Abstract

Epidemiologists often categorize a continuous risk predictor, even when the true risk model is not a categorical one. Nonetheless, such categorization is thought to be more robust and interpretable, and thus their goal is to fit the categorical model and interpret the categorical parameters. We address the question: with measurement error and categorization, how can we do what epidemiologists want, namely to estimate the parameters of the categorical model that would have been estimated if the true predictor were observed? We develop a general methodology for such an analysis, and illustrate it in linear and logistic regression. Simulation studies are presented and the methodology is applied to a nutrition data set. Discussion of alternative approaches is also included.

Keywords: Categorization, differential misclassification, epidemiology practice, inverse problems, measurement error

1. Introduction

Fitting models by categorizing a continuous risk predictor is a common practice in epidemiology. Among many recent examples, see [20, 19, 1, 5, 10] and [25]. A look at current issues of epidemiology journals will uncover many more examples. An important issue is that, generally in these problems, there are many covariates other than the main risk predictor.

The appeal of categorization in interpreting results is clear. If we have a risk predictor X, and we categorize it into J levels (C1, …, CJ), one can compare the highest level of the predictor, CJ, to the lowest level, C1, and if they are statistically significantly different, one can then conclude that it is better to be in the class that has the lowest risk, and quantify how much better.

One important technical point is that categorization implicitly posits an induced model based on the categorized variable X. In some cases, the induced model actually fits the data, e.g., when the response Y actually depends on X only through its categorized version, or if there are no other covariates, see the next paragraph. In other cases, and generally, the induced model does not fit the data, and we call this model misspecified. In particular, suppose that there are other covariates than X, say Z. Consider a binary response, Y, let H(·) be the logistic distribution function, and suppose that the true risk model in the continuous scale is pr(Y = 1|X, Z) = H{m(X, Z, β)} for some continuous function m(·). Then, even if there is no measurement error, if any of the covariates Z are related to Y in this continuous model, or if there is an interaction of X and Z on Y, categorizing X into J levels and plugging that into m(X, Z, β) in place of X leads to a misspecified model as we have defined it. Measurement error in this context makes things even more difficult. When there is no measurement error, [26] gives a characterization of what is actually being estimated in misspecified models: while we do not emphasize it, our paper extends this characterization to the measurement error case. A relevant paper that first solved this particular problem is [14], which was also cited in [26].

This slightly different terminology is motivated by the following example. Suppose that Y is binary, there are no additional covariates Z, and simply define πj = pr(Y = 1|X* = j), where X* is the categorized predictor. Then we can write, correctly, that pr(Y = 1|X* = j) = H{I(X* = j)θj} by making the obvious identifications. Thus, categorization does result in an induced correctly specified logistic model, just not the one in the continuous scale. A logistic regression analysis of Y on the categories of X* then will estimate θj consistently.
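The identification θj = logit(πj) in this example can be checked numerically. The following is a minimal sketch in Python (standard library only) that fits the logistic model with one parameter per category by Newton-Raphson and compares the result with the logit of the observed category proportions. The toy data, the function name `fit_category_logistic` and the zero-based category labels are illustrative assumptions and do not come from the paper.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def expit(t):
    return 1.0 / (1.0 + math.exp(-t))


def fit_category_logistic(y, cat, n_cat, n_iter=25):
    """Newton-Raphson for pr(Y = 1 | X* = j) = H(theta_j), one theta per category."""
    theta = [0.0] * n_cat
    for _ in range(n_iter):
        for j in range(n_cat):
            ys = [yi for yi, ci in zip(y, cat) if ci == j]
            p = expit(theta[j])
            score = sum(ys) - len(ys) * p          # derivative of the log-likelihood
            info = len(ys) * p * (1.0 - p)         # Fisher information for theta_j
            theta[j] += score / info
    return theta


# Hypothetical toy data: three categories with observed proportions 0.25, 0.50, 0.75.
y = [1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1]
cat = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2]
theta_hat = fit_category_logistic(y, cat, n_cat=3)
proportions = [0.25, 0.50, 0.75]
```

Because the category indicators do not overlap, each θj is estimated from its own category only, which is why the fit reduces to the logit of the within-category proportion of events.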

Our point is not to try to get epidemiologists to change their common practice. Instead, we study the effect of measurement error when a continuous predictor variable subject to measurement error is categorized. Our goal is to answer the question: with measurement error in this context, how can we (a) obtain consistent estimates of what epidemiologists would have obtained if X were actually observed; and (b) develop consistent standard errors.

We answer the question above in a general way. Section 2 gives basic technical background. Section 3 provides a general methodology for answering questions (a) and (b) above. Section 4 presents simulation studies for linear and logistic regression that show the good behavior of our methodology, both in terms of bias and confidence interval coverage. Section 5 shows applications of our approach by using data from the Eating at America’s Table Study [23]. Section 6 presents a discussion about other potential approaches to categorization and how those approaches compare to ours. Sketches of technical arguments are in the appendix.

Remark 1.

As discussed above, categorization leads to a misspecified model. It is also well-known that such categorization generally leads to differential measurement error [11, 13, 3], and thus additional complications over simply fitting a measurement error model. Chapters 6.1–6.2 of [13] have a detailed discussion when the continuous variable is dichotomized, calling the result differential by dichotomization. We are thus assuming that the true risk model in a continuous variable X is not categorical in X. If it were, consult [13] and [3], who also discuss the issue of doing a measurement error analysis in this case, especially the difficult and complex issues of computation and identifiability, both theoretical and practical.

2. Data generating mechanism and basic ideas

2.1. Illustration: A special case of linear regression

It is instructive to consider a special case, namely linear regression. Doing so will set the stage for our general method. The response is Y, the scalar predictor subject to error is X, the observed scalar predictor is W, there are predictors Z measured without error, and we define to allow for an intercept. The regression model in the continuous predictor X is , where ϵ is mean zero independent of (W, X, Z). There are j = 1, …, J categories (C1, …, CJ): the number of categories J is set by the investigator, and is generally 3 (tertiles), 4 (quartiles) or 5 (quintiles), depending on the scientific field and the investigator’s interests. Here M(X, Z) = {I(X ∈ C1), …, I(X ∈ CJ), ZT}T. If X could be observed, then we would also immediately obtain an estimate of .

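By [26], when X is observed, what epidemiologists estimate by using the categorized M(X, Z) is Θ, which, based on the normal equations for the categorized predictor, is the solution to

$$0 = E\left[M(X, Z)\{Y - M^{T}(X, Z)\Theta\}\right]. \tag{1}$$

The estimate $\widehat{\Theta}$ is the solution to $0 = n^{-1}\sum_{i=1}^{n} M(X_i, Z_i)\{Y_i - M^{T}(X_i, Z_i)\Theta\}$, and this is a consistent estimate of Θ. Comparisons between categories j and k for j, k ≤ J, say, are $\widehat{\theta}_j - \widehat{\theta}_k$.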

However, when X is not observable, estimating the solution to (1) has to be based solely on (Y, W, Z). In (1), it makes sense that if one believes the true regression model is linear in (X, Z), then, at some point, an estimate of β can be obtained via a measurement error analysis if there are sufficient data to do so.

Solving (1) based only on the observed W though is not so easy, and it is clear that some part of the relationship between W and X given Z is going to need to be specified, as it needs to be to do a general measurement error analysis. One way to do this is to define

| (2) |

and then define . Since 0 = E{Q(W, Z, Θ, β)}, Θ can be estimated by solving

Hence, in this simple case, for j = 1, …, J we will need to be able to calculate expectations of XI(X ∈ Cj) given (W, Z) and the probability that X ∈ Cj given (W, Z). As we will see, in general problems, we will need to estimate the expectations of other functions of X given (W, Z).

So, to summarize, to get a general solution, it appears that we will need to estimate (β1, β2) by a measurement error analysis and estimate expectations of specified functions of X given (W, Z).
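To make the two required quantities concrete, the sketch below computes pr(X ∈ Cj | W) and E{X I(X ∈ Cj) | W} in closed form. It rests on assumptions that are not stated in this subsection: there is no Z, the error is classical and additive (W = X + U), and X and U are independent normal variables, so that X given W is normal with mean μx + λ(W − μx) and variance λσu², where λ = σx²/(σx² + σu²). The function name and the cut points are hypothetical.

```python
import math


def Phi(z):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def phi(z):
    """Standard normal density, with the convention phi(+-infinity) = 0."""
    return 0.0 if math.isinf(z) else math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def conditional_moments(w, cuts, mu_x, var_x, var_u):
    """Return pr(X in C_j | W = w) and E{X I(X in C_j) | W = w} for each category."""
    lam = var_x / (var_x + var_u)        # classical attenuation coefficient
    m = mu_x + lam * (w - mu_x)          # E(X | W = w)
    s = math.sqrt(lam * var_u)           # sd(X | W = w)
    edges = [-math.inf] + list(cuts) + [math.inf]
    probs, partial_means = [], []
    for a, b in zip(edges[:-1], edges[1:]):
        za, zb = (a - m) / s, (b - m) / s
        p = Phi(zb) - Phi(za)
        probs.append(p)
        # Truncated-normal identity: E{X I(a < X <= b)} = m p - s {phi(zb) - phi(za)}
        partial_means.append(m * p - s * (phi(zb) - phi(za)))
    return probs, partial_means


# Hypothetical inputs: approximate quintile cut points of a standard normal X.
cuts = [-0.8416, -0.2533, 0.2533, 0.8416]
probs, partial_means = conditional_moments(1.0, cuts, mu_x=0.0, var_x=1.0, var_u=1.0)
```

As a sanity check, the probabilities sum to one and the partial means sum to E(X | W), which is 0.5 for the inputs shown.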

Remark 2.

Following on Remark 1, it is obvious that in the unlikely event that the true risk model is actually categorical in X, so that E(Y | X, Z) = MT(X, Z)β, then model misspecification and differential measurement error both disappear, and one really needs just the probabilities that X is in the categories given (W, Z). As [13] and [3] discuss in detail, estimating such models is difficult because of model identifiability concerns. Often, papers dealing with this issue assume the existence of a validation data set, where X is actually observed on a subset of the data. [13] is a particularly good source for the difficulties we have mentioned and remedies using replication data. [3], page 314, states that estimating the misclassification rates is “most likely coming from internal validation data”, and also has a nice discussion.

2.2. Assumptions

Our work is very general, but even so, the algorithm is basically the same as in Section 2.1. Our methodology requires three basic assumptions, described below. We let X be the continuous predictor subject to measurement error, Z covariates measured exactly, W the mismeasured version of X, and Y the response.

Assumption 1.

When X is observed, the true response model in the continuous scale has parameters β, such that there is an estimating function, Φtrue(Y, X, Z, β) that identifies β and satisfies

$$0 = E\{\Phi_{\rm true}(Y, X, Z, \beta) \mid X, Z\}. \tag{3}$$

Assumption 1 occurs in at least two circumstances.

Example 1.

(A) There are functions m1(X, Z, β) and m2(X, Z, β) such that E(Y|X, Z) = m1(X, Z, β) and the unbiased estimating function that would be used if X were observable is

| (4) |

(B) There is a parametric model for Y given (X, Z).

Example 1(A) is very general, in that it includes traditional quasilikelihood models, nonlinear regression, generalized linear models, probit regression, etc. Crucially, it does not require a fully parametric model for the distribution of Y given (X, Z).

In our approach, as in linear regression in Section 2.1, we may need to obtain information about moments of specified functions of X given (W, Z). To do this, we will consider the setting in which there may be an external data set of N observations giving information on one set of parameters of the joint distribution, Λext: if there is no external study, N = 0 and Λext does not exist. In addition, there is another set of the parameters, Λint, that is estimated from the n observations in the internal data set.

Assumption 2.

When X is not observed, either (a) the distribution of X given (W, Z) is known up to parameters Λext and Λint as described above, or (b) there is a function, defined at (11) below, whose conditional expectation given (W, Z) depends on parameters Λext and Λint and can be estimated. The parameter Λext cannot be estimated by internal data, while the parameter Λint can be estimated by internal data. For both, there are unbiased estimating functions Vext,m(Λext) for the external data and Vint,i(Λint, Λext) for the internal data such that E{Vext,m(Λext)} = 0 and E{Vint,i(Λint, Λext)} = 0.

For linear regression, is given in (2).

If there are external data and N > 0, we estimate Λext by solving the estimating equation

$$0 = \sum_{m=1}^{N} V_{{\rm ext},m}(\Lambda_{\rm ext}). \tag{5}$$

In the internal data set, we estimate Λint by solving an estimating equation

$$0 = \sum_{i=1}^{n} V_{{\rm int},i}(\Lambda_{\rm int}, \widehat{\Lambda}_{\rm ext}). \tag{6}$$

There is also a very subtle issue that needs to be made explicit.

Assumption 3.

If external data are necessary for model identification, the parameter Λext is transportable in the sense that this parameter is the same in the external and internal data sets.

The issue of when parameters are transportable from an external data set to the internal data set is discussed in Chapter 2.2.4–2.2.5 of [4]. As they state, it is much better if there are sufficient internal data that external data need not be used, but this is not always the case.

2.3. General observations when X is observed

As argued in Section 1, the goal is to fit a model when X is categorized into J levels (C1, …, CJ), and so we defined the dummy variables and Z together as M(X, Z) = {I(X ∈ C1), …, I(X ∈ CJ), ZT}T: our formulation allows more complex forms, including interactions. Suppose there are i = 1, …, n subjects in the primary/main/internal study, and suppose further that we observe (Yi, Xi, Zi). If X is observed, the analysis done on these categories will be based on replacing (X, Z) in (3)–(4) by M(X, Z), and to make clear the categorization, we define a parameter Θ, set Φcat{Yi, M(Xi, Zi), Θ} = Φtrue{Yi, M(Xi, Zi), Θ}, and obtain $\widehat{\Theta}$ by solving

$$0 = n^{-1}\sum_{i=1}^{n} \Phi_{\rm cat}\{Y_i, M(X_i, Z_i), \Theta\}. \tag{7}$$

More complex forms of (7) are easily accommodated.

Unlike in Assumption 1 and (3)–(4), except in the rare case that the categorized model is actually true, 0 ≠ E[Φcat{Y, M(X, Z), Θ}|X, Z], a conditional expectation. This is a key part of the work in [26].

Despite the fact that the categorized model does not fit the data conditional on (X, Z), by standard estimating equation theory [26], the estimate formed by solving (7) has a limit as n → ∞, Θ, which is the solution to

$$0 = E\left[\Phi_{\rm cat}\{Y, M(X, Z), \Theta\}\right]. \tag{8}$$

It is important to observe that (8) is an unconditional expectation, not a conditional one.

If, instead of observing X, we observe its mismeasured version W, and if we replace X by W, we will of course generally inconsistently estimate both β and Θ.

2.4. Estimating the true parameter β

In our approach, as in Section 2.1 for linear regression, we must estimate β in (3). There is of course a large literature on how to do this [13, 4, 3, 27]. Borrowing on that literature, from Assumptions 1–2, for an estimating function Φ(Y, W, Z, β, Λint, Λext), the estimate, $\widehat{\beta}$, is the solution to

$$0 = n^{-1}\sum_{i=1}^{n} \Phi(Y_i, W_i, Z_i, \beta, \widehat{\Lambda}_{\rm int}, \widehat{\Lambda}_{\rm ext}), \tag{9}$$

where $(\widehat{\Lambda}_{\rm ext}, \widehat{\Lambda}_{\rm int})$ are obtained from equations (5) and (6), respectively. Of course, the details and the form of Φ(·) differ from case to case.

3. Methodology and asymptotic theory

3.1. Methodology: General case

The methodology is simple to explain at the general level. The target Θ is defined as the solution to (8). However, we can rewrite (8) as

| (10) |

Since the distribution of Y given (X, Z) depends on β, for notational completeness we define

| (11) |

| (12) |

Making the usual nondifferential measurement error assumption, i.e., that Y and W are independent given (X, Z),

| (13) |

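Critically, (12) depends only on the observed covariates. Thus, if we have consistent estimates $(\widehat{\beta}, \widehat{\Lambda}_{\rm int}, \widehat{\Lambda}_{\rm ext})$ of (β, Λint, Λext), then a consistent estimate, $\widehat{\Theta}$, of Θ solves

$$0 = n^{-1}\sum_{i=1}^{n} Q(W_i, Z_i, \Theta, \widehat{\beta}, \widehat{\Lambda}_{\rm int}, \widehat{\Lambda}_{\rm ext}). \tag{14}$$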

In some cases, we do not have external data. Thus, we do not have Vext and Λext, and Vint and Θ only depend on Λint.
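For simple linear regression without Z, the estimating equation for Θ has a closed-form solution, and the whole procedure can be sketched in a few lines. The sketch below makes several assumptions that go beyond the text: W = X + U with X and U independent normal variables, the error variance σu² is treated as known, β is estimated by the familiar moment correction cov(W, Y)/{var(W) − σu²}, and the categories are quintiles of the fitted normal distribution of X. Under these assumptions θj = β0 + β1 Σi E{Xi I(Xi ∈ Cj) | Wi} / Σi pr(Xi ∈ Cj | Wi). All function names are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean


def Phi(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def phi(z):
    return 0.0 if math.isinf(z) else math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def cond_moments(w, edges, mu_x, var_x, var_u):
    """pr(X in C_j | W = w) and E{X I(X in C_j) | W = w} under the normal model."""
    lam = var_x / (var_x + var_u)
    m, s = mu_x + lam * (w - mu_x), math.sqrt(lam * var_u)
    probs, parts = [], []
    for a, b in zip(edges[:-1], edges[1:]):
        za, zb = (a - m) / s, (b - m) / s
        probs.append(Phi(zb) - Phi(za))
        parts.append(m * probs[-1] - s * (phi(zb) - phi(za)))
    return probs, parts


def corrected_theta(y, w, var_u, n_cat=5):
    """Solve the averaged Q-equation for Theta in the linear, no-Z case."""
    n = len(w)
    w_bar, y_bar = fmean(w), fmean(y)
    s_ww = sum((wi - w_bar) ** 2 for wi in w) / (n - 1)
    s_wy = sum((wi - w_bar) * (yi - y_bar) for wi, yi in zip(w, y)) / (n - 1)
    mu_x, var_x = w_bar, s_ww - var_u          # moment estimates of the X distribution
    beta1 = s_wy / var_x                       # slope corrected for attenuation
    beta0 = y_bar - beta1 * mu_x
    cuts = [mu_x + math.sqrt(var_x) * NormalDist().inv_cdf(k / n_cat)
            for k in range(1, n_cat)]
    edges = [-math.inf] + cuts + [math.inf]
    sum_p, sum_e = [0.0] * n_cat, [0.0] * n_cat
    for wi in w:
        p, e = cond_moments(wi, edges, mu_x, var_x, var_u)
        for j in range(n_cat):
            sum_p[j] += p[j]
            sum_e[j] += e[j]
    return [beta0 + beta1 * sum_e[j] / sum_p[j] for j in range(n_cat)]


# Simulated illustration (hypothetical settings): beta0 = 0, beta1 = 0.75, unit variances.
rng = random.Random(2024)
x = [rng.gauss(0.0, 1.0) for _ in range(20000)]
w = [xi + rng.gauss(0.0, 1.0) for xi in x]
y = [0.75 * xi + rng.gauss(0.0, 1.0) for xi in x]
theta_hat = corrected_theta(y, w, var_u=1.0)
contrast = theta_hat[-1] - theta_hat[0]
```

With these settings the target contrast between the highest and lowest quintiles is about 2.10, and the sketch uses only (Y, W) to approximate it. Standard errors are not computed here.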

Remark 3.

The key question is how to compute in (11). In the fully general case (3), we require a parametric model for the distribution of Y given (X, Z), as in Example 1(B). However, in standard regression models of the form in (4) in Example 1(A), great simplification occurs, because in that case,

and thus

Appendix C.3 gives detailed formulae for linear and logistic regression.

Remark 4.

Our method is closely related to the expectation-correction method of [27], Chapter 2.5.2, and less closely to the general corrected score methods first introduced by [17]. [27] has an excellent and comprehensive discussion of the correction methods in the literature. We do not have a score function per se, but we have a function, Φcat{Y, M(X, Z), Θ}, with the property that E[Φcat{Y, M(X, Z), Θ}] = 0: importantly, it is not true that the conditional expectation E[Φcat{Y, M(X, Z), Θ}|X, Z] ≡ 0. Instead of our (11)–(12), the expectation-correction method uses as its estimating equation E[Φcat{Y, M(X, Z), Θ}|Y, W, Z] = Q*(Y, W, Z, Θ, β, Λint, Λext). The obvious distinction is that our function Q(·) does not involve Y explicitly, while the expectation-correction function Q*(·) does involve Y. We used Q(·) and (11) because our assumptions allow to be calculated explicitly, especially in Example 1(A), so that implementation is somewhat easier. In addition, in Example 1(A), there does not need to be a full likelihood, as would be required in the expectation-correction method, so there are actual differences in the methods.

3.2. Asymptotic Theory

Asymptotic theory for the parameter estimates is easily derived. Let Ω = (Θ, β, Λint, Λext) and let the true values of the parameters be denoted by Ω.

It is neater notation in this section to let i = 1, …, n denote the internal data, and i = n + 1, …, n + N denote the external data. For i > n, define , while for i ≤ n define

If there are external data, the estimate solves . If there are no external data, then N = 0, Ω = (Θ, β, Λint) and the zero element and Λext in the definition of Ψi(Ω) are removed.

By standard estimating equation results, we have the following results, which are shown in Appendices A.1 and A.2.

Lemma 1.

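If there are external data, i.e., N > 0, make Assumptions 1–3. Suppose that N → ∞ and n → ∞ such that n/(n + N) → blim, where 0 < blim < 1. Then

$$(n + N)^{1/2}(\widehat{\Omega} - \Omega) \to {\rm Normal}(0, A^{-1}BA^{-T}) \quad \text{in distribution},$$

where A = blimE{∂Ψ1(Ω)/∂ΩT} + (1 − blim)E{∂Ψn+N(Ω)/∂ΩT} and B = blimcov{Ψ1(Ω)} + (1 − blim)cov{Ψn+N(Ω)}. In the definitions of A and B, the expectation and covariance matrix for Ψ1(Ω) are computed in the internal data, while the expectation and covariance matrix for Ψn+N(Ω) are computed in the external data. Let $\widehat{B}_{\rm ext}$ be the sample covariance matrix of $\Psi_i(\widehat{\Omega})$ for i = n + 1, …, n + N and let $\widehat{B}_{\rm int}$ be the sample covariance matrix of $\Psi_i(\widehat{\Omega})$ for i = 1, …, n. Consistent estimates of A and B are easily seen to be $\widehat{A} = (n + N)^{-1}\sum_{i=1}^{n+N}\partial\Psi_i(\widehat{\Omega})/\partial\Omega^{T}$ and $\widehat{B} = (n\widehat{B}_{\rm int} + N\widehat{B}_{\rm ext})/(n + N)$.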

Lemma 2.

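If there are no external data, i.e., N = 0, make Assumptions 1–2. As n → ∞,

$$n^{1/2}(\widehat{\Omega} - \Omega) \to {\rm Normal}(0, A^{-1}BA^{-T}) \quad \text{in distribution},$$

where A = E{∂Ψ1(Ω)/∂ΩT} and B = cov{Ψ1(Ω)}. In the definitions of A and B, the expectation and covariance matrix for Ψ1(Ω) are computed in the internal data. Let $\widehat{B}$ be the sample covariance matrix of $\Psi_i(\widehat{\Omega})$ for i = 1, …, n. Consistent estimates of A and B are easily seen to be $\widehat{A} = n^{-1}\sum_{i=1}^{n}\partial\Psi_i(\widehat{\Omega})/\partial\Omega^{T}$ and $\widehat{B}$.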
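To illustrate how A and B turn into a standard error, the sketch below works through the simplest scalar case. It assumes the usual sandwich form from estimating equation theory, in which the variance of the estimate is approximately A⁻¹BA⁻ᵀ/n, and it uses the hypothetical estimating function Ψi(θ) = Ii(Yi − θ) for a single category mean with X observed, where Ii indicates membership in the category. This is not the full stacked Ψi(Ω) of this section, and the function name and data are illustrative only.

```python
import math


def sandwich_se_category_mean(y, in_cat):
    """Sandwich standard error for theta solving sum_i I_i (Y_i - theta) = 0."""
    n = len(y)
    n_j = sum(in_cat)
    theta = sum(yi for yi, c in zip(y, in_cat) if c) / n_j
    a_hat = -n_j / n                                   # average derivative of Psi_i
    b_hat = sum((c * (yi - theta)) ** 2                # average of Psi_i^2 (mean is zero)
                for yi, c in zip(y, in_cat)) / n
    variance = b_hat / (a_hat ** 2) / n                # A^{-1} B A^{-T} / n in the scalar case
    return theta, math.sqrt(variance)


# Hypothetical toy data: the first three observations fall in the category.
theta_hat, se_hat = sandwich_se_category_mean([1.0, 2.0, 3.0, 10.0, 20.0], [1, 1, 1, 0, 0])
```

In this scalar case the sandwich variance reduces to Σ Ii(Yi − θ̂)²/nj², where nj is the number of observations in the category, and no model for the variance of Y is needed to compute it.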

Remark 5.

While the calculations used in Lemmas 1–2 are standard, as a referee has pointed out, we are making the following kinds of assumptions to carry them through: weaker conditions can be constructed. All these conditions hold in our examples of linear and logistic regression with additive measurement error. There is a parameter which we have called in this subsection Ω = (Θ, β, Λint, Λext). For i = 1, …, n + N, we have defined estimating functions Ψi(Ω), which we have defined in such a way that E{Ψi(Ω)} = 0 for i = 1, …, n + N: the expectations are unconditional, although in implementing the estimators we have exploited our Assumptions 1–3 to simplify the numerical calculations. Having done all this, we are now in the realm of estimating equation theory. Sufficient but not necessary conditions for our asymptotic theory to hold are the following.

The parameter space is compact. This is not necessary but it is convenient for proving consistency.

There is a unique Ω in the parameter space such that E{Ψi(Ω)} = 0 for all i = 1, …, n + N.

The estimating equations Ψi(Ω) are 3-times continuously and boundedly differentiable in the parameter space.

The estimating equation has a unique solution.

The matrix E{∂Ψi(Ω)/∂ΩT} is of full rank within a neighborhood of the true parameter value.

For sufficiently large (n, N), within a neighborhood of the true parameter value, is of full rank with eigenvalues bounded away from 0 and ±∞.

Remark 6.

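The major new item here in verifying the assumptions mentioned in Remark 5 is the set of differentiability assumptions having to do with Q(W, Z, Θ, β, Λint, Λext) in (12). Let the conditional density/mass function of Y given (X, Z) be fY|X,Z (·, β, Λint, Λext) and the conditional density/mass function of X given (W, Z) be fX|W,Z(·, Λint, Λext). Let dν(y) and dν(x) be integrals/counts as the case requires. Then (12) can be written out as

$$Q(W, Z, \Theta, \beta, \Lambda_{\rm int}, \Lambda_{\rm ext}) = \int\!\!\int \Phi_{\rm cat}\{y, M(x, Z), \Theta\}\, f_{Y|X,Z}(y \mid x, Z, \beta, \Lambda_{\rm int}, \Lambda_{\rm ext})\, f_{X|W,Z}(x \mid W, Z, \Lambda_{\rm int}, \Lambda_{\rm ext})\, d\nu(y)\, d\nu(x).$$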

Then the non-standard differentiability assumptions in Remark 5 are really about the differentiability assumptions of Φcat{y, M(x, Z), Θ}, fY|X,Z(·, β, Λint, Λext) and fX|W,Z(·, Λint, Λext) with respect to the parameters.

4. Simulations: Logistic and linear regression

4.1. Logistic regression

4.1.1. Scenarios

For simplicity, we do our simulations in the case that there is no Z. For logistic regression, we assume that the true model is

$${\rm pr}(Y = 1 \mid X) = H(\beta_0 + \beta_1 X), \tag{15}$$

where H(·) is the logistic distribution function. Then we generate data as

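$$W = X + U, \quad X \sim {\rm Normal}(\mu_x, \sigma_x^2), \quad U \sim {\rm Normal}(0, \sigma_u^2), \tag{16}$$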

where X and U are independent. We set β0 = −0.42 and set β1 = log(1.5) in Table 1. We set (μx = 0, , ), so that the measurement error variance is the same as the variance of X, and the classical attenuation coefficient is . Solving (8) numerically, we find that Θ = (−0.98, −0.64, −0.42, −0.21, 0.14)T. In both cases, the main study sample size is n = 500.

We used the quintiles of the distribution of X to define the categories. This is because, as stated in the introduction, our goal is to obtain consistent estimates of what epidemiologists would have obtained if X were actually observed, in this case, using the quintiles of X.
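The target Θ for this scenario can be approximated by direct numerical integration, since with no Z and quintile categories θj = logit[5 ∫Cj H(β0 + β1x) f(x) dx], where f is the density of X. The sketch below uses a midpoint rule and assumes that X is standard normal. The unit variance is an assumption of this sketch (the text states only that the two variances are equal), and the function name, grid size and truncation of the outer categories at ±8 standard deviations are illustrative choices.

```python
import math
from statistics import NormalDist


def expit(t):
    return 1.0 / (1.0 + math.exp(-t))


def logit(p):
    return math.log(p / (1.0 - p))


def theta_logistic(beta0, beta1, mu_x=0.0, sd_x=1.0, n_cat=5, n_grid=2000):
    """theta_j = logit of pr(Y = 1 | X in C_j) for quantile-based categories of X."""
    dist = NormalDist(mu_x, sd_x)
    cuts = [dist.inv_cdf(k / n_cat) for k in range(1, n_cat)]
    # The outer categories are truncated at 8 sd; the neglected mass is negligible.
    edges = [mu_x - 8.0 * sd_x] + cuts + [mu_x + 8.0 * sd_x]
    theta = []
    for a, b in zip(edges[:-1], edges[1:]):
        h = (b - a) / n_grid
        mids = (a + (k + 0.5) * h for k in range(n_grid))
        integral = h * sum(expit(beta0 + beta1 * t) * dist.pdf(t) for t in mids)
        theta.append(logit(n_cat * integral))      # pr(X in C_j) = 1 / n_cat
    return theta


theta = theta_logistic(beta0=-0.42, beta1=math.log(1.5))
reported = [-0.98, -0.64, -0.42, -0.21, 0.14]
```

Under these assumptions the computed values agree with the reported Θ to within about 0.01 in each component, which is consistent with rounding of the inputs.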

We did simulations in two cases:

External-Internal Data: The internal data has no replicates and the external data set has size N = 300 and K = 2 replicates for each observation. The nuisance parameters are $\Lambda_{\rm ext} = \sigma_u^2$ and $\Lambda_{\rm int} = (\mu_x, \sigma_x^2)$. We estimated $\sigma_u^2$ from the external data with replicates, and estimated $(\mu_x, \sigma_x^2)$ using the internal data without any replicates. Standard errors were computed as in Lemma 1.

Internal Data Only: The internal data has R = 2 replicates and there are no external data (K = 0). The nuisance parameters are $\Lambda_{\rm int} = (\mu_x, \sigma_x^2, \sigma_u^2)$. We estimated $(\mu_x, \sigma_x^2, \sigma_u^2)$ from the internal data with replicates. Standard errors were computed as in Lemma 2.

Appendix C.3 provides details of implementation.
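The nuisance parameters in the two cases can be estimated by simple moment calculations. The sketch below is one way to do so and is not taken from the appendix: the measurement error variance is the pooled within-subject variance of the replicates, and the mean and variance of X come from the mean and variance of W after subtracting the error variance (divided by the number of replicates when W is a subject-level average). The function names and simulated data are hypothetical.

```python
import random
from statistics import fmean


def error_variance_from_replicates(reps):
    """Pooled within-subject variance; `reps` is a list of per-subject replicate lists."""
    ss, df = 0.0, 0
    for r in reps:
        m = fmean(r)
        ss += sum((v - m) ** 2 for v in r)
        df += len(r) - 1
    return ss / df


def x_moments(w, var_u, n_rep=1):
    """Moment estimates of (mu_x, var_x) from W, the mean of n_rep replicates per subject."""
    n = len(w)
    w_bar = fmean(w)
    s2 = sum((v - w_bar) ** 2 for v in w) / (n - 1)
    return w_bar, s2 - var_u / n_rep


# External-internal illustration: N = 300 external subjects with K = 2 replicates,
# n = 500 internal subjects with a single measurement (simulated, unit variances).
rng = random.Random(7)
external = []
for _ in range(300):
    xi = rng.gauss(0.0, 1.0)
    external.append([xi + rng.gauss(0.0, 1.0), xi + rng.gauss(0.0, 1.0)])
internal_w = [rng.gauss(0.0, 1.0) + rng.gauss(0.0, 1.0) for _ in range(500)]

var_u_hat = error_variance_from_replicates(external)
mu_x_hat, var_x_hat = x_moments(internal_w, var_u_hat)
```

For the internal-data-only case, the same two functions apply to the internal replicates, with `x_moments` called on the subject-level means and `n_rep=2`.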

4.1.2. Results

The results given below are similar, and indeed even more impressive, when the main study sample size n increases to n = 1,000, 2,000 and 3,000, and thus these are not displayed here. The results are also similar when β1 is either smaller or larger. The same qualitative results are also found for Θ = (θ1, …, θ5)T individually (results not shown).

We fit the new approach and compare it with the naive method for both cases described above. Our main interest is to estimate the log relative risk θ5 − θ1, which compares the effect of category 5 with the effect of category 1. In the two simulations, we computed (a) the log relative risk pretending that X is observed; (b) our method; and (c) the naive method that ignores measurement error. In the scenario of internal data with R = 2, the predictor used was the sample mean of the replicates.

Based on 1000 simulated data sets, in Table 1, we report the empirical average mean bias, asymptotic standard error, standard deviation, root mean squared error, and coverage rate of the nominal 95% confidence interval across the simulations.

From Table 1, we observe the following.

The estimator using true X and our method both have little bias and provide near-nominal coverage.

The naive estimator that ignores the measurement error is badly biased and attenuated towards zero. Consequently the coverage probabilities are near-zero and the root mean squared errors are quite inflated.

With no internal replicates, i.e., R = 1, the root mean squared error of our method is naturally higher than if X had been observed, but not quite as high as would be expected in a continuous analysis. Indeed, in a continuous analysis with attenuation λ = 0.50, as in our simulation, one would expect a doubling of root mean squared error.
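The attenuation of the naive estimator can be reproduced in a small simulation. The sketch below generates data from a logistic model with β0 = −0.42 and β1 = log(1.5), assumes that X and U are independent standard normal variables (unit variances are an assumption of the sketch), and estimates θ5 − θ1 from empirical quintiles, once using X and once using W in place of X. The sample size is far larger than in the simulation study so that the comparison is not dominated by sampling noise; the function name is hypothetical.

```python
import math
import random


def logit(p):
    return math.log(p / (1.0 - p))


def theta_contrast(score, y, n_cat=5):
    """logit of the event rate in the top group of `score` minus that in the bottom group."""
    order = sorted(range(len(score)), key=lambda i: score[i])
    k = len(score) // n_cat
    p_low = sum(y[i] for i in order[:k]) / k
    p_high = sum(y[i] for i in order[-k:]) / k
    return logit(p_high) - logit(p_low)


rng = random.Random(11)
n = 50000
beta0, beta1 = -0.42, math.log(1.5)
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
w = [xi + rng.gauss(0.0, 1.0) for xi in x]          # classical additive error
y = [1 if rng.random() < 1.0 / (1.0 + math.exp(-(beta0 + beta1 * xi))) else 0
     for xi in x]

contrast_true_x = theta_contrast(x, y)   # what would be estimated if X were observed
contrast_naive_w = theta_contrast(w, y)  # naive analysis that categorizes W
```

Under these assumptions the contrast based on X is close to 0.14 − (−0.98) = 1.12, while the contrast based on W is clearly smaller, which is the attenuation towards zero described above.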

4.2. Linear regression

4.2.1. Scenarios

In this section, we do simulations based on simple linear regression with no Z, including homoscedastic and heteroscedastic cases.

We assume that the true model is

$$Y = \beta_0 + \beta_1 X + \epsilon. \tag{17}$$

Similarly, we generate data as

We set β0 = 0 and set β1 = 0.75 and studied two cases: (a) homoscedastic with ϵ ~ N(0, 1); and (b) heteroscedastic with ϵ ~ N(0, 0.2 + 0.5X²). The classical attenuation coefficient and sample size are the same as in Section 4.1. Solving (8) numerically, we find that Θ = (−1.04, −0.40, 0.00, 0.40, 1.05)T. Appendix C.2 provides implementation details.
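In the linear case with no Z, the target has a closed form, θj = β0 + β1E(X | X ∈ Cj), whether or not the errors are heteroscedastic, because only the mean of Y given X enters. The sketch below evaluates it for quintile categories under the assumption that X is standard normal (the unit variance is an assumption of this sketch), using the truncated-normal mean E(Z | a < Z ≤ b) = {φ(a) − φ(b)}/{Φ(b) − Φ(a)}. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def theta_linear(beta0, beta1, mu_x=0.0, sd_x=1.0, n_cat=5):
    """theta_j = beta0 + beta1 * E(X | X in C_j) for quantile-based categories of X."""
    z = NormalDist()

    def pdf(t):
        return 0.0 if math.isinf(t) else z.pdf(t)

    q = [-math.inf] + [z.inv_cdf(k / n_cat) for k in range(1, n_cat)] + [math.inf]
    theta = []
    for a, b in zip(q[:-1], q[1:]):
        mean_z = n_cat * (pdf(a) - pdf(b))      # each category has probability 1 / n_cat
        theta.append(beta0 + beta1 * (mu_x + sd_x * mean_z))
    return theta


theta = theta_linear(beta0=0.0, beta1=0.75)
reported = [-1.04, -0.40, 0.00, 0.40, 1.05]
```

The closed-form values are symmetric about zero and agree with the reported Θ to within about 0.01 in each component.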

4.2.2. Results

As before, our main interest is to estimate θ5 − θ1, which compares the effect of category 5 with the effect of category 1. In the two simulations, we computed θ5 − θ1 (a) pretending that X is observed; (b) using our method; and (c) using the naive method that ignores measurement error. For the naive method, in internal data with R = 2, the predictor used is the sample mean of the replicates.

Based on 1000 simulated data sets, in Table 2, we report the empirical average mean bias, asymptotic standard error, standard deviation, root mean squared error, and coverage rate of the nominal 95% confidence intervals across the simulations.

From Table 2, we see that similar conclusions can be drawn as in Section 4.1. However, the heteroscedastic case, in which the variance of the noise ϵ depends on X, is of particular interest. Assuming that X is observed, the coverage rate of nominal 95% confidence intervals is low, because the heteroscedasticity is ignored. Using our method, we can get close to nominal coverage without knowing any information about the noise ϵ. Thus, this example shows that our method is very general, as we stated in Example 1(A).

5. Empirical example

5.1. Data description

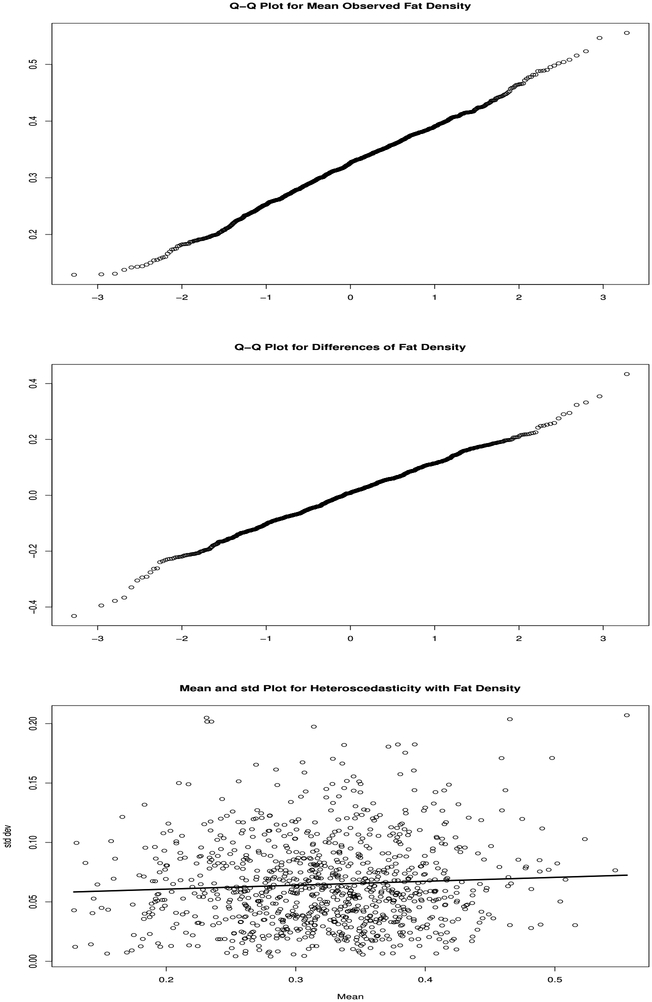

We illustrate our methods using data from the Eating at America’s Table (EATS) Study [23], in which 964 participants completed multiple 24-hour recalls of diet. We consider the variable Fat Density, which is the percentage of calories coming from Fat. The response Y is either (i) the indicator of obesity, which means that a subject’s body mass index (BMI, weight in kilograms divided by the square of height in meters) is 30 or greater, or (ii) the actual body mass index. We assume that W is unbiased for usual intake X, and that W = X + U. It is reasonable in these data to take (a) X to be normally distributed; (b) U to be normally distributed; and (c) X and U to be independent, as we now describe. We used the methods described in [9] and Chapter 1.7 of [4], which also give the rationale for these methods. Specifically, for (a), as they suggest, we examined a qq-plot of the individual means for Fat Density, which looked acceptably normal, with skewness and kurtosis of −0.06 and 3.02, respectively, see the top panel of Figure 1. For (b), as they suggest, we took differences of the first and second Fat Density measurements, which had skewness (theoretically = 0) and kurtosis of −0.14 and 3.40, respectively: the somewhat higher kurtosis here is seen to be minor on the qq-plot, see the middle panel of Figure 1. Finally, for (c), they suggest analyzing the correlation between the individual-level mean and standard deviation, which was 0.06, and there was no obvious strong pattern when we plotted the latter against the former, see the bottom panel of Figure 1.

For numerical stability, our analysis in the continuous scale uses centered and standardized W. To illustrate an example of an internal and an external study, we randomly selected N = 200 subjects as the external study to have the first two 24-hour recalls, while using the remaining data as the main internal study. As in the simulation, we either set the number of recalls to R = 1, K = 2, meaning that the external study data were used to estimate the measurement error variance, or to R = 2, K = 0, in which case the external data were not used.

5.2. Results

5.2.1. Logistic regression

As described in Section 4.1, we assume the true model defined by (15)–(16), and the respective two cases. In this application we again estimate the log relative risk θ5 − θ1. We fit both our new approach and the naive model that ignores measurement error, both when external data are used and when they are not.

In Table 3, we observe that when using the external data and only 1 observation in the internal data, the estimate of the log relative risk θ5 − θ1 from our approach is 108% greater than the naive estimate, while when using internal data with two replicates, the estimate from our approach is 32% greater than the naive estimate. This makes sense because the second case uses the mean of two replicates, hence has smaller measurement error variance, and thus the naive estimate will be closer to that of our method.

In both cases, the asymptotic standard error from our new method is greater than the naive method, which led to wider confidence intervals. This makes sense, because with a scalar covariate measured with error, correcting for measurement error bias usually increases estimated standard errors, while of course reducing bias.

5.2.2. Linear regression

Next we consider the linear model with body mass index as the response. All assumptions for W, X and U are the same as in Section 5.1. Moreover, we maintain the standardization and sampling scheme in Section 5.1: the results are presented in Table 4.

From Table 4, we draw similar conclusions as in the logistic regression case. One point of particular interest is that in both scenarios (external-internal or internal data only), our estimator converges theoretically to the same value, and this is seen in the results. The naive method that ignores measurement error estimates different parameters because the measurement error variance is twice as large in the external-internal case as it is in the internal-only case.

6. Other approaches and the assumptions

6.1. Other approaches

We emphasize once more that it is common practice in epidemiology to categorize a continuous predictor, and we have given numerous citations of this practice. Generally, this practice results in a misspecified model.

Our goal is to correct the analysis so as to reproduce, asymptotically, the estimators that would have been obtained if there were no measurement error. The problem has not been considered previously in the context that a continuous predictor has been categorized. Such categorization generally leads to differential measurement error [11, 13, 3], and thus additional complications over simply fitting a measurement error model.

While our paper is the first to consider the issue of how to correct an analysis to account for a continuous predictor that is categorized, there are of course other possible approaches, but none of them really avoids the basic issues we have discussed of what is needed to obtain consistent estimators with asymptotically correct inference in the case of measurement error.

For example, one could assume that the true risk model is based upon the categorized truth, even if this is implausible in most contexts. One could further assume that the misclassification is nondifferential, which is incorrect if the true risk model is in the continuous scale [11, 13, 3]. There is a small literature on this problem. [13], especially Chapter 6.1, has remarks on the bias induced when a binary predictor is misclassified. [3], Chapter 6.7.7 and Chapter 6.14, has a detailed discussion of the issue, and provides a number of references to the problem. Both [13] and [3] show that a measurement error correction will require a distribution for the categorical X given (W, Z), sometimes called the reclassification rate, and both indicate that there are substantive issues, including identifiability, involved with estimating these models. For replication studies wherein W is measured repeatedly on a subset of the data, there is some evidence that 3 replicates will result in identifiability. However, both books emphasize the use of internal validation substudies, wherein one actually observes X in a substudy.

If Xcat is the categorized truth, then one might attempt an analysis based on assuming a joint distribution of (Y, W, Xcat) given Z, but as in any measurement error model [4], the joint distribution requires (a) a distribution for Y given (Xcat, W, Z), and (b) the distribution of (W, Xcat) given Z. However, (a) actually depends on W, and thus the modeling presents additional complications. In addition, (b) is no easier than our approach, can be implausible and does not make fewer assumptions than we have made.

Simulation-extrapolation, or SIMEX, [6, 22, 4] is a well-known approach to the creation of approximately, but not fully, consistent estimators for additive measurement error models of the form W = X +ZTα +U, where U is independent of Z and can be homoscedastic or heteroscedastic but has replicates [8], and is generally taken to be normally distributed. This literature attempts to dispense with distributional assumptions for X for the continuous case, but is at best approximately correct. The fact that a categorized risk model is implausible, leading to differential measurement error, may also cause complications, but the use of SIMEX in this context is a worthwhile topic for further study. We also mention the MCSIMEX procedure [16], which is appropriate for misclassified data where the misclassification probabilities can be estimated.

It is also possible to change the paradigm entirely and avoid categorization, and all the issues related to categorization, by instead using B-splines. Indeed, part of the reason sometimes given for categorizing a continuous predictor and not modeling a response linearly in the continuous X is that it could lead to unduly extreme comparisons for risk between the lowest and the highest values of X. The general thought is that this can be overcome by replacing the linear X by a B-spline in X. There are papers involving B-splines and measurement error [2, 12, 18], and it appears that regression calibration can possibly be used by calibrating each spline basis function. After the fitting, one could compare the B-spline fits at the 10th, 30th, 50th, 70th and 90th percentiles of X to form versions of the tables found in epidemiology papers, but the interpretations are not fully comparable.

We showed how to solve this problem and gave asymptotically consistent estimators with asymptotically correct standard errors. Assumption 2 is reasonable in contexts other than ours, for example, when X has a mixture-of-normals distribution and U is normally distributed [7].

6.2. Assumptions in the simulations and example

Readers of an initial version of this paper noted that our simulations and data example assume that the distribution of X given (W, Z) is normal, but misinterpreted this as implying that the approach is applicable only in that case. For the data example in Section 5, we justified the assumptions using known methods for model checking of measurement error models. Assumption 2 is widely used and reasonable in many contexts other than our numerical work, for example, when X has a mixture-of-normals distribution and U is normally distributed [7]. Modeling via mixture distributions is a reasonable way to extend what we have done in the classical error case. See also [21] for the homoscedastic and heteroscedastic cases when the variance function and the distributions of X and U are modeled as mixture distributions.

Many papers in the literature also rely on the existence of validation data, where X is actually observed in a subset of the main data set. In that case, Assumption 2 is easily checked by model fitting and validation on the observed validation data subset.

6.3. Categorization

In Section 2.1, we stated that the number J of categories was set by the investigators. Usually, J = 3, 4 or 5, as seen in the examples cited in the introduction. In addition, setting the category limits is also an art, and may be based on (a) limits in the literature; (b) limits based on the error-prone instrument, such as the quintiles of a food frequency questionnaire or 24-hour recall; and (c) limits based on a measurement error analysis. Since our goal is to construct the analysis that would have been done if X could be observed, we use the last of these.
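The difference between options (b) and (c) can be sketched in Python as follows. This is only an illustration under assumptions that are not taken from the paper: X and U are simulated as independent normals, the error variance `sigma_u2` is treated as known, and option (c) is read as "quintiles of a normal distribution for X fitted by the method of moments". All names are hypothetical.

```python
import bisect
import random
import statistics
from statistics import NormalDist


def categorize(value, limits):
    """Return the category index 1..J for value, given J - 1 sorted limits."""
    return bisect.bisect_right(limits, value) + 1


rng = random.Random(7)
sigma_u2 = 1.0                                   # assumed known error variance
x = [rng.gauss(0.0, 1.0) for _ in range(2000)]   # unobserved truth (simulated)
w = [xi + rng.gauss(0.0, sigma_u2 ** 0.5) for xi in x]

# (b) limits taken from the error-prone instrument: sample quintiles of W
limits_w = statistics.quantiles(w, n=5)

# (c) limits from a measurement error analysis, read here as quintiles of a
# normal distribution for X fitted by moments: var(X) = var(W) - sigma_u^2
mu_x = statistics.fmean(w)
sigma_x = (statistics.variance(w) - sigma_u2) ** 0.5
limits_x = NormalDist(mu_x, sigma_x).quantiles(n=5)

# Share of the true X values that fall in the top category under each choice
share_top_w = sum(categorize(xi, limits_w) == 5 for xi in x) / len(x)
share_top_x = sum(categorize(xi, limits_x) == 5 for xi in x) / len(x)
print(f"top category holds {share_top_w:.2f} of X under (b), {share_top_x:.2f} under (c)")
```

Because W is more variable than X, limits based on W are too wide for X, so the "top quintile" defined from W contains noticeably less than a fifth of the true X values in this simulation.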

Acknowledgments

We thank the Associate Editor and two anonymous referees for their valuable comments. Blas and Wang should be considered as joint first authors. Blas’s research was supported by a post-doctoral fellowship from the Brazilian Agency CNPq (201192/2015–2). The research of Wang and Carroll was supported by a grant from the National Cancer Institute (U01-CA057030). The R package CCP for implementing the methods has been placed on GitHub at https://github.com/tianyingw/CCP. The Eating at America’s Table Study data in Section 5 can be obtained from a data transfer agreement with the National Cancer Institute: our R package can generate simulated data as in Section 4 as a check for reproducibility.

Appendix A: Sketch of technical arguments

A.1. Argument for Lemma 1

We consider the case that there are external data used to estimate Λext and that there are parameters Λint. As in Section 3.2, the data for i = 1, …, n are the internal data, while those for i = n + 1, …, n + N are the external data, if such external data exist and are used. The functions Ψi(Ω) are also defined in Section 3.2. By Taylor series,

For logistic regression and linear regression, the forms of Ψi(Ω) can be found in Appendix C. Thus,

It is obvious that , and immediate that , where A and B are defined in Lemma 1.

A.2. Argument for Lemma 2

We consider the case that there are only parameters Λint. As in Section 3.2, the data for i = 1, …, n are for the internal data. The functions Ψi(Ω) are also defined in Section 3.2. Then

so that

Appendix B: Tables for simulations and EATS data analysis

Table 1.

Simulation study for logistic regression in Section 4.1 with sample size n = 500 and, where applicable, the external study has sample size N = 300 and 2 replicates, while β0 = −0.42, β1 = log(1.5). The target parameter, Θ = (θ1, …, θ5)T, where θj is the parameter for the jth category. Displayed are results for the estimation of the log relative risk, θ5 − θ1. Ext-Int Data is the case that external data are used to estimate the measurement error variance. Int Data is the case that the internal data have 2 replicates, and the Ignore ME estimator ignores the measurement error and is based on the mean of these replicates. Coverage is the coverage rate of nominal 95% confidence intervals. RMSE is the square root of the mean squared error.

Log Relative Risk Analysis

| Data | Method | Mean Bias | Mean Estimated Std. Err. | Actual Standard Deviation | RMSE | Coverage |
|---|---|---|---|---|---|---|
| X observed | | 0.016 | 0.304 | 0.301 | 0.301 | 95.2% |
| Ext-Int Data | Our Method | −0.005 | 0.41 | 0.402 | 0.402 | 94.5% |
| Ext-Int Data | Ignore ME | −0.453 | 0.251 | 0.256 | 0.520 | 0% |
| Int Data | Our Method | 0.005 | 0.361 | 0.323 | 0.323 | 95.9% |
| Int Data | Ignore ME | −0.287 | 0.268 | 0.266 | 0.391 | 80.2% |
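As a reading aid for the RMSE column, the short Python check below recomputes RMSE from the mean bias and actual standard deviation reported in Table 1, using the usual decomposition MSE = bias² + variance. The numbers are copied from the table; the dictionary labels and the function name are illustrative only.

```python
import math

# (mean bias, actual standard deviation, reported RMSE) copied from Table 1
rows = {
    "X observed": (0.016, 0.301, 0.301),
    "Ext-Int, Our Method": (-0.005, 0.402, 0.402),
    "Ext-Int, Ignore ME": (-0.453, 0.256, 0.520),
    "Int, Our Method": (0.005, 0.323, 0.323),
    "Int, Ignore ME": (-0.287, 0.266, 0.391),
}


def rmse(bias, sd):
    """Root mean squared error from bias and standard deviation."""
    return math.sqrt(bias ** 2 + sd ** 2)


for name, (bias, sd, reported) in rows.items():
    print(f"{name}: computed {rmse(bias, sd):.3f}, reported {reported:.3f}")
```

The recomputed values agree with the reported column to three decimals. For the Ignore ME rows the RMSE is dominated by the bias term, whereas for the other rows it is essentially the standard deviation.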

Table 2.

Simulation study for linear regression in Section 4.2 with n = 500 and, where applicable, the external study has sample size N = 300 and 2 replicates, while β0 = 0, β1 = 0.75. The target parameter, Θ = (θ1, …, θ5)T, where θj is the parameter for the jth category. Displayed are results for the estimation of θ5 − θ1. Ext-Int Data is the case that external data are used to estimate the measurement error variance. Int Data is the case that the internal data have 2 replicates, and the Ignore ME estimator ignores the measurement error and is based on the mean of these replicates. Coverage is the coverage rate of nominal 95% confidence intervals. RMSE is the square root of the mean squared error.

Results Analysis (θ5 − θ1)

| Data | Method | Mean Bias | Mean Estimated Std. Err. | Actual Standard Deviation | RMSE | Coverage |
|---|---|---|---|---|---|---|
| Homoscedastic ϵ ~ N(0, 1) | | | | | | |
| X observed | | 0.004 | 0.145 | 0.150 | 0.150 | 95.1% |
| Ext-Int Data | Our Method | 0.013 | 0.249 | 0.233 | 0.233 | 95.8% |
| Ext-Int Data | Ignore ME | −0.814 | 0.139 | 0.142 | 0.826 | 0.1% |
| Int Data | Our Method | −0.007 | 0.176 | 0.170 | 0.170 | 95.3% |
| Int Data | Ignore ME | −0.536 | 0.142 | 0.145 | 0.555 | 3.7% |
| Heteroscedastic ϵ ~ N(0, 0.2 + 0.5x²) | | | | | | |
| X observed | | 0.004 | 0.123 | 0.169 | 0.169 | 85.3% |
| Ext-Int Data | Our Method | 0.011 | 0.261 | 0.245 | 0.245 | 95.9% |
| Ext-Int Data | Ignore ME | −0.814 | 0.122 | 0.135 | 0.825 | 0.1% |
| Int Data | Our Method | −0.010 | 0.197 | 0.189 | 0.189 | 95.9% |
| Int Data | Ignore ME | −0.537 | 0.123 | 0.141 | 0.555 | 1.8% |

Table 3.

Data analysis for logistic regression in Section 5. The target parameter, Θ = (θ1, …, θ5)T, where θj is the parameter for the jth category. Displayed are results for the estimation of the log relative risk, θ5 − θ1. Ext-Int Data is the case that external data are used only to estimate the measurement error variance, and the external data have 2 replicates. Int Data is the case that the internal data have 2 replicates, and the Ignore ME estimator ignores the measurement error and is based on the mean of these replicates. Asymptotic Std. Err. is the standard error estimate from the theory. CI is the nominal 95% confidence interval for the log relative risk. p-value is the p-value for the test that the log relative risk = 0.

Log Relative Risk Analysis

| Data | Method | Estimate | Asymptotic Std. Err. | 95% CI | p-value |
|---|---|---|---|---|---|
| Ext-Int Data | Our Method | 0.98 | 0.47 | (0.06, 1.90) | 0.036 |
| Ext-Int Data | Ignore ME | 0.47 | 0.24 | (0.00, 0.95) | 0.049 |
| Int Data | Our Method | 1.10 | 0.34 | (0.43, 1.77) | 0.001 |
| Int Data | Ignore ME | 0.83 | 0.22 | (0.39, 1.26) | 0.000 |
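The interval and p-value columns of Table 3 can be related to the estimate and standard error by a short Python calculation. The sketch assumes that the intervals are Wald-type (estimate ± 1.96 standard errors) and that the p-value is the two-sided normal p-value. The table is consistent with this assumption, but the exact construction is not restated here. Because the inputs are the rounded table entries, small discrepancies in the last digit are to be expected. The function name is illustrative.

```python
import math


def wald_summary(estimate, std_err, z=1.96):
    """Wald interval and two-sided normal p-value for H0: parameter = 0."""
    lower = estimate - z * std_err
    upper = estimate + z * std_err
    z_stat = estimate / std_err
    p_value = math.erfc(abs(z_stat) / math.sqrt(2.0))
    return lower, upper, p_value


# Ext-Int Data, Our Method row of Table 3 (rounded entries)
lower, upper, p_value = wald_summary(0.98, 0.47)
print(f"CI ({lower:.2f}, {upper:.2f}), p-value {p_value:.3f}")
```

From the rounded inputs the interval reproduces the tabulated (0.06, 1.90), while the p-value differs from the tabulated 0.036 only in the third decimal, which is consistent with the table having been computed from unrounded values.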

Table 4.

Data analysis for linear regression in Section 5. The target parameter, Θ = (θ1, …, θ5)T, where θj is the parameter for the jth category. Displayed are results for the estimation of θ5 − θ1. Ext-Int Data is the case that external data are used only to estimate the measurement error variance, and the external data have 2 replicates. Int Data is the case that the internal data have 2 replicates, and the Ignore ME estimator ignores the measurement error and is based on the mean of these replicates. Asymptotic Std. Err. is the standard error estimate from the theory. CI is the nominal 95% confidence interval for θ5 − θ1. p-value is the p-value for the test that θ5 − θ1 = 0.

Results Analysis (θ5 − θ1)

| Data | Method | Estimate | Asymptotic Std. Err. | 95% CI | p-value |
|---|---|---|---|---|---|
| Ext-Int Data | Our Method | 0.59 | 0.18 | (0.24, 0.95) | 0.001 |
| Ext-Int Data | Ignore ME | 0.28 | 0.10 | (0.09, 0.47) | 0.004 |
| Int Data | Our Method | 0.56 | 0.13 | (0.30, 0.81) | 0.000 |
| Int Data | Ignore ME | 0.35 | 0.09 | (0.18, 0.52) | 0.000 |

Fig 1.

EATS data of Section 5. Top panel: Normal qq-plot of the mean Fat Density over 4 recalls. This indicates that the mean Fat Density is approximately normally distributed and satisfies the assumptions in our numerical example. Middle panel: Normal qq-plot of differences of observed Fat Density, as a diagnostic that U is approximately normally distributed. Bottom panel: Mean and standard deviation plot to diagnose heteroscedasticity, showing that there is little heteroscedasticity in the measurement errors.

Appendix C: Estimating equations for linear and logistic regression

C.1. Estimating the nuisance parameter Λ

Here we consider only two cases among numerous possibilities. One is that the internal data consist of (Yi, Wi, Zi) for i = 1, …, n and the measurement error variance is estimated from the external data using replicates Wik for k = 1, …, K and i = n + 1, …, n + N. The second case is that the replicates are in the internal data.

C.1.1. External-internal data

For specificity, we consider the first case, in which the external data have no responses Y and are independent of the internal data. Suppose that we use external data only to estimate the measurement error variance, and we observe Wik = Xi + Uik for k = 1, …, K and i = n+1, …, n+N. We use internal data to estimate μx, without replicates. In the external data, let . Define to be the sample variance of the Wik for a given i. Because , unbiased estimating equations for are

C.1.2. Internal data only

Suppose there is no external data, and we have replicates Wir for r = 1, …, R in the internal data. Now we use internal data to estimate , and we observe Wir = Xi + Uir for r = 1, …, R and i = 1, …, n.

Define . Define to be the sample variance of the Wir within subject i, and define . The estimating equations are

Since the two cases we considered are the same for linear regression and logistic regression, Λint and Λext are estimated in exactly the same way in both. We therefore give details only for the estimating equations for β and Θ below.
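The replicate-based estimation described in Sections C.1.1 and C.1.2 can be sketched in Python as follows. This is a method-of-moments illustration under the classical model Wik = Xi + Uik with independent errors, not a transcription of the estimating equations: the error variance is estimated by averaging the within-subject sample variances, and the variance of X is obtained by subtracting the error contribution from the variance of the subject means. The function names and simulation settings are hypothetical.

```python
import random
import statistics


def error_variance_from_replicates(replicates):
    """Average of the within-subject sample variances of the replicates."""
    return statistics.fmean([statistics.variance(row) for row in replicates])


def moments_of_x(replicates, sigma_u2):
    """Moment estimates of the mean and variance of X from subject means."""
    k = len(replicates[0])
    means = [statistics.fmean(row) for row in replicates]
    mu_x = statistics.fmean(means)
    # var(subject mean) = var(X) + sigma_u^2 / k under the classical model
    sigma_x2 = statistics.variance(means) - sigma_u2 / k
    return mu_x, sigma_x2


rng = random.Random(11)
true_mu_x, true_sigma_x2, true_sigma_u2 = 1.0, 1.0, 0.5   # hypothetical truth
data = []
for _ in range(3000):
    xi = rng.gauss(true_mu_x, true_sigma_x2 ** 0.5)
    data.append([xi + rng.gauss(0.0, true_sigma_u2 ** 0.5) for _ in range(2)])

sigma_u2_hat = error_variance_from_replicates(data)
mu_x_hat, sigma_x2_hat = moments_of_x(data, sigma_u2_hat)
print(f"sigma_u^2 {sigma_u2_hat:.2f}, mu_x {mu_x_hat:.2f}, sigma_x^2 {sigma_x2_hat:.2f}")
```

In the external-internal case only the first function would be applied to the external replicates, with the remaining moments taken from the unreplicated internal W. In the internal-only case both steps use the same replicated data, as in the simulated example.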

C.2. Details for linear regression

C.2.1. Background

Here we give full details of our methodology for linear regression. As in Lemma 1, Ω = (Θ, β, Λint, Λext).

Let . Here we consider the simple case of linear regression with the classical measurement error model in both the external and internal data sets to be

C.2.2. The forms of Φ(·)

In this linear model, denoting the estimating function for β by Φ(·), we consider

C.2.3. The forms of Φcat(·) and Q(·)

Since we assume the true model is , it is easy to see that the categorical estimating function is

Hence, by simple calculations and following Remark 3, with Ω = (Θ, β, Λint, Λext),

We used the integrate function in the R package stats to compute the integrals.

The estimating function for β = (β0, β1) is

The estimating function for Θ is

Asymptotic standard errors were estimated as in Lemma 1 and Lemma 2.
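To indicate what Θ targets in the linear case, the Python sketch below computes category parameters by numerical integration when X is observed. It is not the form of Q(·) used in this appendix. It assumes a model Y = β0 + β1 X + ϵ with no other covariates and a categorical fit by least squares on category indicators, so that θj = E(Y | X in Cj) = β0 + β1 E(X | X in Cj). The values β0 = 0 and β1 = 0.75 are taken from the caption of Table 2, while the standard normal distribution for X, the quintile limits and all names are hypothetical. A hand-written Simpson rule stands in for R's integrate.

```python
from statistics import NormalDist


def simpson(f, a, b, n=400):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def category_parameters(beta0, beta1, dist, limits, tail=8.0):
    """theta_j = beta0 + beta1 * E(X | X in C_j) for each category C_j."""
    edges = [dist.mean - tail * dist.stdev, *limits, dist.mean + tail * dist.stdev]
    thetas = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        prob = simpson(dist.pdf, lo, hi)                       # pr(X in C_j)
        mean_x = simpson(lambda x: x * dist.pdf(x), lo, hi) / prob
        thetas.append(beta0 + beta1 * mean_x)
    return thetas


x_dist = NormalDist(0.0, 1.0)             # hypothetical distribution of X
limits = x_dist.quantiles(n=5)            # quintile limits for J = 5
theta = category_parameters(0.0, 0.75, x_dist, limits)
contrast = theta[-1] - theta[0]
print([round(t, 3) for t in theta], round(contrast, 3))
```

Under these assumptions the contrast θ5 − θ1 is well defined even though the categorical model is misspecified for the continuous truth. It is simply β1 times the difference in the mean of X between the top and bottom categories.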

C.3. Details for logistic regression

C.3.1. Background

Here we give full details of our methodology for logistic regression. As in Lemma 1, Ω = (Θ, β, Λint, Λext).

As before, let H(·) denote the logistic distribution function and let . Here we consider the special case of linear logistic regression with the classical measurement error model in both the external and internal data sets to be

Unlike the linear case in Section C.2, we consider the case where X depends on another covariate Z. There are numerous possible data structures, but here we present only the external-internal and internal-data-only cases.

C.3.2. Settings

There are two settings of interest.

- There is no information about in the internal data, so that the external parameter is the measurement error variance, , while the internal parameters are .

- There are no external data, so that Λext is null, and the internal data with replicates allow estimation of .

In both cases, (or in the internal data only case) are estimated in the same way as in C.1.1 and C.1.2, while the estimating function for (α, ) is

where i = 1, …, n.

C.3.3. Estimating β

In this section, we implement our method and give all estimating equations in the case where we have both external and internal data. In the other case, where only internal data with replicates are used, all results below remain valid after removing Λext.

Define . Then, given (W, Z), X is normally distributed with mean and variance . We write this conditional density as fx|w,z(x, w, z, β, Λint, Λext).

There are multiple ways to estimate β from the observed data. Here we describe two of them.

- The first is regression calibration, in which X is replaced by its mean given (W, Z) and the linear logistic model is fit. Thus the regression calibration method has

- A second possibility, one that we used, is the following. By simple calculations, pr(Y = 1|W, Z) = p(W, Z, β, Λint, Λext), where

a quantity that is easily computed in R using the integrate function in the R package stats. Denote pi = pr(Yi = 1|Wi, Zi). Thus, the loglikelihood is $\sum_{i=1}^{n} \{Y_i \log(p_i) + (1 - Y_i) \log(1 - p_i)\}$. We then use the optim function in the R package stats to minimize the negative loglikelihood and thereby estimate β.
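The two approaches listed above can be compared in a short Python sketch. It is a simplified illustration, not the authors' R code: Z is omitted, X given W is taken to be normal under W = X + U with normal X and U, a hand-written Simpson rule stands in for R's integrate, and no optimizer is included, so only the negative loglikelihood that would be passed to a routine such as optim is shown. The values of β come from the caption of Table 1, while the nuisance parameter values, the toy data and all names are hypothetical.

```python
import math
from statistics import NormalDist


def expit(t):
    """Logistic distribution function H(t)."""
    return 1.0 / (1.0 + math.exp(-t))


def simpson(f, a, b, n=400):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def x_given_w(w, mu_x, sigma_x2, sigma_u2):
    """Normal distribution of X given W when W = X + U, X and U normal, no Z."""
    lam = sigma_x2 / (sigma_x2 + sigma_u2)
    return NormalDist(mu_x + lam * (w - mu_x), math.sqrt(lam * sigma_u2))


def p_regression_calibration(w, beta, nuisance):
    """H evaluated at the conditional mean of X given W."""
    cond = x_given_w(w, *nuisance)
    return expit(beta[0] + beta[1] * cond.mean)


def p_integrated(w, beta, nuisance, tail=8.0):
    """Integral of H(beta0 + beta1 x) against the density of X given W."""
    cond = x_given_w(w, *nuisance)
    lo, hi = cond.mean - tail * cond.stdev, cond.mean + tail * cond.stdev
    return simpson(lambda x: expit(beta[0] + beta[1] * x) * cond.pdf(x), lo, hi)


def neg_loglik(beta, ys, ws, nuisance):
    """Negative Bernoulli loglikelihood based on the integrated probabilities."""
    total = 0.0
    for y, w in zip(ys, ws):
        p = p_integrated(w, beta, nuisance)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total


beta = (-0.42, math.log(1.5))         # values from the Table 1 caption
nuisance = (0.0, 1.0, 1.0)            # hypothetical (mu_x, sigma_x^2, sigma_u^2)
p_rc = p_regression_calibration(2.0, beta, nuisance)
p_int = p_integrated(2.0, beta, nuisance)
nll = neg_loglik(beta, [1, 0, 1], [2.0, -1.0, 0.5], nuisance)
print(f"regression calibration {p_rc:.4f}, integrated {p_int:.4f}, nll {nll:.3f}")
```

For a modest slope such as log(1.5) the two probabilities are close, which is the usual reason regression calibration works well as an approximation in logistic regression. The integrated version remains valid when the slope or the conditional variance of X is larger.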

C.3.4. The forms of Φcat(·) and Q(·)

Since we assume the true model is , it is easy to see that the categorical estimating function is

Hence, with Ω = (Θ, β, Λint, Λext), by simple calculations and following Remark 3,

We used the integrate function in the R package stats to compute the integrals.

Contributor Information

Betsabé G. Blas Achic, Departamento de Estatística, Universidade Federal de Pernambuco, Av. Prof. Moraes Rego, 1235 – Cidade Universitária, Recife-PE-Brasil, CEP: 50670-901.

Tianying Wang, Department of Statistics, Texas A&M University, 3143 TAMU, College Station, TX 77843-3143.

Ya Su, Department of Statistics, University of Kentucky, Lexington, KY, 40536-0082.

Raymond J. Carroll, Department of Statistics, Texas A&M University, 3143 TAMU, College Station, TX 77843-3143, and School of Mathematical Sciences, University of Technology Sydney, Broadway NSW 2007

References

- [1] Arem H, Reedy J, Sampson J, Jiao L, Hollenbeck AR, Risch H, Mayne ST, and Stolzenberg-Solomon RZ (2013). The Healthy Eating Index 2005 and risk for pancreatic cancer in the NIH–AARP Study. Journal of the National Cancer Institute 105, 1298–1305.

- [2] Berry SM, Carroll RJ, and Ruppert D (2002). Bayesian smoothing and regression splines for measurement error problems. Journal of the American Statistical Association 97, 457, 160–169.

- [3] Buonaccorsi JP (2010). Measurement Error: Models, Methods and Applications. Chapman & Hall.

- [4] Carroll RJ, Ruppert D, Stefanski LA, and Crainiceanu CM (2006). Measurement Error in Nonlinear Models: A Modern Perspective, Second Edition. Chapman and Hall.

- [5] Chaix B, Kestens Y, Duncan DT, Brondeel R, Méline J, Aarbaoui TE, Pannier B, and Merlo J (2016). A GPS-based methodology to analyze environment-health associations at the trip level: case-crossover analyses of built environments and walking. American Journal of Epidemiology 184, 8, 579–589.

- [6] Cook JR and Stefanski L (1994). Simulation-extrapolation estimation in parametric measurement error models. Journal of the American Statistical Association 89, 1314–1328.

- [7] Cordy CB and Thomas DR (1997). Deconvolution of a distribution function. Journal of the American Statistical Association 92, 1459–1465.

- [8] Devanarayan V and Stefanski LA (2002). Empirical simulation extrapolation for measurement error models with replicate measurements. Statistics & Probability Letters 59, 219–225.

- [9] Eckert RS, Carroll RJ, and Wang N (1997). Transformations to additivity in measurement error models. Biometrics 53, 262–272.

- [10] Evenson KR, Wen F, and Herring AH (2016). Associations of accelerometry-assessed and self-reported physical activity and sedentary behavior with all-cause and cardiovascular mortality among US adults. American Journal of Epidemiology 184, 10, 621–632.

- [11] Flegal KM, Keyl PM, and Nieto FJ (1991). Differential misclassification arising from nondifferential errors in exposure measurement. American Journal of Epidemiology 134, 10, 1233–1246.

- [12] Ganguli B, Staudenmayer J, and Wand MP (2005). Additive models with predictors subject to measurement error. Australian & New Zealand Journal of Statistics 47, 2, 193–202.

- [13] Gustafson P (2004). Measurement Error and Misclassification in Statistics and Epidemiology. Chapman and Hall/CRC.

- [14] Huber PJ (1967). The behavior of maximum likelihood estimates under nonstandard conditions. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability. Vol. 1, 221–233.

- [15] Kauermann G and Carroll RJ (2001). A note on the efficiency of sandwich covariance matrix estimation. Journal of the American Statistical Association 96, 456, 1387–1396.

- [16] Lederer W and Küchenhoff H (2006). A short introduction to the SIMEX and MCSIMEX. The Newsletter of the R Project Volume 6/4, October 2006, 26.

- [17] Nakamura T (1990). Corrected score function for errors-in-variables models: methodology and application to generalized linear models. Biometrika 77, 127–137.

- [18] Pham TH, Ormerod JT, and Wand MP (2013). Mean field variational Bayesian inference for nonparametric regression with measurement error. Computational Statistics & Data Analysis 68, 375–387.

- [19] Reedy J, Wirfält E, Flood A, Mitrou PN, Krebs-Smith SM, Kipnis V, Midthune D, Leitzmann M, Hollenbeck A, Schatzkin A, and others (2010). Comparing 3 dietary pattern methods – cluster analysis, factor analysis, and index analysis – with colorectal cancer risk: the NIH–AARP Diet and Health Study. American Journal of Epidemiology 171, 479–487.

- [20] Reedy JR, Mitrou PN, Krebs-Smith SM, Wirfält E, Flood AV, Kipnis V, Leitzmann M, Mouwand T, Hollenbeck A, Schatzkin A, and Subar AF (2008). Index-based dietary patterns and risk of colorectal cancer: the NIH-AARP Diet and Health Study. American Journal of Epidemiology 168, 38–48.

- [21] Sarkar A, Mallick BK, Staudenmayer J, Pati D, and Carroll RJ (2014). Bayesian semiparametric density deconvolution in the presence of conditionally heteroscedastic measurement errors. Journal of Computational and Graphical Statistics 23, 1101–1125.

- [22] Stefanski LA and Cook JR (1995). Simulation-extrapolation: the measurement error jackknife. Journal of the American Statistical Association 90, 1247–1256.

- [23] Subar AF, Thompson FE, Kipnis V, Midthune D, Hurwitz P, McNutt S, McIntosh A, and Rosenfeld S (2001). Comparative validation of the Block, Willett, and National Cancer Institute food frequency questionnaires: The Eating at America’s Table Study. American Journal of Epidemiology 154, 1089–1099.

- [24] Trentham-Dietz A, Newcomb PA, B ES, Longnecker MP, Baron J, Greenberg ER, and Willett WC (1997). Body size and risk of breast cancer. American Journal of Epidemiology 145, 11, 1011–1019.

- [25] Wang Y, Wellenius GA, Hickson DA, Gjelsvik A, Eaton CB, and Wyatt SB (2016). Residential proximity to traffic-related pollution and atherosclerosis in 4 vascular beds among African-American adults: Results from the Jackson Heart Study. American Journal of Epidemiology 184, 10, 732–743.

- [26] White H (1982). Maximum likelihood estimation of misspecified models. Econometrica 50, 1–25.

- [27] Yi GY (2017). Statistical Analysis with Measurement Error or Misclassification: Strategy, Method and Application. Springer.

- [28] Zeger SL, Liang K-Y, and Albert PS (1988). Models for longitudinal data: a generalized estimating equation approach. Biometrics 44, 1049–1060.