Abstract

Does everybody look at the same place on a face when identifying another person, and if not, does this variability in fixation behavior have functional consequences? In one condition, observers’ eye movements were recorded while they performed a face identification task. In a second condition, the same observers identified faces while their gaze was restricted to specific locations on the face. We found substantial differences, which persisted over time, in where individuals chose to move their eyes. Observers’ systematic departures from a canonical, theoretically optimal fixation point did not correlate with performance degradation. Instead, each individual’s looking preference corresponded to an idiosyncratic performance-maximizing point of fixation: those who looked lower on the face also performed better when forced to fixate the lower part of the face. The results suggest an observer-specific synergy between the face recognition and eye movement systems that optimizes face identification performance.

Keywords: face perception, eye movements, individual differences

Identifying faces is one of the most common, socially vital visual tasks that humans perform. The combination of task difficulty and evolutionary importance has led to the creation of a network of neural modules dedicated to processing information for the recognition of individual identities, emotional valence, gender, and many other properties (Haxby, Hoffman, & Gobbini, 2000; Kanwisher, McDermott, & Chun, 1997). In order to quickly and accurately accomplish these tasks, the brain’s face recognition system requires access to high-quality, task-specific visual information. The acquisition of this information is primarily determined by where on the retina the image of the face falls, which, in turn, is determined by where on the face the observer chooses to look.

Many studies have examined, at the group level, where people move their eyes when looking at faces (Althoff & Cohen, 1999; Barton, Radcliffe, Cherkasova, Edelman, & Intriligator, 2006; Peterson & Eckstein, 2011; Walker-Smith, Gale, & Findlay, 1977). Other studies have focused on variations in looking behavior between well-defined groups, such as normal versus clinical populations associated with decreased face recognition abilities (e.g., autism: Dalton et al., 2005; schizophrenia: Williams, Loughland, Gordon, & Davidson, 1999; prosopagnosia: Barton, Radcliffe, Cherkasova, & Edelman, 2007; Xivry, Ramon, Lefèvre, & Rossion, 2008), Western Caucasian versus East Asian observers (Blais, Jack, Scheepers, Fiset, & Caldara, 2008; Jack, Blais, Scheepers, Schyns, & Caldara, 2009), and older versus younger adults (Firestone, Turk-Browne, & Ryan, 2007). However, few investigations have focused on differences across seemingly well-matched individuals. Individual differences exist in saccade amplitudes and fixation durations, and these differences are conserved across tasks (Rayner, Li, Williams, Cave, & Well, 2007). With regard to the spatial distribution of saccades, an early report found variation between individuals (Walker-Smith et al., 1977), though that study measured only three observers and did not evaluate the stability of these differences over time.

If people do enact consistent, individualized eye movement patterns during face viewing, are these strategies correlated with recognition performance? Face recognition ability varies widely within the normal population (Duchaine & Nakayama, 2006). A recent study reported differences in specific measures of eye movement behavior, such as time spent fixating the eyes and the number of saccadic transitions, but not in the overall distribution of fixations across internal features (Sekiguchi, 2011). That study also found that fixation times on the eyes and numbers of saccadic transitions differed between high- and low-performing groups.

Here, we first measured where individuals’ initial eye movements landed during a face identification task and found systematic differences across observers. Second, we assessed the stability of these differences over time. Third, we explored the functional consequences of individual differences in eye movement behavior during face recognition. Fourth, we quantified the distance between each observer’s preferred point of fixation and an optimal fixation strategy based on a rational Bayesian observer that takes into account the statistical distribution of discriminating information in the faces, the task demands, and a canonical foveated visual system. Specifically, we tested two hypotheses: 1) an individual’s perceptual performance in a face identification task can be predicted by the distance from the observer’s preferred point of fixation to the optimal point of fixation as determined by a rational Bayesian observer; 2) individual differences in where people choose to first fixate when recognizing a face correspond to individual differences in fixation location-dependent perceptual performance, such that perceptual performance (identification accuracy) is maximized at the preferred point of fixation.

Method

Participants

Thirty Caucasian undergraduate students (18 female, age range 19 to 23) from the University of California, Santa Barbara participated in the study for course credit. All observers had normal or corrected-to-normal vision and no history of neurological disorders.

Stimuli and display

In-house frontal-view photographs of ten male undergraduates were cropped to remove background, hair, and clothing, then scaled so that the distance from the bottom of the hairline to the bottom of the chin was equated across the set (15.25° visual angle). Stimuli were presented on a linearly calibrated monitor with a mean luminance of 25 cd/m². Images were converted to 8-bit grayscale, contrast-energy normalized, and embedded in white Gaussian noise with a standard deviation of 2.75 cd/m² (Fig. 1a).
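The stimulus construction described above can be sketched in Python with NumPy. This is an illustrative sketch rather than the authors' code: it assumes contrast is measured as each pixel's deviation from the image mean (in luminance units), treats the target contrast energy (`target_energy`) as a hypothetical free parameter, and uses a random array as a stand-in for a face photograph.

```python
import numpy as np

MEAN_LUMINANCE = 25.0   # cd/m^2, mean display luminance (from the text)
NOISE_SD = 2.75         # cd/m^2, SD of additive white Gaussian noise (from the text)

def normalize_contrast_energy(image, target_energy):
    """Rescale contrast so that sum(contrast**2) == target_energy.

    Assumption: contrast is the deviation of each pixel from the image mean.
    """
    contrast = image.astype(float) - image.mean()
    energy = np.sum(contrast ** 2)
    return contrast * np.sqrt(target_energy / energy)

def make_noisy_stimulus(face, target_energy, rng):
    """Mean luminance + energy-normalized face contrast + white Gaussian noise."""
    signal = normalize_contrast_energy(face, target_energy)
    noise = rng.normal(0.0, NOISE_SD, size=face.shape)  # independent per pixel
    return MEAN_LUMINANCE + signal + noise

# Usage with a random 8-bit array standing in for a grayscale face photograph.
rng = np.random.default_rng(0)
face = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
stimulus = make_noisy_stimulus(face, target_energy=5000.0, rng=rng)
```

Equating contrast energy presumably keeps overall signal strength constant across identities, so that identification differences reflect where discriminating information lies rather than global contrast.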

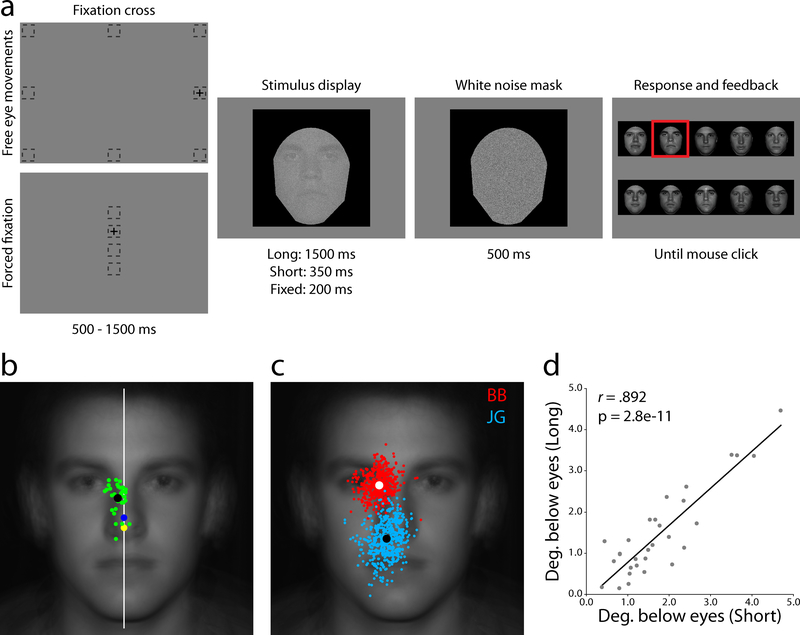

Fig. 1.

Task structure and free eye movements. (a) Participants began free eye movement trials by fixating outside the impending stimulus area, while forced fixation trials required continuous gaze at one of four specified locations along the vertical midline. (b) Average landing point of the first saccade for each of the thirty observers (green dots) and the group average (black dot). The white line represents the vertical midline, showing a consistent bias toward the right side of the face. The geometric center of the visible face area (yellow dot) and the black frame (blue dot) show that observers do not use a central targeting strategy. (c) All saccades from two example observers, BB (red, an “eye-looker”) and JG (cyan, a “nose-looker”). (d) Consistency between the Long (1500 ms viewing time) and Short (350 ms) free eye movement conditions showing that the first saccade does not depend on overall stimulus presentation time.

Procedure

Observers participated in three conditions: two in which eye movements were allowed and one with forced fixation. First, participants completed a 500-trial training set with a long display time (Long; 1500 ms). Next, they completed 500 trials with a short display time (Short; 350 ms). Last, observers completed 1500 trials of a condition that forced them to maintain fixation at one of several locations (Fixed). Eight observers returned to retake the Short condition after delays ranging from 90 to 525 days (mean delay = 176 days) under the same experimental conditions.

Free eye movements

Each trial began with a fixation cross at one of eight positions (randomly selected with equal probability) outside the impending face image, at an average distance of 13.95° visual angle from the center of the stimulus (Fig. 1a). The peripheral starting locations were used to avoid an initial fixation bias in which task information could be accrued prior to an eye movement (Arizpe, Kravitz, Yovel, & Baker, 2012; Hsiao & Cottrell, 2008). Observers fixated the cross and pressed the space bar. If, before stimulus presentation, the eye position fell more than 1° from the center of the cross, an error screen was displayed, followed by a cross at the same location. Following a 500–1500 ms delay, the cross was removed and a randomly sampled face was displayed in the center of the monitor. Participants were free to move their eyes while the face image was present. The face was then replaced with a high-contrast white Gaussian noise mask for 500 ms, followed by a response screen showing high-contrast, noise-free versions of the possible faces. Participants used the mouse to select their face choice. Feedback was provided by framing the correct answer with a red box before the next trial began (Fig. 1a).

Forced fixation

Each trial began with a fixation cross at one of four locations (randomly selected) along the monitor’s vertical midline (3° apart, corresponding to the center of the mouth, the nose tip, the center of the eyes, and the forehead; Fig. 1a). The procedure was the same as in the free eye movement conditions except that eye movements were not allowed during the 200 ms stimulus presentation. If gaze fell more than 1° from the center of the cross, an error screen was displayed, followed by a cross at the same location and a new randomly sampled face.
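The gaze-contingent check used in both the free and forced fixation conditions can be sketched as follows. The function names and the coordinate convention (degrees of visual angle, y increasing upward, center of the eyes at y = 0) are hypothetical; the 1° tolerance and the 3° spacing of the four forced-fixation locations come from the text.

```python
import math

TOLERANCE_DEG = 1.0  # maximum allowed gaze deviation from the cross (from the text)

# Hypothetical coordinates (deg) of the four forced-fixation crosses on the
# vertical midline, 3 deg apart, with the center of the eyes at y = 0.
FORCED_FIXATION_Y = {"forehead": 3.0, "eyes": 0.0, "nose_tip": -3.0, "mouth": -6.0}

def gaze_within_tolerance(gaze_samples, cross_xy, tol=TOLERANCE_DEG):
    """True if every (x, y) gaze sample lies within `tol` deg of the cross."""
    cx, cy = cross_xy
    return all(math.hypot(x - cx, y - cy) <= tol for x, y in gaze_samples)

def forced_fixation_outcome(gaze_samples, location):
    """'valid' if fixation was held during presentation; otherwise 'error'
    (error screen, same cross location, newly sampled face)."""
    cross = (0.0, FORCED_FIXATION_Y[location])
    return "valid" if gaze_within_tolerance(gaze_samples, cross) else "error"

# Usage: 200 ms at 250 Hz is 50 samples; the last sample drifts 1.2 deg upward.
steady = [(0.1, -3.2)] * 50
drifted = steady[:-1] + [(0.0, -1.8)]
```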

Eye-tracking

The left eye of each participant was tracked using an SR Research EyeLink 1000 Tower Mount eye tracker sampling at 250 Hz. A nine-point calibration and validation were run before each 125-trial session, with a mean error of no more than 0.5° visual angle. Saccades were classified as events in which eye velocity exceeded 22°/s and eye acceleration exceeded 4000°/s².
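A simplified velocity/acceleration saccade detector using the thresholds above can be sketched as follows. It is not SR Research's online parser: it assumes gaze positions already converted to degrees, estimates velocity and acceleration with central differences (`np.gradient`), and, as an assumption, treats a saccade as starting when both thresholds are exceeded and ending when speed falls back below the velocity threshold.

```python
import numpy as np

FS_HZ = 250.0          # sampling rate (from the text)
VEL_THRESH = 22.0      # deg/s (from the text)
ACC_THRESH = 4000.0    # deg/s^2 (from the text)

def detect_saccades(x, y, fs=FS_HZ, vel_thresh=VEL_THRESH, acc_thresh=ACC_THRESH):
    """Return a list of (start, end) sample indices (end exclusive) of saccades.

    A saccade starts where speed > vel_thresh AND |acceleration| > acc_thresh,
    and continues while speed stays above vel_thresh.
    """
    dt = 1.0 / fs
    vx = np.gradient(np.asarray(x, float), dt)
    vy = np.gradient(np.asarray(y, float), dt)
    speed = np.hypot(vx, vy)
    accel = np.gradient(speed, dt)
    saccades, i, n = [], 0, len(speed)
    while i < n:
        if speed[i] > vel_thresh and abs(accel[i]) > acc_thresh:
            start = i
            while i < n and speed[i] > vel_thresh:
                i += 1
            saccades.append((start, i))
        else:
            i += 1
    return saccades

# Usage: a synthetic 10-deg horizontal saccade lasting about 40 ms.
x = np.concatenate([np.zeros(100), np.arange(1.0, 11.0), np.full(100, 10.0)])
y = np.zeros_like(x)
saccades = detect_saccades(x, y)
```

Requiring the acceleration criterion only at onset avoids splitting a saccade at its velocity peak, where acceleration passes through zero.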

Saccade-based observer classification

We assessed the consistency and distinctiveness with which individuals executed eye movement strategies by constructing a simple minimum-distance saccade-generator classifier. Each observer’s first fixations from the Short condition were randomly assigned to K groups, where K ranged from 2 to 500. For each of the K groups, each observer’s mean x and y fixation positions were computed (the test set). The distance between these means and the means computed for each observer from the remaining K − 1 groups (the training set) was calculated. The classifier chose the observer, j, whose training set had the smallest distance to the test location as the prediction for who generated the test data:
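
$$\hat{j} = \arg\min_{j} \sqrt{\left(\bar{x}^{\,\mathrm{train}}_{j} - \bar{x}^{\,\mathrm{test}}\right)^{2} + \left(\bar{y}^{\,\mathrm{train}}_{j} - \bar{y}^{\,\mathrm{test}}\right)^{2}}$$

where $\bar{x}$ and $\bar{y}$ represent the mean x and y coordinates for the training and test saccade groups. This was repeated for each of the K groups. To assess chance performance, we ran the same classifier with the observer labels on the test set randomized.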
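The minimum-distance classifier can be sketched in Python as below. The data layout (a list of per-observer arrays of (x, y) landing points), the function name, and the synthetic observers in the usage lines are assumptions for illustration; setting `shuffle_test_labels=True` mirrors the chance-level control described above.

```python
import numpy as np

def minimum_distance_accuracy(fixations, K, rng, shuffle_test_labels=False):
    """Proportion of test groups assigned to the observer who generated them.

    fixations: list of (n_j, 2) arrays of first-saccade landing points (deg),
    one array per observer. Each observer needs at least K fixations.
    """
    n_obs = len(fixations)
    if any(len(f) < K for f in fixations):
        raise ValueError("each observer needs at least K fixations")
    # Randomly split each observer's fixations into K groups.
    groups = [np.array_split(f[rng.permutation(len(f))], K) for f in fixations]
    correct = 0
    for k in range(K):
        # Training set: mean landing point of the remaining K - 1 groups.
        train = np.array([
            np.vstack([g for m, g in enumerate(groups[j]) if m != k]).mean(axis=0)
            for j in range(n_obs)
        ])
        # Test set: mean landing point of group k for each observer.
        test = np.array([groups[j][k].mean(axis=0) for j in range(n_obs)])
        labels = np.arange(n_obs)
        if shuffle_test_labels:
            labels = rng.permutation(labels)  # chance-level control
        for j in range(n_obs):
            distances = np.linalg.norm(train - test[j], axis=1)
            correct += int(np.argmin(distances) == labels[j])
    return correct / (K * n_obs)

# Usage: five synthetic observers whose mean landing points differ vertically.
rng = np.random.default_rng(1)
fixations = [rng.normal([0.5, -2.0 * j], 1.0, size=(100, 2)) for j in range(5)]
acc = minimum_distance_accuracy(fixations, K=10, rng=rng)
chance = minimum_distance_accuracy(fixations, K=10, rng=rng, shuffle_test_labels=True)
```

With K equal to the number of fixations per observer, each test group holds a single fixation, which corresponds to the leave-one-out case.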

Optimal point of fixation for a Foveated Ideal Observer

An ideal observer is a model that makes statistically optimal decisions about the presence of a signal that is corrupted by stochastic noise (Geisler, 2011; Kersten, Mamassian, & Yuille, 2004; Sekuler, Gaspar, Gold, & Bennett, 2004). For a given noisy image, such as those presented to the human observers in this study, an ideal observer calculates the posterior probabilities for each of the possible stimulus classes having been presented and takes the maximum of these probabilities as its decision (Green & Swets, 1989). We modified the ideal observer by implementing a canonical, spatially-variant filtering function to simulate the effects of decreasing contrast sensitivity in the periphery (Peli, Yang, & Goldstein, 1991; Peterson & Eckstein, 2011). For any fixation point this Foveated Ideal Observer (FIO) processes the noisy face image with a series of linear filters, where the form of each filter, in the frequency domain, is a function of both the distance from fixation (retinal eccentricity) and the direction (e.g., upper visual field versus lower visual field). The FIO then compares this filtered stimulus to filtered versions of the possible faces and selects the one most likely to have generated the noisy image. Averaged group performance from the Fixed condition was used to fit the free parameters of the model, which were then held constant to compute predicted performance for each possible fixation (Peterson & Eckstein, 2011). For further details see the Appendix.

Results and discussion

Individual differences in preferred point of fixation and their reliability

The short presentation time allowed for one or, very rarely, two saccades during stimulus presentation (mean number of saccades = 1.04; only first saccades were analyzed). The speeded nature of the task, along with the added difficulty of noise corruption, required observers to execute strategic eye movements that maximized information acquisition given a single fixation. This resulted in eye movement patterns clearly different from the classic “T” pattern (with saccadic transitions between the two eyes and the mouth) observed in face perception studies that used longer presentation times and stimuli that were easier to identify (Rizzo, Hurtig, & Damasio, 1987; Henderson, Williams, & Falk, 2005; Williams & Henderson, 2007; but see Hsiao & Cottrell, 2008 for a corresponding observation using similar experimental parameters, and Fig. S2 for a progression toward a feature-targeting pattern in the Long condition after the initial saccade). Participants universally moved their eyes close to the vertical midline, but slightly and significantly displaced to the right side of the face (mean distance from midline = 0.62°, t(29) = 7.46, p < .001, one-tailed; Fig. 1b). In contrast to this tight horizontal grouping, there was greater inter-individual variability in the vertical position of observers’ saccadic end points (σ(between, horizontal) = 0.47°, σ(between, vertical) = 1.05°; p < .002, bootstrap with 10,000 draws; Fig. 1b). Variation in observers’ mean landing points was accompanied by differences in fixation distribution variance. The average within-subject variability, σ(within, vertical) = 1.12°, was comparable to the inter-individual variability reported above, while the ratios of the fixation distributions’ standard deviations to the initial saccade lengths were in close agreement with previous literature (M = 8.9%, range 6.1–13.6%; Fig. 1c; McGowan, Kowler, Sharma, & Chubb, 1998).
Correlating mean first saccade landing position between the Long and Short conditions showed a highly reliable relationship in eye movement behavior (r(28) = 0.89, p < .001), suggesting that the preferred point of fixation does not depend on time constraints (Fig. 1d). Consistent with previous studies on face learning, fixation variance was greater during the first third of the Long trials, when observers were learning the faces, than during the Short condition when the faces had already been learned (Fig. S3; Henderson et al., 2005).
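The text does not detail the bootstrap procedure used to compare horizontal and vertical between-observer variability. One plausible version, sketched here as an assumption, resamples observers with replacement and counts how often the vertical SD fails to exceed the horizontal SD. The synthetic data in the usage lines reuse the reported SDs (0.47° and 1.05°) to generate 30 hypothetical observers; the vertical mean of −1.5° is arbitrary.

```python
import numpy as np

def bootstrap_sd_comparison(mean_xy, n_boot=10_000, rng=None):
    """Bootstrap test that between-observer SD is larger vertically than horizontally.

    mean_xy: (n_observers, 2) array of each observer's mean landing point (x, y) in deg.
    Returns (sd_h, sd_v, p), where p is the fraction of resamples with sd_v <= sd_h.
    """
    rng = np.random.default_rng() if rng is None else rng
    mean_xy = np.asarray(mean_xy, dtype=float)
    n = len(mean_xy)
    sd_h, sd_v = mean_xy.std(axis=0, ddof=1)
    idx = rng.integers(0, n, size=(n_boot, n))  # resample observers with replacement
    boot_sd = mean_xy[idx].std(axis=1, ddof=1)  # shape (n_boot, 2): (sd_h, sd_v)
    p = float(np.mean(boot_sd[:, 1] <= boot_sd[:, 0]))
    return sd_h, sd_v, p

# Usage with 30 hypothetical observers.
rng = np.random.default_rng(2)
means = np.column_stack([rng.normal(0.62, 0.47, 30), rng.normal(-1.5, 1.05, 30)])
sd_h, sd_v, p = bootstrap_sd_comparison(means, rng=rng)
```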

Temporal stability of preferred point of fixation

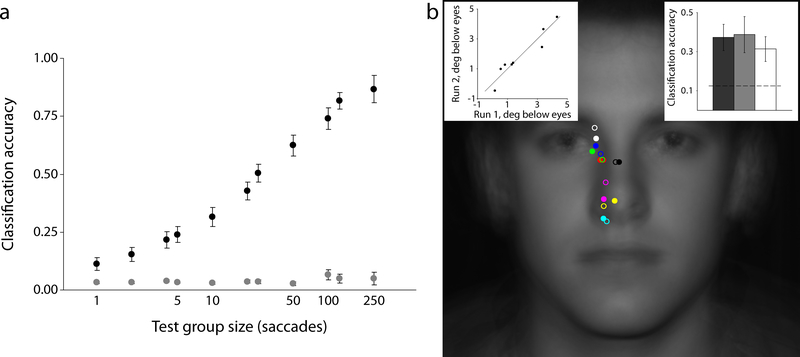

How specific are these strategies to the individual? If observers execute consistent, idiosyncratic eye movement plans, then we should be able to predict which individual generated a given sample of saccades. Using our minimum-distance classifier (see Method), we found that individuals consistently executed distinctive eye movement strategies: the classifier identified an individual from their fixations significantly above chance for all values of the number of groups, K (all p values < .001; Fig. 2a).

Fig. 2.

Consistency and reliability of individual differences in eye movement behavior. (a) A simple minimum-distance classifier can accurately predict which of the 30 observers generated a given random sample of saccades. (b) Idiosyncratic strategies are stable across time. The average landing point for 8 observers is shown with the original test sessions in solid dots and retests (completed from 3 to 17 months later) in open circles. Left inset shows a strong correlation between the vertical position of observers’ eye movements between tests, while the right inset shows how the minimum-distance classifier, trained on saccade samples from the original test sessions, can predict the generators of saccade samples taken from the retest sessions. Error bars represent one standard error of the mean (s.e.m.).

The Short sessions were completed over the course of one or two days. Conceivably, participants could have used ad hoc strategies specific to that testing period that are not representative of their general face identification behavior. To assess the reliability of observer-specific eye movement patterns, eight observers returned to complete another 500 trials of the Short condition. Inspection of each observer’s centroids for the original (Run 1; open circles in Fig. 2b) and re-test sessions (Run 2; filled circles in Fig. 2b) suggests a consistent preference for distinctive points of fixation over time. We quantified this temporal stability in two ways. First, we correlated the distance below the eyes of each observer’s mean landing point between Run 1 and Run 2 (left inset, Fig. 2b). The close proximity of the points to the identity line suggests a stable, individual-specific strategy (r(6) = 0.95, p < .001). Next, we applied the minimum-distance classifier under three train/test conditions using a leave-one-out procedure (i.e., K = 500; right inset, Fig. 2b). First, we trained and tested on data from the original Short sessions (dark grey). We then did the same for the re-test Short sessions (light grey). Finally, we trained on fixation data from Run 1 and tested on data from Run 2 (white). Not surprisingly, classification was best when training and testing on the same run, though not significantly so (F(2, 21) = 0.20, p = .82). Classification accuracy was still significantly above chance when training on the original Short data and testing on Short data collected months later (t(7) = 2.97, p = .02). These analyses show that individuals enact distinctive eye movement strategies when identifying faces and that these strategies are stable over time.

Center of gravity fixations

Finally, it is possible that the fixation patterns are not specific to face identification but are instead driven by a general center-of-gravity strategy (Tatler, 2007). We tested two hypotheses: that observers targeted the geometric center of 1) the visible area of the face (within the black frame; yellow dot in Fig. 1b), or 2) the square frame/monitor (blue dot in Fig. 1b). Every observer fixated significantly away from the visible face center, and 28 observers fixated significantly away from the frame/monitor center (all p values < .001). Additionally, five new observers participated in a control study in which the positions of both the face image and the initial fixation varied independently (Fig. S4). No significant differences were found in where observers chose to look on average across image-location/initial-fixation-location conditions. Although the within-subject variance was slightly greater when the image location varied, the between-subject variance remained unaltered. This supports the interpretation that eye movement strategies are individualized and specific to faces (Fig. S5 and Table S1), and that they are mostly determined with respect to the outline of the face and/or its internal features rather than the position of the face relative to the frame or the monitor.

Functional consequences of individual differences in preferred points of fixation

We addressed the question of whether the differences in preferred fixation points are just random effects between observers (i.e., idiosyncratic over-practiced behavior) with no perceptual function or consequence, or if they translate to perceptual performance differences. To assess the effects of different fixation locations on task performance we measured face identification accuracy as a function of forced fixation location (Methods and Fig. 1a). The group results show that different points of fixation lead to different performance, with identification accuracy falling off rapidly above the eyes and below the nose (Fig. 3a).

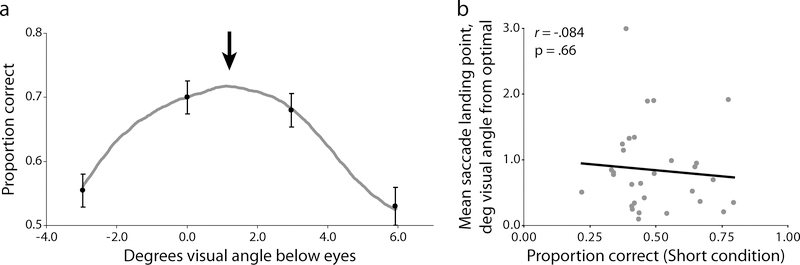

Fig. 3.

Relationship between performance and gaze location. (a) Average forced fixation performance for all 30 observers (black dots) and the Foveated Ideal Observer model predictions. The black arrow represents the average group landing position from the free eye movement condition which also coincides with the model’s predicted point of maximum performance. (b) Correlation between each observer’s overall identification performance and the average distance of their saccades to the group’s optimal fixation point. Error bars represent one s.e.m.

The choice of fixation directly determines task performance at the group level. This relationship between fixation choice and performance can be predicted by an ideal face identifier model equipped with a simulated canonical foveated visual system estimated from multiple observers (Foveated Ideal Observer, FIO; Method and Appendix; Peterson & Eckstein, 2011). Running the FIO on the group data predicts peak performance at 1.48° below the eyes, which corresponds closely to the average group landing position from the free eye movement condition (Fig. 3a). If all observers had roughly similar spatially dependent visual processing (referred to as the visibility map) and higher-order identification mechanisms, we would expect a single optimal region where fixations lead to maximum identification performance. Here, such an effect would produce a statistical relationship between saccade location (taken as the distance from the observer’s mean y coordinate to the group’s optimal fixation point) and task performance (the proportion of correct identifications in the Short condition). Specifically, we would expect observers who regularly move their eyes far from the optimal point to perform poorly relative to counterparts who fixate near it. However, our results show no relationship between the distance of observers’ preferred points of fixation from the theoretically optimal fixation point and their face identification accuracy (r(28) = −0.08, p = .66; Fig. 3b).

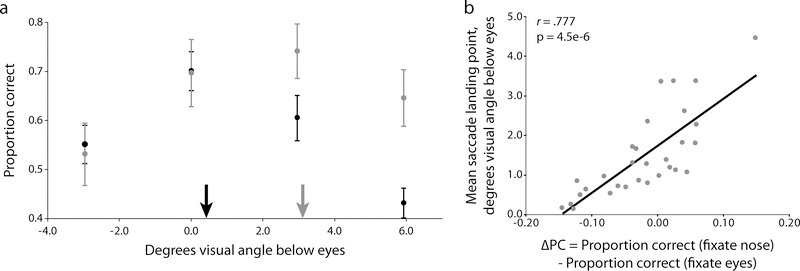

We next tested the hypothesis that observers follow eye movement strategies that maximize their own task performance. For example, individuals who fixate near the nose region should show higher perceptual performance when forced to fixate the nose rather than the eyes, whereas observers who preferentially fixate the eyes should show higher performance when forced to fixate the eyes rather than the nose. The Fixed condition yielded distinctive performance profiles, with every observer exhibiting peak performance either at the eyes or at the nose tip. We first looked for evidence that fixation-dependent performance varies between separate, well-defined groups of observers. We took the seven observers whose average saccade landing points were highest on the face (termed “eye-lookers”) and the seven observers whose average landing points were lowest on the face (termed “nose-lookers”) and computed each group’s fixation-dependent performance profile (Fig. 4a). If the differences in observers’ free eye movement behavior were random, we would expect no systematic difference in performance profiles between the groups. Defining ΔPC as the difference in performance between the eye- and nose-looker groups, we found that performance when forced to fixate the forehead or the eyes did not differ between the groups (ΔPC_forehead = 0.019, t(12) = 0.25, p = .81, two-tailed; ΔPC_eyes = 0.001, t(12) = 0.01, p = .99, two-tailed). In contrast, there was a clear discrepancy for forced fixations below the eyes, with eye-lookers experiencing a severe degradation in performance compared to nose-lookers (ΔPC_nose = −0.143, t(12) = −1.88, p = .04, one-tailed; ΔPC_mouth = −0.215, t(12) = −2.96, p = .01, one-tailed).
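The extreme-group comparison can be sketched as follows, assuming per-observer proportions correct at the four forced-fixation locations and a Student's two-sample t test with pooled variance (which yields the reported df of 12 for two groups of seven). The function names, the column order of `pc`, and the synthetic data in the usage lines are hypothetical.

```python
import numpy as np

LOCATIONS = ("forehead", "eyes", "nose", "mouth")  # column order of `pc`

def pooled_t(a, b):
    """Student's two-sample t statistic (pooled variance) and its df."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    t = (a.mean() - b.mean()) / np.sqrt(pooled_var * (1.0 / na + 1.0 / nb))
    return t, na + nb - 2

def compare_extreme_groups(dist_below_eyes, pc, n_extreme=7):
    """Delta PC (eye-lookers minus nose-lookers), t, and df at each location.

    dist_below_eyes: (n_obs,) distance of each mean landing point below the eyes (deg).
    pc: (n_obs, 4) proportion correct at each forced-fixation location.
    """
    order = np.argsort(dist_below_eyes)  # ascending: highest on the face first
    eye_lookers, nose_lookers = order[:n_extreme], order[-n_extreme:]
    results = {}
    for col, loc in enumerate(LOCATIONS):
        a, b = pc[eye_lookers, col], pc[nose_lookers, col]
        t, df = pooled_t(a, b)
        results[loc] = (a.mean() - b.mean(), t, df)
    return results

# Usage with 30 synthetic observers (made-up data, for illustration only).
rng = np.random.default_rng(3)
dist = np.linspace(0.0, 3.0, 30)
pc = np.column_stack([
    rng.normal(0.55, 0.05, 30),                      # forehead
    rng.normal(0.75, 0.05, 30),                      # eyes
    0.55 + 0.06 * dist + rng.normal(0.0, 0.03, 30),  # nose: better for lower lookers
    0.35 + 0.08 * dist + rng.normal(0.0, 0.03, 30),  # mouth: better for lower lookers
])
results = compare_extreme_groups(dist, pc)
```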

Fig. 4.

Correspondence between saccadic behavior and fixation-dependent performance. (a) Forced fixation performance for two groups of extreme observers: “eye-lookers” in black and “nose-lookers” in grey. A clear differentiation emerges with eye-lookers displaying severe performance costs as they are forced to fixate lower on the face. Arrows represent the mean landing positions for each group in the Short condition. (b) Correlation between each observer’s preferred saccadic end point and their performance profile. In general, the farther up on the face an individual chooses to look, the greater the performance penalty for fixating away from the eyes. Error bars represent one s.e.m.

We also analyzed the relationship between eye movements and performance at the individual level to evaluate whether each observer’s distinctive performance profile corresponded to their eye movement behavior. We used the difference in performance between nose and eye fixation trials as a metric of the relative benefit of fixating lower versus higher on the face (ΔPC_nose−eyes). We then correlated ΔPC_nose−eyes with the distance, in degrees visual angle, of each observer’s preferred fixation location (from the Short condition) below the eyes (Fig. 4b). If observers execute eye movements that account for their distinctive fixation-dependent abilities, there should be a strong relationship between saccade endpoint choice and the difference between lower- and upper-face fixation performance. Figure 4b shows that this is indeed the case: observers who looked lower on the face when free to move their eyes also showed a performance benefit when forced to fixate lower on the face (r(28) = 0.78, p < .001).
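The individual-level analysis reduces to a Pearson correlation between ΔPC_nose−eyes and each observer's preferred landing distance below the eyes, tested with df = n − 2 (28 for 30 observers). A minimal NumPy sketch, with hypothetical function names:

```python
import numpy as np

def delta_pc_nose_eyes(pc_nose, pc_eyes):
    """Relative benefit of fixating the nose tip versus the eyes, per observer."""
    return np.asarray(pc_nose, float) - np.asarray(pc_eyes, float)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.sum(xc * yc) / np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2)))

def r_to_t(r, n):
    """t statistic for testing r against zero, with df = n - 2."""
    return r * np.sqrt((n - 2) / (1.0 - r ** 2))

# With the reported r = 0.78 and n = 30, t(28) is roughly 6.6.
t_reported = r_to_t(0.78, 30)
```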

Source of variation in fixation-dependent performance profiles

Unlike a previous study (Sekiguchi, 2011), we found systematic differences in the preferred point of fixation across individuals. What might explain this disagreement? Almost all of the face recognition literature has used long viewing times, even though humans identify faces very rapidly, often within one or two fixations (Hsiao & Cottrell, 2008). Thus, the great majority of eye movement data analyzed in those studies were collected after identification had most likely been accomplished. It is quite possible that eye movements late in the presentation reflect default social behavior, such as maintaining eye contact, which could be driven by a strategy that is more homogeneous across the population. Our study focused on the first saccade, which may be of paramount importance in acquiring task-relevant information. A second question concerns the source of these individual differences in eye movement strategies and the associated performance-maximizing fixation points. How could different observers, looking at the same stimuli, have such distinct performance profiles? One plausible explanation is that humans formulate fixation strategies that maximize performance given knowledge of their own foveated visual system. It is well known that while visibility universally deteriorates in the periphery, the steepness, total amount, and directionality of this degradation (i.e., differences between the upper, lower, and horizontal visual fields; Abrams, Nizam, & Carrasco, 2012) differ greatly between individuals. A person who sees very well in the upper visual field but poorly in the lower visual field would do well to fixate toward the bottom of the face: from there, the information-rich eyes (Peterson & Eckstein, 2011; Sekuler et al., 2004; Vinette, Gosselin, & Schyns, 2004) fall in the upper visual field, where their information can still be acquired with high fidelity, while the mouth falls on the fovea.
Because humans can select eye movements that maximize task performance given their specific visibility map (Najemnik & Geisler, 2005), this explanation remains plausible.

However, a second possibility cannot be discarded. Presumably, face identification requires, at some stage in the visual stream, comparing the available visual information with stored representations of each identity’s face. Furthermore, recognizing faces is one of the most common and socially important tasks humans perform, with constant exposure and practice from birth. If humans adopt a fixation strategy early in life that does not necessarily maximize information gain, it is conceivable that the developing face recognition network adopts recognition strategies that maximize performance for the individual’s distinctive looking behavior. This could be realized at lower or higher levels of the visual architecture. At the lower level, the individual’s peripheral processing ability itself could be shaped by long-term experience viewing faces and other stimuli from a given fixation region (e.g., modulation of the developing contrast sensitivity function as a function of eccentricity). At a higher level, the face recognition system could optimize its identification algorithm by using fixation-specific internal representations and computations (fixation-specific coding). We currently lack sufficiently precise measurements of infant eye movements and their evolution with age to make any strong claims, though this would seem to be a fruitful avenue for future research.

Supplementary Material

Appendix

We modeled the decrease in resolution and sensitivity in the visual periphery with a canonical, spatially-variant contrast sensitivity function (SVCSF; Peli et al., 1991) given by:

where $a_0$, $b_0$, and $c_0$ are constants chosen to set the peak contrast sensitivity at 1 and the frequency of peak sensitivity in the fovea at 4 cycles per degree visual angle; $f$ is spatial frequency in cycles per degree visual angle; and $r$ is distance from fixation in degrees visual angle. $d_0$, termed the eccentricity factor, determines how quickly sensitivity is attenuated with peripheral distance. $\theta$ is the direction from fixation (a polar angle); it is included because sensitivity does not degrade isotropically.
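The behavior of an SVCSF can be explored numerically. The functional form below is an assumption for illustration, chosen only because it has the properties described above: a foveal peak of 1 at 4 cycles/deg, and an eccentricity factor that lowers sensitivity, most strongly at high spatial frequencies, as r grows. It is not presented as the exact Peli et al. (1991) equation, and the direction-dependent eccentricity factors (`d_horizontal`, `d_upper`, `d_lower`) are hypothetical values.

```python
import numpy as np

PEAK_FREQ = 4.0  # cycles/deg, foveal peak frequency (from the text)

def eccentricity_factor(theta, d_horizontal=0.10, d_upper=0.12, d_lower=0.08):
    """Hypothetical direction-dependent eccentricity factor d0(theta).

    theta is the polar angle from fixation in radians (pi/2 = upper visual field).
    Horizontal and vertical factors are blended with cos^2 / sin^2 weights.
    """
    s = np.sin(theta)
    d_vertical = np.where(s >= 0, d_upper, d_lower)
    return d_horizontal * np.cos(theta) ** 2 + d_vertical * s ** 2

def svcsf(f, r, theta, a0=1.0):
    """Assumed form: S = c0 * f**a0 * exp(-b0 * f * (1 + d0(theta) * r)).

    b0 and c0 are derived from a0 so that, at r = 0, sensitivity peaks at
    PEAK_FREQ with a value of exactly 1.
    """
    b0 = a0 / PEAK_FREQ             # d(log S)/df = 0 at f = a0 / b0 when r = 0
    c0 = (np.e / PEAK_FREQ) ** a0   # makes S(PEAK_FREQ, r=0) = 1
    f = np.asarray(f, dtype=float)
    d0 = eccentricity_factor(theta)
    return c0 * f ** a0 * np.exp(-b0 * f * (1.0 + d0 * r))

# Usage: sensitivity at the fovea and 5 deg into the upper and lower visual fields.
freqs = np.array([1.0, 4.0, 8.0])
foveal = svcsf(freqs, r=0.0, theta=0.0)
upper_5deg = svcsf(freqs, r=5.0, theta=np.pi / 2)
lower_5deg = svcsf(freqs, r=5.0, theta=-np.pi / 2)
```

In this form the peak frequency shifts to a0 / (b0 (1 + d0 r)), so a larger eccentricity factor moves the peak to lower spatial frequencies as well as reducing overall sensitivity.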

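For any fixation point $k$, the Foveated Ideal Observer (FIO) filters each region of the noisy face image in the frequency domain with a unique SVCSF specified by its distance and direction from fixation (see Supplementary Figure S1). The filtered image is compared to similarly filtered versions of the possible faces, resulting in a vector of template responses, $\mathbf{r}_k$. These responses follow a multivariate normal distribution, for which the likelihood, $\ell$, of face $i$ being present is calculated as:

$$\ell(\mathbf{r}_k \mid i) = \frac{1}{(2\pi)^{n/2}\,\lvert\Sigma_k\rvert^{1/2}} \exp\!\left[-\frac{1}{2}\left(\mathbf{r}_k - \boldsymbol{\mu}_{i,k}\right)^{T} \Sigma_k^{-1} \left(\mathbf{r}_k - \boldsymbol{\mu}_{i,k}\right)\right]$$

where $\boldsymbol{\mu}_{i,k}$ is the mean response vector under the hypothesis that face $i$ was present, $\Sigma_k$ is the response covariance, $n$ is the number of template responses (one per possible face), and $T$ is the transpose operator. The FIO takes the face with the maximum likelihood as its decision.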
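The maximum-likelihood decision rule can be sketched with NumPy. The sketch works with log-likelihoods for numerical stability and, as an assumption for illustration, uses small made-up mean response vectors and an identity covariance rather than responses computed from filtered face images.

```python
import numpy as np

def log_likelihood(r, mu, cov):
    """Log of the multivariate normal density of response vector r given mean mu."""
    r, mu = np.asarray(r, float), np.asarray(mu, float)
    n = r.size
    diff = r - mu
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("covariance must be positive definite")
    mahalanobis = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (n * np.log(2 * np.pi) + logdet + mahalanobis)

def fio_decision(r, mus, cov):
    """Index of the face hypothesis with the largest likelihood."""
    scores = [log_likelihood(r, mu, cov) for mu in mus]
    return int(np.argmax(scores))

# Usage with three hypothetical faces and an identity covariance.
mus = np.eye(3)   # row i: mean template responses if face i is shown
cov = np.eye(3)
rng = np.random.default_rng(4)
r = mus[2] + rng.normal(0.0, 0.3, size=3)
choice = fio_decision(r, mus, cov)
```

Because $\Sigma_k$ does not depend on $i$, the normalizing constant is identical across hypotheses, so ranking faces by Mahalanobis distance alone would give the same decision.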

References

- Abrams J, Nizam A, & Carrasco M (2012). Isoeccentric locations are not equivalent: The extent of the vertical meridian asymmetry. Vision Research, 52(1), 70–78. doi: 10.1016/j.visres.2011.10.016

- Althoff RR, & Cohen NJ (1999). Eye-movement-based memory effect: A reprocessing effect in face perception. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25(4), 997–1010. doi: 10.1037/0278-7393.25.4.997

- Arizpe J, Kravitz DJ, Yovel G, & Baker CI (2012). Start Position Strongly Influences Fixation Patterns during Face Processing: Difficulties with Eye Movements as a Measure of Information Use. PLoS ONE, 7(2), e31106. doi: 10.1371/journal.pone.0031106

- Barton JJS, Radcliffe N, Cherkasova MV, & Edelman JA (2007). Scan patterns during the processing of facial identity in prosopagnosia. Experimental Brain Research, 181(2), 199–211. doi: 10.1007/s00221-007-0923-2

- Barton JJS, Radcliffe N, Cherkasova MV, Edelman J, & Intriligator JM (2006). Information processing during face recognition: the effects of familiarity, inversion, and morphing on scanning fixations. Perception, 35(8), 1089–1105.

- Blais C, Jack RE, Scheepers C, Fiset D, & Caldara R (2008). Culture Shapes How We Look at Faces. PLoS ONE, 3(8), e3022. doi: 10.1371/journal.pone.0003022

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, et al. (2005). Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience, 8(4), 519–526. doi: 10.1038/nn1421

- Duchaine B, & Nakayama K (2006). The Cambridge Face Memory Test: Results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia, 44(4), 576–585. doi: 10.1016/j.neuropsychologia.2005.07.001

- Firestone A, Turk-Browne NB, & Ryan JD (2007). Age-Related Deficits in Face Recognition are Related to Underlying Changes in Scanning Behavior. Aging, Neuropsychology, and Cognition, 14(6), 594–607. doi: 10.1080/13825580600899717

- Geisler WS (2011). Contributions of ideal observer theory to vision research. Vision Research, 51(7), 771–781. doi: 10.1016/j.visres.2010.09.027

- Green DM, & Swets JA (1989). Signal Detection Theory and Psychophysics. Peninsula Pub.

- Haxby JV, Hoffman EA, & Gobbini MI (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

- Henderson JM, Williams CC, & Falk RJ (2005). Eye movements are functional during face learning. Memory & Cognition, 33, 98–106. doi: 10.3758/BF03195300

- Hsiao J, & Cottrell G (2008). Two Fixations Suffice in Face Recognition. Psychological Science, 19(10), 998–1006. doi: 10.1111/j.1467-9280.2008.02191.x

- Jack RE, Blais C, Scheepers C, Schyns PG, & Caldara R (2009). Cultural Confusions Show that Facial Expressions Are Not Universal. Current Biology, 19(18), 1543–1548. doi: 10.1016/j.cub.2009.07.051

- Kanwisher N, McDermott J, & Chun MM (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience, 17(11), 4302–4311.

- Kersten D, Mamassian P, & Yuille A (2004). Object perception as Bayesian inference. Annual Review of Psychology, 55, 271–304. doi: 10.1146/annurev.psych.55.090902.142005

- McGowan JW, Kowler E, Sharma A, & Chubb C (1998). Saccadic localization of random dot targets. Vision Research, 38(6), 895–909.

- Najemnik J, & Geisler WS (2005). Optimal eye movement strategies in visual search. Nature, 434(7031), 387–391. doi: 10.1038/nature03390

- Peli E, Yang J, & Goldstein RB (1991). Image invariance with changes in size: the role of peripheral contrast thresholds. Journal of the Optical Society of America A, 8(11), 1762–1774. doi: 10.1364/JOSAA.8.001762

- Peterson MF, & Eckstein MP (2011). Fixating the Eyes is an Optimal Strategy Across Important Face (Related) Tasks. Journal of Vision, 11(11), 662–662. doi: 10.1167/11.11.662

- Rayner K, Li X, Williams CC, Cave KR, & Well AD (2007). Eye movements during information processing tasks: Individual differences and cultural effects. Vision Research, 47(21), 2714–2726. doi: 10.1016/j.visres.2007.05.007

- Rizzo M, Hurtig R, & Damasio AR (1987). The role of scanpaths in facial recognition and learning. Annals of Neurology, 22(1), 41–45. doi: 10.1002/ana.410220111

- Sekiguchi T (2011). Individual differences in face memory and eye fixation patterns during face learning. Acta Psychologica, 137(1), 1–9. doi: 10.1016/j.actpsy.2011.01.014

- Sekuler AB, Gaspar CM, Gold J, & Bennett PJ (2004). Inversion Leads to Quantitative, Not Qualitative, Changes in Face Processing. Current Biology, 14(5), 391–396. doi: 10.1016/j.cub.2004.02.028

- Tatler BW (2007). The central fixation bias in scene viewing: selecting an optimal viewing position independently of motor biases and image feature distributions. Journal of Vision, 7(14), 4.1–17. doi: 10.1167/7.14.4

- Vinette C, Gosselin F, & Schyns PG (2004). Spatio-temporal dynamics of face recognition in a flash: it’s in the eyes. Cognitive Science, 28(2), 289–301. doi: 10.1016/j.cogsci.2004.01.002

- Walker-Smith GJ, Gale AG, & Findlay JM (1977). Eye movement strategies involved in face perception. Perception, 6(3), 313–326. doi: 10.1068/p060313

- Williams CC, & Henderson JM (2007). The face inversion effect is not a consequence of aberrant eye movements. Memory & Cognition, 35, 1977–1985. doi: 10.3758/BF03192930

- Williams LM, Loughland CM, Gordon E, & Davidson D (1999). Visual scanpaths in schizophrenia: is there a deficit in face recognition? Schizophrenia Research, 40(3), 189–199. doi: 10.1016/S0920-9964(99)00056-0

- Xivry JO, Ramon M, Lefèvre P, & Rossion B (2008). Reduced fixation on the upper area of personally familiar faces following acquired prosopagnosia. Journal of Neuropsychology, 2(1), 245–268. doi: 10.1348/174866407X260199
