Abstract

Background

Patients with diabetes are at increased risk of developing oral complications, and annual dental examinations are an endorsed preventive strategy. The authors evaluated the feasibility and validity of implementing an automated electronic health record (EHR)-based dental quality measure to determine whether patients with diabetes received such evaluations.

Methods

The authors selected a Dental Quality Alliance measure developed for claims data and adapted the specifications for EHRs. Automated queries identified patients with diabetes across 4 dental institutions, and the authors manually reviewed a subsample of charts to evaluate query performance. After assessing the initial EHR measure, the authors defined and tested a revised EHR measure to better capture the oral care received by patients with diabetes.

Results

In the initial and revised measures, the authors used EHR automated queries to identify 12,960 and 13,221 patients with diabetes, respectively, in the reporting year. Variations in the measure scores across sites were greater with the initial measure (range, 36.4–71.3%) than with the revised measure (range, 78.8–88.1%). The automated query performed well (93% or higher) for sensitivity, specificity, and positive and negative predictive values for both measures.

Conclusions

The results suggest that an automated EHR-based query can be used successfully to measure the quality of oral health care delivered to patients with diabetes. The authors also found that the rich data available in EHRs may support better estimates of the quality of care than claims data alone.

Practical Implications

Detailed clinical patient-level data in dental EHRs may be useful to dentists in evaluating the quality of dental care provided to patients with diabetes.

Keywords: Informatics, quality of care, dental public health, diabetes mellitus

Despite continuous improvement in the general and oral health of Americans, there is limited information about the quality of oral health care delivered. In 2001, in response to growing awareness of quality deficiencies in the American health care system, the Institute of Medicine published Crossing the Quality Chasm,1 highlighting the need for change in systems of care and quality improvement. Consequently, in 2008, with burgeoning national interest in quality improvement, the Centers for Medicare & Medicaid Services proposed the establishment of a Dental Quality Alliance (DQA) as a collaborative approach to developing oral health quality measures with the mission “to advance performance measurement as a means to improve oral health, patient care, and safety through a consensus-building process.” 2 As part of the collaborative effort to develop dental quality measures (DQMs), the DQA published several documents, including Adult Measures Under Consideration, 3 in which they proposed 13 high-priority adult measures, and the starter set of pediatric measures, 4 with 6 pediatric electronic measures validated for electronic health records (EHRs).

Investigators in most reports in the literature evaluate DQMs by using administrative claims data,5–7 which consist of treatment codes that health care providers submit to payers for billing.8,9 Herndon and colleagues5 evaluated 3 DQMs with a caries preventive focus by using administrative claims data and attested to their validity and feasibility. Although the results are encouraging, the authors noted the limitations of relying on administrative claims data and suggested these may be addressed by leveraging EHRs, which contain more detailed and longitudinal clinical data that better reflect patient oral health status. EHRs have become the standard for dental care documentation in most US dental schools and are increasingly common in community health centers, group practices, and private clinics.10 Because structured data are increasingly available in dental EHRs,4,11 our group in 2016 defined the steps for the implementation of automated DQM queries by using EHRs and demonstrated the feasibility of a meaningful use measure.12

In our study, we evaluated 1 of the DQA-proposed adult, high-priority measures—People With Diabetes: Oral Evaluation3—developed to evaluate “the percentage of adults identified as people with diabetes who received a comprehensive or periodic oral examination or a comprehensive periodontal examination at least once within the reporting year.” Diabetes is a chronic and progressive disease affecting 29 million people in the United States, or 9.3% of the US population.13 In 1995, the Centers for Medicare & Medicaid Services, American Diabetes Association, and National Committee on Quality Assurance set forth performance measures to evaluate the quality of medical care provided to people with diabetes, with a focus on routine testing for indicators such as low-density lipoprotein cholesterol level, eye examination results, and hemoglobin A1c level. Although integrated health care systems, including the Veterans Health Administration, adopted performance and outcome measures, they did not include oral health care quality evaluations.14 People with diabetes are at increased risk of developing oral health complications that include periodontal disease, xerostomia, and burning mouth syndrome.15 To manage this elevated risk and maintain quality of life, annual dental examinations are a preventive strategy endorsed by the American Dental Association,16 American Diabetes Association,17 and Healthy People 2020.15 Despite evidence-based recommendations, only 54.5% of people with diabetes reported having an annual dental examination in 2013,15 with marked disparities according to sex, race, and place of residence (rural versus urban).18

With the adoption of EHRs in dental offices and academic institutions, patient dental care can be documented and stored in a structured data format, allowing for secondary analyses and quality measurement.19 We conducted our study at 4 institutions that use the axiUm (Exan Group, Henry Schein) EHR platform. We had the following research objectives: to adapt the proposed DQA DQM People With Diabetes: Oral Evaluation,3 originally designed for administrative claims data, for use in EHRs; to determine the validity of the DQM in terms of measuring what it was intended to measure; and to develop and test a refined EHR-based DQM that can be used in the dental office setting to assess the quality of care provided to patients with diagnosed diabetes.

METHODS

We developed and assessed the feasibility of the DQM in 3 academic institutions and a large multispecialty group dental practice. The participating sites in this study have a previous and established collaboration,20,21 use the same EHR, and are early adopters of a standardized dental diagnostic terminology.22 The 4 institutions are also members of the Consortium for Oral Health Research and Informatics.23 In this study, we included all patients with a self-reported diagnosis of diabetes identified via EHRs with no stratification according to diabetes type or severity. All 4 dental institutions received institutional review board approval for the study. In the following paragraphs, we describe the methods we used to adapt the measure to the EHR, evaluate the measure’s validity, and develop and validate the revised measure.

People with diabetes: oral evaluation—measure description (original measure)

As described in the DQA measure specification sheet,3 this measure is used to evaluate oral health care received by adults (18 years or older) with diabetes following the specifications designed for administrative claims data.

Denominator

The patient population met the criteria for 2 denominators. Denominator 1 was the unduplicated number of all adults continuously enrolled for 180 days identified as people with diabetes within the reporting year. Denominator 2 was the unduplicated number of all adults with diabetes continuously enrolled for 180 days who received at least 1 dental service within the reporting year with a code between D0100 and D9999 from the Code on Dental Procedures and Nomenclature (CDT).24

Numerator

The numerator was the unduplicated number of adults with diabetes who were part of a denominator who received a comprehensive evaluation (D0150), periodic oral evaluation (D0120), or comprehensive periodontal evaluation (D0180) within the reporting year. We calculated 2 measure rates from the proposed criteria as the ratio of the numerator divided by denominator 1 and the ratio of the numerator divided by denominator 2.

Adaptation of the original measure: initial EHR measure

We retooled the original measure for clinic and EHR use. For example, the original measure determined the eligible population (denominator) by identifying those who had paid and unpaid claims over the past year and were enrolled continuously for 180 days. We made the following adaptations.

Denominator

We determined the denominator by means of an automated query of dental EHRs that identified unduplicated adult patients 18 years or older as of the last day of the reporting year with a self-reported diabetes condition noted in the patient’s medical history. Because we could not determine whether the participant was enrolled continuously for 180 days, our EHR-adapted measure specifications determined that only patients who received at least 1 dental-related procedure code as identified by means of CDT codes D0100 to D9999 in the reporting year of 2014 (January 1 through December 31) were included. Thus, we had only 1 denominator definition.

Numerator

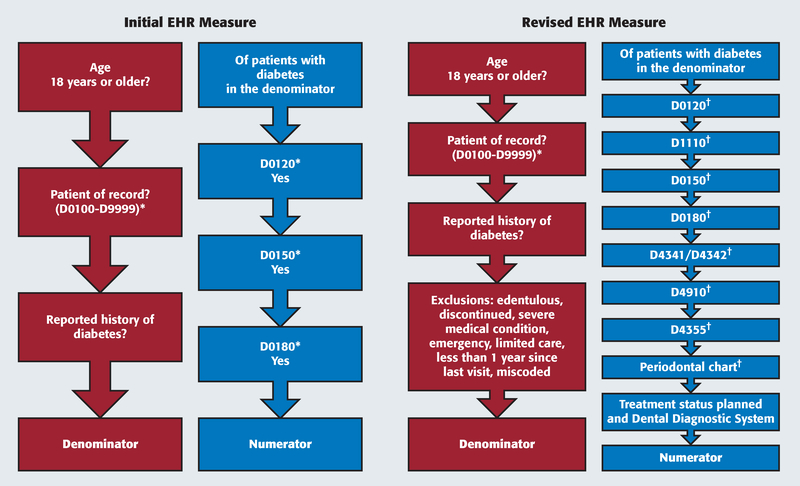

As specified in the original measure, the numerator was the subcategory of unduplicated enrolled adult patients with diabetes who were in the denominator who received a comprehensive oral evaluation (D0150), periodic oral evaluation (D0120), or comprehensive periodontal evaluation (D0180) in the reporting year. Figure 1 displays the criteria for the numerator and denominator populations for the initial EHR measure.

Figure 1.

Numerator and denominator workflow for initial and revised electronic health record (EHR) measures. * Completed Current Dental Terminology (CDT) procedures only. † CDT procedure codes completed or in progress.
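The denominator and numerator rules of the initial EHR measure can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Structured Query Language script run at the sites: the `Procedure` record, the `birth_dates` and `diabetic_ids` inputs, and the function names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date

# Evaluation codes named in the measure specification.
EVALUATION_CODES = {"D0120", "D0150", "D0180"}


@dataclass(frozen=True)
class Procedure:
    """Hypothetical procedure record; field names are assumptions."""
    patient_id: str
    cdt_code: str
    service_date: date


def is_cdt_code(code: str) -> bool:
    """True for codes in the D0100 to D9999 range used by the denominator."""
    if len(code) != 5 or code[0] != "D" or not code[1:].isdigit():
        return False
    return 100 <= int(code[1:]) <= 9999


def initial_measure(birth_dates, diabetic_ids, procedures, year=2014):
    """Return (numerator_ids, denominator_ids) as unduplicated sets.

    birth_dates: dict of patient_id -> date of birth (assumed input shape)
    diabetic_ids: set of patient_ids with self-reported diabetes
    """
    year_end = date(year, 12, 31)
    numerator, denominator = set(), set()
    for proc in procedures:
        pid = proc.patient_id
        if pid not in diabetic_ids or proc.service_date.year != year:
            continue
        if not is_cdt_code(proc.cdt_code):
            continue
        born = birth_dates[pid]
        # Age as of the last day of the reporting year.
        age = year_end.year - born.year - (
            (year_end.month, year_end.day) < (born.month, born.day)
        )
        if age < 18:
            continue
        denominator.add(pid)
        if proc.cdt_code in EVALUATION_CODES:
            numerator.add(pid)
    return numerator, denominator
```

The measure score is then `len(numerator) / len(denominator)`. Using sets keeps each patient counted once, however many procedures were recorded.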

Automated query implementation in the EHR framework

All participating sites used an EHR with a similar back-end database, and we developed a Structured Query Language script to extract data and generate reports in a standard format at all sites. The development of the script was an iterative process in which we first analyzed the feasibility and data availability at each stage and adjusted accordingly. With the exception of 1 site, diabetes information was recorded in the medical history forms. One site maintained multiple versions of the medical history form, and we modified the script to extract information from all pertinent data collection forms. After we finalized the configurations, we executed the scripts at all sites. We randomly selected an initial set of 20 charts at each site and manually reviewed them to confirm the validity of the information provided by the script. We then generated a final script. The appendix (available online at the end of this article) includes the logic or pseudocode of the script.

Validation of the automated query with manual chart reviews

We compared the performance of the automated query with the results of the manual electronic chart review, which we considered the criterion standard. As previously described,12 we determined validation following the steps described here.

Step 1: sample size calculations

We determined the minimum number of charts to be reviewed manually across all 4 sites. The proportions were 80%, 82%, 85%, and 85% for sites 1, 2, 3, and 4, respectively, and represented the number of patients at a specific site who had at least 1 of the following CDT procedure codes: D0120, D0150, or D0180. We set the margins for error and significance levels at each site at a standard 0.05 (d = 0.05; α = .05).

Step 2: chart review process

Two calibrated independent reviewers at each site (S.K., S.B., B.T., J.E., J.W., R.W., J.M., J.E.) with experience in electronic patient chart reviews conducted manual reviews. Each reviewer at each of the 4 sites initially reviewed the same 50 charts, and we calculated interrater agreement by using the Cohen κ.25 Interrater agreement was at a κ value of 1.0 for sites 1, 2, and 4 and 0.95 for site 3. The reviewers discussed and resolved differences in the chart reviews before subsequent chart reviews. A single reviewer at each site completed the remaining chart reviews.
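For readers who want to reproduce the agreement statistic, the following is a minimal sketch of the Cohen κ for two reviewers rating the same charts. The function name and the list-of-labels input are assumptions made for illustration; the software used for the calculation is not described here.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen kappa for two raters labeling the same items.

    ratings_a, ratings_b: equal-length sequences of category labels,
    for example 1 if the reviewer placed the chart in the numerator, else 0.
    """
    if len(ratings_a) != len(ratings_b) or len(ratings_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed proportion of charts on which the reviewers agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance from each reviewer's marginal totals.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used one identical category throughout
    return (observed - expected) / (1 - expected)
```

With 50 charts per site, a single disagreement is enough to move κ noticeably below 1.0, which is why small differences between sites should be read with caution.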

Step 3: concordance between automated and manual queries

To evaluate the concordance between the automated and manual queries, we calculated sensitivity, specificity, positive predictive values, and negative predictive values, treating the manual review as the criterion standard. Sensitivity was the proportion of patients placed in the numerator by the manual review (that is, patients who received at least 1 comprehensive or periodic oral evaluation or a comprehensive periodontal evaluation) whom the automated query also placed in the numerator. Specificity was the proportion of patients excluded from the numerator by the manual review (patients who did not receive any of these evaluations) whom the automated query also excluded. Positive predictive value was the proportion of patients placed in the numerator by the automated query whose numerator status the manual review confirmed. Negative predictive value was the proportion of patients placed in only the denominator by the automated query whose status the manual review confirmed.
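These four statistics follow from a 2 × 2 comparison of the automated query against the manual review. The sketch below shows the standard calculation; the function names and the boolean-flag inputs are hypothetical, and the counts in any usage are for illustration only.

```python
def confusion_counts(query_flags, manual_flags):
    """Count agreement cells, treating the manual review as the criterion standard.

    Each argument is a sequence of booleans, one per chart:
    True if that source placed the patient in the numerator.
    """
    tp = fp = fn = tn = 0
    for query, manual in zip(query_flags, manual_flags):
        if query and manual:
            tp += 1
        elif query and not manual:
            fp += 1
        elif manual:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def diagnostic_metrics(tp, fp, fn, tn):
    """Sensitivity, specificity, PPV, and NPV from the four cell counts."""
    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),  # query positive among manual positive
        "specificity": ratio(tn, tn + fp),  # query negative among manual negative
        "ppv": ratio(tp, tp + fp),          # manual positive among query positive
        "npv": ratio(tn, tn + fn),          # manual negative among query negative
    }
```

Sensitivity and specificity condition on the manual review result, whereas the predictive values condition on the query result, so the two pairs answer different questions about the same table.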

Step 4: calculate measure scores

We determined measure scores as the ratio of the numerator to the denominator for each site and calculated an overall measure score from the results of all sites combined. We calculated these scores for both the manual review and the automated query results. We evaluated the relationship between the manual and automated queries at each site by using a 2-sample test for proportions. Finally, we evaluated the differences in proportions across sites by performing a χ² test with 3 degrees of freedom at an α level of .05 (critical value, 7.815).
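The two tests in this step can be sketched with the Python standard library alone. The sketch assumes a pooled 2-sample z test with a 2-sided P value and a Pearson χ² statistic for homogeneity across sites; the exact variants and software used in the analysis are not specified here, and the function names are hypothetical.

```python
import math

# Critical value for a chi-square test with 3 degrees of freedom at alpha = .05.
CHI2_CRITICAL_DF3 = 7.815


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-sample z test for proportions; returns (z, two-sided P)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided tail area of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def chi_square_homogeneity(numerators, denominators):
    """Pearson chi-square statistic comparing proportions across sites."""
    overall = sum(numerators) / sum(denominators)
    stat = 0.0
    for x, n in zip(numerators, denominators):
        expected_yes = n * overall
        expected_no = n * (1 - overall)
        stat += (x - expected_yes) ** 2 / expected_yes
        stat += ((n - x) - expected_no) ** 2 / expected_no
    return stat
```

For instance, `two_proportion_z_test(584, 710, 11086, 13221)`, which uses the overall revised-measure counts reported in Table 2, gives a 2-sided P value of roughly .26 under these assumptions. A statistic from `chi_square_homogeneity` larger than `CHI2_CRITICAL_DF3` indicates that the proportions differ across the 4 sites at the .05 level.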

Evaluation of patient charts that were not part of the numerator

We thoroughly reviewed charts from patients with diabetes who were included in the denominator but not in the numerator. We identified the different reasons why the patient included in the denominator did not receive a comprehensive or periodic oral evaluation or comprehensive periodontal evaluation in the reporting year. As a result, we designed and implemented a refined and revised EHR measure in our EHRs with the specifications described in the Results section and displayed in Figure 1.

RESULTS

The results for the initial and revised EHR measures are described in the following paragraphs and follow the same steps as in the Methods section.

Descriptive characteristics

Table 1 displays the characteristics of the adult patients with diabetes included in the initial and revised EHR measures. As noted, for each site there were no significant differences in age and sex in the study population included in the initial and revised EHR measures (P > .05) (Table 1). In the intersite comparisons, site 4 had a significantly younger population than did sites 1, 2, and 3 (P < .05). The number of unique patients who received care in the reporting year ranged from 10,000 through 240,000 across the 4 sites. The number of providers (dentists, dental hygienists, and dental and dental hygiene students) ranged from 292 to 766 across the 4 sites.

TABLE 1.

Descriptive characteristics of adults identified as having diabetes.

| CHARACTERISTIC | SITE 1, INITIAL MEASURE | SITE 1, REVISED MEASURE | SITE 2, INITIAL MEASURE | SITE 2, REVISED MEASURE | SITE 3, INITIAL MEASURE | SITE 3, REVISED MEASURE | SITE 4, INITIAL MEASURE | SITE 4, REVISED MEASURE |
|---|---|---|---|---|---|---|---|---|
| Age,* Mean (Standard Deviation) | 60.8 (11.4) | 60.8 (11.3) | 58.4 (14.5) | 58.4 (14.4) | 64.0 (12.7) | 64.4 (12.2) | 54.2 (12.9) | 54.4 (12.7) |
| Sex,* % | | | | | | | | |
| Male | 39.0 | 38.8 | 47.4 | 49.3 | 41.4 | 42.8 | 45.5 | 46.5 |
| Female | 61.0 | 61.2 | 52.6 | 50.7 | 58.6 | 57.2 | 54.5 | 53.5 |

\* There were no differences in age and sex at each site for the initial and revised measures.

Results of the adaptation of the original measure: initial EHR measure

Denominator

The EHR automated queries identified a total of 12,960 patients with diabetes in the reporting year for the initial EHR measure (Table 2).

TABLE 2.

Initial and revised EHR* measure rates across the 4 sites for automated query and manual reviews.

| SITE | SOURCE | INITIAL EHR MEASURE: Num† | INITIAL EHR MEASURE: Den‡ | INITIAL EHR MEASURE: MS§ (95% CI¶) | INITIAL EHR MEASURE: P VALUE# | REVISED EHR MEASURE: Num | REVISED EHR MEASURE: Den | REVISED EHR MEASURE: MS (95% CI) | REVISED EHR MEASURE: P VALUE# |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Manual | 294 | 500 | 58.8% (54% to 63%) | > .05 | 166 | 200 | 83.0% (78% to 88%) | > .05 |
| 1 | Query | 613 | 1,103 | 55.6% (53% to 59%) | | 884 | 1,089 | 81.2% (79% to 84%) | |
| 2 | Manual | 67 | 184 | 36.4% (29% to 43%) | > .05 | 118 | 134 | 88.1% (83% to 94%) | > .05 |
| 2 | Query | 132 | 386 | 34.2% (29% to 39%) | | 288 | 352 | 81.8% (78% to 86%) | |
| 3 | Manual | 194 | 286 | 67.8% (62% to 73%) | < .05 | 144 | 174 | 82.8% (77% to 88%) | > .05 |
| 3 | Query | 620 | 1,178 | 52.6% (50% to 55%) | | 926 | 1,102 | 84.0% (82% to 86%) | |
| 4 | Manual | 194 | 272 | 71.3% (66% to 77%) | < .05 | 156 | 198 | 78.8% (71% to 83%) | < .05 |
| 4 | Query | 7,922 | 10,293 | 77.0% (76% to 78%) | | 8,988 | 10,678 | 84.2% (83% to 85%) | |
| Total | Manual | 749 | 1,242 | 60.3% (58% to 63%) | < .001 | 584 | 710 | 82.3% (79% to 85%) | .26 |
| Total | Query | 9,287 | 12,960 | 71.7% (71% to 72%) | | 11,086 | 13,221 | 83.9% (83% to 84%) | |

| VALIDATION STATISTIC | INITIAL EHR MEASURE | REVISED EHR MEASURE | P VALUE** |
|---|---|---|---|
| Sensitivity | 92.6% (95% CI, 90.7% to 94.5%) | 99.4% (95% CI, 98.7% to 100%) | < .001 |
| Specificity | 100% (95% CI, 100% to 100%) | 90.2% (95% CI, 85.0% to 95.2%) | < .001 |
| PPV†† | 100% (95% CI, 100% to 100%) | 98.0% (95% CI, 96.5% to 99.0%) | < .001 |
| NPV‡‡ | 100% (95% CI, 100% to 100%) | 97.5% (95% CI, 94.8% to 100%) | < .001 |

\* EHR: Electronic health record.

† Num: Numerator.

‡ Den: Denominator.

§ MS: Measure score.

¶ CI: Confidence interval.

\# Relationship between manual and automated queries calculated with 2-sample test of proportions. Each P value is listed on the Manual row and applies to the Manual and Query pair for that site.

\*\* Relationship of the validation analysis between the adapted and revised measures calculated with 2-sample test of proportions.

†† PPV: Positive predictive value.

‡‡ NPV: Negative predictive value.

Numerator

The EHR automated queries identified a total of 9,287 patients with diabetes who received at least 1 CDT procedure code (D0120, D0150, or D0180) (Table 2).

Automated query implementation in the EHR framework

All sites implemented the query. We included in the appendix (available online at the end of this article) the logic or pseudocode of the EHR script for the implementation of the revised EHR measure to evaluate the quality of dental care among people with diabetes.

Validation of the automated query with manual chart reviews

Step 1: sample size calculations. The minimum number of charts manually reviewed at each site was 282, 184, 286, and 272 for sites 1, 2, 3, and 4, respectively. Although the sample size calculations required a minimum of 282 charts for review, site 1 reviewed 500 charts.

Step 2: chart review process. For the patient charts included in the manual review, the overall measure score was 60.3% and was significantly lower than the automated query measure score of 71.7% (P < .001) (Table 2). The manual review measure scores at each site ranged from 36.4% to 71.3% (Table 2). During the manual chart review at site 3, we noted the use of an in-house procedure code used to document the comprehensive oral evaluation clinical procedure that was not initially included in the automated query. As a result, the automated query score was 52.6%, and the manual score was 67.8% (P < .05). We included the in-house procedure code at site 3 in the revised EHR measure.

Step 3: concordance between the automated queries and manual reviews. Our results showed that the automated query performed well overall, with 93% sensitivity and 100% specificity, positive predictive value, and negative predictive value.

Step 4: measure scores. We calculated the automated and manual query measure scores as the ratio of the numerator divided by the denominator showing the proportion of patients with diabetes who received at least 1 eligible clinical CDT procedure code (D0120, D0150, or D0180). The overall automated query and manual measure scores of the initial EHR measure were 71.7% (95% confidence interval [CI], 71% to 72%) and 60.3% (95% CI, 58% to 63%), respectively. In other words, the manual measure scores revealed that 60.3% of all patients with diabetes received at least 1 clinical CDT procedure code (D0120, D0150, or D0180) within the reporting year across all sites (Table 2).
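A measure score and its 95% CI can be recomputed from the numerator and denominator counts. The sketch below assumes a normal-approximation (Wald) interval, which is an assumption made here because the interval method is not stated; the function name is hypothetical.

```python
import math


def measure_score_with_ci(numerator, denominator, z=1.96):
    """Return (score, lower, upper) using a Wald interval for a proportion."""
    score = numerator / denominator
    half_width = z * math.sqrt(score * (1 - score) / denominator)
    # Clamp to the valid range for a proportion.
    return score, max(0.0, score - half_width), min(1.0, score + half_width)
```

Applied to the overall manual review counts (749 of 1,242), this approximation gives 60.3% with limits that round to 58% and 63%. The much larger automated query denominator (12,960) is what produces its narrower interval.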

Evaluation of patient charts that were not part of the numerator

We developed the revised EHR measure after an in-depth analysis of patient charts that were not part of the numerator in the initial EHR measure. Here is the summary of the modifications to both the denominator and numerator of the revised measure.

Revised EHR measure

Denominator

We applied the same specifications described for the initial EHR measure to this measure with the addition of an exclusion criterion. We excluded the following patients:

Discontinued patients (patients whose treatment was interrupted and who were no longer patients of record). Examples of scenarios that resulted in interruption of treatment include patients who were not compliant with their appointments or patients who developed a severe medical condition and were no longer able to come to the dental office;

Edentulous patients because our research focus was to determine whether patients with diabetes received a periodontal evaluation;

Patients seen for only limited, emergency, urgent, or specialist care.

The automated query identified a total of 13,221 patients with diabetes (Table 2).

Numerator

We describe here the revised and refined inclusion criteria, with the objective of better capturing the overall oral health care patients received (Figure 1):

Comprehensive oral evaluation (D0150), periodic oral evaluation (D0120), or comprehensive periodontal evaluation (D0180), including procedures with a completed or in-progress status and following the specifications of the original DQA and initial EHR measures;

Adult prophylaxis (D1110) or periodontal scaling and root planing for 1 to 3 teeth per quadrant (D4342), periodontal maintenance (D4910), or full-mouth debridement (D4355)—patients with diabetes are at increased risk of developing periodontal disease17; therefore, patients with diabetes who received periodontal treatment in the reporting year had at least 1 annual dental care visit and received the required oral health care equivalent beyond an oral health evaluation;

Periodontal charts, with the goal of clinically charting periodontal conditions to record probing depths and attachment levels at 6 sites per tooth, which is a critical step in completing a comprehensive periodontal examination; therefore, patients with a completed or in-progress periodontal chart had an annual dental care visit with an oral examination, thereby meeting the inclusion criteria of being part of the numerator;

Dental treatment procedures under the planned status and in conjunction with a valid structured diagnosis with use of the Dental Diagnostic System (DDS) terminology26 for documenting a periodontal condition: During the dental care visit and as described in the patient’s dental treatment plan, the patient received a valid DDS periodontal diagnosis, thereby meeting the inclusion criteria of the numerator.

The automated query identified 11,086 patients with diabetes across all 4 sites as having received at least 1 of the clinical procedures described in the revised inclusion criteria (Table 2).
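The revised exclusion and inclusion criteria can be summarized as a pair of predicates. This is a minimal sketch, not the script in the appendix: the `PatientYear` and `ProcedureRecord` structures, their field names, and the status labels are hypothetical. It also assumes that the base denominator criteria (adult, self-reported diabetes, at least 1 CDT code in the reporting year) were already applied and that the periodontal treatment codes count when completed or in progress.

```python
from dataclasses import dataclass, field

EVALUATION_CODES = {"D0120", "D0150", "D0180"}
PERIO_TREATMENT_CODES = {"D1110", "D4342", "D4910", "D4355"}
COUNTED_STATUSES = {"completed", "in_progress"}  # assumed status labels


@dataclass
class ProcedureRecord:
    cdt_code: str
    status: str  # "completed", "in_progress", or "planned"
    has_dds_perio_diagnosis: bool = False


@dataclass
class PatientYear:
    """One patient's data for the reporting year (hypothetical shape)."""
    procedures: list = field(default_factory=list)
    has_perio_chart: bool = False  # completed or in-progress periodontal chart
    discontinued: bool = False
    edentulous: bool = False
    limited_care_only: bool = False  # limited, emergency, urgent, or specialist care only


def in_revised_denominator(patient):
    """Apply the three exclusion criteria of the revised measure."""
    return not (patient.discontinued or patient.edentulous or patient.limited_care_only)


def in_revised_numerator(patient):
    """True if the patient meets any of the four inclusion groups."""
    if not in_revised_denominator(patient):
        return False
    if patient.has_perio_chart:  # group 3
        return True
    for proc in patient.procedures:
        counted = proc.status in COUNTED_STATUSES
        if counted and proc.cdt_code in EVALUATION_CODES:  # group 1
            return True
        if counted and proc.cdt_code in PERIO_TREATMENT_CODES:  # group 2
            return True
        if proc.status == "planned" and proc.has_dds_perio_diagnosis:  # group 4
            return True
    return False
```

Checking the denominator first keeps the numerator a strict subset of the denominator, so the resulting measure score cannot exceed 100%.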

Revised EHR measure automated query implementation

We implemented the automated query in the EHR and validated it with manual chart reviews by using the same methodology as described for the initial EHR measure in the Methods section. The overall revised measure score (calculated as the ratio of the numerator to the denominator) was 83.9% (95% CI, 83% to 84%).

Revised EHR measure: validation of the automated query with manual chart reviews

Sample size calculations. After performing sample size calculations, each site completed a manual review of a subsample of charts. The proportions and minimum number of charts reviewed at each site were 80% and 200 at site 1, 82% and 134 at site 2, 85% and 174 at site 3, and 85% and 198 at site 4. We set margins for error and significance levels at each site at a standard 0.05 (d = 0.05; α = .05). For the first 50 charts reviewed at each site, interrater agreement was at a κ value of 1.0 for sites 1, 2, and 4 and 0.89 for site 3.

Chart review process

The overall measure score for the charts included in the manual reviews was 82.3% (95% CI, 79% to 85%). There were no overall differences between the scores revealed by the automated and manual queries (P = .26) (Table 2); however, at site 4, the manual review score (78.8%) was lower than the automated query score (84.2%) (P < .05). The reason for this significant difference was a change in diabetes status in 4 patients because of weight loss and corrections in the medical history recordings during the reporting year. Because we designed the query to capture the first reported entry of a diabetic condition in the reporting year, we identified the discrepancy in the manual review, resulting in a lower measure score when compared with the automated query score.

The manual review (criterion standard) measure scores at each site ranged from 78.8% to 88.1%. The range of the scores for each site showed less variability in the revised EHR measure than in the initial EHR measure, indicating that for a quality measure to be implemented widely and to mirror clinical care, it is important to consider all relevant codes for the condition under examination so that we can evaluate patient care properly and not underestimate it.

Validation of the automated query

Our results showed that the automated query performed well overall, with 99% sensitivity, 90% specificity, 98% positive predictive value, and 98% negative predictive value.

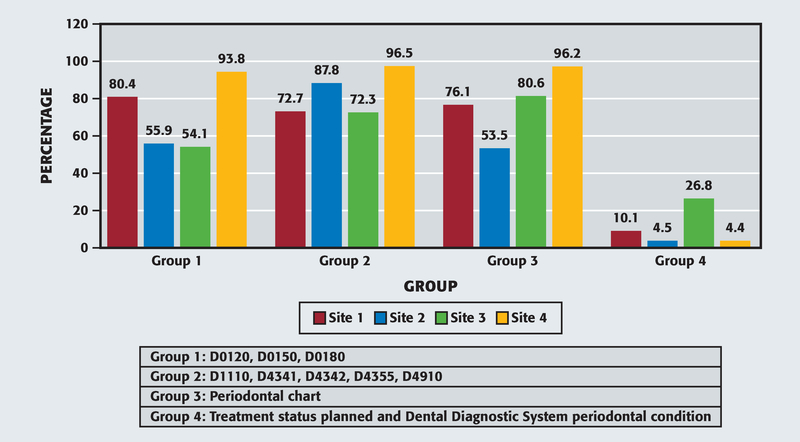

Numerator composition of the revised EHR measure

We grouped the CDT codes included in the revised EHR measure into 4 categories to evaluate the contribution of each code in the numerator composition (Figure 2). We calculated the percentages shown in Figure 2 from the automated query numerators included in Table 2 and included procedure code overlaps. Thus, a patient who received a procedure code included in group 1 also could have received 1 of the codes from group 2 and could be included in both groups. For example, at site 1, if the DQM included only patients in the group 1 category (D0120, D0150, or D0180), the measure would capture 80.4% of the patients but would miss 19.6% of the patients included in groups 2, 3, or 4. However, at site 3, the group 1 category included only 54.1%, suggesting possible variations in clinical procedures and patient population. Groups 2 and 3 showed the highest percentages of patients, highlighting the relevance of including periodontal treatment-related procedures as part of an oral health evaluation provided to people with diabetes. Lastly, group 4 exhibited the lowest percentages, but as the adoption of dental diagnostic terminologies continues in dentistry, DQM evaluation will benefit from diagnosis data and be an integral component of quality measurement.

Figure 2.

Numerator composition of the revised electronic health record measure. Percentages calculated from the automated query numerator are displayed in Table 2. Numbers in each group category include overlaps because patients may have received multiple Code on Dental Procedures and Nomenclature codes in the reporting year.
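Because patients can fall into more than 1 group, the group percentages are not expected to sum to 100%. A minimal sketch of the calculation follows, assuming a hypothetical mapping from each numerator patient to the set of group numbers (1 through 4) he or she met.

```python
def group_shares(patient_groups):
    """Share of numerator patients in each group, with overlaps allowed.

    patient_groups: dict of patient_id -> set of group numbers (1 to 4).
    Returns (shares, missed_by_group_1), where missed_by_group_1 is the
    share of numerator patients captured only by groups 2, 3, or 4.
    """
    total = len(patient_groups)
    if total == 0:
        raise ValueError("numerator is empty")
    shares = {
        group: sum(group in groups for groups in patient_groups.values()) / total
        for group in (1, 2, 3, 4)
    }
    missed_by_group_1 = sum(1 not in groups for groups in patient_groups.values()) / total
    return shares, missed_by_group_1
```

The second return value corresponds to the patients a measure limited to D0120, D0150, and D0180 would leave out of the numerator.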

DISCUSSION

In our study, we adapted, tested, evaluated, and developed an improved EHR-based DQM to determine whether patients with diabetes received an annual oral or periodontal evaluation. We refined the specifications of a measure originally proposed by the DQA and constructed a revised EHR measure that better uses available data from EHRs. A key aspect in quality measure research is the appropriate definition of both the denominator and numerator populations.12 In our initial EHR measure, the denominator included all patients, including those who received only one-time emergency care or those who were discontinued and were not eligible to undergo the procedures listed in the numerator of the initial EHR measure. For example, at our institutions, a comprehensive oral evaluation (D0150) is provided only as part of continuous dental care, not emergency care. We also examined the definition of the numerator population. On examination of the reasons why patients from the denominator were not included in the numerator, we found that including only 3 CDT procedure codes (D0120, D0150, or D0180) failed to account for appropriate dental care delivered and resulted in an underestimation of the treatment patients received. Overly simplistic and loosely defined measures can make clinical evaluation challenging and compromise scientific evidence, generalizability, and interpretation of results.27

The revised EHR measure yielded higher and more consistent scores across all 4 sites than did the initial EHR measure. Overall, nearly 83% of patients with diabetes included in the denominator underwent at least 1 dental procedure as specified in the numerator inclusion criteria. Despite established evidence that dental care visits are an endorsed preventive strategy, data from the National Health Interview Survey indicated that nearly one-half of people with diabetes failed to undergo a dental examination.15 Two main reasons may help explain this large difference. One is that the number of dental procedures included in the revised EHR measure is not limited to annual dental care visits. The other is that the population in our study included patients of record at our dental institutions with at least 1 dental procedure completed in the reporting year. Therefore, it is possible that the population in our study was more likely to seek dental care when compared with the general population of patients with diabetes.

Despite the established benefits of using clinical data, challenges and limitations remain in using EHR data to assess the quality of care received by people with diabetes. First, we identified patients with diabetes on the basis of self-reported information available in the medical history forms with no stratification for diabetes type or severity. In our clinics, patients answer the question of whether they have diabetes at the beginning of dental treatment, and this information undergoes revisions at specific time points during the patient’s clinical treatment (for example, every 6 months as part of the periodic oral examination). Schneider and colleagues28 evaluated the validity and reliability of diabetes as a self-reported condition as part of a large epidemiologic study and concluded that self-reported diabetes is a reliable report (> 90%). Validation of self-reported medical conditions will improve as EHRs continue to evolve and integrated medical and dental clinical information becomes available.29

Second, we acknowledge the possibility of underestimating the number of patients with diabetes because some patients may have undiagnosed diabetes or a prediabetic condition. Approximately 86 million Americans are estimated to have a prediabetic condition, and nearly 90% are not aware they have the condition.30 Lalla and colleagues31 assessed selected oral findings and evaluated the performance of prediction models, and they concluded that dentists play a major role in screening patients for prediabetes and undiagnosed diabetic conditions.

Third, we excluded edentulous patients from our revised measure specification because the focus of our research was to determine whether patients with diabetes received a periodontal evaluation. We therefore focused on periodontal-related assessments and treatments. Patients who received a comprehensive evaluation or periodic oral evaluation also underwent a periodontal evaluation. However, in future quality measure research, there may be value in also including edentulous patients with diabetes who receive other types of oral evaluations.

Lastly, patients who initiated continuous treatment in the last few months of the reporting year had a shorter time to undergo an oral examination. For example, if the patient initiated dental treatment at our institution on December 1, he or she may not have had the chance to complete the oral examination in the reporting year. We also discovered that some patients’ charts were not coded with the appropriate CDT code or that the dental service was documented only in the free text notes and could not be identified by means of an automated query. In future work, we will investigate the usefulness of natural language processing to detect information in clinical notes.
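As a rough illustration of the kind of free-text screening mentioned above, the following Python sketch flags CDT procedure codes that appear in a clinical note. It is a simple regular-expression pass, which falls well short of natural language processing and is not a method used in this study. The function name `find_cdt_codes` and the sample note are hypothetical; the only assumption about CDT is that a procedure code is the letter D followed by 4 digits.

```python
import re

# A CDT procedure code is the letter "D" followed by four digits (e.g., D0120).
# Matching is case-insensitive because free-text notes are typed inconsistently.
CDT_PATTERN = re.compile(r"\bD\d{4}\b", re.IGNORECASE)


def find_cdt_codes(note):
    """Return the distinct CDT-style codes mentioned in a free-text note, sorted."""
    return sorted({match.upper() for match in CDT_PATTERN.findall(note)})


# Hypothetical note text, for illustration only.
sample_note = "Pt presents for recall. Completed d0120 and D1110; perio charting updated."
codes_found = find_cdt_codes(sample_note)
print(codes_found)
```

A pass like this could only recover services whose codes were typed into the note; services described in narrative form without a code would still require more capable text processing.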

We conducted our study at sites using the same EHR (axiUm). However, the measure specifications and methodology we described in this article may be used in institutions and clinics with other EHRs, especially if they are set up to use standardized codes (for treatments and diagnoses) and structured data to determine a patient’s diabetes status. As discussed in several reports on quality measures and dental terminology,5,12,22 data granularity and detailed information that can link dental procedures to disease conditions not only are needed for validating information in the EHR but also will be essential as quality measures increase in number and complexity. Standardized documentation of diagnoses has begun in dentistry. The DDS,32 formerly called EZCodes22 and renamed SNO-DDS (Dental Diagnostic System) to reflect the harmonization with the Systematized Nomenclature of Dentistry,33 is a comprehensive dental diagnostic terminology for use in EHR user interfaces and was designed for the field of dentistry; it is publicly available and licensed through the American Dental Association. The sites participating in this study are early adopters of the DDS terminology and have been using it routinely since 2012. Our revised EHR measure leveraged the DDS terminology when we included patients with a periodontal diagnosis in the numerator, demonstrating the value of and need for including diagnosis information in clinical quality measure research.

In our research, we focused on evaluating a process-of-care measure (that is, did patients with diabetes receive an oral evaluation?) rather than assessing a health outcome (that is, did patients with diabetes improve or maintain their oral health?).34 Although process-of-care measures can act as surrogates for outcomes,14 in future work we will be expanding our focus to assessing outcome measures by using EHR data. In recognition of the benefits of using EHR data, the DQA detailed the specifications for 6 pediatric electronic measures.4 EHR data also will be critical in developing new measures focused on health outcomes such as those related to dental caries and periodontal disease.

We addressed our 3 research objectives across 4 geographically diverse dental institutions. First, we demonstrated the feasibility of adapting a quality measure originally designed for administrative claims data and developing its specifications for use in the EHR framework. Second, we determined the validity of the DQM in terms of measuring what it was intended to measure. Third, we developed and tested a refined EHR-based DQM. In future work, we will continue to develop and validate additional DQMs (process and outcomes of care) and, inspired by the learning health system35 movement, build a consortium of dental institutions willing to share data, knowledge, and expertise with the ultimate goal of improving patient oral and general health.
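To make the validity assessment concrete, the Python sketch below shows one way that sensitivity, specificity, positive predictive value, and negative predictive value can be computed when automated query results are compared with manual chart review as the reference standard. The function name `validity_metrics` and the 10 paired flags are hypothetical and are not data from this study.

```python
def validity_metrics(query_flags, chart_flags):
    """Compare automated query flags with manual chart review (reference standard).

    Both arguments are equal-length sequences of 0/1 values, one pair per patient.
    Assumes each of the four denominators below is nonzero.
    """
    pairs = list(zip(query_flags, chart_flags))
    tp = sum(1 for q, c in pairs if q and c)          # query yes, chart yes
    fp = sum(1 for q, c in pairs if q and not c)      # query yes, chart no
    fn = sum(1 for q, c in pairs if not q and c)      # query no, chart yes
    tn = sum(1 for q, c in pairs if not q and not c)  # query no, chart no
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical flags for 10 reviewed charts (1 = counted in the numerator).
query_result = [1, 1, 1, 1, 0, 0, 0, 1, 0, 0]
chart_review = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
metrics = validity_metrics(query_result, chart_review)
print(metrics)
```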

CONCLUSIONS

EHRs contain rich patient-level data suitable for assessing dental care quality. Our results suggest that an automated EHR-based query can be used successfully to measure the quality of oral health care delivered to patients with diabetes. We also found that using the rich data available in EHRs may help estimate the quality of care better than can relying on claims data.

Acknowledgments

Research reported in this publication was supported in part by award R01DE024166 from the National Institute of Dental and Craniofacial Research, National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

ABBREVIATION KEY.

- CDT: Code on Dental Procedures and Nomenclature
- DDS: Dental Diagnostic System
- Den: Denominator
- DQA: Dental Quality Alliance
- DQM: Dental quality measure
- EHR: Electronic health record
- MS: Measure score
- NPV: Negative predictive value
- Num: Numerator
- PPV: Positive predictive value

Appendix

Logic of the electronic health record script for the implementation of the revised electronic health record measure to evaluate quality of dental care among patients with diabetes.

select row_id, chart#, age, gender, race, ethnicity, diabetes(y/n), part of numerator?(y/n) from

(

(select all patients who are reported to be diabetic as of the last day of the reporting year)

inner join

( select all patients who had at least one visit in the reporting year. exclude patients who had only emergency or specialist care visits in the reporting year )

on patient_id

inner join

( select patients with age >= 18 as of 1st day of reporting year)

on patient_id

inner join

( select patient demographics)

on patient_id

inner join

( select patient statuses and include patients active as of the last day of the reporting year)

on patient_id

inner join

( select patients who were not edentulous as of the last day of the reporting year)

on patient_id

left join

( select patients who satisfied at least one of the numerator criteria (all in the reporting year):

a. received D0120 or D0140

b. received D1110 or D4341 or D4342 or D4910

c. had a completed perio exam or

d. had one of the following diagnoses: periodontal health; gingival diseases - plaque induced; gingival diseases - nonplaque induced; chronic periodontitis; aggressive periodontitis; periodontitis as a manifestation of systemic diseases; necrotizing periodontal diseases; abscesses of the periodontium; developed and acquired deformities and conditions

) on patient_id

)
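The join logic above can be sketched as a runnable query. The following Python example uses an in-memory SQLite database and is an illustration only, not the script used at the study sites: the table names, column names, visit types, and all 7 patient rows are hypothetical, and a real EHR schema such as axiUm’s will differ. The CDT codes are those listed in criteria a and b of the appendix, and D2140 is included only as an example of a procedure outside the numerator list.

```python
import sqlite3

# CDT codes from numerator criteria a and b of the appendix.
NUMERATOR_CDT_CODES = ("D0120", "D0140", "D1110", "D4341", "D4342", "D4910")


def build_demo_db():
    """Create a tiny, hypothetical schema with made-up rows for the reporting year."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE patients (patient_id INTEGER PRIMARY KEY, age_at_year_start INTEGER,
                               diabetic INTEGER, active INTEGER, edentulous INTEGER);
        CREATE TABLE visits (patient_id INTEGER, visit_type TEXT);
        CREATE TABLE procedures (patient_id INTEGER, cdt_code TEXT);
        CREATE TABLE perio_exams (patient_id INTEGER);
        CREATE TABLE perio_diagnoses (patient_id INTEGER, term TEXT);
    """)
    conn.executemany("INSERT INTO patients VALUES (?, ?, ?, ?, ?)", [
        (1, 55, 1, 1, 0),  # eligible, has oral evaluation
        (2, 62, 1, 1, 0),  # eligible, no numerator event
        (3, 47, 1, 1, 0),  # only an emergency visit: excluded
        (4, 70, 1, 1, 1),  # edentulous: excluded
        (5, 16, 1, 1, 0),  # younger than 18: excluded
        (6, 58, 1, 1, 0),  # eligible, periodontal diagnosis only
        (7, 50, 0, 1, 0),  # no diabetes reported: excluded
    ])
    conn.executemany("INSERT INTO visits VALUES (?, ?)", [
        (1, "routine"), (2, "routine"), (3, "emergency"), (4, "routine"),
        (5, "routine"), (6, "routine"), (6, "emergency"), (7, "routine"),
    ])
    conn.executemany("INSERT INTO procedures VALUES (?, ?)",
                     [(1, "D0120"), (2, "D2140"), (3, "D0140")])
    conn.execute("INSERT INTO perio_exams VALUES (1)")
    conn.execute("INSERT INTO perio_diagnoses VALUES (6, 'chronic periodontitis')")
    return conn


def revised_measure(conn):
    """Return (patient_id, 'y'/'n') for each denominator patient, mirroring the appendix joins."""
    placeholders = ", ".join("?" for _ in NUMERATOR_CDT_CODES)
    query = f"""
        SELECT p.patient_id,
               CASE WHEN n.patient_id IS NULL THEN 'n' ELSE 'y' END AS in_numerator
        FROM patients p
        INNER JOIN (  -- at least one visit that is not emergency or specialist care
            SELECT DISTINCT patient_id FROM visits
            WHERE visit_type NOT IN ('emergency', 'specialist')
        ) v ON v.patient_id = p.patient_id
        LEFT JOIN (   -- any numerator criterion; UNION removes duplicate patients
            SELECT patient_id FROM procedures WHERE cdt_code IN ({placeholders})
            UNION SELECT patient_id FROM perio_exams
            UNION SELECT patient_id FROM perio_diagnoses
        ) n ON n.patient_id = p.patient_id
        WHERE p.diabetic = 1 AND p.age_at_year_start >= 18
          AND p.active = 1 AND p.edentulous = 0
        ORDER BY p.patient_id
    """
    return conn.execute(query, NUMERATOR_CDT_CODES).fetchall()


rows = revised_measure(build_demo_db())
denominator = len(rows)
numerator = sum(1 for _, flag in rows if flag == "y")
print(rows)
print(f"measure score = {numerator}/{denominator} = {100 * numerator / denominator:.1f}%")
```

In this sketch the inner joins and the filter conditions define the denominator, and the left join keeps denominator patients who meet no numerator criterion so that they are reported with a flag of "n" rather than dropped. The measure score is the share of denominator patients flagged "y".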

Footnotes

SUPPLEMENTAL DATA

Supplemental data related to this article can be found at: http://dx.doi.org/10.1016/j.adaj.2017.04.017.

Disclosure. None of the authors reported any disclosures.

Contributor Information

Ana Neumann, Dr. Neumann is an associate professor, General Practice and Dental Public Health, School of Dentistry, University of Texas Health Science Center at Houston, Houston, TX.

Elsbeth Kalenderian, Dr. Kalenderian is a professor and the chair, Preventive and Restorative Dental Sciences, School of Dentistry, University of California San Francisco, San Francisco, CA.

Rachel Ramoni, Dr. Ramoni is an assistant professor, Department of Epidemiology and Health Promotion, New York University College of Dentistry, New York, NY.

Alfa Yansane, Dr. Yansane is an assistant adjunct professor, School of Dentistry, University of California San Francisco, San Francisco, CA.

Bunmi Tokede, Dr. Tokede is an assistant professor, Oral Health Policy and Epidemiology, Harvard School of Dental Medicine, Boston, MA.

Jini Etolue, Dr. Etolue is a postdoctoral fellow, Oral Health Policy and Epidemiology, Harvard School of Dental Medicine, Boston, MA.

Ram Vaderhobli, Dr. Vaderhobli is an associate professor, Preventive and Restorative Dental Sciences, School of Dentistry, University of California San Francisco, San Francisco, CA.

Kristen Simmons, Ms. Simmons is a chief operating officer, Willamette Dental Group, Hillsboro, OR.

Joshua Even, Dr. Even is a dentist, Willamette Dental Group, Hillsboro, OR.

Joanna Mullins, Ms. Mullins is a manager of clinical strategy and support, Willamette Dental Group, Hillsboro, OR.

Shwetha Kumar, Dr. Kumar is a graduate research assistant, School of Dentistry, University of Texas Health Science Center at Houston, Houston, TX.

Suhasini Bangar, Dr. Bangar is a graduate research assistant, School of Dentistry, University of Texas Health Science Center at Houston, Houston, TX.

Krishna Kookal, Mr. Kookal is a clinical informatics research datawarehouse systems analyst, School of Dentistry, University of Texas Health Science Center at Houston, Houston, TX.

Joel White, Dr. White is a professor, Preventive and Restorative Dental Sciences, School of Dentistry, University of California San Francisco, San Francisco, CA.

Muhammad Walji, Dr. Walji is a professor, School of Dentistry, University of Texas Health Science Center at Houston, 7500 Cambridge, SOD 4184, Houston, TX 77054, Muhammad.F.Walji@uth.tmc.edu.

References

1. Institute of Medicine Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

2. Dental Quality Alliance. Quality Measurement in Dentistry: A Guidebook. Chicago, IL: American Dental Association on behalf of the Dental Quality Alliance; 2016:56.

3. Dental Quality Alliance. DQA - Measures under consideration. Available at: http://www.ada.org/~/media/ADA/Science%20and%20Research/Files/DQA_2016_Measures_Under_Consideration.pdf?la=en. Accessed May 11, 2017.

4. Dental Quality Alliance. Pediatric Oral Healthcare Exploring the Feasibility for E-Measures. Chicago, IL: American Dental Association on behalf of the Dental Quality Alliance; 2012:31.

5. Herndon JB, Tomar SL, Catalanotto FA, et al. Measuring quality of dental care: caries prevention services for children. JADA. 2015;146(8):581–591.

6. Kenney GM, Pelletier JE. Monitoring duration of coverage in Medicaid and CHIP to assess program performance and quality. Acad Pediatr. 2011;11(3 suppl):S34–S41.

7. Mangione-Smith R, Schiff J, Dougherty D. Identifying children’s health care quality measures for Medicaid and CHIP: an evidence-informed, publicly transparent expert process. Acad Pediatr. 2011;11(3 suppl):S11–S21.

8. Tang PC, Ralston M, Arrigotti MF, Qureshi L, Graham J. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. J Am Med Inform Assoc. 2007;14(1):10–15.

9. Centers for Medicare & Medicaid Services. Quality measures. Available at: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityMeasures/index.html?redirect=/qualitymeasures/03_electronicspecifications.asp. Accessed May 11, 2017.

10. American Dental Association Survey Center. 2010 Technology and Social Media Survey. Chicago, IL: American Dental Association; 2012.

11. Walji MF, Kalenderian E, Tran D, et al. Detection and characterization of usability problems in structured data entry interfaces in dentistry. Int J Med Inform. 2013;82(2):128–138.

12. Bhardwaj A, Ramoni R, Kalenderian E, et al. Measuring up: implementing a dental quality measure in the electronic health record context. JADA. 2016;147(1):35–40.

13. Centers for Disease Control and Prevention. National Diabetes Statistics Report: Estimates of Diabetes and Its Burden in the United States. Atlanta, GA: U.S. Department of Health and Human Services Office of Disease Prevention and Health Promotion; 2014.

14. O’Connor PJ, Bodkin NL, Fradkin J, et al. Diabetes performance measures: current status and future directions. Diabetes Care. 2011;34(7):1651–1659.

15. National Health Interview Survey (NHIS); Centers for Disease Control and Prevention, National Center for Health Statistics (CDC/NCHS). Annual dental examinations among persons with diagnosed diabetes (age adjusted, percent, 2+ years) by total. Available at: https://www.healthypeople.gov/2020/data/Chart/4127?category=1&by=Total&fips=-1. Accessed May 15, 2017.

16. American Dental Association. Dentist highlights dentistry’s key role in identifying diabetes. Available at: http://www.ada.org/en/publications/ada-news/2015-archive/august/dentist-highlights-importance-of-dental-role-in-identifying-diabetes. Accessed March 28, 2016.

17. Furnari W, Gillis M, Novak K, Taylor G. In: Centers for Disease Control and Prevention, ed. What Dental Professionals Would Like Team Members to Know About Oral Health and Diabetes. Atlanta, GA: National Center for Chronic Disease Prevention and Health Promotion Division of Diabetes Translation.

18. National Center for Health Statistics. Health, United States, 2015: With Special Feature on Racial and Ethnic Health Disparities. Hyattsville, MD: U.S. Government; 2016.

19. Walji MF, Kalenderian E, Stark PC, et al. BigMouth: a multi-institutional dental data repository. J Am Med Inform Assoc. 2014;21(6):1136–1140.

20. Ramoni RB, Etolue J, Tokede O, et al. Adoption of dental innovations: the case of a standardized dental diagnostic terminology. JADA. 2017;148(5):319–327.

21. Ramoni RB, Walji MF, Kim S, et al. Attitudes toward and beliefs about the use of a dental diagnostic terminology: a survey of dental care providers in a dental practice. JADA. 2015;146(6):390–397.

22. Kalenderian E, Ramoni RL, White JM, et al. The development of a dental diagnostic terminology. J Dent Educ. 2011;75(1):68–76.

23. Stark PC, Kalenderian E, White JM, et al. Consortium for oral health-related informatics: improving dental research, education, and treatment. J Dent Educ. 2010;74(10):1051–1065.

24. American Dental Association. CDT 2017: Dental Procedure Codes. 7th ed. Chicago, IL: American Dental Association; 2016:180.

25. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20(1):37–46.

26. Kalenderian E, Tokede B, Ramoni R, et al. Dental clinical research: an illustration of the value of standardized diagnostic terms. J Public Health Dent. 2016;76(2):152–156.

27. McGlynn EA. Choosing and evaluating clinical performance measures. Jt Comm J Qual Improv. 1998;24(9):470–479.

28. Schneider AL, Pankow JS, Heiss G, Selvin E. Validity and reliability of self-reported diabetes in the Atherosclerosis Risk in Communities Study. Am J Epidemiol. 2012;176(8):738–743.

29. Rudman W, Hart-Hester S, Jones W, Caputo N, Madison M. Integrating medical and dental records: a new frontier in health information management. J AHIMA. 2010;81(10):36–39.

30. Centers for Disease Control and Prevention. Chronic disease prevention and health promotion: diabetes—working to reverse the US epidemic: at a glance 2016. Available at: https://www.cdc.gov/chronicdisease/resources/publications/aag/diabetes.htm. Accessed April 26, 2017.

31. Lalla E, Cheng B, Kunzel C, Burkett S, Lamster IB. Dental findings and identification of undiagnosed hyperglycemia. J Dent Res. 2013;92(10):888–892.

32. President and Fellows of Harvard College and the Board of Regents of the University of Texas System and Academic Centre for Dentistry Amsterdam and Regents of the University of California. SNODDS Dental Diagnostic System. Available at: http://dentaldiagnosticterminology.org. Accessed June 20, 2016.

33. American Dental Association. SNODENT: Systemized Nomenclature of Dentistry. Available at: http://www.ada.org/en/member-center/member-benefits/practice-resources/dental-informatics/snodent. Accessed June 20, 2016.

34. Rubin HR, Pronovost P, Diette GB. The advantages and disadvantages of process-based measures of health care quality. Int J Qual Health Care. 2001;13(6):469–474.

35. Friedman CP, Wong AK, Blumenthal D. Achieving a nationwide learning health system. Sci Transl Med. 2010;2(57):57cm29.