Abstract

There is accumulating evidence that the entorhinal-hippocampal network is important for temporal memory. However, relatively little is known about the precise neurobiological mechanisms underlying memory for time. In particular, whether the lateral entorhinal cortex is involved in temporal processing remains an open question. During high-resolution fMRI scanning, participants watched a ~30-minute episode of a television show. At test, they viewed still-frames and indicated on a continuous timeline the precise time at which each still-frame had been viewed during study. This procedure allowed us to measure error in seconds for each trial. We analyzed fMRI data from retrieval and found that high temporal precision was associated with increased BOLD fMRI activity in the anterolateral entorhinal (a homologue of the lateral entorhinal cortex in rodents) and perirhinal cortices, but not in the posteromedial entorhinal and parahippocampal cortices. This suggests a novel role for the lateral entorhinal cortex in the processing of high-precision, minute-scale temporal memories.

Summary:

In the lateral entorhinal cortex, high-precision timing judgments were associated with greater BOLD fMRI activity than low-precision timing judgments. This brain region may be involved in memory for when events occur.

Introduction

The association of temporal and spatial contextual information with an experience is a critical component of episodic memory1-3. A rich literature has examined how spatial properties are encoded by hippocampal-entorhinal circuitry, including spatially selective cells both in the hippocampus4 and in the medial entorhinal cortex (MEC)5-7. Temporal coding properties in the same network have only recently been examined. The discovery of “time cells” in hippocampal CA1 and MEC8-11 suggests that the medial temporal lobes (MTL) may employ similar mechanisms and shared circuitry to encode both space and time10,12,13. In contrast to the MEC, the lateral entorhinal cortex (LEC) appears to code for several elements of the sensory experience14, including item information15 and the locations of objects in space14. Human fMRI studies have similarly shown that the LEC is preferentially selective for object identity information (i.e. “what”), whereas the MEC is preferentially selective for spatial locations (i.e. “where”)16,17. Whether the LEC provides temporal information to the hippocampus, or receives it from the hippocampus, for integration into episodic representations remains an open question. While the temporal coding properties of “time cells” offer a suitable mechanism by which short timescales (milliseconds to seconds) may be encoded, it is not clear how these mechanisms could encode the longer timescale of episodes (minutes). Additionally, episodic memory involves unique “one-shot” encoding that is incidental in nature, whereas most studies assessing temporal coding properties involve explicit tasks and/or extensive training (e.g. sequence learning). We address both of these challenges by using a 30-minute incidental viewing paradigm with a complex naturalistic stimulus (an episode of a television sitcom) and a continuous measure of the precision of subsequent temporal memory judgments (on the order of seconds to minutes).
Here, we demonstrate that the LEC plays a prominent role in temporal processing in a task involving a timescale of minutes. These results suggest that there may be multiple distinct mechanisms supporting temporal memory in the MTL and that timescale may be a critical variable that should be considered in future work.

Results

Temporal judgments generate a range of accuracies between 1–3 minutes

During fMRI scanning, subjects watched a ~30-minute episode of a television sitcom (Curb Your Enthusiasm, HBO) and were later asked to indicate, on a continuous timeline, when still-frames extracted from the episode had appeared during incidental viewing (Fig 1). All analyses reported here were performed on retrieval data. To ensure that subjects were able to accomplish the task and that behavioral performance reflected a range of accuracies, we quantified the error in seconds on each trial. Average error was 155.54 seconds (2.6 minutes), with a standard deviation of 163.58 seconds (Fig 2a).
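A minimal sketch of the per-trial error measure, assuming that each response and each true appearance time are both expressed in seconds from episode onset. The function name `trial_errors` and all values are hypothetical illustrations, not the authors' code or data.

```python
from statistics import mean, stdev

def trial_errors(responses_s, actual_s):
    """Absolute temporal error (seconds) for each retrieval trial.

    responses_s: where the participant placed each still-frame on the timeline (s)
    actual_s: when that still-frame actually appeared in the episode (s)
    """
    if len(responses_s) != len(actual_s):
        raise ValueError("one response per trial is required")
    return [abs(r - a) for r, a in zip(responses_s, actual_s)]

# Hypothetical trials (made-up numbers, not study data)
responses = [300.0, 910.0, 1200.0, 450.0]
actual = [330.0, 700.0, 1180.0, 650.0]
errors = trial_errors(responses, actual)
print(errors)         # [30.0, 210.0, 20.0, 200.0]
print(mean(errors))   # 115.0
print(stdev(errors))  # sample standard deviation across trials
```

Taking the absolute value matches the accuracy measure used for the chance comparison (absolute trial-by-trial error in seconds).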

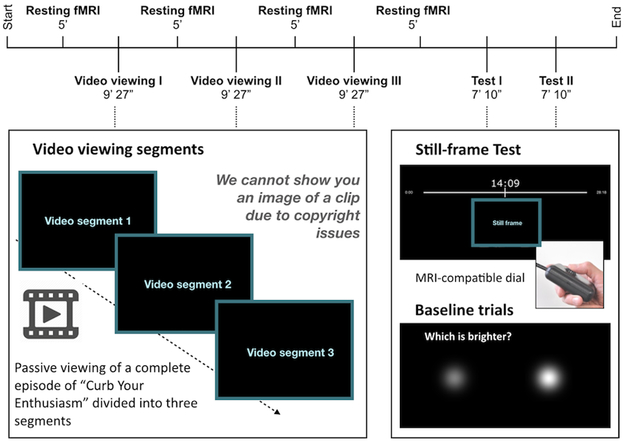

Fig 1. Task parameters and description.

During encoding, participants passively viewed a ~28-minute television episode of “Curb Your Enthusiasm”, separated into three scans of 9 minutes and 27 seconds each. A 5-minute resting state fMRI scan took place before and after each scan, for a total encoding and storage time of ~45 minutes. The subsequent test phase was divided into two scans of 7 minutes and 10 seconds each. During test, participants were shown still frames that appeared during the episode and were asked to indicate on a timeline, using an MR-compatible dial, when the event in question occurred. Each test trial lasted 9 seconds to allow participants to home in on the temporal frame and to enable more comprehensive indexing of temporal signals during retrieval. Perceptual baseline trials were also included, in which participants were asked to indicate which of two circles on the screen was brighter.

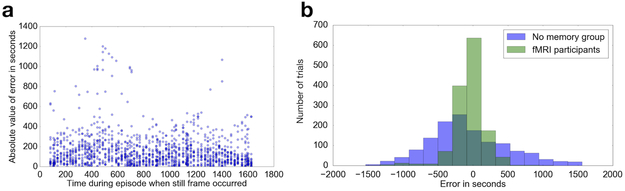

Fig 2. Behavioral performance.

Error was calculated for each trial as the time (in seconds) between the subject’s placement and the actual time of appearance. (a) The average error across all subjects was 153.3 seconds. Still frames shown at retrieval were taken from the middle 1545 seconds of the episode to avoid primacy and recency effects. (b) Behavioral performance compared to chance. fMRI participants had significantly lower error than a separate group of participants who performed the same task but had never seen the episode (n=19 participants, two-tailed Kolmogorov-Smirnov D = 0.4991, p < 0.001).

For each subject, we divided retrieval trials into thirds: “high precision”, “medium precision” and “low precision” trials. Across subjects, “high precision” trials were associated with error < 74 seconds and “low precision” trials with error > 170 seconds, so the differences in time were not drastic: the comparison is akin to examining the difference between being accurate within one minute vs. within three minutes. Trials with error exceeding five minutes were rare across all subjects and did not contribute significantly. Additionally, we verified that all participants attended to the episode by evaluating their semantic knowledge of it with a post-scan true-false test; average accuracy was 96%.
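The per-subject split into thirds can be sketched as below. This is an illustrative sketch rather than the authors' implementation: the function name `split_into_thirds` is hypothetical, and the rule for assigning leftover trials when the count is not divisible by three is our assumption, since the text does not specify it.

```python
def split_into_thirds(errors):
    """Rank one subject's trials by error and split them into three bins.

    Returns a dict mapping condition name -> list of trial indices.
    When the trial count is not divisible by 3, the extra trials go to the
    earlier bins (an arbitrary choice made for this sketch).
    """
    order = sorted(range(len(errors)), key=lambda i: errors[i])  # smallest error first
    base, extra = divmod(len(order), 3)
    sizes = [base + (1 if k < extra else 0) for k in range(3)]
    bins, start = {}, 0
    for name, size in zip(("high", "medium", "low"), sizes):
        bins[name] = order[start:start + size]
        start += size
    return bins

# Hypothetical absolute errors (s) for six trials of one subject
errors = [30.0, 210.0, 20.0, 200.0, 95.0, 150.0]
print(split_into_thirds(errors))
# {'high': [2, 0], 'medium': [4, 5], 'low': [3, 1]}
```

Because the split is done within each subject, the error cut-offs separating the bins differ across participants; the values of 74 and 170 seconds reported above describe the pattern across subjects.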

To further determine whether this level of accuracy could be driven by response biases (a preference for specific portions of the timeline) or other factors unrelated to temporal memory, we conducted a separate control experiment in an independent sample. Subjects in this experiment did not watch the episode but were still asked to place the still-frames on the timeline. Because they had no memory of the episode, their performance provided an estimate of the chance distribution. We compared the distribution of accuracy (absolute value of the trial-by-trial error in seconds) in the experimental fMRI sample and in the control sample using a nonparametric two-tailed Kolmogorov-Smirnov test. The two distributions differed significantly (K-S D=0.4991, p<0.0001; Fig 2b), confirming that performance in the fMRI participants did not merely reflect behavioral biases related to assessment via the continuous timeline. A one-way repeated measures ANOVA comparing trials at short (2–107 seconds), medium (108–186 seconds) and long (200–277 seconds) distances from a segment boundary was not statistically significant [F(2,18)= 3.29, p>0.05], indicating that error did not differ significantly based on a trial’s distance from a segment boundary (Supplementary Fig S1). Additionally, we found no evidence for regional modulation by the vividness of recall. We asked twelve participants to provide vividness ratings after the scanner-based recall and compared high vs. low vividness trials; no differences surpassed our cluster-based threshold of p < 0.05 (Supplementary Fig S2).
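The boundary analysis was run in GraphPad Prism. As a hedged illustration of what a one-way repeated-measures ANOVA computes, the stdlib sketch below partitions a subjects × conditions table into condition, subject, and residual sums of squares. The function name and the toy data are hypothetical; no sphericity correction is applied, and a p-value would come from the upper tail of the F distribution (for example `scipy.stats.f.sf(F, df1, df2)`).

```python
from statistics import mean

def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA F statistic.

    data: list of rows, one per subject; each row holds that subject's mean
    error in each condition (here: short, medium, long distance from a boundary).
    Returns (F, df_conditions, df_error). No sphericity correction is applied.
    """
    n, k = len(data), len(data[0])
    grand = mean(x for row in data for x in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    # Between-subject variability is removed from the error term
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error

# Hypothetical mean errors for 3 subjects x 3 distance bins (not study data)
errors = [[1, 2, 3],
          [2, 3, 5],
          [3, 4, 4]]
print(rm_anova_oneway(errors))  # F ≈ 9.0 with df = (2, 4)
```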

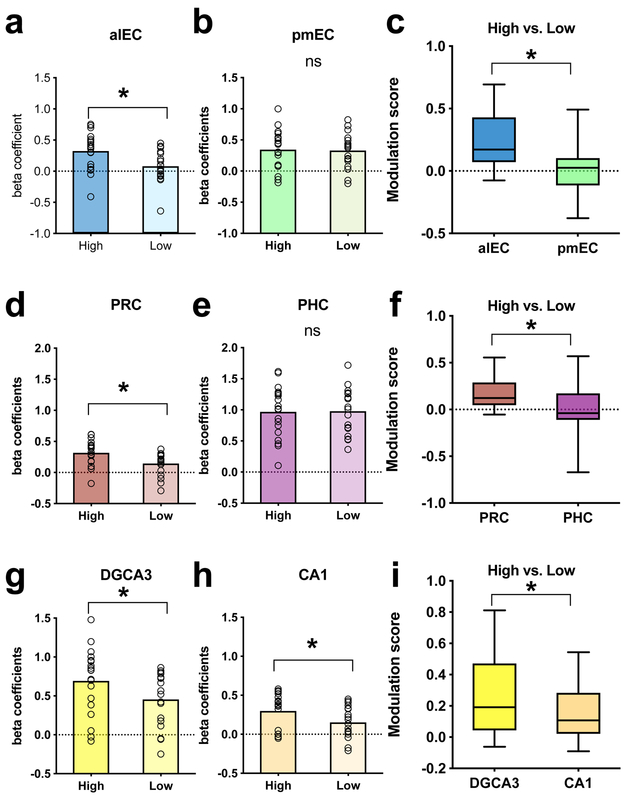

Anterolateral but not posteromedial EC is selectively engaged for precise temporal memory

Recent work using fMRI functional connectivity has clarified the boundaries of the LEC and MEC in the human brain and demonstrated that, consistent with nonhuman primate anatomical studies18, the human analog of rodent LEC is anterolateral (alEC), whereas the human analog of rodent MEC is posteromedial (pmEC)19,20. We used anatomical masks for alEC and pmEC to contrast their level of engagement as a function of temporal precision. Contrasting high vs. low precision trials allowed us to examine the sensitivity of MTL regions to the temporal accuracy of recall. Voxel beta coefficients were averaged within each region of interest as an overall indicator of the degree of model fit with the underlying hemodynamic signal. We found significant temporal precision-related modulation in the alEC (t=4.537, df=18, two-tailed p=0.0003, Cohen’s d=0.8808, Fig 3a) but not in the pmEC (t=0.3504, df=18, two-tailed p=0.7301, Fig 3b). To determine whether this difference across EC subregions was significant, we calculated the difference in beta coefficients between high and low precision conditions (i.e. the modulation score) and compared the alEC and pmEC on this measure. The difference in modulation scores was also significant (t=4.794, df=18, two-tailed p=0.0001, Cohen’s d=1.0886, Fig 3c), suggesting that high precision trials preferentially engaged the alEC but not the pmEC. To determine whether this selective engagement extends upstream of the entorhinal cortex, we additionally averaged voxel activity in the perirhinal (PRC) and parahippocampal (PHC) cortices. Consistent with the EC results, the upstream cortices showed a similar pattern: we found a significant difference between high and low precision trials in the PRC (t=4.331, df=18, two-tailed p=.0004, Cohen’s d=0.8936, Fig 3d) but not in the PHC (t=0.1464, df=18, two-tailed p=0.8852, Fig 3e).
Modulation scores across the two regions were also significantly different (t=3.193, df=18, p=.0005, Cohen’s d=0.7213, Fig 3f). Together, these results suggest that the extension of the ventral visual stream (PRC and alEC) is engaged in temporal processing on the scale of minutes, whereas the extension of the dorsal visual stream (PHC and pmEC) does not appear to show temporal precision-selective signals on the same scale.
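The paired comparisons above can be sketched with the standard library. This is an illustrative sketch, assuming one ROI-averaged beta per participant and condition; `paired_t` and `modulation_scores` are hypothetical helpers and the numbers are made up. It also shows why the alEC vs. pmEC contrast is a paired test on modulation scores (high minus low), which is equivalent to a one-sample t-test of their differences against zero. `scipy.stats.ttest_rel` would return the same statistic together with a two-tailed p-value.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x vs. y.

    x and y must list the same participants in the same order. This equals a
    one-sample t-test of the differences x - y against zero.
    """
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    return mean(d) / (stdev(d) / sqrt(n)), n - 1

def modulation_scores(high, low):
    """Per-participant modulation score: beta(high precision) - beta(low precision)."""
    return [h - l for h, l in zip(high, low)]

# Hypothetical ROI-averaged betas for 4 participants (not study data)
alec_high, alec_low = [0.5, 0.6, 0.4, 0.7], [0.2, 0.4, 0.3, 0.3]
pmec_high, pmec_low = [0.3, 0.2, 0.4, 0.3], [0.3, 0.3, 0.3, 0.2]

print(paired_t(alec_high, alec_low))  # high vs. low within the alEC
mod_alec = modulation_scores(alec_high, alec_low)
mod_pmec = modulation_scores(pmec_high, pmec_low)
print(paired_t(mod_alec, mod_pmec))   # alEC vs. pmEC modulation scores
```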

Fig 3. Effects of precision on MTL regions.

(a,b,d,e,g,h) Comparison of the most precise [within 1 min] vs. least precise [over 3 min] trials across hippocampal subfields and MTL cortical regions. Using two-tailed paired-samples t-tests (n=19 participants, Bonferroni-Holm corrected), we found significantly higher BOLD fMRI activity for high vs. low precision trials in alEC (t=4.537, df=18, two-tailed p=0.0003), PRC (t=4.331, df=18, two-tailed p=0.0004), DG/CA3 (t=4.113, df=18, two-tailed p=0.0007), and CA1 (t=3.691, df=18, two-tailed p=0.0017). No significant differences were found in pmEC (t=0.3504, df=18, two-tailed p=0.7301) or PHC (t=0.1464, df=18, two-tailed p=0.8852). (c,f,i) Magnitude of modulation by precision. Difference metrics were calculated by subtracting the beta coefficients of the ‘least precise’ condition from those of the ‘most precise’ condition. Modulations were significantly higher in the alEC (t=4.794, df=18, two-tailed p=0.0001, minimum=−0.0751, 25th percentile=0.0705, median=0.1723, 75th percentile=0.4288, maximum=0.6932), PRC (t=3.193, df=18, two-tailed p=0.0005, minimum=−0.0535, 25th percentile=0.0466, median=0.1231, 75th percentile=0.2884, maximum=0.5558) and hippocampal subfields (with a stronger effect in DG/CA3; t=3.091, df=18, two-tailed p=0.0063, minimum=−0.0615, 25th percentile=0.0434, median=0.1913, 75th percentile=0.471, maximum=0.8114) compared to the pmEC (minimum=−0.3783, 25th percentile=−0.1157, median=0.0256, 75th percentile=0.1032, maximum=0.4919), PHC (minimum=−0.6703, 25th percentile=−0.1117, median=−0.0387, 75th percentile=0.1733, maximum=0.5688), and CA1 (minimum=−0.0905, 25th percentile=0.0211, median=0.1075, 75th percentile=0.2832, maximum=0.5437). n=19 for all comparisons.

Hippocampal DG/CA3 is more engaged than CA1 for precise temporal memory

Next, we examined whether hippocampal subfields show BOLD fMRI signals modulated by the precision of temporal judgments. We used anatomical segmentations of the hippocampal dentate gyrus and CA3 (combined into a joint DG/CA3 label, as in past fMRI studies) and CA1 to obtain regional averages of voxel-level activation during temporal memory judgments. We found precision-related modulation (high vs. low) in both hippocampal subregions: DG/CA3 (t=4.113, df = 18, two-tailed p=0.0007, Cohen’s d=0.622, Fig 3g) and CA1 (t=3.691, df=18, two-tailed p=.0017, Cohen’s d=0.6871, Fig 3h). We again calculated modulation scores for each subregion in each participant and found a significant difference across subfields (t=3.091, df=18, two-tailed p=0.0063, Cohen’s d=0.4216, Fig 3i), suggesting that the modulation by temporal precision was stronger in DG/CA3 than in CA1.

Cortical regions preferentially engaged during precise temporal memory judgments

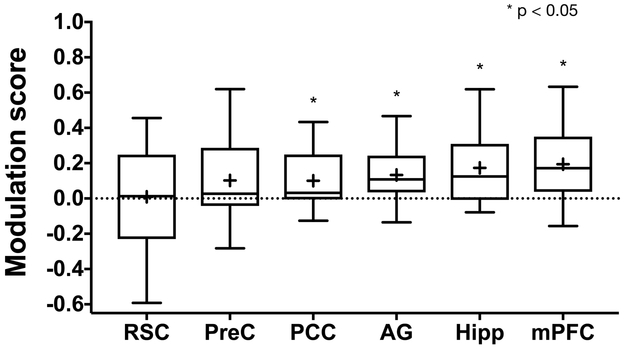

Because accurate temporal memory judgments would be expected to engage circuitry involved in the experience of recollection and in memory for rich contextual details, we examined how cortical regions outside the MTL are modulated by temporal memory precision, focusing on regions previously implicated in recollection and detail memory21: the angular gyrus (AG), retrosplenial cortex (RSC), precuneus (PreC), posterior cingulate cortex (PCC), and medial prefrontal cortex (mPFC). Using anatomical masks for these regions to average voxel-level activity during high and low precision trials, we found significant high vs. low differences bilaterally in the mPFC (t=3.851, df=18, p=0.0017, Cohen’s d=0.6469), the AG (t = 3.41, df = 18, p = 0.0031, Cohen’s d=0.6471), and the PCC (t=2.75, df=18, p=0.0132, Cohen’s d=0.4547). We observed no significant modulation in the precuneus (t=1.937, df=18, p=0.068) or retrosplenial cortex (t=0.137, df=18, p=0.8925). These results are summarized as modulation scores across cortical regions in Fig 4. Collectively, these analyses suggest that memories recollected with higher temporal precision engage some of the same cortical regions known to play a role in the representation of detail memory.

Fig 4. Cortical reinstatement effects.

(a) Cortical temporal modulation scores across regions previously implicated in recollection and recall of contextual or detail memory, including the retrosplenial cortex (RSC, t=−0.0027, df=18, two-tailed p=0.9979, minimum=−0.5316, 25th percentile=−0.2357, median=0.0012, 75th percentile=0.2478, maximum=0.536), precuneus (PreC, t=1.685, df=18, two-tailed p=0.1093, minimum=−0.2382, 25th percentile=−0.0648, median=0.0345, 75th percentile=0.1931, maximum=0.4965), posterior cingulate cortex (PCC, t=2.7984, df=18, two-tailed p=0.0119, minimum=−0.0851, 25th percentile=−0.0426, median=0.0571, 75th percentile=0.1635, maximum=0.295), angular gyrus (AG, t=3.3742, df=18, two-tailed p=0.0034, minimum=−0.1062, 25th percentile=0.0197, median=0.0984, 75th percentile=0.2662, maximum=0.4543), medial prefrontal cortex (mPFC, t=2.899, df=18, two-tailed p=0.0096, minimum=−0.1148, 25th percentile=−0.0118, median=0.1211, 75th percentile=0.2584, maximum=0.0846), and, for reference, the whole hippocampus (Hipp, t=3.9518, df=18, two-tailed p=0.0021, minimum=−0.0784, 25th percentile=−0.0077, median=0.1245, 75th percentile=0.3094, maximum=0.6192). Only modulation scores in the PCC, AG, mPFC and Hipp are significantly different from zero (two-tailed one-sample t-tests, Bonferroni-Holm corrected, n=19 participants).

Discussion

Results from this study suggest that temporal precision judgments on the order of minutes are associated with increased BOLD fMRI activity in the alEC and PRC, consistent with a broad role for these regions in processing external input, including information about temporal context. The observation that the alEC-PRC network, but not the pmEC-PHC network, was significantly more engaged for trials with high temporal precision suggests that distinct mechanisms may be used to process and store spatial and longer-timescale temporal information. Past studies in rodents have demonstrated little spatial selectivity in the LEC but strong coding for object properties14,22. One study that used a similar timeline asked participants to make retrospective estimates of the duration of time between audio clips from a radio story; these duration estimates correlated with BOLD fMRI pattern similarity in the right entorhinal cortex, though the authors did not segment alEC and pmEC23. More recently, an examination of LEC firing properties during open exploration demonstrated strong temporal coding on the order of minutes, consistent with our results24.

The observation that the PRC was significantly more engaged for the most temporally precise trials was only partially consistent with prior studies. Inactivation of the PRC in rats has been associated with impaired temporal order memory for objects25, and a subset of PRC neurons alter their firing based on how recently an object was viewed26. In contrast, a number of studies have demonstrated a role for the PRC in object recognition rather than in the recall of contextual details per se27. Human fMRI studies have reported signals linked to temporal context, operationalized in terms of items’ ordinal positions in a sequence, in the PHC and not the PRC28-30. Notably, these prior studies used a short timescale of event proximity (seconds, not minutes), whereas the current study used a much longer timescale (minutes to tens of minutes). It is possible that coding for temporal relations on this longer timescale involves distinct mechanisms that are more in line with the hypothesized functions of the alEC and PRC regions in semantic recall.

Consistent with the possibility that distinct neural mechanisms support short- and long-timescale temporal coding, we also found no temporally modulated signals in the PHC, a region that has been associated with fine temporal memory judgments29 on a short timescale. A previous study31 reported PHC engagement during retrieval of temporal order for events in a television show, but this activity was not associated with precision, so it is difficult to draw conclusions about whether it supported performance.

Another aspect of this work that differs substantially from the extant literature is that all fMRI data discussed here are from retrieval, not encoding. Previous research investigating temporal memory with a timeline23,32 found that fMRI activity at encoding predicted aspects of subsequent temporal memory. In contrast, our work sought to investigate the networks that support retrieval of experiences in order to make temporal memory judgments. This difference in experimental design fills a gap in the literature and may partially explain the divergence between the reported results and those of previous studies.

One potential limitation is that the current study, like other tasks using naturalistic stimuli, offers less control over every aspect of encoding and retrieval. We tried to control for alternative explanations to the extent possible. One such explanation is that our results were driven by attention at encoding, with participants preferentially attending to objects in scenes for which they later had greater temporal precision. After the study, we asked twelve of our fMRI participants to rate how vividly they could recall the scene associated with each still-frame image from the experiment (Supplementary Fig S2). We then used these ratings in a univariate analysis to test whether BOLD fMRI activity in our ROIs was significantly higher for high vs. low vividness trials. We found no significant differences, indicating that the most vividly recalled scenes were not associated with higher alEC activity. It remains possible that participants’ self-reports of vividness were imperfect, or that during encoding participants preferentially attended to certain parts of the video that were later recalled more precisely.

Overall, naturalistic tasks and tightly controlled laboratory tasks each have different strengths and weaknesses: tightly controlled laboratory experiments are less generalizable to real-life situations, whereas naturalistic tasks offer less control over encoding and retrieval. We controlled for potential confounds as much as possible by choosing an episode of a television show that uses situational humor requiring an understanding of the characters and the narrative, has been used in the past by other investigators33, takes place in a relatively small number of physical locations, and does not include a laugh track. Integrating evidence from both naturalistic and laboratory studies will advance understanding of memory systems.

It is important to consider the relative contributions of pure timing information vs. sequence/event information in determining when events occurred. This is especially true for more naturalistic paradigms involving multisensory information, since events can be salient and have meaning. It is likely that both types of information are important for making temporal judgments. It would be useful for future studies to compare memory for events that occur in a meaningful order with events that have less of a sequential structure.

Our results demonstrate a prominent role for the alEC and PRC in temporal memory on the scale of minutes. This demonstration also brings timescale into consideration as a potential critical variable in studying temporal memory that may affect which brain networks are recruited to support encoding and retrieval. Single MTL neurons fire at a preferred time during trials lasting a few seconds34. However, it is likely that a gradually changing pattern from many MTL neurons would be necessary to encode longer time periods (minutes to days). Experiences that span minutes to hours are likely associated with evolving internal states (wake/sleep cycles, hunger, etc.) that may help in distinguishing them from similar experiences that occurred at different times. Further work will be necessary to elucidate the specific molecular and synaptic mechanisms that underlie temporal storage and retrieval at these different timescales.

Methods

Participants

Twenty-six healthy adult volunteers were recruited from the University of California, Irvine and the surrounding community. This study was approved by the Institutional Review Board (IRB) at the University of California, Irvine, and we complied with the study protocol as approved by the IRB. Participants gave informed consent in accordance with the IRB and received monetary compensation. All participants were right-handed and were screened for psychiatric disorders. Six were excluded due to excessive motion (>20% of TRs censored because the Euclidean norm of the motion derivative exceeded 0.3 mm), and one requested to stop the study after the first functional scan. Data from the remaining 19 participants (10 female, ages 18–29 [mean 21.42, SD = 2.85]) were analyzed. Sample size was calculated a priori based on power analyses demonstrating that, for high-resolution functional MRI studies, a minimum of 16 subjects is required to achieve 80% power at an alpha of .05.

Functional MRI task

Encoding:

Participants viewed an episode of Curb Your Enthusiasm (Season 2, Episode 9, “The Baptism”) in the MRI scanner. It was presented using PsychoPy35 version 1.82.01. The episode was split into three equal parts, each 9 minutes and 26 seconds long (Fig 1). Participants were instructed to pay attention to the videos and were told that they would be asked questions about them later. After each video segment, we collected a 5-minute resting state scan in which participants were instructed to look at a fixation cross in the middle of the screen.

Retrieval:

Retrieval took place approximately 5 minutes after the last resting state scan at encoding. During each of 2 runs, participants were presented with 72 still frames from the video segments and were asked to indicate when during the episode they thought each still frame occurred. Above each still frame, a timeline appeared that ranged from 0:00 (the beginning of the episode) to 28:18 (minutes:seconds; the end of the episode). No still frames from the first or last minute of the episode were used, to avoid primacy/recency effects. A visible cursor moved in sync with an MR-compatible scroll-click device similar to the scroll wheel on a mouse (Current Designs). On perceptual baseline trials, two gray circles appeared on the screen and participants were instructed to indicate which circle was brighter. These baseline trials were each 9 seconds long and comprised 25% of all retrieval trials. Outside the scanner, participants took a test about events that occurred during the episode. All reported analyses were performed on retrieval data only.

Behavioral Control Experiment

To verify that participants were performing above chance, we conducted a behavioral experiment in a separate group of participants who had never watched the episode of Curb Your Enthusiasm. They were asked to place the still frames from the episode on a timeline. Because they could not use memory to guide their responses, their performance was considered to be at chance. We then performed a Kolmogorov–Smirnov test using GraphPad Prism36 to determine whether performance in this experiment differed significantly from that of the fMRI participants.
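The Kolmogorov-Smirnov comparison was run in GraphPad Prism. As a hedged, stdlib-only illustration of the reported statistic (D), the sketch below computes the largest vertical distance between the two empirical cumulative distributions of absolute error. The function name and the data are hypothetical; for a p-value, `scipy.stats.ks_2samp` returns both D and p.

```python
from bisect import bisect_right

def ks_2samp_d(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: D = max |ECDF_a(x) - ECDF_b(x)|."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # Both ECDFs only jump at observed values, so the maximum gap is
    # attained at one of the pooled observations.
    for x in a + b:
        fa = bisect_right(a, x) / len(a)
        fb = bisect_right(b, x) / len(b)
        d = max(d, abs(fa - fb))
    return d

# Hypothetical absolute errors (s): fMRI participants vs. naive controls (not study data)
fmri = [20, 30, 60, 90, 150]
naive = [100, 300, 450, 600, 800]
print(ks_2samp_d(fmri, naive))  # 0.8
```

Because D compares whole distributions rather than means, it is sensitive to any systematic difference in how responses spread along the timeline, which is why it suits a chance comparison against participants who could only guess.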

MRI acquisition

Neuroimaging data were acquired on a 3.0 Tesla Philips Achieva scanner, using a 32-channel sensitivity encoding (SENSE) coil at the Neuroscience Imaging Center at the University of California, Irvine. A high-resolution 3D magnetization-prepared rapid gradient echo (MP-RAGE) structural scan (0.65 × 0.65 × 0.65 mm) was acquired at the beginning of each session and used for co-registration. Each of two functional MRI scans consisted of a T2*-weighted echo planar imaging (EPI) sequence using blood-oxygenation-level-dependent (BOLD) contrast: repetition time (TR) = 2500 ms, echo time (TE) = 26 ms, flip angle = 70 degrees, 33 slices, 172 dynamics per run, 1.8 × 1.8 mm in-plane resolution, 1.8 mm slice thickness, field of view (FOV) = 180 × 65.8 × 180 mm. Slices were acquired as a partial axial volume without offset or angulation. Four initial “dummy scans” were acquired to ensure T1 signal stabilization.
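As a quick consistency check on the acquisition parameters, the run length implied by the TR and the number of dynamics can be computed directly. The sketch assumes that the four dummy scans are not counted among the 172 dynamics; under that assumption, the result matches the 7 minutes and 10 seconds per test scan stated in the Fig 1 caption.

```python
TR_S = 2.5              # repetition time: 2500 ms
DYNAMICS_PER_RUN = 172  # volumes per run, assumed to exclude the 4 dummy scans

run_s = TR_S * DYNAMICS_PER_RUN
minutes, seconds = divmod(int(run_s), 60)
print(f"{run_s} s = {minutes} min {seconds} s")  # 430.0 s = 7 min 10 s
```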

Functional MRI Analysis

Preprocessing:

Preprocessing and general linear model analyses were conducted using AFNI (Analysis of Functional NeuroImages) software37. First, data were brain extracted (3dSkullStrip). Then, using afni_proc.py, pairs of consecutive TRs for which the Euclidean norm of the motion derivative exceeded 0.3 mm were excluded from the analysis. Functional data were slice-timing corrected (3dTshift), motion corrected (3dvolreg), and blurred to 2 mm (3dmerge). Each subject’s functional data were aligned to their anatomical scan (3dAllineate). Then, we used ANTs (Advanced Normalization Tools) software38 to align each subject’s data to a common template (0.65 mm isotropic).
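The motion-censoring rule (applied by afni_proc.py in the actual pipeline) and the participant-exclusion criterion from the Participants section can be sketched as follows. This is a hypothetical stdlib reimplementation for illustration only, not AFNI code: the function names are invented, and all six motion parameters are treated alike in the norm as a simplifying assumption.

```python
from math import sqrt

def censor_mask(motion, limit=0.3):
    """Keep flags (True = keep) for each TR, based on frame-to-frame motion.

    motion: one tuple per TR with the six rigid-body parameters from motion
    correction. The Euclidean norm of the change between consecutive TRs is
    compared with `limit`; when it is exceeded, both TRs of the pair are censored.
    """
    keep = [True] * len(motion)
    for t in range(1, len(motion)):
        enorm = sqrt(sum((cur - prev) ** 2 for cur, prev in zip(motion[t], motion[t - 1])))
        if enorm > limit:
            keep[t - 1] = keep[t] = False
    return keep

def exclude_participant(keep, max_fraction=0.20):
    """True if more than `max_fraction` of TRs were censored."""
    return (len(keep) - sum(keep)) / len(keep) > max_fraction

# Hypothetical motion trace with one 0.4-unit jump between TR 1 and TR 2
motion = [(0, 0, 0, 0, 0, 0), (0.1, 0, 0, 0, 0, 0), (0.5, 0, 0, 0, 0, 0),
          (0.5, 0, 0, 0, 0, 0), (0.5, 0.1, 0, 0, 0, 0)]
keep = censor_mask(motion)
print(keep)                       # [True, False, False, True, True]
print(exclude_participant(keep))  # True: 2 of 5 TRs (40%) censored
```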

General Linear Model:

For each subject, retrieval trials were ordered by the amount of error in seconds (the distance between the subject’s response and the correct answer). The ordered trials were then split into three conditions: high precision, medium precision, and low precision trials. These three conditions were entered into a general linear model using AFNI’s deconvolution program (3dDeconvolve), together with six motion regressors generated during motion correction. We restricted our analysis to task-activated voxels, obtained by thresholding the full F-statistic across all experimental conditions (p = 0.35, cluster extent threshold = 20); because this statistic includes all conditions, it does not bias voxel selection towards any particular condition of interest. Subsequent analyses compared parameter estimates (beta coefficients) for the most and least precise trials, each estimated relative to perceptual baseline trials. The AFNI function 3dmaskave was used to extract average beta coefficients across the left- and right-hemisphere components of each region.
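The ROI averaging step can be illustrated with a toy example. This sketch assumes flattened voxel arrays and binary masks; `roi_mean_beta` is a hypothetical helper, not AFNI's 3dmaskave, and applying the task-activation restriction at this stage reflects our reading of the text. Subtracting the low-precision average from the high-precision average gives a participant's modulation score for that ROI.

```python
from statistics import mean

def roi_mean_beta(betas, roi_mask, active_mask):
    """Mean beta over voxels that lie in the ROI and passed the activation threshold.

    All three arguments are flat, equal-length sequences over voxels, standing in
    for the 3-D volumes that 3dmaskave operates on. The ROI mask is assumed to
    contain both left- and right-hemisphere voxels, so the result is bilateral.
    """
    vals = [b for b, in_roi, active in zip(betas, roi_mask, active_mask) if in_roi and active]
    if not vals:
        raise ValueError("no voxels survive both masks")
    return mean(vals)

# Hypothetical 5-voxel example (not study data)
high = [0.2, 0.4, 0.9, -0.1, 0.3]
low = [0.1, 0.5, 0.4, 0.0, 0.1]
roi = [1, 1, 1, 0, 1]
active = [1, 0, 1, 1, 1]
modulation = roi_mean_beta(high, roi, active) - roi_mean_beta(low, roi, active)
print(modulation)  # high-minus-low modulation score for this ROI
```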

Regions of interest (ROIs) were traced on the common template (0.65 mm isotropic) to which each subject’s data were aligned. Beta coefficients were averaged across all voxels in each ROI (3dmaskave). For each ROI, paired t-tests were conducted on parameter estimates from the most precise and least precise trials. Bonferroni-Holm correction for multiple comparisons was applied within each family of a priori ROIs: hippocampal and medial temporal lobe cortical regions [CA1, DG/CA3, subiculum, alEC, pmEC, PRC, and PHC] and other cortical regions [RSC, mPFC, AG, PCC, and PreC]. Cohen’s d was calculated for significant effects using the formula (Mean1-Mean2)/pooled standard deviation.
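Bonferroni-Holm correction and the pooled-SD Cohen's d described above can be sketched as below. This is an illustrative stdlib version under our own assumptions: the helper names are hypothetical, the p-values are made up, and the pooled SD uses the usual degrees-of-freedom weighting (for equal group sizes it reduces to the square root of the average of the two variances). A test is declared significant when its adjusted p-value is at or below alpha.

```python
from math import sqrt
from statistics import mean, variance

def holm_adjust(pvals):
    """Holm-Bonferroni adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Multiply the rank-th smallest p by (m - rank) and enforce monotonicity.
        running_max = max(running_max, min(1.0, (m - rank) * pvals[i]))
        adjusted[i] = running_max
    return adjusted

def cohens_d(x, y):
    """Cohen's d = (mean(x) - mean(y)) / pooled standard deviation."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var)

# Hypothetical uncorrected p-values for one ROI family (not study data)
print(holm_adjust([0.01, 0.04, 0.03, 0.005]))  # approx. [0.03, 0.06, 0.06, 0.02]
print(cohens_d([2, 4, 6], [1, 2, 3]))          # sqrt(1.6), about 1.265
```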

Still frame presentation order was pseudo-randomized for each participant using PsychoPy35. By contrast, the “high”, “medium” and “low” precision conditions were defined by participant performance and therefore could not be randomized. Data collection and analysis were not performed blind to the conditions of the experiments.

Statistics

We conducted the Kolmogorov-Smirnov test using GraphPad Prism36. This software was also used for the following analyses: 1) to compare BOLD fMRI activity for high and low precision trials using two-tailed paired-samples t-tests, 2) to conduct a one-way repeated measures ANOVA comparing trials with short, medium, and long distances from video boundaries, and 3) to compare BOLD fMRI activity for high and low vividness trials using two-tailed paired-samples t-tests. To assess whether modulation scores (high minus low precision beta coefficients) were significantly different from 0, we used RStudio39 to conduct one-sample t-tests. Data distributions were assumed to be normal, but this was not formally tested. Individual data points are shown for key analyses. Sample size was calculated a priori based on power analyses which demonstrate that for high resolution functional MRI studies, a minimum of 16 subjects is required to achieve 80% power at an alpha of .05. Additional methodological details can be found in the Life Sciences Reporting Summary.

Data availability statement

The data that support the findings of this study are available from the corresponding author upon request.

Code availability statement

The code used to collect and analyze data from this study is available from the corresponding author upon request.

Supplementary Material

Acknowledgements:

We thank M. Tsai, J. Noche, and A. Chun for assistance with data collection. We also thank C. Stark, N. Fortin, and D. Huffman for helpful discussions. This work was supported by US NIH grants P50AG05146, R01MH1023921, and R01AG053555 (PI: M.A.Y.), and Training Grant T32DC010775 (to M.E.M., PI: Metherate).

Footnotes

Competing Interests Statement

The authors declare no competing interests.

References

- 1. Kesner RP & Hunsaker MR The temporal attributes of episodic memory. Behav. Brain Res 215, 299–309 (2010).

- 2. Ekstrom AD & Bookheimer SY Spatial and temporal episodic memory retrieval recruit dissociable functional networks in the human brain. Learn. Mem 14, 645–654 (2007).

- 3. Ekstrom AD & Ranganath C Space, time and episodic memory: the hippocampus is all over the cognitive map. 1–16.

- 4. Hartley T, Lever C, Burgess N & O’Keefe J Space in the brain: how the hippocampal formation supports spatial cognition. Philos. Trans. R. Soc. B Biol. Sci 369, 20120510 (2013).

- 5. Hafting T, Fyhn M, Molden S, Moser MB & Moser EI Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801–806 (2005).

- 6. Save E & Sargolini F Disentangling the Role of the MEC and LEC in the Processing of Spatial and Non-Spatial Information: Contribution of Lesion Studies. Front. Syst. Neurosci 11, 1–9 (2017).

- 7. McNaughton BL, Battaglia FP, Jensen O, Moser EI & Moser MB Path integration and the neural basis of the ‘cognitive map’. Nat. Rev. Neurosci 7, 663–678 (2006).

- 8. MacDonald CJ, Lepage KQ, Eden UT & Eichenbaum H Hippocampal ‘time cells’ bridge the gap in memory for discontiguous events. Neuron 71, 737–49 (2011).

- 9. MacDonald CJ, Carrow S, Place R & Eichenbaum H Distinct hippocampal time cell sequences represent odor memories in immobilized rats. J. Neurosci 33, 14607–16 (2013).

- 10. Kraus BJ et al. During Running in Place, Grid Cells Integrate Elapsed Time and Distance Run. Neuron 88, 578–589 (2015).

- 11. Pastalkova E, Itskov V, Amarasingham A & Buzsáki G Internally Generated Cell Assembly Sequences in the Rat Hippocampus. Science 321, 1322–1327 (2008).

- 12. Salz DM et al. Time Cells in Hippocampal Area CA3. J. Neurosci 36, 7476–7484 (2016).

- 13. Eichenbaum H et al. On the Integration of Space, Time, and Memory. Neuron 95, 1007–1018 (2017).

- 14. Deshmukh SS & Knierim JJ Representation of Non-Spatial and Spatial Information in the Lateral Entorhinal Cortex. Front. Behav. Neurosci 5, (2011).

- 15. Knierim JJ, Neunuebel JP & Deshmukh SS Functional correlates of the lateral and medial entorhinal cortex: objects, path integration and local–global reference frames. Philos. Trans. R. Soc 369, (2014).

- 16. Reagh ZM & Yassa MA Object and spatial mnemonic interference differentially engage lateral and medial entorhinal cortex in humans. Proc. Natl. Acad. Sci. U. S. A (2014). doi: 10.1073/pnas.1411250111

- 17. Murray EA & Yassa MA Pattern separation. 1–31 (2017).

- 18. Suzuki WA & Amaral DG Perirhinal and parahippocampal cortices of the macaque monkey: Cortical afferents. J. Comp. Neurol 350, 497–533 (1994).

- 19. Maass A, Berron D, Libby LA, Ranganath C & Düzel E Functional subregions of the human entorhinal cortex. Elife 4, 1–20 (2015).

- 20. Schröder TN, Haak KV, Jimenez NIZ, Beckmann CF & Doeller CF Functional topography of the human entorhinal cortex. Elife 4, 1–17 (2015).

- 21. Ranganath C & Ritchey M Two cortical systems for memory-guided behaviour. Nat. Rev. Neurosci 13, 713–26 (2012).

- 22. Knierim JJ, Neunuebel JP & Deshmukh SS Functional correlates of the lateral and medial entorhinal cortex: objects, path integration and local–global reference frames. Philos. Trans. R. Soc 369, (2014).

- 23. Lositsky O et al. Neural pattern change during encoding of a narrative predicts retrospective duration estimates. Elife 5, 1–40 (2016).

- 24. Tsao A, Sugar J, Lu L, Wang C, Knierim JJ, Moser M & Moser EI Time coding in lateral entorhinal cortex. (2018).

- 25. Hannesson DK Interaction between Perirhinal and Medial Prefrontal Cortex Is Required for Temporal Order But Not Recognition Memory for Objects in Rats. J. Neurosci 24, 4596–4604 (2004).

- 26. Brown MW Neuronal responses and recognition memory. Semin. Neurosci 8, 23–32 (1996).

- 27. Eichenbaum H, Yonelinas AP & Ranganath C The medial temporal lobe and recognition memory. Annu. Rev. Neurosci 30, 123–152 (2007).

- 28. Hsieh LT, Gruber MJ, Jenkins LJ & Ranganath C Hippocampal Activity Patterns Carry Information about Objects in Temporal Context. Neuron 81, 1165–1178 (2014).

- 29. Jenkins LJ & Ranganath C Prefrontal and medial temporal lobe activity at encoding predicts temporal context memory. J. Neurosci 30, 15558–65 (2010).

- 30. Tubridy S & Davachi L Medial temporal lobe contributions to episodic sequence encoding. Cereb. Cortex 21, 272–80 (2011).

- 31. Lehn H et al. A Specific Role of the Human Hippocampus in Recall of Temporal Sequences. J. Neurosci 29, 3475–3484 (2009).

- 32. Jenkins LJ & Ranganath C Prefrontal and medial temporal lobe activity at encoding predicts temporal context memory. J. Neurosci 30, 15558–65 (2010).

- 33. Furman O, Dorfman N, Hasson U, Davachi L & Dudai Y They saw a movie: Long-term memory for an extended audiovisual narrative. Learn. Mem 14, 457–467 (2007).

- 34. MacDonald CJ, Lepage KQ, Eden UT & Eichenbaum H Hippocampal ‘time cells’ bridge the gap in memory for discontiguous events. Neuron 71, 737–49 (2011).

Methods-only References

- 35. Peirce JW PsychoPy—Psychophysics software in Python. J. Neurosci. Methods 162, 8–13 (2007).

- 36. GraphPad Prism version 7.00 for Mac, GraphPad Software, La Jolla California USA, www.graphpad.com.

- 37. Cox RW AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res 29, 162–173 (1996).

- 38. Avants BB, Tustison N & Song G Advanced Normalization Tools (ANTS). Insight J. 1–35 (2009). at ftp://ftp3.ie.freebsd.org/pub/sourceforge/a/project/ad/advants/Documentation/ants.pdf

- 39. RStudio. RStudio: Integrated development environment for R (Version 1.1.442) [Computer Software]. Boston, MA: Available from http://www.rstudio.org
