Accurate medical image segmentation is essential for diagnosis, surgical planning and many other applications. Convolutional Neural Networks (CNNs) have become the state-of-the-art automatic segmentation methods. However, fully automatic results may still need to be refined to become accurate and robust enough for clinical use. We propose a deep learning-based interactive segmentation method to improve the results obtained by an automatic CNN and to reduce user interactions during refinement for higher accuracy. We use one CNN to obtain an initial automatic segmentation, on which user interactions are added to indicate mis-segmentations. Another CNN takes as input the user interactions with the initial segmentation and gives a refined result. We propose to combine user interactions with CNNs through geodesic distance transforms, and propose a resolution-preserving network that gives a better dense prediction. In addition, we integrate user interactions as hard constraints into a back-propagatable Conditional Random Field. We validated the proposed framework in the context of 2D placenta segmentation from fetal MRI and 3D brain tumor segmentation from FLAIR images. Experimental results show our method achieves a large improvement from automatic CNNs, and obtains comparable and even higher accuracy with fewer user interventions and less time compared with traditional interactive methods.

Keywords: Interactive image segmentation, convolutional neural network, geodesic distance, conditional random fields

1. Introduction

Segmentation of anatomical structures is an essential task for a range of medical image processing applications such as image-based diagnosis, anatomical structure modeling, surgical planning and guidance. During the past decades, researchers have developed many automatic segmentation approaches [1]. However, fully automatic segmentation methods rarely achieve sufficiently accurate and robust results to be clinically useful [2]. This is typically due to poor image quality (with noise, partial volume effect, artifacts and low contrast), large variations among patients, inhomogeneous appearances brought by pathology, and variability of protocols among clinicians leading to different definitions of a given structure boundary. To address the limitations of automatic segmentation approaches, interactive segmentation methods are desirable as they allow higher accuracy and robustness in many applications [3], such as planning of radiotherapy treatment of brain tumors [4]. As providing manual annotations for segmentation is time-consuming and labor-intensive, an efficient interactive segmentation tool is of great importance for practical use.

A good interactive segmentation method should obtain accurate results with as few user interactions as possible, i.e., it should be interaction-efficient. Two factors have a critical impact on the performance and usefulness of such a method. The first is the type of user interactions used as input, and the second is the algorithm's underpinning model. Despite the large number of existing interactive segmentation methods [3], most of them either require a large number of user interactions and long user time, or are limited by the learning ability of their underpinning models.

For example, the widely used ITK-SNAP [5] takes user-provided seed pixels or blobs as a starting point and employs an active contour model for segmentation. It requires most of the user interactions to be given at the beginning, and the underpinning model can hardly be refined with additional user interactions once an initial segmentation is obtained. SlicSeg [6] accepts user-provided scribbles in a single start slice to train an Online Random Forest for 3D segmentation, but lacks the flexibility to allow further user editing. Random Walks [7] and Graph Cuts [8] learn from scribbles and allow the user to provide additional scribbles for refinement. They use Random Walker and Gaussian Mixture Model (GMM) as the underpinning model, respectively. However, they need a large number of scribbles to get a satisfactory segmentation. GrabCut [9] works with a user-provided bounding box to start the segmentation and requires fewer interactions than Graph Cuts [8], but its performance is still limited by the representation ability of the underpinning GMM. Therefore, a more efficient form of user interaction and a better underpinning model are highly desirable for interactive medical image segmentation.

Recently, deep learning with convolutional neural networks (CNNs) has achieved state-of-the-art performance in many image analysis applications [10]. With the high-quality automatic segmentation results achieved by Fully Convolutional Network (FCN) [11], U-Net [12], V-Net [13], HighRes3DNet [14], DeepMedic [15], etc., CNNs have been shown to be powerful learning models for segmentation tasks. However, they have not yet been adapted to interactive medical image segmentation.

In this paper, we propose a novel interactive method for 2D and 3D medical image segmentation that leverages deep learning. We propose a two-stage pipeline, where a first CNN automatically obtains an initial segmentation and a second CNN refines the initial segmentation by taking advantage of a small number of user interactions that we encode as geodesic distance maps. We refer to the proposed interactive segmentation method as Deep Interactive Geodesic Framework (DeepIGeoS).

Compared with existing interactive segmentation methods, DeepIGeoS has several appealing properties. First, it uses a more powerful underpinning learning model, i.e., a CNN with automatic feature learning that takes advantage of knowledge from a large training set. Second, it requires far fewer user interactions, as the method starts with a high-quality automatic segmentation and only needs user-provided clicks or short scribbles in the refinement stage. Third, it is efficient and can respond to user interactions in real time, which leads to very short user time.

The contributions of this work are four-fold: 1) we propose a deep CNN-based interactive framework for 2D and 3D medical image segmentation; 2) to make CNNs suitable for interactive segmentation with high efficiency and accuracy, we propose two new networks for 2D and 3D images respectively; 3) we propose to integrate user interactions with CNNs by converting them into geodesic distance maps as part of the input for CNNs, and use them as constraints for a trainable Conditional Random Field (CRF); 4) we demonstrate that CNNs lead to state-of-the-art performance for interactive medical image segmentation, with far less user effort and user time than existing methods.

2. Related Works

2.1. Image Segmentation Based on CNNs

Typical CNNs [16], [17], [18] were originally designed for image classification tasks. Some early works adapted such networks for pixel labeling with patch or region-based methods [19], [20]. Such methods achieved higher accuracy than traditional methods that relied on hand-crafted features. However, they were inefficient at test time. FCNs [11] take an entire image as input and give a dense segmentation. To overcome the loss of spatial resolution caused by multi-stage max-pooling and downsampling, they use a stack of deconvolution (a.k.a. upsampling) layers and activation functions to upsample the feature maps. Inspired by the convolution and deconvolution framework of FCNs, a U-shape network (U-Net) [12] and its 3D version [21] were proposed for biomedical image segmentation. A similar network (V-Net) [13] was proposed to segment the prostate from 3D MRI volumes.

To overcome the drawbacks of successive max-pooling and downsampling that lead to a loss of feature map resolution, dilated convolution [22], [23] was proposed to preserve the resolution of feature maps and enlarge the receptive field to incorporate larger contextual information. In [24], a stack of dilated convolutions was used for object tracking and semantic segmentation. Dilated convolution has also been used for instance-sensitive segmentation [25] and action detection from video frames [26].

Multi-scale features extracted from CNNs have been shown to be effective for improving segmentation accuracy [11], [22], [23]. One way of obtaining multi-scale features is to pass several scaled versions of the input image through the same network. The features from all the scales can be fused for pixel classification [27]. In [15], [19], the features of each pixel were extracted from two concentric patches with different sizes. In [28], multi-scale images at different stages were fed into a recurrent convolutional neural network. Another widely used way to obtain multi-scale features is exploiting the feature maps from different levels of a CNN. For example, in [29], features from intermediate layers are concatenated for segmentation and localization. In [11], [22], predictions from the final layer are combined with those from previous layers.

2.2. Interactive Image Segmentation

Interactive image segmentation has been widely used in various applications [30], [31], [32]. There are many kinds of user interactions, such as click-based [33], contour-based [34] and bounding box-based methods [9]. Drawing scribbles is user-friendly and particularly popular, e.g., in Graph Cuts [8], GeoS [35], [36], and Random Walks [7]. However, most of these methods rely on low-level features and require a relatively large amount of user interactions to deal with images with low contrast and ambiguous boundaries. Machine learning methods [6], [37], [38] have been proposed to learn from user interactions. They can achieve higher segmentation accuracy with fewer user interactions. However, they are limited by hand-crafted features that depend on the user's experience.

Recently, using deep CNNs to improve interactive segmentation has attracted increasing attention due to CNNs’ automatic feature learning and high performance. For instance, 3D U-Net [21] learns from sparsely annotated images and can be used for semi-automatic segmentation. ScribbleSup [39] also trains CNNs for semantic segmentation supervised by scribbles. DeepCut [32] employs user-provided bounding boxes as annotations to train CNNs for the segmentation of fetal MRI. However, these methods are not fully interactive at test time since they do not accept further interactions for refinement. In [40], a deep interactive object selection method was proposed where user-provided clicks are transformed into Euclidean distance maps and then concatenated with the input of FCNs. However, the Euclidean distance does not take advantage of image context information. In contrast, the geodesic distance transform [35], [36], [41] encodes spatial regularization and contrast-sensitivity, but it has not been used for CNNs.

2.3. CRFs for Spatial Regularization

Graphical models such as CRFs [22], [42], [43] have been widely used to enhance segmentation accuracy by introducing spatial consistency. In [42], spatial regularization was obtained by minimizing the Potts energy with a min-cut/max-flow algorithm. In [43], the discrete max-flow problem was mapped to its continuous optimization formulation. Such methods encourage segmentation consistency between adjacent pixel pairs with high similarity. In order to better model long-range connections within the image, a fully connected CRF was used in [44] to establish pairwise potentials on all pairs of pixels in the image. To make the inference of this CRF efficient, the pairwise edge potentials were defined by a linear combination of Gaussian kernels in [45]. The parameters of CRFs in these works were manually tuned or inefficiently learned by grid search. In [46], a maximum margin learning method was proposed to learn CRFs using Graph Cuts. Other methods including structured output Support Vector Machines [47], approximate marginal inference [48] and gradient-based optimization [49] were also proposed to learn parameters in CRFs. They treat the learning of CRFs as an independent step after the training of classifiers.

The CRF-RNN network [50] formulated dense CRFs as RNNs so that the CNNs and CRFs can be jointly trained in an end-to-end system for segmentation. However, the pair-wise potentials in [50] are limited to weighted Gaussians and not all the parameters are trainable due to the Permutohedral lattice implementation [51]. In [52], a Gaussian Mean Field (GMF) network was proposed and combined with CNNs where all the parameters are trainable. More freeform pairwise potentials for a pair of super-pixels or image patches were proposed in [27], [53], but such CRFs have a low resolution. In [54], a generic CNN-CRF model was proposed to handle arbitrary potentials for labeling body parts in depth images. However, it has not yet been validated with other segmentation applications.

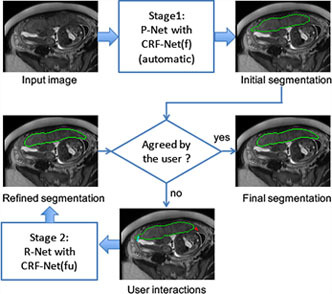

3. Method

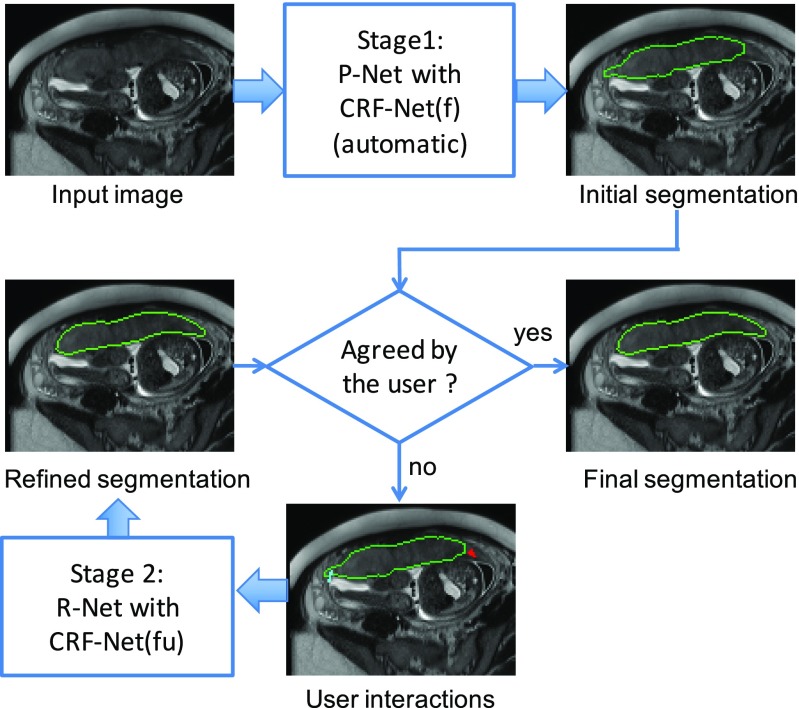

The proposed DeepIGeoS for deep interactive segmentation is depicted in Fig. 1. To minimize the number of user interactions, we propose a two-stage framework: In Stage 1, which is an automatic segmentation problem and requires fast inference, one CNN (P-Net) automatically proposes an initial segmentation. In Stage 2, the user checks the initial segmentation and gives some interactions (clicks and short scribbles) to indicate mis-segmented regions, and a second CNN (R-Net) refines the segmentation by taking as input the original image, the initial segmentation and the user interactions. The user is allowed to give clicks/scribbles to refine the result more than once through R-Net. P-Net and R-Net use a resolution-preserving structure that captures high-level features from a large receptive field without loss of resolution. They share the same structure except for the input dimensions. Unlike previous works [55] that re-train the learning model each time new user interactions are given, the proposed R-Net is trained with user interactions only once, since re-training a CNN model with a large training set takes considerable time.

Fig. 1.

Overview of the proposed interactive segmentation framework with two stages. Stage 1: P-Net automatically proposes an initial segmentation. Stage 2: R-Net refines the segmentation with user interactions indicating mis-segmentations. CRF-Net(f) is our proposed back-propagatable CRF that uses freeform pairwise potentials. It is extended to be CRF-Net(fu) that employs user interactions as hard constraints.

To make the segmentation result more spatially consistent and to use scribbles as hard constraints, both P-Net and R-Net are connected with a CRF, which is modeled as an RNN (CRF-Net) so that it can be trained jointly with P-Net/R-Net by back-propagation. We use freeform pairwise potentials in the CRF-Net. The way user interactions are used is presented in Section 3.1. The structures of 2D/3D P-Net and R-Net are detailed in Section 3.2. In Section 3.3, we describe the implementation of our CRF-Net. Training details are described in Section 3.4.

3.1. User Interaction-Based Geodesic Distance Maps

In Stage 2 of our method, scribbles are provided by the user to refine the initial automatic segmentation obtained by P-Net in Stage 1. A scribble labels a set of pixels as the foreground or background. Interactions with the same label are converted into a distance map. In [40], the Euclidean distance was used due to its simplicity. However, the Euclidean distance treats each direction equally and does not take the image context into account. In contrast, the geodesic distance helps to better differentiate neighboring pixels with different appearances, and improves label consistency in homogeneous regions [36]. GeoF [41] uses the geodesic distance to encode variable dependencies in the feature space and combines it with Random Forests for semantic segmentation. However, it is not designed to deal with user interactions. We propose to encode user interactions via geodesic distance transforms for CNN-based segmentation.

Suppose $\mathcal{S}_f$ and $\mathcal{S}_b$ represent the sets of pixels belonging to foreground scribbles and background scribbles, respectively. Let $i$ be a pixel in an image $\mathbf{I}$, then the unsigned geodesic distance from $i$ to the scribble set $\mathcal{S}$ ($\mathcal{S} \in \{\mathcal{S}_f, \mathcal{S}_b\}$) is:

$$G(i, \mathcal{S}, \mathbf{I}) = \min_{j \in \mathcal{S}} D_{geo}(i, j, \mathbf{I}) \tag{1}$$

$$D_{geo}(i, j, \mathbf{I}) = \min_{p \in \mathcal{P}_{i,j}} \int_0^1 \left\| \nabla \mathbf{I}(p(s)) \cdot \mathbf{u}(s) \right\| \, ds \tag{2}$$

where $\mathcal{P}_{i,j}$ is the set of all paths between pixel $i$ and $j$. $p$ is one feasible path and it is parameterized by $s \in$ [0, 1]. $\mathbf{u}(s) = p'(s) / \|p'(s)\|$ is a unit vector that is tangent to the direction of the path. If no scribbles are drawn for either the foreground or background, the corresponding geodesic distance map is filled with random numbers.
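To make the unsigned geodesic distance defined above concrete, the following is a minimal sketch on a 2D grid. It is not the raster-scan implementation used in this work: it swaps in Dijkstra's algorithm on a 4-connected grid and approximates the path integral by summing absolute intensity differences between neighboring pixels. The function name `geodesic_distance_map` and the toy image are assumptions made for the example.

```python
import heapq
import numpy as np

def geodesic_distance_map(image, seeds):
    """Approximate unsigned geodesic distance from every pixel to a seed set.

    image: 2D float array; seeds: 2D boolean array (True on scribble pixels).
    The cost of stepping between 4-connected neighbours is |I(a) - I(b)|,
    a discrete stand-in for the gradient term integrated along the path.
    """
    h, w = image.shape
    dist = np.full((h, w), np.inf)
    heap = []
    for y, x in zip(*np.nonzero(seeds)):
        dist[y, x] = 0.0
        heap.append((0.0, int(y), int(x)))
    heapq.heapify(heap)
    while heap:
        d, y, x = heapq.heappop(heap)
        if d > dist[y, x]:
            continue  # stale queue entry
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                nd = d + abs(float(image[ny, nx]) - float(image[y, x]))
                if nd < dist[ny, nx]:
                    dist[ny, nx] = nd
                    heapq.heappush(heap, (nd, ny, nx))
    return dist

# Toy image: dark left half, bright right half, one click at the top-left corner.
image = np.zeros((4, 6))
image[:, 3:] = 1.0
seeds = np.zeros(image.shape, dtype=bool)
seeds[0, 0] = True
dist = geodesic_distance_map(image, seeds)
```

In this toy case every pixel in the dark half has distance 0 to the click, and every pixel in the bright half has distance 1, because any path must cross the intensity edge once. A Euclidean distance map would instead grow with spatial distance regardless of the edge. An empty seed set yields a map of infinities here; the handling of missing scribbles described above is not reproduced.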

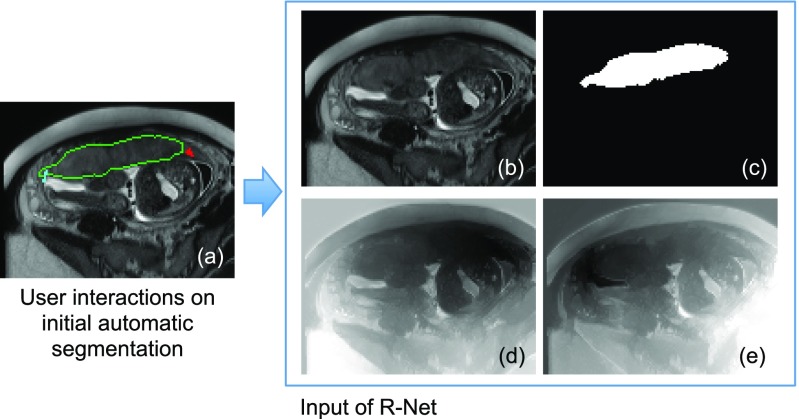

Fig. 2 shows an example of geodesic distance transforms of user interactions. The geodesic distance maps of user interactions and the initial automatic segmentation have the same size as $\mathbf{I}$. They are concatenated with the raw channels of $\mathbf{I}$ so that a concatenated image with $C_I$+3 channels is obtained ($C_I$ being the number of raw channels of $\mathbf{I}$), which is used as the input of the refinement network R-Net.
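The channel concatenation described above can be sketched as follows, assuming a 2D image stored channel-last as a NumPy array. The helper `build_rnet_input` and the array layout are hypothetical and serve only to illustrate how three extra channels are appended to the raw image channels.

```python
import numpy as np

def build_rnet_input(image, init_seg, geo_fg, geo_bg):
    """Stack the raw image channels with the three extra maps (channel-last).

    image: (H, W) or (H, W, C); the other three arguments: (H, W).
    """
    if image.ndim == 2:
        image = image[..., np.newaxis]  # single-channel image -> (H, W, 1)
    extras = [m[..., np.newaxis] for m in (init_seg, geo_fg, geo_bg)]
    return np.concatenate([image] + extras, axis=-1)

h, w = 8, 8
rnet_input = build_rnet_input(
    np.zeros((h, w)),   # raw image, one channel
    np.zeros((h, w)),   # initial automatic segmentation
    np.ones((h, w)),    # foreground geodesic distance map
    np.ones((h, w)),    # background geodesic distance map
)
```

For a single-channel image the result has four channels, i.e., the number of raw channels plus three.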

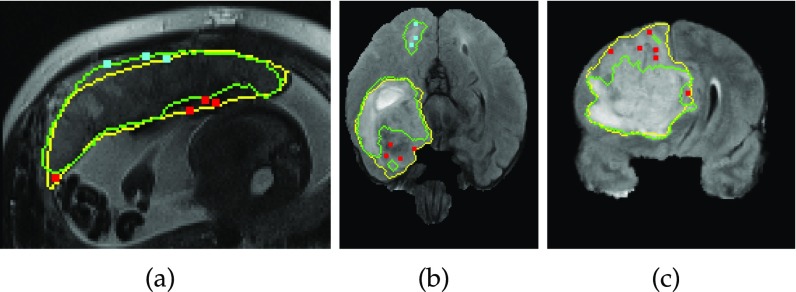

Fig. 2.

Input of R-Net in Stage 2. (a) The user provides clicks/scribbles to correct foreground (red) and background (cyan) on the initial automatic segmentation. (d) and (e) are geodesic distance maps based on foreground and background interactions, respectively. The original image (b) is combined with the initial automatic segmentation (c) and the geodesic distance maps (d), (e) by channel-concatenation and used as the input of R-Net.

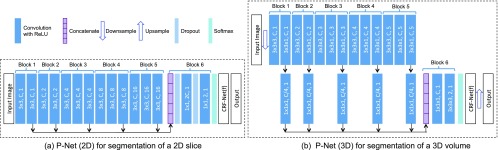

3.2. Resolution-Preserving CNNs using Dilated Convolution

CNNs in our method are designed to capture high-level features from a large receptive field without the loss of resolution of the feature maps. They are adapted from VGG-16 [17] and made resolution-preserving. Fig. 3 shows the structure of 2D and 3D P-Net. In 2D P-Net, the first 13 convolution layers are grouped into five blocks. The first and second blocks have two convolution layers each, and each of the remaining blocks has three convolution layers. The size of the convolution kernel is fixed as 3×3 in all these convolution layers. 2D R-Net uses the same structure as 2D P-Net except that its number of input channels is $C_I$+3 and it employs user interactions in the CRF-Net. To obtain an exponential increase of the receptive field, VGG-16 uses a max-pooling and downsampling layer after each block. However, this implementation would decrease the resolution of feature maps exponentially. Therefore, to preserve resolution through the network, we remove the max-pooling and downsampling layers and use dilated convolution in each block.

Fig. 3.

The CNN structure of 2D/3D P-Net with CRF-Net(f). The parameters of convolution layers are (kernel size, output channels, dilation) in dark blue rectangles. Block 1 to block 6 are resolution-preserving. 2D/3D R-Net uses the same structure as 2D/3D P-Net except its input has three additional channels shown in Fig. 2 and the CRF-Net(f) is replaced by the CRF-Net(fu) (Section 3.3).

Let $\mathbf{I}$ be a 2D image of size $W \times H$, and let $\mathbf{K}_{rq}$ be a square dilated convolution kernel with a size of $(2r + 1) \times (2r + 1)$ and a dilation parameter $q$, where $r \in \{0, 1, 2, \ldots\}$ and $q \in \{0, 1, 2, \ldots\}$. The dilated convolution of $\mathbf{I}$ with $\mathbf{K}_{rq}$ is defined as:

$$\mathbf{I}_c(x, y) = \sum_{i=-r}^{r} \sum_{j=-r}^{r} \mathbf{I}(x - qi,\, y - qj)\, \mathbf{K}_{rq}(i + r,\, j + r) \tag{3}$$
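A naive NumPy sketch of a dilated convolution of this kind is given below for illustration. It assumes zero padding outside the image and the indexing convention I(x − qi, y − qj)·K(i + r, j + r) summed over i, j from −r to r. The name `dilated_conv2d` is hypothetical, and a practical network would rely on a deep learning library rather than explicit loops.

```python
import numpy as np

def dilated_conv2d(image, kernel, q):
    """Naive dilated convolution with zero padding outside the image.

    image: (H, W); kernel: (2r+1, 2r+1); q: dilation parameter.
    """
    r = kernel.shape[0] // 2
    h, w = image.shape
    pad = r * q
    padded = np.pad(image, pad, mode="constant")
    out = np.zeros((h, w), dtype=float)
    for i in range(-r, r + 1):
        for j in range(-r, r + 1):
            # Shifted view holding I(x - q*i, y - q*j) for every (x, y).
            shifted = padded[pad - q * i: pad - q * i + h,
                             pad - q * j: pad - q * j + w]
            out += kernel[i + r, j + r] * shifted
    return out

# A unit impulse shows where the nine kernel taps land when q = 2.
image = np.zeros((7, 7))
image[3, 3] = 1.0
kernel = np.arange(1.0, 10.0).reshape(3, 3)
out = dilated_conv2d(image, kernel, q=2)
```

With a dilation of 2, the nine kernel weights appear two pixels apart around the impulse, so a 3 × 3 kernel covers a 5 × 5 area without any downsampling of the feature map.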

For 2D P-Net/R-Net, we set $r$ to 1 for block 1 to block 5, so the size of a convolution kernel becomes 3 × 3. The dilation parameter in block $i$ is set to:

$$q_i = d \times 2^{i-1}, \quad i = 1, 2, \ldots, 5 \tag{4}$$

where $d$ is a system parameter controlling the base dilation parameter of the network. We set $d$ = 1 in experiments.

The receptive field of a dilated convolution kernel $\mathbf{K}_{rq}$ is $(2rq + 1) \times (2rq + 1)$. Let $R_i \times R_i$ denote the receptive field of block $i$. $R_i$ can be computed as:

$$R_i = 2 \left( \sum_{j=1}^{i} \tau_j \times (r q_j) \right) + 1, \quad i = 1, 2, \ldots, 5 \tag{5}$$

where $\tau_j$ is the number of convolution layers in block $j$, with a value of 2, 2, 3, 3, 3 for the five blocks respectively. When $r$ = 1, the receptive field size of each block is $R_1 = 4d + 1$, $R_2 = 12d + 1$, $R_3 = 36d + 1$, $R_4 = 84d + 1$, $R_5 = 180d + 1$, respectively. Thus, these blocks capture features at different scales.
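The block-wise receptive field sizes quoted above can be checked numerically. The sketch below assumes that the dilation in block i is d·2^(i−1) and that each convolution layer with kernel half-width r and dilation q widens the receptive field by 2rq; the function names are illustrative only.

```python
def dilation(i, d=1):
    """Dilation parameter of block i (1-based), assuming q_i = d * 2**(i - 1)."""
    return d * 2 ** (i - 1)

def receptive_field(i, d=1, r=1, layers=(2, 2, 3, 3, 3)):
    """Side length of the receptive field after block i (1-based)."""
    growth = sum(layers[j - 1] * r * dilation(j, d) for j in range(1, i + 1))
    return 2 * growth + 1

sizes_d1 = [receptive_field(i, d=1) for i in range(1, 6)]
sizes_d2 = [receptive_field(i, d=2) for i in range(1, 6)]
```

For d = 1 this gives 5, 13, 37, 85 and 181, which agrees with the values 4d + 1 through 180d + 1 listed above.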

The stride of each convolution layer is set to 1. The number of output channels of convolution in each block is set to a fixed number $C$. In order to use multi-scale features, we concatenate the features from different blocks to get a composed feature of length 5$C$. This feature is fed into a classifier that is implemented by two additional layers as shown in block 6 in Fig. 3a. These two layers use convolution kernels with size of 1 × 1 and dilation parameter of 0. Block 6 gives each pixel an initial score of belonging to the foreground or background class. In order to get a more spatially consistent segmentation and add hard constraints when scribbles are given, we apply a CRF on the basis of the output from block 6. The CRF is implemented by a recurrent neural network (CRF-Net, detailed in Section 3.3), which can be jointly trained with P-Net or R-Net. The CRF-Net gives a regularized prediction for each pixel, which is fed into a cross entropy loss function layer.

Similar network structures are used by 3D P-Net/R-Net for 3D segmentation, as shown in Fig. 3b. To reduce the memory consumption for 3D images, we use one downsampling layer before the resolution-preserving layers and compress the output features of block 1 to 5 by a factor of four via 1 × 1 × 1 convolutions before the concatenation layer.

3.3. Back-Propagatable CRF-Net with Freeform Pairwise Potentials and User Constraints

In [50], a CRF based on RNN was proposed and it can be trained by back-propagation. Rather than using Gaussian functions, we extend this CRF so that the pairwise potentials can be freeform functions and we refer to it as CRF-Net(f). In addition, we integrate user interactions in our CRF-Net(f) in the interactive refinement context, which is referred to as CRF-Net(fu). The CRF-Net(f) is connected to P-Net and the CRF-Net(fu) is connected to R-Net.

Let  be the label map assigned to an image

be the label map assigned to an image  with a label set

with a label set  = {0, 1, …,

= {0, 1, …,  − 1}. The Gibbs distribution

− 1}. The Gibbs distribution  models the probability of

models the probability of  given

given  in a CRF, where

in a CRF, where  is the normalization factor known as the partition function, and

is the normalization factor known as the partition function, and  is the Gibbs energy:

is the Gibbs energy:

|

where the unary potential  measures the cost of assigning label

measures the cost of assigning label  to pixel

to pixel  , and the pairwise potential

, and the pairwise potential  is the cost of assigning labels

is the cost of assigning labels  to a pixel pair

to a pixel pair  .

.  is the set of all pixel pairs. In our method, the unary potential is obtained from P-Net or R-Net that gives classification scores for each pixel. The pairwise potential is:

is the set of all pixel pairs. In our method, the unary potential is obtained from P-Net or R-Net that gives classification scores for each pixel. The pairwise potential is:

|

where  is the euclidean distance between pixels

is the euclidean distance between pixels  and

and  .

.  is the compatibility between the label of

is the compatibility between the label of  and that of

and that of  represented by a matrix of size

represented by a matrix of size  .

.  , where

, where  and

and  represent the feature vectors of

represent the feature vectors of  and

and  , respectively. The feature vectors can either be learned by a network or be derived from image features such as spatial location with intensity values. For experiments, we used the latter one, as in [8], [45], [50] for simplicity and efficiency.

, respectively. The feature vectors can either be learned by a network or be derived from image features such as spatial location with intensity values. For experiments, we used the latter one, as in [8], [45], [50] for simplicity and efficiency.  (

( ) is a function in terms of

) is a function in terms of  and

and  . Instead of defining

. Instead of defining  (

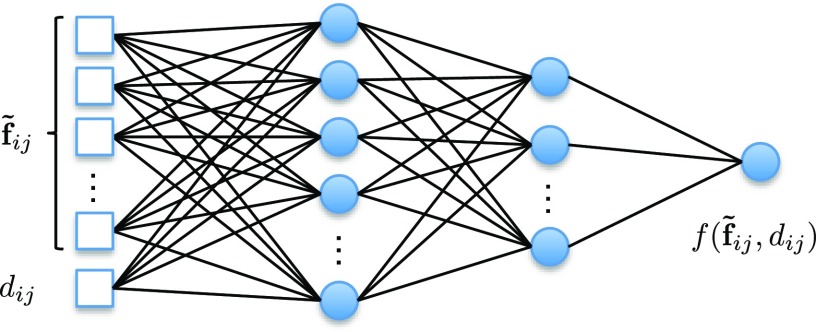

( ) as a single Gaussian function [8] or a combination of several Gaussian functions [45], [50], we set it as a freeform function represented by a fully connected neural network (Pairwise-Net) which can be learned during training. The structure of Pairwise-Net is shown in Fig. 4. The input is a vector composed of

) as a single Gaussian function [8] or a combination of several Gaussian functions [45], [50], we set it as a freeform function represented by a fully connected neural network (Pairwise-Net) which can be learned during training. The structure of Pairwise-Net is shown in Fig. 4. The input is a vector composed of  and

and  . There are two hidden layers and one output layer.

. There are two hidden layers and one output layer.

Fig. 4.

The Pairwise-Net for pairwise potential function $f(\tilde{\mathbf{f}}_{ij}, d_{ij})$. $\tilde{\mathbf{f}}_{ij}$ is the difference of features between a pixel pair $i$ and $j$. $d_{ij}$ is the Euclidean distance between them.

Graph Cuts [8], [46] can be used to minimize Eq. (6) when $\varphi_p(\cdot)$ is submodular [56] such as when the segmentation is binary with $\mu(\cdot)$ being the delta function and $f(\cdot)$ being positive. However, this is not the case for our method since we learn $\mu(\cdot)$ and $f(\cdot)$ where $\mu(\cdot)$ may not be the delta function and $f(\cdot)$ could be negative. Continuous max-flow [43] can also be used for the minimization, but its parameters are manually designed. Alternatively, mean-field approximation [45], [50], [52] is often used for efficient inference of the CRF while allowing learning parameters by back-propagation. Instead of computing $P(X \mid \mathbf{I})$ directly, an approximate distribution $Q(X \mid \mathbf{I}) = \prod_i Q_i(x_i \mid \mathbf{I})$ is computed so that the KL-divergence $D(Q \,\|\, P)$ is minimized. This yields an iterative update of $Q_i(x_i \mid \mathbf{I})$ [45], [50], [52].

$$Q_i(x_i \mid \mathbf{I}) = \frac{1}{Z_i} e^{-E(x_i)} = \frac{1}{Z_i} e^{-\varphi_u(x_i) - \varphi_p(x_i)} \tag{8}$$

$$\varphi_p(x_i = l \mid \mathbf{I}) = \sum_{l' \in \mathcal{L}} \mu(l, l') \sum_{(i,j) \in \mathcal{N}} f(\tilde{\mathbf{f}}_{ij}, d_{ij})\, Q_j(l' \mid \mathbf{I}) \tag{9}$$

where $\mathcal{L}$ is the label set. $i$ and $j$ are a pixel pair. For the proposed CRF-Net(fu), with the set of user-provided scribbles $\mathcal{S}_{fb} = \mathcal{S}_f \cup \mathcal{S}_b$, we force the probability of pixels in the scribble set to be 1 or 0. The following equation is used as the update rule for each iteration:

$$Q_i(x_i \mid \mathbf{I}) = \begin{cases} 1 & \text{if } i \in \mathcal{S}_{fb} \text{ and } x_i = s_i \\ 0 & \text{if } i \in \mathcal{S}_{fb} \text{ and } x_i \neq s_i \\ \frac{1}{Z_i} e^{-E(x_i)} & \text{otherwise} \end{cases} \tag{10}$$

where $s_i$ denotes the user-provided label of a pixel $i$ that is in the scribble set $\mathcal{S}_{fb}$. We follow the implementation in [50] to update $Q$ through a multi-stage mean-field method in an RNN. Each mean-field layer splits Eq. (8) into four steps including message passing, compatibility transform, adding unary potentials and normalizing [50].
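The four steps of one mean-field layer, followed by the clamping of scribble pixels, can be sketched in NumPy as below. This is a toy illustration rather than the CRF-Net implementation: it assumes the pairwise function values are already available as a dense matrix `weights` (zero for unconnected pairs) instead of being produced by Pairwise-Net on local patches, and the names `mean_field_step` and `scribble_labels` are hypothetical.

```python
import numpy as np

def mean_field_step(q, unary, weights, mu, scribble_labels):
    """One mean-field update with scribbles used as hard constraints.

    q, unary: (P, L) for P pixels and L labels; weights: (P, P) pairwise
    values; mu: (L, L) label compatibility; scribble_labels: (P,) integer
    labels, with -1 for pixels that carry no scribble.
    """
    messages = weights @ q                        # message passing
    pairwise = messages @ mu.T                    # compatibility transform
    energy = unary + pairwise                     # adding unary potentials
    energy -= energy.min(axis=1, keepdims=True)   # numerical stability only
    q_new = np.exp(-energy)
    q_new /= q_new.sum(axis=1, keepdims=True)     # normalizing
    marked = scribble_labels >= 0
    # Scribble pixels get probability 1 for the user label and 0 otherwise.
    q_new[marked] = np.eye(q.shape[1])[scribble_labels[marked]]
    return q_new

# Three pixels in a row, two labels; the last pixel is scribbled as label 0
# although its unary potential prefers label 1.
unary = np.array([[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]])
weights = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
mu = 1.0 - np.eye(2)                  # cost 1 when neighbouring labels differ
scribbles = np.array([-1, -1, 0])
q = np.exp(-unary)
q /= q.sum(axis=1, keepdims=True)
for _ in range(5):
    q = mean_field_step(q, unary, weights, mu, scribbles)
```

After a few iterations the scribbled pixel stays fixed at label 0, and the undecided middle pixel is pulled toward label 0 by its two neighbors, which shows how a hard constraint propagates through the pairwise term.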

3.4. Implementation Details

The raster-scan algorithm [36] was used to compute geodesic distance transforms by applying a forward pass scanning and a backward pass scanning with a 3 × 3 kernel for 2D and a 3 × 3 × 3 kernel for 3D. It is fast because it accesses the image memory in contiguous blocks. For the proposed CRF-Net with freeform pairwise potentials, two observations motivate us to use pixel connections based on local patches instead of full connections within the entire image. First, the permutohedral lattice implementation [45], [50] allows efficient computation of fully connected CRFs only when pairwise potentials are Gaussian functions. However, a method that relaxes the requirement of pairwise potentials as freeform functions represented by a network (Fig. 4) cannot use that implementation and therefore would be inefficient for fully connected CRFs. For an image of size $M \times N$, a fully connected CRF has $MN(MN - 1)$ pixel pairs. For a small image with $M = N = 100$, the number of pixel pairs would be almost $10^8$, which requires not only a huge amount of memory but also long computational time. Second, though long-distance dependency helps to improve segmentation in most RGB images [22], [45], [50], this would be very challenging for medical images since the contrast between the target and background is often low [57]. In such cases, long-distance dependency may lead the label of a target pixel to be corrupted by the large number of background pixels with similar appearances. Therefore, to maintain good efficiency and avoid long-distance corruption, we define the pairwise connections for one pixel within a local patch centered on that pixel. In our experiments, the patch size is set to 7 × 7 for 2D images and 5 × 5 × 3 for 3D images.
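The efficiency argument can be checked with a short worked count. The sketch below compares the number of ordered pixel pairs in a fully connected model with the number obtained when each pixel is connected only to the pixels of a patch centred on it. Clipping the patch at the image border is an assumption made for this illustration, and the function names are hypothetical.

```python
def fully_connected_pairs(m, n):
    """Ordered pixel pairs (i, j) with i != j in an m x n image."""
    p = m * n
    return p * (p - 1)

def local_patch_pairs(m, n, patch_h, patch_w):
    """Ordered pairs when each pixel connects only to the pixels inside a
    patch centred on it (the patch is clipped at the image border)."""
    rh, rw = patch_h // 2, patch_w // 2
    total = 0
    for r in range(m):
        rows = min(r + rh, m - 1) - max(r - rh, 0) + 1
        for c in range(n):
            cols = min(c + rw, n - 1) - max(c - rw, 0) + 1
            total += rows * cols - 1  # exclude the centre pixel itself
    return total

full = fully_connected_pairs(100, 100)        # 99,990,000 pairs
local = local_patch_pairs(100, 100, 7, 7)     # 463,344 pairs
```

For the 100 × 100 example the 7 × 7 patch reduces the number of pairs by a factor of roughly 200.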

We initialize $\mu(l_i, l_j)$ as $\mu(l_i, l_j) = [l_i \neq l_j]$, where $[\cdot]$ is the Iverson Bracket [50]. A fully connected neural network (Pairwise-Net) with two hidden layers is used to learn the freeform pairwise potential function (Fig. 4). The first and second hidden layers have 32 and 16 neurons, respectively. In practice, this network is implemented by an equivalent fully convolutional neural network with 1 × 1 kernels for 2D or 1 × 1 × 1 kernels for 3D. We use a pre-training step to initialize the Pairwise-Net with an approximation of a contrast sensitive function [8]:

$$f(\tilde{\mathbf{f}}_{ij}, d_{ij}) = \exp\left(-\frac{\|\tilde{\mathbf{f}}_{ij}\|^2}{2\sigma^2 \cdot F}\right) \cdot \frac{\omega}{d_{ij}} \tag{11}$$

where $F$ is the dimension of the feature vectors $\mathbf{f}_i$ and $\mathbf{f}_j$, and $\omega$ and $\sigma$ are two parameters controlling the magnitude and shape of the initial pairwise function respectively. In this initialization step, we set $\sigma$ to 0.08 and $\omega$ to 0.5 based on experience. Similar to [45], [50], [58], we set $\mathbf{f}_i$ and $\mathbf{f}_j$ as values in input channels (i.e., image intensity in our case) of P-Net for simplicity of implementation and for obtaining contrast-sensitive pairwise potentials. To pre-train the Pairwise-Net we generate a training set $T' = \{X', Y'\}$ with 100k samples, where $X'$ is the set of features simulating the concatenated $\tilde{\mathbf{f}}_{ij}$ and $d_{ij}$, and $Y'$ is the set of prediction values simulating $f(\tilde{\mathbf{f}}_{ij}, d_{ij})$. For each sample $s$ in $T'$, the feature vector $\mathbf{x}'_s$ has a dimension of $F + 1$ where the first $F$ dimensions represent the value of $\tilde{\mathbf{f}}_{ij}$ and the last dimension denotes $d_{ij}$. The $c$th channel of $\mathbf{x}'_s$ is filled with a random number $k'$, where $k' \sim Norm(0, 2)$ for $c \leq F$ and $k' \sim U(0, 8)$ for $c = F + 1$. The ground truth of prediction value $y'_s$ for $\mathbf{x}'_s$ is obtained by Eq. (11). After generating $X'$ and $Y'$, we use a Stochastic Gradient Descent (SGD) algorithm with a quadratic loss function to pre-train the Pairwise-Net.
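The generation of the pre-training set can be sketched as follows. This is an illustration under several assumptions, not the authors' implementation: the contrast-sensitive function is taken to be $\exp(-\|\tilde{\mathbf{f}}\|^2 / (2\sigma^2 F)) \cdot \omega / d$, the shape parameter is taken to be 0.08 and the magnitude parameter 0.5, the second argument of the normal distribution is read as a standard deviation, and distances are clamped away from zero. The names `contrast_sensitive` and `make_pretraining_set` are hypothetical.

```python
import math
import random

SIGMA = 0.08  # assumed shape parameter
OMEGA = 0.5   # assumed magnitude parameter

def contrast_sensitive(f_diff, d, sigma=SIGMA, omega=OMEGA):
    """Assumed form of the contrast-sensitive target function."""
    F = len(f_diff)
    sq_norm = sum(v * v for v in f_diff)
    return math.exp(-sq_norm / (2.0 * sigma ** 2 * F)) * omega / d

def make_pretraining_set(num_samples, F, seed=0):
    """Build (X, Y): each x has F feature-difference channels plus a distance."""
    rng = random.Random(seed)
    X, Y = [], []
    for _ in range(num_samples):
        # First F channels: simulated feature difference (std of 2 is assumed).
        f_diff = [rng.gauss(0.0, 2.0) for _ in range(F)]
        # Last channel: simulated pixel distance in (0, 8], clamped above zero.
        d = max(rng.uniform(0.0, 8.0), 1e-6)
        X.append(f_diff + [d])
        Y.append(contrast_sensitive(f_diff, d))
    return X, Y

# A small set for illustration; the text uses 100k samples.
X, Y = make_pretraining_set(num_samples=1000, F=1)
```

A regression network with two hidden layers could then be fitted to `(X, Y)` with a quadratic loss, as the text describes.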

For pre-processing, all the images are normalized by the mean value and standard deviation of the training set. We apply data augmentation by vertical or horizontal flipping, random rotation with angle range $[-\pi/8, \pi/8]$ and random zoom with scaling factor range [0.8, 1.25]. We use the cross entropy loss function and SGD algorithm for optimization with minibatch size 1, momentum 0.99 and weight decay $5 \times 10^{-4}$. The learning rate is halved every 5k iterations. Since a proper initialization of P-Net and CRF-Net(f) is helpful for a faster convergence of the joint training, we train the P-Net with CRF-Net(f) in three steps. First, the P-Net is pre-trained with initial learning rate $10^{-3}$ and maximal number of iterations 100k. Second, the Pairwise-Net in the CRF-Net(f) is pre-trained as described above. Third, the P-Net and CRF-Net(f) are jointly trained with initial learning rate $10^{-6}$ and maximal number of iterations 50k.
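As a small illustration of the training schedule, the sketch below implements a step schedule that halves the learning rate every 5k iterations and draws augmentation parameters from the quoted ranges. The initial learning rate is left as an argument, the flip probability of 0.5 is an assumption, and both function names are hypothetical.

```python
import math
import random

def learning_rate(iteration, initial_lr, halve_every=5000):
    """Step schedule: the rate is halved after every `halve_every` iterations."""
    return initial_lr * 0.5 ** (iteration // halve_every)

def sample_augmentation(rng):
    """Draw one set of augmentation parameters from the ranges in the text."""
    return {
        "flip_vertical": rng.random() < 0.5,    # probability 0.5 is assumed
        "flip_horizontal": rng.random() < 0.5,  # probability 0.5 is assumed
        "angle": rng.uniform(-math.pi / 8, math.pi / 8),
        "zoom": rng.uniform(0.8, 1.25),
    }

rng = random.Random(0)
params = sample_augmentation(rng)
# Relative rate (initial_lr = 1.0) at a few iteration counts.
schedule = [learning_rate(it, initial_lr=1.0) for it in (0, 4999, 5000, 10000)]
```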

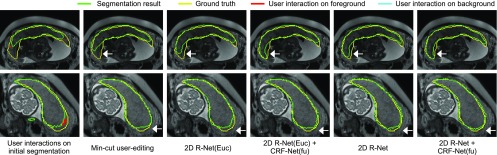

After the training of P-Net with CRF-Net(f), we automatically simulate user interactions to train R-Net with CRF-Net(fu). First, P-Net with CRF-Net(f) is used to obtain an automatic segmentation for each training image. It is compared with the ground truth to find mis-segmented regions. Then the user interactions on each mis-segmented region are simulated by randomly sampling $n$ pixels in that region. Suppose the size of one connected under-segmented or over-segmented region is $N_m$; we set $n$ for that region to 0 if $N_m < 30$ and $\lceil N_m/100 \rceil$ otherwise based on experience. Examples of simulated user interactions on training images are shown in Fig. 5. With these simulated user interactions on the initial segmentation of training data, the training of R-Net with CRF-Net(fu) is implemented through SGD, which is similar to the training of P-Net with CRF-Net(f).
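The click simulation can be sketched in a few lines. The rule used here (no clicks for regions smaller than 30 pixels, otherwise the region size divided by 100 and rounded up) follows the description above; the use of 4-connectivity, the 2D list representation of masks and all function names are assumptions made for this illustration.

```python
import math
import random
from collections import deque

def connected_regions(mask):
    """4-connected regions of True pixels, each a list of (row, col)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, region = deque([(r, c)]), []
                while queue:
                    y, x = queue.popleft()
                    region.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions

def clicks_for_region(size):
    """Number of simulated clicks for one mis-segmented region."""
    return 0 if size < 30 else math.ceil(size / 100)

def simulate_clicks(pred, truth, seed=0):
    """Return (foreground clicks, background clicks) as lists of (row, col)."""
    rng = random.Random(seed)
    h, w = len(truth), len(truth[0])
    under = [[truth[r][c] and not pred[r][c] for c in range(w)] for r in range(h)]
    over = [[pred[r][c] and not truth[r][c] for c in range(w)] for r in range(h)]
    fg = [p for reg in connected_regions(under)
          for p in rng.sample(reg, clicks_for_region(len(reg)))]
    bg = [p for reg in connected_regions(over)
          for p in rng.sample(reg, clicks_for_region(len(reg)))]
    return fg, bg

# Toy example: the prediction misses a 12 x 3 strip (36 pixels) of the target.
truth = [[c < 6 for c in range(12)] for r in range(12)]
pred = [[c < 3 for c in range(12)] for r in range(12)]
fg_clicks, bg_clicks = simulate_clicks(pred, truth)
```

Clicks in under-segmented regions mark foreground and clicks in over-segmented regions mark background.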

Fig. 5.

Simulated user interactions on training images for placenta (a) and brain tumor (b, c). Green: automatic segmentation given by P-Net with CRF-Net(f). Yellow: ground truth. Red (cyan): simulated clicks on under-segmentation (over-segmentation).

We implemented our 2D networks with Caffe [59] and our 3D networks with TensorFlow [60] using NiftyNet [14]. Training was performed on two 8-core E5-2623v3 Intel Haswell CPUs, two NVIDIA K80 GPUs and 128 GB of memory. The testing process with user interactions was performed on a MacBook Pro (OS X 10.9.5) with 16 GB RAM, an Intel Core i7 CPU running at 2.5 GHz and an NVIDIA GeForce GT 750M GPU. A Matlab GUI and a PyQt GUI were developed for the 2D and 3D interactive segmentation tasks, respectively. (See supplementary videos, which can be found on the Computer Society Digital Library at http://doi.ieeecomputersociety.org/10.1109/TPAMI.2018.2840695)

4. Experiments

4.1. Comparison Methods and Evaluation Metrics

We first present the results obtained in Stage 1 of our method, then present the results obtained in Stage 2. For Stage 1, we compared our P-Net with FCN [11] and DeepLab [58] for 2D segmentation and DeepMedic [15] and HighRes3DNet [14] for 3D segmentation. Pre-trained models of FCN and DeepLab based on ImageNet were fine-tuned for 2D placenta segmentation. Since the input of FCN and DeepLab should have three channels, we duplicated each of the gray-level images twice and concatenated them into a three-channel image as the input. DeepMedic and HighRes3DNet were originally designed for multi-modality or multi-class 3D segmentation. We adapted them for single modality binary segmentation. We also compared 2D/3D P-Net with 2D/3D P-Net(b5) that only uses the features from block 5 (Fig. 3) instead of the concatenated multi-scale features. The proposed CRF-Net(f) with freeform pairwise potentials was compared with: 1) Dense CRF as an independent post-processing step for the output of P-Net, for which we followed the implementation in [15], [45], [58] and manually tuned the parameters based on a coarse-to-fine search scheme as suggested by [58]; and 2) CRF-Net(g), which refers to the CRF that can be trained jointly with CNNs by using Gaussian pairwise potentials [50].

For Stage 2, which is the interactive refinement part, we compared three methods to deal with user interactions. 1). Min-cut user-editing [9], where the initial probability map (output of P-Net in our case) is combined with user interactions to solve an energy minimization problem with min-cut [8]; 2). Using the euclidean distance of user interactions in R-Net, which is referred to as R-Net(Euc), and 3). The proposed R-Net with the geodesic distance of user interactions.
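To make the difference between the euclidean and geodesic distance maps concrete, the following sketch runs forward and backward raster scans with a 3 × 3 neighbourhood, in the spirit of the raster-scan scheme mentioned in Section 3.4. It is a simplified illustration rather than the implementation of [36]: the geodesic step cost is taken to be the absolute intensity difference between neighbouring pixels, the euclidean variant is a chamfer approximation, and the function name and arguments are hypothetical.

```python
import math

def raster_scan_distance(image, seeds, use_intensity=True, n_iter=2):
    """Approximate distance map from seed pixels via raster scans.

    use_intensity=True  : step cost |I(p) - I(q)| (simplified geodesic distance)
    use_intensity=False : step cost is the spatial step length (chamfer
                          approximation of the euclidean distance)
    """
    h, w = len(image), len(image[0])
    dist = [[0.0 if (r, c) in seeds else math.inf for c in range(w)]
            for r in range(h)]
    forward = [(-1, -1), (-1, 0), (-1, 1), (0, -1)]  # already-visited neighbours
    backward = [(1, 1), (1, 0), (1, -1), (0, 1)]

    def relax(r, c, offsets):
        for dr, dc in offsets:
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w:
                if use_intensity:
                    step = abs(image[r][c] - image[rr][cc])
                else:
                    step = math.hypot(dr, dc)
                dist[r][c] = min(dist[r][c], dist[rr][cc] + step)

    for _ in range(n_iter):
        for r in range(h):                    # forward pass
            for c in range(w):
                relax(r, c, forward)
        for r in reversed(range(h)):          # backward pass
            for c in reversed(range(w)):
                relax(r, c, backward)
    return dist

# Toy image with a vertical edge between columns 1 and 2; one seed click.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
seeds = {(1, 0)}
geo = raster_scan_distance(image, seeds, use_intensity=True)
euc = raster_scan_distance(image, seeds, use_intensity=False)
```

In the toy image the geodesic map stays at zero inside the homogeneous region containing the click and jumps at the intensity edge, whereas the euclidean map grows with spatial distance regardless of image content.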

We also compared DeepIGeoS with several other interactive segmentation methods. For 2D slices, DeepIGeoS was compared with: 1). Geodesic Framework [35] that computes a probability based on the geodesic distance from user-provided scribbles for pixel classification; 2). Graph Cuts [8] that models segmentation as a min-cut problem based on user interactions; 3). Random Walks [7] that assigns a pixel with a label based on the probability that a random walker reaches a foreground or background seed first, and 4). SlicSeg [6] that uses Online Random Forests to learn from the scribbles and predict the remaining pixels. For 3D images, DeepIGeoS was compared with GeoS [36] and ITK-SNAP [5]. Two users (an Obstetrician and a Radiologist) respectively used these interactive methods to segment every test image until the result was visually acceptable.

For quantitative evaluation, we measured the Dice score and the average symmetric surface distance (ASSD).

$$\text{Dice} = \frac{2|\mathcal{R}_a \cap \mathcal{R}_b|}{|\mathcal{R}_a| + |\mathcal{R}_b|} \tag{12}$$

where $\mathcal{R}_a$ and $\mathcal{R}_b$ represent the region segmented by the algorithm and the ground truth, respectively.

$$\text{ASSD} = \frac{1}{|\mathcal{S}_a| + |\mathcal{S}_b|} \left( \sum_{i \in \mathcal{S}_a} d(i, \mathcal{S}_b) + \sum_{i \in \mathcal{S}_b} d(i, \mathcal{S}_a) \right) \tag{13}$$

where $\mathcal{S}_a$ and $\mathcal{S}_b$ represent the set of surface points of the target segmented by the algorithm and the ground truth, respectively. $d(i, \mathcal{S}_b)$ is the shortest euclidean distance between $i$ and $\mathcal{S}_b$. We used the Student's $t$-test to compute the $p$-value in order to see whether the results of two algorithms significantly differ from each other.
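Both metrics can be computed directly from their definitions on small masks. The sketch below represents each segmentation as a set of pixel coordinates and uses brute-force nearest-point search. Defining a surface pixel as one with at least one 4-neighbour outside the region is an assumption of this illustration, as are the function names.

```python
import math

def dice(a, b):
    """Dice score of two regions given as sets of (row, col) pixels."""
    return 2.0 * len(a & b) / (len(a) + len(b))

def surface(region):
    """Pixels of the region with at least one 4-neighbour outside it."""
    return {(r, c) for (r, c) in region
            if any(n not in region
                   for n in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)))}

def assd(a, b):
    """Average symmetric surface distance between two regions, in pixels."""
    sa, sb = surface(a), surface(b)

    def nearest(p, points):
        return min(math.hypot(p[0] - q[0], p[1] - q[1]) for q in points)

    total = sum(nearest(p, sb) for p in sa) + sum(nearest(p, sa) for p in sb)
    return total / (len(sa) + len(sb))

# Toy example: a 4 x 4 square and the same square shifted by one column.
seg = {(r, c) for r in range(4) for c in range(4)}
gt = {(r, c + 1) for r in range(4) for c in range(4)}
dice_score = dice(seg, gt)   # 0.75
assd_value = assd(seg, gt)   # 0.5
```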

4.2. 2D Placenta Segmentation from Fetal MRI

4.2.1. Clinical Background and Experiments Setting

Fetal MRI is an emerging diagnostic tool complementary to ultrasound due to its large field of view and good soft tissue contrast. Segmenting the placenta from fetal MRI is important for fetal surgical planning such as in the case of twin-to-twin transfusion syndrome [61]. Clinical fetal MRI data are often acquired with a large slice thickness for good contrast-to-noise ratio. Movement of the fetus can lead to inhomogeneous appearances between slices. In addition, the location and orientation of the placenta vary largely between individuals. These factors make automatic and 3D segmentation of the placenta a challenging task [62]. Interactive 2D slice-based segmentation is expected to achieve more robust results [6], [55]. The 2D segmentation results can also be used for motion correction and high-resolution volume reconstruction [63].

We collected clinical T2-weighted MRI scans of 25 pregnant women in the second trimester with Single-shot Fast Spin-echo (SSFSE). The data were acquired in axial view with pixel size between 0.7422 mm × 0.7422 mm and 1.582 mm × 1.582 mm and slice thickness 3-4 mm. Each slice was resampled with a uniform pixel size of 1 mm × 1 mm and cropped by a box of size 172 × 128 containing the placenta. We used 17 volumes with 624 slices for training, three volumes with 122 slices for validation and five volumes with 179 slices for testing. The ground truth was manually delineated by an experienced Radiologist.

4.2.2. Stage 1: Automatic Segmentation by 2D P-Net with CRF-Net(f)

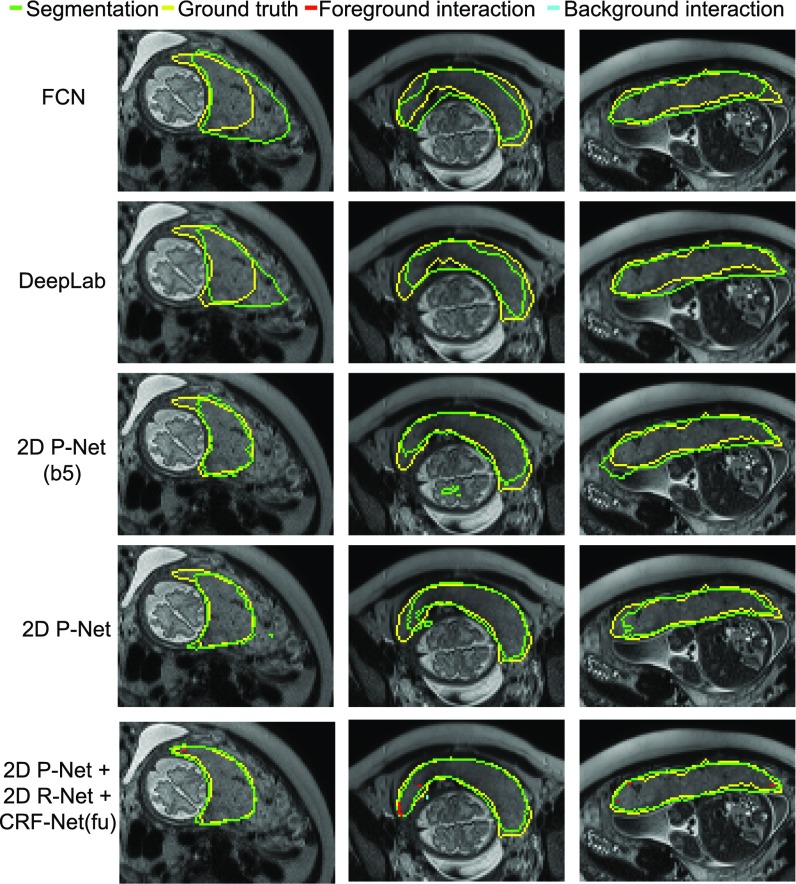

Fig. 6 shows the automatic segmentation results obtained by different networks in Stage 1. It shows that FCN is able to capture the main region of the placenta. However, the segmentation results are blob-like with smooth boundaries. DeepLab is better than FCN, but its blob-like results are similar to those of FCN. This is mainly due to the downsampling and upsampling procedure employed by these methods. In contrast, 2D P-Net(b5) and 2D P-Net obtain more detailed results. It can be observed that 2D P-Net achieves better results than the other three networks. However, there are still some obvious mis-segmented regions by 2D P-Net. Table 1 presents a quantitative comparison of these networks based on all the testing data. 2D P-Net achieves a Dice score of 84.78 ± 11.74 percent and an ASSD of 2.09 ± 1.53 pixels, and it performs better than the other three networks.

Fig. 6.

Visual comparison of different networks in Stage 1 of 2D placenta segmentation. The last row shows interactively refined results by DeepIGeoS.

TABLE 1. Quantitative Comparison of Different Networks and CRFs in Stage 1 of 2D Placenta Segmentation.

| Method | Dice (%) | ASSD (pixels) |
|---|---|---|
| FCN [11] | 81.47 ± 11.40 | 2.66 ± 1.39 |
| DeepLab [58] | 83.38 ± 9.53 | 2.20 ± 0.84 |
| 2D P-Net(b5) | 83.16 ± 13.01 | 2.36 ± 1.66 |
| 2D P-Net | 84.78 ± 11.74 | 2.09 ± 1.53 |
| 2D P-Net + Dense CRF | 84.90 ± 12.05 | 2.05 ± 1.59 |
| 2D P-Net + CRF-Net(g) | 85.44 ± 12.50 | 1.98 ± 1.46 |
| 2D P-Net + CRF-Net(f) | 85.86 ± 11.67 | 1.85 ± 1.30 |

CRF-Net(g) [50] constrains pairwise potential as Gaussian functions. CRF-Net(f) is our proposed CRF that learns freeform pairwise potential functions. Significant improvement from 2D P-Net ($p$-value < 0.05) is shown in bold font.

Based on 2D P-Net, we compared different CRFs in Stage 1. A visual comparison between Dense CRF, CRF-Net(g) with Gaussian pairwise potentials and CRF-Net(f) with freeform pairwise potentials is shown in Fig. 7. In the first column, the placenta is under-segmented by 2D P-Net. Dense CRF leads to very small improvements on the result. CRF-Net(g) and CRF-Net(f) improve the result by preserving more placenta regions, and the latter shows a better segmentation. In the second column, 2D P-Net obtains an over-segmentation of adjacent fetal brain and maternal tissues. Dense CRF does not improve the segmentation noticeably, but CRF-Net(g) and CRF-Net(f) remove more over-segmented areas. CRF-Net(f) shows a better performance than the other two CRFs. The quantitative evaluation of these three CRFs is presented in Table 1, which shows Dense CRF leads to a result that is very close to that of 2D P-Net ($p$-value > 0.05), while the last two CRFs significantly improve the segmentation ($p$-value < 0.05). In addition, CRF-Net(f) is better than CRF-Net(g). Fig. 7 and Table 1 indicate that large mis-segmentation exists in some images; therefore, we use 2D R-Net with CRF-Net(fu) to refine the segmentation interactively in the following section.

Fig. 7.

Visual comparison of different CRFs in Stage 1 of 2D placenta segmentation. The last row shows interactively refined results by DeepIGeoS.

4.2.3. Stage 2: Interactive Refinement by 2D R-Net with CRF-Net(fu)

Fig. 8 shows examples of interactive refinement based on 2D R-Net with CRF-Net(fu) in Stage 2. The first column in Fig. 8 shows initial segmentation results obtained by 2D P-Net + CRF-Net(f). The user provides clicks/scribbles to indicate the foreground (red) or the background (cyan). The second to the last columns in Fig. 8 show the results for five variations of refinement. These refinement methods correct most of the mis-segmented areas but perform at different levels in dealing with local details, as indicated by white arrows. Fig. 8 shows 2D R-Net with geodesic distance performs better than min-cut user-editing and 2D R-Net(Euc) that uses euclidean distance. CRF-Net(fu) can further improve the segmentation. For quantitative comparison, we measured the segmentation accuracy after the first iteration of user refinement (giving user interactions to mark all the main mis-segmented regions and applying refinement once), in which the same initial segmentation and the same set of user interactions were used by the five refinement methods. The results are presented in Table 2, which shows the combination of the proposed 2D R-Net using geodesic distance and CRF-Net(fu) leads to more accurate segmentations than the other refinement methods with the same set of user interactions. The Dice score and ASSD of 2D R-Net + CRF-Net(fu) are 89.31 ± 5.33 percent and 1.22 ± 0.55 pixels, respectively.

Fig. 8.

Visual comparison of different refinement methods in Stage 2 of 2D placenta segmentation. The first column shows the initial automatic segmentation obtained by 2D P-Net + CRF-Net(f), on which user interactions are added for refinement. The remaining columns show refined results. 2D R-Net(Euc) is a counterpart of the proposed 2D R-Net and it uses euclidean distance. White arrows show the difference in local details.

TABLE 2. Quantitative Evaluation of Different Refinement Methods in Stage 2 of 2D Placenta Segmentation.

| Method | Dice (%) | ASSD (pixels) |
|---|---|---|
| Before refinement | 85.86 ± 11.67 | 1.85 ± 1.30 |
| Min-cut user-editing | 87.04 ± 9.79 | 1.63 ± 1.15 |
| 2D R-Net(Euc) | 88.26 ± 10.61 | 1.54 ± 1.18 |
| 2D R-Net | 88.76 ± 5.56 | 1.31 ± 0.60 |
| 2D R-Net(Euc) + CRF-Net(fu) | 88.71 ± 8.42 | 1.26 ± 0.59 |
| 2D R-Net + CRF-Net(fu) | 89.31 ± 5.33 | 1.22 ± 0.55 |

The initial segmentation is obtained by 2D P-Net + CRF-Net(f). 2D R-Net(Euc) uses euclidean distance instead of geodesic distance. Significant improvement from 2D R-Net ($p$-value < 0.05) is shown in bold font.

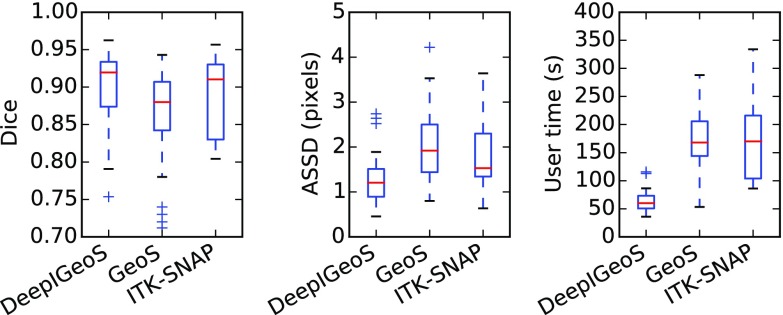

4.2.4. Comparison with Other 2D Interactive Methods

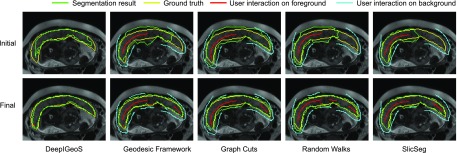

Fig. 9 shows a visual comparison between DeepIGeoS and Geodesic Framework [35], Graph Cuts [8], Random Walks [7] and SlicSeg [6] for 2D placenta segmentation. The first row shows the initial scribbles and the resulting segmentation. Notice no initial scribbles are needed for DeepIGeoS. The second row shows refined results, where DeepIGeoS only needs two short strokes to get an accurate segmentation, while the other methods require far more scribbles to get similar results. Quantitative comparison of these methods based on the final segmentation given by the two users is presented in Fig. 10. It shows these methods achieve similar accuracy, but DeepIGeoS requires far fewer user interactions and less user time. (See supplementary video 1, available online.)

Fig. 9.

Visual comparison of DeepIGeoS and other interactive methods for 2D placenta segmentation. The first row shows initial scribbles (except for DeepIGeoS) and the resulting segmentation. The second row shows final refined results with the entire set of scribbles. The user decided on the level of interaction required to achieve a visually acceptable result.

Fig. 10.

Quantitative comparison of 2D placenta segmentation by different interactive methods in terms of Dice, ASSD, total interactions (scribble length) and user time.

4.3. 3D Brain Tumor Segmentation from FLAIR Images

4.3.1. Clinical Background and Experiments Setting

Gliomas are the most common brain tumors in adults, and treatment effectiveness has improved little despite considerable research [64]. With the development of medical imaging, brain tumors can be imaged by different MR protocols with different contrasts. For example, T1-weighted images highlight the enhancing part of the tumor and FLAIR acquisitions highlight the peritumoral edema. Segmentation of brain tumors can provide better volumetric measurements and therefore has enormous potential value for improved diagnosis, treatment planning, and follow-up of individual patients. However, automatic brain tumor segmentation remains technically challenging because 1) the size, shape, and localization of brain tumors have considerable variations among patients, and 2) the boundaries between adjacent structures are often ambiguous.

In this experiment, we investigate interactive segmentation of the whole tumor from FLAIR images. We used the 2015 Brain Tumor Segmentation Challenge (BraTS) [64] training set with images of 274 cases. The ground truth was manually delineated by several experts. Differently from previous works using this dataset for multi-label and multi-modality segmentation [15], [65], as a first demonstration of deep interactive segmentation in 3D, we only use FLAIR images in the dataset and only segment the whole tumor. We randomly selected 234 cases for training and used the remaining 40 cases for testing. All these images had been skull-stripped and resampled to a size of 240 × 240 × 155 with isotropic resolution 1 mm$^3$. We cropped each image based on the bounding box of its non-zero region. The feature channel number of 3D P-Net and R-Net was $C = 16$.

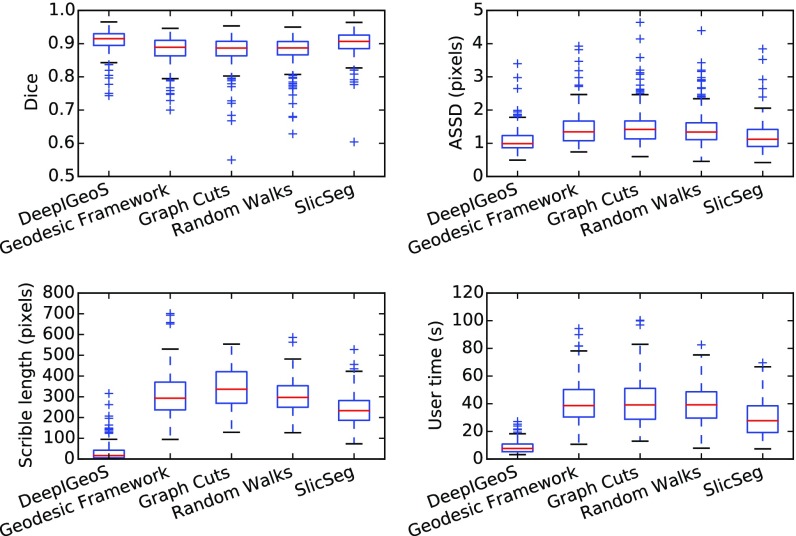

4.3.2. Stage 1: Automatic Segmentation by 3D P-Net with CRF-Net(f)

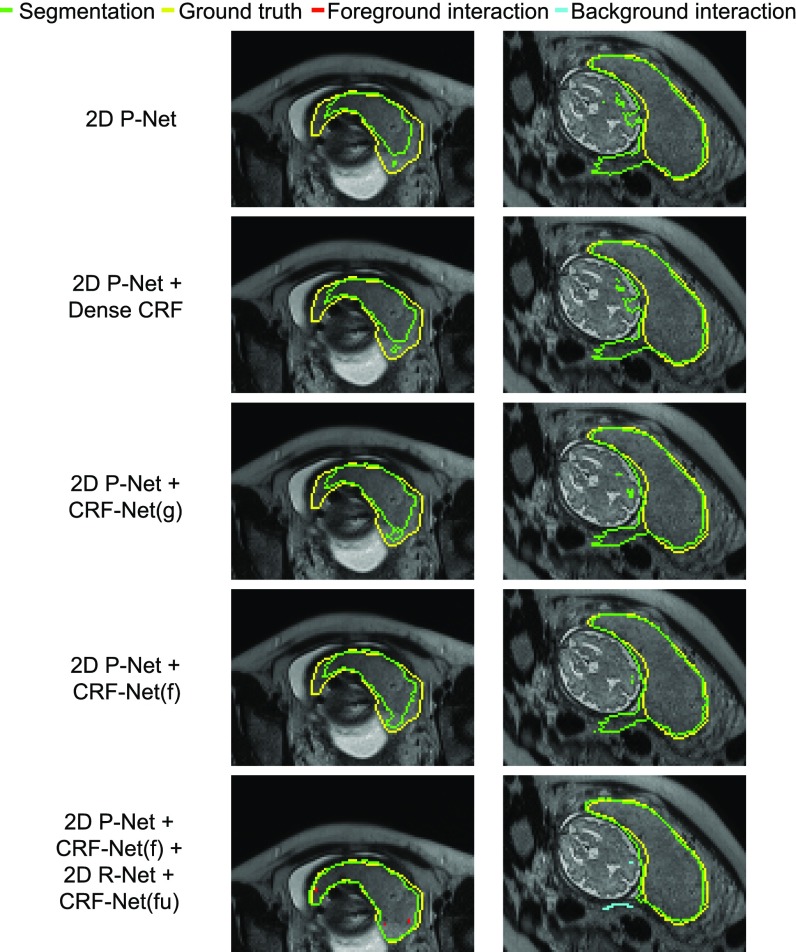

Fig. 11 shows examples of automatic segmentation by different networks in Stage 1, where 3D P-Net is compared with DeepMedic [15], HighRes3DNet [14] and 3D P-Net(b5). In the first column, DeepMedic segments the tumor roughly, with some missed regions near the boundary. HighRes3DNet reduces the missed regions but leads to some over-segmentation. 3D P-Net(b5) obtains a similar result to that of HighRes3DNet. In contrast, 3D P-Net achieves a more accurate segmentation, which is closer to the ground truth. More examples in the second and third columns in Fig. 11 also show 3D P-Net outperforms the other networks. Quantitative evaluation of these four networks is presented in Table 3. DeepMedic achieves an average Dice score of 83.87 percent. HighRes3DNet and 3D P-Net(b5) achieve similar performance, and they are better than DeepMedic. 3D P-Net outperforms these three counterparts with 86.68 ± 7.67 percent in terms of Dice and 2.14 ± 2.17 pixels in terms of ASSD. Note that the proposed 3D P-Net has far fewer parameters compared with HighRes3DNet. It is more memory efficient and therefore can perform inference on a 3D volume in interactive time.

Fig. 11.

Visual comparison of different networks in Stage 1 of 3D brain tumor segmentation. The last row shows interactively refined results by DeepIGeoS.

TABLE 3. Quantitative Comparison of Different Networks and CRFs in Stage 1 of 3D Brain Tumor Segmentation.

| Method | Dice (%) | ASSD (pixels) |
|---|---|---|
| DeepMedic [15] | 83.87 ± 8.72 | 2.38 ± 1.52 |
| HighRes3DNet [14] | 85.47 ± 8.66 | 2.20 ± 2.24 |
| 3D P-Net(b5) | 85.36 ± 7.34 | 2.21 ± 2.13 |
| 3D P-Net | 86.68 ± 7.67 | 2.14 ± 2.17 |
| 3D P-Net + Dense CRF | 87.06 ± 7.23 | 2.10 ± 2.02 |
| 3D P-Net + CRF-Net(f) | 87.55 ± 6.72 | 2.04 ± 1.70 |

Significant improvement from 3D P-Net ($p$-value < 0.05) is shown in bold font.

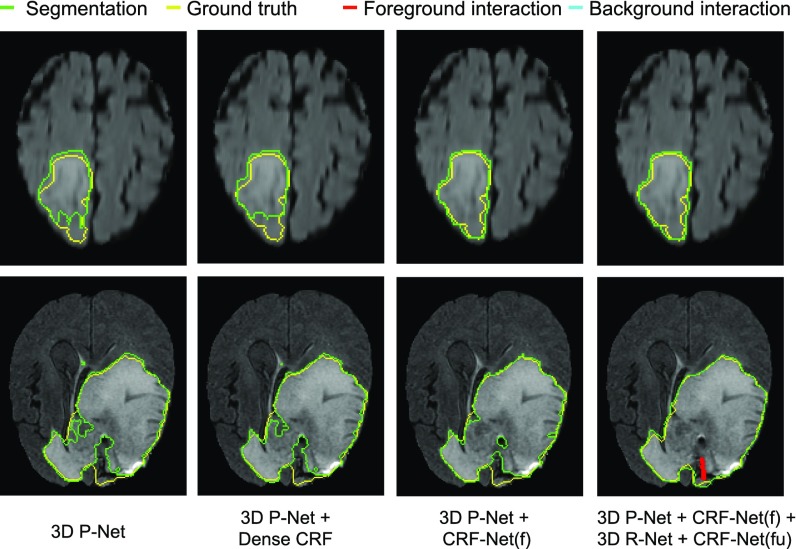

Since CRF-RNN [50] was only implemented for 2D, in the context of 3D segmentation we only compared 3D CRF-Net(f) with 3D Dense CRF [15] that uses manually tuned parameters. Visual comparison between these two types of CRFs working with 3D P-Net in Stage 1 is shown in Fig. 12. It can be observed that CRF-Net(f) achieves more noticeable improvement compared with Dense CRF that is used as post-processing without end-to-end learning. Quantitative measurement of Dense CRF and CRF-Net(f) is listed in Table 3. It shows that only CRF-Net(f) obtains significantly better segmentation than 3D P-Net with $p$-value < 0.05.

Fig. 12.

Visual comparison of different CRFs in Stage 1 of 3D brain tumor segmentation. The last column shows interactively refined results by DeepIGeoS.

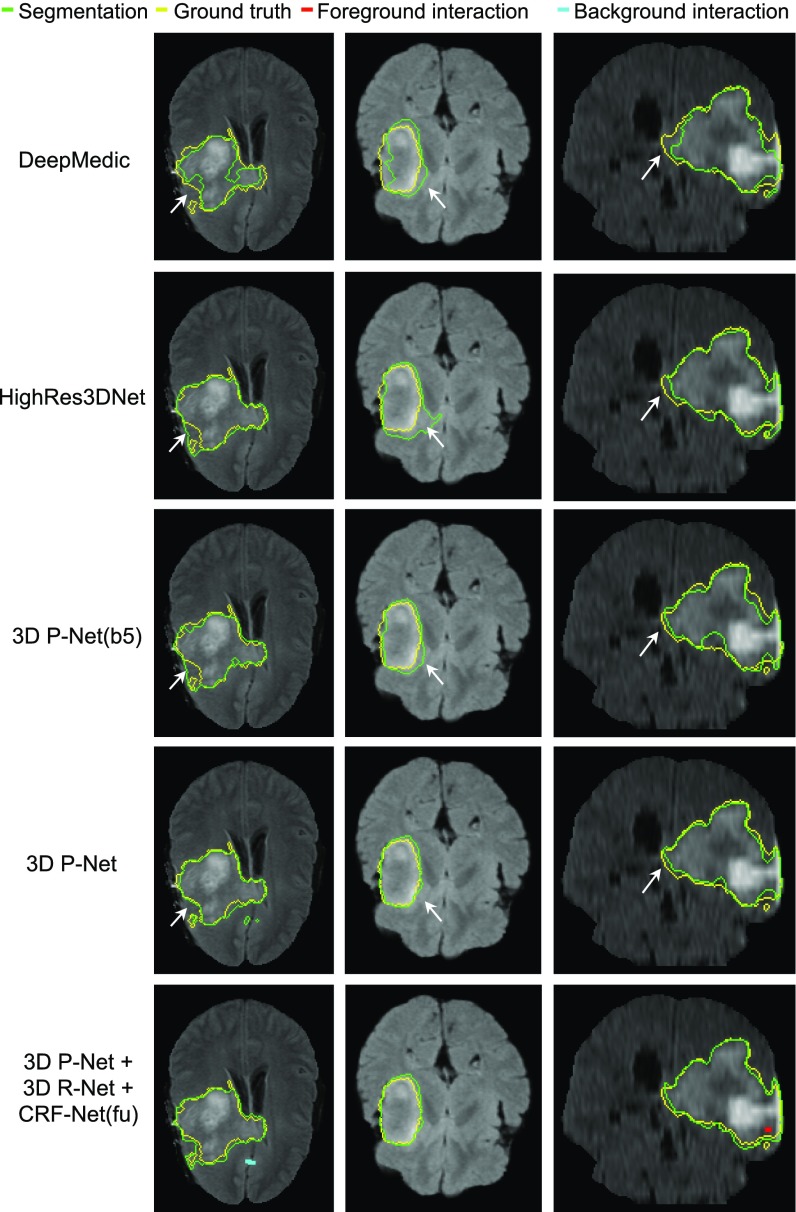

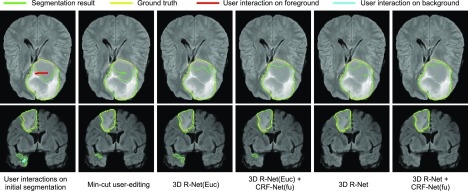

4.3.3. Stage 2: Interactive Refinement by 3D R-Net with CRF-Net(fu)

Fig. 13 shows examples of interactive refinement results in Stage 2 of 3D brain tumor segmentation. The initial segmentation is obtained by 3D P-Net + CRF-Net(f) in Stage 1. With the same set of user interactions, we compared the refined results of min-cut user-editing and four variations of 3D R-Net: using geodesic or euclidean distance transforms with or without CRF-Net(fu). Fig. 13 shows that min-cut user-editing achieves a small improvement. It can be found that more accurate results are obtained by using geodesic distance than using euclidean distance, and CRF-Net(fu) can further help to improve the segmentation. For quantitative comparison, we measured the segmentation accuracy after the first iteration of refinement, in which the same set of scribbles was used for different refinement methods. The quantitative evaluation is listed in Table 4, showing that the proposed 3D R-Net with geodesic distance and CRF-Net(fu) achieves higher accuracy than the other variations with a Dice score of 89.93 ± 6.49 percent and ASSD of 1.43 ± 1.16 pixels.

Fig. 13.

Visual comparison of different refinement methods in Stage 2 of 3D brain tumor segmentation. The initial segmentation is obtained by 3D P-Net + CRF-Net(f), on which user interactions are given. 3D R-Net(Euc) is a counterpart of the proposed 3D R-Net and it uses euclidean distance.

TABLE 4. Quantitative Comparison of Different Refinement Methods in Stage 2 of 3D Brain Tumor Segmentation.

| Method | Dice (%) | ASSD (pixels) |
|---|---|---|
| Before refinement | 87.55 ± 6.72 | 2.04 ± 1.70 |
| Min-cut user-editing | 88.41 ± 7.05 | 1.74 ± 1.53 |
| 3D R-Net(Euc) | 88.82 ± 7.68 | 1.60 ± 1.56 |
| 3D R-Net | 89.30 ± 6.82 | 1.52 ± 1.37 |
| 3D R-Net(Euc) + CRF-Net(fu) | 89.27 ± 7.32 | 1.48 ± 1.22 |
| 3D R-Net + CRF-Net(fu) | 89.93 ± 6.49 | 1.43 ± 1.16 |

The segmentation before refinement is obtained by 3D P-Net + CRF-Net(f). 3D R-Net(Euc) uses euclidean distance instead of geodesic distance. Significant improvement from 3D R-Net ($p$-value < 0.05) is shown in bold font.

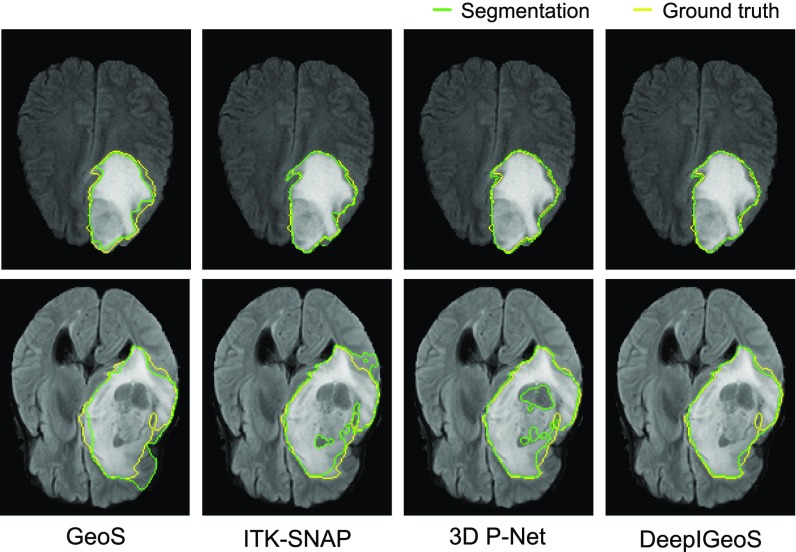

4.3.4. Comparison with Other 3D Interactive Methods

Fig. 14 shows a visual comparison between GeoS [36], ITK-SNAP [5] and DeepIGeoS. In the first row, the tumor has a good contrast with the background. All the compared methods achieve very accurate segmentations. In the second row, a lower contrast makes it difficult for the user to identify the tumor boundary. Benefiting from the initial tumor boundary that is automatically identified by 3D P-Net, DeepIGeoS outperforms GeoS and ITK-SNAP. Quantitative comparison is presented in Fig. 15. It shows DeepIGeoS achieves higher accuracy compared with GeoS and ITK-SNAP. In addition, the user time for DeepIGeoS is about one third of that for the other two methods. Supplementary video 2, available online, shows more examples of DeepIGeoS for 3D brain tumor segmentation.

Fig. 14.

Visual comparison of 3D brain tumor segmentation using GeoS, ITK-SNAP, and DeepIGeoS that is based on 3D P-Net.

Fig. 15.

Quantitative evaluation of 3D brain tumor segmentation by DeepIGeoS, GeoS and ITK-SNAP.

5. Conclusion

In this work, we presented a deep learning-based interactive framework for 2D and 3D medical image segmentation. We proposed a two-stage framework with a P-Net to obtain an initial automatic segmentation and an R-Net to refine the result based on user interactions that are transformed into geodesic distance maps and then integrated into the input of R-Net. We also proposed a resolution-preserving network structure with dilated convolution for dense prediction, and extended the existing RNN-based CRF so that it can learn freeform pairwise potentials and take advantage of user interactions as hard constraints. Segmentation results of the placenta from 2D fetal MRI and brain tumors from 3D FLAIR images show that our proposed method achieves better results than automatic CNNs. It requires far less user time compared with traditional interactive methods and achieves higher accuracy for 3D brain tumor segmentation. The framework can be extended to deal with multiple organs in the future.

Acknowledgments

This work was supported through an Innovative Engineering for Health award by the Wellcome Trust (WT101957); Engineering and Physical Sciences Research Council (EPSRC) (NS/A000027/1, EP/H046410/1, EP/J020990/1, EP/K005278), Wellcome/EPSRC [203145Z/16/Z], the National Institute for Health Research University College London Hospitals Biomedical Research Centre (NIHR BRC UCLH/UCL), the Royal Society [RG160569], a UCL Overseas Research Scholarship and a UCL Graduate Research Scholarship, hardware donated by NVIDIA, and the use of Emerald, a GPU-accelerated High Performance Computer, made available by the Science & Engineering South Consortium operated in partnership with the STFC Rutherford-Appleton Laboratory.

Biographies

Guotai Wang received the bachelor's and master's degrees in biomedical engineering from Shanghai Jiao Tong University, in 2011 and 2014, respectively. He is working toward the PhD degree in the Translational Imaging Group, UCL, focusing on image segmentation for fetal surgical planning. He won the UCL Overseas Research Scholarship and UCL Graduate Research Scholarship. His research interests include image segmentation, computer vision, and deep learning.

Maria A. Zuluaga received the PhD degree from Université Claude Bernard Lyon. After a year as a postdoctoral fellow with the European Synchrotron Radiation Facility, Grenoble, France, she joined CMIC, in March 2012, as a research associate to work on cardiovascular image analysis and computer-aided diagnosis (CAD) of cardiovascular pathologies. Since August 2014, she has been a part of the Guided Instrumentation for Fetal Therapy and Surgery (GIFT-Surg) project as a senior research associate.

Wenqi Li received the BSc degree in computer science from the University of Science and Technology Beijing, in 2010, the MSc degree in computing with vision and imaging from the University of Dundee, in 2011, and the PhD degree in the computer vision and image processing group at the University of Dundee, in 2015. He is a research associate with the Guided Instrumentation for Fetal Therapy and Surgery (GIFT-Surg) project. His main research interests include anatomy detection and segmentation for presurgical evaluation and surgical planning.

Rosalind Pratt received the degree in medicine from the University of Leeds, before starting clinical training in London, where she is undertaking specialist training in obstetrics and gynaecology. She is a clinical academic training fellow with the UCL Institute for Women's Health. Her main research focus is in novel imaging of the human placenta.

Premal A. Patel received the degree in medicine from St Bartholomew's and the Royal London Hospital School of Medicine and Dentistry, University of London. He is a consultant paediatric interventional radiologist with the Great Ormond Street Hospital for Children NHS Foundation Trust and undertakes a variety of image-guided procedures in children. He is also a clinical training fellow within the Translational Imaging Group, UCL. Following a period of training in Surgery and Paediatric Surgery in London, he undertook training in Clinical Radiology in Southampton and subsequently undertook Fellowships in Paediatric Interventional Radiology at Great Ormond Street Hospital for Children, London, United Kingdom and The Hospital for Sick Children (SickKids), University of Toronto, Canada.

Michael Aertsen received the medicine degree from the University of Hasselt and the Katholieke Universiteit Leuven. He is a consultant pediatric radiologist with the University Hospitals of Leuven. He specializes in fetal MRI, and his main research focus is fetal brain development with advanced MRI techniques.

Tom Doel received the MSc degree in applied mathematics from the University of Bath, the MPhys degree in mathematical physics from the University of Edinburgh, and the PhD degree from the University of Oxford, where he developed computational techniques for multi-modal medical image segmentation, registration, analysis and modelling. He is a research associate with the Translational Imaging Group, UCL and an honorary research fellow with the UCL Hospitals NHS Foundation Trust. He is a professional software engineer and worked for Barco Medical Imaging Systems to develop clinical radiology software. His current research is on novel algorithm development and the robust design and architecture of clinically-focussed software for surgical planning and image-guided surgery as part of the GIFT-Surg project.

Anna L. David is a professor and honorary consultant in obstetrics and maternal fetal medicine with the Institute for Women's Health, University College London (UCL), London. She has a clinical practice with UCLH in fetal medicine, fetal therapy and obstetrics. Her main research is in translational medicine. She is head of the Research Department of Maternal Fetal Medicine, UCL Institute for Women's Health.

Jan Deprest is a professor of obstetrics and gynaecology with the Katholieke Universiteit Leuven and consultant obstetrician gynaecologist with the University Hospitals Leuven, Belgium. He is currently the academic chair of his Department and the director of the Centre for Surgical Technologies, Faculty of Medicine. He established the Eurofoetus consortium, which is dedicated to the development of instruments and techniques for minimally invasive fetal and placental surgery.

Sébastien Ourselin is director of the EPSRC Centre for Doctoral Training (CDT) in medical imaging, head of the Translational Imaging Group (TIG) as part of the Centre for Medical Image Computing (CMIC), and professor of Medical Image Computing, UCL. His core skills are in medical image analysis, software engineering, and translational medicine. He is best known for his work on image registration and segmentation, its exploitation for robust image-based biomarkers in neurological conditions, as well as for his development of image-guided surgery systems.

Tom Vercauteren received graduate degrees from Ecole Polytechnique and Columbia University, and the PhD degree from Inria Sophia Antipolis. He is an associate professor with UCL. His main research focus is on the development of innovative interventional imaging systems and their translation to the clinic. One key driving force of his work is the exploitation of image computing and the knowledge of the physics of acquisition to move beyond the initial limitations of the medical imaging devices that are developed or used in the course of his research.

Contributor Information

Guotai Wang, Email: guotai.wang.14@ucl.ac.uk.

Maria A. Zuluaga, Email: maria.zuluaga@gmail.com.

Wenqi Li, Email: wenqi.li@ucl.ac.uk.

Rosalind Pratt, Email: rosalind.pratt@ucl.ac.uk.

Premal A. Patel, Email: premal.patel@ucl.ac.uk.

Michael Aertsen, Email: michaelaertsen@gmail.com.

Tom Doel, Email: t.doel@ucl.ac.uk.

Anna L. David, Email: a.david@ucl.ac.uk.

Jan Deprest, Email: Jan.Deprest@uzleuven.be.

Sébastien Ourselin, Email: s.ourselin@ucl.ac.uk.

Tom Vercauteren, Email: t.vercauteren@ucl.ac.uk.

References

- [1].Withey D. and Koles Z., “Medical image segmentation: Methods and software,” in Proc. Joint Meeting 6th Int. Symp. Noninvasive Functional Source Imaging Brain Heart Int. Conf. Functional Biomedical Imaging, 2007, pp. 140–143.

- [2].Sharma N. and Aggarwal L. M., “Automated medical image segmentation techniques,” J. Medical Physics, vol. 35, no. 1, pp. 3–14, 2010.

- [3].Zhao F. and Xie X., “An overview of interactive medical image segmentation,” Ann. BMVA, vol. 2013, no. 7, pp. 1–22, 2013.

- [4].Hamamci A., Kucuk N., Karaman K., Engin K., and Unal G., “Tumor-Cut: Segmentation of brain tumors on contrast enhanced MR images for radiosurgery applications,” IEEE Trans. Medical Imaging, vol. 31, no. 3, pp. 790–804, Mar. 2012.

- [5].Yushkevich P. A., Piven J., Hazlett H. C., Smith R. G., Ho S., Gee J. C., and Gerig G., “User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability,” NeuroImage, vol. 31, no. 3, pp. 1116–1128, 2006.

- [6].Wang G., Zuluaga M. A., Pratt R., Aertsen M., Doel T., Klusmann M., David A. L., Deprest J., Vercauteren T., and Ourselin S., “Slic-Seg: A minimally interactive segmentation of the placenta from sparse and motion-corrupted fetal MRI in multiple views,” Med. Image Anal., vol. 34, pp. 137–147, 2016.

- [7].Grady L., “Random walks for image segmentation,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 28, no. 11, pp. 1768–1783, Nov. 2006.

- [8].Boykov Y. Y. and Jolly M. P., “Interactive graph cuts for optimal boundary & region segmentation of objects in N-D images,” in Proc. 8th IEEE Int. Conf. Comput. Vis., 2001, pp. 105–112.

- [9].Rother C., Kolmogorov V., and Blake A., “GrabCut: Interactive foreground extraction using iterated graph cuts,” ACM Trans. Graph., vol. 23, no. 3, pp. 309–314, 2004.

- [10].Litjens G., Kooi T., Bejnordi B. E., Setio A. A. A., Ciompi F., Ghafoorian M., van der Laak J. A., Ginneken B. V., and Sánchez C. I., “A survey on deep learning in medical image analysis,” Med. Image Anal., vol. 42, pp. 60–88, 2017.

- [11].Long J., Shelhamer E., and Darrell T., “Fully convolutional networks for semantic segmentation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2015, pp. 3431–3440.

- [12].Ronneberger O., Fischer P., and Brox T., “U-Net: Convolutional networks for biomedical image segmentation,” in Proc. Medical Image Comput. Comput.-Assisted Intervention, 2015, pp. 234–241.

- [13].Milletari F., Navab N., and Ahmadi S.-A., “V-Net: Fully convolutional neural networks for volumetric medical image segmentation,” in Proc. 4th Int. Conf. 3D Vis., 2016, pp. 565–571.

- [14].Li W., Wang G., Fidon L., Ourselin S., Cardoso M. J., and Vercauteren T., “On the compactness, efficiency, and representation of 3D convolutional networks: brain parcellation as a pretext task,” in Proc. Inf. Process. Medical Imaging, 2017, pp. 348–360.

- [15].Kamnitsas K., Ledig C., Newcombe V. F. J., Simpson J. P., Kane A. D., Menon D. K., Rueckert D., and Glocker B., “Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation,” Med. Image Anal., vol. 36, pp. 61–78, 2017.

- [16].Krizhevsky A., Sutskever I., and Hinton G. E., “ImageNet classification with deep convolutional neural networks,” in Proc. 25th Int. Conf. Neural Inf. Process. Syst., 2012, pp. 1097–1105.

- [17].Simonyan K. and Zisserman A., “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556.

- [18].He K., Zhang X., Ren S., and Sun J., “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016, pp. 770–778.

- [19].Havaei M., Davy A., Warde-Farley D., Biard A., Courville A., Bengio Y., Pal C., Jodoin P.-M., and Larochelle H., “Brain tumor segmentation with deep neural networks,” Med. Image Anal., vol. 35, pp. 18–31, 2016.

- [20].Girshick R., Donahue J., Darrell T., and Malik J., “Rich feature hierarchies for accurate object detection and semantic segmentation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2014, pp. 580–587.

- [21].Çiçek Ö., Abdulkadir A., Lienkamp S. S., Brox T., and Ronneberger O., “3D U-Net: Learning dense volumetric segmentation from sparse annotation,” in Proc. Medical Image Comput. Comput.-Assisted Intervention, 2016, pp. 424–432.

- [22].Chen L.-C., Papandreou G., Kokkinos I., Murphy K., and Yuille A. L., “Semantic image segmentation with deep convolutional nets and fully connected CRFs,” arXiv preprint arXiv:1412.7062.

- [23].Yu F. and Koltun V., “Multi-scale context aggregation by dilated convolutions,” arXiv preprint arXiv:1511.07122.

- [24].Ondruska P., Dequaire J., Wang D. Z., and Posner I., “End-to-end tracking and semantic segmentation using recurrent neural networks,” arXiv preprint arXiv:1604.05091.

- [25].Dai J., He K., Li Y., Ren S., and Sun J., “Instance-sensitive fully convolutional networks,” in Proc. 14th Eur. Conf. Comput. Vis., 2016, pp. 534–549.

- [26].Lea C., Vidal R., Reiter A., and Hager G. D., “Temporal convolutional networks for action segmentation and detection,” in Proc. Eur. Conf. Comput. Vis., 2016, pp. 47–54.

- [27].Lin G., Shen C., Reid I., and van den Hengel A., “Efficient piecewise training of deep structured models for semantic segmentation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016, pp. 3194–3203.

- [28].Pinheiro P. and Collobert R., “Recurrent convolutional neural networks for scene labeling,” in Proc. 31st Int. Conf. Mach. Learn., 2014, pp. 82–90.

- [29].Hariharan B., Arbeláez P., Girshick R., and Malik J., “Hypercolumns for object segmentation and fine-grained localization,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2015, pp. 447–456.

- [30].Armstrong C. J., Price B. L., and Barrett W. A., “Interactive segmentation of image volumes with live surface,” Comput. Graph., vol. 31, no. 2, pp. 212–229, 2007.

- [31].Cates J. E., Lefohn A. E., and Whitaker R. T., “GIST: An interactive, GPU-based level set segmentation tool for 3D medical images,” Med. Image Anal., vol. 8, no. 3, pp. 217–231, 2004.