Abstract

A large body of recent work focuses on methods for extracting low-dimensional latent structure from multi-neuron spike train data. Most such methods employ either linear latent dynamics or linear mappings from latent space to log spike rates. Here we propose a doubly nonlinear latent variable model that can identify low-dimensional structure underlying apparently high-dimensional spike train data. We introduce the Poisson Gaussian-Process Latent Variable Model (P-GPLVM), which consists of Poisson spiking observations and two underlying Gaussian processes—one governing a temporal latent variable and another governing a set of nonlinear tuning curves. The use of nonlinear tuning curves enables discovery of low-dimensional latent structure even when spike responses exhibit high linear dimensionality (e.g., as found in hippocampal place cell codes). To learn the model from data, we introduce the decoupled Laplace approximation, a fast approximate inference method that allows us to efficiently optimize the latent path while marginalizing over tuning curves. We show that this method outperforms previous Laplace-approximation-based inference methods in both the speed of convergence and accuracy. We apply the model to spike trains recorded from hippocampal place cells and show that it compares favorably to a variety of previous methods for latent structure discovery, including variational auto-encoder (VAE) based methods that parametrize the nonlinear mapping from latent space to spike rates with a deep neural network.

1. Introduction

Recent advances in multi-electrode array recording techniques have made it possible to measure the simultaneous spiking activity of increasingly large neural populations. These datasets have highlighted the need for robust statistical methods for identifying the latent structure underlying high-dimensional spike train data, so as to provide insight into the dynamics governing large-scale activity patterns and the computations they perform [1-4].

Recent work has focused on the development of sophisticated model-based methods that seek to extract a shared, low-dimensional latent process underlying population spiking activity. These methods can be roughly categorized on the basis of two basic modeling choices: (1) the dynamics of the underlying latent variable; and (2) the mapping from latent variable to neural responses. For choice of dynamics, one popular approach assumes the latent variable is governed by a linear dynamical system [5-11], while a second assumes that it evolves according to a Gaussian process, relaxing the linearity assumption and imposing only smoothness in the evolution of the latent state [1, 12-14]. For choice of mapping function, most previous methods have assumed a fixed linear or log-linear relationship between the latent variable and the mean response level [1, 5-8, 11, 12]. These methods seek to find a linear embedding of population spiking activity, akin to PCA or factor analysis. In many cases, however, the relationship between neural activity and the quantity it encodes can be highly nonlinear. Hippocampal place cells provide an illustrative example: if each discrete location in a 2D environment has a single active place cell, population activity spans a space whose dimensionality is equal to the number of neurons; a linear latent variable model cannot find a reduced-dimensional representation of population activity, despite the fact that the underlying latent variable (“position”) is clearly two-dimensional.

Several recent studies have introduced nonlinear coupling between latent dynamics and firing rate [7, 9, 10, 15]. These models use deep neural networks to parametrize the nonlinear mapping from latent space to spike rates, but often require repeated trials or long training sets. Table 1 summarizes these different model structures for latent neural trajectory estimation (including the original Gaussian process latent variable model (GPLVM) [16], which assumes Gaussian observations and does not produce spikes).

Table 1:

Modeling assumptions of various latent variable models for spike trains.

| model | latent | mapping function | output nonlinearity | observation |

|---|---|---|---|---|

| PLDS [8] | LDS | linear | exp | Poisson |

| PfLDS [9, 10] | LDS | neural net | exp | Poisson |

| LFADS [15] | RNN | neural net | exp | Poisson |

| GPFA [1] | GP | linear | identity | Gaussian |

| P-GPFA [13, 14] | GP | linear | exp | Poisson |

| GPLVM [16] | GP | GP | identity | Gaussian |

| P-GPLVM | GP | GP | exp | Poisson |

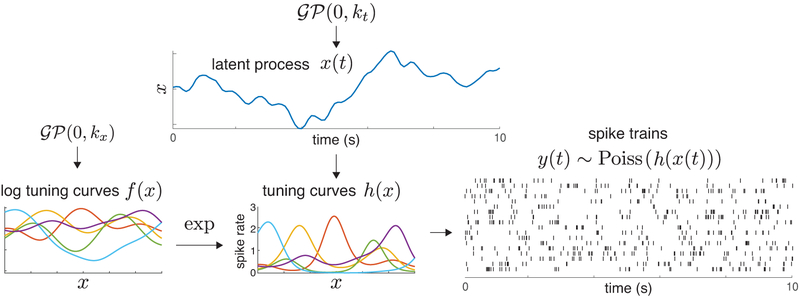

In this paper, we propose the Poisson Gaussian process latent variable model (P-GPLVM) for spike train data, which allows for nonlinearity in both the latent state dynamics and in the mapping from the latent states to the spike rates. Our model posits a low-dimensional latent variable that evolves in time according to a Gaussian process prior; this latent variable governs firing rates via a set of non-parametric tuning curves, parametrized as exponentiated samples from a second Gaussian process, from which spikes are then generated by a Poisson process (Fig. 1).

Figure 1:

Schematic diagram of the Poisson Gaussian Process Latent Variable Model (P-GPLVM), illustrating multi-neuron spike train data generated by the model with a one-dimensional latent process.

The paper is organized as follows: Section 2 introduces the P-GPLVM; Section 3 describes the decoupled Laplace approximation for performing efficient inference for the latent variable and tuning curves; Section 4 describes tuning curve estimation; Section 5 compares P-GPLVM to other models using simulated data and hippocampal place-cell recordings, demonstrating the accuracy and interpretability of P-GPLVM relative to other methods.

2. Poisson-Gaussian process latent variable model (P-GPLVM)

Suppose we have simultaneously recorded spike trains from N neurons. Let denote the matrix of spike count data, with neurons indexed by i ∈ (1,…, N) and spikes counted in discrete time bins indexed by t ∈ (1,…, T). Our goal is to construct a generative model of the latent structure underlying these data, which will here take the form of a P-dimensional latent variable x(t) and a set of mapping functions or tuning curves {hi(x)}, i ∈ (1,…, N) which map the latent variable to the spike rates of each neuron.

Latent dynamics

Let x(t) denote a (vector-valued) latent process, where each component xj(t), j ∈ (1,…, P), evolves according to an independent Gaussian process (GP),

| (1) |

with covariance function kt(t, t′) ≜ cov(xj(t), xj(t′)) governing how each scalar process varies over time. Although we can select any valid covariance function for kt, here we use the exponential covariance function, a special case of the Matérn kernel, given by k(t, t′) = r exp (−∣t − t′∣/l), which is parametrized by a marginal variance r > 0 and length-scale l > 0. Samples from this GP are continuous but not differentiable, equivalent to a Gaussian random walk with a bias toward the origin, also known as the Ornstein-Uhlenbeck process [17].

The latent state x(t) at any time t is a P-dimensional vector that we will write as . The collection of such vectors over T time bins forms a matrix . Let xj denote the jth row of X, which contains the set of states in latent dimension j. From the definition of a GP, xj has a multivariate normal distribution,

| (2) |

with a T × T covariance matrix Kt generated by evaluating the covariance function kt at all time bins in (1,…, T).

Nonlinear mapping

Let denote a nonlinear function mapping from the latent vector xt to a firing rate λt. We will refer to h(x) as a tuning curve, although unlike traditional tuning curves, which describe firing rate as a function of some externally (observable) stimulus parameter, here h(x) describes firing rate as a function of the (unobserved) latent vector x. Previous work has modeled h with a parametric nonlinear function such as a deep neural network [9,10]. Here we develop a nonparametric approach using a Gaussian process prior over the log of h. The logarithm assures that spike rates are non-negative.

Let fi(x) = log hi(x) denote the log tuning curve for the i’th neuron in our population, which we model with a GP,

| (3) |

where kx is a (spatial) covariance function that governs smoothness of the function over its P-dimensional input space. For simplicity, we use the common Gaussian or radial basis function (RBF) covariance function: , where x and x′ are arbitrary points in latent space, ρ is the marginal variance and δ is the length scale. The tuning curve for neuron i is then given by hi(x) = exp(fi(x)).

Let denote a vector with the t’th element equal to fi(xt). From the definition of a GP, fi has a multivariate normal distribution given latent vectors at all time bins ,

| (4) |

with a T × T covariance matrix Kx generated by evaluating the covariance function kx at all pairs of latent vectors in x1:T. Stacking fi for N neurons, we will formulate a matrix with on the i’th row. The element on the i’th row and the t’th column is fi, t = fi(xt).

Poisson spiking

Lastly, we assume Poisson spiking given the latent firing rates. We assume that spike rates are in units of spikes per time bin. Let λi, t = exp(fi, t) = exp(fi(xt)) denote the spike rate of neuron i at time t. The spike-count of neuron i at t given the log tuning curve fi and latent vector xt is Poisson distributed as

| (5) |

In summary, our model is as a doubly nonlinear Gaussian process latent variable model with Poisson observations (P-GPLVM). One GP is used to model the nonlinear evolution of the latent dynamic x, while a second GP is used to generate the log of the tuning curve f as a nonlinear function of x, which is then mapped to a tuning curve h via a nonlinear link function, e.g. exponential function. Fig. 1 provides a schematic of the model.

3. Inference using the decoupled Laplace approximation

For our inference procedure, we estimate the log of the tuning curve, f, as opposed to attempting to infer the tuning curve h directly. Once f is estimated, h can be obtained by exponentiating f. Given the model outlined above, the joint distribution over the observed data and all latent variables is written as,

| (6) |

where θ = {ρ, δ, r, l} is the hyperparameter set, references to which will now be suppressed for simplification. This is a Gaussian process latent variable model (GPLVM) with Poisson observations and a GP prior, and our goal is to now estimate both F and X. A standard Bayesian treatment of the GPLVM requires the computation of the log marginal likelihood associated with the joint distribution (Eq.6). Both F and X must be marginalized out,

| (7) |

However, propagating the prior density p(X) through the nonlinear mapping makes this inference difficult. The nested integral in (Eq. 7) contains X in a complex nonlinear manner, making analytical integration over X infeasible. To overcome these difficulties, we can use a straightforward MAP training procedure where the latent variables F and X are selected according to

| (8) |

Note that point estimates of the hyperparameters θ can also be found by maximizing the same objective function. As discussed above, learning X remains a challenge due to the interplay of the latent variables, i.e. the dependency of F on X. For our MAP training procedure, fixing one latent variable while optimizing for the other in a coordinate descent approach is highly inefficient since the strong interplay of variables often means getting trapped in bad local optima. In variational GPLVM [18], the authors introduced a non-standard variational inference framework for approximately integrating out the latent variables X then subsequently training a GPLVM by maximizing an analytic lower bound on the exact marginal likelihood. An advantage of the variational framework is the introduction of auxiliary variables which weaken the strong dependency between X and F. However, the variational approximation is only applicable to Gaussian observations; with Poisson observations, the integral over F remains intractable. In the following, we will propose using variations of the Laplace approximation for inference.

3.1. Standard Laplace approximation

We first use Laplace’s method to find a Gaussian approximation q(F∣Y, X) to the true posterior p(F∣Y, X), then do MAP estimation for X only. We employ the Laplace approximation for each fi individually. Doing a second order Taylor expansion of log p(fi∣yi, X) around the maximum of the posterior, we obtain a Gaussian approximation

| (9) |

where and is the Hessian of the negative log posterior at that point. By Bayes’ rule, the posterior over fi is given by p(fi∣yi, X) = p(yi∣fi)p(fi∣X)/p(yi∣X), but since p(yi∣X) is independent of fi, we need only consider the unnormalized posterior, defined as Ψ(fi), when maximizing w.r.t. fi. Taking the logarithm gives

| (10) |

Differentiating (Eq. 10) w.r.t. fi we obtain

| (11) |

| (12) |

where Wi = ∔∇∇ logp(yi∣fi). The approximated log conditional likelihood on X (see Sec. 3.4.4 in [17]) can then be written as

| (13) |

We can then estimate X as

| (14) |

When using standard LA, the gradient of log q(yi∣X) w.r.t. X should be calculated for a given posterior mode . Note that not only is the covariance matrix Kx an explicit function of X, but also and Wi are also implicitly functions of X—when X changes, the optimum of the posterior changes as well. Therefore, log q(yi∣X) contains an implicit function of X which does not allow for a straightforward closed-form gradient expression. Calculating numerical gradients instead yields a very inefficient implementation empirically.

3.2. Third-derivative Laplace approximation

One method to derive this gradient explicitly is described in [17] (see Sec. 5.5.1). We adapt their procedure to our setting to make the implicit dependency of and Wi on X explicit. To solve (Eq. 14), we need to determine the partial derivative of our approximated log conditional likelihood (Eq. 13) w.r.t. X, given as

| (15) |

by the chain rule. When evaluating the second term, we use the fact that is the posterior maximum, so ∂Ψ(fi)/∂fi = 0 at , where Ψ(fi) is defined in (Eq. 11). Thus the implicit derivatives of the first two terms in (Eq. 13) vanish, leaving only

| (16) |

To evaluate , we differentiate the self-consistent equation (setting (Eq. 11) to be 0 at ) to obtain

| (17) |

where we use the chain rule and from (Eq. 12). The desired implicit derivative is obtained by multiplying (Eq. 16) and (Eq. 17) to formulate the second term in (Eq. 15).

We can now estimate XMAP with (Eq. 14) using the explicit gradient expression in (Eq. 15). We call this method third-derivative Laplace approximation (tLA), as it depends on the third derivative of the data likelihood term (see [17] for further details). However, there is a big computational drawback with tLA: for each step along the gradient we have just derived, the posterior mode must be reevaluated. This method might lead to a fast convergence theoretically, but this nested optimization makes for a very slow computation empirically.

3.3. Decoupled Laplace approximation

We propose a novel method to relax the Laplace approximation, which we refer to as the decoupled Laplace approximation (dLA). Our relaxation not only decouples the strong dependency between X and F, but also avoids the nested optimization of searching for the posterior mode of F within each update of X. As in tLA, dLA also assumes to be a function of X. However, while tLA assumes to be an implicit function of X, dLA constructs an explicit mapping between and X.

The standard Laplace approximation uses a Gaussian approximation for the posterior p(fi∣yi, X) ∝ p(yi∣fi)p(fi∣X) where, in this paper, p(yi∣fi) is a Poisson distribution and p(fi∣X) is a multivariate Gaussian distribution. We first do the same second order Taylor expansion of log p(fi∣yi, X) around the posterior maximum to find q(fi∣yi, X) as in (Eq. 9). Now if we approximate the likelihood distribution p(yi∣fi) as a Gaussian distribution

, we can derive its mean m and covariance S. If

and

, the relationship between two Gaussian distributions and their product allow us to solve for m and S from the relationship

:

| (18) |

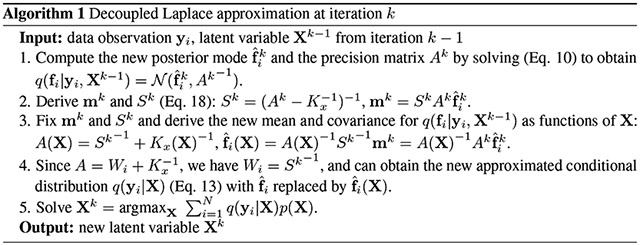

m and S represent the components of the posterior terms, and A, that come from the likelihood. Now when estimating X, we fix these likelihood terms m and S, and completely relax the prior, p(fi∣X). We are still solving (Eq. 14) w.r.t. X, but now q(fi∣yi, X) has both mean and covariance approximated as explicit functions of X. Alg. 1 describes iteration k of the dLA algorithm, with which we can now estimate XMAP. Step 3 indicates that the posterior maximum for the current iteration is now explicitly updated as a function of X, avoiding the computationally demanding nested optimization of tLA. Intuitively, dLA works by finding a Gaussian approximation to the likelihood at such that the approximated posterior of fi, q(fi∣yi, X), is now a closed-form Gaussian distribution with mean and covariance as functions of X, ultimately allowing for the explicit calculation of q(yi∣X).

4. Tuning curve estimation

Given the estimated and from the inference, we can now calculate the tuning curve h for each neuron. Let be a grid of G latent states, where . Correspondingly, for each neuron, we have the log of the tuning curve vector evaluated on the grid of latent states, , with the g’th element equal to f(xg). Similar to (Eq. 4), we can write down its distribution as

| (19) |

with a G × G covariance matrix Kgrid generated by evaluating the covariance function kx at all pairs of vectors in x1:G. Therefore we can write a joint distribution for as

| (20) |

is a covariance matrix with elements evaluated at all pairs of estimated latent vectors in , and . Thus we have the following posterior distribution over fgrid:

| (21) |

where diag(Kgrid) denotes a diagonal matrix constructed from the diagonal of Kgrid. Setting , the spike rate vector

| (22) |

describes the tuning curve h evaluated on the grid x1:G.

5. Experiments

5.1. Simulation data

We first examine performance using two simulated datasets generated with different kinds of tuning curves, namely sinusoids and Gaussian bumps. We will compare our algorithm (P-GPLVM) with PLDS, PfLDS, P-GPFA and GPLVM (see Table 1), using the tLA and dLA inference methods. We also include an additional variant on the Laplace approximation, which we call the approximated Laplace approximation (aLA), where we use only the explicit (first) term in (Eq. 15) to optimize over X for multiple steps given a fixed . This allows for a coarse estimation for the gradient w.r.t. X for a few steps in X before estimation is necessary, partially relaxing the nested optimization so as to speed up the learning procedure.

For comparison between models in our simulated experiments, we compute the R-squared (R2) values from the known latent processes and the estimated latent processes. In all simulation studies, we generate 1 single trial per neuron with 20 simulated neurons and 100 time bins for a single experiment. Each experiment is repeated 10 times and results are averaged across 10 repeats.

Sinusoid tuning curve:

This simulation generates a “grid cell” type response. A grid cell is a type of neuron that is activated when an animal occupies any point on a grid spanning the environment [19]. When an animal moves in a one-dimensional space (P = 1), grid cells exhibit oscillatory responses. Motivated by the response properties of grid cells, the log firing rate of each neuron i is coupled to the latent process through a sinusoid with a neuron-specific phase Φi and frequency ωi,

| (23) |

We randomly generated Φi uniformly from the region [0,2π] and ωi uniformly from [1.0,4.0].

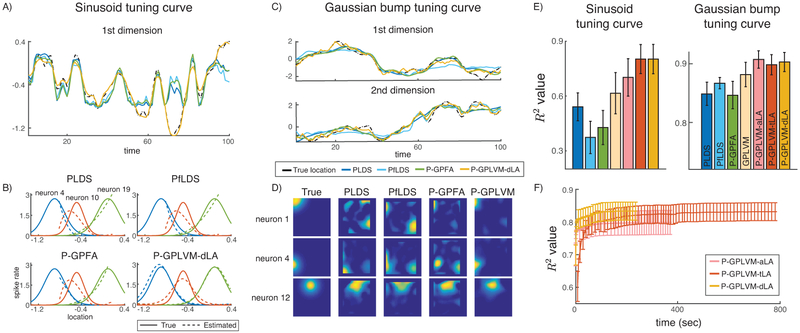

An example of the estimated latent processes versus the true latent process is presented in Fig. 2A. We used least-square regression to learn an affine transformation from the latent space to the space of the true locations. Only P-GPLVM finds the global optimum by fitting the valley around t = 70. Fig. 2B displays the true tuning curves and the estimated tuning curves for neuron 4, 10, & 9 with PLDS, PfLDS, P-GPFA and P-GPLVM-dLA. For PLDS, PfLDS and P-GPFA, we replace the estimated with the observed spike count y in (Eq. 21), and treat the posterior mean as the tuning curve on a grid of latent representations. For P-GPLVM, the tuning curve is estimated via (Eq. 22). The R2 performance is shown in the first column of Fig. 2E.

Figure 2:

Results from the sinusoid and Gaussian bump simulated experiments. A) and C) are estimated latent processes. B) and D) display the tuning curves estimated by different methods. E) shows the R2 performances with error bars. F) shows the convergence R2 performances of three different Laplace approximation inference methods with error bars. Error bars are plotted every 10 seconds.

Deterministic Gaussian bump tuning curve:

For this simulation, each neuron’s tuning curve is modeled as a unimodal Gaussian bump in a 2D space such that the log of the tuning curve, f, is a deterministic Gaussian function of x. Fig. 2C shows an example of the estimated latent processes. PLDS fits an overly smooth curve, while P-GPLVM can find the small wiggles that are missed by other methods. Fig. 2D displays the 2D tuning curves for neuron 1, 4, & 12 estimated by PLDS, PfLDS, P-GPFA and P-GPLVM-dLA. The R2 performance is shown in the second column of Fig. 2E.

Overall, P-GPFA has a quite unstable performance due to the ARD kernel function in the GP prior, potentially encouraging a bias for smoothness even when the underlying latent process is actually quite non-smooth. PfLDS performs better than PLDS in the second case, but when the true latent process is highly nonlinear (sinusoid) and the single-trial dataset is small, PfLDS losses its advantage to stochastic optimization. GPLVM has a reasonably good performance with the nonlinearities, but is worse than P-GPLVM which demonstrates the significance of using the Poisson observation model. For P-GPLVM, the dLA inference algorithm performs best overall w.r.t. both convergence speed and R2 (Fig. 2F).

5.2. Application to rat hippocampal neuron data

Next, we apply the proposed methods to extracellular recordings from the rodent hippocampus. Neurons were recorded bilaterally from the pyramidal layer of CA3 and CA1 in two rats as they performed a spatial alternation task on a W-shaped maze [20]. We confine our analyses to simultaneously recorded putative place cells during times of active navigation. Total number of simultaneously recorded neurons ranged from 7-19 for rat 1 and 24-38 for rat 2. Individual trials of 50 seconds were isolated from 15 minute recordings, and binned at a resolution of 100ms.

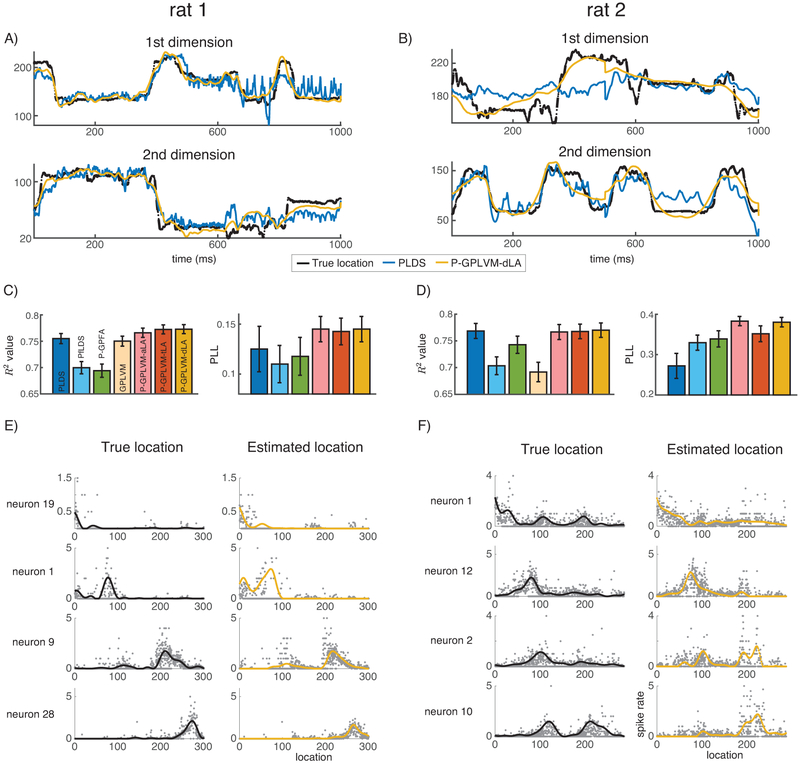

We used this hippocampal data to identify a 2D latent space using PLDS, PfLDS, P-GPFA, GPLVM and P-GPLVMs (Fig. 3), and compared these to the true 2D location of the rodent. For visualization purposes, we linearized the coordinates along the arms of the maze to obtain 1D representations. Fig. 3A & B present two segments of 1s recordings for the two animals. The P-GPLVM results are smoother and recover short time-scale variations that PLDS ignores. The average R2 performance for all methods for each rodent is shown in Fig. 3C & D where P-GPLVM-dLA consistently performs the best.

Figure 3:

Results from the hippocampal data of two rats. A) and B) are estimated latent processes during a 1s recording period for two rats. C) and D) show R2 and PLL performance with error bars. E) and F) display the true tuning curves and the tuning curves estimated by P-GPLVM-dLA.

We also assessed the model fitting quality by doing prediction on a held-out dataset. We split all the time bins in each trial into training time bins (the first 90% time bins) and held-out time bins (the last 10% time bins). We first estimated the parameters for the mapping function or the tuning curve in each model using spike trains from all the neurons within training time bins. Then we fixed the parameters and inferred the latent process using spike trains from 70% neurons within held-out time bins. Finally, we calculated the predictive log likelihood (PLL) for the other 30% neurons within held-out time bins given the inferred latent process. We subtracted the log-likelihood of the population mean firing rate model (single spike rate) from the predictive log likelihood divided by number of observations, shown in Fig. 3C & D. Both P-GPLVM-aLA and P-GPLVM-dLA perform well. GPLVM has very negative PLL, omitted in the figures.

Fig. 3E & F present the tuning curves learned by P-GPLVM-dLA where each row corresponds to a neuron. For our analysis we have the true locations xtrue, the estimated locations xP-GPLVM, a grid of G locations x1:G distributed with a shape of the maze, the spike count observation yi, and the estimated log of the tuning curves for each neuron i. The light gray dots in the first column of Fig. 3E & F are the binned spike counts when mapping from the space of xtrue to the space of x1:G. The second column contains the binned spike counts mapped from the space of xP-GPLVM to the space of x1:G. The black curves in the first column are achieved by replacing and with xtrue and y respectively using the predictive posterior in (Eq. 21) and (Eq. 22). The yellow curves in the second column are the estimated tuning curves by using (Eq. 22) to get for each neuron. We can tell that the estimated tuning curves closely match the true tuning curves from the observations, discovering different responsive locations for different neurons as the rat moves.

6. Conclusion

We proposed a doubly nonlinear Gaussian process latent variable model for neural population spike trains that can identify nonlinear low-dimensional structure underlying apparently high-dimensional spike train data. We also introduced a novel decoupled Laplace approximation, a fast approximate inference method that allows us to efficiently maximize marginal likelihood for the latent path while integrating over tuning curves. We showed that this method outperforms previous Laplace-approximation-based inference methods in both the speed of convergence and accuracy. We applied the model to both simulated data and spike trains recorded from hippocampal place cells and showed that it outperforms a variety of previous methods for latent structure discovery.

Acknowledgments

This work was supported by grants from the Simons Foundation (SCGB AWD543027) and a U19 NIH-NINDS BRAIN Initiative Award (5U19NS104648)

References

- [1].Yu BM, Cunningham JP, Santhanam G, Ryu SI, Shenoy KV, and Sahani M. Gaussian-process factor analysis for low-dimensional single-trial analysis of neural population activity. In Adv neur inf proc sys, pages 1881–1888, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Paninski L, Ahmadian Y, Ferreira Daniel G., Koyama S, Rad Kamiar R., Vidne M, Vogelstein J, and Wu W. A new look at state-space models for neural data. J comp neurosci, 29(1-2):107–126, 2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Cunningham John P and Yu BM. Dimensionality reduction for large-scale neural recordings. Nature neuroscience, 17(11):1500–1509, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Linderman SW, Johnson MJ, Wilson MA, and Chen Z. A bayesian nonparametric approach for uncovering rat hippocampal population codes during spatial navigation. J neurosci meth, 263:36–47, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Macke JH, Buesing L, Cunningham JP, Yu BM, Shenoy KV, and Sahani M. Empirical models of spiking in neural populations. In Adv neur inf proc sys, pages 1350–1358, 2011. [Google Scholar]

- [6].Buesing L, Macke JH, and Sahani M. Spectral learning of linear dynamics from generalised-linear observations with application to neural population data. In Adv neur inf proc sys, pages 1682–1690, 2012. [Google Scholar]

- [7].Archer EW, Koster U, Pillow JW, and Macke JH. Low-dimensional models of neural population activity in sensory cortical circuits. In Adv neur inf proc sys, pages 343–351, 2014. [Google Scholar]

- [8].Macke JH, Buesing L, and Sahani M. Estimating state and parameters in state space models of spike trains. Advanced State Space Methods for Neural and Clinical Data, page 137, 2015. [Google Scholar]

- [9].Archer Evan, Park Il Memming, Buesing Lars, Cunningham John, and Paninski Liam. Black box variational inference for state space models. arXiv preprint arXiv:1511.07367, 2015. [Google Scholar]

- [10].Gao Y, Archer EW, Paninski L, and Cunningham JP. Linear dynamical neural population models through nonlinear embeddings. In Adv neur inf proc sys, pages 163–171, 2016. [Google Scholar]

- [11].Kao JC, Nuyujukian P, Ryu SI, Churchland MM, Cunningham JP, and Shenoy KV. Single-trial dynamics of motor cortex and their applications to brain-machine interfaces. Nature communications, 6, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Pfau David, Pnevmatikakis Eftychios A, and Paninski Liam. Robust learning of low-dimensional dynamics from large neural ensembles. In Adv neur inf proc sys, pages 2391–2399, 2013. [Google Scholar]

- [13].Nam Hooram. Poisson extension of gaussian process factor analysis for modeling spiking neural populations Master’s thesis, Department of Neural Computation and Behaviour, Max Planck Institute for Biological Cybernetics, Tubingen, 8 2015. [Google Scholar]

- [14].Zhao Y and Park IM. Variational latent gaussian process for recovering single-trial dynamics from population spike trains. arXiv preprint arXiv:1604.03053, 2016. [DOI] [PubMed] [Google Scholar]

- [15].Sussillo David, Jozefowicz Rafal, Abbott LF, and Pandarinath Chethan. Lfads-latent factor analysis via dynamical systems. arXiv preprint arXiv:1608.06315, 2016. [Google Scholar]

- [16].Lawrence Neil D. Gaussian process latent variable models for visualisation of high dimensional data. In Adv neur inf proc sys, pages 329–336, 2004. [Google Scholar]

- [17].Rasmussen Carl and Williams Chris. Gaussian Processes for Machine Learning. MIT Press, 2006. [Google Scholar]

- [18].Damianou AC, Titsias MK, and Lawrence ND. Variational inference for uncertainty on the inputs of gaussian process models. arXiv preprint arXiv:1409.2287, 2014. [Google Scholar]

- [19].Hafting T, Fyhn M, Molden S, Moser MB, and Moser EI. Microstructure of a spatial map in the entorhinal cortex. Nature, 436(7052):801–806, 2005. [DOI] [PubMed] [Google Scholar]

- [20].Karlsson M, Carr M, and Frank LM. Simultaneous extracellular recordings from hippocampal areas ca1 and ca3 (or mec and ca1) from rats performing an alternation task in two w-shapped tracks that are geometrically identically but visually distinct. crcns.org. 10.6080/K0NK3BZJ, 2005. [DOI]