Egyptian fruit bats integrate vision and echolocation in a task-dependent manner.

Abstract

How animals integrate information from various senses to navigate and generate perceptions is a fundamental question. Bats are ideal animal models to study multisensory integration due to their reliance on vision and echolocation, two modalities that allow distal sensing with high spatial resolution. Using three behavioral paradigms, we studied different aspects of multisensory integration in Egyptian fruit bats. We show that bats learn the three-dimensional shape of an object using vision only, even when using both vision and echolocation. Nevertheless, we demonstrate that they can classify objects using echolocation and even translate echoic information into a visual representation. Last, we show that in navigation, bats dynamically switch between the modalities: Vision was given more weight when deciding where to fly, while echolocation was more dominant when approaching an obstacle. We conclude that sensory integration is task dependent and that bimodal information is weighed in a more complex manner than previously suggested.

INTRODUCTION

All animals rely on multiple sensory systems for making decisions and guiding behavior. Multisensory information is weighed and integrated in a complex manner, which has been widely studied in various sensory systems and different animals (1–6). The integration of sensory information is dependent on the task and sensory information at hand. It has been shown that in humans, vision overrides audition, touch, and proprioception for spatial tasks (7–9), whereas audition overrides vision in temporal tasks (10). However, if information from the dominant modality is degraded or unreliable, then other modalities will take over. For example, Alais and Burr (7) showed that, when subjects are presented with an audiovisual cue, their judgment of its position will be closer to that of the light (and not sound) source but, if the light is severely blurred, then audition would dominate. This weighing of sensory modalities based on their reliability was mathematically phrased as the maximum likelihood estimation model (1, 7). According to this model, the stimulus from each modality is given a weight proportional to its reliability [i.e., to the modality-specific signal-to-noise ratio (SNR)]. These weighted stimuli are then summed to give a new estimate with maximal reliability, which determines the subject’s perception and behavior.
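
The reliability weighting described above can be illustrated with a minimal sketch. It assumes independent Gaussian noise in each modality and takes reliability as the inverse variance, a common way of writing the model; the function name `mle_combine` and all numbers are illustrative and are not taken from the cited studies.

```python
def mle_combine(estimates, sigmas):
    """Combine unimodal estimates with weights proportional to reliability.

    Assumption: each cue carries independent Gaussian noise with standard
    deviation sigma, and reliability is defined as 1 / sigma**2.
    """
    reliabilities = [1.0 / s ** 2 for s in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * x for w, x in zip(weights, estimates))
    combined_sigma = (1.0 / total) ** 0.5  # never larger than the best single cue
    return combined, combined_sigma, weights


# Hypothetical example: a sharp visual cue at 0 deg and a noisier auditory cue at 10 deg.
position, sigma, weights = mle_combine([0.0, 10.0], [1.0, 3.0])
print(position, sigma, weights)

# Blurring the visual cue (sigma 1 -> 9) shifts the combined estimate toward audition.
blurred_position, _, _ = mle_combine([0.0, 10.0], [9.0, 3.0])
print(blurred_position)
```

With these made-up values, the combined estimate sits near the visual cue when vision is sharp and near the auditory cue when vision is blurred, which is the qualitative pattern reported by Alais and Burr (7).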

Studies also show that integration of multisensory information while learning is often beneficial for unisensory object recognition, that is, animals perform better even when relying on one sense if they learned the object while relying on several senses (11, 12). However, in some cases, sensory information from one modality can interfere with learning of information from another, a phenomenon called overshadowing (13). This might happen when one sensory cue is perceived as more salient than another (14). Information learned in a unisensory manner might also be “translated” to the use of other senses (3, 5, 15). In this case, usually referred to as cross-modal recognition, subjects are trained to identify a target with one sensory modality and are then tested with other modalities. These kinds of experiments reveal how the senses interact and whether representations in the brain are uni- or multisensory.

Most studies on multisensory integration focus on integration and interaction of vision, audition, touch, and proprioception. Notably, these modalities have very different characteristics in terms of their spatial resolution and range of perception (e.g., vision is distal, while touch is proximal). Therefore, naturally, they often show strong asymmetries when combined and weighed, with one modality being more dominant than another. Furthermore, in many experiments on sensory weighing and multisensory learning, the sensory information does not originate from the same source (e.g., for vision and audition, there is usually a visual object and a different sound source). It thus remains unknown how modalities with similar characteristics are perceived and weighed.

The Egyptian fruit bat, Rousettus aegyptiacus, is a very interesting animal model to study multisensory integration due to its strong reliance on two sensory systems: vision and echolocation. Rousettus bats have large eyes, providing high spatial acuity (16), high sensitivity (a low visual threshold) (17), and some binocular overlap, suggesting depth perception (18). In addition to their extensive use of vision, these bats also use echolocation to navigate and forage (19). In contrast to all other echolocating bats that emit laryngeal vocalizations, bats from the genus Rousettus produce ultrashort and broad-banded lingual clicks (20, 21), similar to the clicks of some cetaceans (22). Several recent studies have shown that Rousettus’ performance does not fall short of that of laryngeal echolocators in many sensorimotor tasks (20, 21, 23). Rousettus bats regulate the use of echolocation based on ambient light levels; they increase the rate and intensity of their echolocation clicks in lower light levels. Nevertheless, they keep using echolocation (in both the lab and the field) even in relatively high light levels where vision should suffice, especially when approaching landing (19). The use of two sensory modalities that allow distal perception at up to a few meters and that are comparable in their spatial resolution in this range (24) makes Rousettus an excellent animal model for intersensory integration. More broadly, there is currently very little understanding of how bats (in general) integrate visual- and echolocation-based sensory input to make sensory decisions. Most research on bats focuses on their echolocation while neglecting vision, although all bats use vision to some extent (25).

We examined several aspects of multisensory integration. First, we studied bimodal learning in an object discrimination task. We tested whether Rousettus, exposed to both the visual and acoustic cues of objects, will integrate this multisensory information. If bats integrate bimodal information, then we would expect that after learning to discriminate between the objects bimodally, they would be able to discriminate between them with each modality separately as well. Since these bats rely on both modalities to a large extent, we hypothesized that the bats would integrate these modalities when learning the shape of an object.

Next, we examined cross-modal recognition. In echolocating mammals, this cross-modal recognition was found in the bottle-nosed dolphin (Tursiops truncatus), which was able to transfer information from vision to echolocation and vice versa (3). We tested whether Rousettus bats can transfer information about complex targets and, more specifically, texture information between these modalities. We hypothesized that similar to the bottle-nosed dolphin, Rousettus will be able to visually discriminate between two targets, which were originally learned using echolocation only.

Last, we tested how multisensory information is weighed in a task-dependent manner. We tested how Rousettus bats weigh vision and echolocation in an orientation task. To do that, we designed a task that creates a naturalistic conflict between vision and echolocation, where the bats’ behavior indicated the preferred modality. We hypothesized that the bats will predominantly rely on vision for orientation because vision allows better angular resolution than echolocation (25–27).

RESULTS

Bimodal learning

We first trained bats to discriminate between two differently-shaped three-dimensional (3D) targets (a cylinder and a prism) under conditions that allowed the usage of both vision and echolocation (dim light, 2 lux, “bimodal learning”; Fig. 1A). Next, we trained them on the same targets with only one sensory modality available (“unimodal learning”), expecting that if the bats already learned visual and auditory sensory cues during the bimodal learning phase, then unimodal learning will be faster.

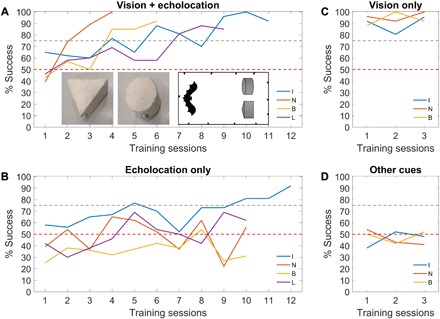

Fig. 1. Bimodal and unimodal learning of shape using echolocation and vision.

Learning curves of bats trained to discriminate between a prism and a cylinder under different sensory conditions. Inset shows front-bottom view of the two targets (the direction from which the bats approached) and top view of the experimental setup. For a more detailed setup, see Fig. 2B (the experiment had identical setup with different targets). The different colors depict different individuals. The red horizontal dashed line indicates chance level, while the gray dashed line indicates 75% success. (A) Training under conditions that allowed usage of both vision and echolocation. (B) Training using echolocation only. (C) Training using vision only. (D) Training with both vision and echolocation abolished. When using vision only (C), the bats immediately solved the discrimination task within one session. If there were other cues available, then the bats should have immediately solved the task in the other cues condition (D) as well. Therefore, we only trained the bats for several sessions under this condition. In all experimental conditions, bats performed one training session (with 24 trials on average) per day.

The bats took off from a platform situated 2.8 m from the targets, and they were rewarded for either landing on the cylinder (two bats) or on the prism (two bats; see targets in Fig. 1A). The bats successfully learned to discriminate a prism from a cylinder when both modalities were available. All bats reached a criterion of 75% success in three consecutive sessions starting in the ninth training session (at most).
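
The learning criterion used here (75% success in three consecutive sessions) can be expressed as a short check over per-session success rates. The sketch below is illustrative only: the function name, the choice to count exactly 75% as a success, and the example rates are assumptions, not data from the experiment.

```python
def first_session_meeting_criterion(success_rates, threshold=0.75, run_length=3):
    """Return the 1-based session that starts the first qualifying run, or None.

    Assumption: a session counts toward the run when its success rate is
    greater than or equal to the threshold.
    """
    run = 0
    for session, rate in enumerate(success_rates, start=1):
        run = run + 1 if rate >= threshold else 0
        if run == run_length:
            return session - run_length + 1
    return None


# Hypothetical per-session success rates for one bat (one session per day).
sessions = [0.50, 0.58, 0.46, 0.63, 0.71, 0.67, 0.79, 0.75, 0.83]
start = first_session_meeting_criterion(sessions)
print(start)
```

For this made-up series, the first run of three qualifying sessions starts in session 7.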

To ensure that the bats were using echolocation and to reconstruct their flight trajectories, we recorded their flight using a 12-microphone array spread around the perimeter of the room (Materials and Methods). The echolocation rates were lower under the bimodal condition than in complete darkness, but they were high enough to provide ample acoustic information about the targets (10.73 ± 2.46 Hz in light versus 17.10 ± 0.89 Hz in dark; means ± SD). One additional bat that was trained on this task did not echolocate under the bimodal condition; it was therefore disqualified from the study, and its data are not shown.

The faces of the two targets had the same area and reflected very similar spectra (fig. S1), but they greatly differed acoustically when scanned from the side (the cylinder is circular, reflecting equally from all directions, while the prism has three planar faces; thus, its reflections strongly depend on the angle). We thus allowed the bats to fly freely and investigate the targets from all angles. We validated that they observed the targets (visually and acoustically) from a wide range of angles by reconstructing the flight trajectories of two bats (fig. S2).

When vision was abolished by conducting the experiment in complete darkness [<10⁻⁷ lux, which is below the visual threshold of these bats (17); “echolocation-only training”], three bats did not succeed in discriminating between the targets (Fig. 1B). We tested them for 10 sessions since, by this point, they had all reached 75% success under the bimodal condition. One bat reached 75% success on the 10th session, so it was tested for two more sessions to allow it to reach the criterion of three consecutive successful sessions, which it did (blue line in Fig. 1B).

We next abolished echolocation by using targets with the same shape, size, and color but made of weakly reflective foam, while playing back pink noise (most intense at 30 kHz, the peak frequency of these bats) to mask possible use of echoes (“vision-only training”). The playback was loud (90-dB sound pressure level at 1 m) and was directed toward the bats’ flight direction. Therefore, when the bats approached the target, the masking noise became more intense, making any echoic information difficult to use. Three bats spontaneously reached 75% success within one session (Fig. 1C), much faster than in the original bimodal learning. This strongly suggests that they only used vision to learn the original task. Another bat refused to continue participating in the experiments and was disqualified.

To ensure that no other cues were available (e.g., olfactory), we abolished both vision and echolocation using foam targets with pink noise playback in complete darkness. The bats’ performance did not differ from chance under this condition, suggesting that no other cues were used (Fig. 1D). This condition also confirmed the effectiveness of our abolishment of echolocation because even the bat that learned the task acoustically dropped to chance performance under this condition. We conclude that when both vision and echolocation are available, Rousettus bats prefer visual cues in a shape discrimination task. Comparing the performance under vision-only and echolocation-only training of the one bat that was able to perform the task acoustically strongly implies that it relearned the task from scratch using echolocation rather than using a representation that was already learned during the bimodal condition [compare blue lines in Fig. 1 (B and C)].

Acoustic-based and cross-modal recognition

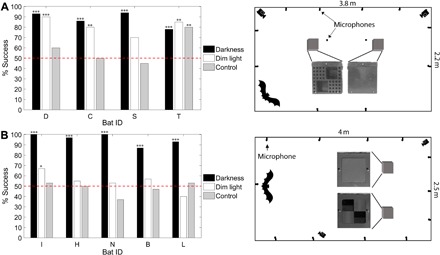

Because one bat was able to perform echo-based recognition, we set out to test whether Rousettus bats can translate a representation acquired acoustically into a visual image. To examine this cross-modal recognition, we trained four bats to discriminate between two targets with different textures (a smooth target and a perforated target; Fig. 2A) using echolocation in complete darkness (<10⁻⁷ lux). These targets were completely unfamiliar to the bats to prevent effects of previous knowledge. After the bats reached the 75% success criterion, we tested them in the dark (under the same conditions but without reward) to ensure learning, and they performed well above chance (P < 10⁻⁴ for each of the four bats, one-tailed binomial test). Then, we tested whether they could discriminate between the targets visually in dim light (2 lux) with echolocation cues abolished by placing each target inside a transparent plastic cube (which allows vision but eliminates echo differences). During visual testing, the bats received no reward so that they would not learn the targets visually. The bats had never seen the targets before; we made sure to expose the targets in the training room only after the lights were off. Three bats performed well above chance (P < 0.01 for each of the three, one-tailed binomial test), and one bat showed a trend but did not reach significance (P = 0.057). In a third control condition, the targets were placed in transparent cubes in complete darkness, thus blocking both acoustic and visual information. Three bats performed at chance level (P > 0.25 for each of the three bats, one-tailed binomial test), indicating that they learned the texture-related echo information, while one bat performed above chance (P < 0.01), suggesting that it managed to rely on some alternative cue, possibly an olfactory cue that none of the other bats had noticed. There was no reward under this condition.
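
The one-tailed binomial test used throughout this section asks how likely it is to obtain at least the observed number of correct choices if the bat were choosing at chance (probability 0.5 in a two-target task). A minimal sketch using only the Python standard library follows; the trial counts are hypothetical, chosen to match the 20-trial light and control tests described in Fig. 2A, and are not the bats' actual scores.

```python
from math import comb


def binomial_p_one_tailed(successes, trials, chance=0.5):
    """Return P(X >= successes) for X ~ Binomial(trials, chance)."""
    return sum(
        comb(trials, k) * chance ** k * (1 - chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical numbers of correct choices in a 20-trial unrewarded test.
for correct in (14, 15, 17):
    print(correct, round(binomial_p_one_tailed(correct, 20), 4))
```

With 20 trials, 14 correct choices give P ≈ 0.058 and 15 give P ≈ 0.021, so with samples of this size a single additional correct choice can separate a "trend" from a significant result.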

Fig. 2. Cross-modal recognition between echolocation and vision.

Bats’ performance under complete darkness (black), dim light (white), and a control, which did not allow vision or echolocation (gray). In all three conditions, the bats received no reward (for either right or wrong decisions), thus preventing any learning. The letters on the x axis depict individual bats’ identifications (IDs). Performance was tested relative to chance level (dashed line). (A) Left: Results of the first round of cross-modal recognition experiment (dark, approximately 45 trials per bat; light and control, 20 trials per bat). Right: Top view of the experimental setup and front view of the targets (the direction from which the bats approached). (B) Left: The results of the second round (30 trials per bat per condition). Right: Top view of the experimental setup and front view of the targets (the direction from which the bats approached). *P < 0.05, **P < 0.01, ***P < 0.001.

We repeated this experiment with five new bats with slight changes in the setup (see Materials and Methods and Fig. 2B). In this round, all five bats learned the acoustic task and performed well above chance when tested in complete darkness (P < 10⁻⁴ for each bat, one-tailed binomial test). The five new bats performed at chance level in the control tests (vision and echoes abolished), suggesting that they used echo cues (P > 0.1 in each of the five bats, one-tailed binomial test). However, only one bat succeeded in translating echo information to visual recognition in the cross-modal task in dim light (P < 0.05, one-tailed binomial test). Because of the clear success of some of the bats, we propose that bats can perform cross-modal translation. There could be various reasons why not all the bats succeeded, such as a motivation problem. Notably, we performed the testing trials in dim light without reward to avoid visual learning. To encourage the bats to keep performing the task, we embedded these visual testing trials between rewarded training trials in the dark. This might have caused the bats to “give up” on the unrewarded light trials and wait for the following rewarded darkness trial. There were also some differences between the targets and the room’s illumination in the two repeats of the experiment. We consider further possible explanations for the unequal performance in the Discussion.

Task-dependent sensory weighing

We found that when learning the shape of an object, Rousettus bats prefer visual cues (Fig. 1). We thus aimed to test how they weigh visual and echolocation cues when performing different tasks. To test sensory weighing in an orientation task, we individually released naïve bats that had never seen the setup at the base of a large two-arm maze (3 m long; Fig. 3A) and allowed them to choose to fly into one of the two arms, mimicking a situation that a bat could face in a cave or a dense forest (298 bats were included in the study; see Table 1). In all trials, one of the arms was fully blocked with an obstacle, a wall, while the other arm was open. The wall was 0.45 m deep into one of the arms after the split of the maze into two arms, which occurred 1.4 m from the base. We altered the sensory (vision versus echolocation) cues in the blocked arm and compared the bats’ preference toward the blocked/open arm under these different conditions. By manipulating a single cue of the blocking wall (e.g., either the color for visual cues or the reflectivity for acoustic cues), we could assess the sensory cues on which the bats based their decision. Each bat was tested only once (in only one condition) without any training; we quantified the response across the population. The bats were not rewarded in this experiment; they only had to choose which arm to fly into.

Fig. 3. Sensory weighing of echolocation and vision in an orientation task.

x-axis condition names: BR, black reflective; WR, white reflective; BNR, black nonreflective. (A) Top view of the setup of the experiment. (B) The percentage of bats flying toward the open versus the blocked arm when altering the visual or the acoustic characteristics of the wall blocking the blocked arm in three different light levels (~40 bats per condition). (C) The proportions of different behaviors of bats approaching the blocked arm when altering the acoustic reflectivity of the wall.

Table 1. Number of bats that participated in the study.

In the bimodal learning and cross-modal recognition experiments, bats were trained to discriminate targets and were disqualified if they failed to reach 75% correct choice within reasonable time in the original training task (i.e., before any testing began). In the sensory weighing experiment, all bats that participated in the experiment were naïve and were flown once in the two-arm maze, except for in the “acoustic detection control” where bats were trained to detect a reflective wall. In the acoustic detection control, the bats were tested with either the right side of the two-arm maze blocked or the left side. The number of bats disqualified from each condition and the reason for disqualification are indicated. The numbers given for each condition are the numbers of participants after the removal of the disqualified bats.

| Experiment | Number of bats |
| --- | --- |
| Bimodal learning | 5 (same bats as round 2 in the cross-modal recognition; one of the bats was disqualified since it did not echolocate in light) |
| Cross-modal recognition | Round 1: 4 (another 4 failed to reach the initial learning criterion); Round 2: 5 (another bat failed to reach the initial learning criterion) |
| Sensory weighing: acoustic detection control (<10⁻⁷ lux) | 10 (another 2 failed to reach criterion) |

| Sensory weighing condition | Left blocked | Right blocked | Disqualified: no echolocation | Disqualified: video malfunction | Disqualified: refused to fly |
| --- | --- | --- | --- | --- | --- |
| Black reflective wall (5 × 10⁻² lux) | 20 | 21 | 10 | 5 | 2 |
| White reflective wall (5 × 10⁻² lux) | 20 | 20 | 8 | 2 | 1 |
| Black reflective wall (2 × 10⁻³ lux) | 20 | 20 | 4 | 2 | |
| White reflective wall (2 × 10⁻³ lux) | 19 | 19 | 4 | 2 | 5 |
| Black reflective wall (3 × 10⁻⁵ lux) | 20 | 20 | 3 | 2 | |
| White reflective wall (3 × 10⁻⁵ lux) | 20 | 18 | 4 | 8 | |
| Black nonreflective wall (3 × 10⁻⁵ lux) | 61 (both sides combined) | | 7 | 1 | |

When presented with a black wall (in the blocked arm) in three different light levels [5 × 10⁻², 2 × 10⁻³, and 3 × 10⁻⁵ lux, all of which are above their visual threshold (17)], the bats preferred the blocked arm almost exclusively over the open arm. On average, 90% of the ~40 bats in each light level preferred the blocked arm (P < 10⁻³ for every light level, binomial test relative to chance; Fig. 3B). Moreover, there was an increase in the bats’ preference toward the blocked arm with a decrease in light level, from 78% preference in 5 × 10⁻² lux to 98% preference in 3 × 10⁻⁵ lux (P = 0.02 and df = 2, Fisher’s exact test). Post hoc analysis with all possible comparisons revealed a difference between the highest and lowest light levels [P = 0.04, Fisher’s exact test with false discovery rate (FDR) correction]. When using a white wall instead of a black wall (with equal acoustic reflectivity), the bats completely changed their behavior and preferred the open arm over the white wall almost exclusively. On average, 92% of the ~40 bats in each light level preferred the open arm under these conditions (P < 10⁻³ for every light level, binomial test relative to chance; Fig. 3B). The choice difference between the two conditions (i.e., black versus white wall) was highly significant (χ² > 44.36, df = 1, and P < 10⁻³ for each of the three light levels, chi-square test for independence). There was no difference between the three light levels with a white wall (P = 0.63 and df = 2, Fisher’s exact test). The bats thus relied on visual information in all light levels tested, suggesting that in these orientation tasks, Rousettus bats will prefer vision.
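
The chi-square test for independence compares the arm choices under the black wall with those under the white wall in a 2 × 2 table. The sketch below computes the Pearson statistic without a continuity correction (whether a correction was applied in the analysis is not stated here, so this is an assumption) and compares it with 10.83, the critical value for df = 1 at the 0.001 level. The counts are hypothetical, chosen only to resemble the reported percentages.

```python
def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Hypothetical counts of (blocked arm, open arm) choices at one light level.
black_wall = (36, 4)   # 90% of 40 bats chose the blocked arm
white_wall = (3, 37)   # about 92% of 40 bats chose the open arm

CRITICAL_DF1_P001 = 10.83  # chi-square critical value, df = 1, alpha = 0.001

stat = chi_square_2x2(*black_wall, *white_wall)
print(round(stat, 2), stat > CRITICAL_DF1_P001)
```

A statistic far above the critical value, as in this made-up table, corresponds to P < 10⁻³ for the difference between the two wall colors.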

In contrast, bats did not change their preference when only the acoustic information was altered. In both conditions discussed so far (i.e., white and black walls), the wall blocking the arm was highly reflective acoustically (target strength, −7 dB at 1 m). To manipulate the acoustic cues, we replaced the black highly reflective wall with a nonreflective black foam wall (target strength, −25 dB at 1 m) and tested the bats in the lowest light level (3 × 10⁻⁵ lux), where the use of echolocation should be most dominant. Because we tested each bat only once, in a single set of experimental conditions, treatment order was not a factor. Just as with the black reflective wall, bats preferred the arm blocked with a black foam wall over the open arm almost exclusively (97% of 40 bats preferred the reflective black wall, and 95% of 61 bats preferred the nonreflective wall over the open arm; P = 1, Fisher’s exact test; Fig. 3B). The fact that Rousettus bats preferred the black arm regardless of acoustic stimulus further suggests that Rousettus bats were relying on vision when deciding which route to take when navigating.
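
Fisher's exact test for this comparison can be sketched with the standard library alone. The counts below are an assumption inferred from the rounded percentages (39 of 40 bats for the reflective wall and 58 of 61 bats for the nonreflective wall); the two-sided P value is computed with the common convention of summing the probabilities of all tables with the same margins that are no more likely than the observed one.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # The small tolerance guards against floating-point ties.
    return sum(
        p for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


# Assumed counts: (chose blocked arm, chose open arm) for each wall type.
p_value = fisher_exact_two_sided(39, 1, 58, 3)
print(round(p_value, 3))
```

With these assumed counts, the observed table is the most likely one given the margins, so the two-sided P value is 1, in line with the reported result.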

We made sure that the bats could use echolocation to detect the blocking wall before the split into the two arms and, therefore, that their reliance on vision was not due to lack of sensory ability. To this end, we trained a different group of bats to detect the blocked arm and fly into the open one (under the same conditions; see Materials and Methods) and then tested them. We found that they could detect the wall [8 of the 10 bats performed significantly above chance (P < 0.01, one-tailed binomial test), while two bats showed the same pattern but did not reach significance (P = 0.057, one-tailed binomial test); two other bats did not learn the task within the training period].

After entering the blocked arm and approaching the wall, the bats shifted to relying on acoustic information. In general, when a bat entered a blocked arm, it either turned back, attempted to land on the wall, or collided with it. The bats altered their behavior according to the acoustic reflectivity of the wall: When the target was more reflective, they turned back significantly more often (46% versus 13% for reflective versus nonreflective wall in 3 × 10⁻⁵ lux; χ² = 13.08, df = 2, and P = 10⁻³, chi-square test for independence for all three behaviors; post hoc for turning only, χ² = 12.47, df = 1, and P = 10⁻³, chi-square test for independence with FDR correction; Fig. 3C). In addition, more bats collided when approaching the nonreflective wall (24% versus 47% for reflective versus nonreflective wall; χ² = 5.5, df = 1, and P = 0.028, chi-square test for independence with FDR correction). Since the visual cues provided by the two walls were almost identical, it is evident that the bats shifted to relying on acoustic information when approaching the barrier. The bats also increased their echolocation rate when approaching both the reflective and nonreflective walls (from 18.2 to 20.0 Hz, z = 2.15 and P = 0.03 and from 18.4 to 21.7 Hz, z = 4.99 and P < 10⁻³, respectively, Wilcoxon signed-rank test). The few bats that entered the arm blocked with a white reflective board all turned back when approaching the wall.
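
For readers who want to relate the reported chi-square statistics to P values, the upper tail of the chi-square distribution has simple closed forms for one and two degrees of freedom. The sketch below uses them; it gives uncorrected P values only and does not reproduce the FDR correction, so the post hoc values are expected to be somewhat smaller than the corrected ones reported above.

```python
from math import erfc, exp, sqrt


def chi_square_p_value(stat, df):
    """Upper-tail P value of a chi-square statistic for df = 1 or df = 2."""
    if df == 1:
        return erfc(sqrt(stat / 2))
    if df == 2:
        return exp(-stat / 2)
    raise ValueError("closed forms are given here only for df = 1 and df = 2")


p_all_behaviors = chi_square_p_value(13.08, 2)  # test over all three behaviors
p_collisions = chi_square_p_value(5.5, 1)       # collisions, before FDR correction
print(p_all_behaviors, p_collisions)
```

The first value is approximately 0.0014, consistent with the reported P = 10⁻³ for the test over all three behaviors.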

DISCUSSION

When both visual and acoustic information were available, Rousettus bats relied only on vision to discriminate between two differently shaped targets (Fig. 1). The availability of bimodal information thus did not enhance learning in this case, although the bats did collect acoustic information through echolocation. Visual information was also not transferred to acoustic information; the bats solved the task easily when using vision but could not do so when using echolocation. The fact that one bat rapidly learned the task acoustically (within 12 sessions) shows that the problem can be solved acoustically by these bats. Therefore, although the bats could have used acoustic information, all of them preferred visual cues, suggesting that overshadowing occurred and there was no acoustic learning.

Nevertheless, Rousettus bats are capable of using echolocation to discriminate targets, as was evident from the performance of one of the bats in the shape discrimination task (Fig. 1B) and from the performance of nine additional bats who learned to discriminate between two targets with different textures using echolocation only (Fig. 2). Ensonifying the targets revealed clear spectral cues, which could be used by the bats (figs. S3 and S4). To date, echo-based object discrimination was demonstrated only for bats using laryngeal echolocation calls, while Rousettus use lingual clicks. Object discrimination using clicks has been demonstrated for many cetaceans (22), but the properties of water allow sound to penetrate objects, which is not the case for airborne sound. This study therefore extends the evidence on the superb performance enabled by Rousettus click-based echolocation (20, 21, 23).

Our cross-modal experiment suggests that Rousettus bats can translate acoustic-based representations into a visual image (Fig. 2). The inconclusive results in some of the bats might suggest a few possible drawbacks in our experiment. First, since no food reward was given during tests under the light condition to prevent learning, the bats’ motivation to work was reduced. Furthermore, since test trials in light were very distinguishable (the light was turned on), the bats could easily know when there would be no food reward and simply wait for the next dark trial (where reward was given). In addition, in light trials, echolocation was blocked using transparent plastic cubes, making the two targets acoustically identical to the smooth target (i.e., the target that had no texture). In these test trials, bats thus received conflicting information from vision and echolocation, which may have interfered with their performance. All of these factors might have reduced the bats’ motivation to perform in the light. Indeed, they often simply refused to fly in the light at all, especially in the second round of the experiment. In addition, a few changes were made in the second round of the experiment, which could explain the differences in performance between the two rounds. Specifically, the targets in the two rounds were not identical and the lighting conditions changed (see Materials and Methods). Because our targets differed in their depth structure and because perceiving depth using vision strongly relies on lighting conditions (particularly with our transparent plastic cubes, which might have reflected the light differently), these changes might have interfered with the bats’ ability to differentiate the targets visually in the second round. Nevertheless, several bats did perform, showing that bats can translate an acoustic representation into a visual one.
The fact that the bats could not perform the shape discrimination task (which they learned visually) when using only echolocation implies that the reverse translation, from vision to echolocation, might be more difficult for them, but further research on this is required.

Last, we found that when navigating and negotiating obstacles, bats weigh sensory information in a task-dependent manner. When choosing where to fly, the bats relied on vision, preferring the darker of the two arms almost exclusively, probably because it looked like an opening to a dark space (e.g., a cave), while the open arm, which was more brightly illuminated, looked blocked visually (when a white wall was blocking the arm, the open arm became the darker of the two and, accordingly, the bats switched to choose it; Fig. 3B). Bats echolocated during the task, but they ignored the echoes returning from the blocking wall, although they could sense them, and preferred to trust their vision. Nevertheless, the bats did incorporate acoustic information in their decision process. First, the bats increased their echolocation rate when approaching the wall, implying that they were responding to incoming echoic information. Second, while approaching a highly reflective blocking wall, 46% of the bats turned back, suggesting a reversal of their decision (for comparison, only 8% of the bats turned around when choosing the open arm; Fig. 3C). We hypothesize that once the bats entered the blocked arm and were not facing a two-alternative visual choice anymore, there was a shift in their sensory attention to acoustic information, which led to the decision to avoid the barrier in front of them. Notably, when the arm was blocked by a nonreflective foam wall, only a minority of the bats (13%) changed their decision and turned back, suggesting that the intensity of the echoic information must be above a certain threshold to overrule the previous visual-based decision. We thus hypothesize that the bats are constantly integrating visual and echoic information but that they weigh the echo-based information in a task-dependent manner, probably depending on the visual information they receive.

The bats preferred the dark cave-like arm at all light levels tested, even at the lowest light level, which is very close to their visual threshold and where the visual SNR was minimal (Fig. 3B). This contradicts the response expected if they were using a maximum likelihood estimator, where deterioration of visual information should result in giving less weight to vision and more to echolocation and thus in a reduced preference for the black blocked arm (1, 7). Instead, the preference for the black blocked arm was higher when vision deteriorated. We suggest that the blocked arm appeared even more like a cave opening at the lowest light level, thus strengthening the visual percept. This is what it looked like to our human eyes, so it was probably even more so to Rousettus vision, which is rod-based and has lower angular resolution (28).
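To make the expected maximum likelihood behavior concrete, the following is a minimal sketch of the standard reliability-weighted cue combination (1, 7), assuming that reliability is the inverse variance of each unimodal estimate. The function name and the numerical values are illustrative assumptions and are not taken from our data.

```python
def mle_combine(est_vision, var_vision, est_echo, var_echo):
    """Combine two unimodal estimates, weighting each by its reliability.

    Reliability is taken as 1/variance (an assumption of the standard model).
    Returns (weight_vision, weight_echo, combined_estimate, combined_variance).
    """
    rel_vision = 1.0 / var_vision
    rel_echo = 1.0 / var_echo
    w_vision = rel_vision / (rel_vision + rel_echo)
    w_echo = 1.0 - w_vision
    combined = w_vision * est_vision + w_echo * est_echo
    combined_var = 1.0 / (rel_vision + rel_echo)
    return w_vision, w_echo, combined, combined_var


# Illustrative values only: reliable vision versus degraded vision.
bright = mle_combine(0.0, 1.0, 10.0, 4.0)
dim = mle_combine(0.0, 16.0, 10.0, 4.0)
print("visual weight, reliable vision:", round(bright[0], 2))
print("visual weight, degraded vision:", round(dim[0], 2))
```

Under this model, the visual weight drops as the visual variance grows, which is the prediction that the bats' behavior at the lowest light level did not follow.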

Despite the many similarities between vision and echolocation (24), there are also some inherent differences. Vision has superior angular acuity (25–27), while echolocation has better range acuity (29–32) (note that range estimation with vision has been tested only for humans, which have far better visual acuities than bats, and yet their performance falls short in comparison to bat echolocation). The difference in performance in range estimation between vision and echolocation is probably due to range being inferred in vision using different visual cues (e.g., binocular disparity, relative size, etc.) (30, 33), whereas in echolocation, it is directly computed from the delay between the emitted pulse and arrival of reflected echoes, which allows very accurate measurements (31, 34). These differences most likely underlie the different weighing of these modalities. In our experiments, vision was more dominant for tasks that required angular spatial information, such as orientation and object discrimination, while echolocation was mainly used for range estimations and obstacle avoidance. Most of these tasks could, in theory, be resolved with information from both modalities. Shape discrimination, for example, could be performed either according to the angular spatial relations between the visual features of a target or using acoustic spectrotemporal information. Nevertheless, vision was usually preferred.

Another possible explanation for this preference is the rapid deterioration of echo-based information with distance due to the strong atmospheric attenuation of sound in air, making echo-based information less reliable from a distance (35). Vision suffers from this problem to a lesser degree. In the shape discrimination task, when both visual and echo information was available, it is possible that visual information allowed the bats to make a choice in the discrimination task from their takeoff platform but echolocation did not. It seems that vision is also superior for navigation even within the range of echolocation, as was also suggested in previous studies in other bat species (36–38). For example, Joermann et al. (38) showed that two bat species (Desmodus rotundus and Phyllostomus discolor) approached a visual illusion of a landing grid but turned away shortly before the grid (supposedly realizing that it does not reflect echoes) and did not attempt to land. In this study, as well as in ours, acoustic information was not disregarded. Rather, it was dynamically weighed along with visual information to resolve the sensory conflict.

In conclusion, intersensory integration is complex and dynamic. Rousettus bats clearly prefer visual information in many scenarios, but they hardly ever stop acquiring echoic information. The dominant sense might depend on the task and switch according to incoming input. Recent results also suggest that multisensory encoding of space can be complex and that the same hippocampal neurons encode different locations based on the available sensory information (39). Bats make uniquely interesting animal models to study intersensory integration due to their heavy reliance on two sensory modalities for similar tasks. The large variety of species-specific behaviors within the bat order suggests a wide variety of intersensory integration strategies at both the behavioral and brain levels.

MATERIALS AND METHODS

Animals

A total of 317 R. aegyptiacus bats were included in the study (Table 1). The bats were caught in a roost in central Israel housing thousands of bats and brought to the Zoological Garden in Tel Aviv University, Israel.

Five male bats participated in the bimodal learning and in the cross-modal recognition experiments. Four bats participated in another cross-modal experiment. These bats were identified by bleaching their fur and using radio frequency identification chips. During their stay, they were housed in a large cage (1 m by 2 m by 2.5 m) and kept in a reversed light cycle. The bats were trained approximately three to five times a week. On training days, the bats’ diet was restricted to 50% of their daily diet, and they were fed with 70 g of apples per bat. On these days, the rest of the nutrition was provided during training and consisted of bananas and mango juice. On weekends, food was enriched with the different fruits available and given ad libitum. Water was always provided ad libitum. During the first few weeks, the bats were weighed on a weekly basis to monitor weight loss. Once it was clear that they were not losing weight, their weight was monitored on a monthly basis, while their physical state was monitored daily during the entire experimental period. The experiment was approved by the Institutional Animal Care and Use Committee (IACUC), permit number L-12-039.

A total of 308 bats participated in the sensory weighing experiment. They were brought to Tel Aviv University in groups of 16 to 30 (mixed sex), tested the next day, and then released. During their stay, they were kept in natural day-night cycle and provided with water and food ad libitum. Naïve bats were kept separately from bats that already performed the experiment. The experiment was approved by the IACUC committee number L-14-054.

Light level measurements

In all experiments, the ambient light level in the room was measured using an International Light Technologies detector (SPM068, ILT1700) with a resolution of 10⁻⁷ lux. We considered a light level of <10⁻⁶ lux as complete darkness since it is lower than the Rousettus bats’ visual threshold (17).

Experimental setups and procedures

Bimodal learning

The experiment took place in an acoustic flight room (4 m by 2.5 m by 2.2 m). Twelve ultrasonic microphones (Knowles FG) were used to record echolocation and were spread around the perimeter of the room. Audio was sampled and recorded using a 12-channel analog-to-digital (A/D) converter (UltraSoundGate 1216, Avisoft) with a sampling rate of 250,000 Hz. In addition, two infrared cameras were placed in the room to allow videoing of the bats’ behavior.

Rousettus bats were trained in a two-alternative forced choice task to discriminate between two wooden 3D targets differing in shape: a triangular prism (base, 24 cm; height, 22 cm; length, 16 cm) and a cylinder (diameter, 17 cm; length, 16 cm). From the bats’ takeoff platform, the targets appeared to the bats as a triangle and a circle with equal area (i.e., their 2D cross section facing the bat; see inset in Fig. 1A). The 3D shapes of the targets differed greatly, so they were expected to provide ample acoustic and visual cues allowing their classification. Two bats were trained to land on the prism, and two were trained to land on the cylinder. Another bat that was trained to land on the prism was disqualified since it did not echolocate. The bats were placed by the experimenter on a starting platform 2.8 m from the targets, from which they initiated the flight toward the targets. They were allowed to fly freely and scan the 3D shapes of the targets from all angles. If, during this process, the bats landed on one of the walls and not the target, then they were returned by the experimenter to the starting platform. Correct choices (i.e., landing on the right target) were rewarded with fruit puree given from a syringe, which was controlled by the experimenter. Wrong choices were punished using an aversive noise. After landing, the experimenter removed the bat from the target and changed the location of the targets (while they were not visible to the bat; see below). The experimenter then placed the bat on the platform with her back to the targets and then moved to the corner of the room in a stereotypical manner to avoid providing any cue to the bats. The bats typically took off immediately. The fact that approximately half of the bats did not succeed in the sensory translation test (cross-modal recognition) and that the bats performed at chance level under all control conditions throughout the experiments implies that they were not cued by the experimenter. The bats were trained in this manner 3 days a week, with each session lasting approximately 30 min (including ~26 to 30 trials).

The targets were mounted on two poles 1 m apart. These poles were a part of an apparatus that rotated around a fixed axis. This allowed the experimenter to easily switch the locations of the two targets between two possible fixed locations (see inset in Fig. 1A). To prevent the usage of spatial memory, the targets switched locations in a pseudorandom order, with each target appearing in the same location in no more than three consecutive trials. Nevertheless, sometimes, during training, the bats fixated on one location for multiple trials. In these cases, the experimenter placed the correct choice target in the opposite location for several trials until the bat chose it. The apparatus was rotated after every trial regardless of whether the targets changed their location or not to prevent the learning of auditory cues that might imply where the correct target is.
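The pseudorandom ordering described above can be sketched as follows. This is an illustrative reconstruction under the stated constraint (the rewarded target appears in the same location in no more than three consecutive trials); the function name, the forced-switch rule, and the seed are assumptions rather than the procedure actually used by the experimenter.

```python
import random


def pseudorandom_sides(n_trials, max_run=3, rng=None):
    """Return a list of 'left'/'right' target locations with runs capped at max_run."""
    rng = rng or random.Random()
    sides = []
    for _ in range(n_trials):
        choice = rng.choice(("left", "right"))
        # Force a switch if the last max_run trials already used this side.
        if len(sides) >= max_run and all(s == choice for s in sides[-max_run:]):
            choice = "right" if choice == "left" else "left"
        sides.append(choice)
    return sides


# Example: a 30-trial session (seed fixed only to make the sketch repeatable).
session = pseudorandom_sides(30, rng=random.Random(0))
print(session)
```

Forcing a switch after a maximal run slightly reduces randomness right after long runs, which is the usual trade-off of this kind of constraint.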

Bimodal training

The bats were first trained to discriminate the targets with both modalities available. Lights were turned on (approximately 2 lux) to allow vision. The bats were trained until they reached a criterion of three consecutive days with 75% correct choice.

To test which modality the bats used, we abolished each of the modalities separately. We first abolished vision (echolocation-only training) and then echolocation (vision-only training). Last, we tested the bats with both modalities abolished. Since the bats did not spontaneously succeed when both modalities were abolished (Fig. 1D), they were definitely using one of these modalities for learning (Fig. 1A). The inability of the bats to perform with echolocation only (Fig. 1B) compared to the immediate success with vision only (Fig. 1C) implies that there was no order effect for the conditions tested.

Echolocation-only training

All light sources in the room were abolished, and the bats were trained in complete darkness. This permitted the use of echolocation only. In these training sessions, the experimenter used night vision goggles.

Vision-only training

The training occurred in dim light (approximately 2 lux) with echolocation blocked. To abolish the usage of echolocation, the wooden targets were replaced with targets of the same shape, size, and color but made of foam. Foam is much less reflective acoustically than wood, thus reducing the possibility of using echoes. To ensure that even the weak echoes reflected from the foam were not used by the bats, pink noise was played to mask the echoes. The noise was played from two speakers (Vifa), one placed 0.6 m behind and 0.4 m below each target, facing the direction from which the bats approached the targets. The speakers were connected to an UltraSoundGate player 116 device (Avisoft). The speakers played noise that was measured to be 90 dB at 1 m at 30 kHz, the frequency of peak intensity of Rousettus echolocation (21).

In both vision-only and echolocation-only conditions, the bats were trained until they reached the criterion of three consecutive sessions with 75% success or for 10 sessions because this was the maximum number of sessions they needed to first reach 75% in the bimodal training, whichever came first. We considered these two conditions as training because the bats were rewarded for correct decisions.

Vision and echolocation abolished

The bats were trained in complete darkness with the foam targets and noise playback. The main purpose of this condition was to ensure that the bats did not use any olfactory cues from the foam targets. If the bats used either acoustic or visual cues from the targets, then their performance should be at chance level under this condition.

Targets’ ensonification

The targets were ensonified using a speaker (Vifa), connected to an UltraSoundGate player 116 device (Avisoft), and a 46DD-FV 1/8″ constant current power calibrated microphone (GRAS) placed on top of the speaker. The speaker played a 2.5-ms-long, 95- to 15-kHz down sweep, and the microphone recorded the echo’s sound pressure. Sampling rate of both the signal and the recording was 375 kHz. The microphone and speaker were placed on a tripod 1 m from the target, which was also placed on a tripod.
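For illustration, a signal with the parameters given above (2.5 ms, 95 to 15 kHz, 375-kHz sampling rate) could be synthesized as sketched below. The sweep is assumed here to be linear in frequency and of unit amplitude without windowing; neither assumption is specified above, and the function name is hypothetical.

```python
import math


def linear_down_sweep(f_start=95_000.0, f_end=15_000.0,
                      duration=2.5e-3, fs=375_000.0):
    """Return the samples of a unit-amplitude linear frequency sweep."""
    n = int(duration * fs)
    rate = (f_end - f_start) / duration  # Hz per second, negative for a down sweep
    samples = []
    for i in range(n):
        t = i / fs
        # Phase is the integral of the instantaneous frequency f_start + rate * t.
        phase = 2.0 * math.pi * (f_start * t + 0.5 * rate * t * t)
        samples.append(math.sin(phase))
    return samples


sweep = linear_down_sweep()
print(len(sweep), "samples")
```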

Audio analysis

We used in-house software written in MATLAB (MathWorks, 2015) to analyze audio recordings. Using the time difference of arrival of the echolocation pulses at the different microphones in the array, we reconstructed the bat’s 3D flight trajectory under the bimodal condition. We then calculated the angle between the bat and the target’s main axis for both targets in every location. We analyzed data of two bats (I and B) from the first two learning sessions in the bimodal training. We only used correct choice trials. In total, seven trials were analyzed per bat, three with the rewarded target to the left and four with the rewarded target to the right.
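The principle of localization from time differences of arrival can be sketched as below. This is not the in-house MATLAB software; it is a simplified grid search written for illustration, and the microphone coordinates, the speed of sound, the grid step, and all function names are assumptions.

```python
import math
from itertools import product

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature

# Hypothetical positions (m) of 12 microphones around a 4 m by 2.5 m by 2.2 m room.
MICS = [(0, 0, 0.5), (2, 0, 1.5), (4, 0, 0.5), (4, 1.25, 1.5),
        (4, 2.5, 0.5), (2, 2.5, 1.5), (0, 2.5, 0.5), (0, 1.25, 1.5),
        (1, 0, 2.0), (3, 2.5, 2.0), (0, 0.6, 2.0), (4, 1.9, 0.2)]


def tdoas(source, mics, c=SPEED_OF_SOUND):
    """Arrival-time differences (s) at each microphone relative to microphone 0."""
    delays = [math.dist(source, m) / c for m in mics]
    return [d - delays[0] for d in delays]


def locate(measured, mics, room=(4.0, 2.5, 2.2), step=0.1):
    """Return the grid point whose predicted TDOAs best match the measured ones."""
    nx, ny, nz = (int(round(size / step)) + 1 for size in room)
    best, best_err = None, float("inf")
    for i, j, k in product(range(nx), range(ny), range(nz)):
        point = (i * step, j * step, k * step)
        err = sum((a - b) ** 2 for a, b in zip(tdoas(point, mics), measured))
        if err < best_err:
            best, best_err = point, err
    return best


# Simulate one noise-free click and recover its position.
true_position = (1.5, 1.0, 1.2)
estimate = locate(tdoas(true_position, MICS), MICS)
print(estimate)
```

Repeating the estimate for successive clicks yields a sequence of positions that approximates the flight trajectory; a real analysis would also need to handle measurement noise and missed detections.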

We also analyzed echolocation rates for all bats on both the bimodal condition and echolocation only. The analysis was conducted on 10 trials per bat from the first two sessions in every condition using the loudest channel.

Cross-modal recognition

The cross-modal recognition experiment was conducted twice with different bats (Table 1) and slightly different targets, in a flight room similar to the one used in the bimodal learning experiment, equipped with the same ultrasonic microphones and infrared cameras (Fig. 2B). In both rounds, Rousettus bats were trained in a two-alternative forced choice task to discriminate between a textured and a smooth target. They were first trained and tested in complete darkness (<10⁻⁷ lux, i.e., using only echolocation). Then, they were tested in dim light (under conditions where only vision can be used; see below) to examine cross-modal recognition. Last, they were tested without visual or acoustic cues to rule out the use of alternative cues.

In the first round, the bats were presented with two identical plastic targets (15 cm by 10 cm by 15 cm) that differed only in texture; one of the targets was smooth, and the other one was perforated with 1-cm-deep holes on four of its sides and 5-cm-deep holes on two opposite sides (see inset in Fig. 2A). The bats had to land on the smooth target to receive fruit puree presented in a small 5-cm-diameter bowl. To control for olfactory cues, both targets had bowls with fruit on their upper face (where the bats landed). Both bowls were covered with a fine mesh made of fishing wires (0.5-mm diameter). The feeder on the smooth target had wide openings of 1.5 cm between two wires, allowing the bats access to the food, while the feeder on the perforated target had narrower openings of 0.5 cm that prevented any access. Food was frequently replenished by the experimenter to equalize odor cues. We confirmed that the bats could not recognize the targets based on this difference between the mesh on the bowls (see below). The targets were mounted on poles at the center of the room in two fixed locations. The two targets’ positions were switched in a pseudorandom order (as in the “Bimodal learning” under “Experimental setups and procedures” section). The targets were always removed from the poles and placed on them again, regardless of whether their location changed or not, to eliminate any acoustic cues.

The bats were trained in complete darkness daily, 5 days a week, with each session lasting 30 min. The bats took off from one of the corners of the room, which they had established as their home base. Flights were initiated by the bats. After a bat had landed on one of the targets, the experimenter encouraged it to fly back to the wall (by gently touching it) so that the location of the targets could be changed. Night vision goggles were used by the experimenter throughout the experiment. We took particular care that the bats never saw the targets. We thus only revealed them every day after ensuring that the room was completely dark.

Test trials began once the bats reached a criterion of 75% correct choices on three consecutive days. All three types of test trials (see below) differed from training trials in that they had no reward to prevent learning (that is, giving reward in light trials would have resulted in bats relearning the task visually, instead of “translating” the information they gained with echolocation). To ensure that bats continued to land on the targets even in the absence of a food reward, test trials were interspersed between regular rewarded training trials, randomly separated by one to three training trials. During test trials, both feeders were blocked with the same dense mesh, preventing access to the food in both of them.

Testing in the dark

These tests were performed in complete darkness to validate the learning. A total of 42 to 49 of these trials were performed per bat.

Testing in dim light

After the bats finished the test trials in the dark, they were tested in dim light (approximately 2 lux). These trials were also embedded within a regular (dark) training session, and no food reward was given. In these test trials, the targets were placed inside plastic cubes to allow the usage of vision but not echolocation (the echoes of both targets were identical as was validated; see control below). These trials were also randomly spread within the regular (darkness) training trials. At the beginning of each visual test trial, the targets were placed in the plastic cube, and they were removed after the bat landed. Twenty trials per bat were performed (and not more) to prevent extinction of the original learning.

Control

The bats were also tested in the dark with the plastic cubes covering the targets. This allowed us to examine whether they relied on any other cue except for the texture differences (e.g., olfactory cues or acoustical cues from the feeders). If texture-related acoustical information was used, then it is expected that under these control conditions, the bats will perform at chance level. Twenty of these trials were performed per bat.

Statistical analysis

All comparisons were performed with a one-tailed binomial test relative to chance level (50% success). Tests were one-sided because of our assumption that training will improve performance.
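A one-tailed binomial test against chance can be computed directly from the binomial distribution, as in the minimal sketch below. The function name and the example counts (15 correct out of 20 trials) are illustrative assumptions and are not data from this study.

```python
from math import comb


def binomial_p_one_tailed(successes, trials, chance=0.5):
    """P(X >= successes) for X ~ Binomial(trials, chance)."""
    return sum(
        comb(trials, k) * chance ** k * (1 - chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical example: 15 correct landings out of 20 test trials.
p_value = binomial_p_one_tailed(15, 20)
print(round(p_value, 4))
```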

Targets’ ensonification

See “Bimodal learning” under “Experimental setups and procedures” section. In addition to recording from an azimuth of 0°, the targets were ensonified from 22.5° and 45° to test the influence of the holes on the spectra. The echoes recorded at the same angle were averaged.

In the second round of the experiment, the same room as in the bimodal learning experiment was used. In this round, the bats were presented with slightly different targets (15 cm by 15 cm by 15 cm) from those used in the first round of the cross-modal recognition experiment: The perforated target had only the two 5-cm-deep holes (without the 1-cm holes; see inset in Fig. 2B) on two parallel faces, which might have made the translation to vision more difficult. The second target was smooth, as in the first round. The targets were changed because this experiment was part of a more comprehensive experiment (whose results will be published elsewhere) aiming to assess depth sensitivity in Rousettus. For the same reason, the location of the takeoff and targets was changed (see Fig. 2, A and B) to ensure that the bats took off from an equal distance from the two targets. In addition, to ensure that the bats did not have a bias toward the smooth target (since they were all trained to land on it in the previous round), in this round, two of the bats were rewarded for flying toward the smooth target, and two were rewarded for flying to the textured target. As in the bimodal learning experiment, the bats were released from the experimenter’s hand to a starting platform from which they initiated their flights. Food reward was given by the experimenter, who then returned the bat to the starting platform for a new trial. Cross-modal recognition was tested in the same manner as in round 1: training in complete darkness, testing in the dark, testing in the light, and control (30 trials per condition).

Sensory weighing

The experiment took place in an acoustic room (4 m by 2.2 m by 2.4 m). A large two-arm maze (1.8 m by 3 m by 1.8 m), which allowed bats to fly, was set up in the middle of the room (Fig. 3A). The maze’s walls and ceiling were made of white tarpaulin, which strongly reflects sound, and did not allow the bats to land on them. A landing platform made of foam (70 cm by 45 cm) was hung at the end of each arm.

One of the arms was blocked with a wall, which we manipulated to control the visual and acoustic cues the bats were receiving. (i) We manipulated the color of the highly acoustically reflective plastic wall, testing white versus black walls (which were identical other than the color). This procedure altered the visual cues only but maintained the same acoustic information (both walls were equally reflective). The bats were tested in three different light levels (see below). (ii) We manipulated the reflectivity of the blocking wall (using a foam instead of a plastic wall) but kept its color identical (black). This procedure manipulated only the acoustic information but maintained the visual cues. The bats were tested under this condition only in the lowest light level (3 × 10⁻⁵ lux) because we expected that echolocation would be more dominant in this light level. The choice of the bats between a blocked arm with varying sensory information (e.g., white versus black walls) and a constant stimulus (i.e., an open arm that never changed) allowed us to reveal which sensory cues they were relying on when making their decision.

Two high-speed infrared cameras (OptiTrack, NaturalPoint) were placed 0.5 m above ground at the entrance of each arm (1.4 m into the maze) facing up and recorded at 125 frames/s. An ultrasonic microphone (UltraSoundGate CM16/CMPA, Avisoft) was placed on a tripod in front of the wall separating the two arms of the maze 1.35 m above the ground. The microphone was facing the main corridor and was tilted upward by 45°. The microphone was connected to an A/D converter (Hm116, Avisoft) and recorded audio at a sampling rate of 250,000 Hz.

We tested naïve bats in this experiment; each bat performed one flight without any training and no more. Each bat was kept in a carrying cage for 15 min in the acoustic room, outside the maze, to allow its eyes to adapt to the dark. Then, the experimenter released the bat from the hand while seated on a chair at the maze entrance (1.50 m above ground). The bat was encouraged to fly if it stayed on the hand longer than a few seconds. If it did not fly toward one of the two arms (but hovered or turned) for three attempts, then the bat was disqualified. We also disqualified bats that did not echolocate or trials in which no video was recorded (Table 1). The microphone and cameras were triggered by another experimenter sitting outside the experimental room at the moment of release and were set to record until the bat entered one of the arms. Light level was adjusted to either 5 × 10⁻², 2 × 10⁻³, or 3 × 10⁻⁵ lux using four LED light sources at the ceiling of the acoustic room outside the maze, which allowed homogeneous lighting (above the tarpaulin ceiling). A light level of 3 × 10⁻⁵ lux was chosen because it is very close to the threshold for vision of these bats (17) and, at this light level, to the human eye, the black walls blocking one of the arms appeared like an opening to a cave. The other light levels allowed more visual information.

When manipulating the color of the wall, we presented the two colored walls on both sides of the maze in all light levels to ensure that there was no effect of the maze itself or its lighting on the behavior of the bats. We found no difference (choice of open versus blocked arm between different sides of the maze with black wall, P = 1; same choice for white wall, P = 0.59; behavior after entering the blocked arm between different sides of the maze with black wall, P = 0.1; Fisher’s exact test for all comparisons; see “Statistical analysis”). Because there was no basal preference to one arm and because each bat only flew once, the trials with the nonreflective wall were performed on the left side only for convenience.

Acoustic detection control

To ensure that R. aegyptiacus were capable of detecting the reflective wall acoustically (i.e., based on echolocation) in the experimental setup before choosing an arm to fly into, 12 bats were trained in complete darkness (<10⁻⁶ lux, lower than their vision threshold) to acoustically detect the open arm, rather than the blocked one, and fly into it. During training, bats were released from the base of the two-arm maze, and correct choices (i.e., flying to the open arm) were rewarded by allowing the bat to hang on the landing platform at the edge of the arm for 2 min (wild bats prefer this over being handled). Bats that agreed to drink mango juice from a syringe were also rewarded with juice. Bats that reached a criterion of 10 consecutive correct choices within 3 hours of training were tested in complete darkness (10⁻⁷ lux). The tests included 20 trials per bat, with the blocking wall positioned on alternating sides in a pseudorandom order, with no more than three consecutive trials on the same side.

Audio and video analysis

Audio recordings were examined using SASLab (Avisoft) to ensure that the bats echolocated. All videos were analyzed by an experimenter and categorized into trials in which the bat entered the blocked arm and trials in which the bat entered the open arm. Trials in which bats entered the blocked arm were further subdivided into trials where the bat approached the wall but then turned back, trials in which the bat attempted to land, and trials in which the bat collided. These trials were classified by three independent observers. The scores of the observers (e.g., proportion of bats colliding per condition) were averaged. Bats whose video was not obtained because of technical failure or bats whose recording did not show echolocation were also disqualified because we aimed to study multimodal decision making (Table 1).

To calculate echolocation rate, we used trials of bats that collided or attempted to land on the blocked arm. We used the moment of contact with the wall to sync video and audio of the trial. In total, we had 19 trials for the reflective wall and 41 for the nonreflective wall. Each trial was divided into two halves using the time of flight, and echolocation rate was calculated per each half and then averaged across bats. The echolocation rates are thus estimates for when the bat was closer to or farther from the wall.
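The half-flight rate calculation can be sketched as follows, assuming that click times have already been detected and aligned to the video so that the start of the flight and the moment of contact are known. The function name and the example times are hypothetical.

```python
def rate_per_half(click_times, t_start, t_contact):
    """Return clicks per second in the first and second halves of a flight.

    click_times, t_start, and t_contact are in seconds on a common clock.
    """
    midpoint = (t_start + t_contact) / 2.0
    half_duration = (t_contact - t_start) / 2.0
    far = sum(1 for t in click_times if t_start <= t < midpoint)
    near = sum(1 for t in click_times if midpoint <= t <= t_contact)
    return far / half_duration, near / half_duration


# Hypothetical trial: a 1-s flight with more clicks close to the wall.
far_rate, near_rate = rate_per_half([0.1, 0.3, 0.55, 0.65, 0.75, 0.9], 0.0, 1.0)
print(far_rate, near_rate)
```

Averaging the two rates across bats then gives one estimate for the half of the flight farther from the wall and one for the half closer to it.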

Statistical analysis

We first tested the preference for the blocked versus the open arm with a binomial test relative to chance. We then tested whether there was a difference in bats’ preference of the open versus the blocked arm under the different conditions. The data were compared using the chi-square test for independence unless >20% of the table cells had expected value of <5. In these cases, data were compared with Fisher’s exact test. Last, we tested whether the behavior of the bats (i.e., turn back, attempt to land, and collide) after they entered the arm varied under different conditions (reflective versus nonreflective walls). For this comparison, chi-square and Fisher’s test were used as well.
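The rule for choosing between the two tests can be sketched as below: expected cell counts are computed from the marginal totals, and Fisher's exact test is used when more than 20% of them fall below 5. The Fisher implementation shown handles only 2 × 2 tables (the three-category behavior comparison would need a generalized version), and the function names and example counts are illustrative assumptions.

```python
from math import comb


def expected_counts(table):
    """Expected counts under independence for a table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    return [[r * c / total for c in col_totals] for r in row_totals]


def needs_fisher(table, min_expected=5.0, max_fraction=0.2):
    """True if more than max_fraction of cells have expected count < min_expected."""
    cells = [e for row in expected_counts(table) for e in row]
    small = sum(1 for e in cells if e < min_expected)
    return small / len(cells) > max_fraction


def fisher_exact_2x2(table):
    """Two-sided Fisher's exact test P value for a 2 x 2 table."""
    (a, b), (c, d) = table
    row1, row2, col1, n = a + b, c + d, a + c, a + b + c + d

    def prob(x):
        # Hypergeometric probability of x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    return sum(prob(x) for x in range(lowest, highest + 1)
               if prob(x) <= p_observed * (1 + 1e-9))


# Hypothetical counts: rows are conditions, columns are open versus blocked arm.
counts = [[3, 1], [1, 3]]
if needs_fisher(counts):
    print("Fisher's exact test, P =", round(fisher_exact_2x2(counts), 4))
```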

Targets’ ensonification

The targets were ensonified in the same manner as the bimodal learning experiment. After the ensonification of the targets, the microphone was placed instead of the target to record the speaker’s incident sound pressure. We then calculated the target strength by dividing the peak intensities of the incident and echo sound pressure for each target.
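As a worked illustration of this calculation, the sketch below expresses target strength in decibels from the ratio of the echo to the incident peak sound pressure. The direction of the ratio, the decibel conversion (20 log10 of a pressure ratio), and the example values are assumptions added for clarity; the text above specifies only that the two peak values were divided.

```python
import math


def target_strength_db(echo_peak_pressure, incident_peak_pressure):
    """Target strength (dB) as 20*log10(echo / incident) peak sound pressure."""
    return 20.0 * math.log10(echo_peak_pressure / incident_peak_pressure)


# Hypothetical pressures (arbitrary but identical units): the echo is 10 times weaker.
print(target_strength_db(0.1, 1.0))
```

With this convention, a weaker echo gives a more negative target strength, so a nonreflective foam wall would be expected to score lower than a reflective plastic wall.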

Acknowledgments

We thank L. Harten, G. Shalev, and T. Reisfeld for helping with the animals. Funding: Sagol School of Neuroscience, Tel Aviv University funded the scholarship for S.D. Author contributions: Y.Y. devised the concept. S.D. and Y.Y. designed the experiment, discussed the results, and wrote the paper. S.D. conducted the experiments and analysis. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/5/6/eaaw6503/DC1

Fig. S1. Spectra of the targets in the bimodal learning experiment.

Fig. S2. The distribution of the bats’ angles relative to the targets.

Fig. S3. Spectra of the targets in the cross-modal recognition experiment—round 1.

Fig. S4. Spectra of the targets in the cross-modal recognition experiment—round 2.

Reference (40)

REFERENCES AND NOTES

- 1.Ernst M. O., Banks M. S., Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433 (2002). [DOI] [PubMed] [Google Scholar]

- 2.Stein B. E., Stanford T. R., Multisensory integration: Current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266 (2008). [DOI] [PubMed] [Google Scholar]

- 3.Pack A. A., Herman L. M., Sensory integration in the bottlenosed dolphin: Immediate recognition of complex shapes across the senses of echolocation and vision. J. Acoust. Soc. Am. 98, 722–733 (1995). [DOI] [PubMed] [Google Scholar]

- 4.Davenport R. K., Rogers C. M., Intermodal equivalence of stimuli in apes. Science 168, 279–280 (1970). [DOI] [PubMed] [Google Scholar]

- 5.Winters B. D., Reid J. M., A distributed cortical representation underlies crossmodal object recognition in rats. J. Neurosci. 30, 6253–6261 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Sober S. J., Sabes P. N., Flexible strategies for sensory integration during motor planning. Nat. Neurosci. 8, 490–497 (2005). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Alais D., Burr D., The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257–262 (2004). [DOI] [PubMed] [Google Scholar]

- 8.Botvinick M., Cohen J., Rubber hands “feel” touch that eyes see. Nature 391, 756 (1998). [DOI] [PubMed] [Google Scholar]

- 9.Rock I., Victor J., Vision and touch: An experimentally created conflict between the two senses. Science 143, 594–596 (1963). [DOI] [PubMed] [Google Scholar]

- 10.Shams L., Kamitani Y., Shimojo S., What you see is what you hear. Nature 408, 788 (2000). [DOI] [PubMed] [Google Scholar]

- 11.Guo J., Guo A., Crossmodal interactions between olfactory and visual learning in Drosophila. Science 309, 307–310 (2005). [DOI] [PubMed] [Google Scholar]

- 12.Seitz A. R., Kim R., Shams L., Sound facilitates visual learning. Curr. Biol. 16, 1422–1427 (2006). [DOI] [PubMed] [Google Scholar]

- 13.Kehoe E. J., Overshadowing and summation in compound stimulus conditioning of the rabbit’s nictitating membrane response. J. Exp. Psychol. Anim. Behav. Process. 8, 313–328 (1982). [PubMed] [Google Scholar]

- 14. Rowe C., Multisensory learning: From experimental psychology to animal training. Anthrozoös 18, 222–235 (2005).

- 15. Schumacher S., Burt De Perera T., Thenert J., von der Emde G., Cross-modal object recognition and dynamic weighting of sensory inputs in a fish. Proc. Natl. Acad. Sci. U.S.A. 113, 7638–7643 (2016).

- 16. Heffner R. S., Koay G., Heffner H. E., Sound localization in an Old-World fruit bat (Rousettus aegyptiacus): Acuity, use of binaural cues, and relationship to vision. J. Comp. Psychol. 113, 297–306 (1999).

- 17. Boonman A., Bumrungsri S., Yovel Y., Nonecholocating fruit bats produce biosonar clicks with their wings. Curr. Biol. 24, 2962–2967 (2014).

- 18. Thiele A., Vogelsang M., Hoffmann K. P., Pattern of retinotectal projection in the megachiropteran bat Rousettus aegyptiacus. J. Comp. Neurol. 314, 671–683 (1991).

- 19. Danilovich S., Krishnan A., Lee W. J., Borrisov I., Eitan O., Kosa G., Moss C. F., Yovel Y., Bats regulate biosonar based on the availability of visual information. Curr. Biol. 25, R1124–R1125 (2015).

- 20. Holland R. A., Waters D. A., Rayner J. M. V., Echolocation signal structure in the Megachiropteran bat Rousettus aegyptiacus Geoffroy 1810. J. Exp. Biol. 207, 4361–4369 (2004).

- 21. Yovel Y., Geva-Sagiv M., Ulanovsky N., Click-based echolocation in bats: Not so primitive after all. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 197, 515–530 (2011).

- 22. Ketten D. R., The marine mammal ear: Specializations for aquatic audition and echolocation, in The Evolutionary Biology of Hearing, D. B. Webster, R. R. Fay, A. N. Popper, Eds. (Springer-Verlag, 1992), pp. 717–750.

- 23. Waters D. A., Vollrath C., Echolocation performance and call structure in the Megachiropteran fruit-bat Rousettus aegyptiacus. Acta Chiropt. 5, 209–219 (2003).

- 24. Surlykke A., Simmons J. A., Moss C. F., Perceiving the world through echolocation and vision, in Bat Bioacoustics, M. B. Fenton, A. D. Grinnell, A. N. Popper, R. R. Fay, Eds. (Springer Handbook of Auditory Research, Springer, 2016), vol. 54, pp. 265–288.

- 25. Eklöf J., thesis, University of Gothenburg (2003).

- 26. Ghose K., Moss C. F., The sonar beam pattern of a flying bat as it tracks tethered insects. J. Acoust. Soc. Am. 114, 1120–1131 (2003).

- 27. Simmons J. A., Kick S. A., Lawrence B. D., Hale C., Bard C., Escudié B., Acuity of horizontal angle discrimination by the echolocating bat, Eptesicus fuscus. J. Comp. Physiol. A. 153, 321–330 (1983).

- 28. Müller B., Goodman S. M., Peichl L., Cone photoreceptor diversity in the retinas of fruit bats (megachiroptera). Brain Behav. Evol. 70, 90–104 (2007).

- 29. Anderson P. W., Zahorik P., Auditory/visual distance estimation: Accuracy and variability. Front. Psychol. 5, 1097 (2014).

- 30. Viguier A., Clément G., Trotter Y., Distance perception within near visual space. Perception 30, 115–124 (2001).

- 31. Simmons J. A., The resolution of target range by echolocating bats. J. Acoust. Soc. Am. 54, 157–173 (1973).

- 32. Simmons J. A., Perception of echo phase information in bat sonar. Science 204, 1336–1338 (1979).

- 33. Brenner E., van Damme W. J., Perceived distance, shape and size. Vis. Res. 39, 975–986 (1999).

- 34. Dear S. P., Simmons J. A., Fritz J., A possible neuronal basis for representation of acoustic scenes in auditory cortex of the big brown bat. Nature 364, 620–623 (1993).

- 35. Boonman A., Bar-On Y., Cvikel N., Yovel Y., It’s not black or white—On the range of vision and echolocation in echolocating bats. Front. Physiol. 4, 248 (2013).

- 36. Höller P., Schmidt U., The orientation behaviour of the lesser spearnosed bat, Phyllostomus discolor (Chiroptera) in a model roost. Concurrence of visual, echoacoustical and endogenous spatial information. J. Comp. Physiol. A. 179, 245–254 (1996).

- 37. Chase J., Visually guided escape responses of microchiropteran bats. Anim. Behav. 29, 708–713 (1981).

- 38. Joermann G., Schmidt U., Schmidt C., The mode of orientation during flight and approach to landing in two Phyllostomid bats. Ethology 78, 332–340 (1988).

- 39. Geva-Sagiv M., Romano S., Las L., Ulanovsky N., Hippocampal global remapping for different sensory modalities in flying bats. Nat. Neurosci. 19, 952–958 (2016).

- 40. Yovel Y., Falk B., Moss C. F., Ulanovsky N., Optimal localization by pointing off axis. Science 327, 701–704 (2010).

Supplementary Materials

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/5/6/eaaw6503/DC1

Fig. S1. Spectra of the targets in the bimodal learning experiment.

Fig. S2. The distribution of the bats’ angles relative to the targets.

Fig. S3. Spectra of the targets in the cross-modal recognition experiment—round 1.

Fig. S4. Spectra of the targets in the cross-modal recognition experiment—round 2.

Reference (40)