Abstract

Some theories hypothesize that spatial context plays a fundamental role in episodic memory, acting as a scaffold on which episodes are constructed. A prediction based on this hypothesis is that spatial context should play a primary role in the neural representation of an event. To test this hypothesis in humans, male and female participants imagined events, composed of familiar locations, people, and objects, during an fMRI scan. We used multivoxel pattern analysis to determine the neural areas in which events could be discriminated based on each feature. We found that events could be discriminated according to their location in areas throughout the autobiographical memory network, including the parahippocampal cortex and posterior hippocampus, retrosplenial cortex, posterior cingulate cortex, precuneus, and medial prefrontal cortex. Events were also discriminable based on person and object features, but in fewer regions. Comparing classifier performance in regions involved in memory for scenes and events demonstrated that the location of an event was more accurately classified than the person or object involved. These results support theories proposing that spatial context is a prominent defining feature of episodic memory.

SIGNIFICANCE STATEMENT Remembered and imagined events are complex, consisting of many elements, including people, objects, and locations. In this study, we sought to determine how these types of elements differentially contribute to how the brain represents an event. Participants imagined events consisting of familiar locations, people, and objects (e.g., kitchen, mom, umbrella) while their brain activity was recorded with fMRI. We found that the neural patterns of activity in brain regions associated with spatial and episodic memory could distinguish events based on their location, and to some extent, based on the people and objects involved. These results suggest that the spatial context of an event plays an important role in how an event is represented in the brain.

Keywords: episodic memory, event memory, hippocampus, MVPA, spatial context

Introduction

Spatial context is a defining property of episodic memory, distinguishing it from semantic memory (Tulving, 1972, 2002). Spatial context contributes to the detail richness of remembered and imagined autobiographical events, suggesting that it may play a scaffolding role in the representation of events in memory (Arnold et al., 2011; de Vito et al., 2012; Robin and Moscovitch, 2014, 2017; Robin et al., 2016; Sheldon and Chu, 2016; Hebscher et al., 2017). Some studies, however, have found that other familiar elements, such as people and objects, contribute equally to the qualities of episodic memory and imagination (D'Argembeau and Van der Linden, 2012; McLelland et al., 2015; Hebscher et al., 2017).

Scenes, the canonical type of spatial context, engage brain areas associated with autobiographical memory and imagination (Hassabis et al., 2007; Spreng et al., 2009; Zeidman et al., 2015; Hodgetts et al., 2016). Scene processing typically results in activation in a posterior network of regions including the parahippocampal gyrus, retrosplenial cortex, precuneus, posterior cingulate cortex, and the hippocampus (Epstein and Kanwisher, 1998; Epstein et al., 2007; Zeidman et al., 2015; Hodgetts et al., 2016). These regions are also active when recalling the spatial context of a memory for an item or event (Burgess et al., 2001; Kumaran and Maguire, 2005; Hassabis et al., 2007; Bar et al., 2008; Szpunar et al., 2009; Gilmore et al., 2015). These scene-related regions are part of the autobiographical memory and imagination networks (Maguire and Mummery, 1999; Addis et al., 2004, 2007, 2009; Svoboda et al., 2006; Cabeza and St Jacques, 2007; Hassabis et al., 2007; Szpunar et al., 2007; Spreng et al., 2009; Spreng and Grady, 2010; Schacter et al., 2012; Rugg and Vilberg, 2013).

Does this overlap of scene and autobiographical memory regions suggest that representations of spatial context are inherent in the representations of episodic memory? Higher hippocampal pattern similarity for events with similar spatial contexts suggests that the hippocampus represents the spatial context of memories (Chadwick et al., 2011; Kyle et al., 2015b; Nielson et al., 2015; Ritchey et al., 2015; Milivojevic et al., 2016). Studies examining whole-brain activity, however, indicate that more posterior neocortical regions, including the parahippocampal, retrosplenial, and posterior cingulate cortices also represent spatial context (Hannula et al., 2013; Staresina et al., 2013; Szpunar et al., 2014). Many of these studies did not directly compare the contributions of spatial context to the representation of episodic memory with those of other event elements.

We investigated how spatial context is represented in memory for complex events compared with other event features: people and objects. By varying all three features, we were able to determine which neural areas are associated with spatial context and directly compare the effects of spatial context to the other feature types. If events involving the same location resemble one another more than events that share other features, it would support the hypothesis that spatial context plays an especially important role in determining the neural representation of an event, consistent with scene construction and related theories (O'Keefe and Nadel, 1978; Nadel, 1991; Burgess et al., 2002; Hassabis and Maguire, 2007, 2009; Maguire and Mullally, 2013; Nadel and Peterson, 2013). A second question is whether spatial context is represented primarily in the hippocampus or by the posterior scene network. By examining activation patterns in the hippocampus and throughout the brain, we will determine whether the effects of spatial context on episodic memory are mediated via the neocortical posterior scene network or by the hippocampus directly.

Materials and Methods

Participants

Nineteen healthy young adults participated in the study. One participant was excluded from analyses due to excessive motion in the scanner, resulting in 18 participants (8 female, 10 male; mean age, 25.55 years; age range, 18–29 years). All participants were right handed, fluent in English, and had completed at least 12 years of formal education (mean years of education, 17.94; SD, 2.69). All had normal or corrected-to-normal vision, no hearing problems, and no history of psychological or neurological illness or injury. Participants provided informed consent before participating in the study, in accordance with the Office of Research Ethics at the Rotman Research Institute at Baycrest Health Sciences, and were provided monetary compensation for their participation.

Experimental design and statistical analyses

Experimental design

Before participating in the study, participants filled out an on-line questionnaire, providing the names of nine well known people from their lives (three family members, three friends, three coworkers/colleagues) and nine well known locations (three rooms in their house or a well known house, three locations relating to school/work, three other locations frequently visited; e.g., stores, gyms, theaters, subway stations). These highly familiar real-world cues were used as stimuli in the experiment.

Study procedure.

Testing took place in a single session of ∼2.5 h. Before the scanning session, participants were given detailed instructions for the study procedures and completed six practice trials using a subset of cues that were not used again in the study, and had the opportunity to ask questions and receive feedback from the experimenter.

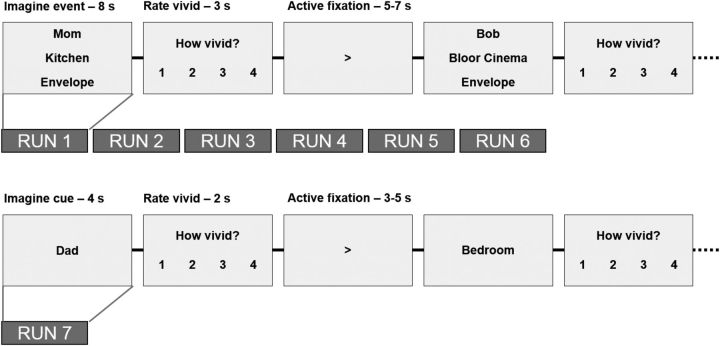

During the scanning session, participants completed six runs of the main experimental task. In each run, participants completed 27 event imagination trials. On each trial, three cues were presented to the participant, who was instructed to imagine the cued features interacting in a life-like event involving themselves, and to picture as many details of the event as possible for the 8 s duration of the trial. The three cues always consisted of the names of one person, one location, and one object. Three person cues and three location cues were randomly selected from the participant's answers to the on-line questionnaire, one from each category. The three object cues were randomly selected from a standard list of nine highly imageable objects used across all participants. Participants were instructed to imagine novel events, distinct from the events on other trials and from any existing memories, and to imagine each feature consistently across trials (i.e., the same specific locations, the same specific objects). Participants pressed a key to indicate when they had an event in mind. After each event, participants rated the vividness of the imagined event on a scale from 1 to 4 (1, not vivid; 4, extremely vivid). Over the 27 trials, participants imagined events based on all combinations of the nine cues (3 locations × 3 persons × 3 objects). Trials were separated by a jittered active fixation period (5–7 s in duration), during which an arrow pointing randomly either left or right was displayed. Participants pressed a key corresponding to the direction of the arrow and were then instructed to focus on the arrow for the remainder of the fixation.
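The factorial structure of a single run can be made concrete with a short sketch. The cue labels below are hypothetical placeholders (the real location and person cues were participant specific), and drawing the fixation jitter uniformly from 5–7 s is an assumption; the text states only the range.

```python
import itertools
import random

# Hypothetical cue labels for illustration only.
LOCATIONS = ["kitchen", "office", "gym"]
PEOPLE = ["mom", "friend_a", "colleague_b"]
OBJECTS = ["umbrella", "guitar", "lamp"]


def make_run(seed=None):
    """Return a shuffled list of (location, person, object, jitter_s) tuples.

    Every one of the 3 x 3 x 3 = 27 cue combinations appears exactly once.
    The fixation jitter is drawn uniformly from 5-7 s (an assumption).
    """
    rng = random.Random(seed)
    combos = list(itertools.product(LOCATIONS, PEOPLE, OBJECTS))
    rng.shuffle(combos)
    return [(loc, per, obj, rng.uniform(5.0, 7.0)) for loc, per, obj in combos]


run = make_run(seed=1)
print(len(run))  # 27
```

Because each feature level appears in exactly 9 of the 27 events, every location, person, and object label is balanced across the other two features, which is what allows the same trials to be relabeled by feature in the classification analyses.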

On subsequent runs (2–6), participants were shown the same 27 cue sets in a random order and were asked to remember the events that they had imagined in the first run and to revisualize them for the duration of the 8 s trial. Once again, they pressed a key to indicate when an event was in mind, and made a vividness rating after every event. Across runs, the order of the cues on the screen varied, and was counterbalanced within and across participants. A schematic of the procedure is shown in Figure 1.

Figure 1.

Schematic of event imagination and single-feature imagination paradigms. On each trial of the event imagination paradigm, participants were presented with the names of a familiar person, location, and object and were asked to imagine an event integrating these three features. They then made a vividness rating (scale of 1–4). Events were separated with a jittered, active fixation period, which required participants to press a key indicating whether the arrow was pointing left or right. In the single-feature run, participants were presented with single cues (nonoverlapping with the cues used in the event imagination paradigm) and were asked to imagine them and provide a vividness rating.

Single-feature imagination paradigm.

For the final run, participants were presented with a single cue per trial (the remaining three locations, three persons, and three object names, not previously seen in the experiment) and were asked to imagine the features in isolation. Each cue was displayed for 4 s, during which time the participants were instructed to imagine the location, person, or object in as much detail as possible. Participants made a vividness rating for each trial (1–4 scale), and trials were separated by the same jittered active fixation task as in the main experiment (3–5 s in duration). One participant was unable to complete this run due to time constraints, resulting in N = 17 for this run.

Postscan measures.

Following the scan, participants completed postscan ratings and descriptions for each cue set. Each of the 27 cue combinations was presented, and participants were asked to provide a brief description of what they imagined for each cue set. Descriptions were recorded using a microphone. Next, participants were asked to make ratings about the imagined events. First, participants were asked to rate how consistently they imagined the event across the six runs (1–4 scale: 1, not consistent; 4, very consistent). Next, they rated how coherent the event was, referring to how integrated the three features were in the event (1–4 scale: 1, not very integrated; 4, very well integrated). Next, they rated the plausibility of the imagined event by rating how easily the elements of the event fit together (1–4 scale: 1, not very well; 4, extremely well). Then, they rated how similar the imagined event was to an existing memory (1–4 scale: 1, not at all similar; 4, same as a memory). Finally, each of the nine cues was presented alone, and participants rated the familiarity of each feature (1–4 scale: 1, not very familiar; 4, extremely familiar) and how easily they could picture each feature (1–4 scale: 1, not very easily; 4, extremely easily).

MRI setup and data acquisition

Stimuli were presented and responses were recorded using E-Prime 2.0 software (Psychology Software Tools; RRID:SCR_009567). Stimuli were projected onto a screen behind the MRI scanner, which was visible to the participant via a mirror attached to the head coil. Participants made responses using an MRI-compatible response box, placed under their right hand. Participants were scanned in a 3.0 T Siemens MAGNETOM Trio MRI scanner using a 12-channel head coil. High-resolution, gradient echo, multislice, T1-weighted scans (160 slices of 1 mm thickness; 19.2 × 25.6 cm field of view) coplanar with the EPI scans, as well as whole-brain magnetization-prepared rapid acquisition gradient echo (MP-RAGE) 3-D T1-weighted scans, were acquired for anatomical localization in the middle of the scanning session, between the third and fourth functional runs. During the seven functional runs, T2*-weighted EPIs sensitive to BOLD contrast were acquired. Images were acquired using a two-shot gradient echo EPI sequence (22.5 × 22.5 cm field of view with a 96 × 96 matrix size, resulting in an in-plane resolution of 2.35 × 2.35 mm for each of 35 axial slices of 3.5 mm thickness with a 0.5 mm interslice gap; repetition time, 2.0 s; echo time, 27 ms; flip angle, 62°).

Behavioral data analysis

Means and SDs for behavioral measures are reported. When measures were collected for each feature type separately, ratings were compared across feature categories using repeated-measures ANOVAs with one repeated factor of cue type. Mauchly's test of sphericity was used to test the validity of the sphericity assumption, and, when violated, Greenhouse–Geisser-corrected p values are reported. Post hoc comparisons were performed using Bonferroni-corrected paired t tests.
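A one-way repeated-measures ANOVA with a Greenhouse–Geisser epsilon can be sketched in a few lines of NumPy. This is an illustrative sketch, not the analysis code used in the study: `rm_anova_oneway` is a hypothetical helper, and Mauchly's test itself is not implemented here.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array of shape (n_subjects, k_conditions).
    Returns (F, df_effect, df_error, gg_epsilon).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)
    ss_total = np.sum((data - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)

    # Greenhouse-Geisser epsilon: covariance of k-1 orthonormal contrasts
    # that are orthogonal to the vector of ones (QR of [1, e1, ..., e_{k-1}]).
    q, _ = np.linalg.qr(np.column_stack([np.ones(k), np.eye(k)[:, : k - 1]]))
    contrasts = q[:, 1:]
    s = contrasts.T @ np.cov(data, rowvar=False) @ contrasts
    eps = np.trace(s) ** 2 / ((k - 1) * np.trace(s @ s))
    # The corrected p value uses an F distribution with both df scaled by eps,
    # e.g. scipy.stats.f.sf(F, eps * df_cond, eps * df_error).
    return F, df_cond, df_error, eps


rng = np.random.default_rng(0)
ratings = rng.normal(size=(18, 3)) + np.array([0.0, 0.3, 0.6])  # synthetic data
F, df1, df2, eps = rm_anova_oneway(ratings)
```

With 18 participants and three feature categories the degrees of freedom are (2, 34), matching those reported for the event ratings; epsilon always lies between 1/(k − 1) and 1.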

fMRI data analysis

Preprocessing.

Functional images were converted into NIFTI-1 format, reoriented to radiological orientation, and realigned to the mean image of the first functional run using the 3dvolreg program in AFNI (Analysis of Functional NeuroImages; Cox, 1996; RRID:SCR_005927). To retain higher spatial resolution for multivariate pattern analyses, the data were not spatially smoothed. Statistical analyses were first conducted on realigned functional images in native EPI space. The MP-RAGE anatomical scan was normalized to the Montreal Neurological Institute (MNI) space using nonlinear symmetric normalization implemented in ANTS (Advanced Normalization Tools; RRID:SCR_004757) software (Avants et al., 2008). This transformation was then applied to maps of statistical results derived from native space functional images using ANTS to normalize these maps for group analyses.

Searchlight multivoxel pattern analysis.

Every imagination event across the six runs was modeled using an 8 s SPM canonical hemodynamic response model in a voxelwise general linear model (GLM) via the 3dDeconvolve program in AFNI. The GLM also included a single parametric regressor for the vividness ratings, and 12 nuisance regressors per run to account for motion and physiological noise in the data using the CompCor approach (Behzadi et al., 2007). This resulted in one GLM per subject, each with 235 regressors (162 events, 1 parametric vividness regressor, 72 nuisance regressors). The resultant 162 β-estimates for the event imagination trials were then used as the input for multivoxel pattern analyses (MVPAs).
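The trialwise model can be illustrated with a sketch of one event regressor: an 8 s boxcar convolved with a double-gamma approximation of the SPM canonical HRF and sampled every TR (2.0 s). This is not AFNI's implementation; the 0.1 s grid, the 32 s HRF length, and the helper names are assumptions. The last lines check the regressor count given above.

```python
import math

import numpy as np


def canonical_hrf(dt=0.1, length_s=32.0):
    """Double-gamma HRF approximating SPM defaults: gamma shapes 6 (response)
    and 16 (undershoot), unit scale, response/undershoot ratio of 6."""
    t = np.arange(0.0, length_s, dt)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale
        return x ** (shape - 1.0) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def event_regressor(onset_s, n_scans, tr=2.0, duration_s=8.0, dt=0.1):
    """Boxcar of duration_s starting at onset_s, convolved with the HRF, sampled every TR."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    boxcar[int(round(onset_s / dt)) : int(round((onset_s + duration_s) / dt))] = 1.0
    conv = np.convolve(boxcar, canonical_hrf(dt))[:n_fine]
    return conv[:: int(round(tr / dt))]


reg = event_regressor(onset_s=10.0, n_scans=40)

# One column per event (27 trials x 6 runs), one vividness regressor,
# and 12 nuisance regressors per run.
n_columns = 27 * 6 + 1 + 12 * 6
print(n_columns)  # 235
```

Modeling each trial with its own regressor yields one β map per event, which is what makes the 162 trialwise patterns available to the classifiers.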

To identify brain areas where patterns of activity distinguished between the features in each category (person, location, and object) or between each unique event, we performed a series of four searchlight analyses over the entire brain. Each analysis used a shrinkage discriminant analysis (SDA) as a classifier, a form of regularized linear discriminant analysis that can be applied to high-dimensional data that have more variables (voxels) than observations (trials). With this method, the estimates of the category means and covariances are shrunk toward zero using James–Stein shrinkage estimators as a way to ensure the estimability of the inverse covariance matrix and to reduce the mean squared error when used for out-of-sample prediction (SDA package for R; Ahdesmäki et al., 2014).
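To illustrate why shrinkage makes a linear discriminant usable when voxels outnumber trials, here is a minimal NumPy sketch. It deliberately swaps in a simpler technique: the pooled covariance is shrunk toward its diagonal with a fixed, hypothetical intensity `lam`, rather than with the data-driven James–Stein estimators used by the R `sda` package.

```python
import numpy as np


class ShrinkageLDA:
    """Minimal regularized LDA: pooled covariance shrunk toward its diagonal.

    Stand-in for the sda package; `lam` is a fixed, hypothetical intensity.
    """

    def __init__(self, lam=0.5):
        self.lam = lam

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        resid = X - self.means_[np.searchsorted(self.classes_, y)]
        cov = resid.T @ resid / (len(X) - len(self.classes_))
        # Convex combination with the diagonal keeps the matrix invertible
        # even when n_voxels > n_trials.
        cov_shrunk = (1 - self.lam) * cov + self.lam * np.diag(np.diag(cov))
        self.precision_ = np.linalg.inv(cov_shrunk)
        self.log_priors_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def decision_function(self, X):
        # Linear discriminant score: x' P mu_c - 0.5 mu_c' P mu_c + log prior_c
        pm = self.means_ @ self.precision_
        return np.asarray(X) @ pm.T - 0.5 * np.sum(pm * self.means_, axis=1) + self.log_priors_

    def predict(self, X):
        return self.classes_[np.argmax(self.decision_function(X), axis=1)]


# Synthetic example with more "voxels" (50) than training trials (30).
rng = np.random.default_rng(0)
class_means = rng.normal(size=(3, 50))
y_train = y_test = np.repeat(np.arange(3), 10)
X_train = class_means[y_train] + rng.normal(size=(30, 50))
X_test = class_means[y_test] + rng.normal(size=(30, 50))
clf = ShrinkageLDA(lam=0.5).fit(X_train, y_train)
acc = np.mean(clf.predict(X_test) == y_test)
```

Without the shrinkage term the 50 × 50 pooled covariance estimated from 30 trials would be singular and could not be inverted.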

Each classifier used a local 10-mm-radius spherical neighborhood that surrounded a central voxel, resulting in an average of 175 voxels in the searchlight. The classifiers were trained via leave-one-run-out cross-validation on the β-coefficients estimated from each event imagination trial. A separate classifier was trained on each of the location features, person features, object features, and the conjunction of all three features (i.e., each of the 27 unique event combinations) to determine which voxels discriminate between the features of each type, regardless of the other elements of the event, and which voxels distinguish each unique event. The searchlight sphere was moved around the entire brain, excluding voxels falling outside a functional brain mask, creating a whole-brain map of classification performance attributed to the central voxel of each sphere. The code for the searchlight analyses is available at https://github.com/bbuchsbaum/rMVPA/blob/master/R/searchlight.R.
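The spherical neighborhood can be sketched as a set of integer voxel offsets whose centers lie within 10 mm of the central voxel. The sketch assumes a voxel spacing of 2.35 × 2.35 × 4.0 mm (in-plane resolution plus slice thickness and gap); the helper names are hypothetical, and because spheres are clipped to the volume and brain mask, the offset count will not exactly match the reported average of 175 voxels.

```python
import itertools

import numpy as np


def sphere_offsets(radius_mm, voxel_size_mm):
    """Integer voxel offsets whose centers lie within radius_mm of the center voxel."""
    vs = np.asarray(voxel_size_mm, dtype=float)
    reach = np.floor(radius_mm / vs).astype(int)  # max whole-voxel steps per axis
    offs = np.array(list(itertools.product(*[range(-r, r + 1) for r in reach])))
    dist = np.sqrt(((offs * vs) ** 2).sum(axis=1))
    return offs[dist <= radius_mm]


def searchlight_indices(center, offsets, mask):
    """Coordinates of the sphere around center, clipped to the volume and brain mask."""
    coords = np.asarray(center) + offsets
    inside = np.all((coords >= 0) & (coords < np.array(mask.shape)), axis=1)
    coords = coords[inside]
    return coords[mask[tuple(coords.T)]]


offsets = sphere_offsets(10.0, (2.35, 2.35, 4.0))  # assumed voxel spacing
brain_mask = np.ones((20, 20, 20), dtype=bool)      # toy mask
sphere = searchlight_indices((10, 10, 10), offsets, brain_mask)
```

In a full searchlight, the β values at these coordinates form the feature vector for the classifier, and the cross-validated score is written back to the central voxel.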

Classifier performance was assessed using the area under the receiver operating characteristic curve (AUC), where 0.5 corresponded to chance-level discrimination. Whole-brain maps of AUC values were generated for each subject and each classifier. These maps were spatially transformed to MNI space and analyzed with voxelwise permutation t tests. The randomise tool in FSL (Winkler et al., 2014; RRID:SCR_002823) was used to perform nonparametric one-sample t tests for each voxel, testing whether the classifier performance was significantly above chance, using threshold-free cluster enhancement (TFCE; Smith and Nichols, 2009) to identify significant clusters and maintain a familywise error (FWE) rate of p < 0.05. randomise uses nonparametric permutation testing rather than assuming a Gaussian distribution, an approach that has been demonstrated to be less prone to type I errors than parametric fMRI analysis methods (Eklund et al., 2016). TFCE computes a voxelwise score reflecting the strength of signal (in this case, AUC) at a given voxel and the extent of contiguous voxels demonstrating the same effect, without relying on the definition of an absolute threshold. This method has been demonstrated to be more sensitive than other voxelwise or clusterwise thresholding methods (Smith and Nichols, 2009). FWE-corrected t maps were produced for each classifier, indicating which voxels were able to distinguish events based on each feature.
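For reference, the TFCE score that Smith and Nichols (2009) define for a voxel v with statistic value h_v integrates, over all thresholds h up to h_v, the extent e(h) of the cluster containing v at that threshold. The exponents E and H weight extent and height; E = 0.5 and H = 2 are their recommended defaults. In practice the integral is approximated by a sum over threshold steps of size Δh:

```latex
\mathrm{TFCE}(v) \;=\; \int_{h_0}^{h_v} e(h)^{E}\, h^{H}\, dh
\;\approx\; \sum_{k \,:\, h_k \le h_v} e(h_k)^{E}\, h_k^{H}\, \Delta h,
\qquad E = 0.5,\quad H = 2
```

FWE correction then compares each voxel's observed TFCE score with the permutation distribution of the maximum TFCE score across the brain.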

Contrasting classifier performance.

To compare performance across the different classifiers, subtraction analyses were performed contrasting classification by each cue type. For each contrast, whole-brain AUC maps were subtracted at the subject level. The resulting maps were tested against zero at the group level, using FSL randomise to perform nonparametric one-sample t tests. TFCE was used to identify significant clusters maintaining a familywise error rate of p < 0.05. Resulting clusters correspond to voxels in which classification was significantly higher for a given feature compared with one of the other features.
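The logic of a one-sample permutation test on subject-level difference maps can be sketched with sign flipping: under the null hypothesis, each subject's difference map is equally likely to have either sign. This simplified sketch controls FWE with the maximum voxelwise t statistic and omits TFCE, so it is not equivalent to randomise; the function names and the number of permutations are assumptions.

```python
import numpy as np


def one_sample_t(x):
    """Voxelwise one-sample t statistic; x has shape (n_subjects, n_voxels)."""
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(x.shape[0]))


def signflip_maxt(diff_maps, n_perm=2000, seed=0):
    """One-sided FWE-corrected p values via sign flipping and the max-t null.

    diff_maps: (n_subjects, n_voxels), e.g. location AUC minus object AUC.
    """
    rng = np.random.default_rng(seed)
    t_obs = one_sample_t(diff_maps)
    max_null = np.empty(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(diff_maps.shape[0], 1))
        max_null[i] = one_sample_t(diff_maps * signs).max()
    # Fraction of permutation maxima at least as large as each observed t
    # (+1 counts the unpermuted data).
    p_fwe = (1 + (max_null[None, :] >= t_obs[:, None]).sum(axis=1)) / (n_perm + 1)
    return t_obs, p_fwe


rng = np.random.default_rng(1)
diffs = rng.normal(size=(18, 50))  # synthetic: 18 subjects x 50 voxels
diffs[:, 0] += 2.0                 # one voxel with a true positive difference
t_vals, p_vals = signflip_maxt(diffs, n_perm=500)
```

The same procedure applies to the above-chance tests by subtracting 0.5 from each subject's AUC map before testing.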

Multifeature ROI definition.

To define a more targeted set of areas that represent the features making up the events in this paradigm, we used the single-feature scanning run to define a set of ROIs selective for the feature categories (i.e., locations, people, and objects). Importantly, the single-feature run used a separate set of cues from the event imagination runs, which were still personally familiar and drawn from the same categories as the event imagination cues. In this run, however, cues were presented singly, and participants were asked to imagine them in isolation. Thus, the single-feature run was independent of the multifeature event runs, allowing us to define regions of interest sensitive to the feature categories in an unbiased manner.

Similar to the searchlight MVPA procedure described above, we trained SDA classifiers to discriminate the features, although in this case, the classifiers were trained and tested to discriminate between feature categories (person, object, and location), rather than between specific items (e.g., kitchen, bedroom, and office) within a category. The parameters of the classifiers were the same as in the previous analyses, except that, since there was only one run of the single-feature paradigm, cross-validation folds were derived from contiguous blocks of trials within the run. This run contained 54 trials, comprising six blocks of the same nine cues (three locations, three people, and three objects). Each block consisted of nine trials in a random order, so that each cue was presented once per block, and presentations were spaced evenly throughout the run. The classifier was trained using leave-one-block-out cross-validation (six folds), each time training on five blocks and testing on the held-out block. Accuracy was assessed for each feature category by calculating the average AUC for discriminating that category from the other two categories in each voxel, for each participant. To derive a map of reliable classification performance at the group level, the spatially normalized AUC maps were compared against chance (AUC = 0.5) using one-sample t tests. This procedure produced separate normalized maps for location, person, and object features, representing the voxels that were selective for these features.
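The block-wise cross-validation and the one-versus-rest AUC described above can be sketched as follows. The AUC is computed with the Mann–Whitney formulation (ties count as one half); taking each category's classifier score column as its ranking score is an assumption about how the one-versus-rest AUC was derived, and the helper names are hypothetical.

```python
import numpy as np


def block_folds(n_blocks=6, trials_per_block=9):
    """Leave-one-block-out splits: train on five blocks, test on the held-out one."""
    block_of_trial = np.repeat(np.arange(n_blocks), trials_per_block)
    for b in range(n_blocks):
        yield np.where(block_of_trial != b)[0], np.where(block_of_trial == b)[0]


def auc_binary(scores, positives):
    """AUC via the Mann-Whitney U statistic (ties count as 0.5)."""
    pos, neg = scores[positives], scores[~positives]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


def one_vs_rest_auc(decision_scores, y, classes):
    """AUC for each category against the other two, using that category's score column."""
    return {c: auc_binary(decision_scores[:, j], y == c) for j, c in enumerate(classes)}


folds = list(block_folds())
labels = np.array(["location", "person", "object"])
aucs = one_vs_rest_auc(np.eye(3), labels, ["location", "person", "object"])
```

Per-category AUCs from each held-out block can then be averaged across the six folds to give one value per voxel and participant.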

To create a set of ROIs that equally represented all three episodic features, we selected the 1000 most significant voxels from each feature category map based on ranking the t values of every voxel in the brain. We then took the union of the three voxel sets to create a combined mask containing the set of voxels most able to discriminate the three features. On average, for the top 1000 voxels for each category, the object voxels had higher t values than the location voxels (t(1998) = 3.831, p = 0.00013) but did not differ from the person voxels (t(1998) = 1.503, p = 0.133), and the person voxels had marginally higher t values than the location voxels (t(1998) = 1.803, p = 0.072), although the overall means and ranges were similar (location voxels: mean, 8.51; SD, 0.72; range, 7.70–11.68; person voxels: mean, 8.59; SD, 1.15; range, 7.23–12.37; object voxels: mean, 8.66; SD, 1.01; range, 7.54–12.93). Note that these values reflect how well, on average, these voxels were able to discriminate that feature category from the other categories, and do not reflect discrimination ability within categories.
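The mask construction can be sketched as a top-k selection per category followed by a set union; depending on how much the three selections overlap, the combined mask contains between 1000 and 3000 voxels. The reported t(1998) values correspond to two-sample comparisons of 1000 + 1000 voxels (1000 + 1000 − 2 degrees of freedom). The helper below is hypothetical and operates on flattened voxelwise t maps.

```python
import numpy as np


def top_k_union(t_maps, k=1000):
    """Boolean mask: union of the k highest-t voxels from each category's t map.

    t_maps: dict mapping category -> 1-D array of voxelwise t values (same length).
    """
    n_vox = len(next(iter(t_maps.values())))
    mask = np.zeros(n_vox, dtype=bool)
    for t in t_maps.values():
        mask[np.argsort(t)[-k:]] = True  # indices of the k largest t values
    return mask


toy = {"location": np.arange(10.0), "person": -np.arange(10.0), "object": np.arange(10.0)}
roi = top_k_union(toy, k=3)
```

In the toy example the location and object selections coincide, so the union contains six voxels rather than nine.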

Hippocampal ROI definition.

T1-weighted structural scans were anatomically segmented using Freesurfer automated cortical and subcortical parcellation software (version 5.3; aparc.a2009s atlas; RRID:SCR_001847; Fischl et al., 2002, 2004; Destrieux et al., 2010). To test hypotheses about the hippocampus, for each subject, a mask of the left and right hippocampus was computed based on the Freesurfer parcellation, and used to define the hippocampal ROI.
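Extracting the hippocampal ROI from a FreeSurfer segmentation amounts to selecting two label values, 17 (Left-Hippocampus) and 53 (Right-Hippocampus) in FreeSurferColorLUT. The sketch assumes the label volume has already been loaded as an array (for example, with nibabel's `nib.load(path).get_fdata()`) and resampled to the functional space; both steps are assumptions not shown here.

```python
import numpy as np

# FreeSurferColorLUT label values
LEFT_HIPPOCAMPUS = 17
RIGHT_HIPPOCAMPUS = 53


def hippocampal_masks(labels):
    """Boolean left, right, and bilateral hippocampal masks from a label volume."""
    labels = np.asarray(labels)
    left = labels == LEFT_HIPPOCAMPUS
    right = labels == RIGHT_HIPPOCAMPUS
    return left, right, left | right


vol = np.zeros((4, 4, 4))
vol[0, 0, 0], vol[1, 1, 1], vol[2, 2, 2] = 17, 53, 10  # toy labels
left_hc, right_hc, both_hc = hippocampal_masks(vol)
```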

Comparing classifier performance across event features.

To compare classification performance across features, we computed average classification accuracy (as measured by the area under the curve) within the ROIs described above. Separate SDA classifiers were trained and tested on each feature using only the voxels in the ROI, and summary measures of performance were computed. For each ROI, we compared average classifier performance across features using a repeated-measures ANOVA with one factor of feature type, and follow-up paired t tests, using the Bonferroni correction for multiple comparisons.
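The follow-up comparisons can be sketched as paired t tests over all feature pairs, with each p value multiplied by the number of comparisons (three) and capped at 1. The per-subject AUC values below are synthetic placeholders, and `bonferroni_pairwise` is a hypothetical helper built on `scipy.stats.ttest_rel`.

```python
import itertools

import numpy as np
from scipy import stats


def bonferroni_pairwise(auc_by_feature):
    """Paired t tests between all feature pairs with Bonferroni correction.

    auc_by_feature: dict mapping feature -> 1-D array of per-subject mean AUCs
    (same subject order for every feature). Returns {(a, b): (t, p_corrected)}.
    """
    pairs = list(itertools.combinations(auc_by_feature, 2))
    results = {}
    for a, b in pairs:
        t, p = stats.ttest_rel(auc_by_feature[a], auc_by_feature[b])
        results[(a, b)] = (float(t), min(1.0, float(p) * len(pairs)))
    return results


rng = np.random.default_rng(1)
roi_aucs = {  # synthetic per-subject ROI AUCs for 18 subjects
    "location": 0.60 + 0.02 * rng.normal(size=18),
    "person": 0.52 + 0.02 * rng.normal(size=18),
    "object": 0.51 + 0.02 * rng.normal(size=18),
}
comparisons = bonferroni_pairwise(roi_aucs)
```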

To compare classification accuracy while varying the number of voxels contributing to the classification, we performed separate classification analyses based on subsets of voxels. For each event feature (object, person, location), all voxels in the brain were ranked according to the correlation-adjusted t statistic (CAT score; Zuber and Strimmer, 2009) to identify the voxels that were most informative in the training set. Separate models were then trained and tested on each feature using only the N highest-ranked voxels, to control for the number of voxels contributing to the classification results. We doubled the number of voxels at each step (from 100 to 25600, and then used the whole brain) and compared classifier performance as a function of voxel number and feature type using a 9 (voxel number) × 3 (feature type) repeated-measures ANOVA, and follow-up Bonferroni-corrected paired t tests. Mauchly's test of sphericity was used to test the validity of the sphericity assumption, and, when violated, Greenhouse–Geisser-corrected p values are reported.
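Voxel selection of this kind can be sketched as ranking voxels on the training data only and keeping the top N. The sketch swaps in a simpler statistic than CAT scores: per-class t-like scores (class mean versus overall mean, scaled by a pooled SD), without the correlation adjustment, and it ranks voxels by their summed squared scores across classes. All names are hypothetical.

```python
import numpy as np

# Voxel-count levels: 100 doubled eight times (100 ... 25600); the whole brain is an extra level.
VOXEL_LEVELS = [100 * 2 ** k for k in range(9)]


def class_t_scores(X, y):
    """Per-class, per-voxel t-like scores; no correlation adjustment (unlike CAT scores)."""
    classes = np.unique(y)
    n, k = len(y), len(classes)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    resid = X - means[np.searchsorted(classes, y)]
    pooled_sd = np.sqrt((resid ** 2).sum(axis=0) / (n - k))
    scale = np.array([np.sqrt(1.0 / np.sum(y == c) - 1.0 / n) for c in classes])
    return (means - X.mean(axis=0)) / (scale[:, None] * pooled_sd)


def top_n_voxels(X_train, y_train, n_vox):
    """Indices of the n_vox voxels with the largest summed squared scores (training data only)."""
    score = (class_t_scores(X_train, y_train) ** 2).sum(axis=0)
    return np.argsort(score)[::-1][:n_vox]


rng = np.random.default_rng(0)
y_demo = np.repeat([0, 1, 2], 10)
X_demo = rng.normal(size=(30, 20))
X_demo[:, 3] += 3.0 * y_demo  # voxel 3 carries the class information
best = top_n_voxels(X_demo, y_demo, 1)
```

Computing the ranking inside each training fold, rather than on all data, keeps the held-out run from influencing which voxels are selected.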

Results

Behavioral results

In-scan vividness ratings of events were high [mean, 3.01; SD, 0.58 (on a scale where 1 is not vivid and 4 is extremely vivid)]. Since cues differed across subjects, it was not possible to examine group-level differences in vividness relating to the individual features. We could, however, examine the variance in vividness ratings according to each feature category by grouping events according to each feature within subject and calculating the variance in the vividness ratings for that feature category across the group. If one feature consistently resulted in higher variability in the vividness ratings, differences in that feature may have been driving the vividness judgments for the events. This was not found to be the case, as a repeated-measures ANOVA with one factor of feature type found no main effect of feature type on within-subject variance of vividness ratings (F(2,34) = 1.287, p = 0.288).

Vividness ratings of single features during the single-feature run were high overall (mean, 3.50; SD, 0.37). Since this run presented single features, it was possible to compare vividness ratings across the feature categories (location: mean, 3.54; SD, 0.44; person: mean, 3.53; SD, 0.44; object: mean, 3.44; SD, 0.40). A repeated-measures ANOVA with one factor of feature type revealed no significant difference between the categories on vividness ratings (F(2,32) = 0.764, p = 0.474).

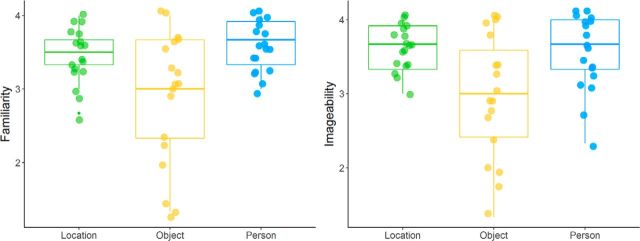

Mean postscan ratings of events revealed that events were generally rated as fairly consistently imagined, with good coherence of the features, medium plausibility and low similarity to existing memories (consistency: mean, 3.12; SD, 0.57; coherence: mean, 3.06; SD, 0.62; plausibility: mean, 2.43; SD, 0.59; similarity to memory: mean, 1.49; SD, 0.35). The familiarity and imageability of the individual features were also both rated highly on the 4 point scales (familiarity: mean, 3.32; SD, 0.46; imageability: mean, 3.37; SD, 0.47). When compared across categories, repeated-measures ANOVAs with one factor of feature type revealed significant differences across the feature categories (imageability: F(2,34) = 11.979, p = 0.0001, η2 = 0.20; familiarity: F(2,34) = 12.118, p = 0.001, η2 = 0.22; Greenhouse–Geisser correction was applied for familiarity ANOVA, ε = 0.655; Fig. 2). Post hoc Bonferroni-corrected paired t tests revealed that in both cases, the location and person features were rated more highly than the object features (familiarity: location vs object, t(17) = 3.859, pcorrected = 0.0068, d = 0.85; person vs object, t(17) = 3.694, pcorrected = 0.0054, d = 0.87; imageability: location vs object, t(17) = 3.866, pcorrected = 0.0037, d = 0.91; person vs object, t(17) = 3.646, pcorrected = 0.0060, d = 0.86), but location and person features did not differ (familiarity: location vs person, t(17) = 1.299, pcorrected = 0.634; imageability: location vs person, t(17) = 1.067, pcorrected = 0.903).

Figure 2.

Postscan ratings of familiarity and imageability by feature category for the features used in the event imagination runs. Location and person cues were rated significantly higher than object cues on both familiarity and imageability, but did not differ from one another.

fMRI results

Classification of events by feature

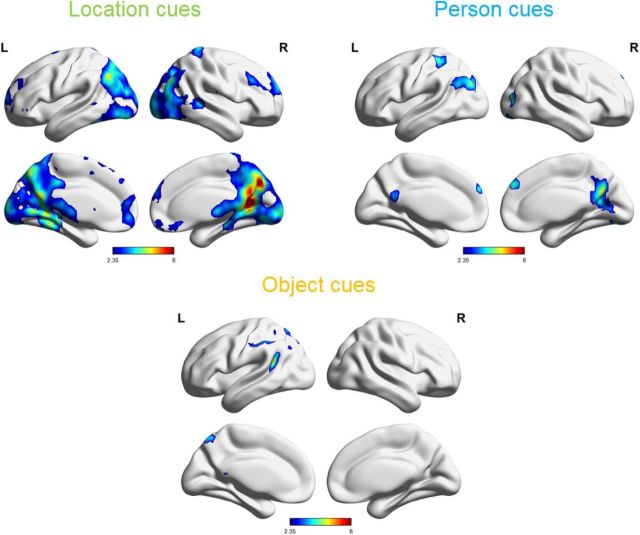

To determine which areas of the brain could distinguish events based on their spatial context, we conducted a searchlight pattern classification analysis to discriminate multifeature events based on their locations. This analysis revealed a collection of posterior regions able to reliably classify events based on location information (Fig. 3 and Fig. 3-1). Regions included the retrosplenial cortex, posterior cingulate cortex, precuneus, parahippocampal cortex, left posterior hippocampus, angular gyrus, occipital cortex, and the medial prefrontal cortex.

Figure 3.

Regions significantly able to discriminate events based on location, person, and object features, as determined by MVPA searchlight classification analysis. Color intensity reflects t values resulting from comparing the classification accuracy against chance. FWE-corrected, p < 0.05 [Figures 3-1, 3-2, and 3-3, cluster tables (figures were made with BrainNet Viewer; Xia et al., 2013; RRID:SCR_009446)]. L, Left; R, right.

Figure 3-1. Clusters and local maxima from searchlight classification based on location features.

Figure 3-2. Clusters and local maxima from searchlight classification based on person features.

Figure 3-3. Clusters and local maxima from searchlight classification based on object features.

In contrast, when the searchlight classifier was trained and tested on person features, a much smaller set of regions was found to reliably distinguish between events (Fig. 3 and Fig. 3-2). Significant regions were primarily along the cortical midline, including the posterior cingulate cortex and the dorsal medial prefrontal cortex, and also included the right lingual gyrus, left angular gyrus, and left inferior parietal lobule.

The object feature classifier likewise revealed a smaller set of regions than the location classifier that could distinguish between events based on the object involved (Fig. 3 and Fig. 3-3). Regions were primarily in the left parietal lobe, including the left inferior parietal lobule, left supramarginal gyrus, left angular gyrus, and the precuneus, as well as the posterior left superior temporal gyrus.

Importantly, although these classifiers were trained separately on the three features (location, person, and object), they all operated on the same set of events; the only difference was how the events were labeled for each analysis, depending on which feature was under study. Thus, to classify events according to one feature, differences based on that feature must be uniquely discriminable from other trialwise variations, such as those resulting from variations in the other features.

Classification of unique events

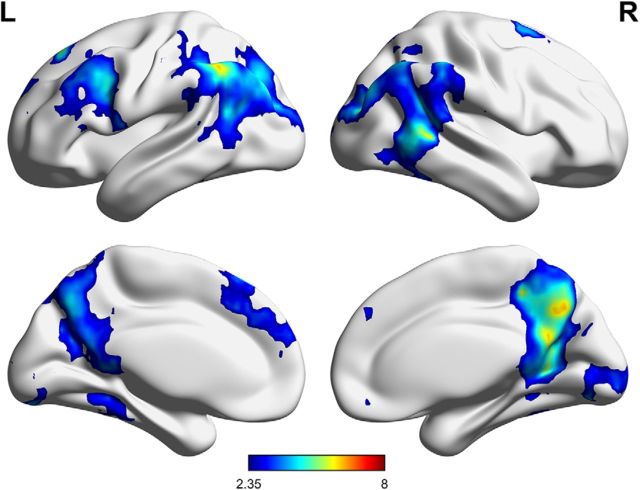

To determine which parts of the brain could distinguish each unique event, formed by a unique combination of the location, person, and object features, we conducted another searchlight classification analysis treating each event as unique. Crucially, to accurately distinguish these events from one another, the unique conjunction of all three features had to be considered, resulting in 27 categories, corresponding to each feature combination. This analysis revealed a set of areas able to distinguish the unique feature combinations including the posterior cingulate cortex, precuneus, supramarginal gyrus, left angular gyrus, posterior middle temporal gyrus, left fusiform gyrus, and left middle frontal and medial frontal gyri (Fig. 4 and Fig. 4-1).

Figure 4.

Regions significantly able to discriminate events based on the unique combination of features, as determined by MVPA searchlight classification analysis. Color intensity reflects t values resulting from comparing the classification accuracy against chance. FWE-corrected, p < 0.05 (Figure 4-1, cluster table). L, Left; R, right.

Figure 4-1. Clusters and local maxima from searchlight classification based on each combination of features.

Contrasting classifier performance

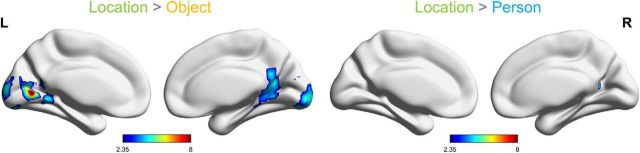

To directly compare classification performance across cue types, we computed pairwise differences between the classification accuracy maps for each pair of features. The only contrasts to yield significant clusters were location > object and location > person (Fig. 5 and Figs. 5-1 and 5-2). Classifying events according to location compared with objects resulted in significantly higher performance in a large posterior–medial cluster including the lingual gyrus, areas of occipital cortex, retrosplenial cortex, posterior cingulate cortex, and posterior parahippocampal cortex. Comparing classification based on location with that based on person cues resulted in a small significant cluster in the posterior cingulate cortex. The reverse contrasts of person > location and object > location, as well as comparisons between person and object cues, resulted in no significant clusters corresponding to better classification performance based on object or person features.
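A minimal sketch of the voxelwise contrast, assuming each subject contributes one searchlight accuracy map per feature and that the group test is a one-sample t test of the paired differences against zero (as the Figure 5 caption indicates). The 18 subjects follow from the reported t(17); the data are synthetic, the function name is hypothetical, and the FWE correction applied to the real maps is not reproduced here.

```python
import numpy as np


def paired_contrast_t(acc_a, acc_b):
    """Voxelwise one-sample t statistic on per-subject accuracy differences.

    acc_a, acc_b: arrays of shape (n_subjects, n_voxels) with searchlight
    accuracy maps for two features (e.g., location and object).
    Positive t means feature A was classified more accurately than feature B.
    """
    diff = np.asarray(acc_a, dtype=float) - np.asarray(acc_b, dtype=float)
    n_subjects = diff.shape[0]
    sem = diff.std(axis=0, ddof=1) / np.sqrt(n_subjects)
    return diff.mean(axis=0) / sem


# Synthetic data: 18 subjects, 5 voxels; feature A has a 0.05 accuracy
# advantage at the first two voxels and no advantage elsewhere.
rng = np.random.default_rng(0)
acc_b = 0.5 + 0.02 * rng.standard_normal((18, 5))
acc_a = acc_b + 0.02 * rng.standard_normal((18, 5))
acc_a[:, :2] += 0.05
t_map = paired_contrast_t(acc_a, acc_b)
print(np.round(t_map, 2))
```

Swapping the arguments flips the sign of every t value, which is how each reverse contrast (e.g., object > location) relates to its forward counterpart.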

Figure 5.

Regions with significantly higher classifier performance for discriminating events based on location, compared with object (left) and person (right). The reverse contrasts and comparisons between person and object features yielded no significant clusters. Color intensity reflects t values resulting from comparing the difference in classification accuracy against zero. FWE-corrected, p < 0.05 (Figures 5-1 and 5-2, FWE-corrected cluster tables). L, Left; R, right.

Figure 5-1. Clusters and local maxima from the contrast of location > object classification.

Figure 5-2. Clusters and local maxima from the contrast of location > person classification.

Determining multifeature ROI

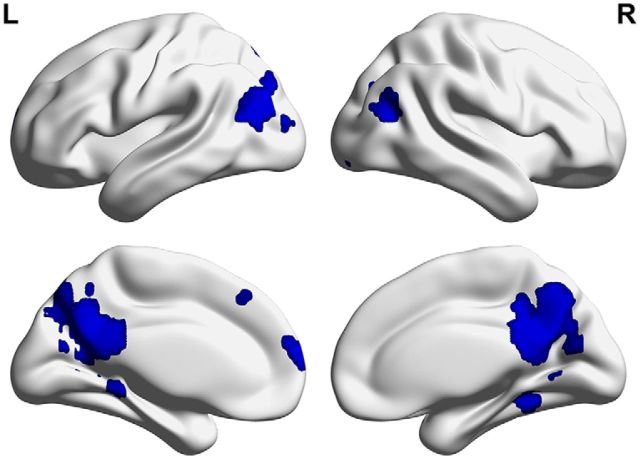

To compare the classification of the events based on each of the features in a set of regions representing all three features, we first defined a set of regions in the brain that showed sensitivity to each of the features, as described in Materials and Methods. An MVPA classifier was used to determine the regions selective for each feature (location, person, object) based on the independent data from the single-feature run, which used a separate set of cues in the same categories as the event imagination runs. Classification analyses determined sets of regions that could accurately distinguish each feature category from the others. From each of these sets of feature-sensitive regions, the 1000 most significant voxels were selected, and the three resulting sets were merged to create a multifeature ROI, representing the voxels most selective for all three features. This ROI included a large region in the posterior medial cortex, spanning the posterior cingulate cortex, precuneus, and retrosplenial cortex (Fig. 6). In addition, it included bilateral parahippocampal cortex clusters, extending into the posterior hippocampus on the left, bilateral angular gyrus clusters, and dorsomedial prefrontal cortex clusters.
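The ROI construction step can be sketched as selecting the top-k voxels from each feature's statistic map and taking their union. This assumes larger values mean more significant (e.g., t or z statistics; for p values one would rank in the opposite direction). The value k = 1000 comes from the text, while the function names and the small synthetic maps are illustrative only.

```python
import numpy as np


def top_k_mask(stat_map, k):
    """Boolean mask (same shape as stat_map) marking its k largest values."""
    stat_map = np.asarray(stat_map, dtype=float)
    mask = np.zeros(stat_map.shape, dtype=bool)
    # argsort with axis=None sorts the flattened map in ascending order,
    # so the last k indices point at the k largest statistics.
    top = np.argsort(stat_map, axis=None)[-k:]
    mask.flat[top] = True
    return mask


def multifeature_roi(stat_maps, k=1000):
    """Union of the top-k voxels from each feature's statistic map."""
    masks = [top_k_mask(m, k) for m in stat_maps.values()]
    return np.logical_or.reduce(masks)


# Synthetic 10 x 10 x 10 maps standing in for the single-feature results.
rng = np.random.default_rng(1)
maps = {f: rng.standard_normal((10, 10, 10)) for f in ("location", "person", "object")}
roi = multifeature_roi(maps, k=50)
print(int(roi.sum()))  # between 50 and 150 voxels, depending on overlap
```

Taking the union rather than the intersection keeps voxels that are strongly selective for any one feature, so the ROI is not biased toward whichever feature happens to share the most voxels with the others.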

Figure 6.

Regions included in the multifeature ROI, based on merging the voxels that could most reliably discriminate each feature from the others in the single-feature scan. Regions include the posterior cingulate cortex, precuneus, retrosplenial cortex, bilateral angular gyrus, bilateral parahippocampal cortex extending into the posterior hippocampus on the left, and the medial prefrontal cortex. L, Left; R, right.

Comparison of classification performance across features

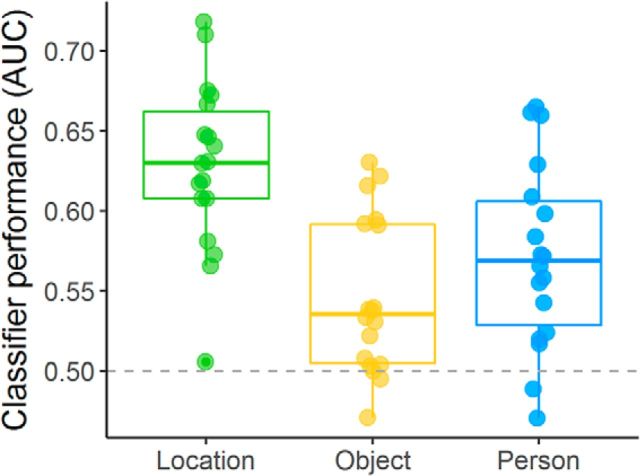

To quantitatively compare classifier performance across features, we trained and tested classifiers based on each feature and compared average classification performance in the multifeature ROI. As shown in Figure 7, events were more accurately classified based on location compared with person or object features. A one-way repeated-measures ANOVA comparing classification performance (measured as the area under the curve, AUC) confirmed a main effect of feature type (F(2,34) = 16.18, p = 0.000016, η2 = 0.31). While average classification performance was significantly above chance for all three features (location: t(17) = 10.40, pcorrected = 0.000000052, d = 2.45; person: t(17) = 5.27, pcorrected = 0.00037, d = 1.24; object: t(17) = 3.96, pcorrected = 0.006, d = 0.93), follow-up Bonferroni-corrected paired t tests demonstrated that classification based on location was significantly more accurate than that based on object (t(17) = 6.94, pcorrected = 0.000014, d = 1.63) and on person (t(17) = 3.55, pcorrected = 0.015, d = 0.84), while person and object classification did not differ from one another (t(17) = 1.59, pcorrected = 0.79).
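For readers less familiar with the AUC measure, the sketch below computes it for a binary problem using its rank interpretation: the probability that a trial of the target class receives a higher classifier score than a trial of another class, with ties counted as one half. We assume the standard receiver operating characteristic (ROC) AUC; how the three-class scores were reduced to a single AUC (for example, by averaging one-vs-rest values) is not stated in this section, and the function name is hypothetical.

```python
def auc(scores, labels):
    """Area under the ROC curve for a binary problem.

    Computed as the probability that a randomly chosen positive trial
    scores higher than a randomly chosen negative trial (ties count 0.5).
    labels: 1 for the target class, 0 otherwise.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative trial")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

Uninformative scores give an AUC of 0.5 and perfect separation gives 1.0, which is why 0.5 serves as the chance level in Figures 7 and 8.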

Figure 7.

Classification performance for each feature category in the multifeature ROI. Classification of the location of an event was significantly more accurate than classification of the person or object included in the event. Mean classification was above chance (0.5) for all three feature types.
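The effect sizes reported for the multifeature ROI appear consistent with computing Cohen's d for a one-sample or paired t test as d = t/√n, with n = 18 participants implied by t(17). This is our reconstruction, not a formula stated in the text; the check below recovers each reported d to within 0.01, the discrepancy expected from rounding t to two decimals.

```python
import math

N_SUBJECTS = 18  # implied by the reported degrees of freedom, t(17)


def cohens_d_from_t(t, n=N_SUBJECTS):
    """Cohen's d for a one-sample or paired t test: d = t / sqrt(n)."""
    return t / math.sqrt(n)


# (t, reported d) pairs copied from the multifeature ROI results.
reported = {
    "location vs chance": (10.40, 2.45),
    "person vs chance": (5.27, 1.24),
    "object vs chance": (3.96, 0.93),
    "location vs object": (6.94, 1.63),
    "location vs person": (3.55, 0.84),
}

for name, (t, d) in reported.items():
    print(f"{name}: recomputed d = {cohens_d_from_t(t):.2f}, reported d = {d}")
```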

Based on our a priori questions about representations in the hippocampus, we also assessed classifier performance in a hippocampal ROI. There was no difference in classifier performance based on feature (F(2,34) = 0.31, p = 0.74), and classification accuracy was not significantly above chance for any of the features (location: t(17) = 0.81, puncorrected = 0.43; person: t(17) = 1.50, puncorrected = 0.15; object: t(17) = 0.69, puncorrected = 0.50). These null results are unlikely to be attributable to the smaller size of the hippocampal ROI, since better classification performance based on location was also observed in a parahippocampal cortex ROI.

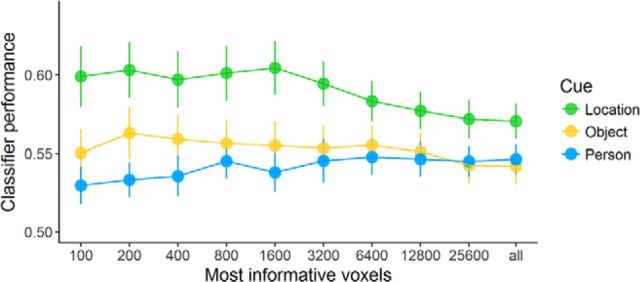

Last, we compared classification accuracy while varying the total number of voxels used to train and test the classifier, which controls for voxel number without constraining the analyses to particular ROIs. As shown in Figure 8, classification according to location features consistently led to better performance than classification according to person or object features across varying voxel numbers. This difference was confirmed by a repeated-measures ANOVA on classification performance with factors of feature type and voxel number, which found a significant main effect of feature (F(2,34) = 5.742, p = 0.007, η2 = 0.119), a marginally significant interaction between feature and voxel number (F(18,306) = 2.629, p = 0.062, η2 = 0.016; Greenhouse–Geisser correction, ε = 0.163), and no significant main effect of voxel number (F(9,153) = 1.753, p = 0.180; Greenhouse–Geisser correction, ε = 0.267). Follow-up comparisons indicated that the difference between location and person classification was significant across all voxel numbers (t(17) = 4.161, pcorrected = 0.002, d = 0.98). The overall difference between location and object classification did not survive Bonferroni correction (t(17) = 2.155, pcorrected = 0.14), but differences were most apparent at 1600 and 3200 voxels (Fig. 8). There was no significant difference between classification based on person and object (t(17) = 0.734, pcorrected > 0.99).
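The Greenhouse–Geisser correction reported above rescales the degrees of freedom of an F test by ε when the sphericity assumption is violated. A minimal single-factor sketch, assuming a subjects × levels data matrix, estimates ε from the double-centered covariance matrix; the feature × voxel-number interaction would need the analogous computation on interaction contrasts, which is not shown. The function names are hypothetical, and the final call simply applies the reported ε = 0.267 to the voxel-number main effect, F(9,153).

```python
import numpy as np


def greenhouse_geisser_epsilon(data):
    """Greenhouse–Geisser epsilon for a single repeated-measures factor.

    data: array of shape (n_subjects, k_levels). Returns a value between
    1 / (k - 1) (maximal violation of sphericity) and 1 (sphericity holds).
    """
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    cov = np.cov(data, rowvar=False)          # k x k covariance of the levels
    center = np.eye(k) - np.ones((k, k)) / k  # projects out the grand mean
    m = center @ cov @ center                 # double-centered covariance
    return np.trace(m) ** 2 / ((k - 1) * np.trace(m @ m))


def corrected_df(df_effect, df_error, eps):
    """Scale both degrees of freedom by epsilon before evaluating the F test."""
    return df_effect * eps, df_error * eps


# Applying the reported epsilon to the voxel-number main effect, F(9,153):
print(corrected_df(9, 153, 0.267))  # roughly (2.40, 40.85)
```

Because the corrected degrees of freedom are much smaller than the nominal ones, the same F value yields a larger p value, which is why small ε values such as these make the correction consequential.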

Figure 8.

Classification performance for each feature category, by voxel number. Classification performance is measured by AUC for the classification at each voxel number. The number of voxels included in the classification was varied from 200 voxels to the whole brain.
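A sketch of how a voxel-count sweep might select voxels without circularity: rank voxels on training trials only and keep the top N, with None standing for the whole brain. The ranking statistic here is a one-way ANOVA F, which is a swapped-in choice; the reference list cites shrinkage discriminant analysis with CAT-score variable selection (Ahdesmäki et al., 2014), but the selection procedure actually used is not described in this section. Function names and data are hypothetical.

```python
import numpy as np


def anova_f(X, y):
    """One-way ANOVA F statistic for every voxel.

    X: (n_trials, n_voxels) training patterns; y: class label of each trial.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    classes = np.unique(y)
    grand_mean = X.mean(axis=0)
    ss_between = np.zeros(X.shape[1])
    ss_within = np.zeros(X.shape[1])
    for c in classes:
        Xc = X[y == c]
        class_mean = Xc.mean(axis=0)
        ss_between += len(Xc) * (class_mean - grand_mean) ** 2
        ss_within += ((Xc - class_mean) ** 2).sum(axis=0)
    df_between = len(classes) - 1
    df_within = len(y) - len(classes)
    return (ss_between / df_between) / (ss_within / df_within)


def select_top_voxels(X_train, y_train, n_voxels):
    """Indices of the n_voxels highest-F voxels; None keeps every voxel ("whole brain")."""
    n_total = np.asarray(X_train).shape[1]
    if n_voxels is None or n_voxels >= n_total:
        return np.arange(n_total)
    f = anova_f(X_train, y_train)
    return np.argsort(f)[::-1][:n_voxels]


# Synthetic example: 60 training trials, 3 classes, 200 voxels, and only the
# first five voxels carry class information.
rng = np.random.default_rng(2)
y = np.repeat([0, 1, 2], 20)
X = rng.standard_normal((60, 200))
X[:, :5] += 2.0 * y[:, None]
print(sorted(select_top_voxels(X, y, 5).tolist()))
```

In a cross-validated analysis, this selection would be repeated inside every training fold and the resulting indices applied to that fold's test trials; ranking voxels on all data first would inflate accuracy.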

Discussion

This study provides evidence that location plays a prominent role in the neural representation of events with overlapping spatial, person, and object features. The influence of location in the neural code is found throughout posterior–medial regions implicated in processing scenes and events, including the parahippocampal cortex and left posterior hippocampus, retrosplenial cortex, precuneus, posterior cingulate cortex, and angular gyrus.

Imagined events that included familiar locations, people, and objects were classified using searchlight MVPA to determine the regions that could distinguish among events with overlapping features. Classifying events according to location yielded the largest set of areas, including the posterior cingulate cortex, retrosplenial cortex, angular gyrus, precuneus, parahippocampal cortex, and the left posterior hippocampus, consistent with regions in the autobiographical and scene memory networks (Zeidman et al., 2015; Hodgetts et al., 2016). Classification according to person features revealed a smaller set of regions, including the right lingual gyrus and medial prefrontal and parietal regions, which have been associated with thinking about oneself and others (Buckner et al., 2008; Andrews-Hanna et al., 2010). Classification according to object features resulted in the smallest set of regions, consisting mainly of medial and lateral left parietal regions, which may be associated with somatosensation or spatial imagery (Andersen et al., 1997; Simon et al., 2002). Direct contrasts between these features revealed that classification accuracy was significantly higher for locations compared with objects in a large set of posterior–medial regions, and compared with people in a posterior cingulate cluster.

To compare classification accuracy for the three features in a set of regions associated with all three event features, a multifeature ROI was independently defined based on single-feature trials, producing a set of regions similar to autobiographical memory networks (Svoboda et al., 2006; Spreng and Grady, 2010; Schacter et al., 2012; Benoit and Schacter, 2015). In these areas, classification of the events was above chance for all three feature types, but was significantly more accurate when based on location, indicating that location plays a primary role in the neural representation of events. In addition, we compared classification accuracy across the brain while varying the number of voxels included in the classification, without limiting the analysis to particular ROIs. This analysis also revealed that classification based on location was significantly more accurate than classification based on person features, with a weaker advantage over object features: the location advantage was apparent relative to person classification across all voxel numbers, and relative to object classification in fewer instances. Thus, across our analyses, location consistently played a dominant role in event representation.

Together, these findings suggest that spatial context is a prominent defining feature of the neural representation of events, consistent with behavioral findings showing that spatial context influences the phenomenology of events (Arnold et al., 2011; Robin and Moscovitch, 2014; Robin et al., 2016; Sheldon and Chu, 2016; Hebscher et al., 2017). The parahippocampal, retrosplenial, and posterior cingulate cortices and the precuneus have been consistently implicated in studies of memory and perception of scenes and spatial context (Epstein and Kanwisher, 1998; Epstein, 2008; Andrews-Hanna et al., 2010; Auger et al., 2012; Szpunar et al., 2014; Horner et al., 2015; Zeidman et al., 2015), and, along with the medial prefrontal cortex, are also parts of the autobiographical memory network (Svoboda et al., 2006; Spreng et al., 2009; Spreng and Grady, 2010; St Jacques et al., 2011; Rugg and Vilberg, 2013; Benoit and Schacter, 2015). These results suggest that the role of these scene-related regions in the autobiographical memory network relates to representing the spatial context of events and memories.

These results are consistent with aspects of scene construction theory, which states that spatial context provides a scaffold on which remembered and imagined events are constructed (Nadel, 1991; Hassabis and Maguire, 2007, 2009; Bird and Burgess, 2008; Maguire and Mullally, 2013). Location effects were not significantly stronger than both person and object effects in every analysis, and events could be significantly classified based on people and objects in certain regions, demonstrating that these other event elements also contribute to the neural representation of events and, in some analyses and brain areas, may play roles comparable to that of location. Nonetheless, compared with the other two features, location appeared to play the most consistent role in determining the neural representation of events. The prominence of location was evident even though familiar people, and the rich associations they can evoke (Liu et al., 2016), should, in principle, make people as strong a defining feature of events as location. This study limited the features under study to locations, people, and objects, which were chosen because they are highly imageable, personally familiar features of events, although events also involve other features such as temporal context, emotions, and thoughts.

Scene construction theory also stipulates that the dependence of episodes on spatial representations relates to their mutual dependence on the hippocampus (Hassabis and Maguire, 2007, 2009; Maguire and Mullally, 2013). In our results, the left posterior hippocampus was included in the set of regions found to discriminate events based on location, and in the multifeature ROI, which demonstrated more accurate classification for events based on location. Both of these effects, however, were also observed in the larger set of scene-related regions including parahippocampal, retrosplenial, and posterior cingulate cortices and precuneus. Moreover, when the classification of events was assessed in a hippocampal ROI, classification was not significantly above chance for any of the features, and there were no significant differences between location and the other features. Thus, while we present evidence that location plays an important role in determining the neural representation of an event, it seems to do so by virtue of a set of neocortical areas relating to the processing of scene and episodic memory.

These findings are not necessarily incompatible with scene construction theory; it is possible that the posterior–medial scene regions represent the spatial contexts of events directly, while the hippocampus may be instrumental for constructing or binding these scenes and events (Eichenbaum et al., 1994; Olsen et al., 2012; Maguire and Mullally, 2013; Yonelinas, 2013; Eichenbaum and Cohen, 2014; Maguire et al., 2016). If the hippocampus provides an index to neocortical representations (Teyler and Discenna, 1986; Moscovitch et al., 2016), patterns of hippocampal activity may not directly reflect the features of the memory but may instead reflect a sparser indexing code (O'Reilly and Norman, 2002; Rolls and Treves, 2011). Consistent with this interpretation are previous findings that cortical areas such as the parahippocampal and perirhinal cortices show increased pattern similarity for related stimuli within a category, whereas the hippocampus shows no differentiation or even decreased similarity for related representations (LaRocque et al., 2013; Copara et al., 2014; Ezzyat and Davachi, 2014; Dimsdale-Zucker et al., 2018), in line with its role in pattern separation, which orthogonalizes the representations of similar events.
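Pattern-similarity findings of this kind are often summarized as the mean correlation between activity patterns for items from the same category minus the mean correlation for items from different categories. A minimal sketch of that generic measure follows; it is not the exact analysis of any cited study, and the synthetic data and names are illustrative. A positive score indicates category-level similarity, whereas a score near zero or below is the signature attributed to hippocampal pattern separation.

```python
import numpy as np


def within_minus_between_similarity(patterns, labels):
    """Mean Pearson r for same-category pairs minus mean r for different-category pairs.

    patterns: (n_items, n_voxels) activity patterns; labels: category of each item.
    """
    r = np.corrcoef(np.asarray(patterns, dtype=float))  # item-by-item correlations
    labels = np.asarray(labels)
    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(len(labels), dtype=bool)  # drop each item's self-correlation
    return r[same & off_diag].mean() - r[~same].mean()


# Synthetic "cortical" patterns: items share a category prototype plus noise.
rng = np.random.default_rng(3)
prototypes = rng.standard_normal((2, 50))
item_labels = np.repeat([0, 1], 4)
items = prototypes[item_labels] + 0.5 * rng.standard_normal((8, 50))
score = within_minus_between_similarity(items, item_labels)
print(round(float(score), 2))
```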

In contrast, other studies have reported changes in hippocampal pattern similarity based on features such as spatial or temporal context (Chadwick et al., 2011; Hsieh et al., 2014; Kyle et al., 2015a; Nielson et al., 2015; Ritchey et al., 2015; Milivojevic et al., 2016; Dimsdale-Zucker et al., 2018). Many of these studies, however, used visually presented stimuli rather than imagined ones, which may have resulted in more consistent and easily discriminable neural representations in the hippocampus. In addition, since the cues in this study were highly familiar and based on remote memory, they may have been less dependent on the hippocampus, as is the case with navigation-related spatial memory (Teng and Squire, 1999; Rosenbaum et al., 2000; Hirshhorn et al., 2012). Furthermore, many of the studies above used higher-resolution fMRI, allowing for the detection of pattern similarity differences specific to hippocampal subfields, which may be obscured at standard resolution (Bakker et al., 2008; Schlichting et al., 2014; Kyle et al., 2015b; Dimsdale-Zucker et al., 2018).

In summary, our study offers evidence that episodic events are discriminable based on their location in posterior–medial regions including the parahippocampal cortex, posterior hippocampus, retrosplenial cortex, angular gyrus, posterior cingulate cortex, and precuneus. In a set of regions consistent with the autobiographical memory network, events were classified more accurately based on their location than based on the person or object in the event. We propose that these findings illustrate the importance of spatial context in determining the neural representation of complex episodic events, providing evidence for a neural mechanism by which spatial context influences event memory. As such, our findings support scene construction theory, which proposes that scenes underlie episodes by forming a scaffold on which events are constructed. Our findings indicate, however, that other event elements, such as people and objects, and a broader set of brain areas beyond the hippocampus are also involved in event representation.

Footnotes

This work was supported by the Canadian Institutes of Health Research (Grant MOP49566). We thank Sigal Gat Lazer for help with data collection, Marilyne Ziegler for technical support, and Buddhika Bellana for helpful discussion.

The authors declare no competing financial interests.

References

- Addis DR, McIntosh AR, Moscovitch M, Crawley AP, McAndrews MP (2004) Characterizing spatial and temporal features of autobiographical memory retrieval networks: a partial least squares approach. Neuroimage 23:1460–1471. 10.1016/j.neuroimage.2004.08.007

- Addis DR, Wong AT, Schacter DL (2007) Remembering the past and imagining the future: common and distinct neural substrates during event construction and elaboration. Neuropsychologia 45:1363–1377. 10.1016/j.neuropsychologia.2006.10.016

- Addis DR, Pan L, Vu MA, Laiser N, Schacter DL (2009) Constructive episodic simulation of the future and the past: distinct subsystems of a core brain network mediate imagining and remembering. Neuropsychologia 47:2222–2238. 10.1016/j.neuropsychologia.2008.10.026

- Ahdesmäki M, Zuber V, Gibb S, Strimmer K (2014) Shrinkage discriminant analysis and CAT score variable selection [software]. Available at: http://strimmerlab.org/software/sda.

- Andersen RA, Snyder LH, Bradley DC, Xing J (1997) Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci 20:303–330. 10.1146/annurev.neuro.20.1.303

- Andrews-Hanna JR, Reidler JS, Sepulcre J, Poulin R, Buckner RL (2010) Functional-anatomic fractionation of the brain's default network. Neuron 65:550–562. 10.1016/j.neuron.2010.02.005

- Arnold KM, McDermott KB, Szpunar KK (2011) Imagining the near and far future: the role of location familiarity. Mem Cognit 39:954–967. 10.3758/s13421-011-0076-1

- Auger SD, Mullally SL, Maguire EA (2012) Retrosplenial cortex codes for permanent landmarks. PLoS One 7:e43620. 10.1371/journal.pone.0043620

- Avants BB, Epstein CL, Grossman M, Gee JC (2008) Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med Image Anal 12:26–41. 10.1016/j.media.2007.06.004

- Bakker A, Kirwan CB, Miller M, Stark CE (2008) Pattern separation in the human hippocampal CA3 and dentate gyrus. Science 319:1640–1642. 10.1126/science.1152882

- Bar M, Aminoff E, Schacter DL (2008) Scenes unseen: the parahippocampal cortex intrinsically subserves contextual associations, not scenes or places per se. J Neurosci 28:8539–8544. 10.1523/JNEUROSCI.0987-08.2008

- Behzadi Y, Restom K, Liau J, Liu TT (2007) A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. Neuroimage 37:90–101. 10.1016/j.neuroimage.2007.04.042

- Benoit RG, Schacter DL (2015) Specifying the CORE network supporting episodic simulation and episodic memory by activation likelihood estimation. Neuropsychologia 75:450–457. 10.1016/j.neuropsychologia.2015.06.034

- Bird CM, Burgess N (2008) The hippocampus and memory: insights from spatial processing. Nat Rev Neurosci 9:182–194. 10.1038/nrn2335

- Buckner RL, Andrews-Hanna JR, Schacter DL (2008) The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci 1124:1–38. 10.1196/annals.1440.011

- Burgess N, Maguire EA, Spiers HJ, O'Keefe J (2001) A temporoparietal and prefrontal network for retrieving the spatial context of lifelike events. Neuroimage 14:439–453. 10.1006/nimg.2001.0806

- Burgess N, Maguire EA, O'Keefe J (2002) The human hippocampus and spatial and episodic memory. Neuron 35:625–641. 10.1016/S0896-6273(02)00830-9

- Cabeza R, St Jacques P (2007) Functional neuroimaging of autobiographical memory. Trends Cogn Sci 11:219–227. 10.1016/j.tics.2007.02.005

- Chadwick MJ, Hassabis D, Maguire EA (2011) Decoding overlapping memories in the medial temporal lobes using high-resolution fMRI. Learn Mem 18:742–746. 10.1101/lm.023671.111

- Copara MS, Hassan AS, Kyle CT, Libby LA, Ranganath C, Ekstrom AD (2014) Complementary roles of human hippocampal subregions during retrieval of spatiotemporal context. J Neurosci 34:6834–6842. 10.1523/JNEUROSCI.5341-13.2014

- Cox RW (1996) AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173. 10.1006/cbmr.1996.0014

- D'Argembeau A, Van der Linden M (2012) Predicting the phenomenology of episodic future thoughts. Conscious Cogn 21:1198–1206. 10.1016/j.concog.2012.05.004

- Destrieux C, Fischl B, Dale A, Halgren E (2010) Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. Neuroimage 53:1–15. 10.1016/j.neuroimage.2010.06.010

- de Vito S, Gamboz N, Brandimonte MA (2012) What differentiates episodic future thinking from complex scene imagery? Conscious Cogn 21:813–823. 10.1016/j.concog.2012.01.013

- Dimsdale-Zucker HR, Ritchey M, Ekstrom AD, Yonelinas AP, Ranganath C (2018) CA1 and CA3 differentially support spontaneous retrieval of episodic contexts within human hippocampal subfields. Nat Commun 9:294. 10.1038/s41467-017-02752-1

- Eichenbaum H, Cohen NJ (2014) Can we reconcile the declarative memory and spatial navigation views on hippocampal function? Neuron 83:764–770. 10.1016/j.neuron.2014.07.032

- Eichenbaum H, Otto T, Cohen NJ (1994) Two functional components of the hippocampal memory system. Behav Brain Sci 17:449–518. 10.1017/S0140525X00035391

- Eklund A, Nichols TE, Knutsson H (2016) Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc Natl Acad Sci U S A 113:7900–7905. 10.1073/pnas.1602413113

- Epstein RA (2008) Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn Sci 12:388–396. 10.1016/j.tics.2008.07.004

- Epstein R, Kanwisher N (1998) A cortical representation of the local visual environment. Nature 392:598–601. 10.1038/33402

- Epstein RA, Parker WE, Feiler AM (2007) Where am I now? Distinct roles for parahippocampal and retrosplenial cortices in place recognition. J Neurosci 27:6141–6149. 10.1523/JNEUROSCI.0799-07.2007

- Ezzyat Y, Davachi L (2014) Similarity breeds proximity: pattern similarity within and across contexts is related to later mnemonic judgments of temporal proximity. Neuron 81:1179–1189. 10.1016/j.neuron.2014.01.042

- Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, van der Kouwe A, Killiany R, Kennedy D, Klaveness S, Montillo A, Makris N, Rosen B, Dale AM (2002) Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron 33:341–355. 10.1016/S0896-6273(02)00569-X

- Fischl B, Salat DH, Van Der Kouwe AJW, Makris N, Ségonne F, Quinn BT, Dale AM (2004) Sequence-independent segmentation of magnetic resonance images. Neuroimage 23 [Suppl. 1]:S69–S84. 10.1016/j.neuroimage.2004.07.016

- Gilmore AW, Nelson SM, McDermott KB (2015) A parietal memory network revealed by multiple MRI methods. Trends Cogn Sci 19:534–543. 10.1016/j.tics.2015.07.004

- Hannula DE, Libby LA, Yonelinas AP, Ranganath C (2013) Medial temporal lobe contributions to cued retrieval of items and contexts. Neuropsychologia 51:2322–2332. 10.1016/j.neuropsychologia.2013.02.011

- Hassabis D, Maguire EA (2007) Deconstructing episodic memory with construction. Trends Cogn Sci 11:299–306. 10.1016/j.tics.2007.05.001

- Hassabis D, Maguire EA (2009) The construction system of the brain. Philos Trans R Soc Lond B Biol Sci 364:1263–1271. 10.1098/rstb.2008.0296

- Hassabis D, Kumaran D, Maguire EA (2007) Using imagination to understand the neural basis of episodic memory. J Neurosci 27:14365–14374. 10.1523/JNEUROSCI.4549-07.2007

- Hebscher M, Levine B, Gilboa A (2017) The precuneus and hippocampus contribute to individual differences in the unfolding of spatial representations during episodic autobiographical memory. Neuropsychologia. Advance online publication. Retrieved February 9, 2018. 10.1016/j.neuropsychologia.2017.03.029

- Hirshhorn M, Grady C, Rosenbaum RS, Winocur G, Moscovitch M (2012) The hippocampus is involved in mental navigation for a recently learned, but not a highly familiar environment: a longitudinal fMRI study. Hippocampus 22:842–852. 10.1002/hipo.20944

- Hodgetts CJ, Shine JP, Lawrence AD, Downing PE, Graham KS (2016) Evidencing a place for the hippocampus within the core scene processing network. Hum Brain Mapp 37:3779–3794. 10.1002/hbm.23275

- Horner AJ, Bisby JA, Bush D, Lin WJ, Burgess N (2015) Evidence for holistic episodic recollection via hippocampal pattern completion. Nat Commun 6:7462. 10.1038/ncomms8462

- Hsieh LT, Gruber MJ, Jenkins LJ, Ranganath C (2014) Hippocampal activity patterns carry information about objects in temporal context. Neuron 81:1165–1178. 10.1016/j.neuron.2014.01.015

- Kumaran D, Maguire EA (2005) The human hippocampus: cognitive maps or relational memory? J Neurosci 25:7254–7259. 10.1523/JNEUROSCI.1103-05.2005

- Kyle CT, Smuda DN, Hassan AS, Ekstrom AD (2015a) Roles of human hippocampal subfields in retrieval of spatial and temporal context. Behav Brain Res 278:549–558. 10.1016/j.bbr.2014.10.034

- Kyle CT, Stokes JD, Lieberman JS, Hassan AS, Ekstrom AD (2015b) Successful retrieval of competing spatial environments in humans involves hippocampal pattern separation mechanisms. Elife 4:e10499. 10.7554/eLife.10499

- LaRocque KF, Smith ME, Carr VA, Witthoft N, Grill-Spector K, Wagner AD (2013) Global similarity and pattern separation in the human medial temporal lobe predict subsequent memory. J Neurosci 33:5466–5474. 10.1523/JNEUROSCI.4293-12.2013

- Liu ZX, Grady C, Moscovitch M (2016) Effects of prior-knowledge on brain activation and connectivity during associative memory encoding. Cereb Cortex 27:1991–2009. 10.1093/cercor/bhw047

- Maguire EA, Mullally SL (2013) The hippocampus: a manifesto for change. J Exp Psychol Gen 142:1180–1189. 10.1037/a0033650

- Maguire EA, Mummery CJ (1999) Differential modulation of a common memory retrieval network revealed by positron emission tomography. Hippocampus 9:54–61.

- Maguire EA, Intraub H, Mullally SL (2016) Scenes, spaces, and memory traces: what does the hippocampus do? Neuroscientist 22:432–439. 10.1177/1073858415600389

- McLelland VC, Devitt AL, Schacter DL, Addis DR (2015) Making the future memorable: the phenomenology of remembered future events. Memory 23:1255–1263. 10.1080/09658211.2014.972960

- Milivojevic B, Varadinov M, Vicente Grabovetsky A, Collin SH, Doeller CF (2016) Coding of event nodes and narrative context in the hippocampus. J Neurosci 36:12412–12424. 10.1523/JNEUROSCI.2889-15.2016

- Moscovitch M, Cabeza R, Winocur G, Nadel L (2016) Episodic memory and beyond: the hippocampus and neocortex in transformation. Annu Rev Psychol 67:105–134. 10.1146/annurev-psych-113011-143733

- Nadel L (1991) The hippocampus and space revisited. Hippocampus 1:221–229. 10.1002/hipo.450010302

- Nadel L, Peterson MA (2013) The hippocampus: part of an interactive posterior representational system spanning perceptual and memorial systems. J Exp Psychol Gen 142:1242–1254. 10.1037/a0033690

- Nielson DM, Smith TA, Sreekumar V, Dennis S, Sederberg PB (2015) Human hippocampus represents space and time during retrieval of real-world memories. Proc Natl Acad Sci U S A 112:11078–11083. 10.1073/pnas.1507104112

- O'Keefe J, Nadel L (1978) The hippocampus as a cognitive map. New York: Oxford UP.

- Olsen RK, Moses SN, Riggs L, Ryan JD (2012) The hippocampus supports multiple cognitive processes through relational binding and comparison. Front Hum Neurosci 6:146. 10.3389/fnhum.2012.00146

- O'Reilly RC, Norman KA (2002) Hippocampal and neocortical contributions to memory: advances in the complementary learning systems framework. Trends Cogn Sci 6:505–510. 10.1016/S1364-6613(02)02005-3

- Ritchey M, Montchal ME, Yonelinas AP, Ranganath C (2015) Delay-dependent contributions of medial temporal lobe regions to episodic memory retrieval. Elife 4:e05025. 10.7554/eLife.05025

- Robin J, Moscovitch M (2014) The effects of spatial contextual familiarity on remembered scenes, episodic memories, and imagined future events. J Exp Psychol Learn Mem Cogn 40:459–475. 10.1037/a0034886

- Robin J, Moscovitch M (2017) Familiar real-world spatial cues provide memory benefits in older and younger adults. Psychol Aging 32:210–219. 10.1037/pag0000162

- Robin J, Wynn J, Moscovitch M (2016) The spatial scaffold: the effects of spatial context on memory for events. J Exp Psychol Learn Mem Cogn 42:308–315. 10.1037/xlm0000167

- Rolls ET, Treves A (2011) The neuronal encoding of information in the brain. Prog Neurobiol 95:448–490. 10.1016/j.pneurobio.2011.08.002

- Rosenbaum RS, Priselac S, Köhler S, Black SE, Gao F, Nadel L, Moscovitch M (2000) Remote spatial memory in an amnesic person with extensive bilateral hippocampal lesions. Nat Neurosci 3:1044–1048. 10.1038/79867

- Rugg MD, Vilberg KL (2013) Brain networks underlying episodic memory retrieval. Curr Opin Neurobiol 23:255–260. 10.1016/j.conb.2012.11.005

- Schacter DL, Addis DR, Hassabis D, Martin VC, Spreng RN, Szpunar KK (2012) The future of memory: remembering, imagining, and the brain. Neuron 76:677–694. 10.1016/j.neuron.2012.11.001

- Schlichting ML, Zeithamova D, Preston AR (2014) CA1 subfield contributions to memory integration and inference. Hippocampus 24:1248–1260. 10.1002/hipo.22310

- Sheldon S, Chu S (2016) What versus where: investigating how autobiographical memory retrieval differs when accessed with thematic versus spatial information. Q J Exp Psychol (Hove) 70:1909–1921. 10.1080/17470218.2016.1215478

- Simon O, Mangin JF, Cohen L, Le Bihan D, Dehaene S (2002) Topographical layout of hand, eye, calculation, and language-related areas in the human parietal lobe. Neuron 33:475–487. 10.1016/S0896-6273(02)00575-5 [DOI] [PubMed] [Google Scholar]

- Smith SM, Nichols TE (2009) Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage 44:83–98. 10.1016/j.neuroimage.2008.03.061 [DOI] [PubMed] [Google Scholar]

- Spreng RN, Grady CL (2010) Patterns of brain activity supporting autobiographical memory, prospection, and theory of mind, and their relationship to the default mode network. J Cogn Neurosci 22:1112–1123. 10.1162/jocn.2009.21282 [DOI] [PubMed] [Google Scholar]

- Spreng RN, Mar RA, Kim AS (2009) The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: a quantitative meta-analysis. J Cogn Neurosci 21:489–510. 10.1162/jocn.2008.21029 [DOI] [PubMed] [Google Scholar]

- Staresina BP, Alink A, Kriegeskorte N, Henson RN (2013) Awake reactivation predicts memory in humans. Proc Natl Acad Sci U S A 110:21159–21164. 10.1073/pnas.1311989110 [DOI] [PMC free article] [PubMed] [Google Scholar]

- St Jacques PL, Kragel PA, Rubin DC (2011) Dynamic neural networks supporting memory retrieval. Neuroimage 57:608–616. 10.1016/j.neuroimage.2011.04.039 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Svoboda E, McKinnon MC, Levine B (2006) The functional neuroanatomy of autobiographical memory: a meta-analysis. Neuropsychologia 44:2189–2208. 10.1016/j.neuropsychologia.2006.05.023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Szpunar KK, Watson JM, McDermott KB (2007) Neural substrates of envisioning the future. Proc Natl Acad Sci U S A 104:642–647. 10.1073/pnas.0610082104 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Szpunar KK, Chan JC, McDermott KB (2009) Contextual processing in episodic future thought. Cereb Cortex 19:1539–1548. 10.1093/cercor/bhn191 [DOI] [PubMed] [Google Scholar]

- Szpunar KK, St Jacques PL, Robbins CA, Wig GS, Schacter DL (2014) Repetition-related reductions in neural activity reveal component processes of mental simulation. Soc Cogn Affect Neurosci 9:712–722. 10.1093/scan/nst035 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Teng E, Squire LR (1999) Memory for places learned long ago is intact after hippocampal damage. Nature 400:675–677. 10.1038/23276 [DOI] [PubMed] [Google Scholar]

- Teyler TJ, DiScenna P (1986) The hippocampal memory indexing theory. Behav Neurosci 100:147–154. 10.1037/0735-7044.100.2.147 [DOI] [PubMed] [Google Scholar]

- Tulving E. (1972) Episodic and semantic memory. In: Organization of memory (Tulving E, Donaldson W, eds), pp 382–402. New York and London: Academic. [Google Scholar]

- Tulving E. (2002) Episodic memory: from mind to brain. Annu Rev Psychol 53:1–25. 10.1146/annurev.psych.53.100901.135114 [DOI] [PubMed] [Google Scholar]

- Winkler AM, Ridgway GR, Webster MA, Smith SM, Nichols TE (2014) Permutation inference for the general linear model. Neuroimage 92:381–397. 10.1016/j.neuroimage.2014.01.060 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Xia M, Wang J, He Y (2013) BrainNet Viewer: a network visualization tool for human brain connectomics. PLoS One 8:e68910. 10.1371/journal.pone.0068910 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yonelinas AP. (2013) The hippocampus supports high-resolution binding in the service of perception, working memory and long-term memory. Behav Brain Res 254:34–44. 10.1016/j.bbr.2013.05.030 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zeidman P, Mullally SL, Maguire EA (2015) Constructing, perceiving, and maintaining scenes: hippocampal activity and connectivity. Cereb Cortex 25:3836–3855. 10.1093/cercor/bhu266 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zuber V, Strimmer K (2009) Gene ranking and biomarker discovery under correlation. Bioinformatics 25:2700–2707. 10.1093/bioinformatics/btp460 [DOI] [PubMed] [Google Scholar]

Supplementary Materials

Figure 3-1. Clusters and local maxima from searchlight classification based on location features (DOCX file, 12.4 KB).

Figure 3-2. Clusters and local maxima from searchlight classification based on person features (DOCX file, 12.1 KB).

Figure 3-3. Clusters and local maxima from searchlight classification based on object features (DOCX file, 11.6 KB).

Figure 4-1. Clusters and local maxima from searchlight classification based on each combination of features (DOCX file, 12.5 KB).

Figure 5-1. Clusters and local maxima from the contrast of location > object classification (DOCX file, 11.3 KB).

Figure 5-2. Clusters and local maxima from the contrast of location > person classification (DOCX file, 11 KB).