Abstract

Sensory perception relies on the precise neuronal encoding of modality-specific environmental features in primary sensory cortices. Some studies have reported that signals from other modalities penetrate even into early sensory areas. So far, however, no comprehensive account of maps induced by “foreign sources” exists. We addressed this question using surface-based topographic mapping techniques applied to ultra-high resolution fMRI data measured in female participants. We show that fine-grained finger maps in human primary somatosensory cortex, area 3b, are somatotopically activated not only during tactile mechanical stimulation, but also when participants view the same fingers being touched. Visually induced maps were weak in amplitude, but overlapped with the stronger tactile maps tangential to the cortical sheet when finger touches were observed in both first- and third-person perspectives. However, visually induced maps did not overlap tactile maps when the observed fingers were only approached by an object but not actually touched. Our data provide evidence that “foreign source maps” in early sensory cortices are present in the healthy human brain, that their arrangement is precise, and that their induction is feature-selective. The computations required to generate such specific responses suggest that counterflow (feedback) processing may be much more spatially specific than is often assumed.

SIGNIFICANCE STATEMENT Using ultra-high field fMRI, we provide empirical evidence that viewing touches activates topographically aligned single finger maps in human primary somatosensory cortical area 3b. This shows that “foreign source maps” in early sensory cortices are topographic, precise, and feature-selective in healthy human participants with intact sensory pathways.

Keywords: area 3b, multisensory integration, somatotopy, touch, vision, visuotactile mapping

Introduction

The notion of a primary sensory cortex for each modality originates with the very first studies in functional neuroanatomy, over a century ago. Although recent electrophysiological and neuroimaging studies have often reported responses to nonpreferred input modalities in primary cortical areas (Sadato et al., 1996; Röder et al., 2002; Sadato et al., 2002; Kupers et al., 2006; Driver and Noesselt, 2008; Schaefer et al., 2009; Bieler et al., 2017), no detailed neuroanatomical account of these “foreign source maps” exists.

Visual cortices of congenitally blind individuals respond to input from nonvisual modalities, including sound and touch (Merabet et al., 2004; Poirier et al., 2007; Collignon et al., 2011). These non-afferent activations have been assigned important roles in sensory signal encoding, and are assumed to be a consequence of unmasking (Sadato et al., 1996; Pascual-Leone and Hamilton, 2001; Merabet et al., 2007). More recently, it was shown that primary visual cortices are involved in tactile encoding also in healthy individuals with intact vision (Snow et al., 2014). However, the fine-grained spatial organization of these foreign source maps in early sensory cortex has never been examined in either intact or sensory-deprived individuals. It therefore remains an open question whether the fine-grained topographic mapping format that characterizes responses to the native modality in early sensory cortex is also used to represent non-afferent input, or whether different formats are used. Studies on visual body perception, for example, indicate that visual representations in somatosensory cortex may be distorted (Longo and Haggard, 2010; Fuentes et al., 2013). In addition, it has been suggested that fine-grained back-projections from higher-level cortical areas down to primary sensory cortices may be absent or masked (Keysers et al., 2010). If so, non-afferent inputs would at best be coarsely mapped.

In primary somatosensory cortex (S-I) in particular, non-afferent responses remain controversial. Whereas primary visual cortex has repeatedly been shown to respond to touch, area 3b is rarely reliably activated by visual stimuli (Meehan et al., 2009; Meftah et al., 2009; Kuehn et al., 2013, 2014; Chan and Baker, 2015). Somatosensory areas 1 and 2 have been activated while participants viewed touches and are assigned multisensory response properties, but area 3b remains mostly silent under such conditions (Cardini et al., 2011; Gentile et al., 2011; Longo et al., 2011; Kuehn et al., 2013, 2014). This supports the current view that area 3b encodes only mechanical touch, not observed touch. Further, area 3b could provide the neuronal substrate for distinguishing perceived touch from observed touch (Kuehn et al., 2013), a computation that can be critical to the survival of the organism.

Here, we used ultra-high field imaging at 7 tesla to shed light on this issue. We characterized topographic representations of single fingers in area 3b, first during tactile stimulation, and then while the same participants viewed individual fingers of another person's hand receiving similar tactile stimulation. We computed somatotopic maps for both tactile and visual input modalities, and tested whether touch to fingers and visual observation of finger touches activated topographic and tangentially aligned finger maps in area 3b. Importantly, we also tested whether these maps were robust across viewing perspectives, and whether they were specific to observing a finger that was actually touched rather than merely approached. Finally, we controlled for the potential influence of finger movements on visual map formation using electromyography (EMG). Our results provide a novel perspective on the functional roles of foreign source maps in primary sensory cortex, and shed new light on associated models of multisensory integration, social cognition, and learning.

Materials and Methods

Participants.

Sixteen healthy female participants between 20 and 36 years of age [mean age: 26.25 ± 3.87 years (mean ± SD)] took part in the phase-encoded fMRI study, which comprised three scanning sessions (2 visual sessions, 1 tactile session, each recorded on a separate day; Fig. 1). Participants were right-handed, and none had a recorded history of neurological or psychiatric disease. This sample size is well above the estimated sample sizes needed for adequate statistical power at the single-subject level (Desmond and Glover, 2002), and a similar sample size was used in previous studies on visual responses in the somatosensory system (Kuehn et al., 2013, 2014). Thirteen healthy female participants between 20 and 35 years of age participated in the blocked-design fMRI study, which again comprised three scanning sessions (2 visual sessions, 1 tactile session, each recorded on a separate day; Fig. 1). Nine participants took part in both studies (mean age: 25.33 ± 4.30 years). All participants gave written informed consent before scanning, and were paid for their attendance. Both studies were approved by the local ethics committee at the University of Leipzig. Data collection was restricted to female participants due to equipment requirements (see fMRI scanning tactile sessions).

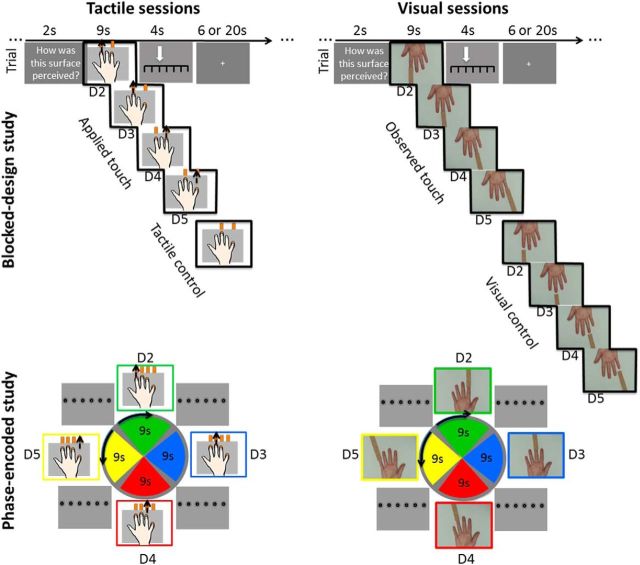

Figure 1.

Experimental designs. Two 7 tesla fMRI studies were conducted, each composed of three sessions. In the phase-encoded study (observation of first-person touches, bottom), visual and tactile stimuli were presented in repeated cycles. A 9 s block of stimulation to the index finger (D2) was followed by 9 s blocks of stimulation to the middle finger (D3), the ring finger (D4), and the small finger (D5), then back to D2, and so forth. In a different block, the finger stimulation order was reversed to cancel fixed local differences in hemodynamic delay (Huang and Sereno, 2013). After each finger stimulation, the numbers “1” (not rough) to “6” (very rough) were displayed on screen for 1.6 s, and participants judged the roughness of the preceding sandpaper via eye gaze. In the two visual sessions, participants saw finger touches on screen rather than feeling them on their own fingertips. In the blocked-design study (observation of third-person touches, top), stimulation blocks were presented in randomized order using similar stimulus material, except that videos were rotated by 180°, and tactile as well as visual control conditions were added in which the sandpaper did not touch the finger. Finger touches were presented for 9 s, and participants had a 4 s time window to respond. Intertrial intervals were 6 s in two-thirds of the trials, and 20 s in one-third of the trials.

Twelve new healthy female participants between 18 and 30 years of age (mean age: 24.58 ± 3.03 years) took part in two additional EMG experiments. Participants were right-handed, and none had a recorded history of neurological or psychiatric disease. We ensured that this number of participants is above the sample sizes needed to obtain robust EMG effects (see below). All participants gave written informed consent before the start of the experiment, and were paid for their attendance. Both EMG experiments were approved by the local ethics committee at the University of Leipzig.

Scanning acquisition parameters.

Functional and structural MRI data were acquired using a 7T MR scanner (Magnetom 7T, Siemens Healthcare Sector) with a 24-channel NOVA head coil in Leipzig (MPI CBS). At the beginning of each scanning session, high-resolution 3D anatomical T1-weighted whole-brain scans were acquired using an MPRAGE sequence with the following parameters: echo spacing = 6.1 ms, TE = 2.26 ms, TI = 1100 ms, flip angle = 6°, isotropic voxel resolution = 1.2 mm. First-order shimming was performed before collecting the functional data. Multiple shimming runs were performed until optimal results were obtained [FWHM (Hz) for visual sessions = 43.36 ± 4.90 (mean ± SD), FWHM (Hz) for tactile sessions = 42.83 ± 5.22]. In the phase-encoded study, a field-map scan was acquired before each functional block (2 per session, voxel size: 2.0 × 2.0 × 2.0 mm, 33 slices, FOV = 192 mm, TR = 1 s, TE1 = 6 ms, TE2 = 7.02 ms, flip angle = 60°).

The T1-weighted scans were subsequently used to select 44 axial slices for functional scans (interleaved slice acquisition, slice thickness = 1.5 mm, no gap) covering bilateral S-I and adjacent areas. The hand knob area was used as a landmark for first-session slice selection. The hand knob is easily identified in sagittal T1 images, and reliably indicates the location of the hand area in the primary motor cortex (Yousry et al., 1997) and the primary somatosensory cortex (White et al., 1997; Sastre-Janer et al., 1998; Moore et al., 2000). In the phase-encoded study, slices for the subsequent two sessions were then positioned by automatic realignment (in the blocked-design study, slice selection was done manually for each scanning session). We used GRAPPA acceleration (Griswold et al., 2002) with iPAT = 3 to acquire functional T2*-weighted gradient-echo echoplanar images. A field-of-view of 192 × 192 mm² and an imaging matrix of 128 × 128 were used. The functional images had isotropic 1.5 mm voxels. The other sequence parameters were as follows: TR = 2.0 s, TE = 18 ms, flip angle = 61°. Although 3D image acquisition can provide better spatial resolution than the 2D (slice-by-slice) acquisition used here, motion artifacts are elevated in 3D multishot sequences, whereas the SNR of single-shot 3D-GRASE is very low (Kemper et al., 2016).

For each participant, we also acquired a T1-weighted 3D MPRAGE sequence with selective water excitation and linear phase encoding at the Siemens TIM Trio 3T scanner (the above listed sequences were collected at the 7T scanner). Imaging parameters were as follows: TI = 650 ms, echo spacing = 6.6 ms, TE = 3.93 ms, α = 10°, bandwidth = 130 Hz/pixel, FOV = 256 mm × 240 mm, slab thickness = 192 mm, 128 slices, spatial resolution = 1 mm × 1 mm × 1.5 mm, 2 acquisitions.

fMRI scanning procedure: general.

There were two fMRI studies in total, one phase-encoded study and one blocked-design study (for an overview, see Fig. 1). Each study consisted of three scanning sessions [2 visual sessions (Sessions 1 and 2), and 1 tactile session (Session 3)]. Two visual sessions were acquired to increase the statistical power of the visual map analyses. Scanning sessions took place on separate days (phase-encoded study: mean = 6.25 ± 1.0 d between scanning Sessions 1 and 2, mean = 13.19 ± 13.51 d between scanning Sessions 2 and 3; blocked-design study: mean = 7.44 ± 1.33 d between Sessions 1 and 2, mean = 19.11 ± 8.42 d between Sessions 2 and 3; all sessions took place between 8:00 A.M. and 7:00 P.M.). The two studies were separated by ∼1.5 years (mean = 20.67 ± 1.22 months). In the first two scanning sessions of both studies (visual sessions), participants observed video clips in the scanner. In the third scanning session of both studies (tactile sessions), participants experienced physical tactile stimulation on their own fingertips. The same stimulus material (sandpaper samples, see below) was used for visual and tactile sessions. During scanning, participants were provided with earplugs and headphones to protect against scanner noise. A small mirror was mounted onto the head coil to provide visual input, and was adjusted for each person to give an optimal view of the screen. Scanner-compatible glasses were provided if necessary to correct vision to normal. A small MRI-compatible video camera (12M from MRC, http://www.mrc-systems.de/deutsch/products/mrcamera.html#Cameras) was mounted above the mirror, which allowed the experimenter to monitor participants' eye movements during the experiment and to verify that participants did not fall asleep.

fMRI scanning tactile sessions.

A custom-built tactile stimulator was used to stimulate participants' fingertips in the MR scanner. The tactile stimulator was nonmagnetic, and was composed of a scanner-compatible table, stimulus belts on which several sandpaper samples were mounted, and a stimulation plate with apertures that exposed the belts to the digits. Once the table was in place, plate position was adjusted until participants' right arms could comfortably reach the stimulation plate. Due to size restrictions in the scanner bore (note that the table was mounted above participants' bodies within the scanner), we could only measure participants of normal or small size and normal or light weight. The stimulation plate was also designed for smaller hands. For these reasons, we only measured female participants in this study. The stimulation plate contained either four holes (phase-encoded design) or two holes (blocked design); participants placed one finger of their right hand onto each hole (phase-encoded design), or two nonadjacent fingers onto the two holes (blocked design). In the blocked design, participants switched finger positions from index finger (D2)/ring finger (D4) to middle finger (D3)/small finger (D5), or vice versa, between blocks. No switching was required in the phase-encoded design. These different designs were used to increase the reliability of our results across stimulation orders and analysis techniques. Armrests were used to promote a relaxed posture for the arm that reached the plate, so that no active muscle contraction was required to hold the arm and fingers in place. If participants had problems maintaining this position in a relaxed state, which the experimenter checked carefully before the experiment started, additional scanner-compatible belts were used to stabilize the arms and hands. Participants were instructed to completely relax their arms, hands, and fingers throughout the experiment. No pulse oximeter was attached to participants' fingers.

In the phase-encoded design, there were four experimental conditions in each session: tactile stimulation to the index finger, the middle finger, the ring finger, and the small finger, in repeated cycles (Fig. 1). Sandpaper belts accessible through the holes within the stimulation plate were used for tactile stimulation. Each belt was composed of 18 sandpaper samples, and 18 neutral surfaces that separated adjacent sandpaper samples. Stimulation order was randomized. Tactile single-finger stimulation was applied in cycles, and always lasted 9 s per finger. Each cycle comprised tactile stimulation of each of the four fingers, each followed by a response screen, and lasted 42.7 s. During tactile stimulation, the experimenter moved one stimulation belt for 9 s in one direction, and was equipped with an auditory timer for stimulation onset and offset. During tactile stimulation, participants saw a white fixation cross on a black screen; the cross was placed at locations corresponding to the fingertip of the stimulated finger in the video clips described below (e.g., during stimulation of the index finger, participants looked at a cross located within their right hemifield). After finger stimulation, a response screen was presented for 1.6 s, and participants fixated the number (between 1 and 6) that best matched the previously felt tactile stimulus. Two cycle types were used (D2 → D5 cycles and D5 → D2 cycles), and each type was presented in a separate block. There were two blocks in total. Each block contained 13 cycles, presented in direct succession without breaks, and lasted 9.8 min. At the start and end of each block, a 15 s fixation screen was presented. The two blocks were separated by a short break. Block order was counterbalanced across participants. The phase-encoded design was similar to the well-validated design used by Besle et al. (2013). Differences between their paradigm and ours include stimulation length (9 s in our study, 4 s in the study by Besle et al., 2013), stimulation material (stimulation belt in our study, piezo stimulator in their study), task (roughness discrimination task in our study, amplitude detection task in their study), and response mode (short breaks for responses after each stimulation block in our study, responses within the running task in their study).

In the blocked design, there were five experimental conditions per session, three in each block: tactile stimulation to each of the four fingers, and a tactile control condition. Tactile stimulation was performed as reported above. In the tactile control condition, no stimulation was applied to the fingers. At the beginning of each trial, a screen appeared for 2 s prompting the participant to rate the roughness of the sandpaper's surface. After the stimulation (or no stimulation), a response screen appeared for 4 s, during which participants used their (nonstimulated) left hand to move an arrow to the vertical line that best matched the previously felt tactile stimulus. There was a 6 s pause between trials in two-thirds of the trials, and a 20 s pause in one-third of the trials. This resulted in a mean trial length of 30.5 s. Each condition was repeated 12 times, and each roughness level was presented twice per condition. Conditions were pseudorandomly presented with the constraints that no condition was presented more than twice in a row, and that the order of the experimental conditions was mirror-symmetric. We tested in two blocks: in one block, tactile stimulation was applied to the index finger or the ring finger; in the other block, to the middle finger or the small finger. Block order was counterbalanced across participants. There was a short break separating blocks. Total experimental time was 37 min.

fMRI scanning: visual sessions.

In the visual session of the phase-encoded study, four conditions were tested: observed tactile stimulation to the index finger, middle finger, ring finger, or small finger, respectively. In all conditions, touches to the right hand were observed, oriented in first-person perspective with the palm up (Fig. 1). Sandpaper of six different grit values and similar color was used for stimulation (Kuehn et al., 2013). The same sandpaper was also used for tactile stimulation (see above). Within each video clip (lasting 9 s), one finger was stroked by a piece of sandpaper three times consecutively. Whereas the whole hand was seen in all video clips (and was always in the same position on the screen), only fingertips were stroked, and the device moving the sandpaper was not visible. After each video clip, a response screen appeared for 1.6 s with the numbers 1–6 displayed horizontally and equally spaced (Fig. 1). As in the tactile session, participants were instructed to fixate the number that best matched the roughness level of the sandpaper they had seen in the previous trial (1 = lowest roughness, 6 = maximal roughness). No active button presses, which could potentially activate the finger maps of the motor and proprioceptive systems, were required for responding. Responses (i.e., eye movements) were observed via the mounted camera but not recorded.

A phase-encoded design was used, and conditions were presented in periodic order. Each stimulus cycle was composed of four video clips (observed touches to each of the four fingers), and four response screens (one after each video clip). One cycle was 42.7 s long. The presentation of sandpaper grit values across conditions was counterbalanced. Each sandpaper value was presented four times. The first trial was always discarded from the analyses to match the length of both cycles (see below). To control for scanner-specific drifts as well as voxelwise variations in hemodynamic delay, we used two cycle orders: the first presented touches to the index finger, followed by the middle finger, the ring finger, and the small finger (D2 → D5 cycle). The other presented touches, first to the small finger, followed by the ring finger, the middle finger, and the index finger (D5 → D2 cycle). The two cycle types were presented in separate blocks. In each block, 25 cycles of the same type were presented in direct succession, and without breaks. The second block started after a short break and presented 25 cycles of the other cycle type in the same way. Block order was counterbalanced across participants, and scanning days. At the start and end of each block, a 15 s fixation screen was presented. Each block lasted 18.3 min. In total, each participant performed four visual blocks, two of each cycle type.
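The block durations reported for the two phase-encoded sessions follow directly from the cycle length. The short Python check below uses only values stated in the text (the reported 42.7 s cycle, 15 s fixation screens at the start and end of each block, 13 tactile and 25 visual cycles per block, and removal of the first cycle); the helper names are illustrative.

```python
CYCLE_S = 42.7      # reported length of one stimulus cycle (four fingers with response screens)
FIXATION_S = 15.0   # fixation screen at the start and at the end of each block

def block_minutes(n_cycles: int) -> float:
    """Duration of one phase-encoded block in minutes."""
    return (n_cycles * CYCLE_S + 2 * FIXATION_S) / 60.0

def analyzed_cycles(n_cycles: int) -> int:
    """Cycles left for analysis after the first cycle of a block is discarded."""
    return n_cycles - 1

print(f"tactile block: {block_minutes(13):.1f} min, {analyzed_cycles(13)} analyzed cycles")
print(f"visual block:  {block_minutes(25):.1f} min, {analyzed_cycles(25)} analyzed cycles")
```

This prints 9.8 min with 12 analyzed cycles for the tactile blocks and 18.3 min with 24 analyzed cycles for the visual blocks, matching the block durations above and the cycle counts used as input frequencies in the Fourier analysis.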

In the blocked design, there were eight experimental conditions: observed touches to each of the four fingers, and visual control conditions in which sandpaper samples approached but did not touch the same four fingers. The video clips used here showed hands in the third-person perspective. These were the same videos as those used in the phase-encoded design, but rotated by 180° (Fig. 1). For the visual control conditions, the same hand was displayed on the screen (also in the third-person perspective), but the sandpaper stopped ∼1 cm in front of the fingertip. Movement speed was kept constant. Before the video clips were presented, a screen appeared for 2 s instructing participants to rate the roughness of the sandpaper surfaces. After each video clip, a response screen appeared for 4 s with seven vertical lines displayed horizontally and equally spaced (leftmost line = no touch, rightmost line = maximal roughness). Participants were instructed to use left-hand button presses (index finger and middle finger) to move a randomly located arrow to the line that best matched the roughness level of the sandpaper they had seen in the previous trial. If they observed a video in which no touch occurred, they were instructed to choose the leftmost line.

Each trial was composed of one video clip lasting 9 s and one response screen. There was a 6 or 20 s pause before the next trial started (6 s for two-thirds of the trials, 20 s for one-third of the trials, randomized and counterbalanced across conditions). This added up to a mean trial length of 30.5 s. Each condition was repeated 24 times. There were 96 trials per session, which added up to a total duration of 48 min per session. Conditions were pseudorandomly presented with the constraints that no condition was presented more than twice in a row, and that conditions were presented in mirror-symmetric order (i.e., the second half of the experiment mirrored the first half). In one of the two scanning sessions, touch on video was presented to the index finger or the ring finger; in the other, touch on video was presented to the middle finger or the small finger. The order of scanning sessions was counterbalanced across participants and scanning days.
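The ordering constraints described above (no condition more than twice in a row, a second half that mirrors the first, and intertrial intervals of 6 s in two-thirds and 20 s in one-third of the trials of each condition) can be met with simple rejection sampling. The Python sketch below is one possible way to do this, not the procedure the authors used. The helper names and condition labels are hypothetical, and it assumes four conditions per session (two observed touches plus their two approach-only controls), which is what 96 trials at 24 repetitions per condition implies.

```python
import random
from itertools import groupby

def max_run(seq):
    """Length of the longest run of identical consecutive items."""
    return max(len(list(group)) for _, group in groupby(seq))

def mirror_symmetric_order(conditions, reps_per_condition, max_repeat=2, seed=None):
    """Hypothetical helper: pseudorandom trial order whose second half mirrors the first.

    The first half is reshuffled until the full sequence (first half + its reverse)
    contains no run longer than `max_repeat`, including across the midpoint.
    """
    if reps_per_condition % 2:
        raise ValueError("reps_per_condition must be even to form two mirrored halves")
    rng = random.Random(seed)
    half = [c for c in conditions for _ in range(reps_per_condition // 2)]
    while True:
        rng.shuffle(half)
        order = half + half[::-1]
        if max_run(order) <= max_repeat:
            return order

def assign_itis(order, short_s=6, long_s=20, seed=None):
    """Hypothetical helper: one intertrial interval per trial.

    Within each condition, one-third of the first-half trials get `long_s` and the
    rest `short_s`; the second half reuses these intervals in mirrored order.
    """
    rng = random.Random(seed)
    first = order[: len(order) // 2]
    itis = [short_s] * len(first)
    for cond in sorted(set(first)):
        idx = [i for i, c in enumerate(first) if c == cond]
        n_long = len(idx) // 3
        pool = [long_s] * n_long + [short_s] * (len(idx) - n_long)
        rng.shuffle(pool)
        for i, iti in zip(idx, pool):
            itis[i] = iti
    return itis + itis[::-1]

# Usage: one session with two observed touches and their approach-only controls.
conditions = ["D2_touch", "D4_touch", "D2_approach", "D4_approach"]
order = mirror_symmetric_order(conditions, reps_per_condition=24, seed=1)
itis = assign_itis(order, seed=1)
```

Checking the whole sequence, rather than each half separately, matters because mirroring places the last trial of the first half next to an identical trial at the midpoint.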

Surface reconstruction.

FSL 5.0 (Smith et al., 2004; Woolrich et al., 2009) and FreeSurfer's recon-all (http://surfer.nmr.mgh.harvard.edu/) were used for brain segmentation and cortical surface reconstruction using the T1-weighted 3D MPRAGE. Recon-all is a fully automated image processing pipeline, which, among other steps, performs intensity correction, transformation to Talairach space, normalization, skull-stripping, subcortical and white-matter segmentation, surface tessellation, surface refinement, surface inflation, sulcus-based nonlinear morphing to a cross-subject spherical coordinate system, and cortical parcellation (Dale et al., 1999; Fischl et al., 1999). Skull stripping, construction of white and pial surfaces, and segmentation were manually checked for each individual participant.

Preprocessing phase-encoded study: touch observed in first-person perspective.

Rigid-body realignment was performed to minimize movement artifacts in the time series (Unser et al., 1993a,b), and slice timing correction was applied to the functional data to correct for differences in image acquisition time between slices, both using SPM8 (Statistical Parametric Mapping, Wellcome Department of Imaging Neuroscience, University College London, London, UK). Distortion correction of the functional images due to magnetic field inhomogeneities was performed using FSL fugue, based on the field-map scan acquired before each functional scan. Functional time series were cut using fslroi. The functional volumes acquired while participants observed the fixation cross at the beginning and end of the experiment, and the data acquired during the first cycle, were removed. After this procedure, the time series of the D2 → D5 cycles and the D5 → D2 cycles were mirror-symmetric to each other. Each of these (shorter) time series was then registered to the T1-weighted 3D MPRAGE used for recon-all using csurf tkregister. We used a 12-degrees-of-freedom (affine) registration. The resulting registration matrix was used to map the x, y, z location of each surface vertex into functional voxel coordinates. The floating point coordinates of points at varying distances along the surface normal to a vertex were used to perform nearest-neighbor sampling of the functional volume voxels (i.e., the 3D functional data were associated with each vertex on the surface by finding the voxel within which each point lay).
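The vertex-wise sampling described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not csurf's implementation: the helper name is hypothetical; `mm_to_vox` is assumed to be a single 4 × 4 matrix that already combines the registration matrix with the inverse voxel-to-scanner transform; depths are assumed to run from the white surface along a unit normal; sampling stops at 80% of cortical thickness (the top 20% is discarded, as described for the statistical analyses); out-of-volume points are clipped to the volume edge; and the depth samples are simply averaged.

```python
import numpy as np

def sample_along_normals(volume, vertices, normals, thickness, mm_to_vox,
                         fractions=np.linspace(0.0, 0.8, 5)):
    """Hypothetical helper: nearest-neighbour sampling of a 3D volume along vertex normals.

    volume    : (X, Y, Z) functional data (one time point or one statistic)
    vertices  : (N, 3) white-surface vertex coordinates in mm
    normals   : (N, 3) unit normals pointing from white matter towards the pial surface
    thickness : (N,) cortical thickness in mm
    mm_to_vox : (4, 4) affine from surface mm coordinates to functional voxel indices
    fractions : depths as fractions of thickness; stopping at 0.8 drops the top 20 %
    """
    fractions = np.asarray(fractions, dtype=float)
    samples = np.zeros((len(vertices), len(fractions)))
    upper = np.array(volume.shape) - 1
    for k, f in enumerate(fractions):
        pts = vertices + f * thickness[:, None] * normals   # (N, 3) points in mm
        homog = np.c_[pts, np.ones(len(pts))]               # (N, 4) homogeneous coords
        vox = (homog @ mm_to_vox.T)[:, :3]                   # floating-point voxel coords
        idx = np.clip(np.rint(vox).astype(int), 0, upper)    # nearest voxel, kept in bounds
        samples[:, k] = volume[idx[:, 0], idx[:, 1], idx[:, 2]]
    return samples.mean(axis=1)                              # average across sampled depths
```

Because several vertices can fall into the same 1.5 mm voxel, neighboring vertices will often share identical values after this step, which is why a single representative vertex per voxel is used for the circular correlations described below.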

Functional time series of the different cycle directions (D2 → D5 and D5 → D2 cycle) were averaged time point by time point by reversing the direction of time on a scan-by-scan basis. This was feasible because both scans were mirror-symmetric (see above). The time-reversed cycle direction (D5 → D2 cycle) was time-shifted before averaging by 4 s (= 2 TRs) to compensate for hemodynamic delay. Averaging was done in 3D without any additional registration.
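A minimal NumPy sketch of the time-reversal averaging step follows. Reversing a run in time turns the hemodynamic lag into a lead, which is why the reversed run is shifted later before averaging. The helper name, the use of a circular shift (np.roll, reasonable only because the trimmed runs contain whole stimulus cycles), and the sign of the shift are assumptions; the runs are assumed to be already trimmed so that they are mirror-symmetric.

```python
import numpy as np

def average_reversed_runs(forward, reverse, tr_s=2.0, shift_s=4.0):
    """Hypothetical helper: average a D2->D5 run with a time-reversed D5->D2 run.

    forward, reverse : (..., T) arrays of equal shape, time on the last axis
    tr_s             : repetition time in seconds
    shift_s          : delay applied to the reversed run (4 s = 2 TRs in the text)
    """
    forward = np.asarray(forward, dtype=float)
    reverse = np.asarray(reverse, dtype=float)
    if forward.shape != reverse.shape:
        raise ValueError("runs must have identical shapes")
    n_shift = int(round(shift_s / tr_s))           # shift in volumes
    reversed_run = reverse[..., ::-1]              # reverse the direction of time
    aligned = np.roll(reversed_run, n_shift, axis=-1)  # move samples later in time
    return 0.5 * (forward + aligned)
```

With `shift_s=0`, averaging a series with its own mirror image returns the original series, which is a quick sanity check that the reversal is applied along the time axis.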

Statistical analyses phase-encoded study: touch observed in first-person perspective.

The program Fourier implemented in csurf (http://www.cogsci.ucsd.edu/∼sereno/.tmp/dist/csurf) was used to conduct statistical analyses on the averaged time series. In brief, the program runs a discrete Fourier transform on the time course at each 3D voxel, and then calculates the phase and significance of the periodic activation. Stimulus frequencies of 24 and 12 cycles per time series were used for the visual and tactile sessions, respectively. No spatial smoothing was applied to the data before statistical analyses. Frequencies <0.005 Hz were ignored when calculating signal-to-noise, defined as the ratio of the amplitude at the stimulus frequency to the amplitudes at other (noise) frequencies. Very low frequencies are dominated by movement artifacts, and this procedure is equivalent to linearly regressing out signals correlated with low-frequency movements. High frequencies up to the Nyquist limit (half the sampling rate) were allowed, which corresponds to using no low-pass filter. The higher-frequency periodic activation due to task responses at 0.08 Hz (vs 0.005 Hz for finger mapping) was discarded as an orthogonal nuisance variable when calculating signal-to-noise. For display, a vector was generated whose amplitude is the square root of the F-ratio, calculated by comparing the signal amplitude at the stimulus frequency to the signal at other noise frequencies, and whose angle was the stimulus phase. The Fourier-analyzed data were then sampled onto the individual surface. To minimize the effect of superficial veins on BOLD signal change, superficial points along the surface normal to each vertex (top 20% of the cortical thickness) were disregarded. Clusters that survived a surface-based correction for multiple comparisons of p < 0.01 (correction was based on the cluster size exclusion method as implemented by surfclust and randsurfclust within the csurf FreeSurfer framework; Hagler et al., 2006), and a cluster-level correction of p < 0.001, were defined as significant. This significance threshold was used to identify significant tactile maps at the individual level. Visual maps were identified using a different statistical approach (see below).
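The core of this Fourier analysis can be illustrated with NumPy. The sketch below is an assumption-laden stand-in for csurf's Fourier program, not a reimplementation: it takes a voxel time series containing an integer number of stimulus cycles, reads amplitude and phase from the FFT bin at the stimulus frequency, and compares the signal power with the mean power at the remaining "noise" frequencies, excluding bins below 0.005 Hz and any nuisance bins (such as the task-response frequency). csurf's exact normalization, degrees of freedom, and phase convention may differ, and the helper name is hypothetical.

```python
import numpy as np

def periodic_response(ts, n_cycles, tr_s=2.0, min_freq_hz=0.005, nuisance_bins=()):
    """Hypothetical helper: phase, F-like ratio, and display vector for one voxel.

    ts            : 1D time series spanning exactly `n_cycles` stimulus cycles
    n_cycles      : stimulus cycles in the series (e.g. 24 visual, 12 tactile)
    tr_s          : repetition time in seconds
    min_freq_hz   : frequencies below this are excluded from the noise estimate
    nuisance_bins : further FFT bins excluded from the noise estimate
    """
    ts = np.asarray(ts, dtype=float)
    spectrum = np.fft.rfft(ts - ts.mean())
    freqs = np.fft.rfftfreq(ts.size, d=tr_s)
    signal = spectrum[n_cycles]              # bin k = k cycles per time series

    noise = freqs >= min_freq_hz             # drop very low (movement-dominated) frequencies
    noise[n_cycles] = False                  # the stimulus frequency is signal, not noise
    for b in nuisance_bins:
        noise[b] = False                     # e.g. the task-response frequency
    f_ratio = np.abs(signal) ** 2 / np.mean(np.abs(spectrum[noise]) ** 2)

    phase = np.angle(signal)
    display = np.sqrt(f_ratio) * np.exp(1j * phase)  # amplitude sqrt(F), angle = phase
    return phase, f_ratio, display
```

For a cosine of the form cos(2π·k·t/N − φ), this phase estimate equals −φ, so the sign convention must be fixed before phases are converted into finger labels.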

For group analyses, complex- and real-valued data from each individual participant's morphed sphere were sampled to the canonical icosahedral sphere surface using a FreeSurfer tool, mri_surf2surf. One step of nearest-neighbor smoothing was applied to the data after resampling. Components were averaged in this common coordinate system, and a phase dispersion index was calculated. Finally, a (scalar) cross-subject F-ratio was calculated from the complex data. For phase analyses, the amplitude was normalized to 1, which prevented overrepresenting subjects with strong amplitudes. An average surface from all subjects was then created (similar to fsaverage) using the FreeSurfer tool make_average_subject, and cross-subject average data were also resampled back onto an individual subject's morphed sphere. To sample data from surface to surface, for each vertex, the closest vertex in the source surface was found; each hole was assigned to the closest vertex (FreeSurfer mri_surf2surf). If a vertex had multiple source vertices, then the source values were averaged. Surface-based statistics were performed as in the individual subject analyses (i.e., correction for multiple comparisons at p < 0.01, and cluster-level correction at p < 0.001; Hagler et al., 2006). This statistical procedure was used to identify significant tactile maps. Visual maps were identified using a different statistical approach (see below).
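Averaging phases across participants is a circular operation: phases of 0.1 and 2π − 0.1 rad should average to 0, not π. A minimal NumPy sketch of amplitude-normalized averaging at a single vertex follows. Normalizing each participant's response to unit amplitude matches the text; reporting 1 − R (R = mean resultant length) as the dispersion index is an assumption, since the text does not define its phase dispersion index, and the helper name is hypothetical.

```python
import numpy as np

def group_phase_average(phases):
    """Hypothetical helper: mean phase and dispersion of unit-amplitude responses.

    phases : per-participant response phases (radians) at one vertex
    Returns (mean_phase, R, 1 - R), where R in [0, 1] is the mean resultant length.
    """
    unit = np.exp(1j * np.asarray(phases, dtype=float))  # amplitude normalized to 1
    mean_vec = unit.mean()
    r = np.abs(mean_vec)
    return np.angle(mean_vec), r, 1.0 - r

# Phases just either side of 0 (one written as 2*pi - 0.1) average to ~0, not pi.
mean_phase, r, dispersion = group_phase_average([2 * np.pi - 0.1, 0.1])
```

Averaging the complex values with their original amplitudes instead would let participants with strong responses dominate the group phase, which is what the unit-amplitude normalization avoids.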

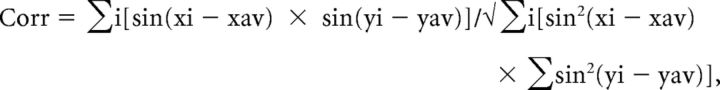

We estimated the similarity between both group datasets, visual and tactile, across the cortex using circular correlation within surface-based searchlights centered at each vertex. Circular correlation was calculated as follows:

$$
r_{c} = \frac{\sum_{i=1}^{n} \sin(\alpha_i - \bar{\alpha})\,\sin(\beta_i - \bar{\beta})}{\sqrt{\sum_{i=1}^{n} \sin^{2}(\alpha_i - \bar{\alpha})\,\sum_{i=1}^{n} \sin^{2}(\beta_i - \bar{\beta})}}
$$

where $\alpha_i$ and $\beta_i$ are the tactile and visual phase values at vertex $i$ of a searchlight, $\bar{\alpha}$ and $\bar{\beta}$ are their circular means, and $n$ is the number of vertices (csurf tksurfer corr_over_label; the circular correlation component is equivalent to the MATLAB code in the circular statistics toolbox). Each searchlight comprised the 150 nearest vertices (selected by approximation to geodesic distances) to each (center) vertex. Importantly, when performing circular correlations, we took care to avoid spurious correlations due to a single voxel being sampled by multiple vertices, as follows: for each voxel, we found all the vertices within it (nearest-neighbor sampling), averaged their locations, then found the vertex in that group nearest the average position, and used that vertex alone as the “representative” vertex for that voxel (csurf tksurfer find_uniqsamp_vertices). Correlations with p < 0.01 and a minimum cluster size of 5 voxels were considered significant.
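The circular correlation coefficient can be computed directly in NumPy. The sketch below follows the formula above together with the large-sample significance test used by `circ_corrcc` in the MATLAB Circular Statistics Toolbox; the Python function names are illustrative, and the searchlight construction and the selection of representative vertices are not included.

```python
import math
import numpy as np

def circ_mean(angles):
    """Circular mean of angles in radians."""
    return np.angle(np.mean(np.exp(1j * np.asarray(angles, dtype=float))))

def circ_corrcc(alpha, beta):
    """Circular-circular correlation with a two-sided large-sample p-value.

    alpha, beta : paired phase values in radians (e.g. tactile and visual phases)
    Returns (rho, p).
    """
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    sa = np.sin(alpha - circ_mean(alpha))
    sb = np.sin(beta - circ_mean(beta))
    rho = np.sum(sa * sb) / math.sqrt(np.sum(sa**2) * np.sum(sb**2))

    # Test statistic is approximately standard normal under H0 (no association).
    n = alpha.size
    l20, l02, l22 = np.mean(sa**2), np.mean(sb**2), np.mean(sa**2 * sb**2)
    ts = math.sqrt(n * l20 * l02 / l22) * rho
    p = math.erfc(abs(ts) / math.sqrt(2))  # equals 2 * (1 - Phi(|ts|))
    return float(rho), p
```

A constant phase offset between the two maps leaves the coefficient at 1, so this measure captures whether the two phase patterns covary, while the alignment index described below additionally penalizes any offset between them.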

Additional comparisons between visual and tactile maps were conducted within larger regions-of-interest (ROIs) using circular correlation as well as alignment indices. Both measures were evaluated across an ROI defined as a connected 2D patch of surface vertices in area 3b that had a significant periodic response to physical touch perception (F(2,245) > 4). Corresponding vertices were extracted from the periodic responses to touch observation. Analyses were performed at the individual level, and at the group level using real-valued statistics (F). The alignment index for each pair of vertices was defined as follows:

$$\mathrm{AI} = 1 - \frac{\delta\phi}{\pi}$$

where δφ is the smallest angle between the two phase measurements in radians (Sereno and Huang, 2006; Mancini et al., 2012). This index ranges from 1 (where the phase measurement at a vertex is identical in the two datasets) to 0 (where the phase measurements are separated by π, for example, index and ring finger). A histogram of alignment indices for an ROI that is sharply peaked near 1 indicates that the two maps are well aligned (perfect alignment would be a spike exactly at 1). The average alignment index (AAI), which reflects the average angular offset, and the SD of angular offsets were calculated for each comparison to roughly characterize the distribution. To statistically compare the empirically measured AIs with chance in each individual, we calculated random AIs by taking each individual's empirically measured phase values of the tactile map, generating a random sample of visual phase values, and computing the AI as described above. We then compared the empirically measured AIs to the random AIs for each subject using paired-sample t tests and a Bonferroni-corrected p-value <0.0031 (0.05/16) to determine significant differences.
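The alignment index and the per-subject comparison against random visual phases can be sketched as below. The sketch assumes the random visual phases are drawn uniformly on (−π, π]; the text does not state the sampling distribution, so `ai_vs_random` is a hypothetical helper rather than the authors' procedure.

```python
import numpy as np
from scipy.stats import ttest_rel

def alignment_index(phase_a, phase_b):
    """AI = 1 - dphi/pi, with dphi the smallest angle between two phases."""
    diff = np.asarray(phase_a, dtype=float) - np.asarray(phase_b, dtype=float)
    delta = np.angle(np.exp(1j * diff))   # wrap difference into (-pi, pi]
    return 1.0 - np.abs(delta) / np.pi

def ai_vs_random(tactile_phase, visual_phase, rng, alpha=0.05 / 16):
    """Compare empirical AIs with AIs from ASSUMED uniform random visual phases."""
    tactile_phase = np.asarray(tactile_phase, dtype=float)
    ai_emp = alignment_index(tactile_phase, visual_phase)
    random_visual = rng.uniform(-np.pi, np.pi, size=tactile_phase.size)
    ai_rand = alignment_index(tactile_phase, random_visual)
    t, p = ttest_rel(ai_emp, ai_rand)     # paired over vertices
    return ai_emp.mean(), ai_rand.mean(), t, p, p < alpha
```

Wrapping the phase difference through the complex exponential guarantees that, for example, phases of 0.1 and 2π − 0.1 are treated as 0.2 rad apart rather than nearly 2π apart.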

We also performed circular correlations between tactile and visual phase values within the ROI (entire finger map area), using a Bonferroni-corrected p-value <0.0031 to determine significant correlations. Finally, we calculated probability density estimates for the empirically measured AIs and for the simulated (random) AIs. Estimates were based on a normal kernel function and were evaluated at equally spaced points. The histogram was sampled in steps of 0.01, and the bandwidth of the density function was 0.04.
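A minimal Gaussian kernel density estimate with the stated bandwidth (0.04) is sketched below. We assume the density is evaluated on a grid over the AI range [0, 1] with the same 0.01 spacing as the histogram; this grid, and the choice to write the estimator by hand rather than calling a toolbox function such as MATLAB's ksdensity, are assumptions.

```python
import numpy as np

def normal_kde(samples, bandwidth=0.04, step=0.01, lo=0.0, hi=1.0):
    """Gaussian-kernel density of `samples` on an evenly spaced grid (ASSUMED [0, 1])."""
    samples = np.asarray(samples, dtype=float)
    grid = np.arange(lo, hi + step / 2, step)          # 0.00, 0.01, ..., 1.00
    u = (grid[:, None] - samples[None, :]) / bandwidth
    kernel = np.exp(-0.5 * u ** 2) / np.sqrt(2 * np.pi)
    density = kernel.sum(axis=1) / (samples.size * bandwidth)
    return grid, density
```

Calling `normal_kde(ai_emp)` and `normal_kde(ai_rand)` would give the two curves that are overlaid on each participant's histogram.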

To estimate map amplitudes (in percentage), we started with the discrete Fourier transform response amplitude (hypotenuse given real and imaginary values) for each vertex within our ROIs. This value was multiplied by 2 to account for positive and negative frequencies, again multiplied by 2 to estimate peak-to-peak values, divided by the number of time points over which averaging was performed (to normalize the discrete FT amplitude), and divided by the average brightness of the functional dataset (excluding air). Finally, the value was multiplied by 100 for percentage response amplitude.
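The amplitude conversion above can be written out step by step. This sketch assumes the discrete Fourier transform is taken over the whole time series, so that the number of time points equals the series length, and that `n_cycles` (the stimulus frequency in cycles per run) and `mean_brightness` (the dataset mean excluding air) are supplied by the caller; both parameter names are hypothetical.

```python
import numpy as np

def percent_amplitude(timeseries, n_cycles, mean_brightness):
    """Peak-to-peak response amplitude (%) at the stimulus frequency."""
    x = np.asarray(timeseries, dtype=float)
    n_timepoints = x.size                      # ASSUMED: DFT over the full series
    spectrum = np.fft.fft(x)
    modulus = np.abs(spectrum[n_cycles])       # hypotenuse of real and imaginary parts
    amplitude = modulus * 2                    # positive + negative frequencies
    peak_to_peak = amplitude * 2               # peak-to-peak value
    normalized = peak_to_peak / n_timepoints   # normalize the DFT amplitude
    return 100.0 * normalized / mean_brightness
```

For example, a 256-point series `1000 + 5 * cos(2π · 8t / 256)` has a peak-to-peak swing of 10 on a baseline of 1000, and `percent_amplitude(x, 8, 1000.0)` returns 1.0 (%).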

We also extracted individual peak coordinates (x, y, and z, in surface-space) of tactile and visual single finger representations in the area 3b tactile map area. To compute x-, y-, and z-bias, we found the difference between the peak coordinate of the visual map and the peak coordinate of the tactile map individually for each finger, and each participant. These biases were averaged, and compared against zero using one-sample t tests.
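The bias computation can be sketched as follows. The text does not say over which dimension the biases were averaged before testing; here we assume an average over the four fingers within each participant, followed by one one-sample t test per axis across participants.

```python
import numpy as np
from scipy.stats import ttest_1samp

def coordinate_bias(visual_peaks, tactile_peaks):
    """Visual-minus-tactile peak offsets tested against zero.

    Both inputs: (n_subjects, n_fingers, 3) surface coordinates (x, y, z).
    ASSUMPTION: offsets are averaged over fingers within each subject.
    Returns mean bias per axis, t values, and p values.
    """
    diff = np.asarray(visual_peaks, float) - np.asarray(tactile_peaks, float)
    per_subject = diff.mean(axis=1)                  # (n_subjects, 3)
    t, p = ttest_1samp(per_subject, 0.0, axis=0)     # one test per axis
    return per_subject.mean(axis=0), t, p
```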

Preprocessing of the blocked-design study with touch observed in the third-person perspective.

Realignment and unwarping were performed to minimize movement artifacts in the time series (Unser et al., 1993a,b), and slice timing correction was applied to the functional data to correct for differences in image acquisition time between slices, all using SPM8.

One functional dataset per participant (volumes acquired during the tactile index finger/ring finger block) was registered to the 7T T1-weighted image using normalized mutual information registration and sixth-degree B-spline interpolation. The other functional datasets (there were four in total: 2 visual sessions + 2 tactile sessions) were coregistered to the already registered functional dataset using normalized cross-correlation and sixth-degree B-spline interpolation. The normalized cross-correlation registration was rerun multiple times (usually 2–3 times) until a plateau was reached where no further improvement in registration could be detected. Registration was performed with SPM8. Note that data were neither normalized nor smoothed (beyond interpolation during registration) during this procedure.

All time series were then registered to the T1-weighted 3D MPRAGE used for recon-all (see above) using csurf tkregister. We used a nonrigid, 12-degree-of-freedom (affine) registration (the registration matrix maps the x, y, z location of each surface vertex into functional voxel coordinates). Rounded floating-point transform coordinates were used for nearest-neighbor sampling of the functional and statistical contrast volumes. To minimize the effect of superficial veins on BOLD signal change, the most superficial sampling points along the vertex normal (top 20% of the cortical thickness) were disregarded.
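The sampling step described above can be sketched for a single vertex. Several details are assumptions rather than facts from the text: the 4 × 4 matrix `reg` is taken to map surface coordinates directly to voxel indices in (i, j, k) order, depth fractions run from 0 (white matter surface) to 1 (pial surface), and the retained samples are averaged. `sample_vertex` is a hypothetical helper.

```python
import numpy as np

def sample_vertex(white_xyz, normal, thickness, reg, volume,
                  fractions=(0.0, 0.2, 0.4, 0.6, 0.8, 1.0), max_fraction=0.8):
    """Nearest-neighbor sample of `volume` along one vertex normal.

    ASSUMPTIONS: `reg` maps homogeneous surface xyz to voxel (i, j, k);
    fractions are depths from white (0) to pial (1); samples above
    `max_fraction` (the top 20% of thickness) are skipped; kept samples are averaged.
    """
    values = []
    for f in fractions:
        if f > max_fraction:                       # discard superficial points
            continue
        xyz = np.asarray(white_xyz, float) + f * thickness * np.asarray(normal, float)
        vox = reg @ np.append(xyz, 1.0)            # surface -> voxel coordinates
        i, j, k = np.rint(vox[:3]).astype(int)     # rounded: nearest-neighbor
        values.append(volume[i, j, k])
    return float(np.mean(values))
```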

Statistical analyses of the blocked-design study with touch observed in the third-person perspective.

Fixed-effects models on the first level were calculated for each subject separately using the general linear model as implemented in SPM8. Because we treated each finger individually and independently, both in the visual and tactile sessions, BOLD activation elicited by each finger's tactile stimulation/observation was treated as an independent measure in the quantification (Kuehn et al., 2014; Stringer et al., 2014). For the tactile sessions, we modeled two sessions with three regressors of interest each (finger stimulation to index finger/ring finger or to middle finger/small finger, respectively, and tactile control). For the visual sessions, we modeled two sessions with four regressors of interest each (observed touch and visual control of index finger/ring finger, or middle finger/small finger, respectively). The button presses and instruction screens were added as nuisance regressors in both models. Given that functional and anatomical data were not normalized, no group statistics were performed with SPM.

For the tactile sessions, four linear contrast estimates were calculated: touch to the index finger (3 −1 −1 −1), touch to the middle finger (−1 3 −1 −1), touch to the ring finger (−1 −1 3 −1), and touch to the small finger (−1 −1 −1 3). For the visual sessions, eight linear contrast estimates were calculated: observed touch to the index finger (3 0 −1 0 −1 0 −1 0), observed touch to the middle finger (−1 0 3 0 −1 0 −1 0), observed touch to the ring finger (−1 0 −1 0 3 0 −1 0), observed touch to the small finger (−1 0 −1 0 −1 0 3 0), visual control index finger (0 3 0 −1 0 −1 0 −1), visual control middle finger (0 −1 0 3 0 −1 0 −1), visual control ring finger (0 −1 0 −1 0 3 0 −1), and visual control small finger (0 −1 0 −1 0 −1 0 3). On the individual subject level, voxels that survived a significance threshold of p < 0.05 were mapped onto the cortical surfaces using the procedure as described above. These thresholded contrast images were used for ROI analyses on the individual subject level within the FSL-framework. Non-thresholded t maps were used for computing group-averaged tactile and visual maps (for visualization purposes only).
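The contrast weights listed above follow a simple one-versus-rest pattern, which the sketch below regenerates. The regressor order for the visual sessions (observed touch and visual control interleaved per finger: D2 touch, D2 control, D3 touch, ...) is inferred from the listed vectors and is an assumption about the design matrix layout.

```python
import numpy as np

def tactile_contrasts(n_fingers=4):
    """One-vs-rest weights: n-1 for the target finger, -1 for each other finger."""
    c = -np.ones((n_fingers, n_fingers), dtype=int)
    np.fill_diagonal(c, n_fingers - 1)
    return c

def visual_contrasts(n_fingers=4):
    """Observed-touch rows then visual-control rows.

    ASSUMED regressor order: [touch D2, control D2, touch D3, control D3, ...].
    """
    base = tactile_contrasts(n_fingers)
    observed = np.zeros((n_fingers, 2 * n_fingers), dtype=int)
    control = np.zeros((n_fingers, 2 * n_fingers), dtype=int)
    observed[:, 0::2] = base      # weights on observed-touch regressors
    control[:, 1::2] = base       # weights on visual-control regressors
    return np.vstack([observed, control])
```

Each row sums to zero, so every contrast tests one finger against the mean of the other three within the same condition.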

We compared tactile maps acquired in the two different studies (phase-encoded and blocked-design) within those participants who took part in both studies (n = 9). We used the phase-encoded tactile maps to define ROIs for each individual finger and each individual subject within area 3b (cluster-level corrected, √[F] > 2). FreeSurfer surface labels were used for areal definition. SPM contrast estimates of each of the four computed tactile contrasts (see above) were extracted from each single finger receptive area defined as described above, averaged, and compared with one another using paired-sample two-tailed t tests and a significance threshold of p < 0.05 (uncorrected). t tests yield robust results even when subsamples of the data are not normally distributed (Bortz, 1999).

For all participants (n = 13), contrast estimates of each of the eight computed visual contrasts (see above) were extracted from each single finger receptive area within area 3b. Anterior–posterior boundaries were taken from FreeSurfer surface labels. Significant clusters of the blocked-design tactile maps of each individual participant (thresholded at F > 4) were used to define the response regions. We compared mean contrast estimates between corresponding and noncorresponding receptive areas for the observed touch study and the visual control study using a paired-sample t test and a significance threshold of p < 0.05. We also characterized the topographic similarity of visual and tactile maps across the whole finger representation, again for both the observed touch and the visual control study. To this end, the single finger response regions as defined above were merged, and amplitude values for each surface vertex were extracted for the eight visual contrasts and the four tactile contrasts (one contrast for each finger), respectively. Correlation coefficients of the vectors were calculated using Pearson correlations. The correlation coefficients were first computed at the individual subject level, and then averaged across subjects to calculate group-averaged correlation matrices. Mean correlation coefficients were then compared between corresponding and noncorresponding fingers for the observed touch and visual control study using paired-sample t tests and a significance threshold of p < 0.05.
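The correlation-matrix analysis can be sketched in two steps: a per-subject 4 × 4 matrix of Pearson correlations between tactile and visual vertex amplitude vectors, then a paired comparison of the diagonal (corresponding fingers) against the off-diagonal (noncorresponding fingers) means across subjects. Array shapes and helper names are assumptions for illustration.

```python
import numpy as np
from scipy.stats import ttest_rel

def finger_corr_matrix(tactile, visual):
    """Pearson r between tactile finger i and visual finger j over vertices.

    tactile, visual: (n_fingers, n_vertices) amplitude arrays for one subject.
    """
    n = tactile.shape[0]
    r = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            r[i, j] = np.corrcoef(tactile[i], visual[j])[0, 1]
    return r

def cor_vs_ncor(r_matrices):
    """Paired t test of mean corresponding vs noncorresponding r across subjects."""
    r = np.asarray(r_matrices, dtype=float)     # (n_subjects, n, n)
    diag = np.eye(r.shape[1], dtype=bool)
    cor = r[:, diag].mean(axis=1)               # corresponding fingers (COR)
    ncor = r[:, ~diag].mean(axis=1)             # noncorresponding fingers (N-COR)
    t, p = ttest_rel(cor, ncor)
    return cor, ncor, t, p
```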

We calculated the percentage of correct responses in the visual and tactile control conditions, where no stimulation at the fingertip occurred. We compared the percentage of correct responses between visual and tactile conditions using a paired-sample t test and a significance threshold of p < 0.05. We further calculated the mean absolute error for the visual and tactile conditions by computing the difference between the roughness of the observed or physically perceived touch, respectively, and the estimated level of roughness. We compared mean absolute error rates of visual and tactile conditions using a paired-sample t test and a significance threshold of p < 0.05.

EMG experiment.

The EMG signal is a biomedical signal that measures electrical currents generated by muscles during their contraction (Raez et al., 2006). EMG was measured with reusable surface cup electrodes placed in the vicinity of the extensor digitorum muscle of the right arm, where small finger movements can be detected as changes in signal amplitude (Ishii, 2011). EMG studies were performed in a mock scanner, which realistically simulates the MR scanner environment, but without the magnetic field. In the first study, EMG signals were recorded while participants observed the same video material and were instructed in the same way as in the experiment reported above (fMRI scanning: visual sessions, third paragraph), except that participants responded via eye movements as in the phase-encoded study. The order of presentation was randomized; there were 12 trials per condition. In a second study, participants observed the same videos but were instructed to perform small finger movements while watching the touch videos. This second experiment was performed to ensure that electrode placement and analysis methods were suitable to detect significant EMG signal changes if small finger movements occurred during touch observation. The signal was sampled at 5000 Hz.

For EMG signal analyses, we followed a standard procedure: we extracted the raw signal via the differential mode (difference in voltage between the positive and negative surface electrodes). We performed full-wave rectification and applied a 50 Hz Butterworth filter to the data. Time series were divided into time windows representing either the rest periods (beginning and end of experiment where a fixation-cross was shown), the no-touch observation periods, or the touch observation periods for Study 1. For Study 2, time windows were defined for the rest periods and the no-touch observation periods as above, and for the instructed movement periods. We calculated mean EMG signal amplitudes (in volts) for each condition, and compared them within each study using a repeated-measures ANOVA and a significance threshold of p < 0.05. Significant main effects were followed up by one-sided paired-sample t tests to specifically test for higher signal amplitude in the touch observation and instructed movement periods compared with the other two conditions of each study.
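The EMG preprocessing chain can be sketched with SciPy. The text specifies only a 50 Hz Butterworth filter; treating it as a 4th-order low-pass applied with zero-phase `filtfilt` is our assumption, as is representing condition windows as (start, end) times in seconds.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def emg_envelope(pos, neg, fs=5000.0, cutoff=50.0, order=4):
    """Differential signal -> full-wave rectification -> Butterworth filter.

    ASSUMPTIONS: low-pass filter, 4th order, zero-phase (filtfilt).
    """
    raw = np.asarray(pos, float) - np.asarray(neg, float)   # differential mode
    rectified = np.abs(raw)                                  # full-wave rectification
    b, a = butter(order, cutoff, btype="low", fs=fs)
    return filtfilt(b, a, rectified)

def window_means(signal, windows, fs=5000.0):
    """Mean amplitude per condition; windows maps name -> list of (start_s, end_s)."""
    out = {}
    for condition, spans in windows.items():
        chunks = [signal[int(s * fs):int(e * fs)] for s, e in spans]
        out[condition] = float(np.mean(np.concatenate(chunks)))
    return out
```

The per-condition means returned by `window_means` are the values that would then enter the repeated-measures ANOVA.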

Results

Vision activates topographic maps in primary somatosensory cortex

The first set of analyses was devoted to testing whether receiving touch to the fingers or observing touch to the same fingers activates topographically aligned finger maps in contralateral area 3b. Data from three fMRI sessions acquired at a 7 tesla MR scanner were used. Participants either viewed video clips of hands being touched at the fingertips, or were touched by the same stimuli at their own fingertips (Fig. 1). Visual and tactile stimuli were presented in a periodic pattern, with one stimulus set starting with index finger touch (or vision of index finger touch, respectively) followed by middle finger touch, ring finger touch, little finger touch, back to index, and so on, and the second stimulus set running in reversed order. This design allowed the use of balanced Fourier-based analysis techniques (Sereno et al., 1995) to reveal primary finger maps (Mancini et al., 2012; Besle et al., 2013).

By visually inspecting non-thresholded phase maps of group averaged data within postcentral gyrus, we found that single finger representations were arranged in expected order, with the little finger most superior, and the index finger most inferior on the cortical surface in tactile, but importantly, also in visually-induced finger maps (Figs. 2A, 3A). As is also clearly visible, the visual map was much weaker in amplitude compared with the tactile map (Fig. 2C).

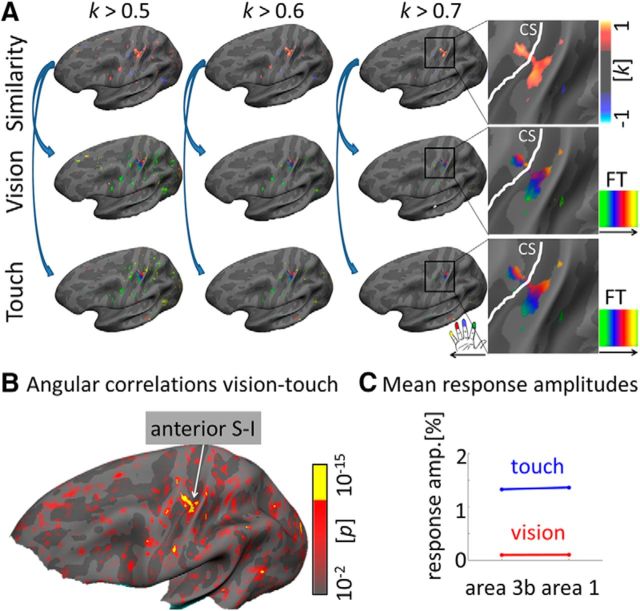

Figure 2.

Phase-encoded tactile and visually-induced finger maps in contralateral primary somatosensory cortex. A, B, visuo-tactile mean similarity maps (A, top row) were calculated by applying whole-brain surface-based searchlight analyses to visual and tactile time series averaged over 16 participants. Angular correlations were calculated on a vertex-wise basis (corrected for autocorrelations). Correlation coefficients were high (k > 0.7), and correlations were significant (p < 10⁻¹⁵) in the hand knob area of left (contralateral) anterior S-I. For visual display, mean visual and tactile maps were masked by areas of high similarity (k > 0.5, k > 0.6, k > 0.7; A, middle and bottom rows). FT, Fourier transform; CS, central sulcus. C, Mean response amplitudes (in percentage) of tactile and visually-induced finger maps. Data were extracted from area 3b and area 1 finger maps, respectively. FreeSurfer surface labels were used for defining both areas.

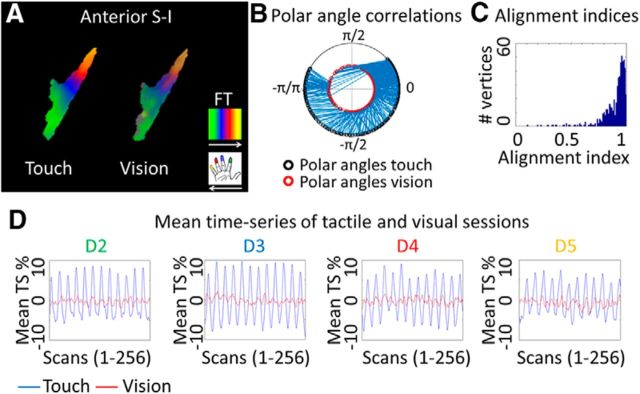

Figure 3.

Similarity between tactile and visually-induced finger maps in contralateral primary somatosensory cortex. A, Similar topographic arrangements of mean tactile and mean visual maps in anterior S-I (averaged phase-maps over 16 participants displayed in the tactile finger map ROI of contralateral area 3b). The FreeSurfer surface label of area 3b was used for masking. B, Spoke plot visualizes the polar angle correlation between visual and tactile time series; data were extracted from the tactile finger map ROI in area 3b. The correlation was significant (p < 10⁻¹⁵). C, Histogram plots the number of vertices against visuotactile phase angle map similarities (alignment indices range between 0 and 1, 1 indicates maximal alignment; Sereno and Huang, 2006). See Figure 5 for histograms of each individual participant. D, Mean time series in percentage units (averaged over 16 participants, displayed for 256 scans) of tactile and visual datasets, extracted from D2 (index finger), D3 (middle finger), D4 (ring finger), and D5 (small finger) receptive areas (shown are values averaged over all vertices of the respective ROI).

We then defined the expected general location of contralateral tactile finger maps within area 3b on the basis of probabilistic FreeSurfer surface labels. Probabilistic FreeSurfer surface labels were used in all reported analyses to identify area 3b. Tactile maps were defined on the basis of the phase of voxels whose periodic response significantly exceeded surface-based cluster-filter thresholds, both at the individual level, and at the group level. Those maps were then used as ROIs. Spoke plots (Hussain et al., 2010) that visualize circular correlations between tactile and visual phase values (correlations are plotted in blue; Fig. 3B) showed few crossing lines, which indicates strong alignment between tactile and visually-induced finger maps within the ROI (hand area of contralateral area 3b). Significant circular correlations between the phase values of tactile and visual maps (Sereno and Huang, 2006) confirmed this observation (k = 0.93, SD = 0.12, p < 10⁻¹⁵; Fig. 2B).

We calculated the average AI (AAI), which is another measure of the similarity of phase angles (i.e., finger-specific response properties), across tactile and visually-induced datasets (Sereno and Huang, 2006; Mancini et al., 2012). The alignment index is a simple measure of angular difference. In an ideal dataset without neuronal, physiological, or scanner noise, a map shifted by one finger would produce an angular offset of π/2 (AAI = 0.5). Our results revealed that the AAI was very high in contralateral area 3b (AAI = 0.90, where possible values range from 0 to 1; Fig. 3C, see Fig. 5 for individual histograms). The sharp peak of the histogram at AI values near 1 indicates high numbers of highly-aligned tactile and visually-induced vertices within the tactile finger map area, with few poorly-aligned vertices (Sereno and Huang, 2006). The values were much higher than those expected from a shift of one finger (or from no alignment at all). We also compared group average AAIs to the mean random AAIs that were calculated subject-by-subject, and obtained a significant difference [mean AAI = 0.63 ± 0.11, mean AAI (random) = 0.49 ± 0.02, t(15) = 4.99, p = 1.62 × 10⁻⁴; Table 1; see Fig. 5].
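The chance-level reasoning above can be made explicit with a short worked calculation. This sketch assumes that the alignment index takes the form AI = 1 − δφ/π, which matches its stated range (1 for identical phases, 0 for phases π apart), and that the four fingers are evenly spaced over one 2π stimulus cycle:

$$
\begin{aligned}
\delta\phi_{\text{one finger}} &= \frac{2\pi}{4} = \frac{\pi}{2}
\;\Rightarrow\; \mathrm{AI} = 1 - \frac{\pi/2}{\pi} = 0.5,\\
\delta\phi \sim \mathcal{U}[0,\pi] &\;\Rightarrow\; \mathbb{E}[\delta\phi] = \frac{\pi}{2}
\;\Rightarrow\; \mathbb{E}[\mathrm{AI}] = 0.5.
\end{aligned}
$$

The second line holds because, when visual phases are uniformly random, the smallest angle to any fixed tactile phase is uniformly distributed on [0, π]. A one-finger shift and a random visual map therefore both predict an AAI of about 0.5, consistent with the random AAIs of about 0.49 in Table 1.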

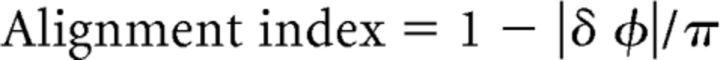

Figure 5.

Individual participants' histograms of alignment indices. Histograms plot the number (#) of vertices against visuo-tactile phase angle map similarities (alignment indices range between 0 and 1, 1 indicates maximal alignment; Sereno and Huang, 2006). Histograms are here shown for each individual participant (P1–P16), and for all vertices in the tactile finger map ROI in area 3b. The red lines in the histograms represent the probability density estimates of each individual histogram (i.e., the empirically-measured data). The green lines in the histograms represent the probability density estimates as expected for given tactile phase angles but random visual phase angles, modeled for each participant individually. The p values show the results of comparing random to real alignment indices for each participant (see Table 1 for full statistics). In all significant cases, the empirically-measured alignment index was higher than the random (modeled) alignment index (Table 1). The histogram in the top right corner shows the modeled random histogram for P8. x- and y-axes' labels and data ranges for P1–P16 are the same as those shown in the enlarged histogram at the top.

Table 1.

Mean AAIs and randomly generated AAIs (AAIs-random) for each of the 16 participants who participated in the phase-encoded experiment

| Participant | AAIs | AAIs-random | p | t |
|---|---|---|---|---|
| P1 | 0.56 ± 0.28 | 0.51 ± 0.30 | 5.93 × 10⁻⁴ | 3.45 |
| P2 | 0.57 ± 0.29 | 0.46 ± 0.28 | 1.02 × 10⁻⁷ | 5.41 |
| P3 | 0.71 ± 0.30 | 0.48 ± 0.30 | 2.57 × 10⁻¹² | 7.55 |
| P4 | 0.62 ± 0.28 | 0.48 ± 0.29 | 1.35 × 10⁻¹⁶ | 8.51 |
| P5 | 0.49 ± 0.28 | 0.50 ± 0.28 | 0.65 | −0.45 |
| P6 | 0.45 ± 0.27 | 0.49 ± 0.30 | 0.10 | −1.65 |
| P7 | 0.74 ± 0.24 | 0.52 ± 0.29 | 1.37 × 10⁻³⁴ | 11.91 |
| P8 | 0.87 ± 0.11 | 0.48 ± 0.30 | 9.16 × 10⁻⁴⁴ | 17.95 |
| P9 | 0.51 ± 0.31 | 0.49 ± 0.28 | 0.40 | 0.85 |
| P10 | 0.67 ± 0.27 | 0.50 ± 0.29 | 7.80 × 10⁻³¹ | 12.09 |
| P11 | 0.70 ± 0.26 | 0.46 ± 0.28 | 2.83 × 10⁻³⁰ | 12.45 |
| P12 | 0.61 ± 0.28 | 0.49 ± 0.29 | 5.46 × 10⁻⁶ | 4.65 |
| P13 | 0.63 ± 0.25 | 0.52 ± 0.29 | 8.92 × 10⁻⁶ | 4.54 |
| P14 | 0.70 ± 0.18 | 0.49 ± 0.29 | 1.99 × 10⁻⁵⁰ | 16.88 |
| P15 | 0.56 ± 0.28 | 0.50 ± 0.30 | 0.0016 | 3.18 |
| P16 | 0.67 ± 0.30 | 0.51 ± 0.29 | 7.38 × 10⁻¹⁰ | 6.36 |
| Average | 0.63 ± 0.11 | 0.49 ± 0.02 | 1.62 × 10⁻⁴ | 4.99 |

AAIs and AAIs-random were compared within each participant and at the group level using paired-sample t test. Reported are AAIs ± SE, AAIs-random ± SE, t values, and p values.

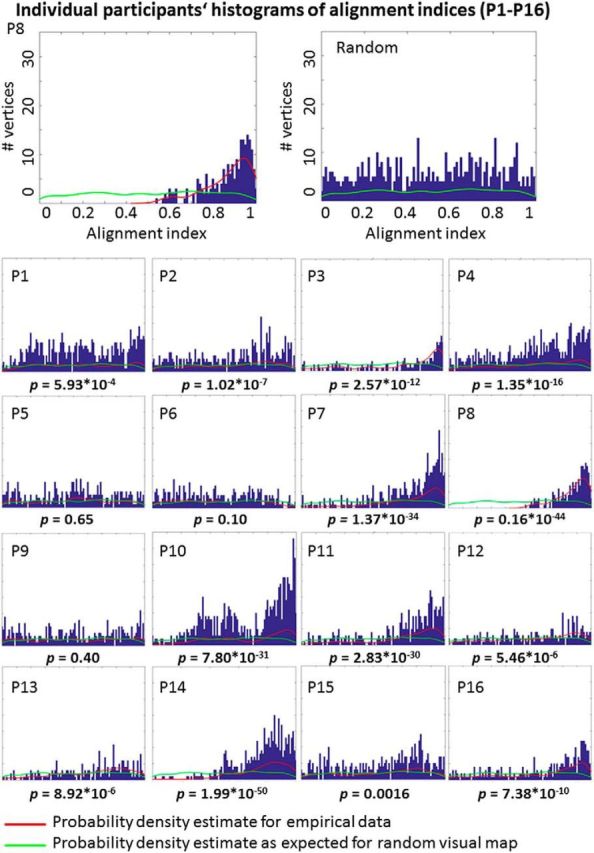

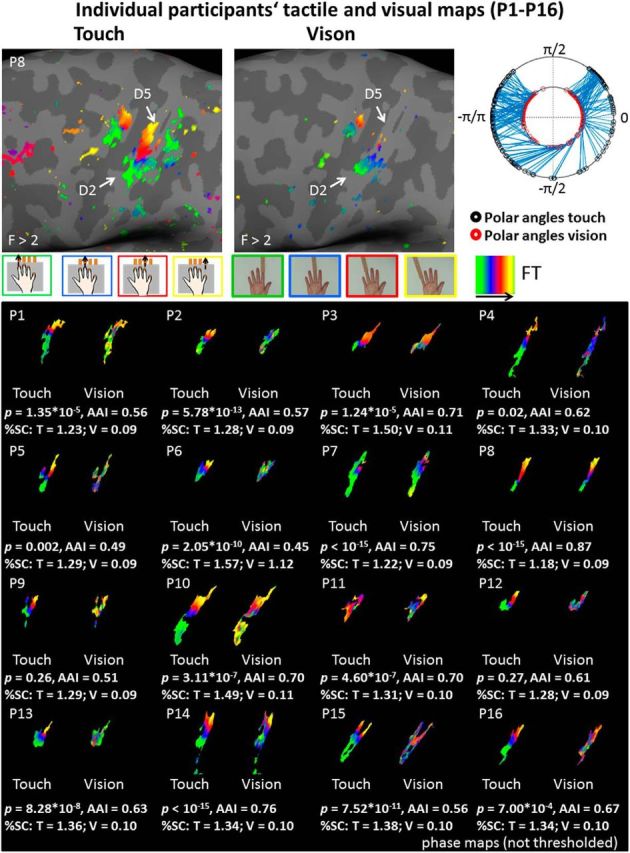

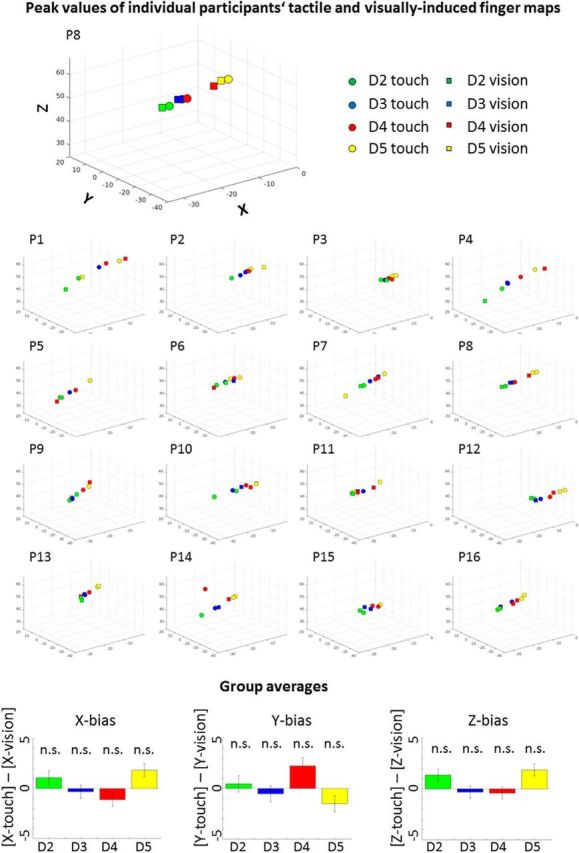

Circular correlations and AAIs were also computed on the individual level, subject-by-subject. Circular correlations were significant at a Bonferroni-corrected significance level of p < 0.0031 (corrected for 16 comparisons) for 13/16 participants, with AAIs ranging between 0.45 and 0.87 [mean AAI = 0.63 ± 0.11 (SD)]. Maps of each individual participant are shown in Figures 4 and 6, and histograms of each individual participant are shown in Figure 5. When peak values were taken as independent measures, there was no significant shift of the visually-induced single finger representations relative to the tactile single finger representations in the x-, y-, or z-dimension (all p values >0.28; Fig. 6). In addition, we compared AAIs to randomly generated AAIs in each individual participant. We found that the AAIs were significantly higher than the randomly generated AAIs for 13/16 participants (all p values <0.0017; Fig. 5; Table 1). When combining both measures (i.e., the circular correlation analyses and the comparisons against randomly generated visual maps), we found 11/16 participants to show significant effects in both analyses at a Bonferroni-corrected significance level of p < 0.0031 (P1, P2, P3, P7, P8, P10, P11, P13, P14, P15, P16).

Figure 4.

Individual participants' tactile and visually-induced finger maps. Phase values of tactile and visually-induced single finger maps (thresholded at F > 2) and associated spoke plot of one example participant (P8, top). Spoke plots visualize polar angle correlations between tactile and visual time-series (each blue line represents the relation between the tactile and visually-induced polar angles of 1 vertex). The shorter the line between the inner ring and the outer ring, the higher the alignment between the tactile map and the visually-induced map. Raw (non-thresholded) phase values of tactile and visually-induced single finger maps from all 16 participants who took part in the phase-encoded study (bottom box). Data were extracted from the contralateral tactile finger map in area 3b as defined based on significant tactile maps in each individual participant. The mask of each individual's tactile map was used to mask both tactile and visual maps. FreeSurfer surface labels were used to define area 3b. Individual histograms and peak vertices can be inspected in Figures 5 and 6. The p values show the results of each individual's polar angle correlation (see Fig. 3 for group data). FT, Fourier transform; SC, signal change; T, touch; V, vision.

Figure 6.

Peak values of individual participants' tactile and visually-induced finger maps. 3D-scatter plots show the locations of each individual single finger representation (D2–D5) of the tactile and visually-induced maps of each individual participant (P1–P16). Circles and squares show the locations of the peak F values for tactile and visually-induced single finger representations, respectively. Group averaged data (bottom) show the difference between the visually-induced single finger representations and the tactile single finger representations (x-bias, y-bias, z-bias; 0 indicates no bias). Biases did not differ significantly from zero (all p values >0.28).

To make sure that our effect was specific to our ROI, and that such correlations were not spread across the whole cortical surface, we applied a whole-brain surface-based searchlight circular correlation analysis to whole-brain tactile and visually-induced angle maps. We found correlated phases of activation of local vertex sets at the stimulus frequency (p < 10⁻¹⁵, k > 0.7) in primary somatosensory cortex across from and just inferior to the hand knob area, and also within primary visual cortex. Correlated maps were expected in visual cortex because participants fixated similar locations on screen during tactile and visual scanning sessions, and retinotopic stimulation occurred in both conditions. Note that visual sessions always preceded tactile sessions, such that the identical fixation points could not influence visual map formation. Correlated phases were found in left area 3b, extending to left area 1 (surface area = 80.3 mm², 38 voxels; Fig. 2A,B). Note that we prevented spurious autocorrelation (inflated degrees of freedom) by only considering one representative mesh point for each functionally activated voxel (rather than including all the multiple mesh points that would otherwise redundantly sample the same voxel). This avoids artificially lowering the p value (i.e., overstating significance).

We also compared mean response amplitudes of tactile and visually-induced maps to a normal distribution with mean equal to zero, and found both maps to be significantly different from zero (all p values <10⁻¹⁰). Mean response amplitudes of the tactile maps were significantly higher than those of the visual maps (touch = 1.48 ± 0.25%, vision = 0.50 ± 0.13%, t(15) = 17.2, p < 10⁻⁶; Fig. 2C). The stronger amplitude of the tactile maps compared with the visual maps is also evident from the finger-specific time series of visual and tactile maps shown in Figure 3D.

Visually-induced topographic maps in area 3b are robust across viewing perspectives

Thirteen participants (9 of whom were from the original cohort) took part in a second multisession fMRI study. Here, touch was viewed in the third-person perspective (Fig. 1). Note that in this case, the visuotopic order of finger stimulation was reversed. This was designed to exclude the possibility that simple visuospatial matching mechanisms were inducing the formation of visually-driven somatotopic maps. Additionally, it allowed us to investigate whether visually-induced topographic maps were only activated during self-referenced touch (first-person perspective, see above), and whether our effect was robust across analysis techniques (Fourier analyses versus blocked-design). The same stimulus material was used as in the study on first-person touches, except that visual stimuli were rotated by 180° (third-person perspective).

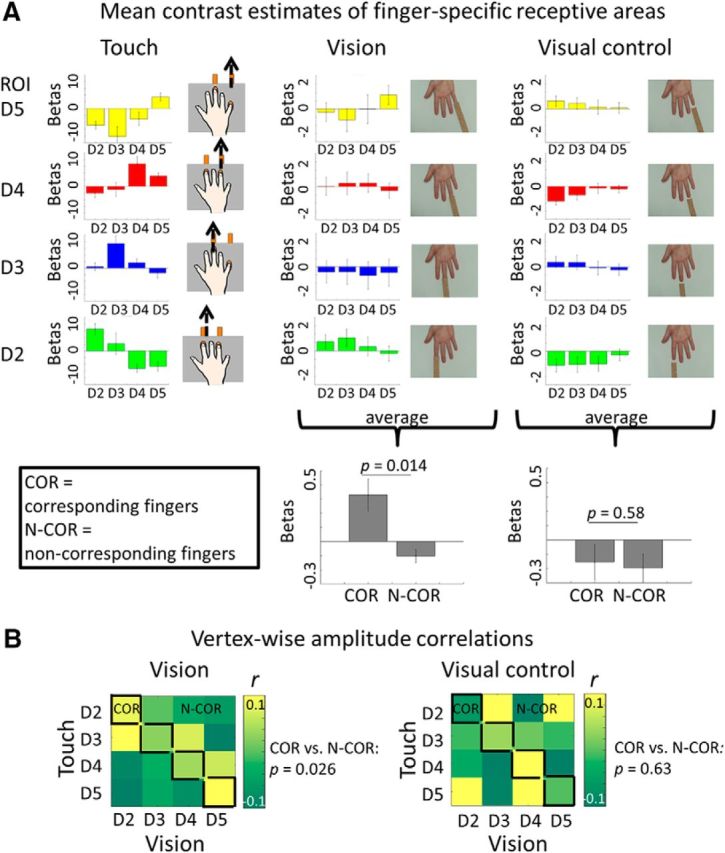

To investigate whether a topographic finger map would be expressed during the observation of third-person touches, we first compared mean betas between corresponding and noncorresponding single finger receptive areas, which yielded a significant difference (t(12) = 2.88, p = 0.014; Fig. 7A). Then, we computed vertex-wise amplitude correlations between single finger response regions of tactile and visual maps. Vertex-wise amplitude correlations allow the comparison of topographic functional response dynamics, such as activation peaks, or center-surround inhibition. We found positive correlations between tactile and visual response regions between corresponding fingers, particularly between feeling touch at D5 and seeing touch to D5, whereas correlations were weaker or negative between noncorresponding fingers (e.g., feeling touch at D5 and seeing touch to D2; Fig. 7B). The difference in correlation coefficients between corresponding and noncorresponding fingers was significant [mean r corresponding fingers = 0.10 ± 0.01 (SD), mean r noncorresponding fingers = −0.034 ± 0.09, t(14) = 2.49, p = 0.026; Fig. 7B], which indicates that alignment between tactile and visual maps also occurs when touch is observed in the third-person perspective. Interestingly, whereas strong and specific correspondence between vision and touch was observed for D5, weaker and/or less-specific correspondences were observed for D4, D3, and D2, where also neighboring finger representations sometimes responded to observing touches to these digits (i.e., the receptive area of D3 responded more to touch observation to D2 than the receptive area of D2, and the receptive area of D3 responded slightly more to touch observation to D4 than the receptive area of D4). This indicates that viewing touches in the third-person perspective weakens correspondences between vision and touch, likely because correct matching requires a rotation by 180°. 
Because our task did not require specific attention to finger identity, participants may have sometimes confused the identities of different fingers when they observed touch in the third-person perspective. Inspection of averaged heat maps of tactile and visually-induced maps reveals that, for the middle finger specifically, observing touch in the third-person perspective resulted in broad and unspecific activations within the tactile map area, with no strong inhibitory response in the receptive areas of the noncorresponding fingers (Fig. 8A). The critical statistical comparisons between corresponding and noncorresponding fingers, however, were significant, and a preference for the corresponding finger ± 1 finger is visible in Figure 7B. We also evaluated whether one of the four digits responded more strongly than the other digits to the observation of finger touches. We conducted a repeated-measures ANOVA with the factor finger, taking the averaged β values as dependent measures. This ANOVA was not significant (F(3,48) = 0.69, p = 0.56).

Figure 7.

Tactile and visually-induced maps of the blocked-design study (observation of third-person touches). A, Left, Mean contrast estimates (±SE) of finger-specific receptive areas as recorded in the blocked-design study extracted from receptive areas defined on the basis of the phase-encoded study. This analysis was conducted to show the robustness of the single finger maps across designs and analysis techniques. Data are averaged over those participants who participated in both experiments (n = 8). A, Middle and right, Mean contrast estimates (±SE) of visually-induced maps as recorded in the blocked-design study, where touches were observed in the third-person perspective, extracted from finger-specific receptive areas. Data are averaged over all participants who participated in the blocked-design study (n = 13). A, Bottom, Averaged contrast estimates extracted for corresponding (“COR”) and noncorresponding (“N-COR”) receptive areas. For COR receptive areas, visually-induced and tactile receptive areas matched, e.g., for the observation of touch to D2, values were extracted from the tactile D2 receptive area. For N-COR receptive areas, visually-induced and tactile receptive areas did not match, e.g., for the observation of touch to D2, values were extracted from the tactile D3, D4, and D5 receptive areas. Left, averages for the observed touch condition. Right, averages for the visual control condition (Fig. 1). Mean β values between COR and N-COR receptive areas were compared (p values shown, see Results for more details). B, Correlation matrices display vertex-wise amplitude correlations between tactile and visually-induced single finger receptive areas. Values were extracted from conditions where touch was applied to the fingers (“Touch”), where tactile contact between finger and sandpaper was displayed (“Vision”), or where tactile contact between finger and sandpaper was not displayed because the sandpaper stopped 1 cm before the finger (“Visual control”; Fig. 1).
Fields inside the black lines show correlations between corresponding visual and tactile single finger receptive areas (COR), whereas fields outside the black lines show correlations between noncorresponding visual and tactile single finger receptive areas (N-COR). Averaged r values of COR and N-COR fields were compared (p values shown, see Results for more details). Mean data of all participants who participated in the blocked-design study are displayed (n = 13).

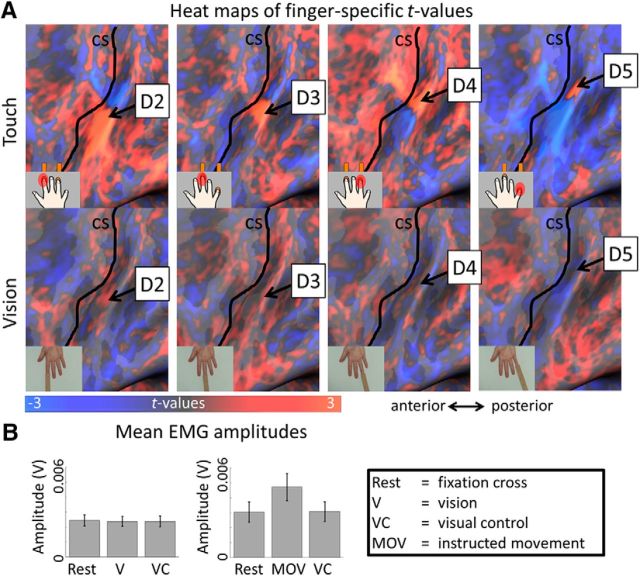

Figure 8.

Heat maps of tactile and visually-induced single finger representations in contralateral primary somatosensory cortex. A, Averaged t maps elicited by touches to single fingers (top) and by observing touches to the same fingers (bottom). In the visual conditions, touches were observed in the third-person perspective (Fig. 1). Unthresholded heat maps display positive and negative t values for finger-specific contrasts, e.g., 3 × D2 − [D3 + D4 + D5] for the index finger. B, Mean EMG amplitudes (in V) measured in the vicinity of the extensor digitorum muscle of the right arm during rest, observed touch, and visual control conditions (left), and during rest, instructed movement, and visual control conditions (right).
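The finger-specific contrast in Figure 8A weights the target finger by 3 and each other finger by −1, so the weights sum to zero. A minimal sketch of how such a contrast yields a t value in an ordinary least-squares GLM is given below. It is a simplification under stated assumptions: the design is a toy boxcar model without hemodynamic convolution or autocorrelation modeling, and the function names and block layout are hypothetical rather than the study's actual pipeline.

```python
import numpy as np

FINGERS = ["D2", "D3", "D4", "D5"]


def finger_contrast(target: str, n_extra: int = 1) -> np.ndarray:
    """Weights such as 3*D2 - (D3 + D4 + D5); zeros for n_extra nuisance columns."""
    weights = [3.0 if f == target else -1.0 for f in FINGERS]
    return np.array(weights + [0.0] * n_extra)


def contrast_t(y: np.ndarray, X: np.ndarray, c: np.ndarray) -> float:
    """OLS t statistic for contrast c (assumes independent, homoscedastic noise)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    return float((c @ beta) / np.sqrt(var_c))


# Toy design: one 25-scan block per finger, each followed by 25 scans of rest
rng = np.random.default_rng(0)
n_scans = 200
X = np.zeros((n_scans, 5))
X[:, 4] = 1.0  # intercept (rest baseline)
for i in range(4):
    X[50 * i: 50 * i + 25, i] = 1.0
y = 2.0 * X[:, 0] + rng.normal(0.0, 1.0, n_scans)  # toy vertex responding to D2 only

t_d2 = contrast_t(y, X, finger_contrast("D2"))
t_d3 = contrast_t(y, X, finger_contrast("D3"))
```

For this toy vertex, the D2 contrast gives a large positive t, whereas the D3 contrast is negative, mirroring the positive and negative values shown in the heat maps.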

Actual contact between finger and object is mandatory for the expression of visually-driven finger maps

Is actual observed contact between the finger and the stimulating object required to evoke topographically aligned visual finger maps in area 3b? Or is mere attention to a finger sufficient to explain the observed effects? To investigate the feature selectivity of visually-induced maps, and whether visuospatial attention toward a specific finger can activate non-afferent maps in area 3b, we added a visual control condition to the blocked-design study in which the sandpaper-covered stimuli stopped shortly before they reached each fingertip (Fig. 1). We again compared mean betas between corresponding and noncorresponding fingers, as above, and here found no significant difference (t(12) = 0.56, p = 0.58; Fig. 7A). We then computed vertex-wise amplitude correlations between single finger response regions of tactile and visually-induced maps (here recorded during the visual control condition), and again found no topographic correspondence between tactile and visually-induced maps: high and low correlation coefficients appeared randomly distributed across corresponding and noncorresponding fingers in the control condition (Fig. 7B). As expected, in this noncontact condition the difference in correlation coefficients between corresponding and noncorresponding fingers was not significant [mean r corresponding fingers = 0.029 ± 0.084 (SD), mean r noncorresponding fingers = −0.0048 ± 0.13, t(14) = 0.48, p = 0.63; Fig. 7B].
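The corresponding versus noncorresponding comparisons above are paired-sample t tests across participants. A minimal sketch, assuming one COR and one N-COR value per participant (for example, averaged over fingers), is shown below; the function name and toy values are hypothetical.

```python
import math
import statistics


def paired_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Paired-sample t statistic and degrees of freedom for per-participant values."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # sample SD uses the n - 1 denominator
    return statistics.mean(diffs) / se, n - 1


# Toy per-participant values (not data from this study)
cor_vals = [1, 2, 3, 4]
ncor_vals = [0, 1, 1, 2]
t, df = paired_t(cor_vals, ncor_vals)
```

`scipy.stats.ttest_rel(cor_vals, ncor_vals)` returns the same t statistic together with a two-sided p-value.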

We also compared the finger maps obtained with the phase-encoded design with those obtained with the blocked design, for participants who took part in both studies. The topographic maps revealed by the two methods corresponded closely, as evidenced by significantly higher mean contrast estimates within the region representing the same finger than within the regions representing different fingers (Fig. 7A; Table 2; Besle et al., 2013).

Table 2.

Mean beta values of the blocked-design experiment extracted from single-finger receptive areas as defined on the basis of the phase-encoded experiment

| Variables | Area 3b | | | | Area 1 | | | |
|---|---|---|---|---|---|---|---|---|
| D2 | D2 | vs D3 | vs D4 | vs D5 | D2 | vs D3 | vs D4 | vs D5 |
| μ ± SEM | 6.57 ± 1.64 | 2.24 ± 3.16 | −5.22 ± 1.17 | −4.65 ± 1.41 | 4.91 ± 0.78 | 1.45 ± 0.88 | −3.46 ± 1.07 | −3.01 ± 1.29 |
| p | | 0.06 | 0.001 | 0.002 | | 0.007 | 2.4 × 10⁻⁴ | 2.23 × 10⁻⁴ |
| t | | 2.20 | 5.27 | 5.01 | | 3.79 | 6.82 | 6.94 |
| D3 | D3 | vs D2 | vs D4 | vs D5 | D3 | vs D2 | vs D4 | vs D5 |
| μ ± SEM | 7.55 ± 2.38 | 0.47 ± 1.15 | 1.71 ± 1.13 | −1.48 ± 1.49 | 4.78 ± 1.57 | 0.58 ± 0.99 | −0.29 ± 1.15 | −1.26 ± 1.49 |
| p | | 0.0064 | 0.020 | 0.0091 | | 0.004 | 0.02 | 0.003 |
| t | | 3.84 | 3.01 | 3.50 | | 4.23 | 3.10 | 4.50 |
| D4 | D4 | vs D2 | vs D3 | vs D5 | D4 | vs D2 | vs D3 | vs D5 |
| μ ± SEM | 6.79 ± 2.12 | −2.11 ± 1.26 | −0.98 ± 2.08 | 3.09 ± 0.88 | 5.06 ± 1.42 | −1.10 ± 0.89 | 0.27 ± 1.22 | 2.52 ± 1.28 |
| p | | 0.020 | 0.075 | 0.15 | | 0.002 | 0.006 | 0.18 |
| t | | 2.98 | 2.31 | 3.50 | | 4.71 | 3.90 | 1.50 |
| D5 | D5 | vs D2 | vs D3 | vs D4 | D5 | vs D2 | vs D3 | vs D4 |
| μ ± SEM | 3.43 ± | −4.91 ± | −8.20 ± | −3.07 ± | 2.18 ± 0.91 | −4.48 ± 1.09 | −6.09 ± 1.24 | −0.92 ± 1.07 |
| p | | 0.0028 | 0.014 | 0.045 | | 0.001 | 2.19 × 10⁻⁴ | 0.03 |
| t | | 4.49 | 3.23 | 2.43 | | 5.30 | 6.96 | 2.60 |

p values and t values of paired-sample two-tailed t test are displayed. p values <0.05 were considered significant. μ, Mean; D2, index finger; D3, middle finger; D4, ring finger; D5, little finger (see Fig. 7A for visualization).

Behavioral performance was similar during vision and touch

Participants were asked to rate the roughness level of the sandpaper surfaces that they perceived either tactually or visually. Participants were confident in their roughness judgments in both the tactile and visual conditions, and there was no significant difference in mean correct responses or mean error rates between tactile and visual conditions [mean correct responses to detect control trials: touch = 98.44 ± 2.16% (SD), vision = 98.44 ± 2.43%, t(7) = −4.28 × 10⁻¹⁵, p = 1; mean error rate to discriminate roughness levels: touch = 2.72 ± 0.26 levels, vision = 2.71 ± 0.29 levels, t(7) = −0.05, p = 0.96].

Touch observation does not trigger muscle activations

To investigate whether touch observation might trigger small finger movements that could confound our results, EMG was measured in the vicinity of the extensor digitorum muscle while participants looked at a fixation cross (rest), observed touch videos, or observed tactile control videos (same stimulus material as above). A repeated-measures ANOVA with the factor condition (rest, observed touch, visual control) was not significant (F(2,22) = 0.45, p = 0.65; Fig. 8B). To ensure that our electrode placement and analysis techniques were, in principle, able to detect small finger movements if present, we conducted a second experiment with the same participants, in which we instructed them to perform small finger movements during the observed touch condition (but not during the other two conditions). The experiment was otherwise identical to the first. Here, as expected, a repeated-measures ANOVA with the factor condition (rest, visual control, instructed movement) revealed a significant main effect of condition (F(2,22) = 5.11, p = 0.015), which was driven by significantly higher EMG signal amplitudes when participants were instructed to perform small finger movements than in the rest and visual control conditions [mean signal amplitude: movement = 4.76 × 10⁻³ ± 1.07 × 10⁻³ V (SE), visual control = 2.98 × 10⁻³ ± 0.76 × 10⁻³ V, t(11) = 2.28, p = 0.026; mean signal amplitude: movement = 4.76 × 10⁻³ ± 1.07 × 10⁻³ V, rest = 2.81 × 10⁻³ ± 0.78 × 10⁻³ V, t(11) = −2.36, p = 0.018; Fig. 8B].

Discussion

We have characterized somatotopic maps in human primary somatosensory cortex during actual touch and during visually perceived (observed) touch to individual fingers. Our data show fine-grained alignment, tangential to the cortical sheet in area 3b, between (1) actual tactile contact on a particular finger and (2) viewing the same finger of another person being touched. This alignment was robust across viewing perspectives, but did not occur when the observed finger was only approached by an object without being touched. The visual map was weak in amplitude and was detectable in most, but not all, participants. Our data indicate that foreign source maps in early sensory cortices are weak but precise, and that their activation is feature-selective. We also provide clarifying evidence for the multisensory response properties of area 3b.

The multisensory response properties of somatosensory cortical area 3b have been controversial. Whereas one study reported activation of area 3b in response to viewing touches (Schaefer et al., 2009), most other studies found no visually-induced responses in area 3b (Kuehn et al., 2013, 2014; Chan and Baker, 2015) and suggested that the area may encode only self-perceived touch (Kuehn et al., 2013). Here, we provide evidence for non-afferent finger maps in area 3b. Why did previous studies not obtain similar results? We used 7 tesla fMRI, which provides higher signal-to-noise ratios than standard MRI field strengths (Bandettini, 2009; van der Zwaag et al., 2009; Stringer et al., 2011). We also applied a statistical analysis procedure that focused on the similarity of phasic responses between visual and tactile maps rather than on amplitude changes. This approach was inspired by the work of Bieler et al. (2017), who showed in rats that S-I neuronal responses during visual stimulation are specific with respect to power and phase, but weak in amplitude. By applying a statistical method that focused on phasic changes, we found visual maps to be topographically precise but relatively weak in signal intensity (∼0.5% mean response amplitude for vision and ∼1.5% for touch; both maps were nevertheless significantly different from 0). Activity triggered by the observation of touch appears to be stronger in less topographically precise areas, such as area 2, area 5, and the secondary somatosensory cortex (Keysers et al., 2004; Kuehn et al., 2013, 2014). In the present study, fine-grained visually-driven finger maps were detected in area 3b and area 1.
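To make the idea of a phase-focused analysis concrete, the sketch below shows a generic Fourier analysis of a phase-encoded time series: the response at the stimulation frequency has an amplitude and a phase, and in a periodic finger-by-finger stimulation cycle the phase indicates which finger a vertex prefers. This is a common textbook approach, not necessarily the pipeline used here; the function names, the neighboring-bin noise estimate, and all toy parameters are assumptions. Comparing tactile and visual maps on phase rather than amplitude could then be done with a wrapped phase difference per vertex.

```python
import numpy as np


def phase_encoded_response(ts, n_cycles: int, n_neighbors: int = 5):
    """Amplitude, phase, and signal-to-noise ratio at the stimulation frequency.

    Assumes the run contains exactly n_cycles stimulation cycles, so the
    stimulation frequency falls on FFT bin n_cycles.
    """
    ts = np.asarray(ts, dtype=float)
    spec = np.fft.rfft(ts - ts.mean())
    k = n_cycles
    power = np.abs(spec) ** 2
    # Neighboring bins (excluding the signal bin and DC) serve as a noise estimate
    neighbors = [j for j in range(k - n_neighbors, k + n_neighbors + 1)
                 if j != k and 0 < j < len(spec)]
    snr = power[k] / power[neighbors].mean()
    return np.abs(spec[k]), np.angle(spec[k]), snr


def phase_difference(a: float, b: float) -> float:
    """Difference between two phases, wrapped to [-pi, pi]."""
    return float(np.angle(np.exp(1j * (a - b))))


# Toy vertex: 20 stimulation cycles in 400 scans, with a known response phase
n_scans, n_cycles = 400, 20
t = np.arange(n_scans)
rng = np.random.default_rng(1)
true_phase = 1.2  # hypothetical value
ts = np.cos(2 * np.pi * n_cycles * t / n_scans + true_phase) + rng.normal(0.0, 0.5, n_scans)
amp, phase, snr = phase_encoded_response(ts, n_cycles)
```

Because phase is estimated independently of amplitude, a weak response can still carry a well-defined phase as long as its power stands out from neighboring frequencies, which is consistent with the precise but low-amplitude visual maps described above.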

Our data show that foreign source maps in primary somatosensory cortex are arranged very similarly to the afferent inputs that reach the same area. We did not find systematic shifts of the visually-induced maps relative to the tactile maps for any of the four fingers. This argues against the hypothesis that, on average, the visually-driven map is distorted or shifted relative to the tactile map. Wide-area searches showed that correlations between tactile and visually-induced maps were strongest in the hand area of contralateral primary somatosensory cortex and in primary visual cortex; that is, they were confined to fine-grained topographic map areas. Observed touches are therefore initially represented in a retinotopic map format in primary visual cortex and are subsequently remapped into a somatotopic map format in area 3b. This remapping reinforces the fundamental importance of topographic maps throughout organizational hierarchies (Sereno and Huang, 2006; Orlov et al., 2010; Huang and Sereno, 2013; Sood and Sereno, 2016; Kuehn, 2017).

Individual participant data sometimes deviate from the average, and visually-driven topographic maps do not appear in all tested participants. The similarity between tactile and visually-driven maps also differs across participants: whereas some show almost perfect alignment, in others tactile and visually-driven maps are less similar, and in yet others there is no significant overlap. One possible explanation is individual differences in social perception and/or empathy; another may be individual differences in somatosensory attention. Responses in somatosensory cortex during the observation of touch are stronger in vision-touch synesthetes, who report actually feeling touch on their own body when they merely observe touch to another person's body (Blakemore et al., 2005). Positive correlations between BOLD signal changes in S-I during touch observation and individual perspective-taking abilities have also been shown (Schaefer et al., 2012), and suppressive interactions between visually-induced receptive fields in posterior S-I seem to be stronger in participants who are able to correctly judge roughness levels by sight (Kuehn et al., 2014). This suggests that individual differences in visually-induced map formation may be relevant for everyday life. Which factors influence the formation of visually-driven maps in S-I remains a question for future research.

Our data are in agreement with single cell recordings in monkeys and rats. Zhou and Fuster (2000) found early somatosensory cortex activation during visual object perception in monkeys, although extensive training was needed. Bieler et al. (2017) found that the majority of neurons in rat S-I showed multisensory additive responses during tactile, visual, and visuotactile stimulation, and provided evidence that bimodal stimulation shapes the timing of neuronal firing in S-I. During visual stimulation, S-I pyramidal neurons decreased their activity, whereas S-I interneurons increased theirs (Bieler et al., 2017). We hypothesize that these specific changes in pyramidal neurons and interneurons are the cellular basis of the effect observed here, although this remains to be tested empirically. A complex interaction between excitation and inhibition of S-I neurons triggered by vision may also explain why we often observe negative BOLD in noncorresponding single finger receptive areas in our data. Other studies have also highlighted the importance of long-range, low-weight connections for globalizing input to small groups of areas (Markov et al., 2013).

A second main finding of our study was that aligned visually-induced maps in area 3b appear only when actual touch to the fingers is observed, not when the sandpaper stimulus stopped ∼1 cm before the fingertip. The visually-induced maps therefore appear to encode actual tactile contact, or simulated actual contact, between an observed object and a finger. During social perception, this points toward a particularly specific role of area 3b in encoding tactile features point-by-point on the body surface. But if the roles of area 3b during actual and observed touch are similar, how can actual touch be distinguished from remapped touch? Differences in map amplitude may be one way to distinguish the self from the other. In addition, feedback loops between primary sensory cortex and higher-level areas may allow the conscious perception of touch in one case, and visual imagery of touch in the other. Finally, the laminar distribution and cell types of these maps may differ (not addressed in this report).