Abstract

This study surveys surgeons’ knowledge of costs and their ability to distinguish subtle price differences between surgical instruments with similar functions or indications.

The operating room is a cost-dense environment, and disposable surgical instruments account for a large proportion of its costs.[1] Because surgeons often choose among several instruments, they play a critical role in managing supply costs. Previous research on surgeon cost knowledge has shown that surgeons are poor at estimating the prices of surgical supplies.[2] However, it is unclear whether surgeons can identify the more expensive of 2 surgical instruments, a task that more closely reflects real-world decisions. Furthermore, cost report cards (CRCs) have been proposed as a passive mechanism for educating surgeons about supply costs in order to reduce spending,[3] but the association between CRCs and cost knowledge is unknown.

Methods

To assess surgeon cost knowledge and the association of CRCs with cost knowledge, we conducted a web-based survey of 100 attending general surgeons and subspecialists (eg, colorectal surgeons, surgical oncologists) at 3 academic health systems in Southern California. This study was reviewed and approved by each hospital’s institutional review board. Written informed consent was obtained from all study participants.

The survey was developed iteratively through 6 cognitive interviews. It presented pictures and descriptions of 10 instrument pairs and asked the surgeon to identify the more expensive instrument in each pair. Because the assessment focused on surgeon cost knowledge, the paired items were not intended to be interchangeable, but they generally had a similar function (eg, 5-mm vs 10-mm Endoclip) and/or indication (eg, Endoclose vs Carter-Thomason). Instrument comparisons were tailored to each site on the basis of its item inventory.

Costs were obtained at each site; although prices for the same item varied by institution, the direction of each comparison (ie, which item was more expensive) was consistent across sites. The primary outcome was the percentage of comparisons answered correctly. Multivariable models were fit to assess differences in cost knowledge between the institution with CRCs and the other 2 sites, controlling for years since training, self-reported exposure to and familiarity with supply costs, and perceived importance of cost vs effectiveness when choosing surgical instruments.

Results

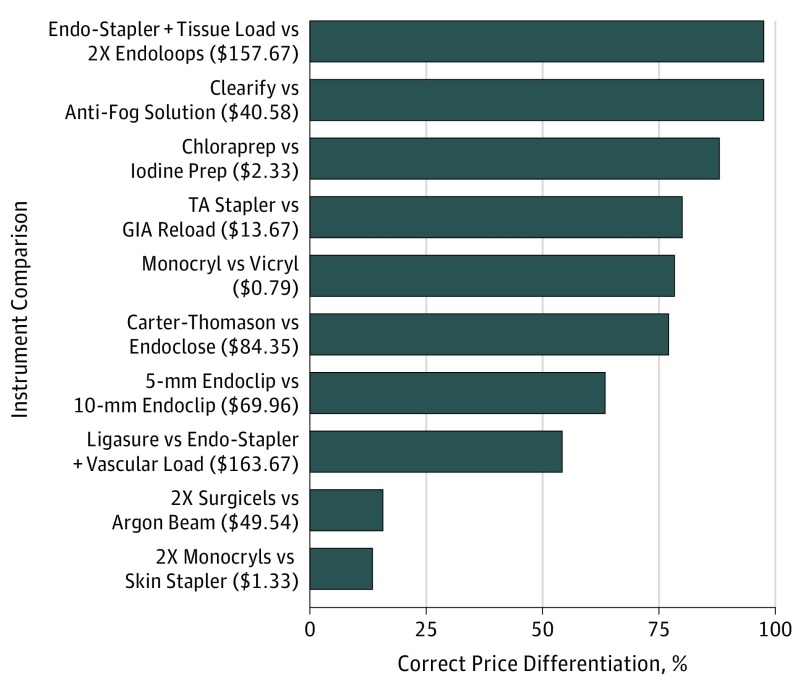

The response rate was 83% (83 of 100 surgeons). The mean (SD) percentage of correct comparisons was 66% (12.49%; range, 40%-100%), which was better than chance (66% vs 50%; P < .001). However, substantial knowledge deficits were observed for some instrument comparisons (Figure). Cronbach coefficient α for the 10-item summated knowledge scale was only .11. Surgeons from the institution with CRCs reported more exposure to supply costs (odds ratio, 3.94; 95% CI, 1.49-10.41; P = .006) but not greater familiarity with them, and they did not perform better on the cost assessment. None of the remaining covariates was associated with cost knowledge.

Figure. Surgical Instrument Price Comparison.

Instrument comparisons are listed in descending order of the percentage of surgeons who correctly identified the more expensive instrument (comparisons near the top indicate better performance; those near the bottom, worse performance). The instrument listed first is the more expensive of the 2 items. Dollar values in parentheses indicate the mean absolute cost difference between the 2 items across the 3 sites.
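The Results do not state which test produced P < .001 for the comparison with chance, or exactly how the odds ratio and its interval were computed. The Python sketch below is an illustrative arithmetic check under stated assumptions, not the authors' analysis. It assumes the chance comparison can be approximated by a one-sample t test of per-surgeon scores against 50%, with a normal approximation for the P value, and it assumes the odds ratio's 95% CI is a Wald interval on the log-odds scale, so the standard error and P value can be back-calculated from the reported bounds. The helper names are hypothetical.

```python
import math


def one_sample_t(mean, sd, n, null):
    """t statistic for H0: population mean == null, from summary statistics."""
    se = sd / math.sqrt(n)
    return (mean - null) / se


def normal_two_sided_p(z):
    """Two-sided P value with a standard normal reference (an approximation)."""
    return math.erfc(abs(z) / math.sqrt(2))


def wald_from_or_ci(odds_ratio, lower, upper, z_crit=1.959964):
    """Back-calculate SE(log OR), z, and P, ASSUMING the 95% CI is a Wald
    interval of the form exp(log(OR) +/- z_crit * SE)."""
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(odds_ratio) / se
    return se, z, normal_two_sided_p(z)


# Mean score vs chance, using the summary figures reported in the Results.
t = one_sample_t(mean=66.0, sd=12.49, n=83, null=50.0)
print(f"t = {t:.2f}")  # t = 11.67
print(f"approximate P = {normal_two_sided_p(t):.1e}")

# Exposure to supply costs at the CRC institution: OR 3.94 (95% CI, 1.49-10.41).
se, z, p = wald_from_or_ci(3.94, 1.49, 10.41)
print(f"SE(log OR) = {se:.3f}, z = {z:.2f}, P = {p:.3f}")  # P = 0.006
```

Under these assumptions, t is about 11.7; with 82 degrees of freedom, an exact t-based P value would also be far below .001. The log of the odds ratio (about 1.371) lies at the midpoint of the log-scale CI bounds, which is consistent with a Wald interval, and the back-calculated P rounds to .006, matching the reported value.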

Discussion

Surgeons identified the more expensive of 2 surgical instruments more often than chance would predict, but their knowledge varied widely across comparisons, seemingly irrespective of the cost difference between the instruments in each pair. Feedback in the form of CRCs may increase self-reported exposure to costs but does not necessarily improve familiarity with prices or cost knowledge. Previous studies have suggested that passive CRCs may decrease supply costs,[4] but the effects were modest and may be subject to publication bias.[5] In this study, the institution with CRCs provided reports to surgeons without active engagement, mandates, or incentives. More active approaches, or approaches that do not rely on surgeons retaining and applying cost knowledge, such as preference card standardization,[5,6] may be more effective.

Limitations of this study include the poor internal consistency and reliability of the knowledge scale and its limited generalizability, with respect to both the sample and the relevance of the comparisons to other sites. To our knowledge, no previous study of surgeon cost knowledge has performed a psychometric evaluation, and similarly poor internal consistency in earlier knowledge measures may explain previous null findings. The reason for the high variability in surgeon performance is unclear; this question may be best answered with a qualitative approach and warrants future study.
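The internal consistency discussed above was summarized with Cronbach α, which compares the sum of the item variances with the variance of the total score: α = k / (k - 1) × (1 - sum of item variances / total-score variance), where k is the number of items. Because the total-score variance equals the sum of the item variances plus twice the sum of all inter-item covariances, α near 0 means that answering one comparison correctly says little about answering another correctly. The minimal Python sketch below computes α for a respondents × items matrix of 0/1 scores (for binary items this is equivalent to KR-20). The function name and the example matrices are hypothetical; the letter does not report item-level responses or describe its software.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items matrix (hypothetical helper).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    The same variance divisor is used throughout, so it cancels in the ratio.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    items = list(zip(*scores))  # transpose rows (respondents) into item columns
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical data: rows are surgeons, columns are comparisons (1 = correct).
consistent = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
unrelated = [[1, 0], [0, 1], [1, 1], [0, 0]]
print(round(cronbach_alpha(consistent), 3))  # 1.0: items always agree
print(round(cronbach_alpha(unrelated), 3))   # 0.0: items are uncorrelated
```

Real survey responses fall between these 2 extremes; a value of .11 sits much closer to the uncorrelated case.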

References

1. Morrison JE Jr, Jacobs VR. Replacement of expensive, disposable instruments with old-fashioned surgical techniques for improved cost-effectiveness in laparoscopic hysterectomy. JSLS. 2004;8(2):201-206.

2. Okike K, O’Toole RV, Pollak AN, et al. Survey finds few orthopedic surgeons know the costs of the devices they implant. Health Aff (Millwood). 2014;33(1):103-109. doi:10.1377/hlthaff.2013.0453

3. Gunaratne K, Cleghorn MC, Jackson TD. The surgeon cost report card: a novel cost-performance feedback tool. JAMA Surg. 2016;151(1):79-80. doi:10.1001/jamasurg.2015.2666

4. Zygourakis CC, Valencia V, Moriates C, et al. Association between surgeon scorecard use and operating room costs. JAMA Surg. 2017;152(3):284-291. doi:10.1001/jamasurg.2016.4674

5. Childers CP, Showen A, Nuckols T, Maggard-Gibbons M. Interventions to reduce intraoperative costs: a systematic review. Ann Surg. 2018;268(1):48-57. doi:10.1097/SLA.0000000000002712

6. Koyle MA, AlQarni N, Odeh R, et al. Reduction and standardization of surgical instruments in pediatric inguinal hernia repair. J Pediatr Urol. 2018;14(1):20-24. doi:10.1016/j.jpurol.2017.08.002