Abstract

In the jungle, survival is highly correlated with the ability to detect and distinguish between an approaching predator and a putative prey. From an ecological perspective, a predator rapidly approaching its prey is a stronger cue for flight than a slowly moving predator. In the present study, we use functional magnetic resonance imaging in the nonhuman primate to investigate the neural bases of the prediction of an impact to the body by a looming stimulus, i.e., the neural bases of the interaction between a dynamic visual stimulus approaching the body and its expected consequences onto an independent sensory modality, namely, touch. We identify a core cortical network of occipital, parietal, premotor, and prefrontal areas maximally activated by tactile stimulations presented at the predicted time and location of impact of the looming stimulus on the face compared with the activations observed for spatially or temporally incongruent tactile and dynamic visual cues. These activations reflect both an active integration of visual and tactile information and of spatial and temporal prediction information. The identified cortical network coincides with a well-described multisensory visuotactile convergence and integration network suggested to play a key role in the definition of peripersonal space. These observations are discussed in the context of multisensory integration, spatial and temporal prediction, and Bayesian causal inference.

SIGNIFICANCE STATEMENT Looming stimuli have a particular ecological relevance as they are expected to come into contact with the body, evoking touch or pain sensations and possibly triggering an approach or escape behavior depending on their identity. Here, we identify the nonhuman primate functional network that is maximally activated by tactile stimulations presented at the predicted time and location of impact of the looming stimulus. Our findings suggest that the integration of spatial and temporal predictive cues possibly relies on the same neural mechanisms that are involved in multisensory integration.

Keywords: fMRI, looming visual, macaque, multisensory integration, prediction, tactile

Introduction

In the jungle, survival is highly correlated with the ability to detect and distinguish between a predator and a putative prey. From an ecological perspective, a predator rapidly approaching its prey is a stronger cue for flight than a slowly moving predator. Experimentally, such a situation can be modeled as a looming stimulus moving toward the subject of the experiment. In such a context, looming stimuli have been described to elicit stereotyped defensive behavior both in monkeys (Schiff et al., 1962) and in human infants (Ball and Tronick, 1971). These observations suggest that subjects predict the possible consequences of these stimuli onto their body and anticipate these consequences by producing an escape motor repertoire. These predictive mechanisms are expected to be heteromodal by essence: the sensory consequences of looming stimuli, be they visual or auditory, are mostly predicted onto the tactile modality, as an impact to the body. And indeed, recent studies show that looming stimuli enhance tactile processing at the predicted time of impact and at the expected location of impact, both as measured by enhanced tactile sensitivity (Cléry et al., 2015a) and shorter reaction times (Canzoneri et al., 2012; Kandula et al., 2015), including when specifically probing nociceptive perception (De Paepe et al., 2016). Likewise, looming auditory-visual multisensory stimuli increase behavioral orienting indices (Maier et al., 2004; Cappe et al., 2009). Threatening visual looming stimuli, such as spiders compared with butterflies, further shortened the reaction times to tactile probes presented on the skin (de Haan et al., 2016). These several lines of behavioral evidence strongly suggest that the predictive mechanisms at play in estimating the consequences of looming stimuli on the skin pertain to more general processes involved in the defense of body integrity.

However, the neurophysiological mechanisms by which the dynamic information provided by one sensory modality predictively enhances the processing of another sensory modality remain unknown. A parsimonious hypothesis is that these processes involve cortical regions receiving neuronal information from both sensory modalities and optimally combining these multisensory signals. In the specific context of visual looming stimuli predicting an impact to the face, this parsimonious view predicts the contribution of a well characterized visuotactile convergence network of post-arcuate premotor (premotor area F4; Gross and Graziano, 1995; Fogassi et al., 1996), intraparietal [ventral intraparietal area (VIP); Duhamel et al., 1997, 1998; Bremmer et al., 1997, 2000, 2002a,b; Guipponi et al., 2013] and striate and extrastriate visual areas (Guipponi et al., 2013, 2015a). Consolidating this prediction, the parietal and the premotor components of this network have been involved in the representation of a defense peripersonal space (PPS; for reviews, see Graziano and Cooke, 2006; Cléry et al., 2015b) and in approaching behavior (Rizzolatti et al., 1997).

Here, we used functional magnetic resonance imaging (fMRI) in monkeys to test this hypothesis and to identify the neural bases of the prediction of an impact of a looming visual stimulus onto the body. While the monkeys were maintaining their gaze onto a central point, we presented them with a visual stimulus looming toward the face and/or a tactile stimulation (airpuff) on the face. These visual and tactile stimuli were either presented in isolation in independent blocks or played together in the same blocks. When played together, we manipulated the spatial and temporal relationships between the visual and the tactile stimulations so as to isolate the specific contribution of either temporal prediction or spatial prediction cues on the cortical activations. Our observations confirm the parsimonious hypothesis outlined above, that impact prediction (i.e., the anticipation of touch) onto the face activates a core post-arcuate, intraparietal, striate, and extrastriate cortical network previously associated with multisensory convergence and multisensory integration. Importantly, we show that the activity of this network is highly dependent upon the spatial and temporal predictive information held by the looming visual stimulus. These observations are discussed in the context of multisensory integration, spatial and temporal prediction, and Bayesian causal inference.

Materials and Methods

All procedures were in compliance with the guidelines of the European Community on animal care (European Community Council, Directive 2010/63/UE). All the protocols used in this experiment were approved by the local animal care committee (agreement #C2EA42-12-10-0401-002). The animals' welfare and the steps taken to reduce suffering were in accordance with the recommendations of the Weatherall report, “The use of nonhuman primates in research”.

Subjects and materials

Two rhesus monkeys (female M1, male M2; 10-8 years old, 6–7 kg) participated in the study. The animals were implanted with a plastic MRI compatible headset covered by dental acrylic. The anesthesia during surgery was induced by Zoletil (Tiletamine-Zolazepam, Virbac, 5 mg/kg) and followed by isoflurane (Belamont, 1–2%). Postsurgery analgesia was ensured thanks to Temgesic (buprenorphine, 0.3 mg/ml, 0.01 mg/kg). During recovery, proper analgesic and antibiotic coverage was provided. The surgical procedures conformed to European and National Institutes of Health Guidelines for the Care and Use of Laboratory Animals.

During the scanning sessions, monkeys sat in a sphinx position in a plastic monkey chair positioned within a horizontal magnet (1.5-T MR scanner Sonata; Siemens) facing a translucent screen placed 90 cm from the eyes. Their head was restrained and equipped with MRI-compatible headphones customized for monkeys (MR Confon GmbH). A radial receive-only surface coil (10 cm diameter) was positioned above the head. Eye position was monitored at 120 Hz during scanning using a pupil-corneal reflection tracking system (Iscan). Animals were rewarded with liquid dispensed by a computer-controlled reward delivery system (Crist) through a plastic tube leading to their mouth. The task, all the behavioral parameters, and the sensory stimulations were monitored by two computers running MATLAB and Presentation (Neurobehavioural Systems). Visual stimulations were projected onto the screen with a Canon XEED SX60 projector. Tactile stimulations were delivered through Teflon tubing and two articulated plastic arms connected to distant air pressure electro-valves. Monkeys were trained in a mock scan environment that approximated the actual MRI scanner setup as closely as possible.

Task and stimuli

The animals were trained to maintain fixation on a red central spot (0.24° × 0.24°) while stimulations (visual and/or tactile) were delivered. The monkeys were rewarded for staying within a 2° × 2° tolerance window centered on the fixation spot. The distance between the monkeys' eyes and the screen was 98 cm. The reward delivery was scheduled to encourage long fixation without breaks (i.e., the interval between successive deliveries was decreased and their amount was increased, up to a fixed limit, as long as the eyes did not leave the window). The fixation spot was placed in the center of a background representing a 3D environment with visual depth cues and was present throughout the runs (Fig. 1A). The 3D environment and different cone trajectories, at eye level, were all constructed with the Blender software (http://www.blender.org/). Visual and/or tactile stimuli were presented to the monkeys as follows.

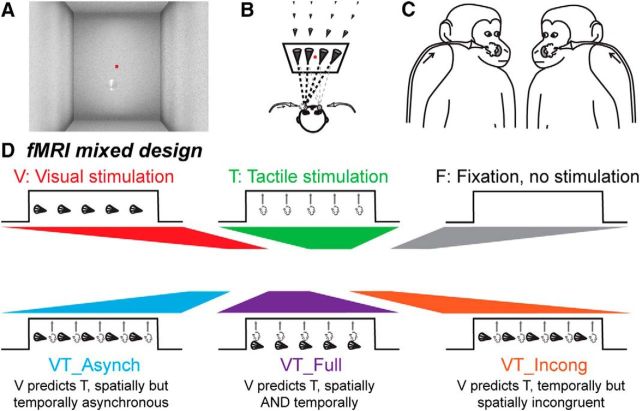

Figure 1.

Methods. A, Visual stimuli consisted of a video sequence of a cone placed in a 3D environment and looming toward the animal's face. The red dot corresponds to the spatial location the monkey was required to fixate to be rewarded. B, The trajectory of the looming cone could start from eight different points in the back of the visual scene, four ipsilateral and four contralateral to the predicted impact location with respect to the monkey's face. C, Tactile stimulations were directed to the center of the face by means of airpuffs directed to the left or right cheek, coinciding with the predicted impact of the looming visual stimulus on the monkey's face. D, We used a mixed fMRI design. Each run was thus composed of five conditions organized in blocks (15 pulses per condition), during which visual (looming cone) and tactile (50 ms airpuff) events were organized as follows: a unimodal visual stimulation condition (V), a unimodal tactile stimulation condition (T), a bimodal condition in which the visual stimulus is spatially and temporally predictive of the tactile stimulus (VT_Full), a bimodal condition in which the visual stimulus predicts the tactile stimulus spatially but the tactile stimulus precedes the expected impact in time (VT_Asynch), and a bimodal condition in which the visual stimulus predicts the tactile stimulus temporally but is spatially incongruent with the tactile stimulus (VT_Incong).

Visual stimuli consisted of a low-contrast dynamic 3D cone-shaped stimulus, pointing toward the monkey. The average luminosity of the cone was the same as that of the background (20% along the white-to-black gray scale of the video display). However, luminosity varied locally within the cone, from 5% to 25%. The cone moved within the 3D environment, originating away from and rapidly approaching the monkey (Fig. 1A). This resulted in a stable unambiguous percept of the cone moving from far away to close to the subject, along precise reproducible trajectories. The trajectory of this looming stimulus was adjusted so as to induce the percept of a potential impact on the monkey's face at two possible locations, on the left or right cheeks, close to the snout. For each impact location, the cone trajectory could originate from eight possible locations around the fixation point: four in the left hemifield and four in the right hemifield at ±0.32°, ±1.27°, ±3.16°, and ±4.11°. As a result, half of the cone trajectories crossed the midsagittal plane and induced a predicted impact to the face on the contralateral cheek with respect to the spatial origin of the cone (Fig. 1B).
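The trajectory set described above can be enumerated in a short sketch. The names used here (`ORIGIN_ECCENTRICITIES_DEG`, `build_trajectories`) and the sign convention (negative values for the left hemifield) are illustrative assumptions, not taken from the stimulus code.

```python
from itertools import product

# Horizontal origin eccentricities reported in the text (degrees).
# Sign convention (negative = left hemifield) is an assumption.
ORIGIN_ECCENTRICITIES_DEG = [0.32, 1.27, 3.16, 4.11]
IMPACT_CHEEKS = ["left", "right"]


def build_trajectories():
    """Enumerate every (origin, impact cheek) pair and flag midline crossings."""
    origins = [-e for e in ORIGIN_ECCENTRICITIES_DEG] + ORIGIN_ECCENTRICITIES_DEG
    trajectories = []
    for origin, cheek in product(origins, IMPACT_CHEEKS):
        origin_side = "left" if origin < 0 else "right"
        trajectories.append({
            "origin_deg": origin,
            "impact_cheek": cheek,
            # A trajectory crosses the midsagittal plane when it starts in
            # one hemifield and ends on the opposite cheek.
            "crosses_midline": origin_side != cheek,
        })
    return trajectories


trajectories = build_trajectories()
n_crossing = sum(t["crosses_midline"] for t in trajectories)
print(len(trajectories), n_crossing)  # 16 8
```

Under these assumptions, two impact locations and eight origins give 16 trajectories, half of which cross the midsagittal plane, as stated in the text.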

Tactile stimuli consisted of airpuffs delivered at two possible locations on the monkey's face, on the left or right cheeks, at the two possible impact locations predicted by the cone trajectory, close to the nose and the mouth (Fig. 1C). These puffs were delivered, with a pressure intensity set at 0.3 bars, through tubes whose extremities were placed 2–4 mm from each cheek of the monkeys. This barely perceivable airpuff intensity was chosen so as to maximize the multisensory integration processes expected to take place when combined with a visual stimulus. Indeed, at the single neuron level, multisensory integration processes have been shown to be inversely proportional to the strength of each unimodal stimulus: the lower their intensity, the higher the deviation of the neuron's multisensory response from its unimodal responses (Stein and Meredith, 1993; Avillac et al., 2004, 2007). Airpuff duration was set to 50 ms (as measured with a silicon pressure on-chip signal-conditioned sensor, MPX5700 Series, Freescale) and successive airpuffs were separated by a random time interval ranging from 1500 to 2800 ms.

The visual and tactile sensory modalities were tested in the same runs, either in separate blocks (unimodal stimulations) or in the same blocks (bimodal stimulations; Fig. 1D). In the visual unimodal blocks, the movement of the visual cone had a duration of 550 ms and two looming stimuli were separated by a random interval ranging from 1300 to 2800 ms. In the tactile unimodal blocks, airpuff duration was set to 50 ms and successive airpuffs were separated by a random time interval ranging from 1300 to 2800 ms. For the bimodal conditions, we defined three different types of stimulation blocks: (1) tactile stimuli temporally offset (preceding) with respect to the time when the visual stimulus is expected to hit the face but spatially congruent bimodal blocks [VT temporally asynchronous (VT_Asynch)], in which the tactile stimulus was presented while the visual stimulus was approaching the face of the monkey (midcourse of the visual stimulus, 300 ms before the end of the video, airpuff latency as measured with the pressure sensor), at the location at which the visual stimulus was expected to impact the face; (2) tactile stimuli temporally synchronous with the time when the visual stimulus hits the face and spatially congruent bimodal blocks [VT fully predictive (VT_Full)], in which the tactile stimulus was presented at the moment when the visual stimulus was expected to impact the face (airpuff latency as measured with the pressure sensor), at the spatial location of the expected impact; and (3) tactile stimuli temporally synchronous with the time when the visual stimulus hits the face but spatially incongruent bimodal blocks [VT spatially incongruent (VT_Incong)], in which the tactile stimulus was presented at the moment when the visual stimulus was expected to impact the face, but at a location symmetrical to where the visual stimulus was expected to impact the face.
In all these bimodal blocks, visual stimuli were presented with the same temporal dynamics as in the unimodal visual blocks. It is crucial to note that the visual and tactile stimuli were designed to have a low salience so as to maximize the multisensory integration, as discussed above (Stein and Meredith, 1993).
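The timing and location rules of the three bimodal block types can be summarized in a minimal sketch. It assumes that the predicted impact coincides with the end of the 550 ms video, which the text implies but does not state explicitly; the function name `tactile_event` is hypothetical.

```python
VIDEO_DURATION_MS = 550  # duration of the looming sequence (from the text)
ASYNCH_LEAD_MS = 300     # VT_Asynch: airpuff occurs 300 ms before video end

OPPOSITE_CHEEK = {"left": "right", "right": "left"}


def tactile_event(condition, predicted_cheek):
    """Return (airpuff time in ms after video onset, stimulated cheek).

    Assumes the predicted impact time equals the end of the video.
    """
    if condition == "VT_Full":
        return VIDEO_DURATION_MS, predicted_cheek
    if condition == "VT_Asynch":
        return VIDEO_DURATION_MS - ASYNCH_LEAD_MS, predicted_cheek
    if condition == "VT_Incong":
        return VIDEO_DURATION_MS, OPPOSITE_CHEEK[predicted_cheek]
    raise ValueError(f"unknown condition: {condition}")


for cond in ("VT_Full", "VT_Asynch", "VT_Incong"):
    print(cond, tactile_event(cond, "left"))
```

Only one factor differs from VT_Full in each control condition: the time of the airpuff in VT_Asynch, and the stimulated cheek in VT_Incong.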

Functional time series (runs) were organized as follows: 15 volume blocks of unimodal and bimodal stimulation blocks were followed by a 15 volume block of pure fixation baseline (Fig. 1D); this sequence was played twice, resulting in a 180 volume run. The 6 types of blocks were presented in 10 counterbalanced possible orders.
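As a quick check of the run structure, the following sketch rebuilds one run from its blocks. The stimulation order passed to `build_run` (a hypothetical helper) stands in for one of the 10 counterbalanced orders, which are not listed in the text, and the sketch assumes the same order is used in both repetitions.

```python
VOLUMES_PER_BLOCK = 15
STIM_BLOCKS = ["V", "T", "VT_Full", "VT_Asynch", "VT_Incong"]


def build_run(stim_order):
    """Return the run as a list of (block name, number of volumes).

    stim_order: a permutation of the five stimulation blocks (placeholder
    for one of the counterbalanced orders used in the experiment).
    """
    if sorted(stim_order) != sorted(STIM_BLOCKS):
        raise ValueError("stim_order must contain each stimulation block once")
    # Five stimulation blocks followed by one fixation baseline block.
    sequence = list(stim_order) + ["Fixation"]
    # The whole sequence is played twice within a run.
    return [(block, VOLUMES_PER_BLOCK) for block in sequence * 2]


run = build_run(STIM_BLOCKS)
total_volumes = sum(n_volumes for _, n_volumes in run)
print(len(run), total_volumes)  # 12 180
```

Six blocks of 15 volumes played twice give the 180 volumes per run reported above.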

Scanning

Before each scanning session, a contrast agent composed of monocrystalline iron oxide nanoparticles (MION; feraheme; Vanduffel et al., 2001) was injected into the animal's femoral/saphenous vein (4–10 mg/kg). Brain activations normally produce increased BOLD signal changes. In contrast, when using MION contrast agents, brain activations produce decreased signal changes (Kolster et al., 2014). For the sake of clarity, the polarity of the contrast agent MR signal changes was inverted. We acquired gradient-echo echoplanar images covering the whole brain (1.5 T; repetition time: 2.08 s; echo time: 27 ms; 32 sagittal slices; 2 × 2 × 2 mm voxels). A total of 135 runs was acquired for M1 and 132 runs for M2.

Analysis

A total of 98 runs for Monkey M1 and 92 runs for Monkey M2 was selected based on the quality of the monkey's fixation throughout each run (>85% within the eye fixation tolerance window). Runs were analyzed using SPM8 (Wellcome Department of Cognitive Neurology, London, UK). For spatial preprocessing, functional volumes were first realigned and rigidly coregistered with the anatomy of each individual monkey (T1-weighted MPRAGE 3D 0.6 × 0.6 × 0.6 mm or 0.5 × 0.5 × 0.5 mm voxel acquired at 1.5T) in stereotactic space. The JIP program (Mandeville et al., 2011) was used to perform a non-rigid coregistration (warping) of mean functional image onto the individual anatomies.

Fixed effect individual analyses were performed for each monkey, with a level of significance set at p < 0.05 corrected for multiple comparisons (FWE, t scores > 4.89) and p < 0.001 (uncorrected level, t scores > 3.1). In all analyses, realignment parameters, as well as eye movement traces, were included as covariates of no interest to remove eye movement and brain motion artifacts. When coordinates are provided, they are expressed with respect to the anterior commissure. Results are displayed on coronal sections or flattened maps obtained with Caret (Van Essen et al., 2001; http://www.nitrc.org/projects/caret/).
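As a sanity check on the uncorrected threshold, the sketch below uses the normal approximation to the t distribution, which is reasonable only under the assumption of a large number of degrees of freedom and a one-tailed test. It does not reproduce the FWE threshold, which depends on the correction procedure applied by SPM.

```python
from statistics import NormalDist


def z_threshold(p_one_tailed):
    """Critical value of the standard normal for a one-tailed p value."""
    return NormalDist().inv_cdf(1.0 - p_one_tailed)


# For large degrees of freedom the t distribution approaches the normal,
# so p < 0.001 (one-tailed) corresponds to a score of about 3.09.
print(round(z_threshold(0.001), 2))  # 3.09
```

This value is consistent with the t > 3.1 criterion reported for the uncorrected level.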

Activations were assigned to specific cortical areas on each individual monkey brain using the monkey brain atlases made available on http://scalablebrainatlas.incf.org. These atlases allow mapping specific anatomical coronal sections with several cytoarchitectonic parcellation studies. We used the Lewis and Van Essen (2000) and Paxinos et al. (1999) rhesus monkey parcellations. For some areas, we additionally referred to more recent works (Petrides et al., 2005; Belmalih et al., 2009).

Mixed block/event-related analysis.

Our main goal in this work was to specifically identify how visual impact as predicted by the time course of the visual video was modulated by a tactile stimulus depending on the subjects' expectations due to the mixed block/event structure of the experimental design (Petersen and Dubis, 2012). We were thus targeting processes time-locked to stimulus dynamics in specific blocks. For each block condition, we extracted visual times (in mid-course of the visual stimulus) and tactile times of presentation. To identify these time-locked processes irrespective of more general block-related processes, activations were not reported against the fixation blocks. Rather, activations were reported against arbitrarily defined fixation events within each unimodal and each bimodal condition, assigned at intermediate timings between two successive stimulus sequences. For bimodal conditions, we performed two types of analyses, one based on the timings of the visual stimuli and one based on the timings of the tactile stimuli. In Figure 4, color contours correspond to the activations obtained relative to the looming visual stimuli time onset and black contours correspond to the activations obtained relative to tactile stimuli time onset (t scores > 3.1). In all three bimodal conditions, the spatial extent of the observed functional activations is only marginally affected by whether the analyses are performed relative to the visual or to the tactile stimuli. Thus, unless stated otherwise, the presentation of the results and their discussion refer to the analysis timed on the visual stimuli.
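The definition of within-block reference fixation events can be illustrated with a minimal sketch. It assumes that "intermediate timings" means the midpoint between the end of one stimulus sequence and the onset of the next; the exact rule is not specified in the text, and `reference_fixation_times` is a hypothetical helper.

```python
def reference_fixation_times(stimulus_onsets_ms, stimulus_duration_ms):
    """Place one reference fixation event between successive stimuli.

    Assumption: each reference event falls midway between the offset of
    one stimulus and the onset of the following one.
    """
    references = []
    for onset, next_onset in zip(stimulus_onsets_ms, stimulus_onsets_ms[1:]):
        offset = onset + stimulus_duration_ms
        references.append((offset + next_onset) / 2)
    return references


# Illustrative onsets (ms) for three 550 ms looming sequences in one block.
print(reference_fixation_times([0, 2000, 4500], 550))  # [1275.0, 3525.0]
```

Because the reference events are sampled within the same block as the events of interest, block-level effects are shared by both and cancel in the contrast.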

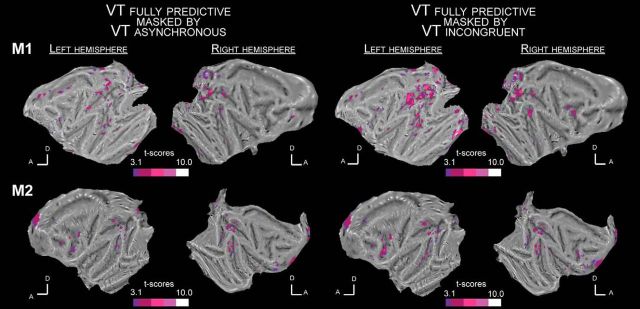

Figure 4.

Whole-brain activation maps for the VT fully predictive versus fixation contrast masked by the VT temporally asynchronous versus fixation contrast (left; p < 0.05) and the VT spatially incongruent versus fixation contrast (right; p < 0.05) for Monkey M1 (top flat maps) and Monkey M2 (bottom flat maps). All else as in Figure 3.

Regions-of-interest.

We performed regions-of-interest (ROIs) analyses using the MarsBar toolbox (Brett et al., 2002), based on the fixed effects individual analyses results. To ensure statistically valid inferences when performing the ROI analysis, we divided, for each individual subject, the functional runs into two independent datasets of equal size. The ROIs were defined on one dataset, using the activations obtained at uncorrected level (t scores > 3.1) in the fully predictive bimodal VT condition (VT_Full) versus fixation contrast. For each of these ROIs, the percentage of signal change (PSC) was extracted at their associated coordinates for all the runs belonging to the second dataset, using SPM8 and the MarsBar toolbox (http://marsbar.sourceforge.net; Brett et al., 2002). Specifically, for each ROI, MarsBar extracts the signal intensity at the event of interest as a percentage of the whole brain mean (Ev/WholeBrain). Likewise, for each ROI and each event of interest, it extracts the signal intensity at the corresponding reference fixations (i.e., fixations sampled within the same blocks as the events of interest), again as a percentage of the whole brain mean (Fix/WholeBrain). The PSC we report corresponds to the difference between Ev/WholeBrain and Fix/WholeBrain. The significance of these PSCs across all runs was assessed using repeated-measures ANOVA and paired t tests, after the data for each ROI were tested for normality (Kolmogorov–Smirnov test). Within these ROIs, we reported superadditive and subadditive interactions to test for multisensory integration processes.
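The PSC definition given above, together with a conventional additivity criterion, can be sketched as follows. The sketch does not use MarsBar; the function names are hypothetical, and the criterion (bimodal response compared with the sum of the unimodal responses) is the usual definition of super- and subadditivity, assumed here rather than taken from the analysis code. The numerical values are purely illustrative.

```python
from statistics import mean


def percent_signal_change(event_pct, fixation_pct):
    """PSC per run: Ev/WholeBrain minus Fix/WholeBrain (both in %)."""
    return [ev - fix for ev, fix in zip(event_pct, fixation_pct)]


def additivity_index(psc_vt, psc_v, psc_t):
    """Mean bimodal PSC minus the sum of the mean unimodal PSCs."""
    return mean(psc_vt) - (mean(psc_v) + mean(psc_t))


def classify_interaction(index):
    """Label the interaction; a statistical test would be needed in practice."""
    if index > 0:
        return "superadditive"
    if index < 0:
        return "subadditive"
    return "additive"


# Illustrative values only (not data from the study).
psc_vt = percent_signal_change([101.0, 101.0], [100.0, 100.0])
psc_v = percent_signal_change([100.25, 100.25], [100.0, 100.0])
psc_t = percent_signal_change([100.25, 100.25], [100.0, 100.0])
print(classify_interaction(additivity_index(psc_vt, psc_v, psc_t)))
```

In the actual analysis, the sign of such an index would have to be supported by the repeated-measures ANOVA and paired t tests described above before an ROI is labeled superadditive or subadditive.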

Potential covariates.

In all analyses, realignment parameters, as well as eye movement traces, were included as covariates of no interest to remove eye movement and brain motion artifacts. However, some of the stimulations might have induced a specific behavioral pattern biasing our analysis, not fully accounted for by the above regressors. For example, airpuffs to the face might have evoked facial movements (for an analysis of blink-related activations, see Guipponi et al., 2015b) inducing some degree of variability in the point of impact of the airpuffs. Although we cannot completely rule out this possibility, our experimental setup allows us to minimize its impact. First, monkeys worked head-restrained (to maintain the brain at the optimal position within the scanner, to minimize movement artifacts on the fMRI signal and to allow for a precise monitoring of their eye movements). As a result, the tactile stimulations to the face were stable in a given session. When drinking the liquid reward, small lip movements occurred. These movements thus correlated with reward timing. The airpuffs were directed to the cheeks on each side of the monkey's nose at a location that was not affected by the lip movements. Airpuffs are often suspected to activate the auditory system. In the present study, the airpuff delivery system was placed outside the MRI scanner room and the monkeys were wearing headphones to protect their hearing from the high intensity sound produced by the scanner. By placing a microphone inside the headphones, we confirmed that no airpuff-triggered sound could be recorded, whether in the absence or presence of a weak MRI scanner noise (Guipponi et al., 2015a). Second, monkeys were required to maintain their gaze on a small fixation point, within a tolerance window of 2° × 2°. This was controlled online and was used to motivate the animal to maximize fixation rates (as fixation disruptions, such as saccades or drifts, affected the reward schedule). 
Eye traces were also analyzed offline for the selection of the runs to include in the analysis (good fixation for 85% of the run duration, with no major fixation interruptions).

Pupil size analysis.

During the scanning sessions, pupil size (eye pupil horizontal diameter, in pixels) was recorded together with eye position signals. The variations of pupil size were analyzed as a function of block condition, to identify possible autonomic markers that the monkeys were interpreting each stimulation block differently, based on the predictive information provided by each block (Fig. 2A). Specifically, changes in pupil size over time were normalized with respect to the pupil size average of each session. The main pupil size analysis describes the average deviation of pupil size in each block condition with respect to the overall session average, per monkey. Eye movement analyses indicate that fixation behavior did not significantly vary from one block to the other, for each monkey (see next section). As a result, changes in pupil diameter cannot be accounted for by changes in eye movements.
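The normalization described above can be written as a minimal sketch, assuming that the deviation is expressed as a percentage of the session average; the function name and the sample values are illustrative.

```python
from statistics import mean


def normalized_pupil_change(block_samples, session_samples):
    """Average pupil size in a block, as % deviation from the session mean."""
    session_mean = mean(session_samples)
    return 100.0 * (mean(block_samples) - session_mean) / session_mean


# Illustrative pupil diameters in pixels (not data from the study).
session = [40, 50, 60]
block = [55, 55]
print(normalized_pupil_change(block, session))  # 10.0
```

Normalizing within each session removes session-to-session differences in absolute pupil diameter, so that block conditions can be compared across sessions.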

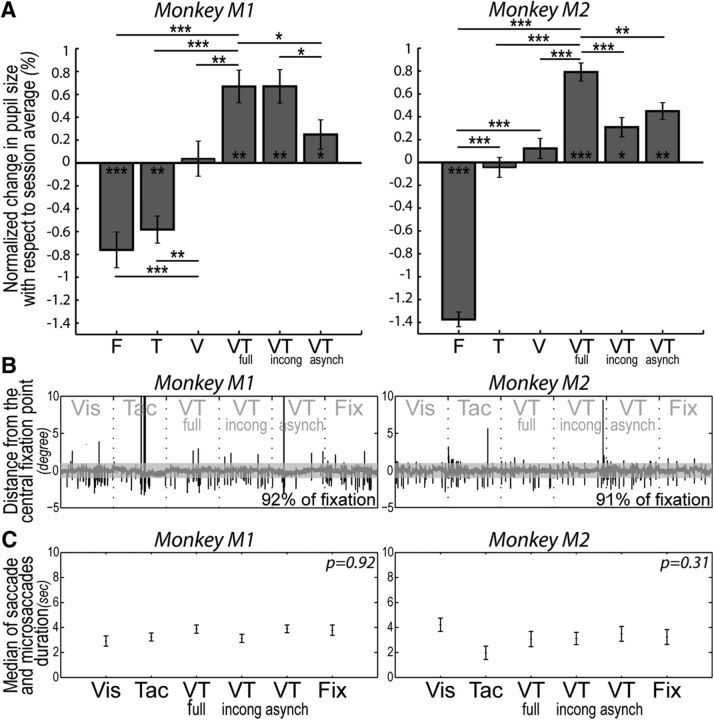

Figure 2.

A, Pupil size changes as a function of the predictive structure of the stimulation blocks. Average normalized changes in pupil size with respect to session average (in %), for each of Monkeys M1 and M2, for the unimodal visual stimulation condition (visual), the unimodal tactile stimulation condition (tactile), the bimodal condition in which the visual stimulus is spatially and temporally predictive of the tactile stimulus (VT_Full), the bimodal condition in which the visual stimulus is temporally asynchronous with the tactile stimulus (VT_Asynch) and the bimodal condition in which the visual stimulus is temporally predictive but spatially incongruent with the tactile stimulus (VT_Incong). The statistical significance of paired t tests is represented as follows: *p < 0.05, **p < 0.01, ***p < 0.001. B, Single run example of eye deviation from the fixation point in distance in degrees, for Monkeys M1 and M2. C, Mean of saccade and microsaccade duration in seconds over all the runs (±median SE), for each type of block and each monkey.

Eye movement data analysis.

During the MRI scanning sessions, the horizontal (X) and vertical (Y) eye position of the monkey was recorded (in degrees). An example of the time course of eye position is presented in Figure 2B, for each monkey, for an exemplar run. For each of these exemplar runs, the block structure is indicated. The quality of fixation was constant across blocks as assessed by a Kruskal–Wallis test performed on the time spent, in each block, beyond a 1° x- or y-threshold (p > 0.05 for each monkey). This measure quantifies both the time spent out of the fixation window and the frequency of saccades and microsaccades (defined as any eye movement deviation of more than 1° in x- or y-coordinates). Figure 2C represents the average time spent out of the fixation window (and corresponding SE), per block type and per monkey.
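The fixation-quality measure can be sketched as follows, assuming eye position is expressed in degrees relative to the fixation point and sampled at the 120 Hz rate reported for the eye tracker; the function name and sample values are illustrative.

```python
SAMPLING_RATE_HZ = 120  # eye tracker sampling rate (from the text)


def time_beyond_threshold(x_deg, y_deg, threshold_deg=1.0):
    """Time (s) during which gaze deviates by more than the threshold.

    x_deg, y_deg: eye position samples relative to the fixation point.
    A sample counts as out of the window if either coordinate exceeds
    the threshold in absolute value.
    """
    n_out = sum(
        1 for x, y in zip(x_deg, y_deg)
        if abs(x) > threshold_deg or abs(y) > threshold_deg
    )
    return n_out / SAMPLING_RATE_HZ


# Four illustrative samples: three of them fall outside the +/-1 deg window.
x = [0.0, 1.5, 0.2, -1.2]
y = [0.0, 0.0, 2.0, 0.0]
print(time_beyond_threshold(x, y))  # 0.025
```

Computed per block, this quantity is the variable submitted to the Kruskal–Wallis test described above.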

Results

Monkeys were exposed, in the same time series, to six different types of stimulation blocks, in a mixed fMRI design (Fig. 1D), while required to fixate a central red point throughout. These six conditions were as follows: (1) pure fixation blocks; (2) visual only blocks, with looming visual stimuli evolving in a virtual 3D environment (Fig. 1A), running along several possible trajectories predicting an impact to the face at two possible locations (Fig. 1B); (3) tactile only blocks with tactile stimulations at two possible facial locations coinciding with the two possible endpoints of the looming visual stimuli (Fig. 1C); and (4–6) three possible bimodal visuotactile stimulations (Fig. 1D) with the visual stimulus spatially and temporally predicting the tactile stimulus (VT_Full), the visual stimulus predicting the tactile stimulus spatially but the tactile stimulus preceding the expected impact in time (VT_Asynch), or the visual stimulus predicting the tactile stimulus temporally but being spatially incongruent with the tactile stimulus (VT_Incong). In the following, we describe the behavioral and functional correlates of the spatial and temporal prediction information held by the visual looming stimulus onto the processing of the tactile stimulus.

Changes in pupil size reveal an implicit processing of predictive signals

The monkeys were required to fixate the central red spot as long as possible to maximize their reward schedule, irrespective of the type of stimulation they were presented with. They thus did not produce any overt indicator that they processed the distinct stimulation blocks. This was done on purpose so as to probe the prediction of impact of looming visual stimuli onto tactile perception in the absence of any active cognitive task to be operated on either of the two sensory inputs, as this would necessarily have interfered with low-level multisensory integrative processes. However, if the perception of the tactile or the visual stimuli is influenced by their co-occurrence (due to multisensory integration) and how they are perceived is further differentially modulated by the temporal and spatial contingencies between these two stimuli (due to spatial and temporal prediction), one can expect block structure to modulate autonomic parameters such as pupil size, which has been shown to covary with a wide range of cognitive processes (for review, see Laeng et al., 2012). Here, the analysis of how pupil size varied as a function of the different stimulation blocks is expected to provide an implicit indicator of a selective processing of predictive sensory stimulations compared with nonpredictive sensory stimulations (Fig. 2A).

Specifically, in both monkeys, pupil size was consistently smaller on fixation blocks than the corresponding average pupil size in each functional run (paired t test, p < 0.001, for both monkeys). Pupil size was also larger on unimodal visual blocks than the corresponding average pupil size observed on fixation blocks (paired t test, p < 0.001, for both monkeys). This result is the opposite from what could have been predicted from the photomotor reflex: the additional presence of the looming visual stimuli is expected to induce, if anything, a phasic reduction in pupil size, resulting in a decrease in the average pupil size on visual blocks relative to fixation blocks. We actually observe the opposite, suggesting that the looming visual stimuli evoke in both monkeys an increased arousal/expectation state associated with relatively enlarged pupils. A similar increase in pupil size compared with fixation can also be observed in the unimodal tactile block, for Monkey M2 (paired t test, p < 0.001). Crucially, pupil size was statistically larger on the three bimodal predictive conditions compared with the fixation blocks (paired t test, p < 0.01, for both monkeys) but also compared with the unimodal visual blocks (paired t test, p < 0.01, for both monkeys). It is to be noted that in all these three conditions, the visual stimuli that could have induced phasic changes in pupil size are actually identical to those presented during the unimodal visual blocks. The fact that the bimodal conditions correlated with larger pupil size than in the unimodal visual condition is an indication that the tactile stimulus was implicitly processed by the monkey.

In the bimodal conditions, for Monkey M2, pupil size changes are maximal in blocks in which the visual stimuli both spatially and temporally predict the tactile stimulation (paired t test, p < 0.01 when compared with the temporally asynchronous condition; p < 0.001 when compared with the spatially incongruent condition). Monkey M1 appears to mostly rely on the temporal cues predictive of the imminence of impact (paired t test, p < 0.05 when comparing the fully predictive blocks to the temporally asynchronous condition; p > 0.05 when comparing the fully predictive blocks to the spatially incongruent condition). There are no differences in the monkeys' fixation behavior across the different blocks, as assessed by a Kruskal–Wallis test performed on the time out of the fixation window per block (p > 0.05 for each monkey; Fig. 2C). As a result, the changes in pupil size are independent of the quality of fixation. Overall, these observations indicate that pupil size changes can serve as an implicit autonomic marker that the monkeys are distinctly processing the predictive signals provided by each block, correlating with the functional observations that will now be described.

Unimodal stimulations evoke very weak cortical functional activations

The visual and tactile stimulations were specifically designed to have a low salience (low-contrast looming cone onto the 3D visual background, low-intensity tactile stimuli) so as to maximize the expected neuronal integration processes (Stein and Meredith, 1993; Avillac et al., 2004, 2007). Confirming that these stimuli were indeed low-salience stimuli, both evoke very weak cortical functional activations when presented on their own, as can be seen on their individual functional whole-brain flat maps (Fig. 3). Specifically, in both monkeys, the visual looming stimuli sparsely activate a few striate or extrastriate cortical regions, the intraparietal cortex, and the peri-arcuate premotor cortex (Fig. 3, red activations; t > 3.1). Tactile stimulations evoke even weaker cortical activations, mostly in somatosensory-related cortices in Monkey M1, and around the post-central sulcus in the right hemisphere of Monkey M2 (Fig. 3, green activations; t > 3.1). These tactile maps can be compared with those obtained with stronger tactile stimuli (Guipponi et al., 2015a, their Fig. 2; Wardak et al., 2016).

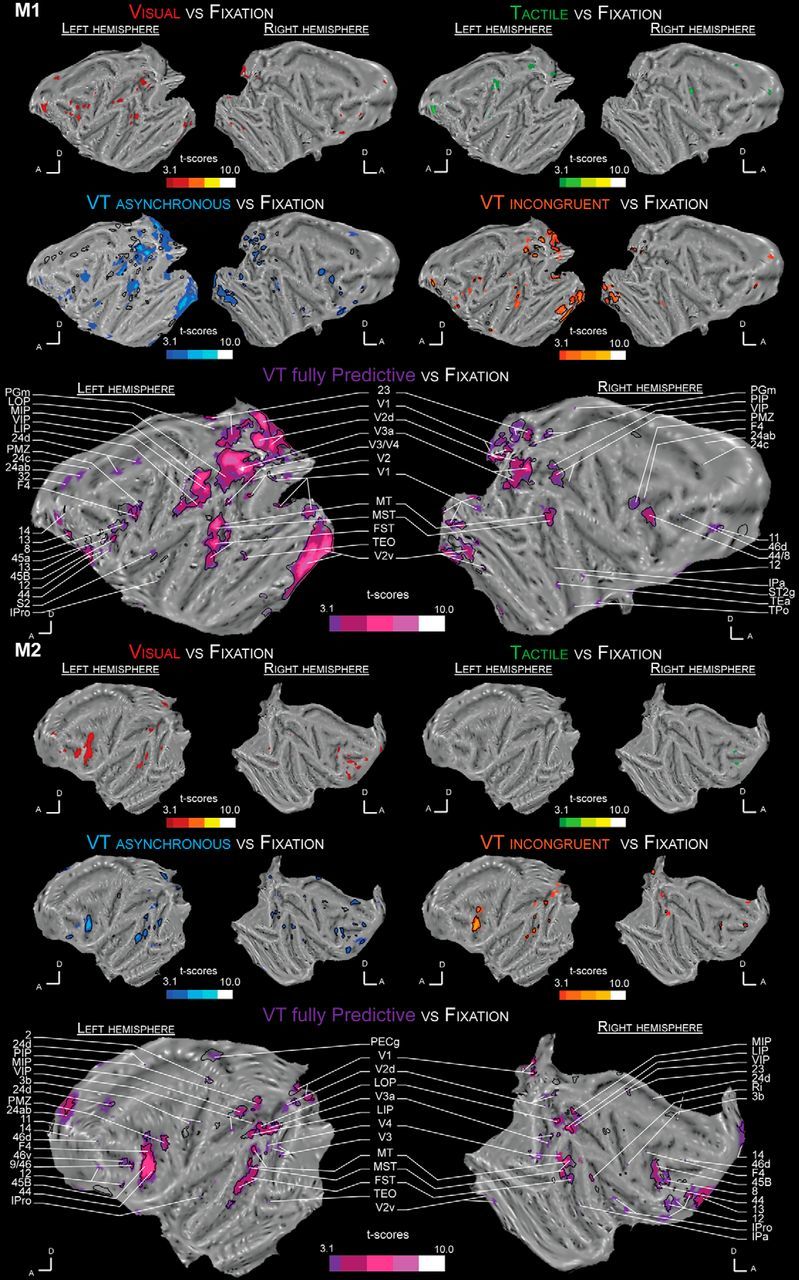

Figure 3.

Whole-brain activation maps for the visual (red), tactile (green), VT temporally asynchronous (blue), VT spatially incongruent (orange), and VT fully predictive (purple) conditions. Each contrast is performed with a level of significance set at p < 0.001 uncorrected level, t > 3.1 for each monkey (M1 and M2). Dark lines represent VT bimodal cortical activations when the time reference is based on the tactile stimulus time rather than on the end of the visual looming sequence, as in the main analysis. 2, Somatosensory area 2; 3b, somatosensory area 3b; 8, area 8; 9/46, area 9/46; 11, area 11; 12, area 12; 13, orbitofrontal area 13; 14, area 14; 23, area 23; 24ab, area 24ab; 24c, area 24c; 24d, area 24d; 32, area 32; 44, area 44; 45, area 45; 46v, ventral area 46; 46d, posterior subdivision of area 46; F4, frontal area F4; FST, floor of superior temporal sulcus; IPa, intraparietal sulcus associated area; IPro, insular proisocortex; LIP, lateral intraparietal area; LOP, lateral occipital parietal cortex; MIP, medial intraparietal area; MST, medial superior temporal area; MT, middle temporal area; PECg, parietal area PE, cingulate part; PGm, parietal area PG, medial part; PIP, posterior intraparietal area; PMZ, premotor zone; Ri, retroinsular area; S2, secondary somatosensory cortex; ST2g, superior temporal sulcus area 2, gyral part; TEa, temporal area TEa; TEO, temporal area TE, occipital part; TPo, temporal parieto-occipital associated area in sts; V1, visual area 1; V2, visual area 2; V2v, ventral visual area 2; V2d, dorsal visual area 2; V3a, visual area 3a; V3, visual area 3; V4, visual area 4.

An occipito-parieto-temporo-premotor network is strongly activated by a tactile stimulus presented at the predicted time of impact of a looming visual stimulus on the face

Figure 3 also presents the whole brain functional activation flat maps for the three bimodal visuotactile stimulation blocks: for the temporally asynchronous bimodal condition (VT_Asynch: blue activations, t > 3.1), the spatially incongruent bimodal condition (VT_Incong: orange activations, t > 3.1), and the fully predictive bimodal condition (VT_Full: purple activations, t > 3.1). Color contours correspond to the activations obtained relative to the looming visual stimuli time onset. Black contours correspond to the activations obtained relative to tactile stimuli time onset (t > 3.1). Crucial to the interpretation of the present data, in all of the three bimodal conditions, the observed functional activations are only marginally affected by whether the analyses are performed relative to the visual or to the tactile stimuli. Only activations identified at least in three of four hemispheres are discussed below.

In blocks in which visual stimuli are spatially and temporally predictive of tactile stimuli at the expected time of impact (VT_Full), the activations are strikingly more widespread and stronger than those observed during unimodal visual or tactile stimulations (Fig. 3, purple activations; t > 3.1). Specifically, these include large portions of the striate and extrastriate cortex in areas V1, V2, V3, V3a, and V4, temporal sulcus activations including areas MT, MST, FST, and TEO, parietal activations including areas LOP, VIP, and MIP, insular activations (area IPro), cingulate activations (area 24c-d), and prefrontal/premotor activations including premotor areas F4, 44, 45b, and 46.

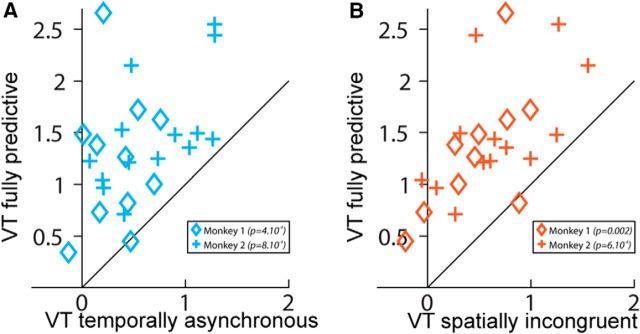

Both spatial and temporal prediction cues of impact to the face are crucial to the observed activations

In striking contrast to what is observed when the visual looming stimulus predicts the imminence of the tactile stimulus both in time and space, in blocks in which visual stimuli are temporally but not spatially predictive of tactile stimuli (i.e., when the tactile stimulus is presented at the expected impact time of the looming visual stimulus, but on the opposite cheek on the face, VT_Incong, Fig. 3, orange maps), functional activations are overall weaker and smaller (Fig. 4, right; VT_Full masked by VT_Incong, p < 0.05). This is even more pronounced for blocks in which tactile stimuli are asynchronous with respect to the expected impact of the visual stimuli on the face (i.e., when the tactile stimulus is presented at the expected impact location of the looming visual stimulus on the face, but while the visual stimulus is still halfway through its trajectory, VT_Asynch; Fig. 3, blue maps; Fig. 4, left; VT_Full masked by VT_Asynch, p < 0.05). Specifically, in the majority of regions of interest identified in the fully predictive versus fixation contrast, performed on half the available runs, the PSC, assessed in the other independent half of the available runs, is statistically higher in the fully predictive bimodal condition than in the bimodal temporally asynchronous condition [Fig. 5a; one-way ANOVA: M1: p < 10⁻³, VT_Full > VT_Asynch (p < 0.05): 7/10 ROIs = 70%, VT_Full ≈ VT_Asynch: 3/10 ROIs = 30%; M2: p < 10⁻³, VT_Full > VT_Asynch (p < 0.05): 7/13 ROIs = 54%, VT_Full ≈ VT_Asynch: 6/13 ROIs = 46%]. Because visual stimuli could originate from eight different locations in the far visual field (4 locations ipsilateral to the impact point and 4 contralateral) but predict only two possible impact locations to the face (left or right cheek), the spatial effects reported here cannot be accounted for by other aspects of the stimulus.
Similarly, in the majority of ROIs, the PSCs are statistically higher in the fully predictive bimodal condition than in the bimodal spatially incongruent condition [Fig. 5b; one-way ANOVA: M1: p < 0.002, VT_Full > VT_Incong (p < 0.05): 8/10 ROIs = 80%, VT_Full ≈ VT_Incong: 2/10 ROIs = 20%; M2: p < 10⁻³, VT_Full > VT_Incong (p < 0.05): 9/13 ROIs = 69%, VT_Full ≈ VT_Incong: 4/13 ROIs = 31%].
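The split-half logic used for these comparisons (ROIs defined on one half of the runs, PSC extracted from the other half) can be sketched as follows. This is only an illustration under stated assumptions: the way the runs are divided (here, by order), the PSC formula (signal change relative to the fixation baseline, in percent), and all names and values are hypothetical and are not taken from the analysis pipeline of the study.

```python
def split_half(run_ids):
    """Split run identifiers into two non-overlapping halves (assumed: by order)."""
    half = len(run_ids) // 2
    return run_ids[:half], run_ids[half:]


def percent_signal_change(condition_mean, fixation_mean):
    """PSC of a condition relative to the fixation baseline (assumed definition)."""
    if fixation_mean == 0:
        raise ValueError("fixation baseline must be non-zero")
    return 100.0 * (condition_mean - fixation_mean) / fixation_mean


# Hypothetical run labels and ROI-averaged signal values, for illustration only.
runs = ["run01", "run02", "run03", "run04"]
roi_definition_runs, psc_runs = split_half(runs)

# The ROI would be defined on roi_definition_runs (VT_Full versus fixation),
# and the PSC extracted only from psc_runs, so the two steps stay independent.
psc_vt_full = percent_signal_change(condition_mean=101.5, fixation_mean=100.0)
```

Keeping the two halves disjoint is what prevents the ROI selection step from biasing the PSC estimates toward the condition used to define the ROIs.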

Figure 5.

Temporal and spatial prediction of imminence of impact maximizes cortical activations. ROIs are defined on the VT fully predictive condition time-locked to the end of the visual looming sequence versus fixation contrast in the two monkeys, using half of the available runs (M1: 10 ROIs; M2: 13 ROIs). PSC is calculated on the remaining independent half of the runs. A, PSC in the VT fully predictive condition as a function of the PSC in the VT temporally asynchronous condition (one-way ANOVA; M1: p < 0.001, M2: p < 0.001). B, PSC in the VT fully predictive condition as a function of the PSC in the VT spatially incongruent condition (one-way ANOVA; M1: p < 0.002, M2: p < 0.001).

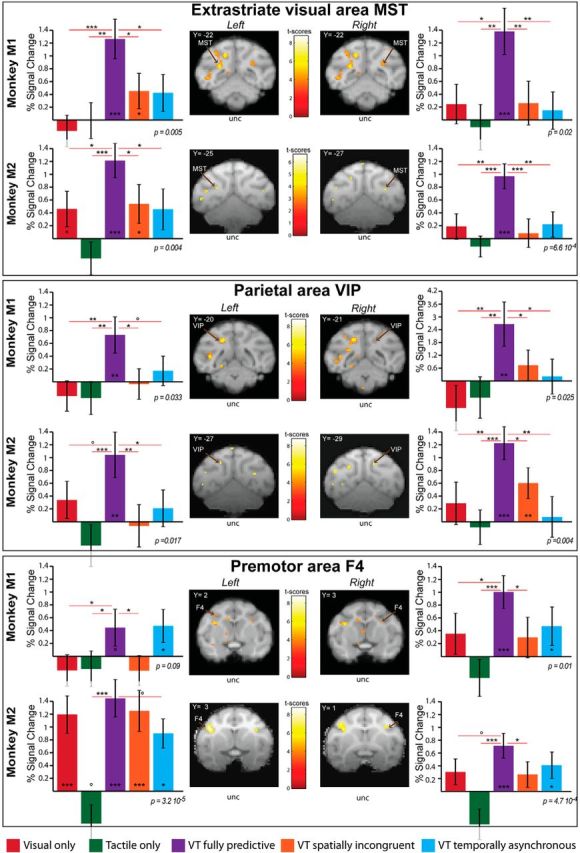

Thus, maximal enhancement is observed in the identified functional network when the tactile stimulus is presented in a time window and at a location compatible with the prediction of impact of the visual dynamic stimulus onto the face. This is further exemplified on a subset of ROIs, selected in key extrastriate (MST), parietal (VIP), and premotor (area F4) cortices of both hemispheres of both monkeys (Fig. 6). These ROIs are defined on a fully predictive versus fixation contrast, performed on half the available runs, and their associated percentage of signal change is estimated over the other independent half of the available runs. For all these ROIs, there is a significant stimulation block effect (one-way ANOVA, p < 0.05, except for the left F4 region of Monkey M1). Specifically, as can be seen in Figure 6, the bimodal fully predictive condition (purple) is in most cases statistically different from both the visual (red) and the tactile (green) conditions. This bimodal fully predictive condition is also statistically different from the bimodal temporally asynchronous (blue) and/or the bimodal spatially incongruent (orange) conditions in extrastriate visual area MST and posterior parietal area VIP (except in the left hemisphere of Monkey M1, where only a trend in the statistical difference between the temporally asynchronous condition and the fully predictive condition is observed). In premotor area F4 (Fig. 6), the difference in the PSC between the fully predictive condition and the two partially predictive conditions is less marked, more so for the temporally asynchronous condition (statistical difference reached for only 1 ROI) than for the spatially incongruent condition (statistical difference reached for 3/4 ROIs).

Figure 6.

Impact prediction activates a parietofrontal network. Histograms represent the percentage signal change for visual (red), tactile (green), VT fully predictive (purple), VT spatially incongruent (orange) and VT temporally asynchronous (blue) conditions for Monkeys 1 and 2, for selected ROIs in the extrastriate cortex (MST), the parietal cortex (area VIP) and the premotor cortex (area F4). As in Figure 5, the ROIs are selected on a VT predictive versus fixation contrast using half of the available runs (p < 0.001, uncorrected level), and the extraction of the PSC is performed on the remaining independent half of the runs. For each ROI, block effect is assessed by a repeated-measures one-way ANOVA; significance of PSC differences with respect to baseline and among conditions is assessed using paired t tests. *p < 0.05, **p < 0.01, ***p < 0.001; °0.05 < p < 0.07.

This predictive heteromodal functional enhancement results from a multisensory integration process of visual and tactile information

Several statistical criteria can be applied to demonstrate multisensory integrative processes on hemodynamic activations (Beauchamp, 2005; Gentile et al., 2011; Love et al., 2011; Werner and Noppeney, 2011; Tyll et al., 2013). The most reliable criterion is departure from additivity, i.e., statistical sub- or supra-additivity. Table 1 summarizes the location and activation statistics for a selected set of ROIs in the striate and extrastriate visual cortex, and the temporal, parietal and prefrontal cortex, in each monkey, defined on half the available functional runs. For these ROIs, Table 2 summarizes the average PSC with respect to fixation, as assessed from the second independent half of available functional runs, for the fully predictive condition (column 2) compared with the sum of the visual and tactile unimodal conditions (column 3) and with the mean of the spatially incongruent and temporally asynchronous conditions (column 4). In Monkey M1, 7/10 (70%, bold fonts) of the ROIs show a significant difference between the fully predictive PSC and the sum of the unimodal PSCs (t test, p < 0.05), indicating a multisensory integrative process. In Monkey M2, all the ROIs show a significant difference between these two values (t test, p < 0.05). For both animals and all integrative ROIs, the identified integrative mechanisms correspond to supra-additivity.
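The additivity criterion can be illustrated on a few of the mean PSC values listed in Table 2 for Monkey M1. The sketch below only reports the direction of the departure from additivity; it does not reproduce the t tests used in the study, which require the underlying per-run data. The function name `additivity_direction` is a hypothetical helper introduced for this example.

```python
# Mean PSC values copied from Table 2 (Monkey M1): (VT_Full, V + T).
table2_m1 = {
    "V1 left": (0.82, -0.96),
    "MST left": (1.26, -0.18),
    "VIP left": (0.73, -0.47),
    "F4 right": (1.00, 0.02),
}


def additivity_direction(vt_full, unimodal_sum):
    """Label the bimodal response relative to the sum of the unimodal responses."""
    if vt_full > unimodal_sum:
        return "supra-additive"
    if vt_full < unimodal_sum:
        return "sub-additive"
    return "additive"


directions = {
    roi: additivity_direction(vt_full, unimodal_sum)
    for roi, (vt_full, unimodal_sum) in table2_m1.items()
}
```

For the four ROIs selected here, the bimodal PSC exceeds the unimodal sum, which is the supra-additive direction described in the text; whether each difference is significant cannot be decided from the table means alone.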

Table 1.

Summary of main ROIs defined on a VT predictive versus fixation contrast using half of the available runs (p < 0.001, uncorrected level), per monkey (M1/M2), for the left and right hemispheres

| Monkey M1 | | | | Monkey M2 | | | |
|---|---|---|---|---|---|---|---|
| Areas | Peak loc. | t | ROI size | Areas | Peak loc. | t | ROI size |
| V1 left | (−14,−38,1) | 5.33 | 27 mm³ (sph) | V1 left | (−17,−31,−6) | 3.35 | 4 mm³ |
| V1 right | (17,−34,−8) | 3.45 | 1 mm³ | | | | |
| V2 left | (−10,−38,−6) | 7.32 | 27 mm³ (sph) | V2 left | (−10,−36,−7) | 4.35 | 24 mm³ |
| V3 left | (−12,−32,2) | 5.17 | 27 mm³ (sph) | | | | |
| V3 right | (11,−31,3) | 3.28 | 27 mm³ (sph) | V3 right | (12,−32,2) | 3.47 | 2 mm³ |
| MST left | (−12,−22,2) | 5.32 | 27 mm³ (sph) | MST left | (−12,−25,1) | 3.24 | 4 mm³ |
| MST right | (11,−22,3) | 3.59 | 8 mm³ (sph) | MST right | (14,−27,0) | 3.64 | 3 mm³ |
| FST left | (−18,−19,−1) | 5.33 | 27 mm³ (sph) | FST left | (−19,−17,−1) | 4.49 | 27 mm³ (sph) |
| FST right | (20,−17,−4) | 3.29 | 4 mm³ | | | | |
| VIP left | (−9,−20,9) | 5.61 | 27 mm³ (sph) | VIP left | (7,−27,7) | 3.29 | 8 mm³ (sph) |
| VIP right | (6,−21,8) | 3.11 | 1 mm³ | VIP right | (6,−29,6) | 3.45 | 8 mm³ (sph) |
| F4 left | (−13,2,14) | 3.40 | 8 mm³ (sph) | F4 left | (−16,3,9) | 4.97 | 27 mm³ (sph) |
| F4 right | (11,3,9) | 3.46 | 8 mm³ (sph) | F4 right | (14,1,10) | 4.06 | 27 mm³ |

For each ROI, the table indicates peak location (x, y, and z coordinates with respect to the anterior commissure), t score at the local maximum, and ROI size in cubic millimeters. When the actual activation was too large and merged with adjacent activations, the ROI was created as an 8 mm³ or a 27 mm³ sphere (sph) centered on the peak location (loc.), depending on whether the cluster size was smaller than 27 mm³ (8 mm³ sph) or larger than 27 mm³ (27 mm³ sph). The areas are identified and labeled in reference to the nomenclature used in the Lewis and Van Essen (2000) atlas as available in Caret and in the scalable brain atlas (http://scalablebrainatlas.incf.org).
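The ROI sizing rule described in this note can be written as a small decision function. This is a sketch of the stated rule only: the function name and arguments are hypothetical, and the handling of a cluster of exactly 27 mm³ is an assumption, since the note does not specify that case.

```python
def roi_sphere_volume_mm3(cluster_volume_mm3, merges_with_neighbors):
    """Return the sphere volume used as ROI, or None if the cluster itself is kept.

    Assumption: a cluster of exactly 27 mm3 is given the 27 mm3 sphere.
    """
    if not merges_with_neighbors:
        # The activation cluster is used directly as the ROI.
        return None
    return 8 if cluster_volume_mm3 < 27 else 27
```

For example, an isolated 4 mm³ cluster is kept as is, whereas a large activation that merges with its neighbors is replaced by a sphere centered on its peak.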

Table 2.

Supra-additive and subadditive integrative processes tested on a selection of ROIs identified in Monkeys M1 and M2

| Monkey M1 | | | | Monkey M2 | | | |
|---|---|---|---|---|---|---|---|
| Areas | VT_Full | V + T | Impact pred. | Areas | VT_Full | V + T | Impact pred. |
| V1 left | 0.82 | −0.96 | 0.66 | V1 left | 2.15 | 0.12 | 1.02 |
| V1 right | 1.49 | −1.02 | 0.72 | | | | |
| V2 left | 1.72 | 0.55 | 0.77 | V2 left | 2.55 | −0.10 | 1.28 |
| V3 left | 1.44 | 0.03 | 0.96 | | | | |
| V3 right | 1.48 | 0.65 | 0.25 | V3 right | 1.25 | 0.30 | 0.87 |
| MST left | 1.26 | −0.18 | 0.44 | MST left | 1.21 | 0.15 | 0.50 |
| MST right | 1.38 | 0.13 | 0.21 | MST right | 0.97 | 0.06 | 0.15 |
| FST left | 1.63 | 0.77 | 0.77 | FST left | 1.35 | −0.13 | 0.90 |
| FST right | 2.44 | 0.37 | 0.88 | | | | |
| VIP left | 0.73 | −0.47 | 0.07 | VIP left | 1.04 | −0.04 | 0.07 |
| VIP right | 2.66 | −2.06 | 0.48 | VIP right | 1.22 | 0.20 | 0.34 |
| F4 left | 0.45 | −0.42 | 0.13 | F4 left | 1.35 | 0.74 | 1.08 |
| F4 right | 1.00 | 0.02 | 0.50 | F4 right | 2.44 | −0.21 | 0.34 |

The ROIs are defined on a VT predictive (pred.) versus fixation contrast using half of the available runs (p < 0.001, uncorrected level), and the PSCs are calculated on the second independent half of the runs. For each ROI, the table indicates the PSC of the VT fully predictive bimodal activation with respect to fixation activations sampled in the same blocks, the sum of the PSCs obtained in visual and tactile stimulation blocks with respect to fixation, and the mean of the PSCs obtained in the two partially predictive bimodal conditions with respect to fixation (VT temporally asynchronous and VT spatially incongruent). Bold values indicate either multisensory integrative supra- or sub-additivity (t test, column 2 compared to column 3) or temporal and spatial information integrative supra- or sub-additivity (t test, column 2 compared to column 4).

Spatial and temporal cues are actively integrated toward impact prediction

The above analysis demonstrates that a majority of ROIs within the identified network actively integrate visual and tactile information during the fully predictive condition. Here, we seek to understand whether spatial and temporal cues are also actively integrated during the fully predictive condition. To this end, we assess how the PSCs measured during the fully predictive condition compare to the mean of those obtained during the spatially incongruent condition and the temporally asynchronous condition, to identify supra-additive or subadditive integrative mechanisms of spatial and temporal information (Table 2, columns 2 and 4). In Monkey M1, 8/10 (80%, bold fonts) of the ROIs show a significant difference between these two values (t test, p < 0.05), indicating an integrative process of spatial and temporal information. In Monkey M2, 7/13 of the ROIs (54%) show this effect. Again, when this integrative process takes place, it corresponds to a supra-additive integrative process rather than a subadditive integrative process. This strongly suggests that spatial and temporal predictive information is actively integrated at the neuronal level and possibly highlights a cortical network specifically involved in the integration of these predictive cues. Single-cell recordings in key regions of this cortical network will make it possible to test whether this results from optimal cue combination and directly predicts behavior, as demonstrated in other instances (e.g., visuo-vestibular integration in parietal area VIP; Fetsch et al., 2011; Pitkow et al., 2015).

The multisensory integration and predictive effects cannot be explained by a larger pupil size

Part of the activations observed during the temporally and spatially congruent condition could be due to the larger pupil, resulting in more retinal photoreceptors being stimulated. However, several arguments indicate that this is not the major explanatory factor of our observations. Indeed, the visual stimuli appear at eight possible locations in the far space and loom toward two positions on the face, thus defining sixteen trajectories in all. Eight of these trajectories involve a crossing of the midline and the stimulation of both visual hemifields. The other eight trajectories involve the stimulation of a unique hemifield. If one assumes that the observed activations are mostly due to changes in pupil size, one would expect strong changes in activations in both the central and the peripheral parts of the striate and extrastriate cortices, bilaterally. This is definitely not the case (Guipponi et al., 2015a, their Fig. 4 shows an outline of the central and peripheral visual field representations in a group of monkeys including Monkey M1). Most importantly, a key observation is the specificity of the observed changes in activations. These are restricted to a cortical network which we have previously described, in an independent set of experiments, as a visuotactile multisensory network (Guipponi et al., 2015a). This multisensory network is composed of prefrontal, parietal, temporal and occipito-parietal regions, but also striate and extrastriate regions representing the periphery of the visual field, confirming the anatomical evidence for somatosensory projections toward these low-level visual areas (Clavagnier et al., 2004). In one monkey that participated both in the present study and in the multisensory study, the similarity, in terms of identified voxels, between the network identified in the full impact prediction condition of the present study and the network identified in the visuotactile convergence study is striking (Cléry et al., 2015b).
Taken together, these observations support the idea that the differential activations observed in the present study in the full impact prediction condition cannot be accounted for by a change in stimulus luminosity due to a larger pupil size.
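The trajectory count used in this argument can be checked by enumerating the stimulus geometry described in the text: eight starting locations in far space (four on each side) and two impact locations (left or right cheek). The location labels below are hypothetical identifiers introduced only for this sketch.

```python
from itertools import product

# Eight far-space starting locations: four per side (indices are arbitrary labels).
start_locations = [(side, index) for side in ("left", "right") for index in range(4)]
impact_sides = ("left", "right")  # left or right cheek

trajectories = list(product(start_locations, impact_sides))

# A trajectory crosses the midline when it starts on one side and ends on the other.
crossing = [t for t in trajectories if t[0][0] != t[1]]
same_side = [t for t in trajectories if t[0][0] == t[1]]
```

The enumeration yields sixteen trajectories, eight of which cross the midline and eight of which remain within a single hemifield, matching the counts given above.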

The multisensory integration and predictive effects cannot be accounted for by changes in arousal or in locus ceruleus-driven changes in norepinephrine release

The higher the degree of arousal (Bradshaw, 1967) or of alertness (Yoss et al., 1970), the larger the pupil size. As a result, because they correlate with pupil size changes, our functional observations could merely reflect nonspecific arousal effects in the spatially and temporally predictive impact blocks. Several lines of evidence go against this interpretation. First, in a recent paper, Chang et al. (2016) describe the cortical regions that negatively correlated with arousal as indexed both by eyelid opening and direct intracortical LFP recordings. These include the extrastriate visual cortex, the parietal cortex, the insula, the limbic cortex and prefrontal cortex, with a strong degree of overlap with the default-mode network (Buckner et al., 2008). In other terms, the higher the degree of arousal, the lower the functional activation of these regions. The functional network that we identify in the full spatial and temporal impact prediction condition demonstrates the exact opposite trend, namely increased activations for large pupil size conditions (a proxy for high arousal conditions). Second, larger pupil size is associated with higher norepinephrine (NE) release from the locus ceruleus (LC). In a recent study by Guedj et al. (2017), atomoxetine, an NE-reuptake inhibitor, induced a switch from negative to positive coupling between the LC and the parietofrontal attentional intrinsic network, at the same time as a decoupling between this parietofrontal network and other sensorimotor networks, including post-arcuate premotor regions. In addition, spontaneous pupil size variations are associated with activations in the salience network, the thalamus and the frontoparietal cortex (Schneider et al., 2016).
Both studies point toward changes in the frontoparietal attentional network, which, in the monkey, is essentially composed of the frontal eye fields (Armstrong et al., 2009; Gregoriou et al., 2009, 2012; Ibos et al., 2013; Astrand et al., 2015) and the lateral intraparietal area (Ben Hamed et al., 2001; Wardak et al., 2004; Ibos et al., 2013). Activations encompassing only a very small part of the frontal eye field and lateral intraparietal area are identified in three of four hemispheres. The vast majority of the identified functional network in the spatial and temporal impact prediction condition lies outside this parietofrontal attentional network. Taken together, these observations strongly indicate that our reported activations cannot be accounted for by variations in the degree of the monkeys' arousal or in LC-NE transmission.

Discussion

Overall, this study demonstrates that looming visual stimuli toward the body enhance the tactile information processing within a temporal window and at a spatial location that coincide with the prediction of impact to the body. The activated network is essentially composed of striate and extrastriate visual areas, intraparietal and peri-arcuate premotor areas and pulvinar subsectors. In this network, this heteromodal enhancement appears to result from active multisensory integrative neuronal mechanisms. These results are discussed in relation with the ecological and behavioral significance of looming stimuli, PPS, and the predictive coding framework.

Ecological and behavioral specificity of looming stimuli

Looming visual stimuli have been shown to generate stereotyped fear responses in monkeys (Schiff et al., 1962), human infants (Ball and Tronick, 1971), and adults (King and Cowey, 1992). These responses are absent when receding stimuli are used or when the looming object is perceived as passing by the observer rather than predicting an impact onto its body (Lewis and Neider, 2015). Although in theory the rate of optical increase in the size of the retinal image as an object approaches allows for a precise prediction of the time-to-collision, independently of object size or distance (Gibson, 1979), threatening objects are perceived as arriving earlier than nonthreatening objects (Brendel et al., 2012; Vagnoni et al., 2012), including in infants (Ayzenberg et al., 2015).

Importantly, looming stimuli interfere with visually guided actions, independently of an observer's current goals (Moher et al., 2015). This has led to the formulation of the behavioral-urgency hypothesis (Franconeri and Simons, 2003), which proposes that dynamic events on specific trajectories with respect to the body capture attention when they require an immediate behavioral response because they represent a danger to the observer's safety.

Changes in pupil size as a behavioral marker of impact prediction to the body

Pupil diameter adjusts as a function of the overall illumination and reflects a phasic response of LC neurons (Koss, 1986; Aston-Jones and Cohen, 2005; Samuels and Szabadi, 2008; Murphy et al., 2011; Laeng et al., 2012; Eldar et al., 2013). However, under constant illumination conditions, pupil size increases with arousal (Bradshaw, 1967), alertness (Yoss et al., 1970), decision making (Simpson and Hale, 1969), behavioral and attentional performance (Gilzenrat et al., 2010; Eldar et al., 2013; for review, see Laeng et al., 2012; Kihara et al., 2015), surprise and updating (Nassar et al., 2012; O'Reilly et al., 2013), changes in uncertainty, learning (Preuschoff et al., 2011), and emotional content of the stimulation (Bradley et al., 2008). In contrast, pupil constriction is associated with information updating (O'Reilly et al., 2013). These variations rely on two major neuromodulation systems, the noradrenergic LC system (Rajkowski et al., 1994; Aston-Jones and Cohen, 2005; Bouret and Sara, 2005; Yu and Dayan, 2005; Sara, 2009; Jepma and Nieuwenhuis, 2011) and the cholinergic basal forebrain system (Yu, 2012). Here we show that pupil size increases as a function of the strength of the spatial and temporal cues predicting an impact to the face. This could be related to an enhanced tonic LC-NE activity due to the processing of the impact-predictive cues and their potential for harm to the body (Bradley et al., 2008).

Prediction of impact to the body and PPS

Looming stimuli enhance tactile processing by enhancing tactile sensitivity (Cléry et al., 2015a) and speeding reaction times (Canzoneri et al., 2012; Kandula et al., 2015). Because these behavioral effects are induced by visual stimuli and have consequences on a different sensory modality, namely touch, this strongly predicts the involvement of a visuotactile convergence network. The network we identify here is extremely similar to the visuotactile convergence network recently described in monkeys (Guipponi et al., 2013, 2015a), though quite distinct from the network activated by pure tactile stimulations to the face (Wardak et al., 2016). A direct prediction of our observation is that the human homologs of these parietal areas (Beauchamp et al., 2004; Sereno and Huang, 2006) also contribute to impact prediction in human subjects.

Importantly, impact prediction to the face involves the parietofrontal (ventral intraparietal/post-arcuate PMZ/F4) network that has been associated with the definition of a defense PPS (Cléry et al., 2015b). These regions have bimodal visuotactile receptive fields representing close PPS and the corresponding skin surface (Gentilucci et al., 1988; Rizzolatti et al., 1988; Colby et al., 1993; Graziano et al., 1994; Gross and Graziano, 1995; Fogassi et al., 1996; Duhamel et al., 1997). They are involved in a defense PPS encoding (Graziano et al., 2002; Cooke and Graziano, 2004; Graziano and Cooke, 2006). We suggest that this network not only processes the trajectory of the looming object with respect to the body, but also anticipates its consequences on the body by modulating sensitivity to touch. It is unclear whether these predictive mechanisms precede the preparation of protective actions in response to the looming stimulus or are concurrent with these flight mechanisms. The fact that tactile sensitivity is also enhanced for looming stimuli brushing past the face (Cléry et al., 2015a) further supports the idea that this network is not only activated by the prediction of intrusion onto the body but more generally into PPS as a comfort zone (Quesque et al., 2017).

Prediction of impact and multisensory integration

In single-cell studies, multisensory integration is the phenomenon by which the sum of neuronal responses to unisensory stimulations, in spikes per second, is different from the neuronal activity to bimodal stimulations (Avillac et al., 2007; Stein and Stanford, 2008; Stein et al., 2009). fMRI studies of multisensory integration pose very specific analysis issues, due to the nonlinear relationship that exists between spike generation and the corresponding change in the hemodynamic response (Boynton et al., 1996; Dale and Buckner, 1997; Heeger and Ress, 2002). In particular, the choice of the baseline is a critical factor (Binder et al., 1999; Stark and Squire, 2001; James and Stevenson, 2012), as are the criteria for deciding that multisensory integration is indeed taking place (Calvert, 2001; Beauchamp et al., 2004; Beauchamp, 2005; Laurienti et al., 2005; Gentile et al., 2011; Love et al., 2011; Werner and Noppeney, 2011; Tyll et al., 2013). Here, we show that >70% of the ROIs reached the superadditive criterion, indicating active visuotactile integration processes. This expands previous evidence for visuotactile integration in the intraparietal cortex (Avillac et al., 2004, 2007) to the temporal prediction domain and to novel cortical territories. Quite surprisingly, multisensory integration is observed in premotor and parietal areas as well as in lower-level striate and extrastriate visual areas, strongly indicating that multisensory integration processes in these regions are modulated by top-down contextual information including spatial and temporal predictive information.

A directly related question is whether this cortical network additionally distinctly integrates the spatial and temporal prediction cues. Again, we show that >50% of the ROIs reached the superadditive criterion, strongly suggesting that spatial and temporal prediction also relies on integrative mechanisms.

Causal multisensory inference and impact prediction

The Bayesian causal inference (BCI) framework, which probabilistically associates diverse sensory outcomes with a set of potential sources and explicitly models the potential external situations that could have generated the observed sensory signals, appears to be a very powerful computational framework for studying multisensory processes (Körding et al., 2007; Shams and Beierholm, 2010; Parise et al., 2012). In the BCI framework, the estimate of the actual structure of the incoming sensory evidence is obtained by combining the estimates under the various causal structures (fusion or segregation) and evaluating the best model (Wozny et al., 2010). Recent studies (Rohe and Noppeney, 2015a,b, 2016) demonstrate that BCI is performed within a hierarchically organized cortical network: early sensory areas segregate sensory information (independent sources prior); posterior intraparietal areas fuse sensory information (common source prior); whereas anterior parietal areas infer the causal structure of the world (implementing predictions compatible with the BCI framework). A study by Arnal et al. (2011) shows that top-down predictions run through backward connections, whereas prediction errors propagate forward within the cortical hierarchy. Most relevant to our present work, predictive coding has been proposed to act as a generic mechanism for temporally binding multisensory signals into a coherent percept (Lee and Noppeney, 2014).
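For reference, the core computation of the BCI framework, as formulated by Körding et al. (2007), can be written compactly. The notation below is an assumption made for this illustration: $x_V$ and $x_T$ denote the noisy visual and tactile signals, $C = 1$ a common source (fusion), $C = 2$ independent sources (segregation), and $\hat{s}_T$ the estimate of the tactile source. The second equation corresponds to the model-averaging strategy, one of several possible decision strategies.

```latex
% Posterior probability of each causal structure given the two sensory signals
p(C \mid x_V, x_T) = \frac{p(x_V, x_T \mid C)\, p(C)}{p(x_V, x_T)}, \qquad C \in \{1, 2\}

% Model averaging: estimates under fusion and segregation, weighted by the posterior
\hat{s}_T = p(C = 1 \mid x_V, x_T)\, \hat{s}_{T, C=1}
          + \bigl(1 - p(C = 1 \mid x_V, x_T)\bigr)\, \hat{s}_{T, C=2}
```

In this formulation, a tactile stimulus delivered at the time and location predicted by the looming stimulus would favor the common-source structure, whereas spatial or temporal incongruence would favor segregation.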

We would like to propose to further enrich this framework with an additional dimension beyond fusion and segregation, namely delayed consequences. This implies taking into account the temporal dimension of dynamic sensory stimuli as may occur in speech where the vision of the lips precedes and predicts the sound of the voice or in the prediction of impact of a visual object to the body. From a neuronal perspective, although single-cell recordings in monkeys show that the ventral intraparietal cortex performs multisensory source fusion (i.e., multisensory integration; Avillac et al., 2004, 2007), our study suggests that this cortical area also performs multisensory predictive binding in time, possibly thanks to gamma-band synchronization, which has been described to increase during the processing of looming stimuli (Maier et al., 2008) and which has been suggested to possibly implement top-down prior inferences (van Atteveldt et al., 2014).

Footnotes

This work was supported by the Fondation pour la Recherche Médicale to J.C., the French education ministry to O.G., and the French Agence Nationale de la Recherche (Grant ANR-05-JCJC-0230-01) to S.B.H.

The authors declare no competing financial interests.

References

- Armstrong KM, Chang MH, Moore T (2009) Selection and maintenance of spatial information by frontal eye field neurons. J Neurosci 29:15621–15629. 10.1523/JNEUROSCI.4465-09.2009

- Arnal LH, Wyart V, Giraud AL (2011) Transitions in neural oscillations reflect prediction errors generated in audiovisual speech. Nat Neurosci 14:797–801. 10.1038/nn.2810

- Aston-Jones G, Cohen JD (2005) An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu Rev Neurosci 28:403–450. 10.1146/annurev.neuro.28.061604.135709

- Astrand E, Ibos G, Duhamel JR, Ben Hamed S (2015) Differential dynamics of spatial attention, position, and color coding within the parietofrontal network. J Neurosci 35:3174–3189. 10.1523/JNEUROSCI.2370-14.2015

- Avillac M, Olivier E, Denève S, Ben Hamed S, Duhamel JR (2004) Multisensory integration in multiple reference frames in the posterior parietal cortex. Cogn Process 5:159–166. 10.1007/s10339-004-0021-3

- Avillac M, Ben Hamed S, Duhamel JR (2007) Multisensory integration in the ventral intraparietal area of the macaque monkey. J Neurosci 27:1922–1932. 10.1523/JNEUROSCI.2646-06.2007

- Ayzenberg V, Longo M, Lourenco S (2015) Evolutionary-based threat modulates perception of looming visual stimuli in human infants. J Vis 15(12):797. 10.1167/15.12.797

- Ball W, Tronick E (1971) Infant responses to impending collision: optical and real. Science 171:818–820. 10.1126/science.171.3973.818

- Beauchamp MS. (2005) Statistical criteria in fMRI studies of multisensory integration. Neuroinformatics 3:93–113. 10.1385/NI:3:2:093

- Beauchamp MS, Lee KE, Argall BD, Martin A (2004) Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41:809–823. 10.1016/S0896-6273(04)00070-4

- Belmalih A, Borra E, Contini M, Gerbella M, Rozzi S, Luppino G (2009) Multimodal architectonic subdivision of the rostral part (area F5) of the macaque ventral premotor cortex. J Comp Neurol 512:183–217. 10.1002/cne.21892

- Ben Hamed S, Duhamel JR, Bremmer F, Graf W (2001) Representation of the visual field in the lateral intraparietal area of macaque monkeys: a quantitative receptive field analysis. Exp Brain Res 140:127–144. 10.1007/s002210100785

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Rao SM, Cox RW (1999) Conceptual processing during the conscious resting state: a functional MRI study. J Cogn Neurosci 11:80–95. 10.1162/089892999563265

- Bouret S, Sara SJ (2005) Network reset: a simplified overarching theory of locus coeruleus noradrenaline function. Trends Neurosci 28:574–582. 10.1016/j.tins.2005.09.002

- Boynton GM, Engel SA, Glover GH, Heeger DJ (1996) Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci 16:4207–4221.

- Bradley MM, Miccoli L, Escrig MA, Lang PJ (2008) The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45:602–607. 10.1111/j.1469-8986.2008.00654.x

- Bradshaw J. (1967) Pupil size as a measure of arousal during information processing. Nature 216:515–516. 10.1038/216515a0

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W (1997) The representation of movement in near extra-personal space in the macaque ventral intraparietal area (VIP). In: Parietal lobe contributions to orientation in 3D space (Thier P, Karnath HO, eds), pp 619–630. Berlin Heidelberg: Springer.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W (2000) Stages of self-motion processing in primate posterior parietal cortex. Int Rev Neurobiol 44:173–198. 10.1016/S0074-7742(08)60742-4

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W (2002a) Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci 16:1554–1568. 10.1046/j.1460-9568.2002.02207.x

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W (2002b) Visual–vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci 16:1569–1586. 10.1046/j.1460-9568.2002.02206.x

- Brendel E, DeLucia PR, Hecht H, Stacy RL, Larsen JT (2012) Threatening pictures induce shortened time-to-contact estimates. Atten Percept Psychophys 74:979–987. 10.3758/s13414-012-0285-0

- Brett M, Anton J-L, Valabregue R, Poline J-B (2002) Region of interest analysis using the MarsBar toolbox for SPM 99. Presented at the 8th International Conference on Functional Mapping of the Human Brain, Sendai, Japan, June 2–6, 2002.

- Buckner RL, Andrews-Hanna JR, Schacter DL (2008) The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci 1124:1–38. 10.1196/annals.1440.011

- Calvert GA. (2001) Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cereb Cortex 11:1110–1123. 10.1093/cercor/11.12.1110

- Canzoneri E, Magosso E, Serino A (2012) Dynamic sounds capture the boundaries of peripersonal space representation in humans. PLoS One 7:e44306. 10.1371/journal.pone.0044306

- Cappe C, Thut G, Romei V, Murray MM (2009) Selective integration of auditory-visual looming cues by humans. Neuropsychologia 47:1045–1052. 10.1016/j.neuropsychologia.2008.11.003

- Chang C, Leopold DA, Schölvinck ML, Mandelkow H, Picchioni D, Liu X, Ye FQ, Turchi JN, Duyn JH (2016) Tracking brain arousal fluctuations with fMRI. Proc Natl Acad Sci U S A 113:4518–4523. 10.1073/pnas.1520613113

- Clavagnier S, Falchier A, Kennedy H (2004) Long-distance feedback projections to area V1: implications for multisensory integration, spatial awareness, and visual consciousness. Cogn Affect Behav Neurosci 4:117–126. 10.3758/CABN.4.2.117

- Cléry J, Guipponi O, Odouard S, Wardak C, Ben Hamed S (2015a) Impact prediction by looming visual stimuli enhances tactile detection. J Neurosci 35:4179–4189. 10.1523/JNEUROSCI.3031-14.2015

- Cléry J, Guipponi O, Wardak C, Ben Hamed S (2015b) Neuronal bases of peripersonal and extrapersonal spaces, their plasticity and their dynamics: knowns and unknowns. Neuropsychologia 70:313–326. 10.1016/j.neuropsychologia.2014.10.022

- Colby CL, Duhamel JR, Goldberg ME (1993) Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol 69:902–914.

- Cooke DF, Graziano MS (2004) Sensorimotor integration in the precentral gyrus: polysensory neurons and defensive movements. J Neurophysiol 91:1648–1660. 10.1152/jn.00955.2003

- Dale AM, Buckner RL (1997) Selective averaging of rapidly presented individual trials using fMRI. Hum Brain Mapp 5:329–340.

- de Haan AM, Smit M, Van der Stigchel S, Dijkerman HC (2016) Approaching threat modulates visuotactile interactions in peripersonal space. Exp Brain Res 234:1875–1884. 10.1007/s00221-016-4571-2

- De Paepe AL, Crombez G, Legrain V (2016) What's coming near? The influence of dynamical visual stimuli on nociceptive processing. PLoS One 11:e0155864. 10.1371/journal.pone.0155864

- Duhamel JR, Bremmer F, Ben Hamed S, Graf W (1997) Spatial invariance of visual receptive fields in parietal cortex neurons. Nature 389:845–848. 10.1038/39865

- Duhamel JR, Colby CL, Goldberg ME (1998) Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol 79:126–136.

- Eldar E, Cohen JD, Niv Y (2013) The effects of neural gain on attention and learning. Nat Neurosci 16:1146–1153. 10.1038/nn.3428

- Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE (2011) Neural correlates of reliability-based cue weighting during multisensory integration. Nat Neurosci 15:146–154. 10.1038/nn.2983

- Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G (1996) Coding of peripersonal space in inferior premotor cortex (area F4). J Neurophysiol 76:141–157.

- Franconeri SL, Simons DJ (2003) Moving and looming stimuli capture attention. Percept Psychophys 65:999–1010. 10.3758/BF03194829

- Gentile G, Petkova VI, Ehrsson HH (2011) Integration of visual and tactile signals from the hand in the human brain: an fMRI study. J Neurophysiol 105:910–922. 10.1152/jn.00840.2010

- Gentilucci M, Fogassi L, Luppino G, Matelli M, Camarda R, Rizzolatti G (1988) Functional organization of inferior area 6 in the macaque monkey: I. Somatotopy and the control of proximal movements. Exp Brain Res 71:475–490. 10.1007/BF00248741

- Gibson JJ. (1979) The ecological approach to visual perception. Boston: Houghton Mifflin.

- Gilzenrat MS, Nieuwenhuis S, Jepma M, Cohen JD (2010) Pupil diameter tracks changes in control state predicted by the adaptive gain theory of locus coeruleus function. Cogn Affect Behav Neurosci 10:252–269. 10.3758/cabn.10.2.252

- Graziano MS, Cooke DF (2006) Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia 44:845–859. 10.1016/j.neuropsychologia.2005.09.009

- Graziano MS, Taylor CS, Moore T (2002) Complex movements evoked by microstimulation of precentral cortex. Neuron 34:841–851. 10.1016/S0896-6273(02)00698-0

- Graziano MS, Yap GS, Gross CG (1994) Coding of visual space by premotor neurons. Science 266:1054–1057. 10.1126/science.7973661

- Gregoriou GG, Gotts SJ, Zhou H, Desimone R (2009) High-frequency, long-range coupling between prefrontal and visual cortex during attention. Science 324:1207–1210. 10.1126/science.1171402

- Gregoriou GG, Gotts SJ, Desimone R (2012) Cell-type-specific synchronization of neural activity in FEF with V4 during attention. Neuron 73:581–594. 10.1016/j.neuron.2011.12.019

- Gross CG, Graziano MS (1995) Review: multiple representations of space in the brain. Neuroscientist 1:43–50. 10.1177/107385849500100107

- Guedj C, Monfardini E, Reynaud AJ, Farnè A, Meunier M, Hadj-Bouziane F (2017) Boosting norepinephrine transmission triggers flexible reconfiguration of brain networks at rest. Cereb Cortex 27:4691–4700. 10.1093/cercor/bhw262

- Guipponi O, Wardak C, Ibarrola D, Comte JC, Sappey-Marinier D, Pinède S, Ben Hamed S (2013) Multimodal convergence within the intraparietal sulcus of the macaque monkey. J Neurosci 33:4128–4139. 10.1523/JNEUROSCI.1421-12.2013

- Guipponi O, Cléry J, Odouard S, Wardak C, Ben Hamed S (2015a) Whole brain mapping of visual and tactile convergence in the macaque monkey. Neuroimage 117:93–102. 10.1016/j.neuroimage.2015.05.022

- Guipponi O, Odouard S, Pinède S, Wardak C, Ben Hamed S (2015b) fMRI cortical correlates of spontaneous eye blinks in the nonhuman primate. Cereb Cortex 25:2333–2345. 10.1093/cercor/bhu038

- Heeger DJ, Ress D (2002) What does fMRI tell us about neuronal activity? Nat Rev Neurosci 3:142–151. 10.1038/nrn730

- Ibos G, Duhamel JR, Ben Hamed S (2013) A functional hierarchy within the parietofrontal network in stimulus selection and attention control. J Neurosci 33:8359–8369. 10.1523/JNEUROSCI.4058-12.2013

- James TW, Stevenson RA (2012) The use of fMRI to assess multisensory integration. In: The neural bases of multisensory processes (Murray MM, Wallace MT, eds). Boca Raton, FL: CRC.

- Jepma M, Nieuwenhuis S (2011) Pupil diameter predicts changes in the exploration–exploitation trade-off: evidence for the adaptive gain theory. J Cogn Neurosci 23:1587–1596. 10.1162/jocn.2010.21548