Abstract

Classical economic theory contends that the utility of a choice option should be independent of other options. This view is challenged by the attraction effect, in which the relative preference between two options is altered by the addition of a third, asymmetrically dominated option. Here, we leveraged the attraction effect in the context of intertemporal choices to test whether both decisions and reward prediction errors (RPE) in the absence of choice violate the independence of irrelevant alternatives principle. We first demonstrate that intertemporal decision making is prone to the attraction effect in humans. In an independent group of participants, we then investigated how this affects the neural and behavioral valuation of outcomes using a novel intertemporal lottery task and fMRI. Participants' behavioral responses (i.e., satisfaction ratings) were modulated systematically by the attraction effect and this modulation was correlated across participants with the respective change of the RPE signal in the nucleus accumbens. Furthermore, we show that, because exponential and hyperbolic discounting models are unable to account for the attraction effect, recently proposed sequential sampling models might be more appropriate to describe intertemporal choices. Our findings demonstrate for the first time that the attraction effect modulates subjective valuation even in the absence of choice. The findings also challenge the prospect of using neuroscientific methods to measure utility in a context-free manner and have important implications for theories of reinforcement learning and delay discounting.

SIGNIFICANCE STATEMENT Many theories of value-based decision making assume that people first assess the attractiveness of each option independently of the others and then pick the option with the highest subjective value. The attraction effect, however, shows that adding a new option to a choice set can change the relative value of the existing options, which is a violation of the independence principle. Using an intertemporal choice framework, we tested whether such violations also occur when the brain encodes the difference between expected and received rewards (i.e., the reward prediction error). Our results suggest that neither intertemporal choice nor valuation without choice adheres to the independence principle.

Keywords: asymmetric dominance, cognitive modeling, delay discounting, fMRI, nucleus accumbens, value-based decision making

Introduction

The independence of irrelevant alternatives (IIA) principle is a fundamental property of the majority of choice theories such as expected utility theory (Von Neumann and Morgenstern, 1947) and is implied by common choice rules such as Luce's choice axiom or the softmax choice rule (Luce, 1959; Sutton and Barto, 1998). It states that the relative preference for two options must not change when other options are added to the choice set. However, empirical research provides compelling evidence that the IIA principle is violated systematically in a wide range of different choice settings and across species (Tversky, 1972; Heath and Chatterjee, 1995; Shafir et al., 2002; Trueblood et al., 2013; Berkowitsch et al., 2014). Perhaps the most compelling example of the violation of IIA is the attraction effect, in which adding a third alternative D (for decoy), which is clearly inferior to an option T (for target), but not to another option C (for competitor), increases the probability of choosing T (Huber et al., 1982). The attraction effect also violates the regularity principle according to which the probability of choosing an option must not increase when a choice set is enlarged by another option (Rieskamp et al., 2006). For example, imagine yourself choosing between two digital cameras, with option T being an expensive and high-quality product and option C being a cheap and low-quality alternative. Upon noticing your indifference, a salesman draws your attention to a third option D, which is even more expensive than the first one and of slightly lower quality. Of course, you will not pick this new dominated option D, but its inferiority to the similar high-quality camera T will make this dominating option more attractive than the competing low-quality camera C. As a result, you will buy the expensive camera T.

In the present study, we argue that the abundant evidence against IIA in preferential choice should have similar implications for our understanding of how the brain learns from reward and punishment: The reward prediction error (RPE) reflects the difference between received and expected rewards and acts as the central teaching signal in reinforcement learning (Schultz et al., 1997). We propose the novel hypothesis that, similar to its effect on preferential choices, the attraction effect influences expectations about rewards even in the absence of choice, which in turn leads to a modulation of the RPE. To illustrate this point, let us return to the camera example and assume that you are not making a choice, but that a fortune wheel selects one out of the three options as your reward. We predict that, even though the subjective values of options T and C are similar when considering only the two options, the presence of D in the lottery makes T relatively more attractive than C. This implies that winning option T should be a more rewarding event than winning option C and should thus elicit a more positive RPE signal. Importantly, it has been argued recently that RPE coding is stable across different behavioral contexts, which allows predicting RPE signals in one task by deriving the utility of different rewards in another task (Lak et al., 2014). Here, we challenge this putative context independence of the RPE by predicting a susceptibility of the RPE to the attraction effect.

To determine whether the RPE in brain and behavior violates the IIA principle, we conducted an fMRI experiment in which participants played a lottery with options taken from intertemporal choice tasks (Kirby and Herrnstein, 1995). Conveniently, these options are defined by two attributes (i.e., reward amount and delay), allowing us to test for the attraction effect. Although there is some evidence for multi-attribute context effects in intertemporal choice (Scholten and Read, 2010), to the best of our knowledge, the attraction effect has never been demonstrated in this domain. Accordingly, we first establish that intertemporal choices are prone to the attraction effect. The results of both the behavioral and fMRI studies challenge existing theories of intertemporal choice, including exponential and hyperbolic discounting models (Samuelson, 1937; Mazur, 1987), and provide novel evidence for the context dependency of subjective valuation of rewards in the absence of choice.

Materials and Methods

Participants.

Twenty-one psychology students (15 females; mean age = 22.0 years, SD = ±1.9, range = 19–26 years; one participant without indication of age) from the University of Basel took part in the behavioral experiment (henceforth Study 1). The fMRI experiment (henceforth Study 2) comprised 30 participants (21 females; 24.8 years, ±4.2, 19–35 years). One participant of Study 2 did not perform the left-right task (see below) above chance level and was therefore excluded from any further analysis. Parts of the dataset from the two-alternative choice task of another participant were lost due to a restart of the computer program during the task. Fortunately, the participant's discount factor (see below) could be computed successfully based on the remaining data and the participant was therefore included in the analysis. Participants in Study 1 received partial course credit for their participation plus an additional monetary outcome of a randomly selected choice from one of the intertemporal choice tasks (paid to the participants by bank transfers at the corresponding time point). Participants in Study 2 were paid CHF 50 (∼$48 US) for their participation plus the outcome of a randomly selected choice/lottery outcome from the intertemporal choice task/lotteries. All participants gave written informed consent and the study was approved by the local ethics committee (Ethikkommission Nordwest und Zentralschweiz).

Experimental design.

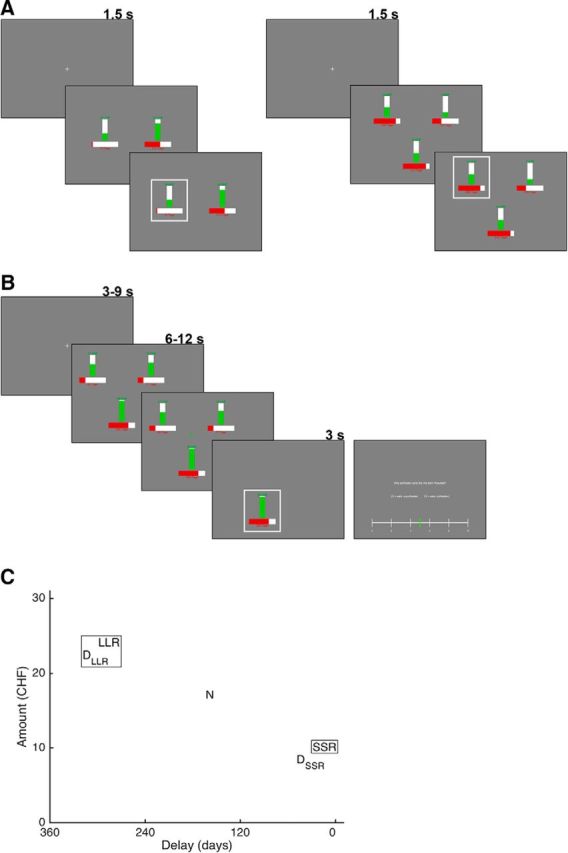

Both experiments began with filling out a demographic questionnaire, followed by a classical two-alternative intertemporal choice task (Kirby and Herrnstein, 1995; McClure et al., 2004) in which participants chose between a larger later reward (LLR) and a smaller sooner reward (SSR) in 60 trials (see Fig. 1A, left). These trials were interspersed with 10 “catch” trials, in which one option dominated the alternative on both attributes, to test whether participants were able to detect first-order dominance. The dominating option in these catch trials was consistently chosen in both experiments (Study 1: 100%, SD = ±0%; Study 2: 99%; ±6%). Reward amounts could vary between CHF 1 and 30, delays between 1 and 360 d. Each trial began with a fixation cross in the middle of the screen (1.5 s), followed by the presentation of the two choice options on the left and right side. Amount and delay of an option were depicted numerically in green and red, respectively. In addition, vertical/horizontal white bars were filled by green/red in proportion to amount/delay (e.g., if the delay was 270 d, 270/360 = 75% of the horizontal bar was red). Participants chose one of the options by pressing the respective button (left/right arrow keys in Study 1, left/right buttons on an MR-compatible button box in Study 2). The selected option was indicated by a white frame encompassing the option and participants confirmed their choice by pressing a third button (spacebar in Study 1, a third button on the button box in Study 2) to proceed to the next trial. Participants could change their selection as long as they did not press this third button. There was no time limit for choices.

Figure 1.

Task design. A, All participants started with a two-alternative intertemporal choice task (left). Reward amounts were indicated by numbers and a vertical bar in green; delays were indicated by numbers and a horizontal bar in red. Participants of Study 1 went on to perform a three-alternative intertemporal choice task (right). B, Participants of Study 2 went on to perform a three-alternative intertemporal lottery task during fMRI. Upon presentation of the three possible outcomes, they had to indicate whether options were shown more on the left (as in this example) or more on the right. After a variable delay, the actual outcome was presented. Satisfaction ratings were only obtained in an additional lottery task after scanning. C, Locations in the two-attribute (money and delay) space of an example set of five options used in the three-alternative choice and lottery tasks. Based on the results from the two-alternative choice task, several of these five-option sets were created to test for the attraction effect. For a single trial, three out of the five options were selected. For example, the framed options (LLR, SSR, and DLLR) make up a choice set in which, according to the attraction effect, LLR is expected to be chosen.

The primary goal of the two-alternative choice task was to find an individual's indifference point between LLR and SSR options. For the initial creation of the two-alternative choice set, we used a hyperbolic discounting function (Mazur, 1987) as follows:

$$ V_i = \frac{X_i}{1 + \kappa Y_i} \tag{1} $$

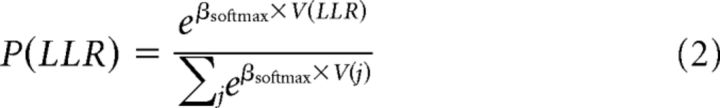

where X and Y represent the amount and delay of an option i, respectively, and κ is the discount factor, which we set to 0.0083, the median estimate reported in a large sample study with a comparable pool of participants (Peters et al., 2012). We selected hyperbolic discounting instead of exponential discounting because the former usually provides a better account of human intertemporal choices (Kirby and Herrnstein, 1995; Frederick et al., 2002; Peters et al., 2012). Using this function, we kept V for LLR and SSR options similar (with some added variability). However, amounts and delays were adaptively changed every sixth trial if either LLR or SSR options were chosen in less than two of the last six trials. Upon completion of this task, Equation 1 was fitted to the choice data using the softmax choice rule (Sutton and Barto, 1998) to derive choice probabilities as follows:

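$$ P(\mathrm{LLR}) = \frac{\exp(\beta_{\mathrm{softmax}} V_{\mathrm{LLR}})}{\sum_j \exp(\beta_{\mathrm{softmax}} V_j)} \tag{2} $$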

where P(LLR) is the probability of choosing LLR, j are all possible options, and βsoftmax is a (free) sensitivity parameter. Parameters κ and βsoftmax were estimated by minimizing the root mean square deviation (RMSD) with a grid search (301 steps per parameter; range of κ between 0 and 0.15; range of βsoftmax between 0 and 20). We chose RMSD and grid search to quickly obtain robust estimates of the discount parameter without taking the risk of ending up in local minima. The discount parameter was then used to create multiple sets of five options: two of these five options were an LLR and an SSR option with similar V and two further options were decoys DLLR and DSSR, which were similar to the LLR and SSR options, respectively, with a slightly lower amount (1–2 CHF less) and a slightly higher delay (1–29 d more) than the respective LLR/SSR option. The fifth option was a neutral option N with amount and delay halfway between LLR and SSR (see Fig. 1C). We repeatedly used a set of five options to create three choice trials for the three-alternative choice task that followed in Study 1. All of these three trials included the LLR and SSR options plus DLLR, DSSR, or N. Thirty sets were created to obtain 90 trials for the three-alternative choice task. A custom-built algorithm was used to pseudorandomize trial order to ensure that the same option (and pairs of options) did not repeat on subsequent trials.
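The fitting step described above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' MATLAB code: the function names, the coding of choices as 1 (LLR chosen) or 0 (SSR chosen), and the example trials are assumptions made here, whereas the grid ranges and the default of 301 steps follow the text.

```python
import math

def hyperbolic_value(amount, delay, kappa):
    """Equation 1: hyperbolically discounted value of a delayed reward."""
    return amount / (1.0 + kappa * delay)

def p_choose_llr(llr, ssr, kappa, beta):
    """Equation 2 for two options; softmax reduces to a logistic of the value difference."""
    diff = hyperbolic_value(*llr, kappa) - hyperbolic_value(*ssr, kappa)
    return 1.0 / (1.0 + math.exp(-beta * diff))

def rmsd(trials, choices, kappa, beta):
    """Root mean square deviation between choices (1 = LLR, 0 = SSR) and predictions."""
    errors = [(c - p_choose_llr(llr, ssr, kappa, beta)) ** 2
              for (llr, ssr), c in zip(trials, choices)]
    return math.sqrt(sum(errors) / len(errors))

def grid_search(trials, choices, steps=301, kappa_max=0.15, beta_max=20.0):
    """Exhaustive search over an evenly spaced kappa x beta grid."""
    best = (float("inf"), None, None)
    for i in range(steps):
        kappa = kappa_max * i / (steps - 1)
        for j in range(steps):
            beta = beta_max * j / (steps - 1)
            err = rmsd(trials, choices, kappa, beta)
            if err < best[0]:
                best = (err, kappa, beta)
    return best  # (rmsd, kappa, beta)

# Hypothetical data: each option is (amount in CHF, delay in days).
trials = [((20, 100), (10, 1)), ((30, 360), (8, 1)),
          ((15, 30), (12, 1)), ((25, 200), (9, 7))]
choices = [1, 0, 1, 1]
# A coarse grid keeps the demo fast; the text uses 301 steps per parameter.
err, kappa_hat, beta_hat = grid_search(trials, choices, steps=31)
```

Because every grid point is evaluated, the search cannot get stuck in a local minimum, at the price of a resolution limited by the grid spacing.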

Upon completion of the two-alternative task, participants of Study 1 proceeded with the three-alternative intertemporal choice task (see Fig. 1A, right), which was highly similar but included a third option presented in the lower middle part of the screen and selectable via the down arrow key (note that the spatial arrangement of options in both tasks was randomized). Unlike in Study 1, participants of Study 2 went into the MR scanner to conduct a novel three-alternative intertemporal lottery task (see Fig. 1B). In this task, each trial started with an initial fixation cross shown for 3–9 s before the three possible rewards of the trial's lottery were presented for 6–12 s. To ensure that participants were attentive, the three possible rewards were displayed either more on the left or more on the right and participants had to press the corresponding button on the MR-compatible button box (for the 29 participants included in the analysis, the accuracy in this task was very high: 98%; SD = ±2%). A green cross appeared in the middle of the three options after participants pressed the correct button; a red cross appeared for incorrect responses. If a response was incorrect or not made, participants were told so and the trial was terminated. After correct responses, an outcome was randomly selected and indicated for 3 s by disappearance of the 2 other options and appearance of a white frame encompassing the actual outcome. The task consisted of 144 trials in total. The creation of lottery sets in Study 2 was similar to the creation of choice sets in Study 1 (i.e., sets of five options: LLR, SSR, DLLR, DSSR, and N), but for Study 2, a set of three possible outcomes (e.g., LLR, SSR, DLLR) was used in three different trials so that each option was the actual outcome once (e.g., LLR in trial 1, SSR in trial 2, DLLR in trial 3) (note that, similar to Study 1, trials were pseudorandomized so that direct repetitions of options were avoided). 
Therefore, a set of five options could be used to create nine trials so that 16 different sets were required to obtain the 144 trials of the lottery task. Note that this procedure ensures that the probability of each option being the actual outcome of the lottery is exactly 1/3, which was also instructed to the participants. Participants further knew that, at the end of the experiment, one of the trials would be selected randomly and that they would receive the lottery outcome from this trial (unless they pressed the wrong button or missed in the left/right task).
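The construction of option sets and lottery trials can be sketched as follows. The names `make_option_set` and `lottery_trials`, the uniform sampling of decoy offsets, and the example amounts and delays are assumptions for illustration; range checks against the task limits (CHF 1 to 30, 1 to 360 d) are omitted.

```python
import random

def make_option_set(llr, ssr, rng):
    """Build a five-option set from an LLR and an SSR option, each (amount, delay)."""
    def decoy(option):
        amount, delay = option
        # Slightly worse on both attributes: 1-2 CHF less, 1-29 days more.
        return (amount - rng.randint(1, 2), delay + rng.randint(1, 29))
    # Neutral option: amount and delay halfway between LLR and SSR.
    neutral = ((llr[0] + ssr[0]) / 2, (llr[1] + ssr[1]) / 2)
    return {"LLR": llr, "SSR": ssr,
            "D_LLR": decoy(llr), "D_SSR": decoy(ssr), "N": neutral}

def lottery_trials(option_set):
    """Three triplets per set, each shown three times with a different outcome."""
    trials = []
    for third in ("D_LLR", "D_SSR", "N"):
        triplet = ("LLR", "SSR", third)
        for outcome in triplet:
            trials.append({"options": triplet,
                           "outcome": outcome,
                           "reward": option_set[outcome]})
    return trials

rng = random.Random(1)
option_set = make_option_set(llr=(24, 120), ssr=(12, 10), rng=rng)
trials = lottery_trials(option_set)  # 9 trials per set; 16 sets give 144 trials
```

Within each triplet every option is the outcome exactly once, which is what fixes the outcome probability at 1/3.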

After MR scanning, participants in Study 2 conducted half of the lottery trials again, but this time, they had to indicate their satisfaction with receiving the actual lottery reward (and not the other two possible rewards) at the end of each trial (see Fig. 1B). We consider these satisfaction ratings to be a “behavioral approximation” of the RPE because people should be more satisfied with receiving more reward than expected and less satisfied with receiving less reward than expected (Tobler et al., 2006). To indicate their satisfaction with each reward, participants used a continuous rating scale from 0 (“very unsatisfied”) to 10 (“very satisfied”) with the left and right arrow keys to move a green bar along the white scale and the spacebar button to confirm their rating. The 72 trials for this task were selected by randomly picking eight of the 16 five-option sets that were used to create the 144 trials for the task inside the MR scanner. We decided to collect the satisfaction rating data in a separate task for two reasons: (1) we did not want to induce a specific form of outcome valuation that might have influenced the neural RPE signal in accordance with our hypothesis (for the same reason, people were only informed about the rating task after exiting the scanner) and (2) we wanted to minimize the duration of the task inside the MR scanner. Stimuli were created and choice sets were presented using MATLAB (RRID:SCR_001622) and its Cogent 2000 toolbox.

Behavioral data analysis.

The critical test for the attraction effect in our three-alternative intertemporal choice task (Study 1) is whether the frequency of choosing LLR over SSR increases in the presence of DLLR and whether the frequency of choosing SSR over LLR increases in the presence of DSSR. Stated differently, in the presence of DLLR, the LLR option is the target (and SSR is the competitor), whereas in the presence of DSSR, the SSR option is the target (and LLR is the competitor), and the attraction effect is present when targets are chosen significantly more often than competitors. Note that our design provides a very strong test of the attraction effect because the same option acts as target and competitor on two different trials (i.e., LLR and SSR appear on both trials, with only the unattractive decoys changing). Statistically, we used a paired t test and report effect sizes (Cohen's d). Similarly, we tested for an attraction-effect modulation of satisfaction with the received outcome (a behavioral proxy of the RPE) in the three-alternative intertemporal lottery task (Study 2) by comparing the ratings for targets and competitors, again using a paired t test. In addition, we ran a multiple linear regression with satisfaction ratings as the dependent variable and the following predictor variables: (1) the subjective value of the outcome Voutcome, (2) the RPE (Voutcome and RPE were estimated based on the hyperbolic discounting model), (3) a variable coding whether the outcome was a target (+1) or a competitor (−1), (4) a variable coding whether the outcome was a decoy (+1), and (5) a variable coding whether the outcome was an LLR (+1) or an SSR (−1) option. Note that, among these predictor variables, only Voutcome and RPE are not orthogonal to each other. We checked the average correlation between these as well as their variance inflation factor (VIF) to ensure that they can be used in the same regression analysis. 
Both measures indicate a low level of collinearity compared with other studies in the field (cf. Hare et al., 2008): average correlation = 0.25; SD = ±0.22; VIF(Voutcome) = 1.27, ±0.73; VIF(RPE) = 2.77, ±1.40. The regression analysis was conducted to test whether the attraction effect can explain variance in the ratings over and above the Voutcome and RPE information given by the hyperbolic discounting model. We chose a mixed-model approach in which the regression is first run within each participant and the individual regression coefficients are then subjected to (“group-level”) one-sample t tests against 0.
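The target-versus-competitor comparison can be illustrated with a minimal paired t test. The text does not state which variant of Cohen's d was used; the sketch below assumes the mean difference divided by the SD of the differences, and the per-participant values are invented for the example.

```python
import math
from statistics import mean, stdev

def paired_t(x, y):
    """Paired t test on two equally long lists of per-participant values.

    Returns the t statistic, the degrees of freedom, and an effect size
    (assumed here: mean difference / SD of the differences).
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    sd = stdev(diffs)                       # sample SD (n - 1 in the denominator)
    t_stat = mean(diffs) / (sd / math.sqrt(n))
    cohens_d = mean(diffs) / sd
    return t_stat, n - 1, cohens_d

# Hypothetical per-participant choice proportions for targets and competitors.
target_share = [0.60, 0.70, 0.50, 0.80]
competitor_share = [0.40, 0.30, 0.50, 0.20]
t_stat, df, cohens_d = paired_t(target_share, competitor_share)
```

With this definition the two statistics are tied together by t = d × √n, so reporting both conveys the sample size implicitly.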

Cognitive models I and II (hyperbolic and exponential discounting).

We compared four different cognitive models against each other with respect to how well their choice predictions in Study 1 could be generalized from the two-alternative to the three-alternative intertemporal choice task and from trials comprising a neutral option N to trials comprising a decoy option (within the three-alternative task). The four models comprised two traditional discounting models (i.e., exponential and hyperbolic discounting), a multi-attribute sequential sampling model (i.e., the leaky competing accumulator; LCA; Usher and McClelland, 2001, 2004) and the recently proposed intertemporal choice heuristic (ITCH; Ericson et al., 2015). The hyperbolic discounting model is described by Equations 1 and 2 (see above). For the exponential discounting model, Equation 1 is replaced by the following value function (e.g., Peters et al., 2012):

$$ V_i = X_i \, e^{-\kappa Y_i} \tag{3} $$

where X and Y represent the amount and delay of an option i, respectively, and κ is the discount factor. The probability of choosing option i is given by Equation 2.
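A short numerical check shows why either discounting model, combined with the softmax rule, cannot produce an attraction effect: the ratio P(LLR)/P(SSR) depends only on the values of these two options, so adding a decoy leaves it unchanged. The option values and parameter settings below are hypothetical.

```python
import math

def hyperbolic(amount, delay, kappa):
    """Hyperbolic discounting (Equation 1)."""
    return amount / (1.0 + kappa * delay)

def exponential(amount, delay, kappa):
    """Exponential discounting."""
    return amount * math.exp(-kappa * delay)

def softmax(values, beta):
    """Softmax choice rule; subtracting the maximum avoids overflow."""
    top = max(values)
    weights = [math.exp(beta * (v - top)) for v in values]
    total = sum(weights)
    return [w / total for w in weights]

def llr_ssr_ratio(options, value_fn, kappa, beta):
    """P(first option) / P(second option) under value_fn plus softmax."""
    probs = softmax([value_fn(a, d, kappa) for a, d in options], beta)
    return probs[0] / probs[1]

# Hypothetical options as (amount in CHF, delay in days).
llr, ssr, decoy = (20, 100), (11, 5), (19, 115)
for value_fn in (hyperbolic, exponential):
    two = llr_ssr_ratio([llr, ssr], value_fn, kappa=0.0083, beta=0.5)
    three = llr_ssr_ratio([llr, ssr, decoy], value_fn, kappa=0.0083, beta=0.5)
    print(value_fn.__name__, round(two, 6), round(three, 6))
```

The two printed ratios agree for each model, which is the IIA property in numerical form.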

Cognitive model III: LCA model.

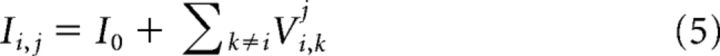

LCA relies on a sequential sampling process with leakage and mutual inhibition, in which each choice option i is represented by an accumulator Ai that is updated every time step Δt based on a comparison of i's attribute value Mi,k on the currently attended attribute k (here: magnitude or delay) with the other options' attribute values. More precisely, with n choice options and m attributes, the n by 1 vector A, representing all accumulators, is updated by the following:

$$ A_{t+\Delta t} = S A_t + (1 - \delta)\,(I W_t + E_t) \tag{4} $$

where S represents an n by n leakage and inhibition matrix with δ as on-diagonal elements and −φ × (1 − δ) as off-diagonal elements (δ and φ model leakage and inhibition, respectively), I represents an n by m input matrix (see below), and Wt represents an m by 1 attention vector with one element being 1 (representing the currently attended attribute) and the other elements being 0 (representing the currently unattended attributes) at every time point t. The probability that a particular attribute is attended to at t depends on the m − 1 attention weights ω. Et represents an n by 1 noise vector with each element being drawn from a normal distribution with mean 0 and SD σ. Note that LCA is inspired by neurophysiological constraints and truncates negative values of A to 0 because of the implausibility of negative firing rates (Usher and McClelland, 2001, 2004; but see Bogacz et al., 2006). The input matrix I contains the attribute-wise comparisons with each element according to the following:

$$ I_{i,k} = I_0 + \sum_{j \neq i} V_{i,k}^{j} \tag{5} $$

where I0 represents a constant input to each accumulator and Vi,kj represents the output of a comparison between option i and j on the attribute k that differs depending on whether i is better or worse than j on attribute k:

$$ V_{i,k}^{j} = \begin{cases} (M_{i,k} - M_{j,k})^{\alpha} & \text{if } M_{i,k} \geq M_{j,k} \\ -\lambda\,(M_{j,k} - M_{i,k})^{\alpha} & \text{if } M_{i,k} < M_{j,k} \end{cases} \tag{6} $$

where α and λ represent parameters for marginal utility and loss aversion, respectively, in the sense of prospect theory's value function (Kahneman and Tversky, 1979; Tversky and Kahneman, 1991, 1992). Note that the assumption of loss aversion is critical for LCA to explain the attraction effect because competitors, but not targets, are worse than decoys on one attribute (Usher and McClelland, 2004; Tsetsos et al., 2010). As a member of the family of sequential sampling models (Smith and Ratcliff, 2004; Gold and Shadlen, 2007; Heekeren et al., 2008), LCA can predict response times if one assumes that a choice is made as soon as one element of A exceeds a decision threshold. However, because we were interested in predicting choices but not response times, we assumed that choices were always made after a fixed time interval tmax based on which element of A was highest at tmax (Berkowitsch et al., 2014; Trueblood et al., 2014). We set tmax to 100, which we found to be sufficient for the trial-wise LCA predictions to stabilize.
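A compact simulation may help to make the model description concrete. This is a sketch under stated assumptions rather than the authors' implementation: it assumes that input and noise enter the update scaled by (1 − δ), in the manner of Usher and McClelland (2004), that accumulators start at 0, that ω is the probability of attending to the first attribute, and that attribute values are already coded so that higher is better. All function names and the example attribute matrix are hypothetical.

```python
import random

def pt_value(diff, alpha=0.88, lam=2.25):
    """Prospect-theory-style value of an attribute difference (gain vs loss)."""
    return diff ** alpha if diff >= 0 else -lam * (-diff) ** alpha

def input_matrix(M, i0=0.75):
    """n x m inputs: constant input plus summed pairwise comparisons per attribute."""
    n, m = len(M), len(M[0])
    return [[i0 + sum(pt_value(M[i][k] - M[j][k]) for j in range(n) if j != i)
             for k in range(m)]
            for i in range(n)]

def simulate_lca(M, phi, sigma, omega, delta=0.94, t_max=100, rng=random):
    """One simulated trial; returns the index of the highest accumulator at t_max."""
    n = len(M)
    inputs = input_matrix(M)
    acc = [0.0] * n                           # assumed starting point
    for _ in range(t_max):
        k = 0 if rng.random() < omega else 1  # currently attended attribute
        total = sum(acc)
        new_acc = []
        for i in range(n):
            inhibition = phi * (1 - delta) * (total - acc[i])
            drive = (1 - delta) * (inputs[i][k] + rng.gauss(0.0, sigma))
            # Negative activations are truncated to 0.
            new_acc.append(max(0.0, delta * acc[i] - inhibition + drive))
        acc = new_acc
    return max(range(n), key=lambda i: acc[i])

def choice_probabilities(M, phi, sigma, omega, n_sim=100, seed=0):
    """Approximate choice probabilities by simulated choice frequencies."""
    rng = random.Random(seed)
    counts = [0] * len(M)
    for _ in range(n_sim):
        counts[simulate_lca(M, phi, sigma, omega, rng=rng)] += 1
    return [c / n_sim for c in counts]

# Hypothetical rescaled attributes (amount, delay) for LLR, SSR, and a decoy near LLR.
M = [[0.80, 0.30], [0.30, 0.80], [0.75, 0.25]]
probs = choice_probabilities(M, phi=0.3, sigma=1.0, omega=0.5)
```

Reading out the highest accumulator at a fixed `t_max` corresponds to the fixed-time decision rule described above; a threshold-based stopping rule would be needed to predict response times.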

To the best of our knowledge, LCA has never been applied to intertemporal choices. We adapted the model to this choice context in two ways. First, we applied a power transformation to delays D to account for the nonlinearity in time perception (Wittmann and Paulus, 2008; Zauberman et al., 2009; Dai and Busemeyer, 2014) using an exponent γ to account for interindividual differences in this nonlinearity. This was necessary because context effects such as the attraction effect are usually investigated with attributes that have (approximately) linear utility functions, and LCA as well as multi-alternative decision field theory (MDFT; Roe et al., 2001) and the multi-attribute linear ballistic accumulator model (MLBA; Trueblood et al., 2014) are tailored to such attributes. Second, magnitudes and (transformed) delays were rescaled to values ranging between 0 and 1, where 0 represents the worst possible attribute value and 1 the best possible attribute value. In other words, 1 CHF was rescaled to 0, 30 CHF was rescaled to 1, 1^γ day was rescaled to 1, and 360^γ days was rescaled to 0 (with intermediate values being rescaled accordingly). Note that rescaling has to be done after (and not before) applying the power transformation of delays to preserve the shape of the delay curve induced by γ. Importantly, rescaling was used merely for technical reasons (i.e., to bring the attribute values in a range in which LCA had been applied in the past) and differs from procedures that change choice predictions qualitatively (e.g., divisive normalization; Louie et al., 2013). The rescaled magnitudes and the power-transformed and rescaled delays were then entered (as elements Mi,k) into LCA (see above).
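The two adaptations amount to a small preprocessing step, sketched below. The function names are hypothetical and linear min-max rescaling is assumed; the attribute ranges (CHF 1 to 30, 1 to 360 d) are those of the task.

```python
def rescale_amount(amount, lo=1.0, hi=30.0):
    """Map amounts linearly so that 1 CHF -> 0 (worst) and 30 CHF -> 1 (best)."""
    return (amount - lo) / (hi - lo)

def rescale_delay(delay, gamma, lo=1.0, hi=360.0):
    """Power-transform the delay first, then rescale so that 1 d -> 1 and 360 d -> 0."""
    t, t_lo, t_hi = delay ** gamma, lo ** gamma, hi ** gamma
    return (t_hi - t) / (t_hi - t_lo)

# With gamma < 1 the transformed delay axis is compressed at long delays,
# so a mid-range delay ends up closer to the "worst" end of the scale.
linear_mid = rescale_delay(180.5, gamma=1.0)
compressed_mid = rescale_delay(180.5, gamma=0.5)
```

Applying the transformation before rescaling matters: rescaling first would map the shortest delay to a fixed point of the power function and distort the curvature that γ is meant to capture.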

LCA is only one of various sequential sampling models that have been shown to account for context effects including the attraction effect. Alternatives include the above-mentioned MDFT and MLBA models, the associative accumulation model (Bhatia, 2013), and the 2N-ary choice tree model (Wollschläger and Diederich, 2012). The latter three models are relatively new and less established than LCA and MDFT. Compared with LCA, MDFT's simulations require more within-trial samples to converge (i.e., a higher tmax value; cf. Tsetsos et al., 2010), which reduces the efficiency of parameter search. Therefore, we chose LCA to represent the larger class of sequential sampling models for both conceptual and practical reasons.

Cognitive model IV: ITCH.

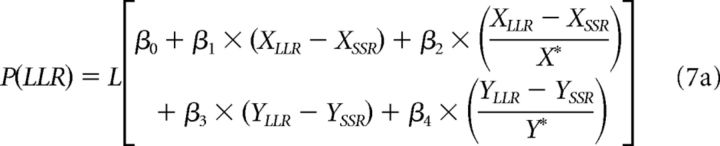

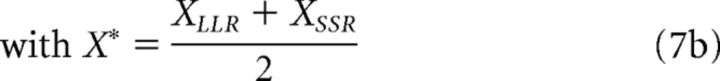

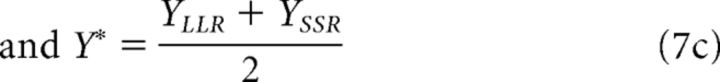

The ITCH model is based on direct comparisons of magnitudes X and delays Y (Ericson et al., 2015). We included it in our model comparison because it has been argued to account for within-attribute comparison effects in intertemporal choice that are difficult to reconcile with traditional discounting models and because it is in principle compatible with violations of IIA. When choosing between an LLR and an SSR option, the probability of choosing LLR is as follows:

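$$ P(\mathrm{LLR}) = L\left[\beta_0 + \beta_1 (X_{\mathrm{LLR}} - X_{\mathrm{SSR}}) + \beta_2 \frac{X_{\mathrm{LLR}} - X_{\mathrm{SSR}}}{X^*} + \beta_3 (Y_{\mathrm{LLR}} - Y_{\mathrm{SSR}}) + \beta_4 \frac{Y_{\mathrm{LLR}} - Y_{\mathrm{SSR}}}{Y^*}\right] \tag{7} $$

$$ X^* = \frac{X_{\mathrm{LLR}} + X_{\mathrm{SSR}}}{2} $$

$$ Y^* = \frac{Y_{\mathrm{LLR}} + Y_{\mathrm{SSR}}}{2} $$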

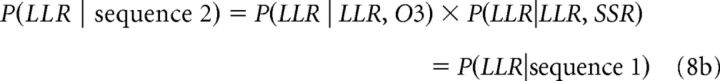

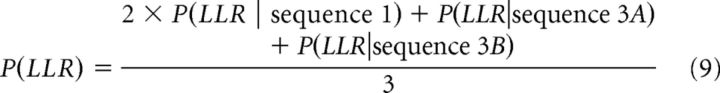

where XLLR (XSSR) is the amount of money associated with option LLR (SSR), YLLR (YSSR) is the delay associated with option LLR (SSR), L[x] is the value of the cumulative logistic distribution at x with mean 0 and variance 1, and the β's represent regression coefficients (i.e., free parameters). Put simply, the ITCH model predicts intertemporal choices by a logistic regression of absolute and relative differences in X and Y. To generalize the model to a situation in which a third option (O3) is added to the choice set, we assumed that choices between three options arise from a sequence of pairwise comparisons. For example, LLR and SSR are compared first and LLR is preferred in this pairwise comparison with probability P(LLR|LLR, SSR), which is computed as in Equation 7 above. Next, LLR is compared with O3 and is preferred in this pairwise comparison with probability P(LLR|LLR, O3), which is also given by Equation 7 when replacing the X and Y terms of SSR by those of O3. The probability of choosing LLR given the described sequence (i.e., sequence 1 = LLR vs SSR, then LLR vs O3) is simply the product of the pairwise comparisons as follows:

$$ P(\mathrm{LLR} \mid \text{sequence 1}) = P(\mathrm{LLR} \mid \mathrm{LLR}, \mathrm{SSR}) \times P(\mathrm{LLR} \mid \mathrm{LLR}, O_3) \tag{8} $$

To compute the overall probability of choosing LLR, three other possible sequences must be taken into account (i.e., sequence 2 = LLR vs O3, then LLR vs SSR; sequence 3A = SSR vs O3, then LLR vs SSR; sequence 3B = SSR vs O3, then LLR vs O3). We denote the last two sequences by “3A” and “3B” because they are essentially the same (first SSR and O3 are compared, then the winner of this comparison is compared with LLR). The probability of choosing LLR given sequences 2, 3A, and 3B is as follows:

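$$ \begin{aligned}
P(\mathrm{LLR} \mid \text{sequence 2}) &= P(\mathrm{LLR} \mid \mathrm{LLR}, O_3) \times P(\mathrm{LLR} \mid \mathrm{LLR}, \mathrm{SSR}) \\
P(\mathrm{LLR} \mid \text{sequence 3A}) &= P(\mathrm{SSR} \mid \mathrm{SSR}, O_3) \times P(\mathrm{LLR} \mid \mathrm{LLR}, \mathrm{SSR}) \\
P(\mathrm{LLR} \mid \text{sequence 3B}) &= P(O_3 \mid \mathrm{SSR}, O_3) \times P(\mathrm{LLR} \mid \mathrm{LLR}, O_3)
\end{aligned} \tag{9} $$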

We assume that all sequences are equally likely to occur, which means that the probability of sequence 3A and 3B together is 1/3 because both begin with a comparison of SSR and O3. Therefore, the overall probability of choosing LLR is as follows:

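$$ P(\mathrm{LLR}) = \tfrac{1}{3}\left[ P(\mathrm{LLR} \mid \text{sequence 1}) + P(\mathrm{LLR} \mid \text{sequence 2}) + P(\mathrm{LLR} \mid \text{sequence 3A}) + P(\mathrm{LLR} \mid \text{sequence 3B}) \right] \tag{10} $$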

The probabilities of choosing SSR and O3 are computed accordingly. This generalization of the ITCH model has the advantage of preserving the original choice process represented by Equation 7 of the model. However, it is important to note that this is only one of many possible generalizations.
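The sequential-comparison generalization can be sketched as follows. Several points are assumptions made for illustration: the assignment of β1 to β4 to the absolute and relative difference terms, the use of the standard logistic function (a variance-1 logistic differs only by a scale factor that the β's can absorb), and the convention that the first argument of `itch_pairwise` takes the LLR role while its opponent receives the complementary probability. Delays are assumed to be positive so that the mean delay is nonzero.

```python
import math

def logistic(z):
    """Numerically stable standard logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def itch_pairwise(first, second, betas):
    """P(first | first, second), with `first` in the LLR role; options are (amount, delay)."""
    (x1, y1), (x2, y2) = first, second
    b0, b1, b2, b3, b4 = betas
    x_star, y_star = (x1 + x2) / 2, (y1 + y2) / 2   # reference points (pair means)
    z = (b0 + b1 * (x1 - x2) + b2 * (x1 - x2) / x_star
         + b3 * (y1 - y2) + b4 * (y1 - y2) / y_star)
    return logistic(z)

def three_way(p_ls, p_lo, p_so):
    """Combine pairwise probabilities over equally likely comparison sequences.

    p_ls = P(LLR | LLR, SSR), p_lo = P(LLR | LLR, O3), p_so = P(SSR | SSR, O3).
    """
    p_sl, p_ol, p_os = 1 - p_ls, 1 - p_lo, 1 - p_so
    p_llr = (2 * p_ls * p_lo + p_so * p_ls + p_os * p_lo) / 3
    p_ssr = (2 * p_sl * p_so + p_lo * p_sl + p_ol * p_so) / 3
    p_o3 = (2 * p_ol * p_os + p_ls * p_ol + p_sl * p_os) / 3
    return p_llr, p_ssr, p_o3

# Hypothetical options and coefficients.
llr, ssr, o3 = (20, 100), (11, 5), (19, 115)
betas = (0.0, 0.1, 1.0, -0.01, -1.0)
probs = three_way(itch_pairwise(llr, ssr, betas),
                  itch_pairwise(llr, o3, betas),
                  itch_pairwise(ssr, o3, betas))
```

The three probabilities sum to 1 for any pairwise inputs, and because the pairwise terms are combined multiplicatively, the ratio of P(LLR) to P(SSR) can change with the third option, which is why the model is in principle compatible with violations of IIA.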

Modeling procedures.

We compared the four models against each other using two generalization tests (Busemeyer and Wang, 2000; see also Gluth et al., 2015), for which model parameters are estimated based on one dataset and then used to predict behavior in a second dataset. More specifically, we conducted a first generalization test by estimating parameters of each model for the dataset of the two-alternative choice task and then predicting the choices participants made in the three-alternative choice task. In the second generalization test, the models were fitted on the basis of trials from the three-alternative choice task that comprised the neutral option N and generalized to trials from the same task that comprised a decoy option. The generalization test is similar to cross-validation, but differs from it by testing the fitted models with a new task design instead of with a replication sample from the same design, thereby examining to what extent models can be generalized to a new context (Busemeyer and Wang, 2000). Importantly, model complexity is taken into account automatically, because overly complex models will overfit the training dataset and will therefore perform poorly when being generalized to the test dataset.

The hyperbolic and exponential discounting models have two free parameters, the discount rate κ and the sensitivity parameter βsoftmax. Our adapted LCA model can have up to eight free parameters, but we fixed four of them (δ = 0.94; I0 = 0.75; α = 0.88; λ = 2.25) to values already used and reported in the literature (Usher and McClelland, 2004; Tsetsos et al., 2010) to speed up parameter estimation and because these parameters have little impact on predictions when only two alternatives are in the choice set. Accordingly, the LCA has four free parameters, the inhibition parameter φ, the standard deviation σ of noise, attention weight ω, and γ for the power transformation of delays. The ITCH model has five free parameters (i.e., β0, β1, β2, β3, and β4). The two discounting models and ITCH have closed-form solutions and were estimated using maximum likelihood estimation. Because LCA does not have a closed-form solution, we approximated maximum likelihood estimation by simulating every trial 100 times and using the choice frequencies as probabilities (Gluth et al., 2013). To prevent the log-likelihood of LCA (or of any other model) from going to −∞ when a single choice is predicted with a probability of 0, the trialwise predictions of all models were truncated to a minimum and maximum of 0.01 and 0.99, respectively (Rieskamp, 2008; Gluth et al., 2013). For the training datasets, the models were compared based on the Bayesian information criterion (BIC; Schwarz, 1978) as follows:

$$ \mathrm{BIC}(M) = \mathrm{deviance}(M) + q \ln(n) \tag{11} $$

$$\mathrm{BIC}(M) = \mathrm{deviance}(M) + q \cdot \ln(n)$$

where the deviance of a model M is two times the negative log-likelihood of the data given M, n is the number of trials, and q is the number of free parameters of the model. For the test datasets, the deviance itself was used to compare the models because the generalization test controls for model complexity automatically (see above). In addition to the average BIC and deviance values, we report the model evidence of the best model within each participant: Following Raftery (1995), we define the model evidence as “weak,” “positive,” “strong,” or “very strong” when the BIC/deviance difference x between the best and the second-best model is x < 2, 2 < x < 6, 6 < x < 10, or x > 10, respectively. Parameters of all models were estimated in two steps: an initial grid search (with 25 steps per parameter) provided reasonable starting values, which were then passed on to a Simplex minimization algorithm (Nelder and Mead, 1965) as implemented in the MATLAB function fminsearchcon to obtain the final estimates. Parameters were constrained as follows. For the discounting models: κ between 0.0001 and 1, and βsoftmax between 0.001 and 100; for LCA: φ between 0 and 1, σ between 0.05 and 5.05, ω between 0.01 and 0.99, and γ between 0.01 and 1.01; for ITCH: all β's between −12 and 12.
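The quantities used for model comparison can be illustrated with a short Python sketch. It assumes the standard BIC form (deviance plus q times the natural log of n) and uses a toy stochastic simulator as a hypothetical stand-in for a model without a closed-form likelihood such as LCA. How differences of exactly 2, 6, or 10 are labeled is not specified in the text; the sketch assigns them to the higher category, which is an arbitrary choice.

```python
import math
import random

def approx_choice_probs(simulate_choice, n_options, n_sims=100, rng=None):
    """Approximate choice probabilities by simulated choice frequencies."""
    rng = rng or random.Random(0)
    counts = [0] * n_options
    for _ in range(n_sims):
        counts[simulate_choice(rng)] += 1
    return [c / n_sims for c in counts]

def clip(p, lo=0.01, hi=0.99):
    """Truncate a predicted probability so that its log stays finite."""
    return min(max(p, lo), hi)

def deviance(probs_of_observed):
    """Two times the negative log-likelihood of the observed choices."""
    return -2.0 * sum(math.log(clip(p)) for p in probs_of_observed)

def bic(dev, n_trials, n_params):
    """Standard BIC: deviance plus a complexity penalty of q * ln(n)."""
    return dev + n_params * math.log(n_trials)

def evidence_label(diff):
    """Raftery (1995) categories; boundary values go to the higher category (assumption)."""
    if diff < 2:
        return "weak"
    if diff < 6:
        return "positive"
    if diff < 10:
        return "strong"
    return "very strong"

def toy_simulator(rng):
    # Hypothetical model that never predicts option 2
    return 0 if rng.random() < 0.7 else 1

probs = approx_choice_probs(toy_simulator, n_options=3)
observed = [0, 1, 2, 0]  # chosen option on four identical toy trials
dev = deviance([probs[c] for c in observed])
print(probs, round(dev, 2), round(bic(dev, len(observed), 4), 2))
print(evidence_label(1.5), evidence_label(7.0), evidence_label(12.0))
```

Without the truncation, the third observed choice (an option the simulator never produced) would contribute log(0) and make the deviance infinite.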

fMRI data acquisition and preprocessing.

Whole-brain fMRI data were collected on a 3 tesla Siemens MAGNETOM Prisma scanner with a 20-channel head coil. In 3 consecutive runs, a total of 1191 echoplanar T2*-weighted images (TR 2200 ms, TE 30 ms, FoV 228 × 228, flip angle 82°) were acquired using 40 axial slices with a voxel size of 2 × 2 × 2 mm plus a 1 mm gap between slices. A z-shim gradient moment of −1.0 mT/m*ms was used to minimize signal drop in the orbitofrontal cortex (Deichmann et al., 2003). In addition, field maps (short TE: 4.92 ms; long TE: 7.38 ms) and a high-resolution T1-weighted MPRAGE image (voxel size 1 × 1 × 1 mm) were acquired for each subject to improve spatial preprocessing.

Preprocessing of fMRI data was performed using SPM8 (RRID:SCR_007037) and commenced with slice timing correction to the middle slice of each volume, followed by spatial realignment and unwarping to account for movement artifacts. Realignment and unwarping were supported by correction for geometric distortions using field maps generated with the FieldMap toolbox (Hutton et al., 2002). The individual T1-weighted image was then coregistered to the mean functional image generated during realignment. The coregistered image was segmented into gray matter, white matter, and CSF by the “new segment” algorithm of SPM8, and the obtained tissue-class images were used to generate individual flow fields and a structural template of all participants with the DARTEL toolbox (Ashburner, 2007). Flow fields were used for spatial normalization of functional images to MNI space and to create a structural group mean image for displaying statistical maps. Functional images were smoothed with a Gaussian kernel of 6 mm FWHM.

Statistical analysis of fMRI data.

To avoid different standardization of input values for parametric modulators (PMs, see below), the three scanning sessions were collapsed into a single run per participant. Individual (first-level) design matrices included an onset vector for presentation of the three lottery offers (duration = 6–12 s), an onset vector for the time point when the left/right response was made (duration = 0 s), and an onset vector for presentation of the actual lottery outcome (duration = 3 s). Each of these onset vectors was accompanied by PMs that modeled the predicted change of the hemodynamic response as a function of a variable of interest at the respective time point (Büchel et al., 1998). The lottery offers vector included a PM for the hyperbolically discounted expected value (EV) of the current lottery, which is given by the following: EV = (V1 + V2 + V3)/3, where Vi is the value of option i according to Equation 1. The left/right response vector included two PMs for response time and whether the left (+1) or the right (−1) button was pressed. The outcome vector included four PMs for the hyperbolic discounting estimates of Voutcome and RPE, whether the outcome was a target (+1) or a competitor (−1), and whether the outcome was a decoy (+1). Note that similar to the predictor variables in the behavioral regression analysis, the correlation between the PMs Voutcome and RPE and the respective VIFs were comparatively low: avg. correlation = 0.19; SD = ±0.17; VIF(Voutcome) = 1.24, ±0.35; VIF(RPE) = 2.11, ±0.72 (in an additional exploratory analysis, we also found that the exclusion of Voutcome does not have a substantial effect on the results). PMs were z-standardized before implementation into the design matrix and stepwise orthogonalization was deactivated to test for each PM's unique contribution to explain variance in the data (Mumford et al., 2015).
In addition, three nuisance onset vectors were included (if necessary) for invalid trials in which participants missed the left/right task or responded incorrectly [one vector for presentation of the lottery, one vector for the button press (in case of incorrect responses), one vector for presentation of the feedback slide]. A high-pass filter of 128 s and correction for temporal autocorrelation were applied to the data (a custom-built algorithm was used to adjust filter and autocorrelation to the fact that we collapsed the three scanning sessions). Individual statistical parametric maps were calculated based on SPM's GLM estimation procedure. To test for different BOLD signal contrasts between targets versus competitors depending on whether these were LLR or SSR outcomes, we ran a second GLM in which we split the target versus competitor PM into two separate PMs for LLR and SSR outcomes. To test whether LLR versus SSR outcomes themselves explained reward-related brain activation, we ran a third GLM but added another PM to the outcome vector that coded for LLR (+1) versus SSR (−1) outcomes.
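How the value-based parametric modulators relate to each other can be sketched as follows. The expected value follows the formula given above, EV = (V1 + V2 + V3)/3; the RPE is taken here as Voutcome − EV, which is an assumption based on the usual definition (received minus expected reward), as is the hyperbolic form V = A/(1 + κD). Whether the sample or the population SD was used for z-standardization is not stated; the sketch uses the sample SD. All names and numbers are hypothetical.

```python
import statistics

def hyperbolic_value(amount, delay, kappa):
    """Assumed standard hyperbolic form: V = A / (1 + kappa * D)."""
    return amount / (1.0 + kappa * delay)

def lottery_regressors(options, outcome_index, kappa):
    """Return (EV, V_outcome, RPE) for one lottery trial."""
    values = [hyperbolic_value(a, d, kappa) for a, d in options]
    ev = sum(values) / len(values)      # all three options are equally likely
    v_outcome = values[outcome_index]
    return ev, v_outcome, v_outcome - ev  # RPE assumed to be V_outcome - EV

def zscore(xs):
    """z-standardize a regressor (sample SD; an assumption)."""
    mu = statistics.mean(xs)
    sd = statistics.stdev(xs)
    return [(x - mu) / sd for x in xs]

# Made-up trials: (options as (amount, delay in days), index of the drawn outcome)
trials = [
    ([(20, 0), (30, 30), (28, 35)], 1),
    ([(20, 0), (30, 30), (18, 2)], 0),
    ([(25, 5), (45, 60), (40, 70)], 2),
    ([(25, 5), (45, 60), (22, 8)], 1),
]
kappa = 0.01  # would come from the two-alternative calibration task
rpe = [lottery_regressors(opts, k, kappa)[2] for opts, k in trials]
rpe_pm = zscore(rpe)
print([round(x, 2) for x in rpe_pm])
```

Under these assumptions, the RPEs of the three possible outcomes of a lottery always sum to zero, so a positive RPE for one outcome implies negative RPEs for at least one of the others.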

At the group level, individual contrast images were taken together and used in combination with SPM's flexible factorial design to test for effects of RPE and target versus competitors. In addition, a regression analysis with the mean difference in satisfaction ratings between targets and competitors as independent variable was used to test for a brain–behavior relationship of the attraction effect across participants. Given the well-established evidence for RPE signals in the NAcc (Bartra et al., 2013; Garrison et al., 2013; Clithero and Rangel, 2014), we focused our analyses on the NAcc using small-volume correction (SVC) for multiple comparisons and/or ROI analyses. Here, we report SVC/ROI results based on an anatomical NAcc ROI taken from the IBASPM atlas (Alemán-Gómez et al., 2006) as implemented in the WFU PickAtlas toolbox (WFU PickAtlas, RRID:SCR_007378). Note, however, that results pertaining to the attraction effect (i.e., comparisons between targets and competitors) do not change qualitatively if we use individually defined functional ROIs that are based on the RPE signal (i.e., 4 mm spheres around the individual peak voxels) instead. A statistical threshold of p < 0.05 was used for SVC and ROI analyses. For other regions, we chose a threshold of p < 0.05 (FWE corrected for whole brain), but no region survived this threshold for any analysis reported here. Activations are depicted on an overlay of the mean structural T1 image from all participants, with a threshold of p < 0.005 (uncorrected) with 10 contiguous voxels chosen for display purposes.

Results

The attraction effect biases intertemporal choices

To investigate whether intertemporal choices were affected by an attraction effect, we tested 21 participants in Study 1 who first completed a classical two-alternative version of the intertemporal choice task. This allowed us to adjust the subsequent three-alternative version to their individual level of temporal discounting (Fig. 1A). In the three-alternative choice task, participants were always offered an LLR and an SSR, plus a third option that was either a decoy option similar to (but worse than) LLR (DLLR), a decoy option similar to (but worse than) SSR (DSSR), or a neutral option (N; Fig. 1C). The attraction effect predicts that the probability of choosing LLR is higher when DLLR is in the choice set than when DSSR is in the choice set (and vice versa for SSR). In other words: LLR becomes the target and SSR the competitor in the presence of DLLR; SSR becomes the target and LLR the competitor in the presence of DSSR; and targets are expected to be chosen more often than competitors.
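The recoding of choices into targets, competitors, and decoys can be sketched as follows. This is a minimal illustration with made-up trials; the labels and function names are hypothetical.

```python
from collections import Counter

def code_choice(decoy_type, chosen):
    """Recode a choice relative to the decoy in the choice set.

    decoy_type: 'DLLR' (decoy near LLR) or 'DSSR' (decoy near SSR)
    chosen:     'LLR', 'SSR', or 'D' (the decoy itself)
    """
    if decoy_type not in ("DLLR", "DSSR"):
        raise ValueError("neutral-option trials have no target or competitor")
    target = "LLR" if decoy_type == "DLLR" else "SSR"
    if chosen == "D":
        return "decoy"
    return "target" if chosen == target else "competitor"

def choice_proportions(trials):
    """Relative choice frequencies of targets, competitors, and decoys."""
    counts = Counter(code_choice(d, c) for d, c in trials)
    return {k: counts[k] / len(trials) for k in ("target", "competitor", "decoy")}

# Made-up choices of one participant: (decoy type, chosen option)
trials = [
    ("DLLR", "LLR"), ("DLLR", "LLR"), ("DLLR", "SSR"),
    ("DSSR", "SSR"), ("DSSR", "LLR"), ("DSSR", "D"),
]
props = choice_proportions(trials)
print(props)
```

In this toy dataset, the target proportion (3 of 6 choices) exceeds the competitor proportion (2 of 6), which is the pattern the attraction effect predicts.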

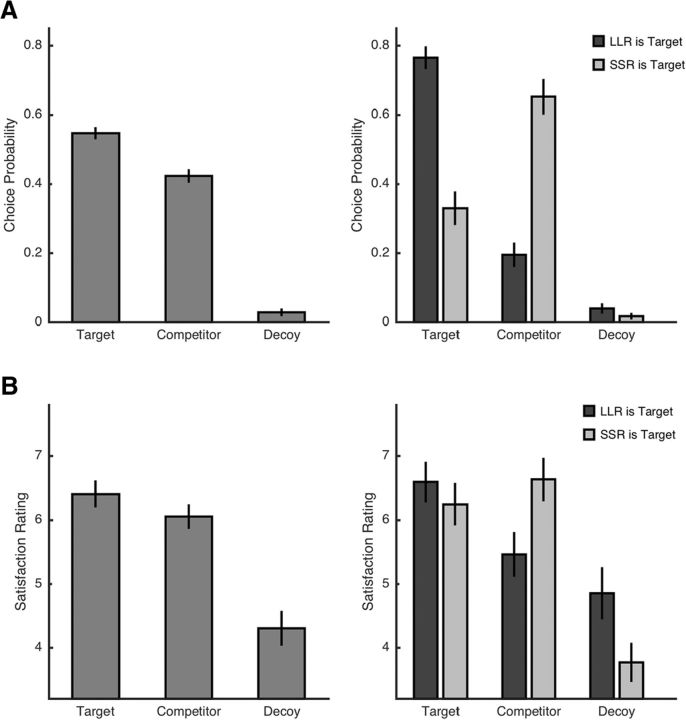

As predicted, we found that participants chose targets significantly more often than competitors (targets: 55 ± 7%; competitors: 42 ± 8%; t(20) = 4.15; p < 0.001; d = 0.90; Fig. 2A, left). We further observed that, in this task, participants chose LLR options more often than SSR options (LLR: 57 ± 17%; SSR: 20 ± 12%; t(20) = 6.10; p < 0.001; d = 1.33). This is surprising given that we ensured that LLRs' and SSRs' subjective values (based on fitting a hyperbolic discounting model to the two-alternative choice data) did not differ significantly from each other. Note that indifference between LLR and SSR is not a necessary condition for the attraction effect. Consistent with the attraction effect, although participants preferred LLR over SSR in the presence of both DLLR (t(20) = 9.38; p < 0.001; d = 2.05) and DSSR (t(20) = 3.46; p = 0.002; d = 0.75), this preference was weaker for DSSR trials (Fig. 2A, right).

Figure 2.

Behavioral results. A, Relative choice proportion of targets, competitors, and decoys for all trials (left) and separated for trials in which LLR or SSR was the target (right) in Study 1. B, Same results for satisfaction ratings in Study 2. Note that, even though the attraction effect is significant over both trial types (in both studies), the expected pattern (i.e., targets are preferred to competitors) is only seen in trials with LLR being the target. Error bars in all figures represent SEM.

The attraction effect modulates the subjective valuation of rewards in the absence of choice

In the fMRI experiment (Study 2), 29 participants first completed the same two-alternative choice task as in Study 1 for calibration purposes. During MR scanning, however, they worked on a three-alternative intertemporal lottery task (Fig. 1B), which allowed us to investigate whether the attraction effect modulates the RPE-related fMRI BOLD signal in the NAcc (see below). In each lottery trial, participants saw the three options, but could not choose. Instead, one of the three options was selected by the computer as the reward for that particular trial. After scanning, half of the lottery trials were repeated and participants gave satisfaction ratings for the received lottery outcome in each trial. Similar to the choice task in Study 1, we expected a modulation of the subjective valuation of rewards by the attraction effect, but now in the absence of choice. Indeed, satisfaction ratings were significantly higher for targets than for competitors (t(28) = 3.20; p = 0.003; d = 0.60; Fig. 2B, left). In contrast to Study 1, we did not find that participants rated LLR outcomes significantly higher than SSR outcomes (t(28) = 1.50; p = 0.144; d = 0.28). However, it is notable that the attraction effect was mainly driven by DLLR trials (t(28) = 2.17; p = 0.038; d = 0.40), whereas the rating difference between targets and competitors in DSSR trials was not significant (t(28) = −0.75; p = 0.460; d = −0.14; Fig. 2B, right). In addition, we ran a multiple linear regression analysis to test whether the attraction effect explained variance over and above the value of the lottery outcome and the RPE as derived from hyperbolic discounting. The rationale of this analysis is as follows. We expected that the hyperbolic discounting-based outcome value and RPE regressors can explain some variance in satisfaction ratings because they capture general interindividual differences in temporal discounting and because they should make accurate predictions in trials with a neutral third option N.
Conversely, given that hyperbolic discounting does not account for the attraction effect (see the cognitive modeling results below), we expected that the target versus competitor and the decoy predictor variables explain additional variance in the satisfaction ratings. The results of this analysis are provided in Table 1. Indeed, the regression coefficients of RPE, but also of target versus competitor outcomes, were significantly positive, whereas the coefficient of decoy outcomes was significantly negative. Consistent with the results reported above, the coefficient for LLR versus SSR was not significant.

Table 1.

Regression analysis of satisfaction ratings

| Independent variable | Coefficient: mean (SD) | t-statistic | p-value |
|---|---|---|---|
| Intercept | 3.96 (2.65) | 8.04 | <0.001 |
| Voutcome | 0.57 (2.29) | 1.33 | 0.193 |
| RPE | 0.79 (1.58) | 2.68 | 0.012 |
| Target vs Competitor | 0.19 (0.32) | 3.29 | 0.003 |
| Decoy | −1.07 (1.58) | −3.63 | 0.001 |
| LLR vs SSR | 0.28 (1.29) | 1.16 | 0.254 |
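The t-statistics in Table 1 can be related to the reported means and SDs with a short sketch. It assumes that each participant's regression coefficients were tested against zero across the 29 participants with a one-sample t-test; this is an inference from the reported values, not a statement taken from the text. Because the tabulated means and SDs are rounded, the recomputed values only approximate the reported ones.

```python
import math

def one_sample_t(mean, sd, n):
    """t-statistic for testing a mean against zero: mean / (SD / sqrt(n))."""
    return mean / (sd / math.sqrt(n))

n_participants = 29  # Study 2

# (mean, SD) of coefficients as reported in Table 1
table1 = {
    "Intercept": (3.96, 2.65),
    "Voutcome": (0.57, 2.29),
    "RPE": (0.79, 1.58),
    "Decoy": (-1.07, 1.58),
}

for name, (mean, sd) in table1.items():
    t = one_sample_t(mean, sd, n_participants)
    print(f"{name}: t({n_participants - 1}) is approximately {t:.2f}")
```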

In sum, our behavioral results suggest the presence of a robust attraction effect in both intertemporal choices and in valuation of rewards from an intertemporal lottery. However, the typical attraction effect pattern (i.e., that targets are preferred over competitors) was only present in DLLR trials.

RPE signals in NAcc

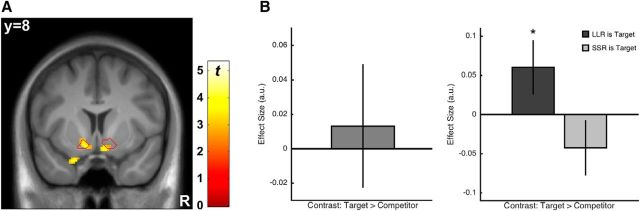

With respect to the fMRI analyses, we first sought to establish that our paradigm elicited a “conventional” RPE signal in the NAcc. As for the behavioral analysis, the RPE used in this analysis was based on the hyperbolic discounting model fitted to the choices that each participant made in the two-alternative choice task before scanning. Based on the results of the behavioral regression analysis (see above and Table 1), we expected a significant relationship between the hyperbolic discounting-based RPE regressor and the signal in NAcc even though this model is incompatible with the attraction effect and should therefore not capture the NAcc signal entirely. As shown in Figure 3A, we found a significant RPE signal in both left and right NAcc (left: peak MNI coordinates: X = −14/Y = 4/Z = −10; z = 4.92; p(SVC) < 0.001; right: 10/10/−14; z = 3.31; p(SVC) = 0.016). Note that, similar to previous work (Park et al., 2012), we controlled for the subjective value of the reward itself by including this variable in the same analysis.

Figure 3.

RPE brain signal and the attraction effect. A, “Conventional” RPE regressor based on hyperbolic discounting correlated with the fMRI signal in bilateral NAcc. The red outline illustrates the NAcc ROI used for statistical testing and data extraction. B, Mean activations in the NAcc ROI for the contrast target outcomes > competitor outcomes over all trials (left) and for trials with LLR/SSR being the target separately (right). The attraction effect pattern (target > competitor) was found in trials with LLR being the target. The negative tendency for trials with SSR being the target was not significant. *p < 0.05.

RPE modulation by the attraction effect

Next, we tested for neural evidence of RPE modulation by the attraction effect by contrasting the fMRI signal for target versus competitor outcomes. Given the strong empirical support for RPE coding in NAcc, we used an ROI approach testing for the contrast target > competitor averaged over an anatomically defined NAcc ROI. The contrast was positive but not significant over all trials (t(28) = 0.44; p = 0.666; d = 0.08; Fig. 3B, left). This is not surprising given that we saw the typical attraction effect pattern in the behavior only in DLLR trials (see above). Therefore, we set up two separate target > competitor contrasts for lotteries containing either a DLLR or a DSSR decoy option. For DLLR trials, we found that the target > competitor contrast was significantly positive (t(28) = 2.10; p = 0.045; d = 0.39), whereas for DSSR trials, the contrast was not significant (t(28) = −1.44; p = 0.160; d = −0.27; Fig. 3B, right). As expected, the difference between trial types was also significant (t(28) = 2.63; p = 0.014; d = 0.49). Importantly, to rule out that these effects are merely driven by a difference in NAcc activation between LLR and SSR outcomes, we tested for such a difference, but did not find any evidence for it (neither with a very liberal statistical threshold nor with an ROI approach), which is consistent with the nonsignificant behavioral difference between LLR and SSR outcomes in Study 2 (see above). In summary, for those trials for which our behavioral results suggested a strong attraction effect, we also found an attraction effect modulation of the RPE signal in the NAcc.

RPE modulation across participants

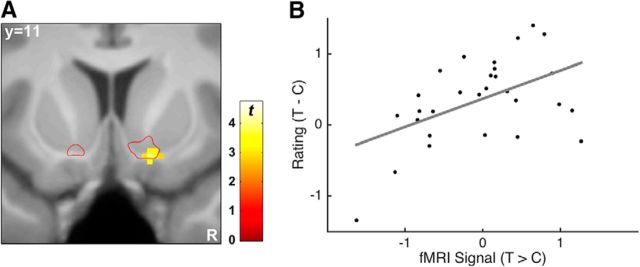

Interdependencies between choice or lottery options (such as attraction effects) may affect valuation processes differently in different participants. We predicted that participants whose satisfaction ratings were more strongly modulated by the attraction effect should also exhibit a stronger modulation of the RPE response in NAcc. To test this, we conducted a regression analysis across participants with the (behavioral) rating difference of targets − competitors as independent variable and the (brain) fMRI contrast of target > competitor as dependent variable. This analysis revealed a significant brain–behavior correlation in the right NAcc (14/12/−14; z = 3.44; p(SVC) = 0.013; Fig. 4). In other words, participants with a stronger NAcc signal for target compared with competitor outcomes during MR scanning reported higher satisfaction for receiving target outcomes compared with competitor outcomes afterward.

Figure 4.

Brain–behavior correlation of the attraction effect. A, Regression of the fMRI contrast target > competitor on the target − competitor difference in satisfaction ratings revealed a significant effect in the right NAcc. B, Scatterplot of the effect for illustration purposes. The individual peak estimates of the fMRI contrast shown here were derived using a leave-one-subject-out approach (Esterman et al., 2010) to avoid the nonindependence error. According to this approach, the strength of the brain–behavior correlation is r(27) = 0.50.

Cognitive modeling of intertemporal choices

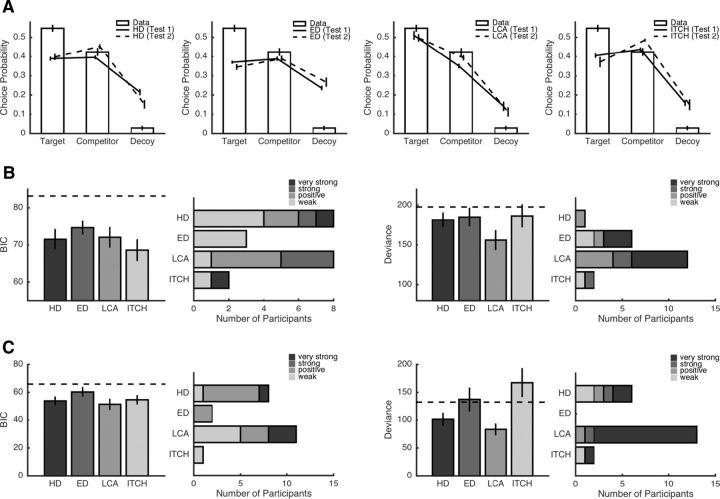

The attraction effect can be accounted for by multi-attribute sequential sampling models such as the LCA model (Usher and McClelland, 2001, 2004), but should pose a challenge to traditional discounting models because they adhere to the IIA principle. In addition, the recently proposed ITCH model (Ericson et al., 2015), which is based on the comparison of absolute and relative differences of magnitudes and delays, has been shown to better account for specific patterns in intertemporal choices than traditional discounting models. Because this model does not imply IIA, it remains open whether it also explains the attraction effect. Therefore, we compared hyperbolic and exponential discounting models, the LCA model (adapted to intertemporal choices), and the ITCH model with respect to their ability to predict participants' decisions in Study 1. We used a generalization test (Busemeyer and Wang, 2000) to examine how well the models' predictions could be generalized from the two-alternative to the three-alternative intertemporal choice task and from trials comprising a neutral option N to trials comprising a decoy option (within the three-alternative task).

First, we tested the generalization results on a qualitative level by looking at the choice predictions for targets, competitors, and decoys. As can be seen from Figure 5A, only LCA predicted (correctly) that targets were chosen more often than competitors. Next, we compared the models on a quantitative level. When fitting the models to the data from the two-alternative choice task, we found that all models were better than a baseline model that predicted each choice with p = 1/2 and that the models performed almost equally well (Fig. 5B, left). On the individual level, hyperbolic discounting and the LCA model best explained the data for the majority of participants but, in general, the two-alternative choice dataset was insufficient to identify a winning model unambiguously. In contrast, the generalization test to the three-alternative intertemporal choice task yielded more conclusive results (Fig. 5B, right). LCA had the lowest average deviance of all models and provided the best generalization results for 12 of 21 participants (with very strong evidence for LCA in six participants).

Figure 5.

Model comparisons. A, Comparison of choice frequencies and model predictions for targets, competitors, and decoys. B, Results of the first generalization test (i.e., generalizing from two-alternative to all three-alternative choice trials). Left and right panels show results for the training and test datasets, respectively. Within each panel, average BICs/deviances per model are shown on the left and evidence for the best model for each participant is shown on the right. Lower BICs/deviances indicate better fits. Dashed line indicates the fit of a baseline model that “guesses” each choice with equal probability. C, Results for the second generalization test (i.e., generalizing from trials with an N option to trials with a decoy option). HD, Hyperbolic discounting model; ED, exponential discounting model.

In light of participants' tendency to prefer LLR options in the three-alternative task (see above), we conducted a second generalization test. Here, we used the trials from the three-alternative choice task that involved the neutral option N to estimate parameters and then tested the models' generalizability with respect to the remaining trials (that involved a decoy option). For the dataset for which parameters were estimated, all models predicted choices better than chance (Fig. 5C, left). LCA was the best model overall and for more participants than any other model, but the hyperbolic discounting model and the ITCH model performed similarly. Yet again, the generalization results unequivocally identified LCA as the best model (Fig. 5C, right): LCA had a much smaller deviance than any other model and provided the best fit for 13 of 21 participants (with very strong evidence for LCA in 11 participants).

Discussion

In the present study, we tested for behavioral and neural evidence of a modulation of the RPE by the attraction effect. To begin, we demonstrated for the first time an attraction effect in the context of intertemporal choices (Study 1). Also for the first time, we observed an RPE modulation on the behavioral level (i.e., outcome satisfaction) in Study 2 when participants did not choose between options but received and evaluated potential rewards. On the neural side, we found the expected RPE modulation by the attraction effect in the NAcc in trials in which the LLR option was the target. This was consistent with our behavioral results, which also showed the typical attraction effect pattern (i.e., target > competitor) for only these trials. Moreover, the target/competitor differences in the NAcc signal and in the ratings were correlated across participants. Finally, we demonstrated that a cognitive process model, which relies on the dynamic comparisons of attribute values, outperforms not only traditional hyperbolic and exponential discounting models, but also a more recently proposed attribute-based heuristic (i.e., the ITCH model) in predicting our data.

In a recent electrophysiology study with nonhuman primates (Lak et al., 2014), the dopaminergic RPE signal in response to different reward-predicting cues could be anticipated by assessing the subjective value of the rewards in an independent choice task. This observation led the authors to speculate that “the value of the cue was independent of the value of the other options,” that “economic values were … stable across time and behavioral context,” and that “the value represented by dopamine neurons in a nonchoice context is consistent with the values used for choice.” Although these conclusions may be warranted in specific experimental contexts, our findings challenge the putative general context independence of the RPE on the basis of its susceptibility to the attraction effect. In particular, we argue that because the (momentary) utility of an option depends on the alternatives in the set (Rieskamp et al., 2006; Vlaev et al., 2011), the RPE signal, after receiving a particular option, should also reflect this dependence. Similar to previous studies (Yacubian et al., 2006; Hare et al., 2008; Park et al., 2012), we examined the characteristics of RPE coding in a lottery task that did not include a learning component. Provided that our results generalize to settings in which outcomes are not fully described but have to be experienced through trial and error (Daw et al., 2006; Gluth et al., 2014), they will have important implications for theories of reinforcement learning (RL). For instance, the softmax rule, arguably the most commonly applied choice rule in RL models, implies the IIA principle (Hunt et al., 2014) and is thus incompatible with context effects such as the attraction effect. Our findings therefore suggest that the softmax choice rule might not be suitable for decisions with more than two alternatives that are characterized by two or more attributes (e.g., reward magnitude and probability).
Moreover, if context effects are not taken into account when computing (subjective) reward expectations, the size and even the sign of the RPE might be predicted incorrectly.
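Why the softmax rule implies IIA can be seen in a short numerical sketch. It assumes the common softmax form, P(i) = exp(βVi) / Σj exp(βVj), applied to option values that are computed independently of the choice set; the values and β below are made up for illustration.

```python
import math

def softmax(values, beta=1.0):
    """Softmax choice probabilities over independently computed option values."""
    m = max(values)  # subtract the maximum for numerical stability
    expv = [math.exp(beta * (v - m)) for v in values]
    total = sum(expv)
    return [e / total for e in expv]

beta = 0.8
v_llr, v_ssr, v_decoy = 10.0, 9.0, 8.5  # made-up subjective values

p_two = softmax([v_llr, v_ssr], beta)
p_three = softmax([v_llr, v_ssr, v_decoy], beta)

ratio_two = p_two[0] / p_two[1]
ratio_three = p_three[0] / p_three[1]

# Both ratios equal exp(beta * (v_llr - v_ssr)), whatever the third option is
print(ratio_two, ratio_three, math.exp(beta * (v_llr - v_ssr)))
```

Adding the third option lowers both choice probabilities but leaves their ratio untouched, so a softmax rule over fixed values cannot produce the shift in relative preference that defines the attraction effect.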

The fMRI signal in the NAcc at the time of reward was only consistent with an attraction effect modulation for trials with DLLR decoys. This, however, matched our behavioral findings in both Study 1 and 2 to the extent that only in DLLR trials were targets preferred over competitors and given higher ratings. In fact, the only difference between the behavioral and neural results was that in the former, but not in the latter, the preference for targets in DLLR trials was so much higher than the preference for competitors in DSSR trials that the attraction effect became significant when collapsing the data over all trials. The linkage of the attraction effect modulation between brain and behavior was further supported by the interindividual correlation of target versus competitor differences in NAcc activation and satisfaction ratings. Importantly, we ruled out an alternative explanation of the significant effect in DLLR trials, namely that LLR rewards generally elicited a more positive RPE signal than SSR rewards (also consistent with the LLR–SSR difference in satisfaction ratings being not significant). Notably, asymmetries in the attraction effect as we observed it in our data are common in studies of multi-alternative decision making. For instance, in their meta-analyses on the attraction effect in consumer choice, Heath and Chatterjee (1995) conclude that the introduction of decoys leads to a stronger attracting effect on higher-quality, higher-price than on lower-quality, lower-price options. Therefore, different attributes such as money, delay, price, or product quality appear to be differently resistant to context-dependent modulations, although the reasons for these asymmetries remain unclear.

Hyperbolic discounting has replaced exponential discounting as the standard way of describing intertemporal choice because of its ability to account for time inconsistencies that were confirmed empirically (Kirby and Herrnstein, 1995; Laibson, 1997; Peters et al., 2012). However, more recent work has revealed novel systematic patterns in intertemporal choice that are incompatible with both hyperbolic and exponential discounting (Scholten and Read, 2010; Dai and Busemeyer, 2014; Ericson et al., 2015; Lempert and Phelps, 2016). In particular, the magnitude and the delay duration effect show that discount factors change when reward amounts and delays of LLR and SSR options are multiplied by the same constant. Accordingly, new choice models such as the trade-off model (Scholten and Read, 2010) or the ITCH model (Ericson et al., 2015) have been proposed. The critical feature of those models is the direct comparison of attribute values (i.e., amount and delay) between LLR and SSR options. Intriguingly, this development in intertemporal choice theories matches the more general critique of “value-first” models, which assume that the choice process is preceded by independently assessing each option's subjective value (Rieskamp et al., 2006; Vlaev et al., 2011; Hunt et al., 2014). Hyperbolic and exponential discounting belong to the family of “value-first” models and so do expected utility theory (Von Neumann and Morgenstern, 1947), prospect theory (Kahneman and Tversky, 1979), and simple neuroeconomic choice models (Glimcher, 2009; Padoa-Schioppa, 2011). The general picture that emerges here is that these models fail to account for interactions between choice options caused by comparisons within single attributes. Our findings suggest that we must recognize intertemporal choice as a form of multi-attribute decision making in which money and delay trade off.

Even if a cognitive model is based on direct comparisons of attribute values, it is not guaranteed that it will account for particular context effects. The ITCH model was specifically designed to explain context effects reported in studies of intertemporal choice (i.e., the magnitude and the delay duration effect) and allows for violations of the IIA principle. In our study, however, we showed that this model has difficulties with predicting the attraction effect (though other ways to generalize the model to choices with more than two alternatives are conceivable and might be better suited to predict the effect). This is because the model does not have a mechanism to exploit asymmetries in dominance relationships. In contrast, LCA uses loss aversion to produce the attraction effect and accounts for several other multi-alternative context effects, including the similarity and the compromise effects (Usher and McClelland, 2004; Tsetsos et al., 2010). Moreover, because LCA assumes direct comparisons of attribute values, it can (in principle) account for the magnitude and the delay duration effects (see also Dai and Busemeyer, 2014). It should be noted, however, that LCA is only one of many sequential sampling models that account for context effects by using various different assumptions (cf. Rieskamp et al., 2006). In fact, LCA has been criticized for requiring loss aversion to explain the attraction effect, which is difficult to reconcile with evidence for this effect in perceptual decisions (Trueblood et al., 2014). Given that loss aversion appears sensible in the context of intertemporal choice, the current study does not add to this ongoing debate. It rather points to sequential sampling models as a general and strong framework that enables context-dependent predictions of choice behavior.

To the best of our knowledge, our results also demonstrate for the first time that context effects such as the attraction effect can be elicited in the absence of choice, that is, when people evaluate the (relative) desirability of different potential rewards. The underlying mechanisms of this context-dependent reward valuation remain unclear, but we speculate that within-attribute comparisons similar to those during multi-alternative choice are at play. In other words, when evaluating the desirability of different potential rewards, people weigh the rewards' advantages and disadvantages with respect to specific attributes and determine on this basis which of the different rewards are most/least desirable (in the current context). Certainly, more research is needed to substantiate this proposition and to identify common versus distinct mechanisms of context-dependent choice and valuation.

In conclusion, we have shown that both RPE coding and intertemporal choice are prone to the attraction effect and violate the IIA and regularity principles of the axiomatic approach in behavioral economics. Accordingly, potential multi-alternative context effects in choice and valuation processes must be considered as we develop theories of reinforcement learning and delay discounting.
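For reference, the two principles named above can be made concrete with a small numerical sketch. It assumes hyperbolic discounting combined with a softmax (logit) choice rule; the choice rule, the discount rate `k`, the `sensitivity` parameter, and the option values are assumptions of this sketch rather than estimates from the study. Under these assumptions, adding a decoy cannot change the ratio of choice probabilities between the two original options (IIA) and cannot increase the probability of choosing either of them (regularity), which is why such a model cannot produce an attraction effect.

```python
import math


def hyperbolic_value(amount, delay, k=0.01):
    """Discounted value of a delayed reward under hyperbolic discounting."""
    return amount / (1.0 + k * delay)


def softmax_choice(values, sensitivity=0.5):
    """Choice probabilities from a softmax over discounted values."""
    top = max(values.values())  # subtract the maximum for numerical stability
    weights = {name: math.exp(sensitivity * (v - top)) for name, v in values.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# Hypothetical options given as (amount, delay in days).
options = {
    "target": (30.0, 60.0),      # larger-later
    "competitor": (20.0, 10.0),  # smaller-sooner
    "decoy": (28.0, 70.0),       # smaller and later than the target
}
values = {name: hyperbolic_value(a, d) for name, (a, d) in options.items()}

p_binary = softmax_choice({n: values[n] for n in ("target", "competitor")})
p_ternary = softmax_choice(values)

ratio_binary = p_binary["target"] / p_binary["competitor"]
ratio_ternary = p_ternary["target"] / p_ternary["competitor"]
print(ratio_binary, ratio_ternary)
print(p_binary["target"], p_ternary["target"])
```

The attraction effect reported here runs against both properties: the decoy shifts relative preference toward the target, which an option-wise valuation model of this kind cannot express.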

Footnotes

This work was supported by the Swiss National Science Foundation (SNSF Grant 100014_153616 to S.G. and J.R.). We thank Tehilla Mechera-Ostrowsky and Pauldy Otermans for help with data collection.

The authors declare no competing financial interests.

References

- Alemán-Gómez Y, Melie-García L, Valdés-Hernandez P. IBASPM: Toolbox for automatic parcellation of brain structures. Poster presented at the 12th Annual Meeting of the Organization for Human Brain Mapping; June; Florence, Italy. 2006.

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007.

- Bartra O, McGuire JT, Kable JW. The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage. 2013;76:412–427. doi: 10.1016/j.neuroimage.2013.02.063.

- Berkowitsch NA, Scheibehenne B, Rieskamp J. Rigorously testing multialternative decision field theory against random utility models. J Exp Psychol Gen. 2014;143:1331–1348. doi: 10.1037/a0035159.

- Bhatia S. Associations and the accumulation of preference. Psychol Rev. 2013;120:522–543. doi: 10.1037/a0032457.

- Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev. 2006;113:700–765. doi: 10.1037/0033-295X.113.4.700.

- Büchel C, Holmes AP, Rees G, Friston KJ. Characterizing stimulus-response functions using nonlinear regressors in parametric fMRI experiments. Neuroimage. 1998;8:140–148. doi: 10.1006/nimg.1998.0351.

- Busemeyer JR, Wang YM. Model comparisons and model selections based on generalization criterion methodology. J Math Psychol. 2000;44:171–189. doi: 10.1006/jmps.1999.1282.

- Clithero JA, Rangel A. Informatic parcellation of the network involved in the computation of subjective value. Soc Cogn Affect Neurosci. 2014;9:1289–1302. doi: 10.1093/scan/nst106.

- Dai J, Busemeyer JR. A probabilistic, dynamic, and attribute-wise model of intertemporal choice. J Exp Psychol Gen. 2014;143:1489–1514. doi: 10.1037/a0035976.

- Daw ND, O'Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- Deichmann R, Gottfried JA, Hutton C, Turner R. Optimized EPI for fMRI studies of the orbitofrontal cortex. Neuroimage. 2003;19:430–441. doi: 10.1016/S1053-8119(03)00073-9.

- Ericson KM, White JM, Laibson D, Cohen JD. Money earlier or later? Simple heuristics explain intertemporal choices better than delay discounting does. Psychol Sci. 2015;26:826–833. doi: 10.1177/0956797615572232.

- Esterman M, Tamber-Rosenau BJ, Chiu YC, Yantis S. Avoiding non-independence in fMRI data analysis: leave one subject out. Neuroimage. 2010;50:572–576. doi: 10.1016/j.neuroimage.2009.10.092.

- Frederick S, Loewenstein G, O'Donoghue T. Time discounting and time preference: a critical review. J Econ Lit. 2002;40:351–401. doi: 10.1257/jel.40.2.351.

- Garrison J, Erdeniz B, Done J. Prediction error in reinforcement learning: a meta-analysis of neuroimaging studies. Neurosci Biobehav Rev. 2013;37:1297–1310. doi: 10.1016/j.neubiorev.2013.03.023.

- Glimcher PW. Choice: toward a standard back-pocket model. In: Glimcher PW, Camerer CF, Fehr E, Poldrack RA, editors. Neuroeconomics: decision making and the brain. Ed 1. Amsterdam: Elsevier/Academic; 2009. pp. 503–522.

- Gluth S, Rieskamp J, Büchel C. Deciding not to decide: computational and neural evidence for hidden behavior in sequential choice. PLoS Comput Biol. 2013;9:e1003309. doi: 10.1371/journal.pcbi.1003309.

- Gluth S, Rieskamp J, Büchel C. Neural evidence for adaptive strategy selection in value-based decision-making. Cereb Cortex. 2014;24:2009–2021. doi: 10.1093/cercor/bht049.

- Gluth S, Sommer T, Rieskamp J, Büchel C. Effective connectivity between hippocampus and ventromedial prefrontal cortex controls preferential choices from memory. Neuron. 2015;86:1078–1090. doi: 10.1016/j.neuron.2015.04.023.

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Hare TA, O'Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J Neurosci. 2008;28:5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

- Heath TB, Chatterjee S. Asymmetric decoy effects on lower-quality versus higher-quality brands: meta-analytic and experimental evidence. J Consum Res. 1995;22:268–284. doi: 10.1086/209449.

- Heekeren HR, Marrett S, Ungerleider LG. The neural systems that mediate human perceptual decision making. Nat Rev Neurosci. 2008;9:467–479. doi: 10.1038/nrn2374.

- Huber J, Payne JW, Puto C. Adding asymmetrically dominated alternatives: violations of regularity and the similarity hypothesis. J Consum Res. 1982;9:90–98. doi: 10.1086/208899.

- Hunt LT, Dolan RJ, Behrens TE. Hierarchical competitions subserving multi-attribute choice. Nat Neurosci. 2014;17:1613–1622. doi: 10.1038/nn.3836.

- Hutton C, Bork A, Josephs O, Deichmann R, Ashburner J, Turner R. Image distortion correction in fMRI: a quantitative evaluation. Neuroimage. 2002;16:217–240. doi: 10.1006/nimg.2001.1054.

- Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–292. doi: 10.2307/1914185.

- Kirby KN, Herrnstein RJ. Preference reversals due to myopic discounting of delayed reward. Psychol Sci. 1995;6:83–89. doi: 10.1111/j.1467-9280.1995.tb00311.x.

- Laibson D. Golden eggs and hyperbolic discounting. Q J Econ. 1997;112:443–478. doi: 10.1162/003355397555253.

- Lak A, Stauffer WR, Schultz W. Dopamine prediction error responses integrate subjective value from different reward dimensions. Proc Natl Acad Sci U S A. 2014;111:2343–2348. doi: 10.1073/pnas.1321596111.

- Lempert KM, Phelps EA. The malleability of intertemporal choice. Trends Cogn Sci. 2016;20:64–74. doi: 10.1016/j.tics.2015.09.005.

- Louie K, Khaw MW, Glimcher PW. Normalization is a general neural mechanism for context-dependent decision making. Proc Natl Acad Sci U S A. 2013;110:6139–6144. doi: 10.1073/pnas.1217854110.

- Luce RD. Individual choice behavior: a theoretical analysis. Mineola, NY: Dover Publications; 1959.

- Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Quantitative analyses of behavior, Vol. 5: the effect of delay and of intervening events on reinforcement value. Hillsdale, NJ: Erlbaum; 1987. pp. 55–73.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

- Mumford JA, Poline JB, Poldrack RA. Orthogonalization of regressors in FMRI models. PLoS One. 2015;10:e0126255. doi: 10.1371/journal.pone.0126255.

- Nelder JA, Mead R. A simplex method for function minimization. Comput J. 1965;7:308–313. doi: 10.1093/comjnl/7.4.308.

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- Park SQ, Kahnt T, Talmi D, Rieskamp J, Dolan RJ, Heekeren HR. Adaptive coding of reward prediction errors is gated by striatal coupling. Proc Natl Acad Sci U S A. 2012;109:4285–4289. doi: 10.1073/pnas.1119969109.

- Peters J, Miedl SF, Büchel C. Formal comparison of dual-parameter temporal discounting models in controls and pathological gamblers. PLoS One. 2012;7:e47225. doi: 10.1371/journal.pone.0047225.

- Raftery AE. Bayesian model selection in social research. Sociol Methodol. 1995;25:111. doi: 10.2307/271063.

- Rieskamp J. The probabilistic nature of preferential choice. J Exp Psychol Learn Mem Cogn. 2008;34:1446–1465. doi: 10.1037/a0013646.

- Rieskamp J, Busemeyer JR, Mellers BA. Extending the bounds of rationality: Evidence and theories of preferential choice. J Econ Lit. 2006;44:631–661. doi: 10.1257/jel.44.3.631.

- Roe RM, Busemeyer JR, Townsend JT. Multialternative decision field theory: a dynamic connectionist model of decision making. Psychol Rev. 2001;108:370–392. doi: 10.1037/0033-295X.108.2.370.

- Samuelson P. A note on measurement of utility. Rev Econ Stud. 1937;4:155–161. doi: 10.2307/2967612.

- Scholten M, Read D. The psychology of intertemporal tradeoffs. Psychol Rev. 2010;117:925–944. doi: 10.1037/a0019619.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Schwarz G. Estimating the dimension of a model. Ann Stat. 1978;6:461–464. doi: 10.1214/aos/1176344136.

- Shafir S, Waite T, Smith B. Context-dependent violations of rational choice in honeybees (Apis mellifera) and gray jays (Perisoreus canadensis). Behav Ecol Sociobiol. 2002;51:180–187. doi: 10.1007/s00265-001-0420-8.

- Smith PL, Ratcliff R. Psychology and neurobiology of simple decisions. Trends Neurosci. 2004;27:161–168. doi: 10.1016/j.tins.2004.01.006.

- Sutton RS, Barto AG. Reinforcement learning: an introduction. Cambridge, MA: MIT; 1998.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Human neural learning depends on reward prediction errors in the blocking paradigm. J Neurophysiol. 2006;95:301–310. doi: 10.1152/jn.00762.2005.

- Trueblood JS, Brown SD, Heathcote A, Busemeyer JR. Not just for consumers: context effects are fundamental to decision making. Psychol Sci. 2013;24:901–908. doi: 10.1177/0956797612464241.

- Trueblood JS, Brown SD, Heathcote A. The multiattribute linear ballistic accumulator model of context effects in multialternative choice. Psychol Rev. 2014;121:179–205. doi: 10.1037/a0036137.

- Tsetsos K, Usher M, Chater N. Preference reversal in multiattribute choice. Psychol Rev. 2010;117:1275–1293. doi: 10.1037/a0020580.

- Tversky A. Elimination by aspects: a theory of choice. Psychol Rev. 1972;79:281–299. doi: 10.1037/h0032955.

- Tversky A, Kahneman D. Loss aversion in riskless choice: a reference-dependent model. Q J Econ. 1991;106:1039–1061. doi: 10.2307/2937956.

- Tversky A, Kahneman D. Advances in prospect theory: cumulative representation of uncertainty. J Risk Uncertain. 1992;5:297–323. doi: 10.1007/BF00122574.

- Usher M, McClelland JL. The time course of perceptual choice: the leaky, competing accumulator model. Psychol Rev. 2001;108:550–592. doi: 10.1037/0033-295X.108.3.550.

- Usher M, McClelland JL. Loss aversion and inhibition in dynamical models of multialternative choice. Psychol Rev. 2004;111:757–769. doi: 10.1037/0033-295X.111.3.757.

- Vlaev I, Chater N, Stewart N, Brown GD. Does the brain calculate value? Trends Cogn Sci. 2011;15:546–554. doi: 10.1016/j.tics.2011.09.008.

- Von Neumann J, Morgenstern O. Theory of games and economic behavior. Ed 2. Princeton, NJ: Princeton University; 1947.

- Wittmann M, Paulus MP. Decision making, impulsivity and time perception. Trends Cogn Sci. 2008;12:7–12. doi: 10.1016/j.tics.2007.10.004.

- Wollschläger LM, Diederich A. The 2N-ary choice tree model for N-alternative preferential choice. Front Psychol. 2012;3:189. doi: 10.3389/fpsyg.2012.00189.

- Yacubian J, Gläscher J, Schroeder K, Sommer T, Braus DF, Büchel C. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci. 2006;26:9530–9537. doi: 10.1523/JNEUROSCI.2915-06.2006.

- Zauberman G, Kim BK, Malkoc SA, Bettman JR. Discounting time and time discounting: Subjective time perception and intertemporal preferences. J Mark Res. 2009;46:543–556. doi: 10.1509/jmkr.46.4.543.