Abstract

There is significant controversy over the existence and function of a direct subcortical visual pathway to the amygdala. It is thought that this pathway rapidly transmits low spatial frequency information to the amygdala independently of the cortex, and yet the directionality of this function has never been determined. We used magnetoencephalography to measure neural activity while human participants discriminated the gender of neutral and fearful faces filtered for low or high spatial frequencies. We applied dynamic causal modeling to demonstrate that the most likely underlying neural network consisted of a pulvinar-amygdala connection that was uninfluenced by spatial frequency or emotion, and a cortical-amygdala connection that conveyed high spatial frequencies. Crucially, data-driven neural simulations revealed a clear temporal advantage of the subcortical connection over the cortical connection in influencing amygdala activity. Thus, our findings support the existence of a rapid subcortical pathway that is nonselective in terms of the spatial frequency or emotional content of faces. We propose that the “coarseness” of the subcortical route may be better reframed as “generalized.”

SIGNIFICANCE STATEMENT The human amygdala coordinates how we respond to biologically relevant stimuli, such as threat or reward. It has been postulated that the amygdala first receives visual input via a rapid subcortical route that conveys “coarse” information, namely, low spatial frequencies. For the first time, the present paper provides direction-specific evidence from computational modeling that the subcortical route plays a generalized role in visual processing by rapidly transmitting raw, unfiltered information directly to the amygdala. This calls into question a widely held assumption across human and animal research that fear responses are produced faster by low spatial frequencies. Our proposed mechanism suggests that organisms quickly generate fear responses to a wide range of visual properties, with strong implications for future research on anxiety-prevention strategies.

Keywords: amygdala, dynamic causal modeling, emotion, faces, pulvinar, spatial frequency

Introduction

The ability to rapidly detect external threats is essential to the survival of all species (Mobbs et al., 2015). It has long been known that the amygdala plays an important role in coordinating fear responses (Morris et al., 1998; Furl et al., 2013), but there is considerable controversy regarding how quickly the amygdala initially receives visual information. One proposition from converging human and animal evidence is that there is a short and direct colliculus-pulvinar pathway to the amygdala (Morris et al., 1999). This so-called “low road” is thought to transmit coarse visual information more rapidly than alternative “high roads” believed to transmit fine-grained details via the visual cortex (LeDoux, 1998). This multipathway proposition has sparked debate within the literature over the very existence of the low road and its potential influence over the numerous, cascading processes emanating from the amygdala (Pessoa and Adolphs, 2010, 2011; de Gelder et al., 2011; Cauchoix and Crouzet, 2013).

Anatomical evidence for the subcortical route has been identified using diffusion imaging (Tamietto et al., 2012; Rafal et al., 2015) and computational modeling (Rudrauf et al., 2008; Garvert et al., 2014) in humans as well as neuroanatomical tracing in animals (Berman and Wurtz, 2010; Day-Brown et al., 2010). The estimated synaptic integration time for the subcortical route (80–90 ms) is faster than that of the cortical visual stream (145–170 ms), supporting the notion of rapid subcortical input to the amygdala (Silverstein and Ingvar, 2015). Furthermore, the superior colliculus consists primarily of magnocellular neurons tuned preferentially to lower spatial frequencies (Márkus et al., 2009). Together, this forms the dominant hypothesis for a rapid colliculus-pulvinar-amygdala pathway facilitating early processing of coarse visual information, such as low spatial frequency (LSF) content (Ohman, 2005). fMRI research has supported this hypothesis by finding greater signal in the superior colliculus, pulvinar, and amygdala to LSF fearful faces and greater signal in the extrastriate visual cortex for high spatial frequency (HSF) faces (Vuilleumier et al., 2003). These findings were recently validated at the electrophysiological level, where LSF fearful faces were found to evoke early activity (75 ms poststimulus onset) in the lateral amygdala (Méndez-Bértolo et al., 2016).

Critically, however, these studies cannot tell us about the directionality of interactions among these brain regions. The human pulvinar receives both magnocellular and parvocellular retinal input (Cowey et al., 1994) and is connected with cortical visual areas, such as V1, V3, and V5/MT (Tamietto and Morrone, 2016). This suggests that the pulvinar is well situated to rapidly transmit both LSF and HSF information to the amygdala, along with perceptual features sufficient to generate an emotional response in the amygdala (Nguyen et al., 2013; Le et al., 2016), via multiple parallel or converging pathways (Pessoa and Adolphs, 2010; Diano et al., 2017). Thus, it remains to be seen whether rapid subcortical input to the amygdala is, indeed, restricted to LSF affective visual information. Moreover, evidence for a temporal advantage of LSF affective information has been inconsistent, which we would not expect if a rapid subcortical pathway to the amygdala existed predominantly for LSF information (De Cesarei and Codispoti, 2013).

In this study, we evaluated how spatial frequency and affective content flow toward the amygdala via subcortical and cortical connections. We measured neural activity using magnetoencephalography (MEG) while participants made gender judgements on faces filtered for different spatial frequencies. We then applied dynamic causal modeling (DCM) to these data to infer the direction of information transmission within hypothesized neural networks (David et al., 2006) consisting of increasingly complex combinations of the following: (1) a cortical pathway from the LGN to the primary visual cortex (V1) to the amygdala; (2) a subcortical pathway from the pulvinar to the amygdala; and (3) a medial pathway from the pulvinar to V1 to the amygdala, to account for a possible indirect influence of the pulvinar over the amygdala via cortical sources (Furl et al., 2013). After determining the most likely underlying neural architecture, we tested hypotheses about the types of spatial frequency and emotion content propagated along each connection. Thus, we aimed to determine whether LSF faces modulate the subcortical pathway.

Materials and Methods

Participants.

Twenty-seven people participated in the study, but one was excluded because they were taking psychiatric medication. This left 26 neurologically healthy participants (50% female; 23 right-handed, three left-handed) with an age range from 18 to 32 years (mean ± SD, 22.69 ± 3.87 years). All participants had normal or corrected-to-normal vision. Participation in the study was voluntary, and all participants were reimbursed AUD$40 for their time. Ethical clearance was granted by the University of Queensland Institutional Human Research Ethics committee.

Procedure.

All participants were scanned at Swinburne University of Technology in Melbourne, Victoria. After removing all metal items from their bodies, participants were seated in the MEG scanner inside a magnetically shielded room. Stimuli were projected onto a Perspex screen positioned ∼1.15 m in front of the participant (viewing angle ≈ 22.81°). Participants held an MEG-compatible two-button response box in their dominant hand, with the index and middle fingers resting on the two buttons (akin to a computer mouse). Participants were instructed to fixate on the center of the screen and remain still for the duration of each block (three blocks of ∼11 min each, with a break of a few minutes between blocks). Faces appeared one at a time on a gray background. Stimuli were presented using the Cogent 2000 toolbox for MATLAB (http://www.vislab.ucl.ac.uk/cogent.php). Each face was displayed for 200 ms to minimize the likelihood of saccades. Whenever no face was on the screen, a fixation cross was displayed to help participants maintain central fixation. The next trial did not begin until the participant had indicated, using the button box, whether the face was male or female. The left/right assignment of male/female responses did not change across the three blocks but was counterbalanced between participants. Participants were instructed to respond as accurately and as quickly as they could. The intertrial interval was jittered between 750 and 1500 ms to reduce onset predictability.

Stimuli.

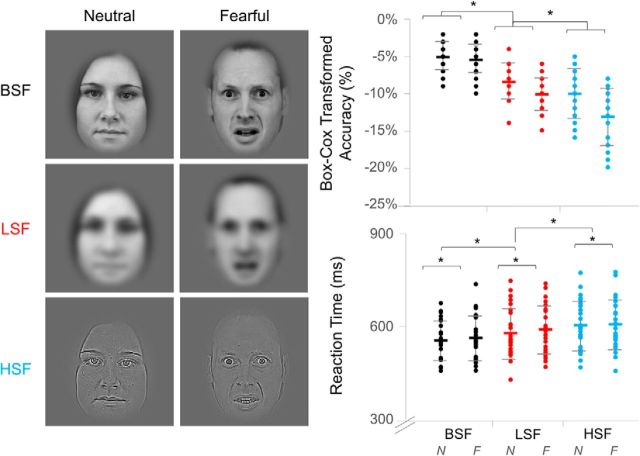

The face stimuli originated from the Karolinska Directed Emotional Faces set (Lundqvist et al., 1998). Image dimensions were 198 × 252 pixels, and all images were grayscale. Spatial frequency was manipulated by applying a low-pass cutoff of <6 cycles/image to create the LSF stimuli and a high-pass cutoff of >24 cycles/image to create the HSF stimuli (Fig. 1). Broad spatial frequency (BSF) stimuli were images with unaltered frequency content. The luminance and contrast of the LSF and HSF images were matched to those of their respective BSF images using the SHINE toolbox for MATLAB (Willenbockel et al., 2010). There were 60 identities: 30 males and 30 females. Each identity was presented once per condition (3 spatial frequency levels × 2 emotional expressions), resulting in six presentations of each identity (360 trials) per block. Hence, the three blocks yielded 180 trials per condition. All faces were presented in a random order within each block, but the same identity was never presented on consecutive trials. A photodiode was placed at the bottom-left corner of the screen to record precise stimulus onset.
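
The filtering and matching steps can be illustrated with a minimal Python sketch. It assumes an ideal (hard-edged) filter on radial spatial frequency, which the text does not specify, and it approximates the SHINE luminance/contrast matching by rescaling each filtered image to the mean and standard deviation (RMS contrast) of its BSF source; SHINE itself is a MATLAB toolbox and is not reproduced here. All function names are hypothetical, and the "face" is random noise.

```python
import numpy as np


def radial_frequency(shape):
    """Radial spatial frequency, in cycles/image, of each 2D FFT coefficient."""
    rows, cols = shape
    fy = np.fft.fftfreq(rows) * rows  # cycles per image height
    fx = np.fft.fftfreq(cols) * cols  # cycles per image width
    return np.sqrt(fy[:, None] ** 2 + fx[None, :] ** 2)


def sf_filter(image, cutoff, kind):
    """Ideal (hard-edged) low-pass or high-pass filter with a cutoff in cycles/image."""
    radius = radial_frequency(image.shape)
    if kind == "low":
        mask = radius < cutoff
    elif kind == "high":
        mask = radius > cutoff
    else:
        raise ValueError("kind must be 'low' or 'high'")
    return np.real(np.fft.ifft2(np.fft.fft2(image) * mask))


def match_luminance_contrast(image, reference):
    """Give `image` the mean luminance and RMS contrast (pixel SD) of `reference`."""
    z = (image - image.mean()) / image.std()
    return z * reference.std() + reference.mean()


# Hypothetical usage on a random 252 x 198 (rows x columns) image.
rng = np.random.default_rng(0)
bsf = rng.random((252, 198))
lsf = match_luminance_contrast(sf_filter(bsf, 6, "low"), bsf)
hsf = match_luminance_contrast(sf_filter(bsf, 24, "high"), bsf)
```

For non-square images, "cycles/image" along each axis is one of several possible conventions; a Gaussian or Butterworth filter would avoid the ringing an ideal filter can introduce.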

Figure 1.

Experimental design and behavioral data. Left, Examples of the face stimuli used in the experiment. Columns indicate the neutral and fearful emotion conditions. Rows indicate the three spatial frequency conditions: BSF, LSF, and HSF. Right, Dot plots of each participant's gender judgment score for normalized accuracy (top) and reaction time (bottom). Black represents BSF. Red represents LSF. Blue represents HSF. Each spatial frequency column contains a pair, where the left series represents neutral (N) expressions and the right series represents fearful (F) expressions. Error bars indicate standard error. *p < 0.05.

MEG data acquisition.

Neural activity was recorded using a whole-head 306-sensor (102 magnetometers and 102 pairs of orthogonally oriented planar gradiometers) TRIUX system (Elekta Neuromag Oy). Activity was recorded at a sampling rate of 1000 Hz. Before entering the MEG room, head position indicator coils were positioned on each participant (three on the forehead and one behind each ear). An electromagnetic digitizer system was used to determine the location of the coils relative to anatomical fiducials at the nasion and at the left and right preauricular points (FastTrak, Polhemus). Electro-oculographic (EOG) electrodes were also placed above and below the right eye to record eye blinks. When seated in the scanner, participants were positioned so that the helmet of the MEG was in as much contact with the head as comfortably possible. Total scanning time was 30–40 min, including breaks. Head position was tracked continuously using the head position indicator coils.

MEG preprocessing.

The temporal extension of Signal-Space Separation (Taulu and Simola, 2006) was applied using the MaxFilter software (Elekta Neuromag Oy) to actively cancel noise and interpolate bad channels. The MaxMove function was also used to correct for head movement using a standard reference head position (x = 0, y = 0, z = 40 mm). All subsequent offline preprocessing was completed using SPM12 (Wellcome Trust Center for Neuroimaging, University College London, London) via MATLAB 2014a (The MathWorks). The data were down-sampled to 200 Hz, and a 0.5–30 Hz bandpass filter was applied. Each participant's MEG data were coregistered with their anatomical T1 MRI image, which was acquired at the same site (Swinburne University of Technology, Melbourne, Australia) immediately after the MEG scan.
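
The down-sampling and bandpass step can be sketched with SciPy. The sketch assumes a Chebyshev anti-aliasing filter (the default of `scipy.signal.decimate`) and a fourth-order zero-phase Butterworth bandpass; the filter types and orders that SPM12 used in this study are not stated, so these are illustrative choices, and `downsample_and_bandpass` is a hypothetical helper.

```python
import numpy as np
from scipy import signal


def downsample_and_bandpass(data, fs_in=1000, fs_out=200, band=(0.5, 30.0), order=4):
    """Down-sample (channels x samples) data, then apply a zero-phase bandpass filter."""
    factor = fs_in // fs_out
    # decimate() low-pass filters (anti-aliasing) before keeping every `factor`-th sample
    down = signal.decimate(data, factor, axis=-1, zero_phase=True)
    # Butterworth bandpass in second-order sections, run forward and backward (zero phase)
    sos = signal.butter(order, band, btype="bandpass", fs=fs_out, output="sos")
    return signal.sosfiltfilt(sos, down, axis=-1)


# Hypothetical usage: 10 s of one channel with a 10 Hz signal, 60 Hz noise, and an offset.
fs = 1000
t = np.arange(10 * fs) / fs
raw = (np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 60 * t) + 2.0)[None, :]
clean = downsample_and_bandpass(raw)
```

Filtering forward and backward avoids shifting component latencies, which matters when timing differences of a few milliseconds are later interpreted.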

Because of a technical error, EOG activity was not recorded for the first four participants. For blink correction in these participants, a frontal MEG planar gradiometer (channel MEG0922) was therefore used in place of the EOG, given its proximity to the upper EOG sensor and the presence of the eyeblink artifact in its signal. Eyeblink artifacts were identified as deviations of 3 fT/mm in the EOG signal, and a −300 to 300 ms time window was marked around each deviation. The sensor topographies associated with these artifacts were then used to correct the MEG data.
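
The marking of blink windows can be sketched as follows. This is a hypothetical helper, not SPM12's eyeblink routine: it assumes the deviation is measured from the channel's median and that the threshold is given in the channel's own units (e.g., 3 fT/mm for the substituted gradiometer). Estimating and projecting out the blink topography is not shown.

```python
import numpy as np


def mark_blink_windows(eog, fs, threshold, half_window_ms=300.0):
    """Boolean mask of samples within +/- half_window_ms of any supra-threshold deviation.

    The deviation is measured from the channel median (an assumed baseline).
    """
    above = np.abs(eog - np.median(eog)) > threshold
    half = int(round(half_window_ms * fs / 1000.0))
    kernel = np.ones(2 * half + 1)
    # Convolving with a box of ones dilates each supra-threshold sample by +/- half samples
    return np.convolve(above.astype(float), kernel, mode="same") > 0


# Hypothetical usage: 10 s at 200 Hz with one simulated blink sample at 5 s.
fs = 200
eog = np.zeros(10 * fs)
eog[1000] = 10.0
mask = mark_blink_windows(eog, fs, threshold=3.0)
```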

The MEG data were segmented into −100 to 650 ms epochs of planar gradiometer activity time-locked to stimulus onset and baseline-corrected, creating a series of event-related fields (ERFs). For DCM, these ERFs were averaged using the robust averaging function in SPM12, which weights the contribution of each epoch to the average according to its relative noise (Litvak et al., 2011). A 30 Hz low-pass filter and baseline correction were reapplied to the averaged ERFs to remove any high-frequency noise introduced by robust averaging. For the spatiotemporal analysis, the ERFs for each trial (i.e., before the robust averaging step used for the DCM analysis) were converted to 3D scalp/time images (x space, y space, ms time) and then smoothed with an 8 mm × 8 mm × 20 ms FWHM Gaussian kernel to accommodate intersubject variability.
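
Two steps of this paragraph are sketched below in Python. `robust_average` follows the general idea of robust averaging, iteratively down-weighting outlying samples with Tukey bisquare weights; the tuning constant k = 3 and the MAD-based scale are assumptions, and SPM12's own implementation may differ in detail. `smooth_image` converts the stated FWHM to a Gaussian standard deviation, σ = FWHM / (2√(2 ln 2)) ≈ 0.4247 × FWHM; the voxel size of the scalp images is not given in the text and must be supplied. Both names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def robust_average(epochs, k=3.0, n_iter=20):
    """Weighted average of (trials x samples) epochs using Tukey bisquare weights.

    Weights are recomputed at every sample from residuals scaled by the median
    absolute deviation, so noisy epochs contribute less to the average.
    """
    estimate = np.median(epochs, axis=0)
    weights = np.ones_like(epochs)
    for _ in range(n_iter):
        resid = epochs - estimate
        scale = np.median(np.abs(resid), axis=0) / 0.6745  # robust SD estimate
        scale = np.maximum(scale, np.finfo(float).tiny)
        u = resid / (k * scale)
        weights = np.where(np.abs(u) < 1.0, (1.0 - u**2) ** 2, 0.0)
        estimate = (weights * epochs).sum(axis=0) / weights.sum(axis=0)
    return estimate, weights


FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # about 0.4247


def smooth_image(image, voxel_size, fwhm=(8.0, 8.0, 20.0)):
    """Gaussian smoothing of an (x, y, time) image; fwhm and voxel_size in (mm, mm, ms)."""
    sigma = [f * FWHM_TO_SIGMA / v for f, v in zip(fwhm, voxel_size)]
    return gaussian_filter(image, sigma=sigma)
```

With 5 ms time bins (200 Hz), the 20 ms temporal FWHM corresponds to σ ≈ 1.7 samples.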

DCM.

DCM was used to compare different plausible neural networks that may underlie the observed neural data. DCM is a biologically informed computational method of estimating the effective connectivity between brain regions using Bayesian statistics (Daunizeau et al., 2011). Critically, this method allows us to make causal inferences on how the neural dynamics of one population directly influence changes in the dynamics of another population. We constructed several models that were based closely on previous work by Garvert et al. (2014), who also investigated neural networks of emotional face perception. These models (see Fig. 4) included the LGN (MNI coordinates: left = −22, −22, −6; right = 22, −22, −6), pulvinar (PUL; MNI coordinates: left = −12, −25, 7; right = 12, −25, 7), V1 (MNI coordinates: left = −7, −85, −7; right = 7, −85, −7), and amygdala (AMY; MNI coordinates: left = −23, −5, −22; right = 23, −5, −22). We modeled driving sensory input (i.e., onset of face stimuli) as entering both the LGN and pulvinar. These inputs were agnostic as to the anatomical source of the signal (Stephan et al., 2010), but we assumed that input to the LGN would encapsulate retinal input, whereas input to the pulvinar could have encapsulated signals from the retina, superior colliculus, extrastriate cortex, or other neural areas, as suggested by previous neuroanatomical research (Tamietto and Morrone, 2016). All models encompassed only the first 300 ms (i.e., 0–300 ms poststimulus onset) of the ERFs, as we were interested primarily in the earliest stages of visual processing.
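
The node layout can be written down as a small data structure. The sketch below records the MNI coordinates listed above, checks that the right-hemisphere coordinates mirror the left, and builds a binary connectivity matrix in the "to × from" orientation conventionally used for DCM A matrices. The forward connections of the winning anatomical model (Model 21, described in the Results) serve as the example; names such as `bilateral_adjacency` are hypothetical.

```python
import numpy as np

# Left-hemisphere source locations (MNI, mm) as listed in the text.
SOURCES = {
    "LGN": (-22, -22, -6),
    "PUL": (-12, -25, 7),
    "V1": (-7, -85, -7),
    "AMY": (-23, -5, -22),
}
NAMES = list(SOURCES)
DRIVING_INPUTS = ("LGN", "PUL")  # nodes receiving the face-onset input

# Forward-only connections of the winning anatomical model (Model 21).
MODEL_21_FORWARD = [("LGN", "V1"), ("V1", "AMY"), ("PUL", "AMY"), ("PUL", "V1")]


def mirror(coord):
    """Right-hemisphere coordinate obtained by flipping the sign of x."""
    x, y, z = coord
    return (-x, y, z)


def hemisphere_adjacency(connections):
    """Binary matrix A[target, source] for one hemisphere ('to x from' convention)."""
    index = {name: i for i, name in enumerate(NAMES)}
    A = np.zeros((len(NAMES), len(NAMES)), dtype=int)
    for source, target in connections:
        A[index[target], index[source]] = 1
    return A


def bilateral_adjacency(connections):
    """Block-diagonal 8 x 8 matrix: left block, right block, no cross-hemisphere links."""
    return np.kron(np.eye(2, dtype=int), hemisphere_adjacency(connections))


A = bilateral_adjacency(MODEL_21_FORWARD)
```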

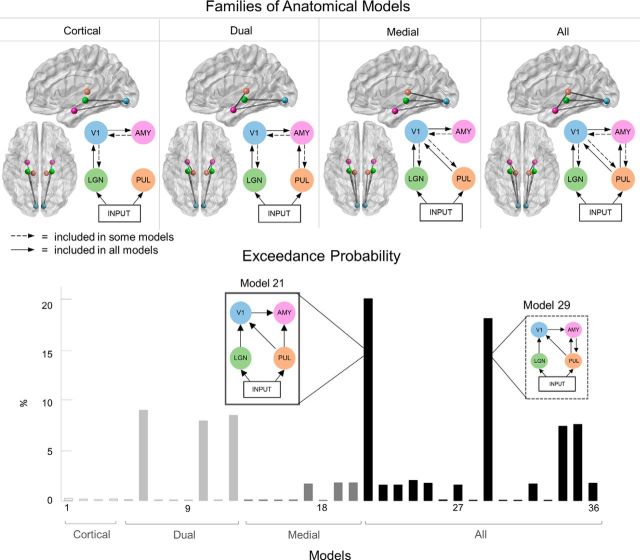

Figure 4.

Selection of anatomical model families. Top, Four families of anatomical models were tested. Green nodes represent the LGN. Blue represents the primary visual cortex (V1). Pink represents the amygdala (AMY). Orange represents the pulvinar (PUL). 3D brain models (sagittal and axial views) are shown. All models consisted of separate left and right hemispheres. Bottom, The exceedance probability of each model, grouped into the four families: light gray represents cortical; gray represents subcortical; dark gray represents medial; black represents all. Model 21, which had the highest probability, is shown in the left break-out box, whereas the next most probable model, Model 29, is shown in the right break-out box.

After the models were defined, DCM was used to generate full spatiotemporal models of evoked responses using a neural mass model (i.e., a set of biologically informed differential equations describing the synaptic dynamics underpinning neural connectivity) in conjunction with the parameters specified in each model (Moran et al., 2013). These predicted data, along with our observed data, were used in Bayesian model estimation (via the expectation maximization algorithm) and random-effects Bayesian model selection (which balances explanatory power against model complexity and accounts for variability across participants) to determine the most likely model for our data. This DCM procedure was used to estimate the most likely underlying neural architecture in the anatomical stage (see Fig. 4), followed by the most likely modulation of connections by our experimental conditions (spatial frequency and emotion) in the functional stage (see Fig. 5).
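
Random-effects Bayesian model selection can be illustrated with a compact Python version of the standard variational scheme, which treats each participant's model as a random draw from Dirichlet-distributed model frequencies. This is an illustrative re-implementation under that assumption, not SPM12's `spm_BMS` routine; the log model evidences in the usage example are synthetic, and exceedance probabilities are estimated by Monte Carlo sampling.

```python
import numpy as np
from scipy.special import digamma


def rfx_bms(lme, alpha0=1.0, tol=1e-6, max_iter=1000, n_samples=100_000, seed=0):
    """Random-effects BMS over a (subjects x models) matrix of log model evidences.

    Returns the Dirichlet parameters, expected model frequencies, and
    exceedance probabilities (estimated by sampling from the Dirichlet).
    """
    n_models = lme.shape[1]
    prior = np.full(n_models, alpha0, dtype=float)
    alpha = prior.copy()
    for _ in range(max_iter):
        # Posterior probability that each subject's data were generated by each model
        log_u = lme + digamma(alpha) - digamma(alpha.sum())
        log_u -= log_u.max(axis=1, keepdims=True)  # numerical stability
        g = np.exp(log_u)
        g /= g.sum(axis=1, keepdims=True)
        new_alpha = prior + g.sum(axis=0)
        converged = np.max(np.abs(new_alpha - alpha)) < tol
        alpha = new_alpha
        if converged:
            break
    expected_r = alpha / alpha.sum()
    # Exceedance probability: how often each model has the largest sampled frequency
    samples = np.random.default_rng(seed).dirichlet(alpha, size=n_samples)
    xp = np.bincount(samples.argmax(axis=1), minlength=n_models) / n_samples
    return alpha, expected_r, xp
```

Because the model frequencies are allowed to vary across participants, one participant with an extreme log evidence cannot dominate the group result the way it can under a fixed-effects sum of log evidences.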

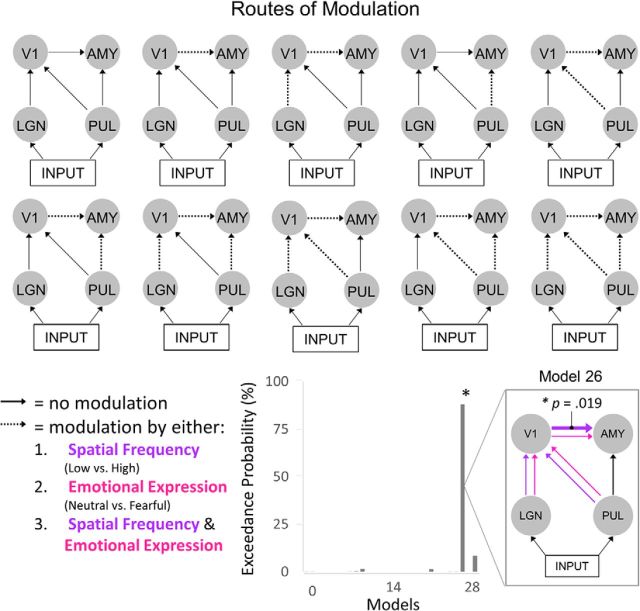

Figure 5.

Routes of modulation by emotion and spatial frequency. Left, Each diagram represents a plausible route of modulation. Dotted arrows indicate potential modulation by spatial frequency, emotion, or both. Top left, The first model is the null model with no modulated connections. The other nine models were each uniquely modulated. Right, The exceedance probability, determined via Bayesian model selection, for all 29 models. The model shown in the break-out box is the winning model (Model 26), where all connections were modulated by spatial frequency and emotion, except the subcortical route. Classical t tests revealed that only the parameter estimates for the cortical connection were significantly > 0 (Bonferroni-corrected, p = 0.019).

After obtaining the final winning model in the functional stage, we conducted a sensitivity analysis to determine how different connections and their modulations influenced source activity (see Fig. 6), as has been done in previous DCM studies (Dietz et al., 2014; Garvert et al., 2014; FitzGerald et al., 2015). In sensitivity (or “contribution”) analysis, the strength of the coupling parameter between two sources of interest is arbitrarily increased. The generative model is then used to predict the dynamics of pyramidal cell activity in the target source, taking into account the strengthened connection. By comparing the simulated source activity with and without the strengthened connection, we can estimate the influence of that connection over the dynamics of the target source (i.e., if the connection contributes significantly to that source within the model, we would expect a strengthened coupling parameter to produce marked changes in the generated source activity).
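
The logic of a sensitivity analysis can be shown on a deliberately simplified system. The Python sketch below uses a linear toy model, dx/dt = A x + C u, with the four nodes and forward connections of the winning anatomical model, rather than the neural mass model that DCM actually uses; every numerical value (time constant, coupling strengths, input shape, increment δ) is made up for illustration. Boosting one coupling and subtracting the unperturbed simulation shows when that connection influences the readout node.

```python
import numpy as np

NODES = ["LGN", "PUL", "V1", "AMY"]
IDX = {name: i for i, name in enumerate(NODES)}


def simulate(A, C, u, dt=1.0):
    """Forward-Euler integration of dx/dt = A x + C u(t) from x = 0 (dt in ms)."""
    x = np.zeros((len(u), A.shape[0]))
    for t in range(1, len(u)):
        x[t] = x[t - 1] + dt * (A @ x[t - 1] + C * u[t - 1])
    return x


def connection_sensitivity(A, C, u, source, target, readout, delta=0.05):
    """Change in the readout node's activity when source->target coupling grows by delta."""
    boosted = A.copy()
    boosted[IDX[target], IDX[source]] += delta
    base = simulate(A, C, u)[:, IDX[readout]]
    return simulate(boosted, C, u)[:, IDX[readout]] - base


# Hypothetical toy network: forward connections of Model 21, all values made up.
A = -0.1 * np.eye(4)  # self-decay (10 ms time constant)
for src, dst in [("LGN", "V1"), ("V1", "AMY"), ("PUL", "AMY"), ("PUL", "V1")]:
    A[IDX[dst], IDX[src]] = 0.05
C = np.array([1.0, 1.0, 0.0, 0.0])  # face-onset input drives LGN and PUL
t = np.arange(300.0)  # 0-300 ms at 1 ms resolution
u = np.exp(-((t - 20.0) ** 2) / (2 * 5.0**2))

d_subcortical = connection_sensitivity(A, C, u, "PUL", "AMY", readout="AMY")
d_cortical = connection_sensitivity(A, C, u, "V1", "AMY", readout="AMY")
```

In this toy network, the pulvinar-amygdala perturbation peaks earlier than the V1-amygdala perturbation simply because it has one fewer relay. This illustrates what the analysis measures, not the paper's result.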

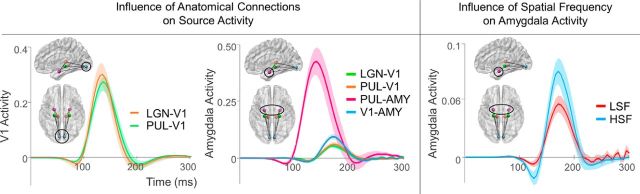

Figure 6.

Sensitivity analysis of connection type on source activity. Sensitivity analysis of anatomical connectivity (left, middle) and modulatory (right) connections. Each wave depicts the simulated perturbation in source activity by either the presence of a connection (left, middle) or the modulation by LSF or HSF (right).

Results

Gender discrimination performance

We analyzed participants' behavioral responses in the gender discrimination task to determine whether spatial frequency and emotion influenced general face processing. After removing outlier trials (>3 SD from each participant's mean; mean ± SD, 7.73 ± 4.24% of trials), we conducted 2 (emotion) × 3 (spatial frequency) repeated-measures ANOVAs for reaction time (correct responses only) and for Box-Cox-transformed accuracy.
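
The trimming and transformation steps can be sketched in Python. `trim_outliers` is a hypothetical helper that applies the 3 SD rule to one participant's trials. `scipy.stats.boxcox` estimates the Box-Cox λ by maximum likelihood, which is an assumption about how λ was chosen here. The data are synthetic, and the repeated-measures ANOVA itself is not shown.

```python
import numpy as np
from scipy import stats


def trim_outliers(values, n_sd=3.0):
    """Drop trials more than n_sd sample SDs from the participant's own mean."""
    values = np.asarray(values, dtype=float)
    keep = np.abs(values - values.mean()) <= n_sd * values.std(ddof=1)
    return values[keep], keep


# Hypothetical reaction times (ms) for one participant and condition, with one extreme trial.
rng = np.random.default_rng(0)
rt = np.append(rng.normal(600.0, 50.0, size=30), 5000.0)
rt_kept, keep = trim_outliers(rt)

# Hypothetical per-participant proportions correct for one condition (must be > 0).
accuracy = np.array([0.85, 0.90, 0.92, 0.95, 0.97, 0.88, 0.93])
accuracy_bc, lam = stats.boxcox(accuracy)  # lambda chosen by maximum likelihood
```

Box-Cox requires strictly positive data, so proportions of exactly 0 would need an offset before transformation.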

Gender discrimination was impaired more by the removal of low than of high spatial frequencies: accuracy was higher (F(1,32) = 108.29, p = 6.65 × 10−13) and reaction times faster (F(2,41) = 47.14, p = 2.30 × 10−10) for BSF faces (−5.30%, 559 ms) than for LSF faces (−9.29%, 585 ms), and for LSF than for HSF faces (−11.65%, 605 ms). Furthermore, fearful faces impaired performance: participants were more accurate (F(1,25) = 116.46, p = 6.74 × 10−11) and faster (F(1,25) = 12.24, p = 0.002) for neutral faces (−7.89%, 579 ms) than for fearful faces (−9.60%, 587 ms). Reduced accuracy for fearful faces was seen only for LSF and HSF faces (emotion × spatial frequency interaction, F(2,43) = 24.89, p = 2.33 × 10−7).

Although gender discrimination performance has previously been considered equivalent for LSF and HSF faces (Schyns and Oliva, 1999; Vuilleumier et al., 2003; Pourtois et al., 2005), our results support other studies that have found an advantage of LSF over HSF information (Goffaux et al., 2003; Winston et al., 2003; Deruelle and Fagot, 2005; Aguado et al., 2010; Awasthi et al., 2013). Interestingly, few of these studies report an influence of emotional expression on performance (Vlamings et al., 2009), unlike our findings. The average luminance and root-mean-square contrast did not differ significantly between emotion conditions, as determined by separate 2 (emotion) × 3 (spatial frequency) repeated-measures ANOVAs, so the difference is unlikely to reflect low-level perceptual confounds. It is possible that fearful faces impaired performance because participants were instructed to respond as quickly and as accurately as possible, whereas such time pressure may have been absent in previous studies.

Spatiotemporal analysis of MEG sensor data

Our behavioral analysis revealed that both spatial frequency and facial expression influenced gender discrimination. To understand where and when the brain differentially processed spatial frequency and emotion, we analyzed the MEG data using statistical parametric mapping, a technique that allowed us to investigate these effects without bias toward specific scalp locations or time windows. This technique converts sensor activity across all 204 planar gradiometers into 3D maps of space (x and y, in mm) and time (−200–600 ms, 5 ms resolution). These 3D maps were entered into a 2 (emotion) × 3 (spatial frequency) within-subjects mass univariate design, correcting for multiple comparisons using Random Field Theory, as is typically done in standard GLM analyses of fMRI data. We then compared the resulting statistical parametric maps across participants in a series of planned comparisons: (1) LSF versus HSF, (2) neutral versus fearful emotion, and (3) the interaction between spatial frequency (LSF vs HSF) and emotion. BSF was also used as an ecological control for LSF and HSF in two further comparisons: BSF versus LSF and BSF versus HSF.

Significant differences (p < 0.05, family-wise error corrected) in field intensity were found between LSF and HSF across occipital, temporal, and central areas, spanning a time window of 160–585 ms (set-level F(2,25) = 38.86, p = 1.61 × 10−6; Fig. 2). Greater absolute field intensity for LSF was found at the earliest significant time point (160 ms) over right occipitotemporal areas and later at 460 ms and 465 ms over right temporal areas, in accordance with previous literature (Goffaux et al., 2003; Peyrin et al., 2010; Awasthi et al., 2013). All remaining clusters of significant activity showed greater field intensity for HSF than LSF faces. These clusters were found at various time-points between 170 and 585 ms, located bilaterally and centrally over occipital and parietal areas, similar to previous work (Vlamings et al., 2009; Craddock et al., 2013; Mu and Li, 2013). Thus, we found distinct early (160 ms) and late (460 ms) components of LSF processing despite overwhelmingly greater activity for HSF overall (170–585 ms). Some studies have reported earlier effects of LSF (i.e., within the M100 component) than our relatively late effect (Craddock et al., 2013; Mu and Li, 2013). This may reflect differences in analysis technique: statistics computed on preselected electrodes and time windows are more targeted (but also more biased) than our more conservative, unbiased spatiotemporal analysis, in which corrections for multiple comparisons are made across the entire sensor space and all time-points.

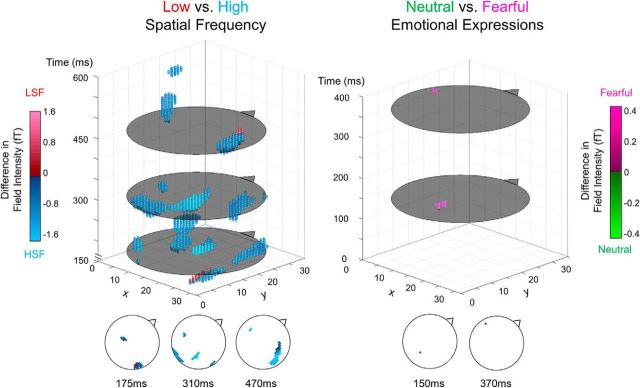

Figure 2.

Statistical parametric maps of sensor data. A 3D representation of significant voxels across space and time. Flat gray circles in the 3D plot represent time-points of interest (for graphical purposes), each of which is displayed as a traditional scalp plot along the bottom of the figure. The triangle (nose) indicates the direction the participant faced. For LSF versus HSF (left), blue and red spheres represent voxels with significantly greater absolute field intensity for HSF and LSF faces, respectively. Similarly, for neutral versus fearful (right), pink and green represent greater absolute field intensity for fearful and neutral expressions, respectively. All points are significant at p < 0.05 (family-wise error corrected).

No significant differences were found between BSF and LSF (even when uncorrected for multiple comparisons), suggesting comparable neural activation across both space and time. In contrast, there were significant differences between BSF and HSF across occipital, temporal, and central areas over a time window of 160–525 ms, very similar to that seen in the LSF versus HSF contrast described above.

Activity elicited by fearful faces was significantly greater than that elicited by neutral faces at two distinct clusters: an occipital peak at 150 ms and a left temporal peak at 370 ms (set-level F(1,25) = 38.48, p = 1.73 × 10−6), indicating typical early and late emotion effects reported in the literature (Vuilleumier, 2005). This effect did not, however, interact significantly with spatial frequency. Thus, although LSF processing was indeed found to be faster overall, this did not result in enhanced processing of fearful faces. Hence, our findings do not support an automatic prioritization of LSF fear processing in a task where the emotional expression of a face is task-irrelevant (De Cesarei and Codispoti, 2013).

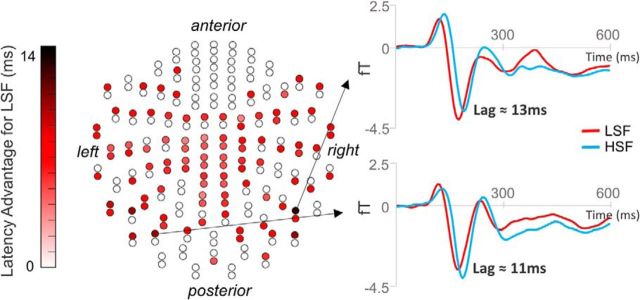

Assessing neural latency with cross-correlation

In our spatiotemporal analysis, the earliest significant difference between spatial frequencies was found at 160 ms, where field intensity was greater for LSF than HSF, followed by significantly greater activity for HSF than LSF from 170 ms onwards. To determine whether this apparent temporal difference was significant, we performed cross-correlation analyses. This approach assumes that two paired waveforms (i.e., LSF and HSF ERFs at each sensor) are highly correlated, which was indeed the case in this dataset (average R2 across channels and subjects = 82.79%). We therefore computed the relative lag between these waveforms at each of the 204 sensors. Of these, 96 sensors (47.06%) showed a significant lag between LSF and HSF faces (Bonferroni-corrected, p < 2.45 × 10−4), and all 204 sensors showed an earlier effect of LSF (7.13 ± 2.19 ms; Fig. 3). This finding is consistent with similar studies showing earlier M170 latencies for LSF compared with HSF image processing (Vlamings et al., 2009; Awasthi et al., 2013). Therefore, there appears to be a significant temporal disadvantage for HSF compared with LSF faces, such that the waveform as a whole (which encompasses multiple face-processing components such as the M100 and M170) shifted later in time.
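
Per-sensor lag estimation can be sketched as below. This assumes the lag is the shift that maximizes the normalized cross-correlation within ±50 ms (the search range is an assumption). How each sensor's p-value was derived is not described here, so the sketch returns only the lag alongside the Bonferroni threshold of 0.05/204. The waveforms are synthetic Gaussians, and `xcorr_lag_ms` is hypothetical.

```python
import numpy as np

BONFERRONI_ALPHA = 0.05 / 204  # about 2.45e-4 per sensor


def xcorr_lag_ms(a, b, fs, max_lag_ms=50.0):
    """Lag (ms) maximizing the normalized cross-correlation of a and b.

    A positive lag means b is a delayed copy of a (b[i + k] matches a[i]).
    """
    a = (a - a.mean()) / a.std()
    b = (b - b.mean()) / b.std()
    n = len(a)
    max_lag = int(round(max_lag_ms * fs / 1000.0))
    lags = np.arange(-max_lag, max_lag + 1)
    scores = [
        np.dot(a[: n - k], b[k:]) / (n - k) if k >= 0 else np.dot(a[-k:], b[: n + k]) / (n + k)
        for k in lags
    ]
    return lags[int(np.argmax(scores))] * 1000.0 / fs


# Hypothetical ERFs sampled at 200 Hz: the "HSF" peak occurs 10 ms after the "LSF" peak.
fs = 200
t_ms = np.arange(0, 300, 5)
erf_lsf = np.exp(-((t_ms - 100) ** 2) / (2 * 15.0**2))
erf_hsf = np.exp(-((t_ms - 110) ** 2) / (2 * 15.0**2))
lag = xcorr_lag_ms(erf_lsf, erf_hsf, fs)
```

At 200 Hz, lags are resolved only in 5 ms steps; averaging such lags across participants is one way to obtain sub-sample estimates such as the 7.13 ms reported above.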

Figure 3.

Latency advantage for LSF faces. Left, A sensor map displaying 204 planar gradiometers. Red represents a sensor that showed a significant time difference between LSF and HSF. Darker red represents an earlier neural effect of LSF compared with HSF faces. Right, Activity at two example sensors that showed the greatest time difference.

DCM of neural networks

DCM is a biophysically informed method of comparing the likelihood of hypothetical neural networks that underlie a given dataset, balancing model accuracy against complexity (David et al., 2006). We applied this technique in two stages: an anatomical stage, in which we compared the likelihood that cortical, medial, and subcortical connections were recruited across all conditions; and a functional stage, in which we took the winning model from the anatomical stage and compared the likelihood that its connections were modulated by spatial frequency and/or emotion.

In the anatomical stage, four families of models were constructed (Fig. 4). Each family consisted of models for each possible combination of forward and backward connections, resulting in four models for the Cortical family, eight models for Dual, eight models for Medial, and 16 models for All. Each model contained nodes for the left and right hemispheres, but cross-hemisphere connections (e.g., from the left amygdala to the right amygdala) were not modeled.
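
The size of the anatomical model space can be reproduced with a short enumeration. The sketch assumes that every model in a family contains all of that family's forward connections and that each forward connection may optionally be paired with a backward one, which reproduces the stated counts (4, 8, 8, and 16; 36 in total). The connection sets per family are inferred from the pathway definitions in the Introduction, and the family labels follow this paragraph (the legend of Fig. 4 names the second family "subcortical"). If the models were numbered in the order listed, the 21st would be the forward-only All model, which is at least consistent with Model 21 being described as the simplest in its family; the actual numbering is not stated.

```python
from itertools import product

# Forward connections per family; assumed from the pathway definitions and model counts.
FAMILIES = {
    "Cortical": [("LGN", "V1"), ("V1", "AMY")],
    "Dual": [("LGN", "V1"), ("V1", "AMY"), ("PUL", "AMY")],
    "Medial": [("LGN", "V1"), ("V1", "AMY"), ("PUL", "V1")],
    "All": [("LGN", "V1"), ("V1", "AMY"), ("PUL", "AMY"), ("PUL", "V1")],
}


def family_models(forward):
    """All models that keep every forward connection and add any subset of backward ones."""
    models = []
    for flags in product([False, True], repeat=len(forward)):
        backward = [(dst, src) for (src, dst), on in zip(forward, flags) if on]
        models.append({"forward": list(forward), "backward": backward})
    return models


model_space = [(family, m) for family, fwd in FAMILIES.items() for m in family_models(fwd)]
counts = {family: len(family_models(fwd)) for family, fwd in FAMILIES.items()}
chance = 100.0 / len(model_space)  # chance-level exceedance probability, in percent
```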

We then conducted random-effects Bayesian model selection on all models grouped into families. The greatest exceedance probability was found for the All family (59.61%), followed by the Dual (23.69%), Medial (13.29%), and Cortical (3.40%) families. Thus, including the subcortical route (i.e., the All and Dual families) substantially increased model likelihood. Within the winning All family, two models had markedly higher exceedance probabilities than the other 14: Model 21 (20.72%) and Model 29 (18.69%). Model 21 was the simplest model in the family, containing only forward connections, whereas Model 29 was only incrementally more complex, adding a backward connection between the amygdala and the pulvinar. Given previous research establishing that backward connections contribute more to a model's explanatory power as peristimulus time increases (Garrido et al., 2007), we speculated that the difference between these two models (2.03%), which was below chance level (100% divided by the 36 models in the space, i.e., 2.78%), may have arisen because the backward connection exerted its effect only in the latter part of the 0–300 ms time window. Applying Occam's razor, we selected Model 21 as the most parsimonious explanation for our data because it had both the fewest connections and the greatest exceedance probability. Hence, the anatomical foundation for the following series of functional models consisted of the well-known cortical stream, the controversial subcortical pathway, and the hypothesized medial connection between the pulvinar and the visual cortex.

The functional stage was designed to determine the likelihood of different routes of modulation by spatial frequency, emotion, or both, using a between-trial effects approach (i.e., differences between LSF and HSF or between neutral and fearful emotional expressions) (Garrido et al., 2009). First, we determined the possible paths along which visual information may be modulated (four possibilities: V1-amygdala, LGN-V1-amygdala, pulvinar-amygdala, pulvinar-V1-amygdala). These modulatory pathways were then combined in every possible way, resulting in nine models each for modulation by spatial frequency, emotion, or both (Fig. 5). Thus, the entire model space consisted of 28 models, including a null model precluding any modulation.

Random-effects Bayesian model selection revealed that Model 26 had the greatest exceedance probability (88.04%), indicating that all pathways except the subcortical pathway were likely modulated by both spatial frequency and emotion in this dataset. To assess whether this neural network generalizes to the population, classical one-sample t tests were conducted on the individual parameter (i.e., connection) estimates. After Bonferroni correction for multiple comparisons, only the modulation of the cortical connection from V1 to the amygdala was significantly >0 (Bonferroni-corrected p = 0.019). The modulation of this cortical connection was greater for HSF than LSF (F(1,25) = 11.312, p = 0.002) and for fearful than neutral HSF faces (F(1,25) = 6.403, p = 0.018), suggesting a role for V1 in processing facial expressions in the HSF domain. Finally, we calculated the difference in functional modulation of the cortical route for spatial frequency (HSF-LSF) and emotion (fearful-neutral), as well as the corresponding differences in reaction time and accuracy. Correlations between these measures revealed that the slowing of reaction times for fearful relative to neutral faces increased with fearful modulation of the cortical route (R2 = 0.437, Bonferroni-corrected p = 0.050), suggesting that the perceptual features of fearful expressions transmitted along the cortical-amygdala connection slowed reaction times during gender identification. Thus, cortical transmission of facial expressions to the amygdala (Das et al., 2005) is a potential neural mechanism for the typical slowing of responses to fearful faces (Neath and Itier, 2015).
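
The group-level tests can be sketched with SciPy. The hypothetical helper below runs one-sided one-sample t tests against zero on each column of a participants × parameters array and applies a Bonferroni correction over the number of columns tested. Whether the original tests were one- or two-sided, and how many parameters entered the correction, are assumptions here. The squared Pearson correlation mirrors the R2 reported for the reaction-time analysis, and all data are synthetic.

```python
import numpy as np
from scipy import stats


def bonferroni_t_greater(params, alpha=0.05):
    """One-sided one-sample t tests (mean > 0) on each column of a subjects x parameters array."""
    t, p = stats.ttest_1samp(params, popmean=0.0, axis=0, alternative="greater")
    p_corrected = np.minimum(p * params.shape[1], 1.0)  # Bonferroni over the tested columns
    return t, p_corrected, p_corrected < alpha


def r_squared(x, y):
    """Squared Pearson correlation between two per-participant difference scores."""
    r, _ = stats.pearsonr(x, y)
    return r**2


# Hypothetical modulatory estimates for 26 participants and two connections.
rng = np.random.default_rng(0)
params = np.column_stack([rng.normal(1.0, 0.5, 26), rng.normal(-1.0, 0.5, 26)])
t, p_corr, significant = bonferroni_t_greater(params)
```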

Sensitivity analysis

Using DCM, we found an optimal model for both the underlying neural architecture and the likely modulation of parameters by spatial frequency and emotion. Using this model in conjunction with sensitivity analysis, we then investigated the times at which each connection influenced the amplitude of the estimated source activity. Sensitivity analysis uses simulated data generated by an estimated dynamic causal model (which, in turn, was generated using the observed data) and tests the influence of particular parameters within a network by artificially amplifying coupling strength through stimulation. If the parameter influences the activity at a particular node in the simulated network, then it should be possible to observe a fluctuation in the simulated activity (Daunizeau et al., 2009; Dietz et al., 2014; FitzGerald et al., 2015). Therefore, using a data-driven modeling approach, we were able to make inferences about the influence of the subcortical, cortical, and medial connections on visual cortex and amygdala source activity.
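
To make the logic of sensitivity analysis concrete, here is a toy Python sketch. It is not the neural mass model that DCM for MEG uses. Instead it simulates a hypothetical three-node linear network (pulvinar, V1, amygdala) driven by a Gaussian input to the pulvinar, and estimates the sensitivity of simulated amygdala activity to a coupling parameter at every time step as a central finite-difference derivative. The coupling values, time constant, and input are all assumptions chosen for illustration. Because the V1-amygdala influence must first pass through V1, its effect on the amygdala peaks later than that of the direct pulvinar-amygdala coupling.

```python
import math

PUL, V1, AMY = 0, 1, 2  # hypothetical node indices for a three-node toy network


def simulate(A, tau=10.0, dt=1.0, t_max=300.0, onset=50.0, width=10.0):
    """Euler-integrate the linear toy model dx/dt = -x/tau + A x + u(t),
    with a Gaussian input bump u(t) driving the pulvinar node only.
    Returns one state vector per time step (1 ms by default)."""
    n = len(A)
    x = [0.0] * n
    trace = []
    for k in range(int(t_max / dt)):
        t = k * dt
        u = math.exp(-((t - onset) / width) ** 2)
        dx = [-x[i] / tau + sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        dx[PUL] += u
        x = [x[i] + dt * dx[i] for i in range(n)]
        trace.append(x)
    return trace


def sensitivity(A, target, source, node, eps=1e-4):
    """Central finite-difference derivative of one node's simulated activity
    with respect to the coupling A[target][source], at every time step."""
    up = [row[:] for row in A]
    down = [row[:] for row in A]
    up[target][source] += eps
    down[target][source] -= eps
    s_up, s_down = simulate(up), simulate(down)
    return [(a[node] - b[node]) / (2 * eps) for a, b in zip(s_up, s_down)]


def peak(signal):
    """Index (time step) of the largest value."""
    return max(range(len(signal)), key=signal.__getitem__)


# Hypothetical forward couplings: PUL->V1, PUL->AMY and V1->AMY.
A = [[0.0, 0.0, 0.0],
     [0.1, 0.0, 0.0],
     [0.1, 0.1, 0.0]]

direct = sensitivity(A, AMY, PUL, AMY)   # subcortical PUL->AMY coupling
cortical = sensitivity(A, AMY, V1, AMY)  # cortical V1->AMY coupling
print(peak(direct), peak(cortical))      # the direct coupling peaks earlier
```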

We constructed a replica of the winning model in which the modulatory effects of spatial frequency and emotion along the cortical and medial streams were split into LSF Neutral, LSF Fearful, HSF Neutral, and HSF Fearful conditions, allowing us to observe any potential interaction effects. We then conducted sensitivity analysis separately on the anatomical presence and functional modulation of each connection. This produced waveforms of amygdala and V1 source activity for perturbation by each connection type. Similar to the previous spatiotemporal analysis, we statistically analyzed the entirety of each of these waveforms by creating statistical parametric maps (source activity × time) and using Random Field Theory to correct for multiple comparisons across time (p < 0.05).

First, we investigated how each anatomical connection influenced V1 and amygdala activity by boosting the coupling parameters for each connection. V1 source activity was equally influenced by its forward connections from the LGN and the pulvinar from 110 to 160 ms (Fig. 6, left). Changes in amygdala activity, on the other hand, were significantly greater for the subcortical pathway than for all other connections between 70 and 90 ms and 110–165 ms (Fig. 6, middle). There was also a significantly greater change in amygdala source activity for the direct cortical connection from V1 than from the more indirect LGN-V1 (155–190 ms) and pulvinar-V1 (165–180 ms) connections.

To determine whether these apparent time differences in amygdala perturbation were significant, we conducted cross-correlation on pairs of the four connections. As expected, the perturbation by the subcortical pathway at 70 ms was significantly earlier than the perturbation by the LGN-V1 (by 33.27 ms, p = 6.638 × 10⁻¹⁰), pulvinar-V1 (by 31.54 ms, p = 1.956 × 10⁻¹²), and V1-amygdala connections (by 32.88 ms, p = 9.659 × 10⁻¹³), each of which perturbed activity later at ∼155 ms.
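
Cross-correlation estimates the delay between two waveforms as the lag at which they are most strongly correlated. The Python sketch below recovers an integer lag (in samples, here 1 ms) between two synthetic Gaussian waveforms peaking at 70 and 103 ms. The waveforms are invented for illustration, and the sketch reproduces neither the sub-millisecond lag estimates nor the significance tests reported above.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (nan if either is flat)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else float("nan")


def best_lag(a, b, max_lag):
    """Return (lag, r) maximizing the correlation between a[t] and b[t + lag]
    over their overlapping samples. A positive lag means b is delayed
    relative to a."""
    best = (0, -math.inf)
    for lag in range(-max_lag, max_lag + 1):
        idx = [t for t in range(len(a)) if 0 <= t + lag < len(b)]
        r = pearson([a[t] for t in idx], [b[t + lag] for t in idx])
        if r > best[1]:  # nan never compares greater, so flat windows are skipped
            best = (lag, r)
    return best


def bump(t, peak_ms):
    """Synthetic Gaussian waveform (hypothetical, for illustration only)."""
    return math.exp(-((t - peak_ms) / 10) ** 2)


subcortical = [bump(t, 70) for t in range(300)]
cortical = [bump(t, 103) for t in range(300)]
lag, r = best_lag(subcortical, cortical, max_lag=60)
print(lag, round(r, 3))  # 33 1.0
```

Correlating only the overlapping samples at each lag keeps the estimate from being biased toward small lags by zero-padding; the trade-off is that very large lags are estimated from short windows.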

A separate sensitivity analysis was conducted on the functional modulation of connections. This first revealed that neither spatial frequency nor emotion modulation influenced V1 source activity through LGN or pulvinar input pathways. Interestingly, a main effect of spatial frequency was found for amygdala source activity, showing greater perturbation by HSF than LSF during 125–130 ms and 165–180 ms (Fig. 6, right). Upon observation of each individual connection (i.e., LGN-V1, V1-amygdala, and pulvinar-V1), it appears that HSF faces yielded a greater general effect across connections but that this effect was predominantly driven by modulation along the cortical V1-amygdala connection, which was the only significant simple effect. This corroborates the significant t statistic on this parameter found within the winning functional Model 26.

Validation checks

A pertinent consideration of our study is the relative insensitivity of MEG gradiometers to magnetic fields evoked by deep sources (e.g., amygdala, pulvinar, and LGN) compared with cortical sources (e.g., V1) (Papadelis and Ioannides, 2007). DCM is designed to model unobserved neural activity (i.e., “hidden states”), such as activity generated at the source level. This is made possible through a combination of a biophysical model and a priori informed sources known to be active under a given task (Moran et al., 2013). Importantly, the amygdala has previously been successfully localized with MEG data (Attal et al., 2012), aided by the high density of pyramidal neurons in the amygdala (Dumas et al., 2013) as well as by the use of validated prior source locations (Attal et al., 2012; Garrido et al., 2012; Garvert et al., 2014). Crucially, the DCMs in our study use source locations defined a priori from an fMRI study of the very same task (Vuilleumier et al., 2003). It remains possible, however, that other nearby sources may have contributed to the activity captured by the deep nodes in our models, such as the superior temporal sulcus (STS; MNI coordinates: left −46, −4, −18), which was previously shown to be engaged in the fMRI version of this task (Vuilleumier et al., 2003).

To address this possibility, we designed a series of validation models to assess whether activity in the STS may have contributed to the activity we observed in the amygdala. The model space consisted of three families: (1) Amygdala Connected, (2) STS Connected, and (3) Both Connected. The nodes within each model consisted of the LGN, pulvinar, V1, amygdala, and STS. Each family included eight models, with forwards-only and forwards-and-backwards versions of the Cortical, Dual, Medial, and All model types, as described in the anatomical stage (similar to Fig. 4). Thus, the models within the Amygdala Connected family were identical to the first (forwards-only) and last (forwards-and-backwards) Cortical, Dual, Medial, and All models within the anatomical stage, with the addition of a disconnected STS node. The STS Connected family was identical to the Amygdala Connected family, except that all connections to the amygdala were instead made to the STS, leaving the amygdala disconnected. Differences in probability between the Amygdala Connected and STS Connected families would reveal whether the modeled hidden states of the amygdala and STS were distinguishable. Finally, the Both Connected models combined the two previous families, allowing us to estimate the probability that the amygdala and STS had dynamically distinct profiles and were both functionally active during our task.

After estimating these 24 models and conducting Bayesian model selection on the three families, we found that the Amygdala Connected family (50.40%) outperformed the STS Connected family (1.50%), and that the winning model overall was the Amygdala Connected: All, forwards-only model (54.15%). This demonstrates that our measurements were indeed sensitive to differences in the dynamic profiles of the STS and amygdala and that our DCM reconstruction captured true amygdala activity. Importantly, the probability of the Both Connected family (48.10%) was similar to that of the Amygdala Connected family, suggesting a negligible contribution of the STS to our data within this early 0–300 ms time window. Given the absence of evidence for an advantage of the models including the STS, all subsequent analyses were based on the more parsimonious family of models (i.e., Amygdala Connected).
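
Family-level inference pools models that share a feature. The Python sketch below shows one random-effects version of it: model frequencies are sampled from a Dirichlet posterior, summed within each family, and the proportion of samples in which a family has the largest total gives its exceedance probability. The family, model-type, and direction labels follow the text, but the Dirichlet parameters are hypothetical and not fitted to this study's data. Because all three families contain eight models, a uniform prior over models also gives each family equal prior mass.

```python
import random
from itertools import product

FAMILIES = ("Amygdala Connected", "STS Connected", "Both Connected")
TYPES = ("Cortical", "Dual", "Medial", "All")
DIRECTIONS = ("forwards-only", "forwards-and-backwards")

# 3 families x 4 model types x 2 connection schemes = 24 models.
models = list(product(FAMILIES, TYPES, DIRECTIONS))


def family_exceedance(alpha, family_of, families, n_samples=5000, seed=1):
    """Sample model frequencies from Dirichlet(alpha), sum them within each
    family, and return how often each family has the largest total."""
    rng = random.Random(seed)
    wins = dict.fromkeys(families, 0)
    for _ in range(n_samples):
        draws = [rng.gammavariate(a, 1.0) for a in alpha]
        total = sum(draws)
        freq = dict.fromkeys(families, 0.0)
        for d, fam in zip(draws, family_of):
            freq[fam] += d / total  # normalized Dirichlet sample, pooled by family
        wins[max(freq, key=freq.get)] += 1
    return {fam: w / n_samples for fam, w in wins.items()}


# Hypothetical Dirichlet parameters (not the study's values).
alpha = [1.5 if fam == "Amygdala Connected" else 1.0 for fam, _, _ in models]
xp = family_exceedance(alpha, [m[0] for m in models], FAMILIES)
print({fam: round(p, 2) for fam, p in xp.items()})
```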

Another pressing issue with deep source modeling in MEG is the extent to which we can tease apart nearby deep sources in the model space, such as the pulvinar and the LGN. To address this, we compared our original model, connected to both the LGN and pulvinar, with a model connected to a single thalamic node whose coordinates lay halfway between those used for the pulvinar and LGN (MNI: left [−17, −23, 6], right [17, −26, 6]). In principle, if the data were insensitive to differences in activity between the LGN and pulvinar, the source activity at each of these nodes would be indistinguishable and the simpler thalamic model would win. Alternatively, if these hidden states were successfully captured by DCM, the model including the LGN and pulvinar would win. A random-effects Bayesian model selection analysis revealed that the model including the LGN and pulvinar won with 100% exceedance probability. This demonstrates that DCM for MEG can indeed distinguish activity in the LGN from activity in the pulvinar.

Discussion

Here we present computational evidence supporting the existence of a subcortical amygdala connection that facilitates rapid visual information transfer as early as 70 ms, regardless of spatial frequency or emotional expression. Participants discriminated the gender of LSF, HSF, and BSF faces exhibiting neutral or fearful expressions. Behavioral performance was better for LSF than HSF faces and for neutral than fearful faces, whereas neural activity was greater and slower overall for HSF compared with LSF and BSF faces. Together, these findings suggest that HSF faces were more computationally demanding (Mermillod et al., 2010a).

We used DCM to test hypotheses for the most likely structure and function of the neural network underlying face perception, specifically the rapid processing that occurs within the first 300 ms. The anatomical stage demonstrated that the most likely network consisted of cortical, subcortical, and medial connections to the amygdala, providing direction-specific evidence for the existence of a forward pulvinar-amygdala connection that operates in parallel with cortical-amygdala connections. This lends functional support to anatomical evidence for the pulvinar-amygdala connection (Day-Brown et al., 2010; de Gelder et al., 2012; Rafal et al., 2015), as well as to previous demonstrations of a functional visual pulvinar-amygdala connection using DCM of MEG data (Rudrauf et al., 2008; Garvert et al., 2014). The presence of the medial pulvinar-V1 connection in our winning anatomical model also corroborates previous anatomical evidence from human and nonhuman primates (Bridge et al., 2016).

Input to the pulvinar from the striate and extrastriate cortex may contribute to information flow along the pulvinar-amygdala connection, implying the subcortical route is not purely “subcortical” (Pessoa and Adolphs, 2010). Our results suggest that V1 is an unlikely contributor to the subcortical route, given the lack of a backward connection between V1 and the pulvinar in our winning model. By modeling an agnostic “input” parameter into the pulvinar, however, we have inevitably sampled a bigger and broader pathway (both anatomically and functionally) that could encompass input from several other candidate neural areas, such as the optic tract, V2, and MT/V5 (Tamietto and Morrone, 2016). Anatomically, reconstructed white matter streamlines from superior colliculus to pulvinar to amygdala form only a fraction of the total streamlines from pulvinar to amygdala (Tamietto et al., 2012; Rafal et al., 2015). Functionally, the medial pulvinar connects with the amygdala as well as several cortical regions with various cognitive and affective functions (Bridge et al., 2016). Importantly, the results from the functional modeling stage of our study revealed that the pulvinar-amygdala connection lacked modulation by spatial frequency or facial expression. We did not incorporate the superior colliculus in our models due to constraints on the spatial resolution of MEG for such a small, deep source. Given that input from the superior colliculus would be predominantly LSF, however, we can deduce that the rapid information transfer we observed from pulvinar to amygdala most likely represents multiple sources capable of processing LSF and/or HSF stimuli from retinal and cortical input (Tamietto and Morrone, 2016). Future research could elucidate the temporal and spatial frequency properties of these inputs to the pulvinar, helping to disambiguate our own findings as well as others whose sources of input remain speculative (Méndez-Bértolo et al., 2016).

Emotional expression did not modulate the pulvinar-amygdala connection, consistent with previous DCM studies (Garrido et al., 2012; Garvert et al., 2014). There was, however, modulation by emotional expression along the medial pathway. Thus, although the pulvinar may be capable of enhancing activity during exposure to fearful faces (Maior et al., 2010; Le et al., 2016), it did not modify the signal transmitted to the amygdala. In contrast, modulation of the cortical-amygdala pathway by HSF fearful faces correlated with slower reaction times to fearful faces, implying greater modulation by facial expression along the cortical than the subcortical route. Our results are therefore inconsistent with previous findings of increased amygdala activity for LSF fearful faces (Vuilleumier et al., 2003; Méndez-Bértolo et al., 2016). One potential explanation is that our stimuli were matched for both luminance and contrast, unlike previous studies in which only luminance was matched (Vuilleumier et al., 2003; Méndez-Bértolo et al., 2016). The effects of contrast equalization on spatial frequency processing are particularly important during early (i.e., <100 ms) visual processing (Vlamings et al., 2009). In support of this notion, an intracranial EEG study that used luminance- and contrast-equalized stimuli did not observe significant differences between LSF and HSF faces until 240 ms (Willenbockel et al., 2012). Thus, apparent interactions between spatial frequency and emotion may be explained by differences in the contrast and luminance of the stimuli. Finally, a critical difference between our study and previous work is that the directionality of information flow was not assessed in previous methodologies, leaving open the possibility that the reported effects were driven by backward connections (Vuilleumier et al., 2003; Krolak-Salmon et al., 2004; Willenbockel et al., 2012; Méndez-Bértolo et al., 2016).

It is clear that differences in stimulus and task parameters can have a significant impact on the apparent function of the subcortical pathways to the amygdala. For example, emotional expression was task-irrelevant in the present study. The pulvinar responds more strongly to behaviorally relevant stimuli (Kanai et al., 2015), which might explain the lack of modulation by emotional expression along the pulvinar-amygdala connection because fearful and neutral stimuli were equally task-relevant. Indeed, much of the work on this topic also used tasks in which emotional expression was task-irrelevant (Vuilleumier et al., 2003; Garvert et al., 2014; Méndez-Bértolo et al., 2016), allowing for fruitful cross-comparison between studies. There are many avenues through which future research could explore the different functional roles of the pulvinar-amygdala connection, depending on the motion of stimuli (given the connection between pulvinar and V5/MT) (Tamietto and Morrone, 2016), the animate/inanimate nature of stimuli (Mermillod et al., 2010b), emotion regulation (Beffara et al., 2015), context (e.g., fear-conditioned stimuli) (Shang et al., 2015), or interacting neural areas (e.g., networks for unconscious vision) (Tamietto and de Gelder, 2010). Similarly, other threat-specific subcortical amygdala pathways from the superior colliculus to different regions of the thalamus have been identified in mice (Shang et al., 2015; Wei et al., 2015). Future work could look for these pathways in humans and nonhuman primates using methods with higher spatial resolution, such as fMRI and intracranial recordings.

Overall, our results suggest that the subcortical route is recruited during semipassive face viewing but not specifically for LSF fearful faces. We propose that these results provide novel direction-specific evidence for a generalized functional role of the subcortical route in processing faces, such that neither spatial frequency nor emotional content is automatically filtered. Such a mechanism is intuitive, considering that an organism's survival is maximized if it can rapidly detect potential threats using both LSF and HSF visual information (Fradcourt et al., 2013; Stein et al., 2014). The so-called “diagnostic approach” describes flexible prioritization of spatial frequency processing depending on the task at hand (Ruiz-Soler and Beltran, 2006; de Gardelle and Kouider, 2010). For example, LSF information could indicate the presence of a face, whereas the HSF information could reveal the face's identity, either of which could be essential for detecting a potential threat (Sowden and Schyns, 2006). The purported role of the amygdala as a “relevance detector” (Sander et al., 2003) would suggest that its earliest visual input would contain all spatial frequencies and all emotional content, which is in line with the fast subcortical pathway unfiltered for spatial frequency or emotional content demonstrated here. This complements other research on the auditory subcortical route to the amygdala, which was found to be unmodulated by the predictability of sounds (Garrido et al., 2012).

The evidence we have presented for a rapid, unmodulated pulvinar-amygdala connection helps reconcile apparently contradictory perspectives in the literature. First, we have shown that the effect of the pulvinar on amygdala activity precedes that of the visual cortex (Tamietto and de Gelder, 2010) but that the pulvinar influences amygdala activity via multiple parallel pathways (Pessoa and Adolphs, 2010). Second, the pulvinar-amygdala connection rapidly transmits a broad range of spatial frequencies, indicating a multiplicity of subcortical pathways to the amygdala that likely include the superior colliculus. Finally, reframing the subcortical route as playing a generalized instead of a specialized role in face processing may explain why emotional responses to different spatial frequencies have yielded contradictory findings (De Cesarei and Codispoti, 2013). Thus, we propose that the supposed “coarseness” of the subcortical route may be better reframed as “unfiltered.” By elucidating precisely what information is transmitted along this rapid subcortical pathway and how this is used by the amygdala, we may better understand the first stages of emotional experience and the potential role that subcortical activity plays in emotional disorders, such as anxiety (Carr, 2015) and autism (Nomi and Uddin, 2015).

Footnotes

This work was supported by the Australian Research Council (ARC) Centre of Excellence for Integrative Brain Function ARC Centre Grant CE140100007. J.B.M. was supported by ARC Australian Laureate Fellowship FL110100103. M.I.G. was supported by University of Queensland Fellowship 2016000071. J.M. was supported by an Australian Postgraduate Award. We thank Will Woods, Johanna Stephens, Mahla Cameron-Bradley, and Rachael Batty (Swinburne University) for help in collecting the MEG data; and two anonymous reviewers for insightful comments.

The authors declare no competing financial interests.

References

- Aguado L, Serrano-Pedraza I, Rodríguez S, Román FJ (2010) Effects of spatial frequency content on classification of face gender and expression. Span J Psychol 13:525–537. 10.1017/S1138741600002225

- Attal Y, Maess B, Friederici A, David O (2012) Head models and dynamic causal modeling of subcortical activity using magnetoencephalographic/electroencephalographic data. Rev Neurosci 23:85–95. 10.1515/rns.2011.056

- Awasthi B, Sowman PF, Friedman J, Williams MA (2013) Distinct spatial scale sensitivities for early categorization of faces and places: neuromagnetic and behavioral findings. Front Hum Neurosci 7:91. 10.3389/fnhum.2013.00091

- Beffara B, Wicker B, Vermeulen N, Ouellet M, Bret A, Molina MJ, Mermillod M (2015) Reduction of interference effect by low spatial frequency information priming in an emotional Stroop task. J Vis 15:16. 10.1167/15.6.16

- Berman RA, Wurtz RH (2010) Functional identification of a pulvinar path from superior colliculus to cortical area MT. J Neurosci 30:6342–6354. 10.1523/JNEUROSCI.6176-09.2010

- Bridge H, Leopold DA, Bourne JA (2016) Adaptive pulvinar circuitry supports visual cognition. Trends Cogn Sci 20:146–157. 10.1016/j.tics.2015.10.003

- Carr JA (2015) I'll take the low road: the evolutionary underpinnings of visually triggered fear. Front Neurosci 9:1–13. 10.3389/fnins.2015.00414

- Cauchoix M, Crouzet SM (2013) How plausible is a subcortical account of rapid visual recognition? Front Hum Neurosci 7:1–4. 10.3389/fnhum.2013.00039

- Cowey A, Stoerig P, Bannister M (1994) Retinal ganglion-cells labeled from the pulvinar nucleus in macaque monkeys. Neuroscience 61:691–705. 10.1016/0306-4522(94)90445-6

- Craddock M, Martinovic J, Müller MM (2013) Task and spatial frequency modulations of object processing: an EEG study. PLoS One 8:1–12. 10.1371/journal.pone.0070293

- Das P, Kemp AH, Liddell BJ, Brown KJ, Olivieri G, Peduto A, Gordon E, Williams LM (2005) Pathways for fear perception: modulation of amygdala activity by thalamo-cortical systems. Neuroimage 26:141–148. 10.1016/j.neuroimage.2005.01.049

- Daunizeau J, Kiebel SJ, Friston KJ (2009) Dynamic causal modelling of distributed electromagnetic responses. Neuroimage 47:590–601. 10.1016/j.neuroimage.2009.04.062

- Daunizeau J, David O, Stephan KE (2011) Dynamic causal modelling: a critical review of the biophysical and statistical foundations. Neuroimage 58:312–322. 10.1016/j.neuroimage.2009.11.062

- David O, Kiebel SJ, Harrison LM, Mattout J, Kilner JM, Friston KJ (2006) Dynamic causal modeling of evoked responses in EEG and MEG. Neuroimage 30:1255–1272. 10.1016/j.neuroimage.2005.10.045

- Day-Brown JD, Wei H, Chomsung RD, Petry HM, Bickford ME (2010) Pulvinar projections to the striatum and amygdala in the tree shrew. Front Neuroanat 4:143. 10.3389/fnana.2010.00143

- De Cesarei A, Codispoti M (2013) Spatial frequencies and emotional perception. Rev Neurosci 24:89–104. 10.1515/revneuro-2012-0053

- de Gardelle V, Kouider S (2010) How spatial frequencies and visual awareness interact during face processing. Psychol Sci 21:58–66. 10.1177/0956797609354064

- de Gelder B, van Honk J, Tamietto M (2011) Emotion in the brain: of low roads, high roads and roads less travelled. Nat Rev Neurosci 12:425; author reply 425. 10.1038/nrn2920-c1

- de Gelder B, Hortensius R, Tamietto M (2012) Attention and awareness each influence amygdala activity for dynamic bodily expressions: a short review. Front Integr Neurosci 6:54. 10.3389/fnint.2012.00054

- Deruelle C, Fagot J (2005) Categorizing facial identities, emotions, and genders: attention to high- and low-spatial frequencies by children and adults. J Exp Child Psychol 90:172–184. 10.1016/j.jecp.2004.09.001

- Diano M, Celeghin A, Bagnis A, Tamietto M (2017) Amygdala response to emotional stimuli without awareness: facts and interpretations. Front Psychol 7:1–13. 10.3389/fpsyg.2016.02029

- Dietz MJ, Friston KJ, Mattingley JB, Roepstorff A, Garrido MI (2014) Effective connectivity reveals right-hemisphere dominance in audiospatial perception: implications for models of spatial neglect. J Neurosci 34:5003–5011. 10.1523/JNEUROSCI.3765-13.2014

- Dumas T, Dubal S, Attal Y, Chupin M, Jouvent R, Morel S, George N (2013) MEG evidence for dynamic amygdala modulations by gaze and facial emotions. PLoS One 8:1–11. 10.1371/journal.pone.0074145

- FitzGerald TH, Moran RJ, Friston KJ, Dolan RJ (2015) Precision and neuronal dynamics in the human posterior parietal cortex during evidence accumulation. Neuroimage 107:219–228. 10.1016/j.neuroimage.2014.12.015

- Fradcourt B, Peyrin C, Baciu M, Campagne A (2013) Behavioral assessment of emotional and motivational appraisal during visual processing of emotional scenes depending on spatial frequencies. Brain Cogn 83:104–113. 10.1016/j.bandc.2013.07.009

- Furl N, Henson RN, Friston KJ, Calder AJ (2013) Top-down control of visual responses to fear by the amygdala. J Neurosci 33:17435–17443. 10.1523/JNEUROSCI.2992-13.2013

- Garrido MI, Kilner JM, Kiebel SJ, Friston KJ (2007) Evoked brain responses are generated by feedback loops. Proc Natl Acad Sci U S A 104:20961–20966. 10.1073/pnas.0706274105

- Garrido MI, Kilner JM, Kiebel SJ, Stephan KE, Baldeweg T, Friston KJ (2009) Repetition suppression and plasticity in the human brain. Neuroimage 48:269–279. 10.1016/j.neuroimage.2009.06.034

- Garrido MI, Barnes GR, Sahani M, Dolan RJ (2012) Functional evidence for a dual route to amygdala. Curr Biol 22:129–134. 10.1016/j.cub.2011.11.056

- Garvert MM, Friston KJ, Dolan RJ, Garrido MI (2014) Subcortical amygdala pathways enable rapid face processing. Neuroimage 102:309–316. 10.1016/j.neuroimage.2014.07.047

- Goffaux V, Jemel B, Jacques C, Rossion B, Schyns PG (2003) ERP evidence for task modulations on face perceptual processing at different spatial scales. Cogn Sci 27:313–325. 10.1207/s15516709cog2702_8

- Kanai R, Komura Y, Shipp S, Friston K (2015) Cerebral hierarchies: predictive processing, precision and the pulvinar. Philos Trans R Soc Lond B Biol Sci 370:20140169. 10.1098/rstb.2014.0169

- Krolak-Salmon P, He M, Vighetto A, Bertrand O, Thomas A, Lyon CB (2004) Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: a depth electrode ERP study in human. Neuron 42:665–676. 10.1016/s0896-6273(04)00264-8

- Le QV, Isbell LA, Matsumoto J, Le VQ, Nishimaru H, Hori E, Maior RS, Tomaz C, Ono T, Nishijo H (2016) Snakes elicit earlier, and monkey faces, later, gamma oscillations in macaque pulvinar neurons. Sci Rep 6:20595. 10.1038/srep20595

- LeDoux J (1998) The emotional brain: the mysterious underpinnings of emotional life. New York: Simon and Schuster.

- Litvak V, Mattout J, Kiebel S, Phillips C, Henson R, Kilner J, Barnes G, Oostenveld R, Daunizeau J, Flandin G, Penny W, Friston K (2011) EEG and MEG data analysis in SPM8. Comput Intell Neurosci 2011:852961. 10.1155/2011/852961

- Lundqvist D, Flykt A, Öhman A (1998) The Karolinska directed emotional faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet. 91–630.

- Maior RS, Hori E, Tomaz C, Ono T, Nishijo H (2010) The monkey pulvinar neurons differentially respond to emotional expressions of human faces. Behav Brain Res 215:129–135. 10.1016/j.bbr.2010.07.009 [DOI] [PubMed] [Google Scholar]

- Márkus Z, Berényi A, Paróczy Z, Wypych M, Waleszczyk WJ, Benedek G, Nagy A (2009) Spatial and temporal visual properties of the neurons in the intermediate layers of the superior colliculus. Neurosci Lett 454:76–80. 10.1016/j.neulet.2009.02.063 [DOI] [PubMed] [Google Scholar]

- Méndez-Bértolo C, Moratti S, Toledano R, Lopez-Sosa F, Martínez-Alvarez R, Mah YH, Vuilleumier P, Gil-Nagel A, Strange BA (2016) A fast pathway for fear in human amygdala. Nat Neurosci 19:1041–1049. 10.1038/nn.4324 [DOI] [PubMed] [Google Scholar]

- Mermillod M, Bonin P, Mondillon L, Alleysson D, Vermeulen N (2010a) Coarse scales are sufficient for efficient categorization of emotional facial expressions: evidence from neural computation. Neurocomputing 73:2522–2531. 10.1016/j.neucom.2010.06.002 [DOI] [Google Scholar]

- Mermillod M, Droit-Volet S, Devaux D, Schaefer A, Vermeulen N (2010b) Are coarse scales sufficient for fast detection of visual threat? Psychol Sci 21:1429–1437. 10.1177/0956797610381503 [DOI] [PubMed] [Google Scholar]

- Mobbs D, Hagan CC, Dalgleish T, Silston B, Prévost C (2015) The ecology of human fear: survival optimization and the nervous system. Front Neurosci 9:1–22. 10.3389/fnins.2015.00055 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moran R, Pinotsis DA, Friston K (2013) Neural masses and fields in dynamic causal modeling. Front Comput Neurosci 7:57. 10.3389/fncom.2013.00057 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morris JS, Friston KJ, Büchel C, Frith CD, Young AW, Calder AJ, Dolan RJ (1998) A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121:47–57. 10.1093/brain/121.1.47 [DOI] [PubMed] [Google Scholar]

- Morris JS, Ohman A, Dolan RJ (1999) A subcortical pathway to the right amygdala mediating “unseen” fear. Proc Natl Acad Sci U S A 96:1680–1685. 10.1073/pnas.96.4.1680 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mu T, Li S (2013) The neural signature of spatial frequency-based information integration in scene perception. Exp Brain Res 227:367–377. 10.1007/s00221-013-3517-1 [DOI] [PubMed] [Google Scholar]

- Neath KN, Itier RJ (2015) Fixation to features and neural processing of facial expressions in a gender discrimination task. Brain Cogn 99:97–111. 10.1016/j.bandc.2015.05.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nguyen MN, Hori E, Matsumoto J, Tran AH, Ono T, Nishijo H (2013) Neuronal responses to face-like stimuli in the monkey pulvinar. Eur J Neurosci 37:35–51. 10.1111/ejn.12020 [DOI] [PubMed] [Google Scholar]

- Nomi JS, Uddin LQ (2015) Face processing in autism spectrum disorders: from brain regions to brain networks. Neuropsychologia 71:201–216. 10.1016/j.neuropsychologia.2015.03.029 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ohman A. (2005) The role of the amygdala in human fear: automatic detection of threat. Psychoneuroendocrinology 30:953–958. 10.1016/j.psyneuen.2005.03.019 [DOI] [PubMed] [Google Scholar]

- Papadelis C, Ioannides AA (2007) Localization accuracy and temporal resolution of MEG: a phantom experiment. Int Congr Ser 1300:257–260. 10.1016/j.ics.2007.01.055 [DOI] [Google Scholar]

- Pessoa L, Adolphs R (2010) Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nat Rev Neurosci 11:773–783. 10.1038/nrn2920 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pessoa L, Adolphs R (2011) Emotion and the brain: multiple roads are better than one. Nat Rev Neurosci 12:425. 10.1038/nrn2920-c2 21673722 [DOI] [Google Scholar]

- Peyrin C, Michel CM, Schwartz S, Thut G, Seghier M, Landis T, Marendaz C, Vuilleumier P (2010) The neural substrates and timing of top-down processes during coarse-to-fine categorization of visual scenes: a combined fMRI and ERP study. J Cogn Neurosci 22:2768–2780. 10.1162/jocn.2010.21424 [DOI] [PubMed] [Google Scholar]

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P (2005) Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum Brain Mapp 26:65–79. 10.1002/hbm.20130 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rafal RD, Koller K, Bultitude JH, Mullins P, Ward R, Mitchell AS, Bell AH (2015) Connectivity between the superior colliculus and the amygdala in humans and macaque monkeys: virtual dissection with probabilistic DTI tractography. J Neurophysiol 114:1947–1962. 10.1152/jn.01016.2014

- Rudrauf D, David O, Lachaux JP, Kovach CK, Martinerie J, Renault B, Damasio A (2008) Rapid interactions between the ventral visual stream and emotion-related structures rely on a two-pathway architecture. J Neurosci 28:2793–2803. 10.1523/JNEUROSCI.3476-07.2008

- Ruiz-Soler M, Beltran FS (2006) Face perception: an integrative review of the role of spatial frequencies. Psychol Res 70:273–292. 10.1007/s00426-005-0215-z

- Sander D, Grafman J, Zalla T (2003) The human amygdala: an evolved system for relevance detection. Rev Neurosci 14:303–316.

- Schyns PG, Oliva A (1999) Dr. Angry and Mr. Smile: when categorization flexibly modifies the perception of faces in rapid visual presentations. Cognition 69:243–265. 10.1016/S0010-0277(98)00069-9

- Shang C, Liu Z, Chen Z, Shi Y, Wang Q, Liu S, Li D, Cao P (2015) A parvalbumin-positive excitatory visual pathway to trigger fear responses in mice. Science 348:1472–1477. 10.1126/science.aaa8694

- Silverstein DN, Ingvar M (2015) A multi-pathway hypothesis for human visual fear signaling. Front Syst Neurosci 9:101. 10.3389/fnsys.2015.00101

- Sowden PT, Schyns PG (2006) Channel surfing in the visual brain. Trends Cogn Sci 10:538–545. 10.1016/j.tics.2006.10.007

- Stein T, Seymour K, Hebart MN, Sterzer P (2014) Rapid fear detection relies on high spatial frequencies. Psychol Sci 25:566–574. 10.1177/0956797613512509

- Stephan KE, Penny WD, Moran RJ, den Ouden HE, Daunizeau J, Friston KJ (2010) Ten simple rules for dynamic causal modeling. Neuroimage 49:3099–3109. 10.1016/j.neuroimage.2009.11.015

- Tamietto M, de Gelder B (2010) Neural bases of the non-conscious perception of emotional signals. Nat Rev Neurosci 11:697–709. 10.1038/nrn2889

- Tamietto M, Morrone MC (2016) Visual plasticity: blindsight bridges anatomy and function in the visual system. Curr Biol 26:R70–R73. 10.1016/j.cub.2015.11.026

- Tamietto M, Pullens P, de Gelder B, Weiskrantz L, Goebel R (2012) Subcortical connections to human amygdala and changes following destruction of the visual cortex. Curr Biol 22:1449–1455. 10.1016/j.cub.2012.06.006

- Taulu S, Simola J (2006) Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys Med Biol 51:1759–1768. 10.1088/0031-9155/51/7/008

- Vlamings PH, Goffaux V, Kemner C (2009) Is the early modulation of brain activity by fearful facial expressions primarily mediated by coarse low spatial frequency information? J Vis 9:12.1–13. 10.1167/9.5.12

- Vuilleumier P (2005) How brains beware: neural mechanisms of emotional attention. Trends Cogn Sci 9:585–594. 10.1016/j.tics.2005.10.011

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2003) Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci 6:624–631. 10.1038/nn1057

- Wei P, Liu N, Zhang Z, Liu X, Tang Y, He X, Wu B, Zhou Z, Liu Y, Li J, Zhang Y, Zhou X, Xu L, Chen L, Bi G, Hu X, Xu F, Wang L (2015) Processing of visually evoked innate fear by a non-canonical thalamic pathway. Nat Commun 6:6756. 10.1038/ncomms7756

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, Tanaka JW (2010) Controlling low-level image properties: the SHINE toolbox. Behav Res Methods 42:671–684. 10.3758/BRM.42.3.671

- Willenbockel V, Lepore F, Nguyen DK, Bouthillier A, Gosselin F (2012) Spatial frequency tuning during the conscious and non-conscious perception of emotional facial expressions: an intracranial ERP study. Front Psychol 3:237. 10.3389/fpsyg.2012.00237

- Winston JS, Vuilleumier P, Dolan RJ (2003) Effects of low-spatial frequency components of fearful faces on fusiform cortex activity. Curr Biol 13:1824–1829. 10.1016/j.cub.2003.09.038