Abstract

BACKGROUND:

Overutilization of clinical laboratory testing in the inpatient setting is a common problem. The objective of this project was to develop an inexpensive and easily implemented intervention to promote rational laboratory utilization without compromising resident education or patient care.

METHODS:

The study was a cluster-randomized, controlled trial assessing the impact on laboratory test ordering of a multifaceted intervention consisting of education, guideline development, elimination of recurring lab orders, unbundling of laboratory panels, and redesign of the daily progress note. The population included all patients hospitalized on a general medicine teaching service within a 999-bed tertiary care hospital in Boston, MA, during two consecutive months in 2008. The primary outcome was the total number of commonly utilized laboratory tests per patient-day. Secondary outcomes included a subgroup analysis of each individual test per patient-day, adverse events, and resident and nursing satisfaction.

RESULTS:

A total of 5392 patient-days were captured. The intervention produced a 9% decrease in aggregate laboratory utilization (rate ratio 0.91, 95% CI 0.84–0.98, p = 0.021). Six instances of delayed diagnosis of acute kidney injury and 11 near misses were reported in the intervention arm.

CONCLUSIONS:

A bundled educational and administrative intervention promoting rational ordering of laboratory tests on a single academic general medicine service led to a modest but significant decrease in laboratory utilization. To our knowledge, this is the first study to examine the daily progress note as a tool to limit excessive test ordering. Unadjudicated near misses and possible harm were reported with this intervention; this finding warrants further study.

Keywords: diagnostic tests, medical education, resource utilization

Introduction

As noted by Peabody in 1922, “…Good medicine does not consist in the indiscriminate application of laboratory examinations to a patient, but rather in having so clear a comprehension of the probabilities and possibilities of a case as to know what tests may be expected to give information of value.” 1 Overutilization of clinical laboratory testing in the inpatient setting is a long-recognized and vexing problem, in part due to inherent variation in practice patterns and disagreement over what studies are and are not appropriate. 1–4 At our hospital, recurring daily lab orders have become the prevailing clinical practice. Sequelae of excessive laboratory utilization can include increased cost, false-positive results, 5 patient discomfort due to phlebotomy, and hospital-acquired anemia. 6–8 Further, indiscriminate laboratory testing deprives trainees of opportunities to learn and practice diagnostic reasoning.

Attempts to encourage physicians to order tests more parsimoniously have achieved inconsistent success. 9–12 Programs that combine multiple strategies may be more successful in promoting rational laboratory use. 11,12 Accordingly, we sought to assess the effect of a multifaceted approach to curbing laboratory overutilization by internal medicine housestaff—a group accounting for significant resource utilization in teaching hospitals. 13

We report a novel multidisciplinary intervention that integrates education, consensus-building, and administrative elements. It is inexpensive, requires no new infrastructure, and avoids measures that would likely be regarded as punitive. In keeping with the mission of a teaching institution, this project is didactic in nature. In recognition of clinical, fiscal, and intellectual benefits, the ultimate goal of the intervention is to train physicians to become responsible stewards of healthcare resources. This project invited physicians-in-training to help answer a clinical question relevant to their daily work. We hypothesized that our intervention would decrease unnecessary testing.

Methods

The Massachusetts General Hospital (MGH) is a 999-bed tertiary care teaching hospital located in Boston, MA. At the time of this study, the Bigelow Medical Service was staffed by five teams of resident and attending physicians regionalized by floor. Each of the five Bigelow teams consisted of four interns, one to two junior residents, one to two attending physicians, and two to three medical students. Teams A and E each covered 24 beds, while teams B, C, and D each covered 20 beds. Physicians and medical students rotated on the Bigelow Service in two- or four-week intervals. Each team was assisted by a staff of nurses and administrative personnel assigned by floor.

The study period was chosen to accommodate the rotation schedule of the residency program, to avoid the confounding influence of the July arrival of new interns, and to minimize migration bias from intervention team members rotating onto control teams. Bigelow teams were cluster-randomized to the intervention and control arms using a random number table, as shown in Figure 1. In the “May” block (April 30 to May 27, 2008), teams A, B, and C received the intervention, while teams D and E served as concurrent controls. In the “June” block (May 28 to June 24, 2008), teams B and D received the intervention, while teams A, C, and E served as controls. The intervention consisted of three elements:

Teams were given a 15-minute educational briefing on day one of each block. Ordering guidelines, developed through a literature search and consensus of resident and attending physicians, were distributed and briefly discussed. Use of these guidelines was optional but encouraged.

Team members were encouraged not to write automatically recurring daily orders for laboratory tests. In common practice these are also known as “daily” or “QD” orders; in this paper we refer to them as “standing orders.” Physicians could monitor laboratory studies as frequently as they desired, but they were encouraged to write a new order for each episode of measurement. No other test ordering restrictions were enacted. All physician orders were entered through an institutionally developed computerized provider order entry (CPOE) system.

Team members were asked to include a new section entitled “Labs Needed for Tomorrow” in their daily progress note. This list was non-binding; teams were allowed to revise their selection of laboratory studies as clinically warranted. At the time of this intervention, all progress notes were hand-written on a printed note template, and the “Labs Needed for Tomorrow” section was added to the bottom.

Intervention teams received posters depicting these lab ordering changes, in addition to printed copies of the ordering guidelines. The posters were placed in the physician workrooms on each intervention floor to minimize their visibility to control providers on other floors. Nurse management on each floor was briefed regarding the intervention.

FIGURE 1.

Schema.

NOTE: Breakdown of intervention and control teams by month.
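The study allocated whole teams (clusters) using a random number table. As an illustration only, and not a reproduction of the study's procedure or of the allocation in Figure 1, the same kind of team-level randomization can be sketched with a seeded pseudorandom generator standing in for the table. The function `randomize_teams` and the seed values are hypothetical; only the team labels and the number of intervention teams per block come from the text.

```python
import random

TEAMS = ["A", "B", "C", "D", "E"]

def randomize_teams(teams, n_intervention, seed):
    """Cluster-randomize whole teams (not individual patients) to two arms.

    A seeded generator stands in for a random number table so that the
    allocation can be reproduced and audited afterwards.
    """
    rng = random.Random(seed)
    shuffled = list(teams)
    rng.shuffle(shuffled)
    intervention = sorted(shuffled[:n_intervention])
    control = sorted(shuffled[n_intervention:])
    return intervention, control

# Hypothetical seeds; block sizes follow the text (3 intervention teams in May, 2 in June).
may_arms = randomize_teams(TEAMS, n_intervention=3, seed=2008)
june_arms = randomize_teams(TEAMS, n_intervention=2, seed=2009)
print("May:", may_arms)
print("June:", june_arms)
```

Randomizing teams rather than patients keeps every patient on a team under one ordering policy at a time, which is why patients who changed teams had to be handled separately.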

The primary endpoint of this study was the total number of completed laboratory tests per patient-day for Bigelow teams during the May and June rotation blocks of 2008. The following tests were tracked: serum sodium (Na), potassium (K), bicarbonate (CO2), chloride (Cl), blood urea nitrogen (BUN), creatinine (Cr), glucose (Gluc), calcium (Ca), magnesium (Mg), phosphorus (Phos), alanine aminotransferase (ALT), aspartate aminotransferase (AST), alkaline phosphatase (Alk phos), albumin (Alb), globulin (Glob), total protein (TP), direct bilirubin (Dbili), total bilirubin (Tbili), complete blood counts with and without differential (CBC +/− diff), partial thromboplastin time (PTT), prothrombin time (PT), international normalized ratio (INR), and urinalysis (U/A). Compliance with the intervention was monitored by auditing ten randomly selected progress notes per team, Monday through Friday, during the intervention.
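The compliance audit can be sketched as follows. The text does not state whether ten notes were drawn per team per day or per week, so this sketch assumes ten per team on each weekday audit day. The note identifiers, `audit_sample`, and `compliance_rate` are hypothetical names, and "compliant" is assumed to mean that a note contains the “Labs Needed for Tomorrow” section.

```python
import datetime as dt
import random

def audit_sample(notes_by_team, day, k=10, seed=None):
    """Return up to k randomly chosen note IDs per team for one audit day.

    Assumption: audits run Monday through Friday only, so weekend days
    return an empty dict. If a team wrote fewer than k notes, all are audited.
    """
    if day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return {}
    rng = random.Random(seed)
    return {
        team: rng.sample(notes, min(k, len(notes)))
        for team, notes in notes_by_team.items()
    }

def compliance_rate(audited_flags):
    """Fraction of audited notes flagged as containing the new section."""
    if not audited_flags:
        return float("nan")
    return sum(audited_flags) / len(audited_flags)

# Hypothetical note IDs for two teams on Monday, May 5, 2008.
notes = {"A": [f"A-{i}" for i in range(18)], "D": [f"D-{i}" for i in range(6)]}
sample = audit_sample(notes, dt.date(2008, 5, 5), seed=1)
print({team: len(ids) for team, ids in sample.items()})
```

Sampling without replacement (`random.Random.sample`) avoids auditing the same note twice on a given day.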

All bundled test panels were broken down and tabulated by individual test. For example, a basic metabolic panel order was counted as discrete studies for serum Na, K, Cl, CO2, BUN, Cr, and glucose. Although each is composed of multiple studies, the CBC and U/A were each counted as a single test. Laboratory utilization and patient demographics were collected from administrative databases.
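The counting rule above can be made concrete with a small tabulation sketch. The panel map is illustrative and covers only the panels the text names (BMP, CBC, U/A); the order codes and the function `tabulate_tests` are hypothetical, and any code not in the map is assumed to be a single test.

```python
from collections import Counter

# Illustrative map following the stated rule: a BMP expands into seven
# constituent tests, while a CBC and a U/A each count as one test.
PANEL_COMPONENTS = {
    "BMP": ["Na", "K", "Cl", "CO2", "BUN", "Cr", "Gluc"],
    "CBC": ["CBC"],
    "U/A": ["U/A"],
}

def tabulate_tests(orders):
    """Count individual tests from a list of order codes.

    Codes missing from PANEL_COMPONENTS are treated as single tests.
    """
    counts = Counter()
    for order in orders:
        counts.update(PANEL_COMPONENTS.get(order, [order]))
    return counts

day_orders = ["BMP", "CBC", "Mg", "U/A"]
counts = tabulate_tests(day_orders)
print(sum(counts.values()))  # 7 + 1 + 1 + 1 = 10
```

Unbundling this way makes a BMP weigh seven times as much as a CBC in the aggregate count, which is worth keeping in mind when reading the aggregate rate ratio.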

Adverse events, both those resulting in perceived harm to patients and “near misses” of harm, were assessed in real time through polling of supervising residents on each intervention team. To increase reporting, all physicians on intervention teams were surveyed regarding adverse events at the end of the intervention period, though these data were not linked to specific patients. “Harm” and “near miss” were neither specifically defined nor independently adjudicated. Adverse event reports were not solicited from control teams, both to minimize bias from a Hawthorne effect and because the study was not powered to detect a difference in adverse events between groups. Respondents were not required to answer every survey question.

Patient characteristics were compared by chi-square test and one-way analysis of variance. Frequencies of lab tests were analyzed with generalized linear mixed models that assumed a negative binomial distribution, with a log link, for the daily count of tests of a given type ordered for each patient. Each model included a fixed effect of the treatment assigned to the ordering team at the time of ordering and random patient × month intercepts, to account for the dominant influence of a patient’s medical condition on the frequency of lab testing. Poisson models were fit for Ca, Mg, and PTT to achieve convergence of estimates. Too few data were available to control for team effects. As a sensitivity analysis, we analyzed counts of lab tests as normally distributed data in linear mixed models with both patient × month and team random effects.
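The primary model described above can be written compactly as follows. The notation is ours, not the authors': $Y_{pmd}$ is the count of one test type for patient $p$ in month $m$ on day $d$, $T_{pmd}$ is 1 if the ordering team was in the intervention arm and 0 otherwise, and $u_{pm}$ is the random patient × month intercept, assumed (as is conventional) to be normally distributed. The variance form assumes the common NB2 parameterization.

$$
\begin{aligned}
Y_{pmd} \mid u_{pm} &\sim \operatorname{NegBin}\left(\mu_{pmd}, \theta\right), \qquad \operatorname{Var}\left(Y_{pmd} \mid u_{pm}\right) = \mu_{pmd} + \frac{\mu_{pmd}^{2}}{\theta},\\
\log \mu_{pmd} &= \beta_0 + \beta_1 T_{pmd} + u_{pm}, \qquad u_{pm} \sim \mathcal{N}\left(0, \sigma_u^{2}\right),\\
\mathrm{RR} &= \exp\left(\beta_1\right).
\end{aligned}
$$

Under this sketch, the Poisson fits for Ca, Mg, and PTT correspond to the limit $\theta \to \infty$ (no extra-Poisson dispersion), and an aggregate rate ratio of 0.91 corresponds to $\beta_1 = \ln 0.91 \approx -0.094$.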

Because medical teams rather than patients were randomized, a number of patients crossed over from an intervention team to a control team, or vice versa, during the trial. In such cases, each lab draw was attributed to the arm (intervention or control) of the team that ordered it at the time of ordering. Demographics of this crossover population were analyzed separately from those of patients whose entire care was provided by a single team.
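The attribution rule can be sketched as a lookup keyed on the ordering team and the order date rather than on the patient. The block dates and arm assignments are taken from the Methods; the function name `arm_for_order` and the assumption that attribution works at day-level resolution are ours.

```python
import datetime as dt

# Arm assignments as described in the Methods (Figure 1); block end dates inclusive.
BLOCKS = [
    (dt.date(2008, 4, 30), dt.date(2008, 5, 27), {"A", "B", "C"}),  # "May" block
    (dt.date(2008, 5, 28), dt.date(2008, 6, 24), {"B", "D"}),       # "June" block
]

def arm_for_order(team, order_date):
    """Return 'intervention' or 'control' for the team that placed an order.

    The arm depends on the ordering team and the order date, not on the
    patient, so a patient who changes teams contributes orders to both arms.
    Returns None for dates outside the study blocks.
    """
    for start, end, intervention_teams in BLOCKS:
        if start <= order_date <= end:
            return "intervention" if team in intervention_teams else "control"
    return None

# Hypothetical orders for one patient who stays on team A across blocks,
# plus an order from team D in June.
orders = [("A", dt.date(2008, 5, 20)), ("A", dt.date(2008, 6, 2)), ("D", dt.date(2008, 6, 10))]
print([arm_for_order(team, day) for team, day in orders])
# ['intervention', 'control', 'intervention']
```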

This project was approved by the institutional review board of the Massachusetts General Hospital, Partners Healthcare, Inc. Consent was obtained from all participating physicians for the purposes of tracking individual test ordering patterns. Funding for the study was provided by the Massachusetts General Hospital Department of Medicine.

Results

A total of 5392 patient-days were captured in the trial: 2790 in the control groups and 2602 in the intervention groups. Patient characteristics are shown in Table 1. Mortality, comorbidities, and length of stay (LOS) were similar in all groups. Because of the crossover design, some patients transitioned from an intervention team in May to a control team in June, or vice versa; these patients and their demographics were counted and analyzed separately in Table 1.

TABLE 1:

Patient Demographics

| Variable | Overall (n=881) | Control (n=383) | Intervention (n=415) | Both conditions (n=83) | P value |
|---|---|---|---|---|---|
| Number of admissions during study period | | | | | <0.001 |
| 1 | 806 (91.5%) | 365 (95.3%) | 399 (96.1%) | 42 (50.6%) | |
| 2 | 66 (7.5%) | 18 (4.7%) | 14 (3.4%) | 34 (41.0%) | |
| 3 | 7 (0.8%) | 0 | 2 (0.5%) | 5 (6.0%) | |
| 4 | 2 (0.2%) | 0 | 0 | 2 (2.4%) | |
| Gender | | | | | 0.13 |
| F | 380 (43.1%) | 175 (45.7%) | 177 (42.7%) | 28 (33.7%) | |
| M | 501 (56.9%) | 208 (54.3%) | 238 (57.3%) | 55 (66.3%) | |
| Age at first admission, years (mean±SD, range) | 63.6±17.7 (18.0, 98.0) | 63.0±18.1 (19.0, 98.0) | 64.7±17.5 (18.0, 97.0) | 60.9±17.4 (21.0, 91.0) | 0.14 |
| Top principal ICD-9 diagnoses | | | | | 0.34 |
| 1. Acute renal failure NOS, 5849 | 46 (4.8%) | 23 (5.2%) | 17 (3.6%) | 6 (11.5%) | |
| 2. Congestive heart failure NOS, 4280 | 30 (3.1%) | 11 (2.5%) | 18 (3.8%) | 1 (1.9%) | |
| 3. Obstructive chronic bronchitis with acute exacerbation, 49121 | 27 (2.8%) | 9 (2.0%) | 17 (3.6%) | 1 (1.9%) | |
| 4. Alcohol withdrawal, 29181 | 26 (2.7%) | 13 (2.9%) | 12 (2.5%) | 1 (1.9%) | |
| 5. Pneumonia, organism NOS, 486 | 26 (2.7%) | 14 (3.2%) | 11 (2.3%) | 1 (1.9%) | |
| 6. Other ICD-9 diagnoses | 812 (84%) | 371 (84.1%) | 399 (84.2%) | 42 (80.8%) | |
| Discharge disposition | | | | | 0.89 |
| Skilled nursing facility | 125 (12.9%) | 56 (12.7%) | 64 (13.5%) | 5 (9.6%) | |
| Inpatient rehabilitation | 82 (8.5%) | 37 (8.4%) | 39 (8.2%) | 6 (11.5%) | |
| Long-term acute care | 20 (2.1%) | 10 (2.3%) | 8 (1.7%) | 2 (3.8%) | |
| Psychiatric hospital | 21 (2.2%) | 8 (1.8%) | 13 (2.7%) | 0 | |
| Home or self care (including home services) | 636 (65.8%) | 293 (66.4%) | 306 (64.6%) | 37 (71.2%) | |
| Hospice | 9 (0.9%) | 6 (1.4%) | 2 (0.4%) | 1 (1.9%) | |
| Against medical advice | 33 (3.4%) | 14 (3.2%) | 19 (4.0%) | 0 | |
| Died | 25 (2.6%) | 11 (2.5%) | 13 (2.7%) | 1 (1.9%) | |
| Discharged to certified Medicaid facility | 1 (0.1%) | 1 (0.2%) | 0 | 0 | |
| Discharged to a federal hospital | 3 (0.3%) | 1 (0.2%) | 2 (0.4%) | 0 | |
| Short-term general hospital | 4 (0.4%) | 2 (0.5%) | 2 (0.4%) | 0 | |
| Other | 8 (0.8%) | 2 (0.5%) | 6 (1.3%) | 0 | |
| Length of stay, days (mean±SD, range) | 7.28±7.98 (1.00, 88.0) | 6.85±6.39 (1.00, 44.0) | 7.53±9.16 (1.00, 88.0) | 8.54±8.59 (2.00, 49.0) | 0.22 |
| Log-transformed length of stay (mean±SD, range) | 1.64±0.77 (0.00, 4.48) | 1.62±0.75 (0.00, 3.78) | 1.65±0.79 (0.00, 4.48) | 1.82±0.77 (0.69, 3.89) | 0.22 |

NOTE: “Intervention” and “control” refer to the respective study arms, while “both” refers to patients who crossed over from the intervention arm to the control arm, or vice versa, during the trial. Abbreviations: ICD-9 = International Classification of Diseases, Ninth Revision; NOS = not otherwise specified; SD = standard deviation.

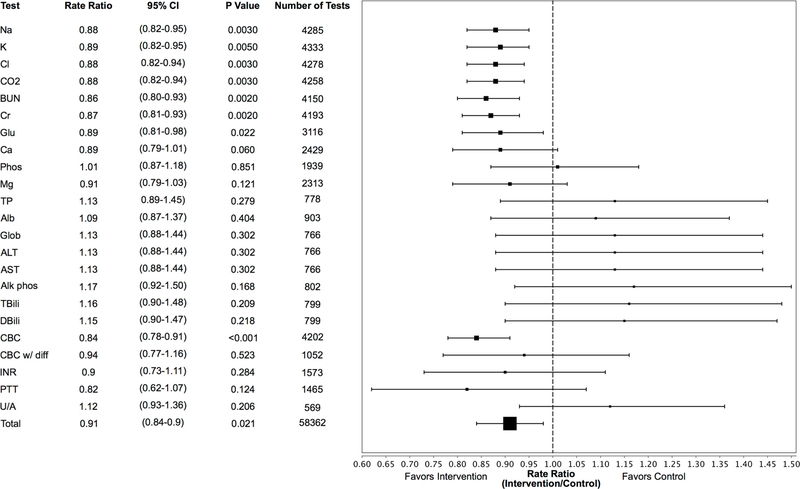

Figure 2 depicts Poisson and negative binomial estimated rate ratios (RR) comparing laboratory orders in the intervention and control arms. Aggregate laboratory ordering was lower in the intervention arm (RR 0.91, 95% confidence interval [CI] 0.84–0.98, P = 0.021). Significant decreases in utilization were observed for Na (RR 0.88, 95% CI 0.82–0.95, P = 0.003), K (RR 0.89, 95% CI 0.82–0.95, P = 0.005), Cl (RR 0.88, 95% CI 0.82–0.95, P = 0.003), CO2 (RR 0.88, 95% CI 0.82–0.94, P = 0.003), BUN (RR 0.86, 95% CI 0.80–0.93, P = 0.002), Cr (RR 0.87, 95% CI 0.81–0.93, P = 0.002), Gluc (RR 0.89, 95% CI 0.81–0.98, P = 0.02), and CBC without differential (RR 0.84, 95% CI 0.78–0.91, P < 0.001). The sensitivity analysis incorporating a random team effect led to the same inferences.
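The rate ratios above translate directly into percent reductions in tests per patient-day: a reduction of (1 − RR) × 100, with the CI limits swapping roles so that the upper RR limit gives the smallest reduction. A minimal sketch using a few of the reported estimates (the helper name `rr_to_percent_reduction` is ours):

```python
def rr_to_percent_reduction(rr, lower, upper):
    """Convert a rate ratio and its 95% CI into percent reductions.

    Returns (point, smallest, largest) reduction in percent. The upper RR
    limit maps to the smallest reduction and the lower limit to the largest.
    """
    return tuple(round((1.0 - x) * 100.0, 1) for x in (rr, upper, lower))

# Point estimates and 95% CIs as reported in the Results.
REPORTED = {
    "Aggregate": (0.91, 0.84, 0.98),
    "BUN": (0.86, 0.80, 0.93),
    "CBC without differential": (0.84, 0.78, 0.91),
}

for test, (rr, lo, hi) in REPORTED.items():
    pct, pct_min, pct_max = rr_to_percent_reduction(rr, lo, hi)
    print(f"{test}: {pct}% fewer tests (95% CI {pct_min}% to {pct_max}%)")
```

For the aggregate outcome this gives a 9% reduction with a CI of roughly 2% to 16%, which is why the effect is best described as modest.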

FIGURE 2.

Rate ratios of lab ordering by test

NOTE: Forest plot depicting rate ratios of lab ordering generated from Poisson (Ca, Mg, PTT) and negative binomial regressions. Abbreviations: Lower CL = lower limit of 95% confidence interval, Upper CL = upper limit of 95% confidence interval, individual laboratory test abbreviations per methods section.

Survey response rates were high: 86% (19/22) for interns, 90% (9/10) for junior residents, 92% (11/12) for senior residents, and 100% (11/11) for attendings. 14 Forty-six percent (18/39) of respondents believed that the intervention improved overall patient care; 38% (15/39) and 15% (6/39) felt that it was neutral or harmed patient care, respectively. Forty-nine percent (19/39) felt that it increased the time needed to render care, 44% (17/39) felt it was time-neutral, and 8% (3/39) felt that it saved time. Eighty-two percent (32/39) of respondents reported that the intervention helped them think more carefully about laboratory ordering. Sixty-four percent (21/33) felt that the intervention should be implemented as formal policy. Fifteen percent (6/39) of physicians believed the intervention resulted in at least one episode of definite patient harm, and 32% (12/38) believed it resulted in at least one “near miss.”
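Each reported percentage follows from its stated fraction, and the denominators vary from question to question because respondents were not required to answer every item. A short check using only the fractions given in the text (the helper `pct` is ours):

```python
# Numerators and denominators as reported in the survey results.
RESPONSE_RATES = {
    "interns": (19, 22),
    "junior residents": (9, 10),
    "senior residents": (11, 12),
    "attendings": (11, 11),
}
ITEMS = {
    "improved patient care": (18, 39),
    "neutral for patient care": (15, 39),
    "harmed patient care": (6, 39),
    "thought more carefully about ordering": (32, 39),
    "implement as formal policy": (21, 33),
    "at least one near miss": (12, 38),
}

def pct(numerator, denominator):
    """Percentage rounded to the nearest whole number, as in the text."""
    return round(100 * numerator / denominator)

for label, (n, d) in {**RESPONSE_RATES, **ITEMS}.items():
    print(f"{label}: {pct(n, d)}% ({n}/{d})")
```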

Table 2 tabulates the reported adverse events. Clinical providers reported 11 “near misses” and 7 events of perceived patient harm, but only two of these were reported to the investigators in real time: a delay in checking calcium and phosphorus levels in a vitamin D-deficient patient who had received an IV bisphosphonate, and new acute kidney injury in a patient undergoing active diuresis who did not have a basic metabolic panel checked for multiple days. The remaining adverse events were reported retrospectively through the survey.

TABLE 2:

Physician-reported adverse events

| Near Misses (Number of survey reports) | Events Resulting in Perceived Harm (Number of survey reports) |
|---|---|
| Delayed observation of elevated Ca-Phos product (2) | Delayed diagnosis of acute kidney injury (6) |
| Delayed phlebotomy draws (4) | Delayed phlebotomy draws with unspecified harm (1) |
| Delayed measurement of Ca and Phos in vitamin D-deficient patient treated with IV bisphosphonate (1) | |
| Delayed observation of rising INR in patient with liver failure (1) | |
| Ectopy in patient found to have hypomagnesemia (1) | |
| Delayed diagnosis of acute kidney injury (2) | |

NOTE: Data were collected from a retrospective survey of physicians on intervention teams. Abbreviations: Ca = calcium, Phos = phosphorus, IV = intravenous, INR = international normalized ratio.

Random chart audits showed intervention compliance of 80% in May and 53% in June. Limited auditing of control teams’ notes found no evidence of contamination by the intervention.

Discussion

This report describes a successful collaborative intervention to promote rational laboratory utilization and to develop an inpatient medical service into a research engine with trainees as key stakeholders. We achieved a modest reduction in laboratory volume while preserving the educational mission of our training program. Common strategies to change physician practice patterns include education, feedback, consensus-building, administrative changes, incentives, and penalties. All of these strategies have been applied to improve clinical laboratory utilization. 9,11,12 Our intervention incorporated consensus-building (creation of guidelines), education (discussion of guidelines with intervention teams), and administrative changes (voluntary elimination of recurring laboratory orders and addition of a “labs needed for tomorrow” section to the daily progress note). To our knowledge, this is the first reported intervention to use the daily progress note as a vehicle to stimulate discussion and explicit planning of laboratory studies. 11,12 Other efforts have shown that requiring physicians to justify a study during the ordering process can increase the proportion of properly indicated laboratory studies compared with an educational intervention alone. 15

The 9% reduction in aggregate laboratory utilization in the intervention arm appears to be driven by decreased use of the basic metabolic panel (BMP) and its constituent studies (Na, K, Cl, CO2, BUN, Cr, glucose) and of the CBC without differential. Meanwhile, the intervention did not appear to influence ordering of extended chemistries (Ca, Phos, Mg), liver biochemical profiles (ALT, AST, Alk phos, Alb, Glob, TP), coagulation studies (PTT, INR), or urinalyses. High-utilization studies, such as the BMP and CBC, may prove more amenable to demand management than less commonly ordered tests, although this has yet to be conclusively demonstrated. 16,17 We hypothesize that high-volume tests are subject to greater ordering variation and overutilization, which may explain why the intervention seemed to have a larger effect on the most commonly ordered lab tests.

Eliminating standing laboratory orders may be effective in curbing utilization of high-demand studies. 16,17 May et al. reported that elimination of standing laboratory orders produced a 12% decrease in utilization over one year compared with a historical control, similar in magnitude to the reduction observed with our intervention. 16 In another study, a stepwise intervention that coupled unbundling of metabolic panel tests with changes in the electronic ordering interface produced a 24% reduction in BMP utilization, and the reduction grew to 51% when standing orders were banned outright. 17 An early retrospective, quasi-experimental study showed that elimination of standing laboratory orders at one urban teaching hospital did not decrease overall laboratory utilization in patients with acute myocardial infarction, coronary artery disease, or congestive heart failure, but the study was small and likely underpowered. 18

The success of administrative efforts appears to rest largely upon local institutional culture; strong support by clinical and hospital leadership, as well as the housestaff, may be as important as the design of the interventions themselves. 9 Involvement of residents in guideline design and trial execution likely improved acceptance of this intervention. Furthermore, “buy-in” was sought from nurses, nursing management, chief residents, and residency program leadership, which may have further reinforced compliance.

This study was neither powered nor designed to systematically compare adverse events between the intervention and control arms. Nonetheless, intervention teams reported a number of “near misses” and events associated with perceived harm. Only two events were reported in real time, so detailed clinical data for the other 16 reports are unavailable, which makes it difficult to independently attribute these events to the intervention. In one instance of delayed diagnosis of acute kidney injury reported in real time, the clinical team did not check a basic metabolic panel for a number of days, even though the patient was receiving large doses of IV diuretics; at our institution, daily or more frequent monitoring of electrolytes is widely accepted as standard practice in this setting, and this was stated in the intervention guidelines that were distributed. Some reports in Table 2 may have referred to the same event(s); because survey respondents were not asked to provide identifiable patient information, these reports could not be further characterized or adjudicated. However, survey data indicate that most clinicians did not believe that the intervention caused harm, and no statistically significant differences in LOS or mortality were observed in our trial. This is consistent with the experience of other institutions, where meaningful decreases in lab utilization were achieved without an increase in adverse events or LOS. 16

Because this was a small single-center study, the results may not be generalizable to other institutions. Owing to the crossover design, a population of patients moved from an intervention team in May to a control team in June, or vice versa. However, as shown in Table 1, the patients in this group were few in number and similar to the overall study population.

Survey data gathered by this study are subject to bias, as those inclined to complete their questionnaires may hold stronger opinions, positive or negative, about the intervention. In spite of this shortcoming, the survey captured some useful provider feedback on the intervention, and our response rates were high (86–100%). 14 We relied on clinical teams to report adverse events, so it is possible that negative outcomes associated with underutilization of laboratory studies were missed. Clinicians reported a number of adverse events only in retrospect, which hindered investigation of their context and consequences.

This study was not designed to explore the durability of the intervention’s effect. Interventions incorporating consensus-building, education, and/or feedback often produce weak or transient reductions in test ordering. 9,11 Future studies should examine whether outright elimination of automatically recurring daily laboratory orders may help produce a durable decrease in laboratory utilization. To our knowledge, there have been no multi-center trials addressing this question.

In summary, we found that a bundled educational and administrative intervention to promote rational ordering of laboratory tests on a single academic general medicine service led to a modest but significant decrease in the most commonly ordered studies.

CLINICAL SIGNIFICANCE.

Overutilization of clinical laboratory testing in the inpatient setting remains a common problem.

An inexpensive and easily implemented intervention that incorporates guidelines, education, elimination of recurring laboratory orders, and addition of a “labs needed for tomorrow” section to the daily progress note achieved a 9% decrease in aggregate laboratory ordering without compromising resident education.

Further study is needed to assess the impact of this intervention on patient outcomes.

Acknowledgements:

The authors would like to thank Chris Lofgren for his assistance with laboratory data collection and Drs. Arjun Rao and Ciaran McMullan for their assistance with study implementation. In addition, they gratefully acknowledge the residents, faculty, and staff of the MGH Department of Medicine and Internal Medicine Residency Training Program for their assistance and financial support of this project. This work was conducted with support from Harvard Catalyst, the Harvard Clinical and Translational Science Center (National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health Award UL1 TR001102), and financial contributions from Harvard University and its affiliated academic healthcare centers. The content is solely the responsibility of the authors and does not necessarily represent the official views of Harvard Catalyst, Harvard University and its affiliated academic healthcare centers, or the National Institutes of Health.

Disclosures

Bradley Wertheim was paid by the Massachusetts General Hospital Department of Medicine for his work as a research assistant on this study. Eric Macklin is supported by NIH grant UL1 TR001102, and is a paid DSMB member of Acorda Therapeutics and Shire Human Genetic Therapies. Gabriela Motyckova was paid for serving on the blinatumomab ALL Advisory Board in 2015.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflicts of Interest

All other authors reported no conflicts of interest.

References:

1. Peabody FW. The physician and the laboratory. Boston Med Surg J 1922;187(9):324–327.
2. Griner PF, Liptzin B. Use of the laboratory in a teaching hospital. Implications for patient care, education, and hospital costs. Ann Intern Med 1971;75(2):157–63.
3. Editorial: Routine laboratory tests--the physician’s responsibility. N Engl J Med 1966;274:222.
4. van Walraven C, Naylor CD. Do we know what inappropriate laboratory utilization is? A systematic review of laboratory clinical audits. JAMA 1998;280(6):550–8.
5. Bates DW, Goldman L, Lee TH. Contaminant blood cultures and resource utilization. The true consequences of false-positive results. JAMA 1991;265(3):365–9.
6. Chant C, Wilson G, Friedrich JO. Anemia, transfusion, and phlebotomy practices in critically ill patients with prolonged ICU length of stay: A cohort study. Crit Care 2006;10(5):R140.
7. Salisbury AC, Reid KJ, Alexander KP, et al. Diagnostic blood loss from phlebotomy and hospital-acquired anemia during acute myocardial infarction. Arch Intern Med 2011;171(18):1646–1653.
8. Thavendiranathan P, Bagai A, Ebidia A, Detsky AS, Choudhry NK. Do blood tests cause anemia in hospitalized patients? The effect of diagnostic phlebotomy on hemoglobin and hematocrit levels. J Gen Intern Med 2005;20(6):520–4.
9. Eisenberg JM. Physician utilization: The state of research about physicians’ practice patterns. Med Care 2002;40(11):1016–1035.
10. Axt-Adam P, van der Wouden JC, van der Does E. Influencing behavior of physicians ordering laboratory tests: A literature study. Med Care 1993;31(9):784–794.
11. Solomon DH, Hashimoto H, Daltroy L, Liang MH. Techniques to improve physicians’ use of diagnostic tests: A new conceptual framework. JAMA 1998;280(23):2020–7.
12. Kobewka DM, Ronksley PE, McKay JA, Forster AJ, van Walraven C. Influence of educational, audit and feedback, system based, and incentive and penalty interventions to reduce laboratory test utilization: A systematic review. Clin Chem Lab Med 2015;53(2):157–183.
13. Iwashyna TJ, Fuld A, Asch DA, Bellini LM. The impact of residents, interns, and attendings on inpatient laboratory ordering patterns: A report from one university’s hospitalist service. Acad Med 2011;86(1):139–145.
14. Kellerman SE, Herold J. Physician response to surveys. A review of the literature. Am J Prev Med 2001;20(1):61–67.
15. Kroenke K, Hanley JF, Copley JB, et al. Improving house staff ordering of three common laboratory tests. Reductions in test ordering need not result in underutilization. Med Care 1987;25(10):928–935.
16. May TA, Clancy M, Critchfield J, et al. Reducing unnecessary inpatient laboratory testing in a teaching hospital. Am J Clin Pathol 2006;126(2):200–6.
17. Neilson EG, Johnson KB, Rosenbloom ST, et al. The impact of peer management on test-ordering behavior. Ann Intern Med 2004;141(3):196–204.
18. Sussman E, Goodwin P, Rosen H. Administrative change and diagnostic test use. The effect of eliminating standing orders. Med Care 1984;22(6):569–72.