Abstract

Mental health clinicians do not consistently use evidence-based assessment (EBA), a critical component of accurate case conceptualization and treatment planning. The present study used the Unified Theory of Behavior to examine determinants of intentions to use EBA in clinical practice among a sample of Master’s-level social work trainees (N = 241). Social norms had the largest effect on intentions to use EBA. Injunctive norms in reference to respected colleagues accounted for the most variance in EBA intentions. Findings differed between respondents aged 29 years and older and younger respondents. Implications for implementation strategies and further research are discussed.

Keywords: Pre-Service Implementation Strategies, Behavioral Health, Evidence-Based Assessment, Measurement-Based Care

Access to evidence-based practices is an established determinant of population mental health outcomes (Colton & Manderscheid, 2006; Hoagwood, Burns, Kiser, Ringeisen, & Schoenwald, 2001; Kazdin, 2017). Evidence-based assessment (EBA) comprises the use of standardized assessment tools to inform treatment decisions and track progress. It is considered an evidence-based approach by a range of disciplines that provide mental health services, including social work (Jensen-Doss, 2011; Lyon, Dorsey, Pullmann, Silbaugh-Cowdin, & Berliner, 2015). Standardized diagnostic and monitoring tools provide a more valid assessment than informal methods such as unstructured interviewing (De Los Reyes & Alado, 2015; Jensen-Doss, 2011; Love, Koob, & Hill, 2007). Studies have consistently associated the use of EBA with improved patient outcomes (Lambert et al., 2003; Lewis et al., 2015; Youngstrom et al., 2017).

In recent years, significant efforts guided by principles of implementation science have been dedicated to implementing evidence-based treatments in mental health settings (Beidas et al., 2016; Townsend & Morgan, 2017). The use of EBA in mental health services has received considerably less attention among implementation researchers than have evidence-based treatments, and use of EBA remains low (Jensen-Doss, 2011; Lyon et al., 2015). Studies consistently highlight that mental health clinicians, including psychiatrists, psychologists, couples therapists and social workers, tend not to use EBA, often with detrimental consequences, such as preventable suicides (De Los Reyes & Alado, 2015; Jensen-Doss & Hawley, 2010; Posner, 2016; Schacht, Dimidjian, George, & Berns, 2009).

Despite its increasing appearance in the curricula of mental health professional programs, EBA is still not used by many mental health clinicians (Lyon et al., 2015). There is a need to understand why this disconnect persists and to develop targeted strategies to increase the use of EBA by mental health professionals. The present study uses established approaches from leading social psychological theories to better understand mental health trainees’ decision-making regarding the use of EBA. The study advances implementation science by identifying targets for pre-service implementation strategies (i.e., strategies used while clinicians are still in training; Becker-Haimes et al., 2018) intended to increase mental health trainees’ intentions to use EBA.

Targeting clinical trainees

Most efforts to strengthen the use of EBA in the mental health workforce have focused on clinicians already in practice (Jensen‐Doss, 2011). A promising alternative is to target clinical trainees before they practice independently (Becker-Haimes et al., 2018; Huey, 2002; Stanhope, Tuchman, & Sinclair, 2011). Trainees tend to be more responsive to training than practicing clinicians, who are often less flexible toward new practices (Donaldson, 2015; Tennille, Solomon, Brusilovskiy, & Mandell, 2016). It may also be more feasible to reach clinicians while they are still in training, before they are dispersed across provider organizations. Many experts agree on the potential of pre-service implementation strategies (Becker-Haimes et al., 2018; Burns et al., 2012; Huey, 2002; Stanhope et al., 2011), although few such strategies have been studied. A small literature has shown that pre-service training increases evidence-based practice (EBP) skills to a greater extent than in-service training does (Donaldson, 2015; Santa Maria, Markham, Crandall, & Guilamo‐Ramos, 2017).

Extant pre-service implementation studies focus almost solely on training. Only one pre-service implementation study, to our knowledge, has identified a theory-based implementation target beyond building EBP skills, though no comparison of multiple potential implementation targets was pursued (Santa Maria et al., 2017). The present study addresses this gap by examining modifiable determinants of intentions to use EBA among mental health trainees and thus identifying potential targets for pre-service implementation strategies.

Focus on social work trainees

Although problems with EBA use have been demonstrated across multiple mental health professions (De Los Reyes & Alado, 2015; Jensen-Doss & Hawley, 2010; Schacht et al., 2009), social work trainees are a particularly important group to study, as social workers make up almost 50% of the US mental health workforce (SAMHSA, 2013). While the US Council on Social Work Education requires that social workers be trained to use research evidence to assess and intervene with clients (Grady et al., 2018), multiple studies suggest the need to improve the use of evidence-based approaches among social workers (Grady et al., 2018; McNeill, 2006). One study found that only 1% of clinical decisions made by Master’s-level social workers were guided by empirical evidence (McNeill, 2006), leading to suggestions that social work programs increase training in evidence-based practice and, specifically, in EBA (Grady et al., 2018; McNeill, 2006). Some social workers may perceive evidence-based practices as “cookbook” approaches that disregard the human nature of mental health practice and do not map onto their clients’ clinical needs, which may weaken social workers’ intention to use EBA (McNeill, 2006; Pignotti & Thyer, 2009).

Conceptual frameworks

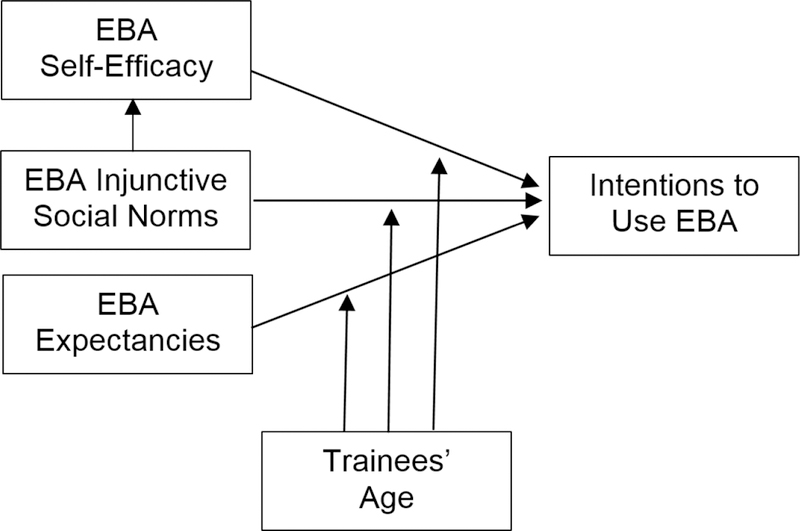

Many implementation approaches to date have not relied on strong causal theory (French et al., 2012). Established theories such as the Theory of Reasoned Action (TRA; Fishbein & Ajzen, 2011) and the Unified Theory of Behavior (UTB; Jaccard & Levitz, 2015; Jaccard, Dodge, & Dittus, 2002) generally recognize two major sequences of social and cognitive processes shaping human behavior. The first is (a) the formation of behavioral intentions as a function of proximal determinants (i.e., behavioral beliefs, social norms, and self-efficacy). The second is (b) the translation of intentions into action in a context that may hinder or facilitate performance of the intended behavior (Figure 1). In recent years, such two-sequence models have supported some of the most effective and widely adopted behavioral interventions in public health (e.g., Guilamo-Ramos et al., 2011).

Figure 1.

Proximal Determinants of EBA Intentions: A Hypothetical Causal Model.

Much implementation research to date has examined how contextual factors such as organizational culture influence the use of evidence-based practice (Beidas et al., 2016). These factors fit the second, intention-to-behavior sequence of two-sequence behavioral frameworks. Before addressing contextual factors that affect the performance of the intended behavior, however, it is important to establish whether intentions to perform the behavior in question are actually high. For example, if trainees strongly intend to perform the practice, implementation efforts should modify contextual factors so that clinicians can act on their intentions. If, on the other hand, intentions to perform the practice are weak, implementation efforts should first target modifiable proximal determinants of those intentions.

Many implementation studies examine clinicians’ attitudes toward EBP, which have been found to strongly predict intentions (e.g., Proctor et al., 2009). However, it is often difficult to persuade people directly to change their attitudes, whereas behavioral beliefs, norms, and self-efficacy are amenable to change (Fishbein & Ajzen, 2011; Jaccard et al., 2002). Implementation efforts may therefore benefit from studying clinicians’ behavioral beliefs, norms, and self-efficacy. These constructs underlie attitudes and represent proximal, modifiable determinants of behavioral intentions (Fishman, Beidas, Reisinger, & Mandell, 2018; Jaccard et al., 2002). A powerful theoretical framework for examining proximal determinants of intentions is the Unified Theory of Behavior. Jaccard et al. (2002) developed it by integrating elements of influential causal models posited by Fishbein, Bandura, Triandis, Kanfer, and Becker (Ajzen & Fishbein, 2005; Bandura, 1986; Kanfer, 1975; Rosenstock, Strecher, & Becker, 1988; Triandis, 1996). The decision-making portion of the UTB examines intentions as a function of five classes of proximal determinants:

(a) expectancies;
(b) injunctive and descriptive norms;
(c) self-efficacy;
(d) self-concept, a view of oneself as a person for whom the referent behavior is appropriate; and
(e) emotions related to the referent behavior.

The first three (a, b, and c) represent highly malleable cognitions (Jaccard & Levitz, 2015).

Current study

The present study uses well-established social psychological theory and methods to test a conceptual model explaining intentions to use EBA among Master of Social Work (MSW) trainees at a major university. The model treats those intentions as a function of three theoretically based determinants:

(a) behavioral beliefs regarding EBA, that is, trainees’ beliefs about the likely results of using EBA, also referred to as expectancies;
(b) social norms, or perceptions of other individuals’ favorability toward EBA use; and
(c) self-efficacy, or trainees’ beliefs about their own ability to perform EBA successfully.

The TRA approach guides our mixed-method “elicitation” of EBA-related behavioral beliefs (Fishbein & Ajzen, 2011). We then use the UTB framework to test a structural equation model examining EBA intentions as a function of behavioral beliefs, social norms, and self-efficacy (Figure 1), while testing potential mediation and moderation effects in the model.

We hypothesized (a) that expectancies, social norms, and self-efficacy regarding EBA use are independently associated with intentions to use EBA. We also hypothesized (b) that self-efficacy mediates the effect of norms on intentions, based on our prior work exploring the causal relationship between these two constructs (Figure 1). According to this logic, individuals experiencing higher normative pressure to perform a behavior will practice it more and thus develop higher self-efficacy regarding this behavior. Finally, we hypothesized (c) that model parameters differ as a function of trainees’ age. This expectation follows Aarons and Sawitzky (2006) and Aarons and Palinkas (2007), who found that younger mental health clinicians tend to have more positive attitudes toward EBP than their more experienced colleagues. Clinicians of different age groups may hold dissimilar sets of beliefs about the use of an EBP because of differences in theoretical orientation, belief structures, and accumulated professional experience, among other age-related characteristics. We also controlled all model parameters for a theory-based measure of social desirability.

Method

Conceptual model

We tested a theoretical model based on a reduced form of the decision-making sequence of the UTB (Figure 1). We focused on the three classes of proximal determinants of MSW trainees’ intentions to use EBA that are theoretically most amenable to change via a pre-service implementation strategy: (a) expectancies, (b) injunctive social norms, and (c) self-efficacy (Bandura, 2004; Guilamo-Ramos et al., 2011). EBA expectancies were elicited from the population of interest (Fishbein & Ajzen, 2011). Data on social norms and self-efficacy were collected from the same respondents via more traditional close-ended measures, because social norms and self-efficacy beliefs tend to be more homogeneously distributed across subpopulations than expectancies are (Fishbein & Ajzen, 2011).

As suggested by prior studies (Aarons & Palinkas, 2007; Aarons & Sawitzky, 2006), we formally tested for age-related differences in clinicians’ EBA motivations by comparing all model parameters across two age groups of respondents. The cutoff of 29 years of age is based on themes from qualitative work with mental health trainees. That work suggests that reliance on one’s own professional experience tends to increase shortly before the age of 30 (Lushin, Beidas, Conrad, Marcus, & Mandell, in preparation).

Participants and Procedures

All procedures were approved by the New York University Institutional Review Board. Participants were second-year MSW students at the New York University School of Social Work during the fall semester of 2017. All trainees in the 14 sections of the core Clinical Practice and Program Evaluation course (N = 276) were approached once during class time by graduate students trained as data collectors. Respondents provided consent and completed a voluntary survey containing open-ended and close-ended questions. Several core MSW courses offered at NYU emphasize evidence-based practice and assessment, including Social Work Research I and II and Social Work Practice IV (NYU, 2017).

Most trainees completed the survey (241 of 276; 87% response rate). Respondents averaged 26.9 years of age (SD = 6.1) and were largely female (83.8%). They came from diverse racial and ethnic backgrounds: 56.4% White, 14.1% African American, 9.5% Asian, and 3.4% other (e.g., Native American); 13.7% identified as Latino, and 16.6% did not report race or ethnicity. Most held a Bachelor’s degree (82%); 8.5% held a Master’s or professional degree outside social work. All participants were assured of the anonymity of their responses, and respondents never had to reveal potentially socially undesirable behaviors in an identifiable manner. The survey included a measure of social desirability (see Measures section).

Measures

Open-ended elicitation of EBA expectancies.

The primary architects of the TRA recommend eliciting behavioral beliefs in an open-ended fashion. The most frequently mentioned beliefs are then used as the expectancy items most relevant to the given population (Fishbein & Ajzen, 2011). The purpose is to focus on the behavioral beliefs that are salient for the specific group of participants. This avoids asking participants to rate preconceived belief statements that may or may not be relevant to them. The utility of this elicitation approach is well established in studies of health behavior (Middlestadt, Bhattacharyya, Rosenbaum, Fishbein, & Shepherd, 1996), but it is rarely used in implementation science. In the present study, we used the elicitation approach for two purposes. It complemented existing standardized questionnaires of clinician attitudes toward standardized assessment (Jensen-Doss & Hawley, 2010). It also let us explore beliefs about EBA that are specifically salient to social work trainees.

We used two open-ended questions: “What advantages / good things do you think will happen if you conduct evidence-based assessment in your future professional role as a social worker?” and “What disadvantages / bad things... ?” Participants were encouraged to list “top-of-the-mind” responses. Content analysis was used to group similar responses into categories (Middlestadt et al., 1996). Two raters independently generated categories from individual responses and then met to develop a final list of categories. Examples of the categories were “Anticipation of a more evidence-based clinical work” and “Anticipation of a more client-centered clinical work” (Table 4). Responses were then sorted into categories, and each category was scored dichotomously (0 = not mentioned, 1 = mentioned), a process referred to as coding. One person coded all responses. A second coder double-coded 200 individual responses selected at random from all responses. Inter-rater agreement for these 200 responses, assessed with the kappa statistic, was 0.90.
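The agreement statistic above can be illustrated with a minimal Python sketch. The text does not name the kappa variant. This sketch assumes Cohen's kappa for two coders applying the dichotomous 0/1 codes described above. The function name `cohens_kappa` and the ten example codes are hypothetical, not study data.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who coded the same responses (hypothetical helper).

    coder_a, coder_b: equal-length sequences of codes, e.g. 0 = not mentioned, 1 = mentioned.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of responses")
    n = len(coder_a)
    # Observed agreement: share of responses that received the same code from both coders
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: sum over codes of the product of the coders' marginal proportions
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a.keys() | counts_b.keys()) / n**2
    if p_chance == 1.0:
        return 1.0  # both coders used one identical code for every response
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical codes for ten responses (not study data); the coders disagree once
coder_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
coder_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
print(round(cohens_kappa(coder_1, coder_2), 2))  # 0.8
```

Observed agreement here is 0.90, but chance agreement is 0.50, so kappa is lower (0.80). This is why kappa is preferred over raw percent agreement for coding reliability.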

EBA intentions.

Trainees reported their intentions to use standardized assessment tools in their future work by rating ten statements on a five-point scale (“Strongly Disagree,” “Moderately Disagree,” “Neither,” “Moderately Agree,” “Strongly Agree”). Seven items, developed by McLeod, Jensen-Doss, and Ollendick (2013), reflected intentions to perform EBA within various task domains of clinical work. Examples include: I intend to use standardized measurement tools to establish my clients’ problems and intervention needs; I intend to use data gathered by standardized measurement tools to formulate a treatment plan; and I intend to use standardized measurement tools at the end of treatment to evaluate whether treatment has resulted in improved outcomes. In addition, three items asked respondents to reflect on the certainty of their future use of standardized measurement tools to evaluate and monitor client problems (I will use standardized measurement tools…; I intend to…; and If the situation at my future workplace is right, I would be willing to…) (Jaccard & Jacoby, 2009). Cronbach’s alpha for the entire scale was 0.92. The content validity index (Polit, Beck, & Owen, 2007), based on a panel of four raters, was ≥0.80.
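Cronbach's alpha, reported for each scale in this section, compares the sum of the item variances with the variance of the total score: α = k/(k − 1) × (1 − Σ item variances / total-score variance). The sketch below assumes complete data with respondents in rows and items in columns. The helper name `cronbach_alpha` and the toy responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a complete respondents-by-items matrix (hypothetical helper).

    responses: list of rows, one per respondent, each holding k item scores.
    Assumes no missing data.
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance (n - 1 denominator) of each item across respondents
    item_variance_sum = sum(variance(item) for item in zip(*responses))
    # Sample variance of each respondent's summed total score
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings from three respondents on two items (not study data)
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```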

Social norms.

Trainees reported their EBA-related injunctive normative beliefs by rating four statements. Each statement expressed a belief that the use of EBA would be recommended by one referent: professors, clinical supervisors, professional role models, or “the most respected colleagues” in the field. These referents were selected based on themes from previous interviews with MSW trainees about, among other topics, the individuals influencing their development as clinicians (Lushin et al., in preparation). The wording of the injunctive norms items was based on recommendations by Jaccard and Jacoby (2009). Answers were rated on a five-point agree-disagree scale. Cronbach’s alpha was 0.86. The content validity index (Polit et al., 2007), based on a panel of four raters, was ≥0.80.
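The content validity index reported for these scales can be sketched as follows. The text does not state which CVI variant was computed. This sketch assumes the item-level CVI (the share of experts rating an item 3 or 4 on a 4-point relevance scale) and the scale-level average (S-CVI/Ave) described by Polit, Beck, and Owen (2007). The function names and ratings are hypothetical.

```python
def item_cvi(ratings):
    """I-CVI: share of experts rating the item 3 or 4 on an assumed 4-point relevance scale."""
    return sum(r >= 3 for r in ratings) / len(ratings)


def scale_cvi_average(ratings_by_item):
    """S-CVI/Ave: mean of the item-level CVIs across all items of a scale."""
    icvis = [item_cvi(ratings) for ratings in ratings_by_item]
    return sum(icvis) / len(icvis)


# Hypothetical relevance ratings: four items, each rated by four experts (not study data)
ratings = [
    [4, 4, 3, 4],
    [4, 3, 4, 2],
    [3, 4, 4, 4],
    [4, 4, 4, 4],
]
print([item_cvi(r) for r in ratings])  # [1.0, 0.75, 1.0, 1.0]
print(scale_cvi_average(ratings))      # 0.9375
```

With only four raters, each I-CVI can take only the values 0, 0.25, 0.5, 0.75, or 1. A single dissenting expert therefore moves an item from 1.0 to 0.75.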

Self-efficacy.

This construct was measured by four statements about respondents’ perceived ability to successfully perform EBA-related tasks, such as administering standardized assessment tools, finding appropriate standardized measures, and identifying reliable and valid assessment tools. Two additional items were used: I will be successful at administering standardized assessment measures, and I am confident about administering standardized assessment measures. The wording of the self-efficacy items was based on a communicative self-efficacy scale with a predictive validity of r = 0.55 (Lushin, 2017). Statements were rated on a five-point agree-disagree scale (Lushin, 2017). Cronbach’s alpha was 0.90. The content validity index (Polit et al., 2007), based on a panel of four raters, was ≥0.80.

Social desirability.

The measure of social desirability used four items from the established impression management subscale (Paulhus, 1984): (1) I never swear; (2) I never say something bad about a friend behind his or her back; (3) I don’t gossip about other people’s business; (4) I never criticize other people. Answers were rated on a five-point agree-disagree scale. Cronbach’s alpha was 0.74. The measure has shown strong validity in previous studies (Guilamo‐Ramos et al., 2011).

Socio-demographic variables.

Respondents reported their age, gender, race, ethnicity, and parents’ combined educational level. To avoid “othering” respondents, the gender question was open-ended. Each parent’s educational attainment was measured on an ordinal scale from 1 (did not finish high school) to 7 (finished a professional/doctoral program). The two parents’ levels were summed, yielding a metric of 2–14. Parents were defined as the dyad of the respondent’s most important caregivers.

Analytic strategy

First, we calculated frequencies with which respondents mentioned the elicited beliefs/expectancies about the consequences of EBA use. These beliefs were represented by the categories emerging from the content analysis of trainees’ open-ended responses.

We then tested the theoretical model in Figure 1 with structural equation modeling (SEM) in the Mplus software package, using robust (Huber-White) maximum likelihood estimation. Model fit was evaluated with several global fit indices: the traditional overall chi-square test of model fit, the Root Mean Square Error of Approximation (RMSEA), the p value for the test of close fit, the Comparative Fit Index (CFI), and the Standardized Root Mean Square Residual (SRMR) (Bollen & Long, 1992). We also examined standardized residual covariances and modification indices, and we checked parameter estimates for Heywood cases. Multiple-group comparisons were used to examine a potential interaction effect of age. Age group differences for each primary path in the model were tested using the delta method as implemented in Mplus, with Huber-White robust standard errors. In addition, we tested potential interaction effects among expectancies, self-efficacy, and norms using a product-term moderation approach.
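The age-group comparison of a single path can be illustrated with the delta method applied to a simple difference. For the linear function b_young − b_old, the delta-method standard error is √(SE_young² + SE_old² − 2·Cov). The covariance term is zero when the two groups are independent samples. The sketch below assumes that robust standard errors are already available for each group. It is a hypothetical illustration, not the Mplus procedure, and the input estimates are made up.

```python
import math


def group_difference_test(b_young, se_young, b_old, se_old):
    """Wald z test for one path estimated in two independent groups (hypothetical helper).

    Delta method for the linear function b_young - b_old: the gradient is (1, -1),
    so Var(diff) = se_young**2 + se_old**2 because the cross-group covariance is zero.
    """
    diff = b_young - b_old
    se_diff = math.sqrt(se_young**2 + se_old**2)
    z = diff / se_diff
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return diff, se_diff, z, p_two_sided


# Hypothetical path estimates and robust standard errors (not the study's values)
diff, se_diff, z, p = group_difference_test(0.50, 0.30, -0.10, 0.40)
print(f"diff = {diff:.2f}, SE = {se_diff:.2f}, z = {z:.2f}, p = {p:.3f}")
# diff = 0.60, SE = 0.50, z = 1.20, p = 0.230
```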

We used the joint significance test to assess mediation (MacKinnon, Lockwood, Hoffman, West, & Sheets, 2002). Under this approach, mediation is supported when both paths that make up the intervening variable effect are statistically significant in the context of a causal model. These are the path from the predictor to the mediator and the path from the mediator to the outcome. This method provides the best balance of Type I error and statistical power among mediation tests (MacKinnon et al., 2002). To account for the nesting of respondents within classrooms, the SEM was estimated with the clustering option in Mplus; intraclass correlations (ICCs) are reported.
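The joint significance test can be sketched in a few lines. Here `a` stands for the path from the predictor to the mediator; in this study, that is injunctive norms to self-efficacy. `b` stands for the path from the mediator to the outcome, controlling for the predictor; here, self-efficacy to intentions. The sketch assumes normal-theory z tests with a two-sided α of 0.05. The function names and input values are hypothetical.

```python
import math


def two_sided_p(estimate, se):
    """Two-sided normal-theory p-value for estimate / se."""
    return math.erfc(abs(estimate / se) / math.sqrt(2))


def joint_significance(a, se_a, b, se_b, alpha=0.05):
    """Joint significance test of mediation (hypothetical helper).

    a: predictor -> mediator path; b: mediator -> outcome path, adjusted for the predictor.
    Mediation is supported only if both paths are significant; a * b is the indirect effect.
    """
    p_a, p_b = two_sided_p(a, se_a), two_sided_p(b, se_b)
    return {
        "indirect_effect": a * b,
        "p_a": p_a,
        "p_b": p_b,
        "mediation_supported": p_a < alpha and p_b < alpha,
    }


# Hypothetical estimates (not study values): both paths significant
print(joint_significance(0.40, 0.10, 0.20, 0.08)["mediation_supported"])  # True
# Same a path, weaker b path (z = 1.25): the joint test fails
print(joint_significance(0.40, 0.10, 0.10, 0.08)["mediation_supported"])  # False
```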

We then analyzed the relative importance of behavioral beliefs, injunctive norms, and self-efficacy for EBA intentions. We used dominance analysis (Budescu & Azen, 2004) to compare predictors’ contributions to the explained variance of the outcome in a way that is not distorted by collinearity among the predictors. The predictors with the largest relative importance (dominance) indices make the greatest contribution to the outcome. Relative to the other predictors in this model, they therefore represent the most important implementation targets. The importance metrics are scaled from 0 to 100 and sum to 100 across predictors; they indicate each predictor’s relative contribution to the overall squared multiple correlation (R²). We first analyzed the relative importance of beliefs, injunctive norms, and self-efficacy, with the latter two constructs represented by composite scores as described in the Measures section.
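General dominance weights are one common dominance-analysis metric. They can be computed by fitting every subset of predictors. Each predictor's weight is its average increase in R² when added to subsets of the other predictors, averaged first within each subset size and then across sizes. The weights sum to the full-model R², so dividing by that R² and multiplying by 100 gives a 0 to 100 scale like the one described above. This is a minimal sketch that assumes ordinary least squares, NumPy, and simulated data. It assumes the study's importance indices correspond to general dominance weights and is not the software used in the study.

```python
from itertools import combinations

import numpy as np


def ols_r2(X, y, cols):
    """R^2 from an OLS regression (with intercept) of y on the columns `cols` of X."""
    if not cols:
        return 0.0
    design = np.column_stack([np.ones(len(y)), X[:, list(cols)]])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    centered = y - y.mean()
    return 1.0 - (resid @ resid) / (centered @ centered)


def general_dominance(X, y):
    """General dominance weight of each predictor (columns of X); hypothetical helper.

    For predictor j: average the R^2 gain from adding j to every subset of the
    other predictors, first within each subset size, then across sizes.
    """
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    p = X.shape[1]
    cache = {}

    def r2(cols):
        key = tuple(sorted(cols))
        if key not in cache:
            cache[key] = ols_r2(X, y, key)
        return cache[key]

    weights = np.zeros(p)
    for j in range(p):
        others = [i for i in range(p) if i != j]
        mean_gain_by_size = [
            np.mean([r2(subset + (j,)) - r2(subset) for subset in combinations(others, size)])
            for size in range(p)
        ]
        weights[j] = np.mean(mean_gain_by_size)
    return weights


# Simulated data (not study data): three predictors with unequal true effects
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 3))
y = X @ np.array([1.0, 0.5, 0.0]) + rng.normal(size=300)
weights = general_dominance(X, y)
full_r2 = ols_r2(X, y, (0, 1, 2))
importance = 100 * weights / full_r2  # 0-100 scale that sums to 100
print(np.round(importance, 1), round(full_r2, 3))
```

Averaging over every subset size means that no single entry order of the predictors drives the result. That averaging is what distinguishes dominance analysis from comparing standardized coefficients in one model.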

Results

Descriptive statistics

Table 1 displays the sample characteristics, including socio-demographics and means of continuous variables. Statistics are reported for the full sample and for respondents <29 and ≥29 years of age. The composite score of all EBA intentions items (alpha = 0.91) had a sample mean of 3.9 (SD = 0.7). The composite score of intentions was positively correlated with the total score of the social desirability items (r = 0.13, p < 0.05). Subsequent analyses therefore included social desirability as a covariate.

Table 1.

Descriptive Statistics and Key Variables

| Variable | Full Sample (N = 241) | Younger Subsample (below 29 years old), N = 191 | Older Subsample (29 years old and older), N = 50 |
|---|---|---|---|
| Female, % | 83.8 | 90.4ᵃ | 66.7ᵇ |
| White, % | 56.4 | 57.8 | 51.1 |
| African American, % | 14.1 | 14.0 | 13.3 |
| Asian / Pacific Islanders, % | 9.5 | 9.9 | 8.9 |
| Native American / Other, % | 3.4 | 3.1 | 4.4 |
| Latino, % | 13.7 | 12.5 | 17.8 |
| Race / Ethnicity Not Reported, % | 6.6 | 6.8 | 4.4 |
| Multiple Bachelor’s Programs Attended, % | 21 | 2ᵃ | 24.4ᵇ |
| Master’s / Professional Degree outside SW, % | 8.9 | 8.5ᵃ | 26.7ᵇ |
| Age, mean (SD) | 26.9 (6.1) | 24.50 (2.0) | 37.50 (6.7) |
| Summated Parental Education Attainment*, mean (SD) | 9.8 (3.1) | 9.8 (3.0) | 9.7 (3.5) |
| Intentions to Use EBA (alpha = 0.91)**, mean (SD) | 3.9 (0.6) | 4.0 (0.6) | 3.7 (0.94) |
| Injunctive Norms re. EBA (alpha = 0.86), mean (SD) | 3.7 (0.8) | 3.4 (0.7) | 3.4 (1.05) |
| Self-Efficacy re. Use of EBA (alpha = 0.71), mean (SD) | 3.4 (0.9) | 3.8 (0.8) | 3.1 (1.0) |

Note: * Summated Parental Education Attainment ranges from 2 to 14. ** Scales of the core continuous variables (Intentions, Norms, and Self-Efficacy) are on a 1–5 metric. Within rows, statistics with different superscripts differ significantly across age groups.

Elicited expectancies

Content analysis of trainees’ open-ended responses about their EBA expectancies yielded seven expectancy categories, four positive and three negative. Positive expectancy categories comprised beliefs that EBA would:

(1) make one’s clinical work more evidence-based;
(2) make it more effective;
(3) support client-centered practice by emphasizing clients’ interests and empowerment; and
(4) give clinicians or their agencies external incentives such as material gain, prestige, and administrative advantages.

Negative expectancy categories comprised beliefs that EBA would:

(5) reduce an individualized, flexible approach to clients;
(6) compromise rapport with clients; and
(7) increase workload.

Examples of open-ended responses in each category are displayed in the Appendix, Table 4.

Table 2 presents frequencies of the elicited expectancies regarding the use of EBA. These are represented by the percent of respondents who mentioned beliefs encapsulated by each expectancy category. As recommended by Middlestadt (2012), we used only those expectancy categories mentioned by ≥10% of the respondents.

Table 2.

Frequencies of Elicited Expectancies (percent of individuals mentioning beliefs within each belief category)

| Belief Category / Expectancy | Full Sample (N = 241) | Younger Subsample (below 29 years old), N = 191 | Older Subsample (29 years old and older), N = 50 |
|---|---|---|---|
| EBA will make my clinical work more evidence-based | 15% | 15% | 13% |
| EBA will effectively help in assessment and treatment | 24% | 25% | 22% |
| EBA will support client-centered practices | 10% | 9% | 11% |
| EBA will increase external incentives | 15% | 13%ᵃ | 22%ᵇ |
| EBA will reduce flexible approach to clients | 22% | 21% | 29% |
| EBA will compromise rapport with clients | 12% | 11% | 16% |
| EBA will increase work load | 10% | 10% | 10% |

Note: Within rows, statistics with different superscripts differ significantly across age groups.

Model testing

The theoretical model in Figure 1 was fit to the data. The overall model for the full sample of MSW trainees was tested first and yielded good fit indices. A multiple-group solution was then estimated to allow paths to differ as a moderating function of age group: under 29 years old (younger) or 29 years old and over (older). The group comparison revealed statistically significant differences in several key model coefficients. The multiple-group solution yielded good model fit (χ²(58) = 66.8, p = 0.20; RMSEA = 0.04; p value for close fit = 0.70; SRMR = 0.05; CFI = 0.95). Focused fit tests for both groups revealed no significant points of model stress in theoretically meaningful modification indices or standardized residuals. Analyses revealed no Heywood cases.
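The RMSEA point estimate can be recovered approximately from the model chi-square. The sketch below uses the common formula √(max(χ² − df, 0) / (df · (N − 1))). For multiple-group models, it adds an optional √G multiplier (G = number of groups) that some programs apply. Software conventions differ in such details (e.g., N versus N − 1), so treat this as a rough consistency check rather than a reproduction of the Mplus output. The function name `rmsea` is hypothetical.

```python
import math


def rmsea(chi_square, df, n, n_groups=1):
    """Approximate RMSEA point estimate from a model chi-square (hypothetical helper).

    Uses the N - 1 convention; n_groups > 1 applies a sqrt(G) multiple-group
    multiplier. Programs differ in these details, so results may not match exactly.
    """
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1")
    return math.sqrt(n_groups) * math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


print(round(rmsea(66.8, 58, 241, n_groups=2), 3))  # 0.036
print(round(rmsea(66.8, 58, 241), 3))              # 0.025
```

The first call uses the values reported above (χ² = 66.8, df = 58, N = 241, G = 2) and gives approximately 0.036, which rounds to the reported 0.04. Without the √G multiplier, the value would be about 0.025.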

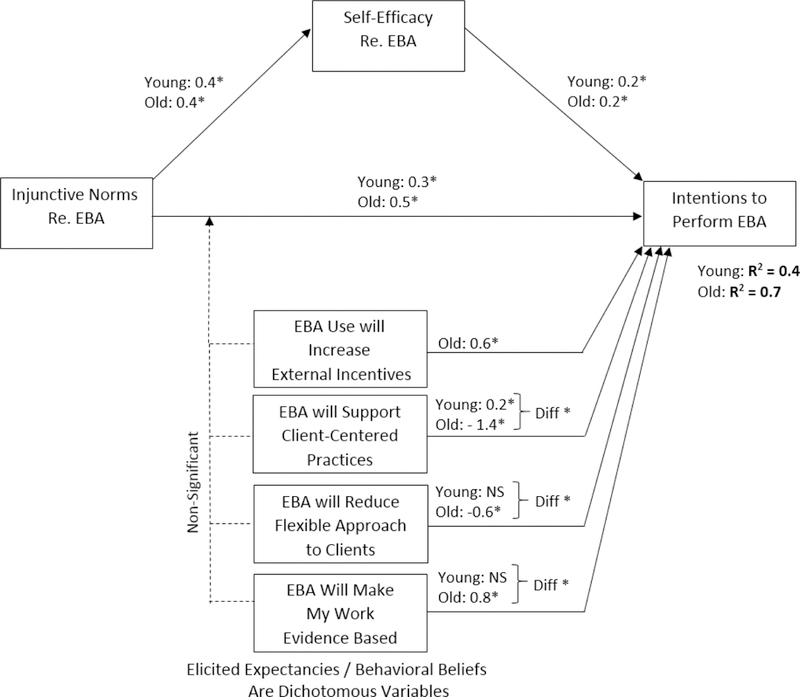

We therefore report results of the SEM analyses of the main model using multiple-group comparisons by age group. The results address three questions. The first two ask how expectancies, social norms, and self-efficacy related to EBA intentions (1) in the younger group and (2) in the older group. The third asks (3) whether these relationships differed across age groups (moderation by age). Path coefficients for the younger and older group models are reported in Figure 2.

Figure 2.

EBA Intentions as a Function of Proximal Determinants: Multi-Group Solution by Age Group (path coefficients are non-standardized)

Total indirect effect from injunctive norms: younger subsample, 0.06*; older subsample, non-significant.

Notes: * p < 0.05. “Young” represents the age group below 29 years old; “Old” represents the age group 29 years old and older. Path coefficients presented in pairs (“Young” and “Old”) are parameter estimates for the younger and older groups, respectively. “Diff*” marks statistically significant parameter differences between the younger and older groups. The multi-group comparison formally documents a moderation effect of age group.

Expectancies, norms and self-efficacy effects on EBA intentions: Younger group.

In the younger group model, injunctive norms about EBA implementation were positively associated with intentions to use EBA in trainees’ future careers (b = 0.30 ± 0.11, p < 0.05). Younger trainees’ self-efficacy regarding EBA was also positively associated with their EBA intentions (b = 0.18 ± 0.09, p < 0.05). In addition, EBA self-efficacy in the younger subsample was positively associated with injunctive norms regarding EBA (b = 0.35 ± 0.12, p < 0.05). In the younger group, only one elicited expectancy was directly and significantly associated with EBA intentions: the belief that EBA would make work more client-centered. The association was positive (b = 0.23 ± 0.12, p < 0.05).

Expectancies, norms and self-efficacy effects on EBA intentions: Older group.

In the older group model, injunctive norms about EBA implementation were positively associated with intentions to use EBA in trainees’ future careers (b = 0.45 ± 0.20, p < 0.05). Older trainees’ self-efficacy regarding EBA was positively associated with their EBA intentions, although this association did not reach conventional statistical significance (b = 0.21 ± 0.22, p = 0.06). EBA self-efficacy in the older subsample was positively associated with injunctive norms regarding EBA (b = 0.37 ± 0.25, p < 0.05). For the older group, three categories of elicited expectancies were significantly associated with EBA intentions. Beliefs that EBA would make work more evidence-based were positively related to EBA intentions (b = 0.77 ± 0.32, p < 0.05). Beliefs that EBA would reduce a flexible approach to clients were negatively related to EBA intentions (b = −0.58 ± 0.12, p < 0.05). Beliefs that EBA would make work more client-centered were also associated with EBA intentions, but, unexpectedly, the association was negative (b = −1.44 ± 0.78, p < 0.05). In other words, older trainees who anticipated that EBA would make their work more client-centered reported EBA intentions that were, on average, 1.44 scale points weaker, holding the other predictors constant. Thus, in the older group, the client-centeredness expectancy had the opposite effect on intentions from the one observed in the younger group. Younger trainees who anticipated that EBA would make their work more client-centered had, on average, stronger intentions to perform EBA.

Model group differences (moderation by age).

Statistically significant age group differences (p < 0.05) were observed for three paths to EBA intentions:

(1) the path from reduced-flexibility expectancies (difference = 0.46 ± 0.31);
(2) the path from evidence-based treatment expectancies (difference = 0.71 ± 0.36); and
(3) the path from client-centeredness expectancies (difference = 1.67 ± 0.80).

All three differences remained statistically significant after adjustment for family-wise error using the Holm-modified Bonferroni method. These age group differences represent moderation effects of age. In both the younger and the older group models, EBA self-efficacy partially mediated the effect of EBA injunctive norms on EBA intentions (Figure 2). Additionally, in both age group models, expectancies had no statistically significant moderating effects (Figure 2, dotted lines).
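The Holm-modified Bonferroni adjustment mentioned above is a step-down procedure. First, sort the m p-values in ascending order. Next, multiply the i-th smallest by (m − i + 1), capping the result at 1. Finally, carry forward the running maximum so that adjusted values never decrease. A minimal sketch follows. The helper name `holm_adjust` is hypothetical. The input p-values are also hypothetical, because exact p-values for the three differences are not reported.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the original order (hypothetical helper)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The (rank + 1)-th smallest p-value is multiplied by m - rank, capped at 1
        candidate = min(1.0, (m - rank) * p_values[i])
        # Enforce monotonicity: an adjusted p-value never falls below an earlier one
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical p-values for three path differences (not reported in the study)
p_raw = [0.010, 0.040, 0.030]
p_adj = holm_adjust(p_raw)
print([round(p, 2) for p in p_adj])  # [0.03, 0.06, 0.06]
print([p < 0.05 for p in p_adj])     # [True, False, False]
```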

The explained variance (R²) of EBA intentions was 0.38 (p < 0.05) for the younger group model, 0.74 (p < 0.05) for the older group model, and 0.42 (p < 0.05) for the overall model. The model accounted for nesting of respondents within classrooms (N = 14; ICC = 0.02).

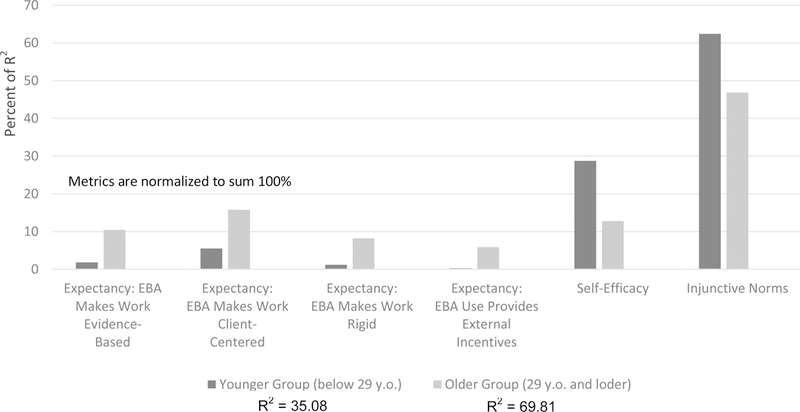

Relative importance analysis

Table 3 and Figure 3 present the relative importance of each proximal determinant (EBA injunctive social norms, self-efficacy, and four categories of expectancies) in predicting intentions to perform EBA, for each of the two age groups. Importance values are expressed as percentages of the explained variance. In the overall sample, all predictors together explained 42% of the variance in EBA intentions, and the bulk of this explained variance was accounted for by normative beliefs (importance = 65.9) and self-efficacy (importance = 28.6). For the younger subsample (below 29 years old), EBA injunctive norms and self-efficacy dominated the explained variance in EBA intentions (importance = 62.4 and 28.7, respectively). For the older subsample, the importance of social norms (46.9) was similar to the combined importance of expectancies (40.3), particularly the expectancies of client-centered practice and of evidence-based practice (15.7 and 10.5, respectively); self-efficacy (12.8) played a smaller role. All predictors together explained 35% of the variance in EBA intentions for the younger subsample and 70% for the older subsample (Table 3). These values are close to the estimates from the structural equation models (0.38 and 0.74, respectively).
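This section does not spell out the algorithm behind the relative importance values. One common approach is dominance analysis, which the “dominance indices” mentioned for the item-level analysis suggest. Dominance analysis computes each predictor’s general dominance weight as its average increment to R² across all subsets of the other predictors. The sketch below is a minimal version under that assumption. It uses simulated data with three hypothetical predictors, is not the authors’ code, and does not reproduce the ± values in Table 3.

```python
from itertools import combinations

import numpy as np


def r_squared(X, y, cols):
    """R^2 from an OLS regression (with intercept) of y on the given columns of X."""
    if not cols:
        return 0.0  # intercept-only model explains no variance
    design = np.column_stack([np.ones(len(y)), X[:, cols]])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)


def general_dominance(X, y):
    """General dominance weight of each predictor: its R^2 increment averaged
    over all subsets of the other predictors within each subset size, then
    averaged across subset sizes. The weights sum to the full-model R^2."""
    p = X.shape[1]
    weights = np.zeros(p)
    for j in range(p):
        others = [k for k in range(p) if k != j]
        size_means = []
        for size in range(p):  # subsets of the other predictors of size 0..p-1
            increments = [
                r_squared(X, y, list(s) + [j]) - r_squared(X, y, list(s))
                for s in combinations(others, size)
            ]
            size_means.append(np.mean(increments))
        weights[j] = np.mean(size_means)
    return weights


# Simulated data: three hypothetical predictors of an intention score
rng = np.random.default_rng(0)
n = 241
X = rng.normal(size=(n, 3))
y = 0.6 * X[:, 0] + 0.3 * X[:, 1] + 0.1 * X[:, 2] + rng.normal(size=n)

weights = general_dominance(X, y)
full_r2 = r_squared(X, y, [0, 1, 2])
shares = 100 * weights / full_r2  # percent of explained variance, as in Table 3
print("Full-model R^2:", round(full_r2, 3))
print("Percent of explained variance:", np.round(shares, 1))
```

Because the general dominance weights sum exactly to the full-model R², rescaling them by R² yields shares that sum to 100, the same form as the values in Table 3. The all-subsets approach requires 2^p regressions, which is trivial for the six predictors considered here.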

Table 3.

Relative Importance of the Proximal Determinants for the Intentions to Perform EBA (percent of explained variance)

| Proximal Determinant | Younger Subsample (below 29 years old), N = 191 | Older Subsample (29 years old and older), N = 50 |
|---|---|---|
| Injunctive Social Norms (composite score) | 62.4 ± 20.2 | 46.9 ± 22.2 |
| Self-Efficacy (composite score) | 28.7 ± 16.6 | 12.8 ± 14.3 |
| EBA will support Client-Centered Practices | 5.5 ± 10.2 | 15.7 ± 18.1 |
| EBA will increase External Incentives | 0.3 ± 5.7 | 5.9 ± 11.1 |
| EBA will reduce Flexible Approach to Clients | 1.2 ± 7.6 | 8.2 ± 14.2 |
| EBA will make my work Evidence-Based | 1.9 ± 8.2 | 10.5 ± 14.1 |
| Overall Explained Variance of EBA Intentions | R² = 35% | R² = 70% |
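Table 3 reports shares of explained variance rather than of total variance. A quick check with the published values can therefore help in reading it. Summing the four expectancy rows gives each group’s combined expectancy share, and multiplying a share by the group’s R² converts it into percentage points of total variance in EBA intentions. The snippet below performs only that arithmetic on the table values. The dictionary keys are illustrative labels, not variable names from the study.

```python
# Relative importance (percent of explained variance), copied from Table 3
TABLE3 = {
    "younger": {"norms": 62.4, "self_efficacy": 28.7, "client_centered": 5.5,
                "external_incentives": 0.3, "reduced_flexibility": 1.2, "evidence_based": 1.9},
    "older": {"norms": 46.9, "self_efficacy": 12.8, "client_centered": 15.7,
              "external_incentives": 5.9, "reduced_flexibility": 8.2, "evidence_based": 10.5},
}
R2 = {"younger": 0.35, "older": 0.70}  # overall explained variance (Table 3)
EXPECTANCIES = ("client_centered", "external_incentives", "reduced_flexibility", "evidence_based")


def summarize(shares, r2):
    """Total share, combined expectancy share, and shares rescaled to points of total variance."""
    total = sum(shares.values())
    expectancies = sum(shares[k] for k in EXPECTANCIES)
    points = {k: v * r2 for k, v in shares.items()}  # percentage points of total variance
    return total, expectancies, points


for group in ("younger", "older"):
    total, expectancies, points = summarize(TABLE3[group], R2[group])
    print(f"{group}: shares sum to {total:.1f}%, expectancies = {expectancies:.1f}% of R^2, "
          f"norms = {points['norms']:.1f} of {100 * R2[group]:.0f} points")
```

For the younger subsample, for example, injunctive norms account for about 21.8 of the 35 percentage points of explained variance, and the four expectancy rows together account for 8.9% of R². The corresponding figures for the older subsample are 32.8 of 70 points and 40.3%.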

Figure 3.

Relative Importance of Proximal Determinants for EBA Intentions (percent of explained variance)

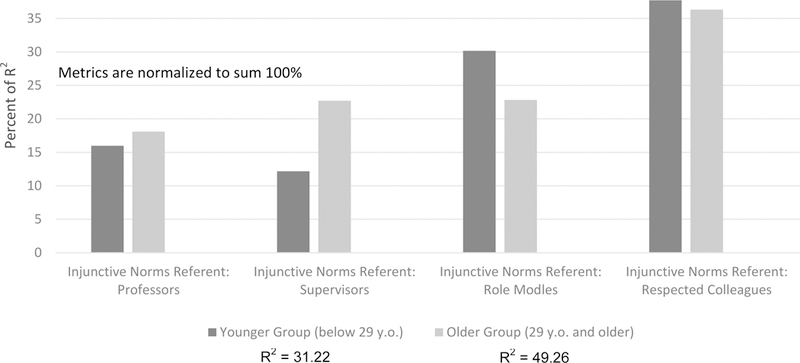

Disaggregating relative importance of injunctive social norms.

We disaggregated the relative importance of the social norms total score by examining dominance indices for each individual social norms item (Figure 4). In both age groups, the explained variance of EBA intentions was dominated by social norms in reference to the most respected colleagues in the field. These were followed by social norms in reference to one’s professional role models, with social norms in reference to one’s professors and to one’s supervisors lagging behind. These four items together explained about one third of the variance of EBA intentions for the younger group, and roughly half of the variance for the older group (Figure 4).

Figure 4.

Relative Importance, for EBA Intentions, of Injunctive Social Norms, across Normative Referents (percent of explained variance)

Discussion

The present research used well-established theoretical and analytic approaches to identify specific targets for implementation strategies aimed at motivating mental health trainees to use EBA. Our theoretical model (Figure 2) explained a large proportion of the variance in the outcome (42% for the overall model). Many prior implementation science studies have accounted for smaller proportions of outcome variance. For example, a previous study examining the relative contribution of individual and organizational factors to therapists’ use of EBP explained about 23% of the variance (Beidas et al., 2015).

The core hypotheses embedded in the theoretical model (Figure 1) were supported, and some of them have notable implications. Injunctive social norms played an important role in determining trainees’ intentions to use EBA. The impact of injunctive norms on EBA intentions was greater than that of expectancies, whereas in many studies of other behaviors, expectancies have dominated in shaping intentions (Chang & Crowe, 2011; Estrada, 2009; Jaccard et al., 2002). One interpretation is that trainees have only modest exposure to the contexts of their future clinical practice, which leaves them few first-hand experiences from which to form their own impactful expectancies. Instead, nascent clinicians’ decision-making may rely largely on the perspectives of more experienced others, a cognitive process reflected by injunctive social norms (Jaccard et al., 2002). This interpretation is consistent with our finding that expectancies played a modest role in the younger group of trainees, accounting in sum for only 8.9% of the explained variance in EBA intentions. In the older group, by contrast, expectancies accounted for 40.3% of the explained variance (Table 3). This age difference in the impact of expectancies may also help explain why the proportion of variance in EBA intentions explained was nearly double for the older group compared to the younger subsample. In the younger group, only norms contributed strongly to the explained variance, whereas in the older group, strong contributions came from both norms and expectancies. The younger trainees’ EBA intentions may additionally be shaped by self-concept and social self-image considerations (Jaccard & Levitz, 2015). This class of variables has been shown to predict youth health-related behaviors but has not yet been examined as a determinant of clinician decision-making.

The most consequential injunctive norm referents were respected colleagues in the field, not professors or supervisors. Our data do not specify who these respected colleagues are. One hypothesis is that, for our respondents, this group comprises fellow social workers with some field experience whom respondents encountered during their internships or worked with before entering the MSW program. This interpretation is consistent with themes from our ongoing qualitative research among MSW students (Lushin et al., in preparation). One explanation for the importance of this referent group is that, for novice clinicians, workplace peers represent the most proximal source of hands-on work knowledge. Professors may be perceived as focusing largely on theoretical teaching, while supervisors promote work standards and manualized protocols; both roles are somewhat removed from “real life” work experiences. In contrast, experienced colleagues may provide models of work operations that are responsive to the myriad constraints of the work setting. These models are intuitive, not always articulated, and rich with shortcuts and ad hoc solutions, in the vein of Polanyi’s (1966) concept of “tacit knowledge.”

Another key finding is the age group difference in the predictors of intentions to implement EBA. Results suggest that as trainees’ age increases, the role of expectancies in shaping intentions tends to increase, while the role of self-efficacy tends to decrease. The growing role of expectancies may be attributed to the accumulation of workplace experience with age. As mentioned above, younger trainees may lack first-hand work experience, which may make their work-related expectancies less consequential than those of older trainees. Conversely, self-efficacy was less consequential for older trainees. Older trainees may perceive that their current appraisal of their ability to use EBA is likely to change with circumstances, so this appraisal may carry less weight in forming their intentions.

The age difference in the role of the expectancy that EBA use would increase the client-centered focus of one’s clinical work is potentially illustrative. Older trainees who mentioned this belief had significantly lower intentions to perform EBA, while younger trainees who mentioned it had higher intentions. This difference may hypothetically be explained by the accumulation of stress and burnout from prior workplace exposures among older MSW trainees (Beidas et al., 2016). In a series of interviews with social work trainees (Lushin et al., in preparation), themes emerged suggesting that older trainees for whom social work is not a first career appeared to have left employment in which they were unhappy or stressed. This suggests a potentially larger burden of burnout in the older group. Prior studies have associated higher staff stress and burnout with lower implementation of EBPs (Nelson et al., 2014; Prince & Carey, 2010); however, little research has examined the modifiable social and cognitive mechanisms of this association. Alternatively, the age group difference may reflect dissimilar theoretical orientations among graduate trainees of different ages, who received their undergraduate education in different periods. An emphasis on person-centered orientation in human services may have emerged across educational settings in recent years and shaped decision-making among younger trainees.

Future directions and limitations

Examining determinants of intentions to implement.

The malleable determinants of implementation intentions examined in this study (social norms, expectancies, and self-efficacy) represent mutable dimensions of the ‘acceptability’ construct frequently used in implementation science. One’s perceptions of the benefits, feasibility, and normative support for a particular behavior largely determine whether that behavior is acceptable to an individual (Proctor et al., 2009). Further implementation research may benefit from routinely examining clinicians’ intentions to use EBPs, social norms, expectancies, and self-efficacy, as done in this study. These measures could be combined with contextual variables that moderate the translation of clinician intentions into action, in the vein of established two-step behavioral frameworks (Fishbein & Ajzen, 2011; Jaccard & Levitz, 2015). Each implementation project would then benefit from an empirically defined set of mutable targets: determinants of intentions, moderators of the intention-to-behavior sequence, or both.

Utility of injunctive norms and respected colleagues.

Overall, our finding that injunctive norms play a dominant role in nascent clinicians’ EBA buy-in points to a potentially useful target for implementation strategies, given the demonstrated malleability of injunctive norms (Prince & Carey, 2010). Our data also demonstrate the dominant role of respected colleagues as normative referents for nascent clinicians. Healthcare policy currently favors implementation approaches that target organizational leaders and supervisors, for their apparent efficiency (Szydlowski & Smith, 2009; Touati et al., 2006). This finding suggests that such approaches may not be ideal for some target populations, including students, trainees, and new clinicians. Instead, a more in-depth examination of how “tacit knowledge” is transferred from respected colleagues to newcomer clinicians may provide evidence for peer-based pre-service implementation strategies. In such strategies, colleagues already in practice would convey their high regard for EBA and share tips for the least stressful use of EBA in specific practice contexts. Graduate programs and service organizations alike would benefit from knowing which individuals occupy the role of “respected colleague” and are most readily emulated by nascent clinicians. Such individuals could be systematically enlisted as promoters of an organizational culture that supports implementation.

Tailoring implementation strategies for age groups.

The age difference in patterns of clinical decision-making calls for longitudinal research to identify more precisely the age at which clinicians’ decision-making patterns shift most consequentially. Overall, our findings suggest the potential utility of tailoring implementation strategies to individuals, in conjunction with the more broadly discussed approach of tailoring to settings (Williams et al., 2011). Implementation studies may benefit from accounting for clinicians’ age and other characteristics underlying individual differences in decision-making. More generally, such studies may benefit from seeking meaningful segmentation parameters for clinician populations. Future research should also explore potential mediators and moderators of the effects of age and burnout on clinicians’ work-related beliefs and EBP use. Specifically, our findings suggest tailoring implementation strategies to clinicians’ age groups by focusing on norms and self-efficacy among younger clinicians, and on expectancies and norms among older clinicians.

Limitations.

One limitation of the present study is the cross-sectional nature of the data. Multiple prior longitudinal studies, however, have shown that normative beliefs, expectancies, and self-efficacy predict behavioral intentions, rather than the reverse (Jaccard & Levitz, 2015). The cross-sectional design also limits our ability to determine when and how best to intervene to strengthen trainees’ EBA intentions. In future longitudinal studies, we plan to examine how determinants of EBA intentions evolve among future clinicians throughout their training, and to identify optimal time points for pre-service implementation efforts. This future research will also include measures of trainees’ EBP/EBA behaviors before and after they join the workforce. Another concern is the reliance on self-report, although multiple steps were taken to encourage honest responses. In addition, the sample was drawn from one university, and participants represented only one mental health service discipline (social work). All participants were also at the same level of training (year 2 of the program). These features limit the generalizability of our findings. In future research, we plan to compare trainees’ EBA intentions and beliefs across graduate programs, accounting for different approaches to EBA training and potentially dissimilar organizational cultures regarding EBP/EBA. We also plan comparisons across training programs representing various mental health service disciplines. Further, the modest sample size, especially in the older subgroup (N = 50), means that the coefficients should be interpreted with caution. Potential common method (shared measurement) biases may also be a concern, as they often are in behavioral research (Podsakoff, MacKenzie, Lee, & Podsakoff, 2003).
Additionally, the extent of trainees’ field experience in clinical settings, the degree of stress and burnout experienced in their previous employment, and trainees’ theoretical orientation were not measured or controlled. Future studies should measure these variables, which may confound or explain the effect of age on EBA intentions and/or have independent explanatory effects. Finally, we did not collect behavioral data from respondents. Intentions are regarded as the strongest predictor of behavior (Fishbein & Ajzen, 2011); however, trainees’ EBA intentions may have differed had they been measured after trainees entered the workforce. Future work should examine how trainee intentions change over the course of their careers and how these changes influence their use of EBA.

Conclusions

To our knowledge, this study is the first to test a conceptual model of EBA intentions, derived from well-established theories, among pre-service mental health trainees. Injunctive norms provide strong targets for implementation strategies among novice mental health clinicians. Respected colleagues in the field are the strongest normative referents for nascent clinicians. Our findings suggest age-related differences in patterns of decision-making among newcomer clinicians: injunctive norms and self-efficacy dominate decision-making for younger trainees, while expectancies play a more prominent role for older trainees. More broadly, our study highlights the likely advantages of focused pre-service implementation strategies for early-career mental health clinicians. Such strategies may work best if mounted at the interface of academic and field internship settings.

Abbreviations

- EBP: Evidence-based practice
- EBA: Evidence-based assessment
- MSW: Master of Social Work
- TRA: Theory of Reasoned Action
- UTB: Unified Theory of Behavior
- SEM: Structural equation modeling
- RMSEA: Root mean square error of approximation
- SRMR: Standardized root mean square residual
- CFI: Comparative fit index
- NIH: National Institutes of Health

APPENDIX

Table 4.

Categories of elicited expectancies with examples of open-ended responses

| No. | Expectancy Category | Examples of Open-Ended Responses |
|---|---|---|
| 1. | The use of EBA will make one’s clinical work more reliant on strong evidence, i.e., will make the work more evidence-based. | “I will be using the methods that have been proven to work.” |
| 2. | The use of EBA will make one’s work more effective. | “[The use of EBA] will increase effectiveness of the program.” |
| 3. | The use of EBA will make one’s clinical work more client-centered by placing stronger emphasis on clients’ interests and empowerment. | “Show clients that we are invested in their progress,” “Give the patients better understanding of therapy.” |
| 4. | The use of EBA will give clinicians or their agency external incentives such as material gain, prestige, and administrative advantages. | “My work can be verifiable,” “Funding can be allocated to what’s proven to work.” |
| 5. | The use of EBA will reduce individual/flexible approach to the clients. | “[The use of EBA will] put the client into a box/mold that they have to fit into.” |
| 6. | The use of EBA will compromise rapport with clients. | “[The use of EBA] will take away from the natural human connection.” |
| 7. | The use of EBA will increase “external negatives” such as added work load. | “I will feel overwhelmed,” “Work load will increase.” |

Footnotes

Conflict of Interest: Author Lushin declares that he has no conflict of interest. Author Becker-Haimes declares that she has no conflict of interest. Author Mandell declares that he has no conflict of interest. Author Conrad declares that he has no conflict of interest. Author Kaploun declares that he has no conflict of interest. Author Bailey declares that she has no conflict of interest. Author Ai Bo declares that she has no conflict of interest. Author Beidas declares that she has no conflict of interest.

Ethical approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Ethical approval: This article does not contain any studies with animals performed by any of the authors.

References

- Aarons GA, & Sawitzky AC (2006). Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychological Services, 3(1), 61.

- Ajzen I, & Fishbein M (2005). The influence of attitudes on behavior. In The handbook of attitudes (pp. 173–221).

- Bandura A (1986). The explanatory and predictive scope of self-efficacy theory. Journal of Social and Clinical Psychology, 4(3), 359.

- Bandura A (2004). Health promotion by social cognitive means. Health Education & Behavior, 31(2), 143–164.

- Becker-Haimes EM, Okamura KH, Baldwin CD, Wahesh E, Schmidt C, & Beidas RS (2018). Understanding the landscape of behavioral health pre-service training to inform evidence-based intervention implementation. Psychiatric Services. Online first.

- Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, ... & Adams DR (2015). Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatrics, 169(4), 374–382.

- Beidas RS, Stewart RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, Aarons G, Hoagwood K, Evans A, Hurford M, Rubin R, Hadley T, Mandell DS, & Barg FK (2016). A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 893–908.

- Bollen KA, & Long JS (1992). Tests for structural equation models: Introduction. Sociological Methods & Research, 21(2), 123–131.

- Burns HK, Puskar K, Flaherty MT, Mitchell AM, Hagle H, Braxter B, ... & Terhorst L (2012). Addiction training for undergraduate nurses using screening, brief intervention, and referral to treatment. Journal of Nursing Education and Practice, 2(4), 167.

- Chang AM, & Crowe L (2011). Validation of scales measuring self-efficacy and outcome expectancy in evidence-based practice. Worldviews on Evidence-Based Nursing, 8(2), 106–115.

- Colton CW, & Manderscheid RW (2006). Congruencies in increased mortality rates, years of potential life lost, and causes of death among public mental health clients in eight states. Preventing Chronic Disease, 3(2).

- De Los Reyes A, & Aldao A (2015). Introduction to the special issue: Toward implementing physiological measures in clinical child and adolescent assessments. Journal of Clinical Child & Adolescent Psychology, 44(2), 221–237.

- Donaldson AL (2015). Pre-professional training for serving children with ASD: An apprenticeship model of supervision. Teacher Education and Special Education, 38(1), 58–70.

- Estrada N (2009). Exploring perceptions of a learning organization by RNs and relationship to EBP beliefs and implementation in the acute care setting. Worldviews on Evidence-Based Nursing, 6(4), 200–209.

- Fishbein M, & Ajzen I (2011). Predicting and changing behavior: The reasoned action approach. Taylor & Francis.

- Fishman J, Beidas R, Reisinger E, & Mandell DS (2018). The utility of measuring intentions to use best practices: A longitudinal study among teachers supporting students with autism. Journal of School Health, 88(5), 388–395.

- French SD, Green SE, O’Connor DA, McKenzie JE, Francis JJ, Michie S, ... & Grimshaw JM (2012). Developing theory-informed behaviour change interventions to implement evidence into practice: A systematic approach using the Theoretical Domains Framework. Implementation Science, 7(1), 38.

- Grady MD, Wike T, Putzu C, Field S, Hill J, Bledsoe SE, ... & Massey M (2018). Recent social work practitioners’ understanding and use of evidence-based practice and empirically supported treatments. Journal of Social Work Education, 54(1), 163–179.

- Guilamo-Ramos V, Bouris A, Jaccard J, Gonzalez B, McCoy W, & Aranda D (2011). A parent-based intervention to reduce sexual risk behavior in early adolescence: Building alliances between physicians, social workers, and parents. Journal of Adolescent Health, 48(2), 159–163.

- Hoagwood K, Burns BJ, Kiser L, Ringeisen H, & Schoenwald SK (2001). Evidence-based practice in child and adolescent mental health services. Psychiatric Services, 52(9), 1179–1189.

- Huey LY (2002). Problems in behavioral health care: Leap-frogging the status quo. Administration and Policy in Mental Health and Mental Health Services Research, 29(4–5), 403–419.

- Jaccard J, Dodge T, & Dittus P (2002). Parent-adolescent communication about sex and birth control: A conceptual framework. New Directions for Child and Adolescent Development, 2002(97), 9–42.

- Jaccard J, & Jacoby J (2009). Theory construction and model-building skills: A practical guide for social scientists. Guilford Press.

- Jaccard J, & Levitz N (2015). Parent-based interventions to reduce adolescent problem behaviors: New directions for self-regulation approaches. In Self-regulation in adolescence. New York: Cambridge University Press.

- Jensen-Doss A (2011). Practice involves more than treatment: How can evidence-based assessment catch up to evidence-based treatment? Clinical Psychology: Science and Practice, 18(2), 173–177.

- Jensen-Doss A, & Hawley KM (2010). Understanding barriers to evidence-based assessment: Clinician attitudes toward standardized assessment tools. Journal of Clinical Child & Adolescent Psychology, 39(6), 885–896.

- Jensen-Doss A, Smith AM, Becker-Haimes EM, Ringle VM, Walsh LM, Nanda M, ... & Lyon AR (2017). Individualized progress measures are more acceptable to clinicians than standardized measures: Results of a national survey. Administration and Policy in Mental Health and Mental Health Services Research, 1–12.

- Kanfer FH, Karoly P, & Newman A (1975). Reduction of children’s fear of the dark by competence-related and situational threat-related verbal cues. Journal of Consulting and Clinical Psychology, 43(2), 251.

- Kazdin AE (2017). Addressing the treatment gap: A key challenge for extending evidence-based psychosocial interventions. Behaviour Research and Therapy, 88, 7–18.

- Lambert MJ, Whipple JL, Hawkins EJ, Vermeersch DA, Nielsen SL, & Smart DW (2003). Is it time for clinicians to routinely track patient outcome? A meta-analysis. Clinical Psychology: Science and Practice, 10(3), 288–301.

- Lewis CC, Scott K, Marti CN, Marriott BR, Kroenke K, Putz JW, ... & Rutkowski D (2015). Implementing measurement-based care (iMBC) for depression in community mental health: A dynamic cluster randomized trial study protocol. Implementation Science, 10(1), 127.

- Love SM, Koob JJ, & Hill LE (2007). Meeting the challenges of evidence-based practice: Can mental health therapists evaluate their practice? Brief Treatment and Crisis Intervention, 7(3), 184.

- Lushin V (2017). Underage drinking, parental self-efficacy and communication of alcohol expectations among Latino families in New York (Doctoral dissertation, New York University).

- Lushin V, Beidas R, Conrad J, Marcus S, & Mandell D (in preparation). Implementation of evidence-based assessment: Thoughts and experiences of social work trainees.

- Lyon AR, Dorsey S, Pullmann M, Silbaugh-Cowdin J, & Berliner L (2015). Clinician use of standardized assessments following a common elements psychotherapy training and consultation program. Administration and Policy in Mental Health and Mental Health Services Research, 42(1), 47–60.

- MacKinnon DP, Lockwood CM, Hoffman JM, West SG, & Sheets V (2002). A comparison of methods to test mediation and other intervening variable effects. Psychological Methods, 7(1), 83.

- McLeod BD, Jensen-Doss A, & Ollendick TH (2013). Overview of diagnostic and behavioral assessment. In McLeod BD, Jensen-Doss A, & Ollendick TH (Eds.), Diagnostic and behavioral assessment in children and adolescents: A clinical guide (pp. 3–33). New York: Guilford Press.

- McNeill T (2006). Evidence-based practice in an age of relativism: Toward a model for practice. Social Work, 51(2), 147–156.

- Middlestadt SE (2012). Beliefs underlying eating better and moving more: Lessons learned from comparative salient belief elicitations with adults and youths. The ANNALS of the American Academy of Political and Social Science, 640(1), 81–100.

- Middlestadt SE, Bhattacharyya K, Rosenbaum J, Fishbein M, & Shepherd M (1996). The use of theory based semistructured elicitation questionnaires: Formative research for CDC’s Prevention Marketing Initiative. Public Health Reports, 111(Suppl 1), 18.

- Nelson KM, Helfrich C, Sun H, Hebert PL, Liu CF, Dolan E, ... & Sanders W (2014). Implementation of the patient-centered medical home in the Veterans Health Administration: Associations with patient satisfaction, quality of care, staff burnout, and hospital and emergency department use. JAMA Internal Medicine, 174(8), 1350–1358.

- New York University Silver School of Social Work. Masters of Social Work course descriptions. https://socialwork.nyu.edu/academics/msw/course-descriptions.html Retrieved 02/02/2018.

- Paulhus DL (1984). Two-component models of socially desirable responding. Journal of Personality and Social Psychology, 46(3), 598.

- Pignotti M, & Thyer BA (2009). Use of novel unsupported and empirically supported therapies by licensed clinical social workers: An exploratory study. Social Work Research, 33(1), 5–17.

- Podsakoff PM, MacKenzie SB, Lee JY, & Podsakoff NP (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879.

- Polanyi M (1966). The logic of tacit inference. Philosophy, 41(155), 1–18.

- Posner K (2016). Evidence-based assessment to improve assessment of suicide risk, ideation, and behavior. Journal of the American Academy of Child & Adolescent Psychiatry, 55(10), S95.

- Prince MA, & Carey KB (2010). The malleability of injunctive norms among college students. Addictive Behaviors, 35(11), 940–947.

- Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, & Mittman B (2009). Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research, 36(1), 24–34.

- Rosenstock IM, Strecher VJ, & Becker MH (1988). Social learning theory and the health belief model. Health Education Quarterly, 15(2), 175–183.

- SAMHSA (2013). Behavioral health, United States, 2013. HHS Publication No. (SMA) 13-4797. Rockville, MD: SAMHSA.

- Santa Maria D, Markham C, Crandall S, & Guilamo-Ramos V (2017). Preparing student nurses as parent-based adolescent sexual health educators: Results of a pilot study. Public Health Nursing, 34(2), 130–137.

- Schacht RL, Dimidjian S, George WH, & Berns SB (2009). Domestic violence assessment procedures among couple therapists. Journal of Marital and Family Therapy, 35(1), 47–59.

- Stanhope V, Tuchman E, & Sinclair W (2011). The implementation of mental health evidence based practices from the educator, clinician and researcher perspective. Clinical Social Work Journal, 39(4), 369–378.

- Szydlowski S, & Smith C (2009). Perspectives from nurse leaders and chief information officers on health information technology implementation. Hospital Topics, 87(1), 3–9.

- Tennille J, Solomon P, Brusilovskiy E, & Mandell D (2016). Field Instructors Extending EBP Learning in Dyads (FIELD): Results of a pilot randomized controlled trial. Journal of the Society for Social Work and Research, 7(1), 1–22.

- Touati N, Roberge D, Denis JL, Cazale L, Pineault R, & Tremblay D (2006). Clinical leaders at the forefront of change in health-care systems: Advantages and issues. Lessons learned from the evaluation of the implementation of an integrated oncological services network. Health Services Management Research, 19(2), 105–122.

- Townsend MC, & Morgan KI (2017). Psychiatric mental health nursing: Concepts of care in evidence-based practice. FA Davis.

- Triandis HC (1996). The psychological measurement of cultural syndromes. American Psychologist, 51(4), 407.

- Williams EC, Johnson ML, Lapham GT, Caldeiro RM, Chew L, Fletcher GS, ... & Bradley KA (2011). Strategies to implement alcohol screening and brief intervention in primary care settings: A structured literature review. Psychology of Addictive Behaviors, 25(2), 206.

- Youngstrom EA, Van Meter A, Frazier TW, Hunsley J, Prinstein MJ, Ong ML, & Youngstrom JK (2017). Evidence-based assessment as an integrative model for applying psychological science to guide the voyage of treatment. Clinical Psychology: Science and Practice.