Abstract

Attentional engagement is a major determinant of how effectively we gather information through our senses. Alongside the sheer growth in the amount and variety of information content that we are presented with through modern media, there is increased variability in the degree to which we “absorb” that information. Traditional research on attention has illuminated the basic principles of sensory selection of isolated features or locations, but it provides little insight into the neural underpinnings of our attentional engagement with modern naturalistic content. Here, we show in human subjects that the reliability of an individual's neural responses with respect to a larger group provides a highly robust index of the level of attentional engagement with a naturalistic narrative stimulus. Specifically, fast electroencephalographic evoked responses were more strongly correlated across subjects when naturally attending to auditory or audiovisual narratives than when attention was directed inward to a mental arithmetic task during stimulus presentation. This effect was strongest for audiovisual stimuli with a cohesive narrative and greatly reduced for speech stimuli lacking meaning. For compelling audiovisual narratives, the effect is remarkably strong, allowing perfect discrimination between attentional states across individuals. Control experiments rule out possible confounds related to altered eye movement trajectories or order of presentation. We conclude that reliability of evoked activity reproduced across subjects viewing the same movie is highly sensitive to the attentional state of the viewer and listener, which is aided by a cohesive narrative.

SIGNIFICANCE STATEMENT Modern technology has brought about a monumental surge in the availability of information. There is a pressing need for ways to measure and characterize attentional engagement and understand the causes of its waxing and waning in natural settings. The present study demonstrates that the degree of an individual's attentional engagement with naturalistic narrative stimuli is strongly predicted by the degree of similarity of his/her neural responses to a wider group. The effect is so strong that it enables perfect classification of attentional state across individuals for some narrative stimuli. As modern information content continues to diversify, such direct neural metrics will become increasingly important to manage and evaluate its effects on both the healthy and disordered human brain.

Keywords: attention, intersubject correlation, natural narrative

Introduction

In the modern, information-saturated world, sustaining attention on a single continuous information stream, even in the absence of competing sensory stimuli, is a constant challenge. Consistent attentional engagement is particularly important in an educational context and is problematic for those with learning disorders. The modulation of neural responses by attentional state has long been studied using reductionistic laboratory paradigms involving sequences of discrete stimuli with limited feature content. However, the kinds of stimuli that engage human attention in day-to-day life usually take the form of dynamic multisensory streams characterized by high-dimensional features.

Behavioral studies of everyday activities such as reading, watching TV, driving, listening to lectures, or using a computer confirm our common experience that attention is in constant flux (Anderson and Burns, 1991, Shinoda et al., 2001, Smallwood, 2011, Lindquist and McLean, 2011, Risko et al., 2013, Szpunar et al., 2013). A number of studies on the neural correlates of attentional fluctuations have used laboratory tasks specifically designed to probe mind wandering (Christoff et al., 2009, Braboszcz and Delorme, 2011) or lapses of attention (Weissman et al., 2006, O'Connell et al., 2009, Kam et al., 2011). These studies point to reduced stimulus-evoked responses and increased default network activity when attention drifts away from the experimental stimulus, but little is known about the neural substrates of everyday attentional engagement with dynamic naturalistic stimuli.

In recent years, there has been an increase in neuroscience research using naturalistic stimuli (Hasson et al., 2004, Bartels and Zeki, 2004, 2005, Spiers and Maguire, 2007), providing new tools for understanding brain processing under “natural” settings. Such approaches can provide insight into how the brain processes narratives and, conversely, how narratives affect mental state (Furman et al., 2007, Naci et al., 2014, Wilson et al., 2008). Naturalistic stimuli such as films mimic the real-world environment much better than conventional laboratory stimuli but, unlike our mundane day-to-day experience, films are directed and edited to orient our attention systematically (Hasson et al., 2008b). Although the extent of engagement with a film is subject to personal bias, motivation, interests, etc., narrative stimuli such as films tend to elicit similar emotions and sentiments across viewers. In fact, it has been shown with fMRI that hemodynamic cortical responses are reliably reproduced across viewers during cinematic presentations, suggesting that cognitive processes are reliable and reproducible (Hasson et al., 2004, 2008a; Furman et al., 2007; Jääskeläinen et al., 2008; Wilson et al., 2008). A structured film, such as a scene from a Hollywood movie, elicits more strongly correlated cortical responses across viewers than unstructured, unedited footage of people walking in front of an office building and much more than when viewers watch incoherent stimuli such as a movie clip with scrambled scenes (Hasson et al., 2004, 2008a; Furman et al., 2007; Dmochowski et al., 2012). A plausible account of these effects is that viewers' attentional engagement varies across different types of movies. However, this has not been tested explicitly through direct manipulation of voluntary attention, so it remains possible that intersubject reliability is driven mostly by low-level preattentive or bottom-up processes. 
Here, we aimed to manipulate attentional engagement explicitly and determine how this affects neural reliability for stimuli that naturally attract different levels of attention.

We asked several groups of participants to listen to and/or watch auditory or audiovisual stories with different levels of narrative coherence and assessed the intersubject reliability of neural responses by measuring the correlation of the electroencephalogram across subjects during attentive and inattentive states. We measured reliability across rather than within subjects to avoid presenting the stimulus several times to the same subject, which would clearly hamper natural attentional engagement. Subjects were instructed either to attend normally to the stimulus or to perform mental arithmetic that diverted their attention from the physically identical stimulus. As predicted, neural responses were more reliable (i.e., better correlated across subjects) when subjects were freely attending to the stimulus. Moreover, neural responses were more reliable for audiovisual stimuli and for stimuli with a clear narrative. For a suspenseful narrative [e.g., the television show “Bang! You're Dead” (BYD); Hitchcock, 1961], the attentional modulation was so strong that we could perfectly identify the attentional state of the participant based solely on the similarity of their neural activity to that of a larger group.

Materials and Methods

Subjects and stimuli.

We recruited different sets of subjects for the four experiments reported here (n = 20, 20, 17, and 20, for a total of 76 unique subjects). All subjects were fluent English speakers with ages ranging from 18 to 30 years (42% female). All participants provided written informed consent and were remunerated for their participation. All participants also reported little or no prior familiarity with the stimuli. Procedures were approved by the Institutional Review Board of the City University of New York.

First, we recorded EEG from 20 participants during the presentation of two 6-min-long audiovisual narratives (used previously in Hasson et al., 2008a; Dmochowski et al., 2012): a segment of the TV series BYD (Hitchcock, 1961) and a clip from the feature film “The Good, the Bad, and the Ugly” (GBU; Leone, 1966). In BYD, a boy handles a handgun loaded with live rounds, which he mistakenly believes to be a toy. BYD features several intense and suspenseful scenes in which the boy points the loaded gun at unsuspecting individuals and pulls the trigger. The scenes of BYD change rapidly and are edited to orient the viewer's attention systematically. The clip selected from GBU portrays three men in a standoff scene composed of prolonged stills of the scenery, body parts, and faces. There is minimal movement or dialogue. Unless one is familiar with GBU's story, the scene carries little narrative content. Each video clip was presented twice, with instructions to attend normally during the first presentation (attend task) and to count backwards in steps of seven starting at 1000 during the second presentation (count task). The order was fixed because participants were expected to be most attentive during the first exposure and to lose interest naturally during the second viewing, which was intended to further diminish attention in the count condition.

In a second experiment, we recorded EEG from an additional 20 participants during the presentation of three audio-only naturalistic stimuli: a 6 min recording of live storytelling, “Pie Man” (PM; O'Grady, 2012); a version of the same PM story with the order of words scrambled in time (PMsc; both the original and scrambled versions are identical to those used in Hasson et al., 2004); and a 6-min-long recording of live storytelling in Japanese (Jpn). PM was recorded during a live, on-stage performance by American journalist Jim O'Grady, in which he tells a story from his time as a student reporter for a university publication. The scrambled version of this story (PMsc) was intended to elicit low-level auditory stimulation similar to that of PM while removing any narrative content. The story in Japanese (Jpn), also a live recording of a male stand-up comedian, was intended to be entirely incomprehensible and without narrative content for our participants (none of whom reported knowledge of Japanese). Note that PMsc carries some meaning in the individual words, whereas Jpn does not. The three stimuli were presented in random order and, as before, each was repeated for every subject with an attend and a count condition. Subjects were instructed to maintain their gaze on a fixation cross at the center of the screen during these auditory stimuli.

For a control experiment, we recorded data from an additional 17 participants using the same stimuli as in the first experiment (BYD and GBU) and the same task conditions (attend and count), but now asked participants to maintain their gaze at the center of the screen. To assist viewers with this, a rectangular area (50 × 50 pixels, 1.3 × 1.3 cm) at the center of the screen was outlined with a thin gray line. Viewers were seated 75 cm from the screen, and the video stimulus covered an area of 33.8 × 27.03 cm (1280 × 1024 pixels). Eye movements were monitored with an EyeLink 2000 eye tracker (SR Research) to assess whether subjects managed to follow this instruction.
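As a rough check of this viewing geometry, the visual angle subtended by an object of size s viewed head-on from distance d is 2·atan(s / (2d)). The short sketch below only illustrates that arithmetic for the sizes stated above; the function name is hypothetical. It gives roughly 1.0° for the fixation rectangle and roughly 25.4° × 20.4° for the video.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) of an object of size_cm seen head-on
    from distance_cm: 2 * atan(size / (2 * distance))."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

# Values taken from the setup described in the text.
distance = 75.0                          # viewing distance, cm
box = visual_angle_deg(1.3, distance)    # fixation rectangle
width = visual_angle_deg(33.8, distance)
height = visual_angle_deg(27.03, distance)
print(f"fixation box ~{box:.2f} deg, video {width:.1f} x {height:.1f} deg")
```

The same numbers also show that the stated pixel and centimeter sizes are consistent: 1280 pixels over 33.8 cm is about 37.9 pixels/cm, so 50 pixels is about 1.3 cm.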

Finally, in a fourth experiment, we collected data from an additional 20 subjects to control for a potential order confound. Here, we reversed the order, instructing subjects to first count and then attend to each of the stories. The order of the three stories (the audiovisual BYD and GBU and the auditory-only PM) was randomized across subjects.

In total, each subject contributed several minutes of continuous data for analysis, summed over stimuli and conditions (∼26, ∼42.6, ∼26, and ∼40.5 min for Experiments 1–4, respectively).

EEG acquisition.

Video presentation and EEG recording were performed in an electromagnetically shielded and sound-dampened room with no ambient lighting. All subjects were fitted with a standard 64-electrode cap following the international 10/10 system. EEG signals were collected using a BioSemi ActiveTwo system at a sampling frequency of 512 Hz. EOG was measured at six locations (above, below, and lateral to each eye) and referenced to the same centroparietal ground electrodes as the EEG electrodes. Video playback used an in-house modified version of the MPlayer software (http://www.mplayerhq.hu) running on an RT Linux platform, which sent trigger signals to the EEG acquisition system once per second for the duration of each stimulus. This trigger was used to assess the temporal jitter of playback, which remained below ±2 ms across subjects.

Preprocessing.

All data processing was performed using MATLAB software (The MathWorks). Signals were filtered with second-order Butterworth filters (a 1 Hz high-pass and a 60 Hz notch) and downsampled to 256 Hz. Eye movement-related artifacts were linearly regressed out of all EEG channels using the EOG recordings (Parra et al., 2005). To remove outliers automatically, we processed the EEG data with robust principal component analysis (rPCA) (Candès et al., 2011), which identifies individual outlier samples in the data and implicitly substitutes them with an interpolation from other sensors, leveraging the spatial correlation between sensors among non-outlier samples. We used the inexact augmented Lagrange multiplier method for computing rPCA (Lin et al., 2010) and applied it to the data of all subjects combined, that is, concatenated over all subjects for each stimulus in both attentional conditions. In doing so, the interpolation was consistent across subjects, which is important because we sought to extract activity that was consistent across subjects.
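A minimal Python/SciPy sketch of the filtering, downsampling, and EOG-regression steps is given below. It is not the authors' MATLAB code, and several details are assumptions: the 60 Hz notch is implemented as a second-order Butterworth band-stop between 59 and 61 Hz, filters are applied forward and backward (zero phase), downsampling uses `scipy.signal.decimate` with its default anti-aliasing filter, and the EOG regression is an ordinary least-squares fit without an intercept. The rPCA outlier step is not included.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, decimate

FS_RAW = 512  # Hz, acquisition rate stated in the text

def filter_and_downsample(eeg, fs=FS_RAW, notch_band=(59.0, 61.0)):
    """eeg: (channels, samples). 1 Hz second-order Butterworth high-pass, then a
    second-order Butterworth band-stop around 60 Hz (band edges assumed),
    both applied zero-phase (assumed), then downsampling by a factor of 2."""
    hp = butter(2, 1.0, btype="highpass", fs=fs, output="sos")
    notch = butter(2, notch_band, btype="bandstop", fs=fs, output="sos")
    x = sosfiltfilt(hp, eeg, axis=-1)
    x = sosfiltfilt(notch, x, axis=-1)
    # decimate() low-pass filters before keeping every 2nd sample (512 -> 256 Hz)
    return decimate(x, 2, axis=-1, zero_phase=True)

def regress_out_eog(eeg, eog):
    """Remove the part of each EEG channel that is linearly predictable from
    the EOG channels (least squares, no intercept; data assumed high-passed).
    eeg: (n_eeg, T), eog: (n_eog, T)."""
    # Solve eog.T @ B ~= eeg.T for B, shape (n_eog, n_eeg)
    B, *_ = np.linalg.lstsq(eog.T, eeg.T, rcond=None)
    return eeg - B.T @ eog
```

After the regression, the cleaned EEG is exactly orthogonal to every EOG channel over the recording, which is the defining property of a least-squares residual.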

Intersubject correlation (ISC).

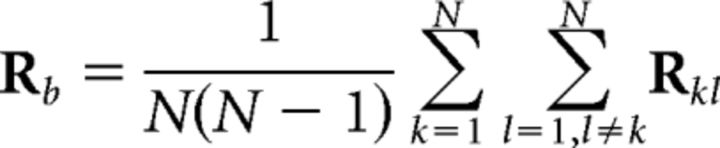

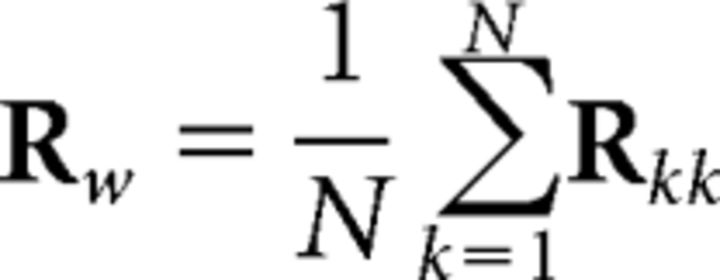

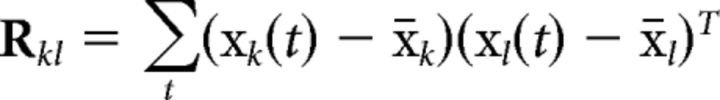

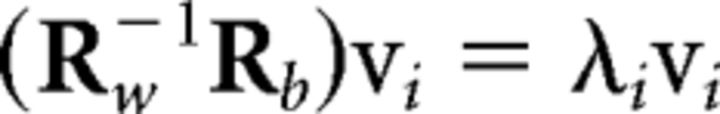

To assess reliability of evoked responses to the stimulus, we measured correlation of the EEG between subjects. Note that reproducibility across subjects, if at all present, requires reliable responses in each subject. Importantly, by measuring reliability across subjects, we avoid having to present the narrative stimulus repeatedly to the same subjects. ISC was evaluated in the correlated components of the EEG (Dmochowski et al., 2012, 2014). The goal of correlated component analysis here is to find linear combinations of electrodes (one could think of them as virtual sensors or “sources” in the brain) that are maximally correlated between subjects. The basic idea of the technique is summarized in Figure 1. Correlated components are similar to principal components except that the projections of the data capture maximal correlation between datasets rather than maximal variance within a given dataset. This concept of maximizing correlations is the same as in canonical correlation analysis (Hotelling, 1936), with the only difference being that the datasets are in the same space and share the same projection vectors. Here, components are found by solving an eigenvalue problem similar to PCA and other component extraction techniques (Parra and Sajda, 2003; de Cheveigné and Parra, 2014). The technique requires calculation of the pooled between-subject cross-covariance, as follows:

$$R_b = \frac{1}{N(N-1)} \sum_{k=1}^{N} \sum_{l=1,\, l \neq k}^{N} R^{kl},$$

and the pooled within-subject covariance, as follows:

$$R_w = \frac{1}{N} \sum_{k=1}^{N} R^{kk},$$

where

$$R^{kl} = \sum_{t} \left(x^{k}(t) - \bar{x}^{k}\right)\left(x^{l}(t) - \bar{x}^{l}\right)^{\top}$$

measures the cross-covariance of all electrodes in subject $k$ with all electrodes in subject $l$. Vector $x^k(t)$ represents the ($D = 64$) scalp voltages measured at time $t$ in subject $k$, $\bar{x}^k$ is their mean value in time, and $N$ is the number of subjects. The component projections that capture the largest correlation between subjects are the eigenvectors $v_i$ of the matrix $R_w^{-1} R_b$ with the largest eigenvalues $\lambda_i$, as follows:

$$R_w^{-1} R_b \, v_i = \lambda_i v_i$$

Before computing eigenvectors, we regularized the pooled within-subject covariance matrix using shrinkage to improve robustness to outliers (Blankertz et al., 2011): $R_w \leftarrow (1 - \gamma) R_w + \gamma \bar{\lambda} I$, where $\bar{\lambda}$ is the mean eigenvalue of $R_w$ and we selected $\gamma = 0.5$.
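The component extraction described above can be sketched in a few lines of NumPy/SciPy. This is an illustrative sketch, not the authors' code, and the function name is hypothetical. Two implementation choices are ours: the sum over all pairs $k \neq l$ is obtained without a double loop from the identity $\sum_{k \neq l} R^{kl} = S S^\top - \sum_k R^{kk}$ with $S = \sum_k (x^k - \bar{x}^k)$, and the eigenvectors of $R_w^{-1} R_b$ are computed as the generalized symmetric eigenproblem $R_b v = \lambda R_w v$ via `scipy.linalg.eigh(a, b)`, which has the same eigenvectors. The mean eigenvalue used for shrinkage is $\operatorname{tr}(R_w)/D$.

```python
import numpy as np
from scipy.linalg import eigh

def corrca(X, gamma=0.5):
    """Correlated component analysis (sketch).

    X: array (N subjects, D channels, T samples).
    Returns eigenvalues in descending order and projection vectors V (columns).
    """
    N, D, _ = X.shape
    Xc = X - X.mean(axis=2, keepdims=True)        # x^k(t) - mean over time
    Rw_sum = np.einsum("kdt,ket->de", Xc, Xc)      # sum_k R^{kk}
    S = Xc.sum(axis=0)                             # sum_k (x^k(t) - xbar^k)
    Rw = Rw_sum / N                                # pooled within-subject covariance
    Rb = (S @ S.T - Rw_sum) / (N * (N - 1))        # pooled over all pairs k != l
    lam_bar = np.trace(Rw) / D                     # mean eigenvalue of Rw
    Rw_reg = (1 - gamma) * Rw + gamma * lam_bar * np.eye(D)  # shrinkage
    # Rb v = lambda Rw v  <=>  Rw^{-1} Rb v = lambda v
    evals, V = eigh(Rb, Rw_reg)
    order = np.argsort(evals)[::-1]                # eigh returns ascending order
    return evals[order], V[:, order]
```

Usage: for data from one experiment stacked as `(subjects, 64, samples)`, `evals, V = corrca(X)` returns the projection vectors, and the first three columns of `V` correspond to the three strongest components used in this study.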

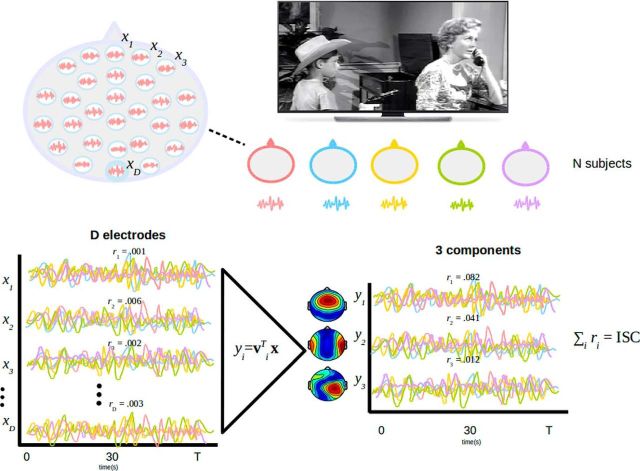

Figure 1.

Overview of the neural reliability measure. Neural responses are recorded from n subjects during presentation of a naturalistic stimulus. Each subject provides time courses x(t) recorded on D electrodes (D = 64). Correlations across subjects in each of these electrodes, $x_d(t)$, are small ($r_d < 0.01$). The data are projected using projection vectors $v_i$, which maximize the correlations $r_i$. In the first component projections, $y_i$, correlations are larger (although still small in absolute terms, they are now substantially above chance; see Fig. 3). ISC is measured as the sum of the correlations of the first few correlated components (the first three in this study).

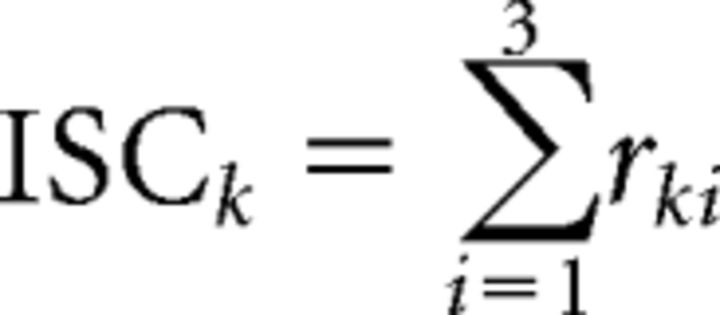

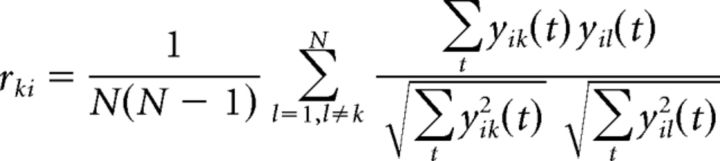

Within- and between-subject covariances and the resulting component vectors $v_i$ were computed separately for each experiment, combining data from all subjects, all stimuli, and both attention conditions. To determine how reliable the EEG responses of single subjects are, we measured how similar they are to the responses of the group after projecting the data onto the component vectors $v_i$. ISC was then calculated as the correlation coefficient of these projections, separately for each stimulus presentation and each component, and averaged over all possible pairs of subjects involving an individual subject. Therefore, for each stimulus, attention condition, and subject $k$, we obtained a measure of reliability as the ISC summed over components, as follows:

$$\mathrm{ISC}_k = \sum_{i=1}^{3} r_i^{k}$$

where

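$$r_i^{k} = \frac{1}{N-1} \sum_{l=1,\, l \neq k}^{N} \frac{\sum_t y_i^{k}(t)\, y_i^{l}(t)}{\sqrt{\sum_t \left(y_i^{k}(t)\right)^2 \sum_t \left(y_i^{l}(t)\right)^2}}$$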

are the conventional Pearson correlation coefficients, here averaged across pairs of subjects and computed for the component projections $y_i^k(t) = v_i^{\top}\left(x^k(t) - \bar{x}^k\right)$.
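The per-subject ISC can be computed directly from these definitions. The NumPy sketch below takes projection vectors `V` as given (for example from a correlated component analysis); because the projections are zero-mean in time, each Pearson coefficient reduces to an inner product of unit-norm time courses. Function and argument names are hypothetical.

```python
import numpy as np

def isc_per_subject(X, V, n_comp=3):
    """Per-subject ISC from component projections (sketch).

    X: (N subjects, D channels, T samples); V: (D, >= n_comp) projection
    vectors, columns ordered by eigenvalue. Returns (isc, r) where
    r[i, k] is r_i^k and isc[k] = sum_i r[i, k].
    """
    N = X.shape[0]
    Xc = X - X.mean(axis=2, keepdims=True)              # remove temporal mean
    Y = np.einsum("dc,kdt->kct", V[:, :n_comp], Xc)     # y_i^k(t)
    Yn = Y / np.linalg.norm(Y, axis=2, keepdims=True)   # unit norm over time
    corr = np.einsum("kct,lct->ckl", Yn, Yn)            # Pearson r for each pair (k, l)
    # Average over l != k by removing the self-correlation on the diagonal
    r = (corr.sum(axis=2) - np.einsum("ckk->ck", corr)) / (N - 1)
    return r.sum(axis=0), r
```

If every subject had identical responses, each $r_i^k$ would be 1 and the summed ISC would equal the number of components (3 here); independent responses give values near 0.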

We selected the strongest three correlated components (i = 1… 3) and ignored smaller components because they are close to the chance level of correlation (C3 is already close to chance levels; see Fig. 3). In Figures 2, 6, and 7, we report ISCs summed over these three components to capture overall reliability. In Figure 3, we analyzed the three components separately. The corresponding “forward models” showing the spatial distribution of the correlated activity were also computed (see Fig. 3) using established methods (Parra et al., 2005; Haufe et al., 2014).
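For reference, the forward model of Haufe et al. (2014) for a set of projection vectors $V$ is $A = R V (V^\top R V)^{-1}$, where $R$ is a data covariance matrix; column $i$ of $A$ is the scalp pattern associated with component $i$. Which covariance to plug in is an assumption in the sketch below (the pooled within-subject covariance $R_w$ is one option); the function name is hypothetical.

```python
import numpy as np

def forward_model(R, V):
    """Scalp patterns A = R V (V^T R V)^{-1} (Haufe et al., 2014).

    R: (D, D) data covariance; V: (D, C) projection vectors.
    Column i of the result is the spatial pattern of component i.
    """
    RV = R @ V
    return RV @ np.linalg.inv(V.T @ RV)
```

Unlike the projection vectors themselves, which can have counterintuitive spatial structure because they also suppress noise, these patterns indicate how each component's activity appears on the scalp, which is why forward models are what is usually plotted as topographies.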

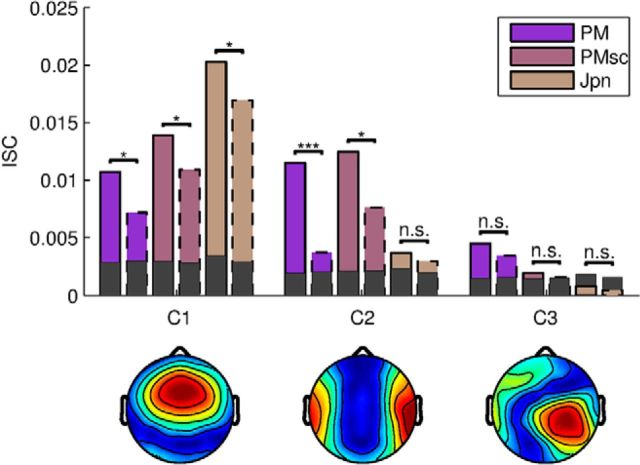

Figure 3.

Attentional modulation of ISC depends on narrative coherence and differs by component. Top, For the auditory stimuli with no narrative (PMsc and Jpn), the first component dominates the overall ISC. Strongest attentional modulation of ISC is observed for the second component, in particular for the narrative stimulus (PM) (solid and dashed correspond to attend and count conditions). For the non-narrative stimuli, ISC is at chance levels (marked by dark-shaded bars) for the third component. Bottom, Scalp topographies (“forward model”) indicate that each component captures a different neural process. These topographies for audio-only stimuli are similar to those found previously in audiovisual stimuli (Dmochowski et al., 2012, 2014).

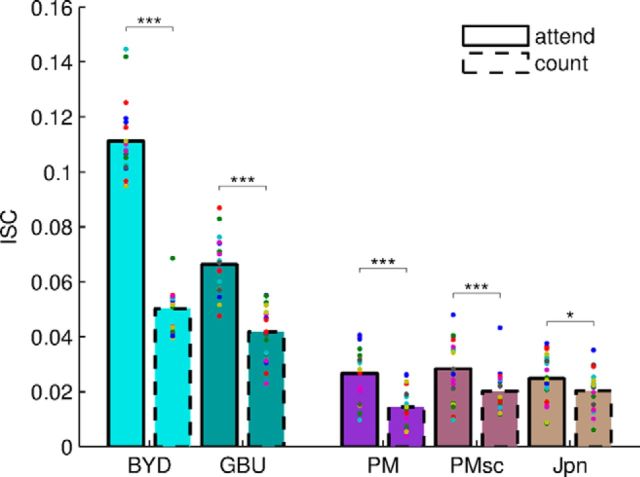

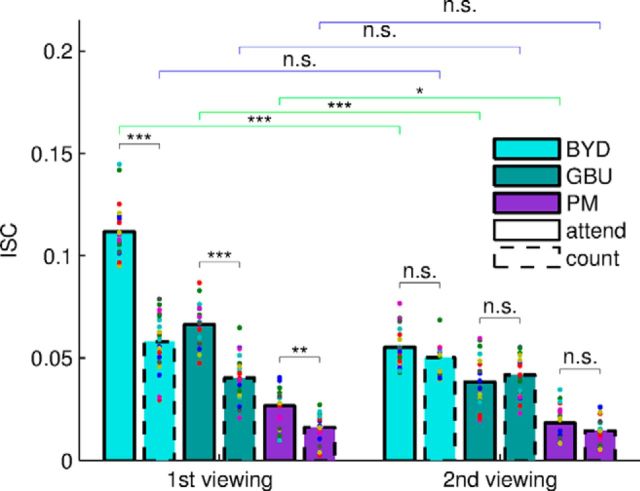

Figure 2.

ISC is modulated by attention for audiovisual stimuli and, to a lesser degree, for audio-only stimuli. ISC for individual subjects (colored dots; bars indicate the mean across subjects) differs significantly between attend (solid bars) and count conditions (dashed bars) and between auditory and audiovisual stimuli. Audiovisual stimuli (blue/green) and audio-only stimuli (purple/brown) were presented to two separate cohorts of 20 subjects. Audiovisual: BYD and GBU; auditory: PM, PMsc, Jpn. For the audio-only stimuli, ISC was significantly lower and less modulated by attention (see text). The ISC measure for each subject is obtained by summing the correlation in each of the three most correlated components. Pairwise comparisons here used a within-subject t test (*q < 0.05, **q < 0.01, ***q < 0.001).

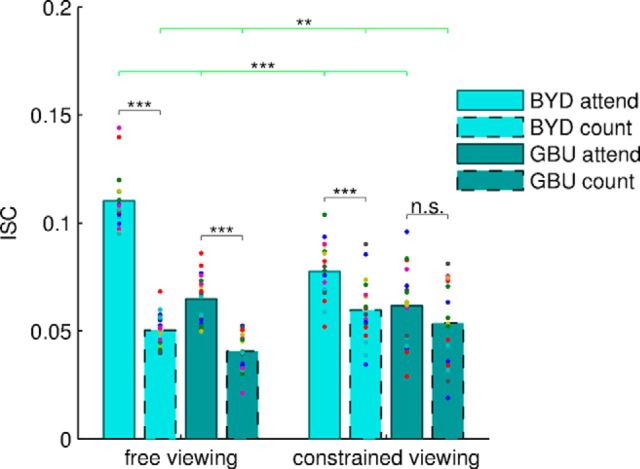

Figure 6.

The attentional effect on ISC is lessened when eye movements are constrained. EEG ISC increases when eye gaze is constrained in the count condition and decreases in the attend condition (green horizontal lines, repeated-measures ANOVA; for p-values, see text). Follow-up pairwise comparisons are shown for attentional modulation (black horizontal lines; BYD-free: q < 10⁻¹⁴; GBU-free: q < 10⁻⁵; BYD-constrained: q < 10⁻⁴; GBU-constrained: q = 0.061).

Figure 7.

ISC is reduced by counting in a separate group also viewing the stimuli for the first time. An additional group of 20 subjects was presented with three stimuli with the order of attend and count conditions reversed. Data are grouped to compare attention conditions across groups with a common order of presentation. Both counting and repeated viewing significantly reduce ISC from the levels observed for the attentive first viewing, but once ISC was reduced by one of the two manipulations, there was no further drop in ISC. Follow-up two-sample comparisons are shown for attentional modulation (black horizontal lines; BYD first viewing: q = 10⁻¹³; GBU first viewing: q = 10⁻⁷; PM first viewing: q = 0.0012). Follow-up two-sample pairwise comparisons are shown for the order effect, first vs second viewing (green horizontal lines, attend task: BYD: q = 10⁻¹⁵; GBU: q = 10⁻⁷; PM: q = 0.034; blue horizontal lines: count task).

Chance-level ISC can be determined using phase-randomized surrogate data. To do so, the phase of the EEG time series is randomized in the frequency domain following Theiler et al. (1992). This method generates new time series whose temporal fluctuations are no longer aligned in time with the original data and thus do not correlate across subjects. Chance values of correlation nevertheless remain the same, because they depend solely on the spatiotemporal correlation structure of the data, which this phase-randomization technique preserves. After this randomization, we followed the same procedure as with the original data: within- and between-subject covariance matrices were computed, the signals were projected onto the eigenvectors, and ISC values were computed. Significance was determined by comparing the ISC of the original data to the mean ISC of 100 sets of such phase-randomized surrogate data.
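One common way to implement multivariate phase randomization in the spirit of Theiler et al. (1992) is to add the same random phase to every channel at each frequency. This keeps each channel's power spectrum and the cross-spectra between channels intact, and therefore also the zero-lag spatial covariance. The sketch below assumes this variant, drawing independent phases for each subject so that temporal alignment across subjects is destroyed; function names are hypothetical.

```python
import numpy as np

def phase_randomize(x, rng):
    """x: (D channels, T samples), real. Adds one random phase per frequency,
    shared by all channels, so auto- and cross-spectra are unchanged."""
    T = x.shape[1]
    Xf = np.fft.rfft(x, axis=1)
    phases = rng.uniform(0.0, 2.0 * np.pi, Xf.shape[1])
    phases[0] = 0.0                    # keep the DC term real
    if T % 2 == 0:
        phases[-1] = 0.0               # keep the Nyquist term real
    return np.fft.irfft(Xf * np.exp(1j * phases), n=T, axis=1)

def surrogate_dataset(X, rng):
    """X: (N subjects, D, T). Independent phase randomization per subject."""
    return np.stack([phase_randomize(x, rng) for x in X])
```

Chance-level ISC would then be obtained by running the same component extraction and ISC computation on each surrogate dataset and averaging over surrogates (100 in this study).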

Apart from preprocessing, we correlate the raw EEG signals here. Correlations therefore measure fluctuations of electric potential that are consistent in phase across subjects; in EEG these are referred to as “evoked responses.” In contrast, oscillatory fluctuations whose phase is not stimulus locked do not contribute to the present correlation measure. Therefore, ISC here measures phase-locked evoked responses and does not capture the envelope of oscillatory power, typically referred to as “induced” activity. To capture such activity, one has to band-pass filter the signal; we did this for the alpha band as described below. We focus mostly on evoked activity because of the remarkable observation that it is reliably reproduced for naturalistic stimuli (Dmochowski et al., 2014). We used ISC as a measure of reliability (as opposed to event-related potentials, ERPs) because naturalistic stimuli do not have well defined events on which to trigger an ERP analysis, and we did not want to repeat the stimulus many times for the purpose of averaging.

ISC to the attentive group.

For the purpose of discriminating between task conditions (attend vs count; see Figs. 4, 5), correlated component vectors $v_i$ were extracted from the attend group only, separately for each stimulus. We then projected the data onto these components and measured correlations between each individual (to be classified as attending or counting) and all other members of the attend group. The resulting measure, which we call ISC-A, is the average of the correlation coefficients between each test subject and all attend subjects. As before, ISC-A sums correlation across the first three components. Correlation is thus measured within condition and across conditions for data from the attend and count conditions, respectively. The notion here is that normally attending subjects (attend condition) serve as a normative group to ascertain attentional state: ISC-A measures how similar a given recording is to those of the individuals in the attentive group. To avoid training biases in the component extraction, data from the subject being tested were excluded from the component extraction step. Classification performance for task (attend vs count) was assessed using the standard metric of area under the receiver operating characteristic curve (Az of ROC). Chance level for Az was determined using surrogate data with randomly shuffled task labels (attend/count). For each shuffle, Az was computed following the identical procedure, starting with extraction of the correlated components from the attend-labeled group and continuing with all subsequent steps as outlined above. Significance levels were determined by comparing the observed Az with 10,000 renditions of randomized task labels.
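Az equals the probability that a randomly chosen attend recording has a higher ISC-A than a randomly chosen count recording (the Mann-Whitney formulation of the ROC area, with ties counted as one half). The sketch below computes Az this way, together with a label-shuffling p-value. Unlike the procedure described above, it keeps the ISC-A scores fixed instead of re-extracting the components for every shuffle, so it is a simplification; function names are hypothetical.

```python
import numpy as np

def auc(scores_pos, scores_neg):
    """ROC area via the Mann-Whitney statistic (ties count 1/2)."""
    pos = np.asarray(scores_pos, dtype=float)[:, None]
    neg = np.asarray(scores_neg, dtype=float)[None, :]
    return ((pos > neg).sum() + 0.5 * (pos == neg).sum()) / (pos.size * neg.size)

def auc_permutation_p(scores, labels, n_perm=10000, seed=0):
    """Observed Az and one-sided permutation p-value (labels: 1 attend, 0 count).
    Simplification: scores are held fixed across shuffles."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    observed = auc(scores[labels], scores[~labels])
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(labels)
        null[i] = auc(scores[perm], scores[~perm])
    # +1 in numerator and denominator counts the observed labeling itself
    return observed, (1 + np.sum(null >= observed)) / (1 + n_perm)
```

With 10,000 shuffles, the smallest attainable p-value of this estimator is about 10⁻⁴.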

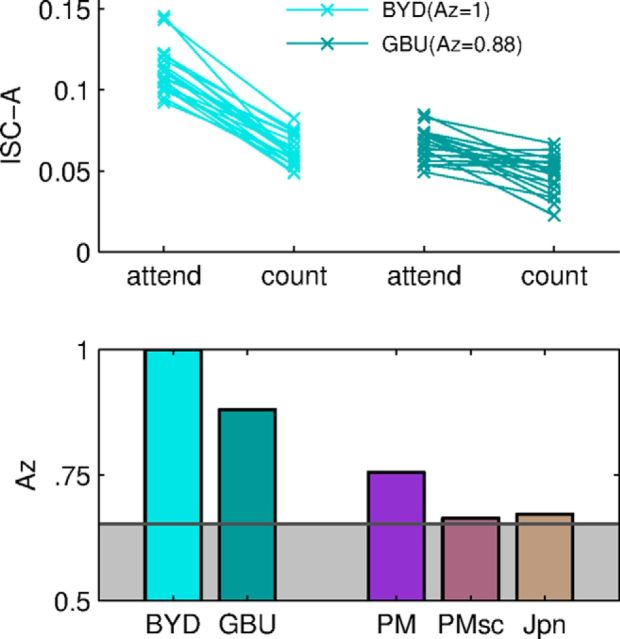

Figure 4.

Neural reliability can be used to identify the attentional state of individual subjects by comparing their evoked responses with those of an attentive group. Top, ISC-A is strongly modulated by task (attend vs count) for individual subjects (one line per subject) for the audiovisual stimuli presented here (BYD and GBU as in Fig. 2). Bottom, Prediction performance of attentional state measured as Az for the same audiovisual and auditory stimuli shown in Figure 2. The gray-shaded area marks values that are not statistically significant (p > 0.05).

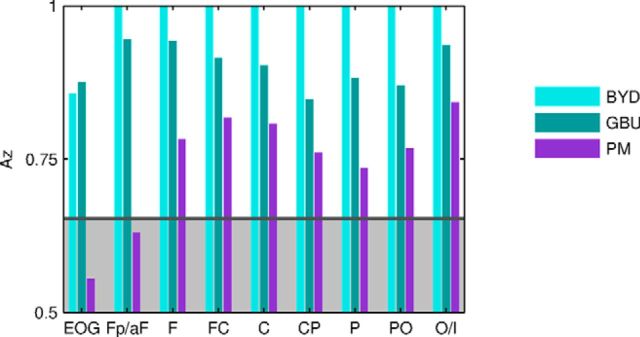

Figure 5.

Eye movement artifacts do not explain the modulation of EEG ISC. Prediction performance of attentional state using ISC-A was computed using subgroups of eight electrodes, from most anterior (FP/AF) to most posterior (O/I), to measure spatial differences in EEG reliability. EOG electrodes alone can predict attentional state, suggesting that attention changes eye movement patterns. Discrimination performance using EEG electrodes (with EOG regressed out) improves moving posteriorly, away from the eyes, indicating that eye movement artifacts themselves are unlikely to drive ISC. The gray-shaded area marks values that are not statistically significant (p > 0.05).

Statistical analysis.

All statistical tests were performed using subjects as independent samples; that is, each subject provided one measurement of neural reliability per stimulus and condition, namely, the correlation of the evoked activity of that subject with the rest of the group (ISC). Unless otherwise noted, comparisons across conditions involving the same subjects always used within-subject tests: paired t tests or repeated-measures ANOVA. Details of the planned comparisons using ANOVA are described in the Results section. For post hoc pairwise comparisons, we used paired t tests, and p-values were corrected for multiple comparisons using the Benjamini–Hochberg–Yekutieli false-discovery rate procedure (Benjamini and Yekutieli, 2001); in these cases, we report the q-value. For planned comparisons, we report uncorrected p-values. ANOVAs and t tests were performed using standard MATLAB functions, in particular anovan() for all ANOVAs. The Az calculation and the test for significance of Az differences (DeLong et al., 1988) were performed using an in-house program written in MATLAB.
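The Benjamini–Yekutieli adjustment multiplies each sorted p-value $p_{(j)}$ by $m\,c(m)/j$, where $m$ is the number of tests and $c(m) = \sum_{i=1}^{m} 1/i$, and then enforces monotonicity from the largest p-value downward. The sketch below is an illustrative NumPy implementation (the function name is hypothetical); if statsmodels is available, `statsmodels.stats.multitest.multipletests(p, method="fdr_by")` is expected to return the same adjusted values.

```python
import numpy as np

def by_qvalues(p):
    """Benjamini-Yekutieli adjusted p-values (q-values), valid under
    arbitrary dependence between tests."""
    p = np.asarray(p, dtype=float)
    m = p.size
    c_m = np.sum(1.0 / np.arange(1, m + 1))             # harmonic correction c(m)
    order = np.argsort(p)
    ranked = p[order] * m * c_m / np.arange(1, m + 1)   # p_(j) * m * c(m) / j
    # q_(j) = min over i >= j of ranked_i (running minimum from the top)
    q_sorted = np.minimum.accumulate(ranked[::-1])[::-1]
    q = np.empty(m)
    q[order] = np.minimum(q_sorted, 1.0)
    return q
```

For example, `by_qvalues([0.01, 0.04, 0.03, 0.2])` gives approximately `[0.083, 0.111, 0.111, 0.417]`.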

Alpha power.

To compare the effects obtained with the ISC measures to existing, established markers of attentional engagement, we also analyzed alpha-band (∼10 Hz) activity in the data. To obtain alpha power, the signal was projected onto each correlated component and band-pass filtered using a complex-valued Morlet filter with a center frequency of 10 Hz and a bandwidth of one octave (Q-factor = 1); the absolute value was then squared and averaged over all samples of each stimulus and condition. The resulting power was normalized by broadband power (0–256 Hz) for each viewing in each component and then summed over the first three correlated components, because there was no notable difference across components in the modulation of alpha power by attention.
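A minimal sketch of the alpha-power computation for one component time course is shown below. The exact wavelet parameterization used in the study is not specified beyond the center frequency and Q-factor, so the definition here is an assumption: the Gaussian frequency response is given a full width at half maximum of fc / Q Hz, and the wavelet is normalized to unit gain at fc. Broadband power is taken as the mean squared signal. The function name is hypothetical.

```python
import numpy as np

def normalized_alpha_power(y, fs, fc=10.0, q=1.0):
    """Alpha power of a component time course y, normalized by broadband power.
    Assumed: the Gaussian frequency response has a FWHM of fc / q Hz."""
    fwhm = fc / q
    sigma_f = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))   # Hz
    sigma_t = 1.0 / (2.0 * np.pi * sigma_f)               # s
    n_half = int(np.ceil(4.0 * sigma_t * fs))             # truncate at +/- 4 sigma
    t = np.arange(-n_half, n_half + 1) / fs
    gauss = np.exp(-t**2 / (2.0 * sigma_t**2))
    wavelet = gauss * np.exp(2j * np.pi * fc * t)          # complex Morlet
    wavelet /= gauss.sum()                                 # unit gain at fc
    z = np.convolve(y, wavelet, mode="same")               # complex band-pass output
    alpha = np.mean(np.abs(z) ** 2)
    broadband = np.mean(y ** 2)
    return alpha / broadband
```

In this sketch, a unit-amplitude 10 Hz cosine yields a ratio close to 0.5, because the complex filter keeps only the positive-frequency half of the cosine, while a 40 Hz cosine yields a ratio near 0. Following the text, the per-component values would then be summed over the first three components.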

Results

Attention modulates neural reliability for audiovisual and audio-only naturalistic stimuli

In a first experiment, we presented two narrative audiovisual stimuli of 5–6 min duration to 20 subjects, who were asked either to attend to the stimulus naturally or to silently count backwards in steps of 7, a task that makes it virtually impossible to attend to the narrative. In a second experiment, we presented three audio-only naturalistic stimuli to a new set of 20 subjects undergoing the same attention manipulation. EEG was recorded and analyzed to assess the reliability of evoked responses across subjects using ISC (see Materials and Methods and Fig. 1). For each subject, we obtained an ISC measure reflecting the similarity of that individual's evoked responses to those of the others in the group who were presented with the same stimulus under the same attention condition.

As predicted, neural responses were more reliable (i.e., ISC was higher) when subjects were attending normally to the stimulus (Fig. 2). A two-way ANOVA with factors of task (attend vs count) and modality (audiovisual vs audio-only) showed a strong effect of task condition (F = 439.17, df = 1, p < 10⁻²⁶) and a strong effect of presentation modality (across-subjects comparison, F = 412.91, df = 1, p < 10⁻²³). The most reliable responses were elicited by audiovisual stimuli when subjects attended normally. An interaction effect indicated that the modulation of reliability (ISC) by attentional state was stronger for the audiovisual stimuli than for the audio-only stimuli (F = 201.16, df = 1, p < 10⁻¹⁹). Follow-up pairwise comparisons showed that ISC was significantly higher during attentive viewing than during inattentive viewing (count condition) for all narrative stimuli (BYD: t = 25.19, df = 19, q < 10⁻¹⁴; GBU: t = 7.53, df = 19, q < 10⁻⁵; PM: t = 6.33, df = 19, q < 10⁻⁴). Weaker attentional modulation was also observed for the non-narrative stimuli: PMsc, in which words were scrambled in time such that no meaningful story could be discerned (t = 4.24, df = 19, q < 0.001), and Jpn, a non-intelligible Japanese story (t = 2.7, df = 19, q = 0.014).

Correlated components are modulated by attention and narrative

To determine which factors affect neural reliability, we analyzed the effect of narrative cohesiveness and differentiated between the correlated components. Although the audiovisual stimuli also varied in narrative content (BYD may have been more suspenseful than GBU), we focused this analysis on the auditory-only stimuli, which in the second experiment were explicitly selected to vary in narrative cohesiveness: PM had a cohesive narrative, PMsc contained individually meaningful words without narrative cohesion, and Jpn consisted of speech that was unintelligible to our subjects. In addition, different correlated components have different spatial scalp distributions (Fig. 3, bottom), suggesting that they represent different neural processes. We wondered to what extent each of these neural generators depended on attentional state and narrative type. To answer this question, we report ISC separately for each component for the three auditory stimuli (Fig. 3, top). By definition, the correlated component algorithm captures the highest ISC in the first component. A two-way repeated-measures ANOVA showed that the first component was modulated by task (attend vs count) for all stimuli (F = 65.34, df = 1, p < 10⁻⁷), a modulation that was significant in follow-up pairwise comparisons (PM: t = 3.31, df = 19, q = 0.009; PMsc: t = 2.64, df = 19, q = 0.016; Jpn: t = 0.90, df = 19, q = 0.016). For the second component, attentional modulation was highly significant for the narrative stimulus (PM: t = 7.1, df = 19, q < 10⁻⁵) and somewhat weaker for PMsc, which carries meaning at the word level (t = 3.1, df = 19, q = 0.017). For Jpn, which was entirely unintelligible, there was no attentional modulation in the second component (q = 0.69). ISC values for the third and higher components (data not shown) were close to chance level and were not significantly modulated by attention.
Overall, the strongest attentional modulation was observed for the second component in the presence of a cohesive narrative.
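To make the per-component ISC concrete, here is a minimal, dependency-free Python sketch. It assumes each subject's EEG has already been projected onto a single correlated component (one time course per subject) and computes ISC as the summed between-subject covariance divided by (N − 1) times the summed within-subject variance, i.e., the mean pairwise covariance over the mean variance. This ratio is an assumption modeled on the correlated-component ISC of Dmochowski et al. (2012); the function names and toy data are hypothetical, and the study's actual pipeline (filtering, regularization, estimation of the projection vectors) is not reproduced here.

```python
from itertools import permutations
from statistics import fmean


def _cov(a, b):
    """Sample covariance (normalized by T - 1) of two equal-length time courses."""
    ma, mb = fmean(a), fmean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def component_isc(components):
    """ISC of one correlated component across N subjects.

    components: list of N time courses (one per subject, equal length T), assumed
    to be each subject's EEG already projected onto the same component.
    Returns sum_{i != j} cov(y_i, y_j) / ((N - 1) * sum_i var(y_i)),
    i.e. the mean pairwise covariance divided by the mean variance.
    """
    n = len(components)
    if n < 2:
        raise ValueError("need at least two subjects")
    # Every ordered pair (i, j) with i != j contributes a between-subject term.
    between = sum(_cov(a, b) for a, b in permutations(components, 2))
    # Each subject's own variance contributes a within-subject term.
    within = sum(_cov(a, a) for a in components)
    return between / ((n - 1) * within)


# Usage with toy (hypothetical) data: three subjects with identical responses.
same = [1.0, 2.0, 3.0, 2.0]
print(component_isc([same, same, same]))
```

Identical time courses give an ISC of 1 (up to floating-point rounding), a perfectly anti-correlated pair gives −1, and unrelated components, like the third and higher components above, hover near 0.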

Reliability of EEG is a strong predictor of attentional state for audiovisual narrative stimuli

As reported above, the modulation of ISC due to attentional state (attend vs count) is substantial, in particular for the audiovisual stimuli. We wondered whether it is possible to infer the attentional state of an individual subject based solely on the ISC of the neural activity evoked by the stimulus. In other words, can we tell from their brain activity whether someone is paying attention to a naturalistic stimulus? To test this, we compared the evoked activity of an individual viewer with the activity of the attentive group. Correlated components were extracted from the attentive group and then used to measure the correlation between an individual subject and the attentive individuals (denoted here as ISC-A to distinguish it from the measure used above, which measured correlation within a condition). As expected, the responses of an individual were much more similar to the attentive group (i.e., had higher ISC-A) when that individual was attending to the stimulus than when not (Fig. 4, top). Treating ISC-A as a predictor of attentional state yields very high classification performance. The area under the receiver operating characteristic curve (Az), a standard metric of classification performance, is highly significant and reaches values as high as Az = 1.0 for BYD and Az = 0.88 for GBU (Fig. 4, bottom). Prediction of attentional state was lower for the audio-only narrative (PM: Az = 0.76) and not significant for the stimuli lacking narrative content (PMsc and Jpn: p > 0.05).
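The ISC-A classification can be sketched as follows, assuming the component projections have already been learned from the attentive group. The hypothetical `isc_a` averages the Pearson correlation between one subject's component time course and each attentive group member (the caller excludes the subject itself); this simplifies whatever normalization the study used. `roc_az` computes Az in its Mann–Whitney form: the probability that a randomly drawn attend score exceeds a randomly drawn count score, with ties counted as one half.

```python
from statistics import fmean


def pearson(a, b):
    """Pearson correlation of two equal-length time courses."""
    ma, mb = fmean(a), fmean(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = (sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)) ** 0.5
    if den == 0:
        raise ValueError("constant time course has undefined correlation")
    return num / den


def isc_a(subject, attentive_group):
    """Hypothetical ISC-A: mean correlation of one subject's component time course
    with each member of the attentive group (caller excludes the subject itself)."""
    return fmean(pearson(subject, other) for other in attentive_group)


def roc_az(attend_scores, count_scores):
    """Area under the ROC curve (Az) in Mann-Whitney form: the probability that a
    random attend score exceeds a random count score; ties count one half."""
    wins = 0.0
    for a in attend_scores:
        for c in count_scores:
            if a > c:
                wins += 1.0
            elif a == c:
                wins += 0.5
    return wins / (len(attend_scores) * len(count_scores))


# Toy (hypothetical) ISC-A values: complete separation of the two conditions.
print(roc_az([0.12, 0.15, 0.11], [0.04, 0.06, 0.05]))  # 1.0
```

Az = 1.0 means every attend score lies above every count score, which is what perfect discrimination for BYD corresponds to; Az = 0.5 is chance.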

Attentive subjects have correlated eye movements but eye movement artifacts do not account for attentional modulation of neural reliability

During active scene perception, the eyes of different viewers move from one location to another in a reliable fashion, which can result in significant correlation of eye movement trajectories across subjects (Hasson et al., 2008c). It is possible that these eye movement paths are disrupted when viewers no longer naturally attend to the stimulus, so we suspected that the ISC of eye movements is also modulated by attention. Indeed, we found that one can distinguish between task conditions using only the EOG electrodes (Fig. 5, EOG), which capture vertical and horizontal eye movements with reasonable fidelity. Because eye movement artifacts are also present in the EEG electrodes, we wanted to rule out that the results reported above are a consequence of inadequate removal of these artifacts. We therefore reanalyzed the data using subsets of EEG electrodes along the anterior-to-posterior axis. If the attentional modulation of ISC were driven entirely by eye movement artifacts, which are strongest at frontal sites, we would expect the attentional modulation to diminish at more posterior electrodes. However, this was not the case for either the audiovisual or the audio-only stimuli (Fig. 5), ruling out volume conduction of eye movement artifacts as a spurious cause of the attentional modulation observed in the EEG.
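The anterior-to-posterior control can be outlined as below. The sketch assumes a hypothetical mapping from electrode labels to an anterior-posterior coordinate (larger values more frontal) and a caller-supplied `isc_for_subset` function that recomputes ISC using only the listed electrodes; neither the coordinate convention nor the number of bands is taken from the study. If eye artifacts drove the effect, the attend-minus-count difference returned per band would shrink toward the posterior bands.

```python
def split_anterior_posterior(positions, n_groups):
    """Split electrodes into n_groups contiguous bands, ordered front to back.

    positions: dict mapping electrode label -> anterior-posterior coordinate;
    larger values are assumed (hypothetically) to be more anterior.
    """
    if not 1 <= n_groups <= len(positions):
        raise ValueError("n_groups must be between 1 and the number of electrodes")
    ordered = sorted(positions, key=positions.get, reverse=True)  # most anterior first
    size, extra = divmod(len(ordered), n_groups)
    groups, start = [], 0
    for g in range(n_groups):
        stop = start + size + (1 if g < extra else 0)  # spread remainder over first bands
        groups.append(ordered[start:stop])
        start = stop
    return groups


def modulation_by_band(groups, isc_for_subset):
    """Attend-minus-count ISC for each electrode band.

    isc_for_subset(labels, condition) is a caller-supplied (hypothetical) function
    that recomputes ISC using only the given electrodes for "attend" or "count".
    """
    return [isc_for_subset(g, "attend") - isc_for_subset(g, "count") for g in groups]


pos = {"Fp1": 0.9, "Fz": 0.5, "Cz": 0.0, "Pz": -0.5, "Oz": -0.9}  # hypothetical coordinates
print(split_anterior_posterior(pos, 2))  # [['Fp1', 'Fz', 'Cz'], ['Pz', 'Oz']]
```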

Constrained viewing diminishes attentional modulation

Although we ruled out eye movement artifacts as the driver of ISC modulation, it is still possible that similarity of eye movements across subjects leads to similar primary visual stimulation (as well as similar oculomotor activity), which in turn dominates the ISC in the EEG signals. To test for this, a third experiment used the same audiovisual stimuli (BYD and GBU) while restricting eye movements (Fig. 6). During both task conditions, 17 subjects were instructed to keep their gaze within a constrained area at the center of the screen (constrained viewing), ensuring that retinal visual input was similar across attention conditions. A two-way ANOVA with factors of task (attend vs count, repeated measures) and viewing condition (free vs constrained, between groups) showed that the main effect of attention remained robust (F = 221.67, df = 1, p < 10⁻¹⁵); however, there was a clear interaction between the two factors (F = 61.79, df = 1, p < 10⁻⁹). Although the attention effect was significant in the constrained viewing condition on its own, it was significantly smaller than in the free viewing condition. Importantly, we verified that subjects' points of gaze at any instant were in closer agreement across subjects during constrained viewing than during normal free viewing (for BYD, the time-averaged distance from the mean fixation point across subjects was 14.7 pixels during constrained viewing vs 44.5 pixels during free viewing). For the count condition considered alone, ISC increased slightly with constrained viewing (F = 7.56, df = 1, p = 0.0094, between groups), suggesting that some portion of the ISC relates to common retinal input. However, ISC in the attend condition decreased strongly with constrained viewing (F = 17.95, df = 1, p = 0.0002, between groups), even though retinal input was more similar across subjects.
This indicates that a large portion of the attentional modulation of ISC cannot be accounted for simply by common retinal input, and thus by early visual cortical representations.
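One plausible way to compute the gaze-agreement figure quoted above (14.7 vs 44.5 pixels for BYD) is sketched below: at each time sample, take the mean gaze position across subjects, measure each subject's Euclidean distance from it, and average over subjects and then over time. The input layout and the function name are assumptions; how the study handled blinks, missing samples, or the order of averaging is not specified in this section.

```python
from math import hypot
from statistics import fmean


def gaze_dispersion(gaze):
    """Time-averaged distance of each subject's gaze from the across-subject mean.

    gaze: list over time samples; each sample is a list of (x, y) gaze positions
    in pixels, one per subject (hypothetical layout, no missing samples).
    """
    per_sample = []
    for points in gaze:
        # Mean fixation point across subjects at this instant.
        cx = fmean(x for x, _ in points)
        cy = fmean(y for _, y in points)
        # Mean Euclidean distance of the subjects from that point.
        per_sample.append(fmean(hypot(x - cx, y - cy) for x, y in points))
    return fmean(per_sample)


print(gaze_dispersion([[(0.0, 0.0), (6.0, 8.0)]]))  # 5.0
```

Smaller values mean tighter agreement across subjects, so constrained viewing should yield a smaller dispersion than free viewing.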

Attentional modulation persists when controlling for order of presentation

In the experiments thus far, subjects attended normally to the stimulus during the first presentation and were distracted from it by counting during the second presentation. It is therefore possible that the observed drop in ISC results from repeating the stimulus rather than from reduced attentional engagement. Indeed, ISC is known to drop when a stimulus is repeated (Dmochowski et al., 2012), which we previously interpreted as an attentional effect (i.e., subjects are less interested in the stimulus after the initial viewing). To disambiguate the effect of counting from that of repeated presentation, we recorded from a new group of 20 subjects with the order reversed (first count, then attend) for two audiovisual stimuli and one auditory stimulus. Figure 7 compares these results with the previous data collected in the original order (first attend, then count). First and foremost, we confirmed the effect of attention using only first viewings: a two-way between-group ANOVA showed that the effects of attention and of stimulus were robust (task: F = 212.22, df = 1, p < 10⁻²⁷; stimulus: F = 314.23, df = 2, p < 10⁻⁴⁶). A separate ANOVA on the count conditions only, with viewing order and stimulus as factors, again showed an effect of stimulus but, interestingly, no effect of viewing order (between groups, F = 2.35, df = 1, p = 0.13). This indicates that ISC during counting is reduced regardless of order. For the attend condition, however, there was a large drop in ISC with the second viewing (two-way between-group ANOVA with order and stimulus as factors; order: F = 235.62, df = 1, p < 10⁻²⁸; stimulus: F = 305.12, df = 2, p < 10⁻⁴⁵). This extends our previous finding (Dmochowski et al., 2012) that neural responses become less reliable on the second viewing of a stimulus.
It further indicates that, despite having been instructed to ignore the stimulus the first time around, subjects do not engage with the content on the second viewing to a degree anywhere near that attained on a first, attended viewing.
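The between-group ANOVAs in this section test main effects such as viewing order (or task) and stimulus. A balanced two-way between-subjects ANOVA can be sketched as follows. It assumes equal cell sizes and independent observations, returns only F statistics with their degrees of freedom (p-values would come from the F distribution, e.g., `scipy.stats.f.sf`, omitted here to keep the sketch dependency-free), and is not the authors' analysis code.

```python
from statistics import fmean


def two_way_anova(cells):
    """F statistics for a balanced two-way between-subjects ANOVA.

    cells[i][j] holds the observations for level i of factor A (e.g. viewing
    order) and level j of factor B (e.g. stimulus); all cells need the same n >= 2.
    Returns {"A" | "B" | "AxB": (F, df_effect, df_error)}.
    """
    a, b, n = len(cells), len(cells[0]), len(cells[0][0])
    if n < 2 or any(len(row) != b for row in cells) or any(
            len(c) != n for row in cells for c in row):
        raise ValueError("design must be balanced with at least 2 observations per cell")
    cell_mean = [[fmean(c) for c in row] for row in cells]
    grand = fmean(m for row in cell_mean for m in row)  # equals the data mean when balanced
    mean_a = [fmean(row) for row in cell_mean]
    mean_b = [fmean(cell_mean[i][j] for i in range(a)) for j in range(b)]

    # Sums of squares for the main effects, the interaction and the error term.
    ss_a = n * b * sum((m - grand) ** 2 for m in mean_a)
    ss_b = n * a * sum((m - grand) ** 2 for m in mean_b)
    ss_ab = n * sum((cell_mean[i][j] - mean_a[i] - mean_b[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    ss_err = sum((x - cell_mean[i][j]) ** 2
                 for i in range(a) for j in range(b) for x in cells[i][j])
    df_a, df_b, df_ab, df_err = a - 1, b - 1, (a - 1) * (b - 1), a * b * (n - 1)
    if ss_err == 0:
        raise ValueError("no within-cell variance")
    ms_err = ss_err / df_err
    return {
        "A": (ss_a / df_a / ms_err, df_a, df_err),
        "B": (ss_b / df_b / ms_err, df_b, df_err),
        "AxB": (ss_ab / df_ab / ms_err, df_ab, df_err),
    }
```

With 2 orders and 3 stimuli this reproduces the degrees-of-freedom pattern reported above (df = 1 for order, df = 2 for stimulus).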

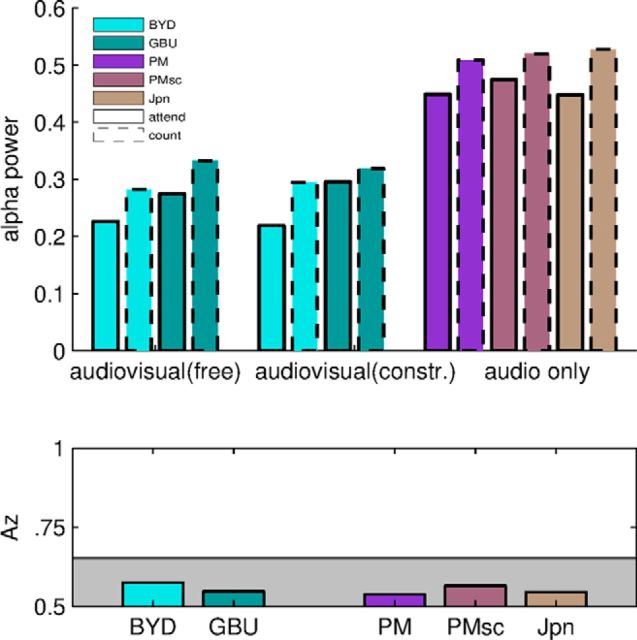

Alpha activity replicates results of ISC but with relatively weaker modulation

Attentional modulation has long been linked to changes in alpha-band activity (the power of oscillations around 10 Hz). To test whether our results are consistent with this conventional measure of attentional modulation, we analyzed alpha-band activity for all stimuli and conditions, summing alpha-band power over all three extracted components (Fig. 8, top). We performed a three-way ANOVA on the unconstrained viewing and listening conditions with the following factors: task (attend vs count), modality (audiovisual vs audio-only), and stimulus (BYD, GBU, PM, PMsc, Jpn). Alpha power was higher during the count condition than during the attend condition (F = 5.92, df = 1, p = 0.019), independent of modality or stimulus. Alpha power was also higher for the audio-only stimuli than for the audiovisual stimuli (F = 6.91, df = 1, p = 0.019). A separate three-way ANOVA on the audiovisual stimuli with task (attend vs count), viewing condition (free vs constrained), and stimulus (BYD vs GBU) as factors confirmed the attentional modulation of alpha-band power (F = 16.12, df = 1, p = 0.0003). However, alpha power did not differ between the free and constrained viewing conditions (F = 0.47, df = 1, p = 0.49). Alpha power also differed between BYD and GBU (F = 9.14, df = 1, p = 0.0047). All of these modulations in alpha power are consistent with those observed above using the reliability of evoked responses. We wondered how the attention-dependent modulation of alpha power compares with the modulation of ISC. To this end, we calculated how well attentional state can be determined across subjects from alpha power. Az values were below 0.56 for all stimuli (Fig. 8, bottom), which is not statistically significant classification performance (the gray area marks performance not significantly above chance, p < 0.05).
For the narrative stimuli, these values are significantly lower than the Az values obtained with ISC-A (Fig. 4; BYD: z = 4.52, p < 10⁻⁵; GBU: z = 4.13, p < 10⁻⁴; PM: z = 2.58, p = 0.0048), whereas for the stimuli lacking a narrative the difference is not significant (PMsc: z = 1.16, p = 0.1230; Jpn: z = 1, p = 0.16). The main reason for the poor ability of alpha-band power to detect attentional state may be the strong differences in power across subjects (subject as a random factor: F = 15.32, df = 47, p < 10⁻¹⁷). When alpha-band power is normalized per subject by the alpha power averaged across both viewings, its ability to discriminate attentional state improves but remains weaker than that of ISC-A (Az < 0.7 for all stimuli).
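The alpha-power measure and the per-subject normalization described above can be sketched as follows. The band edges (8–12 Hz in the test values), the naive DFT periodogram, and the function names are assumptions for illustration; this section does not specify the spectral estimator or windowing used. Normalization divides each subject's attend and count values by that subject's mean over both viewings, which removes large between-subject differences in overall alpha power before classification.

```python
import cmath
import math


def band_power(signal, fs, lo, hi):
    """Summed periodogram power of `signal` between lo and hi Hz (naive DFT)."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if lo <= freq <= hi:
            coef = sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))
            power += abs(coef) ** 2 / n
    return power


def normalize_per_subject(attend, count):
    """Divide each subject's attend and count alpha power by that subject's mean
    over the two viewings, so only the relative (within-subject) change remains."""
    out_attend, out_count = [], []
    for a, c in zip(attend, count):
        ref = (a + c) / 2
        out_attend.append(a / ref)
        out_count.append(c / ref)
    return out_attend, out_count
```

After normalization, every subject's attend and count values average to 1, so a classifier such as the ROC analysis sees only the within-subject change; the text reports that this helps but still leaves alpha power below ISC-A (Az < 0.7).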

Figure 8.

Modulation of alpha power confirms attentional effects, but the modulation is not sufficient to predict attentional state across individuals. Top, Alpha power is modulated by attention independent of stimulus, modality, or viewing condition. It is higher for auditory-only stimuli but does not differ between free and constrained viewing. Bottom, Classification performance between attend and count conditions based on alpha power. Given the variability of alpha power across subjects, the observed modulation with attention is not strong enough to distinguish attentional state across subjects (gray area indicates chance performance).

Discussion

In the present study, we demonstrated that attention strongly affects the reliability with which naturalistic stimuli are processed, as reflected in the ISC of human EEG (Fig. 1, ISC). ISC was highest and most strongly modulated by attention during free viewing of audiovisual stimuli (Fig. 2) compared with auditory stimuli (though see the caveat below), and for stimuli with a compelling narrative compared with stimuli that lacked meaning (Fig. 3). We further demonstrated that the correlation of an individual's EEG with a group of normally attending individuals was an extremely good predictor of attentional state (Fig. 4), achieving perfect discrimination (Az = 1.0) in the case of BYD.

ISC measures the reliability of neural processing across, rather than within, subjects (Fig. 1). This has the distinct advantage that it does not require repeated presentations of the same stimulus to a single subject, which is important because repeated presentation of a narrative stimulus in itself likely reduces attentional engagement (Dmochowski et al., 2012). In the present study, we demonstrated a strong attentional modulation of ISC both when comparing the first, attentive viewing with a second, inattentive viewing by the same subjects, and when comparing two separate groups who first attended or did not attend, thus ruling out potential order confounds. The magnitude of ISC relates directly to the reliability of evoked activity within individual subjects; that is, if the signal is not reliable in single subjects, then it will not be reliable across subjects. Whether the observed relationship between restricted attention and diminished evoked reliability results from a reduced magnitude of evoked responses or from an increase in trial-to-trial variability is something that we cannot address with a single rendition of the stimulus.

It has been established previously that saccade paths are correlated across viewers during the presentation of dynamic naturalistic stimuli (Hasson et al., 2008c). Consistent with this, we found that the correlation of eye movements between subjects decreased when viewers engaged in the counting task rather than naturally attending to the stimulus (Fig. 5). However, we ruled out the possibility that the attentional modulation of ISC is purely the result of this difference in the similarity of eye movement patterns, first by showing that ISC modulation does not decrease from anterior to posterior scalp sites, as would be characteristic of electrical eye movement artifacts (Fig. 5), and second by showing significant attentional modulation of neural reliability even during constrained viewing. This second control revealed an interesting interaction between attentional engagement and the constraining of eye position: whereas activity during engaged viewing became less reliable with constrained fixation, activity during disengaged counting became more reliable. This indicates that a portion of the ISC measure is driven by similarity in retinal input, although this was clearly not enough to account for the full attentional effect on neural reliability. At the same time, the reduction in neural reliability with constrained viewing is consistent with the known link between the attention and eye movement systems of the brain, which has been the subject of a long line of work in psychophysics (Kowler, 2011), animal electrophysiology (Awh et al., 2006), and human neuroimaging (Nobre et al., 2000). The present finding builds on this body of work by showing that this link is not limited to individual eye movements and discrete spatial fixation points, but is also an important determinant of whether a continuous sensory stream is attended to.
More generally, these results indicate that constraining eye movements in the presence of an attractive stimulus strongly interferes with the natural expression of attentional engagement in stimulus-guided eye movement patterns and the resultant similarity in cortical processing. It also suggests that maintaining fixation while engaging with a sensory stream is an inherently demanding task and thus attenuates the attention allotted to the stimulus in the attend condition. Anecdotally, participants reported that this task was difficult.

Over the past several decades, human EEG research has established that sensory responses indexed in both the transient and steady-state evoked potential are significantly modulated by attention to locations and features, consistent with sensory gain modulation (Hillyard et al., 1998; Andersen et al., 2008; Hopf et al., 2009; Kam et al., 2011). It is plausible that the correlated components showing attentional modulation in our study relate in part to those sensory response modulations. However, two important distinctions are noteworthy. First, the most correlated component, whose size and modulation were least contingent on narrative content and which may therefore be the most likely to reflect low-level processes, appears to be supramodal in that it is elicited and modulated in both the audio and audiovisual modalities (Dmochowski et al., 2012, 2014). Second, the attentional modulation observed here for naturalistic stimuli is considerably stronger than what is typically observed for transient or steady-state stimuli: for some stimuli, ISC differs by more than a factor of two between the attend and count conditions (Fig. 2).

The strongest ISC was observed for multisensory audiovisual stimuli, consistent with ISC studies using fMRI (Hasson et al., 2008c; Lerner et al., 2011). Although it is possible that the audio and audiovisual stimuli selected here coincidentally differed in their propensity to engage subjects attentionally, this result more likely relates to well-known neural processing enhancements for multisensory compared with unisensory stimuli (Giard and Peronnet, 1999; Molholm et al., 2002) and to the interplay between attention and multisensory integration (Talsma et al., 2010). It should be noted, however, that the current sample of stimuli (two audiovisual and three auditory) is not large enough to conclude that the attentional modulation of ISC is universally stronger for multisensory stimuli.

Intersubject reliability of neural responses using fMRI has been associated with a variety of higher-level processes such as memory encoding, emotional valence, language comprehension, temporal integration, level of consciousness, psychological state, and stimulus preference (Furman et al., 2007; Hasson et al., 2008a; Jääskeläinen et al., 2008; Wilson et al., 2008; Dumas et al., 2010; Nummenmaa et al., 2012; Dmochowski et al., 2014; Lahnakoski et al., 2014; Naci et al., 2014). In our own work using EEG, we have suggested a relationship between ISC and engagement with the stimulus (Dmochowski et al., 2012). However, the present study is the first to explicitly manipulate the viewer's voluntary attentional state while presenting a continuous dynamic stimulus, and it thus provides the first direct evidence of the considerable sensitivity of this EEG-based ISC measure to attentional state. We also compared attention effects at various levels of semantic coherence and, although the ISC in the first component (C1; Fig. 3) is modulated by attention regardless of semantic coherence, the attentional modulation in the second component (C2; Fig. 3) depended on the level of semantic coherence. Although it is tempting to conclude that C2 captures higher-level semantic processing, the overall levels of ISC were comparable for the scrambled and unscrambled versions of the stimulus. Further research is needed to determine the relevance of these components to higher-level semantic processing.

A more conventional measure of attentional modulation is the power of alpha oscillations. For example, alpha power increases when subjects turn their attention inward and away from external stimuli (Ray and Cole, 1985; Cooper et al., 2003). Alpha power also increases during lapses of attention (O'Connell et al., 2009). Modulations of alpha can be used to detect shifts in covert spatial attention on a single-trial basis (van Gerven and Jensen, 2009), and alpha power during viewing of TV commercials correlates with recall and recognition of the commercials' contents (Reeves et al., 1985; Smith and Gevins, 2004). With this well-established measure, we validated that subjects were indeed attending across modalities, stimuli, and attention conditions, in agreement with our findings using ISC. Importantly, however, the modulation of alpha activity with attentional state is weaker than the modulation of ISC. This is demonstrated by the stark difference in classification accuracy of attentional state across viewers: using ISC-A, we obtain Az = 0.75–1.0 for the narrative stimuli (Fig. 4), whereas using alpha power we obtain only Az < 0.56, which is no better than chance (Fig. 8). Classification performance is hindered by the large variability of alpha power across subjects, a well-established phenomenon also observed here. Indeed, the power spectrum varies so substantially across subjects that individuals can be identified from their power spectrum alone (Näpflin et al., 2007). However, even after calibrating alpha power within individual subjects, classification performance remains low (Az < 0.7). In contrast, our proposed measure of attentional state does not require within-subject calibration to obtain high levels of accuracy. Instead, only data from a normative group are required for a given stimulus, in this sense providing an “absolute” measure of attentional state.

Footnotes

This work was supported by the Defense Advanced Research Projects Agency (DARPA Contract W911NF-14-1-0157). We thank Samantha S. Cohen for extensive discussions and careful editing of this manuscript and Uri Hasson for providing the various stimuli used here.

This is an Open Access article distributed under the terms of the Creative Commons Attribution License Creative Commons Attribution 4.0 International, which permits unrestricted use, distribution and reproduction in any medium provided that the original work is properly attributed.

References

- Andersen SK, Hillyard SA, Müller MM. Attention facilitates multiple stimulus features in parallel in human visual cortex. Curr Biol. 2008;18:1006–1009. doi: 10.1016/j.cub.2008.06.030.

- Anderson DR, Burns J. Paying attention to television. In: Bryant J, Zillmann D, editors. Responding to the screen: reception and reaction processes. New York: Routledge; 1991. pp. 3–25.

- Awh E, Armstrong KM, Moore T. Visual and oculomotor selection: links, causes and implications for spatial attention. Trends Cogn Sci. 2006;10:124–130. doi: 10.1016/j.tics.2006.01.001.

- Bartels A, Zeki S. Functional brain mapping during free viewing of natural scenes. Hum Brain Mapp. 2004;21:75–85. doi: 10.1002/hbm.10153.

- Bartels A, Zeki S. Brain dynamics during natural viewing conditions: a new guide for mapping connectivity in vivo. Neuroimage. 2005;24:339–349. doi: 10.1016/j.neuroimage.2004.08.044.

- Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat. 2001;29:1165–1188. doi: 10.1214/aos/1013699998.

- Blankertz B, Lemm S, Treder M, Haufe S, Müller KR. Single-trial analysis and classification of ERP components: a tutorial. Neuroimage. 2011;56:814–825. doi: 10.1016/j.neuroimage.2010.06.048.

- Braboszcz C, Delorme A. Lost in thoughts: neural markers of low alertness during mind wandering. Neuroimage. 2011;54:3040–3047. doi: 10.1016/j.neuroimage.2010.10.008.

- Candès EJ, Li X, Ma Y, Wright J. Robust principal component analysis? JACM. 2011;58:11. doi: 10.1145/1970392.1970395.

- Christoff K, Gordon AM, Smallwood J, Smith R, Schooler JW. Experience sampling during fMRI reveals default network and executive system contributions to mind wandering. Proc Natl Acad Sci U S A. 2009;106:8719–8724. doi: 10.1073/pnas.0900234106.

- Cooper NR, Croft RJ, Dominey SJ, Burgess AP, Gruzelier JH. Paradox lost? Exploring the role of alpha oscillations during externally vs internally directed attention and the implications for idling and inhibition hypotheses. Int J Psychophysiol. 2003;47:65–74. doi: 10.1016/S0167-8760(02)00107-1.

- de Cheveigné A, Parra LC. Joint decorrelation, a versatile tool for multichannel data analysis. Neuroimage. 2014;98:487–505. doi: 10.1016/j.neuroimage.2014.05.068.

- DeLong ER, DeLong DM, Clarke-Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics. 1988;44:837–845. doi: 10.2307/2531595.

- Dmochowski JP, Sajda P, Dias J, Parra LC. Correlated components of ongoing EEG point to emotionally laden attention–a possible marker of engagement? Front Hum Neurosci. 2012;6:112. doi: 10.3389/fnhum.2012.00112.

- Dmochowski JP, Bezdek MA, Abelson BP, Johnson JS, Schumacher EH, Parra LC. Audience preferences are predicted by temporal reliability of neural processing. Nat Commun. 2014;5:4567. doi: 10.1038/ncomms5567.

- Dumas G, Nadel J, Soussignan R, Martinerie J, Garnero L. Inter-brain synchronization during social interaction. PLoS One. 2010;5:e12166. doi: 10.1371/journal.pone.0012166.

- Furman O, Dorfman N, Hasson U, Davachi L, Dudai Y. They saw a movie: long-term memory for an extended audiovisual narrative. Learn Mem. 2007;14:457–467. doi: 10.1101/lm.550407.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506.

- Hasson U, Furman O, Clark D, Dudai Y, Davachi L. Enhanced intersubject correlations during movie viewing correlate with successful episodic encoding. Neuron. 2008a;57:452–462. doi: 10.1016/j.neuron.2007.12.009.

- Hasson U, Landesman O, Knappmeyer B, Vallines I, Rubin N, Heeger DJ. Neurocinematics: the neuroscience of film. Projections. 2008b;2:1–26.

- Hasson U, Yang E, Vallines I, Heeger DJ, Rubin N. A hierarchy of temporal receptive windows in human cortex. J Neurosci. 2008c;28:2539–2550. doi: 10.1523/JNEUROSCI.5487-07.2008.

- Haufe S, Meinecke F, Görgen K, Dähne S, Haynes JD, Blankertz B, Bießmann F. On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage. 2014;87:96–110. doi: 10.1016/j.neuroimage.2013.10.067.

- Hillyard SA, Vogel EK, Luck SJ. Sensory gain control (amplification) as a mechanism of selective attention: electrophysiological and neuroimaging evidence. Philos Trans R Soc Lond B Biol Sci. 1998;353:1257–1270. doi: 10.1098/rstb.1998.0281.

- Hitchcock A. Bang! You're Dead. Television. Alfred Hitchcock Presents. 1961.

- Hopf J-M, Schoenfeld MA, Hillyard SA. Spatio-temporal analysis of visual attention. In: The cognitive neurosciences IV. Cambridge, MA: MIT; 2009. pp. 235–250.

- Hotelling H. Relations between two sets of variates. Biometrika. 1936;28:321–377.

- Jääskeläinen IP, Koskentalo K, Balk MH, Autti T, Kauramäki J, Pomren C, Sams M. Inter-subject synchronization of prefrontal cortex hemodynamic activity during natural viewing. Open Neuroimag J. 2008;2:14–19. doi: 10.2174/1874440000802010014.

- Kam JW, Dao E, Farley J, Fitzpatrick K, Smallwood J, Schooler JW, Handy TC. Slow fluctuations in attentional control of sensory cortex. J Cogn Neurosci. 2011;23:460–470. doi: 10.1162/jocn.2010.21443.

- Kowler E. Eye movements: the past 25 years. Vision Res. 2011;51:1457–1483. doi: 10.1016/j.visres.2010.12.014.

- Lahnakoski JM, Glerean E, Jääskeläinen IP, Hyönä J, Hari R, Sams M, Nummenmaa L. Synchronous brain activity across individuals underlies shared psychological perspectives. Neuroimage. 2014;100:316–324. doi: 10.1016/j.neuroimage.2014.06.022.

- Leone S. The Good, the Bad and the Ugly. Movie. United Artists. 1966.

- Lerner Y, Honey CJ, Silbert LJ, Hasson U. Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J Neurosci. 2011;31:2906–2915. doi: 10.1523/JNEUROSCI.3684-10.2011.

- Lin Z, Chen M, Ma Y. The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv:1009.5055. 2010. Available at: http://arxiv.org/abs/1009.5055. Accessed May 15, 2015.

- Lindquist SI, McLean JP. Daydreaming and its correlates in an educational environment. Learning and Individual Differences. 2011;21:158–167. doi: 10.1016/j.lindif.2010.12.006.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/S0926-6410(02)00066-6.

- Naci L, Cusack R, Anello M, Owen AM. A common neural code for similar conscious experiences in different individuals. Proc Natl Acad Sci U S A. 2014;111:14277–14282. doi: 10.1073/pnas.1407007111.

- Näpflin M, Wildi M, Sarnthein J. Test–retest reliability of resting EEG spectra validates a statistical signature of persons. Clin Neurophysiol. 2007;118:2519–2524. doi: 10.1016/j.clinph.2007.07.022.

- Nobre AC, Sebestyen GN, Miniussi C. The dynamics of shifting visuospatial attention revealed by event-related potentials. Neuropsychologia. 2000;38:964–974. doi: 10.1016/S0028-3932(00)00015-4.

- Nummenmaa L, Glerean E, Viinikainen M, Jääskeläinen IP, Hari R, Sams M. Emotions promote social interaction by synchronizing brain activity across individuals. Proc Natl Acad Sci U S A. 2012;109:9599–9604. doi: 10.1073/pnas.1206095109.

- O'Connell RG, Dockree PM, Robertson IH, Bellgrove MA, Foxe JJ, Kelly SP. Uncovering the neural signature of lapsing attention: electrophysiological signals predict errors up to 20 s before they occur. J Neurosci. 2009;29:8604–8611. doi: 10.1523/JNEUROSCI.5967-08.2009.

- O'Grady J. Pie Man. The Moth. 2012. Available at: http://themoth.org/posts/stories/pie-man.

- Parra LC, Sajda P. Blind source separation via generalized eigenvalue decomposition. Journal of Machine Learning Research. 2003;4:1261–1269.

- Parra LC, Spence CD, Gerson AD, Sajda P. Recipes for the linear analysis of EEG. Neuroimage. 2005;28:326–341. doi: 10.1016/j.neuroimage.2005.05.032.

- Ray WJ, Cole HW. EEG alpha activity reflects attentional demands, and beta activity reflects emotional and cognitive processes. Science. 1985;228:750–752. doi: 10.1126/science.3992243.

- Reeves B, Thorson E, Rothschild ML, McDonald D, Hirsch J, Goldstein R. Attention to television: intrastimulus effects of movement and scene changes on alpha variation over time. Int J Neurosci. 1985;27:241–255. doi: 10.3109/00207458509149770.

- Risko EF, Buchanan D, Medimorec S, Kingstone A. Everyday attention: mind wandering and computer use during lectures. Computers and Education. 2013;68:275–283. doi: 10.1016/j.compedu.2013.05.001.

- Shinoda H, Hayhoe MM, Shrivastava A. What controls attention in natural environments? Vision Res. 2001;41:3535–3545. doi: 10.1016/S0042-6989(01)00199-7.

- Smallwood J. Mind-wandering while reading: attentional decoupling, mindless reading and the cascade model of inattention. Language and Linguistics Compass. 2011;5:63–77. doi: 10.1111/j.1749-818X.2010.00263.x.

- Smith ME, Gevins A. Attention and brain activity while watching television: components of viewer engagement. Media Psychology. 2004;6:285–305. doi: 10.1207/s1532785xmep0603_3.

- Spiers HJ, Maguire EA. Decoding human brain activity during real-world experiences. Trends Cogn Sci. 2007;11:356–365. doi: 10.1016/j.tics.2007.06.002.

- Szpunar KK, Moulton ST, Schacter DL. Mind wandering and education: from the classroom to online learning. Front Psychol. 2013;4:495. doi: 10.3389/fpsyg.2013.00495.

- Talsma D, Senkowski D, Soto-Faraco S, Woldorff MG. The multifaceted interplay between attention and multisensory integration. Trends Cogn Sci. 2010;14:400–410. doi: 10.1016/j.tics.2010.06.008.

- Theiler J, Eubank S, Longtin A, Galdrikian B, Doyne Farmer J. Testing for nonlinearity in time series: the method of surrogate data. Phys Nonlinear Phenom. 1992;58:77–94. doi: 10.1016/0167-2789(92)90102-S.

- van Gerven M, Jensen O. Attention modulations of posterior alpha as a control signal for two-dimensional brain–computer interfaces. J Neurosci Methods. 2009;179:78–84. doi: 10.1016/j.jneumeth.2009.01.016.

- Weissman DH, Roberts KC, Visscher KM, Woldorff MG. The neural bases of momentary lapses in attention. Nat Neurosci. 2006;9:971–978. doi: 10.1038/nn1727.

- Wilson SM, Molnar-Szakacs I, Iacoboni M. Beyond superior temporal cortex: intersubject correlations in narrative speech comprehension. Cereb Cortex. 2008;18:230–242. doi: 10.1093/cercor/bhm049.