Abstract

Dopamine is known to be involved in regulating effort investment in relation to reward, and the disruption of this mechanism is thought to be central in some pathological situations such as Parkinson's disease, addiction, and depression. According to an influential model, dopamine plays this role by encoding the opportunity cost, i.e., the average value of forfeited actions, which is an important parameter to take into account when making decisions about which action to undertake and how fast to execute it. We tested this hypothesis by asking healthy human participants to perform two effort-based decision-making tasks, following either placebo or levodopa intake in a double blind within-subject protocol. In the effort-constrained task, there was a trade-off between the amount of force exerted and the time spent in executing the task, such that investing more effort decreased the opportunity cost. In the time-constrained task, the effort duration was constant, but exerting more force allowed the subject to earn more substantial reward instead of saving time. Contrary to the model predictions, we found that levodopa caused an increase in the force exerted only in the time-constrained task, in which there was no trade-off between effort and opportunity cost. In addition, a computational model showed that dopamine manipulation left the opportunity cost factor unaffected but altered the ratio between the effort cost and reinforcement value. These findings suggest that dopamine does not represent the opportunity cost but rather modulates how much effort a given reward is worth.

SIGNIFICANCE STATEMENT A prevalent theory proposes that dopamine signals the average reward rate, which is used to estimate the cost of investing time in an action, also referred to as the opportunity cost. We contrasted the effects of dopamine manipulation in healthy participants in two tasks in which increasing response vigor (i.e., the amount of effort invested in an action) allowed them either to save time or to earn more reward. We found that levodopa, a synthetic precursor of dopamine, increased response vigor only in the latter situation, demonstrating that dopamine is involved in computing the expected value of effort rather than the opportunity cost.

Keywords: computational model, decision making, dopamine, effort-based decision making, psychopharmacology

Introduction

One of the fundamental functions of the brain is to optimize the allocation of limited resources to the behaviors that are most likely to lead to valuable outcomes. This requires comparing benefits with incurred costs, whether physical, mental, or temporal (Prévost et al., 2010; Schmidt et al., 2012; Skvortsova et al., 2014). The dopaminergic system has long been regarded as central to this adaptive function, and its dysfunction is thought to be involved in several pathological conditions in which cost–benefit computation is severely disrupted, such as Parkinson's disease, addiction, depression, and schizophrenia (Turner and Desmurget, 2010; Cléry-Melin et al., 2011; Salamone et al., 2012; Strauss et al., 2014; Zenon and Olivier, 2014). Dopamine (DA) manipulations in animals have been shown to affect effort processing, both in terms of response vigor, i.e., the amount of effort invested in a motor response (Salamone et al., 2003), and in terms of effort-based decision making: blockade or depletion of DA drives animals to shift their preferences from high-reward options requiring a large amount of effort to lower rewards accessible with smaller amounts of effort (Cousins et al., 1994; Salamone et al., 1994). In healthy humans performing a task in which they had to choose between a high-cost/high-reward and a low-cost/low-reward option to obtain varying monetary reinforcements, amphetamine intake, which increases the synaptic concentration of catecholamines, was shown to increase the willingness to choose the more strenuous option (Wardle et al., 2011). Similarly, in a recent study of patients with Parkinson's disease, DA replacement therapy was shown to increase the acceptance rate for large efforts (Chong et al., 2015).

In an influential paper, Niv et al. (2007) proposed a model that explains, from a normative perspective, the role of DA in effort processing. In this model, the baseline DA concentration (i.e., tonic DA) signals the average reward per unit of time encountered in the past. This average reward rate can then be used to estimate the opportunity cost of a potential action, i.e., the cost of forfeiting the rewards that could have been obtained had another action available in the same context been chosen instead. Niv et al. (2007) modeled opportunity cost as the product of the average reward rate and the time spent executing a given action. Consequently, in many tasks described in the literature, response vigor, which determines the time spent executing the task, trades off against opportunity cost. According to the model of Niv et al. (2007), in such tasks the average reward rate should affect response vigor, a prediction recently confirmed experimentally, albeit in a task that required mental effort, which makes its generalization to physical effort uncertain (Guitart-Masip et al., 2011).
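The trade-off can be made concrete with a short numerical sketch. It assumes the simplified single-action cost structure of Niv et al. (2007), in which responding with latency τ incurs a vigor cost C_v/τ plus an opportunity cost R̄·τ, so that the cost-minimizing latency is τ* = √(C_v/R̄). The function names and the numbers used are illustrative only, not values from any experiment.

```python
import math

def total_cost(tau: float, vigor_cost: float, avg_reward_rate: float) -> float:
    """Vigor cost C_v / tau plus opportunity cost R_bar * tau for latency tau."""
    return vigor_cost / tau + avg_reward_rate * tau

def optimal_latency(vigor_cost: float, avg_reward_rate: float) -> float:
    """Closed-form minimizer of total_cost: tau* = sqrt(C_v / R_bar)."""
    return math.sqrt(vigor_cost / avg_reward_rate)

# A richer environment (higher average reward rate) favors faster, more vigorous responses.
for r_bar in (0.5, 1.0, 2.0):
    tau = optimal_latency(vigor_cost=1.0, avg_reward_rate=r_bar)
    print(f"R_bar={r_bar}: tau*={tau:.3f}, total cost={total_cost(tau, 1.0, r_bar):.3f}")
```

Because the opportunity cost grows linearly with time, any increase in R̄ shortens the optimal latency; this is the link between tonic DA and vigor that the present tasks were designed to test.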

To determine whether DA affects opportunity cost during effort-based decision making, we compared the effects of levodopa (a synthetic precursor of DA) and placebo in two tasks. In the first, called the effort-constrained task, there was a trade-off between effort and opportunity cost; in the second, the time-constrained task, there was no such trade-off. Based on the model of Niv et al. (2007), we predicted that DA should affect the level of effort invested in the task in which effort is traded off against opportunity cost, whereas performance in the task in which effort and opportunity cost are unrelated should not be affected by DA modulation.

Materials and Methods

Subjects.

Twenty healthy subjects aged 20 to 35 years were invited to participate in the experiment. Participants had no medical history of neurological problems and did not take medication that could affect the nervous system. One subject did not complete the tasks for reasons unrelated to the experimental conditions, and her results were excluded. The final sample thus included 19 subjects (10 males). The experiment was conducted with approval from the local ethics committee, and all participants provided informed consent before participating.

Procedure.

The experiments were performed double blind; i.e., neither the subject nor the experimenter knew which substance was being given. Nine of the subjects took part in a first series of experiments that included three drug conditions (sulpiride, placebo, and DA). Because of concerns about the low dosage of sulpiride (200 mg) and the short delay (30 min) between its intake and the task, the sulpiride condition was dropped for the following subjects. The second series of experiments therefore included only the DA and placebo conditions; apart from the absence of the sulpiride session, the experimental procedure was the same as in the first series. Only the results from the placebo and DA sessions are reported in the current paper.

Participants attended the laboratory on separate days, with an interval of at least 48 h between two sessions. Thirty minutes before the beginning of the experiment, the subject was given a pill containing either the placebo or the active substance, DA (125 mg of levodopa). Each session consisted of three blocks of the effort-constrained task and three blocks of the time-constrained task, each block lasting 6 min. Half of the subjects started with the effort-constrained task and the other half with the time-constrained task; the two tasks then alternated throughout the session. Each subject started both experimental sessions with the same task. Each subject was given a brief training session on each task before the experiments began, to ensure that the tasks were fully understood.

Before each block, the subject was asked to squeeze a homemade dynamometer three times as hard as possible to determine their maximal voluntary contraction. The highest value was retained, and the effort levels used in the two main tasks were expressed as percentages of this maximal voluntary contraction. This ensured that the required contractions were calibrated to the participant's strength in each block and allowed us to compensate for fatigue effects (Zénon et al., 2015). In each trial, a certain amount of money was offered for executing a physical effort, which consisted of squeezing the dynamometer with a certain level of force. The subjects received real-time feedback on their performance in the form of a gauge displayed on the screen, i.e., a rectangle that progressively “filled up” with color according to the force exerted during the task. After each block, the total “earnings” were displayed on the screen before the next task began.
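The per-block calibration amounts to a simple normalization, sketched below. The dynamometer readings are hypothetical values in arbitrary units, and the function names are illustrative; the point is that a fatigued participant produces a lower maximum, so the same percentage target then corresponds to less absolute force.

```python
def max_voluntary_contraction(calibration_squeezes: list[float]) -> float:
    """MVC for a block: the highest of the calibration squeezes."""
    return max(calibration_squeezes)

def to_percent_mvc(force_samples: list[float], mvc: float) -> list[float]:
    """Express raw dynamometer readings as a percentage of the block's MVC."""
    return [100.0 * f / mvc for f in force_samples]

# Hypothetical readings (arbitrary units) from one calibration and one trial.
mvc = max_voluntary_contraction([38.0, 41.5, 40.2])
print(mvc, to_percent_mvc([10.375, 20.75], mvc))
```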

The pupil size and eye movements of the subjects were recorded at 250 Hz by means of an Eyelink eye tracker (SR Research; for details, see Zénon et al., 2014).

Task 1: effort-constrained task.

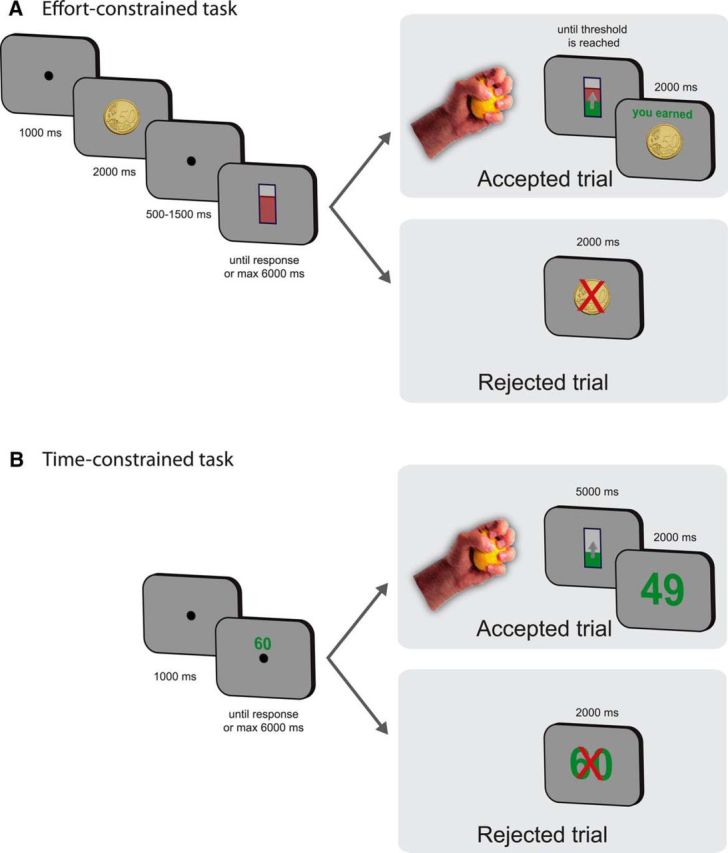

Following a 1000 ms fixation screen, the task started with the on-screen presentation of a coin (5, 20, or 50 euro cents; Fig. 1A) for 2000 ms. This was followed by a fixation point for 500–1500 ms, after which the gauge of strength required to obtain the reward was shown, with the exact level to be reached indicated by a horizontal red line. There were 12 possible levels, namely, 0.34, 0.50, 0.69, 0.97, 1.36, 1.90, 2.66, 3.72, 5.20, 7.27, 10.16, and 15.22 (a value of 1 corresponded to a force equal to the maximal voluntary contraction exerted for 1 s). The subject could then refuse or accept the offer by pressing a keyboard key with their nondominant hand (“Ctrl” key to refuse and “Enter” key to accept) within a 6 s time limit; this allowed us to calculate the “acceptance rate.” If the offer was refused, the forfeited reward superimposed with a red cross was shown for 2000 ms, and the next trial was then presented. If the offer was accepted, the subject had to squeeze the dynamometer until the required level was reached to win the reward proposed on the previous screen, without any time limit. As visual feedback, a green rectangle superimposed on the gauge indicated in real time the integral of the force profile, so that the speed at which the level rose was directly proportional to the force exerted on the dynamometer. For example, exerting 25% of the maximal voluntary contraction for 4 s raised the gauge to the same level as exerting 50% of the maximal voluntary contraction for 2 s. Therefore, in the effort-constrained task, exerting more force allowed the participants to save time by reaching the target faster, resulting in a trade-off between effort and opportunity cost. Because the block duration was limited (6 min) but the number of trials was not, increasing the level of effort allowed the subject to gain time: the reward was obtained sooner and they could move on to the next trial faster, increasing their chances of raising their total earnings; this strategy was explicitly described to the participants.
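The gauge of the effort-constrained task integrates force over time, which can be sketched as follows. Force is expressed as a fraction of the maximal voluntary contraction (MVC), and the 100 Hz force sampling interval is a hypothetical choice, since the force sampling rate is not given here. The sketch reproduces the 25%-for-4-s versus 50%-for-2-s example and shows that doubling the force halves the time needed to reach a given target.

```python
def gauge_level(force_samples, dt):
    """Integrate force (fraction of MVC) over time with a rectangle rule.

    A gauge level of 1.0 corresponds to 1 MVC held for 1 s.
    """
    return sum(f * dt for f in force_samples)

def time_to_target(required_effort, force):
    """Seconds needed to fill the gauge when holding a constant force (fraction of MVC)."""
    return required_effort / force

DT = 0.01  # hypothetical 100 Hz force sampling
print(gauge_level([0.25] * 400, DT))  # 25% MVC for 4 s
print(gauge_level([0.50] * 200, DT))  # 50% MVC for 2 s
# Target level 1.90 (one of the 12 levels): doubling the force halves the time spent.
print(time_to_target(1.90, 0.5), time_to_target(1.90, 0.25))
```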

Figure 1.

Schematic depiction of the tasks. A, Effort-constrained task layout. The subject was presented with a coin of 50, 20, or 5 euro cents. The screen then showed the amount of effort required to obtain the reward as a red line on a rectangular gauge. If the subject accepted, he or she then had to squeeze the dynamometer to obtain the reward, with no time constraint. If he or she refused, the next offer was proposed. B, Time-constrained task layout. The subject was presented with a proposed reward amount (ranging from 5 to 80 cents). If the subject accepted the offer, he or she had to squeeze the dynamometer for 5 s. The reward earned depended on the level reached on the gauge at the end of the 5 s time limit. If the subject refused, the next offer was proposed.

Task 2: time-constrained task.

In this task, a number was first shown on the screen (5, 6, 7, 8, 9, 10, 12, 14, 16, 19, 22, 25, 29, 33, 39, 45, 52, 60, 69, or 80; Fig. 1B), representing the reward, in euro cents, that would be won at the end of the trial if the force level reached the top of the gauge. As in the effort-constrained task, the subject could accept or refuse the offer by pressing the “Enter” or “Ctrl” key, respectively, within a 6 s time limit, and the force exerted on the dynamometer likewise determined the speed at which the level displayed on screen rose. When the offer was refused, the forfeited reward superimposed with a red cross was shown for 2000 ms, followed by the beginning of the next trial. In contrast to the effort-constrained task, the duration of the effort was constant, fixed at 5 s. The reward actually received in a trial was proportional to the gauge position at the end of the trial (e.g., if the subject was offered 52 cents but only got halfway to the target, he or she gained only 26 cents) and was displayed on the screen at the end of each trial (Fig. 1B). If the subject reached the top of the gauge within the 5 s limit (4 ± 5% of the trials, mean ± SD), the force was still integrated, but the gauge remained at the top. Thus, in the time-constrained task, exerting more effort allowed a proportional increase in earnings but did not save time, as the duration of each trial was constant. There was therefore no trade-off between effort and opportunity cost in this task.
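The payout rule of the time-constrained task can be sketched in a few lines. Here `gauge_fraction` is a hypothetical normalization (integrated force at the end of the fixed 5 s divided by the integral needed to fill the gauge), since the exact force integral corresponding to the top of the gauge is not stated. The sketch reproduces the 52-cent example and the cap at the top of the gauge.

```python
TRIAL_DURATION_S = 5.0  # fixed effort duration, independent of the force exerted

def time_constrained_payout(offered_cents, gauge_fraction):
    """Payout proportional to the final gauge position, capped at the top of the gauge.

    gauge_fraction: hypothetical normalized gauge position at the end of the trial
    (1.0 = top of the gauge).
    """
    return offered_cents * min(max(gauge_fraction, 0.0), 1.0)

print(time_constrained_payout(52, 0.5))  # halfway to the target
print(time_constrained_payout(52, 1.3))  # overshoot: the gauge stays at the top
```

More force raises the payout but never shortens the trial, which is why opportunity cost cannot be traded against effort in this task.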

Data analysis.

The statistical analyses consisted of generalized linear mixed models (GLMMs) and were performed with SAS Enterprise Guide software (version 5.1, copyright 2012, SAS Institute). The acceptance rate was modeled as a binary variable (offer accepted or rejected), while the reaction time (RT; log transformed to bring its distribution closer to normal), the force intensity, and the pupil responses were modeled as normal variables. In the effort-constrained task, the independent variables were the reward proposed (5, 20, or 50 cents), the level of effort required (12 levels), and the treatment condition (levodopa vs placebo). In the time-constrained task, the independent variables were the reward (20 levels) and the treatment condition. We also performed a GLMM analysis on the log-transformed pupil response (Zénon et al., 2014). The baseline pupil size during the second before the decision was computed and subtracted from the average response during task execution. Eye blinks were linearly interpolated. The average force actually exerted during the task was then used as a continuous predictor, and the treatment condition as a categorical predictor, in the GLMM. In all models, all fixed effects were also included as random effects. In addition, separate parameters were used to model the residual variance for each predictor (variance components).
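The pupil preprocessing can be sketched as follows, under stated assumptions: blinks are marked as `None` samples in a trace recorded at 250 Hz, the log transform is applied sample by sample before averaging (so the baseline-corrected response is a log ratio), and the decision time and task window are given as sample indices. The function names are hypothetical, and the GLMM itself is not reproduced here.

```python
import math

SAMPLING_HZ = 250  # Eyelink sampling rate reported in the text

def interpolate_blinks(trace):
    """Linearly interpolate runs of missing samples (None = blink).

    Gaps touching the start or end of the trace are filled with the nearest valid value.
    """
    out = list(trace)
    n, i = len(out), 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i
        while i < n and out[i] is None:  # find the end of the blink
            i += 1
        left = out[start - 1] if start > 0 else None
        right = out[i] if i < n else None
        for k in range(start, i):
            if left is not None and right is not None:
                frac = (k - start + 1) / (i - start + 1)
                out[k] = left + frac * (right - left)
            else:
                out[k] = left if left is not None else right
    return out

def pupil_response(trace, decision_idx, task_start, task_end):
    """Mean log pupil size during the task minus mean log pupil size in the 1 s before the decision."""
    logged = [math.log(x) for x in interpolate_blinks(trace)]
    baseline = logged[max(0, decision_idx - SAMPLING_HZ):decision_idx]
    task = logged[task_start:task_end]
    return sum(task) / len(task) - sum(baseline) / len(baseline)

# Hypothetical trace: 1 s baseline, a short blink, then a 10% dilation during the task.
trace = [1000.0] * 250 + [None] * 5 + [1100.0] * 250
print(pupil_response(trace, decision_idx=250, task_start=255, task_end=505))
```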

We also performed a control analysis to assess the relationship between the treatment effect (change in force intensity) and the total effort (sum of all contraction intensities relative to maximal voluntary contraction) exerted during each task on the one hand and the total reward earned during each task on the other hand. We conducted ANCOVAs with the treatment effect as dependent variable, the total effort or total reward as fixed independent variable, and the subject index as random independent variable. The treatment effect was computed by z scoring all the effort intensities performed during both sessions for a given subject and averaging these standardized values for all trials in the levodopa condition.
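The per-subject treatment effect used in the ANCOVAs can be sketched as below. Whether the population or sample standard deviation was used for z scoring is not stated, so `pstdev` is an assumption; the function name and the example forces are hypothetical.

```python
from statistics import mean, pstdev

def treatment_effect(placebo_forces, levodopa_forces):
    """Mean z score of levodopa-session forces, z-scored against both sessions pooled.

    Positive values mean the subject exerted relatively more force under levodopa.
    """
    pooled = list(placebo_forces) + list(levodopa_forces)
    mu, sd = mean(pooled), pstdev(pooled)  # population SD is an assumption
    return mean((f - mu) / sd for f in levodopa_forces)

# Hypothetical force intensities (fraction of MVC) for one subject in one task.
print(treatment_effect([0.40, 0.45, 0.50], [0.50, 0.55, 0.60]))
```

One such value per subject and task would then serve as the dependent variable, with total effort or total reward as the covariate.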

Computational model.

We modeled subjects' behavior by means of a computational model inspired by the work of Niv et al. (2007). The model we tested initially included eight free parameters (Er, Ef, Ed, Te, Ta, Ru, Oc, and Qr; see definitions below and Table 1).

Table 1.

Model parameters

| Parameter | Notation | Median | Mean ± SD | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 |
|---|---|---|---|---|---|---|---|---|
| Reference value | Qr | −0.7024 | −0.683 ± 0.567 | * | * | * | * | * |
| Utility ratio | Ru | 0.9942 | 1.186 ± 0.470 | * | * | * | * | * |
| Opportunity cost | Oc | 0.258 | 0.296 ± 0.228 | * | * | * | * | * |
| Acceptance temperature | Ta | 0.2192 | 0.247 ± 0.120 | * | * | * | | |
| Effort temperature | Te | 0.0751 | 0.098 ± 0.103 | * | * | * | | |
| Reward exponent | Er | 0.3747 | 0.391 ± 0.087 | * | | | | |
| Force exponent | Ef | 2.1364 | 2.366 ± 0.808 | * | | | | |
| Duration exponent | Ed | 0.2314 | 0.290 ± 0.225 | * | | | | |

The table shows the parameters included in the computational model, together with their median and mean values. The asterisks in the model columns indicate which parameters were included in each version of the model.

The model estimated the value of each proposed offer as a function of the force that could be exerted, as follows:

where Qr was a free parameter representing the decision threshold, U was the utility of the reward, Co was the opportunity cost, and Ce was the cost of effort.

The utility of reward U was computed as follows:


to account for the decreasing marginal value of reward, and where both Er and Ru (for utility ratio) were free parameters. In the effort-constrained task, reward was equal to the reward proposed (promised reward). In the time-constrained task, reward was equal to force times promised reward, as the final amount of reward was proportional to the average amount of force exerted during the task.

The cost of effort, Ce, was equal to the following:

in accordance with a previous neuroeconomic model of effort cost (Körding et al., 2004), with Ed and Ef being free parameters. The value of the duration variable differed between tasks. In the effort-constrained task, duration was equal to the total effort required in the trial divided by the force exerted during the task. In the time-constrained task, duration was always equal to 5 s.

The opportunity cost factor Oc was computed as follows:

where Oc was a free parameter and duration took the same value as in Equation 3. In our model, Oc thus did not depend on the past average reward rate. This simplification allowed us to avoid making assumptions about how the average reward rate should be computed (e.g., how long should the memory of past rewards be? how should breaks between tasks affect the average reward rate? should effort cost be discounted from the reward to compute the reward rate?) and was legitimate, given that any change in opportunity cost between the treatment conditions would be reflected in variations of the Oc parameter. The effects of changes in Oc and Ru on performance in both tasks are illustrated in Figure 2, A and B, respectively. As expected, increases in Oc led to increased force exertion in the effort-constrained task but did not influence the time-constrained task, whereas the opposite pattern was found for changes in Ru.
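The dissociation between Oc and Ru can be checked numerically with a minimal sketch. The equations for U, Ce, and Co are not reproduced in this text, so the sketch assumes hypothetical functional forms: U = reward^Er / Ru (chosen so that a lower Ru makes a reward worth more effort, as stated in the Results), Ce = force^Ef · duration^Ed, and Co = Oc · duration. The exponents are fixed at the Table 1 medians, and Qr is omitted because a constant offset does not change the preferred force. In the time-constrained task, duration is fixed, so Oc only shifts all values by a constant; in the effort-constrained task, reward does not depend on force, so Ru only shifts them by a constant.

```python
ER, EF, ED = 0.3747, 2.1364, 0.2314  # Table 1 medians: reward, force, duration exponents
# Force grid in MVC units; 0 is excluded to avoid dividing by zero in the effort-constrained duration.
FORCE_GRID = [round(0.01 * i, 2) for i in range(1, 201)]

def offer_value(f, task, offered_reward, required_effort, oc, ru):
    """Hypothetical value of exerting force f on one offer (assumed functional forms)."""
    if task == "effort":
        duration = required_effort / f   # more force -> gauge filled sooner
        reward = offered_reward          # payout does not depend on force
    else:  # "time"
        duration = 5.0                   # fixed effort duration
        reward = f * offered_reward      # payout scales with force
    utility = reward ** ER / ru          # ASSUMED form of U
    effort_cost = f ** EF * duration ** ED  # ASSUMED form of Ce
    opportunity_cost = oc * duration     # ASSUMED form of Co
    return utility - effort_cost - opportunity_cost

def best_force(task, offered_reward, required_effort=1.0, oc=0.258, ru=0.9942):
    """Force on the grid that maximizes the hypothetical offer value."""
    return max(FORCE_GRID,
               key=lambda f: offer_value(f, task, offered_reward, required_effort, oc, ru))

# Oc moves the preferred force only in the effort-constrained task ...
print(best_force("effort", 20, 1.9, oc=0.258), best_force("effort", 20, 1.9, oc=0.6))
print(best_force("time", 52, oc=0.258), best_force("time", 52, oc=0.6))
# ... whereas Ru moves it only in the time-constrained task.
print(best_force("effort", 20, 1.9, ru=0.7), best_force("effort", 20, 1.9, ru=1.2))
print(best_force("time", 52, ru=0.7), best_force("time", 52, ru=0.9942))
```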

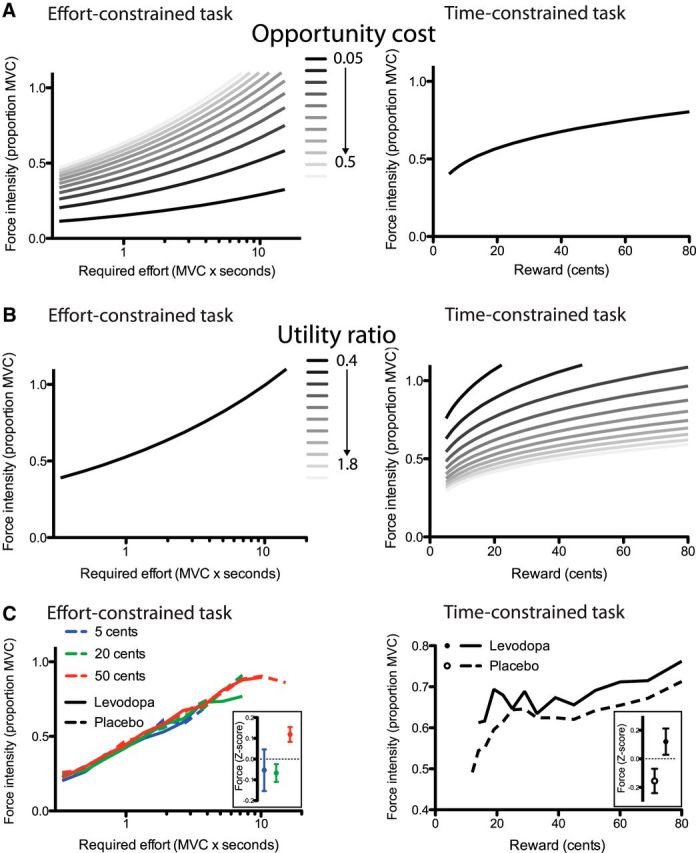

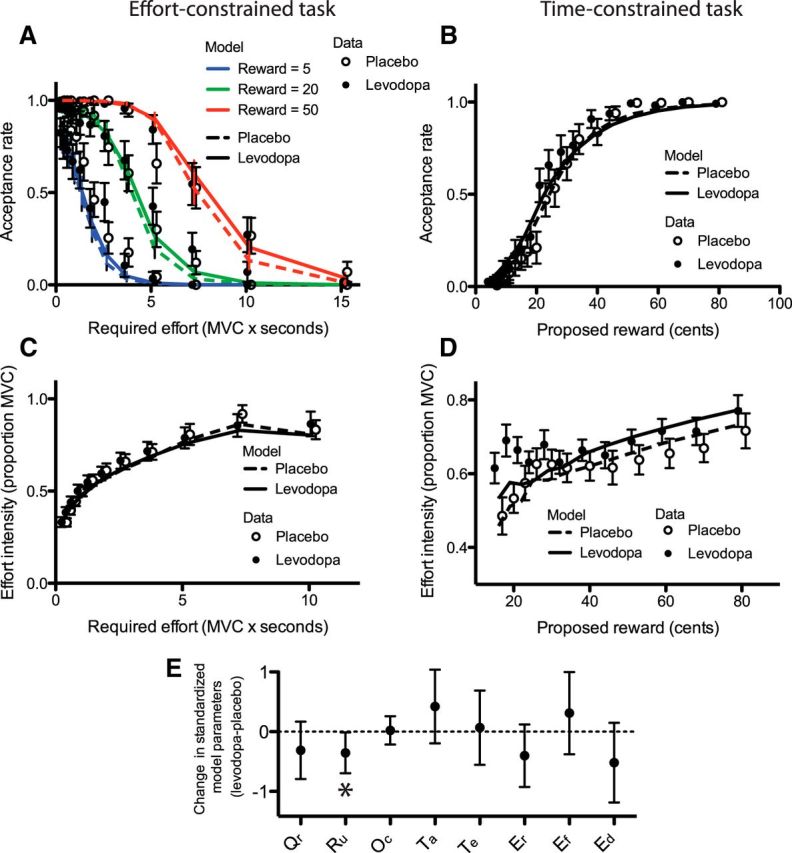

Figure 2.

Main predictions and behavioral results. A, B, Computational model predictions of force execution during the effort-constrained (left) and time-constrained tasks (right), for different values of the opportunity cost factor Oc (A) or utility ratio factor Ru (B). In the right panel of A and left panel of B, the force intensity does not vary with the changes in the model factors, and all curves are therefore superimposed. Changes in Oc predict variations in the force intensity in the effort-constrained task only, while changes in Ru predict force variations only in the time-constrained task. C, The relation between the force intensity and the effort required in the effort-constrained task (left) or reward magnitude on offer in the time-constrained task (right) is shown for both the placebo (dashed) and levodopa (solid) treatment conditions. In the left panel, the three different reward conditions are color coded, and the inset illustrates the effect of the reward condition on force intensity, z-scored within each effort level condition. In the right panel, the force intensity was computed from accepted trials only, and only data points with an average acceptance rate above 10% were included. The inset in the right panel shows the effect of the treatment condition on the force intensity, z-scored within each reward condition. Error bars illustrate the SEM.

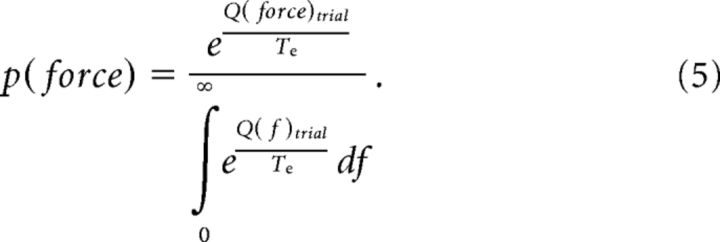

We then computed the probability of choosing a given force value in each trial as follows:


The probability of accepting the trial was computed as follows:


and


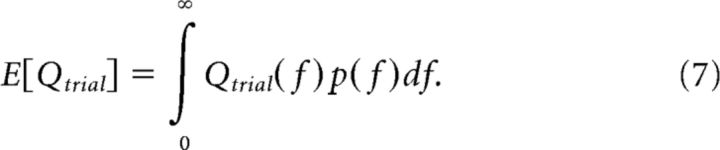

The integrals over variable f in Equations 5 and 7 were approximated by a discrete sum over values of f between 0 and 2, with a 0.01 sampling interval.
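The discrete approximation can be sketched as follows. The exact forms of Equations 5 to 7 are not reproduced in this text, so the sketch assumes a softmax choice rule with temperature Te over the force grid (0 to 2 in steps of 0.01, as stated) and a logistic acceptance rule with temperature Ta applied to the expected offer value under p(force). The toy value curve is hypothetical, and the temperatures are the Table 1 medians.

```python
import math

DF = 0.01
FORCE_GRID = [round(DF * i, 2) for i in range(201)]  # 0.00 to 2.00 MVC, as in the text

def force_distribution(values, te):
    """Softmax over the discrete force grid with temperature te (assumed choice rule)."""
    m = max(values)  # subtract the max for numerical stability
    weights = [math.exp((v - m) / te) for v in values]
    total = sum(weights)
    return [w / total for w in weights]

def logistic(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def accept_probability(values, te, ta):
    """Assumed rule: logistic of the expected offer value under p(force), temperature ta."""
    p = force_distribution(values, te)
    expected_value = sum(pi * vi for pi, vi in zip(p, values))  # discrete sum replaces the integral
    return logistic(expected_value / ta)

# Hypothetical value curve peaking at 0.7 MVC, for illustration only.
toy_values = [0.1 - (f - 0.7) ** 2 for f in FORCE_GRID]
p_force = force_distribution(toy_values, te=0.0751)
mode_force = FORCE_GRID[max(range(len(p_force)), key=p_force.__getitem__)]
print(mode_force, accept_probability(toy_values, te=0.0751, ta=0.2192))
```

`mode_force` corresponds to the mode of p(force), which the text uses as the model's force output when computing the variance explained.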

We fitted this model by minimizing its negative log likelihood (LL):


where “accepted” was equal to one when the participant actually accepted the trial and equal to zero otherwise. The “chosen effort” corresponded to the actual average force exerted during the trial, if accepted. LLeff was equal to zero if the trial was refused.
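A per-trial version of this likelihood can be sketched as follows, assuming (since the equation is not reproduced here) that the acceptance term is a Bernoulli log likelihood and that LLeff is the log probability of the observed force, read off the nearest point of the 0.01-spaced force grid. Using grid probability masses instead of densities only shifts the total by a constant (log 0.01 per accepted trial), which does not change the best-fitting parameters. All function names are hypothetical.

```python
import math

DF = 0.01
N_GRID = 201  # force grid from 0.00 to 2.00 MVC

def nearest_grid_index(observed_force):
    """Map an observed average force (MVC units) to the closest grid point."""
    return min(max(round(observed_force / DF), 0), N_GRID - 1)

def trial_nll(accepted, p_accept, force_probs=None, observed_force=None):
    """Negative log likelihood of one trial (assumed decomposition).

    Acceptance term: Bernoulli log likelihood of the accept/refuse decision.
    Effort term (LL_eff): log probability of the chosen force, zero for refused trials.
    """
    ll_accept = math.log(p_accept) if accepted else math.log(1.0 - p_accept)
    ll_eff = 0.0
    if accepted:
        ll_eff = math.log(force_probs[nearest_grid_index(observed_force)])
    return -(ll_accept + ll_eff)

def total_nll(trials):
    """Sum over (accepted, p_accept, force_probs, observed_force) tuples for one subject and condition."""
    return sum(trial_nll(*t) for t in trials)
```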

We fitted this model to the acceptance and effort data of the two tasks separately for each subject and each treatment condition. To avoid local minima, the fit was performed by means of a genetic algorithm with a population of 500. Because multicollinearity between the parameters made it difficult to find a global minimum for the complete eight-parameter model, we ran a series of other versions of the model in which the exponent parameters (Er, Ef, Ed) and/or one or two of the temperature parameters (Te, Ta) were fixed to the medians of the values obtained in the first model-fitting procedure (Table 1). Interestingly, the value of Ef (median, 2.14) was close to the quadratic effort cost function estimated in a previous study (Hartmann et al., 2013).
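For readers unfamiliar with the fitting procedure, a generic real-coded genetic algorithm is sketched below. Only the population size of 500 comes from the text; the operators (elitism, tournament selection, blend crossover, Gaussian mutation with a geometrically shrinking scale) and all argument names are assumptions, not a description of the implementation actually used. In this setting, `loss` would be the total negative log likelihood of one subject in one treatment condition, and `bounds` would give a search range for each free parameter.

```python
import random

def genetic_minimize(loss, bounds, pop_size=500, generations=60, elite=10,
                     tournament=3, mutation_scale=0.1, decay=0.9, seed=0):
    """Minimize loss(params) over box bounds with a simple real-coded genetic algorithm."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scale = mutation_scale
    for _ in range(generations):
        ranked = sorted(pop, key=loss)                 # lowest loss first
        next_pop = [ind[:] for ind in ranked[:elite]]  # elitism: keep the best unchanged
        while len(next_pop) < pop_size:
            mom = min(rng.sample(ranked, tournament), key=loss)  # tournament selection
            dad = min(rng.sample(ranked, tournament), key=loss)
            child = []
            for (lo, hi), a, b in zip(bounds, mom, dad):
                w = rng.random()
                x = w * a + (1.0 - w) * b               # blend crossover
                x += rng.gauss(0.0, scale * (hi - lo))  # Gaussian mutation
                child.append(min(max(x, lo), hi))       # keep within bounds
            next_pop.append(child)
        pop = next_pop
        scale *= decay  # shrink mutations as the population converges
    return min(pop, key=loss)

# Toy check on a known minimum at (1, -2); a real model loss would be far costlier to evaluate.
print(genetic_minimize(lambda v: (v[0] - 1) ** 2 + (v[1] + 2) ** 2,
                       [(-5, 5), (-5, 5)], pop_size=100, generations=40, seed=1))
```

Because the loss is evaluated many times per generation, caching or vectorizing it is advisable when it involves a full pass over a participant's trials.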

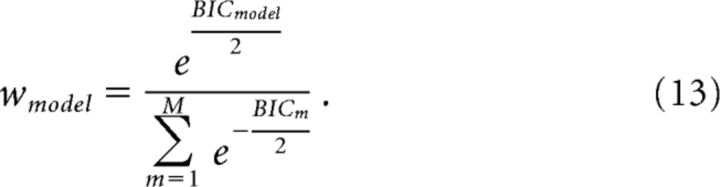

The Bayesian information criterion (BIC) was computed for each model, subject, and treatment condition:

$$\mathrm{BIC} = -2\ln L + k\ln n$$

where L is the maximum likelihood, k is the number of degrees of freedom, and n is the number of data points. The model with the smallest BIC (i.e., best performance) varied across subjects and treatment conditions. We therefore computed the final parameter values by means of Bayesian model averaging (BMA):

$$\hat{\beta} = \sum_{m=1}^{M} w_m \beta_m$$

in which β represents the vector of model parameters (Table 1), M is the total number of models (five in our case), and w is computed as follows:

$$w_m = \frac{\exp(-\mathrm{BIC}_m/2)}{\sum_{j=1}^{M}\exp(-\mathrm{BIC}_j/2)}$$
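The model comparison and averaging steps can be sketched as follows, assuming the standard BIC-based model weights (each model weighted in proportion to exp(−BIC/2), normalized over the M models). Because the reduced models fix some parameters to their medians, every model still yields a complete parameter vector, so the vectors can be averaged element by element. The function names are hypothetical.

```python
import math

def bic(neg_log_likelihood, k, n):
    """BIC = -2 ln L + k ln n, where neg_log_likelihood = -ln L at the optimum."""
    return 2.0 * neg_log_likelihood + k * math.log(n)

def bma_weights(bics):
    """Weights proportional to exp(-BIC / 2); the minimum is subtracted to avoid underflow."""
    best = min(bics)
    raw = [math.exp(-(b - best) / 2.0) for b in bics]
    total = sum(raw)
    return [r / total for r in raw]

def bma_average(param_vectors, bics):
    """Weighted average of full parameter vectors (fixed parameters hold their fixed values)."""
    w = bma_weights(bics)
    return [sum(wi * vec[j] for wi, vec in zip(w, param_vectors))
            for j in range(len(param_vectors[0]))]
```

Subtracting the smallest BIC before exponentiating leaves the normalized weights unchanged while keeping the exponentials in a safe numerical range.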

To estimate the quality of the fit, we computed the percentage of variance in the force data explained by the model, using the mode of the p(force) distribution as the model's force output, and the area under the receiver operating characteristic (ROC) curve, using p(accept) to predict the actual acceptance data.
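Both goodness-of-fit measures can be sketched in plain Python. The ROC area is computed through its rank interpretation (the probability that a randomly chosen accepted trial receives a higher p(accept) than a randomly chosen refused one, ties counting one half), and variance explained is taken as 100 × (1 − SS_residual / SS_total); the text does not specify which definition of variance explained was used, so the latter is an assumption.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparisons (labels: 1 = accepted, 0 = refused)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def variance_explained(observed, predicted):
    """Percentage of variance in the observed forces explained by the model predictions."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 100.0 * (1.0 - ss_res / ss_tot)

# Hypothetical p(accept) predictions and decisions for four trials.
print(roc_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]))
```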

Results

First, it is noteworthy that levodopa did not affect the maximal voluntary contraction made at the beginning of each block [F(1,18) = 0.37, p = 0.5506; Bayes factor (BF), 44.7; p(H0) = 0.98], making the effort requirements comparable between treatment conditions. To estimate the validity of the null effect of treatment on the maximal voluntary contraction, we compared the Bayesian information criteria of the full model with an alternative model not including the treatment factor. This comparison was performed by computing the Bayes factor (BF = 44.7) and estimating the probability of the null hypothesis [p(H0) = 0.98; Masson, 2011].
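The conversion from BIC differences to Bayes factors and posterior probabilities can be sketched as below, following the BIC approximation described by Masson (2011) and assuming equal prior probabilities for the null and alternative hypotheses. Under that assumption, p(H0) = BF/(1 + BF), which reproduces the values reported in this section (BF 44.7 → 0.98, 12.18 → 0.92, 4.26 → 0.81). The function names are hypothetical.

```python
import math

def bayes_factor_01(bic_null, bic_alternative):
    """BIC approximation of the Bayes factor in favor of H0: exp((BIC_alt - BIC_null) / 2)."""
    return math.exp((bic_alternative - bic_null) / 2.0)

def posterior_null(bf01):
    """p(H0 | data), assuming equal prior probabilities for H0 and H1."""
    return bf01 / (1.0 + bf01)

for bf in (44.7, 12.18, 4.26):  # Bayes factors reported in the Results
    print(bf, round(posterior_null(bf), 2))
```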

Effort-constrained task

In the effort-constrained task, the acceptance rate varied with the amount of reward on offer (F(2,36) = 58.13, p < 0.0001; Fig. 3A) but was not affected by the treatment condition [F(1,18) = 0.40, p = 0.5343; BF = e^(ΔBIC/2) > 10^6, p(H0) = 1]. Additionally, the acceptance rate decreased as the required effort increased (F(11,198) = 52.22, p < 0.0001). All other effects were nonsignificant (p > 0.05).

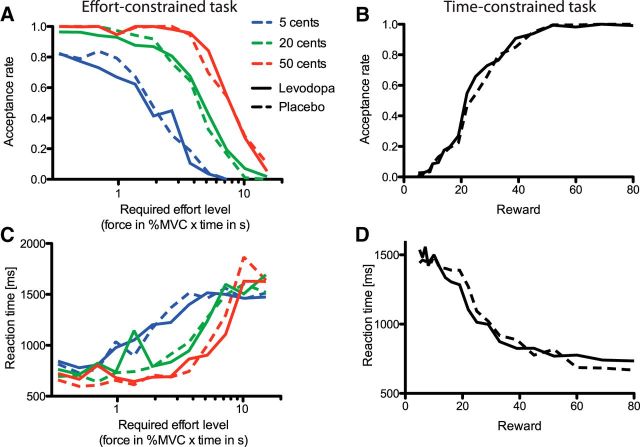

Figure 3.

Acceptance rate and reaction time results. A, C, Effort-constrained task. The relations between the effort required (x-axis) and the acceptance rate (A) and the RT (C) are depicted. The conventions are the same as in Figure 2. B, D, Time-constrained task. The relations between reward magnitude on offer (cents) and the acceptance rate (B) and the RT (D) are illustrated for both the placebo (dashed) and levodopa (solid) treatment conditions.

RT for accepting/refusing the offer was not influenced by the treatment condition (F(1,17.9) = 0.00, p = 0.9945) but was affected by the amount of reward on offer (Fig. 3C; F(2,36.7) = 6.84, p = 0.003) and by effort (F(11,202) = 16.35, p < 0.0001). The interaction between offered reward and effort also affected RT (F(22,397) = 5.18, p < 0.0001). All other effects were nonsignificant (p > 0.05), and the probability of the null effect of treatment on RT was equal to one (BF > 20 × 10^6).

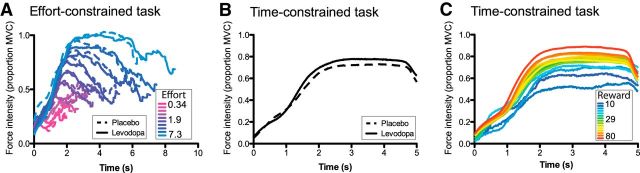

Nor did the treatment condition affect the force used to squeeze the dynamometer (F(1,18) = 0.03, p = 0.8661; Figs. 2C, 4A). In contrast, the effort required had a significant effect on the force applied to the dynamometer (F(11,162) = 101.05, p < 0.0001; Figs. 2C, 4A). This effect is not trivial, given that the effort variable corresponded to the target value of the integral of the force profile and therefore did not directly constrain the force intensity. The promised reward also significantly influenced the force (F(2,36) = 7.39, p = 0.0020; Fig. 2C, inset): subjects exerted more force for a 50 or 20 cent reward than for a 5 cent reward. All other effects were nonsignificant (p > 0.05), and the probability of the null effect of treatment was very close to one (BF > 5 × 10^6).

Figure 4.

Average force execution time course. A, Averaged time course of force execution as a function of effort (color coded) and treatment condition (solid and dashed for the levodopa and placebo conditions, respectively) in the effort-constrained task. The rate of acceptance and duration of the effort varied between conditions and subjects. Therefore, we excluded from the figure the bins that included data for <30% of the participants. B, Effect of the treatment condition (solid and dashed for the levodopa and placebo conditions, respectively) on the time course of force execution in the time-constrained task. C, Time course of force execution as a function of reward (color coded) in the time-constrained task.

We then looked at the pupil response during the effort execution. We ran a GLMM with the average force exerted during the task as continuous predictor and the treatment condition as categorical predictor. Only accepted trials were taken into account. We found that the force condition significantly affected the pupil response (F(1,17.5) = 26.94, p < 0.0001), confirming our earlier findings (Zénon et al., 2014), while neither the treatment effect nor the interaction were significant (all p > 0.05). The Bayes factor for the absence of significant treatment effect was equal to 12.18 [p(H0) = 0.92].

Time-constrained task

The acceptance rate was not affected by the treatment condition (F(1,18) = 0.01, p = 0.9395) but rose as the proposed reward increased (Fig. 3B; F(1,18) = 198.45, p < 0.0001). The interaction between the reward and treatment conditions was nonsignificant (F(1,18) = 1.41, p = 0.2501), and the Bayes factor associated with the absence of a treatment effect was greater than 100 × 10^6 [p(H0) = 1].

RT for accepting/refusing the offer was, as for the effort-constrained task, not affected by the subject's treatment condition (Fig. 3D; F(1,18) = 0.08, p = 0.7794). RT was, however, as in the effort-constrained task, influenced by the amount of reward on offer (F(19,342) = 6.77, p < 0.0001). The interaction between the treatment and reward factors was nonsignificant (F(19,342) = 1.03, p = 0.4209), and the Bayesian probability for the absence of treatment effect was, again, close to one.

Figure 2C shows that, in contrast to the effort-constrained task, levodopa administration led the subjects to apply more force than placebo (F(1,18) = 6.46, p = 0.0204; Fig. 4B). Reward also drove the subjects to exert more force: the larger the reward, the harder they squeezed the dynamometer (F(19,199) = 8.10, p < 0.0001; Fig. 4C). However, the interaction between the treatment and reward conditions was not significant (F(19,164) = 0.89, p = 0.5987).

As in the effort-constrained task, we found that the pupil response was affected by the average force applied during the task (F(1,17) = 5.94, p = 0.026), but that there was no main effect of the treatment variable and no significant interactions (all p > 0.05). The Bayes factor for the absence of effect in this case was equal to 4.26 [p(H0) = 0.81].

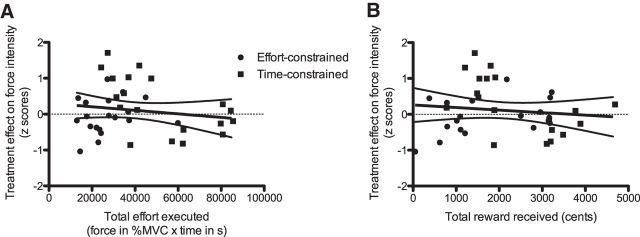

Control analysis

As the time-constrained and effort-constrained tasks differed not only in terms of the presence of trade-off between effort and opportunity cost, but also in terms of the total effort exerted and total reward received, we conducted a control analysis (Fig. 5) in which we analyzed the potential influence of the total effort and the total reward on the effect of levodopa on the force intensity. ANCOVAs showed that neither the total effort (F(1,18) = 0, p = 0.9673; Fig. 5A) nor the total reward (F(1,18) = 1.7, p = 0.2093; Fig. 5B) influenced the treatment effect on force intensity.

Figure 5.

Control analysis. A, Treatment effect on force intensity (z scores) as a function of the total effort executed [force in percentage times time (seconds)] in both types of tasks (effort and time constrained). B, Treatment effect on force intensity (z scores) as a function of the total amount of reward received (cents) in both types of tasks (effort and time constrained).

Computational model

The model fitted the acceptance data very well (Fig. 6A,B; median area under the ROC curve, 0.97 and 0.96 for the placebo and levodopa conditions, respectively) and also fitted the force data well (Fig. 6C,D; median percentage of variance explained, 62 and 63% for the placebo and levodopa conditions, respectively). When comparing the parameter values under placebo and levodopa, we found that only the Ru parameter, accounting for the ratio between effort cost and reward value, decreased significantly (Fig. 6E; paired t test, t(18) = −2.17, p = 0.043; all other tests, p > 0.1), indicating that a given monetary amount was worth more effort in the levodopa than in the placebo condition.

Figure 6.

Computational results. A–D, Data points correspond to the actual data averaged across participants, while solid and dashed lines correspond to the average model fitting in the levodopa and placebo conditions, respectively. A, Acceptance rate in the effort-constrained task. Reward conditions are color coded. B, Acceptance rate in the time-constrained task. C, Average force during the effort-constrained task. D, Average force during the time-constrained task. It is noteworthy that while the fit was quite accurate overall, the model failed to account for the force exertion in the low reward condition when levodopa was administered. E, Changes in model parameters between the placebo and levodopa sessions. Error bars represent 95% confidence intervals. Qr corresponds to the decision threshold, Ru to the utility ratio, Oc to the opportunity cost factor, Ta to the acceptance temperature parameter, Te to the effort temperature parameter, and Er, Ef, and Ed to the reward, force, and duration exponent parameters, respectively. MVC, Maximal Voluntary Contraction. The asterisk indicates that only Ru changed significantly between treatment conditions (α = 0.05).

Discussion

In the present study, we found that DA increased subjects' willingness to exert greater effort in the time-constrained task, in which there was no trade-off between opportunity cost and effort. In contrast, DA had no effect on the force exerted in the effort-constrained task. Because the baseline rate of responding in rodents has been shown to correlate strongly with the DA-induced decrease in response rate (Salamone et al., 2012), we had to ensure that the difference in DA effects between our two tasks was not caused by their different demands for effort or reward. The correlation analysis showed that these differences did not explain the differential effect of levodopa on the subjects' performance. It was also crucial to show that the maximal effort exerted by the subjects at the beginning of each block was unaffected by levodopa. This result is consistent with several previous studies (Schmidt et al., 2008; Chong et al., 2015) and supports the absence of a simple relationship between DA and effort (Kurniawan et al., 2011). The absence of a direct link between DA and effort was further supported by the lack of effect of the DA manipulation on the pupil response, which can be viewed as an estimate of how effortful the force exertion was perceived to be (Zénon et al., 2014) or, more generally, as a marker of the effect of effort exertion on arousal (Sara and Bouret, 2012).

The present findings are inconsistent with the hypothesis put forth by Niv et al. (2007), who proposed that tonic DA signals the average reward rate, which is used to compute the opportunity cost. According to this view, DA manipulations should have affected effort investment specifically in the effort-constrained task, in which investing more effort allowed participants to decrease the opportunity cost. Our findings also appear to contradict those of Beierholm et al. (2013), who found that the response vigor of subjects taking levodopa was influenced more by the average reward rate than that of a control group. This result would appear to support the hypothesis of Niv et al. (2007) that DA codes the opportunity cost. However, several important differences between that study and the present experiment should be noted. First, in the study by Beierholm et al. (2013), DA did not directly influence any of the behavioral parameters, including RT (i.e., their estimate of response vigor), which remained, on average, unaffected by the dopaminergic manipulation, in contrast with the animal literature (Salamone et al., 2012). Rather, the manipulation affected only one parameter of the computational model, representing the influence of the average reward rate on response vigor. Second, in their study, response vigor was measured during an oddball detection task that did not involve physical effort, in stark contrast with the present study and all previous experiments on response vigor (Salamone et al., 2012). Determining whether the discrepancy between our results and those of Beierholm et al. (2013) derives from their use of a cognitive task will require further experiments directly comparing the effects of DA manipulations on cognitive and physical effort.
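The prediction derived from Niv et al. (2007) can be made explicit with a small calculation. In that framework, the opportunity cost of time is the average reward rate multiplied by the time spent on an action. The sketch below assumes constant force and treats the effort-constrained task as "reward is obtained once force × time reaches the requested total effort" and the time-constrained task as having an imposed duration; the parameter values and function names are hypothetical. Raising the average reward rate (the quantity tonic DA is proposed to signal) increases the benefit of pressing harder only in the effort-constrained task.

```python
def action_duration(task, force, total_effort=None, fixed_duration=None):
    """Time (s) spent on one accepted offer, assuming constant force."""
    if task == "effort_constrained":
        # Reward is obtained once force x time reaches the requested total effort.
        return total_effort / force
    if task == "time_constrained":
        # Duration is imposed; force changes the reward, not the time spent.
        return fixed_duration
    raise ValueError(f"unknown task: {task!r}")


def opportunity_cost(avg_reward_rate, duration):
    """Opportunity cost of time: average reward rate x time spent (Niv et al., 2007)."""
    return avg_reward_rate * duration


def cost_saved_by_pressing_harder(task, f_low, f_high, avg_reward_rate, **task_params):
    """Opportunity cost avoided by switching from force f_low to f_high."""
    before = opportunity_cost(avg_reward_rate, action_duration(task, f_low, **task_params))
    after = opportunity_cost(avg_reward_rate, action_duration(task, f_high, **task_params))
    return before - after
```

For example, with a hypothetical total effort of 600 (%MVC × s), doubling force from 30 to 60% saves 5 cents of opportunity cost at 0.5 cent/s and 10 cents at 1 cent/s, whereas in the time-constrained task the saving is zero at any reward rate. Under the opportunity cost hypothesis, levodopa should therefore have shifted force in the effort-constrained task, which is not what was observed.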

In the effort-constrained task, the intensity of the response increased with the amount of effort required to obtain the reward. This finding is not trivial, because subjects could in principle have squeezed the dynamometer with a constant force, regardless of the total effort required to obtain the reward. The observation that subjects exerted more force when the total effort required was higher implies that they took into account the opportunity cost, which justified investing more force to decrease the time spent on the action (Guitart-Masip et al., 2011). Subjects understood that a larger force intensity allowed the reward to be obtained more quickly, leaving more time to move on to the next offer and thus eventually leading to a higher total reward by the end of the 6 min block. This shows that the null effect of the DA manipulation in the effort-constrained task cannot be attributed to subjects failing to understand the task constraints.
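The reasoning in this paragraph, that pressing harder pays off more when the required total effort is larger, follows from simple arithmetic if duration equals total effort divided by force. The sketch below is a hypothetical illustration: it assumes constant force, a fixed per-offer overhead for presenting and deciding on each offer (`overhead_s`, an invented parameter), identical offers, and that every offer is accepted. Only the 6 min block length comes from the text.

```python
import math

BLOCK_SECONDS = 6 * 60  # 6 min block, as stated in the text


def time_saved(total_effort, f_low, f_high):
    """Seconds saved on one offer by pressing at f_high instead of f_low.

    Because duration = total_effort / force, the saving grows linearly
    with the total effort requested.
    """
    return total_effort * (1.0 / f_low - 1.0 / f_high)


def offers_completed(total_effort, force, overhead_s, block_s=BLOCK_SECONDS):
    """Offers completed within one block, assuming every offer is accepted."""
    per_offer = overhead_s + total_effort / force
    return math.floor(block_s / per_offer)
```

With these toy numbers, raising force from 30 to 60% saves 5 s per offer when the total effort is 300 but 10 s when it is 600, and with a 5 s overhead the number of 600-unit offers completed in a block rises from 14 to 24. The larger the required effort, the stronger the incentive to press harder, consistent with the observed increase in force.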

In the present experiment, we used levodopa to manipulate dopamine concentrations in the brain. However, the mechanisms by which levodopa affects tonic levels and phasic responses are complex and remain poorly understood (Cools, 2006; Dreyer, 2014). In addition, baseline DA levels vary between subjects, and the behavioral effect of levodopa is likely to depend on the individual baseline level (Cools and D'Esposito, 2011), which itself varies with the time of day (Sowers and Vlachakis, 1984) and the menstrual cycle (Jacobs and D'Esposito, 2011). We tried to minimize the impact of this confound by asking our subjects to arrive at the same time of day for both sessions and by conducting the two experimental days under conditions as similar as possible.

To our knowledge, the present study is the first to confirm in humans the direct effect of DA on response vigor previously described in animals (Salamone et al., 2003; Wang et al., 2013). Previous human studies focused on the effect of DA manipulations on effort-based decision making, showing that higher DA levels increased the willingness to exert greater effort (Wardle et al., 2011; Chong et al., 2015), similar to T-maze studies in rodents (Salamone, 2007). In these studies, the amount of effort to be exerted was imposed, and the only degree of freedom left to the participants was to choose the best offer. In both of our tasks, by contrast, subjects had to decide whether to accept each offer and then how much force to exert on each trial. Surprisingly, we found that DA modulated the amount of force exerted only in the task in which reward was directly proportional to effort, and not in the task in which the reward was fixed. In the computational model, this dissociation was accounted for by a dopamine-induced change in the utility ratio parameter Ru, which represents the cost of effort relative to the value of the monetary reward. This parameter was significantly influenced by levodopa, whereas none of the other parameters changed significantly between treatment conditions. The absence of change in Oc, the opportunity cost factor, contradicts the hypothesis of Niv et al. (2007), as already mentioned, and the absence of change in the decision threshold Qr is inconsistent with the model of Phillips et al. (2007), which proposes that DA adjusts the threshold between worthwhile and nonworthwhile outcomes. Instead, our findings indicate that DA manipulations altered the ratio between the subjective cost of the associated effort and the subjective value of the reinforcement. This confirms and extends the view that DA signals the incentive value of reward cues, which is used to determine how much effort a given expected reinforcement is worth (Salamone et al., 2005; Berridge, 2007; Flagel et al., 2011).

This function is viewed as central in the pathophysiology of addiction (Robinson and Berridge, 1993), in which a large share of resources is allocated to seeking and consuming the drug at the expense of essential activities such as gathering food (Heyman, 2000). Similarly, bradykinesia in Parkinson's disease is no longer considered a mere motor deficit but rather a disorder of cost–benefit computation (Mazzoni et al., 2007; Turner and Desmurget, 2010). The role of motivational disturbances in schizophrenia has also been highlighted recently, including in effort-based decision-making tasks (Fervaha et al., 2013; Barch et al., 2014; Strauss et al., 2014), and depression disrupts effort allocation as a function of expected reward value (Cléry-Melin et al., 2011). DA appears to be a common denominator of these disorders, and a better understanding of its function should help improve their treatment.

Footnotes

This work was supported by grants from Innoviris (Région Bruxelles-Capitale), the Actions de Recherche Concertées (Communauté Française de Belgique), the Fondation Médicale Reine Elisabeth, and the Fonds de la Recherche Scientifique (FNRS).

The authors declare no conflict of interest.

References

- Barch DM, Treadway MT, Schoen N. Effort, anhedonia, and function in schizophrenia: reduced effort allocation predicts amotivation and functional impairment. J Abnorm Psychol. 2014;123:387–397. doi: 10.1037/a0036299.

- Beierholm U, Guitart-Masip M, Economides M, Chowdhury R, Düzel E, Dolan R, Dayan P. Dopamine modulates reward-related vigor. Neuropsychopharmacology. 2013;38:1495–1503. doi: 10.1038/npp.2013.48.

- Berridge KC. The debate over dopamine's role in reward: the case for incentive salience. Psychopharmacology. 2007;191:391–431. doi: 10.1007/s00213-006-0578-x.

- Chong TT, Bonnelle V, Manohar S, Veromann KR, Muhammed K, Tofaris GK, Hu M, Husain M. Dopamine enhances willingness to exert effort for reward in Parkinson's disease. Cortex. 2015;69:40–46. doi: 10.1016/j.cortex.2015.04.003.

- Cléry-Melin ML, Schmidt L, Lafargue G, Baup N, Fossati P, Pessiglione M. Why don't you try harder? An investigation of effort production in major depression. PLoS One. 2011;6:e23178. doi: 10.1371/journal.pone.0023178.

- Cools R. Dopaminergic modulation of cognitive function-implications for L-DOPA treatment in Parkinson's disease. Neurosci Biobehav Rev. 2006;30:1–23. doi: 10.1016/j.neubiorev.2005.03.024.

- Cools R, D'Esposito M. Inverted-U-shaped dopamine actions on human working memory and cognitive control. Biol Psychiatry. 2011;69:e113–e125. doi: 10.1016/j.biopsych.2011.03.028.

- Cousins MS, Wei W, Salamone JD. Pharmacological characterization of performance on a concurrent lever pressing/feeding choice procedure: effects of dopamine antagonist, cholinomimetic, sedative and stimulant drugs. Psychopharmacology. 1994;116:529–537. doi: 10.1007/BF02247489.

- Dreyer JK. Three mechanisms by which striatal denervation causes breakdown of dopamine signaling. J Neurosci. 2014;34:12444–12456. doi: 10.1523/JNEUROSCI.1458-14.2014.

- Fervaha G, Graff-Guerrero A, Zakzanis KK, Foussias G, Agid O, Remington G. Incentive motivation deficits in schizophrenia reflect effort computation impairments during cost-benefit decision-making. J Psychiatr Res. 2013;47:1590–1596. doi: 10.1016/j.jpsychires.2013.08.003.

- Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, Akers CA, Clinton SM, Phillips PE, Akil H. A selective role for dopamine in stimulus-reward learning. Nature. 2011;469:53–57. doi: 10.1038/nature09588.

- Guitart-Masip M, Beierholm UR, Dolan R, Duzel E, Dayan P. Vigor in the face of fluctuating rates of reward: an experimental examination. J Cogn Neurosci. 2011;23:3933–3938. doi: 10.1162/jocn_a_00090.

- Hartmann MN, Hager OM, Tobler PN, Kaiser S. Parabolic discounting of monetary rewards by physical effort. Behav Processes. 2013;100:192–196. doi: 10.1016/j.beproc.2013.09.014.

- Heyman GM. An economic approach to animal models of alcoholism. Alcohol Res Health. 2000;24:132–139.

- Jacobs E, D'Esposito M. Estrogen shapes dopamine-dependent cognitive processes: implications for women's health. J Neurosci. 2011;31:5286–5293. doi: 10.1523/JNEUROSCI.6394-10.2011.

- Körding KP, Fukunaga I, Howard IS, Ingram JN, Wolpert DM. A neuroeconomics approach to inferring utility functions in sensorimotor control. PLoS Biol. 2004;2:e330. doi: 10.1371/journal.pbio.0020330.

- Kurniawan IT, Guitart-Masip M, Dolan RJ. Dopamine and effort-based decision making. Front Neurosci. 2011;5:81. doi: 10.3389/fnins.2011.00081.

- Masson ME. A tutorial on a practical Bayesian alternative to null-hypothesis significance testing. Behav Res Methods. 2011;43:679–690. doi: 10.3758/s13428-010-0049-5.

- Mazzoni P, Hristova A, Krakauer JW. Why don't we move faster? Parkinson's disease, movement vigor, and implicit motivation. J Neurosci. 2007;27:7105–7116. doi: 10.1523/JNEUROSCI.0264-07.2007.

- Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- Phillips PE, Walton ME, Jhou TC. Calculating utility: preclinical evidence for cost-benefit analysis by mesolimbic dopamine. Psychopharmacology. 2007;191:483–495. doi: 10.1007/s00213-006-0626-6.

- Prévost C, Pessiglione M, Météreau E, Cléry-Melin ML, Dreher JC. Separate valuation subsystems for delay and effort decision costs. J Neurosci. 2010;30:14080–14090. doi: 10.1523/JNEUROSCI.2752-10.2010.

- Robinson TE, Berridge KC. The neural basis of drug craving: an incentive-sensitization theory of addiction. Brain Res Brain Res Rev. 1993;18:247–291. doi: 10.1016/0165-0173(93)90013-P.

- Salamone JD. Functions of mesolimbic dopamine: changing concepts and shifting paradigms. Psychopharmacology. 2007;191:389. doi: 10.1007/s00213-006-0623-9.

- Salamone JD, Cousins MS, Bucher S. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav Brain Res. 1994;65:221–229. doi: 10.1016/0166-4328(94)90108-2.

- Salamone JD, Correa M, Mingote S, Weber SM. Nucleus accumbens dopamine and the regulation of effort in food-seeking behavior: implications for studies of natural motivation, psychiatry, and drug abuse. J Pharmacol Exp Ther. 2003;305:1–8. doi: 10.1124/jpet.102.035063.

- Salamone JD, Correa M, Mingote SM, Weber SM. Beyond the reward hypothesis: alternative functions of nucleus accumbens dopamine. Curr Opin Pharmacol. 2005;5:34–41. doi: 10.1016/j.coph.2004.09.004.

- Salamone JD, Correa M, Nunes EJ, Randall PA, Pardo M. The behavioral pharmacology of effort-related choice behavior: dopamine, adenosine and beyond. J Exp Anal Behav. 2012;97:125–146. doi: 10.1901/jeab.2012.97-125.

- Sara SJ, Bouret S. Orienting and reorienting: the locus coeruleus mediates cognition through arousal. Neuron. 2012;76:130–141. doi: 10.1016/j.neuron.2012.09.011.

- Schmidt L, d'Arc BF, Lafargue G, Galanaud D, Czernecki V, Grabli D, Schüpbach M, Hartmann A, Lévy R, Dubois B, Pessiglione M. Disconnecting force from money: effects of basal ganglia damage on incentive motivation. Brain. 2008;131:1303–1310. doi: 10.1093/brain/awn045.

- Schmidt L, Lebreton M, Cléry-Melin ML, Daunizeau J, Pessiglione M. Neural mechanisms underlying motivation of mental versus physical effort. PLoS Biol. 2012;10:e1001266. doi: 10.1371/journal.pbio.1001266.

- Skvortsova V, Palminteri S, Pessiglione M. Learning to minimize efforts versus maximizing rewards: computational principles and neural correlates. J Neurosci. 2014;34:15621–15630. doi: 10.1523/JNEUROSCI.1350-14.2014.

- Sowers JR, Vlachakis N. Circadian variation in plasma dopamine levels in man. J Endocrinol Invest. 1984;7:341–345. doi: 10.1007/BF03351014.

- Strauss GP, Waltz JA, Gold JM. A review of reward processing and motivational impairment in schizophrenia. Schizophr Bull. 2014;40:S107–S116. doi: 10.1093/schbul/sbt197.

- Turner RS, Desmurget M. Basal ganglia contributions to motor control: a vigorous tutor. Curr Opin Neurobiol. 2010;20:704–716. doi: 10.1016/j.conb.2010.08.022.

- Wang AY, Miura K, Uchida N. The dorsomedial striatum encodes net expected return, critical for energizing performance vigor. Nat Neurosci. 2013;16:639–647. doi: 10.1038/nn.3377.

- Wardle MC, Treadway MT, Mayo LM, Zald DH, de Wit H. Amping up effort: effects of d-amphetamine on human effort-based decision-making. J Neurosci. 2011;31:16597–16602. doi: 10.1523/JNEUROSCI.4387-11.2011.

- Zénon A, Olivier E. Contribution of the basal ganglia to spoken language: is speech production like the other motor skills? Behav Brain Sci. 2014;37:576. doi: 10.1017/S0140525X13004238.

- Zénon A, Sidibé M, Olivier E. Pupil size variations correlate with physical effort perception. Front Behav Neurosci. 2014;8:286. doi: 10.3389/fnbeh.2014.00286.

- Zénon A, Sidibé M, Olivier E. Disrupting the supplementary motor area makes physical effort appear less effortful. J Neurosci. 2015;35:8737–8744. doi: 10.1523/JNEUROSCI.3789-14.2015.