Abstract

Maladaptive decision-making is increasingly recognized to play a significant role in numerous psychiatric disorders, such that therapeutics capable of ameliorating core impairments in judgment may be beneficial in a range of patient populations. The field of “decision neuroscience” is therefore in its ascendancy, with researchers from diverse fields bringing their expertise to bear on this complex and fascinating problem. In addition to the advances in neuroimaging and computational neuroscience that contribute enormously to this area, an increase in the complexity and sophistication of behavioral paradigms designed for nonhuman laboratory animals has also had a significant impact on researchers' ability to test the causal nature of hypotheses pertaining to the neural circuitry underlying the choice process. Multiple such decision-making assays have been developed to investigate the neural and neurochemical bases of different types of cost/benefit decisions. However, what may seem like relatively trivial variation in behavioral methodologies can actually result in recruitment of distinct cognitive mechanisms, and alter the neurobiological processes that regulate choice. Here we focus on two areas of particular interest, namely, decisions that involve an assessment of uncertainty or effort, and compare some of the most prominent behavioral paradigms that have been used to investigate these processes in laboratory rodents. We illustrate how an appreciation of the diversity in the nature of these tasks can lead to important insights into the circumstances under which different neural regions make critical contributions to decision making.

Introduction

Understanding the mechanism by which the brain makes decisions is perhaps one of the most fundamental questions for neuroscientists, psychologists, and economists alike. The demonstration that the humble laboratory rodent can show evidence of complex cost-benefit decision making, subject to similar preferences and biases as those that hallmark human cognition, has opened up numerous exciting possibilities. For example, researchers can apply optogenetic and chemogenetic techniques to dissect neural circuits and manipulate neuronal signaling with unprecedented neuroanatomical and temporal precision. However, for the novice (and even experienced!) behavioral neuroscientist, there may appear to be a bewildering array of different assays to choose from, all of which look superficially similar yet may actually tap into quite distinct cognitive processes and be regulated by dissociable neural circuitries. Here, we illustrate this point and the insights gained through comparing task variants within two areas of particular interest, namely, decision making under uncertainty, and models of effort-based choice, before highlighting potential avenues for future research.

Decisions involving uncertainty

Given that nearly all “real-life” decisions involve an element of uncertainty, it is perhaps unsurprising that there is significant interest in understanding the deliberative process by which the brain chooses between probabilistic outcomes. From an economics perspective, risk is used to describe uncertain outcomes associated with known probabilities of occurrence. This concept has also been described as “expected uncertainty,” in which the unreliability of predictive relationships between environmental events is known to the subject (Yu and Dayan, 2005). This can be distinguished from unexpected uncertainty, or ambiguity, in which the degree of uncertainty associated with a particular response or event is unknown to the subject. Expected/risky and unexpected/ambiguous types of uncertain outcomes engage distinct neural circuitries (e.g., Huettel et al., 2006), and the type of uncertainty encountered may recruit different cognitive processes to guide choice (for review, see Winstanley et al., 2012). Most behavioral methodologies involve repeated training sessions or forced choice trials to expose the animals to the contingencies in play, and reduce the chance that animals are responding at random. Once acquired to a stable baseline, most behavioral tasks are therefore assessing risk rather than ambiguity.

Human decision making typically does not obey mathematical norms when choosing between uncertain outcomes, in that our choice is not always governed by the expected utility of different options. As famously described by Prospect Theory, our decision making is instead subject to various heuristics and biases, such as risk aversion and loss aversion (Kahneman and Tversky, 1979; Kahneman, 2011), and such choice patterns are thought to make important contributions to the maladaptive decision making observed in gambling disorder (GD) (Clark, 2010). Whether animals likewise show such mathematically irrational choice is important to consider when assuming that the cognitive processes underlying such decisions may approximate human behavior.

“Risk” is also commonly used to mean “the possibility that something unpleasant or unwelcome will happen” (Oxford English Dictionary). Thus, the phrase “risky” choice could also imply the chances of not only something good failing to occur, but also something bad happening. In some assays designed to assess risk/reward decision making in animals, the “risk” may merely be a lack of reward, whereas others incorporate the potential for some additional aversive consequence, such as a shock (Simon et al., 2009), unpleasant taste (van den Bos et al., 2006), or prolonged time-out period (Rivalan et al., 2009; Zeeb et al., 2009). As we will describe below, decisions where rewards may potentially come with unpleasant outcomes may recruit different neural circuits to guide choice behavior compared with situations involving choice between some versus no reward. These considerations suggest a number of important ways by which decision-making tasks that require an assessment of uncertainty or risk could be clustered.

Signaling loss: rodent analogs of the Iowa Gambling Task (IGT) and related behavioral tasks

The IGT (Bechara et al., 1994) is one of the best known and widely used decision-making tasks. Subjects choose between four decks of cards, with the goal of accumulating the most points. Each card results in either a net gain, or a net loss. Two decks are considered “risky” or disadvantageous, in that choice can result in large per trial gains but also disproportionately heavy losses, such that continued selection from such decks will result in a reduction in points over time. The optimal strategy is therefore to favor the conservative or advantageous decks, choice from which results in smaller per trial gains and penalties, but gradual accumulation of profit throughout the session. Elevated risky choice has been reported in numerous clinical populations, including bipolar mania (Clark et al., 2001), psychopathy (van Honk et al., 2002), substance use disorders (Bechara and Damasio, 2002), and the presence of suicidal features (Jollant et al., 2005), to name just a few, and has been associated with damage to the amygdala and areas of frontal cortex (Bechara et al., 1999; Fellows and Farah, 2003).

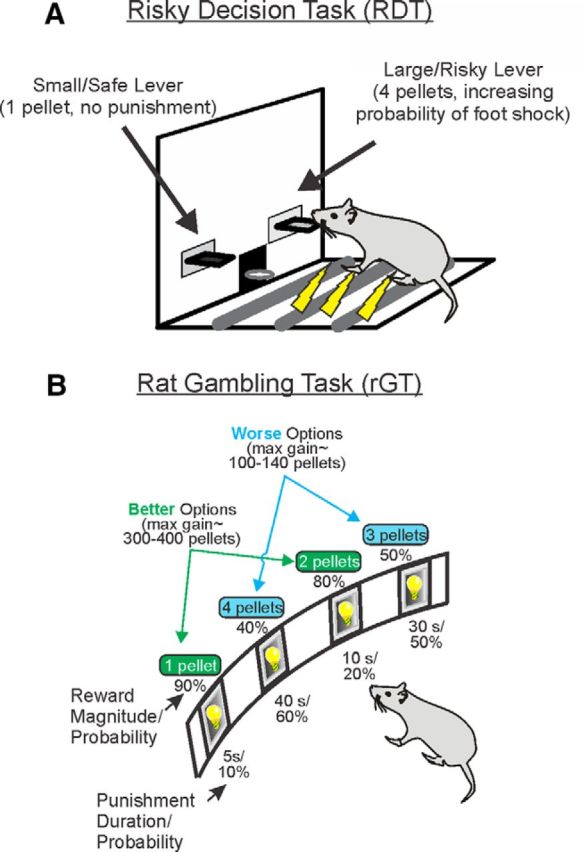

Development of a rodent analog was considered a worthy endeavor by a number of groups, due to the high translational potential of any neurobiological mechanisms found to drive choice. The first obvious challenge is how to model the loss component, given that the food reinforcement typically used with animal tasks is consumed immediately upon delivery and cannot be repossessed. Cues signaling how much reward is cached may not be intuitively recognized by rodents, and the use of conditioned stimuli in the absence of immediate reward leads to issues of extinction over time. Instead, different groups have adopted a range of ingenious ways to either signal loss or substitute for the aversive nature of losses, namely, through one of the following: (1) adding an unpleasant taste to the food, as in the runway-based rat IGT (RIGT) (van den Bos et al., 2006); (2) delivering electric shocks with the reward in what has been termed the risky decision-making task (RDT) (Fig. 1A) (Simon et al., 2009); or (3) through the use of punishing time-outs that reduce the remaining time-to-earn reward to closely approximate “losses,” such as in the rat gambling task (rGT, Fig. 1B) (Zeeb et al., 2009).

Figure 1.

Two assays used to probe decision-making involving uncertainty that use different magnitudes of rewards and distinct punishments. A, In the RDT, rats are trained to choose between two levers that deliver either 1 pellet (Small/Safe lever) or 4 pellets (Large/Risky lever), both of which are delivered with 100% certainty. However, selection of the Risky lever may also deliver a foot shock punisher, and the probability of punishment increases over blocks of trials within a session. B, The rGT was patterned after the IGT used with human subjects. In each 30 min session, rats try to maximize sugar pellet profits by choosing between four options that vary in the number of sugar pellets (1–4) that can be earned on win trials, but also the probability (0.1–0.6) and duration (5–40 s) of punishing time-out periods delivered on losses. “Safe” options associated with smaller per-trial gains yield the most reward per session, whereas “risky” options appear tempting due to the larger reward size but result in less reward over time due to the longer and more frequent time-out punishments they are paired with. In the cued rGT, delivery of reward is accompanied by a 2 s audiovisual cue that increases in complexity with the reward size.
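The statement that the “safe” rGT options yield the most reward per session can be checked with simple arithmetic. The sketch below is illustrative only: the per-option values are assumptions chosen to fall within the ranges given in the caption (1–4 pellets, punishment probability 0.1–0.6, time-out 5–40 s), and the fixed per-trial time cost `TRIAL_OVERHEAD_S` is a hypothetical parameter, not one taken from the text.

```python
# Illustrative sketch: option values are assumptions drawn from the ranges in
# the Figure 1 caption; they are not presented here as the published schedule.
SESSION_S = 30 * 60        # 30 min session, as stated in the caption
TRIAL_OVERHEAD_S = 5.0     # hypothetical fixed time cost of every trial

# name: (pellets on a win, probability of a time-out, time-out duration in s)
OPTIONS = {
    "safe_1": (1, 0.1, 5),
    "safe_2": (2, 0.2, 10),
    "risky_3": (3, 0.5, 30),
    "risky_4": (4, 0.6, 40),
}


def pellets_per_session(pellets, p_timeout, timeout_s):
    """Expected pellets if this option were chosen on every trial."""
    expected_gain = (1 - p_timeout) * pellets                 # pellets per trial
    expected_time = TRIAL_OVERHEAD_S + p_timeout * timeout_s  # seconds per trial
    return SESSION_S * expected_gain / expected_time


expected = {name: pellets_per_session(*vals) for name, vals in OPTIONS.items()}

# Print options from most to least profitable over a session.
for name, value in sorted(expected.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:.0f} pellets")
```

Under these assumed values, the options with larger per-trial rewards earn less over the session because the expected time lost to time-outs grows faster than the expected gain.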

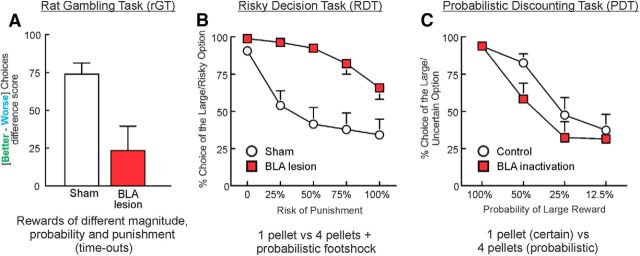

Regardless of other methodological differences between these three task variants, some clear similarities start to emerge when considering the neural circuitry underlying performance. Lesions to the basolateral amygdala (BLA) made after the animals have already acquired either the rGT (timeouts; Fig. 2A) or RDT (footshock punishment; Fig. 2B) lead to an increase in choice of the risky options (Zeeb and Winstanley, 2011; Orsini et al., 2015a). Thus, in these contexts, the BLA is crucial for mediating how negative outcomes diminish the value of otherwise more preferred rewards. This is in keeping with a broad literature suggesting that the BLA encodes the emotional significance of negative events, and may likewise explain why BLA lesions reduce the ability of a loss to spur further gambling in a rodent model of “loss-chasing” (Tremblay et al., 2014).

Figure 2.

A comparison of the effects of BLA lesions/inactivation on three forms of decision-making involving uncertainty. A, On the rGT (see Fig. 1B), subjects choose between options that vary in terms of the probability of obtaining different magnitudes of reward associated with different durations of time-out punishments, with some options being more advantageous (“better”) in the long term. BLA lesions cause disadvantageous patterns of decision making, with rats selecting options that yield larger but less frequent rewards and receiving more time-outs. Adapted with permission from Zeeb and Winstanley (2011). B, In the RDT (see Fig. 1A), rats choose between smaller rewards and larger ones associated with increasing likelihood of foot shock punishment. Again, BLA lesions increase choice of the risky option, such that rats receive more foot shocks, as well as more reward. Adapted with permission from Orsini et al. (2015a). C, In contrast, the probabilistic discounting task (PDT) entails choice between a small/certain reward and a larger, uncertain one, with the probability of receiving the large reward varying across blocks of trials within a session. Unlike the rGT or RDT, there are no explicit punishments associated with the uncertain reward. Here, BLA inactivations reduce choice of larger, uncertain rewards, most prominently when the uncertain option is of equal or greater utility relative to the certain one. Adapted with permission from Ghods-Sharifi et al. (2009).

Lesions to the orbitofrontal cortex (OFC) made after extended training fail to alter choice on the rGT yet decrease risky choices associated with shock in the RDT (Zeeb and Winstanley, 2011; Orsini et al., 2015a). Although lesions/inactivations targeting the lateral portion of the OFC have not, to our knowledge, been performed on the RIGT, cFos levels within the OFC, measured ex vivo following task performance, did not correlate with levels of risky decision making (de Visser et al., 2011). Hence, the use of a painful stimulus (shock) alters how the OFC guides the direction of choice. Interestingly, lesions made to either the OFC or BLA, or disconnecting these two regions before the acquisition of the rGT, result in slower learning about which option is the most beneficial, suggesting that these areas form a functional circuit that enables efficacious development of optimal strategies (Zeeb and Winstanley, 2011, 2013). Whether functional connectivity between the BLA and OFC is necessary for acquisition of the other tasks mentioned here is, as yet, unknown.

With respect to other prefrontal regions, lesions/inactivations of the prelimbic (PrL) and infralimbic (IL) areas of the medial prefrontal cortex (anatomically homologous to anterior cingulate [ACC] areas 32/25) (Uylings et al., 2003; Heilbronner et al., 2016) lead to a suboptimal, risk-averse pattern of choice on the rGT, whereby animals increase choice of the option associated with the most frequent reward that is of lowest value, but that is also associated with the lowest penalties (Paine et al., 2013; Zeeb et al., 2015). Inactivating the agranular insula had a similar effect (Pushparaj et al., 2015). However, as yet, no cortical area targeted has resulted in a clear increase in risky choice comparable with that seen after BLA lesions.

Given the pronounced role of striatal regions in mediating the link between affective states and motor output (nucleus accumbens, NAc), goal-directed responding (dorsomedial striatum) and habitual behavior (dorsolateral striatum) (Balleine and O'Doherty, 2010), there has been surprisingly little work done on elucidating the contributions made by these regions to performance of any of these tasks. Likewise, the contribution of dopamine (DA, a major input to the striatum) to decisions involving reward and punishment remains to be clarified. D1-selective ligands do not affect choice on the RDT or the rGT (Zeeb et al., 2009; Simon et al., 2011). Conversely, D2 receptor activation reduces risky choices associated with shock on the RDT, yet neither acute nor chronic administration of D2/3 agonists affects choice on the rGT (Zeeb et al., 2009; Tremblay et al., 2013). However, associating salient audiovisual cues with “wins” that increase in complexity with the magnitude of reward does render choice on the rGT sensitive to modulation by D3 receptors (Barrus and Winstanley, 2016). The picture is further complicated by the findings that systemic blockade of D2 receptors reduces risky choice on the rGT (where the punishment is a timeout) yet has no effect on the RDT using shocks as a punisher (Zeeb et al., 2009; Simon et al., 2011). Clearly, our limited understanding of how striatal and dopaminergic systems contribute to decisions involving rewards and punishment highlights this as an important topic for future research.

Certain versus uncertain rewards: uncertainty discounting tasks

Just as many laboratory-based tasks used with humans frame decision outcomes with respect to gain versus no gain, as opposed to gain versus loss, certain rodent paradigms likewise use probabilistic schedules in which rats can choose to respond for a smaller-certain versus larger-uncertain reward. A paradigm that has been used by a number of groups is one in which the odds of receiving the larger reward shift between blocks of trials in a systematic manner from 100% to 12.5%, either in a descending (“good-to-bad”) or ascending (“bad-to-good”) manner (Cardinal and Howes, 2005; St Onge and Floresco, 2009; Rokosik and Napier, 2011), reminiscent of the structure of some delay-discounting tasks (Evenden and Ryan, 1996).
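How the relative value of the two options changes across blocks can be made concrete with a short calculation. In this sketch the reward sizes (1 versus 4 pellets) and the intermediate probabilities (successive halving) are assumptions for illustration; the text above specifies only that the odds of the larger reward move between 100% and 12.5%.

```python
# Assumed for illustration: reward magnitudes and intermediate block odds.
SMALL_CERTAIN = 1                        # pellets, delivered on every choice
LARGE_UNCERTAIN = 4                      # pellets, delivered probabilistically
BLOCK_PROBS = [1.0, 0.5, 0.25, 0.125]    # descending ("good-to-bad") order


def expected_value(magnitude, probability):
    """Expected pellets per choice for a probabilistic reward."""
    return magnitude * probability


def better_option(p_large):
    """Which lever has the higher expected value at this block's odds."""
    ev_large = expected_value(LARGE_UNCERTAIN, p_large)
    if ev_large > SMALL_CERTAIN:
        return "uncertain"
    if ev_large < SMALL_CERTAIN:
        return "certain"
    return "equal"


# One (probability, expected value of large option, better option) row per block.
schedule = [
    (p, expected_value(LARGE_UNCERTAIN, p), better_option(p))
    for p in BLOCK_PROBS
]

for p, ev, best in schedule:
    print(f"p(large)={p:<5} EV(large)={ev:<4} EV(small)={SMALL_CERTAIN} -> {best}")
```

Reversing `BLOCK_PROBS` gives the ascending (“bad-to-good”) variant. With these assumed magnitudes, the advantageous option changes within a session, which is what distinguishes this design from tasks in which the options are matched for expected value.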

A series of studies has systematically explored the neural circuitry underlying probabilistic discounting, much of which has recently been reviewed in depth (Orsini et al., 2015b). Inactivation of the BLA (Fig. 2C) or NAc, as well as disconnection of these two regions, decreased choice of the uncertain options (Ghods-Sharifi et al., 2009; Stopper and Floresco, 2011; St Onge et al., 2012). In contrast, inactivating the medial (but not lateral) OFC increased choice of the risky options, whereas inactivation of the insular cortex had no effect (St Onge and Floresco, 2010; Stopper et al., 2014). One key region involved in adjusting decision biases is the PrL PFC. Inactivation of this region increased risky choice when the probability of reward decreased during the session, had the opposite pattern of effects when the order of blocks was reversed, yet had no impact on choice when the reinforcement contingencies remained stable across the session (St Onge and Floresco, 2010). This fascinating pattern of results suggests that this region plays a key role in tracking the probability of reward over time, facilitating adjustments in choice preference in response to contingency shifts. Furthermore, selectively disrupting top-down PFC→BLA connections increased choice of the larger yet increasingly uncertain reward, implying that communication between these two regions serves to modify choice biases driven by BLA→NAc circuits (St Onge et al., 2012).

Dopaminergic transmission plays a key role in modulating this form of decision making. Unlike other tasks that incorporate some form of punishment, D1 receptor activity modifies decision making when risky choices either yield larger rewards or nothing. Reductions in risky choice have been observed when D1 antagonists have been administered systemically (St Onge and Floresco, 2009) or locally within the PFC (St Onge et al., 2011), NAc (Stopper et al., 2013), or BLA (Larkin et al., 2016). Moreover, reducing D1 receptor tone within the forebrain increases lose-shift behavior, suggesting that these receptors reduce the negative impact of unrewarded actions and help a decision-maker keep an “eye on the prize,” maintaining choice biases even when risky choices do not always yield rewards. In comparison, D2 receptor activity within the PFC facilitates shifts in decision biases during probabilistic discounting, as blockade of these receptors increases risky choice when odds shift from “good-to-bad” (St Onge et al., 2011). These findings highlight the complexity through which mesocortical DA transmission regulates different aspects of decision making by acting on different receptors and, presumably, distinct populations of PFC neurons (Gee et al., 2012; Seong and Carter, 2012). Interestingly, D2 receptors within the NAc do not appear to play a role in this form of decision making (Stopper et al., 2013). When comparing this lack of effect with the seemingly more prominent role D2 receptors play in decision making involving some form of punishment described earlier, it is notable that separate populations of striatal neurons expressing D1 or D2 receptors have been proposed to regulate different patterns of behavior. D1-containing cells may be more important for promoting approach behaviors, whereas activation of D2-expressing neurons can be aversive, suggesting their involvement in avoidance behaviors (Lobo et al., 2010; Kravitz et al., 2012).
Thus, within corticostriatal circuits, D1 versus D2 receptors may mediate distinct forms of decision making dependent in part on whether the objective is to merely maximize rewards or avoid potentially aversive consequences.

Certain versus uncertain rewards of equal expected value

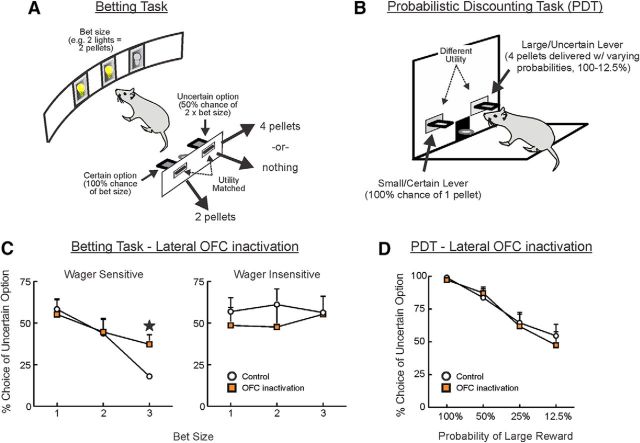

To address whether rats show decision-making biases similar to human subjects, tasks have been designed in which the options are matched in terms of expected utility. Preference for uncertainty or guaranteed rewards can therefore be ascertained without the potential confounds of differential reinforcement rates, similar to the original studies by Kahneman and Tversky (Tversky and Kahneman, 1974, 1981; Kahneman and Tversky, 1979). In the rat betting task (rBT, Fig. 3A), animals choose between either a guaranteed reward, or double that option or nothing with 50:50 odds. During the course of the session, the number of pellets available on the safe lever (the “bet size”) varies between blocks from 1 to 3 in a pseudo-random fashion, with changes in bet size signaled by forced choice trials and cue lights. Even though the relative utility of the guaranteed and probabilistic options remains equal, choice of the uncertain option decreases as the bet size increases, comparable to escalation of commitment effects in human subjects, whereby individuals are less willing to gamble as the stakes increase even though the odds of success remain constant (Cocker et al., 2012b).
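The equivalence of the two rBT options can be verified directly. The sketch below uses only the quantities stated above (bet sizes of 1 to 3 pellets; double-or-nothing at 50:50 odds). Reporting the variance of the uncertain outcome as a measure of what is at stake is an illustrative choice made here, not an analysis taken from the studies cited.

```python
BET_SIZES = [1, 2, 3]   # pellets available on the safe lever


def uncertain_lever_stats(bet):
    """Mean and variance of the double-or-nothing outcome for a given bet."""
    outcomes = [2 * bet, 0]
    probs = [0.5, 0.5]
    mean = sum(p * o for p, o in zip(probs, outcomes))
    variance = sum(p * (o - mean) ** 2 for p, o in zip(probs, outcomes))
    return mean, variance


# bet size -> (expected pellets, variance) on the uncertain lever
stats = {bet: uncertain_lever_stats(bet) for bet in BET_SIZES}

for bet, (mean, variance) in stats.items():
    print(f"bet={bet}: safe={bet}, uncertain mean={mean}, variance={variance}")
```

The expected pellets match the guaranteed amount at every bet size, so any shift toward the safe lever as bet size grows cannot be explained by a difference in reinforcement rate.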

Figure 3.

A comparison of the effects of lateral OFC inactivation on two forms of decision making involving uncertainty. A, On a given trial of the Betting task, the number of pellets available on the certain lever (or “bet size”) is signaled by illumination of an equivalent number of cue lights on the opposite wall. A nosepoke at each illuminated aperture turns off the light inside. When all the lights have been extinguished in this way, the two levers are presented. Choice of the certain option results in guaranteed delivery of the bet size, whereas a response on the uncertain lever leads to double that amount or nothing with 50:50 odds. As such, there is no net advantage, with respect to pellets earned, in choosing one option over another: even though the bet size shifts across blocks of 10 trials between 1 and 3 sugar pellets, the relative utility of each option remains equal and constant. B, In the PDT, rats choose between a small/certain reward and larger reward delivered with varying probabilities. In contrast to the betting task, the utility of the two options differs and changes across trial blocks. C, Under control conditions on the rBT, some animals show sensitivity to bet size (wager sensitive, left), and decrease preference for the uncertain option when greater amounts of reward are at stake. Other rats are relatively wager insensitive (right). OFC inactivation increased choice of the uncertain option in wager-sensitive rats when bet sizes were large while having no effect on wager-insensitive rats. D, In contrast to the rBT, OFC inactivation does not affect choice behavior on the PDT. A comparison of these two observations suggests that the OFC may play a more prominent role in directing risk-related decisions influenced by subjective biases, independent of reward utility.

Individual rats vary considerably in their propensity to switch preference to the safe lever as bet size increases, such that rats may be classified as either wager-sensitive or -insensitive (Fig. 3C) (Cocker and Winstanley, 2015). With regard to the brain regions involved in mediating such biases, inactivations or lesions to the BLA, PrL, or IL regions are without effect on choice in either wager-sensitive or -insensitive rats, yet inactivation of the lateral OFC selectively ameliorates wager-sensitive choice patterns (Tremblay et al., 2014; Barrus et al., 2016). Recent data using a somewhat comparable task suggest that selective activation of NAc neurons expressing D2 receptors can ameliorate risky choice through signaling unfavorable outcomes during the decision-making process (Zalocusky et al., 2016). In keeping with such observations, acute and chronic administration of a D2/3 receptor agonist increased risky choice on these different decision-making tasks, whereas a D1 agonist was without effect (Tremblay et al., 2016; Zalocusky et al., 2016). Thus, even though D2 receptors in the NAc may not play as prominent a role in guiding risky choice in situations where the utility of options differs, they may nonetheless shape choice biases in other situations involving subjective preferences for risky versus more certain options.

Comparison of neural circuitries

As is often the case in neuroscience, the same manipulations have not been performed in all the tasks described above. Yet, the involvement of four key systems (the BLA, lateral OFC, mPFC, and DA system) has been assessed in at least the majority of the paradigms under consideration, which permits some comparison of their relative contribution to different aspects of decision making (Table 1). Whereas inactivating the BLA increases risky choice during both the rGT and RDT, it has the opposite effect on the PDT (Fig. 2). The manner in which the BLA guides choice during risky decision making thus depends on the nature of the penalties involved and corresponding response priorities of the decision-maker. When risky choices can incur some form of punishment, the BLA biases action selection to reduce the chances of aversive events. In comparison, when choosing between certain versus larger uncertain reward (but no other punishment), the neural activity in the BLA promotes choices that may maximize the amount of reward obtained. In contrast, neural activity in this nucleus does not appear to contribute to choice when options are matched for expected utility, as in the rBT.

Table 1.

Relationship between task features and neurobiology implicated in decision-making assays involving an assessment of uncertaintyᵃ

The first three columns after the task type indicate the task features present; the last four columns indicate the neurobiology implicated in risky choice.

| Task type | Loss | Contingency shift | Utility matched | BLA | lOFC | mPFC | DA |
|---|---|---|---|---|---|---|---|
| rIGT | ✓ |  |  | ? | —ᵇ | ? | ? |
| rGT | ✓ |  |  | ↑ | — | ↓ | ᶜ |
| RDT | ✓ | ✓ |  | ↑ | ↓ | ? | ✓ |
| PDT |  | ✓ |  | ↓ | — | ↑↓ᵈ | ✓ |
| rBT |  | ✓ | ✓ | — | ↑ | — | ✓ |

ᵃ Symbols are used to indicate whether risky choice is increased (↑), decreased (↓), or unaffected (—) by inactivation or silencing of the BLA, lateral OFC, or regions of the mPFC. The last column provides a somewhat crude overview of whether DA plays a strong role in mediating choice, based on the effects of acute or chronic pharmacological challenges with specific dopaminergic agents (i.e., not just psychostimulants, which can act on a multitude of neurochemical signaling systems).

ᵇ This is inferred from cFos staining, rather than inactivation/lesion experiments.

ᶜ The cued version of the rGT is sensitive to D3 receptor agonists and antagonists.

ᵈ The presence of both upward and downward arrows signifies that risky choice can be both increased and decreased by targeting different DA receptor subtypes, particularly in frontal cortex, such that a simple linear relationship between DA tone and risky choice is unlikely.

The lateral OFC has a pronounced role to play in risk-related decisions when there is a chance of a painful aversive stimulus, in which case this region appears to promote risky choice, perhaps through signaling the appetitive qualities of the reward. Lateral OFC inactivation had no effect in either the PDT (Fig. 3C,D) or rGT (at least once task contingencies have been learned), suggesting that the effects on the RDT are not attributable to disruptions in processing of loss signals or contingency shifts. In the rBT, inactivating the OFC selectively ameliorated wager-sensitive response bias, increasing choice of the risky option. A singular theory of OFC function has been notoriously difficult to establish (Stalnaker et al., 2015). However, when attempting to integrate these findings, both increases in choice of uncertain options in the rBT and decreases in risky choice in the RDT following OFC lesions may reflect alterations in the subjective evaluation of different outcomes. Thus, in the rGT and PDT, there are arguably objectively defined “optimal” strategies. In contrast, in the rBT, wager-sensitive rats shift preference away from the risky option as the bet size increases, even though this does not result in any change in overall reward rate; lateral OFC inactivations abolish this effect. In a similar vein, the risky option is always rewarded in the RDT, but the chances of punishment increase during the session. Given that the aversive and appetitive consequences associated with choice in this task are in different modalities, there is no “mathematical” norm by which choice can be judged. As such, silencing the OFC causes animals to behave as if the larger reward is not “worth” the penalty, which may be a reflection of diminished valuation of rewards.
Thus, if subjective value is viewed as some internally generated reward assessment, based more on how the outcomes make the subjects feel, then perhaps the effects of lateral OFC inactivation on both tasks can be understood as a decrease in the impact that this value judgment has on choice.

On the other hand, the finding that inactivation of the PrL PFC reduces choice of the best option in the rGT and impairs modifications in choice biases when reward probabilities change in the PDT suggests that this region promotes efficient choice behavior to ensure gain is maximized under conditions where the objective values of different options are discrepant. More specifically, this region of the frontal lobes serves to optimize reward seeking in situations presenting multiple options (i.e., more than two) or when the relative utility of different options is volatile.

Last, decisions that do not involve explicit aversive outcomes are exquisitely sensitive to manipulations of DA in multiple terminal regions, with D1 receptors playing a prominent role in promoting choice of larger uncertain rewards by reducing sensitivity to nonrewarded actions. In comparison, choice guided in part by the potential of aversive outcomes, as in the rGT or RDT, is relatively insensitive to D1 receptor manipulations, whereas tone on D2 receptors may be more consequential in modifying choice. This being said, DA transmission within the PFC can mediate seemingly opposing patterns of behavior by acting on different receptors. Thus, the principles of operation by which cortical and subcortical DA transmission refines different forms of risky decisions are likely to be much more complex than simple dichotomies (e.g., D1 = reward, D2 = aversion), as is typically the case with studies of monoamines.

Decisions involving effort-related costs

Deciding whether a particular outcome is worth the effort required to achieve it is another almost universal factor that influences daily decision making and may contribute to clinically relevant symptoms, such as anhedonia and anergia (Salamone et al., 1994; Treadway et al., 2009). Effortful tasks can generally be conceptualized as taxing physical or mental resources. Although human neuroimaging has started to delineate a somewhat overlapping network of frontostriatal regions implicated in both choice variants (e.g., Botvinick et al., 2009; Croxson et al., 2009; Treadway et al., 2012), it is unclear whether the allocation of cognitive and physical effort is underpinned by the same cognitive or neurobiological processes (Kool et al., 2010; Kurniawan et al., 2010), and preclinical studies may make an important contribution in this regard.

Physical effort

The original assays developed by Salamone and colleagues (Cousins et al., 1994; Salamone et al., 2001) have been highly influential and used a concurrent choice task conducted in an operant chamber, wherein rats choose between pressing a lever a number of times to obtain a more palatable, sweetened food pellet reward, or consuming less palatable laboratory chow that is freely available, with a more recent variant requiring rats to respond on a progressive ratio to obtain the preferred reward (Randall et al., 2012). Critically, this task can be used to delineate whether alterations in choice are due to nonspecific effects on satiety or more evaluative aspects of decision making. A complementary method requires rats to choose between different magnitudes of the same reward. The original T-maze-based task required rats to surmount a scalable barrier to obtain a larger reward in one arm, whereas no barrier is placed in the small reward arm (Salamone et al., 1994). An automated “effort-discounting” task conducted in operant chambers works on a similar principle, in which rats choose between two levers, one delivering a smaller reward after one press and the other a larger reward after a greater number of presses (1–20) that increases over blocks of trials. Advantages of this latter procedure include the ability to assess choice latencies, rates of responding (as an index of response vigor), and being able to equate the relative delay to obtain the two rewards (Floresco et al., 2008; Shafiei et al., 2012; Simon et al., 2013).

Preference for high effort/large reward options on each of the above-mentioned tasks is markedly reduced by manipulations of the NAc, primarily the more lateral “core” region. These include cell body lesions or inactivations (Hauber and Sommer, 2009; Ghods-Sharifi and Floresco, 2010), neurotoxic lesions of mesoaccumbens DA terminals (Salamone et al., 1991; Salamone et al., 1994), and blockade of either D1 or D2 receptors within the NAc (Nowend et al., 2001). Lesions or inactivation of the BLA likewise shift preference away from high-cost options (Floresco and Ghods-Sharifi, 2007; Ghods-Sharifi et al., 2009; Ostrander et al., 2011). Notably, these manipulations do not affect preference for larger versus smaller rewards of equal cost. Thus, subcortical circuitry that enables overcoming physical effort costs to obtain more preferred rewards includes the NAc, BLA, and mesoaccumbens dopamine, mirroring their involvement in mediating choice of larger, uncertain rewards. In this regard, it could be argued that decisions involving effort may be related conceptually to those involving uncertainty, in that the decision-maker may be unsure whether the physical challenges can be overcome. This being said, these studies typically involve extensive pretraining, so that subjects are well experienced in overcoming the effort requirements at hand, which would minimize this form of uncertainty. Furthermore, the considerable dissociations in the regions of the frontal lobe that mediate decisions involving uncertainty versus effort (as discussed below) suggest that these two types of decisions may be mediated by distinct evaluative processes.

With respect to prefrontal involvement, a number of groups have shown that lesions of the rat dorsal ACC (but not the PrL or OFC) also reduce choice of high effort options on the T-maze barrier task (Walton et al., 2002, 2003; Schweimer and Hauber, 2005; Rudebeck et al., 2006; Floresco and Ghods-Sharifi, 2007; Hauber and Sommer, 2009; Ostrander et al., 2011; Holec et al., 2014). The finding of a seemingly selective involvement of the ACC in effort-related choice has spawned a plethora of lesion, neurophysiological, and imaging studies in rats, monkeys, and humans exploring its contribution to these functions. Indeed, direct stimulation of the ACC in humans elicits a feeling of foreboding regarding an impending challenge accompanied by the will to persevere and strive to overcome difficulties, described as if driving into a storm when there is no alternative but to make it to the other side (Parvizi et al., 2013).

However, a deeper inspection of the literature reveals that the involvement of this region in decisions related to physical effort costs manifests itself only under some experimental conditions. For example, similar lesions do not affect performance of the concurrent choice task, where rats choose between pressing a lever for sweetened pellets versus freely available laboratory chow, nor do they affect instrumental responding for food on a progressive ratio (Schweimer and Hauber, 2005). Similarly, ACC lesions did not alter effort-related decisions when rats were required to exert greater physical force by pressing a weighted lever to obtain larger rewards (Holec et al., 2014). Furthermore, in their original study, Walton et al. (2002) noted that the effects of medial PFC lesions could be remediated if the ratio of reward magnitude was changed from 4:2 to 5:1. Of particular note, a detailed analysis of choice behavior on the T-maze barrier task revealed that, early in a test session, ACC lesion rats were just as likely to initially approach the arm containing the larger reward and the barrier as controls. Yet, upon encountering the barrier, lesioned rats were much more likely to abort their choice and select the easily accessible smaller reward (Holec et al., 2014). This pattern of results suggests that the ACC is not involved in the initial decision to select higher cost/larger reward options, but instead may be more important in overcoming these costs at the point when faced with a physical challenge. Thus, even though neurophysiological studies suggest that ACC neural activity encodes information regarding effort cost (Kennerley et al., 2006; Cowen et al., 2012), it is apparent that the ACC does not play a ubiquitous role in effort-related decisions. Rather, it may serve a more specialized function in biasing choice when the discrepancy between the costs and benefits associated with different choices is relatively small or when impending effort costs are physically apparent (e.g., a perceptible barrier).

Cognitive effort

The design of the rat cognitive effort task (rCET) takes advantage of a well-validated rodent test of visuospatial attention: the five choice serial reaction time task (5CSRT) (Carli et al., 1983; Robbins, 2002). Rats are trained to attend to an array of five nosepoke holes, and respond in an illuminated aperture when a stimulus light inside is briefly (0.5 s) illuminated to earn reward. The number of premature nosepoke responses provides an index of motor impulsivity, while attentional ability is indicated by the accuracy of target detection. Each trial in the rCET is similar to that in the 5CSRT, with one exception: at the start of each trial, the animal chooses between two levers, and its response determines whether the trial is attentionally demanding, with a stimulus duration of 0.2 s, or much easier, with a stimulus duration of 1.0 s. Successful performance of a hard trial results in 2 pellets, compared with only 1 following an accurate response on an easy trial. Accuracy for hard trials averages ∼65%, whereas for easy trials this improves to 90% (Cocker et al., 2012a).
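To make the payoff structure of the rCET concrete, the expected reward per trial for each option can be worked out from the figures quoted above. The following is a minimal sketch in Python, assuming that mean accuracy can be treated as the probability of earning reward on a trial and ignoring omissions and premature responses; the function name `expected_pellets` is hypothetical and is not taken from any published analysis.

```python
def expected_pellets(accuracy: float, pellets: int) -> float:
    """Expected pellets per trial, treating accuracy as the
    probability of a rewarded response (a simplifying assumption)."""
    return accuracy * pellets


# Approximate group averages quoted in the text (Cocker et al., 2012a).
hard = expected_pellets(accuracy=0.65, pellets=2)  # 0.2 s stimulus, 2 pellets
easy = expected_pellets(accuracy=0.90, pellets=1)  # 1.0 s stimulus, 1 pellet

print(f"hard: {hard:.2f} pellets/trial, easy: {easy:.2f} pellets/trial")
```

Under these simplifying assumptions, the hard option returns roughly 1.3 pellets per trial against roughly 0.9 for the easy option, so a preference for easy trials is unlikely to reflect a lower expected payoff alone.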

Notable individual differences emerge when analyzing preference for the hard versus easy trials, such that animals can be classified as either “workers” or “slackers” by whether percentage choice of the harder option falls above or below the group mean (typically 70% choice of the harder option). Critically, this does not depend on animals' ability to perform the harder trials: accuracy is just as good in slackers as in workers. Such observations provide surface-level support for the theory that cognitive ability and mental endeavor are dissociable processes (Naccache et al., 2005). Experiments probing the neurobiological substrates that guide rCET performance also largely point to such a conclusion. As expected from the human literature (and in keeping with some studies of physical effort in animals), ACC inactivations uniformly decreased choice of the more effortful option without impairing accuracy of target detection (Hosking et al., 2014a). However, this manipulation also increased premature responses, indicative of an increase in motor impulsivity. The time taken to make a choice between the easy and hard options was not affected by silencing the ACC, suggesting that the increase in choice of the easier option did not occur more rapidly due to a loss of deliberation or increased reflection impulsivity. On the other hand, PrL inactivation decreased choice of the harder option while also impairing accuracy of target detection (Hosking et al., 2016).
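The worker/slacker classification described above amounts to a mean split on baseline choice of the harder option. A minimal sketch in Python follows, assuming one percentage-choice score per animal. The animal identifiers, the scores, and the function name `classify_by_group_mean` are invented for illustration and are not data from the cited studies. The text does not say how an animal falling exactly on the mean is handled; here it is arbitrarily labeled a slacker.

```python
from statistics import mean


def classify_by_group_mean(choice_pct: dict[str, float]) -> dict[str, str]:
    """Label each animal 'worker' if its choice of the hard option is
    above the group mean, otherwise 'slacker' (ties go to 'slacker',
    an arbitrary choice made for this sketch)."""
    group_mean = mean(choice_pct.values())
    return {
        rat: ("worker" if pct > group_mean else "slacker")
        for rat, pct in choice_pct.items()
    }


# Hypothetical baseline scores (% choice of the hard option); group mean is 70.
baseline = {"rat01": 92.0, "rat02": 55.0, "rat03": 78.0, "rat04": 60.0, "rat05": 65.0}
labels = classify_by_group_mean(baseline)

for rat, label in labels.items():
    print(rat, baseline[rat], label)
```

Because the split is relative to the group mean, the same animal could be labeled differently in another cohort, which is worth bearing in mind when comparing subgroups across studies.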

The data described above implicate frontal regions in the evaluation of differentially effortful tasks, yet these findings pose an additional question as to whether perturbations in impulse control or attentional ability induced by frontal inactivations drive the reduced choice of the more cognitively challenging option. That is, are rats shying away from the harder option because they are less likely to succeed at the task? Put another way, can choice between differentially effortful cognitive challenges be manipulated independently of performance? The effects of BLA inactivation on this task would suggest so, in that this manipulation altered choice without affecting accuracy or premature responding, although rats were slower to make a choice (Hosking et al., 2014a). Interestingly, BLA inactivations reduced choice of the more preferred option in both subgroups, increasing choice of the harder option in slackers and the easier option in worker rats. As such, although the BLA may facilitate expression of subjective preferences, other regions may play a more prominent role in determining the most preferred course of action.

In contrast to these more selective regional effects, striatal manipulations have a profound impact on almost every facet of the rCET: inactivations of the DMS decrease choice of the harder option, impair accuracy, increase impulsivity, and slow responding, whereas inactivating the NAc leads to a complete cessation of task performance (Silveira et al., 2016a). Given these pronounced effects, it is somewhat surprising that selective dopaminergic drugs do not affect choice on the rCET, in marked contrast to physical effort-based choice (Hosking et al., 2015). Instead, cholinergic and cannabinoid, but not noradrenergic, signaling appears to be highly influential on decision-making in the rCET (Hosking et al., 2014b; Silveira et al., 2016b). Of particular note is the observation that THC decreased choice of the more demanding option without affecting any other task parameter. Although acute administration of the psychostimulants amphetamine and caffeine decreased high effort choice in workers, these drugs either had the opposite or null effects on slackers' choice, respectively (Cocker et al., 2012a). These observations are also largely contrary to the effects of these compounds in tests of physical effort (e.g., Salamone et al., 2007; Bardgett et al., 2009), although higher doses of amphetamine can reduce choice of the more effortful option in the operant task version (Floresco et al., 2008).

Comparison of neural circuitries

Table 2 provides a simple overview regarding the ways in which the tasks discussed vary in terms of core structural characteristics, and variation in the neural circuitry involved. Some of the most interesting findings from the rCET pertain to individual differences in animals' willingness to select the more cognitively demanding option, and this has yet to be fully explored in physical effort judgments. BLA inactivations decrease choice of the high effort option in both physical and cognitive effort tasks, as long as only “worker” rats are considered in the latter; BLA inactivations have the opposite effect, increasing choice of the harder option, in slacker rats. Baseline choice of the HR option is typically >75% in both the T-maze barrier task, and in the epoch of the effort-discounting task where BLA inactivations had the greatest effect, leading to the suggestion that the increase in choice of the harder option in the cognitively lazy rats may be a result of the difference in baseline choice rather than there being something fundamentally different about the role of the BLA in cognitively effortful decision making. However, close inspection of the data does not support this hypothesis. BLA inactivation still decreased choice of the more physically demanding option even in a subgroup of rats that showed <60% preference for the difficult option at baseline, virtually matching the basal choice profile of slacker rats (Floresco and Ghods-Sharifi, 2007). As such, the role of the BLA in mediating the exertion of cognitive effort may be distinct from its role in promoting physically demanding choice.

Table 2.

Relationship between task features and neurobiology implicated in different decision-making assays involving the assessment of effortᵃ

| Task type | Type of effort taxed | Effortful challenge | NAc | BLA | ACC | mPFC | DA |
|---|---|---|---|---|---|---|---|
| T-maze | Physical | Complete one unique and effortful action (scale barrier) | ↓ | ↓ | ↓ | — | ✓ |
| Concurrent choice task | Physical | Complete more units of equally effortful response (lever press) versus none | ↓ | NA | — | — | ✓ |
| EDT | Physical | Complete more units of equally effortful response (lever press) versus less | ↓ | ↓ | — | — | ✓ |
| rCET | Cognitive | Accurately localize brief visual stimulus (attentionally demanding) | —ᵇ | ↑↓ | ↓ | ↓ |  |

ᵃ Symbols are used to indicate whether choice of the effortful option is increased (↑), decreased (↓), or unaffected (—) by inactivation or silencing of the NAc, BLA, ACC, or regions of the mPFC. The last column provides a rough indication of whether DA plays a strong role in mediating choice, based on the effects of acute or chronic pharmacological challenges with specific dopaminergic agents.

ᵇ NAc inactivation completely abolished performance of the rCET; therefore, it is impossible to conclude that a selective reduction in choice of the harder option is observed, although obviously such choice does decline.

The frontal cortex is also more widely involved in rCET performance, with PrL, IL, and ACC inactivations causing a shift toward the easier option in all rats, whereas the ACC only plays a critical role in supporting choice of more physically demanding tasks when either a physical barrier is used or when the two options are more difficult to distinguish. When integrating these preclinical findings with those obtained from human subjects, it is interesting to note that ACC stimulation induced a feeling of persevering in the face of adversity (Parvizi et al., 2013). As such, activity within the ACC may influence effort-related choices by enabling the decision-maker to overcome seemingly more daunting mental or physical obstacles, or alternatively, reducing the attractiveness of easily accessible yet inferior rewards, thereby empowering the individual to “stay the course” to obtain more preferred rewards.

The differential involvement of other medial prefrontal regions in mediating performance of the rCET versus physical effort tasks may reflect the nature of the effortful task. Rather than completing a physical challenge, rats are instead performing an attentionally-demanding test requiring accuracy of target detection and behavioral inhibition, processes that are more frontally dependent than scaling a barrier or completing a series of lever-responses. Similarly, the greater involvement of dopamine in physical-effort tasks may reflect the importance of this neurotransmitter in motor control. However, dopaminergic innervation of frontostriatal circuits is clearly implicated in attention and impulsivity (Dalley et al., 2004), yet dopamine antagonists did not alter choice on the rCET. While involvement in the effortful activity itself may recruit neurochemical systems into the decision process, this is clearly not the dominant factor involved. The observation that decisions involving the assessment of physical and cognitive effort cost are mediated by partly dissociable neural and neurochemical systems may offer valuable insight into the nature of individual differences in willingness to engage in physically versus cognitively demanding tasks, and potentially inform any interventions designed to encourage these qualitatively distinct kinds of endeavor.

Summary and future directions

Combining the latest technological advances with behavioral assays of decision making has the potential to increase our knowledge regarding the mechanisms underlying the choice process with unparalleled neural and neurochemical specificity. Current neuroscience research does not support the idea that there is one central “decision-making region” in which all evaluation and comparative processes occur; rather, the choice process is likely supported by a dynamic network of regions. As per the related field of sensorimotor decision making, activity within the nodes of this network is thought to peak as evidence from internal and external processing accumulates, until selection of option X among competing alternatives comes to dominate (Orsini et al., 2015b). Furthermore, each node and neurotransmitter within the neural circuitry discussed here also contributes to numerous other brain and cognitive processes. Recognizing the multiplicity of functions mediated by a region may actually clarify its contribution to choice on a particular paradigm. For example, the conclusion that damage to the OFC results in risky choice, as measured by the original version of the IGT, was refined in light of data demonstrating that this choice deficit could be attributed to a deficit in reversal learning (Fellows and Farah, 2005).

Because most of the data collected to date were generated from male rats, it will be important to validate these behavioral assays in female rats and determine whether performance is moderated by sex. Indeed, whereas female rats make fewer advantageous choices compared with males in the RIGT (van den Bos et al., 2012), recent findings suggest that female rats are more risk-averse when performing the RDT (Orsini et al., 2016). The authors of the latter study discussed the importance of ruling out potential confounds related to task engagement before concluding there could be a sex-dependent difference in cognition. For example, with respect to the RDT, it was critical to control for shock reactivity and motivation for food reward, both of which may be altered in female rats given that they are typically smaller and lighter than males. It is increasingly recognized that a range of contextual factors, including trial rate, motivational state, the “framing” of decisions (gain vs loss), and even the risk preference of probands, can also significantly impact risky choice, and likewise may be important to consider when trying to translate behavioral methods across species (Blanchard et al., 2014; Blanchard and Hayden, 2015; Cocker and Winstanley, 2015; Heilbronner and Hayden, 2016; Suzuki et al., 2016). While we have focused on rodent studies, the importance of nonhuman primate research in delineating key brain mechanisms underlying choice, such as parsing action-value versus stimulus-value computations, cannot be overstated (Samejima et al., 2005; Lau and Glimcher, 2008; Morris et al., 2014). As rat decision-making research increases in complexity, its synthesis with nonhuman primate decision-making research merits further consideration and may likewise highlight important areas for future research and methodological innovation (e.g., Redish, 2016).

In conclusion, the data reviewed here demonstrate that the cognitive processes taxed by different decision-making tasks can vary significantly, and this impacts the neural circuits that are recruited in guiding the direction of behavior. It is important to keep this in mind when selecting a behavioral assay that will maximize the chances of fulfilling the goals of the experiment. At the simplest level, this could mean choosing a task that is known to be sensitive to manipulations of the area or neurotransmitter system under investigation. For example, if the experimental aim is to delineate the role of dopaminergic projections to the anterior cingulate cortex in mediating decisions involving differential amounts of effort, it would be optimal to select the maze-based test of physical effort, instead of the rCET or concurrent-choice task. If the aim is to look at the role played by subjective, rather than objective, reward value in risky choice, consider choosing a task in which the options are matched for expected utility. Critically, if task choice must be determined by either available behavioral testing equipment or other logistical challenges, being aware of the limitations and potential confounds associated with any particular behavioral assay will enable a more informed discussion of the results, and therefore a more insightful and informative contribution to the literature. Deciding which decision-making task is most appropriate for addressing an experimental question may therefore be one of the most critical decisions of all.

Footnotes

This work was supported by Canadian Institutes for Health Research operating grants to C.A.W. and S.B.F. and Canadian Natural Sciences and Engineering Council Discovery Grants to C.A.W. and S.B.F. We thank Dr. Caitlin Orsini for providing data used to generate one of the figures in this article.

C.A.W. received salary support through the Michael Smith Foundation for Health Research and the Canadian Institutes of Health Research New Investigator Award program, and has consulted for Shire Pharmaceuticals on an unrelated matter. The other author declares no competing financial interests.

References

- Balleine BW, O'Doherty JP. Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology. 2010;35:48–69. doi: 10.1038/npp.2009.131.

- Bardgett ME, Depenbrock M, Downs N, Points M, Green L. Dopamine modulates effort-based decision-making in rats. Behav Neurosci. 2009;123:242–251. doi: 10.1037/a0014625.

- Barrus MM, Winstanley CA. Dopamine D3 receptors modulate the ability of win-paired cues to increase risky choice in a rat gambling task. J Neurosci. 2016;36:785–794. doi: 10.1523/JNEUROSCI.2225-15.2016.

- Barrus MM, Hosking JG, Cocker PJ, Winstanley CA. Inactivation of the orbitofrontal cortex reduces irrational choice on a rodent Betting Task. Neuroscience. 2016. doi: 10.1016/j.neuroscience.2016.02.028. Advance online publication. Retrieved Feb. 19, 2016.

- Bechara A, Damasio H. Decision-making and addiction: I. Impaired activation of somatic states in substance dependent individuals when pondering decisions with negative future consequences. Neuropsychologia. 2002;40:1675–1689. doi: 10.1016/S0028-3932(02)00015-5.

- Bechara A, Damasio AR, Damasio H, Anderson SW. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition. 1994;50:7–15. doi: 10.1016/0010-0277(94)90018-3.

- Bechara A, Damasio H, Damasio AR, Lee GP. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J Neurosci. 1999;19:5473–5481. doi: 10.1523/JNEUROSCI.19-13-05473.1999.

- Blanchard TC, Hayden BY. Monkeys are more patient in a foraging task than in a standard intertemporal choice task. PLoS One. 2015;10:e0117057. doi: 10.1371/journal.pone.0117057.

- Blanchard TC, Wilke A, Hayden BY. Hot-hand bias in rhesus monkeys. J Exp Psychol Anim Learn Cogn. 2014;40:280–286. doi: 10.1037/xan0000033.

- Botvinick MM, Huffstetler S, McGuire JT. Effort discounting in human nucleus accumbens. Cogn Affect Behav Neurosci. 2009;9:16–27. doi: 10.3758/CABN.9.1.16.

- Cardinal RN, Howes NJ. Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neurosci. 2005;6:37. doi: 10.1186/1471-2202-6-37.

- Carli M, Robbins TW, Evenden JL, Everitt BJ. Effects of lesions to ascending noradrenergic neurons on performance of a 5-choice serial reaction time task in rats: implications for theories of dorsal noradrenergic bundle function based on selective attention and arousal. Behav Brain Res. 1983;9:361–380. doi: 10.1016/0166-4328(83)90138-9.

- Clark L. Decision-making during gambling: an integration of cognitive and psychobiological approaches. Philos Trans R Soc Lond B Biol Sci. 2010;365:319–330. doi: 10.1098/rstb.2009.0147.

- Clark L, Iversen SD, Goodwin GM. A neuropsychological investigation of prefrontal cortex involvement in acute mania. Am J Psychiatry. 2001;158:1605–1611. doi: 10.1176/appi.ajp.158.10.1605.

- Cocker PJ, Winstanley CA. Irrational beliefs, biases and gambling: exploring the role of animal models in elucidating vulnerabilities for the development of pathological gambling. Behav Brain Res. 2015;279:259–273. doi: 10.1016/j.bbr.2014.10.043.

- Cocker PJ, Hosking JG, Benoit J, Winstanley CA. Sensitivity to cognitive effort mediates psychostimulant effects on a novel rodent cost/benefit decision-making task. Neuropsychopharmacology. 2012a;37:1825–1837. doi: 10.1038/npp.2012.30.

- Cocker PJ, Dinelle K, Kornelson R, Sossi V, Winstanley CA. Irrational choice under uncertainty correlates with lower striatal D(2/3) receptor binding in rats. J Neurosci. 2012b;32:15450–15457. doi: 10.1523/JNEUROSCI.0626-12.2012.

- Cousins MS, Wei W, Salamone JD. Pharmacological characterization of performance on a concurrent lever pressing/feeding choice procedure: effects of dopamine antagonist, cholinomimetic, sedative and stimulant drugs. Psychopharmacology (Berl) 1994;116:529–537. doi: 10.1007/BF02247489.

- Cowen SL, Davis GA, Nitz DA. Anterior cingulate neurons in the rat map anticipated effort and reward to their associated action sequences. J Neurophysiol. 2012;107:2393–2407. doi: 10.1152/jn.01012.2011.

- Croxson PL, Walton ME, O'Reilly JX, Behrens TE, Rushworth MF. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- Dalley JW, Cardinal RN, Robbins TW. Prefrontal executive and cognitive function in rodents: neural and neurochemical substrates. Neurosci Biobehav Rev. 2004;28:771–784. doi: 10.1016/j.neubiorev.2004.09.006.

- de Visser L, Baars AM, Lavrijsen M, van der Weerd CM, van den Bos R. Decision-making performance is related to levels of anxiety and differential recruitment of frontostriatal areas in male rats. Neuroscience. 2011;184:97–106. doi: 10.1016/j.neuroscience.2011.02.025.

- Evenden JL, Ryan CN. The pharmacology of impulsive behaviour in rats: the effects of drugs on response choice with varying delays of reinforcement. Psychopharmacology. 1996;128:161–170. doi: 10.1007/s002130050121.

- Fellows LK, Farah MJ. Ventromedial frontal cortex mediates affective shifting in humans: evidence from a reversal learning paradigm. Brain. 2003;126:1830–1837. doi: 10.1093/brain/awg180.

- Fellows LK, Farah MJ. Different underlying impairments in decision-making following ventromedial and dorsolateral frontal lobe damage in humans. Cereb Cortex. 2005;15:58–63. doi: 10.1093/cercor/bhh108.

- Floresco SB, Ghods-Sharifi S. Amygdala-prefrontal cortical circuitry regulates effort-based decision-making. Cereb Cortex. 2007;17:251–260. doi: 10.1093/cercor/bhh143.

- Floresco SB, Tse MT, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision-making. Neuropsychopharmacology. 2008;33:1966–1979. doi: 10.1038/sj.npp.1301565.

- Gee S, Ellwood I, Patel T, Luongo F, Deisseroth K, Sohal VS. Synaptic activity unmasks dopamine D2 receptor modulation of a specific class of layer V pyramidal neurons in prefrontal cortex. J Neurosci. 2012;32:4959–4971. doi: 10.1523/JNEUROSCI.5835-11.2012.

- Ghods-Sharifi S, Floresco SB. Differential effects on effort discounting induced by inactivations of the nucleus accumbens core or shell. Behav Neurosci. 2010;124:179–191. doi: 10.1037/a0018932.

- Ghods-Sharifi S, St Onge JR, Floresco SB. Fundamental contribution by the basolateral amygdala to different forms of decision-making. J Neurosci. 2009;29:5251–5259. doi: 10.1523/JNEUROSCI.0315-09.2009.

- Hauber W, Sommer S. Prefrontostriatal circuitry regulates effort-related decision-making. Cereb Cortex. 2009;19:2240–2247. doi: 10.1093/cercor/bhn241.

- Heilbronner SR, Hayden BY. The description-experience gap in risky choice in nonhuman primates. Psychonom Bull Rev. 2016;23:593–600. doi: 10.3758/s13423-015-0924-2.

- Heilbronner SR, Rodriguez-Romaguera J, Quirk GJ, Groenewegen HJ, Haber SN. Circuit-based corticostriatal homologies between rat and primate. Biol Psychiatry. 2016;80:509–521. doi: 10.1016/j.biopsych.2016.05.012.

- Holec V, Pirot HL, Euston DR. Not all effort is equal: the role of the anterior cingulate cortex in different forms of effort-reward decisions. Front Behav Neurosci. 2014;8:12. doi: 10.3389/fnbeh.2014.00012.

- Hosking JG, Cocker PJ, Winstanley CA. Dissociable contributions of anterior cingulate cortex and basolateral amygdala on a rodent cost/benefit decision-making task of cognitive effort. Neuropsychopharmacology. 2014a;39:1558–1567. doi: 10.1038/npp.2014.27.

- Hosking JG, Lam FC, Winstanley CA. Nicotine increases impulsivity and decreases willingness to exert cognitive effort despite improving attention in “slacker” rats: insights into cholinergic regulation of cost/benefit decision-making. PLoS One. 2014b;9:e111580. doi: 10.1371/journal.pone.0111580.

- Hosking JG, Floresco SB, Winstanley CA. Dopamine antagonism decreases willingness to expend physical, but not cognitive, effort: a comparison of two rodent cost/benefit decision-making tasks. Neuropsychopharmacology. 2015;40:1005–1015. doi: 10.1038/npp.2014.285.

- Hosking JG, Cocker PJ, Winstanley CA. Prefrontal cortical inactivations decrease willingness to expend cognitive effort on a rodent cost/benefit decision-making task. Cereb Cortex. 2016;26:1529–1538. doi: 10.1093/cercor/bhu321.

- Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- Jollant F, Bellivier F, Leboyer M, Astruc B, Torres S, Verdier R, Castelnau D, Malafosse A, Courtet P. Impaired decision-making in suicide attempters. Am J Psychiatry. 2005;162:304–310. doi: 10.1176/appi.ajp.162.2.304.

- Kahneman D. Thinking fast and slow. London: Penguin; 2011.

- Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–292. doi: 10.2307/1914185.

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision-making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- Kool W, McGuire JT, Rosen ZB, Botvinick MM. Decision-making and the avoidance of cognitive demand. J Exp Psychol Gen. 2010;139:665–682. doi: 10.1037/a0020198.

- Kravitz AV, Tye LD, Kreitzer AC. Distinct roles for direct and indirect pathway striatal neurons in reinforcement. Nat Neurosci. 2012;15:816–818. doi: 10.1038/nn.3100.

- Kurniawan IT, Seymour B, Talmi D, Yoshida W, Chater N, Dolan RJ. Choosing to make an effort: the role of striatum in signaling physical effort of a chosen action. J Neurophysiol. 2010;104:313–321. doi: 10.1152/jn.00027.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Larkin JD, Jenni NL, Floresco SB. Modulation of risk/reward decision-making by dopaminergic transmission within the basolateral amygdala. Psychopharmacology (Berl). 2016;233:121–136. doi: 10.1007/s00213-015-4094-8.

- Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron. 2008;58:451–463. doi: 10.1016/j.neuron.2008.02.021.

- Lobo MK, Covington HE, 3rd, Chaudhury D, Friedman AK, Sun H, Damez-Werno D, Dietz DM, Zaman S, Koo JW, Kennedy PJ, Mouzon E, Mogri M, Neve RL, Deisseroth K, Han MH, Nestler EJ. Cell type-specific loss of BDNF signaling mimics optogenetic control of cocaine reward. Science. 2010;330:385–390. doi: 10.1126/science.1188472.

- Morris RW, Dezfouli A, Griffiths KR, Balleine BW. Action-value comparisons in the dorsolateral prefrontal cortex control choice between goal-directed actions. Nat Commun. 2014;5:4390. doi: 10.1038/ncomms5390.

- Naccache L, Dehaene S, Cohen L, Habert MO, Guichart-Gomez E, Galanaud D, Willer JC. Effortless control: executive attention and conscious feeling of mental effort are dissociable. Neuropsychologia. 2005;43:1318–1328. doi: 10.1016/j.neuropsychologia.2004.11.024.

- Nowend KL, Arizzi M, Carlson BB, Salamone JD. D1 or D2 antagonism in nucleus accumbens core or dorsomedial shell suppresses lever pressing for food but leads to compensatory increases in chow consumption. Pharmacol Biochem Behav. 2001;69:373–382. doi: 10.1016/S0091-3057(01)00524-X.

- Orsini CA, Trotta RT, Bizon JL, Setlow B. Dissociable roles for the basolateral amygdala and orbitofrontal cortex in decision-making under risk of punishment. J Neurosci. 2015a;35:1368–1379. doi: 10.1523/JNEUROSCI.3586-14.2015.

- Orsini CA, Moorman DE, Young JW, Setlow B, Floresco SB. Neural mechanisms regulating different forms of risk-related decision-making: insights from animal models. Neurosci Biobehav Rev. 2015b;58:147–167. doi: 10.1016/j.neubiorev.2015.04.009.

- Orsini CA, Willis ML, Gilbert RJ, Bizon JL, Setlow B. Sex differences in a rat model of risky decision-making. Behav Neurosci. 2016;130:50–61. doi: 10.1037/bne0000111.

- Ostrander S, Cazares VA, Kim C, Cheung S, Gonzalez I, Izquierdo A. Orbitofrontal cortex and basolateral amygdala lesions result in suboptimal and dissociable reward choices on cue-guided effort in rats. Behav Neurosci. 2011;125:350–359. doi: 10.1037/a0023574.

- Paine TA, Asinof SK, Diehl GW, Frackman A, Leffler J. Medial prefrontal cortex lesions impair decision-making on a rodent gambling task: reversal by D1 receptor antagonist administration. Behav Brain Res. 2013;243:247–254. doi: 10.1016/j.bbr.2013.01.018.

- Parvizi J, Rangarajan V, Shirer WR, Desai N, Greicius MD. The will to persevere induced by electrical stimulation of the human cingulate gyrus. Neuron. 2013;80:1359–1367. doi: 10.1016/j.neuron.2013.10.057.

- Pushparaj A, Kim AS, Musiol M, Zangen A, Daskalakis ZJ, Zack M, Winstanley CA, Le Foll B. Differential involvement of the agranular vs granular insular cortex in the acquisition and performance of choice behavior in a rodent gambling task. Neuropsychopharmacology. 2015;40:2832–2842. doi: 10.1038/npp.2015.133.

- Randall PA, Pardo M, Nunes EJ, López Cruz L, Vemuri VK, Makriyannis A, Baqi Y, Müller CE, Correa M, Salamone JD. Dopaminergic modulation of effort-related choice behavior as assessed by a progressive ratio chow feeding choice task: pharmacological studies and the role of individual differences. PLoS One. 2012;7:e47934. doi: 10.1371/journal.pone.0047934.

- Redish AD. Vicarious trial and error. Nat Rev Neurosci. 2016;17:147–159. doi: 10.1038/nrn.2015.30.

- Rivalan M, Ahmed SH, Dellu-Hagedorn F. Risk-prone individuals prefer the wrong options on a rat version of the Iowa Gambling Task. Biol Psychiatry. 2009;66:743–749. doi: 10.1016/j.biopsych.2009.04.008.

- Robbins TW. The 5-choice serial reaction time task: behavioural pharmacology and functional neurochemistry. Psychopharmacology. 2002;163:362–380. doi: 10.1007/s00213-002-1154-7.

- Rokosik SL, Napier TC. Intracranial self-stimulation as a positive reinforcer to study impulsivity in a probability discounting paradigm. J Neurosci Methods. 2011;198:260–269. doi: 10.1016/j.jneumeth.2011.04.025.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Salamone JD, Steinpreis RE, McCullough LD, Smith P, Grebel D, Mahan K. Haloperidol and nucleus accumbens dopamine depletion suppress lever pressing for food but increase free food consumption in a novel food choice procedure. Psychopharmacology (Berl). 1991;104:515–521. doi: 10.1007/BF02245659.

- Salamone JD, Cousins MS, Bucher S. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav Brain Res. 1994;65:221–229. doi: 10.1016/0166-4328(94)90108-2.

- Salamone JD, Wisniecki A, Carlson BB, Correa M. Nucleus accumbens dopamine depletions make animals highly sensitive to high fixed ratio requirements but do not impair primary food reinforcement. Neuroscience. 2001;105:863–870. doi: 10.1016/S0306-4522(01)00249-4.

- Salamone JD, Correa M, Farrar A, Mingote SM. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl). 2007;191:461–482. doi: 10.1007/s00213-006-0668-9.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Schweimer J, Hauber W. Involvement of the rat anterior cingulate cortex in control of instrumental responses guided by reward expectancy. Learn Mem. 2005;12:334–342. doi: 10.1101/lm.90605.

- Seong HJ, Carter AG. D1 receptor modulation of action potential firing in a subpopulation of layer 5 pyramidal neurons in the prefrontal cortex. J Neurosci. 2012;32:10516–10521. doi: 10.1523/JNEUROSCI.1367-12.2012.

- Shafiei N, Gray M, Viau V, Floresco SB. Acute stress induces selective alterations in cost/benefit decision-making. Neuropsychopharmacology. 2012;37:2194–2209. doi: 10.1038/npp.2012.69.

- Silveira MM, Tremblay M, Winstanley CA. Dissociable contributions of dorsal and ventral regions of the striatum on a rodent cost/benefit decision-making task requiring cognitive effort. San Diego: Society for Neuroscience; 2016a.

- Silveira MM, Adams WK, Morena M, Hill MN, Winstanley CA. Δ9-Tetrahydrocannabinol decreases willingness to exert cognitive effort in male rats. J Psychiatry Neurosci. 2016b;41:150363. doi: 10.1503/jpn.150363.

- Simon NW, Gilbert RJ, Mayse JD, Bizon JL, Setlow B. Balancing risk and reward: a rat model of risky decision-making. Neuropsychopharmacology. 2009;34:2208–2217. doi: 10.1038/npp.2009.48.

- Simon NW, Montgomery KS, Beas BS, Mitchell MR, LaSarge CL, Mendez IA, Bañuelos C, Vokes CM, Taylor AB, Haberman RP, Bizon JL, Setlow B. Dopaminergic modulation of risky decision-making. J Neurosci. 2011;31:17460–17470. doi: 10.1523/JNEUROSCI.3772-11.2011.

- Simon NW, Beas BS, Montgomery KS, Haberman RP, Bizon JL, Setlow B. Prefrontal cortical-striatal dopamine receptor mRNA expression predicts distinct forms of impulsivity. Eur J Neurosci. 2013;37:1779–1788. doi: 10.1111/ejn.12191.

- Stalnaker TA, Cooch NK, Schoenbaum G. What the orbitofrontal cortex does not do. Nat Neurosci. 2015;18:620–627. doi: 10.1038/nn.3982.

- St Onge JR, Floresco SB. Dopaminergic regulation of risk-based decision-making. Neuropsychopharmacology. 2009;34:681–697. doi: 10.1038/npp.2008.121.

- St Onge JR, Floresco SB. Prefrontal cortical contribution to risk-based decision-making. Cereb Cortex. 2010;20:1816–1828. doi: 10.1093/cercor/bhp250.

- St Onge JR, Abhari H, Floresco SB. Dissociable contributions by prefrontal D1 and D2 receptors to risk-based decision-making. J Neurosci. 2011;31:8625–8633. doi: 10.1523/JNEUROSCI.1020-11.2011.

- St Onge JR, Stopper CM, Zahm DS, Floresco SB. Separate prefrontal-subcortical circuits mediate different components of risk-based decision-making. J Neurosci. 2012;32:2886–2899. doi: 10.1523/JNEUROSCI.5625-11.2012.

- Stopper CM, Floresco SB. Contributions of the nucleus accumbens and its subregions to different aspects of risk-based decision-making. Cogn Affect Behav Neurosci. 2011;11:97–112. doi: 10.3758/s13415-010-0015-9.

- Stopper CM, Khayambashi S, Floresco SB. Receptor-specific modulation of risk-based decision-making by nucleus accumbens dopamine. Neuropsychopharmacology. 2013;38:715–728. doi: 10.1038/npp.2012.240.

- Stopper CM, Green EB, Floresco SB. Selective involvement by the medial orbitofrontal cortex in biasing risky, but not impulsive, choice. Cereb Cortex. 2014;24:154–162. doi: 10.1093/cercor/bhs297.

- Suzuki S, Jensen EL, Bossaerts P, O'Doherty JP. Behavioral contagion during learning about another agent's risk-preferences acts on the neural representation of decision-risk. Proc Natl Acad Sci U S A. 2016;113:3755–3760. doi: 10.1073/pnas.1600092113.

- Treadway MT, Buckholtz JW, Schwartzman AN, Lambert WE, Zald DH. Worth the ‘EEfRT?’ The effort expenditure for rewards task as an objective measure of motivation and anhedonia. PLoS One. 2009;4:e6598. doi: 10.1371/journal.pone.0006598.

- Treadway MT, Buckholtz JW, Cowan RL, Woodward ND, Li R, Ansari MS, Baldwin RM, Schwartzman AN, Kessler RM, Zald DH. Dopaminergic mechanisms of individual differences in human effort-based decision-making. J Neurosci. 2012;32:6170–6176. doi: 10.1523/JNEUROSCI.6459-11.2012.

- Tremblay M, Hosking JG, Winstanley CA. Effects of chronic D-2/3 agonist ropinirole medication on rodent models of gambling behaviour. Mov Disord. 2013;28:S218.

- Tremblay M, Cocker PJ, Hosking JG, Zeeb FD, Rogers RD, Winstanley CA. Dissociable effects of basolateral amygdala lesions on decision-making biases in rats when loss or gain is emphasized. Cogn Affect Behav Neurosci. 2014;14:1184–1195. doi: 10.3758/s13415-014-0271-1.

- Tremblay M, Silveira MM, Kaur S, Hosking JG, Adams WK, Baunez C, Winstanley CA. Chronic D2/3 agonist ropinirole treatment increases preference for uncertainty in rats regardless of baseline choice patterns. Eur J Neurosci. 2016. doi: 10.1111/ejn.13332. Advance online publication. Retrieved July 16, 2016.

- Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.

- Tversky A, Kahneman D. The framing of decisions and the psychology of choice. Science. 1981;211:453–458. doi: 10.1126/science.7455683.

- Uylings HB, Groenewegen HJ, Kolb B. Do rats have a prefrontal cortex? Behav Brain Res. 2003;146:3–17. doi: 10.1016/j.bbr.2003.09.028.

- van den Bos R, Lasthuis W, den Heijer E, van der Harst J, Spruijt B. Toward a rodent model of the Iowa gambling task. Behav Res Methods. 2006;38:470–478. doi: 10.3758/BF03192801.

- van den Bos R, Jolles J, van der Knaap L, Baars A, de Visser L. Male and female Wistar rats differ in decision-making performance in a rodent version of the Iowa Gambling Task. Behav Brain Res. 2012;234:375–379. doi: 10.1016/j.bbr.2012.07.015.

- van Honk J, Hermans EJ, Putman P, Montagne B, Schutter DJ. Defective somatic markers in sub-clinical psychopathy. Neuroreport. 2002;13:1025–1027. doi: 10.1097/00001756-200206120-00009.

- Walton ME, Bannerman DM, Rushworth MF. The role of rat medial frontal cortex in effort-based decision-making. J Neurosci. 2002;22:10996–11003. doi: 10.1523/JNEUROSCI.22-24-10996.2002.