Abstract

A major principle of human brain organization is “integrating” some regions into networks while “segregating” other sets of regions into separate networks. However, little is known about the cognitive function of the integration and segregation of brain networks. Here, we examined the well-studied brain network for face processing, and asked whether the integration and segregation of the face network (FN) are related to face recognition performance. To do so, we used a voxel-based global brain connectivity method based on resting-state fMRI to characterize the within-network connectivity (WNC) and the between-network connectivity (BNC) of the FN. We found that 95.4% of voxels in the FN had a significantly stronger WNC than BNC, suggesting that the FN is a relatively encapsulated network. Importantly, individuals with a stronger WNC (i.e., integration) in the right fusiform face area were better at recognizing faces, whereas individuals with a weaker BNC (i.e., segregation) in the right occipital face area performed better in the face recognition tasks. In short, our study not only demonstrates the behavioral relevance of integration and segregation of the FN but also provides evidence supporting functional division of labor between the occipital face area and fusiform face area in the hierarchically organized FN.

SIGNIFICANCE STATEMENT Although the integration and segregation are major principles of human brain organization, little is known about whether they support the cognitive processes. By correlating the within-network connectivity (WNC) and between-network connectivity (BNC) of the face network with face recognition performance, we found that individuals with stronger WNC in the right fusiform face area or weaker BNC in the right occipital face area were better at recognizing faces. Our study not only demonstrates the behavioral relevance of the integration and segregation but also provides evidence supporting functional division of labor between the occipital face area and fusiform face area in the hierarchically organized face network.

Keywords: between-network connectivity, face recognition, fusiform face area, occipital face area, within-network connectivity

Introduction

A major principle of human brain organization is “integrating” some regions into functional networks while “segregating” other sets of regions into separate networks (Tononi et al., 1994, 1999; Fair et al., 2007; Supekar et al., 2009). The mechanism of integration (i.e., strengthened interregional connections within a network) and segregation (i.e., weakened interregional connections between networks) is important for gating information flow (Salinas and Sejnowski, 2001; Raichle and Snyder, 2007; Rubinov and Sporns, 2010) and for developing and maintaining mature network architecture (Fair et al., 2007, 2008, 2009; Dosenbach et al., 2010). Specifically, studies based on resting-state functional connectivity (FC) have revealed that both integration and segregation drive the functional maturation of six major functional networks in the adult brain (i.e., the frontoparietal, cingulo-opercular, default, sensorimotor, occipital, and cerebellum networks) (Dosenbach et al., 2010). However, a fundamental question that remains unanswered is whether and how the integration and segregation of functional networks support the related cognitive processes.

The functional network for face processing provides an ideal test platform to address this question. Neuroimaging studies have revealed a well-described face network (FN) comprising brain regions that are coactivated in a variety of face processing tasks (for reviews, see Haxby et al., 2000; Gobbini and Haxby, 2007; Ishai, 2008; Pitcher et al., 2011a), including the fusiform face area (FFA), occipital face area (OFA), superior temporal sulcus (STS), amygdala (AMG), inferior frontal gyrus (IFG), anterior temporal cortex (ATC), and visual cortex (VC) (Calder and Young, 2005; Kanwisher and Yovel, 2006; Gobbini and Haxby, 2007; Liu et al., 2010; Zhang et al., 2012; Zhen et al., 2013). Recent FC studies have shown that some face-selective regions are strongly connected (e.g., the FFA-OFA, FFA-STS, FFA-VC, and STS-AMG) under both task-state and resting-state in the adult brain (Zhang et al., 2009; Turk-Browne et al., 2010; Zhu et al., 2011; Davies-Thompson and Andrews, 2012; O'Neil et al., 2014), and effective connectivity of the FFA-OFA and the OFA-STS increases from childhood to adulthood (Cohen Kadosh et al., 2011). Therefore, the coactivated face-selective regions are likely integrated through strengthened FC to form the FN. Furthermore, FC between the face-selective regions can be read out for behavioral performance. For example, stronger FFA-OFA FC is related to better performance in a variety of face tasks (Zhu et al., 2011), and stronger FC between the FFA and a face region in the perirhinal cortex is associated with a larger face inversion effect (O'Neil et al., 2014). However, these studies only focused on specific regions within the FN, and did not investigate the integration of the FN as a whole. Furthermore, no study has yet characterized the segregation of the FN, that is, the weakened FC between the FN and nonface networks (NFN) in the brain.

In this study, we first characterized the within-network connectivity (WNC) and between-network connectivity (BNC) of the FN in a large sample of participants (N = 296) with a voxel-based global brain connectivity (GBC) method using resting-state fMRI (rs-fMRI) (Cole et al., 2012), and then examined whether these measures were related to behavioral performance in face recognition. Specifically, the FN was defined as a set of voxels that are selectively responsive to faces over objects through a standard functional localizer, and the NFN was defined as the rest of the non–face-selective voxels in the brain. For each voxel in the FN, the WNC was calculated as the averaged FC of a voxel to the rest of the face-selective voxels in the FN, whereas BNC was the averaged FC of a face-selective voxel in the FN to all of the NFN voxels. By correlating the WNC and BNC of each voxel in the FN with face recognition performance across participants, we characterized the behavioral relevance of the integration (i.e., stronger WNC) and segregation (i.e., weaker BNC) of the FN, which may shed light on the hierarchical structure of the FN.

Materials and Methods

As part of an ongoing project investigating the associations among gene, environment, brain, and behavior (e.g., Wang et al., 2012, 2014; Huang et al., 2014; Kong et al., 2015a,b,c; Song et al., 2015a; Zhang et al., 2015b; Zhen et al., 2015), the present study used multimodal data to investigate the behavioral relevance of the FN's within-network integration and between-network segregation. First, a task-state fMRI (ts-fMRI) scan was performed to localize the FN (Pitcher et al., 2011b; Zhen et al., 2015). Second, an rs-fMRI scan was used to characterize the intrinsic WNC and BNC of the FN. Third, two behavioral tests were conducted outside of the MRI scanner in a separate behavioral session to measure face recognition performance for each participant (using the same cohort as in the rs-fMRI scan). Finally, correlational analyses were performed between the WNC/BNC of the FN and face recognition ability to investigate the behavioral relevance of the within-network integration and between-network segregation of the FN.

Participants

All participants were college students recruited from Beijing Normal University, Beijing, China. The participants reported no history of neurological or psychiatric disorders and had normal or corrected-to-normal vision. A total of 202 participants (124 females; 182 self-reported right-handed; mean age = 20.3 years, SD = 0.9 years) participated in the functional localizer scan, and 296 participants (176 females; 273 self-reported right-handed; mean age = 20.4 years, SD = 0.9 years; of whom 192 had participated in the functional localizer scan) participated in the rs-fMRI scan and behavioral session. Both the behavioral and MRI protocols were approved by the Institutional Review Board of Beijing Normal University. Written informed consent was obtained from all participants before the study.

Functional localizer for the FN

A dynamic face localizer was used to define the FN (Pitcher et al., 2011b). Specifically, three blocked-design functional runs were conducted with each participant. Each run contained two block sets, intermixed with three 18 s fixation blocks at the beginning, middle, and end of the run. Each block set consisted of four blocks with four stimulus categories (faces, objects, scenes, and scrambled objects), with each stimulus category presented in an 18 s block that contained six 3 s movie clips. During the scanning, participants were instructed to passively view the movie clips containing faces, objects, scenes, or scrambled objects (for more details on the paradigm, see Zhen et al., 2015).

Image acquisition

MRI scanning was conducted on a Siemens 3T scanner (MAGNETOM Trio, a Tim system) with a 12-channel phased-array head coil at Beijing Normal University Imaging Center for Brain Research, Beijing, China. In total, three sets of MRI images were acquired. Specifically, the ts-fMRI was acquired using a T2*-weighted gradient-echo echo-planar-imaging (GRE-EPI) sequence (TR = 2000 ms, TE = 30 ms, flip angle = 90°, number of slices = 30, voxel size = 3.125 × 3.125 × 4.8 mm). The rs-fMRI was acquired using the same GRE-EPI sequence but with a different slice prescription (TR = 2000 ms, TE = 30 ms, flip angle = 90°, number of slices = 33, voxel size = 3.125 × 3.125 × 3.6 mm). The rs-fMRI scan lasted 8 min and consisted of 240 contiguous EPI volumes. During the scan, participants were instructed to relax without engaging in any specific task and to remain still with their eyes closed. In addition, a high-resolution T1-weighted magnetization-prepared rapid gradient-echo (MPRAGE: TR/TE/TI = 2530/3.39/1100 ms, flip angle = 7°, matrix = 256 × 256, number of slices = 128, voxel size = 1 × 1 × 1.33 mm) anatomical scan was acquired for registration purposes and for anatomically localizing the functional regions. Earplugs were used to attenuate scanner noise, and a foam pillow and extendable padded head clamps were used to restrain participants' head motion.

Image preprocessing

ts-fMRI data preprocessing.

The task-state functional images were preprocessed with FEAT (FMRI Expert Analysis Tool) version 5.98, part of FSL (FMRIB's Software Library, http://www.fmrib.ox.ac.uk/fsl). The first-level analysis was conducted separately on each run and each session for each participant. Preprocessing included the following steps: motion correction, brain extraction, spatial smoothing with a 6 mm FWHM Gaussian kernel, intensity normalization, and high-pass temporal filtering (120 s cutoff). Statistical analyses on the time series were performed using FILM (FMRIB's Improved Linear Model) with a local autocorrelation correction. Predictors (i.e., the faces, objects, scenes, and scrambled objects stimuli) were convolved with a gamma hemodynamic response function to generate the main explanatory variables. The temporal derivative of each explanatory variable was modeled to improve the sensitivity of the model. Motion parameters were also included in the GLM as confounding variables of no interest to account for the effect of residual head movements. Two face-selective contrasts were calculated for each run of each session: (1) faces versus objects and (2) faces versus fixation.

A second-level analysis was performed to combine all runs within each session. Specifically, the parameter image from the first-level analysis was initially aligned to the individual's structural images through FLIRT (FMRIB's linear image registration tool) with 6 degrees-of-freedom and then warped to the MNI standard template through FNIRT (FMRIB's nonlinear image registration tool) running with the default parameters. The spatially normalized parameter images (resampled to 2 mm isotropic voxels) were then summarized across runs in each session using a fixed-effect model. The statistical images from the second-level analysis were then used for further group analysis.

rs-fMRI data preprocessing.

The resting-state functional images were also preprocessed with FSL. The preprocessing included the removal of the first four volumes, head motion correction (by aligning each volume to the middle volume of the series with MCFLIRT), spatial smoothing (with a Gaussian kernel of 6 mm FWHM), intensity normalization, and the removal of linear trends. Next, a temporal bandpass filter (0.01–0.1 Hz) was applied to reduce low-frequency drifts and high-frequency noise.

To further eliminate physiological noise, such as fluctuations caused by motion, cardiac and respiratory cycles, nuisance signals from CSF, white matter, whole brain average, motion correction parameters, and first derivatives of these signals were regressed out using the methods described in previous studies (Fox et al., 2005; Biswal et al., 2010). The 4-D residual time series obtained after removing the nuisance covariates were used for the FC analyses. The strength of the intrinsic FC between two voxels was estimated using the Pearson's correlation of the residual resting-state time series at those voxels.
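The nuisance-regression and correlation steps above can be illustrated with a minimal sketch. It assumes the data are already loaded as NumPy arrays; the function name `regress_out` and the random stand-in signals are hypothetical, and this is an ordinary least-squares illustration rather than the FSL-based pipeline actually used.

```python
import numpy as np

def regress_out(ts, nuisance):
    """Residuals of ts (time x voxels) after OLS regression on nuisance (time x regressors)."""
    # Add an intercept column so the mean of each voxel is modeled as well.
    design = np.column_stack([np.ones(len(nuisance)), nuisance])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta

rng = np.random.default_rng(0)
n_vol = 236  # 240 acquired volumes minus the first four discarded
# Hypothetical stand-ins for nuisance signals (e.g., CSF, white matter, global mean).
nuisance = rng.standard_normal((n_vol, 3))
ts = rng.standard_normal((n_vol, 5)) + nuisance @ rng.standard_normal((3, 5))

resid = regress_out(ts, nuisance)
# FC between voxels: Pearson correlation of the residual time series.
fc = np.corrcoef(resid, rowvar=False)
```

In practice the regressor set would also include the motion parameters and the first derivatives of all nuisance signals, as described above.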

Registration of each participant's rs-fMRI images to the structural images was performed using FLIRT to produce a 6 degrees-of-freedom affine transformation matrix. Registration of each participant's structural images to the MNI space was accomplished using FLIRT to produce a 12 degrees-of-freedom linear affine matrix (Jenkinson and Smith, 2001; Jenkinson et al., 2002).

Behavioral tests

Participants completed two computer-based tasks: the old/new recognition task (Zhu et al., 2011; Wang et al., 2012) and the face-inversion task (Zhu et al., 2011), and one paper-based test, the Raven's Advanced Progressive Matrices (RAPM) (Raven et al., 1998). The RAPM test was conducted on a separate day from the computer-based tasks.

Old/new recognition task.

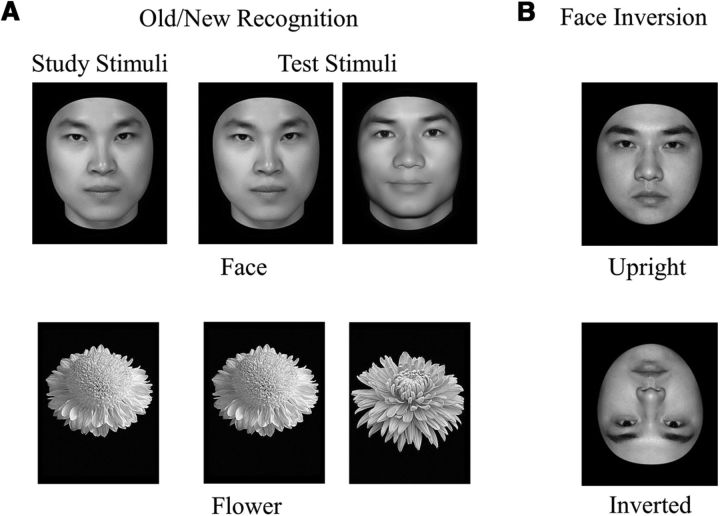

Thirty face images and 30 flower images were used in the old/new recognition task (Fig. 1A). The face images were grayscale pictures of adult Chinese faces with the external contours removed, and the flower images were grayscale pictures of common flowers with leaves and background removed. There were two blocks in this task that were counterbalanced across participants: a face block and a flower block. Each block consisted of one study segment and one test segment. In the study segment, 10 images of one object category were shown for 1 s per image, with an interstimulus interval of 0.5 s, and these studied images were shown twice. In the test segment, 10 studied images were shown twice, randomly intermixed with 20 new images from the same category. On presentation of each image, participants were instructed to indicate whether the image had been shown in the study segment. For each participant, the recognition accuracy was calculated as the average proportion of hits and correct rejections for each category (i.e., face and flower), respectively. The face-specific recognition ability (FRA) was calculated as the normalized residual of the face recognition accuracy after regressing out the flower recognition accuracy (Wang et al., 2012; DeGutis et al., 2013; Huang et al., 2014).
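As an illustration of the FRA measure, the following minimal sketch regresses face accuracy on flower accuracy and standardizes the residuals. The function name `face_specific_ability` and the simulated accuracies are hypothetical; the standardization to mean 0 and SD 1 is an assumption about what "normalized residual" means here.

```python
import numpy as np

def face_specific_ability(face_acc, flower_acc):
    """Standardized residual of face accuracy after regressing out flower accuracy."""
    face_acc = np.asarray(face_acc, dtype=float)
    flower_acc = np.asarray(flower_acc, dtype=float)
    # Simple linear regression: face_acc ~ slope * flower_acc + intercept.
    slope, intercept = np.polyfit(flower_acc, face_acc, 1)
    resid = face_acc - (slope * flower_acc + intercept)
    # z-score the residuals (assumed normalization).
    return (resid - resid.mean()) / resid.std(ddof=1)

rng = np.random.default_rng(1)
flower = rng.uniform(0.6, 1.0, 50)                      # simulated flower accuracy
face = 0.35 + 0.5 * flower + rng.normal(0.0, 0.05, 50)  # simulated face accuracy
fra = face_specific_ability(face, flower)
```

By construction, the resulting score is uncorrelated with flower accuracy, so it captures the face-specific component of recognition performance.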

Figure 1.

Example stimuli and trial types. A, In the old/new recognition task, participants studied a single image (either a face or a flower). They were then shown a series of individual images of the corresponding type and asked to indicate which of the images had been shown in the study segment. B, In the face-inversion task, participants performed a successive same-different matching task on upright and inverted faces.

Face-inversion task.

Twenty-five face images were used, which were grayscale adult Chinese male faces (not shown in the old/new recognition task), with all external information (e.g., hair) removed (Fig. 1B). Pairs of face images were presented sequentially, either both upright or both inverted, with upright- and inverted-face trials randomly interleaved. Each trial started with a blank screen for 1 s, followed by the first face image presented at the center of the screen for 0.5 s. Then, after an interstimulus interval of 0.5 s, the second image was presented until a response was made. Participants were instructed to judge whether the two sequentially presented faces were identical. There were 50 trials in each condition, half of which consisted of face pairs that were identical and the other half of which consisted of face pairs from different individuals. Each face pair was shown only once in each condition, and face pairs from different individuals were mixed and matched. Therefore, each of the 25 male faces was shown 4 times in total (2 for identical trials, 2 for different trials) in each of the upright and inverted conditions. For each participant, the accuracy was calculated as the average proportion of hits and correct rejections for the upright and inverted condition, respectively.
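The accuracy measure used in both tasks can be written out explicitly. In this minimal sketch, the function name `accuracy` is hypothetical, and the correct-rejection rate is taken as one minus the false alarm rate; the example values are the group means reported in Table 2.

```python
def accuracy(hit_rate, false_alarm_rate):
    """Average of the hit rate and the correct-rejection rate (1 - false alarm rate)."""
    return (hit_rate + (1.0 - false_alarm_rate)) / 2.0

# Group means from Table 2 (old/new recognition task, face condition).
face_old_new = accuracy(0.797, 0.252)   # 0.7725, consistent with the reported 0.772
# Group means from Table 2 (face-inversion task, inverted condition).
inverted = accuracy(0.788, 0.321)       # 0.7335, consistent with the reported 0.733
```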

Assessment of general intelligence.

The RAPM contains 48 multiple-choice items of abstract reasoning, in which participants are required to select the missing piece of a 3 × 3 matrix from one of eight alternatives. Because the participants were highly homogeneous, the number of correctly answered items was used as a measure of each individual's general intelligence.

Within-network and between-network FC analyses for the FN

Definition of the FN.

A voxel that satisfied the following two criteria was considered a face-selective voxel (Kanwisher et al., 1997). First, the response in the face condition must be significantly higher than the baseline (i.e., fixation). Second, the response in the face condition must be significantly higher than that in the object condition. The first criterion ensures that a voxel is responsive to faces, and the second criterion ensures that the response of the voxel is selective to faces (i.e., responding more to faces than to nonface objects). Therefore, we combined the contrast of faces versus fixation and that of faces versus objects to define face-selective activation of each voxel in each individual participant, where the value of the conjunction activation was defined as the smaller Z value of the two contrasts. The conjunction activation of each participant (i.e., Z-statistic image) was then thresholded (Z > 2.3, p < 0.01, uncorrected) (Kawabata Duncan and Devlin, 2011; Zhen et al., 2015) and binarized. The binarized images of all participants were then averaged to create a probabilistic activation map for face recognition, where the value in each voxel represents the proportion of participants who showed face-selective activation in the voxel. That is, a value of 0.2 in a voxel indicates that 20% of the participants showed significant face-selective activation in this voxel. Thus, the probabilistic activation map provides information regarding the interindividual variability of face-selective activation (Van Essen, 2002; Julian et al., 2012; Tahmasebi et al., 2012; Engell and McCarthy, 2013; Zhen et al., 2015). Finally, the FN was created by keeping only the voxels that showed a probability of activation >0.2 in the probabilistic activation map (see also Flöel et al., 2010; Engell and McCarthy, 2013). Accordingly, the rest of the voxels in the gray matter of the brain (constrained by the MNI 25% structural atlas implemented in FSL) were defined as the NFN (i.e., not belonging to the FN).
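The conjunction, thresholding, and averaging steps above can be sketched as follows. This is a minimal illustration on a toy array of Z values; the function name `define_face_network` and the example numbers are hypothetical, and real data would be spatially normalized Z-statistic images rather than small arrays.

```python
import numpy as np

def define_face_network(z_faces_vs_fix, z_faces_vs_obj, z_thresh=2.3, prob_thresh=0.2):
    """Inputs have shape (participants, voxels) and hold Z statistics for each contrast."""
    # Conjunction value: the smaller Z of the two contrasts.
    conj = np.minimum(z_faces_vs_fix, z_faces_vs_obj)
    # Threshold and binarize each participant's conjunction map.
    active = conj > z_thresh
    # Probabilistic map: proportion of participants active at each voxel.
    prob_map = active.mean(axis=0)
    # FN mask: voxels whose activation probability exceeds the cutoff.
    return prob_map, prob_map > prob_thresh

# Toy example: 5 participants, 3 voxels.
z_fix = np.array([[3, 3, 1], [3, 3, 3], [3, 1, 1], [3, 3, 1], [3, 3, 1]], dtype=float)
z_obj = np.array([[3, 3, 3], [3, 2, 3], [3, 3, 3], [3, 1, 3], [3, 3, 1]], dtype=float)
prob_map, fn_mask = define_face_network(z_fix, z_obj)
# prob_map is [1.0, 0.4, 0.2]; only the first two voxels exceed the 0.2 cutoff.
```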

Within-network and between-network FC.

A GBC method, which is a recently developed analytical approach for neuroimaging data, was used to characterize the intrinsic WNC and BNC of each voxel within the FN (Cole et al., 2012). The GBC of a voxel is generally defined as the averaged FC of that voxel to the rest of the voxels in the whole brain or a predefined mask. This method enables characterization of a specific region's full-range FC with voxelwise resolution, allowing us to comprehensively examine the role of each region's FC in face recognition. Specifically, the WNC of each voxel in the FN was computed as its voxel-based FC within the FN. That is, the FC between an FN voxel and each of the remaining FN voxels was computed, and these values were averaged to yield the WNC of that voxel. In contrast, the BNC of each FN voxel was computed as its voxel-based FC to the NFN. That is, the FC between an FN voxel and each NFN voxel was computed, and these values were averaged to yield the BNC of that voxel. Next, participant-level FC maps were transformed to z-score maps using Fisher's z-transformation to yield normally distributed values (Cole et al., 2012; Gotts et al., 2013a). As a result, two types of FC were estimated for each voxel and each participant. As we expected that the WNC would be stronger than the BNC, a paired t test between the WNC and BNC was performed across participants for each voxel within the FN.
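For a single participant, the WNC and BNC computation can be sketched as follows. This minimal example assumes a small toy time-series matrix that fits in memory, so the full correlation matrix is computed at once; a whole-brain analysis would need to process voxels in blocks. The function name `wnc_bnc` and the simulated data are hypothetical, and the Fisher transformation is applied after averaging, following the order described above.

```python
import numpy as np

def wnc_bnc(ts, fn_mask):
    """ts: (time, voxels) residual time series; fn_mask: boolean (voxels,) marking FN voxels."""
    r = np.corrcoef(ts, rowvar=False)          # voxel-by-voxel Pearson correlations
    fn = np.flatnonzero(fn_mask)
    nfn = np.flatnonzero(~fn_mask)
    r_fn = r[np.ix_(fn, fn)]
    # WNC: mean FC to the other FN voxels, excluding the self-correlation of 1.
    wnc_r = (r_fn.sum(axis=1) - 1.0) / (len(fn) - 1)
    # BNC: mean FC to all NFN voxels.
    bnc_r = r[np.ix_(fn, nfn)].mean(axis=1)
    # Fisher z-transformation of the averaged correlations.
    return np.arctanh(wnc_r), np.arctanh(bnc_r)

rng = np.random.default_rng(2)
n_time, n_fn, n_nfn = 200, 4, 6
shared = rng.standard_normal(n_time)           # common signal shared by toy FN voxels
ts = rng.standard_normal((n_time, n_fn + n_nfn))
ts[:, :n_fn] = shared[:, None] + 0.5 * ts[:, :n_fn]
fn_mask = np.arange(n_fn + n_nfn) < n_fn

wnc, bnc = wnc_bnc(ts, fn_mask)
```

In this toy case the FN voxels share a common signal, so each voxel's WNC exceeds its BNC, mirroring the pattern tested with the paired t test.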

FC-FRA correlation analyses.

Based on the individual variability of the two FC measures and scores from the behavioral tests, correlation analyses were conducted to reveal the functionality of the WNC and BNC in relation to the individual differences in the FRA. Specifically, a Pearson's correlation between FC and FRA was conducted for each voxel with a GLM tool implemented in FSL, where FRA was set as an independent variable and FC as the dependent variable. Multiple-comparison correction was performed on the statistical map using the 3dClustSim program implemented in Analysis of Functional NeuroImages (http://afni.nimh.nih.gov). A threshold of cluster-level p < 0.05 and voxel-level p < 0.05 (cluster size > 100 voxels) was set based on Monte Carlo simulations in the FN mask.
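The voxelwise correlation itself (without the cluster-level correction, which is not reproduced here) can be sketched in a few lines. The function name `voxelwise_pearson` and the random inputs are hypothetical; the analysis described above was run with a GLM tool in FSL rather than with this code.

```python
import numpy as np

def voxelwise_pearson(fc, behavior):
    """fc: (participants, voxels) WNC or BNC values; behavior: (participants,) scores."""
    fc_c = fc - fc.mean(axis=0)            # center each voxel across participants
    b_c = behavior - behavior.mean()       # center the behavioral score
    num = b_c @ fc_c
    den = np.sqrt((fc_c ** 2).sum(axis=0) * (b_c ** 2).sum())
    return num / den                       # one Pearson r per voxel

rng = np.random.default_rng(4)
fc = rng.standard_normal((30, 4))          # simulated FC values
fra = rng.standard_normal(30)              # simulated FRA scores
r_map = voxelwise_pearson(fc, fra)
```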

As the FRA was calculated from the accuracy for recognizing faces regressing out the accuracy of recognizing flowers, we further explored whether the correlation largely resulted from a correlation between FC and performance in the face condition, but not from a correlation between FC and performance in the flower condition. First, significant clusters from the FC-FRA correlation analysis were identified as the ROI. Then, we correlated the mean FC value of these ROI with the accuracy of the face condition and flower condition, respectively. Finally, to validate this finding, Pearson's correlation was performed between mean FC values of the FC-FRA defined ROI and the accuracy of recognizing upright and inverted faces in the face-inversion task. In addition, ROI-based analyses after exploratory voxelwise analyses help control for Type I error by limiting the number of statistical tests to a few ROI (Poldrack, 2007).

Participant exclusion

The quality control of the MRI data focused on the artifacts caused by head motion during the scanning. Participants whose head motion was >3.0° in rotation or 3.0 mm in translation throughout the fMRI scan were excluded from further analyses. For the rs-fMRI, two male participants who met this criterion were excluded, whereas no participant was excluded in the ts-fMRI. For the computer-based face tests, Tukey's outlier filter (Hoaglin et al., 1983) was used to identify outlier participants with an exceptionally low performance (3× the interquartile range below the first quartile), or an exceptionally high performance (3× the interquartile range above the third quartile). No participant was excluded with this method.
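The behavioral outlier rule can be illustrated with a minimal sketch. The function name `tukey_outliers` and the example scores are hypothetical, and quartiles are computed with NumPy's default linear interpolation, which may differ slightly from the quartile definition used in the original analysis.

```python
import numpy as np

def tukey_outliers(scores, k=3.0):
    """Flag scores more than k x IQR below the first or above the third quartile."""
    scores = np.asarray(scores, dtype=float)
    q1, q3 = np.percentile(scores, [25, 75])
    iqr = q3 - q1
    return (scores < q1 - k * iqr) | (scores > q3 + k * iqr)

# Toy accuracies: the last value is far below the rest of the sample.
flags = tukey_outliers([0.70, 0.75, 0.78, 0.80, 0.82, 0.85, 0.10])
# Only the last score is flagged as an outlier.
```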

Results

To define the FN, we created a probabilistic map for face-selective activation in the brain. The probability for each voxel was calculated as the proportion of participants who showed face-selective activation in the conjunction contrasts of faces versus objects and faces versus fixation (Z > 2.3, uncorrected). A high probability indicated that the voxel was highly likely to belong to the FN. Across the brain, the probability of a given voxel showing significant face-selective activation ranged from 0 to 0.713 (Fig. 2). The FN was created by keeping only the voxels with a probability of activation >0.2. From these voxels, 14 clusters were identified, including the bilateral FFA, bilateral OFA, bilateral STS, bilateral AMG, bilateral superior frontal gyrus, bilateral medial frontal gyrus, right precentral gyrus, right IFG, right ATC, and bilateral VC (Fig. 2). The regions in the FN were in agreement with the face-selective regions identified in previous studies on faces (Haxby et al., 2000; Kanwisher and Yovel, 2006; Gobbini and Haxby, 2007; Ishai, 2008; Pitcher et al., 2011a; Engell and McCarthy, 2013; Zhen et al., 2013). The anatomical coordinates of all of these clusters are reported in Table 1.

Figure 2.

Group-level face-selective probabilistic activation map. Fourteen face-selective regions were identified in group analysis. Color bar represents the percentage of participants who showed face-selective activation. SFG, Superior frontal gyrus; MFG, medial frontal gyrus; PCG, right precentral gyrus; L, left; R, right. The visualization was provided by BrainNet Viewer (http://www.nitrc.org/projects/bnv/).

Table 1.

The local maxima of the group-level probabilistic activation map^a

| Region | MNI x | MNI y | MNI z | Peak p |
|---|---|---|---|---|
| Right FFA | 42 | −50 | −22 | 0.644 |
| Left FFA | −40 | −52 | −22 | 0.436 |
| Right OFA | 42 | −82 | −14 | 0.540 |
| Left OFA | −42 | −80 | −16 | 0.327 |
| Right STS | 48 | −38 | 4 | 0.693 |
| Left STS | −58 | −42 | 6 | 0.347 |
| Right AMG | 22 | −8 | −16 | 0.653 |
| Left AMG | −18 | −10 | −16 | 0.589 |
| SFG | 6 | 56 | 20 | 0.297 |
| MFG | 2 | 34 | −22 | 0.277 |
| Right PCG | 50 | 4 | 44 | 0.381 |
| Right IFG | 48 | 24 | 18 | 0.257 |
| Right ATC | 36 | −2 | −40 | 0.252 |
| VC | −8 | −78 | −4 | 0.713 |

^a SFG, Superior frontal gyrus; MFG, medial frontal gyrus; PCG, precentral gyrus.

After identifying the FN, we computed each voxel's WNC and BNC in the FN with the rs-fMRI data, where the WNC measured the voxelwise FC within the FN and the BNC measured the voxelwise FC between the FN and the NFN. As shown in Figure 3A, B, most of the voxels in the FN showed positive WNC and BNC. Further quantitative analysis showed that, among all of the face-selective regions, the OFA, FFA, STS, and VC had the largest WNC values (Figs. 3A, 4). In addition, the WNC value of the rFFA, bilateral OFA, and VC was 1 SD higher than the mean WNC value of the FN, suggesting that they are hubs of the FN (Dai et al., 2014). On the other hand, the FFA, AMG, and VC had the largest BNC values (Figs. 3B, 4).

Figure 3.

Global pattern of the WNC/BNC in the FN. The group-level (one-sample t test) WNC map (A), group-level (one-sample t test) BNC map (B), and the difference (paired t test) between the two connectivity metrics across participants (C) are overlaid on the cortical surface. C, A total of 95.4% of the voxels in the FN had significantly larger WNC than BNC (t > 2.6, two-tailed p < 0.01, uncorrected). D, Scatter plots showing the across-voxel correlation between the probability of the face-selective activation in Figure 2 and the t-statistical difference in C (nonparametric Spearman r = 0.40, p < 10^−5).

Figure 4.

WNC/BNC value and their comparison across participants. For display purposes, the average WNC and BNC values of the peak coordinates in Table 1 are shown in the bar plot. The t value indicates the paired t test statistic between WNC and BNC across participants for each peak. Error bars indicate SEM. SFG, Superior frontal gyrus; MFG, medial frontal gyrus; PCG, right precentral gyrus.

Importantly, the WNC of most voxels in the FN was much larger than the BNC. Paired t tests between the WNC and BNC, performed across participants for each voxel in the FN, revealed that 95.4% of voxels in the FN had a significantly larger WNC value than BNC value (t > 2.6, two-tailed p < 0.01, uncorrected; Fig. 3C), indicating that voxels in the FN have stronger mutual connections than connections between the FN and NFN. Further inspection of the probabilistic activation map shown in Figure 2 and the difference map between the WNC and BNC values shown in Figure 3C suggested that regions with a lower probability of face activation (e.g., frontal regions) were less likely to be connected to other regions in the face network. To test this intuition, we computed the spatial correlation across the FN between the face-selective probabilistic activation map (Fig. 2) and the difference map between WNC and BNC values (Fig. 3C), and found a significant positive correlation between these two maps (nonparametric Spearman r = 0.40, p < 10^−5; Fig. 3D), suggesting an intrinsic association between face-selective responses and the functional connectivity between face-selective regions (see also Song et al., 2015b). Interestingly, a similar pattern was observed in the scene network (defined by the conjunction of the contrast between scenes and objects and that of scenes and fixation), where 94.8% of the voxels in the scene network had a significantly larger WNC value than BNC value (t > 2.6, two-tailed p < 0.01, uncorrected), and the scene-selective probabilistic activation map was significantly correlated with the difference map of the scene network between the WNC and BNC values (nonparametric Spearman r = 0.49, p < 10^−5). Together, this pattern suggests that the FN is a relatively encapsulated network, which may result from a general organization principle of the brain that regions with similar functions are more likely to be connected together (see also Stevens et al., 2010, 2012, 2015; Zhu et al., 2011; Simmons and Martin, 2012; He et al., 2013; Peelen et al., 2013; Hutchison et al., 2014).
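The per-voxel comparison of WNC and BNC can be sketched as follows. This is a minimal illustration on simulated values: the function name `paired_t` and all numbers are hypothetical, and the cutoff of 2.6 is borrowed from the text purely for illustration, since it corresponds to the sample size of the study rather than to this toy sample.

```python
import numpy as np

def paired_t(wnc, bnc):
    """Paired t statistic per voxel; inputs have shape (participants, voxels)."""
    diff = wnc - bnc
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))

rng = np.random.default_rng(3)
n_sub, n_vox = 50, 20
bnc = rng.normal(0.05, 0.02, (n_sub, n_vox))
wnc = bnc + rng.normal(0.08, 0.03, (n_sub, n_vox))      # WNC larger on average
wnc[:, -1] = bnc[:, -1] + rng.normal(0.0, 0.03, n_sub)  # one voxel with no true difference

t_map = paired_t(wnc, bnc)
frac_stronger = np.mean(t_map > 2.6)   # proportion of voxels with WNC reliably above BNC
```

The resulting t map could then be correlated with the probabilistic activation map across voxels (e.g., with a rank correlation) to obtain the spatial correlation described above.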

Having characterized the WNC and BNC of the FN, we investigated whether these measures can be read out for behavioral performance on face recognition. To do so, the participant's FRA was measured using the old/new recognition task. The descriptive statistics for the task are summarized in Table 2. To examine whether the integration of the FN was related to FRA (face accuracy regressing out flower accuracy), we conducted a Pearson's correlation analysis between the WNC and FRA across all voxels in the FN. As shown in Figure 5A, a cluster in the right FFA (rFFA) showed a significantly positive WNC-FRA correlation (168 voxels, p < 0.05, corrected; r = 0.17, p = 0.004, MNI coordinates: 40, −48, −16), indicating that individuals with a stronger WNC (i.e., integration) in the rFFA performed better in recognizing previously presented faces. To examine the specificity of the WNC-FRA correlation, we performed an ROI-based analysis to ask whether the WNC-FRA correlation in the rFFA was specific to face recognition, not to flower recognition. We found that the WNC in the rFFA (defined by the WNC-FRA correlation) was positively correlated with the participants' accuracy of recognizing faces (r = 0.14, p = 0.01), but not with the accuracy of recognizing flowers (r = −0.05, p = 0.37). In addition, to examine the reliability of the correlation, the top 25% and bottom 25% of the participants based on their FRA were labeled as the high (N = 73) and low (N = 73) FRA groups, respectively. Consistent with the correlational results, the high FRA group (WNC = 0.114) showed a stronger WNC value than the low FRA group (WNC = 0.085) in the rFFA cluster (t(144) = 2.88, p = 0.005, Cohen's d = 0.48). Finally, no association was found between the BNC and FRA in the rFFA (r = 0.002, p = 0.97), indicating the specific role of the rFFA in integrating the rest of the regions in the FN to facilitate face recognition.
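As a consistency check on the reported statistics, the standard conversions between r, t, and Cohen's d can be worked through. This sketch assumes a sample of 294 participants (296 scanned minus the 2 excluded for head motion) and two equal-sized groups with a pooled SD; the function names are hypothetical.

```python
import math

def t_from_r(r, n):
    """t statistic (df = n - 2) corresponding to a Pearson correlation r."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)

def cohens_d_from_t(t, n1, n2):
    """Cohen's d for an independent two-sample t test with pooled SD."""
    return t * math.sqrt(1.0 / n1 + 1.0 / n2)

t_rffa = t_from_r(0.17, 294)               # roughly 2.95 with df = 292
d_groups = cohens_d_from_t(2.88, 73, 73)   # roughly 0.48, matching the reported Cohen's d
```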

Table 2.

Descriptive statistics for behavioral tests^a

| Task, trial type, and measure | 25th percentile | 50th percentile | 75th percentile | Mean (SD) |
|---|---|---|---|---|
| Old/new recognition | | | | |
| Face accuracy | 0.700 | 0.775 | 0.825 | 0.772 (0.092) |
| Hit | 0.700 | 0.800 | 0.900 | 0.797 (0.126) |
| FA | 0.150 | 0.250 | 0.350 | 0.252 (0.138) |
| Flower accuracy | 0.750 | 0.800 | 0.875 | 0.804 (0.089) |
| Hit | 0.850 | 0.900 | 0.950 | 0.894 (0.099) |
| FA | 0.150 | 0.250 | 0.400 | 0.286 (0.150) |
| FRA | −0.650 | 0.008 | 0.677 | 0 (1) |
| Face inversion | | | | |
| Upright accuracy | 0.860 | 0.900 | 0.940 | 0.893 (0.067) |
| Hit | 0.840 | 0.880 | 0.960 | 0.872 (0.102) |
| FA | 0.040 | 0.080 | 0.120 | 0.085 (0.081) |
| Inverted accuracy | 0.680 | 0.740 | 0.800 | 0.733 (0.092) |
| Hit | 0.720 | 0.800 | 0.880 | 0.788 (0.131) |
| FA | 0.200 | 0.320 | 0.440 | 0.321 (0.148) |
| Raven's test | 34 | 37 | 40 | 36.9 (4.86) |

^a Accuracy, Average proportion of hits and correct rejections; FA, false alarms; FRA, face-specific recognition ability. Hit and FA rows refer to the accuracy measure listed directly above them.

Figure 5.

Correlation between WNC/BNC and FRA. Correlations were calculated between each voxel's WNC/BNC in the FN and FRA. A, Of the entire FN, only rFFA's WNC significantly correlated with FRA (p < 0.05, corrected for multiple comparisons, 168 voxels). To better visualize the location of the significant cluster, the boundary of the rFFA in Figure 2 is shown with a green contour. The scatter plot between the WNC in the rFFA region and FRA is shown for illustration purposes only. B, Of the entire FN, only rOFA's BNC significantly correlated with FRA (p < 0.05, corrected for multiple comparisons, 200 voxels). Green contour represents the boundary of the rOFA in Figure 2. The scatter plot between the BNC in the rOFA region and FRA is shown for illustration purposes only. L, Left; R, right; a.u., arbitrary units.

Three control analyses were performed to ensure that the FC-FRA correlation in the rFFA was not caused by confounding factors, such as head motion, gender, and general intelligence. First, recent studies have shown that FC is strongly affected by head motion (Power et al., 2012; Satterthwaite et al., 2012; Van Dijk et al., 2012; Kong et al., 2014). To rule out this confounding factor, we reanalyzed the FC-FRA correlations controlling for head motion. Specifically, the mean displacement of each brain volume relative to the previous volume was calculated for each participant to measure the extent of her/his head motion (Van Dijk et al., 2012). With this measure controlled for, the WNC in the rFFA remained significantly correlated with FRA (r = 0.17, p = 0.004). In addition, spike-like head motion (>1 mm) was observed in 15 participants; after excluding these participants and recalculating the correlation, the rFFA WNC-FRA correlation remained significant (r = 0.18, p = 0.002). In short, the WNC-FRA correlation is unlikely to be an artifact of head motion. Second, previous studies have shown that females are better at face recognition than males (e.g., Rehnman and Herfitz, 2007; Zhu et al., 2011; Sommer et al., 2013), which was also replicated in this study (FRA: t(292) = 2.08, p = 0.04, Cohen's d = 0.25). To ensure that the linear relationship between the WNC and FRA did not arise from the group difference between the male and female participants, we recalculated the partial correlation between the WNC in the rFFA and FRA with gender being regressed out, and found that the association between the WNC in the rFFA and FRA remained significant (r = 0.15, p = 0.01). Third, to examine whether the association between FRA and FC was accounted for by individual differences in general intelligence, we measured the participants' general intelligence using the RAPM (Raven et al., 1998). We recalculated the partial correlation between the WNC in the rFFA and FRA, with RAPM scores being controlled for, and found that the correlation remained essentially unchanged (r = 0.17, p = 0.003).
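The control analyses above rely on partial correlation. As a minimal sketch, a partial correlation can be computed by regressing the covariate(s) out of both variables and correlating the residuals. The function name `partial_corr` and the synthetic data are assumptions for illustration only; the source does not state which software or exact procedure was used.

```python
import numpy as np

def partial_corr(x, y, covariates):
    """Correlation between x and y after regressing the covariates out of both.

    covariates may be a 1-D array (one covariate) or an (n, k) array.
    """
    Z = np.column_stack([np.ones(len(x)), covariates])
    res_x = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    res_y = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])

# Synthetic example: x and y are related ONLY through a shared confound,
# so the raw correlation is sizeable but the partial correlation is near zero.
rng = np.random.default_rng(3)
n = 5000
confound = rng.normal(size=n)          # e.g., a head-motion summary (hypothetical)
x = confound + rng.normal(size=n)
y = confound + rng.normal(size=n)

raw_r = float(np.corrcoef(x, y)[0, 1])
adj_r = partial_corr(x, y, confound)
print(f"raw r = {raw_r:.3f}, partial r = {adj_r:.3f}")
```

In the study, the reported correlations barely changed after controlling for head motion, gender, or RAPM scores, which is the opposite pattern from this synthetic confounded case and is what argues against those confounds.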

Next, we examined whether the link between the FC and FRA was influenced by the preprocessing and analysis strategies. First, to rule out the possibility that our results depended on the choice of the threshold, we redefined the FN with four different thresholds (i.e., 0.1, 0.15, 0.25, and 0.3) and then recomputed the WNC-FRA correlation. The results were replicated under all thresholds. That is, a cluster in the rFFA showed a positive WNC-FRA correlation under each threshold (threshold 0.1: 291 voxels, r = 0.19, p = 0.001; threshold 0.15: 215 voxels, r = 0.17, p = 0.003; threshold 0.25: 122 voxels, r = 0.16, p = 0.005; threshold 0.3: 80 voxels, r = 0.16, p = 0.006; corrected p < 0.05 for all thresholds), suggesting that our results are unlikely to be accounted for by the choice of threshold in defining the FN. Second, although the V1/V2 are frequently activated in face tasks (e.g., Pitcher et al., 2011b; Julian et al., 2012; Engell and McCarthy, 2013), they are not face-specific regions. To examine whether the correlation was mainly driven by the V1/V2, we removed the V1/V2 from the FN and recalculated the voxelwise FC-FRA association. Again, we replicated a positive correlation between the WNC in an rFFA cluster and FRA (52 voxels, uncorrected; r = 0.15, p = 0.01), suggesting that the V1/V2 did not significantly contribute to the correlation. Third, in light of debates on the effect of global signal in FC analyses (e.g., Murphy et al., 2009; Fox et al., 2009; Saad et al., 2012; Gotts et al., 2013b), we recomputed the WNC-behavioral correlation without the removal of global signal, and found a similar but weaker positive correlation between the WNC in an rFFA cluster and FRA (59 voxels, one-tailed p < 0.05, uncorrected; r = 0.14, p = 0.02).
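For readers unfamiliar with the global signal step mentioned above, the following is a minimal sketch of global signal regression in its simplest form: the mean time series across voxels is regressed out of every voxel's time series. The function names and the synthetic data are hypothetical, and the authors' actual preprocessing pipeline (masking, additional nuisance regressors, filtering) is not specified in this section.

```python
import numpy as np

def regress_out_global_signal(ts):
    """Remove the global (voxel-averaged) signal from each voxel's time series.

    ts: array of shape (n_timepoints, n_voxels).
    """
    g = ts.mean(axis=1)                              # global signal
    G = np.column_stack([np.ones_like(g), g])        # intercept + global signal
    beta, *_ = np.linalg.lstsq(G, ts, rcond=None)    # one fit per voxel
    return ts - G @ beta

def mean_offdiag_corr(ts):
    """Mean pairwise correlation between voxels, excluding self-correlations."""
    fc = np.corrcoef(ts, rowvar=False)
    v = fc.shape[0]
    return float((fc.sum() - np.trace(fc)) / (v * (v - 1)))

# Synthetic data: every voxel carries a common fluctuation plus independent noise.
rng = np.random.default_rng(2)
T, V = 150, 40
ts = rng.normal(size=(T, 1)) + rng.normal(size=(T, V))

cleaned = regress_out_global_signal(ts)
mean_fc_before = mean_offdiag_corr(ts)
mean_fc_after = mean_offdiag_corr(cleaned)
print(f"mean FC before = {mean_fc_before:.3f}, after = {mean_fc_after:.3f}")
```

After this step, the voxel-averaged signal is zero at every time point, so the average pairwise correlation is pushed toward slightly negative values. This property is one reason the procedure is debated in the studies cited above.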

We further examined whether the WNC in the rFFA can predict the participants' performance in recognizing upright versus inverted faces to (1) replicate the finding based on the old/new recognition task, and (2) confirm the specificity of the association because inverted faces share virtually all of the visual properties of upright faces yet are not processed as faces (Yin, 1969). Further, this analysis helps extend the finding from face recognition to face perception because the face-inversion task relies on perceptual discrimination in a successive same-different matching task. Indeed, the accuracy of recognizing upright faces in the old/new face task was only moderately correlated with that of discriminating upright faces in the face-inversion task (r = 0.32, p < 0.001). Consistent with the finding from the old/new recognition task, which relies mainly on face memory, we found that the correlation between the WNC in the rFFA and the accuracy for discriminating upright faces was significant (r = 0.14, p = 0.01), whereas no significant correlation was found between the WNC in the rFFA and the accuracy for discriminating inverted faces (r = 0.05, p = 0.38). In short, the rFFA apparently integrates the rest of the regions in the FN for face recognition, but not for nonface recognition.

Having examined the behavioral relevance of the integration of the FN, we next investigated whether the segregation of the FN was related to face recognition ability as well. We found that the BNC in a cluster of the right OFA (rOFA) was negatively correlated with the FRA (200 voxels, p < 0.05, corrected; r = −0.19, p = 0.001; MNI coordinates: 36, −84, −14) (Fig. 5B), indicating that individuals with a weaker BNC (i.e., segregation) in the rOFA were better at face recognition. This association was specific to face recognition indexed by the raw accuracy of the face condition (r = −0.17, p = 0.003), but not to flower recognition indexed by the raw accuracy of the flower condition (r = 0.02, p = 0.73). Similarly, the high FRA group (BNC = 0.002) showed a weaker BNC value than the low FRA group (BNC = 0.007) in the rOFA cluster (t(144) = −3.26, p = 0.001, Cohen's d = 0.54). Finally, no association was found between the WNC in the rOFA and FRA (r = 0.01, p = 0.91), indicating the specific function of the rOFA in segregating the FN from the NFN to facilitate face recognition.
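As a quick consistency check on the group comparisons, Cohen's d can be recovered from an independent-samples t statistic and the two group sizes via d = |t| × sqrt(1/n1 + 1/n2). The sketch below applies this standard identity to the values reported in the text for the rFFA and rOFA clusters (N = 73 per group); the helper name `cohens_d_from_t` is hypothetical.

```python
import math

def cohens_d_from_t(t, n1, n2):
    """Magnitude of Cohen's d implied by an independent-samples t statistic."""
    return abs(t) * math.sqrt(1.0 / n1 + 1.0 / n2)

# Values reported in the text: high vs. low FRA groups, 73 participants each.
d_rffa = cohens_d_from_t(2.88, 73, 73)    # WNC in the rFFA cluster
d_rofa = cohens_d_from_t(-3.26, 73, 73)   # BNC in the rOFA cluster

print(f"rFFA: d = {d_rffa:.2f}; rOFA: d = {d_rofa:.2f}")
```

Both values round to the effect sizes given in the text (0.48 and 0.54), so the reported t statistics, group sizes, and effect sizes are mutually consistent.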

Similar control analyses were performed and showed that the BNC-FRA association in the rOFA was not caused by head motion (controlling for mean displacement: r = −0.18, p = 0.002; excluding participants with large spike-like head motion: r = −0.18, p = 0.002), gender (r = −0.19, p = 0.001), or general intelligence (r = −0.18, p = 0.002). In addition, the BNC-FRA correlation was also validated with the face-inversion task. That is, the BNC in the rOFA was significantly correlated with the discrimination of upright faces (r = −0.12, p = 0.04), but not with the discrimination of inverted faces (r = −0.05, p = 0.35). Furthermore, the BNC-FRA correlation in the rOFA was also replicated with different thresholds in defining FN (threshold 0.1: 548 voxels, r = −0.18, p = 0.002; threshold 0.15: 323 voxels, r = −0.19, p = 0.001; threshold 0.25: 108 voxels, r = −0.18, p = 0.002; threshold 0.3: 87 voxels, r = −0.18, p = 0.002; corrected p < 0.05 for all thresholds), suggesting that the correlation was independent of the choice of threshold. In addition, the removal of the V1/V2 from the FN did not significantly affect the BNC-FRA correlation in the rOFA (121 voxels, p < 0.05, corrected; r = −0.18, p = 0.002). Finally, without the removal of global signal, a similar but weaker BNC-FRA correlation was observed in an rOFA cluster (17 voxels, one-tailed p < 0.05, uncorrected; r = −0.12, p = 0.04).

In sum, we observed a double dissociation between the face-selective regions and FC metrics in recognizing faces, where the rFFA serves as a hub to integrate other face-selective regions in the FN and the rOFA serves as a gate to segregate the FN from the NFN.
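To make the two FC metrics concrete, the sketch below computes a WNC and a BNC value for each FN voxel under a simplifying assumption: WNC is taken as the mean correlation of a voxel's time series with all other FN voxels, and BNC as its mean correlation with all non-FN (NFN) voxels. The paper's own definitions are given in its Methods, which are not part of this section, and may differ in detail (for example, in whether correlations are Fisher-transformed before averaging). The function `wnc_bnc` and the data are hypothetical.

```python
import numpy as np

def wnc_bnc(ts, in_fn):
    """Per-voxel within- and between-network connectivity for FN voxels.

    ts:    (n_timepoints, n_voxels) time series.
    in_fn: boolean array of length n_voxels, True for FN voxels.
    """
    ts = np.asarray(ts, dtype=float)
    in_fn = np.asarray(in_fn, dtype=bool)
    fc = np.corrcoef(ts, rowvar=False)          # voxel-by-voxel correlations
    fn = np.flatnonzero(in_fn)
    nfn = np.flatnonzero(~in_fn)
    within = fc[np.ix_(fn, fn)]
    # Average over the OTHER FN voxels, excluding each voxel's self-correlation.
    wnc = (within.sum(axis=1) - np.diag(within)) / (len(fn) - 1)
    bnc = fc[np.ix_(fn, nfn)].mean(axis=1)
    return wnc, bnc

# Synthetic data: FN voxels share a common signal; NFN voxels are independent.
rng = np.random.default_rng(1)
T, n_fn, n_nfn = 200, 10, 30
fn_ts = rng.normal(size=(T, 1)) + rng.normal(size=(T, n_fn))
nfn_ts = rng.normal(size=(T, n_nfn))
ts = np.hstack([fn_ts, nfn_ts])
in_fn = np.arange(n_fn + n_nfn) < n_fn

wnc, bnc = wnc_bnc(ts, in_fn)
frac_wnc_gt_bnc = float(np.mean(wnc > bnc))
print(f"mean WNC = {wnc.mean():.3f}, mean BNC = {bnc.mean():.3f}, "
      f"fraction WNC > BNC = {frac_wnc_gt_bnc:.2f}")
```

In this toy network, a voxel with high WNC is strongly coupled to the rest of its own network (integration), whereas a voxel with low BNC is weakly coupled to everything outside it (segregation), which mirrors the roles attributed to the rFFA and rOFA above.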

Discussion

In the current study, we assessed the within-network integration and between-network segregation of the FN and then investigated their behavioral relevance in face recognition. First, we found that the majority of voxels in the FN had a stronger WNC than BNC, suggesting that the FN is a relatively encapsulated network. Then, we found that individuals with stronger WNC in the rFFA (i.e., integration) performed better in both face memory and face perception tasks, and those with a weaker BNC in the rOFA (i.e., segregation) were better at recognizing faces. Overall, our study provides the first empirical evidence demonstrating the behavioral relevance of the integration and segregation of the FN, and shows that the OFA and FFA play different roles in network organization for face recognition.

Our finding of a stronger WNC than BNC in the FN is consistent with recent findings that the resting-state FC between the face-selective regions is larger than the FC between the face-selective and non–face-selective regions (Zhu et al., 2011; Hutchison et al., 2014; Stevens et al., 2015). For example, the FC between two face-selective regions (i.e., the FFA and OFA) is larger than the FC between a face-selective and a scene-selective region (i.e., the parahippocampal place area or transverse occipital sulcus) (Zhu et al., 2011). Moreover, studies investigating resting-state FC of other category-specific networks (e.g., animals, scenes, objects, bodies, and tools) also demonstrate similar privileged FC among brain regions with congruent category preferences (Stevens et al., 2010, 2012, 2015; Simmons and Martin, 2012; He et al., 2013; Peelen et al., 2013; Hutchison et al., 2014). For example, a tool-preferential region in the left medial fusiform gyrus shows stronger FC with other lateralized tool-preferential regions than with other category-related regions in the ventral occipitotemporal cortex (Stevens et al., 2015). The finding of encapsulated face and scene network in our study, along with these studies, suggests a general organization principle of the brain that cortical regions for the same cognitive components are more strongly coupled than regions involved in different components (Di et al., 2013; Chan et al., 2014; Yeo et al., 2015). Finally, given that the appropriate change of the WNC and BNC of brain networks with age is critical for normal maturation (Dosenbach et al., 2010; Cao et al., 2014) and healthy aging (Cao et al., 2014; Chan et al., 2014), a stronger WNC relative to BNC in the FN may be helpful for maintaining functional specialization of the FN and for enhancing the efficiency of facial information processing.

Importantly, the integration of the rFFA with other face-selective regions in the FN (i.e., stronger WNC) contributed to behavioral performance in face recognition, suggesting a hub-like role of the FFA within the FN for face recognition (Fairhall and Ishai, 2007; Nestor et al., 2011). This result is in line with previous studies showing that FC between the FFA and other face-selective regions (e.g., the OFA and perirhinal) contributes to behavioral performance in face recognition (Zhu et al., 2011; O'Neil et al., 2014). Our study extended these findings obtained from measuring regional-wise FC between two face-selective regions by characterizing the full range FC of the FFA to the whole FN, thereby illustrating a hub-like role of the FFA in the FN for the first time. Therefore, our study provides direct evidence supporting the influential model on face recognition in which the FFA presumably plays a central role in face recognition, with the coordinated participation of more regions in the core and extended FN (Haxby et al., 2000; Calder and Young, 2005; Gobbini and Haxby, 2007). Moreover, the behavioral relevance of the FC between the FFA and other coactivated regions implies the existence of an intrinsic and meaningful interaction among the coactivated face-selective regions, even in the absence of tasks. Because coactivation in a task likely leads to Hebbian strengthening of connections among coactivated neurons/regions (Hebb, 1949), the strengthened FC between the FFA and other coactivated regions may be the remnant of their past coactivation history in recognizing faces (see also Fair et al., 2007, 2008, 2009).

In contrast, the segregation of the rOFA from the NFN (i.e., weaker BNC) was associated with better face recognition, suggesting the pivotal role of the OFA in gating face and nonface information. This result is in line with previous studies that the first wave of neural activity differentiating faces from nonface objects occurs in the OFA (Liu et al., 2002; Pitcher et al., 2007, 2009). First, transcranial stimulation delivered at the rOFA at ∼100 ms after stimulus onset impairs the discrimination of faces, but not of objects or bodies (Pitcher et al., 2007, 2009). Second, the first face-selective magnetoencephalography component peaks at a latency of 100 ms (M100), and the amplitude of the M100 correlates with the successful categorization of faces from nonface objects (Liu et al., 2002). The temporal proximity of the transcranial stimulation to the M100 latency suggests that the M100 may originate from the OFA (Pitcher et al., 2011a). Furthermore, the OFA adjusts its FC with other face-selective regions in the FN when the task switches between face and nonface recognition, suggesting the gating role of the OFA for the FN (Zhen et al., 2013). Therefore, segregation between the FN and NFN may serve as a possible mechanism by which the OFA differentiates faces from nonface objects.

Together, our results shed light on how the hierarchical structure of the FN is implemented through the functional division of labor between the FFA and OFA via within-network integration and between-network segregation. Several lines of evidence support the hierarchical structure of the FN, with the OFA computing an early structural description of faces, whereas the FFA computing the subsequent invariant aspects of faces, such as facial identity (Haxby et al., 2000; Calder and Young, 2005; Liu et al., 2010). First, the OFA is located posterior to the FFA, and the OFA response is more biased to contralateral stimuli than the FFA (Hemond et al., 2007). Second, the OFA preferentially represents face parts (Pitcher et al., 2007; Harris and Aguirre, 2008; Liu et al., 2010), whereas the FFA is involved in processing configural and holistic information of faces (Yovel and Kanwisher, 2004; Liu et al., 2010; Zhang et al., 2012; Zhang et al., 2015a). Third, the OFA is sensitive to physical changes in a face, whereas the FFA is sensitive to changes in the identity of a face (Rotshtein et al., 2005). Combined with the hierarchical structure of the FN, our study implies a two-stage model of face processing through the different roles of the OFA and FFA in network integration and segregation: in the first stage, the OFA likely segregates the FN from the NFN based on the detection of face parts; and in the second stage, the FFA may integrate facial information from multiple face-selective regions to holistically process faces. Future studies with more elaborate behavioral paradigms and task-related FC analyses are needed to test this speculation.

There are several unaddressed issues that are important topics for future research. First, the present study focused on the invariant facial aspect (i.e., facial identity); therefore, future studies are needed to investigate whether the processing of changeable face aspects also follows the principle of the integration and segregation of the FN. Specifically, does the face-selective STS play a hub-like role in recognizing facial expression by integrating other face-selective regions in the FN (Calder and Young, 2005; Pitcher et al., 2014; Yang et al., 2015)? Second, our study proposes a two-stage model for the FN to process facial information. Future studies are invited to examine whether this model can be generalized to other brain networks. That is, do brain networks in general exhibit a similar hierarchical structure to accomplish their corresponding cognitive functions, with an early stage of segregating from nonrelated brain networks and a later stage of integrating within-network regions? Third, previous studies have shown increased specificity in both task activation and resting-state FC analyses with individual localization compared with group-level localization (e.g., Glezer and Riesenhuber, 2013; Laumann et al., 2015; Stevens et al., 2015). Therefore, it would be interesting in future studies to define the FN at the individual level and then examine the relation between the FN's connectivity pattern and behavioral performance in face recognition. Finally, although similar patterns were observed regardless of whether global signal was removed, the functional division of labor between the OFA and FFA was more readily revealed when global signal was regressed out. This finding is in line with previous studies where the distinct connection patterns of the FFA and OFA to other brain regions (i.e., AMG, pSTS, and early VC) are only revealed with the removal of global signal (Roy et al., 2009; Kruschwitz et al., 2015). One possible interpretation is that global signal regression may help pull apart neighboring but functionally distinct brain regions (Fox et al., 2009; Roy et al., 2009; Shehzad et al., 2009; Kruschwitz et al., 2015).

Footnotes

This work was supported by National Natural Science Foundation of China (31230031, 31221003, 31470055, and 31471067), the National Basic Research Program of China (2014CB846101, 2014CB846103), and Changjiang Scholars Programme of China.

The authors declare no competing financial interests.

References

- Biswal BB, Mennes M, Zuo XN, Gohel S, Kelly C, Smith SM, Beckmann CF, Adelstein JS, Buckner RL, Colcombe S, Dogonowski AM, Ernst M, Fair D, Hampson M, Hoptman MJ, Hyde JS, Kiviniemi VJ, Kötter R, Li SJ, Lin CP, et al. Toward discovery science of human brain function. Proc Natl Acad Sci U S A. 2010;107:4734–4739. doi: 10.1073/pnas.0911855107.

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724.

- Cao M, Wang JH, Dai ZJ, Cao XY, Jiang LL, Fan FM, Song XW, Xia MR, Shu N, Dong Q, Milham MP, Castellanos FX, Zuo XN, He Y. Topological organization of the human brain functional connectome across the lifespan. Dev Cogn Neurosci. 2014;7:76–93. doi: 10.1016/j.dcn.2013.11.004.

- Chan MY, Park DC, Savalia NK, Petersen SE, Wig GS. Decreased segregation of brain systems across the healthy adult lifespan. Proc Natl Acad Sci U S A. 2014;111:E4997–E5006. doi: 10.1073/pnas.1415122111.

- Cohen Kadosh K, Cohen Kadosh R, Dick F, Johnson MH. Developmental changes in effective connectivity in the emerging core face network. Cereb Cortex. 2011;21:1389–1394. doi: 10.1093/cercor/bhq215.

- Cole MW, Yarkoni T, Repovs G, Anticevic A, Braver TS. Global connectivity of prefrontal cortex predicts cognitive control and intelligence. J Neurosci. 2012;32:8988–8999. doi: 10.1523/JNEUROSCI.0536-12.2012.

- Dai Z, Yan C, Li K, Wang Z, Wang J, Cao M, Lin Q, Shu N, Xia M, Bi Y, He Y. Identifying and mapping connectivity patterns of brain network hubs in Alzheimer's disease. Cereb Cortex. 2014;2015:3723–3742. doi: 10.1093/cercor/bhu246.

- Davies-Thompson J, Andrews TJ. Intra- and interhemispheric connectivity between face-selective regions in the human brain. J Neurophysiol. 2012;108:3087–3095. doi: 10.1152/jn.01171.2011.

- DeGutis J, Wilmer J, Mercado RJ, Cohan S. Using regression to measure holistic face processing reveals a strong link with face recognition ability. Cognition. 2013;126:87–100. doi: 10.1016/j.cognition.2012.09.004.

- Di X, Gohel S, Kim EH, Biswal BB. Task vs. rest-different network configurations between the coactivation and the resting-state brain networks. Front Hum Neurosci. 2013;7:493. doi: 10.3389/fnhum.2013.00493.

- Dosenbach NU, Nardos B, Cohen AL, Fair DA, Power JD, Church JA, Nelson SM, Wig GS, Vogel AC, Lessov-Schlaggar CN, Barnes KA, Dubis JW, Feczko E, Coalson RS, Pruett JR, Jr, Barch DM, Petersen SE, Schlaggar BL. Prediction of individual brain maturity using fMRI. Science. 2010;329:1358–1361. doi: 10.1126/science.1194144.

- Engell AD, McCarthy G. Probabilistic atlases for face and biological motion perception: an analysis of their reliability and overlap. Neuroimage. 2013;74:140–151. doi: 10.1016/j.neuroimage.2013.02.025.

- Fair DA, Dosenbach NU, Church JA, Cohen AL, Brahmbhatt S, Miezin FM, Barch DM, Raichle ME, Petersen SE, Schlaggar BL. Development of distinct control networks through segregation and integration. Proc Natl Acad Sci U S A. 2007;104:13507–13512. doi: 10.1073/pnas.0705843104.

- Fair DA, Cohen AL, Dosenbach NU, Church JA, Miezin FM, Barch DM, Raichle ME, Petersen SE, Schlaggar BL. The maturing architecture of the brain's default network. Proc Natl Acad Sci U S A. 2008;105:4028–4032. doi: 10.1073/pnas.0800376105.

- Fair DA, Cohen AL, Power JD, Dosenbach NU, Church JA, Miezin FM, Schlaggar BL, Petersen SE. Functional brain networks develop from a “local to distributed” organization. PLoS Comput Biol. 2009;5:e1000381. doi: 10.1371/journal.pcbi.1000381.

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cereb Cortex. 2007;17:2400–2406. doi: 10.1093/cercor/bhl148.

- Flöel A, Ruscheweyh R, Krüger K, Willemer C, Winter B, Völker K, Lohmann H, Zitzmann M, Mooren F, Breitenstein C, Knecht S. Physical activity and memory functions: are neurotrophins and cerebral gray matter volume the missing link? Neuroimage. 2010;49:2756–2763. doi: 10.1016/j.neuroimage.2009.10.043.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci U S A. 2005;102:9673–9678. doi: 10.1073/pnas.0504136102.

- Fox MD, Zhang D, Snyder AZ, Raichle ME. The global signal and observed anticorrelated resting state brain networks. J Neurophysiol. 2009;101:3270–3283. doi: 10.1152/jn.90777.2008.

- Glezer LS, Riesenhuber M. Individual variability in location impacts orthographic selectivity in the “visual word form area.” J Neurosci. 2013;33:11221–11226. doi: 10.1523/JNEUROSCI.5002-12.2013.

- Gobbini MI, Haxby JV. Neural systems for recognition of familiar faces. Neuropsychologia. 2007;45:32–41. doi: 10.1016/j.neuropsychologia.2006.04.015.

- Gotts SJ, Jo HJ, Wallace GL, Saad ZS, Cox RW, Martin A. Two distinct forms of functional lateralization in the human brain. Proc Natl Acad Sci U S A. 2013a;110:E3435–E3444. doi: 10.1073/pnas.1302581110.

- Gotts SJ, Saad ZS, Jo HJ, Wallace GL, Cox RW, Martin A. The perils of global signal regression for group comparisons: a case study of Autism Spectrum Disorders. Front Hum Neurosci. 2013b;7:356. doi: 10.3389/fnhum.2013.00356.

- Harris A, Aguirre GK. The representation of parts and wholes in face-selective cortex. J Cogn Neurosci. 2008;20:863–878. doi: 10.1162/jocn.2008.20509.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233. doi: 10.1016/S1364-6613(00)01482-0.

- He C, Peelen MV, Han Z, Lin N, Caramazza A, Bi Y. Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage. 2013;79:1–9. doi: 10.1016/j.neuroimage.2013.04.051.

- Hebb DO. The organization of behavior. New York: Wiley; 1949.

- Hemond CC, Kanwisher NG, Op de Beeck HP. A preference for contralateral stimuli in human object- and face-selective cortex. PLoS One. 2007;2:e574. doi: 10.1371/journal.pone.0000574.

- Hoaglin DC, Mosteller F, Tukey JW. Understanding robust and exploratory data analysis. New York: Wiley; 1983.

- Huang L, Song Y, Li J, Zhen Z, Yang Z, Liu J. Individual differences in cortical face selectivity predict behavioral performance in face recognition. Front Hum Neurosci. 2014;8:483. doi: 10.3389/fnhum.2014.00483.

- Hutchison RM, Culham JC, Everling S, Flanagan JR, Gallivan JP. Distinct and distributed functional connectivity patterns across cortex reflect the domain-specific constraints of object, face, scene, body, and tool category-selective modules in the ventral visual pathway. Neuroimage. 2014;96:216–236. doi: 10.1016/j.neuroimage.2014.03.068.

- Ishai A. Let's face it: it's a cortical network. Neuroimage. 2008;40:415–419. doi: 10.1016/j.neuroimage.2007.10.040.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/S1361-8415(01)00036-6.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1006/nimg.2002.1132.

- Julian JB, Fedorenko E, Webster J, Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. Neuroimage. 2012;60:2357–2364. doi: 10.1016/j.neuroimage.2012.02.055.

- Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philos Trans R Soc Lond B Biol Sci. 2006;361:2109–2128. doi: 10.1098/rstb.2006.1934.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kawabata Duncan KJ, Devlin JT. Improving the reliability of functional localizers. Neuroimage. 2011;57:1022–1030. doi: 10.1016/j.neuroimage.2011.05.009.

- Kong F, Hu S, Wang X, Song Y, Liu J. Neural correlates of the happy life: the amplitude of spontaneous low frequency fluctuations predicts subjective well-being. Neuroimage. 2015a;107:136–145. doi: 10.1016/j.neuroimage.2014.11.033.

- Kong F, Hu S, Xue S, Song Y, Liu J. Extraversion mediates the relationship between structural variations in the dorsolateral prefrontal cortex and social well-being. Neuroimage. 2015b;105:269–275. doi: 10.1016/j.neuroimage.2014.10.062.

- Kong F, Liu L, Wang X, Hu S, Song Y, Liu J. Different neural pathways linking personality traits and eudaimonic well-being: a resting-state functional magnetic resonance imaging study. Cogn Affect Behav Neurosci. 2015c;15:299–309. doi: 10.3758/s13415-014-0328-1.

- Kong XZ, Zhen Z, Li X, Lu HH, Wang R, Liu L, He Y, Zang Y, Liu J. Individual differences in impulsivity predict head motion during magnetic resonance imaging. PLoS One. 2014;9:e104989. doi: 10.1371/journal.pone.0104989. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kruschwitz JD, Meyer-Lindenberg A, Veer IM, Wackerhagen C, Erk S, Mohnke S, Pöhland L, Haddad L, Grimm O, Tost H, Romanczuk-Seiferth N, Heinz A, Walter M, Walter H. Segregation of face sensitive areas within the fusiform gyrus using global signal regression? A study on amygdala resting-state functional connectivity. Hum Brain Mapp. 2015;36:4089–4103. doi: 10.1002/hbm.22900. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laumann TO, Gordon EM, Adeyemo B, Snyder AZ, Joo SJ, Chen MY, Gilmore AW, McDermott KB, Nelson SM, Dosenbach NU, Schlaggar BL, Mumford JA, Poldrack RA, Petersen SE. Functional system and areal organization of a highly sampled individual human brain. Neuron. 2015;87:657–670. doi: 10.1016/j.neuron.2015.06.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu J, Harris A, Kanwisher N. Stages of processing in face perception: an MEG study. Nat Neurosci. 2002;5:910–916. doi: 10.1038/nn909. [DOI] [PubMed] [Google Scholar]

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: an FMRI study. J Cogn Neurosci. 2010;22:203–211. doi: 10.1162/jocn.2009.21203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murphy K, Birn RM, Handwerker DA, Jones TB, Bandettini PA. The impact of global signal regression on resting state correlations: are anti-correlated networks introduced? Neuroimage. 2009;44:893–905. doi: 10.1016/j.neuroimage.2008.09.036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nestor A, Plaut DC, Behrmann M. Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc Natl Acad Sci U S A. 2011;108:9998–10003. doi: 10.1073/pnas.1102433108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- O'Neil EB, Hutchison RM, McLean DA, Köhler S. Resting-state fMRI reveals functional connectivity between face-selective perirhinal cortex and the fusiform face area related to face inversion. Neuroimage. 2014;92:349–355. doi: 10.1016/j.neuroimage.2014.02.005. [DOI] [PubMed] [Google Scholar]

- Peelen MV, Bracci S, Lu X, He C, Caramazza A, Bi Y. Tool selectivity in left occipitotemporal cortex develops without vision. J Cogn Neurosci. 2013;25:1225–1234. doi: 10.1162/jocn_a_00411. [DOI] [PubMed] [Google Scholar]

- Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Curr Biol. 2007;17:1568–1573. doi: 10.1016/j.cub.2007.07.063.

- Pitcher D, Charles L, Devlin JT, Walsh V, Duchaine B. Triple dissociation of faces, bodies, and objects in extrastriate cortex. Curr Biol. 2009;19:319–324. doi: 10.1016/j.cub.2009.01.007.

- Pitcher D, Walsh V, Duchaine B. The role of the occipital face area in the cortical face perception network. Exp Brain Res. 2011a;209:481–493. doi: 10.1007/s00221-011-2579-1.

- Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage. 2011b;56:2356–2363. doi: 10.1016/j.neuroimage.2011.03.067.

- Pitcher D, Duchaine B, Walsh V. Combined TMS and fMRI reveal dissociable cortical pathways for dynamic and static face perception. Curr Biol. 2014;24:2066–2070. doi: 10.1016/j.cub.2014.07.060.

- Poldrack RA. Region of interest analysis for fMRI. Soc Cogn Affect Neurosci. 2007;2:67–70. doi: 10.1093/scan/nsm006.

- Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage. 2012;59:2142–2154. doi: 10.1016/j.neuroimage.2011.10.018.

- Raichle ME, Snyder AZ. A default mode of brain function: a brief history of an evolving idea. Neuroimage. 2007;37:1083–1090. doi: 10.1016/j.neuroimage.2007.02.041. discussion 1097–1099.

- Raven J, Raven JC, Court JH. Manual for Raven's progressive matrices and vocabulary scales. Oxford: Oxford Psychologists; 1998.

- Rehnman J, Herlitz A. Women remember more faces than men do. Acta Psychol. 2007;124:344–355. doi: 10.1016/j.actpsy.2006.04.004.

- Rotshtein P, Henson RN, Treves A, Driver J, Dolan RJ. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat Neurosci. 2005;8:107–113. doi: 10.1038/nn1370.

- Roy AK, Shehzad Z, Margulies DS, Kelly AM, Uddin LQ, Gotimer K, Biswal BB, Castellanos FX, Milham MP. Functional connectivity of the human amygdala using resting state fMRI. Neuroimage. 2009;45:614–626. doi: 10.1016/j.neuroimage.2008.11.030.

- Rubinov M, Sporns O. Complex network measures of brain connectivity: uses and interpretations. Neuroimage. 2010;52:1059–1069. doi: 10.1016/j.neuroimage.2009.10.003.

- Saad ZS, Gotts SJ, Murphy K, Chen G, Jo HJ, Martin A, Cox RW. Trouble at rest: how correlation patterns and group differences become distorted after global signal regression. Brain Connect. 2012;2:25–32. doi: 10.1089/brain.2012.0080.

- Salinas E, Sejnowski TJ. Correlated neuronal activity and the flow of neural information. Nat Rev Neurosci. 2001;2:539–550. doi: 10.1038/35086012.

- Satterthwaite TD, Wolf DH, Loughead J, Ruparel K, Elliott MA, Hakonarson H, Gur RC, Gur RE. Impact of in-scanner head motion on multiple measures of functional connectivity: relevance for studies of neurodevelopment in youth. Neuroimage. 2012;60:623–632. doi: 10.1016/j.neuroimage.2011.12.063.

- Shehzad Z, Kelly AM, Reiss PT, Gee DG, Gotimer K, Uddin LQ, Lee SH, Margulies DS, Roy AK, Biswal BB, Petkova E, Castellanos FX, Milham MP. The resting brain: unconstrained yet reliable. Cereb Cortex. 2009;19:2209–2229. doi: 10.1093/cercor/bhn256.

- Simmons WK, Martin A. Spontaneous resting-state BOLD fluctuations reveal persistent domain-specific neural networks. Soc Cogn Affect Neurosci. 2012;7:467–475. doi: 10.1093/scan/nsr018.

- Sommer W, Hildebrandt A, Kunina-Habenicht O, Schacht A, Wilhelm O. Sex differences in face cognition. Acta Psychol. 2013;142:62–73. doi: 10.1016/j.actpsy.2012.11.001.

- Song Y, Lu H, Hu S, Xu M, Li X, Liu J. Regulating emotion to improve physical health through the amygdala. Soc Cogn Affect Neurosci. 2015a;10:523–530. doi: 10.1093/scan/nsu083.

- Song Y, Zhu Q, Li J, Wang X, Liu J. Typical and atypical development of functional connectivity in the face network. J Neurosci. 2015b;35:14624–14635. doi: 10.1523/JNEUROSCI.0969-15.2015.

- Stevens WD, Buckner RL, Schacter DL. Correlated low-frequency BOLD fluctuations in the resting human brain are modulated by recent experience in category-preferential visual regions. Cereb Cortex. 2010;20:1997–2006. doi: 10.1093/cercor/bhp270.

- Stevens WD, Kahn I, Wig GS, Schacter DL. Hemispheric asymmetry of visual scene processing in the human brain: evidence from repetition priming and intrinsic activity. Cereb Cortex. 2012;22:1935–1949. doi: 10.1093/cercor/bhr273.

- Stevens WD, Tessler MH, Peng CS, Martin A. Functional connectivity constrains the category-related organization of human ventral occipitotemporal cortex. Hum Brain Mapp. 2015;36:2187–2206. doi: 10.1002/hbm.22764.

- Supekar K, Musen M, Menon V. Development of large-scale functional brain networks in children. PLoS Biol. 2009;7:e1000157. doi: 10.1371/journal.pbio.1000157.

- Tahmasebi AM, Artiges E, Banaschewski T, Barker GJ, Bruehl R, Büchel C, Conrod PJ, Flor H, Garavan H, Gallinat J, Heinz A, Ittermann B, Loth E, Mareckova K, Martinot JL, Poline JB, Rietschel M, Smolka MN, Ströhle A, Schumann G, et al. Creating probabilistic maps of the face network in the adolescent brain: a multicentre functional MRI study. Hum Brain Mapp. 2012;33:938–957. doi: 10.1002/hbm.21261.

- Tononi G, Sporns O, Edelman GM. A measure for brain complexity: relating functional segregation and integration in the nervous system. Proc Natl Acad Sci U S A. 1994;91:5033–5037. doi: 10.1073/pnas.91.11.5033.

- Tononi G, Sporns O, Edelman GM. Measures of degeneracy and redundancy in biological networks. Proc Natl Acad Sci U S A. 1999;96:3257–3262. doi: 10.1073/pnas.96.6.3257.

- Turk-Browne NB, Norman-Haignere SV, McCarthy G. Face-specific resting functional connectivity between the fusiform gyrus and posterior superior temporal sulcus. Front Hum Neurosci. 2010;4:176. doi: 10.3389/fnhum.2010.00176.

- Van Dijk KR, Sabuncu MR, Buckner RL. The influence of head motion on intrinsic functional connectivity MRI. Neuroimage. 2012;59:431–438. doi: 10.1016/j.neuroimage.2011.07.044.

- Van Essen DC. Windows on the brain: the emerging role of atlases and databases in neuroscience. Curr Opin Neurobiol. 2002;12:574–579. doi: 10.1016/S0959-4388(02)00361-6.

- Wang R, Li J, Fang H, Tian M, Liu J. Individual differences in holistic processing predict face recognition ability. Psychol Sci. 2012;23:169–177. doi: 10.1177/0956797611420575.

- Wang X, Xu M, Song Y, Li X, Zhen Z, Yang Z, Liu J. The network property of the thalamus in the default mode network is correlated with trait mindfulness. Neuroscience. 2014;278:291–301. doi: 10.1016/j.neuroscience.2014.08.006.

- Yang DY, Rosenblau G, Keifer C, Pelphrey KA. An integrative neural model of social perception, action observation, and theory of mind. Neurosci Biobehav Rev. 2015;51:263–275. doi: 10.1016/j.neubiorev.2015.01.020.

- Yeo BT, Krienen FM, Eickhoff SB, Yaakub SN, Fox PT, Buckner RL, Asplund CL, Chee MW. Functional specialization and flexibility in human association cortex. Cereb Cortex. 2015;25:3654–3672. doi: 10.1093/cercor/bhu217.

- Yin RK. Looking at upside-down faces. J Exp Psychol. 1969;81:141. doi: 10.1037/h0027474.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.

- Zhang H, Tian J, Liu J, Li J, Lee K. Intrinsically organized network for face perception during the resting state. Neurosci Lett. 2009;454:1–5. doi: 10.1016/j.neulet.2009.02.054.

- Zhang J, Li X, Song Y, Liu J. The fusiform face area is engaged in holistic, not parts-based, representation of faces. PLoS One. 2012;7:e40390. doi: 10.1371/journal.pone.0040390.

- Zhang J, Liu J, Xu Y. Neural decoding reveals impaired face configural processing in the right fusiform face area of individuals with developmental prosopagnosia. J Neurosci. 2015a;35:1539–1548. doi: 10.1523/JNEUROSCI.2646-14.2015.

- Zhang L, Liu L, Li X, Song Y, Liu J. Serotonin transporter gene polymorphism (5-HTTLPR) influences trait anxiety by modulating the functional connectivity between the amygdala and insula in Han Chinese males. Hum Brain Mapp. 2015b;36:2732–2742. doi: 10.1002/hbm.22803.

- Zhen Z, Fang H, Liu J. The hierarchical brain network for face recognition. PLoS One. 2013;8:e59886. doi: 10.1371/journal.pone.0059886.

- Zhen Z, Yang Z, Huang L, Kong XZ, Wang X, Dang X, Huang Y, Song Y, Liu J. Quantifying interindividual variability and asymmetry of face-selective regions: a probabilistic functional atlas. Neuroimage. 2015;113:13–25. doi: 10.1016/j.neuroimage.2015.03.010.

- Zhu Q, Zhang J, Luo YL, Dilks DD, Liu J. Resting-state neural activity across face-selective cortical regions is behaviorally relevant. J Neurosci. 2011;31:10323–10330. doi: 10.1523/JNEUROSCI.0873-11.2011.