Every day, the public is exposed to multiple industrial chemicals via food, water, air, and consumer products. Many are known to be toxic and can increase the risk of adverse health effects, including cancer, asthma, developmental disabilities, and infertility. The US Environmental Protection Agency (EPA) is responsible for making evidence-based policies to limit exposure to dangerous chemicals. To inform potential chemical regulations, a core component of the EPA’s duty is to evaluate data on the hazards and risks of industrial chemicals under the 1976 Toxic Substances Control Act (TSCA; Pub L No. 94-469), the law covering chemicals in commerce. Congress reformed the TSCA after widespread recognition of fatal flaws in the 1976 law, under which the EPA could not even restrict asbestos, a known human carcinogen. In 2016, President Barack Obama signed the Frank R. Lautenberg Chemical Safety for the 21st Century Act (Pub L No. 114-182), overhauling the TSCA after 40 years. The TSCA covers more than 40 000 chemicals in the marketplace. The EPA’s action (or inaction) on these chemicals has major implications for human health both in the United States, because federal law preempts the states, and beyond it, because of global commerce and trade agreements.

Pursuant to implementation of the new law, the EPA’s Office of Chemical Safety and Pollution Prevention recently released a methodology for collecting, evaluating, and interpreting scientific evidence on chemicals (http://bit.ly/2TFEDrF). The EPA officially calls the method a “systematic review” framework for TSCA, but it is systematic in name only, as it falls far short of best practices for systematic reviews. Application of the TSCA method will exclude relevant research from chemical assessments, leading to underestimation of health risks and resulting in inadequate policies that allow unsafe chemical exposures, thus harming public health. The TSCA systematic review method could be especially detrimental for populations more vulnerable to chemical exposures, such as pregnant women and children.

INTERNATIONAL CONSENSUS ON METHODS

Systematic review methodology originated more than 40 years ago in psychology and is now the standard for evaluating intervention effectiveness in evidence-based medicine. Well-conducted systematic reviews have saved lives and money by providing a comprehensive, unbiased evaluation of the evidence.1

International scientific organizations (e.g., Cochrane and Campbell collaborations) developed, advanced, and applied the methodology. In 2009, the Navigation Guide systematic review method adapted these clinical research synthesis methods for environmental health evidence streams and decision contexts.2 In 2013, the National Toxicology Program’s Office of Health Assessment and Translation (OHAT) developed a comparable method (http://bit.ly/2H9MjN7), and scientists at the EPA’s Integrated Risk Information System program played an important role in the development and implementation of systematic review methods. Importantly, the Integrated Risk Information System’s review method has been positively evaluated by the National Academies of Sciences and does not have the problems we list (http://bit.ly/2EKyZuQ). Peer-reviewed case studies demonstrated the value of systematic reviews in environmental health,3 and the National Academies of Sciences has recommended the Navigation Guide and OHAT’s methods for chemical evaluations (http://bit.ly/2VNzew5).

FLAWS IN THE NEW METHODOLOGY

The 2016 TSCA law mandates that the EPA make decisions about chemical risks on the basis of the “best available science” and the “weight of the scientific evidence.” The EPA defined “weight of the scientific evidence” in its 2017 regulations as follows:

a systematic review method, applied in a manner suited to the nature of the evidence or decision, that uses a preestablished protocol to comprehensively, objectively, transparently, and consistently identify and evaluate each stream of evidence, including strengths, limitations, and relevance of each study and to integrate evidence as necessary and appropriate based on strengths, limitations, and relevance. (http://bit.ly/2SVDGa8)

However, instead of building on current well-established methods, the EPA issued a new TSCA methodology that is inconsistent with both the definition in its own regulation and the empirical evidence. It also has three fundamental flaws.

INCOMPLETENESS

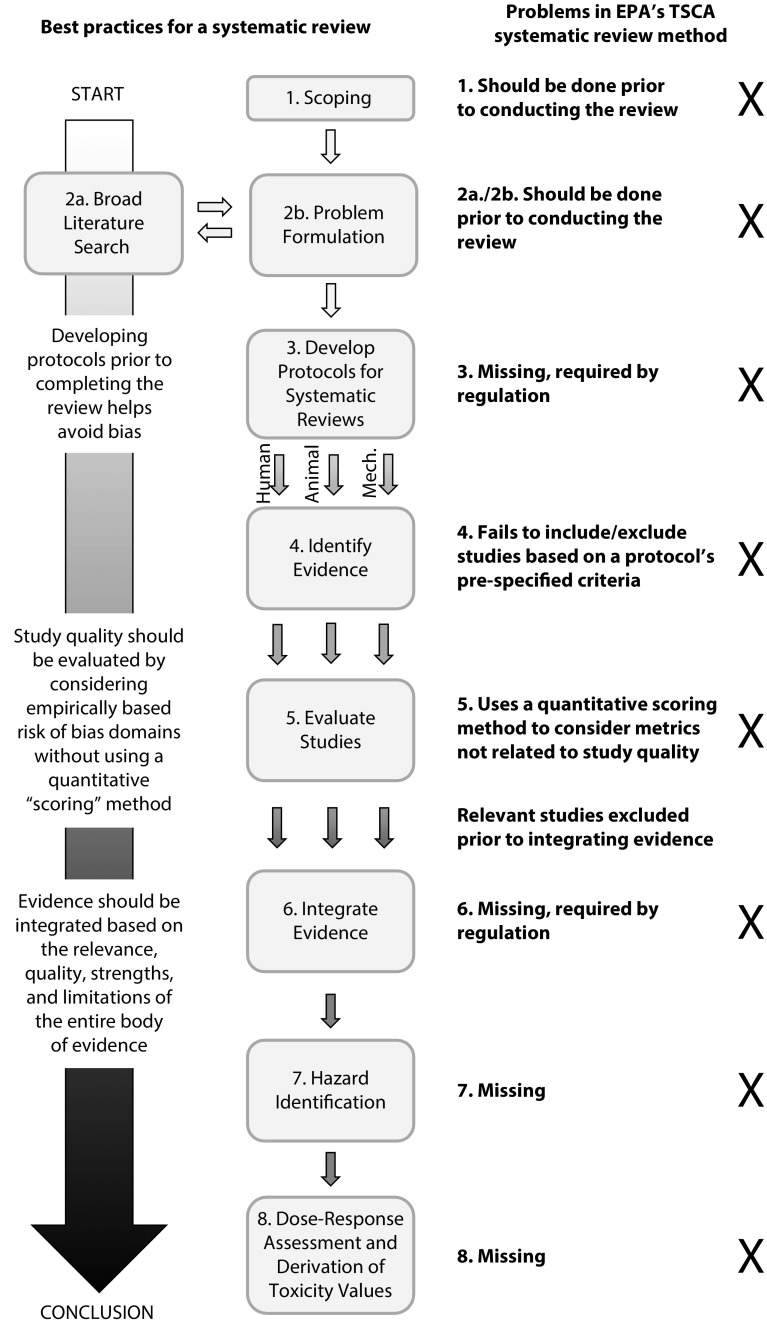

First, the TSCA method is incomplete. As shown in Figure 1, it lacks numerous essential systematic review elements. For example, it includes neither an explicit method for evaluating the overall body of each evidence stream (animal, human, mechanistic) nor a method for integrating two or more streams of evidence (http://bit.ly/2NRpPkq, http://bit.ly/2CbAd1A).4 A critical missing piece is the creation of protocols for all review components before the review is conducted, a step that minimizes bias and ensures transparency in decision making and that all established methods specify as best practice (http://bit.ly/2NRpPkq, http://bit.ly/2CbAd1A).5 The EPA’s 2017 regulation also requires this best practice, mandating that the agency use “a preestablished protocol” to conduct assessments.

FIGURE 1. A Comparison of General Steps for a Systematic Review and the Toxic Substances Control Act’s Systematic Review Method

Note. EPA = Environmental Protection Agency; Mech. = mechanistic; TSCA = Toxic Substances Control Act. The TSCA method is missing steps, does not follow established best practices for systematic review, and does not conform to regulatory requirements.

Source. Adapted from the National Academies of Sciences (http://bit.ly/2CbAd1A) and the Institute of Medicine (http://bit.ly/2NRpPkq).

AN INAPPROPRIATE SCORING SCHEME

Second, the TSCA systematic review method establishes an inappropriate scoring scheme for the quality of studies by assigning numerical scores to various study components and calculating an overall “quality score.” The implicit assumption in quantitative scoring methods such as the EPA’s is that we understand how much each factor used to evaluate study quality contributes to the overall quality and that these factors are independent of each other. This is not a scientifically supportable underlying assumption, as researchers have documented that such scoring methods have unknown validity and may contain invalid items. Thus, results of a quality score are not predictive of the quality of studies (http://bit.ly/2CbAd1A).

An examination of the application of quality scores in meta-analysis found that quality score weighting produced biased effect estimates because quality is not a singular dimension that is additive, but may be nonadditive and nonlinear.6 The National Academies recommended against the use of scoring systems, concluding, “There is no empirical basis for weighting the different criteria in the scores. . . . The current standard in evaluation of clinical research calls for reporting each component of the assessment tool separately and not calculating an overall numeric score” (http://bit.ly/2CbAd1A). In addition, the new TSCA methodology scores study components that are unrelated to research quality, for instance, how completely the authors of a study reported the methods used. This will result in a biased evaluation of the literature.
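The concern about arbitrary weights can be illustrated with a minimal sketch. Everything in it is hypothetical: the two studies, the three component names, the 1 (best) to 4 (worst) ratings, and both weighting schemes are invented for illustration and are not taken from the TSCA method or any other published tool. It shows only that, when weights have no empirical basis, two equally defensible choices can reverse which study appears to be of higher quality.

```python
# Hypothetical component ratings (1 = best, 4 = worst) for two made-up studies.
# None of these names or numbers come from the TSCA method.
studies = {
    "study_A": {"reporting": 1, "exposure_assessment": 4, "confounding": 1},
    "study_B": {"reporting": 3, "exposure_assessment": 1, "confounding": 2},
}


def weighted_score(ratings, weights):
    """Collapse component ratings into one weighted mean (lower = 'better')."""
    total_weight = sum(weights.values())
    return sum(ratings[name] * weights[name] for name in weights) / total_weight


def rank(all_studies, weights):
    """Return study names ordered from best to worst overall score."""
    return sorted(all_studies, key=lambda s: weighted_score(all_studies[s], weights))


# Two arbitrary weighting schemes; neither has an empirical justification.
weights_reporting_heavy = {"reporting": 2, "exposure_assessment": 1, "confounding": 1}
weights_exposure_heavy = {"reporting": 1, "exposure_assessment": 2, "confounding": 1}

for label, weights in [
    ("reporting-heavy", weights_reporting_heavy),
    ("exposure-heavy", weights_exposure_heavy),
]:
    print(label, rank(studies, weights))
```

Under the first scheme the hypothetical study A ranks ahead of study B; under the second the order flips, although the component ratings never changed. Reporting each component separately, as the National Academies passage quoted above describes, avoids committing to either set of weights.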

INCENTIVES TO DISREGARD RELEVANT RESEARCH

Third, the new TSCA methodology could disregard relevant research findings because it uses this scoring scheme to exclude studies that have only a single reporting or methodological limitation. It is inappropriate to use a single limitation to exclude relevant studies, as the EPA’s 2017 regulation requires consideration of all relevant science while accounting for “strengths and limitations.” Considering all relevant studies is also consistent with approaches in established systematic review methodologies (http://bit.ly/2VNzew5, http://bit.ly/2H9MjN7).4 Furthermore, there is no empirical evidence that the “critical metrics” the EPA uses to exclude studies are related to study quality. For example, in scoring human epidemiology studies, some critical metrics are whether the eligibility criteria, sources, and methods for selecting participants were reported. If these are not reported, the study may be scored “low quality” or “unacceptable for use.” It has, however, been documented that how completely and clearly a study is reported is not a valid measure of the quality of the underlying research.7 Thus, the TSCA criteria could exclude many high-quality epidemiological studies. The first application of the TSCA method in evaluations of five persistent, bioaccumulative, and toxic chemicals excluded almost 500 studies that “did not meet evaluation criteria” of the new methodology (http://bit.ly/2XPVWp0).

In summary, the TSCA method ignores significant, internationally accepted scientific rules and procedures for conducting systematic reviews. This will result in incomplete and biased chemical evaluations and, ultimately, in policy decisions on billions of pounds of industrial chemicals that threaten public health. We recommend that for TSCA evaluations, the EPA adopt and implement existing empirically based methodology, as the National Academies recommends for chemical evaluations (http://bit.ly/2VNzew5, http://bit.ly/2H9MjN7, http://bit.ly/2EKyZuQ).3 Using these methods would enable the EPA to make the best science-based decisions to protect the environment and human health.

ACKNOWLEDGMENTS

Funding for this work was provided by the JPB Foundation (grant 681), the Passport Foundation, and the Clarence E. Heller Charitable Foundation.

The authors wish to acknowledge Daniel Fox, PhD.

CONFLICTS OF INTEREST

The authors declare no conflicts of interest.

REFERENCES

- 1. Fox DM. The Convergence of Science and Governance: Research, Health Policy, and American States. Berkeley, CA: University of California Press; 2010.

- 2. Woodruff TJ, Sutton P; Navigation Guide Work Group. An evidence-based medicine methodology to bridge the gap between clinical and environmental health sciences. Health Aff (Millwood). 2011;30(5):931–937. doi: 10.1377/hlthaff.2010.1219.

- 3. Woodruff TJ, Sutton P. The Navigation Guide systematic review methodology: a rigorous and transparent method for translating environmental health science into better health outcomes. Environ Health Perspect. 2014;122(10):1007–1014. doi: 10.1289/ehp.1307175.

- 4. Schunemann HJ, Oxman AD, Vist GE. Interpreting results and drawing conclusions. In: Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions, version 5.1. London, England: Cochrane Collaboration; 2011. ch. 12.

- 5. Higgins JPT, Green S. Preparing a Cochrane review. In: Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions, version 5.1. London, England: Cochrane Collaboration; 2011. ch. 2.

- 6. Greenland S, O’Rourke K. On the bias produced by quality scores in meta-analysis, and a hierarchical view of proposed solutions. Biostatistics. 2001;2(4):463–471. doi: 10.1093/biostatistics/2.4.463.

- 7. Soares HP, Daniels S, Kumar A, et al. Bad reporting does not mean bad methods for randomised trials: observational study of randomised controlled trials performed by the Radiation Therapy Oncology Group. BMJ. 2004;328(7430):22–24. doi: 10.1136/bmj.328.7430.22.