Abstract

Introduction

Endovascular aortic repair (EVAR) is a minimally invasive technique that prevents life-threatening rupture in patients with aortic pathologies by implanting an endoluminal stent graft. During the endovascular procedure, device navigation is currently performed by fluoroscopy in combination with digital subtraction angiography. This study presents the current iterative process of biomedical engineering within the disruptive interdisciplinary project Nav EVAR, which combines advanced navigation, imaging techniques and augmented reality with the aim of reducing side effects (namely radiation exposure and contrast agent administration) and optimising visualisation during EVAR procedures. This article describes the current prototype developed in this project and the experiments conducted to evaluate it.

Methods

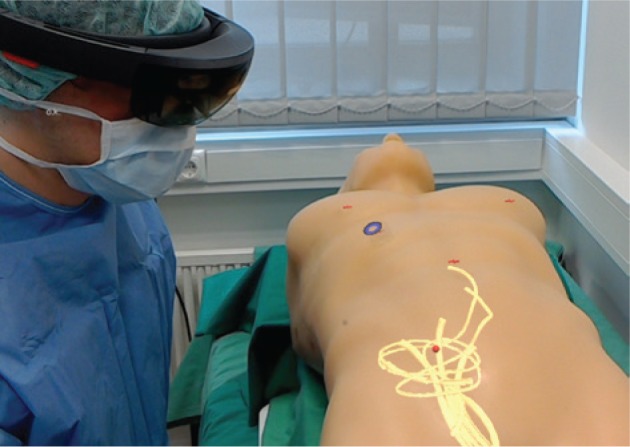

The current approach of the Nav EVAR project is to guide EVAR interventions in real time with an electromagnetic tracking system, using a sensor attached to the catheter tip, and to display this information on Microsoft HoloLens glasses. This augmented reality technology enables the visualisation of virtual objects superimposed on the real environment. These virtual objects include three-dimensional (3D) objects (namely 3D models of the skin and vascular structures) and two-dimensional (2D) objects [namely orthogonal views of computed tomography (CT) angiograms, 2D images of 3D vascular models, and 2D images of a new virtual angioscopy whose rendering of the vessel wall follows its appearance in ex vivo and in vivo angioscopies]. Specific external markers were designed to serve as landmarks in the registration process that maps the tracking data and radiological data into a common space. In addition, real-time 3D ultrasound (US) is under evaluation in the Nav EVAR project for guiding endovascular tools and updating navigation with intraoperative imaging. US volumes are streamed from the US system to HoloLens and visualised at a certain distance from the probe by tracking augmented reality markers. A human model torso that includes a 3D-printed patient-specific aortic model was built to provide a realistic test environment for evaluating technical components of the Nav EVAR project. The solutions presented in this study were tested using a US training model and the aortic-aneurysm phantom.

Results

During the navigation of the catheter tip in the US training model, the 3D models of the phantom surface and vessels were visualised on HoloLens. A virtual angioscopy was also built from a CT scan of the aortic-aneurysm phantom. The external markers designed for this study were visible in the CT scan, and the electromagnetically tracked pointer fitted into each marker hole. US volumes of the US training model were sent from the US system to HoloLens for display with a latency of 259±86 ms (mean±standard deviation).

Conclusion

The Nav EVAR project tackles the problems of radiation exposure and contrast agent administration during EVAR interventions by using a multidisciplinary approach to guide the endovascular tools. Its current state has several limitations, such as the rigid alignment between preoperative data and the simulated patient. Nevertheless, the techniques shown in this study, in combination with fibre Bragg gratings and optical coherence tomography, are a promising approach to overcoming the problems of EVAR interventions.

Keywords: 3D rapid prototyping, aortic aneurysm, augmented reality, EVAR, image-guided therapy, real-time 3D ultrasound, tracking system

Abbreviations: 2D, two-dimensional; 3D, three-dimensional; CBCT, cone-beam computed tomography; CT, computed tomography; DoF, degrees of freedom; DSA, digital subtraction angiography; EVAR, endovascular aortic repair; FAST, focussed assessment with sonography for trauma; FBG, fibre Bragg grating; IVUS, intravascular ultrasound; OCT, optical coherence tomography; OST, optical see-through; PLA, polylactic acid; TCP/IP, transmission control protocol/internet protocol; US, ultrasound

Introduction

Aortic aneurysm is defined as an enlargement of the aorta to more than 1.5 times its normal diameter, and is a relatively common and potentially lethal disease. The prevalence in Europe is estimated to be up to 5%, and most aneurysms are located in the abdominal segment of the aorta (abdominal aortic aneurysm). Rupture occurs when the stress (defined as force per unit area) on the aneurysm wall exceeds the wall strength [1], causing life-threatening bleeding with mortality rates greater than 80% [2]. Treatment is therefore ideally performed before rupture, and elective aneurysm repair is currently generally recommended for diameters of 5.5 cm or more, or for progressive aneurysm growth [3]. Endovascular aortic repair (EVAR) is a minimally invasive technique that excludes the risk of rupture by implanting an endoluminal (covered) stent graft into the aorta using introducer devices from the femoral or brachial arteries. EVAR is associated with lower perioperative morbidity and mortality and faster recovery [3], and is the standard procedure where feasible. During the endovascular procedure, device navigation is currently performed by visualising the vessel anatomy with fluoroscopy in combination with digital subtraction angiography (DSA), which involves radiation exposure and contrast agent administration. The use of contrast agents for endovascular navigation is a significant risk factor for kidney injury during these procedures. In this context, the proceduralist has to be aware that a significant proportion of patients with abdominal aneurysm have pre-existing renal impairment and that the administration of contrast agent may cause deterioration of renal function [4]. Acute kidney injury is a relevant post-operative complication following EVAR and is associated with increased mortality [5]. In addition to the issue of contrast agents, patients as well as medical staff are exposed to significant radiation doses during endovascular procedures [6].
DSA runs of the abdomen and pelvis in particular, used intraoperatively to visualise the vessel anatomy for adequate navigation and stent deployment, contribute significantly to radiation exposure during EVAR procedures. The average radiation exposure time in an EVAR procedure has been reported as 22.6 min [7]. Treating patients with complex aneurysms using the chimney technique or fenestrated and branched stent grafts further increases radiation exposure [8], [9]. Deterministic effects of ionising radiation, such as skin erythema (2 Gy) or hair loss (3 Gy) in the patient, occur once a threshold dose is exceeded [10]. A previous study estimated deterministic radiation effects in patients in 29% of EVAR procedures [11]. Stochastic effects of repeated radiation exposure in medical staff may cause cancer or cataracts, even years or decades after the exposure [12].

Another limitation of acquiring two-dimensional (2D) fluoroscopic images to determine the position of endovascular tools such as catheters, guide wires, sheaths and stent grafts relative to the patient’s anatomy is the lack of depth information. Navigation systems overcome this problem by superimposing the current position of endovascular tools in the operating room (obtained with electromagnetic tracking systems) on preoperative computed tomography (CT) angiograms and anatomical three-dimensional (3D) models. A sensor (specifically, a set of coils) on the tip of the endovascular device measures the magnetic field generated by a transmitter, and the electromagnetic tracking system estimates the sensor position and orientation from a model of the transmitted magnetic field. Unlike optical tracking systems, this technology does not require line of sight, but it can be affected by ferromagnetic objects and other electronic devices that distort the reference magnetic field [13]. An average error of 4.2 mm was obtained when tracking a guide wire in non-rigid scenarios (animal study) [14]; moreover, the accuracy is not uniform throughout the limited working volume of the electromagnetic tracking system.
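Why the working volume is limited can be illustrated with a deliberately simplified field model. The sketch below assumes that a transmitter coil behaves like an ideal magnetic dipole; commercial trackers use calibrated, proprietary field models, so this is an illustration of the physics only. Under this assumption the field falls off with the cube of the distance, so doubling the sensor-to-transmitter distance weakens the measured signal about eightfold, while the contribution of distorting objects does not shrink in the same way.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability (T*m/A), classical SI value

def dipole_field(moment, position):
    """Flux density (T) of an ideal magnetic dipole located at the origin.

    moment: dipole moment (A*m^2); position: sensor position (m).
    B = mu0 / (4*pi) * (3 (m . r_hat) r_hat - m) / |r|^3
    """
    r = math.sqrt(sum(c * c for c in position))
    if r == 0.0:
        raise ValueError("the field is undefined at the dipole itself")
    r_hat = [c / r for c in position]
    m_dot_r = sum(m * u for m, u in zip(moment, r_hat))
    k = MU0 / (4.0 * math.pi * r ** 3)
    return [k * (3.0 * m_dot_r * u - m) for m, u in zip(moment, r_hat)]

def magnitude(v):
    return math.sqrt(sum(c * c for c in v))

# Hypothetical transmitter coil: 1 A*m^2 moment along z
coil = (0.0, 0.0, 1.0)
b_near = magnitude(dipole_field(coil, (0.0, 0.0, 0.2)))  # 20 cm from the coil
b_far = magnitude(dipole_field(coil, (0.0, 0.0, 0.4)))   # 40 cm from the coil
print(f"doubling the distance weakens the field {b_near / b_far:.1f}-fold")
```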

Ultrasound (US) imaging is a common screening technique for abdominal aortic aneurysms and is used to guide femoral access in percutaneous EVAR procedures to reduce access-related complications [15], [16]. This relatively low-cost and portable technology requires the physician to identify which section of the patient’s anatomy is currently displayed and then to mentally reconstruct the 3D anatomy from 2D images [17]. In addition, out-of-plane motion caused by patient respiration or transducer movements changes the imaged slice of the patient’s anatomy. There is growing interest in using real-time 3D US to address these problems [18]. Delivery of fenestrated stent grafts could be guided with real-time 3D US, as the tips of guide wires can be tracked in 3D [19]. Combining real-time 3D US with preoperative CT data, which addresses the orientation problem of 3D US by displaying these volumes in an intuitive and understandable way [20], could be useful for navigation in EVAR procedures.

In navigation systems, preoperative 3D studies and anatomical 3D models are commonly displayed on standard 2D screens. HoloLens (Microsoft, Redmond, WA, USA) is an optical see-through (OST) head-mounted computer that renders virtual 3D objects superimposed on the real environment using a stereoscopic display. This wireless augmented reality technology enables a more intuitive visualisation of 3D content than standard 2D screens and, unlike virtual reality, does so without blocking the user’s view of the real world. Augmented reality glasses also have advantages over other augmented reality systems such as tablets or mobile phones: the user does not need to hold the device or to look down at a display, as the virtual objects appear in the visual field. Several OST augmented reality systems are on the market apart from HoloLens [21]. However, a recent study rated HoloLens as more suitable for surgical interventions than two other representative, commercially available OST devices [namely Moverio BT-200 (Epson, Shinjuku, Tokyo, Japan) and R-7 (ODG, San Francisco, CA, USA)] in terms of frame rate, contrast perception and task load [22]. This technology may therefore be applied to guide EVAR procedures.

Our study forms part of the ongoing research project Nav EVAR, which focusses on guiding EVAR procedures by means of an interdisciplinary approach to reduce their current disadvantages, namely radiation exposure (medical staff and patients) and contrast agent administration (patients). The technologies under evaluation in this project are navigation, electromagnetic tracking systems, intraoperative US imaging, fibre Bragg gratings (FBGs) and optical coherence tomography (OCT). Augmented reality, specifically HoloLens, is used to visualise data from these systems. The Nav EVAR project also includes rapid prototyping of patient-specific phantoms to generate realistic test environments, thereby avoiding animal experiments in accordance with German guidelines and European legislation on the protection of animals used for scientific purposes (European Union Directive 2010/63/EU). A general overview of this research project (namely motivation, objectives and involved technologies) is available in this video: https://youtu.be/t5Bhvb9kf44.

The aim of this article is to describe the technologies involved in the current prototype developed in the Nav EVAR project to guide EVAR procedures and the experiments conducted to evaluate this approach. This study presents the current iterative process of biomedical engineering within the disruptive interdisciplinary project Nav EVAR that includes advanced navigation, imaging techniques and augmented reality with the aim of reducing side effects and optimising visualisation during EVAR procedures.

Materials and methods

Aortic aneurysm phantom

Advanced rapid prototyping and model manufacturing techniques were also introduced at this stage of the Nav EVAR project to provide a realistic test environment for integrating, examining and evaluating the technical components. A human model torso was built in cooperation with HumanX GmbH and the Fraunhofer Research Institution for Marine Biotechnology and Cell Technology. This torso comprises a patient-specific aortic model including outgoing vessels, the spine from the fourth thoracic vertebra to the coccyx and the pelvis for anatomical orientation, and a hosepipe system that enables the circulation of fluids. The patient-specific aortic model and bones were produced from a preoperative CT scan of a patient who was treated for aortoiliac aneurysm disease. A declaration of consent was obtained, and the patient data were anonymised. The high-resolution CT scan (1-mm slice thickness) was segmented and converted into STL format semi-automatically using the open-source software 3D Slicer [23]. The patient-specific aortic model, spine and pelvis were produced by rapid prototyping [materials: FLX9070-DM (TangoPlus FLX930 and VeroClear RGD810; Stratasys, Eden Prairie, MN, USA) for the thoracic part of the aortic model, silicone for the abdominal part of the aortic model and polylactic acid (PLA) for the bones]. The bones were painted with lime varnish to improve their contrast in CT scans. The patient-specific aortic model is not rigid and can be exchanged for other 3D-printed aortoiliac pathologies (models based on patient data to be collected during the Nav EVAR project), providing a flexible modular system for further research.

Augmented reality

The specifications of Microsoft HoloLens glasses include an inertial measurement unit (namely an accelerometer, a gyroscope and a magnetometer) to track the user’s movements, and a time-of-flight depth camera for finger tracking and depth sensing of enclosed environments (also referred to as spatial mapping). This spatial information is related to the user by means of four environment understanding cameras [24]. Spatial mapping enables the simulation of physical effects that make virtual objects look more realistic, and the placement of virtual 3D objects or 2D panels at specific locations. HoloLens also includes a 2.4-megapixel video camera and four microphones for recording or streaming from the user’s point of view, which facilitates documentation of surgical procedures, communication with experts and training [25]. In HoloLens, virtual objects are selected with the user’s gaze, while interaction is based on hand gestures and voice commands [26]. This touch-free interaction makes HoloLens suitable for surgical environments [27]. Surgeons can visualise the patient’s data in front of their eyes while maintaining sterile conditions, without turning their heads to look at other screens. In addition, this approach does not require a member of the surgical team to operate the workstation [25]. HoloLens also natively supports sharing virtual objects across multiple augmented reality devices, so that all members of the operating room team who are wearing HoloLens can see the same scene.

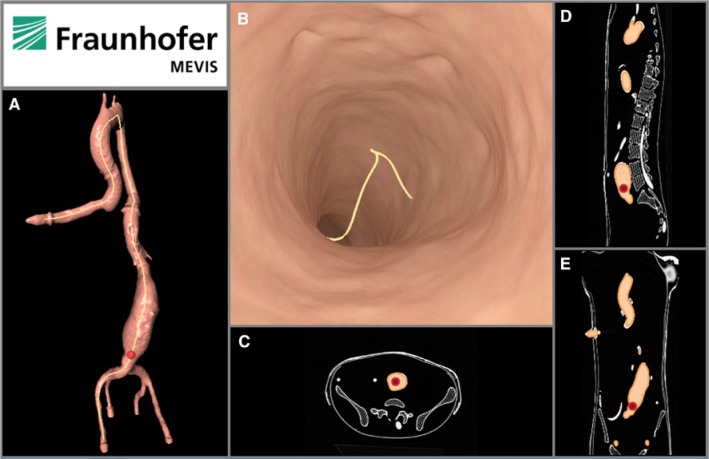

In the Nav EVAR project, 3D models (triangle meshes) of the phantom surface and vascular structures are the virtual 3D objects superimposed on the phantom in the real world with HoloLens [28]. These 3D models are reconstructed from contours of the structures on a CT scan (surface rendering technique). The HoloLens wearer can also visualise virtual 2D objects on 2D panels, such as the orthogonal views of CT scans, 2D images of 3D vascular models and 2D images of virtual angioscopies, which are also built from CT scans. The point of view of the catheter, which was incorporated in the initial version of the Nav EVAR prototype [28], was replaced with a virtual angioscopy implemented with the Open Inventor library [29]. Its rendering of the vessel wall follows the appearance seen in ex vivo and in vivo angioscopies [30], [31].

Navigation

The initial approach of the Nav EVAR project is to guide these procedures in real time with an electromagnetic tracking system, using a sensor attached to the catheter tip, and to display this information on HoloLens. The proof of concept of this approach was evaluated in a phantom study with the Focused Assessment with Sonography for Trauma (FAST) Ultrasound Training Model (Blue Phantom, Sarasota, FL, USA), in which vascular surgeons, radiologists and thoracic surgeons highlighted the potential of the prototype [28].

Tracking data are mapped to both the CT scan and the virtual objects derived from these radiological data by a landmark-based rigid registration algorithm. Its inputs are the 3D coordinates, in both coordinate systems (namely the electromagnetic tracking system and the radiological data), of at least three landmarks on the phantom distributed evenly around the area of interest. The position of each landmark in the tracking coordinate system is recorded with the tip of an electromagnetically tracked pointer. This process is controlled with voice commands on HoloLens rather than with a keyboard or mouse. A second registration is needed to align the renderings of the virtual 3D objects on HoloLens with the phantom in the real world; this is done by pointing at the virtual landmarks displayed on HoloLens with the tracked pointer. Navigation is then carried out by electromagnetically tracking the catheter tip inside the phantom. HoloLens displays the current position of the catheter tip on the 3D model of the vascular structure and on the orthogonal views of the CT scan, together with the current view of the virtual angioscopy.
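Landmark-based rigid registration of this kind has a well-known closed-form least-squares solution. The sketch below uses the SVD method of Arun et al. (equivalent to the Kabsch algorithm and to Horn's quaternion method); it is an illustration of the technique, not the prototype's actual implementation. The landmark coordinates and the simulated pose are hypothetical. The fiducial registration error (FRE), the root-mean-square residual at the landmarks, is reported alongside the transform.

```python
import numpy as np

def rigid_register(moving, fixed):
    """Least-squares rigid transform (R, t) with R @ moving[i] + t ≈ fixed[i].

    moving, fixed: (N, 3) corresponding landmarks, N >= 3, not all collinear.
    SVD solution of the orthogonal Procrustes problem (Arun/Kabsch).
    """
    P = np.asarray(moving, dtype=float)
    Q = np.asarray(fixed, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t

def fiducial_registration_error(moving, fixed, R, t):
    """Root-mean-square distance between mapped and target landmarks."""
    residuals = np.asarray(moving, float) @ R.T + t - np.asarray(fixed, float)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Hypothetical landmarks in CT space (mm) and the same points as a tracked
# pointer would record them (simulated here with a known pose, no noise).
ct_points = np.array([[0, 0, 0], [100, 0, 0], [0, 150, 0], [50, 75, 40]], float)
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
tracker_points = ct_points @ R_true.T + np.array([10.0, -5.0, 20.0])

R, t = rigid_register(tracker_points, ct_points)   # tracker space -> CT space
fre = fiducial_registration_error(tracker_points, ct_points, R, t)
print(f"FRE = {fre:.6f} mm")
```

With real pointer measurements the FRE will be non-zero; note that a small FRE does not by itself guarantee a small error at the catheter tip.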

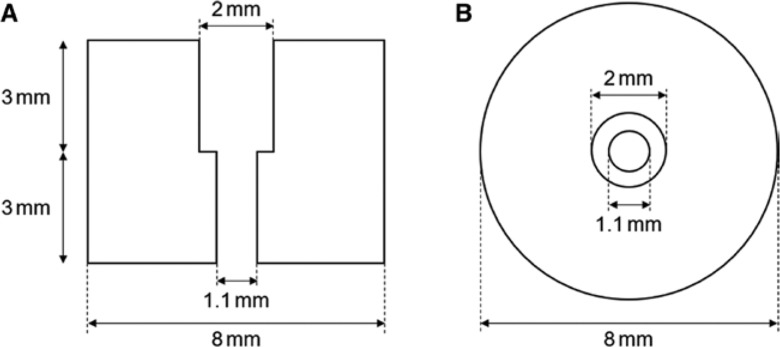

Specific external markers were designed to be used as landmarks in the registration process, with the aim of improving their localisation both in CT scans and with the electromagnetically tracked pointer before navigation (Figure 1). Before the CT scan is acquired, the location of each external marker is marked on the phantom with a pen (specifically, a point) for later repositioning. Each external marker is placed on the phantom surface by aligning the hole of the external marker with the point marker, and can be removed after the CT acquisition. Before navigation, each external marker is placed on the phantom again following the point markers. The hole of the external markers (lower diameter 0.98 mm and upper diameter 1.98 mm) was specifically designed to hold the pointer tip without relative movement when the 3D coordinates of each external marker are recorded, thus reducing inaccuracies during this process.
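One common way to localise a radiopaque marker automatically in a CT sub-volume is an intensity-weighted centroid of the voxels above a threshold, converted to millimetres with the voxel size. The sketch below is a hypothetical illustration, not the procedure used in this study (which identifies the centre of the bottom portion of the cylinder; restricting the sub-volume to that portion would approximate it). The threshold and the synthetic volume are assumptions; the voxel size matches the one reported in the Figure 2 caption.

```python
import numpy as np

def marker_centroid_mm(volume, threshold, spacing, origin=(0.0, 0.0, 0.0)):
    """Intensity-weighted centroid (mm) of voxels brighter than `threshold`.

    volume: 3D array indexed (i, j, k); spacing: voxel size (mm) along i, j, k;
    origin: position (mm) of voxel (0, 0, 0). Image orientation (direction
    cosines) is ignored for simplicity.
    """
    vol = np.asarray(volume, dtype=float)
    mask = vol > threshold
    if not mask.any():
        raise ValueError("no voxel exceeds the threshold")
    idx = np.argwhere(mask)                # (M, 3) voxel indices, C order
    w = vol[mask]                          # intensities in the same order
    centre_idx = (idx * w[:, None]).sum(axis=0) / w.sum()
    return np.asarray(origin, dtype=float) + centre_idx * np.asarray(spacing, dtype=float)

# Synthetic sub-volume with a bright 2x2x2 "marker" (not real CT data)
vol = np.zeros((20, 20, 20))
vol[9:11, 9:11, 9:11] = 1000.0
centre = marker_centroid_mm(vol, threshold=500.0, spacing=(0.5, 0.5, 0.6))
print(centre)
```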

Figure 1:

Design of the external marker.

(A) Lateral view. (B) Top view.

Ultrasound imaging

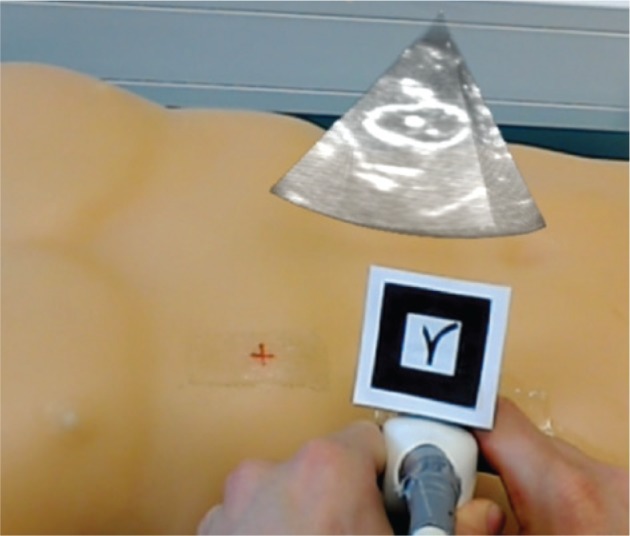

Real-time 3D US is also under evaluation in the Nav EVAR project for guiding endovascular tools. Several studies have streamed 2D US images to HoloLens [32], [33], [34]. In our research project, US volumes (raw data) are streamed from a real-time 3D cardiovascular US system (Vivid 7 Dimension, GE Healthcare, Chicago, IL, USA) with a 3V matrix array probe to a client computer via Ethernet, using an in-house modification [35]. These volumes are then transmitted to HoloLens via WiFi with an open-source, multi-language, multi-platform remote procedure call framework (gRPC [36]). Both steps use a client-server architecture over transmission control protocol/Internet protocol (TCP/IP). Streaming US volumes to HoloLens enables visualisation of both the US data and the probe relative to the patient. The volumes are superimposed on the HoloLens user’s field of view at a certain distance from the US probe. This was achieved by attaching an augmented reality marker to the US probe and integrating HoloLensARToolKit [37] into the Nav EVAR prototype.
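Whatever the transport, each streamed volume needs a small header so that the receiver knows where one volume ends and the next begins, and a send timestamp makes latency measurement possible. The sketch below illustrates this with plain length-prefixed framing over a stream socket; it is not gRPC and not the format of the in-house modification [35], and the header layout is a hypothetical choice. Because TCP delivers a byte stream, the receiver must keep reading until a complete frame has arrived.

```python
import socket
import struct
import threading
import time

# Hypothetical frame header: send time (float64, s since epoch), volume
# dimensions (3 x uint16) and payload length in bytes (uint32), big-endian.
HEADER = struct.Struct("!dHHHI")

def send_volume(sock, voxels, dims):
    """Send one volume as header + raw voxel bytes."""
    sock.sendall(HEADER.pack(time.time(), *dims, len(voxels)) + voxels)

def recv_exactly(sock, n):
    """Read exactly n bytes; a stream socket may split a frame into chunks."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)

def recv_volume(sock):
    """Receive one framed volume; returns (send time, dims, voxel bytes)."""
    sent_at, nx, ny, nz, length = HEADER.unpack(recv_exactly(sock, HEADER.size))
    return sent_at, (nx, ny, nz), recv_exactly(sock, length)

# Usage: a connected socket pair stands in for the network link.
sender, receiver = socket.socketpair()
dims = (32, 32, 16)                       # toy 8-bit volume
payload = bytes(dims[0] * dims[1] * dims[2])
worker = threading.Thread(target=send_volume, args=(sender, payload, dims))
worker.start()
sent_at, got_dims, voxels = recv_volume(receiver)
worker.join()
latency_ms = (time.time() - sent_at) * 1000.0
sender.close()
receiver.close()
print(got_dims, len(voxels), f"{latency_ms:.2f} ms")
```

A sender thread is used so that the example cannot block on a small socket buffer.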

Evaluation

The solutions presented in this study were tested using a US training model (FAST Ultrasound Training Model, Blue Phantom, Sarasota, FL, USA) and the aortic-aneurysm phantom. The former is a realistic model that simulates human tissues in the thorax, upper quadrant and abdomen in terms of soft-tissue deformation (for instance, of the skin) and acoustic characteristics for US imaging.

CT studies were acquired with a Biograph40 scanner (Siemens, Munich, Bavaria, Germany) for the FAST Ultrasound Training Model, and with a Siemens SOMATOM Definition AS+ scanner for the external markers and the aortic-aneurysm phantom. These studies were used to check whether the external markers were visible in CT scans, to create a virtual angioscopy of the aortic-aneurysm phantom (a model built from different materials), and to test the navigation workflow and its visualisation with HoloLens using the FAST Ultrasound Training Model and a tracked catheter. The 3D models of the surface and the vessels (specifically, six tubes) of the FAST Ultrasound Training Model were obtained from the CT scan of this phantom. The registration used to set up a common coordinate system before navigation was carried out with four anatomical landmarks on the phantom surface (namely both nipples, the navel and the junction of the legs). During navigation, the tracked catheter was inserted into one of the tubes that simulated the vascular structure and moved along it.

US volumes (imaging depth of 15 cm) of the FAST Ultrasound Training Model were also acquired with the Vivid 7 Dimension system at a rate of 13.8 Hz and visualised with HoloLens. The latency (specifically, the time interval between sending a volume from the US system and its visualisation on HoloLens) was calculated over 75 s after synchronising both the US system and the HoloLens with Network Time Protocol.
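The latency statistic is a mean and standard deviation over per-volume differences between display and send timestamps, which is meaningful only because both clocks are synchronised. The sketch below computes these statistics from hypothetical timestamps (not the measured data) and expresses the result in inter-volume intervals at the 13.8 Hz acquisition rate: at this rate a new volume arrives about every 72 ms, so the reported 259±86 ms corresponds to roughly 3.6 intervals.

```python
import statistics

VOLUME_RATE_HZ = 13.8                          # acquisition rate reported above
frame_interval_ms = 1000.0 / VOLUME_RATE_HZ    # time between consecutive volumes
volumes_in_75_s = round(75 * VOLUME_RATE_HZ)   # volumes in a 75 s recording

def latency_stats_ms(sent_s, shown_s):
    """Mean and sample standard deviation (ms) of per-volume latency.

    sent_s[i]: time volume i left the US system; shown_s[i]: time it was
    displayed on the headset. Both clocks are assumed NTP-synchronised.
    """
    if len(sent_s) != len(shown_s) or len(sent_s) < 2:
        raise ValueError("need at least two paired timestamps")
    latencies = [(shown - sent) * 1000.0 for sent, shown in zip(sent_s, shown_s)]
    return statistics.mean(latencies), statistics.stdev(latencies)

# Hypothetical timestamps (s) for three volumes, not measured data
sent = [0.000, 0.072, 0.145]
shown = [0.250, 0.340, 0.400]
mean_ms, sd_ms = latency_stats_ms(sent, shown)
print(f"{mean_ms:.0f} ± {sd_ms:.0f} ms = {mean_ms / frame_interval_ms:.1f} volume intervals")
# A 100 ms target would correspond to about 1.4 volume intervals at this rate.
```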

Results

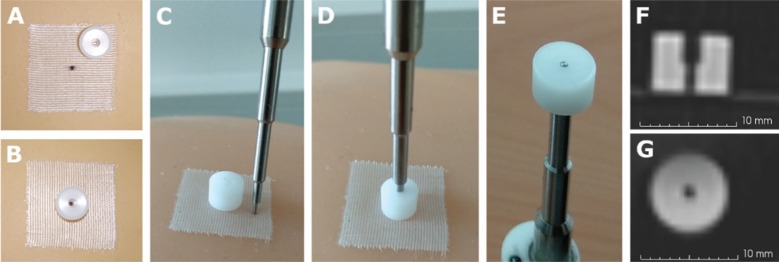

Figure 2 shows the external marker designed for this study and the tip of the electromagnetically tracked pointer. The point marker remained visible through the hole of the external marker, and the pointer tip fitted into that hole. The external marker was also visible in the CT scan.

Figure 2:

External marker and pointer tip used for mapping the tracking and radiological data.

(A) External marker not aligned with the point marker. In this case, the point was drawn on the surgical tape. (B) External marker aligned with the point marker. (C) External marker and pointer tip. (D) Pointer tip inserted in the external marker. (E) Bottom view of the insertion. (F) CT slice with the lateral view of the external marker. (G) CT slice with the bottom view of the external marker. Voxel size 0.5×0.5×0.6 mm.

Figure 3 illustrates the virtual angioscopy built from the CT scan of the aortic-aneurysm phantom, together with the 3D model of the segmented vessel system with the aneurysm and the orthogonal views of the CT scan. This visualisation replaces the point of view of the catheter included in the initial version of the Nav EVAR prototype, which is shown in Figure 4.

Figure 3:

2D panel built from the radiological data.

(A) 2D image of the 3D vascular model. (B) View (2D image) of the virtual angioscopy. (C) Axial view of the CT scan of the aortic-aneurysm phantom. (D) Sagittal view of that CT scan. (E) Coronal view of that CT scan. The red point and the yellow line represent the position of the catheter tip and a virtual path of the catheter along the vessel centre line respectively.

Figure 4:

Visualisation with HoloLens.

3D models of the phantom surface (blue wireframe) and vessels (yellow) superimposed on the FAST Ultrasound Training Model. 2D panels above the phantom show the point of view of the catheter (left, initial version of the Nav EVAR prototype) and an axial slice of the CT scan of the phantom (right). This photo was taken from the HoloLens user’s point of view after connecting to the web server Windows Device Portal on the HoloLens [38].

The catheter tip was navigated in the FAST Ultrasound Training Model. Figures 4 and 5 illustrate the visualisation of this navigation with HoloLens. During navigation, the rendering of the 3D model of the phantom surface was not perfectly aligned with the phantom in the real world (Figure 4), nor was the catheter tip aligned with the tube (Figure 5).

Figure 5:

Navigation with HoloLens.

3D model of the vessels (yellow) superimposed on the FAST Ultrasound Training Model. The red crosses, the red sphere and the blue ring correspond to the virtual anatomical markers used in the registration process, the tip of the catheter and the user’s gaze respectively. This photo was taken with a second HoloLens, specifically from that HoloLens user’s point of view.

US volumes of the FAST Ultrasound Training Model were acquired with the Vivid 7 Dimension system and the 3V transducer and subsequently visualised with HoloLens. These data were superimposed on the HoloLens user’s field of view at a certain distance from the US probe, more precisely from the augmented reality marker attached to it (Figure 6). The measured latency was 259±86 ms (mean±standard deviation).

Figure 6:

Visualisation of US volumes with HoloLens.

The volume rendering of the US data shows the kidney of the FAST Ultrasound Training Model.

Discussion

Microsoft HoloLens glasses were the augmented reality technology selected to visualise data during navigation in EVAR procedures. This OST device allows a more intuitive visualisation of 3D content, such as the position of the catheter tip along the 3D model of the aorta. In addition, 2D panels with information such as the virtual angioscopy or the orthogonal views of the CT angiogram can be placed at user-defined locations that are more convenient than the fixed positions of standard 2D screens. The virtual angioscopy had a better appearance than that presented in our previous study [39] and that included in the initial prototype [28]. However, HoloLens has some drawbacks, such as its weight (579 g), memory (limited to 900 MB for applications), battery life (2–3 h), binocular visual field [approximately 30°×17.5° (horizontal×vertical) compared with 120°×135° in humans (region of binocular overlap)] and potential discomfort (for instance, dizziness, headache, eye strain or dry eyes) [21], [40], [41], [42], [43], [44]. New versions of this experimental device or other augmented reality systems may overcome these disadvantages in the future. In the meantime, the weight of HoloLens can be distributed around the head by adjusting its headband, users can get used to its limited field of view by compensating for it with head movements, and the visualisation of virtual objects can be adjusted after an automatic calibration of the interpupillary distance.

The mapping between the tracking data and the radiological data used a landmark-based rigid registration algorithm. This study presents new external markers that can be identified in the CT scan (specifically, at the centre of the bottom portion of the cylinder). Before navigation, the hole in each marker allows it to be repositioned on the point marker drawn on the phantom surface and to be localised with the tracked pointer, preventing the pointer tip from sliding along the phantom surface. The external markers will include a transparent adhesive layer to attach them to the phantom surface. A possible disadvantage of external marking is patient compliance. In particular, complex aortic anatomies require custom-made stent grafts, such as fenestrated or branched grafts, whose design is based on preoperative CT angiograms and whose production can take up to 2 months. The point marker drawn on the patient’s skin before the CT angiogram acquisition would have to last until the endovascular intervention to allow the placement of the external marker.

During navigation, the position of the catheter tip showed some error, as it was not perfectly aligned with the tube. This misalignment may be caused by the anatomical landmarks on the phantom, which cannot be identified accurately either in the CT images or just before navigation. Further research will include the use of the aortic-aneurysm phantom and the designed external markers, and a quantitative assessment of the target registration error by acquiring CT or cone-beam CT (CBCT) scans of the whole setting (phantom with external markers and tracked catheter) during navigation. In addition, alignment based on external markers on the patient’s skin may not be sufficiently accurate in obese patients owing to skin movement. This matching could be improved by including intraoperative imaging, such as real-time 3D US, and a deformable registration between preoperative and intraoperative imaging modalities [45] to update the navigation with the patient’s current anatomy. Preoperative CT angiograms may provide an overview of the target and orientation towards it, but may not resemble the actual scenario in the operating room owing to patient positioning or tissue deformation/movement, whereas intraoperative US volumes offer a limited but up-to-date picture during the treatment [46]. A robotised US system may address the problem of continuously acquiring US volumes during a treatment without manual positioning of the US probe [47]. Furthermore, the mapping between the renderings of the 3D models (namely the phantom surface and vascular structures) on HoloLens and the real world may be improved by including surface data. This approach will be tested by attaching a high-quality depth camera to HoloLens in order to improve on the rough surfaces obtained from the spatial mapping of HoloLens [28], [48].
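How landmark quality and placement propagate to the error at the catheter tip can be estimated before any CT-based validation with the first-order prediction of Fitzpatrick, West and Maurer (1998): TRE²(r) ≈ (FLE²/N)·[1 + (1/3)·Σ_k d_k²/f_k²], where N is the number of landmarks, FLE the RMS fiducial localisation error, d_k the distance of the target r from the k-th principal axis of the landmark configuration and f_k the RMS distance of the landmarks from that axis. The sketch below assumes independent, isotropic localisation error; the landmark layout and the FLE value are hypothetical. It shows why targets far from the landmark centroid, or poorly localised landmarks, yield larger errors.

```python
import numpy as np

def predicted_tre(fiducials, target, fle_rms):
    """Expected RMS target registration error (same units as the inputs).

    First-order approximation of Fitzpatrick, West and Maurer (1998), assuming
    independent, isotropic fiducial localisation error with RMS value fle_rms:
    TRE^2 = FLE^2 / N * (1 + 1/3 * sum_k d_k^2 / f_k^2)
    """
    X = np.asarray(fiducials, dtype=float)
    centroid = X.mean(axis=0)
    Xc = X - centroid
    r = np.asarray(target, dtype=float) - centroid
    _, _, axes = np.linalg.svd(Xc)           # rows: principal axes (unit vectors)
    ratio_sum = 0.0
    for axis in axes:
        # mean squared distance of the fiducials from this axis (f_k^2)
        f2 = np.mean(np.sum(Xc ** 2, axis=1) - (Xc @ axis) ** 2)
        # squared distance of the target from the same axis (d_k^2)
        d2 = r @ r - (r @ axis) ** 2
        ratio_sum += d2 / f2
    return float(np.sqrt(fle_rms ** 2 / len(X) * (1.0 + ratio_sum / 3.0)))

fiducials = [[-100, 0, 0], [100, 0, 0], [0, -150, 0], [0, 150, 50]]  # mm, hypothetical
fle = 2.0                                                              # mm, hypothetical
near = predicted_tre(fiducials, [0, 0, 10], fle)
far = predicted_tre(fiducials, [0, 400, 10], fle)
print(f"TRE near the landmark centroid: {near:.2f} mm; outside the landmarks: {far:.2f} mm")
```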

In this study, tracking of endovascular tools was based on an electromagnetic sensor attached to the catheter tip. However, these surgical devices are flexible to facilitate their delivery through tortuous vessels. A better understanding of the position of the instrument relative to the patient’s anatomy can be obtained by tracking several points of the tool rather than only its tip. In addition, visualising the catheter shape could help to avoid damaging the vessel wall [49]. In [50], two miniaturised electromagnetic sensors with five degrees of freedom (DoF), namely three translations and two rotations, were attached inside a 5-F catheter, one at the catheter tip and the other a few centimetres further along the shaft, in order to estimate the tip position, the orientation of the catheter axis at both sensor locations and the catheter curvature. The number of sensors was increased in [51], where the authors embedded seven electromagnetic sensors in a 7-F radiofrequency ablation catheter sheath [the sensor at the tip has 6 DoF (three translations and three rotations)] to reconstruct its shape over a distance of 70.5 cm inside a 2D silicone aortic phantom. Results showed an average error of 3.0 mm when estimating the catheter shape at three sensor positions (catheter tip, middle and catheter distal end) using the electromagnetic tracking data. A lower average error (2.1 mm) was obtained when a simulation of the mechanical characteristics of the catheter was included in the estimation of its position. An alternative for shape sensing is based on FBG technology. An FBG sensor consists of a periodic structure (grating) in an optical fibre core that reflects a narrow band of wavelengths of the incident light. Strain or temperature changes alter the grating period, which in turn shifts the spectrum of the reflected light [52].
A common configuration for shape reconstruction consists of three optical fibres placed parallel to the longitudinal axis of the needle/catheter, with several sets of FBG sensors at specific locations along the fibres. Each set is composed of three FBG sensors (one in each optical fibre) arranged in a triangle in cross-section. This configuration enables temperature compensation and the determination of twist angles [53]. The shape is obtained by interpolating the magnitude and direction of the curvature calculated from the strains measured at each set of FBG sensors. The accuracy is affected by factors such as the sensor configuration (number and placement of FBG sensors) and the interpolation method [54]. The error increases when estimating long shapes and for tools with low stiffness [53]. In [55], the authors estimated the needle shape with a maximum error of 0.74 mm when inserting the needle 115 mm into a soft-tissue phantom (gelatine) using four sets of FBG sensors (triangular configuration) spaced 30 mm apart (first set of FBG sensors 18 mm from the needle tip). A limitation of FBG technology is that shapes are obtained in the needle/catheter coordinate system (local coordinate system) [56]. Therefore, no information about the tool position is available, only its deflection. Further research in the Nav EVAR project will focus on using FBG sensors for shape reconstruction [39] combined with electromagnetic tracking to increase accuracy [53] and to provide the position in a global coordinate system.
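The sensing chain from reflected wavelength to curvature can be sketched for one FBG set. The Bragg condition λ_B = 2·n_eff·Λ links the reflected wavelength to the effective refractive index n_eff and the grating period Λ; for a silica fibre the relative shift is approximately Δλ_B/λ_B ≈ (1 − p_e)·ε, with a photo-elastic coefficient p_e ≈ 0.22 (about 1.2 pm per microstrain near 1550 nm). Bending with curvature κ towards direction θ_b strains a fibre at angle θ_i and core offset r by ε_i = −κ·r·cos(θ_i − θ_b) + ε_0, where ε_0 (axial strain plus the thermal effect, which appears as an apparent strain) is common to all three fibres; solving for ε_0 alongside κ and θ_b is what makes the triangular set temperature-compensated. The sketch below assumes this bending model and ignores twist; the core offset, wavelengths and bend are hypothetical values. Interpolating the curvature between sets and integrating it into a 3D shape is not shown.

```python
import numpy as np

P_E = 0.22  # typical effective photo-elastic coefficient of silica fibre (assumed)

def strain_from_shift(wavelength_nm, rest_wavelength_nm):
    """Apparent axial strain from a Bragg wavelength shift (thermal shifts
    show up as an additional common-mode term)."""
    wavelength_nm = np.asarray(wavelength_nm, dtype=float)
    rest_wavelength_nm = np.asarray(rest_wavelength_nm, dtype=float)
    return (wavelength_nm - rest_wavelength_nm) / (rest_wavelength_nm * (1.0 - P_E))

def curvature_from_strains(strains, fibre_angles_rad, core_offset_mm):
    """Curvature (1/mm), bending direction (rad) and common-mode strain of one FBG set.

    Model: eps_i = -kappa * r * cos(theta_i - theta_b) + eps_0, where eps_0 is
    shared by all fibres and estimated separately from the bending terms.
    """
    th = np.asarray(fibre_angles_rad, dtype=float)
    A = np.column_stack([np.cos(th), np.sin(th), np.ones_like(th)])
    (a, b, eps0), *_ = np.linalg.lstsq(A, np.asarray(strains, dtype=float), rcond=None)
    kappa = np.hypot(a, b) / core_offset_mm
    theta_b = np.arctan2(-b, -a)
    return kappa, theta_b, eps0

# Simulated FBG set (all values hypothetical): fibres 120 degrees apart
angles = np.deg2rad([0.0, 120.0, 240.0])
r = 0.1                                        # core offset from neutral axis (mm)
kappa_true, theta_true, common = 1 / 50.0, np.deg2rad(30.0), 20e-6
strains = -kappa_true * r * np.cos(angles - theta_true) + common
rest = np.full(3, 1550.0)                      # rest Bragg wavelengths (nm)
measured = rest * (1.0 + (1.0 - P_E) * strains)
kappa, theta_b, eps0 = curvature_from_strains(strain_from_shift(measured, rest), angles, r)
print(f"bend radius {1 / kappa:.1f} mm towards {np.degrees(theta_b):.1f} deg")
```

Least squares is used so that the same function also works for sets with more than three fibres.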

US volumes of the FAST Ultrasound Training Model were superimposed on the HoloLens user’s field of view at a certain distance from the US probe by means of an augmented reality marker attached to the probe. However, the latency should be reduced to 100 ms or less in order to display real-time 3D US volumes on HoloLens. In addition, both the opacity-based volume rendering implemented in HoloLens and the interaction with US volumes were limited. The opacity transfer function should be replaced with an approach that enhances structures of interest better than those implemented in commercial systems and that is better suited to real-time 3D US data, such as the one presented in [57]. Furthermore, the user interface in HoloLens should be intuitive and provide tools for moving, rotating and cropping the rendered volume and, if necessary, for adjusting the parameters of the opacity transfer function. This interaction would be based on hand gestures and voice commands.
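To make "opacity-based volume rendering" concrete, the following Python sketch shows a hypothetical piecewise-linear opacity transfer function and standard front-to-back alpha compositing along a single viewing ray. It is not the renderer implemented on HoloLens, nor the adaptive transfer-function method of [57]; the ramp thresholds, the use of the sample intensity as grey colour and the early-termination threshold are assumptions chosen for illustration.

```python
import numpy as np


def ramp_opacity(values, low, high, max_opacity=1.0):
    """Hypothetical piecewise-linear opacity transfer function.

    Intensities below `low` are transparent, intensities above `high` get
    `max_opacity`, with a linear ramp in between.
    """
    t = np.clip((np.asarray(values, dtype=float) - low) / (high - low), 0.0, 1.0)
    return max_opacity * t


def composite_ray(samples, low, high, max_opacity=1.0, stop_at=0.99):
    """Front-to-back alpha compositing of grey-level samples along one ray.

    Samples are ordered from the viewer into the volume. Returns
    (colour, accumulated_opacity); the loop stops early once the ray is
    nearly opaque, since further samples would contribute almost nothing.
    """
    colour, alpha = 0.0, 0.0
    for value, a in zip(samples, ramp_opacity(samples, low, high, max_opacity)):
        colour += (1.0 - alpha) * a * value  # sample intensity used as grey colour
        alpha += (1.0 - alpha) * a
        if alpha >= stop_at:
            break
    return colour, alpha
```

Changing `low` and `high` is the kind of parameter adjustment the user interface would need to expose; an approach such as [57] instead derives the transfer function from the data itself.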

Obesity, bowel gas and vessel calcification are factors that may hinder the use of real-time 3D US to guide EVAR procedures, owing to the limited penetration depth of US and the acoustic shadowing caused by interfaces such as tissue/air or tissue/bone. Intravascular US (IVUS), which visualises vessels from the inside, could overcome these potential obstacles of real-time 3D US. Cross-sectional images of the vessel are acquired by means of a catheter with a miniaturised US probe mounted on its tip. This imaging modality enables assessment of the vessel lumen and wall, and also shows atherosclerotic plaque [58]. Stent grafts can be identified in these images, as can the shadow cast by guide wires [58], [59]. In [60], an IVUS probe tracked with an electromagnetic sensor was proposed to guide the deployment of stent grafts. 3D models of a silicone descending thoracic aorta were reconstructed from these data with an average cross-sectional radius error of 0.9 mm. An alternative catheter-based imaging technique is optical coherence tomography (OCT), which uses near-infrared light. Compared with IVUS, OCT offers less tissue penetration and a smaller scan diameter, but provides higher resolution and frame rate with a smaller catheter [61]. Acquiring intraoperative OCT images to guide EVAR procedures will also be assessed in the Nav EVAR project.
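The core of combining a tracked IVUS probe with its images is a rigid mapping of each 2D lumen contour into tracker coordinates, p_tracker = R·[u, v, 0] + t, using the sensor pose (R, t). The Python sketch below illustrates this step and a simple centroid-based radius estimate. It is not the pipeline of [60]: it assumes that a probe calibration already aligns the image plane with the x-y plane of the sensor frame, and all function names are hypothetical.

```python
import numpy as np


def contour_to_tracker(contour_mm, rotation, translation_mm):
    """Map a 2D lumen contour from the IVUS image plane into tracker coordinates.

    Assumption: after calibration, the image plane coincides with the x-y plane
    of the tracked sensor frame, so p_tracker = R @ [u, v, 0] + t.
    """
    uv = np.asarray(contour_mm, dtype=float)
    local = np.column_stack([uv, np.zeros(len(uv))])
    return local @ np.asarray(rotation, dtype=float).T + np.asarray(translation_mm, dtype=float)


def mean_radius(points_mm):
    """Mean distance of the contour points from their centroid, in mm."""
    pts = np.asarray(points_mm, dtype=float)
    return float(np.mean(np.linalg.norm(pts - pts.mean(axis=0), axis=1)))


def mean_abs_error(estimated_mm, reference_mm):
    """Average absolute difference between estimated and reference radii, in mm."""
    return float(np.mean(np.abs(np.asarray(estimated_mm) - np.asarray(reference_mm))))
```

Stacking the mapped contours from successive tracked frames yields a point cloud of the vessel from which a 3D surface can be built; comparing per-slice radii against a reference model gives an error figure of the kind reported in [60].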

The Nav EVAR project tackles the problem of radiation exposure and contrast agent administration during EVAR interventions with a multidisciplinary approach that combines navigation, electromagnetic tracking systems, intraoperative US imaging, FBGs and OCT to guide the endovascular tools (namely catheters, guide wires, sheaths and stent grafts). Augmented reality technology, specifically HoloLens, was included to provide a more intuitive visualisation of the navigation data than standard screens. This article reviewed the current state of the project and its planned further research, describing the potential and limitations of each technology. The combination of these techniques is a promising approach to reducing radiation exposure and contrast agent administration in EVAR procedures.

Acknowledgments

The authors extend their gratitude to Ralf Bruder, Sven Böttger (Institute for Robotics and Cognitive Systems, University of Lübeck), Erik Stahlberg and Annika Dell (University Hospital Schleswig-Holstein, Campus Lübeck) for their technical support.

Supplementary Material

The article (iss-2018-2001) offers reviewer assessments as supplementary material.

Author Statement

Research funding: This study was supported by the German Federal Ministry of Education and Research (grant number 13GW0228), the Ministry of Economic Affairs, Employment, Transport and Technology of Schleswig-Holstein, and the German Research Foundation (DFG) (grant number ER 817/1-1). Conflict of interest: Authors state no conflict of interest. Informed consent: The research related to the use of anonymised radiological data of patients (specifically, CT scans) complied with all the relevant national regulations and institutional policies, and was performed in accordance with the tenets of the Helsinki Declaration with written informed consent. Ethical approval: Not applicable.

Author Contributions

Verónica García-Vázquez: conceptualization; software; data acquisition; writing – original draft. Felix von Haxthausen: conceptualization; software; data acquisition; writing – review & editing. Sonja Jäckle: software; data processing; writing – review & editing. Christian Schumann: software; writing – review & editing. Ivo Kuhlemann: funding acquisition; software; writing – review & editing. Juljan Bouchagiar: production of aortic-aneurysm phantom; data acquisition; writing – review & editing. Anna-Catharina Höfer: data acquisition; writing – review & editing. Florian Matysiak: production of aortic-aneurysm phantom; data acquisition; writing – review & editing. Gereon Hüttmann: funding acquisition; writing – review & editing. Jan Peter Goltz: funding acquisition; writing – review & editing. Markus Kleemann: conceptualization; funding acquisition; supervision; writing – review & editing. Floris Ernst: conceptualization; funding acquisition; supervision; writing – review & editing. Marco Horn: funding acquisition; writing – original draft.

References

- [1] Truijers M, Pol JA, SchultzeKool LJ, van Sterkenburg SM, Fillinger MF, Blankensteijn JD. Wall stress analysis in small asymptomatic, symptomatic and ruptured abdominal aortic aneurysms. Eur J Vasc Endovasc Surg 2007;33:401–7. doi: 10.1016/j.ejvs.2006.10.009.

- [2] Debus ES, Kölbel T, Böckler D, Eckstein H-H. Abdominelle aortenaneurysmen [Abdominal aortic aneurysms]. Gefässchirurgie 2010;15:154–68.

- [3] Brown LC, Powell JT, Thompson SG, Epstein DM, Sculpher MJ, Greenhalgh RM. The UK endovascular aneurysm repair (EVAR) trials: randomised trials of EVAR versus standard therapy. Health Technol Assess 2012;16:1–218. doi: 10.3310/hta16090.

- [4] Walsh SR, Tang TY, Boyle JR. Renal consequences of endovascular abdominal aortic aneurysm repair. J Endovasc Ther 2008;15:73–82. doi: 10.1583/07-2299.1.

- [5] Greenberg RK, Chuter TAM, Lawrence-Brown M, Haulon S, Nolte L. Analysis of renal function after aneurysm repair with a device using suprarenal fixation (Zenith AAA endovascular graft) in contrast to open surgical repair. J Vasc Surg 2004;39:1219–28. doi: 10.1016/j.jvs.2004.02.033.

- [6] Bartal G, Vano E, Paulo G, Miller DL. Management of patient and staff radiation dose in interventional radiology: current concepts. Cardiovasc Intervent Radiol 2014;37:289–98. doi: 10.1007/s00270-013-0685-0.

- [7] Kalef-Ezra JA, Karavasilis S, Ziogas D, Dristiliaris D, Michalis LK, Matsagas M. Radiation burden of patients undergoing endovascular abdominal aortic aneurysm repair. J Vasc Surg 2009;49:283–7. doi: 10.1016/j.jvs.2008.09.003.

- [8] Ho P, Cheng SWK, Wu PM, Ting ACW, Poon JTC, Cheng CKM, et al. Ionizing radiation absorption of vascular surgeons during endovascular procedures. J Vasc Surg 2007;46:455–9. doi: 10.1016/j.jvs.2007.04.034.

- [9] Ziegler P, Avgerinos ED, Umscheid T, Perdikides T, Stelter WJ. Fenestrated endografting for aortic aneurysm repair: a 7-year experience. J Endovasc Ther 2007;14:609–18. doi: 10.1177/152660280701400502.

- [10] Ketteler ER, Brown KR. Radiation exposure in endovascular procedures. J Vasc Surg 2011;53:35S–8S. doi: 10.1016/j.jvs.2010.05.141.

- [11] Weerakkody RA, Walsh SR, Cousins C, Goldstone KE, Tang TY, Gaunt ME. Radiation exposure during endovascular aneurysm repair. Br J Surg 2008;95:699–702. doi: 10.1002/bjs.6229.

- [12] Klein LW, Miller DL, Balter S, Laskey W, Haines D, Norbash A, et al. Occupational health hazards in the interventional laboratory: time for a safer environment. J Vasc Interv Radiol 2009;20:147–53. doi: 10.1016/j.jvir.2008.10.015.

- [13] Franz AM, Haidegger T, Birkfellner W, Cleary K, Peters TM, Maier-Hein L. Electromagnetic tracking in medicine – a review of technology, validation, and applications. IEEE Trans Med Imaging 2014;33:1702–25. doi: 10.1109/TMI.2014.2321777.

- [14] Manstad-Hulaas F, Tangen GA, Gruionu LG, Aadahl P, Hernes TAN. Three-dimensional endovascular navigation with electromagnetic tracking: ex vivo and in vivo accuracy. J Endovasc Ther 2011;18:230–40. doi: 10.1583/10-3301.1.

- [15] Stavropoulos SW, Charagundla SR. Imaging techniques for detection and management of endoleaks after endovascular aortic aneurysm repair. Radiology 2007;243:641–55. doi: 10.1148/radiol.2433051649.

- [16] Arthurs ZM, Starnes BW, Sohn VY, Singh N, Andersen CA. Ultrasound-guided access improves rate of access-related complications for totally percutaneous aortic aneurysm repair. Ann Vasc Surg 2008;22:736–41. doi: 10.1016/j.avsg.2008.06.003.

- [17] Fenster A, Parraga G, Bax J. Three-dimensional ultrasound scanning. Interface Focus 2011;1:503–19. doi: 10.1098/rsfs.2011.0019.

- [18] Huang Q, Zeng Z. A review on real-time 3D ultrasound imaging technology. Biomed Res Int 2017;2017:6027029. doi: 10.1155/2017/6027029.

- [19] Brekken R, Dahl T, Hernes TAN. Ultrasound in abdominal aortic aneurysm. In: Grundmann R, editor. Diagnosis, Screening and Treatment of Abdominal, Thoracoabdominal and Thoracic Aortic Aneurysms. IntechOpen, 2011:103–24. Available from: https://www.intechopen.com/books/diagnosis-screening-and-treatment-of-abdominal-thoracoabdominal-and-thoracic-aortic-aneurysms.

- [20] Ipsen S, Bruder R, Kuhlemann I, Jauer P, Motisi L, Cremers F, et al. A visual probe positioning tool for 4D ultrasound-guided radiotherapy. Conf Proc IEEE Eng Med Biol Soc 2018 (accepted for publication). doi: 10.1109/EMBC.2018.8512390.

- [21] Kuntz S, Kulpa R, Royan J. The democratization of VR-AR. In: Arnaldi B, Guitton P, Moreau G, editors. Virtual Reality and Augmented Reality: Myths and Realities. Hoboken, NJ: John Wiley & Sons, 2018:73–122.

- [22] Qian L, Barthel A, Johnson A, Osgood G, Kazanzides P, Navab N, et al. Comparison of optical see-through head-mounted displays for surgical interventions with object-anchored 2D-display. Int J CARS 2017;12:901–10. doi: 10.1007/s11548-017-1564-y.

- [23] Fedorov A, Beichel R, Kalpathy-Cramer J, Finet J, Fillion-Robin J-C, Pujol S, et al. 3D Slicer as an image computing platform for the quantitative imaging network. Magn Reson Imaging 2012;30:1323–41. doi: 10.1016/j.mri.2012.05.001.

- [24] Getting started with a mixed reality platformer using Microsoft HoloLens. [cited 2018 May 6]. Available from: https://blogs.windows.com/buildingapps/2017/02/27/getting-started-mixed-reality-platformer-using-microsoft-hololens/#Pq3ymvAwQA5Jcde6.97.

- [25] Mitrasinovic S, Camacho E, Trivedi N, Logan J, Campbell C, Zilinyi R, et al. Clinical and surgical applications of smart glasses. Technol Health Care 2015;23:381–401. doi: 10.3233/THC-150910.

- [26] Pratt P, Ives M, Lawton G, Simmons J, Radev N, Spyropoulou L, et al. Through the HoloLens™ looking glass: augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels. Eur Radiol Exp 2018;2:2. doi: 10.1186/s41747-017-0033-2.

- [27] Tepper OM, Rudy HL, Lefkowitz A, Weimer KA, Marks SM, Stern CS, et al. Mixed reality with HoloLens: where virtual reality meets augmented reality in the operating room. Plast Reconstr Surg 2017;140:1066–70. doi: 10.1097/PRS.0000000000003802.

- [28] Kuhlemann I, Kleemann M, Jauer P, Schweikard A, Ernst F. Towards X-ray free endovascular interventions – using HoloLens for on-line holographic visualisation. Healthc Technol Lett 2017;4:184–7. doi: 10.1049/htl.2017.0061.

- [29] Open Inventor. [cited 2018 May 6]. Available from: https://www.mevislab.de/mevislab/features/open-inventor.

- [30] Faure EM, Canaud L, Cathala P, Serres I, Marty-Ané C, Alric P. Assessment of abdominal branch vessel patency after bare-metal stenting of the thoracoabdominal aorta in a human ex vivo model of acute type B aortic dissection. J Vasc Surg 2015;61:1299–305. doi: 10.1016/j.jvs.2013.11.095.

- [31] Savastano LE, Zhou Q, Smith A, Vega K, Murga-Zamalloa C, Gordon D, et al. Multimodal laser-based angioscopy for structural, chemical and biological imaging of atherosclerosis. Nat Biomed Eng 2017;1:0023. doi: 10.1038/s41551-016-0023.

- [32] Matava C, Alam F, Saab R. Using Hololens augmented reality to combine real-time US-guided anesthesia procedure images and patient monitor data overlaid on a simulated patient: development. Anesth Analg 2017;124:1064.

- [33] Kuzhagaliyev T, Clancy NT, Janatka M, Tchaka K, Vasconcelos F, Clarkson MJ, et al. Augmented reality needle ablation guidance tool for irreversible electroporation in the pancreas. Proc SPIE, Medical Imaging 2018: Image-Guided Procedures, Robotic Interventions, and Modeling 2018;10576:1057613.

- [34] Mahmood F, Mahmood E, Dorfman RG, Mitchell J, Mahmood F-U, Jones SB, et al. Augmented reality and ultrasound education: initial experience. J Cardiothorac Vasc Anesth 2018;32:1363–7. doi: 10.1053/j.jvca.2017.12.006.

- [35] Bruder R, Ernst F, Schlaefer A, Schweikard A. A framework for real-time target tracking in IGRT using three-dimensional ultrasound. Int J CARS 2011;6:S306–7.

- [36] gRPC. [cited 2018 May 6]. Available from: https://www.grpc.io.

- [37] ARToolKit on HoloLens. [cited 2018 May 6]. Available from: http://longqian.me/2017/01/20/artoolkit-on-hololens.

- [38] Using the Windows Device Portal. [cited 2018 May 6]. Available from: https://docs.microsoft.com/en-us/windows/mixed-reality/using-the-windows-device-portal.

- [39] Horn M, Nolde J, Goltz JP, Barkhausen J, Schade W, Waltermann C, et al. Ein prototyp für die navigierte implantation von aortenstentprothesen zur reduzierung der kontrastmittel- und Strahlenbelastung: das Nav-CARS-EVAR-konzept (navigated-contrast-agent and radiation sparing endovascular aortic repair) [An experimental set-up for navigated-contrast-agent and radiation sparing endovascular aortic repair (Nav-CARS EVAR)]. Zentralbl Chir 2015;140:493–9. doi: 10.1055/s-0035-1546261.

- [40] Health & safety. [cited 2018 May 6]. Available from: https://www.microsoft.com/en-us/hololens/legal/health-and-safety-information.

- [41] Performance recommendations for HoloLens apps. [cited 2018 May 6]. Available from: https://docs.microsoft.com/en-us/windows/mixed-reality/performance-recommendations-for-hololens-apps.

- [42] HoloLens hardware details. [cited 2018 May 6]. Available from: https://docs.microsoft.com/en-us/windows/mixed-reality/hololens-hardware-details.

- [43] Thompson WB, Fleming RW, Creem-Regehr SH, Stefanucci JK. Visual perception from a computer graphics perspective. Boca Raton: CRC Press, 2011.

- [44] Wang S, Parsons M, Stone-McLean J, Rogers P, Boyd S, Hoover K, et al. Augmented reality as a telemedicine platform for remote procedural training. Sensors 2017;17:2294. doi: 10.3390/s17102294.

- [45] Brekken R, Iversen DH, Tangen GA, Dahl T. Registration of real-time 3-D ultrasound to tomographic images of the abdominal aorta. Ultrasound Med Biol 2016;42:2026–32. doi: 10.1016/j.ultrasmedbio.2016.03.021.

- [46] Kaspersen JH, Sjølie E, Wesche J, Åsland J, Lundbom J, Ødegård A, et al. Three-dimensional ultrasound-based navigation combined with preoperative CT during abdominal interventions: a feasibility study. Cardiovasc Intervent Radiol 2003;26:347–56. doi: 10.1007/s00270-003-2690-1.

- [47] Kuhlemann I, Bruder R, Ernst F, Schweikard A. WE-G-BRF-09: Force- and image-adaptive strategies for robotised placement of 4D ultrasound probes. Med Phys 2014;41:523.

- [48] Garon M, Boulet P-O, Doironz J-P, Beaulieu L, Lalonde J-F. Real-time high resolution 3D data on the HoloLens. Int Symp Mix Augment Real 2016:189–91.

- [49] Chen F, Liu J, Liao H. 3D catheter shape determination for endovascular navigation using a two-step particle filter and ultrasound scanning. IEEE Trans Med Imaging 2017;36:685–95. doi: 10.1109/TMI.2016.2635673.

- [50] Condino S, Calabrò EM, Alberti A, Parrini S, Cioni R, Berchiolli RN, et al. Simultaneous tracking of catheters and guidewires: comparison to standard fluoroscopic guidance for arterial cannulation. Eur J Vasc Endovasc Surg 2014;47:53–60. doi: 10.1016/j.ejvs.2013.10.001.

- [51] Dore A, Smoljkic G, Poorten EV, Sette M, Sloten JV, Yang G-Z. Catheter navigation based on probabilistic fusion of electromagnetic tracking and physically-based simulation. Rep US 2012:3806–11.

- [52] Hill KO, Meltz G. Fiber Bragg grating technology fundamentals and overview. J Lightwave Technol 1997;15:1263–76.

- [53] Shi C, Luo X, Qi P, Li T, Song S, Najdovski Z, et al. Shape sensing techniques for continuum robots in minimally invasive surgery: a survey. IEEE Trans Biomed Eng 2017;64:1665–78. doi: 10.1109/TBME.2016.2622361.

- [54] Henken KR, Dankelman J, van den Dobbelsteen JJ, Cheng LK, van der Heiden MS. Error analysis of FBG-based shape sensors for medical needle tracking. IEEE ASME Trans Mechatron 2014;19:1523–31.

- [55] Roesthuis RJ, Kemp M, van den Dobbelsteen JJ, Misra S. Three-dimensional needle shape reconstruction using an array of fiber Bragg grating sensors. IEEE ASME Trans Mechatron 2014;19:1115–26.

- [56] Borot de Battisti M, Denis de Senneville B, Maenhout M, Lagendijk JJW, van Vulpen M, Hautvast G, et al. Fiber Bragg gratings-based sensing for real-time needle tracking during MR-guided brachytherapy. Med Phys 2016;43:5288–97. doi: 10.1118/1.4961743.

- [57] Petersch B, Hadwiger M, Hauser H, Hönigmann D. Real time computation and temporal coherence of opacity transfer functions for direct volume rendering of ultrasound data. Comput Med Imaging Graph 2005;29:53–63. doi: 10.1016/j.compmedimag.2004.09.013.

- [58] Naka KK, Papamichael ND, Tsakanikas VD. Intravascular image interpretation. In: Tsakanikas VD, editor. Intravascular Imaging: Current Applications and Research Developments. Hershey: IGI Global, 2011:10–35.

- [59] White RA, Donayre C, Kopchok G, Walot I, Wilson E, deVirgilio C. Intravascular ultrasound: the ultimate tool for abdominal aortic aneurysm assessment and endovascular graft delivery. J Endovasc Ther 1997;4:45–55. doi: 10.1583/1074-6218(1997)004<0045:IUTUTF>2.0.CO;2.

- [60] Shi C, Tercero C, Ikeda S, Ooe K, Fukuda T, Komori K, et al. In vitro three-dimensional aortic vasculature modeling based on sensor fusion between intravascular ultrasound and magnetic tracker. Int J Med Robotics Comput Assist Surg 2012;8:291–9. doi: 10.1002/rcs.1416.

- [61] Hoye A. Current status of intravascular imaging with IVUS and OCT: the clinical implications of intravascular imaging. In: Tsakanikas VD, editor. Intravascular Imaging: Current Applications and Research Developments. Hershey: IGI Global, 2011:293–308.
