Abstract

Humans can consciously project themselves into the future and imagine themselves in different places. Do mental time travel and mental space navigation abilities share common cognitive and neural mechanisms? To test this, we recorded fMRI while participants mentally projected themselves in time or in space (e.g., 9 years ago, in Paris) and ordered historical events from their mental perspective. Behavioral patterns were comparable for mental time and space and were shaped by self-projection and by the distance of historical events to the mental position of the self, suggesting the existence of egocentric mapping in both dimensions. Nonetheless, self-projection in space engaged the medial and lateral parietal cortices, whereas self-projection in time engaged a widespread parietofrontal network. Moreover, while a large distributed network was found for spatial distances, temporal distances specifically engaged the right inferior parietal cortex and the anterior insula. Across these networks, a robust overlap was only found in a small region of the inferior parietal lobe, adding evidence for its role in domain-general egocentric mapping. Our findings suggest that mental travel in time or space capitalizes on egocentric remapping and on distance computation, which are implemented in distinct dimension-specific cortical networks converging in the inferior parietal lobe.

SIGNIFICANCE STATEMENT As humans, we can consciously imagine ourselves at a different time (mental time travel) or at a different place (mental space navigation). Are such abilities domain-general, or are the temporal and spatial dimensions of our conscious experience separable? Here, we tested the hypothesis that mental time travel and mental space navigation required the egocentric remapping of events, including the estimation of their distances to the self. We report that, although both remapping and distance computation are foundational for the processing of the temporal and spatial dimensions of our conscious experience, their neuroanatomical implementations were clearly dissociable and engaged distinct parietal and parietofrontal networks for mental space navigation and mental time travel, respectively.

Keywords: egocentric map, fMRI, self, spatial navigation, time

Introduction

The link between the two fundamental dimensions of our reality—space and time—is foundational for cognitive neuroscience (Gallistel, 1990; Dehaene and Brannon, 2010; Buzsáki and Moser, 2013). The representation of time has been hypothesized to be integrated in a common mental magnitude (Walsh, 2003), construed to spatial primitives (Boroditsky, 2000; Lakoff and Johnson, 2005) and spatialized (Arzy et al., 2009a; Bonato et al., 2012) so that temporal ordinality (before/after) may map to spatial polarity (left/right). To date, however, the relationship between the mental representations of space and time remains more descriptive than explicative. Here, we asked whether the temporal and spatial dimensions of mental events undergo such domain-general processing.

The ability to imagine oneself in the past or in the future is called mental time travel (Tulving, 2002; Suddendorf et al., 2009) and has been characterized by chronometric effects. During mental time travel, participants are typically slower and less accurate in ranking an event as happening before or after a mental self-position in time (Arzy et al., 2009b). This effect has been hypothesized to result from the imagery of the self at different times and referred to as “self-projection in time” (Arzy et al., 2009b). Regardless of the mental self-position, participants are also typically faster and more accurate in ranking events that are further away from the mental self-position (Arzy et al., 2009a), a pattern consistent with canonical symbolic distance effects (Moyer and Bayer, 1976) suggesting that the distances between events are automatically computed. Under the hypothesis of a common cognitive system for the representation of time and space, we predicted comparable chronometric patterns in a mental space navigation task designed to be cognitively analogous to the mental time travel task used here. Mental time travel and mental space navigation were predicted to share neural activation patterns on the basis of recent findings studying distance effects (Parkinson et al., 2014; Peer et al., 2015).

To test these hypotheses, 17 participants took part in an event-related fMRI study during which they judged the ordinality of remembered events with respect to an imagined self-position in time or in space or, in control trials, estimated their emotional valence. In a given experimental block, a series of eight temporal, spatial, or emotional judgments was preceded by a self-positioning cue. The self-positioning cue instructed participants to mentally position themselves in time or in space (Fig. 1A). Participants were then prompted with one of three possible response pairs indicating the judgment to be done: before/after (TIME), west/east (SPACE), and positive/negative (CTRL). Following the presentation of the response pair, an event selected from a distribution of historical events was displayed on the screen (Fig. 1B). The distribution of historical events was centered on the imagined self-position to ensure an equal probability of occurrence of before/west/positive and after/east/negative answers (Fig. 1C). Although mental time travel and mental space navigation were hypothesized to yield overlapping neural activity, we also tested whether self-projection and distance effects would reveal distinct networks for mental time travel and mental space navigation.

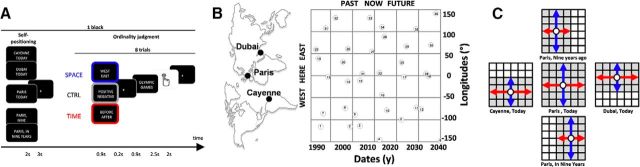

Figure 1.

Experimental design. A, One experimental block. One block started with a self-position cue providing the time and place at which participants should mentally position themselves. Five possible cues combined three places (Cayenne, Dubai, and Paris) and three dates (9 years ago, in 9 years, and today). Following this cue, a series of eight trials was presented. A trial started with a question indicating whether the ordinality judgment was about TIME (red; before/after), SPACE (blue; east/west), or emotion (CTRL, gray; positive/negative). Any dimension could follow any self-position cue. Following the question, a historical event selected from 36 possible events was presented to the participant. B, Place and time of historical events. Events were distributed in a grid-like manner around the possible geographical and temporal locations, enabling their use regardless of the dimension. C, Distribution of events in space and time. Used events (shaded grid unit) were distributed so that their relative west/east (red) and past/future (blue) positions were even with respect to each possible self-position cue (open circles). This design entailed a restriction of the number of events for positions that were not here and now.

Materials and Methods

Participants.

Seventeen healthy, right-handed volunteers (age 24 ± 4 years old; 8 males) with normal or corrected-to-normal vision and no history of psychological disorders participated in this study. All subjects lived in the Parisian region. All participants were compensated for their participation and provided written informed consent in accordance with the Ethics Committee on Human Research at the Commissariat à l'Energie Atomique et aux Energies Alternatives (DRF/I2BM, NeuroSpin, Gif-sur-Yvette, France) and the Declaration of Helsinki 2008.

Design and procedure.

At least 48 h before the experiment, participants were provided with a list of events with their historical description, dates, and locations on a world map centered on Paris (https://www.google.ca/maps, June 1, 2013). Participants were asked to study the list and were informed that their knowledge would be tested before the fMRI experiment. On the day of the neuroimaging experiment, participants filled out a questionnaire and rated their recollection of each event by selecting “sure,” “not sure,” or “forgotten.” Events were presented in random order across participants.

During the neuroimaging experiment, stimuli consisted of white words on a black background presented via a back-projection display (1024 × 768 resolution, 60 Hz refresh rate). The PsychToolbox-3 (http://psychtoolbox.org/) was used for presenting stimuli, recording behavioral responses, and synchronizing experimental timing with the scanner pulse timing. Stimuli covered on average 1.3 × 8.9° of visual angle. Participants were instructed to maintain fixation on a central cross when no words were presented.

The experiment consisted of a fast event-related design of 76 blocks grouped in four acquisition runs. Each block started with the presentation of the spatiotemporal self-position cue indicating where and when participants were to imagine themselves. Over the 76 experimental blocks, participants could be asked to imagine themselves now in Paris (NOW, HERE), 9 years ago in Paris (PAST, HERE), in 9 years in Paris (FUTURE, HERE), now in Cayenne (NOW, WEST), or now in Dubai (NOW, EAST). Each possible self-position comprised 16 blocks, except for the (NOW, HERE) condition, which comprised 20 blocks (Fig. 1C). The presentation of the self-position cue (2 s) was followed by a fixation cross lasting 3 s and then by a succession of 8 trials (Fig. 1A). One trial consisted of one of three possible tasks: a spatial judgment task akin to mental space navigation (SPACE), in which participants indicated whether a historical event occurred to the west or east of the imagined self-position; a temporal judgment task akin to mental time travel (TIME), in which participants indicated whether a historical event occurred before or after the imagined self-position; and a valence judgment task (control condition, CTRL), designed to control for strategic and procedural aspects of the 2-AFC task as well as memory retrieval, in which participants indicated whether they considered the historical event to have a positive or negative valence. In a given trial, the question indicating the dimension of the judgment (Before/After? for TIME, West/East? for SPACE, and Positive/Negative? for CTRL) was presented on the screen for 0.9 s and followed by a varying interstimulus interval ranging from 0.220 to 0.330 s. The historical event (e.g., Olympic Games) was then presented for 0.9 s, after which participants were given up to 2.5 s to give their answer. The next trial followed after a 2 s intertrial interval. On average, a trial lasted 5 s. 
Hence, in the 76 blocks, a total of 104 trials (2 × 24 for TIME + 2 × 24 for SPACE + 8 for CTRL) were collected for each self-position other than (NOW, HERE), and 152 trials (2 × 36 for TIME + 2 × 36 for SPACE + 8 for CTRL) for the (NOW, HERE) condition. TIME, SPACE, and CTRL trials were randomized inside each block. Self-position was fixed for each block and randomized between two successive acquisition runs.
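The trial timeline and trial counts described above can be checked with a short Python sketch. It is only an illustration under stated assumptions: the constant and function names are hypothetical, the response window is assumed to start at event offset, and the intertrial interval is assumed to start once the response is given.

```python
# Durations in seconds, taken from the trial description in the text.
QUESTION_S = 0.9               # question display (e.g., "Before/After?")
ISI_RANGE_S = (0.220, 0.330)   # variable interstimulus interval
EVENT_S = 0.9                  # historical event display
MAX_RESPONSE_S = 2.5           # maximum response window after event offset
ITI_S = 2.0                    # intertrial interval


def trial_duration(isi_s, response_s):
    """Duration of one trial, assuming the ITI starts when the response is given."""
    if not ISI_RANGE_S[0] <= isi_s <= ISI_RANGE_S[1]:
        raise ValueError("ISI outside the stated range")
    if not 0.0 <= response_s <= MAX_RESPONSE_S:
        raise ValueError("response time outside the response window")
    return QUESTION_S + isi_s + EVENT_S + response_s + ITI_S


def trials_per_self_position(n_per_dimension, n_ctrl=8):
    """Trial count written as in the text: 2 x n (TIME) + 2 x n (SPACE) + CTRL."""
    return 2 * n_per_dimension + 2 * n_per_dimension + n_ctrl


shortest = trial_duration(ISI_RANGE_S[0], 0.0)            # immediate response
longest = trial_duration(ISI_RANGE_S[1], MAX_RESPONSE_S)  # slowest allowed response

print(f"trial duration between {shortest:.2f} s and {longest:.2f} s")
print(trials_per_self_position(24), trials_per_self_position(36))
```

Under these assumptions, the reported 5 s average trial duration falls between the two bounds, and the two counts reproduce the 104 and 152 trials given in the text.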

Judgments were given by a button press with the thumb using FORP buttons (Current Designs) in both hands. Participants responded with their left hand for Before, West, and Positive, and with their right hand for After, East, and Negative in the first two acquisition runs; the response-hand mapping was reversed for the last two acquisition runs to alleviate cognitive-motor mapping confounds in the behavioral and the fMRI data. Accuracy and speed were equally emphasized.

Historical events.

Thirty-six historical events were used (Fig. 1B; Table 1). Past events were public and well-known events to avoid subject-specific recollection bias as much as possible; similarly, future events were highly probable events, such as future construction sites or upcoming sport events, to reduce the impact of event probability with temporal distance. The final set of historical events covered a span of 54 years centered on 2013 (the year in which fMRI data were collected) and 320 degrees of longitude centered on Paris (experiment location). During the fMRI scans, historical events were distributed uniformly across dates and longitudes according to the imagined self-position and the dimension: for example, when participants imagined themselves 9 years ago and made a temporal judgment, the event set was centered on the year 2004 and comprised 24 events. Similarly, when participants imagined themselves in Cayenne and produced a spatial judgment, the event set was centered on Cayenne and comprised 24 events (Fig. 1C).

Table 1.

Full list of the 36 historical events with their dates and longitudesᵃ

| Event stimulus | Year | Longitude (°) | Event stimulus | Year | Longitude (°) |
|---|---|---|---|---|---|
| 1 Miles Davis | 1991 | −118.5 | 19 Desert Storm | 1990 | 43.9 |
| 2 Ronald Reagan | 2003 | −118.3 | 20 Second Intifada | 2000 | 35.7 |
| 3 Artificial genome | 2007 | −117.1 | 21 Radovan Karadzic | 2008 | 7.5 |
| 4 Drinkable water | 2015 | −149.4 | 22 Universal exhibition | 2015 | 9.2 |
| 5 Solar eclipse | 2024 | −102.1 | 23 Elephant extinction | 2024 | 34.8 |
| 6 Oil drying up | 2040 | −152.4 | 24 Nuclear phase out | 2034 | 8.2 |
| 7 Barack Obama | 1992 | −87.6 | 25 Cold War | 1989 | 65.8 |
| 8 Twin Towers | 2001 | −74.1 | 26 DCN bombing | 2002 | 67.1 |
| 9 Hurricane Katrina | 2005 | −90 | 27 Meteor shower | 2013 | 60 |
| 10 Canal expansion | 2018 | −79.7 | 28 Dam building | 2017 | 95.8 |
| 11 Francophony ending | 2030 | −73.5 | 29 Archipelago flooding | 2026 | 72.9 |
| 12 Air traffic | 2032 | −77 | 30 First population | 2036 | 76 |
| 13 Tony Blair | 1994 | −0.1 | 31 Celestial peace | 1989 | 116 |
| 14 Prestige wreckage | 2002 | −11.1 | 32 Ecology agreement | 1997 | 140.5 |
| 15 Depenalized abortion | 2007 | −9.1 | 33 Nuclear accident | 2011 | 140.5 |
| 16 Olympic Games | 2016 | −43.2 | 34 Second Titanic | 2016 | 119 |
| 17 Sewer renovation | 2025 | −0.1 | 35 Indonesia growth | 2030 | 113.9 |
| 18 Metro express | 2035 | 2 | 36 Koala extinction | 2038 | 151.2 |

ᵃ Historical events were provided in French to participants and consisted systematically of 2 words.
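To illustrate how the correct answer and the egocentric distance of an event depend on the imagined self-position, the following Python sketch applies the before/after and west/east judgments to three rows of Table 1. It is a sketch under assumptions, not the authors' stimulus code: the class and function names are hypothetical, the longitudes of Paris and Cayenne are approximate values supplied here, and west/east is decided by a plain longitude difference on a map centered on Paris (no wraparound at ±180°).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    year: int
    longitude: float  # degrees; negative values lie west of Greenwich


@dataclass(frozen=True)
class SelfPosition:
    label: str
    year: int
    longitude: float


# Three rows copied from Table 1.
EVENTS = [
    Event("Twin Towers", 2001, -74.1),
    Event("Olympic Games", 2016, -43.2),
    Event("Nuclear accident", 2011, 140.5),
]

# Assumed reference values: 2013 is the acquisition year stated in the text;
# the two longitudes are approximations introduced for this example.
PARIS_LON, CAYENNE_LON = 2.3, -52.3
NOW_HERE = SelfPosition("NOW, HERE", 2013, PARIS_LON)
PAST_HERE = SelfPosition("PAST, HERE", 2013 - 9, PARIS_LON)
NOW_WEST = SelfPosition("NOW, WEST", 2013, CAYENNE_LON)


def temporal_judgment(event, pos):
    """Return (before/after, distance in years) relative to the self-position."""
    answer = "before" if event.year < pos.year else "after"
    return answer, abs(event.year - pos.year)


def spatial_judgment(event, pos):
    """Return (west/east, distance in degrees of longitude) relative to the self-position."""
    answer = "west" if event.longitude < pos.longitude else "east"
    return answer, abs(event.longitude - pos.longitude)


for pos in (NOW_HERE, PAST_HERE, NOW_WEST):
    for ev in EVENTS:
        print(pos.label, ev.name, temporal_judgment(ev, pos), spatial_judgment(ev, pos))
```

In this sketch the same event can change sides with the self-position: the Olympic Games event lies west of Paris but east of Cayenne. An event falling exactly on the self-position would need a tie rule, which is not specified here.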

MRI data acquisition and analysis.

Anatomical MRI and fMRI were acquired using a 3T MRI scanner (TIM Trio; Siemens). Each participant underwent 7 min of anatomical imaging using a T1-weighted MPRAGE sequence (160 slices; repetition time, 2300 ms; echo time, 2.98 ms; FOV, 256; voxel size, 1 × 1 × 1 mm³) and four sessions of 15–20 min of functional imaging with a 32-channel head-coil and high-resolution multiband imaging (Xu et al., 2013) with multiband factor = 3, GRAPPA factor = 2, 69 interleaved axial slices, voxel size 2 × 2 × 2 mm³, repetition time = 1.5 s, echo time = 30 ms.

We used statistical parametric mapping (SPM8; Wellcome Trust Centre for Neuroimaging, London; http://www.fil.ion.ucl.ac.uk/spm/) for image preprocessing, which comprised slice timing correction, realignment, coregistration with the structural image, normalization to MNI stereotactic space, and spatial smoothing with a 5 mm FWHM isotropic Gaussian kernel. A two-level analysis was implemented. In the first-level analysis, fixed-effect GLMs were estimated subject-wise on the basis of a design matrix that covered all four fMRI sessions. For every experimental condition, the design matrix included a boxcar regressor, ranging from question onset to event offset and convolved with a canonical hemodynamic response function; nuisance covariates comprised the motion parameters, their first derivatives, and the acquisition runs. Single-subject GLMs were estimated for all voxels included in a liberal subject-wise gray matter mask (index > 0.1). A high-pass filter (128 s cutoff) was applied to remove slow drifts unrelated to the paradigm. Each trial was modeled as a regressor specifying the following combined parameters: the spatiotemporal self-position used in the block, one of five (NOW in PARIS; NOW to the WEST or EAST of PARIS, i.e., in Cayenne or Dubai; PAST or FUTURE in PARIS, i.e., 9 years ago or 9 years in the future); the task (TIME, SPACE); the relative position of the historical event (relative west/east, relative past/future; not analyzed in this study); and the distance separating the imagined self-position and the historical event in the trial (egocentric distance), split into four bins (very close, close, far, and very far). For instance, one trial could be as follows: self-position = PAST, task = TIME, side = relative west, distance = close. In the model, the control task was combined with the five spatiotemporal self-positions only. The five self-position presentations were modeled separately. 
Overall, because conditions were randomized across blocks, 51 regressors could be included in an acquisition block. In the second-level analysis, individual contrast images for each of the experimental conditions (first-level regressor) relative to rest were entered into a whole-brain ANOVA (random-effect model) with experimental conditions as within-subject factor. T contrasts were then estimated as weighted linear combinations of the effects of interest. Standard thresholds were applied for whole-brain T contrasts: second-level contrast maps were thresholded voxelwise at p < 0.001, and FDR correction was then performed at the cluster level, so that only clusters with a clusterwise FDR-corrected p value below 0.05 were kept. The T contrasts of interest were TIME > SPACE, SPACE > TIME, TIME > CTRL, SPACE > CTRL, TIME: PAST + FUTURE > NOW, SPACE: WEST + EAST > HERE, TIME: CLOSE > FAR, and SPACE: CLOSE > FAR.
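The construction of a first-level regressor (a boxcar from question onset to event offset, convolved with a hemodynamic response function) can be sketched in plain Python as follows. This is an illustrative approximation, not SPM8 code: the function names are hypothetical, the double-gamma shape (peak and undershoot gamma densities with shape parameters 6 and 16 and an undershoot ratio of 1/6) is a commonly used form assumed here, and the 0.1 s convolution grid and the single trial onset at 10 s are arbitrary choices.

```python
import math

TR = 1.5   # repetition time in seconds (from the acquisition parameters)
DT = 0.1   # fine time grid for the convolution (assumption)


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(duration=32.0, dt=DT):
    """Assumed double-gamma HRF: early peak minus a smaller, later undershoot."""
    n = int(round(duration / dt))
    return [gamma_pdf(i * dt, 6.0) - gamma_pdf(i * dt, 16.0) / 6.0 for i in range(n)]


def boxcar(onsets, durations, total_time, dt=DT):
    """1 during each trial epoch (question onset to event offset), 0 elsewhere."""
    n = int(round(total_time / dt))
    x = [0.0] * n
    for onset, dur in zip(onsets, durations):
        start = int(round(onset / dt))
        stop = min(n, int(round((onset + dur) / dt)))
        for i in range(start, stop):
            x[i] = 1.0
    return x


def convolve(x, h, dt=DT):
    """Discrete causal convolution, truncated to the length of x."""
    y = [0.0] * len(x)
    for i, xi in enumerate(x):
        if xi == 0.0:
            continue
        for j, hj in enumerate(h):
            if i + j >= len(y):
                break
            y[i + j] += xi * hj * dt
    return y


def sample_at_tr(y, tr=TR, dt=DT):
    """Keep one sample per acquired volume."""
    return y[:: int(round(tr / dt))]


# One hypothetical trial: question (0.9 s) + mean ISI (0.275 s) + event (0.9 s).
epoch = 0.9 + 0.275 + 0.9
regressor = sample_at_tr(convolve(boxcar([10.0], [epoch], 60.0), double_gamma_hrf()))
peak_time = max(range(len(regressor)), key=regressor.__getitem__) * TR
print(len(regressor), "volumes; predicted response peaks near", peak_time, "s")
```

In a full design matrix, one such column would be built per experimental condition, with the nuisance covariates appended as additional columns.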

To perform conjunction analyses, we used the minimum statistic compared to the conjunction null (Nichols et al., 2005), as implemented in SPM8, on a second-level random-effect ANOVA model in which the relevant T contrasts were selected. We applied the same multiple-comparisons procedure as for single T contrasts: a voxel-level threshold of p < 0.001 uncorrected, with cluster-level FDR correction at p < 0.05. Z statistics, corrected clusterwise p values, and cluster extents are provided in Tables 3–6.
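The logic of a minimum-statistic conjunction can be sketched in a few lines of Python: a voxel survives only if the smaller of its two T values exceeds the critical value, so both contrasts must be individually significant there. The function name, the toy T values, and the critical value of 3.1 are hypothetical and serve only as an illustration; the cluster-level FDR step applied in the study is not reproduced here.

```python
def conjunction_min_statistic(t_map_a, t_map_b, t_crit):
    """Voxelwise minimum of two T maps and the mask of voxels exceeding t_crit."""
    if len(t_map_a) != len(t_map_b):
        raise ValueError("T maps must have the same number of voxels")
    min_map = [min(a, b) for a, b in zip(t_map_a, t_map_b)]
    mask = [m > t_crit for m in min_map]
    return min_map, mask


# Toy T values for four voxels (invented for the example, not study data).
t_time = [4.2, 1.0, 3.5, 5.0]
t_space = [3.9, 6.0, 2.0, 5.5]

min_map, mask = conjunction_min_statistic(t_time, t_space, t_crit=3.1)
print(min_map)  # smaller of the two T values at each voxel
print(mask)     # True where both contrasts exceed the critical value
```

In this toy example, the second voxel is excluded despite a large T value in one map, because the other map does not reach the threshold.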

Table 3.

Regions engaged in temporal (TIME) and spatial (SPACE) versus control tasks (CTRL)ᵃ

| Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | p (FDR corrected) | Z value | Extent |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TIME > CTRL | | | | | | | | | SPACE > CTRL | | | | | | | | | Conjunction | | |
| Parietal | | | | | | | | | Parietal | | | | | | | | | | | |
| L | AG | 39 | −36 | −76 | 32 | <0.001 | 5.12 | 207 | L | AG | 39 | −36 | −78 | 32 | <0.001 | >8 | 611 | <0.001 | 5.12 | 205 |
| R | AG | 39 | 42 | −72 | 38 | <0.001 | 5.69 | 365 | R | AG | 39 | 42 | −74 | 30 | <0.001 | >8 | 622 | <0.001 | 5.69 | 311 |
| L | PCUN/RSC | 7 | −16 | −60 | 18 | 0.006 | 3.65 | 65 | L | PCUN/RSC | 29/30 | −16 | −60 | 18 | <0.001 | >8 | 1434 | 0.008 | 3.65 | 63 |
| R | PCUN/RSC | 29/30 | 20 | −56 | 20 | <0.001 | 4.39 | 127 | R | PCUN/RSCᵇ | 29/30 | 18 | −54 | 20 | — | — | — | <0.001 | 4.39 | 127 |
| L | PCUN/SPL | 7 | −4 | −66 | 50 | 0.024 | 4.22 | 40 | L | PCUN/SPLᵇ | 7 | −6 | −66 | 52 | — | — | — | 0.03 | 4.22 | 40 |
| R | PCUN/SPL | 7 | 8 | −66 | 50 | <0.001 | 5.24 | 174 | R | PCUN/SPLᵇ | 7 | 8 | −66 | 52 | <0.001 | 7.51 | 875 | <0.001 | 5.24 | 168 |
| R | PCC | 23 | 8 | −38 | 44 | 0.032 | 3.98 | 35 | R | PCCᵇ | 23 | 8 | −38 | 44 | — | — | — | 0.039 | 3.98 | 35 |
| R | IPS/SMG | 7/40 | 50 | −42 | 50 | <0.001 | 4.69 | 185 | — | — | — | — | — | — | — | — | — | — | — | — |
| Frontal | | | | | | | | | Frontal | | | | | | | | | | | |
| L | sFront | 6 | −26 | 10 | 54 | 0.006 | 4.68 | 65 | L | sFront | 6 | −24 | 10 | 54 | <0.001 | 6.25 | 202 | 0.008 | 4.68 | 64 |
| R | sFront | 6 | 28 | 10 | 56 | <0.001 | 5.81 | 284 | R | sFront | 6 | 26 | 10 | 52 | <0.001 | 7.23 | 378 | <0.001 | 5.81 | 247 |
| R | Frontopolar | 10 | 32 | 60 | 12 | <0.001 | 4.77 | 247 | R | Frontopolar | 10 | 26 | 56 | 6 | 0.001 | 3.94 | 85 | 0.003 | 3.94 | 85 |
| R | dlPFC | 10 | 42 | 40 | 24 | <0.001 | 5.3 | 286 | R | dlPFC | 6/8 | 28 | 32 | 44 | 0.02 | 4.22 | 35 | | | |
| Temporal | | | | | | | | | | | | | | | | | | | | |
| — | — | — | — | — | — | — | — | — | L | iT | 37 | −58 | −58 | −6 | 0.003 | 4.89 | 65 | — | — | — |
| — | — | — | — | — | — | — | — | — | R | iT | 37 | 58 | −54 | −8 | 0.002 | 4.73 | 75 | — | — | — |
| — | — | — | — | — | — | — | — | — | L | PHC | 30 | −30 | −42 | −8 | <0.001 | 6.68 | 132 | — | — | — |
| — | — | — | — | — | — | — | — | — | R | PHC | 30 | 26 | −36 | −14 | 0.005 | 4.64 | 55 | — | — | — |

ᵃ All clusters shown here at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 35 voxels, cluster level). For each activation cluster, the MNI coordinates of the peak voxel within each different structure are reported together with the corresponding Z values, p values, and cluster extent. Most regions were at least partially overlapped. L, Left; R, right.

ᵇ Part of a larger cluster, reported for observed symmetry.

Table 4.

Regions engaged in temporal (TIME) versus spatial (SPACE) tasks and spatial (SPACE) versus temporal (TIME) tasksᵃ

| Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SPACE > TIME | | | | | | | | | TIME > SPACE | | | | | | | | |
| Parietal | | | | | | | | | Parietal | | | | | | | | |
| L | AG | 39/19 | −36 | −78 | 30 | <0.001 | >8 | 1,006 | — | — | — | — | — | — | — | — | — |
| R | AG | 39/19 | 40 | −76 | 30 | <0.001 | — | — | — | — | — | — | — | — | — | — | — |
| L | PCUN/RSC | 29/30 | −16 | −58 | 18 | <0.001 | >8 | 4,207 | — | — | — | — | — | — | — | — | — |
| R | PCUN/RSC | 29/30 | 16 | −54 | 18 | <0.001 | — | — | — | — | — | — | — | — | — | — | — |
| L | IPL/IPS | 40 | −40 | −58 | 42 | <0.001 | 4.71 | 223 | | | | | | | | | |
| R | IPL/IPS | 40 | 48 | −58 | 38 | <0.001 | 6.71 | 794 | | | | | | | | | |
| Frontal | | | | | | | | | Frontal | | | | | | | | |
| R | sFront | 6/8 | 28 | 0 | 56 | <0.001 | 6.91 | 291 | — | — | — | — | — | — | — | — | — |
| L | sFront | 6/8 | −22 | 6 | 56 | <0.001 | 6.16 | 490 | — | — | — | — | — | — | — | — | — |
| L | IFG | 11 | −4 | 50 | −14 | 0.006 | 4.65 | 69 | — | — | — | — | — | — | — | — | — |
| L | MFG | 47 | −34 | 34 | −12 | 0.006 | 4.60 | 69 | — | — | — | — | — | — | — | — | — |
| — | — | — | — | — | — | — | — | — | L | Pre-SMA | 6 | −6 | 14 | 66 | 0.001 | 6.64 | 104 |
| — | — | — | — | — | — | — | — | — | R | dlPFC/pars Tri | 46 | 42 | 18 | 50 | <0.001 | 6.12 | 264 |
| — | — | — | — | — | — | — | — | — | L | IFG | 45 | −56 | 20 | 20 | <0.001 | 5.73 | 261 |
| — | — | — | — | — | — | — | — | — | L | MFG | 6 | −42 | 2 | 50 | <0.001 | 5.66 | 323 |
| — | — | — | — | — | — | — | — | — | R | dlPFC/pars orb | 11 | 42 | 52 | −6 | <0.001 | 4.93 | 136 |
| — | — | — | — | — | — | — | — | — | R | dlPFC/pars Tri | 46 | 54 | 30 | 24 | <0.001 | 4.79 | 190 |
| — | — | — | — | — | — | — | — | — | R | MFG | 8 | 4 | 30 | 46 | 0.013 | 3.97 | 52 |
| Temporal | | | | | | | | | Temporal | | | | | | | | |
| L | PHC | 30 | −30 | −42 | −8 | <0.001 | >8 | 661 | — | — | — | — | — | — | — | — | — |
| R | PHC | 30 | 26 | −36 | −14 | <0.001 | >8 | 332 | — | — | — | — | — | — | — | — | — |
| L | iT | 37 | −44 | −56 | −6 | <0.001 | 5.48 | 321 | — | — | — | — | — | — | — | — | — |
| R | iT | 37 | 54 | −58 | −6 | 0.003 | 4.66 | 82 | — | — | — | — | — | — | — | — | — |
| — | — | — | — | — | — | — | — | — | R | iT | 37 | 66 | −38 | −4 | <0.001 | 5.33 | 185 |
| — | — | — | — | — | — | — | — | — | L | iT | 37 | −50 | −42 | 2 | <0.001 | 4.77 | 203 |
| Occipital | | | | | | | | | | | | | | | | | |
| — | — | — | — | — | — | — | — | — | L | Lingual | 19 | −12 | −96 | −4 | <0.001 | 4.78 | 108 |
| Cerebellum | | | | | | | | | | | | | | | | | |
| — | — | — | — | — | — | — | — | — | L | Cereb crus1 | — | −12 | −82 | −28 | 0.003 | 4.02 | 80 |
| — | — | — | — | — | — | — | — | — | L | Cereb crus2 | — | −36 | −70 | −42 | 0.02 | 3.82 | 44 |

ᵃ All clusters shown here at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 44, cluster level). For each activation cluster, the MNI coordinates of the maximally activated voxel within each different structure are reported together with the corresponding Z values, p values, and cluster extent. L, Left; R, right.

Table 5.

Regions activated for self-projection in time (TIME: PAST + FUTURE > NOW) and self-projection in space (SPACE: WEST + EAST > HERE)ᵃ

| Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | p (FDR corrected) | Z value | Extent |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PAST + FUTURE > NOW | | | | | | | | | WEST + EAST > HERE | | | | | | | | | Conjunction | | |
| Parietal | | | | | | | | | Parietal | | | | | | | | | | | |
| L | AG | 19 | −34 | −80 | 34 | <0.001 | 4.67 | 127 | — | — | — | | | | | | | | | |
| R | AG/IPS | 39/7/40 | 52 | −40 | 52 | <0.001 | 5.83 | 1,303 | R | AG | 19/39 | 44 | −74 | 32 | <0.001 | 5.34 | 266 | <0.001 | 5.24 | 176 |
| R | PCUN/SPL | 7 | 6 | −66 | 54 | 0.01 | 3.89 | 54 | L | PCUN/SPL | 7 | −12 | −70 | 56 | 0.03 | 4.03 | 37 | 0.016 | 3.89 | 46 |
| R | PCUN/SPL | 7 | 8 | −60 | 54 | <0.001 | 4.47 | 162 | — | — | — | | | | | | | | | |
| L + R | PCUN/RSC | NA | 18 | −54 | 22 | 0.001 | 4.24 | 100 | — | — | — | | | | | | | | | |
| L | IPS | 7/40 | −30 | −64 | 34 | <0.001 | 4.97 | 505 | — | — | — | — | — | — | — | — | — | — | | |
| Frontal | | | | | | | | | Frontal | | | | | | | | | | | |
| L | sFront | 6 | −26 | 12 | 50 | <0.001 | 4.63 | 275 | — | — | — | — | — | — | — | — | — | — | | |
| R | sFront | 9 | 30 | 16 | 52 | <0.001 | 5.68 | 1,178 | R | sFront | 9 | 28 | 6 | 56 | 0.013 | 4.15 | 87 | 0.006 | 4.15 | 68 |
| L | dlPFC/pars tri | 45 | −46 | 34 | 26 | <0.001 | 5.08 | 183 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | Rostrolateral PFC | 46 | −40 | 46 | 8 | 0.004 | 3.69 | 69 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | dmPFC/pre-SMA | 8 | −4 | 34 | 42 | <0.001 | 6.22 | 364 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | dmPFC/pre-SMA | 8 | 4 | 28 | 44 | <0.001 | 6.29 | 314 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | aIns | 16 | −30 | 24 | −4 | <0.001 | 5.74 | 176 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | aIns | 16 | 34 | 24 | 0 | <0.001 | 5.28 | 222 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | dlPFC | 9 | 30 | 16 | 52 | <0.001 | 5.68 | 1,178 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | Pars orb | 47 | 42 | 48 | −6 | 0.004 | 4.03 | 72 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | Caudal MFC | 6 | −40 | 8 | 34 | <0.001 | 4.68 | 251 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | Frontopolar | 10 | 24 | 56 | 4 | 0.002 | 4.67 | 83 | — | — | — | — | — | — | — | — | — | — | — | — |
| Temporal | | | | | | | | | | | | | | | | | | | | |
| L | iT | 21 | −62 | −40 | −14 | 0.002 | 3.93 | 85 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | iT | 21 | 62 | −36 | −12 | <0.001 | 5.37 | 234 | — | — | — | — | — | — | — | — | — | — | — | — |
| Cerebellum | | | | | | | | | | | | | | | | | | | | |
| L | Cereb crus1 | — | −38 | −70 | −28 | <0.001 | 4.91 | 213 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | Cereb crus2 | — | −8 | −80 | −30 | <0.001 | 5.13 | 234 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | Cereb crus2 | — | −38 | −66 | −44 | 0.045 | 4.03 | 32 | — | — | — | — | — | — | — | — | — | — | — | — |

ᵃ All clusters shown at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 32 voxels, cluster level). For each activation cluster, the MNI coordinates of the maximally activated voxel within each different structure are reported together with the corresponding Z values and cluster extent. Right AG, right PCUN/SPL, and sFront were overlapped across the two contrasts. L, Left; R, right.

Table 6.

Regions responsive to egocentric distance (CLOSE > FAR) in time and spaceᵃ

| Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | Hemisphere | Brain region | BA | MNI x | MNI y | MNI z | p (FDR corrected) | Z value | Extent | p (FDR corrected) | Z value | Extent |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SPACE: CLOSE > FAR | | | | | | | | | TIME: CLOSE > FAR | | | | | | | | | Conjunction | | |
| Parietal | | | | | | | | | Parietal | | | | | | | | | | | |
| R | AGᵇ | 39 | 38 | −76 | 36 | <0.001 | 5.46 | 1,496 | R | AG | 39 | 42 | −70 | 40 | 0.007 | 3.91 | 74 | 0.017 | 3.91 | 58 |
| L + R | PCUN/RSCᵇ | 29/30 | −20 | −64 | 24 | 0.006 | 4.28 | 74 | — | — | — | — | — | — | — | — | — | — | — | — |
| L + R | PCUN/SPLᵇ | 7 | −10 | −68 | 56 | 0.002 | 4.16 | 98 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | IPS | 40 | −42 | −42 | 42 | <0.001 | 3.98 | 65 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | IPS | 7/40 | 46 | −42 | 52 | <0.001 | 4.75 | 98 | — | — | — | — | — | — | — | — | — | — | — | — |
| Frontal | | | | | | | | | Frontal | | | | | | | | | | | |
| R | aIns | 16 | 30 | 24 | 6 | <0.001 | 4.62 | 150 | R | aIns | 16 | 38 | 22 | −2 | 0.01 | 4.18 | 55 | 0.038 | 4.09 | 36 |
| L | AG | 39 | −36 | −78 | 32 | <0.001 | 5.23 | 67 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | aIns | 16 | −32 | 24 | −2 | <0.001 | 4.64 | 135 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | dmPFC/pre-SMA | 8 | 6 | 30 | 44 | <0.001 | 4.65 | 120 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | dlPFC/pars Tri | 45 | 46 | 36 | 26 | 0.021 | 3.73 | 195 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | sFront | 6 | 34 | 18 | 54 | <0.001 | 4.73 | 265 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | Frontopolar cortex | 10 | 38 | 58 | 12 | <0.001 | 4.51 | 49 | — | — | — | — | — | — | — | — | — | — | — | — |
| Temporal | | | | | | | | | | | | | | | | | | | | |
| L | PHC | 19 | −30 | −44 | −8 | 0.008 | 5.23 | 290 | — | — | — | — | — | — | — | — | — | — | — | — |
| R | Inf Temp | 21 | 56 | −50 | −10 | <0.001 | 5.67 | 120 | — | — | — | — | — | — | — | — | — | — | — | — |
| Cerebellum | | | | | | | | | | | | | | | | | | | | |
| L | Cereb crus 1 | — | −26 | −66 | −32 | <0.001 | 4.08 | 152 | — | — | — | — | — | — | — | — | — | — | — | — |
| L | Cereb crus 2 | — | −6 | −82 | −32 | 0.008 | 4.04 | 67 | — | — | — | — | — | — | — | — | — | — | — | — |

ᵃ All clusters shown here at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 49, cluster level). For each activation cluster, the MNI coordinates of the maximally activated voxel within each different structure are reported together with the corresponding Z values and cluster extent. Right AG and right aIns correspond to the overlap between the two contrasts. L, Left; R, right.

ᵇ Part of a larger cluster, reported for global consistency.

Prescan interviews.

Participants were asked to recall the dates and locations of the historical events provided to them 1 week before taking part in the fMRI acquisition. Participants correctly dated events (12.4% mistaken dates, mean error: 0.04 years) and localized them on the world map (3.5% of locations outside the country to which they belonged, and 7.3% slightly misplaced). Significantly more recall errors occurred for future compared with past events (mean difference = 5.56%, z = 2.2, p = 0.03) and for locations east of Paris compared with locations west of Paris (mean difference = 9.06%, z = 3.5, p = 0.004). Reported and veridical dates revealed no significant differences in terms of absolute value, suggesting that most errors were close approximations of veridical dates. Additionally, the more distant events were in time from the present, the more often they were forgotten (temporally very far minus very close mean difference: 7.6%, z = 1.98, p = 0.047). Altogether, 11.6% of behavioral data were excluded from all reported analyses.

Behavioral data analysis.

Linear mixed-effects (LME) models were fitted using R (R Foundation for Statistical Computing) with the lme4 and lmerTest packages (Bates et al., 2015). Two LMEs were fitted: one for reaction times (RT) and one for error rates (ER). An LME contains both random and fixed factors. We tested four fixed factors for each model: temporal distance (TD, continuous), spatial distance (SD, continuous), the dimension of the task (DIM, categorical, 2 levels: time and space), and self-location (SL, categorical, 3 levels). For this latter factor, past and future self-locations were combined, as were west and east locations, so that the three levels were “HERE, NOW,” “HERE, PAST + FUTURE,” and “WEST + EAST, NOW,” based on the absence of difference observed in previous studies using the same design (Arzy et al., 2009a; Gauthier and van Wassenhove, 2016). In each tested model, random effects were modeled for subjects (SUBJ factor, 17 levels) and events (EVENT factor, 36 levels) in addition to fixed effects. This addressed the question of whether subject- or item-related variance should be modeled as a random effect. Rather than performing two different repeated-measures ANOVAs (with event and with subject random effects), we chose to conjointly account for subject- and item-related variance with LME (Baayen et al., 2008). This means that, by considering that both subjects and events are drawn from a much larger population, we can generalize our findings to any subjects and events. In the LME modeling RT, only correct trials were analyzed (accounting for 83% of RT data). Moreover, in typical ANOVAs, the incremental addition of factors to a statistical model can overfit the data. To circumvent this issue, we used a statistical model selection strategy based on the Akaike Information Criterion (AIC). We used a forward model selection strategy, starting from a null model containing only random effects and iteratively adding, one by one, the fixed effect that provided the model with the lowest AIC and passed a χ2 test (α = 0.05) against the previous model. Planned comparisons and post hoc tests were then derived from the selected final model with the lmerTest package. Reported p values are relative to an intercept baseline condition, chosen here to be the physical reference (SL = NOW, HERE); they were estimated with Markov chain Monte Carlo, and degrees of freedom were estimated with the Satterthwaite approximation (Bates et al., 2015).
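The forward selection procedure described above can be sketched as follows. This is a minimal illustration in Python using only the standard library, not the authors' R code: the `fit` callback, the `toy_fit` function, and the log-likelihood gains in `GAIN` are hypothetical stand-ins for refitting an LME with lme4. The sketch assumes that, at each step, the candidate term with the lowest AIC is kept only if it also lowers AIC and passes a likelihood-ratio χ2 test at α = 0.05 against the current model.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: closed-form finite sum.
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: normal tail plus a finite series.
    root = math.sqrt(x)
    tail = math.erfc(root / math.sqrt(2.0))
    term, total = root, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    density = math.exp(-half) / math.sqrt(2.0 * math.pi)
    return tail + 2.0 * density * total

def aic(loglik, n_params):
    """Akaike Information Criterion."""
    return 2.0 * n_params - 2.0 * loglik

def forward_select(candidates, fit, alpha=0.05):
    """Greedy forward selection; `fit(terms)` returns (loglik, n_params)."""
    selected = []
    best_ll, best_k = fit(tuple(selected))
    remaining = list(candidates)
    while remaining:
        # Score every one-term extension of the current model by AIC.
        scored = []
        for term in remaining:
            ll, k = fit(tuple(selected + [term]))
            scored.append((aic(ll, k), term, ll, k))
        new_aic, term, ll, k = min(scored)
        # Likelihood-ratio statistic against the current model.
        stat = 2.0 * (ll - best_ll)
        if new_aic >= aic(best_ll, best_k) or chi2_sf(stat, k - best_k) >= alpha:
            break
        selected.append(term)
        remaining.remove(term)
        best_ll, best_k = ll, k
    return selected

# Hypothetical log-likelihood gains and extra parameters per term (not the study's data).
GAIN = {"SL": (30.0, 2), "DIM": (14.0, 1), "TD": (13.0, 1), "NOISE": (0.5, 1)}

def toy_fit(terms):
    """Stand-in for refitting a mixed model: additive gains over a null model."""
    loglik = -6123.0 + sum(GAIN[t][0] for t in terms)
    n_params = 3 + sum(GAIN[t][1] for t in terms)
    return loglik, n_params

selected = forward_select(list(GAIN), toy_fit)
print(selected)
```

With these toy gains, the uninformative `NOISE` term is rejected because it raises AIC, which is the stopping behavior the selection strategy relies on.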

Results

Self-position, distances, and dimensions (space and time) significantly affected RT and ER with respect to subject- and event-related variance as assessed by mixed model selection for effects of interest (Table 2). Assessing individual significance of selected factors revealed no main effects of dimension on RT (DIM, t = −0.89, p = 0.38) or on ER (t = 0.44, p = 0.66), suggesting that the temporal and spatial conditions were balanced for task difficulty. In the following results, reported differences between TIME and SPACE are thus unlikely to be related to differences in task difficulty or task execution.

Table 2.

Linear mixed model selections for RT and ER produced in Mental Time Travel and Mental Space Navigation (TIME and SPACE, respectively)a

| Model | df | AIC | LogLik | χ2 | Pr (>χ2) |
|---|---|---|---|---|---|
| RT model | | | | | |
| Model 0 = (1 \| SUBJ) + (1 \| EVENT) | 3 | 12256 | −6123 | | |
| Model 1 = Model 0 + SL | 6 | 12198 | −6093 | 61.8 | 3.84e-14 |
| Model 2 = Model 1 + DIM | 7 | 12172 | −6079 | 27.9 | 1.26e-7 |
| Model 3 = Model 2 + TD | 8 | 12148 | −6065 | 26.6 | 2.52e-7 |
| Model 4 = Model 3 + SD | 9 | 12126 | −6054 | 23.2 | 1.49e-6 |
| Model 5 = Model 4 + TD × DIM | 10 | 12100 | −6040 | 28.0 | 1.21e-7 |
| Model 6 = Model 5 + SD × DIM | 11 | 12079 | −6028 | 23.0 | 1.66e-6 |
| Model 7 = Model 6 + SL × SD | 13 | 12072 | −6023 | 11.0 | 0.0041 |
| Model 8 = Model 7 + SL × DIM | 15 | 12067 | −6018 | 9.04 | 0.011 |
| Model 9 = Model 8 + SL × SD × DIMb | 17 | 12064 | −6015 | 7.45 | 0.024 |
| Model 10 = Model 9 + SL × SD × TD | 20 | 12066 | −6013 | 3.5 | 0.33 |
| ER model | | | | | |
| Model 0 = (1 \| SUBJ) + (1 \| EVENT) | 3 | 7615 | −3804 | | |
| Model 1 = Model 0 + SL | 5 | 7581 | −3785 | 37.4 | 7.5e-9 |
| Model 2 = Model 1 + SD | 6 | 7563 | −3775 | 20.2 | 6.9e-6 |
| Model 3 = Model 2 + TD | 7 | 7561 | −3773 | 4.1 | 0.045 |
| Model 4 = Model 3 + SL × DIM | 10 | 7534 | −3757 | 32.9 | 3.4e-7 |
| Model 5 = Model 4 + SL × TD | 12 | 7528 | −3752 | 10.7 | 0.004 |
| Model 6 = Model 5 + SL × SD | 14 | 7520 | −3746 | 11.3 | 0.003 |
| Model 7 = Model 6 + SD × DIM | 15 | 7519 | −3744 | 3.9 | 0.046 |
| Model 8 = Model 7 + SL × SD × DIMb | 17 | 7505 | −3735 | 18.7 | 9.5e-5 |
| Model 9 = Model 8 + SL × SD × TD × DIM | 23 | 7505 | −3729 | 11.7 | 0.07 |

a SL is the self-location in time and in space and accounts for self-projection in time, or in space or for no self-projection. TD and SD are the temporal and spatial distances, respectively. DIM is the task performed (TIME and SPACE). Only those models showing significant or marginal effects compared with previous iteration are reported for clarity. The final models are shown in bold. RT model: The self-location (SL), the dimension of the task (DIM), and the distance (TD, SD) significantly improved the model. All the double interactions with DIM improved the RT model, as well as SL:SD and SD:SL:DIM. ER model: All but the main effect of DIM improved the model. All the double interactions with SL improved the model, as well as the SD:DIM and SL:SD:DIM. Overall, it shows that interactions between dimensions (DIM) and the other factors as well as between self-location (SL) and the other factors are crucial to account for the data.

b Final models.

Self-projection and distance effects in temporal and spatial judgments

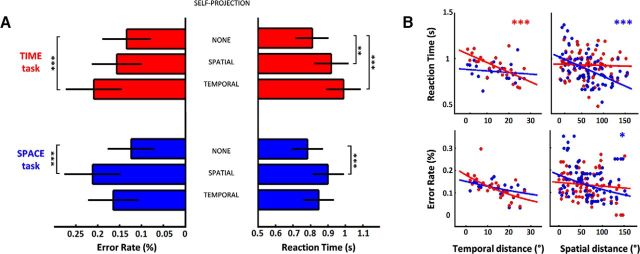

Self-projection in time is typically associated with slowed RT and increased ER (Arzy et al., 2009b; Gauthier and van Wassenhove, 2016). As predicted, temporal judgments were slowest after self-projection in time (PAST + FUTURE vs NOW, t = 3.77, p < 0.001; Fig. 2A); similarly, spatial judgments were slower when participants mentally self-positioned in Cayenne or Dubai compared with in Paris (WEST + EAST vs HERE, t = 5.11, p < 0.001; Fig. 2A). Additionally, self-positioning in space increased ER during spatial judgments (WEST + EAST vs HERE, t = 5.10, p < 0.001; Fig. 2B), and self-positioning in time increased ER during temporal judgments (PAST + FUTURE vs NOW, t = 4.11, p < 0.001; Fig. 2B). Together, these results support the existence of self-projection mechanisms in the time dimension (Arzy et al., 2009b), but also in the spatial dimension: mental self-positioning away from the here and now is associated with a behavioral cost (Gauthier and van Wassenhove, 2016). The behavioral costs of self-projection in space and in time were not significantly different for RT (paired t test, t(32) = −1.27, p = 0.21) or ER (paired t test, t(32) = 0.63, p = 0.53), allowing us to rule out trivial effects of task difficulty when comparing the neural correlates of self-projection in time for mental time travel and self-projection in space for mental space navigation. Additionally, temporal judgments were slower for self-positions away from Paris (WEST + EAST vs HERE, t = 3.04, p < 0.005), although spatial judgments were not significantly slowed down when participants self-positioned away from the present (PAST + FUTURE vs NOW, t = 1.04, p = 0.3). Chronometric space-time interactions may index a competition between the psychological dimensions of space and time during self-projection (Gauthier and van Wassenhove, 2016): mental events may be represented egocentrically along their temporal or spatial dimension but not both simultaneously. This working hypothesis is next explored with fMRI.

Figure 2.

Behavioral cost of self-projection and distance shows an egocentric remapping of events. A, ER (Left) and RT (Right) as a function of self-projection (NONE, TEMPORAL, or SPATIAL), for TIME (red) and SPACE (blue) tasks. Temporal self-projection increased ER and RT for TIME; spatial self-projection increased ER and RT for SPACE as well as RT for TIME. B, ER and RT as a function of distance between events and mental self-positions. Top, RT in TIME and SPACE became faster with increasing distances in time and space, respectively. Bottom, ER in TIME and SPACE decreased with increasing distances in time and space, respectively. Error bars represent the standard error across repetitions.

Regardless of self-position in time and space, the further away events were from the mental self-position, the faster the temporal (TD(TIME), t = −6.72, p < 0.001) and the spatial (SD(SPACE), t = −3.37, p < 0.001) judgments were. There were no significant effects of spatial (temporal) distance on temporal (spatial) judgments (SD(TIME), t = −0.25, p = 0.8; TD(SPACE), t = −1.82, p = 0.07), suggesting that distance effects were dimension-specific (TD × DIM, t = 4.26, p < 0.001 and SD × DIM, t = 2.43, p < 0.05). As observed with self-projection, the behavioral costs of spatial and temporal distance computations were not significantly different for RT (paired t test, t(32) = 0.46, p = 0.57) or ER (paired t test, t(32) = 0.35, p = 0.72), allowing us to rule out trivial effects of task difficulty when comparing the neural correlates of temporal distance in mental time travel and spatial distance in mental space navigation. Additionally, spatial distances produced a stronger slowing down of RT when participants were self-positioned away from Paris (SD × (WEST + EAST vs HERE), t = −2.2, p < 0.05). Distance effects were found for ER of spatial judgments with spatial distance (SD, t = −2.03, p < 0.05) but not for temporal judgments with temporal distance (t = 0.1, p = 0.9). Self-positioning in space yielded a faster decrease of ER with spatial distance for spatial judgments (SD × (WEST + EAST vs HERE), t = −3.8, p < 0.001) and self-positioning in time yielded a faster decrease of ER with temporal distance for temporal judgments (TD × (PAST + FUTURE vs PRESENT), t = −3.22, p = 0.001), consistent with previous findings (Gauthier and van Wassenhove, 2016).

Because increasing distances between the self and mental events systematically sped up RT and decreased ER, we operationalized self-projection, as a working hypothesis, as the egocentric remapping of events stored as allocentric memories, and this was specifically tested with fMRI.

Overlapping networks for mental navigation in time and space

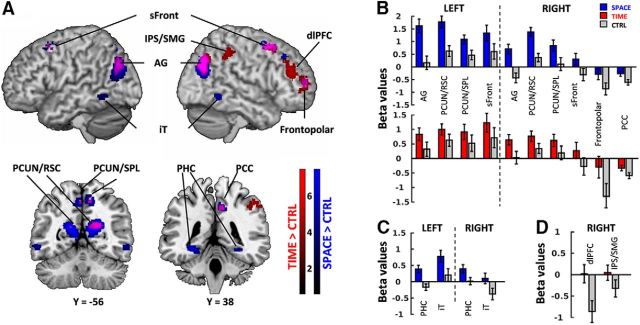

First, to reveal the core network mediating mental travels in time and space, we examined specific regions engaged in the processing of mental time (TIME) and mental space (SPACE) with respect to a control condition (emotional valence judgment, CTRL). TIME and SPACE were each contrasted with CTRL (Fig. 3A; Table 3, peak voxel coordinates). Additionally, to uncover regions engaged in both temporal and spatial processing, a conjunction analysis was performed (Fig. 3, activations in purple; Table 3, last two columns). Ten activation clusters overlapped in the conjunction analysis: the bilateral inferior parietal lobule/angular gyrus (IPL/AG), medial precuneus/retrosplenial cortices (PCUN/RSC), medial precuneus/superior parietal lobules (PCUN/SPL), and superior frontal cortices (sFront), as well as the right posterior cingulate (PCC) and frontopolar cortices (Fig. 3A), covering 65% of the volume of TIME > CTRL activations (1345 of 2080 voxels) and 30% of the SPACE > CTRL activations (1345 of 4569 voxels). Among these 10 regions commonly activated by mental travels in time and in space against control, the statistically significant effects observed in frontopolar cortex and the PCC were driven by differences in negative betas, suggesting that these two regions were not part of the core network of mental travels, but rather idiosyncratic to the chosen control. Conversely, a few regions were found in only one contrast: bilateral parahippocampal cortices (PHC) and inferior temporal gyri (iT) were found only in the SPACE > CTRL contrast and were mainly driven by positive betas for SPACE, whereas right intraparietal sulcus (IPS) and dorsolateral prefrontal cortex (dlPFC) were found only in the TIME > CTRL contrast and were largely driven by negative betas for CTRL (Fig. 3B). Among the 8 core regions found to be specific to mental travels, SPACE tended to elicit higher activation levels than TIME, especially in the parietal lobe.
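How a conjunction map and its coverage of each contrast can be computed is sketched below on toy data. The text does not state which conjunction test was used, so the minimum-statistic rule here is an assumption, and the voxel coordinates, t values, and threshold are hypothetical.

```python
def suprathreshold(tmap, threshold):
    """Set of voxels whose statistic exceeds the threshold."""
    return {voxel for voxel, t in tmap.items() if t > threshold}

def conjunction(tmap_a, tmap_b, threshold):
    """Voxels whose minimum statistic across both maps exceeds the threshold."""
    shared = tmap_a.keys() & tmap_b.keys()
    return {v for v in shared if min(tmap_a[v], tmap_b[v]) > threshold}

def coverage(overlap, mask):
    """Fraction of a contrast's suprathreshold voxels that lie in the overlap."""
    return len(overlap) / len(mask)

# Hypothetical t maps keyed by voxel coordinates (not the study's data).
time_map = {(0, 0, 0): 4.1, (0, 0, 1): 3.6, (0, 1, 0): 2.0, (1, 0, 0): 5.0}
space_map = {(0, 0, 0): 3.9, (0, 0, 1): 1.2, (0, 1, 0): 4.4,
             (1, 0, 0): 4.8, (1, 1, 0): 3.5}
THRESHOLD = 3.0  # hypothetical voxel-level threshold

time_mask = suprathreshold(time_map, THRESHOLD)
space_mask = suprathreshold(space_map, THRESHOLD)
both = conjunction(time_map, space_map, THRESHOLD)

print(sorted(both))
print(coverage(both, time_mask), coverage(both, space_mask))
```

Because the overlap is a single set of voxels, the same voxel count appears in the numerator of both coverage figures; only the denominators differ between contrasts.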

Figure 3.

A, Overlap of brain regions engaged in TIME (red) and SPACE (blue) compared with CTRL, and their conjunction (purple). All clusters are shown at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 35 voxels, cluster level). Color bars represent the contrast t values. Coordinates corresponding to peak voxel activations are reported in Table 3. Brain regions common to TIME and SPACE comprised bilateral AG, PCUN/RSC, and PCUN/SPL, and superior frontal cortices as well as the right PCC and frontopolar cortex. TIME engaged the right IPS/SMG and the dlPFC. SPACE engaged bilateral iTs, bilateral PHG, and right dlPFC. B, Average beta values for regions activated in both TIME > CTRL and SPACE > CTRL contrasts. Most of the overlapped clusters, apart from frontopolar cortex and PCC, showed positive betas. C, Average beta values for regions activated in SPACE > CTRL contrast only. iT and PHC appear to be significantly more activated than the control. D, Average beta values for regions activated in TIME > CTRL contrast only. dlPFC and IPS/SMG are more activated than the control; the contrast is mainly driven by the deactivation in the control task. Error bars represent the standard error across subjects.

In sum, we found a parietofrontal network specifically supporting mental time travel and mental navigation in space, independently of the chosen 2-AFC task on memorized events. The differences found between TIME and SPACE were further investigated and quantified through direct TIME versus SPACE whole-brain contrasts.

TIME versus SPACE: dimension-specific networks

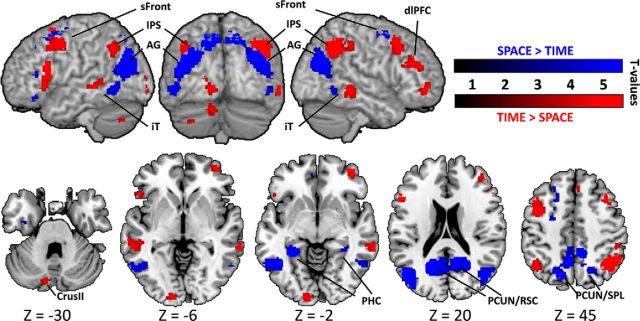

To have a general overview of the specificity of mental time travel and mental space navigation, we performed direct contrasts between all TIME and all SPACE trials regardless of all other parameters. Contrasting TIME and SPACE yielded two networks that were consistent with those observed in the contrasts for self-projection and distance (Fig. 4; Table 4). SPACE > TIME contrast yielded large posterior activations in bilateral PCUN/RSC, PHC and AG/transverse occipital sulcus, iT but also frontal activations in bilateral sFront (BA6/BA8), left IFG and left middle frontal gyrus (MFG). When contrasting TIME > SPACE, a large parietofrontal network was found: bilateral posterior IPS and bilateral frontal activations along the dlPFC, and left presupplementary motor area (pre-SMA). In addition to parietofrontal activations, bilateral iT, left lingual gyrus, and left cerebellar activations were also found.

Figure 4.

Dimension-specific networks engaged by TIME versus SPACE and SPACE versus TIME. All clusters are shown here at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 44, cluster level). Coordinates corresponding to peak voxel activations are reported in Table 4. For each activation cluster, the MNI coordinates of the maximally activated voxel within each different structure are reported together with the corresponding Z values and cluster extent. L, Left; R, right.

Overall, the direct contrast between mental time travel (TIME) and mental space navigation (SPACE) showed that spatial processing capitalizes specifically on medial temporal and posterior parietal regions, whereas temporal processing operates through a widespread network importantly comprising prefrontal regions.

Dimension specificity of brain activations for self-projection and distance

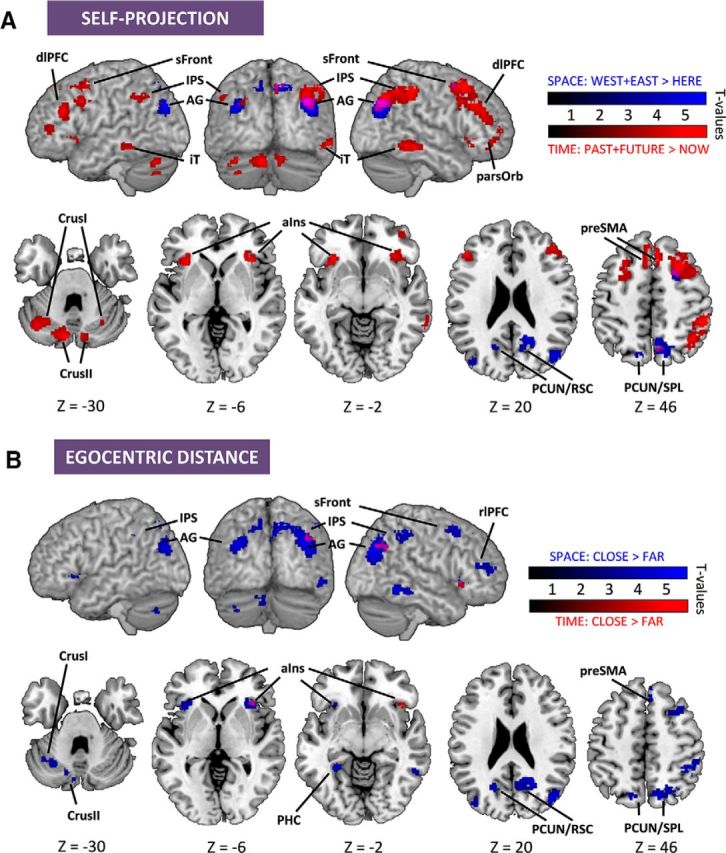

The behavioral effects found for self-projection and relative distance effects were dimension-specific. Thus, we first assessed brain activation patterns capturing self-projection in time and self-projection in space separately. For this, we contrasted TIME trials when participants self-projected (PAST + FUTURE) with TIME trials in which no self-projection was required (NOW). Corresponding peak voxel coordinates are reported in Table 5. Self-projection in time elicited activation in bilateral IPS, IPL/AG, sFront, several areas of prefrontal cortices (dlPFC, pars orbitalis), anterior insula (aIns), iT, the anterior part of the pre-SMA, and medial and lateral regions of the cerebellum (Fig. 5A). We then contrasted SPACE trials during self-projection (WEST + EAST) with SPACE trials without self-projection (HERE). Self-projection in space mainly elicited parietal activations: IPS/AG, PCUN/RSC, and PCUN/SPL, with the notable exception of the right superior frontal cortex (Fig. 5A). To reveal the regions commonly engaged by self-projection in time and in space, a conjunction analysis was performed and revealed that right IPL/AG, PCUN/SPL, and superior frontal cortices were significantly activated in both contrasts (Fig. 5A; Table 5). These three regions accounted for 4% of the clusters found in PAST + FUTURE > NOW contrast (290 of 7116 voxels, 3 of 21 clusters) and 37% of the clusters found in WEST + EAST > HERE contrast (290 of 779 voxels, 3 of 6 clusters).

Figure 5.

Distinct and common brain regions indexing self-projection and distance computation. All clusters are at p < 0.001 uncorrected (voxel level) and p < 0.05 FDR-corrected (extent threshold = 32, cluster level). A, Brain regions indexing self-projection in time for TIME (red; PAST + FUTURE > NOW), in space for SPACE (blue; WEST + EAST > HERE), and their conjunction (purple). Coordinates corresponding to peak voxel activations are reported in Table 5. Temporal self-projection activated a distributed network comprising anterior parietal and frontal cortices, whereas spatial self-projection was mainly restricted to posterior and medial parietal regions. The two networks overlapped in right IPL/AG, PCUN/SPL, and sFront. B, Brain regions indexing temporal distance in TIME (red), spatial distance in SPACE (blue), and their conjunction (purple). Coordinates corresponding to peak voxel activations are reported in Table 6. The temporal distance contrast yielded activations of the right AG and aIns. The spatial distance contrast revealed a wide parietofrontal network comprising IPS, sFront, aIns, PHC, and iT. The temporal and spatial distance contrasts overlapped in right AG and right aIns.

In sum, self-projection in time elicited a widespread activation of parietal, frontal, temporal, and cerebellar cortices, whereas self-projection in space essentially engaged the parietal lobe. In the conjunction analysis, only 3 regions were found that covered a small volume of the two networks. For these reasons, we consider that self-projection in time and in space mainly operates on dimension-specific substrates.

We then assessed brain activity corresponding to the dimension-specific distance effects observed behaviorally by investigating separately the temporal distance effect in TIME and spatial distance effect in SPACE. For this, we contrasted TIME trials performed on the temporally closest events (TIME: CLOSE) with those performed on the furthest ones (TIME: FAR). Similarly for SPACE, trials performed on the spatially closest events (SPACE: CLOSE) were contrasted with those performed on the spatially furthest ones (SPACE: FAR). Temporal distance effects were restricted to the right IPL/AG and the right aIns (Fig. 5B; Table 6). By contrast, spatial distance effects included a large network: bilateral IPL/AG, aIns, PCUN/SPL, PCUN/RSC, IPS, right superior frontal cortex, pre-SMA, rostrolateral PFC, iT, and left cerebellum (Fig. 5B). A conjunction analysis showed that right IPL/AG and the right aIns were commonly engaged by distance in a CLOSE-FAR contrast regardless of the dimension of the task (Table 6). These two regions accounted for 72% of the clusters found in TIME: CLOSE > FAR contrast (94 of 129 voxels, 2 of 2 clusters) and 3% of the clusters found in SPACE: CLOSE > FAR contrast (94 of 3441 voxels, 2 of 16 clusters). Bilateral IPS, right iT, right sFront, right pre-SMA, and left cerebellum were all differentially engaged across the time and space dimensions as well as the two cognitive task components (i.e., self-projection and distance). Overall, although the network responding to temporal distance was almost entirely contained in the network responding to spatial distances, the converse was not true: spatial distances elicited a widespread network encompassing parietal, temporal, and frontal cortices.

Together, we observed a partial dissociation between TIME and SPACE in self-projection and a partial dissociation between TIME and SPACE in distance computation. Additionally, both operations partially dissociated: self-projection in time covered 90% (7116/(7116 + 779) voxels) of the activated volume over the two self-projection contrasts, whereas distance in space covered 96% (3441/(3441 + 129) voxels) of the activated volume over the two distance contrasts.
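The proportions quoted in this section follow directly from the reported voxel counts. The short Python check below recomputes them; the variable names are illustrative only, and the counts are those given in the text.

```python
def share(part, whole):
    """Percentage of `whole` accounted for by `part`."""
    return 100.0 * part / whole

# Voxel counts as reported in the text.
self_proj_time, self_proj_space, self_proj_overlap = 7116, 779, 290
dist_time, dist_space, dist_overlap = 129, 3441, 94

# Conjunction volume relative to each contrast.
overlap_in_time_projection = share(self_proj_overlap, self_proj_time)    # about 4%
overlap_in_space_projection = share(self_proj_overlap, self_proj_space)  # about 37%
overlap_in_time_distance = share(dist_overlap, dist_time)    # about 72.9%, given as 72% in the text
overlap_in_space_distance = share(dist_overlap, dist_space)  # about 3%

# Share of the total activated volume taken by the larger network.
time_share_of_projection = share(self_proj_time, self_proj_time + self_proj_space)  # about 90%
space_share_of_distance = share(dist_space, dist_time + dist_space)                 # about 96%

for name, value in [
    ("overlap / self-projection in time", overlap_in_time_projection),
    ("overlap / self-projection in space", overlap_in_space_projection),
    ("overlap / distance in time", overlap_in_time_distance),
    ("overlap / distance in space", overlap_in_space_distance),
    ("time share of self-projection volume", time_share_of_projection),
    ("space share of distance volume", space_share_of_distance),
]:
    print(f"{name}: {value:.1f}%")
```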

The overall results indicate that mental time travel and mental space navigation build on largely dissociable neural substrates for two cognitive operations that are self-projection and distance computation. Remarkably, the dissociation between TIME and SPACE networks across these cognitive components is consistent with the results obtained with their direct contrasts reported in the previous section. These observations suggest that the intrinsic differences in neural activation between mental time travel and mental space navigation can be specifically attributed to the implementation of self-projection and distance computations.

Right IPL/AG, a hub for setting and monitoring egocentric maps

Although large dissociations were found between the mental processing of time and space, and across self-projection and distance computation, a small region of IPL was consistently found in each contrast conjunction (self-projection: 176 over 266 voxels of IPL cluster; and distance: 58 over 74 voxels). A whole-brain conjunction analysis between self-projection contrasts and egocentric distances contrasts, in time and in space, revealed that a portion of the right IPL located in BA39 (x = 42, y = −70, z = 40, Z = 3.91, extent = 46) was specifically involved in self-projection and distance computation in both TIME and SPACE (Fig. 6). This finding indicated that this subregion in IPL likely supports a cognitive operation that is central to mental travels, regardless of its temporal or spatial dimension. These results also suggested that self-projection and relative distance computation may heavily rely on the abstract coding of egocentric maps in this subregion. Additionally, the inferior part of the right IPL was more activated for spatial content and the superior part for temporal content as assessed by direct SPACE versus TIME contrasts (Fig. 4), self-projection contrasts (Fig. 5A), and distance contrasts (Fig. 5B), suggesting a regional specialization of IPL.

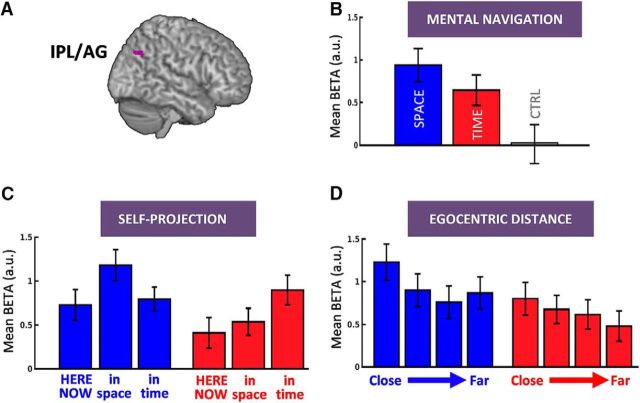

Figure 6.

Right IPL/AG and egocentric mapping of time and space. A, IPL/AG survived a whole-brain conjunction analysis between self-projection time, self-projection space, distance in time, and distance in space contrasts. B, Beta values for SPACE (blue), TIME (red), and CTRL (gray) showed a specific engagement of IPL/AG for TIME and SPACE relative to CTRL. C, Beta values for SPACE and TIME split across self-projection conditions showed that right IPL/AG was selectively implicated in spatial self-projection during the SPACE task and temporal self-projection during the TIME task. D, Beta values for SPACE and TIME split across four egocentric distance bins showed higher activation for close than for far events. The overall activation pattern suggests that the right IPL/AG contributes to the setting, maintenance, and manipulation of egocentric temporal and spatial maps. Error bars represent the standard error across subjects.

Discussion

In this study, subjects performed a mental time travel and a mental space navigation task while undergoing fMRI. The main findings are that self-projection and distance computations engaged distinct brain networks for the processing of the temporal and the spatial dimensions of events, with the exception of activity in the right IPL, which captured egocentric distance but also self-projection in these two dimensions. We discuss below how the observation of distinct neural networks suggests that similar cognitive operations (here, self-projection and distance computations) may operate in different cognitive maps (here, temporal and spatial cognitive maps).

Domain specificity of egocentric mapping in mental time and in mental space

The robust behavioral cost associated with self-projection is consistent with the literature on mental time travel (Arzy et al., 2008, 2009b) and mental space navigation (Gauthier and van Wassenhove, 2016): a change of mental reference point increases the behavioral cost, whereas the relative distance between an event and the mental reference decreases it. Importantly, this pattern is the inverse of the one found in the seminal mental rotation studies reporting increased behavioral cost with distance (Shepard and Metzler, 1971; Kosslyn et al., 1978), suggesting that mental time and space travels cannot be reduced to mental imagery. Rather, it was recently hypothesized that self-projection could be operationalized as an egocentric remapping of the temporal or spatial cognitive maps (Gauthier and van Wassenhove, 2016). In other words, the here and now would be a vantage point not only for actually experienced journeys but also for mental ones (Liberman and Trope, 2008).

In our study, the activation of both retrosplenial and posterior parietal cortices during mental space navigation supports the hypothesis of egocentric remapping, even in the absence of real (Ghaem et al., 1997) or virtual (Committeri et al., 2004) spatial experience. Damage in posterior parietal cortex is known to impair egocentric navigation and memory (Aguirre and D'Esposito, 1999; Wilson et al., 2005; Ciaramelli et al., 2010); and more recently, the imagined spatial locations and heading have been inferred from activation patterns in RSC (Marchette et al., 2014; Sulpizio et al., 2016). This is in agreement with the BBB model (Burgess et al., 2001a; Byrne et al., 2007), in which allocentric coordinates of the self-position in the hippocampus and landmark positions in the parahippocampus are converted into egocentric frames in retrosplenial cortex, a hypothesis also supported by recent empirical evidence (Zhang et al., 2012; Dhindsa et al., 2014). Also consistent with the egocentric hypothesis, the hippocampal and parahippocampal cortices were not significantly engaged by self-projection in space.

Self-projection in TIME entailed a frontoparietal network that was largely distinct from the one observed in SPACE, suggesting a different cognitive mapping scheme. The network was consistent with the widespread dlPFC activations previously reported during mental time travel (Botzung et al., 2008; Nyberg et al., 2010; Viard et al., 2011a). Additionally, the bilateral insular and pre-SMA activations were only found for self-projection in TIME: the insula has been associated with emotional valence (Craig, 2009; Viard et al., 2011a) and the representation of self (Arzy et al., 2009b). Functionally coupled with the anterior insula (Cauda et al., 2011), pre-SMA also contributed to the same network. As no significant engagement of the hippocampus was found, self-projection in time appears to capitalize on high level neocortical structures with a privileged link to the representation of the self.

A direct contrast between SPACE and TIME confirmed the neural dissociations: whereas space processing was mostly confined to posterior regions, time processing mostly recruited frontal regions, consistent with previous reports on the temporal and spatial ordering of memories (Schedlbauer et al., 2014). Hence, one possibility is that, unlike spatial processing for which dedicated structures enable egocentric remapping, setting a new temporal viewpoint may require online computations implicating executive functions, in agreement with mental time travel as an evolutionarily novel form of mental manipulation (Suddendorf et al., 2009).

In sum, and consistent with recent psychophysical predictions (Gauthier and van Wassenhove, 2016), self-projection or egocentric remapping appears to be a major contributor to the differentiation of mental time and space processing.

Dedicated networks for dimension-specific egocentric distances

Psychological distances are mental metrics representing the distance of various psychological dimensions (e.g., time, space, or social distances) with respect to the self (Liberman and Trope, 2008, 2014). Interestingly, no behavioral interferences between temporal and spatial distances were observed, supporting the notion that time and space are representationally distinct (Gauthier and van Wassenhove, 2016).

A widespread parietofrontal network was related to spatial distance in SPACE (Fig. 5B), functionally divided into two classes of regions with respect to self-projection and distance. The first class overlapped with the network activated by self-projection in space (PCUN/RSC, PCUN/SPL, and IPL) and reflected both real-to-imagined position and self-to-event egocentric spatial distances. The second, comprising IPS, left PHC, right temporal cortex, right prefrontal cortex, bilateral insula, and right pre-SMA, was exclusively related to the self-to-event egocentric spatial distances. This suggests that, although the first network may encode distance egocentrically (Byrne et al., 2007), the second may be related to the allocentric coding of distance, notably implicating the parahippocampus. This interpretation would be in line with the recent proposal of a network-based allocentric coding of space (Ekstrom et al., 2014). Alternatively, this network could relate to task demands: goal-directed virtual navigation reported similar distance modulation of precentral cortex, middle temporal gyrus, and insula, associated with behavioral costs (Epstein, 2008; Viard et al., 2011b; Sherrill et al., 2013). The bilateral IPS and prefrontal activations have often been reported in symbolic and nonsymbolic magnitude processing (Fias et al., 2007; Piazza et al., 2007), suggesting that spatial distances could be processed through such a quantitative system. Although these regions were not found in the distance contrasts for TIME, the IPS was seen in the self-projection contrast: one possibility is that self-projection in time entails the elaboration of a temporal cognitive map for events, in an egocentric frame of reference, onto which events are maintained along with their magnitudes. It is noteworthy that none of the spatial navigation system, the general language network (Friederici and Gierhan, 2013), or the known arithmetic network (Arsalidou and Taylor, 2011) appeared to fully overlap with the regions we observe here for mental time travel.

A domain-general representation in IPL?

The finding of common activations in parietal and frontal cortices suggests a common processing of abstract relations computed within egocentric maps. PCUN/SPL (Burgess et al., 2001b; Committeri et al., 2004; Lambrey et al., 2012) and superior frontal cortex (BA6/BA8) activations have previously been associated with changes in spatial perspective (Committeri et al., 2004; Sulpizio et al., 2016) and in temporal self-location during mental time travel (Nyberg et al., 2010; Viard et al., 2011a). These two regions may serve egocentric remapping of mental events by directing attention to internal representations (Wagner et al., 2005) according to retrieval goals (Cabeza, 2008). In other words, these two regions may support a common workspace for the computation of relations between event representations within a cognitive map.

Here, the right IPL/AG was responsive to both self-projection and egocentric distances, thus appearing to be a key area for egocentric remapping and for metric computations. This finding is consistent with recent reports (Parkinson et al., 2014) and with the observation that lesions of AG impair the processing of visual egocentric frames in near space (Bjoertomt et al., 2009; Lane et al., 2015). The activation of the right IPL/AG has been reported during mental time travel (Botzung et al., 2008; Viard et al., 2011a) as well as during the perception of temporal order (Battelli et al., 2007). The right IPL/AG may thus support the mapping of magnitudes (time, space, quantity) with respect to an egocentric reference so as to allow self-related ordinal judgments. Such mapping would account for our findings of similar behavioral distance effects in time and space, and it strongly suggests that egocentric mapping in IPL is indeed abstract. Although activations were found in IPL for both TIME and SPACE, it is noteworthy that an inferior-to-superior regional specialization of IPL could be seen for space and time, respectively (Figs. 4, 5A). This gradient in IPL was consistent with a recent subject-level fMRI study of mental orientation (Peer et al., 2015) and with resting-state functional connectivity parcellation of IPL, in which inferior IPL is connected only to the spatial network (Uddin et al., 2010; Yeo et al., 2011).

Together, these observations suggest distinct networks for time and space computations before a remapping in an abstract format, a hypothesis that will be addressed with time-resolved neuroimaging.

In conclusion, our findings nuance the hypothesis of a common system for the representation of time and space. We showed that two important cognitive operations characteristic of mental time and space travel (namely, self-projection and distance computations) strongly dissociate in their neural implementations as a function of the task dimension (time or space). However, a robust overlap was found in a subregion of IPL, suggesting the possibility of a shared dimensionless (thus, abstract) egocentric mapping during mental time travel and mental space navigation. Such convergence may have emerged from shared constraints in anchoring the self in a common referential system.

Footnotes

This work was supported by European Research Council YStG-263584 and Agence Nationale de la Recherche 10JCJC-1904 to V.v.W. Preliminary data were presented at the annual meeting of the Society for Neuroscience (2014, Washington, DC), the annual meeting of the Organization for Human Brain Mapping (2015, Geneva), and the annual meeting of the Cognitive Neuroscience Society (2016, New York). We thank the members of the Unité de Recherche en NeuroImagerie Applicative Clinique et Translationnelle and the medical staff at NeuroSpin for help in recruiting and scheduling participants; Karin Pestke for help with some of the data collection; Benoît Martin for some of the preliminary analysis; members of the Cognitive Neuroimaging Unit for fruitful discussions; Kamil Ugurbil, Essa Yacoub, Steen Moeller, Eddie Auerbach, and Gordon Junqian Xu (Center for Magnetic Resonance Research, University of Minnesota) for sharing pulse sequence and reconstruction algorithms; and Alexis Amadon for technical support.

The authors declare no competing financial interests.

References

- Aguirre GK, D'Esposito MD. Topographical disorientation: a synthesis and taxonomy. Brain. 1999;122:1613–1628. doi: 10.1093/brain/122.9.1613.

- Arsalidou M, Taylor MJ. Is 2 + 2 = 4? Meta-analyses of brain areas needed for numbers and calculations. Neuroimage. 2011;54:2382–2393. doi: 10.1016/j.neuroimage.2010.10.009.

- Arzy S, Molnar-Szakacs I, Blanke O. Self in time: imagined self-location influences neural activity related to mental time travel. J Neurosci. 2008;28:6502–6507. doi: 10.1523/JNEUROSCI.5712-07.2008.

- Arzy S, Adi-Japha E, Blanke O. The mental time line: an analogue of the mental number line in the mapping of life events. Conscious Cogn. 2009a;18:781–785. doi: 10.1016/j.concog.2009.05.007.

- Arzy S, Collette S, Ionta S, Fornari E, Blanke O. Subjective mental time: the functional architecture of projecting the self to past and future. Eur J Neurosci. 2009b;30:2009–2017. doi: 10.1111/j.1460-9568.2009.06974.x.

- Baayen RH, Davidson DJ, Bates DM. Mixed-effects modeling with crossed random effects for subjects and items. J Mem Lang. 2008;59:390–412. doi: 10.1016/j.jml.2007.12.005.

- Bates D, Martin M, Bolker B. Fitting linear mixed-effects models using lme4. J Stat Softw. 2015;67:1–48.

- Battelli L, Pascual-Leone A, Cavanagh P. The “when” pathway of the right parietal lobe. Trends Cogn Sci. 2007;11:204–210. doi: 10.1016/j.tics.2007.03.001.

- Bjoertomt O, Cowey A, Walsh V. Near space functioning of the human angular and supramarginal gyri. J Neuropsychol. 2009;3:31–43. doi: 10.1348/174866408X394604.

- Bonato M, Zorzi M, Umiltà C. When time is space: evidence for a mental time line. Neurosci Biobehav Rev. 2012;36:2257–2273. doi: 10.1016/j.neubiorev.2012.08.007.

- Boroditsky L. Metaphoric structuring: understanding time through spatial metaphors. Cognition. 2000;75:1–28. doi: 10.1016/S0010-0277(99)00073-6.

- Botzung A, Denkova E, Manning L. Experiencing past and future personal events: functional neuroimaging evidence on the neural bases of mental time travel. Brain Cogn. 2008;66:202–212. doi: 10.1016/j.bandc.2007.07.011.

- Burgess N, Becker S, King JA, O'Keefe J. Memory for events and their spatial context: models and experiments. Philos Trans R Soc Lond B Biol Sci. 2001a;356:1493–1503. doi: 10.1098/rstb.2001.0948.

- Burgess N, Maguire EA, Spiers HJ, O'Keefe J. A temporoparietal and prefrontal network for retrieving the spatial context of lifelike events. Neuroimage. 2001b;14:439–453. doi: 10.1006/nimg.2001.0806.

- Buzsáki G, Moser EI. Memory, navigation and theta rhythm in the hippocampal-entorhinal system. Nat Neurosci. 2013;16:130–138. doi: 10.1038/nn.3304.

- Byrne P, Becker S, Burgess N. Remembering the past and imagining the future: a neural model of spatial memory and imagery. Psychol Rev. 2007;114:340–375. doi: 10.1037/0033-295X.114.2.340.

- Cabeza R. Role of parietal regions in episodic memory retrieval: the dual attentional processes hypothesis. Neuropsychologia. 2008;46:1813–1827. doi: 10.1016/j.neuropsychologia.2008.03.019.

- Cauda F, D'Agata F, Sacco K, Duca S, Geminiani G, Vercelli A. Functional connectivity of the insula in the resting brain. Neuroimage. 2011;55:8–23. doi: 10.1016/j.neuroimage.2010.11.049.

- Ciaramelli E, Rosenbaum RS, Solcz S, Levine B, Moscovitch M. Mental space travel: damage to posterior parietal cortex prevents egocentric navigation and reexperiencing of remote spatial memories. J Exp Psychol Learn Mem Cogn. 2010;36:619–634. doi: 10.1037/a0019181.

- Committeri G, Galati G, Paradis AL, Pizzamiglio L, Berthoz A, LeBihan D. Reference frames for spatial cognition: different brain areas are involved in viewer-, object-, and landmark-centered judgments about object location. J Cogn Neurosci. 2004;16:1517–1535. doi: 10.1162/0898929042568550.

- Craig AD. Emotional moments across time: a possible neural basis for time perception in the anterior insula. Philos Trans R Soc Lond B Biol Sci. 2009;364:1933–1942. doi: 10.1098/rstb.2009.0008.

- Dehaene S, Brannon EM. Space, time, and number: a Kantian research program. Trends Cogn Sci. 2010;14:517–519. doi: 10.1016/j.tics.2010.09.009.

- Dhindsa K, Drobinin V, King J, Hall GB, Burgess N, Becker S. Examining the role of the temporo-parietal network in memory, imagery, and viewpoint transformations. Front Hum Neurosci. 2014;8:709. doi: 10.3389/fnhum.2014.00709.

- Ekstrom AD, Arnold AE, Iaria G. A critical review of the allocentric spatial representation and its neural underpinnings: toward a network-based perspective. Front Hum Neurosci. 2014;8:803. doi: 10.3389/fnhum.2014.00803.

- Epstein RA. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn Sci. 2008;12:388–396. doi: 10.1016/j.tics.2008.07.004.

- Fias W, Lammertyn J, Caessens B, Orban GA. Processing of abstract ordinal knowledge in the horizontal segment of the intraparietal sulcus. J Neurosci. 2007;27:8952–8956. doi: 10.1523/JNEUROSCI.2076-07.2007.

- Friederici AD, Gierhan SM. The language network. Curr Opin Neurobiol. 2013;23:250–254. doi: 10.1016/j.conb.2012.10.002.

- Gallistel CR. The organization of learning. Cambridge, MA: Massachusetts Institute of Technology; 1990.

- Gauthier B, van Wassenhove V. Cognitive mapping in mental time travel and mental space navigation. Cognition. 2016;154:55–68. doi: 10.1016/j.cognition.2016.05.015.

- Ghaem O, Mellet E, Crivello F, Tzourio N, Mazoyer B, Berthoz C, Denis M. Mental navigation along memorized routes activates the hippocampus, precuneus, and insula. Neuroreport. 1997;8:739–744. doi: 10.1097/00001756-199702100-00032.

- Kosslyn SM, Ball TM, Reiser BJ. Visual images preserve metric spatial information: evidence from studies of image scanning. J Exp Psychol Hum Percept Perform. 1978;4:47–60. doi: 10.1037/0096-1523.4.1.47.

- Lakoff G, Johnson M. Metaphors we live by. Chicago: University of Chicago; 2005. pp. 103–114.

- Lambrey S, Doeller C, Berthoz A, Burgess N. Imagining being somewhere else: neural basis of changing perspective in space. Cereb Cortex. 2012;22:166–174. doi: 10.1093/cercor/bhr101.

- Lane AR, Ball K, Ellison A. Dissociating the neural mechanisms of distance and spatial reference frames. Neuropsychologia. 2015;74:42–49. doi: 10.1016/j.neuropsychologia.2014.12.019.

- Liberman N, Trope Y. The psychology of transcending here and now. Science. 2008;322:1201–1205. doi: 10.1126/science.1161958.

- Liberman N, Trope Y. Traversing psychological distance. Trends Cogn Sci. 2014;18:364–369. doi: 10.1016/j.tics.2014.03.001.

- Marchette SA, Vass LK, Ryan J, Epstein RA. Anchoring the neural compass: coding of local spatial reference frames in human medial parietal lobe. Nat Neurosci. 2014;17:1598–1606. doi: 10.1038/nn.3834.

- Moyer S, Bayer RH. Mental comparison and the symbolic distance effect. Cogn Psychol. 1976:228–246.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Nyberg L, Kim AS, Habib R, Levine B, Tulving E. Consciousness of subjective time in the brain. Proc Natl Acad Sci U S A. 2010;107:22356–22359. doi: 10.1073/pnas.1016823108.

- Parkinson C, Liu S, Wheatley T. A common cortical metric for spatial, temporal, and social distance. J Neurosci. 2014;34:1979–1987. doi: 10.1523/JNEUROSCI.2159-13.2014.

- Peer M, Salomon R, Goldberg I, Blanke O, Arzy S. Brain system for mental orientation in space, time, and person. Proc Natl Acad Sci U S A. 2015;112:11072–11077. doi: 10.1073/pnas.1504242112.

- Piazza M, Pinel P, Le Bihan D, Dehaene S. A magnitude code common to numerosities and number symbols in human intraparietal cortex. Neuron. 2007;53:293–305. doi: 10.1016/j.neuron.2006.11.022.

- Schedlbauer AM, Copara MS, Watrous AJ, Ekstrom AD. Multiple interacting brain areas underlie successful spatiotemporal memory retrieval in humans. Sci Rep. 2014;4:6431. doi: 10.1038/srep06431.

- Shepard RN, Metzler J. Mental rotation of three-dimensional objects. Science. 1971;171:701–703. doi: 10.1126/science.171.3972.701.

- Sherrill KR, Erdem UM, Ross RS, Brown TI, Hasselmo ME, Stern CE. Hippocampus and retrosplenial cortex combine path integration signals for successful navigation. J Neurosci. 2013;33:19304–19313. doi: 10.1523/JNEUROSCI.1825-13.2013.

- Suddendorf T, Addis DR, Corballis MC. Mental time travel and the shaping of the human mind. Philos Trans R Soc Lond B Biol Sci. 2009;364:1317–1324. doi: 10.1098/rstb.2008.0301.

- Sulpizio V, Committeri G, Lambrey S, Berthoz A, Galati G. Role of the human retrosplenial cortex/parieto-occipital sulcus in perspective priming. Neuroimage. 2016;125:108–119. doi: 10.1016/j.neuroimage.2015.10.040.

- Tulving E. Episodic memory: from mind to brain. Annu Rev Psychol. 2002;53:1–25. doi: 10.1146/annurev.psych.53.100901.135114.