Abstract

The personal significance of a language statement depends on its communicative context. However, this is rarely taken into account in neuroscience studies. Here, we investigate how the implied source of single-word statements alters their cortical processing. Participants' event-related brain potentials (ERPs) were recorded in response to identical word streams consisting of positive, negative, and neutral trait adjectives that were stated either to represent personal trait feedback from a human or to be randomly generated by a computer. Results showed a strong impact of the perceived sender. Regardless of content, the belief that feedback came from a human enhanced all components from the P2 onward, including the early posterior negativity (EPN), P3, and late positive potential (LPP). Moreover, negative feedback by the “human sender” elicited a larger EPN, whereas positive feedback generally induced a larger LPP. Source estimations revealed differences between “senders” in visual areas, particularly the bilateral fusiform gyri. Likewise, emotional content enhanced activity in these areas. These results specify how even implied sender identity changes the processing of single words in seemingly realistic communicative settings, amplifying their processing in the visual brain. This suggests that the concept of motivated attention extends from stimulus significance to simultaneous appraisal of contextual relevance. Finally, consistent with distinct stages of emotional processing, at least in contexts perceived as social, humans are initially alerted to negative content but later process what is perceived as positive feedback more intensely.

Keywords: EEG/ERP, emotion, fusiform gyri, language, social feedback, social neuroscience

Introduction

Language is intrinsically communicative, yet neuroscience studies typically investigate the processing of isolated words or phrases. Communication theories posit that meaning is derived from interaction with others (Blumer, 1969), implying that the perceived identity of a communicative partner should affect the way language content is processed. When emotional content is communicated, context is likely to be especially important because emotional language is particularly relevant for humans (Barrett et al., 2007; Lieberman et al., 2007). Neuroscience research has amply demonstrated the prioritized processing of emotional language (for review, see Kissler, 2013). Event-related brain potentials (ERPs) consistently differentiate between emotional and neutral words (Ortigue et al., 2004; Kissler et al., 2009; Schacht and Sommer, 2009a, 2009b; Kanske et al., 2011). Although earlier effects have been reported (Scott et al., 2009; Kanske et al., 2011), emotion effects are typically reflected in a larger early posterior negativity (EPN) and a more pronounced late positive potential (LPP). The EPN emerges at ∼200 ms and indexes mechanisms of lexical (Kissler and Herbert, 2013) and perceptual tagging as well as early attention (Schupp et al., 2007). The LPP occurs from ∼500 ms after word presentation and is implicated in stimulus evaluation and memory processing (Kanske and Kotz, 2007; Herbert et al., 2008; Hofmann et al., 2009; Schacht and Sommer, 2009a). Emotional intensity plays an important role in amplifying ERPs, but EEG data further suggest distinct functional stages, with initial alerting by negative stimuli and later evaluative processing favoring positive content (Luo et al., 2010; Zhang et al., 2014).

Source analyses revealed generators of early emotion effects in word processing in primary visual cortex (Ortigue et al., 2004) and in left extrastriate visual cortex (Kissler et al., 2007), including the fusiform gyri (Hofmann et al., 2009) and left middle temporal gyrus (Keuper et al., 2014). For emotional pictures, LPP generators have been found in occipitoparietal (Schupp et al., 2007; Moratti et al., 2011) and frontal regions (Moratti et al., 2011).

Enhanced visual processing of emotional stimuli can be accounted for within the motivated attention framework, stating that emotional stimuli amplify visual cortex activity due to their higher motivational relevance (Lang et al., 1998).

Here, we test whether a contextual manipulation can modulate word processing in a similar manner, amplifying motivational relevance and enhancing processing in the visual brain. We chose a social feedback situation as a particularly salient context (Eisenberger et al., 2011; Korn et al., 2012). Participants were either told that a human would give them personal feedback by endorsing positive, negative, or neutral trait adjectives or they expected random feedback from a computer. In reality, both conditions were perceptually identical. We hypothesized that the feedback would induce larger ERP components when perceived as coming from the “human sender.” Content effects were expected to replicate prioritized processing of emotional words. In sum, we analyze the sequence of early (N1, P2), midlatency (EPN), and late components (P3, LPP) in response to visually presented social feedback and determine the time course and cortical generators of context and content effects.

Materials and Methods

Participants.

Eighteen participants were recruited at the University of Bielefeld. They gave written informed consent according to the Declaration of Helsinki and received 10 Euros for participation. The study was approved by the ethics committee. Due to errors during the experiment, two datasets had to be excluded, leaving 16 participants for the final analysis. These 16 participants (12 females) had a mean age of 24.40 years (range, 21–30). All participants were native German speakers, had normal or corrected-to-normal visual acuity, and were right-handed. Screenings with the German versions of the Beck Depression Inventory and the State-Trait Anxiety Inventory revealed no clinically relevant depression (M = 4.12; SD = 4.54) or anxiety scores (M = 35.94; SD = 3.06).

Stimuli.

Adjectives had previously been rated by 20 students for valence and arousal using the Self-Assessment Manikin (Bradley and Lang, 1994). Raters had been specifically instructed to consider adjective valence and arousal in an interpersonal evaluative context. A total of 150 adjectives (60 negative, 30 neutral, 60 positive) were selected and matched on linguistic properties such as word length, frequency, familiarity, and regularity (Table 1). Linguistic parameters were obtained from the dlex database, a corpus of the German language that draws on a wide variety of sources and includes more than 100 million written words (Heister et al., 2011). Importantly, negative and positive adjectives differed only in their valence. Because truly neutral trait adjectives are rare, neutral adjectives were allowed to differ further from emotional adjectives in rated abstractness. Abstractness was rated on a scale similar to the Self-Assessment Manikin, showing a manikin with many distinct features on the left side (concrete, low values) that is successively transformed into a very abstract rendering on the right side. Positive and negative adjectives were somewhat more concrete than neutral ones.

Table 1.

Comparisons of negative, neutral, and positive adjectives by one-way ANOVAs

| Variable | Negative adjectives (n = 60) | Neutral adjectives (n = 30) | Positive adjectives (n = 60) | F(2,147) |
|---|---|---|---|---|
| Valence | 3.10ᵃ (0.84) | 5.01ᵇ (0.32) | 7.01ᶜ (0.90) | 371.05*** |
| Arousal | 4.57ᵃ (0.85) | 3.30ᵇ (0.66) | 4.40ᵃ (0.85) | 25.93*** |
| Abstractness | 3.24ᵃ (1.03) | 5.07ᵇ (1.46) | 3.16ᵃ (1.27) | 28.10*** |
| Word length | 8.93 (2.65) | 9.23 (2.94) | 9.15 (2.48) | 0.16 |
| Word frequency (per million) | 4.64 (8.56) | 4.34 (6.26) | 4.78 (8.05) | 0.03 |
| Familiarity (absolute) | 21805.77 (39221.26) | 18832.23 (48387.29) | 19331.85 (42795.46) | 0.07 |
| Regularity (absolute) | 261.58 (551.78) | 165.97 (378.73) | 239.06 (388.71) | 0.44 |
| Neighbors Coltheart (absolute) | 3.45 (4.44) | 2.53 (3.42) | 3.78 (4.70) | 0.83 |
| Neighbors Levenshtein (absolute) | 6.13 (6.48) | 4.93 (4.14) | 6.60 (6.26) | 0.76 |

Data are shown as mean (SD). Means in the same row sharing the same superscript letter do not differ significantly from one another at p ≤ 0.05; means that do not share a superscript letter differ at p ≤ 0.05 based on LSD post hoc comparisons.

***p ≤ 0.001.
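The matching checks in Table 1 are one-way between-item ANOVAs. As a minimal sketch (the helper names are hypothetical and this is not the authors' analysis script), the F statistic can be recomputed from group means, standard deviations, and sizes; a second function computes the same test from item-level values for cross-checking. Because the tabled means and SDs are rounded to two decimals, F values recovered from them will only approximate the tabled ones.

```python
import statistics
from typing import Sequence


def anova_from_summary(means: Sequence[float], sds: Sequence[float],
                       ns: Sequence[int]) -> tuple[float, int, int]:
    """One-way between-groups ANOVA from group means, sample SDs (n - 1) and sizes."""
    if not (len(means) == len(sds) == len(ns)):
        raise ValueError("means, sds and ns must have equal length")
    k, n_total = len(means), sum(ns)
    grand = sum(n * m for n, m in zip(ns, means)) / n_total
    ss_between = sum(n * (m - grand) ** 2 for n, m in zip(ns, means))
    ss_within = sum((n - 1) * sd ** 2 for n, sd in zip(ns, sds))
    df_b, df_w = k - 1, n_total - k
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w


def anova_from_raw(groups: Sequence[Sequence[float]]) -> tuple[float, int, int]:
    """The same test computed directly from item-level ratings."""
    values = [x for g in groups for x in g]
    grand = statistics.fmean(values)
    ss_between = sum(len(g) * (statistics.fmean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - statistics.fmean(g)) ** 2 for g in groups for x in g)
    df_b, df_w = len(groups) - 1, len(values) - len(groups)
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w


# Word-length row of Table 1 (negative, neutral, positive); inputs are rounded.
f_len, df1, df2 = anova_from_summary([8.93, 9.23, 9.15], [2.65, 2.94, 2.48], [60, 30, 60])
print(f"F({df1},{df2}) = {f_len:.2f}")
```

With 150 items in three groups, the degrees of freedom come out as (2, 147), matching the table header.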

Procedure.

Participants were told that they would be evaluated by an unknown other person or would see evaluations generated randomly by a computer program. Participants were instructed that evaluations were made by accepting or rejecting presented trait adjectives online and that these evaluations would be communicated via color changes of words on the screen. All subjects underwent both conditions. The sequence of conditions was counterbalanced across participants (Schindler et al., 2014).

Upon arrival, participants were asked to describe themselves in a brief structured interview in front of a camera. They were told that their self-description would be videotaped and shown to a second participant next door. After the interview, participants filled out a demographic questionnaire, the Beck Depression Inventory (Hautzinger et al., 2009), and the State-Trait Anxiety Inventory (Spielberger et al., 1999) while the EEG electrodes were applied. To ensure face validity, a research assistant left the testing room several minutes before the fictitious feedback began, guiding an “unknown person” to a laboratory room next to the testing room.

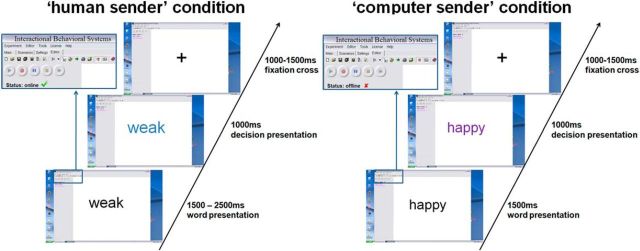

Stimuli were presented within a desktop environment of a fictitious program called “Interactional Behavioral Systems,” allegedly allowing instant online communication (Fig. 1).

Figure 1.

Trial presentation in the fictitious interactive software environment. Each trial started with a presented trait adjective. Subsequent color change indicated endorsement of a trait.

Network cables and changes of the fictitious software desktop image were made salient to ensure the credibility of the situation. The presented feedback was randomly generated and half of all presented adjectives were endorsed, leading to 30 affirmative negative, 30 neutral, and 30 affirmative positive decisions. In addition, 20 highly negative adjectives were inserted and always rejected to further increase credibility, because it would seem very unlikely for someone to judge such extremely negative traits to apply to a near stranger. These additional trials were excluded from further analysis. The desktop environment and stimulus presentation were created using Presentation software (www.neurobehavioralsystems.com). In the “human” condition, color changes between 1500 and 2500 ms after adjective onset indicated a decision by the supposed interaction partner, simulating the variable decision latencies of humans. The decision was communicated via a color change (blue or purple) of the presented adjective, indicating whether or not the respective adjective applied to the participant. Color feedback assignments were counterbalanced. In the computer condition, the corresponding color changes always occurred at 1500 ms, conveying the notion of constant machine computing time. In both conditions, color changes lasted for 1000 ms and were followed by a fixation cross for 1000 to 1500 ms. After testing and debriefing, participants rated their confidence in truly having been judged by another person in the “human” condition on a five-point Likert scale.
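The timing logic described above can be sketched as a trial-list generator. This is a hypothetical illustration, not the Presentation script used in the study: it assumes uniformly distributed integer latencies within the stated ranges, assumes a random half of the analyzed adjectives is endorsed, and treats the word lists and function names as placeholders.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    word: str
    endorsed: bool            # color change signals "applies" (True) or "does not apply"
    filler: bool              # extra highly negative word: always rejected, not analyzed
    feedback_onset_ms: int    # color change, relative to adjective onset
    feedback_ms: int = 1000   # duration of the color change
    fixation_ms: int = 1000   # fixation cross after the feedback


def build_block(words: list[str], fillers: list[str], sender: str,
                rng: random.Random) -> list[Trial]:
    """Hypothetical trial list for one sender condition ("human" or "computer")."""
    if sender not in ("human", "computer"):
        raise ValueError("sender must be 'human' or 'computer'")
    # Assumed allocation: endorse a random half of the analyzed words.
    endorsed = set(rng.sample(range(len(words)), len(words) // 2))
    items = [(w, i in endorsed, False) for i, w in enumerate(words)]
    items += [(w, False, True) for w in fillers]
    trials = []
    for word, is_endorsed, is_filler in items:
        # Human: jittered "decision latency"; computer: constant 1500 ms.
        onset = rng.randint(1500, 2500) if sender == "human" else 1500
        trials.append(Trial(word, is_endorsed, is_filler, onset,
                            fixation_ms=rng.randint(1000, 1500)))
    rng.shuffle(trials)
    return trials


rng = random.Random(1)
block = build_block([f"adj{i}" for i in range(150)], [f"neg{i}" for i in range(20)], "human", rng)
```

Keeping the two senders in one function makes the only systematic difference between conditions explicit: the distribution of the feedback onset.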

EEG recording and analyses.

EEG was recorded from 128 BioSemi active electrodes (www.biosemi.com) at a sampling rate of 2048 Hz. During recording, Cz was used as the reference electrode. Four additional electrodes (EOG), placed at the outer canthi and below the eyes, measured horizontal and vertical eye movements.

Preprocessing and statistical analyses were performed using SPM8 for EEG data (http://www.fil.ion.ucl.ac.uk/spm/) and EMEGS (Peyk et al., 2011). In a first step, data were re-referenced offline to the average reference. To identify artifacts caused by saccades (horizontal, HEOG) or eye blinks (vertical, VEOG), virtual HEOG and VEOG channels were created from the EOG electrodes. EEG signals that were highly correlated with HEOG or VEOG activity (minimum correlation of 0.5) were subtracted from the EEG. Data were then down-sampled to 250 Hz and band-pass filtered from 0.166 to 30 Hz with a fifth-order zero-phase Butterworth filter. Filtered data were segmented from 500 ms before to 1000 ms after stimulus onset. Because word presentation transitioned immediately into feedback by color change, results are presented without baseline correction so as not to introduce prebaseline differences into the feedback phase. However, there were no apparent differences in the time segment immediately preceding the color change (Figs. 2, 4, 5), and control analyses with baseline correction led to analogous results. Automatic artifact detection was used to eliminate remaining artifacts, defined as trials exceeding a threshold of 160 μV. Data were then averaged using the robust averaging algorithm of SPM8, which excludes possible further artifacts. Robust averaging down-weights outliers separately for each channel and time point and thereby preserves a higher number of trials, on the assumption that artifacts usually corrupt only parts of a trial rather than the whole trial. We used the recommended offset of the weighting function, which preserves ∼95% of the data points drawn from a random Gaussian distribution (Litvak et al., 2011). Overall, <1% of all electrodes were interpolated and, on average, 13.18% of all trials were rejected, leaving, on average, 26.05 trials per condition.
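The two artifact-handling steps can be illustrated in NumPy. This is a sketch under stated assumptions, not SPM8's implementation: it reads the 160 μV criterion as an absolute-amplitude threshold, and it approximates robust averaging with an iteratively reweighted Tukey biweight whose residuals are scaled by the median absolute deviation (MAD). With an offset of 3 MAD units (about 2 SD for Gaussian data), roughly 95% of Gaussian samples keep a non-zero weight, in line with the behavior described above. Function names are hypothetical.

```python
import numpy as np


def reject_epochs(epochs: np.ndarray, threshold_uv: float = 160.0) -> np.ndarray:
    """Drop trials whose absolute amplitude exceeds threshold_uv anywhere.

    epochs: trials x channels x samples, in microvolts.
    """
    keep = np.abs(epochs).max(axis=(1, 2)) <= threshold_uv
    return epochs[keep]


def robust_average(epochs: np.ndarray, offset: float = 3.0,
                   tol: float = 1e-6, max_iter: int = 50) -> np.ndarray:
    """Tukey-biweight weighted mean over trials, separately per channel and sample.

    Residuals are scaled by the MAD; points further than offset * MAD from the
    current estimate receive zero weight, so single-sample artifacts are ignored
    without discarding the rest of the trial.
    """
    x = np.asarray(epochs, dtype=float)
    center = np.median(x, axis=0)  # start from the median
    for _ in range(max_iter):
        resid = x - center
        mad = np.median(np.abs(resid - np.median(resid, axis=0)), axis=0)
        mad = np.where(mad > 0, mad, np.finfo(float).eps)  # avoid division by zero
        u = resid / (offset * mad)
        w = np.where(np.abs(u) < 1.0, (1.0 - u ** 2) ** 2, 0.0)
        wsum = w.sum(axis=0)
        weighted = (w * x).sum(axis=0) / np.where(wsum > 0, wsum, 1.0)
        new_center = np.where(wsum > 0, weighted, center)
        converged = np.max(np.abs(new_center - center)) < tol
        center = new_center
        if converged:
            break
    return center
```

Usage would be `robust_average(reject_epochs(epochs))` per condition, yielding a channels x samples ERP.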

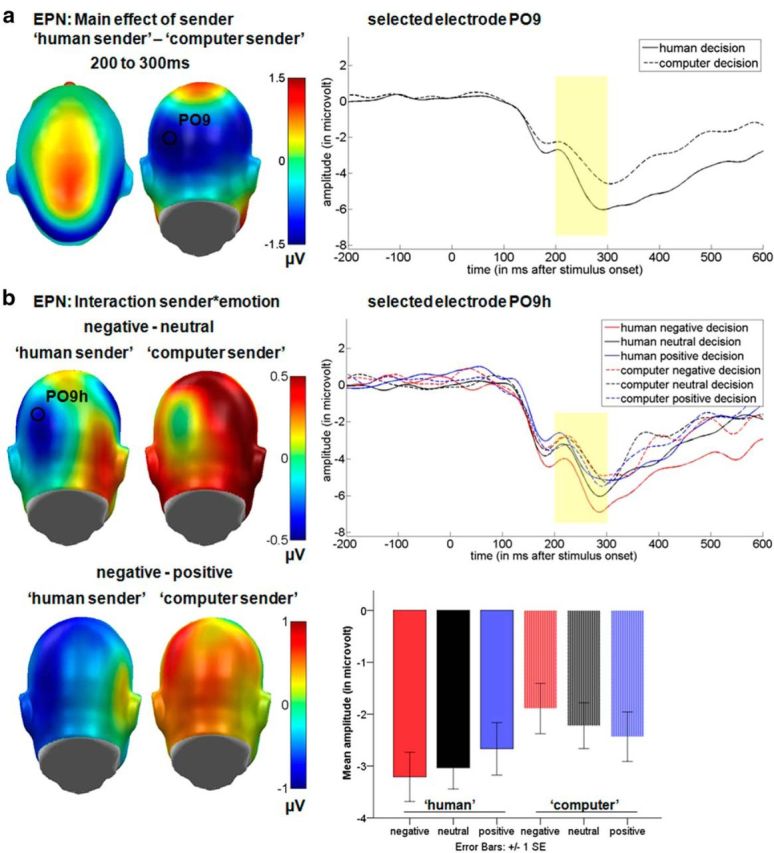

Figure 2.

Results for the EPN time window displaying the significant main effect of the communicative sender and the interaction between communicative sender and emotional content. a, Left, Difference topographies for the communicative sender. Blue indicates more negativity and red more positivity for decisions from the “human sender.” Right, Selected electrode PO9 displaying the time course for both senders. b, Left, Difference topographies for the “human sender” and the “computer sender.” Right, Selected electrode PO9h displaying the time course for all decisions from both senders. Mean amplitudes in microvolts for the occipital EPN cluster are shown at bottom. For display purposes, data were filtered using a 15 Hz low-pass filter.

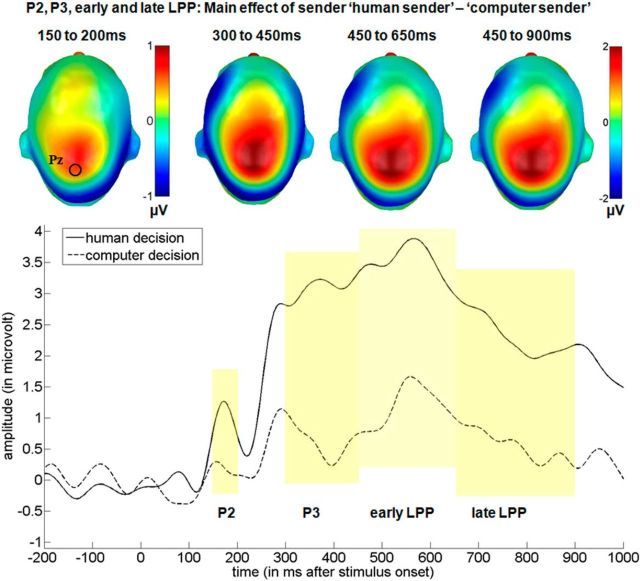

Figure 4.

Main effect of the communicative sender over the central cluster in the P2, P3, and early and late LPP time windows. Top, Difference topographies for the communicative sender. Blue color indicates more negativity and red more positivity for the “human sender.” Bottom, Selected electrode Pz displaying the time course for both senders. For display purposes, data were filtered using a 15 Hz low-pass filter.

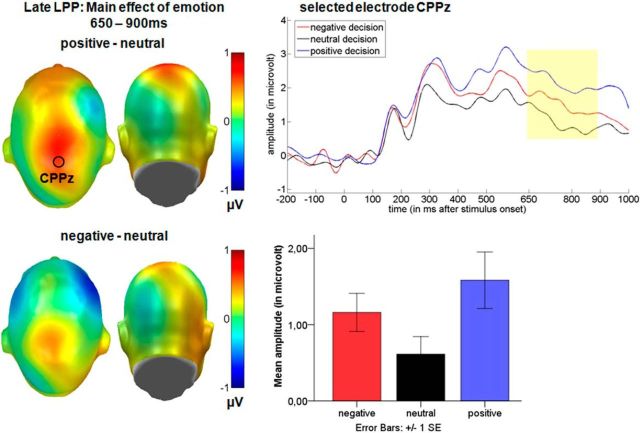

Figure 5.

Main effect of emotion over the central cluster in the late LPP time window. Left, Difference topographies between emotional and neutral decisions. Blue color indicates more negativity and red more positivity for emotional decisions. Right, Selected electrode CPPz illustrates the time course for negative, neutral, and positive decisions. Mean amplitudes in microvolt for the central late LPP cluster are shown at bottom. For display purposes, data were filtered using a 15 Hz low-pass filter.

Statistical analyses.

EEG scalp data were statistically analyzed with EMEGS (Peyk et al., 2011). Two (sender: human vs computer) by three (content: positive, negative, neutral) repeated-measures ANOVAs were set up to investigate main effects of the communicative sender and emotion, and their interaction, in time windows and electrode clusters of interest. Effect sizes were calculated for main and interaction effects and post hoc comparisons. For all post hoc tests, mean microvolt values are presented for each condition. Time windows were set from 150 to 200 ms to investigate N1 and P2 effects, from 200 to 300 ms to investigate EPN effects (Kissler et al., 2007), from 300 to 450 ms to investigate P3 effects, and from 450 to 650 ms and 650 to 900 ms to investigate early and late portions of the LPP, respectively (Schupp et al., 2004; Bublatzky and Schupp, 2012). For the N1 time window, an occipital cluster was used (20 electrodes: PO9, PO9h, PO7, PO7h, I1, OI1, O1, POO3, Iz, OIz, Oz, POOz, I2, OI2, O2, POO4, PO10, PO10h, PO8, PO8h). For the EPN time window, two symmetrical occipital clusters of 11 electrodes each were examined (left: I1, OI1, O1, PO9, PO9h, PO7, P9, P9h, P7, TP9h, TP7; right: I2, OI2, O2, PO10, PO10h, PO8, P10, P10h, P8, TP10h, TP8; see Kissler et al., 2007). For the P2, P3, and LPP time windows, a central cluster was investigated (26 electrodes: FCC1h, FCC2h, C3h, C1, C1h, Cz, C2h, C2, C4h, CCP3h, CCP1, CCP1h, CCPz, CCP2h, CCP2, CCP4h, CPz, CPP1, CPPz, CPP2, P1h, Pz, P2h, PPO1h, PPOz, PPO2h; see Schupp et al., 2007).
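The 2 × 3 within-subject design can be made concrete with a textbook sums-of-squares implementation. This is a hedged sketch, not EMEGS's code: the function name is hypothetical, the input is assumed to be one mean amplitude per participant, sender, and content for a given time window and cluster, and no sphericity (e.g., Greenhouse–Geisser) correction is applied because the text does not state whether one was used. Each effect is tested against its own effect × subject error term, and partial η² is SS_effect / (SS_effect + SS_error).

```python
import numpy as np


def rm_anova_2way(data: np.ndarray) -> dict[str, tuple[float, int, int, float]]:
    """Two-way fully within-subject ANOVA.

    data: subjects x levels of factor A (sender) x levels of factor B (content).
    Returns {effect: (F, df_effect, df_error, partial_eta_squared)}.
    """
    n, a, b = data.shape
    grand = data.mean()
    m_s = data.mean(axis=(1, 2))  # subject means
    m_a = data.mean(axis=(0, 2))  # sender means
    m_b = data.mean(axis=(0, 1))  # content means
    m_ab = data.mean(axis=0)      # sender x content cell means
    m_as = data.mean(axis=2)      # subject x sender means
    m_bs = data.mean(axis=1)      # subject x content means

    ss_a = n * b * np.sum((m_a - grand) ** 2)
    ss_b = n * a * np.sum((m_b - grand) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + grand) ** 2)
    ss_as = b * np.sum((m_as - m_s[:, None] - m_a[None, :] + grand) ** 2)
    ss_bs = a * np.sum((m_bs - m_s[:, None] - m_b[None, :] + grand) ** 2)
    resid = (data - m_ab[None] - m_as[:, :, None] - m_bs[:, None, :]
             + m_a[None, :, None] + m_b[None, None, :] + m_s[:, None, None] - grand)
    ss_abs = np.sum(resid ** 2)

    def effect(ss: float, df: int, ss_err: float, df_err: int):
        f = (ss / df) / (ss_err / df_err)
        return float(f), df, df_err, float(ss / (ss + ss_err))

    return {
        "sender": effect(ss_a, a - 1, ss_as, (a - 1) * (n - 1)),
        "content": effect(ss_b, b - 1, ss_bs, (b - 1) * (n - 1)),
        "interaction": effect(ss_ab, (a - 1) * (b - 1), ss_abs,
                              (a - 1) * (b - 1) * (n - 1)),
    }
```

For 16 participants this yields df = (1, 15) for sender and (2, 30) for content and the interaction, matching the degrees of freedom reported in the Results. With only two sender levels, the sender F equals the squared paired t statistic on participants' content-averaged sender differences.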

Source reconstructions of the generators of significant ERP differences were computed and statistically assessed with SPM8 for EEG (Litvak and Friston, 2008; López et al., 2014) following recommended procedures. First, a realistic boundary element head model was derived from SPM's template head model based on the MNI brain. Electrode positions were then transformed to match the template head, which generates reasonable results even when individual subjects' heads differ from the template (Litvak et al., 2011). Average electrode positions as provided by BioSemi were coregistered with the cortical mesh template for source reconstruction. This cortical mesh was used to calculate the forward solution. The inverse solution was then calculated from 0 to 1000 ms after feedback onset (Campo et al., 2012). Group inversion (Litvak and Friston, 2008) was computed using the multiple sparse priors algorithm implemented in SPM8. This method allows the degree of activity of the sources to vary across subjects but constrains the activated sources to be the same in all subjects (Litvak and Friston, 2008). Compared with single-subject matrix inversion, this approach has been shown to yield more robust source estimations (Litvak and Friston, 2008; Sohoglu et al., 2012).

For each analyzed time window, 3D source reconstructions were generated as NIfTI images (voxel size = 2 × 2 × 2 mm). These images were smoothed using an 8 mm full-width at half-maximum (FWHM) Gaussian kernel. Consistent with a previous study (Campo et al., 2013), we report differences in source activity for voxels significant at an uncorrected threshold of p < 0.005 within clusters of at least 25 significant voxels (Sun et al., 2015). Results that also survive stricter thresholds (p < 0.001 uncorrected or FWE corrected) are marked separately to provide a transparent and comprehensive data presentation. For comparison, some previous studies show generators of surface activity only descriptively (Schupp et al., 2007) or test at a more liberal significance threshold of 0.05 (Sohoglu et al., 2012; Sun et al., 2015). Furthermore, we extracted the voxels showing a significant sender effect and used them as an ROI for the emotion effect to determine the spatial overlap between the two effects. Within this ROI, FWE correction was applied.
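The voxel-plus-extent criterion (p < 0.005, at least 25 voxels) can be sketched as follows. The helper names are hypothetical and the sketch assumes face-sharing (6-)connectivity to define clusters; SPM's own clustering may use a different neighborhood definition. It also converts the 8 mm FWHM kernel to a Gaussian standard deviation, σ = FWHM / (2√(2 ln 2)) ≈ 3.40 mm, or about 1.70 voxels at 2 mm.

```python
import math
from collections import deque

import numpy as np


def fwhm_to_sigma_vox(fwhm_mm: float = 8.0, voxel_mm: float = 2.0) -> float:
    """Gaussian sigma (in voxels) corresponding to a smoothing kernel's FWHM."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0))) / voxel_mm


def extent_threshold(p_map: np.ndarray, alpha: float = 0.005,
                     min_voxels: int = 25) -> tuple[np.ndarray, list[int]]:
    """Keep voxels with p < alpha in 6-connected clusters of >= min_voxels voxels."""
    supra = p_map < alpha
    labels = np.zeros(p_map.shape, dtype=int)
    keep = np.zeros(p_map.shape, dtype=bool)
    sizes = []
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    current = 0
    for seed in zip(*np.nonzero(supra)):
        if labels[seed]:
            continue  # already assigned to a cluster
        current += 1
        labels[seed] = current
        queue, members = deque([seed]), [seed]
        while queue:  # breadth-first flood fill over suprathreshold neighbors
            x, y, z = queue.popleft()
            for dx, dy, dz in steps:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, p_map.shape))
                if inside and supra[nb] and not labels[nb]:
                    labels[nb] = current
                    queue.append(nb)
                    members.append(nb)
        if len(members) >= min_voxels:
            for v in members:
                keep[v] = True
            sizes.append(len(members))
    return keep, sizes
```

Here `p_map` stands for a voxelwise map of uncorrected p values from the source-level contrast; the returned mask and cluster sizes correspond to the "No. of significant voxels" column in the source tables.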

The identification of activated brain regions was performed using the AAL atlas (Tzourio-Mazoyer et al., 2002).

Results

Questionnaire data

After debriefing, mean credibility ratings of the evaluative situation were 3.4 (SD = 1.02) on a scale ranging from 1 to 5. Two participants stated they were strongly convinced, six participants were quite convinced, four participants were somewhat convinced, and four participants said they were little convinced that they had been rated by another person in the “human sender” condition.

N1 and P2

For the N1 (150–200 ms), there was no main effect of sender (F(1,15) = 1.54, p = 0.23, partial η2 = 0.09), of emotion (F(2,30) = 0.20, p = 0.82, partial η2 = 0.01), or an interaction (F(2,30) = 1.44, p = 0.25, partial η2 = 0.09) over occipital sensors.

For the P2, over the central sensor group, a main effect of the putative sender (F(1,15) = 11.45, p < 0.01, partial η2 = 0.43) was found (Fig. 4). Post hoc tests showed that the “human sender” (M = 1.29 μV) led to an enhanced P2 compared with the “computer sender” (M = 0.82 μV). There was no main effect of emotion (F(2,30) = 0.31, p = 0.74, partial η2 = 0.02) and no interaction (F(2,30) = 2.02, p = 0.15, partial η2 = 0.12).
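Each partial η² reported here can be recovered from its F value and degrees of freedom, which offers a quick consistency check on the reported statistics. For the P2 sender effect:

```latex
\eta_p^2 = \frac{SS_\text{effect}}{SS_\text{effect} + SS_\text{error}}
         = \frac{F \, df_\text{effect}}{F \, df_\text{effect} + df_\text{error}}
         = \frac{11.45 \times 1}{11.45 \times 1 + 15} \approx 0.43
```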

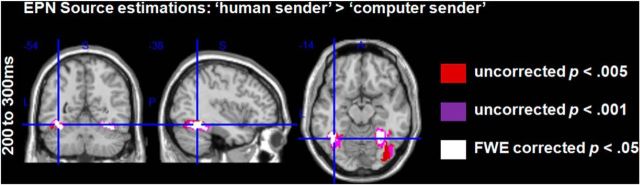

In source space, significant differences between the putative senders were found in the P2 time window. Decisions made by the “human sender” led to more activity in the bilateral fusiform gyri and in the right inferior occipital gyrus (Table 2). Larger activations were found only for the comparison “human sender” versus “computer sender.” No significantly larger activity was found for the reverse comparison, even using a liberal threshold (uncorrected p < 0.05).

Table 2.

Source estimations for the comparison between the “human sender” and the “computer sender” in the P2 time window (150–200 ms)

| Cluster level: no. of significant voxels | Peak level: t(1,90) | Peak level: p (uncorrected) | MNI x (mm) | MNI y (mm) | MNI z (mm) | AAL area |
|---|---|---|---|---|---|---|
| 273 | 3.03 | <0.005 | 38 | −66 | −18 | Fusiform R |
| 74 | 2.99 | <0.005 | 44 | −72 | −16 | Fusiform R |
| 249 | 2.78 | <0.005 | −42 | −64 | −18 | Fusiform L |
| 273 | 2.71 | <0.005 | −36 | −54 | −20 | Fusiform L |

Results show enhanced source activations for the human sender in visual areas. For each significant peak, the respective coordinates (x, y, and z) are reported in MNI space. A cluster may exhibit more than one peak, but only the largest peak is displayed. Area, peak-level brain region as identified by the AAL atlas; R/L, laterality right or left.

EPN

Over the occipital sensor clusters, a significant main effect of communicative sender was observed in the EPN time window (F(1,15) = 8.04, p < 0.05, partial η2 = 0.35; Fig. 2a). Here, the “human sender” (M = −2.97 μV) led to a larger negativity than the “computer sender” (M = −2.18 μV, p < 0.05). Further, an interaction between sender and emotion was observed (F(2,30) = 3.56, p < 0.05, partial η2 = 0.19). Within the “human sender” condition, negative decisions elicited the largest EPN, followed by neutral and then positive decisions, whereas the opposite pattern was observed for the “computer sender” (Fig. 2b). In particular, negative decisions (M = −3.21 μV) by the “human sender” elicited a more negative-going EPN than negative (M = −1.89 μV; t(15) = −3.82, p < 0.01) and neutral decisions (M = −2.22 μV; t(15) = −2.59, p < 0.05) by the “computer sender.” In addition, neutral decisions (M = −3.03 μV) by the “human sender” elicited a larger EPN than both negative (t(15) = −2.74, p < 0.05) and neutral (t(15) = −2.61, p < 0.05) decisions by the “computer sender.” All other comparisons were not significant.

In source space, significant differences between the putative senders were also found in the EPN time window. Decisions made by the “human sender” led to more activity in the bilateral fusiform gyri and in the right inferior occipital gyrus (Fig. 3, Table 3). Larger activations were found only for the comparison “human sender” versus the “computer sender.” No significantly larger activity was found for the “computer sender” even using a liberal threshold (uncorrected p < 0.05).

Figure 3.

EPN: source estimations for the comparison between the “human sender” and the “computer sender” (displayed are the FWE-corrected t-contrasts). Decisions by the “human sender” led to enhanced activations in the bilateral fusiform gyri in the EPN time window.

Table 3.

Source estimations for the comparison between the “human sender” and the “computer sender” in the EPN time window (200–300 ms)

| Cluster level: no. of significant voxels | Peak level: t(1,90) | Peak level: p (uncorrected) | MNI x (mm) | MNI y (mm) | MNI z (mm) | AAL area |
|---|---|---|---|---|---|---|
| 750 (656ᵃ, 346ᵇ) | 5.64ᶜ | <0.001 | −40 | −66 | −18 | Fusiform L |
| 1031 (639ᵃ, 320ᵇ) | 5.08ᶜ | <0.001 | 36 | −50 | −18 | Fusiform R |
| 65 | 3.50 | <0.001 | 44 | −72 | −16 | Fusiform R |

Results show enhanced source activations for the human sender in visual areas. For each significant peak, the respective coordinates (x, y, and z) are reported in MNI space. A cluster may exhibit more than one peak, but only the largest peak is displayed. Area, peak-level brain region as identified by the AAL atlas; R/L, laterality right or left.

ᵃResulting cluster size when a threshold of p < 0.001 was used.

ᵇResulting cluster size when an FWE-corrected threshold of p < 0.05 was used.

ᶜPeak significant at an FWE-corrected threshold of p < 0.05.

P3

In the time window between 300 and 450 ms, a significant main effect of the putative sender was found over central sensors (F(1,15) = 15.35, p < 0.01, partial η2 = 0.51). Post hoc tests showed that the “human sender” (M = 1.85 μV) led to an enhanced P3 (Fig. 4). There was a trend-level effect of emotional content (F(2,30) = 3.10, p = 0.06, partial η2 = 0.17): negative decisions (M = 1.85 μV, p < 0.05) and positive decisions (M = 1.97 μV, p = 0.08) led to somewhat larger P3 amplitudes than neutral decisions (M = 1.25 μV). Finally, no interaction between sender and emotion was found (F(2,30) = 0.45, p = 0.64, partial η2 = 0.03).

In source space, significant differences were also found between the putative senders. Similar to the above reported effects, decisions made by the “human sender” led to enhanced activity in the bilateral fusiform gyri (Table 4), the right middle occipital gyrus, and the left lingual gyrus. Again, no significantly larger activity was found for the “computer sender” even using a liberal threshold (uncorrected p < 0.05).

Table 4.

Source estimations for the comparison between the “human sender” and the “computer sender” in the P3 time window (300–450 ms)

| Cluster level: no. of significant voxels | Peak level: t(1,90) | Peak level: p (uncorrected) | MNI x (mm) | MNI y (mm) | MNI z (mm) | AAL area |
|---|---|---|---|---|---|---|
| 807 (725ᵃ, 549ᵇ) | 6.73ᶜ | <0.001 | −42 | −64 | −18 | Fusiform L |
| 795 (723ᵃ, 553ᵇ) | 6.63ᶜ | <0.001 | 36 | −50 | −18 | Fusiform R |
| 63 | 3.35 | <0.005 | −12 | −80 | −12 | Lingual L |
| 81 | 2.90 | <0.005 | 32 | −84 | 6 | Midoccipital R |
| 63 | 2.77 | <0.005 | 40 | −82 | 10 | Midoccipital R |

Results show enhanced source activations for the human sender in visual areas. For each significant peak, the respective coordinates (x, y, and z) are reported in MNI space. A cluster may exhibit more than one peak, but only the largest peak is displayed. Area, peak-level brain region as identified by the AAL atlas; R/L, laterality right or left.

ᵃResulting cluster size when a threshold of p < 0.001 was used.

ᵇResulting cluster size when an FWE-corrected threshold of p < 0.05 was used.

ᶜPeak significant at an FWE-corrected threshold of p < 0.05.

LPP

In the time window between 450 and 650 ms, a significant main effect of the communicative sender was observed (F(1,15) = 10.45, p < 0.01, partial η2 = 0.41), with the “human sender” (M = 2.31 μV) eliciting a larger positivity than the “computer sender” (M = 0.80 μV; Fig. 4). There was again a trend-level main effect of emotion (F(2,30) = 2.66, p = 0.09, partial η2 = 0.15): negative decisions (M = 1.55 μV, p = 0.08) and positive decisions (M = 1.97 μV, p = 0.09) led to somewhat larger amplitudes than neutral decisions (M = 1.14 μV). No interaction between sender and emotion was found (F(2,30) = 2.20, p = 0.15, partial η2 = 0.12).

Between 650 and 900 ms, during the late portion of the LPP, main effects of both the communicative sender (F(1,15) = 7.96, p < 0.05, partial η2 = 0.35) and emotion (F(2,30) = 3.99, p < 0.05, partial η2 = 0.21) were observed (Figs. 4, 5). Again, post hoc comparisons showed a sustained larger positivity for decisions by the “human sender” (M = 1.75 μV) than for the “computer sender” (M = 0.49 μV, p < 0.01). Further, positive decisions (M = 1.58 μV) elicited a larger LPP than neutral decisions (M = 0.62 μV, p < 0.05), whereas they did not differ from negative decisions (M = 1.16 μV, p = 0.39). There was also a trend toward an enhanced LPP for negative compared with neutral decisions (p = 0.055).

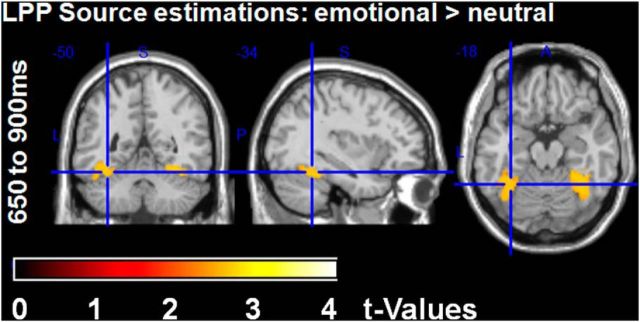

In source space, significant differences between the putative senders were found in the two LPP time windows. Further, significantly larger activations were found for emotional decisions compared with neutral decisions (Table 5, Table 6).

Table 5.

Source estimations for the comparison between the “human sender” and the “computer sender” in the early and late LPP time windows

| Cluster level: no. of significant voxels | Peak level: t(1,90) | Peak level: p (uncorrected) | MNI x (mm) | MNI y (mm) | MNI z (mm) | AAL area |
|---|---|---|---|---|---|---|
| *Early LPP time window (450–650 ms)* | | | | | | |
| 195 (104ᵃ, 1ᵇ) | 4.73ᶜ | <0.001 | −12 | −80 | −12 | Lingual L |
| 647 (548ᵃ) | 4.37 | <0.001 | −42 | −64 | −18 | Fusiform L |
| 766 (547ᵃ) | 4.31 | <0.001 | 36 | −50 | −18 | Fusiform R |
| 102 (47ᵃ) | 3.80 | <0.001 | −18 | −82 | 38 | Sup Occipital L |
| 175 (89ᵃ) | 3.59 | <0.001 | 30 | −88 | 4 | Mid Occipital R |
| 46 | 3.51 | <0.005 | 24 | −82 | 40 | Sup Occipital R |
| 156 (64ᵃ) | 3.46 | <0.001 | −32 | −80 | 8 | Mid Occipital L |
| *Late LPP time window (650–900 ms)* | | | | | | |
| 105 (31ᵃ) | 3.74 | <0.001 | −12 | −80 | −12 | Lingual L |
| 69 | 3.35 | <0.005 | 18 | −74 | −12 | Lingual R |
| 55 | 3.06 | <0.005 | −16 | −82 | 40 | Cuneus L |
| 321 | 2.87 | <0.005 | −30 | −64 | −14 | Fusiform L |
| 322 | 2.78 | <0.005 | 26 | −62 | −14 | Fusiform R |

Results show enhanced source activations for the human sender in visual areas. For each significant peak, the respective coordinates (x, y, and z) are reported in MNI space. A cluster may exhibit more than one peak, but only the largest peak is displayed. Area, peak-level brain region as identified by the AAL atlas; R/L, laterality right or left.

ᵃResulting cluster size when a threshold of p < 0.001 was used.

ᵇResulting cluster size when an FWE-corrected threshold of p < 0.05 was used.

ᶜPeak significant at an FWE-corrected threshold of p < 0.05.

Table 6.

Source estimations for the comparison between emotional and neutral decisions during the late LPP time window (650–900 ms)

| Cluster level: no. of significant voxels | Peak level: t(1,90) | Peak level: p (uncorrected) | MNI x (mm) | MNI y (mm) | MNI z (mm) | AAL area |
|---|---|---|---|---|---|---|
| 248 (437ᵃ) | 2.71 | <0.005 | 40 | −40 | −24 | Fusiform R |
| 176 (414ᵃ) | 2.69 | <0.005 | −40 | −42 | −20 | Fusiform L |

Results show enhanced source activations for the emotional decisions in visual areas. For each significant peak, the respective coordinates (x, y, and z) are reported in MNI space. A cluster may exhibit more than one peak, but only the largest peak is displayed. Area, peak-level brain region as identified by the AAL atlas; R/L, laterality right or left.

ᵃResulting cluster size when an FWE-corrected threshold of p < 0.05 was used.

In the early LPP time window, enhanced activity was again observed in the bilateral fusiform gyri for the “human sender” as well as in the bilateral lingual, bilateral middle occipital gyri, and bilateral superior occipital gyri. In the late LPP time window, more activity for the “human sender” was found in the bilateral fusiform gyri, the bilateral lingual gyri, and the left superior occipital gyrus. Importantly, activity induced by the “computer sender” was never significantly larger than activity for the “human sender” even using a liberal threshold (uncorrected p < 0.05).

To examine emotion differences in source space in the late LPP time window and to assess their overlap with the sender effect, the sender main effect was used as an ROI within which familywise error (FWE) correction was applied. Within this ROI, emotional decisions led to significantly larger activity in the bilateral fusiform gyri than neutral decisions did, analogous to the sender effect (Fig. 6, Table 6). Neutral decisions never induced more activity than emotional ones, even at a liberal significance threshold (uncorrected p < 0.05).
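The ROI-restricted correction described above can be illustrated with a sketch. SPM's FWE correction is usually based on random field theory. The code below instead swaps in a sign-flip, max-statistic permutation test, a different but standard way of controlling the familywise error rate. The aim is only to show why correcting within an ROI is more sensitive than correcting over the whole source space: the null distribution of the maximum is built from fewer voxels. Several elements are assumptions for illustration, not the analysis pipeline used here:

- the function names;
- the per-subject difference maps (emotional minus neutral);
- the boolean mask standing in for the sender main effect.

```python
import numpy as np


def one_sample_t(data):
    """Voxel-wise one-sample t statistic across subjects (axis 0)."""
    n = data.shape[0]
    return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n))


def roi_fwe_pvalues(diff, roi_mask, n_perm=2000, seed=0):
    """Max-statistic sign-flip permutation test restricted to an ROI.

    diff:     (n_subjects, n_voxels) per-subject contrast values,
              e.g. emotional minus neutral (hypothetical layout)
    roi_mask: (n_voxels,) boolean mask, e.g. voxels of the sender main effect
    Returns observed t values and FWE-corrected one-tailed p values
    for the ROI voxels only.
    """
    rng = np.random.default_rng(seed)
    roi_data = diff[:, roi_mask]  # correction is confined to ROI voxels
    t_obs = one_sample_t(roi_data)
    max_null = np.empty(n_perm)
    for i in range(n_perm):
        # One random sign per subject, applied to all voxels, preserves
        # the spatial correlation of each subject's map.
        signs = rng.choice([-1.0, 1.0], size=(roi_data.shape[0], 1))
        max_null[i] = one_sample_t(roi_data * signs).max()
    # Corrected p: share of permutation maxima >= observed t (+1 counts the identity).
    p_fwe = (1 + (max_null[None, :] >= t_obs[:, None]).sum(axis=1)) / (n_perm + 1)
    return t_obs, p_fwe
```

Enlarging `roi_mask` to cover the whole source space can only raise the permutation maxima. The corrected p values therefore can only stay the same or grow, which is the practical reason for restricting the emotion contrast to the sender ROI.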

Figure 6.

Late LPP: source estimations for the comparison between emotional and neutral decisions within ROIs derived from sender main effects (shown are the FWE-corrected t-contrasts). Emotional decisions led to larger activity in bilateral fusiform gyri.

Discussion

This study examined the impact of perceived communicative context on the processing of emotional language. We hypothesized that trait adjectives would be processed more intensely when perceived as social feedback from another human. Indeed, despite physically identical stimuli, we observed large differences between the putative human and computer sender. In the "human sender" condition, larger ERPs were observed starting with the P2 and extending throughout the entire analysis window, encompassing the EPN, P3, and LPP. Moreover, a main effect of emotion was found in the late LPP time window. Here, positive decisions and, as a trend, negative decisions were processed more intensely than neutral ones. Finally, emotion effects on the EPN differed between senders: alerting to negative content was more pronounced in the "human sender" condition than in the "computer sender" condition. Source analysis revealed the fusiform gyri as primary generators of both sender and emotion effects.

The sender effects support our main hypothesis, namely that sender information is implicitly factored into stimulus processing. This occurred even though the communicative context was only implied and stimulation was identical across conditions. Effects started with the P2 and extended throughout the epoch, indicating phasic amplification of processing due to the higher motivational relevance of the "human sender" situation. The results fit well with the concept of motivated attention (Lang et al., 1997), which has been proposed to account for the spontaneously enhanced processing of emotional stimuli (Junghöfer et al., 2001; Herbert et al., 2006; Flaisch et al., 2011). The concept builds on the observation that experimental effects of emotional stimuli often parallel those of explicit instructions in feature-based attention (for review, see Schupp et al., 2006). Both attention and emotion amplify stimulus processing in object-specific regions of the visual brain (Schupp et al., 2007). Accordingly, studies with face stimuli show that fusiform gyrus activity is modulated both by attended faces and, independent of instruction, by emotionally relevant faces (Vuilleumier et al., 2001). Here we show, for the first time, that fusiform responses to words are modulated by their implied contextual relevance.

Emotional content also affected processing. Consistent with other studies, we found evidence for fast extraction of emotional significance (Kissler et al., 2007; Scott et al., 2009), particularly in the more relevant condition (Rohr and Abdel Rahman, 2015). An interaction between content and context occurred as early as the EPN: negative decisions by the "human sender" elicited more negativity than negative or neutral decisions by the "computer sender." Three functionally distinct stages of emotional processing have recently been proposed (Luo et al., 2010; Kissler and Herbert, 2013; Zhang et al., 2014). In this model, initial alerting is thought to accentuate negative content, whereas evaluative postprocessing favors positive content. The present interaction demonstrates that contextual factors can modulate early emotion processing and that salient social contexts accentuate early alerting mechanisms.

Consistent with the stage model, positive words in particular were processed more intensely than neutral ones on the late LPP. The pattern was most pronounced in the "human sender" condition but also held in the "computer sender" condition. This replicates previous reports of enhanced LPPs in emotion word processing (Kissler et al., 2007; Kanske and Kotz, 2007; Herbert et al., 2008; Hofmann et al., 2009; Kissler et al., 2009; Schacht and Sommer, 2009a). A self-positivity bias may contribute to this. For example, Tucker et al. (2003) had participants decide whether adjectives described themselves or a close friend, with passive viewing as the control condition. The LPP was generally larger in the active conditions. Moreover, positive traits endorsed for oneself or a close friend elicited larger LPPs than negative traits, and this effect was again larger for self-positive traits (Tucker et al., 2003). fMRI findings (Korn et al., 2012) and behavioral studies (Hepper et al., 2011) also support a self-positivity bias. For healthy subjects, positive traits seem more self-relevant than negative traits. Self-positivity bias has also been found to correlate with self-esteem (Zhang et al., 2013), and the bias might be adaptive in helping people maintain psychological health (Blackhart et al., 2009). Overall, the healthy brain appears to amplify the processing of self-related positive information. Using the temporal resolution of EEG, the present study identifies the distinct processing stage at which this occurs, namely the LPP window.
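Effects at a given stage, such as the late LPP difference, are commonly quantified as mean amplitudes within a fixed time window and sensor group. Below is a minimal sketch, assuming:

- ERPs stored as channels × samples NumPy arrays;
- a hypothetical 250 Hz sampling grid;
- a hypothetical set of channel indices.

The 650–900 ms window matches the late LPP window of Table 6. The helper names and data layout are illustrative only.

```python
import numpy as np


def window_mean_amplitude(erp, times_ms, start_ms, end_ms, channels):
    """Mean amplitude of `erp` (channels x samples) over the given channels
    and the closed time window [start_ms, end_ms]."""
    in_window = (times_ms >= start_ms) & (times_ms <= end_ms)
    return float(erp[np.ix_(channels, in_window)].mean())


# Hypothetical epoch: -100 to 996 ms sampled at 250 Hz (4 ms steps).
times = np.arange(-100.0, 1000.0, 4.0)
LATE_LPP = (650.0, 900.0)    # late LPP window used in Table 6
ROI_CHANNELS = [10, 11, 12]  # hypothetical centro-parietal sensor indices


def emotion_effect(erps_by_condition):
    """Late-LPP mean amplitude of each emotional condition minus neutral."""
    amps = {
        cond: window_mean_amplitude(erp, times, *LATE_LPP, ROI_CHANNELS)
        for cond, erp in erps_by_condition.items()
    }
    return {cond: amps[cond] - amps["neutral"] for cond in amps if cond != "neutral"}
```

In practice such values would be computed per participant and condition and then entered into the statistical model. The window and sensor choice, not the code, determines which processing stage an effect is attributed to.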

Source analyses indicate that the emotion enhancement occurs largely in the same region as the contextual enhancement, suggesting that context and content can each independently amplify visual processing. Stronger activations for both the "human sender" and emotional content were localized mostly in the bilateral fusiform gyri, including the visual word form area (VWFA), a region specialized in the processing of written language (Yarkoni et al., 2008; Mei et al., 2010; Szwed et al., 2011; Wang et al., 2011). For example, VWFA activations are stronger for real words than for objects or scrambled words (Szwed et al., 2011) and can be modulated by task demands (Wang et al., 2011). VWFA activity is thought to reflect the integration of orthography, word sound, and meaning (Yarkoni et al., 2008) and is also linked to memory for words (Mei et al., 2010).

Overall, our findings agree with the motivated attention model, further specify its cortical basis, and extend it to contextual factors. The results confirm that motivationally relevant stimuli enhance visual processing in object-specific cortical areas. They also indicate that both stimulus and context characteristics can confer motivational relevance. Enhanced bilateral fusiform activations likely reflect heightened attention, which boosts visual processing of the stimuli when feedback supposedly comes from a "human sender." Task demands have been shown to modulate word processing in a similar manner. Examples include lexical or semantic decisions compared with passive viewing (Chen et al., 2013), and semantic compared with perceptual tasks (Martens et al., 2011). In the current experiment, however, both conditions essentially required passive viewing. Therefore, the modulations can only be explained by implicit tuning due to the context manipulation.

In face processing, stronger fusiform activity has been reported for socially and biologically relevant faces than for nonface stimuli (Kanwisher et al., 1997). Fusiform responses are larger for emotional than for neutral faces and can be tuned by attentional demands (Vuilleumier and Pourtois, 2007). Finally, social relevance manipulations, such as assigning faces to a group, also amplify fusiform activity for in-group faces (Van Bavel et al., 2011). The present research extends these findings to word processing in social contexts. For humans, verbal feedback is socially, and perhaps also biologically, important. Belonging to a community is a fundamental motivational need, likely derived from its evolutionary advantage for group-living species.

A key question for future research concerns the mechanisms by which stimulus and/or context attributes tune fusiform activity. An influential model suggests that reentrant processing from the amygdala drives fusiform responses to emotional faces (Vuilleumier, 2005). Studies with neurological patients also indicate a crucial role for the amygdala in the prioritized processing of emotional words (Anderson and Phelps, 2001). The current study does not lend itself to a straightforward test of this model because EEG does not reliably localize subcortical structures; future neuroimaging studies could be revealing in this regard. Attentional modulation of visual cortex has been suggested to be driven by top-down influences from parietal or prefrontal cortex (for review, see Corbetta and Shulman, 2002). However, in the present study, very little activity outside the visual cortex was found even at lenient thresholds (uncorrected p < 0.05). This suggests that these projections play little role in the current experimental situation. Here, we confirm reports of occipital generators of emotion-related LPP enhancements (Sabatinelli et al., 2007; Moratti et al., 2011) but find no significant differences in parietal or frontal generator activity. This may be because context was manipulated between blocks, resulting in more sustained sensitization within the visual cortex itself. Indeed, recent connectivity studies indicate bottom-up effects of the left ventral occipitotemporal cortex, including the left fusiform gyrus, in word detection (Schurz et al., 2014). Finally, emotional stimuli and social context manipulations may differ in their respective patterns of activity within the fusiform gyrus.

In summary, we found that the perceived social context has a large impact on word processing. Our research specifies the time course of this effect and identifies the brain structures involved. Sender differences started with the P2 and extended throughout the analysis window. Source estimation localized these effects primarily to the fusiform portions of the visual cortex. Early in the processing stream, negative feedback was processed more intensely when received from the "human sender," possibly reflecting an early alerting mechanism. At late processing stages, however, positive feedback was preferentially processed, supporting a self-positivity bias (Izuma et al., 2010; Korn et al., 2012; Simon et al., 2014). These results extend the concept of motivated attention from emotional stimulus content to socio-emotional context. They also provide a step toward studying word processing in more realistic, or at least quasi-communicative, scenarios. The present design lends itself to straightforward investigations of interindividual differences and clinical disorders. It gains further relevance in the age of virtual communication, in which communication partners who do not know each other personally often ascribe states and intentions to each other.

Footnotes

This work was funded by the Deutsche Forschungsgemeinschaft (Grant DFG KI1283/4-1) and by the Cluster of Excellence 277 "Cognitive Interaction Technology." We thank all participants who contributed to this study.

The authors declare no competing financial interests.

References

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–309. doi: 10.1038/35077083.

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends Cogn Sci. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Blackhart GC, Nelson BC, Knowles ML, Baumeister RF. Rejection elicits emotional reactions but neither causes immediate distress nor lowers self-esteem: A meta-analytic review of 192 studies on social exclusion. Pers Soc Psychol Rev. 2009;13:269–309. doi: 10.1177/1088868309346065.

- Blumer H. Symbolic interactionism: perspective and method. New York: Prentice Hall; 1969.

- Bradley MM, Lang PJ. Measuring emotion: the self-assessment manikin and the semantic differential. J Behav Ther Exp Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- Bublatzky F, Schupp HT. Pictures cueing threat: Brain dynamics in viewing explicitly instructed danger cues. Soc Cogn Affect Neurosci. 2012;7:611–622. doi: 10.1093/scan/nsr032.

- Campo P, Garrido MI, Moran RJ, Maestú F, García-Morales I, Gil-Nagel A, del Pozo F, Dolan RJ, Friston KJ. Remote effects of hippocampal sclerosis on effective connectivity during working memory encoding: a case of connectional diaschisis? Cereb Cortex. 2012;22:1225–1236. doi: 10.1093/cercor/bhr201.

- Campo P, Poch C, Toledano R, Igoa JM, Belinchón M, García-Morales I, Gil-Nagel A. Anterobasal temporal lobe lesions alter recurrent functional connectivity within the ventral pathway during naming. J Neurosci. 2013;33:12679–12688. doi: 10.1523/JNEUROSCI.0645-13.2013.

- Chen Y, Davis MH, Pulvermüller F, Hauk O. Task modulation of brain responses in visual word recognition as studied using EEG/MEG and fMRI. Front Hum Neurosci. 2013;7:376. doi: 10.3389/fnhum.2013.00376.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Eisenberger NI, Inagaki TK, Muscatell KA, Byrne Haltom KE, Leary MR. The neural sociometer: brain mechanisms underlying state self-esteem. J Cogn Neurosci. 2011;23:3448–3455. doi: 10.1162/jocn_a_00027.

- Flaisch T, Häcker F, Renner B, Schupp HT. Emotion and the processing of symbolic gestures: An event-related brain potential study. Soc Cogn Affect Neurosci. 2011;6:109–118. doi: 10.1093/scan/nsq022.

- Hautzinger M, Keller F, Kühner C. BDI-II Beck-Depressions-Inventar Revision. Ed 2. Frankfurt: Pearson Assessment; 2009.

- Heister J, Würzner KM, Bubenzer J, Pohl E, Hanneforth T, Geyken A. dlexDB–eine lexikalische Datenbank für die psychologische und linguistische Forschung. Psychologische Rundschau. 2011;62:10–20. doi: 10.1026/0033-3042/a000029.

- Hepper EG, Hart CM, Gregg AP, Sedikides C. Motivated expectations of positive feedback in social interactions. J Soc Psychol. 2011;151:455–477. doi: 10.1080/00224545.2010.503722.

- Herbert C, Kissler J, Junghöfer M, Peyk P, Rockstroh B. Processing of emotional adjectives: evidence from startle EMG and ERPs. Psychophysiology. 2006;43:197–206. doi: 10.1111/j.1469-8986.2006.00385.x.

- Herbert C, Junghöfer M, Kissler J. Event related potentials to emotional adjectives during reading. Psychophysiology. 2008;45:487–498. doi: 10.1111/j.1469-8986.2007.00638.x.

- Hofmann MJ, Kuchinke L, Tamm S, Võ ML, Jacobs AM. Affective processing within 1/10th of a second: high arousal is necessary for early facilitative processing of negative but not positive words. Cogn Affect Behav Neurosci. 2009;9:389–397. doi: 10.3758/9.4.389.

- Izuma K, Saito DN, Sadato N. Processing of the incentive for social approval in the ventral striatum during charitable donation. J Cogn Neurosci. 2010;22:621–631. doi: 10.1162/jocn.2009.21228.

- Junghöfer M, Bradley MM, Elbert TR, Lang PJ. Fleeting images: a new look at early emotion discrimination. Psychophysiology. 2001;38:175–178. doi: 10.1111/1469-8986.3820175.

- Kanske P, Plitschka J, Kotz SA. Attentional orienting towards emotion: P2 and N400 ERP effects. Neuropsychologia. 2011;49:3121–3129. doi: 10.1016/j.neuropsychologia.2011.07.022.

- Kanske P, Kotz SA. Concreteness in emotional words: ERP evidence from a hemifield study. Brain Res. 2007;1148:138–148. doi: 10.1016/j.brainres.2007.02.044.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Keuper K, Zwanzger P, Nordt M, Eden A, Laeger I, Zwitserlood P, Kissler J, Junghöfer M, Dobel C. How 'love' and 'hate' differ from 'sleep': using combined electro/magnetoencephalographic data to reveal the sources of early cortical responses to emotional words. Hum Brain Mapp. 2014;35:875–888. doi: 10.1002/hbm.22220.

- Kissler J. Love letters and hate mail: cerebral processing of emotional language content. In: Armony J, Vuilleumier P, editors. Handbook of human affective neuroscience. Cambridge: Cambridge University Press; 2013. pp. 304–328.

- Kissler J, Herbert C. Emotion, etmnooi, or emitoon?–faster lexical access to emotional than to neutral words during reading. Biol Psychol. 2013;92:464–479. doi: 10.1016/j.biopsycho.2012.09.004.

- Kissler J, Herbert C, Peyk P, Junghöfer M. Buzzwords: early cortical responses to emotional words during reading. Psychol Sci. 2007;18:475–480. doi: 10.1111/j.1467-9280.2007.01924.x.

- Kissler J, Herbert C, Winkler I, Junghöfer M. Emotion and attention in visual word processing–an ERP study. Biol Psychol. 2009;80:75–83. doi: 10.1016/j.biopsycho.2008.03.004.

- Korn CW, Prehn K, Park SQ, Walter H, Heekeren HR. Positively biased processing of self-relevant social feedback. J Neurosci. 2012;32:16832–16844. doi: 10.1523/JNEUROSCI.3016-12.2012.

- Lang PJ, Bradley MM, Fitzsimmons JR, Cuthbert BN, Scott JD, Moulder B, Nangia V. Emotional arousal and activation of the visual cortex: an fMRI analysis. Psychophysiology. 1998;35:199–210. doi: 10.1111/1469-8986.3520199.

- Lang PJ, Bradley MM, Cuthbert BN. Motivated attention: affect, activation, and action. In: Lang PJ, Simons RF, Balaban MT, editors. Attention and orienting: sensory and motivational processes. Hillsdale, NJ: Erlbaum; 1997. pp. 97–135.

- Lieberman MD, Eisenberger NI, Crockett MJ, Tom SM, Pfeifer JH, Way BM. Putting feelings into words: affect labeling disrupts amygdala activity in response to affective stimuli. Psychol Sci. 2007;18:421–428. doi: 10.1111/j.1467-9280.2007.01916.x.

- Litvak V, Mattout J, Kiebel S, Phillips C, Henson R, Kilner J, Barnes G, Oostenveld R, Daunizeau J, Flandin G, Penny W, Friston K. EEG and MEG data analysis in SPM8. Comput Intell Neurosci. 2011;2011:852961. doi: 10.1155/2011/852961.

- Litvak V, Friston K. Electromagnetic source reconstruction for group studies. Neuroimage. 2008;42:1490–1498. doi: 10.1016/j.neuroimage.2008.06.022.

- López JD, Litvak V, Espinosa JJ, Friston K, Barnes GR. Algorithmic procedures for Bayesian MEG/EEG source reconstruction in SPM. Neuroimage. 2014;84:476–487. doi: 10.1016/j.neuroimage.2013.09.002.

- Luo W, Feng W, He W, Wang NY, Luo YJ. Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage. 2010;49:1857–1867. doi: 10.1016/j.neuroimage.2009.09.018.

- Martens U, Ansorge U, Kiefer M. Controlling the unconscious: attentional task sets modulate subliminal semantic and visuomotor processes differentially. Psychol Sci. 2011;22:282–291. doi: 10.1177/0956797610397056.

- Mei L, Xue G, Chen C, Xue F, Zhang M, Dong Q. The "visual word form area" is involved in successful memory encoding of both words and faces. Neuroimage. 2010;52:371–378. doi: 10.1016/j.neuroimage.2010.03.067.

- Moratti S, Saugar C, Strange BA. Prefrontal-occipitoparietal coupling underlies late latency human neuronal responses to emotion. J Neurosci. 2011;31:17278–17286. doi: 10.1523/JNEUROSCI.2917-11.2011.

- Ortigue S, Michel CM, Murray MM, Mohr C, Carbonnel S, Landis T. Electrical neuroimaging reveals early generator modulation to emotional words. Neuroimage. 2004;21:1242–1251. doi: 10.1016/j.neuroimage.2003.11.007.

- Peyk P, De Cesarei A, Junghöfer M. Electro Magneto Encephalograhy Software: overview and integration with other EEG/MEG toolboxes. Comput Intell Neurosci. 2011;2011:861705. doi: 10.1155/2011/861705.

- Rohr L, Abdel Rahman R. Affective responses to emotional words are boosted in communicative situations. Neuroimage. 2015;109:273–282. doi: 10.1016/j.neuroimage.2015.01.031.

- Sabatinelli D, Lang PJ, Keil A, Bradley M. Emotional perception: correlation of functional MRI and event-related potentials. Cereb Cortex. 2007;17:1085–1091. doi: 10.1093/cercor/bhl017.

- Schacht A, Sommer W. Emotions in word and face processing: Early and late cortical responses. Brain Cogn. 2009a;69:538–550. doi: 10.1016/j.bandc.2008.11.005.

- Schacht A, Sommer W. Time course and task dependence of emotion effects in word processing. Cogn Affect Behav Neurosci. 2009b;9:28–43. doi: 10.3758/CABN.9.1.28.

- Schindler S, Wegrzyn M, Steppacher I, Kissler J. It's all in your head–how anticipating evaluation affects the processing of emotional trait adjectives. Front Psychol. 2014;5:1292. doi: 10.3389/fpsyg.2014.01292.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41:441–449. doi: 10.1111/j.1469-8986.2004.00174.x.

- Schupp HT, Flaisch T, Stockburger J, Junghöfer M. Emotion and attention: event-related brain potential studies. Prog Brain Res. 2006;156:31–51. doi: 10.1016/S0079-6123(06)56002-9.

- Schupp HT, Stockburger J, Codispoti M, Junghöfer M, Weike AI, Hamm AO. Selective visual attention to emotion. J Neurosci. 2007;27:1082–1089. doi: 10.1523/JNEUROSCI.3223-06.2007.

- Schurz M, Kronbichler M, Crone J, Richlan F, Klackl J, Wimmer H. Top-down and bottom-up influences on the left ventral occipito-temporal cortex during visual word recognition: an analysis of effective connectivity. Hum Brain Mapp. 2014;35:1668–1680. doi: 10.1002/hbm.22281.

- Scott GG, O'Donnell PJ, Leuthold H, Sereno SC. Early emotion word processing: evidence from event-related potentials. Biol Psychol. 2009;80:95–104. doi: 10.1016/j.biopsycho.2008.03.010.

- Simon D, Becker MP, Mothes-Lasch M, Miltner WH, Straube T. Effects of social context on feedback-related activity in the human ventral striatum. Neuroimage. 2014;99:1–6. doi: 10.1016/j.neuroimage.2014.05.071.

- Sohoglu E, Peelle JE, Carlyon RP, Davis MH. Predictive top-down integration of prior knowledge during speech perception. J Neurosci. 2012;32:8443–8453. doi: 10.1523/JNEUROSCI.5069-11.2012.

- Spielberger CD, Sydeman SJ, Owen AE, Marsh BJ. Measuring anxiety and anger with the State-Trait Anxiety Inventory (STAI) and the State-Trait Anger Expression Inventory (STAXI). In: Maruish ME, editor. The use of psychological testing for treatment planning and outcomes assessment. Ed 2. Mahwah, NJ: Lawrence Erlbaum Associates; 1999. pp. 993–1021.

- Sun D, Lee TM, Chan CC. Unfolding the spatial and temporal neural processing of lying about face familiarity. Cereb Cortex. 2015;25:927–936. doi: 10.1093/cercor/bht284.

- Szwed M, Dehaene S, Kleinschmidt A, Eger E, Valabrègue R, Amadon A, Cohen L. Specialization for written words over objects in the visual cortex. Neuroimage. 2011;56:330–344. doi: 10.1016/j.neuroimage.2011.01.073.

- Tucker DM, Luu P, Desmond RE Jr, Hartry-Speiser A, Davey C, Flaisch T. Corticolimbic mechanisms in emotional decisions. Emotion. 2003;3:127–149. doi: 10.1037/1528-3542.3.2.127.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Van Bavel JJ, Packer DJ, Cunningham WA. Modulation of the fusiform face area following minimal exposure to motivationally relevant faces: evidence of in-group enhancement (not out-group disregard). J Cogn Neurosci. 2011;23:3343–3354. doi: 10.1162/jocn_a_00016.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends Cogn Sci. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–194. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/S0896-6273(01)00328-2.

- Wang X, Yang J, Shu H, Zevin JD. Left fusiform BOLD responses are inversely related to word-likeness in a one-back task. Neuroimage. 2011;55:1346–1356. doi: 10.1016/j.neuroimage.2010.12.062.

- Yarkoni T, Speer NK, Balota DA, McAvoy MP, Zacks JM. Pictures of a thousand words: investigating the neural mechanisms of reading with extremely rapid event-related fMRI. Neuroimage. 2008;42:973–987. doi: 10.1016/j.neuroimage.2008.04.258.

- Zhang D, He W, Wang T, Luo W, Zhu X, Gu R, Li H, Luo YJ. Three stages of emotional word processing: an ERP study with rapid serial visual presentation. Soc Cogn Affect Neurosci. 2014;9:1897–1903. doi: 10.1093/scan/nst188.

- Zhang H, Guan L, Qi M, Yang J. Self-esteem modulates the time course of self-positivity bias in explicit self-evaluation. PLoS One. 2013;8:e81169. doi: 10.1371/journal.pone.0081169.