Abstract

Plasticity in the visual cortex of blind individuals provides a rare window into the mechanisms of cortical specialization. In the absence of visual input, occipital (“visual”) brain regions respond to sound and spoken language. Here, we examined the time course and developmental mechanism of this plasticity in blind children. Nineteen blind and 40 sighted children and adolescents (4–17 years old) listened to stories and two auditory control conditions (unfamiliar foreign speech, and music). We find that “visual” cortices of young blind (but not sighted) children respond to sound. Responses to nonlanguage sounds increased between the ages of 4 and 17. By contrast, occipital responses to spoken language were maximal by age 4 and were not related to Braille learning. These findings suggest that occipital plasticity for spoken language is independent of plasticity for Braille and for sound. We conclude that in the absence of visual input, spoken language colonizes the visual system during brain development. Our findings suggest that early in life, human cortex has a remarkably broad computational capacity. The same cortical tissue can take on visual perception and language functions.

SIGNIFICANCE STATEMENT Studies of plasticity provide key insights into how experience shapes the human brain. The “visual” cortex of adults who are blind from birth responds to touch, sound, and spoken language. To date, all existing studies have been conducted with adults, so little is known about the developmental trajectory of plasticity. We used fMRI to study the emergence of “visual” cortex responses to sound and spoken language in blind children and adolescents. We find that “visual” cortex responses to sound increase between 4 and 17 years of age. By contrast, responses to spoken language are present by 4 years of age and are not related to Braille-learning. These findings suggest that, early in development, human cortex can take on a strikingly wide range of functions.

Keywords: blindness, cross-modal, development, language, plasticity, visual cortex

Introduction

Studies of blindness provide a rare glimpse into how nature and nurture shape human brain development. “Visual” cortices of congenitally blind individuals respond to input from non-visual modalities, including sound and touch. Occipital cortex of blind adults participates in functions such as tactile discrimination, auditory motion perception, and localization of sounds (Merabet et al., 2004; Poirier et al., 2006; Collignon et al., 2011). Strikingly, there is also evidence that occipital cortex processes spoken language (Cohen et al., 1997; Burton et al., 2002, 2003; Röder et al., 2002; Amedi et al., 2003, 2004; Bedny et al., 2011; Watkins et al., 2012). Occipital areas are active when blind adults listen to words and sentences (Sadato et al., 1996; Burton et al., 2002; Röder et al., 2002; Amedi et al., 2003) and occipital activity is sensitive to the meanings of words and the compositional structure of sentences (Röder et al., 2002; Bedny et al., 2011, 2012). Transient disruption of occipital circuits with transcranial magnetic stimulation (TMS) impairs the ability of blind people to produce semantically appropriate verbs to heard nouns (Amedi et al., 2004). Occipital participation in these tasks is striking, in light of the cognitive and evolutionary differences between vision and language. Understanding the mechanism of this plasticity could provide insights into human brain development.

All existing data on visual cortex plasticity in blindness come from adults. Since prior studies have not examined the emergence of plasticity during development, multiple fundamentally different hypotheses about timing and mechanism remain untested. With regard to timing, one possibility is that occipital responses to spoken language emerge in adulthood or late childhood. This might be the case if many years of blindness are required to revamp the visual system for non-visual functions. At the other extreme, occipital responses to speech might be present at birth. Over time vision might eliminate these non-visual responses in sighted children, whereas they are maintained in children who are blind. An intermediate possibility is that blindness causes responses to spoken language to emerge in visual cortex during early childhood.

The time course of plasticity also provides insight into its mechanism. How does occipital cortex come to respond to speech? According to one hypothesis, spoken language reaches occipital circuits from primary auditory areas or the auditory nucleus of the thalamus (Miller and Vogt, 1984; Falchier et al., 2002; Clavagnier et al., 2004). During development, occipital cortex initially responds to sound and, subsequently, gradually specializes for common or highly practiced sounds, such as speech.

Another possibility is that Braille learning bootstraps occipital areas for spoken language. A number of studies find that occipital areas of blind adults are active during Braille reading (Sadato et al., 1996; Burton et al., 2002; Merabet et al., 2004; Sadato, 2005; Reich et al., 2011). On the Braille bootstrapping account, occipital cortex initially receives input from somatosensory cortex and participates in the fine-grained spatial discrimination of Braille dots (Merabet et al., 2004). Practice reading Braille in turn causes occipital cortex to respond to spoken language (Bavelier and Neville, 2002). This “Braille bootstrapping” account predicts that the timing of occipital plasticity for spoken language should follow the time course of learning to read Braille (Sandak et al., 2004; Church et al., 2008; Ben-Shachar et al., 2011).

Finally, language and vision might directly compete for cortical territory during development. This could occur, for example, if nearby language regions in temporal cortex invade what would otherwise be visual areas in occipitotemporal cortex. This view predicts that occipital responses to language should be present as early as, or even earlier than, occipital responses to sound and before learning Braille. If such direct encroachment occurs early in development, it might also affect the development of classic language areas in left prefrontal and temporoparietal cortices.

Materials and Methods

Participants.

Participants were 19 blind children (mean age = 9.32 years, SD = 4.04, range 4–17, 6 female), 20 blindfolded sighted children (mean age = 8.66, SD = 2.90, range = 4–16, 13 female), and 20 sighted children (mean age = 9.09, SD = 2.63, range 5–16, 11 female). Blindfolded children wore total-light-exclusion blindfolds during the fMRI scan only and were included to control for the presence of visual stimulation during the scan.

All blind participants had lost their sight due to pathology in or anterior to the optic chiasm (not involving brain damage). None of the blind children had any measurable visual acuity at the time of testing and all but one had at most minimal light perception since 2 months of age or earlier (see Table 1 for details). All sighted participants had normal or corrected-to-normal vision. None of the participants had any known neurological or cognitive disabilities. One congenitally blind participant had suffered a seizure early in life.

Table 1.

Participant demographics

| Age (years) | Gender | Subject | Age when learned English | Handedness | Age of blindness | Cause of blindness | Residual vision | General Braille ability | WJIII Braille score |
|---|---|---|---|---|---|---|---|---|---|
| 4.03 | F | BC4 | 24 months | Right | Birth | Premature birth/ROP stage V | None | None (Learning pre-Braille) | 0 |
| 4.64 | M | BC13 | 0 | Right | Birth | Leber's congenital amaurosis | None | Letter-by-letter | 0.15 |
| 5.05 | F | BC16 | 0 | Right | Birth | Leber's congenital amaurosis | LP | Letter-by-letter | 0.07 |
| 5.13 | M | BC15 | 0 | Right | Birth | Microphthalmia with sclerocornea | LP, some color | Letter-by-letter | NA |
| 5.69 | F | BC19 | 0 | Right | Birth | Microphthalmia | None | Word-by-word | 0.12 |
| 5.7 | M | BC3 | 28.5 months | Ambi | Birth | Microphthalmia, retinal dysplasia, CM, PA | None | Letter-by-letter | 0.12 |
| 6.76 | M | BC17 | 0 | Right | Birth | Leber's congenital amaurosis | None | Word-by-word | 0.20 |
| 7.22 | F | BC12 | 0 | Right/Ambi | 2 mo | Leber's congenital amaurosis | LP | Whole stories | 0.57 |
| 7.53 | F | BC6 | 0 | Right | 2–5 yrs | Uveitis | Minimal LP | Whole stories | 0.34 |
| 7.78 | M | BC18 | 0 | Right | Birth | Leber's congenital amaurosis | LP | Word-by-word | 0.37 |
| 8.05 | M | BC2 | 0 | Left | Birth | Premature birth/ROP | Minimal LP | Whole stories | 0.30 |
| 8.37 | M | BC10 | 0 | Right | Birth | Leber's congenital amaurosis | None | Whole stories | 0.54 |
| 9.5 | F | BC8 | 0 | Right/Ambi | Birth | Familial exudative vitreoretinopathy | None | Whole stories/Adult level | 0.75 |
| 9.62 | M | BC11 | 0 | Right/Ambi | Birth | Leber's congenital amaurosis | Light/some form perception | Adult level | 0.94 |
| 9.65 | F | BC7 | 6 years | Left/Ambi | Birth | Microphthalmia | Minimal LP | Word-by-word/Whole stories | 0.31 |
| 11.6 | F | BC14 | 0 | Left | Birth | Leber's congenital amaurosis | None | Whole stories | 0.91 |
| 14.2 | M | BC9 | 0 | Ambi | Birth | Bilateral detached retinas at birth | None | Whole stories/Adult level | 0.70 |
| 15.6 | M | BC1 | 0 | Right | Birth | Anophthalmia | None | Adult level | NA |
| 17 | F | BC5 | 0 | Right | Birth | Optic nerve hypoplasia | None | Adult level | 0.99 |

Age of blindness describes onset of blindness, which is defined as absence of vision with at most minimal light perception (LP). General Braille ability measure reflects a subjective report from the parents of the blind children. WJIII Braille score is combined scores from the Letter-Word ID and Word Attack subsets of the Woodcock-Johnson III Test of Cognitive Abilities. The demographic groups are: Sighted blindfolded children: n = 20, 13 female, mean age 8.66 years, SD, 2.90, all native English speakers (8 multilingual), 5 left-handed; sighted children: n = 20, 11 female, mean age 9.08 years, SD, 2.63, all native English speakers (3 multilingual), 1 left-handed. BC, Blind child; ROP, retinopathy of prematurity; CM, coloboma microcornea; PA, Peter's anomaly.

All participants were fluent English speakers. Three of the blind children, two of the sighted children, and six of the blindfolded sighted children were multilingual (see demographics in Table 1 for further details). Analyses in classic language areas were all conducted separately for multilingual and monolingual children. We found no effects of multilingualism on either prefrontal or lateral temporal language specialization; thus, results for multilingual and monolingual children are not reported separately.

Seven additional children took part in the study but were excluded from all analyses due to excessive motion in the scanner (one blind and one sighted child), autism spectrum disorder diagnosis and inability to do the experimental task (one blind child), inability to remain in the scanner (two sighted children and one blind child), or abnormal neuroanatomy (one sighted child).

Task.

Before the beginning of the fMRI study, all children participated in a 10–15 min mock scan session and completed seven practice trials of the task. A researcher remained inside the scanner room and stayed in physical contact with each child throughout the scan.

During the scan, children performed a “Does this come next?” task. Each trial began with a 20-s target clip in one of three conditions: an English story (Language), a story in a foreign language (Foreign Speech: Hebrew, Korean, or Russian), or instrumental music (Music). None of the children who participated in the experiment spoke Hebrew, Korean, or Russian. Children then heard the question, “Does this come next?” (1.5 s), followed by a 3 s probe (sentence, foreign speech, or music). Participants judged whether the probe was the correct continuation of the initial story or the continuation of a different story (Language), the same foreign language or a different foreign language (Foreign Speech), or the same melody by the same instrument or, instead, a different melody by a different instrument (Music). For all conditions, the correct answer was “yes” 50% of the time. Each probe clip was followed by a 6.5-s pause during which the participant's response was collected via a button press. Following each trial, participants heard a 5-s motivational auditory clip, which either said “Great Job! Get ready for the next one” following correct responses, or “Alright. Here comes another one!” following incorrect responses. For sighted non-blindfolded children, a colorful image was presented on the screen. Images did not differ across conditions. Stimuli were presented through Matlab 7.10 running on an Apple MacBook Pro.

Although they performed well when verbally prompted during the practice trials, children < 5 years of age (two blind and one blindfolded) were unable to consistently respond to questions by pressing buttons inside the scanner. Their behavioral data were excluded from analyses due to an insufficient number of responses. Due to technical error, behavioral data from four blindfolded children were also not collected.

English stories were recorded by one of three native English (female) speakers in child-directed prosody. Different fluent speakers recorded the Korean, Hebrew, and Russian stories. English stories were on average 4.7 sentences long (SD = 0.92) and had a Flesch Reading Ease Level of 90.4 (SD = 6.74). Across conditions, all targets and probes were matched in duration and volume (p values > 0.3). Order of conditions was counterbalanced across runs and participants.

The full experiment consisted of four runs, with 6 language trials and 2 trials of foreign speech and music per run. Each run consisted of 10 experimental trials (36 s each) and three rest blocks (12 s each), for a total run-time of 6.6 min. The rest blocks occurred at the start of the run, at the halfway point of the run (after five experimental blocks), and at the end of the run.
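The trial and run durations stated above follow directly from the component durations given in the task description. A minimal sketch of that arithmetic is shown here; the constant and function names are illustrative, not taken from the study's stimulus code.

```python
# Durations in seconds, taken from the Task description.
TARGET_S = 20.0      # story, foreign speech, or music clip
QUESTION_S = 1.5     # "Does this come next?"
PROBE_S = 3.0        # probe clip
RESPONSE_S = 6.5     # pause in which the button press is collected
FEEDBACK_S = 5.0     # motivational clip

# Trial counts per run and rest-block layout, as stated in the text.
TRIALS_PER_RUN = {"language": 6, "foreign_speech": 2, "music": 2}
REST_BLOCKS_PER_RUN = 3
REST_BLOCK_S = 12.0


def trial_duration_s() -> float:
    """Length of one experimental trial in seconds."""
    return TARGET_S + QUESTION_S + PROBE_S + RESPONSE_S + FEEDBACK_S


def run_duration_s() -> float:
    """Length of one run in seconds: all trials plus all rest blocks."""
    n_trials = sum(TRIALS_PER_RUN.values())
    return n_trials * trial_duration_s() + REST_BLOCKS_PER_RUN * REST_BLOCK_S


if __name__ == "__main__":
    print(trial_duration_s())         # 36.0
    print(run_duration_s() / 60.0)    # run length in minutes
```

With these values a trial lasts 36 s and a run lasts 396 s, which matches the reported run-time of 6.6 min.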

After the scan, a teacher for the visually impaired administered a subset of the Braille Adaptation of the Woodcock-Johnson III Test of Achievement to assess Braille-reading and cognitive abilities of blind children.

fMRI analysis.

MRI structural and functional data of the whole brain were collected on a 3 Tesla Siemens scanner. T1-weighted structural images were collected in 128 axial slices with 1.33 mm isotropic voxels (TR = 2 ms, TE = 3.39 ms). Functional blood oxygenation level-dependent (BOLD) data were acquired in 3 × 3 × 4 mm voxels (TR = 2 s, TE = 30 ms), in 30 near-axial slices. The first 4 s of each run were excluded to allow for steady-state magnetization. Data analyses were performed using SPM8 (http://www.fil.ion.ucl.ac.uk/) and Matlab-based in-house software. Before modeling, data were realigned to correct for head motion, normalized to a standard adult template in Montreal Neurological Institute space, and smoothed with a 5 mm smoothing kernel. Although anatomical differences exist in the shape and size of brain regions among adults and children of this age, these differences are small relative to the resolution of the MRI data (Kang et al., 2003; for similar procedures, see Cantlon et al., 2006; Gweon et al., 2012; for discussion of methodological considerations, see Burgund et al., 2002).

Motion spike time points (motion > 1.5 mm relative to the previous time point) were removed to maximize data quality and quantity per child, while matching groups on amount of motion. If more than half of the time points in a run were contaminated by motion, the run was dropped; the remaining motion spikes were removed during modeling using a spike regressor. We excluded participants from analysis if they had < 10 min of usable data. This resulted in dropping one blind and one sighted child from analysis. Blind, blindfolded, and sighted children did not differ in number of motion spikes (one-way ANOVA F(2,55) = 0.51, p = 0.6; blind mean = 98, SD = 90; sighted blindfolded mean = 88, SD = 97; sighted non-blindfolded mean = 71, SD = 63).
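The motion criteria above can be sketched as a simple decision procedure. The thresholds (1.5 mm, half of a run, 10 min) and the 2 s TR come from the text. Two things are assumptions made for illustration: that motion is summarized as one displacement value per time point, and that "usable data" means non-spike time points in retained runs multiplied by the TR. All function names are hypothetical.

```python
SPIKE_THRESHOLD_MM = 1.5   # from the text
MIN_USABLE_MIN = 10.0      # from the text
TR_S = 2.0                 # functional TR from the text


def spike_mask(displacement_mm):
    """True where motion relative to the previous time point exceeds the threshold."""
    return [d > SPIKE_THRESHOLD_MM for d in displacement_mm]


def keep_run(mask):
    """A run is dropped when more than half of its time points are spikes."""
    return sum(mask) <= len(mask) / 2


def usable_minutes(runs):
    """Minutes of clean data in retained runs.

    Assumption: usable data = non-spike time points * TR.
    """
    clean_points = 0
    for displacement_mm in runs:
        mask = spike_mask(displacement_mm)
        if keep_run(mask):
            clean_points += len(mask) - sum(mask)
    return clean_points * TR_S / 60.0


def include_participant(runs):
    """Participants with less than 10 min of usable data are excluded."""
    return usable_minutes(runs) >= MIN_USABLE_MIN
```

In the actual analysis the spikes in retained runs were handled with a spike regressor during modeling; the sketch only covers the run-level and participant-level inclusion decisions.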

We defined functional regions of interest (ROIs) to examine responses to spoken language in visual cortex. Based on prior work with blind adults (Bedny et al., 2011, 2012), we hypothesized that we would observe occipital responses to spoken language in left medial occipital cortex, left lateral occipital cortex, and the left fusiform gyrus. Because the precise location of the language responses may change across the lifespan, we used an iterated leave-one-run-out procedure (cross-validation) to define independent functional ROIs in occipital cortex specific to blind children. First, group contrasts (blind > sighted) for language > music were computed separately for runs 1, 2 and 3; runs 2, 3, and 4; runs 1, 3, and 4; and runs 1, 2, and 4. In each resulting contrast map, we defined regions of interest in the left medial occipital cortex, left lateral occipital cortex, and the left fusiform gyrus, as all suprathreshold voxels (p < 0.005 uncorrected, k = 10) within a 15 mm sphere around the activation peak of the region. We then extracted percentage signal change (PSC) for the left out run for each participant, and averaged across the four folds to calculate the average response per region.
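The iterated leave-one-run-out procedure can be sketched as follows. This is a schematic of the fold structure only: `define_roi` and `extract_psc` are hypothetical callables standing in for the group-contrast ROI definition and the PSC extraction, which the study performed with SPM8 and in-house software.

```python
RUNS = (1, 2, 3, 4)


def leave_one_run_out(runs):
    """Return (training_runs, held_out_run) pairs, one per run."""
    folds = []
    for held_out in runs:
        training = tuple(r for r in runs if r != held_out)
        folds.append((training, held_out))
    return folds


def cross_validated_response(runs, define_roi, extract_psc):
    """Define the ROI on the training runs, measure PSC on the held-out run,
    and average the held-out values across folds.

    define_roi(training_runs) -> ROI object   (hypothetical)
    extract_psc(roi, held_out_run) -> float   (hypothetical)
    """
    values = []
    for training, held_out in leave_one_run_out(runs):
        roi = define_roi(training)
        values.append(extract_psc(roi, held_out))
    return sum(values) / len(values)


if __name__ == "__main__":
    for training, held_out in leave_one_run_out(RUNS):
        print("define ROI on runs", training, "-> test on run", held_out)
```

Because the voxels are always selected on runs that do not contribute to the measured response, the extracted PSC is independent of the ROI definition.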

Prefrontal and lateral temporal ROIs were defined based on the sentences > backwards speech contrast within sighted adults (Bedny et al., 2011, 2012). Group ROIs were defined by including all suprathreshold voxels (p < 0.001) within the left inferior and middle frontal gyri for the prefrontal ROI and the left middle and superior temporal gyri for the lateral temporal ROI.

A leave-one-run-out analysis was performed within classic language group ROIs to look for voxels that were responsive to language in each child. One run of data was used to identify voxels that responded more to language than music at p < 0.001. This is equivalent to defining individual-subject functional ROIs. We then used data from the remaining runs to compute β values for the Language > Music contrast. This procedure was repeated iteratively across runs (i.e., defining voxels based on run 1, run 2, and so on).

For the remainder of the analyses, statistics were performed on PSC differences from rest, extracted from each ROI. We calculated PSC for every time point after the onset of the trial for each condition [(raw signal during condition − raw signal during rest)/raw signal during rest] * 100. PSC was first calculated in each voxel and then averaged over all the voxels in the ROI. We averaged PSC from the middle seven time points from the “story” portion of each trial (8 s through 22 s).
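The PSC computation described above can be written out as a short sketch. The formula and the order of operations (per voxel first, then averaged over the ROI) follow the text. The data layout (lists of per-voxel time courses) and the function names are assumptions, and the averaging window is left as a parameter because the mapping from "8 s through 22 s" to sample indices depends on how time points are indexed.

```python
def percent_signal_change(condition_signal, rest_signal):
    """[(condition - rest) / rest] * 100 for a single raw signal value."""
    return (condition_signal - rest_signal) / rest_signal * 100.0


def roi_psc_timecourse(voxel_timecourses, voxel_rest):
    """PSC at every time point, computed per voxel and then averaged over voxels.

    voxel_timecourses: one list of raw signal values per voxel (same length each)
    voxel_rest: mean raw signal during rest, one value per voxel
    """
    n_voxels = len(voxel_timecourses)
    n_timepoints = len(voxel_timecourses[0])
    roi = []
    for t in range(n_timepoints):
        total = 0.0
        for v in range(n_voxels):
            total += percent_signal_change(voxel_timecourses[v][t], voxel_rest[v])
        roi.append(total / n_voxels)
    return roi


def window_mean(psc_timecourse, start, stop):
    """Mean PSC over time points start..stop-1 (window chosen by the caller)."""
    window = psc_timecourse[start:stop]
    return sum(window) / len(window)
```

For example, a voxel with a rest signal of 100 and a condition signal of 102 has a PSC of 2%.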

Because we expected the blindfold to affect neural responses in occipital regions of sighted children, analyses of occipital regions were all conducted with three groups: blind, sighted with a blindfold, and sighted without a blindfold. In contrast, there were no differences expected or observed in classic language ROIs of sighted children with and without blindfolds, so analyses of these ROIs were conducted with two groups: blind and sighted.

In whole-brain analyses, a general linear model was used to analyze BOLD activity of each subject as a function of condition. Data were modeled in SPM 8 (http://www.fil.ion.ucl.ac.uk/spm) using a standard hemodynamic response function (HRF) with a 2 s offset to account for a previously observed delay in the HRF peak in similar paradigms. Covariates of interest were convolved with a standard hemodynamic response function. Nuisance covariates included run effects, an intercept term, and motion spike time points. BOLD signal differences between conditions were evaluated through second-level, random-effects analyses thresholded at permutation corrected p < 0.05 or p < 0.001, k = 50.

Results

Behavioral

All groups performed above chance in all conditions. Children were least accurate in the Foreign Speech condition (mean 77%, SD = 21), followed by Language (89%, SD = 14) and Music (93%, SD = 13; 2 × 3 ANOVA, main effect of condition F(2,94) = 15.65, p < 0.0001). Across conditions, blind children made marginally more errors than sighted children (main effect of group F(2,47) = 2.88, p = 0.07), but this difference was not specific to any condition (Group-by-condition interaction F(4,94) = 1.57, p = 0.19). All groups responded to questions faster in the Music and Foreign Speech conditions than in the Language condition (main effect of condition F(2,93) = 28.76, p < 0.0001, main effect of group F(2,47) = 0.07, p = 0.93, Group-by-condition interaction F(4,93) = 1.05, p = 0.39).

Occipital responses to spoken language

We asked first whether the occipital responses to language previously observed in blind adults are also present in blind children. PSC was extracted from three occipital ROIs that respond to language in congenitally blind adults: a lateral occipital region, a fusiform region, and a medial occipital region in the calcarine sulcus (Bedny et al., 2011).

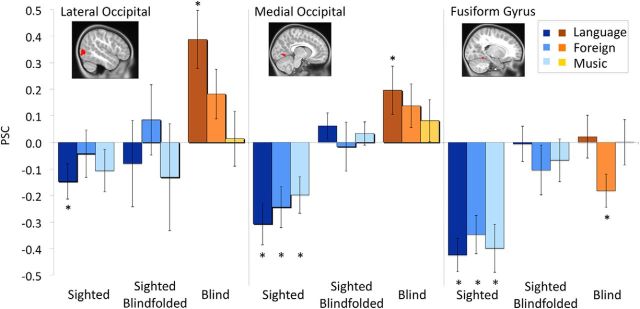

The results of ROI analyses are summarized in Figure 1. The left lateral occipital ROI responded more to spoken language than to music or foreign speech in blind children (within-group ANOVA, effect of condition F(2,36) = 6.36, p = 0.004; Language > Music t(18) = 2.93, p = 0.01, Language > Foreign Speech t(18) = 2.78, p = 0.01). The response to language was present in blind children but not in sighted children with or without a blindfold (2 × 3 ANOVA group-by-condition interactions, without blindfold F(2,74) = 6.96, p = 0.002; with blindfold F(2,74) = 4.28, p = 0.02; Fig. 1). We next looked at activity in right lateral occipital cortex to ask whether lateral occipital responses to spoken language were hemisphere specific. In a hemisphere-by-condition ANOVA in the blind group there was a main effect of condition (F(2,90) = 4.55, p = 0.01) but no effects of hemisphere or hemisphere-by-condition interaction (hemisphere F(1,90) = 2.08, p = 0.15, hemisphere by condition interaction F(2,90) = 0.1, p = 0.91). These results suggest that lateral occipital responses to spoken language are not left lateralized in blind children.

Figure 1.

Response of left occipital regions to language and non-linguistic sounds. Bars show PSC for language (darkest), foreign speech (intermediate), and music (lightest). Error bars represent the SEM. Asterisks indicate when a particular condition (bar) is different from the rest. Occipital regions show stronger responses to language and non-language sounds in blind children (right) than in sighted blindfolded children (middle) or sighted children without blindfolds (left).

A similar, but weaker, pattern of response was observed in the left lingual gyrus region (Blind group: effect of condition, F(2,36) = 2.48, p = 0.098, Language > Music t(18) = 2.28, p = 0.04, Language > Foreign Speech t(18) = 1.80, p = 0.09; ANOVA group-by-condition interaction without blindfold F(2,74) = 5.56, p = 0.006, with blindfold F(2,74) = 0.83, p = 0.44). In blind children, the left fusiform region responded more to language than foreign speech (t(18) = 2.72, p = 0.01), but there was no difference between language and music (t(18) = 0, p > 0.5) and the response to music was higher than to foreign speech (t(18) = 2.43, p = 0.03; blind group: effect of condition, F(2,36) = 4.95, p = 0.01, group-by-condition interaction with non-blindfold F(2,74) = 6.09, p = 0.004; with blindfold F(2,74) = 1.34, p = 0.27). None of the occipital regions responded more to spoken language than to music or to language than to foreign speech in sighted children (blindfolded and non-blindfolded, all p values > 0.1).

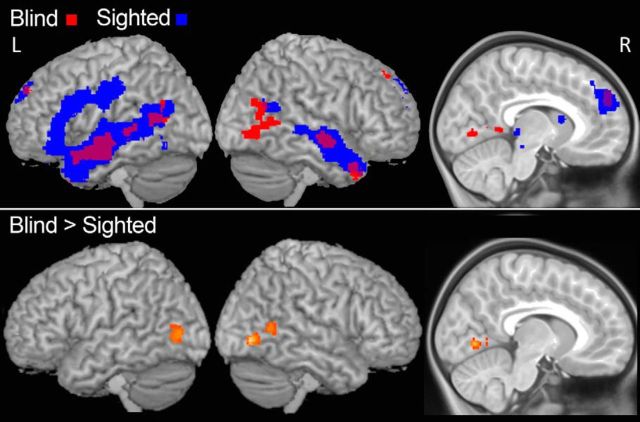

In whole-brain analyses blind but not sighted children activated several occipital regions during spoken story comprehension. In blind children, we observed greater responses to spoken language than to music in a right lateral occipital region (inferior and middle occipital gyri) and the posterior aspect of the right superior temporal sulcus (p < 0.05, corrected; Figs. 2, 3). At a more lenient statistical threshold we also observed responses in right posterior fusiform gyrus and the inferior and anterior aspect of the left lingual gyrus (p < 0.001, uncorrected, k = 50). In group-by-condition interaction analyses (Language > Music, Blind > Sighted), blind children had higher BOLD signals in the anterior and inferior aspect of the left lingual gyrus (p < 0.05, corrected). Responses in the fusiform gyrus and lateral occipital cortex bilaterally were observed at a more lenient threshold (p < 0.001 uncorrected, k > 50). A similar group-by-condition interaction was observed for the Language > Foreign + Music contrast (p < 0.001 uncorrected, k > 10). Notably, the whole-brain analyses reveal that in the current sample of blind children, occipital responses to spoken language were bilateral and not left-lateralized.

Figure 2.

Whole-brain responses to spoken language. Top, Whole-brain analysis of activity in Language > Music. Red, Blind children; blue, sighted children (with and without blindfold). Bottom, Whole-brain analysis of the group-by-condition interaction for Language > Music, Blind > Sighted (with and without blindfold; k = 50, p < 0.001 uncorrected).

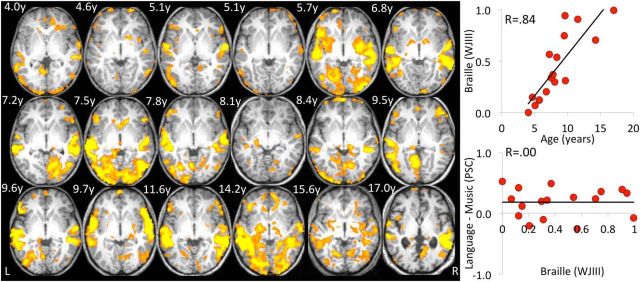

Figure 3.

Occipital responses to spoken language are stable across age and Braille proficiency. Left, Language responses in individual blind children. Each child's Language > Music t-map is displayed on his or her own normalized anatomy at a threshold of t > 2.59 (p < 0.005). Children's data are shown from youngest (left) to oldest (right). Data from the child who continued to have some residual vision during toddlerhood are second from left in the middle row. This participant showed as much plasticity as the congenitally blind children. The z coordinates for each child are between −2 and 6, and the y coordinates between −54 and −64. Right, Effects of Braille reading and age. Braille-reading abilities differed substantially across children and were highly correlated with age (top). However, Braille-reading scores did not predict responses to spoken language in occipital cortex (Language > Music) (bottom).

Effects of age and Braille-learning on occipital plasticity in blind children

A multiple regression with Age, Condition, and ROI as covariates revealed a main effect of Age on occipital activity in blind children (F(1,153) = 12.3, p < 0.0005), a main effect of Condition (F(2,153) = 3.79, p = 0.03) and main effect of ROI (F(2,153) = 5.67, p = 0.004). No significant interactions were found. Importantly, there was no age-by-condition interaction, suggesting that the observed age-related changes are not specific to spoken language (p > 0.3). Corresponding analyses in blindfolded sighted children did not reveal any significant main effects or interactions, and no change with Age in any ROI (all p values > 0.25).

A multiple regression with Braille, Condition, and ROI as covariates revealed a main effect of Condition (F(2,135) = 3.64, p = 0.03), a main effect of ROI (F(2,135) = 4.96, p = 0.001), and a significant effect of Braille (F(1,135) = 8.57, p = 0.004). No other main effects or interactions reached significance. Age and Braille-reading ability were highly correlated with each other: r = 0.84, t(15) = 6.04, p < 0.0001. Therefore, it is not possible to unambiguously attribute the observed increase in occipital activity to age as opposed to Braille learning. When Braille and Age were both included in the multiple regression, only the variable Age remained significant (Age F(1,117) = 6.20, p = 0.01; Braille F(1,117) = 0.13, p = 0.72). This suggests that Age was a better predictor than Braille proficiency.
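The t statistic reported for the Age–Braille correlation follows from the standard relation between a Pearson correlation and its test statistic, t = r·sqrt(n − 2)/sqrt(1 − r²), with n − 2 degrees of freedom. The sketch below applies it to the rounded r = 0.84. The sample size n = 17 is an inference from the reported 15 degrees of freedom (consistent with the two NA Braille scores in Table 1), not a value stated in the text.

```python
import math


def t_from_r(r, n):
    """t statistic and degrees of freedom for a Pearson correlation r over n pairs."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    return t, df


if __name__ == "__main__":
    # n = 17 is inferred from the reported df of 15 (assumption).
    t, df = t_from_r(0.84, 17)
    print(f"t({df}) = {t:.2f}")
```

The result is close to 6.0; the small difference from the reported 6.04 is what one would expect if the published r was rounded to two decimals.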

We also tested directly whether Braille proficiency or Age predicts specialization for spoken language, as opposed to other kinds of sound (Language > Music). We found no relationship between occipital responses to spoken language and Braille (R < 0.01, p > 0.3) or Age (R < 0.01, p > 0.3; Fig. 3). This analysis collapsed across occipital ROIs, since ROI did not interact with any variables in the multiple regressions.

In sum, occipital responses to all sounds increased as blind children got older. Two features of the data support the interpretation that the increase in occipital activity is related to age. First, the correlation of occipital activity with age is stronger than with Braille. Second, age correlated with occipital responses to all sounds, and not specifically with occipital responses to spoken language.

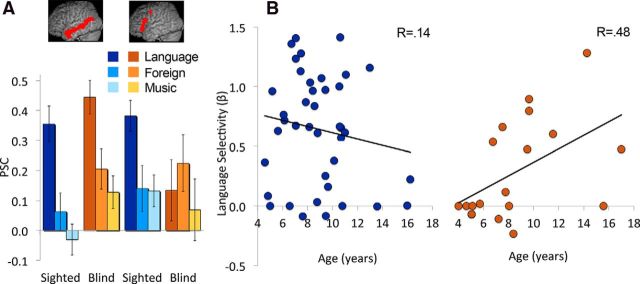

Effects of blindness on the development of classic language areas

Next, we examined activity in classic language areas of blind and sighted children. In blind children, occipital regions may be added to an otherwise typical language system. However, it is possible that the emergence of an occipital language response interacts with development of classic language areas. We identified classic language ROIs in left prefrontal cortex (LPFC) and left lateral temporal cortex, using data from a sentence comprehension task in a group of sighted adults (Bedny et al., 2011). In both sighted and blind children, the left lateral temporal region responded more to Language than to Music or Foreign Speech (effect of condition in sighted, F(2,78) = 46.46, p < 0.0001; effect of condition in blind, F(2,36) = 14.14, p < 0.001, group-by-condition interaction: F(4,112) = 1.29, p = 0.28). By contrast, the LPFC region responded selectively to language only in sighted children (effect of condition in sighted, F(2,78) = 6.89, p = 0.002; effect of condition in blind, F(2,36) = 0.97, p = 0.39, group-by-condition interaction F(4,112) = 2.73, p = 0.03; Fig. 4).

Figure 4.

Responses of frontal and temporal cortical areas to spoken language, foreign speech, and music. A, The bar graph shows PSC in temporal and prefrontal language areas. Temporal areas show similar language responses in blind and sighted children, whereas prefrontal regions show reduced selectivity in blind children. B, Scatter plots show relationship of age (x-axis) and prefrontal language selectivity (y-axis) for the sighted children (blue) and blind children (orange). A significant correlation is observed only in the blind group.

Similarly, in whole-brain analyses, blind and sighted children both activated bilateral lateral temporal areas along the extent of the middle temporal and superior temporal gyri (p < 0.05, corrected). This language-related activity was more extensive in the left hemisphere in both groups. However, sighted but not blind children had a focus of activation in left prefrontal cortex (left inferior/middle frontal gyri; Fig. 2).

The above analyses suggest that blind children have reduced language selectivity in prefrontal cortex relative to sighted children. Surprisingly, the left prefrontal ROI showed no language response at all in blind children as a group. We know from prior work that by adulthood, blind individuals do have language responses in the inferior frontal cortex. Furthermore, language responses in the frontal lobe are known to vary in their anatomical location across individuals (Fedorenko et al., 2011). Our analyses may have missed language-related responses due to averaging over a large part of frontal lobe and over individuals. We next conducted more sensitive individualized ROI analyses. Using cross-validation, we looked for language-selective voxels within the left prefrontal cortex of each participant (see Materials and Methods for details). This analysis revealed that although prefrontal language effects are weaker in blind children, there is nevertheless language-selective tissue in the left prefrontal cortex of the blind children (in independent data, Language > Music in blind children, t(18) = 3.16, p = 0.005, sighted children, t(39) = 8.67, p < 0.0001; between-group difference, t(40) = 2.8, p = 0.008). In blind but not sighted children, language-selective voxels became more selective between the ages of 4 and 17 (effect of Age on Language > Music difference in blind, r = 0.48, t(17) = 2.25, p = 0.04; sighted, r = 0.14, t(38) = −0.95, p = 0.35; group-by-age interaction, F(1,55) = 4.38, p = 0.04). Inspection of the data suggests that in prefrontal cortex, responses to spoken language are absent or minimal in blind children until 8 years of age. By contrast, in sighted children such responses are present by 4 years of age. These results are consistent with the observation that in blind and sighted adults, left prefrontal cortex does not differ in selectivity for language.

Discussion

Studies of the visual system in blind individuals provide rare insights into how developmental experience shapes cortical specialization. In the absence of vision, occipital regions respond to spoken language. The current findings shed light on the developmental timing and mechanism of this plasticity.

We find that in the absence of visual input, occipital regions respond to spoken language by early childhood. By 4 years of age, lateral occipital cortex responds more to spoken stories than to foreign speech or to music. Although language responses are present early, occipital responses to all sounds continue to increase between the ages of 4 and 17. These findings suggest that in occipital cortex preferential responses to spoken language are present as early as responses to sounds in general. We found no evidence for the idea that general responses to sound gradually become specialized for speech sounds. Early emergence of occipital responses to speech is consistent with prior evidence of a sensitive period. Individuals who became blind in adulthood fail to show responses to spoken language in occipital cortex, even after decades of blindness (Bedny et al., 2012; but see Ofan and Zohary, 2007). Other forms of plasticity are observed in late blind individuals (Burton et al., 2006). In this respect, total blindness is similar to monocular deprivation, which has qualitatively different consequences in childhood and adulthood (Hensch, 2005). Together, the available evidence suggests that early blindness modifies the process of cortical development, enabling occipital responses to speech.

The current data suggest that 4 years of age is an upper bound on when spoken language responses emerge in the occipital cortex of blind children. Future research will be necessary to determine whether language responses are already present in occipital regions of blind infants, similar to language responses in frontal and temporal regions of sighted infants (Dehaene-Lambertz et al., 2002; Gervain et al., 2008), or whether occipital plasticity occurs gradually over children's first 4 years.

The present findings provide evidence against the hypothesis that occipital responses to spoken language are bootstrapped from earlier tactile responses to Braille. Occipital plasticity in blind individuals has been linked to expertise in tactile discrimination of Braille dots (Sadato et al., 1996). If Braille learning causes occipital language plasticity, the development of occipital activation for language should follow the time course of learning to read Braille. In sighted children, brain regions that support reading become increasingly specialized for written language as reading skills improve (Sandak et al., 2004; Church et al., 2008; Ben-Shachar et al., 2011). Contrary to this prediction, we find that responses to spoken language in occipital cortex do not follow the time course of Braille learning. Our findings suggest that occipital responses to speech are independent of Braille.

The present results do not speak to the neurobiological mechanisms that support the processing of written language in blindness. A number of studies have reported occipital activity during Braille-reading (Sadato et al., 1996; Hamilton and Pascual-Leone, 1998; Reich et al., 2011). We expect that neural responses to Braille itself would be related to Braille proficiency, unlike responses to spoken language.

The present findings support the hypothesis that in the absence of bottom-up visual input, language directly encroaches into occipital cortical territory. At least two routes are possible for linguistic input to reach occipital cortex. First, typical visual areas such as the middle temporal motion complex and the fusiform face area are anatomically proximal to language cortex in the temporal lobe. In the absence of bottom-up visual input, neighboring language regions might colonize these visual areas (Florence and Kaas, 1995; Borsook et al., 1998).

Alternatively, linguistic input could reach occipital cortex via connections to prefrontal language regions. Some evidence for this idea comes from studies of functional connectivity: in the absence of a task, activity of occipital and prefrontal cortex is correlated in blind, but not sighted adults (Liu et al., 2007; Bedny et al., 2011; Watkins et al., 2012; Deen et al., 2015). Consistent with this hypothesis, we find that acquisition of linguistic functions by occipital cortex affects the development of prefrontal language areas. Although there is no difference between left lateral temporal responses to language among sighted and blind children (Ahmad et al., 2003), blind children tended to have weaker left prefrontal selectivity for language. Moreover, in blind but not sighted children, prefrontal selectivity for language increased between the ages of 4 and 17 years.

An intriguing possibility is that there are competitive interactions between cortical regions, such that the contribution of occipital regions to language processing delays the emergence of language selectivity in prefrontal cortex. There is some evidence that occipital circuits supplant prefrontal areas in blind adults. In one study, TMS applied to left prefrontal cortex impaired verb generation in sighted, but not blind adults, whereas TMS to the occipital pole impaired verb generation in blind, but not sighted adults (Amedi et al., 2004). These data suggest that occipital contributions can render prefrontal areas less essential for some language tasks. Through a similar process, the “extra” language circuits in the occipital cortex might reduce the pressure on prefrontal areas to specialize for language during development. If so, the effects of blindness on prefrontal regions could reflect a process of interactive specialization, whereby the functional specialization of each brain region depends on interactions, and in some cases competition, with other brain regions (Johnson, 2000, 2001; Thelen, 2002; Karmiloff-Smith, 2006).

Note, though, that further studies are necessary to determine whether and how prefrontal language responses are affected by blindness. First, it will be important to replicate the present findings in other samples of blind children. Second, the prefrontal cortex may also have been affected by the blind children's linguistic experience, rather than by occipital plasticity itself. Prior evidence suggests that social and linguistic experience can affect prefrontal language specialization (Raizada et al., 2008). There is also some evidence that blind children are delayed on early milestones of language acquisition. Blind children are late to produce their first words and first multiword utterances (in the low-normal range; Norris, 1957; Fraiberg, 1977; Landau and Gleitman, 1985). These delays are subtle and short-lived but could nevertheless affect the development of prefrontal language responses.

In summary, the present findings provide insights into how experience and intrinsic constraints drive human cortical development. We find that blindness alters the developmental trajectory of visual cortex. In the absence of visual input, occipital areas develop responses to sound and specifically to spoken language. Vision and language are evolutionarily and cognitively distinct and typically depend on different cortical circuits with distinctive cytoarchitectural profiles (Amunts et al., 2002; Enard et al., 2002; Barton, 2007; Kaas, 2008; Rilling et al., 2008; Makuuchi et al., 2009). Despite this, “visual” cortex is colonized by language functions in blindness. Input during development is thus a major determinant of a cortical area's cognitive function (Sharma et al., 2000; von Melchner et al., 2000).

An important outstanding question is how non-visual encroachment into the visual system of blind children affects the potential to recover visual function. Studies with adults who recover eye function after long periods of blindness suggest that absence of vision during childhood impairs the capacity of the visual cortex to support vision (Fine et al., 2003; Le Grand et al., 2003; Lewis and Maurer, 2005). Colonization of occipital circuits by non-visual functions might make them less suitable for visual processing. Conversely, such plasticity could prevent atrophy of cortical tissue and therefore preserve occipital circuits. Understanding the functional role and time course of cross-modal plasticity could inform decisions about the timing of sight recovery.

Footnotes

This research was supported by a grant from the David and Lucile Packard Foundation to R.S. and a grant from the Harvard/MIT Joint Research Grants Program in Basic Neuroscience to R.S. and Anne Fulton. We thank the children and their families for generously giving their time to make this research possible. We are grateful to Anne Fulton and Lindsay Yazzolino for their invaluable help with recruitment and testing. We thank Tammy Reisman and the UMass Boston teachers of students with visual impairments for administering the Woodcock Johnson III. We also thank the Athinoula A. Martinos Imaging Center for help with fMRI data collection and analyses.

References

- Ahmad Z, Balsamo LM, Sachs BC, Xu B, Gaillard WD. Auditory comprehension of language in young children: neural networks identified with fMRI. Neurology. 2003;60:1598–1605. doi: 10.1212/01.WNL.0000059865.32155.86.

- Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early ‘visual’ cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci. 2003;6:758–766. doi: 10.1038/nn1072.

- Amedi A, Floel A, Knecht S, Zohary E, Cohen LG. Transcranial magnetic stimulation of the occipital pole interferes with verbal processing in blind subjects. Nat Neurosci. 2004;7:1266–1270. doi: 10.1038/nn1328.

- Amunts K, Schleicher A, Zilles K. Architectonic mapping of the human cerebral cortex. In: Schuez A, Miller R, editors. Cortical areas: unity and diversity. London, UK: Taylor and Francis; 2002. pp. 29–52.

- Barton RA. Evolutionary specialization in mammalian cortical structure. J Evol Biol. 2007;20:1504–1511. doi: 10.1111/j.1420-9101.2007.01330.x.

- Bavelier D, Neville H. Cross-modal plasticity: where and how? Nat Rev Neurosci. 2002;3:443–452. doi: 10.1038/nrn848.

- Bedny M, Pascual-Leone A, Dodell-Feder D, Fedorenko E, Saxe R. Language processing in the occipital cortex of congenitally blind adults. Proc Natl Acad Sci U S A. 2011;108:4429–4434. doi: 10.1073/pnas.1014818108.

- Bedny M, Pascual-Leone A, Dravida S, Saxe R. A sensitive period for language in the visual cortex: distinct patterns of plasticity in congenitally versus late blind adults. Brain Lang. 2012;122:162–170. doi: 10.1016/j.bandl.2011.10.005.

- Ben-Shachar M, Dougherty RF, Deutsch GK, Wandell BA. The development of cortical sensitivity to visual word forms. J Cogn Neurosci. 2011;23:2387–2399. doi: 10.1162/jocn.2011.21615.

- Borsook D, Becerra L, Fishman S, Edwards A, Jennings CL, Stojanovic M, Papinicolas L, Ramachandran VS, Gonzalez RG, Breiter H. Acute plasticity in the human somatosensory cortex following amputation. Neuroreport. 1998;9:1013–1017. doi: 10.1097/00001756-199804200-00011.

- Burgund ED, Kang HC, Kelly JE, Buckner RL, Snyder AZ, Petersen SE, Schlaggar BL. The feasibility of a common stereotactic space for children and adults in fMRI studies of development. Neuroimage. 2002;17:184–200. doi: 10.1006/nimg.2002.1174.

- Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME. Adaptive changes in early and late blind: a fMRI study of Braille reading. J Neurophysiol. 2002;87:589–607. doi: 10.1152/jn.00285.2001.

- Burton H, Diamond JB, McDermott KB. Dissociating cortical regions activated by semantic and phonological tasks: a FMRI study in blind and sighted people. J Neurophysiol. 2003;90:1965–1982. doi: 10.1152/jn.00279.2003.

- Burton H, McLaren DG, Sinclair RJ. Reading embossed capital letters: an fMRI study in blind and sighted individuals. Hum Brain Mapp. 2006;27:325–339. doi: 10.1002/hbm.20188.

- Cantlon JF, Brannon EM, Carter EJ, Pelphrey KA. Functional imaging of numerical processing in adults and 4-year-old children. PLoS Biol. 2006;4:e125. doi: 10.1371/journal.pbio.0040125.

- Church JA, Coalson RS, Lugar HM, Petersen SE, Schlaggar BL. A developmental fMRI study of reading and repetition reveals changes in phonological and visual mechanisms over age. Cereb Cortex. 2008;18:2054–2065. doi: 10.1093/cercor/bhm228.

- Clavagnier S, Falchier A, Kennedy H. Long-distance feedback projections to area V1: implications for multisensory integration, spatial awareness, and visual consciousness. Cogn Affect Behav Neurosci. 2004;4:117–126. doi: 10.3758/CABN.4.2.117.

- Cohen LG, Celnik P, Pascual-Leone A, Corwell B, Falz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catalá MD, Hallett M. Functional relevance of cross-modal plasticity in blind humans. Nature. 1997;389:180–183. doi: 10.1038/38278.

- Collignon O, Vandewalle G, Voss P, Albouy G, Charbonneau G, Lassonde M, Lepore F. Functional specialization for auditory-spatial processing in occipital cortex of congenitally blind humans. Proc Natl Acad Sci U S A. 2011;108:4435–4440. doi: 10.1073/pnas.1013928108.

- Deen B, Saxe R, Bedny M. Occipital cortex of blind individuals is functionally coupled with executive control areas of frontal cortex. J Cogn Neurosci. 2015;27:1633–1647. doi: 10.1162/jocn_a_00807.

- Dehaene-Lambertz G, Dehaene S, Hertz-Pannier L. Functional neuroimaging of speech perception in infants. Science. 2002;298:2013–2015. doi: 10.1126/science.1077066.

- Enard W, Przeworski M, Fisher SE, Lai CS, Wiebe V, Kitano T, Monaco AP, Pääbo S. Molecular evolution of FOXP2, a gene involved in speech and language. Nature. 2002;418:869–872. doi: 10.1038/nature01025.

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Fedorenko E, Behr MK, Kanwisher N. Functional specificity for high-level linguistic processing in the human brain. Proc Natl Acad Sci U S A. 2011;108:16428–16433. doi: 10.1073/pnas.1112937108.

- Fine I, Wade AR, Brewer AA, May MG, Goodman DF, Boynton GM, Wandell BA, MacLeod DI. Long-term deprivation affects visual perception and cortex. Nat Neurosci. 2003;6:915–916. doi: 10.1038/nn1102.

- Florence SL, Kaas JH. Large-scale reorganization at multiple levels of the somatosensory pathway follows therapeutic amputation of the hand in monkeys. J Neurosci. 1995;15:8083–8095. doi: 10.1523/JNEUROSCI.15-12-08083.1995.

- Fraiberg S. Insights from the blind: comparative studies of blind and sighted infants. London, UK: Souvenir; 1977.

- Gervain J, Macagno F, Cogoi S, Peña M, Mehler J. The neonate brain detects speech structure. Proc Natl Acad Sci U S A. 2008;105:14222–14227. doi: 10.1073/pnas.0806530105.

- Gweon H, Dodell-Feder D, Bedny M, Saxe R. Theory of mind performance in children correlates with functional specialization of a brain region for thinking about thoughts. Child Dev. 2012;83:1853–1868. doi: 10.1111/j.1467-8624.2012.01829.x.

- Hamilton RH, Pascual-Leone A. Cortical plasticity associated with Braille learning. Trends Cogn Sci. 1998;2:168–174. doi: 10.1016/S1364-6613(98)01172-3.

- Hensch TK. Critical period plasticity in local cortical circuits. Nat Rev Neurosci. 2005;6:877–888. doi: 10.1038/nrn1787.

- Johnson MH. Functional brain development in infants: elements of an interactive specialization framework. Child Dev. 2000;71:75–81. doi: 10.1111/1467-8624.00120.

- Johnson MH. Functional brain development in humans. Nat Rev Neurosci. 2001;2:475–483. doi: 10.1038/35081509.

- Kaas JH. The evolution of the complex sensory and motor systems of the human brain. Brain Res Bull. 2008;75:384–390. doi: 10.1016/j.brainresbull.2007.10.009.

- Kang HC, Burgund D, Burgund ED, Lugar HM, Petersen SE, Schlaggar BL. Comparison of functional activation foci in children and adults using a common stereotactic space. Neuroimage. 2003;19:16–28. doi: 10.1016/S1053-8119(03)00038-7.

- Karmiloff-Smith A. The tortuous route from genes to behavior: a neuroconstructivist approach. Cogn Affect Behav Neurosci. 2006;6:9–17. doi: 10.3758/CABN.6.1.9.

- Landau B, Gleitman L. Language and experience: evidence from the blind child. Cambridge, MA: Harvard UP; 1985.

- Le Grand R, Mondloch CJ, Maurer D, Brent HP. Expert face processing requires visual input to the right hemisphere during infancy. Nat Neurosci. 2003;6:1108–1112. doi: 10.1038/nn1121.

- Lewis TL, Maurer D. Multiple sensitive periods in human visual development: evidence from visually deprived children. Dev Psychobiol. 2005;46:163–183. doi: 10.1002/dev.20055.

- Liu Y, Yu C, Liang M, Li J, Tian L, Zhou Y, Qin W, Li K, Jiang T. Whole brain functional connectivity in the early blind. Brain. 2007;130:2085–2096. doi: 10.1093/brain/awm121.

- Makuuchi M, Bahlmann J, Anwander A, Friederici AD. Segregating the core computational faculty of human language from working memory. Proc Natl Acad Sci U S A. 2009;106:8362–8367. doi: 10.1073/pnas.0810928106.

- Merabet L, Thut G, Murray B, Andrews J, Hsiao S, Pascual-Leone A. Feeling by sight or seeing by touch? Neuron. 2004;42:173–179. doi: 10.1016/S0896-6273(04)00147-3.

- Merabet LB, Hamilton R, Schlaug G, Swisher JD, Kiriakopoulos ET, Pitskel NB, Kauffman T, Pascual-Leone A. Rapid and reversible recruitment of early visual cortex for touch. PLoS One. 2008;3:e3046. doi: 10.1371/journal.pone.0003046.

- Miller MW, Vogt BA. Direct connections of rat visual cortex with sensory, motor, and association cortices. J Comp Neurol. 1984;226:184–202. doi: 10.1002/cne.902260204.

- Norris M. Blindness in children. Chicago: University of Chicago; 1957.

- Ofan RH, Zohary E. Visual cortex activation in bilingual blind individuals during use of native and second language. Cereb Cortex. 2007;17:1249–1259. doi: 10.1093/cercor/bhl039.

- Poirier C, Collignon O, Scheiber C, Reiner L, Vanlierde A, Tranduy D, Veraart C, De Volder AG. Auditory motion perception activates visual motion areas in early blind subjects. Neuroimage. 2006;31:279–285. doi: 10.1016/j.neuroimage.2005.11.036.

- Raizada RD, Richards TL, Meltzoff A, Kuhl PK. Socioeconomic status predicts hemispheric specialisation of the left inferior frontal gyrus in young children. Neuroimage. 2008;40:1392–1401. doi: 10.1016/j.neuroimage.2008.01.021.

- Reich L, Szwed M, Cohen L, Amedi A. A ventral visual stream reading center independent of visual experience. Curr Biol. 2011;21:363–368. doi: 10.1016/j.cub.2011.01.040.

- Rilling JK, Glasser MF, Preuss TM, Ma X, Zhao T, Hu X, Behrens TE. The evolution of the arcuate fasciculus revealed with comparative DTI. Nat Neurosci. 2008;11:426–428. doi: 10.1038/nn2072.

- Röder B, Stock O, Bien S, Neville H, Rösler F. Speech processing activates visual cortex in congenitally blind humans. Eur J Neurosci. 2002;16:930–936. doi: 10.1046/j.1460-9568.2002.02147.x.

- Sadato N. How the blind “see” Braille: lessons from functional magnetic resonance imaging. Neuroscientist. 2005;11:577–582. doi: 10.1177/1073858405277314.

- Sadato N, Pascual-Leone A, Grafman J, Ibañez V, Deiber MP, Dold G, Hallett M. Activation of the primary visual cortex by Braille reading in blind subjects. Nature. 1996;380:526–528. doi: 10.1038/380526a0.

- Sandak R, Mencl W, Frost S, Pugh K. The neurobiological basis of skilled and impaired reading: recent findings and new directions. Sci Studies Reading. 2004;8:273–292. doi: 10.1207/s1532799xssr0803_6.

- Sharma J, Angelucci A, Sur M. Induction of visual orientation modules in auditory cortex. Nature. 2000;404:841–847. doi: 10.1038/35009043.

- Thelen E. Self-organization in developmental processes: Can systems approaches work? In: Johnson MH, Munakata Y, Gilmore R, editors. Brain development and cognition: a reader. Oxford, UK: Blackwell; 2002.

- von Melchner L, Pallas SL, Sur M. Visual behaviour mediated by retinal projections directed to the auditory pathway. Nature. 2000;404:871–876. doi: 10.1038/35009102.

- Watkins KE, Cowey A, Alexander I, Filippini N, Kennedy JM, Smith SM, Ragge N, Bridge H. Language networks in anophthalmia: maintained hierarchy of processing in ‘visual’ cortex. Brain. 2012;135:1566–1577. doi: 10.1093/brain/aws067.