Abstract

Complex audio-vocal integration systems depend on a strong interconnection between the auditory and the vocal motor system. To gain cognitive control over audio-vocal interaction during vocal motor control, the PFC needs to be involved. Neurons in the ventrolateral PFC (VLPFC) have been shown to separately encode the sensory perception and motor production of vocalizations. It is unknown, however, whether single neurons in the PFC reflect audio-vocal interactions. We therefore recorded single-unit activity in the VLPFC of rhesus monkeys (Macaca mulatta) while they produced vocalizations on command or passively listened to monkey calls. We found that 12% of randomly selected neurons in VLPFC modulated their discharge rate in response to acoustic stimulation with species-specific calls. Almost three-fourths of these auditory neurons showed an additional modulation of their discharge rates before and/or during the monkeys' motor production of vocalization. Based on these audio-vocal interactions, the VLPFC might be well positioned to combine higher order auditory processing with cognitive control of the vocal motor output. Such audio-vocal integration processes in the VLPFC might constitute a precursor for the evolution of complex learned audio-vocal integration systems, ultimately giving rise to human speech.

Keywords: audio-vocal integration, cognitive control, monkey homolog of human Broca's area, prefrontal cortex, vocal motor control, vocalization

Introduction

The ability to recognize vocalizations of others and to produce vocal utterances in return has enabled primates to develop sophisticated audio-vocal communication systems (Seyfarth et al., 1980; Miller and Wang, 2006; Ouattara et al., 2009; Hage, 2013). Self-produced vocalizations have to be monitored continuously to detect and compensate for vocal production errors or external acoustical events, both in primates and several other vertebrate species. For instance, speakers and vocalizing animals involuntarily raise their call amplitude in response to masking ambient noise to increase the signal-to-noise ratio, an effect known as the Lombard effect (Lombard, 1911; Cynx et al., 1998; Brumm et al., 2004; Hage et al., 2013a). Hearing and vocalizing thus interact, and auditory feedback is required to adjust and guide vocal motor output.

In primates, interactions between the auditory and the vocal motor system exist on several brain levels such as lower brainstem, midbrain, and auditory cortex (AC; Müller-Preuss and Ploog, 1981; Eliades and Wang, 2003, 2008, 2013; Pieper and Jürgens, 2003; Hage et al., 2006). However, to subject audio-vocal interaction to cognitive control, interactions higher up in the cortical hierarchy are needed. We therefore investigated the auditory and motor representations of species-specific vocalizations in single neurons of ventrolateral PFC (VLPFC), a multimodal association area (Sugihara et al., 2006; Nieder, 2012) and key interface of the brain's perception-action cycle (Fuster, 2000).

The VLPFC is presumed to constitute the apex of complex auditory processing in the brain (Romanski and Averbeck, 2009). Neurons recorded in BA45 and BA12/47 of VLPFC have been found to respond to complex sounds including species-specific vocalizations (Romanski and Goldman-Rakic, 2002; Romanski et al., 2005). The VLPFC receives input from the AC of the temporal lobe, including belt, parabelt, and the superior temporal plane (STP; Hackett et al., 1999; Romanski et al., 1999a,b). Complex sounds including noise bursts and vocalizations activate regions of the belt and parabelt (Rauschecker et al., 1995, 1997; Rauschecker, 1998) and along STP (Poremba et al., 2003; Kikuchi et al., 2010). An area anterior to the auditory input fields on STP has been identified as a vocalization area in macaques (Petkov et al., 2008), with cells more selective to individual voices than to call types (Perrodin et al., 2011, 2014).

In addition to sensory encoding of vocalizations, VLPFC is also involved in the production of vocalizations. Electrical microstimulation in BA44 elicited orofacial responses (Petrides et al., 2005). Recently, we reported that single-neuron activity in BA44/45 specifically predicted the preparation of conditioned vocalizations (Hage and Nieder, 2013), indicating cognitive control over the vocal motor system. Moreover, BA44/45 in macaques share similar cytoarchitectonics and corticocortical connections with human Broca's area, a key speech production area (Petrides and Pandya, 2002, 2009; Petrides et al., 2005).

Despite the evidence for separate sensory and motor representations of vocalizations in VLPFC, it is unknown whether single neurons in PFC reflect audio-vocal interactions. Neurons in VLPFC responding to both external sounds and before and/or during self-produced vocalizations would provide a neuronal hub for cognitively controlling vocal output.

Materials and Methods

Subjects.

We used two 5-year-old male rhesus monkeys (Macaca mulatta) weighing 4.2 and 4.5 kg for this study. During the experiments, the monkeys worked under a controlled water-intake protocol. All surgery procedures were accomplished under aseptic conditions under general anesthesia. All procedures were in accordance with the guidelines for animal experimentation and authorized by the national authorities (Regierungspräsidium Tübingen, Germany). All neurons analyzed in this study are a fraction of cells that have been recorded in a previous study (Hage and Nieder, 2013).

Experimental design.

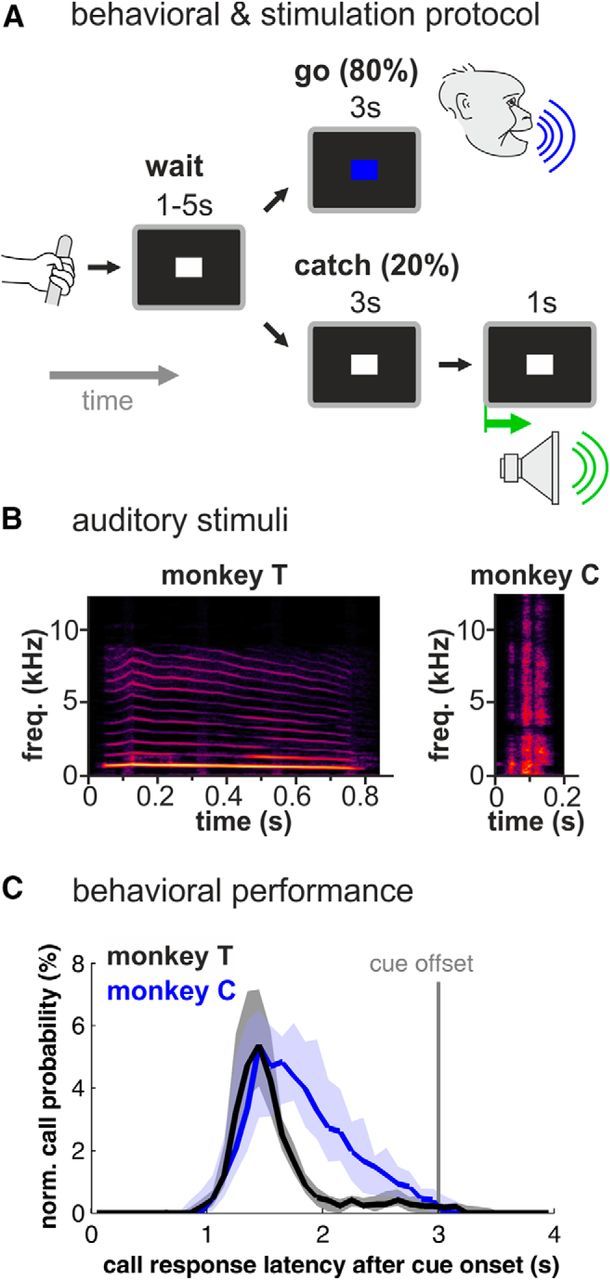

Single-cell recordings were conducted in monkeys trained to alternately produce calls and listen to species-specific vocalizations. The behavioral protocol required the monkeys to vocalize in response to an arbitrary visual cue in a go/nogo detection task as described earlier (Hage and Nieder, 2013; Hage et al., 2013b). Briefly, the monkey started a trial by grabbing a bar (“ready” response; Fig. 1A). A visual “nogo” signal (“precue”) appeared for a randomized time period of 1–5 s (white square, diameter: 0.5 degrees of visual angle) during which the monkey was not allowed to vocalize. In 80% of the trials, the precue signal was followed by a colored visual “go” signal (red or blue square with equal probability; diameter: 0.5 degrees of visual angle) lasting 3 s during which the monkey had to emit a vocalization to receive a liquid reward. To control for random calling behavior, the precue remained unchanged for 3 s in the remaining 20% of the trials and the monkey had to continue withholding vocal output (“catch” trials).
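The trial structure described above can be illustrated with a minimal sketch. It is not the CORTEX implementation used in the study; the function name `draw_trial`, the dictionary fields, and the uniform distribution of the precue duration are assumptions made for illustration (the text only states that the precue lasted a randomized 1–5 s).

```python
import random

def draw_trial(rng):
    """Draw one hypothetical trial with the proportions given in the text."""
    # Assumption: precue duration is uniform on 1-5 s (only the range is stated).
    precue_s = rng.uniform(1.0, 5.0)
    if rng.random() < 0.8:
        # 80% go trials: red or blue cue with equal probability, 3 s response window.
        cue = rng.choice(["red", "blue"])
        return {"type": "go", "precue_s": precue_s, "cue": cue, "window_s": 3.0}
    # 20% catch trials: the precue stays unchanged for 3 s and the monkey must stay silent.
    return {"type": "catch", "precue_s": precue_s, "cue": None, "window_s": 3.0}

rng = random.Random(0)
trials = [draw_trial(rng) for _ in range(10000)]
go_fraction = sum(t["type"] == "go" for t in trials) / len(trials)
print(f"fraction of go trials: {go_fraction:.3f}")
```

Over many simulated trials the fraction of go trials should settle near 0.8, with the remaining trials serving as catch trials.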

Figure 1.

Behavioral and auditory stimulation protocol and behavioral performance. A, Monkeys were trained in a go/nogo protocol to vocalize in response to a colored cue and were stimulated acoustically at the end of catch trials (20% of all trials) that required the monkey to withhold vocalization. B, Spectrograms of the coo and grunt vocalization that were used as acoustical stimuli. Spectral intensity is represented by different shades of color with black as the lowest and yellow as the highest intensity. C, Probability of the occurrence of call onsets within each 100 ms bin for both monkeys during go trials normalized for 31 (monkey T) and 27 recording sessions (monkey C); shaded areas indicate first and third quartiles.

At the end of each successful catch trial, i.e., when the monkey did not vocalize, the precue stimulus remained unchanged for 1 s and the monkey was acoustically stimulated with the type of call it uttered during go trials (see below for further details).

One session was recorded per individual per day. Monkeys were head fixed during the experiment, maintaining a constant distance of 5 cm between the monkey's head and the microphone. Eye movements were monitored via an IR-eye tracking system (ISCAN), sampled at 1 kHz, and stored with the Plexon system for subsequent analysis.

Behavioral data acquisition.

Stimulus presentation and behavioral monitoring were automated on PCs running the CORTEX program (NIH) and recorded by a multi-acquisition system (Plexon) as described earlier (Hage and Nieder, 2013; Hage et al., 2013b). Briefly, vocalizations were recorded synchronously with the neuronal data by the same system with a sampling rate of 40 kHz via an A/D converter for post hoc analysis. Vocalizations were detected automatically by a custom-written MATLAB program (The MathWorks) that calculated on-line several temporal and spectral acoustic parameters and ran on another PC, which monitored the vocal behavior in real time. All recordings were performed in a double-walled sound-proof booth (IAC Acoustics).

Auditory stimuli.

For auditory stimulation, we used the identical call type each of the monkeys uttered during the behavioral protocol. The main focus of the present study was to directly compare the activity of single neurons in response to specific self-produced and perceived identical vocalizations. Because our behavioral protocol required a sparse presentation of (sufficiently often repeated) acoustic stimuli, we played back the vocal stimulus only during the catch trial, when the monkey was not preparing for vocal output. Monkey T produced coo vocalizations and was therefore acoustically stimulated with a high-quality recording of its own coo call (753 ms duration). Monkey C produced grunt vocalizations and was therefore played back a recording of its own grunt call (140 ms duration; Fig. 1B). We used one vocalization exemplar as an acoustic stimulus for each monkey. The vocalizations were stored as WAV files (sample rate 44.1 kHz), amplified (Yamaha amplifier A-520), and presented through one broadband speaker (Visaton), which was positioned 55 cm centered in front and 45 degrees above the animal's head. The system was calibrated using a measuring amplifier (Brüel & Kjær 2606 with condenser microphone 4135 and preamplifier 2633) to ensure a flat response of sound presentation (±5 dB) between 0.1 and 18 kHz. Vocal stimuli were presented with intensities of 80 dB SPL for the coo and 75 dB SPL for the grunt call, respectively.

Neurophysiological recordings.

Extracellular single-unit activity was recorded with arrays of 6–14 glass-coated tungsten microelectrodes of 1 and 2 MΩ impedance (Alpha Omega). Microelectrode arrays were inserted each recording day using a grid with 1 mm spacing, which was mounted inside the recording chamber. Several marks on grid and chamber ensured the identical position of the grid on each day. Recordings were made in the left VLPFC. Neurons were randomly selected; no attempts were made to preselect units for specific discharge properties. Signal acquisition, amplification, filtering, and off-line spike sorting were accomplished using the Plexon system. Data analysis was performed using MATLAB (The MathWorks). We analyzed all well isolated neurons with mean discharge rates >1 Hz that were recorded for at least seven hit trials, three miss trials, and seven auditory stimulation trials. Neurons showing eye movement-correlated and fixation-correlated activity (see below) were excluded.

Assignment of neurons to different brain areas.

Recording sites, i.e., recording wells and craniotomies, were localized using stereotaxic reconstructions from the individuals' MR images by combining stereotaxic coordinates measured during surgery and the individuals' MR images taken before implantation (Fig. 2A). The identical stereotaxic frame was used for MRI and all surgeries (MRI stereotaxic frame 9-YST-35-P-O, all plastic materials; ear tips style: A; eye/ear bars: offset; guide rails: 19 mm; Crist Instrument Company). Recordings were made from the VLPFC with recording well and craniotomy centered on the inferior arcuate sulcus. The recording wells were implanted parallel to the cortical surface in both monkeys (Fig. 2A), i.e., the electrodes entered perpendicular to the cortical surface.

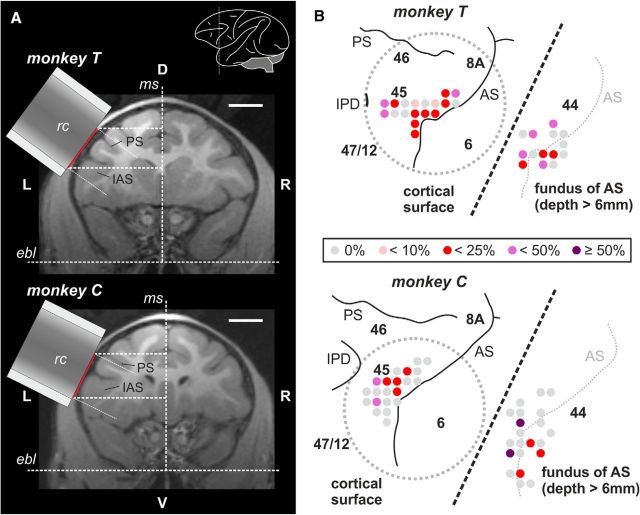

Figure 2.

Recording areas. A, MR images (frontal sections) of monkey T and monkey C used to reconstruct the position of the center of the recording chamber (rc). Red lines indicate the position of the craniotomy. Scale bar, 10 mm. Inset, Lateral view of the left hemisphere indicating the approximate position of the frontal section. B, Proposed location of recording sites inside each recording chamber in the prefrontal cortex as reconstructed from MR images (without recording wells; gray dotted circles: position of craniotomy). For better inspection, recording sites within the fundus of the inferior AS (depth > 6 mm; predominantly BA44) are depicted offset on the right side of each chamber (separated by oblique dotted line). The proportion of auditory units in relation to all neurons recorded at a specific recording site is color coded. AS, arcuate sulcus; ebl, ear bar level; IAS inferior arcuate sulcus; IPD, inferior precentral dimple; ms, mid-sagittal plane; PS, principal sulcus.

Since both monkeys are still used in ongoing experiments, no histological or electrode-MRI data are available yet. However, recordings on the surface were all centered around the posterior VLPFC, between the lower arcuate sulcus and the inferior prefrontal dimple. In accordance with Petrides and Pandya (2002), we refer to this region as proper BA44/45. However, we are aware that according to another classification only the middle and posterior part of this area is referred to as BA45, while the rostral-most part is referred to as the posterior part of BA47/12 (Preuss and Goldman-Rakic, 1991). Due to the lack of histological verification, we therefore cannot exclude that the rostral-most recordings of the present study might be positioned in BA47/12. In addition, we cannot exclude that our dorsal-most recordings might be positioned in BA46 or BA8.

Behavioral data analysis.

Successful vocalizations during go trials were defined as “hits,” and calls during catch trials as “false alarms” according to the go/nogo detection paradigm. To test whether the monkey was capable of performing the detection task successfully, we computed d′ sensitivity values derived from signal detection theory (Green and Swets, 1966) by subtracting z-scores (normal deviates) of median false alarm rates from z-scores of median hit rates. Detection threshold for d′ values was set to 1.8.
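As a worked illustration of the sensitivity index, the following minimal sketch computes d′ in the conventional way, as z(hit rate) minus z(false alarm rate). The function name `d_prime` and the clipping parameter `eps`, which keeps the z-transform finite when a rate is exactly 0 or 1, are assumptions for illustration and are not taken from the original analysis code.

```python
from statistics import NormalDist

def d_prime(hit_rate, false_alarm_rate, eps=1e-3):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate)."""
    # Assumption: clip rates away from 0 and 1 so the inverse normal CDF stays finite.
    h = min(max(hit_rate, eps), 1.0 - eps)
    f = min(max(false_alarm_rate, eps), 1.0 - eps)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(h) - z(f)

# Hypothetical rates, not values from the study.
value = d_prime(0.9, 0.1)
print(f"d' = {value:.2f}, above threshold: {value > 1.8}")
```

With equal hit and false alarm rates the index is 0, and it grows as hits become more frequent relative to false alarms.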

Neuronal data analysis

Eye movement-correlated and fixation-correlated neurons.

The demanding behavioral design prevented us from training the monkeys to maintain eye position when they produced vocalizations on command. However, we observed that vocalizations were typically accompanied by large eye movements. To avoid confounding the neuronal discharges with eye movement-related responses, we therefore excluded eye movement-related and eye fixation-related neurons from the dataset (for details, see Hage and Nieder, 2013). Briefly, we tested whether neuronal activity during the precue period was a function of saccade direction to reveal eye movement-correlated neurons. Saccadic eye movements were defined as eye positions that changed by >4 degrees of visual angle within 4 ms. The directional vector of the saccades was produced by comparing the eye positions 50 ms prior to saccade onset and 50 ms after saccade onset. Next, directional vectors were rearranged into eight directional groups (Hage and Nieder, 2013) and peri-event time histograms were generated. A nonparametric one-way ANOVA (Kruskal–Wallis test) was performed to test for significant differences (p < 0.05) in firing rate between vector groups within 200 ms around saccade onset. Neurons significant for this test were excluded.
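The saccade criterion and the grouping into eight directions can be sketched as follows. This is an illustrative reconstruction, not the original MATLAB code: the function names, the 50 ms skip after each detected onset, and the choice to center group 0 on rightward movements are assumptions. Eye traces are taken to be in degrees of visual angle at 1 kHz, so one sample corresponds to 1 ms.

```python
import math

def find_saccade_onsets(x, y, threshold_deg=4.0, window_ms=4, skip_ms=50):
    """Return sample indices where eye position changes by > threshold within the window."""
    onsets = []
    i = 0
    while i + window_ms < len(x):
        displacement = math.hypot(x[i + window_ms] - x[i], y[i + window_ms] - y[i])
        if displacement > threshold_deg:
            onsets.append(i)
            i += skip_ms  # assumption: skip ahead so one saccade is counted once
        else:
            i += 1
    return onsets

def direction_group(x, y, onset, offset_ms=50, n_groups=8):
    """Assign a saccade to one of n_groups sectors from positions 50 ms before and after onset."""
    i0 = max(onset - offset_ms, 0)
    i1 = min(onset + offset_ms, len(x) - 1)
    angle = math.atan2(y[i1] - y[i0], x[i1] - x[i0]) % (2 * math.pi)
    sector = 2 * math.pi / n_groups
    # Assumption: sectors are centered on 0, 45, 90, ... degrees (group 0 = rightward).
    return int(((angle + sector / 2) % (2 * math.pi)) // sector)

# Synthetic trace: fixation at (0, 0), then a 10 degree rightward jump at sample 200.
x = [0.0] * 200 + [10.0] * 200
y = [0.0] * 400
for onset in find_saccade_onsets(x, y):
    print(onset, direction_group(x, y, onset))
```

Firing rates around each onset could then be grouped by the returned direction index before a Kruskal–Wallis test across the eight groups.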

Additionally, we tested all neurons that did not show saccade-related neuronal activity to detect fixation-related neurons, i.e., neurons that increase their firing rates after precue fixation (Izawa et al., 2009). Therefore, neuronal activity was analyzed during recording periods in which a precue was present and the animal fixated the precue stimulus for at least 200 ms. A Wilcoxon sign rank test (p < 0.05) was performed to test for significant increases of firing rates during the fixation period (100–200 ms after fixation onset) compared with firing rates within a 100 ms window before. Neurons significant for this test were excluded.

Auditory neurons.

Monkey T was trained to produce coo vocalizations and monkey C to utter grunt calls. Therefore, we acoustically stimulated monkey T with a coo vocalization and monkey C with a grunt vocalization, which gave us the unique ability to investigate both the neuron's activity in response to the auditory stimuli and its activity before the production of the equivalent vocalization. We determined a significant auditory response by comparing the neuronal activity during auditory stimulation with the neuron's baseline activity measured in a 500 ms window before stimulus onset (for baseline position see Figs. 3B–E, 5A).

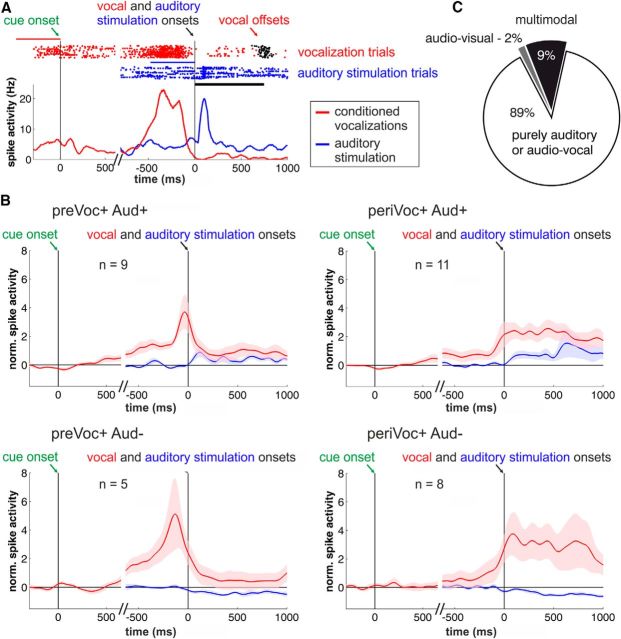

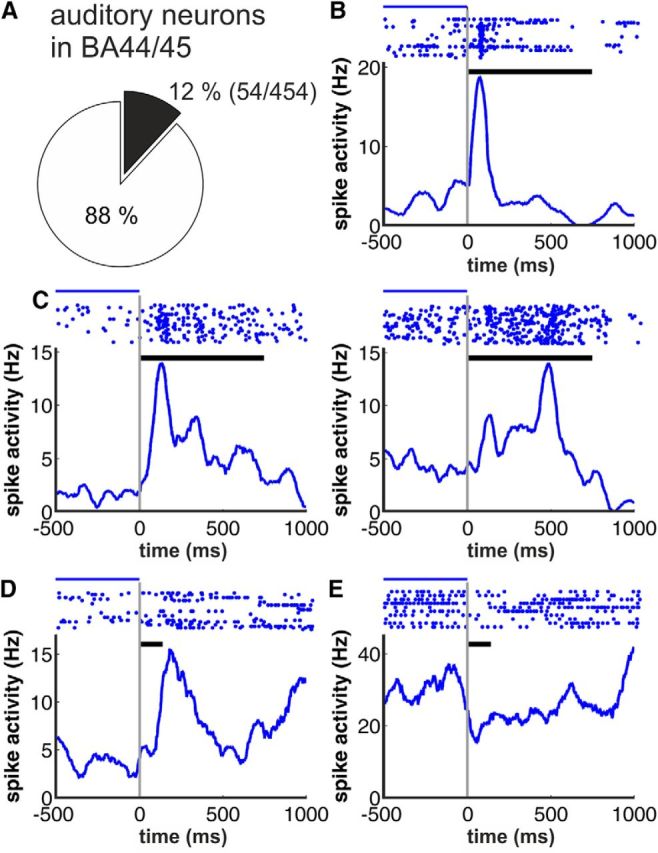

Figure 3.

Examples for acoustic responses. A, Proportion of neurons showing auditory responses in BA44/45 (54 of 454 recorded neurons). B–E, Example neurons showing different types of activity in response to coo (monkey T) and grunt vocalization (monkey C). Top, Neuronal responses are plotted as dot-raster histograms (each dot represents an action potential). Bottom, Spike-density functions (activity averaged over all trials and smoothed by a 100 ms Gaussian kernel). Phasic (B), sustained (C), and long-latency responses (D) and suppressed activity after call onset (E) were observed. Example neurons in B and C were recorded in monkey T, whereas neurons depicted in D and E stem from monkey C, respectively; black horizontal bars between dot-raster histograms indicate the duration of the coo and grunt vocalization used as acoustical stimulus for monkey T and C, respectively. Blue lines indicate from where the baseline firing rate was estimated.

Figure 5.

Audio-vocal activity in PFC neurons. A, Example neuron showing a phasic response during auditory stimulation and ramping neuronal activity before voluntary vocalizations. Top, Raster plot. Bottom, Represents the corresponding spike-density histogram averaged and smoothed with a Gaussian kernel (100 ms) for illustration. The blue and red lines indicate in which trial period the baseline firing rate was estimated (blue for auditory and red for vocal responses). B, Averaged and normalized population responses of auditory neurons subdivided into neurons showing increased (Aud+) or suppressed (Aud−) responses during auditory stimulation (blue curves) with an additional significant increase in neuronal activity before vocal output (preVoc+) or during conditioned vocal output (periVoc+; red curves). There are no significant differences in firing rates due to the visual cue stimuli. Vocalization-correlated activity is triggered by vocal onset and auditory response by the onset of the auditory stimuli (right, top and bottom); visual response by go-cue onset (left, top and bottom). C, Fractions of auditory neurons that also showed visual (1/54 neurons) as well as visual and prevocal and/or perivocal activity (multimodal neurons 5/54).

In monkey T, which was stimulated with a 753 ms coo vocalization, auditory responses were determined within two response periods: an early 500 ms interval starting 50 ms after stimulus onset and a subsequent late 500 ms interval. Auditory responses in monkey C, which was stimulated with a 140 ms grunt vocalization, were identified within a single 500 ms interval starting 50 ms after stimulus onset. The second analysis window in monkey T and the analysis window in monkey C, respectively, extend beyond the offset of the stimuli used, which ensured the detection of potential auditory offset responses. Neurons with significant differences in firing rate in at least one of the analysis windows compared with the baseline were classified as auditory neurons (paired t test, p < 0.05).

In addition, we detected phasic onset responses by dividing the time after stimulus onset into two intervals of equal duration (0–200 ms and 200–400 ms) and comparing the neuronal activity within these windows with the neuron's baseline activity within a 200 ms window before stimulus onset. Responses were defined as “phasic” if statistical analysis revealed significant differences within these three windows (Kruskal–Wallis test, p < 0.05) and the neuronal activity in the first window after stimulus onset was higher than in the other two windows.
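The phasic criterion can be illustrated with a small standard-library sketch. It assumes that each window is summarized as one firing rate per trial and that "higher" refers to the mean rate across trials; neither detail is specified in the text. The function names are hypothetical. For three groups the Kruskal–Wallis statistic has 2 degrees of freedom, for which the chi-square tail probability is exp(−H/2).

```python
import math

def kruskal_wallis_3(groups):
    """Kruskal-Wallis H (tie-corrected) and p-value for exactly three groups (df = 2)."""
    pooled = sorted((v, g) for g, vals in enumerate(groups) for v in vals)
    n = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the tied ranks i+1 .. j
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    h = 12.0 / (n * (n + 1)) * sum(r * r / len(g) for r, g in zip(rank_sums, groups)) - 3 * (n + 1)
    correction = 1.0 - tie_term / (n ** 3 - n)
    if correction == 0:
        return 0.0, 1.0  # all values identical
    h /= correction
    return h, math.exp(-h / 2.0)  # chi-square survival function for df = 2

def is_phasic(baseline, early, late, alpha=0.05):
    """Phasic: the three windows differ and the first post-onset window has the highest rate."""
    _, p = kruskal_wallis_3([baseline, early, late])
    mean = lambda v: sum(v) / len(v)  # assumption: compare mean rates across trials
    return p < alpha and mean(early) > mean(baseline) and mean(early) > mean(late)

# Hypothetical per-trial rates (Hz) in the baseline, 0-200 ms, and 200-400 ms windows.
baseline = [1, 2, 3, 2, 1, 2, 3, 2]
early = [10, 11, 12, 13, 10, 12, 11, 14]
late = [2, 3, 1, 2, 3, 2, 1, 3]
print(is_phasic(baseline, early, late))
```

A neuron whose rate stays elevated in the second window would fail the last condition and would not be labeled phasic under this sketch.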

Prevocal and perivocal activity in auditory neurons.

We further investigated the auditory neurons post hoc with respect to their prevocal and perivocal activity before and during self-produced vocalizations. We tested for prevocal activity by comparing the neuronal activity in a 500 ms window just before vocal onset with baseline activity (500 ms analysis window before go stimulus onset; for baseline position see Fig. 5A; paired t test, p < 0.05). Perivocal activity was evaluated by comparing the neuronal activity in a 500 ms window right after vocal onset with baseline activity (500 ms window before go stimulus onset; paired t test, p < 0.05). Neurons with significant rate differences were classified as prevocal and perivocal neurons, respectively.

Visual activity in auditory neurons.

Neurons in VLPFC are known to show visual and visuomotor responses (Wilson et al., 1993; Murray et al., 2000; Passingham et al., 2000). We therefore analyzed the responses of the auditory neurons to the visual go stimulus. We determined a significant change in neuronal activity due to the go cue by comparing the neuronal activity of the first 300 ms after go-cue onset with the neuron's baseline activity measured in a 300 ms window before go-cue onset (paired t test, p < 0.05).

Modulation index.

Neurons that showed statistically significant changes in neuronal discharge rates either in response to auditory stimulation or in prevocal or perivocal activity were further investigated to evaluate the direction of the changes compared with baseline. Similar to earlier studies (Eliades and Wang, 2003, 2008, 2013), we calculated a normalized measure, the modulation index, for auditory responses, prevocal responses, and perivocal responses as (R_stimulus − R_baseline)/(R_stimulus + R_baseline). Here, a modulation index of 0 indicates identical discharge rates during baseline and stimulus epoch, i.e., auditory response, prevocal or perivocal activity. A modulation index of −1 indicates complete suppression during the stimulus epoch, whereas a value of 1 indicates a neuron with a very low spontaneous rate and/or a very strong response during the stimulus epoch.
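The modulation index defined above translates directly into a few lines of code. In this minimal sketch, the function name and the handling of a neuron that is silent in both epochs (returning 0) are assumptions; the rates in the example are hypothetical.

```python
def modulation_index(r_stimulus, r_baseline):
    """(R_stimulus - R_baseline) / (R_stimulus + R_baseline), bounded between -1 and 1."""
    total = r_stimulus + r_baseline
    if total == 0:
        return 0.0  # assumption: no spikes in either epoch is treated as no modulation
    return (r_stimulus - r_baseline) / total

# Hypothetical mean firing rates in Hz.
print(modulation_index(14.0, 10.0))  # excitation, positive index
print(modulation_index(0.0, 5.0))    # complete suppression
```

Because the index is normalized by the summed rates, neurons with very different overall firing rates can be compared on the same scale.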

Population analysis and normalization.

Normalized activity was calculated by subtracting the mean neuronal baseline activity from the neuronal responses and dividing the outcome by the SD of the baseline activity. Spike-density histograms for single neurons were smoothed with a Gaussian kernel (bin width 150 ms; step size 1 ms) for illustrative purposes only. Neuronal discharges were systematically modulated throughout a trial, so that the pretrial period turned out to be an unreliable baseline rate estimate. For example, the neurons' baseline was significantly different in 32% of auditory neurons (17 of 54 neurons) when comparing between a pretrial period and before go onset, and in 13% (7/54) when comparing baseline between before go onset and auditory onset. For baseline normalization, we therefore analyzed auditory responses and vocalization-correlated activity relative to baseline activation in a preceding trial phase. This way, we could measure changes in discharge rates directly related to auditory stimuli and vocal output, respectively. Please note, as stated above, that the preceding trial phase, which was used for baseline normalization, was before go onset and, therefore, was not blurred by any potential motor preparation-related activity.
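The baseline normalization amounts to a z-score relative to the baseline epoch. A minimal sketch follows; the function name is hypothetical, and the use of the sample SD (n − 1 in the denominator) is an assumption because the text does not state which SD estimator was used.

```python
from statistics import mean, stdev

def normalize_to_baseline(response_rates, baseline_rates):
    """Subtract the baseline mean and divide by the baseline SD."""
    mu = mean(baseline_rates)
    sd = stdev(baseline_rates)  # assumption: sample SD; the estimator is not specified
    if sd == 0:
        raise ValueError("baseline SD is zero; normalized activity is undefined")
    return [(r - mu) / sd for r in response_rates]

# Hypothetical firing rates in Hz.
print(normalize_to_baseline([4.0, 6.0, 8.0], [2.0, 4.0, 6.0]))
```

Expressing activity in units of baseline SD allows responses of neurons with different firing rates to be averaged into population responses.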

Results

The activity of single neurons during playbacks of vocalizations (auditory condition), preparation of vocalizations (prevocal condition), and production of vocalizations (perivocal condition) was recorded in two monkeys trained to vocalize on command in a go/nogo task (see Fig. 1A for experimental design; Hage and Nieder, 2013; Hage et al., 2013b). We trained one monkey (monkey T) to utter coo vocalizations, and the second monkey (monkey C) was taught to emit grunts (Fig. 1B). To have identical vocalizations during listening and production, monkey T was acoustically stimulated with a coo vocalization, whereas grunts were played back to monkey C. Data from 31 daily sessions for monkey T and 27 sessions for monkey C were collected. On average, each recording session consisted of 135 ± 9 (monkey T) and 134 ± 6 (monkey C) vocalizations. In all sessions with both monkeys, the values of the sensitivity index d′ were above the threshold of 1.8 (mean d′: 3.4 ± 0.1 in monkey T; 4.7 ± 0.1 in monkey C). This showed that the monkeys produced calls very reliably and almost exclusively in response to the visual go cues. The median call latencies were similar in both monkeys (1.48 s in monkey T; 1.79 s in monkey C; Fig. 1C).

While the monkeys performed the task, we recorded 548 neurons from the left VLPFC (BA44 and BA45; Fig. 2A,B) of these two male rhesus monkeys (Macaca mulatta). To avoid potential confounding effects caused by eye movements, we excluded neurons showing eye movement-related and eye fixation-related activity from the dataset with post hoc tests because the monkeys were not required to maintain fixation during the task. Fourteen percent of the neurons (79 of 548 neurons) showed saccadic eye movement-related activity, and 3% of neurons (15/548) exhibited eye fixation-correlated activity. After exclusion of these neurons, a total of 454 neurons was used for further analyses. All neurons analyzed in this study are a fraction of cells that have been recorded in a previous study (Hage and Nieder, 2013).

Auditory response properties

First, we examined the activity of VLPFC neurons in response to external acoustical stimulation with vocalizations. A proportion of 12% of the recorded neurons (54/454) showed significant modulation in response to external acoustic stimulation (paired t test and Kruskal–Wallis test, respectively, p < 0.05; Fig. 3A). Monkey T showed a higher proportion of auditory neurons [16% (40/255)] than monkey C [7% (14/199); χ2 test, p < 0.05]. We observed several auditory response types with increasing discharge rates such as phasic (Fig. 3B), sustained (Fig. 3C), and long-latency responses (Fig. 3D) and response types with suppressed neuronal activity after stimulus onset (Fig. 3E). A comparable proportion of 18% of the excluded neurons that showed eye movement-related or fixation-related activity also showed auditory-related activity (χ2 test, p > 0.1; 12 vs 18%).
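The comparison of the two monkeys' proportions of auditory neurons (40/255 vs 14/199) can be re-derived with a short worked example. The sketch assumes a Pearson χ2 test on a 2 × 2 table without continuity correction; the text does not state which variant was used, and the function name is hypothetical. For 1 degree of freedom, the chi-square tail probability equals erfc(√(χ2/2)).

```python
import math

def chi2_two_proportions(k1, n1, k2, n2):
    """Pearson chi-square (no continuity correction) for a 2x2 table and its p-value (df = 1)."""
    a, b = k1, n1 - k1  # group 1: responsive, non-responsive
    c, d = k2, n2 - k2  # group 2: responsive, non-responsive
    n = n1 + n2
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2.0))  # chi-square survival function for df = 1
    return chi2, p

# Counts reported in the text: monkey T 40/255, monkey C 14/199.
chi2, p = chi2_two_proportions(40, 255, 14, 199)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

Under these assumptions the resulting p-value falls below 0.05, in line with the reported difference between the two animals.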

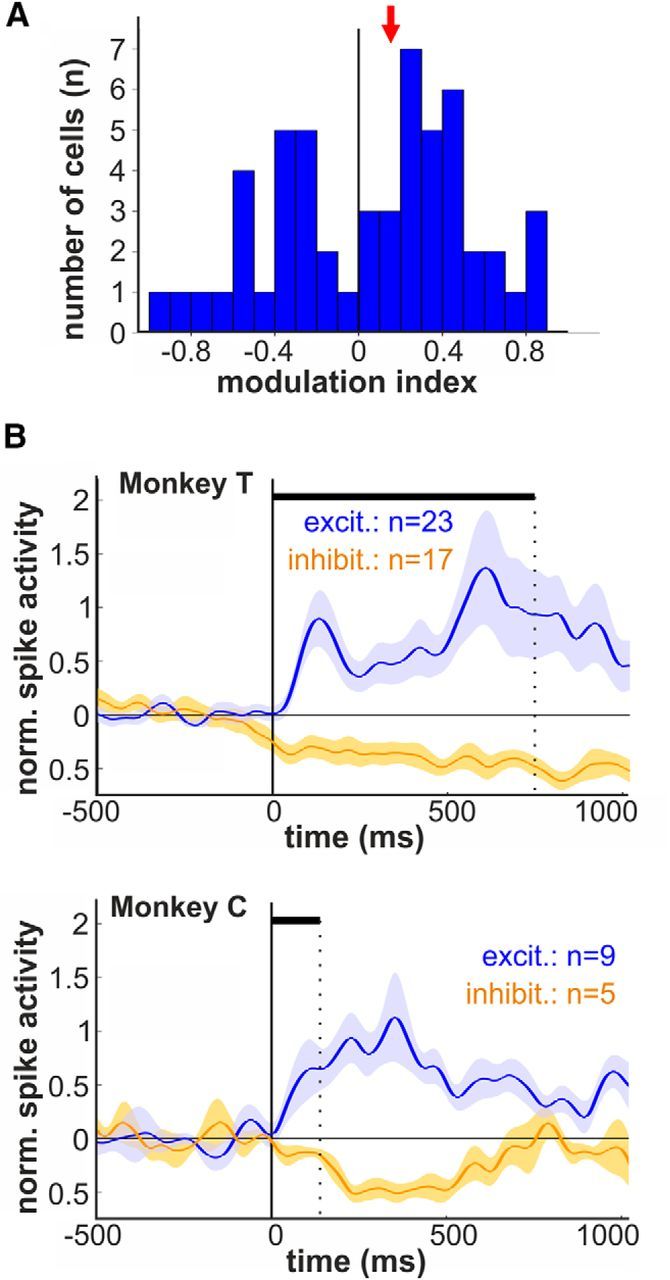

To quantify the effect of auditory stimulation on the neurons' discharge rate, we calculated the auditory modulation index for every single responsive neuron (Fig. 4A). Overall, a fraction of 59% (32/54) showed positive modulation indices, indicating excited neuronal activity in response to auditory stimulation. The remaining 41% of the responsive neurons (22/54) were characterized by negative modulation indices, indicating that the neurons' firing rates were suppressed by auditory stimulation. Auditory neurons showed a median auditory modulation index of 0.16. We separated the auditory neurons into two populations regarding modulation response for each monkey. Figure 4B shows the mean population responses of acoustically excited (modulation index > 0) and inhibited neurons (modulation index < 0) for each monkey.

Figure 4.

Modulation indices and population responses for excited and inhibited auditory activity. A, Distribution of the modulation indices indicating the magnitude of excitation and suppression during auditory stimulation (red arrow, median modulation index). B, Average normalized activity (population responses) for auditory neurons showing increased and suppressed responses separately for monkey T and C, respectively.

Interaction of auditory and vocal activity

Next, we investigated the activity of the auditory neurons before and during conditioned vocalizations. We were not able to analyze changes of discharge rates during spontaneous calls due to the small number of auditory neurons recorded during spontaneous vocalizations. Figure 5A shows an auditory neuron with phasic responses to auditory stimulation. At the same time, this neuron shows a significant increase in prevocal activity, i.e., before vocalization, and a significant decrease in perivocal activity, i.e., during conditioned vocal output. No change in neuronal discharge rates was observed in response to the visual go cue. A proportion of 50% (27/54) of the auditory neurons showed significantly modulated prevocal activity; 26% of the recorded auditory neurons (14/54) showed an increase in prevocal activity (paired t test, p < 0.05), whereas decreased prevocal discharge rates were found in 24% (13/54) of auditory neurons.

Perivocal discharge rates were modulated in 57% (31/54) of the auditory cells, with increased activity in 35% (19/54), and suppressed responses in 22% (12/54) of auditory neurons. Figure 5B shows the population responses for the groups of auditory excited (Aud+) and suppressed (Aud−) neurons that exhibited both a significant increase in prevocal (preVoc+) and perivocal activity (periVoc+) for goal-directed vocalizations. Neuronal activities were much weaker in response to auditory stimuli than to vocal activity. This observation is analyzed in detail below.

The population responses of all four groups of audio-vocal neurons did not show any significant visual responses to the go stimulus (paired t test, p > 0.05). We further examined the activity of the auditory neurons in response to the visual go stimulus. A proportion of 11% of the auditory neurons (6/54) showed significant modulation in response to the visual stimulus (paired t test, p < 0.05). Interestingly, all but one of these neurons showed prevocal and/or perivocal activity, indicating multimodal responses in approximately every tenth auditory neuron (Fig. 5C).

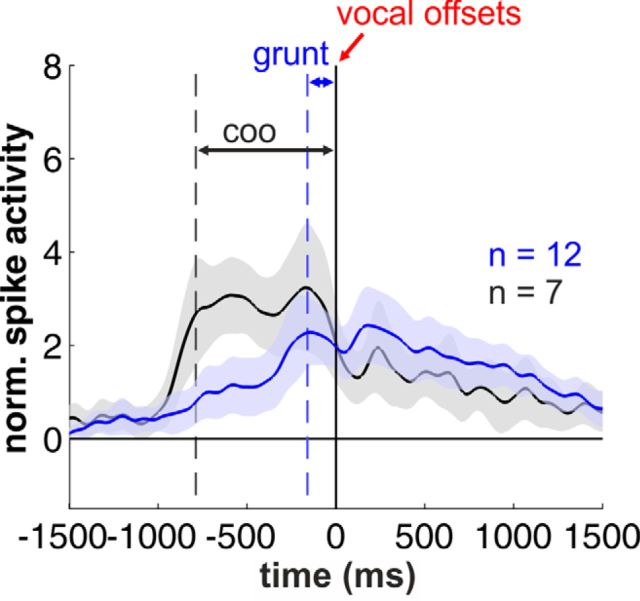

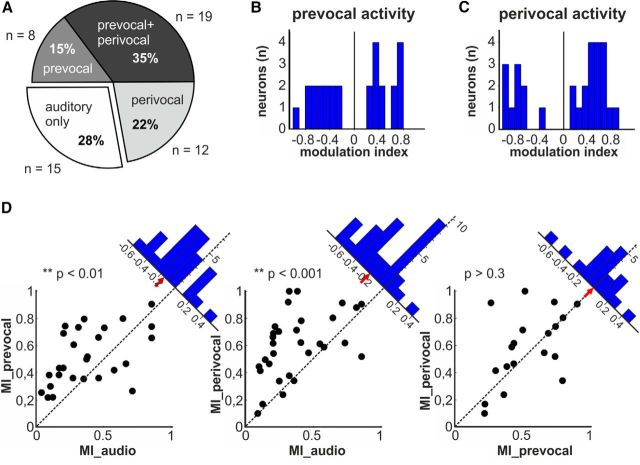

We further analyzed the neuronal activity of the periVoc+ neurons to evaluate their responses after call offset (Fig. 6). We observed that the duration of the sustained activity depended on the duration of the uttered calls (grunts and coo vocalizations, respectively). In both cases, however, the neuronal activity continued after call offset and showed no significant differences between the two call types in a 1 s time window after call offset (p > 0.1, Wilcoxon test). Overall, 72% (39/54) of the auditory neurons showed a significant modulation of their discharge rates prior to vocal onset (prevocal), during vocal output (perivocal), or in both cases (Fig. 7A). Specifically, about a third of the auditory neurons exhibited changes in prevocal activity together with an additional modulation in firing rates during goal-directed vocalizations (35%, 19/54). Fifteen percent (8/54) of auditory neurons showed significant activity changes only before vocal onset (prevocal). Finally, 22% (12/54) of auditory neurons showed only perivocal responses.

Figure 6.

Postvocal activity in periVoc+ neurons. Averaged and normalized population responses of periVoc+ neurons separated for monkey T (coo vocalizations) and monkey C (grunt calls). There are no significant differences in firing rates after call offset.

Figure 7.

Prevocal and perivocal activity of auditory neurons. A, Fractions of auditory neurons that also showed prevocal (8/54), perivocal (12/54), or both types of activation (19/54). B, C, Distribution of the modulation indices of auditory neurons showing significant modulation before conditioned vocal output (B) and/or during conditioned vocal output (C). D, Scatter plots of auditory neurons as a function of the absolute values of their modulation indices. Each dot represents one neuron with its corresponding absolute value of its modulation index in response to auditory stimulation (MI_audio) and before (MI_prevocal) and during self-produced vocalizations (MI_perivocal). Insets indicate the distance of the dot to the bisection line. Positive values were assigned to dots below the line (modulation index on abscissa higher than on the ordinate) and negative values to dots above the line (modulation index on abscissa lower than on the ordinate). Red arrows mark the median distance to the bisection line.

Prevocal and perivocal activity of auditory neurons

To quantify the premotor-related and motor-related neuronal responses, we calculated the modulation indices for the observed prevocal and perivocal activities. During both prevocal and perivocal phases, the auditory neurons showed an increase in median discharge rates with average modulation indices of 0.22 and 0.34, respectively (Fig. 7B,C).

Finally, we compared the strengths of the neuronal modulation of the auditory neurons during the prevocal, perivocal, and auditory stimulation phases by plotting the respective absolute values of the modulation indices of individual neurons against each other (Fig. 7D). Then, we calculated the distance of the resulting points to the bisection line (Fig. 7D, insets). Plotting the prevocal modulation indices as a function of the auditory modulation indices revealed a significant difference from the bisection line with a median distance of −0.16 (one-sample t test, p < 0.01, n = 27). This finding indicates that prevocal modulation indices were on average higher than auditory modulation indices. Similar results were observed for the perivocal modulation indices as a function of the auditory modulation indices. Here, significant differences from the bisection line were found with a median distance of −0.20 (one-sample t test, p < 0.001, n = 31), which indicates modulation indices that were on average higher during the perivocal phase than during auditory stimulation. The distribution of the perivocal modulation indices as a function of the prevocal modulation indices showed no differences from the bisection line (median distance: −0.005), indicating similar values of prevocal and perivocal modulation indices on the population level (one-sample t test, p > 0.3, n = 19).
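The sign convention for the distance to the bisection line can be illustrated with a short sketch. It assumes that "distance" means the perpendicular distance to the line y = x, which the text does not state explicitly, and it uses made-up modulation index pairs rather than data from the study. The function names are hypothetical.

```python
import math
from statistics import median


def signed_distance_to_bisector(x, y):
    """Signed perpendicular distance of the point (x, y) to the line y = x.

    Assumption: perpendicular distance. The sign follows the figure legend:
    positive below the line (x > y), negative above the line (x < y).
    """
    return (x - y) / math.sqrt(2)


def median_distance(points):
    """Median signed distance of a collection of (x, y) points."""
    return median(signed_distance_to_bisector(x, y) for x, y in points)


# Hypothetical (|MI_audio|, |MI_prevocal|) pairs, NOT values from the study.
example_points = [(0.10, 0.30), (0.20, 0.25), (0.15, 0.50), (0.30, 0.20)]

print(round(median_distance(example_points), 3))
```

With the abscissa holding the auditory index and the ordinate the prevocal or perivocal index, a negative median distance means that most points lie above the bisection line, that is, the vocalization-related modulation exceeds the auditory one.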

Discussion

We show that a fraction of neurons in VLPFC exhibits responses to auditory stimulation with species-specific calls. Almost three-fourths of these auditory neurons show a significant modulation of their discharge rates before and/or during vocalization. Based on these audio-vocal interactions, VLPFC might be an ideal candidate to combine higher order auditory processing mechanisms with cognitive control of the vocal motor network.

Auditory activity in monkey PFC

Our findings of auditory neurons in BA44/45 are in agreement with previous studies, in which auditory neurons have been recorded in VLPFC, including areas BA45 and BA12/47 (for review, see Plakke et al., 2013), that were preferentially driven by species-specific vocalizations compared with pure tones, noise bursts, and other complex sounds (Romanski and Goldman-Rakic, 2002; Romanski et al., 2005). Importantly, most auditory neurons in these areas have been found to be multisensory, integrating acoustic features with the facial gesture that accompanies the corresponding vocalization (Sugihara et al., 2006; Romanski, 2012; Diehl and Romanski, 2014). In the current study, we also report auditory neurons in BA44, an area that has not been investigated so far. Coudé et al. (2011) recently also recorded from vocalizing monkeys but did not find auditory responses in vocalization-correlated neurons in the frontal lobe. This discrepancy is probably a result of different recording sites, namely the ventrorostral premotor cortex in the Coudé et al. (2011) study as opposed to VLPFC in our study. The VLPFC seems to receive auditory information from the belt and parabelt regions of AC, with which it is reciprocally connected (Romanski et al., 1999a,b; Petrides and Pandya, 2002, 2009). Neurons in VLPFC as well as in the belt and parabelt regions of AC show similar responses to auditory stimulation with species-specific vocalizations (Tian et al., 2001; Romanski et al., 2005). In contrast to core areas of AC, some neurons in higher auditory areas of macaque monkeys are activated by complex sounds such as vocalizations rather than by simple stimuli (Rauschecker et al., 1995, 1997; Rauschecker, 1998; Kusmierek and Rauschecker, 2009; Kikuchi et al., 2010). Some of these neurons showed even shorter response latencies than neurons in primary AC (Kusmierek and Rauschecker, 2014). In addition, a vocalization area has been identified in STP of macaques in which neurons preferred vocalizations over other auditory stimuli (Petkov et al., 2008), individual callers over call type (Perrodin et al., 2011, 2014), or one call type over the other (Fukushima et al., 2014).

Audio-vocal interaction in VLPFC

In addition to its role in multisensory processes, VLPFC has been proposed to be involved in vocal motor initiation and preparation processes (for review, see Ackermann et al., 2014). Electrical stimulations in BA44 of anesthetized macaques elicited orofacial responses (Petrides et al., 2005), and single neurons in BA44/45 specifically predicted the preparation of conditioned vocal output (Hage and Nieder, 2013). These findings argue for a direct involvement of BA44/45 in motor selection and initiation processes.

While several studies investigated responses to auditory stimuli in VLPFC, it was virtually unknown how this area is involved in audio-vocal integration processes. In the current study, we report such audio-vocal integration processes on the level of single neurons. Most recorded auditory neurons showed an increased neuronal modulation during voluntary vocalizations that started before vocal onset. Neurons with such audio-vocal response properties seem to be a general phenomenon of auditory-vocal interactions and have already been found at several levels in the primate brain (Müller-Preuss and Ploog, 1981; Eliades and Wang, 2003, 2008, 2013; Pieper and Jürgens, 2003; Hage et al., 2006). Similar to the ventrolateral prefrontal level, audio-vocal neurons in the lower brainstem and in AC showed each combination of excited or suppressed activity between self-produced vocalizations and auditory stimulation (Eliades and Wang, 2003, 2013; Hage et al., 2006). In contrast to the present study, where the activity of auditory neurons predominantly increased prior to and/or during vocal output, neurons in AC were predominantly suppressed around self-produced vocalizations (Eliades and Wang, 2008). Here, a predominant suppression that is directly linked to vocal output seems to increase the dynamic range of the auditory system and maintain the hearing sensitivity to the external acoustic environment during vocal output (Eliades and Wang, 2003). Audio-vocal neurons in the brainstem seem to be predominantly involved in basic adjustments and modifications during audio-vocal reflexes (Nonaka et al., 1997; Hage et al., 2006).

Potential role of audio-vocal neurons in VLPFC

To investigate the possible function of audio-vocal activity in the prefrontal cortex, we analyzed the neuronal modulation rates of single neurons recorded in BA44/45. In most neurons, the absolute modulation rates before and/or during conditioned vocalizations were significantly higher than those during the playback of the same type of vocalization. Even though conclusions about auditory perceptual abilities based on the neuronal response to a single acoustic stimulus need to be made with caution, our findings suggest that not only prevocal, but also perivocal neurons are driven more by vocal motor activity than by the playback of the exact same type of vocalization. In addition, the neurons' modulation rates before and during vocal output were similar in neurons that showed a significant modulation in both time epochs. Further studies will have to decipher whether neurons in BA44/45 show a preponderance of premotor function in which audio-vocal neurons get modulatory auditory input but seem to be primarily involved in call initiation and vocal preparation. Alternatively, or in addition, such neurons might be involved in complex cognitive and perceptual auditory and/or multimodal processes, as has been shown for neurons predominantly recorded in BA47/12 (Romanski, 2007, 2012).

Our results help to elucidate the role of VLPFC in audio-vocal integration processes. Several recent studies suggest BA44/45 of nonhuman primates as the monkey homolog of human Broca's area (Petrides et al., 2005), which is crucial for speech control in humans (Amunts and Zilles, 2012). BA44/45 shows similar corticocortical projections, anatomical similarities, and comparable cytoarchitectonics as human Broca's area (Petrides and Pandya, 2002, 2009; Petrides et al., 2005). Moreover, these areas are particularly interconnected with several auditory areas within the superior temporal lobe (Catani et al., 2005; Parker et al., 2005; Romanski, 2007; Petrides and Pandya, 2009). In addition, brain activity in BA44/45 is preferentially elicited in response to species-specific vocalizations in both human and nonhuman primates (Romanski and Goldman-Rakic, 2002; Gil-da-Costa et al., 2006; Joly et al., 2012). Functional differences between BA44 and BA45 have not yet been observed. However, several cortical auditory, multisensory, and visual association areas in the lateral superior temporal lobe have stronger connections with BA45 than BA44 (Petrides and Pandya, 2009). This previously undetected prefrontal loop for audio-vocal integration processes may constitute an evolutionary precursor of how networks control complex audio-vocal behavior on a cognitive level in the primate lineage and might be the first step in the evolution of complex learned vocal communication signals such as in human speech. However, this prefrontal loop may go back even further and be present in many mammals, since voice-sensitive regions have not only been found in humans, but also recently in dogs (Andics et al., 2014). Furthermore, a recent study showed that the organization of human Broca's area seems to be much more complex, incorporating 5–10 local fields that differ in their cellular properties (Amunts et al., 2010). Future studies will have to reveal whether such local fields also exist for the monkey homolog of Broca's area, and whether audio-vocal activity is limited to some of these fields.

Several studies found visual and visuomotor cells in VLPFC (Wilson et al., 1993; Miller et al., 1996; O Scalaidhe et al., 1997; Vallentin and Nieder, 2008; Nieder, 2013). The primate VLPFC at the apex of the cortical hierarchy is ideally positioned to integrate not only social signals from the auditory modality (Gil-da-Costa et al., 2006; Sugihara et al., 2006; Romanski, 2012), but also from the visual domain (Sugihara et al., 2006). Neurons in BA47/12, BA45, and the lateral orbital cortex of PFC are known to respond to faces (O Scalaidhe et al., 1997), and Tsao et al. (2008) identified three discrete, highly face-selective regions in ventral PFC. Also in the present study, we found cells that were responsive to both visual (cue) items and auditory signals. All but one of these cells were also correlated to vocalizations, making them ideal candidates for multimodal integration in sensorimotor processes. In addition, we observed auditory responses in the population of eye movement-related and/or fixation-related neurons, additionally suggesting audio-motor integration (Murray et al., 2000; Passingham et al., 2000). Because PFC is known to give rise to executive functions, VLPFC neurons that are preferably active during conditioned but not spontaneous vocalization (Hage and Nieder, 2013) might be exclusively active during macaque vocal communication with a goal-directed purpose in which VLPFC might be able to directly control the vocal motor network (Ackermann et al., 2014). Such circuitries in PFC, integrating social signals from many sources, could readily have been adopted in the course of primate evolution to establish complex audio-vocal behavior known to be crucial for proper speech and language production in humans (Rauschecker and Scott, 2009).

Footnotes

The authors declare no competing financial interests.

References

- Ackermann H, Hage SR, Ziegler W. Brain mechanisms of acoustic communication in humans and nonhuman primates: an evolutionary perspective. Behav Brain Sci. 2014;37:529–546. doi: 10.1017/S0140525X13003099.

- Amunts K, Zilles K. Architecture and organization principles of Broca's region. Trends Cogn Sci. 2012;16:418–426. doi: 10.1016/j.tics.2012.06.005.

- Amunts K, Lenzen M, Friederici AD, Schleicher A, Morosan P, Palomero-Gallagher N, Zilles K. Broca's region: novel organizational principles and multiple receptor mapping. PLoS Biol. 2010;8:e1000489. doi: 10.1371/journal.pbio.1000489.

- Andics A, Gácsi M, Faragó T, Kis A, Miklósi A. Voice-sensitive regions in the dog and human brain are revealed by comparative fMRI. Curr Biol. 2014;24:574–578. doi: 10.1016/j.cub.2014.01.058.

- Brumm H, Voss K, Köllmer I, Todt D. Acoustic communication in noise: regulation of call characteristics in a New World monkey. J Exp Biol. 2004;207:443–448. doi: 10.1242/jeb.00768.

- Catani M, Jones DK, ffytche DH. Perisylvian language networks of the human brain. Ann Neurol. 2005;57:8–16. doi: 10.1002/ana.20319.

- Coudé G, Ferrari PF, Rodà F, Maranesi M, Borelli E, Veroni V, Monti F, Rozzi S, Fogassi L. Neurons controlling voluntary vocalization in the macaque ventral premotor cortex. PLoS One. 2011;6:e26822. doi: 10.1371/journal.pone.0026822.

- Cynx J, Lewis R, Tavel B, Tse H. Amplitude regulation of vocalizations in noise by a songbird, Taeniopygia guttata. Anim Behav. 1998;56:107–113. doi: 10.1006/anbe.1998.0746.

- Diehl MM, Romanski LM. Responses of prefrontal multisensory neurons to mismatching faces and vocalizations. J Neurosci. 2014;34:11233–11243. doi: 10.1523/JNEUROSCI.5168-13.2014.

- Eliades SJ, Wang X. Sensory-motor interaction in the primate auditory cortex during self-initiated vocalizations. J Neurophysiol. 2003;89:2194–2207. doi: 10.1152/jn.00627.2002.

- Eliades SJ, Wang X. Neural substrates of vocalization feedback monitoring in primate auditory cortex. Nature. 2008;453:1102–1106. doi: 10.1038/nature06910.

- Eliades SJ, Wang X. Comparison of auditory-vocal interactions across multiple types of vocalizations in marmoset auditory cortex. J Neurophysiol. 2013;109:1638–1657. doi: 10.1152/jn.00698.2012.

- Fukushima M, Saunders RC, Leopold DA, Mishkin M, Averbeck BB. Differential coding of conspecific vocalizations in the ventral auditory cortical stream. J Neurosci. 2014;34:4665–4676. doi: 10.1523/JNEUROSCI.3969-13.2014.

- Fuster JM. Executive frontal functions. Exp Brain Res. 2000;133:66–70. doi: 10.1007/s002210000401.

- Gil-da-Costa R, Martin A, Lopes MA, Muñoz M, Fritz JB, Braun AR. Species-specific calls activate homologs of Broca's and Wernicke's areas in the macaque. Nat Neurosci. 2006;9:1064–1070. doi: 10.1038/nn1741.

- Green DM, Swets J. Signal detection theory and psychophysics. New York: Wiley; 1966.

- Hackett TA, Stepniewska I, Kaas JH. Prefrontal connections of the parabelt auditory cortex in macaque monkeys. Brain Res. 1999;817:45–58. doi: 10.1016/S0006-8993(98)01182-2.

- Hage SR. Audio-vocal interactions during vocal communication in squirrel monkeys and their neurobiological implications. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2013;199:663–668. doi: 10.1007/s00359-013-0810-1.

- Hage SR, Nieder A. Single neurons in monkey prefrontal cortex encode volitional initiation of vocalizations. Nat Commun. 2013;4:2409. doi: 10.1038/ncomms3409.

- Hage SR, Jürgens U, Ehret G. Audio-vocal interaction in the pontine brainstem during self-initiated vocalization in the squirrel monkey. Eur J Neurosci. 2006;23:3297–3308. doi: 10.1111/j.1460-9568.2006.04835.x.

- Hage SR, Jiang T, Berquist SW, Feng J, Metzner W. Ambient noise induces independent shifts in call frequency and amplitude within the Lombard effect in echolocating bats. Proc Natl Acad Sci U S A. 2013a;110:4063–4068. doi: 10.1073/pnas.1211533110.

- Hage SR, Gavrilov N, Nieder A. Cognitive control of distinct vocalizations in rhesus monkeys. J Cogn Neurosci. 2013b;25:1692–1701. doi: 10.1162/jocn_a_00428.

- Izawa Y, Suzuki H, Shinoda Y. Response properties of fixation neurons and their location in the frontal eye field in the monkey. J Neurophysiol. 2009;102:2410–2422. doi: 10.1152/jn.00234.2009.

- Joly O, Pallier C, Ramus F, Pressnitzer D, Vanduffel W, Orban GA. Processing of vocalizations in human and monkeys: a comparative fMRI study. Neuroimage. 2012;62:1376–1389. doi: 10.1016/j.neuroimage.2012.05.070.

- Kikuchi Y, Horwitz B, Mishkin M. Hierarchical auditory processing directed rostrally along the monkey's supratemporal plane. J Neurosci. 2010;30:13021–13030. doi: 10.1523/JNEUROSCI.2267-10.2010.

- Kusmierek P, Rauschecker JP. Functional specialization of medial auditory belt cortex in the alert rhesus monkey. J Neurophysiol. 2009;102:1606–1622. doi: 10.1152/jn.00167.2009.

- Kusmierek P, Rauschecker JP. Selectivity for space and time in early areas of the auditory dorsal stream in the rhesus monkey. J Neurophysiol. 2014;111:1671–1685. doi: 10.1152/jn.00436.2013.

- Lombard E. Le signe de l'élévation de la voix. Ann Mal Oreille Larynx. 1911;37:101–119.

- Miller CT, Wang X. Sensory-motor interactions modulate a primate vocal behavior: antiphonal calling in common marmosets. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2006;192:27–38. doi: 10.1007/s00359-005-0043-z.

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- Müller-Preuss P, Ploog D. Inhibition of auditory cortical neurons during phonation. Brain Res. 1981;215:61–76. doi: 10.1016/0006-8993(81)90491-1.

- Murray EA, Bussey TJ, Wise SP. Role of prefrontal cortex in a network for arbitrary visuomotor mapping. Exp Brain Res. 2000;133:114–129. doi: 10.1007/s002210000406.

- Nieder A. Supramodal numerosity selectivity of neurons in primate prefrontal and posterior parietal cortices. Proc Natl Acad Sci U S A. 2012;109:11860–11865. doi: 10.1073/pnas.1204580109.

- Nieder A. Coding of abstract quantity by ‘number neurons’ of the primate brain. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2013;199:1–16. doi: 10.1007/s00359-012-0763-9.

- Nonaka S, Takahashi R, Enomoto K, Katada A, Unno T. Lombard reflex during PAG-induced vocalization in decerebrate cats. Neurosci Res. 1997;29:283–289. doi: 10.1016/S0168-0102(97)00097-7.

- O Scalaidhe SP, Wilson FA, Goldman-Rakic PS. Areal segregation of face-processing neurons in prefrontal cortex. Science. 1997;278:1135–1138. doi: 10.1126/science.278.5340.1135.

- Ouattara K, Lemasson A, Zuberbühler K. Campbell's monkeys concatenate vocalizations into context-specific call sequences. Proc Natl Acad Sci U S A. 2009;106:22026–22031. doi: 10.1073/pnas.0908118106.

- Parker GJ, Luzzi S, Alexander DC, Wheeler-Kingshott CA, Ciccarelli O, Lambon Ralph MA. Lateralization of ventral and dorsal auditory-language pathways in the human brain. Neuroimage. 2005;24:656–666. doi: 10.1016/j.neuroimage.2004.08.047.

- Passingham RE, Toni I, Rushworth MF. Specialisation within the prefrontal cortex: the ventral prefrontal cortex and associative learning. Exp Brain Res. 2000;133:103–113. doi: 10.1007/s002210000405.

- Perrodin C, Kayser C, Logothetis NK, Petkov CI. Voice cells in the primate temporal lobe. Curr Biol. 2011;21:1408–1415. doi: 10.1016/j.cub.2011.07.028.

- Perrodin C, Kayser C, Logothetis NK, Petkov CI. Auditory and visual modulation of temporal lobe neurons in voice-sensitive and association cortices. J Neurosci. 2014;34:2524–2537. doi: 10.1523/JNEUROSCI.2805-13.2014.

- Petkov CI, Kayser C, Steudel T, Whittingstall K, Augath M, Logothetis NK. A voice region in the monkey brain. Nat Neurosci. 2008;11:367–374. doi: 10.1038/nn2043.

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- Petrides M, Pandya DN. Distinct parietal and temporal pathways to the homologues of Broca's area in the monkey. PLoS Biol. 2009;7:e1000170. doi: 10.1371/journal.pbio.1000170.

- Petrides M, Cadoret G, Mackey S. Orofacial somatomotor responses in the macaque monkey homologue of Broca's area. Nature. 2005;435:1235–1238. doi: 10.1038/nature03628.

- Pieper F, Jürgens U. Neuronal activity in the inferior colliculus and bordering structures during vocalization in the squirrel monkey. Brain Res. 2003;979:153–164. doi: 10.1016/S0006-8993(03)02897-X.

- Plakke B, Diltz MD, Romanski LM. Coding of vocalizations by single neurons in ventrolateral prefrontal cortex. Hear Res. 2013;305:135–143. doi: 10.1016/j.heares.2013.07.011.

- Poremba A, Saunders RC, Crane AM, Cook M, Sokoloff L, Mishkin M. Functional mapping of the primate auditory system. Science. 2003;299:568–572. doi: 10.1126/science.1078900.

- Preuss TM, Goldman-Rakic PS. Myelo- and cytoarchitecture of the granular frontal cortex and surrounding regions in the strepsirhine primate Galago and the anthropoid primate Macaca. J Comp Neurol. 1991;310:429–474. doi: 10.1002/cne.903100402.

- Rauschecker JP. Cortical processing of complex sounds. Curr Opin Neurobiol. 1998;8:516–521. doi: 10.1016/S0959-4388(98)80040-8.

- Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331.

- Rauschecker JP, Tian B, Hauser M. Processing of complex sounds in the macaque nonprimary auditory cortex. Science. 1995;268:111–114. doi: 10.1126/science.7701330.

- Rauschecker JP, Tian B, Pons T, Mishkin M. Serial and parallel processing in rhesus monkey auditory cortex. J Comp Neurol. 1997;382:89–103. doi: 10.1002/(SICI)1096-9861(19970526)382:1<89::AID-CNE6>3.0.CO;2-G.

- Romanski LM. Representation and integration of auditory and visual stimuli in the primate ventral lateral prefrontal cortex. Cereb Cortex. 2007;17(Suppl 1):i61–i69. doi: 10.1093/cercor/bhm099.

- Romanski LM. Integration of faces and vocalizations in ventral prefrontal cortex: implications for the evolution of audiovisual speech. Proc Natl Acad Sci U S A. 2012;109:10717–10724. doi: 10.1073/pnas.1204335109.

- Romanski LM, Averbeck BB. The primate cortical auditory system and neural representation of conspecific vocalizations. Annu Rev Neurosci. 2009;32:315–346. doi: 10.1146/annurev.neuro.051508.135431.

- Romanski LM, Goldman-Rakic PS. An auditory domain in primate prefrontal cortex. Nat Neurosci. 2002;5:15–16. doi: 10.1038/nn781.

- Romanski LM, Bates JF, Goldman-Rakic PS. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1999a;403:141–157. doi: 10.1002/(SICI)1096-9861(19990111)403:2<141::AID-CNE1>3.0.CO;2-V.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999b;2:1131–1136. doi: 10.1038/16056.

- Romanski LM, Averbeck BB, Diltz M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J Neurophysiol. 2005;93:734–747. doi: 10.1152/jn.00675.2004.

- Seyfarth RM, Cheney DL, Marler P. Monkey responses to three different alarm calls: evidence for predator classification and semantic communication. Science. 1980;210:801–803. doi: 10.1126/science.7433999.

- Sugihara T, Diltz MD, Averbeck BB, Romanski LM. Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J Neurosci. 2006;26:11138–11147. doi: 10.1523/JNEUROSCI.3550-06.2006.

- Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911.

- Tsao DY, Schweers N, Moeller S, Freiwald WA. Patches of face-selective cortex in the macaque frontal lobe. Nat Neurosci. 2008;11:877–879. doi: 10.1038/nn.2158.

- Vallentin D, Nieder A. Behavioral and prefrontal representation of spatial proportions in the monkey. Curr Biol. 2008;18:1420–1425. doi: 10.1016/j.cub.2008.08.042.

- Wilson FA, Ó Scalaidhe SP, Goldman-Rakic PS. Dissociation of object and spatial processing domains in primate prefrontal cortex. Science. 1993;260:1955–1958. doi: 10.1126/science.8316836.