Abstract

From an ecological point of view, approaching objects are potentially more harmful than receding objects. A predator, a dominant conspecific, or a mere branch coming up at high speed can all be dangerous if one does not detect them and produce the appropriate escape behavior fast enough. And indeed, looming stimuli trigger stereotyped defensive responses in both monkeys and human infants. However, while the heteromodal somatosensory consequences of visual looming stimuli can be fully predicted by their spatiotemporal dynamics, few, if any, studies have explored whether visual stimuli looming toward the face predictively enhance heteromodal tactile sensitivity around the expected time of impact and at its expected location on the body. In the present study, we report that, in addition to triggering a defensive motor repertoire, looming stimuli toward the face provide the nervous system with predictive cues that enhance tactile sensitivity on the face. Specifically, we describe an enhancement of tactile processes at the expected time and location of impact of the stimulus on the face. We additionally show that a looming stimulus that brushes past the face also enhances tactile sensitivity on the nearby cheek, suggesting that the space close to the face is incorporated into the subjects' body schema. We propose that this cross-modal predictive facilitation involves multisensory convergence areas subserving the representation of a peripersonal space and a safety boundary of self.

Keywords: body limit, looming visual stimuli, multisensory integration, prediction of impact, predictive coding

Introduction

From an ecological point of view, approaching objects are potentially harmful. A predator, a dominant conspecific, or a mere branch coming up at high speed are dangerous if one does not detect them fast enough to produce the appropriate escape motor repertoire. And indeed, looming stimuli trigger stereotyped defensive responses in both monkeys (Schiff et al., 1962) and human infants (Ball and Tronick, 1971); the estimated time of impact on the body of threatening looming stimuli is shorter than that of neutral looming stimuli (Vagnoni et al., 2012), and looming visual stimuli trigger pronounced orienting behavior toward simultaneous congruent auditory cues compared with receding stimuli, both in nonhuman primates (Maier et al., 2004) and in 5-month-old human infants (Walker-Andrews and Lennon, 1985). This suggests that the dynamics of visual stimuli with respect to the subject exert cross-modal influences and possibly recruit multisensory integration processes. These observations are in agreement with the general multisensory integration framework, which assumes a common source for multimodal sensory inputs (Sugita and Suzuki, 2003). They specifically extend this framework to the case of dynamical multimodal sources, demonstrating that spatially and temporally congruent auditory stimuli enhance the perception of both static (McDonald et al., 2000) and dynamic visual stimuli (Maier et al., 2004; Cappe et al., 2009; Leo et al., 2011; Parise et al., 2012). Such bimodal dynamic stimuli are frequent in everyday life (visuo-auditory: a car passing a pedestrian; visuotactile: a mosquito walking on one's forearm). The neural substrates underlying the integration of such dynamic sensory information are increasingly well understood, both in humans (Cappe et al., 2012; Tyll et al., 2013) and in nonhuman primates (Maier and Ghazanfar, 2007; Maier et al., 2008).
Overall, these studies derive multisensory integration principles for looming multisensory stimuli under the assumption of a common causal source.

However, a dynamic looming stimulus can also have delayed consequences for a second sensory modality. For example, an object approaching the face will induce a tactile stimulation at the moment of its impact on the body. The heteromodal somatosensory consequences of such a stimulus can be fully predicted by its spatiotemporal dynamics. Accordingly, approaching auditory (Canzoneri et al., 2012) or visual (Kandula et al., 2014) looming stimuli predictively speed up tactile processing. In the following, we test whether visual stimuli looming toward the face predictively enhance heteromodal tactile sensitivity around the expected time of impact and at its expected location on the body. We discuss our observations in relation to neurophysiological and behavioral evidence suggesting the existence of a defensive peripersonal space defining a safety margin around the body (Gentilucci et al., 1988; Rizzolatti et al., 1988; Colby et al., 1993; Graziano et al., 1994, 2002; Gross and Graziano, 1995; Fogassi et al., 1996; Duhamel et al., 1998; Cooke and Graziano, 2004; Graziano and Cooke, 2006).

Materials and Methods

The experimental protocol was approved by the local ethics committee in biomedical research (Comité de protection des personnes sud-est IV, N CPP 11/025) and all participants gave their written informed consent.

Experimental set-up

Subjects sat in a chair at 50 cm from a 23 inch computer monitor. Their heads were restrained by a chin rest. Their arms were placed on a table and they held a gamepad with their two hands. Vertical and horizontal eye positions were monitored using a video eye tracker (EyeLink; sampling at 120 Hz, spatial resolution <1°). Data acquisition, eye monitoring, and visual presentation were controlled by a PC running Presentation (Neurobehavioral Systems).

Visual stimuli.

The fixation point was a 0.06° × 0.06° yellow square (0.67 cd/m²). The screen background was set to a structured 3D environment with visual depth cues (Fig. 1A). Visual stimuli consisted of eight possible video sequences of a cone, pointing toward the subject, moving within this 3D environment, originating away from and rapidly approaching the subject. For Experiments 1–4, the cone could originate from eight possible locations around the fixation point: (−6.8°, −1.0°), (−3.2°, −1.0°), (−2.8°, −1.0°), (−1.1°, −1.0°), (1.1°, −1.0°), (2.8°, −1.0°), (3.2°, −1.0°) and (6.8°, −1.0°). The cone moved along trajectories that intersected the subject's face at two possible locations, on the left or right cheeks, close to the nostrils (Fig. 1B). For Experiment 5, the cone origins were slightly modified to achieve the desired percept: (−5.8°, −1.0°), (−2.8°, −1.0°), (−1.1°, −1.0°), (−0.2°, −1.0°), (0.2°, −1.0°), (1.1°, −1.0°), (2.8°, −1.0°), (5.8°, −1.0°). In this experiment, the cone trajectory could either intersect the face on the left or right cheek or move past the face on the left or on the right. Each video sequence consisted of 24 images played for a total duration of 800 ms. The 3D environment and different cone trajectories were all constructed with the Blender software (http://www.blender.org/).

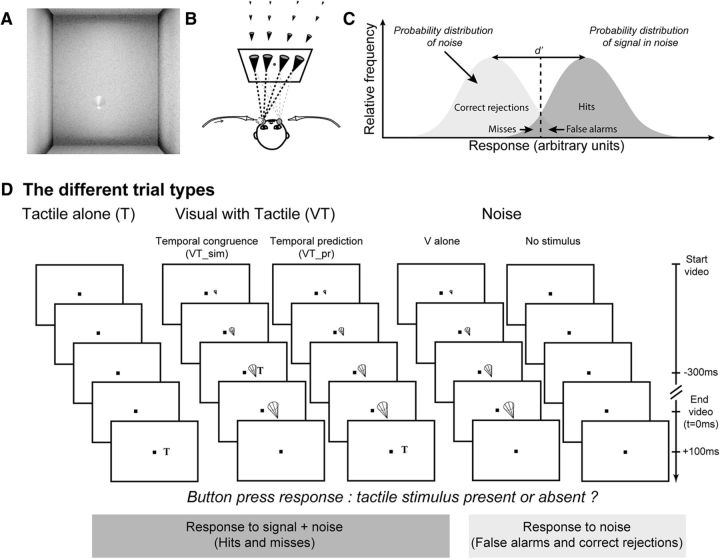

Figure 1.

Experimental protocol. A, Visual stimulus: video sequence of a cone in a 3D environment, looming toward the subject's face. B, Experimental setup, air-puff delivery, and possible apparent looming cone trajectories. C, Signal detection theory and d′ estimation. D, Possible variants of signal trials (tactile alone or visual with tactile) and noise trials (visual alone or no stimulus); example of a temporal prediction block (Experiment 1).

Tactile stimuli.

Tactile stimuli consisted of air puffs directed to the left or right cheek of the subjects, at locations coinciding with the two possible visual cone trajectory endpoints (Fig. 1B), through tubes placed 2–4 mm from each cheek and rigidly fixed to the chin rest. The relative position between the screen and the air-puff tubing was kept constant throughout the experiments and across subjects. The intensities of the left and right air puffs were adjusted independently for each subject to achieve a 50% detection rate, as estimated over a short block of 20 trials (one block for the left air puff and one block for the right air puff). The air-puff delivery system was placed in a separate room, so that the subjects could not predict air-puff delivery from the sound produced by the delivery system. Air puffs were delivered to the subjects through long tubing connecting the air-puff delivery system to a rigid connector fixed on the chin rest. The latency of the air-puff outlet at the tubing end following the opening of the solenoid air pressure valve was measured as a function of air-puff intensity, using a silicon on-chip signal-conditioned pressure sensor (MPX5700 Series; Freescale). Detection thresholds were reached with air pressures between 0.05 and 0.1 bar, corresponding to an average air-puff latency of 220 ms. Throughout this manuscript, air-puff timings are corrected to reflect the actual time at which the air puffs hit the face.
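Because the valve-to-face latency (about 220 ms on average) is of the same order as the visuotactile asynchronies tested, the valve has to be triggered ahead of the intended impact time. The Python sketch below illustrates this correction under simplifying assumptions: a single fixed latency of 220 ms (in the experiments, latency was measured as a function of intensity, so a per-subject value would apply) and times expressed in milliseconds from video onset. The helper names `valve_trigger_time` and `impact_time_from_offset` are hypothetical and are not part of the Presentation software.

```python
# Minimal sketch (hypothetical helpers): when to open the air-puff valve so that
# the puff reaches the cheek at a desired time. Assumes one fixed outlet latency;
# the real latency was measured as a function of air-puff intensity.

VIDEO_DURATION_MS = 800.0  # 24 images played over 800 ms (normal speed)
PUFF_LATENCY_MS = 220.0    # average valve-to-face latency reported in the text


def valve_trigger_time(impact_time_ms: float,
                       latency_ms: float = PUFF_LATENCY_MS) -> float:
    """Return the valve-opening time (ms from video onset) that makes the puff
    hit the face at impact_time_ms (ms from video onset)."""
    return impact_time_ms - latency_ms


def impact_time_from_offset(asynchrony_ms: float,
                            video_ms: float = VIDEO_DURATION_MS) -> float:
    """Convert an asynchrony relative to video offset (e.g., +100 or -300 ms)
    into a time from video onset."""
    return video_ms + asynchrony_ms


if __name__ == "__main__":
    for asynchrony in (-300.0, -100.0, 100.0, 300.0):
        impact = impact_time_from_offset(asynchrony)
        print(f"puff at {asynchrony:+.0f} ms from offset -> "
              f"open valve at {valve_trigger_time(impact):.0f} ms from onset")
```

Under these assumptions, a puff meant to land 100 ms after video offset (900 ms after onset) requires opening the valve 680 ms after video onset.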

Experimental procedure

Subjects had to fixate a central yellow point throughout the trial. Fixation was monitored with the video eye tracker and had to remain within a 2° tolerance window around the fixation stimulus. One to three seconds after trial start, a visual stimulus, a tactile stimulus, or a combination of both visual and tactile stimuli was presented. No-stimulation trials were also presented. The precise ratios of trial types are detailed below for each experiment. At the end of the trial, subjects were requested to report the detection of a tactile stimulus by a “Yes” button press (right-hand gamepad button) and to respond by a “No” button press otherwise (left-hand gamepad button). To maximize multisensory integration, we used very weak tactile stimuli to the face, specifically directed to the left or right cheeks (see above, Tactile stimuli). The main measure reported in the present study is a d′ measure quantifying the sensitivity of each subject to tactile stimulation as a function of the stimulation context (no stimulation, tactile stimulation alone, or tactile stimulation associated with visual stimulation of specific spatial and temporal properties; Fig. 1C,D). This measure is based on a reliable estimate of false reports of tactile stimuli in noise (false alarms) and correct reports of tactile stimuli (hits). Each d′ is calculated from the subject's responses to a minimum of 75 trials per stimulation context. The d′ measures were estimated for the different stimulation conditions in six different experiments, as follows.
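For readers less familiar with signal detection theory, the Python sketch below shows the standard equal-variance computation, d′ = z(H) − z(FA), together with the response criterion c = −[z(H) + z(FA)]/2, where z is the inverse of the standard normal cumulative distribution. The log-linear correction applied to the rates (adding 0.5 to counts and 1 to totals) is our assumption: the text does not say how hit or false-alarm rates of 0 or 1 were handled, nor which noise trials were pooled for each false-alarm rate.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse standard normal CDF (probit)


def corrected_rate(count: int, total: int) -> float:
    """Log-linear correction (assumption): keeps rates strictly inside (0, 1),
    where the probit is finite."""
    return (count + 0.5) / (total + 1)


def sdt_measures(hits: int, n_signal: int,
                 false_alarms: int, n_noise: int) -> tuple[float, float]:
    """Return (d_prime, criterion) under the equal-variance Gaussian model."""
    h = corrected_rate(hits, n_signal)
    fa = corrected_rate(false_alarms, n_noise)
    d_prime = _z(h) - _z(fa)
    criterion = -0.5 * (_z(h) + _z(fa))
    return d_prime, criterion


if __name__ == "__main__":
    # Illustrative counts only (not data from the study):
    # 60 "Yes" responses on 75 signal trials, 15 on 75 noise trials.
    d, c = sdt_measures(60, 75, 15, 75)
    print(f"d' = {d:.2f}, c = {c:.2f}")
```

In this illustrative case, the corrected hit and false-alarm rates mirror each other around 0.5, so the criterion is zero (up to rounding) and only d′ reflects the difference between signal and noise responses.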

Experiment 1: influence of a visual looming stimulus on tactile d′, temporal prediction.

Ten subjects participated in this study (27.2 ± 5.3 years, five males and five females; Fig. 3A). All subjects were naive to the purpose of the experiment except one (S.B.H.). All participants had normal or corrected-to-normal vision. Five possible trial types were presented to the subjects. Noise trials allowed the estimation of the false alarm rate; they could be either no-stimulus trials (1/6 of all trials) or visual stimulation-only trials (1/3 of all trials). Signal trials allowed the estimation of the hit rate as a function of the stimulation condition; they could be (1) tactile stimulation-only trials (1/6 of all trials, allowing estimation of d′ for pure tactile stimuli), (2) visual + tactile stimulation trials in which the tactile stimulus was presented midway through the video sequence (1/6 of all trials, 300 ms before video offset, allowing the estimation of d′ for tactile stimuli in the presence of a visual stimulus), or (3) visual + tactile stimulation trials in which the tactile stimulus was presented when the cone was expected to impact the face, on the cheek predicted by the cone trajectory (1/6 of all trials, 100 ms after video offset, allowing the estimation of d′ for tactile stimuli that are spatially and temporally predicted by a dynamical visual looming stimulus). Trials were presented pseudorandomly. Subjects were allowed to rest whenever they needed by closing their eyes. During these rest periods, they were instructed not to move their head on the chin rest, so as not to change the distance of the air-puff tubing to their face or the eye-tracker calibration. In all, 450 trials were collected.
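The trial-type proportions of Experiment 1 translate directly into trial counts. The Python sketch below builds one pseudorandomly ordered list of 450 trials and checks that every proportion yields a whole number of trials. The condition labels and the unconstrained shuffle are our assumptions; the text does not describe any constraints (e.g., on repetitions) used in the authors' pseudorandomization.

```python
import random
from fractions import Fraction

# Trial-type proportions for Experiment 1 (labels are hypothetical names).
EXP1_PROPORTIONS = {
    "no_stimulus": Fraction(1, 6),
    "visual_only": Fraction(1, 3),
    "tactile_only": Fraction(1, 6),
    "T_during_V": Fraction(1, 6),      # puff 300 ms before video offset
    "V_predicting_T": Fraction(1, 6),  # puff 100 ms after video offset
}


def build_schedule(proportions, n_trials, seed=None):
    """Return per-condition counts and a shuffled list of trial labels."""
    if sum(proportions.values()) != 1:
        raise ValueError("proportions must sum to 1")
    counts = {}
    for name, p in proportions.items():
        k = p * n_trials  # exact rational arithmetic
        if k.denominator != 1:
            raise ValueError(f"{name}: {p} of {n_trials} trials is not an integer")
        counts[name] = int(k)
    schedule = [name for name, k in counts.items() for _ in range(k)]
    random.Random(seed).shuffle(schedule)  # plain shuffle, no extra constraints
    return counts, schedule


if __name__ == "__main__":
    counts, schedule = build_schedule(EXP1_PROPORTIONS, 450, seed=1)
    print(counts)  # 75 trials per signal condition, 225 noise trials
    print(schedule[:5])
```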

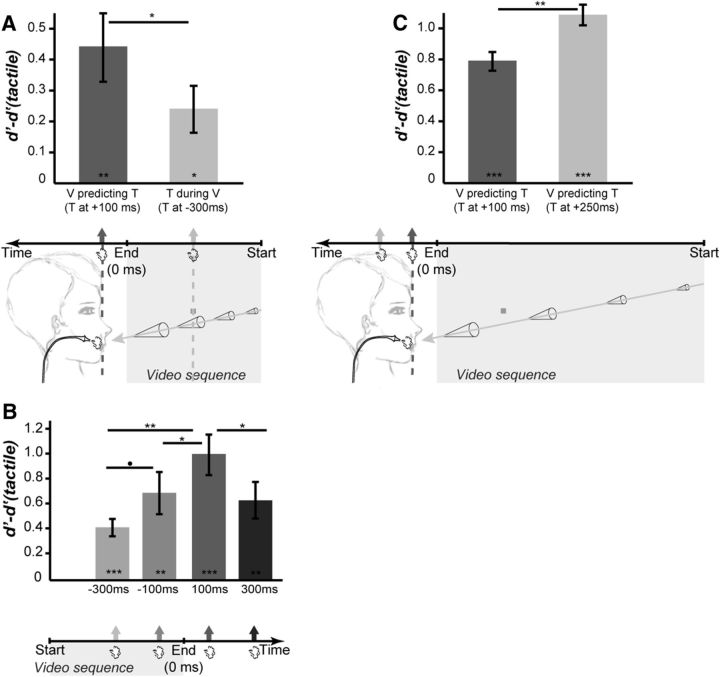

Figure 3.

Temporal prediction. A, Temporal prediction versus temporal simultaneity. Bar plots represent the mean (±SE) of the difference between the baseline tactile d′ [d′(tactile)] and the d′ obtained when the tactile stimulus is applied during the visual video sequence (T during V) or at the predicted time of impact of the looming cone onto the subject's face (V predicting T). B, Temporal prediction window. Bar plots represent the mean (±SE) of the difference between the baseline tactile d′(tactile) and the d′ obtained when the tactile stimulus is applied at different temporal asynchronies from the end of the visual video sequence. C, Temporal prediction window is modulated by the speed of the looming visual stimulus. Bar plots represent the mean (±SE) of the difference between the baseline tactile d′(tactile) and the d′ obtained when the tactile stimulus is applied at +100 ms or +250 ms from the end of the visual video sequence of looming stimuli played at half the speed of those used in A and B. Significant statistical differences between each test condition and the d′(tactile) baseline are indicated by symbols inside the bars. Statistical differences across the test conditions are indicated by symbols above the bar plots. *p < 0.05, Bonferroni corrected; **p < 0.01, Bonferroni corrected; ***p < 0.001, Bonferroni corrected; ·p < 0.05, no correction for multiple comparisons.

Experiment 2: influence of a visual looming stimulus on tactile d′, temporal prediction window.

Ten subjects participated in this study (26.5 ± 4.0 years, four males and six females; Fig. 3B). All subjects were naive to the purpose of the experiment. All participants had normal or corrected-to-normal vision. Seven possible trial types were presented to the subjects. Noise trials were composed of no-stimulus trials (1/10 of all trials) and visual stimulus-only trials (4/10 of all trials). Signal trials were as follows: (1) tactile stimulation-only trials (1/10 of all trials, allowing estimation of d′ for pure tactile stimuli) and (2) visual + tactile stimulation trials in which the tactile stimulus could be presented at four possible timings with respect to the visual stimulus (1/10 of all trials for each timing: −300 ms, −100 ms, 100 ms, or 300 ms from the end of the video, allowing the estimation of d′ for tactile stimuli in each condition). Trials were presented pseudorandomly. Subjects were allowed to rest whenever they needed by closing their eyes. Rest periods were arranged as in Experiment 1, and 450 trials were collected in total.

Experiment 3: influence of the speed of a visual looming stimulus on the temporal prediction window, as assessed from the enhancement of tactile d′.

Ten subjects participated in this study (28.0 ± 5.4 years, two males and eight females; Fig. 3C). All subjects were naive to the purpose of the experiment, except two (J.C. and S.B.H.). Importantly, the looming stimuli approached the subjects' face and aimed for the cheek at half the speed of those used in Experiments 1, 2, 4, 5, and 6: the same video sequences were used across experiments, but in Experiment 3 each image of the video sequence stayed on the screen twice as long as in Experiments 1, 2, 4, 5, and 6. Five possible trial types were presented to the subjects. Noise trials were composed of no-stimulus trials (1/6 of all trials) and visual stimulus-only trials (2/6 of all trials). Signal trials were as follows: (1) tactile stimulation-only trials (1/6 of all trials, allowing estimation of d′ for pure tactile stimuli) and (2) visual + tactile stimulation trials in which the tactile stimulus could be presented at two possible timings with respect to the visual stimulus (1/6 of all trials for each timing: 100 ms or 250 ms from the end of the video, allowing the estimation of d′ for tactile stimuli in each condition). Trials were presented pseudorandomly. Subjects were allowed to rest whenever they needed by closing their eyes. Rest periods were arranged as in Experiment 1, and 500 trials were collected in total.
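The speed manipulation amounts to simple arithmetic on image durations: at normal speed, 24 images over 800 ms give about 33.3 ms per image; doubling each image's display time gives about 66.7 ms per image and a 1600 ms sequence. The Python sketch below computes the image onset schedule for either speed. On a real monitor, image durations are quantized to whole refresh periods; the refresh rate is not reported in the text, so the 60 Hz default used here is an assumption.

```python
N_IMAGES = 24
NORMAL_SEQUENCE_MS = 800.0


def image_onsets(slowdown: int = 1) -> tuple[list[float], float]:
    """Return image onset times (ms from video onset) and total duration.
    slowdown=2 shows each image twice as long (Experiment 3)."""
    image_ms = NORMAL_SEQUENCE_MS / N_IMAGES * slowdown
    onsets = [i * image_ms for i in range(N_IMAGES)]
    return onsets, N_IMAGES * image_ms


def refreshes_per_image(slowdown: int = 1, refresh_hz: float = 60.0) -> float:
    """Number of monitor refreshes per image (60 Hz display is an assumption)."""
    image_ms = NORMAL_SEQUENCE_MS / N_IMAGES * slowdown
    return image_ms * refresh_hz / 1000.0


if __name__ == "__main__":
    for slowdown in (1, 2):
        onsets, total = image_onsets(slowdown)
        print(f"slowdown x{slowdown}: {onsets[1]:.1f} ms per image, "
              f"{total:.0f} ms sequence, "
              f"{refreshes_per_image(slowdown):.1f} refreshes per image at 60 Hz")
```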

Experiment 4: influence of a visual looming stimulus on tactile d′, spatial prediction.

Ten subjects participated in this study (24.3 ± 2.4 years, four males and six females; Fig. 4A). All subjects were naive to the purpose of the experiment, except one (J.C.). All participants had normal or corrected-to-normal vision. Five possible trial types were presented to the subjects. Noise trials were as in Experiment 1. Signal trials were as follows: (1) tactile stimulation-only trials (1/6 of all trials, allowing estimation of d′ for pure tactile stimuli), (2) visual + tactile stimulation trials in which the tactile stimulus was presented when the cone was expected to impact the face, on the cheek predicted by the cone trajectory (1/6 of all trials, 100 ms after video offset, allowing estimation of d′ for tactile stimuli that are spatially and temporally predicted by a dynamical visual stimulus), and (3) visual + tactile stimulation trials in which the tactile stimulus was presented at the time the cone was expected to impact the face, but on the cheek opposite to the one predicted by the cone trajectory (1/6 of all trials, 100 ms after video offset, allowing the estimation of d′ for tactile stimuli that are temporally predicted by a dynamical visual stimulus but spatially incongruent with it). Trials were presented pseudorandomly. Subjects were allowed to rest whenever they needed by closing their eyes. Rest periods were arranged as in Experiment 1, and 450 trials were collected in total.

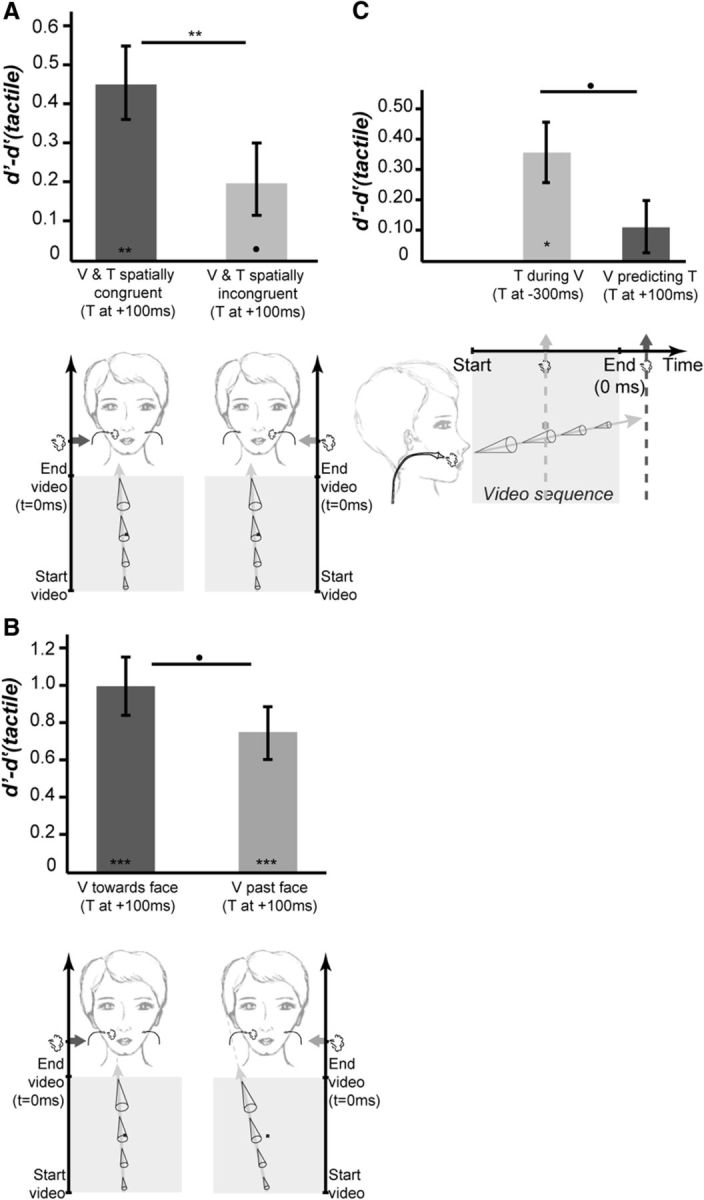

Figure 4.

Spatial prediction. A, Spatial congruence. Bar plots represent the mean (±SE) of the difference between the baseline tactile d′(tactile) and the d′ obtained when the tactile stimulus is applied after the end of the looming-cone video sequence, at the predicted time of impact on the subject's face, either at the location predicted by the cone trajectory (V and T spatially congruent) or on the opposite cheek (V and T spatially incongruent). B, Body limits. Bar plots represent the mean (±SE) of the difference between the baseline tactile d′(tactile) and the d′ obtained when the tactile stimulus is applied after the end of the looming-cone video sequence, at the predicted time of impact, when the cone trajectory predicts an impact on the face (V toward face) or not (V past face). C, Looming versus receding stimuli. Bar plots represent the mean (±SE) of the difference between the baseline tactile d′(tactile) and the d′ obtained when the tactile stimulus is applied during the video sequence of a cone receding away from the subject's face (T during V) or after the end of the video sequence (V predicting T). All else as in Figure 3.

Experiment 5: influence of visual stimulus impact on tactile detection.

Ten subjects participated in this study (27.8 ± 5.8 years, three males and seven females; Fig. 4B). All subjects were naive to the purpose of the experiment, except two (J.C. and S.B.H.). All trial types were as in Experiment 1, except that in half of the video sequences, the cone trajectories did not intersect the subjects' face but passed to the right or to the left of it. Rest periods were arranged as in Experiment 1, and 450 trials were collected in total.

Experiment 6: influence of a visual receding stimulus on tactile d′.

Ten subjects participated in this study (27.8 ± 5.8 years, three males and seven females; Fig. 4C). All subjects were naive to the purpose of the experiment, except one (J.C.). All trial types were as in Experiment 1, except that the video sequences were played in reverse order, such that the cones appeared to move away from the subjects rather than toward their face. Rest periods were arranged as in Experiment 1, and 450 trials were collected in total.

Analysis

Data analysis was performed in MATLAB (The MathWorks). For each experiment, each subject, and each signal trial type (i.e., each trial type in which a tactile stimulus was actually presented), we extracted the d′ quantifying the subject's sensitivity at detecting tactile stimuli (Table 1). For all experiments, we also quantified each subject's response criterion and confirmed that it was independent of trial type (data not shown). Statistical effects were first assessed using a repeated-measures one-way ANOVA, followed by post hoc paired t tests to which the appropriate Bonferroni correction was applied (* symbol on all figures), unless otherwise specified (filled circle symbol on all figures). For the ANOVA tests, the F values and p values are reported, together with the corresponding partial η squared. For the t tests, the t statistic, the degrees of freedom (df), and Cohen's d are indicated to allow for a direct assessment of the effect size.
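The analysis was implemented in MATLAB; as a language-neutral illustration, the Python sketch below computes a one-way repeated-measures ANOVA from a subjects × conditions table of d′ values, using the textbook sum-of-squares decomposition, and returns partial η² = SS_condition / (SS_condition + SS_error). It omits the p value (which requires the F distribution, e.g., `scipy.stats.f.sf`) and any sphericity correction; whether the authors applied one is not stated.

```python
from statistics import fmean


def rm_anova_oneway(data: list[list[float]]) -> dict:
    """One-way repeated-measures ANOVA.

    data[i][j] is the d' of subject i in condition j (n subjects x k conditions).
    Returns F, its degrees of freedom, and partial eta squared.
    """
    n, k = len(data), len(data[0])
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete n x k table with n, k >= 2")
    grand = fmean(x for row in data for x in row)
    cond_means = [fmean(row[j] for row in data) for j in range(k)]
    subj_means = [fmean(row) for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual

    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return {
        "F": f_value,
        "df": (df_cond, df_error),
        "partial_eta_sq": ss_cond / (ss_cond + ss_error),
    }


if __name__ == "__main__":
    # Hypothetical d' values for 4 subjects x 3 conditions (not study data).
    toy = [[1.2, 1.5, 1.8],
           [1.0, 1.1, 1.6],
           [1.6, 1.9, 2.1],
           [1.3, 1.4, 1.9]]
    res = rm_anova_oneway(toy)
    print(f"F{res['df']} = {res['F']:.2f}, "
          f"partial eta^2 = {res['partial_eta_sq']:.2f}")
```

Removing the between-subject variance (SS_subject) from the error term is what distinguishes this design from a between-subject ANOVA; with 10 subjects and 3 conditions, it yields the F(2,18) degrees of freedom reported in Experiments 1 and 4.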

Table 1.

Average raw d′ values, per experiment and per experimental condition, quantifying the subjects' sensitivity at detecting tactile stimuli

| Experiment | Subjects (n) | Experimental condition (raw d′) | | | | |
|---|---|---|---|---|---|---|
| 1, Figure 3A | 10 | d′(tactile only) = 1.45 | d′(V predicting T) = 1.89 | d′(T during V) = 1.69 | | |
| 2, Figure 3B | 10 | d′(tactile only) = 1.37 | d′(−300 ms) = 2.06 | d′(−100 ms) = 1.79 | d′(100 ms) = 2.37 | d′(300 ms) = 2.01 |
| 3, Figure 3C | 10 | d′(tactile only) = 0.66 | d′(100 ms) = 1.45 | d′(250 ms) = 1.75 | | |
| 4, Figure 4A | 10 | d′(tactile only) = 2.20 | d′(V and T spatially congruent) = 2.66 | d′(V and T spatially incongruent) = 2.40 | | |
| 5, Figure 4B | 10 | d′(tactile only) = 1.72 | d′(V toward face) = 2.72 | d′(V past face) = 2.47 | | |
| 6, Figure 4C | 10 | d′(tactile only) = 1.92 | d′(V predicting T) = 2.03 | d′(T during V) = 2.27 | | |

Control for the absence of facial muscle contraction induced by air-puff delivery

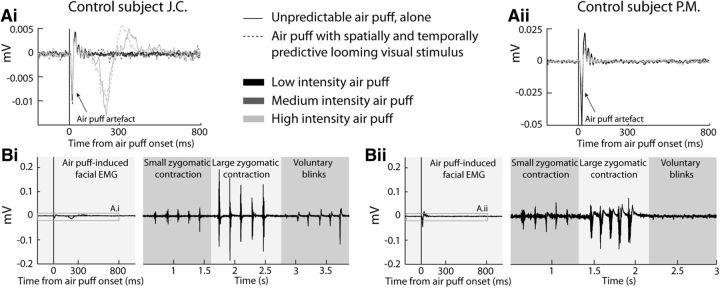

Our main prediction in the present work is that visual and temporal predictive cues will result in an enhancement of tactile sensitivity through sensory processes (namely, multisensory integration). However, predictive cues could also lead subjects to unconsciously contract their facial muscles around the air-puff impact point in anticipation of air-puff delivery, which could locally change tactile sensitivity. We thus recorded, in two representative control subjects (Fig. 2; J.C., left plots; P.M., naive subject, right plots), the facial EMG of the zygomatic minor (Fig. 2; tendon-belly configuration; reference behind the ear for subject J.C. and on the forehead for subject P.M.; Biopac Systems EMG100C amplification module; gain 2000; acquisition frequency 1 kHz; low-pass filter 500 Hz; high-pass filter 10 Hz; 50 Hz notch filter) while the subjects received temporally unpredictable (Fig. 2Ai,Aii, continuous lines) or fully predictable (Fig. 2Ai,Aii, dashed lines) air puffs of low intensity (Fig. 2Ai,Aii, black; subject J.C.: 85% detections for predictable air puffs and 55% for unpredictable air puffs; subject P.M.: 60% detections for predictable air puffs and 60% for unpredictable air puffs), medium intensity (Fig. 2Ai,Aii, medium gray; just-above-threshold air puffs, 0.05 bar), or high intensity (Fig. 2Ai,Aii, light gray; reported as aversive by subjects, 0.5 bar). The air puffs were located as in the main experiments, and the EMG recording electrodes were placed on the same side as the air-puff delivery tubing, one just above the lip commissure and the other on the other side of the nasolabial fold, above the first one, such that the air puff impacted bare skin. Apart from the electrical artifact induced by the air-puff trigger, no modulation of the facial EMG could be observed, neither at the low air-puff intensities used in the main experiments nor at higher intensities (Fig. 2Aii; except for blinks evoked at the highest, aversive intensities in subject J.C.).

To allow for a proper interpretation of this absence of effect, the facial EMGs recorded during small zygomatic contractions, large zygomatic contractions, or voluntary eye blinks are also shown on the same y-scale (Fig. 2Bi,Bii). This comparison attributes the modulation of J.C.'s facial EMG by high-intensity air puffs to blinks rather than to a contraction of the zygomatic minor muscle, whose EMG signature differs from that of a blink. The EMG modulation evoked by these involuntary blinks is smaller than that observed during voluntary blinks, consistent with the view that voluntary and spontaneous or reflexive blinks rely on different neural substrates (Guipponi et al., 2014).

Figure 2.

Low-intensity air puffs, whether unpredicted or fully predictable, do not produce anticipatory facial contractions that could account for changes in tactile sensitivity. A, Average facial EMG aligned on air-puff delivery, as a function of whether the air puff is unpredictable (continuous lines) or fully predictable (dashed lines), for low air-puff intensities (black; subject J.C.: 55% detections for predictable air puffs and 85% for unpredictable air puffs; subject P.M.: 60% detections for predictable air puffs and 60% for unpredictable air puffs), medium air-puff intensities (medium gray; just above threshold air puff, 0.05 bars), and high air-puff intensities (light gray; reported as aversive by subjects, 0.5 bars). B, For comparison, same data as in A reproduced with the same y-scale as facial EMG recordings during five small zygomatic contractions, five large zygomatic contractions, or five voluntary eye blinks. Data for control subject J.C. are presented on the left (plots Ai and Bi) and data for control subject P.M. are presented on the right (plots Aii and Bii).

Results

The main experimental measures reported below are tactile d′ sensitivity measures. These measures are based on how often subjects report the presence of a tactile stimulus when none was actually presented (i.e., responses to noise, also referred to as false alarms) and how often they correctly report the presence of a tactile stimulus when one was indeed presented (i.e., responses to signal, also referred to as hits or correct detections). The d′ values are high when stimuli can be unambiguously detected and low when they are difficult to discriminate from noise (Fig. 1C). As a result, they reflect the sensitivity of the subject to the stimulus of interest. In the following, we analyze how the sensitivity to a tactile stimulus is affected by the presentation of a dynamical looming visual stimulus, as a function of its spatial and temporal characteristics relative to the tactile stimulus.

Temporal prediction versus temporal simultaneity

The classical multisensory integration framework assumes that maximum cross-modal enhancement is obtained for spatially and temporally colocalized sensory sources. In a first experiment (Fig. 3A, Table 1), we asked whether tactile detection is maximized by the simultaneous presentation of a dynamic visual stimulus approaching the face (specifically the cheeks) or whether maximum enhancement of tactile processing is obtained at the predicted time of impact of the looming stimulus on the face. We measured the tactile d′ of subjects when the tactile stimulus was applied to one of their cheeks (1) in the absence of any visual stimulation [d′(Tactile), serving as a baseline], (2) midway through the video sequence of a cone looming toward their face and predicting an impact at the very location of the tactile stimulus (T during V, 300 ms before the end of the video sequence), or (3) after the video sequence of a cone looming toward their face, at its predicted time and location of impact on the skin (V predicting T, 100 ms after the end of the video sequence). There was a significant effect of the experimental condition on the subjects' tactile d′, as assessed by a repeated-measures one-way ANOVA (F(2,18) = 11.89, p < 0.0005, partial η squared = 57%). Specifically, and as expected from previous studies, d′(Tactile) was significantly smaller than d′(T during V) (Table 1, one-tailed t test, p = 0.0053, t statistic = 3.2, df = 9, Cohen's d = 0.45, Bonferroni corrected p < 0.05; Fig. 3A, dark gray bar) and d′(V predicting T) (Table 1, one-tailed t test, p = 0.0018, t statistic = 3.9, df = 9, Cohen's d = 0.89, Bonferroni corrected p < 0.01; Fig. 3A, light gray bar). Most interestingly, d′(T during V) was also significantly smaller than d′(V predicting T) (Table 1, one-tailed t test, p = 0.0165, t statistic = 2.5, df = 9, Cohen's d = 0.41, Bonferroni corrected p < 0.05; Fig. 3A).
Thus, maximum tactile detection is achieved when the tactile stimulus is temporally predicted by a visual stimulus looming toward the location of the tactile stimulation, rather than when visual and tactile stimuli are presented simultaneously. This effect did not depend on the side of tactile stimulation (left or right cheek), nor on the origin of the looming visual stimulus (left or right visual field, periphery or center of the visual field). All the statistically significant comparisons reported above still hold when two-tailed t tests are performed instead of one-tailed t tests. This can be assessed directly from the t statistics and p values given above, because the t distribution is symmetric about zero. It is, however, important to note that one-tailed t tests are fully appropriate here, because we have a strong a priori hypothesis on the direction of the effects, given the expected impact of multisensory integration and predictive coding on the subjects' overt responses.
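The tail and multiple-comparison arguments above reduce to a few lines of arithmetic. The Python sketch below computes a paired t statistic, one possible Cohen's d for paired data, the conversion from a one-tailed (upper-tail) to a two-tailed p value, and the Bonferroni adjustment. It is an illustration, not the authors' MATLAB code. Obtaining the one-tailed p value itself requires the t distribution (e.g., `scipy.stats.ttest_rel` with `alternative="greater"`), and the Cohen's d variant shown here is an assumption, because the text does not specify which standardizer was used.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Paired t statistic for H1: mean(a - b) > 0, with its degrees of freedom."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return fmean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def cohens_d_av(a: list[float], b: list[float]) -> float:
    """One common paired-design effect size (assumed variant): mean difference
    divided by the root mean square of the two conditions' SDs."""
    sd_av = sqrt((stdev(a) ** 2 + stdev(b) ** 2) / 2)
    return (fmean(a) - fmean(b)) / sd_av


def two_tailed_from_one_tailed(p_upper: float) -> float:
    """Two-tailed p from an upper-tail p, using the symmetry of the t
    distribution: 2 * min(p, 1 - p)."""
    return min(1.0, 2.0 * min(p_upper, 1.0 - p_upper))


def bonferroni(p: float, n_comparisons: int) -> float:
    """Bonferroni-adjusted p value (capped at 1)."""
    return min(1.0, p * n_comparisons)


if __name__ == "__main__":
    # Hypothetical d' values for 3 subjects (not study data).
    with_cue, baseline = [2.0, 3.0, 5.0], [1.0, 1.0, 2.0]
    t, df = paired_t(with_cue, baseline)
    print(f"t({df}) = {t:.2f}, d_av = {cohens_d_av(with_cue, baseline):.2f}")
    print(two_tailed_from_one_tailed(0.02), bonferroni(0.02, 3))
```

When the observed effect lies in the predicted direction, doubling the one-tailed p gives the two-tailed p; this is why a one-tailed result that survives correction may fail to do so once converted to two tails.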

Temporal prediction window

In Experiment 2, we manipulated the temporal asynchrony between the dynamic visual stimulus and the tactile stimulus to identify the temporal window of tactile sensitivity enhancement around the end of the looming stimulus video sequence. There was a significant effect of the time at which the air puffs were delivered relative to the end of the visual stimulus on the subjects' tactile d′, as assessed by a repeated-measures one-way ANOVA (F(4,36) = 17.74, p < 0.00001, partial η squared = 66%). Specifically, all visuotactile conditions were associated with significantly higher d′ than the d′(Tactile) baseline condition (Table 1, Fig. 3B; one-tailed t test, all ps < 0.0014, t statistics ranging from 4.1 to 6.1, df = 9, Cohen's d ranging from 0.67 to 1.40, Bonferroni corrected p < 0.01 or better). Maximum tactile enhancement is obtained when the tactile stimulus is applied at the estimated time of impact of the looming cone on the face (100 ms, the same timing as in Experiment 1; Fig. 3B). This d′ is significantly higher than that obtained for a tactile stimulus presented 300 ms before the end of the video sequence (Table 1, Fig. 3B; p = 0.000515, t statistic = 4.8, df = 9, Cohen's d = 0.81, Bonferroni corrected p < 0.01), 100 ms before the end of the video sequence (Table 1, Fig. 3B; p = 0.0039, t statistic = 3.4, df = 9, Cohen's d = 0.40, Bonferroni corrected p < 0.015), or 300 ms after the end of the video sequence (Table 1, Fig. 3B; p = 0.0093, t statistic = 2.9, df = 9, Cohen's d = 0.45, Bonferroni corrected p < 0.05). All the statistically significant comparisons reported above still hold when two-tailed t tests are performed instead of one-tailed t tests, except for the last one, as can be assessed directly from the t statistics and p values provided.
Here again, one-tailed t tests are appropriate, given the a priori prediction that maximum tactile enhancement is obtained at the expected time of impact.
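Partial η² is not independent information: because F·df_effect/df_error = SS_effect/SS_error, partial η² can be recovered from the F value and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The short Python check below applies this identity to the F statistics reported for Experiments 1, 2, and 4 and reproduces the reported partial η² values, a quick consistency check when transcribing ANOVA results.

```python
def partial_eta_sq_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """partial eta^2 = F*df_effect / (F*df_effect + df_error),
    which equals SS_effect / (SS_effect + SS_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


if __name__ == "__main__":
    reported = {  # (F, df_effect, df_error) as reported in the Results
        "Experiment 1": (11.89, 2, 18),
        "Experiment 2": (17.74, 4, 36),
        "Experiment 4": (14.45, 2, 18),
    }
    for name, (f, d1, d2) in reported.items():
        print(f"{name}: partial eta^2 = {100 * partial_eta_sq_from_f(f, d1, d2):.0f}%")
    # -> 57%, 66%, 62%, matching the values reported in the text
```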

The temporal prediction window depends on the speed of the looming stimulus

In Experiment 2, maximum tactile enhancement was obtained 100 ms after the end of the video sequence of the looming objects. To test whether the time of maximum tactile enhancement depends on the predicted time of impact on the face, and thus on the speed of the looming object, in Experiment 3 we used dynamic visual stimuli looming at half the speed of those used in all other experiments, and we probed tactile sensitivity at 100 and 250 ms after the end of the video sequence. There was a significant effect of the time at which the air puffs were delivered relative to the end of the visual stimulus on the subjects' tactile d′, as assessed by a repeated-measures one-way ANOVA (F(4,36) = 52.7, p < 0.00001, partial η squared = 85%). Specifically, all visuotactile conditions were associated with significantly higher d′ than the d′(tactile) baseline condition (Table 1, Fig. 3C; one-tailed t test, all ps < 0.00001, t statistics ranging from 6.7 to 8.3, df = 9, Cohen's d ranging from 1.0 to 1.48, Bonferroni corrected p < 0.00001 or better). Importantly, maximum tactile enhancement is obtained when the tactile stimulus is applied at the estimated time of impact of the looming cone on the face (Fig. 3C; 250 ms, compared with 100 ms, the timing that yielded maximum enhancement of tactile sensitivity in Experiment 2; p = 0.0013, t statistic = 4.1, df = 9, Cohen's d = 0.5, Bonferroni corrected p < 0.01). In other words, for a high-speed looming stimulus (Experiment 2), tactile sensitivity is higher 100 ms after the end of the stimulus (predicted time of impact) than later (300 ms), whereas for a low-speed stimulus (Experiment 3), tactile sensitivity is lower 100 ms after the end of the stimulus than later (250 ms, predicted time of impact; between-subject one-tailed unpaired t test, t statistic = 4.53, df = 18, p = 0.00012, Cohen's d = 2.03).
All the statistically significant comparisons performed above still hold when two-tailed t tests are performed instead of one-tailed t tests, as can directly be assessed from the t statistics and p values provided.
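The claim that each result survives a two-tailed test can be checked from the reported values alone: because the t distribution is symmetric, the two-tailed p of an effect in the predicted direction is twice its one-tailed p, and a Bonferroni correction multiplies p by the number of comparisons. The minimal Python sketch below illustrates this arithmetic; the family size `N_COMPARISONS` is a hypothetical value, since the number of comparisons entering each correction is not restated in this section.

```python
def two_tailed_from_one_tailed(p_one: float) -> float:
    """Two-tailed p for a t test, given the one-tailed p of an effect in the predicted direction.

    Valid because the t distribution is symmetric about zero.
    """
    if not 0.0 <= p_one <= 0.5:
        raise ValueError("expected a one-tailed p for an effect in the predicted direction (p <= 0.5)")
    return 2.0 * p_one


def bonferroni(p: float, n_comparisons: int) -> float:
    """Bonferroni-adjusted p value, capped at 1."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be >= 1")
    return min(1.0, p * n_comparisons)


# Hypothetical family size: an assumption, not taken from the study.
N_COMPARISONS = 3

# One-tailed p reported above for 250 ms vs 100 ms with half-speed stimuli.
p_one = 0.0013
p_two = two_tailed_from_one_tailed(p_one)
print(f"one-tailed {p_one}, two-tailed {p_two:.4f}, "
      f"two-tailed Bonferroni (k={N_COMPARISONS}) {bonferroni(p_two, N_COMPARISONS):.4f}")
```

Doubling p is exact only for symmetric test statistics such as t; it does not carry over to, for example, F tests, which are inherently one-sided.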

Spatial prediction

In Experiments 1 and 2, the tactile stimulus is always presented at the expected impact location. In Experiment 4, we test whether spatial prediction also contributes to the enhancement of tactile processing. We measured the tactile d′ of subjects when the tactile stimulus was applied to one of their cheeks (1) in the absence of any visual stimulation [d′(Tactile)], (2) following the video sequence of a cone looming toward their face, at the time and location predicted by the dynamic visual stimulus [d′(V and T spatially congruent)], or (3) following the video sequence of a cone looming toward their face, at the time predicted by this visual stimulus but at the opposite location [d′(V and T spatially incongruent)]. Here again, there was a significant effect of the experimental conditions on the subjects' tactile d′, as assessed from a repeated-measures one-way ANOVA (F(2,18) = 14.45, p < 0.0002, partial η squared = 62%). Specifically, tactile sensitivity was significantly increased with respect to the baseline d′(Tactile) when V and T were spatially congruent (Table 1, Fig. 4A, dark gray bar; one-tailed t test, p = 0.00048, t statistic = 4.8, df = 9, Cohen's d = 0.73, Bonferroni corrected p < 0.01). When V and T were spatially incongruent, a similar trend was observed at an uncorrected level (Table 1, Fig. 4A, light gray bar; one-tailed t test, p = 0.029, t statistic = 2.2, df = 9, Cohen's d = 0.36). Most interestingly, d′(V and T spatially incongruent) was also significantly smaller than d′(V and T spatially congruent) (Table 1, Fig. 4A; one-tailed t test, p = 0.0015, t statistic = 4.0, df = 9, Cohen's d = 0.47, Bonferroni corrected p < 0.01). This crucial observation still holds when two-tailed t tests are used, as can be directly assessed from the t statistics and p values provided.
Thus, tactile detection is maximal when the tactile stimulus is both temporally and spatially predicted by a looming visual stimulus, compared with when the visual stimulus is only temporally predictive of the tactile stimulus. Again, this effect did not depend on the tactile stimulation side (left or right cheek) or on the origin of the looming visual stimulus (left or right visual field, periphery, or center of the visual field). These observations, together with those reported in the previous experiments, indicate that both temporal and spatial predictions contribute to enhanced target detection.
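The tactile d′ values compared throughout are signal-detection sensitivity indices. As a reminder of what such an index measures, here is a minimal sketch of the standard computation, d′ = z(hit rate) − z(false-alarm rate). It assumes a yes/no detection task in which hits are detected air puffs and false alarms are "yes" responses on catch trials without a puff, and it uses a log-linear correction for extreme rates. Both the task layout and the correction are assumptions for illustration, and all counts are hypothetical.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """Signal-detection sensitivity: d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction (0.5 added to each count, 1 to each denominator) keeps the rates
    strictly between 0 and 1, where the inverse normal CDF is finite. This correction is an
    assumption, not necessarily the one used in the original study.
    """
    z = NormalDist().inv_cdf
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts: 50 air-puff trials and 50 catch (no-puff) trials per condition.
baseline = d_prime(hits=30, misses=20, false_alarms=8, correct_rejections=42)
visuotactile = d_prime(hits=40, misses=10, false_alarms=8, correct_rejections=42)
print(f"d'(Tactile) = {baseline:.2f}, d'(visuotactile) = {visuotactile:.2f}")
```

Because d′ separates sensitivity from response bias, an increase in d′ cannot come from subjects simply answering "yes" more often. A more liberal criterion would raise both the hit and the false-alarm rates.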

Body limit

In the above experiment, we show that the enhancement of tactile sensitivity is highest on the cheek to be impacted by a looming stimulus, compared with the opposite cheek, on which no impact is predicted. In Experiment 5, we test whether a looming stimulus whose trajectory brushes past the face, predicting no impact on the skin, also enhances tactile processing. We measured the tactile d′ of subjects when the tactile stimulus was applied to one of their cheeks (1) in the absence of any visual stimulation [d′(Tactile)], (2) following the video sequence of a cone looming toward their face, at the time and location predicted by the dynamic visual stimulus [d′(V toward face)], or (3) following the video sequence of a cone looming past their face and predicting no impact on the skin [d′(V past face)]. There was a significant effect of the experimental conditions on the subjects' tactile d′, as assessed from a repeated-measures one-way ANOVA (F(2,18) = 26.37, p < 0.00001, partial η squared = 75%). Specifically, tactile sensitivity was significantly increased with respect to the baseline d′(Tactile) both when the visual trajectory pointed toward the face (Table 1, Fig. 4B, dark gray bar; one-tailed t test, p = 0.000059, t statistic = 6.4, df = 9, Cohen's d = 1.67, Bonferroni corrected p < 0.001) and when it pointed past the face (Table 1, Fig. 4B, light gray bar; one-tailed t test, p = 0.00027, t statistic = 5.2, df = 9, Cohen's d = 1.27, Bonferroni corrected p < 0.001). Both these observations still hold when two-tailed t tests are used, as can be directly assessed from the t statistics and p values provided. Importantly, d′(V toward face) was on average higher than d′(V past face), although this trend failed to reach significance at the Bonferroni corrected level (Table 1, Fig. 4B; one-tailed t test, t statistic = 1.9, df = 9, Cohen's d = 0.39, p = 0.0445).
As a result, a nonimpacting ipsilateral looming stimulus passing close to the stimulated cheek only marginally reduces the enhancement of tactile processing, in contrast with the significant reduction described in Experiment 4 for a looming visual stimulus impacting the contralateral cheek. This effect holds independently of the side of tactile stimulation (left or right cheek) and of the origin of the looming visual stimulus (left or right visual field, periphery, or center of the visual field).
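The spatial-prediction and body-limit results rest on the idea that a looming trajectory alone specifies where, and whether, an object will meet the face. Below is a minimal sketch of that extrapolation. It assumes constant velocity and models the face as a disk in a frontal plane, surrounded by a near-face margin. The geometry, the margin width, and the names `predict_impact` and `ImpactPrediction` are hypothetical and are not taken from the study's stimuli.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical geometry, head-centred, in cm: the face is approximated by a disk of radius
# FACE_RADIUS_CM in the plane z = 0, surrounded by a near-face margin of SAFETY_MARGIN_CM.
FACE_RADIUS_CM = 8.0
SAFETY_MARGIN_CM = 10.0


@dataclass
class ImpactPrediction:
    outcome: str                    # "impact", "near miss", "miss" or "not approaching"
    side: Optional[str] = None      # "left cheek" or "right cheek" when outcome == "impact"
    time_s: Optional[float] = None  # time until the object crosses the face plane


def predict_impact(x0, y0, z0, vx, vy, vz) -> ImpactPrediction:
    """Extrapolate a constant-velocity trajectory to the face plane z = 0.

    x > 0 is the subject's right, y is vertical, z is the distance in front of the face (cm);
    velocities are in cm/s, so vz < 0 means the object is approaching.
    """
    if z0 <= 0:
        raise ValueError("the object must start in front of the face (z0 > 0)")
    if vz >= 0:
        return ImpactPrediction("not approaching")
    t = -z0 / vz                        # time at which the object reaches z = 0
    x, y = x0 + vx * t, y0 + vy * t     # crossing point in the face plane
    r = math.hypot(x, y)                # distance of the crossing point from the face centre
    if r <= FACE_RADIUS_CM:
        # x > 0 is labelled right cheek; the midline (x == 0) is arbitrarily labelled left.
        return ImpactPrediction("impact", "right cheek" if x > 0 else "left cheek", t)
    if r <= FACE_RADIUS_CM + SAFETY_MARGIN_CM:
        return ImpactPrediction("near miss", None, t)
    return ImpactPrediction("miss", None, t)


# Example trajectories, all starting 100 cm in front of the face and 30 cm to the right.
print(predict_impact(30, 0, 100, -27, 0, -100))   # crosses at x = 3 cm after 1 s
print(predict_impact(30, 0, 100, -18, 0, -100))   # crosses at x = 12 cm, inside the margin
print(predict_impact(30, 0, 100, 0, 0, 100))      # moving away
```

The same extrapolation yields both a time and a location of crossing. This is why a single trajectory can carry the temporal and the spatial predictive cues tested separately in the experiments above.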

Looming versus receding stimuli

In the previous experiments, the dynamic visual stimulus was a looming cone approaching the face. The spatial and temporal predictive enhancement of tactile detection described above could be fully due to the predictive cues provided by the stimulus trajectory. Alternatively, the reported effect could reflect an attentional spatiotemporal enhancement of tactile processing at the predicted location, independent of the fact that the trajectory of the cone is predictive of an impact on the face. To test this alternative, we repeated the first experiment, but this time with inverted video sequences, i.e., with dynamic visual stimuli receding away from the subject's face. A significant effect of the experimental conditions on the subjects' tactile d′, as assessed from a repeated-measures one-way ANOVA, was also observed (F(2,18) = 5.91, p < 0.05, partial η squared = 40%). Specifically, with a receding cone, the baseline d′(Tactile) is statistically smaller than d′(T during V) (Table 1, Fig. 4C, light gray bar; one-tailed t test, p = 0.0077, t statistic = 3.0, df = 9, Cohen's d = 0.67, Bonferroni corrected p < 0.05) but statistically indistinguishable from d′(V predicting T) (Table 1, Fig. 4C, dark gray bar; one-tailed t test, p = 0.1121, t statistic = 1.3, df = 9, Cohen's d = 0.22). In contrast with what was observed in the first experiment, d′(V predicting T) tended to be smaller than d′(T during V), although this trend did not survive Bonferroni correction (Table 1, Fig. 4C; one-tailed t test, p = 0.028, t statistic = 2.2, df = 9, Cohen's d = 0.495, p < 0.05 uncorrected). This effect did not depend on the tactile stimulation side (left or right cheek) or on the origin of the visual stimulus (left or right visual field, periphery, or center of the visual field).
Importantly, the change between the baseline d′(Tactile) and the d′ obtained in the looming condition (Experiment 1) is significantly different from the change between the baseline d′(Tactile) and the d′ obtained in the receding condition (Experiment 6), as assessed from a between-subject one-tailed unpaired t test (t statistic = 2.4, df = 18, p = 0.0136, Cohen's d = 1.06); this observation still holds when a two-tailed t test is used.
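The effect sizes reported in this section can be cross-checked from the test statistics. Partial η squared follows from an F ratio as F·df1 / (F·df1 + df2). For two independent groups, Cohen's d with a pooled-SD standardizer follows from the unpaired t as t·√(1/n1 + 1/n2). The sketch below assumes two groups of 10 subjects for the between-subject tests, which is consistent with df = 18 and with df = 9 for the within-subject tests. It does not attempt the within-subject d values, whose standardizer is not stated here.

```python
import math


def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


def cohens_d_unpaired(t: float, n1: int, n2: int) -> float:
    """Cohen's d (pooled-SD standardizer) from an unpaired t statistic: t * sqrt(1/n1 + 1/n2)."""
    return t * math.sqrt(1.0 / n1 + 1.0 / n2)


# Repeated-measures ANOVAs as reported above: (F, df_effect, df_error).
for f, df1, df2 in [(52.7, 4, 36), (14.45, 2, 18), (26.37, 2, 18), (5.91, 2, 18)]:
    print(f"F({df1},{df2}) = {f}: partial eta squared = {partial_eta_squared(f, df1, df2):.2f}")

# Between-subject comparisons, assuming two groups of 10 subjects (consistent with df = 18).
for t in (4.53, 2.4):
    print(f"t(18) = {t}: Cohen's d = {cohens_d_unpaired(t, 10, 10):.2f}")
```

The ANOVA values reproduce the reported 85%, 62%, 75%, and 40%. For the between-speed comparison, d is 2.03. For the looming versus receding comparison, the sketch gives 1.07 against the reported 1.06, a gap presumably due to rounding of t.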

Overall, these observations indicate that the effects reported in the previous experiments cannot be accounted for by a general attentional enhancement of perception; rather, the predictive cues contained in the looming cone trajectories contribute crucially to enhanced tactile detection at the predicted impact location and at the expected time of impact.

Discussion

This study demonstrates that visual stimuli looming toward the face provide the nervous system with predictive cues that selectively enhance tactile sensitivity at the expected impact location of the visual stimulus. In the following, we discuss these observations in the context of multisensory integration, peripersonal space, and a defense boundary of self.

Predictive cues

Three predictive dimensions of the visual looming stimulus contribute to enhancing tactile sensitivity on the face.

The estimated time of impact

With high-speed looming stimuli, maximum tactile sensitivity is observed at 100 ms following the disappearance of the looming stimulus, at the subjective time of its impact on the face (Experiments 1 and 2; Fig. 3A,B). Importantly, with low-speed looming stimuli, maximum enhancement of tactile sensitivity is observed later, indicating that the enhancement of tactile sensitivity adjusts to the speed of the looming stimuli and to the time of their predicted impact on the face (Experiment 3; Fig. 3C). Several studies demonstrate that the temporal coincidence (Sugita and Suzuki, 2003) and temporal correlation (Parise et al., 2012) between a looming visual stimulus and a sound maximize audiovisual integration. The phenomenon we report here is fundamentally different: in the presence of dynamic looming visual stimuli, maximum enhancement of tactile processing is achieved at the predicted time of impact, reflecting the expected subjective consequence of the visual stimulus for the tactile modality. Interestingly, the perceptual and physiological binding of two stimuli into the representation of a unique external source is subject to some degree of temporal tolerance, which has led to the description of a multisensory temporal binding window (for review, see Wallace and Stevenson, 2014). The bell-shaped pattern of enhanced tactile sensitivity around the predicted time of impact, as described by our Experiment 2 (Fig. 3B), suggests that a similar probabilistic temporal window of predicted impact may be at play when processing the consequences of a looming stimulus for our body, and possibly points toward common neuronal bases.
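How can a time of impact be read from a looming stimulus at all? One standard optical answer is the ratio of an object's visual angle to its rate of expansion, often called tau. For an object approaching at constant speed, this ratio approximates distance divided by speed without requiring either quantity to be known. The sketch below illustrates that, under this approximation, halving the approach speed roughly doubles the predicted time of impact, and that a receding object yields a negative value. The object size, distance, and speeds are hypothetical, and tau is not presented as the mechanism used by the nervous system or measured in this study.

```python
import math


def angular_size(radius_cm: float, distance_cm: float) -> float:
    """Visual angle (radians) subtended by an object of the given radius at the given distance."""
    return 2.0 * math.atan(radius_cm / distance_cm)


def tau_estimate(radius_cm: float, distance_cm: float, speed_cm_s: float, dt_s: float = 1e-4) -> float:
    """Time-to-contact estimate from optical variables only: tau = theta / (d theta / dt).

    speed_cm_s > 0 means approaching (looming), < 0 receding. The expansion rate is a
    forward finite difference over dt_s seconds; a negative tau signals a receding object.
    """
    if speed_cm_s == 0:
        raise ValueError("a static object has no time to contact")
    next_distance = distance_cm - speed_cm_s * dt_s
    if next_distance <= 0:
        raise ValueError("dt_s too large: the object would reach the eye within one step")
    theta_now = angular_size(radius_cm, distance_cm)
    theta_next = angular_size(radius_cm, next_distance)
    expansion_rate = (theta_next - theta_now) / dt_s
    return theta_now / expansion_rate


# Hypothetical stimulus: a 2-cm-radius object 50 cm from the face.
for speed in (200.0, 100.0, -200.0):  # cm/s: full speed, half speed, receding
    print(f"speed {speed:+.0f} cm/s: tau = {tau_estimate(2.0, 50.0, speed):+.3f} s, "
          f"distance/speed = {50.0 / speed:+.3f} s")
```

The approximation is good for small visual angles. As the object fills more of the visual field, tau increasingly overestimates the true time to contact.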

The estimated position of impact

Maximal tactile sensitivity enhancement is observed when the tactile stimulus is presented at the expected location of impact of the looming stimulus on the face, compared with an impact on the opposite side of the face (Experiment 4; Fig. 4A). This matches the spatial rule of multisensory integration as initially formulated (Stein and Meredith, 1993), most probably because of the crucial importance of spatial information when predicting an impact on the body (see Spence, 2013, and Stein and Stanford, 2008, for a discussion of the task dependence of multisensory integration processes).

Dynamic depth cues

Maximum tactile sensitivity enhancement is selectively observed for a looming stimulus, whereas a receding stimulus hardly has any effect on tactile sensitivity (Experiment 6; Fig. 4C). This effect is similar to what is observed for orienting biases due to looming stimuli (Maier et al., 2004), with the major difference that we probe tactile sensitivity after the looming visual stimulus has disappeared. While both size and depth cues most probably contribute to the modulation of tactile sensitivity on the face, we propose that the movement vector cue (away from or toward the subject) is the dominant cue affecting tactile detection. Indeed, the spatial, temporal, and dynamic predictive cues are fully accounted for by the trajectory and speed of the looming visual stimuli. This is confirmed by the fact that slower looming stimuli result in a delayed predicted time of impact on the face, and hence a delayed time at which tactile sensitivity is maximally enhanced (Experiment 3; Fig. 3C).

Impact prediction and multisensory integration

Multisensory integration and causal inference

Multisensory integration is a neuronal process by which the response of a neuron (in spikes per second) to two simultaneously presented stimuli of different modalities (say visual and tactile) differs from the sum of the responses of this same neuron to each stimulus presented alone (Avillac et al., 2007). Multisensory integration is maximized when the two sensory stimuli are presented at the same location (spatial congruence) and at the same time (temporal congruence) and are low-energy stimuli (inverse effectiveness; Stein and Meredith, 1993), though recent evidence indicates that these factors are highly interdependent (Carriere et al., 2008; Royal et al., 2009; Ghose and Wallace, 2014; for review, see Wallace and Stevenson, 2014) and task dependent (Doehrmann and Naumer, 2008; Spence, 2013; van Atteveldt et al., 2014). This leads to the notion of causal inference: a visual and an auditory signal originating at the same spatial location at the same time are likely to share a common underlying cause (Körding et al., 2007; Shams and Beierholm, 2010; Parise et al., 2012).
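The definition above compares the bimodal response with the sum of the unimodal responses. A commonly used complementary index instead compares it with the best unimodal response. The sketch below computes both. It labels a response superadditive, additive, or subadditive using a hypothetical tolerance band around an additivity index of 1. Real single-unit analyses test the difference statistically across trials. All firing rates are hypothetical.

```python
def additivity_index(resp_vt: float, resp_v: float, resp_t: float) -> float:
    """Bimodal response divided by the sum of the two unimodal responses (all in spikes/s)."""
    total = resp_v + resp_t
    if total <= 0:
        raise ValueError("the sum of unimodal responses must be positive")
    return resp_vt / total


def enhancement_percent(resp_vt: float, resp_v: float, resp_t: float) -> float:
    """Change of the bimodal response relative to the best unimodal response, in percent."""
    best = max(resp_v, resp_t)
    if best <= 0:
        raise ValueError("the best unimodal response must be positive")
    return 100.0 * (resp_vt - best) / best


def classify(resp_vt: float, resp_v: float, resp_t: float, tolerance: float = 0.1) -> str:
    """Label a response as super-, sub- or (approximately) additive.

    `tolerance` is a hypothetical band around an index of 1, standing in for a proper
    statistical comparison of the bimodal response with the unimodal sum.
    """
    ai = additivity_index(resp_vt, resp_v, resp_t)
    if ai > 1.0 + tolerance:
        return "superadditive"
    if ai < 1.0 - tolerance:
        return "subadditive"
    return "additive"


# Hypothetical mean firing rates (spikes/s): visual alone 10, tactile alone 5.
for vt in (25.0, 15.0, 12.0):
    print(vt, classify(vt, 10.0, 5.0), f"{enhancement_percent(vt, 10.0, 5.0):.0f}% enhancement")
```

The last case shows why both indices are useful. A bimodal response of 12 spikes/s exceeds the best unimodal response by 20%, yet it is subadditive relative to the unimodal sum of 15 spikes/s.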

Baseline multisensory enhancement

The mere presence of a looming visual stimulus around the time of tactile stimulation enhances tactile sensitivity, both when the tactile stimulus is presented during the looming phase (Experiments 1–3) and when the looming stimulus predicts an impact away from the tactile stimulation location (Experiments 4 and 5). This baseline effect could be due to an alerting effect of the visual stimulus, although it should be noted that this alerting effect is present only for looming stimuli, as receding stimuli do not induce an increase in tactile sensitivity (Experiment 6). This nonspecific enhancement of tactile sensitivity most probably builds on the classical multisensory integration mechanisms described above.

Impact prediction

On top of a baseline multisensory enhancement, tactile sensitivity is further enhanced by the predictive components of the heteromodal visual stimulus. This situation is common in everyday life: anticipating the impact of an obstacle on the body, for example, is of vital importance. Because this process involves cross-modal influences, we propose that the cortical regions responsible for this multisensory impact prediction largely overlap with the multisensory convergence and integration functional network. Very early on, Hyvärinen and Poranen (1974) (cited in Brozzoli et al., 2012) described the visual response of parietal neurons “as an anticipatory activation” that appears before the neuron's tactile receptive field is touched. The ventral intraparietal area (VIP) is an ideal candidate for impact prediction. Indeed, its neurons integrate vestibular and proprioceptive self-motion signals with visual motion cues to encode relative self-motion with respect to the environment (Bremmer et al., 1997, 1999, 2000, 2002a, b; Duhamel et al., 1997). This region encodes both large-field visual motion mimicking the consequences of the displacement of a subject within its environment (Bremmer et al., 1999, 2000, 2002a, b) and the movement of visual objects within near peripersonal space (Bremmer et al., 1997, 2013). Importantly, VIP neurons respond to both visual and tactile stimuli (Duhamel et al., 1998; Guipponi et al., 2013) and perform subadditive, additive, or superadditive multisensory integration (Avillac et al., 2004, 2007). Most interestingly, this cortical region has been proposed to play a key role in the definition of a defense peripersonal space.

Impact prediction and peripersonal space

Our observations indicate that, quite surprisingly, a visual stimulus intruding into peripersonal space close to one's cheek has a stronger impact prediction effect on that cheek than a visual stimulus predicting an impact on the other cheek. This enhancement is smaller than that observed for spatially congruent looming stimuli, but this difference does not reach significance at the corrected level. This suggests the existence of a safety margin around the face, with looming stimuli that come too close to the face alerting our nervous system to a potentially harmful impact on the body. Our observations parallel a recent study by Canzoneri et al. (2012), which demonstrates that tactile processing on the hand is speeded by the presence of a looming sound predicting an impact on the hand or within a well-defined distance from the hand. A parieto-frontal cortical network composed of the VIP and premotor area F4 has been suggested to play a crucial role in the definition of a defense peripersonal space. The neurons of both these regions have bimodal visuotactile receptive fields representing close peripersonal space and the corresponding skin surface (Gentilucci et al., 1988; Rizzolatti et al., 1988; Colby et al., 1993; Graziano et al., 1994; Gross and Graziano, 1995; Fogassi et al., 1996; Duhamel et al., 1998). Electrical microstimulation of both regions induces a behavioral defense repertoire of whole-body movements, suggesting their involvement in the coding of a defense peripersonal space (Graziano et al., 2002; Cooke and Graziano, 2004; Graziano and Cooke, 2006). We predict that this cortical network subserving a defense peripersonal space plays a key role in the prediction of an impact on the body. Preliminary nonhuman primate functional-imaging data from our research group corroborate this prediction (Cléry et al., 2014).
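A "well-defined distance" from the body, as in the hand study cited above, can be turned into a number. One common approach is to fit a sigmoid relating tactile facilitation to stimulus distance and to read the sigmoid's midpoint as the boundary. The sketch below fits `offset + amplitude * logistic(x; x_c, b)` by grid search over the midpoint `x_c` and slope `b`, solving the offset and amplitude in closed form. The functional form, grids, and data are assumptions for illustration only. The reaction times are synthetic and noise-free, not data from this or any cited study.

```python
import math


def logistic(x: float, x_c: float, b: float) -> float:
    """Logistic curve rising from 0 to 1, centred on x_c with slope parameter b (> 0)."""
    return 1.0 / (1.0 + math.exp(-(x - x_c) / b))


def fit_sigmoid(xs, ys, xc_grid, b_grid):
    """Least-squares fit of y = offset + amplitude * logistic(x; x_c, b).

    (x_c, b) are found by exhaustive grid search; for each candidate pair, offset and
    amplitude are solved in closed form by simple linear regression of y on the logistic term.
    Returns (sse, x_c, b, offset, amplitude) for the best pair.
    """
    n = len(xs)
    my = sum(ys) / n
    best = None
    for x_c in xc_grid:
        for b in b_grid:
            s = [logistic(x, x_c, b) for x in xs]
            ms = sum(s) / n
            sxx = sum((si - ms) ** 2 for si in s)
            if sxx == 0.0:
                continue  # logistic term constant over xs: amplitude not identifiable
            amplitude = sum((si - ms) * (yi - my) for si, yi in zip(s, ys)) / sxx
            offset = my - amplitude * ms
            sse = sum((yi - (offset + amplitude * si)) ** 2 for si, yi in zip(s, ys))
            if best is None or sse < best[0]:
                best = (sse, x_c, b, offset, amplitude)
    return best


# Synthetic, noise-free reaction times (ms) at hypothetical distances (cm): responses are
# faster when the stimulus is close. Illustrative numbers only.
distances = list(range(10, 101, 10))
rts = [350.0 + 60.0 * logistic(d, 45, 6) for d in distances]

sse, x_c, b, offset, amplitude = fit_sigmoid(distances, rts, range(20, 81), [2, 4, 6, 8, 10])
print(f"estimated boundary x_c = {x_c} cm (slope parameter b = {b})")
```

With real, noisy data, a gradient-based fit with confidence intervals on `x_c` would be preferable. The grid search is used here only to keep the sketch dependency-free.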

Predictive coding and multisensory integration

Dynamic visual stimuli can be predictive of an impact (e.g., a mosquito approaching our face for a blood meal), but they can also be coincident with tactile stimulation (e.g., a mosquito moving on one's arm). The Bayesian framework has proven highly successful in accounting for the behavioral (Fetsch et al., 2010) and single-cell (Gu et al., 2008) manifestations of multisensory integration. In addition, divisive normalization operating within local neuronal populations has been shown to account for the inverse effectiveness and spatial congruence rules (Ohshiro et al., 2011; Fetsch et al., 2013) as well as for flexible adaptation to cue reliability (Morgan et al., 2008). On the other hand, the general predictive coding framework, which relies on internal generative models (or priors) constructed through experience (Friston and Kiebel, 2009; Friston, 2010), has been successfully applied to speech comprehension, in which visual cues are predictive of auditory speech information (van Wassenhove et al., 2005; van Wassenhove, 2013; Altieri, 2014; Lee and Noppeney, 2014). So how does a given functional multisensory integration network perform either coincident or predictive, context- or task-dependent computations? Context-dependent time-varying priors could account for the reported behavioral effects. Alternatively, phase resetting of ongoing neuronal oscillations by a given sensory input (looming visual), predictively preparing neurons to respond to a second sensory input (tactile) with a particular timing relationship to the first, has been proposed as a general model for predictive neuronal processing (Lakatos et al., 2005, 2007; Kayser et al., 2008; van Atteveldt et al., 2014). This mechanism could underlie the enhanced tactile sensitivity during impact prediction.
The local and global neuronal mechanisms by which the speed, distance, and trajectory characteristics of a looming stimulus with respect to the subject are integrated to estimate time to impact, the specific cortical regions that process this information, and how it is used for phase resetting remain to be unveiled.
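In its simplest form, the Bayesian account of multisensory integration discussed above is reliability-weighted averaging. Each Gaussian estimate is weighted by its inverse variance, and the fused estimate is never less reliable than the better of the two. The same arithmetic gives the posterior when a Gaussian prior is combined with a Gaussian likelihood. The sketch below therefore illustrates, under that assumption, how an experience-based prior over impact times could pull a visual time-of-impact estimate. Treating the prior as a second "cue" and all the numbers are hypothetical illustrations, not a model fitted in this study.

```python
def fuse_gaussian_cues(mu_a: float, var_a: float, mu_b: float, var_b: float):
    """Reliability-weighted (maximum-likelihood) fusion of two independent Gaussian estimates.

    Each estimate is weighted by its reliability (inverse variance); the fused variance is
    never larger than the smaller of the two input variances.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    rel_a, rel_b = 1.0 / var_a, 1.0 / var_b
    w_a = rel_a / (rel_a + rel_b)
    mu = w_a * mu_a + (1.0 - w_a) * mu_b
    var = 1.0 / (rel_a + rel_b)
    return mu, var


# Hypothetical numbers: a visual estimate of time to impact (100 ms, SD 30 ms) combined with
# an experience-based prior over impact times (150 ms, SD 60 ms).
mu, var = fuse_gaussian_cues(100.0, 30.0 ** 2, 150.0, 60.0 ** 2)
print(f"fused estimate: {mu:.1f} ms, SD {var ** 0.5:.1f} ms")
```

Because the visual estimate is four times more reliable here, it receives a weight of 0.8. A context-dependent, time-varying prior would correspond to letting the second mean and variance change with the stimulus history.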

Footnotes

We thank Karen Reilley for her help with the EMG recordings and Serge Pinède for his technical assistance in preparing the experiments.

The authors declare no competing financial interests.

References

- Altieri N. Multisensory integration, learning, and the predictive coding hypothesis. Front Psychol. 2014;5:257. doi: 10.3389/fpsyg.2014.00257.

- Avillac M, Olivier E, Denève S, Ben Hamed S, Duhamel J-R. Multisensory integration in multiple reference frames in the posterior parietal cortex. Cogn Process. 2004;5:159–166. doi: 10.1007/s10339-004-0021-3.

- Avillac M, Ben Hamed S, Duhamel JR. Multisensory integration in the ventral intraparietal area of the macaque monkey. J Neurosci. 2007;27:1922–1932. doi: 10.1523/JNEUROSCI.2646-06.2007.

- Ball W, Tronick E. Infant responses to impending collision: optical and real. Science. 1971;171:818–820. doi: 10.1126/science.171.3973.818.

- Bremmer F, Duhamel J-R, Ben Hamed S, Graf W. The representation of movement in near extra-personal space in the macaque ventral intraparietal area (VIP). In: Thier P, Karnath H-O, editors. Parietal lobe contributions to orientation in 3D space, Vol 25, Experimental brain research series. Heidelberg: Springer; 1997. pp. 619–630.

- Bremmer F, Graf W, Ben Hamed S, Duhamel JR. Eye position encoding in the macaque ventral intraparietal area (VIP). Neuroreport. 1999;10:873–878. doi: 10.1097/00001756-199903170-00037.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Stages of self-motion processing in primate posterior parietal cortex. Int Rev Neurobiol. 2000;44:173–198. doi: 10.1016/S0074-7742(08)60742-4.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002b;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Bremmer F, Schlack A, Kaminiarz A, Hoffmann KP. Encoding of movement in near extrapersonal space in primate area VIP. Front Behav Neurosci. 2013;7:8. doi: 10.3389/fnbeh.2013.00008.

- Brozzoli C, Makin TR, Cardinali L, Holmes NP, Farnè A. Peripersonal space: a multisensory interface for body–object interactions. In: Murray MM, Wallace MT, editors. The neural bases of multisensory processes, Frontiers in neuroscience. Boca Raton, FL: CRC; 2012.

- Canzoneri E, Magosso E, Serino A. Dynamic sounds capture the boundaries of peripersonal space representation in humans. PLoS One. 2012;7:e44306. doi: 10.1371/journal.pone.0044306.

- Cappe C, Thut G, Romei V, Murray MM. Selective integration of auditory-visual looming cues by humans. Neuropsychologia. 2009;47:1045–1052. doi: 10.1016/j.neuropsychologia.2008.11.003.

- Cappe C, Thelen A, Romei V, Thut G, Murray MM. Looming signals reveal synergistic principles of multisensory integration. J Neurosci. 2012;32:1171–1182. doi: 10.1523/JNEUROSCI.5517-11.2012.

- Carriere BN, Royal DW, Wallace MT. Spatial heterogeneity of cortical receptive fields and its impact on multisensory interactions. J Neurophysiol. 2008;99:2357–2368. doi: 10.1152/jn.01386.2007.

- Cléry J, Guipponi O, Wardak C, Ben Hamed S. Neuronal bases of peripersonal and extrapersonal spaces, their plasticity and their dynamics: knowns and unknowns. Neuropsychologia. 2014. doi: 10.1016/j.neuropsychologia.2014.10.022. pii: S0028-3932(14)00382-0.

- Colby CL, Duhamel JR, Goldberg ME. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol. 1993;69:902–914. doi: 10.1152/jn.1993.69.3.902.

- Cooke DF, Graziano MS. Sensorimotor integration in the precentral gyrus: polysensory neurons and defensive movements. J Neurophysiol. 2004;91:1648–1660. doi: 10.1152/jn.00955.2003.

- Doehrmann O, Naumer MJ. Semantics and the multisensory brain: how meaning modulates processes of audio-visual integration. Brain Res. 2008;1242:136–150. doi: 10.1016/j.brainres.2008.03.071.

- Duhamel JR, Bremmer F, Ben Hamed S, Graf W. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature. 1997;389:845–848. doi: 10.1038/39865.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- Fetsch CR, Deangelis GC, Angelaki DE. Visual–vestibular cue integration for heading perception: applications of optimal cue integration theory. Eur J Neurosci. 2010;31:1721–1729. doi: 10.1111/j.1460-9568.2010.07207.x.

- Fetsch CR, DeAngelis GC, Angelaki DE. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci. 2013;14:429–442. doi: 10.1038/nrn3503.

- Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G. Coding of peripersonal space in inferior premotor cortex (area F4). J Neurophysiol. 1996;76:141–157. doi: 10.1152/jn.1996.76.1.141.

- Friston K. The free-energy principle: a unified brain theory? Nat Rev Neurosci. 2010;11:127–138. doi: 10.1038/nrn2787.

- Friston K, Kiebel S. Predictive coding under the free-energy principle. Philos Trans R Soc Lond B Biol Sci. 2009;364:1211–1221. doi: 10.1098/rstb.2008.0300.

- Gentilucci M, Fogassi L, Luppino G, Matelli M, Camarda R, Rizzolatti G. Functional organization of inferior area 6 in the macaque monkey. I. Somatotopy and the control of proximal movements. Exp Brain Res. 1988;71:475–490. doi: 10.1007/BF00248741.

- Ghose D, Wallace MT. Heterogeneity in the spatial receptive field architecture of multisensory neurons of the superior colliculus and its effects on multisensory integration. Neuroscience. 2014;256:147–162. doi: 10.1016/j.neuroscience.2013.10.044.

- Graziano MS, Cooke DF. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia. 2006;44:2621–2635. doi: 10.1016/j.neuropsychologia.2005.09.011.

- Graziano MS, Yap GS, Gross CG. Coding of visual space by premotor neurons. Science. 1994;266:1054–1057. doi: 10.1126/science.7973661.

- Graziano MS, Taylor CS, Moore T. Complex movements evoked by microstimulation of precentral cortex. Neuron. 2002;34:841–851. doi: 10.1016/S0896-6273(02)00698-0.

- Gross CG, Graziano MSA. Review: multiple representations of space in the brain. Neuroscientist. 1995;1:43–50. doi: 10.1177/107385849500100107.

- Gu Y, Angelaki DE, Deangelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191.

- Guipponi O, Wardak C, Ibarrola D, Comte JC, Sappey-Marinier D, Pinède S, Ben Hamed S. Multimodal convergence within the intraparietal sulcus of the macaque monkey. J Neurosci. 2013;33:4128–4139. doi: 10.1523/JNEUROSCI.1421-12.2013.

- Guipponi O, Odouard S, Pinède S, Wardak C, Ben Hamed S. fMRI cortical correlates of spontaneous eye blinks in the nonhuman primate. Cereb Cortex. 2014. doi: 10.1093/cercor/bhu038. Advance online publication.

- Hyvärinen J, Poranen A. Function of the parietal associative area 7 as revealed from cellular discharges in alert monkeys. Brain. 1974;97:673–692. doi: 10.1093/brain/97.1.673.

- Kandula M, Hofman D, Chris Dijkerman H. Visuo-tactile interactions are dependent on the predictive value of the visual stimulus. Neuropsychologia. 2014. [Accessed December 10, 2014]. Available at: http://www.sciencedirect.com/science/article/pii/S002839321400462X.

- Kayser C, Petkov CI, Logothetis NK. Visual modulation of neurons in auditory cortex. Cereb Cortex. 2008;18:1560–1574. doi: 10.1093/cercor/bhm187.

- Körding KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L. Causal inference in multisensory perception. PLoS One. 2007;2:e943. doi: 10.1371/journal.pone.0000943.

- Lakatos P, Shah AS, Knuth KH, Ulbert I, Karmos G, Schroeder CE. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005;94:1904–1911. doi: 10.1152/jn.00263.2005.

- Lakatos P, Chen CM, O'Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- Lee H, Noppeney U. Temporal prediction errors in visual and auditory cortices. Curr Biol. 2014;24:R309–R310. doi: 10.1016/j.cub.2014.02.007.

- Leo F, Romei V, Freeman E, Ladavas E, Driver J. Looming sounds enhance orientation sensitivity for visual stimuli on the same side as such sounds. Exp Brain Res. 2011;213:193–201. doi: 10.1007/s00221-011-2742-8.

- Maier JX, Ghazanfar AA. Looming biases in monkey auditory cortex. J Neurosci. 2007;27:4093–4100. doi: 10.1523/JNEUROSCI.0330-07.2007.

- Maier JX, Neuhoff JG, Logothetis NK, Ghazanfar AA. Multisensory integration of looming signals by rhesus monkeys. Neuron. 2004;43:177–181. doi: 10.1016/j.neuron.2004.06.027.

- Maier JX, Chandrasekaran C, Ghazanfar AA. Integration of bimodal looming signals through neuronal coherence in the temporal lobe. Curr Biol. 2008;18:963–968. doi: 10.1016/j.cub.2008.05.043.

- McDonald JJ, Teder-Sälejärvi WA, Hillyard SA. Involuntary orienting to sound improves visual perception. Nature. 2000;407:906–908. doi: 10.1038/35038085.

- Morgan ML, Deangelis GC, Angelaki DE. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron. 2008;59:662–673. doi: 10.1016/j.neuron.2008.06.024.

- Ohshiro T, Angelaki DE, DeAngelis GC. A normalization model of multisensory integration. Nat Neurosci. 2011;14:775–782. doi: 10.1038/nn.2815.

- Parise CV, Spence C, Ernst MO. When correlation implies causation in multisensory integration. Curr Biol. 2012;22:46–49. doi: 10.1016/j.cub.2011.11.039.

- Rizzolatti G, Camarda R, Fogassi L, Gentilucci M, Luppino G, Matelli M. Functional organization of inferior area 6 in the macaque monkey. II. Area F5 and the control of distal movements. Exp Brain Res. 1988;71:491–507. doi: 10.1007/BF00248742.

- Royal DW, Carriere BN, Wallace MT. Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Exp Brain Res. 2009;198:127–136. doi: 10.1007/s00221-009-1772-y.

- Schiff W, Caviness JA, Gibson JJ. Persistent fear responses in rhesus monkeys to the optical stimulus of “looming.” Science. 1962;136:982–983. doi: 10.1126/science.136.3520.982.

- Shams L, Beierholm UR. Causal inference in perception. Trends Cogn Sci. 2010;14:425–432. doi: 10.1016/j.tics.2010.07.001.

- Spence C. Just how important is spatial coincidence to multisensory integration? Evaluating the spatial rule. Ann N Y Acad Sci. 2013;1296:31–49. doi: 10.1111/nyas.12121.

- Stein BE, Meredith MA. The merging of the senses. Cambridge, MA: MIT; 1993.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Sugita Y, Suzuki Y. Audiovisual perception: implicit estimation of sound-arrival time. Nature. 2003;421:911. doi: 10.1038/421911a.

- Tyll S, Bonath B, Schoenfeld MA, Heinze HJ, Ohl FW, Noesselt T. Neural basis of multisensory looming signals. Neuroimage. 2013;65:13–22. doi: 10.1016/j.neuroimage.2012.09.056.

- Vagnoni E, Lourenco SF, Longo MR. Threat modulates perception of looming visual stimuli. Curr Biol. 2012;22:R826–R827. doi: 10.1016/j.cub.2012.07.053.

- van Atteveldt N, Murray MM, Thut G, Schroeder CE. Multisensory integration: flexible use of general operations. Neuron. 2014;81:1240–1253. doi: 10.1016/j.neuron.2014.02.044.

- van Wassenhove V. Speech through ears and eyes: interfacing the senses with the supramodal brain. Front Psychol. 2013;4:388. doi: 10.3389/fpsyg.2013.00388.

- van Wassenhove V, Grant KW, Poeppel D. Visual speech speeds up the neural processing of auditory speech. Proc Natl Acad Sci U S A. 2005;102:1181–1186. doi: 10.1073/pnas.0408949102.

- Walker-Andrews AS, Lennon EM. Auditory-visual perception of changing distance by human infants. Child Dev. 1985;56:544–548. doi: 10.2307/1129743.

- Wallace MT, Stevenson RA. The construct of the multisensory temporal binding window and its dysregulation in developmental disabilities. Neuropsychologia. 2014;64C:105–123. doi: 10.1016/j.neuropsychologia.2014.08.005.