Abstract

Even simple tasks rely on information exchange between functionally distinct and often relatively distant neuronal ensembles. Considerable work indicates oscillatory synchronization through phase alignment is a major agent of inter-regional communication. In the brain, different oscillatory phases correspond to low- and high-excitability states. Optimally aligned phases (or high-excitability states) promote inter-regional communication. Studies have also shown that sensory stimulation can modulate or reset the phase of ongoing cortical oscillations. For example, auditory stimuli can reset the phase of oscillations in visual cortex, influencing processing of a simultaneous visual stimulus. Such cross-regional phase reset represents a candidate mechanism for aligning oscillatory phase for inter-regional communication. Here, we explored the role of local and inter-regional phase alignment in driving a well established behavioral correlate of multisensory integration: the redundant target effect (RTE), which refers to the fact that responses to multisensory inputs are substantially faster than to unisensory stimuli. In a speeded detection task, human epileptic patients (N = 3) responded to unisensory (auditory or visual) and multisensory (audiovisual) stimuli with a button press, while electrocorticography was recorded over auditory and motor regions. Visual stimulation significantly modulated auditory activity via phase reset in the delta and theta bands. During the period between stimulation and subsequent motor response, transient synchronization between auditory and motor regions was observed. Phase synchrony to multisensory inputs was faster than to unisensory stimulation. This sensorimotor phase alignment correlated with behavior such that stronger synchrony was associated with faster responses, linking the commonly observed RTE with phase alignment across a sensorimotor network.

Keywords: ECoG, EEG, motor, multisensory, oscillations, synchrony

Introduction

Oscillatory brain activity reflects fluctuations of neuronal excitability; that is, different phases of brain oscillations correspond to high- and low-excitability states (Steriade et al., 1996; Sherman and Guillery, 2002; Lakatos, 2005). Interactions between neuronal groups can be facilitated, or not, by whether their respective excitatory states (or phases) are optimally aligned for the exchange of information. Synchronized activity can lead to temporal windows of communication between task-relevant brain regions (Varela et al., 2001; Fries, 2005; Womelsdorf and Fries, 2006). But how do local oscillations become aligned to promote inter-regional communication? Such synchronization might occur through phase reset of ongoing oscillations (Lakatos et al., 2007; Mercier et al., 2013). Previous evidence has shown, for example, that auditory stimulation can reset the phase of ongoing oscillations in visual cortex, influencing the processing of simultaneous or subsequent visual stimulation (Lakatos et al., 2009; Fiebelkorn et al., 2011; Romei et al., 2012; Mercier et al., 2013). Such cross-sensory (or cross-regional) phase reset, which is a mechanism for early multisensory interactions (MSIs) in sensory cortex (Lakatos et al., 2007), might serve to synchronize oscillatory activity across a network of task-relevant brain regions. Greater synchronization might then facilitate the transmission of information and thus improve behavioral outcomes.

A number of studies have linked the phase of ongoing oscillations, particularly in the delta and theta frequency bands, with fluctuations in behavioral performance under conditions of unisensory stimulation (Lakatos et al., 2008; Busch et al., 2009; Stefanics et al., 2010; Drewes and VanRullen, 2011; Fiebelkorn et al., 2011, 2013a, b; Henry and Herrmann, 2012; Ng et al., 2012). Recent investigations from our laboratory have also indicated that the detection of a near-threshold target is influenced by cross-sensory phase reset (Fiebelkorn et al., 2011, 2013b). The present study is focused on the neural mechanisms underlying perhaps the most commonly observed behavioral correlate of multisensory integration: the speeding of response times under conditions of multisensory relative to unisensory stimulation, typically referred to as the multisensory redundant target effect (RTE; Schröger and Widmann, 1998; Molholm et al., 2002, 2006; Teder-Salejärvi et al., 2002; Murray et al., 2005; Talsma and Woldorff, 2005; Senkowski et al., 2006; Romei et al., 2007; Moran et al., 2008; Gingras et al., 2009). Whereas several models have been developed to explain this behavioral facilitation (Miller, 1982; Otto and Mamassian, 2012), none of these models have incorporated the potential role of neuro-oscillatory mechanisms in contributing to the multisensory RTE.

Here, electrocorticographic (ECoG) data were recorded from auditory and motor cortices while patients with epilepsy performed a simple detection task that included both unisensory (auditory and visual) and multisensory (audiovisual) stimuli. Our analysis assessed (1) whether visual-alone stimulation influenced oscillatory activity in auditory cortex and (2) whether phase alignment during multisensory stimulation was stronger than for the sum of unisensory stimulation. Further, we investigated (3) whether multisensory stimulation leads to greater/faster local and inter-regional phase alignment and (4) whether inter-regional phase alignment was linked to response times. Our results show that stronger delta-band phase alignment in auditory cortex is linked to stronger phase alignment across a sensorimotor network and that stronger synchronization leads to faster response times (RTs), with the fastest phase locking and the fastest RTs occurring under conditions of multisensory stimulation. These data provide compelling support for the notion that modulation of neuro-oscillatory activity, in the form of cross-sensory phase reset and sensorimotor phase coupling, plays a significant role in the multisensory facilitation of reaction times.

Materials and Methods

Participants.

Data were collected from three patients implanted with subdural electrodes before undergoing presurgical evaluation for intractable epilepsy (P1: male of 46 years; P2: female of 18 years; P3: female of 38 years). Participants provided written informed consent, and the Institutional Review Boards of the Nathan Kline Institute, Weill Cornell Presbyterian Hospital, and The Albert Einstein College of Medicine approved the procedures. The conduct of this study was strictly in line with the principles outlined in the Declaration of Helsinki.

Electrode placement and localization.

Subdural electrode (stainless steel electrodes from AD-Tech Medical Instrument) placement and density were dictated solely by medical purposes. The precise location of each electrode was determined through nonlinear coregistration of preoperative structural MRI (sMRI), postoperative sMRI, and CT scans. The preoperative sMRI provided accurate anatomic information, the postoperative CT scan provided an undistorted view of electrode placements, and the postoperative sMRI (i.e., sMRI conducted while the electrodes were still implanted) allowed for an assessment of the entire coregistration process and the correction of brain deformation due to the presence of the electrodes. Coregistration procedures, normalization into MNI space, electrode localization, and image reconstruction were done through the BioImage Suite software package (Lacadie et al., 2008) and results projected on the MNI-Colin27 brain (X. Papademetris, M. Jackowski, N. Rajeevan, H. Okuda, R. T. Constable, and L. H. Staib, BioImage Suite: an integrated medical image analysis suite, Section of Bioimaging Sciences, Department of Diagnostic Radiology, Yale School of Medicine).

Stimuli and task.

Auditory-alone, visual-alone, and audiovisual stimuli were presented equiprobably and in random order using Presentation software (Neurobehavioral Systems). The interstimulus interval was randomly distributed between 750 and 3000 ms. The auditory stimulus, a 1000 Hz tone with a duration of 60 ms (5 ms rise/fall times), was presented at a comfortable listening level that ranged between 60 and 70 dB, through Sennheiser HD600 headphones; the visual stimulus, a centered red disk subtending 3° on the horizontal meridian, was presented on a CRT (Dell Trinitron, 17”) monitor for 60 ms, at a viewing distance of 75 cm. Patients maintained central fixation and responded as quickly as possible whenever a stimulus was detected, regardless of stimulus type (auditory-alone, visual-alone, or audiovisual). All participants responded with a button press, using their right index finger (for previous application of this paradigm to probe multisensory processing, see Molholm et al., 2002, 2006; Senkowski et al., 2006, 2007; Brandwein et al., 2011, 2013; Mercier et al., 2013). Each block included 100 stimuli, and the patients completed between 12 and 20 blocks. To maintain focus and prevent fatigue, patients were encouraged to take frequent breaks. The experimenters monitored eye position.

Intracranial EEG recording and preprocessing.

Continuous intracranial EEG (iEEG) was recorded using BrainAmp amplifiers (Brain Products) and sampled at 1000 Hz (low/high cutoff = 0.1/250 Hz). A subdural, frontally placed electrode was used as the reference during the recordings.

Off-line, trials with a button response falling between 100 and 750 ms poststimulus onset were selected, and corresponding iEEG was epoched from −1500 to 1500 ms either time locked to stimulus onset or to the button press. These epochs (±500 ms padding) then underwent artifact rejection. An adaptive procedure, based on standardized z-values calculated across time independently for each channel and adjusted for each dataset (participant), was applied to detect artifacts. Manual scanning of the raw data was performed to evaluate the quality of this procedure [final average number of trials across participants used for this analysis was as follows: audiovisual (AV) 194 ± 12; visual-alone (V) 181 ± 7; auditory-alone (A) 148 ± 15]. Detrended epochs were further preprocessed to remove line noise (60/120 Hz) using a discrete Fourier transform and high-pass (0.1 Hz) and low-pass (125 Hz) filtered using a two-pass, fourth-order Butterworth filter. Baseline correction was conducted over the entire epoch.

Electrodes implanted subdurally are highly sensitive to local field potentials (LFPs) and much less sensitive to distant activity. To further improve the spatial resolution and avoid far-field diffusion, LFPs were used to estimate the spatial derivative of the voltage axis (Perrin et al., 1987; Butler et al., 2011; Gomez-Ramirez et al., 2011; Mercier et al., 2013). A composite local reference scheme was applied in which the composite was defined by the number of immediate electrode neighbors on the horizontal and/or vertical plane (see Eq. 1). This number varied from 1 to 4 on the basis of the reliability of the electrical signal (i.e., electrodes contaminated by electrical noise or epileptic activity were not included). For instance, a five-point formula was applied when there were four immediate neighbors (grids), whereas a four-point formula was used when there were three immediate neighbors. This approach was used to ensure maximum representation of the local signal, independent of the reference, and minimum contamination through diffusion of currents from more distant generators (i.e., volume conduction).

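$$
V^{\mathrm{local}}_{i,j} = V_{i,j} - \frac{1}{N}\sum_{(m,n)\,\in\,\mathcal{N}(i,j)} V_{m,n} \tag{1}
$$

where $V_{i,j}$ (or $V_k$) denotes the recorded field potential at the ith row and jth column (or kth position) in the electrode grid (or strip), $\mathcal{N}(i,j)$ is the set of usable immediate neighbors of that contact, and $N$ (from 1 to 4) is the number of those neighbors.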
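The composite local reference described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors' MATLAB code; the function name `local_reference`, the `bad` argument, and the toy 3 × 3 grid are assumptions introduced here. Each contact has the mean of its usable immediate neighbors (1 to 4 of them) subtracted, and contacts flagged as noisy are skipped both as targets and as neighbors.

```python
def local_reference(grid, bad=frozenset()):
    """Subtract from each contact the mean of its usable immediate neighbors.

    grid: list of rows, each a list of voltages for one time sample.
    bad:  set of (row, col) contacts excluded as noisy (hypothetical argument).
    """
    n_rows, n_cols = len(grid), len(grid[0])
    out = [[None] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):
        for j in range(n_cols):
            if (i, j) in bad:
                continue  # excluded contacts are not re-referenced
            neighbors = [
                grid[m][n]
                for m, n in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                if 0 <= m < n_rows and 0 <= n < n_cols and (m, n) not in bad
            ]
            if neighbors:  # between 1 and 4 usable neighbors
                out[i][j] = grid[i][j] - sum(neighbors) / len(neighbors)
    return out


# Toy 3 x 3 grid (assumed values, one time sample)
grid = [[1.0, 2.0, 3.0],
        [4.0, 5.0, 6.0],
        [7.0, 8.0, 9.0]]
referenced = local_reference(grid)
with_exclusion = local_reference(grid, bad={(0, 1)})
```

In this toy grid the center contact uses the five-point form (four neighbors), whereas a corner contact has only two neighbors; excluding a contact reduces the neighbor count of the contacts adjacent to it.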

ERP analysis.

To compute ERPs, all re-referenced nonrejected trials were averaged both time locked to stimulus onset for each stimulus condition (auditory-alone, visual-alone, and audiovisual) and time locked to the button press in response to each stimulus condition (following auditory-alone, visual-alone, and audiovisual stimuli). To verify whether sensory ERPs represented a statistically significant modulation from baseline, poststimulus amplitudes (from 0 to 300 ms) were compared with baseline amplitude values (from −100 to 0 ms). This was done using a random permutation test as described previously (Mercier et al., 2013). For the motor ERPs, the same method was applied with baseline defined as the entire period, since motor-related evoked activity starts before the button press due to preparatory activity and lasts for a few hundred milliseconds after the button press.

Based on the ERP analysis, we defined the contacts of interest (COIs); that is, for each participant, we selected the channels with the highest auditory or motor activity. For the auditory COI, the selection was based on the earliest and largest ERP modulation observed over auditory regions. For the motor COI, the observation of the strongest ERP phase reversal (with the first phase characteristic of a readiness potential) time locked to the response was used as a selection criterion.

Time-frequency analysis.

To perform time-frequency decomposition, individual trials were convolved with complex Morlet wavelets, which had a width from three to seven cycles (f0/σf = 3 for 3–4 Hz, 5 for 5–6 Hz, and 7 for higher frequencies; Fiebelkorn et al., 2013b). The frequency range of these wavelets was 3–50 and 70–125 Hz (to circumvent the ambient 60 Hz artifact noise) increasing in 1 Hz steps, with convolution applied every 10 ms. Power and phase concentrations were computed based on the complex output of the wavelet transform (Tallon-Baudry et al., 1996; Roach and Mathalon, 2008; Oostenveld et al., 2011). To avoid any back-leaking from poststimulus activity into the prestimulus period, the baseline used for the time-frequency analysis was from −1000 to −500 ms.
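The wavelet decomposition can be illustrated with a short sketch. It assumes the common Gaussian-windowed complex exponential form of the Morlet wavelet with σt = 1/(2πσf); the truncation at three standard deviations, the normalization constant, and the names `n_cycles`, `morlet`, and `coefficient` are choices made here for illustration and are not taken from the toolbox used in the study.

```python
import cmath
import math


def n_cycles(freq):
    """Wavelet width f0/sigma_f as stated in the text (3, 5, or 7 cycles)."""
    if freq <= 4:
        return 3
    if freq <= 6:
        return 5
    return 7


def morlet(freq, sfreq=1000.0, n_sd=3.0):
    """Complex Morlet wavelet sampled at sfreq (n_sd truncation is assumed)."""
    sigma_f = freq / n_cycles(freq)
    sigma_t = 1.0 / (2.0 * math.pi * sigma_f)
    half = int(round(n_sd * sigma_t * sfreq))
    norm = 1.0 / math.sqrt(sigma_t * math.sqrt(math.pi))
    return [
        norm * math.exp(-((k / sfreq) ** 2) / (2.0 * sigma_t ** 2))
        * cmath.exp(2j * math.pi * freq * k / sfreq)
        for k in range(-half, half + 1)
    ]


def coefficient(signal, wavelet, center):
    """Complex coefficient at sample `center`; no edge handling in this sketch."""
    half = len(wavelet) // 2
    return sum(
        signal[center + k] * wavelet[half + k].conjugate()
        for k in range(-half, half + 1)
    )


# One second of a 10 Hz cosine sampled at 1000 Hz (synthetic test signal)
signal = [math.cos(2 * math.pi * 10 * n / 1000.0) for n in range(1000)]
wavelet_10hz = morlet(10.0)
phase_at_peak = cmath.phase(coefficient(signal, wavelet_10hz, 500))
phase_quarter_cycle = cmath.phase(coefficient(signal, wavelet_10hz, 525))
```

The modulus of the coefficient gives amplitude (its square gives power) and its argument gives instantaneous phase, which are the two quantities used in the analyses that follow. For the synthetic cosine, the recovered phase is close to 0 at a peak and close to π/2 a quarter cycle later.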

Analysis of phase concentration and power.

To evaluate the presence or absence of systematic increases in phase concentration across trials, the phase concentration index (PCI; introduced as phase-locking factor in Tallon-Baudry et al., 1996, and also referred to as intertrial coherence in Makeig et al., 2002 and Delorme and Makeig, 2004) was computed as follows. The complex result of the wavelet convolution for each time point and frequency within a given trial was normalized by its amplitude such that each trial contributed equally to the subsequent average (in terms of amplitude). This provided an indirect representation of the phase concentration across trials, with possible values ranging from 0 (no phase consistency across trials) to 1 (perfect phase alignment across trials). To test for significant PCIs relative to stimulus onset, we used the same random permutation test procedure as used to assess ERP statistical significance (see above). A unique distribution was generated for each frequency.
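As a minimal sketch of the PCI computation for a single time-frequency point, assuming the per-trial complex wavelet coefficients are already available (the function name `phase_concentration` and the example values are hypothetical):

```python
import cmath


def phase_concentration(coeffs):
    """PCI: modulus of the mean of amplitude-normalized complex coefficients."""
    unit = [c / abs(c) for c in coeffs if abs(c) > 0]  # keep phase, drop amplitude
    return abs(sum(unit) / len(unit))


# Three trials with identical phase but very different amplitudes
aligned = [cmath.rect(amp, 0.3) for amp in (0.5, 2.0, 7.0)]
# Two trials with opposite phases
opposed = [cmath.rect(1.0, 0.0), cmath.rect(1.0, cmath.pi)]

pci_aligned = phase_concentration(aligned)
pci_opposed = phase_concentration(opposed)
```

Because each coefficient is divided by its own amplitude, the aligned trials yield a PCI of 1 regardless of their amplitudes, and the opposed trials yield a PCI of 0.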

To assess evidence for phase resetting, we determined whether or not significant changes in phase concentration occurred in the absence of changes in power (Shah et al., 2004). Event-related spectral perturbations were visualized by computing spectral power relative to baseline (i.e., after subtracting the baseline average power, the power value was divided by the mean of the baseline values). The significance of increases or decreases in power from baseline was calculated using the same statistical procedure as for PCI and ERP analyses.
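The baseline normalization of power described above amounts to a relative change, (P − mean baseline) / mean baseline. A small sketch under that reading, with a hypothetical function name and toy values:

```python
def relative_power(power, baseline_idx):
    """Power relative to baseline: (P - mean_baseline) / mean_baseline."""
    base = [power[i] for i in baseline_idx]
    mean_base = sum(base) / len(base)
    return [(p - mean_base) / mean_base for p in power]


# Toy power time course; the first two samples serve as baseline (assumed)
power = [2.0, 2.0, 4.0, 1.0]
change = relative_power(power, baseline_idx=range(0, 2))
```

A value of 1.0 then corresponds to a doubling of power relative to baseline and −0.5 to a halving, so increases and decreases are expressed on the same scale across frequencies.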

For both the ERP and power analyses, poststimulus activity could be either positive or negative relative to baseline. Therefore a two-tailed threshold was used to determine statistical significance (p was considered significant if p ≤ 0.025 or p ≥ 0.975). For analysis of phase alignment, a one-tailed approach was used to determine statistical significance (p was considered significant if p ≤ 0.05) because we were specifically interested in identifying increases in poststimulus phase consistency.

Multisensory statistics.

To assess whether visually driven modulations interacted nonlinearly with the auditory response we applied the additive criterion model [AV vs (A + V); Stein and Meredith, 1993; Stein, 1998; Stanford et al., 2005; Avillac et al., 2007; Kayser et al., 2008; Mercier et al., 2013]; that is, we measured if the activity elicited in the multisensory condition differed from what would be expected from simple summation of the activity elicited by unisensory stimuli. Such nonlinear multisensory effects were then identified as either supra-additive or subadditive if the multisensory condition was, respectively, larger or smaller than the sum of unisensory conditions.

For this, a randomization method was used in which the average audiovisual response was compared with a representative distribution of the summed “unisensory” trials (see Senkowski et al., 2007; Mercier et al., 2013 for similar approaches). This distribution was built from a random subset of all possible summed combinations of the unisensory trials (baseline corrected), with the number of summed trials corresponding to the number of audiovisual trials. All randomization procedures (and therefore unisensory trials) were performed independently for each time point, and for the frequency analyses, for each frequency band. For tests of MSI effects in phase concentration, the unisensory trials were summed before being transformed through a wavelet convolution due to the nonlinearity of the procedure (Senkowski et al., 2006, 2007).
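The logic of this randomization can be sketched for a single time point as follows. This is an illustration under simplifying assumptions, not the authors' procedure: summed trials are formed by pairing randomly drawn auditory and visual trials with replacement, and the p value is defined here as the fraction of surrogate (A + V) means at or above the observed AV mean, so that values near 0 indicate supra-additivity and values near 1 indicate subadditivity. The function name and the toy amplitudes are hypothetical.

```python
import random


def additive_model_p(av, a, v, n_perm=1000, seed=0):
    """Fraction of surrogate (A + V) means that are >= the observed AV mean."""
    rng = random.Random(seed)
    observed = sum(av) / len(av)
    at_or_above = 0
    for _ in range(n_perm):
        # As many summed "unisensory" trials as there are audiovisual trials
        summed = [rng.choice(a) + rng.choice(v) for _ in range(len(av))]
        if sum(summed) / len(summed) >= observed:
            at_or_above += 1
    return at_or_above / n_perm


# Toy single-time-point amplitudes (assumed values)
a_trials = [1.0, 2.0, 1.5]
v_trials = [0.5, 1.0, 0.8]
av_trials = [4.0, 4.2, 3.9, 4.1]

p_supra = additive_model_p(av_trials, a_trials, v_trials)
p_sub = additive_model_p([0.0] * 4, a_trials, v_trials)
```

With a two-tailed threshold, a p value at or below 0.025 or at or above 0.975 would be treated as a nonlinear multisensory effect. For phase measures, the summation would be applied to the raw trials before the wavelet transform, as noted above.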

Measures of functional connectivity.

To measure communication between COIs, we computed phase-locking value (PLV; Tass et al., 1998; Lachaux et al., 1999), which is an index that represents the degree of phase synchrony between two signals. It measures, at a given time point, the variability across trials of the phase difference between two electrodes and is defined as follows:

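$$
\mathrm{PLV} = \frac{1}{n}\left|\sum_{k=1}^{n} e^{\,i\left(\phi_1(k) - \phi_2(k)\right)}\right|
$$

with $n$ being the number of trials and $\phi_1(k)$ and $\phi_2(k)$ being the phase measured on trial $k$ at electrode 1 and 2, respectively.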

PLV ranges from 0, when the phases at the two electrodes are randomly distributed with respect to each other, to 1, when the phase difference is consistent across trials. To test for significant increases in PLV, angle differences across trials were subjected to the Omnibus test (independently along the time and frequency dimensions). The Omnibus test is an alternative to the more commonly used Rayleigh test, with the advantage that it tests for circular uniformity without making assumptions about the underlying distribution (Berens, 2009). A second series of statistical tests was conducted using the random permutation procedure proposed by Lachaux et al. (1999). For the latter test, single trials were randomly permuted such that trials at one COI did not match the trials at the other COI when PLV was computed; that is, the within-trial phase relationship was broken. After each permutation the corresponding PLV was computed. A surrogate distribution was created by repeating this procedure 1000 times, independently for each time point and frequency. Finally, the observed PLV was considered significant if it exceeded 95% of the surrogate values, and the p value was calculated accordingly.
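The PLV and its trial-shuffling surrogate test can be sketched for one time-frequency point as follows. The sketch assumes that per-trial phases at the two contacts are already available; the function names, the seed, and the synthetic phases (a constant 0.5 rad lag between contacts) are illustrative assumptions, and the Omnibus test is not reproduced here.

```python
import cmath
import math
import random


def plv(phases_1, phases_2):
    """Phase-locking value across trials for one time-frequency point."""
    n = len(phases_1)
    return abs(sum(cmath.exp(1j * (p1 - p2))
                   for p1, p2 in zip(phases_1, phases_2))) / n


def plv_permutation_p(phases_1, phases_2, n_perm=1000, seed=0):
    """Shuffle trial order at one contact to break within-trial pairing."""
    rng = random.Random(seed)
    observed = plv(phases_1, phases_2)
    shuffled = list(phases_2)
    at_or_above = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if plv(phases_1, shuffled) >= observed:
            at_or_above += 1
    return observed, at_or_above / n_perm


# Synthetic phases: contact 2 lags contact 1 by a constant 0.5 rad on every trial
rng = random.Random(1)
auditory = [rng.uniform(-math.pi, math.pi) for _ in range(50)]
motor = [p - 0.5 for p in auditory]

observed_plv, p_value = plv_permutation_p(auditory, motor)
plv_opposed = plv([0.0, 0.0], [0.0, math.pi])
```

A constant phase lag gives a PLV of 1 even though the phases themselves vary randomly from trial to trial, which is why shuffling the trial pairing (rather than the phases) is the appropriate surrogate.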

We next tested for nonlinear multisensory effects by comparing PLV for the multisensory condition against the sum of the unisensory conditions. To do so, we used a variant of the test described under multisensory statistics. First, all possible combinations of summed unisensory trials were computed for the two COIs (over auditory cortex and over motor cortex). Then they were transformed into time-frequency space to obtain the phase estimate. Next, a random subset of the summed single trials, equal in number to the multisensory trials used to compute the observed PLV, was drawn and the corresponding PLV computed. This last procedure was repeated 1000 times to build an (A + V) distribution against which the observed multisensory PLV (two-tailed) could be compared statistically. PLV is a measure of phase synchrony, and as such it does not take into account the amplitude of the signal. To ensure that the effects observed were specific to phase, we further computed amplitude and power correlations. No systematic pattern emerged from these control analyses, across participants or conditions.

Measures of coupling between COIs (PLV indices and multisensory statistics using the additive model) were applied both on the data time locked to the stimulus and to the data time locked to the response. The first approach is more sensitive to phase alignment between auditory cortex and motor cortex relative to the stimulus onset (i.e., less susceptible to variability in sensory response), whereas the second approach is more sensitive to phase alignment relative to the motor response.

Measures of correlation between phase alignment indices and response time.

To investigate the functional implications of phase alignment, we assessed if there was any correlation between PCI and PLV and between PLV and RTs. Single trials were sorted per RTs (from the fastest to the slowest trials, using bins containing 10% of the trials for each iteration with an increment of 1) and running averages of PCI (recorded from auditory cortex), PLV (recorded between auditory and motor cortices), and RTs were computed. Each PCI and PLV, time locked to the stimulus, was computed at the latency corresponding to the highest value measured for each condition in the delta band. Finally, we compared running PCIs to PLVs and RTs to PLVs using the Pearson correlation. Last, to verify that correlation measures were not influenced by response time distribution, the same analyses were conducted after log-transform and with reciprocal transform. With both approaches, comparable results were obtained, confirming results obtained with the nontransformed data.
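The running-bin analysis can be sketched as follows for the RT-to-PLV comparison. The sketch assumes one phase difference per trial (auditory minus motor phase at the chosen latency and frequency); the function names and the synthetic data, in which phase differences scatter more widely on slower trials, are assumptions made for illustration.

```python
import cmath
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def running_rt_plv(rts, phase_diffs, frac=0.10):
    """Sort trials by RT, then slide a window of frac * n trials one trial at a time."""
    order = sorted(range(len(rts)), key=lambda k: rts[k])
    width = max(2, int(round(frac * len(rts))))
    mean_rts, plvs = [], []
    for start in range(len(order) - width + 1):
        idx = order[start:start + width]
        mean_rts.append(sum(rts[k] for k in idx) / width)
        plvs.append(abs(sum(cmath.exp(1j * phase_diffs[k]) for k in idx)) / width)
    return mean_rts, plvs


# Synthetic data: phase differences spread out as RT increases
rts = [250.0 + 2.0 * k for k in range(100)]
phase_diffs = [((-1) ** k) * 0.01 * k for k in range(100)]

mean_rts, plvs = running_rt_plv(rts, phase_diffs)
r = pearson(mean_rts, plvs)
```

Because adjacent windows share most of their trials, the running values are not independent observations; the sketch only illustrates how the running series are formed and correlated. Applying the same steps to `log(rt)` or `1 / rt` corresponds to the control analyses mentioned above.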

Control for multiple comparisons.

All p values were corrected (in the time dimension for ERP, and both the time and frequency dimensions for PCI, power, and PLV) using the false discovery rate procedure for dependent tests from Benjamini and Yekutieli (2001). This correction, a sequential Bonferroni-type procedure, is highly conservative and thus favors certainty (fewer type I errors) over statistical power (more type II errors; for consideration of different approaches to controlling for multiple comparisons, see Groppe et al., 2011). This approach is derived from Benjamini and Hochberg (1995) and is widely used to control for multiple comparisons in neuroimaging studies (Genovese et al., 2002).
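For reference, the Benjamini and Yekutieli step-up rule can be sketched in a few lines: with m tests sorted by p value, the largest rank k satisfying p(k) ≤ k·q / (m·c(m)), where c(m) = Σ 1/i for i = 1..m, determines how many hypotheses are rejected. The function name, the level q = 0.05, and the example p values are assumptions made for illustration.

```python
def benjamini_yekutieli(p_values, q=0.05):
    """Return a list of booleans marking the tests rejected at FDR level q."""
    m = len(p_values)
    c_m = sum(1.0 / i for i in range(1, m + 1))  # harmonic correction for dependency
    order = sorted(range(m), key=lambda k: p_values[k])
    cutoff_rank = 0
    for rank, k in enumerate(order, start=1):
        if p_values[k] <= rank * q / (m * c_m):
            cutoff_rank = rank  # keep the largest rank that passes
    reject = [False] * m
    for k in order[:cutoff_rank]:
        reject[k] = True
    return reject


rejected_a = benjamini_yekutieli([0.001, 0.04, 0.03, 0.5])
rejected_b = benjamini_yekutieli([0.001, 0.011, 0.5, 0.9])
```

The factor c(m) is what distinguishes this rule from the Benjamini and Hochberg (1995) procedure and makes it stricter: with four tests, c(m) ≈ 2.08, so each threshold is roughly halved.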

All data analyses were performed in MATLAB (The MathWorks) using custom-written scripts, the FieldTrip toolbox (Oostenveld et al., 2011), and CircStat: A MATLAB Toolbox for Circular Statistics (Berens, 2009).

Results

There were four central questions driving the current work: first, we assessed if visual stimulation modulated local auditory cortical activity and second if this leads to local multisensory interactions; third, we investigated inter-regional interactions between auditory and motor cortices by measuring phase synchronization in both unisensory and multisensory contexts; and finally, using correlation measures, we tested for relationships between local and inter-regional phase alignment and between inter-regional phase alignment and response times. The experiment (a simple stimulus-detection task) was performed by three patients implanted with frontotemporal electrode grids, which allow for both high temporal and high spatial sampling of the electrophysiological signal. For each participant, the two most relevant electrodes were selected via conjunction of functional and anatomical characteristics to best capture neuronal activity from auditory and motor cortices.

Behavioral data

Hit rates were close to ceiling, indicating that participants easily performed the task (hit rates: 97 ± 1%). RT data demonstrated the commonly observed multisensory RTE (Schröger and Widmann, 1998; Molholm et al., 2002, 2006; Teder-Salejärvi et al., 2002; Murray et al., 2005; Talsma and Woldorff, 2005; Senkowski et al., 2006; Moran et al., 2008; Gingras et al., 2009), with RTs to audiovisual stimuli (AV average across participants: 290 ± 25 ms) faster than RTs to either of the unisensory stimuli (A: 341 ± 34 ms and V: 349 ± 5 ms).

Primary and cross-sensory responses and MSI in auditory cortex

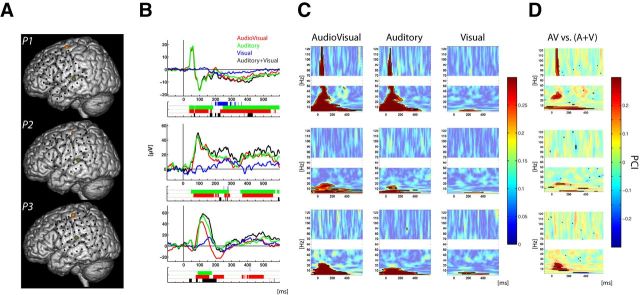

We first conducted analyses on the signal from the electrode that presented the largest and fastest auditory response. Most of the electrodes located along the lateral sulcus showed auditory-related signal modulation. For all participants, the strongest auditory-evoked potential (AEP) response, also occurring at the shortest latency, was positioned over the more posterior and superior region of the superior temporal gyrus (STG; Fig. 1A). Several intracranial studies have reported a close relationship between activity from primary and secondary auditory cortex, located in Heschl's gyrus, and the signal recorded over the posterior bank of the STG (Liegeois-Chauvel et al., 1991; Howard et al., 2000; Brugge et al., 2003; Yvert et al., 2005; Guéguin et al., 2007). For each participant, the selected electrode was therefore considered to be the one that best captured the earliest stages of auditory cortical processing (normalized MNI coordinates: x = 158, 157, 157; y = 113, 119, 116; z = 78, 82, 91, respectively, for Participants 1, 2, and 3). For both auditory-alone and audiovisual conditions, an AEP with an onset before 100 ms was observed at the selected electrode (Fig. 1B). For the visual-alone condition, no clear sharp ERP was observed; instead a low-amplitude slow response emerged from baseline at later latencies and showed only brief periods of significance relative to baseline (Fig. 1B). The nature of this cross-sensory effect on multisensory processing was next tested using the additive model [AV vs (A + V)]. This comparison revealed subadditive effects in all participants, with the ERP to the multisensory condition smaller than would be expected from the sum of the unisensory responses.

Figure 1.

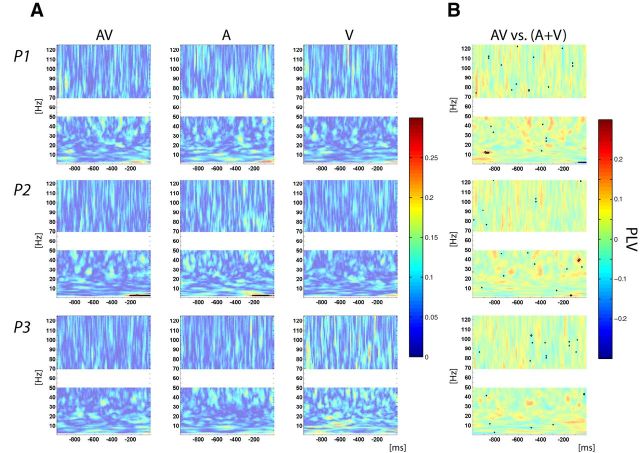

Electrode locations and modulation of activity in auditory cortex. A, Projection of the electrodes of interest on MNI brain template for the three subjects. Electrodes over auditory and motor cortices are indicated in dark green and orange, respectively. B, Evoked potentials recorded over auditory cortex. The activity following AV, A, and V stimuli is plotted in red, green, and blue, respectively; the black line depicts the sum of the ERPs evoked in the unisensory conditions (A + V). Ribbon-plot, below ERP traces, represents statistical results [comparison of each condition against baseline and between conditions following the additive model (AV vs (A + V); in black)]. Corrected significant p values are represented as solid color, and uncorrected p values appear transparent. C, PCI following audiovisual (left), auditory (middle), and visual (right) stimuli. D, Multisensory effects on PCI assessed using the additive model: AV versus (A + V). C, D, Solid colors with black contours represent statistically significant values corrected for multiple comparisons.

To further characterize the mechanisms underlying these ERP responses, we conducted analyses in the time-frequency domain, with a particular interest in assessing whether increases in phase consistency were accompanied by increases in power; that is, we computed the PCI (see Materials and Methods) across trials to reveal whether ongoing oscillations were reset after stimulus presentation. We then examined concomitant power to investigate whether phase reset of ongoing oscillations was related to a classic ERP effect, or alternatively, whether it was modulatory in nature, occurring in the absence of significant increases in power. For auditory-alone and audiovisual conditions, significant increases in both PCI (Fig. 1C) and power were observed. Conversely, for the visual-alone condition, increases in PCI, which were only observed in the lowest delta and theta frequency bands (respectively, 3–4 and 5–8 Hz; Fig. 1C), did not co-occur with significant changes in power (Fig. 2). In other words, the presentation of a visual-alone stimulus led to a reorganization of ongoing oscillatory activity in auditory cortex, with the phase of low frequencies reset without any detectable change in power. This profile (i.e., increases in phase concentration without increases in power) is indicative of a modulatory effect through phase reset (Shah et al., 2004; Lakatos et al., 2007).

Figure 2.

Power modulations in auditory cortex. Power modulation following audiovisual (left), auditory-alone (middle), and visual-alone (right) stimuli. Solid colors with black contours represent statistically significant values corrected for multiple comparisons.

We then wanted to assess whether cross-sensory phase reset in auditory cortex (demonstrated in response to a visual-alone stimulus) modulated the response to a simultaneously presented auditory stimulus. To investigate this question, PCI measured for the audiovisual condition was compared with what would be expected from the sum of the unisensory conditions (auditory-alone plus visual-alone). Consistent across all participants, the application of the additive model [AV vs (A + V); Stein and Meredith, 1993; Stein, 1998; Stanford et al., 2005; Avillac et al., 2007; Kayser et al., 2008; Mercier et al., 2013] revealed a multisensory supra-additive effect in the lower frequency bands (theta and delta; Fig. 1D). This result advocates for multisensory interactions because the phases of low-frequency oscillations in the multisensory conditions were more strongly reset than what would be expected from the sum of the unisensory conditions. Further supra-additive multisensory effects, consistent across participants, were found in mid-range frequencies (alpha and beta bands). However, these multisensory effects were not linked to PCI modulations observed for the visual-alone condition in the same frequency range.

Response-related activity in motor cortex

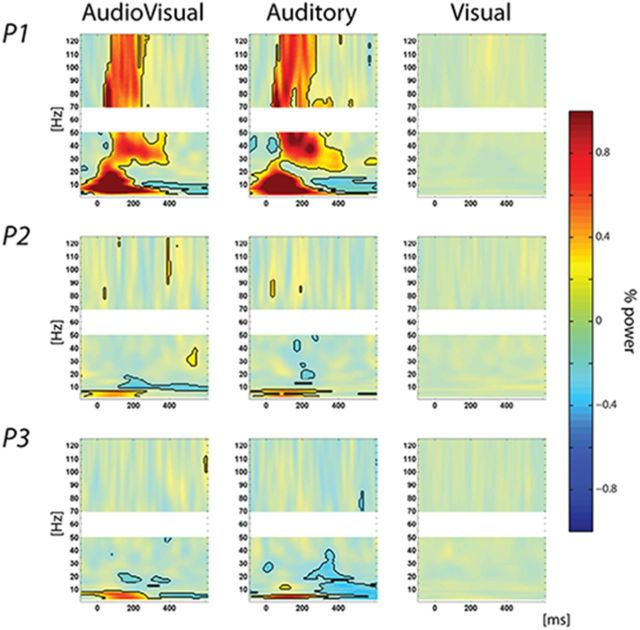

Next we sought to investigate the relationship between activity in auditory and motor cortices. To do so, we first looked for the electrode most representative of motor-related activity. For each patient, analyses performed in the time domain and in the time-frequency domain localized the highest amplitude response-related activity to the same electrode, which was the case regardless of condition. In the time domain, the ERP time locked to the button press was characterized by a slow ramping, starting a couple of hundred milliseconds before the button press and peaking at approximately the time of motor response, then reversing in polarity. This typical Bereitschaftspotential profile (or readiness potential; Satow et al., 2003) was confirmed at the single-trial level as shown in Figure 3B, where signal amplitude is depicted for each trial, sorted as a function of response time. For all conditions, the plots show fluctuations of the signal time locked to the button press, but not time locked to stimulus onset. Additionally, time-frequency analysis revealed the typical spectral characteristics of a motor response (Crone et al., 1998a, b; Aoki et al., 1999; Ball et al., 2008; Miller et al., 2009, 2012; Ruescher et al., 2013), consisting of a strong synchronization of low frequencies (<10 Hz) and high gamma band (>70 Hz) concurrent with a desynchronization in the beta band (Fig. 3B). For all participants, these temporal motifs of motor-related activity were observed in an electrode located along the most dorsal portion of the precentral sulcus (Fig. 1A; normalized MNI coordinates: x = 129, 135, 143; y = 98, 110, 110; z = 136, 133, 129, respectively, for Participants 1, 2, and 3). The present anatomical-functional observations are in full agreement with reports from other human intracranial studies (Crone et al., 1998a, b; Aoki et al., 1999; Ball et al., 2008; Miller et al., 2009, 2012; Ruescher et al., 2013).

Figure 3.

Behavior and motor-related activations. A, The RT distribution is plotted for each participant. Bars represent the number of trials with RTs falling within each 20 ms bin. Red, green, and blue correspond, respectively, to audiovisual, auditory-alone, and visual-alone conditions. B, Motor-related activity in response to AV (left), A (middle), and V (right) stimuli. For each subject the first row depicts single-trial representations of activity, in microvolts, recorded over motor cortex, from −100 to 700 ms. Single trials were sorted by RT (represented by the black line). The second row represents induced power modulation (relative to the entire period) time locked to the response, from −350 to 350 ms, for frequencies ranging from 3 to 50 and 70 to 125 Hz. Corrected significant values are depicted with solid colors and black contours.

Phase locking between auditory and motor cortices

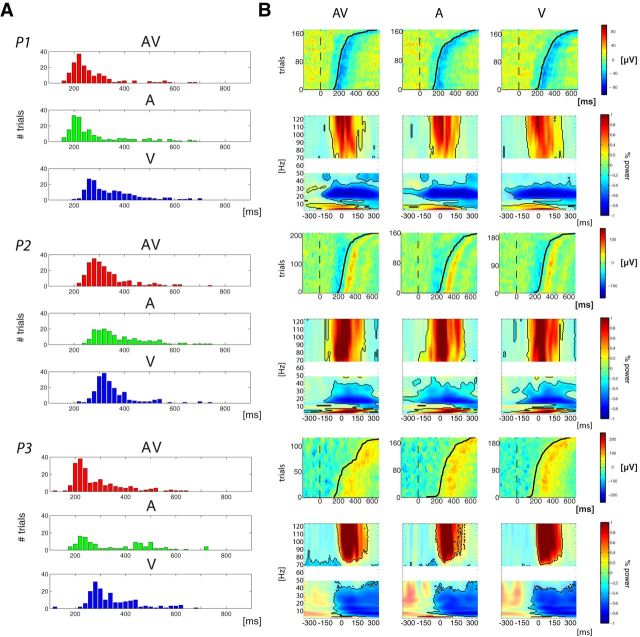

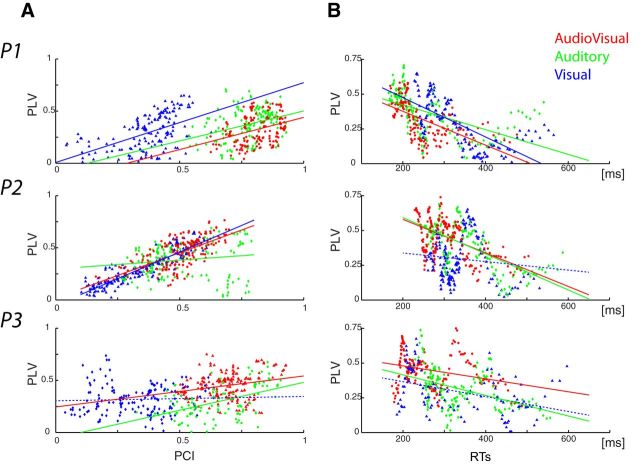

After characterizing sensory and motor-related activity over auditory and motor cortices, we aimed to investigate their possible functional link. We estimated functional connectivity across trials using the PLV, which indexes phase consistency between two signals (Tass et al., 1998; Lachaux et al., 1999). For audiovisual and auditory-alone conditions, analysis of PLV, time locked to the stimulus, revealed a strong and significant increase of phase synchronization between auditory and motor cortices in the low theta and delta bands, occurring between presentation of the stimulus and the button-press response (Fig. 4A). For the visual-alone condition, there was a weaker but still significant PLV increase in two participants in the same theta and delta bands. These results demonstrate a synchronization of activity between auditory and motor cortices, which was stronger when an auditory input was presented. The same analysis conducted on the data, time locked to the response instead, showed an increase in PLV in the low-frequency band (Fig. 5A) that was weaker than that time locked to the stimulus, and only reached significance in the case of one participant. Thus coupling between auditory and motor cortices is more consistently locked to stimulus presentation than to the motor response.
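For readers unfamiliar with the measure, the across-trial PLV can be sketched as the length of the mean unit vector of the per-trial phase difference between two channels, following the general definition in Lachaux et al. (1999). The block below is a minimal illustration under assumptions, not the analysis pipeline used here: the function name `phase_locking_value`, the array layout (trials by time points at a single frequency), and the toy data are all hypothetical, and the step that extracts instantaneous phase from the recordings is omitted.

```python
import numpy as np


def phase_locking_value(phase_a, phase_b):
    """Across-trial PLV between two channels at one frequency.

    phase_a, phase_b: arrays of shape (n_trials, n_times) holding
    instantaneous phase in radians (hypothetical layout).
    Returns an array of shape (n_times,) with values between 0 and 1.
    """
    phase_a = np.asarray(phase_a, dtype=float)
    phase_b = np.asarray(phase_b, dtype=float)
    # Unit vectors whose angle is the per-trial phase difference.
    unit_vectors = np.exp(1j * (phase_a - phase_b))
    # PLV is the length of the mean vector across trials.
    return np.abs(unit_vectors.mean(axis=0))


# Toy data (assumption): channel B either follows channel A with a fixed
# lag plus small jitter, or has a phase unrelated to channel A.
rng = np.random.default_rng(0)
n_trials, n_times = 200, 50
phase_a = rng.uniform(-np.pi, np.pi, size=(n_trials, n_times))
locked_b = phase_a - 0.5 + rng.normal(0.0, 0.1, size=(n_trials, n_times))
random_b = rng.uniform(-np.pi, np.pi, size=(n_trials, n_times))

plv_locked = phase_locking_value(phase_a, locked_b)
plv_random = phase_locking_value(phase_a, random_b)
```

In this toy case the PLV is high whenever the phase difference is consistent across trials, whatever the lag itself, and low when the two phases are unrelated.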

Figure 4.

Phase-locking activity between auditory and motor cortices time locked to stimulus onset. A, PLV plot for AV (left), A (middle), and V (right) conditions. B, Multisensory effects on PLV assessed using the additive model: AV versus (A + V). Color bars indicate PLV value (A) and difference in PLV value between AV and (A + V) (B). A, B, Solid contoured colors represent statistically significant values corrected for multiple comparisons.

Figure 5.

Phase-locking activity between auditory and motor cortices time locked to the response. A, PLV plot for AV (left), A (middle), and V (right) conditions. B, Multisensory effects on PLV assessed using the additive model: AV versus (A + V). Color bars indicate PLV value (A) and difference in PLV value between AV and (A + V) (B). A, B, Solid contoured colors represent statistically significant values after correction for multiple comparisons.

Subsequently we investigated whether there was a multisensory effect on synchrony, as defined by the additive model. We assessed whether the increase in PLV in the multisensory condition was comparable to the sum of the PLV increases in the unisensory conditions. The analysis of the data time locked to the stimulus revealed nonlinear multisensory interactions by showing a supra-additive effect for all participants (Fig. 4B); that is, in the multisensory condition, phase synchronization between auditory and motor cortices was stronger than expected from the sum of the unisensory conditions. Applying the same analysis to the data time locked to the motor response did not show systematically significant multisensory interactions (Fig. 5B). This second analysis lends further support to the notion that the supra-additive effects found in coupling when the data are analyzed time locked to the stimulus reflect differences in coupling time, with faster synchronization in the audiovisual condition.
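The additive-model contrast described above, AV versus (A + V), can be sketched as a pointwise difference between the multisensory PLV and the sum of the unisensory PLVs. The block below is only an illustration under assumptions: the function name `additive_model_contrast` and the numerical values are hypothetical, and the baseline handling, statistical testing, and correction for multiple comparisons applied in the study are not reproduced.

```python
import numpy as np


def additive_model_contrast(plv_av, plv_a, plv_v):
    """Return AV - (A + V), computed point by point.

    Positive values indicate a supra-additive effect and negative values
    a sub-additive one. Inputs are arrays of identical shape.
    """
    plv_av = np.asarray(plv_av, dtype=float)
    plv_a = np.asarray(plv_a, dtype=float)
    plv_v = np.asarray(plv_v, dtype=float)
    return plv_av - (plv_a + plv_v)


# Toy PLV values at four time points (made-up numbers, for illustration).
plv_av = np.array([0.10, 0.60, 0.70, 0.15])
plv_a = np.array([0.10, 0.30, 0.40, 0.15])
plv_v = np.array([0.05, 0.10, 0.10, 0.05])

contrast = additive_model_contrast(plv_av, plv_a, plv_v)
supra_additive = contrast > 0  # Boolean mask of supra-additive points
```

A positive contrast at a given latency can arise either from stronger synchronization or from synchronization that merely occurs earlier in the multisensory condition, which is why the comparison with the response-locked analysis is informative.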

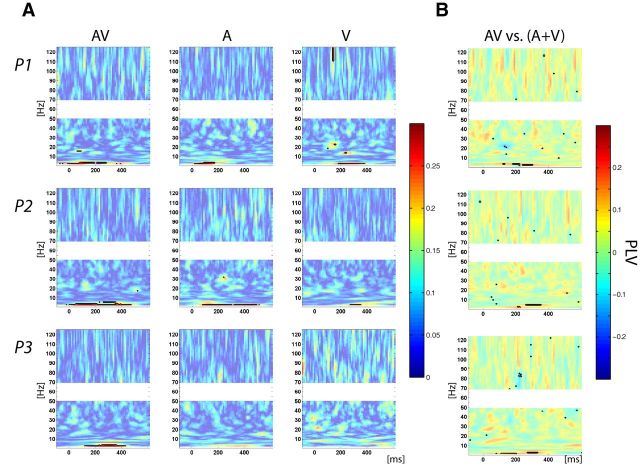

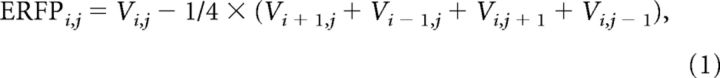

Correlation between phase alignment indices and response time

First, to link phase synchronization between auditory and motor cortices with modulation of ongoing oscillations in auditory cortex, we correlated those two indices, computing sliding PCIs and PLVs on 10% of the trials binned by response time. The results in Figure 6A reveal a positive correlation between PCI and PLV along trial bins (P1: all rs > 0.33, all ps < 0.001; P2: AV and V: all rs > 0.67, p < 0.001; A: r = 0.15, p < 0.03; P3: AV and A: r > 0.23, p < 0.001; V: r = 0.04, p = 0.29); that is, the stronger the phase alignment was in auditory cortex, the higher the phase locking was between auditory and motor cortices. To extend our investigations we also assessed the behavioral relevance of inter-regional phase synchronization. This analysis followed the same approach: PLVs, time locked to the stimulus, were computed for trials that were sorted and then binned by response time. The results, depicted in Figure 6B, show a negative correlation between PLV and response time, which was further confirmed by running a Pearson correlation (Participant 1: all rs < −0.5, all ps < 0.001; Participant 2: AV and A: r < −0.5, p < 0.001, V: r < −0.1, p < 0.1; Participant 3: all rs < −0.3, all ps < 0.001). In summary, the greater the phase alignment in auditory cortex, the higher the synchrony between auditory and motor cortices and the faster the response times. These results thus link synchronization across a sensorimotor network with the speeding of response times.
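The sliding-bin approach described above can be sketched as follows: trials are sorted by response time, a window containing 10% of the trials is slid across the sorted trials, a PLV and a mean RT are computed in each window, and the two series are correlated. This is a minimal sketch under stated assumptions rather than the procedure used here: the function name `sliding_bin_plv_vs_rt`, the one-trial step size, the reduction of each trial to a single phase difference, and the toy data are all hypothetical.

```python
import numpy as np


def sliding_bin_plv_vs_rt(phase_diff, rts, bin_frac=0.10):
    """Correlate windowed PLV with windowed mean RT.

    phase_diff: (n_trials,) phase difference between two channels, one
        value per trial in radians (assumed already extracted).
    rts: (n_trials,) response times.
    bin_frac: fraction of trials per sliding window (10% in the text).
    Returns (plv_per_window, mean_rt_per_window, pearson_r).
    """
    phase_diff = np.asarray(phase_diff, dtype=float)
    rts = np.asarray(rts, dtype=float)
    order = np.argsort(rts)  # sort trials from fastest to slowest
    phase_diff, rts = phase_diff[order], rts[order]

    width = max(2, int(round(bin_frac * rts.size)))
    n_windows = rts.size - width + 1
    plv = np.empty(n_windows)
    mean_rt = np.empty(n_windows)
    for start in range(n_windows):  # slide one trial at a time
        window = slice(start, start + width)
        plv[start] = np.abs(np.exp(1j * phase_diff[window]).mean())
        mean_rt[start] = rts[window].mean()

    pearson_r = np.corrcoef(plv, mean_rt)[0, 1]
    return plv, mean_rt, pearson_r


# Toy data (assumption): phase jitter grows with RT, so slower trials
# are less consistently phase locked.
rng = np.random.default_rng(1)
rts = rng.uniform(200.0, 500.0, size=300)  # milliseconds
jitter_sd = 0.1 + 1.5 * (rts - 200.0) / 300.0
phase_diff = rng.normal(0.0, jitter_sd)

plv, mean_rt, r = sliding_bin_plv_vs_rt(phase_diff, rts)
```

Because neighboring windows share most of their trials, the points are not independent, and any significance test built on this sketch would need to take that overlap into account.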

Figure 6.

Relationship between phase alignment in auditory cortex, phase locking between auditory and motor cortices, and response times (each row represents a participant). A, Correlation between PCI and PLV. B, Correlation between PLV and RTs. Each point on the x-axis of the scatter plots represents the estimated phase alignment index and/or corresponding RTs for 10% of the trials, binned per RT (audiovisual in red, auditory-alone in green, visual-alone in blue). Fitting lines are plotted plain if statistics on the slopes were significant and/or at trend, dashed otherwise.

Discussion

Multisensory stimulation, even when fully redundant, facilitates behavior in simple reaction-time tasks (Schröger and Widmann, 1998; Molholm et al., 2002, 2006; Teder-Salejärvi et al., 2002; Murray et al., 2005; Talsma and Woldorff, 2005; Senkowski et al., 2006; Romei et al., 2007; Moran et al., 2008; Gingras et al., 2009). In the present study, we investigated the neural basis of this speeding. Studies demonstrate that phase reset of ongoing oscillations, via cross-sensory inputs (e.g., auditory inputs into visual cortex), is central to multisensory interactions in sensory cortex (Lakatos et al., 2009; Fiebelkorn et al., 2011; Romei et al., 2012; Mercier et al., 2013). Here, we asked whether such local phase alignment might also lead to inter-regional phase alignment across a sensorimotor network, and we hypothesized that such inter-regional synchronizations might give rise to a well established behavioral consequence of multisensory stimulation: the so-called redundant target effect. The present data reveal that multisensory stimulation indeed leads to greater local and faster inter-regional phase alignment, and that greater phase alignment across a sensorimotor network is linked to faster response times.

Visually driven modulation of ongoing activity in auditory cortex and multisensory interactions

Several noninvasive EEG and MEG studies have reported early latency multisensory interactions that localized best to auditory cortex (Foxe et al., 2000; Murray et al., 2005; Mishra et al., 2007; Raij et al., 2010; Thorne et al., 2011), consistent with findings from human neuroimaging (Foxe et al., 2002) and electrophysiological recordings in nonhuman primates (Schroeder and Foxe, 2002). Here, subdural recordings confirmed the presence of both early multisensory interactions (<200 ms) and visually driven activity in human auditory cortex. Notably, in stark contrast to the classical responses evoked by auditory-alone and audiovisual stimuli, the response in auditory cortex to visual-alone stimulation was dominated by low-amplitude, slow oscillatory activity (Fig. 1B). Further analysis showed that this visually driven modulation of ongoing activity over auditory cortex was attributable to the phase reset of oscillations in the delta and theta bands (Fig. 1C), consistent with earlier work in animals that showed that cross-sensory inputs can modulate neuronal firing by resetting the phase of ongoing oscillatory activity, without increasing signal power (Lakatos et al., 2007; Kayser et al., 2008). It also supports findings from recent noninvasive EEG work that described visually driven modulatory effects within low-frequency oscillations localized (via dipole source-modeling) to auditory cortex (Thorne et al., 2011). The present data go further yet to reveal the presence of nonlinear multisensory effects in the same low frequencies (i.e., delta and theta).

Further, supra-additive multisensory effects, consistent across participants, were also found in the alpha and beta bands, with PCI for the multisensory condition stronger than for the sum of unisensory conditions (Fig. 1D). Moreover, absence of significant PCI increases in the visual-alone condition in these bands raises questions as to the origins of these multisensory effects. We propose two possible explanations. First, it may be that PCI modulations in the visual-alone condition are simply too weak to survive the stringent correction for multiple comparisons applied here. Alternatively, this supra-additive effect may rely on a different mechanism than the one observed in the low frequencies (i.e., visually driven cross-modal phase reset), one that perhaps involves another anatomofunctional pathway. We reported a very similar observation in a previous study where auditory-driven modulation of activity in visual cortex was similarly linked to multisensory interactions (Mercier et al., 2013), even though these multisensory effects were not always associated with detectable cross-modal inputs in the auditory-alone condition. The source of these alpha and beta effects will bear further investigation in future studies.

Phase alignment between auditory and motor cortices and the influence on MSI

Recurrent interactions among multiple cortical and subcortical regions are thought to underlie even the simplest of cognitive tasks. An abiding question is just how distant neuronal populations involved in a given task communicate with each other. Coordination of fluctuations in neuronal oscillatory activity has been proposed to mediate such interactions by providing optimal temporal windows of communication between these populations (Engel et al., 2001; Varela et al., 2001; Fries, 2005). Synchronized activity between distant brain regions has now been demonstrated during a wide range of processes including perception (Sehatpour et al., 2008; Hipp et al., 2011), attention (Buschman and Miller, 2007; Womelsdorf et al., 2007; Gregoriou et al., 2009; Gray et al., 2015), memory (Palva et al., 2010; Liebe et al., 2012; Burke et al., 2013; Jutras et al., 2013), and sensorimotor coordination (Bressler et al., 1993). Bressler et al. (1993), for example, observed synchronous activity between striate and motor cortex while monkeys performed a go/no-go task, but only when the monkeys had to respond to the visual target (i.e., during go trials).

Here, we find that oscillatory phase alignment between auditory and motor cortices increased during the time period between stimulus presentation and the button-press response. This suggests active communication between two major nodes of the sensorimotor network, recruited to perform the task at hand. Moreover, we observed supra-additive multisensory effects on phase synchronization between auditory and motor cortices that were due to faster synchronization in the multisensory condition. Based on a significant correlation between phase reset in auditory cortex and subsequent phase alignment between auditory and motor cortices, we propose that stronger multisensory-driven local phase alignment leads to faster inter-regional phase alignment between auditory and motor cortices.

Oscillatory activity, MSI, and behavior

Finally, we examined the relationship between auditory and sensorimotor phase alignment and behavior. Correlation analysis revealed that greater phase alignment in the delta band (i.e., the frequency band that showed maximal sensorimotor phase alignment) was significantly related to faster responses. This suggests that speeding of responses commonly observed in multisensory redundant target tasks is due, at least partially, to faster multisensory-related increases in phase alignment between sensory and motor cortex.

Using the same experimental design and scalp-recorded EEG, our group previously identified a relationship between beta power over frontal, left central, and right occipital scalp regions and response times (Senkowski et al., 2006). Multisensory effects were found in the same frequency band and scalp regions, suggesting a link between multisensory interactions and multisensory response time facilitation. While in the present intracranial study we did not observe consistent effects in beta power, it must be noted that the highly localized LFPs were recorded over circumscribed brain regions. The present results, therefore, do not capture all task-related activity, which has been observed in neuroimaging studies to involve a large and distributed network (see Martuzzi et al., 2007, for an fMRI study based on the same experimental design; for reviews, see Senkowski et al., 2007; Koelewijn et al., 2010).

Brain oscillations are hierarchically organized, with the phase of low-frequency oscillations modulating the amplitude of higher frequencies (Lakatos et al., 2005; Maris et al., 2011; van der Meij et al., 2012). Further, phase-amplitude coupling has been reported in human intracranial recordings for spatially distributed electrodes (Maris et al., 2011; van der Meij et al., 2012). One could hypothesize that phase alignment at lower frequencies, as observed here, might be linked to multisensory effects in the beta band at other network nodes, such as the superior parietal lobule, which is known to be involved in MSI (Molholm et al., 2006; Moran et al., 2008).

Alternatively, analysis of PCI revealed supra-additive effects in the alpha and beta bands that were not associated with statistically significant cross-sensory phase reset in the visual-alone condition, unlike what was observed in the low frequencies. These two observations question the role of the different frequency bands in neuronal interactions. Several studies that investigated inter-regional synchronization in different cognitive contexts revealed a parallel between functional hierarchy and distinct frequency bands (Buschman and Miller, 2007; Buschman et al., 2012; Bastos et al., 2015). For example, Bastos et al. (2015) investigated this question using dense subdural electrode coverage in nonhuman primates in conjunction with anatomical tracing. They demonstrated that distinct frequency bands support feedforward and feedback influences, with theta bands responsible for the former, whereas beta bands were implicated in the latter. Here, multisensory effects found in the delta-theta bands would therefore be supposed to reflect feedforward processing, driving cross-sensory phase reset and phase synchrony between auditory and motor cortices, whereas multisensory effects in the alpha-beta bands would be linked to feedback from higher order multisensory processing zones.

Importantly, a recent EEG study conducted by Thorne et al. (2011) reported that cross-sensory phase reset of low frequencies in auditory cortex partly accounted for response time variability. The present results extend this finding by linking response times to phase alignment between auditory and motor cortices and by linking multisensory interactions in auditory cortex (i.e., cross-sensory phase reset) to inter-regional sensorimotor communication. Phase reset in sensory cortex thus appears to promote processing of incoming input not only by increasing the efficiency of the sensory system (as demonstrated by the inverse effectiveness effect; Lakatos et al., 2007), but also by enhancing neuronal communication with other task-related brain regions.

Study limitations

As with all studies using ECoG in humans, response profiles over putatively similar cortical regions show a high degree of interindividual variability (Edwards et al., 2005, 2009; Molholm et al., 2006, 2014; Bidet-Caulet et al., 2007; Besle et al., 2008; Sehatpour et al., 2008; Sinai et al., 2009; Vidal et al., 2010; Butler et al., 2011; Gomez-Ramirez et al., 2011; Bahramisharif et al., 2013; Mercier et al., 2013), very much the same as is observed from noninvasive scalp recordings (Foxe and Simpson, 2002). Much of this variability is due to heterogeneity of underlying cortical geometry across individuals (Stensaas et al., 1974; Rademacher et al., 1993), such that electrodes will almost certainly be at varying orientations regarding generators of primary interest (Kelly et al., 2008). In turn, there is large interindividual variability in the timing of neural transmission and extent of the cortical network that is activated, even for very simple tasks.

It would also be preferable to engage a larger participant cohort. Sample sizes in ECoG studies are often limited by electrode coverage, which is solely dictated by medical needs. Such surgeries are relatively rare, patients often present with significant cognitive compromise that precludes participation, and others will be excluded because of contamination of recordings by epileptic activity. Nonetheless, clear commonalities can be observed and the fact that similar phenomena can be established as statistically robust at the individual participant level across three unique individuals provides a large degree of confidence that we are observing the same underlying mechanisms.

As mentioned, a limitation of ECoG studies concerns spatial coverage, since electrode implants cannot reasonably cover all brain areas involved in a given task. Therefore, exhaustively mapping a given functional network requires large cohorts. A recent study estimated that 50–100 patients (with depth electrodes) would be required to reach 90% coverage depending on the atlas parcellation used (Arnulfo et al., 2015). Also, it is important to clarify that we do not assume that the redundant target effect can be exclusively ascribed to phase-synchronization mechanisms in sensory cortices. Neuroimaging has implicated an extensive network of regions involved in audiovisual integrative processing, even for the very basic stimuli and simple task used herein (Martuzzi et al., 2007). Our aim here is simply to demonstrate that brain oscillatory activity is one mechanism participating in production of the RTE.

Summary and conclusions

Recordings over auditory and motor cortices during a reaction time task revealed (1) visually driven cross-sensory delta band phase reset in auditory cortex; (2) supra-additive multisensory interaction effects [AV > (A + V)] on phase alignment in the delta and theta bands; (3) phase synchronization between auditory and motor cortex in the delta band for all stimulation conditions, with faster sensorimotor inter-regional phase alignment in the multisensory condition; and (4) faster responses with stronger inter-regional phase synchronization. These data thus suggest that multisensory stimulation leads to stronger (i.e., more consistent) local phase alignment in sensory cortex, which in turn leads to faster inter-regional phase synchrony between sensory and motor cortex. This increase in local phase alignment allows for more rapid transfer of information across a sensorimotor network and, consequently, faster responses.

Footnotes

This work was primarily supported by a grant from the U.S. National Science Foundation to J. J. F. (BCS1228595). Part of the data analysis was performed using the Fieldtrip toolbox for EEG/MEG-analysis, developed at the Donders Institute for Brain, Cognition and Behavior (Oostenveld et al., 2011). We thank the three patients who donated their time and energy with enthusiasm at a challenging time for them.

The authors declare no competing financial interests.

References

- Aoki F, Fetz EE, Shupe L, Lettich E, Ojemann GA. Increased gamma-range activity in human sensorimotor cortex during performance of visuomotor tasks. Clin Neurophysiol. 1999;110:524–537. doi: 10.1016/S1388-2457(98)00064-9.

- Arnulfo G, Hirvonen J, Nobili L, Palva S, Palva JM. Phase and amplitude correlations in resting-state activity in human stereotactical EEG recordings. Neuroimage. 2015;112:114–127. doi: 10.1016/j.neuroimage.2015.02.031.

- Avillac M, Ben Hamed S, Duhamel JR. Multisensory integration in the ventral intraparietal area of the macaque monkey. J Neurosci. 2007;27:1922–1932. doi: 10.1523/JNEUROSCI.2646-06.2007.

- Bahramisharif A, van Gerven MA, Aarnoutse EJ, Mercier MR, Schwartz TH, Foxe JJ, Ramsey NF, Jensen O. Propagating neocortical gamma bursts are coordinated by traveling alpha waves. J Neurosci. 2013;33:18849–18854. doi: 10.1523/JNEUROSCI.2455-13.2013.

- Ball T, Demandt E, Mutschler I, Neitzel E, Mehring C, Vogt K, Aertsen A, Schulze-Bonhage A. Movement related activity in the high gamma range of the human EEG. Neuroimage. 2008;41:302–310. doi: 10.1016/j.neuroimage.2008.02.032.

- Bastos AM, Vezoli J, Bosman CA, Schoffelen JM, Oostenveld R, Dowdall JR, De Weerd P, Kennedy H, Fries P. Visual areas exert feedforward and feedback influences through distinct frequency channels. Neuron. 2015;85:390–401. doi: 10.1016/j.neuron.2014.12.018.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B. 1995;57:289–300.

- Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat. 2001;29:1165–1188. doi: 10.1214/aos/1013699998.

- Berens P. CircStat: a MATLAB toolbox for circular statistics. J Statistical Software. 2009:31.

- Besle J, Fischer C, Bidet-Caulet A, Lecaignard F, Bertrand O, Giard MH. Visual activation and audiovisual interactions in the auditory cortex during speech perception: intracranial recordings in humans. J Neurosci. 2008;28:14301–14310. doi: 10.1523/JNEUROSCI.2875-08.2008.

- Bidet-Caulet A, Fischer C, Besle J, Aguera PE, Giard MH, Bertrand O. Effects of selective attention on the electrophysiological representation of concurrent sounds in the human auditory cortex. J Neurosci. 2007;27:9252–9261. doi: 10.1523/JNEUROSCI.1402-07.2007.

- Brandwein AB, Foxe JJ, Russo NN, Altschuler TS, Gomes H, Molholm S. The development of audiovisual multisensory integration across childhood and early adolescence: a high-density electrical mapping study. Cereb Cortex. 2011;21:1042–1055. doi: 10.1093/cercor/bhq170.

- Brandwein AB, Foxe JJ, Butler JS, Russo NN, Altschuler TS, Gomes H, Molholm S. The development of multisensory integration in high-functioning autism: high-density electrical mapping and psychophysical measures reveal impairments in the processing of audiovisual inputs. Cereb Cortex. 2013;23:1329–1341. doi: 10.1093/cercor/bhs109.

- Bressler SL, Coppola R, Nakamura R. Episodic multiregional cortical coherence at multiple frequencies during visual task performance. Nature. 1993;366:153–156. doi: 10.1038/366153a0.

- Brugge JF, Volkov IO, Garell PC, Reale RA, Howard MA 3rd. Functional connections between auditory cortex on Heschl's gyrus and on the lateral superior temporal gyrus in humans. J Neurophysiol. 2003;90:3750–3763. doi: 10.1152/jn.00500.2003.

- Burke JF, Zaghloul KA, Jacobs J, Williams RB, Sperling MR, Sharan AD, Kahana MJ. Synchronous and asynchronous theta and gamma activity during episodic memory formation. J Neurosci. 2013;33:292–304. doi: 10.1523/JNEUROSCI.2057-12.2013.

- Busch NA, Dubois J, VanRullen R. The phase of ongoing EEG oscillations predicts visual perception. J Neurosci. 2009;29:7869–7876. doi: 10.1523/JNEUROSCI.0113-09.2009.

- Buschman TJ, Miller EK. Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science. 2007;315:1860–1862. doi: 10.1126/science.1138071.

- Buschman TJ, Denovellis EL, Diogo C, Bullock D, Miller EK. Synchronous oscillatory neural ensembles for rules in the prefrontal cortex. Neuron. 2012;76:838–846. doi: 10.1016/j.neuron.2012.09.029.

- Butler JS, Molholm S, Fiebelkorn IC, Mercier MR, Schwartz TH, Foxe JJ. Common or redundant neural circuits for duration processing across audition and touch. J Neurosci. 2011;31:3400–3406. doi: 10.1523/JNEUROSCI.3296-10.2011.

- Crone NE, Miglioretti DL, Gordon B, Lesser RP. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. II. Event-related synchronization in the gamma band. Brain. 1998a;121:2301–2315. doi: 10.1093/brain/121.12.2301.

- Crone NE, Miglioretti DL, Gordon B, Sieracki JM, Wilson MT, Uematsu S, Lesser RP. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. I. Alpha and beta event-related desynchronization. Brain. 1998b;121:2271–2299. doi: 10.1093/brain/121.12.2271.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Drewes J, VanRullen R. This is the rhythm of your eyes: the phase of ongoing electroencephalogram oscillations modulates saccadic reaction time. J Neurosci. 2011;31:4698–4708. doi: 10.1523/JNEUROSCI.4795-10.2011.

- Edwards E, Soltani M, Deouell LY, Berger MS, Knight RT. High gamma activity in response to deviant auditory stimuli recorded directly from human cortex. J Neurophysiol. 2005;94:4269–4280. doi: 10.1152/jn.00324.2005.

- Edwards E, Soltani M, Kim W, Dalal SS, Nagarajan SS, Berger MS, Knight RT. Comparison of time-frequency responses and the event-related potential to auditory speech stimuli in human cortex. J Neurophysiol. 2009;102:377–386. doi: 10.1152/jn.90954.2008.

- Engel AK, Fries P, Singer W. Dynamic predictions: oscillations and synchrony in top-down processing. Nat Rev Neurosci. 2001;2:704–716. doi: 10.1038/35094565.

- Fiebelkorn IC, Foxe JJ, Butler JS, Mercier MR, Snyder AC, Molholm S. Ready, set, reset: stimulus-locked periodicity in behavioral performance demonstrates the consequences of cross-sensory phase reset. J Neurosci. 2011;31:9971–9981. doi: 10.1523/JNEUROSCI.1338-11.2011.

- Fiebelkorn IC, Saalmann YB, Kastner S. Rhythmic sampling within and between objects despite sustained attention at a cued location. Curr Biol. 2013a;23:2553–2558. doi: 10.1016/j.cub.2013.10.063.

- Fiebelkorn IC, Snyder AC, Mercier MR, Butler JS, Molholm S, Foxe JJ. Cortical cross-frequency coupling predicts perceptual outcomes. Neuroimage. 2013b;69:126–137. doi: 10.1016/j.neuroimage.2012.11.021.

- Foxe JJ, Simpson GV. Flow of activation from V1 to frontal cortex in humans. A framework for defining “early” visual processing. Exp Brain Res. 2002;142:139–150. doi: 10.1007/s00221-001-0906-7.

- Foxe JJ, Morocz IA, Murray MM, Higgins BA, Javitt DC, Schroeder CE. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res Cogn Brain Res. 2000;10:77–83. doi: 10.1016/S0926-6410(00)00024-0.

- Foxe JJ, Wylie GR, Martinez A, Schroeder CE, Javitt DC, Guilfoyle D, Ritter W, Murray MM. Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J Neurophysiol. 2002;88:540–543. doi: 10.1152/jn.2002.88.1.540.

- Fries P. A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn Sci. 2005;9:474–480. doi: 10.1016/j.tics.2005.08.011. [DOI] [PubMed] [Google Scholar]

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037. [DOI] [PubMed] [Google Scholar]

- Gingras G, Rowland BA, Stein BE. The differing impact of multisensory and unisensory integration on behavior. J Neurosci. 2009;29:4897–4902. doi: 10.1523/JNEUROSCI.4120-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gomez-Ramirez M, Kelly SP, Molholm S, Sehatpour P, Schwartz TH, Foxe JJ. Oscillatory sensory selection mechanisms during intersensory attention to rhythmic auditory and visual inputs: a human electrocorticographic investigation. J Neurosci. 2011;31:18556–18567. doi: 10.1523/JNEUROSCI.2164-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gray MJ, Frey HP, Wilson TJ, Foxe JJ. Oscillatory recruitment of bilateral visual cortex during spatial attention to competing rhythmic inputs. J Neurosci. 2015;35:5489–5503. doi: 10.1523/JNEUROSCI.2891-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gregoriou GG, Gotts SJ, Zhou H, Desimone R. High-frequency, long-range coupling between prefrontal and visual cortex during attention. Science. 2009;324:1207–1210. doi: 10.1126/science.1171402. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Groppe DM, Urbach TP, Kutas M. Mass univariate analysis of event-related brain potentials/fields I: a critical tutorial review. Psychophysiology. 2011;48:1711–1725. doi: 10.1111/j.1469-8986.2011.01273.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guéguin M, Le Bouquin-Jeannès R, Faucon G, Chauvel P, Liégeois-Chauvel C. Evidence of functional connectivity between auditory cortical areas revealed by amplitude modulation sound processing. Cereb Cortex. 2007;17:304–313. doi: 10.1093/cercor/bhj148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henry MJ, Herrmann B. A precluding role of low-frequency oscillations for auditory perception in a continuous processing mode. J Neurosci. 2012;32:17525–17527. doi: 10.1523/JNEUROSCI.4456-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hipp JF, Engel AK, Siegel M. Oscillatory synchronization in large-scale cortical networks predicts perception. Neuron. 2011;69:387–396. doi: 10.1016/j.neuron.2010.12.027. [DOI] [PubMed] [Google Scholar]

- Howard MA, Volkov IO, Mirsky R, Garell PC, Noh MD, Granner M, Damasio H, Steinschneider M, Reale RA, Hind JE, Brugge JF. Auditory cortex on the human posterior superior temporal gyrus. J Comp Neurol. 2000;416:79–92. doi: 10.1002/(SICI)1096-9861(20000103)416:1<79::AID-CNE6>3.0.CO;2-2.

- Jutras MJ, Fries P, Buffalo EA. Oscillatory activity in the monkey hippocampus during visual exploration and memory formation. Proc Natl Acad Sci U S A. 2013;110:13144–13149. doi: 10.1073/pnas.1302351110.

- Kayser C, Petkov CI, Logothetis NK. Visual modulation of neurons in auditory cortex. Cereb Cortex. 2008;18:1560–1574. doi: 10.1093/cercor/bhm187.

- Kelly SP, Gomez-Ramirez M, Foxe JJ. Spatial attention modulates initial afferent activity in human primary visual cortex. Cereb Cortex. 2008;18:2629–2636. doi: 10.1093/cercor/bhn022.

- Koelewijn T, Bronkhorst A, Theeuwes J. Attention and the multiple stages of multisensory integration: a review of audiovisual studies. Acta Psychol. 2010;134:372–384. doi: 10.1016/j.actpsy.2010.03.010.

- Lacadie CM, Fulbright RK, Rajeevan N, Constable RT, Papademetris X. More accurate Talairach coordinates for neuroimaging using non-linear registration. Neuroimage. 2008;42:717–725. doi: 10.1016/j.neuroimage.2008.04.240.

- Lachaux JP, Rodriguez E, Martinerie J, Varela FJ. Measuring phase synchrony in brain signals. Hum Brain Mapp. 1999;8:194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C.

- Lakatos P, Shah AS, Knuth KH, Ulbert I, Karmos G, Schroeder CE. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005;94:1904–1911. doi: 10.1152/jn.00263.2005.

- Lakatos P, Chen CM, O'Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320:110–113. doi: 10.1126/science.1154735.

- Lakatos P, O'Connell MN, Barczak A, Mills A, Javitt DC, Schroeder CE. The leading sense: supramodal control of neurophysiological context by attention. Neuron. 2009;64:419–430. doi: 10.1016/j.neuron.2009.10.014.

- Liebe S, Hoerzer GM, Logothetis NK, Rainer G. Theta coupling between V4 and prefrontal cortex predicts visual short-term memory performance. Nat Neurosci. 2012;15:456–462. S1–S2. doi: 10.1038/nn.3038.

- Liegeois-Chauvel C, Musolino A, Chauvel P. Localization of the primary auditory area in man. Brain. 1991;114:139–151.

- Makeig S, Westerfield M, Jung TP, Enghoff S, Townsend J, Courchesne E, Sejnowski TJ. Dynamic brain sources of visual evoked responses. Science. 2002;295:690–694. doi: 10.1126/science.1066168.

- Maris E, van Vugt M, Kahana M. Spatially distributed patterns of oscillatory coupling between high-frequency amplitudes and low-frequency phases in human iEEG. Neuroimage. 2011;54:836–850. doi: 10.1016/j.neuroimage.2010.09.029.

- Martuzzi R, Murray MM, Michel CM, Thiran JP, Maeder PP, Clarke S, Meuli RA. Multisensory interactions within human primary cortices revealed by BOLD dynamics. Cereb Cortex. 2007;17:1672–1679. doi: 10.1093/cercor/bhl077.

- Mercier MR, Foxe JJ, Fiebelkorn IC, Butler JS, Schwartz TH, Molholm S. Auditory-driven phase reset in visual cortex: human electrocorticography reveals mechanisms of early multisensory integration. Neuroimage. 2013;79:19–29. doi: 10.1016/j.neuroimage.2013.04.060.

- Miller J. Divided attention: evidence for coactivation with redundant signals. Cogn Psychol. 1982;14:247–279. doi: 10.1016/0010-0285(82)90010-X.

- Miller KJ, Zanos S, Fetz EE, den Nijs M, Ojemann JG. Decoupling the cortical power spectrum reveals real-time representation of individual finger movements in humans. J Neurosci. 2009;29:3132–3137. doi: 10.1523/JNEUROSCI.5506-08.2009.

- Miller KJ, Hermes D, Honey CJ, Hebb AO, Ramsey NF, Knight RT, Ojemann JG, Fetz EE. Human motor cortical activity is selectively phase-entrained on underlying rhythms. PLoS Comput Biol. 2012;8:e1002655. doi: 10.1371/journal.pcbi.1002655.

- Mishra J, Martinez A, Sejnowski TJ, Hillyard SA. Early cross-modal interactions in auditory and visual cortex underlie a sound-induced visual illusion. J Neurosci. 2007;27:4120–4131. doi: 10.1523/JNEUROSCI.4912-06.2007.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/S0926-6410(02)00066-6.

- Molholm S, Sehatpour P, Mehta AD, Shpaner M, Gomez-Ramirez M, Ortigue S, Dyke JP, Schwartz TH, Foxe JJ. Audio-visual multisensory integration in superior parietal lobule revealed by human intracranial recordings. J Neurophysiol. 2006;96:721–729. doi: 10.1152/jn.00285.2006.

- Molholm S, Mercier MR, Liebenthal E, Schwartz TH, Ritter W, Foxe JJ, De Sanctis P. Mapping phonemic processing zones along human perisylvian cortex: an electro-corticographic investigation. Brain Struct Funct. 2014;219:1369–1383. doi: 10.1007/s00429-013-0574-y.

- Moran RJ, Molholm S, Reilly RB, Foxe JJ. Changes in effective connectivity of human superior parietal lobule under multisensory and unisensory stimulation. Eur J Neurosci. 2008;27:2303–2312. doi: 10.1111/j.1460-9568.2008.06187.x.

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Ng BS, Schroeder T, Kayser C. A precluding but not ensuring role of entrained low-frequency oscillations for auditory perception. J Neurosci. 2012;32:12268–12276. doi: 10.1523/JNEUROSCI.1877-12.2012.

- Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- Otto TU, Mamassian P. Noise and correlations in parallel perceptual decision making. Curr Biol. 2012;22:1391–1396. doi: 10.1016/j.cub.2012.05.031.

- Palva JM, Monto S, Kulashekhar S, Palva S. Neuronal synchrony reveals working memory networks and predicts individual memory capacity. Proc Natl Acad Sci U S A. 2010;107:7580–7585. doi: 10.1073/pnas.0913113107.

- Perrin F, Bertrand O, Pernier J. Scalp current density mapping: value and estimation from potential data. IEEE Trans Biomed Eng. 1987;34:283–288. doi: 10.1109/TBME.1987.326089.

- Rademacher J, Caviness VS, Jr, Steinmetz H, Galaburda AM. Topographical variation of the human primary cortices: implications for neuroimaging, brain mapping, and neurobiology. Cereb Cortex. 1993;3:313–329. doi: 10.1093/cercor/3.4.313.

- Raij T, Ahveninen J, Lin FH, Witzel T, Jääskeläinen IP, Letham B, Israeli E, Sahyoun C, Vasios C, Stufflebeam S, Hämäläinen M, Belliveau JW. Onset timing of cross-sensory activations and multisensory interactions in auditory and visual sensory cortices. Eur J Neurosci. 2010;31:1772–1782. doi: 10.1111/j.1460-9568.2010.07213.x.

- Roach BJ, Mathalon DH. Event-related EEG time-frequency analysis: an overview of measures and an analysis of early gamma band phase locking in schizophrenia. Schizophr Bull. 2008;34:907–926. doi: 10.1093/schbul/sbn093.

- Romei V, Murray MM, Merabet LB, Thut G. Occipital transcranial magnetic stimulation has opposing effects on visual and auditory stimulus detection: implications for multisensory interactions. J Neurosci. 2007;27:11465–11472. doi: 10.1523/JNEUROSCI.2827-07.2007.

- Romei V, Gross J, Thut G. Sounds reset rhythms of visual cortex and corresponding human visual perception. Curr Biol. 2012;22:807–813. doi: 10.1016/j.cub.2012.03.025.

- Ruescher J, Iljina O, Altenmüller DM, Aertsen A, Schulze-Bonhage A, Ball T. Somatotopic mapping of natural upper- and lower-extremity movements and speech production with high gamma electrocorticography. Neuroimage. 2013;81:164–177. doi: 10.1016/j.neuroimage.2013.04.102.

- Satow T, Matsuhashi M, Ikeda A, Yamamoto J, Takayama M, Begum T, Mima T, Nagamine T, Mikuni N, Miyamoto S, Hashimoto N, Shibasaki H. Distinct cortical areas for motor preparation and execution in human identified by Bereitschaftspotential recording and ECoG-EMG coherence analysis. Clin Neurophysiol. 2003;114:1259–1264. doi: 10.1016/S1388-2457(03)00091-9.

- Schroeder CE, Foxe JJ. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res Cogn Brain Res. 2002;14:187–198. doi: 10.1016/S0926-6410(02)00073-3.

- Schröger E, Widmann A. Speeded responses to audiovisual signal changes result from bimodal integration. Psychophysiology. 1998;35:755–759. doi: 10.1111/1469-8986.3560755.

- Sehatpour P, Molholm S, Schwartz TH, Mahoney JR, Mehta AD, Javitt DC, Stanton PK, Foxe JJ. A human intracranial study of long-range oscillatory coherence across a frontal-occipital-hippocampal brain network during visual object processing. Proc Natl Acad Sci U S A. 2008;105:4399–4404. doi: 10.1073/pnas.0708418105.

- Senkowski D, Molholm S, Gomez-Ramirez M, Foxe JJ. Oscillatory beta activity predicts response speed during a multisensory audiovisual reaction time task: a high-density electrical mapping study. Cereb Cortex. 2006;16:1556–1565. doi: 10.1093/cercor/bhj091.

- Senkowski D, Gomez-Ramirez M, Lakatos P, Wylie GR, Molholm S, Schroeder CE, Foxe JJ. Multisensory processing and oscillatory activity: analyzing non-linear electrophysiological measures in humans and simians. Exp Brain Res. 2007;177:184–195. doi: 10.1007/s00221-006-0664-7.

- Shah AS, Bressler SL, Knuth KH, Ding M, Mehta AD, Ulbert I, Schroeder CE. Neural dynamics and the fundamental mechanisms of event-related brain potentials. Cereb Cortex. 2004;14:476–483. doi: 10.1093/cercor/bhh009.

- Sherman SM, Guillery RW. The role of the thalamus in the flow of information to the cortex. Philos Trans R Soc Lond B Biol Sci. 2002;357:1695–1708. doi: 10.1098/rstb.2002.1161.

- Sinai A, Crone NE, Wied HM, Franaszczuk PJ, Miglioretti D, Boatman-Reich D. Intracranial mapping of auditory perception: event-related responses and electrocortical stimulation. Clin Neurophysiol. 2009;120:140–149. doi: 10.1016/j.clinph.2008.10.152.

- Stanford TR, Quessy S, Stein BE. Evaluating the operations underlying multisensory integration in the cat superior colliculus. J Neurosci. 2005;25:6499–6508. doi: 10.1523/JNEUROSCI.5095-04.2005.

- Stefanics G, Hangya B, Hernádi I, Winkler I, Lakatos P, Ulbert I. Phase entrainment of human delta oscillations can mediate the effects of expectation on reaction speed. J Neurosci. 2010;30:13578–13585. doi: 10.1523/JNEUROSCI.0703-10.2010.

- Stein BE. Neural mechanisms for synthesizing sensory information and producing adaptive behaviors. Exp Brain Res. 1998;123:124–135. doi: 10.1007/s002210050553.

- Stein BE, Meredith MA. The merging of the senses. Cambridge, MA: MIT; 1993.

- Stensaas SS, Eddington DK, Dobelle WH. The topography and variability of the primary visual cortex in man. J Neurosurg. 1974;40:747–755. doi: 10.3171/jns.1974.40.6.0747.

- Steriade M, Contreras D, Amzica F, Timofeev I. Synchronization of fast (30–40 Hz) spontaneous oscillations in intrathalamic and thalamocortical networks. J Neurosci. 1996;16:2788–2808. doi: 10.1523/JNEUROSCI.16-08-02788.1996.

- Tallon-Baudry C, Bertrand O, Delpuech C, Pernier J. Stimulus specificity of phase-locked and non-phase-locked 40 Hz visual responses in human. J Neurosci. 1996;16:4240–4249. doi: 10.1523/JNEUROSCI.16-13-04240.1996.

- Talsma D, Woldorff MG. Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. J Cogn Neurosci. 2005;17:1098–1114. doi: 10.1162/0898929054475172.

- Tass P, Rosenblum MG, Weule J, Kurths J, Pikovsky A, Volkmann J, Schnitzler A, Freund HJ. Detection of n:m phase locking from noisy data: application to magnetoencephalography. Phys Rev Lett. 1998;81:3291–3294. doi: 10.1103/PhysRevLett.81.3291.

- Teder-Salejärvi WA, McDonald JJ, Di Russo F, Hillyard SA. An analysis of audio-visual crossmodal integration by means of event-related potential (ERP) recordings. Brain Res Cogn Brain Res. 2002;14:106–114. doi: 10.1016/S0926-6410(02)00065-4.

- Thorne JD, De Vos M, Viola FC, Debener S. Cross-modal phase reset predicts auditory task performance in humans. J Neurosci. 2011;31:3853–3861. doi: 10.1523/JNEUROSCI.6176-10.2011.