Abstract

A crucial function of the brain is to be able to distinguish whether or not changes in the environment are caused by one's own actions. Even the smallest brains appear to be capable of making this distinction, as has been shown by closed-loop behavioral experiments in flies controlling visual stimuli in virtual reality paradigms. We questioned whether activity in the fruit fly brain is different during such closed-loop behavior, compared with passive viewing of a stimulus. To address this question, we used a procedure to record local field potential (LFP) activity across the fly brain while flies were controlling a virtual object through their movement on an air-supported ball. The virtual object was flickered at a precise frequency (7 Hz), creating a frequency tag that allowed us to track brain responses to the object while animals were behaving. Following experiments under closed-loop control, we replayed the same stimulus to the fly in open loop, such that it could no longer control the stimulus. We found identical receptive fields and similar strength of frequency tags across the brain for the virtual object under closed loop and replay. However, when comparing central versus peripheral brain regions, we found that brain responses were differentially modulated depending on whether flies were in control or not. Additionally, coherence of LFP activity in the brain increased when flies were in control, compared with replay, even if motor behavior was similar. This suggests that processes associated with closed-loop control promote temporal coordination in the insect brain.

SIGNIFICANCE STATEMENT We show that closed-loop control of a visual stimulus promotes temporal coordination across the Drosophila brain, compared with open-loop replay of the same visual sequences. This is significant because it suggests that, to understand goal-directed behavior or visual attention in flies, it may be most informative to sample neural activity from multiple regions across the brain simultaneously, and to examine temporal relationships (e.g., coherence) between these regions.

Keywords: behavior, closed-loop, Drosophila, electrophysiology, SSVEP, vision

Introduction

Operant behavior, behavior that is guided by its consequences, is crucial for an animal to learn how to adapt its actions to make appropriate choices. Research in Drosophila melanogaster has shown that flies are able to display their learned choices by orienting toward objects in real or virtual environments (Götz, 1980; Heisenberg and Wolf, 1984). Interestingly, operant behavior during training facilitates memory formation, compared with classical conditioning by passive exposure to stimuli; flies learn more quickly to avoid visual patterns associated with heat when they are in control of the stimulus during the training period compared with when they are passively viewing the same stimulus (Wolf and Heisenberg, 1991; Brembs and Heisenberg, 2000). This suggests that operant control involves a distinct brain state that promotes learning by simultaneously engaging an animal's attention and motor control systems. It is unknown whether operant control in flies is associated with distinct neural signatures.

In freely moving animals there is often a correspondence between actions and environmental consequences. For example, insects show prey-tracking behavior toward small objects and escape responses from large, looming objects (Olberg et al., 2000, 2007; Card and Dickinson, 2008). Insects tracking prey or a food source are essentially under operant control: they are continuously using sensory feedback to adapt their behavior, such as head rotation or body posture (Collett and Land, 1975), which allows them to better predict their prey's position (Mischiati et al., 2014). Although object tracking neurons have been identified in insects such as dragonflies, hoverflies, and blowflies (Collett, 1971; Egelhaaf and Borst, 1993; Kimmerle and Egelhaaf, 2000; Nordström and O'Carroll, 2006), it has been difficult to study neural responses while an insect is actively engaged in tracking behavior, particularly at a whole-brain level. Even more challenging is knowing whether an animal is paying attention to a visual stimulus. In a recent study we described neural correlates of attention in tethered, walking honeybees actively interacting with a stimulus (Paulk et al., 2014). Interestingly, neural correlates of attention emerge even when insects do not actively interact with a stimulus, such as for tethered flies passively viewing novel objects (van Swinderen and Greenspan, 2003; van Swinderen and Flores, 2007; van Swinderen and Brembs, 2010), or for bees before fixating on a selected object (Paulk et al., 2014), suggesting that attention-like processes in the insect brain can be dissociated from behavior (van Swinderen, 2011). However, whether operant control over a stimulus gives rise to a distinct attention-like state is still an open question.

We recently developed a brain-recording procedure allowing us to record local field potential (LFP) activity across multiple sites in the brains of behaving flies (Paulk et al., 2013). Using this novel preparation, we were able to ask the question: how does brain activity differ in flies that are actively controlling versus passively viewing the same stimulus sequences? In this study, we show that the strongest difference was detected by coherence analysis: active control resulted in more strongly correlated responses to a visual stimulus, especially in the central brain.

Materials and Methods

Animals.

Flies were reared on standard fly media under a 12 h light/dark cycle at 25°C. Female laboratory-reared wild-type Canton S flies (3–10 d past eclosion; Drosophila melanogaster) were immobilized under cold anesthesia and positioned for tethering (Paulk et al., 2013). Flies were glued dorsally to a tungsten rod using dental cement (Coltene Whaledent synergy D6 FLOW A3.5/B3). In addition, dental cement cured with blue light was applied to their necks to fix their heads to the thorax (Radii Plus, Henry Schein Dental).

Behavioral experiments.

Tethered flies were suspended above an air-supported ball surrounded by either a diamond-shaped light emitting diode (LED) arena (Fig. 1; Paulk et al., 2013; Moore et al., 2014) or a round multipaneled LED arena (Reiser and Dickinson, 2008). The air-supported ball was a ∼37 mg Styrofoam ball (radius 7.5 mm; PioneerCraft) painted with a black and red pattern using a Sharpie pen. The ball was held in a mold made of plaster of Paris (Prep) and a 50 ml Falcon tube, with a small tube providing air flow at ∼1 L/min (monitored with an air flow meter, EZI-FLOW) leading from the side to the base of the hollow in which the ball was placed.

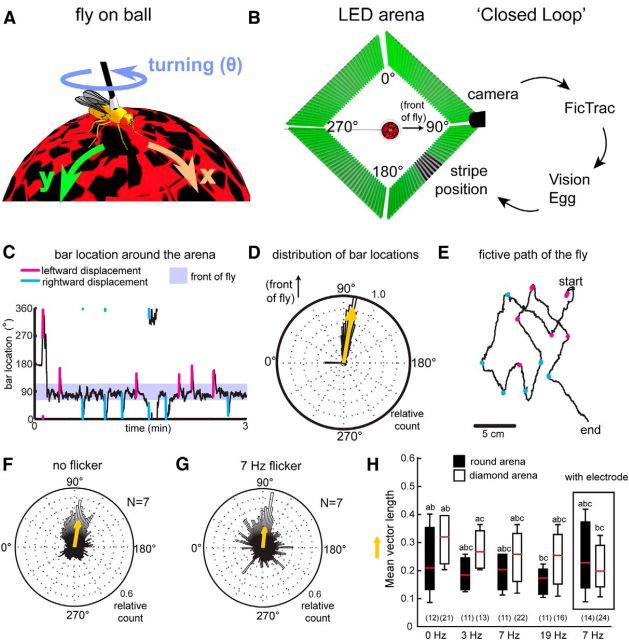

Figure 1.

Walking flies fixate on single vertical bars. A, A fly walks on an air-supported ball, using its movements to turn the ball by walking forward in the x direction, side-stepping in the y direction, or rotating the ball around the z-axis (theta). B, The fly is surrounded by a diamond-shaped LED array arena (here, viewed from above) onto which a visual stimulus (a vertical dark bar) is displayed. The fly's movements on the ball were detected by a camera and registered through FicTrac software, whose output was fed back through Vision Egg software to change the location of the bar in the arena (closed-loop behavior). C, An example of a fly fixating strongly on the flickering dark bar. The flies also compensated for 90° displacements, occurring randomly for 300 ms during the experiment, by bringing the bar back to the front (cyan and magenta spikes). D, This resulted in a distribution of bar locations centered in front of the fly, as evidenced in the polar plot. Yellow arrow is the mean vector of the bar locations. Rayleigh test for nonuniformity of bar positions: z = 20,812; p < 0.0000001. E, The 2D path of the same fly from C takes the displacements into account: the fly generally walks straight, turning only in response to displacements to the left (magenta dots) or to the right (cyan dots). F, G, Flies fixate on both nonflickering and flickering vertical dark bars. H, This fixation behavior results in mean vector lengths that are not significantly different between nonflickering bars and bars flickering at 7 Hz (Kruskal–Wallis; χ2 = 2.92, p = 0.0872). Another set of flies was tested on a round arena (black boxplots; Reiser and Dickinson, 2008). Box: flies were still able to fixate on the 7 Hz bar following electrode insertion. The letters a–c indicate statistically different groups. N for each dataset is indicated in brackets on the graph.

In the two different arenas (round or diamond-shaped), the flies' movements were filmed using either an A602f-2 Basler FireWire camera (Basler) at 60 frames per second with a 12× zoom lens (Navitar) or a RoHS 0.3MP B&W Firefly MV USB 2.0 camera with a 40.5 mm UV[0] lens (HOYA). The view from above allowed us to track the movement of the ball as the flies walked on its surface. This was key for the use of FicTrac, a computer vision ball-tracking program that can provide feedback to control the visual stimuli on the arena (Moore et al., 2014). FicTrac, running on the Linux Ubuntu 12.10 operating system, detected the location and rotation of the ball in three-dimensional space. The coordinate information of the ball, including its 3D rotation, was then sent to one of two visual display programs: VisionEgg (Straw, 2008) or MATLAB (Reiser and Dickinson, 2008). In these experiments, rotation of the ball around its vertical axis was translated into horizontal rotation of the visual stimulus presented on the arena (Fig. 1). For all experiments, FicTrac detected the rotation of the ball at 60 frames per second.
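The closed-loop coupling between ball yaw and bar position can be sketched as below. This is a minimal illustration under stated assumptions, not the VisionEgg or MATLAB code used in the experiments: the 1:1 gain, the sign convention, and the idea that the 128 LED columns of the diamond arena (four panels of 32 columns, see Stimuli) divide 360° evenly are all hypothetical (flat panels do not actually subtend equal angles per column).

```python
import numpy as np

N_COLUMNS = 128  # assumption: four 32-column panels spanning 360 degrees evenly


def bar_angle_from_yaw(yaw_deltas_rad, start_deg=0.0, gain=1.0):
    """Closed-loop update: the bar azimuth is counter-rotated by the fly's
    cumulative fictive yaw (ball rotation about its vertical axis).
    The gain and the sign convention are hypothetical."""
    heading_deg = np.degrees(np.cumsum(yaw_deltas_rad))
    return np.mod(start_deg - gain * heading_deg, 360.0)


def angle_to_column(angle_deg, n_columns=N_COLUMNS):
    """Map azimuths (deg) to LED column indices 0..n_columns-1."""
    frac = np.mod(np.asarray(angle_deg, float), 360.0) / 360.0
    return np.floor(frac * n_columns).astype(int) % n_columns
```

Called once per FicTrac frame (60 Hz), the first function gives the bar azimuth to draw and the second the column to light.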

Electrophysiology and recording.

The back of a 16-electrode linear silicon probe (model no. A1X16-3mm50-177, Neuronexus Technologies) was painted with Texas Red fluorescent dye conjugated to 10,000 MW dextran dissolved in distilled water (Invitrogen; Paulk et al., 2013). We inserted the probe laterally into the fly's eye with the aid of a micromanipulator (Merzhauser), such that the electrode sites faced posteriorly and the probe was perpendicular to the curvature of the eye (Fig. 2; Paulk et al., 2013). An electrolytically sharpened fine tungsten wire (0.01 inch; A-M Systems), placed superficially in the thorax, served as the reference electrode. A Tucker-Davis Technologies multichannel data acquisition system was used to record the signal at ∼25 kHz (24,414 Hz) unless otherwise specified.

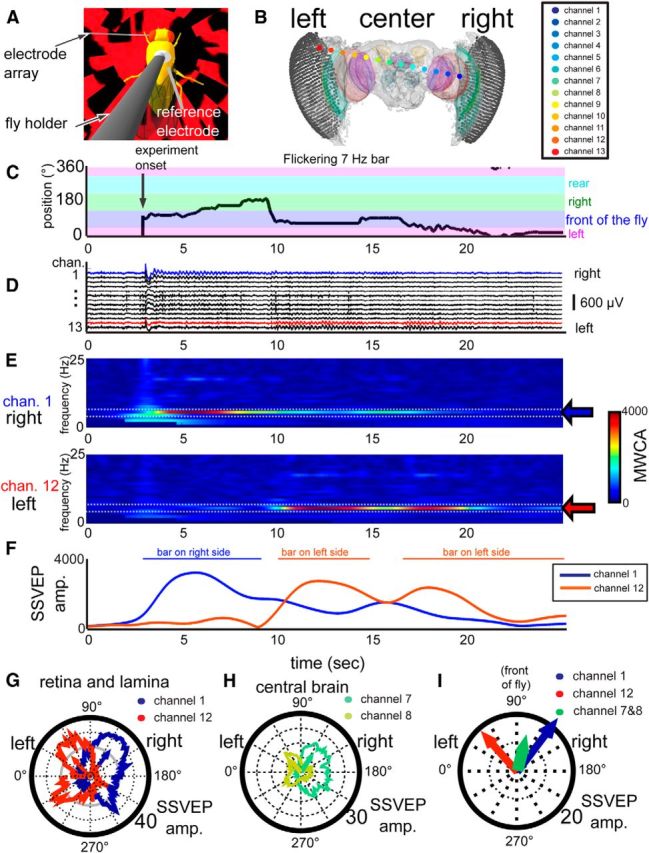

Figure 2.

Multichannel recording in the fly brain reveals bar location during behavior. A, A close-up representation of a tethered fly walking on an air-supported ball with an electrode array inserted. B, A 3D model representation of the electrode array inserted into the head of the fly. C, An example trace of the bar position controlled by a fly walking on a ball with an electrode array inserted. D, The flickering bar can be detected in the brain as oscillations in the LFP across channels, which can be mapped to specific neural structures (colored dots in B). E, These oscillations can be detected by measuring the MWCA over time, as represented in these spectrograms of channels 1 and 12 during the same time period represented in C and D. F, The MWCA value for the flicker frequency at 7 Hz (called the SSVEP) can be represented for each channel over time (left and right eye channels are shown, in blue and red). In this case, as the bar moves from left to right, the SSVEP amplitude in the left eye decreases and the SSVEP in the right eye increases. G–I, Mapping the SSVEP amplitude onto the bar position results in an average distribution of flicker responses around the fly. Individual channels in the same fly brain have visual flicker responses that can be mapped to the bar position. Each line represents the mean SSVEP per position, and the arrows are the mean directions of the polar plot data. Peripheral brain channels, such as those in the lamina or retina, have more lateral and larger receptive fields (G) than central brain channels (H). I, The SSVEP mean vectors of the left and right eyes (red and blue arrows) were directed left and right, respectively, whereas the central brain channels (green arrow) pointed in front of the fly.

Stimuli.

Visual stimuli included a vertical dark bar presented on one of two types of LED arenas (Fig. 1). For the diamond-shaped arena, four LED panels (Shenzhen Sinorad Medical Electronics) were assembled into the arena (Fig. 1). Each panel contained 32 × 32 pixels, each consisting of one blue, one green, and one red LED. We used only the green LEDs, which had a peak wavelength of 518 nm and a half-peak width of 36 nm (Zhou et al., 2013). For the round arena, we used preassembled LED panels arranged into a cylinder (Mettrix Technology Corporation; Reiser and Dickinson, 2008).

In both cases, we presented a dark vertical bar (20° width and 54° height) on a bright green background. The location of the bar around the arena was controlled either in closed loop, where the rotation of the ball changed the horizontal location of the bar, or in open loop, where the location of the bar was controlled by the computer only. In closed loop, behavior was tested with nonflickering (0 Hz) and flickering (3, 7, and 19 Hz) bars. During electrophysiological recordings, we tested behavioral and neural responses to 7 Hz flickering bars, because freely walking flies show strong behavioral responses to this frequency (L. Kirszenblat, Y. Zhou, and B. van Swinderen, unpublished observations).

During electrophysiological recordings, we presented the flies with 3 min of closed-loop behavior, during which the flies could control the angular location of the bar around the arena. This was immediately followed by open-loop replay, in which the same visual stimulus the flies had just experienced in closed loop was replayed to them. This closed-loop/replay sequence was repeated three times for each fly.
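The session structure described above can be expressed as a short schedule generator. This is a hypothetical sketch: `closed_loop_block` stands in for whatever runs one closed-loop block and logs the bar azimuth per frame; the 60 Hz frame rate and the 3 min block length come from the Methods, while storing replay as a verbatim copy of the logged sequence is an assumption about how "the same visual stimulus" was reproduced.

```python
import numpy as np

FRAME_RATE_HZ = 60   # FicTrac update rate given in the Methods
BLOCK_S = 180        # 3 min closed-loop block
N_REPEATS = 3        # closed-loop/replay pairs per fly


def run_session(closed_loop_block, n_repeats=N_REPEATS):
    """Alternate closed-loop blocks with open-loop replay of the same sequence.

    `closed_loop_block` is a hypothetical callable that runs one closed-loop
    block for the given trial index and returns the bar azimuth (deg) shown
    on each frame.
    """
    schedule = []
    for trial in range(n_repeats):
        positions = np.asarray(closed_loop_block(trial), dtype=float)
        schedule.append(("closed_loop", trial, positions))
        # Replay shows the identical frame sequence; ball movement is ignored.
        schedule.append(("replay", trial, positions.copy()))
    return schedule
```

Because each replay immediately follows its own closed-loop block, the two conditions are interleaved rather than run as two long blocks.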

Mapping electrode locations.

Flies were fixed in 4% paraformaldehyde (Electron Microscopy Sciences) in a phosphate buffer solution (PBS). After >8 h in fixative, the flies were transferred to PBS and washed before being embedded in 6% low-melting-point agarose. To align the heads on the slides, flies were placed between two coverslips so that the electrode entry sites on both eyes could be viewed (Paulk et al., 2013) using an LSM 510 microscope (Zeiss). To compare the location of the electrodes in the brain with the recorded neural activity, we created a three-dimensional model of fly brain structures relative to the eyes from three partially dissected and labeled fly heads (Paulk et al., 2013). This model was used as a standard for mapping electrode locations across all recorded flies. To map the inserted electrodes onto this three-dimensional representation, the reconstructed brain was aligned to the facets of the two eyes (Paulk et al., 2013). Briefly, because the insertion sites of the multielectrode probe were easily visible on the eye surface, a line from the insertion site on one eye to the exit site on the other eye was mapped to a standard 3D fly head and brain (Paulk et al., 2013). We then assigned electrode sites to brain regions.
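The line-based mapping can be illustrated by placing the 16 sites at fixed spacing along the entry-to-exit track and labeling each by the nearest region. This is a simplified sketch, not the registration procedure of Paulk et al. (2013): the 50 µm spacing is read from the probe model name, while the tip depth input, the site ordering, and nearest-centroid assignment (instead of testing membership in 3D region volumes) are hypothetical.

```python
import numpy as np

SITE_SPACING_UM = 50.0  # assumption: inferred from the probe model name (A1X16-3mm50-177)
N_SITES = 16


def site_positions(entry_um, exit_um, tip_depth_um,
                   n_sites=N_SITES, spacing_um=SITE_SPACING_UM):
    """Place recording sites along the straight insertion track.

    entry_um / exit_um: 3D points (um) where the probe enters one eye and exits
    the other, in standard-head coordinates. tip_depth_um: hypothetical distance
    from the entry point to the deepest site. Site 0 is taken as the deepest.
    """
    entry = np.asarray(entry_um, float)
    direction = np.asarray(exit_um, float) - entry
    direction /= np.linalg.norm(direction)
    depths = tip_depth_um - spacing_um * np.arange(n_sites)
    return entry + depths[:, None] * direction


def assign_regions(sites_um, region_centroids_um):
    """Label each site with the nearest region centroid (a simplification)."""
    names = list(region_centroids_um)
    centroids = np.array([region_centroids_um[n] for n in names], float)
    d = np.linalg.norm(sites_um[:, None, :] - centroids[None, :, :], axis=-1)
    return [names[i] for i in d.argmin(axis=1)]
```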

Behavioral data analysis.

Data analysis was conducted with custom programs written in MATLAB (MathWorks). The angular direction of the fly at each sampling point was determined from the rotation around the vertical axis of the ball, θ. This value was then transformed from degrees around the fly (0°–360°) into pixels of the display. The distribution of bar angular positions is shown as a radial histogram (Fig. 1D); for display purposes, the histogram was normalized to a peak value of 1. A mean vector of the angular positions around the arena was calculated using the Circular Statistics Toolbox in MATLAB (Berens, 2009). The length of this vector represents how narrowly the data are distributed, and its angle represents the mean direction of bar locations.
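The mean vector and the Rayleigh z statistic reported in Figure 1 follow standard circular-statistics definitions, sketched here in NumPy rather than with the MATLAB toolbox used in the study. The mean resultant length r lies between 0 (uniform spread) and 1 (all bar positions identical), and Rayleigh's z is n·r². The optional weights argument is an addition for illustration.

```python
import numpy as np


def mean_vector(angles_rad, weights=None):
    """Mean resultant vector: (length r in [0, 1], direction in rad)."""
    angles = np.asarray(angles_rad, float)
    w = np.ones_like(angles) if weights is None else np.asarray(weights, float)
    z = np.sum(w * np.exp(1j * angles)) / np.sum(w)
    return np.abs(z), np.angle(z)


def rayleigh_z(angles_rad):
    """Rayleigh statistic z = n * r**2 for a test of nonuniformity."""
    r, _ = mean_vector(angles_rad)
    return len(angles_rad) * r ** 2
```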

Electrophysiological analysis.

We obtained LFPs by low-pass filtering the electrophysiological data (Butterworth filter, <300 Hz) and downsampling from 24,414 Hz to 1 kHz. We rejected channels that were outside the brain, blocked by tracheal tissue, or faulty; such channels often had no signal or were dominated by 50 Hz line noise. To remove movement-related deflections in the recordings caused by muscle potentials in the thorax, we performed independent component analysis (ICA) using the fastICA function (Hyvarinen et al., 2001) in the sigTOOL MATLAB toolbox (Lidierth, 2009). We used ICA to separate the signal common to all channels from that seen in individual channels (Paulk et al., 2013).
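The filtering and downsampling step can be sketched with SciPy. The Methods give only the filter type, cutoff, and rates; the filter order, the use of zero-phase `sosfiltfilt`, and resampling by linear interpolation (needed because 24,414 Hz is not an integer multiple of 1 kHz) are assumptions of this sketch, and the ICA step is not reproduced.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_RAW = 24414.0   # raw sampling rate stated in the Methods (Hz)
FS_LFP = 1000.0    # target LFP rate (Hz)


def raw_to_lfp(raw, fs_raw=FS_RAW, fs_out=FS_LFP, cutoff_hz=300.0, order=4):
    """Zero-phase Butterworth low-pass, then resample onto a 1 kHz grid.

    `raw` is (n_samples,) or (n_channels, n_samples). Filter order and
    zero-phase filtering are assumptions, not taken from the text.
    """
    sos = butter(order, cutoff_hz, btype="low", fs=fs_raw, output="sos")
    filtered = sosfiltfilt(sos, raw, axis=-1)
    t_raw = np.arange(filtered.shape[-1]) / fs_raw
    t_out = np.arange(0.0, t_raw[-1], 1.0 / fs_out)
    # Once content above 300 Hz is removed, linear interpolation between the
    # densely spaced raw samples is accurate.
    if filtered.ndim == 1:
        return t_out, np.interp(t_out, t_raw, filtered)
    return t_out, np.vstack([np.interp(t_out, t_raw, ch) for ch in filtered])
```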

The LFP signals from the probe and the signals from five photodiodes were recorded and digitized on the same computer; four of the photodiodes were placed at the apices of the diamond-shaped arena. Within the arena, we allocated one LED to be a “counter pixel,” which flickered at a regular 2 Hz rate. This part of the display was covered so that it was not visible to the fly, but it faced the fifth photodiode, whose signal was fed into the Tucker-Davis multichannel system. This allowed us to synchronize the system recording neural activity with the visual stimulus, which was controlled by a second computer. This stimulus computer controlled the LED arena and recorded the angular position of the stimulus, the rotation of the ball, and the on/off state of the counter pixel. The photodiode signal recorded by the Tucker-Davis system and the logged on/off state of the counter pixel were matched at a temporal resolution of 16 ms, allowing the LFP signal to be aligned with the fly's behavior.
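One plausible way to implement the counter-pixel alignment is to detect rising edges in the photodiode trace, pair them with the edge times logged by the stimulus computer, and fit a linear clock mapping (offset plus drift). This is an illustrative sketch, not the authors' code: the midpoint threshold, the one-to-one pairing of edges, and the linear drift model are assumptions.

```python
import numpy as np


def rising_edges(trace, fs, threshold=None):
    """Times (s) at which a photodiode trace crosses `threshold` upward."""
    trace = np.asarray(trace, float)
    if threshold is None:
        threshold = 0.5 * (trace.min() + trace.max())  # assumption: midpoint threshold
    above = trace > threshold
    idx = np.flatnonzero(~above[:-1] & above[1:]) + 1
    return idx / fs


def fit_clock_map(stim_edge_times, rec_edge_times):
    """Least-squares linear map from stimulus-computer time to recording time.

    Assumes the two edge lists are already paired one-to-one, in order.
    """
    stim = np.asarray(stim_edge_times, float)
    rec = np.asarray(rec_edge_times, float)
    if stim.shape != rec.shape:
        raise ValueError("edge lists must be paired one-to-one")
    slope, offset = np.polyfit(stim, rec, 1)
    return lambda t_stim: slope * np.asarray(t_stim, float) + offset
```

The returned function converts any logged stimulus timestamp (bar position, ball rotation) into the LFP time base.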

To measure the amplitude of the different frequencies within the LFP signal, we performed continuous Morlet wavelet transforms for the flicker frequency and for other frequencies outside of the flicker frequency tag (which included 1–100 Hz except for ±3 Hz around the flicker frequency) using the Fieldtrip toolbox (http://www.ru.nl/fc-donders/fieldtrip/; Oostenveld et al., 2011). This approach involved a 1 Hz spectral resolution and a 30 ms window sliding every 5 ms (thereby giving the Morlet wavelet coefficients at each point of the visual stimulus presented at 200 Hz). The real value of the Morlet wavelet coefficient was taken to measure the magnitude of the oscillations as the Morlet wavelet coefficient amplitude (MWCA). For the frequencies at which the bars were flickered, we define the MWCA as the steady-state visually evoked potential (SSVEP) amplitude.
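The idea of the MWCA can be illustrated by convolving the LFP with a complex Morlet wavelet at the tag frequency. This NumPy sketch does not reproduce the FieldTrip configuration: the number of cycles (7) and the ±4 SD truncation are assumptions, and it takes the modulus of the complex coefficient as the amplitude, whereas the text above refers to the real value. The scaling makes a sinusoid of amplitude A at the analyzed frequency return approximately A away from the edges; `step=5` at 1 kHz gives one value every 5 ms.

```python
import numpy as np


def morlet_amplitude(x, fs, freq, n_cycles=7.0, step=1):
    """Amplitude envelope of x at `freq` from a complex Morlet wavelet convolution."""
    x = np.asarray(x, float)
    sigma_t = n_cycles / (2.0 * np.pi * freq)      # s.d. of the Gaussian envelope (s)
    half = int(np.ceil(4.0 * sigma_t * fs))        # truncate at +/- 4 s.d.
    if x.size < 2 * half + 1:
        raise ValueError("signal shorter than the wavelet")
    t = np.arange(-half, half + 1) / fs
    gauss = np.exp(-t ** 2 / (2.0 * sigma_t ** 2))
    wavelet = gauss * np.exp(2j * np.pi * freq * t)
    wavelet *= 2.0 / gauss.sum()                   # sinusoid of amplitude A -> ~A
    coeffs = np.convolve(x, wavelet, mode="same")
    return np.abs(coeffs)[::step]
```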

To measure coherence, the spectral equivalent of correlation, we used multitaper coherence calculations within a series of sliding, overlapping windows (Bokil et al., 2010; Paulk et al., 2013). Coherence was computed as $C_{xy}(f) = \frac{|S_{xy}(f)|}{\sqrt{S_x(f)\,S_y(f)}}$, where $S_x(f)$ and $S_y(f)$ are the multitaper power spectrum estimates of the recordings from channels $x$ and $y$ within a given time window, and $S_{xy}(f)$ is the cross-spectrum of these two channels during that same window (Roach and Mathalon, 2008). A 0.5 s sliding window was moved every 0.25 s across the entire experiment. Within each window, coherence between every pair of channels was calculated from 0 to 200 Hz. We could then correlate the coherence over time with the behavior of the flies.
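A multitaper version of this coherence estimate, applied in 0.5 s windows stepped by 0.25 s, can be sketched with SciPy's DPSS (Slepian) tapers. This is not the Chronux routine the study relied on: the time-bandwidth product NW = 3, the choice of K = 2NW − 1 tapers, and removing the mean within each window are assumptions.

```python
import numpy as np
from scipy.signal.windows import dpss


def multitaper_coherence(x, y, fs, nw=3.0):
    """Coherence |Sxy| / sqrt(Sx * Sy) for one window, averaged over DPSS tapers."""
    n = len(x)
    n_tapers = int(2 * nw) - 1                     # common choice K = 2NW - 1
    tapers = dpss(n, nw, Kmax=n_tapers)            # shape (n_tapers, n)
    X = np.fft.rfft(tapers * (x - np.mean(x)), axis=-1)
    Y = np.fft.rfft(tapers * (y - np.mean(y)), axis=-1)
    sx = np.mean(np.abs(X) ** 2, axis=0)           # multitaper power spectrum of x
    sy = np.mean(np.abs(Y) ** 2, axis=0)           # multitaper power spectrum of y
    sxy = np.mean(X * np.conj(Y), axis=0)          # multitaper cross-spectrum
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, np.abs(sxy) / np.sqrt(sx * sy)


def sliding_coherence(x, y, fs, win_s=0.5, step_s=0.25, fmax=200.0):
    """Coherence in overlapping windows (0.5 s every 0.25 s), 0 to fmax Hz."""
    win = int(round(win_s * fs))
    step = int(round(step_s * fs))
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)
    keep = freqs <= fmax
    rows = []
    for start in range(0, len(x) - win + 1, step):
        _, coh = multitaper_coherence(x[start:start + win], y[start:start + win], fs)
        rows.append(coh[keep])
    return freqs[keep], np.array(rows)
```

With a 0.5 s window the frequency bins are 2 Hz apart, so the 7 Hz tag falls between the 6 and 8 Hz bins; the taper bandwidth (about ±6 Hz for NW = 3) spreads it across both.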

Statistical comparisons.

Because data were nonparametric, we used the Kruskal–Wallis test for comparisons across multiple groups and the Wilcoxon rank sum test for two-group comparisons; false discovery rate control was applied to correct for multiple comparisons. Circular statistics for comparisons and significance were computed with the Circular Statistics MATLAB toolbox (Berens, 2009). In figures and the text, “N” indicates the number of flies and “n” indicates the number of electrode sites. MWCA was plotted against stimulus position in a polar plot. The vector length represents how narrowly the MWCA is distributed around the arena (0°–360°), and the direction represents the mean stimulus position, i.e., where the LFP MWCA for that frequency was maximal. To test interactions between behavioral measures (such as walking speed and turning speed), bar location, and neural activity, we also performed a multiway ANOVA. The data points for this analysis were 1 s overlapping sliding-window estimates of 7 Hz oscillations, each with a corresponding bar position, walking speed, angular velocity, brain region of the recording, and experimental condition (closed loop or open-loop replay). We additionally categorized each time window as walking/not walking and turning/not turning based on the underlying distribution of values in the data (see Fig. 4).
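The Methods do not name the false discovery rate procedure. Assuming the widely used Benjamini–Hochberg step-up procedure, the correction applied to a set of p values (for example, from pairwise Wilcoxon rank sum tests following a Kruskal–Wallis test) can be sketched as follows; the level q = 0.05 is also an assumption.

```python
import numpy as np


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean 'reject' mask."""
    p = np.asarray(pvals, float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order]
    thresholds = q * np.arange(1, m + 1) / m
    below = ranked <= thresholds
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.max(np.flatnonzero(below))  # largest rank meeting its threshold
        reject[order[:k + 1]] = True       # reject that test and all smaller p values
    return reject
```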

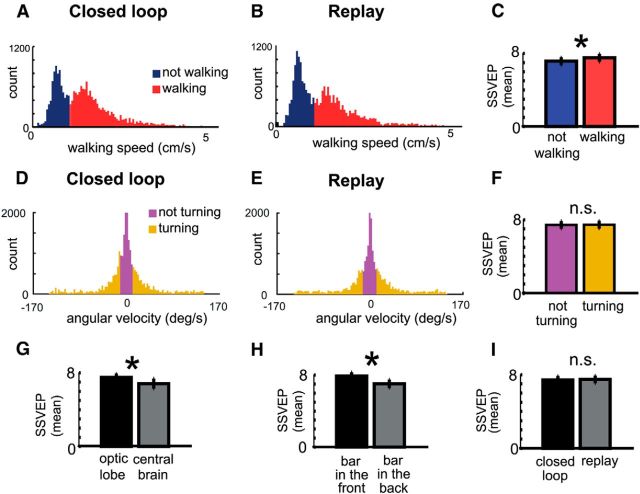

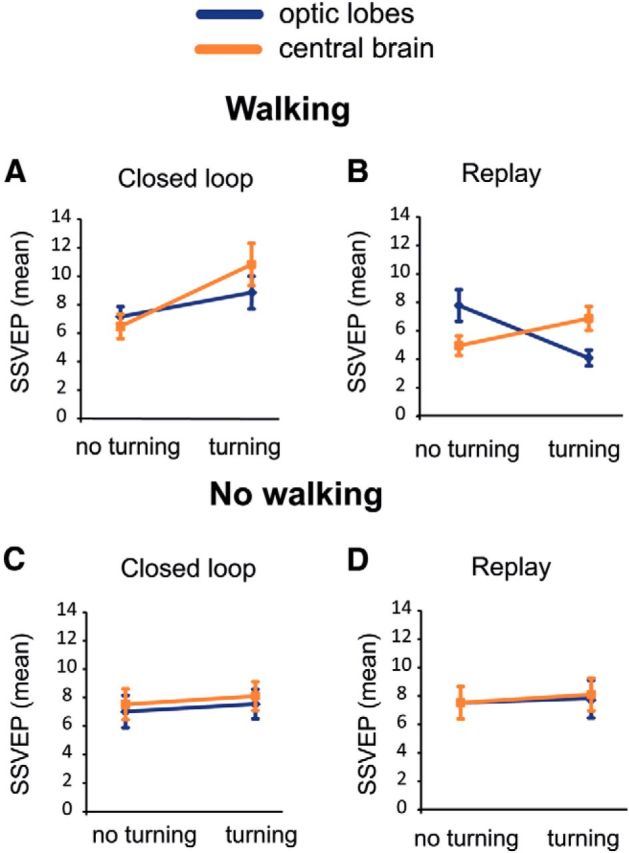

Figure 4.

Mean SSVEP varies with bar position and walking speed. A–C, The distribution of walking speeds could be divided broadly into no walking and walking (A, B) classifications (movement along the x/y axes; Fig. 1A) and turning and no turning (D, E) classifications (changes in theta; Fig. 1A). Median walking speed was not significantly different (1.372 cm/s vs 1.304 cm/s for closed loop and replay, respectively; p = 0.468, Wilcoxon rank sum test). Median turning speed was also not significantly different (p = 0.26, Wilcoxon rank sum test). C, F, SSVEP was significantly higher when flies were walking compared with not walking (C), whereas SSVEP was not dependent on turning behavior (F). G, H, SSVEP was significantly higher in optic lobes versus central brain (G) and when the bar was in the front versus the back halves of the arena (H). I, No significant differences were seen in the SSVEP across the brain for closed-loop versus replay experiments. N = 24 flies.

Results

Walking flies fixate on dark vertical bars in closed loop

In our virtual reality paradigm, walking flies rotate an air-supported ball to control a visual stimulus (a vertical dark stripe) in front of them (“closed-loop” behavior) in a diamond-shaped arena made of LEDs (Fig. 1A,B). Closed-loop control was achieved by filming the ball's movement to control the angular position of the visual object (Fig. 1B; Moore et al., 2014). Consistent with previous tethered paradigms, we found that flies tended to bring the bar to the front, a behavior called fixation (Fig. 1C; Heisenberg and Wolf, 1984). Fixation behavior resulted in mean angular distributions of bar locations centered in front of the fly (Fig. 1D).

To test whether flies were actively fixating, we included randomly timed 90° clockwise or counterclockwise displacements lasting 300 ms (Heisenberg and Wolf, 1984; Paulk et al., 2014). Flies generally adjusted to the displacements by refixating on the bar and bringing it to the front once more (Fig. 1C). These corrective responses were evident when we examined the fictive path the flies would have taken if they were walking on a flat two-dimensional surface, as measured using FicTrac (see Materials and Methods; Moore et al., 2014): flies turned left or right, continuously adjusting their behavior to face the dark bar once again (Fig. 1E). This resulted in robust fixation behavior in response to the dark bar when averaged across multiple flies (Fig. 1F).
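The fictive path is obtained by path integration: per-frame ball rotations are converted to distances using the ball radius (7.5 mm), rotated into world coordinates by the accumulated heading, and summed. FicTrac performs this integration itself; the sketch below only illustrates the principle, and its input layout (forward and sideways surface rotation plus yaw per frame, counterclockwise positive) is an assumption.

```python
import numpy as np

BALL_RADIUS_MM = 7.5  # ball radius given in the Methods


def fictive_path(fwd_rot_rad, side_rot_rad, yaw_rad, radius_mm=BALL_RADIUS_MM):
    """Integrate per-frame ball rotations into a 2D walking path (mm).

    fwd_rot_rad / side_rot_rad: per-frame rotation of the ball surface under the
    fly along its forward and sideways axes; yaw_rad: per-frame heading change.
    """
    forward = radius_mm * np.asarray(fwd_rot_rad, float)   # arc length walked forward
    sideways = radius_mm * np.asarray(side_rot_rad, float)
    heading = np.cumsum(yaw_rad)
    # Rotate body-frame steps into world coordinates, then accumulate.
    dx = forward * np.cos(heading) - sideways * np.sin(heading)
    dy = forward * np.sin(heading) + sideways * np.cos(heading)
    return np.cumsum(dx), np.cumsum(dy)
```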

We then flickered the dark bar at different frequencies, thereby adding a “frequency tag” associated with the object, so that it could potentially be tracked in brain activity (van Swinderen, 2012; Paulk et al., 2013, 2014). We found that flies could still fixate on bars flickering at 3, 7, and 19 Hz (Fig. 1G,H), and the mean fixation vector length (a measure of fixation performance; Paulk et al., 2014) was not significantly different between nonflickering and flickering conditions (Fig. 1H, white or black bars). In addition, the mean walking speed and angular velocity were not significantly different between nonflickering and flickering stimuli (Kruskal–Wallis; walking speed: χ2 = 6.66, p = 0.0836; angular velocity: χ2 = 1.33, p = 0.7211). Finally, we found that fixation performance in the diamond-shaped arena (Paulk et al., 2014) appeared to be better than in a round LED arena previously used for closed-loop experiments in flies (Reiser and Dickinson, 2008; Fig. 1H, white vs black bars).

Flickering visual stimuli are represented in the brain activity of walking flies

We recorded LFP activity across the fly brain by inserting a linear 16-channel probe into the brain of a fly walking on the air-supported ball (Fig. 2A,B; Paulk et al., 2013). Flies with an inserted electrode displayed a slight decrease in walking speed, but no change in angular velocity, compared with flies without an electrode (mean walking speed, mm/s: without electrode, 0.0314 ± 0.0172; with electrode, 0.0256 ± 0.017; p = 0.0423; mean angular velocity, rad/s: without electrode, −2.423 ± 27.958; with electrode, −12.941 ± 12.305; p = 0.647; N = 24 flies). We found that flies in both the round and diamond-shaped arenas still fixated on a 7 Hz flickering bar with the electrode inserted, with a mean fixation vector length that was not significantly different from that for a 7 Hz bar without the electrode (Fig. 1H, box; χ2 = 0.05; p = 0.8302). We therefore flickered the bar at 7 Hz in the diamond arena for subsequent electrophysiology experiments, as this would provide a “frequency tag” for tracking responses across the brain during behavioral fixation experiments.

To identify brain structures associated with the neural activity, we mapped the location of the recording sites in each experiment using a three-dimensional brain registration technique (Paulk et al., 2013), allowing us to combine multiple experiments into one model schematic (N = 24 flies; Fig. 3A). Responses to 7 Hz visual flicker could thus be mapped to distinct neuroanatomical regions throughout the model brain (Figs. 2B, 3B; Paulk et al., 2013). These neural responses are evident as oscillating waves in the LFP; such oscillations, produced by the activity of neural populations, are an intrinsic property of the CNS in both mammals and insects (Vialatte et al., 2010; van Swinderen, 2012; Paulk et al., 2013, 2014). The response to 7 Hz flicker was visible across the brain of flies engaged in closed-loop fixation behavior (Fig. 2C,D). The visual response in the brain, also called the SSVEP (Vialatte et al., 2010), changed depending on the location of the bar in the arena (Fig. 2C,D). To quantify the flicker response, we measured the MWCA across multiple frequencies for each channel (Oostenveld et al., 2011). This allowed us to track changes in the frequency tag over time for different channels (Fig. 2E), quantified as SSVEP amplitudes (Fig. 2F).

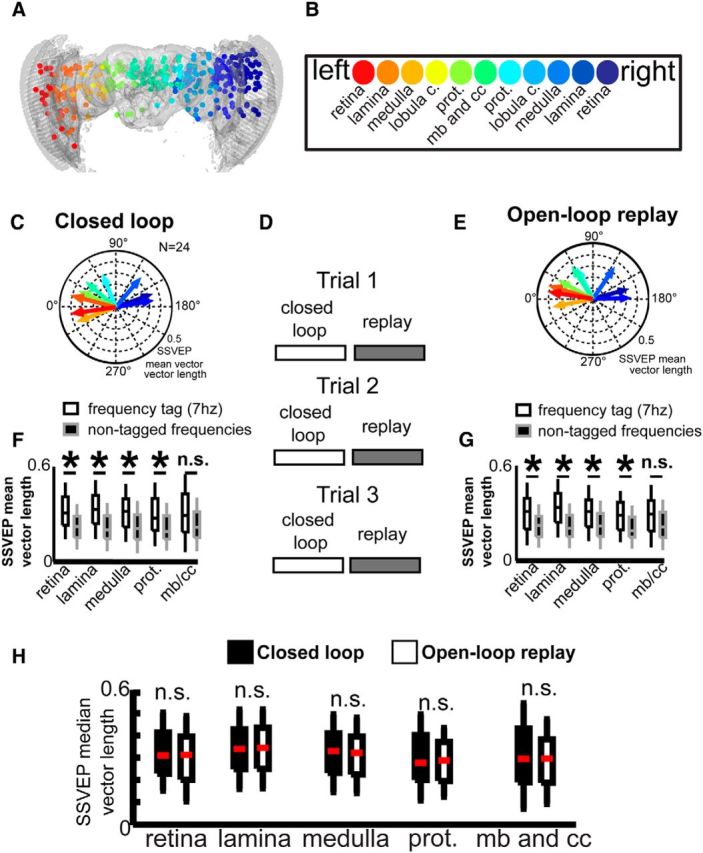

Figure 3.

Closed-loop and open-loop replay reveal identical receptive fields for the 7 Hz visual flicker. A, Recording sites from multiple flies (N = 24) were mapped onto a standard brain and combined into a common dataset. B, The brain could be subdivided into structures, which are color-coded in A and in the legend in B (mb, mushroom body; cc, central complex; prot, protocerebrum). C–E, Flies were able to control a visual stimulus in closed loop, and the same visual sequence was replayed back to the fly during open-loop replay in a series of trials (D). The SSVEP receptive fields were summarized as mean SSVEP vectors per brain region, for closed-loop (C) and replay (E) experiments. These mean SSVEP vectors have mean directions and amplitudes per brain region, color-coded as in A and B. F, G, The mean SSVEP vector amplitudes were significantly higher than the amplitudes of LFP oscillations at other frequencies outside the range of the frequency tag (7 Hz) during both closed-loop and open-loop experiments. SSVEP mean vector directions in the central brain were significantly different from the left optic lobe (P test statistic = 7.05; p = 0.0079) but not the right optic lobe (P test statistic = 1.76; p = 0.1847). H, The SSVEP mean vector amplitudes were not significantly different (n.s.) between open-loop replay and closed-loop experiments.

We found that SSVEP amplitudes depended on the location of the bar in the arena, as well as on the recording location in the brain. As expected, when the flickering bar is on the right side of the fly, the right eye (e.g., channel 1; Fig. 2E,F) is more responsive to the 7 Hz stimulus, and when the bar is on the left, the left eye (e.g., channel 12; Fig. 2E,F) is more responsive to 7 Hz. Thus, as the bar moves around the fly, the flicker response in the brain's visual periphery (e.g., the retina and lamina) corresponds with the bar's location.

SSVEP responses to visual flicker in behaving flies reveal receptive fields

To measure how changing the position of the visual stimulus correlated with changes in the strength of neural activity in particular brain regions, we mapped the average SSVEP amplitude for individual brain channels onto all bar positions around the fly. These data are visualized as SSVEP polar plots, which represent the receptive field of a single recording site (Fig. 2G–I). For example, an optic lobe channel on the fly's right side had a receptive field spanning both the right and front of the fly, resulting in an average SSVEP vector pointing between the front and the right visual field (Fig. 2G, blue arrow). These SSVEP-based receptive fields shifted to the left in the other eye (Fig. 2G, red arrow). In central brain channels, however, the SSVEP receptive field appeared smaller (Fig. 2H). Using this approach, different channels from each recording could be compared with one another with regard to the receptive field and amplitude of the SSVEP (Fig. 2I).
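An SSVEP polar plot of this kind can be sketched by binning the SSVEP samples of one channel by bar azimuth, averaging within bins, and taking the SSVEP-weighted mean vector of the bin centers. The 10° bin width and the use of bin means as weights are assumptions for illustration; the study's exact binning is not stated.

```python
import numpy as np


def ssvep_receptive_field(bar_deg, ssvep, n_bins=36):
    """Mean SSVEP per bar-position bin plus the SSVEP-weighted mean vector.

    Returns (bin centers in rad, mean SSVEP per bin, vector length, direction in deg).
    """
    bar = np.mod(np.asarray(bar_deg, float), 360.0)
    ssvep = np.asarray(ssvep, float)
    edges = np.linspace(0.0, 360.0, n_bins + 1)
    # Bin index per sample; clip guards against values that round to 360.
    idx = np.clip(np.digitize(bar, edges) - 1, 0, n_bins - 1)
    centers_rad = np.radians(edges[:-1] + 180.0 / n_bins)
    mean_per_bin = np.full(n_bins, np.nan)
    for b in range(n_bins):
        if np.any(idx == b):
            mean_per_bin[b] = ssvep[idx == b].mean()
    ok = ~np.isnan(mean_per_bin)
    # SSVEP-weighted mean resultant vector over the occupied bins.
    z = np.sum(mean_per_bin[ok] * np.exp(1j * centers_rad[ok])) / np.sum(mean_per_bin[ok])
    return centers_rad, mean_per_bin, np.abs(z), np.degrees(np.angle(z)) % 360.0
```

A channel whose SSVEP is uniform around the arena gives a vector length near 0, whereas one responding only at a single azimuth gives a length near 1 pointing at that azimuth.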

When we assigned recording sites to different brain regions according to our 3D fly brain model (Fig. 3A,B; see Materials and Methods), we found that central brain channels generally had a more frontal receptive field, whereas the optic lobes had more lateral receptive fields (Fig. 3C; the angular distributions of central channels 7 and 8 are significantly different from optic lobe channels 1 and 2, and 12 and 13, p = 0.001 and p = 0.05, respectively, by Kuiper test). Each SSVEP mean vector has a directional and a length component. The former indicates the bar position that elicits the highest visual response per brain region, and the latter indicates the amplitude of that direction-specific response. Neural activity in the optic lobes was clearly side-specific: the mean SSVEP direction in one optic lobe was significantly different from the mean SSVEP direction in the other optic lobe (Fig. 3C; p = 0.000002, Kuiper test). The response is also clearly linked to the tagged object: the SSVEP mean vector directions were significantly different from those for other, nontagged frequencies (Wilcoxon rank sum test, rank sum = 4,302,100, p < 0.00001), and also different from the mean vector direction at the same frequency (7 Hz) when no flickering bar was present (Wilcoxon rank sum test, rank sum = 1,843,398, p < 0.00001). This confirms that the SSVEP vectors indeed reflect receptive fields throughout the brain.

To better assess how different brain regions responded to the 7 Hz bar during closed-loop fixation, we next compared SSVEP mean vector lengths alone, ignoring the angular component, so that recordings from the left and right brain hemispheres could be combined (Fig. 3F). We found that the mean SSVEP vector length (for the 7 Hz frequency tag) during closed-loop behavior was significantly higher than for frequencies outside of the flicker frequency (“nontagged frequencies”) in all areas except the central brain regions, which include the mushroom bodies and central complex (Kruskal–Wallis; p < 0.01; Fig. 3F). This may reflect the decreased directionality of the frequency tag in the central brain, compared with the optic lobes.

SSVEP receptive fields during open-loop stimulation resemble receptive fields during closed-loop behavior

In the preceding experiments, flies could control the angular position of the 7 Hz bar in closed loop. We wanted to know whether neural responses to visual flicker differed when the fly was in control of the stimulus versus when it passively viewed the same stimulus sequence. In these open-loop “replay” experiments, the fly experienced exactly the same visual stimulus as during the preceding closed-loop experiment, but could not control it. Crucially, closed-loop and open-loop replay experiments were repeated over three trials, so that fatigue or motor learning are unlikely to explain any differences between the conditions (Fig. 3D). Flies showed similar walking and turning distributions during closed-loop and replay experiments (Fig. 4A–D). We found that during open-loop replay, the mean SSVEP vector length for the 7 Hz frequency tag was significantly higher than at frequencies outside the flicker frequency (nontagged frequencies) across most brain regions, as during closed-loop control (Fig. 3F,G). When we directly compared open-loop with closed-loop brain responses, we found that replaying the visual stimulus to the same flies had very little effect on SSVEP responses across the brain, as seen in the almost identical SSVEP vector distributions (Fig. 3C,E) and the similar mean vector lengths (Fig. 3H). This suggests that, at least for SSVEP amplitude, responses to 7 Hz visual flicker in the fly brain are largely determined by where the object is relative to the fly, rather than by the fly's ability to control the object's position.
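The difference between the two conditions can be made concrete with a toy model. In closed loop, each yaw sample from the ball tracker counter-rotates the bar, so the fly can bring the bar to the front by turning toward it; in replay, the recorded bar trace is shown again and the fly's new turning is logged but never moves the bar. The gain, time step, and sign convention (positive yaw = turn right, positive azimuth = bar on the right) are assumptions for illustration, not the parameters of the actual arena or FicTrac setup (Moore et al., 2014).

```python
def run_closed_loop(yaw_deg_s, dt=0.01, gain=1.0, start_deg=0.0):
    """Closed loop: each yaw sample counter-rotates the bar (hypothetical gain).

    Bar azimuth is in degrees, 0 = in front of the fly, wrapped to [-180, 180).
    Returns the bar trace so it can be replayed later.
    """
    angle = start_deg
    trace = []
    for yaw in yaw_deg_s:
        angle -= gain * yaw * dt  # turning right shifts the bar leftward
        angle = (angle + 180.0) % 360.0 - 180.0
        trace.append(angle)
    return trace


def run_replay(recorded_trace, yaw_deg_s):
    """Open-loop replay: show the recorded bar trace again, pairing each frame
    with the fly's current yaw only for logging; the yaw never moves the bar."""
    return list(zip(recorded_trace, yaw_deg_s))
```

Because `run_replay` ignores the yaw values, the visual input is identical between the two conditions; only the contingency between turning and bar motion is removed.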

Interaction effects between fly behavior and bar position on SSVEP responses

Although we found very little difference in the receptive fields between closed loop and open-loop replay, we hypothesized that other aspects of the flies' behavior could be contributing to the neural responses. For example, walking speed has been shown to correlate with the visual motion response of single neurons in the Drosophila brain (Chiappe et al., 2010). Additionally, flies might still respond to the moving object in open loop, for example by walking or turning toward it. Therefore, rather than a simple question of closed loop versus replay, we are in fact presented with a multivariate problem in which several factors could affect the visual response: walking speed, turning speed, bar position, location of the electrode in the brain, and, finally, whether the fly is actively controlling the bar or not. To make this analysis tractable, we first classified the SSVEP data by distinct categorical variables: (1) whether the flies were walking or not (Fig. 4A–C), and (2) whether the flies were turning or not (Fig. 4D–F; see Materials and Methods). In addition, we grouped brain regions into two domains (optic lobes and central brain; Fig. 4G), and we divided bar position into two areas (in front of or behind the fly; Fig. 4H). Finally, closed loop versus replay was also included as a factor (Fig. 4I), to assess whether any differences become evident once all other factors are accounted for.
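The categorization step can be sketched as a function that maps one analysis window onto the five categorical factors. The thresholds, the use of combined forward and sidestep speed for "walking" (following the Fig. 5 legend), and the ±90° boundary between "front" and "behind" are assumptions for illustration; the criteria actually used are given in Materials and Methods. `Window` and `categorize` are hypothetical names.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds; the values used in the study are given in its
# Materials and Methods, which are not reproduced here.
WALK_THRESHOLD_MM_S = 1.0
TURN_THRESHOLD_DEG_S = 30.0


@dataclass(frozen=True)
class Window:
    """One analysis window of ball-tracking data plus stimulus context."""
    forward_mm_s: float
    sidestep_mm_s: float
    yaw_deg_s: float
    bar_deg: float   # bar azimuth, 0 = in front of the fly
    region: str      # "optic_lobe" or "central_brain"
    closed_loop: bool


def categorize(w):
    """Map a window onto the five categorical factors used in the ANOVA."""
    speed = math.hypot(w.forward_mm_s, w.sidestep_mm_s)  # forward + sidestep
    return (
        "walking" if speed > WALK_THRESHOLD_MM_S else "not_walking",
        "turning" if abs(w.yaw_deg_s) > TURN_THRESHOLD_DEG_S else "not_turning",
        "front" if abs(w.bar_deg) < 90.0 else "behind",
        w.region,
        "closed_loop" if w.closed_loop else "replay",
    )
```

Each SSVEP sample then carries a tuple of labels that can be passed to a multiway ANOVA as grouping variables.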

We performed a multiway ANOVA (Table 1) to identify factors that influence the 7 Hz SSVEP as sampled through time (see Materials and Methods). Considering main effects, we found, as expected, that the neural response to flicker was significantly influenced by walking activity (Fig. 4C; Table 1), by brain region (Fig. 4G), and by bar position (Fig. 4H). In contrast, turning did not seem to alter the SSVEP (Fig. 4F), and, as shown before, replay and closed loop remained similar on average (Fig. 4I). Differences between closed loop and replay became evident at the level of the SSVEP only when we considered interaction effects. When flies were walking in closed loop, both the central brain and the optic lobes responded more strongly only when flies also made turning maneuvers (Fig. 5A; Table 1). During replay, however, the optic lobes responded less strongly to the flickering bar under these same conditions, opposite to the effect seen in the central brain (Fig. 5B). Interestingly, when flies were not walking at all, turning the ball made no difference to the SSVEP in either closed loop or replay (Fig. 5C,D; Table 1). These results suggest that turning combined with active walking is a key factor in distinguishing whether the fly senses that it is in control (in closed loop), and that the SSVEP amplitude differences between closed loop and replay are most evident in the optic lobes. They also suggest that the central brain and the optic lobes become uncoupled during replay (as evidenced by the crossed lines in Fig. 5B), when flies are not receiving visual feedback that matches their behavioral actions.
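The "crossed lines" in an interaction plot correspond to a difference of differences. The sketch below computes the effect of turning within each brain region and the contrast between them; it shows the descriptive quantity that a region × turning interaction term tests, not the full multiway ANOVA. The numbers in the usage example are hypothetical and chosen only to produce crossed lines.

```python
from statistics import mean


def simple_effects_and_interaction(cells):
    """Difference of differences behind a region x turning interaction.

    `cells` maps (region, turning) to SSVEP values, e.g.
    ("optic_lobe", True) -> [...]. Returns the turning effect within each
    region and their difference. Effects of opposite sign correspond to
    crossed lines in an interaction plot.
    """
    optic = mean(cells[("optic_lobe", True)]) - mean(cells[("optic_lobe", False)])
    central = mean(cells[("central_brain", True)]) - mean(cells[("central_brain", False)])
    return optic, central, optic - central


# Hypothetical values: turning lowers the optic lobe SSVEP but raises the
# central brain SSVEP, so the two simple effects have opposite signs.
example = {
    ("optic_lobe", True): [1.0, 1.2],
    ("optic_lobe", False): [1.4, 1.6],
    ("central_brain", True): [2.0, 2.2],
    ("central_brain", False): [1.8, 1.8],
}
```

Here the optic lobe effect is about -0.4, the central brain effect about +0.3, and the interaction contrast about -0.7; parallel lines would give a contrast near zero.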

Table 1.

Multiway ANOVA testing how the SSVEP varies with brain region (where the electrodes were located), whether the experiment was closed loop or open-loop replay, bar position (front vs behind the fly), whether or not the fly was walking, and whether or not the fly was turning

| Source | Factor | p |
|---|---|---|
| Walking vs not walking | Walking | **0.0044** |
| Turning vs not turning | Turning | 0.4672 |
| Bar position (front vs back) | Bar location | **<0.000001** |
| Closed loop vs replay | Loop | 0.9538 |
| Brain region | Region | **<0.000001** |
| Interaction effects | Walking × turning | 0.2954 |
| | Walking × bar location | **<0.000001** |
| | Walking × loop | **0.035** |
| | Walking × region | 0.4614 |
| | Turning × bar location | 0.3855 |
| | Turning × loop | **0.0189** |
| | Turning × region | **0.048** |
| | Bar location × loop | 0.2839 |
| | Bar location × region | **<0.000001** |
| | Loop × region | 0.6365 |

Bold text indicates significant effects.

Figure 5.

Mean SSVEP shows different interaction effects with behavior in closed-loop and replay experiments. Significant interaction effects were detected between brain region and turning behavior (see Table 1 for associated statistics). Different interaction effects were detected depending on whether the experiment was closed loop (A, C) or replay (B, D), and whether the fly was walking (forward or sidestep movements) or not. These interaction effects were not evident when the flies were not walking (C, D). SSVEP interaction effects were examined for periods when the bar was in front of the fly, to control for the effects of bar position. N = 24 flies.

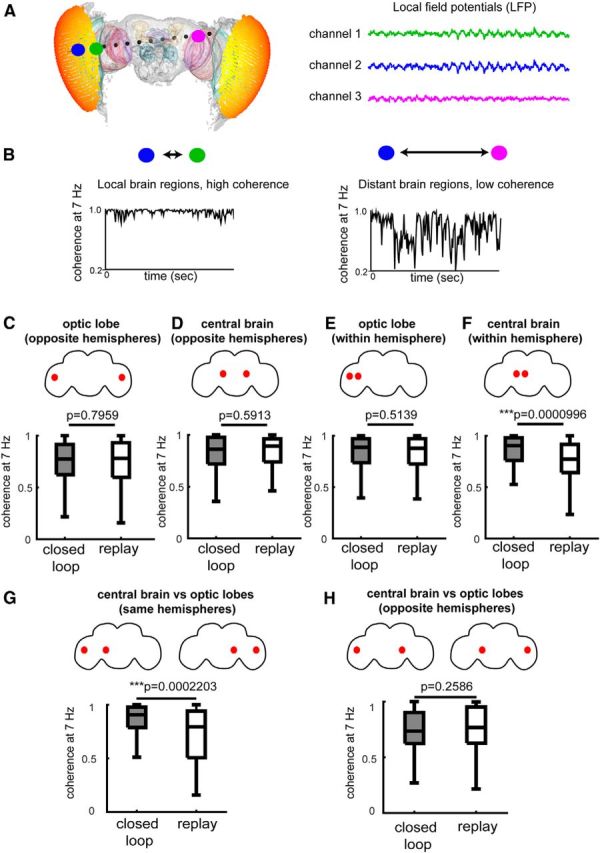

Coherence between channels increases during closed-loop experiments

Coherence analysis offers a way of measuring the coupling between different brain regions at specific frequency bands (Bokil et al., 2010; Paulk et al., 2013; Fig. 6A,B). Because our multichannel recording preparation provides LFP information from up to 16 recording sites simultaneously, it is ideally suited to measuring coherence between channels in the fly brain. Coherence is the spectral analog of correlation: it quantifies how consistent the amplitude and phase relationship between two signals is at a specific frequency, such as 7 Hz (Bokil et al., 2010). It has been used in primate studies of attention to measure how neural synchronization changes during attention tasks (Fries et al., 2001). In previous work using Drosophila, we have shown that the level of coherence in the fly brain depends on the behavioral context, such as whether or not flies are standing on a substrate (Paulk et al., 2013). Here, we extend these observations to comparisons between closed-loop fixation and replay. We found that neighboring channels are more coherent than interhemispheric channels, as might be expected (Fig. 6A,B).
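A minimal sketch of magnitude-squared coherence at a single frequency, assuming two channels are cut into non-overlapping segments (for example, trials or time windows) and that cross- and auto-spectra are averaged across segments before normalizing. This is a simplified, untapered estimator; Chronux (Bokil et al., 2010) uses multitaper spectral estimates, so values will not match it exactly. Function names are hypothetical.

```python
import cmath
import math


def dft_bin(segment, fs, freq):
    """Complex Fourier coefficient of `segment` at `freq` Hz (no taper)."""
    return sum(
        x * cmath.exp(-2j * math.pi * freq * k / fs) for k, x in enumerate(segment)
    )


def coherence_at(x, y, fs, freq, seg_len):
    """Magnitude-squared coherence between x and y at `freq` Hz.

    The signals are cut into non-overlapping segments of `seg_len` samples;
    cross- and auto-spectra are averaged over segments before normalising:
        C = |<X Y*>|^2 / (<|X|^2> <|Y|^2>),
    which lies in [0, 1]. With a single segment C would always equal 1.
    """
    n_seg = min(len(x), len(y)) // seg_len
    if n_seg < 2:
        raise ValueError("need at least two segments to estimate coherence")
    sxy, sxx, syy = 0j, 0.0, 0.0
    for s in range(n_seg):
        lo, hi = s * seg_len, (s + 1) * seg_len
        fx = dft_bin(x[lo:hi], fs, freq)
        fy = dft_bin(y[lo:hi], fs, freq)
        sxy += fx * fy.conjugate()
        sxx += abs(fx) ** 2
        syy += abs(fy) ** 2
    return abs(sxy) ** 2 / (sxx * syy)
```

Two channels whose 7 Hz phases drift from segment to segment but keep a fixed lag relative to each other give a coherence of 1, whereas channels with independent phases give a value near zero: coherence measures the consistency of the phase relationship, not the strength of either signal.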

Figure 6.

Coherence increases during closed-loop experiments. A, Coherence measures the coupling of brain activity between different brain regions (Bokil et al., 2010; Paulk et al., 2013), here for the 7 Hz frequency tag in the fly brain. Representative examples of three LFP recordings are shown: two are close together in one optic lobe (green, blue), and the third is more distal, in the opposite optic lobe (magenta). B, Coherence is higher between local brain regions and lower between distal brain regions. Coherence fluctuates through time; high coherence indicates strong coupling between signals, and low coherence indicates weak coupling. C–H, Coherence was measured between electrode sites in different brain regions (indicated by red dots in fly brain schemas) when flies were active (walking and turning) during closed-loop and replay behavior. Coherence was not significantly different between opposite hemispheres of the optic lobes or central brain (C, D), or between neighboring channels in the optic lobes (E). However, coherence was significantly higher between neighboring channels within hemispheres in the central brain during closed-loop behavior (F). Coherence was significantly higher between central brain and optic lobes in the same hemisphere during closed-loop behavior (G), but not between opposite hemispheres (H). Within-hemisphere calculations were made for both left and right sides of the brain. Statistical comparisons in C–F were performed using the Wilcoxon rank sum test, N = 24 flies.

To investigate whether coherence levels differed between closed loop and replay, we performed a multiway ANOVA testing how coherence at 7 Hz varies with channel comparison (local vs distant in the brain), fly behavior (walking or turning), bar position, and experimental condition (closed loop vs replay; Table 2). We subdivided our coherence data into comparisons between neighboring channels (local coherence) and between channels on either side of the fly brain (interhemispheric coherence) in two regional categories, (1) optic lobes and (2) central brain, across the experiment types. Unlike SSVEP amplitude, coherence was not significantly altered when flies walked forward or side-stepped; it increased only when flies turned (Table 2). There were no differences between closed loop and replay when we compared interhemispheric coherence in the optic lobes or central brain, or local coherence between neighboring recording sites in the optic lobes (Fig. 6C–E). In contrast, we observed a highly significant decrease in local coherence in the central brain during open-loop replay, compared with closed-loop behavior (Fig. 6F). This suggests that synchronous neural activity in the central brain reflects, at some level, a fundamental difference between closed loop and replay, even though the amplitude of the flicker response is on average similar between the paradigms (Fig. 3H). Interestingly, coherence between the central brain and optic lobes also decreased during open-loop replay when we compared recording sites in the same hemisphere (Fig. 6G), but not when we compared opposite hemispheres (Fig. 6H). These coherence results support our earlier finding that the central brain and optic lobes become uncoupled during replay under certain behavioral conditions (Fig. 5A,B).
Together, our SSVEP and coherence results suggest that a direct correspondence between behavior and visual feedback (i.e., closed-loop control) promotes temporal coordination in the fly brain, whereas a mismatch (i.e., open-loop replay) is associated with a loss of coherence.
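The closed-loop versus replay comparisons in Figure 6 use the Wilcoxon rank sum test. A minimal sketch of that test follows: tied values receive average ranks, and the p-value comes from the normal approximation without a tie correction. Statistical packages may instead report an exact p-value for small samples or apply a tie correction, so this is an illustration of the statistic, not a reproduction of the reported values.

```python
import math


def rank_sum(x, y):
    """Wilcoxon rank sum W for sample x, with a two-sided normal-approximation p.

    Ties receive the average of the ranks they span. No tie correction is
    applied to the variance, so p is slightly conservative when ties occur.
    """
    if not x or not y:
        raise ValueError("both samples must be non-empty")
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1  # 1-based average rank for this block of ties
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    n1, n2 = len(x), len(y)
    mu = n1 * (n1 + n2 + 1) / 2  # expected W under the null hypothesis
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w, z, p
```

For `rank_sum([1, 2, 3], [4, 5, 6])`, W = 6 and the approximate p is just under 0.05; with only three observations per group, an exact test would be preferable.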

Table 2.

Multiway ANOVA testing how coherence varies with brain regions, whether the experiment was closed-loop or open-loop replay, the bar position (front vs behind the fly), whether or not the fly was walking, and whether or not the fly was turning

| Source | Factor | F statistic | p |
|---|---|---|---|
| Walking vs not walking | Walking | 0.229706 | 0.631742 |
| Turning vs not turning | Turning | 7.100217 | **0.007708** |
| Bar position (front vs back) | Bar location | 3.564825 | 0.059017 |
| Closed loop vs replay | Loop | 2.423198 | 0.119552 |
| Coherence estimation across brain regions | Brain region comparison | 437.1532 | **<0.00000001** |
| Interaction effects | Walking × turning | 1.052304 | 0.304978 |
| | Walking × bar location | 17.05647 | **0.0000363** |
| | Walking × loop | 22.53801 | **0.000002** |
| | Walking × region | 54.86743 | **<0.00000001** |
| | Turning × bar location | 0.690296 | 0.406064 |
| | Turning × loop | 5.459179 | **0.019466** |
| | Turning × region | 0.384883 | 0.859501 |
| | Bar location × loop | 1.135769 | 0.286549 |
| | Bar location × region | 96.13367 | **<0.00000001** |
| | Loop × region | 16.58375 | **<0.00000001** |

Bold text indicates significant effects.

Discussion

We live in a closed-loop world: our actions have immediate consequences that we can perceive, allowing us to continuously adjust our behavior to maximize our performance. For a newborn child, this realization may occur gradually, but afterward it is taken for granted that sensory feedback accurately describes the consequences of our actions. Feedback from our actions does not have to be consciously perceived to optimize our behavior: goal-directed actions can be accompanied by an internal copy of the motor command, termed an efference copy, which modulates sensory thresholds for self-generated stimulation, thereby establishing boundaries between self and non-self (Arbib, 2003).

The capacity to learn how one's own actions affect the outside world is a fundamental requisite for adaptive behavior in all animals. Even insects such as Drosophila can adjust to their environment by associating their actions with consequences, as shown in operant learning paradigms (Heisenberg and Wolf, 1984). In Drosophila, virtual reality paradigms have been used successfully for decades to study learning, memory, and attention-like mechanisms by examining behavioral choices made during closed-loop behavior. These elegant paradigms have allowed fly researchers to ask questions reminiscent of human psychology: How do flies discriminate figures from the background (Heisenberg and Wolf, 1984; Fenk et al., 2014)? Do flies prefer novelty (Dill and Heisenberg, 1995)? Do flies generalize context (Liu et al., 1999; Xi et al., 2008)? Is fly visual learning position invariant (Tang et al., 2004)? How do flies deal with contradictory cues (Tang and Guo, 2001; Zhang et al., 2007)? The closed-loop configuration of Drosophila flight arenas was crucial for addressing many of these questions. Yet, with the possible exception of one study (van Swinderen and Greenspan, 2003), little is known about the neural correlates of closed-loop behavior in Drosophila. How does the fly brain respond when it encounters the somewhat artificial situation of being able to control the position of a visual object displayed on a wrap-around arena, compared with passively viewing the same object?

We addressed this last question by combining a recently developed multichannel brain recording preparation for Drosophila (Paulk et al., 2013) with novel approaches to accurately perform closed-loop visual fixation experiments in walking insects (Moore et al., 2014). Neural responses were measured by frequency tagging, a method to track flickering stimuli in brain activity. Frequency tagging has been extensively used to understand aspects of attention and cognition in humans and other vertebrates, usually through electroencephalogram (EEG) recordings (Vialatte et al., 2010; Norcia et al., 2015). However, it is only recently that frequency tagging has been established for insects such as the honeybee and fruit flies by recording LFP responses in the brain (van Swinderen, 2012; Paulk et al., 2013, 2014). This approach diverges from traditional methods of recording spiking activity from single cells, but has the advantage of allowing neural activity to be recorded across the entire brain of the animal in ways that are similar to human EEG recording methods.

We used the frequency-tagging approach to map receptive fields of the brain response to the flickering object during closed-loop behavior. Analogous to receptive fields of single neurons, LFPs from populations of neurons can show tuning characteristics depending on the location of the stimulus in the visual field, as shown in cats and primates (Victor et al., 1994; Kayser and König, 2004). We found that closed-loop and replayed stimuli evoked identical receptive fields for the 7 Hz frequency tag in the fly brain, indicating that receptive fields did not depend on whether the fly was in control of the stimulus. This contrasts with previous bee experiments, in which a frequency tag at 20 Hz appeared stronger during closed loop than during replay of the stimulus (Paulk et al., 2014). In future work, it will be crucial to determine whether some frequencies work better than others to study attention in the insect brain. Here, we used 7 Hz because walking flies respond strongly to this frequency (L. Kirszenblat, Y. Zhou, and B. van Swinderen, unpublished observations), and we have shown previously that 7 Hz creates an effective tag in the fly brain to study attention-like effects (van Swinderen, 2012).

Interestingly, the amplitude of the 7 Hz frequency tag was influenced by the fly's walking and turning behavior. We have previously shown that even contact with a substrate (e.g., standing on a Styrofoam ball) significantly changes brain activity in Drosophila (Paulk et al., 2013). Perhaps similarly, neural responses to motion are also modulated by behavioral states, such as walking or flying (Chiappe et al., 2010; Maimon et al., 2010). The integration of forward walking with simultaneous turning movements is most likely crucial for goal-directed behavior, as when a fly makes a choice to approach an object in its environment. Accordingly, our results showed increased coupling between the optic lobes and the central brain when the fly was engaged in such goal-directed behavior (active walking and turning) under closed-loop control.

To formally examine temporal coordination between different brain regions in our experiments, we measured LFP coherence. This had not been possible in previous honeybee closed-loop experiments (Paulk et al., 2014), which involved only two or three glass electrodes at a time, whereas each of our current Drosophila experiments included multiple (10–16) simultaneous recordings across the fly brain, from eye to eye. We found that coherence between optic lobe and central brain channels from the same hemisphere decreased during replay, whereas coherence between contralateral channels remained unchanged. Local coherence in the central brain also decreased during replay. This suggests that immediate visual feedback on the consequence of the fly's actions somehow binds neural signals in the fly brain, especially from ipsilateral sites involving the central brain. The central brain of insects includes different structures such as the mushroom bodies, which have been associated with learning and memory, and the central complex, which is crucial for coordinating sensory input with motor control and has been compared with the vertebrate basal ganglia (Strausfeld and Hirth, 2013). It is likely that increased coherence has functional relevance for operant behavior in general, and for selective attention more specifically.

One limitation of our recording preparation is that, although we can examine how brain regions are coordinated at the global level, we cannot identify the individual neurons and circuits driving the neural responses. Centrifugal neurons that send signals from the central brain to the peripheral visual system could be responsible for the increased coherence between optic lobes and central brain during closed-loop behavior, because such neurons have recently been implicated in selective attention in insects (Wiederman and O'Carroll, 2013). Because the central brain is involved in sensory-motor coordination, the increased coherence within the central brain during closed-loop behavior might result from changes in temporal coordination between sensory and motor signals, or alternatively could arise from neuromodulation associated with the fly's attentional state. How these changes in coherence relate to the activity of individual neurons and neuromodulators could be explored in the future by monitoring individual neural responses simultaneously with global neural activity, or through manipulation of neuromodulatory pathways.

How might neural coherence be relevant to behavior? When animals adapt their behavior to achieve a goal (such as visual fixation in our study), a number of different sensory and motor modalities must work together, such as vision, locomotion, and proprioception. This means that the brain regions controlling each of these modalities must be coordinated, and central-brain coherence provides a likely mechanism for this. However, when closed-loop feedback is broken, coherence within central brain regions might be maladaptive until the animal regains control by finding the right behavioral program, in the real world or in virtual reality. Our paradigm for Drosophila provides a way to test the limits of this adaptive system in wild-type and mutant animals. One important implication of our findings is that a better understanding of behavior requires measures of temporal relationships among neurons in the fly's central brain. Traditional approaches examining firing rates or calcium signals in just one region of interest would not capture the subtle temporal effects associated with behavioral control that we have uncovered here. Future work using our multichannel preparation or paired recordings in Drosophila mutants should elucidate how closed-loop control promotes sensory coupling in the fly brain.

Footnotes

Funding for this research was provided by the Australian Research Council and the Queensland Brain Institute. We thank Richard Moore and Gavin Taylor for assistance and development of FicTrac Software, Li Liu for assistance with providing materials for the diamond arena, and Aoife Larkin for comments on the paper.

The authors declare no competing financial interests.

References

- Arbib MA. The handbook of brain theory and neural networks. Ed 2. Cambridge, Mass: MIT; 2003.

- Berens P. CircStat: a MATLAB toolbox for circular statistics. J Stat Softw. 2009;31:1–21.

- Bokil H, Andrews P, Kulkarni JE, Mehta S, Mitra PP. Chronux: a platform for analyzing neural signals. J Neurosci Methods. 2010;192:146–151. doi: 10.1016/j.jneumeth.2010.06.020.

- Brembs B, Heisenberg M. The operant and the classical in conditioned orientation of Drosophila melanogaster at the flight simulator. Learn Mem. 2000;7:104–115. doi: 10.1101/lm.7.2.104.

- Card G, Dickinson MH. Visually mediated motor planning in the escape response of Drosophila. Curr Biol. 2008;18:1300–1307. doi: 10.1016/j.cub.2008.07.094.

- Chiappe ME, Seelig JD, Reiser MB, Jayaraman V. Walking modulates speed sensitivity in Drosophila motion vision. Curr Biol. 2010;20:1470–1475. doi: 10.1016/j.cub.2010.06.072.

- Collett T. Visual neurones for tracking moving targets. Nature. 1971;232:127–130. doi: 10.1038/232127a0.

- Collett TS, Land MF. Visual control of flight behavior in hoverfly, Syritta pipiens L. J Comp Physiol. 1975;99:1–66. doi: 10.1007/BF01464710.

- Dill M, Heisenberg M. Visual-pattern memory without shape-recognition. Philos Trans R Soc Lond B Biol Sci. 1995;349:143–152. doi: 10.1098/rstb.1995.0100.

- Egelhaaf M, Borst A. A look into the cockpit of the fly: visual orientation, algorithms, and identified neurons. J Neurosci. 1993;13:4563–4574. doi: 10.1523/JNEUROSCI.13-11-04563.1993.

- Fenk LM, Poehlmann A, Straw AD. Asymmetric processing of visual motion for simultaneous object and background responses. Curr Biol. 2014;24:2913–2919. doi: 10.1016/j.cub.2014.10.042.

- Fries P, Reynolds JH, Rorie AE, Desimone R. Modulation of oscillatory neuronal synchronization by selective visual attention. Science. 2001;291:1560–1563. doi: 10.1126/science.1055465.

- Götz KG. Visual guidance in Drosophila. Basic Life Sci. 1980;16:391–407. doi: 10.1007/978-1-4684-7968-3_28.

- Heisenberg M, Wolf R. Vision in Drosophila: genetics of microbehavior. Berlin, New York: Springer; 1984.

- Hyvarinen A, Karhunen J, Oja E. Independent component analysis. New York: Wiley; 2001.

- Kayser C, König P. Stimulus locking and feature selectivity prevail in complementary frequency ranges of V1 local field potentials. Eur J Neurosci. 2004;19:485–489. doi: 10.1111/j.0953-816X.2003.03122.x.

- Kimmerle B, Egelhaaf M. Detection of object motion by a fly neuron during simulated flight. J Comp Physiol A. 2000;186:21–31. doi: 10.1007/s003590050003.

- Lidierth M. sigTOOL: a MATLAB-based environment for sharing laboratory-developed software to analyze biological signals. J Neurosci Methods. 2009;178:188–196. doi: 10.1016/j.jneumeth.2008.11.004.

- Liu L, Wolf R, Ernst R, Heisenberg M. Context generalization in Drosophila visual learning requires the mushroom bodies. Nature. 1999;400:753–756. doi: 10.1038/23456.

- Maimon G, Straw AD, Dickinson MH. Active flight increases the gain of visual motion processing in Drosophila. Nat Neurosci. 2010;13:393–399. doi: 10.1038/nn.2492.

- Mischiati M, Lin H, Siwanowicz I, Leonardo A. Active head control and its role in steering during dragonfly interception flights. Integr Comp Biol. 2014;54:E141.

- Moore RJ, Taylor GJ, Paulk AC, Pearson T, van Swinderen B, Srinivasan MV. FicTrac: a visual method for tracking spherical motion and generating fictive animal paths. J Neurosci Methods. 2014;225:106–119. doi: 10.1016/j.jneumeth.2014.01.010.

- Norcia AM, Appelbaum LG, Ales JM, Cottereau BR, Rossion B. The steady-state visual evoked potential in vision research: a review. J Vis. 2015;15(6):4, 1–46. doi: 10.1167/15.6.4.

- Nordström K, O'Carroll DC. Small object detection neurons in female hoverflies. Proc Biol Sci. 2006;273:1211–1216. doi: 10.1098/rspb.2005.3424.

- Olberg RM, Worthington AH, Venator KR. Prey pursuit and interception in dragonflies. J Comp Physiol A. 2000;186:155–162. doi: 10.1007/s003590050015.

- Olberg RM, Seaman RC, Coats MI, Henry AF. Eye movements and target fixation during dragonfly prey-interception flights. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2007;193:685–693. doi: 10.1007/s00359-007-0223-0.

- Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- Paulk AC, Zhou Y, Stratton P, Liu L, van Swinderen B. Multichannel brain recordings in behaving Drosophila reveal oscillatory activity and local coherence in response to sensory stimulation and circuit activation. J Neurophysiol. 2013;110:1703–1721. doi: 10.1152/jn.00414.2013.

- Paulk AC, Stacey JA, Pearson TW, Taylor GJ, Moore RJ, Srinivasan MV, van Swinderen B. Selective attention in the honeybee optic lobes precedes behavioral choices. Proc Natl Acad Sci U S A. 2014;111:5006–5011. doi: 10.1073/pnas.1323297111.

- Reiser MB, Dickinson MH. A modular display system for insect behavioral neuroscience. J Neurosci Methods. 2008;167:127–139. doi: 10.1016/j.jneumeth.2007.07.019.

- Roach BJ, Mathalon DH. Event-related EEG time-frequency analysis: an overview of measures and an analysis of early gamma band phase locking in schizophrenia. Schizophr Bull. 2008;34:907–926. doi: 10.1093/schbul/sbn093.

- Strausfeld NJ, Hirth F. Deep homology of arthropod central complex and vertebrate basal ganglia. Science. 2013;340:157–161. doi: 10.1126/science.1231828.

- Straw AD. Vision egg: an open-source library for realtime visual stimulus generation. Front Neuroinform. 2008;2:4. doi: 10.3389/neuro.11.004.2008.

- Tang S, Guo A. Choice behavior of Drosophila facing contradictory visual cues. Science. 2001;294:1543–1547. doi: 10.1126/science.1058237.

- Tang SM, Wolf R, Xu SP, Heisenberg M. Visual pattern recognition in Drosophila is invariant for retinal position. Science. 2004;305:1020–1022. doi: 10.1126/science.1099839.

- van Swinderen B. Attention in Drosophila. Int Rev Neurobiol. 2011;99:51–85. doi: 10.1016/B978-0-12-387003-2.00003-3.

- van Swinderen B. Competing visual flicker reveals attention-like rivalry in the fly brain. Front Integr Neurosci. 2012;6:96. doi: 10.3389/fnint.2012.00096.

- van Swinderen B, Brembs B. Attention-like deficit and hyperactivity in a Drosophila memory mutant. J Neurosci. 2010;30:1003–1014. doi: 10.1523/JNEUROSCI.4516-09.2010.

- van Swinderen B, Flores KA. Attention-like processes underlying optomotor performance in a Drosophila choice maze. Dev Neurobiol. 2007;67:129–145. doi: 10.1002/dneu.20334.

- van Swinderen B, Greenspan RJ. Salience modulates 20–30 Hz brain activity in Drosophila. Nat Neurosci. 2003;6:579–586. doi: 10.1038/nn1054.

- Vialatte FB, Maurice M, Dauwels J, Cichocki A. Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog Neurobiol. 2010;90:418–438. doi: 10.1016/j.pneurobio.2009.11.005.

- Victor JD, Purpura K, Katz E, Mao B. Population encoding of spatial-frequency, orientation, and color in macaque V1. J Neurophysiol. 1994;72:2151–2166. doi: 10.1152/jn.1994.72.5.2151.

- Wiederman SD, O'Carroll DC. Selective attention in an insect visual neuron. Curr Biol. 2013;23:156–161. doi: 10.1016/j.cub.2012.11.048.

- Wolf R, Heisenberg M. Basic organization of operant-behavior as revealed in Drosophila flight orientation. J Comp Physiol A. 1991;169:699–705. doi: 10.1007/BF00194898.

- Xi W, Peng Y, Guo J, Ye Y, Zhang K, Yu F, Guo A. Mushroom bodies modulate salience-based selective fixation behavior in Drosophila. Eur J Neurosci. 2008;27:1441–1451. doi: 10.1111/j.1460-9568.2008.06114.x.

- Zhang K, Guo JZ, Peng Y, Xi W, Guo A. Dopamine-mushroom body circuit regulates saliency-based decision-making in Drosophila. Science. 2007;316:1901–1904. doi: 10.1126/science.1137357.