Abstract

Dancing to music involves synchronized movements, which can be at the basic beat level or higher hierarchical metrical levels, as in a march (groups of two basic beats, one–two–one–two …) or waltz (groups of three basic beats, one–two–three–one–two–three …). Our previous human magnetoencephalography studies revealed that the subjective sense of meter influences auditory evoked responses phase locked to the stimulus. Moreover, the timing of metronome clicks was represented in periodic modulation of induced (non-phase locked) β-band (13–30 Hz) oscillation in bilateral auditory and sensorimotor cortices. Here, we further examine whether acoustically accented and subjectively imagined metric processing in march and waltz contexts during listening to isochronous beats were reflected in neuromagnetic β-band activity recorded from young adult musicians. First, we replicated previous findings of beat-related β-power decrease at 200 ms after the beat followed by a predictive increase toward the onset of the next beat. Second, we showed that the β decrease was significantly influenced by the metrical structure, as reflected by differences across beat type for both perception and imagery conditions. Specifically, the β-power decrease associated with imagined downbeats (the count “one”) was larger than that for both the upbeat (preceding the count “one”) in the march, and for the middle beat in the waltz. Moreover, beamformer source analysis for the whole brain volume revealed that the metric contrasts involved auditory and sensorimotor cortices; frontal, parietal, and inferior temporal lobes; and cerebellum. We suggest that the observed β-band activities reflect a translation of timing information to auditory–motor coordination.

SIGNIFICANCE STATEMENT With magnetoencephalography, we examined β-band oscillatory activities around 20 Hz while participants listened to metronome beats and imagined musical meters such as a march and waltz. We demonstrated that β-band event-related desynchronization in the auditory cortex differentiates between beat positions, specifically between downbeats and the following beat. This is the first demonstration of β-band oscillations related to hierarchical and internalized timing information. Moreover, the meter representation in the β oscillations was widespread across the brain, including sensorimotor and premotor cortices, parietal lobe, and cerebellum. The results extend current understanding of the role of β oscillations in neural processing of predictive timing.

Keywords: event-related desynchronization, ERD, magnetoencephalography, predictive coding, timing processing

Introduction

When people dance to music with isochronous beats, they synchronize their movements with each beat while emphasizing or accenting the downbeats (beat one) compared to upbeats of perceived musical meters, such as a march (perceived as one–two–one …) or a waltz (perceived as one–two–three–one …). Spontaneous dance movements to music involve different repetitive limb and trunk motions corresponding to different levels of the metrical hierarchy (Toiviainen et al., 2009). Thus, adults can extract hierarchical timing information and plan entrained movements in a predictive manner without specific training. Predictive timing behavior is illustrated in finger tapping to a metronome beat, wherein the beat-to-tap interval is notably shorter than the fastest reaction time (Repp, 2005; Repp and Su, 2013). Predictive timing also appears to be an integral part of dynamic attention allocation (Jones and Boltz, 1989), such that auditory discrimination is facilitated at predictable time points (Jones et al., 2002; Grube and Griffiths, 2009; Repp, 2010), and even enhanced during rhythmic finger tapping in synchrony with these time points (Morillon et al., 2014). Altogether, these findings suggest that privileged connections between auditory and motor systems underlie predictive timing processing.

As for neural processing of beat and meter, evoked (phase-locked) activity elicited by acoustically identical beats has been shown to reflect the subjective sense of meter in magnetoencephalography (MEG; Fujioka et al., 2010) and electroencephalography (EEG; Potter et al., 2009; Nozaradan et al., 2011; Schaefer et al., 2011). However, only our previous study examined the brain areas sensitive to differences in metrical structure (Fujioka et al., 2010). The results showed involvement of sensorimotor cortices and basal ganglia and hippocampal areas, in addition to auditory areas. We also demonstrated that the magnitude of induced (non-phase-locked) β-band (13–30 Hz) oscillations during metronome listening is modulated in a synchronized manner in bilateral auditory cortices (Fujioka et al., 2009) and motor-related areas, including sensorimotor cortices, supplementary motor areas, basal ganglia, and cerebellum (Fujioka et al., 2012). Because the observed induced β activity does not contain phase-locked evoked responses, and our listening task did not require any movements (unlike tapping studies; Pollok et al., 2005a,b), these findings strongly suggest that the auditory system is part of a functional sensorimotor network characterized by β-band oscillations (Neuper et al., 2006). Recently, β activity has been associated with anticipation and predictive timing (Arnal et al., 2011; van Ede et al., 2011) as part of a hierarchical network of oscillators involved in predictive sensory processing, including contributions from δ to θ (1–3 and 4–7 Hz, respectively) and α to β (8–12 and 13–30 Hz, respectively) frequencies (Saleh et al., 2010; Arnal et al., 2014).

The present study examined whether induced β oscillations reflect musical meter and, if so, which brain areas are involved. We hypothesized that a shared representation for auditorily perceived and subjectively imagined meter exists, although the latter would be more effortful, involving extended brain areas. Participants listened to alternations of 12-beat sequences of metrically accented beats (every second or third beat was louder) and unaccented beats (all beats at the same loudness), probing metrical perception and imagery, respectively. This design allowed us to examine induced β modulation, as shown previously for listening to beats (Fujioka et al., 2009, 2012), and extend it to metrical perception, while avoiding effects of movements.

Materials and Methods

Participants.

Fourteen healthy young adults (eight females; age, 19–32 years; mean, 22.8 years) who were active professionally performing musicians at or above the university level (3–29 years of musical training; mean, 12.7 years; 3–28 h of weekly practice; mean, 18.5 h) participated in the study. All were right-handed except one, who was ambidextrous. None of the participants reported any history of neurological, otological, or psychological disorders, and their hearing was tested with clinical audiometry between 250 and 4000 Hz. All gave signed informed consent before participation after receiving a detailed explanation of the nature of the study. The procedure was approved by the Ethics Board at the Rotman Research Institute, Baycrest Centre.

Stimuli and task.

Auditory stimuli were 250 Hz pure tones with 5 ms rise and fall times and 15 ms steady-state duration, created by MATLAB using a sampling rate of 44,100 Hz (MathWorks). The tones were repeated with a regular onset-to-onset interval of 390 ms and combined into a looping 24 tone sequence. During the first half of the sequence, every second or third tone was acoustically accented (+13 dB) to create either a march or a waltz metric structure, whereas in the second half, unaccented tones of equal intensity (40 dB above individual sensation threshold, measured immediately before each MEG recording) were repeated 12 times. The resultant 24 tone sequence was then repeated continuously 28 times (about 4.5 min) in the march condition and 43 times (about 7 min) in the waltz condition in separate blocks. The waltz block was made ∼50% longer than the march block to accommodate the same number of each different beat type so as to equate signal-to-noise ratios for each beat type in MEG recordings. The stimulation was controlled by Presentation software (Neurobehavioral Systems). Participants were instructed to perceive the meter in the accented segments and to imagine the same meter in the unaccented segments. Occasionally a 500 Hz pure-tone target sound with the same duration as the other tones was inserted in one of the unaccented 12 beat segments at a nominal downbeat or upbeat position. The target position and beat type (down vs up) were randomized by the stimulation program to insert targets in 10% of sequences (i.e., about three to five times in a block) with equal probability in downbeat and upbeat positions. The actual number of targets varied across blocks and participants. No targets occurred in nominal middle-beat positions in the case of the waltz condition for the sake of simplicity. 
When hearing the high-pitched tone, participants indicated whether it was at a downbeat or an upbeat position by pressing a button with their left or right index finger on a keypad assigned to downbeats and upbeats. This target detection task was primarily designed to keep the participants vigilant and attending to the respective metric structure, rather than assessing their behavioral performance, given the level of musicianship in the participants. Another reason to keep the number of the targets extremely small was to prevent contamination from movements. Other than the occasional button presses, participants were instructed to stay still, avoid any movements, and keep their eyes open and fixated on a visual target placed in front of them. The march and waltz blocks were alternated, and each condition repeated three times. The order of the blocks as well as the hand-target (downbeat or upbeat) assignment was randomized across participants. Before going to the MEG testing, the participants received detailed instruction about the stimuli and task, and practiced until they felt confident. The sound was delivered binaurally with ER3A transducers (Etymotic Research), which were connected to the participant's ears via 3.4-m-long plastic tubes and foam earplugs.
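The stimulus timing described above can be illustrated with a short sketch. The tone frequency, ramp and steady-state durations, sampling rate, onset-to-onset interval, and accent level are taken from the text; the linear ramp shape, the placement of the first accent on the first tone, and the function names are assumptions made for illustration, and the sketch is not the original MATLAB or Presentation code.

```python
import numpy as np

FS = 44_100        # audio sampling rate (Hz), from the text
TONE_HZ = 250.0    # frequency of the standard tone (Hz)
IOI_S = 0.390      # onset-to-onset interval (s)
ACCENT_DB = 13.0   # accent level relative to unaccented tones (dB)


def make_tone(freq_hz=TONE_HZ, rise_s=0.005, steady_s=0.015, fs=FS):
    """Pure tone with onset and offset ramps (linear ramp shape is assumed)."""
    n_ramp = int(round(rise_s * fs))
    n_steady = int(round(steady_s * fs))
    n = 2 * n_ramp + n_steady
    t = np.arange(n) / fs
    envelope = np.ones(n)
    envelope[:n_ramp] = np.linspace(0.0, 1.0, n_ramp, endpoint=False)
    envelope[n - n_ramp:] = np.linspace(1.0, 0.0, n_ramp, endpoint=False)
    return envelope * np.sin(2.0 * np.pi * freq_hz * t)


def make_sequence(group, n_beats_half=12, fs=FS):
    """24-tone loop: accented every `group` tones in the first half only.

    group=2 gives the march pattern, group=3 the waltz pattern.
    Assumption: the first tone of the sequence carries the first accent.
    """
    n_ioi = int(round(IOI_S * fs))
    tone = make_tone(fs=fs)
    gains = np.ones(2 * n_beats_half)
    gains[:n_beats_half:group] = 10.0 ** (ACCENT_DB / 20.0)  # +13 dB in amplitude
    sequence = np.zeros(2 * n_beats_half * n_ioi)
    for i, gain in enumerate(gains):
        sequence[i * n_ioi:i * n_ioi + tone.size] += gain * tone
    return sequence, gains


march, march_gains = make_sequence(group=2)
waltz, waltz_gains = make_sequence(group=3)
```

Under these assumptions each 24-tone sequence lasts 24 × 0.39 s = 9.36 s and contains six accented tones in the march and four in the waltz, so 28 and 43 repetitions give 168 and 172 accented downbeats, respectively, which is consistent with the stated aim of equating the number of each beat type.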

MEG recording.

MEG was performed in a quiet magnetically shielded room with a 151 channel whole-head axial gradiometer-type MEG system (VSM Medtech) at the Rotman Research Institute. The participants were seated comfortably in an upright position with the head resting inside the helmet-shaped MEG sensor. The magnetic field data were low-pass filtered at 200 Hz, sampled at 625 Hz, and stored continuously. The head location relative to MEG sensors was registered at the beginning and end of each recording block using small electromagnetic coils attached to three fiducial points at the nasion and left and right preauricular points. The mean of the repeated fiducial recordings defined the head-based coordinate system with origin at the midpoint between the bilateral preauricular points. The posteroanterior x-axis was oriented from the origin to the nasion, the mediolateral y-axis (positive toward the left ear) was the perpendicular to x in the plane of the three fiducials, and the inferosuperior z-axis was perpendicular to the x–y plane (positive toward the vertex). The block was repeated when the fiducial locations deviated in any direction by more than ±5 mm from the mean. A surface electromyogram (EMG) was recorded with brass electrodes placed below the first dorsal interosseous muscle and the first knuckle of the index finger in the left and right hands using two channels of a bipolar EMG amplifier system. The EMG signals as well as the trigger signals from the stimulus computer were recorded simultaneously with the magnetoencephalogram.
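The head-based coordinate system defined above can be written down compactly. The following sketch constructs the three axes from the fiducial positions exactly as described (origin at the midpoint of the preauricular points, x toward the nasion, y toward the left ear within the fiducial plane, z toward the vertex). The function names and the assumption that positions are given in millimetres are illustrative and not taken from the acquisition software.

```python
import numpy as np


def head_frame(nasion, lpa, rpa):
    """Return (origin, R) such that head coordinates are R @ (p - origin)."""
    nasion, lpa, rpa = (np.asarray(v, dtype=float) for v in (nasion, lpa, rpa))
    origin = (lpa + rpa) / 2.0            # midpoint between preauricular points
    x = nasion - origin                   # posteroanterior axis
    x /= np.linalg.norm(x)
    y = lpa - origin                      # toward the left ear ...
    y -= np.dot(y, x) * x                 # ... made perpendicular to x in the fiducial plane
    y /= np.linalg.norm(y)
    z = np.cross(x, y)                    # inferosuperior axis, positive toward the vertex
    return origin, np.vstack([x, y, z])


def to_head(points, origin, rotation):
    """Transform device-space points (last axis of length 3) into head coordinates."""
    return (np.asarray(points, dtype=float) - origin) @ rotation.T


def within_tolerance(fid_start, fid_end, tol_mm=5.0):
    """True if no fiducial deviates from the mean of the two measurements by more than tol_mm on any axis."""
    a = np.asarray(fid_start, dtype=float)
    b = np.asarray(fid_end, dtype=float)
    mean = (a + b) / 2.0
    return bool(np.all(np.abs(a - mean) <= tol_mm) and np.all(np.abs(b - mean) <= tol_mm))
```

With x anterior and y toward the left, the cross product x × y points upward, so the frame is right-handed, in line with the axis definitions given in the text.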

Data analysis.

Artifacts in the MEG recording were corrected using the following procedure. First, the time points of eye-blink and heartbeat artifacts were identified using independent component analysis (Ille et al., 2002). The first principal components of the averaged artifacts were used as spatiotemporal templates to eliminate artifacts in the continuous data (Kobayashi and Kuriki, 1999). Thereafter, the continuous data were parsed into epochs according to experimental trials containing 24 beat intervals of 390 ms (9.36 s) plus preceding and succeeding intervals of 1.0 s each, resulting in a total epoch length of 11.36 s.
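The epoch arithmetic can be made explicit with a minimal sketch. The sampling rate, beat interval, number of beats, and 1.0 s padding come from the text; the array layout (channels × samples), the representation of sequence onsets as sample indices, and the function names are assumptions for illustration.

```python
import numpy as np

FS_MEG = 625     # MEG sampling rate (Hz), from the text
IOI_S = 0.390    # beat interval (s)
N_BEATS = 24     # beats per sequence
PAD_S = 1.0      # interval added before and after each sequence (s)


def epoch_bounds(onset_sample, fs=FS_MEG):
    """Start (inclusive) and stop (exclusive) sample of the epoch around one sequence onset."""
    n_pad = int(round(PAD_S * fs))
    n_sequence = int(round(N_BEATS * IOI_S * fs))
    return onset_sample - n_pad, onset_sample + n_sequence + n_pad


def cut_epochs(data, onsets, fs=FS_MEG):
    """Cut continuous data (n_channels, n_samples) into epochs; incomplete epochs are skipped."""
    epochs = []
    for onset in onsets:
        start, stop = epoch_bounds(onset, fs)
        if start >= 0 and stop <= data.shape[1]:
            epochs.append(data[:, start:stop])
    if not epochs:
        return np.empty((0, data.shape[0], 0))
    return np.stack(epochs)
```

At 625 Hz an epoch of 11.36 s corresponds to 7100 samples. A single 390 ms beat interval corresponds to 243.75 samples, so beat onsets within an epoch do not fall on integer sample indices and have to be rounded.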

We used two types of source analysis. First, to examine the dynamics of induced β-band oscillations in bilateral auditory cortices, we obtained a dipole source model and examined the time series of auditory evoked responses and its time-frequency decomposition representing event-related changes in oscillatory activity. Second, to examine the involvement of different brain areas in the β-band activities, we applied a model-free source analysis using a spatial filter based on an MEG beamformer to investigate β activity across the whole brain.

β-Band oscillations in bilateral auditory cortical sources.

To examine source activities in the bilateral auditory cortices, we used a source localization approach with an equivalent current dipole model. Short segments of MEG data ±200 ms around the time points of the accented beats were averaged to obtain the auditory evoked response. At the N1 peak of the auditory evoked response, single equivalent dipoles were modeled in the left and right temporal lobes. In right-handed subjects, the auditory source in the right hemisphere is consistently found to be several millimeters more anterior than in the left hemisphere (Pantev et al., 1998). Therefore, we compared locations between the two hemispheres in our data to verify the quality of the source localization. The accented beats were used for this modeling purpose because their N1 peak was particularly enhanced, thus offering a superior signal-to-noise ratio for the dipole modeling. Dipole locations and orientations from all blocks were averaged to obtain individual dipole models.

Based on the individual dipole models, the source waveforms for all single trials were calculated (Tesche et al., 1995; Teale et al., 2013). The resulting dipole source waveforms, sometimes termed “virtual channel” or “virtual electrode” waveforms, served as estimates of the neuroelectric activity in the auditory cortices. The polarities of the dipoles were adjusted to follow the convention from EEG recording such that the N1 response showed negative polarity at frontocentral electrodes.

The single-trial source waveforms were submitted to time-frequency analysis. To obtain induced oscillatory activities, the time-domain-averaged evoked response was regressed out from all waveforms. The time-frequency decomposition used a modified Morlet wavelet (Samar et al., 1999) at 64 logarithmically spaced frequencies between 2 and 50 Hz. The half maximum width of the wavelet was adjusted across the frequency range to contain two cycles at 2 Hz and six cycles at 50 Hz. This design accounted for the expectation of a larger number of cycles in a burst of oscillations at higher than at lower frequencies. The signal power was calculated for each time-frequency coefficient. For each frequency bin, the signal power was normalized to the mean across the 9.36 s epoch (i.e., the 24 beat cycle) and expressed as the percentage signal power change. This normalization was conducted separately for each stimulus interval and meter context. The percentage signal power changes were averaged across repeated trials and across participants. The resulting time-frequency map of the whole epoch was segmented according to the 390 ms beat interval, the two-beat interval in the march condition, and the three-beat interval in the waltz condition, separately for the intervals of meter perception (containing accented beats) and imagery (no physical accents present). The 13–30 Hz frequency range subsumes multiple functionally and individually different narrowband oscillations, and signal analyses were performed on subsets of the β band (Kropotov, 2009). The power modulation in the auditory cortex β oscillations was first inspected using the aforementioned time-frequency decompositions for the average of responses across all beat types and then selectively for each beat type, resulting in the time-frequency representations (TFRs) shown in Figures 2–4.
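The decomposition steps can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: it uses a standard complex Morlet wavelet rather than the modified wavelet of Samar et al. (1999), interprets the half maximum width as the full width at half maximum of the Gaussian amplitude envelope, interpolates the number of cycles linearly across the log-spaced frequencies, and regresses out the evoked response with one gain per trial. All function and variable names are hypothetical.

```python
import numpy as np


def induced_power(trials, fs, freqs, n_cycles):
    """Trial-averaged induced power, shape (n_freqs, n_samples).

    trials: array (n_trials, n_samples) of single-trial source waveforms.
    The epoch must be longer than the longest wavelet.
    """
    trials = np.asarray(trials, dtype=float)
    evoked = trials.mean(axis=0)
    # Regress the averaged evoked response out of every trial (one gain per trial).
    gains = trials @ evoked / (evoked @ evoked)
    residual = trials - np.outer(gains, evoked)

    power = np.empty((len(freqs), trials.shape[1]))
    for k, (f, c) in enumerate(zip(freqs, n_cycles)):
        # Gaussian envelope whose full width at half maximum spans c cycles.
        sigma = (c / f) / (2.0 * np.sqrt(2.0 * np.log(2.0)))
        half = int(np.ceil(4.0 * sigma * fs))
        t = np.arange(-half, half + 1) / fs
        wavelet = np.exp(2j * np.pi * f * t - t**2 / (2.0 * sigma**2))
        wavelet /= np.abs(wavelet).sum()
        coeffs = np.array([np.convolve(tr, wavelet, mode="same") for tr in residual])
        power[k] = (np.abs(coeffs) ** 2).mean(axis=0)
    return power


def percent_change(power, ref):
    """Percentage power change relative to the mean over the samples selected by `ref`."""
    baseline = power[:, ref].mean(axis=1, keepdims=True)
    return 100.0 * (power / baseline - 1.0)


# Frequency grid from the text; the cycle interpolation is an assumption.
freqs = np.geomspace(2.0, 50.0, 64)
n_cycles = np.linspace(2.0, 6.0, 64)
beta_bins = (freqs >= 18.0) & (freqs <= 22.0)   # bins averaged for the 20 Hz band
```

Here `ref` would select the 9.36 s stimulus sequence within the 11.36 s epoch, so that the added 1.0 s margins absorb the edge effects of the longest wavelets.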
Based on the previous observation of the strongest event-related desynchronization (ERD) at 20 Hz, we examined the power modulation by averaging the wavelet coefficients across bins with center frequencies between 18 and 22 Hz. The combined signal had a bandpass characteristic with points of 50% amplitude reduction at 15.1 and 25.5 Hz, as determined by the properties of the short Morlet wavelet kernels. In the resulting β-band waveforms, the 95% confidence interval of the grand average as a representation of subject variability was estimated with bootstrap resampling (N = 1000). The magnitude of power decrease at ∼200 ms following tone onsets compared to the baseline was computed as the mean in a 120 ms window around the grand-average peak latency for each beat type. This magnitude of β-ERD was further examined by a repeated measures ANOVA using three within-subject factors—hemisphere (left vs right), beat type (downbeat vs upbeat, plus middle beat in the case of waltz), and stimulus interval (perception vs imagery)—separately for the march and waltz meter conditions.
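The bootstrap confidence interval and the ERD magnitude measure can be sketched as below. The number of resamples (1000), the 95% level, and the 120 ms window around the grand-average peak are from the text; the percentile method, the latency range searched for the peak, and the function names are assumptions.

```python
import numpy as np


def bootstrap_ci(subject_waves, n_boot=1000, alpha=0.05, seed=0):
    """Percentile confidence interval of the group mean at every time point.

    subject_waves: array (n_subjects, n_samples) of beta-band power changes.
    """
    rng = np.random.default_rng(seed)
    n = subject_waves.shape[0]
    idx = rng.integers(0, n, size=(n_boot, n))       # resample participants with replacement
    means = subject_waves[idx].mean(axis=1)          # (n_boot, n_samples)
    lower, upper = np.percentile(means, [100 * alpha / 2, 100 * (1 - alpha / 2)], axis=0)
    return lower, upper


def erd_magnitude(subject_waves, times_s, search=(0.1, 0.3), win_s=0.120):
    """Mean power change per participant in a window around the grand-average minimum.

    The search range for the minimum (100 to 300 ms) is an assumption.
    """
    grand = subject_waves.mean(axis=0)
    in_range = (times_s >= search[0]) & (times_s <= search[1])
    peak_t = times_s[in_range][np.argmin(grand[in_range])]
    window = np.abs(times_s - peak_t) <= win_s / 2
    return peak_t, subject_waves[:, window].mean(axis=1)
```

The per-participant magnitudes returned by `erd_magnitude` are the kind of values that would enter the repeated-measures ANOVA with hemisphere, beat type, and stimulus interval as within-subject factors.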

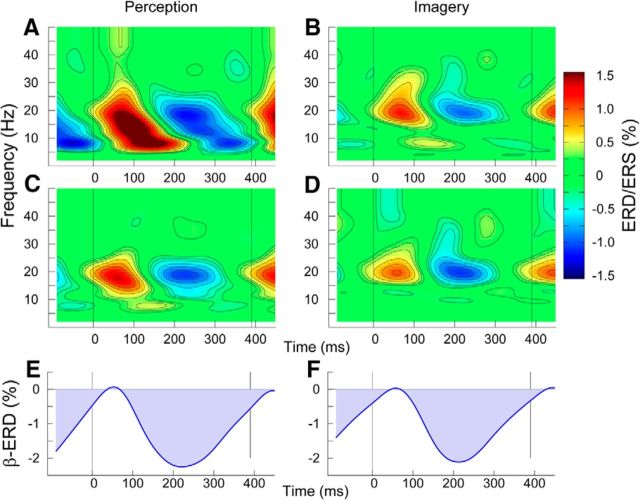

Figure 2.

Oscillatory activities related to the beat in the left and right auditory cortices, obtained by averaging across all beat and meter types. Spectral power changes were referenced to the mean across the beat interval. A, The original TFR for all the beat types averaged from the accented “perception” stimulus interval. The signal power increase at ∼100 ms latency between 5 and 15 Hz reflects spectral power of the auditory evoked response, which is enhanced by the acoustically accented beats. B, The TFR during the unaccented imagery stimulus interval. This contains less contribution from the evoked response because all the stimuli are unaccented. C, D, Induced oscillatory activities expressed in the TFRs in which the spectral power of the averaged evoked response was subtracted before averaging, thus leaving only non-phase-locked signal power changes. E, F, Time series of β modulation in the 15–25 Hz band. The β-ERD was referenced to the maximum amplitude at ∼50 ms latency.

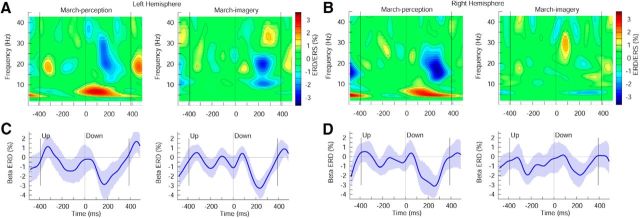

Figure 3.

Induced oscillatory activity in the left and right auditory cortex for the march condition. A, B, Time-frequency representation of the auditory source activity in the perception and imagery conditions, respectively, in the left (A) and right hemispheres (B). C, D, Time course of modulation of β-band activity in the left (C) and right hemispheres (D). The shaded area represents the 95% confidence interval of the group mean.

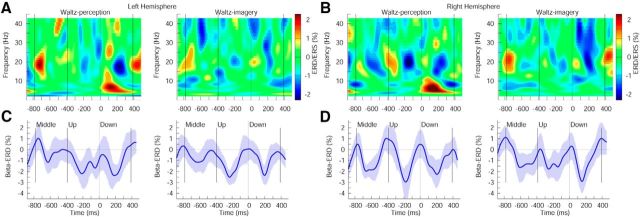

Figure 4.

Induced oscillatory activity in the left and right auditory cortex for the waltz condition. A, B, Time-frequency representation of the auditory source activity in the perception and imagery conditions, respectively, in the left (A) and right hemispheres (B). C, D, Time course of modulation of β-band activity in the left (C) and right hemispheres (D). The shaded area represents the 95% confidence interval of the group mean.

β-Band oscillations in beamformer sources.

We identified areas in the whole brain that showed a contrast in responses to different beat types and thus likely contributed to the meter representation. This analysis was conducted through three major steps: first, an MEG source model was constructed as a spatial filter across the brain volume; second, β-band power of the spatially filtered source activity at each volume element was calculated; and finally, the brain areas were extracted at which β activities matched the prescribed contrast between beat types using a multivariate analysis.

First, to capture source activities across the brain volume, we constructed a spatial filter using a beamformer approach called synthetic aperture magnetometry (SAM) and applied it to the magnetic field data to calculate the time series of local source activity at 8 × 8 × 8 mm volume elements covering the whole brain. The SAM approach uses a linearly constrained minimum variance beamformer algorithm (Van Veen et al., 1997; Robinson and Vrba, 1999), normalizes source power across the whole cortical volume (Robinson, 2004), and is capable of revealing deep brain sources (Vrba and Robinson, 2001; Vrba, 2002). SAM source analysis has been successfully applied for identifying activities in auditory (Ross et al., 2009) and sensorimotor cortices (Jurkiewicz et al., 2006), and deep sources such as hippocampus (Riggs et al., 2009), fusiform gyrus, and amygdala (Cornwell et al., 2008). The SAM spatial filter was computed with 15–25 Hz bandpass filtered MEG data, according to our previous SAM analysis of beat-related β oscillations (Fujioka et al., 2012). This operation was aimed at obtaining the SAM filter that can suppress correlated activities across the brain volume (thus spurious artifacts observed at different areas likely originated from the shared source) specifically in this frequency range, based on the covariance matrix. In this computation, we used a template brain magnetic resonance image (MRI) in standard Talairach coordinates (positive axes toward anterior, right, and superior directions) using the Analysis of Functional NeuroImages (AFNI) software package (Cox, 1996). MEG source analysis based on individual coregistration with a spherical head model, and group analysis based on a template brain, is sufficiently accurate (Steinstraeter et al., 2009) and equivalent to the typical spatial uncertainty in group analysis based on Talairach normalization using individual MRIs (Hasnain et al., 1998). 
Thus, this approach has been used when individual MRIs are not available (Jensen et al., 2005; Ross et al., 2009; Fujioka et al., 2010).
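The core of a linearly constrained minimum variance spatial filter can be illustrated in a few lines. This sketch shows the generic unit-gain LCMV weights for a single source with a fixed orientation; it is not an implementation of SAM, which additionally optimizes the source orientation and normalizes source power. The regularization parameter, the noise-normalized power measure, and all names are assumptions.

```python
import numpy as np


def lcmv_weights(cov, leadfield, reg=0.0):
    """Unit-gain LCMV weights w = C^-1 l / (l^T C^-1 l) for one fixed-orientation source.

    cov: sensor covariance (n_channels, n_channels), here of 15-25 Hz filtered data.
    leadfield: forward field of the source (n_channels,).
    reg: optional diagonal loading as a fraction of the mean sensor variance (assumed).
    """
    n = cov.shape[0]
    loaded = cov + reg * np.trace(cov) / n * np.eye(n)
    c_inv_l = np.linalg.solve(loaded, leadfield)
    return c_inv_l / (leadfield @ c_inv_l)


def pseudo_z(weights, cov, noise_cov):
    """Source power divided by projected noise power (one common normalization)."""
    return (weights @ cov @ weights) / (weights @ noise_cov @ weights)


def virtual_channel(weights, data):
    """Project sensor data (n_channels, n_samples) onto the source."""
    return weights @ data
```

The weights pass activity from the target location with unit gain while minimizing the total output variance, which is how correlated contributions from other locations in the chosen frequency band are suppressed.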

As a next step, time series of β-power change were calculated at each volume element using the SAM virtual channel data, after applying a bandpass filter to the artifact-corrected magnetic field data. The bandpass filter was constructed using a MATLAB filter design routine (fir1) to obtain similar frequency characteristics as for the wavelet analysis of auditory β-band activity with points of 50% amplitude reduction at 15.0 and 25.0 Hz. Epochs of magnetic field data were first transformed to the SAM virtual channel data for each single trial and normalized to the SD of the whole epoch segment. Thereafter, we averaged the one-beat onset-to-onset interval (0–390 ms) plus a short segment before and after (each about 48 ms) for each combination of beat type, stimulus interval, and meter condition. The baseline was adjusted using a time window in the latency range between −48 and 0 ms.
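A sketch of the band-pass filtering and beat-locked averaging follows. It uses `scipy.signal.firwin`, the window-method counterpart of MATLAB `fir1`, with cutoffs at 15 and 25 Hz, where the window method places the points of 50% amplitude. The filter length (401 taps), the use of the squared normalized signal as the power estimate, and the function names are assumptions not stated in the text.

```python
import numpy as np
from scipy.signal import firwin

FS_MEG = 625.0   # MEG sampling rate (Hz), from the text
IOI_S = 0.390    # beat interval (s)
PAD_S = 0.048    # segment added before and after the beat interval (s)


def beta_bandpass_taps(numtaps=401, band=(15.0, 25.0), fs=FS_MEG):
    """Linear-phase FIR band-pass filter; the number of taps is an assumption."""
    return firwin(numtaps, band, pass_zero=False, fs=fs)


def bandpass(x, taps):
    """Single-pass, zero-delay filtering with a symmetric odd-length kernel."""
    return np.convolve(x, taps, mode="same")


def beat_locked_power(virtual, beat_onsets, fs=FS_MEG):
    """Average beta power around beat onsets, baseline-corrected to -48..0 ms.

    virtual: band-passed virtual-channel signal of one epoch (1-D array).
    beat_onsets: sample indices of the beats belonging to one beat type.
    """
    z = virtual / virtual.std()          # normalize to the SD of the whole epoch
    power = z ** 2                       # squared amplitude as power estimate (assumed)
    n_pre = int(round(PAD_S * fs))
    n_post = int(round((IOI_S + PAD_S) * fs))
    segments = [power[o - n_pre:o + n_post] for o in beat_onsets
                if o - n_pre >= 0 and o + n_post <= power.size]
    mean_power = np.mean(segments, axis=0)
    return mean_power - mean_power[:n_pre].mean()
```

A single forward convolution is used instead of forward-backward filtering because applying the filter twice would square its amplitude response and move the 50% points inward.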

Finally, we compared the four-dimensional source data (3D maps × time) across beat types using partial least squares (PLS) analysis (McIntosh et al., 1996; Lobaugh et al., 2001; McIntosh and Lobaugh, 2004). This multivariate analysis, using singular value decomposition, is an extension of principal component analysis to identify a set of orthogonal factors [latent variables (LVs)] to model the covariance between two matrices such as spatiotemporal brain activities and contrasts between conditions. A latent variable consists of three components: (1) a singular value representing the strength of the identified differences; (2) a design LV, which characterizes a contrast pattern across conditions and indicates which conditions have different data-contrast correlations; and (3) a brain LV characterizing which time points and source locations represent the spatiotemporal pattern most related to the associated contrast described as the design LV. Here we used a nonrotated version of PLS analysis (McIntosh and Lobaugh, 2004), which allows a hypothesis-driven comparison of the dependent variables using a set of predefined design contrasts about conditions. (For example, in our march case, we assigned −1 to the downbeat and +1 to the upbeat, and in the waltz case, we added another design LV, assigning −1 to the middle beat and +1 to the upbeat.) Our main goal here was to identify differences between beat types in acoustically and subjectively maintained meter processing. Accordingly, we conducted four separate nonrotated PLS analyses (march perception, march imagery, waltz perception, waltz imagery). In the march perception and march imagery conditions, a design LV contrasting downbeat and upbeat data was applied. For the waltz perception and waltz imagery conditions, downbeat, middle beat, and upbeat data were compared as a combination of two pairwise comparisons (e.g., LV1, down vs up; LV2, middle vs up). 
The significance of the obtained LVs was validated through two types of resampling statistics. The first step, using random permutation, examined whether each latent variable represented a significant contrast between the conditions. The PLS analysis was repeatedly applied to the data set with conditions permuted within subjects, and the probability was estimated as the proportion of permutations in which the permuted singular value was higher than the originally observed singular value. We used 200 permutations, and the significance level was set at 0.05. For each significant LV, the second step examined where and at which time point the corresponding brain LV (the obtained brain activity pattern) was significantly consistent across participants. At each volume element and each time point, the SD of the brain LV value was estimated with bootstrap resampling (N = 200), drawing participants with replacement. The results were expressed as the ratio of the brain LV value to the SD. Note that the ratio reflects the signal strength compared to the interindividual variability, corresponding to a z-score. Locations at which the magnitude of this bootstrap ratio, taken as a mean within the beat interval, was larger than 2.0 (corresponding approximately to the 95% confidence interval) were visualized using AFNI, as illustrated in Figures 5 and 6. Finally, the same data were further analyzed to extract local maxima and minima to determine the brain areas contributing to the obtained contrast, as listed in Tables 1 and 2.
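The logic of the nonrotated PLS analysis with its two resampling steps can be sketched in simplified form. This is an illustration under assumptions, not the PLS software used in the study: the brain LV is computed as the projection of the condition means onto a single normalized design contrast, the singular value as the norm of that projection, and the data layout (participants × conditions × features, where features are volume elements × time points) and all names are hypothetical.

```python
import numpy as np


def brain_lv(data, contrast):
    """Project condition means onto a predefined contrast.

    data: array (n_subjects, n_conditions, n_features).
    contrast: design contrast across conditions, e.g. [-1, +1] for downbeat vs upbeat.
    Returns the brain LV (n_features,) and its singular value.
    """
    c = np.asarray(contrast, dtype=float)
    c = c - c.mean()
    c /= np.linalg.norm(c)
    lv = c @ data.mean(axis=0)
    return lv, np.linalg.norm(lv)


def permutation_p(data, contrast, n_perm=200, seed=0):
    """Proportion of within-subject condition permutations with a singular value >= observed."""
    rng = np.random.default_rng(seed)
    _, sv_observed = brain_lv(data, contrast)
    n_cond = data.shape[1]
    count = 0
    for _ in range(n_perm):
        shuffled = np.stack([subject[rng.permutation(n_cond)] for subject in data])
        count += int(brain_lv(shuffled, contrast)[1] >= sv_observed)
    return count / n_perm


def bootstrap_ratio(data, contrast, n_boot=200, seed=0):
    """Brain LV divided by its bootstrap SD (participants resampled with replacement)."""
    rng = np.random.default_rng(seed)
    lv_observed, _ = brain_lv(data, contrast)
    n = data.shape[0]
    boots = np.stack([brain_lv(data[rng.integers(0, n, n)], contrast)[0]
                      for _ in range(n_boot)])
    return lv_observed / boots.std(axis=0, ddof=1)
```

In this simplified form, a feature would be reported when the magnitude of its bootstrap ratio exceeds 2.0, provided the permutation test for the corresponding LV is significant at 0.05.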

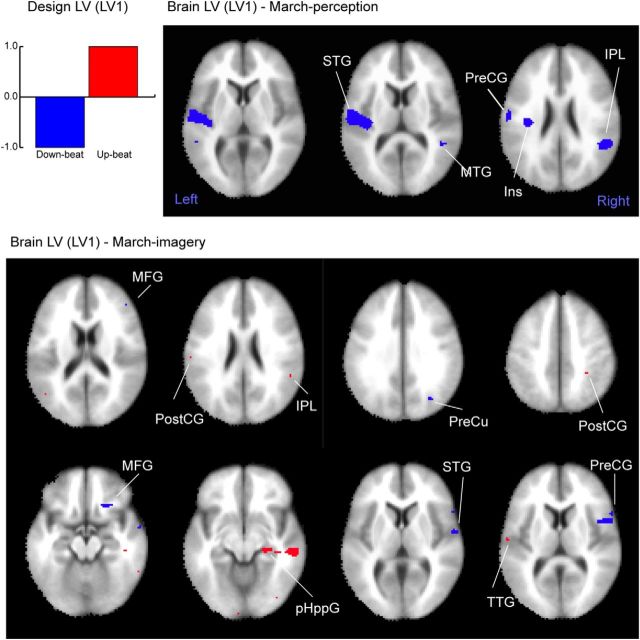

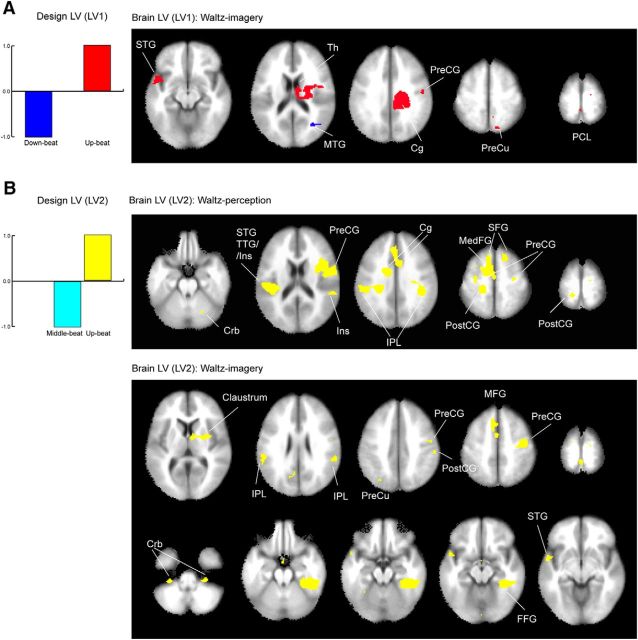

Figure 5.

The results of the PLS analysis for the β-band power that characterizes the contrast between the different beat types in the march condition. The LV1 represents the contrast between the downbeat and upbeat (left, top), which was significant in both march perception and march imagery conditions. The corresponding brain areas in the march perception condition (right, top) show only cool-colored voxels, in which the β power is decreased for the downbeat compared to the upbeat. In the march imagery condition (bottom), the associated brain areas (right) show both cool-colored areas (downbeat β decrease > upbeat β decrease) and warm-colored areas (i.e., downbeat β decrease < upbeat β decrease). The locations and their Talairach coordinates are listed in Table 1. Ins, Insula; MFG, middle frontal gyrus; MTG, middle temporal gyrus; pHppG, parahippocampal gyrus; PostCG, postcentral gyrus; PreCG, precentral gyrus; PreCu, precuneus; TTG, transverse temporal gyrus.

Figure 6.

The results of the PLS analysis for the β-band power that characterizes the contrast between the different beat types in the waltz condition. A, The LV1 related to the contrast between the downbeat and upbeat (left) was only significant in the waltz perception condition. The corresponding brain areas (right) show blue-colored voxels, in which the β power is more decreased for the downbeat compared to the upbeat, and red-colored areas, representing the opposite pattern. B, The LV2 related to the contrast between the middle beat and upbeat (left) was significant for both the waltz perception and waltz imagery conditions, yielding the associated brain areas (right). Note that for the LV2, in both perception and imagery, the only brain areas above the significance level were associated with the larger β-power decrease for the upbeat, compared to the middle beat. The locations and their Talairach coordinates are listed in Table 2. Cg, Cingulate; Crb, cerebellum; FFG, fusiform gyrus; Ins, insula; MedFG, medial frontal gyrus; MFG, middle frontal gyrus; MTG, middle temporal gyrus; PCL, paracentral lobule; PostCG, postcentral gyrus; PreCG, precentral gyrus; PreCu, precuneus; SFG, superior frontal gyrus; Th, thalamus; TTG, transverse temporal gyrus.

Table 1.

Stereotaxic Talairach coordinates of brain area locations with a statistically significant effect of the beat-type contrast in β-ERD for the march condition

| Hemisphere | Brain area | x (R–L) | y (A–P) | z (I–S) | Intensity |
|---|---|---|---|---|---|
| March perception | | | | | |
| Down > up | | | | | |
| Right | Inferior parietal lobule | −61 | 27 | 37 | −3.311 |
| Left | Precentral gyrus | 60 | 8 | 12 | −3.058 |
| Right | Supramarginal gyrus | −53 | 47 | 23 | −2.829 |
| Right | Precentral gyrus | −65 | 2 | 16 | −2.747 |
| Left | Insula | 37 | 21 | 18 | −2.448 |
| Left | Middle temporal gyrus | 53 | 43 | 8 | −2.242 |
| March imagery | | | | | |
| Down > up | | | | | |
| Right | Middle frontal gyrus | −23 | −25 | −16 | −2.661 |
| Right | Precuneus | −31 | 69 | 32 | −2.589 |
| Right | Superior temporal gyrus | −61 | 5 | 6 | −2.539 |
| Left | Superior frontal gyrus | 0 | −4 | 71 | −2.495 |
| Right | Uncus | −8 | −3 | −25 | −2.391 |
| Right | Middle frontal gyrus | −46 | −38 | 19 | −2.251 |
| Up > down | | | | | |
| Right | Middle temporal gyrus | −60 | 28 | −11 | 2.631 |
| Right | Inferior parietal lobule | −31 | 40 | 49 | 2.532 |
| Left | Lingual gyrus | 0 | 89 | −13 | 2.412 |
| Right | Parahippocampal gyrus | −23 | 27 | −11 | 2.387 |
| Left | Transverse temporal gyrus | 60 | 13 | 11 | 2.241 |
| Right | Inferior occipital gyrus | −38 | 82 | −11 | 2.188 |
| Left | Middle temporal gyrus | 45 | 64 | 18 | 2.149 |
| Left | Inferior temporal gyrus | 60 | 43 | −15 | 2.085 |
| Left | Anterior cingulate | 7 | −28 | 24 | 2.076 |
| Right | Inferior parietal lobule | −53 | 40 | 25 | 2.052 |

The areas with increased ERD for the downbeat compared to the upbeat (downbeat > upbeat) are associated with a negative value of the intensity, and the areas with increased ERD for the upbeat compared to the downbeat (upbeat > downbeat) are associated with a positive intensity value. The intensity indicates the averaged z-score of the brain activity component within the time interval of interest based on the bootstrap resampling on each location. L, Left; R, right; A, anterior; P, posterior; I, inferior; S, superior.

Table 2.

Stereotaxic Talairach coordinates of brain area locations with a statistically significant effect of the beat-type contrast in β-ERD for the waltz condition

| Hemisphere | Brain area | x (R–L) | y (A–P) | z (I–S) | Intensity |
|---|---|---|---|---|---|
| Waltz perception | | | | | |
| Up > middle (LV2) | | | | | |
| Left | Middle frontal gyrus | 22 | 3 | 46 | 3.908 |
| Left | Postcentral gyrus | 15 | 40 | 72 | 3.692 |
| Right | Superior frontal gyrus | −8 | −18 | 52 | 3.335 |
| Right | Insula | −31 | −5 | 17 | 3.302 |
| Left | Insula | 37 | 30 | 21 | 3.072 |
| Right | Inferior parietal lobule | −39 | 35 | 34 | 2.921 |
| Right | Uvula | −23 | 67 | −23 | 2.803 |
| Left | Inferior parietal lobule | 60 | 26 | 34 | 2.701 |
| Right | Precentral gyrus | −31 | 13 | 58 | 2.694 |
| Right | Middle temporal gyrus | −64 | 9 | −5 | 2.414 |
| Right | Middle temporal gyrus | −46 | 69 | 8 | 2.333 |
| Right | Precuneus | −1 | 69 | 56 | 2.329 |
| Waltz imagery | | | | | |
| Down > up (LV1) | | | | | |
| Right | Middle temporal gyrus | −31 | 64 | 18 | −2.583 |
| Up > down (LV1) | | | | | |
| Right | Superior parietal lobule | −16 | 70 | 59 | 3.249 |
| Left | Superior temporal gyrus | 53 | −7 | −12 | 3.18 |
| Right | Thalamus | −8 | 7 | 13 | 3.07 |
| Left | Culmen | 45 | 41 | −23 | 2.651 |
| Left | Paracentral lobule | 0 | 40 | 73 | 2.333 |
| Left | Uncus | 7 | 4 | −25 | 2.316 |
| Right | Precentral gyrus | −53 | 13 | 34 | 2.302 |
| Right | Superior frontal gyrus | −16 | 16 | 72 | 2.171 |
| Right | Cerebellar tonsil | −30 | 33 | −44 | 2.17 |
| Left | Postcentral gyrus | 37 | 22 | 46 | 2.169 |
| Left | Middle temporal gyrus | 65 | 30 | −3 | 2.166 |
| Right | Precuneus | −23 | 53 | 37 | 2.011 |
| Up > middle (LV2) | | | | | |
| Right | Fusiform gyrus | −45 | 33 | −21 | 4.185 |
| Left | Medial frontal gyrus | 0 | −1 | 56 | 3.288 |
| Right | Precentral gyrus | −48 | 11 | 53 | 3.043 |
| Left | Inferior parietal lobule | 60 | 38 | 26 | 2.966 |
| Right | Precentral gyrus | −15 | 14 | 73 | 2.84 |
| Left | Precuneus | 30 | 72 | 40 | 2.762 |
| Right | Claustrum | −31 | 0 | 8 | 2.758 |
| Left | Superior temporal gyrus | 53 | −5 | −7 | 2.67 |
| Right | Inferior parietal lobule | −61 | 33 | 27 | 2.583 |
| Left | Cerebellar tonsil | 30 | 33 | −44 | 2.481 |
| Left | Superior frontal gyrus | 15 | 11 | 74 | 2.379 |
| Left | Lingual gyrus | 0 | 88 | −13 | 2.314 |
| Left | Culmen | 30 | 51 | −18 | 2.279 |
| Right | Precentral gyrus | −53 | 6 | 29 | 2.204 |
| Right | Superior parietal lobule | −31 | 58 | 51 | 2.135 |

The areas with increased ERD for the downbeat compared to the upbeat and the middle beat compared to the upbeat are indicated. The intensity indicates the averaged z-score of the brain activity component within the time interval of interest based on the bootstrap resampling on each location. L, Left; R, right; A, anterior; P, posterior; I, inferior; S, superior.

Results

Behavioral performance in target detection

During the MEG recording, participants were instructed to pay attention to the metrical structure and indicate with a button press whether an occasional high-pitched target tone in the imagery sections of the stimuli occurred at a downbeat or upbeat position. This task was intended more for keeping participants alert than for comparing behavioral performance across conditions. The number of targets was, on average, 21 per participant across six blocks. All the participants successfully maintained vigilance, as evident in the very small number of missed targets. Seven of 14 participants missed no targets, and the remaining seven missed no more than three targets. Participants were also quite successful in identifying the beat type, as indicated by the correct identification rates: means, 74.4% (SEM, 8.0) for the march downbeat, 75.3% (SEM, 8.1) for the march upbeat, 81.0% (SEM, 7.5) for the waltz downbeat, and 85.2% (SEM, 5.9) for the waltz upbeat. There were no significant differences in performance across beat types, as assessed by ANOVA and t tests.

Auditory source localization

Localization of equivalent current dipole sources for the N1 peak of the evoked response to the accented beats was successful in all participants in all six blocks. Mean dipole locations in the head-based coordinate system were x = −4.4 mm, y = −47.8 mm, z = 47.6 mm in the right hemisphere and x = −8.0 mm, y = 46.3 mm, z = 47.7 mm in the left hemisphere, corresponding to Talairach coordinates of the MNI–Colin27 template brain of x = −47 (right), y = 18 (posterior), and z = 9 (superior) in the right hemisphere and x = 42, y = 19, and z = 7 in the left hemisphere. The right hemispheric dipole location was significantly more anterior than the left across all acquired dipoles (t(83) = 5.7, p < 0.001), demonstrating the integrity of the obtained source localizations.

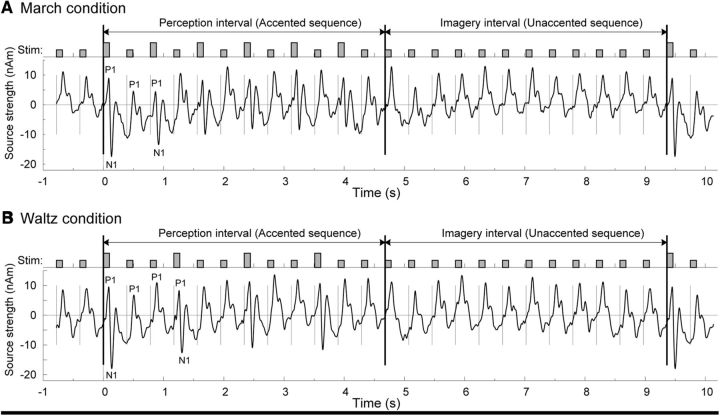

Evoked responses in the auditory cortex

Waveforms of auditory evoked responses were calculated for each experimental trial as the dipole moment of the estimated cortical sources. Grand-averaged waveforms across all participants for left and right auditory cortices are shown in Figure 1A for the march condition and in Figure 1B for the waltz condition. The top traces show the time course of the stimuli, which were presented with a constant stimulus onset asynchrony of 390 ms. In the first 12-beat segment, the vertically longer bars indicate the accented beats, which were 13 dB higher in intensity (six in the march condition, four in the waltz condition), whereas the second 12-beat segment of the imagery condition contained the unaccented softer beats only. Although each beat stimulus elicited a series of positive and negative deflections, the morphology of the responses changed systematically over the time course of the stimulus sequence. Most prominent were the early P1 waves with latencies of ∼50–80 ms, and the N1 waves with latencies of ∼100–130 ms. However, pronounced N1 waves were expressed only in response to the louder accented stimuli in the perception interval. For the softer stimuli, there was only a subtle dip between the P1 and the following P2 peak. The first accented beat after the 12 equally soft stimuli of the imagery interval was the most perceptually salient stimulus. Accordingly, this first accented beat elicited the most pronounced N1 response in both hemispheres across both the march and waltz conditions. Source strengths were 17.4 and 17.8 nAm in the left hemisphere and 14.7 and 16.2 nAm in the right hemisphere, respectively. The N1 response to the second accented sound was already strongly attenuated compared to the first accented sound, as shown in Figure 1, A and B. By the end of the physically accented 12-beat sequence, the last accented sound elicited a much smaller N1 peak. Significantly reduced N1 amplitudes at a fast stimulation rate are consistent with the literature (Näätänen and Picton, 1987). In contrast to the varying N1 amplitude, the P1 peaks were more consistent across the stimulus sequence (Fig. 1A,B).
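The timing of the stimulus sequence described above can be sketched in a few lines of Python. This is only an illustration and not the presentation code used in the study. The function name `build_sequence` is hypothetical, and the sketch assumes that each perception segment starts on an accented click. The 13 dB intensity difference is represented only as a boolean flag.

```python
SOA_MS = 390          # stimulus onset asynchrony given in the text
BEATS_PER_HALF = 12   # 12 perception beats followed by 12 imagery beats

def build_sequence(group_size):
    """Return (onset_ms, accented) pairs for one 24-click sequence.

    group_size is 2 for the march and 3 for the waltz. Assumption: the
    accent falls on the first click of each group, and only in the
    perception half of the sequence.
    """
    sequence = []
    for i in range(2 * BEATS_PER_HALF):
        in_perception_half = i < BEATS_PER_HALF
        accented = in_perception_half and i % group_size == 0
        sequence.append((i * SOA_MS, accented))
    return sequence

march = build_sequence(2)
waltz = build_sequence(3)
print(sum(acc for _, acc in march))  # 6 accented clicks
print(sum(acc for _, acc in waltz))  # 4 accented clicks
```

Under these assumptions the counts match the six accented beats in the march and four in the waltz reported in the text.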

Figure 1.

Time courses of click stimuli and auditory evoked responses, grand averaged across left and right cortical sources and across all participants. Twenty-four isochronous clicks were used as auditory stimuli with an onset-to-onset interval of 390 ms. A, In the march condition, the first half of the stimulus sequence imposed the meter structure by acoustically accenting every second click. In this interval, the participants were instructed to perceive the march meter. The second half of the sequence remained at the softer intensity throughout. Here the participants had to imagine the meter structure subjectively. The evoked P1 was prominently expressed in response to each beat stimulus. In the perception interval, the auditory evoked N1 response was predominantly expressed for the accented downbeat stimuli only, whereas during the imagery interval, the evoked N1 was very small for all beats. B, For the waltz condition, every third stimulus was accented. Again, P1 responses were prominent to each beat and N1 responses followed the physically accented stimuli.

Meter representation in the auditory cortex β oscillations

Time-frequency representations (TFRs) in Figure 2 illustrate how each beat stimulus led to changes in oscillatory activity. The TFR in Figure 2A shows signal power changes grand averaged across all beats, regardless of the position within the metric structure, for the “perceived” meter; similarly, Figure 2B shows this for the “imagined” meter. For each frequency, the spectral power was normalized to the mean across each one-beat time interval (0–390 ms) and expressed as the percentage change, commonly termed event-related synchronization and desynchronization (Pfurtscheller and Lopes da Silva, 1999). The TFRs show temporal fluctuations following beat onsets predominantly at frequencies of ∼20 Hz and below. The amplitude of low-frequency oscillations was larger for the perception condition than the imagery condition. This corresponds to the higher stimulus intensity for the downbeat in the perception condition, which elicited enlarged N1 responses, as shown in Figure 1. To reduce such effects of the evoked response and capture solely induced oscillatory activities, the TFRs were recalculated (Fig. 2C,D) after the time-domain-averaged response was regressed out from each trial of the MEG signal. For these evoked (Fig. 2A,B) and induced (Fig. 2C,D) activities, the baseline was calculated and normalized separately for perception and imagery conditions. The time courses of the β-band amplitude changes, shown in Figure 2, E and F, were referenced to the level at 50 ms after the stimulus onset. The β modulations showed a steep decrease immediately after the stimulus, reached the minimum at 200 ms latency, and recovered with a shallow slope. The time courses of β-ERD for the perception and imagery conditions resembled each other closely.
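The percentage-change normalization described above can be illustrated with a minimal Python sketch. The function name `percent_change` and the sample values are hypothetical. The sketch covers only the normalization to the mean over one beat interval. It does not include the time-frequency decomposition, the removal of the evoked response, or the re-referencing to the 50 ms level.

```python
from statistics import mean

def percent_change(power):
    """Express a one-beat power time course relative to its own mean.

    Negative values correspond to event-related desynchronization (ERD),
    positive values to event-related synchronization (ERS).
    """
    baseline = mean(power)
    return [100.0 * (p - baseline) / baseline for p in power]

# Hypothetical beta power samples spanning one 0-390 ms beat interval.
beta_power = [10.0, 8.0, 6.0, 8.0, 10.0, 12.0, 16.0]
erd = percent_change(beta_power)
print(erd)
```

Because the baseline is the mean of the same interval, the normalized values average to zero, so a decrease after the beat is necessarily balanced by an increase elsewhere in the interval.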

The TFRs with attenuated contribution of the evoked response were analyzed separately for the different beat types, stimulus intervals, and metric conditions. The TFRs for the march and waltz conditions are shown in Figures 3 and 4, respectively, for the perception and imagery conditions. Time 0 corresponds to the onset of the downbeat stimulus. The periodic modulation at β frequencies was visible in all beat-related intervals. However, in the march condition, the trough of β-ERD was noticeably deeper after the downbeat than after the upbeat for both hemispheres. This was not only the case in the perception condition, where metrical accents were physically present, but also for the imagery condition. The depths of the β-ERD around 200 ms were compared with a repeated measures ANOVA with three within-participant factors: beat type (down, up), stimulus interval (perception, imagery), and hemisphere (left, right). The ANOVA revealed a main effect of the beat type (F(1,13) = 7.075, p = 0.0196) due to larger β-ERD after the downbeats compared to the upbeats (p < 0.001). Pairwise comparisons between the downbeat and upbeat in each stimulus interval revealed that for both perception and imagery conditions, the beat-type contrast was significant when the data from both hemispheres were combined (perception, t(27) = 2.74, p = 0.0108; imagery, t(27) = 2.28, p = 0.0307). In particular, for the perception condition, the beat-type contrast in the right hemisphere was significant (t(13) = 2.227, p = 0.0442). For the imagery condition, beat type was significant in the left hemisphere (t(13) = 3.356, p = 0.0052). In the ANOVA, no other main effects or interactions were found to be significant.
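As a rough illustration of the pairwise comparisons reported above, the following Python sketch computes a paired t statistic and its degrees of freedom. The function name `paired_t` and all data values are hypothetical and are not taken from the study. The p value would additionally require the t distribution, which is omitted here.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(a, b):
    """Return (t, df) for a paired comparison of two equal-length samples."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Hypothetical beta-ERD depths (percent change) for 14 participants.
down = [-14.0, -11.5, -9.0, -12.5, -10.0, -13.0, -8.5,
        -11.0, -12.0, -9.5, -10.5, -13.5, -11.0, -10.0]
up = [-10.0, -9.5, -8.0, -9.0, -9.5, -10.0, -8.0,
      -8.5, -10.5, -9.0, -8.0, -10.0, -9.5, -9.0]

t, df = paired_t(down, up)
print(f"t({df}) = {t:.2f}")
```

With 14 paired observations the degrees of freedom are 13, as in the single-hemisphere tests. Pooling both hemispheres gives 28 paired observations and 27 degrees of freedom, matching the t(27) values in the text.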

In the waltz condition (Fig. 4), the trough of β-ERD at ∼200 ms after the downbeat was also deeper compared with those following the upbeat or the middle beat for both hemispheres. In the ANOVA, again, beat type was the only factor showing a significant effect (F(2,26) = 10.257, p = 0.0005). This main effect was driven in part by the stronger β-ERD after the downbeat compared to the middle beat for perception and imagery combined (p < 0.01). This contrast was significant for the perception condition in the left hemisphere (t(13) = 2.23, p = 0.0443). When the data for both hemispheres were combined, the contrast in the perception condition approached the significance level, whereas that in the imagery condition reached the significance level (perception, t(27) = 1.79, p = 0.0842; imagery, t(27) = 2.36, p = 0.0258). The main effect of beat type was also driven by the larger β-ERD after the upbeat than after the middle beat (p < 0.05). This contrast in the imagery condition was significant in the left hemisphere (t(13) = 2.22, p = 0.0449). When both hemispheres were combined, the contrast for perception and imagery conditions approached the significance level (perception, t(27) = 1.56, p = 0.129; imagery, t(27) = 1.79, p = 0.0842). There was no significant difference between the upbeat and downbeat. No other main effects or interactions were found.

In summary, periodic modulation of induced β oscillation, with a minimum around 200 ms after the beat onset and a subsequent rebound, was the most prominent effect on brain oscillations regardless of whether the meter was imposed by acoustic accents or by subjective imagery. Furthermore, the β-ERD was larger for the downbeat than for the upbeat in the march condition, and larger for the downbeat and upbeat compared to the middle beat in the waltz condition.

Meter representation in β-band oscillation across the whole brain

Next, we examined the sources of β activity within each beat interval with a SAM beamformer analysis. The SAM spatial filter was constructed on an 8 × 8 × 8 mm lattice across the whole brain. The resulting source activity was bandpass filtered between 15 and 25 Hz and expressed as magnitude of β power, as source analysis does not preserve the signal polarity.

The spatiotemporal pattern of the brain activity in the 0–390 ms latency interval that specifically expressed beat-type differences across acoustically accented and imagined meters was analyzed by a nonrotated task PLS. In the nonrotated PLS, planned comparisons are conducted by using a set of contrast patterns between the conditions of interest as the design LVs. Resampling of the identified brain LV resulted in estimates of bootstrap ratios, normalized to the SD of the group, thus expressing the LV by sets of z-scores for each volume element. Note that through this conversion, the signal strength is expressed in reference to the intersubject variability. Static volumetric brain maps were obtained from averaging the four-dimensional data over the time interval corresponding to one beat (0–390 ms), as illustrated and visualized in Figures 5 and 6 for the march perception, march imagery, waltz perception, and waltz imagery conditions, respectively. Talairach coordinates of local minima and maxima were extracted among those voxels where the bootstrap score was higher than 2 and are listed in Tables 1 and 2.
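The idea behind a bootstrap ratio and the threshold of 2 can be sketched as follows. This is a simplified illustration, not the PLS implementation used in the study: it replaces the PLS salience with a plain mean of per-participant contrast values, and the function name `bootstrap_ratio`, the number of resamples, and all data are hypothetical.

```python
import random
from statistics import mean, stdev

def bootstrap_ratio(values, n_boot=1000, seed=0):
    """Ratio of the observed mean to the SD of bootstrap-resampled means."""
    rng = random.Random(seed)
    n = len(values)
    boot_means = [
        mean(rng.choices(values, k=n))  # resample participants with replacement
        for _ in range(n_boot)
    ]
    return mean(values) / stdev(boot_means)

# Hypothetical per-participant contrast values at two volume elements.
voxel_a = [1.2, 0.9, 1.5, 1.1, 0.8, 1.3, 1.0, 1.4, 0.7, 1.2, 1.1, 0.9, 1.3, 1.0]
voxel_b = [0.5, -0.6, 0.2, -0.3, 0.4, -0.5, 0.1, -0.2, 0.3, -0.4, 0.6, -0.1, 0.0, 0.0]

for name, values in (("voxel_a", voxel_a), ("voxel_b", voxel_b)):
    ratio = bootstrap_ratio(values)
    print(name, "exceeds threshold" if abs(ratio) > 2 else "below threshold")
```

Dividing by the variability across resamples is what expresses the effect relative to intersubject variability: a consistent effect across participants yields a large ratio, whereas an effect that changes sign between participants stays near zero.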

In the march condition, the design LV expressed the contrast between the downbeat (−1) and upbeat (+1) for the perception and imagery conditions (Fig. 5, bar plot) to capture the beat-related power decrease. In the march perception condition, the LV was significant (p = 0.0249), explaining the difference in β power between the downbeat and upbeat. The map of associated brain areas, shown in Figure 5 (top right), indicates that only the downbeat-related β-ERD contributed to the contrast, involving the auditory cortex, which is in line with the results of our equivalent current dipole source analysis. However, the equivalent PLS for the march imagery condition revealed significant contributions from both downbeat-related and upbeat-related β-ERDs in different brain areas. The downbeat-related ERD involved the right inferior parietal lobule (IPL), right superior temporal gyrus (STG), precuneus, right precentral gyrus, and middle frontal gyrus. The upbeat-related β-ERD was observed in bilateral postcentral gyrus, right IPL and parahippocampal gyrus, and left transverse temporal gyrus.

In the waltz condition, the nonrotated PLS analysis examined data across the three beat types by two pairwise comparisons for the perception and imagery stimulus intervals. LV1 used the contrast between downbeats (−1) and upbeats (+1), and LV2 used the contrast between middle (−1) and upbeats (+1; Fig. 6, left, bar plots). LV1 explained 36.0% and 44.3% of the data variance in the perception and imagery conditions, respectively, but reached statistical significance only in the waltz imagery condition (p = 0.0199), even though the waltz perception condition used acoustically louder stimuli. Only brain areas in the right middle temporal gyrus were significantly involved in the downbeat-related β-ERD (Fig. 6A, right, blue colored areas), whereas the upbeat β-ERD was associated with power decreases in widespread areas in the left STG, right cingulate gyrus, precentral gyrus, precuneus, and paracentral lobule. LV2, representing the contrast between the middle beats and upbeats, explained 63.9% and 55.7% of the variance in the waltz perception and waltz imagery conditions, respectively, and reached statistical significance in both (p = 0.005 and p < 0.0001, respectively). The brain areas exceeding the significance level by the bootstrap test were associated with β-ERD related to the upbeat (Fig. 6B, yellow), compared to that for the middle beat. In the waltz perception condition, the areas included bilateral auditory and sensorimotor sites such as the STG, IPL, and precentral and postcentral gyrus. Also, medial and lateral premotor cortex and anterior cingulate cortex contributed to the contrast. The brain areas involved in the waltz imagery condition were similar to those in the waltz perception condition, but included additional subcortical areas such as the right claustrum and bilateral cerebellum.
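The two design contrasts for the waltz can be written out explicitly. The sketch below is a simplification that applies each contrast to a single set of condition values, as one might do for one volume element; the names `LV1`, `LV2`, and `contrast_score` and the numeric values are hypothetical. It makes no claim about the sign convention that links the resulting score to the direction of the ERD in the tables.

```python
BEATS = ("down", "middle", "up")

# Contrast weights as given in the text: downbeat or middle beat (-1) versus upbeat (+1).
LV1 = {"down": -1, "middle": 0, "up": +1}
LV2 = {"down": 0, "middle": -1, "up": +1}

def contrast_score(values, weights):
    """Project per-beat values onto a design contrast."""
    return sum(weights[beat] * values[beat] for beat in BEATS)

# Hypothetical condition values (arbitrary units) for one volume element.
values = {"down": 0.80, "middle": 1.00, "up": 0.85}

print(round(contrast_score(values, LV1), 2))  # up minus down
print(round(contrast_score(values, LV2), 2))  # up minus middle
```

Each contrast sums to zero, so a volume element with identical values for all three beat types yields a score of zero for both latent variables.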

Altogether, the beamformer source analysis followed by PLS revealed that meter structure was reflected in the modulation of β power across a wide range of brain areas such as the temporal, frontal, and parietal lobes and cerebellum. Changes in β activity were generally sensitive to the meter structure and involved wide-range networks of brain areas that were specifically different between march and waltz meters, and between perception and imagery conditions.

Discussion

Our study demonstrates four key findings. First, we replicated our previous finding of periodic modulation of induced β activity in bilateral auditory cortices, elicited by isochronous beats. Second, the amount of β-ERD 200 ms after beat onsets depended on whether beats were perceived as accented or not, regardless of whether the accents were physically present in the stimulus or imagined. Third, march and waltz metrical structures elicited different relationships between upbeats and downbeats. Fourth, despite the common metric representation of β activity in the auditory cortex between the perception and imagery conditions, the distributed brain areas representing the beat-type contrasts differed between the stimulus intervals and meter types. In general, compared to simply perceiving the meter, imagining the meter subjectively required a notably larger number of brain areas. Also, the waltz condition was associated with a wider range of sensorimotor and frontoparietal areas than the march condition, particularly for the middle beat/upbeat contrast. Altogether, the results demonstrate that meter processing likely involves orienting temporal attention to upcoming beats differently according to the beat type. Such temporal processing systematically regulates the β-band network similarly to motor imagery tasks, but without involvement of specific effectors or spatial attention.

The observed periodic β modulation synchronized with the beat interval regardless of beat type and meter (Figs. 2–4) extends our previous results in passive listening (Fujioka et al., 2012) to attentional listening. The robustness of this pattern regardless of metrical structure (physically present or imagined) further supports our previous interpretation that it reflects the automatic transformation of predictable auditory interbeat time intervals into sensorimotor codes, coordinated by corticobasal ganglia–thalamocortical circuits. Other previous studies corroborate this view (Merchant et al., 2015). For example, a similar dependency between the auditory beat tempo and β modulation was found with EEG in 7-year-old children (Cirelli et al., 2014), and adults' center frequency of spontaneous β activity correlates with their preferred tapping tempo (Bauer et al., 2015). Premovement β-power time course predicted the subsequently produced time interval (Kononowicz and Rijn, 2015). In primates, β oscillations in local field potentials from the putamen showed similar entrainment during internally guided tapping, and it was stronger than in auditory-paced tapping, which suggests the importance of internalized timing information for the initiation of movement sequences (Bartolo et al., 2014; Bartolo and Merchant, 2015). These findings, including the current one, are in line with broader hypotheses on the role of β oscillation for timing and predictive sensory processing (Arnal and Giraud, 2012; Leventhal et al., 2012), specifically, that coupling between β oscillation and slower δ-to-θ modulatory activities regulates task-relevant sensory gating (Lakatos et al., 2005; Saleh et al., 2010; Cravo et al., 2011; Arnal et al., 2014). In this respect, it should be noted that the metric levels (rate of strong beats) in the present study of the march and waltz (1.28 and 0.85 Hz, respectively) fit into the δ band.
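The metric-level rates quoted above follow directly from the 390 ms onset-to-onset interval, as this short Python check shows. The function name `metric_rate_hz` is hypothetical, and the δ-band limits of 0.5–4 Hz are an assumed conventional range that is not stated in the text.

```python
SOA_S = 0.390                # onset-to-onset interval from the text, in seconds
DELTA_BAND_HZ = (0.5, 4.0)   # assumed conventional range, not stated in the text

def metric_rate_hz(beats_per_group):
    """Rate at which a group of beats_per_group beats repeats."""
    return 1.0 / (beats_per_group * SOA_S)

march_rate = metric_rate_hz(2)   # one strong beat every 780 ms
waltz_rate = metric_rate_hz(3)   # one strong beat every 1170 ms

for name, rate in (("march", march_rate), ("waltz", waltz_rate)):
    in_delta = DELTA_BAND_HZ[0] <= rate <= DELTA_BAND_HZ[1]
    print(name, round(rate, 2), "Hz, within assumed delta range:", in_delta)
```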

More importantly, the experience of meter was encoded in β-ERD, which varied significantly across beat types in both the perception and imagery intervals (Figs. 3, 4). No significant interactions were found between beat type and whether the meter was given in the stimulus or imagined. This indicates that the listeners can endogenously generate internalized experiences of metric structure. Because of the cyclic nature of the stimuli, the modulation pattern likely contains contributions from both stimulus-induced β-ERD and subsequent β rebound, which may relate to endogenous processes. Interestingly, the enhancement of the β-ERD related to beat types was different between the march and waltz conditions. Specifically, for the march, larger β-ERD was observed after the downbeat compared to the upbeat. The pattern was more complex in the waltz, for which listeners showed larger β-ERD for both downbeats and upbeats compared to middle beats. For the target detection task during imagery, targets never occurred on middle beats. However, the different β-ERD pattern for middle beats is unlikely to be a consequence of this. First, it is only by performing the imagery while internalizing the metric structure that participants would even know which beats were middle beats. Without internalizing the metric structure, the memory demands to differentiate all 12 beat positions during the imagery interval would be unrealistic. Second, attention to target positions cannot explain the similar pattern of middle beat/upbeat β-ERD in the perception interval, where there were no targets. The qualitative differences between march and waltz meters, especially when guided by imagery, are similar to previous findings in auditory evoked responses with MEG (Fujioka et al., 2010) and EEG (Schaefer et al., 2011). For example, Fujioka et al. (2010) found significant differences between evoked responses to downbeats in march and waltz conditions across the brain, although that study did not explicitly analyze responses to middle beats. Schaefer et al. (2011) examined ERPs in patterns with accents every two, three, or four beats, objectively and subjectively. Their principal component waveforms (Schaefer et al., 2011, their Fig. 7, bottom) show that the middle beat in the three-beat pattern was more different from the downbeat and upbeat than those two were from each other. This result may be related to those from another series of studies that investigated spontaneously and subjectively imposed “binary” (two-beat) meter processing on identical isochronous tones, in which auditory evoked responses to one of the tones and its deviations were enhanced for presumed downbeat positions (Brochard et al., 2003; Abecasis et al., 2005; Potter et al., 2009). These findings support the view that the binary march meter is “more natural” than the ternary waltz meter, as the production and perception of ternary meters seem more difficult than those of binary meters (Drake, 1993; Desain and Honing, 2003), although this bias may be learned and not universal across cultures (Hannon and Trainor, 2007; Trainor and Hannon, 2013). The novel finding here is that whereas evoked responses to metrically accented beats are much larger when the meter is acoustically defined than when it is imagined, the pattern of β-ERD differences across beat types is similar across both the perceived and imagined meters. Thus, this result further supports the role of β modulation for the representation of internalized timing.

The brain areas for β-band meter representations paint a rather complex picture. The identified areas generally agree with those found previously for beat representation (Fujioka et al., 2012), including auditory cortex, sensorimotor cortex, medial frontal premotor cortex, anterior and posterior cingulate cortex, and some portions of the medial temporal lobe, parietal lobe, basal ganglia/thalamus, and cerebellum. The sensorimotor and medial frontal premotor cortices as well as parietal lobe, basal ganglia, and cerebellum have been repeatedly implicated in auditory rhythms and temporal attention tasks in neuroimaging studies (Lewis and Miall, 2003; Nobre et al., 2007; Zatorre et al., 2007; Kotz and Schwartze, 2010; Wiener et al., 2010). Our results also provide a number of interesting observations. First, although left and right auditory cortices were involved in meter processing, the hemispheric contributions were complex and seem to be affected by meter type (march, waltz) and stimulus interval (perception, imagery). Specifically, enhanced β-ERD was significant in the left auditory cortex for downbeat processing in the march perception, in the right for the march imagery (Fig. 5), and again in the left in the waltz imagery for upbeat processing (Fig. 6). Second, processing both the march and waltz meters engaged similar areas in the parietal lobe such as the inferior parietal lobule and precuneus, but more extended areas were observed in the waltz compared to march conditions, in line with the idea that the waltz rhythm is more complex and requires additional resources. Third, the perception and imagery conditions engaged overlapping but not identical areas in the brain, despite the fact that these conditions produced similar responses from auditory cortical areas. The imagery engaged additional brain regions, in line with the increased cognitive load, which may have partly resulted from the target detection task during the imagery. In sum, metrical processing is reflected in β-power modulation across a wide network, and the details of the extent to which different brain regions are involved depend on both the complexity of the meter and the task requirements related to mental effort. This also resonates with inconclusive results from lesion studies. Previous studies reported that meter processing was impaired by right-hemisphere lesions (Kester et al., 1991; Wilson et al., 2002) and by lesions in either hemisphere (Liégeois-Chauvel et al., 1998). So far, no single neural substrate or hemisphere has been related to meter processing (Stewart et al., 2006). Future research including animal models examining how global and local β oscillations reflect timing and their hierarchical representations, combined with neural computational models of metrical timing processing (Jazayeri and Shadlen, 2010; Vuust et al., 2014), will be needed to elucidate finer details.

Neural representation of the auditory rhythm in the β oscillations is relevant to clinical conditions. For example, metronome pacing stimuli can benefit those with stuttering (Toyomura et al., 2015) and other motor impairments caused by Parkinson's disease and stroke (Thaut et al., 2015), as well as children with dyslexia (Przybylski et al., 2013). Previously, β oscillations in the basal ganglia and sensorimotor cortex were hypothesized to be associated with dopamine levels available in the corticostriatal circuits (Jenkinson and Brown, 2011; Brittain and Brown, 2014) because of their rapid changes with learning (Herrojo Ruiz et al., 2014). Time processing mechanisms related to auditory rhythm would provide useful biomarkers for rehabilitation and for learning in developmental disorders.

Footnotes

This work was supported by Canadian Institutes of Health Research Grant MOP 115043 to L.J.T. and T.F. We sincerely thank Brian Fidali and Panteha Razavi for assisting with recruiting and testing procedures.

The authors declare no competing financial interests.

References

- Abecasis D, Brochard R, Granot R, Drake C. Differential brain response to metrical accents in isochronous auditory sequences. Music Percept. 2005;22:549–562. doi: 10.1525/mp.2005.22.3.549. [DOI] [Google Scholar]

- Arnal LH, Giraud AL. Cortical oscillations and sensory predictions. Trends Cogn Sci. 2012;16:390–398. doi: 10.1016/j.tics.2012.05.003. [DOI] [PubMed] [Google Scholar]

- Arnal LH, Wyart V, Giraud AL. Transitions in neural oscillations reflect prediction errors generated in audiovisual speech. Nat Neurosci. 2011;14:797–801. doi: 10.1038/nn.2810. [DOI] [PubMed] [Google Scholar]

- Arnal LH, Doelling KB, Poeppel D. Delta-beta coupled oscillations underlie temporal prediction accuracy. Cereb Cortex. 2014;25:3077–3085. doi: 10.1093/cercor/bhu103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bartolo R, Merchant H. β oscillations are linked to the initiation of sensory-cued movement sequences and the internal guidance of regular tapping in the monkey. J Neurosci. 2015;35:4635–4640. doi: 10.1523/JNEUROSCI.4570-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bartolo R, Prado L, Merchant H. Information processing in the primate basal ganglia during sensory-guided and internally driven rhythmic tapping. J Neurosci. 2014;34:3910–3923. doi: 10.1523/JNEUROSCI.2679-13.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bauer AKR, Kreutz G, Herrmann CS. Individual musical tempo preference correlates with EEG beta rhythm. Psychophysiology. 2015;52:600–604. doi: 10.1111/psyp.12375. [DOI] [PubMed] [Google Scholar]

- Brittain JS, Brown P. Oscillations and the basal ganglia: motor control and beyond. Neuroimage. 2014;85:637–647. doi: 10.1016/j.neuroimage.2013.05.084. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brochard R, Abecasis D, Potter D, Ragot R, Drake C. The “ticktock” of our internal clock: direct brain evidence of subjective accents in isochronous sequences. Psychol Sci. 2003;14:362–366. doi: 10.1111/1467-9280.24441. [DOI] [PubMed] [Google Scholar]

- Cirelli LK, Bosnyak D, Manning FC, Spinelli C, Marie C, Fujioka T, Ghahremani A, Trainor LJ. Beat-induced fluctuations in auditory cortical beta-band activity: using EEG to measure age-related changes. Front Psychol. 2014;5:742. doi: 10.3389/fpsyg.2014.00742. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cornwell BR, Johnson LL, Holroyd T, Carver FW, Grillon C. Human hippocampal and parahippocampal theta during goal-directed spatial navigation predicts performance on a virtual Morris water maze. J Neurosci. 2008;28:5983–5990. doi: 10.1523/JNEUROSCI.5001-07.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014. [DOI] [PubMed] [Google Scholar]

- Cravo AM, Rohenkohl G, Wyart V, Nobre AC. Endogenous modulation of low frequency oscillations by temporal expectations. J Neurophysiol. 2011;106:2964–2972. doi: 10.1152/jn.00157.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Desain P, Honing H. The formation of rhythmic categories and metric priming. Perception. 2003;32:341–365. doi: 10.1068/p3370. [DOI] [PubMed] [Google Scholar]

- Drake C. Reproduction of musical rhythms by children, adult musicians, and adult nonmusicians. Percept Psychophys. 1993;53:25–33. doi: 10.3758/BF03211712. [DOI] [PubMed] [Google Scholar]

- Fujioka T, Trainor LJ, Large EW, Ross B. Beta and gamma rhythms in human auditory cortex during musical beat processing. Ann N Y Acad Sci. 2009;1169:89–92. doi: 10.1111/j.1749-6632.2009.04779.x. [DOI] [PubMed] [Google Scholar]

- Fujioka T, Zendel BR, Ross B. Endogenous neuromagnetic activity for mental hierarchy of timing. J Neurosci. 2010;30:3458–3466. doi: 10.1523/JNEUROSCI.3086-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fujioka T, Trainor LJ, Large EW, Ross B. Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations. J Neurosci. 2012;32:1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012.

- Grube M, Griffiths TD. Metricality-enhanced temporal encoding and the subjective perception of rhythmic sequences. Cortex. 2009;45:72–79. doi: 10.1016/j.cortex.2008.01.006.

- Hannon EE, Trainor LJ. Music acquisition: effects of enculturation and formal training on development. Trends Cogn Sci. 2007;11:466–472. doi: 10.1016/j.tics.2007.08.008.

- Hasnain MK, Fox PT, Woldorff MG. Intersubject variability of functional areas in the human visual cortex. Hum Brain Mapp. 1998;6:301–315. doi: 10.1002/(SICI)1097-0193(1998)6:4<301::AID-HBM8>3.0.CO;2-7.

- Herrojo Ruiz M, Brücke C, Nikulin VV, Schneider GH, Kühn AA. Beta-band amplitude oscillations in the human internal globus pallidus support the encoding of sequence boundaries during initial sensorimotor sequence learning. Neuroimage. 2014;85:779–793. doi: 10.1016/j.neuroimage.2013.05.085.

- Ille N, Berg P, Scherg M. Artifact correction of the ongoing EEG using spatial filters based on artifact and brain signal topographies. J Clin Neurophysiol. 2002;19:113–124. doi: 10.1097/00004691-200203000-00002.

- Jazayeri M, Shadlen MN. Temporal context calibrates interval timing. Nat Neurosci. 2010;13:1020–1026. doi: 10.1038/nn.2590.

- Jenkinson N, Brown P. New insights into the relationship between dopamine, beta oscillations and motor function. Trends Neurosci. 2011;34:611–618. doi: 10.1016/j.tins.2011.09.003.

- Jensen O, Goel P, Kopell N, Pohja M, Hari R, Ermentrout B. On the human sensorimotor-cortex beta rhythm: sources and modeling. Neuroimage. 2005;26:347–355. doi: 10.1016/j.neuroimage.2005.02.008.

- Jones MR, Boltz M. Dynamic attending and responses to time. Psychol Rev. 1989;96:459–491. doi: 10.1037/0033-295X.96.3.459.

- Jones MR, Moynihan H, MacKenzie N, Puente J. Temporal aspects of stimulus-driven attending in dynamic arrays. Psychol Sci. 2002;13:313–319. doi: 10.1111/1467-9280.00458.

- Jurkiewicz MT, Gaetz WC, Bostan AC, Cheyne D. Post-movement beta rebound is generated in motor cortex: evidence from neuromagnetic recordings. Neuroimage. 2006;32:1281–1289. doi: 10.1016/j.neuroimage.2006.06.005.

- Kester DB, Saykin AJ, Sperling MR, O'Connor MJ, Robinson LJ, Gur RC. Acute effect of anterior temporal lobectomy on musical processing. Neuropsychologia. 1991;29:703–708. doi: 10.1016/0028-3932(91)90104-G.

- Kobayashi T, Kuriki S. Principal component elimination method for the improvement of S/N in evoked neuromagnetic field measurements. IEEE Trans Biomed Eng. 1999;46:951–958. doi: 10.1109/10.775405.

- Kononowicz TW, van Rijn H. Single trial beta oscillations index time estimation. Neuropsychologia. 2015;75:381–389. doi: 10.1016/j.neuropsychologia.2015.06.014.

- Kotz SA, Schwartze M. Cortical speech processing unplugged: a timely subcortico-cortical framework. Trends Cogn Sci. 2010;14:392–399. doi: 10.1016/j.tics.2010.06.005.

- Kropotov JD. Beta rhythms. In: Kropotov JD, editor. Quantitative EEG, event-related potentials and neurotherapy. London, UK: Elsevier; 2009. pp. 59–76.

- Lakatos P, Shah AS, Knuth KH, Ulbert I, Karmos G, Schroeder CE. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005;94:1904–1911. doi: 10.1152/jn.00263.2005.

- Leventhal DK, Gage GJ, Schmidt R, Pettibone JR, Case AC, Berke JD. Basal ganglia beta oscillations accompany cue utilization. Neuron. 2012;73:523–536. doi: 10.1016/j.neuron.2011.11.032.

- Lewis PA, Miall RC. Distinct systems for automatic and cognitively controlled time measurement: evidence from neuroimaging. Curr Opin Neurobiol. 2003;13:250–255. doi: 10.1016/S0959-4388(03)00036-9.

- Liégeois-Chauvel C, Peretz I, Babai M, Laguitton V, Chauvel P. Contribution of different cortical areas in the temporal lobes to music processing. Brain. 1998;121(Pt 10):1853–1867. doi: 10.1093/brain/121.10.1853.

- Lobaugh NJ, West R, McIntosh AR. Spatiotemporal analysis of experimental differences in event-related potential data with partial least squares. Psychophysiology. 2001;38:517–530. doi: 10.1017/S0048577201991681.

- McIntosh AR, Lobaugh NJ. Partial least squares analysis of neuroimaging data: applications and advances. Neuroimage. 2004;23(Suppl 1):S250–S263. doi: 10.1016/j.neuroimage.2004.07.020.

- McIntosh AR, Bookstein FL, Haxby JV, Grady CL. Spatial pattern analysis of functional brain images using partial least squares. Neuroimage. 1996;3:143–157. doi: 10.1006/nimg.1996.0016.

- Merchant H, Grahn J, Trainor L, Rohrmeier M, Fitch WT. Finding the beat: a neural perspective across humans and non-human primates. Philos Trans R Soc Lond B Biol Sci. 2015;370:20140093. doi: 10.1098/rstb.2014.0093.

- Morillon B, Schroeder CE, Wyart V. Motor contributions to the temporal precision of auditory attention. Nat Commun. 2014;5:5255. doi: 10.1038/ncomms6255.

- Näätänen R, Picton T. The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology. 1987;24:375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- Neuper C, Wörtz M, Pfurtscheller G. ERD/ERS patterns reflecting sensorimotor activation and deactivation. Prog Brain Res. 2006;159:211–222. doi: 10.1016/S0079-6123(06)59014-4.

- Nobre A, Correa A, Coull J. The hazards of time. Curr Opin Neurobiol. 2007;17:465–470. doi: 10.1016/j.conb.2007.07.006.

- Nozaradan S, Peretz I, Missal M, Mouraux A. Tagging the neuronal entrainment to beat and meter. J Neurosci. 2011;31:10234–10240. doi: 10.1523/JNEUROSCI.0411-11.2011.

- Pantev C, Oostenveld R, Engelien A, Ross B, Roberts LE, Hoke M. Increased auditory cortical representation in musicians. Nature. 1998;392:811–814. doi: 10.1038/33918.

- Pfurtscheller G, Lopes da Silva FH. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin Neurophysiol. 1999;110:1842–1857. doi: 10.1016/S1388-2457(99)00141-8.

- Pollok B, Südmeyer M, Gross J, Schnitzler A. The oscillatory network of simple repetitive bimanual movements. Brain Res Cogn Brain Res. 2005a;25:300–311. doi: 10.1016/j.cogbrainres.2005.06.004.

- Pollok B, Gross J, Müller K, Aschersleben G, Schnitzler A. The cerebral oscillatory network associated with auditorily paced finger movements. Neuroimage. 2005b;24:646–655. doi: 10.1016/j.neuroimage.2004.10.009.

- Potter DD, Fenwick M, Abecasis D, Brochard R. Perceiving rhythm where none exists: Event-related potential (ERP) correlates of subjective accenting. Cortex. 2009;45:103–109. doi: 10.1016/j.cortex.2008.01.004.

- Przybylski L, Bedoin N, Krifi-Papoz S, Herbillon V, Roch D, Léculier L, Kotz SA, Tillmann B. Rhythmic auditory stimulation influences syntactic processing in children with developmental language disorders. Neuropsychology. 2013;27:121–131. doi: 10.1037/a0031277.

- Repp BH. Sensorimotor synchronization: a review of the tapping literature. Psychon Bull Rev. 2005;12:969–992. doi: 10.3758/BF03206433.

- Repp BH. Do metrical accents create illusory phenomenal accents? Atten Percept Psychophys. 2010;72:1390–1403. doi: 10.3758/APP.72.5.1390.

- Repp BH, Su YH. Sensorimotor synchronization: a review of recent research (2006–2012). Psychon Bull Rev. 2013;20:403–452. doi: 10.3758/s13423-012-0371-2.

- Riggs L, Moses SN, Bardouille T, Herdman AT, Ross B, Ryan JD. A complementary analytic approach to examining medial temporal lobe sources using magnetoencephalography. Neuroimage. 2009;45:627–642. doi: 10.1016/j.neuroimage.2008.11.018.

- Robinson SE. Localization of event-related activity by SAM(erf). Neurol Clin Neurophysiol. 2004;2004:109.

- Robinson SE, Vrba J. Functional neuroimaging by synthetic aperture magnetometry. In: Yoshimoto T, Kotani M, Kuriki S, Karibe H, Nakasato N, editors. Recent advances in biomagnetism. Sendai, Japan: Tohoku UP; 1999. pp. 302–305.

- Ross B, Snyder JS, Aalto M, McDonald KL, Dyson BJ, Schneider B, Alain C. Neural encoding of sound duration persists in older adults. Neuroimage. 2009;47:678–687. doi: 10.1016/j.neuroimage.2009.04.051.

- Saleh M, Reimer J, Penn R, Ojakangas CL, Hatsopoulos NG. Fast and slow oscillations in human primary motor cortex predict oncoming behaviorally relevant cues. Neuron. 2010;65:461–471. doi: 10.1016/j.neuron.2010.02.001.

- Samar VJ, Bopardikar A, Rao R, Swartz K. Wavelet analysis of neuroelectric waveforms: a conceptual tutorial. Brain Lang. 1999;66:7–60. doi: 10.1006/brln.1998.2024.

- Schaefer RS, Vlek RJ, Desain P. Decomposing rhythm processing: electroencephalography of perceived and self-imposed rhythmic patterns. Psychol Res. 2011;75:95–106. doi: 10.1007/s00426-010-0293-4.

- Steinstraeter O, Teismann IK, Wollbrink A, Suntrup S, Stoeckigt K, Dziewas R, Pantev C. Local sphere-based co-registration for SAM group analysis in subjects without individual MRI. Exp Brain Res. 2009;193:387–396. doi: 10.1007/s00221-008-1634-z.

- Stewart L, von Kriegstein K, Warren JD, Griffiths TD. Music and the brain: disorders of musical listening. Brain. 2006;129:2533–2553. doi: 10.1093/brain/awl171.

- Teale P, Pasko B, Collins D, Rojas D, Reite M. Somatosensory timing deficits in schizophrenia. Psychiatry Res. 2013;212:73–78. doi: 10.1016/j.pscychresns.2012.11.007.

- Tesche CD, Uusitalo MA, Ilmoniemi RJ, Huotilainen M, Kajola M, Salonen O. Signal-space projections of MEG data characterize both distributed and well-localized neuronal sources. Electroencephalogr Clin Neurophysiol. 1995;95:189–200. doi: 10.1016/0013-4694(95)00064-6.

- Thaut MH, McIntosh GC, Hoemberg V. Neurobiological foundations of neurologic music therapy: rhythmic entrainment and the motor system. Front Psychol. 2015;5:1185. doi: 10.3389/fpsyg.2014.01185.

- Toiviainen P, Luck G, Thompson M. Embodied metre: hierarchical eigenmodes in spontaneous movement to music. Cogn Process. 2009;10(Suppl 2):S325–S327. doi: 10.1007/s10339-009-0304-9.

- Toyomura A, Fujii T, Kuriki S. Effect of an 8-week practice of externally triggered speech on basal ganglia activity of stuttering and fluent speakers. Neuroimage. 2015;109:458–468. doi: 10.1016/j.neuroimage.2015.01.024.

- Trainor LJ, Hannon EE. Musical development. In: Deutsch D, editor. The psychology of music. London: Elsevier; 2013. pp. 423–498.

- van Ede F, de Lange F, Jensen O, Maris E. Orienting attention to an upcoming tactile event involves a spatially and temporally specific modulation of sensorimotor alpha- and beta-band oscillations. J Neurosci. 2011;31:2016–2024. doi: 10.1523/JNEUROSCI.5630-10.2011.

- Van Veen BD, van Drongelen W, Yuchtman M, Suzuki A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans Biomed Eng. 1997;44:867–880. doi: 10.1109/10.623056.

- Vrba J. Magnetoencephalography: the art of finding a needle in a haystack. Physica C Superconductivity Appl. 2002;368:1–9. doi: 10.1016/S0921-4534(01)01131-5.

- Vrba J, Robinson SE. Signal processing in magnetoencephalography. Methods. 2001;25:249–271. doi: 10.1006/meth.2001.1238.

- Vuust P, Gebauer LK, Witek MA. Neural underpinnings of music: the polyrhythmic brain. Adv Exp Med Biol. 2014;829:339–356. doi: 10.1007/978-1-4939-1782-2_18.

- Wiener M, Turkeltaub P, Coslett HB. The image of time: a voxel-wise meta-analysis. Neuroimage. 2010;49:1728–1740. doi: 10.1016/j.neuroimage.2009.09.064.

- Wilson SJ, Pressing JL, Wales RJ. Modelling rhythmic function in a musician post-stroke. Neuropsychologia. 2002;40:1494–1505. doi: 10.1016/S0028-3932(01)00198-1.

- Zatorre RJ, Chen JL, Penhune VB. When the brain plays music: auditory-motor interactions in music perception and production. Nat Rev Neurosci. 2007;8:547–558. doi: 10.1038/nrn2152.