Abstract

Classical animal visual deprivation studies and human neuroimaging studies have shown that visual experience plays a critical role in shaping the functionality and connectivity of the visual cortex. Interestingly, recent studies have additionally reported circumscribed regions in the visual cortex in which functional selectivity was remarkably similar in individuals with and without visual experience. Here, by directly comparing resting-state and task-based fMRI data in congenitally blind and sighted human subjects, we obtained large-scale continuous maps of the degree to which connectional and functional “fingerprints” of ventral visual cortex depend on visual experience. We found a close agreement between connectional and functional maps, pointing to a strong interdependence of connectivity and function. Visual experience (or the absence thereof) had a pronounced effect on the resting-state connectivity and functional response profile of occipital cortex and the posterior lateral fusiform gyrus. By contrast, connectional and functional fingerprints in the anterior medial and posterior lateral parts of the ventral visual cortex were statistically indistinguishable between blind and sighted individuals. These results provide a large-scale mapping of the influence of visual experience on the development of both functional and connectivity properties of visual cortex, which serves as a basis for the formulation of new hypotheses regarding the functionality and plasticity of specific subregions.

SIGNIFICANCE STATEMENT How are the functionality and connectivity of the visual cortex shaped by visual experience? By directly comparing resting-state and task-based fMRI data in congenitally blind and sighted subjects, we obtained large-scale continuous maps of the degree to which connectional and functional “fingerprints” of ventral visual cortex depend on visual experience. In addition to revealing regions that are strongly dependent on visual experience (early visual cortex and posterior fusiform gyrus), our results showed regions in which connectional and functional patterns are highly similar in blind and sighted individuals (anterior medial and posterior lateral ventral occipital temporal cortex). These results serve as a basis for the formulation of new hypotheses regarding the functionality and plasticity of specific subregions of the visual cortex.

Keywords: blind, connectional fingerprints, functional fingerprints, resting-state functional connectivity, ventral visual cortex, visual experience

Introduction

The ventral occipital-temporal cortex (VOTC) is primarily implicated in visual object recognition. This is achieved through a hierarchical (interactive) process that proceeds from V1, which processes simple visual features such as line orientation, to higher-level visual regions in inferior temporal cortex, which process more complex visual properties (Felleman and Van Essen, 1991). Although the role of VOTC in vision is well characterized, its involvement in processing input from other modalities is less clear (Morrell, 1972; Gibson and Maunsell, 1997; for a review, see Ghazanfar and Schroeder, 2006). In the present study, we provide large-scale maps of the degree to which the functionality and connectivity of VOTC are shaped by visual experience, revealing both visual and nonvisual parts of VOTC.

Animal visual deprivation studies (Hubel et al., 1977; Heil et al., 1991) and human brain imaging studies with congenitally blind subjects (Sadato et al., 1996, 1998; Cohen et al., 1997; Büchel et al., 1998; Burton et al., 2002; Burton, 2003; Amedi et al., 2003; Lewis et al., 2010; for reviews, see Merabet et al., 2005; Pascual-Leone et al., 2005) have shown that visual input plays a critical role in shaping early visual cortical function. Absence of visual experience early in life also affects the functionality of VOTC regions beyond early visual cortex, such as regions selective for faces (Goyal et al., 2006) or motion (Saenz et al., 2008; Bedny et al., 2010). These functional changes are reflected in altered anatomical and functional connectivity patterns of visual cortex in early blind adults (Liu et al., 2007; Bedny et al., 2010; Watkins et al., 2012; Butt et al., 2013; Burton et al., 2014; Wang et al., 2014; for reviews, see Bock and Fine, 2014; Ricciardi et al., 2014).

Intriguingly, a set of recent findings showed that the categorical response preferences of other parts of VOTC are surprisingly independent of visual experience (for review, see Ricciardi et al., 2014). For example, several category-preferring regions in VOTC respond similarly (in nonvisual tasks) in the congenitally blind and the sighted, including selectivity for scenes and large objects in the parahippocampal place area (Wolbers et al., 2011; He et al., 2013), for tools in left lateral occipital-temporal cortex (Peelen et al., 2013), and for bodies in the right extrastriate body area (Kitada et al., 2014; using a training procedure: Striem-Amit and Amedi, 2014). These results suggest that some parts of VOTC are relatively polymodal, or metamodal (Pascual-Leone and Hamilton, 2001) in nature, exhibiting similar functional profiles in terms of category preference in the absence of visual input/experience.

In sum, previous studies with blind subjects have used specific contrasts to test hypotheses about the influence of visual experience on circumscribed regions of VOTC, reporting evidence for both visually dependent and visually independent regions. The goal of the present study was to systematically map out, across the whole VOTC, the influence of visual experience on functional fingerprints (response patterns to 16 object categories) and connectional fingerprints (functional connectivity patterns during rest, expressed as vectors of correlations between resting-state time series; Biswal et al., 1995). We characterized the functional and connectional profiles of regions within VOTC that were strongly influenced by visual experience, showing divergent functional and connectivity patterns between the blind and sighted groups, as well as regions within VOTC that appeared polymodal, showing highly similar patterns in the two groups.

Materials and Methods

Participants

Thirteen congenitally blind subjects (4 females) and 16 sighted control subjects (7 females) participated in the object category experiments. All blind subjects reported that they had been blind since birth. Because medical records of the onset of blindness were not available for most subjects, we cannot rule out that some of them had vision very early in life. None of the subjects remembered ever having been able to recognize shapes visually. All blind subjects were examined by an ophthalmologist to confirm their blindness and, where possible, to establish its cause. Five blind subjects reported having had faint light perception in the past. Three blind subjects had faint light perception at the time of testing. Detailed diagnoses for all blind subjects are listed in Table 1. The blind and sighted groups were matched on handedness (all right-handed), age (blind: mean ± SD = 38 ± 12 years, range = 18–58 years; sighted: mean ± SD = 43 ± 11 years, range = 26–59 years; t(27) < 1), and years of education (blind: mean ± SD = 11 ± 3 years, range = 0–12 years; sighted: mean ± SD = 12 ± 2 years, range = 9–15 years; t(27) < 1).

Table 1.

Background information of congenitally blind subjects

| Subject | Age (yr), gender | Years of education | Cause of blindness | Light perception | Reported age at blindness onset (yr) | Experiments participated in |
|---|---|---|---|---|---|---|
| 1 | 21, M | 12 | Congenital microphthalmia | None | 0 | Task-fMRI |
| 2 | 18, M | 12 | Congenital glaucoma and cataracts | None | 0 | Task-fMRI |
| 3 | 23, M | 0 | Congenital anophthalmos | None | 0 | Task-fMRI |
| 4 | 30, M | 12 | Congenital microphthalmia and microcornea | None | 0 | Task-fMRI |
| 5 | 38, F | 12 | Congenital glaucoma | None | 0 | Both |
| 6 | 45, M | 12 | Congenital microphthalmia; cataracts; leukoma | None | 0 | Both |
| 7 | 44, F | 9 | Congenital glaucoma | None | 0 | Both |
| 8 | 46, F | 12 | Cataracts; congenital eyeball dysplasia | Faint | 0 | Both |
| 9 | 53, M | 12 | Congenital eyeball dysplasia | None | 0 | Both |
| 10 | 36, M | 12 | Congenital leukoma | Faint | 0 | Both |
| 11 | 36, F | 12 | Congenital optic nerve atrophy | Faint | 0 | Both |
| 12 | 58, M | 9 | Congenital glaucoma and leukoma | None | 0 | Both |
| 13 | 41, M | 12 | Congenital glaucoma | None | 0 | Both |
| 14 | 28, F | 15 | Congenital microphthalmia; microcornea; leukoma | Faint | 0 | Resting-state fMRI |
| 15 | 37, F | 12 | No professional medical establishment of cause of blindness | Faint | 0 | Resting-state fMRI |
| 16 | 60, M | 12 | No professional medical establishment of cause of blindness | Faint | 0 | Resting-state fMRI |
| 17 | 46, M | 12 | No professional medical establishment of cause of blindness | Faint | 0 | Resting-state fMRI |
| 18 | 54, F | 9 | No professional medical establishment of cause of blindness | Faint | 0 | Resting-state fMRI |

Resting-state fMRI data were acquired from 14 congenitally blind subjects (7 females; mean age = 45 years, range = 26–60 years), 9 of whom also participated in our object category experiments (Table 1), and from 34 right-handed sighted subjects (20 females; age: mean ± SD = 22.5 ± 1.3 years, range = 20–26 years) who took part in separate studies (Wei et al., 2012; Peelen et al., 2013). The potential confound of the age difference between the two groups was examined in a control analysis using a separate group of 7 sighted adults who were age-matched with the congenitally blind group (2 females; age: mean ± SD = 42 ± 10 years, range = 26–54 years; years of education: mean ± SD = 12 ± 2 years, range = 9–15 years; all right-handed; 2 of them also took part in the object category experiment).

All subjects were native Mandarin Chinese speakers. None had any history of psychiatric or neurological disorders or of head injury. All subjects provided informed consent and received monetary compensation for their participation. The study was approved by the institutional review board of the Beijing Normal University Imaging Center for Brain Research.

Stimuli

The stimuli consisted of 18 categories, each containing 18 items. The 18 categories were human face parts, human body parts, daily scenes, tools, mammals, reptiles, birds, fishes, bugs, fruits and vegetables, flowers, preserved food, clothes, musical instruments, vehicles, furniture, celebrities, and famous places (for an exemplar of each category, see Table 2). Our analyses focused on the first 16 categories, which are common objects. Celebrities (presented as faces in the visual experiment) and famous places may be represented differently in the brain (e.g., Ross and Olson, 2012) and were therefore not analyzed in this paper. This set of categories was chosen (1) to cover a range of common object categories that people know about and interact with in daily life and (2) to include categories that have previously been shown to evoke relatively distinct response patterns in VOTC (e.g., tools, animals, bodies, faces, furniture, scenes). Our set was a subset of the categories used in an earlier study of sighted subjects (Downing et al., 2006).

Table 2.

Stimulus characteristics

| Category | Exemplar stimulus | Word frequency (log), mean | Word frequency (log), SD | Familiarity, sighted, mean | Familiarity, sighted, SD | Familiarity, blind, mean | Familiarity, blind, SD |
|---|---|---|---|---|---|---|---|
| Bird | Pigeon | 0.4 | 0.4 | 4.9 | 1.0 | 4.1 | 1.0 |
| Body parts | Palm | 0.8 | 0.5 | 6.7 | 0.4 | 6.9 | 0.1 |
| Bug | Ladybug | 0.4 | 0.5 | 5.2 | 0.9 | 4.6 | 1 |
| Clothing | Jacket | 0.6 | 0.5 | 6.5 | 0.5 | 6.3 | 0.6 |
| Face parts | Nose | 1.0 | 0.6 | 6.6 | 0.3 | 6.8 | 0.3 |
| Fish | Goldfish | 0.2 | 0.3 | 4.3 | 0.8 | 3.9 | 0.9 |
| Flower | Rose | 0.4 | 0.4 | 4.6 | 0.9 | 4.1 | 1.0 |
| Food | Dumpling | 0.4 | 0.4 | 6.4 | 0.5 | 6.5 | 0.5 |
| Furniture | Bed | 0.6 | 0.5 | 6.2 | 0.4 | 6.1 | 0.7 |
| Fruits and vegetables | Apple | 0.7 | 0.5 | 6.6 | 0.5 | 6.6 | 0.5 |
| Mammal | Cat | 0.6 | 0.6 | 5.1 | 1.2 | 4.3 | 0.8 |
| Musical instruments | Piano | 0.4 | 0.5 | 4.3 | 1.1 | 4.8 | 1.0 |
| Reptile | Turtle | 0.2 | 0.5 | 4.1 | 1.0 | 3.4 | 0.8 |
| Scene | Kitchen | 1.0 | 0.6 | 6 | 0.5 | 5.7 | 0.7 |
| Tool | Hammer | 0.6 | 0.5 | 6.4 | 0.6 | 6.4 | 0.7 |
| Vehicle | Bus | 0.8 | 0.7 | 5.9 | 0.8 | 5.1 | 0.9 |

Familiarity was rated on a scale of 1–7 (7 = most familiar).

In the visual object category experiment, stimuli were grayscale photographs (400 × 400 pixels, subtending 10.55° × 10.55° of visual angle); in the auditory experiment, they were spoken object names, digitally recorded (22,050 Hz, 16 bit) from a female native Mandarin speaker. Stimulus presentation was controlled by E-prime (Schneider et al., 2002). Word length did not differ significantly across the 16 categories (number of one-, two-, or three-syllable words: χ² = 1.807, df = 15, p > 0.05). Names of face parts, body parts, scenes, and vehicles tended to be of higher frequency, and those of reptiles and fish were of low frequency (Table 2). Familiarity ratings collected from both sighted and blind subjects showed that face parts, body parts, clothing, food, fruits and vegetables, and tools were the most familiar, and fish, reptiles, and birds were the least familiar (Table 2). The familiarity ratings of the 16 object categories were highly correlated between subject groups (Spearman's ρ = 0.95, p < 0.001).

Design and task

A size judgment task was adopted (Mahon et al., 2009; He et al., 2013; Peelen et al., 2013) to encourage subjects to retrieve object information. The auditory and visual versions of the experiment had the same structure. Each object category experiment consisted of 12 runs of 348 s each. Each run started and ended with a 12 s fixation block (visual experiment) or silence block (auditory experiment). Between these blocks, eighteen 9 s task blocks, one per category, were presented with an interblock interval of 9 s. In each task block, six items from the same category were presented sequentially, 1.5 s per item. Thus, each object category was presented in 12 blocks across the 12 runs of each experiment, yielding 72 item presentations per category, with each of the 18 items in a category repeated 4 times. For each category, items were presented in order of increasing real-world object size in half of the blocks and in random order in the remaining blocks. The order of the 12 runs and of the 18 blocks within each run was pseudorandomized across subjects, with no more than 4 successive blocks in a run requiring the same judgment (ascending order or not; see below).
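The stated timing parameters can be cross-checked with a few lines of arithmetic. The sketch below is a hypothetical reconstruction, not the authors' E-prime script: it assumes each 9 s task block is followed by a 9 s interval (one arrangement under which the durations sum to 348 s), takes TR = 2 s from the acquisition parameters, and the helper names are our own.

```python
# Hypothetical reconstruction of one run's timing (not the original E-prime script).
# Assumption: every 9 s task block is followed by a 9 s interval.
TR = 2.0               # s, from the EPI parameters
FIXATION = 12.0        # s, leading and trailing fixation/silence block
BLOCK = 9.0            # s, one category block
INTERVAL = 9.0         # s, interblock interval
N_BLOCKS = 18          # one block per category per run
ITEM = 1.5             # s per item
ITEMS_PER_BLOCK = 6
N_RUNS = 12


def block_onsets():
    """Onset (s) of each task block within a run."""
    return [FIXATION + k * (BLOCK + INTERVAL) for k in range(N_BLOCKS)]


def run_duration():
    """Run length (s): fixation + 18 x (block + interval) + fixation."""
    return FIXATION + N_BLOCKS * (BLOCK + INTERVAL) + FIXATION


def longest_streak(judgments):
    """Longest run of identical consecutive judgments (e.g., True = ascending).
    The design constraint corresponds to longest_streak(order) <= 4."""
    best = current = 0
    previous = object()  # sentinel that never equals a judgment
    for j in judgments:
        current = current + 1 if j == previous else 1
        best = max(best, current)
        previous = j
    return best


if __name__ == "__main__":
    print(run_duration())            # 348.0
    print(run_duration() / TR)       # 174.0 volumes per run
    print(N_RUNS * ITEMS_PER_BLOCK)  # 72 presentations per category
```

A candidate block order could be checked against the "no more than 4 successive blocks" rule with `longest_streak` before being accepted.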

Auditory object category experiment.

All subjects were asked to keep their eyes closed throughout the experiment and to listen carefully to groups of six spoken words presented binaurally through headphones. Subjects were instructed to think about each item and to press one button with the right index finger if the items were presented in ascending order of real-world object size, and the other button with the right middle finger otherwise. Responses were made after the onset of a response cue (a 300 ms tone) immediately following the last item of the block.

Visual object category experiment.

Sighted subjects viewed the stimuli, grayscale photographs presented at the center of the screen, through a mirror attached to the head coil. Subjects were instructed to think about the real-world size of each item and to press one button with the right index finger if the items were presented in order of increasing real-world object size, and the other button with the right middle finger otherwise. Responses were made when the central fixation dot turned from red to green, immediately after the offset of the last item of the block.

Image acquisition

All fMRI and structural MRI data were collected using a 3T Siemens Trio Tim Scanner at the Beijing Normal University MRI center.

For the object category fMRI experiments, high-resolution anatomical 3D MP-RAGE images were collected in the sagittal plane (144 slices, TR = 2530 ms, TE = 3.39 ms, flip angle = 7°, matrix size = 256 × 256, voxel size = 1.33 × 1 × 1.33 mm). BOLD activity was measured with an EPI sequence covering the whole cerebral cortex and most of the cerebellum (33 axial slices, TR = 2000 ms, TE = 30 ms, flip angle = 90°, matrix size = 64 × 64, voxel size = 3 × 3 × 3.5 mm with a 0.7 mm gap).

Fourteen congenitally blind and 34 sighted subjects underwent a resting-state scan in separate studies. Subjects were instructed to close their eyes and to not fall asleep. The resting-state scan lasted 6 min 40 s for the congenitally blind group and 8 min for the sighted group.

A T1-weighted MP-RAGE structural image with the same scanning parameters as reported above and 200 volumes of resting-state functional images using an EPI sequence (32 axial slices, TR = 2000 ms, TE = 33 ms, flip angle = 73°, matrix size = 64 × 64, voxel size = 3.125 × 3.125 × 4 mm with a 0.6 mm gap) were acquired for each of the 14 blind subjects. For the 34 sighted subjects, a T1-weighted structural MP-RAGE image in the sagittal plane (128 slices; TR = 2530 ms, TE = 3.39 ms, flip angle = 7°, matrix size = 64 × 64, voxel size = 1.3 × 1 × 1.3 mm) and 240 volumes of resting-state functional images using an EPI sequence (33 axial slices, TR = 2000 ms, TE = 30 ms, flip angle = 90°, matrix size = 64 × 64, voxel size = 3.1 × 3.1 × 3.5 mm with a 0.6 mm gap) were acquired.

Data preprocessing

Object category experiment data were preprocessed using Statistical Parametric Mapping software (SPM8; http://www.fil.ion.ucl.ac.uk/spm/) and the advanced edition of DPARSF version 2.1 (Chao-Gan and Yu-Feng, 2010). The first six volumes (12 s) of each functional run were discarded to allow for signal equilibration. Functional data were motion-corrected, high-pass filtered (cutoff: 0.008 Hz) to remove low-frequency drifts, and normalized to MNI space using unified segmentation. The functional images were then resampled to 3 mm isotropic voxels and spatially smoothed with a 6 mm FWHM Gaussian kernel.

Resting-state data were preprocessed and analyzed using SPM 8, DPARSF version 2.1, and Resting-State fMRI Data Analysis Toolkit version 1.5 (Song et al., 2011). The first 6 min 40 s of the sighted group's resting-state data were analyzed so that the two subject groups had matching lengths of resting-state time-series. Functional data were resampled to 3 mm isotropic voxels. The first 10 volumes of the functional images were discarded. Preprocessing of the functional data included slice timing correction, head motion correction, spatial normalization to the MNI space using unified segmentation, spatial smoothing with 6 mm FWHM Gaussian kernel, linear trend removal, and bandpass filtering (0.01–0.1 Hz). Six head motion parameters, global mean signals, white matter, and CSF signals were regressed out as nuisance covariates. Global mean, white matter, and CSF signals were calculated as the mean signals in SPM's whole-brain mask (brainmask.nii) thresholded at 50%, white matter mask (white.nii) thresholded at 90%, and CSF mask (csf.nii) thresholded at 70%, respectively (Chao-Gan and Yu-Feng, 2010). As described below, validation analyses were performed to examine the effects of global signal removal, gray matter density difference, and head motion.
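To illustrate the nuisance regression and bandpass filtering steps, the sketch below residualizes voxel time series on a set of confound regressors and then applies an FFT-based ideal bandpass filter (0.01–0.1 Hz, TR = 2 s). It is a minimal NumPy approximation under these assumptions, not the DPARSF or REST implementation, whose filtering and regression details may differ; the function names are hypothetical.

```python
import numpy as np


def regress_out(data, confounds):
    """Residualize each column of `data` (time x voxels) on `confounds`
    (time x k, e.g., 6 motion parameters plus global, WM, and CSF signals).
    An intercept column is included, so the mean is removed as well."""
    data = np.asarray(data, dtype=float)
    X = np.column_stack([np.ones(len(data)), np.asarray(confounds, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, data, rcond=None)
    return data - X @ beta


def ideal_bandpass(data, tr=2.0, low=0.01, high=0.1):
    """Zero all Fourier components outside [low, high] Hz along the time axis."""
    data = np.asarray(data, dtype=float)
    n = data.shape[0]
    freqs = np.fft.rfftfreq(n, d=tr)
    keep = (freqs >= low) & (freqs <= high)
    spectrum = np.fft.rfft(data, axis=0)
    spectrum[~keep] = 0  # removes DC, slow drift, and fast (e.g., respiratory) power
    return np.fft.irfft(spectrum, n=n, axis=0)
```

An ideal (brick-wall) filter is the simplest choice for a fixed-length series; a Butterworth-type filter would be an equally reasonable alternative.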

Data analysis

Object category experiment data were analyzed using the GLM in SPM8. For both the auditory and visual object category experiments, the GLM for each run included 18 predictors corresponding to the 18 category blocks (duration = 9 s), convolved with a canonical HRF, along with one regressor of no interest for the button press in each block (duration = 0) and six regressors of no interest corresponding to the six head motion parameters. For each subject, runs in which head motion exceeded 3 mm or 3° were excluded, and the remaining runs were entered into the analysis. For group effects, a random-effects analysis was conducted separately for each experiment.
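A minimal sketch of how such block regressors can be built is shown below. It assumes a double-gamma HRF with the commonly used SPM shape parameters (peak gamma shape 6, undershoot gamma shape 16, undershoot ratio 1/6), samples the convolved boxcar at scan onsets, and omits the button-press regressor for brevity. It approximates, but is not, SPM8's design-matrix code, and the function names are hypothetical.

```python
import math

import numpy as np


def canonical_hrf(dt=0.1, length=32.0):
    """Double-gamma HRF approximating SPM's canonical shape, normalized to unit sum."""
    t = np.arange(0.0, length, dt)

    def gamma_pdf(x, shape):  # gamma density with scale 1
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def block_regressor(onsets, duration, n_scans, tr=2.0, dt=0.1):
    """Boxcar for the given onsets/duration (s), convolved with the HRF on a
    fine time grid and sampled at the start of each scan."""
    n_fine = int(round(n_scans * tr / dt))
    box = np.zeros(n_fine)
    for onset in onsets:
        box[int(round(onset / dt)):int(round((onset + duration) / dt))] = 1.0
    conv = np.convolve(box, canonical_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return conv[scan_idx]


def design_matrix(onsets_by_category, n_scans, motion, tr=2.0, block=9.0):
    """One HRF-convolved regressor per category, followed by the motion parameters."""
    task = [block_regressor(o, block, n_scans, tr) for o in onsets_by_category]
    return np.column_stack(task + [np.asarray(motion, dtype=float)])
```

For one run of the design described above, `onsets_by_category` would hold one onset per category and `n_scans` would be 174.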

All voxelwise analyses were conducted within a VOTC mask, defined according to three criteria (Mahon et al., 2009): (1) the voxels were activated (random effects, FDR corrected, p < 0.05) in the task versus fixation contrast of the sighted visual experiment; (2) the voxels were within occipitotemporal cortex, as defined by regions 37–56 and 79–90 of the Automated Anatomical Labeling template (Tzourio-Mazoyer et al., 2002); and (3) the voxels had an MNI z coordinate at or below 10.
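The three mask criteria amount to a voxelwise logical AND. The sketch below assumes that one-sided task-versus-fixation p values, AAL label values, and MNI z coordinates are already available as aligned per-voxel arrays, and uses the Benjamini–Hochberg procedure for the FDR step; SPM's own FDR implementation may differ in detail, and the names are illustrative.

```python
import numpy as np

# AAL label values for the occipitotemporal regions listed in the text.
VOTC_AAL_LABELS = list(range(37, 57)) + list(range(79, 91))


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg FDR: boolean array marking p values declared significant."""
    p = np.asarray(pvals, dtype=float).ravel()
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    significant = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()  # largest rank that meets the BH bound
        significant[order[: k + 1]] = True
    return significant.reshape(np.shape(pvals))


def votc_mask(p_task, aal_labels, mni_z, q=0.05):
    """Voxels that are (1) FDR-significant for task > fixation, (2) inside the
    listed AAL regions, and (3) at or below MNI z = 10."""
    active = fdr_bh(p_task, q)
    in_regions = np.isin(aal_labels, VOTC_AAL_LABELS)
    inferior = np.asarray(mni_z) <= 10
    return active & in_regions & inferior
```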

Comparing connectional fingerprints between sighted and congenitally blind groups.

To examine the between-group similarity/dissimilarity of RSFC patterns for each voxel in the VOTC mask, we compared the intrinsic functional connectivity patterns between sighted and congenitally blind groups. Two of the 14 congenitally blind subjects were excluded because of excessive head motion (>2 mm maximum translation or 2° rotation), resulting in 12 blind subjects in the RSFC analysis. There were no significant head motion differences between the remaining blind and sighted subjects: mean/SD of translational motion: blind, 0.36 mm/0.19 mm, sighted, 0.31 mm/0.16 mm; mean/SD of rotational motion: blind, 0.42°/0.23°, sighted, 0.39°/0.26°; two-tailed two-sample t test: p = 0.43 for translational, p = 0.75 for rotational, p = 0.32 for mean framewise displacement computed using the method in Power et al. (2012) and p = 0.22 using the method in Jenkinson et al. (2002).
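Mean framewise displacement in the sense of Power et al. (2012) can be computed from the six realignment parameters as sketched below. The sketch assumes the parameter columns are three translations (mm) followed by three rotations (radians), as in SPM realignment output, converts rotations to displacement on a 50 mm sphere, and assigns the first volume FD = 0; the Jenkinson et al. (2002) variant is not shown, and the function names are hypothetical.

```python
import numpy as np


def framewise_displacement_power(params, radius=50.0):
    """FD per volume: sum of absolute backward differences of the six
    realignment parameters, rotations converted to mm of arc on a sphere
    of `radius` mm. Columns assumed: 3 translations (mm), 3 rotations (rad)."""
    params = np.asarray(params, dtype=float)
    deltas = np.abs(np.diff(params, axis=0))
    deltas[:, 3:] *= radius
    return np.concatenate([[0.0], deltas.sum(axis=1)])


def mean_fd(params):
    """Subject-level summary used for between-group comparison."""
    return framewise_displacement_power(params).mean()
```

The per-subject `mean_fd` values of the two groups would then be compared with a two-sample t test.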

For each voxel in the VOTC mask, its intrinsic functional connectivity pattern was defined as a vector of the strengths of RSFC between the voxel and each of 180 cerebral regions covering the whole cerebrum. The 180 cerebral regions were taken from an atlas generated by parcellating the voxels of the whole brain into 200 regions according to the homogeneity of their RSFC patterns (Craddock et al., 2012); the 20 regions in the cerebellum and brainstem were excluded from our analysis. Connectional fingerprints were constructed at both the individual and group levels. First, for each voxel and each subject, the vector of RSFC strengths was obtained by calculating the Pearson correlation between the time series of the voxel and the mean time series of each of the 180 cerebral regions (including the region in which the seed voxel resided). For each voxel, the RSFC strengths were then Fisher-transformed and averaged across the subjects of each group (sighted, congenitally blind).
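The fingerprint construction can be written compactly in NumPy. This sketch assumes that one subject's preprocessed voxel time series and region-mean time series are given as (time × voxels) and (time × regions) arrays; the clipping constant that keeps the Fisher transform finite is our choice, not part of the original analysis, and the function names are hypothetical.

```python
import numpy as np


def connectional_fingerprints(voxel_ts, region_ts):
    """Fisher-z RSFC between every voxel and every region for one subject.
    voxel_ts: (T, V); region_ts: (T, R). Row v of the result is voxel v's fingerprint."""
    v = np.asarray(voxel_ts, dtype=float)
    r = np.asarray(region_ts, dtype=float)
    v = (v - v.mean(axis=0)) / v.std(axis=0)
    r = (r - r.mean(axis=0)) / r.std(axis=0)
    corr = v.T @ r / v.shape[0]                 # Pearson r for every voxel-region pair
    corr = np.clip(corr, -0.999999, 0.999999)   # keep arctanh finite
    return np.arctanh(corr)


def group_fingerprints(per_subject):
    """Average the Fisher-z fingerprints across the subjects of one group."""
    return np.mean(np.stack(per_subject), axis=0)
```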

In a first analysis, these group-level data were directly compared using correlation analysis: a correlation map was generated by calculating, for each voxel, the Pearson correlation coefficient between the group-level connectional fingerprints (i.e., the 180 values reflecting RSFC strengths) of the sighted and congenitally blind groups. The resulting map represents the degree of between-group RSFC pattern similarity, with voxels showing a relatively high correlation having relatively similar connectional fingerprints in the blind and sighted groups.
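Because the between-group map is a voxelwise correlation of two fingerprint matrices, it can be computed for all voxels at once. The sketch below assumes the sighted and blind group-level fingerprints are stored as (voxels × 180) arrays with matching voxel order; the function name is hypothetical.

```python
import numpy as np


def rowwise_pearson(a, b):
    """Pearson r between row v of `a` and row v of `b`, for every voxel v."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a - a.mean(axis=1, keepdims=True)
    b = b - b.mean(axis=1, keepdims=True)
    num = (a * b).sum(axis=1)
    den = np.sqrt((a ** 2).sum(axis=1) * (b ** 2).sum(axis=1))
    return num / den
```

For example, `rowwise_pearson(sighted_group, blind_group)` would return one similarity value per VOTC voxel.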

In a second analysis, putative group differences and similarities were examined in more detail by statistically comparing within-group and between-group similarities using individual subject data. Specifically, for each voxel, within-blind and within-sighted r values were calculated by correlating each pair of individual-level connectional fingerprints within the congenitally blind group and within the sighted group, respectively. These values were compared with between-group r values, obtained by correlating the individual-level connectional fingerprints of all pairs of blind and sighted subjects. The bootstrap resampling method (Efron and Tibshirani, 1993; Kriegeskorte et al., 2008) was used to test, separately for each voxel, whether within-group similarity (collapsing within-blind and within-sighted values) differed significantly from between-group similarity of connectional fingerprints. This method was chosen because it does not require the data to meet parametric distributional assumptions (Kriegeskorte et al., 2008). Specifically, we bootstrap-resampled the within-group and between-group Fisher-transformed r values and recomputed the mean within-group similarity, the mean between-group similarity, and the difference between them. This process was repeated 1000 times, yielding a distribution of the difference between mean within-group and mean between-group similarity. For each voxel, within-group similarity was considered significantly higher than between-group similarity at significance level α if the lower bound of the (1 − α) confidence interval of the difference was positive. A map indicating the significance level (α = 0.05, 0.01, or 0.001) reached by each voxel was produced.
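The within-versus-between bootstrap for a single voxel can be sketched as follows. This is an illustrative NumPy version under stated assumptions: the (1 − α) interval is taken as a two-sided percentile interval, r values are clipped before the Fisher transform, and r values are resampled directly as described above (so pairs sharing a subject are treated as independent). Function names are hypothetical.

```python
import numpy as np


def fisher_pairwise(blind, sighted):
    """Fisher-z similarities of individual fingerprints at one voxel.
    blind: (n_b, R); sighted: (n_s, R). Returns (within, between) 1-D arrays."""
    n_b = len(blind)
    r = np.corrcoef(np.vstack([blind, sighted]))
    z = np.arctanh(np.clip(r, -0.999999, 0.999999))
    rb, cb = np.triu_indices(n_b, k=1)            # within-blind pairs
    rs, cs = np.triu_indices(len(sighted), k=1)   # within-sighted pairs
    within = np.concatenate([z[rb, cb], z[n_b + rs, n_b + cs]])
    between = z[:n_b, n_b:].ravel()               # all blind x sighted pairs
    return within, between


def bootstrap_diffs(within, between, n_boot=1000, seed=0):
    """Bootstrap distribution of mean(within) - mean(between)."""
    rng = np.random.default_rng(seed)
    diffs = np.empty(n_boot)
    for i in range(n_boot):
        w = rng.choice(within, size=within.size, replace=True)
        b = rng.choice(between, size=between.size, replace=True)
        diffs[i] = w.mean() - b.mean()
    return diffs


def significance_level(diffs, alphas=(0.001, 0.01, 0.05)):
    """Smallest alpha whose two-sided (1 - alpha) percentile interval has a
    positive lower bound; None if no level is reached."""
    for alpha in sorted(alphas):
        if np.percentile(diffs, 100 * alpha / 2) > 0:
            return alpha
    return None
```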

Comparing functional fingerprints between sighted and congenitally blind groups.

To examine the between-group similarity/dissimilarity of functional fingerprints for each voxel in the VOTC mask, we compared the functional response profile to the 16 object categories between sighted and congenitally blind groups. This analysis was identical to the connectional fingerprint analysis described above, except that the voxelwise vectors consisted of the responses (β values) to the 16 object categories in the auditory experiment instead of the 180 RSFC values.

Comparing functional fingerprints between modalities, within the sighted group.

In addition to comparing the blind and sighted groups, our data also allowed us to compare response profiles between modalities (auditory, visual) within the sighted group. This analysis examined the degree of between-modality similarity of functional fingerprints within the sighted group and was otherwise identical to the between-group comparison of functional response profiles described above.

Comparing results from connectional and functional fingerprint analyses.

Motivated by the view that the functional profile of a brain region is driven by its functional/structural connectivity pattern (Passingham et al., 2002; Mahon and Caramazza, 2009, 2011; Saygin et al., 2012), we directly compared the between-group similarity maps of category response patterns and RSFC patterns. For regions in which the between-group similarity of RSFC patterns and of category response patterns agreed (both highly similar or both dissimilar between subject groups), we plotted the RSFC pattern maps and category response maps. We further computed, across all VOTC voxels, the Pearson correlation between the between-group category response pattern similarity and the between-group RSFC pattern similarity to measure the correspondence between connectional and functional fingerprints.

Finally, we examined the consistency of the between-group and between-modality similarity maps of category response patterns by plotting overlapping regions and calculating their Pearson correlation coefficients across all voxels in the VOTC mask.

Control and validation analyses.

We additionally performed four control analyses to address the potentially confounding effects of global signal removal, gray matter density, head motion, and subject age, and one validation analysis to confirm the main clusters of interest using an alternative approach (support vector machine [SVM] classification).

1. Global signal removal.

Whether the global signal should be removed during resting-state fMRI preprocessing is currently controversial. Although previous studies have suggested removing the global signal because it is associated with non-neuronal activity such as respiration (e.g., Fox et al., 2009), this procedure introduces widespread negative correlations and may alter the intrinsic brain network structure (e.g., Murphy et al., 2009). To explore the effects of global signal removal on our results, we recomputed the correlation between the between-group similarity of category response patterns and the between-group similarity of RSFC patterns without global signal removal.

2. Group difference of gray matter density.

To control for the potential influence of gray matter density on RSFC patterns (He et al., 2007; Wang et al., 2011), we calculated the partial correlation between the between-group similarity of RSFC patterns and the between-group similarity of category response patterns, with the between-group difference in gray matter density as a covariate. For each voxel, the between-group difference in gray matter density was defined as the absolute value of the estimated effect size (Hedges, 1982) for the comparison of gray matter density between blind and sighted subjects.
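The two quantities in this control analysis can be sketched as below. The sketch assumes the effect size is Hedges' g with the usual small-sample correction, and computes the partial correlation by residualizing both variables on the covariate. In use, `x` and `y` would be the voxelwise between-group similarity maps (RSFC and category response) and `covariate` the per-voxel |g| values. Names are illustrative.

```python
import numpy as np


def hedges_g(x, y):
    """Bias-corrected standardized mean difference (Hedges' g) between two samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n1, n2 = x.size, y.size
    pooled = np.sqrt(((n1 - 1) * x.var(ddof=1) + (n2 - 1) * y.var(ddof=1)) / (n1 + n2 - 2))
    d = (x.mean() - y.mean()) / pooled
    return d * (1 - 3 / (4 * (n1 + n2) - 9))  # small-sample correction factor J


def partial_corr(x, y, covariate):
    """Pearson correlation between x and y after regressing `covariate` out of both."""
    z = np.column_stack([np.ones(len(covariate)), covariate])

    def resid(v):
        v = np.asarray(v, dtype=float)
        beta, *_ = np.linalg.lstsq(z, v, rcond=None)
        return v - z @ beta

    return np.corrcoef(resid(x), resid(y))[0, 1]
```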

3. Head motion confounds.

Head motion can confound functional connectivity patterns (Power et al., 2012, 2014; Van Dijk et al., 2012; Satterthwaite et al., 2013). As reported above, head motion did not differ significantly between our sighted and congenitally blind groups. To further exclude any potential effects of head motion, we preprocessed the resting-state functional images again using a scrubbing method (Power et al., 2012; Yan et al., 2013). Specifically, for each subject, resting-state volumes with framewise displacement (Power et al., 2012) >0.2 mm were removed, together with the one preceding and two following volumes (Power et al., 2013). Because scrubbing produced time series of different lengths across subjects, 9 sighted and 3 congenitally blind subjects with too few remaining time points (<150 volumes, i.e., 5 min) were excluded (Power et al., 2012). For the remaining 25 sighted and 9 congenitally blind subjects, the first 150 time points were analyzed to match the lengths of the time series between groups.
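The scrubbing rule and the length matching can be sketched as follows, assuming a per-volume framewise displacement array (first volume set to 0) and a (time × voxels) array aligned with it. This is a hypothetical NumPy helper, not the DPARSF scrubbing code.

```python
import numpy as np


def scrub_mask(fd, threshold=0.2, back=1, forward=2):
    """Boolean mask of volumes to keep: drop every volume with FD > threshold,
    together with `back` preceding and `forward` following volumes."""
    fd = np.asarray(fd, dtype=float)
    keep = np.ones(fd.size, dtype=bool)
    for i in np.flatnonzero(fd > threshold):
        keep[max(i - back, 0): i + forward + 1] = False
    return keep


def scrubbed_series(ts, fd, n_keep=150, **kwargs):
    """First `n_keep` retained volumes of `ts`, or None if fewer than
    `n_keep` volumes survive scrubbing (the subject would be excluded)."""
    kept = np.asarray(ts)[scrub_mask(fd, **kwargs)]
    return kept[:n_keep] if len(kept) >= n_keep else None
```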

4. Between-group age difference.

There was a significant age difference between the sighted and congenitally blind groups in the RSFC analysis. To rule out age effects on the RSFC patterns in the VOTC (Biswal et al., 2010; Zuo et al., 2010; Yan et al., 2011), we performed a control analysis using resting-state data from a separate group of 7 sighted subjects who were age-matched with our congenitally blind subjects. The scanning duration and parameters for this group were identical to those for the congenitally blind group.

5. SVM classification analyses on identified clusters of interest.

To anticipate the Results, our analyses revealed visually dependent regions in bilateral posterior occipital cortex and the left posterior fusiform region, and polymodal regions including bilateral anterior medial temporal regions and bilateral middle/posterior lateral temporal regions. To verify these results, we performed group classification in each cluster based on the RSFC patterns or category response patterns of the sighted and blind groups, using a linear SVM (LIBSVM: http://www.csie.ntu.edu.tw/∼cjlin/libsvm) with default parameters. We used a leave-one-subject-out cross-validation scheme. In each cross-validation iteration, each dimension of the feature vectors of both training and test examples was z-normalized within each subject. The classifier was trained on the training set and then tested on the left-out subject, who was classified as belonging to either the blind or the sighted group. Decoding accuracy was computed as the percentage of correct group-label predictions across all iterations. The statistical significance of decoding accuracy was assessed using permutation tests (1000 iterations) in which the group labels of all subjects were shuffled. The p value was calculated as the fraction of permutation accuracies that were equal to or greater than the accuracy obtained with the correct group labels.
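The cross-validation and permutation logic can be sketched in plain NumPy. The sketch substitutes a full-batch subgradient solver for the L2-regularized hinge loss in place of LIBSVM, so its decision boundaries will not exactly match LIBSVM's. It assumes labels coded as +1/−1 and reads "z-normalized within each subject" as standardizing each subject's feature vector across its dimensions. All names and hyperparameters (`lam`, `lr`, `n_iter`) are our assumptions.

```python
import numpy as np


def zscore_rows(X):
    """Standardize each subject's feature vector across its dimensions."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=1, keepdims=True)) / X.std(axis=1, keepdims=True)


def fit_linear_svm(X, y, lam=0.01, lr=0.1, n_iter=200):
    """Linear SVM (L2-regularized hinge loss) via full-batch subgradient descent.
    A constant column is appended so a bias term is learned."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        viol = y * (Xb @ w) < 1                      # margin violations
        grad = lam * w - (y[viol][:, None] * Xb[viol]).sum(axis=0) / len(y)
        w -= lr * grad
    return w


def predict(w, X):
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return np.where(Xb @ w >= 0, 1, -1)


def loso_accuracy(X, y, **kwargs):
    """Leave-one-subject-out decoding accuracy (fraction correct)."""
    X = zscore_rows(X)  # within-subject normalization, so no train/test leakage
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i
        w = fit_linear_svm(X[train], y[train], **kwargs)
        correct += int(predict(w, X[i:i + 1])[0] == y[i])
    return correct / len(y)


def permutation_p(X, y, n_perm=1000, seed=0, **kwargs):
    """Observed accuracy and the fraction of label-shuffled accuracies >= it."""
    rng = np.random.default_rng(seed)
    observed = loso_accuracy(X, y, **kwargs)
    null = np.array([loso_accuracy(X, rng.permutation(y), **kwargs) for _ in range(n_perm)])
    return observed, float(np.mean(null >= observed))
```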

All results in this paper are shown in the MNI space and projected onto the MNI brain surface using the BrainNet viewer (http://www.nitrc.org/projects/bnv/) (Xia et al., 2013).

Results

Comparing connectional fingerprints between sighted and congenitally blind groups

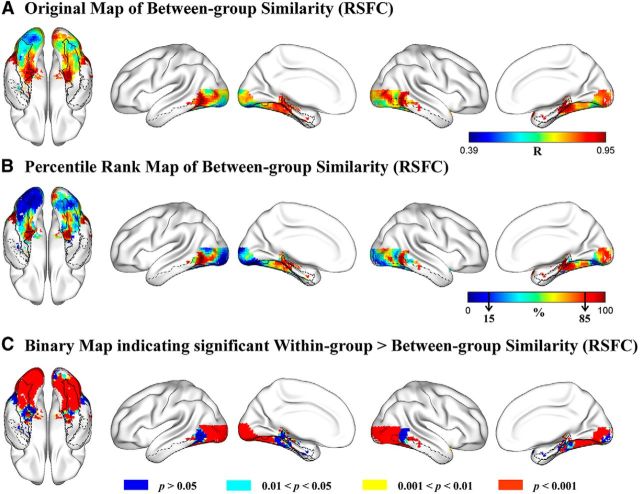

For each voxel in the VOTC mask, we computed RSFC strengths (Fisher-transformed Pearson correlation coefficients) between the voxel and each of 180 cerebral regions covering the whole brain (the parcellation atlas of Craddock et al., 2012). These strengths were computed for each subject and averaged across subjects within the sighted and congenitally blind groups separately, yielding, for each voxel in each group, a vector of 180 mean RSFC strength values (the RSFC pattern). The similarity of RSFC patterns between the blind and sighted groups was then calculated for each voxel as the Pearson correlation between the two vectors (180 pairs of RSFC strength values). For better visualization, the resulting r map (Fig. 1A) was transformed into a percentile rank map in which the value of each voxel denotes the rank of its between-group RSFC pattern similarity among all voxels in the VOTC mask (Fig. 1B).
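A minimal NumPy sketch of the voxelwise connectional-fingerprint comparison described above. It assumes preprocessed voxel and parcel time series are already available as arrays; the function names are hypothetical, and the clipping before the Fisher transform is our safeguard against r = ±1, not a documented step.

```python
import numpy as np

def rsfc_fingerprints(voxel_ts, region_ts):
    """Fisher-z RSFC between each voxel and each region for ONE subject.

    voxel_ts: (T, V) VOTC voxel time series; region_ts: (T, R) mean time series
    of R parcels (R = 180 in the text). Returns a (V, R) array.
    """
    voxel_ts = np.asarray(voxel_ts, dtype=float)
    region_ts = np.asarray(region_ts, dtype=float)
    zv = (voxel_ts - voxel_ts.mean(0)) / voxel_ts.std(0)
    zr = (region_ts - region_ts.mean(0)) / region_ts.std(0)
    r = zv.T @ zr / voxel_ts.shape[0]          # Pearson r for every voxel-region pair
    return np.arctanh(np.clip(r, -0.999999, 0.999999))

def between_group_similarity(group_a, group_b):
    """Per-voxel Pearson r between the two groups' mean RSFC patterns.

    group_a, group_b: (n_subjects, V, R) stacks of subject fingerprints.
    """
    mean_a, mean_b = group_a.mean(0), group_b.mean(0)   # (V, R) each
    a = mean_a - mean_a.mean(1, keepdims=True)
    b = mean_b - mean_b.mean(1, keepdims=True)
    return (a * b).sum(1) / np.sqrt((a ** 2).sum(1) * (b ** 2).sum(1))
```

The output of `between_group_similarity` corresponds to the r map of Fig. 1A, one value per VOTC voxel.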

Figure 1.

Comparison of the connectional fingerprints of the VOTC voxels in the blind and sighted groups. A, Original map of Pearson correlation coefficients of RSFC patterns between blind and sighted groups. Color bar represents the Pearson r value. Warmer colors represent greater between-group similarity. B, Percentile rank map of Pearson correlation coefficients of RSFC patterns between blind and sighted groups. Color bar represents the percentile rank value. Warmer colors represent greater between-group similarity. Black arrows in the color bar indicate the bottom and the top 15% similarity. C, Brain map indicating the significance level reached by each voxel for the difference between within-group similarity and between-group similarity of RSFC patterns. The contours of bilateral fusiform gyrus (solid black lines), bilateral parahippocampal gyrus (dashed black lines), and bilateral inferior temporal gyrus (dash-dot black lines) are superimposed on the brain surface as anatomical landmarks.

As shown in Figure 1A, voxels showing the highest between-group similarity of RSFC patterns (top 15%, Pearson r > 0.88; Fig. 1B, dark red clusters) were observed in the bilateral anterior medial and posterior lateral parts of the VOTC, whereas voxels showing the lowest between-group RSFC pattern similarity (bottom 15%, Pearson r < 0.67; Fig. 1B, dark blue regions) were mainly found in the posterior occipital cortex, including the bilateral inferior occipital and lingual gyri, and in the left posterior lateral fusiform gyrus.

To statistically examine where the two subject groups differed significantly, we compared the between-group and within-group correlations of RSFC patterns for every VOTC voxel. For each subject, an individual-level connectional fingerprint was constructed as the vector of 180 RSFC strength values computed from that subject's own resting-state time series. For each VOTC voxel, 627 within-group r values (66 within-blind r values, n = 12; 561 within-sighted r values, n = 34) and 408 between-group r values were obtained by correlating the individual-level connectional fingerprints of pairs of subjects from the same group or from different groups (see Materials and Methods). Voxels showing higher within-group than between-group similarity of connectional fingerprints were identified by applying the bootstrap resampling method (Efron and Tibshirani, 1993; Kriegeskorte et al., 2008) to the within-group and between-group r values. The resulting brain map (Fig. 1C) shows which VOTC voxels' connectional fingerprints differed significantly between the sighted and blind groups. As shown in Figure 1C (red patches), the difference between the within-group and between-group similarities was significant (within > between, uncorrected p < 0.001) in a large portion of the VOTC, including the bilateral calcarine cortex, lingual gyrus, inferior and middle occipital gyri, posterior fusiform gyrus, and portions of the posterior inferior/middle temporal gyri. These areas almost completely covered the regions showing the lowest between-group correlations (bottom 15%), indicating that the RSFC patterns of these clusters were systematically different between the sighted and congenitally blind groups. 
By contrast, the difference between the within-group similarities and the between-group similarities was not significant (uncorrected single voxel, p > 0.05) in parts of the bilateral anterior parahippocampal gyrus, anterior medial fusiform gyrus, and middle/posterior parts of the inferior and middle temporal gyrus (Fig. 1C, blue patches). These voxels overlapped with the anterior medial temporal regions and middle/posterior lateral temporal regions that showed the highest rank (top 15%) of between-group similarities (Fig. 1B). This analysis was also done for the blind and sighted groups separately (i.e., within-blind correlations vs between-group correlations; within-sighted correlations vs between-group correlations), yielding similar results (data not shown).
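The within- versus between-group comparison for a single voxel can be sketched as follows. The text does not specify exactly what was resampled, so this sketch makes a simplifying assumption: it resamples the pairwise r values themselves with replacement and reports the fraction of bootstrap samples in which the within-group mean does not exceed the between-group mean. Because pairs share subjects, the r values are not independent; a subject-level bootstrap may be preferable. Function names are hypothetical.

```python
import numpy as np
from itertools import combinations

def pairwise_similarities(fp_a, fp_b):
    """Within- and between-group Pearson r values for ONE voxel.

    fp_a: (n_a, R) and fp_b: (n_b, R) individual-level fingerprints.
    Returns (within, between) holding n_a(n_a-1)/2 + n_b(n_b-1)/2 and n_a*n_b values.
    """
    def corr(x, y):
        return np.corrcoef(x, y)[0, 1]

    within = [corr(g[i], g[j]) for g in (fp_a, fp_b)
              for i, j in combinations(range(len(g)), 2)]
    between = [corr(x, y) for x in fp_a for y in fp_b]
    return np.array(within), np.array(between)

def bootstrap_within_vs_between(within, between, n_boot=1000, seed=0):
    """One-sided bootstrap p value for mean(within) > mean(between)."""
    rng = np.random.default_rng(seed)
    diffs = np.empty(n_boot)
    for k in range(n_boot):
        w = rng.choice(within, size=within.size, replace=True)
        b = rng.choice(between, size=between.size, replace=True)
        diffs[k] = w.mean() - b.mean()
    return np.mean(diffs <= 0)
```

With 12 blind and 34 sighted fingerprints, `pairwise_similarities` yields the 627 within-group and 408 between-group r values reported above; repeating the bootstrap over all voxels gives a significance map analogous to Fig. 1C.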

Comparing functional fingerprints between sighted and congenitally blind groups

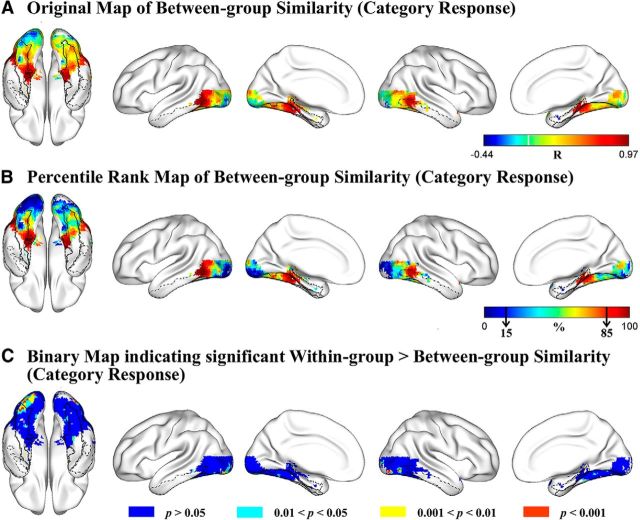

To explore the degree of between-group similarity of functional response patterns in VOTC, we calculated, for each voxel, the correlation of the response profiles to 16 object categories (represented by the spoken names of category exemplars) between the sighted and blind groups. The r map (Fig. 2A) was transformed into a percentile rank map for an overview of the pattern of between-group similarity across the VOTC (Fig. 2B). Regions showing the highest between-group similarity of category response patterns (top 15%, Pearson r > 0.71; Fig. 2B, dark red clusters) were the anterior medial parts of the bilateral VOTC, including the parahippocampal gyrus and anterior medial fusiform gyrus, and the middle/posterior lateral parts of the bilateral VOTC, including the posterior inferior/middle temporal regions. In contrast, voxels showing the lowest between-group category response similarity (bottom 15%, Pearson r < 0.02; Fig. 2B, dark blue regions) were mainly located in the bilateral posterior lingual gyrus and inferior occipital gyrus, with the left-hemisphere clusters extending anteriorly to the left posterior lateral fusiform gyrus.
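The percentile rank maps and the top/bottom 15% selections used in Figs. 1B, 2B, and 3B can be sketched as below. The paper does not state its exact percentile convention or tie handling; this sketch assumes ranks scaled to 0 to 100 with ties broken by sort order, and the function names are hypothetical.

```python
import numpy as np

def percentile_rank_map(r_map):
    """Percentile rank (0 to 100) of each voxel's similarity among all VOTC voxels."""
    r_map = np.asarray(r_map)
    ranks = r_map.argsort().argsort()       # 0 = lowest similarity; ties broken by order
    return 100.0 * ranks / (r_map.size - 1)

def extreme_voxels(r_map, percent=15):
    """Masks of voxels in the top and bottom `percent` of between-group similarity."""
    pr = percentile_rank_map(r_map)
    return pr >= 100 - percent, pr < percent
```

With `percent=15`, the two masks correspond to the "≥85" and "<15" percentile-rank thresholds used in the figure captions.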

Figure 2.

Comparison of the functional fingerprints of the VOTC voxels in the blind and sighted auditory experiments. A, Original map of Pearson correlation coefficients of category response patterns between blind and sighted groups. Color bar represents the Pearson r value. Warmer colors represent greater between-group similarity. B, Percentile rank map of Pearson correlation coefficients of category response patterns between blind and sighted groups. Color bar represents the percentile rank value. Warmer colors represent greater between-group similarity. Black arrows in the color bar indicate the bottom and the top 15% similarity. C, Brain map indicating the significance level reached by each voxel for the difference between within-group similarity and between-group similarity of category response patterns. The contours of bilateral fusiform gyrus (solid black lines), bilateral parahippocampal gyrus (dashed black lines), and bilateral inferior temporal gyrus (dash-dot black lines) are superimposed on the brain surface as anatomical landmarks.

Similar to the connectional fingerprint comparison, we statistically compared the within-group and between-group similarities calculated from individual-level functional fingerprints. For each VOTC voxel, bootstrap resampling (1000 iterations) was applied to 198 within-group r values (78 within-blind r values, n = 13; 120 within-sighted r values, n = 16) and 208 between-group r values. As shown in Figure 2C, a small number of voxels (red patches) in the bilateral posterior inferior occipital and lingual gyri showed significant within- versus between-group differences (within > between; uncorrected p < 0.001). When the significance threshold was lowered to uncorrected p < 0.01 (yellow patches) and p < 0.05 (cyan patches), voxels exhibiting differences in category response patterns between subject groups were still mainly observed in these bilateral posterior occipital regions. These regions largely overlapped with the regions showing the lowest between-group correlations (bottom 15%), indicating that the category response rankings of these clusters were systematically different between the sighted and congenitally blind groups. The VOTC regions showing the highest between-group similarities (top 15%) did not exhibit significant within- versus between-group differences (uncorrected single voxel, p > 0.05), confirming similar category response patterns in the two groups in these bilateral anterior medial and middle/posterior lateral VOTC regions (Fig. 2C, blue patches). This analysis was also done for the blind and sighted groups separately (i.e., within-blind correlations vs between-group correlations; within-sighted correlations vs between-group correlations), yielding similar results (data not shown).

Comparing functional fingerprints between modalities, within the sighted group

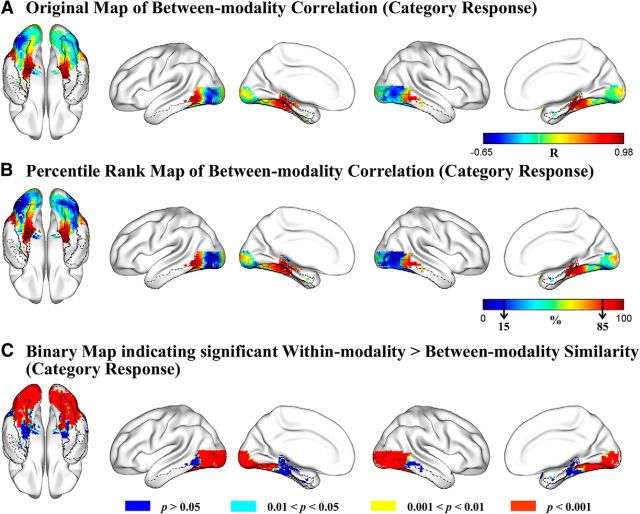

To explore the degree of between-modality similarity of functional fingerprints in VOTC, for each voxel we calculated the Pearson correlation of the category response levels for the 16 categories between the sighted-visual and sighted-auditory experiments. The r map (Fig. 3A) was transformed into a percentile rank map for an overview of the pattern of between-modality similarity across the VOTC (Fig. 3B). Regions showing the highest between-modality similarity of category response patterns (top 15%, Pearson r > 0.63; Fig. 3B, dark red clusters) were the anterior medial parts of the bilateral VOTC, including the posterior parahippocampal gyrus and anterior medial fusiform gyrus, and the middle/posterior lateral parts of the bilateral VOTC, including the posterior parts of the middle/inferior temporal regions. Regions showing the lowest correlations between input modalities in the sighted group (bottom 15%, Pearson r < −0.27; Fig. 3B, dark blue clusters) were mainly located in the bilateral posterior lingual gyrus, posterior lateral fusiform gyrus, and inferior occipital gyrus. Spearman's rank-order correlations generated highly similar results.

Figure 3.

Comparison of the functional fingerprints of the VOTC voxels in the sighted visual and auditory experiments. A, Original map of Pearson correlation coefficients of category response patterns between visual and auditory modalities. Color bar represents the Pearson r value. Warmer colors represent greater between-modality similarity. B, Percentile rank map of Pearson correlation coefficients of category response patterns between visual and auditory modalities. Color bar represents the percentile rank value. Warmer colors represent greater between-modality similarity. Black arrows in the color bar indicate the bottom and the top 15% similarity. C, Brain map indicating the significance level reached by each voxel for the difference between within-modality similarity and between-modality similarity of category response patterns. The contours of bilateral fusiform gyrus (solid black lines), bilateral parahippocampal gyrus (dashed black lines), and bilateral inferior temporal gyrus (dash-dot black lines) are superimposed on the brain surface as anatomical landmarks.

Similar to the other measures, we statistically compared the within-modality and between-modality similarities (240 within-modality vs 256 between-modality r values) using the bootstrap resampling method. The resulting map (Fig. 3C) showed that the within-modality correlations were significantly higher than the between-modality correlations (uncorrected p < 0.001) in vast regions of the VOTC (Fig. 3C, red patches), mainly including the bilateral calcarine cortex, lingual gyrus, inferior and middle occipital gyri, middle and posterior fusiform gyrus, and the posterior tip of the inferior and middle temporal gyri. These areas largely overlapped with the bilateral posterior lingual, lateral fusiform, and inferior occipital regions showing the lowest between-modality correlations (bottom 15%), confirming that the category response rankings of these clusters were indeed systematically different between the auditory and visual modalities. By contrast, in the bilateral parahippocampal gyrus, medial anterior fusiform gyrus, and the middle/posterior parts of the lateral inferior and middle temporal cortex (Fig. 3C, blue regions), the within-modality and between-modality correlations did not differ significantly (uncorrected single voxel, p > 0.05). These regions correspond well to the bilateral anterior medial temporal and middle/posterior lateral temporal regions showing the highest rank (top 15%) of between-modality similarities. This analysis was also done for the sighted visual and sighted auditory experiments separately (i.e., within-visual correlations vs between-modality correlations; within-auditory correlations vs between-modality correlations), yielding similar results (data not shown).
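The numbers of r values entering each bootstrap follow from simple pair counting, which the sketch below checks. One assumption is ours: reproducing 256 between-modality values requires that all 16 sighted subjects contributed to both the visual and the auditory experiments and that each subject's visual fingerprint was also paired with their own auditory fingerprint (16 × 16 pairings).

```python
from math import comb

def pair_counts(n_a, n_b):
    """Numbers of within-set and between-set pairs for two sets of fingerprints."""
    within = comb(n_a, 2) + comb(n_b, 2)
    between = n_a * n_b
    return within, between

print(pair_counts(12, 34))  # connectional fingerprints, blind vs sighted
print(pair_counts(13, 16))  # functional fingerprints, blind vs sighted auditory
print(pair_counts(16, 16))  # visual vs auditory, assuming the same 16 sighted subjects
```

The three calls print `(627, 408)`, `(198, 208)`, and `(240, 256)`, matching the counts reported for the three comparisons.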

Comparing results from connectional and functional fingerprint analyses

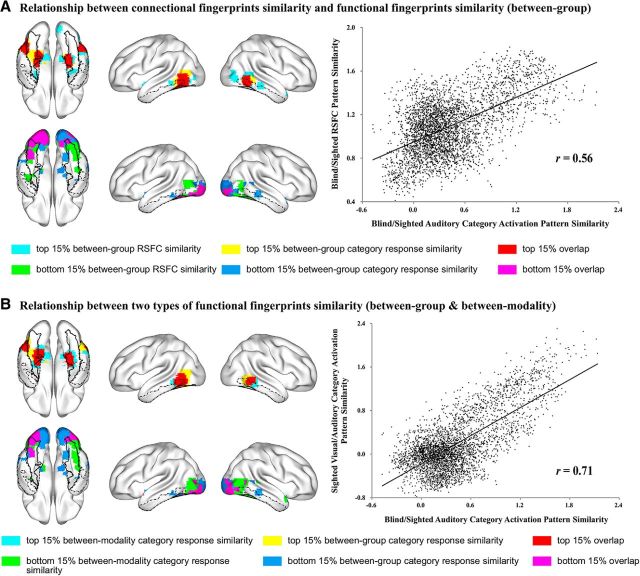

The maps of voxels showing various degrees of similarity between the blind and the sighted subjects were highly similar for RSFC and category response patterns (Fig. 4A). The bilateral medial parts of the anterior temporal cortex, including the parahippocampal and anterior medial fusiform gyri, and the bilateral posterior parts of the lateral temporal cortex, including the posterior middle and inferior temporal gyri, showed the greatest similarity (top 15%) between the blind and sighted groups for both category response patterns and RSFC patterns (Fig. 4A, top row of the left panel, red patches). Hereafter, we refer to these regions as "polymodal." Bilateral posterior occipital regions and a small region in the left posterior lateral fusiform gyrus showed the least similarity (bottom 15%) in both category response patterns and RSFC patterns (Fig. 4A, bottom row of the left panel, purple patches); we refer to these as "visual" regions.

Figure 4.

Relationship between connectional fingerprint similarity and functional fingerprint similarity, and between two types of visual input effects on functional fingerprint similarity, in VOTC. A, Relationship between the between-group similarity of RSFC patterns and the between-group similarity of category response patterns in VOTC. Top row of left panel, Voxels with the highest percentile rank (≥85) of between-group RSFC pattern similarity (cyan) overlap with voxels showing the highest percentile rank (≥85) of between-group category response pattern similarity (yellow), with overlapping voxels in red. Bottom row of left panel, Voxels with the lowest percentile rank (<15) of between-group RSFC pattern similarity (green) overlap with voxels with the lowest percentile rank (<15) of between-group category response pattern similarity (blue), with overlapping voxels in purple. Right panel, Scatter plot of between-group RSFC pattern similarity (y-axis, Fisher-transformed r values) against between-group category response pattern similarity (x-axis, Fisher-transformed r values). B, Relationship between category response pattern similarities between groups (blind and sighted auditory experiments) and between task input modalities (visual and auditory experiments within the sighted group) in VOTC. Top row of left panel, Voxels with the highest percentile rank (≥85) of between-group category response pattern similarity (yellow) overlap with voxels showing the highest percentile rank (≥85) of between-modality category response pattern similarity (cyan), with overlapping voxels in red. Bottom row of left panel, Voxels with the lowest percentile rank (<15) of between-group category response pattern similarity (blue) overlap with voxels with the lowest percentile rank (<15) of between-modality category response pattern similarity (green), with overlapping voxels in purple. 
Right panel, Scatter plot of between-modality category response pattern similarity (y-axis, Fisher-transformed r values) against between-group category response pattern similarity (x-axis, Fisher-transformed r values). The contours of bilateral fusiform gyrus (solid black lines), bilateral parahippocampal gyrus (dashed black lines), and bilateral inferior temporal gyrus (dash-dot black lines) are superimposed on the brain surface as anatomical landmarks.

There was a positive correlation (Pearson r = 0.56) between the between-group RSFC pattern similarity and the between-group category response profile similarity across the VOTC mask. As can be seen from the scatter plot (Fig. 4A, right panel), the coupling between the functional response pattern and the intrinsic functional connectivity pattern appeared to hold generally across the VOTC rather than being driven by a few functionally specific clusters.
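The conjunction used to label voxels as "polymodal" or "visual" and the cross-voxel coupling shown in the scatter plot of Fig. 4A can be sketched as below. The inputs are assumed to be per-voxel percentile-rank maps and r maps for the two fingerprint types; the function names are hypothetical.

```python
import numpy as np

def conjunction_masks(pr_rsfc, pr_func, percent=15):
    """'Polymodal' = top `percent` in both maps; 'visual' = bottom `percent` in both."""
    pr_rsfc, pr_func = np.asarray(pr_rsfc), np.asarray(pr_func)
    polymodal = (pr_rsfc >= 100 - percent) & (pr_func >= 100 - percent)
    visual = (pr_rsfc < percent) & (pr_func < percent)
    return polymodal, visual

def fisher_coupling(r_rsfc, r_func):
    """Pearson r across voxels between Fisher-transformed similarity maps."""
    z1 = np.arctanh(np.asarray(r_rsfc, dtype=float))
    z2 = np.arctanh(np.asarray(r_func, dtype=float))
    return np.corrcoef(z1, z2)[0, 1]
```

The same two functions apply unchanged to the between-group versus between-modality comparison of Fig. 4B, by passing the between-modality maps in place of the RSFC maps.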

We also examined the relationship between the category response patterns in VOTC in two types of visual input analyses: the between-group similarity (blind and sighted auditory experiments), which reflects how visual input across the lifespan affects response patterns, and the between-modality similarity (sighted visual and auditory experiments), which measures how visual input during the task affects response patterns. The distribution of voxels showing various degrees of similarity of category response patterns between the blind and sighted auditory experiments (i.e., across groups with different visual experience) was highly similar to that between the visual and auditory experiments within the sighted group (i.e., across different task input modalities in the same subject group) (Fig. 4B). The bilateral medial parts of the anterior temporal cortex, including the parahippocampal gyrus and anterior medial fusiform gyrus, and the bilateral posterior parts of the lateral temporal cortex, including the posterior middle and inferior temporal gyri, showed the greatest similarity (top 15%) of task-induced object category response patterns between the blind and sighted auditory experiments, as well as between the visual and auditory experiments within the sighted group (Fig. 4B, top row of the left panel, red patches). Bilateral posterior occipital regions, with the left-hemisphere cluster extending anteriorly to the left fusiform gyrus, showed the least similarity (bottom 15%) of task-induced object category response patterns both between the blind and sighted auditory experiments and between the visual and auditory experiments within the sighted group (Fig. 4B, bottom row of the left panel, purple patches). There was a high correlation (Pearson r = 0.71) between the between-group similarity and the between-modality similarity of category response patterns across the VOTC mask. 
These results show that visual input across the lifespan and visual input in the task setting modulate the responses of VOTC voxels in a similar way.

Results of control and validation analyses

We validated these results with five additional analyses.

We calculated the RSFC strengths without removing the global signal. The overall pattern of results was largely similar to that obtained with global signal removal. Polymodal voxels, those exhibiting high between-group similarities in terms of both RSFC patterns and category response patterns, were in the bilateral medial parts of the anterior temporal cortex and the lateral parts of the posterior temporal cortex. Visual voxels, those exhibiting the lowest between-group similarity in terms of both RSFC patterns and category response patterns, were in the posterior occipital cortex. There was a positive correlation (Pearson r = 0.44) between the between-group RSFC pattern similarity and the between-group category response profile similarity across the VOTC mask.

We considered the possible influence of gray matter density. When the between-group differences (indexed by the absolute value of effect size) in terms of gray matter probability of each voxel were included as a covariate, the partial correlation between the between-group RSFC pattern similarity and the between-group category response profile similarity across the VOTC mask was still high (Pearson r = 0.53), indicating that the results were not driven by cross-voxel variations of gray matter density differences between blind and sighted subjects.
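The gray matter control can be sketched as a partial correlation across voxels. The text indexes the group difference by "the absolute value of effect size" without naming the measure; this sketch assumes Cohen's d with a pooled standard deviation, and the function names are hypothetical.

```python
import numpy as np

def abs_cohens_d(gm_a, gm_b):
    """|Cohen's d| per voxel from (n_subjects, V) gray matter probability arrays (pooled SD)."""
    gm_a, gm_b = np.asarray(gm_a, dtype=float), np.asarray(gm_b, dtype=float)
    na, nb = len(gm_a), len(gm_b)
    pooled = np.sqrt(((na - 1) * gm_a.var(0, ddof=1) + (nb - 1) * gm_b.var(0, ddof=1))
                     / (na + nb - 2))
    return np.abs(gm_a.mean(0) - gm_b.mean(0)) / pooled

def partial_corr(x, y, covariate):
    """Correlation of x and y after regressing an intercept and `covariate` out of both."""
    C = np.column_stack([np.ones(len(covariate)), covariate])

    def residualize(v):
        v = np.asarray(v, dtype=float)
        beta = np.linalg.lstsq(C, v, rcond=None)[0]
        return v - C @ beta

    return np.corrcoef(residualize(x), residualize(y))[0, 1]
```

Here `x` and `y` would be the per-voxel between-group RSFC and category response similarities, and `covariate` the per-voxel output of `abs_cohens_d`.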

We ruled out possible head motion confounds by performing the analysis using head-motion scrubbed resting-state data (see Materials and Methods). The overall results remained stable. Bilateral medial parts of anterior temporal cortex and lateral parts of posterior temporal cortex exhibited high between-group similarity in terms of both RSFC patterns and category response patterns, whereas voxels in the posterior occipital cortex exhibited low between-group similarity in terms of both RSFC patterns and category response patterns. There was again a positive correlation (Pearson r = 0.42) between the between-group RSFC pattern similarity and the between-group category response profile similarity across the VOTC mask.

To control for age differences, we performed an additional analysis on the resting-state data of 7 sighted subjects who were age-matched with the congenitally blind subjects. Again, we found a very similar pattern of results. Voxels exhibiting high between-group similarity of RSFC patterns were located in the bilateral medial parts of the anterior temporal cortex and the lateral parts of the posterior temporal cortex, whereas voxels exhibiting low between-group similarity of RSFC patterns were in the posterior occipital cortex. A positive correlation (Pearson r = 0.45) was again observed between the between-group RSFC pattern similarity and the between-group category response profile similarity across the VOTC mask.

We performed group classification based on the functional or connectional fingerprints using a linear SVM (LIBSVM: http://www.csie.ntu.edu.tw/∼cjlin/libsvm) with standard parameters to confirm the characteristics of the visual (Fig. 4A, purple patches in the bottom row) and polymodal (Fig. 4A, red patches in the top row) clusters identified above. The results (Table 3) showed that the bilateral posterior occipital "visual" clusters successfully discriminated between the blind and sighted groups based on both the connectional and functional fingerprints, whereas the left posterior fusiform cluster successfully distinguished the blind from the sighted group based on the connectional fingerprints but not the functional fingerprints. In contrast, the temporal "polymodal" clusters could not discriminate between groups based on either connectional or functional fingerprints. Thus, the ROI validation analyses confirmed the "visual" and "polymodal" characteristics of the VOTC clusters we identified.

Table 3.

ROI-based SVM results

| Classification accuracy (p value) | Left posterior occipital | Right posterior occipital | Left posterior fusiform | Left anterior medial temporal | Right anterior medial temporal | Left posterior lateral temporal | Right posterior lateral temporal |
|---|---|---|---|---|---|---|---|
| RSFC | 93.48% (<0.001) | 93.48% (<0.001) | 78.26% (0.017) | 69.57% (0.198) | 60.87% (0.601) | 69.57% (0.188) | 76.09% (0.053) |
| Category response | 75.86% (0.027) | 79.31% (0.015) | 55.17% (0.356) | 48.28% (0.548) | 48.28% (0.566) | 34.48% (0.889) | 65.52% (0.120) |

Characterization of visual and polymodal regions

In this section, we map the connectional and functional fingerprints of the polymodal regions (showing the highest percentile ranks, ≥85, of between-group similarity in both RSFC patterns and category response patterns; Fig. 4A, red patches in the top row) and the visual regions (showing the lowest percentile ranks, <15, of between-group similarity in both RSFC patterns and category response patterns; Fig. 4A, purple patches in the bottom row) identified above. In these analyses, the time series (in RSFC analyses) and β values (in category response analyses) were averaged across all voxels within each region for exploration and visualization purposes. Note that these analyses do not consider possibly heterogeneous subregions within the clusters.

To plot the RSFC maps, for each cluster, one-sample t tests were conducted separately for the blind and sighted groups on the RSFC strengths between the cluster and each of the 180 regions covering the whole brain. The t values were then mapped onto each region of the brain for a direct visual inspection of the RSFC patterns in the blind and sighted groups. To depict the functional fingerprints (category response profiles) for each cluster, β values of activation strength to the 16 object categories in the auditory experiments were extracted and plotted.
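The per-region one-sample t tests and the FDR thresholding reported below can be sketched as follows. The text says "FDR corrected" without naming the procedure; the Benjamini-Hochberg step-up rule used here is an assumption. Two-tailed p values can be obtained from the t values with n − 1 degrees of freedom, for example with `2 * scipy.stats.t.sf(abs(t), df=n - 1)`. Function names are hypothetical.

```python
import numpy as np

def one_sample_t(rsfc):
    """t value per region for H0: mean RSFC = 0. rsfc: (n_subjects, R) Fisher-z values."""
    rsfc = np.asarray(rsfc, dtype=float)
    n = rsfc.shape[0]
    return rsfc.mean(0) / (rsfc.std(0, ddof=1) / np.sqrt(n))

def fdr_bh(p, q=0.05):
    """Benjamini-Hochberg step-up: boolean mask of tests surviving FDR level q."""
    p = np.asarray(p, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    keep = np.zeros(m, dtype=bool)
    if below.any():
        k = np.max(np.flatnonzero(below))   # largest rank meeting the BH bound
        keep[order[: k + 1]] = True
    return keep
```

Mapping the surviving t values onto the 180 parcels, separately for each group, yields RSFC maps of the kind shown in Figs. 5 to 7.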

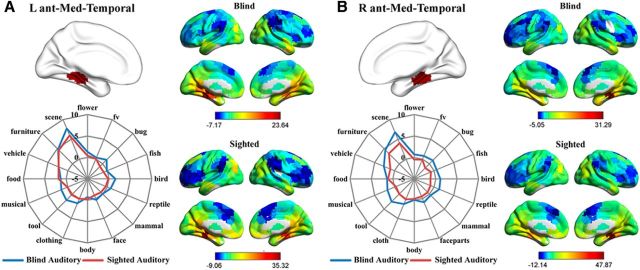

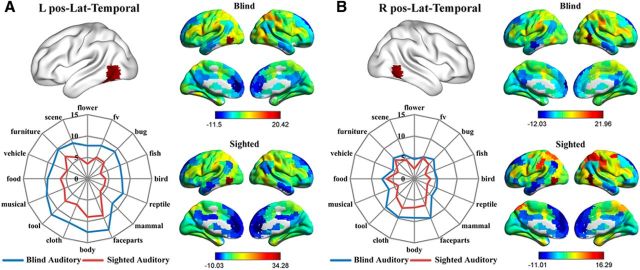

As shown in Figures 5 and 6, the whole-brain RSFC patterns as well as the category response patterns (in the auditory experiments) of the bilateral anterior medial temporal clusters and the bilateral posterior lateral temporal clusters were highly similar between the congenitally blind and sighted groups. The bilateral anterior medial temporal clusters (Fig. 5) were significantly connected (FDR corrected, p < 0.05) with the bilateral precuneus and posterior cingulate cortex and adjacent calcarine and lingual regions, the ventral medial and anterior temporal cortex, middle occipital gyrus, and medial prefrontal cortex in both the blind and sighted groups. Two-way mixed-design ANOVAs were performed on the functional responses in these two clusters, with object category as the within-subject factor and subject group as the between-subject factor. A significant main effect of category was observed in both clusters (left: F(7,189) = 18.74, p < 10^−18; right: F(8,222) = 13.55, p < 10^−15; Greenhouse-Geisser corrected). Post hoc comparisons revealed that both clusters exhibited significantly stronger responses to daily scenes and furniture than to the average of the remaining 15 object categories (Fs(1,27) > 30.12, Bonferroni corrected, ps < 0.05). The main effect of group and the interaction between category and group were not significant in either cluster (ps > 0.07). Of the bilateral posterior lateral temporal clusters, the left cluster (Fig. 6A) had significant positive connections (FDR corrected, p < 0.05) with the abutting middle and inferior temporal gyri, bilateral inferior and middle frontal cortex, right superior frontal cortex, left precentral gyrus, bilateral superior and inferior parietal lobules, and adjacent postcentral gyrus in both the blind and the sighted groups. 
A two-way mixed-design ANOVA identified significant main effects of category (F(8,223) = 11.53, p < 10^−13, Greenhouse-Geisser corrected) and group (F(1,27) = 6.43, p < 0.05) in this region, although the interaction was not significant (F(8,223) = 0.56, p = 0.83, Greenhouse-Geisser corrected). Post hoc comparisons revealed that this cluster showed significantly stronger responses to body parts, face parts, and tools than to the average of the remaining 15 object categories (Fs(1,27) > 16.95, Bonferroni corrected, ps < 0.05). The right posterior lateral temporal cluster (Fig. 6B) was significantly positively connected (FDR corrected, p < 0.05) with the bilateral inferior and middle temporal gyri, inferior and middle frontal cortex, supplementary motor area, precentral and postcentral cortex, and inferior/superior parietal cortex in both the sighted and the blind groups. The two-way mixed-design ANOVA identified a significant main effect of category (F(15,405) = 8.08, p < 10^−15). Post hoc comparisons revealed that the right posterior lateral temporal cluster showed significantly stronger responses to body parts, face parts, and clothing than to the average of the remaining 15 object categories (Fs(1,27) > 13.04, Bonferroni corrected, ps < 0.05). The main effect of group and the interaction between category and group were not significant (ps > 0.22).
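The group × category mixed-design ANOVA can be sketched with a sums-of-squares decomposition. This is a simplified illustration: it does not apply the Greenhouse-Geisser correction used above (which shrinks the within-subject degrees of freedom, e.g., from F(15,405) toward F(7,189)), it uses group-size-weighted marginal means (statistics packages may use other sums-of-squares types for unequal group sizes), and it omits the post hoc contrasts. The function name is hypothetical.

```python
import numpy as np

def mixed_anova(Y, groups):
    """Two-way mixed ANOVA: group (between subjects) x category (within subjects).

    Y: (n_subjects, n_categories) responses, e.g., beta values.
    groups: (n_subjects,) group labels. No sphericity correction is applied.
    """
    Y, groups = np.asarray(Y, dtype=float), np.asarray(groups)
    n, c = Y.shape
    labels = np.unique(groups)
    g = labels.size
    grand = Y.mean()
    sizes = np.array([(groups == lab).sum() for lab in labels])
    group_means = np.array([Y[groups == lab].mean() for lab in labels])        # (g,)
    cell_means = np.array([Y[groups == lab].mean(axis=0) for lab in labels])  # (g, c)

    ss_total = ((Y - grand) ** 2).sum()
    ss_subjects = c * ((Y.mean(axis=1) - grand) ** 2).sum()
    ss_group = c * (sizes * (group_means - grand) ** 2).sum()
    ss_cat = n * ((Y.mean(axis=0) - grand) ** 2).sum()
    ss_cells = (sizes[:, None] * (cell_means - grand) ** 2).sum()
    ss_inter = ss_cells - ss_group - ss_cat
    ss_err_between = ss_subjects - ss_group          # subjects within groups
    ss_err_within = ss_total - ss_subjects - ss_cat - ss_inter

    df = {"group": g - 1, "err_between": n - g, "cat": c - 1,
          "inter": (g - 1) * (c - 1), "err_within": (n - g) * (c - 1)}
    ms_eb = ss_err_between / df["err_between"]
    ms_ew = ss_err_within / df["err_within"]
    F = {"group": ss_group / df["group"] / ms_eb,
         "cat": ss_cat / df["cat"] / ms_ew,
         "inter": ss_inter / df["inter"] / ms_ew}
    ss = {"group": ss_group, "err_between": ss_err_between, "cat": ss_cat,
          "inter": ss_inter, "err_within": ss_err_within}
    return ss, df, F
```

With 13 blind and 16 sighted subjects and 16 categories, the uncorrected degrees of freedom are (1, 27) for group and (15, 405) for category and the interaction, matching the values reported where no correction was needed.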

Figure 5.

Connectional fingerprints and functional fingerprints (category response in the auditory experiments) of the (A) left and (B) right anterior medial temporal clusters. Color scales in the RSFC maps represent the strengths of RSFC between regions (t values). The radial distance in the functional fingerprints reflects the activation strength (β values).

Figure 6.

Connectional fingerprints and functional fingerprints (category response in the auditory experiments) of the (A) left and (B) right posterior lateral temporal clusters. Color scales in the RSFC maps represent the strengths of RSFC between regions (t values). The radial distance in the functional fingerprints reflects the activation strength (β values).

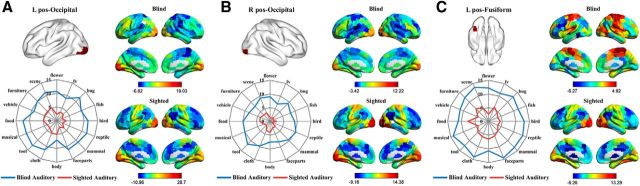

For the bilateral posterior occipital and left posterior lateral fusiform clusters, the whole-brain RSFC patterns differed markedly between the blind and sighted groups (Fig. 7). One-sample t tests showed that the posterior occipital clusters were significantly connected with the precentral and postcentral cortex and superior temporal regions in the sighted group, whereas in the blind group they exhibited significant functional connectivity with the left inferior/middle frontal cortex and adjacent precentral gyrus (both FDR corrected, ps < 0.05). The left posterior lateral fusiform cluster was significantly connected with vast regions of the bilateral occipital and ventral temporal cortex and with regions in the bilateral precentral and postcentral cortex and supplementary motor areas in the sighted group (FDR corrected, p < 0.05). In the blind group, the left posterior lateral fusiform cluster was significantly connected with the bilateral Heschl's gyrus and superior temporal regions corresponding to the primary and secondary auditory cortices, bilateral superior parietal regions, postcentral and precentral cortices and supplementary motor areas, and small parts of the right inferior frontal cortex (FDR corrected, p < 0.05). Two-way mixed-design ANOVAs showed a significant main effect of group in all three clusters (left posterior lateral FG: F(1,27) = 8.84, p = 0.006; left posterior occipital: F(1,27) = 9.07, p = 0.006; right posterior occipital: F(1,27) = 11.34, p = 0.002), with blind subjects exhibiting stronger overall activity than sighted subjects. The main effect of category was not significant (ps > 0.24), but there was a significant interaction between group and category in the left posterior fusiform cluster (F(15,405) = 1.70, p = 0.048). 
Further analyses revealed that only the responses to the fish, flower, musical instrument, and reptile categories were significantly stronger in the congenitally blind group than in the sighted group (ts(27) > 3.26, Bonferroni corrected, ps < 0.05).

Figure 7.

RSFC pattern maps and functional fingerprints (category response in the auditory experiments) of the (A) left posterior occipital, (B) right posterior occipital, and (C) left posterior lateral fusiform visual regions (low between-group similarity of both RSFC patterns and category response patterns). Color scales in the RSFC maps represent the strengths of RSFC between regions (t values). The radial distance in the functional fingerprints reflects the activation strength (β values).

Discussion

We mapped out the extent to which visual experience modulates the connectional and functional fingerprints of VOTC by comparing RSFC patterns and category response profiles between congenitally blind and sighted subjects. Large-scale continuous maps of functional and connectional similarity revealed which parts of VOTC are shaped by visual input/experience and which parts are not. We identified highly "visual" areas in the bilateral posterior occipital and left posterior lateral fusiform clusters, where RSFC patterns and object category responses differed significantly between congenitally blind and sighted individuals, as well as between sighted subjects performing visual and auditory tasks. We also revealed "polymodal" regions in the bilateral anterior medial temporal and posterior lateral temporal clusters, where functional and connectivity patterns were highly similar between sighted and blind individuals. Regions with intermediate levels of visual dependence were interspersed between these extremes.

The first result to emphasize is the convergence between the connectional fingerprints and the two types of functional fingerprints, which strengthens confidence in the generality of our findings. We also found strong convergence between the effect of visual input during the task and the effect of visual input over the lifetime. The map of similarity between input modalities within sighted individuals (sighted visual experiment vs sighted auditory experiment) resembled the map of similarity between groups with and without visual experience (blind auditory experiment vs sighted auditory experiment). This correspondence suggests that a unitary explanation may account for both the modality effect within the sighted group and the visual experience effect across groups. One possibility is that regions of VOTC exhibit variable plasticity reflecting the degree to which they are typically programmed to process visual or polymodal information (or, in terms of the metamodal hypothesis, computations; e.g., Pascual-Leone and Hamilton, 2001).
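The agreement between the within-sighted modality map and the between-group experience map can be summarized by a rank correlation across voxels. The Python sketch below is a hedged illustration rather than the authors' test: the function name `map_agreement` and the use of Spearman's rho are assumptions made here.

```python
import numpy as np
from scipy import stats


def map_agreement(modality_map, experience_map):
    """Spearman correlation across voxels between two voxelwise similarity maps.

    modality_map   : per-voxel similarity, sighted visual vs sighted auditory
    experience_map : per-voxel similarity, blind auditory vs sighted auditory
    Voxels that are NaN in either map are dropped.
    """
    x = np.asarray(modality_map, dtype=float)
    y = np.asarray(experience_map, dtype=float)
    keep = ~(np.isnan(x) | np.isnan(y))
    rho, p = stats.spearmanr(x[keep], y[keep])
    return rho, p
```

The returned p value assumes independent voxels. Neighboring voxels are spatially autocorrelated, so that p value is optimistic; rho is safer to read descriptively, or to test with a permutation scheme that preserves spatial structure.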

The coupling between a region's functional profile and its intrinsic connectional fingerprint observed here corroborates a previous finding that face selectivity in VOTC is accurately predicted by voxelwise whole-brain structural connectivity patterns (Saygin et al., 2012). Our study extends this finding to functional connectivity and to categories other than faces and, most importantly, shows that drastic changes in visual experience affect local activity and functional connectivity patterns together. These results accord with the general hypothesis that local activity is determined by large-scale connectivity patterns (Passingham et al., 2002; Mahon and Caramazza, 2009, 2011; Behrens and Sporns, 2012).
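The idea that a voxel's functional response can be predicted from its connectivity fingerprint can be made concrete with a cross-validated regression. The Python sketch below uses leave-one-subject-out ridge regression; it illustrates the general approach only and is not a reproduction of the Saygin et al. (2012) pipeline or of any analysis in this study. The function name `loso_ridge_predict`, the ridge penalty, and `alpha=1.0` are assumptions.

```python
import numpy as np


def loso_ridge_predict(X, y, subject, alpha=1.0):
    """Leave-one-subject-out ridge prediction of voxel responses from connectivity.

    X       : (n_rows, n_targets) connectivity fingerprint of each voxel
    y       : (n_rows,) functional measure of each voxel (e.g. a category beta)
    subject : (n_rows,) subject label of each row; each subject is held out once
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    subject = np.asarray(subject)
    pred = np.empty_like(y)
    for s in np.unique(subject):
        test = subject == s
        Xtr, ytr = X[~test], y[~test]
        mu_x, mu_y = Xtr.mean(axis=0), ytr.mean()
        Xc = Xtr - mu_x
        # closed-form ridge on centered training data: w = (Xc'Xc + alpha*I)^-1 Xc'(y - mean)
        w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ (ytr - mu_y))
        pred[test] = (X[test] - mu_x) @ w + mu_y
    return pred
```

Holding out whole subjects, rather than individual voxels, keeps the training and test data independent, so the correlation between `pred` and `y` reflects generalization to new brains.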

The effects of visual input in shaping the occipital and part of the posterior lateral fusiform regions are in line with previous studies showing plastic changes in this territory. Studies of visually deprived animals have identified functional and structural reorganization in occipital visual cortices (Hubel et al., 1977; Hyvärinen et al., 1981; Price et al., 1994). In humans, cross-modal activation has been robustly reported in the occipital visual cortices of blind individuals performing nonvisual tasks (for reviews, see Noppeney, 2007; Ricciardi et al., 2014). Here we not only observed stronger activation in blind than in sighted subjects in the auditory experiments but also showed that the categorical response patterns differ between blind and sighted subjects, further confirming the visual character of these posterior regions and indicating that the cross-modal reorganization does not follow a simple additive pattern (compare Lewis et al., 2010). Studies of RSFC have found that visual deprivation is associated with reduced connectivity within the occipital cortices and between the occipital cortex and other primary sensory/motor regions, and with increased connectivity between the occipital and inferior frontal cortices (Liu et al., 2007; Butt et al., 2013; Burton et al., 2014; for review, see Bock and Fine, 2014). We showed that posterior occipital regions are significantly synchronized with the postcentral/precentral cortices and superior temporal regions in sighted but not congenitally blind individuals, whereas they are functionally connected with the left inferior frontal cortex in congenitally blind but not sighted individuals. These findings are consistent with previous studies and may underlie the recruitment of this occipital region for verbal (e.g., Bedny et al., 2011) or other higher cognitive functions in early blind adults. RSFC patterns between early visual cortex and other visual regions have been reported to be similar between groups (Burton et al., 2014; Striem-Amit et al., 2015); the intriguing differences between the RSFC patterns within the visual cortex and those between early visual cortex and nonvisual regions warrant further investigation.

These findings are well explained by the assumption that neurons in early visual cortex are genetically programmed to process visual stimuli and to represent features characteristic of this modality, such as orientation and spatial relations. Stimuli presented in other modalities (e.g., auditory input) cannot be expressed in terms of these basic units because they depend on other stimulus properties, in this case the temporal dimension. Even for tactile input, where spatial relations are important, constructing an object representation requires integrating tactile features over time. In other words, the type of representation typically computed in early visual cortex by dedicated neural circuits may be intrinsic and exclusive to the visual modality and is therefore not directly recoverable from other sensory inputs. Consequently, the plastic changes associated with congenital blindness are more drastic here: these regions come to respond to language and auditory stimuli in ways that bear no similarity to their visual response characteristics. An unexpected hemispheric asymmetry was present in the occipital cortex: the between-group similarity of both the connectional and functional fingerprints was more prominent in the right than in the left hemisphere (Figs. 1, 2), perhaps because of more extensive reorganization in the left occipital cortex (Raz et al., 2005; Bedny et al., 2011; Watkins et al., 2012; Butt et al., 2013).

The other major result concerns the bilateral anterior medial temporal regions and posterior lateral temporal regions, where highly similar patterns of intrinsic functional connectivity and response preferences for various types of objects were observed between congenitally blind and sighted subjects, as well as similar categorical response patterns between visual and auditory verbal input within sighted subjects. For the bilateral anterior medial temporal regions, we observed that, for both blind and sighted subjects, the activity was strongest for scenes and furniture, converging with previous findings of selectivity for tactile exploration of scenes and verbal comprehension of large nonmanipulable objects in the parahippocampal cortex of early blind adults (Wolbers et al., 2011; He et al., 2013). Furthermore, in both subject groups, these regions were functionally connected with bilateral precuneus and posterior cingulate cortex, ventral medial temporal cortex, and middle occipital gyrus. This connectivity pattern is in line with the proposal that this section of VOTC is part of a navigation network that processes scenes and large objects that may serve as landmarks (Epstein, 2008; He et al., 2013). The categorical response profile in bilateral posterior lateral temporal regions is less transparent. Here, relatively stronger activation was observed for diverse categories, including human face and body parts, clothing, and tools. One possibility is that these regions represent one or more properties that are common to these categories. Alternatively, these clusters may consist of voxels with diverse functional properties; our tests of visual dependence did not require voxels of a cluster to be functionally homogeneous.
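How "highly similar" a cluster's category response profile is between groups can be quantified with an uncertainty estimate. One standard option is a percentile bootstrap over subjects (Efron and Tibshirani, 1993, appears in the reference list), sketched below in Python. This is an assumption-based illustration, not the procedure reported in this study's Methods: the function name, the use of the correlation between group-mean profiles as the similarity measure, `n_boot`, and the 95% interval are all choices made here.

```python
import numpy as np


def bootstrap_profile_similarity(blind, sighted, n_boot=2000, seed=0):
    """Percentile bootstrap CI for the blind-sighted similarity of a category profile.

    blind, sighted : arrays (n_subjects, n_categories) of responses (e.g. betas)
    Subjects are resampled with replacement within each group.
    """
    rng = np.random.default_rng(seed)
    blind = np.asarray(blind, dtype=float)
    sighted = np.asarray(sighted, dtype=float)

    def sim(b, s):
        # Pearson r between the two group-mean category profiles
        return np.corrcoef(b.mean(axis=0), s.mean(axis=0))[0, 1]

    observed = sim(blind, sighted)
    boots = np.empty(n_boot)
    for i in range(n_boot):
        bi = rng.integers(0, blind.shape[0], blind.shape[0])
        si = rng.integers(0, sighted.shape[0], sighted.shape[0])
        boots[i] = sim(blind[bi], sighted[si])
    lo, hi = np.percentile(boots, [2.5, 97.5])
    return observed, (lo, hi)
```

Resampling within each group preserves the group sizes, so the interval reflects subject-level variability in each group rather than mixing blind and sighted subjects.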

We suggest that the polymodal nature of the regions revealed here is closely related to the type of information and/or computation they represent: information that is abstracted away from vision-specific properties, such as an object's shape (Peelen et al., 2014) or size (Konkle and Oliva, 2012; Konkle and Caramazza, 2013), is suitable for interaction with representations computed through other modalities, including tactile and verbal input. Thus, these regions respond similarly when sighted subjects receive visual and nonvisual stimuli and when blind subjects receive verbal or tactile stimuli. Removing input from one modality does not change the function of these regions, perhaps because they can develop functional specificity through input from other modalities.

The transition between visual and polymodal cortical regions appears to be continuous rather than discrete. Cortical regions with intermediate levels of visual dependence may contain neurons that are more strongly tuned to visual input but are also modulated by nonvisual input. Alternatively, they may contain a mixture of visual and polymodal neurons that cannot be distinguished at the current spatial scale.

In conclusion, by comparing congenitally blind and sighted individuals, we provide a large-scale mapping of the degree to which connectional and functional fingerprints of the “visual” cortex depend on visual experience/input. In addition to revealing regions that are strongly dependent on visual experience (early visual cortex and posterior fusiform gyrus), our results point to regions in which connectional and functional patterns are surprisingly similar in blind and sighted individuals (anterior medial and posterior lateral parts of the VOTC). There is general consensus that representations become more “high-level” and more “abstract” as one moves anteriorly from the primary visual system; our results show where such transitions occur. Finally, our results help define the limits of plasticity: plasticity is maximal in those regions that are most strongly modality-specific. In the absence of relevant input, these regions may become “available” for alternative functions.

Footnotes

This study was supported by National Key Basic Research Program of China (2013CB837300, 2014CB846100), National Natural Science Foundation of China (31222024, 31171073, 31271115, 81030028, 31221003), and Program for New Century Excellent Talents in University (12-0055, 12-0065). A.C. was supported by the Fondazione Cassa di Risparmio di Trento e Rovereto. We thank Dr. Yong He for helpful comments; Drs. Xueming Lu and Yujun Ma for help with data analyses; and Beijing Normal University-CNLab members for assistance in data collection.

The authors declare no competing financial interests.

References

- Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early “visual” cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci. 2003;6:758–766. doi: 10.1038/nn1072.

- Bedny M, Konkle T, Pelphrey K, Saxe R, Pascual-Leone A. Sensitive period for a multimodal response in human visual motion area MT/MST. Curr Biol. 2010;20:1900–1906. doi: 10.1016/j.cub.2010.09.044.

- Bedny M, Pascual-Leone A, Dodell-Feder D, Fedorenko E, Saxe R. Language processing in the occipital cortex of congenitally blind adults. Proc Natl Acad Sci U S A. 2011;108:4429–4434. doi: 10.1073/pnas.1014818108.

- Behrens TE, Sporns O. Human connectomics. Curr Opin Neurobiol. 2012;22:144–153. doi: 10.1016/j.conb.2011.08.005.

- Biswal BB, Mennes M, Zuo XN, Gohel S, Kelly C, Smith SM, Beckmann CF, Adelstein JS, Buckner RL, Colcombe S, Dogonowski AM, Ernst M, Fair D, Hampson M, Hoptman MJ, Hyde JS, Kiviniemi VJ, Kötter R, Li SJ, Lin CP, et al. Toward discovery science of human brain function. Proc Natl Acad Sci U S A. 2010;107:4734–4739. doi: 10.1073/pnas.0911855107.

- Biswal B, Yetkin FZ, Haughton VM, Hyde JS. Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magn Reson Med. 1995;34:537–541. doi: 10.1002/mrm.1910340409.

- Bock AS, Fine I. Anatomical and functional plasticity in early blind individuals and the mixture of experts architecture. Front Hum Neurosci. 2014;8:971. doi: 10.3389/fnhum.2014.00971.

- Büchel C, Price C, Frackowiak RS, Friston K. Different activation patterns in the visual cortex of late and congenitally blind subjects. Brain. 1998;121:409–419. doi: 10.1093/brain/121.3.409.

- Burton H. Visual cortex activity in early and late blind people. J Neurosci. 2003;23:4005–4011. doi: 10.1523/JNEUROSCI.23-10-04005.2003.

- Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME. Adaptive changes in early and late blind: a fMRI study of Braille reading. J Neurophysiol. 2002;87:589–607. doi: 10.1152/jn.00285.2001.

- Burton H, Snyder AZ, Raichle ME. Resting state functional connectivity in early blind humans. Front Syst Neurosci. 2014;8:51. doi: 10.3389/fnsys.2014.00051.

- Butt OH, Benson NC, Datta R, Aguirre GK. The fine-scale functional correlation of striate cortex in sighted and blind people. J Neurosci. 2013;33:16209–16219. doi: 10.1523/JNEUROSCI.0363-13.2013.

- Chao-Gan Y, Yu-Feng Z. DPARSF: a MATLAB Toolbox for “pipeline” data analysis of resting-state fMRI. Front Syst Neurosci. 2010;4:13. doi: 10.3389/fnsys.2010.00013.

- Cohen LG, Celnik P, Pascual-Leone A, Corwell B, Falz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catalá MD, Hallett M. Functional relevance of cross-modal plasticity in blind humans. Nature. 1997;389:180–183. doi: 10.1038/38278.

- Craddock RC, James GA, Holtzheimer PE, 3rd, Hu XP, Mayberg HS. A whole brain fMRI atlas generated via spatially constrained spectral clustering. Hum Brain Mapp. 2012;33:1914–1928. doi: 10.1002/hbm.21333.

- Downing PE, Chan AW, Peelen MV, Dodds CM, Kanwisher N. Domain specificity in visual cortex. Cereb Cortex. 2006;16:1453–1461. doi: 10.1093/cercor/bhj086.

- Efron B, Tibshirani RJ. An introduction to the bootstrap. Boca Raton, FL: Chapman and Hall/CRC; 1993.

- Epstein RA. Parahippocampal and retrosplenial contributions to human spatial navigation. Trends Cogn Sci. 2008;12:388–396. doi: 10.1016/j.tics.2008.07.004.

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.