Abstract

Experiments that study feature-based attention have often examined situations in which selection is based on a single feature (e.g., the color red). However, in more complex situations relevant stimuli may not be set apart from other stimuli by a single defining property but by a specific combination of features. Here, we examined sustained attentional selection of stimuli defined by conjunctions of color and orientation. Human observers attended to one out of four concurrently presented superimposed fields of randomly moving horizontal or vertical bars of red or blue color to detect brief intervals of coherent motion. Selective stimulus processing in early visual cortex was assessed by recordings of steady-state visual evoked potentials (SSVEPs) elicited by each of the flickering fields of stimuli. We directly contrasted attentional selection of single features and feature conjunctions and found that SSVEP amplitudes in conditions in which selection was based on a single feature only (color or orientation) exactly predicted the magnitude of attentional enhancement of SSVEPs when attending to a conjunction of both features. Furthermore, enhanced SSVEP amplitudes elicited by attended stimuli were accompanied by equivalent reductions of SSVEP amplitudes elicited by unattended stimuli in all cases. We conclude that attentional selection of a feature-conjunction stimulus is accomplished by the parallel and independent facilitation of its constituent feature dimensions in early visual cortex.

SIGNIFICANCE STATEMENT The ability to perceive the world is limited by the brain's processing capacity. Attention affords adaptive behavior by selectively prioritizing processing of relevant stimuli based on their features (location, color, orientation, etc.). We found that attentional mechanisms for selection of different features belonging to the same object operate independently and in parallel: the effect of concurrently attending to two stimulus features is simply the sum of the effects of attending to each of those features separately. This result is key to understanding attentional selection in complex (natural) scenes, where relevant stimuli are likely to be defined by a combination of stimulus features.

Keywords: attention, EEG, feature-based attention, frequency tagging, steady-state visual evoked potentials, visual search

Introduction

Selective attention allocates processing resources toward relevant stimuli, allowing for adaptive behavior despite a potential overload of sensory information. The fundamental limitation of processing resources in retinotopic visual cortex is taken into account by the biased competition model (Desimone and Duncan, 1995; Duncan et al., 1997) and more recent normalization models of attention (Lee and Maunsell, 2009; Reynolds and Heeger, 2009). According to these proposals, stimuli falling into shared receptive fields compete for neuronal representation. This competition is particularly intense when feature-based attention is used to select one of multiple spatially overlapping stimuli (Andersen et al., 2012).

It is less clear whether the top-down attentional control system that modulates visual processing operates with its own capacity limitation. If this were the case, the effectiveness of attentional selection might decrease in situations where multiple features had to be selected concurrently, as these selections would have to share the same putative limited resource. For example, color selection would be less effective when selection is also based on another feature as opposed to when selection is based on color alone. Whereas previous studies have examined the neural correlates of attention to single features (Saenz et al., 2002; Andersen et al., 2012) and to conjunctions of features (Anllo-Vento and Hillyard, 1996; Treue and Martínez-Trujillo, 1999; Anllo-Vento et al., 2004; Lu and Itti, 2005; Andersen et al., 2008, 2011a; Hayden and Gallant, 2009), none has directly compared the effectiveness of selecting a single feature with the effectiveness of selecting that same feature as part of a conjunction with a second feature.

The present study contrasted conditions in which participants attended to a stimulus defined by a single feature (color OR orientation) with conditions in which they attended to stimuli defined by a feature conjunction (color AND orientation). Participants were presented with four completely overlapping fields of randomly moving bars (Fig. 1). On each trial, they were cued to attend to a subset of the bars defined by either a single feature (red, blue, horizontal, or vertical) or by a feature conjunction (red horizontal, red vertical, blue horizontal, or blue vertical). Frequency-tagged steady-state visual evoked potentials (SSVEPs) elicited in early visual-cortical areas were recorded to concurrently assess the allocation of attention to each of the four superimposed fields of bars (Di Russo et al., 2002; Andersen et al., 2011b). If attentional selection of feature conjunctions is achieved through parallel and independent facilitation of the constituent features (Anllo-Vento et al., 2004; Andersen et al., 2008), then the attentional modulation of SSVEP amplitudes for feature conjunctions would be exactly the sum of the attentional modulations of the SSVEPs to the individual single features. If, however, attentional selection of different feature dimensions relies on shared resources of top-down attentional control, then attentional selection of feature conjunctions could result in amplitude modulations that are less than the sum of those when the individual features are attended separately.

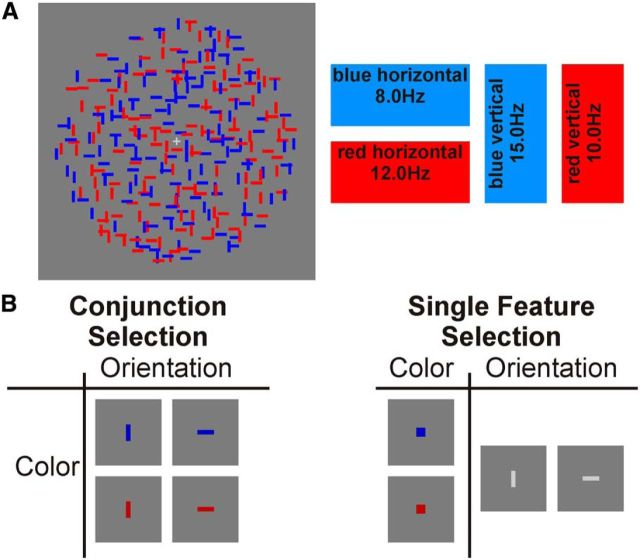

Figure 1.

A, Stimulus display and schematic illustration of assignment of flicker frequencies to stimuli. All bars were in constant random incoherent motion and each of the four fields of bars flickered at a specific frequency: blue horizontal 8.0 Hz, red vertical 10.0 Hz, red horizontal 12.0 Hz, blue vertical 15.0 Hz. B, Illustration of experimental conditions and corresponding attentional cues. In conjunction-selection conditions, participants were instructed to only respond to brief coherent motion intervals of the cued bars (targets) while ignoring corresponding coherent motions of the three other types of bars (distractors). For example, if “blue horizontal” was cued, only coherent motions of blue horizontal bars were targets, while coherent motions of blue vertical, red horizontal, and red vertical bars were distractors. In the single feature-selection conditions, participants were instructed to respond to coherent motion of either of the two types of bars with the cued feature value (targets) while ignoring coherent motion of the two other types of bars (distractors). For example, if “blue” was cued, coherent motions of blue horizontal or blue vertical bars were targets, while coherent motions of red horizontal or red vertical bars were distractors.

Materials and Methods

Subjects.

Fifteen subjects (10 female, 14 right-handed, ages 20–55, average 30.8 years) with normal color vision and normal or corrected-to-normal visual acuity participated in the experiment after giving informed consent. All subjects were included in the analysis of electrophysiological data. Behavioral data from one subject were lost because of a technical failure and were therefore excluded from the behavioral analysis.

Stimulus material and procedure.

Four completely overlapping fields of randomly and independently moving horizontal and vertical bars of red and blue color were presented centrally together with a fixation cross (Fig. 1A). Each of the four fields of 75 bars flickered at an individual frequency (blue horizontal = 8.0 Hz, red vertical = 10.0 Hz, red horizontal = 12.0 Hz, blue vertical = 15.0 Hz) synchronized to the screen's refresh rate of 120 Hz. This frequency tagging enabled SSVEPs to be recorded separately and concurrently to each of the four types of stimuli. The SSVEP is a continuous oscillatory response of the visual cortex with the same frequency as the driving stimulus and whose amplitude is enhanced by attention (Morgan et al., 1996; Di Russo et al., 2002; Andersen et al., 2011b).
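Because all four tagging frequencies divide the 120 Hz refresh rate evenly, each flicker cycle spans a whole number of frames (15, 12, 10, and 8 frames, respectively). The Python sketch below makes that arithmetic explicit. The text does not specify the on/off duty cycle, so the 50% square wave used here (rounded down for odd cycle lengths) is an assumption, as are the function names.

```python
REFRESH_HZ = 120
TAG_FREQS_HZ = {
    "blue horizontal": 8.0,
    "red vertical": 10.0,
    "red horizontal": 12.0,
    "blue vertical": 15.0,
}


def frames_per_cycle(freq_hz: float, refresh_hz: int = REFRESH_HZ) -> int:
    """Number of screen frames in one flicker cycle; must be a whole number."""
    frames = refresh_hz / freq_hz
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{freq_hz} Hz cannot be frame-locked at {refresh_hz} Hz")
    return round(frames)


def is_visible(frame: int, freq_hz: float, refresh_hz: int = REFRESH_HZ) -> bool:
    """Assumed 50% duty cycle: the field is drawn in the first half of each cycle
    (for odd cycle lengths, e.g. 15 frames at 8 Hz, the 'on' half is rounded down)."""
    n = frames_per_cycle(freq_hz, refresh_hz)
    return frame % n < n // 2


for name, freq in TAG_FREQS_HZ.items():
    print(f"{name}: {freq} Hz -> {frames_per_cycle(freq)} frames per cycle")
```

A frequency such as 7 Hz would raise an error here, because it cannot be rendered with an identical frame pattern on every cycle at 120 Hz.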

Stimuli were presented on a 19” computer monitor set to a resolution of 640 × 480 pixels and 32 bits per pixel color mode. Isoluminance of bars and gray background (9.3 cd/m²) was adjusted for each subject by heterochromatic flicker photometry before EEG recordings. At a viewing distance of 80 cm, the fields of bars were contained within a circle with a diameter of 12.94° of visual angle; each bar subtended 0.61° × 0.16° and moved 0.051° in a random direction per frame of screen refresh. Bars moving over the edge of the circular field reappeared on the opposite side. This geometric layout of stimuli was identical to the one used in our previous study (Andersen et al., 2008). To prevent systematic overlapping of bars, which might induce a depth cue, all bars were drawn in random order. Stimuli were generated using Cogent Graphics (John Romaya, Laboratory of Neurobiology, Wellcome Department of Imaging Neuroscience).
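These motion parameters imply a speed of 0.051° × 120 frames/s = 6.12°/s. The following is a minimal sketch of one frame update. It assumes that each bar keeps a fixed random direction and that "reappeared on the opposite side" means a point reflection through the field center, clamped onto the aperture edge. Both details, and the helper names, are illustrative assumptions and not the authors' Cogent Graphics code.

```python
import numpy as np

FIELD_RADIUS_DEG = 12.94 / 2  # radius of the circular stimulus field
STEP_DEG = 0.051              # displacement per 120 Hz frame


def random_positions(n_bars: int, rng: np.random.Generator,
                     radius: float = FIELD_RADIUS_DEG) -> np.ndarray:
    """Bar centres distributed uniformly over the circular field."""
    r = radius * np.sqrt(rng.random(n_bars))
    theta = rng.uniform(0.0, 2.0 * np.pi, n_bars)
    return np.column_stack((r * np.cos(theta), r * np.sin(theta)))


def step_bars(pos: np.ndarray, directions: np.ndarray, step: float = STEP_DEG,
              radius: float = FIELD_RADIUS_DEG) -> np.ndarray:
    """Advance every bar by one frame along its direction (radians).

    Bars leaving the field re-enter on the opposite side (assumed here:
    reflection through the centre, placed on the aperture edge).
    """
    new = pos + step * np.column_stack((np.cos(directions), np.sin(directions)))
    dist = np.hypot(new[:, 0], new[:, 1])
    outside = dist > radius
    new[outside] *= -(radius / dist[outside])[:, None]
    return new


rng = np.random.default_rng(0)
pos = random_positions(75, rng)            # one field of 75 bars
dirs = rng.uniform(0.0, 2.0 * np.pi, 75)   # assumption: fixed direction per bar
for _ in range(120):                       # one second of motion
    pos = step_bars(pos, dirs)
```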

Each trial began with the presentation of one of eight cues at fixation that instructed participants which stimuli (defined either by a single feature or a feature conjunction) to attend to on that trial (Fig. 1B). After a randomly chosen interval of 750–1250 ms the cue was removed and the flickering and moving random bar stimuli were presented for 15 s followed by an intertrial interval of 2200 ms during which only the gray background was presented.

Attention to the assigned field of flickering bars was assessed behaviorally by a target-detection task. During brief intervals (500 ms), the bars of one type (color and orientation) could move in one of the four cardinal directions with 70% coherence. Beginning 400 ms after stimulus onset, between two and eight such coherent motion events occurred at random times within each 15 s trial, with onsets separated by at least 700 ms. Participants were instructed to press a button whenever they detected a coherent motion of the attended bars (target) while ignoring corresponding motions of the unattended bars (distractors). Detection responses occurring within an interval from 300 to 1000 ms after the onset of a target or distractor were counted as hits or false alarms, respectively. The responding hand was switched halfway through each recording session.
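The response-scoring rule can be sketched as follows. Under our assumption that the window is half-open, [300, 1000) ms, the 700 ms minimum spacing between onsets guarantees that the windows of successive events never overlap, so each button press is credited to at most one event. Event times, press times, and function names are hypothetical.

```python
from bisect import bisect_left

HIT_WINDOW_S = (0.3, 1.0)  # response window relative to event onset (s)


def score_responses(events, press_times, window=HIT_WINDOW_S):
    """Classify coherent-motion events by whether a press fell in their window.

    events: list of (onset_s, is_target) tuples.
    press_times: button-press times (s) on the same clock as the onsets.
    Returns (counts, hit_rts): outcome counts and reaction times of hits.
    """
    presses = sorted(press_times)
    lo, hi = window
    counts = {"hit": 0, "miss": 0, "false_alarm": 0, "correct_rejection": 0}
    hit_rts = []
    for onset, is_target in events:
        i = bisect_left(presses, onset + lo)  # first press at or after window start
        responded = i < len(presses) and presses[i] < onset + hi
        if is_target:
            counts["hit" if responded else "miss"] += 1
            if responded:
                hit_rts.append(presses[i] - onset)
        else:
            counts["false_alarm" if responded else "correct_rejection"] += 1
    return counts, hit_rts


counts, rts = score_responses(
    events=[(1.0, True), (2.0, False), (3.5, True)],  # hypothetical onsets (s)
    press_times=[1.45, 2.5, 5.0],
)
print(counts, rts)
```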

Twenty-four trials were presented for each of the eight attentional conditions resulting in a total of 192 trials for the entire experiment. These were presented in random order, and every 24 trials there was a short break with feedback provided to the subject about his or her behavioral performance. In each of the eight attentional conditions, a total of 100 coherent motion intervals were presented (i.e., 25 per stimulus). Accordingly, in the conjunction-selection conditions, 25 of those coherent motion events were targets and 75 were distractors. In the feature-selection conditions, 50 of those events were targets and 50 were distractors. Before recordings, subjects performed a training session of three or more blocks of 12 trials each until stable target-detection performance was reached. During training, correct and incorrect target-detection responses were indicated by immediate auditory feedback (hits: high beep; false alarms and misses: low beep). This feedback was not given during the main experiment.
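The design arithmetic follows from classifying each stimulus by the attentional status of its features under a given cue. There are 8 cues × 24 trials = 192 trials. With 25 coherent-motion events per stimulus, a conjunction cue yields 25 targets and 75 distractors, and a single-feature cue yields 50 of each. The sketch below implements this classification with hypothetical helper names. The same labels (C+O−, C−, etc.) are used in the SSVEP analysis, written here with an ASCII hyphen for the minus sign.

```python
import random
from collections import Counter
from itertools import product

# The four superimposed fields, as (color, orientation).
STIMULI = list(product(("red", "blue"), ("horizontal", "vertical")))
# Eight cues: four single features (None = dimension not cued), four conjunctions.
CUES = [("red", None), ("blue", None), (None, "horizontal"), (None, "vertical")] + STIMULI

EVENTS_PER_STIMULUS = 25   # coherent-motion events per stimulus and condition
TRIALS_PER_CONDITION = 24


def attention_label(cue, stimulus):
    """E.g. 'C+O-' under a conjunction cue or 'C-' under a color cue."""
    cue_color, cue_orient = cue
    color, orient = stimulus
    label = ""
    if cue_color is not None:
        label += "C+" if color == cue_color else "C-"
    if cue_orient is not None:
        label += "O+" if orient == cue_orient else "O-"
    return label


def is_target(cue, stimulus):
    """Coherent motion is a target only if every cued feature matches."""
    return "-" not in attention_label(cue, stimulus)


def event_counts(cue):
    """(targets, distractors) among the 100 coherent-motion events of a condition."""
    n_targets = EVENTS_PER_STIMULUS * sum(is_target(cue, s) for s in STIMULI)
    return n_targets, EVENTS_PER_STIMULUS * len(STIMULI) - n_targets


def trial_order(seed=None):
    """All 8 x 24 = 192 trials in random order."""
    order = [cue for cue in CUES for _ in range(TRIALS_PER_CONDITION)]
    random.Random(seed).shuffle(order)
    return order
```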

EEG recordings and analysis.

Brain electrical activity was recorded noninvasively at a sampling rate of 256 Hz from 64 Ag/AgCl electrodes mounted in an elastic cap using an ActiveTwo amplifier system (BioSemi). Lateral eye movements were monitored with a bipolar outer canthus montage (horizontal EOG, HEOG). Vertical eye movements and blinks were monitored with a bipolar montage positioned below and above the right eye (vertical EOG, VEOG). Throughout experimental and training blocks, the experimenter monitored the HEOG and VEOG channels online and, if necessary, reminded the participant to maintain fixation after each block. Processing of EEG data was performed using the EEGLab toolbox (Delorme and Makeig, 2004) in combination with custom-written analysis routines in MATLAB (The MathWorks).

Fifteen successive 1 s epochs were extracted from each 15 s stimulus train. The first epoch in each trial was discarded to exclude the VEP to stimulus onset and to allow the SSVEP sufficient time to build up. To ensure that the analyzed data were not contaminated by activity related to coherent motion or manual responses, an epoch was also discarded if a target or distractor onset occurred either within that epoch or later than 200 ms after the onset of the preceding epoch. The remaining epochs (200 per condition) were detrended (removal of mean and linear trends), and epochs with eye movements or blinks were rejected from further analysis. All remaining artifacts were corrected or rejected by an automated procedure using a combination of trial exclusion and channel approximation based on statistical parameters of the data (Junghöfer et al., 2000). This led to an average rejection rate of 12.5% of all epochs, which did not differ between conditions. Subsequently, all epochs were re-referenced to the average reference and averaged separately for each of the eight experimental conditions.
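A literal reading of the epoch-exclusion rule is sketched below in Python. Epoch k spans k to k + 1 s after stimulus onset. It is dropped if any target or distractor onset falls after k − 0.8 s (200 ms into the preceding epoch) and before k + 1 s. Epoch 0 is always dropped. The function name and the open-interval boundary handling are assumptions.

```python
def kept_epochs(event_onsets_s, n_epochs=15, epoch_len_s=1.0, guard_s=0.2):
    """Indices of 1 s epochs retained for SSVEP analysis.

    event_onsets_s: onsets (s, relative to stimulus onset) of all coherent-motion
    events in the trial, targets and distractors alike.
    """
    kept = []
    for k in range(1, n_epochs):  # epoch 0 dropped: onset VEP, SSVEP build-up
        start = k * epoch_len_s
        prev_start = start - epoch_len_s
        contaminated = any(
            prev_start + guard_s < t < start + epoch_len_s for t in event_onsets_s
        )
        if not contaminated:
            kept.append(k)
    return kept


print(kept_epochs([0.5, 5.1, 9.9]))  # hypothetical event onsets
```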

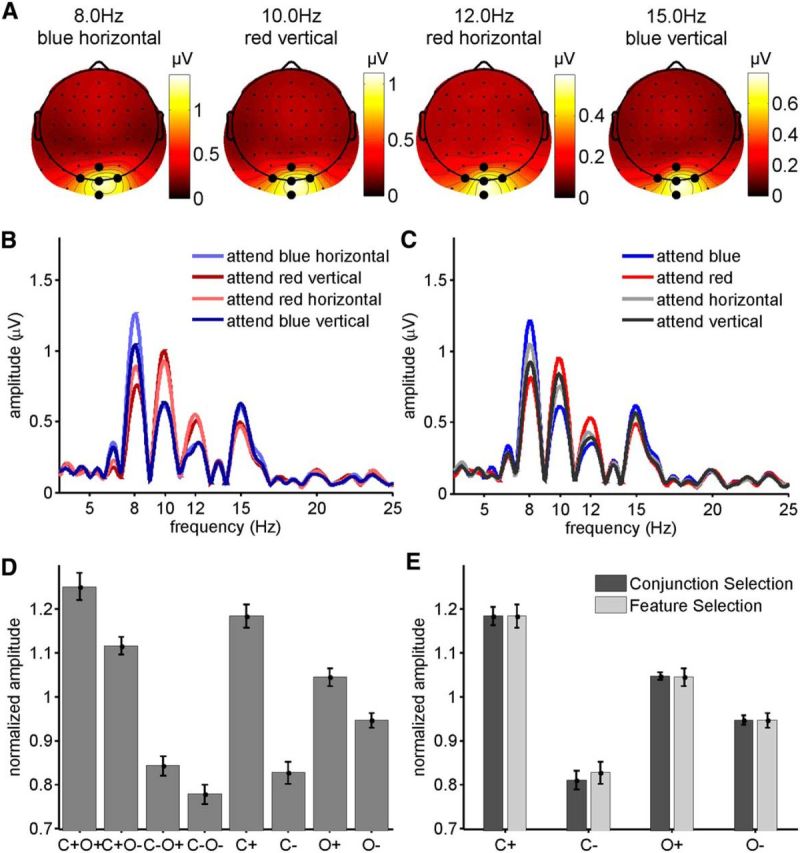

SSVEP amplitudes for each electrode and frequency were quantified as the absolute values of the complex Fourier coefficients at the four stimulation frequencies. Isocontour voltage maps of SSVEP amplitudes averaged over all eight attentional conditions (Fig. 3A) showed a narrow peak over occipital electrodes. Accordingly, a cluster of five electrodes centered on Oz was chosen for statistical analysis. The amplitude spectrum of this electrode cluster (Fig. 3B,C) showed a strong concentration of signal power at the four stimulation frequencies. As in previous similar studies (Andersen et al., 2008, 2011a, 2013), SSVEP amplitudes showed equivalent attention effects for the different frequencies. To make SSVEPs comparable across frequencies, SSVEP amplitudes were normalized to a mean of 1.0 for each frequency and subject by dividing amplitudes by the mean over all conditions (Andersen et al., 2011a). For each stimulus (i.e., frequency), conditions were labeled according to the attentional status of the driving stimulus. For conjunction selection, these labels were as follows: color and orientation attended (C+O+); color attended, orientation unattended (C+O−); color unattended, orientation attended (C−O+); color and orientation unattended (C−O−). For single feature selection the labels were as follows: color attended (C+), color unattended (C−), orientation attended (O+), orientation unattended (O−). Subsequently, SSVEP amplitudes were collapsed over the four frequencies (i.e., stimuli) for each of the eight above-defined attentional conditions.
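With 1 s epochs sampled at 256 Hz, the Fourier transform has 1 Hz resolution, so 8, 10, 12, and 15 Hz fall exactly on frequency bins. The following is a minimal NumPy sketch of amplitude extraction and the per-frequency normalization. It assumes a 2/N scaling so that a pure sinusoid returns its peak amplitude. The text does not state the scaling, but any constant factor cancels in the normalization. The function names are hypothetical.

```python
import numpy as np

FS_HZ = 256
TAG_FREQS_HZ = (8.0, 10.0, 12.0, 15.0)


def ssvep_amplitudes(avg_epoch, fs=FS_HZ, freqs=TAG_FREQS_HZ):
    """Amplitude at each tagging frequency of one electrode's condition-averaged epoch."""
    x = np.asarray(avg_epoch, dtype=float)
    n = x.size
    spectrum = np.fft.rfft(x)
    bin_hz = fs / n  # 1 Hz for a 1 s epoch at 256 Hz
    # Assumed 2/N scaling: a sinusoid of amplitude A yields A.
    return {f: 2.0 * np.abs(spectrum[int(round(f / bin_hz))]) / n for f in freqs}


def normalize_per_frequency(amps):
    """amps: (n_conditions, n_frequencies) for one subject; each column is divided
    by its mean over conditions, so every frequency averages 1.0."""
    amps = np.asarray(amps, dtype=float)
    return amps / amps.mean(axis=0, keepdims=True)
```

Zero-padding, as used for the displayed spectra in Figure 3, only interpolates the spectrum. It is not needed here because the tagging frequencies already coincide with native bins.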

Figure 3.

A, Spline-interpolated isocontour voltage maps of SSVEP amplitudes averaged over all subjects and attentional conditions for each of the four frequencies. Electrodes used for the analysis are indicated by the larger black dots. B, C, Grand-average amplitude spectra (Fourier transform zero-padded to 16,384 points) for the conjunction-selection (B) and single feature-selection (C) conditions. Amplitudes at all four frequencies are larger when one or both of the features defining the driving stimulus are attended. D, Normalized SSVEP amplitudes collapsed across frequencies for conjunction selection (C+O+, C+O−, C−O+, C−O−) and single feature selection (C+, C−, O+, O−). E, Direct comparison of normalized SSVEP amplitudes for conjunction and single feature selection. Amplitudes for C+, C−, O+, and O− under conjunction selection were derived by averaging amplitudes from C+O+ and C+O−, C−O+ and C−O−, C+O+ and C−O+, and C+O− and C−O−, respectively (see Eqs. 1–4). Error bars represent SEM.

Statistical analysis.

Behavioral responses to coherent motion targets were compared between conjunction targets and single feature targets defined by color or orientation by a one-way repeated-measures ANOVA with the factor target type (C+O+, C+, O+) for hit rates and reaction times separately. False alarm rates to distractors were tested by a one-way repeated-measures ANOVA with the factor distractor type (C+O−, C−O+, C−O−, O−, C−). In both cases, Greenhouse–Geisser correction for nonsphericity was used. Pairwise comparisons between the individual levels of target type or distractor type were tested by two-tailed paired t tests using Bonferroni correction for multiple comparisons.
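A one-way repeated-measures ANOVA and its Greenhouse–Geisser epsilon can be computed with NumPy alone. The sketch below is a generic textbook version, not the authors' analysis script, and the function name is hypothetical. The corrected degrees of freedom are ε·df1 and ε·df2. A p value could then be obtained from the F distribution, for example with SciPy's `scipy.stats.f.sf`.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array (n_subjects, k_levels), e.g. hit rates for C+O+, C+, O+.
    Returns (F, df1, df2, epsilon_GG).
    """
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)
    ss_error = np.sum((y - grand) ** 2) - ss_cond - ss_subj  # subject x condition
    df1, df2 = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df1) / (ss_error / df2)

    # Greenhouse-Geisser epsilon from the covariance of k-1 orthonormal contrasts.
    q, _ = np.linalg.qr(np.column_stack([np.ones(k), np.eye(k)[:, : k - 1]]))
    c = q[:, 1:]  # orthonormal columns, each orthogonal to the constant vector
    m = c.T @ np.cov(y, rowvar=False) @ c
    eps = np.trace(m) ** 2 / ((k - 1) * np.trace(m @ m))
    return f, df1, df2, eps
```

Epsilon is bounded between 1/(k − 1) and 1. With only two levels it is always 1, and F then equals the squared paired t statistic.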

To examine attentional modulation of stimulus processing, normalized SSVEP amplitudes were first tested separately for conjunction and feature-selection conditions and subsequently tested directly against each other. Conjunction-selection conditions (C+O+, C+O−, C−O+, C−O−) were subjected to a 2 × 2 repeated-measures ANOVA with the factors attention to color (attended, unattended) and attention to orientation (attended, unattended). To assess whether attention to color or attention to orientation modulated normalized SSVEP amplitudes more strongly, the C+O− and C−O+ conditions were directly compared by a two-tailed paired t test. Single feature-selection conditions (C+, C−, O+, O−) were also tested by a 2 × 2 repeated-measures ANOVA with the factors attention (attended, unattended) and feature dimension (color, orientation).
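Every factor in these designs has two levels. Each F(1, n − 1) of a 2 × 2 repeated-measures ANOVA therefore equals the squared one-sample t statistic of the corresponding per-subject contrast. The following NumPy sketch relies on that identity. Dictionary keys use an ASCII hyphen for the minus sign, and the function names are hypothetical.

```python
import numpy as np


def one_sample_t(d):
    """t statistic of per-subject contrast scores against zero."""
    d = np.asarray(d, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size))


def rm_anova_2x2(cond):
    """F(1, n-1) for each effect of a 2 x 2 within-subject design.

    cond maps 'C+O+', 'C+O-', 'C-O+', 'C-O-' to per-subject normalized amplitudes.
    """
    a = {k: np.asarray(v, dtype=float) for k, v in cond.items()}
    contrasts = {
        "color": (a["C+O+"] + a["C+O-"] - a["C-O+"] - a["C-O-"]) / 2,
        "orientation": (a["C+O+"] + a["C-O+"] - a["C+O-"] - a["C-O-"]) / 2,
        "interaction": (a["C+O+"] - a["C+O-"] - a["C-O+"] + a["C-O-"]) / 2,
    }
    return {name: one_sample_t(d) ** 2 for name, d in contrasts.items()}


def paired_t(x, y):
    """Paired t statistic, e.g. C+O- versus C-O+."""
    return one_sample_t(np.asarray(x, dtype=float) - np.asarray(y, dtype=float))
```

Per-subject offsets cancel in every contrast, which is how the repeated-measures design removes between-subject variability.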

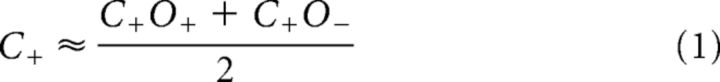

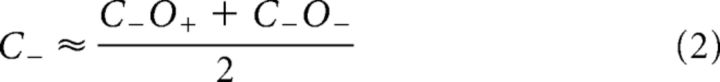

To directly compare conjunction and single feature selection, we tested whether SSVEP amplitudes in the single feature-selection conditions could be predicted from SSVEP amplitudes in the conjunction-selection conditions (and vice versa). For example, an estimate of amplitudes when color alone was attended (C+) was obtained by averaging the two conjunction conditions in which color was attended and orientation was either attended (C+O+) or unattended (C+O−). Estimates for C−, O+, and O− were obtained correspondingly:

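$$C^{+} = \tfrac{1}{2}\left(C^{+}O^{+} + C^{+}O^{-}\right) \tag{1}$$

$$C^{-} = \tfrac{1}{2}\left(C^{-}O^{+} + C^{-}O^{-}\right) \tag{2}$$

$$O^{+} = \tfrac{1}{2}\left(C^{+}O^{+} + C^{-}O^{+}\right) \tag{3}$$

$$O^{-} = \tfrac{1}{2}\left(C^{+}O^{-} + C^{-}O^{-}\right) \tag{4}$$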

If these equations were found to be empirically correct in accounting for SSVEP amplitude modulations, this would validate the three assumptions that underlie their derivation: (1) the effects of attending to a feature are parallel (i.e., attending to a color affects stimulus processing equally for all stimuli having that color, regardless of their orientation; Andersen et al., 2008); (2) these effects are independent (i.e., the magnitude of the effect of attending to color does not depend upon concurrent selection by orientation); and (3) the amplitudes of concurrently presented stimuli are subject to divisive normalization, such that any change in the amplitude elicited by one stimulus is counterbalanced by changes in the amplitudes elicited by the other stimuli, so that the mean amplitude over all stimuli remains constant. For example, an increase of the amplitude elicited by an attended stimulus will be counterbalanced by an equal-sized reduction of the amplitude elicited by a superimposed unattended stimulus.
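The three assumptions can be made concrete with a toy additive model. In this model, each stimulus's normalized amplitude is 1 plus a color gain and an orientation gain, whose signs depend on whether its feature values are attended. The gains and the `share` parameter below are hypothetical and chosen for illustration only. With `share = 1` (independent selection), Equations 1–4 reproduce the single-feature amplitudes exactly. A `share` below 1, mimicking a capacity cost when two dimensions are selected together, makes the conjunction-based estimates fall short.

```python
from itertools import product

STATUS = (+1, -1)  # +1 = attended feature value, -1 = unattended value


def conjunction_amplitudes(gain_color, gain_orient, share=1.0):
    """Toy normalized amplitudes of the four stimuli under a conjunction cue.

    Keys are (color_status, orientation_status). share < 1 is a hypothetical
    capacity cost shrinking both gains; share = 1 means independent selection.
    """
    return {
        (sc, so): 1.0 + share * (gain_color * sc + gain_orient * so)
        for sc, so in product(STATUS, STATUS)
    }


def single_feature_amplitudes(gain_color, gain_orient):
    """Toy amplitudes when only color or only orientation is cued."""
    return {
        "C+": 1.0 + gain_color, "C-": 1.0 - gain_color,
        "O+": 1.0 + gain_orient, "O-": 1.0 - gain_orient,
    }


def estimates_from_conjunction(conj):
    """Equations 1-4: average the two conjunction conditions sharing a status."""
    return {
        "C+": (conj[(+1, +1)] + conj[(+1, -1)]) / 2,
        "C-": (conj[(-1, +1)] + conj[(-1, -1)]) / 2,
        "O+": (conj[(+1, +1)] + conj[(-1, +1)]) / 2,
        "O-": (conj[(+1, -1)] + conj[(-1, -1)]) / 2,
    }


gc, go = 0.18, 0.05  # hypothetical gains, not fitted to the data
conj = conjunction_amplitudes(gc, go)
print(sum(conj.values()) / 4)            # mean over stimuli is 1 (normalization)
print(estimates_from_conjunction(conj))  # equals single_feature_amplitudes(gc, go)
print(estimates_from_conjunction(conjunction_amplitudes(gc, go, share=0.7))["C+"])
```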

The amplitude estimates for C+, C−, O+, and O− conditions derived from the conjunction-selection conditions were compared with the actual amplitudes measured in these single feature-selection conditions by a 2 × 2 × 2 repeated-measures ANOVA with the factors attention (attended, unattended), feature dimension (color, orientation), and selection type (conjunction, single feature).

Results

Behavioral data

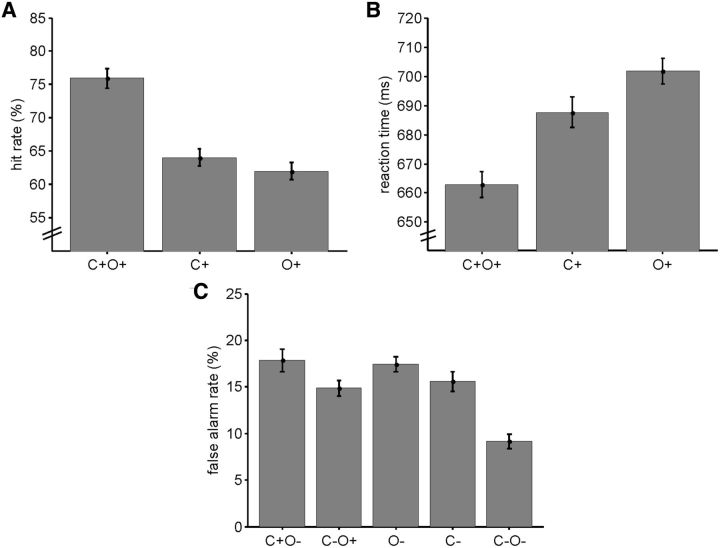

Behavioral performance, as measured by hit rates, reaction times, and false alarm rates, was consistently better for conjunction selection compared with single feature-selection conditions (Fig. 2). Hit rates and reaction times to targets depended strongly upon target type (hit rate: F(2,13) = 21.155, p < 10⁻⁵, η² = 61.9%; reaction time: F(2,13) = 11.442, p < 0.0005, η² = 46.8%). Conjunction targets were detected more readily than single feature targets (C+O+ vs C+: t(13) = 4.942, p < 0.0005; C+O+ vs O+: t(13) = 5.700, p < 10⁻⁴) and elicited faster responses (C+O+ vs C+: t(13) = −2.863, p < 0.05; C+O+ vs O+: t(13) = −5.381, p < 0.0005). Hit rates and reaction times did not differ between targets defined by color or orientation (C+ vs O+: hit rate: t(13) = 1.000, p > 0.1; reaction time: t(13) = −1.624, p > 0.1).

Figure 2.

Behavioral results. Hit rates (A) and reaction times (B) to coherent motion targets in conjunction-selection (C+O+) and single feature-selection (C+, O+) conditions. C, False alarm rates for coherent motion distractors in conjunction-selection (C+O−, C−O+, C−O−) and single feature-selection (O−, C−) conditions. Error bars represent within-subjects SEM (obtained by subtracting each participant's mean before the calculation of the SD).
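The within-subjects SEM described in the caption can be sketched as follows (NumPy; the function name is hypothetical). Adding back the grand mean only shifts the values for plotting and does not change the SD. This sketch applies no further bias-correction factor.

```python
import numpy as np


def within_subject_sem(data):
    """data: (n_subjects, n_conditions). Removes each participant's mean across
    conditions (adding back the grand mean) before computing the SD per condition."""
    y = np.asarray(data, dtype=float)
    normed = y - y.mean(axis=1, keepdims=True) + y.mean()
    return normed.std(axis=0, ddof=1) / np.sqrt(y.shape[0])
```

If subjects differ only by a constant offset across conditions, the within-subjects SEM is zero, whereas the ordinary SEM would reflect the full between-subject spread.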

False alarms depended strongly upon distractor type (F(4,13) = 11.070, p < 0.0005, η² = 46.0%). Conjunction distractors with both the unattended color and orientation (C−O−) elicited fewer false alarms than all other distractor types (all t(13) ≤ −4.608, all ps < 0.0005). False alarm rates to the other four distractor types (C+O−, C−O+, O−, C−) did not differ significantly from each other (all |t(13)| ≤ 1.887, all ps > 0.05).

Conjunction-selection SSVEPs

SSVEP amplitudes for the conjunction-selection conditions are depicted in Figure 3B. As in our previous study (Andersen et al., 2008), the highest SSVEP amplitudes were elicited when both the color and orientation of a stimulus were attended (C+O+), while the lowest amplitudes were elicited when neither the color nor the orientation of a stimulus was attended (C−O−). Normalized SSVEP amplitudes collapsed over frequencies (Fig. 3D) were enhanced both by attention to the color (F(1,14) = 84.342, p < 10⁻⁶, η² = 76.5%) and to the orientation (F(1,14) = 33.520, p < 10⁻⁴, η² = 5.5%) of a stimulus. The interaction of attention to color and orientation approached but did not reach significance (F(1,14) = 4.015, p = 0.0648, η² = 0.7%). Attention to color modulated SSVEP amplitudes more strongly than attention to orientation (C+O− vs C−O+: t(14) = 7.495, p < 10⁻⁵).

Single feature-selection SSVEPs

Attention to a single stimulus feature strongly enhanced SSVEP amplitudes (F(1,14) = 54.586, p < 10⁻⁵, η² = 54.1%; Fig. 3C). The overall magnitude of SSVEP amplitudes (attended plus unattended) did not depend on the feature dimension on which selection was based (F(1,14) = 0.273, p > 0.1, η² = 0.1%). However, there was a strong interaction of attention × feature dimension (F(1,14) = 25.971, p < 0.0005, η² = 17.5%), as attention to color modulated SSVEP amplitudes more strongly than attention to orientation (Fig. 3D).

Direct comparison of feature-selection and conjunction-selection SSVEPs

SSVEP amplitudes for the conjunction-selection conditions precisely predicted amplitudes for the single feature conditions (Fig. 3E), in line with Equations 1–4. Specifically, the SSVEP amplitude for C+ (single feature selection) was virtually identical to the average of the amplitudes for C+O+ and C+O− (conjunction selection), the amplitude for C− was predicted by the average of the amplitudes for C−O+ and C−O−, the amplitude for O+ was predicted by the average of the amplitudes for C+O+ and C−O+, and the amplitude for O− was predicted by the average of the amplitudes for C+O− and C−O−. In line with the foregoing separate analyses of the single feature-selection and conjunction-selection conditions, SSVEP amplitudes were larger for attended stimuli (F(1,14) = 81.301, p < 10⁻⁶, η² = 57.9%). This effect was larger for color selection (C+ − C−) compared with orientation selection (O+ − O−; interaction attention × feature dimension: F(1,14) = 46.170, p < 10⁻⁵, η² = 19.1%). Importantly, the magnitude of SSVEP attention effects did not depend on whether amplitudes were measured in single feature-selection conditions or derived from averages of conjunction-selection conditions (interaction attention × selection type: F(1,14) = 0.184, p > 0.1; interaction attention × feature dimension × selection type: F(1,14) = 0.092, p > 0.1). The main effects of feature dimension and selection type and the interaction feature dimension × selection type were also clearly nonsignificant (all F(1,14) < 0.3, all ps > 0.1). Together the two nonsignificant main effects and the three nonsignificant interactions accounted for <1% of the total variance.

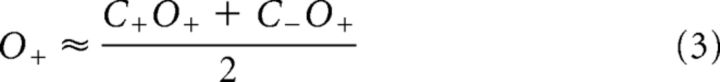

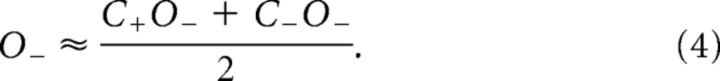

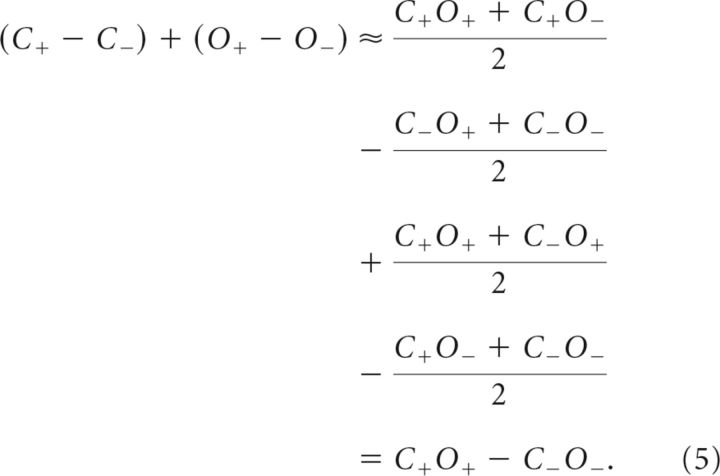

The foregoing analysis revealed that Equations 1–4 yielded very precise estimates for the single feature-selection amplitudes. It directly follows from this that the sum of the single feature attention effects equals the overall attentional enhancement when attending to the conjunction of both features:

$$C^{+}O^{+} - C^{-}O^{-} = \left(C^{+} - C^{-}\right) + \left(O^{+} - O^{-}\right) \tag{5}$$
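Substituting Equations 1–4 into the right-hand side of Equation 5 shows why it follows: the mixed-status terms cancel, leaving only the fully attended and fully unattended conjunctions.

$$
\begin{aligned}
\left(C^{+}-C^{-}\right) + \left(O^{+}-O^{-}\right)
&= \tfrac{1}{2}\left[\left(C^{+}O^{+} + C^{+}O^{-}\right) - \left(C^{-}O^{+} + C^{-}O^{-}\right)\right] \\
&\quad + \tfrac{1}{2}\left[\left(C^{+}O^{+} + C^{-}O^{+}\right) - \left(C^{+}O^{-} + C^{-}O^{-}\right)\right] \\
&= \tfrac{1}{2}\left(2\,C^{+}O^{+} - 2\,C^{-}O^{-}\right) = C^{+}O^{+} - C^{-}O^{-}
\end{aligned}
$$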

The observed mean attention effects on SSVEP amplitudes (in normalized values ± SEM) were 0.357 (±0.048) for color (C+ − C−), 0.098 (±0.030) for orientation (O+ − O−), and 0.473 (±0.051) for conjunction selection (C+O+ − C−O−). Using these values to directly test Equation 5, there was no significant difference between the sum of the two single feature attention effects and the overall effect of attention to the conjunction (t(14) = 0.404, p > 0.1). Thus, the modulation of SSVEP amplitudes produced during attention to a feature conjunction can be exactly predicted by summing the individual effects of attention to the constituent features.
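Plugging the reported group means into Equation 5 gives the following worked comparison. The paired t test above was computed on per-subject values, so these group means alone do not reproduce the test statistic.

$$
\underbrace{0.357}_{C^{+}-C^{-}} + \underbrace{0.098}_{O^{+}-O^{-}} = 0.455
\qquad\text{vs.}\qquad
\underbrace{0.473}_{C^{+}O^{+}-C^{-}O^{-}},
\qquad 0.473 - 0.455 = 0.018
$$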

Discussion

In the present study attentional selections of features and feature conjunctions were directly contrasted. Both behavioral and electrophysiological results consistently supported the hypothesis that sustained attentional selection of feature conjunctions is achieved by parallel and independent selection of the constituent features. Hit rates for feature-conjunction targets (C+O+) were higher and reaction times were faster than for single feature targets (C+ or O+). Moreover, the lowest false alarm rates were observed for distractors sharing neither of the attended features (C−O−) in conjunction selection trials. These results support the view that attended conjunctions receive a “double dose” of facilitation by combining the attentional effects of both defining features (Andersen et al., 2008). This conclusion is fully supported by the electrophysiological results. The magnitude of enhancement of processing attended conjunctions in visual cortex, as assessed by SSVEP amplitudes, was exactly the sum of the magnitudes of enhancement when attending to the single constituent features.

The parallel enhancement of the individual features during the conjunction-selection conditions was evident in the equal enhancement of stimulus processing for C+O+ and C+O− stimuli by attention to color and for C+O+ and C−O+ stimuli by attention to orientation. This parallel enhancement is fully consistent with the feature-similarity gain model (Treue and Martínez-Trujillo, 1999) and constitutes a nonspatial equivalent to the spatially “global” effect of feature attention. In the present experiment the global effect is manifested as an enhanced processing of the attended feature of a conjunction stimulus, whether or not it is coupled with the attended or unattended value of the other feature.

Importantly, no cost was incurred when attentional selection had to concurrently operate on two feature dimensions as opposed to only one. That is, the magnitude of enhancement of the attended color remained the same, regardless of whether or not selection for orientation was also required. Correspondingly, the magnitude of enhancement of the attended orientation did not depend upon the need to select by color. This finding suggests that attentional selection of individual features within different feature dimensions is entirely independent: there seems to be no shared resource of limited capacity for top-down attentional modulation of different dimensions. This seemingly conflicts with the “dimension-weighting account” of visual search, which proposes that attentional weights are shifted between feature dimensions when the target-defining dimension changes in consecutive trials (Müller et al., 1995; Found and Müller, 1996; Töllner et al., 2008). To reconcile our findings with this account, one would have to assume independence of attentional weights for different dimensions. “Weight shifting” would then correspond to independently increasing the attentional weight of one dimension while reducing the weight of another, rather than reallocating the same attentional weight between both dimensions.

Resource limitations of visual processing were apparent, however, in the absence of any overall main effects of selection type or attended feature and any interactions between these two factors in the three-way ANOVA comparing single feature and conjunction selection. That is, the normalized amplitude summed over all four stimuli was constant regardless of whether participants' attention was based on color, orientation, or the conjunction of these two features. This implies that enhanced processing of attended stimuli was exactly counterbalanced by reduced processing of unattended stimuli. This observation is in accord with models suggesting competitive interactions (Desimone and Duncan, 1995) or divisive normalization (Bundesen et al., 2005; Lee and Maunsell, 2009; Reynolds and Heeger, 2009) among spatially proximate stimuli.

Attention to color in the present experiment modulated SSVEP amplitudes more strongly than attention to orientation, as in our previous study (Andersen et al., 2008), and has previously been found to modulate SSVEPs as strongly as spatial attention (Andersen et al., 2011a). Consistent with these electrophysiological findings, faster response times to color-defined targets than to orientation-defined targets have been found in visual search (Found and Müller, 1996). Although a direct comparison of the effectiveness of different feature dimensions for attentional selection would require a parametric manipulation of feature differences (Martinez-Trujillo and Treue, 2004), these findings do suggest that color may be a highly efficient feature for attentional selection. Our results are thus in accord with a mechanism of independent selections of the more effective color and the less effective orientation cues.

Alternative explanations of our SSVEP results that assume that (1) attentional selection was based on flicker frequencies, (2) attentional modulations were affected by interference from targets or distractors, or (3) attention was switched between conjunction stimuli rather than deployed to color and orientation in parallel can be ruled out for the following reasons. (1) The idea that flicker frequencies affected attentional selection is inconsistent with the patterns of results in previous studies (Andersen et al., 2008, 2011a, 2013). Most convincingly, behavioral control conditions in previous experiments (Müller et al., 2006; Störmer et al., 2013) have demonstrated that attentional selection is unaffected by whether the flicker frequencies of attended and unattended stimuli are the same or different. (2) Although single feature-selection trials contained on average twice as many targets as conjunction-selection trials (and correspondingly fewer distractors), this difference cannot have directly affected our SSVEP amplitudes because epochs with targets or distractors were excluded from the SSVEP analysis. (3) Finally, the idea that attentional modulation on conjunction-selection trials resulted from serial switching of attention between color and orientation rather than from sustained parallel enhancement of both features was disproved by a single-trial analysis in a previous study (Andersen et al., 2008).

Behavioral performance, as measured by hit rates, reaction times, and false alarm rates, was consistently better for conjunction-selection compared with single feature-selection conditions in the present experiment. This difference is compatible with the observed “dual dose” of facilitation received in conjunction selection, but may also be in part attributable to the requirement for attending to half of the display items during single feature selection (while detecting targets that could affect either half of those stimuli) as opposed to attending to only a quarter of the items during conjunction selection (while detecting targets that affected only those items). In comparison, visual search for targets defined by feature conjunctions has generally been found to be less efficient than search for targets defined by a single feature (Treisman and Gelade, 1980; Wolfe et al., 1989; Painter et al., 2014). However, in visual search tasks the comparison between single feature and conjunction search conditions is confounded because both the task and the physical display differ between these conditions. This is not the case in the present experiment, which used identical displays for single feature and conjunction selection and only varied the task instructions. The parallel and independent facilitation of different feature dimensions in early visual areas observed here and in our previous study (Andersen et al., 2008) allows conjunction stimuli to stand out by receiving a dual dose of facilitation, thus providing direct neurophysiological evidence for the mechanism proposed to underlie efficient conjunction search (Wolfe et al., 1989; Wolfe, 1994).

Our main finding—that attentional effects on SSVEP amplitudes in conjunction-selection conditions were precisely equal to the sum of the attention effects observed in single-feature selection—is not readily interpretable in terms of Load Theory (Lavie, 2005), which proposes that increasing “perceptual load” decreases the amount of resources available to process distractor stimuli. Perceptual load has commonly been manipulated by comparing feature (low-load) versus conjunction (high-load) selection conditions (Lavie, 2005; Schwartz et al., 2005). The present findings advise caution in interpreting results obtained using this particular manipulation. Consistent with an interpretation of our conjunction-selection conditions as a “high-load” condition, distractor stimuli sharing none of the attended features (C−O−) in conjunction-selection conditions elicited fewer false alarms and lower SSVEP amplitudes than distractor stimuli in single feature-selection (low-load) conditions (Figs. 2C, 3C). However, such an interpretation of the present findings in terms of perceptual load is untenable because hit rates were higher and reaction times were faster in conjunction-selection conditions compared with single feature-selection conditions. This is the opposite of what would be expected of a high-load condition. Alternatively, if one interprets our conjunction selection conditions as low-load conditions because of their superior behavioral performance, the results are still incompatible with Load Theory; in this latter case, the maximal attentional modulation in low-load conditions (C+O+ vs C−O−) exceeded the maximal attentional modulation in high-load conditions (C+ vs C−), which again is the opposite of what one would expect according to Load Theory (Lavie, 2005). Instead, our findings can be fully explained in terms of parallel and independent attentional selection of two feature dimensions rather than a difference in “perceptual load.”
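The Load Theory argument can be made explicit under the additive account. As a hedged formalization (the symbols are introduced here for illustration only), let $A$ denote SSVEP amplitude and let $\Delta_C$ and $\Delta_O$ be the attended-minus-unattended amplitude differences when selecting by color alone and by orientation alone. Additivity then implies that the maximal conjunction contrast exceeds the single-feature contrast whenever the second feature's effect is positive:

```latex
\begin{aligned}
\Delta_C &= A_{C^{+}} - A_{C^{-}}, \qquad \Delta_O = A_{O^{+}} - A_{O^{-}} \\
A_{C^{+}O^{+}} - A_{C^{-}O^{-}} &= \Delta_C + \Delta_O \\
\Delta_O > 0 \;&\Longrightarrow\; A_{C^{+}O^{+}} - A_{C^{-}O^{-}} > \Delta_C
\end{aligned}
```

If conjunction selection is treated as the low-load condition, the last line states the direction of effect that is opposite to the Load Theory prediction.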

In summary, the present results provide evidence that voluntary attentional selection of feature conjunctions is achieved by parallel and independent selection of the constituent features. That is, attentional selection of a particular feature does not appear to be influenced by the need to concurrently select another feature of a different dimension. Thus, under the conditions of this study there was no cost for the concurrent operation of multiple stimulus selection mechanisms.

Footnotes

This work was supported by Deutsche Forschungsgemeinschaft (AN 841/1-1, MU 972/20-1). We thank Renate Zahn and Norman Forschack for help with data collection.

The authors declare no competing financial interests.

References

- Andersen SK, Hillyard SA, Müller MM. Attention facilitates multiple stimulus features in parallel in human visual cortex. Curr Biol. 2008;18:1006–1009. doi: 10.1016/j.cub.2008.06.030.

- Andersen SK, Fuchs S, Müller MM. Effects of feature-selective and spatial attention at different stages of visual processing. J Cogn Neurosci. 2011a;23:238–246. doi: 10.1162/jocn.2009.21328.

- Andersen SK, Müller MM, Hillyard SA. Tracking the allocation of attention in visual scenes with steady-state evoked potentials. In: Posner MI, editor. Cognitive neuroscience of attention. New York: Guilford; 2011b. pp. 197–216.

- Andersen SK, Müller MM, Martinovic J. Bottom-up biases in feature-selective attention. J Neurosci. 2012;32:16953–16958. doi: 10.1523/JNEUROSCI.1767-12.2012.

- Andersen SK, Hillyard SA, Müller MM. Global facilitation of attended features is obligatory and restricts divided attention. J Neurosci. 2013;33:18200–18207. doi: 10.1523/JNEUROSCI.1913-13.2013.

- Anllo-Vento L, Hillyard SA. Selective attention to the color and direction of moving stimuli: electrophysiological correlates of hierarchical feature selection. Percept Psychophys. 1996;58:191–206. doi: 10.3758/BF03211875.

- Anllo-Vento L, Schoenfeld MA, Hillyard SA. Cortical mechanisms of visual attention: electrophysiological and neuroimaging studies. In: Posner MI, editor. Cognitive neuroscience of attention. New York: Guilford; 2004. pp. 180–193.

- Bundesen C, Habekost T, Kyllingsbaek S. A neural theory of visual attention: bridging cognition and neurophysiology. Psychol Rev. 2005;112:291–328. doi: 10.1037/0033-295X.112.2.291.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Di Russo F, Teder-Sälejärvi WA, Hillyard SA. Steady-state VEP and attentional visual processing. In: Zani A, Proverbio AM, editors. The cognitive electrophysiology of mind and brain. New York: Academic; 2002. pp. 259–274.

- Duncan J, Humphreys G, Ward R. Competitive brain activity in visual attention. Curr Opin Neurobiol. 1997;7:255–261. doi: 10.1016/S0959-4388(97)80014-1.

- Found A, Müller HJ. Searching for unknown feature targets on more than one dimension: investigating a “dimension-weighting” account. Percept Psychophys. 1996;58:88–101. doi: 10.3758/BF03205479.

- Hayden BY, Gallant JL. Combined effects of spatial and feature-based attention on responses of V4 neurons. Vis Res. 2009;49:1182–1187. doi: 10.1016/j.visres.2008.06.011.

- Junghöfer M, Elbert T, Tucker DM, Rockstroh B. Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37:523–532. doi: 10.1111/1469-8986.3740523.

- Lavie N. Distracted and confused?: Selective attention under load. Trends Cogn Sci. 2005;9:75–82. doi: 10.1016/j.tics.2004.12.004.

- Lee J, Maunsell JH. A normalization model of attentional modulation of single unit responses. PLoS One. 2009;4:e4651. doi: 10.1371/journal.pone.0004651.

- Lu J, Itti L. Perceptual consequences of feature-based attention. J Vis. 2005;5(7):622–631. doi: 10.1167/5.7.2.

- Martinez-Trujillo JC, Treue S. Feature-based attention increases the selectivity of population responses in primate visual cortex. Curr Biol. 2004;14:744–751. doi: 10.1016/j.cub.2004.04.028.

- Morgan ST, Hansen JC, Hillyard SA. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proc Natl Acad Sci U S A. 1996;93:4770–4774. doi: 10.1073/pnas.93.10.4770.

- Müller HJ, Heller D, Ziegler J. Visual search for singleton feature targets within and across feature dimensions. Percept Psychophys. 1995;57:1–17. doi: 10.3758/BF03211845.

- Müller MM, Andersen S, Trujillo NJ, Valdés-Sosa P, Malinowski P, Hillyard SA. Feature-selective attention enhances color signals in early visual areas of the human brain. Proc Natl Acad Sci U S A. 2006;103:14250–14254. doi: 10.1073/pnas.0606668103.

- Painter DR, Dux PE, Travis SL, Mattingley JB. Neural responses to target features outside a search array are enhanced during conjunction but not unique-feature search. J Neurosci. 2014;34:3390–3401. doi: 10.1523/JNEUROSCI.3630-13.2014.

- Reynolds JH, Heeger DJ. The normalization model of attention. Neuron. 2009;61:168–185. doi: 10.1016/j.neuron.2009.01.002.

- Saenz M, Buracas GT, Boynton GM. Global effects of feature-based attention in human visual cortex. Nat Neurosci. 2002;5:631–632. doi: 10.1038/nn876.

- Schwartz S, Vuilleumier P, Hutton C, Maravita A, Dolan RJ, Driver J. Attentional load and sensory competition in human vision: modulation of fMRI responses by load at fixation during task-irrelevant stimulation in the peripheral visual field. Cereb Cortex. 2005;15:770–786. doi: 10.1093/cercor/bhh178.

- Störmer VS, Winther GN, Li SC, Andersen SK. Sustained multifocal attentional enhancement of stimulus processing in early visual areas predicts tracking performance. J Neurosci. 2013;33:5346–5351. doi: 10.1523/JNEUROSCI.4015-12.2013.

- Töllner T, Gramann K, Müller HJ, Kiss M, Eimer M. Electrophysiological markers of visual dimension changes and response changes. J Exp Psychol Hum Percept Perform. 2008;34:531–542. doi: 10.1037/0096-1523.34.3.531.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Treue S, Martínez-Trujillo JC. Feature-based attention influences motion processing gain in macaque visual cortex. Nature. 1999;399:575–579. doi: 10.1038/21176.

- Wolfe JM. Guided search 2.0: a revised model of visual search. Psychon Bull Rev. 1994;1:202–238. doi: 10.3758/BF03200774.

- Wolfe JM, Cave KR, Franzel SL. Guided search: an alternative to the feature integration model for visual search. J Exp Psychol Hum Percept Perform. 1989;15:419–433. doi: 10.1037/0096-1523.15.3.419.