Abstract

Visual attention enables observers to select behaviorally relevant information based on spatial locations, features, or objects. Attentional selection is not limited to physically present visual information, but can also operate on internal representations maintained in working memory (WM) in service of higher-order cognition. However, little is known about whether attention to WM contents follows the same principles as attention to sensory stimuli. To address this question, we investigated in humans whether the typically observed effects of object-based attention in perception are also evident for object-based attentional selection of internal object representations in WM. In full accordance with effects in visual perception, the key behavioral and neuronal characteristics of object-based attention were observed in WM. Specifically, we found that reaction times were shorter when shifting attention to memory positions located on the currently attended object compared with equidistant positions on a different object. Furthermore, functional magnetic resonance imaging and multivariate pattern analysis of visuotopic activity in visual (areas V1–V4) and parietal cortex revealed that directing attention to one position of an object held in WM also enhanced brain activation for other positions on the same object, suggesting that attentional selection in WM activates the entire object. This study demonstrated that all characteristic features of object-based attention are present in WM and that attentional selection in WM thus follows the same principles as in perception.

Keywords: multivoxel pattern analysis, object-based attention, parietal cortex, perception, visual cortex, working memory

Introduction

Limited mental processing capacity demands selection of goal-relevant information from the plethora of available stimuli via selective attention. Selective attention may operate on sensory representations and on internal representations held in working memory (WM) (Chun and Johnson, 2011; Gazzaley and Nobre, 2012). Therefore, attention supports the “working” part of memory by providing a means to flexibly prioritize currently relevant pieces of information among those represented in the mental workspace (Bledowski et al., 2009; Bledowski et al., 2010).

Selective attention has been studied extensively in perceptual processing, delineating differential mechanisms of visual attention. Most studies have examined space-based attention, showing that the focusing of attention to a certain location in the visual field leads to enhanced (faster and more accurate) processing at that location (Posner, 1980). This processing advantage is accompanied by increased neuronal activity in retinotopic visual cortex representing the focused location (Kanwisher and Wojciulik, 2000; Pessoa et al., 2003). Frontal and parietal regions including caudal parts of the superior frontal sulcus and posterior parts of the parietal cortex (PPC) are activated transiently during voluntary attention shifts between external spatial locations.

A second mechanism of attention is “object-based,” improving processing of elements in the visual field that are grouped to a single object by Gestalt principles (Roelfsema and Houtkamp, 2011). This object-based mechanism can be explained by the attentional spread hypothesis (Vecera and Farah, 1994): when attending one part of an object, attention spreads across all parts and features of the object's internal sensory representation, thereby enhancing processing of all other elements of the same object. This object-based mechanism of attention has been confirmed using various methods and experimental designs (Chen, 2012).

On the neural level, Wannig et al. (2011) demonstrated that activity in monkey area V1 at retinotopic object locations was elevated only when these locations were grouped to an attended visual object by Gestalt principles. Similarly, neuroimaging studies in humans found attention shifting-related activity in retinotopic areas V1–V4 to be modulated differentially by the perceptual grouping of the shifting targets (Müller and Kleinschmidt, 2003; Shomstein and Behrmann, 2006). In addition to its role in spatial shifting, PPC has also been implicated in object-based shifting (Shomstein and Behrmann, 2006).

Shifting the attentional focus between items held in WM has been established as a central operation for selection also within WM. It elicits similar fMRI activations as found in perception (Bledowski et al., 2010; Gazzaley and Nobre, 2012; Oberauer and Hein, 2012). Although the detailed mechanisms of attentional selection are well studied in perception, we have little knowledge about whether similar mechanisms are used in WM too. The present study assessed whether the principles of object-based attention also apply to WM representations. In particular, we sought to characterize object-related processing in WM with respect to behavioral effects, recruitment of the same control regions as in perception, and the characteristic attentional spread effects in visual and parietal cortex shown for visual perception.

Materials and Methods

Participants and procedure.

Seventeen healthy right-handed adults (11 females, age 19–30) with normal or corrected-to-normal visual acuity were recruited for the behavioral experiment. One participant was excluded from the behavioral experiment due to overly long reaction times (RTs), resulting in numerous responses given after presentation of the following cue. Twenty different subjects (11 females, aged 20–37) underwent the same behavioral experiment (outside of the MRI scanner), including eye movement recordings, and two sessions of an fMRI experiment. The behavioral experiment and each of the two sessions of the fMRI experiment were performed on separate days. All participants were recruited from Goethe University and gave written informed consent. The study was approved by the local ethics committee. The behavioral experiment comprised a WM task and a perceptual version of the same task. The fMRI experiment included four runs of the WM task and two runs of a functional localizer and retinotopic mapping equally divided between the two sessions.

WM and perceptual task.

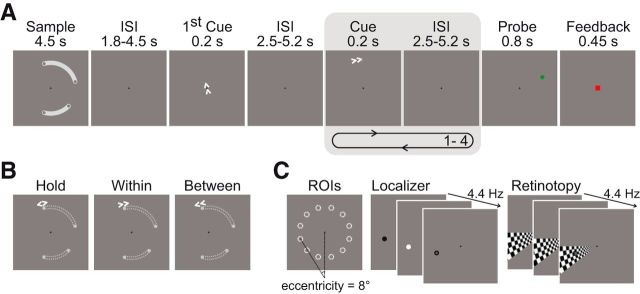

The WM task used a modified delayed-match-to-sample paradigm consisting of encoding, delay and recognition phases, with the operation of shifting covert attention between memorized positions embedded in the delay phase (Fig. 1A). At the beginning of each trial, participants were given 4.5 s to encode the positions of 4 gray dots (size in visual angle: 0.37°) always located at the ends of 2 white, curved shapes presented simultaneously on a gray background. The curved shapes served to group the positions of two dots each to one object. The perceptual grouping had no relevance for the task. The dots appeared on 4 of 12 possible locations on an invisible circle (radius 8° visual angle) around the center of the display (Fig. 1C). The curved shapes covered a maximum of five adjacent positions each and were separated by at least one empty position. Encoding was followed by the delay phase that contained the embedded cued shifting operations: 1.8–4.5 s (in steps of 0.9 s) after the end of encoding, a central arrow cue (“first cue”) appeared for 200 ms, instructing the participants to covertly shift their attention to the memorized position indicated by the cue and maintain it there. This first cue was not of interest itself and was only used to ensure that attention was focused on a specific location so that the following cue presentation could be clearly assigned to one of the shifting conditions detailed below. The first cue was followed by a sequence of 1–4 cues (200 ms) separated by an interval of 2.5–5.2 s (in steps of 0.9 s) during which only a fixation point was shown. Each of these cue presentations consisted of a peripheral arrow cue that appeared at 9.8° eccentricity close to the currently attended position, instructing the next operation. In 1/3 of events, this cue was a “hold” event (opposite arrows), indicating that attention should be held at its current position. 
Alternatively, the cue pointed either in the clockwise (33%) or counterclockwise (33%) direction, demanding covert shifts of attention to the next memorized position in the indicated direction relative to the current position. Cue-based shifting of attention to the next position in the counterclockwise or clockwise direction required a shift either to the other position of the same curved object shape (“within”-object shift) or to the nearest position of the other object (“between”-object shift) (Fig. 1B). Importantly, to avoid spurious artifactual effects in position-specific analyses of neuroimaging data, we carefully pseudorandomized the numbers of cue occurrences by shifting condition (within, between), shift distance (two, three, or four of 12 positions) and spatial position (12 positions). Pseudorandomization also ensured that each condition (“hold,” “within,” and “between”) was equally likely to occur (33.3%) for each cue number in the sequence (one up to four) and that the conditional probability of one condition following another was equal for all conditions. Therefore, for each cue number, it was equally likely (and thus unpredictable) whether a hold event, a within-object shift, or a between-object shift would occur.

Figure 1.

Experimental design. A, During the working memory (WM) task, subjects had to memorize four spatial positions that were grouped to two task-irrelevant objects. In the maintenance period, arrow cues indicated to which memorized position subjects had to shift their focus of attention. The first cue only served to indicate the starting point of the subsequent clockwise or counterclockwise attentional shifts. The number of cues of interest varied unpredictably from 1–4 across trials and, at the end of each trial, a probe stimulus with subsequent feedback was presented. B, Opposing arrows instructed participants to hold their attention at the memorized location. Depending on the underlying grouping of the memorized positions (indicated here by gray dotted lines for illustration only), the shift cues implied a within- or a between-object shift. C, Memory positions could appear at any of the 12 locations that were mapped functionally with a visual-attentional localizer.

The recognition phase followed 2.5–5.2 s (in steps of 0.9 s) after the offset of the last cue. To test recognition, a single dot appeared for 800 ms and the participants had to indicate via left/right button press whether this probe was at the exact position of the current focus of attention. In 50% of the cases, the probe matched the current focus of attention. Nonmatching probes could appear at four of the set of 12 possible locations. These could be either the two memorized positions that were nearest to the match position (“same-object position” and “different-object position”) or the two positions directly neighboring the match position on the circle of 12 possible positions. The latter positions were used to encourage precise spatial encoding. Because participants did not know after how many cues the recognition test would be presented and because the test demanded quick responding (800 ms response deadline), they were forced to comply with the instruction to select the current locus of attention as the target during cue presentations. After the 800 ms test presentation, a green (correct and in time) or red (incorrect or too slow) square was presented as feedback for 450 ms. This was followed by an intertrial interval (ITI) of 3.25–6.85 s (in steps of 0.9 s). A total of 130 WM trials were presented during fMRI, including 108 cues for each shifting condition. Subjects were instructed to maintain fixation on the black fixation cross at the center of the screen throughout the experiment. The WM task in the behavioral experiment was identical to the fMRI experiment except that participants indicated via button press whenever they had finished shifting attention to a cued position as instructed by the arrow cue. The ITI in the behavioral experiment ranged between 2.475 and 4.275 s. The behavioral experiment included 36 trials with 30 cues per condition.

We used two behavioral measures of the object-based attention effect in WM. First, we evaluated shifting times to cues, that is, the time subjects needed to complete a shift of attention to a target memory position that was located on the same object (within-object shift) or on a different object (between-object shift). These shifting events were of main interest for this study and correspond to the analyzed fMRI events. Overt responses were only collected during the behavioral experiment (see above) to avoid any motor interference during functional measurement. Second, we compared RTs for correct answers to nonmatching probes that appeared at the end of each trial either at the location of a memorized position within the same object (same-object position) as the current target position of the attentional shift (i.e., the focus of attention) or at the memorized position within the other object (different-object position) that was next to the current focus of attention. This measure corresponds to the classical approach of assessing object-based attention benefit in perception, but was implemented here in a WM task. To assess whether this effect was modulated by the previous attentional selection requirements in WM, we also analyzed RTs to the probe separately for trials containing a shift event (within or between) or a hold event as the last cued operation before the probe. RTs to probes were collected during both behavioral and fMRI experiments. Because the experimental design was optimized for the analysis of the shifting events, we obtained far fewer repetitions of the nonmatching probes than shift events. Therefore, to obtain sufficient statistical power, we combined the data from the behavioral and fMRI experiment of those 20 subjects who participated in both experiments.

In the behavioral experiment, subjects also performed a perceptual version of this task to determine whether comparable object-based attention effects in the shifting times after cues are elicited in WM as in perception. The perceptual task was identical to the WM task except that spatial positions and objects were visible throughout the whole trial—thus not requiring maintenance in WM—and disappeared with the onset of the probe in the recognition phase.

For subjects who participated in the fMRI experiment, we collected monocular eye position data at 500 Hz using an infrared eye tracker (Eyelink 1000; SR Research Ltd.) during the behavioral experiment (outside of the MR scanner). Nine-point calibration was conducted before each run. Saccades during the cue period (interval: 0–2.4 s after cue onset) were detected by the Eyelink software using eye-movement thresholds of 22°/s for velocity and 3800°/s² for acceleration.

Presentation software (version 14.9) was used for stimulus presentation, recording of responses, and synchronization to the scanner with the eye tracker. In the fMRI experiment, stimuli were presented via MRI-compatible LCD goggles (VisuaStim XGA; Resonance Technology).

RT data were analyzed with R (version 3.0.0, R Development Core Team, 2013). First, RTs below 150 ms and above 3000 ms were discarded. Then, for a given contrast, RTs within 3 SDs of each subject's mean were selected and individual condition means tested against each other via paired t test. The within-object benefit and object-based effects to the probe were tested as one-sided tests. To test for possible differences in eye movements between the attentional shift conditions, we used a two-tailed paired t test across subjects on the saccade rate averaged within each condition.
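The trimming and testing steps described above can be sketched as follows. This is a minimal illustration in Python rather than the R code used in the study; the function names are hypothetical, and which RTs are pooled when computing each subject's mean and SD for "a given contrast" is an assumption left to the caller.

```python
from math import sqrt
from statistics import mean, stdev

def trim_rts(rts, lo=150.0, hi=3000.0, n_sd=3.0):
    """Discard RTs below lo or above hi (ms), then keep RTs within n_sd SDs of the mean."""
    kept = [rt for rt in rts if lo <= rt <= hi]
    if len(kept) < 2:
        return kept  # SD is undefined for fewer than two values
    m, s = mean(kept), stdev(kept)
    return [rt for rt in kept if abs(rt - m) <= n_sd * s]

def paired_t(cond_a, cond_b):
    """Paired t statistic for (cond_b - cond_a) across subjects, with degrees of freedom."""
    diffs = [b - a for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1
```

Each subject's trimmed RTs would be averaged per condition, and the resulting per-subject condition means passed to `paired_t`. Converting the statistic to a one-sided p value requires the t distribution, which is omitted here.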

Functional localizer and retinotopic mapping.

For the functional localizer recording, we sequentially stimulated each of the 12 spatial locations of the WM experiment 30 times for the duration of one whole-brain repetition time (TR) using a pseudorandomized order. For stimulation, a disk (0.92°) alternating between black and white (average reversal rate 4.44 Hz) was presented (Fig. 1C). For retinotopic mapping, participants monitored a wedge-shaped, black-and-white checkerboard (average reversal rate 4.44 Hz) subtending 30 degrees of visual angle with check size linearly scaled to eccentricity. The wedge extended from 2° of visual angle to full screen eccentricity and rotated in the clockwise (first run) or counterclockwise (second run) direction in steps of 30°, completing 15.5 rotations per run (first half cycle discarded), with each location being stimulated for the duration of one TR. To increase signal-to-noise ratio compared with passive stimulation, participants were instructed to maintain fixation and to covertly monitor the stimulated sites for the appearance of a small target disk (Bressler and Silver, 2010) (localizer: 0.45°, retinotopic mapping: 0.92°) that could appear at the orbital center of the stimulated site at 8° eccentricity. Target probability at each location was 50% and the contrast of the target disk was adapted in an up/down staircase procedure to equate behavioral performance (75% detection rate) between subjects.

fMRI data acquisition and analysis.

Functional measurements were conducted on a 3T Siemens Allegra head scanner at the Brain Imaging Center, Goethe University Frankfurt. Functional whole-brain volumes were acquired with a T2*-weighted echo-planar sequence [echo time (TE) = 30 ms, flip angle (FA) = 90°, matrix = 64 × 64, 3 × 3 mm in-plane resolution] with 28 slices (3 mm, 1 mm gap) and a TR of 1.8 s. In each session, we acquired a high-resolution, magnetization-prepared rapid-acquisition gradient echo (MPRAGE; TR = 2.2 s, TE = 3.93 ms, FA = 9°, inversion time 0.900 s, 256 × 256 × 192 isotropic 1 mm³ voxels) and a gradient echo field map (short TE = 4.89 ms, long TE = 7.35 ms, total EPI readout time = 26.88 ms). One session of the fMRI experiment lasted ∼1 h. In total, we acquired 2120, 770, and 400 functional volumes for the WM experiment, the functional localizer, and the retinotopic mapping, respectively.

Image processing was performed using SPM8 (Wellcome Department of Cognitive Neurology, http://www.fil.ion.ucl.ac.uk/spm/software/spm8/) in combination with FreeSurfer (http://surfer.nmr.mgh.harvard.edu) and custom MATLAB (The MathWorks) scripts. Data preprocessing included correction of spatial distortions caused by magnetic field inhomogeneities using the SPM FieldMap toolbox (Hutton et al., 2002), motion correction, and slice-timing correction (reference slice: 14). Normalization was conducted using the VBM8 Toolbox (http://dbm.neuro.uni-jena.de/vbm/) by segmenting the averaged and coregistered anatomical images into gray matter, white matter, and CSF, computing deformation fields for subject-to-MNI152 (IXI550 template, www.brain-development.org) space mapping with high-dimensional DARTEL normalization (Ashburner, 2007), and applying these parameters including a resampling to 1.5 mm³ isotropic voxels to the functional volumes. For whole-brain univariate fMRI analysis, functional volumes were then spatially smoothed with an isotropic 8 mm full-width at half-maximum Gaussian kernel. No normalization or smoothing was performed for the multivoxel pattern analysis (MVPA).

Univariate analysis.

Each individual design matrix contained predictors for the onset of encoding, first cue, hold, within and between cues, and the onset of the recognition phase. Events were modeled with a finite impulse response approach with 8 time points in intervals of 1.8 s (corresponding to the TR), thus covering the time window from 0–14.4 s after event onset. In addition, the design matrix contained movement parameters and a constant term for each run. Model estimation included high-pass filtering (1/128 Hz cutoff frequency) and a first-order autoregressive error structure. Individual contrasts of between-object shifts versus within-object shifts at scan 3 capturing the peak of the hemodynamic response were computed. Parameter maps were then entered into a second-level one-sample t test. Results are reported at a cluster-corrected false discovery rate of p < 0.05 using a height threshold of p < 0.005 (t > 2.86) and visualized on a surface reconstruction of the SPM canonical single-subject brain using the BrainVoyager QX software (Brain Innovation).
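To illustrate the finite impulse response model, the sketch below builds the eight FIR columns for one event type. It is a simplified stand-in for the SPM design matrix, not the study's code: the function name is hypothetical, and onsets are assumed to fall on the TR grid.

```python
def fir_design(onsets_s, n_scans, tr=1.8, n_bins=8):
    """Return an n_scans x n_bins 0/1 matrix.

    Column k marks the scans acquired k TRs after each event onset, so the
    eight columns together cover 0 to 14.4 s after onset at TR = 1.8 s.
    """
    X = [[0] * n_bins for _ in range(n_scans)]
    for onset in onsets_s:
        first_scan = round(onset / tr)  # assumption: onsets aligned to the TR grid
        for k in range(n_bins):
            scan = first_scan + k
            if scan < n_scans:
                X[scan][k] = 1
    return X
```

Under this layout, the third column (index 2) corresponds to the scan used for the between-object versus within-object contrast. A full model would add one such block per event type plus the movement parameters and run constants.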

Retinotopic mapping.

Standard retinotopic mapping was conducted to delineate the borders of V1, V2d, V2v, V3d, V3v, and hV4 in each hemisphere of each subject. Preprocessed functional data (see “fMRI data acquisition and analysis” for details) were analyzed within each subject's individual anatomical space using SPM and custom scripts. For each voxel and run, the percentage signal change time course was Fourier transformed, the amplitude and phase of the stimulation frequency (15 rotations per run) extracted, and a signal-to-noise ratio (SNR) (Warnking et al., 2002) computed. Across runs, phase and amplitude data were then obtained as the sum of the complex vectors of both runs weighted by their respective SNRs. The real and imaginary parts of the complex volume were then projected onto the reconstructed and computationally inflated cortex by averaging, at each vertex, values within the gray matter along the surface normal using FreeSurfer. Surface labels for areas V1, V2, V3, and V4 were defined by identifying borders of V1, V2d, V2v, V3d, V3v, and hV4 as the phase reversals of the polar angle map and subsequently transformed into volume space.
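The per-voxel Fourier step and the SNR-weighted combination across runs might look like the following sketch. The function names are hypothetical, the SNR values are taken as given rather than computed as in Warnking et al. (2002), and any sign reversal of phase needed for runs with opposite rotation direction is not handled here.

```python
import cmath
import math

def component_at(ts, n_cycles):
    """Complex Fourier coefficient of a time course at n_cycles per run.

    The magnitude is the response amplitude and the angle is the response phase.
    """
    n = len(ts)
    m = sum(ts) / n  # remove the mean before projecting
    total = sum((x - m) * cmath.exp(-2j * math.pi * n_cycles * t / n)
                for t, x in enumerate(ts))
    return 2 * total / n

def combine_runs(coefs, snrs):
    """SNR-weighted sum of per-run complex coefficients; returns (amplitude, phase)."""
    total = sum(c * w for c, w in zip(coefs, snrs))
    return abs(total), cmath.phase(total)
```

For a voxel whose time course is a pure cosine at 15 cycles per run, `component_at(ts, 15)` returns a coefficient with amplitude 1 and a phase equal to the cosine's phase offset.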

MVPA.

We generated examples for classification for each shift event (within or between) in the main experiment and each stimulation event of a spatial location in the localizer experiment using an approach optimized for rapid event-related designs (Turner et al., 2012). In this approach, for each event of interest, a GLM is fitted to the data by least-squares estimation. The model contains finite impulse response predictors for the single event of interest, together with the predictors of the univariate design for that run (as described above), which model all events except the single event of interest. Unsmoothed functional data from brain voxels (determined using the SPM-generated mask from the univariate analysis) and the design matrix were high-pass filtered using the SPM filter with a cutoff period of 128 s. Functional data were scaled for each run to have a voxel mean of 0 and unit variance across all voxels and time points. The t-map of the third time bin of the event of interest for the respective regions was then used in the classification because t-maps are considered superior to β-estimates for classification (Misaki et al., 2010). Before entering the classifier, each example vector was scaled to have unit variance and zero mean.

We trained 66 (i.e., 12 choose 2) linear support vector machines, each to distinguish localizer activations of one possible pair of the 12 spatial locations, using the LIBSVM package (Chang and Lin, 2011) on the data of the functional localizer. We used all voxels within a respective region, yielding a mean region voxel count of 1335.8 for PPC (SD across subjects = 148.2), 399.7 for V1 (SD = 78.1), 344.8 for V2 (SD = 58.0), 320.4 for V3 (SD = 46.6), and 142.6 for V4 (SD = 29.7). For the combined region V1–V4, we pooled all voxels from areas V1 to V4, yielding a mean voxel count of 1196.8 (SD = 143.3). Hyperparameter C was obtained by grid search and twofold cross-validation in the training set using the two sessions as a natural separation of the data. As a result, we obtained a set of classifiers that distinguished which of any two given locations was more active.

To analyze whether we could decode the memory position inside the focus of attention in WM from nonattended memory positions, we applied the classifiers derived from the localizer experiment to the shifting events of the WM task. Specifically, for a given classifier, we collected all those events of the WM experiment that contained a memory position “inside” the focus of attention (i.e., the target memory position of the current attention shift) or one of the three memory positions “outside” the focus of attention situated at one of the two locations that the classifier was trained to distinguish. These trials were then projected onto the decision vector of this classifier. Therefore, this linear spatial filter projected the cue-related activity of a WM trial into a 1D space that optimally separated the retinotopic activity of the respective 2 locations in the independent localizer. Each resulting decision value then reflected the relative evidence of one location being more active than the other during a shift event. Decision values were first recoded by multiplying them with 1 or −1 so that positive values corresponded to evidence in favor of inside and outside positions and then averaged and normalized by their SD to account for different scaling between the filters, separately for inside and outside positions. This yielded one mean decision value for each condition (inside and outside) and classifier.
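The projection and recoding steps can be sketched as follows. This is an illustration under stated assumptions, not the study's MATLAB/LIBSVM code: the weight vector `w` and bias `b` stand for a linear classifier already trained on the localizer, and the convention that the positive class is the first location of each pair is hypothetical.

```python
from itertools import combinations

N_LOCATIONS = 12
# One linear classifier per unordered pair of locations: 12 choose 2 = 66.
PAIRS = list(combinations(range(N_LOCATIONS), 2))

def decision_value(w, b, x):
    """Project an activity pattern x onto the decision vector of a linear classifier."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def recode(value, pair, location):
    """Flip the sign so that positive values are evidence for `location`.

    Assumes (hypothetically) that positive raw values favour pair[0].
    """
    if location == pair[0]:
        return value
    if location == pair[1]:
        return -value
    raise ValueError("location is not one of the two this classifier separates")
```

For each classifier, the recoded values would then be collected separately for inside and outside positions before averaging and scaling by their SD.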

We then calculated activation values d_inside and d_outside that represented the relative activation of the inside and the outside position compared with all other positions by averaging the mean decision values across all classifiers, which is equivalent to averaging across all combinations of the 12 spatial locations. Therefore, the resulting activation values, d_inside and d_outside, reflected an activation state of the respective memory positions regardless of their spatial location within the experiment. Note that negative activation values reflect weaker activation at that position relative to the other positions.

To obtain error probabilities for the null hypothesis that the memory positions outside of the focus of attention were at least as strongly activated as the memory position inside the focus of attention (activation values d_outside ≥ d_inside), we computed for each classifier the mean difference of decision values between the inside and the outside positions standardized by their pooled SD. These values were then averaged across all classifiers per subject as described above. The resulting group mean was then tested against the permutation null distribution of all 2^20 possible group means, which was obtained by an exhaustive permutation of the signs of all subject effects. If, within a trial, a filter contained both the inside and an outside position at the two spatial locations, the decision value was only included in one of the two distributions of decision values. This applied to both the calculation of the activation value and the inferential statistics.
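An exhaustive sign-permutation test of a group mean can be sketched as below. This is a generic illustration with a hypothetical function name; with 20 subjects it enumerates 2^20 = 1,048,576 sign assignments, which takes a few seconds in pure Python.

```python
from itertools import product

def sign_flip_p(effects):
    """One-sided exact p value for the mean of per-subject effects.

    Enumerates every assignment of +1/-1 signs to the subject effects and
    returns the share of permuted group means that reach the observed mean.
    """
    n = len(effects)
    observed = sum(effects) / n
    count = 0
    for signs in product((1, -1), repeat=n):
        perm_mean = sum(s * e for s, e in zip(signs, effects)) / n
        if perm_mean >= observed - 1e-12:  # tolerance for floating-point ties
            count += 1
    return count / 2 ** n
```

Because the unpermuted data are included in the enumeration, the smallest attainable p value is 1 / 2^n.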

To analyze the object-based attentional coactivation in WM, we used the identical approach described above to calculate activation values d_same and d_different by comparing the decision values when one of the two locations was a same-object position (i.e., memorized position that was colocalized on the same object as the target memory position of the current attention shift, i.e., the focus of attention) or a different-object position (memorized position that was also next to the focus of attention but localized on the different object). To analyze coactivation in visual areas and PPC when no attentional selection was required, we proceeded as described above using only the cue-related activity after a hold event.

Univariate control analysis.

Twelve regions of interest representing the 12 stimulated positions in the functional localizer were generated within visual cortex (V1–V4 as defined by retinotopic mapping) for each subject by fitting a GLM that contained a predictor for each position. Each predictor was contrasted against the other position predictors with stronger negative weights for neighboring positions. The ROI for a specific position was then defined as the 30 voxels with the highest t-values in the respective contrast. For region-specific analyses (V1, V2, V3, V4), we proceeded in exactly the same way within each retinotopically mapped visual area.

For each ROI, we extracted the first principal component of the BOLD signal using the Marsbar toolbox (http://marsbar.sourceforge.net/) and fitted a GLM with parameters and data as described above. This model contained the factors cue (First Cue, Hold, Within, Between) and the position-specific memory/attentional state (focus of attention, same-object position, different-object position, distant-object position:= memory position in the unattended object that is furthest apart from the focus of attention, a nonmemory point within the attended object, nonmemory point within the nonattended object, a nonmemory point outside of objects close to the target position, a nonmemory point outside of objects distant to the target position). In addition, we included predictors for the encoding event and distinguished whether the position was a memory position, within an object, or not stimulated at all. Finally, for the recognition event, we distinguished whether the target appeared at that position.

To examine the effect of object-based coactivation, we contrasted the parameter estimates at time bin 3 after cue onset of positions that were not the target of a shift but equidistant from the target. Specifically, we compared parameter estimates of the unattended position on the attended object (same-object position, c_same) with the unattended position on the unattended object (different-object position, c_different). The extracted contrast values were averaged across positions for each subject and a one-sided t test was used to determine whether the difference in the parameter estimates was greater than zero. To analyze whether the focus of attention was more active than the other three memory positions, we contrasted the parameter estimates of the focus of attention to the parameter estimates of the other three memory positions with the same approach described above.

Results

Behavioral results

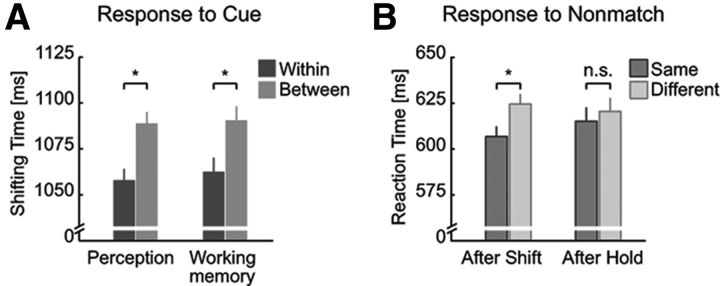

First, we compared the time that subjects needed to complete the shifts of attention to a target position held in WM (Fig. 2A). As hypothesized, we observed a within-object benefit: shifting times to cues were shorter for shifts between memorized spatial positions located on the same object (within-object shift) compared with equidistant positions on separate objects (between-object shift) (paired t test, t(35) = 2.59, p = 0.007; “within”-object shift, shifting time mean: 1062.5 ms; between-object shift: 1090.4 ms; ± SEM for the within-subjects comparison; Morey, 2008: ± 7.6 ms; within-object benefit Δt = 27.9 ms). Consistent with the assumption of shared attentional mechanisms between perception and WM, we observed almost identical within-object benefits in both the WM and the perceptual version of the task, in which spatial positions and objects were visible throughout the trial (t(35) = 3.55, p = 5.6 × 10⁻⁴; shifting times in perceptual task: 1057.9 ms for shifts within vs 1088.9 ms for shifts between objects; ± 6.2 ms; Δt = 31.0 ms). The within-object benefit did not differ between the task versions (t(35) = 0.27, p = 0.791). Importantly, the within-object benefit in WM did not differ between the group of subjects that participated only in the behavioral experiment and the group of subjects that participated in both the fMRI and the behavioral experiment (F(1,35) = 0.001, p = 0.975, fMRI group: t(19) = 1.56, p = 0.068; 1206.7 ms for shifts within vs 1234.3 ms for shifts between objects; ± 12.5 ms; Δt = 27.6 ms).

Figure 2.

Behavioral results. A, Times to complete shifts of attention (shifting times to cues) were shorter between positions located on the same (within) versus different (between) object for both the WM task and its perceptual version, in which the sample display was visible throughout the maintenance period. B, RTs to nonmatching probes only following shift events (after shift) in the WM experiment were faster for probes presented at the memory location within the same object as the current focus of attention (same-object position) compared with probes presented at the memory position on the other object (different-object position). Error bars indicate SEM for within-subjects comparison (Morey, 2008). *p < 0.05; n.s., not significant.

In the fMRI experiment, to avoid strong manual motor activations in temporal proximity to attention shifts, we required overt behavioral responses only to the probe stimulus at the end of each trial. Performance on this probe was high (correct response rate ± SEM: 85.0 ± 1.4%). Importantly, RTs to matching probes (i.e., probes presented at the attended spatial position) were shorter than those to nonmatching probes (presented at unattended spatial positions) (t19 = 7.32, p = 3.1 × 10−7; 565.0 ms vs 623.8 ms; ± 5.7 ms). Therefore, as commonly observed in attentional cueing paradigms (Posner, 1980), directing spatial attention to a particular position accelerated detection of stimuli presented there. This result indicates that participants performed the instructed covert spatial attentional shifts between memorized positions.

In addition, we also compared the RTs to nonmatching probes (Fig. 2B). This represents the classical behavioral measure of object-based effects in perceptual studies. Specifically, RTs to nonmatching probes that appeared at the unattended position belonging to the same object (same-object position) as the attended position were shorter than those to nonmatching probes that appeared at the proximal position of the nonattended object (different-object position) (t19 = 2.43, p = 0.013; 607.9 ms for same-object position vs 623.4 ms for different-object position; ± 4.5 ms; Δt = 15.6 ms). Interestingly, the magnitude of this “classical” object-based attention effect was very similar to that typically observed in perception (Egly et al., 1994). Moreover, it was particularly strong when the probe appeared after a shift cue (t19 = 2.25, p = 0.018; 606.9 ms vs 624.6 ms; ± 5.6 ms; Δt = 17.7 ms). In contrast, after a hold cue, when no attentional selection in WM was required for the processing of the cue, there was no difference in RTs to subsequent probes (t19 = 0.50, p = 0.310; 615.2 ms vs 620.5 ms; ± 7.5 ms; Δt = 5.3 ms).
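The paired comparisons and the within-subjects SEM (Morey, 2008) reported above can be illustrated with a minimal sketch. It assumes one mean time per subject and condition; the function names and the example values are hypothetical and are not the study's data or analysis code. The SEM follows the common Cousineau-Morey recipe: remove each subject's mean, add back the grand mean, and scale the resulting SEM by sqrt(J / (J - 1)) for J conditions.

```python
import math
from statistics import mean, stdev


def paired_t(cond_a, cond_b):
    """Paired t statistic for (cond_b - cond_a) and its degrees of freedom."""
    diffs = [b - a for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def within_subject_sem(data):
    """Cousineau-Morey SEM per condition.

    data: list of per-subject lists, one value per condition.
    """
    n_subj = len(data)
    n_cond = len(data[0])
    grand = mean(v for row in data for v in row)
    # Remove between-subject variability, keep the condition effects.
    normed = [[v - mean(row) + grand for v in row] for row in data]
    # Morey (2008) correction for the bias introduced by the normalization.
    corr = math.sqrt(n_cond / (n_cond - 1))
    return [corr * stdev(col) / math.sqrt(n_subj) for col in zip(*normed)]


# Hypothetical shifting times in ms (within-object, between-object) for 4 subjects.
within = [1000.0, 1100.0, 1050.0, 1200.0]
between = [1030.0, 1120.0, 1090.0, 1220.0]
t_value, df = paired_t(within, between)
sems = within_subject_sem([list(pair) for pair in zip(within, between)])
print(f"t({df}) = {t_value:.2f}, within-subject SEM = {sems[0]:.2f} ms")
```

With only two conditions, the corrected SEM is the same for both conditions, which is why a single ± value can accompany each pair of means.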

fMRI group level results

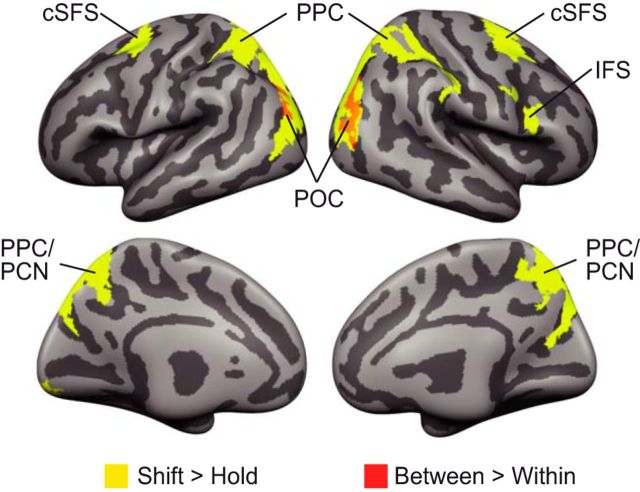

To characterize the neural correlates of object-based shifting of attention between positions maintained in WM, participants performed the WM task while BOLD responses were recorded. Analysis comprised two steps that validated our results in the context of previous findings on attention and WM and provided a detailed view of the neural basis of object-based shifting. First, we identified brain regions responsive to shifting versus holding attention. Here, both within- and between-object shifts were contrasted to events that demanded holding attention at the same position. Consistent with previous findings (Bledowski et al., 2010; Gazzaley and Nobre, 2012), shifting attention between representations in WM was associated with increased activity in the frontal and parietal regions (Fig. 3, Table 1; paired t test, p < 0.05, cluster-wise FDR-corrected). These brain networks are also known to be active during the control of attention on perceived spatial locations (Corbetta and Shulman, 2002). Second, we identified brain regions associated with the type of attention shift by comparing within-object shifts with between-object shifts. Between-object shifts elicited stronger activation bilaterally in the parieto-occipital cortex (POC) on the lateral bank of the transverse-occipital sulcus. The region associated with object-based attention shifting corresponded to the posterior part of the parietal cluster that showed elevated BOLD activity for shifting versus holding attention. Moreover, within the PPC, subthreshold activity extended anteriorly along the bilateral intraparietal sulci. These findings are consistent with studies showing parietal contributions to object-based attention shifting in perception (Serences et al., 2004; Shomstein and Behrmann, 2006; Stoppel et al., 2013).

Figure 3.

Whole-brain effects related to attentional shifts in WM. Several frontoparietal regions showed elevated activation for shifts (within and between objects) compared with hold events. Between-object shifts elicited higher activation compared with within-object shifts in the POC. Results were cluster corrected at a false discovery rate of 0.05 (uncorrected voxelwise threshold t > 2.86). cSFS, caudal superior frontal sulcus; IFS, inferior frontal sulcus; PPC, posterior parietal cortex; PCN, precuneus; POC, parieto-occipital cortex. Also see Table 1.

Table 1.

List of cortical regions activated by attentional shifts in WM

| Region | Side | Peak voxel z-score | MNI x (mm) | MNI y (mm) | MNI z (mm) | Cluster size (vox) |
|---|---|---|---|---|---|---|
| Shift > hold | | | | | | |
| PPC | l | 5.50 | −14 | −72 | 55 | 15203 |
| | r | 5.24 | 20 | −61 | 51 | |
| cSFS | l | 5.48 | −26 | −4 | 55 | 1959 |
| | r | 5.28 | 32 | 3 | 64 | 2351 |
| IFS | r | 3.34 | 56 | 11 | 22 | 499 |
| VC | l | 3.88 | −9 | −87 | −12 | 444 |
| Hold > shift | | | | | | |
| MT/IPL | r | 5.29 | 57 | −37 | 3 | 6981 |
| MT | l | 4.55 | −50 | −42 | −3 | 2936 |
| IPL | l | 5.26 | −53 | −55 | 45 | 2373 |
| Insula | l | 4.68 | 53 | 0 | 9 | 447 |
| MFG | r | 4.37 | 38 | 26 | 42 | 1044 |
| PCC | — | 4.34 | −2 | −27 | 37 | 1605 |
| rSFS | r | 4.31 | 26 | 57 | 25 | 1546 |
| Cuneus | — | 3.88 | −8 | −78 | 22 | 838 |
| IFG | r | 3.82 | 53 | 26 | 16 | 397 |
| Between-Object > Within-Object Shifts | | | | | | |
| POC | r | 4.20 | 42 | −67 | 28 | 788 |
| | l | 3.60 | −30 | −72 | 30 | 388 |

Significant activations are reported at a cluster-corrected false discovery rate of p < 0.05 (height threshold of t > 2.86). No significant clusters were found for the contrast Within-Object > Between-Object Shifts.

PPC, posterior parietal cortex; cSFS, caudal superior frontal sulcus; IFS, inferior frontal sulcus; VC, visual cortex; MT, medial temporal lobe; IPL, inferior parietal lobe; MFG, middle frontal gyrus; PCC, posterior cingulate cortex; rSFS, rostral superior frontal sulcus; IFG, inferior frontal gyrus; POC, parieto-occipital cortex.

Object-related coactivation in visual cortex

To evaluate object-related effects on spatial memory representations, we used MVPA. In contrast to classical ROI analysis, which uses the mean activation across a set of voxels as an aggregate estimate of their activity, MVPA takes into account that different voxels may be differentially informative. Therefore, whereas all voxels are equally weighted in ROI analysis, MVPA allows for unequal weights and searches algorithmically for the multivoxel weighting pattern that optimally separates the data between two conditions (Kriegeskorte et al., 2006). To prevent circular inference, this necessitates a two-step procedure: first, optimal patterns are learned (“trained”) using one part of the dataset. Second, these patterns are then applied to independent data to evaluate the resulting classification statistically.

For training, we used an independent localizer task that required subjects to covertly attend visual stimulation of those 12 locations (one at a time) that were also used in the WM part of the study. As a result of training, for each combination of these locations and in each of the areas of interest (V1–V4), separate weighting patterns were obtained. These weighting patterns were used to project the activity in each trial onto the 1D discriminative vector between the activation patterns of the two locations. Then, for a single position during the WM experiment (e.g., the memory position inside the focus of attention), we aggregated these projected values into an activation value that reflected the relative activation (mean decision value of the classifiers) of that position compared with all other positions during the WM experiment.
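The train-then-project logic described above can be sketched as follows. This is only an illustration under stated assumptions: it substitutes a simple mean-difference discriminant for whatever classifier the study actually used, and all names (`train_pairwise`, `activation_value`) and the toy voxel patterns are hypothetical. For every pair of locations, a unit weight vector is learned from localizer patterns; a pattern from the main experiment is then projected onto each discriminative axis, and the signed decision values involving a given location are averaged into one activation value.

```python
import math
from itertools import combinations


def mean_pattern(patterns):
    """Voxel-wise mean of a list of equally long patterns."""
    n = len(patterns)
    return [sum(col) / n for col in zip(*patterns)]


def train_pairwise(localizer):
    """Learn one linear filter per pair of locations.

    localizer: dict mapping location -> list of voxel patterns.
    Returns a dict mapping (i, j) -> (unit weight vector, midpoint).
    """
    means = {loc: mean_pattern(p) for loc, p in localizer.items()}
    filters = {}
    for i, j in combinations(sorted(means), 2):
        w = [a - b for a, b in zip(means[i], means[j])]
        norm = math.sqrt(sum(v * v for v in w)) or 1.0
        w = [v / norm for v in w]
        mid = [(a + b) / 2 for a, b in zip(means[i], means[j])]
        filters[(i, j)] = (w, mid)
    return filters


def activation_value(filters, pattern, loc):
    """Mean signed decision value of all pairwise filters involving `loc`.

    Positive values mean the pattern looks more like `loc` than like
    the other locations; negative values mean the opposite.
    """
    values = []
    for (i, j), (w, mid) in filters.items():
        if loc not in (i, j):
            continue
        d = sum(wk * (x - m) for wk, x, m in zip(w, pattern, mid))
        values.append(d if loc == i else -d)
    return sum(values) / len(values)


# Toy localizer data: 3 locations, 3 voxels (independent of the test pattern).
localizer = {0: [[1.0, 0.0, 0.0], [1.2, 0.0, 0.0]],
             1: [[0.0, 1.0, 0.0]],
             2: [[0.0, 0.0, 1.0]]}
filters = train_pairwise(localizer)
test_pattern = [1.0, 0.0, 0.0]
for loc in sorted(localizer):
    print(loc, round(activation_value(filters, test_pattern, loc), 3))
```

Because the filters are learned from the localizer and applied to separate data, the sketch also respects the two-step separation between training and evaluation that prevents circular inference.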

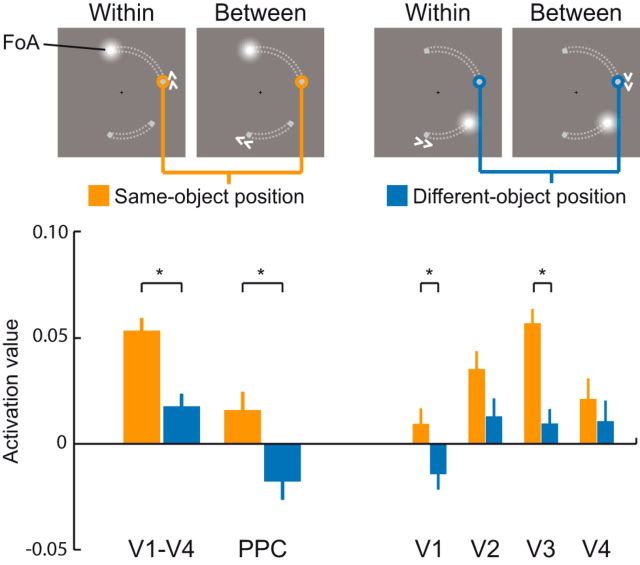

As a first result, the focus of attention, the target memory position to which attention was shifted, was clearly discriminable from the other three memory positions in V1–V4 (Fig. 4; mean activation value inside the focus of attention: dinside = 0.092; mean activation value of other three memory positions outside the focus of attention doutside = −0.055; ± SEM for the within-subjects comparison; Morey, 2008: ± 0.014; probability of dinside ≤ doutside: p = 9.5 × 10−6). This effect was also present at the level of individual regions (V1: dinside = 0.017, doutside = −0.038, ± 0.009, p = 0.002; V2: dinside = 0.04, doutside = −0.038, ± 0.01, p = 2.1 × 10−4; V3: dinside = 0.105, doutside = −0.036, ± 0.013, p = 2.6 × 10−6; V4: dinside = 0.054, doutside = −0.01, ± 0.008, p = 2.2 × 10−4). It is important to note that negative values reflect lower activation of a position relative to all other positions.

Figure 4.

Decoding the focus of attention in working memory. Activation values (normalized decision value of a multivariate filter; see Materials and Methods for details) during attentional shifts in WM were analyzed at positions that were functionally mapped with a perceptual localizer. Activation values at target memory positions to which the focus of attention (FoA) was shifted were compared with the activation values at all other memory positions, regardless of whether they were part of the same object (grouping of the memorized positions indicated here by gray dotted lines for illustration only). Negative values reflect lower activation relative to all other positions. Error bars indicate SEM for within-subjects comparison (Morey, 2008). *p < 0.05.

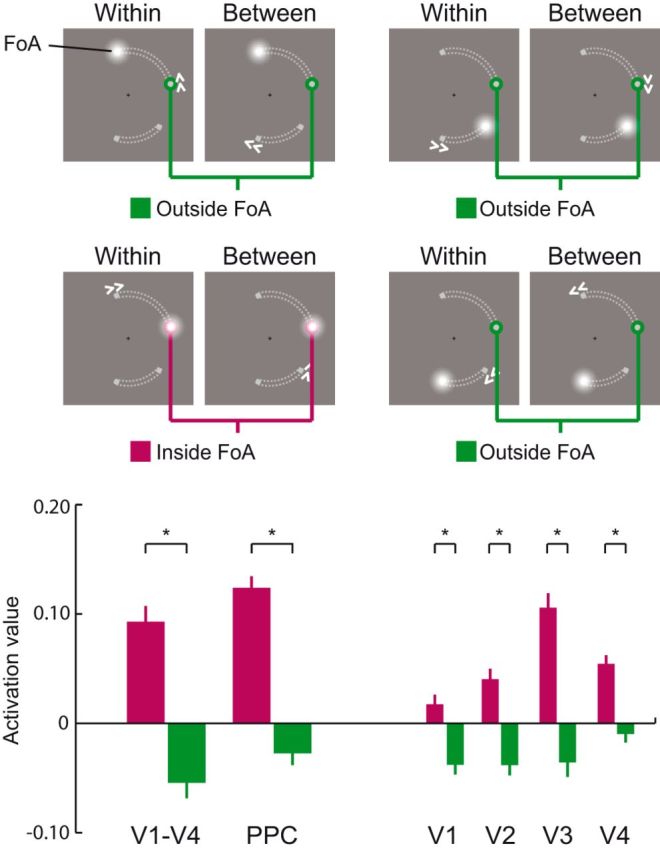

To assess object-based effects, we compared the activation value of an unattended memory position located on the same object (same-object position) as the focus of attention to its activation value when it corresponded to a memory position located on the other, unattended object (different-object position; Fig. 5). Consistent with our hypothesis, we found that same-object positions showed higher activation values (dsame = 0.054) compared with different-object positions (ddifferent = 0.018; ± 0.006; p = 0.002). This confirmed that an object-based attentional coactivation of positions that are grouped with the attended position takes place in V1–V4 during shifts of attention between memory representations in WM. Higher activation of the same-object position was nominally evident in all regions with areas V1 and V3 reaching statistical significance (V1: dsame = 0.009, ddifferent = −0.015, ± 0.007, p = 0.044; V2: dsame = 0.035, ddifferent = 0.013, ± 0.009, p = 0.094; V3: dsame = 0.057, ddifferent = 0.009, ± 0.007, p = 8 × 10−4; V4: dsame = 0.021, ddifferent = 0.011, ± 0.01, p = 0.296). Importantly, the same- and the different-object position conditions were identical with respect to all factors but the specific grouping of the memory items. Specifically, to avoid confounding influences from the previous attentional state, we kept the number of occurrences equal for both conditions when the same or different object position was in the focus of attention before the onset of the current cue. In addition, to avoid differences between the same- and different-object positions due to different general activations of the visual areas during a between- or a within-object shift (as observed in the univariate analysis; Fig. 3), both conditions also contained the same number of cues instructing a within- or between-object shift.

Figure 5.

Attentional spread in the visual and parietal cortex during attentional shifts in WM. Activation values (normalized decision value of a multivariate filter; see Materials and Methods for details) during attentional shifts in WM were analyzed at positions that were mapped functionally with a perceptual localizer. Memory positions within the same object as the target memory position to which the focus of attention (FoA) was shifted were compared with memory positions that were part of the other object (grouping of the memorized positions indicated here by gray dotted lines for illustration only). Negative values reflect lower activation relative to all other positions. Error bars indicate SEM for within-subjects comparison (Morey, 2008). *p < 0.05.

Object-related coactivation in the parietal cortex

Our univariate analysis revealed that PPC was more strongly engaged during shifts of attention than when attention was held at a memory position. In addition, PPC activation was also modulated by the type of attention shifting with stronger responses to between- than within-object shifts. PPC is considered a central hub of attentional control that modulates activity in visual cortices depending on attentional demands (Kastner and Ungerleider, 2000). Moreover, the intraparietal sulcus has been shown to consist of multiple visuotopically organized maps (Silver and Kastner, 2009), at least one of which might be a representation of a so-called “priority map” (Fecteau and Munoz, 2006; Bisley and Goldberg, 2010; Jerde et al., 2012). This visuotopic organization is preserved in the anatomical and functional connectivity pattern with lower visual areas (Lauritzen et al., 2009; Greenberg et al., 2012). Having demonstrated attentional object-based coactivation in several visual areas and given the strong anatomical and functional links between PPC and visual areas during attentional shifts, we expected that PPC would also show attentional object-based coactivation across same-object positions maintained in WM.

To test this hypothesis, we tracked the activity of the 12 locations at which memory items could appear during the course of the experiment in PPC. This ROI was defined by the bilateral parietal cluster for the contrast between shifting versus holding the focus of attention in the group level analysis. We applied the multivariate retinotopic filter approach as described above in the analysis of visual cortex to the activity patterns in posterior parietal cortex during attention shifts in WM. Similar to visual cortex, in PPC we could clearly discriminate the focus of attention from the other memory positions (dinside = 0.123, doutside = −0.028, ± 0.011, p = 5.2 × 10−7; Fig. 4). As hypothesized, the same-object position showed higher activation values compared with the different-object position (dsame = 0.016, ddifferent = −0.018, ± 0.009, p = 0.025; Fig. 5) during an attention shift. Therefore, object-based attentional coactivation of same-object positions during attention shifts in WM also takes place in PPC.

Object-related coactivation requires attentional selection

We also assessed whether the reported attentional coactivation effect in the visual and parietal cortex could be observed after a hold cue, when no attentional selection in WM was required. In contrast to the object-based attentional coactivation of same-object positions during attentional selection in WM, we did not observe such coactivation after hold events in V1–V4 (dsame = −0.146, ddifferent = −0.172, ± 0.012, p = 0.157), in any of the individual visual areas (V1: dsame = −0.101, ddifferent = −0.098, ± 0.012, p = 0.537; V2: dsame = −0.109, ddifferent = −0.110, ± 0.011, p = 0.464; V3: dsame = −0.078, ddifferent = −0.128, ± 0.015, p = 0.063; V4: dsame = −0.036, ddifferent = −0.004, ± 0.015, p = 0.847), or in PPC (dsame = −0.029, ddifferent = −0.012, ± 0.018, p = 0.677).

Univariate control analyses

Classical ROI analysis was used as a computationally low-level validation of MVPA results. We defined for each visual region 12 ROIs as the most discriminative voxels during the localizer experiment. We then fitted GLMs to each of the 12 extracted time courses that captured the position-specific variance during the main experiment and computed the same contrasts as in the MVPA analysis. The overall pattern of results of the ROI analysis corresponded well to the results of the multivariate decoding analyses: the focus of attention showed more activity than the other three memory positions. This was evident in all regions (V1–V4: cinside = 5.5 × 10−3, coutside = 3.2 × 10−3, ± SEM for within-subjects comparison; Morey, 2008: 1.2 × 10−3, p = 0.002; V1: cinside = 5.3 × 10−4, coutside = −8.9 × 10−5, ± 8.6 × 10−4, p = 0.060; V2: cinside = 3.4 × 10−3, coutside = 2.3 × 10−3, ± 8.8 × 10−4, p = 0.006; V3: cinside = 5.3 × 10−3, coutside = 3.2 × 10−3, ± 9.6 × 10−4, p = 4.3 × 10−4; V4: cinside = 3.2 × 10−3, coutside = 2.0 × 10−3, ± 6.3 × 10−4, p = 0.049) except area V1, where only a statistical trend was observed. In addition, completely consistent with the MVPA results, the same-object position was nominally more active than the different-object position in all visual regions (V1–V4: csame = 4.2 × 10−3, cdifferent = 3.2 × 10−3, ± 6.0 × 10−4, p = 0.112; V1: csame = 1.7 × 10−4, cdifferent = −8.9 × 10−5, ± 5.7 × 10−4, p = 0.375; V2: csame = 2.6 × 10−3, cdifferent = 2.3 × 10−3, ± 7.0 × 10−4, p = 0.387; V4: csame = 3.4 × 10−3, cdifferent = 2.0 × 10−3, ± 7.1 × 10−4, p = 0.090), with the strongest effect being found in V3 (csame = 4.6 × 10−3, cdifferent = 3.2 × 10−3, ± 5.6 × 10−4, p = 0.046), but with smaller effect sizes compared with the MVPA results.

We did not perform a univariate control analysis in PPC because spatially extended and overlapping receptive fields precluded the definition of 12 nonoverlapping ROIs given our fMRI sequence.

Eye-movement control study

To rule out that the observed BOLD activation differences were due to differences in eye movements between the shift conditions (Corbetta et al., 1998; Konen and Kastner, 2008), we recorded eye movements with high spatial accuracy and temporal resolution using a video-based infrared eye tracker (for details, see Materials and Methods) during the behavioral session for the 20 subjects who also participated in the fMRI experiment. Analysis of saccade rate revealed no significant differences between the within-object and between-object shift conditions (t19 = −0.36, p = 0.72). Brain activation associated with the within-object benefit therefore cannot be attributed to saccade rate.

Discussion

Object-based attention has been studied intensively in perception. Here, we tested the impact of object-based attention in WM and found that the characteristic effects of object-based attention in perception can also be observed for WM. We obtained three main findings. First, attention was shifted faster between memorized positions of the same compared with different objects. This mirrored the behavioral benefit observed in a perceptual version of the same task. In addition, RTs to probes at memorized positions within the same versus different object as the current attentional focus showed a same-object benefit that reflected the well established findings in perception. Second, this benefit was accompanied by a smaller activation increase in PPC for within- compared with between-object attentional shifts. Third, analyses of retinotopic visual cortex revealed neural coactivation of areas representing another memorized position on the same object compared with equidistant positions on a different object after attentional selection in WM. This was fully consistent with previous results on object-based attention in vision. This effect was not confined to visual areas, but was also present in PPC, a region known to integrate bottom-up and top-down information from multiple visuotopic regions. Therefore, the present results establish object-related attention as a contributor to WM processing.

We devised a novel paradigm that allowed us to study object-based shifting of attention in both perception and WM. We found that attentional shifts between positions of the same object occurred faster than shifts between equidistant positions of different objects regardless of whether these positions were physically present or maintained in WM. Moreover, the magnitude of the within-object benefit was almost identical in the WM and the perceptual version of the task. Importantly, we also observed the key characteristic of the classic object-based effect observed in perceptual tasks showing that, even after a long memory delay of several seconds, the detection of nonmatching probes was faster when presented at memorized locations of the same versus different object. Together, these results suggest that a common mechanism might underlie the within-object benefit in perception and WM.

Based on the notion of WM as a composite function emerging from the interaction of attentional and mnemonic mechanisms, numerous studies have proposed a common neural basis for—mostly spatial—attention and WM. One of the central shared processes is the shifting of the attentional focus between different locations, or between items held in WM (Bledowski et al., 2010; Gazzaley and Nobre, 2012; Oberauer and Hein, 2012). Here, we replicate findings showing that this operation is associated with the activation of several frontoparietal control regions (Nobre et al., 2004; Lepsien et al., 2005; Bledowski et al., 2009). In addition, we established that the common basis of these core operations in attention and WM also applies to object-based shifting of attention. This process was associated with increases in activation within several regions in PPC and bilateral POC following the presentation of “between” compared with “within” cues. These regions are also involved in attention shifts between objects in perception (Serences et al., 2004; Shomstein and Behrmann, 2006; Stoppel et al., 2013).

Mechanistically, object-based attention is conceived to be based on the spreading of attention (Vecera and Farah, 1994). In this view, attentional selection of one part of an object entails an automatic spread of attention within object boundaries, thereby coactivating the entire object. Attentional spread to perceptual stimuli at unattended locations of an attended grouping was reflected by elevated multiunit activity in the visual cortex of monkeys (Roelfsema et al., 1998; Wannig et al., 2011). Converging evidence has been obtained in human BOLD responses (Müller and Kleinschmidt, 2003; Shomstein and Behrmann, 2006) and EEG/MEG activity (Martínez et al., 2006). Our findings of fMRI activity in retinotopic visual areas during the WM experiment were consistent with those results: when subjects performed an attentional shift to a memorized position within WM, the multivoxel pattern activity at the retinotopic location of an unattended position within the same object revealed higher activation values compared with equidistant positions on the other object. Faster RTs to nonmatching probes at same-object positions compared with different object positions further support the notion of enhanced processing of same-object positions due to a coactivation after an attentional selection of one part of an object. After hold events, which did not require attentional selection of a memorized position in WM, the object-based attentional coactivation of same-object memory positions was absent and subjects responded equally fast to nonmatching probes presented on the same versus different object. This further emphasizes that the observed object-based behavioral benefits and fMRI coactivations result from the active selection between grouped items within WM.

Sprague and Serences (2013) showed that attention enhances spatial representations across the human visual hierarchy, including early and late visual as well as parietal areas. We observed similar modulations of spatial memory representations in the PPC during object-based attention that mirrored those found in visual cortex: attentional selection of a memorized position led to coactivation of the representation of another memorized position within the same object also in PPC. This finding supports the view that object-based spreading of attention evolves along horizontal and feedback recurrent connections in the visual hierarchy (Roelfsema, 2006). It is also consistent with a recent study showing that PPC contains item-specific signals of memorized stimuli relying strongly on spatial features (Christophel et al., 2012). Attention-related activity modulations in visual areas are thought to result from top-down signals from visuotopic regions in PPC (Lauritzen et al., 2009; Greenberg et al., 2012). Specifically, the “priority map” model predicts that PPC exerts control over visual areas by computing prioritized maps of space that integrate bottom-up salience information and top-down goals (Fecteau and Munoz, 2006; Bisley and Goldberg, 2010). In terms of this model, object-based attention might reflect object-based attentional top-down selection effects that are mediated by parietal visuotopic regions in PPC. This complies with evidence that common visuotopic maps reflect both representations of attentional priority in perception and the maintenance of spatial representations in WM (Jerde et al., 2012).

Assuming that space priority is assigned in an object-based manner with higher priority for positions colocated on the same compared with another object, the activity increase in PPC following shifts between objects might reflect the necessary reconfiguration of the priority maps. In contrast to visual areas, PPC has larger receptive fields with more distributed spatial representations encompassing larger voxel numbers. Therefore, the position-independent univariate contrast of between- versus within-object shifts exceeded significance levels only for PPC voxels encoding the receptive fields of multiple locations.

Notably, we observed the attentional object-based coactivation effect in visual areas even though no stimuli were visually present during the maintenance period. Moreover, our results also show that visual information held in WM can be decoded from BOLD activity in visual areas even if the pattern classifiers were trained on data from a simple perceptual localizer task. The decodability of currently processed memory positions in visual cortex confirms recent neuroimaging findings and supports the view that sensory cortices can retain information about stimuli that are not physically present (Ester et al., 2009; Harrison and Tong, 2009; Emrich et al., 2013). Furthermore, the decodability of WM contents from visual cortex as well as interference by transcranial magnetic stimulation over visual cortex was found to depend on whether that content was attended (Lewis-Peacock et al., 2012; Zokaei et al., 2014). Moreover, it has been shown that memory contents outside of the focus of attention are not detectable but can be successfully retrieved and reactivated by bringing them back into the focus of attention (Lewis-Peacock et al., 2012; LaRocque et al., 2013). Our data are fully compatible with these findings and further emphasize the notion that attention coactivates all memorized positions that are bound to the focus of attention by Gestalt criteria.

Finally, even though the object information was task irrelevant, perceptual grouping information was still encoded into WM. Therefore, an automatic integration of perceptual grouping information might enable structured memory representations (Brady et al., 2011), so attentional coactivation could be observed during attention shifts in WM.

In summary, we have demonstrated that the characteristic behavioral and neuronal features of perceptual object-based attention can be found in WM. This establishes the contribution of object-based attention to WM. The present findings underline the hypothesized resemblance of attentional mechanisms operating in perception and WM.

Footnotes

This work was supported by the German Research Foundation (DFG Grant BL 931/3-1 to C.B. and J.K.). We thank Christian Fiebach (Department of Psychology, Goethe University Frankfurt) for the use of his laboratory's eye tracking system, Ralf Deichmann and Ulrike Nöth (Brain Imaging Center, Goethe University Frankfurt) for help with data acquisition, and Michael Wibral and Axel Kohler for helpful discussions.

The authors declare no competing financial interests.

References

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007. [DOI] [PubMed] [Google Scholar]

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bledowski C, Rahm B, Rowe JB. What “works” in working memory? Separate systems for selection and updating of critical information. J Neurosci. 2009;29:13735–13741. doi: 10.1523/JNEUROSCI.2547-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bledowski C, Kaiser J, Rahm B. Basic operations in working memory: contributions from functional imaging studies. Behav Brain Res. 2010;214:172–179. doi: 10.1016/j.bbr.2010.05.041. [DOI] [PubMed] [Google Scholar]

- Brady TF, Konkle T, Alvarez GA. A review of visual memory capacity: Beyond individual items and toward structured representations. J Vis. 2011;11:4. doi: 10.1167/11.5.4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bressler DW, Silver MA. Spatial attention improves reliability of fMRI retinotopic mapping signals in occipital and parietal cortex. Neuroimage. 2010;53:526–533. doi: 10.1016/j.neuroimage.2010.06.063. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chang CC, Lin CJ. LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology. 2011;2:1–27. 27. [Google Scholar]

- Chen Z. Object-based attention: a tutorial review. Atten Percept Psychophys. 2012;74:784–802. doi: 10.3758/s13414-012-0322-z. [DOI] [PubMed] [Google Scholar]

- Christophel TB, Hebart MN, Haynes JD. Decoding the contents of visual short-term memory from human visual and parietal cortex. J Neurosci. 2012;32:12983–12989. doi: 10.1523/JNEUROSCI.0184-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chun MM, Johnson MK. Memory: enduring traces of perceptual and reflective attention. Neuron. 2011;72:520–535. doi: 10.1016/j.neuron.2011.10.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- Corbetta M, Akbudak E, Conturo TE, Snyder AZ, Ollinger JM, Drury HA, Linenweber MR, Petersen SE, Raichle ME, Van Essen DC, Shulman GL. A common network of functional areas for attention and eye movements. Neuron. 1998;21:761–773. doi: 10.1016/S0896-6273(00)80593-0. [DOI] [PubMed] [Google Scholar]

- Egly R, Driver J, Rafal RD. Shifting visual attention between objects and locations: evidence from normal and parietal lesion subjects. J Exp Psychol Gen. 1994;123:161–177. doi: 10.1037/0096-3445.123.2.161. [DOI] [PubMed] [Google Scholar]

- Emrich SM, Riggall AC, Larocque JJ, Postle BR. Distributed patterns of activity in sensory cortex reflect the precision of multiple items maintained in visual short-term memory. J Neurosci. 2013;33:6516–6523. doi: 10.1523/JNEUROSCI.5732-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ester EF, Serences JT, Awh E. Spatially global representations in human primary visual cortex during working memory maintenance. J Neurosci. 2009;29:15258–15265. doi: 10.1523/JNEUROSCI.4388-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fecteau JH, Munoz DP. Salience, relevance, and firing: a priority map for target selection. Trends Cogn Sci. 2006;10:382–390. doi: 10.1016/j.tics.2006.06.011. [DOI] [PubMed] [Google Scholar]

- Gazzaley A, Nobre AC. Top-down modulation: bridging selective attention and working memory. Trends Cogn Sci. 2012;16:129–135. doi: 10.1016/j.tics.2011.11.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Greenberg AS, Verstynen T, Chiu YC, Yantis S, Schneider W, Behrmann M. Visuotopic cortical connectivity underlying attention revealed with white-matter tractography. J Neurosci. 2012;32:2773–2782. doi: 10.1523/JNEUROSCI.5419-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harrison SA, Tong F. Decoding reveals the contents of visual working memory in early visual areas. Nature. 2009;458:632–635. doi: 10.1038/nature07832. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hutton C, Bork A, Josephs O, Deichmann R, Ashburner J, Turner R. Image distortion correction in fMRI: a quantitative evaluation. Neuroimage. 2002;16:217–240. doi: 10.1006/nimg.2001.1054.

- Jerde TA, Merriam EP, Riggall AC, Hedges JH, Curtis CE. Prioritized maps of space in human frontoparietal cortex. J Neurosci. 2012;32:17382–17390. doi: 10.1523/JNEUROSCI.3810-12.2012.

- Kanwisher N, Wojciulik E. Visual attention: insights from brain imaging. Nat Rev Neurosci. 2000;1:91–100. doi: 10.1038/35039043.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annu Rev Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Konen CS, Kastner S. Representation of eye movements and stimulus motion in topographically organized areas of human posterior parietal cortex. J Neurosci. 2008;28:8361–8375. doi: 10.1523/JNEUROSCI.1930-08.2008.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- LaRocque JJ, Lewis-Peacock JA, Drysdale AT, Oberauer K, Postle BR. Decoding attended information in short-term memory: an EEG study. J Cogn Neurosci. 2013;25:127–142. doi: 10.1162/jocn_a_00305.

- Lauritzen TZ, D'Esposito M, Heeger DJ, Silver MA. Top-down flow of visual spatial attention signals from parietal to occipital cortex. J Vis. 2009;9:18.1–18.14. doi: 10.1167/9.13.18.

- Lepsien J, Griffin IC, Devlin JT, Nobre AC. Directing spatial attention in mental representations: interactions between attentional orienting and working-memory load. Neuroimage. 2005;26:733–743. doi: 10.1016/j.neuroimage.2005.02.026.

- Lewis-Peacock JA, Drysdale AT, Oberauer K, Postle BR. Neural evidence for a distinction between short-term memory and the focus of attention. J Cogn Neurosci. 2012;24:61–79. doi: 10.1162/jocn_a_00140.

- Martínez A, Teder-Sälejärvi W, Vazquez M, Molholm S, Foxe JJ, Javitt DC, Di Russo F, Worden MS, Hillyard SA. Objects are highlighted by spatial attention. J Cogn Neurosci. 2006;18:298–310. doi: 10.1162/jocn.2006.18.2.298.

- Misaki M, Kim Y, Bandettini PA, Kriegeskorte N. Comparison of multivariate classifiers and response normalizations for pattern-information fMRI. Neuroimage. 2010;53:103–118. doi: 10.1016/j.neuroimage.2010.05.051.

- Morey RD. Confidence intervals from normalized data: a correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology. 2008;4:61–64.

- Müller NG, Kleinschmidt A. Dynamic interaction of object- and space-based attention in retinotopic visual areas. J Neurosci. 2003;23:9812–9816. doi: 10.1523/JNEUROSCI.23-30-09812.2003.

- Nobre AC, Coull JT, Maquet P, Frith CD, Vandenberghe R, Mesulam MM. Orienting attention to locations in perceptual versus mental representations. J Cogn Neurosci. 2004;16:363–373. doi: 10.1162/089892904322926700.

- Oberauer K, Hein L. Attention to information in working memory. Curr Dir Psychol Sci. 2012;21:164–169. doi: 10.1177/0963721412444727.

- Pessoa L, Kastner S, Ungerleider LG. Neuroimaging studies of attention: from modulation of sensory processing to top-down control. J Neurosci. 2003;23:3990–3998. doi: 10.1523/JNEUROSCI.23-10-03990.2003.

- Posner MI. Orienting of attention. Q J Exp Psychol. 1980;32:3–25. doi: 10.1080/00335558008248231.

- Roelfsema PR. Cortical algorithms for perceptual grouping. Annu Rev Neurosci. 2006;29:203–227. doi: 10.1146/annurev.neuro.29.051605.112939.

- Roelfsema PR, Houtkamp R. Incremental grouping of image elements in vision. Atten Percept Psychophys. 2011;73:2542–2572. doi: 10.3758/s13414-011-0200-0.

- Roelfsema PR, Lamme VA, Spekreijse H. Object-based attention in the primary visual cortex of the macaque monkey. Nature. 1998;395:376–381. doi: 10.1038/26475.

- Serences JT, Schwarzbach J, Courtney SM, Golay X, Yantis S. Control of object-based attention in human cortex. Cereb Cortex. 2004;14:1346–1357. doi: 10.1093/cercor/bhh095.

- Shomstein S, Behrmann M. Cortical systems mediating visual attention to both objects and spatial locations. Proc Natl Acad Sci U S A. 2006;103:11387–11392. doi: 10.1073/pnas.0601813103.

- Silver MA, Kastner S. Topographic maps in human frontal and parietal cortex. Trends Cogn Sci. 2009;13:488–495. doi: 10.1016/j.tics.2009.08.005.

- Sprague TC, Serences JT. Attention modulates spatial priority maps in the human occipital, parietal and frontal cortices. Nat Neurosci. 2013;16:1879–1887. doi: 10.1038/nn.3574.

- Stoppel CM, Boehler CN, Strumpf H, Krebs RM, Heinze HJ, Hopf JM, Schoenfeld MA. Distinct representations of attentional control during voluntary and stimulus-driven shifts across objects and locations. Cereb Cortex. 2013;23:1351–1361. doi: 10.1093/cercor/bhs116.

- Turner BO, Mumford JA, Poldrack RA, Ashby FG. Spatiotemporal activity estimation for multivoxel pattern analysis with rapid event-related designs. Neuroimage. 2012;62:1429–1438. doi: 10.1016/j.neuroimage.2012.05.057.

- Vecera SP, Farah MJ. Does visual attention select objects or locations? J Exp Psychol Gen. 1994;123:146–160. doi: 10.1037/0096-3445.123.2.146.

- Wannig A, Stanisor L, Roelfsema PR. Automatic spread of attentional response modulation along Gestalt criteria in primary visual cortex. Nat Neurosci. 2011;14:1243–1244. doi: 10.1038/nn.2910.

- Warnking J, Dojat M, Guérin-Dugué A, Delon-Martin C, Olympieff S, Richard N, Chéhikian A, Segebarth C. fMRI retinotopic mapping–step by step. Neuroimage. 2002;17:1665–1683. doi: 10.1006/nimg.2002.1304.

- Zokaei N, Manohar S, Husain M, Feredoes E. Causal evidence for a privileged working memory state in early visual cortex. J Neurosci. 2014;34:158–162. doi: 10.1523/JNEUROSCI.2899-13.2014.