Abstract

The prefrontal cortex houses representations critical for ongoing and future behavior expressed in the form of patterns of neural activity. Dopamine has long been suggested to play a key role in the integrity of such representations, with D2-receptor activation rendering them flexible but weak. However, it is currently unknown whether and how D2-receptor activation affects prefrontal representations in humans. In the current study, we use dopamine receptor-specific pharmacology and multivoxel pattern-based functional magnetic resonance imaging to test the hypothesis that blocking D2-receptor activation enhances prefrontal representations. Human subjects performed a simple reward prediction task after double-blind, placebo-controlled administration of the D2-receptor antagonist amisulpride. Using a whole-brain searchlight decoding approach, we show that D2-receptor blockade enhances decoding of reward signals in the medial orbitofrontal cortex. Examination of activity patterns suggests that amisulpride increases the separation of activity patterns related to reward versus no reward. Moreover, consistent with the cortical distribution of D2 receptors, post hoc analyses showed enhanced decoding of motor signals in motor cortex, but not of visual signals in visual cortex. These results suggest that D2-receptor blockade enhances content-specific representations in frontal cortex, presumably by a dopamine-mediated increase in pattern separation. These findings are in line with a dual-state model of prefrontal dopamine, and provide new insights into the potential mechanism of action of dopaminergic drugs.

Keywords: associative learning, dopamine, fMRI, MVPA, orbitofrontal cortex, reward

Introduction

The prefrontal cortex is critical for higher cognitive functions and goal-directed behavior (Goldman-Rakic, 1987; Fuster, 2001; Miller and Cohen, 2001). Specifically, sustained activity of neuronal populations in the prefrontal cortex of animals represents and maintains information for subsequent utilization (Goldman-Rakic, 1996). The fidelity of these representations has been suggested to be modulated by dopamine in a receptor-specific manner (Durstewitz et al., 2000). More specifically, a physiologically plausible dual-state model suggests that D2-receptor activation renders prefrontal representations prone to interference and disruption by allowing for several simultaneous but weak network representations (Durstewitz et al., 2000; Seamans et al., 2001). Accordingly, blockade of D2-receptor activation should in turn enhance prefrontal representations by inhibiting potentially interfering concurrent representations (Seamans and Yang, 2004). However, the effects of dopamine D2-receptor blockade on cognitive representations in the human prefrontal cortex have remained elusive.

Here we use dopamine receptor-specific pharmacology and multivoxel pattern-based fMRI to test the hypothesis that D2-receptor blockade enhances prefrontal reward signals in humans. Reward representations are fundamental for goal-directed behavior, learning, and decision-making. A prefrontal area key for representing reward-related information is the orbitofrontal cortex (OFC; Murray et al., 2007; Wallis, 2007; Padoa-Schioppa, 2011), and neurons in this region have been shown to maintain reward information throughout delays (Tremblay and Schultz, 1999; Murray et al., 2007; Lara et al., 2009). Despite anatomical and cytoarchitectural differences in the OFC of different species (Wallis, 2012), neural signatures of reward value have been identified in the OFC of rodents (Schoenbaum et al., 1998; van Duuren et al., 2008; Takahashi et al., 2009), nonhuman primates (Tremblay and Schultz, 1999; Padoa-Schioppa and Assad, 2006; Morrison and Salzman, 2009; Kennerley et al., 2011), and humans (Gottfried et al., 2003; Lebreton et al., 2009; Kahnt et al., 2010; Hare et al., 2011; Wunderlich et al., 2012; Barron et al., 2013; McNamee et al., 2013). Reward signals can be decoded from fMRI activity in the OFC using multivoxel pattern analysis (MVPA) techniques (Kahnt et al., 2010; Vickery et al., 2011; McNamee et al., 2013; Kahnt et al., 2014). Instead of focusing on single fMRI voxels, MVPA techniques combine the activity of multiple voxels and are thus capable of identifying signals that are encoded in the distributed activity of neuronal populations (Haxby et al., 2001; Haynes and Rees, 2005; Kamitani and Tong, 2005). Here we use this technique to estimate a proxy of the fidelity of prefrontal reward representations. Specifically, we reasoned that enhanced prefrontal representations should be accompanied by increased fMRI pattern separation between reward and no reward, and thus lead to increased decoding accuracy. 
Using this MVPA measure, we examine the effects of dopamine D2-receptor blockade on the decoding of reward signals in the human OFC. In particular, we hypothesize that blocking D2-receptor activation using the D2/D3-receptor antagonist amisulpride (Rosenzweig et al., 2002) enhances decoding of reward.

Materials and Methods

Subjects.

In total, 53 right-handed, male subjects participated in the study. Two subjects (both in the placebo group) failed to follow the instructions and were excluded. This left 51 subjects, aged 18–27 years (mean ± SEM, 22.4 ± 0.28 years). Approximately 1.5 h before the experiment (1 h 30 min ± 4 min), subjects received a pill containing either placebo (N = 24) or 400 mg of the D2-receptor antagonist amisulpride (N = 27) in a randomized and double-blind fashion. To enhance and equate absorption time across subjects, subjects were asked to fast for 6 h before the experiment. Groups did not differ in age (t = 0.83, p = 0.41) or weight (t = 0.36, p = 0.72), and subjects were unaware of whether or not they received the drug, as assessed by postexperimental questionnaires (χ2 = 0.10, p = 0.75).

Task and stimuli.

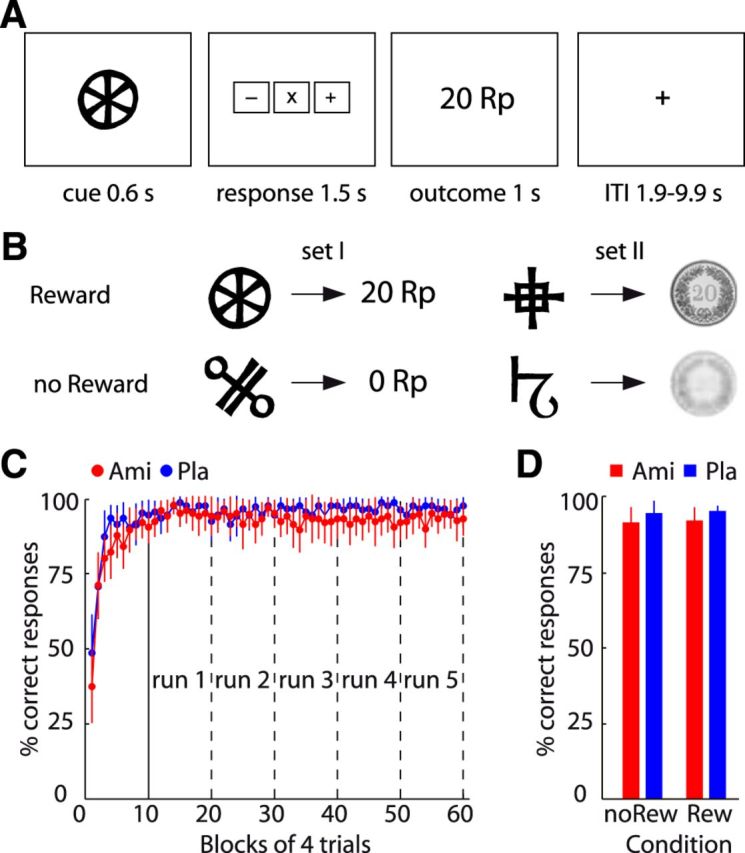

To investigate neural reward signals we used a noninstrumental outcome prediction task (Kahnt et al., 2014) in which different visual stimuli were deterministically associated either with reward (CHF 0.20) or with no reward (CHF 0.00). In each trial (Fig. 1A), subjects saw one of four visual cues for 0.6 s, followed by a response mapping screen on which they had to indicate the upcoming reward (+, reward; −, no reward; x, unsure) using the index, middle, or ring finger of their right hand. To control for preparatory and motor-related signals, associations between buttons and responses were randomized across trials (i.e., in different trials, different buttons had to be pressed to indicate +, −, and x). The response mapping screen stayed on for a total of 1.5 s and was followed by the presentation of the outcome (1 s). Trials were separated by a variable intertrial interval (1.9–9.9 s, mean 3.5 s). To control for the visual features of the cues and the outcomes, two different sets of cue-outcome pairs were used. With one pair, the outcomes were shown as images of coins and with the other pair as digits (Fig. 1B). This ensured that the decoded signals were related to reward rather than visual features of cues or outcomes (see below, MVPA searchlight decoding). Outcomes were deterministically (100% cue-outcome contingency) predicted by the cues (associations were randomized across subjects), and each cue-outcome pair was presented 10 times in each of the five scanning runs. Before fMRI data acquisition, subjects performed one training session to learn the cue-outcome associations (Fig. 1C).

Figure 1.

Task and behavioral results. A, Timing of the noninstrumental outcome prediction task. Locations of response options on the response mapping screen were randomized across trials. B, Different cue-outcome pairs were used to control for visual features of cues and outcomes. C, Percentage of correctly predicted outcomes for amisulpride (Ami) and placebo (Pla) group across time (bins of 4 trials each). Because three response options are provided in each trial, chance level is 33%. D, Percentage of correctly predicted no reward (noRew) and reward (Rew) outcomes. Error bars depict 95% CIs.

fMRI acquisition and preprocessing.

Functional imaging was performed on a Philips Achieva 3 T whole-body scanner equipped with an 8-channel head coil. During each of the five scanning sessions 140 T2*-weighted whole-brain EPI images (37 transversal slices acquired in ascending order) were acquired with a TR of 2 s. Imaging parameters were as follows: slice thickness, 3 mm; in-plane resolution, 2.75 × 2.75 mm; TE, 30 ms; flip angle, 90°. Preprocessing was performed using SPM8 and consisted of slice-time correction, realignment, and spatial normalization to the standard EPI template of the MNI, resampling to 3 × 3 × 3 mm voxels. Unsmoothed time series data were used for the MVPA analysis, whereas data for the standard univariate GLM analysis were smoothed using a Gaussian Kernel with 8 mm FWHM.

MVPA searchlight decoding.

To decode reward representations (reward vs no reward) we used linear support vector classification (SVC) in combination with a searchlight approach that allows whole-brain information mapping without potentially biasing voxel selection (Kriegeskorte et al., 2006; Haynes et al., 2007). At the level of single OFC neurons, reward information is represented either positively (more activation for higher value) or negatively (more activation for lower value; Schoenbaum et al., 1998; Morrison and Salzman, 2009; Kennerley et al., 2011). The two populations are intercalated in the OFC (Morrison and Salzman, 2009), making it difficult to identify these signals using conventional univariate fMRI analyses (Kennerley et al., 2009). However, individual voxels can happen to cover a slightly higher proportion of one or the other population (i.e., sampling bias), which results in a nonzero response of each voxel (Haynes and Rees, 2005; Kamitani and Tong, 2005). The response biases of a set of voxels form a condition-specific multivoxel response pattern, such that the pattern elicited by the reward condition is different from that elicited by the no reward condition. These different patterns can then be classified as belonging to reward or no reward trials using pattern recognition algorithms (Kahnt et al., 2010, 2011b). However, it should be noted that it is not entirely clear how exactly multivoxel patterns translate to the underlying neurophysiology, and several models accounting for the relationship between multivoxel patterns and neural firing have been proposed. Specifically, in addition to the biased sampling model described above, activity patterns have been suggested to reflect complex spatiotemporal dynamics of the vascular system (Kriegeskorte et al., 2010; Shmuel et al., 2010) and large-scale biases (Mannion et al., 2010; Freeman et al., 2011). 
Regardless of the exact mechanism, MVPA methods have been widely used to decode signals represented differentially in intercalated neural populations (Haxby et al., 2001; Haynes and Rees, 2005; Kamitani and Tong, 2005; Xue et al., 2010; Kahnt et al., 2011a), and are able to disentangle overlapping representations within single brain regions, such as value and salience in parietal cortex (Kahnt et al., 2014) or color and motion direction in early visual cortex (Seymour et al., 2009).

In a first step, we estimated condition-specific response amplitudes for each voxel and scanning run that were later used as input to the SVC. Specifically, for each fMRI scanning run, we estimated a voxelwise GLM. This GLM contained four regressors for the onsets of the four cue-outcome pairs (duration 3.1 s) that were convolved with a canonical hemodynamic response function, as well as six regressors of no interest, which accounted for variance induced by head motion. The voxelwise parameter estimates for the four regressors of interest represent the response amplitudes to each of the four cue-outcome pairs in each of the five scanning runs. They were subsequently used as input to a subject-wise SVC decoding analysis described below.
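As an illustration of how one regressor of interest in such a GLM can be built, the following minimal sketch convolves a 3.1 s boxcar with a double-gamma hemodynamic response function and samples it once per TR. The TR, number of volumes, and event duration are taken from the text; the HRF shape parameters, the fine time grid (`DT`), and all function names are assumptions chosen for illustration and are not taken from the SPM8 implementation.

```python
import math

TR = 2.0          # repetition time in seconds (from the acquisition section)
N_SCANS = 140     # volumes per run (from the acquisition section)
DURATION = 3.1    # modeled event duration in seconds (from the text)
DT = 0.1          # fine time grid for the convolution (assumption)


def gamma_pdf(t, shape):
    """Density of a unit-scale gamma distribution at time t (zero for t <= 0)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t):
    """Assumed HRF shape: a peak near 5 s minus a small late undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def build_regressor(onsets):
    """Convolve a boxcar (DURATION long per onset) with the HRF, sampled every TR."""
    n_fine = int(round(N_SCANS * TR / DT))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / DT))
        stop = int(round((onset + DURATION) / DT))
        for i in range(start, min(stop, n_fine)):
            boxcar[i] = 1.0
    hrf = [double_gamma_hrf(k * DT) for k in range(int(32 / DT))]
    step = int(round(TR / DT))
    regressor = []
    for scan in range(N_SCANS):
        i = scan * step
        total = 0.0
        for k in range(min(i + 1, len(hrf))):
            total += boxcar[i - k] * hrf[k]
        regressor.append(total * DT)
    return regressor


# Hypothetical onsets (in seconds) for one cue-outcome pair within a run.
regressor = build_regressor([10.0, 50.0, 120.0])
```

Fitting one such regressor per cue-outcome pair, together with the six motion regressors, would yield the voxelwise parameter estimates that serve as classifier input.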

The SVC was performed by using the LIBSVM implementation (http://www.csie.ntu.edu.tw/~cjlin/libsvm/) with a linear kernel and a cost parameter of c = 0.1 [using different cost parameters or a different decoding algorithm (Naive Bayes Classifier) produced similar results]. At each voxel, we formed a searchlight in the form of a sphere with a radius of 10 mm surrounding the center voxel. Thus, each searchlight contained ∼170 voxels (different searchlight sizes produced similar results). The activity patterns within each searchlight were used to decode information about reward by using the following cross-validation procedure. We trained an SVC model to classify patterns of parameter estimates for reward versus no reward trials from stimulus set I and obtained the cross-validated decoding accuracy by testing the SVC model on parameter estimates for reward versus no reward trials from stimulus set II (Fig. 2A). This procedure was repeated vice versa by training on stimulus set II and testing on stimulus set I. The decoding accuracies for both directions were averaged to obtain a measure of locally distributed reward information that was assigned to the center voxel of the searchlight. This procedure was repeated for every possible center voxel (i.e., searchlight) and resulted in a subject-wise, whole-brain 3D map of decoding accuracy. Importantly, by training and testing the classifier on data from different stimulus sets, we ensured that decoding accuracy is only related to what is common between the two cue-outcome pairs of each set (i.e., reward information) and not related to the visual features of the cues paired with reward and no reward. Moreover, because decoding accuracy was computed based on model predictions in independent test data, and not based on model fits in the training data, this cross-validation procedure is completely insensitive to potential noise fitting (i.e., overfitting) in the training data (Kriegeskorte et al., 2009).
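The searchlight size can be checked with a short calculation. The sketch below counts the voxels whose centers fall within 10 mm of a center voxel on a 3 × 3 × 3 mm grid (both values from the text). The function name and the inclusion rule (center-to-center distance less than or equal to the radius) are assumptions; searchlights at the edge of the brain mask would contain fewer voxels.

```python
import math

VOXEL_SIZE_MM = 3.0   # isotropic voxel size after normalization (from the text)
RADIUS_MM = 10.0      # searchlight radius (from the text)


def sphere_offsets(radius_mm=RADIUS_MM, voxel_mm=VOXEL_SIZE_MM):
    """Integer voxel offsets whose centers lie within radius_mm of the center voxel."""
    reach = int(math.floor(radius_mm / voxel_mm))
    offsets = []
    for dx in range(-reach, reach + 1):
        for dy in range(-reach, reach + 1):
            for dz in range(-reach, reach + 1):
                dist_mm = voxel_mm * math.sqrt(dx * dx + dy * dy + dz * dz)
                if dist_mm <= radius_mm:
                    offsets.append((dx, dy, dz))
    return offsets


# 171 voxels under these assumptions, consistent with the reported ~170.
print(len(sphere_offsets()))
```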

Figure 2.

Effects of D2-receptor blockade on reward signals. A, Schematic of the searchlight decoding approach. Activity patterns were extracted for all four cue-outcome pairs from each searchlight. An SVC model was trained to discriminate reward from no reward on set I only or set II only. This yielded predictions that were then tested on the other set (testing on set II after training on set I and vice versa) to obtain decoding accuracy, which was assigned to the center voxel. This procedure was repeated for every searchlight (center voxel) in the entire brain, resulting in a 3D map of decoding accuracy. B, Cluster in the medial OFC with significantly (pFWE-corr < 0.05) higher decoding accuracy in the amisulpride (Ami) than placebo (Pla) group. C, For illustration purposes, bar plots depict averaged decoding accuracy from individual peak searchlights in the OFC cluster for both groups. Error bars depict 95% CI.

Group level analysis.

To identify brain regions where decoding accuracy differed between the two groups, the subject-wise decoding accuracy maps were smoothed with a Gaussian Kernel of 6 mm FWHM and entered into voxelwise two-sample t tests. This generated a voxelwise whole-brain t-map reflecting the statistical significance of the group differences in decoding accuracy. Except for exploratory analyses, we corrected for multiple comparisons at the cluster-level by applying a whole-brain FWE-corrected threshold of pFWE-corr < 0.05.
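For a single voxel, the group comparison reduces to a two-sample t statistic on the subjects' decoding accuracies. The following sketch assumes a pooled-variance (Student) test; whether the original analysis assumed equal variances is not stated in the text, and the function name and example values are hypothetical.

```python
import math


def two_sample_t(group_a, group_b):
    """Pooled-variance two-sample t statistic and its degrees of freedom."""
    na, nb = len(group_a), len(group_b)
    mean_a = sum(group_a) / na
    mean_b = sum(group_b) / nb
    ss_a = sum((x - mean_a) ** 2 for x in group_a)
    ss_b = sum((x - mean_b) ** 2 for x in group_b)
    df = na + nb - 2
    pooled_var = (ss_a + ss_b) / df
    t = (mean_a - mean_b) / math.sqrt(pooled_var * (1.0 / na + 1.0 / nb))
    return t, df


# Hypothetical decoding accuracies at one voxel for three subjects per group.
t_value, df = two_sample_t([0.6, 0.7, 0.8], [0.5, 0.5, 0.6])
```

With the group sizes reported here (27 and 24 subjects), the same formula gives 49 degrees of freedom. Repeating the computation at every voxel of the smoothed accuracy maps yields the whole-brain t-map.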

Univariate analysis.

To test for changes in the reward-related fMRI signal between groups, we performed a conventional univariate analysis. This analysis was performed on the smoothed time series data. The GLM contained the same regressors (four regressors for the four cue-outcome pairings) and the six movement parameters. Subject-wise linear contrast images were computed for reward minus no reward and entered into voxelwise two-sample t tests for group analysis.

Results

Behavior

One and a half hours before the experiment, subjects received a pill containing either placebo (N = 24) or 400 mg of the D2-receptor blocker amisulpride (N = 27) in a randomized parallel double-blind design. Previous studies have shown that a single dose of 400 mg of sulpiride (similar to amisulpride) occupies ∼30% of D2 receptors in the striatum (Mehta et al., 2008). To reveal reward representations, subjects performed a noninstrumental outcome prediction task (see Materials and Methods) in which visual cues deterministically (100% cue-outcome contingency) predicted reward or no reward. In each trial, subjects saw one cue and were asked to indicate the upcoming outcome on a randomized response mapping screen before the actual outcome was shown (Fig. 1A). Two different pairs of cues predicted reward or no reward either in the form of coins or numbers (Fig. 1B). To make a correct response on a given trial, subjects had to represent the predicted reward and act on this representation.

Subjects in both groups learned the associations between all visual cues and outcomes before the first scanning run, and maintained high performance throughout scanning (Fig. 1C). In line with the notion that amisulpride induces very few behavioral effects (Rosenzweig et al., 2002), groups did not differ in any behavioral learning or performance parameters. Specifically, a time by group ANOVA on the percentage of correct responses revealed a significant effect of time (F(59,2891) = 23.30, p < 0.001), but no effect of group (amisulpride vs placebo, F(1,49) = 1.42, p = 0.24), and no group by time interaction (F(59,2891) = 0.93, p = 0.62). To capture potential differences in learning speed between groups, we estimated the learning rate (α) of a simple reinforcement learning (RL) model (Sutton and Barto, 1998; Kahnt et al., 2009). Mirroring task performance, the individual learning rates did not differ between groups (amisulpride, α = 0.56, ±0.07; placebo, α = 0.64, ±0.06; t = −0.86, p = 0.39). Testing for reward-specific effects, a group (amisulpride vs placebo) by reward (reward vs no reward) ANOVA on the percentage of correct responses (Fig. 1D) did not reveal a significant main effect of group (F(1,49) = 1.375, p = 0.25), reward (F(1,49) = 0.238, p = 0.63), or a group by reward interaction (F(1,49) = 0.001, p = 0.98). A corresponding ANOVA on response times (RTs) revealed a significant effect of reward (F(1,49) = 128.10, p < 0.001; faster responding in reward than no reward trials) but no significant effect of group (F(1,49) = 0.27, p = 0.61), and no group by reward interaction (F(1,49) = 0.27, p = 0.61). In summary, these results demonstrate that groups were well matched with regard to behavioral performance, and that neural reward signals can therefore be compared between groups independent of potentially confounding differences in behavior or learning.
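To make the role of the learning rate concrete, the sketch below implements a delta-rule update of a cue's predicted outcome, V ← V + α(r − V). The text specifies only a "simple reinforcement learning (RL) model", so the delta rule itself, the starting value, and the function name are assumptions, and the fitting procedure used to estimate α is not shown.

```python
def learn_values(outcomes, alpha, v0=0.0):
    """Return the predicted value held before each trial under a delta-rule update."""
    value = v0
    trajectory = []
    for outcome in outcomes:
        trajectory.append(value)
        value += alpha * (outcome - value)  # prediction error scaled by the learning rate
    return trajectory


# A cue that is always rewarded (outcome coded as 1), with a hypothetical alpha of 0.5.
print(learn_values([1, 1, 1], alpha=0.5))  # [0.0, 0.5, 0.75]
```

A larger α moves the prediction toward the observed outcome in fewer trials, which is why α can serve as a summary of learning speed.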

Prefrontal reward signals

We revealed neural reward signals by applying multivoxel pattern-based decoding techniques. Specifically, using a searchlight-decoding approach (Kriegeskorte et al., 2006; Haynes et al., 2007) and linear SVC we searched for information about reward value that is contained in locally distributed multivoxel patterns of fMRI activity (see Materials and Methods). To avoid confounds related to the specific (e.g., visual) features of the cues and to ensure that classifier performance is only driven by reward information, we used a cross-classification procedure. Specifically, we trained the SVC model on the multivoxel response patterns acquired during the presentation of one set of cue-outcome pairs (reward vs no reward), and tested it on the multivoxel response patterns evoked by the other set of cue-outcome pairs (Fig. 2A).
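The logic of the cross-classification can be sketched on toy data. To keep the example self-contained, a nearest-centroid classifier stands in for the linear SVC, so this illustrates the train-on-one-set, test-on-the-other scheme rather than the LIBSVM analysis itself. All names and the toy patterns are hypothetical: voxel 0 carries reward information shared across sets, voxel 1 carries a set-specific (e.g., visual) feature, and voxel 2 drifts across runs.

```python
def centroid(patterns):
    """Voxelwise mean of a list of activity patterns."""
    return [sum(column) / len(patterns) for column in zip(*patterns)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(patterns, labels):
    """Nearest-centroid model (stand-in for the linear SVC): one centroid per class."""
    return {lab: centroid([p for p, l in zip(patterns, labels) if l == lab])
            for lab in set(labels)}


def accuracy(model, patterns, labels):
    hits = 0
    for pattern, label in zip(patterns, labels):
        predicted = min(model, key=lambda c: squared_distance(pattern, model[c]))
        hits += predicted == label
    return hits / len(labels)


def cross_classify(set1, labels1, set2, labels2):
    """Train on one stimulus set, test on the other, and average both directions."""
    acc_1_to_2 = accuracy(train(set1, labels1), set2, labels2)
    acc_2_to_1 = accuracy(train(set2, labels2), set1, labels1)
    return (acc_1_to_2 + acc_2_to_1) / 2


def toy_set(set_feature):
    """One reward and one no-reward pattern per run, for five runs."""
    patterns, labels = [], []
    for run in range(5):
        jitter = 0.1 * (run - 2)
        patterns.append([1.0 + jitter, set_feature, 0.2 * run])
        labels.append("reward")
        patterns.append([-1.0 + jitter, set_feature, 0.2 * run])
        labels.append("no_reward")
    return patterns, labels


set1, labels1 = toy_set(3.0)    # stimulus set I
set2, labels2 = toy_set(-3.0)   # stimulus set II
print(cross_classify(set1, labels1, set2, labels2))  # 1.0 on this toy data
```

Because the set-specific feature is identical for both classes within a set, it cannot drive classification across sets; only the shared reward dimension generalizes.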

We hypothesized that D2-receptor blockade should reduce the D2-mediated weakening of prefrontal representations (Seamans and Yang, 2004) and thus enhance fMRI pattern separation between reward and no reward trials, which in turn should increase decoding accuracy in the amisulpride group. In line with this prediction, we found significantly (pFWE-corr < 0.05) higher decoding accuracies in the medial OFC (MNI coordinates [x, y, z], [−3, 35, −23], t = 6.07, pFWE-corr = 0.012) in the amisulpride than the placebo group (Fig. 2B, see Table 1 for results at an uncorrected threshold). A similar effect in the left dorsolateral prefrontal cortex did not survive correction for multiple comparisons (left middle and superior frontal gyrus, [−27, 14, 55], t = 4.04, pFWE-corr = 0.058). Exploratory analyses revealed no significant (puncorr < 0.001) voxels when searching for higher decoding accuracy in the placebo than amisulpride group. The same set of results was obtained when behavioral performance was included as covariate of no interest, demonstrating that (nonsignificant) behavioral differences did not affect our decoding results.

Table 1.

Brain regions with higher decoding accuracy for reward in the amisulpride > placebo group

| Region | MNI x | MNI y | MNI z | T | k (voxels) |
|---|---|---|---|---|---|
| Medial OFC | −3 | 35 | −23 | 6.07 | 186 |
| Left dorsolateral PFC | −27 | 14 | 55 | 4.04 | 115 |
| Dorsomedial PFC | −6 | 41 | 49 | 3.76 | 60 |
| Left ventrolateral PFC | −30 | 50 | 1 | 3.74 | 27 |
| Right inferior TC | 54 | −43 | −8 | 3.98 | 40 |
| Left inferior TC | −33 | −43 | −23 | 3.95 | 42 |

Results thresholded at p < 0.001, uncorrected (k > 15). TC, temporal cortex.

The decoding results described above provide only an abstract picture of the changes induced by amisulpride. We further examined the pattern changes in the OFC using more direct and parsimonious methods. Specifically, we tested whether amisulpride enhances pattern separation between reward and no reward trials in the OFC, by computing the mean squared difference between the activity patterns related to reward and no reward trials. Comparing this measure between the two groups demonstrated significantly greater pattern separation in the amisulpride compared with the placebo group (t = 2.29, p = 0.01, one-tailed; Fig. 3). Notably, these changes in pattern separation were not accompanied by changes in the variance of the patterns per se (t = 0.55, p = 0.58). Moreover, we tested whether patterns in the amisulpride group were also more consistent across time by correlating the reward coding patterns from different scanning runs. As expected, this revealed significantly higher temporal pattern consistency in the amisulpride versus placebo group (t = 1.90, p = 0.03, one-tailed).
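The two pattern measures can be written compactly. In this sketch, pattern separation is the mean squared voxelwise difference between the reward and no-reward patterns, as described in the text. Pattern consistency is computed as the average pairwise Pearson correlation of run-wise reward-coding patterns; the pairwise averaging, the definition of a reward-coding pattern as a per-run difference pattern, and all names are assumptions.

```python
import math


def pattern_separation(reward, no_reward):
    """Mean squared voxelwise difference between two activity patterns."""
    return sum((r - n) ** 2 for r, n in zip(reward, no_reward)) / len(reward)


def pearson(x, y):
    """Pearson correlation between two equally long patterns."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def pattern_consistency(coding_by_run):
    """Average correlation over all pairs of runs (assumed aggregation)."""
    n_runs = len(coding_by_run)
    pairs = [(i, j) for i in range(n_runs) for j in range(i + 1, n_runs)]
    return sum(pearson(coding_by_run[i], coding_by_run[j]) for i, j in pairs) / len(pairs)


# Hypothetical three-voxel patterns.
separation = pattern_separation([1.0, 2.0, 3.0], [1.0, 0.0, 1.0])
consistency = pattern_consistency([[1, 2, 3], [2, 4, 6], [3, 6, 9]])
```

Each subject contributes one value per measure, and these values are then compared between groups with two-sample t tests.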

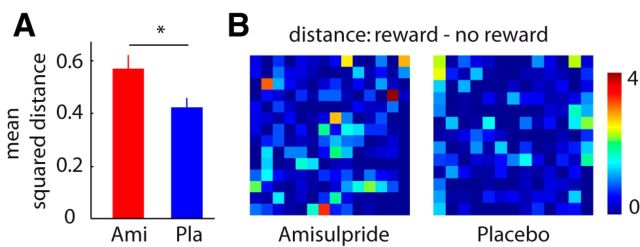

Figure 3.

Amisulpride enhances pattern separation in the OFC. A, Bar plots depict average squared difference between activity patterns related to reward and no reward. Asterisk depicts significant two-sample t test at p < 0.05 (one-tailed). Error bars depict SEM. B, Squared difference between activity patterns related to reward and no reward for a representative subject in the amisulpride group (Ami; left) and in the placebo group (Pla; right). Each pixel represents the squared difference (reward minus no reward) in the activity of one voxel in the medial OFC. The color map represents squared activity difference and is min–max scaled across both displayed patterns. The two subjects were selected such that their average squared pattern difference is close to the mean of their respective group (amisulpride subject = 0.55 [amisulpride group average = 0.57], placebo subject = 0.43 [placebo group average = 0.42]).

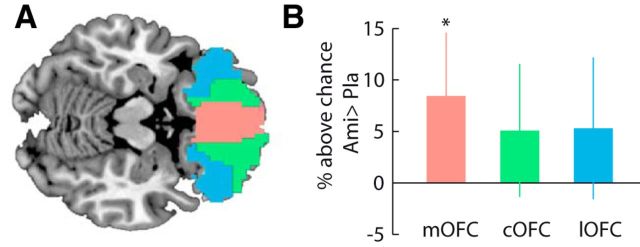

We confirmed the results of the searchlight analysis using an independent region of interest analysis in anatomically defined subregions of the OFC (medial, central, and lateral OFC; Fig. 4A). Training and testing the SVC on the activity patterns within these anatomical regions (using the cross-classification procedure described above) revealed enhanced reward representation with D2-receptor blockade in the medial OFC (t = 2.67, p = 0.01, one-tailed) but not the central (t = 1.55, p = 0.13) and lateral OFC (t = 1.50, p = 0.14, Fig. 4B). Moreover, pattern separation and pattern consistency over time were significantly enhanced in the amisulpride relative to the placebo group in the anatomically defined medial OFC (pattern separation, t = 2.17, p = 0.02; pattern consistency t = 2.69, p = 0.005, one-tailed), but not in the central OFC (pattern separation, t = 1.24, p = 0.11; pattern consistency, t = 1.31, p = 0.10, one-tailed) or the lateral OFC (pattern separation, t = 1.21, p = 0.11; pattern consistency, t = 1.34, p = 0.09, one-tailed).

Figure 4.

Effects of D2-receptor blockade on reward signals in anatomical ROIs. A, Anatomically defined ROIs in the orbitofrontal cortex derived from the automated anatomical labeling (AAL) atlas. B, Difference in decoding accuracy for reward between groups [amisulpride (Ami) − placebo (Pla)]. Asterisk depicts significant two-sample t tests at p < 0.05 (one-tailed). Error bars depict 95% CI. Medial OFC, mOFC; central OFC, cOFC; lateral OFC, lOFC.

To examine the effects of amisulpride on mean BOLD signals, we performed a standard univariate analysis (see Materials and Methods). Univariate BOLD signals in the medial OFC did not differ between groups (t = −0.83, p = 0.41). However, an exploratory voxelwise whole-brain analysis revealed elevated activation in reward > no-reward trials in the amisulpride group compared with the placebo group in the ventral striatum ([15, 14, −11], t = 3.05, puncorr < 0.005). Together, these findings suggest that whereas amisulpride may enhance the average reward signal in the ventral striatum, the effects on prefrontal representations are more subtle. Specifically, amisulpride in the prefrontal cortex enhances the decoding of reward information by increasing pattern separation between the reward and no reward trials as well as pattern consistency across time, without changing the mean signal between conditions.

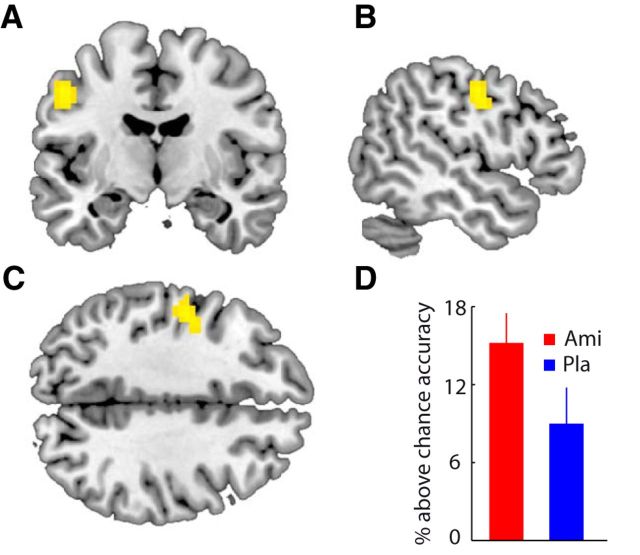

Other cortical signals

An important question is whether the enhancement of decodability by D2-receptor blockade is specific for reward signals. In principle, amisulpride could generally increase decoding of content-specific signals in cortical areas with substantial D2-receptor density. In a set of post hoc analyses we therefore tested whether amisulpride also enhances decoding of other signals required for task performance. For instance, subjects gave their behavioral response using the index, middle, or ring finger (randomized across trials) of their right hand, which should elicit characteristic activity patterns in premotor and motor cortex of the contralateral hemisphere. Given the presence of D2 receptors in motor cortex (Lidow et al., 1989), amisulpride should enhance decoding of these signals. To test this idea, we decoded the specific motor response that subjects made on a given trial using a leave-one-run-out cross-validation procedure. Specifically, using a searchlight approach we trained and tested a three-class SVC on activity patterns corresponding to the three fingers that were used to make the response. We found significantly higher decoding accuracy in left premotor (BA 6) and primary motor cortex (BA 4, [−51, −10, 43], t = 3.61, puncorr < 0.001) in the amisulpride relative to the placebo group (Fig. 5). This suggests that amisulpride enhanced the separation of finger-specific fMRI response patterns in areas of motor cortex that represent the fingers of the right hand (Meier et al., 2008).
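The leave-one-run-out scheme for the three-class motor decoding can be sketched as follows. As a simplification, a nearest-centroid classifier replaces the three-class SVC, and the toy finger patterns, run structure, and function name are hypothetical. With three classes, chance accuracy is 1/3.

```python
def leave_one_run_out(patterns, labels, runs):
    """Average test accuracy when each run in turn is held out for testing."""
    accuracies = []
    for held_out in sorted(set(runs)):
        train_idx = [i for i, r in enumerate(runs) if r != held_out]
        test_idx = [i for i, r in enumerate(runs) if r == held_out]
        # One centroid per class, estimated from the training runs only.
        centroids = {}
        for lab in set(labels[i] for i in train_idx):
            members = [patterns[i] for i in train_idx if labels[i] == lab]
            centroids[lab] = [sum(col) / len(members) for col in zip(*members)]
        hits = 0
        for i in test_idx:
            predicted = min(
                centroids,
                key=lambda c: sum((a - b) ** 2 for a, b in zip(patterns[i], centroids[c])),
            )
            hits += predicted == labels[i]
        accuracies.append(hits / len(test_idx))
    return sum(accuracies) / len(accuracies)


# Toy data: five runs, one pattern per finger and run; each finger drives "its" voxel.
fingers = ["index", "middle", "ring"]
patterns, labels, runs = [], [], []
for run in range(5):
    for f, finger in enumerate(fingers):
        pattern = [0.05 * run] * 3
        pattern[f] = 1.0 + 0.1 * run
        patterns.append(pattern)
        labels.append(finger)
        runs.append(run)

print(leave_one_run_out(patterns, labels, runs))  # 1.0 on this toy data
```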

Figure 5.

Effects of D2-receptor blockade on motor signals. Coronal (A), sagittal (B), and transversal (C) slices depicting a cluster in motor cortex with significantly higher decoding accuracy for motor response (finger of right hand was used for behavioral response) in the amisulpride (Ami) compared with the placebo (Pla) group. D, For illustration purposes, bar plots depict averaged decoding accuracy from individual peak searchlights in the cluster for both groups. Error bars depict 95% CI.

In contrast, signals in regions with few D2 receptors, such as visual cue representations in occipital cortex (Lidow et al., 1989), should not be changed by amisulpride. As a control, we used a searchlight approach to decode visual signals independent of value (leave-one-run-out training and testing on left-out run for reward and no reward set I versus reward and no reward set II). In line with the idea that the effects of amisulpride on cortical representations are specific to regions with a high density of D2 receptors, we did not find any significant (puncorr > 0.01) increases (amisulpride > placebo) in the accuracy for visual decoding in early visual areas.

Discussion

In the current study we examined the relationship between dopamine D2 signaling and prefrontal representations in humans. Dopamine has long been suggested to play a fundamental role in prefrontal functions (Miller and Cohen, 2001; Robbins and Arnsten, 2009; D'Ardenne et al., 2012). For instance, dopamine applied to the primate prefrontal cortex enhances the signal-to-noise ratio of pyramidal neurons representing task-relevant stimuli (Jacob et al., 2013). However, effects of dopamine on prefrontal function seem to be receptor specific, as D1- and D2-specific agents differentially affect the activity patterns of prefrontal neurons (Seamans et al., 2001). For instance, a low dose of a D1 agonist applied to the prefrontal cortex sharpens the spatial tuning of task-sensitive neurons in a spatial working memory task (Vijayraghavan et al., 2007), and blocking prefrontal D1 receptors impairs learning of visuomotor associations (Puig and Miller, 2012). In contrast, D2-receptor antagonists impair cognitive flexibility without altering behavioral performance (Puig and Miller, 2014), or even fail to affect neuronal activity in the prefrontal cortex at all (Sawaguchi et al., 1990). By showing that D2-receptor blockade enhances decoding of reward signals in the human OFC, our study provides evidence for the importance of receptor-specific dopamine action on prefrontal representations in humans.

We found enhanced decoding not only for reward signals in the OFC but also for motor signals in the motor cortex. In contrast, visual signals in occipital areas remained unaltered by amisulpride. Intriguingly, D2-receptor concentration in the primate brain follows an anterior–posterior gradient with the highest concentration in the prefrontal cortex and the lowest concentration in the occipital cortex, with motor cortex falling in between (Lidow et al., 1989). Our results therefore suggest that amisulpride enhances decoding of region-specific information in cortical regions with high D2-receptor density, presumably by enhancing the separation of content-specific response patterns. However, further studies are required to explore the range of signals for which decoding is enhanced by amisulpride.

These results are in line with a dual-state model of prefrontal dopamine, which suggests that activation of D2 and D1 receptors has opposing effects on the strength of network representations (Durstewitz et al., 2000; Seamans et al., 2001). According to the model, when D1 activation predominates (D1-dominated state), only very strong inputs are able to access prefrontal circuits and establish dominant network representations therein. This effect of D1-receptor activation is thought to be mediated by persistent NMDA receptor activation and increased GABAergic inhibition. In contrast, predominant D2 activation (D2-dominated state) is accompanied by reduced GABAergic inhibition allowing multiple inputs to simultaneously establish weak and fragile network representations in the prefrontal cortex. Our results provide support for this model in humans by showing that D2-receptor blockade is sufficient to enhance decoding of prefrontal signals. Specifically, by blocking D2 receptors, amisulpride should have decreased the likelihood of D2-dominated states and increased the likelihood of D1-dominated states (Seamans and Yang, 2004). Hypothetically, this could have strengthened prefrontal representations, which in turn resulted in enhanced fMRI pattern separation and thus improved decoding accuracy. While recent findings call for modifications of this model (Tseng and O'Donnell, 2007), and the proposed mechanism and functional consequences are therefore presently somewhat speculative, the model predicts that D1-receptor antagonists should weaken prefrontal representations relative to placebo. Unfortunately, such agents are currently unavailable for use in humans. It is important to note that this model was originally designed to account for sustained activity in prefrontal cortex, maintaining sensory or mnemonic representations. Nevertheless, similar mechanisms could apply to activity patterns in motor and premotor cortex, maintaining motor representations.

The model described above focuses on how D2 blockade affects prefrontal representations through local effects on D2 receptors (which are located mainly in layer 5), and the regional specificity of our effects is explained most parsimoniously by this local mechanism. However, more indirect routes and mechanisms may fulfill similar functions. For instance, two opposing pathways project from the striatum through the thalamus back to the cortex (Frank et al., 2004). Activity in the direct pathway is thought to facilitate prefrontal representations, whereas activity in the indirect pathway suppresses them. Interestingly, neurons in the direct and indirect pathways primarily express D1 and D2 receptors, respectively (Aubert et al., 2000). Reduced activation of the indirect versus the direct pathway could therefore have affected the spatial distribution of activity and thus prefrontal signals in our data. Moreover, the striatum and the dopaminergic midbrain (but not the OFC) contain D2 autoreceptors, and blocking these could have increased the availability of dopamine in the synaptic cleft. Blocking D2 autoreceptors could therefore lead to an overall increase in dopamine function and, in theory, to a greater global occupation of D1 receptors, especially if D2 receptors are concurrently blocked by amisulpride. Finally, blocking D2 receptors could have shifted the tonic/phasic balance toward D1/NMDA-mediated phasic activity (Goto and Grace, 2005) and thus increased the separation of patterns coding reward and no reward.

In the current experiment, we used a simple task to ensure that behavioral performance was matched across groups, allowing a straightforward interpretation of the neural effects. In general, however, it would be interesting to test the effects of enhanced cortical representations on behavioral performance. For instance, if D2-receptor blockade decreases the ability to flexibly switch between prefrontal representations, amisulpride may reduce distractibility at the cost of reduced cognitive flexibility and increased perseveration (Mehta et al., 2004). Future experiments are needed to test the behavioral markers of altered prefrontal representations.

Phasic increases in dopamine are thought to play a major role in motivation, reward processing, and RL (Berridge and Robinson, 1998; Pessiglione et al., 2006; Bromberg-Martin et al., 2010; Schultz, 2013). Specifically, unpredicted rewards and reward-predictive stimuli activate dopamine neurons (Tobler et al., 2005) and concomitant dopamine release in striatum and prefrontal cortex (Hart et al., 2014) could play a role in implementing behavioral functions. For instance, dopamine is thought to signal reward prediction errors that drive RL (Schultz, 2013). Interestingly, whereas previous studies show reduced RL when blocking dopamine receptors using haloperidol (Pessiglione et al., 2006), individually estimated learning rates of an RL model did not differ in our experiment. This is in line with the fact that amisulpride has generally very limited effects on behavior (Rosenzweig et al., 2002) and learning (Eisenegger et al., 2014), and corroborates the notion that many of the reinforcing effects of dopamine arise only when both D1 and D2 receptors are stimulated (Wise, 2006).
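
For readers unfamiliar with the role of the learning rate, the sketch below shows a generic delta-rule (Rescorla-Wagner style) value update of the kind described by Sutton and Barto (1998). It is an illustration only: this section does not specify the exact RL model fitted to the behavioral data, and the names `update_value` and `learn` and all parameter values are hypothetical.

```python
def update_value(value, reward, learning_rate):
    """One delta-rule step: move the reward prediction toward the observed outcome."""
    # The reward prediction error is the difference between outcome and prediction.
    prediction_error = reward - value
    new_value = value + learning_rate * prediction_error
    return new_value, prediction_error


def learn(rewards, learning_rate, initial_value=0.0):
    """Return the sequence of reward predictions after each observed outcome."""
    value = initial_value
    trajectory = []
    for reward in rewards:
        value, _ = update_value(value, reward, learning_rate)
        trajectory.append(value)
    return trajectory


if __name__ == "__main__":
    outcomes = [1, 1, 0, 1, 1, 1]  # hypothetical sequence: 1 = reward, 0 = no reward
    for alpha in (0.1, 0.5):
        print(alpha, [round(v, 3) for v in learn(outcomes, alpha)])
```

A higher learning rate makes predictions track recent outcomes more closely, whereas a lower one averages over a longer history. Comparing individually fitted learning rates across groups is therefore one way to ask whether a drug changed how quickly reward predictions were updated.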

Of note, amisulpride is one of the few relatively selective drugs affecting dopaminergic neurotransmission available for human use. However, D3 and 5-HT7 receptors are also modulated by amisulpride. The D3 receptor belongs to the D2-like family of dopaminergic receptors, activation of which inhibits the formation of cAMP. Thus, it is likely that D3-receptor activation also opposes D1-receptor activation (which facilitates cAMP formation) and has comparable effects on the strength of prefrontal representations. In contrast, very little is known about the effects of 5-HT7 receptor activation on cognitive functioning, except for a role in memory formation, sleep, and psychiatric disorders (Gasbarri and Pompili, 2014). In general, however, the neuromodulator serotonin (5-HT) has been suggested to play a role in punishment processing and aversive learning (Cools et al., 2008), and has been hypothesized to act as an opponent to dopamine (Daw et al., 2002). Thus, given the role of 5-HT in aversive processing, we believe it is unlikely that 5-HT7 receptors contributed to the effects of amisulpride observed in the current study.

In summary, here we have shown a link between dopamine and prefrontal signals, supporting a dual-state model of prefrontal dopamine in which the strength of network representations can be enhanced by blocking D2 receptors. Thus, our results link a theory that is derived from nonhuman animal models of dopamine receptor functioning to human prefrontal function. By suggesting a mechanism by which prefrontal representations can be manipulated, our results have important implications for the treatment of cognitive dysfunctions. Specifically, high doses of amisulpride (400–1200 mg/d) are widely used in the management of positive symptoms in schizophrenia (Curran and Perry, 2001), which include disordered thoughts and speech, hallucinations, and delusions. Such symptoms could result from multiple weak cognitive representations, suggesting that the enhancement of cognitive representations may be an important aspect of the therapeutic drug effect. This potential mechanism also has implications for the management of other psychiatric disorders that are characterized by enhanced cognitive flexibility and attentional deficits such as attention deficit hyperactivity disorder.

Footnotes

This work was supported by the Swiss National Science Foundation (grants PP00P1_128574, PP00P1_150739, and CRSII3_141965) and the Swiss National Centre of Competence in Research in Affective Sciences. The BCNI is supported by the Medical Research Council and Wellcome Trust. We also acknowledge the Neuroscience Center Zurich and thank M. Wälti and T. Baumgartner for help with data collection.

T.W.R. discloses consultancy with Lilly, Lundbeck, Teva, Otsuka, Shire Pharmaceuticals, ChemPartners, and Cambridge Cognition; and research grants with Lilly, Lundbeck, and GlaxoSmithKline. The remaining authors declare no competing financial interests.

References

- Aubert I, Ghorayeb I, Normand E, Bloch B. Phenotypical characterization of the neurons expressing the D1 and D2 dopamine receptors in the monkey striatum. J Comp Neurol. 2000;418:22–32. doi: 10.1002/(SICI)1096-9861(20000228)418:1<22::AID-CNE2>3.3.CO;2-H.

- Barron HC, Dolan RJ, Behrens TE. Online evaluation of novel choices by simultaneous representation of multiple memories. Nat Neurosci. 2013;16:1492–1498. doi: 10.1038/nn.3515.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Res Brain Res Rev. 1998;28:309–369. doi: 10.1016/S0165-0173(98)00019-8.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68:815–834. doi: 10.1016/j.neuron.2010.11.022.

- Cools R, Roberts AC, Robbins TW. Serotoninergic regulation of emotional and behavioural control processes. Trends Cogn Sci. 2008;12:31–40. doi: 10.1016/j.tics.2007.10.011.

- Curran MP, Perry CM. Amisulpride: a review of its use in the management of schizophrenia. Drugs. 2001;61:2123–2150. doi: 10.2165/00003495-200161140-00014.

- D'Ardenne K, Eshel N, Luka J, Lenartowicz A, Nystrom LE, Cohen JD. Role of prefrontal cortex and the midbrain dopamine system in working memory updating. Proc Natl Acad Sci U S A. 2012;109:19900–19909. doi: 10.1073/pnas.1116727109.

- Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Netw. 2002;15:603–616. doi: 10.1016/S0893-6080(02)00052-7.

- Durstewitz D, Seamans JK, Sejnowski TJ. Dopamine-mediated stabilization of delay-period activity in a network model of prefrontal cortex. J Neurophysiol. 2000;83:1733–1750. doi: 10.1152/jn.2000.83.3.1733.

- Eisenegger C, Naef M, Linssen A, Clark L, Gandamaneni PK, Müller U, Robbins TW. Role of dopamine D2 receptors in human reinforcement learning. Neuropsychopharmacology. 2014;39:2366–2375. doi: 10.1038/npp.2014.84.

- Frank MJ, Seeberger LC, O'Reilly RC. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 2004;306:1940–1943. doi: 10.1126/science.1102941.

- Freeman J, Brouwer GJ, Heeger DJ, Merriam EP. Orientation decoding depends on maps, not columns. J Neurosci. 2011;31:4792–4804. doi: 10.1523/JNEUROSCI.5160-10.2011.

- Fuster JM. The prefrontal cortex—an update: time is of the essence. Neuron. 2001;30:319–333. doi: 10.1016/S0896-6273(01)00285-9.

- Gasbarri A, Pompili A. Serotonergic 5-HT7 receptors and cognition. Rev Neurosci. 2014;25:311–323. doi: 10.1515/revneuro-2013-0066.

- Goldman-Rakic P. Circuitry of primate prefrontal cortex and regulation of behavior by representational memory. In: Mountcastle VB, Plum F, Geiger SR, editors. Handbook of physiology: the nervous system. Bethesda, MD: American Physiological Society; 1987. pp. 373–417.

- Goldman-Rakic PS. Regional and cellular fractionation of working memory. Proc Natl Acad Sci U S A. 1996;93:13473–13480. doi: 10.1073/pnas.93.24.13473.

- Goto Y, Grace AA. Dopaminergic modulation of limbic and cortical drive of nucleus accumbens in goal-directed behavior. Nat Neurosci. 2005;8:805–812. doi: 10.1038/nn1471.

- Gottfried JA, O'Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Hare TA, Schultz W, Camerer CF, O'Doherty JP, Rangel A. Transformation of stimulus value signals into motor commands during simple choice. Proc Natl Acad Sci U S A. 2011;108:18120–18125. doi: 10.1073/pnas.1109322108.

- Hart AS, Rutledge RB, Glimcher PW, Phillips PE. Phasic dopamine release in the rat nucleus accumbens symmetrically encodes a reward prediction error term. J Neurosci. 2014;34:698–704. doi: 10.1523/JNEUROSCI.2489-13.2014.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haynes JD, Rees G. Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat Neurosci. 2005;8:686–691. doi: 10.1038/nn1445.

- Haynes JD, Sakai K, Rees G, Gilbert S, Frith C, Passingham RE. Reading hidden intentions in the human brain. Curr Biol. 2007;17:323–328. doi: 10.1016/j.cub.2006.11.072.

- Jacob SN, Ott T, Nieder A. Dopamine regulates two classes of primate prefrontal neurons that represent sensory signals. J Neurosci. 2013;33:13724–13734. doi: 10.1523/JNEUROSCI.0210-13.2013.

- Kahnt T, Park SQ, Cohen MX, Beck A, Heinz A, Wrase J. Dorsal striatal-midbrain connectivity in humans predicts how reinforcements are used to guide decisions. J Cogn Neurosci. 2009;21:1332–1345. doi: 10.1162/jocn.2009.21092.

- Kahnt T, Heinzle J, Park SQ, Haynes JD. The neural code of reward anticipation in human orbitofrontal cortex. Proc Natl Acad Sci U S A. 2010;107:6010–6015. doi: 10.1073/pnas.0912838107.

- Kahnt T, Grueschow M, Speck O, Haynes JD. Perceptual learning and decision-making in human medial frontal cortex. Neuron. 2011a;70:549–559. doi: 10.1016/j.neuron.2011.02.054.

- Kahnt T, Heinzle J, Park SQ, Haynes JD. Decoding the formation of reward predictions across learning. J Neurosci. 2011b;31:14624–14630. doi: 10.1523/JNEUROSCI.3412-11.2011.

- Kahnt T, Park SQ, Haynes JD, Tobler PN. Disentangling neural representations of value and salience in the human brain. Proc Natl Acad Sci U S A. 2014;111:5000–5005. doi: 10.1073/pnas.1320189111.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14:1581–1589. doi: 10.1038/nn.2961.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci. 2009;12:535–540. doi: 10.1038/nn.2303.

- Kriegeskorte N, Cusack R, Bandettini P. How does an fMRI voxel sample the neuronal activity pattern: compact-kernel or complex spatiotemporal filter? Neuroimage. 2010;49:1965–1976. doi: 10.1016/j.neuroimage.2009.09.059.

- Lara AH, Kennerley SW, Wallis JD. Encoding of gustatory working memory by orbitofrontal neurons. J Neurosci. 2009;29:765–774. doi: 10.1523/JNEUROSCI.4637-08.2009.

- Lebreton M, Jorge S, Michel V, Thirion B, Pessiglione M. An automatic valuation system in the human brain: evidence from functional neuroimaging. Neuron. 2009;64:431–439. doi: 10.1016/j.neuron.2009.09.040.

- Lidow MS, Goldman-Rakic PS, Rakic P, Innis RB. Dopamine D2 receptors in the cerebral cortex: distribution and pharmacological characterization with [3H]raclopride. Proc Natl Acad Sci U S A. 1989;86:6412–6416. doi: 10.1073/pnas.86.16.6412.

- Mannion DJ, McDonald JS, Clifford CW. Orientation anisotropies in human visual cortex. J Neurophysiol. 2010;103:3465–3471. doi: 10.1152/jn.00190.2010.

- McNamee D, Rangel A, O'Doherty JP. Category-dependent and category-independent goal-value codes in human ventromedial prefrontal cortex. Nat Neurosci. 2013;16:479–485. doi: 10.1038/nn.3337.

- Mehta MA, Manes FF, Magnolfi G, Sahakian BJ, Robbins TW. Impaired set-shifting and dissociable effects on tests of spatial working memory following the dopamine D2 receptor antagonist sulpiride in human volunteers. Psychopharmacology. 2004;176:331–342. doi: 10.1007/s00213-004-1899-2.

- Mehta MA, Montgomery AJ, Kitamura Y, Grasby PM. Dopamine D2 receptor occupancy levels of acute sulpiride challenges that produce working memory and learning impairments in healthy volunteers. Psychopharmacology. 2008;196:157–165. doi: 10.1007/s00213-007-0947-0.

- Meier JD, Aflalo TN, Kastner S, Graziano MS. Complex organization of human primary motor cortex: a high-resolution fMRI study. J Neurophysiol. 2008;100:1800–1812. doi: 10.1152/jn.90531.2008.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Morrison SE, Salzman CD. The convergence of information about rewarding and aversive stimuli in single neurons. J Neurosci. 2009;29:11471–11483. doi: 10.1523/JNEUROSCI.1815-09.2009.

- Murray EA, O'Doherty JP, Schoenbaum G. What we know and do not know about the functions of the orbitofrontal cortex after 20 years of cross-species studies. J Neurosci. 2007;27:8166–8169. doi: 10.1523/JNEUROSCI.1556-07.2007.

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Puig MV, Miller EK. The role of prefrontal dopamine D1 receptors in the neural mechanisms of associative learning. Neuron. 2012;74:874–886. doi: 10.1016/j.neuron.2012.04.018.

- Puig MV, Miller EK. Neural substrates of dopamine D2 receptor modulated executive functions in the monkey prefrontal cortex. Cereb Cortex. 2014. doi: 10.1093/cercor/bhu096. Advance online publication. Retrieved Feb. 10, 2015.

- Robbins TW, Arnsten AF. The neuropsychopharmacology of fronto-executive function: monoaminergic modulation. Annu Rev Neurosci. 2009;32:267–287. doi: 10.1146/annurev.neuro.051508.135535.

- Rosenzweig P, Canal M, Patat A, Bergougnan L, Zieleniuk I, Bianchetti G. A review of the pharmacokinetics, tolerability and pharmacodynamics of amisulpride in healthy volunteers. Hum Psychopharmacol. 2002;17:1–13. doi: 10.1002/hup.320.

- Sawaguchi T, Matsumura M, Kubota K. Effects of dopamine antagonists on neuronal activity related to a delayed response task in monkey prefrontal cortex. J Neurophysiol. 1990;63:1401–1412. doi: 10.1152/jn.1990.63.6.1401.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci. 1998;1:155–159. doi: 10.1038/407.

- Schultz W. Updating dopamine reward signals. Curr Opin Neurobiol. 2013;23:229–238. doi: 10.1016/j.conb.2012.11.012.

- Seamans JK, Yang CR. The principal features and mechanisms of dopamine modulation in the prefrontal cortex. Prog Neurobiol. 2004;74:1–58. doi: 10.1016/j.pneurobio.2004.05.006.

- Seamans JK, Gorelova N, Durstewitz D, Yang CR. Bidirectional dopamine modulation of GABAergic inhibition in prefrontal cortical pyramidal neurons. J Neurosci. 2001;21:3628–3638. doi: 10.1523/JNEUROSCI.21-10-03628.2001.

- Seymour K, Clifford CW, Logothetis NK, Bartels A. The coding of color, motion, and their conjunction in the human visual cortex. Curr Biol. 2009;19:177–183. doi: 10.1016/j.cub.2008.12.050.

- Shmuel A, Chaimow D, Raddatz G, Ugurbil K, Yacoub E. Mechanisms underlying decoding at 7 T: ocular dominance columns, broad structures, and macroscopic blood vessels in V1 convey information on the stimulated eye. Neuroimage. 2010;49:1957–1964. doi: 10.1016/j.neuroimage.2009.08.040.

- Sutton RS, Barto AG. Reinforcement learning: an introduction. Cambridge, MA: MIT; 1998.

- Takahashi YK, Roesch MR, Stalnaker TA, Haney RZ, Calu DJ, Taylor AR, Burke KA, Schoenbaum G. The orbitofrontal cortex and ventral tegmental area are necessary for learning from unexpected outcomes. Neuron. 2009;62:269–280. doi: 10.1016/j.neuron.2009.03.005.

- Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307:1642–1645. doi: 10.1126/science.1105370.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Tseng KY, O'Donnell P. Dopamine modulation of prefrontal cortical interneurons changes during adolescence. Cereb Cortex. 2007;17:1235–1240. doi: 10.1093/cercor/bhl034.

- van Duuren E, Lankelma J, Pennartz CM. Population coding of reward magnitude in the orbitofrontal cortex of the rat. J Neurosci. 2008;28:8590–8603. doi: 10.1523/JNEUROSCI.5549-07.2008.

- Vickery TJ, Chun MM, Lee D. Ubiquity and specificity of reinforcement signals throughout the human brain. Neuron. 2011;72:166–177. doi: 10.1016/j.neuron.2011.08.011.

- Vijayraghavan S, Wang M, Birnbaum SG, Williams GV, Arnsten AF. Inverted-U dopamine D1 receptor actions on prefrontal neurons engaged in working memory. Nat Neurosci. 2007;10:376–384. doi: 10.1038/nn1846.

- Wallis JD. Orbitofrontal cortex and its contribution to decision-making. Annu Rev Neurosci. 2007;30:31–56. doi: 10.1146/annurev.neuro.30.051606.094334.

- Wallis JD. Cross-species studies of orbitofrontal cortex and value-based decision-making. Nat Neurosci. 2012;15:13–19. doi: 10.1038/nn.2956.

- Wise RA. Role of brain dopamine in food reward and reinforcement. Philos Trans R Soc Lond B Biol Sci. 2006;361:1149–1158. doi: 10.1098/rstb.2006.1854.

- Wunderlich K, Dayan P, Dolan RJ. Mapping value based planning and extensively trained choice in the human brain. Nat Neurosci. 2012;15:786–791. doi: 10.1038/nn.3068.

- Xue G, Dong Q, Chen C, Lu Z, Mumford JA, Poldrack RA. Greater neural pattern similarity across repetitions is associated with better memory. Science. 2010;330:97–101. doi: 10.1126/science.1193125.