Abstract

Objective:

Emotional communication is a cornerstone of social cognition and informs human interaction. Previous studies have shown deficits in facial and vocal emotion recognition in older adults, particularly for negative emotions. However, few studies have examined combined effects of aging and hearing loss on vocal emotion recognition by adults. The objective of this study was to compare vocal emotion recognition in adults with hearing loss relative to age-matched peers with normal hearing. We hypothesized that age would play a role in emotion recognition and that listeners with hearing loss would show deficits across the age range.

Design:

Thirty-two adults (22-74 years of age) with mild to severe, symmetrical sensorineural hearing loss, amplified with bilateral hearing aids, and 30 adults (21-75 years of age) with normal hearing participated in the study. Stimuli consisted of sentences spoken by two talkers, one male and one female, in 5 emotions (angry, happy, neutral, sad, and scared) in an adult-directed manner. The task used a single-interval, five-alternative forced-choice paradigm, in which participants listened to individual sentences and indicated which of the 5 emotions was conveyed in each sentence. Reaction time was recorded as an indirect measure of cognitive load.

Results:

Results showed significant effects of age. Older listeners had reduced accuracy, increased reaction times, and reduced d’ values. Listeners with normal hearing showed an Age by Talker interaction, in which older listeners had more difficulty identifying the male talker’s vocal emotions. Listeners with hearing loss showed reduced accuracy, increased reaction times, and lower d’ values compared with age-matched listeners with normal hearing. Within the group with hearing loss, age and talker effects were significant, and the low-frequency pure tone average showed a marginally significant effect. Contrary to other studies, once hearing thresholds were taken into account, no effects of listener sex were observed, nor were there effects of individual emotions on accuracy. However, reaction times and d’ values differed significantly across individual emotions.

Conclusions:

The results of this study confirm existing findings in the literature that older adults show significant deficits in voice emotion recognition compared with younger adults, and that among listeners with normal hearing, age-related changes in hearing do not predict this age-related deficit. The present results also add to the literature by showing that hearing impairment contributes to deficits in vocal emotion recognition over and above the deficits related to age. These effects of age and hearing loss appear to be robust, being evident in reduced accuracy scores and d’ measures as well as in reaction time measures.

Keywords: adults, aging, hearing loss, voice, emotion recognition

Introduction

Social cognition has been defined as the ability to use interactions with others to alter one’s own reactions based on judgements relating to the intent, motivation, and emotional state of others, as well as the content and context of the interaction (Bandura 1986; Gross et al. 2006; Pajares et al. 2009). The ability to recognize emotion in others and in oneself can facilitate the development of interpersonal skills and social interactions, which in turn can affect personal and professional relationships (Carstensen et al. 1997) and help avoid conflict (Carstensen et al. 1999; Schieman 1999). Aging has been shown to be associated with an impaired ability to recognize negative emotion in others (Mill et al. 2009), which can lead to reduced social cognition and poorer quality of life. Studies of emotion recognition in older adults have focused more on facial than on vocal emotion recognition, but emotion identification declines in both modalities (e.g., Mill et al., 2009). Age-related hearing loss may present a confound in studies of vocal emotion perception. At least one study (Dupuis & Pichora-Fuller, 2015) has shown that the deficit persists in older adults even when hearing thresholds are taken into account. However, the effect of hearing loss on emotion recognition has not been comprehensively studied in adults. The goal of the current study is to investigate the combined effect of age and hearing loss on vocal emotion recognition.

Effects of Age on Facial and Vocal Emotion Recognition

The ability to recognize emotion, particularly negative facial emotions, has been shown to decrease with age (Moreno et al. 1993; McDowell et al. 1994; MacPherson et al. 2002; Phillips et al. 2002; Calder et al. 2003; Sullivan and Ruffman 2004). However, findings are mixed regarding the specifics of such decline. Mill et al. (2009) found a decline in both facial and vocal emotion identification for sadness and anger, suggesting that age-related deficits in emotion identification are not modality-specific. Conversely, Isaacowitz et al. (2007) reported that age differences were more pronounced in auditory emotion recognition tasks than in facial emotion recognition tasks. They posited that auditory emotion recognition tasks are harder for the aging brain because they carry a greater cognitive load than visual emotion recognition tasks. Sullivan and Ruffman (2004) used a series of control experiments to show that declines in facial emotion recognition in their elderly population were not related to general face processing deficits, fluid intelligence, or processing speed.

Declines in facial and vocal emotion recognition may start early for specific emotions. Young adults have been shown to outperform middle-aged adults in vocal recognition of anger, disgust, fear, happiness, and surprise (Paulmann et al. 2008). Mill et al. (2009) found that deficits in the facial and vocal recognition of sadness began in young adulthood and worsened with each decade. Brosgole and Weisman (1995) found deficits in facial emotion recognition starting at age 45.

Some researchers have reported differences in reactions to, and recognition of, positive and negative emotions. Charles and Carstensen (2008) found that older adults reported feeling less anger than younger adults in response to speech that simulated conflict. Mather and Carstensen (2005) reported an attentional bias toward positive facial emotions in the elderly. Isaacowitz et al. (2007) could not replicate this finding for happiness in facial or vocal emotion and suggested the possibility that a ceiling effect had confounded the findings of previous studies. The cause of the hypothesized positivity bias in emotion recognition in older adults is not understood. Socio-environmental factors may play a role. Several authors (Schieman 1999; Phillips and Allen 2004; Mather and Carstensen 2005) have speculated that older adults who are out of the workforce are better able to customize their environment to avoid negative situations, resulting in lower exposure to negative emotions, which may account for their ability to recognize positive emotions more readily. On the other hand, Phillips and Allen (2004) reported that age-related differences in the positivity bias could be explained by lower levels of depression and anxiety in the elderly population.

Neurobiological changes in the aging brain may underlie deficits in the facial recognition of negative emotions. Benton and Van Allen (1968) reported that age-related declines from right hemisphere lesions influence facial emotion recognition. Owsley et al. (1981) found that age-related changes in facial perception were not due to ocular pathologies but were likely neural in origin. Calder et al. (2003) proposed that changes in the aging brain were responsible for progressive declines in identification of fear and, to a lesser extent, sadness. As recognition of disgust was intact, Calder et al. (2003) conjectured that age-related declines in emotion recognition relate not to a general cognitive impairment but to declines in the specific brain regions that support the recognition of specific emotions. They posited that different rates of deterioration explain why the ability to recognize some emotions remains intact while others decline. MacPherson et al. (2002) compared emotion recognition ability in older and younger participants and found significant main effects of age related to medial temporal-lobe deterioration. They further suggested that the current body of literature may not capture the full extent of emotion recognition decline in the elderly, as damage to the ventromedial frontal lobes in people beyond the typical age cutoffs of these studies could reveal further declines in facial emotion recognition in octogenarians and beyond.

The majority of vocal emotion recognition studies have not controlled for hearing loss, which may account for some of the variability in findings across studies. Dupuis and Pichora-Fuller (2015) showed that hearing thresholds did not predict deficits in vocal emotion recognition in older listeners with normal hearing. Mitchell and Kingston (2014) found that impaired pitch perception in older adults with normal hearing was a predictor of deficits in voice emotion recognition. However, they did not test audiometric thresholds above 2000 Hz, so high-frequency hearing loss cannot be ruled out as a confounding factor. Their finding of poorer complex pitch discrimination in older listeners with normal hearing has been reported by others (Schvartz-Leyzac & Chatterjee, 2015; Shen et al., 2016), and a correlation between complex pitch sensitivity and vocal emotion recognition has also been reported in the literature, although not in older listeners (Deroche et al., 2016).

In the current study, a group of older adults with normal hearing was included in an effort to examine aging effects independent of hearing loss. Because some of these individuals still had elevated high-frequency thresholds, their audiometric thresholds at lower and higher frequencies were considered as predictors of performance, to rule out hearing loss as a confound.

Effects of Hearing Loss

In listeners with normal hearing, facial emotion recognition, and to a lesser extent vocal emotion recognition, have been studied extensively as a function of age. However, few studies have examined the role of hearing loss in vocal emotion recognition in adults, even though the National Institutes of Health reports that 25% of adults aged 65-74 and 50% of adults over 74 years of age have disabling hearing loss (https://www.nidcd.nih.gov/health/statistics/quick-statistics-hearing). Orbelo et al. (2005) found that, although elderly adults with mild to moderate hearing loss showed deficits in vocal emotion recognition compared to young adults, the variability within the elderly group was related not to hearing loss but to aging effects in the right hemisphere of the brain. Picou (2016) used non-speech auditory stimuli to compare older listeners with mild-to-severe hearing loss to middle-aged listeners with normal hearing in an emotion perception task. She found deficits in emotional valence and arousal ratings and concluded that acquired hearing loss, not age, affected the emotional valence ratings. Using fMRI, Husain et al. (2014) presented emotional sounds to adults with high-frequency hearing loss (aged 58 +/− 8 years) and to a control group with normal hearing. They concluded that the decline of emotion recognition in the group with hearing loss could be related either to missing high-frequency information in the stimulus or to neuronal reorganization related to hearing loss. Lambrecht et al. (2012) found a mediation effect on auditory emotion recognition in older adults with a mild hearing loss at 4000 Hz. They excluded older adults with greater degrees of hearing loss. A few older studies have examined emotion recognition in adults with hearing loss using sentence materials.
Rigo and Lieberman (1989) correlated degree of hearing loss (mild, moderate, severe) with the ability to identify affective, interpersonal situations (auditory-only expressions of dislike, affection, etc.) and found that listeners with hearing loss performed as well as normal hearing peers if their low-frequency hearing thresholds were in the normal range. Most et al. (1993) completed a similar study and found no correlation; however, their participants were adolescents with severe to profound hearing loss. Oster and Risberg (1986) found deficits in emotion recognition by adolescents with hearing loss attending to sentences spoken in different emotions by actors. They found similar deficits in normal hearing peers who listened to the same sentences through a 500 Hz low-pass filter. None of these studies investigated the effects of hearing loss compounded with age-related declines in emotion processing in the elderly population. In the present study, we examined the effects of hearing loss in two ways. First, we compared the performance of a group of listeners with hearing loss with that of a group with normal hearing. Second, within both groups, we examined low- and high-frequency thresholds as predictors. Effects of high-frequency hearing loss are expected to provide information about overall hearing sensitivity. However, effects of low-frequency hearing loss are expected to be most relevant to vocal emotion recognition, because voice pitch changes, a critical cue to vocal emotions, are encoded primarily through cochlear place and the temporal fine structure of neural responses. Without good low-frequency hearing, the rich harmonic structure underlying voice pitch cues would not be well represented.

Effects of Listener Sex

Hall’s (1978) extensive literature review of facial and vocal emotion recognition found a clear female advantage that was particularly large for auditory-only stimuli. A number of recent studies have confirmed a small female advantage (for review and meta-analysis, see Thompson and Voyer, 2014), but the female advantage for auditory-only stimuli was not replicated. Hoffman et al. (2010) found a female advantage for subtle facial emotions, but no advantage for high-intensity facial expressions. Other studies have shown no sex effect for facial or gestural emotion recognition (Grimshaw et al. 2004; Alaerts et al. 2011). Erwin et al. (1992) and Thompson and Voyer (2014) both reported a female advantage for negative emotions (facial and vocal emotions in Thompson and Voyer, facial emotions only in Erwin et al.); however, a ceiling effect in numerous studies of positive emotions led Thompson and Voyer to question whether women would also have outperformed men for positive emotions in the absence of a ceiling. In Hoffman et al.’s (2010) study, women’s performance reached ceiling for mid-intensity facial expressions, whereas men showed a more gradual improvement from low to high intensity.

Some studies have suggested that the sex of the person producing the stimuli interacts with the sex of the participants. Erwin et al. (1992) found that women were better at detecting some facial emotions in male faces and that men were worse at detecting some emotions in female faces, and Rahman et al. (2004) found faster reaction times for some male faces than for female faces.

Reaction Times in Emotion Recognition

Studies of facial and gestural emotion recognition (Rahman et al. 2004; Alaerts et al. 2011) have found faster reaction times in women than in men. Rahman et al. (2004) reported this reaction time advantage even when recognition accuracy did not differ between sexes or emotions. As noted above, Rahman et al. (2004) also found that reaction times differed across specific facial emotions and with the sex of the face in the stimulus. Reaction times were faster for happy male faces than for happy female faces and for sad male faces than for sad female faces; they were slower for neutral male faces than for neutral female faces.

Response time measures may produce different effects depending on whether participants are instructed to focus on accuracy or on response speed. In the present study, accuracy was emphasized in the instructions; participants were not aware that reaction times were being recorded. Recent work by Pals et al. (2015) indicates that within similar experimental paradigms, reaction times still reflect listening effort. Although the central focus of our investigation was on accuracy and sensitivity, reaction times were included to supplement these findings.

Objectives of the present study

In the current study, we examined emotion recognition with sentence stimuli in a group of adults with hearing loss ranging in age from young to old and compared the findings with those of a group of normal hearing adults of a similar age range. Previous emotion recognition studies have investigated responses to a wide range of emotions, including happy, sad, scared, angry, disgusted, and surprised. Given that relatively little is known about the acoustic cues most relevant to perceptions of emotion in general, we focused in the present study on 4 primary emotions (happy, sad, scared, and angry) in addition to neutral, in order to simplify the analysis and interpretation of our results. These 4 emotions are included in a large body of literature on both facial and vocal emotion recognition, in children and in adults. This should make our results more comparable to the literature while ensuring that the emotions are conceptually distinct enough for most individuals. In addition, intergenerational differences stemming from variations in social communication style across age groups, as well as talker-based variations, are likely to be smaller for primary emotions than for emotions with more nuanced differences (e.g., surprised vs. happy).

We were interested in effects of listeners’ age and sex and how these effects might interact with hearing loss. Based on previous literature, we hypothesized that there would be a female advantage in emotion recognition, that younger listeners would perform better than older listeners, and, finally, that the combined effects of age and hearing loss would be associated with poorer emotion recognition than either age or hearing loss alone. Performance was quantified in terms of accuracy (percent correct), sensitivity (d’ values derived from confusion matrices), and reaction times.

Methods

Participants

Two groups took part in this study: (1) listeners with symmetrical, bilateral sensorineural hearing loss (HL) who wore binaural hearing aids, and (2) listeners with normal hearing (NH). All participants were adult native speakers of American English.

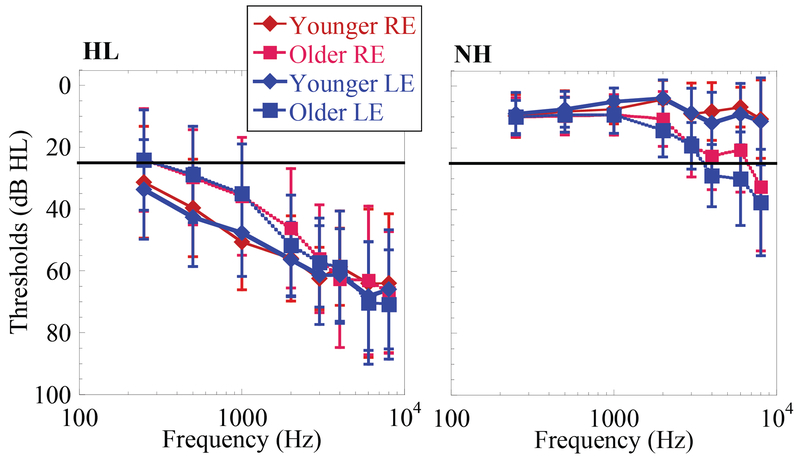

Thirty-two listeners with HL, aged 22-74 years, participated. Air and bone conduction thresholds were measured on the day of testing or within 4 months of testing, if the participant reported no changes in hearing status. Hearing loss was defined as air conduction thresholds worse than 25 dB HL at the majority of frequencies from 250-8000 Hz. Means and standard deviations of audiometric thresholds of these participants are shown in Figure 1.

Figure 1.

Audiometric thresholds obtained in younger (diamonds) and older (squares) participants, with red and blue lines designating the right ear (RE) and left ear (LE), respectively; participants with hearing loss (HL) are shown in the left column and those with normal hearing (NH) in the right column. The solid black line represents the criterion for a normal hearing threshold (25 dB HL). Frequency in Hz (abscissa). Hearing threshold in dB HL (ordinate). Error bars show +/− 1 standard deviation from the mean.

Thirty NH listeners, aged 21-75 years, participated. Air conduction thresholds were measured on the day of testing or within 2 months of testing. Normal hearing was defined as pure tone air conduction thresholds equal to or better than 25 dB HL from 250-8000 Hz; however, the criteria were relaxed somewhat for older participants. Thus, in participants over the age of 65, a PTA (500 Hz, 1 kHz, 2 kHz, and 4 kHz) of up to 22 dB HL was deemed acceptable, even when thresholds were worse than 25 dB HL at 4, 6, and 8 kHz. A small number of additional exceptions were also accepted: 3 younger listeners had borderline thresholds at a single frequency; a 53-year-old had a 30 dB HL threshold at 8 kHz; a 56-year-old had a 35 dB HL threshold at 4 kHz; and a 63-year-old had a 35 dB HL threshold at 6 kHz. Figure 1 shows the means and standard deviations of audiometric thresholds measured in this population.
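To make the inclusion rules concrete, the Python sketch below computes the four-frequency PTA (500, 1000, 2000, 4000 Hz) for one ear and applies the NH and HL definitions given above. It is a hypothetical illustration, not the screening procedure actually used: the set of tested frequencies, the dictionary input format, the function names, and the reading of the relaxed criterion as "PTA ≤ 22 dB HL" are all assumptions, and the individually approved exceptions listed above are not encoded.

```python
from statistics import mean

# Assumed set of tested frequencies (Hz) spanning 250-8000 Hz.
TEST_FREQS = [250, 500, 1000, 2000, 4000, 6000, 8000]
PTA_FREQS = [500, 1000, 2000, 4000]  # four-frequency PTA described in the text
NORMAL_LIMIT_DB = 25                 # normal-hearing limit (dB HL)
OLDER_PTA_LIMIT_DB = 22              # relaxed criterion for age > 65 (read as PTA <= 22)


def pta(thresholds):
    """Four-frequency pure tone average (dB HL) for one ear.

    `thresholds` is a hypothetical dict mapping frequency (Hz) to threshold (dB HL).
    """
    return mean(thresholds[f] for f in PTA_FREQS)


def meets_nh_criterion(thresholds, age):
    """NH rule for one ear: all thresholds <= 25 dB HL, or, if older than 65, PTA <= 22 dB HL."""
    if all(thresholds[f] <= NORMAL_LIMIT_DB for f in TEST_FREQS):
        return True
    return age > 65 and pta(thresholds) <= OLDER_PTA_LIMIT_DB


def meets_hl_criterion(thresholds):
    """HL rule for one ear: thresholds worse than 25 dB HL at most tested frequencies."""
    n_elevated = sum(thresholds[f] > NORMAL_LIMIT_DB for f in TEST_FREQS)
    return n_elevated > len(TEST_FREQS) / 2
```

For example, an ear with thresholds of 10, 15, 15, 20, 35, 40, and 45 dB HL at the seven frequencies has a PTA of 21.25 dB HL. Under this sketch it would qualify as NH for a 70-year-old, but not for a 50-year-old.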

Stimuli

Twelve semantically emotion-neutral sentences from the HINT database were used for stimulus recordings, along with 2 additional sentences used for training. These sentences are identical to those described in Chatterjee et al. (2015), but new recordings were made in which the talkers used an adult-directed manner. Each sentence was recorded with the talker conveying each of the following emotions: happy, sad, angry, scared, and neutral. Ten adult talkers (5 male, 5 female) made recordings, and the male and the female talker whose emotions were most recognizable (based on a previous pilot study with NH listeners in their 20s) were selected for this study. The male and female talkers chosen were aged 34 and 26 years, respectively. Recordings were made at a 44.1 kHz sampling rate and 16-bit resolution using an AKG C 2000 B microphone feeding into an Edirol-25EX audio interface, and were processed offline using Audition v.3.0 or Audition v.5.0 software. Each talker recorded three repetitions of each set, and the cleanest recording of each utterance was included in the final stimulus set. When all three recordings were clean, the second repetition was used.

Procedure

Stimuli were presented via an Edirol-25EX soundcard and a single loudspeaker (Grason Stadler Inc.) at a mean level of 65 dB SPL. Each talker’s materials were presented in a single block. The listener sat in a sound booth, facing the loudspeaker approximately one meter away. Note that individual utterances were not normalized to each other in intensity. Rather, a 1 kHz tone was generated to have the same mean root-mean-square level as that of all 60 utterances produced by a specific talker, and the system was calibrated to deliver the 1 kHz tone at 65 dB SPL. This allowed the natural intensity variations associated with the different emotions to be present in the stimuli. For the listeners with HL, hearing aids were worn at preferred settings.
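The calibration logic can be illustrated with a short Python sketch. It computes the RMS of each utterance, averages across a talker's utterances, and synthesizes a 1 kHz sine whose RMS matches that average, using the fact that a sine of peak amplitude A has an RMS of A/√2. Averaging the per-utterance RMS values in linear units is an assumption; the text does not say whether the mean was taken over linear RMS values, in dB, or over the concatenated recordings. Signals are plain lists of floats to keep the example self-contained, and the function names are hypothetical.

```python
import math

FS = 44100  # sampling rate of the recordings (Hz)


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def calibration_tone(utterances, freq_hz=1000.0, dur_s=1.0, fs=FS):
    """Return a sine tone whose RMS equals the mean RMS of `utterances`.

    Assumption: 'mean RMS level' = arithmetic mean of the per-utterance RMS values.
    """
    target_rms = sum(rms(u) for u in utterances) / len(utterances)
    peak = target_rms * math.sqrt(2)  # RMS of a sine is peak / sqrt(2)
    n = int(round(dur_s * fs))
    return [peak * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]
```

The output system would then be adjusted until this tone measures 65 dB SPL at the listening position. Because the individual utterances are not rescaled, their natural level differences across emotions are preserved.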

A Matlab-based custom software tool, Emognition, was used to control the experiment. A computer screen pictured 5 simple (stick figure-like) faces conveying the 5 emotions, with the corresponding emotion written under each face. The emotions were stacked vertically in the same order for each sentence (top to bottom: Happy, Scared, Neutral, Sad, Angry). Participants used a mouse to click on the emotion that corresponded to each sentence heard. Passive training consisted of the participant listening to 2 sentences recorded by the particular talker in all 5 emotions, presented in random order. After each utterance was heard, the corresponding face lit up on the computer screen. After 2 rounds of passive training, the test stimulus set (consisting of 12 new sentences spoken by the same talker) was presented in random order, and the participant used the mouse to choose the corresponding emotion. This procedure (including the passive training) was repeated, and then the entire procedure was repeated with the second talker’s materials. For example, a participant passively trained with the female talker’s ten training utterances (2 sentences, 5 emotions) 2 times in a row, then actively selected the emotion for 12 different sentences (each in 5 emotions, a total of 60 utterances). Two passive training rounds were then performed again with the female talker, followed by the participant again selecting the emotion for the test block of 60 utterances spoken by the same talker, presented in random order. Next, the entire procedure was repeated with the same sentences, using the male talker. The order of talkers (female, male) was counterbalanced by alternating it between participants.
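The presentation schedule described above can be sketched in Python as follows. This is a hypothetical reconstruction of the trial order only, not the Emognition software. The sentence labels are placeholders, `random.shuffle` stands in for whatever randomization was actually used, and the alternation rule for talker order is an assumption.

```python
import random

EMOTIONS = ["happy", "scared", "neutral", "sad", "angry"]


def shuffled_block(sentences, rng):
    """All sentence x emotion combinations in a freshly shuffled order."""
    items = [(s, e) for s in sentences for e in EMOTIONS]
    rng.shuffle(items)
    return items


def session(talker_order, train_sents, test_sents, rng):
    """For each talker: twice run (2 passive training rounds + 1 test block)."""
    schedule = []
    for talker in talker_order:
        for _repeat in range(2):
            for _round in range(2):
                schedule += [(talker, "train", s, e)
                             for s, e in shuffled_block(train_sents, rng)]
            schedule += [(talker, "test", s, e)
                         for s, e in shuffled_block(test_sents, rng)]
    return schedule


def talker_order_for(participant_index):
    """Counterbalance by alternating talker order across participants (assumed rule)."""
    return ["female", "male"] if participant_index % 2 == 0 else ["male", "female"]
```

With 2 training and 12 test sentences, each participant receives 80 passive training trials and 240 test trials. That is 24 test trials per emotion per talker.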

Responses were recorded as overall percent correct scores, confusion matrices, and reaction times. Although reaction times were measured, participants were not informed of this, and instructions emphasized accuracy (i.e., not speed of response). The sensitivity index d’ was calculated based on hit rates and false alarm rates derived from the confusion matrices (Macmillan & Creelman, 2005).
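A minimal Python sketch of how percent correct and per-emotion d’ can be derived from trial-level responses follows. For each emotion, the hit rate is the proportion of trials with that target that were labelled correctly. The false-alarm rate is the proportion of trials with a different target that received that label, and d’ = z(H) − z(F). This one-vs-rest treatment is one simple approach and is not necessarily the exact computation used here. Clamping rates of 0 or 1 to 1/(2N) and 1 − 1/(2N) is an assumed correction, since the text does not state which correction, if any, was applied.

```python
from statistics import NormalDist

EMOTIONS = ["angry", "happy", "neutral", "sad", "scared"]
_z = NormalDist().inv_cdf  # inverse standard normal CDF (z-transform)


def confusion_matrix(trials):
    """Count responses per target: counts[target][response] from (target, response) pairs."""
    counts = {t: {r: 0 for r in EMOTIONS} for t in EMOTIONS}
    for target, response in trials:
        counts[target][response] += 1
    return counts


def percent_correct(counts):
    """Overall percent correct: sum of the diagonal over all trials."""
    total = sum(sum(row.values()) for row in counts.values())
    correct = sum(counts[e][e] for e in EMOTIONS)
    return 100.0 * correct / total


def _clamp(rate, n):
    """Assumed 1/(2N) correction so that rates of 0 or 1 give finite z-scores."""
    return min(max(rate, 1 / (2 * n)), 1 - 1 / (2 * n))


def d_prime(counts, emotion):
    """One-vs-rest d' = z(hit rate) - z(false-alarm rate) for a single emotion."""
    n_signal = sum(counts[emotion].values())
    n_noise = sum(sum(counts[t].values()) for t in EMOTIONS if t != emotion)
    hits = counts[emotion][emotion]
    false_alarms = sum(counts[t][emotion] for t in EMOTIONS if t != emotion)
    hit_rate = _clamp(hits / n_signal, n_signal)
    fa_rate = _clamp(false_alarms / n_noise, n_noise)
    return _z(hit_rate) - _z(fa_rate)
```

In this formulation, confusions lower d’ for both emotions involved. Missed targets reduce the hit rate of the presented emotion, and the same responses raise the false-alarm rate of the chosen emotion.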

Statistical Analyses

Data analyses were conducted in R version 3.1.2 (R Core Team 2015) using the packages car (Fox & Weisberg, 2011), nlme (Pinheiro et al. 2015), which provided the linear mixed-effects model implementation, and ggplot2 (Wickham, 2009), which was used for graphing. Outliers were identified using “Tukey fences” (Tukey, 1977): points above the upper fence (third quartile + 1.5*interquartile range) or below the lower fence (first quartile − 1.5*interquartile range) were considered outliers.
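The Tukey-fence rule can be written in a few lines of Python. This sketch computes quartiles with `statistics.quantiles(..., method="inclusive")`, which uses linear interpolation between order statistics, matching R's default `quantile` type 7. Whether that quartile definition was used here, and whether fences were computed over the whole dataset or within conditions, are assumptions.

```python
from statistics import quantiles


def tukey_fences(values, k=1.5):
    """Return (lower, upper) fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _median, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def remove_outliers(values, k=1.5):
    """Keep only the values lying on or within the fences."""
    lower, upper = tukey_fences(values, k)
    return [v for v in values if lower <= v <= upper]
```

For the values 1 through 8 plus 100, Q1 = 3 and Q3 = 7, so the fences are −3 and 13. Only the value 100 is removed.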

The linear mixed-effects model used the lme function within nlme. The anova function within the car library was used to compare models with and without the inclusion of specific factors of interest. Models were built beginning with Age or Age Group as the primary fixed effect, and other predictors were introduced sequentially. If the inclusion of a predictor did not improve the model significantly, it was not included in the final model. Model residuals were visually inspected to ensure that their distribution did not deviate from normality.
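The sequential model-building procedure can be summarized as a forward-selection loop driven by likelihood-ratio tests between nested models. The Python sketch below assumes that each candidate model has already been fit elsewhere (for example, with `lme` in R). The hypothetical callback `fit` returns only the model's maximized log-likelihood and number of estimated parameters. When nested models differ in their fixed effects, such comparisons are valid only for maximum-likelihood fits rather than REML fits (nlme's `lme` defaults to REML). The chi-square tail probability uses the closed-form series for integer degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variate with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2-1} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: 2*(1 - Phi(c)) + 2*phi(c) * sum_{r=1}^{(df-1)/2} c^(2r-1) / (1*3*...*(2r-1)), c = sqrt(x)
    c = math.sqrt(x)
    tail = math.erfc(c / math.sqrt(2))  # equals 2 * (1 - Phi(c))
    pdf = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    term, total = c, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    return tail + 2 * pdf * total


def lr_test(ll_reduced, k_reduced, ll_full, k_full):
    """Likelihood-ratio statistic, degrees of freedom, and p-value for nested models."""
    stat = 2 * (ll_full - ll_reduced)
    df = k_full - k_reduced
    return stat, df, chi2_sf(stat, df)


def forward_select(base_terms, candidates, fit, alpha=0.05):
    """Add candidates in order, keeping each only if it significantly improves the fit.

    `fit(terms)` is a hypothetical callback returning (log_likelihood, n_params).
    """
    terms = list(base_terms)
    ll, k = fit(terms)
    for term in candidates:
        ll_new, k_new = fit(terms + [term])
        _stat, _df, p = lr_test(ll, k, ll_new, k_new)
        if p < alpha:
            terms.append(term)
            ll, k = ll_new, k_new
    return terms
```

Entering predictors in a fixed order, as described above, makes the outcome depend on that order when predictors are correlated. This is one reason the collinear PTA measures were handled with care in the within-group analyses.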

Results

1. Predictors of overall accuracy

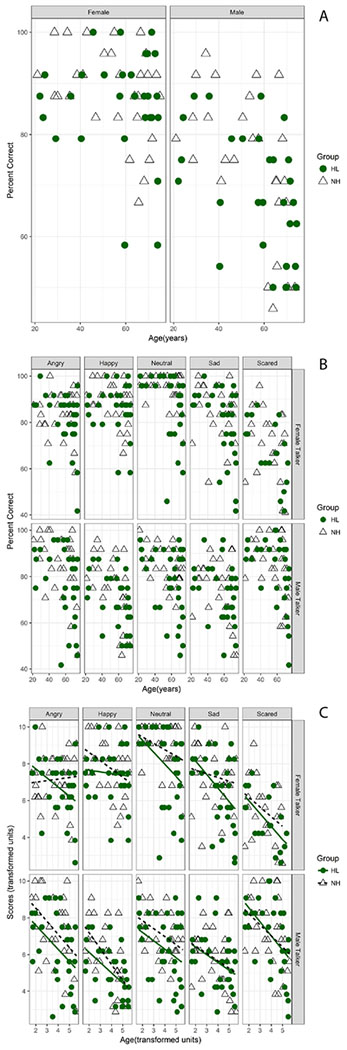

First, outliers in the accuracy scores were removed from the overall dataset. This resulted in removal of 5.9% of the data. Figure 2A shows the accuracy scores (overall percent correct) obtained in the 2 groups of participants (NH, HL) plotted as a function of their age and separated out by performance with the female and male talkers’ materials respectively (left and right panels). Figure 2B also shows accuracy scores, this time separated into different panels showing the different emotions and Talker sex (Male or Female). Generally, the younger NH listeners showed overall excellent performance, and scores declined with age in a talker-dependent manner (greater declines for the male talker’s stimuli). The data follow a nonlinear function, with the slope of the age-related change steepening beyond middle age. To linearize the data, we applied an exponential transform to the Age data and the percent correct scores using the equations:

[Eqn. 1]

[Eqn. 2]

where expAge represents the transformed Age variable (Age is expressed in years) and expPC represents the transformed percent correct scores. Figure 2C shows the results after this transformation, along with best-fitting linear regression lines. Henceforth, “Age” will refer to expAge, and the dependent variable expPC will be referred to as “Accuracy”. These data were used as inputs to a linear mixed-effects (LME) regression analysis with Age, Listener Sex, Talker (Male or Female), Emotion, and Hearing Status (NH, HL) as predictors and subject-based random intercepts. Visual inspection of Q-Q plots and histograms of the model residuals did not show notable deviations from normality or homoscedasticity. The results indicated a main effect of Age (t(53)=−4.57, p < .0001) and an interaction between Age and Talker (t(489)=2.17, p=0.03). No other interactions were observed between the predictors.

Figure 2.

A) Overall percent correct, plotted as a function of age (years), for participants with HL (filled circles) and NH (open triangles). Data obtained with the female talker’s materials are in the left column and those obtained with the male talker’s materials in the right column. B) As in Fig. 2A, but columns separate data by the 5 emotions (angry, happy, neutral, sad, scared). Responses are divided by talker (the upper two rows correspond to the female talker, the lower two rows to the male talker). C) As in Fig. 2A, but with the ordinate and abscissa represented as scores (transformed units) and age (transformed units), respectively. The solid and dotted lines show linear regression fits for listeners with HL and NH, respectively.

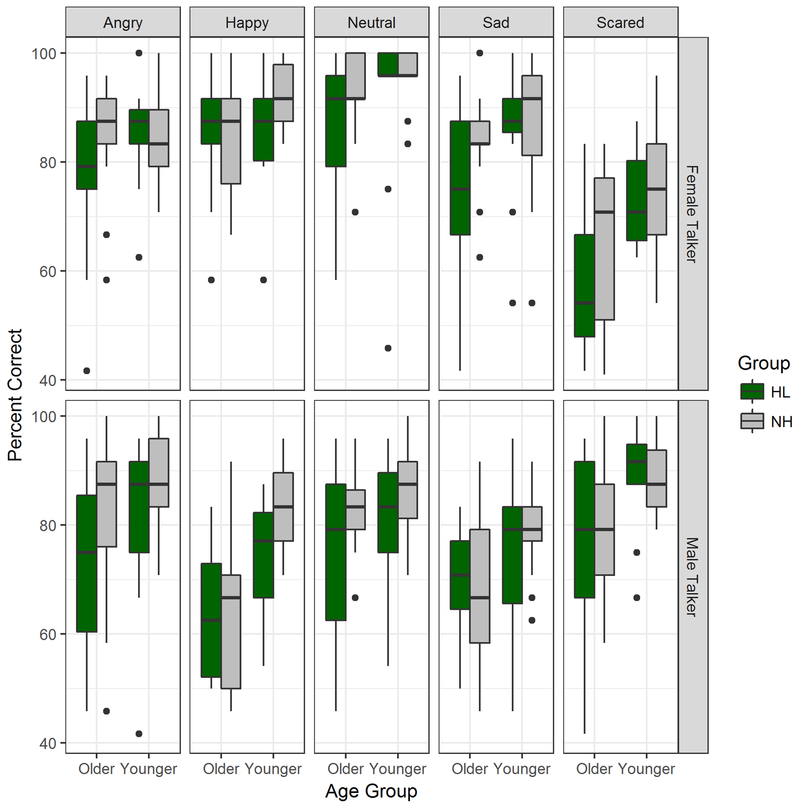

In a second analysis, Age was replaced by a categorical variable, Age Group (Younger: age < 60; Older: age > 60), and an LME analysis was performed with the same factors as before. Results showed significant effects of Age Group (t(53)=−3.11, p=0.003), Talker (t(489)=5.49, p<0.0001), and Hearing Status (t(53)=−2.85, p=0.006), and a marginally significant interaction between Age Group and Talker (t(489)=1.84, p=0.066). Again, no effects of Emotion were observed. Figure 3 shows boxplots of the data obtained in the two age groups, separated by Emotion, Hearing Status, and Talker Sex as in Figs. 2B and 2C.

Figure 3.

As in Fig. 2, but box plots show percent correct as a function of age group (older and younger groups), separated into participants with HL (green) and NH (gray). Dots show data points extending beyond whiskers (not including outliers).

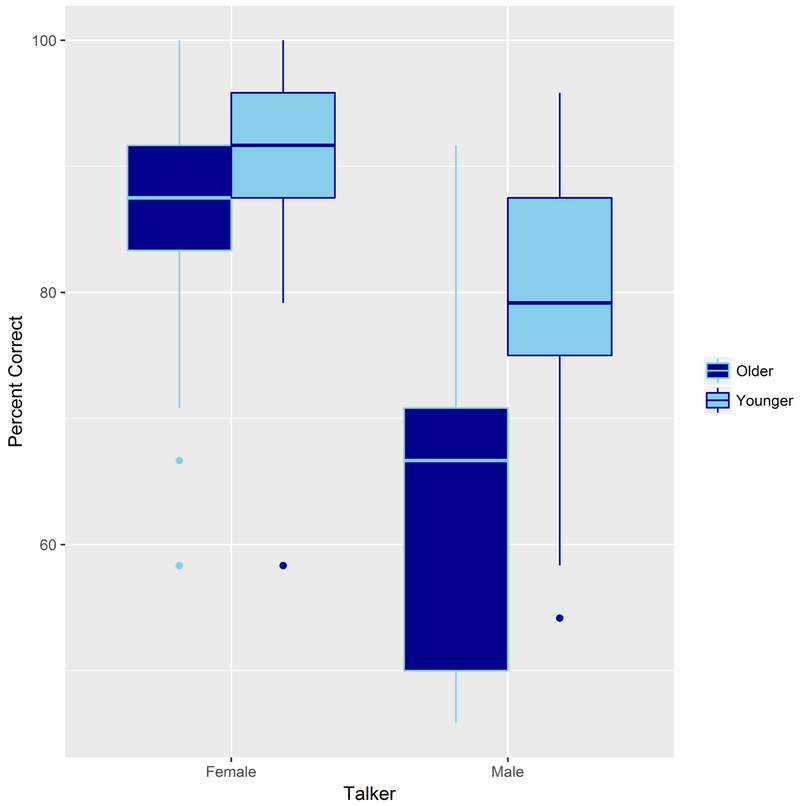

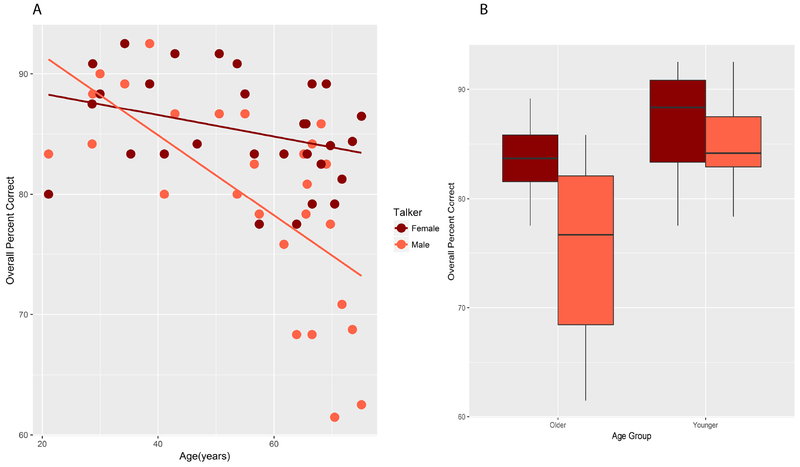

Figure 4 illustrates the interaction between Age and Talker. The participants (NH and HL groups together) were divided into two groups by age, those younger than 60 and those older than 60. The older group appears to be more susceptible to differences between talkers than the younger group, showing a greater decline in performance from the female to the male talker’s materials.

Figure 4.

Overall percent correct, plotted as a function of talker sex. Box plots show percent correct for the female talker (left) and the male talker (right), for older listeners (dark blue) and younger listeners (light blue). Dots show data points extending beyond whiskers (not including outliers).

2. Effects of Hearing Loss

We were specifically interested in effects of the degree of hearing loss on performance by the participants. The overall PTA, as well as the low and high frequency PTAs (LFPTA, HFPTA) were considered in these analyses. As might be expected, the distributions of the PTAs were quite different between the two groups, with PTAs being clustered closely around normal values in the NH group and widely spread out in the HL group. As the ranges of the PTAs were different between the two groups of participants, we examined effects of PTA separately within each group.

A. Analyses of Accuracy within the NH group

Within-group analyses of Accuracy included pure tone averages of audiometric thresholds (PTAs) as predictors. An analysis was conducted within the NH group to investigate the effects of Age, Emotion, PTA, Listener Sex, and Talker. Within the NH group, the overall PTA was highly correlated with the high-frequency PTA (HFPTA) (r=0.92, p<0.0001) and with the low-frequency PTA (LFPTA) (r=0.78, p<0.0001). The high- and low-frequency PTAs were also significantly correlated with each other (r=0.47, p<0.01). Because of these collinearities, a hierarchical LME modeling approach was taken, with Age (transformed units), Talker, LFPTA, HFPTA, Emotion, and Listener Sex entered in sequence. The model showed a significant effect of Age, and adding Talker significantly improved the model; none of the other factors significantly improved the model, so they were excluded. The final model showed a significant effect of Age (t(27)=−4.41, p<0.001) and a significant interaction between Age and Talker (t(248)=2.23, p=0.027). Visual inspection of model residuals revealed no obvious deviations from normality or homoscedasticity.
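As a rough illustration of why these correlations matter, consider a model containing only two predictors correlated at r. In that case the variance inflation factor (VIF) of each coefficient is 1/(1 − r²). The Python sketch below applies this two-predictor formula to the correlations reported above. It is a back-of-the-envelope indicator only: VIFs were not part of the reported analysis, and the fitted models contained additional predictors and a random intercept.

```python
def vif_two_predictors(r):
    """Variance inflation factor when a model contains only two predictors correlated at r."""
    return 1.0 / (1.0 - r * r)


# Pairwise correlations reported for the NH group
pairs = {"PTA vs HFPTA": 0.92, "PTA vs LFPTA": 0.78, "HFPTA vs LFPTA": 0.47}
for name, r in pairs.items():
    print(f"{name}: r = {r:.2f}, VIF = {vif_two_predictors(r):.2f}")
```

This prints VIFs of 6.51, 2.55, and 1.28, respectively. The overall PTA shares much of its variance with HFPTA, which is consistent with entering LFPTA and HFPTA as separate predictors rather than alongside the overall PTA.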

A similar analysis was conducted with Age Group as a categorical variable. Again, Age Group contributed significantly, and the inclusion of Talker improved the model fit significantly. Emotion, LFPTA, HFPTA, and Listener Sex did not significantly improve the model fit, and these factors were excluded. The results showed significant effects of Age Group (t(27)=−3.89, p<0.001) and Talker (t(248)=3.61, p<0.001) and a marginal interaction between the two (t(248)=1.74, p=0.08). The interactions between Age and Talker and between Age Group and Talker in these two analyses are illustrated in Figs. 5A and 5B, respectively. The pattern is consistent: performance by older listeners falls more steeply from the female to the male talker’s stimuli.

Figure 5.

A) Overall percent correct as a function of age (years) in the NH group, for the female talker’s (dark red circles) and male talker’s (light red circles) materials. Solid lines show linear regression fits. B) Box plots of percent correct scores for the older and younger NH groups (abscissa), for the female talker’s (dark red) and male talker’s (light red) materials. Colors are as in Fig. 5A.
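The hierarchical approach used for the NH group enters predictors one at a time and keeps a predictor only if it significantly improves the model. A common way to test each step is a likelihood-ratio test between nested models. The text does not state which test was used, so this is an assumption. The sketch below shows only the arithmetic of that test, in standard-library Python, and the log-likelihoods are hypothetical. In practice they would come from fitted mixed models, for example from the nlme package cited in the reference list. Comparing fixed effects this way requires maximum-likelihood fits rather than REML fits.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1.

    Uses closed forms of the regularized upper incomplete gamma function Q(df/2, x/2).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        # Even df: Q(n, y) = exp(-y) * sum_{j=0}^{n-1} y**j / j!
        return math.exp(-y) * math.fsum(y**j / math.factorial(j) for j in range(df // 2))
    # Odd df: Q(n + 1/2, y) = erfc(sqrt(y)) + exp(-y) * sum_{j=0}^{n-1} y**(j + 1/2) / Gamma(j + 3/2)
    n = (df - 1) // 2
    tail = math.fsum(y ** (j + 0.5) / math.gamma(j + 1.5) for j in range(n))
    return math.erfc(math.sqrt(y)) + math.exp(-y) * tail


def likelihood_ratio_test(loglik_reduced: float, loglik_full: float, df_diff: int) -> tuple:
    """Compare two nested models; returns (LR statistic, p-value)."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)


# Hypothetical ML log-likelihoods for models with predictors entered in sequence.
# Each step adds one parameter (e.g., a two-level Talker effect or a numeric LFPTA slope).
models = [
    ("Age", -512.4),
    ("Age + Talker", -506.1),
    ("Age + Talker + LFPTA", -505.6),
]

for (prev_label, prev_ll), (label, ll) in zip(models, models[1:]):
    stat, p = likelihood_ratio_test(prev_ll, ll, df_diff=1)
    print(f"{label} vs {prev_label}: LR = {stat:.2f}, p = {p:.4f}")
```

Each comparison here is between consecutive models. In a strict hierarchical procedure, a predictor that fails the test would be dropped before the next one is entered.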

B. Analyses of Accuracy within the HL group

Within the HL group, multiple correlations were observed between potential predictors. LFPTA and HFPTA were significantly correlated with each other (r=0.27, p=0.04). LFPTA and HFPTA were also significantly related to Listener Sex (men had higher thresholds overall than women) and to exponentiated Age (expAge). To minimize collinearity effects, LFPTA and HFPTA were considered in separate analyses, and Listener Sex was not included as a factor. Age had a significant effect, and the inclusion of Talker significantly improved the model. The inclusion of LFPTA marginally improved the model (p=0.08), but the inclusion of Emotion did not. Incorporating interactions did not improve the model fit. In a second analysis, HFPTA did not improve the model when added to Age and Talker. The final model therefore showed significant effects of Age (t(28)=−3.88, p<0.001) and Talker (t(253)=4.63, p<0.001), and a marginal effect of LFPTA (t(28)=−1.78, p=0.085). The remaining factors did not contribute.

A similar analysis was conducted with Age Group as a categorical predictor instead of Age. Age Group had a significant effect (t(28)=−2.73, p=0.011), as did Talker (t(253)=4.65, p<0.001). LFPTA contributed only weakly to the model and did not reach significance (t(28)=−1.65, p=0.11). In a separate analysis, HFPTA did not contribute significantly beyond Age and Talker. The remaining factors, Emotion and Listener Sex, did not contribute to the model.
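The variance inflation factor (VIF) offers one way to quantify the collinearity concerns raised for both groups. In a model with exactly two predictors correlated at r, the VIF of each predictor is 1/(1 − r²). With more predictors, the VIF instead uses the R² from regressing each predictor on all the others. This is an illustrative calculation and not an analysis reported in the study. The sketch applies the two-predictor formula to the correlations quoted above and to a small set of hypothetical threshold values. The function names are hypothetical.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = math.fsum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = math.fsum((a - mx) ** 2 for a in x)
    syy = math.fsum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(r):
    """Variance inflation factor for either of two predictors correlated at r."""
    if not -1.0 < r < 1.0:
        raise ValueError("|r| must be < 1")
    return 1.0 / (1.0 - r * r)


# Correlations reported in the text (NH and HL groups)
reported = {
    "NH: PTA vs HFPTA": 0.92,
    "NH: PTA vs LFPTA": 0.78,
    "NH: LFPTA vs HFPTA": 0.47,
    "HL: LFPTA vs HFPTA": 0.27,
}
for label, r in reported.items():
    print(f"{label}: r = {r:.2f}, VIF = {vif_two_predictors(r):.2f}")

# The same check starting from raw values (hypothetical PTAs in dB HL, one per listener)
lfpta = [10.0, 15.0, 20.0, 25.0, 30.0, 35.0]
hfpta = [40.0, 35.0, 55.0, 50.0, 65.0, 60.0]
r = pearson_r(lfpta, hfpta)
print(f"hypothetical LFPTA vs HFPTA: r = {r:.2f}, VIF = {vif_two_predictors(r):.2f}")
```

Common rules of thumb flag VIFs above 5 or 10. By that standard, the VIF of about 6.5 implied by r=0.92 signals substantial redundancy, whereas r=0.27 inflates coefficient variance by less than 10%.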

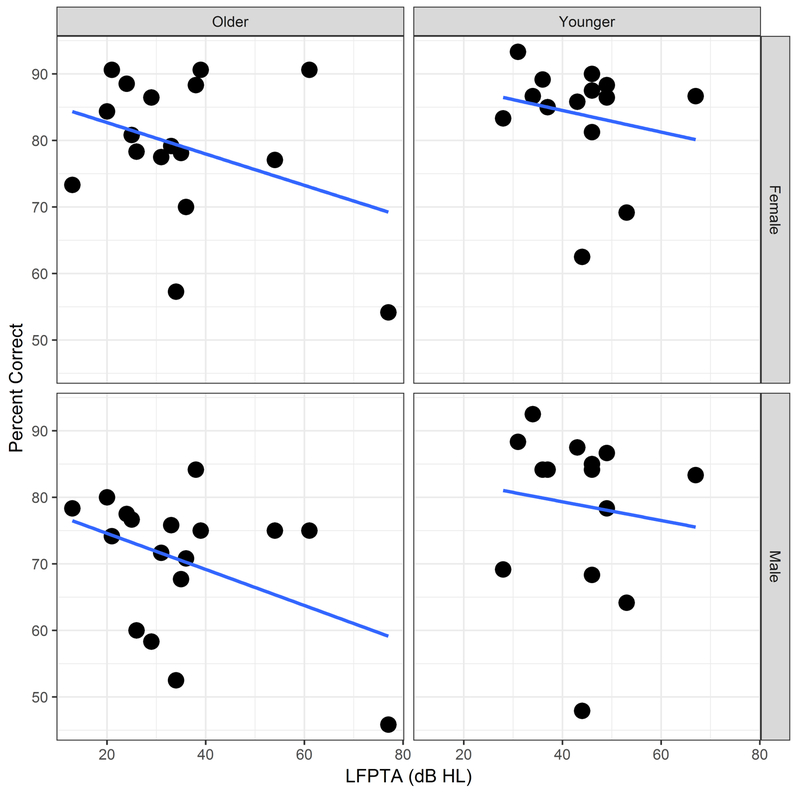

Figure 6 shows the effect of LFPTA on the mean percent correct scores obtained by listeners (calculated across emotions), for older and younger age groups (left and right columns) and for the female and male talkers (top and bottom rows).

Figure 6.

Overall accuracy (percent correct scores) as a function of LFPTA (dB HL). Panels show the older group (left column) and the younger group (right column), for the female talker’s materials (top row) and the male talker’s materials (bottom row). Circles represent individual participants. Solid lines represent linear regression fits.

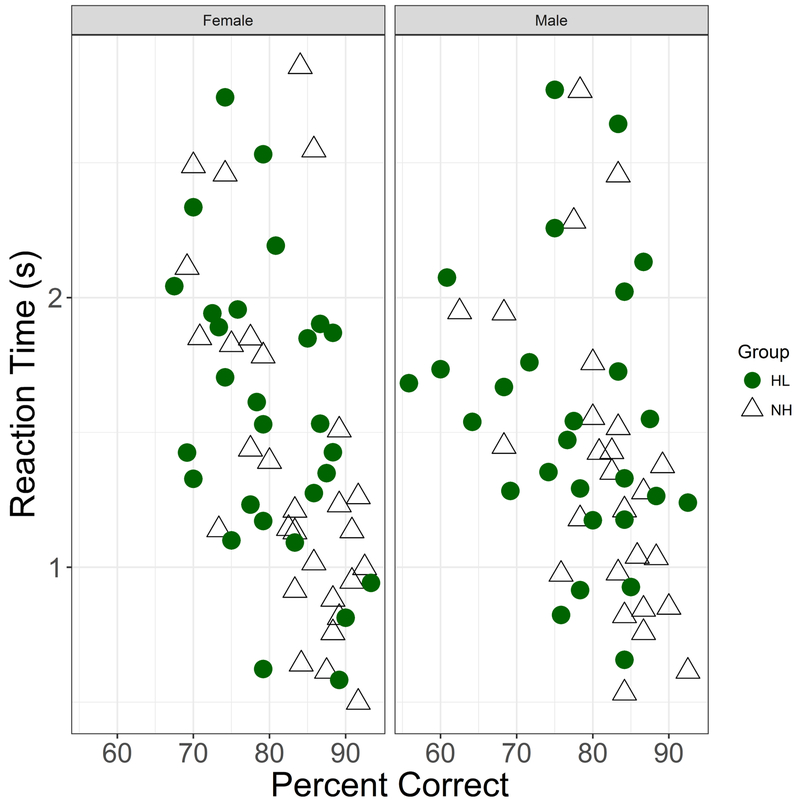

Predictors of Reaction Times

Outlier analyses led to the exclusion of 5.7% of the data. A multiple regression analysis showed that overall reaction time was significantly related to accuracy (estimated slope=−0.032, p=0.02) but was not influenced by Hearing Status or Talker (Fig. 7). When plotted against listener age, the reaction time data showed a pattern parallel to that of the Accuracy scores, with reaction times increasing above age 60 (Fig. 8). As with the accuracy data, the Age and RT values were exponentiated to linearize the data space prior to analyses. An LME analysis with subject-based random intercepts showed main effects of Age (t(59)=4.32, p<0.001), Talker (t(512)=−2.10, p=0.036), and Emotion (t(512)=2.91, p=0.004), but no effects of Hearing Status or Listener Sex. Including interactions did not significantly improve the model.

A parallel analysis was conducted with Age Group (Younger, Older) in place of Age as a fixed effect. An LME analysis with subject-based random intercepts showed a main effect of Age Group (t(58)=2.47, p=0.017) and a marginal effect of Hearing Status (t(58)=2.01, p=0.05). It also showed main effects of Talker (t(512)=−2.11, p=0.035) and Emotion (t(512)=2.92, p=0.004), but no effects of Listener Sex.
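This section does not specify the outlier criterion. The reference list cites Tukey (1977), so one plausible reading is Tukey's fences, which exclude values lying more than 1.5 interquartile ranges below the first quartile or above the third quartile. The sketch below assumes that rule. The reaction times are hypothetical. The quartiles use Python's default `statistics.quantiles` method, which can differ slightly from other quartile definitions such as R's boxplot hinges. The sketch also leaves open whether the rule was applied per listener, per condition, or to pooled data.

```python
from statistics import quantiles


def tukey_fences(values, k=1.5):
    """Lower and upper fences: quartiles extended by k interquartile ranges."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def exclude_outliers(values, k=1.5):
    """Drop values outside the Tukey fences; return kept values and percent excluded."""
    lo, hi = tukey_fences(values, k)
    kept = [v for v in values if lo <= v <= hi]
    pct_excluded = 100.0 * (len(values) - len(kept)) / len(values)
    return kept, pct_excluded


# Hypothetical reaction times (seconds) for one condition
rts = [1.2, 1.4, 1.3, 1.5, 1.6, 1.4, 1.3, 6.8, 1.5, 1.7]
kept, pct = exclude_outliers(rts)
print(f"kept {len(kept)} of {len(rts)} trials ({pct:.1f}% excluded)")
```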

Figure 7.

Reaction time (seconds) plotted as a function of percent correct. Female listeners are shown in the left column and male listeners in the right column. Participants with HL are shown as filled circles and participants with NH as open triangles.

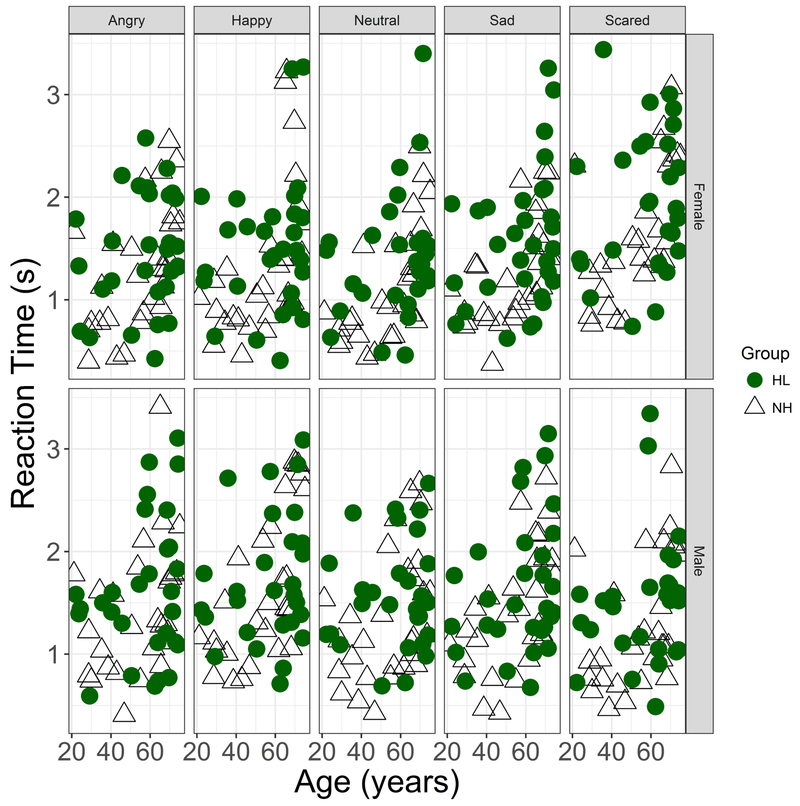

Figure 8.

As in Fig. 7, but with columns separating the data by emotion (angry, happy, neutral, sad, scared). The upper row shows data obtained with the female talker’s materials, and the lower row shows data obtained with the male talker’s materials.

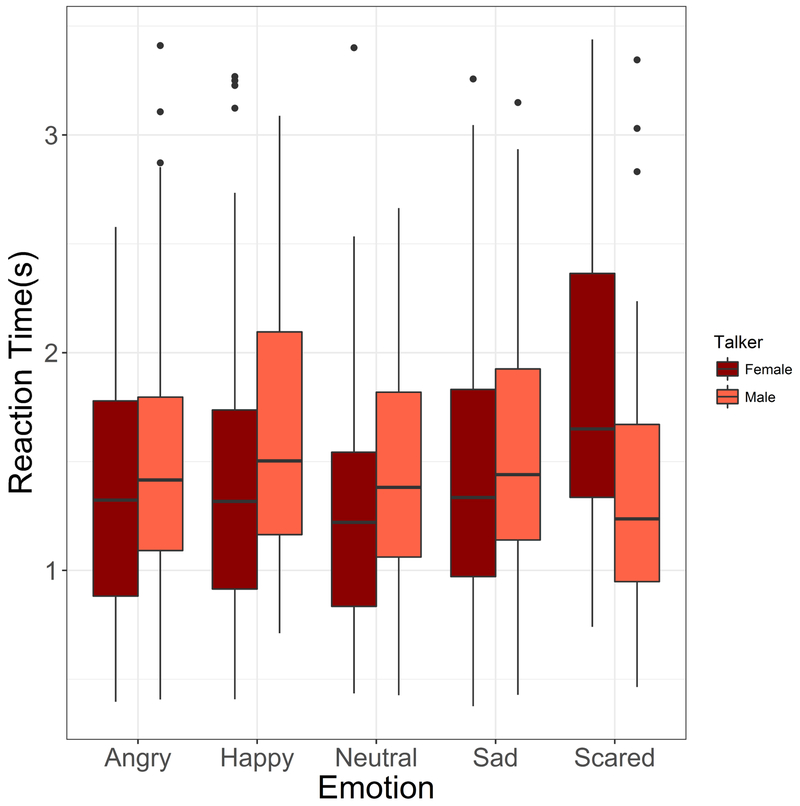

To follow up the effect of Emotion, reaction times for each pair of emotions were compared using pairwise t-tests with Bonferroni correction, separately for the male and female talkers’ materials. With the male talker’s materials, no significant differences were observed between emotions. With the female talker’s materials, reaction times for scared were significantly longer than those for each of the other emotions (scared vs. angry: p=0.004; scared vs. happy: p=0.014; scared vs. neutral: p<0.001; scared vs. sad: p=0.018). Figure 9 shows boxplots of the reaction time data obtained with the female and male talkers’ materials for each emotion.
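Five emotions give (5 × 4)/2 = 10 pairwise comparisons per talker. A Bonferroni correction therefore multiplies each raw p-value by 10, capping the result at 1. This is equivalent to testing each raw p-value against 0.05/10 = 0.005. The sketch below illustrates the adjustment. The raw p-values are hypothetical. The sketch also assumes that all ten pairs formed the correction family for each talker, which the text does not state.

```python
from itertools import combinations

EMOTIONS = ("angry", "happy", "neutral", "sad", "scared")


def bonferroni(p_values):
    """Bonferroni-adjust a mapping of comparison -> raw p-value.

    Each raw p is multiplied by the number of comparisons m and capped at 1,
    which is equivalent to testing the raw p against alpha / m.
    """
    m = len(p_values)
    return {key: min(1.0, p * m) for key, p in p_values.items()}


pairs = list(combinations(EMOTIONS, 2))  # 5 emotions -> 10 pairwise comparisons

# Hypothetical raw p-values, one per pair of emotions (same order as `pairs`)
raw_p = dict(zip(pairs, [0.21, 0.34, 0.62, 0.0031, 0.47, 0.09, 0.012, 0.28, 0.0002, 0.0049]))

adjusted = bonferroni(raw_p)
for (a, b), p_adj in adjusted.items():
    flag = "significant" if p_adj < 0.05 else "n.s."
    print(f"{a} vs {b}: raw p = {raw_p[(a, b)]:.4f}, adjusted p = {p_adj:.4f} ({flag})")
```

In R, the same adjustment is available through `p.adjust(p, method = "bonferroni")`, which `pairwise.t.test` applies via its `p.adjust.method` argument.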

Figure 9.

Reaction times (seconds) plotted against individual emotions (left to right: angry, happy, neutral, sad, scared). Box plots show participants’ reaction times for the female talker (dark red) and the male talker (light red). Dots show data points lying beyond the whiskers (not including outliers).

Analyses of d’

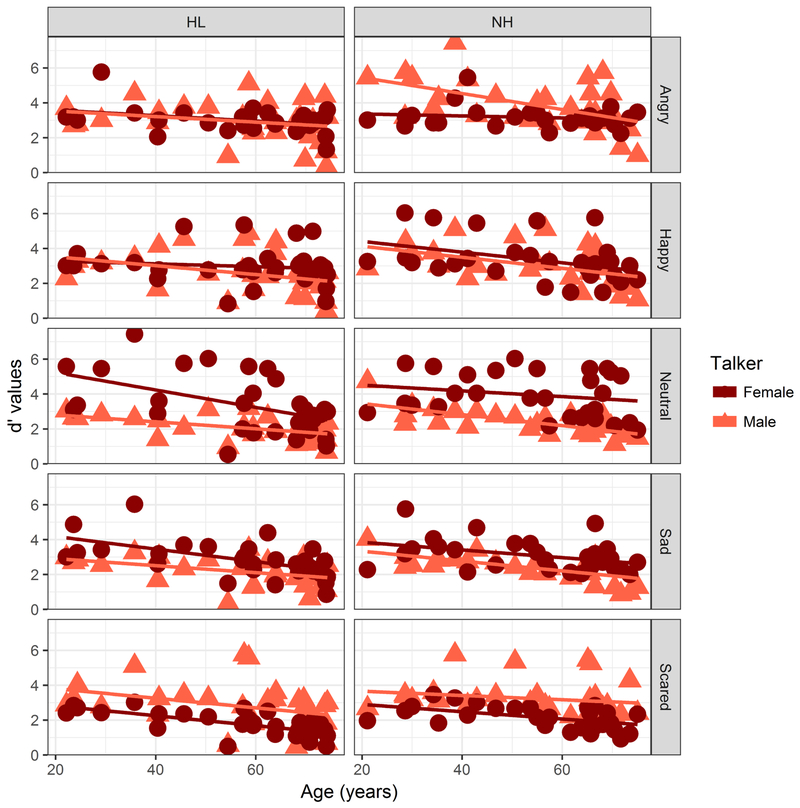

Outlier analysis led to the exclusion of 4.94% of the data. As with Accuracy and RTs, d’ values showed age-related changes. The d’ data did not require transformation prior to analyses. An LME analysis showed significant effects of Age (t(58)=−5.33, p<0.001), Talker (t(544)=3.06, p=0.002), Emotion (t(544)=−2.38, p=0.017), and Hearing Status (t(58)=−2.32, p=0.024), but no effects of Listener Sex and no interactions. Figure 10 shows d’ values plotted as a function of Age, separately for each emotion, talker, and hearing status group.
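Elsewhere the study states that d’ values were computed from confusion matrices, but the exact procedure is not given. One common approach for identification tasks, assumed here, treats each emotion in a one-versus-rest fashion. The hit rate H is the proportion of that emotion's trials answered correctly. The false-alarm rate F is the proportion of trials with any other emotion on which that emotion was chosen. This gives d’ = z(H) − z(F). To keep z finite when a rate is 0 or 1, the sketch applies a log-linear correction, adding 0.5 to each count and 1 to each total. The correction, the function names, and the confusion matrix are all hypothetical choices for illustration. Macmillan and Creelman (2005) describe alternatives, including models designed specifically for m-alternative identification.

```python
from statistics import NormalDist

EMOTIONS = ("angry", "happy", "neutral", "sad", "scared")
_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def _corrected_rate(count, total):
    """Log-linear correction: keeps rates strictly inside (0, 1) so z() is finite."""
    return (count + 0.5) / (total + 1.0)


def dprime_per_emotion(confusions, labels=EMOTIONS):
    """One-vs-rest d' for each category of a square confusion matrix.

    confusions[i][j] = number of trials on which stimulus emotion i received
    response j. Hits are responses 'e' to stimulus 'e'; false alarms are
    responses 'e' to any other stimulus.
    """
    k = len(confusions)
    if len(labels) != k or any(len(row) != k for row in confusions):
        raise ValueError("confusion matrix must be square and match the labels")
    result = {}
    for e in range(k):
        hits = confusions[e][e]
        n_signal = sum(confusions[e])
        false_alarms = sum(confusions[i][e] for i in range(k) if i != e)
        n_noise = sum(sum(confusions[i]) for i in range(k) if i != e)
        h = _corrected_rate(hits, n_signal)
        f = _corrected_rate(false_alarms, n_noise)
        result[labels[e]] = _z(h) - _z(f)
    return result


# Hypothetical confusion matrix: rows = stimulus emotion, columns = response (20 trials per row)
matrix = [
    [16, 1, 1, 1, 1],
    [2, 15, 1, 0, 2],
    [1, 1, 16, 2, 0],
    [0, 0, 3, 15, 2],
    [2, 3, 1, 4, 10],
]
for emotion, d in dprime_per_emotion(matrix).items():
    print(f"{emotion:>8}: d' = {d:.2f}")
```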

Figure 10.

Relationship between d’ values (ordinate) and age in years (abscissa) for the female talker’s (circles) and male talker’s (triangles) materials. Participants with HL and NH are shown in the left and right columns, respectively. Rows separate the individual emotions, from top to bottom: angry, happy, neutral, sad, and scared.

A second analysis confirmed these results. In it, Age was grouped into two categories, Younger and Older, as in our analyses of Accuracy described previously. The LME analysis showed significant effects of Age Group (t(57)=−3.95, p=0.0002), Hearing Status (t(57)=−2.69, p=0.009), Emotion (t(544)=−2.39, p=0.017), and Talker (t(544)=3.06, p=0.002), but no effects of Listener Sex and no significant interactions.

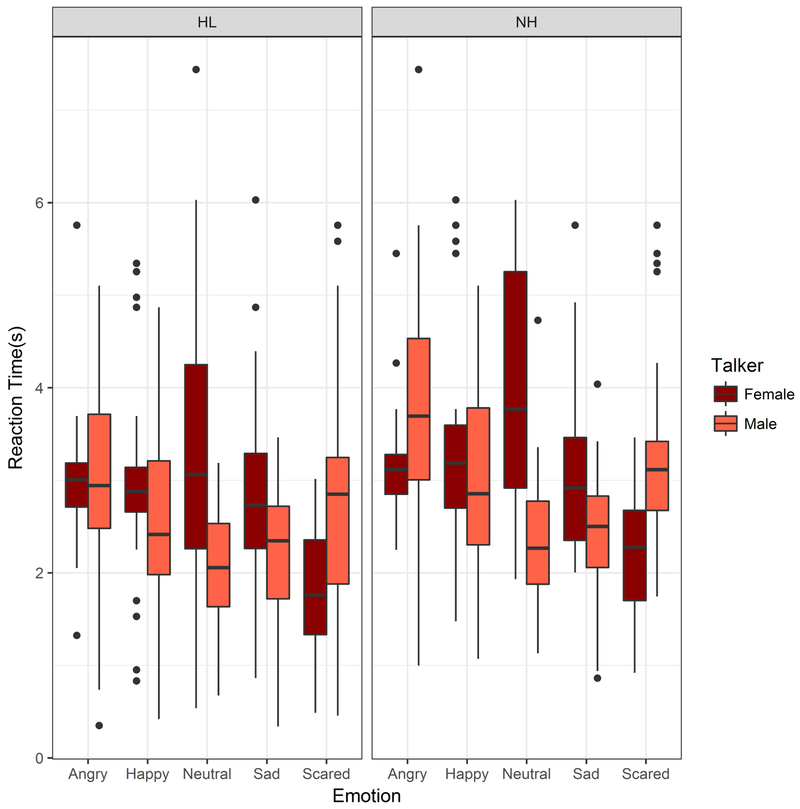

Figure 11 shows boxplots of d’ values plotted against emotion for listeners with HL and NH and for the two talkers. Post-hoc pairwise comparisons for the effect of Emotion (t-tests with Bonferroni correction) were conducted separately for the male and female talkers. For the female talker, d’ for neutral was significantly higher than for angry (p=0.03), and d’ for scared was significantly lower than for all other emotions (p<0.001). For the male talker, d’ for happy was significantly lower than for angry (p=0.015). The male talker’s d’ for neutral was significantly lower than for angry (p<0.001) and happy (p=0.031), and d’ for sad was significantly lower than for angry (p<0.01). Also for the male talker, d’ for scared was significantly lower than for neutral (p<0.001) and sad (p=0.002). When d’ values were averaged across the male and female talkers, pairwise t-tests with Bonferroni correction showed that d’ for sad was significantly lower than for angry (p=0.0017). On these averaged values, d’ for scared was significantly lower than for angry (p<0.001) and happy (p=0.049), and marginally different from neutral (p=0.07).

Figure 11.

Box plots of d’ values for the female talker’s (dark red) and male talker’s (light red) materials plotted against individual emotions. Data obtained from participants with HL and NH are shown in the left and right columns, respectively. Dots show data points lying beyond the whiskers (not including outliers).

Within-group analyses of d’ that included LFPTA as a predictor showed no significant contributions of LFPTA to d’ values. For NH listeners, a significant effect of Age was observed (t(27)=−4.67, p<0.001), but Talker and Emotion did not contribute significantly. For listeners with HL, there were significant effects of Age (t(28)=−3.71, p<0.001) and Talker (t(275)=2.88, p=0.004) and a marginal effect of Emotion (t(275)=−1.71, p=0.088). LFPTA contributed non-significantly (t(28)=1.64, p=0.11). Results were very similar when Age was treated as a categorical variable.

Summary of Results

Overall, the results confirmed an effect of Age, reflected in lower accuracy scores, longer reaction times, and lower d’ values in older listeners.

Listeners with HL showed lower Accuracy, longer reaction times, and lower d’ values than listeners with NH. The effect of Hearing Status was weaker when Accuracy or Reaction Time was the dependent variable. In both cases, it was observed only when Age was treated as a categorical variable. In contrast, d’ values showed consistent and significant effects of Hearing Status. Within the group with HL, effects of Age and Talker were significant, LFPTA had a marginally significant effect, and there were no interactions.

LFPTA and HFPTA were correlated with Age, but when Age was controlled for, LFPTA contributed at most marginally to Accuracy and d’ values within the group with HL. In the group with NH, LFPTA was not predictive of Accuracy or d’ values, even though the PTA values were correlated with Age. HFPTA did not contribute to the results for either the participants with NH or those with HL.

No effects of Listener Sex were observed.

Effects of individual emotions were not observed in the Accuracy scores, but were observed in reaction times and in d’ values.

A significant effect of Talker was also observed. The female talker’s vocal emotions were easier to identify than the male talker’s, as reflected in lower accuracy scores, lower d’ values, and longer reaction times with the male talker’s materials. An Age by Talker interaction was observed in NH listeners, with older participants showing poorer performance with the male talker’s materials.

Discussion

The present results confirm previous findings of age-related declines in vocal emotion recognition. They extend the literature by showing that, independent of age, hearing loss further impairs the ability to recognize emotions. The present findings also indicate that including the sensitivity index d’ and reaction times alongside accuracy scores may give a fuller picture. The calculation of d’ incorporates accuracy (the hit rate), but it also incorporates false alarms. From a signal detection theoretic perspective, it therefore provides a criterion-free measure of the listener’s sensitivity. Reaction times additionally provide information about cognitive load. Thus, the same accuracy scores may be associated with different reaction times and (depending on the internal noise distribution) different d’ values.
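The standard equal-variance formula shows why equal accuracy need not imply equal d’: d’ = z(H) − z(F), where z is the inverse of the standard normal cumulative distribution Φ. For a single emotion, the hit rate H is the per-emotion accuracy, and F is the rate at which that emotion is chosen in response to other stimuli. In the hypothetical example below, two listeners have identical accuracy for an emotion but different false-alarm rates. The equal-variance Gaussian model is itself an assumption about the internal noise distribution.

```latex
\begin{aligned}
d' &= z(H) - z(F), \qquad z(\cdot) = \Phi^{-1}(\cdot) \\
\text{Listener A: } H = 0.80,\ F = 0.05 \;&\Rightarrow\; d' = 0.842 - (-1.645) \approx 2.49 \\
\text{Listener B: } H = 0.80,\ F = 0.20 \;&\Rightarrow\; d' = 0.842 - (-0.842) \approx 1.68
\end{aligned}
```

Listener B chooses that emotion more often for other stimuli, and so has the same accuracy but lower sensitivity.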

Effects of age and hearing loss were consistently observed across the three measures, demonstrating their robustness. All three measures also showed greater difficulty with the male talker’s emotions. Effects of individual emotions, however, differed across the measures. Overall accuracy showed no effects of individual emotions, whereas d’ scores and reaction times revealed significant differences between emotions. Because we used only two talkers, one male and one female, we cannot generalize these findings to talker sex in general. Even the effects of individual emotions varied between the two talkers. A study incorporating stimuli recorded by a large number of talkers might show different effects of both talker variability and talker sex, and different patterns of results across emotions.

Analyses of Accuracy showed main effects of age. This is in line with earlier studies showing age-related declines in emotion recognition ability in NH listeners, including Isaacowitz et al. (2007), Paulmann et al. (2008), Mill et al. (2009), and Dupuis and Pichora-Fuller (2015). It contradicts Phillips et al. (2002), who found no age effects for vocal emotion recognition. Our findings regarding the effects of hearing loss are consistent with other studies that have taken hearing status into account. Husain et al. (2014) and Picou (2016) found deficits in responses to emotion in listeners with hearing loss. However, both examined emotional valence and/or arousal rather than specific emotions. Husain et al. (2014) found deficits in older listeners with mild to moderate high-frequency HL, whereas our study did not find any deficit in older listeners with only mild high-frequency HL. We did find marginal deficits in listeners with low-frequency HL, but when we removed listener sex from the analysis, that effect became nonsignificant.

Multiple studies have found an age-related decline in recognizing facial expressions of sadness and/or anger (Moreno et al. 1993; McDowell et al. 1994; MacPherson et al. 2002; Phillips et al. 2002; Calder et al. 2003; Phillips and Allen 2004; Sullivan and Ruffman 2004), followed by fear (McDowell et al. 1994; Calder et al. 2003; Sullivan and Ruffman 2004). Age cutoffs in these previous studies generally ranged from 70 to 90 years, depending on the study. The current study found no effect of emotion in accuracy measures. As MacPherson et al. (2002) speculated, an age cutoff like ours would miss further declines in older listeners, so the current study may not have captured the full extent of decline for specific emotions. However, testing older participants would introduce other confounds, specifically age-related cognitive changes. Effects of specific emotions were observed in the d’ and reaction time measures, which points to their greater sensitivity as outcome measures.

Many earlier studies found a female advantage (Hall 1978; for review, see Thompson and Voyer 2014), particularly for vocal emotion, and these may not have carefully taken hearing thresholds into account. In more recent studies, the gap is smaller. We observed no effects of Listener Sex once hearing thresholds were taken into account (male listeners had higher thresholds than female listeners).

Our analyses showed that reaction times were significantly related to accuracy. As with accuracy scores, hearing status had a relatively weak influence on reaction times. There was no significant effect of hearing status when age was treated as a continuous variable, and a marginally significant effect when age was treated as a categorical variable. Reaction times were longer in older listeners, as expected. Talker and emotion also influenced reaction times, but there were no interactions and no effects of listener sex. Husain et al. (2014) found that older adults with HL had significantly slower reaction times than NH peers. Differences in methodology (task and stimuli) may have contributed to the relatively weak effect of hearing status on reaction times in the present study.

Hearing thresholds (LFPTA and HFPTA) were considered as contributors in our statistical models. In the NH group, our analyses were consistent with Dupuis and Pichora-Fuller’s (2015) finding that age-related changes in vocal emotion recognition are not related to age-related hearing loss. In the group with HL, contributions of LFPTA were marginal and did not reach significance. Low-frequency coding of voice pitch cues plays an important role in emotion recognition. It is therefore plausible that LFPTA would predict performance on this task better than HFPTA.

Overall, d’ measures confirmed the effects of age, hearing loss, and talker, and added information about emotion-specific differences beyond that provided by accuracy scores. No effects of LFPTA were observed in the d’ measures. The three measures considered here (Accuracy, d’, and reaction times) provide consistent information regarding age, hearing loss, and talker variability. They diverge in some areas, however, such as the effects of individual emotions. Measures of Accuracy were not sensitive to these effects, but d’ values and reaction times did show emotion-based differences in both older and younger listeners. Even between d’ and reaction times, the specific pattern of emotion dependence varied somewhat. A more comprehensive picture emerges from incorporating error patterns alongside accuracy rates, as d’ does. Including reaction times adds further information, as they may provide some insight into cognitive load (Pals et al. 2015).

Other studies have referred to a “positivity bias” in older individuals, who tend to attend more to emotional scenarios associated with positive emotions. The present study could not address the question of such a bias because we did not include a wide range of emotions. Our set of five included only one positive emotion (happy) and three negative ones (angry, sad, scared), along with neutral. Further, the study was not designed to examine attentional effects, which would be most informative about such a bias.

One limitation of the present study was the lack of cognitive measures, which might have provided greater insight into underlying processes. For example, Suzuki and Akiyama (2013) found emotion-specific patterns of decline associated with cognitive deficits. The mechanisms underlying these associations remain unclear, but the clinical implications of such findings could be important. Along with cognitive measures, future studies would benefit from other measures of social cognition, such as facial emotion recognition. These could help separate effects specific to the auditory perception of emotions from more modality-general changes in emotion understanding with advancing age.

Clinical implications

Age-related changes in facial and vocal emotion recognition have important implications for social communication by middle-aged and older members of the community. These apply to communication with each other and with younger family members, caregivers, and friends. Older individuals also have greater difficulty understanding rapid speech, a speaking style increasingly prevalent among younger generations and in radio and television broadcasts. Taken together, these findings suggest that middle-aged and older individuals may face significant challenges when communicating with others in their environment. Our finding of a larger talker effect in older listeners also suggests that age-related effects may include greater vulnerability to talker variations in speaking style and vocal affect. This could pose additional difficulties for older individuals interacting with unfamiliar talkers at larger social gatherings.

One aspect that neither the present study nor others of its kind have taken into account is the effect of social context. The literature suggests that older individuals are better able than younger listeners to use contextual cues in speech perception. A similar advantage may exist for social context but has not yet been studied. Sze et al. (2012) have suggested that when presented with a more dynamic social scenario, older listeners may not show the same deficits. However, a greater need to rely on contextual cues, whether social or linguistic, implies a greater reliance on higher-level processes in older individuals. This might diminish their ability to handle concurrent tasks (e.g., preparing food from a complicated recipe while conversing with family members). These challenges are likely to make social interactions more burdensome, resulting in a greater tendency toward social withdrawal with increasing age. Social networks have been shown to play an important role in individuals’ emotional well-being (Cohen & Wills, 1985; Pinquart & Sörensen, 2000) and emotional state (e.g., Huxhold et al., 2013; Huxhold et al., 2014). These networks are often diminished in the elderly, although the frequency of social interactions may increase in old age (Cornwell et al., 2008).

For listeners with hearing loss, the present study shows a compounding effect of age and hearing impairment. Taken together with the cognitive declines associated with aging and hearing loss (Lin et al., 2013), the present findings suggest an even greater risk for negative impacts on social cognition, social interactions, and quality of life in aging individuals with hearing loss. Listening effort has been shown to increase in speech perception with hearing loss or under adverse conditions. For older listeners with hearing loss, social interactions may exact an even greater toll. Greater listening effort may mean more fatigue at the end of a long day, which may have a negative impact on the individual’s health and well-being.

Reaction time measures showed a clear lengthening with age and, to a lesser extent, with hearing loss. This may partly reflect slower processing or greater processing demands in the task. In everyday scenarios, slowed responses may disrupt the natural rhythm of social communication. Such implications have not been studied in the literature but would be an important aspect of communication to examine.

Conclusions

The present study confirmed that age-related declines occur in emotion recognition by adults with normal hearing. It also added the new finding that bilateral sensorineural hearing loss is associated with additional deficits alongside aging effects. These effects were found in accuracy scores, in d’ values computed from confusion matrices, and in reaction times. The three measures showed different patterns of emotion-specific effects, suggesting the need for comprehensive, multi-pronged approaches rather than single-outcome designs. Older age was also associated with greater vulnerability to talker variability, although this needs to be confirmed with a larger stimulus set. Listener sex effects were not observed once degree of hearing loss was taken into account. Future studies should take into account listeners’ cognitive status and measures of social cognition in addition to hearing status.

Acknowledgements

This research was supported by National Institutes of Health (NIH) grant R01 DC014233, the Clinical Management Core of NIH grant P20 GM10923, and the Human Research Subject Core of P30 DC004662. Portions of this work were presented at the 2018 Midwinter Meeting of the Association for Research in Otolaryngology, held in San Diego, CA.

Footnotes

Financial Disclosures/Conflicts of Interest:

The authors have no conflicts of interest to disclose.

References

- Alaerts K, Nackaerts E, Meyns P, Swinnen SP, & Wenderoth N (2011). Action and emotion recognition from point light displays: an investigation of gender differences. PLoS One, 6(6), e20989.

- Bandura A (1986). Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall.

- Benton AL, & Van Allen MW (1968). Impairment in facial recognition in patients with cerebral disease. Cortex, 4, 344–358.

- Brosgole L, & Weisman J (1995). Mood recognition across the ages. International Journal of Neuroscience, 82, 169–189.

- Calder AJ, Keane J, Manly T, Sprengelmeyer R, Scott S, Nimmo-Smith I, & Young AW (2003). Facial expression recognition across the adult life span. Neuropsychologia, 41, 195–202.

- Carstensen LL, Gross JJ, & Fung HH (1997). of Emotional Experience. Annual Review of Gerontology and Geriatrics, Volume 17, 1997: Focus on Emotion and Adult Development, 325.

- Carstensen LL, Isaacowitz DM, & Charles ST (1999). Taking time seriously: A theory of socioemotional selectivity. American Psychologist, 54(3), 165.

- Charles ST, & Carstensen LL (2008). Unpleasant situations elicit different emotional responses in younger and older adults. Psychology and Aging, 23(3), 495.

- Chatterjee M, Zion DJ, Deroche ML, Burianek BA, Limb CJ, Goren AP, ... & Christensen JA (2015). Voice emotion recognition by cochlear-implanted children and their normally-hearing peers. Hearing Research, 322, 151–162.

- Cohen S, & Wills TA (1985). Stress, social support, and the buffering hypothesis. Psychological Bulletin, 98(2), 310.

- Cornwell B, Laumann EO, & Schumm LP (2008). The social connectedness of older adults: A national profile. American Sociological Review, 73(2), 185–203.

- Deroche ML, Kulkarni AM, Christensen JA, Limb CJ, & Chatterjee M (2016). Deficits in sensitivity to pitch sweeps by school-aged children wearing cochlear implants. Frontiers in Neuroscience, 10. doi: 10.3389/fnins.2016.00073

- Dupuis K, & Pichora-Fuller MK (2015). Aging affects identification of vocal emotions in semantically neutral sentences. Journal of Speech, Language, and Hearing Research, 58(3), 1061–1076.

- Erwin RJ, Gur RC, Gur RE, Skolnick B, Mawhinney-Hee M, & Smailis J (1992). Facial emotion discrimination: I. Task construction and behavioral findings in normal subjects. Psychiatry Research, 42(3), 231–240.

- Grimshaw GM, Bulman-Fleming MB, & Ngo C (2004). A signal-detection analysis of sex differences in the perception of emotional faces. Brain and Cognition, 54(3), 248–250.

- Gross JJ, Richards JM, & John OP (2006). Emotion regulation in everyday life. In Snyder DK, Simpson J, & Hughes JN (Eds.), Emotion regulation in couples and families: Pathways to dysfunction and health (pp. 13–35). Washington, DC: American Psychological Association.

- Hall JA (1978). Gender effects in decoding nonverbal cues. Psychological Bulletin, 85, 845–857.

- Hoffmann H, Kessler H, Eppel T, Rukavina S, & Traue HC (2010). Expression intensity, gender and facial emotion recognition: Women recognize only subtle facial emotions better than men. Acta Psychologica, 135(3), 278–283.

- Husain FT, Carpenter-Thompson JR, & Schmidt SA (2014). The effect of mild-to-moderate hearing loss on auditory and emotion processing networks. Frontiers in Systems Neuroscience, 8, 10.

- Huxhold O, Fiori KL, & Windsor TM (2013). The dynamic interplay of social network characteristics, subjective well-being, and health: the costs and benefits of socio-emotional selectivity. Psychology and Aging, 28(1), 3–16.

- Huxhold O, Miche M, & Schüz B (2014). Benefits of having friends in older ages: differential effects of informal social activities on well-being in middle-aged and older adults. The Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 69(3), 366–375.

- Isaacowitz DM, Lockenhoff CE, Lane RD, Wright R, Sechrest L, Riedel R, et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging, 22, 147–159.

- Lambrecht L, Kreifelts B, & Wildgruber D (2012). Age-related decrease in recognition of emotional facial and prosodic expressions. Emotion, 12(3), 529–539.

- Macmillan NA, & Creelman CD (2005). Detection Theory: A User’s Guide. Mahwah, NJ: Lawrence Erlbaum Associates.

- MacPherson SE, Phillips LH, & Della Sala S (2002). Age, executive function, and social decision making: A dorsolateral prefrontal theory of cognitive aging. Psychology and Aging, 17, 598–609.

- Martin M, & Zimprich D (2005). Cognitive development in midlife. In Willis SL & Martin M (Eds.), Middle adulthood: A lifespan perspective (pp. 179–206). Thousand Oaks, CA: Sage.

- Mather M, & Carstensen LL (2005). Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences, 9, 496–502.

- McDowell CL, Harrison DW, & Demaree HA (1994). Is right-hemisphere decline in the perception of emotion a function of aging? International Journal of Neuroscience, 79, 1–11.

- Mill A, Allik J, Realo A, & Valk R (2009). Age-related differences in emotion recognition ability: a cross-sectional study. Emotion, 9(5), 619.

- Moreno C, Borod JC, Welkowitz J, & Alpert M (1993). The perception of facial emotion across the adult life-span. Developmental Neuropsychology, 9, 305–314.

- Most T, Weisel A, & Zaychik A (1993). Auditory, visual and auditory-visual identification of emotions by hearing and hearing-impaired adolescents. British Journal of Audiology, 27, 247–253.

- Orbelo DM, Grim MA, Talbott RE, & Ross ED (2005). Impaired comprehension of affective prosody in elderly subjects is not predicted by age-related hearing loss or age-related cognitive decline. Journal of Geriatric Psychiatry and Neurology, 18(1), 25–32.

- Oster AM, & Risberg A (1986). The identification of the mood of a speaker by hearing-impaired listeners. STL-Quarterly Progress and Status Report, 4, 79–90.

- Owsley C, Sekuler R, & Boldt C (1981). Aging and low-contrast vision: Face perception. Investigative Ophthalmology & Visual Science, 21, 362–365.

- Pajares F, Prestin A, Chen J, & Nabi RL (2009). Social cognitive theory and media effects. In The Sage handbook of media processes and effects (pp. 283–297).

- Pals C, Sarampalis A, van Rijn H, & Başkent D (2015). Validation of a simple response-time measure of listening effort. The Journal of the Acoustical Society of America, 138(3), EL187–EL192. doi: 10.1121/1.4929614

- Paulmann S, Pell MD, & Kotz SA (2008). How aging affects the recognition of emotional speech. Brain and Language, 104(3), 262–269.

- Phillips LH, MacLean RDJ, & Allen R (2002). Age and the understanding of emotions: Neuropsychological and social-cognitive perspectives. Journal of Gerontology: Psychological Sciences, 57(B), 526–530.

- Phillips LH, & Allen R (2004). Adult aging and the perceived intensity of emotions in faces and stories. Aging Clinical and Experimental Research, 16, 190–199.

- Picou EM (2016). How hearing loss and age affect emotional responses to nonspeech sounds. Journal of Speech, Language, and Hearing Research, 59(5), 1233–1246.

- Pinheiro J, Bates D, DebRoy S, Sarkar D, & R Core Team (2015). nlme: Linear and Nonlinear Mixed Effects Models. R package version 3.1-121, http://CRAN.R-project.org/package=nlme

- Pinquart M, & Sörensen S (2000). Influences of socioeconomic status, social network, and competence on subjective well-being in later life: A meta-analysis. Psychology and Aging, 15(2), 187–224.

- Rahman Q, Wilson GD, & Abrahams S (2004). Sex, sexual orientation, and identification of positive and negative facial affect. Brain and Cognition, 54(3), 179–185.

- Rigo TG, & Lieberman DA (1989). Nonverbal sensitivity of normal-hearing and hearing-impaired older adults. Ear and Hearing, 10(3), 184–189.

- Schvartz-Leyzac KC, & Chatterjee M (2015). Fundamental frequency discrimination using noise-band-vocoded harmonic complexes in older listeners with normal hearing. The Journal of the Acoustical Society of America, 138(3), 1687–1695.

- Shen J, Wright R, & Souza PE (2016). On older listeners’ ability to perceive dynamic pitch. Journal of Speech, Language, and Hearing Research, 59(3), 572–582.

- Sullivan S, & Ruffman T (2004). Emotion recognition deficits in the elderly. International Journal of Neuroscience, 114, 403–432.

- Suzuki A, Hoshino T, Shigemasu K, & Kawamura M (2007). Decline or improvement? Age-related differences in facial expression recognition. Biological Psychology, 74(1), 75–84.

- Suzuki A, & Akiyama H (2013). Cognitive aging explains age-related differences in face-based recognition of basic emotions except for anger and disgust. Aging, Neuropsychology, and Cognition, 20(3), 253–270.

- Sze JA, Goodkind MS, Gyurak A, & Levenson RW (2012). Aging and emotion recognition: Not just a losing matter. Psychology and Aging, 27(4), 940.

- Thompson AE, & Voyer D (2014). Sex differences in the ability to recognise non-verbal displays of emotion: A meta-analysis. Cognition and Emotion, 28(7), 1164–1195.

- Tukey JW (1977). Exploratory data analysis. Reading, MA: Addison-Wesley.

- Wickham H (2009). ggplot2: Elegant graphics for data analysis. New York: Springer.