Abstract

Background:

Health literacy as a concept is gaining importance in European countries, although it is still not adequately addressed among health personnel. Health literacy supports the self-management of patients in maintaining and improving health, which could decrease the burden on health systems in Europe. However, health professionals lack adequate knowledge about health literacy and the skills to promote health literacy among their patients.

Objective:

The Health Literacy Practices and Educational Competencies for Health Professionals (a health literacy training curriculum for health professionals) was recently developed in the United States, and the study presented here aimed to refine that assessment for health personnel in European settings.

Methods:

A modified Delphi method was used, and data were collected online via electronic communication to achieve consensus among an expert panel. The participants were a group of 20 health literacy and health care experts from 10 professional fields representing 13 European countries. The participants rated health literacy competencies on a four-point Likert scale and provided written feedback and recommendations. Consensus was defined as agreement on a competency by a predetermined threshold of 70% or more of the participants (similar to the criteria in the Health Literacy Practices and Educational Competencies for Health Professionals intervention).

Key Results:

After three rounds of ratings and modifications, consensus agreement was reached on 56 health literacy competencies (20 knowledge items, 25 skills items, 11 attitude items) and 38 practices. Eight items were removed from the original list and eight new items were added to the final list.

Conclusions:

This study is the first known attempt to develop a measurable list of health literacy competencies for health personnel in Europe. Further work is needed to develop educational curricula, standard national and regional guidelines, and questionnaires for the process of implementation to maximize health literacy responsiveness in health care organizations. [Health Literacy Research and Practice. 2017;1(4):e247–e256.]

Plain Language Summary:

The Health Literacy Practices and Educational Competencies for Health Professionals was recently developed in the United States. This study aimed to refine that assessment for health care professionals in Europe. A modified Delphi method was used, with data collected online via electronic communication, and in the end, 56 health literacy competencies were included.

Health systems in Europe are currently facing multiple challenges, including escalating health care costs, rising rates of chronic disease, and aging populations. These issues are further exacerbated by large numbers of patients with low health literacy and shortages of health care personnel who are adequately prepared to work with such populations (Dennis et al., 2012; Heijmans et al., 2015; Nolte & McKee, 2008; Rudd & Anderson, 2006; Volandes & Paasche-Orlow, 2007; World Health Organization, 2008; Wise & Nutbeam, 2007). Health literacy is linked to literacy and entails people's knowledge, motivation, and competences to access, understand, appraise, and apply health information to make judgments and decisions in everyday life concerning health care, disease prevention, and health promotion to maintain or improve quality of life during the life course (Sørensen et al., 2012). Low health literacy has been associated with greater risk for less healthy behavior and lower efficacy in self-managed care (Sørensen et al., 2015); poorer skills in interpreting health information, worse control of medical conditions, and increased rates of hospitalization (Berkman, Davis, Donahue, Halpern, & Crotty, 2011); increased risk of chronic conditions (Kickbusch, Pelikan, Apfel, & Tsouros, 2013); and increased need for emergency care (Morrison, Myrvik, Brousseau, Hoffmann, & Stanley, 2013). Health literacy levels have been estimated to vary between 29% and 62% in Europe (Sørensen et al., 2015).

To address these problems, there is a need to create and strengthen health literacy-friendly settings within the health sector, which could empower patients and promote and support health literacy (Kickbusch et al., 2013).

However, both American and European research has recognized the lack of understanding and recognition of health literacy matters among health care personnel (Barrett, Puryear, & Westpheling, 2008; Brach et al., 2012; Dennis et al., 2012; Dickens, Lambert, & Cromwell, 2013; Koh, Brach, Harris, & Parchman, 2013; Jukkala, Deupree, & Graham, 2009; Nutbeam & Kickbusch, 2000; Osborne, Batterham, Elsworth, Hawkins, & Buchbinder, 2013; Ratzan, 2011). Health care professionals have limited skills to address low health literacy among patients effectively (Bass, Wilson, Griffith, & Barnett, 2002; Lambert et al., 2014; Schlichting et al., 2007). Unfortunately, only a minority of ongoing health literacy interventions in 16 European Union member states focus on health professionals (e.g., Dickens et al., 2013; Heijmans et al., 2015; Jukkala et al., 2009).

In the United States, Coleman, Hudson, and Maine (2013) addressed the importance of mitigating the effects of low health literacy through the consistent use of health literacy-sensitive practices and the adoption of multiprofessional educational curricula. Key health literacy educational competencies were defined as the knowledge, skills, and attitudes that health personnel need to effectively address low health literacy among consumers of health care services and health information (Coleman et al., 2013; Heijmans et al., 2015). This American research group established a Delphi expert panel to agree upon the curricula for educational competencies regarding health literacy for newly graduated students (Coleman et al., 2013). The aim of the present study was to assess whether the health literacy practices and educational competencies developed for health care professionals in the U.S. would be relevant among European stakeholders. The research question was: “Considering the U.S. health literacy curricula made by Coleman et al., what are the ideal health literacy knowledge, skills, attitudes, and practices among health care personnel in Europe?” The hypothesis was that the developed tool would be valid in the European health care context with some adjustments to the European cultural setting.

Methods

Expert Panel

The panelists (Table 1) were identified based on their expertise and leadership role in health care, health communication, or health literacy. They were identified based on recommendations from one key informant in the European health literacy network and another key informant in the health communication network. Emails were sent to the identified experts requesting them to voluntarily participate in the study. This was a convenience sample of members of certain professional networks, namely Health Literacy Europe, the European Forum for Primary Care, the European Health Communication Network, and the European consensus on learning objectives for a core communication curriculum in health care professions study. To ensure representation of different European regions, the invitation was sent to health literacy and health care experts from Northern, Southern, Eastern, and Western Europe. Experts with research history in health literacy and specific knowledge and interest in health literacy were particularly recruited. The criteria for selection included a long history in health literacy and primary care research and practice with an important role in health literacy promotion locally and regionally. To confirm their expertise in health literacy, they were asked if their peers would consider them health literacy experts. Everyone who responded was accepted to participate. In total, 20 participants accepted the invitation.

Table 1.

Delphi Expert Panel Demographics Compared to the United States Panel

| Characteristic | Current Study (N = 16), n (%) | Coleman et al. (2013) (N = 22), n (%) |
|---|---|---|
| Mean age (years) | 50.25 | 51.9 |
| Sex | | |
| Female | 10 (62.5) | 15 (71.4) |
| Male | 6 (37.5) | 6 (28.6) |
| Nationality | | |
| Nordic (Swedish, Norwegian, Danish) | 4 (25) | |
| Central Europe (German, Swiss) | 4 (25) | |
| Western Europe (Belgian, French, British, Dutch) | 4 (25) | |
| Eastern Europe (Polish, Romanian) | 2 (12.5) | |
| Southern Europe (Portuguese, Greek) | 2 (12.5) | |
| Country of residence | | |
| Nordic (Swedish, Norwegian, Danish) | 5 (31.3) | |
| Central Europe (German, Swiss) | 5 (31.3) | |
| Western Europe (Belgian, French, British, Dutch) | 3 (18.8) | |
| Eastern Europe (Polish, Romanian) | 2 (12.5) | |
| Southern Europe (Portuguese, Greek) | 1 (6.3) | |
| Highest level of education completed | | |
| Master's | 2 (12.5) | 1 (5) |
| Doctorate | 14 (87.5) | 19 (90) |
| Current jobᵃ | | |
| Professor | 11 (68.8) | |
| Researcher | 3 (18.8) | |
| Clinical practitioner | 3 (18.8) | |
| Manager | 2 (12.5) | |
| Education | | |
| Medicine | 5 (31.3) | |
| Psychology | 1 (6.3) | |
| Nursing | 1 (6.3) | |
| Pharmacy | 1 (6.3) | |
| Occupational therapy | 1 (6.3) | |
| Nutrition | 1 (6.3) | |
| Cognitive-behavioral therapist | 1 (6.3) | |
| Others | 5 (31.3) | |
| Would your peers consider you to have expertise on the topic of health literacy? | | |
| Yes | 14 (87.5) | 16 (72.7) |
| No | 2 (12.5) | 6 (27.3) |

Note. ᵃSome respondents had more than one job.

Study Design

To stay consistent with the Coleman et al. (2013) study, we used a modified Delphi approach that included three rounds of group consensus-building via electronic communication among the group of health literacy experts between February 2015 and April 2015.

Originally, 20 health literacy experts were recruited, a number that falls within the usual Delphi panel size of 15 to 30 (De Villiers et al., 2005). The electronic communication took place via email and no online platform was used. After the data were gathered, they were anonymized. No additional pilot test was done for the Coleman et al. (2013) competency list. Each panelist completed a consent form. All Delphi rounds were conducted in English.

In Round 1, 16 health literacy and health care experts rated the original 32 health literacy practices and 62 educational competencies for health professionals identified in the American consensus study using the decision rule described below (Coleman et al., 2013). These items were based on a nonsystematic literature review of primarily North American sources available as of 2010. We did not conduct a supplemental review for the period 2010 to 2015.

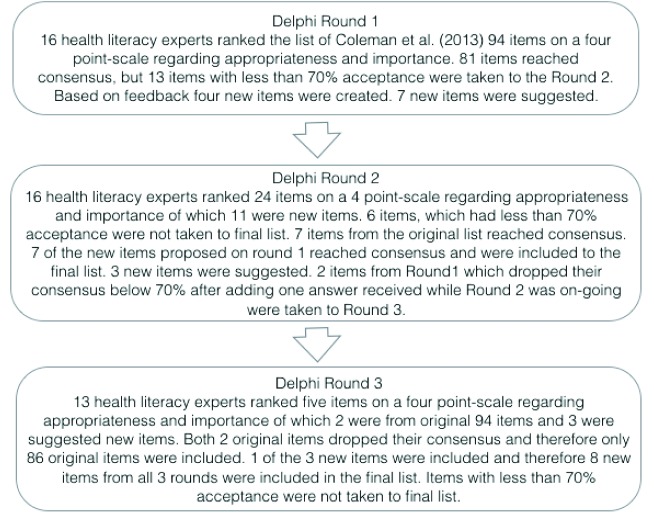

The items were originally represented in four categories: (1) knowledge, (2) skills, (3) attitudes, and (4) practices. This division was retained to increase comparability (Figure 1).

Figure 1.

Overview of the complete Delphi process.

During all rounds, the participants were asked to specify in the comment section if they thought the new items overlapped or repeated items from the original list and were also asked to suggest necessary editing. In addition, a space for adding more items was provided in both Round 1 and Round 2. Furthermore, a space for any additional comments and suggestions was provided next to each item during all rounds.

In Round 2, items from Round 1 that did not achieve consensus (13 items) were rated again to confirm the consensus. In addition, newly suggested items were added, based on the feedback from Round 1 (11 items). Three new items that had been proposed during Round 2 were added for Round 3. In addition, a late response to items in Round 1 that was received from one participant during Round 2 caused two items that had been accepted in Round 1 to fall below the acceptance threshold. These two items were added back to be re-rated in Round 3 (Figure 1). Each panelist was kept updated via individual emails. Each round was 2 weeks long. Those who could not adhere to this time frame despite individual reminders dropped out. No new participants joined between the rounds, and those who missed a round were not able to continue to another round. One panelist was allowed to remain for additional rounds even after completing Round 1 late.

Decision Rule

Using a procedure similar to that of the American study, the rating of the practices and competencies during all three rounds was based on the appropriateness and the importance of each item to all health care professionals to ensure comparability and validity. Next to each item and at the end of the survey there was space for comments, suggestions, and recommendations that were further used in qualitative analysis. Participants were asked to rate each item on two four-point Likert-type scales. The importance scale ranged from 1 (very important) to 4 (not important), and the appropriateness scale ranged from 1 (very appropriate) to 4 (not appropriate).

As in the previous study (Coleman et al., 2013), the importance ratings of “very important” and “important” and appropriateness ratings of “very appropriate” or “appropriate” were considered affirmative ratings. To improve comparability between the two studies, we used the same consensus level: 70% or more of the experts had to agree on both the importance and the appropriateness of a competency for it to be retained (Coleman et al., 2013). On a few occasions, participants suggested edits to the wording of original items, but these were not accepted in consensus.
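The decision rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the example ratings are hypothetical and are not taken from the study data or from the authors' analysis files. The sketch treats ratings of 1 or 2 as affirmative and retains an item only when at least 70% of ratings are affirmative on both criteria.

```python
from typing import Sequence

AFFIRMATIVE = {1, 2}   # 1 = "very ...", 2 = "..." on the four-point scale
THRESHOLD = 0.70       # consensus level stated in the text


def share_affirmative(ratings: Sequence[int]) -> float:
    """Fraction of panelists whose rating is affirmative (1 or 2)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return sum(r in AFFIRMATIVE for r in ratings) / len(ratings)


def item_retained(importance: Sequence[int],
                  appropriateness: Sequence[int]) -> bool:
    """Retain an item only if both criteria reach the threshold."""
    return (share_affirmative(importance) >= THRESHOLD
            and share_affirmative(appropriateness) >= THRESHOLD)


# Hypothetical ratings from 10 panelists for one item (not study data).
importance = [1, 1, 2, 2, 2, 1, 3, 2, 4, 1]        # 8 of 10 affirmative
appropriateness = [1, 2, 2, 3, 3, 2, 4, 1, 3, 2]   # 6 of 10 affirmative

print(item_retained(importance, appropriateness))
```

In this hypothetical case the item would not be retained, because only one of the two criteria reaches the threshold.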

Quantitative Analysis

The demographics were analyzed using descriptive statistics (i.e., mean percentages, medians, and standard deviations). The statistical findings of the rankings were analyzed by using descriptive statistics in both IBM SPSS and Microsoft Excel.

Qualitative Analysis

To identify, analyze, and report patterns within the qualitative data, a thematic analysis was performed, similar to that used in the Delphi study by Bachmann et al. (2013). Thematic analysis was used because it focuses on identifying and describing both implicit and explicit ideas and themes within qualitative data. It also enables identifying code co-occurrence and displaying relationships between codes and capturing the complexities of meaning within data (Braun & Clarke, 2006). First, verbatim transcripts were read. Second, themes were identified based on grouping of concepts used by the panelists. Third, themes were compared, contrasted, and structured to upper categories. Lastly, theoretical models were built while constantly checking against the data by drawing four overarching themes. The structure of basic themes, organizing themes, and four global themes were created and adjusted similarly to the thematic network of Attride-Stirling (2001) and the thematic map of Braun & Clarke (2006).

Ethical Considerations

The project plan was approved by the Institutional Review Board of Lund University's Department of Health Sciences.

Results

Expert Panel

There were 16 participants in Rounds 1 and 2, and three dropped out before Round 3 (Table 1). The mean age of participants was 50.3 years (range, 31–65 years). Most of the participants were women (62.5%), had an educational background of clinical patient care (78.6%), had a doctoral-level education (87.5%), and considered themselves to have expertise in the field of health literacy (85.7%). The informants were allowed to define their education freely without multiple answer options; therefore, the answers were not limited only to the person's education in their professional field (Table 1). The most commonly reported field was medicine (n = 5). Moreover, Northern (25%), Southern (12.5%), Eastern (12.5%), Western (25%), and Central (25%) Europe all had representatives on the panel (Table 1).

Quantitative Analysis

Out of the 94 items rated during Round 1, consensus was reached on 83 items regarding importance and on 81 items regarding appropriateness. With a range from 46.7% to 100% rated “very important” or “important,” or “very appropriate” or “appropriate,” most items had high relevance among health care personnel. There were 21 items that had an acceptance of 100% regarding importance (rated “very important” or “important”) and 13 items that had an acceptance of 100% regarding appropriateness (rated “very appropriate” or “appropriate”). There were 27 items that had an acceptance of 90% to 99% regarding importance, and 31 items that had an acceptance of 90% to 99% regarding appropriateness. (If the reader would like to see all of the data used in the analysis, contact the corresponding author.)

After Round 1, there were 13 items without consensus, and these were carried forward to Round 2 for another ranking. In addition to these, seven new items suggested by the panel and four new items developed by the researchers based on the feedback given during Round 1 were included in Round 2. Therefore, there were 11 new items added to the Round 2 ranking list. In total, there were 24 items included for ranking in Round 2.

In Round 2, consensus was reached on 8 of 13 items regarding both importance and appropriateness. Five items were excluded from the original list based on the predetermined decision rule. In addition, 7 of 11 new items reached consensus and were included in the list with recommendations for edits suggested. During this round, there were three new items developed by the researchers from the feedback of the panel that were included for ranking in Round 3. One more expert from Round 1 was delayed and returned the answers while Round 2 was ongoing. This meant that two items (one regarding importance and one regarding appropriateness) lost their consensus because the agreement level fell below 70%. These two items were also included in Round 3, so altogether there were five items included for ranking in Round 3.
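The effect of the late response can be illustrated with a short calculation. The counts below are hypothetical and were chosen only to show the mechanism; the source does not report the actual counts for the two affected items. The sketch assumes that the late respondent gave a non-affirmative rating, which increases the denominator without increasing the number of affirmative ratings.

```python
THRESHOLD = 0.70  # consensus level used in the study


def agreement(affirmative: int, respondents: int) -> float:
    """Share of respondents giving an affirmative rating."""
    return affirmative / respondents


# Hypothetical counts (not study data): 11 of 15 respondents rate an
# item affirmatively before the late response arrives.
before = agreement(11, 15)
# The late respondent rates the item non-affirmatively: 11 of 16.
after = agreement(11, 16)

print(f"before: {before:.1%}, consensus: {before >= THRESHOLD}")
print(f"after:  {after:.1%}, consensus: {after >= THRESHOLD}")
```

With a panel of this size, a single additional rating shifts the agreement level by several percentage points, which is why an item close to the threshold can lose consensus.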

During Round 3, consensus was reached for only one new item, and two items from the original set were excluded based on the predetermined decision rule. The final set of competencies, therefore, consisted of 20 knowledge items, 25 skills items, 11 attitude items, and 38 practice items, yielding a total of 94 health literacy competencies.
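The item counts reported for Rounds 2 and 3 and for the final set can be checked with a small tally. The dictionary keys are descriptive labels introduced here for illustration; the numbers are those given in the text.

```python
# Items entering Round 2, as reported in the text.
round2 = {
    "no consensus in Round 1": 13,
    "new, suggested by the panel": 7,
    "new, developed by the researchers": 4,
}

# Items entering Round 3.
round3 = {
    "new, developed from Round 2 feedback": 3,
    "lost consensus after the late response": 2,
}

# Final set by category.
final_set = {"knowledge": 20, "skills": 25, "attitudes": 11, "practices": 38}

round2_total = sum(round2.values())
round3_total = sum(round3.values())
competencies = sum(n for name, n in final_set.items() if name != "practices")
final_total = sum(final_set.values())

print(round2_total, round3_total, competencies, final_total)
```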

Ratings on each item were compared to the ratings in the U.S. study (Coleman et al., 2013), along with the percentage of ratings and the round in which they were accepted from each study (a consensus was not reached on some items). Round 3 was the last round in this study, whereas there were 4 rounds in the U.S. study (Coleman et al., 2013).

In the U.S. study (Coleman et al., 2013), the appropriateness was consistently higher compared to importance. In this study, when using the same consensus level, only 22 items (23.4%) were rated more highly on appropriateness than importance. In this study, when comparing the ratings regarding importance criteria (“very important” and “important” together), 34 items (36.6%) were considered more important than appropriate. In addition, in this study 39.2% (n = 37) of the time the rating was the same on both criteria (“very appropriate” and “appropriate” compared to “very important” and “important”) per item.

When looking only at the category “very appropriate” compared to “very important” in this study, 33 items (35.1%) were more appropriate and 27 items (28.7%) were more important. Furthermore, 36.6% (n = 34) of the time, the rating on both “very appropriate” and “very important” was the same in this study. With regard to the importance, two of the most important competencies were a universal precautions approach to communication errors together with knowledge about shame in association to health literacy, the latter also being considered most appropriate. In addition, skills to elicit a patient's prior understanding of their health issues in a nonshaming manner was the other one of the “most appropriate” items.

Qualitative Analysis

During each round, experts provided extensive suggestions and recommendations that were analyzed using a thematic analysis approach. Feedback was given on 87 of the 94 items during Round 1, and on all of the items during Round 2 and Round 3. These qualitative data helped to illustrate the rationale behind the experts' ratings and facilitated further analysis.

Through the thematic analysis, several organizing themes were created and in the end, four broad global themes were identified: (1) emphasizing holistic approach in routine clinical practices, (2) positioning the health literacy demands for health systems, (3) inclusion of other stakeholders from different sectors, and (4) increasing health personnel capacity building in health literacy.

Discussion

Consensus was reached among European experts for 90% (n = 85) of the competency items, which had been derived in the U.S. study (Coleman et al., 2013). This indicates a high agreement level between the American and European lists and many similarities in the understanding of health literacy requirements from health personnel across continents, despite the historical and cultural differences. One example of this cultural difference is health literacy proficiency levels, varying from 12% in the U.S. (Kutner, Greenberg, Jin, & Paulsen, 2006) to between 29% and 62% in Europe depending on the country (Sørensen et al., 2015).

The items with the highest consensus in our study concerned shame (items K7 and A6), assessment of prior understanding (items S14 and P7), health personnel's pro-activity in addressing communication needs (items A10 & A11), avoiding medical jargon (items K4, K16, K17, S1, S2, and P14), Teach-Back method (items K19, S16, and P28), context-specific thinking (items K11 and S5), cultural sensitivity (items K6, S10, and P12), acknowledging informed refusal (item A5), empathy (item A8), nonjudgmental approach (items S15, A7, and P22), and regular information check with understanding (items S17 and P28).

However, the panelists also revealed some differences of opinion between the American and European health literacy competencies and practices. Competencies that were considered a more common approach in American settings were aligned more with health literacy as a risk. For instance, Nutbeam (2008) previously described how health literacy can be viewed as a risk factor as well as an asset, and these views were expressed in the study. In contrast, and typical for the European health literacy paradigm, the items referring to person-centered approaches (items P4, K21, S26, and P23) were rated among the more important competency items for health professionals, supporting a view of health literacy as an asset. The items related to population-level approaches (items P33, A11, K2, and P24) and asset empowerment (items P17, A7, and P25) were recognized to suit the European context according to the ratings. The clinical and risk paradigm of the U.S. has, on the other hand, been acknowledged in previous research to separate the body of research from the public health and asset paradigm in the European Union together with Australia and Canada (Nutbeam, 2008; Nutbeam & Kickbusch, 2000; Paasche-Orlow, McCaffery, & Wolf, 2009; Rimal & Lapinski, 2009).

Among the health literacy skill items, those referring to oral communication were included within higher consensus rankings than written communication competencies. Other studies have identified the use of modern health communication skills in clinical settings as a way to improve clinical patient outcomes (Coulter & Jenkinson, 2005; Parrott, 2004; Rimal & Lapinski, 2009), patient satisfaction, symptom relief, adherence, recall, and physical recovery (Dooris, 2009; Jansen et al., 2010; Joosten et al., 2008; Lie, Carter-Pokras, Braun, & Coleman, 2012; Shilling, Jenkins, & Fallowfield, 2003; Zolnierik & DiMatteo, 2009).

The panelists highlighted that health personnel across the health system should have competencies to routinely use health literacy methods relevant in the European setting to provide holistic patient care. Communicative and critical health literacy were considered the most important health literacy approaches in patient care according to the rating of the panel compared to the other health literacy items (items K4, A2, S1, P5, and K2). These aspects of health literacy are increasingly receiving attention in European research. Sykes, Wills, Rowlands, & Popple (2013), for example, described critical health literacy as a unique set of characteristics of advanced personal skills, health knowledge, information skills, effective interaction between service providers and users, informed decision-making, and empowerment including political action. However, critical health literacy also highlights that there needs to be a political will and drive for critical health literacy to develop and that requires location of responsibilities beyond the individual level (Sykes et al., 2013). Along these lines, the panel suggested new items that received consensus with regard to health systems prioritizing the development of health literacy friendliness as a new comprehensive structural solution to the issue, as recommended by Kickbusch et al. (2013).

The panelists mentioned several times that health literacy competencies should be relevant to routine practices among clinical professionals and realistic to role division, available resources, and time per patient, which is a general challenge recognized at the societal level in Europe (Dennis et al., 2012; Nolte & McKee, 2008; Sørensen et al., 2012; Volandes & Paasche-Orlow, 2007; World Health Organization, 2008; Wise & Nutbeam, 2007).

The U.S. consensus study (Coleman et al., 2013) was recently replicated among nurses in the U.S. Toronto (2016) performed a three-round e-Delphi study, which used a national sample of health literacy nurse experts. The study discovered that not all of the health literacy competencies of Coleman et al. (2013) for health professionals were essential to nurses. Furthermore, the study identified additional patient-centered competencies that nurses should possess while interacting with patients with low health literacy (Toronto, 2016).

Finally, our study acknowledged a need to assess different health literacy measures and to train health care personnel to use them accordingly. According to the panelists, health literacy measures should be adequate and suitable, which in turn requires advanced skills from health care personnel. However, controversy exists concerning this recommendation, as there is a risk of stigmatizing patients through the measurement and screening process in spite of the good intentions to help (Paasche-Orlow & Wolf, 2008). Instead, it is proposed to teach health care staff to use “health literacy universal precautions.” Health literacy universal precautions are the steps that practitioners take when they assume that all patients may have difficulty comprehending health information and accessing health services. They are aimed at (1) simplifying communication with and confirming comprehension for all patients, so that the risk of miscommunication is minimized, (2) making the institutional environment and health care system easier to navigate, and (3) supporting patients' efforts to improve their health (DeWalt et al., 2003).

Limitations

Although there is no agreement on the number of participants needed for consensus studies, different results might have been reached with a larger number of participants, or with different participants, such as nonmedical professionals, patients, or their relatives; however, our results are similar to those found by Coleman et al. (2013) in a consensus study using 23 expert panelists. The use of a convenience sample consisting primarily of physicians with lower representation among other disciplines could be considered a limitation of the recruitment method used. Not having a representative from each European country and from each health professional group might also increase the risk of selection bias. It was also not assessed how long the participants had worked in the field of health literacy or in clinical settings, nor how they defined health literacy.

Delphi studies typically encounter participant drop-outs (de Meyrick, 2003). For instance, 1 of 23 participants dropped out in the electronically conducted American study (Coleman et al., 2013). To minimize this risk, the participants in this study were told in the invitation letter that the aim was to have three online rounds and that they should reserve enough time to join all of the rounds. However, 4 of the original 20 panelists dropped out before Round 1 without participating, and 3 more dropped out during the third and final round. The diminishing sample size might have affected the validity of the results.

The cut-off point could have been even more conservative than the 70% consensus level to make the list of competencies shorter. Furthermore, the same conclusion as in the U.S. study can be made here, which is that because there is no standard for defining consensus level in Delphi studies, and because the number of participants was relatively low, the pooled opinions should be interpreted taking these limitations into account (Coleman et al., 2013).

In addition, the consensus discussions were conducted in English; however, the panelists were from a variety of European nationalities and English may not have been their preferred language. It is possible that participants may have interpreted the meaning of items differently as a result.

On the final list there are several competency items that should be rewritten for each European country accordingly. For example, competency item K5 should be rewritten for various European Union countries; competency item S6 should be rewritten for professionals for whom English is not the primary language; and competency items P3 and P16 should also be rewritten for European Union countries where English is not the predominant language of health care professionals.

Lastly, as noted above, we used the list of items generated in the U.S. study, which was based on a 2010 literature review. It is possible that more recent literature may include additional recommended practices and competencies.

Conclusion

A predefined set of health literacy competencies derived from an American study was assessed for its relevance for European health care personnel via a Delphi process with health literacy experts from different professional backgrounds. For the final set of 94 health literacy competencies, eight original items were excluded and eight new items were included and given individual ranks based on importance and appropriateness. Due to high acceptance by the health literacy experts, it is recommended that the set of health literacy competencies can serve as a basis for curriculum or for a guideline and clinical assessment by different stakeholders, such as health educators, practitioners, or policy makers.

Implications and Recommendations

The set of health literacy competencies confirmed in this study can provide a sound basis for further development of curricula for health professionals while taking into account the inter- and multi-professional learning objectives for health literacy education in different national settings in Europe. One main concern with the U.S. consensus study was that the final list was too long and unprioritized. Our study has this same limitation. Efforts are underway in the U.S. to rank the order of the items (Coleman et al., 2017) and a similar follow-up prioritization study may be needed to aid European educators, clinicians, and policy makers. Furthermore, more detailed analysis of the different rating levels together with the written input from the experts can be used in developing educational and training material, questionnaires for assessments or guidelines, and protocols for clinical practitioners.

In the future, more detailed investigation is needed into how health literacy competencies are adapted and implemented across the different European health systems and professional groups. This set of health literacy competencies can also be further assessed in national settings with country experts to ensure adjustment to the different cultures and health systems. Depending on the intended use, a larger and more representative sample, including experts from nonmedical professions, students, patients, and their relatives, could also be explored in further research.

To get a more detailed overview of the value of each competency, ranking categories other than appropriateness and importance could be used. In addition, instead of the 4-point Likert scale, a smaller or larger scale could have been chosen, such as the 5-point Likert scale used by Bachmann et al. (2013) in their Delphi study on core curriculum development for health communication. Having more than three rounds might also improve validity. Finally, evaluating the entire list of health literacy competencies in each round, instead of only the items without consensus together with the new items, could further strengthen the validity of the results.

If the intent is to create a shorter standardized guideline for core competencies, the desired number of competencies could be determined in advance to arrive at the most essential ones, as in the Delphi study on nursing core competencies by Lock (2011), in which 192 standards were reduced to a core set of 12. However, at this stage, the assessed set of health literacy competencies for health personnel in Europe provides new material for policy makers, health practitioners, and educators in different European countries that is applicable to various settings and assessments.

References

- Attride-Stirling J. (2001). Thematic networks: An analytic tool for qualitative research. Qualitative Research, 1(3), 385–405. 10.1177/146879410100100307

- Bachmann C. Abramovitch H. Barbu C. G. Cavaco A. M. Elorza R. D. Haak R. Rosenbaum M. (2013). A European consensus on learning objectives for a core communication curriculum in health care professions. Patient Education and Counseling, 93(1), 18–26. 10.1016/j.pec.2012.10.016

- Bass P. F. Wilson J. F. Griffith C. H. Barnett D. R. (2002). Residents' ability to identify patients with poor literacy skills. Academic Medicine, 77(10), 1039–1041. 10.1097/00001888-200210000-00021

- Barrett S. E. Puryear J. S. Westpheling K. (2008). Health literacy practices in primary care settings: Examples from the field. Retrieved from Commonwealth Fund website: http://www.commonwealthfund.org/publications/fund-reports/2008/jan/health-literacy-practices-in-primary-care-settings--examples-from-the-field

- Berkman N. D. Davis T. C. Donahue K. E. Halpern D. J. Crotty K. (2011). Low health literacy and health outcomes: An updated systematic review. Annals of Internal Medicine, 155(2), 97–107. 10.7326/0003-4819-155-2-201107190-00005

- Brach C. Keller D. Hernandez L. M. Baur C. Parker R. Schyve P. Schillinger D. (2012). Ten attributes of health literate health care organizations. Retrieved from National Academy of Medicine website: https://nam.edu/perspectives-2012-ten-attributes-of-health-literate-health-care-organizations/

- Braun V. Clarke V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. 10.1191/1478088706qp063oa

- Coleman C. A. Hudson S. Maine L. L. (2013). Health literacy practices and educational competencies for health professionals: A consensus study. Journal of Health Communication, 18(Suppl. 1), 82–102. 10.1080/10810730.2013.829538

- Coleman C. Hudson S. Pederson B. (2017). Prioritized health literacy and clear communication practices for health care professionals. HLRP: Health Literacy Research and Practice, 1(3), e90–e99. 10.3928/24748307-20170503-01

- Coulter A. Jenkinson C. (2005). European patients' views on the responsiveness of health systems and healthcare providers. European Journal of Public Health, 15(4), 355–360. 10.1093/eurpub/cki004

- Dennis S. M. Williams A. Taggart J. Newall A. Denney Wilson E. Zwar N. Harris M. F. (2012). Which providers can bridge the health literacy gap in lifestyle risk factor modification education: A systematic review and narrative synthesis. BMC Family Practice, 13, 44. 10.1186/1471-2296-13-44

- de Meyrick J. (2003). The Delphi method and health research. Health Education, 103(1), 7–16. 10.1108/09654280310459112

- De Villiers M. R. De Villiers P. J. T. Kent A. P. (2005). The Delphi technique in health science education. Medical Teacher, 27, 639–643. 10.1080/13611260500069947

- DeWalt D. A. Callahan L. F. Hawk V. H. Broucksou K. A. Hink A. Rudd R. Brach C. (2003). AHRQ health literacy universal precautions toolkit. Retrieved from Agency for Healthcare Research and Quality website: https://www.ahrq.gov/professionals/quality-patient-safety/quality-resources/tools/literacy-toolkit/index.html

- Dickens C. Lambert B. L. Cromwell T. (2013). Nurse overestimation of patients' health literacy. Journal of Health Communication, 18(Suppl. 1), 62–69. 10.1080/10810730.2013.825670

- Dooris M. (2009). Holistic and sustainable health improvement: The contribution of the settings-based approach to health promotion. Perspectives in Public Health, 129(1), 29–36. 10.1177/1757913908098881

- Heijmans M. Uiters E. Rose T. Hofstede J. Devillé W. van der Heide I. Rademakers J. (2015). Study on sound evidence for a better understanding of health literacy in the European Union. Final Report. Retrieved from European Commission website: ec.europa.eu/health/sites/health/.../2015_health_literacy_en.pdf

- Jansen J. van Weert J. C. M. de Groot J. van Dulmen S. Heeren T. J. Bensing J. M. (2010). Emotional and informational patient cues: The impact of nurses' responses on recall. Patient Education and Counseling, 79(2), 218–224. 10.1016/j.pec.2009.10.010

- Joosten E. G. DeFuentes-Merillas L. De Weert G. H. Sensky T. Van Der Staak C. P. F. DeJong J. A. J. (2008). Systematic review of the effects of shared decision-making on patient satisfaction, treatment adherence and health status. Psychotherapy and Psychosomatics, 77(4), 219–226. 10.1159/000126073

- Jukkala A. Deupree J. P. Graham S. (2009). Knowledge of limited health literacy at an academic health center. Journal of Continuing Education in Nursing, 40(7), 298–302. 10.3928/00220124-20090623-01

- Kickbusch I. Pelikan J. Apfel F. Tsouros A. (2013). Health literacy: The solid facts. Retrieved from World Health Organization website: www.euro.who.int/__data/assets/pdf_file/0008/190655/e96854.pdf

- Koh H. K. Brach C. Harris L. M. Parchman M. L. (2013). A proposed “health literate care model” would constitute a systems approach to improving patients' engagement in care. Health Affairs (Millwood), 32(2), 357–367. 10.1377/hlthaff.2012.1205

- Kutner M. Greenberg E. Jin Y. Paulsen C. (2006). The health literacy of America's adults. Results from the 2003 national assessment of adult literacy. (NCES 2006–483). Retrieved from National Center for Education Statistics website: https://nces.ed.gov/pubs2006/2006483.pdf

- Lambert M. Luke J. Downey B. Grengle S. Kelaher M. Reid S. Smylie J. (2014). Health literacy? Health professionals' understandings and their perceptions of barriers that Indigenous patients encounter. BMC Health Services Research, 14, 614. 10.1186/s12913-014-0614-1

- Lie D. Carter-Pokras O. Braun B. Coleman C. (2012). What do health literacy and cultural competence have in common? Calling for a collaborative health professional pedagogy. Journal of Health Communication, 17(Suppl. 3), 13–22. 10.1080/10810730.2012.712625

- Lock L. R. (2011). Selecting examinable nursing core competencies: A Delphi project. International Nursing Review, 58(3), 347–353. 10.1111/j.1466-7657.2011.00886.x

- Morrison A. K. Myrvik M. P. Brousseau D. C. Hoffmann R. G. Stanley R. M. (2013). The relationship between parent health literacy and pediatric emergency department utilization: A systematic review. Academic Pediatrics, 13(5), 421–429. 10.1016/j.acap.2013.03.001

- Nolte E. McKee M. (2008). Caring for people with chronic conditions - a health system perspective. Retrieved from World Health Organization website: http://www.euro.who.int/en/publications/abstracts/caring-for-people-with-chronic-conditions.-a-health-system-perspective-2008

- Nutbeam D. (2008). The evolving concept of health literacy. Social Science and Medicine, 67(12), 2072–2078. 10.1016/j.socscimed.2008.09.050

- Nutbeam D. Kickbusch I. (2000). Advancing health literacy: A global challenge for the 21st century. Health Promotion International, 15(3), 183–184. 10.1093/heapro/15.3.183

- Osborne R. H. Batterham R. W. Elsworth G. R. Hawkins M. Buchbinder R. (2013). The grounded psychometric development and initial validation of the Health Literacy Questionnaire (HLQ). BMC Public Health, 13(1), 658. 10.1186/1471-2458-13-658

- Paasche-Orlow M. K. Wolf M. S. (2008). Evidence does not support clinical screening of literacy. Journal of General Internal Medicine, 23, 100–102. 10.1007/s11606-007-0447-2

- Paasche-Orlow M. K. McCaffery K. Wolf M. S. (2009). Bridging the international divide for health literacy research. Patient Education and Counseling, 75(3), 293–294. 10.1016/j.pec.2009.05.001

- Parrott R. (2004). Emphasizing “communication” in health communication. Journal of Communication, 54(4), 751–787. 10.1111/j.1460-2466.2004.tb02653.x

- Ratzan S. C. (2011). Health communication: Beyond recognition to impact. Journal of Health Communication, 16(2), 109–111. 10.1080/10810730.2011.552379

- Rimal R. N. Lapinski M. K. (2009). Why health communication is important in public health. Bulletin of the World Health Organization, 87(4), 247. 10.2471/BLT.08.056713

- Rudd R. E. Anderson J. E. (2006). The health literacy environment of hospitals and health centers. Partners for action: Making your healthcare facility literacy-friendly. Retrieved from National Center for the Study of Adult Learning and Literacy website: http://www.ncsall.net/fileadmin/resources/teach/environ.pdf

- Schlichting J. A. Quinn M. T. Heuer L. J. Schaefer C. T. Drum M. L. Chin M. H. (2007). Provider perceptions of limited health literacy in community health centers. Patient Education and Counseling, 69(1–3), 114–120. 10.1016/j.pec.2007.08.003

- Shilling V. Jenkins V. Fallowfield L. (2003). Factors affecting patient and clinician satisfaction with the clinical consultation: Can communication skills training for clinicians improve satisfaction? Psycho-Oncology, 12(6), 599–611. 10.1002/pon.731

- Sørensen K. Pelikan J. M. Rothlin F. Ganahl K. Slonska Z. Doyle G. Brand H. (2015). Health literacy in Europe: Comparative results of the European health literacy survey (HLS-EU). The European Journal of Public Health, 25(6), 1053–1058. 10.1093/eurpub/ckv043

- Sørensen K. van den Broucke S. Fullam J. Doyle G. Pelikan J. Slonska Z. Brand H. (2012). Health literacy and public health: A systematic review and integration of definitions and models. BMC Public Health, 12(1), 80. 10.1186/1471-2458-12-80

- Sykes S. Wills J. Rowlands G. Popple K. (2013). Understanding critical health literacy: A concept analysis. BMC Public Health, 13, 150. 10.1186/1471-2458-13-150

- Toronto C. E. (2016). Health literacy competences for registered nurses: An e-Delphi study. The Journal of Continuing Education in Nursing, 47(12), 558–565. 10.3928/00220124-20161115-09

- Volandes A. E. Paasche-Orlow M. K. (2007). Health literacy, health inequality and a just healthcare system. The American Journal of Bioethics, 7, 5–10. 10.1080/15265160701638520

- Wise M. Nutbeam D. (2007). Enabling health systems transformation: What progress has been made in re-orienting health services? Promotion and Education, 14(Suppl. 2), S23–S27. 10.1177/10253823070140020801x

- World Health Organization. (2008). The World Health Report 2008. Primary health Care - Now more than ever. Retrieved from http://www.who.int/whr/2008/en/

- Zolnierek K. B. DiMatteo R. M. (2009). Physician communication and patient adherence to treatment: A meta-analysis. Medical Care, 47(8), 826–834. 10.1097/MLR.0b013e31819a5acc