Abstract

Knowledge of object shape is primarily acquired through the visual modality but can also be acquired through other sensory modalities. In the present study, we investigated the representation of object shape in humans without visual experience. Congenitally blind and sighted participants rated the shape similarity of pairs of 33 familiar objects, referred to by their names. The resulting shape similarity matrices were highly similar for the two groups, indicating that knowledge of the objects' shapes was largely independent of visual experience. Using fMRI, we tested for brain regions that represented object shape knowledge in blind and sighted participants. Multivoxel activity patterns were established for each of the 33 aurally presented object names. Sighted participants additionally viewed pictures of these objects. Using representational similarity analysis, neural similarity matrices were related to the behavioral shape similarity matrices. Results showed that activity patterns in occipitotemporal cortex (OTC) regions, including inferior temporal (IT) cortex and functionally defined object-selective cortex (OSC), reflected the behavioral shape similarity ratings in both blind and sighted groups, also when controlling for the objects' tactile and semantic similarity. Furthermore, neural similarity matrices of IT and OSC showed similarities across blind and sighted groups (within the auditory modality) and across modality (within the sighted group), but not across both modality and group (blind auditory–sighted visual). Together, these findings provide evidence that OTC not only represents objects visually (requiring visual experience) but also represents objects nonvisually, reflecting knowledge of object shape independently of the modality through which this knowledge was acquired.

Introduction

Object shape allows us to recognize, identify, categorize, and interact with objects. Knowledge of object shape is typically acquired through the visual modality, yet object shape is not a strictly visual object property (unlike, e.g., color): shape knowledge can also be acquired through other sensory modalities, such as touch. Indeed, congenitally blind individuals, who lack visual experience, have a detailed, rich, and relatively accurate representation of object shape (Gregory and Wallace, 1963). In the present study, we aimed to reveal nonvisual shape representations in the human brain. Specifically, by testing congenitally blind individuals, we tested whether regions in high-level “visual” cortex that have been associated with visual shape representations also represent object shape nonvisually.

Much research has investigated the neural mechanisms underlying the recognition of object shape from visual input. In human fMRI, regions in ventral and lateral occipitotemporal cortex respond more strongly to pictures of intact objects relative to their scrambled counterparts (Malach et al., 1995; Kourtzi and Kanwisher, 2000). Important evidence that these regions represent the shape of objects, rather than other properties of intact objects, comes from recent fMRI studies that used multivoxel pattern analysis (Eger et al., 2008; Haushofer et al., 2008; Op de Beeck et al., 2008; Drucker and Aguirre, 2009; Peelen and Caramazza, 2012; Mur et al., 2013). These studies have shown that multivoxel activity patterns within object-selective cortex (OSC; defined by the contrast between intact and scrambled objects) carry information about the shape of the viewed objects. For example, patterns of activity within OSC discriminate between objects with smooth, sharp, and straight contours (Op de Beeck et al., 2008). Another study (Haushofer et al., 2008) used representational similarity analysis (RSA; Kriegeskorte et al., 2008) to directly link OSC activity patterns with perceived shape similarity. In that study, participants rated the pairwise similarity of several shapes, thus creating a matrix describing the perceptual similarity among the set of shapes. Multivoxel fMRI activity patterns in OSC were established for each of the shapes, and the similarity between these activity patterns was computed using correlations. Correlating the perceptual and neural similarity matrices showed that activity patterns in parts of OSC reflected perceived object shape.

These findings raise the question of whether the representation of object shape in high-level visual cortex is a purely visual representation or whether this region also represents object shape nonvisually. One way to address this question is to relate neural similarity with shape similarity for objects that are not presented visually (e.g., objects referred to by aurally presented words or objects explored by touch). However, in sighted individuals, positive results in such an experiment could reflect visual imagery of shapes (Stokes et al., 2009; Reddy et al., 2010; Cichy et al., 2012). Therefore, in the present study we tested congenitally blind individuals, who have never visually experienced object shape and therefore cannot visually imagine shapes, to test whether nonvisual object shape is represented in high-level visual cortex.

Materials and Methods

Participants

Thirteen congenitally blind and 15 sighted adults were scanned and paid for participation in the study. All blind participants reported that they had been blind since birth. Because medical records of onset of blindness were not available for most participants, it cannot be ruled out that some of the participants may have had vision very early in life. None of the participants remembered ever having been able to visually recognize shapes. Five blind participants reported having had faint light perception in the past. Three participants reported having faint light perception at the time of testing. An ophthalmologist established the cause of blindness of all participants. This led to the following diagnoses: three congenital glaucoma, one congenital glaucoma and cataracts, one congenital glaucoma and leukoma, one congenital microphthalmia, one congenital microphthalmia and microcornea, one congenital anophthalmos, one congenital optic nerve atrophy, one congenital leukoma, one congenital eyeball dysplasia, one congenital microphthalmia, cataracts, and leukoma, and one cataracts and congenital eyeball dysplasia.

One sighted participant was excluded from the auditory experiment and three sighted participants were excluded from the visual experiment because of excessive head motion (the visual experiment was always run at the end of the experimental session). The blind group (four female) was matched to the 14 remaining sighted participants (seven female) of the auditory experiment on handedness (all right handed), age (blind: mean = 38, SD = 12, range = 18–58; sighted: mean = 43, SD = 10, range = 27–60; t(25) = 1.2, p = 0.22), and years of education (blind: mean = 11, SD = 3, range = 0–12; sighted: mean = 11, SD = 2, range = 9–15; t(25) < 1). They were all native Mandarin Chinese speakers. None suffered from psychiatric or neurological disorders, had ever sustained head injury, or were on any psychoactive medications. All participants provided written informed consent, as approved by the institutional review board of Beijing Normal University (BNU) Imaging Center for Brain Research.

Materials and procedure

Auditory experiment.

During the auditory fMRI experiment, congenitally blind and sighted participants performed an auditory object-size judgment task. The objects presented were 33 common household objects (Fig. 1), selected from a larger set based on an informal interview with a congenitally blind individual verifying his knowledge of the shape of the items. All stimuli were disyllabic words and were recorded digitally (22,050 Hz, 16 bit) by a female native Mandarin speaker. In the scanner, stimuli were presented binaurally over headphones. Participants were instructed to compare the real-world size of each object with that of the palm of a typical adult's hand. If the object was smaller than a palm, participants responded by pressing a button with their right index finger; if the object was bigger than a palm, they pressed a button with their right middle finger. Sighted participants were asked to close their eyes during the experiment. Blind participants judged 53% (SD, 7%) of items smaller than a palm (mean RT, 1129 ms; SD, 101 ms). Sighted participants judged 45% (SD, 13%) of items smaller than a palm (mean RT, 1203 ms; SD, 118 ms).

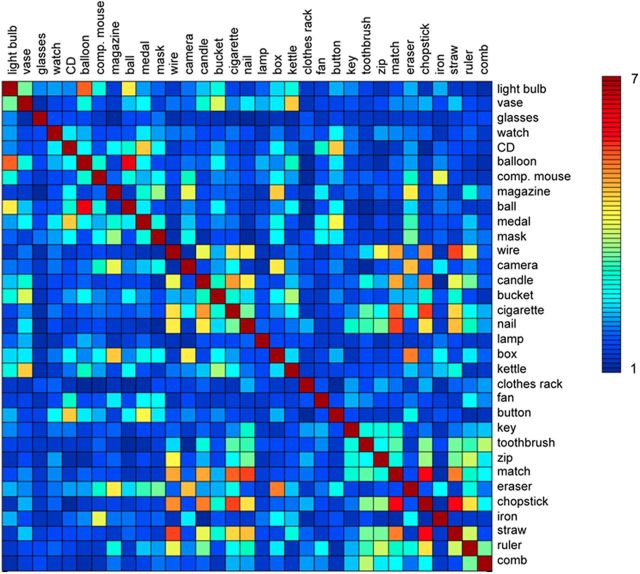

Figure 1.

Shape similarity matrix. Matrix showing the shape similarity between all object pairs on a scale from 1 (least similar) to 7 (most similar), averaged across the participants of an independent group. Participants were asked to rate “how similar in shape are the objects denoted by these two words.”

Each experimental run consisted of 66 3-s-long trials (1 s auditory word followed by 2 s silence) and 22 3-s-long null-trials (3 s silence). Each object name was presented twice within a run. The order of the 88 trials was pseudorandomized, with the restriction that no two consecutive trials were identical, and that the first and the last trials were experimental trials rather than null-trials. Each run started and ended with a 12 s silence period. The whole experiment consisted of eight runs each lasting 4 min 48 s, with each of the 33 object words presented 16 times during the experiment.

Visual experiment.

After they had completed the auditory experiment, the sighted participants additionally participated in an fMRI experiment in which they viewed pictures of the objects. This allowed us to test whether, using our analysis approach, ROI selection, and experimental design, we would replicate previous reports of shape similarity in high-level visual cortex using visual stimuli (Haushofer et al., 2008; Op de Beeck et al., 2008; Peelen and Caramazza, 2012). The task (object-size judgment) was the same as in the auditory experiment. Pictures were black-and-white photographs (200 × 200 pixels, visual angle 5.29 × 5.29°). Three different exemplars for each object were used. Participants viewed the pictures through a mirror attached to the head coil adjusted to allow foveal viewing of a back-projected monitor.

One run consisted of 99 2-s-long picture trials (800 ms picture followed by 1200 ms fixation) and 33 2-s-long fixation trials. Trial order was pseudo-randomized with the restriction that no two consecutive objects were identical and that both the first and the last presentation were picture trials. Each of the 33 object conditions was presented three times within a run, with each exemplar presented once. Each run started and ended with 12 s fixation. Participants performed five runs, each lasting 4 min 48 s, with each of the 33 objects presented 15 times in the experiment.
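The run lengths and presentation counts reported for the two experiments follow directly from the trial structure. The short Python check below is only an illustrative sketch; the function name `run_duration_s` is hypothetical and not part of the original analysis.

```python
def run_duration_s(n_stim_trials, n_null_trials, trial_s, pad_s=12):
    """Run length in seconds: all trials plus a padding period at start and end."""
    return (n_stim_trials + n_null_trials) * trial_s + 2 * pad_s

# Auditory run: 66 word trials + 22 null trials, 3 s each, 12 s silence at both ends
auditory_run = run_duration_s(66, 22, 3)
# Visual run: 99 picture trials + 33 fixation trials, 2 s each, 12 s fixation at both ends
visual_run = run_duration_s(99, 33, 2)

# Presentations per object over the whole experiment
auditory_reps = 2 * 8   # twice per run, eight runs
visual_reps = 3 * 5     # three times per run, five runs

print(divmod(auditory_run, 60), divmod(visual_run, 60))  # (minutes, seconds) per run
print(auditory_reps, visual_reps)
```

Both designs come out at 288 s per run, which matches the reported 4 min 48 s.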

Similarity ratings for auditory experiment.

Shape similarity ratings were collected from the participants of the fMRI study as well as from 16 college students who did not participate in the fMRI study. Two additional student groups rated the tactile (N = 15) and semantic (N = 16) similarity of the objects. These ratings were collected for use in partial correlation analyses, aimed at finding correlations between shape similarity and neural similarity after removing the effects of tactile and semantic similarity on both these variables. The partial correlation is equivalent to the correlation between the residuals of shape and neural similarity after regression on tactile and semantic similarity.
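The equivalence between a partial correlation and a correlation of regression residuals can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the code used in the study: the helper names are hypothetical, the vectors are toy values standing in for the unique object pairs, and the regression on two covariates is implemented by orthogonalizing the second covariate against the first.

```python
import math

def center(v):
    """Subtract the mean from every element of v."""
    m = sum(v) / len(v)
    return [a - m for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    xc, yc = center(x), center(y)
    return dot(xc, yc) / math.sqrt(dot(xc, xc) * dot(yc, yc))

def residualize(v, z1, z2):
    """Residuals of v after least-squares regression on z1, z2, and an intercept."""
    v, z1, z2 = center(v), center(z1), center(z2)
    # Orthogonalize z2 against z1 so each projection can be removed separately
    b = dot(z2, z1) / dot(z1, z1)
    z2o = [c - b * a for a, c in zip(z1, z2)]
    b1 = dot(v, z1) / dot(z1, z1)
    b2 = dot(v, z2o) / dot(z2o, z2o)
    return [x - b1 * a - b2 * c for x, a, c in zip(v, z1, z2o)]

def partial_corr(x, y, z1, z2):
    """Second-order partial correlation of x and y, controlling for z1 and z2."""
    return pearson(residualize(x, z1, z2), residualize(y, z1, z2))

# Toy vectors (illustrative values only, not data from the study)
tactile = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
semantic = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
shared = [0.5, -0.3, 0.1, -0.4, 0.2, -0.1]
shape = [t - s + e for t, s, e in zip(tactile, semantic, shared)]
neural = [2 * t + 3 * s + e for t, s, e in zip(tactile, semantic, shared)]

print(pearson(shape, neural))                          # zero-order correlation
print(partial_corr(shape, neural, tactile, semantic))  # approximately 1
```

In this toy case the two variables share only the `shared` component once tactile and semantic similarity are regressed out, so the partial correlation approaches 1 even though the zero-order correlation is far from it.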

For sighted participants, each trial of the rating task showed two object names on a computer screen, whereas for the blind participants the object names were read aloud by the experimenter. Each participant rated all possible 528 pairs of the 33 object names. For shape similarity, participants were asked to rate: “how similar in shape are the objects denoted by these two words?” For tactile similarity, participants were asked to rate: “how similar do they feel when you touch the objects denoted by these two words?” For semantic similarity, participants were asked to rate: “how related in meaning are the objects denoted by these two words?” All ratings used a seven-point scale (seven for most similar). The next trial started after the response was collected. For shape similarity ratings, participants were told to disregard other properties of the objects such as their color, real-world size, or tactile properties, such as texture. This resulted in symmetric 33 × 33 matrices of shape, tactile, or semantic similarity. The correlations between these matrices (ratings of college students) were as follows: shape–tactile, r = 0.00; shape–semantic, r = 0.24; tactile–semantic, r = 0.21. Some examples (from student group) of highly similar pairs on the shape dimension were as follows: match–chopstick (5.8), wire–straw (5.6), cigarette–chopstick (5.5), and balloon–light bulb (5.4). Some examples of highly similar pairs on the tactile dimension were as follows: glasses–light bulb (6.2), kettle–bucket (6.0), iron–medal (5.4), and ball–eraser (5.4). Some examples of highly similar pairs on the semantic dimension were as follows: lamp–light bulb (6.5), kettle–bucket (6.5), balloon–ball (6.4), and cigarette–match (6.3).

All fMRI analyses presented in this paper used the group-average ratings from sighted students who did not participate in the fMRI experiment (Fig. 1), as the blind and sighted fMRI participants did not rate tactile and semantic similarity. Using the student groups' similarity matrices has the advantage that the ratings were fully independent of the fMRI data. We also analyzed the data using each participant's own shape similarity matrix. These analyses gave qualitatively similar but less consistent results, in line with a previous study showing that consensus judgments may be more informative than idiosyncratic judgments in predicting fMRI activity to individual items (Engell et al., 2007). Indeed, using the shape similarity matrix averaged across all raters (blind, sighted, students) slightly improved results (in terms of statistical significance) relative to the results reported here.

Similarity ratings for visual experiment.

A new group of sighted participants (N = 16; college students) rated the perceptual similarity of the pictures presented in the visual experiment. In each trial, two pictures, of different objects, were shown simultaneously and participants answered the question “how much do these two objects look alike?” using a seven-point scale (seven for most similar). Participants were instructed to judge the similarity of the shape of the objects disregarding low-level similarities, such as differences in size, illumination, or viewpoint.

The pixelwise similarity between the objects was computed using pixelwise correlations (Peelen and Caramazza, 2012) on black-and-white silhouettes of the object pictures used in the visual experiments. The analysis was done separately for each of the three exemplars of the 33 objects. Correlations were Fisher transformed and then averaged across the three exemplars. This resulted in a symmetric 33 × 33 matrix of pixel similarity.

Data acquisition and analysis

MRI data were collected with a 3T Siemens Trio Tim scanner at the BNU MRI center. A high-resolution 3D structural dataset was acquired with a 3D-MPRAGE sequence in the sagittal plane (TR, 2530 ms; TE, 3.39 ms; flip angle, 7°; matrix size, 256 × 256; 144 slices; voxel size, 1.33 × 1 × 1.33 mm; acquisition time, 8.07 min). BOLD activity was measured with an EPI sequence (TR, 2000 ms; TE, 30 ms; flip angle, 90°; matrix size, 64 × 64; voxel size, 3 × 3 × 3.5 mm with gap of 0.7 mm; 33 axial slices).

fMRI data were analyzed using BrainVoyager QX v2.3. The first five volumes (10 s) of each run were discarded. Preprocessing of the functional data included slice time correction, 3D motion correction, and high-pass filtering (cutoff, three cycles per run). No spatial smoothing was applied. For each participant, functional data were then registered to her/his anatomical data and transformed into Talairach space. Functional data were analyzed using the general linear model. We included 33 regressors of interest corresponding to the 33 objects, and six regressors of no interest corresponding to the six motion parameters.

Anatomical regions of interest (ROIs) were defined using the Talairach atlas implemented in BrainVoyager. Corresponding ROIs of the two hemispheres were combined because no significant differences between hemispheres were found in any of the analyses. The first ROI (occipital cortex; OC) consisted of Brodmann areas 17 and 18. BA17 and BA18, located in the occipital lobe, approximately correspond to retinotopic areas V1 and V2/V3, respectively. The second ROI (inferior temporal cortex; IT) consisted of Brodmann areas 37 and 20. BA37 and BA20 include the occipitotemporal and ventral temporal cortex, covering regions in which object-selective responses are found. The two ROIs were of comparable size (OC, 28,906 mm³; IT, 20,348 mm³).

A functionally defined OSC ROI was taken from a previous study (Bracci et al., 2012) conducted in a separate group of sighted participants. Participants performed one run of a standard object-selective cortex localizer (Malach et al., 1995), lasting 5 min. Stimuli consisted of 20 intact and 20 scrambled objects, which were presented in alternating blocks. OSC was defined at the group level (N = 11) in Talairach space by contrasting intact versus scrambled objects (random-effects, p < 0.001), and had a size of 24,896 mm³.

To create a neural similarity matrix for a given ROI, multivoxel response patterns (t values relative to baseline) for the 33 objects were correlated with each other, resulting in a symmetric 33 × 33 matrix. These correlation values were Fisher transformed. Shape information was computed by correlating the neural similarity matrix of a given ROI with the average shape similarity matrix of the independent student group, considering only unique off-diagonal values of the matrices. Correlations between neural and shape matrices were computed for each participant individually, Fisher transformed, and then tested against zero across participants using t tests. Considering the unidirectional hypothesis for this test (a positive correlation between neural similarity and shape similarity), one-tailed statistical tests were used. For all other tests (e.g., differences between groups), for which both directions might be hypothesized, two-tailed tests were used.
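The ROI analysis described above can be outlined as follows. This is a minimal sketch with hypothetical function names and toy data, not the original BrainVoyager-based pipeline; `math.atanh` is used as the Fisher transformation, and diagonal entries are left at zero because they never enter the analysis.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def similarity_matrix(patterns):
    """Symmetric matrix of Fisher-transformed correlations between object patterns."""
    n = len(patterns)
    sim = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            sim[i][j] = sim[j][i] = math.atanh(pearson(patterns[i], patterns[j]))
    return sim

def upper_triangle(m):
    """Unique off-diagonal values of a symmetric matrix."""
    n = len(m)
    return [m[i][j] for i in range(n) for j in range(i + 1, n)]

def shape_information(patterns, shape_matrix):
    """Fisher-transformed correlation between neural and rated shape similarity."""
    neural = upper_triangle(similarity_matrix(patterns))
    return math.atanh(pearson(neural, upper_triangle(shape_matrix)))

def one_sample_t(values):
    """t statistic for testing the mean of values against zero."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean / math.sqrt(var / n)

# Toy example: 4 objects x 5 voxels (illustrative values only)
patterns = [
    [1.0, 2.0, 0.5, -1.0, 0.3],
    [0.9, 2.2, 0.4, -0.8, 0.1],
    [-1.0, 0.2, 1.5, 0.7, -0.4],
    [-0.8, 0.1, 1.7, 0.5, -0.6],
]
shape = [[7, 6, 2, 1], [6, 7, 1, 2], [2, 1, 7, 6], [1, 2, 6, 7]]
print(shape_information(patterns, shape))
```

In the study, one such value would be obtained per participant and ROI, and `one_sample_t` would then be applied across participants. For 33 objects, `upper_triangle` yields the 528 unique pairs that participants also rated.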

Whole-brain pattern analysis was performed using a searchlight approach (Kriegeskorte et al., 2006). For each voxel in the brain we took a cube of 18 mm side length (corresponding to 216 resampled 3 × 3 × 3 mm voxels) around this voxel. For each of these cubes, shape information was computed as described above. Information values from each cube were assigned to the center voxel of this cube. The analysis was performed for each participant separately. A random-effects group analysis was performed testing for voxels in which information values differed from zero using t tests. The threshold was set to p < 0.05 (conjunction analysis) and p < 0.0005 (combined analysis), with a cluster size threshold of five voxels.
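The geometry of the searchlight cube can be illustrated with a short sketch. An 18 mm side at 3 mm resolution gives 6 voxels per side, so the cube cannot be exactly centered on a voxel; the offsets of −3 to +2 used here are an assumption made for illustration, as are the function names.

```python
def cube_offsets(side_mm=18, voxel_mm=3):
    """Voxel offsets of a cubic searchlight relative to its reference voxel."""
    n = side_mm // voxel_mm              # 6 voxels per side
    start = -(n // 2)                    # assumed placement: offsets -3 .. +2
    axis = range(start, start + n)
    return [(dx, dy, dz) for dx in axis for dy in axis for dz in axis]

def searchlight_voxels(center, volume_shape, offsets):
    """Coordinates of the cube around `center` that lie inside the volume."""
    cx, cy, cz = center
    inside = []
    for dx, dy, dz in offsets:
        x, y, z = cx + dx, cy + dy, cz + dz
        if 0 <= x < volume_shape[0] and 0 <= y < volume_shape[1] and 0 <= z < volume_shape[2]:
            inside.append((x, y, z))
    return inside

offsets = cube_offsets()
print(len(offsets))  # number of voxels in one searchlight cube
print(len(searchlight_voxels((10, 10, 10), (20, 20, 20), offsets)))
```

For each such set of voxels, the multivoxel patterns would be extracted and shape information computed as in the ROI analysis, with the result written back to the reference voxel.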

Results

Shape similarity ratings

As can be seen in Figure 1, there was considerable variability in the shape similarity of the object name pairs, as rated by an independent group of sighted students who did not participate in the fMRI experiment. Shape similarity was also rated by the sighted and blind participants of the fMRI study. Interestingly, the shape similarity matrix of the independent student group was equally strongly correlated with the shape similarity matrix of the sighted (r = 0.84) and of the blind (r = 0.84) group participating in the fMRI experiment. The correlation between the matrices of the sighted and blind fMRI groups was r = 0.88 (Fig. 2). These similarities show that the blind participants' judgments of shape are surprisingly similar to those of sighted individuals, suggesting a common representation of object shape.

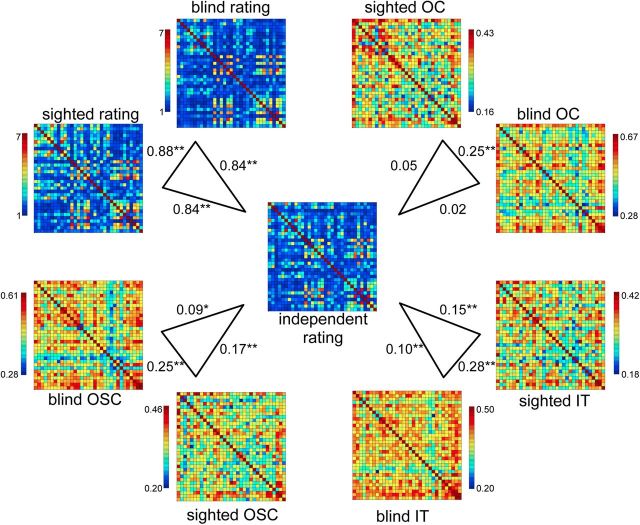

Figure 2.

Group-average shape and neural similarity matrices. Correlations between the group-average independent shape rating matrix (center) and the group-average shape rating matrices of the blind and sighted groups of the fMRI study (top left), and correlations between the group-average independent shape rating matrix and the group-average neural similarity matrices of OC, IT, and OSC ROIs in the auditory experiment. Asterisks indicate significance levels of the correlations relative to zero; *p < 0.05; **p < 0.01.

Auditory experiment

fMRI activity was measured while sighted and congenitally blind participants listened to the names of 33 common household objects. For several regions of interest, the neural similarity between the 33 objects was correlated with the rated shape similarity between these same objects. Regions of interest included two anatomical ROIs, corresponding to what is often considered low-level visual cortex (OC) and high-level visual cortex (IT), and an OSC ROI, functionally defined by the contrast between pictures of intact and scrambled objects in a separate group of sighted participants (see Materials and Methods).

Group-average matrices

In a first analysis we compared the similarity between the group-average neural matrices of the blind and sighted groups, and between the group-average neural matrices and the independent shape similarity matrix (Fig. 2). Significance of these correlations was determined using permutation tests (1 million iterations), by computing correlations between each pair of matrices after randomly shuffling the labels of the conditions. This yielded, for each comparison, the probability of obtaining, under the null hypothesis, a correlation as large as or larger than the one obtained between the original matrices.
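A label-shuffling permutation test of this kind can be sketched as below. The sketch makes several assumptions that are not specified in the text: the condition labels of one matrix are permuted (rows and columns together), the p value is the plain proportion of permuted correlations at least as large as the observed one, and far fewer iterations are used than the 1 million reported. Function names and matrices are hypothetical.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def upper_triangle(m):
    """Unique off-diagonal values of a symmetric matrix."""
    n = len(m)
    return [m[i][j] for i in range(n) for j in range(i + 1, n)]

def relabel(m, order):
    """Reorder rows and columns of m according to a permutation of condition labels."""
    return [[m[i][j] for j in order] for i in order]

def permutation_p(a, b, n_iter=10000, seed=0):
    """Observed correlation between two matrices and its permutation p value."""
    rng = random.Random(seed)
    b_vec = upper_triangle(b)
    observed = pearson(upper_triangle(a), b_vec)
    order = list(range(len(a)))
    count = 0
    for _ in range(n_iter):
        rng.shuffle(order)  # shuffle the condition labels of matrix a
        if pearson(upper_triangle(relabel(a, order)), b_vec) >= observed:
            count += 1
    return observed, count / n_iter

# Toy 5 x 5 similarity matrices (illustrative values only)
a = [[0, 6, 5, 2, 1], [6, 0, 4, 2, 3], [5, 4, 0, 1, 2], [2, 2, 1, 0, 7], [1, 3, 2, 7, 0]]
b = [[0, 5, 6, 1, 2], [5, 0, 4, 3, 2], [6, 4, 0, 2, 1], [1, 3, 2, 0, 6], [2, 2, 1, 6, 0]]
print(permutation_p(a, b, n_iter=2000))
```

Shuffling labels rather than individual matrix cells keeps the dependency structure of a similarity matrix intact, since every cell in a row and column belongs to the same condition.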

As shown in Figure 2, the neural similarity matrices of all three regions were reliably correlated across groups (p < 0.00002, for all comparisons), indicating an overlap in the neural representational space of the objects in blind and sighted participants. Importantly, the neural similarity matrices of IT and OSC were significantly correlated with the shape similarity matrix for both the sighted (IT, p = 0.00011; OSC, p = 0.000007) and the blind (IT, p = 0.010; OSC, p = 0.022) groups, indicating that these regions showed relatively similar neural patterns to objects that were rated as relatively similar in shape, even in the absence of visual experience. By contrast, the neural similarity matrix of OC was not significantly correlated with the shape similarity matrix in either group (sighted, p = 0.12; blind, p = 0.31).

Individual-participant matrices

Our main statistical analyses were based on individual-participant correlations, allowing for population-level inferences. For each participant, correlations between each ROI's neural similarity matrix and the independent shape similarity matrix were computed and Fisher transformed. To test for significant shape information, correlations were tested against zero using one-sample t tests. To test for differences in shape information between blind and sighted groups, and between ROIs, correlations were contrasted between groups and ROIs using ANOVAs and independent-samples t tests. Individual-participant correlations were also tested using random-effects searchlight analyses, focusing on the occipitotemporal cortex. To ensure that correlations between neural similarity and shape similarity were not due to effects of tactile similarity or semantic similarity (see Materials and Methods for the rating procedure of tactile and semantic similarity), second-order partial correlations were also computed, removing the influence of both tactile and semantic similarity.

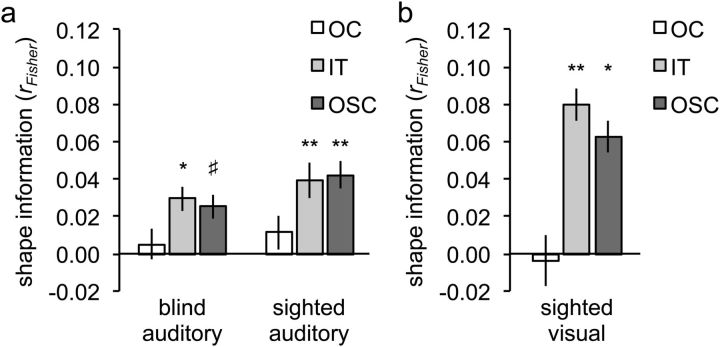

A 2 × 2 ANOVA on shape information in the two anatomical ROIs, with group (sighted, blind) and ROI (OC, IT) as factors, revealed a main effect of ROI, with significantly more shape information in IT than OC (F(1,25) = 5.51; p = 0.027; Fig. 3a). Shape information was significant in IT (r = 0.034; t(26) = 3.87; one-tailed p = 0.0003) but not in OC (r = 0.008; t(26) = 0.70; one-tailed p = 0.25). There was no main effect of group (F(1,25) = 0.19; p = 0.67) and no interaction between ROI and group (F(1,25) = 0.03; p = 0.88), indicating equally strong shape information in blind and sighted groups. Shape information in the IT ROI was significant in both the sighted group (Fig. 3a; t(13) = 3.09; one-tailed p = 0.0043) and the blind group (Fig. 3a; t(12) = 2.28; one-tailed p = 0.021). These results did not change when controlling for the influence of both tactile and semantic similarity using second-order partial correlation analysis: shape information was again significant in IT (r = 0.028; t(26) = 3.03; one-tailed p = 0.0027) but not in OC (r = 0.006; t(26) = 0.44; one-tailed p = 0.33). Furthermore, shape information in the IT ROI was again significant in both the sighted group (r = 0.031; t(13) = 2.24; one-tailed p = 0.021) and the blind group (r = 0.025; t(12) = 1.96; one-tailed p = 0.037). Tactile information was not significant in either ROI (r < 0.017; one-tailed p > 0.19, for both ROIs), whereas semantic information was significant in IT (r = 0.028; t(26) = 2.32; one-tailed p = 0.014) but not in OC (r = 0.014; t(26) = 1.06; one-tailed p = 0.15).

Figure 3.

Results of ROI analyses. The plotted values (shape information) are the average (Fisher-transformed) correlations between the shape similarity matrix and the neural similarity matrix of each ROI (OC, IT, and OSC). a, Auditory experiment. b, Visual experiment. Error bars indicate within-participant SEM. Asterisks indicate significance levels of the correlations relative to zero (one-tailed); #p = 0.06; *p < 0.05; **p < 0.01.

Similar to the IT ROI, response patterns in the functionally defined OSC contained significant shape information (r = 0.034; t(26) = 3.18; one-tailed p = 0.0019; Fig. 3a), which did not differ between sighted and blind groups (t(25) = 0.79; p = 0.44). Shape information was also significant when controlling for both tactile and semantic dimensions using second-order partial correlation analysis (r = 0.028; t(26) = 2.45; one-tailed p = 0.011). Tactile information was not significant in OSC (r = −0.003; t(26) = −0.14; one-tailed p = 0.56), whereas semantic information was significant in OSC (r = 0.029; t(26) = 2.30; one-tailed p = 0.015).

Searchlight analyses

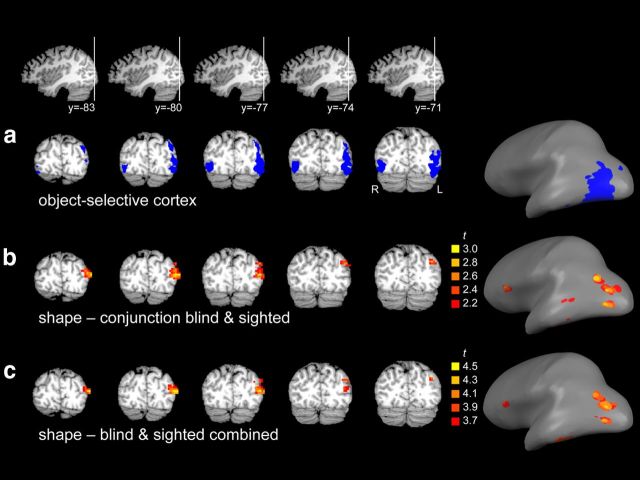

To test which parts of the occipitotemporal cortex (OTC) contributed most strongly to the shape information observed in the ROI analyses, we next tested for shape information in OTC without limiting the analysis to a priori regions of interest by using a searchlight pattern analysis (Kriegeskorte et al., 2006). This analysis tested for local clusters in which neural similarity was correlated with shape similarity. In a first analysis, a conjunction analysis was performed to reveal OTC clusters in which shape information was significant (p < 0.05) in both groups. This analysis revealed a large cluster in left lateral occipitotemporal cortex (volume = 2916 mm³; xyz = −43, −80, 15; Fig. 4b), overlapping object-selective cortex (Fig. 4a). A cluster in left inferior temporal cortex was also observed (volume = 54 mm³; xyz = −51, −41, −21), but this cluster did not survive the cluster size threshold. One cluster was observed outside OTC, in left inferior frontal cortex (volume = 162 mm³; xyz = −46, 21, 13).

Figure 4.

Results of whole-brain analyses. a, Object-selective cortex ROI displayed in volume space (left) and on an inflated brain (right). b, Results of a random-effects conjunction searchlight analysis, testing for regions showing significant shape information in both blind and sighted groups, displayed in volume space (left) and on an inflated brain (right). The cluster in left occipitotemporal cortex was one contiguous cluster in volume space but not when displayed on the inflated brain. c, Results of a random-effects searchlight analysis on all participants combined, displayed in volume space (left) and on an inflated brain (right). The cluster in left occipitotemporal cortex was one contiguous cluster in volume space but not when displayed on the inflated brain.

In a second analysis, auditory task data of both groups were combined to maximize statistical power. At a threshold of one-tailed p < 0.0005, this analysis revealed two clusters in OTC (Fig. 4c). The largest cluster was again located in left lateral occipitotemporal cortex (volume = 2268 mm³; xyz = −43, −79, 14), overlapping object-selective cortex (Fig. 4a). A smaller cluster was located in left inferior temporal cortex (volume = 216 mm³; xyz = −51, −42, −21). A third cluster was located outside OTC, in left inferior frontal cortex (volume = 216 mm³; xyz = −50, 21, 14). None of these clusters showed a significant difference in shape information between sighted and blind groups (t(25) < 1, for all tests).

Visual experiment

Data of a visual experiment, in which pictures of the objects were presented, were analyzed using the same approach as used for the analysis of the auditory experiment data. This served to replicate previous reports of shape representations in OTC for visually presented objects (Haushofer et al., 2008; Op de Beeck et al., 2008; Peelen and Caramazza, 2012) and their overlap with representations of imagined objects, referred to by aurally presented object names (Stokes et al., 2009; Reddy et al., 2010; Cichy et al., 2012).

A 2 × 2 ANOVA with ROI (OC, IT) and dimension (pixel, shape) as factors revealed a significant interaction (F(1,11) = 97.2, p < 0.0001), reflecting significant information about shape similarity in IT (Fig. 3b; t(11) = 2.96, one-tailed p = 0.0064) but not OC (Fig. 3b; t(11) = −0.21, one-tailed p = 0.42) and significant information about pixelwise image similarity in OC (t(11) = 8.82, one-tailed p < 0.0001) but not IT (t(11) = 0.01, one-tailed p = 0.49). Shape information was also significant in OSC (Fig. 3b; t(11) = 2.50, one-tailed p = 0.015). These results replicate previous studies showing that inferior temporal cortex represents visual object shape, whereas occipital cortex represents low-level visual image properties (Peelen and Caramazza, 2012).

Next, we tested whether the neural similarity matrices of the sighted participants were similar across modalities (auditory-visual). To this aim, we computed the correlation between each individual participant's neural similarity matrix in one modality (e.g., visual) and the group-average neural similarity matrix in the other (e.g., auditory) modality, excluding the tested participant from the group-average matrices. The cross-modality correlation (averaged across both comparison directions; Fig. 5 shows each comparison separately) was significantly positive in OSC (r = 0.053; t(11) = 3.78; one-tailed p = 0.0015) and IT (r = 0.036; t(11) = 1.91; one-tailed p = 0.041), but not in OC (r = −0.01; t(11) = −0.30). Thus, neural similarity matrices in OTC showed similarities across the auditory and visual modalities in the sighted participants, replicating previous reports (Stokes et al., 2009; Reddy et al., 2010; Cichy et al., 2012).
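The leave-one-participant-out logic of this cross-modality comparison can be sketched as follows. Function names and data are hypothetical. This passage does not state whether correlations were Fisher transformed before averaging the two directions, so the sketch simply averages raw correlations; each participant's matrix is assumed to be already vectorized to its unique off-diagonal values.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def mean_vector(vectors):
    """Element-wise mean of equal-length vectors."""
    return [sum(vals) / len(vals) for vals in zip(*vectors)]

def cross_modality_correlations(visual, auditory):
    """Per-participant cross-modality correlation, averaged over both directions.

    visual[p] and auditory[p] are participant p's vectorized neural similarity
    matrices; participant p is excluded from both group averages.
    """
    n = len(visual)
    result = []
    for p in range(n):
        others = [i for i in range(n) if i != p]
        auditory_avg = mean_vector([auditory[i] for i in others])
        visual_avg = mean_vector([visual[i] for i in others])
        r_vis_to_aud = pearson(visual[p], auditory_avg)
        r_aud_to_vis = pearson(auditory[p], visual_avg)
        result.append((r_vis_to_aud + r_aud_to_vis) / 2)
    return result

# Toy data: 3 participants, 6 object pairs each (illustrative values only)
visual = [[0.9, 0.1, 0.2, 0.3, 0.1, 0.8], [0.7, 0.2, 0.1, 0.2, 0.3, 0.9], [0.8, 0.3, 0.2, 0.1, 0.2, 0.7]]
auditory = [[0.5, 0.2, 0.1, 0.2, 0.1, 0.6], [0.6, 0.1, 0.3, 0.2, 0.2, 0.4], [0.4, 0.2, 0.2, 0.3, 0.1, 0.5]]
print(cross_modality_correlations(visual, auditory))
```

Excluding the tested participant from the group average prevents within-participant similarity between the two sessions from inflating the estimate.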

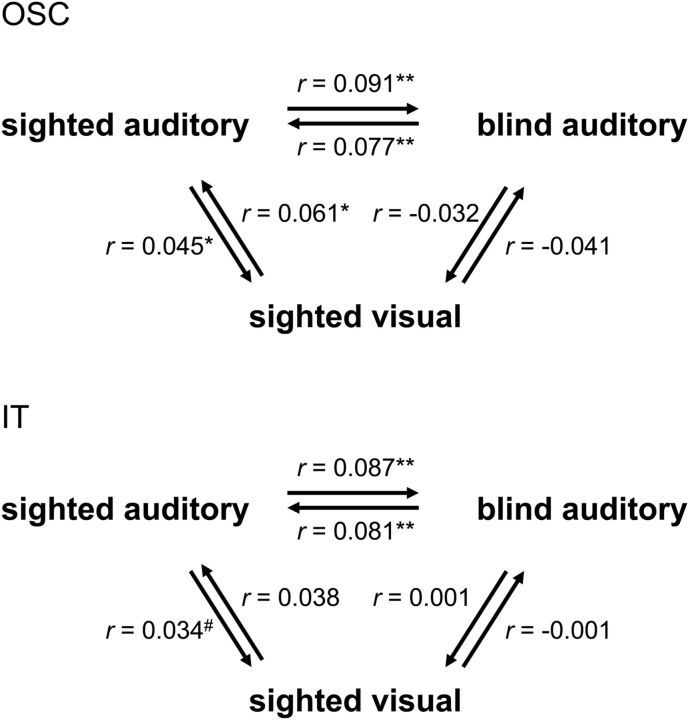

Figure 5.

Comparing neural similarity matrices across modality and group. Neural similarity matrices of OSC (top) and IT (bottom) were correlated across the two modalities (auditory, visual) in the sighted group, across the two groups (blind, sighted) in the auditory experiment and across both group and modality (sighted visual–blind auditory). Individual participants' neural similarity matrices were correlated with group-average neural similarity matrices (excluding the tested participant from the group-average matrix). Arrows indicate the direction of the comparison, pointing from the individual matrix to the group-average matrix. Correlations were averaged across participants. Asterisks indicate significance levels of the correlations relative to zero (one-tailed); #p = 0.09; *p < 0.05; **p < 0.01.

Comparing neural similarity matrices across modality and group

The current results indicate commonalities in the neural representational spaces of OSC and IT across modality (within the sighted group) and across blind and sighted groups (within the auditory modality; Fig. 2). A final question that we addressed was whether these two commonalities were related to each other as well. That is, we asked whether neural similarity matrices in OSC and IT would also be correlated when comparing visual experiment matrices of the sighted with auditory experiment matrices of the blind. In a first analysis, we computed the correlation between each sighted participant's neural similarity matrix in the visual experiment (sighted_visual) and the group-average neural similarity matrix of the blind participants' auditory experiment (blind_auditory). These correlations were not significantly different from zero (Fig. 5; r < 0; t(11) < 1, for both ROIs) even though for the same sighted participants the correlation between their auditory experiment neural similarity matrices and the group-average blind auditory neural similarity matrix was reliably positive in both OSC (Fig. 5; r = 0.091; t(11) = 2.36; one-tailed p = 0.019) and IT (Fig. 5; r = 0.087; t(11) = 4.09; one-tailed p = 0.0009). Follow-up t tests confirmed this difference, showing significantly lower correlations for the sighted_visual–blind_auditory comparison than for both the sighted_auditory–blind_auditory comparison (OSC, t(11) = 2.22; p = 0.048; IT, t(11) = 2.36; p = 0.038) and (at least in OSC) the sighted_visual–sighted_auditory comparison (OSC, t(11) = 2.86; p = 0.016; IT, t(11) = 1.43; p = 0.18). To provide a further test for the correlation between sighted visual and blind auditory matrices, we also performed the reverse comparison: computing the correlation between each blind participant's neural similarity matrix (blind_auditory) and the group-average neural similarity matrix of the sighted participants' visual experiment (sighted_visual). These correlations were again not significantly different from zero (Fig. 5; r < 0.01; t(12) < 1, for both ROIs) even though for the same blind participants the correlation between their (auditory experiment) neural similarity matrices and the group-average sighted auditory neural similarity matrix was reliably positive in both OSC (Fig. 5; r = 0.077; t(12) = 3.31; one-tailed p = 0.003) and IT (Fig. 5; r = 0.081; t(12) = 3.06; one-tailed p = 0.005). The correlations for the blind_auditory–sighted_visual comparison were (at least in OSC) significantly lower than the correlations for the blind_auditory–sighted_auditory comparison (OSC, t(12) = 3.34; p = 0.006; IT, t(12) = 2.07; p = 0.060).
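As an illustration of the two kinds of tests used here, the sketch below computes a one-sample t statistic (per-participant correlations against zero) and a paired t statistic (two comparisons within the same participants). It assumes the tests are run on the raw per-participant correlation values; whether a transformation such as Fisher's z was applied is not stated in this section. Function names and the toy values are hypothetical. Converting t to a p value requires the t distribution and is omitted.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, popmean=0.0):
    """Return (t, df) for a one-sample t test of `values` against `popmean`."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(values) - popmean) / standard_error, n - 1


def paired_t(a, b):
    """Return (t, df) for a paired t test on two same-length samples.

    a[i] and b[i] must come from the same participant.
    """
    differences = [x - y for x, y in zip(a, b)]
    return one_sample_t(differences)


# Toy per-participant correlations for two comparisons (hypothetical values).
same_modality = [0.10, 0.07, 0.12, 0.05, 0.09]
cross_modality = [0.01, -0.02, 0.03, 0.00, -0.01]

t_same, df_same = one_sample_t(same_modality)           # correlation vs. zero
t_diff, df_diff = paired_t(same_modality, cross_modality)  # difference between comparisons
```

With 12 sighted participants the degrees of freedom are 11, and with 13 blind participants they are 12, matching the t(11) and t(12) values reported above.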

To summarize, the neural similarity matrices of OSC and IT showed similarities across modality (within the sighted group) and across blind and sighted groups (within the auditory modality), but not across both modality and group (blind auditory–sighted visual). These results suggest that the auditory experiment in sighted participants activated both visual object representations (uniquely shared with the visual experiment) and nonvisual object representations (uniquely shared with the blind group's auditory experiment).

Discussion

We examined whether the occipitotemporal cortex, classically considered the high-level visual cortex, also represents object shape in the absence of prior visual input. Congenitally blind and sighted participants were presented with words referring to 33 common household objects. fMRI activity was measured in response to each of these objects individually to establish the neural similarity between all pairings of the objects. This neural similarity matrix was then correlated with a shape similarity matrix capturing the pairwise shape similarity of the objects, as rated by an independent group of participants. A positive correlation between these matrices indicates that the neural similarity space in a brain region partly reflects the shape similarity space. Results showed that activity patterns in anatomically defined inferior temporal cortex and functionally defined object-selective cortex, but not occipital cortex, carried information about object shape in both sighted and congenitally blind participants. Finally, a searchlight analysis revealed two regions in left occipitotemporal cortex in which neural similarity reflected shape similarity, both when averaging across groups and in a group conjunction analysis. Together, these results provide evidence that the occipitotemporal cortex, previously linked to visual shape representation, also represents object shape in the absence of vision.
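The logic of relating a neural similarity matrix to a behavioral shape similarity matrix can be sketched as follows. This is a simplified illustration, not the analysis pipeline used in the study: it assumes that neural similarity is the Pearson correlation between the multivoxel patterns of two objects and that the two matrices are compared with a Pearson correlation over their off-diagonal entries. All function names and the toy data are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def neural_similarity_matrix(patterns):
    """Pairwise pattern correlations; patterns[i] is the voxel vector of object i."""
    n = len(patterns)
    return [[pearson(patterns[i], patterns[j]) for j in range(n)] for i in range(n)]


def lower_triangle(matrix):
    """Off-diagonal entries below the diagonal, one per object pair."""
    return [matrix[i][j] for i in range(len(matrix)) for j in range(i)]


def rsa_correlation(patterns, behavioral):
    """Correlate neural similarity with a behavioral similarity matrix."""
    neural = neural_similarity_matrix(patterns)
    return pearson(lower_triangle(neural), lower_triangle(behavioral))


# Toy example: 3 objects, 3 voxels. Objects 0 and 1 are rated as similar in shape.
patterns = [[1.0, 2.0, 3.0], [1.0, 2.0, 4.0], [3.0, 2.0, 1.0]]
shape_similarity = [[1.0, 0.9, 0.1], [0.9, 1.0, 0.2], [0.1, 0.2, 1.0]]

r = rsa_correlation(patterns, shape_similarity)
```

A positive `r` for a given region indicates that object pairs rated as similar in shape also evoke similar activity patterns there. With 33 objects, each matrix contributes 528 object pairs to the correlation.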

The correlations between individual-participant neural similarity matrices of OSC/IT and shape similarity matrices were consistently positive across participants but were numerically weak (Fig. 3). This is likely a result of noisy activity estimates for individual conditions due to the few repetitions we could include for each of the individual object conditions (16 repetitions per condition). This notion is supported by the finding of numerically higher correlations between the shape matrix and the group-average neural matrices (Fig. 2), with noise expected to cancel out when averaging across participants. Furthermore, individual-participant correlations in the visual experiment were of the same order of magnitude as correlations in the main (auditory) experiment and were slightly weaker than those reported in previous studies with more powerful designs (Haushofer et al., 2008; Peelen and Caramazza, 2012). Additionally, it is of course likely that shape similarity only explains a small part of neural similarity, with activity patterns in OTC reflecting not just the shape of the objects but several additional object properties as well.

The aim of our study was to test for regions in which multivoxel activity patterns carry information about object shape. Our approach differs from previous studies investigating object processing in blind individuals, as these studies tested for activity increases associated with the active exploration of objects (through tactile or auditory input) relative to control conditions. These studies provided evidence that regions of the high-level visual cortex of congenitally/early blind individuals are activated in response to objects conveyed by sounds (De Volder et al., 2001), touch (Amedi et al., 2010), echolocation (Arnott et al., 2013), or through visual-to-auditory sensory substitution soundscapes (Amedi et al., 2007). One consistent finding from these studies was evidence for a region in lateral occipital cortex (Amedi et al., 2002, 2007, 2010) that responds significantly more strongly to exploration of objects than to exploration of textures, to hand movements, or to retrieval of object knowledge (e.g., listening to object names, as in the present study). It is unclear whether activity in response to the active exploration of objects (as studied previously) primarily reflects activity in neurons representing object shape or whether it primarily reflects more general processes involved in grouping sensory input to build coherent object representations (for example, integrating haptic input from multiple fingers; Peelen et al., 2010). Comparing Talairach coordinates between the searchlight clusters carrying shape information in the present study (−43, −79, 14 and −51, −42, −21) and the object exploration region in previous studies (e.g., −51, −62, 1; Amedi et al., 2010) suggests that these may be different regions. 
Furthermore, increased activity during nonvisual object recognition, relative to control conditions not involving objects, could reflect activity in neurons coding for other (nonshape) properties of objects, such as their semantic category (Pietrini et al., 2004; Mahon et al., 2009; He et al., 2013; Peelen et al., 2013). An important avenue for future research is to relate these two lines of research, for example by testing for shape information within regions localized with an active object exploration task.

In our main experiment, participants were presented with the names of objects rather than with tactile or visual input. Still, one possibility is that our results reflected activity in sensory shape representations, with the nature of these representations being different for the two groups (tactile in blind, visual in sighted). Alternatively, our results can be parsimoniously explained by assuming representations of object shape that are not specific to one modality (e.g., reflecting the knowledge that a particular object has an elongated shape; Caramazza et al., 1990). Such amodal representations may be applied to both vision and touch, thus speaking to the intriguing question first posed by Molyneux more than 300 years ago (Locke, 1690): when a man who is born blind regains sight later in life, would he be able to immediately visually recognize shapes that are known to him by touch? A recent study empirically addressed this question by testing shape matching in congenitally blind participants who had undergone cataract removal surgery (Held et al., 2011). Shortly after treatment, participants were able to accurately match two shapes within the visual modality. They were at chance, however, when matching shapes across visual and tactile modalities. This finding suggests a negative answer to Molyneux's question, although it could be argued that the visual ability in the newly sighted participants may have been insufficient to reach conceptual representations of object shape (they may have used simpler visual cues to perform the visual task). Interestingly, performance on the cross-modal matching task improved dramatically within a week even without explicit training on the task, suggesting rapid cross-modal mappings.

In summary, we found that knowledge of object shape is represented in the occipitotemporal cortex of both sighted and congenitally blind individuals. Our results indicate that bottom-up visual input is not required for establishing object shape representations in OTC. Regions previously assumed to house visual representations of object shape also represent object shape nonvisually, reflecting knowledge of object shape independently of the modality through which this knowledge was acquired.

Footnotes

This work was supported by the 973 Program (2013CB837300), NSFC (31171073; 31222024; 31271115, 31221003), NCET (12-0055; 12-0065), and MPNSSF (11&ZD186). M.V.P. was supported by the Fondazione Cassa di Risparmio di Trento e Rovereto.

References

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202.

- Amedi A, Stern WM, Camprodon JA, Bermpohl F, Merabet L, Rotman S, Hemond C, Meijer P, Pascual-Leone A. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nat Neurosci. 2007;10:687–689. doi: 10.1038/nn1912.

- Amedi A, Raz N, Azulay H, Malach R, Zohary E. Cortical activity during tactile exploration of objects in blind and sighted humans. Restor Neurol Neurosci. 2010;28:143–156. doi: 10.3233/RNN-2010-0503.

- Arnott SR, Thaler L, Milne JL, Kish D, Goodale MA. Shape-specific activation of occipital cortex in an early blind echolocation expert. Neuropsychologia. 2013;51:938–949. doi: 10.1016/j.neuropsychologia.2013.01.024.

- Bracci S, Cavina-Pratesi C, Ietswaart M, Caramazza A, Peelen MV. Closely overlapping responses to tools and hands in left lateral occipitotemporal cortex. J Neurophysiol. 2012;107:1443–1456. doi: 10.1152/jn.00619.2011.

- Caramazza A, Hillis AE, Rapp BC, Romani C. The multiple semantics hypothesis-multiple confusions. Cogn Neuropsych. 1990;7:161–189. doi: 10.1080/02643299008253441.

- Cichy RM, Heinzle J, Haynes JD. Imagery and perception share cortical representations of content and location. Cereb Cortex. 2012;22:372–380. doi: 10.1093/cercor/bhr106.

- De Volder AG, Toyama H, Kimura Y, Kiyosawa M, Nakano H, Vanlierde A, Wanet-Defalque MC, Mishina M, Oda K, Ishiwata K, Senda M. Auditory triggered mental imagery of shape involves visual association areas in early blind humans. Neuroimage. 2001;14:129–139. doi: 10.1006/nimg.2001.0782.

- Drucker DM, Aguirre GK. Different spatial scales of shape similarity representation in lateral and ventral LOC. Cereb Cortex. 2009;19:2269–2280. doi: 10.1093/cercor/bhn244.

- Eger E, Ashburner J, Haynes JD, Dolan RJ, Rees G. fMRI activity patterns in human LOC carry information about object exemplars within category. J Cogn Neurosci. 2008;20:356–370. doi: 10.1162/jocn.2008.20019.

- Engell AD, Haxby JV, Todorov A. Implicit trustworthiness decisions: automatic coding of face properties in the human amygdala. J Cogn Neurosci. 2007;19:1508–1519. doi: 10.1162/jocn.2007.19.9.1508.

- Gregory RL, Wallace J. Recovery from early blindness: a case study. Exp Soc Monogr. 1963;2:65–129.

- Haushofer J, Livingstone MS, Kanwisher N. Multivariate patterns in object-selective cortex dissociate perceptual and physical shape similarity. PLoS Biol. 2008;6:e187. doi: 10.1371/journal.pbio.0060187.

- He C, Peelen MV, Han Z, Lin N, Caramazza A, Bi Y. Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage. 2013;79:1–9. doi: 10.1016/j.neuroimage.2013.04.051.

- Held R, Ostrovsky Y, deGelder B, Gandhi T, Ganesh S, Mathur U, Sinha P. The newly sighted fail to match seen with felt. Nat Neurosci. 2011;14:551–553. doi: 10.1038/nn.2795.

- Kourtzi Z, Kanwisher N. Cortical regions involved in perceiving object shape. J Neurosci. 2000;20:3310–3318. doi: 10.1523/JNEUROSCI.20-09-03310.2000.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis-connecting the branches of systems neuroscience. Front Syst Neurosci. 2008;2:4. doi: 10.3389/neuro.01.016.2008.

- Locke J. An essay concerning human understanding. London; 1690.

- Mahon BZ, Anzellotti S, Schwarzbach J, Zampini M, Caramazza A. Category-specific organization in the human brain does not require visual experience. Neuron. 2009;63:397–405. doi: 10.1016/j.neuron.2009.07.012.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci U S A. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- Mur M, Meys M, Bodurka J, Goebel R, Bandettini PA, Kriegeskorte N. Human object-similarity judgments reflect and transcend the primate-IT object representation. Front Psychol. 2013;4:128. doi: 10.3389/fpsyg.2013.00128.

- Op de Beeck HP, Torfs K, Wagemans J. Perceived shape similarity among unfamiliar objects and the organization of the human object vision pathway. J Neurosci. 2008;28:10111–10123. doi: 10.1523/JNEUROSCI.2511-08.2008.

- Peelen MV, Caramazza A. Conceptual object representations in human anterior temporal cortex. J Neurosci. 2012;32:15728–15736. doi: 10.1523/JNEUROSCI.1953-12.2012.

- Peelen MV, Rogers J, Wing AM, Downing PE, Bracewell RM. Unitary haptic perception: integrating moving tactile inputs from anatomically adjacent and non-adjacent digits. Exp Brain Res. 2010;204:457–464. doi: 10.1007/s00221-010-2306-3.

- Peelen MV, Bracci S, Lu X, He C, Caramazza A, Bi Y. Tool selectivity in left occipitotemporal cortex develops without vision. J Cogn Neurosci. 2013;25:1225–1234. doi: 10.1162/jocn_a_00411.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WH, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: object-based representation in the human ventral pathway. Proc Natl Acad Sci U S A. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Reddy L, Tsuchiya N, Serre T. Reading the mind's eye: decoding category information during mental imagery. Neuroimage. 2010;50:818–825. doi: 10.1016/j.neuroimage.2009.11.084.

- Stokes M, Thompson R, Cusack R, Duncan J. Top-down activation of shape-specific population codes in visual cortex during mental imagery. J Neurosci. 2009;29:1565–1572. doi: 10.1523/JNEUROSCI.4657-08.2009.