Abstract

The posterior parietal cortex (PPC) has traditionally been viewed as containing separate regions for the planning of eye and limb movements, but recent neurophysiological and neuroimaging observations show that the degree of effector specificity is limited. This has led to the hypothesis that effector specificity in PPC is part of a more efficient, rather than strictly modular, organization, characterized by both distinct and common activations for different effectors. It is unclear, however, what determines whether effector representations are distinct or common. Here, we used fMRI in humans to study the cortical representations involved in the planning of eye, hand, and foot movements. We used a novel combination of fMRI measures to assess the effector-related representational content of the PPC: a multivariate information measure, reflecting whether representations were distinct or common across effectors, and a univariate activation measure, indicating which representations were actively involved in movement preparation. Active distinct representations were evident in areas previously reported to be effector specific: eye specificity in the posterior intraparietal sulcus (IPS), hand tuning in anterior IPS, and a foot bias in the anterior precuneus. Crucially, PPC regions responding to a particular effector also contained an active representation common to the other two effectors. We infer that rostral PPC areas do not code single effectors, but rather dichotomies of effectors. Such combinations of representations could be well suited for active effector selection, efficiently coding both a selected effector and its alternatives.

Keywords: effector selectivity, fMRI, foot, MVPA, parietal cortex, pointing

Introduction

The ability to generate an appropriate response in a complex environment is of utmost importance for the survival of any organism. A critical aspect is the selection of the effectors involved, such as eyes, hand, and foot. While the posterior parietal cortex (PPC) has been implicated in this process, it is debated how this structure maps multiple effectors onto the 2D cortical surface.

PPC organization could separate motor responses in relation to the effector that is to be moved. This would lead to separate neural modules for the control of different effector systems (Cui and Andersen, 2007). To date, this has been the prevailing concept of PPC organization (Colby and Goldberg, 1999; Matelli and Luppino, 2001; Filimon et al., 2009). For instance, the lateral intraparietal area (LIP) is thought to encode eye movements (Gnadt and Andersen, 1988), the parietal reach region (PRR) reaching movements (Snyder et al., 1997), and the anterior intraparietal sulcus (aIPS) grasping movements (Murata et al., 2000). Recently, it has been argued that effector specificity in PPC is part of an efficient coding scheme, representing effectors in terms of commonalities in the behavioral repertoire (Graziano, 2006; Levy et al., 2007; Jastorff et al., 2010). For example, because eye and hand are often moved together, the efficient coding principle proposes a shared neural substrate for both effectors. Accordingly, both LIP and PRR respond during preparation of hand as well as eye movements (Snyder et al., 1997; Calton et al., 2002). Likewise, human fMRI studies have revealed large overlap in PPC during the planning of eye, hand (Beurze et al., 2007; Levy et al., 2007), and foot movements (Heed et al., 2011). However, it remains unclear which characteristic of those effector representations drives distinct and overlapping representations in PPC.

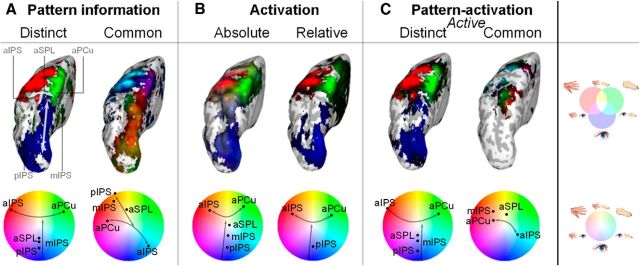

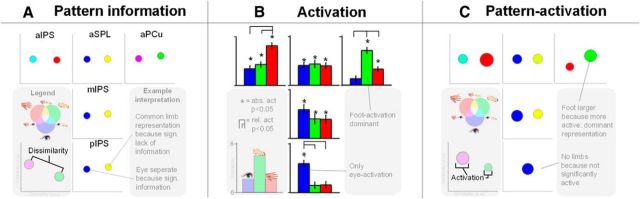

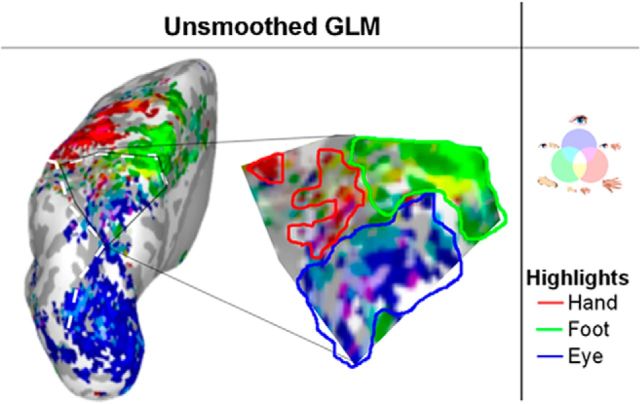

Here, we characterize identities and commonalities in the neural representations of eye, hand, and foot movements in PPC by using a novel combination of multivariate and univariate fMRI measures. Multivariate pattern analysis (MVPA) tests spatial patterns of fMRI activity to infer informational content (Fig. 1A; Haxby et al., 2001; Haynes et al., 2005; Gallivan et al., 2011, 2013). The underlying logic is that distinct patterns encode effector identities, whereas a shared pattern across effectors codes effector commonalities (Fig. 1B). However, multivariate patterns are inherently undirected (Fig. 1C). For instance, if the multivariate activity pattern of a region differs between eye movements and both hand and foot movements, but not between hand and foot movements, the region might code for eye movements, it might integrate hand and foot movements, or it might distinguish eye from limb movements. These possibilities can be disentangled by considering the degree of activation of that region for each effector, that is, whether the region is activated for the eye only, for the limbs, or for all three, respectively. Activation adds direction to the pattern information results. Crucially, we combine information and activation measures into one score, showing which distinct and common representations (Fig. 1D) are present as well as activated across PPC during movement planning.

Figure 1.

Study rationale. A, Preparing to move different effectors (eye, foot, and hand icons, represented in blue, green, and red colors, respectively) might evoke different spatial patterns and intensity of BOLD responses in a given cortical region (gray levels). Activation measures pertain to differences in mean activation (histogram of mean effector-related activities, on the left). Information measures capture the distance (correlation) between patterns of activation (plot of multidimensional scaling distances between effectors, MDS, on the right, with similarity in arbitrary units, a.u.). B, Example of how information measures can disambiguate activation. Given a region equally active for the three effectors (left histogram), information measures can follow three different patterns (middle column, MDS plots), reflecting three different representational contents (right column). C, Example of how activation measures can disambiguate information. Given a region with a pattern of eye-related responses different from hand- and foot-related responses (left, MDS plot), activation measures can follow three different patterns (middle column, histograms), reflecting three different representations (right column). D, Combining activation and information measures over three effectors results in 14 different types of representational content, as indicated by the corresponding icons and RGB combinations. These can be separated in a group pertaining to differences between effectors (“distinct,” on the left, meaning distinct patterns, or separate dots, for each effector), and a group pertaining to commonalities across effectors (“common,” on the right, meaning shared patterns, or overlapping dots, for the effectors). A given region can follow types from either group or, when complementary, both groups. Pattern-activation analysis only considers the activated patterns.

Materials and Methods

Participants

Twenty-three healthy, right-handed participants with normal or corrected-to-normal vision participated in this study. Data of six participants were not included in further analyses due to poor task performance (see below). The remaining 17 participants (9 female) were aged 19–33 years (mean 23.5). Participants gave their written consent in accordance with the local ethics committee (CMO Committee on Research Involving Human Subjects, region Arnhem-Nijmegen, The Netherlands).

Experimental setup

Participants lay supine in the scanner, with their head inside a phased-array receiver head coil. The head and neck were stabilized within the coil using foam blocks and wedges. The limbs used in the task (right arm, right leg) were additionally cushioned for stabilization. Upper arms and legs were cushioned and strapped to minimize head movements during task execution. Visual stimuli were controlled using Presentation software (Version 14.7; Neurobehavioral Systems), projected onto a screen, and viewed by the participant via a mirror, so that the stimuli appeared to be located approximately above the participant's head. Responses to stimuli were made using the eyes, right hand, or right foot.

Eye tracking.

To track eye movements, the position of the left eye was recorded at 50 Hz using a long-range, infrared, video-based eye tracker (SMI). Saccades were identified by detecting a change in eye position from baseline exceeding 2% of the maximum amplitude of the trial. Results from the automatic analysis were verified visually.

Hand and foot movement recording.

Hand and foot pointing was performed with the right limbs. Hand pointing involved rotating the wrist and pointing at the target with the index finger. Foot pointing involved rotating the ankle to point at the target with the big toe. To track hand and foot movements, infrared light-emitting diodes (LEDs) were taped to the right index finger and right big toe. The infrared light, while invisible to the participants, was visible to a camera mounted to the ceiling at a distance of ∼2 m from the scanner. One of the two LEDs was briefly interrupted simultaneously with the presentation of the stimulus and of the movement cue, respectively, to align the movement data with the experiment's events. The LED locations were extracted from the video footage frame by frame using MATLAB (MathWorks), as done previously (Heed et al., 2011). Movements were identified by detecting a change in hand or foot position from baseline exceeding 5% of the maximum amplitude of the current trial, and were verified visually.
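The onset criterion described above (a departure from baseline exceeding a fixed fraction of the trial's maximum excursion: 2% for the eye, 5% for hand and foot) can be sketched in Python as follows. This is an illustrative sketch, not the MATLAB code used in the study; the helper name `detect_onset` is hypothetical, and defining the baseline as the mean of the first few samples of the trial is our assumption.

```python
import numpy as np

def detect_onset(trace, baseline_samples, threshold_frac):
    """Return the index of the first sample whose distance from baseline exceeds
    threshold_frac times the trial's maximum excursion, or None if none does.

    Hypothetical helper. Assumption: baseline = mean of the first
    `baseline_samples` samples of the trial.
    """
    trace = np.asarray(trace, dtype=float)
    baseline = trace[:baseline_samples].mean()
    excursion = np.abs(trace - baseline)      # direction-independent (left or right)
    max_amp = excursion.max()
    if max_amp == 0:
        return None                           # flat trace: no movement
    above = np.flatnonzero(excursion > threshold_frac * max_amp)
    return int(above[0]) if above.size else None
```

For the eye one would call `detect_onset(trace, n_baseline, 0.02)` and convert the returned index `i` to time as `i / 50` s (50-Hz tracker); for the limbs the threshold would be `0.05` and the conversion would use the camera frame rate, which is not stated here.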

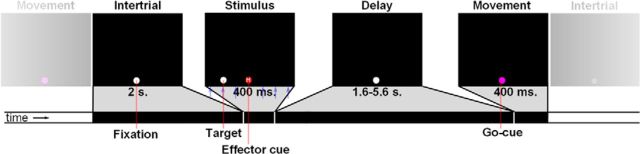

Experimental paradigm

Participants performed a delayed movement task using three possible effectors (eyes, right hand, and right foot) to six potential targets (4, 6, and 8 degrees to the left and right of the fixation point; Fig. 2). Participants started all trials pointing to a central fixation point (white dot, size 1°) with all three effectors. The hand and foot pointed to the fixation dot in the horizontal (left–right) direction only, as the degrees of freedom of wrist and ankle limit vertical movement. Trials started with a change of the fixation dot's color to indicate the effector, together with the presentation of a movement target (400 ms). The fixation dot could change to red, blue, or orange to instruct a hand, a foot, or an eye movement, respectively. In addition, a capital letter was displayed in the center of the fixation point (O, H, V, the first letters for eye, hand, and foot in Dutch). Next, the fixation point turned white again to indicate the delay period (1.6–5.6 s). Finally, the fixation dot turned purple to indicate movement execution (400 ms). During the delay period, participants had to plan the instructed movement to the remembered target, and execute this movement at the presentation of the purple dot. After 1.6 s, the next trial started.

Figure 2.

Experimental paradigm: the delayed movement task. A white fixation dot was presented for 2 s (Intertrial period). Next, the effector was indicated by a change of color of the fixation dot for 400 ms, on top of which a small letter was projected (O, V, H, for the first letter of the Dutch words for eye, foot, or hand). Simultaneously, the target stimulus was presented at one of six possible horizontal locations (Stimulus period; blue arrows, which were not shown to the subject, indicate possible stimulus eccentricities). Subsequently, the fixation dot turned white again for a variable duration (Delay period). Finally, the fixation dot turned purple, signaling the participant to execute the cued movement to the remembered stimulus location (Movement period).

Trials were grouped in runs of 43 trials. Trial order was arranged such that each of the six trial types [resulting from three effectors (eye, foot, and hand) and two target sides (left and right)] followed each other equally often, while appearing random to the participant (Brooks, 2012). Each run started and ended with 20 and 8 s of fixation, respectively. These intervals served as baseline in the GLM analysis. The length of the breaks between runs was determined by the participant. Excluding breaks, the experiment consisted of 18 runs of 43 trials (774 trials in total), lasted 86 min, and was split into two sessions.
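As a consistency check, the timing parameters of the paradigm can be combined into an expected run length. The sketch below assumes (our assumption, not stated in the text) that the 1.6 s before the next trial is counted from the offset of the 400-ms go cue, and that delays are spread symmetrically over the 1.6 to 5.6 s range so that the mean delay is the midpoint.

```python
# Timing parameters from the paradigm description (seconds).
CUE = 0.4                        # effector cue + target presentation
DELAY_MIN, DELAY_MAX = 1.6, 5.6  # variable planning delay
GO = 0.4                         # purple go cue
POST = 1.6                       # interval before the next trial (assumed: after go-cue offset)
TRIALS_PER_RUN = 43
N_RUNS = 18
FIX_START, FIX_END = 20.0, 8.0   # fixation baseline at run start and end
TR = 2.01

# Assumption: mean delay equals the midpoint of the delay range.
mean_delay = (DELAY_MIN + DELAY_MAX) / 2
mean_trial = CUE + mean_delay + GO + POST
run_duration = FIX_START + FIX_END + TRIALS_PER_RUN * mean_trial
scans_per_run = run_duration / TR
total_trials = N_RUNS * TRIALS_PER_RUN
total_minutes = N_RUNS * run_duration / 60

print(f"mean trial: {mean_trial:.1f} s, run: {run_duration:.0f} s "
      f"(~{scans_per_run:.0f} scans), {total_trials} trials, {total_minutes:.1f} min")
```

Under these assumptions a trial lasts 6.0 s on average and a run about 286 s, or roughly 142 scans at TR = 2.01 s, close to the average of 143 scans per run given in the GLM description; the 18 runs then amount to 774 trials and about 86 min.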

We used eye and limb movement data to verify that participants held fixation with all three effectors during the delay period and moved only the cued effector during the execution period. Six participants made a large number of errors (performance < 75%) and were excluded from further analysis. The excluded participants mostly failed to maintain eye fixation during the execution of hand or foot movements. For the remaining 17 participants, on average 87% (SD: 6.7%) of trials entered the analysis.

MRI settings and preprocessing

MR images were acquired using a Siemens Trio 3 T MRI scanner (Siemens Tim TRIO) with a 32-channel head coil. A multi-echo sequence of five echoes (TE: 9.4, 21.2, 33, 45, 57 ms, TR: 2.01 s) was used to improve signal strength. It encompassed 26 slices, centered on the parietal and frontal motor areas (voxel size 3 × 3 × 3.5 mm, FOV 192 mm, flip angle = 80°). After collecting the functional images, high-resolution anatomical images were acquired using a T1-weighted MP-RAGE GRAPPA sequence (176 sagittal slices, voxel size = 1 × 1 × 1 mm, TR = 2300 ms, TE = 3.93 ms, FOV = 256 mm, flip angle = 8°).

The multi-echo data were combined using the PAID algorithm (Poser et al., 2006). Slices were temporally aligned to the center (14th) slice to correct for slice-timing differences. High-pass filtering (cutoff: 128 s) was applied to remove low-frequency confounds. To retain maximal pattern information, no spatial smoothing was applied. GLM analyses were performed in native space, and the resulting images were normalized to MNI space using DARTEL normalization procedures (Ashburner, 2007). To estimate normalization flow fields, the structural images were segmented into tissue types. In addition, the gray and white matter segments of the normalized brain of one participant were used to reconstruct and inflate the cortical sheet of each hemisphere in the FreeSurfer Toolbox (Dale et al., 1999; Fischl et al., 1999). All further processing and subsequent analysis steps were performed using the SPM8 toolbox (Statistical Parametric Mapping) and MATLAB (MathWorks).
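For readers unfamiliar with PAID, the sketch below illustrates the idea in Python/NumPy. It assumes that, as we understand Poser et al. (2006), each echo is weighted voxelwise by the product of its echo time and its temporal signal-to-noise ratio (tSNR), with the weights normalized to sum to one per voxel. The function names are hypothetical and this is not the implementation used in the study; the optional `calib` argument lets the weights be estimated from a subset of volumes.

```python
import numpy as np

def paid_weights(echoes, tes, eps=1e-12):
    """PAID-style echo weights, assumed here to be w_n proportional to TE_n * tSNR_n.

    echoes: array (n_echoes, n_voxels, n_timepoints)
    tes:    echo times in ms, shape (n_echoes,)
    Returns weights of shape (n_echoes, n_voxels) that sum to 1 per voxel.
    """
    echoes = np.asarray(echoes, dtype=float)
    tes = np.asarray(tes, dtype=float)
    tsnr = echoes.mean(axis=-1) / np.maximum(echoes.std(axis=-1), eps)
    w = tes[:, None] * tsnr
    return w / np.maximum(w.sum(axis=0, keepdims=True), eps)

def paid_combine(echoes, tes, calib=None):
    """Weighted sum over echoes; `calib` optionally selects the time points
    used to estimate the weights (e.g., a slice of initial volumes)."""
    echoes = np.asarray(echoes, dtype=float)
    ref = echoes if calib is None else echoes[..., calib]
    w = paid_weights(ref, tes)
    return np.einsum("ev,evt->vt", w, echoes)   # (n_voxels, n_timepoints)

# Echo times of the multi-echo sequence described above (ms).
TES = [9.4, 21.2, 33.0, 45.0, 57.0]
```

When all echoes have the same tSNR, the weights reduce to the echo times normalized to sum to one, so later echoes, which carry more BOLD contrast, contribute more.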

fMRI analysis

A recent report analyzed the data obtained in the present paradigm using a repetition suppression approach (Heed et al., 2013) and contains a detailed analysis of the behavioral data. Here, we developed and applied a new analysis approach that integrates univariate and multivariate analysis of these data to examine the cortical representations involved in the planning of eye, hand, and foot movements.

Pattern information versus activation measures.

In the current study, we combined pattern information and activation measures into a pattern-activation framework. Pattern information analysis distinguishes regional representations (Kriegeskorte and Bandettini, 2007; Raizada and Kriegeskorte, 2010), focusing on differences in the activation pattern across voxels in that region. Activation analysis, on the other hand, is based on statistical parametric mapping, and typically tests average activation relative to a baseline in a given region. Activation is believed to represent the differential metabolic response of a region to different sensorimotor or cognitive challenges (Friston et al., 2011; Fig. 1A). The metabolic demand, in turn, is thought to indicate the involvement of a region in current processing. Note that the two measures are independent, in the same way that a mean and an SD are independent, or that a correlation measure is independent of the mean: a pattern can be highly informative but, on average, not activated above baseline. Similarly, an activated region can contain the same pattern of activation across conditions. For this reason, the two measures have often been explicitly contrasted (Peelen et al., 2006; Peelen and Downing, 2007; Jimura and Poldrack, 2012; Coutanche, 2013). In the present framework, the two methods are combined rather than contrasted: patterns (i.e., representations) can be activated (i.e., involved) to different degrees.
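The independence of the two measures can be illustrated with a toy example using synthetic numbers, not data from this study: adding a constant to a voxel pattern changes its mean activation but leaves its correlation with the original pattern at 1, whereas inverting a zero-mean pattern leaves the mean unchanged but drives the correlation to −1.

```python
import numpy as np

rng = np.random.default_rng(0)
pattern = rng.normal(size=83)          # synthetic pattern for one searchlight sphere
pattern -= pattern.mean()              # give it zero mean activation

cond_a = pattern                       # reference condition
cond_b = pattern + 5.0                 # same pattern, much higher mean activation
cond_c = -pattern                      # opposite pattern, same (zero) mean

r_ab = np.corrcoef(cond_a, cond_b)[0, 1]   # activation differs, pattern identical
r_ac = np.corrcoef(cond_a, cond_c)[0, 1]   # activation equal, pattern maximally different
print(f"mean a={cond_a.mean():.2f}, b={cond_b.mean():.2f}, c={cond_c.mean():.2f}")
print(f"r(a,b)={r_ab:.2f}, r(a,c)={r_ac:.2f}")
```

Conditions a and b would be separated only by an activation measure, and a and c only by an information measure, which is why the framework uses both.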

The rationale of the framework is as follows. Consider the patterns of activation in a region for eye, hand, and foot (Fig. 1D). Patterns for two of the three effectors can be equal (high correlation or low classification accuracy) or different (low correlation or high classification accuracy). Equal patterns indicate a representation common across effectors; different patterns indicate a distinct representation per effector. With three effectors, a combination of distinct and common is also possible, e.g., eye versus hand and foot. For each pattern, its mean activation, as tested by univariate analysis, represents the pattern's level of involvement. In our framework, the pattern for each effector is weighted by that effector's mean activation: the more activated, the higher the combined value. This combination of pattern and activation again separates common and distinct representations (Fig. 1D). Representations that are not activated are effectively masked out, because their low activation weight leads to negligible combination scores.

We first performed separate pattern information and activation analyses, followed by the combination of the two measures into a common pattern-activation measure. Following the conventions from MVPA (Kriegeskorte et al., 2006), we refer here to pattern information simply as “information,” although, in a mathematical sense, mean activation can also be considered information, albeit on a different spatial scale.

GLM.

Both information and activation analyses were based on the output of a participant-specific GLM. Six regressors of interest captured variance during the planning period, specific to effector (three: eye, hand, foot) and target side (two: left, right). Side was included because PPC is highly sensitive to target direction (for review, see Silver and Kastner, 2009). Thirteen additional regressors were used to constrain the variance explained by the planning regressors: six location-dependent spike regressors at stimulus onset explained location-dependent variance, and six movement spike regressors at go-cue presentation accounted for activation specific to the direction of the executed movement. One spike regressor at session onset accounted for break-related effects. Erroneous trials, as defined by the criteria described above, were removed from the main regressors of interest and instead captured by corresponding error regressors. All regressors were convolved with a standard hemodynamic response function (Friston et al., 2011). In addition, we included 17 nuisance regressors. Twelve movement regressors (translation and rotation, as well as their derivatives) captured signal variance due to head movement. Five additional regressors accounted for changes in overall signal intensity in five compartments that are not expected to reflect task-related activity (white matter, CSF, skull, fat, and out of brain; Verhagen et al., 2008). Runs were modeled separately in the design matrix, each run containing 36 regressors (not including the constant and a variable number of error regressors) and on average 143 scans. We tested 18 runs, resulting in 2574 scans in total.
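Each regressor is an event time course convolved with a hemodynamic response function. The sketch below approximates SPM's canonical double-gamma HRF (a gamma response peaking around 5 s minus one-sixth of a later gamma undershoot) and builds either a boxcar or a spike regressor sampled at TR = 2.01 s. It is not SPM code, the function names are hypothetical, and modeling the planning period as a boxcar spanning the delay is our assumption, since the regressor durations are not specified. Per run, 6 planning, 13 additional task, and 17 nuisance regressors give the 36 columns stated above.

```python
import math
import numpy as np

def double_gamma_hrf(dt, length=32.0):
    """SPM-like canonical HRF sampled every dt seconds (an approximation):
    gamma(shape 6) response minus gamma(shape 16) undershoot scaled by 1/6."""
    t = np.arange(0.0, length, dt)

    def gamma_pdf(x, shape):           # unit-scale gamma density
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def make_regressor(onsets, durations, n_scans, tr, dt=0.1):
    """Boxcar (duration > 0) or spike (duration == 0) regressor, convolved with
    the HRF on a fine time grid and sampled at the start of each scan."""
    n_fine = int(round(n_scans * tr / dt))
    x = np.zeros(n_fine)
    for onset, dur in zip(onsets, durations):
        i0 = int(round(onset / dt))
        i1 = max(i0 + 1, int(round((onset + dur) / dt)))   # spikes get one sample
        x[i0:i1] = 1.0
    y = np.convolve(x, double_gamma_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return y[scan_idx]
```

For example, `make_regressor([10.0], [4.0], 40, 2.01)` models a 4-s delay period starting 10 s into a 40-scan run; its peak falls several seconds after the boxcar because of the hemodynamic lag.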

We used the t contrast scores for the comparison of the planning regressors versus baseline, collapsed across runs and movement target sides, as the basis for the pattern information and activation analyses. In short, for the information analysis we correlated the pattern in t values between effectors, and for the activation analysis we averaged the values (Fig. 1A). We chose t values over β-values from the GLM because they are the more informative measure (Misaki et al., 2010).

Searchlight analysis.

Both information and activation analyses were performed within a searchlight sphere (Kriegeskorte et al., 2006) with a radius of three voxels. Because spheres were truncated at the brain's border, their size varied, averaging 83 voxels. Searchlight analysis was performed in native space.
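The searchlight geometry can be sketched as follows. For illustration we assume that the radius is measured in voxel units on the integer grid (ignoring the anisotropic 3 × 3 × 3.5 mm voxel size); under that assumption a full sphere of radius 3 contains 123 voxels, and truncation at the brain mask shrinks it. Function names are hypothetical.

```python
import numpy as np

def sphere_offsets(radius=3):
    """Integer voxel offsets within `radius` voxels of the center (voxel units)."""
    r = int(radius)
    g = np.arange(-r, r + 1)
    dx, dy, dz = np.meshgrid(g, g, g, indexing="ij")
    keep = dx**2 + dy**2 + dz**2 <= radius**2
    return np.stack([dx[keep], dy[keep], dz[keep]], axis=1)

def sphere_voxels(center, mask, offsets):
    """Coordinates of the sphere around `center` that lie inside the volume
    and inside the boolean brain `mask` (this is where spheres get truncated)."""
    coords = offsets + np.asarray(center)
    inside = np.all((coords >= 0) & (coords < np.array(mask.shape)), axis=1)
    coords = coords[inside]
    in_mask = mask[coords[:, 0], coords[:, 1], coords[:, 2]]
    return coords[in_mask]
```

A sphere centered on the edge of the volume keeps only the voxels on one side, which is the kind of truncation that lowers the average sphere size below the full count.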

Pattern information analysis.

Pattern information analysis was based on a correlation analysis (Haxby et al., 2001; Kriegeskorte et al., 2008) of the three effector pairs: eye–hand, eye–foot, and hand–foot. The spatial distribution of effector-specific t values across a searchlight sphere was taken as the local effector-specific pattern, and these patterns were then compared per effector pair using correlations. Low correlations between patterns reflect dissimilarity, or information (akin to high classification performance), meaning that the patterns form distinct effector-specific representations. In addition, we took into account the alternative possibility: high correlations between patterns, indicative of similarity or lack of information, mean that the patterns form a common, effector-unspecific representation.

Before calculating correlation scores, we transformed t values into z-scores voxel by voxel to ensure that each voxel's contribution to pattern information was not influenced by mean activation (Haxby et al., 2001; Pereira et al., 2009; Hanson and Schmidt, 2011). Next, for each pair of effectors, we calculated the correlation between the voxel patterns in the searchlight sphere. We then recentered the distribution of correlations across searchlight spheres by subtracting the expected mean correlation for a given effector pair (the average correlation for permuted spheres; see Significance test, below). This procedure makes the reference value consistent across participants, allowing group analysis. The correlation (similarity) values were then normalized to the range between −1 and 1 by dividing them by the maximum absolute value across all three effector pairs. To obtain a dissimilarity (i.e., information) measure while retaining the two-sided nature of the original correlation measure, we flipped the sign of the centered similarity score. These dissimilarity values were used to construct MDS figures (Edelman et al., 1998).
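A minimal NumPy sketch of this pipeline for one participant follows. Two points are our reading rather than the authors' specification: the voxel-by-voxel z-scoring is applied across the three effector t values of each voxel (following the mean-removal logic of Haxby et al., 2001), and the normalizing maximum is taken over all spheres. With only three effectors, this z-scoring makes each voxel's values sum to zero, which on average pushes the raw pairwise correlations toward negative values; subtracting the permutation-based expected mean removes that offset. Function names are hypothetical.

```python
import numpy as np
from itertools import combinations

PAIRS = list(combinations(range(3), 2))   # (eye, hand), (eye, foot), (hand, foot)

def zscore_voxelwise(t):
    """t: (3, n_voxels) effector t values in one sphere; z-score each voxel
    across the three effectors (assumed reading of the normalization step)."""
    t = np.asarray(t, dtype=float)
    sd = t.std(axis=0, keepdims=True)
    return (t - t.mean(axis=0, keepdims=True)) / np.where(sd > 0, sd, 1.0)

def pair_similarity(t):
    """Pearson correlation between z-scored effector patterns, one value per pair."""
    z = zscore_voxelwise(t)
    return np.array([np.corrcoef(z[i], z[j])[0, 1] for i, j in PAIRS])

def dissimilarity_scores(similarity, expected_mean):
    """similarity: (n_spheres, 3) pair correlations; expected_mean: (3,) mean
    correlation of permuted spheres. Recenter, scale to [-1, 1] by the largest
    absolute value, and flip the sign so that positive = distinct."""
    centered = np.asarray(similarity, dtype=float) - np.asarray(expected_mean, dtype=float)
    scale = np.abs(centered).max()
    return -centered / scale if scale > 0 else -centered
```

If the eye and hand patterns in a sphere are identical and the foot pattern differs, `pair_similarity` returns 1 for the eye–hand pair and −1 for the two pairs involving the foot, i.e., a common eye–hand representation and a distinct foot representation.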

Next, we converted the information scores for effector pairs into three effector-specific information values. Note that the raw information scores are comparisons between pairs of effectors (e.g., the correlation between eye and hand, cyan in Fig. 1D, distinct representation). However, combination with the effector-specific activation scores requires an effector-specific information score of one effector versus the other two (e.g., eye vs hand and foot, blue in Fig. 1D). First, we converted RGB values to HSB (hue, saturation, brightness) color space. Second, we rotated hue by one-sixth of the color circle (60°, i.e., π/3 rad), which is half the distance between the colors of two effectors: green becomes cyan, blue turns magenta, and red transforms to yellow. Finally, we converted the rotated HSB color value back to RGB. After rotation, each of the three values (R, G, and B) indicated the amount of information on one effector versus the other two effectors in a [−1 1] range. We split this range into two [0 1] intervals to differentiate heuristically between common representations (similarity: the left side of the dissimilarity distribution, with the sign flipped) and distinct representations (dissimilarity: the right side of the dissimilarity distribution).
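The hue rotation can be written in closed form. In HSB (equivalently HSV) space, rotating hue preserves the largest and smallest channel values, and a 60° step maps (r, g, b) to (M + m − g, M + m − b, M + m − r), where M and m are the maximum and minimum of the three values. The sketch below implements that formula, which is also defined for signed [−1 1] scores, and checks it against Python's standard `colorsys` conversion for [0, 1] inputs. How the three effector pairs are assigned to the R, G, and B channels follows Figure 1D and is not reproduced here; applying the formula to signed values is our assumption, and the function names are hypothetical.

```python
import colorsys
import numpy as np

def rotate_hue_60(rgb):
    """Rotate hue by +60 degrees (red -> yellow, green -> cyan, blue -> magenta).

    Closed form: with M = max and m = min of the channels,
    (r, g, b) -> (M + m - g, M + m - b, M + m - r). Also defined for signed values.
    """
    r, g, b = (float(v) for v in rgb)
    s = max(r, g, b) + min(r, g, b)
    return np.array([s - g, s - b, s - r])

def rotate_hue_60_colorsys(rgb):
    """Reference implementation via the standard library, for values in [0, 1]."""
    h, sat, val = colorsys.rgb_to_hsv(*rgb)
    return np.array(colorsys.hsv_to_rgb((h + 1.0 / 6.0) % 1.0, sat, val))

def split_signed(scores):
    """Split [-1, 1] scores into distinct (positive part) and common
    (sign-flipped negative part) scores, each in [0, 1]."""
    scores = np.asarray(scores, dtype=float)
    return np.clip(scores, 0.0, None), np.clip(-scores, 0.0, None)
```

The closed form avoids the explicit RGB to HSB to RGB round trip and makes clear that the rotation only permutes and reflects channel values within their original range.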

Activation analysis.

Within a sphere, the activation analysis resembled an ROI analysis (Poldrack, 2007): we averaged the t values within a searchlight sphere for each effector-specific regressor of interest. Contrasts were first computed against baseline to detect activations common across effectors. We regard this as a measure of absolute activation, even though baseline measures are inherently relative in fMRI (Stark and Squire, 2001). We also calculated the mean t contrasts for one effector versus the other two effectors as a measure of relative activation. Both types of activation values were normalized to a [0–1] range by considering only positive values and dividing by the maximum value across effectors. For the combined pattern-activation analysis, we merged the two activation measures into a single activation measure consisting of three numbers (one per effector) in a [0–1] range. This score was defined as the maximum of the absolute and relative activation for each effector, indicating that the region was activated, regardless of whether the activation was absolute or relative.
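In NumPy, the activation score could be computed as below. The inputs are the sphere-averaged t values per effector for the effector-versus-baseline and effector-versus-other-two contrasts. Normalizing by the maximum over all spheres and effectors is our reading of the normalization step (per-sphere normalization would force one effector to 1 in every sphere), and the function names are hypothetical.

```python
import numpy as np

def normalize_positive(x):
    """Keep positive values only and divide by the overall maximum (-> [0, 1])."""
    x = np.clip(np.asarray(x, dtype=float), 0.0, None)
    peak = x.max()
    return x / peak if peak > 0 else x

def activation_scores(abs_t, rel_t):
    """abs_t, rel_t: (n_spheres, 3) mean t per sphere for effector > baseline
    and for effector > other two effectors. Returns, per sphere and effector,
    the larger of the two normalized measures."""
    return np.maximum(normalize_positive(abs_t), normalize_positive(rel_t))
```

Taking the elementwise maximum means a sphere counts as activated for an effector if it responds either above baseline or more than for the other two effectors.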

Combination of pattern information and activation measures.

We subsequently combined the pattern information and activation measures into a pattern-activation measure by elementwise multiplication of the activation scores with either the dissimilarity or the similarity scores. Multiplying the activation scores with the full [−1 1] information range and splitting the range afterward gives the same result. The active common and distinct scores are the left and right sides of the pattern-activation distribution, respectively, which allows a straightforward inspection of common and distinct representations. Subsequently, we took the square root of the products to correct for the dampening that results from multiplying values below 1. The multiplication of pattern information and activation scores attenuates nonactive patterns relative to active patterns. To determine areas encoding active distinct representations, we multiplied activation and dissimilarity scores. Thus, the distinction score will be high only when both activation and dissimilarity scores are high for a given effector. To determine areas encoding active common representations, we multiplied the activation scores with the similarity scores. As a result, the value for the common representation will be high only if both activation and similarity scores are high for a given effector. Such a high value would indicate that the region, even though significantly active, does not carry any specific information about effectors.
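The combination step amounts to a signed geometric mean. Because multiplying with the full [−1 1] range and splitting afterward is stated to be equivalent, the sketch below multiplies first and then splits. The inputs are assumed to be the [0 1] activation scores and the signed, hue-rotated [−1 1] information scores, and the function name is hypothetical.

```python
import numpy as np

def pattern_activation(activation, information):
    """activation in [0, 1], information in [-1, 1] (positive = distinct,
    negative = common), both (n_spheres, 3). Returns (distinct, common) in [0, 1]."""
    prod = np.asarray(activation, dtype=float) * np.asarray(information, dtype=float)
    combined = np.sign(prod) * np.sqrt(np.abs(prod))   # square root undoes product dampening
    distinct = np.clip(combined, 0.0, None)            # right side of the distribution
    common = np.clip(-combined, 0.0, None)             # left side, sign flipped
    return distinct, common

# Usage: the third effector is informative (0.9) but not active, so it is masked out.
act = np.array([[0.64, 0.25, 0.0]])
info = np.array([[0.25, -1.0, 0.9]])
distinct, common = pattern_activation(act, info)
```

Here the first effector obtains a distinct score of 0.4, the second a common score of 0.5, and the third neither, despite its high dissimilarity.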

The combination of information and activation values can reveal a large range of representations, including multiple representations within a region. On the one side, the current approach can identify active distinct representations (eye, hand, and foot) and combinations thereof, with up to three active distinct representations within a single region (Fig. 1D, white, left). On the other side, a region could contain a representation common to several (up to all three) effectors (Fig. 1D, right). A representation can also be common for one effector, if that is the only effector for which there is consistently a common representation across subjects. In combination, a region may also contain one active effector-specific representation and, in addition, one active representation common to the remaining two effectors (Fig. 1D). In our framework, dominance of one representation over the others is determined by activation: the effector with the highest activation is assigned dominance.

Significance test.

To assess significance, we performed within-participant permutation tests separately for the three types of analyses (information, activation, and pattern activation). The reference distribution of the permutation test was created by repeating the searchlight procedure with the same number of spheres and the same sphere sizes as in the original procedure, but with the voxel data permuted randomly across the entire brain. As in the original analysis, information, activation, and pattern-activation scores were calculated for each of the three effectors within each searchlight sphere. The information scores were centered by subtracting the mean information score. P values were calculated as the proportion of permuted spheres with a score higher than (one-sided test, for activation) or more extreme in either direction than (two-sided test, for information and pattern activation) that of the original, nonpermuted sphere, and were determined separately for each effector. This procedure tests whether the contiguous group of voxels in a searchlight sphere yields a significantly different test score than a random set of voxels taken from the same brain.
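The p value computation can be sketched as follows. The null spheres are random, non-contiguous voxel sets of the same size drawn from the whole brain; the sphere statistic (a simple mean here) stands in for any of the three scores. Counting ties as at least as extreme is a conservative choice on our part, and the function names are hypothetical.

```python
import numpy as np

def permutation_pvalue(observed, null, two_sided):
    """Proportion of null scores at least as extreme as the observed score.
    One-sided: null >= observed. Two-sided (for centered scores): |null| >= |observed|."""
    null = np.asarray(null, dtype=float)
    if two_sided:
        return float(np.mean(np.abs(null) >= abs(observed)))
    return float(np.mean(null >= observed))

def null_sphere_means(values, sphere_size, n_perm, rng):
    """Null distribution of a sphere statistic (here the mean) computed on random,
    non-contiguous voxel sets of the same size drawn from all brain voxels."""
    values = np.asarray(values, dtype=float)
    return np.array([rng.choice(values, size=sphere_size, replace=False).mean()
                     for _ in range(n_perm)])
```

A typical call would be `permutation_pvalue(obs, null_sphere_means(brain_t, 83, 10000, np.random.default_rng()), two_sided=False)` for an activation score, where `brain_t` holds the t values of all brain voxels.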

Group analysis.

For analysis across participants, searchlight sphere results were first converted to MNI space, after which information, activation, and pattern-activation scores were averaged across participants. Participant-level p values were combined using Fisher's method (Fisher, 1925), which aggregates multiple p values into one p value based on a χ² distribution. Spheres were regarded as significant for a given test if at least one of the three effectors was significant across participants.
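Fisher's method sums −2 ln p over the k participants; under the null hypothesis this statistic follows a χ² distribution with 2k degrees of freedom. Because the degrees of freedom are even, the χ² survival function has a closed form, so the combination can be sketched with the Python standard library alone (the function name is hypothetical).

```python
import math

def fisher_combined_p(pvalues):
    """Fisher's method: X = -2 * sum(ln p) ~ chi-square with 2k df under H0.
    For even df the survival function has a closed form:
    P(X >= x) = exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!
    """
    k = len(pvalues)
    half = -sum(math.log(p) for p in pvalues)   # equals x / 2
    term, total = 1.0, 1.0
    for i in range(1, k):
        term *= half / i                        # (x/2)^i / i!
        total += term
    return math.exp(-half) * total
```

With 17 participants the statistic has 34 degrees of freedom. Permutation p values of exactly zero would first need to be floored (for example at 1/(number of permutations + 1)) because ln 0 is undefined; that floor is our suggestion, not part of the described method.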

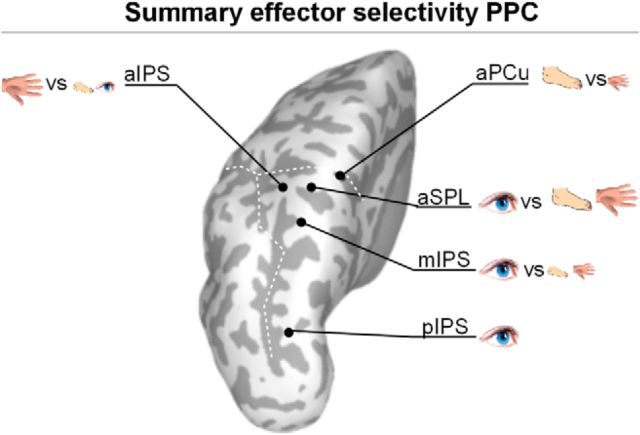

ROIs.

To facilitate reporting, and to allow direct linking of our results to previous, region-specific findings, we defined five ROI search spheres in PPC, corresponding to areas often implicated in motor planning (Levy et al., 2007; Filimon et al., 2009; Heed et al., 2011): posterior intraparietal sulcus (pIPS), medial IPS (mIPS), aIPS, anterior superior parietal lobe (aSPL), and anterior precuneus (aPCu).

Area pIPS, also known as V7 or IPS0 (Swisher et al., 2007), has previously been implicated in saccade control (Levy et al., 2007). Area mIPS, also referred to as IPS2 (Silver and Kastner, 2009), has been found active both for saccade and reach control (Levy et al., 2007; Heed et al., 2011). Area aIPS is involved in hand movements (Culham et al., 2003; Heed et al., 2011; Konen et al., 2013), whereas aPCu (within area 5L) seems to be involved in both hand and foot control (Filimon et al., 2009; Heed et al., 2011). Last, aSPL (area 5L/7A) has recently been found to contain a shared representation for hand and foot (Heed et al., 2013).

The location of each ROI was determined by the activation peak (for any of the effectors or combination thereof) closest to the reference coordinates, restricted to fall within a posterior parietal and precuneus surface mask as defined by FreeSurfer cortical parcellation. Because the ROIs are thus defined on the basis of the functional results, they are used for descriptive purposes only, to avoid circular inference (Kriegeskorte et al., 2009). We report the properties of the search sphere centered on the activation peak of each area. The complete overview of ROIs is shown in Table 1.
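The ROI placement rule is a nearest-neighbor search restricted to a mask, sketched below with a hypothetical function name and made-up candidate peaks. Reference coordinates originally reported in Talairach space (marked in Table 1) would first have to be converted to MNI space before distances are meaningful; that conversion is not shown.

```python
import numpy as np

def closest_peak(reference, peaks, in_mask):
    """Return the activation peak nearest (Euclidean distance, mm) to a reference
    coordinate, considering only peaks inside the parietal/precuneus mask."""
    peaks = np.asarray(peaks, dtype=float)
    candidates = peaks[np.asarray(in_mask, dtype=bool)]
    if candidates.size == 0:
        return None
    d = np.linalg.norm(candidates - np.asarray(reference, dtype=float), axis=1)
    return candidates[np.argmin(d)]

# Hypothetical candidate peaks (MNI, mm); the second one lies outside the mask.
peaks = [(-15, -45, 70), (-10, -55, 61), (-30, -40, 50)]
roi_center = closest_peak((-9, -54, 60), peaks, [True, False, True])
```

Although the second candidate is nearest to the reference, it is excluded by the mask, so the first candidate is selected.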

Table 1.

The MNI coordinates of the regions used in the ROI analysis and their normalized peak values for activation, information, and combination for eye (E), hand (H), and foot (F)

| ROI | Reference x | Reference y | Reference z | Peak x | Peak y | Peak z | Source | Activation E | Activation F | Activation H | Information E | Information F | Information H | Combination E | Combination F | Combination H |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pIPSᵃ | −24 | −81 | 29 | −21 | −87 | 28 | Heed et al. (2011) | 0.38 | 0.10 | 0.10 | 0.17 | −0.11 | −0.22 | 0.32 | −0.08 | −0.17 |
| mIPSᵃ | −19 | −69 | 57 | −21 | −63 | 60 | Heed et al. (2011) | 0.38 | 0.26 | 0.25 | 0.12 | −0.17 | −0.27 | 0.19 | −0.09 | −0.24 |
| aIPS | −32 | −38 | 57 | −39 | −42 | 63 | Blangero et al. (2009) | 0.23 | 0.28 | 0.54 | −0.22 | −0.16 | 0.24 | −0.21 | −0.19 | 0.46 |
| aSPLᵃ | −27 | −42 | 70 | −24 | −54 | 63 | Heed et al. (unpublished observations) | 0.26 | 0.28 | 0.25 | 0.09 | −0.11 | −0.13 | 0.12 | −0.15 | −0.13 |
| aPCu | −9 | −54 | 60 | −15 | −45 | 70 | Filimon et al. (2009) | 0.07 | 0.40 | 0.18 | −0.05 | 0.21 | −0.16 | −0.01 | 0.32 | −0.16 |

ᵃCoordinates were originally reported in Talairach space. Bold numbers indicate significant effects, p < 0.05, permutation test.

Results

The goal of this study was to characterize commonalities and distinctions in parietal effector representations involved in movement planning. Participants performed a delayed movement task involving goal-directed movements of the eye, hand, and foot. A novel searchlight-based pattern-activation approach (see Materials and Methods; Fig. 1) was developed to disentangle the representations committed to the three effectors. Pattern information measures (dissimilarity and similarity) determined whether regions contained distinct or common representations for the different effectors. Activation measures tested the strength of the activation of these effector-specific patterns. The combined pattern-activation measure was used to delineate active distinct representations (significant activation as well as information) and active common representations (significant activation, but lack of information), revealing the active representational content of a region. We first describe the results for the left hemisphere, contralateral to the moving limbs.

Pattern information

Pattern information analysis was applied to determine which representations are present in PPC, coding effector distinctions and/or commonalities (Figs. 3A, 4A).

Figure 3.

Group results for pattern information (A), activation (B), and combined pattern-activation (C) tests of effector specificity, plotted on an inflated representation of the left hemisphere (top) and per ROI in color gradients (bottom). The cortical locations of the ROIs are indicated by white crosses on the inflated surface. Color coding following Figure 1D: blue, eye; red, hand; green, foot; brightness indicates strength of tuning. A, Information is split into distinct (dissimilarity, i.e., information is present) and common (similarity, i.e., lack of information). B, The activation results are separated into absolute activation (compared with baseline) and relative activation (compared with the other two effectors). C, The combined pattern-activation results are split into active distinct results (both activation and information effects) and active common results (only activation, no information effects). Values are normalized per part (A–C). Only voxels and ROIs significant for the respective tests are shown (p < 0.05, permutation test). The straight and curved arrows highlight the caudorostral and lateromedial gradients, respectively.

Figure 4.

Regional results for effector specificity according to pattern information (A), activation (B), and combined pattern-activation (C) measures, based on the values in Table 1. Gray text highlights examples to facilitate interpretation. Color coding as in Figure 1B. A, Information results, shown in MDS plots: color indicates the effector and distance indicates dissimilarity. When two effectors were significantly similar (i.e., had a common representation), the two colors were merged. B, Average normalized activation results, shown as bar plots (±SE). Asterisks indicate significant differences from baseline. Connecting lines indicate significant relative differences between effectors (p < 0.05, permutation test). C, Combined pattern-activation results, depicting the combination of information and activation scores: only significantly activated representations are included, and the size of the dots represents the relative activation of the effector(s).

Information was present within a parietofrontal network, including different types of effector-specific representations in PPC (Fig. 3A, straight arrow, top and bottom). Along the caudorostral extent of the IPS, we found a large, continuous, distinct representation related to eye movement planning (Fig. 3A, blue, left). This was combined with a strong common representation of the hand and foot, to different relative degrees (Fig. 3A, yellow/orange/light green, right). More specifically, pIPS, mIPS, and aSPL primarily represented an eye versus limb distinction (Fig. 4A, blue vs yellow colors).

Furthermore, we found a lateromedial hand–eye–foot information gradient in rostral PPC. The gradient for common representation ranged from foot and eye (Fig. 3A, cyan, right), to foot (green), to hand and foot (yellow), to hand (red), and to hand and eye (magenta). Note that the regional boundaries differ between the two information measures.

Regarding the predetermined ROIs (Fig. 4A), aIPS represented hand movements versus foot and eye movements (red vs cyan); aSPL represented the eye versus the limbs (blue vs yellow), and aPCu represented foot versus hand and eye movements (green vs magenta). The distinct representation of aIPS and aPCu continued well into lateral and medial somatosensory and motor cortex, respectively.

In summary, information analysis revealed a caudorostral distinct eye representation and a rostral, lateromedial distinct hand-eye-foot representational gradient, combined with mostly common representations for the complementary effectors. Thus, most PPC areas represented a dichotomy between a distinct representation for a single effector and a representation common to the other two effectors.

Activation

Whereas the information analysis revealed distinct and common representations across PPC, it does not show which of a region's representations was most activated by the task, or whether some representations were, on average, not activated at all. The activation analysis examined such regional involvement (Figs. 3B, 4B).

Activation of the different effectors against the fixation baseline was evident widely across a parietofrontal network, showing strong involvement of the PPC (Fig. 3B). Within PPC, there was a caudorostral eye-to-limb gradient: caudally, eye activation was dominant, whereas more rostral regions were activated by all three effectors, both in a shared fashion (Fig. 3B, gray-white colors, left, straight arrow) and separately (Fig. 3B, red and green). At the level of the preselected ROIs (Fig. 4B), pIPS was activated only for eye movements, mIPS was also activated by the other two effectors, and aSPL showed comparable activations for the three effectors.
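The two activation measures (absolute, against the fixation baseline; relative, against the other two effectors) can be illustrated with per-effector response estimates for a single ROI. This is a sketch under stated assumptions: the beta values are hypothetical, the baseline is taken as zero, and "relative" is operationalized here as the effector's response minus the mean response to the other two effectors, which may differ from the exact contrast used in the study.

```python
from __future__ import annotations

from statistics import mean


def absolute_activation(betas: dict[str, float], baseline: float = 0.0) -> dict[str, float]:
    """Response to each effector relative to the fixation baseline."""
    return {eff: beta - baseline for eff, beta in betas.items()}


def relative_activation(betas: dict[str, float]) -> dict[str, float]:
    """Response to each effector minus the mean response to the other effectors."""
    rel = {}
    for eff, beta in betas.items():
        others = [b for other, b in betas.items() if other != eff]
        rel[eff] = beta - mean(others)
    return rel


# Hypothetical eye-dominated ROI (arbitrary units): all effectors are above baseline.
betas = {"eye": 0.75, "hand": 0.25, "foot": 0.25}
absolute = absolute_activation(betas)
relative = relative_activation(betas)
```

In this toy ROI all three effectors are active against baseline, yet only the eye shows a positive relative bias (0.5 versus −0.25 for hand and foot), which is the kind of profile the text describes for regions with one dominant effector and secondary activations.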

We also observed a rostral, lateromedial hand–foot gradient: a hand bias (Fig. 3B, red, curved arrow) was evident lateral to aSPL, a foot bias (green) more medially, whereas all three effectors were represented in the in-between region (yellow or gray-white). For the ROIs (Fig. 4B) along the postcentral sulcus, aIPS was predominantly activated for the hand, aSPL for the hand and foot, and aPCu for the foot. In aIPS the dominant hand activation was accompanied by activation for the foot and eye, and in aPCu the dominant foot activation was accompanied by activation for the hand. Thus, all rostral PPC ROIs were activated for multiple effectors. The relative hand and foot dominance in aIPS and aPCu continued in lateral and medial somatosensory and motor cortex.

In sum, the activation analysis revealed a caudorostral eye–limb gradient and a lateromedial hand–foot gradient, combined with significant secondary activations in rostral PPC.

Combined activation and information

The information-based analysis revealed mostly dichotomies of distinct and common effector representations across PPC, but did not allow conclusions about which representations were actually activated by the task. Activation analysis, on the other hand, showed general activation with relative effector-specific peaks, but did not allow conclusions about whether the general activations held effector-specific representations. Combining the two measures in the pattern-activation framework allows examination of both of these aspects simultaneously, that is, to observe which unique representations are present as well as which of them are activated by the task (Figs. 3C, 4C).

The combined pattern-activation analysis revealed active distinct representations across the frontoparietal network, including the PPC (Fig. 3C, left). Caudal PPC was dominated by eye-related representations, whereas rostral PPC contained active distinct representations for the hand and the foot, separated by an active distinct representation for planning eye movements. Note that activation shared by all three effectors (Fig. 3B, gray, left) has been filtered out, revealing clear patches of representations. ROI analysis revealed that pIPS, mIPS, and aSPL all actively distinguished movements of the eyes, whereas aIPS encoded movements of the hand and aPCu represented planned movements of the foot (Fig. 4C).

Of the common representations revealed by the information analysis (Fig. 3A, right), especially the rostral PPC representations were activated (Fig. 3B), resulting in rostral patches of active common representations (Fig. 3C, right). Specifically, the combined analysis uncovered three separate representations that were hidden in the overlapping activations: an activated limb representation (Fig. 3C, right, yellow/orange), a foot and eye representation (green/cyan), and a hand representation (red). The selected ROIs are covered by these patches (Fig. 4C): in addition to the active distinct representations, mIPS contained an active common representation for the limbs (circle diagram, yellow), aIPS coded an active common representation for the foot and eyes (green and cyan), and aPCu contained a large activated hand representation (red). Furthermore, in aSPL, we found an integrated hand–foot representation at the intersection of the aIPS and aPCu regions.

Both active distinct and active common representations were found within the same PPC regions (compare Fig. 3C, left and right; Fig. 4C), but with different amounts of activation. For most regions, the distinct representation was also the most activated representation; that is, most regions were dominated by one effector. aSPL formed an exception, in that the eye and limb representations were equally activated (dominance is indicated by the brightness of colors in Fig. 3C and the size of the dots in Fig. 4C).

In summary (Fig. 5), the pattern of representations for effector planning in PPC that emerges from the combined information-activation framework is mostly one of active dichotomies: mIPS, eye versus the limbs (the first more strongly activated); aSPL, limbs versus eye (equal dominance); aIPS, hand versus eye and foot; aPCu, foot versus hand. Area pIPS is an exception, with only an active representation of the eye.

Figure 5.

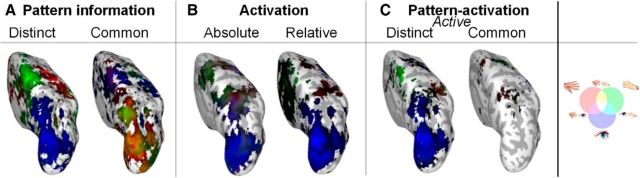

Right hemisphere, group results. Data in same format as in Figure 3, top panel.

Right hemisphere

Analysis was focused on the contralateral (left) hemisphere, which is thought to be most involved in coding right hand and foot movements (Beurze et al., 2009; Gallivan et al., 2011; Heed et al., 2011). However, the hemisphere ipsilateral to the moving limb can also contain information about planned movements (Gallivan et al., 2013). As Figure 6 shows, eye-specific information was present ipsilaterally in caudal PPC, and hand- and foot-specific information was present in the motor cortex. In rostral PPC regions, effector-specific informational content was reduced compared with the contralateral hemisphere. Furthermore, activation of the right hemisphere was attenuated compared with the left hemisphere, particularly in motor and somatosensory cortices. The combined analysis revealed that the eye-specific posterior representations were active and distinct, whereas no clear, active distinct limb-specific representations emerged in rostral PPC. Similarly, we did not observe any strong active common representations.

Figure 6.

Group GLM test of effector specificity on unsmoothed data, depicted on an inflated left hemisphere, with magnification of the rostral PPC. Voxels significantly (p < 0.001, uncorrected) tuned for one or more effectors are included. Colored lines highlight clusters of effector-specific tuning (red, hand; green, foot; blue, eye).

Thus, the only representation active in ipsilateral PPC was for the one effector for which the brain is not lateralized: the eyes.

Control measures

Relation between activation and information

Results of the pattern information and activation analyses show a remarkable consistency: in a given region, the most distinct representation was also the most strongly activated. However, this consistency may be artifactual: more strongly activated voxels may dominate the correlation calculation and thus increase the likelihood that more strongly activated representations turn out distinct. If this is the case, positive correlations between information and activation should also be present in the random data generated in the permutation test. If not, the positive correlations should be unique to the actual data, and more specifically to the effectors represented in each hemisphere: all effectors in the left hemisphere, only the eyes in the right hemisphere. We tested this potential confound by comparing the relationship between mean relative activation per effector and information per effector in the actual data, separately for each hemisphere, with the same measures calculated for random data.

In the left hemisphere, correlations between activation and information were positive for all three effectors [mean correlation: 0.68 (hand), 0.39 (foot), 0.47 (eye), all p < 0.05]. In the right hemisphere, the correlation dropped for the hand (−0.02, p > 0.05), but not for the eyes and foot (0.38 and 0.45, respectively, both p < 0.05). In contrast, for the random data, activation and information correlated negatively (r = −0.19, −0.18, −0.09, all p < 0.05). We therefore conclude that higher relative activation predicts higher information, but that this relationship is not due to a confound in our analyses.
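The logic of this control can be sketched as a permutation test on the correlation between per-sphere activation and information values. Note the simplification: here the null distribution is built by shuffling which information value is paired with which activation value, whereas the study compared the actual correlations with those computed on the random data of its own label-permutation test. The function names, the one-sided test, and the add-one correction are assumptions for illustration.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def permutation_p(activation, information, n_perm=5000, seed=0):
    """Observed r(activation, information) and a one-sided permutation p-value.

    The null breaks the pairing between each sphere's activation and information
    values by shuffling the information values (a simplification, see text).
    """
    rng = random.Random(seed)
    observed = pearson(activation, information)
    shuffled = list(information)
    n_ge = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if pearson(activation, shuffled) >= observed:
            n_ge += 1
    # Add-one correction so the p-value is never exactly zero.
    return observed, (n_ge + 1) / (n_perm + 1)
```

A positive r with a small p-value in the real data, together with the absence of such a relationship in random data, is the pattern that argues against an analysis artifact.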

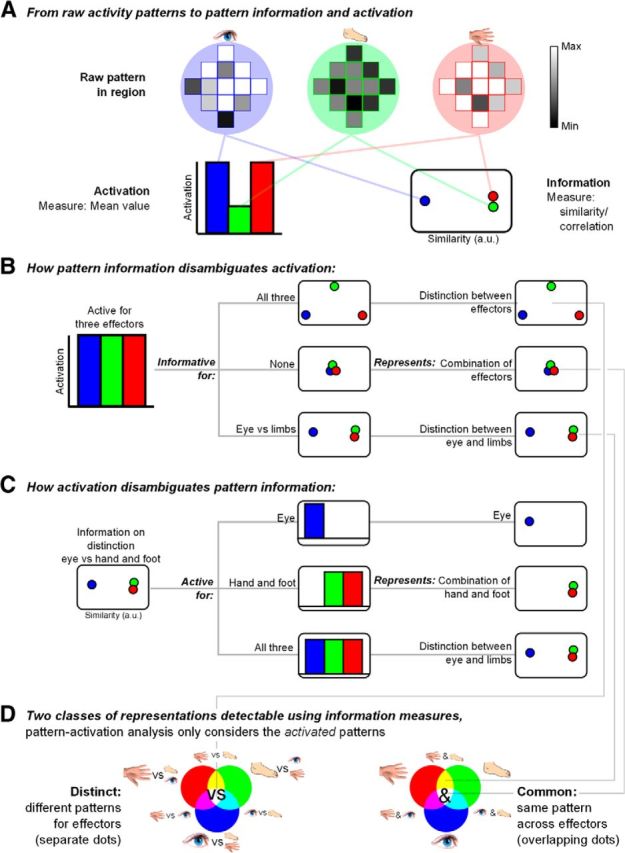

Unsmoothed group GLM

All our analyses are based on search spheres of 83 voxels on average, which is comparable in size to a standard smoothing kernel. This approach may have limited spatial precision, with spheres capturing information and activation from neighboring areas. To control for such effects, we performed a standard group GLM on spatially unsmoothed data (Fig. 7). If our analyses were spatially specific, this analysis should also reveal effector-specific voxels within the PPC areas.
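One plausible reason a sphere size is reported as an average is that spheres near the edge of the brain mask lose voxels. The sketch below counts the voxels in a searchlight sphere for a given radius and voxel size, and how many survive a mask. The radius, voxel size, and mask are hypothetical; the study's actual searchlight settings are given in its Materials and Methods.

```python
from __future__ import annotations

import itertools
import math


def sphere_offsets(radius_mm: float, voxel_size_mm: tuple[float, float, float]) -> list[tuple[int, int, int]]:
    """Integer voxel offsets whose centres lie within radius_mm of the sphere centre."""
    extents = [math.floor(radius_mm / size) for size in voxel_size_mm]
    ranges = [range(-e, e + 1) for e in extents]
    offsets = []
    for i, j, k in itertools.product(*ranges):
        dist = math.sqrt(
            (i * voxel_size_mm[0]) ** 2 + (j * voxel_size_mm[1]) ** 2 + (k * voxel_size_mm[2]) ** 2
        )
        if dist <= radius_mm:
            offsets.append((i, j, k))
    return offsets


def voxels_in_mask(center, offsets, mask) -> int:
    """Number of sphere voxels that fall inside a brain mask (a set of voxel indices)."""
    ci, cj, ck = center
    return sum((ci + i, cj + j, ck + k) in mask for i, j, k in offsets)


# Hypothetical mask: a slab of voxels with i >= 0, so spheres centred at i = 0 are clipped.
full_sphere = sphere_offsets(2.0, (1.0, 1.0, 1.0))
mask = {(i, j, k) for i in range(0, 6) for j in range(-5, 6) for k in range(-5, 6)}
```

With isotropic 1-unit voxels, a radius of 2 voxels gives 33 voxels and a radius of 3 gives 123, so an average near 83 lies between these; a sphere centred on the mask boundary keeps only 23 of its 33 voxels.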

Figure 7.

Overview of the effector specificity results per ROI, combining the two pattern-activation plots (Fig. 3C). Black dots indicate the ROIs; the symbols indicate the contrasting ("vs") activated representations within each ROI. Large symbols indicate the dominant (i.e., most activated) representations; small symbols indicate the less activated representations.

The prediction was confirmed: activation patterns were consistent with those determined by the searchlight procedure. aIPS was most active for hand planning, mIPS was most active for eye planning, and aPCu was most active for foot planning. Unsurprisingly, activations were more scattered, and patches were less consistent than those detected by the pattern-activation analysis, which underlines the sensitivity of the pattern-activation analysis to regional representations.

Discussion

This study characterized distinctions and commonalities across effector representations in PPC. The motor planning responses of most regions were dominated by one particular effector: eye movements in pIPS, hand movements in aIPS, and foot movements in aPCu. The new observation is that several PPC regions also actively coded for commonalities between the other effectors, distinct from the dominant effector. This finding provides empirical evidence for the notion that effector selection is based on an efficient neural code that distinguishes an effector from other potential effectors (Medendorp et al., 2005; Cui and Andersen, 2011).

Pattern-activation gradient

The pattern-activation measure revealed a caudorostral gradient in PPC. This finding extends previous reports by showing that activation of a particular region by the planning of several effectors (Levy et al., 2007; Heed et al., 2011) does not necessarily imply identical representational content. Furthermore, we extend previous MVPA studies (Gallivan et al., 2011a,b, 2013) by specifying, per region, the dominant representation and by testing for differences between the limbs, instead of only eye–hand or hand–hand distinctions.

Previous reports have shown that, along the caudorostral axis, pIPS is informative for the eye–hand distinction (Gallivan et al., 2011). Here we show that in pIPS the eye representation is dominant, that there is no information on the distinction between the hand and foot (limbs), and that limb movements do not activate the region. We hence conclude that pIPS is involved in saccade planning (Levy et al., 2007). Following the same logic, the pattern-activation findings suggest that areas mIPS and aSPL specifically distinguish between eye and limbs. Eye, hand, and foot planning all activate these regions, but their informational content is restricted to a distinction between eyes and limbs, not between the limbs. Only the distinct representations for eye movements are active in both hemispheres, in line with the bilateral organization of saccade preparation (Sereno et al., 2001).

In rostral PPC, aIPS coded for the hand and aPCu coded for the foot. The common representations underlying this dominant hand–foot gradient largely complemented the dominant representations: the foot area also represents the hand and the hand area also represents the foot and eye. This corepresentation of the different limbs may explain why others have found hand-tuning in the aPCu region (Fernandez-Ruiz et al., 2007; Filimon et al., 2009), which we find to be dominated by foot planning (Heed et al., 2011). Importantly, the combined pattern-activation measure allowed us to detect two separate representations in aPCu (one foot, one hand), of which the foot representation is dominant in activation. We hence conclude that aPCu is predominantly responsible for foot movement planning, while aIPS is responsible for hand movement planning.

In addition to motor planning, rostral PPC is important for somatosensation (Sereno and Huang, 2014). The rostral effector gradient may hence stem from somatosensory activations. However, the current analysis specifically focused on the planning period, during which there is no somatosensory feedback. Rather, the activation could be related to the prediction of the somatosensory consequences of the movement from the start of the trial onward (MacKay and Crammond, 1987), consistent with the effector-specific involvement of SI and SII in the current task (SII not shown; Eickhoff et al., 2007) and the predictive coding framework (Friston and Kiebel, 2009). Furthermore, knowing the position of the hand and foot, in addition to their target positions, is essential for the planning of movements with the respective effectors (Buneo et al., 2002; Beurze et al., 2009). Such an integrative role would fit with the position of the regions next to the somatosensory homunculus (Seelke et al., 2012), in line with continuous topographic coding (Graziano and Aflalo, 2007a), similar to the location of the parietal saccade areas near regions containing visual maps (Aflalo and Graziano, 2010).

Nature of coding

The continuous topographic organization of different combinations of active distinct and active common effector representations suggests a more complex organization than modular effector specificity. The common representations could code movements with similar computational constraints (cf. Heed et al., 2011), and the combination of distinct and common representations might allow combining effectors toward a common functional goal (Graziano and Aflalo, 2007b); e.g., hand and foot in aPCu for walking or climbing (Abdollahi et al., 2013). Such classes of movement based on coding commonalities and identities may represent a more efficient organization of motor preparation than effector-specific coding per se (Levy et al., 2007; Jastorff et al., 2010).

The fact that the rostral planning-related PPC representations are consistently dichotomous suggests that these representations could additionally be used in the selection of effectors or classes of movements. For example, in single-effector movements, aPCu could select foot movements over hand movements, while in actual climbing or walking it could represent both effectors (Graziano, 2006). This would imply that rostral PPC regions can make effector “choices,” rather than representing one or the other effector (Cisek and Kalaska, 2010; Cui and Andersen, 2011). The activated distinct representation would then be related to the selected effector, the less-activated common representation to the alternative effector(s). Which alternative effectors are considered could depend on the class of movement represented in a region (e.g., walking or climbing in aPCu: foot and hand) and/or on the combination of effectors tested in the task. Whether the basis of selection is single effectors or classes of movement could be studied by testing movements involving combinations of effectors or movements belonging to different movement classes.

Alternatively, the selection process could reflect motor inhibition of the alternative effectors (Brown et al., 2006). In the present study, participants were explicitly instructed to move only one effector, while, in nonexperimental circumstances, some effectors (e.g., eye and hand) are often moved together. Moreover, the task context itself, with equal probability for each effector, might have induced coactivation of effectors based on contingency (Chang et al., 2008; Gallivan et al., 2013). Testing movement planning with different effector probabilities should allow the separation of these accounts.

Finally, the active common representations could be caused by a general effect, such as spatial attention. We cannot rule this out, given that we did not test non-motor trials, but the specificity of our analysis to the delay period, the specific parietofrontal representations in the ipsilateral hemisphere, and the rich gradient of secondary representations all speak against a general origin of the reported common representations.

Pattern-activation framework

The pattern-activation framework makes explicit the notion that information and activation are complementary measures (Peelen and Downing, 2007; Jimura and Poldrack, 2012). Patterns are seen as representations contained in an area (MVPA assumption), whereas the mean activity shows how involved the region is in representing the condition (univariate analysis assumption). In essence, the framework assumes that patterns can be active to differing degrees, allowing quantification of the representation's involvement in the current cognitive process: we interpret weakly activated representations as weakly involved in the task. For example, limb representations in the ipsilateral hemisphere were activated for the current task to some extent, but our framework makes the testable prediction that comparable representations should be evident, though at higher activation, during movement planning of the left limbs.

There are several ways in which the pattern-activation framework may be implemented. An alternative to the information measure used here (correlation) is classification (Cox and Savoy, 2003). The advantage of a correlation-based measure is that it allows testing whether two patterns are less distinct than expected by chance. This allowed us to identify common representations; in a classification approach, by contrast, nondistinctiveness would be a null result. Alternatively, we could have used cross-classification: if patterns for two classes are indistinguishable (low interclass classification score), they could either be noise (no cross-classification possible) or truly the same pattern (cross-classification possible). The current results make specific predictions for such classification analyses. Another alternative to correlation is component analysis (Beckmann et al., 2005). This approach has the attractive feature of allowing detection of partly shared representations, such as a common hand–foot representation and a distinct hand representation within the same region. Any alternative to the activation measure should be based on a clean baseline and should ideally be sensitive to both absolute and relative activation effects.
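Because correlation is a signed, continuous measure, it can be tested in both directions, which is what allows common representations to be identified. The following is a minimal sketch under assumptions not stated in this section: run-wise voxel patterns per effector, a within-minus-between correlation statistic in the style of Haxby et al. (2001), and a label-permutation null. The study's actual statistic and permutation scheme are defined in its Materials and Methods; all names and data here are hypothetical.

```python
from __future__ import annotations

import math
import random


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two voxel patterns of equal length."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def information(runs_a, runs_b) -> float:
    """Mean within-effector minus mean between-effector correlation of run-wise patterns."""
    within = [pearson(x, y) for runs in (runs_a, runs_b)
              for i, x in enumerate(runs) for y in runs[i + 1:]]
    between = [pearson(x, y) for x in runs_a for y in runs_b]
    return sum(within) / len(within) - sum(between) / len(between)


def label_permutation_test(runs_a, runs_b, n_perm=2000, seed=0):
    """Observed information plus two one-sided p-values.

    p_distinct: patterns more distinct than chance (information present).
    p_common:   patterns less distinct than chance (common representation).
    """
    rng = random.Random(seed)
    observed = information(runs_a, runs_b)
    pooled = list(runs_a) + list(runs_b)
    n_a = len(runs_a)
    null = []
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign effector labels to run-wise patterns
        null.append(information(pooled[:n_a], pooled[n_a:]))
    p_distinct = (sum(v >= observed for v in null) + 1) / (n_perm + 1)
    p_common = (sum(v <= observed for v in null) + 1) / (n_perm + 1)
    return observed, p_distinct, p_common


# Hypothetical data: four runs per effector, six voxels, clearly different patterns.
runs_a = [[3.0, 1.0, 4.0, 1.0, 5.0, 9.0] for _ in range(4)]
runs_b = [[2.0, 7.0, 1.0, 8.0, 2.0, 8.0] for _ in range(4)]
observed, p_distinct, p_common = label_permutation_test(runs_a, runs_b)
```

With four runs per effector there are only 70 ways to split the eight patterns into two groups of four, and the correct split and its mirror image give the same statistic, so the smallest attainable p_distinct is roughly 2/70. Permutation-based information tests therefore need enough independent runs.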

Activation is essentially information at a different spatial scale. With a larger ROI, some activation effects would turn into information effects, because the activated patch would become a subpart of the region; conversely, with a smaller ROI, some information effects would turn into activation effects, when a local pattern no longer activates part of the region but the whole region. The combined measure we propose takes this into account by considering both measures together.
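The scale argument can be made concrete with a toy example. Assume a hypothetical region of ten voxels in which effector A drives only the first five voxels and effector B only the last five. Restricted to the first five voxels, the difference appears as an activation effect; over all ten voxels, the mean activation is identical for A and B, and the difference survives only as a pattern (information) effect.

```python
import math
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two voxel patterns of equal length."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical responses (arbitrary units) of a ten-voxel region.
pattern_a = [1.0] * 5 + [0.0] * 5
pattern_b = [0.0] * 5 + [1.0] * 5

# Small ROI (first five voxels): a pure activation difference.
small_roi_difference = mean(pattern_a[:5]) - mean(pattern_b[:5])

# Large ROI (all ten voxels): equal mean activation, but opposite patterns.
large_roi_difference = mean(pattern_a) - mean(pattern_b)
large_roi_correlation = pearson(pattern_a, pattern_b)
```

In the small ROI each pattern is constant, so a pattern correlation would be undefined (zero variance) and only the activation measure is informative; in the large ROI the activation difference vanishes while the patterns are perfectly anticorrelated.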

Conclusion

We conclude that PPC represents effector dichotomies of distinct and common representations. The distinct representations are organized along a posterior-to-anterior, visuosomatic gradient, which splits into a second, lateral-to-medial hand–foot gradient in rostral PPC. This appears to reflect a topographically continuous organization, from visual perception and saccades, along lateral hand and medial foot planning pathways, to the somatosensory hand and foot regions. The combination of distinct and common representations in rostral PPC makes this region ideally suited to function as a selector, either of effectors or of classes of movements.

Footnotes

This work was supported by an internal grant from the Donders Centre for Cognition. W.P.M. is supported by the European Research Council (EU-ERC 283567) and the Netherlands Organisation for Scientific Research (NWO-VICI: 453-11-001). T.H. is supported by the German Research Foundation (DFG-HE 6368/1-1) and the Emmy Noether program of the DFG. We thank Paul Gaalman for expert assistance with magnetic resonance imaging recording.

The authors declare no competing financial interests.

References

- Abdollahi RO, Jastorff J, Orban GA. Common and segregated processing of observed actions in human SPL. Cereb Cortex. 2013;23:2734–2753. doi: 10.1093/cercor/bhs264.

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007.

- Beckmann CF, DeLuca M, Devlin JT, Smith SM. Investigations into resting-state connectivity using independent component analysis. Philos Trans R Soc Lond B Biol Sci. 2005;360:1001–1013. doi: 10.1098/rstb.2005.1634.

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Integration of target and effector information in the human brain during reach planning. J Neurophysiol. 2007;97:188–199. doi: 10.1152/jn.00456.2006.

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Spatial and effector processing in the human parietofrontal network for reaches and saccades. J Neurophysiol. 2009;101:3053–3062. doi: 10.1152/jn.91194.2008.

- Brooks JL. Counterbalancing for serial order carryover effects in experimental condition orders. Psychol Methods. 2012;17:600–614. doi: 10.1037/a0029310.

- Brown MR, Goltz HC, Vilis T, Ford KA, Everling S. Inhibition and generation of saccades: rapid event-related fMRI of prosaccades, antisaccades, and nogo trials. Neuroimage. 2006;33:644–659. doi: 10.1016/j.neuroimage.2006.07.002.

- Calton JL, Dickinson AR, Snyder LH. Non-spatial, motor-specific activation in posterior parietal cortex. Nat Neurosci. 2002;5:580–588. doi: 10.1038/nn0602-862.

- Chang SW, Dickinson AR, Snyder LH. Limb-specific representation for reaching in the posterior parietal cortex. J Neurosci. 2008;28:6128–6140. doi: 10.1523/JNEUROSCI.1442-08.2008.

- Cisek P, Kalaska JF. Neural mechanisms for interacting with a world full of action choices. Annu Rev Neurosci. 2010;33:269–298. doi: 10.1146/annurev.neuro.051508.135409.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Coutanche MN. Distinguishing multi-voxel pattern and mean activation: why, how, and what does it tell us? Cogn Affect Behav Neurosci. 2013;13:667–673. doi: 10.3758/s13415-013-0186-2.

- Cox DD, Savoy RL. Functional magnetic resonance imaging (fMRI) “brain reading”: detecting and classifying distributed patterns of fMRI activity in human visual cortex. Neuroimage. 2003;19:261–270. doi: 10.1016/S1053-8119(03)00049-1.

- Cui H, Andersen RA. Posterior parietal cortex encodes autonomously selected motor plans. Neuron. 2007;56:552–559. doi: 10.1016/j.neuron.2007.09.031.

- Cui H, Andersen RA. Different representations of potential and selected motor plans by distinct parietal areas. J Neurosci. 2011;31:18130–18136. doi: 10.1523/JNEUROSCI.6247-10.2011.

- Culham JC, Danckert SL, DeSouza JF, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage. 1999;9:179–194. doi: 10.1006/nimg.1998.0395.

- Edelman S, Grill-Spector K, Kushnir T, Malach R. Toward direct visualization of the internal shape representation space by fMRI. Psychobiology. 1998;26:309–321.

- Eickhoff SB, Grefkes C, Zilles K, Fink GR. The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb Cortex. 2007;17:1800–1811. doi: 10.1093/cercor/bhl090.

- Fernandez-Ruiz J, Goltz HC, DeSouza JF, Vilis T, Crawford JD. Human parietal “reach region” primarily encodes intrinsic visual direction, not extrinsic movement direction, in a visual motor dissociation task. Cereb Cortex. 2007;17:2283–2292. doi: 10.1093/cercor/bhl137.

- Filimon F, Nelson JD, Huang RS, Sereno MI. Multiple parietal reach regions in humans: cortical representations for visual and proprioceptive feedback during on-line reaching. J Neurosci. 2009;29:2961–2971. doi: 10.1523/JNEUROSCI.3211-08.2009.

- Fischl B, Sereno MI, Dale AM. Cortical surface-based analysis. II: Inflation, flattening, and a surface-based coordinate system. Neuroimage. 1999;9:195–207. doi: 10.1006/nimg.1998.0396.

- Fisher RA. Statistical methods for research workers. Edinburgh: Oliver and Boyd; 1925.

- Friston K, Kiebel S. Predictive coding under the free-energy principle. Philos Trans R Soc Lond B Biol Sci. 2009;364:1211–1221. doi: 10.1098/rstb.2008.0300.

- Friston KJ, Ashburner JT, Kiebel SJ, Nichols TE, Penny WD. Statistical parametric mapping: the analysis of functional brain images. London: Academic; 2011.

- Gallivan JP, McLean DA, Valyear KF, Pettypiece CE, Culham JC. Decoding action intentions from preparatory brain activity in human parieto-frontal networks. J Neurosci. 2011a;31:9599–9610. doi: 10.1523/JNEUROSCI.0080-11.2011.

- Gallivan JP, McLean DA, Smith FW, Culham JC. Decoding effector-dependent and effector-independent movement intentions from human parieto-frontal brain activity. J Neurosci. 2011b;31:17149–17168. doi: 10.1523/JNEUROSCI.1058-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gallivan JP, McLean DA, Flanagan JR, Culham JC. Where one hand meets the other: limb-specific and action-dependent movement plans decoded from preparatory signals in single human frontoparietal brain areas. J Neurosci. 2013;33:1991–2008. doi: 10.1523/JNEUROSCI.0541-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gnadt JW, Andersen RA. Memory related motor planning activity in posterior parietal cortex of macaque. Exp Brain Res. 1988;70:216–220. doi: 10.1007/BF00271862. [DOI] [PubMed] [Google Scholar]

- Graziano M. The organization of behavioral repertoire in motor cortex. Annu Rev Neurosci. 2006;29:105–134. doi: 10.1146/annurev.neuro.29.051605.112924. [DOI] [PubMed] [Google Scholar]

- Graziano MS, Aflalo TN. Mapping behavioral repertoire onto the cortex. Neuron. 2007a;56:239–251. doi: 10.1016/j.neuron.2007.09.013. [DOI] [PubMed] [Google Scholar]

- Graziano MS, Aflalo TN. Rethinking cortical organization: moving away from discrete areas arranged in hierarchies. Neuroscientist. 2007b;13:138–147. doi: 10.1177/1073858406295918. [DOI] [PubMed] [Google Scholar]

- Hanson SJ, Schmidt A. High-resolution imaging of the fusiform face area (FFA) using multivariate non-linear classifiers shows diagnosticity for non-face categories. Neuroimage. 2011;54:1715–1734. doi: 10.1016/j.neuroimage.2010.08.028.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haynes JD, Deichmann R, Rees G. Eye-specific effects of binocular rivalry in the human lateral geniculate nucleus. Nature. 2005;438:496–499. doi: 10.1038/nature04169.

- Heed T, Beurze SM, Toni I, Röder B, Medendorp WP. Functional rather than effector-specific organization of human posterior parietal cortex. J Neurosci. 2011;31:3066–3076. doi: 10.1523/JNEUROSCI.4370-10.2011.

- Heed T, Leoné FTM, Toni I, Medendorp WP. Repetition suppression effects for eye, hand and foot movement planning: effector-dependent versus effector-independent representations. Soc Neurosci Abstr. 2013;39 163.05/JJ15.

- Jastorff J, Begliomini C, Fabbri-Destro M, Rizzolatti G, Orban GA. Coding observed motor acts: different organizational principles in the parietal and premotor cortex of humans. J Neurophysiol. 2010;104:128–140. doi: 10.1152/jn.00254.2010.

- Jimura K, Poldrack RA. Analyses of regional-average activation and multivoxel pattern information tell complementary stories. Neuropsychologia. 2012;50:544–552. doi: 10.1016/j.neuropsychologia.2011.11.007.

- Konen CS, Mruczek RE, Montoya JL, Kastner S. Functional organization of human posterior parietal cortex: grasping- and reaching-related activations relative to topographically organized cortex. J Neurophysiol. 2013;109:2897–2908. doi: 10.1152/jn.00657.2012.

- Kriegeskorte N, Bandettini P. Analyzing for information, not activation, to exploit high-resolution fMRI. Neuroimage. 2007;38:649–662. doi: 10.1016/j.neuroimage.2007.02.022.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis – connecting the branches of systems neuroscience. Front Syst Neurosci. 2008;2:4. doi: 10.3389/neuro.01.016.2008.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci. 2009;12:535–540. doi: 10.1038/nn.2303.

- Levy I, Schluppeck D, Heeger DJ, Glimcher PW. Specificity of human cortical areas for reaches and saccades. J Neurosci. 2007;27:4687–4696. doi: 10.1523/JNEUROSCI.0459-07.2007.

- MacKay WA, Crammond DJ. Neuronal correlates in posterior parietal lobe of the expectation of events. Behav Brain Res. 1987;24:167–179. doi: 10.1016/0166-4328(87)90055-6.

- Matelli M, Luppino G. Parietofrontal circuits for action and space perception in the macaque monkey. Neuroimage. 2001;14:S27–S32. doi: 10.1006/nimg.2001.0835.

- Medendorp WP, Goltz HC, Crawford JD, Vilis T. Integration of target and effector information in human posterior parietal cortex for the planning of action. J Neurophysiol. 2005;93:954–962. doi: 10.1152/jn.00725.2004.

- Misaki M, Kim Y, Bandettini PA, Kriegeskorte N. Comparison of multivariate classifiers and response normalizations for pattern-information fMRI. Neuroimage. 2010;53:103–118. doi: 10.1016/j.neuroimage.2010.05.051.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol. 2000;83:2580–2601. doi: 10.1152/jn.2000.83.5.2580.

- Peelen MV, Downing PE. Using multi-voxel pattern analysis of fMRI data to interpret overlapping functional activations. Trends Cogn Sci. 2007;11:4–5. doi: 10.1016/j.tics.2006.10.009.

- Peelen MV, Wiggett AJ, Downing PE. Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron. 2006;49:815–822. doi: 10.1016/j.neuron.2006.02.004.

- Pereira F, Mitchell T, Botvinick M. Machine learning classifiers and fMRI: a tutorial overview. Neuroimage. 2009;45:S199–S209. doi: 10.1016/j.neuroimage.2008.11.007.

- Poldrack RA. Region of interest analysis for fMRI. Soc Cogn Affect Neurosci. 2007;2:67–70. doi: 10.1093/scan/nsm006.

- Poser BA, Versluis MJ, Hoogduin JM, Norris DG. BOLD contrast sensitivity enhancement and artifact reduction with multiecho EPI: parallel-acquired inhomogeneity-desensitized fMRI. Magn Reson Med. 2006;55:1227–1235. doi: 10.1002/mrm.20900.

- Raizada RDS, Kriegeskorte N. Pattern-information fMRI: new questions which it opens up and challenges which face it. Int J Imaging Syst Technol. 2010;20:31–41. doi: 10.1002/ima.20225.

- Seelke AM, Padberg JJ, Disbrow E, Purnell SM, Recanzone G, Krubitzer L. Topographic maps within Brodmann's area 5 of macaque monkeys. Cereb Cortex. 2012;22:1834–1850. doi: 10.1093/cercor/bhr257.

- Sereno MI, Huang RS. Multisensory maps in parietal cortex. Curr Opin Neurobiol. 2014;24:39–46. doi: 10.1016/j.conb.2013.08.014.

- Sereno MI, Pitzalis S, Martinez A. Mapping of contralateral space in retinotopic coordinates by a parietal cortical area in humans. Science. 2001;294:1350–1354. doi: 10.1126/science.1063695.

- Silver MA, Kastner S. Topographic maps in human frontal and parietal cortex. Trends Cogn Sci. 2009;13:488–495. doi: 10.1016/j.tics.2009.08.005.

- Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- Stark CE, Squire LR. When zero is not zero: the problem of ambiguous baseline conditions in fMRI. Proc Natl Acad Sci U S A. 2001;98:12760–12766. doi: 10.1073/pnas.221462998.

- Swisher JD, Halko MA, Merabet LB, McMains SA, Somers DC. Visual topography of human intraparietal sulcus. J Neurosci. 2007;27:5326–5337. doi: 10.1523/JNEUROSCI.0991-07.2007.

- Taoka M, Toda T, Iriki A, Tanaka M, Iwamura Y. Bilateral receptive field neurons in the hindlimb region of the postcentral somatosensory cortex in awake macaque monkeys. Exp Brain Res. 2000;134:139–146. doi: 10.1007/s002210000464.

- Verhagen L, Dijkerman HC, Grol MJ, Toni I. Perceptuo-motor interactions during prehension movements. J Neurosci. 2008;28:4726–4735. doi: 10.1523/JNEUROSCI.0057-08.2008.