Abstract

Research from the previous decade suggests that word meaning is partially stored in distributed modality-specific cortical networks. However, little is known about the mechanisms by which semantic content from multiple modalities is integrated into a coherent multisensory representation. Therefore we aimed to characterize differences between integration of lexical-semantic information from a single modality compared with two sensory modalities. We used magnetoencephalography in humans to investigate changes in oscillatory neuronal activity while participants verified two features for a given target word (e.g., “bus”). Feature pairs consisted of either two features from the same modality (visual: “red,” “big”) or different modalities (auditory and visual: “red,” “loud”). The results suggest that integrating modality-specific features of the target word is associated with enhanced high-frequency power (80–120 Hz), while integrating features from different modalities is associated with a sustained increase in low-frequency power (2–8 Hz). Source reconstruction revealed a peak in the anterior temporal lobe for low-frequency and high-frequency effects. These results suggest that integrating lexical-semantic knowledge at different cortical scales is reflected in frequency-specific oscillatory neuronal activity in unisensory and multisensory association networks.

Keywords: cortical oscillations, language, multisensory integration, semantic memory, visual word processing

Introduction

Imaging studies have shown that verbal descriptions of perceptual/motoric information activate neural networks also engaged in processing this information at the sensory level. For example, color words activate pathways that are also sensitive to chromatic contrasts (Simmons et al., 2007), action words engage areas involved in action planning (Hauk et al., 2004; van Dam et al., 2010), and words with acoustic associations (e.g., “telephone”) engage regions sensitive to meaningful sounds (Kiefer et al., 2008). More recent studies have investigated words that are associated with features from more than one modality. For example, van Dam et al. (2012) showed that words that are associated with visual and action features (e.g., “tennis ball”) activate networks from both modalities. While this is evidence that lexical-semantic knowledge is partially stored in modality-specific (MS) cortical networks, where and how this information is integrated is still debated.

Some accounts postulate that information is integrated in distributed convergence zones (Barsalou et al., 2003; Damasio et al., 2004), while others have argued for a single hub in the anterior temporal lobe (ATL; Patterson et al., 2007). Although there is compelling neuropsychological evidence for the existence of a hub (Hodges et al., 1992), little is known about how the network dynamics within this region combine semantic content from distributed sources in the cortex. Combining multiple semantic features may be relevant for identifying a specific token of a concept, and detecting relationships between concepts that are not perceptually based.

Physiological evidence from animals and humans suggests that distributed information is integrated through synchronized oscillatory activity (Singer and Gray, 1995). Several accounts have attempted to link oscillatory activity with perceptual and cognitive processes (Raghavachari et al., 2001; Bastiaansen et al., 2005; Schneider et al., 2008). However, the link between power changes in a given frequency band and specific perceptual/cognitive processes remains contentious. One compelling recent account suggests that high-frequency and low-frequency oscillations may operate at different spatial scales at the level of the cortex (von Stein and Sarnthein, 2000; Donner and Siegel, 2011). Specifically, it has been argued that oscillatory activity at low frequencies (<30 Hz) is involved in coordinating distributed neural populations, whereas interactions within a neural population are reflected in high frequencies (>30 Hz). This idea converges with evidence suggesting that high-frequency oscillatory activity can be nested in low-frequency cycles (Canolty et al., 2006). With respect to embodied accounts of lexical semantics, this is interesting because integrating distributed information across modalities (e.g., color–sound) may be reflected in lower frequency bands than integrating MS information locally (e.g., color–shape).

We used magnetoencephalography (MEG) to test whether oscillatory neuronal activity is relevant for the integration of cross-modal (CM) semantic information during word comprehension. In the experimental paradigm, two feature words and one target word were presented visually. Feature words referred to either MS information from the same modality or to CM information from different modalities (Fig. 1A). We hypothesized that integrating features of the target word from local MS networks will be reflected in high-frequency oscillatory activity, while integrating features across modalities will induce a modulation in low frequencies.

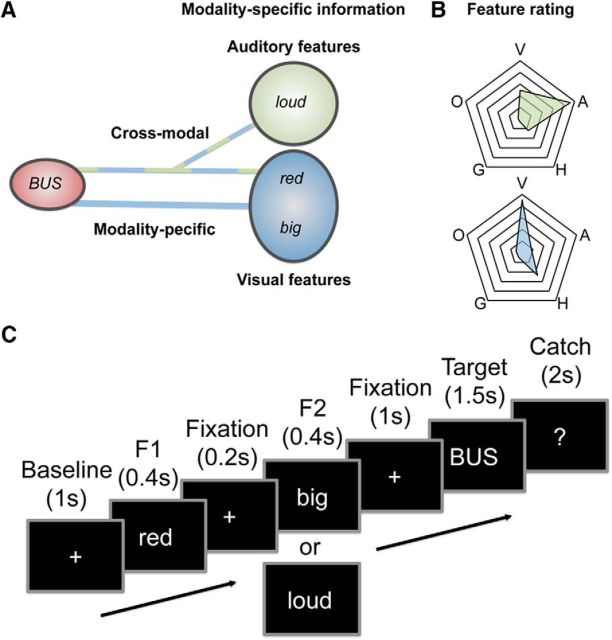

Figure 1.

Design of the experiment. A, The word “bus” is associated with visual and auditory features. Participants are required to verify features from either the same (visual: “red,” “big”) or different modalities (auditory and visual: “red,” “loud”). B, Spider plots show that auditory (green) and visual features (blue) are rated as predominantly auditory (A) and visual (V) rather than haptic (H), olfactory (O), or gustatory (G). C, Features were always presented before target words.

Materials and Methods

Participants.

Participants were 22 healthy, right-handed individuals (nine male) with no neurological disorder, normal or corrected to normal vision, and no known auditory impairment. The age range was 18–35 years (mean, 24.45 years; SE, 1.19 years). Four participants were excluded from the analysis due to excessive ocular and movement-related artifacts.

All participants were students at the University of York and participated either for monetary compensation or course credits on a voluntary basis. All participants gave written informed consent according to the Declaration of Helsinki and were debriefed after the study. The study was approved by the Ethics Committee of the York Neuroimaging Centre.

Experimental design.

Participants performed a dual property verification task. After a baseline period of fixation (1000 ms), two feature words (e.g., “red,” “big”; 400 ms each) and a target (e.g., “bus”; 1500 ms) were presented (Fig. 1C). Participants were instructed to decide whether the two features can be used to describe the target. To reduce motor response-related activity in the signal, participants were asked to respond only on catch trials (33%, see below).

One hundred and ninety-six unique features were extracted from previous feature rating studies (Lynott and Connell, 2009; van Dantzig et al., 2011). A rating system was created for the purpose of the current experiment, in which participants (N = 10) rated how likely they were to perceive a given feature by seeing, hearing, feeling, tasting, and smelling. Dependent-sample t tests confirmed that visual features were more strongly experienced by seeing compared with hearing, feeling, tasting, and smelling (all p < 0.001). Similarly, auditory features were more strongly perceived by hearing than seeing, feeling, tasting, or smelling (all p < 0.001).
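As an illustration of the dependent-sample comparison described above, the following minimal sketch computes a paired t statistic for two sets of ratings of the same items. The function name `paired_t` and the example ratings are hypothetical and are not taken from the study.

```python
import math

def paired_t(x, y):
    """Dependent-samples t statistic and degrees of freedom.

    x and y hold two ratings of the same items (e.g., "seeing" vs. "hearing").
    Assumes the paired differences are not all identical (nonzero variance).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired and contain at least two values")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)  # sample variance
    se_d = math.sqrt(var_d / n)                               # standard error of the mean difference
    return mean_d / se_d, n - 1

# Made-up ratings for five visual features (illustration only).
seeing = [4, 5, 3, 4, 5]
hearing = [1, 2, 1, 1, 2]
t_value, df = paired_t(seeing, hearing)
```

The resulting t value would then be compared against the t distribution with `df` degrees of freedom to obtain a p value.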

Three hundred target words were either paired with a CM or an MS feature pair. For example, “bus” was paired with visual features (“red,” “big”) in the MS condition, and a visual and auditory feature in the CM condition (“red,” “loud”). Feature pairs were rated (N = 10), and matched for relatedness and predictability with respect to the target word in the CM versus MS condition (p = 0.83; p = 0.123), as well as the MS visual (MS-v) versus auditory (MS-a) condition (p = 0.092; p = 0.95). In addition, features were matched between the CM and MS condition for word length (p = 0.75), log10 frequency [p = 0.86; British National Corpus (BNC); http://www.kilgarriff.co.uk/bnc-readme.html], and the proportion of gerundives to adjectives (p = 0.24). The entire list of stimuli will be made available upon request. Target words were the same in the CM and MS conditions, and were matched for word length and log10 frequency (BNC; http://www.kilgarriff.co.uk/bnc-readme.html) across the MS-v and MS-a conditions (p = 0.74; p = 0.40).

Additionally, 150 feature–target (IF) combinations (e.g., “rumbling-squeaking-cactus”) were included as nonintegrable distracters. Each participant saw an experimental target word only in one condition, resulting in 100 CM, 100 MS-v, 100 MS-a, and 150 IF pairs. The presentation of experimental items in a given condition was pseudorandomized within each participant and counterbalanced over participants.
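The exact counterbalancing scheme is not described here. One simple possibility, shown purely as an assumption, is a Latin-square style rotation in which each target is assigned to one of the three experimental conditions per participant and the assignment is shifted across participants. The names `CONDITIONS` and `assign_conditions`, and the seeding rule, are hypothetical.

```python
import random

CONDITIONS = ("CM", "MS-v", "MS-a")

def assign_conditions(targets, participant, seed=0):
    """Return a shuffled list of (target, condition) pairs for one participant.

    Assumption: conditions are rotated over targets, and the rotation is
    shifted by the participant index, so that across every three consecutive
    participants each target appears once in each condition.
    """
    n_cond = len(CONDITIONS)
    if len(targets) % n_cond != 0:
        raise ValueError("number of targets must be divisible by the number of conditions")
    shift = participant % n_cond
    trials = [(target, CONDITIONS[(i + shift) % n_cond])
              for i, target in enumerate(targets)]
    # Participant-specific random order (a stand-in for pseudorandomization).
    rng = random.Random(seed * 100003 + participant)
    rng.shuffle(trials)
    return trials

# Placeholder target labels; the real stimulus list is not reproduced here.
targets = [f"target_{i:03d}" for i in range(300)]
trials_p0 = assign_conditions(targets, participant=0)
```

With 300 targets this yields 100 trials per experimental condition for every participant; distracter (IF) trials would be interleaved separately.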

Data acquisition.

MEG data were acquired on a Magnes 3600 whole-head 248-channel magnetometer system (4-D Neuroimaging) using a sampling rate of 678.17 Hz. Head position was measured at the start and end of the experiment from five head coils (left and right preauricular points, Cz, nasion, and inion) using a Polhemus Fastrak system (Polhemus). Horizontal and vertical eye movements as well as cardiac responses were recorded and monitored during the whole experiment.

Structural T1-weighted images (TR = 8.03 ms; TE = 3.07 ms; flip angle, 20°; spatial resolution, 1.13 × 1.13 × 1.0 mm; in-plane resolution, 256 × 256 × 176) were recorded on a GE 3.0 Tesla Excite HDx system (General Electric) using an eight-channel head coil and a 3-D fast spoiled gradient recall sequence. Coregistration of the MEG to the structural images was performed using anatomical landmarks (preauricular points, nasion) and a surface-matching technique based on individually digitized head shapes.

Preprocessing.

The analysis was performed using Matlab 7.14 (MathWorks) and Fieldtrip (http://fieldtrip.fcdonders.nl/). For subsequent analysis, the data were bandpass filtered (0.5–170 Hz; Butterworth filter; low-pass filter order, 4; high-pass filter order, 3) and resampled (400 Hz). Line noise was suppressed by filtering the 50, 100, and 150 Hz Fourier components. Artifact rejection followed a two-step procedure. First, artifacts arising from muscle contraction, SQUID jumps, and other nonstereotyped sources (e.g., cars, cable movement) were removed using semiautomatic artifact rejection. Second, extended infomax-independent component analysis, with a weight change stop criterion of <10⁻⁷, was applied to remove components representing ocular (eye blinks, horizontal eye movements, saccadic spikes) and cardiac signals.
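One common way to suppress line noise at fixed Fourier components is to fit a sine and a cosine at each line frequency and subtract the fit. The sketch below illustrates that idea in Python with NumPy; it is not the Fieldtrip code used in the study, and the function name `remove_line_noise` and the synthetic signal are assumptions.

```python
import numpy as np

def remove_line_noise(x, fs, freqs=(50.0, 100.0, 150.0)):
    """Subtract least-squares sine/cosine fits at the given frequencies.

    x  : 1-D signal (one channel, one trial)
    fs : sampling rate in Hz (400 Hz after resampling in this example)
    """
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size) / fs
    columns = []
    for f in freqs:
        columns.append(np.cos(2 * np.pi * f * t))
        columns.append(np.sin(2 * np.pi * f * t))
    design = np.column_stack(columns)
    # Amplitudes of each line component, estimated by least squares.
    coef, *_ = np.linalg.lstsq(design, x, rcond=None)
    return x - design @ coef

# Synthetic example: a 6 Hz rhythm contaminated by 50 Hz interference.
fs = 400.0
t = np.arange(800) / fs
brain = np.sin(2 * np.pi * 6 * t)
noisy = brain + 2.0 * np.sin(2 * np.pi * 50 * t + 0.3)
cleaned = remove_line_noise(noisy, fs)
```

Because only narrow-band components at the specified frequencies are removed, activity at neighboring frequencies is left largely untouched, provided the segment is long enough to separate them.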

Time frequency analysis.

Total power was computed using a sliding window Fourier transformation for each trial with fixed time windows (500 and 200 ms) in steps of 50 ms during the first 1000 ms after the onset of the target word. To maximize power, low (2–30 Hz) and high frequencies (30–120 Hz) were analyzed separately. For low frequencies, Fourier transformation was applied to Hanning tapered time windows of 500 ms, resulting in a frequency smoothing of ∼2 Hz. For high frequencies, a multitaper method was applied to reduce spectral leakage (Percival and Walden, 1993); sliding time windows of 200 ms were multiplied with three orthogonal Slepian tapers and subjected to Fourier transformation separately. The resulting power spectra were averaged over tapers, resulting in a frequency smoothing of ±10 Hz.
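The relation between window length, frequency resolution, and taper count can be made concrete with a short sketch. It assumes a 400 Hz sampling rate (the resampled rate) and uses hypothetical function names; it illustrates a Hanning-tapered sliding-window Fourier analysis in general, not the Fieldtrip routine itself. The taper count follows the usual rule K = 2TW − 1, where T is the window length and W the half-bandwidth.

```python
import numpy as np

def sliding_hann_power(x, fs, win_s=0.5, step_s=0.05):
    """Power of Hanning-tapered windows slid over a single-trial signal.

    Returns window-center times (s), frequencies (Hz), and a
    (n_windows, n_freqs) power array. Frequency spacing is 1 / win_s.
    """
    x = np.asarray(x, dtype=float)
    n_win = int(round(win_s * fs))
    n_step = int(round(step_s * fs))
    taper = np.hanning(n_win)
    starts = np.arange(0, x.size - n_win + 1, n_step)
    freqs = np.fft.rfftfreq(n_win, d=1.0 / fs)
    power = np.empty((starts.size, freqs.size))
    for i, start in enumerate(starts):
        segment = x[start:start + n_win] * taper
        power[i] = np.abs(np.fft.rfft(segment)) ** 2
    times = (starts + n_win / 2) / fs
    return times, freqs, power

def n_slepian_tapers(win_s, half_bandwidth_hz):
    """Usual taper count K = 2TW - 1 for window T (s) and half-bandwidth W (Hz)."""
    return int(round(2 * win_s * half_bandwidth_hz)) - 1

# A 500 ms window gives 2 Hz spacing; 200 ms with +/-10 Hz smoothing gives 3 tapers.
fs = 400.0
t = np.arange(800) / fs
times, freqs, power = sliding_hann_power(np.sin(2 * np.pi * 6 * t), fs)
k_tapers = n_slepian_tapers(0.2, 10)
```

The trade-off is visible in the parameters: longer windows sharpen frequency resolution for slow rhythms, whereas short windows with several tapers sacrifice resolution for a more stable estimate of broadband high-frequency power.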

Statistical analysis.

Power differences across conditions were evaluated using cluster-based randomization, which controls for multiple comparisons by clustering neighboring samples in time, frequency, and space (Maris and Oostenveld, 2007). At the sensor level, clustering was performed by computing dependent-samples t tests for each sensor–time point in five frequency bands of interest, θ (2–8 Hz), α (10–14 Hz), β (16–30 Hz), low γ (30–70 Hz), and high γ (80–120 Hz), during the first 1000 ms after the onset of the target word. At the source level, clustering was performed across space at the frequency–time window of interest. Neighboring t values exceeding the cluster-level threshold (corresponding to α < 0.05) were combined into a single cluster. Cluster-level statistics were computed by comparing the summed t values of each cluster against a permutation distribution. The permutation distribution was constructed by randomly permuting the conditions (1000 iterations) and calculating the maximum cluster statistic on each iteration.
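The logic of the cluster-based randomization can be sketched in a simplified form. The example below clusters over time only (the analysis described above also clusters over frequency and space), permutes conditions within participants by randomly flipping the sign of each participant's difference, and uses a fixed t threshold of 2.11 (the two-sided 5% critical value for 17 degrees of freedom). All function names, the simulated data, and the (count + 1)/(n + 1) p value convention are assumptions of this sketch.

```python
import numpy as np

def paired_t(diff):
    """Dependent-samples t value per sample; diff is (n_subjects, n_samples)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))

def max_cluster_sum(tvals, thresh):
    """Largest |sum of t| over runs of adjacent same-sign supra-threshold samples."""
    best, run, prev_sign = 0.0, 0.0, 0
    for t in tvals:
        sign = int(np.sign(t)) if abs(t) > thresh else 0
        if sign != 0 and sign == prev_sign:
            run += t                       # extend the current cluster
        else:
            run = t if sign != 0 else 0.0  # start a new cluster or reset
        prev_sign = sign
        best = max(best, abs(run))
    return best

def cluster_permutation_test(cond_a, cond_b, thresh=2.11, n_perm=1000, seed=0):
    """Return the observed maximum cluster statistic and its permutation p value."""
    rng = np.random.default_rng(seed)
    diff = np.asarray(cond_a, float) - np.asarray(cond_b, float)
    observed = max_cluster_sum(paired_t(diff), thresh)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Swapping condition labels within a participant flips the sign of the difference.
        flips = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        null[i] = max_cluster_sum(paired_t(diff * flips), thresh)
    p_value = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p_value

# Simulated power for 18 participants and 40 time samples, with an effect in samples 10-24.
rng = np.random.default_rng(1)
cm = rng.standard_normal((18, 40))
ms = rng.standard_normal((18, 40))
cm[:, 10:25] += 2.0
observed, p_value = cluster_permutation_test(cm, ms)
```

Because only the maximum cluster statistic enters the null distribution on each iteration, a single test covers all samples, which is how the procedure controls the family-wise error rate.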

Source reconstruction.

Following the recommendation from Gross et al. (2013), statistical analysis of the contrast CM–MS was performed at the sensor level; subsequent source reconstruction was used to localize this effect. Oscillatory sources were localized at the whole-brain level using linear beamforming (Gross et al., 2001; Liljeström et al., 2005), an adaptive spatial filter technique. Individual structural MRI scans were segmented and the brain compartment was used to compute a single-shell headmodel for the source analysis. The individual single-shell headmodel was used to compute the forward model (Nolte, 2003) on a regular 3-D grid (with 10 × 10 × 10 mm spacing). Filters were constructed using the leadfield of each grid point and the cross-spectral density matrix (CSD). The CSD matrix was computed between all MEG sensors using Hanning tapers (500 ms; ∼2 Hz smoothing) for low frequencies, and multitapers for high frequencies (200 ms; seven tapers; 20 Hz smoothing). The grid points from each individual structural image were warped to corresponding locations in an MNI template grid (International Consortium for Brain Mapping; Montreal Neurological Institute, Montreal, QC, Canada) before averaging across participants.
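To illustrate how a spatial filter can be built from a leadfield and a CSD matrix, the sketch below uses a widely used unit-gain beamformer formulation, W = (Lᴴ C⁻¹ L)⁻¹ Lᴴ C⁻¹. The exact filter settings of the study are not stated here, so the regularization parameter `reg`, the function names, and the random test data are assumptions.

```python
import numpy as np

def beamformer_filter(leadfield, csd, reg=0.05):
    """Unit-gain spatial filter for one grid point.

    leadfield : (n_sensors, n_orientations) forward field of the grid point
    csd       : (n_sensors, n_sensors) Hermitian cross-spectral density matrix
    reg       : regularization as a fraction of mean sensor power (assumed value)
    """
    n_sensors = csd.shape[0]
    lam = reg * np.trace(csd).real / n_sensors
    csd_inv = np.linalg.inv(csd + lam * np.eye(n_sensors))
    lh_cinv = leadfield.conj().T @ csd_inv
    return np.linalg.inv(lh_cinv @ leadfield) @ lh_cinv

def source_power(weights, csd):
    """Power at the grid point: trace of W C W^H."""
    return np.trace(weights @ csd @ weights.conj().T).real

# Random stand-ins for a leadfield (20 sensors, 3 orientations) and a CSD matrix.
rng = np.random.default_rng(0)
leadfield = rng.standard_normal((20, 3))
data = rng.standard_normal((20, 40)) + 1j * rng.standard_normal((20, 40))
csd = data @ data.conj().T / data.shape[1]
weights = beamformer_filter(leadfield, csd)
power = source_power(weights, csd)
```

The filter passes activity from the target grid point with unit gain (W L equals the identity) while suppressing contributions from elsewhere; repeating the computation for every grid point yields a whole-brain power map per condition.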

Results

Behavioral analysis

Responses of one participant were not recorded, leaving 17 participants for the behavioral analysis. A one-sample t test confirmed that participants were able to correctly verify trials above chance (t(16) = 7.652; p < 0.001; mean, 0.77; SE, 0.04). As expected with a delayed response, there were no significant differences between the cross-modal and the MS condition in accuracy (t(16) = −1.287; p = 0.216; CM: mean, 0.74; SE, 0.05; MS: mean, 0.78; SE, 0.34) or reaction times (t(16) = −0.931; p = 0.366; CM: mean, 900 ms; SE, 61 ms; MS: mean, 874 ms; SE, 54 ms).

Low frequencies are sensitive to semantic integration at the global scale

In the low-frequency range, both conditions (CM, MS) show a power increase in the θ band (2–8 Hz) as well as a decrease in the α (10–14 Hz) and β bands (16–30 Hz; Fig. 2A). Statistical comparison between conditions (Fig. 2A, right) revealed enhanced θ-band power (2–8 Hz; 580–1000 ms) to the target word for CM versus MS (p = 0.04, two-sided) over left-lateralized magnetometers (Fig. 2B). In other words, enhanced θ power in response to visually presented words is more sustained when participants think about a target word in the context of lexical-semantic features from different modalities.

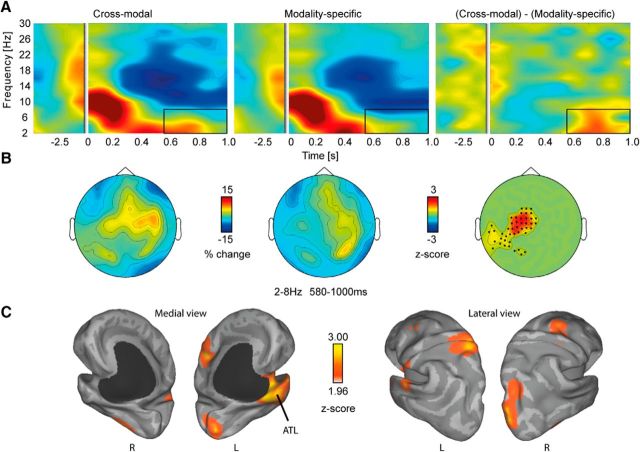

Figure 2.

Low-frequency oscillations in sensor and source space. A, Total power changes in the CM and MS condition relative to a 500 ms fixation baseline. Right, Statistical differences between conditions averaged over the identified cluster. The box depicts the significant time–frequency range. B, Topographies showing relative signal change across magnetometers between 2 and 8 Hz and between 580 and 1000 ms. Right, Significant channels are marked as dots. C, Source reconstruction revealed peaks in the ATL and parietal lobe.

Source reconstructions for each condition were computed at the center frequency (4–8 Hz; Gross et al., 2001; Liljeström et al., 2005). Figure 2C shows the difference between conditions, expressed in z-scores. Major peaks are observed in left ATL, precuneus, and around the paracentral lobule. Smaller peaks are seen in left lingual and right posterior fusiform gyrus, as well as right superior occipital and middle frontal gyrus.

To evaluate whether these effects are related to evoked activity sensitive to semantic processing (N400m), time-domain data for each condition were averaged, baseline corrected (150 ms pretarget), and converted into planar gradients (Bastiaansen and Knösche, 2000). ANOVAs with repeated measures at all sensors were computed for the N400m time window (350–550 ms) and subjected to a cluster-randomization procedure. This analysis yielded no significant clusters (p = 0.7), suggesting that any effect related to semantic processing is induced.

High frequencies reflect MS semantic integration

The high-frequency range revealed an early increase in high-γ power (80–120 Hz, 150–350 ms) for MS, but not CM, pairs. Specifically, γ power was enhanced when lexical-semantic features from the same modality were presented (p = 0.006, two-sided; Fig. 3A). The topography of the effect showed a left posterior distribution (Fig. 3B). This suggests that integrating MS features of a target word is reflected in high-frequency γ oscillations.

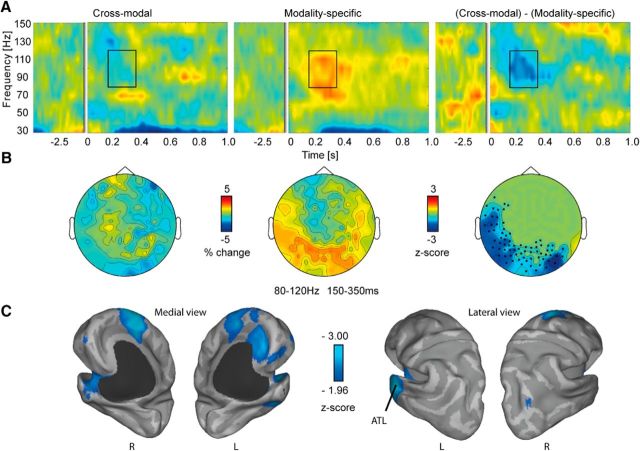

Figure 3.

High-frequency oscillations in sensor and source space. A, Total power changes in the CM and MS condition relative to a 500 ms fixation baseline, and the statistical difference between conditions. The box depicts the significant time–frequency range. B, Topographies showing relative signal change across magnetometers between 80 and 120 Hz and between 150 and 350 ms. Right, Significant channels are marked as dots. C, Source reconstruction revealed peaks in left ATL and medial frontal lobe.

Source reconstruction in the high-frequency range (80–120 Hz) revealed a peak in the left ATL, as well as the medial superior frontal gyrus, midcingulum, and left anterior cingulate cortex. Smaller peaks were observed in the right middle occipital gyrus (Fig. 3C).

Modality specificity to semantic features in auditory and visual cortices

Whole-brain cluster statistics in source space were performed on the two MS conditions (visual and auditory) versus baseline to investigate whether enhanced γ power reflects MS network interactions. Both conditions showed enhanced γ power in visual areas, but only the auditory condition showed a peak in left posterior superior temporal sulcus (pSTS)/BA22 (p = 0.004, two-sided; Fig. 4, left, middle). A direct comparison between conditions confirmed that γ power in left pSTS is enhanced for the auditory, but not visual, condition (p < 0.002, two-sided; Fig. 4, right). No effect in the opposite direction was found.

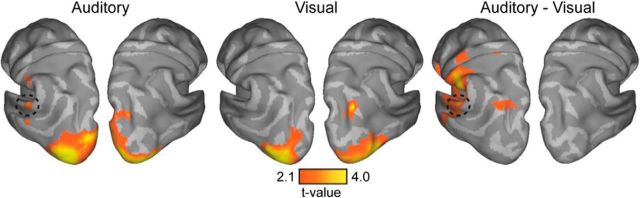

Figure 4.

Whole-brain contrasts for MS auditory and visual feature contexts in the γ range. Left, Middle, The contrast between the auditory (left) and visual (middle) condition versus baseline. Right, Contrast between the auditory and visual condition. γ Power is enhanced in visual areas for both conditions, but only the auditory condition shows a peak in pSTS/BA22 (dashed circle). All contrasts are corrected at the cluster level (p < 0.005).

Discussion

The aim of the current experiment was to investigate (1) whether oscillatory neuronal activity contributes to the integration of semantic information from different modalities and, if so, (2) how these dynamics are linked to a putative hub or multiple convergence zones in the cortex. Therefore, we characterized differences in oscillatory neuronal activity when participants integrated semantic features of a target word (e.g., “bus”), from the same or multiple modalities. Our results suggest that integrating features from different modalities (e.g., “red,” “loud”) is reflected in a more sustained increase in θ power, while high γ power is more sensitive to integrating lexical-semantic knowledge from a single modality (e.g., “red,” “big”). The neural generators of both effects include the ATL, a region that is proposed to be critical for semantic association and integration (Patterson et al., 2007). Furthermore, a direct comparison between auditory and visual feature contexts revealed that γ power is enhanced in visual areas for both conditions, while only the auditory context reveals a peak in auditory areas (pSTS).

Fast and slow components of lexical-semantic processing are reflected in the spectral and temporal profile of the signal

Previous research using evoked fields in MEG has shown that MS activation of lexical-semantic features can be detected before 200 ms (Pulvermüller et al., 2009). It has been proposed that these responses reflect early parallel processes during language comprehension. The current study revealed an early (150–350 ms) modulation in high-frequency power (80–120 Hz) in response to the target word if participants had to access two features from the same modality. The implication of these results for neurocognitive models of language understanding is that early integration of word meaning from the same modality is reflected in a transient high-frequency oscillatory response.

Furthermore, the current study identified a late θ power increase on the target word when participants verified features from different modalities. Previous research has shown that θ oscillations are sensitive to lexical-semantic content of open-class words (Bastiaansen et al., 2005). θ is also the only known frequency band that shows a linear power increase during sentence processing, suggesting that it could be involved in ongoing integration of word meaning (Bastiaansen et al., 2002). In the current study, θ-band power increased when participants evaluated semantic features of the target word, and this increase was prolonged for CM features. Given similar behavioral performance, identical target words, and careful matching of feature and target words in both conditions, differences in task demands are unlikely to account for this effect. A possible explanation is that integration demands increase when participants integrate information over a more widespread cortical network, that is, information from multiple MS networks. This account is in line with behavioral findings showing delayed reaction times during property verification of CM features (Barsalou et al., 2005).

In sum, the present study identified two processes relevant for lexical-semantic processing: an early increase in γ power for combining similar information, and a sustained increase in θ power that could reflect ongoing integration of information from distributed cortical networks.

Oscillatory neuronal activity in the ATL reflects the distribution of lexical-semantic information in the cortex

Based on neuropsychological research in patients with temporal lobe atrophy (Hodges et al., 1992), Patterson and colleagues (2007) have argued that the ATL might be involved in combining semantic knowledge from distributed MS networks. However, the physiological mechanisms of how information is integrated within, and possibly outside, this region are poorly understood. The current study showed that integrating information from different modalities is reflected in sustained θ power within the ATL. As previously suggested (Raghavachari et al., 2001), θ oscillations could operate as a temporal gating mechanism in which incoming information from different networks is combined into a coherent representation.

The present data also revealed enhanced γ power in left ATL when the target was presented with two features from the same modality. As recently demonstrated by Peelen and Caramazza (2012), using fMRI pattern classification, the ATL is sensitive to the MS content of the stimulus (motoric, visuospatial). This suggests that different activation patterns in the ATL could reflect inputs from distributed MS networks. Indeed, recent imaging work (van Dam et al., 2012) has demonstrated that listening to words that are associated with more than one modality (visual and functional) activates multiple MS cortical networks. For example, a motor network in the parietal lobe responds more strongly if the participant is asked to think about what to do with an object rather than what it looks like. The current results further demonstrate that accessing and combining MS semantic information enhances γ power in local MS networks. Specifically, γ power is enhanced in pSTS when participants are asked to access auditory features of an object. No such modulation was observed in the visual feature context. A possible reason for the lack of a semantic effect is that the sensory response to a visual word desensitizes the visual system to the more subtle semantic modulations (Pulvermüller, 2013).

Several studies have associated CM perceptual matching with enhanced γ activity (Schneider et al., 2008). These findings are not necessarily in conflict with the current framework. As Donner and Siegel (2011) point out, local γ band modulations can also be the result of higher-order interactions. The current study extends this work showing that oscillatory dynamics in temporal association networks reflect whether one or multiple local networks participate in these interactions.

In conclusion, the current study has demonstrated that combining word meaning from a single modality is reflected in early oscillatory activity in the γ band, originating in sensory cortices and left ATL. More specifically, MS networks in the auditory cortex were more sensitive to auditory than to visual features. In contrast, integrating features from multiple modalities induced a more sustained oscillatory response in the θ band that was partially localized to ventral networks in the ATL. Together, these results represent a mechanistic framework for lexical-semantic processing. At the physiological level, accessing knowledge from a single or from multiple semantic networks is reflected in oscillatory activity at different frequencies. At the cognitive level, the current data suggest two processes that operate in parallel, but at a different temporal resolution: a fast process for combining similar information early on, and a slow process that could be involved in integrating distributed semantic information into a coherent object representation.

Footnotes

This work was supported by grants from the European Union (ERC-2010-AdG-269716) and the Deutsche Forschungsgemeinschaft (SFB TRR58-B4), as well as a study visit grant from the Experimental Psychology Society.

The authors declare no competing financial interests.

References

- Barsalou LW, Simmons WK, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends Cogn Sci. 2003;7:84–91. doi: 10.1016/S1364-6613(02)00029-3.

- Barsalou LW, Pecher D, Zeelenberg R, Simmons WK, Hamann SB. Multimodal simulation in conceptual processing. In: Ahn W, Goldstone R, Love B, Markman A, Wolff P, editors. Categorization inside and outside the lab: Festschrift in honor of Douglas L. Medin. Washington, DC: American Psychological Association; 2005. pp. 249–270.

- Bastiaansen MC, Knösche TR. Tangential derivative mapping of axial MEG applied to event-related desynchronization research. Clin Neurophysiol. 2000;111:1300–1305. doi: 10.1016/S1388-2457(00)00272-8.

- Bastiaansen MC, van Berkum JJ, Hagoort P. Event-related theta power increases in the human EEG during online sentence processing. Neurosci Lett. 2002;323:13–16. doi: 10.1016/S0304-3940(01)02535-6.

- Bastiaansen MC, van der Linden M, Ter Keurs M, Dijkstra T, Hagoort P. Theta responses are involved in lexical-semantic retrieval during language processing. J Cogn Neurosci. 2005;17:530–541. doi: 10.1162/0898929053279469.

- Canolty RT, Edwards E, Dalal SS, Soltani M, Nagarajan SS, Kirsch HE, Berger MS, Barbaro NM, Knight RT. High gamma power is phase-locked to theta oscillations in human neocortex. Science. 2006;313:1626–1628. doi: 10.1126/science.1128115.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- Donner TH, Siegel M. A framework for local cortical oscillation patterns. Trends Cogn Sci. 2011;15:191–199. doi: 10.1016/j.tics.2011.03.007.

- Gross J, Kujala J, Hamalainen M, Timmermann L, Schnitzler A, Salmelin R. Dynamic imaging of coherent sources: studying neural interactions in the human brain. Proc Natl Acad Sci U S A. 2001;98:694–699. doi: 10.1073/pnas.98.2.694.

- Gross J, Baillet S, Barnes GR, Henson RN, Hillebrand A, Jensen O, Jerbi K, Litvak V, Maess B, Oostenveld R, Parkkonen L, Taylor JR, van Wassenhove V, Wibral M, Schoffelen JM. Good practice for conducting and reporting MEG research. Neuroimage. 2013;65:349–363. doi: 10.1016/j.neuroimage.2012.10.001.

- Hauk O, Johnsrude I, Pulvermüller F. Somatotopic representation of action words in human motor and premotor cortex. Neuron. 2004;41:301–307. doi: 10.1016/S0896-6273(03)00838-9.

- Hodges JR, Patterson K, Oxbury S, Funnell E. Semantic dementia: progressive fluent aphasia with temporal lobe atrophy. Brain. 1992;115:1783–1806. doi: 10.1093/brain/115.6.1783.

- Kiefer M, Sim EJ, Herrnberger B, Grothe J, Hoenig K. The sound of concepts: four markers for a link between auditory and conceptual brain systems. J Neurosci. 2008;28:12224–12230. doi: 10.1523/JNEUROSCI.3579-08.2008.

- Liljeström M, Kujala J, Jensen O, Salmelin R. Neuromagnetic localization of rhythmic activity in the human brain: a comparison of three methods. Neuroimage. 2005;25:734–745. doi: 10.1016/j.neuroimage.2004.11.034.

- Lynott D, Connell L. Modality exclusivity norms for 423 object properties. Behav Res Methods. 2009;41:558–564. doi: 10.3758/BRM.41.2.558.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164:177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- Nolte G. The magnetic lead field theorem in the quasi-static approximation and its use for magnetoencephalography forward calculation in realistic volume conductors. Phys Med Biol. 2003;48:3637–3652. doi: 10.1088/0031-9155/48/22/002.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- Peelen MV, Caramazza A. Conceptual object representations in human anterior temporal cortex. J Neurosci. 2012;32:15728–15736. doi: 10.1523/JNEUROSCI.1953-12.2012.

- Percival DB, Walden AT. Spectral analysis for physical applications: multitaper and conventional univariate techniques. Cambridge, UK: Cambridge UP; 1993.

- Pulvermüller F. How neurons make meaning: brain mechanisms for embodied and abstract-symbolic semantics. Trends Cogn Sci. 2013;17:458–470. doi: 10.1016/j.tics.2013.06.004.

- Pulvermüller F, Shtyrov Y, Hauk O. Understanding in an instant: neurophysiological evidence for mechanistic language circuits in the brain. Brain Lang. 2009;110:81–94. doi: 10.1016/j.bandl.2008.12.001.

- Raghavachari S, Kahana MJ, Rizzuto DS, Caplan JB, Kirschen MP, Bourgeois B, Madsen JR, Lisman JE. Gating of human theta oscillations by a working memory task. J Neurosci. 2001;21:3175–3183. doi: 10.1523/JNEUROSCI.21-09-03175.2001.

- Schneider TR, Debener S, Oostenveld R, Engel AK. Enhanced EEG gamma-band activity reflects multisensory semantic matching in visual-to-auditory object priming. Neuroimage. 2008;42:1244–1254. doi: 10.1016/j.neuroimage.2008.05.033.

- Simmons WK, Ramjee V, Beauchamp MS, McRae K, Martin A, Barsalou LW. A common neural substrate for perceiving and knowing about color. Neuropsychologia. 2007;45:2802–2810. doi: 10.1016/j.neuropsychologia.2007.05.002.

- Singer W, Gray CM. Visual feature integration and the temporal correlation hypothesis. Annu Rev Neurosci. 1995;18:555–586. doi: 10.1146/annurev.ne.18.030195.003011.

- van Dam WO, Rueschemeyer SA, Bekkering H. How specifically are action verbs represented in the neural motor system: an fMRI study. Neuroimage. 2010;53:1318–1325. doi: 10.1016/j.neuroimage.2010.06.071.

- van Dam WO, van Dijk M, Bekkering H, Rueschemeyer SA. Flexibility in embodied lexical-semantic representations. Hum Brain Mapp. 2012;33:2322–2333. doi: 10.1002/hbm.21365.

- van Dantzig S, Cowell RA, Zeelenberg R, Pecher D. A sharp image or a sharp knife: norms for the modality-exclusivity of 774 concept-property items. Behav Res Methods. 2011;43:145–154. doi: 10.3758/s13428-010-0038-8.

- von Stein A, Sarnthein J. Different frequencies for different scales of cortical integration: from local gamma to long range alpha/theta synchronization. Int J Psychophysiol. 2000;38:301–313. doi: 10.1016/S0167-8760(00)00172-0.