Abstract

Though the fusiform is well-established as a key node in the face perception network, its role in facial expression processing remains unclear, due to competing models and discrepant findings. To help resolve this debate, we recorded from 17 subjects with intracranial electrodes implanted in face sensitive patches of the fusiform. Multivariate classification analysis showed that facial expression information is represented in fusiform activity and in the same regions that represent identity, though with a smaller effect size. Examination of the spatiotemporal dynamics revealed a functional distinction between posterior fusiform and midfusiform expression coding, with posterior fusiform showing an early peak of facial expression sensitivity at around 180 ms after subjects viewed a face and midfusiform showing a later and extended peak between 230 and 460 ms. These results support the hypothesis that the fusiform plays a role in facial expression perception and highlight a qualitative functional distinction between processing in posterior fusiform and midfusiform, with each contributing to temporally segregated stages of expression perception.

Keywords: face perception, facial expressions, fusiform, intracranial electroencephalography, multivariate temporal pattern analysis

Introduction

Face perception, including detecting a face, recognizing face identity, assessing sex, age, emotion, attractiveness, and other characteristics associated with the face, is critical to social communication. An influential cognitive model of face processing distinguishes processes associated with recognizing the identity of a face from those associated with recognizing expression (Bruce and Young 1986). A face sensitive region of the lateral fusiform gyrus, sometimes called the fusiform face area, is a critical node in the face processing network (Haxby et al. 2000; Calder and Young 2005; Ishai 2008; Duchaine and Yovel 2015) that has been shown to be involved in identity perception (Barton et al. 2002; Barton 2008; Nestor et al. 2011; Goesaert and Op de Beeck 2013; Ghuman et al. 2014). What role, if any, the fusiform plays in face expression processing continues to be debated, particularly given the hypothesized cognitive distinction between identity and expression perception.

Results demonstrating relative insensitivity of the fusiform to face dynamics (Pitcher et al. 2011), reduced fusiform activity for attention to gaze direction (Hoffman and Haxby 2000), and findings showing insensitivity of the fusiform to expression (Breiter et al. 1996; Whalen et al. 1998; Streit et al. 1999; Thomas et al. 2001; Foley et al. 2012) led to a model that proposed that this area was involved strictly in identity perception and not expression processing (Haxby et al. 2000). This model provided neuroscientific grounding for the earlier cognitive model that hypothesized a strong division between identity and expression perception (Bruce and Young 1986). Recently, imaging studies have increasingly suggested that fusiform is involved in expression coding (Vuilleumier et al. 2001; Ganel et al. 2005; Fox et al. 2009; Xu and Biederman 2010; Bishop et al. 2015; Achaibou et al. 2016). Positive findings for fusiform sensitivity to expression have led to the competing hypothesis that the division of face processing is not for identity and expression, but rather form/structure and motion (Duchaine and Yovel 2015). However, mixed results have been reported in examining whether the same patches of the fusiform that code for identity also code for expression (See [Zhang et al. 2016] for a study that examined both, but saw negative results for expression coding). Furthermore, some studies show the fusiform has an expression-independent identity code (Nestor et al. 2011; Ghuman et al. 2014). Taken together, prior results provide some, but not unequivocal, evidence for a role of the fusiform in expression processing.

Beyond whether the fusiform responds differentially to expression, one key question is whether the fusiform intrinsically codes for expression or if differential responses are due to task-related and/or top-down modulation of fusiform activity (Dehaene and Cohen 2011). Assessing this requires a method with high temporal resolution to distinguish between early, more bottom-up biased activity, and later activity that likely involved recurrent interactions. Furthermore, a passive viewing or incidental task is required to exclude biases introduced by variable task demands across stimuli. The low temporal resolution of fMRI makes it difficult to disentangle early bottom-up processing from later top-down and recurrent processing. Some previous intracranial electroencephalography (iEEG) studies have used an explicit expression identification task, making task effects difficult to exclude (Tsuchiya et al. 2008; Kawasaki et al. 2012; Musch et al. 2014). Those that have used an implicit task have shown mixed results regarding whether early fusiform response is sensitive to expression (Pourtois et al. 2010; Musch et al. 2014). Furthermore, iEEG studies often lack sufficient subjects and population-level analysis to allow for a generalizable interpretation.

To help mediate between these 2 models and clarify the role of the fusiform in facial expression perception, iEEG was recorded from 17 subjects with a total of 31 face sensitive electrodes in face sensitive patches of the fusiform gyrus while these subjects viewed faces with neutral, happy, sad, angry, and fearful expressions in a gender discrimination task. Multivariate temporal pattern analysis (MTPA) on the data from these electrodes was used to analyze the temporal dynamics of neural activity with respect to facial expression sensitivity in fusiform. In a subset of 7 subjects, identity coding was examined in the same electrodes also using MTPA. In addition to examining the overall patterns across all electrodes, the responses from posterior fusiform and midfusiform, as well as the left and right hemisphere, were compared. To supplement these iEEG results, a meta-analysis of 64 neuroimaging studies was done examining facial expression sensitivity in the fusiform. The results support the view that fusiform response is sensitive to facial expression and suggest that the posterior fusiform and midfusiform regions play qualitatively different roles in facial expression processing.

Materials and Methods

Participants

The experimental protocols were approved by the Institutional Review Board of the University of Pittsburgh. Written informed consent was obtained from all participants.

A total of 17 human subjects (8 males, 9 females) underwent surgical placement of subdural electrocorticographic electrodes or stereoelectroencephalography (together electrocorticography and stereoelectroencephalography are referred to here as iEEG) as standard of care for seizure onset zone localization. The ages of the subjects ranged from 19 to 65 years old (mean = 37.9, SD = 12.7). None of the participants showed evidence of epileptic activity on the fusiform electrodes used in this study nor any ictal events during experimental sessions.

Experiment Design

In this study, each subject participated in 2 experiments. Experiment 1 was a functional localizer experiment and Experiment 2 was a face perception experiment. The experimental paradigms and the data preprocessing methods were similar to those described previously by Ghuman and colleagues (Ghuman et al. 2014).

Stimuli

In Experiment 1, 180 images of faces (50% males), bodies (50% males), words, hammers, houses, and phase scrambled faces were used as visual stimuli. Each of the 6 categories contained 30 images. Phase scrambled faces were created in MATLAB™ by taking the 2D spatial Fourier spectrum of each of the face images, extracting the phase, adding random phases, recombining the phase and amplitude, and taking the inverse 2D spatial Fourier transform.
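The phase scrambling procedure can be sketched as follows. This is a minimal illustration in Python with NumPy rather than the MATLAB code used in the study; the function name `phase_scramble` and the choice to draw the random phases from the spectrum of white noise (which keeps the output real-valued) are assumptions made for the example.

```python
import numpy as np


def phase_scramble(image, rng):
    """Return a phase-scrambled version of a 2D grayscale image."""
    spectrum = np.fft.fft2(image)
    amplitude = np.abs(spectrum)
    phase = np.angle(spectrum)
    # Assumption: random phases come from the spectrum of real-valued white
    # noise, so they are conjugate-symmetric and the inverse stays real.
    noise_phase = np.angle(np.fft.fft2(rng.standard_normal(image.shape)))
    scrambled = amplitude * np.exp(1j * (phase + noise_phase))
    return np.real(np.fft.ifft2(scrambled))


rng = np.random.default_rng(0)
face = rng.random((64, 64))  # stand-in for a grayscale face image
scrambled_face = phase_scramble(face, rng)
```

The scrambled image keeps the amplitude spectrum of the original while its spatial structure is destroyed.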

In Experiment 2, face stimuli were taken from the Karolinska Directed Emotional Faces stimulus set (Lundqvist et al. 1998). Frontal views and 5 different facial expressions (fearful, angry, happy, sad, and neutral) from 70 faces (50% male) in the database were used, which yielded a total of 350 unique images. A short version of Experiment 2 used a subset of 40 faces (50% males) from the same database, which yielded a total of 200 unique images. Four subjects participated in the long version of the experiment, and all other subjects participated in the short version of the experiment.

Paradigms

In Experiment 1, each image was presented for 900 ms with 900 ms intertrial interval during which a fixation cross was presented at the center of the screen (~10° × 10° of visual angle). At random, 1/3 of the time an image would be repeated, which yielded 480 independent trials in each session. Participants were instructed to press a button on a button box when an image was repeated (1-back).

In Experiment 2, each face image was presented for 1500 ms with 500 ms intertrial interval during which a fixation cross was presented at the center of the screen. This yielded 350 (200 for the short version) independent trials per session. Faces subtended approximately 5° of visual angle in width. Subjects were instructed to report whether the face was male or female via button press on a button box.

Paradigms were programmed in MATLAB™ using Psychtoolbox and custom written code. All stimuli were presented on an LCD computer screen placed approximately 150 cm from participants’ heads.

All of the participants performed one session of Experiment 1. Nine of the subjects performed one session of Experiment 2, and the other 8 participants performed 2 or more sessions of Experiment 2.

Data Analysis

Data Preprocessing

The electrophysiological activity was recorded using iEEG electrodes at 1000 Hz. Common reference and ground electrodes were placed subdurally at a location distant from any recording electrodes, with contacts oriented toward the dura. Single-trial potential signal was extracted by band-pass filtering the raw data between 0.2 and 115 Hz using a fourth order Butterworth filter to remove slow and linear drift, and high-frequency noise. The 60 Hz line noise was removed using a fourth order Butterworth filter with 55–65 Hz stop-band. Power spectrum density (PSD) at 2–100 Hz with bin size of 2 Hz and time-step size of 10 ms was estimated for each trial using multitaper power spectrum analysis with Hann tapers, using FieldTrip toolbox (Oostenveld et al. 2011). For each channel, the neural activity between 50 and 300 ms prior to stimulus onset was used as baseline, and the PSD at each frequency was then z-scored with respect to the mean and variance of the baseline activity to correct for the power scaling over frequency at each channel. The broadband gamma signal was extracted as mean z-scored PSD across 40–100 Hz. Event-related potential (ERP) and event-related broadband gamma signal (ERBB), both time-locked to the onset of stimulus from each trial, were used in the following data analysis. Specifically, the ERP signal is sampled at 1000 Hz and the ERBB is sampled at 100 Hz.
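The baseline normalization and broadband gamma extraction can be illustrated with a short sketch. It assumes a PSD array that has already been estimated (the multitaper step is not reproduced here), and it assumes that the baseline mean and standard deviation are pooled over trials and baseline time points for each frequency bin; the function name `broadband_gamma` and the toy data are hypothetical.

```python
import numpy as np


def broadband_gamma(psd, freqs_hz, times_ms, baseline_ms=(-300, -50), band_hz=(40, 100)):
    """z-score PSD against the prestimulus baseline, then average over the band.

    psd has shape (n_trials, n_freqs, n_times).
    """
    in_baseline = (times_ms >= baseline_ms[0]) & (times_ms <= baseline_ms[1])
    baseline = psd[:, :, in_baseline]
    # Assumption: baseline statistics are pooled over trials and baseline
    # time points, separately for each frequency bin.
    mean = baseline.mean(axis=(0, 2), keepdims=True)
    sd = baseline.std(axis=(0, 2), keepdims=True)
    z = (psd - mean) / sd
    in_band = (freqs_hz >= band_hz[0]) & (freqs_hz <= band_hz[1])
    return z[:, in_band, :].mean(axis=1)  # shape (n_trials, n_times)


rng = np.random.default_rng(0)
freqs_hz = np.arange(2, 101, 2)      # 2-100 Hz in 2 Hz bins
times_ms = np.arange(-300, 600, 10)  # 10 ms time steps
# Toy PSD whose power falls off with frequency (not the study's data)
psd = rng.gamma(2.0, 1.0, size=(20, freqs_hz.size, times_ms.size)) / freqs_hz[None, :, None]
erbb = broadband_gamma(psd, freqs_hz, times_ms)
```

Normalizing each frequency bin separately removes the fall-off of power with frequency before the 40–100 Hz bins are averaged.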

To reduce potential artifacts in the data, raw data were inspected for ictal events, and none were found during experimental recordings. Trials with maximum amplitude 5 standard deviations above the mean across all the trials were eliminated. In addition, trials with a change of more than 25 μV between consecutive sampling points were eliminated. These criteria resulted in the elimination of less than 1% of trials.
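The two rejection criteria can be sketched as below. The sketch assumes that "maximum amplitude" refers to the peak absolute voltage of each trial and that the mean and standard deviation are taken over those per-trial peaks; the function name `trials_to_keep` and the simulated trials are illustrative only.

```python
import numpy as np


def trials_to_keep(trials_uv, n_sd=5.0, max_step_uv=25.0):
    """Boolean mask of trials passing both artifact criteria.

    trials_uv has shape (n_trials, n_samples), in microvolts.
    """
    # Assumption: amplitude criterion is applied to per-trial peak |voltage|
    peak = np.abs(trials_uv).max(axis=1)
    too_large = peak > peak.mean() + n_sd * peak.std()
    # Largest change between consecutive samples within each trial
    step = np.abs(np.diff(trials_uv, axis=1)).max(axis=1)
    too_jumpy = step > max_step_uv
    return ~(too_large | too_jumpy)


rng = np.random.default_rng(0)
trials = rng.standard_normal((200, 500))  # toy trials, unit-variance noise
trials[0] += 200.0 * np.hanning(500)      # slow, very large deflection
trials[1, 250:] += 40.0                   # abrupt 40 uV step
keep = trials_to_keep(trials)
```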

Electrode Localization

Coregistration of grid electrodes and electrode strips was adapted from the method of Hermes et al. (2010). Electrode contacts were segmented from high-resolution postoperative CT scans of patients coregistered with anatomical MRI scans before neurosurgery and electrode implantation. The Hermes method accounts for shifts in electrode location due to the deformation of the cortex by utilizing reconstructions of the cortical surface with FreeSurfer™ software and co-registering these reconstructions with a high-resolution postoperative CT scan. SEEG electrodes were localized with Brainstorm software (Tadel et al. 2011) using postoperative MRI coregistered with preoperative MRI images.

Electrode Selection

Face sensitive electrodes were selected based on both anatomical and functional constraints. The anatomical constraint was based upon the localization of the electrodes on the reconstruction using postimplantation MRI. In addition, MTPA was used to functionally select the electrodes that showed sensitivity to faces compared with the other conditions in the localizer experiment (see below for MTPA details). Specifically, 3 criteria were used to screen and select the electrodes of interest: 1) electrodes of interest were restricted to those that were located in the midfusiform sulcus (MFS), on the fusiform gyrus, or in the sulci adjacent to fusiform gyrus; 2) electrodes were selected such that their peak 6-way classification d′ score for faces (see below for how this was calculated) exceeded 0.5 (P < 0.01 based on a permutation test, as described below); and 3) electrodes were selected such that the peak amplitude of the mean ERP and/or mean ERBB for faces was larger than the peak of mean ERP and/or ERBB for the other nonface object categories in the time window of 0–500 ms after stimulus onset. Dual functional criteria were used because criterion 2) ensures only that faces give rise to statistically different activity from other categories, but not necessarily activity that is greater in magnitude. Combining criteria 2) and 3) ensures that face activity is both statistically significantly different from other categories and greater in magnitude in the electrodes of interest.

Multivariate Temporal Pattern Analysis

Multivariate methods were used instead of traditional univariate statistics because of their superior sensitivity (Ghuman et al. 2014; Haxby et al. 2014; Hirshorn et al. 2016; Miller et al. 2016). In this study, MTPA was applied to decode the coding of stimulus condition in the recorded neural activity. The timecourse of the decoding accuracy was estimated by classification using a sliding time window of 100 ms. Previous studies have demonstrated that both the low-frequency and the high-frequency neural activity contribute to the coding of facial information (Ghuman et al. 2014; Miller et al. 2016; Furl et al. 2017); therefore, both ERP and ERBB signals in the time window were combined as input features for the MTPA classifier. According to our preprocessing protocol, the ERP signal is sampled at 1000 Hz and the ERBB is sampled at 100 Hz, which yields 110 temporal features in each 100 ms time window (100 voltage potentials for ERP and 10 normalized mean power-spectrum density values for ERBB). The 110-dimensional data were then used as input for the classifier (see Supplementary Results for a detailed analysis on the contribution by ERP and ERBB features for the classification, Fig. S1). The goal of the classifier was to learn the patterns of the data distributions in this 110-dimensional space for different conditions and to decode the conditions of the corresponding stimuli from the testing trials. The classifier was trained on each electrode of each subject separately to assess the electrode sensitivity to faces and facial expressions. For Experiment 1, it was a 6-way classification problem and we specifically focused on the sensitivity of the face category against the other nonface categories. Therefore, we used the sensitivity index (d′) for the face category against all other nonface categories as the metric of face sensitivity. d′ was calculated as Z(true positive rate) – Z(false positive rate), where Z is the inverse of the Gaussian cumulative distribution function. d′ was used because it is an unbiased measure of effect size and one that takes into account both the true positive and false positive rates. It also has the advantage that it is an effect size measure that has a similar interpretation to Cohen’s d (Cohen 1988; Sawilowsky 2009) while also being applicable to multivariate classification. In addition, we provide full receiver–operator characteristic (ROC) curves for completeness and as validation of d′ values. For Experiment 2, averaged pairwise classification between every possible pair of facial expressions (10 pairs in total) was used.
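The construction of the 110 features per window and the d′ computation can be sketched as follows. The array layout (ERP and ERBB epochs starting at the same time point), the function names, and the clipping of rates away from 0 and 1 to keep Z finite are assumptions of this example, not details taken from the analysis code.

```python
from statistics import NormalDist

import numpy as np


def window_features(erp, erbb, start_ms, width_ms=100):
    """Concatenate ERP (1000 Hz) and ERBB (100 Hz) samples from one window.

    erp: (n_trials, n_ms); erbb: (n_trials, n_ms // 10).
    start_ms is measured from the start of the epoch.
    """
    erp_part = erp[:, start_ms:start_ms + width_ms]
    erbb_part = erbb[:, start_ms // 10:(start_ms + width_ms) // 10]
    return np.hstack([erp_part, erbb_part])


def d_prime(true_positive_rate, false_positive_rate, eps=1e-3):
    """d' = Z(true positive rate) - Z(false positive rate)."""
    z = NormalDist().inv_cdf  # inverse Gaussian cumulative distribution
    # Assumption: clip rates so that Z stays finite at 0 and 1
    tpr = min(max(true_positive_rate, eps), 1 - eps)
    fpr = min(max(false_positive_rate, eps), 1 - eps)
    return z(tpr) - z(fpr)


rng = np.random.default_rng(0)
erp = rng.standard_normal((8, 600))   # toy epochs, 600 ms at 1000 Hz
erbb = rng.standard_normal((8, 60))   # same epochs at 100 Hz
features = window_features(erp, erbb, start_ms=200)
```

Each 100 ms window therefore contributes 100 ERP samples and 10 ERBB samples per trial, and a classifier at chance (equal true and false positive rates) yields d′ = 0.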

The choice of the classifier is an empirical problem. The performance of the classifier depends on whether the assumptions of the classifier approximate the underlying truth of the data. Additionally, the complexity of the model and the size of the dataset affect performance (bias-variance trade-off). In this study, we employed Naïve Bayes (NB) classifiers, which assume that the input features are conditionally independent of one another and Gaussian distributed. The classification accuracy of the classifier was estimated through 5-fold cross-validation. Specifically, all the trials were randomly and evenly split into 5 folds. In each cross-validation loop, the classifier was trained on 4 folds and the performance was evaluated on the left-out fold. The overall performance was estimated by averaging across all 5 cross-validation loops. In general, different classifiers gave similar results. Specifically, we evaluated the performance of different classifiers (NB, support vector machines, and random forests) on a small subset of the data, and the NB classifier tended to perform better than the other commonly used classifiers in the current experiment, although the other classifiers gave similar results. In addition, our previous experience (Hirshorn et al. 2016) with similar datasets also suggested that NB performed reasonably well in such classification analysis. We therefore used NB throughout the work presented here. The advantage of the Naïve Bayes classifier in the current study is likely due to intrinsic properties of the high dimensional problem (Bickel and Levina 2004) that make a high-bias low-variance classifier (i.e., the NB classifier) preferable to low-bias high-variance classifiers (e.g., support vector machines).
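A Gaussian Naïve Bayes classifier with 5-fold cross-validation can be sketched in a few lines of NumPy. This is an illustrative reimplementation under stated assumptions (a small variance floor for numerical stability, empirical class priors, simulated two-class data), not the code used for the reported results; all function names are hypothetical.

```python
import numpy as np


def fit_gnb(X, y):
    """Estimate per-class feature means, variances, and log priors."""
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    # Assumption: small variance floor to avoid division by zero
    variances = np.array([X[y == c].var(axis=0) for c in classes]) + 1e-9
    log_priors = np.log(np.array([(y == c).mean() for c in classes]))
    return classes, means, variances, log_priors


def predict_gnb(model, X):
    classes, means, variances, log_priors = model
    diff = X[:, None, :] - means[None, :, :]
    # Independent Gaussian features: sum the per-feature log likelihoods
    log_lik = -0.5 * (np.log(2 * np.pi * variances)[None] + diff ** 2 / variances[None]).sum(axis=2)
    return classes[np.argmax(log_lik + log_priors[None, :], axis=1)]


def cross_validated_accuracy(X, y, n_folds=5, seed=0):
    """Mean accuracy over folds; trials are shuffled, then split evenly."""
    order = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(order, n_folds)
    accuracies = []
    for k in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        model = fit_gnb(X[train], y[train])
        accuracies.append(np.mean(predict_gnb(model, X[folds[k]]) == y[folds[k]]))
    return float(np.mean(accuracies))


rng = np.random.default_rng(1)
# Toy data: 40 trials per condition, 110 features, mean shift between conditions
X = np.vstack([rng.standard_normal((40, 110)), rng.standard_normal((40, 110)) + 1.0])
y = np.repeat([0, 1], 40)
accuracy = cross_validated_accuracy(X, y)
```

Because only a mean and a variance are estimated per feature and class, the number of fitted parameters grows linearly with the 110 features, which is the high-bias low-variance property discussed above.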

Permutation Testing

Permutation testing was used to determine the significance of the sensitivity index d′. For each permutation, the condition labels of all the trials were randomly permuted and the same procedure as described above was used to calculate the d′ for each permutation. The permutation was repeated for a total of 1000 times. The d′ of each permutation was used as the test statistic and the null distribution of the test statistic was estimated using the histogram of the permutation test.
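The label permutation procedure can be sketched generically. In the sketch, `statistic` stands in for the full cross-validated d′ computation and is replaced by a simple difference of class means for brevity; the add-one correction in the P value is a common convention assumed here rather than one stated in the text.

```python
import numpy as np


def permutation_test(statistic, X, y, n_perm=1000, seed=0):
    """Compare an observed statistic with its label-permutation null."""
    rng = np.random.default_rng(seed)
    observed = statistic(X, y)
    # Recompute the statistic after randomly permuting the condition labels
    null = np.array([statistic(X, rng.permutation(y)) for _ in range(n_perm)])
    # Assumption: add-one correction so the P value is never exactly zero
    p_value = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, null, p_value


def mean_difference(X, y):
    # Placeholder statistic; the study used d' from the classifier instead
    return abs(X[y == 1].mean() - X[y == 0].mean())


rng = np.random.default_rng(2)
X = np.concatenate([rng.standard_normal(30), rng.standard_normal(30) + 2.0])
y = np.repeat([0, 1], 30)
observed, null, p_value = permutation_test(mean_difference, X, y)
```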

K-Means Clustering

K-means clustering was used to cluster the electrodes into groups based on both functional and anatomical features (Kaufman and Rousseeuw 2009). Specifically, we applied k-means clustering algorithm to the electrodes in a 2D feature space of MNI y-coordinate and the peak classification accuracy time. Note that each dimension was normalized through z-scoring in order to account for different scales in space and time. See Supplemental Information for detailed analysis using Bayesian information criterion (BIC) and Silhouette analysis for model selection.
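The clustering step can be illustrated with a compact k-means sketch on z-scored features. The electrode values below are toy numbers chosen for illustration and are not the values in Table 1; the deterministic farthest-point initialization is also an assumption, since the initialization used in the study is not described.

```python
import numpy as np


def zscore(features):
    return (features - features.mean(axis=0)) / features.std(axis=0)


def kmeans(X, k=2, n_iter=100):
    """Lloyd's algorithm with a deterministic farthest-point initialization."""
    centers = [X[0]]
    for _ in range(1, k):
        nearest = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(nearest)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


# Toy electrodes: MNI y-coordinate (mm) and peak decoding time (ms)
mni_y = np.array([-59, -55, -52, -57, -50, -38, -40, -36, -37, -41], dtype=float)
peak_ms = np.array([150, 170, 120, 100, 180, 400, 450, 330, 500, 380], dtype=float)
features = zscore(np.column_stack([mni_y, peak_ms]))
labels, centers = kmeans(features, k=2)
```

Without the z-scoring, the peak time (hundreds of ms) would dominate the Euclidean distance over the y-coordinate (tens of mm).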

Facial Feature Analysis

The facial features from the stimulus images were extracted following a process similar to that of Ghuman et al. (2014). Anatomical landmarks for each picture were first determined by IntraFace (Xiong and De la Torre 2013), which marks 49 points on the face along the eyebrows, down the bridge of the nose, along the base of the nose, and outlining the eyes and mouth. Based on these landmarks we calculated 17 facial feature dimensions listed in Table S3. The values for these 17 feature dimensions were normalized by subtracting the mean and dividing by the standard deviation across all the pictures. The mean representation of each expression in facial feature space was computed by averaging across all faces of the same expression.

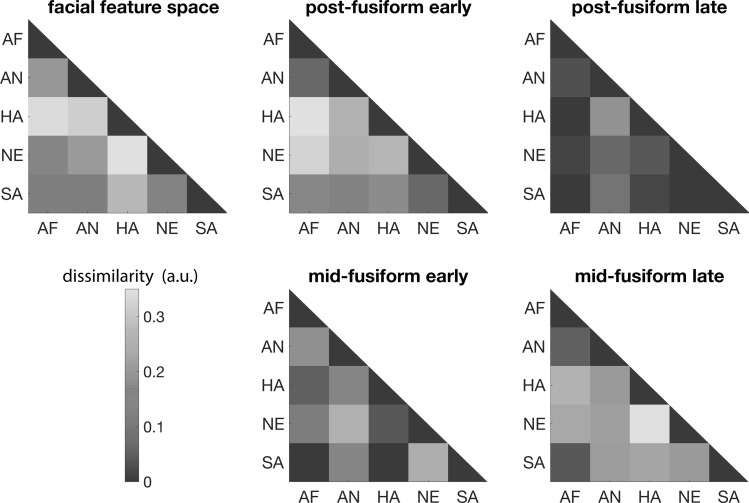

Representational Similarity Analysis

Representational similarity analysis (RSA) was used to analyze the neural representational space for expressions (Kriegeskorte and Kievit 2013). With pairwise classification accuracy between each pair of facial expressions, we constructed the representational dissimilarity matrix (RDM) of the neural representation of facial expressions, with the element in the i-th column of the j-th row in the matrix corresponding to the pairwise classification accuracy between the i-th and j-th facial expressions. The corresponding RDM in the facial feature space was constructed by assessing the Euclidean distance between the vectors for the i-th and the j-th facial expressions averaged over all identities in the 17-dimensional facial feature space (Fig. 3, top left).
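The construction and comparison of the two RDMs can be sketched as follows. The feature values and pairwise accuracies are simulated, the function names are hypothetical, and the rank correlation assumes no tied values; the sketch is meant only to make the matrix definitions concrete.

```python
import numpy as np


def feature_rdm(features, labels, expressions):
    """Euclidean distances between mean z-scored feature vectors."""
    z = (features - features.mean(axis=0)) / features.std(axis=0)
    means = np.array([z[labels == e].mean(axis=0) for e in expressions])
    return np.linalg.norm(means[:, None, :] - means[None, :, :], axis=2)


def neural_rdm(pairwise_accuracy, n):
    """Symmetric matrix filled with pairwise classification accuracies."""
    rdm = np.zeros((n, n))
    for (i, j), acc in pairwise_accuracy.items():
        rdm[i, j] = rdm[j, i] = acc
    return rdm


def spearman_upper(rdm_a, rdm_b):
    """Spearman correlation of the upper triangles of two RDMs."""
    iu = np.triu_indices_from(rdm_a, k=1)
    # Assumption: no tied values, so ranks come from a double argsort
    rank_a = np.argsort(np.argsort(rdm_a[iu]))
    rank_b = np.argsort(np.argsort(rdm_b[iu]))
    return float(np.corrcoef(rank_a, rank_b)[0, 1])


expressions = ["AF", "AN", "HA", "NE", "SA"]
rng = np.random.default_rng(3)
labels = np.repeat(expressions, 10)          # 10 toy faces per expression
features = rng.standard_normal((50, 17))     # 17 toy feature dimensions
f_rdm = feature_rdm(features, labels, expressions)
# Toy accuracies that increase monotonically with feature distance
toy_accuracy = {(i, j): 0.5 + 0.05 * f_rdm[i, j] for i in range(5) for j in range(i + 1, 5)}
n_rdm = neural_rdm(toy_accuracy, 5)
rho = spearman_upper(f_rdm, n_rdm)
```

With 5 expressions, each RDM has 10 unique off-diagonal entries, one per pair of expressions, and only those entries enter the correlation.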

Figure 3.

Representational similarity analysis (RSA) between the facial feature space and the representational spaces of posterior fusiform and midfusiform at both early and late stages. Top row: representational dissimilarity matrices (RDM) of facial expressions in the facial feature space (left), RDM of posterior fusiform at early stage (middle), RDM of posterior fusiform at late stage (right). Bottom row: RDM of midfusiform at early stage (middle), RDM of midfusiform at late stage (right). Abbreviations: AF = fearful; AN = angry; HA = happy; NE = neutral; SA = sad.

Results

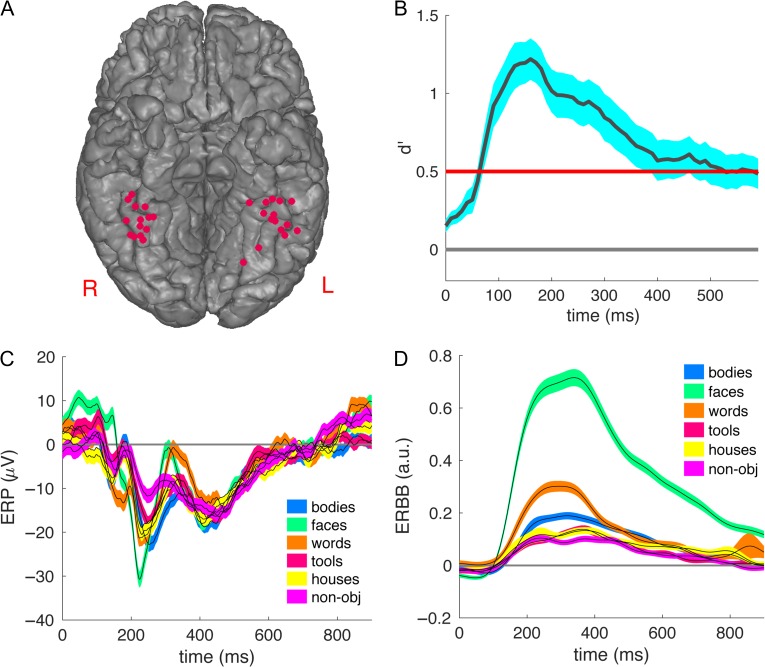

Electrode Selection and Face Sensitivity

The locations of the 31 fusiform electrodes from 17 participants sensitive to faces are shown in Figure 1A and Table 1. The averaged event-related potential (ERP) and event-related broadband gamma activity (ERBB) responses (see Materials and Methods for detailed definitions of ERP and ERBB) for each category across all channels are shown in Figure 1C,D, respectively. The averaged sensitivity index (d′) for faces peaked at 160 ms (d′ = 1.22, P < 0.01 in every channel, Fig. 1B). Consistent with previous findings (Allison et al. 1999; Eimer 2000c, 2011; Ghuman et al. 2014), strong sensitivity for faces was observed in the fusiform around 100–400 ms after stimulus onset.

Figure 1.

The face sensitive electrodes in the fusiform. (A) The localization of the 31 face sensitive electrodes in (or close to) the fusiform, mapped onto a common space based on MNI coordinates. We moved depth electrode locations to the nearest location on the overlying cortical surface, in order to visualize all the electrodes. (B) The timecourse of the sensitivity index (d′) for faces versus the other categories in the 6-way classification averaged across all 31 fusiform electrodes. The shaded areas indicate standard error of the mean across electrodes. The red line corresponds to P < 0.01 with Bonferroni correction for multiple comparisons across 60 time points. (C) The ERP for each category averaged across all face sensitive fusiform electrodes. The shaded areas indicate standard error of the mean across electrodes. (D) The ERBB for each category averaged across all face sensitive fusiform electrodes. The shaded areas indicate standard error of the mean across electrodes.

Table 1.

MNI coordinates and facial expression sensitivity (d′) for all face sensitive electrodes

| Electrode ID | X (mm) | Y (mm) | Z (mm) | Peak time (ms) | Peak d′ | Sensitive to expressions |
|---|---|---|---|---|---|---|
| S1a | 35 | −59 | −22 | 260 | 0.29 | Y |
| S1b | 33 | −53 | −22 | 150 | 0.31 | Y |
| S1c | 42 | −56 | −26 | 200 | 0.20 | N |
| S2a | 40 | −57 | −23 | 170 | 0.34 | Y |
| S3a | −33 | −44 | −31 | 580 | 0.18 | N |
| S4a | −38 | −36 | −30 | 440 | 0.12 | N |
| S5a | −38 | −36 | −20 | 300 | 0.25 | Y |
| S5b | −42 | −37 | −19 | 330 | 0.25 | Y |
| S6a | 34 | −40 | −11 | 540 | 0.24 | Y |
| S6b | 39 | −40 | −10 | 490 | 0.33 | Y |
| S7a | 36 | −57 | −21 | 100 | 0.42 | Y |
| S8a | −22 | −72 | −9 | 100 | 0.23 | Y |
| S8b | −40 | −48 | −23 | 170 | 0.38 | Y |
| S9a | 32 | −46 | −7 | 180 | 0.34 | Y |
| S9b | 36 | −48 | −8 | 160 | 0.40 | Y |
| S10a | 29 | −46 | −15 | 310 | 0.31 | Y |
| S11a | −25 | −38 | −17 | 580 | 0.36 | Y |
| S11b | −34 | −38 | −18 | 400 | 0.46 | Y |
| S11c | −49 | −37 | −20 | 430 | 0.27 | Y |
| S12a | 41 | −33 | −19 | 70 | 0.06 | N |
| S12b | 37 | −51 | −9 | 70 | 0.22 | Y |
| S12c | 35 | −59 | −4 | 80 | 0.23 | Y |
| S13a | 43 | −36 | −13 | 400 | 0.11 | N |
| S13b | 44 | −48 | −11 | 190 | 0.10 | N |
| S14a | −52 | −54 | −17 | 30 | 0.14 | N |
| S15a | −37 | −47 | −10 | 180 | 0.64 | Y |
| S16a | −39 | −45 | −11 | 160 | 0.03 | N |
| S17a | −43 | −53 | −26 | 90 | 0.20 | N |
| S17b | −46 | −50 | −28 | 110 | 0.31 | Y |
| S17c | −30 | −63 | −20 | 120 | 0.27 | Y |
| S17d | −45 | −56 | −25 | 40 | 0.13 | N |

Note: Electrode ID is labeled by subject number (SX) and electrode from that subject (a, b, etc.). Sensitivity to expression is defined as P < 0.05 decoding accuracy corrected for multiple comparisons.

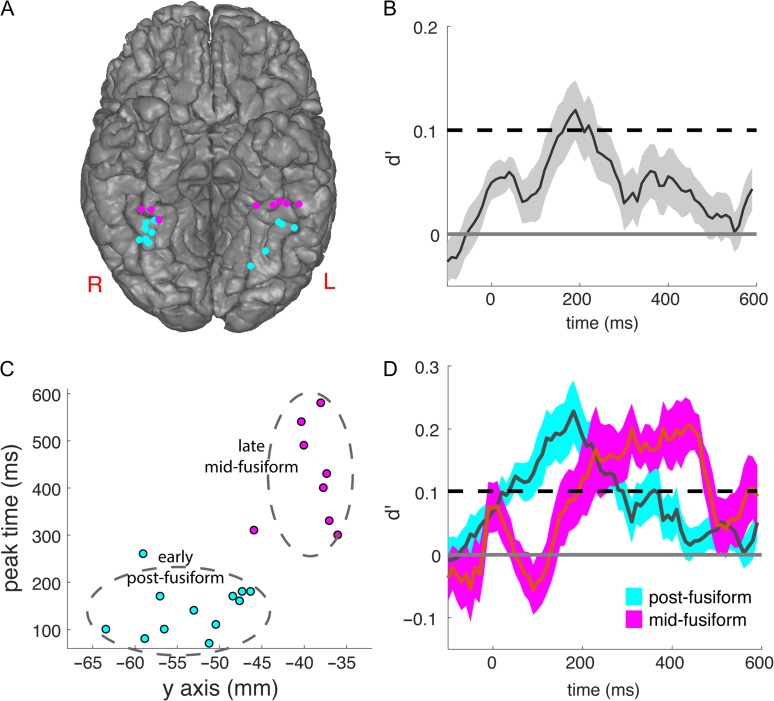

Facial Expression Classification at the Individual and Group Level

For each participant, the classification accuracy between each pair of facial expressions was estimated using 5-fold cross-validation (see Materials and Methods for details). As shown in Figure 2B, the averaged timecourse peaked at 190 ms after stimulus onset (average decoding at peak d′ = 0.12, P < 0.05, Bonferroni corrected for multiple comparisons). In addition to the grand average, on the single electrode level, 21 out of the 31 electrodes from 12 out of 17 subjects showed a significant peak in their individual timecourses (P < 0.05, permutation test corrected for multiple comparisons). The locations of the significant electrodes are shown in Figure 2A and all electrodes are listed in Table 1.

Figure 2.

The timecourse of the facial expression classification in fusiform. (A) The locations of the electrodes with significant face expression decoding accuracy, with the posterior fusiform group colored in cyan and the midfusiform group colored in magenta. (B) The timecourse of mean and standard error for pairwise classification between different face expressions in all 31 fusiform electrodes. The shaded areas indicate standard error of the mean across electrodes. Dashed line: P = 0.05 threshold with Bonferroni correction for 60 time points (600 ms with 10 ms stepsize). (C) The time of the peak classification accuracy plotted against the MNI y-coordinate for each single electrode with significant expression classification accuracy. K-means clustering partitions these electrodes into posterior fusiform and midfusiform groups. Each dashed oval represents the 2-σ contour using the mean and standard deviation along the MNI x- and y-axes. (D) The mean and standard error for pairwise classification between different face expressions in posterior fusiform electrodes and midfusiform electrodes. The posterior group peaked at 180 ms after stimulus onset and the midfusiform group had an extended peak starting at 230 ms and extending to 450 ms (both P < 0.05, binomial test, Bonferroni corrected; dashed line: P = 0.05 threshold with Bonferroni correction for 60 time points [600 ms with 10 ms stepsize]). The shaded areas indicate standard error of the mean across electrodes. See supplement for receiver operator characteristic (ROC) curves validating classification analysis (Fig. S2).

The effect size for the mean peak expression classification is relatively low. This is in part because the electrodes consisted of 2 distinct populations with different timecourses (see below). Additionally, due to the variability in electrode position, iEEG effect sizes can be lower in some cases than what would be seen with electrodes optimally placed over face patches. To assess whether this was the case, we examined the correspondence between face category decoding and expression decoding based on the logic that placement closer to face patches should lead to higher face category decoding accuracy. A significant positive correlation between the decoding accuracy (d′) for face category and the decoding accuracy (d′) for facial expressions was seen (Pearson correlation r = 0.57, N = 21, P = 0.007). This suggests that electrode position relative to face patches in the fusiform can explain some of the effect size variability for expression classification. That suggests the true effect size for expression classification for optimal electrode placement may be closer to what was seen for electrodes with higher accuracy (0.4–0.6, see Table 1) rather than the mean across all electrodes.

Spatiotemporal Dynamics of Facial Expression Decoding

The next question we addressed was whether spatiotemporal dynamics of facial expression representation in fusiform was location dependent. Specifically, we compared the dynamics of expression sensitivity between left and right hemispheres, and between posterior fusiform and midfusiform regions for electrodes showing significant expression sensitivity.

We first analyzed the lateralization effect for the expression coding in fusiform. The mean timecourses of decoding accuracy for left and right fusiform did not differ at the P < 0.05 uncorrected level at any time point (Fig. S3).

In contrast, substantial differences were seen in the timing and representation of expression coding between posterior fusiform and midfusiform. This was first illustrated by plotting the time of the peak decoding accuracy in each individual electrode against the corresponding MNI y-coordinate of the electrode (Fig. 2C). A qualitative difference was seen between the peak times for electrodes posterior to approximately y = −45 compared with those anterior to that, rather than a continuous relationship between y-coordinate and peak time. This was quantified by a clustering analysis using both BIC (Kass and Wasserman 1995) and Silhouette analysis (Kaufman and Rousseeuw 2009) (Fig. S4), which both showed evidence for a cluster structure in the data (Bayes factor >20) with k = 2 as the optimal number of clusters (mean Silhouette coefficient = 0.59). The 2 clusters corresponded to the posterior fusiform and midfusiform (Fig. 2C; see Supplemental Information for detailed analysis on clustering and the selection of models with different values of k). The border between these data-driven clusters corresponds well with prior functional and anatomical evidence showing that the midfusiform face patch falls within a 1 cm disk centered around the anterior tip of MFS (which falls at y = −40 in MNI coordinates) with high probability (Weiner et al. 2014). That would make the border between the midfusiform and posterior fusiform face patch approximately y = −45 in MNI coordinates, which is very close to the border produced by the clustering analysis (y = −45.9).

The timecourses of the posterior fusiform and midfusiform clusters were then examined in detail. As shown in Figure 2D, the timecourse of decoding accuracy in the posterior group peaked at 180 ms after stimulus onset, whereas the timecourse of the midfusiform group first peaked at 230 ms and the peak extended until approximately 450 ms after stimulus onset.

Representational Similarity Analysis

A recent meta-analysis suggests that fusiform is particularly sensitive to the contrast between specific pairs of expressions (Vytal and Hamann 2010). To examine this in iEEG data, the representation dissimilarity matrices (RDMs) for facial expressions in the early and late activity in posterior fusiform and midfusiform were computed (Fig. 3). No contrasts between expressions showed significant differences in posterior fusiform in the late window or in midfusiform in the early window (P > 0.1 in all cases, T-test), as expected due to the corresponding low overall classification accuracy. In the early posterior fusiform, expressions of negative emotions (fearful, angry) were dissimilar to happy and neutral expressions (P < 0.05 in each case, T-test), but not very distinguishable from one another. In the late midfusiform activity, happy and neutral expressions were both distinguishable from expressions of negative emotions and from each other (P < 0.05 in each case, T-test). The results showed partial consistency with a previous meta-analysis based on neuroimaging studies (consistent in angry vs. neutral, fearful vs. neutral, fearful vs. happy, and fear vs. sad) (Vytal and Hamann 2010). However, the previous meta-analysis also reported statistical significance for the contrasts of fearful versus angry and angry versus sad, which were absent in our results.

One question is the degree to which the representation in fusiform reflects the structural properties of the facial expressions subjects were viewing. To examine this question, a 17-dimensional facial feature space was constructed based on a computer vision algorithm (Xiong and De la Torre 2013). The features characterize structural and spatial frequency properties of each image, for example, eye width, eyebrow length, nose height, eye–mouth width ratio, and skin tone. An RDM was then built between the expressions in this feature space and compared with the neural feature spaces. There was a significant correlation between the posterior fusiform representation space in the early time window and the facial feature space (Spearman’s rho = 0.24, P < 0.05, permutation test). The correlation between the midfusiform representation space in the late time window and the facial feature space was smaller and did not reach statistical significance (Spearman’s rho = 0.15, P > 0.1, permutation test).
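The comparison between a neural RDM and a feature-space RDM can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis pipeline used in the study: the Euclidean distance measure, the label-shuffling permutation scheme, all function names, and the small vectors in the usage example are hypothetical.

```python
import random
from itertools import combinations

def rdm(vectors):
    """Upper triangle of pairwise Euclidean distances between conditions."""
    return [sum((a - b) ** 2 for a, b in zip(vectors[i], vectors[j])) ** 0.5
            for i, j in combinations(range(len(vectors)), 2)]

def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out

def spearman(x, y):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)

def permutation_p(neural, features, n_perm=2000, seed=0):
    """One-sided permutation p-value obtained by shuffling condition labels
    of the neural vectors (an assumed scheme, chosen for illustration)."""
    rng = random.Random(seed)
    feature_rdm = rdm(features)
    observed = spearman(rdm(neural), feature_rdm)
    idx = list(range(len(neural)))
    at_least_as_large = 0
    for _ in range(n_perm):
        rng.shuffle(idx)
        shuffled = [neural[i] for i in idx]
        if spearman(rdm(shuffled), feature_rdm) >= observed:
            at_least_as_large += 1
    return observed, (at_least_as_large + 1) / (n_perm + 1)

# Hypothetical vectors for four expressions; NOT the study's data.
features = [[0.0, 1.0], [0.2, 1.1], [1.0, 0.0], [0.9, 0.3]]
neural = [[0.1, 0.9], [0.3, 1.0], [1.1, 0.1], [0.8, 0.2]]
rho, p = permutation_p(neural, features)
print(rho, p)
```

Shuffling condition labels rather than individual RDM entries keeps the dependence among distances that share a condition, which is one common reason to prefer it for RDM comparisons.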

Comparison to Facial Identity Classification

Given the strongly supported hypothesis that the fusiform plays a central role in face identity recognition, the effect size of identity and expression coding in the fusiform was compared. Due to the relatively few repetitions of individual faces, individuation was examined in only the 7 subjects who had sufficient repetitions of each face identity allowing for multivariate classification of identity across expression; identity decoding was previously reported for 4 of these subjects in a recent study (Ghuman et al. 2014). Across the 7 total subjects (3 here and 4 reported previously), the mean peak d’ = 0.50 for face identity decoding was significantly greater than the mean peak accuracy for facial expression decoding in the exact same set of electrodes (mean peak d’ = 0.20; t[6] = 3.7821, P = 0.0092). With regard to the timing of identity (mean peak time = 314 ms) versus expression sensitivity, the posterior peak time for expression classification was significantly earlier than the peak time for identity (t[18] = 4.45, P = 0.0003). The midfusiform extended peak time for expression classification overlapped with the peak time for identity.

Discussion

Multivariate classification methods were used to evaluate the encoding of facial expressions recorded from electrodes placed directly in face sensitive fusiform cortex. Though the effect size for expression classification is smaller than for identity classification, the results support a role for the fusiform in the processing of facial expressions. Electrodes that were sensitive to expression were also sensitive to identity, suggesting a shared neural substrate for identity and expression coding in the fusiform. The results also show that the posterior fusiform and midfusiform are dynamically involved in distinct stages of facial expression processing and have different representations of expressions. The differential representation and the magnitude of the temporal displacement between the sensitivity in posterior fusiform and midfusiform suggest that these are qualitatively distinct stages of facial expression processing and not merely a consequence of transmission or information processing delay along a feedforward hierarchy.

Fusiform is Sensitive to Facial Expression

The results here show that the fusiform is sensitive to expression, though the effect size for classification of expression in the fusiform using iEEG is small-to-medium (Cohen 1988; d′ is on the same scale as Cohen’s d, and the two are equivalent when the data are univariate Gaussian, so d′ values between 0.2 and 0.5 are “small” and those between 0.5 and 0.8 are “medium”). The results also suggest that the same patches of the fusiform that are sensitive to expression are sensitive to identity as well. Given the variability of the effect size due to the proximity of electrode placement relative to face patches, the relative effect size may be more informative than the absolute effect size. The magnitude for facial expression classification is approximately half what was seen for face identity classification. This suggests that while fusiform contributes to facial expression perception, it is to a lesser degree than face identity processing. Greater involvement in identity than expression perception is expected for a region involved in structural processing of faces, because identity relies on this information more than expression does; expression perception also relies on facial dynamics. These results support models that hypothesize fusiform involvement in form/structural processing, at least for posterior fusiform (see discussion on Multiple, Spatially, and Temporally Segregated Stages of Face Expression Processing in the Fusiform section below), which can support facial expression processing (Calder and Young 2005; Duchaine and Yovel 2015). These results do not support models that hypothesize a strong division between facial identity and expression processing (Bruce and Young 1986; Haxby et al. 2000).
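For intuition about these magnitudes, d′ can be related to proportion correct in the simplest textbook case: two classes, equal-variance Gaussian evidence, and an unbiased criterion, where accuracy equals Φ(d′/2). The sketch below uses that relation only as an illustration; applying it to the multi-class decoding reported here is an assumption, and the function names are hypothetical.

```python
from statistics import NormalDist

_STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def accuracy_from_dprime(d_prime):
    """Proportion correct for an unbiased two-class, equal-variance
    Gaussian observer: accuracy = Phi(d'/2)."""
    return _STANDARD_NORMAL.cdf(d_prime / 2)

def dprime_from_accuracy(accuracy):
    """Inverse of the relation above; accuracy must lie strictly in (0, 1)."""
    return 2 * _STANDARD_NORMAL.inv_cdf(accuracy)

# d' values taken from the text: 0.2 (expression) and 0.5 (identity).
for d in (0.2, 0.5, 0.8):
    print(d, round(accuracy_from_dprime(d), 3))
```

Under these simplifying assumptions, a d′ of 0.2 corresponds to roughly 54% correct and a d′ of 0.5 to roughly 60% correct against a 50% chance level, which conveys why such effects are described as small to medium.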

To test what a brain region codes for, one must examine its response for early, bottom-up activation during an incidental task or passive viewing (Dehaene and Cohen 2011); otherwise, it is difficult to disentangle effects of task demands and top-down modulation. Indeed, previous studies have demonstrated that extended fusiform activity, particularly in the broadband gamma range, is modulated by task-related information (Engell and McCarthy 2011; Ghuman et al. 2014). Some previous iEEG studies of expression coding in the fusiform have used an explicit expression judgment task and examined only broadband gamma activity, making it difficult to draw definitive conclusions about fusiform expression coding from these results (Tsuchiya et al. 2008; Kawasaki et al. 2012). One previous study that used an implicit task did not show evidence of expression sensitivity during the early stage of activity in the fusiform (Musch et al. 2014); another did show evidence of expression sensitivity, though it reported results only from a single subject (Pourtois et al. 2010). The results here show in a large iEEG sample that the early response of the fusiform most sensitive to bottom-up processing is modulated by expression, at least for the posterior fusiform.

The effect size for facial expression classification is consistent with mixed findings in the neuroimaging literature for expression sensitivity in the fusiform (Haxby et al. 2000; Tsuchiya et al. 2008; Harry et al. 2013; Harris et al. 2014; Skerry and Saxe 2014; Zhang et al. 2016). iEEG generally has greater sensitivity and lower noise than noninvasive measures of brain activity. Methods with lower sensitivity, such as fMRI, would be expected to have a substantial false negative rate for facial expression coding in the fusiform. To quantify fMRI sensitivity to expression, we performed a meta-analysis on 64 studies. Of these studies, 24 reported at least one expression sensitive locus in the fusiform. However, at the meta-analytic level, no significant cluster of expression sensitivity was seen in the fusiform after whole brain analysis (see Supplementary Tables S1, S2, and Fig. S5). Thus, consistent with the iEEG effect size for expression decoding in the fusiform seen here, there is some suggestion in the fMRI literature for expression sensitivity in the fusiform, but it is relatively small in magnitude and does not achieve statistical significance at the whole brain level.

Multiple, Spatially, and Temporally Segregated Stages of Face Expression Processing in the Fusiform

Using a data-driven analysis, posterior fusiform and midfusiform face patches were shown to contribute differentially to expression processing. The dividing point between posterior fusiform and midfusiform electrodes found in a data-driven manner is consistent with the anatomical border for the posterior fusiform and midfusiform face patches previously described, suggesting a strong coupling between the anatomical and functional divisions in fusiform (Weiner et al. 2014). While posterior fusiform and midfusiform have been shown to be cytoarchitectonically distinct regions each with separate face sensitive patches (Freiwald and Tsao 2010; Weiner et al. 2014, 2016), functional differences between these patches have remained elusive in the literature. The results here suggest that these anatomical and physiological distinctions correspond to functional distinctions in the role of these areas in face processing, as reflected in qualitatively different temporal dynamics in these regions for facial expression processing. Specifically, posterior fusiform participates in a relatively early stage of facial expression processing that may be related to structural encoding of faces. Midfusiform demonstrates a distinct pattern of extended dynamics and participates in a later stage of processing that may be related to a more abstract and/or multifaceted representation of expression and emotion. These results support the revised model of fusiform function that posits the fusiform contributes to structural encoding of facial expression during the initial stages of processing (Calder and Young 2005; Duchaine and Yovel 2015), with the notable addition that it may be primarily posterior fusiform that contributes to structural processing.

The early time period of expression sensitivity in posterior fusiform overlaps with strong face sensitive activity measured noninvasively around 170 ms after viewing a face, which is thought to reflect structural encoding of face information (Bentin and Deouell 2000; Eimer 2000a, 2000c; Blau et al. 2007; Eimer 2011). Face sensitive activity in this time window has been shown to be insensitive to attention and is thought to reflect a “rapid, feedforward phase of face-selective processing” (Furey et al. 2006). Additionally, a face adaptation study showed that activity in this window reflects the actual facial expression rather than the perceived (adapted) expression (Furl et al. 2007). Consistent with these previous findings, the RSA results here show that the early posterior activity is significantly correlated to the physical/structural features of the face.

Expression sensitivity in midfusiform began later than in posterior fusiform (around 230 ms) and persisted until ~450 ms after viewing a face. Face sensitive activity in this time window has been shown to be sensitive to face familiarity and to attention (Eimer 2000b; Eimer et al. 2003). Previous studies and the results presented here show that face identity can be decoded from the activity in this later time window in midfusiform (Ghuman et al. 2014; Vida et al. 2017) and that this activity reflects a distributed code for identity among regions of the face processing network (Li et al. 2017). Thus, this later activity may relate to integration of multiple kinds of face information, such as integration of identity and expression. Additionally, the previously mentioned face adaptation study showed that activity in this window reflects the subjectively perceived facial expression after adaptation (Furl et al. 2007). The RSA analysis here showed that the activity in this time window in midfusiform was not significantly correlated with physical similarity of the facial expressions. This lack of correlation with the physical features of the face, combined with the result that midfusiform activity does show significant expression decoding, suggests that the representation in midfusiform may reflect a more conceptual representation of expression. Taken together, these results and prior findings suggest that the midfusiform expression sensitivity in this later window reflects a more abstract and subjective representation of expression and may be related to integration of multiple face cues, including identity and expression. This abstract and multifaceted representation is likely to reflect processes arising from interactions across the face processing network (Ishai 2008).

To conclude, the results presented here support the hypothesis that the fusiform contributes to expression processing (Calder and Young 2005; Duchaine and Yovel 2015). The finding that the same part of the fusiform is sensitive to both identity and expression contradicts models that hypothesize separate pathways for their processing (Bruce and Young 1986; Haxby et al. 2000) and instead supports the hypothesis that the fusiform supports structural encoding of faces in service of both identity and expression (Duchaine and Yovel 2015). The results also show there is a qualitative distinction between face processing in posterior fusiform and midfusiform, with each contributing to temporally and functionally distinct stages of expression processing. This distinct contribution of these two fusiform patches suggests that the structural and cytoarchitectonic differences between posterior fusiform and midfusiform are associated with functional differences between the contributions of these areas to face perception. The results here illustrate the dynamic role the fusiform plays in multiple stages of facial expression processing.

Supplementary Material

Supplementary material is available at Cerebral Cortex online.

Funding

National Institute on Drug Abuse under award (NIH R90DA023420 to Y.L.), the National Institute of Mental Health under award (NIH R01MH107797 and NIH R21MH103592 to A.S.G.), and the National Science Foundation under award (1734907 to A.S.G.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or the National Science Foundation.

Notes

We thank the patients for participating in the iEEG experiments and the UPMC Presbyterian epilepsy monitoring unit staff and administration for their assistance and cooperation with our research. We thank Michael Ward, Ellyanna Kessler, Vincent DeStefino, Shawn Walls, Roma Konecky, Nicolas Brunet, and Witold Lipski for assistance with data collection and thank Ari Kappel and Matthew Boring for assistance with electrode localization. Conflict of Interest: None declared.

References

- Achaibou A, Loth E, Bishop SJ. 2016. Distinct frontal and amygdala correlates of change detection for facial identity and expression. Soc Cogn Affect Neurosci. 11:225–233.

- Allison T, Puce A, Spencer DD, McCarthy G. 1999. Electrophysiological studies of human face perception. I: Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cereb Cortex. 9:415–430.

- Barton JJ. 2008. Structure and function in acquired prosopagnosia: lessons from a series of 10 patients with brain damage. J Neuropsychol. 2:197–225.

- Barton JJ, Press DZ, Keenan JP, O’Connor M. 2002. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 58:71–78.

- Bentin S, Deouell LY. 2000. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cogn Neuropsychol. 17:35–55.

- Bickel PJ, Levina E. 2004. Some theory for Fisher’s linear discriminant function, ‘naive Bayes’, and some alternatives when there are many more variables than observations. Bernoulli. 10:989–1010.

- Bishop SJ, Aguirre GK, Nunez-Elizalde AO, Toker D. 2015. Seeing the world through non rose-colored glasses: anxiety and the amygdala response to blended expressions. Front Hum Neurosci. 9:152.

- Blau VC, Maurer U, Tottenham N, McCandliss BD. 2007. The face-specific N170 component is modulated by emotional facial expression. Behav Brain Funct. 3:7.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR. 1996. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 17:875–887.

- Bruce V, Young A. 1986. Understanding face recognition. Br J Psychol. 77(Pt 3):305–327.

- Calder AJ, Young AW. 2005. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 6:641–651.

- Cohen J. 1988. Statistical power analysis for the behavioral sciences. Hillsdale, NJ: L. Erlbaum Associates.

- Dehaene S, Cohen L. 2011. The unique role of the visual word form area in reading. Trends Cogn Sci. 15:254–262.

- Duchaine B, Yovel G. 2015. A revised neural framework for face processing. Annu Rev Vis Sci. 1:393–416.

- Eimer M. 2000a. Effects of face inversion on the structural encoding and recognition of faces. Evidence from event-related brain potentials. Brain Res Cogn Brain Res. 10:145–158.

- Eimer M. 2000b. Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clin Neurophysiol. 111:694–705.

- Eimer M. 2000c. The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport. 11:2319–2324.

- Eimer M. 2011. The face-sensitive N170 component of the event-related brain potential. In: Calder AJ, Rhodes G, Johnson MH, Haxby JV, editors. The Oxford handbook of face perception. Oxford: Oxford University Press; p. 329–344.

- Eimer M, Holmes A, McGlone FP. 2003. The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn Affect Behav Neurosci. 3:97–110.

- Engell AD, McCarthy G. 2011. The relationship of gamma oscillations and face-specific ERPs recorded subdurally from occipitotemporal cortex. Cereb Cortex. 21:1213–1221.

- Foley E, Rippon G, Thai NJ, Longe O, Senior C. 2012. Dynamic facial expressions evoke distinct activation in the face perception network: a connectivity analysis study. J Cogn Neurosci. 24:507–520.

- Fox CJ, Moon SY, Iaria G, Barton JJ. 2009. The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. Neuroimage. 44:569–580.

- Freiwald WA, Tsao DY. 2010. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science. 330:845–851.

- Furey ML, Tanskanen T, Beauchamp MS, Avikainen S, Uutela K, Hari R, Haxby JV. 2006. Dissociation of face-selective cortical responses by attention. Proc Natl Acad Sci USA. 103:1065–1070.

- Furl N, Lohse M, Pizzorni-Ferrarese F. 2017. Low-frequency oscillations employ a general coding of the spatio-temporal similarity of dynamic faces. Neuroimage. 157:486–499.

- Furl N, van Rijsbergen NJ, Treves A, Friston KJ, Dolan RJ. 2007. Experience-dependent coding of facial expression in superior temporal sulcus. Proc Natl Acad Sci USA. 104:13485–13489.

- Ganel T, Valyear KF, Goshen-Gottstein Y, Goodale MA. 2005. The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia. 43:1645–1654.

- Ghuman AS, Brunet NM, Li Y, Konecky RO, Pyles JA, Walls SA, Destefino V, Wang W, Richardson RM. 2014. Dynamic encoding of face information in the human fusiform gyrus. Nat Commun. 5:5672.

- Goesaert E, Op de Beeck HP. 2013. Representations of facial identity information in the ventral visual stream investigated with multivoxel pattern analyses. J Neurosci. 33:8549–8558.

- Harris RJ, Young AW, Andrews TJ. 2014. Brain regions involved in processing facial identity and expression are differentially selective for surface and edge information. Neuroimage. 97:217–223.

- Harry B, Williams MA, Davis C, Kim J. 2013. Emotional expressions evoke a differential response in the fusiform face area. Front Hum Neurosci. 7:692.

- Haxby JV, Connolly AC, Guntupalli JS. 2014. Decoding neural representational spaces using multivariate pattern analysis. Annu Rev Neurosci. 37:435–456.

- Haxby JV, Hoffman EA, Gobbini MI. 2000. The distributed human neural system for face perception. Trends Cogn Sci. 4:223–233.

- Hermes D, Miller KJ, Noordmans HJ, Vansteensel MJ, Ramsey NF. 2010. Automated electrocorticographic electrode localization on individually rendered brain surfaces. J Neurosci Methods. 185:293–298.

- Hirshorn EA, Li Y, Ward MJ, Richardson RM, Fiez JA, Ghuman AS. 2016. Decoding and disrupting left midfusiform gyrus activity during word reading. Proc Natl Acad Sci USA. 113:8162–8167.

- Hoffman EA, Haxby JV. 2000. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci. 3:80–84.

- Ishai A. 2008. Let’s face it: it’s a cortical network. Neuroimage. 40:415–419.

- Kass RE, Wasserman L. 1995. A reference Bayesian test for nested hypotheses and its relationship to the Schwarz criterion. J Am Stat Assoc. 90:928–934.

- Kaufman L, Rousseeuw PJ. 2009. Finding groups in data: an introduction to cluster analysis. Hoboken, NJ: John Wiley & Sons.

- Kawasaki H, Tsuchiya N, Kovach CK, Nourski KV, Oya H, Howard MA, Adolphs R. 2012. Processing of facial emotion in the human fusiform gyrus. J Cogn Neurosci. 24:1358–1370.

- Kriegeskorte N, Kievit RA. 2013. Representational geometry: integrating cognition, computation, and the brain. Trends Cogn Sci. 17:401–412.

- Li Y, Richardson RM, Ghuman AS. 2017. Multi-connection pattern analysis: decoding the representational content of neural communication. Neuroimage. 162:32–44.

- Lundqvist D, Flykt A, Öhman A. 1998. The Karolinska directed emotional faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet; 91-630.

- Miller KJ, Schalk G, Hermes D, Ojemann JG, Rao RP. 2016. Spontaneous decoding of the timing and content of human object perception from cortical surface recordings reveals complementary information in the event-related potential and broadband spectral change. PLoS Comput Biol. 12:e1004660.

- Musch K, Hamame CM, Perrone-Bertolotti M, Minotti L, Kahane P, Engel AK, Lachaux JP, Schneider TR. 2014. Selective attention modulates high-frequency activity in the face-processing network. Cortex. 60:34–51.

- Nestor A, Plaut DC, Behrmann M. 2011. Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc Natl Acad Sci USA. 108:9998–10003.

- Oostenveld R, Fries P, Maris E, Schoffelen JM. 2011. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011:156869.

- Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. 2011. Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage. 56:2356–2363.

- Pourtois G, Spinelli L, Seeck M, Vuilleumier P. 2010. Modulation of face processing by emotional expression and gaze direction during intracranial recordings in right fusiform cortex. J Cogn Neurosci. 22:2086–2107.

- Sawilowsky SS. 2009. New effect size rules of thumb. J Mod Appl Stat Methods. 8:597–599.

- Skerry AE, Saxe R. 2014. A common neural code for perceived and inferred emotion. J Neurosci. 34:15997–16008.

- Streit M, Ioannides AA, Liu L, Wolwer W, Dammers J, Gross J, Gaebel W, Muller-Gartner HW. 1999. Neurophysiological correlates of the recognition of facial expressions of emotion as revealed by magnetoencephalography. Brain Res Cogn Brain Res. 7:481–491.

- Tadel F, Baillet S, Mosher JC, Pantazis D, Leahy RM. 2011. Brainstorm: a user-friendly application for MEG/EEG analysis. Comput Intell Neurosci. 2011:879716.

- Thomas KM, Drevets WC, Whalen PJ, Eccard CH, Dahl RE, Ryan ND, Casey BJ. 2001. Amygdala response to facial expressions in children and adults. Biol Psychiatry. 49:309–316.

- Tsuchiya N, Kawasaki H, Oya H, Howard MA 3rd, Adolphs R. 2008. Decoding face information in time, frequency and space from direct intracranial recordings of the human brain. PLoS One. 3:e3892.

- Vida MD, Nestor A, Plaut DC, Behrmann M. 2017. Spatiotemporal dynamics of similarity-based neural representations of facial identity. Proc Natl Acad Sci USA. 114:388–393.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. 2001. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 30:829–841.

- Vytal K, Hamann S. 2010. Neuroimaging support for discrete neural correlates of basic emotions: a voxel-based meta-analysis. J Cogn Neurosci. 22:2864–2885.

- Weiner KS, Barnett MA, Lorenz S, Caspers J, Stigliani A, Amunts K, Zilles K, Fischl B, Grill-Spector K. 2016. The cytoarchitecture of domain-specific regions in human high-level visual cortex. Cereb Cortex.

- Weiner KS, Golarai G, Caspers J, Chuapoco MR, Mohlberg H, Zilles K, Amunts K, Grill-Spector K. 2014. The mid-fusiform sulcus: a landmark identifying both cytoarchitectonic and functional divisions of human ventral temporal cortex. Neuroimage. 84:453–465.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. 1998. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J Neurosci. 18:411–418.

- Xiong X, De la Torre F. 2013. Supervised descent method and its application to face alignment. IEEE CVPR.

- Xu X, Biederman I. 2010. Loci of the release from fMRI adaptation for changes in facial expression, identity, and viewpoint. J Vis. 10:36.

- Zhang H, Japee S, Nolan R, Chu C, Liu N, Ungerleider LG. 2016. Face-selective regions differ in their ability to classify facial expressions. Neuroimage. 130:77–90.
