Abstract

Motivation

Sequence alignment is a central operation in bioinformatics pipeline and, despite many improvements, remains a computationally challenging problem. Locality-sensitive hashing (LSH) is one method used to estimate the likelihood of two sequences to have a proper alignment. Using an LSH, it is possible to separate, with high probability and relatively low computation, the pairs of sequences that do not have high-quality alignment from those that may. Therefore, an LSH reduces the overall computational requirement while not introducing many false negatives (i.e. omitting to report a valid alignment). However, current LSH methods treat sequences as a bag of k-mers and do not take into account the relative ordering of k-mers in sequences. In addition, due to the lack of a practical LSH method for edit distance, in practice, LSH methods for Jaccard similarity or Hamming similarity are used as a proxy.

Results

We present an LSH method, called Order Min Hash (OMH), for the edit distance. This method is a refinement of the minHash LSH used to approximate the Jaccard similarity, in that OMH is sensitive not only to the k-mer contents of the sequences but also to the relative order of the k-mers in the sequences. We present theoretical guarantees of the OMH as a gapped LSH.

Availability and implementation

The code to generate the results is available at http://github.com/Kingsford-Group/omhismb2019.

Supplementary information

Supplementary data are available at Bioinformatics online.

1 Introduction

Measuring sequence similarity is the core of many algorithms in computational biology. For example, in the overlap–layout–consensus paradigm to assemble genomes (e.g. Jaffe et al., 2003; Myers et al., 2000), the first overlap step consists of aligning the reads against one another to determine which pairs have a significant alignment (an overlap). In meta-genomics, sequencing reads, or longer sequences created from these reads, are aligned against known genomes, or against one another to cluster the sequences, to determine the constituent species of the sample. Sequence similarity is also at the heart of the many general sequence aligners, either genome to genome [e.g. MUMmer4 (Marçais et al., 2018), LASTZ (Harris, 2007)] or reads to genome [e.g. Bowtie2 (Langmead and Salzberg, 2012), BWA (Li and Durbin, 2010)], that are used in countless pipelines in bioinformatics.

Despite many algorithmic and engineering improvements [e.g. implementation on SIMD (Zhao et al., 2013) and GPU (Liu et al., 2012)], computing the sequence alignment or edit distance between two sequences takes approximately quadratic time in the length of the input sequences, which remains computationally expensive in practice. Given that the edit distance is likely not computable in strong subquadratic time (Backurs and Indyk, 2015), most aligners rely on heuristics to more quickly detect sequences with a high probability of having an alignment.

Recent aligners, such as Mash (Ondov et al., 2016), Mashmap (Jain et al., 2017), or overlappers such as MHap (Berlin et al., 2015), use a method called ‘locality-sensitive hashing’ (LSH) to reduce the amount of work necessary (Indyk and Motwani, 1998). The procedure is a dimensionality reduction method and works in two steps. First, the sequences (or part of the sequences) are summarized into sketches that are much smaller than the original sequences while preserving important information to estimate how similar two sequences are. Second, by directly comparing those sketches (with no need to refer to the original sequences) or by using these sketches as keys into hash tables, the software finds pairs of sequences that are likely to be similar. A more thorough, and computationally expensive, alignment procedure may then be used on the candidate pairs to refine the actual alignments.

In an LSH method, the distance between sketches is used as a first approximation for the distance between the sequences. That is, with high probability, two sequences which are very similar must have sketches which are similar, and conversely dissimilar sequences have dissimilar sketches. More precise definition of these concepts is given in Section 2.

Instead of using an LSH for the edit distance or an alignment score, in practice sequence alignment programs use the minHash LSH (Broder, 1997) for the Jaccard similarity or an LSH for the Hamming distance as a proxy for the edit distance. Although these two techniques have proven themselves useful in practice, they suffer from one major flaw: neither the Jaccard similarity nor the Hamming similarity directly corresponds to the edit distance (see Section 2.2 for examples). In fact, it is possible to find sequences that are indistinguishable according to the Jaccard similarity, but have large edit distance. Similarly, with the Hamming distance, there exist sequences with very low edit distance that are completely dissimilar according to the Hamming similarity.

Depending on the problem and the software implementation, the cases above can lead to false negatives (an alignment is missed) and a decrease in precision, or false positives (a nonexistent potential alignment reported) and extra computational work. An LSH method for edit distance instead of the proxy Jaccard or Hamming similarities would reduce both of these issues.

Although multiple definitions are possible for sequence similarity (or distance), in this study, we focus on the edit distance (a.k.a. Levenshtein distance, Levenshtein, 1966), which is the number of operations (mismatch, insertion, deletion) needed to transform a string into another one.

Two methods that are LSH for the edit distance have been described previously. Bar-Yossef et al. (2004) propose a sketch that can distinguish, with some probability, between sequences with edit distance from sequences with edit distance , where n is the length of the sequences, for any . They use an indirect method to obtain an LSH for the edit distance: first they embed the edit distance space into a Hamming space with low distortion, and second, apply an LSH on the Hamming space. That is, the input sequence is first transformed into a bit vector of high dimension, then sketching for the Hamming distance is applied to obtain an LSH for the edit distance.

Similarly, Ostrovsky and Rabani (2007) propose a two-step method, where the edit distance space is first embedded into an space with low distortion, then a sketching algorithm for the (Kushilevitz et al., 2000) is used to obtain an LSH for the edit distance. This method can distinguish between sequences with edit distance and edit distance , for some constant c.

We propose a simpler and direct method that is an LSH for the edit distance. Our method is an extension to the minHash method. We call our method OMH for Order Min Hash, and it can be seen as a correction of the minHash method. The probability of hash collision in the OMH method is the product of two probabilities. The first is the probability to select a k-mer from the set of common k-mers between the two sequences. This probability is similar to minHash that estimates the Jaccard similarity between the k-mer contents of two sequences. However, there is one key difference: the minHash method estimates the Jaccard similarity which treats sequences as sets of k-mers, and the number of occurrences of each k-mer in the sequences is ignored, whereas OMH estimates the weighted Jaccard, where the number of occurrences of a k-mer in a sequence is significant, i.e. the weighted Jaccard works with multi-sets. The second probability is the likelihood that the common k-mers appear in the same relative order in the two sequences. Therefore, OMH is sensitive not only to the k-mer content of the sequences but also to the order of the k-mers in the sequences.

The sketch proposed for OMH is only slightly bigger than the sketch for minHash while maintaining significantly more information about the similarity of two sequences. In addition to providing an estimate for the edit distance between two sequences, it also provides an estimate of the k-mer content similarity (the weighted Jaccard) and how similar the relative order is between the common k-mers of the two sequences.

Section 2 summarizes the notation used though out and main results. Detailed proofs of the results are given in Section 3. Section 4 discusses some practical consideration on the implementation of the sketches.

2 Main results

2.1 Concepts and definitions

Similarity and dissimilarity. A dissimilarity is a function that indicates the distance between two elements in the universe . d satisfies the triangle inequality and means that x = y. In other words, a dissimilarity is a normalized distance. A similarity is a function such that is a dissimilarity. Hence, a dissimilarity defines a similarity and vice versa. We will therefore use either of the terms ‘edit dissimilarity’ or ‘edit similarity’.

Given two strings of length n (where Σ is the alphabet of size ), the Hamming dissimilarity is the number of indices at which S1 and S2 differ divided by n: ( denotes the set ). The edit dissimilarity (a.k.a. normalized edit distance) is the minimum number of indels (short for insertion or deletion) and mismatches necessary to transform S1 into S2, divided by n. Given two sets A and B, the Jaccard similarity is .

Gapped LSH. Let be a set of hash functions defined on a set (the universe). A probability distribution on the set is called -sensitive for the similarity s when

| (1) |

| (2) |

where and . A similarity admits a gapped LSH scheme if there exists a distribution on a set of hash functions that is -sensitive. In the definition above, the probability is taken over the choice of the hash function in and the implications hold for any choice of x and . In a gapped LSH, the probability of a hash collision is increased () between similar elements, and less likely () for dissimilar elements.

In the following, the probabilities are always taken over the choice of the hashing function, even though we may omit the ‘’ subscript.

LSH. An LSH for a similarity is a family of hash functions that is -sensitive for any . Equivalently, the family of hash functions satisfies . In practice a gapped LSH is typically used to put elements into a hash table where there is high likelihood of a collision, whereas a full LSH can be used as a direct estimator of the underlying measurement.

minHash sketch. Let the universe be a family of sets on the ground set X (i.e. ). The minHash LSH for the Jaccard similarity is defined as the uniform distribution on the set . That is, the hash function selects the smallest element of the set A according to some ordering π of the elements of the ground set X. This family of hash functions is -sensitive for any value of , or equivalently .

LSH for Hamming similarity. The Hamming similarity between two sequences with same length n is the proportion of positions which are equal: . For the Hamming similarity, the uniform distribution on satisfies .

String k-mer set. For a sequence S, the set of its constituent k-mers is , where is the substring of length k starting at index i. By extension, the Jaccard similarity between two sequences is the Jaccard similarity between their k-mer sets: .

Weighted Jaccard. The weighted Jaccard similarity on multi-sets (or weighted sets) is defined similarly to the Jaccard similarity on sets, where the intersection and union take the multiplicity of the elements into account. More precisely, a multi-set A is defined by an index function , where gives the multiplicity of x in A (zero if not present in A). The index function of the intersection of two multi-sets is the minimum of the index functions, and for the union it is the maximum. Then, the weighted Jaccard is defined by

This is a direct extension to the set definitions, where the index function takes values in {0, 1}.

2.2 Jaccard and Hamming similarities differ from edit similarity

Similarly to the definition of the LSH, we say that a similarity f1 is a -proxy for the similarity f2 if

| (3) |

| (4) |

That is high similarity for f1 implies high similarity for f2, and the converse. Because of the similar structure between the definitions of sensitivity and proxy, if f1 is not a proxy for f2 (for any non-trivial choice of parameters ), then an LSH for f1 is not an LSH for f2.

We show here that neither the Hamming similarity nor the Jaccard similarity is a good proxy for the edit dissimilarity. More precisely, only one of the implications above is satisfied.

Jaccard similarity differs from edit similarity. A low Jaccard similarity does imply a low edit similarity (Equation 4). On the other hand, consider the sequence that has n − k 0s followed by k 1s, and S2 with k 0s followed by n − k 1s (k fixed, n arbitrarily large). The k-mer sets of S1 and S2 are identical, hence , while the edit similarity is . These sequences are indistinguishable according to the Jaccard similarity while having arbitrarily small edit similarity (Equation 3 not satisfied).

Weighted Jaccard similarity differs from edit similarity. Consider two de Bruijn sequences: sequences of length σk containing every k-mer exactly once (van Aardenne-Ehrenfest and de Bruijn, 1951). There is a very, very large number of such sequences [], and although any two such sequences have exactly the same k-mer content, they might otherwise have a very low edit similarity. Both the Jaccard and weighted Jaccard similarities fail to distinguish between de Bruijn sequences, regardless of their mutual edit dissimilarity.

For example, the two sequences 1111011001010000111 and 0000101001111011000 of length 19 each contain exactly the 16 possible 4-mers; hence, their Jaccard and weighted Jaccard similarities are 1. Their edit similarity is only . By comparison, two random binary sequences of length 19 have an average edit similarity of and an average Jaccard similarity of . In other words, these two de Bruijn sequences are much more dissimilar than two random sequences despite having a perfect Jaccard similarity.

More generally, both Jaccard and weighted Jaccard similarities treat sequences as bags of k-mers. The information on relative order of these k-mers within the sequence is ignored, although it is of great importance for the edit similarity. In contrast, an OMH sketch does retain some information on the order of the k-mers in the original sequence. In the case of the two de Bruijn sequences above, the proportion of pairs of k-mers that are in the same relative order in the two sequences is 0.4. The expected similarity between the OMH sketches of these sequences is also equal to 0.4.

Hamming similarity differs from edit similarity. A high Hamming similarity does imply a high edit similarity (Equation 3). The opposite is not true however. Consider the sequences of length n, and . These sequences have a Hamming similarity of 0 and an edit similarity of (two indels). That is, these sequences are as dissimilar as possible according to the Hamming dissimilarity, but an arbitrarily high edit similarity (Equation 4 not satisfied).

The Hamming similarity is very sensitive to the absolute position in the string. A single shift between two sequences has a large impact on the Hamming similarity but only a unit cost for the edit similarity. An OMH sketch on the other hand only contains relative order between k-mers and is indifferent to changes in absolute position.

2.3 LSH for the edit similarity

An LSH for the edit similarity must be sensitive to the k-mer content of the strings and the relative order of these k-mers, but relatively insensitive to the absolute position of the k-mers in the string. This motivates the definition below. Similarly to the minHash, k-mers are selected at random by using a permutation on the k-mers. Additionally, to preserve information about relative order, k-mers are selected at once and recorded in the order they appear in the sequence (rather than the order defined by the permutation).

Additionally, the method must handle repeated k-mers. Two copies of the same k-mer occur at different positions in the sequence, and it is important for the relative ordering between k-mers to distinguish between these two copies. We make k-mers unique by appending to them their ‘occurrence number’.

More precisely, for a string S of length , consider the set of the pairs of the k-mers and their occurrence number. If there are x copies of m in sequence S, then the x pairs are in the set , and the occurrence number denotes the number of other copies of m that are in the sequence S to the left of this particular copy. That is, if m is the k-mer at position i in S (i.e. ), then its occurrence number is . This set is the ‘multi-set’ of the k-mer content of string S, or the ‘weighted set’ of k-mers where the number of occurrences is the weight of the k-mer (hence the w superscript). We call a pair (m, i) of a k-mer and an occurrence number a ‘uniquified’ k-mer.

A permutation π of defines two functions and . is a vector of length of elements of such that:

the pairs (mi, oi) are the smallest elements of according to π,

the pairs are listed in the vector in the order in which the k-mer appears in the sequence S. That is, if i < j, and , then x < y.

The vector contains only the k-mers from , in the same order. The OMH method is defined as the uniform distribution on the set of hash functions .

For extreme cases, where , the vector contains overlapping k-mers that cover the entire sequence S. In that case, equality of the hash values implies strict equality of the sequences.

At the other extreme, where , the vectors contain only one k-mer and no relative order information is preserved. In that case, only the k-mer content similarity between S1 and S2 matters.

The weighted Jaccard similarity of two sequences is the weighted Jaccard of their k-mer content (seen as multi-set). Because k-mers were made unique by their occurrence number in , the weighted Jaccard similarity is equivalently defined as .

Theorem 1. When, OMH is an LSH for the weighted Jaccard similarity:

(5) Proof. This proof is similar to that of minHash and the Jaccard similarity (Broder, 1997). Because every uniquified k-mer in has the same probability of being selected, the probability of having a hash collision is the same as selected a k-mer from the intersection where the probability of picking a k-mer is weighted by its maximum occurrence number. □

As we shall see in Section 4.4, the weighted Jaccard similarity contains approximately the same information as the Jaccard similarity with respect to the edit similarity.

For the general case , we shall prove the following theorem in Section 3 that OMH is a gapped LSH for the edit dissimilarity.

Theorem 2. For anyand any, there exist functionsandsuch that OMH is-sensitive for the edit distance.

The actual functions p1 and p2 are explicitly defined in Section 3, but they may not be easily expressed with elementary functions in general.

3 Proofs of main results

We shall now prove Theorem 2 that OMH is sensitive for the edit similarity by exhibiting the relations between parameters that satisfy Equations (1) and (2). We will break the proof in two lemmas that provide the relations between s1, p1 and between s2, p2. In the following, S1 and S2 are two sequences of length n. The number of k-mers in each of these sequences is .

In the following, we assume that a binomial coefficient where n is negative or null is equal to 0. In these proofs, it means that the probability of choosing elements from the empty set is zero.

Lemma 1. when

(6) Proof. The situation is similar to the minHash method. Suppose that , then the edit dissimilarity and the number of mismatches and indels is . Any alignment between s1 and s2 has at most mismatches and indels, because . Therefore, there are at least k-mers in the aligned bases, as an error (mismatch or indel) affects at most k consecutive k-mers.

Similarly, the size of the set is maximized when all the k-mers that are not part of the alignment are different. Then, is at most .

We estimate the probability to have a hash collision from the number of uniquified k-mers in the aligned bases. As seen in Figure 1, it is possible for a k-mer m with different occurrence numbers to be part of the aligned bases. Any permutation that has in the lowest uniquified k-mers does not lead to a hash collision. Let x be the number of k-mers in the aligned bases with occurrence number that disagree between S1 and S2. Therefore, there are at least x k-mers outside of the aligned bases representing at least unaligned bases. Given that the edit similarity is , the number of unaligned bases is at most and . Consequently, the number of k-mers in the aligned bases to choose from is at least the number of k-mers in the aligned bases () minus the number of k-mers with disagreeing occurrence number (x) .

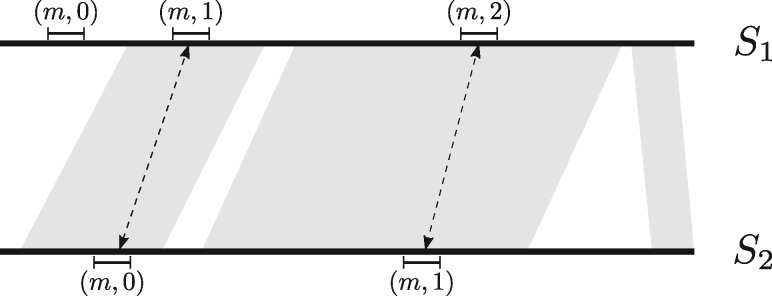

Fig. 1.

For an alignment between S1 and S2, the gray area represents the aligned bases. A particular k-mer m is shown with its occurrence numbers. The occurrence number of the matched k-mer pairs in the aligned bases may not agree (as in this example). For every such m with a mismatch occurrence number in the aligned bases, there must exist an instance of m outside the aligned bases [ in S1 here]

Every element of has an equal probability to be in the lowest elements according to a permutation π; therefore, the probability of having a hash collision is:

| (7) |

This defines the relationship between p1 and s1 as in Equation (6).

□

For the proof of Equation (2), we will consider its contrapositive

| (8) |

That is for any two sequences with high probability of having a hash collision, the edit similarity of the sequences must be high.

To have a high probability of collision between two sketches, the sequences must (i) have a large number of common k-mers and (ii) these common k-mers should be mostly in the same relative order. The first condition corresponds to the sequences having a large weighted Jaccard similarity.

The second condition is related to common subsequences (CSs) between sequences of k-mers. A ‘common subsequence’ between and is a sequence of elements that are in both and and appear in the same order (formally an increasing function such that ). We emphasize here that the ‘sequences’ considered here are not DNA sequences, but ordered lists of k-mers .

If the sequences S1 and S2 have a long CS of k-mers, then the probability to pick k-mers in the same order between the common k-mers of S1 and S2 will be high. In turn, the presence of a long CS of k-mers implies a high similarity.

Considering Equation (8), we are looking for the lowest similarity (s2) that is achievable given a high probability (p2) of hash collision. This is done in two parts: (i) finding the lowest weighted Jaccard between S1 and S2 given the high hash collision rate and (ii) constructing a worse case example of having many CSs of k-mers while not having any long CS. This second problem is equivalent to finding, for a given L, a single sequence with as many as possible increasing subsequences of length L (see Lemma 3).

Lemma 2. when

(9) Proof. We use the notation for the set of elements in the vector (in other words, because all the elements are unique by construction, the elements without order).

As mentioned above, we consider the contrapositive state in Equation (8). We have that

| (10) |

Under the conditional event (C) that , we have . The reverse implication () is always true as h is obtained from hw by using only the k-mer in each element. The forward implication () holds thanks to (C). Given that the k-mers are listed in order in which they appear in the respective sequences, they are also listed in order of their occurrence number, and because the content in the weighted vectors hw is the same, the equality of the unweighted vectors h implies equality of the weighted vectors.

Let be the size of the intersection of the weighted k-mer sets. The event (C) occurs when the smallest k-mers/occurrence number pairs according to the permutation π belong to the intersection . Therefore,

| (11) |

Consider now the sequences and of the elements of listed in the order in which they occur in S1 and S2, respectively. Both of these sequences have length m. Then, the event that under the condition (C) is equivalent to having the hash function picking a CS of length between and . Because the elements of these sequences are never repeated (it is a list of uniquified k-mers), the problem of finding CSs between and is identical to finding increasing subsequences (IS) in a sequence of integers of length m (Fredman, 1975; Hunt and Szymanski, 1977).

| (12) |

where is the set of all permutations of . Together, Equations (10–12), and Lemma 3 imply that the following holds for any choice of sequences S1, S2:

| (13) |

where L is the length of the longest CS between and . The function on the right-hand side of Equation (13) is an increasing function of L, equal to 0 when , and equal to 1 when . Given that , replacing L by in Equation (13) gives the desired relation between s2 and p2 of Equation (9).□

Finally, we prove the relationship between the length of the longest increasing subsequence (LIS) and the largest number of sequences of maximal length.

Lemma 3. For , for any sequence of length n with an LIS of at most i, the largest number of increasing subsequences of length is

and this bound is tight.

Proof. The proof relies on the properties of patience sorting (Aldous and Diaconis, 1999). Patience sorting for a shuffled deck of cards works as follows:

The algorithm creates stacks of cards where in each stack the cards are in decreasing order from the bottom to the top of the stack. The stacks are organized in a line, left to right.

At each round, the next card of the deck is examined and added to the top of the left most stack it can go on, i.e. the left most stack with a top card whose value is higher than the new card.

If no existing stack is suitable, a new stack is created to the right with the new card.

After all the cards are drawn and organized in stacks (see Fig. 2), the following properties hold: (i) no two cards from an increasing subsequence in the original deck are in the same stack, and (ii) the number of stacks is equal to the LIS (see Aldous and Diaconis, 1999, Lemma 1).

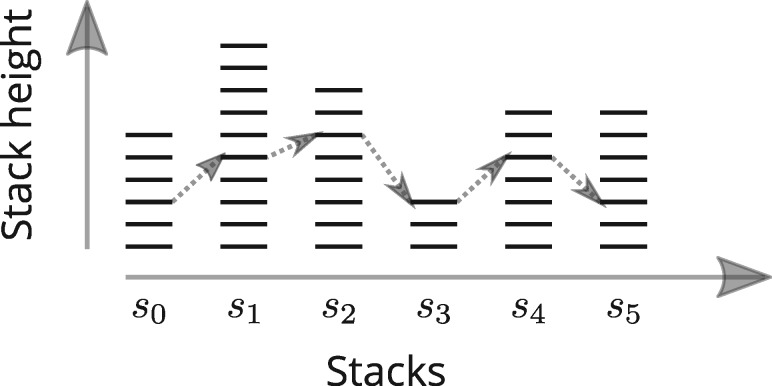

Fig. 2.

An example of stacks created when sorting a deck of cards. The LIS is 6, with the arrows showing a possible increasing subsequence of maximal length. To maximize the number of possible subsequences of maximum length, the height of the stacks have to be equal

Fix and a sequence S of length n with LIS of . At the end of patience sorting of S, let be the vector of the height of each of the stacks. Then, an upper bound on the number of increasing subsequence of length in S is

| (14) |

This is an upper bound as every choice of elements from different stacks does not necessarily define a valid increasing subsequence of S. We show that g reaches its maximum when .

Because the set is compact, g reaches a maximum on C. Suppose that in s, not all the sj are equal; without loss of generality, assume that and are distinct. Set and consider the point . Let us also use the notation

Then, we split the sum in into the terms containing neither nor (), the terms that contain one of or () and the terms that contain both (), and we use the inequality (arithmetic mean is larger than geometric mean):

Hence, , where s contains two distinct values, is not maximum, and g must reach its maximum when all the sj are equal. Furthermore, in that case .

Therefore,

| (15) |

The function defined above is increasing and the maximum is reached for .

Finally, consider the sequence S(i, n), i divides n, defined by blocks:

Each block is of length n/i, the numbers in each blocks are in decreasing order, and the start of the blocks are in increasing order: block j is the decreasing sequence .

When the patience sorting algorithm is applied to the list S(i, n), the stacks are filled up one by one, from bottom to top and from left to right, and have the same height of n/i. Therefore, any choice of one element in each stack is a valid increasing subsequence of S(i, n) and the bound of Equation (15) is attained. □

Finally, we can restate and prove the main theorem.

Theorem 2. For anyand any, there exist functionsandsuch that OMH is-sensitive for the edit distance.

Proof. It is a direct consequence of Lemma 1 and Lemma 2. □

4 Discussion

4.1 Parameters

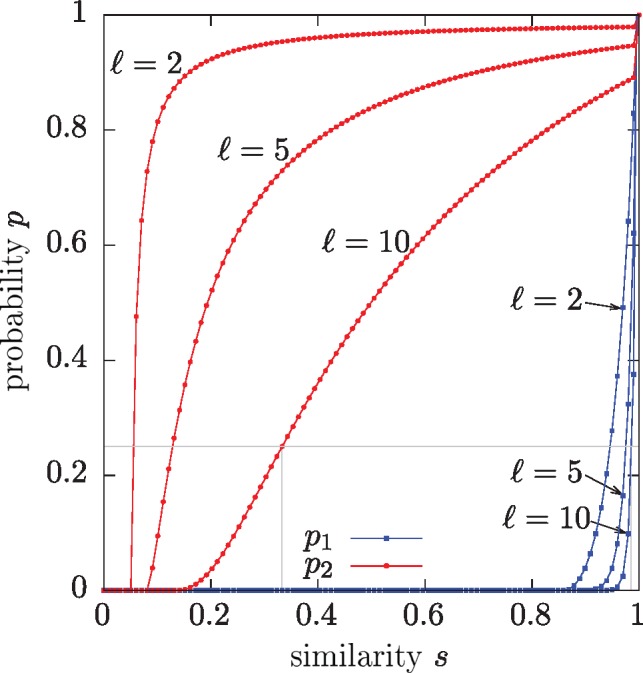

To have a proper LSH method, the conditions must hold. This condition means that the method is able to distinguish with some probability between dissimilar () and similar () sequences. Figure 3 shows the functions p1 (blue lines) and p2 (red lines) from Theorem 2 for varying values of .

Fig. 3.

The relationships between the similarity thresholds s1 and s2, and the probabilities p1 and p2, for n = 100, k = 5. Given a probability, e.g. shown by the horizontal gray line, the OMH method can distinguish between similarities below s2 (below left vertical gray line) and from similarities above s1 (above the right vertical gray line). The functions are defined at discrete points, when ns is integral, represented by the circles and squares

At the limit, taking , the method can distinguish between any s1 and s2 such that and (gray lines on Fig. 3). For larger values of , the gap between distinguishable values is reduced, although at the cost of having high values for s1.

4.2 Choice of parameter

The main difference between OMH and the minHash methods is the choice of k-mers, where minHash corresponds to the case of (ignoring the slight difference between Jaccard and weighted Jaccard). It might seem surprising at first that OMH is an LSH for edit dissimilarity for any values of , except for the extremes of and .

The proof of Theorem 2 is consistent with this analysis. For both these extreme values of , Equation (13), which relates the probability p2 to the similarity s2, becomes trivially true (). This means that even a certain hash collision (probability of 1) provides no guarantee on the relative order of common k-mers between the two sequences (i.e. the length of the longest subsequence L = 1: only one k-mer is guaranteed to align). On the other hand, any other value of leads to an actual bound in Equation (13).

For example, when , the minimum number of k-mers that must align in proper order as a function of the collision probability p2 is

| (16) |

Even in the case where n is very large, then , the number of properly aligning k-mers becomes large when the probability of collision p2 is close to 1. This is in contrast to the minHash, where a probability of collision of 1 (i.e. a Jaccard similarity of 1) does not guarantee that more than two k-mers properly align (see example in Section 2.2).

In practice, the parameter should be relatively small, say . Increasing the value of has two effects on the OMH method. First, it increases the minimum edit similarity that is detectable by the method, as there must be at least bases in the alignment of the two sequences for OMH to have a non-zero probability of hash collision. Second, a larger value of implies that the probability of hash collision is small, which requires storing a higher number of vectors in a sketch to obtain a low variance. There is a trade-off between how sensitive the scheme is to relative order (high value of ) and the smaller size for the sketch (low value of ).

4.3 Practical sketches for OMH

In our implementation, the OMH sketch for a sequence S contains more than just the list of vectors . In practice, we store

the length of the sequence ,

a list of m vectors and associated order vector .

Recall that the k-mers in the vector are listed in the order in which they appear in S. The order vector is a permutation of the indices that can reorder the k-mers according to π. That is, and i < j imply that (where, as in the definition, oi is the occurrence number of the k-mer mi). The total space usage of a sketch is .

The reason for the order vector in the sketch is to recover both an estimate of the weighted Jaccard between the two sequences and how well these common k-mers properly align. More precisely, given two sketches for S1 and S2, the number of collisions and the number of collisions in the reordered k-mers according to the order vector give an estimate of the weighted Jaccard . Using this estimate, the sizes of S1 and S2 from the sketches, and the formula , we can recover estimates for the size of the intersection and union of the weighted k-mer sets. Finally, formulas (10) and (11) give the probability for k-mers from the intersection to be in the same alignment order between the two sequences. The case where the Jaccard similarity is not sufficient to assess that the sequences have a high edit similarity is precisely the case when this last probability is low.

In other words, the extra bits of information per k-mer in the OMH sketch compared with a weighted minHash sketch corresponds to the supplemental information given by OMH compared with minHash. Given that is small in practice, the cost for this extra information is also very small.

For genomics sequences, it is traditional to compute the minHash using ‘canonical’ k-mers (defined as a k-mer or its reverse complement, whichever comes first lexicographically). In the OMH sketches, it is not possible to use canonical k-mers as this in incompatible with the order information encoded in the vector . Rather, two sketches, one for the forward strand and one for the reverse are stored. Comparing two sequences requires doing two sketches comparisons.

The size of an OMH sketch is .

4.4 Weighted Jaccard and OMH

Even though the Jaccard and minHash sketches are regularly used as a measure of the k-mer content similarity in computational biology software, the weighted Jaccard similarity has been heavily studied and used in other contexts, such as large database document classification and retrieval (e.g. Shrivastava, 2016; Wu et al., 2017), near duplicate image detection (Chum et al., 2008), duplicate news story detection (Alonso et al., 2013), source code deduplication, time series indexing (Luo and Shrivastava, 2017), hierarchical topic extraction (Gollapudi and Panigrahy, 2006), or malware classification (Drew et al., 2017) and detection (Raff and Nicholas, 2017).

The weighted Jaccard, compared with the unweighted Jaccard, gives a more complete measure of the similarity between two sets or sequences. Obviously, when no elements are repeated, the two similarities are equal. On the other hand, in the case of many repeated elements, the difference can be significant.

For example, returning to the example from Section 2.2 where with n – k 0s followed by k 1s and S2 with k 0s followed by n – k 1s, the edit similarity is very low: . The Jaccard similarity is , in other words, these two sequences are indistinguishable according to the Jaccard similarity. On the other hand, the weighted Jaccard is also very low: , much more similar to the edit similarity.

In the case of two de Bruijn sequences that might have very low edit similarity, the Jaccard and weighted Jaccard are both equal to 1, as every k-mer occurs exactly once. Therefore, in this case the weighted Jaccard provides no extra information. The OMH sketching method, being also sensitive to the relative orders of the k-mers (see Equation 10), would have a probability of hash collision much lower than 1.

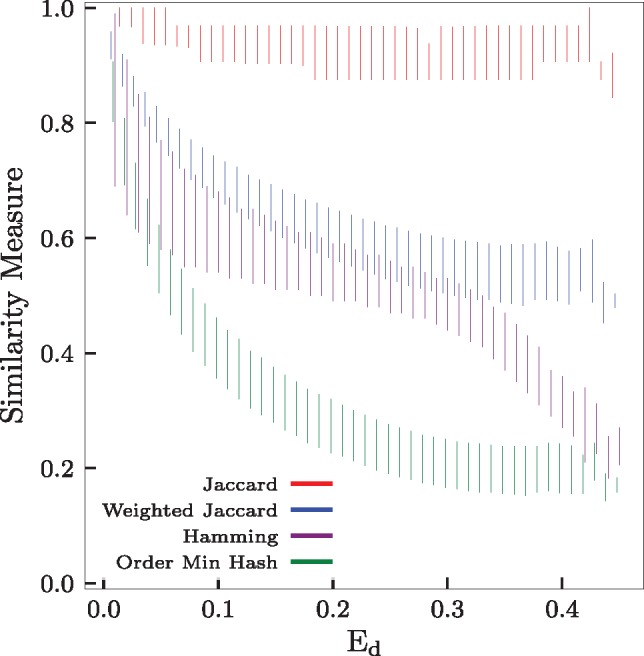

In Figure 4, we generated 1 million random binary sequences (σ = 2) of length n = 100. Each string is then randomly mutated a random number of times (up to 100 times) to obtain a pair of sequences with a random edit dissimilarity. Then, for each pair, we compute the actual edit dissimilarity, Hamming dissimilarity, the exact—i.e. not estimated by minHash—Jaccard and weighted Jaccard similarities. Additionally, the OMH sketch (with and m = 500) is also computed for each pair. The graph shows the median and first quartiles computed over the million pairs of sequences. Even for sequences with high edit dissimilarity (>0.4), the Jaccard similarity remains very high. However, the weighted Jaccard and OMH are more sensitive to the edit dissimilarity.

Fig. 4.

Evolution of the Jaccard, weighted Jaccard, Hamming and OMH against the edit dissimilarity on randomly generated binary sequences. In average, the Jaccard similarity stays high, even for sequences with high edit dissimilarity, unlike the weighted Jaccard, Hamming or OMH which are much more sensitive to the edit dissimilarity

4.5 Using sketches for phylogeny reconstruction

Genomic rearrangement in bacteria often involves mobile genomic elements known as ‘insertion sequences’ (IS). Lee et al. (2016) studied the frequencies and locations of these insertions and found that the positions are largely driven by the locations of existing copies. Although the locations of these inserts may vary across the genome, there are only a small number of inserted sequences that occur regularly. Consequently, the k-mer content of the genomes remains almost unchanged through these insertion events.

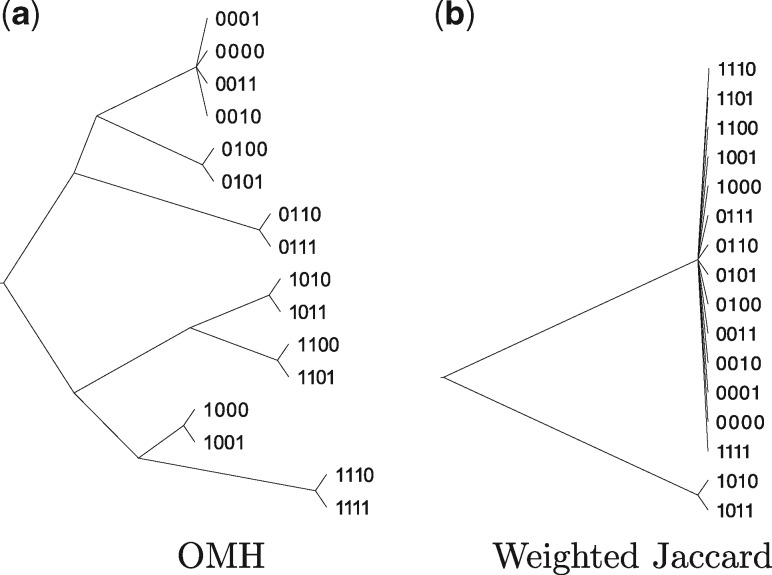

To test the effectiveness of OMH to recover the history of these insertion events, we simulated a family of Escherichia coli genomes by inserting these IS elements. We randomized the order of the four most common sequences (IS1, IS5, IS2, and IS186) and insert one into the genome at each of four generations of produced genomes. The location of the insertion was randomly chosen from the list of locations where the same sequence had been previously identified. Starting with E.coli K-12 MG1655 (NC_000913.2) we created two children by inserting the first element in the randomized order at two separate locations. For the next generation, we created two children for each of the individuals by choosing two random locations at which to insert the next sequence. For all the children in a generation, the same IS is inserted. We then measured all pairwise dissimilarities and created a phylogeny using the distances computed by OMH and weighted Jaccard.

Figure 5 shows two phylogenies of the 16 final sequences with , k = 22, . We chose to provide high differentiation between OMH and Jaccard, and m to provide high sensitivity to sequence perturbation. The binary sequences are the path used to create the child, i.e. siblings in the last generation would have the same three-digit prefix. OMH recovered the structure of the tree except for the four nodes at the top of the tree. Weighted Jaccard on the other hand cannot resolve most of the lineages as the sequences are very similar at the level of k-mer content. The experiment was repeated 10 times, and in each a similar tree was recovered (see Supplementary Fig. S1).

Fig. 5.

Phylogenies of the 16 child sequences produced by inserting IS elements into the Escherichia coli genome when distance is measured by both (a) OMH and (b) weighted Jaccard with , k = 22, . In general, OMH is able to recover more of the lineage structure than Jaccard because the k-mer content is very similar even though the sequences are inserted at different locations

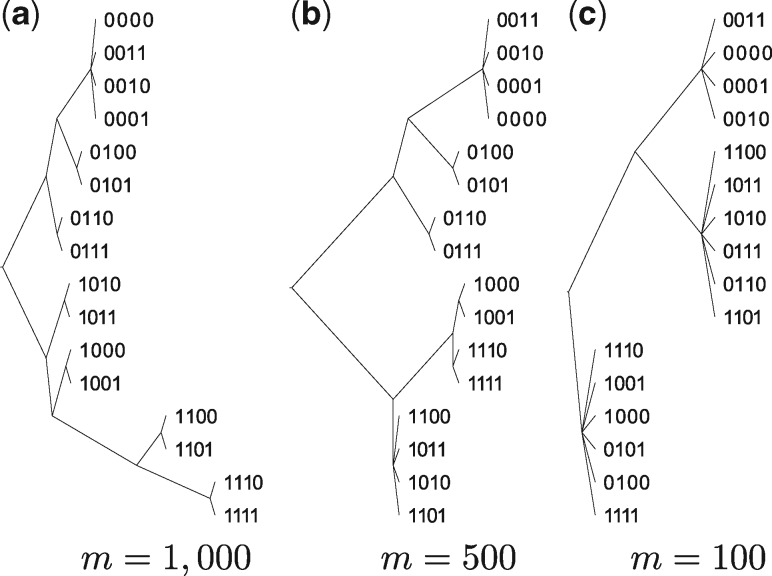

To test the robustness of OMH to lower values of m, and in turn the necessary computational resources, we reconstructed the tree above with and 100 (see Fig. 6). Even for low values of m, OMH is able to recover most of the tree structure, which is not recovered by weighted Jaccard even with a much larger m.

Fig. 6.

Phylogenies of the 16 child sequences produced by inserting IS elements into the Escherichia coli genome when distance is measured by OMH k = 22, and m equals to (a) 1000, (b) 500 and (c) 100. As m increases, OMH recovers more accurately the general structure of the tree

5 Conclusion

We presented the OMH method that is an LSH for the edit dissimilarity. Unlike the Jaccard similarity, which is only sensitive to the k-mer content of a sequence, OMH additionally takes into account the relative order of the k-mers in a sequence.

The OMH method is a refinement of the weighted Jaccard similarity that is used extensively in many related fields, such as document classification and duplicate detection. However, despite the advantages of the weighted Jaccard similarity, it has not yet been widely adopted by the bioinformatics community. Using weighted Jaccard and OMH for estimating edit similarity in bioinformatics applications can help reduce the number of false-positive matches which can in turn avoid unnecessary computations.

Supplementary Material

Acknowledgements

The authors would like to thank Mohsen Ferdosi and Heewook Lee for valuable discussion, and Natalie Sauerwald for comments on the manuscript.

Funding

This work was supported in part by the Gordon and Betty Moore Foundation’s Data-Driven Discovery Initiative [GBMF4554 to C.K.]; the US National Institutes of Health [R01GM122935]; The Shurl and Kay Curci Foundation; and the generosity of Eric and Wendy Schmidt by recommendation of the Schmidt Futures program.

Conflict of Interest: C.K. is a co-founder of Ocean Genomics, Inc.

References

- Aldous D., Diaconis P. (1999) Longest increasing subsequences: from patience sorting to the Baik-Deift-Johansson theorem. Bull. Am. Math. Soc., 36, 413–432. [Google Scholar]

- Alonso O. et al. (2013) Duplicate news story detection revisited In: Asia Information Retrieval Symposium. Springer, pp. 203–214. [Google Scholar]

- Backurs A., Indyk P. (2015) Edit distance cannot be computed in strongly subquadratic time (unless SETH is false) In: Proceedings of the Forty-Seventh Annual ACM Symposium on Theory of Computing, STOC ’15. ACM, New York, NY, USA, pp. 51–58. [Google Scholar]

- Bar-Yossef Z. et al. (2004) Approximating edit distance efficiently. In: 45th Annual IEEE Symposium on Foundations of Computer Science, pp. 550–559.

- Berlin K. et al. (2015) Assembling large genomes with single-molecule sequencing and locality-sensitive hashing. Nat. Biotechnol., 33, 623–630. [DOI] [PubMed] [Google Scholar]

- Broder A.Z. (1997) On the resemblance and containment of documents. In: Proceedings. Compression and Complexity of SEQUENCES 1997 (Cat. No.97TB100171), pp. 21–29.

- Chum O. et al. (2008) Near duplicate image detection: min-Hash and tf-idf weighting. In: BMVC, Vol. 810, pp. 812–815. [Google Scholar]

- Drew J. et al. (2017) Polymorphic malware detection using sequence classification methods and ensembles. EURASIP J. Inf. Secur., 2017, 2. [Google Scholar]

- Fredman M.L. (1975) On computing the length of longest increasing subsequences. Discrete Math., 11, 29–35. [Google Scholar]

- Gollapudi S., Panigrahy R. (2006) Exploiting asymmetry in hierarchical topic extraction. In: Proceedings of the 15th ACM International Conference on Information and Knowledge Management ACM, pp. 475–482.

- Harris R.S. (2007) Improved pairwise alignment of genomic DNA. PhD Thesis, The Pennsylvania State University, PA, USA.

- Hunt J.W., Szymanski T.G. (1977) A fast algorithm for computing longest common subsequences. Commun. ACM, 20, 350–353. [Google Scholar]

- Indyk P., Motwani R. (1998) Approximate nearest neighbors: towards removing the curse of dimensionality In: Proceedings of the Thirtieth Annual ACM Symposium on Theory of Computing, STOC ’98. ACM, New York, NY, USA, pp. 604–613. [Google Scholar]

- Jaffe D.B. et al. (2003) Whole-genome sequence assembly for mammalian genomes: Arachne 2. Genome Res., 13, 91–96. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jain C. et al. (2017) A fast approximate algorithm for mapping long reads to large reference databases In: Sahinalp S.C. (ed.) Research in Computational Molecular Biology. Springer International Publishing, Cham, pp. 66–81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kushilevitz E. et al. (2000) Efficient search for approximate nearest neighbor in high dimensional spaces. SIAM J. Comput., 30, 457–474. [Google Scholar]

- Langmead B., Salzberg S.L. (2012) Fast gapped-read alignment with Bowtie 2. Nat. Methods, 9, 357–359. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee H. et al. (2016) Insertion sequence-caused large-scale rearrangements in the genome of Escherichia coli. Nucleic Acids Res., 44, 7109–7119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Levenshtein V.I. (1966) Binary codes capable of correcting deletions, insertions, and reversals. In: Soviet Physics Doklady, Vol. 10, pp. 707–710. [Google Scholar]

- Li H., Durbin R. (2010) Fast and accurate long-read alignment with Burrows–Wheeler transform. Bioinformatics, 26, 589–595. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu C.-M. et al. (2012) SOAP3: ultra-fast GPU-based parallel alignment tool for short reads. Bioinformatics (Oxford, England), 28, 878–879. [DOI] [PubMed] [Google Scholar]

- Luo C., Shrivastava A. (2017) SSH (sketch, shingle, & hash) for indexing massive-scale time series. In: NIPS 2016 Time Series Workshop, pp. 38–58.

- Marçais G. et al. (2018) MUMmer4: a fast and versatile genome alignment system. PLOS Comput. Biol., 14, e1005944. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Myers E.W. et al. (2000) A whole-genome assembly of Drosophila. Science, 287, 2196–2204. [DOI] [PubMed] [Google Scholar]

- Ondov B.D. et al. (2016) Mash: fast genome and metagenome distance estimation using MinHash. Genome Biol., 17, 132. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ostrovsky R., Rabani Y. (2007) Low distortion embeddings for edit distance. J. ACM, 54, 218–224. [Google Scholar]

- Raff E., Nicholas C. (2017) Malware classification and class imbalance via stochastic hashed LZJD. In: Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security ACM, pp. 111–120.

- Shrivastava A. (2016) Simple and efficient weighted minwise hashing. In: Advances in Neural Information Processing Systems, pp. 1498–1506.

- van Aardenne-Ehrenfest and de Bruijn (1951) Circuits and trees in oriented linear graphs. Simon Stevin : Wis-en Natuurkundig Tijdschrift. Tschr., 28, 203–217. [Google Scholar]

- Wu W. et al. (2017) Consistent weighted sampling made more practical. In: Proceedings of the 26th International Conference on World Wide Web, WWW ’17. Republic and Canton of Geneva, Switzerland, pp. 1035–1043. International World Wide Web Conferences Steering Committee.

- Zhao M. et al. (2013) SSW Library: an SIMD Smith-Waterman C/C++ library for use in genomic applications. PLoS One, 8, e82138. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.