Abstract

Reaching a final diagnosis through clinical judgment has remained a challenge since time immemorial. Artificial intelligence (AI) and machine learning programs now compete to outperform the seasoned physician in arriving at a differential diagnosis. We discuss here the possible roles of AI in neurology.

Keywords: Artificial intelligence, clinical decision support system, machine learning

INTRODUCTION

To quote Hippocrates, “Life is short, the art long, opportunity fleeting, experience treacherous and judgment difficult.” Clinical judgment has been the proverbial Sword of Damocles hanging over a neurologist's head since time immemorial. In a recent study, the diagnostic accuracy of doctors was found to be far superior to that of artificial intelligence (AI) algorithms, with the caveat that doctors also made an incorrect diagnosis in 15% of cases.[1] While the gray cells of an experienced neurologist may filter through the multitude of clinical possibilities using his or her experience, AI invokes complex algorithms based on previously fed data sets to achieve similar results.[2,3]

CLINICAL JUDGMENT VERSUS ARTIFICIAL INTELLIGENCE

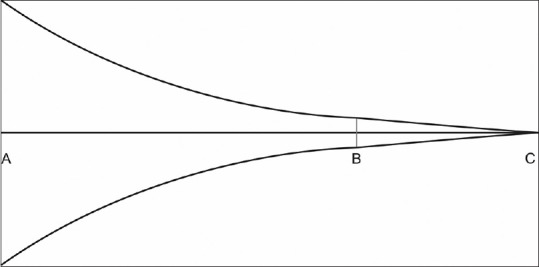

Let us imagine the scenario of a neurologist and an AI-based system being called simultaneously to see a patient. On the way to the patient, both the neurologist and the AI system will have to consider an infinite set of possible diagnoses. Let us further assume that the patient is a middle-aged man who has developed an acute-onset inability to speak. At the point of first contact, the AI system, with its built-in camera, microphones, and deductive algorithms, will struggle to narrow down the possibilities from the original infinite set of possible diagnoses. The neurologist, however, having arrived at the scene, may be able to immediately narrow down the possibilities to a finite number based on prior knowledge, intuition, and instinct. As more information becomes available by way of leading questions and guided investigations, the possibilities become progressively fewer in number. Soon, a structure emerges, and the differential diagnosis takes form. The efficiency of the AI system, though wanting at the start, will progressively improve to parallel and even surpass human reasoning as more and more information is successively fed into it. The cognitive process involved in reasoning through the clinical scenario cited above can be depicted in the form of a funnel, with its wide end representing the reasoning process just before the point of first contact (A) and its narrow end representing the final conclusion (C) [Figure 1]. The shrinking diameter of the funnel (B) reflects the narrowing down of possibilities with progressive acquisition of new information.[4]

Figure 1. Funnel plot of diagnostic reasoning in neurologists

Pauker et al. have estimated that the core knowledge of internal medicine involves about two million facts or data points.[5] It would be wise to assume then that any AI system must sift through at least two million facts at the wide end of the funnel before moving forward toward point B. At the wide end of the funnel, the totality of the world must be encountered with its huge amount of data that may not always follow the classical computer logic of true or false.[4] This can be a limiting factor for most AI systems. The seasoned neurologist, on the other hand, can quickly move from this world of overwhelming facts at point A toward the zone at point B, as suggested in the clinical vignette above. The AI may, however, be able to outperform the neurologist in moving from point B to point C (conclusion or final diagnosis) due to the limited data points to start with and powerful algorithms with blazing computing speeds.
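The narrowing of the funnel from A through B to C can be sketched as repeated Bayesian updating over a set of candidate diagnoses. This is only an illustration under stated assumptions, not the method of any system cited here: the diagnosis labels (`dx_a` to `dx_d`), the prior, the likelihood values, and the 0.10 cutoff are all invented placeholders and carry no clinical meaning.

```python
from typing import Dict, List


def bayes_update(belief: Dict[str, float],
                 likelihood: Dict[str, float]) -> Dict[str, float]:
    """Return the posterior over diagnoses after one new finding (Bayes' rule)."""
    unnormalized = {dx: p * likelihood.get(dx, 0.0) for dx, p in belief.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("finding is incompatible with every candidate diagnosis")
    return {dx: p / total for dx, p in unnormalized.items()}


def funnel_widths(prior: Dict[str, float],
                  findings: List[Dict[str, float]],
                  threshold: float) -> List[int]:
    """Count the diagnoses still at or above `threshold` after each finding."""
    belief = dict(prior)
    widths = [sum(p >= threshold for p in belief.values())]
    for likelihood in findings:
        belief = bayes_update(belief, likelihood)
        widths.append(sum(p >= threshold for p in belief.values()))
    return widths


# Hypothetical inputs: four placeholder diagnoses with a flat prior (point A).
prior = {"dx_a": 0.25, "dx_b": 0.25, "dx_c": 0.25, "dx_d": 0.25}

# Each dict is P(finding | diagnosis) for one new piece of information.
# The numbers are invented for illustration only.
findings = [
    {"dx_a": 0.90, "dx_b": 0.60, "dx_c": 0.10, "dx_d": 0.05},
    {"dx_a": 0.80, "dx_b": 0.10, "dx_c": 0.50, "dx_d": 0.50},
]

widths = funnel_widths(prior, findings, threshold=0.10)
print(widths)
```

With these invented numbers the count of diagnoses still in play goes from 4 to 2 to 1, mirroring the wide end (A), the narrowing zone (B), and the conclusion (C). A real system would face a far larger candidate set at point A, which is where the text locates the difficulty.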

RULE-BASED EXPERT SYSTEM VERSUS MACHINE LEARNING MODEL

Rule-based expert algorithms rely on a knowledge database prefed by humans and employ computer programs based on Bayesian inference, fuzzy logic, and other statistical models to draw inferences from clinical input. This is akin to a navigation app (e.g., Google Maps) computing the shortest route from point A to point B based on prefed data points in a city map database. Rule-based expert systems built on prefed static data tend to work in the manner an ideal undergraduate medical student would, by taking general principles about medicine and applying them to new patients. A study involving fifty clinicopathological conferences published in NEJM suggested pattern recognition and selection of a “pivot” or key finding as an essential step toward generating a differential diagnosis.[6] AI systems based on machine learning algorithms and neural networks are explicitly designed to recognize patterns in the fed data.[2] Such a system approaches clinical problems as a doctor progressing through residency might, i.e., by learning rules and patterns from every new patient seen. An AI-based solution is, therefore, not preprogrammed by humans but is capable of “programming” itself when fed with very large amounts of structured and unstructured data. Such data sets exist in neurology in the form of electronic medical records, including patients' imaging data. These self-learned patterns, referred to as learned “models,” later form a pivot for the AI to derive meaningful clinical inferences from new real-time patient data. This is similar to modern driverless cars constantly trying to predict and negotiate evolving scenarios based on real-time data as they move forward without human intervention. AI thus acquires dynamic “knowledge” progressively in real time through training, validation, and practical application on data sets, whereas rule-based expert algorithms are static in their knowledge base and output. A trained AI algorithm may in principle acquire enough prowess to outperform human clinical reasoning skills. The potential ramifications of this phenomenon in the field of neurology are profound.
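The contrast between a static rule base and a model that updates with each new case can be sketched in a few lines. The sketch below is a deliberately simplified assumption: the learner is a plain frequency table, not a neural network, and the class names, the finding `finding_x`, and the labels `label_1` and `label_2` are hypothetical placeholders rather than clinical entities.

```python
from collections import Counter, defaultdict
from typing import Dict


class RuleBasedSystem:
    """Static knowledge: rules are fixed when the system is built."""

    def __init__(self, rules: Dict[str, str]) -> None:
        self._rules = dict(rules)

    def predict(self, finding: str) -> str:
        return self._rules.get(finding, "unknown")


class LearnedModel:
    """Dynamic knowledge: the mapping is re-estimated from every labeled case."""

    def __init__(self) -> None:
        self._counts: Dict[str, Counter] = defaultdict(Counter)

    def learn(self, finding: str, label: str) -> None:
        self._counts[finding][label] += 1

    def predict(self, finding: str) -> str:
        counts = self._counts.get(finding)
        if not counts:
            return "unknown"
        # Return the label seen most often with this finding so far.
        return counts.most_common(1)[0][0]


# Hypothetical rule written once by a human expert.
rule_system = RuleBasedSystem({"finding_x": "label_1"})

# Hypothetical stream of labeled cases arriving over time.
model = LearnedModel()
for finding, label in [("finding_x", "label_1"),
                       ("finding_x", "label_2"),
                       ("finding_x", "label_2")]:
    model.learn(finding, label)

rule_output = rule_system.predict("finding_x")   # fixed by the prefed rule
learned_output = model.predict("finding_x")      # follows the observed cases
print(rule_output, learned_output)
```

The rule-based output never changes unless a human edits the rule, whereas the learned output shifts as the observed cases accumulate. That dependence on data is also why such a model inherits whatever biases the data contain.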

ARTIFICIAL INTELLIGENCE IN NEUROLOGY

In a recently published article, a machine learning algorithm for image classification called a deep convolutional neural network was trained using a retrospective data set of 128,175 retinal images, which had been graded for diabetic retinopathy by a panel of human experts. When the algorithm was tested with new data sets of fundus photographs, it diagnosed diabetic retinopathy with a sensitivity and specificity of 90% and 98%, respectively.[7] In a recent study published in Lancet, a retrospectively collected data set containing 313,318 head computed tomography (CT) scans together with their clinical reports from around twenty centers in India was used to train an AI algorithm to detect critical findings on CT scans.[8] The deep learning algorithm could accurately identify head CT scan abnormalities requiring urgent attention, opening up the possibility of using these algorithms to automate the triage process. Machine learning has the potential to displace the work of radiologists, as neuroimaging converted to digitized image data sets and fed into these algorithms will soon deliver an accuracy exceeding that of the trained human eye.[1] Bentley et al. have used CT scan-based AI to predict intracranial hemorrhage in patients receiving intravenous thrombolysis with tissue plasminogen activator (tPA).[9] Machine learning has also been employed in outcome prediction and prognostic evaluation after stroke.[10,11,12,13,14,15,16,17]
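For readers less familiar with the two metrics quoted above, the following minimal sketch shows how sensitivity and specificity are computed from a confusion matrix. The counts are invented so that the arithmetic reproduces the 90% and 98% figures; they are not the actual counts from the cited study.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of diseased cases the algorithm correctly flags."""
    diseased = true_pos + false_neg
    if diseased == 0:
        raise ValueError("no diseased cases in the test set")
    return true_pos / diseased


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of healthy cases the algorithm correctly clears."""
    healthy = true_neg + false_pos
    if healthy == 0:
        raise ValueError("no healthy cases in the test set")
    return true_neg / healthy


# Hypothetical test set: 100 diseased and 100 healthy images.
sens = sensitivity(true_pos=90, false_neg=10)
spec = specificity(true_neg=98, false_pos=2)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this hypothetical set, a sensitivity of 0.90 means 10 of 100 diseased cases would be missed, while a specificity of 0.98 means 2 of 100 healthy cases would be flagged in error.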

THE DARK SIDE

Today's machine learning is not the sponge-like learning of which humans are capable, which allows rapid progress in a new domain without drastic redesign for the specific task. The ability of an algorithm to perform outstandingly at a single task, such as making a diagnosis from a digitized image, should not be confused with the larger task of performing the role of a neurologist involved in day-to-day patient care. Machine learning algorithms are also data hungry, needing data points to the tune of millions to be of any value. Training AI with clinical data sets is also prone to human biases introduced during data collection. AI algorithms are often referred to as “black box models” because, unlike rule-based expert systems, the underlying rationale for the output generated by the AI algorithm is inscrutable not only to physicians but also to the engineers who develop them.[18]

CONCLUSION

To quote Charles Darwin, “It is not the strongest of the species that survive, nor the most intelligent, but the one most responsive to change.” Algorithms of tomorrow may equal or outperform the doctor even at the wide end of the funnel (A). There is a need to create awareness of this disruption, to foresee it, and to grow along with it by adapting to this new reality. Sir William Osler once said, “Medicine is the science of uncertainty and the art of probability-Listen, listen, listen the patient is telling you the diagnosis.” AI is listening; are we?

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

REFERENCES

- 1. Semigran HL, Levine DM, Nundy S, Mehrotra A. Comparison of physician and computer diagnostic accuracy. JAMA Intern Med. 2016;176:1860–1. doi: 10.1001/jamainternmed.2016.6001.

- 2. Obermeyer Z, Emanuel EJ. Predicting the future – Big data, machine learning, and clinical medicine. N Engl J Med. 2016;375:1216–9. doi: 10.1056/NEJMp1606181.

- 3. McCarthy M. Doctors beat computer programs in making diagnoses. BMJ. 2016;355:i5552. doi: 10.1136/bmj.i5552.

- 4. Blois MS. Clinical judgment and computers. N Engl J Med. 1980;303:192–7. doi: 10.1056/NEJM198007243030405.

- 5. Pauker SG, Gorry GA, Kassirer JP, Schwartz WB. Towards the simulation of clinical cognition. Taking a present illness by computer. Am J Med. 1976;60:981–96. doi: 10.1016/0002-9343(76)90570-2.

- 6. Eddy DM, Clanton CH. The art of diagnosis: Solving the clinicopathological exercise. N Engl J Med. 1982;306:1263–8. doi: 10.1056/NEJM198205273062104.

- 7. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–10. doi: 10.1001/jama.2016.17216.

- 8. Chilamkurthy S, Ghosh R, Tanamala S, Biviji M, Campeau NG, Venugopal VK, et al. Deep learning algorithms for detection of critical findings in head CT scans: A retrospective study. Lancet. 2018;392:2388–96. doi: 10.1016/S0140-6736(18)31645-3.

- 9. Bentley P, Ganesalingam J, Carlton Jones AL, Mahady K, Epton S, Rinne P, et al. Prediction of stroke thrombolysis outcome using CT brain machine learning. Neuroimage Clin. 2014;4:635–40. doi: 10.1016/j.nicl.2014.02.003.

- 10. Zhang Q, Xie Y, Ye P, Pang C. Acute ischaemic stroke prediction from physiological time series patterns. Australas Med J. 2013;6:280–6. doi: 10.4066/AMJ.2013.1650.

- 11. Asadi H, Dowling R, Yan B, Mitchell P. Machine learning for outcome prediction of acute ischemic stroke post intra-arterial therapy. PLoS One. 2014;9:e88225. doi: 10.1371/journal.pone.0088225.

- 12. Asadi H, Kok HK, Looby S, Brennan P, O'Hare A, Thornton J, et al. Outcomes and complications after endovascular treatment of brain arteriovenous malformations: A Prognostication attempt using artificial intelligence. World Neurosurg. 2016;96:562–90. doi: 10.1016/j.wneu.2016.09.086.

- 13. Birkner MD, Kalantri S, Solao V, Badam P, Joshi R, Goel A, et al. Creating diagnostic scores using data-adaptive regression: An application to prediction of 30-day mortality among stroke victims in a rural hospital in India. Ther Clin Risk Manag. 2007;3:475–84.

- 14. Ho KC, Speier W, El-Saden S, Liebeskind DS, Saver JL, Bui AA, et al. Predicting discharge mortality after acute ischemic stroke using balanced data. AMIA Annu Symp Proc 2014. 2014:1787–96.

- 15. Chen Y, Dhar R, Heitsch L, Ford A, Fernandez-Cadenas I, Carrera C, et al. Automated quantification of cerebral edema following hemispheric infarction: Application of a machine-learning algorithm to evaluate CSF shifts on serial head CTs. Neuroimage Clin. 2016;12:673–80. doi: 10.1016/j.nicl.2016.09.018.

- 16. Siegel JS, Ramsey LE, Snyder AZ, Metcalf NV, Chacko RV, Weinberger K, et al. Disruptions of network connectivity predict impairment in multiple behavioral domains after stroke. Proc Natl Acad Sci U S A. 2016;113:E4367–76. doi: 10.1073/pnas.1521083113.

- 17. Hope TM, Seghier ML, Leff AP, Price CJ. Predicting outcome and recovery after stroke with lesions extracted from MRI images. Neuroimage Clin. 2013;2:424–33. doi: 10.1016/j.nicl.2013.03.005.

- 18. Cabitza F, Rasoini R, Gensini GF. Unintended consequences of machine learning in medicine. JAMA. 2017;318:517–8. doi: 10.1001/jama.2017.7797.