A retrospective cohort study revealed wide variation in electrolyte testing rates across hospitals, with strong hospital-level correlation across diagnoses.

Abstract

BACKGROUND:

Overuse of laboratory testing contributes substantially to health care waste, downstream resource use, and patient harm. Understanding patterns of variation in hospital-level testing across common inpatient diagnoses could identify outliers and inform waste-reduction efforts.

METHODS:

We conducted a multicenter retrospective cohort study of pediatric inpatients at 41 children’s hospitals using administrative data from 2010 to 2016. Initial electrolyte testing was defined as testing occurring within the first 2 days of an encounter, and repeat testing was defined as subsequent testing within an encounter in which initial testing occurred. To examine whether testing rates correlated across diagnoses at the hospital level, we compared risk-adjusted rates for gastroenteritis with a weighted average of risk-adjusted rates in other diagnosis cohorts. For each diagnosis, linear regression was performed to assess the association between hospital-level initial and subsequent testing rates.

RESULTS:

In 497 719 patient encounters, wide variation was observed across hospitals in adjusted initial and repeat testing rates. Hospital-specific rates of testing in gastroenteritis were moderately to strongly correlated with the weighted average of testing in other conditions (initial: r = 0.63; repeat: r = 0.83). Within diagnoses, higher hospital-level initial testing rates were associated with significantly increased rates of subsequent testing for all diagnoses except gastroenteritis.

CONCLUSIONS:

Among children’s hospitals, rates of initial and repeat electrolyte testing vary widely across 8 common inpatient diagnoses. For most diagnoses, hospital-level rates of initial testing were associated with rates of subsequent testing. Consistent rates of testing across multiple diagnoses suggest that hospital-level factors, such as institutional culture, may influence decisions for electrolyte testing.

What’s Known on This Subject:

Overuse of laboratory testing contributes to health care waste. A better understanding of the variation in electrolyte testing rates across hospitals may inform future research and improvement interventions that target overuse.

What This Study Adds:

Wide variation in testing rates was observed across hospitals, with strong associations between rates of testing across multiple diagnoses at individual hospitals. These results suggest potential overuse of electrolyte testing that is at least partially related to hospital-level factors.

Health care spending in pediatrics is rising sharply, with profound and growing consequences for patients, families, and health systems.1–3 A significant proportion of this spending can be categorized as overuse,4 which is defined as the delivery of health care for which net benefits do not exceed net harms.5 In particular, overuse of laboratory testing, especially repeat daily testing in which there is a low pretest probability of a clinically significant result, contributes substantially to waste.6 Overuse of commonly repeated tests, such as serum electrolyte tests, can lead to excess costs, patient charges, increased demand on nursing time, iatrogenic anemia,7 and poor patient and family experience because of the pain of phlebotomy8,9 and interrupted sleep from early morning laboratory draws.10,11 Decreasing overtesting may also reduce other unnecessary care, such as repeat testing and electrolyte supplementation, or other interventions for mild subclinical abnormalities that would have self-corrected.12

Previous studies in pediatrics have identified variation in testing that affects the value and cost of subsequent care.13–15 However, a more detailed understanding of the patterns of variation in testing across diagnoses and across hospitals could improve knowledge of the hospital-level factors that increase testing rates and low-value care. Although previous quality improvement interventions have reduced the use of routine testing at single centers,16,17 recognition of consistent differences in how testing is used at different hospitals could inform broader efforts to reduce overtesting. In particular, although electrolyte testing is commonly performed in pediatrics, various guidelines recommend limiting its use in generally healthy children with acute conditions such as bronchiolitis and gastroenteritis.18,19

In this study, we examined a cohort of generally healthy children hospitalized with common pediatric conditions to measure the frequency of and variation in inpatient electrolyte testing across children’s hospitals and to determine patterns of use that may impact testing at a hospital level. In particular, we sought to determine if individual hospitals had a pattern of higher testing rates across conditions, which may indicate hospital factors that drive disproportionate resource use (such as a hospital-specific “culture” of testing), and to evaluate if hospitals with higher rates of initial testing perform more subsequent testing, even after adjusting for measurable differences in patient demographics and clinical severity.

Methods

Study Design

We conducted a multicenter retrospective cohort study of pediatric inpatients with 1 of 8 common pediatric conditions (gastroenteritis, urinary tract infections [UTIs], constipation, febrile conditions in infants, pneumonia, skin and soft tissue infections [SSTIs], bronchiolitis, and asthma) who were cared for at children’s hospitals that contribute data to the Pediatric Health Information System (PHIS) database. The PHIS database includes administrative data from 49 tertiary care children’s hospitals across the United States that are affiliated with the Children’s Hospital Association (Lenexa, KS). Data quality and reliability are ensured through a joint effort between the Children’s Hospital Association and participating hospitals. Hospitals submit discharge and encounter data, including demographics, diagnoses, and procedures, in International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) and International Classification of Diseases, 10th Revision, Clinical Modification (ICD-10-CM) format. Data are de-identified at the time of submission and are reviewed through bimonthly coding consensus meetings, coding-consistency reviews, and quarterly data-quality reports.20 The current study excluded 6 hospitals that had incomplete data during the study period and 2 hospitals that did not contribute local emergency department data to the PHIS.

Study Population

Inclusion and Exclusion Criteria

We included all patients hospitalized (admitted and observation status) at <18 years of age between January 1, 2010, and December 31, 2016, who had specific billing codes for 1 of 8 common medical diagnoses (Supplemental Table 2).

Because our objective was to understand electrolyte testing in a generally healthy cohort of children with uncomplicated hospitalizations, we made the following exclusions: (1) patients with complex chronic conditions (CCCs),21 (2) patients with a length of stay (LOS) >7 days, (3) encounters that included ICU stays, and (4) encounters that involved the use of specific medications that directly alter serum electrolytes with a high risk of significant abnormality, including mineralocorticoids, diuretics, and desmopressin. We also excluded transfers from other hospitals because we were unable to determine the extent of any previous testing and could not distinguish between initial and repeat testing. This study was categorized as not human subjects research by the Institutional Review Board of Cincinnati Children’s Hospital Medical Center.

Diagnosis-Specific Inclusion and Exclusion Criteria

The 8 chosen diagnoses represent frequent causes of inpatient hospitalization.22 Diagnosis cohort definitions and diagnosis-specific inclusion and exclusion criteria were adapted from previous studies (Supplemental Table 2).13,14,23–28 Because these definitions were developed by using ICD-9-CM codes, ICD-10-CM equivalents were created by using Centers for Medicare and Medicaid Services general equivalency mapping.29 Draft ICD-10-CM codes were then reviewed by study authors and by a coding specialist, with minor adjustments to preserve the intended cohort definition. Population size and seasonal variation in discharge percentage were then compared between ICD-9-CM and ICD-10-CM definitions for each cohort to evaluate the similarity of the cohorts coded with each International Classification of Diseases coding system (Supplemental Fig 5).

Measures

The primary variable of interest was use of an electrolyte test on any day of the patient hospitalization. Electrolyte tests were defined as any test for an individual electrolyte or any test panel (such as a basic metabolic panel) that was used to test for any electrolyte. Point-of-care blood gas analyses were not included as electrolyte tests. We defined the overall testing rate as the proportion of hospitalizations in which electrolyte testing was performed at any time in the admission encounter (including testing in the emergency department). Testing that occurred on the first or second day was categorized as initial testing, and the initial testing rate was calculated as the proportion of hospitalizations in which any initial testing occurred. Testing that occurred on any subsequent day after initial testing was categorized as repeat testing, and the repeat testing rate was calculated as the proportion of hospitalizations in which any repeat testing occurred. To measure the volume of testing after the first 2 days of an encounter, subsequent testing was defined as the total number of tests that occurred after the second day of hospitalization divided by the total number of hospitalizations. The subsequent testing rate, expressed as the total number of subsequent tests per hospitalization, was calculated for each diagnosis cohort within each hospital.

Outcomes included hospital-level variation in overall electrolyte testing rate, initial electrolyte testing rate, and repeat electrolyte testing rate.

Statistical Analysis

Demographic characteristics were summarized with frequencies and percentages by diagnosis.

To describe variation across hospitals in electrolyte testing, we first calculated unadjusted proportions of patient hospitalizations within each of the electrolyte testing categories (overall, initial, and repeat testing) within each of the 8 diagnoses. Descriptive statistics, including median, range, and interquartile range (IQR), were calculated for each diagnosis group.
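As a minimal sketch of these descriptive statistics, the Python function below summarizes one diagnosis's hospital-level rates (one value per hospital). The input values are hypothetical, and `statistics.quantiles` with `method="inclusive"` is only one of several quartile conventions; SAS procedures may use a different default, so quartiles computed this way can differ slightly from published values.

```python
import statistics


def describe_hospital_rates(rates):
    """Median, range, and IQR of hospital-level rates for one diagnosis."""
    if len(rates) < 2:
        raise ValueError("need rates from at least 2 hospitals")
    # Quartiles by linear interpolation between order statistics ("inclusive");
    # other software may use a different quantile definition.
    q1, median, q3 = statistics.quantiles(rates, n=4, method="inclusive")
    return {
        "median": median,
        "range": (min(rates), max(rates)),
        "iqr": (q1, q3),
    }


# Hypothetical unadjusted initial testing rates at 5 hospitals
summary = describe_hospital_rates([0.61, 0.80, 0.84, 0.90, 0.99])
print(summary)
```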

We risk adjusted all hospital-level rates of testing by including covariates in a generalized linear mixed-effect model, with hospital included as a random effect. Covariates in the model included age, sex, race and/or ethnicity, payer, LOS, and severity for each hospitalization. Severity was assessed by using the relative cost weights (hospitalization resource intensity score for kids)30 on the basis of each encounter’s assigned all patients refined diagnosis related group and level of severity.
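A random-intercept logistic model of the general kind described above can be written as follows. This is a sketch under stated assumptions (a logit link and normally distributed hospital intercepts), not the authors' exact specification; the source does not report the link function or how hospital-level adjusted rates were derived from the fitted model.

$$
\operatorname{logit}\Pr(Y_{ij}=1) = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_j, \qquad u_j \sim \mathcal{N}(0,\sigma_u^2)
$$

Here $Y_{ij}$ indicates whether hospitalization $i$ at hospital $j$ had the testing outcome of interest, $\mathbf{x}_{ij}$ collects the covariates listed above (age, sex, race and/or ethnicity, payer, LOS, and severity), and $u_j$ is the hospital random intercept that captures residual between-hospital differences after covariate adjustment.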

To understand the baseline rates of testing and to determine rates of change in testing over time, we calculated yearly rates of initial testing over time for each diagnosis group. We constructed generalized linear mixed-effects models with a binomial distribution and hospital random effects to assess for any significant changes to testing rates over time for each diagnosis group.

To model the impact of potential hospital-specific factors (eg, a local culture or guidelines that encourage testing) on rates of testing and to analyze whether rates of testing in 1 condition were associated with testing in other conditions, risk-adjusted testing rates were divided into quintiles by diagnosis and displayed on a heat map. The proportion of hospitals with all 8 diagnoses, or at least 7 of 8 diagnoses, in the highest 40% of testing rates was calculated for both initial and repeat testing. We also used Spearman’s correlation to compare adjusted rates of initial and repeat testing for a single diagnosis with high rates of testing in both categories (gastroenteritis) with a weighted average of the other diagnosis cohorts. Only 1 comparison was performed to minimize the potential for increased type I error due to multiple comparisons.
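The cross-diagnosis comparisons described above can be sketched in Python as follows. Everything here is illustrative: the 5 hospitals, 3 diagnoses, rates, and encounter counts are invented; weighting the other diagnoses by encounter counts is an assumption (the source does not state the weights); and the top 40% is taken as the first floor(0.4 × n) hospitals when sorted from the highest rate, with ties broken arbitrarily. Spearman's correlation is computed as the Pearson correlation of average ranks.

```python
from math import sqrt


def average_ranks(values):
    """Rank values 1..n (ascending); tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1  # mean of ranks start+1..end+1
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


def weighted_other_rate(rates, counts, index_dx):
    """Encounter-weighted mean of one hospital's rates, excluding index_dx."""
    others = [dx for dx in rates if dx != index_dx]
    total = sum(counts[dx] for dx in others)
    return sum(rates[dx] * counts[dx] for dx in others) / total


def count_consistently_high(rates_by_dx, min_dx):
    """Number of hospitals in the top 40% for at least min_dx diagnoses.

    rates_by_dx maps diagnosis -> {hospital: adjusted rate}.
    """
    hits = {}
    for by_hospital in rates_by_dx.values():
        ranked = sorted(by_hospital, key=by_hospital.get, reverse=True)
        for hospital in ranked[: int(0.4 * len(ranked))]:
            hits[hospital] = hits.get(hospital, 0) + 1
    return sum(1 for count in hits.values() if count >= min_dx)


# Hypothetical adjusted rates and encounter counts
rates_by_dx = {
    "gastroenteritis": {"A": 0.95, "B": 0.85, "C": 0.80, "D": 0.70, "E": 0.60},
    "uti": {"A": 0.80, "B": 0.70, "C": 0.50, "D": 0.90, "E": 0.40},
    "asthma": {"A": 0.30, "B": 0.25, "C": 0.20, "D": 0.10, "E": 0.05},
}
counts = {"gastroenteritis": 100, "uti": 100, "asthma": 300}

hospitals = sorted(rates_by_dx["gastroenteritis"])
gastro = [rates_by_dx["gastroenteritis"][h] for h in hospitals]
others = [
    weighted_other_rate({dx: r[h] for dx, r in rates_by_dx.items()}, counts, "gastroenteritis")
    for h in hospitals
]
rho = spearman_rho(gastro, others)
print(f"rho = {rho:.2f}; hospitals high in all 3: {count_consistently_high(rates_by_dx, 3)}")
```

With these toy values, hospitals C and D swap ranks between gastroenteritis and the weighted average of the other diagnoses, giving a correlation of 0.9, and only hospital A is in the top 40% for all 3 diagnoses.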

Finally, we assessed the relationship between risk-adjusted rates of initial testing and all subsequent testing after the initial time period for each diagnosis via linear regression.
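A minimal sketch of the per-diagnosis regression, using unweighted ordinary least squares on hypothetical hospital-level values. Whether the authors weighted hospitals by volume or included additional terms is not stated, so this shows only the simplest form. With the initial testing rate expressed as a proportion, the slope is the expected change in subsequent tests per hospitalization for a change from 0 to 1 in that proportion.

```python
def ols_fit(x, y):
    """Intercept and slope of the least-squares line y = a + b * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical hospitals for one diagnosis:
# x = adjusted initial testing rate (proportion of hospitalizations)
# y = subsequent tests per hospitalization
initial_rate = [0.2, 0.4, 0.6, 0.8]
subsequent_rate = [0.15, 0.25, 0.35, 0.45]
intercept, slope = ols_fit(initial_rate, subsequent_rate)
print(f"intercept = {intercept:.3f}, slope = {slope:.3f}")
```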

All analyses were performed by using SAS version 9.4 (SAS Institute, Inc, Cary, NC), and P < .05 was considered statistically significant.

Results

During the study period, 17.7% of all patient encounters (497 719 encounters) were eligible for inclusion across 41 hospitals and 8 diagnosis categories (Table 1). Cohort sizes and seasonal variation were similar over time (Supplemental Fig 5). Cohort sizes ranged from 21 922 encounters for gastroenteritis to 134 515 encounters for asthma over the 7-year study period.

TABLE 1.

Demographics of Electrolyte Testing Cohorts

| Characteristic | Gastroenteritis (n = 21 922) | UTI (n = 27 572) | Constipation (n = 35 681) | Febrile Infant (n = 28 463) | Pneumonia (n = 77 574) | SSTI (n = 76 380) | Bronchiolitis (n = 95 612) | Asthma (n = 134 515) |
|---|---|---|---|---|---|---|---|---|
| | n (%) | n (%) | n (%) | n (%) | n (%) | n (%) | n (%) | n (%) |
| Age, y | | | | | | | | |
| 0 | 7339 (33.5) | 10 580 (38.4) | — | 28 463 (100) | 10 415 (13.4) | 11 514 (15.1) | 70 977 (74.2) | — |
| 1–4 | 13 049 (59.5) | 6472 (23.5) | 7174 (20.1) | — | 41 808 (53.9) | 30 561 (40) | 24 635 (25.8) | 53 377 (39.7) |
| 5–12 | 1534 (7) | 6311 (22.9) | 19 891 (55.7) | — | 21 497 (27.7) | 22 070 (28.9) | — | 69 020 (51.3) |
| 13–17 | — | 4209 (15.3) | 8616 (24.1) | — | 3854 (5) | 12 235 (16) | — | 12 118 (9) |
| Sex | | | | | | | | |
| Male | 11 668 (53.2) | 5872 (21.3) | 17 973 (50.4) | 15 628 (54.9) | 40 770 (52.6) | 40 426 (52.9) | 56 395 (59) | 84 157 (62.6) |
| Female | 10 252 (46.8) | 21 697 (78.7) | 17 706 (49.6) | 12 830 (45.1) | 36 800 (47.4) | 35 947 (47.1) | 39 211 (41) | 50 354 (37.4) |
| Race | | | | | | | | |
| Non-Hispanic white | 11 192 (51.1) | 13 576 (49.2) | 21 207 (59.4) | 12 791 (44.9) | 34 186 (44.1) | 35 779 (46.8) | 41 786 (43.7) | 35 211 (26.2) |
| Non-Hispanic African American | 3683 (16.8) | 3099 (11.2) | 5642 (15.8) | 5141 (18.1) | 14 422 (18.6) | 17 209 (22.5) | 19 849 (20.8) | 61 302 (45.6) |
| Hispanic | 4430 (20.2) | 7418 (26.9) | 5620 (15.8) | 6867 (24.1) | 18 383 (23.7) | 15 505 (20.3) | 22 277 (23.3) | 24 523 (18.2) |
| Asian American | 513 (2.3) | 747 (2.7) | 550 (1.5) | 676 (2.4) | 2539 (3.3) | 1569 (2.1) | 1976 (2.1) | 2623 (1.9) |
| Other | 2104 (9.6) | 2732 (9.9) | 2662 (7.5) | 2988 (10.5) | 8044 (10.4) | 6318 (8.3) | 9724 (10.2) | 10 856 (8.1) |
| Payer | | | | | | | | |
| Government | 13 250 (60.4) | 17 224 (62.5) | 19 588 (54.9) | 18 499 (65) | 45 115 (58.2) | 48 024 (62.9) | 63 001 (65.9) | 89 018 (66.2) |
| Private | 7797 (35.6) | 9578 (34.7) | 15 021 (42.1) | 8980 (31.5) | 30 027 (38.7) | 25 609 (33.5) | 29 809 (31.2) | 41 658 (31) |
| Other | 875 (4) | 770 (2.8) | 1072 (3) | 984 (3.5) | 2432 (3.1) | 2747 (3.6) | 2802 (2.9) | 3839 (2.9) |
| Disposition | | | | | | | | |
| Home | 21 645 (98.7) | 27 130 (98.4) | 35 140 (98.5) | 28 069 (98.6) | 76 512 (98.6) | 75 174 (98.4) | 94 293 (98.6) | 131 871 (98) |
| Other | 277 (1.3) | 442 (1.6) | 541 (1.5) | 394 (1.4) | 1062 (1.4) | 1206 (1.6) | 1319 (1.4) | 2644 (2) |
| LOS, d | | | | | | | | |
| 1 | 12 288 (56.1) | 8808 (31.9) | 16 484 (46.2) | 6533 (23) | 32 671 (42.1) | 31 599 (41.4) | 46 817 (49) | 85 415 (63.5) |
| 2–4 | 9026 (41.2) | 17 353 (62.9) | 17 288 (48.5) | 21 074 (74) | 40 058 (51.6) | 41 297 (54.1) | 43 956 (46) | 47 686 (35.5) |
| 5–7 | 608 (2.8) | 1411 (5.1) | 1909 (5.4) | 856 (3) | 4845 (6.2) | 3484 (4.6) | 4839 (5.1) | 1414 (1.1) |

—, not applicable per cohort definitions.

Hospital-Level Use of Electrolyte Testing

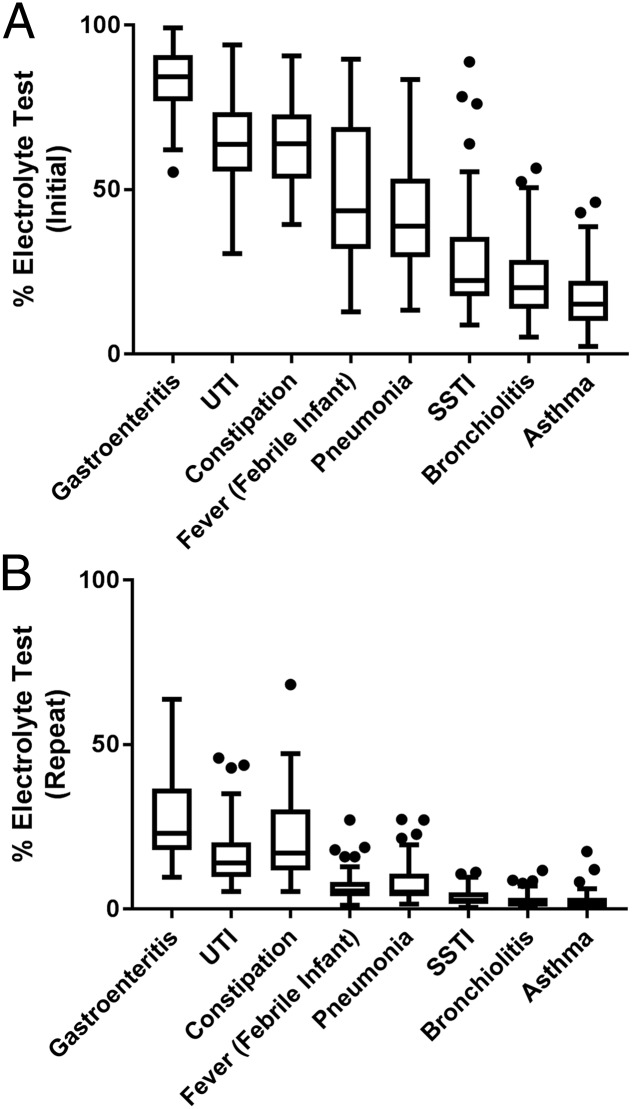

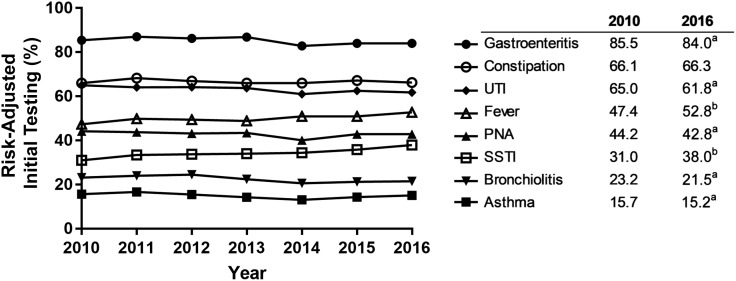

Across hospitals, there was wide variation in unadjusted rates of initial and repeat electrolyte testing within each diagnosis category (Fig 1, Supplemental Table 3). Both initial and repeat electrolyte testing were most common in gastroenteritis (median: initial 84%, repeat 23%), UTIs (median: initial 64%, repeat 14%), and constipation (median: initial 64%, repeat 17%). These were the only 3 diagnoses with an IQR entirely above 50% for initial testing. Across the 41 hospitals, initial testing rates varied widely for these 3 conditions as well as for pneumonia (range: 14%–86%), SSTIs (range: 9%–89%), and febrile infants (range: 13%–92%). Both initial and repeat electrolyte testing were less frequent in bronchiolitis and asthma. Within each diagnosis, initial electrolyte testing was much more common than repeat testing. After risk adjustment, the range of testing rates across institutions changed little compared with the unadjusted data (Fig 2, Supplemental Table 3). For example, within the gastroenteritis cohort, the range of initial testing rates changed from 61%–99% (unadjusted) to 56%–99% (adjusted).

FIGURE 1.

Unadjusted rates of testing. A, Box plot to describe the variation in unadjusted hospital rates of initial electrolyte testing across 8 common pediatric diagnoses. B, Box plot to describe the variation in unadjusted hospital rates of repeat electrolyte testing across 8 common pediatric diagnoses.

FIGURE 2.

Adjusted rates of initial testing over time by diagnosis: rates of risk-adjusted initial testing by year across all included hospitals for each individual diagnosis. ᵃ Significantly decreasing rate (P < .001). ᵇ Significantly increasing rate (P < .001).

Changes in Rates of Testing Over Time

Over the 7-year study period, there were statistically significant changes in initial testing rates in 7 of the 8 diagnoses, although the changes were small and of questionable clinical relevance. The febrile infant and SSTI cohorts had increasing rates, whereas gastroenteritis, UTI, pneumonia, bronchiolitis, and asthma had decreasing rates. Only constipation showed no change in the rate of initial testing over time.

Cross-Diagnosis Hospital-Level Use

Heat maps of adjusted initial and repeat electrolyte testing rates are displayed in Fig 3. The proportion of hospitals with testing rates in the top 40% for 8 of 8 or 7 of 8 diagnoses was 12% (5 of 41) and 24% (10 of 41), respectively, for initial testing and 15% (6 of 41) and 22% (9 of 41), respectively, for repeat testing. Hospital-specific initial rates of testing in gastroenteritis were moderately correlated with the weighted average of initial testing rates in other conditions (r = 0.63; P ≤ .001). Hospital-specific repeat rates of testing in gastroenteritis were highly correlated with the weighted average of repeat testing rates in other conditions (r = 0.83; P ≤ .001).

FIGURE 3.

Hospital-level variation in adjusted testing rates as a heat map: individual hospital rates of initial (left heat map) and repeat (right heat map) electrolyte testing, ordered from highest testers (top) to lowest testers (bottom). Individual hospitals are displayed in rows, which are consistent across both charts; diagnoses are displayed in columns. Maximum, median, and minimum values for each diagnosis are displayed under the chart. Colors correspond to quintiles of testing within each diagnosis, from red (highest quintile) through orange, yellow, and light green to dark green (lowest quintile).

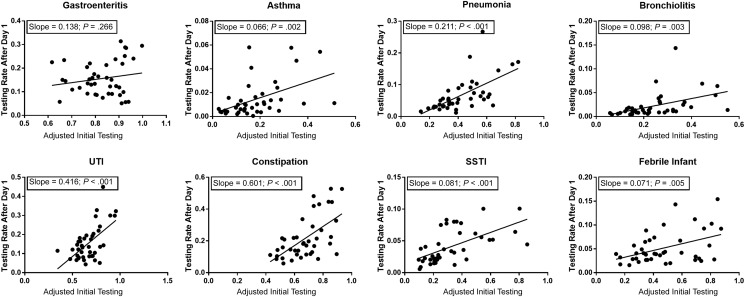

Subsequent Electrolyte Testing

There was a positive, significant association between adjusted initial testing rates and the rate of subsequent testing for all diagnoses except gastroenteritis (Fig 4). Slopes for diagnosis cohorts ranged from 0.071 for febrile infants to 0.601 for constipation and indicate how differences in hospital initial testing rates relate to expected differences in subsequent testing rates. For example, in the cohort with constipation, a 10-percentage-point decrease in the initial testing rate (ie, 10 fewer hospitalizations with initial testing per 100 hospitalizations) would be expected to correspond to approximately 6 fewer subsequent electrolyte tests per 100 hospitalizations.
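The constipation example above follows from the fitted slope, treating the regression as linear over this range and expressing the initial testing rate as a proportion:

$$
\Delta\,\text{subsequent tests per hospitalization} = \hat{\beta} \times \Delta\,\text{initial testing rate} = 0.601 \times (-0.10) \approx -0.060
$$

That is, about 6 fewer subsequent tests per 100 hospitalizations. The same arithmetic applied to the smallest reported slope (0.071, febrile infants) gives roughly 0.7 fewer subsequent tests per 100 hospitalizations.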

FIGURE 4.

Linear regression of the adjusted initial testing rate and subsequent rate of testing. The subsequent rate of testing is defined as the total number of repeat and late tests (ie, all tests after the first 2 days of the encounter) divided by the total number of hospitalizations.

Discussion

In this multicenter study of electrolyte testing, we identified significant variation in rates of initial and repeat testing across children’s hospitals, even after adjusting for variation in patient-level covariates. Although some diagnoses, such as pneumonia, febrile conditions in infants, and SSTIs, had ranges of testing rates as wide as 80 percentage points, other diagnoses had narrower ranges that clustered around higher rates of testing (gastroenteritis, constipation, and UTI) or lower rates of testing (bronchiolitis and asthma). In addition to this variation within each diagnosis, many hospitals had similar ranks of testing across conditions (ie, were consistently high or low testers). This finding is depicted in the heat maps, in which individual hospitals often had similar colors across all diagnoses, and only 1 hospital in the top third of the heat map had any testing rate in the bottom quintile for a diagnosis. Additionally, although only 0 or 1 hospital would be expected by chance alone to fall into the top 40% of testing rates across 7 of 8 conditions, almost one-quarter of our hospitals fell into this category. Within most diagnoses, hospitals with higher rates of initial testing also had higher rates of subsequent testing, even after limiting our cohort to a relatively homogeneous noncomplex population and controlling for measurable differences in severity of illness. Overall, these relationships support the idea that hospital-level factors beyond case severity have a significant impact on testing rates and have the potential to lead to low-value testing.
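The "0 or 1 hospital by chance" expectation can be checked with a binomial calculation. The Python sketch below assumes, as a simplification, that each hospital independently has a 40% chance of landing in the top 40% for each of the 8 diagnoses; the source does not state how its expectation was derived, and this independence is the null scenario that the observed clustering argues against.

```python
from math import comb


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


N_HOSPITALS = 41
N_DIAGNOSES = 8
P_TOP = 0.40  # assumed chance of being in the top 40% for any one diagnosis

p_7_or_more = prob_at_least(7, N_DIAGNOSES, P_TOP)
expected_hospitals = N_HOSPITALS * p_7_or_more
print(f"P(>=7 of 8) = {p_7_or_more:.4f}; expected hospitals = {expected_hospitals:.2f}")
```

Under these assumptions, the probability of being in the top 40% for at least 7 of 8 diagnoses is about 0.0085, so roughly 0.35 of 41 hospitals would be expected to do so by chance, compared with the 10 observed.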

An important consideration in evaluating variation in health care systems is whether the variation is intended or unintended. Intended variation is guided by a plan and is introduced for a reason,31 such as the necessary variation related to different diagnoses that require different tests and treatments. The variation we see in testing rates between certain diagnoses may in part be a result of intended variation. For instance, guidelines in gastroenteritis recommend electrolyte testing in severe cases,18 whereas bronchiolitis guidelines generally recommend minimal testing,19 which is consistent with the significant difference in testing rates between these conditions. However, much of the variation we describe within conditions is likely unintended. After we controlled for differences in patient characteristics and severity, wide variation in initial and repeat testing rates remained within all the diagnoses we investigated. Many factors may contribute to this diagnosis-specific variation in electrolyte testing. Previous studies focusing on a single diagnosis or condition have similarly revealed variation in the use of diagnostic testing, often with high use in conditions that generally do not warrant testing.13–15,32,33 Some variation may occur because of differences in diagnosis volume; in bronchiolitis, a higher volume of patients has been shown to lead to lower levels of testing.34 There may also be systems factors within an institution, such as the content of order sets or adherence to diagnosis-specific guidelines, that lead to variation in testing. Diagnosis-specific variation in the ordering of other nonelectrolyte laboratory tests, such as blood cultures in febrile infants or patients with SSTIs, may also increase electrolyte ordering by creating more opportunities to add on tests. Even without order sets or other clinical decision support tools, physicians at 1 institution may develop similar ordering habits through their shared practice environment.35 Diagnoses with wide variation in testing rates may highlight opportunities for further investigation and for targeted overuse reduction.

In addition to diagnosis-specific practice variation in testing, consistent hospital-level trends were seen in test use across all diagnoses. Within a single hospital, electrolyte testing rates in 1 diagnosis were highly correlated with rates in other diagnoses, a finding that would be unlikely if only diagnosis-specific factors affected testing rates. This supports the idea that macrosystem factors also likely influence testing rates across multiple diagnoses within a hospital. Previous studies have revealed that systems factors, such as health system messaging from leadership,36 role models who demonstrate high-value testing,35–37 supportive training environments,35,36 health system culture,37 and cost transparency,37,38 may all affect the use of health system resources. The training environment may also play a role because some studies have indicated that high-use training environments may decrease trainees’ ability to recognize when conservative management is appropriate.39 In addition, hospitals that test more may do so in an attempt to mitigate risk, for example through testing protocols meant to improve safety around nephrotoxic medications or intravenous fluids. Although electrolyte testing is appropriate in many circumstances, applying a protocol too broadly to both low- and high-risk patients may lead to excess testing across a health system. Developing ways to risk stratify patients within such protocols may be 1 direct way to reduce this overtesting. Regardless of the reason, consistent hospital-level variation in testing likely leads to unintended variation in clinical care and may adversely affect outcomes, including through unnecessary patient harm and expense. These results highlight the need for interventions that address value at a health system level40 and that guide appropriate use of laboratory testing.41,42

Finally, our study revealed a clear relationship between a hospital’s initial testing rate and subsequent testing volume in most diagnoses. Without data on the clinical circumstances that may affect testing decisions, the reasons behind this relationship are unclear and require further study. In some clinical situations, repeat testing may be warranted. However, another potential driver of this relationship is the “cascade effect,” in which overuse of testing leads to a cascade of further testing or interventions.12 This may occur through overdiagnosis (detection of a valid finding, such as mild hypokalemia, that would not cause symptoms and would resolve without treatment) or false-positive results (detection of a falsely abnormal finding, such as hyperkalemia related to a hemolyzed blood sample, that leads to repeat testing and possibly other interventions). Higher rates of initial testing may increase the chance of slightly abnormal results, which may in turn prompt repeat tests without improving outcomes. Alternatively, the correlation between initial and subsequent testing rates may be a further indication of a higher-testing culture at some institutions.

Our work should be interpreted in the context of important limitations. In the current study, we used administrative data, which led to several limitations. The data set lacks any results of testing, specific timing of testing, or other patient-level clinical context, which could be found in electronic medical records (information that may be important for understanding the clinical circumstances surrounding testing). Although we excluded transfers from our data set, there remains the possibility that patients had previous electrolyte testing at urgent care or outpatient sites, which could have influenced the testing decisions we observed. Although we controlled for many factors, there remains the possibility that there are significant differences in severity of illness or other clinical characteristics across hospitals that may be related to testing rates and the repetition of testing. For instance, the definition of observation status may be somewhat different at various hospitals. However, given the wide distribution of variation in our data, even after restricting the cohort to a homogeneous noncomplex population and adjusting for measurable differences in illness severity, it is unlikely that these missing factors explain all the variation and trends we observed. Our data set is limited to children’s hospitals, which may limit the generalizability of results to other care environments such as community hospitals or hospitals without training programs. It is also important to consider that because our initial testing definition included any tests on the first 2 days of a hospitalization, it is likely that our analysis underrepresents the true rate of follow-up tests. For instance, electrolyte testing obtained on admission and repeated the following morning would be included as a single initial test, whereas many providers would recognize this clinically as a repeat test. However, this limitation would likely underestimate the proportion of hospitalizations with repeat testing, and it is unlikely that this underestimation would be differential across institutions.

These results provide a baseline understanding of current electrolyte testing rates across a variety of inpatient pediatric diagnoses. Although electrolyte tests are generally considered low cost, the strain of low-cost, low-value interventions on the US health system is substantial43 and widespread.44 Further studies to understand the relationship between testing, test results, and outcomes (eg, LOS, hyperkalemia, and acute kidney injury) are warranted. If the variation we have described is associated with no change in outcomes, it may support the concept that lower rates of testing will provide better overall value. Benchmarking testing rates against outcomes in this way would more clearly define overuse in this testing category and facilitate both hospital-level monitoring of laboratory test use and future guideline development.41,42 In addition, a better understanding of the factors that drive overuse of testing is needed. Data sets with more granular clinical data from the electronic health record, such as laboratory test results, intravenous fluid use, and electrolyte supplements, may help validate and clarify the interpretation of testing rates. Finally, a mixed-methods evaluation of culture and of systems-level factors, such as policies, clinical environment, order sets, and staffing models, may improve understanding of which specific environmental factors affect testing rates.

Conclusions

Overall, our study reveals wide variation in rates of initial and repeat electrolyte testing across 8 common inpatient pediatric conditions. This interhospital variation in testing correlated strongly across conditions, such that hospitals that tested more in 1 condition were more likely to test more in other conditions. There is also evidence consistent with a potential cascade effect, whereby initial testing may lead to higher rates of subsequent testing. These results provide evidence of potential overuse of electrolyte testing, and further research is required to evaluate outcomes and establish benchmarks that may inform guidelines to improve the value of testing in pediatric inpatient care.

Acknowledgment

This work was completed in partial fulfillment of the Master of Science degree in Clinical and Translational Research at the Division of Epidemiology, College of Medicine, University of Cincinnati.

Glossary

- CCC: complex chronic condition
- ICD-9-CM: International Classification of Diseases, Ninth Revision, Clinical Modification
- ICD-10-CM: International Classification of Diseases, 10th Revision, Clinical Modification
- IQR: interquartile range
- LOS: length of stay
- PHIS: Pediatric Health Information System
- SSTI: skin and soft tissue infection
- UTI: urinary tract infection

Footnotes

Dr Tchou led the overall conceptualization and design of the study, analyzed and interpreted the data, drafted the initial manuscript, and reviewed and revised the manuscript; Dr Hall led the acquisition and analysis of the data and contributed to the conceptualization and design of the study and the drafting and critical review of the manuscript; Drs S.S. Shah, Johnson, Schroeder, Antoon, Genies, Quinonez, Miller, S.P. Shah, and Brady contributed to the overall conceptualization and design of the study, the analysis and interpretation of data, and the critical review of the manuscript; and all authors approved the final manuscript as submitted and agree to be accountable for all aspects of the work.

FINANCIAL DISCLOSURE: The authors have indicated they have no financial relationships relevant to this article to disclose.

FUNDING: Supported by an institutional Clinical and Translational Science Award (National Institutes of Health National Center for Advancing Translational Sciences; 1UL1TR001425). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the National Institutes of Health. Funded by the National Institutes of Health (NIH).

POTENTIAL CONFLICT OF INTEREST: The authors have indicated they have no potential conflicts of interest to disclose.

COMPANION PAPER: A companion to this article can be found online at www.pediatrics.org/cgi/doi/10.1542/peds.2019-1334.

References

- 1. Bui AL, Dieleman JL, Hamavid H, et al. Spending on children’s personal health care in the United States, 1996–2013. JAMA Pediatr. 2017;171(2):181–189

- 2. Health Care Cost Institute. Children’s health spending - 2010–2013. Available at: https://www.healthcostinstitute.org/research/annual-reports/entry/children-s-health-spending-2010-2013. Accessed May 6, 2019

- 3. Chua KP, Conti RM, Freed GL. Appropriately framing child health care spending: a prerequisite for value improvement. JAMA. 2018;319(11):1087–1088

- 4. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513–1516

- 5. Chassin MR, Galvin RW. The urgent need to improve health care quality. Institute of Medicine National Roundtable on Health Care Quality. JAMA. 1998;280(11):1000–1005

- 6. Zhi M, Ding EL, Theisen-Toupal J, Whelan J, Arnaout R. The landscape of inappropriate laboratory testing: a 15-year meta-analysis. PLoS One. 2013;8(11):e78962

- 7. Hassan NE, Winters J, Winterhalter K, Reischman D, El-Borai Y. Effects of blood conservation on the incidence of anemia and transfusions in pediatric parapneumonic effusion: a hospitalist perspective. J Hosp Med. 2010;5(7):410–413

- 8. Bisogni S, Dini C, Olivini N, et al. Perception of venipuncture pain in children suffering from chronic diseases. BMC Res Notes. 2014;7:735

- 9. Postier AC, Eull D, Schulz C, et al. Pain experience in a US children’s hospital: a point prevalence survey undertaken after the implementation of a system-wide protocol to eliminate or decrease pain caused by needles. Hosp Pediatr. 2018;8(9):515–523

- 10. Stickland A, Clayton E, Sankey R, Hill CM. A qualitative study of sleep quality in children and their resident parents when in hospital. Arch Dis Child. 2016;101(6):546–551

- 11. McCann D. Sleep deprivation is an additional stress for parents staying in hospital. J Spec Pediatr Nurs. 2008;13(2):111–122

- 12. Deyo RA. Cascade effects of medical technology. Annu Rev Public Health. 2002;23:23–44

- 13. Lind CH, Hall M, Arnold DH, et al. Variation in diagnostic testing and hospitalization rates in children with acute gastroenteritis. Hosp Pediatr. 2016;6(12):714–721

- 14. Florin TA, French B, Zorc JJ, Alpern ER, Shah SS. Variation in emergency department diagnostic testing and disposition outcomes in pneumonia. Pediatrics. 2013;132(2):237–244

- 15. Thomson J, Hall M, Berry JG, et al. Diagnostic testing and hospital outcomes of children with neurologic impairment and bacterial pneumonia. J Pediatr. 2016;178:156–163.e1

- 16. Johnson DP, Lind C, Parker SE, et al. Toward high-value care: a quality improvement initiative to reduce unnecessary repeat complete blood counts and basic metabolic panels on a pediatric hospitalist service. Hosp Pediatr. 2016;6(1):1–8

- 17. Tchou MJ, Tang Girdwood S, Wormser B, et al. Reducing electrolyte testing in hospitalized children by using quality improvement methods. Pediatrics. 2018;141(5):e20173187

- 18. Centers for Disease Control and Prevention. Managing acute gastroenteritis among children: oral rehydration, maintenance, and nutritional therapy. Pediatrics. 2004;114(2):507

- 19. Ralston SL, Lieberthal AS, Meissner HC, et al; American Academy of Pediatrics. Clinical practice guideline: the diagnosis, management, and prevention of bronchiolitis [published correction appears in Pediatrics. 2015;136(4):782]. Pediatrics. 2014;134(5). Available at: www.pediatrics.org/cgi/content/full/134/5/e1474

- 20. Mongelluzzo J, Mohamad Z, Ten Have TR, Shah SS. Corticosteroids and mortality in children with bacterial meningitis. JAMA. 2008;299(17):2048–2055

- 21. Feudtner C, Feinstein JA, Zhong W, Hall M, Dai D. Pediatric complex chronic conditions classification system version 2: updated for ICD-10 and complex medical technology dependence and transplantation. BMC Pediatr. 2014;14:199

- 22. Keren R, Luan X, Localio R, et al; Pediatric Research in Inpatient Settings (PRIS) Network. Prioritization of comparative effectiveness research topics in hospital pediatrics. Arch Pediatr Adolesc Med. 2012;166(12):1155–1164

- 23. Parikh K, Hall M, Mittal V, et al. Establishing benchmarks for the hospitalized care of children with asthma, bronchiolitis, and pneumonia. Pediatrics. 2014;134(3):555–562

- 24. Tieder JS, Hall M, Auger KA, et al. Accuracy of administrative billing codes to detect urinary tract infection hospitalizations. Pediatrics. 2011;128(2):323–330

- 25. Stephens JR, Steiner MJ, DeJong N, et al. Healthcare utilization and spending for constipation in children with versus without complex chronic conditions. J Pediatr Gastroenterol Nutr. 2017;64(1):31–36

- 26. Puls HT, Hall M, Bettenhausen J, et al. Failure to thrive hospitalizations and risk factors for readmission to children’s hospitals. Hosp Pediatr. 2016;6(8):468–475

- 27. Aronson PL, Thurm C, Williams DJ, et al; Febrile Young Infant Research Collaborative. Association of clinical practice guidelines with emergency department management of febrile infants ≤56 days of age. J Hosp Med. 2015;10(6):358–365

- 28. Foradori DM, Lopez MA, Hall M, et al. Invasive bacterial infections in infants younger than 60 days with skin and soft tissue infections [published online ahead of print August 20, 2018]. Pediatr Emerg Care. doi:10.1097/PEC.0000000000001584

- 29. Centers for Medicare and Medicaid Services. 2018 ICD-10 CM and GEMs. 2017. Available at: https://www.cms.gov/Medicare/Coding/ICD10/2018-ICD-10-CM-and-GEMs.html. Accessed February 13, 2018

- 30. Richardson T, Rodean J, Harris M, Berry J, Gay JC, Hall M. Development of hospitalization resource intensity scores for kids (H-RISK) and comparison across pediatric populations. J Hosp Med. 2018;13(9):602–608

- 31. Berwick DM. Controlling variation in health care: a consultation from Walter Shewhart. Med Care. 1991;29(12):1212–1225

- 32. Tieder JS, Robertson A, Garrison MM. Pediatric hospital adherence to the standard of care for acute gastroenteritis. Pediatrics. 2009;124(6). Available at: www.pediatrics.org/cgi/content/full/124/6/e1081

- 33. Tyler A, McLeod L, Beaty B, et al. Variation in inpatient croup management and outcomes. Pediatrics. 2017;139(4):e20163582

- 34. Van Cleve WC, Christakis DA. Unnecessary care for bronchiolitis decreases with increasing inpatient prevalence of bronchiolitis. Pediatrics. 2011;128(5). Available at: www.pediatrics.org/cgi/content/full/128/5/e1106

- 35. Stammen LA, Stalmeijer RE, Paternotte E, et al. Training physicians to provide high-value, cost-conscious care: a systematic review. JAMA. 2015;314(22):2384–2400

- 36. Gupta R, Moriates C, Harrison JD, et al. Development of a high-value care culture survey: a modified Delphi process and psychometric evaluation. BMJ Qual Saf. 2017;26(6):475–483

- 37. Sedrak MS, Patel MS, Ziemba JB, et al. Residents’ self-report on why they order perceived unnecessary inpatient laboratory tests. J Hosp Med. 2016;11(12):869–872

- 38. Tek Sehgal R, Gorman P. Internal medicine physicians’ knowledge of health care charges. J Grad Med Educ. 2011;3(2):182–187

- 39. Sirovich BE, Lipner RS, Johnston M, Holmboe ES. The association between residency training and internists’ ability to practice conservatively. JAMA Intern Med. 2014;174(10):1640–1648

- 40. Lee VS, Kawamoto K, Hess R, et al. Implementation of a value-driven outcomes program to identify high variability in clinical costs and outcomes and association with reduced cost and improved quality. JAMA. 2016;316(10):1061–1072

- 41. Morgan DJ, Malani P, Diekema DJ. Diagnostic stewardship-leveraging the laboratory to improve antimicrobial use. JAMA. 2017;318(7):607–608

- 42. Melanson SE. Establishing benchmarks and metrics for utilization management. Clin Chim Acta. 2014;427:127–130

- 43. Mafi JN, Russell K, Bortz BA, Dachary M, Hazel WA Jr, Fendrick AM. Low-cost, high-volume health services contribute the most to unnecessary health spending. Health Aff (Millwood). 2017;36(10):1701–1704

- 44. Chua K-P, Schwartz AL, Volerman A, Conti RM, Huang ES. Use of low-value pediatric services among the commercially insured [published correction appears in Pediatrics. 2017;139(3):e20164215]. Pediatrics. 2016;138(6):e20161809