Abstract

The ability to synchronize movement to a steady beat is a fundamental skill underlying musical performance and has been studied for decades as a model of sensorimotor synchronization. Nevertheless, little is known about the neural correlates of individual differences in the ability to synchronize to a beat. In particular, links between auditory-motor synchronization ability and characteristics of the brain's response to sound have not yet been explored. Given direct connections between the inferior colliculus (IC) and subcortical motor structures, we hypothesized that consistency of the neural response to sound within the IC is linked to the ability to tap consistently to a beat. Here, we show that adolescent humans who demonstrate less variability when tapping to a beat have auditory brainstem responses that are less variable as well. One of the sources of this enhanced consistency in subjects who can steadily tap to a beat may be decreased variability in the timing of the response, as these subjects also show greater between-trial phase-locking in the auditory brainstem response. Thus, musical training with a heavy emphasis on synchronization of movement to musical beats may improve auditory neural synchrony, potentially benefiting children with auditory-based language impairments characterized by excessively variable neural responses.

Introduction

Beat synchronization, the ability to move to a steady beat, has been studied for decades as a simple example of sensorimotor coordination, i.e., the alignment of motor output to sensory input. However, despite steady progress in elucidating the mechanisms enabling beat synchronization (for review, see Repp, 2005; Repp and Su, 2013), little is known about the neural resources underlying individual differences in beat synchronization ability. The work that has been done has focused on the motor system; the ability to tap consistently to a beat, for example, is linked to activation within the cerebellum and motor cortex (Penhune and Doyon, 2005; Steele and Penhune, 2010; Steele, 2012) and to gray and white matter volume in frontal cortex (Ullén et al., 2008). No research has investigated whether individual differences in auditory function underlie differences in beat synchronization ability. There is, however, reason to believe that beat synchronization relies on temporal fidelity in sensory systems, as tapping to a beat is less variable and more accurate when stimuli are presented in the auditory, rather than the visual, modality (Kolers and Brewster, 1985; Chen et al., 2002; Repp, 2003; Patel et al., 2005; Birkett and Talcott, 2012). This auditory advantage suggests that the fine temporal resolution of the auditory system enables more accurate beat synchronization.

Temporal stability of the neural response to sound within the inferior colliculus (IC) may be particularly important for beat synchronization. The IC receives ascending connections from subcortical auditory structures (Kudo and Niimi, 1980; Coleman and Clerici, 1987) as well as descending input from the cerebral cortex (Bajo et al., 2010), and it sends input to the cerebellum by way of the dorsolateral pontine nuclei (Mower et al., 1979; Hashikawa, 1983; Saint Marie, 1996). Compared with the cerebral cortex, the IC is capable of representing timing information with far greater precision (Liu et al., 2006; Warrier et al., 2011). The IC is, therefore, in a unique position to integrate precise timing information from throughout the auditory system and influence motor output. Imaging studies have shown that the cerebellum is involved in sensorimotor synchronization (Rao et al., 1997; Molinari et al., 2007; Bijsterbosch et al., 2011; Grahn et al., 2011), and damage to the cerebellum results in impaired motor response timing (Ivry et al., 1988) and increased variability during beat synchronization (Molinari et al., 2005). The transformation of temporal auditory periodicity into rhythmic motor timing may, therefore, take place via the connection from the IC to the cerebellum (Warren et al., 2005; Molinari et al., 2005; Malcolm et al., 2008). If so, variability in the timing of the IC response to sound during beat synchronization could result in a more variable estimate of stimulus periodicity and, therefore, more variable motor responses. We tested this hypothesis by measuring the consistency of the complex auditory brainstem response (cABR) to sound, an electrophysiological response driven by activity within the IC (Smith et al., 1975; Chandrasekaran and Kraus, 2010; Warrier et al., 2011).

Materials and Methods

Subjects

We tested 124 high-school students, aged 14 to 17 years, enrolled in Chicago charter schools (58 female; mean age 15.8 years, SD 0.90 years). All subjects had normal audiometric thresholds (air conduction thresholds <20 dB hearing level for pure tones at octave intervals from 250 to 8000 Hz), normal brainstem responses to click stimuli, and no history of learning or neurological disorders. Subjects were monetarily compensated for their time.

Tapping test: data collection

The apparatus used for the tapping test was a NanoPad2 (KORG). It is a small rectangular plastic device containing 16 square rubber pads, each ∼4 cm across. First, subjects were familiarized with the device and instructed to pick one of these pads and tap on it with the second and third fingers of their dominant hand as if they were hitting a drum. After confirming that the subject was tapping correctly, the experimenter explained that the subject would hear a drum sound presented at a regular beat and that the subject should tap along to the beat such that the subject's taps occurred at the exact same time as the sound. Each trial consisted of a total of 40 sound presentations. Tapping during the first 20 sound presentations was not analyzed to give the subject ample time to absorb the stimulus tempo and begin tapping along. During the next 20 sound presentations, tap times were recorded by software custom-written in Python (Python Labs; Van Rossum and Drake, 2001) and aligned with stimulus presentation times. Two different tempos were presented, 1.5 and 2 Hz (twice each), for a total of four trials. Forty total taps were analyzed for each stimulus rate. These rates were chosen because they overlap with the rate of stressed syllable production in conversational speech.

Tapping test: analysis

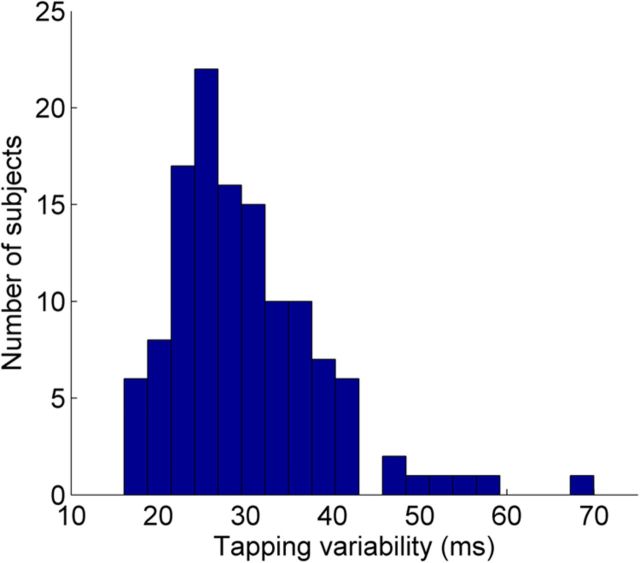

The main variable of interest was the variability of the intertap interval. This measure was chosen because it is the primary measure used in studies investigating individual differences in tapping ability (Rubia et al., 2001, 2003; Pitcher et al., 2002; Repp, 2005; Ben-Pazi et al., 2006; Thomson et al., 2006; Thomson and Goswami, 2008; Ullén et al., 2008; Corriveau and Goswami, 2009; Holm et al., 2011; Tierney and Kraus, 2013). First, to remove instances in which the subject did not tap with enough force to elicit a response from the tapping pad, interonset intervals (IOIs) exceeding 1.7 times the target IOI were removed from analysis. Cases in which tapping on the pad resulted in a double tap (two taps very close together in time, likely caused by a small bounce upon contact with the pad) were also corrected by removing the second of the two taps, on the assumption that the first tap was the one the subject intended to produce. This correction was applied only when the IOI between the two taps was a small fraction of the target IOI (<100 ms). After these rare cases were corrected, tapping variability was calculated for each trial as the SD of the intervals between successive taps. Finally, to create a global measure of each subject's tapping ability, tapping variability was averaged across all four trials. A histogram of tapping performance is shown in Figure 1. To minimize the effects of outliers, tapping variability values were adjusted to fall within 2 SDs of the group mean (six data points corrected).
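The cleaning and scoring steps above can be sketched in Python, the language of the original tapping software. This is a minimal sketch, not the authors' code: the function names (`trial_tapping_variability`, `subject_tapping_variability`, `clamp_outliers`) are hypothetical, the use of the sample SD (ddof=1) is an assumption, and the outlier rule assumes that bringing values "within 2 SDs of the mean" means clamping them to the mean ± 2 SDs.

```python
import numpy as np


def trial_tapping_variability(tap_times, target_ioi, double_tap_s=0.100, long_ioi_factor=1.7):
    """SD (s) of the intertap intervals in one trial after the two cleaning rules.

    tap_times: tap onset times (s) from the analyzed part of the trial.
    target_ioi: metronome interval (s), e.g. 1 / 1.5 or 1 / 2.
    """
    taps = np.sort(np.asarray(tap_times, dtype=float))
    # Rule 1: drop the second tap of a double tap (< 100 ms after the
    # previously kept tap), treating the first tap as the intended one.
    kept = [taps[0]]
    for t in taps[1:]:
        if t - kept[-1] >= double_tap_s:
            kept.append(t)
    iois = np.diff(kept)
    # Rule 2: drop intervals longer than 1.7 x target; these span a tap
    # that was too soft to register on the pad.
    iois = iois[iois <= long_ioi_factor * target_ioi]
    # Sample SD (ddof=1) is an assumption; the text does not specify.
    return float(np.std(iois, ddof=1))


def subject_tapping_variability(trials):
    """Average per-trial variability; `trials` is a list of (tap_times, target_ioi)."""
    return float(np.mean([trial_tapping_variability(t, ioi) for t, ioi in trials]))


def clamp_outliers(values, n_sd=2.0):
    """Pull values lying beyond mean +/- n_sd * SD back to that boundary."""
    x = np.asarray(values, dtype=float)
    mu, sd = x.mean(), x.std(ddof=1)
    return np.clip(x, mu - n_sd * sd, mu + n_sd * sd)
```

For example, at a 2 Hz metronome (target IOI 0.5 s), a double tap 30 ms after a real tap is discarded, and a 1.0 s gap caused by an unregistered tap is dropped from the interval set rather than inflating the SD.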

Figure 1.

Histogram of tapping variability across subjects. Lower variability indicates better tapping performance.

Neurophysiology: stimuli

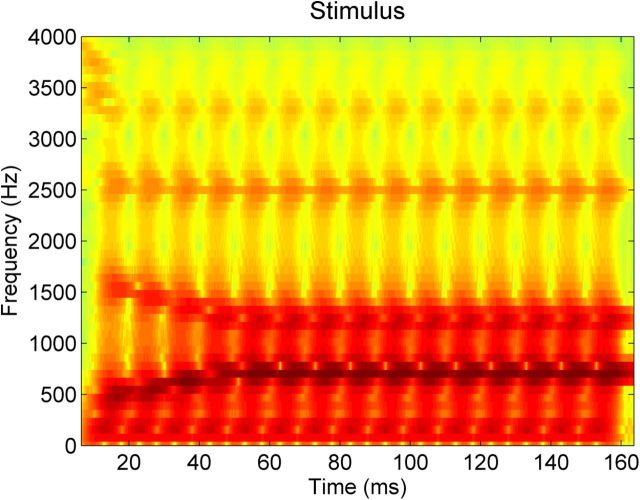

Neural responses were collected to a 170 ms speech sound /da/ synthesized with a Klatt-based synthesizer. This speech sound begins with a 5 ms onset burst; following this onset, the remainder of the sound is voiced with a 100 Hz fundamental frequency. From 5 to 50 ms, during the formant transition period, the first formant rises from 400 to 720 Hz, and the second and third formants fall from 1700 to 1240 Hz and from 2580 to 2500 Hz, respectively. During the steady-state vowel portion of the sound, from 50 to 170 ms, the first, second, and third formants remain steady at 720, 1240, and 2500 Hz. Throughout the sound, the fourth, fifth, and sixth formants remain steady at 3330, 3750, and 4900 Hz. A spectrogram of the stimulus is presented in Figure 2. Presented concurrently with the synthesized speech sound was looped background noise consisting of 45 s of grammatically correct but semantically anomalous overlapping speech babble from six different talkers (two male, four female). Specific recording parameters for the speech babble can be found in Smiljanić and Bradlow (2005). The speech sound was presented at 80 dB with a 10 dB signal-to-noise ratio over the background noise.
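Because the speech was presented at 80 dB with a 10 dB signal-to-noise ratio, the babble level works out to about 70 dB. The sketch below shows one way to scale a noise track to a target SNR. Defining SNR by RMS amplitude over the speech token, and the helper names `rms` and `mix_at_snr`, are assumptions rather than details given in the text.

```python
import numpy as np


def rms(x):
    """Root-mean-square amplitude of a signal."""
    return float(np.sqrt(np.mean(np.square(x))))


def mix_at_snr(speech, babble, snr_db=10.0):
    """Add babble scaled so that 20*log10(rms(speech) / rms(babble)) == snr_db.

    Returns (mixture, scaled_babble). Only the first len(speech) samples of
    the babble track are used.
    """
    speech = np.asarray(speech, dtype=float)
    babble = np.asarray(babble, dtype=float)[: len(speech)]
    # Gain that puts the babble snr_db below the speech in RMS terms.
    gain = rms(speech) / (rms(babble) * 10 ** (snr_db / 20.0))
    scaled = gain * babble
    return speech + scaled, scaled
```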

Figure 2.

Spectrogram of the stimulus, a 170 ms synthesized /da/. Analysis focused on the first 50 ms of the sound, the consonant-vowel transition, during which the first, second, and third formants gradually changed.

Neurophysiology: data collection

Testing was conducted in a soundproof booth. Brainstem EEG recordings were collected while the speech sound was presented monaurally through insert earphones (ER-3; Etymotic Research) using Neuroscan Stim 2 presentation software (Compumedics). Stimuli were presented in alternating polarities at a rate of 4.35 Hz, the standard stimulus presentation rate used across studies in our laboratory to facilitate cross-study comparisons (Skoe and Kraus, 2010). Subjects were told that they would hear a synthesized speech sound but that they did not need to listen to it actively. Instead, to maintain relaxation and prevent drowsiness, subjects were instructed to watch a self-selected subtitled movie with the soundtrack presented at <40 dB SPL. Six thousand artifact-free responses to the speech sound were collected with an 81 ms interstimulus interval over the course of ∼30 min. Brainstem responses were collected using NeuroScan Acquire (Compumedics) through a vertical Ag-AgCl electrode montage, with the active electrode at Cz, the reference electrode on the right earlobe, and the ground electrode on the forehead. Responses were digitized at 20,000 Hz by a Synamp2 system (Compumedics). Electrode impedances were kept <5 kΩ.

Neurophysiology: data analysis

All neurophysiological analyses were conducted using custom-written software in MATLAB (MathWorks). Before further processing, recordings were bandpass filtered from 70 to 2000 Hz (12 dB/octave rolloff) using a Butterworth filter. This filter was applied to minimize low-frequency contributions to the signal from the cerebral cortex (Chandrasekaran and Kraus, 2010). The resulting signal, known as the cABR, is driven by activity within the IC (Smith et al., 1975; Chandrasekaran and Kraus, 2010; Warrier et al., 2011). This neural measure confers a number of advantages as an index of the auditory system's encoding of sound; for example, the cABR is highly stable between test and retest (Hornickel et al., 2012a), making it an ideal tool for the study of individual differences in auditory function. The cABR also accurately reproduces spectrotemporal stimulus features (Fig. 3) because of the high degree of timing precision within the IC (Liu et al., 2006; Warrier et al., 2011), enabling examination of the auditory system's encoding of a variety of acoustic characteristics (Kraus and Chandrasekaran, 2010).
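A minimal Python sketch of the band-pass step, using SciPy (the original analysis was done in MATLAB). In `scipy.signal.butter`, a band-pass design of order N has skirts of roughly 6N dB/octave, so N = 2 approximates the stated 12 dB/octave rolloff. Whether the original filter was applied once (causal) or forward-backward (zero phase, which would double the effective rolloff) is not stated; this sketch assumes a single causal pass, and `cabr_bandpass` is a hypothetical name.

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 20_000  # Hz, digitization rate reported above


def cabr_bandpass(x, fs=FS, low=70.0, high=2000.0, order=2):
    """Butterworth band-pass from 70 to 2000 Hz along the last axis.

    order=2 gives ~12 dB/octave skirts on each side (6 dB/octave per order).
    Applied causally in a single pass (an assumption; see lead-in).
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfilt(sos, np.asarray(x, dtype=float), axis=-1)
```

Second-order sections (`output="sos"`) are used because they are numerically more robust than transfer-function coefficients for band-pass designs with widely separated corner frequencies.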

For both analysis techniques described below, the recording was segmented from stimulus onset (0 ms) to 170 ms after stimulus onset. Trials containing amplitude spikes exceeding 35 µV were then rejected as artifacts (amplitude rejections were always kept below 10% of the total trials collected), and 6000 artifact-free trials were selected. Analysis was conducted on two time windows: 5–60 ms, which corresponds to the response to the formant transition of the stimulus, and 60–170 ms, which corresponds to the response to the steady-state vowel. The response to the formant transition was examined separately because neural encoding of the timing of this dynamic portion of the stimulus stresses the auditory system, revealing relationships between neural timing and behavior that are not present in the response to the steady-state vowel (Parbery-Clark et al., 2009a; Anderson et al., 2010).
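The epoching, artifact-rejection, and windowing steps can be sketched as follows. Assumptions: the continuous recording is a NumPy array in microvolts, stimulus onsets are given as sample indices, a trial is rejected when its absolute amplitude exceeds 35 µV anywhere in the epoch, and the first 6000 clean trials are kept (the text does not say how the 6000 were chosen). The function names are hypothetical. At 20,000 Hz, a 170 ms epoch is 3400 samples, and the 5–60 ms transition window spans samples 100 to 1200.

```python
import numpy as np

FS = 20_000  # Hz


def epoch_and_reject(eeg_uv, onsets, fs=FS, epoch_ms=170.0, reject_uv=35.0, n_keep=6000):
    """Cut 0-170 ms epochs at each onset, drop trials whose absolute amplitude
    exceeds reject_uv, and keep the first n_keep clean trials."""
    n = int(round(epoch_ms * fs / 1000.0))
    epochs = np.stack([eeg_uv[o:o + n] for o in onsets if o + n <= len(eeg_uv)])
    clean = epochs[np.max(np.abs(epochs), axis=1) <= reject_uv]
    return clean[:n_keep]


def analysis_window(epochs, start_ms, end_ms, fs=FS):
    """Slice an analysis window (e.g. 5-60 ms or 60-170 ms) from each epoch."""
    a = int(round(start_ms * fs / 1000.0))
    b = int(round(end_ms * fs / 1000.0))
    return epochs[..., a:b]
```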

Neural response consistency.

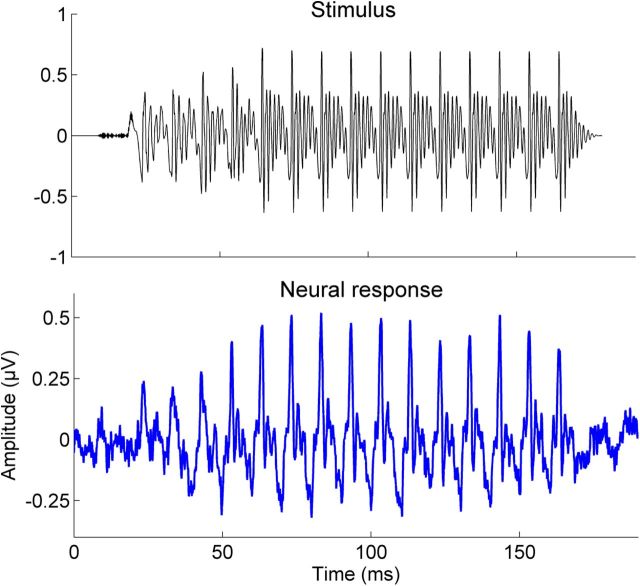

We assessed the trial-by-trial consistency of the brainstem's response to sound using a procedure reported previously (Hornickel et al., 2012b; Hornickel and Kraus, 2013). This procedure is designed to assess the extent to which the brainstem's representation of sound varies from trial to trial. First, 3000 of the 6000 total trials were randomly selected and averaged, producing an average waveform (Fig. 3, bottom waveform). Second, the remaining 3000 trials were also averaged, producing a second average waveform. The greater the consistency of the brainstem's response to sound, the more similar these two waveforms will be. Third, to quantify this similarity, the two waveforms were cross-correlated. These three steps were repeated 100 times with 100 different random samplings of the 6000 trials, yielding 100 response consistency values; these were averaged to generate a final measure of neural response consistency. Response consistency data were Fisher transformed before statistical analyses.
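The split-half procedure above can be sketched as follows. This is an illustration under stated assumptions: "cross-correlated" is implemented as the Pearson correlation at zero lag (the text does not say whether a lag search was used), the 100 correlations are averaged before the Fisher z transform (`np.arctanh`), and `response_consistency` is a hypothetical name.

```python
import numpy as np


def response_consistency(trials, n_iter=100, rng=None):
    """Split-half response consistency.

    trials: array (n_trials, n_samples) of single-trial responses.
    Returns (mean r over n_iter random splits, Fisher z of that mean).
    """
    rng = np.random.default_rng(rng)
    trials = np.asarray(trials, dtype=float)
    n = trials.shape[0]
    half = n // 2
    rs = np.empty(n_iter)
    for i in range(n_iter):
        idx = rng.permutation(n)
        a = trials[idx[:half]].mean(axis=0)             # first half-average
        b = trials[idx[half:2 * half]].mean(axis=0)     # second half-average
        rs[i] = np.corrcoef(a, b)[0, 1]                 # zero-lag Pearson r (assumed)
    r_mean = float(rs.mean())
    return r_mean, float(np.arctanh(r_mean))           # Fisher z
```

With 6000 trials, each half-average contains 3000 trials, as described above.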

Figure 3.

Top, Waveform of the synthesized /da/ stimulus. Bottom, Auditory brainstem response from a single subject. The auditory brainstem response reproduces the spectrotemporal features of the stimulus with high fidelity. The stimulus has been shifted forward in time by 8.75 ms to account for neural transmission delay.

Intertrial phase-locking.

The neural response consistency measure is driven in part by variability in the timing of the cABR, but it is also influenced by trial-by-trial changes in the frequency content of the response. To specifically examine the timing variability of the auditory brainstem response, we calculated intertrial phase-locking from 70 to 1000 Hz for each subject. This procedure, which is commonly used to examine the timing consistency of the brainstem response (Clinard et al., 2010; Ruggles et al., 2012), measures the extent to which the phase of each frequency component of the brainstem response is consistent across trials.

Six thousand artifact-free trials were selected. The frequency spectrum of each trial (within each analysis window) was then calculated using a fast Fourier transform. For each frequency, this yields a complex value that can be treated as a vector with two properties: a length, which indicates the extent to which that frequency is encoded in the response, and a phase, which contains information about the timing of the representation of that frequency. Because our goal was specifically to examine the timing variability of the response, each vector was transformed into a unit vector (i.e., a vector with a length of one). Next, for each frequency, the 6000 unit vectors (one per trial) were averaged. Finally, the length of the resulting vector was calculated as a measure of the consistency of the phase across trials. This measure ranges from 0 (no phase consistency) to 1 (perfect phase consistency). To examine phase-locking specifically at stimulus frequencies represented in the response, maximum intertrial phase-locking was measured in 40 Hz windows centered on the fundamental frequency of the stimulus (100 Hz) and the second through fourth harmonics (200, 300, and 400 Hz). These four values were then averaged to form a global phase-locking measure.
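A sketch of the intertrial phase-locking measure: take the FFT of each trial, normalize every complex coefficient to unit length, average across trials, and take the length of the mean vector; then take the maximum within ±20 Hz of 100, 200, 300, and 400 Hz and average the four maxima. The FFT length (4096 points, zero padded), the absence of a taper, and the function names are assumptions, not details from the text.

```python
import numpy as np

FS = 20_000  # Hz


def intertrial_phase_locking(trials, fs=FS, nfft=4096):
    """Return (freqs, plv): length of the mean unit phase vector per frequency."""
    spec = np.fft.rfft(np.asarray(trials, dtype=float), n=nfft, axis=1)
    mag = np.abs(spec)
    unit = spec / np.where(mag == 0, 1.0, mag)  # keep phase, discard amplitude
    plv = np.abs(unit.mean(axis=0))              # 0 = random phase, 1 = identical phase
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)
    return freqs, plv


def harmonic_phase_locking(trials, fs=FS, f0=100.0, harmonics=(1, 2, 3, 4),
                           half_width=20.0, nfft=4096):
    """Average of the peak phase-locking within +/- half_width Hz of each harmonic."""
    freqs, plv = intertrial_phase_locking(trials, fs, nfft)
    peaks = [plv[np.abs(freqs - h * f0) <= half_width].max() for h in harmonics]
    return float(np.mean(peaks))
```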

Results

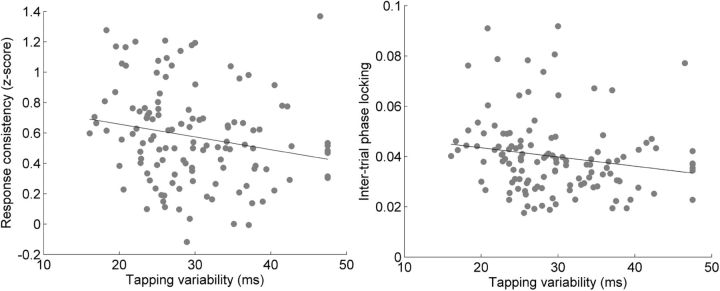

Pearson's correlations revealed that subjects with lower synchronized tapping variability had more consistent speech-evoked brainstem responses when compared with subjects with higher tapping variability (Fig. 4). Tapping variability related to consistency in the response to the transition portion of the speech sound (r = −0.204, p < 0.05), in which the frequency content of the sound is changing rapidly, but not to the response to the unchanging steady-state vowel portion (r = −0.0359, p > 0.2). More variable tapping was linked to more variable neural responses to sound.

Figure 4.

Scatterplots of tapping variability versus neural response consistency (left) and intertrial phase locking (right). Tapping variability inversely correlated with neural response consistency (r = −0.204, p < 0.05) and with intertrial phase locking (r = −0.183, p < 0.05). Lower variability indicates better tapping performance.

Several different factors can influence neural response consistency, including changes in the frequency spectrum of the response and timing jitter between trials. To test more directly our hypothesis that variable synchronized tapping performance is affected by variability in the timing of the auditory system's representation of sound, we used Pearson's correlations to examine the relationship between synchronized tapping variability and intertrial phase-locking, a measure of timing consistency at a particular frequency within a specified time range. Intertrial phase-locking at the fundamental frequency and the second through fourth harmonics was related to tapping variability in the response to the transition (r = −0.183, p < 0.05) but not in the response to the steady-state vowel (r = −0.0638, p > 0.2), such that greater neural timing variability was linked to greater variability in synchronized tapping.
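As a quick arithmetic check on the reported correlations, the two-sided p value for a Pearson r follows from t = r·sqrt(n − 2)/sqrt(1 − r²) with n − 2 degrees of freedom. The sketch below assumes that all 124 subjects entered each correlation (the text does not state the n per correlation); under that assumption, r = −0.204 and r = −0.183 both fall below p = 0.05, and the steady-state correlations are far from significance. `pearson_p_from_r` is a hypothetical helper.

```python
from scipy import stats


def pearson_p_from_r(r, n):
    """Return (t, two-sided p) for a Pearson correlation r computed on n pairs."""
    df = n - 2
    t = r * df ** 0.5 / (1.0 - r * r) ** 0.5
    return t, 2.0 * stats.t.sf(abs(t), df)


for label, r in [("consistency, transition", -0.204),
                 ("consistency, steady state", -0.0359),
                 ("phase-locking, transition", -0.183),
                 ("phase-locking, steady state", -0.0638)]:
    t, p = pearson_p_from_r(r, n=124)  # n = 124 is an assumption
    print(f"{label}: t(122) = {t:.2f}, p = {p:.3f}")
```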

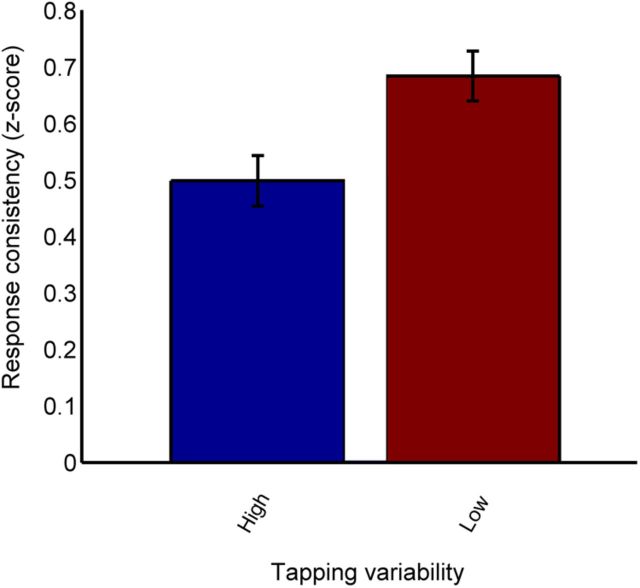

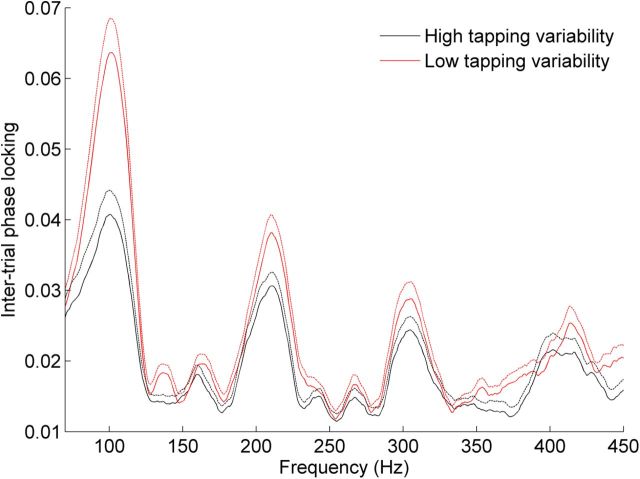

To further investigate the relationship between neural response consistency and tapping ability, we divided subjects into good and poor tapping groups consisting of the top and bottom terciles (n = 41 subjects each) of tapping variability. Because tapping ability related to neural consistency only in the response to the transition of the speech sound, we limited our group analyses to this region of the response. The group of subjects able to tap to the metronome with low variability showed less variable neural responses to sound (Fig. 5). The good tapping group showed a mean response consistency score of 0.684 (SD 0.282), whereas the poor tapping group showed a mean response consistency score of 0.499 (SD 0.287). This difference was significant according to a two-sided unpaired t test (t(80) = −2.94, p < 0.01). In the response to the transition of the speech sound, the good tapping group also showed greater phase-locking than the poor tapping group (Fig. 6; good tapping mean = 0.0444, SD 0.0140; poor tapping mean = 0.0361, SD 0.0122; t(80) = −2.84, p < 0.01).
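The tercile split and the group comparison can be sketched as below. `tercile_groups` is a hypothetical helper; with raw data, its outputs would be passed to `scipy.stats.ttest_ind`. As a consistency check, `scipy.stats.ttest_ind_from_stats` recomputes Student's t from the reported means and SDs (assuming the parenthetical values in the phase-locking comparison are SDs, as they are for the consistency scores). This gives t ≈ −2.94 and t ≈ −2.86, close to the reported −2.94 and −2.84; the small gap for phase-locking is consistent with rounding of the reported summary statistics.

```python
import numpy as np
from scipy import stats


def tercile_groups(tapping_variability, neural_measure):
    """Return (good, poor): neural values for the lowest- and highest-variability thirds."""
    x = np.asarray(tapping_variability, dtype=float)
    y = np.asarray(neural_measure, dtype=float)
    k = len(x) // 3  # 124 subjects -> 41 per group
    order = np.argsort(x)
    return y[order[:k]], y[order[-k:]]


# Student's t test (equal variances, df = 41 + 41 - 2 = 80) from the reported
# group means and SDs; the poor group is entered first so the sign matches.
t_consistency, p_consistency = stats.ttest_ind_from_stats(
    mean1=0.499, std1=0.287, nobs1=41,
    mean2=0.684, std2=0.282, nobs2=41, equal_var=True)
t_phase, p_phase = stats.ttest_ind_from_stats(
    mean1=0.0361, std1=0.0122, nobs1=41,
    mean2=0.0444, std2=0.0140, nobs2=41, equal_var=True)
```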

Figure 5.

Subjects who show less variability when tapping to a beat show more consistent neural responses to sound (t(80) = −2.94, p < 0.01). Error bars represent ±1 standard error of the mean.

Figure 6.

Subjects who show less variability when tapping to a beat show greater intertrial phase-locking in the auditory brainstem response. Intertrial phase-locking to the fundamental frequency and the second through fourth harmonics of the speech sound /da/ in the third of subjects with the least variable synchronized tapping (red) was greater than phase-locking in the third of subjects with the most variable synchronized tapping (black; t(80) = −2.84, p < 0.01). Dashed lines represent +1 standard error of the mean.

Discussion

We find that variability in synchronized tapping is linked to trial-by-trial variability in the cABR, an electrophysiological response to sound primarily originating within the IC. We also find that beat synchronization variability relates to timing jitter in the auditory brainstem response, as measured by intertrial phase-locking. This is the first evidence supporting the hypothesis that the IC, due to its connection with the cerebellum and its ability to respond with high temporal precision to auditory stimuli (Liu et al., 2006; Warrier et al., 2011), plays an important role in the transformation of periodicity in auditory stimuli to motor output (Molinari et al., 2005; Warren et al., 2005; Malcolm et al., 2008). More generally, this is the first evidence linking beat synchronization ability to individual differences in auditory system function.

The link between variability in the timing of the cABR and variability in synchronizing to a beat suggests that a high degree of timing jitter within the IC leads to a more variable representation of stimulus periodicity, and in turn, more variable motor output. The higher degree of consistency found when subjects tap to auditory stimuli versus visual stimuli (Kolers and Brewster, 1985; Semjen and Ivry, 2001; Chen et al., 2002; Repp and Penel, 2002; Repp, 2003; Patel et al., 2005; Birkett and Talcott, 2012) may therefore stem from the high degree of temporal precision and consistency within the auditory brainstem (Liu et al., 2006; Warrier et al., 2011). The manner in which the auditory system extracts the periodicity of a stimulus is unknown; several different mechanisms have been proposed, including coupling of low-frequency neural oscillators to stimuli (Large and Jones, 1999) within the auditory cortex (Nozaradan et al., 2012) and measurement of temporal intervals by a central timekeeper, possibly within the cerebellum (Ivry et al., 1988). The IC's role in beat synchronization may, therefore, simply be to relay precise timing information to either the auditory cortex or the cerebellum, where periodicity detection takes place. Alternatively, timekeeping processes may actually take place within the IC, as it is the first brain region in the ascending auditory pathway in which duration-sensitive neurons have been reported (Casseday et al., 1994; Brand et al., 2000; Pérez-González et al., 2006; Yin et al., 2008), and damage to the IC leads to impaired discrimination of time intervals (Champoux et al., 2007).

Another explanation for the link between timing jitter within the auditory brainstem and beat synchronization variability is that error correction relies on auditory temporal precision. No matter how accurate a subject's performance when synchronizing to a beat, the slightest discrepancy between the target tempo and the produced tempo will lead to a build-up in phase error. Detection of this phase error is crucial to maintain accurate synchronization. In fact, subjects will correct for phase shifts of as little as 1.5 ms, even though the threshold for conscious detection of timing shifts is on the order of 20 ms (Repp, 2000; Madison and Merker, 2004). Less accurate temporal estimates could, therefore, cause the threshold for phase error detection to be increased, thereby increasing the overall variability of the response (Krause et al., 2010a). To test the idea that temporal jitter within the IC leads to increased thresholds for error correction, future work could directly examine individual differences in phase error correction during synchronized tapping. We predict that subjects whose auditory brainstem responses display less intertrial timing consistency will show higher phase error detection thresholds.
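The error-correction argument can be made concrete with the linear phase-correction model commonly used in the synchronization literature (reviewed in Repp, 2005). This is a swapped-in formalization, not the authors' model: the threshold mechanism discussed above is replaced by a noise term on the perceived asynchrony, standing in for auditory timing jitter. The parameter values and the function name `simulate_iti_sd` are hypothetical. Under these assumptions, adding perceptual noise raises the SD of the intertap intervals even though timekeeper and motor noise are unchanged.

```python
import numpy as np


def simulate_iti_sd(n_taps=5000, target_ioi=0.5, alpha=0.5, timekeeper_sd=0.010,
                    motor_sd=0.005, sensory_sd=0.0, seed=0):
    """Simulate synchronization tapping with linear phase correction.

    Asynchrony update (all times in s):
        A[n+1] = A[n] - alpha * (A[n] + E[n]) + (T[n] - target_ioi) + M[n+1] - M[n]
    T: timekeeper intervals, M: motor delays, E: hypothetical noise in the
    perceived asynchrony. Returns the SD of the resulting intertap intervals.
    """
    rng = np.random.default_rng(seed)
    # T and M are drawn first, so runs with the same seed share them and
    # differ only in the perceptual noise E.
    T = target_ioi + rng.normal(0.0, timekeeper_sd, n_taps)
    M = rng.normal(0.0, motor_sd, n_taps + 1)
    E = rng.normal(0.0, sensory_sd, n_taps)
    A = np.zeros(n_taps + 1)
    for n in range(n_taps):
        A[n + 1] = A[n] - alpha * (A[n] + E[n]) + (T[n] - target_ioi) + M[n + 1] - M[n]
    taps = target_ioi * np.arange(n_taps + 1) + A  # tap = metronome onset + asynchrony
    return float(np.std(np.diff(taps), ddof=1))
```

Comparing `simulate_iti_sd(sensory_sd=0.0)` with `simulate_iti_sd(sensory_sd=0.02)` isolates the effect of noisier asynchrony estimates on tapping variability.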

The temporal jitter we find in the frequency-following response to frequencies of 70 Hz and above likely reflects, more broadly, temporal jitter in the response to sound of neurons within the IC, including jitter in whatever response components are necessary for the extraction of stimulus periodicity (such as lower-frequency oscillations). For example, temporal jitter in the relay of auditory information from other subcortical structures to the IC would lead to globally decreased phase-locking throughout the IC. The ideal experiment would be to measure temporal jitter using intracranial recordings in the IC and examine how individual differences in particular aspects of IC function relate to tapping ability; unfortunately, this has not been practical because the extreme rarity of beat synchronization in animals necessitates the use of human subjects. However, the recent finding of beat synchronization in cockatoos (Patel et al., 2009), budgerigars (Hasegawa et al., 2011), chimpanzees (Hattori et al., 2013), and sea lions (Cook et al., 2013), and the existence of natural rhythmic primate behaviors, such as chest beating and foot drumming (Randall, 2001; Fitch, 2006; Remedios et al., 2009), suggest that finding an animal model for beat synchronization may eventually be feasible, enabling a direct test of the hypothesis that the IC and its connection to the cerebellum play a vital role in beat synchronization.

We find that the ability to tap consistently to a beat relates to the consistency of the auditory brainstem response to sound, a measure that has also been linked to reading ability (Hornickel and Kraus, 2013) and phonological awareness, the explicit knowledge of the components of spoken language. To distinguish speech sounds based on certain acoustic characteristics, such as voice onset time, listeners must be able to detect extremely small differences in timing. Timing jitter in the brain's response to sound may therefore hinder the development of stable mental representations of speech sound categories necessary for phonological awareness. Thus, an explanation for the relationship between the ability to tap to a beat and reading ability (Thomson et al., 2006; Thomson and Goswami, 2008; Corriveau and Goswami, 2009; Tierney and Kraus, 2013) supported by the present findings is that both skills rely on the shared neural resource of consistent auditory brainstem timing.

Interestingly, the consistency of the auditory brainstem response can be modified by experience: an experimental study has shown that a group of children with dyslexia who wore an assistive listening device in the classroom showed improved consistency in the neural response to the consonant-vowel transition of a synthesized speech sound after a year relative to a control group who did not wear the device (Hornickel et al., 2012b). Furthermore, the increase in response consistency was linked to improvements in language skills. Given that response consistency also tracks with beat synchronization ability, it is possible that training in rhythmic abilities including beat synchronization practice could lead to a more stable neural representation of sound, in addition to improving linguistic skills, such as phonological awareness and reading.

Examining the effects of musical training with a high degree of emphasis on beat synchronization ability may be a particularly fruitful line of research. Musicians outperform nonmusicians on beat synchronization tasks, producing responses that are both more consistent and more accurate (Repp and Doggett, 2007; Krause et al., 2010a,b; Repp, 2010). Musical training has also been shown to lead to improvements in reading ability (Overy, 2003; Moreno et al., 2009), and musical experience has been linked to a variety of linguistic, perceptual, cognitive, and neural benefits, including enhanced speech-in-noise perception (Parbery-Clark et al., 2009b), auditory attention, and memory (Strait et al., 2010, 2012a; Parbery-Clark et al., 2011; Strait and Kraus, 2011), as well as enhanced neural sound encoding (Parbery-Clark et al., 2009a; Bidelman and Krishnan, 2010; Bidelman et al., 2011a,b,c; for review, see Kraus and Chandrasekaran, 2010; Parbery-Clark et al., 2012b; Skoe and Kraus, 2012; Strait et al., 2012a,b; Parbery-Clark et al., 2012c), including enhanced response consistency (Parbery-Clark et al., 2012a). At least some of the benefits of musical training may be driven by practice synchronizing to complex auditory rhythms, a process that, as our findings suggest, relies on low timing jitter within the auditory system. If so, emphasizing perception of and interaction with auditory rhythms may enhance the beneficial linguistic and scholastic effects of musical training.

Our data suggest that the IC is an important region for beat synchronization. However, other regions both within and outside the auditory system are likely also crucial for beat synchronization. For example, given that auditory and motor/premotor cortex are functionally connected during synchronized tapping tasks (Pollok et al., 2003; Chen et al., 2006, 2008; Krause et al., 2010b), characteristics of neural function in these two regions may also be tied to synchronization ability. Other neural areas that have been associated with synchronized tapping tasks, and therefore potentially underlie synchronization ability, include the cerebellum (Molinari et al., 2007; Bijsterbosch et al., 2011) and the basal ganglia (Rao et al., 1997; Jäncke et al., 2000; Jantzen et al., 2002; Witt et al., 2008; Schwartze et al., 2011).

We find that tapping ability relates only to timing consistency in the response to the dynamic portion of the stimulus, likely because a rapidly changing stimulus is more difficult for the brainstem to accurately and reliably encode. Thus, the response to the dynamic portion of the sound may expose individual differences that are obscured in the response to the static portion.

In summary, we find that subjects who are able to consistently synchronize their movements to a beat have auditory brainstem responses that are also more consistent and show less timing jitter between trials. Successful beat synchronization, therefore, depends upon accurate, consistent sensory input. These findings also suggest that the acquisition of reading and beat synchronization ability may relate because both rely on consistent timing within the auditory system.

Footnotes

This work was supported by National Science Foundation Grant 0842376 to N.K.

The authors declare no competing financial interests.

References

- Anderson S, Skoe E, Chandrasekaran B, Kraus N. Neural timing is linked to speech perception in noise. J Neurosci. 2010;30:4922–4926. doi: 10.1523/JNEUROSCI.0107-10.2010.

- Bajo VM, Nodal FR, Moore DR, King AJ. The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nat Neurosci. 2010;13:253–260. doi: 10.1038/nn.2466.

- Ben-Pazi H, Shalev RS, Gross-Tsur V, Bergman H. Age and medication effects on rhythmic responses in ADHD: possible oscillatory mechanisms? Neuropsychologia. 2006;44:412–416. doi: 10.1016/j.neuropsychologia.2005.05.022.

- Bidelman GM, Krishnan A. Effects of reverberation on brainstem representation of speech in musicians and non-musicians. Brain Res. 2010;1355:112–125. doi: 10.1016/j.brainres.2010.07.100.

- Bidelman G, Gandour J, Krishnan A. Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem. J Cogn Neurosci. 2011a;23:424–434. doi: 10.1162/jocn.2009.21362.

- Bidelman GM, Gandour JT, Krishnan A. Musicians and tone-language speakers share enhanced brainstem encoding but not perceptual benefits for musical pitch. Brain Cogn. 2011b;77:1–10. doi: 10.1016/j.bandc.2011.07.006.

- Bidelman GM, Krishnan A, Gandour JT. Enhanced brainstem encoding predicts musicians' perceptual advantages with pitch. Eur J Neurosci. 2011c;33:530–538. doi: 10.1111/j.1460-9568.2010.07527.x.

- Bijsterbosch JD, Lee KH, Hunter MD, Tsoi DT, Lankappa S, Wilkinson ID, Barker AT, Woodruff PW. The role of the cerebellum in sub- and supraliminal error correction during sensorimotor synchronization: evidence from fMRI and TMS. J Cogn Neurosci. 2011;23:1100–1112. doi: 10.1162/jocn.2010.21506.

- Birkett EE, Talcott JB. Interval timing in children: effects of auditory and visual pacing stimuli and relationships with reading and attention variables. PLoS ONE. 2012;7:e42820. doi: 10.1371/journal.pone.0042820.

- Brand A, Urban A, Grothe B. Duration tuning in the mouse auditory midbrain. J Neurophysiol. 2000;84:1790–1799. doi: 10.1152/jn.2000.84.4.1790.

- Casseday JH, Ehrlich D, Covey D. Neural tuning for sound duration: role of inhibitory mechanisms in the inferior colliculus. Science. 1994;264:847–850. doi: 10.1126/science.8171341.

- Champoux F, Paiement P, Mercier C, Lepore F, Lassonde M, Gagné JP. Auditory processing in a patient with a unilateral lesion of the inferior colliculus. Eur J Neurosci. 2007;25:291–297. doi: 10.1111/j.1460-9568.2006.05260.x.

- Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: neural origins. Psychophysiology. 2010;47:236–246. doi: 10.1111/j.1469-8986.2009.00928.x.

- Chen JL, Zatorre RJ, Penhune VB. Interactions between auditory and dorsal premotor cortex during synchronization to musical rhythms. Neuroimage. 2006;32:1771–1781. doi: 10.1016/j.neuroimage.2006.04.207.

- Chen JL, Penhune VB, Zatorre RJ. Moving on time: brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J Cogn Neurosci. 2008;20:226–239. doi: 10.1162/jocn.2008.20018.

- Chen Y, Repp BH, Patel AD. Spectral decomposition of variability in synchronization and continuation tapping: comparisons between auditory and visual pacing and feedback conditions. Hum Mov Sci. 2002;21:515–532. doi: 10.1016/S0167-9457(02)00138-0.

- Clinard CG, Tremblay KL, Krishnan AR. Aging alters the perception and physiological representation of frequency: evidence from human frequency-following response recordings. Hear Res. 2010;264:48–55. doi: 10.1016/j.heares.2009.11.010.

- Coleman JR, Clerici WJ. Sources of projections to subdivisions of the inferior colliculus in the rat. J Comp Neurol. 1987;262:215–226. doi: 10.1002/cne.902620204.

- Cook P, Rouse A, Wilson M, Reichmuth C. A California sea lion (Zalophus californianus) can keep the beat: motor entrainment to rhythmic auditory stimuli in a nonvocal mimic. J Comp Psychol. 2013. In press. doi: 10.1037/a0032345.

- Corriveau KH, Goswami U. Rhythmic motor entrainment in children with speech and language impairments: tapping to the beat. Cortex. 2009;45:119–130. doi: 10.1016/j.cortex.2007.09.008.

- Fitch W. On the biology and evolution of music. Music Percept. 2006;24:85–88. doi: 10.1525/mp.2006.24.1.85.

- Grahn JA, Henry MJ, McAuley JD. FMRI investigation of cross-modal interactions in beat perception: audition primes vision, but not vice versa. Neuroimage. 2011;54:1231–1243. doi: 10.1016/j.neuroimage.2010.09.033.

- Hasegawa A, Okanoya K, Hasegawa T, Seki Y. Rhythmic synchronization tapping to an audio-visual metronome in budgerigars. Sci Rep. 2011;1:120. doi: 10.1038/srep00120.

- Hashikawa T. The inferior colliculopontine neurons of the cat in relation to other collicular descending neurons. J Comp Neurol. 1983;219:241–249. doi: 10.1002/cne.902190209.

- Hattori Y, Tomonaga M, Matsuzawa T. Spontaneous synchronized tapping to an auditory rhythm in a chimpanzee. Sci Rep. 2013;3:1566. doi: 10.1038/srep01566.

- Holm L, Ullén F, Madison G. Intelligence and temporal accuracy of behaviour: unique and shared associations with reaction time and motor timing. Exp Brain Res. 2011;214:175–183. doi: 10.1007/s00221-011-2817-6.

- Hornickel J, Knowles E, Kraus N. Test-retest consistency of speech-evoked auditory brainstem responses in typically-developing children. Hear Res. 2012a;284:52–58. doi: 10.1016/j.heares.2011.12.005.

- Hornickel J, Zecker SG, Bradlow AR, Kraus N. Assisted listening devices drive neuroplasticity in children with dyslexia. Proc Natl Acad Sci U S A. 2012b;109:16731–16736. doi: 10.1073/pnas.1206628109.

- Hornickel J, Kraus N. Unstable representation of sound: a biological marker of dyslexia. J Neurosci. 2013;33:3500–3504. doi: 10.1523/JNEUROSCI.4205-12.2013.

- Ivry RB, Keele SW, Diener HC. Dissociation of the lateral and medial cerebellum in movement timing and movement execution. Exp Brain Res. 1988;73:167–180. doi: 10.1007/BF00279670.

- Jäncke L, Loose R, Lutz K, Specht K, Shah NJ. Cortical activations during paced finger-tapping applying visual and auditory pacing stimuli. Cogn Brain Res. 2000;10:51–66. doi: 10.1016/S0926-6410(00)00022-7.

- Jantzen KJ, Steinberg FL, Kelso JA. Practice-dependent modulation of neural activity during human sensorimotor coordination: a functional magnetic resonance imaging study. Neurosci Lett. 2002;332:205–209. doi: 10.1016/S0304-3940(02)00956-4.

- Kolers P, Brewster J. Rhythms and responses. J Exp Psychol Hum Percept Perform. 1985;11:150–167. doi: 10.1037/0096-1523.11.2.150.

- Kraus N, Chandrasekaran B. Music training for the development of auditory skills. Nat Rev Neurosci. 2010;11:599–605. doi: 10.1038/nrn2882.

- Krause V, Pollok B, Schnitzler A. Perception in action: the impact of sensory information on sensorimotor synchronization in musicians and non-musicians. Acta Psychologica. 2010a;133:28–37. doi: 10.1016/j.actpsy.2009.08.003.

- Krause V, Schnitzler A, Pollok B. Functional network interactions during sensorimotor synchronization in musicians and non-musicians. Neuroimage. 2010b;52:245–251. doi: 10.1016/j.neuroimage.2010.03.081.

- Kudo M, Niimi K. Ascending projections of the inferior colliculus in the cat: an autoradiographic study. J Comp Neurol. 1980;191:545–556. doi: 10.1002/cne.901910403.

- Large EW, Jones MR. The dynamics of attending: how people track time-varying events. Psychol Rev. 1999;106:119–159. doi: 10.1037/0033-295X.106.1.119.

- Liu L, Palmer A, Wallace M. Phase-locked responses to pure tones in the inferior colliculus. J Neurophysiol. 2006;96:1926–1935. doi: 10.1152/jn.00497.2005.

- Madison G, Merker B. Human sensorimotor tracking of continuous subliminal deviations from isochrony. Neurosci Lett. 2004;370:69–73. doi: 10.1016/j.neulet.2004.07.094.

- Malcolm MP, Lavine A, Kenyon G, Massie C, Thaut M. Repetitive transcranial magnetic stimulation interrupts phase synchronization during rhythmic motor entrainment. Neurosci Lett. 2008;435:240–245. doi: 10.1016/j.neulet.2008.02.055.

- Molinari M, Leggio MG, Filippini V, Gioia MC, Cerasa A, Thaut MH. Sensorimotor transduction of time information is preserved in subjects with cerebellar damage. Brain Res Bull. 2005;67:448–458. doi: 10.1016/j.brainresbull.2005.07.014.

- Molinari M, Leggio MG, Thaut MH. The cerebellum and neural networks for rhythmic sensorimotor synchronization in the human brain. Cerebellum. 2007;6:18–23. doi: 10.1080/14734220601142886.

- Moreno S, Marques C, Santos A, Santos M, Castro SL, Besson M. Musical training influences linguistic abilities in 8-year-old children: more evidence for brain plasticity. Cereb Cortex. 2009;19:712–723. doi: 10.1093/cercor/bhn120.

- Mower G, Gibson A, Glickstein M. Tectopontine pathway in the cat: laminar distribution of cells of origin and visual properties of target cells in dorsolateral pontine nucleus. J Neurophysiol. 1979;42:1–15. doi: 10.1152/jn.1979.42.1.1.

- Nozaradan S, Peretz I, Mouraux A. Selective neuronal entrainment to the beat and meter embedded in a musical rhythm. J Neurosci. 2012;32:17572–17581. doi: 10.1523/JNEUROSCI.3203-12.2012.

- Overy K. Dyslexia and music. Ann N Y Acad Sci. 2003;999:497–505. doi: 10.1196/annals.1284.060.

- Parbery-Clark A, Skoe E, Lam C, Kraus N. Musician enhancement for speech in noise. Ear Hear. 2009a;30:653–661. doi: 10.1097/AUD.0b013e3181b412e9.

- Parbery-Clark A, Skoe E, Kraus N. Musical experience limits the degradative effects of background noise on the neural processing of sound. J Neurosci. 2009b;29:14100–14107. doi: 10.1523/JNEUROSCI.3256-09.2009.

- Parbery-Clark A, Strait DL, Anderson S, Hittner E, Kraus N. Musical experience and the aging auditory system: implications for cognitive abilities and hearing speech in noise. PLoS ONE. 2011;6:e18082. doi: 10.1371/journal.pone.0018082.

- Parbery-Clark A, Anderson S, Hittner E, Kraus N. Musical experience strengthens the neural representation of sounds important for communication in middle-aged adults. Front Aging Neurosci. 2012a;4:30. doi: 10.3389/fnagi.2012.00030.

- Parbery-Clark A, Anderson S, Hittner E, Kraus N. Musical experience offsets age-related delays in neural timing. Neurobiol Aging. 2012b;33:1483.e1–1483.e4. doi: 10.1016/j.neurobiolaging.2011.12.015.

- Parbery-Clark A, Tierney A, Strait DL, Kraus N. Musicians have fine-tuned neural distinction of speech syllables. Neuroscience. 2012c;219:111–119. doi: 10.1016/j.neuroscience.2012.05.042.

- Patel AD, Iversen JR, Chen Y, Repp BH. The influence of metricality and modality on synchronization with a beat. Exp Brain Res. 2005;163:226–238. doi: 10.1007/s00221-004-2159-8.

- Patel AD, Iversen JR, Bregman MR, Schulz I. Experimental evidence for synchronization to a musical beat in a nonhuman animal. Curr Biol. 2009;19:827–830. doi: 10.1016/j.cub.2009.03.038.

- Penhune VB, Doyon J. Cerebellum and M1 interaction during early learning of timed motor sequences. Neuroimage. 2005;26:801–812. doi: 10.1016/j.neuroimage.2005.02.041.

- Pérez-González D, Malmierca MS, Moore JM, Hernández O, Covey E. Duration selective neurons in the inferior colliculus of the rat: topographic distribution and relation of duration sensitivity to other response properties. J Neurophysiol. 2006;95:823–836. doi: 10.1152/jn.00741.2005.

- Pitcher TM, Piek JP, Barrett NC. Timing and force control in boys with attention deficit hyperactivity disorder: subtype differences and the effect of comorbid developmental coordination disorder. Hum Mov Sci. 2002;21:919–945. doi: 10.1016/S0167-9457(02)00167-7.

- Pollok B, Müller K, Aschersleben G, Schmitz F, Schnitzler A, Prinz W. Cortical activations associated with auditorily paced finger tapping. Neuroreport. 2003;14:247–250. doi: 10.1097/00001756-200302100-00018.

- Randall J. Evolution and function of drumming as communication in mammals. Am Zoologist. 2001;41:1143–1156. doi: 10.1668/0003-1569(2001)041[1143:EAFODA]2.0.CO;2.

- Rao SM, Harrington DL, Haaland KY, Bobholz JA, Cox RW, Binder JR. Distributed neural systems underlying the timing of movements. J Neurosci. 1997;17:5528–5535. doi: 10.1523/JNEUROSCI.17-14-05528.1997.

- Remedios R, Logothetis NK, Kayser C. Monkey drumming reveals common networks for perceiving vocal and nonvocal communication sounds. Proc Natl Acad Sci U S A. 2009;106:18010–18015. doi: 10.1073/pnas.0909756106.

- Repp BH. Compensation for subliminal timing perturbations in perceptual-motor synchronization. Psychol Res. 2000;63:106–128. doi: 10.1007/PL00008170.

- Repp BH. Rate limits in sensorimotor synchronization with auditory and visual sequences: the synchronization threshold and the benefits and costs of interval subdivision. J Mot Behav. 2003;35:355–370. doi: 10.1080/00222890309603156.

- Repp BH. Sensorimotor synchronization: a review of the tapping literature. Psychon Bull Rev. 2005;12:969–992. doi: 10.3758/BF03206433.

- Repp BH. Sensorimotor synchronization and perception of timing: effects of music training and task experience. Hum Mov Sci. 2010;29:200–213. doi: 10.1016/j.humov.2009.08.002.

- Repp B, Doggett R. Tapping to a very slow beat: a comparison of musicians and nonmusicians. Music Percept. 2007;24:367–376. doi: 10.1525/mp.2007.24.4.367.

- Repp BH, Penel A. Auditory dominance in temporal processing: new evidence from synchronization with simultaneous visual and auditory sequences. J Exp Psychol Hum Percept Perform. 2002;28:1085–1099. doi: 10.1037/0096-1523.28.5.1085.

- Repp BH, Su YH. Sensorimotor synchronization: a review of recent research (2006–2012). Psychon Bull Rev. 2013;20:403–452. doi: 10.3758/s13423-012-0371-2.

- Rubia K, Taylor E, Smith AB, Oksanen H, Overmeyer S, Newman S. Neuropsychological analysis of impulsiveness in childhood hyperactivity. Br J Psychiatry. 2001;179:138–143. doi: 10.1192/bjp.179.2.138.

- Rubia K, Noorloos J, Smith A, Gunning B, Sergeant J. Motor timing deficits in community and clinical boys with hyperactive behavior: the effect of methylphenidate on motor timing. J Abnorm Child Psychol. 2003;31:301–313. doi: 10.1023/A:1023233630774.

- Ruggles D, Bharadwaj H, Shinn-Cunningham BG. Why middle-aged listeners have trouble hearing in everyday settings. Curr Biol. 2012;22:1417–1422. doi: 10.1016/j.cub.2012.05.025.

- Saint Marie RL. Glutamatergic connections of the auditory midbrain: selective uptake and axonal transport of D-[3H]aspartate. J Comp Neurol. 1996;373:255–270. doi: 10.1002/(SICI)1096-9861(19960916)373:2<255::AID-CNE8>3.0.CO;2-2.

- Schwartze M, Rothermich K, Schmidt-Kassow M, Kotz SA. Temporal regularity effects on pre-attentive and attentive processing of deviance. Biol Psychol. 2011;87:146–151. doi: 10.1016/j.biopsycho.2011.02.021.

- Semjen A, Ivry RB. The coupled oscillator model of between-hand coordination in alternate-hand tapping: a reappraisal. J Exp Psychol Hum Percept Perform. 2001;27:251–265. doi: 10.1037/0096-1523.27.2.251.

- Skoe E, Kraus N. Auditory brainstem response to complex sounds: a tutorial. Ear Hear. 2010;31:302–324. doi: 10.1097/AUD.0b013e3181cdb272.

- Skoe E, Kraus N. A little goes a long way: how the adult brain is shaped by musical training in childhood. J Neurosci. 2012;32:11507–11510. doi: 10.1523/JNEUROSCI.1949-12.2012.

- Smiljanić R, Bradlow AR. Production and perception of clear speech in Croatian and English. J Acoust Soc Am. 2005;118:1677–1688. doi: 10.1121/1.2000788.

- Smith JC, Marsh JT, Brown WS. Far-field recorded frequency-following responses: evidence for the locus of brainstem sources. Electroencephalogr Clin Neurophysiol. 1975;39:465–472. doi: 10.1016/0013-4694(75)90047-4.

- Steele C. The relationship between brain structure, motor performance, and early musical training. Montreal, Quebec: Dissertation, Concordia University; 2012.

- Steele CJ, Penhune VB. Specific increases within global decreases: a functional magnetic resonance imaging investigation of five days of motor sequence learning. J Neurosci. 2010;30:8332–8341. doi: 10.1523/JNEUROSCI.5569-09.2010.

- Strait DL, Kraus N. Can you hear me now? Musical training shapes functional brain networks for selective auditory attention and hearing speech in noise. Front Psychol. 2011;2:113. doi: 10.3389/fpsyg.2011.00113.

- Strait DL, Kraus N, Parbery-Clark A, Ashley R. Musical experience shapes top-down auditory mechanisms: evidence from masking and auditory attention performance. Hear Res. 2010;261:22–29. doi: 10.1016/j.heares.2009.12.021.

- Strait DL, Parbery-Clark A, Hittner E, Kraus N. Musical training during early childhood enhances the neural encoding of speech in noise. Brain Lang. 2012a;123:191–201. doi: 10.1016/j.bandl.2012.09.001.

- Strait DL, Chan K, Ashley R, Kraus N. Specialization among the specialized: auditory brainstem function is tuned in to timbre. Cortex. 2012b;48:360–362. doi: 10.1016/j.cortex.2011.03.015.

- Thomson JM, Goswami U. Rhythmic processing in children with developmental dyslexia: auditory and motor rhythms link to reading and spelling. J Physiol Paris. 2008;102:120–129. doi: 10.1016/j.jphysparis.2008.03.007.

- Thomson J, Fryer B, Maltby J, Goswami U. Auditory and motor rhythm awareness in adults with dyslexia. J Res Read. 2006;29:334–348. doi: 10.1111/j.1467-9817.2006.00312.x.

- Tierney AT, Kraus N. The ability to tap to a beat relates to cognitive, linguistic, and perceptual skills. Brain Lang. 2013;124:225–231. doi: 10.1016/j.bandl.2012.12.014.

- Ullén F, Forsman L, Blom O, Karabanov A, Madison G. Intelligence and variability in a simple timing task share neural substrates in the prefrontal white matter. J Neurosci. 2008;28:4238–4243. doi: 10.1523/JNEUROSCI.0825-08.2008.

- Van Rossum G, Drake F. Python reference manual. Virginia: PythonLabs; 2001. Available at http://www.python.org.

- Warren JE, Wise RJ, Warren JD. Sounds do-able: auditory-motor transformations and the posterior temporal plane. Trends Neurosci. 2005;28:636–643. doi: 10.1016/j.tins.2005.09.010.

- Warrier CM, Nicol TG, Abrams DA, Kraus N. Inferior colliculus contributions to phase encoding of stop consonants in an animal model. Hear Res. 2011;282:108–118. doi: 10.1016/j.heares.2011.09.001.

- Witt ST, Laird AR, Meyerand ME. Functional neuroimaging correlates of finger-tapping task variations: an ALE meta-analysis. Neuroimage. 2008;42:343–356. doi: 10.1016/j.neuroimage.2008.04.025.

- Yin S, Chen Z, Yu D, Feng Y, Wang J. Local inhibition shapes duration tuning in the inferior colliculus of guinea pigs. Hear Res. 2008;237:32–48. doi: 10.1016/j.heares.2007.12.008.