Abstract

Strong evidence exists for a key role of the human ventral occipitotemporal cortex (vOT) in reading, yet there have been conflicting reports about the specificity of this area in orthographic versus nonorthographic processing. We suggest that the inconsistencies in the literature can be explained by the method used to identify regions that respond to words. Here we provide evidence that the “visual word form area” (VWFA) shows word selectivity when identified at the individual subject level, but that intersubject variability in the location and size of the VWFA causes this selectivity to be washed out when the VWFA is defined at the group level or based on coordinates from the literature. Our findings confirm the existence of a word-selective region in vOT while providing an explanation for why other studies have found a lack of word specificity in vOT.

Introduction

Over the past decade, much progress has been made in elucidating the neural foundations of reading. A particular focus of research efforts has been on clarifying the role of the left ventral occipitotemporal cortex (vOT) in reading (Cohen et al., 2002; Baker et al., 2007; Kherif et al., 2011; Mano et al., 2012; Vogel et al., 2012). Yet, although providing strong evidence for a key role of vOT in reading, these studies have also given rise to a strong divide in the field (for reviews, see Dehaene and Cohen, 2011; Price and Devlin, 2011). Although there is general agreement that reading engages the left vOT and that learning to read increases the activity in this region, there is currently considerable disagreement about the role the vOT plays in the processing of written words (Dehaene and Cohen, 2011; Price and Devlin, 2011). Specifically, a key area of contention is whether the vOT contains neuronal representations selective for orthographic stimuli. One view (Price and Devlin, 2011), pointing to a number of studies showing that the vOT responds to both orthographic and nonorthographic stimuli (Kherif et al., 2011; Vogel et al., 2012), hypothesizes that responses to words in the vOT arise from a general role of this area in perception as an integrator of bottom-up sensory information with top-down predictions based on prior experience with these stimuli, in the absence of dedicated populations of neurons selectively tuned to orthographic stimuli. In contrast, the other side of the debate (Dehaene and Cohen, 2011) hypothesizes that there is an area within the left vOT, coined the “visual word form area” (VWFA), that contains neuronal representations with a specific selectivity for orthography, i.e., with neurons that respond more to written words than other objects.
This theory proposes that the difficulty researchers have had in finding word selectivity in the vOT could be a result of averaging across subjects that led to a blending of functionally distinct areas selective for nonorthographic stimuli that abut or even intermingle with the orthographically selective representations in the VWFA (Baker et al., 2007; Dehaene and Cohen, 2011; Wandell, 2011; Mano et al., 2012).

Yet, although researchers have commented on the impact of the individual variability in VWFA location and its relatively small size as potential explanations for the divergence in interpretations regarding the functional role of the VWFA, surprisingly, no study to date has directly tested this hypothesis. Here, we investigated the impact of variability of word-selective regions at the level of individual subjects on the apparent word selectivity in the VWFA. We found that the VWFA has a high degree of word selectivity when identified at the individual subject level, but that intersubject variability in the location and size of the VWFA causes this selectivity to be washed out when the VWFA is defined at the group level or based on coordinates from the literature.

Materials and Methods

Participants.

For the main experiment a total of 53 right-handed normal adults who were native English speakers (aged 18–32, 32 female) were included. Forty-one subjects were drawn from the experiments conducted in (Glezer et al., 2009). Additionally, we include data from 12 subjects who participated in an ongoing study in the lab. For the control experiment in which we identified the VWFA ROI using separate event-related scans, we included all subjects for whom we had event-related scan data that included real word (RW) and fixation conditions. This yielded a subset of 24 subjects, from Experiment 1 in (Glezer et al., 2009) and the ongoing study. Subjects from these two experiments were included if a VWFA could be identified as described below (N = 20). Experimental procedures were approved by Georgetown University's Institutional Review Board, and written informed consent was obtained from all subjects before the experiment.

Scanning, stimuli, and task.

The data reported for the main experiment were taken from the localizer scans from (Glezer et al., 2009) and an ongoing study in the lab. All scans were acquired as outlined in (Glezer et al., 2009). In short, we used a block design and collected echo-planar imaging (EPI) images from two scans. Participants passively viewed blocks of images of written words (high-frequency nouns, >50 per million), scrambled words, faces, and objects. Each block lasted 20,400 ms (stimuli were displayed for 500 ms and were separated by a 100 ms blank), and blocks were separated by a 10,200 ms fixation block. Each run consisted of two blocks of each group (words, scrambled words, faces, objects) and eight fixation blocks. For the control experiment, the VWFA was identified in each subject using separate scans, namely the event-related rapid adaptation scans from Experiment 1 from (Glezer et al., 2009) and the ongoing study, in which subjects performed an orthographic oddball detection task as in (Glezer et al., 2009). These experiments were chosen as the experimental design included both RW and fixation conditions. In these experiments, RW prime/target pairs were presented briefly in each trial and included either the same word repeated (“same” condition) or two different words (“different” condition; Glezer et al., 2009). The stimuli for all scans were presented using E-Prime (http://www.pstnet.com/products/e-prime/), back-projected on a translucent screen located at the rear of the scanner, and viewed by participants through a mirror mounted on the head coil.

MRI acquisition.

All MRI data were acquired at Georgetown University's Center for Functional and Molecular Imaging using an EPI sequence on a 3 Tesla Siemens Trio scanner. For the 24 subjects from Experiments 1 and 2 from (Glezer et al., 2009) an eight-channel head coil was used (flip angle = 90°, TR = 2040 ms, TE = 30 ms, FOV = 205 mm, 64 × 64 matrix). For all of the other 29 subjects, a 12-channel head coil was used (flip angle = 90°, TR = 2040 ms, TE = 29 ms, FOV = 205 mm, 64 × 64 matrix). For all subjects, 35 interleaved axial slices (thickness = 4.0 mm, no gap; in-plane resolution = 3.2 × 3.2 mm²) were acquired.
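The timing and geometry values quoted above can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes that each stimulus block is completely filled with stimulus-plus-blank cycles and it ignores the initial acquisitions that are discarded during preprocessing; the constant and function names are ours, not part of the original analysis.

```python
# Timing values quoted in the text (all in milliseconds).
TR_MS = 2040
STIM_MS = 500
BLANK_MS = 100
BLOCK_MS = 20_400
FIXATION_MS = 10_200
STIM_BLOCKS_PER_RUN = 2 * 4   # two blocks each of words, scrambled words, faces, objects
FIX_BLOCKS_PER_RUN = 8

# In-plane acquisition geometry quoted in the text.
FOV_MM = 205
MATRIX = 64


def volumes(duration_ms, tr_ms=TR_MS):
    """Number of EPI volumes acquired during a period of the given length."""
    return duration_ms / tr_ms


# Assumption: a block consists only of back-to-back stimulus + blank cycles.
stimuli_per_block = BLOCK_MS // (STIM_MS + BLANK_MS)
run_ms = STIM_BLOCKS_PER_RUN * BLOCK_MS + FIX_BLOCKS_PER_RUN * FIXATION_MS
in_plane_mm = FOV_MM / MATRIX

print(f"stimuli per block:         {stimuli_per_block}")
print(f"volumes per stimulus block: {volumes(BLOCK_MS):.0f}")
print(f"volumes per fixation block: {volumes(FIXATION_MS):.0f}")
print(f"volumes per run (blocks):   {volumes(run_ms):.0f}")
print(f"in-plane voxel size (mm):   {in_plane_mm:.2f}")
```

Under these assumptions, block and fixation durations are whole multiples of the TR, and the field of view divided by the matrix size agrees with the reported 3.2 mm in-plane resolution.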

MRI data analysis.

All preprocessing and most statistical analyses were done using the SPM2 software package (http://www.fil.ion.ucl.ac.uk/spm/software/spm2/). After discarding the first five acquisitions of each run, the EPI images were temporally corrected to the middle slice (for event-related scans only), spatially realigned, resliced to 2 × 2 × 2 mm³, normalized to a standard MNI reference brain in Talairach space and smoothed with an isotropic 6.4 mm Gaussian kernel. For the main experiment, the VWFA regions were identified for each individual subject using localizer scans to obtain the individual ROI (iROI) as in (Glezer et al., 2009). We first modeled the hemodynamic activity for each condition with the standard canonical hemodynamic response function, then identified a word-selective ROI with the contrast of words > fixation (at least p < 0.00001, uncorrected) masked by the contrast of words > scrambled words (at least p < 0.05, uncorrected). This contrast typically resulted in only 1–2 foci in the left vOT (p < 0.05, corrected); thresholds were tightened beyond this only to obtain a cluster with one peak coordinate with at least 10 but not >100 contiguous voxels. ROIs were selected by identifying in each subject the most anterior cluster that was significant at the corrected cluster-level of at least p < 0.05 in the vOT (specifically, the occipitotemporal sulcus/fusiform gyrus region) in a location closest to the published location of the VWFA, approximate Talairach coordinates −43 −54 −12 ± 5 (Cohen et al., 2002; Kronbichler et al., 2004), MNI −45 −57 −12 (Vogel et al., 2012). To identify the word-selective group ROI (gROI) we performed a whole brain group analysis using the contrast of words > fixation masked by words > scrambled words. This identified an ROI in the vOT (MNI −44 −54 −16) near the classically defined VWFA ROI (Cohen et al., 2002).
To obtain the largest cluster with only one peak coordinate we used the threshold of p = 2 × 10⁻¹³, uncorrected, yielding a 28 voxel gROI. Finally, we created a “literature-based” ROI (litROI) using MarsBar Toolbox (Brett et al., 2002a) following the same methods as in (Vogel et al., 2012), by building a 4-mm-radius sphere around MNI coordinates −45 −57 −12. To determine whether variability in ROI size impacted our results, we also analyzed our fMRI data using different-size gROI and litROI. For the gROI we systematically tightened and loosened the threshold of the main contrast (words > fixation) and kept the masking contrast (words > scrambled words) constant (voxelwise, p = 0.05, FWE). For the main contrast we used the following uncorrected voxelwise thresholds: 1 × 10⁻¹³ (16 voxels, size 1 gROI), 2 × 10⁻¹³ (28 voxels, size 2 gROI and the main gROI reported on in the paper), 3 × 10⁻¹³ (54 voxels, size 3), 4 × 10⁻¹³ (63 voxels, size 4), 5 × 10⁻¹³ (80 voxels, size 5), 6 × 10⁻¹³ (91 voxels, size 6), and 7 × 10⁻¹³ (103 voxels, size 7). For the litROI, in addition to the 4-mm-radius sphere, we built a sphere using the same techniques as described above with an 8 mm radius. Following ROI selection we then extracted the mean percentage signal change of each individual subject's iROI, the gROI and the litROI with the MarsBar toolbox (Brett et al., 2002a) and conducted statistical analyses (paired t tests, two-tailed) on the percentage signal change.
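For readers less familiar with the statistic, a paired t test on percentage signal change compares, within each subject, the response to two stimulus classes in the same ROI. The following is a minimal sketch of the test statistic using only the Python standard library; the function name and the example values are hypothetical and are not data from this study. A two-tailed p value additionally requires the t distribution, which is available in statistics libraries such as SciPy (`scipy.stats.ttest_rel`).

```python
import math
import statistics


def paired_t(a, b):
    """Return (t, df) for a paired t test on two equal-length samples."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 in the denominator)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical percentage signal change for five subjects in one ROI.
words = [0.9, 1.1, 0.8, 1.2, 1.0]
objects = [0.6, 0.9, 0.7, 0.8, 0.7]

t, df = paired_t(words, objects)
print(f"t({df}) = {t:.2f}")
```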

For the control experiment, VWFA regions were identified using the event-related scans for each individual subject to obtain the word-selective iROI and gROI. iROI were identified with the contrast of all RW stimuli versus fixation (at least p < 0.001, uncorrected) masked by “different” > “same” (at least p < 0.05, uncorrected; Glezer et al., 2009). This mask allowed for the selection of voxels specifically activated in word reading as it includes only voxels that showed word-selective repetition suppression (Glezer et al., 2009). iROI selection criteria were as discussed above for the main experiment. To identify the gROI we performed a whole brain group analysis using the contrast of RW > fixation masked by “different” > “same”. This identified an ROI in vOT (MNI −45 −56 −16) near the classically defined VWFA ROI (Cohen et al., 2002). To obtain an ROI with one peak coordinate we used the threshold of p = 1 × 10⁻⁶, uncorrected, yielding a gROI of 58 voxels. To determine whether variability in ROI size impacted our results, we also performed analyses using different-size gROI and litROI. For the gROI we systematically varied the threshold of the main contrast (RW > fixation) and kept the masking contrast (“different” > “same”) constant (voxelwise, p = 0.01). For the main contrast we used the following uncorrected voxelwise thresholds: 1 × 10⁻⁷ (13 voxels, size 1 gROI), 1 × 10⁻⁶ (58 voxels, size 2 gROI and the gROI reported on in the paper), 3 × 10⁻⁶ (96 voxels, size 3), and 1 × 10⁻³ (495 voxels, size 4). For the litROI, we used 4 and 8 mm spheres as in the main experiment. We then used the separate data from the localizer scans to examine the responses to word, face, and object stimuli within the iROI, gROI, and litROI.
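The masked-contrast logic used for ROI definition in both experiments (a main contrast thresholded at one level, restricted to voxels that also pass a masking contrast at another level) can be sketched as a voxelwise conjunction. This is a simplified illustration, not the SPM2 procedure itself: it treats voxels as a flat list, ignores spatial contiguity and cluster-level correction, and uses hypothetical p values and function names.

```python
def masked_roi(main_p, mask_p, main_thresh, mask_thresh):
    """Indices of voxels that pass both the main and the masking contrast."""
    if len(main_p) != len(mask_p):
        raise ValueError("p maps must cover the same voxels")
    return [
        i
        for i, (p_main, p_mask) in enumerate(zip(main_p, mask_p))
        if p_main < main_thresh and p_mask < mask_thresh
    ]


def size_ok(voxels, min_voxels=10, max_voxels=100):
    """Size criterion from the text: at least 10 but not more than 100 voxels."""
    return min_voxels <= len(voxels) <= max_voxels


# Hypothetical voxelwise p values for six voxels.
main_p = [1e-8, 5e-7, 2e-6, 4e-4, 1e-2, 3e-9]    # e.g., RW > fixation
mask_p = [0.001, 0.2, 0.004, 0.009, 0.001, 0.5]  # e.g., "different" > "same"

# Tightening the main threshold while holding the mask constant shrinks the ROI.
for main_thresh in (1e-3, 1e-6, 1e-7):
    roi = masked_roi(main_p, mask_p, main_thresh, mask_thresh=0.01)
    print(f"main p < {main_thresh:g}: voxels {roi}")
```

Holding the mask constant while varying only the main threshold mirrors the way the different-size gROI were generated, although in the actual analysis the resulting voxels also had to form a single contiguous cluster.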

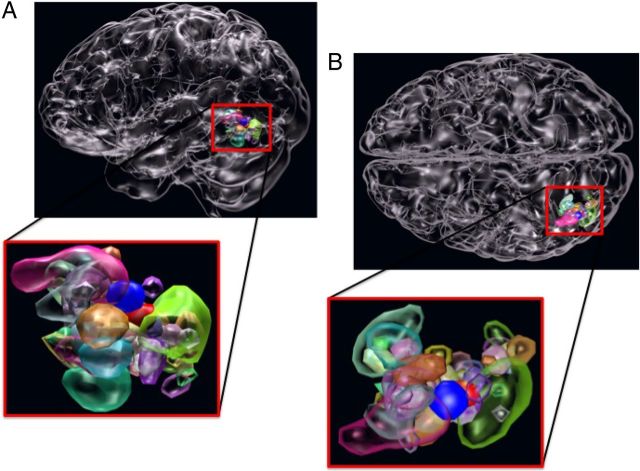

For visualization in Figure 1, ROI and glass brain were rendered using Blender (http://www.blender.org). In addition to the ROI-based analyses, we performed a whole-brain analysis using the contrast of words > objects (p < 0.05, uncorrected).

Figure 1.

ROI variability. Lateral (A) and superior (B) views of the brain, along with a blow-up of the relevant regions (red frame) showing gROI (solid red blob), litROI (solid blue blob), and iROI (translucent blobs, N = 53).

Results

We first examined variability of the iROI across subjects and how well the gROI and litROI represented the individually defined ROI. As can be seen from Figure 1, individual VWFA ROI showed considerable variability across subjects, which was only poorly captured by the gROI and litROI. To quantify this variability, we tested whether the peaks of individual subjects' ROI were located within the gROI and litROI. This was the case for only 5 of 53 and 0 of 53 subjects, respectively. These observations suggest that, given the variability in the location of word-selective voxels across subjects, the gROI and litROI may be too coarse to detect orthographic selectivity in cortex.
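For the spherical litROI, the peak-containment check described above reduces to a distance test against the sphere's center. The sketch below assumes a purely geometric sphere (a toolbox such as MarsBar may discretize the sphere onto the voxel grid slightly differently), and the subject peaks are hypothetical values for illustration, not coordinates from this study. For an irregularly shaped cluster such as the gROI, the equivalent check would be a lookup in the cluster's voxel mask.

```python
import math

LIT_CENTER_MNI = (-45.0, -57.0, -12.0)  # litROI center given in the text
LIT_RADIUS_MM = 4.0                     # litROI radius given in the text


def inside_sphere(peak, center=LIT_CENTER_MNI, radius_mm=LIT_RADIUS_MM):
    """True if a peak coordinate (mm) lies within the spherical ROI."""
    return math.dist(peak, center) <= radius_mm


# Hypothetical iROI peak coordinates (MNI, mm) for three subjects.
peaks = {
    "s01": (-42, -58, -17),
    "s02": (-46, -56, -12),
    "s03": (-38, -64, -20),
}

n_inside = sum(inside_sphere(p) for p in peaks.values())
print(f"{n_inside} of {len(peaks)} peaks fall inside the litROI")
```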

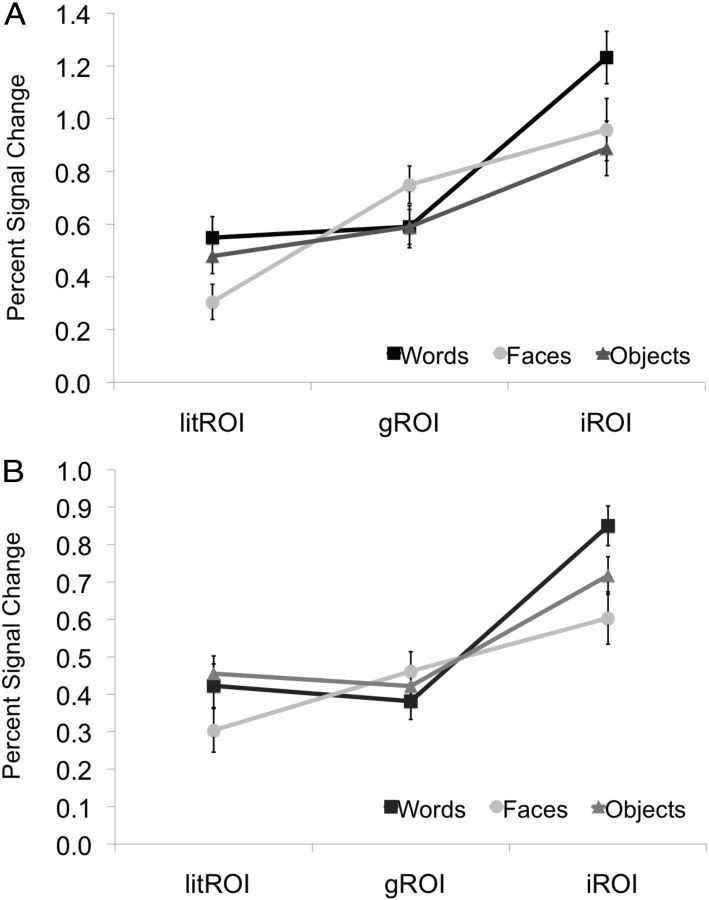

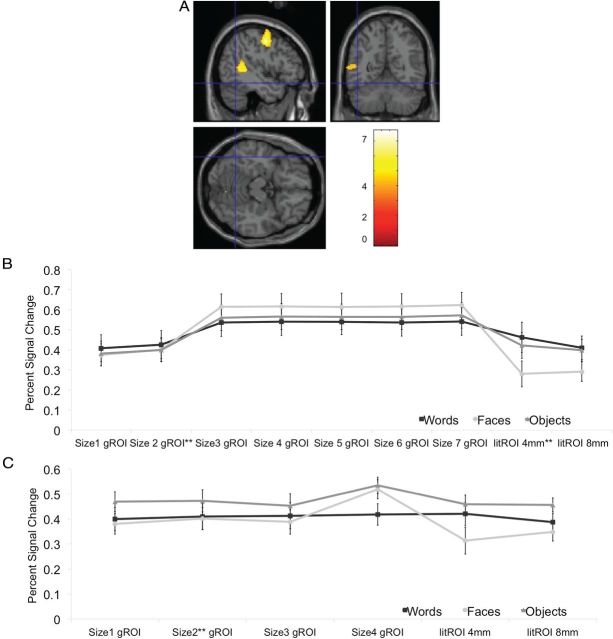

To assess the impact of the gROI and litROI mismatch relative to individual word-responsive ROI on ROI selectivity, we extracted the individual responses to words, faces, and objects in all 53 subjects using MarsBar (Brett et al., 2002a) and compared activations to the different stimulus classes across the different ROI. As shown in Figure 2, in the gROI (MNI −44 −54 −16) we found that responses to words were not significantly higher than to objects or faces (at least p = 0.58, paired t test). This replicates previous findings showing no selectivity to orthography in a group-based ROI (Duncan et al., 2009). For the litROI, we likewise found no word selectivity compared with objects (p = 0.44). Interestingly, we did find a higher response to words than faces (p = 0.001). These results replicate findings showing no selectivity to orthography compared with objects within the litROI (Vogel et al., 2012). We also found that varying the size of the gROI and litROI did not alter the results (Fig. 3), as words never activated the gROI or litROI significantly more than objects. We also performed a whole brain group analysis using the contrast of words > objects, which identified no cluster in the vOT that responded more to words than objects, even at the loosest threshold of p < 0.05, uncorrected, whereas other regions known to be involved in word reading were active (i.e., STS/STG region and precentral gyrus; Moore and Price, 1999; Turkeltaub et al., 2002; Fig. 3); these results are in line with previous findings showing no activation in the vOT when directly comparing words and objects (Moore and Price, 1999; Sevostianov et al., 2002).

Figure 2.

The VWFA is selective for orthography, but only if defined individually. Average percentage signal change to words, objects and faces in the left hemisphere in three ROIs: the literature based ROI (litROI), group-based ROI (gROI), and individually defined ROI (iROI) for (A) the main experiment and (B) the control experiment. Error bars represent within-subject SEM.

Figure 3.

Whole-brain analysis and effect of varying ROI size. A, Whole-brain analysis of words > objects contrast. Crosshair is centered at the location of the classically defined VWFA (Talairach coordinates −43 −54 −12, Cohen et al., 2002; MNI −45 −57 −12, Vogel et al., 2012). Color bar shows t values. B, Effect of changing the size of gROI and litROI in the main experiment and (C) control experiment. Plots show mean percentage signal change to words, objects, and faces in the left hemisphere gROI and litROI for varying ROI sizes. **Indicates the ROI reported on in Figure 2. Error bars represent within-subject SEM.

An absence of orthographic selectivity in gROI and litROI is compatible with the domain-general theory of vOT function (Price and Devlin, 2011) as well as with the theory of an orthographically selective VWFA (Dehaene and Cohen, 2011) whose selectivity is washed out in group averaging. However, the two theories make clearly different predictions for individually defined ROI: the theory that posits the existence of a VWFA with orthographically selective neurons (Baker et al., 2007; Glezer et al., 2009; Dehaene and Cohen, 2011) predicts that each subject's iROI should show a significantly stronger response to words than to other object classes, as the iROI captures each individual subject's orthographically selective voxels. In contrast, the domain-general theory of vOT function (Price and Devlin, 2011; Vogel et al., 2012) predicts that the iROI, like the gROI, should not show preferential responses to words versus other object classes. To test these competing hypotheses, we identified each individual subject's VWFA (average location was MNI coordinates −42 ± 5 −58 ± 8 −17 ± 6), and then analyzed the responses in each subject's iROI to faces and objects. Crucially, in the iROI, responses to words were significantly higher than responses to both objects and faces (p = 0.00003 and 0.002, respectively), strongly supporting the existence of an orthographically selective representation in ventral occipitotemporal cortex. Moreover, this degree of orthographic selectivity was specific to the left hemisphere: although word-responsive iROI could be identified in the right hemisphere in a majority of subjects (n = 35; average MNI coordinate 43 ± 8 −61 ± 7 −16 ± 6), these right hemisphere iROI showed no significant response differences between the different stimulus groups. Likewise, a right hemisphere gROI was identified at MNI 42 −64 −22 (n = 53); this gROI showed stronger responses to objects and faces than to words (p < 0.0001).

To address the concern that orthographic selectivity in the VWFA in the preceding analyses might have been inflated due to the same scans being used to define the VWFA and to examine its selectivity, we conducted a set of control analyses in which we used separate datasets to identify the VWFA ROI (see Materials and Methods). Results of this control experiment fully confirmed the findings from the main experiment: individual VWFA ROI showed considerable variability across subjects, which was only poorly captured by the gROI and litROI. Again, we quantified this variability and found poor overlap of the peaks of individual subjects' ROI with the gROI and litROI. Only 2 of 20 and 1 of 20 subjects had iROI peaks within the gROI and litROI, respectively. We further found that responses in the gROI (MNI −45 −56 −16) and litROI to words were not significantly higher than to objects or faces (gROI p = 0.51 and 0.28; litROI p = 0.48, p = 0.08, respectively, paired t test; Fig. 2). Varying the size of the gROI and litROI did not alter the results (Fig. 3), as words never activated the gROI or litROI significantly more than objects or faces. In fact, in the largest ROI, responses to objects were significantly higher than to words (p = 0.02) and almost reached significance for faces (p = 0.057). However, in the iROI (average location −42 ± 6 −56 ± 9 −16 ± 6), responses to words were significantly higher than responses to both objects and faces (p = 0.012 and 0.006, respectively). As in the main experiment, this degree of orthographic selectivity was specific to the left hemisphere. Word-responsive iROI could be identified in the right hemisphere in a majority of subjects (n = 18) (average MNI 39 ± 6 −58 ± 8 −16 ± 6). However, these ROI showed a significantly higher response to faces and objects (p = 0.03 and 0.005, respectively).
Likewise, while a right hemisphere gROI could be identified at MNI 42 −60 −14 (n = 20), this gROI showed significantly stronger responses to objects (p = 0.0002) and faces (p < 0.0001) than to words.

Discussion

In our study, we explored the impact of fMRI analysis and ROI selection methods on the ability to identify orthographically selective regions in human vOT. Using individual subject-based analyses, we show that there exists in ventral temporal cortex a neuronal representation selective for orthography, the so-called VWFA. Although this representation can be reliably identified in individual subjects, intersubject variability in its location and size washes out orthographic selectivity in a group analysis or when attempting to define the VWFA based on coordinates from the literature. The idea of intersubject variability and averaging leading to decreased selectivity has been examined previously in other areas (Brett et al., 2002b; Saxe et al., 2006; Nieto-Castañón and Fedorenko, 2012), yet no study to date has directly examined the impact of individual variability in VWFA location as a potential explanation for the divergence in interpretations regarding the functional role of the VWFA. Our study not only strengthens the case for an orthographically selective VWFA, but also explains why studies using group-based analyses have failed to identify an orthographically selective ROI in ventral occipitotemporal cortex.
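The washing-out argument can be made concrete with a deliberately simple toy example. The sketch below is not a model of our data: it assumes a one-dimensional strip of cortex in which every simulated subject has a small word-selective patch at a different position, surrounded by object-preferring tissue, and all response values, patch positions, and names are hypothetical.

```python
from statistics import mean

# All numbers below are hypothetical and chosen only for illustration.
N_VOXELS = 12                      # voxels along a 1-D strip of cortex
PATCH_WIDTH = 2                    # width of each subject's word-selective patch
WORD_IN, WORD_OUT = 1.0, 0.2       # word response inside / outside the patch
OBJ_IN, OBJ_OUT = 0.3, 0.8         # object response inside / outside the patch
PATCH_STARTS = [1, 3, 5, 7, 9]     # patch location differs across five subjects
FIXED_ROI = [5, 6]                 # one fixed location applied to every subject


def subject_maps(patch_start):
    """Return (word_map, object_map, patch_voxels) for one simulated subject."""
    patch = list(range(patch_start, patch_start + PATCH_WIDTH))
    words = [WORD_IN if v in patch else WORD_OUT for v in range(N_VOXELS)]
    objects = [OBJ_IN if v in patch else OBJ_OUT for v in range(N_VOXELS)]
    return words, objects, patch


def roi_mean(voxel_values, roi):
    """Mean response over the voxels of an ROI."""
    return mean(voxel_values[v] for v in roi)


subjects = [subject_maps(start) for start in PATCH_STARTS]

# Individually defined ROI: each subject's own word-selective patch.
iroi_words = mean(roi_mean(w, patch) for w, _, patch in subjects)
iroi_objects = mean(roi_mean(o, patch) for _, o, patch in subjects)

# Fixed ROI: the same voxels for every subject, as with a group or literature ROI.
fixed_words = mean(roi_mean(w, FIXED_ROI) for w, _, _ in subjects)
fixed_objects = mean(roi_mean(o, FIXED_ROI) for _, o, _ in subjects)

print(f"individual ROI: words {iroi_words:.2f}, objects {iroi_objects:.2f}")
print(f"fixed ROI:      words {fixed_words:.2f}, objects {fixed_objects:.2f}")
```

In this toy setting the individually defined ROI shows a clear word preference, whereas the fixed ROI coincides with the word-selective patch in only one of the five simulated subjects and therefore averages in mostly object-preferring tissue.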

Interestingly, the debate regarding the nature of representations in the vOT parallels recent discussions regarding the nature of neural representations in the human “fusiform face area” (FFA). Whereas one theory argued for the FFA being highly specialized for face perception (Kanwisher et al., 1997), another theory argued for an object-general but process-specific role of the FFA (Gauthier, 2000), in which individual neurons were not specifically selective for faces but generally responded to objects of expertise. That is, neurons in the FFA that respond to faces were posited to also respond to birds in a birder, or to cars in a car expert, with the same group of neurons supporting subordinate-level discrimination for all objects of expertise. The theory of face specificity of the FFA came under attack also from studies reporting that images of body parts could activate the FFA as strongly as images of faces did (Spiridon et al., 2006). Additional studies, however, revealed that selectivity for objects of expertise was close to the FFA but did not show much overlap with face-selective voxels (Rhodes et al., 2004; Jiang et al., 2007; but see McGugin et al., 2012), and that similarly, apparent body part-selectivity of the FFA was due to body part-selective voxels adjacent to but separate from the FFA (Schwarzlose et al., 2005). Very relevantly, recent high-resolution imaging studies (Weiner and Grill-Spector, 2013) have revealed that right ventral temporal cortex contains an arrangement of regions of alternating face- and body part-selectivity, whose topography is highly consistent across subjects, but whose locations and spatial extents are variable, producing the appearance of one large region responsive to faces and body parts in a group analysis, very similar to the data presented in this paper showing that group averaging washes out the orthographic selectivity of neuronal representations located at slightly different locations in individual subjects. 
It would therefore be attractive in future work to further probe selectivity in the vicinity of the VWFA, specifically whether there might be a topographically consistent arrangement of selectivity for words and other object classes. Interestingly, a recent high-resolution imaging study (Mano et al., 2012) showed that orthographically selective areas comingle with object selective regions in left vOT. Finding consistency in object selectivity around the VWFA would be highly interesting for our understanding of development and plasticity, given the theory (Dehaene and Cohen, 2011) that the VWFA arises during reading acquisition from the recruitment of neurons in object-selective visual cortex that have appropriate connectivity with language regions to become selective for the “objects” of reading, i.e., real words.

Footnotes

This research was supported by NSF Grants 1026934 and 0449743. We thank Paul Robinson for his help in creating Figure 1. We further thank Xiong Jiang for his assistance with the projects that generated the data that went into the analysis for this paper.

The authors declare no competing financial interests.

References

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proc Natl Acad Sci U S A. 2007;104:9087–9092. doi: 10.1073/pnas.0703300104.

- Brett M, Anton JL, Valabregue R, Poline JB. Region of interest analysis using an SPM toolbox. Paper presented at the 8th International Conference on Functional Mapping of the Human Brain; June 2–6, 2002; Sendai, Japan. 2002a.

- Brett M, Johnsrude IS, Owen AM. The problem of functional localization in the human brain. Nat Rev Neurosci. 2002b;3:243–249. doi: 10.1038/nrn756.

- Cohen L, Lehéricy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the Visual Word Form Area. Brain. 2002;125:1054–1069. doi: 10.1093/brain/awf094.

- Dehaene S, Cohen L. The unique role of the visual word form area in reading. Trends Cogn Sci. 2011;15:254–262. doi: 10.1016/j.tics.2011.04.003.

- Duncan KJ, Pattamadilok C, Knierim I, Devlin JT. Consistency and variability in functional localisers. Neuroimage. 2009;46:1018–1026. doi: 10.1016/j.neuroimage.2009.03.014.

- Gauthier I. What constrains the organization of the ventral temporal cortex? Trends Cogn Sci. 2000;4:1–2. doi: 10.1016/S1364-6613(99)01416-3.

- Glezer LS, Jiang X, Riesenhuber M. Evidence for highly selective neuronal tuning to whole words in the “visual word form area.” Neuron. 2009;62:199–204. doi: 10.1016/j.neuron.2009.03.017.

- Jiang X, Bradley E, Rini RA, Zeffiro T, Vanmeter J, Riesenhuber M. Categorization training results in shape- and category-selective human neural plasticity. Neuron. 2007;53:891–903. doi: 10.1016/j.neuron.2007.02.015.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kherif F, Josse G, Price CJ. Automatic top-down processing explains common left occipitotemporal responses to visual words and objects. Cereb Cortex. 2011;21:103–114. doi: 10.1093/cercor/bhq063.

- Kronbichler M, Hutzler F, Wimmer H, Mair A, Staffen W, Ladurner G. The visual word form area and the frequency with which words are encountered: evidence from a parametric fMRI study. Neuroimage. 2004;21:946–953. doi: 10.1016/j.neuroimage.2003.10.021.

- Mano QR, Humphries C, Desai RH, Seidenberg MS, Osmon DC, Stengel BC, Binder JR. The role of left occipitotemporal cortex in reading: reconciling stimulus, task, and lexicality effects. Cereb Cortex. 2012;23:988–1001. doi: 10.1093/cercor/bhs093.

- McGugin RW, Gatenby JC, Gore JC, Gauthier I. High-resolution imaging of expertise reveals reliable object selectivity in the fusiform face area related to perceptual performance. Proc Natl Acad Sci U S A. 2012;109:17063–17068. doi: 10.1073/pnas.1116333109.

- Moore CJ, Price CJ. Three distinct ventral occipitotemporal regions for reading and object naming. Neuroimage. 1999;10:181–192. doi: 10.1006/nimg.1999.0450.

- Nieto-Castañón A, Fedorenko E. Subject-specific functional localizers increase sensitivity and functional resolution of multisubject analyses. Neuroimage. 2012;63:1646–1669. doi: 10.1016/j.neuroimage.2012.06.065.

- Price CJ, Devlin JT. The interactive account of ventral occipitotemporal contributions to reading. Trends Cogn Sci. 2011;15:246–253. doi: 10.1016/j.tics.2011.04.001.

- Rhodes G, Byatt G, Michie PT, Puce A. Is the fusiform face area specialized for faces, individuation, or expert individuation? J Cogn Neurosci. 2004;16:189–203. doi: 10.1162/089892904322984508.

- Saxe R, Brett M, Kanwisher N. Divide and conquer: a defense of functional localizers. Neuroimage. 2006;30:1088–1096; discussion 1097–1099. doi: 10.1016/j.neuroimage.2005.12.062.

- Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. J Neurosci. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Sevostianov A, Horwitz B, Nechaev V, Williams R, Fromm S, Braun AR. fMRI study comparing names versus pictures of objects. Hum Brain Mapp. 2002;16:168–175. doi: 10.1002/hbm.10037.

- Spiridon M, Fischl B, Kanwisher N. Location and spatial profile of category-specific regions in human extrastriate cortex. Hum Brain Mapp. 2006;27:77–89. doi: 10.1002/hbm.20169.

- Turkeltaub PE, Eden GF, Jones KM, Zeffiro TA. Meta-analysis of the functional neuroanatomy of single-word reading: method and validation. Neuroimage. 2002;16:765–780. doi: 10.1006/nimg.2002.1131.

- Vogel AC, Petersen SE, Schlaggar BL. The left occipitotemporal cortex does not show preferential activity for words. Cereb Cortex. 2012;22:2715–2732. doi: 10.1093/cercor/bhr295.

- Wandell BA. The neurobiological basis of seeing words. Ann N Y Acad Sci. 2011;1224:63–80. doi: 10.1111/j.1749-6632.2010.05954.x.

- Weiner KS, Grill-Spector K. Neural representations of faces and limbs neighbor in human high-level visual cortex: evidence for a new organization principle. Psychol Res. 2013;77:74–97. doi: 10.1007/s00426-011-0392-x.