Abstract

Persistent activity in cortex is the neural correlate of working memory (WM). In persistent activity, spike trains are highly irregular, even more than in baseline. This seemingly innocuous feature challenges our current understanding of the synaptic mechanisms underlying WM. Here we argue that in WM the prefrontal cortex (PFC) operates in a regime of balanced excitation and inhibition and that the observed temporal irregularity reflects this regime. We show that this requires that nonlinearities underlying the persistent activity are primarily in the neuronal interactions between PFC neurons. We also show that short-term synaptic facilitation can be the physiological substrate of these nonlinearities and that the resulting mechanism of balanced persistent activity is robust, in particular with respect to changes in the connectivity. As an example, we put forward a computational model of the PFC circuit involved in oculomotor delayed response task. The novelty of this model is that recurrent excitatory synapses are facilitating. We demonstrate that this model displays direction-selective persistent activity. We find that, even though the memory eventually degrades because of the heterogeneities, it can be stored for several seconds for plausible network size and connectivity. This model accounts for a large number of experimental findings, such as the findings that have shown that firing is more irregular during the persistent state than during baseline, that the neuronal responses are very diverse, and that the preferred directions during cue and delay periods are strongly correlated but tuning widths are not.

Introduction

Working memory (WM), the ability to temporarily hold, integrate, and process information to produce goal-directed behavior, is crucial to higher cognitive functions, such as planning, reasoning, decision-making, and language comprehension (Baddeley, 1986; Fuster, 2008). The persistent activity recorded in neocortex during WM tasks is thought to be the main neuronal correlate of WM (Fuster and Alexander, 1971; Miyashita and Chang, 1988; Goldman-Rakic, 1995). For example, in an oculomotor-delayed response (ODR) task in which a monkey has to remember the location of a stimulus for several seconds to make a saccade in its direction, a significant fraction of the neurons in the prefrontal cortex (PFC) modify their activity persistently and selectively to the cue direction during the delay period (Funahashi et al., 1989, 1990, 1991; Constantinidis et al., 2001; Takeda and Funahashi, 2007). The classical view is that this reflects a multistability in the dynamics of the PFC circuit because sensory inputs are the same in the precue and in the delay periods but neuronal activity is different (Hebb, 1949; Hopfield, 1984; Amit, 1995; Amit and Brunel, 1997; Wang, 2001).

The persistent activity of PFC neurons is highly irregular temporally (Shinomoto et al., 1999; Compte et al., 2003; Shafi et al., 2007). For instance, it has been reported that in ODR tasks, the coefficient of variation of the interspike interval distribution is close to 1, and even >1 for many of the neurons (Compte et al., 2003). Previous modeling works have attempted to account for this irregularity (Brunel, 2000; van Vreeswijk and Sompolinsky, 2004; Renart et al., 2007; Roudi and Latham, 2007; Barbieri and Brunel, 2008). However, in all these models, unless parameters are tuned, the neurons fire much too regularly in memory states. Hence, accounting in a robust way for highly irregular firing and persistent activity remains challenging.

Irregular firing of cortical neurons is naturally explained if one assumes that cortical networks operate in a regime in which excitation is strong and balanced by strong inhibition (Tsodyks and Sejnowski, 1995; van Vreeswijk and Sompolinsky, 1996, 1998; Vogels et al., 2005; Lerchner et al., 2006; Vogels and Abbott, 2009). It is therefore tempting to assume that in WM tasks the PFC network expresses a multistability between balanced states. We argue here that this requires that the nonlinearities underlying the persistence of activity in PFC are primarily in the neuronal interactions and not in the neurons as assumed previously. We also argue that short-term plasticity is a possible substrate for these nonlinearities. Specifically, we propose that the short-term facilitating excitatory synapses recently reported in PFC (Hempel et al., 2000; Wang et al., 2006) play an essential role in WM. To illustrate these claims, we develop a new network model for spatial WM based on this assumption. We show that the model displays highly irregular spontaneous activity as well as persistent, selective, and highly irregular delay activity. Importantly, it also displays a great deal of the diversity observed in the delay activity of PFC neurons (Funahashi et al., 1989, 1990; Asaad et al., 1998; Romo et al., 1999; Takeda and Funahashi, 2002; Brody et al., 2003; Shafi et al., 2007).

A brief account of this study has been presented in abstract form (Hansel and Mato, 2008).

Materials and Methods

The network models.

In this paper we consider two network models made of spiking neurons. One model (Model I) has an unstructured connectivity. The second model (Model II) represents a network in PFC involved in an ODR task and has a ring architecture (Ben-Yishai et al., 1995; Hansel and Sompolinsky, 1996).

Single neuron dynamics.

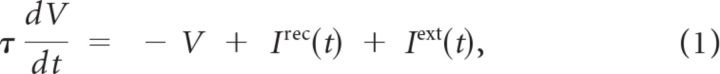

Both models are made up of an excitatory and an inhibitory population of integrate-and-fire neurons. The membrane potential of a neuron follows the dynamics:

|

where τ is the membrane time constant, Irec(t) is the total recurrent synaptic current the neuron receives from all other cells in the network connected to it and Iext(t) represents feedforward inputs from outside the network. Whenever the membrane potential of the neuron reaches the threshold, VT, it fires an action potential and its voltage is reset to VR. We take VT = 20 mV, VR = −3.33 mV. The time constants of the neurons are τ = 20 and 10 ms for excitatory and inhibitory neurons respectively, in accordance with standard values (Somers et al., 1995).

The external inputs.

Simulation of a memory task (Model I) or of the ODR task (Model II) requires a stimulus that depends on time. First, there is a precue period that allows the system to settle in a baseline state. In the second stage, a transient input is applied to simulate the cue, after which the input returns to the previous value. Finally another transient input is applied to erase the memory that has been stored. Thus, the external feedforward input into neuron i = 1, …, Nα in population α = E, I (hereafter neuron i, α) is:

The first term on the right hand side represents the background input, which is constant in time and depends solely on the target population. The second term represents the transient sensory inputs related to the cue to be memorized. The third term represents a transient input that erases the memory at the end of the delay period. In Model I, Ii,αγ(t) (γ = cue, erase) is homogeneous (i.e., Ii,αγ(t) ≡ Aαγ(t) does not depend on i). In model II:

where θcue is the direction in which the cue is presented; is the direction of the cue for which the sensory input into neuron (i, α) is maximum; and θerase is the direction of the saccade that is actually performed. An estimate of θerase is given by the direction of the population vector computed from the activity of the neurons at the end of the delay period (see below). We assume that the angles, θi,α, are uniformly distributed between 0 and 360°.

For simplicity we take Aαγ(t) = ÂαγHγ(t) (γ = cue, erase), where Âγ is constant, Hcue(t) = 1 during the cue period, and Hcue(t) = 0 otherwise. Similarly, Herase(t) = 1 when the memory erasing input is present and Herase(t) = 0 otherwise.

Connectivity of the networks.

We define the connectivity matrix of the network by Jiα,jβ = 1 if neuron (j, β) is connected presynaptically to neuron (i, α) and Jiα,jβ = 0 otherwise. In Model I the connectivity is unstructured. Therefore the probability, Piα,jβ, that Jiα,jβ = 1 depends only on α and β: Piα,jβ = Kαβ/Nβ, where Nβ is the number of neurons in population β = E, I and Kαβ is the average number of connections a neuron in population α receives from population β.

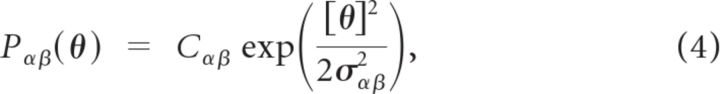

The architecture of Model II is consistent with the columnar functional anatomy of the monkey PFC (Goldman-Rakic, 1987, 1988, 1995; Rao et al., 1999). The probability of connection between two neurons is given by Piα,jβ = Pαβ(|θiα − θjβ|) with:

|

where [θ] = min(|θ|, 2π − |θ|) and Cαβ is a normalization that ensures that the total number of inputs a neuron in population α receives from population β is on average Kαβ. The range of the interactions is characterized by the parameters σαβ. The resulting network architecture is a probabilistic version of the architecture of the ring model, in which neurons are all connected with probability 1, whereas the strength of their interactions depends on their distance on the ring (Ben-Yishai et al., 1995; Hansel and Sompolinsky, 1996; Compte et al., 2000).

Synaptic interactions.

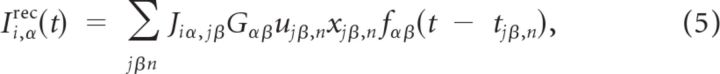

We model the recurrent synaptic input current into neuron (i, α) as:

|

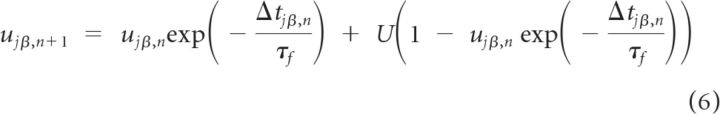

where Gαβ is a constant that measures the maximal synaptic current neuron (i, α) receives from neuron (j, β), tjβ,n is the time of the n-th spike fired by neuron (j, β), xjβ,n is the amount of synaptic resources available at its synaptic terminals before this spike, and ujβ,n is the fraction of these resources used by this spike. The dynamics of these two variables are responsible for the short-term plasticity (STP) and we model them as (Markram et al., 1998):

|

|

where Δtjβ,n is the n-th interspike interval of neuron (j, β); τr and τf are the recovery and the facilitation time constants of the synapse, and U the maximal utilization parameter.

Finally, the function fαβ(t) in Equation 5 describes the dynamics of individual postsynaptic currents (PSCs). It is given by fαβ(t) = (1/ταβ) exp(−t/ταβ)H(t) where ταβ is the synaptic decay time, and H(t) = 0 [respectively (resp.) 1] for t < 0 (resp. t > 0) (Dayan and Abbott, 2001).

Network size, connectivity, and scaling of network parameters with connectivity.

The total number of neurons in the network is N = NE + NI with NE = 0.8N and NI = 0.2N. The average total number of synaptic inputs a neuron receives, K, is assumed to be the same in the two populations. We take: KEE = KIE ≡ KE = 0.8K and KII = KEI ≡ KI = 0.2K. Unless specified otherwise we take N = 80,000 and K = 2000.

The balanced regime is mathematically well defined only in the limit N → ∞, K → ∞ while K ≪ N, and the strength of the recurrent interactions and the external inputs are scaled as (van Vreeswijk and Sompolinsky, 1998):

|

|

|

where gαβ, iαb, and âαγ do not depend on K. This scaling ensures that, in a wide range of parameters, the temporal fluctuations in the synaptic inputs remain finite and do not depend on the connectivity when the connectivity is large, whereas the time-average excitatory and inhibitory inputs increase and balance each other (van Vreeswijk and Sompolinsky, 1996, 1998). In that limit, even though the excitatory and inhibitory inputs become infinitely large, the temporal mean and SD of the fluctuations of the total inputs remain finite and on the same order of the neuronal threshold.

For finite connectivity, the balance of excitation and inhibition is only approximate. Therefore, to qualify the dynamic regime of the network as balanced, it is important to check the robustness to increasing K. As explained in Results, it is essential to verify that the domain of the parameters in which the multistability between balanced states occurs does not vanish in the limit of large N and large K. To test for this robustness, we performed numerical simulations with a network of size up to N = 320,000 neurons and connectivities as large as K = 32,000.

Synaptic parameters.

Unless specified otherwise, the parameters of the models used in our simulations are those given in Table 1.

Table 1.

Parameters of the synaptic interactions for the unstructured and spatial working memory networks

| Unstructured | Spatial WM | |

|---|---|---|

| gEE (AMPA) | 560 mV ms | 533.3 mV ms |

| gEE (NMDA) | 560 mV ms | 533.3 mV ms |

| gIE (AMPA) | 67.2 mV ms | 67.2 mV ms |

| gIE (NMDA) | 7.4 mV ms | 7.4 mV ms |

| gEI | −138.6 mV ms | −138.6 mV ms |

| gII | −90.6 mV ms | −90.6 mV ms |

| U | 0.03 | 0.03 |

| τr | 200 ms | 200 ms |

| τf | 450 ms | 450 ms |

| σEE | — | 60° |

| σIE | — | 70° |

| σEI | — | 60° |

| σII | — | 60° |

In both models, pyramidal cells form a mixture of fast (AMPA) and slow (NMDA) synapses on other pyramidal cells and interneurons. Both components share the same connectivity matrices Jiα,jE but differ in their synaptic strength (gAMPA and gNMDA, respectively) and in the decay time constant of their PSCs. Equation 5 must be interpreted as including the sum over both components. The decay time constant of the excitatory postsynaptic currents are 3 and 50 ms for AMPA and NMDA synapses, respectively. We tested to confirm that taking longer time constants for the NMDA synapses (for instance 80 ms, as reported by Wang et al., 2008) has no impact on the results (data not shown).

The voltage dependence of the NMDA currents was not included in the simulations depicted in this paper. However, we have verified that including voltage dependence while keeping the STP of the recurrent excitation (EE) synapse interactions does not qualitatively change the conclusions of our work. For that purpose, we multiplied the synaptic strength, GαENMDA, by the same voltage-dependent factor as in Compte et al. (2000). Since this factor is always <1, we had to increase GEENMDA and GIENMDA by some constant factor equal to 5.

We define Rα as follows: Rα = gαEAMPA/(gαENMDA + gαEAMPA). In most of the simulations we took RE = 0.5 and RI = 0.9. This accounts for the fact that NMDA synapses are more abundant between pyramidal cells than between pyramidal cells and interneurons (Thomson, 1997). All the inhibitory interactions have a decay time constant of 4 ms (Bartos et al., 2001, 2002). We verified that the properties of the model were robust with respect to the values of the excitation and inhibition decay-time constants, as well as with respect to the ratios RE and RI.

The recurrent excitation between pyramidal cells displays short-term plasticity. For simplicity, in the simulations described in this paper, we assumed that this is the case for AMPA as well as for NMDA interactions. However, we verified that the properties of the network are essentially the same if only AMPA synapses display STP (provided that the synaptic strength GEEAMPA is increased appropriately to compensate for the absence of facilitation in NMDA synapses). The parameters of the STP were chosen such that the network displays multistability in a broad range of background inputs. The chosen values for the recovery and the facilitation time constants were compatible with the in vitro data of Wang et al. (2006). The utilization parameter, U, was in the range of the lower values reported in that study. We assume that excitatory synapses to inhibitory neurons as well as all inhibitory synapses do not display STP. Therefore for these synapses xjβ,n = ujβ,n = 1.

With the parameters in Table 1, the maximal postsynaptic potentials (PSPs) of the various connections are as follows: 4.3 10−2 mV and 0.14 mV for the NMDA and AMPA components of the EE synapses, respectively; −2.3 mV for the EI synapses, −2.5 mV for the II synapses; and 2.5 10−2 and 1 mV for the NMDA and AMPA components of the excitatory to the inhibitory neurons, respectively. Note that the PSPs generated by individual excitatory connections are substantially weaker than those generated by inhibitory ones. This is partially compensated for by excitatory connectivity that is larger by a factor of 4 than the inhibitory connectivity, and by greater activity for inhibitory than for excitatory neurons. Moreover, the neurons receive an excitatory tonic input. Altogether, excitation and inhibition inputs balance approximately as we show in the results.

Numerical simulations.

Simulations were performed using a second-order Runge–Kutta scheme with a fixed time step, δt = 0.1 ms, supplemented by an interpolation scheme for the determination of the firing times of the neurons (Hansel et al., 1998).

Characterization of the irregularity in action potential firing.

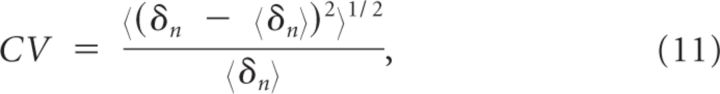

We quantify the irregularity of the discharge of a neuron by the coefficient of variation (CV) of its interspike interval (ISI) distribution defined by:

|

where δn is n-th ISI of the neuron and 〈 … 〉 denotes an average overall number of spikes it has fired.

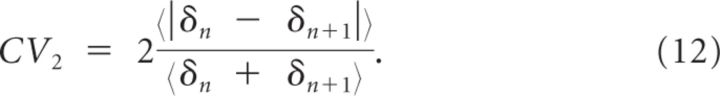

We also evaluate the coefficient of variation CV2. CV2 is computed by comparing each ISI (δn) to the following ISI (δn+1) to evaluate the degree of variability of ISIs in a local manner (Holt et al., 1996). It is defined by:

|

For a Poisson spike train, CV = CV2 = 1.

Evaluation of the phase diagrams.

To evaluate which regions in the space of parameters display persistent activity, we use the following procedure: In simulating the network, we slowly increase the external input IE (while keeping the rest of the parameters constant) and monitor the mean and spatial modulation of the network activity. When IE has reached a predefined value of sufficient size, we continue the simulations while decreasing IE back to its initial value. This generates a hysteresis curve, which enables us to identify the bistability region for that point in the parameter space. The procedure is repeated for different values of the parameters (e.g., GEE) to obtain a phase diagram.

Simulation of the delayed response task in Model II.

At the beginning of a trial, the network is initialized from random initial conditions. After 3 s (representing the fixation period in the experiment), the cue is presented and the related feedforward input occurs for Δtcue = 0.5 s. The delay period goes from 3.5 to 6.5 s. The transient input, which erases the memory, begins at t = 6.5 s and has a duration of Δterase = 1 s.

Quantification of the single neuron directional selectivity in Model II.

Tuning curves were estimated by simulating 20 trials for each of the eight cues from 0 to 315° in intervals of 45°. Using a bootstrap method, we determined whether the task-related activity of a neuron was directionally tuned (Constantinidis et al., 2001). For each neuron we evaluated the quantity Oi,α = [∑θrθ2 ]1/2 where rθ is the firing rate of the neuron during the delay period averaged over the 20 trials with the cue presented in direction θ. We compared the obtained value of Oi,α with the one obtained after randomly permuting the angles of each trial before averaging. If the second quantity is smaller than the first one for 99% of the permutations, we consider that the activity is significantly directionally tuned.

We quantified the degree of directional selectivity with the circular variance (CircVar) (Mardia, 1972) defined by CircVar ≡ 1 − c1/c0 where ck = |Σθ rθ exp(ikθ)|. A broad tuning curve (badly selective response) corresponds to CircVar close to 1 whereas for very sharp tuning CircVar is close to 0.

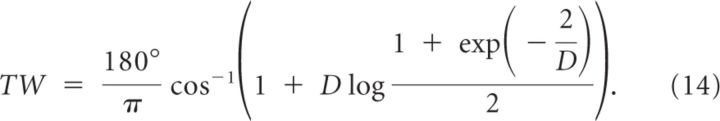

The tuning curves of a large fraction of the neurons can be well fitted to a von Mises function defined as:

|

We estimated the parameters A, B, ψ, D for each neuron by minimizing the quadratic error of the fit: E = Σθ(r(θ) − rθ)2/σθ2, where the sum is over the eight directions of the cue and σθ is the trial-to-trial SD of the response. The estimate of the preferred direction (PD) of the neuron is given by PD = 180°ψ/π. The sharpness of the tuning curve [tuning width (TW)] above baseline can be computed from the formula:

|

The quality of the fit is estimated by evaluating the χ2 distribution for 4 degrees of freedom (8 points minus 4 parameters) (Press et al., 1992). This probability characterizes the goodness-of-fit. Bad fits correspond to extremely low values of the probability, q. We consider that the fit is good if q > 0.001. To determine the spatial modulation of the network activity and population vector in Model II, let us denote by fjα(t) the firing rate of neuron (j, α) averaged over a time window of 50 ms around time t. To characterize the spatial modulation of the activity of population α at time t we computed:

|

where Mα is the modulus of the complex number Zα and ψα is its argument. Note that the real and imaginary parts of Zα(t) are the components of the population vector at time t for population α. If the network activity is homogeneous, Mα(t) is ∼0, whereas for a very sharply modulated activity profile, Mα(t) is ∼1. In our simulations, we always found that ψE(t) is approximately equal to ψI(t). The preferred direction, θerase, of the feedforward input that erases the memory trace was taken to be the value of ψE = ψI at the end of the delay period.

Results

Irregular firing in cortex in vivo and balance of excitation and inhibition

Cortical neurons fire irregularly (Burns and Webb, 1976; Softky and Koch, 1993; Bair et al., 1994). The neurons that fire less irregularly are those in primary motor cortices, in supplementary motor cortices, or in association with or in motor areas, such as parietal regions (Maimon and Asaad, 2009; Shinomoto et al., 2009), where the CV of the ISI distributions of the neurons are in the range CV = 0.5–0.8. The neurons that fire more irregularly are in sensory areas and in prefrontal cortex where the CVs are ∼1.

Remarkably, recent experimental studies in monkeys performing WM tasks have reported that the level of temporal irregularity with which PFC neurons fire during the delay period is comparable to, if not higher than, what is observed in spontaneous activity or during the fixation period (Shinomoto et al., 1999; Compte et al., 2003; Shafi et al., 2007).

The highly irregular activity of cortical neurons in vivo has long appeared paradoxical in view of the large number of their synaptic inputs (Softky and Koch, 1992, 1993; Holt et al., 1996). This is because the temporal fluctuations of the postsynaptic current produced by K ≫ 1 presynaptic afferents firing asynchronously are much smaller, by a factor , than its average. Accordingly, since in vitro neurons fire regularly in response to weakly noisy input (Connors et al., 1982), one would expect that firing in vivo would be only weakly irregular. A generic solution to this problem posits nearly balanced strong excitatory and inhibitory synaptic inputs such that their temporal fluctuations, although much smaller than their means taken separately, are comparable to the average total input and to the neuronal threshold (van Vreeswijk and Sompolinsky, 1996, 1998). Modeling studies have shown that balanced states emerge in a robust manner without fine tuning of parameters from the collective dynamics of recurrent neuronal networks (van Vreeswijk and Sompolinsky, 1996, 1998, 2004; Amit and Brunel, 1997; Lerchner et al., 2006; Hertz, 2010). The balance mechanism has been applied to account for the high variability of spontaneous activity as well as sensory-evoked neuronal activity in cortex (van Vreeswijk and Sompolinsky, 2004; Lerchner et al., 2006). Can it also provide a natural framework to account for the spiking irregularity observed during WM tasks?

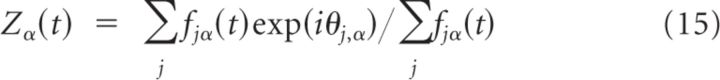

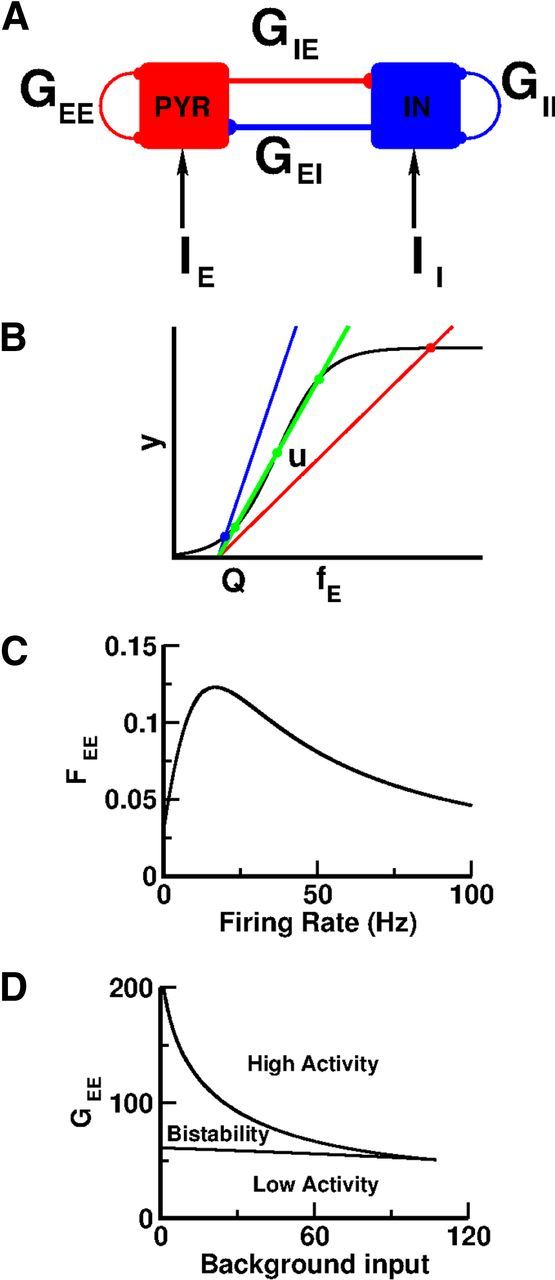

In balanced states, the level of activity of macroscopic ensembles of neuronal populations are largely independent of single-cell intrinsic properties (van Vreeswijk and Sompolinsky, 1998). This can be understood heuristically as follows. Let us consider a network comprising one excitatory population (E) and one inhibitory population (I) (Fig. 1A). The state of each population is characterized by its activity, fα, α = E, I. Assuming a stationary state of the network, this activity is related to the total input into the population α, hα, via fα = Sα(hα) where Sα is the sigmoidal input–output transfer function of population α and hE and hI are given by (see for instance Dayan and Abbott, 2001):

where the constants Gαβ measure the efficacy of the interactions between population β and α and IE and II are external inputs to the network.

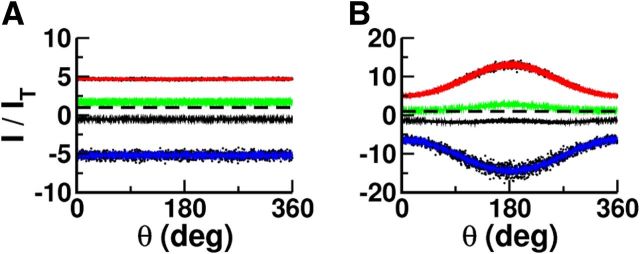

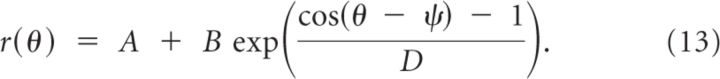

Figure 1.

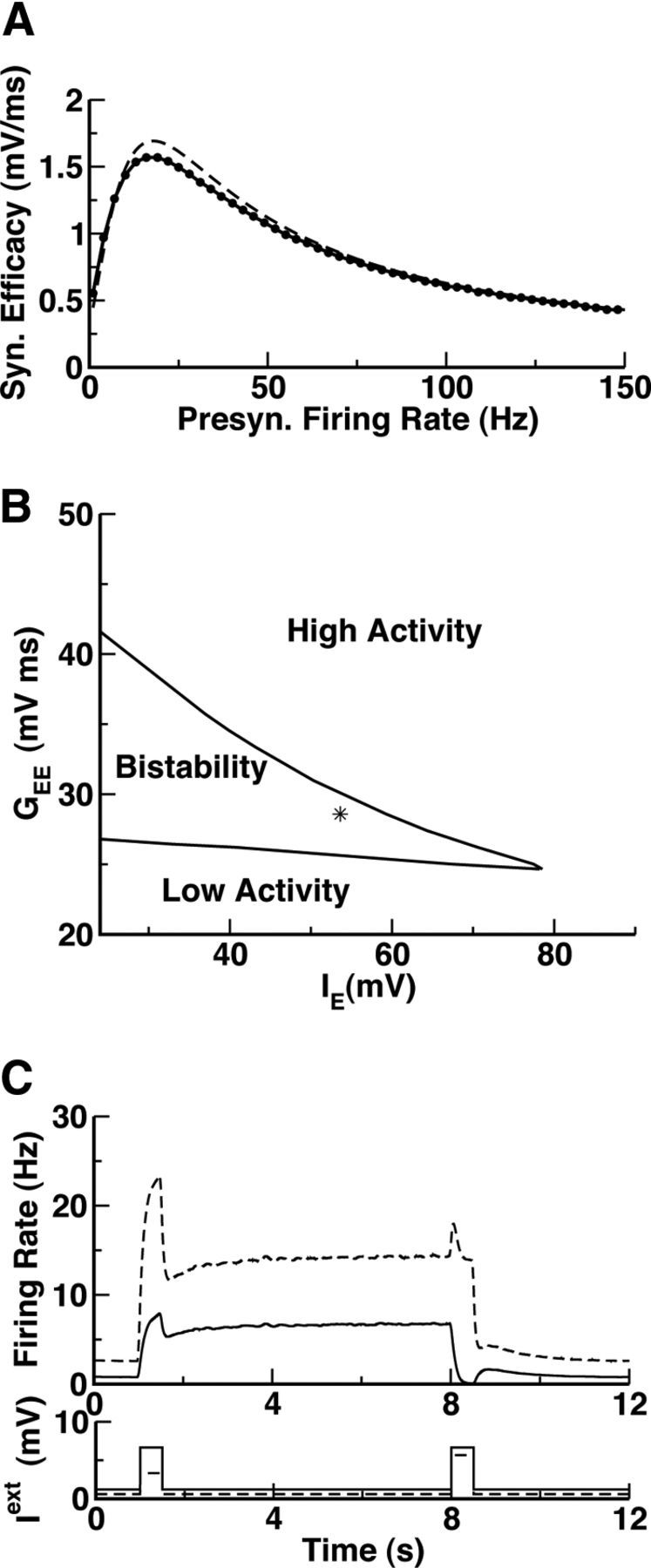

Bistability between balanced states sustained by nonlinear recurrent excitation in a two-population rate model. A, Architecture of the model. B, Graphic solution of the balance equations for the sigmoidal synaptic transduction function, SEE(fE): Black curve: The function SEE(fE). Straight lines: y = (fE − Q)/G, Q = 0.5; blue: G = 20; green: G = 3.3; red: G = 9.3. The intersections between the straight lines and the black curve correspond to the possible states of the network. For G = 20 and G = 3.3, the network has only one stable state. For G = 9.3, it is bistable and also displays one unstable state (indicated by the symbol u). C, Nonlinear input–output transfer function FEE(fE) = (a + bfE)/(1 + cfE + dfE2) with a = 0.03, b = 0.0135 s, c = 0.0195 s, d = 2.7 10−3 s2 (top). D, Phase diagram of the network. Background inputs to the E and I populations are equal (IE = II). Parameters: GEI = 2.5, GIE = 9, GII = 3.

If the excitation is too strong, the inputs hE and hI and therefore the activities of the populations reach the saturation levels of SE and SI. Conversely, for overly strong inhibition, hE and hI are below the (soft) threshold of SE and SI and network activity is very low. An appropriate balance of excitation and inhibition is necessary to prevent the network from being in one of these extreme regimes. This occurs if the activities of the two populations obey the conditions:

which express the very fact that inhibition balances excitation. These balance conditions do not depend on the input–output transfer functions of the populations. They fully determine the population activities as a function of the external inputs. Since these equations are linear, there is generically a unique solution for given values of IE and II. Hence the network cannot exhibit more than one balanced state. This effective washout at the macroscopic level of the neuronal intrinsic properties is a remarkable feature of the balance regime. As a matter of fact, it can be derived in large networks of randomly connected binary neurons (van Vreeswijk and Sompolinsky, 1998), of randomly connected spiking integrate-and-fire neurons (Renart et al., 2010), or of integrate-and-fire networks (Lerchner et al., 2004; van Vreeswijk and Sompolinsky, 2004).

According to the classical theory of WM, the selective persistent activity observed during the delay period reflects the coexistence of many collective stable states of the network dynamics in PFC. The argument above implies that for these states to be balanced, other nonlinearities than those present in the input–output transfer functions of the neurons are required. This prompted us to inquire which nonlinearities other than those of single neurons are sufficient to achieve multistability between balanced states.

Bistability between balanced states can be robustly sustained by nonlinear recurrent interactions

The simplest form of persistent activity is exhibited by neural networks that possess two stable states that differ by the level of activity of the neurons. If the network is in one of these states, it remains there until an appropriate perturbation of a macroscopic number of neurons induces a transition to the other state. We will term the state in which the activity is lower as baseline and the state in which the activity is elevated as persistent.

Bistability between balanced states in a simplified rate model with nonlinear synaptic interactions

It is clear that the linearity of Equations 19 and 20 stems from the assumption that the interactions between the neurons are linear; namely, that the synaptic inputs are proportional to the presynaptic firing rates and linearly sum up.

We first relax this assumption by considering that the recurrent excitatory interactions depend nonlinearly on the activity of the excitatory neurons. This means that we replace GEE in Equations 19 and 20 by a term GEEFEE(fE), which depends nonlinearly on the activity of the excitatory population. Equations 19 and 20 are therefore replaced by:

with SEE(fE) = fEFEE(fE). Expressing fI as a function of fE using Equation 22 and inserting in Equation 21 we get:

|

where

and Q = (GIIIE/GEI − II)/GIE. Equation 23 determines fE as a function of the model parameters. Note that this equation is formally equivalent to the one that determines the firing rate of a population of excitatory neurons with an input–output transfer function, SEE, coupled recurrently with linear interactions of strength G, receiving an external input GQ. It can be solved graphically: its solutions are given by the intersections of the straight line y = (fE − Q)/G with the curve y = SEE(fE).

Of particular interest is the case in which SEE has a sigmoidal shape (Fig. 1B). Then, for G small (Fig. 1B, blue line) or G large (red line) only one solution exists (blue and red points, respectively). For intermediate G (green line), three solutions coexist (green points). One corresponds to a low activity state and another to a high activity state. In the third solution (point u) the activity is at an intermediate level. Stability analysis reveals that the low and high activity states are stable whereas the intermediate state is unstable. Therefore, a network with such nonlinear recurrent excitatory interactions can display bistability between two balanced states.

As an example, we consider the function FEE plotted in Figure 1C. We numerically solved Equation 23 for different values of the external input and the strength of the recurrent excitation. The resulting phase diagram is plotted in Figure 1D. It shows that there is a large domain in the parameter space where the network displays bistability. In this domain, the balanced conditions, Equations 21 and 22, are fulfilled. Hence, the bistability is between balanced states.

Similar analyses can be performed when nonlinearities are present in II, EI, or IE interactions. This shows that bistability between balanced states can also occur if the II interactions are nonlinear with a sigmoidal transfer function. However, nonlinearities only in EI or IE interactions are not sufficient to sustain bistability of balanced states (results not shown).

Nonlinearities induced by facilitating recurrent excitatory synapses can sustain bistability between balanced states in a spiking network

The analysis above provides insights into the possibility of achieving bistability between balanced states in large neuronal networks in which excitatory recurrent synaptic currents are sigmoidal functions of the firing rates of the presynaptic neurons. Synapses exhibiting STP with facilitation at a low presynaptic firing rate display input–output transfer functions that exhibit this feature. This suggests that STP may underlie bistability of balanced states. Let us note that it has been previously found that synapses with STP can give rise to bistability in a fully connected network of excitatory integrate-and-fire neurons, although in this case no irregular firing is observed (Hempel et al., 2000).

We investigated this hypothesis in a network consisting of two large populations of integrate-and-fire neurons with random and unstructured connectivity (see Materials and Methods for details). The recurrent excitatory synapses are endowed with STP described according to the model of Markram et al. (1998). The efficacy of an EE connection, GEEux, is the product of the maximal efficacy GEE, the amount of available synaptic resources x, and the utilization fraction of resources u. At each presynaptic spike, the synapse depresses due to depletion of neurotransmitter and it also facilitates due to calcium influx. As a result, the variable x is reduced by a quantity ux (depression) and the fraction u increases (facilitation). Between spikes, u relaxes to its baseline level, U, and x recovers to 1, with time constants τf and τr, respectively. The parameters we use for the STP are given in Table 1. Figure 2A depicts the steady-state input–output transfer function for these parameters when the presynaptic spike train has Poisson statistics or when it is periodic. In both cases, the shape is similar to the one in Figure 1C. Note that the shape of the input–output transfer function depends only weakly on the spike statistics.

Figure 2.

Bistability between balanced states induced by STP in recurrent excitation in a two-population network of integrate-and-fire neurons. Parameters as in Table 1. We keep the relationship IEb = IIb. A, The transduction function of the recurrent excitatory synapses facilitates (resp. depresses) at a low (resp. high) presynaptic firing rate. This function was computed by simulating the model synapse (Eqs. 6, 7 in Materials and Methods) and the stationary value (after 5 s of simulation) of the product ux averaged over 100 trials. Dashed line, Periodic input. Dots, Input with Poisson statistics. B, Phase diagram of the network (GEI = 2.6). The star indicates the parameters used in C. C, Top, The population average activity of the excitatory (solid line, low activity state: fE = 1.25 Hz; high activity state: fE = 3.9 Hz) and inhibitory (dashed line, low activity state: fI = 4.1 Hz; high activity state: fI = 9.32 Hz) populations. Bottom, External inputs (background plus transient inputs).

We performed extensive numerical simulations to study the dependence of the network steady states on the model parameters. Figure 2B shows the phase diagram of the model as a function of the strength of the recurrent excitation and the background inputs. All other parameters of the model are given in Tables 1 and 2. It is qualitatively similar to the phase diagram of our nonlinear rate model (Fig. 1D). It displays a wide region of bistability between a low (baseline) and an elevated (persistent) activity state. In this region, the network prepared in the baseline state remains in that state. However, a transient input of appropriate intensity and duration induces a switch of the network to the activity-elevated state. The network persists in that state until another appropriate transient input switches it back to baseline (Fig. 2C).

Table 2.

Parameters of the external current for the unstructured and spatial working memory networks

| Unstructured | Spatial WM | |

|---|---|---|

| iEb | 1.66 mV | 1.66 mV |

| âEcue | 4.66 mV | 2.4 mV |

| ϵEcue | 0 | 0.17 |

| âEerase | 2.66 mV | 5.2 mV |

| ϵEerase | 0 | 0.23 |

| iIb | 0.83 mV | 0.83 mV |

| âIcue | 2.33 mV | 1 mV |

| ϵIcue | 0 | 0 |

| âIerase | 1.83 mV | 3.7 mV |

| ϵIerase | 0 | 0.28 |

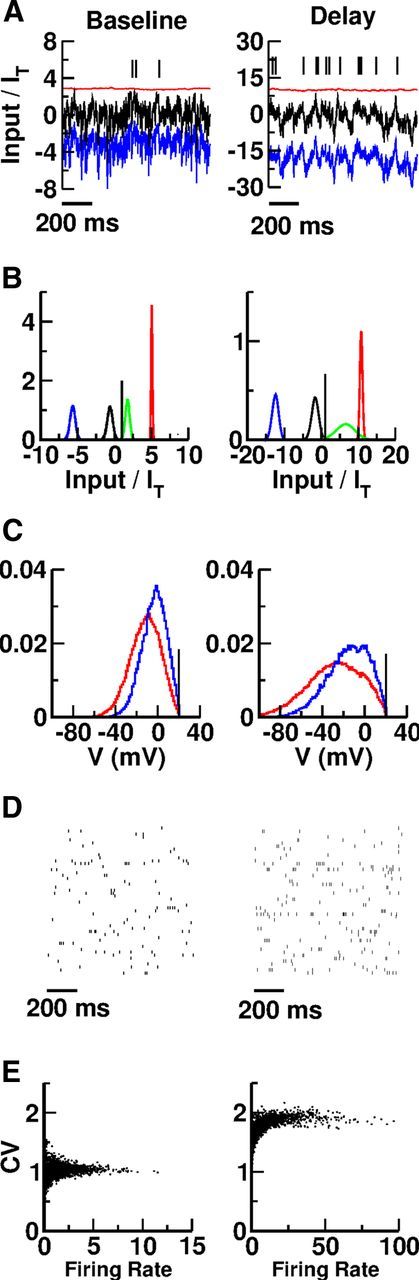

The balance regime is characterized by excitatory and inhibitory inputs into neurons that are much larger than the neuronal threshold, whereas the temporal mean and temporal fluctuations of the total (net) inputs are comparable to the threshold. Figure 3A shows the excitatory (red), inhibitory (blue), and total synaptic currents (black) to an excitatory neuron in the network in the baseline and in the persistent states. In both situations, the time average of the excitatory current into this neuron is much larger than the threshold. However, it is compensated for to a large extent by a strong inhibition. This results in a total input whose temporal mean is below threshold at a distance comparable to the amplitude of the input temporal fluctuations. As a result, in baseline as well as during the delay period, the action potentials this neuron fires are driven by the temporal fluctuations. The resulting spike trains are highly irregular in both epochs.

Figure 3.

Inhibition balances excitation in the baseline as well as in the persistent state. A, Input currents into an excitatory neuron. Red, Excitatory input (recurrent plus background). Blue, Inhibitory input. Black, Total input (excitatory plus inhibitory). The firing rate and the CV of the ISI histogram of this neuron are as follows: baseline: f = 1.4 Hz, CV = 1.2; delay: f = 16.6 Hz, CV = 1.9. B, Population histograms of the inputs into the neurons normalized to the firing threshold. Blue, Time-averaged inhibitory input. Red, Time-averaged excitatory input. Black, Time-averaged total input. Green, Time-averaged total input plus 1.5 SDs of the total input fluctuations. All neurons are included in the histograms. Vertical line corresponds to threshold. C, Population histograms of the membrane potentials. Red, Excitatory population. Blue, Inhibitory population. The membrane potentials of all the neurons are included in the histograms. The potentials are sampled with a rate of 0.1 Hz. Vertical line corresponds to threshold. D, Spike trains of 200 excitatory neurons during baseline and delay periods. E, CV versus firing rates. One thousand neurons in each population are included. The histograms of the CVs and the firing rates are plotted in Figure 4. The results plotted in C and D were obtained in simulations 100 s long.

The histograms plotted in Figure 3B show that these features are not specific to this particular neuron. For all the neurons, the mean excitatory and inhibitory currents are much larger than the threshold in baseline as well as in the persistent state. However, in both states the mean net input is comparable, in absolute value, to the threshold and the input fluctuations. It is in general below threshold, but the fluctuations are large enough to bring the membrane potential of the neurons above threshold. This is clear from the histogram of the mean inputs plus 1.5 SDs plotted in green in Figure 3B. The distribution of the membrane potentials in the two populations can be seen in Figure 3C. We can see that even if the firing rate is higher in the delay state than in baseline, the membrane potentials tend to be smaller in the second case because the mean total input decreases. The activity of the neurons is highly irregular in both states. This is depicted in Figure 3D, where the spike rasters of a subset of neurons are plotted. This is also confirmed by Figure 3E, which plots the CV of the ISI of 2000 neurons as a function of their averaged firing rates.

Interestingly, in the persistent state, the mean net inputs into the neurons are more negative than during baseline. However, the resulting mean hyperpolarization of the neurons is compensated for by an increase in their input temporal fluctuations in such a way that the neuronal activity is larger in the persistent states than in baseline. The firing is more irregular in the persistent state: the histogram of the CV of the ISI distributions is shifted toward values larger than during baseline (Fig. 3E). This is a consequence of the increase in the input temporal fluctuations.

Note that our model does not incorporate the voltage dependence of NMDA synapses (Jahr and Stevens, 1990). This is another nonlinearity that will not be washed out in the balanced state. We have checked that these nonlinearities do not affect the qualitative behavior of the model when STP is present, but they are incapable by themselves of generating persistent activity in a balanced state. This is because as the firing rate increases the mean total input decreases and the mean membrane potential decreases also (Fig. 3C). The increase in the firing rate is allowed only by the increase in the fluctuations, but the NMDA synapse filters those fluctuations and is dominated by the mean voltage. Therefore the voltage-dependent NMDA synapse will not become potentiated as firing rate increases, and cannot provide a suitable substrate for WM.

Robustness of the bistability regime with respect to connectivity changes

We investigated the robustness of the bistability regime in our model with respect to changes in connectivity K by simulating the network with different values of K while the strength of the interactions and the external inputs are scaled according to Equations 8, 9, and 10 (van Vreeswijk and Sompolinsky, 1996, 1998, 2004). This scaling guarantees that the temporal fluctuations in the total inputs into the neurons remain similar while increasing K.

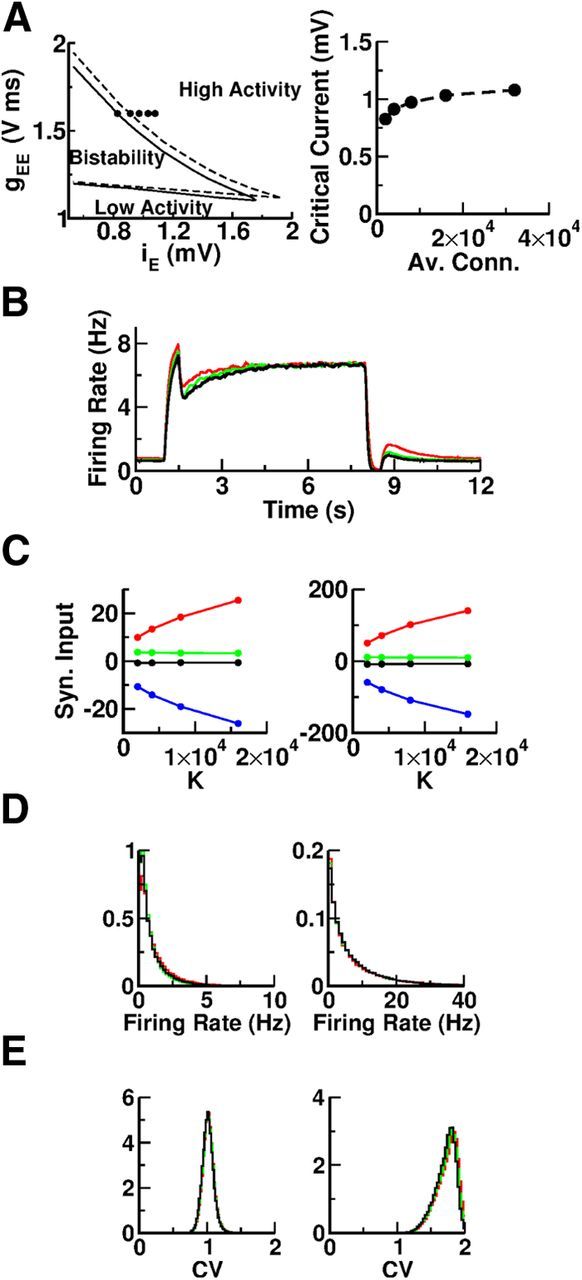

Changing the connectivity from K = 2000 to K = 4000 has some effect in the phase diagram of the network as indicated by the comparison of the solid and dashed lines in Figure 4A (left). The lower boundary of the bistable region moves slightly upward but at the same time the upper boundary also moves in the same direction. The latter move is larger than the former. Hence, in fact, the bistable region is slightly larger for K = 4000. This suggests that the phase diagram remains essentially the same when K increases. Figure 4A (right) plots the critical value of the background current on the boundary of the bistable region for GEE = 1.6 V ms for K = 2000 up to K = 32,000. The overall variation of the critical current suggests that it saturates as K becomes very large.

Figure 4.

The bistable regime is robust with respect to changes in the average connectivity, K. A, Left, Phase diagram for K = 2000 (solid line) and K = 4000 (dashed line). The dots show the critical value of the background current below which the network displays bistability for gEE = 1.6 V ms and K = 2000, 4000, 8000, 16,000, and 32,000 (left to right). Right, The critical value of the background current below which the network displays bistability as a function of K. For the simulations with K = 2000, the network size was N = 80,000. For the other values of K, N = 160,000. B, The average activity of the excitatory population versus time for different values of K. Red, K = 2000; green, K = 4000; black, K = 8000. The network size is N = 80,000 and the synaptic strength is gEE = 1.23 V ms. C, The population averages of the mean excitatory input (red), total inhibitory input (blue), mean net input (black), and of the input fluctuations (green). Left, Baseline. Right, Delay period. D, Histograms of the firing rates for K = 2000 (red), K = 4000 (green), and K = 8000 (black); N = 80,000. All the neurons in the two populations are included. Left, Baseline. Right, Delay period. The histograms are very similar for all the three values of the connectivity. E, Histograms of the CV for K = 2000 (red), K = 4000 (green), and K = 8000 (black); N = 80,000. CVs were estimated from spike trains 100 s long. All the neurons with a firing rate larger than 0.5 Hz in the two populations are included. Left, Baseline. Right, Delay period. The histograms are almost identical for all three values of the connectivity.

The properties of the dynamical states of the network for the reference set of parameters (see Tables 1, 2) are also compared in Figure 4 for K = 2000, 4000, and 8000. Figure 4B confirms the robustness of the bistability with respect to K. Indeed, the network is bistable, the population average activities in the two coexisting stable states are the same, and the overall dynamics of activity during the switch on/off are very similar for all the values of K tested. Figure 4C plots, as a function of K, the population average of the mean excitatory currents (red) and mean inhibitory currents (blue) as well as the mean (black) and the fluctuations (green) of the net inputs into one excitatory neuron. The mean excitation and the mean inhibition increase proportionally to . This contrasts with the mean and the fluctuations of the net inputs, which remain almost constant and on the order of the neuronal threshold. These features indicate that the baseline as well as the elevated activity states are balanced independently of K. Finally, Figure 4D,E plots the histograms of the single neuron firing rates and CV for different values of K. It is clear that increasing the connectivity has almost no effect on these distributions.

We therefore conclude that the bistability, the excitation and inhibition balance, the irregularity, and the heterogeneity of the neuronal activity are robust in our network with respect to change in connectivity.

Network mechanism underlying visuospatial WM

The classical framework to investigate visuospatial WM in primates is the ODR task schematically represented in Figure 5A. In this task, a monkey needs to remember the location of a stimulus for a delay period of several seconds and make a saccade in that direction at the end of the period (Funahashi et al., 1989). Electrophysiological recordings performed in dorsolateral prefrontal cortex of primates has revealed that neurons in this region modify their activity persistently and selectively to the cue direction during the delay period (Funahashi et al., 1989, 1990, 1991; Constantinidis et al., 2001; Takeda and Funahashi, 2007). It is believed that this selective persistent activity is the neural correlate of information on the location of the cue that has to be memorized to perform the saccade at the end of the delay period.

Figure 5.

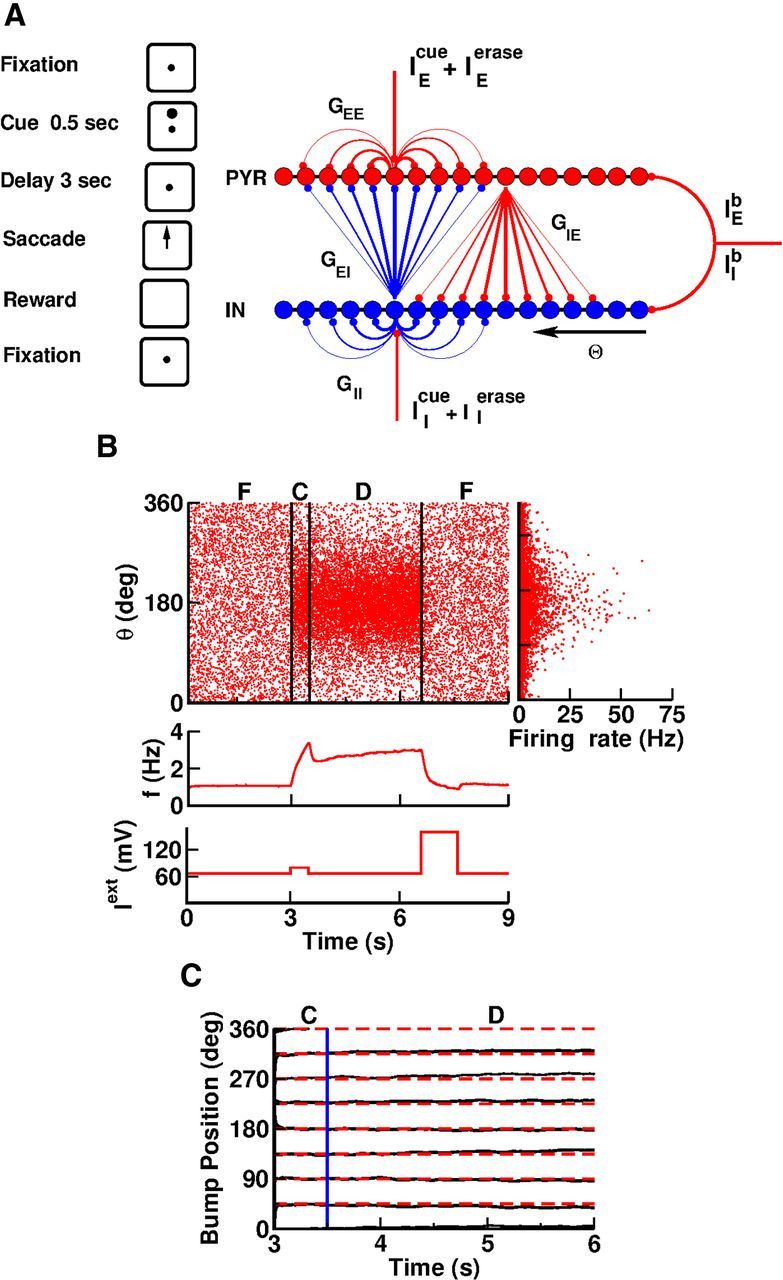

The PFC network model. A, Left, The visuomotor WM task. A trial begins while the monkey fixates the screen center. A visual cue appears for 0.5 s. The monkey must memorize the cue direction during a 3 s delay period while it maintains fixation. At the end of the delay it must make a saccade in the cue direction and restore fixation. Right, Model architecture. Red dots, Excitatory (pyramidal) neurons. Blue dots, Inhibitory interneurons. Neurons are arranged according to their preferred directions θ. The probability of connection decreases as a function of the difference between the preferred directions. The interaction strengths are GEE, GIE, GEI, and GII. The background currents IEb, IIb are applied uniformly to all the neurons. The transient currents IEcue, IIcue, IEerase, and IEerase are applied as explained in Materials and Methods. B, One trial of the task simulated in the network. Cue direction, 180°. Fixation, cue, and delay periods are denoted by F, C, and D, respectively. Top, Left, Spike rasters. Ten percent of the excitatory neurons are included. Top, Right, Spatial profile of the time-averaged activity of the neurons during the delay period. Twenty-five percent of the neurons are represented. Middle, Population-averaged activity of excitatory neurons versus time. Bottom, External input to excitatory neurons versus time. C, The position of the bump versus time during cue (C) and delay (D) periods for eight directions of the cue (every 45°, dashed lines). The blue vertical line denotes the beginning of the delay period (see Materials and Methods for details).

A theoretical framework to account for this selective persistent delay activity is a recurrent network made of identical neurons with the geometry of a ring and a connectivity pattern such that the interaction between two neurons depends solely on their distance on the ring (Camperi and Wang, 1998; Compte et al., 2000). With sufficiently strong and spatially modulated recurrent excitation and appropriate inhibition, the network operates in a regime of multistability between a state in which the activity is homogeneous and a set of states characterized by a bumpy activity profile. The “bump” can be localized at an arbitrary location if the network connectivity is tuned so that it is rotationally invariant. During the cue period, a transient stimulus tuned to a specific location on the ring, corresponding to the direction to be memorized, selects the state in which the bump peaks at that location. After the stimulus is withdrawn, the network remains in this state. Therefore, the network is able to encode the memory of the cue location.

A crucial ingredient in this hypothesis is that neuronal populations respond in a nonlinear fashion to external inputs. This is essential not only to generate the persistence of neuronal activity during the delay but also its bumpy localized profile (i.e., selectivity) (Hansel and Sompolinsky, 1998). In all the models studied so far to account for selective persistent activity in ODR tasks (Compte et al., 2000; Barbieri and Brunel, 2008), the population nonlinearities are induced by nonlinear input–output transfer functions of single neurons. As argued above, the network in these models cannot display baseline as well as memory balanced states because of the washout at the population level of the latter nonlinearities. In the following we show that this becomes possible if the nonlinearities are generated by short-term facilitation in the recurrent excitatory synapses.

Balanced visuospatial WM can be sustained by STP

We consider a large recurrent network of integrate-and-fire neurons with a ring architecture (Fig. 5A; Materials and Methods). Each neuron is parametrized by its location, θ, on the ring. The probability of connection between two neurons decreases with their distance according to Equation 4. The neurons receive a homogeneous background input that is constant in time. During the cue period, the neurons receive an additional input. This stimulus depends on the direction of the cue according to Equation 3. It is maximum for θcue = θ. Another transient input erases the memory at the end of the delay period. A crucial feature of the model is that excitatory recurrent synapses display STP as in Figure 2A. See Materials and Methods for the details of the model.

We studied this network using numerical simulations that mimic the experimental protocol of an ODR task. Figure 5B shows a typical trial. At the start, during the precue period, the network activity is low and homogeneous: the network is in its baseline state. The visual cue is presented for 500 ms in direction θcue = 180°. Subsequently the neurons near θ = 180° elevate their activity. Upon removal of the cue, the network relaxes to a state with a “bumpy” pattern of activity localized near θcue. The network activity remains elevated and localized close to θ = θcue (Fig. 5C), maintaining memory of the cue direction during the 3 s delay period. Eventually, the transient input at the end of the delay period erases this memory.

For the duration of the turn-on input we have chosen 0.5 s, as this is the typical duration of the cue period in the ODR task experiments (Funahashi et al., 1989). We have also assumed that the cue-related input is tuned to account for the direction selectivity of the cue period activity of PFC neurons. With the parameters we have chosen, the cue-related input, averaged over all directions, is not very different from the background input. The modulation with the direction, ε, is 0.17. So the maximum/minimum of this input are only 17% larger/smaller than the background. In fact, our simulations indicate that the mean cue-related input can be taken as the same as for the background input (results not shown). The most critical parameter is ε, which needs to be at least 0.1. Therefore, the transient input necessary to activate the persistent state does not need to be large compared with background.

As far as we know, nothing is known experimentally about the strength and the duration of the external inputs responsible for turning the switch off. Simulations show that erasing of the memory can be achieved in various ways in our model. In fact, a broad range of stimulus parameters and stimulus durations can achieve switch-off. The most efficient way is a sufficiently strong and long-lasting feedfoward inhibitory input into the excitatory population, or a strong excitatory input on the inhibitory neurons. However, such stimuli suppresses almost completely the activity in all the neurons. This is not observed in experiments. In the parameters of our reference set, both populations of neurons are excited by the transient stimulus that erases the memory. The excitation of the interneurons induces an inhibition on the pyramidal cells. This inhibition is to some extent balanced by the feedforward excitatory input these neurons receive via the erasing stimulus. As a result, this input reduces their activity and only some of the pyramidal neurons display a transient complete suppression of activity. Note that according to Table 2 with the parameters of the simulations described in the paper, the mean (over directions) of the switch-off-related input is approximately 2 (for excitatory neurons) and 3 (for inhibitory neurons) times larger than the background input. Hence the switch-off input is larger than the background, but only moderately.

To show that there is a balance of excitation and inhibition during the precue as well as during the delay period, we computed the time average of each neuron's excitatory, inhibitory, and total synaptic inputs. The spatial profiles of these signals are depicted in Figure 6 for the excitatory neurons. During the precue period, these inputs display heterogeneities on a short spatial scale, due to the randomness of the connectivity. After averaging over these fluctuations, the profile of activity is essentially homogeneous. This is in contrast to the delay period, during which these inputs are modulated, in line with the fact that the mnemonic activity encodes the direction of the cue. Another difference between the two periods is that excitatory and inhibitory inputs into the neurons are larger during the delay than during the precue period. However, during both periods, inhibition approximately balances excitation and action potentials are driven by temporal fluctuations in the inputs (compare green line with threshold). Interestingly, the temporal mean of the total inputs tends to be lower during the delay than during the precue period. This is compatible with the elevation of the activity of a large fraction of the neurons during the delay period because the fluctuations also tend to increase. This is similar to what we found in our simulations of the unstructured network studied above (e.g., Fig. 3).

Figure 6.

Spatial profiles of the time-averaged inputs into excitatory neurons demonstrating the balance of excitation and inhibition in the spatial WM model. A, Baseline. B, Delay period. Black curves, Top to bottom, Excitatory input, total input, and inhibitory input. Red, Excitatory input averaged with a square filter with a width of 50 neurons to smooth fast spatial fluctuations. Blue, Inhibitory input averaged with the same filter. Green, Total input plus 1.5 SDs of the total synaptic input fluctuations. All the inputs are normalized to the threshold (dashed line).

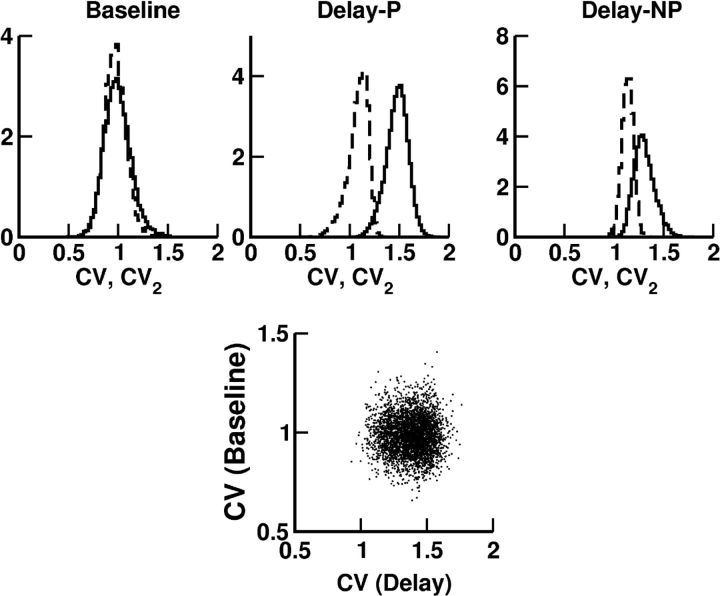

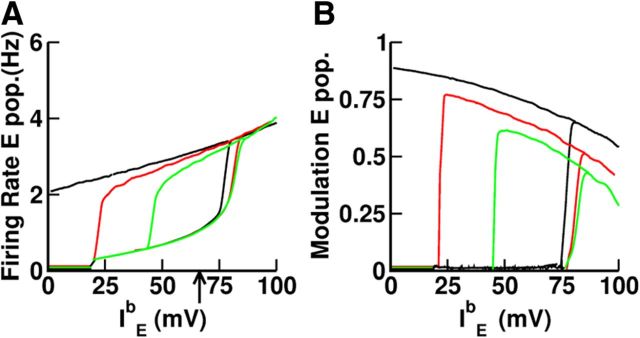

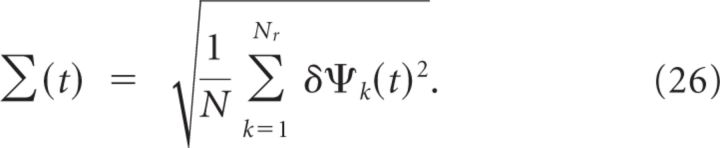

The activity of most of the neurons in our network is direction-selective during the cue period as well as during the delay. This is depicted in Figure 7 for one excitatory neuron. Note that its tuning curves during the two periods have slightly different preferred directions (PDs) and different tuning widths (TWs). The discharge pattern of the neuron at baseline is highly irregular (CV = 0.9). It is also strongly irregular during the delay whether the cue is presented at PD (CV = 1.6) or away from it (CV = 1.3). In Figure 8 we show the distribution of CV over the whole population in the precue and the delay periods. For the delay period the CVs were computed separately for preferred (Fig. 8, left) and nonpreferred directions (Fig. 8, right), defined as those directions for which the trial-averaged firing rate was higher (preferred directions) or lower (nonpreferred directions) than the activity of that neuron averaged both over trials and over the eight directions (Compte et al., 2003). These results show that all the neurons in both periods fire in a very irregular manner and that, during the delay, the firing is more irregular when the cue was presented at preferred directions. We also investigated whether slow firing rate nonstationarities can account for part of the irregularity. To do that we computed CV2, which takes into account difference between adjacent interspike intervals (see Materials and Methods). We found (Fig. 8, dashed lines) that the behavior is now much more similar to a Poisson process. This is also compatible with the results found in Compte et al. (2003). In Figure 8 we also show CV in baseline period versus CV in delay period for all the neurons in the network. We can see that there is no correlation between the two values.

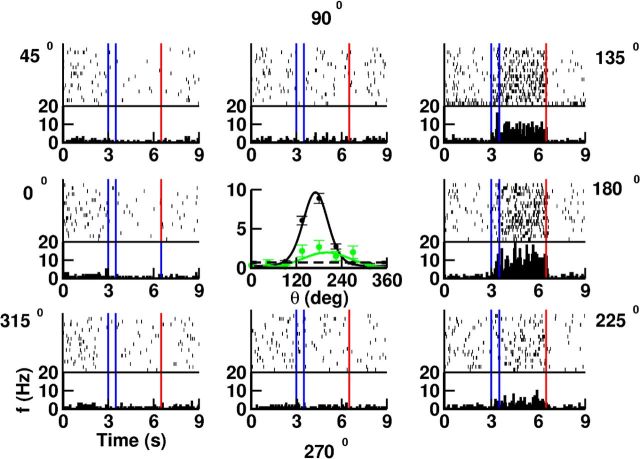

Figure 7.

The activity of the neurons during the delay period is selective to the direction of the cue and the spike trains are highly irregular for preferred as well as for nonpreferred cue directions. Rasters and PSTH (bins, 50 ms) for one excitatory cell. Eight cue directions and 20 trials/cue direction. Vertical lines indicate the cue period (blue) and the end of the delay period (red). Center, tuning curves of the neuron for the delay (black; PD, 170°; TW, 38°) and cue (green; PD, 199°; TW, 68°) periods. Note that for this neuron the TWs are different but the PDs are similar. Error bars: SD estimated over the 20 trials. Dashed line, Baseline firing.

Figure 8.

Top, Histograms of the CV (solid line) and CV2 (dashed line) for activities during fixation (baseline), and delay periods. Delay-P, preferred directions. Delay-NP, nonpreferred directions. (see Materials and Methods). For each neuron, CV and CV2 were computed from 20 trials per cue direction. Only directions with average firing rate >2 Hz are included. Bottom, CV in baseline versus CV in delay for all the neurons.

The selectivity properties and the dynamics of the delay activity are diverse

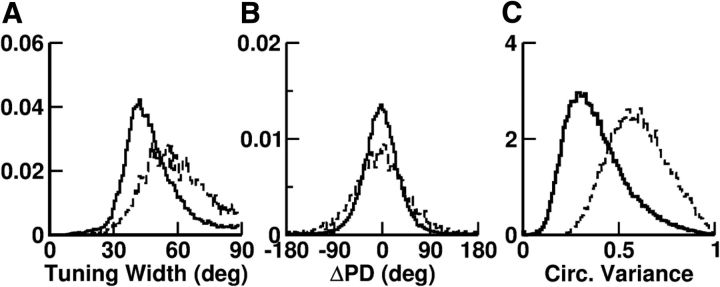

The neurons in our model display a diversity of selectivity properties. Although most neurons (98%) have selective delay activity (selectivity evaluated by the bootstrap method), only half have tuning curves well fitted to a von Mises function (Eq. 13). Moreover the width of the tuning curves of these neurons are broadly distributed (Fig. 9A) and the preferred directions are diverse even for nearby neurons (Fig. 9B). Neurons with tuning curves badly fitted to a von Mises function also display a broad dispersion in the degree of selectivity as quantified by the circular variance (Mardia, 1972) (Fig. 9C). Other aspects of the diversity in tuning curves are depicted in Figure 10A. Note in particular that, depending on the neuron, for a cue that is opposite to the preferred direction, the delay activity can be suppressed (also Fig. 7), similar to, or enhanced compared with baseline (Fig. 10A, black lines).

Figure 9.

Diversity in the tuning curves. A, Histogram of the tuning width for neurons with tuning curves well fitted with a von Mises function. Solid, Excitatory neurons (TW, 48 ± 14°). Dashed, Inhibitory neurons (TW, 61 ± 16°). B, Histograms of the difference ΔPD = PD − θ between the PD of a neuron and its location in the network for these neurons. C, Histograms of the circular variance for all the neurons (solid, excitatory neurons; dashed, inhibitory neurons).

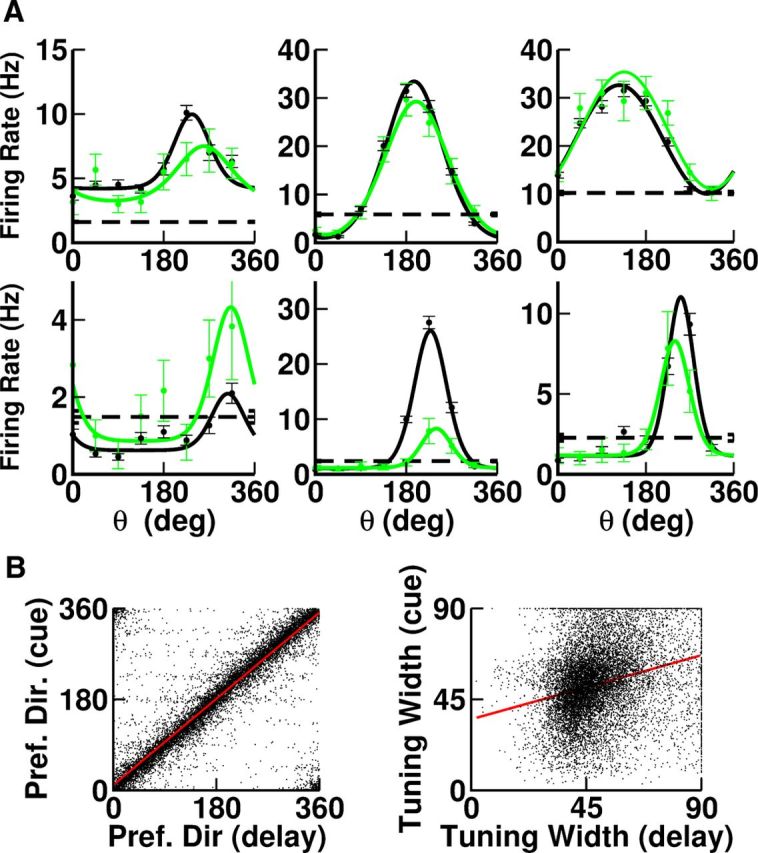

Figure 10.

A, Diversity in the shapes of the tuning curves. Black, Delay. Green, Cue. Dashed line, Baseline average firing rate. All the neurons are excitatory except for the last neurons in the second line, which are inhibitory. B, Comparison of the tuning properties of the neurons during the cue and delay periods. Left, The preferred directions are strongly correlated (R2 = 0.94). Right, The tuning widths are weakly correlated (R2 = 0.02). Only neurons with tuning curves well fitted with von Mises functions for the two periods are included.

The tuning curves of the responses during the cue period are also diverse (Fig. 10A, green lines). For instance, for some neurons the optimal response is larger for the cue than for the delay whereas for others the reverse is observed. The simulations also revealed strong correlations between the preferred direction of the delay and the cue responses. In contrast, the tuning widths in the two epochs are only weakly correlated (Fig. 10B). Note that in Fig. 10B (left) one can see eight faint horizontal stripes associated with the eight equally spaced directions used to evaluate the tuning curves. This is because for very narrow tuning curves, the sampling of the directions is too coarse to obtain a precise estimate of the preferred directions. This effect involves <0.1% of the neurons.

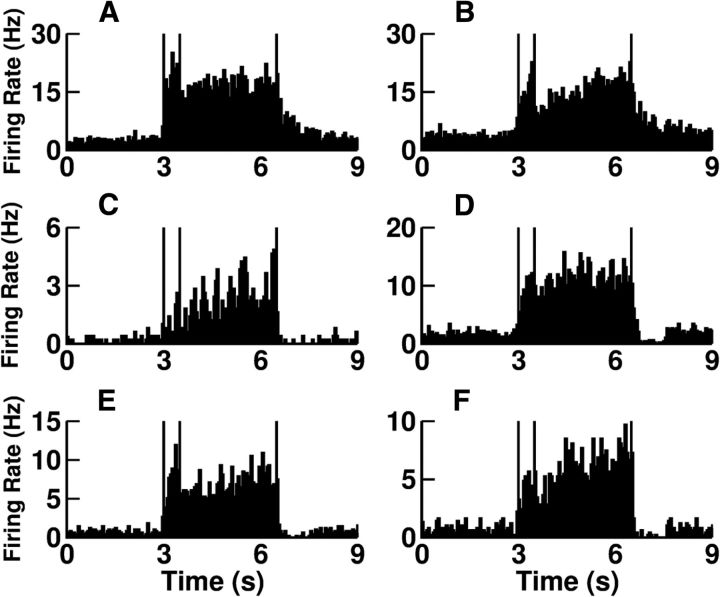

Figure 11 plots the poststimulus time histograms (PSTHs) of the activity for several neurons. It shows that the firing rate dynamics of the neurons during the task are also diverse. In particular, although for many neurons activity remains essentially constant during the delay period (Figs. 1, 3, 11C), for others it ramps up (Figs. 2, 4, 11C). Some neurons display a phasic response to the cue (Figs. 1, 2, 11C), whereas others do not (Figs. 3, 4, 11C). In fact, visual inspection of the PSTH for a sample of 200 neurons (data not shown) indicates a phasic cue response at the preferred direction for approximately half of them.

Figure 11.

Diversity in the firing rate dynamics during cue, delay, and response periods. Poststimulus histograms (100 trials included) for five neurons. A–D, Different excitatory neurons for a cue direction at their PDs. E, F, One excitatory neuron with PD = 161° and a cue at 135° (E) and at 225° (F).

This diversity is also an outcome of the balanced regime in which the network is operating. Because of the randomness in connectivity, the excitatory and the inhibitory inputs fluctuate spatially. Although the spatial fluctuations are much smaller than the spatial average for each of these inputs, they are comparable in size in the total inputs. As a result, the spatial fluctuations in connectivity substantially affect the discharge of the neurons (van Vreeswijk and Sompolinsky, 1996, 1998, 2004) inducing diversity in single neuron activity properties.

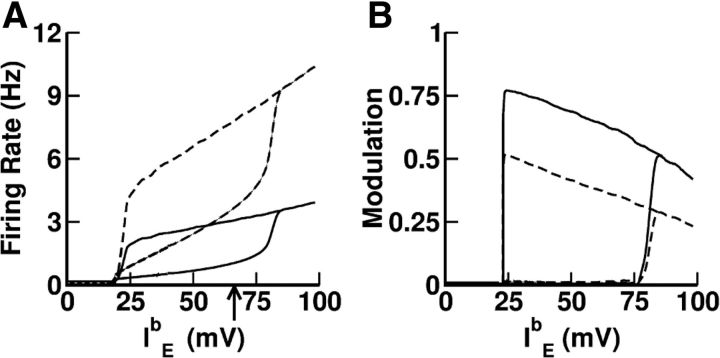

The network dynamics are multistable in a broad range of the background inputs

We assessed the robustness of multistability with respect to changes in the background inputs. Figure 12 depicts the bifurcation diagram for the network dynamic states when the background input is varied. This was done by running the dynamics of the network (with the parameters of Table 1) while changing IEb (keeping IIb = IEb/2) very slowly. The network was initialized in the low activity state with a small value of IEb just above the threshold current of the excitatory neurons, IEb ≈ 20 mV. Figure 12 plots the hysteresis behavior of the spatial average (A) and the spatial modulation (B) of the network activity, as defined in the Materials and Methods section, as a function of IEb. By increasing IEb slowly, the network remains in a state of low activity up to a value of IEb ≈ 80 mV, beyond which this state no longer exists. The network then settles in a state of elevated activity where it remains while IEb keeps increasing. At IEb = 100 mV the direction of the changes of IEb is reversed and it starts to decrease. The network now tracks the state of elevated activity until IEb ≈ 24 mV, when it ceases to exist. This shows that in a broad range of background inputs the network displays multistability between states that differ by their level of activity and by their spatial profile.

Figure 12.

Bifurcation diagram as IEb is varied. A, Spatial average activities of excitatory neurons (solid) and inhibitory (dashed). B, Spatial modulation of the activities of the two populations. All parameters are given in Table 1. The arrow indicates the value of the current used in simulations.

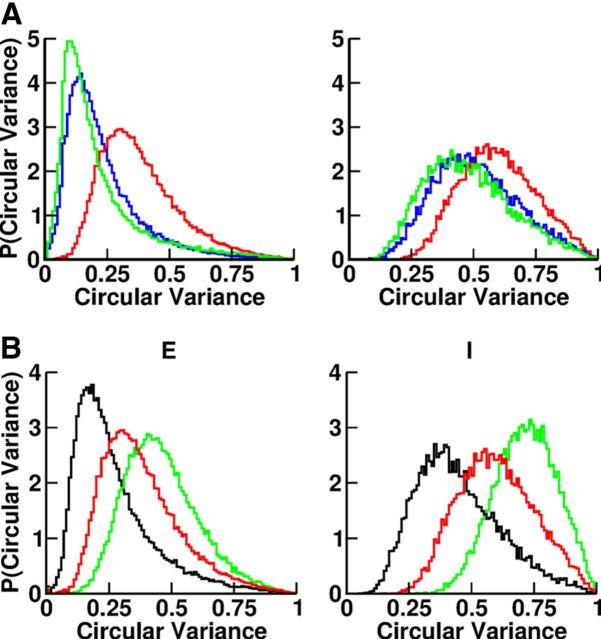

Selectivity depends on the range of the inhibition

Importantly, the multistability of the network and the selectivity of the neurons are robust to changes in the connectivity K. This is shown in Figure 13A, which plots the distribution of the circular variance for three different values of the connectivity (K = 2000, 4000, and 8000). It shows that for larger connectivity the neurons tend to be more selective, but that this effect saturates for large values of K.

Figure 13.

A, Dependence of the width of the tuning curves on the network connectivity. Left, Distribution of the circular variance for the excitatory neurons. Right, Distribution of the circular variance for the inhibitory neurons. K = 2000 (red), K = 4000 (blue), K = 8000 (green). Other parameters as in Tables 1 and 2. B, Dependence of the width of the tuning curves on the range of inhibition. Left, Distribution of circular variance for the excitatory neurons. Right, Distribution of the circular variance for the inhibitory neurons. σII = σEI = 60° (red), σII = σEI = 80° (black), and σII = σEI = 40° (green). All the other parameters (but σII, σEI) are given in Table 1.

Figure 14 plots the bifurcation diagram for different values of the spatial range of the inhibitory interactions. It shows that, even though the range of the inhibition is shorter than the range of the excitation, the network displays multistability. It also shows that the domain of multistability is larger when the inhibition is broader. It can also be seen that in the multistable regime, the spatial average firing rates in the baseline as well as in persistent states depend only weakly on the range of the inhibition (Fig. 14, left). The dependency of the spatial modulation of the activity profile in the persistent state is more pronounced: for broader inhibition the modulation is larger (Fig. 14, right). This corresponds to an increase in the degree of direction selectivity of the neurons during the delay period when inhibition is broader. This is shown in Figure 13B and in Table 3. The fraction of neurons with good fit to a circular variance, also given in Table 3, is also sensitive to the range of the inhibitory interactions. The broader the inhibition, the smaller this fraction becomes.

Figure 14.

Dependence of the bifurcation diagram on the range of inhibition. A, Spatial average activity of the excitatory neurons for the following: σII = σEI = 60° (red), σII = σEI = 80° (black), and σII = σEI = 40° (green). B, Spatial modulation of the activity of the excitatory neurons. All parameters (but σII, σEI) are given in Tables 1 and 2.

Table 3.

Tuning width and percentage of neurons with good tuning, fq>0.001, as a function of the width of the inhibitory interactions, σ = σII = σEI

| σ | TWE | TWI | fq>0.001 |

|---|---|---|---|

| 40° | 50±13° | 60±14° | 75% |

| 60° | 48±14° | 61±16° | 44% |

| 80° | 38±13° | 49±16° | 23% |

These results indicate that these parameters (range of inhibition and connectivity) can be varied in a very broad range and the network still displays selective persistent activity.

Encoding of the cue direction in the position of the activity bump

In theory, the existence of a continuous set of persistent attractors is necessary to maintain memory of the cue direction in the network. This continuity is destroyed by very small spatial heterogeneities in the connectivity or in the intrinsic properties of the neurons. In the presence of such heterogeneities, the network state during the delay period drifts toward a discrete attractor of the dynamics that is only very weakly correlated with the cue position (Tsodyks and Sejnowski, 1995; Zhang, 1996; Seung et al., 2000; Renart et al., 2003). Hence, the memory trace encoded in the location of the bump of network activity fades during the delay period at a rate that depends on the velocity of this drift. If the drift is too fast, this trace cannot be conserved for the duration of the delay and the selectivity of the neurons to the cue direction will be impaired. However, if the drift is sufficiently slow, the network can still function properly to encode the position of the cue, provided the delay duration is not overly lengthy.

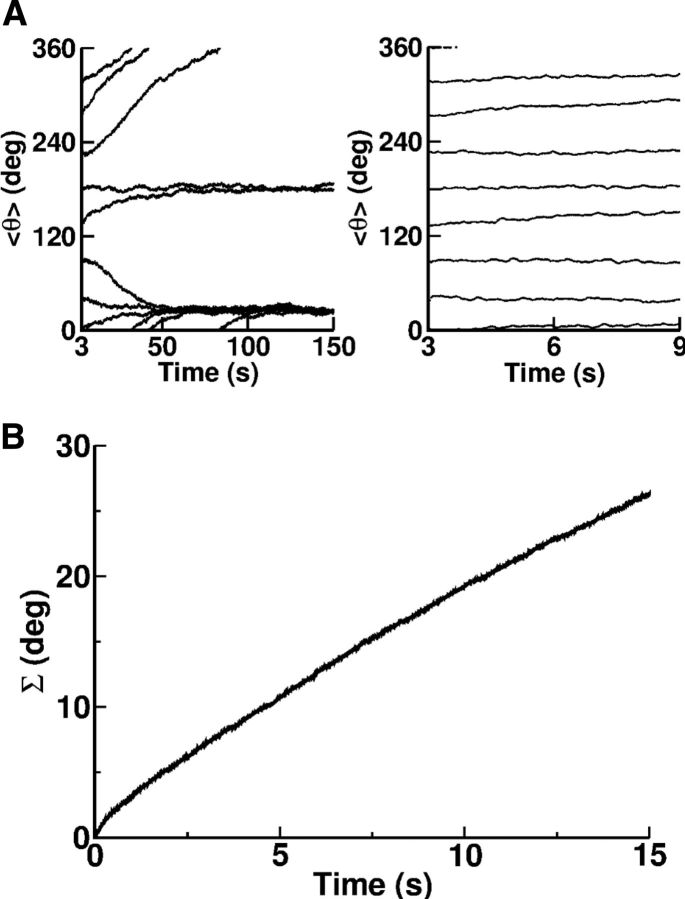

In our network model, the connectivity is random and hence is heterogeneous. As a result, the dynamics of the network possesses only a small number of attractors, as shown in Figure 15A (left). The eight trajectories of the bump plotted in that figure correspond to different directions of presentation of the stimulus during the cue period. After a relatively long time all the trajectories converge toward one of two possible locations. In other words, there are only two persistent attractors. However, if the delay period is not too long, the position of the cue is still strongly correlated at the end of this period (Fig. 15A, right).

Figure 15.

A, The direction of the population vector estimated from the activity of the excitatory neurons versus time. All parameters are as in the reference set. At that time the cue is presented at t = 3 s for a duration of 500 ms in one of the eight directions. From bottom to top (in degrees): 0, 45, 90, 135, 180, 225, 270, 315. The total simulation time is 150 s (left) and 10 s (right). B, Σ(t) (in degrees) versus time (see definition in Materials and Methods). The size of the network is NE = 64,000, NI = 16,000. The averaging was performed over Nr = 100 realizations of the network. All parameters are as in the reference set.

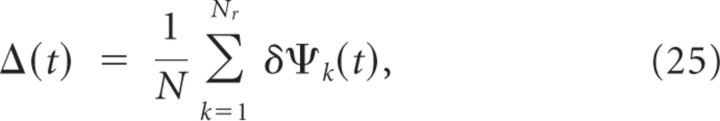

In fact, the accuracy with which the location of the cue can be memorized decreases with the duration of the delay period. To estimate the rapidity of the memory degradation, we simulated Nr = 100 realizations of the network for delay periods of 15 s. For each realization we computed, as a function of time, the location of the bump of activity, ψk(t) (k = 1, …, Nr), as explained in Materials and Methods. Defining δψk(t) = ψk(t) − ψk(0) (t = 0 at the beginning of the delay period), we evaluated:

|

and:

|

Clearly Δ(t) is a small quantity on the order of . This is because, after averaging over the realizations of the connectivity, the network displays rotational symmetry. This is in contrast with Σ(t), which does not vanish even though Nr is large, as depicted in Figure 15B. At short time, the drift is dominated by the effect of the fast noise generated by the network dynamics in the balanced state. Therefore, it is a diffusion process and Σ(t) ∝ . For very long times, the dynamics converge toward one of the attractors therefore Σ(t) saturates. For intermediate values of t, the drift is dominated by the effect of the heterogeneity in the connectivity. It takes the form of a directed random walk and Σ(t) increases linearly with time: Σ(t) ≈ Vt. The rate of this increase, V, is an estimate of the drift velocity and thus of the rapidity of memory deterioration during the delay period. For the parameters of Table 1, we find a drift velocity of approximately 1.8°/s (Fig. 15B). Hence, the typical error in encoding the direction of the cue in the memory trace in this network is not >6° if the delay period is 3 s in duration.

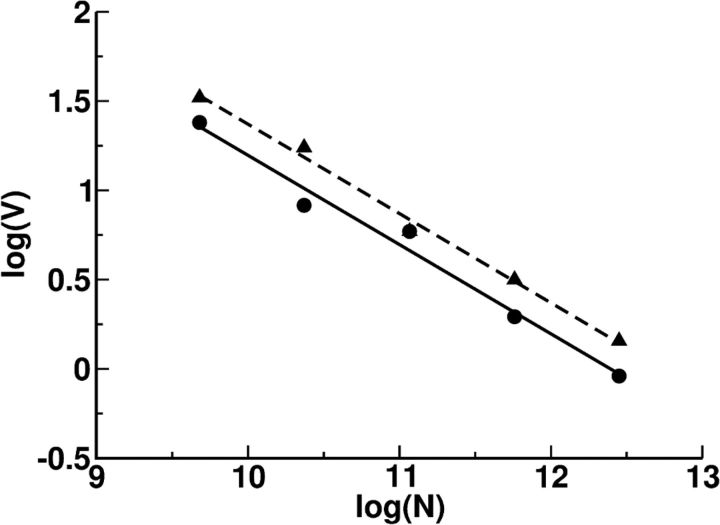

The drift velocity depends on the network size, N, and on its connectivity, K. This is depicted in Figure 16, which plots log V as a function of log N for two values of K. The best linear fit of the data points reveals that the slope is very close to ½ for these two cases. This means that the velocity of the drift scales is V ∝ with a prefactor that decreases with the connectivity. This prefactor also depends on other parameters of the network. For instance, we found that it increases with the background input and therefore with the average activity of the network in the persistent state.

Figure 16.

The drift velocity of the bump versus the network size. Circles, Average connectivity as in the reference set. Triangles, Average connectivity smaller by a factor of 2. All other parameters are as in the reference set. Lines correspond to the best linear fit: y = a0 + a1x. Solid: a0 = 6.193, a1 = −0.499; correlation coefficient, 0.991. Dashed: a0 = 6.372, a1 = −0.5; correlation coefficient, 0.996.

The scaling of the drift velocity can be understood in terms of the fluctuations in the neuronal input. For a bump that involves a finite fraction of the network [i.e., a number of neurons which is O(N)] and if the fluctuations are uncorrelated, the drift velocity should scale as the fluctuation of the input on the individual neuron divided by . In Zhang (1996) these fluctuations come from perturbations in connections on the order of . This means that the fluctuation in the total input for a given neuron is on the order of and the drift velocity is O(1/). In Renart et al. (2003) the fluctuations come from intrinsic heterogeneity and are order 1, so the drift velocity will be O(). In our case we have O(K) inputs into each neuron, each one of them scaling as [. Therefore the total fluctuations per neuron are on the order of 1 and the drift velocity scales as O(1 ). The prefactor should remain finite in the limit of very large K because the quenched fluctuations do not vanish in the balanced regime. Proving rigorously this conjecture is an interesting problem that deserves further research.

Itskov et al. (2011) have recently studied a ring model of visuospatial WM with rate-based neuronal dynamics in which the selective delay activity was generated by the nonlinearity of the neurons. In the presence of heterogeneities the dynamics had only a small number of attractors. However, they showed that short-term facilitation in the recurrent interactions can slow down the drift of the bump dramatically. This is because it selectively amplifies synapses from neurons that have been activated by the cue, which tends to pin down the bump at its initial position. A similar effect explains the slowness of the drift in our PFC model. Therefore, facilitation in the recurrent excitatory interactions plays two roles in our mechanism: (1) it induces nonlinearities that sustain the persistence of the activity and (2) it slows down the degradation of the memory trace.

In our PFC model, the spatial fluctuations in the connectivity give rise to strong heterogeneities. As a result, the bump of activity that should encode the direction of the cue drifts during the delay period toward a location that is weakly correlated with the stimulus. This leads to an eventual loss of memory. However, we found that the drift is slow. It is on the order of 2°/s in a network with 80,000 neurons and connectivity K = 2000 and even slower for a larger network. For plausible size and connectivity one can easily obtain a drift of 0.5–1°/s, which is in line with experimental data (White et al., 1994, Ploner et al., 1998).

Discussion

Experimental data support the hypothesis that the cortex operates in states in which excitation is balanced by inhibition (Destexhe et al., 2003; Shu et al., 2003; Haider et al., 2006). Modeling studies (van Vreeswijk et al., 1996, 1998; Lerchner et al., 2004, Vogels et al., 2005; Vogels and Abbott, 2009; Hansel and van Vreeswijk, 2012) have argued that this can explain in a natural way why neurons in vivo fire so irregularly in spontaneous as well as in sensory-evoked activity. In the present work, we argued that balance of excitation and inhibition can also explain the high irregularity of neuronal firing in persistent activity but that this requires synaptic nonlinearities. This is because, if the synaptic interactions are linear, neuronal nonlinearities wash out at the population level in balanced states, thus precluding more than one balanced state.

Our first result is that in an unstructured network, nonlinearities induced by short-term facilitation in recurrent excitation are appropriate to generate bistability between balanced states in a very robust way. We then demonstrated that in a network model of PFC, these nonlinearities can also sustain the selectivity of the delay activity as recorded in PFC during ODR tasks. Interestingly, we found in our simulations that the mean input into a neuron is more hyperpolarizing during the delay period than in baseline. Concomitantly, the temporal fluctuations in the input also increase to guarantee that the activity is larger during the delay. This explains why in our model the spike trains are typically more irregular during the delay period than during baseline, in qualitative agreement with the experimental results of Compte et al. (2003). Similar features were found in the mean-field theory of the integrate-and-fire network with stochasticity and short-term plasticity in neuronal interactions studied by Mongillo et al. (2012). However, this effect may be model and parameter dependent and more modeling as well as experimental works are required to further probe its significance.

Another important feature of our PFC model is the diversity across neurons in the neuronal tuning curves and in the dynamics. This occurs although all the neurons (in a given population) are identical. In fact this is another hallmark of the balanced regime in which the network operates. Such diversity is also observed in experimental data (Funahashi et al., 1989, 1990; Takeda and Funahashi, 2007). Remarkably, in our model, the preferred directions of the neurons during the cue and during the delay periods are highly correlated whereas the tuning widths are very weakly correlated in agreement with experimental reports (Funahashi et al., 1990).

An essential property of the mechanism we have proposed is its robustness. Even if the average connectivity, K, is very large, the unstructured network as well as our PFC model display multistability of balanced states in which the activity is driven by temporal fluctuations in synaptic inputs. The temporal irregularities and the heterogeneities in the neuronal firing are very robust features that depend only weakly on K for very large K. This agrees with the work of Mongillo et al. (2012).

This contrasts with what happens in networks with linear synaptic interactions. In this case a coexistence of several states in which the neuronal firing is driven by the temporal fluctuations rather than by the mean inputs can in theory be achieved. However, this requires an increasingly precise tuning of the parameters as the connectivity increases (Renart et al., 2007). This is because the response of populations to external inputs becomes more linear as the connectivity increases. As a result, accounting for both spatiotemporal irregularity and mnemonic activity in models with linear synaptic interactions requires fine-tuning of the network size and connectivity (Renart et al., 2007), the coding level (van Vreeswijk and Sompolinsky, 2004; Lundqvist et al., 2010), synaptic efficacies (Roudi and Latham, 2007), and on the level of fast noise (Barbieri and Brunel, 2008). Durstewitz and Gabriel (2007) argued that a network with voltage-dependent NMDA synapses can significantly display enhanced variability when it operated in a chaotic close-to-bifurcation regime. This presumably also needs fine-tuning of the parameters in the large size limit to insure the right membrane potential distribution.