Abstract

A neural correlate of parametric working memory is a stimulus-specific rise in neuron firing rate that persists long after the stimulus is removed. Network models with local excitation and broad inhibition support persistent neural activity, linking network architecture and parametric working memory. Cortical neurons receive noisy input fluctuations that cause persistent activity to diffusively wander about the network, degrading memory over time. We explore how cortical architecture that supports parametric working memory affects the diffusion of persistent neural activity. Studying both a spiking network and a simplified potential well model, we show that spatially heterogeneous excitatory coupling stabilizes a discrete number of persistent states, reducing the diffusion of persistent activity over the network. However, heterogeneous coupling also coarse-grains the stimulus representation space, limiting the storage capacity of parametric working memory. The storage errors due to coarse-graining and diffusion trade off so that information transfer between the initial and recalled stimulus is optimized at a fixed network heterogeneity. For sufficiently long delay times, the optimal number of attractors is less than the number of possible stimuli, suggesting that memory networks can under-represent stimulus space to optimize performance. Our results clearly demonstrate the combined effects of network architecture and stochastic fluctuations on parametric memory storage.

Introduction

Persistent neural activity occurs in prefrontal (Fuster, 1973; Funahashi et al., 1989; Romo et al., 1999) and parietal (Pesaran et al., 2002) cortex during the retention interval of parametric working memory tasks. Model networks of stimulus-tuned neurons that are connected with local slow excitation (Wang, 1999) and broadly tuned inhibitory feedback (Compte et al., 2000; Goldman-Rakic, 1995) exhibit localized and persistent high-rate spike train patterns called “bump” states (Compte et al., 2000; Renart et al., 2003). Bumps have initial locations that are stimulus-dependent, so population activity provides a code for the remembered stimulus (Durstewitz et al., 2000). These models relate cortical architecture to persistent neural activity, and are a popular framework for studying working memory (Wang, 2001; Brody et al., 2003).

Neural variability is present in all brain regions and limits neural coding in many sensory, motor, and cognitive tasks (Stein et al., 2005; Faisal et al., 2008; Laing and Lord, 2009). In parametric working memory networks, dynamic input fluctuations cause bump states to wander diffusively (Compte et al., 2000; Laing and Chow, 2001; Wu et al., 2008; Polk et al., 2012; Burak and Fiete, 2012; Kilpatrick and Ermentrout, 2013), degrading stimulus storage over time. Psychophysical data show that the spread of the recalled position increases with delay time (White et al., 1994; Ploner et al., 1998), consistent with diffusive wandering of a bump state. While several results examine how bump formation depends upon neural architecture, little is known about how cortical wiring affects the diffusion of persistent neural activity.

The response properties of cells are often heterogeneous (Ringach et al., 2002), a feature that can improve population-based codes (Chelaru and Dragoi, 2008; Shamir and Sompolinsky, 2006; Marsat and Maler, 2010; Osborne et al., 2008; Padmanabhan and Urban, 2010). In particular, there is a large degree of variation in synaptic plasticity and cortical wiring in prefrontal cortical networks involved in persistent activity during working memory tasks (Rao et al., 1999; Wang et al., 2006). Heterogeneity in excitatory coupling quantizes the neural space used to store inputs, reducing the network's overall storage capacity (Renart et al., 2003; Itskov et al., 2011). On the other hand, stabilizing a discrete number of network states improves the robustness of working memory dynamics to parameter perturbation (Rosen, 1972; Koulakov et al., 2002; Brody et al., 2003; Goldman et al., 2003; Miller, 2006). In this study, we investigate how stabilization introduced by synaptic heterogeneity affects the temporal diffusion of persistent neural activity.

We show that spatial heterogeneities in the excitatory architecture of a spiking network model of working memory reduce the rate at which bumps diffuse away from their initial position. However, the same heterogeneities limit the number of stable network states used to store memories. A tradeoff between these consequences maximizes the transfer of stimulus information at a specific degree of network heterogeneity. For a large number of stimulus locations and long retention times, we show that network architectures that under-represent stimulus space can optimize performance in working memory tasks.

Materials and Methods

Recurrent network architecture.

Our network had a ring architecture commonly used for generating persistent activity to represent direction between 0 and 360° (Ben-Yishai et al., 1995; Compte et al., 2000) with NE = 256 pyramidal cells (E) and NI = 64 interneurons (I). Each leaky integrate-and-fire neuron (Laing and Chow, 2001) was distinguished by its cue orientation preference θj, where θj(E) = ΔE · j (j = 1, …, NE) and θj(I) = ΔI · j (j = 1, …, NI) for ΔE = 360/256 and ΔI = 360/64, respectively. The subthreshold membrane potential of each neuron, Vα(θj, t) (α = E, I), obeyed the following equation:

|

where IE = 0.6 and II = 0.6 are bias currents that determine the resting potential of E and I neurons. The external current, expressed in the following equation:

|

represents sensory input received only by pyramidal neurons, where I0 = 2 is the input amplitude, Id = 3 determines input width, and θext is the cue position. The stimulus was turned on at TON = −1 s and off at TOFF = 0 s. Interneurons received no external input, so Iext,I = 0. Voltage fluctuations were represented by the white noise process In,α(t) with variance σV,α² (σV,E = 0.5 and σV,I = 0.3). We scaled and nondimensionalized voltage so the threshold potential Vth = 1 and the reset potential Vres = 0 for all neurons.

Synaptic currents were mediated by a sum of AMPA, NMDA, and GABA currents:

each modeled as

|

|

|

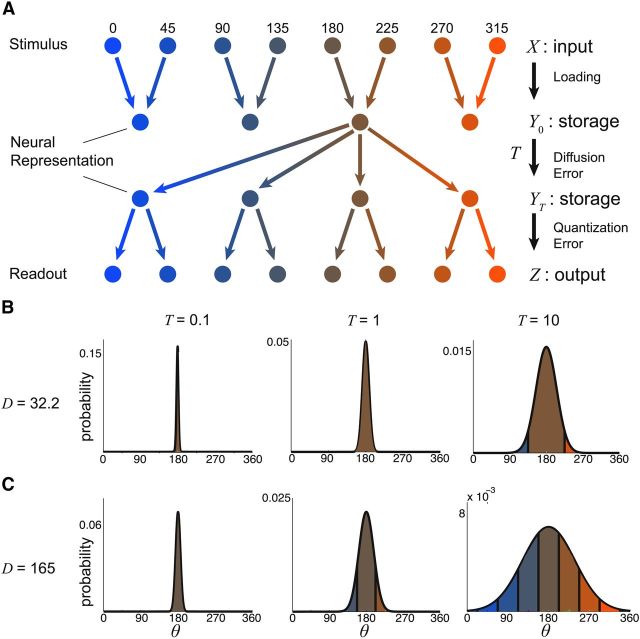

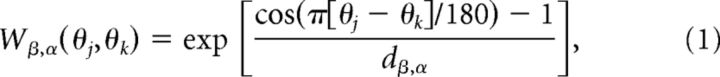

where AAMPA,E = 1, ANMDA,E = 2, AGABA,E = 0.81, AAMPA,I = 1, ANMDA,I = 1, and AGABA,I = 0. Orientation preference was introduced into synaptic conductance by the spatially decaying functions (Fig. 1A) expressed:

|

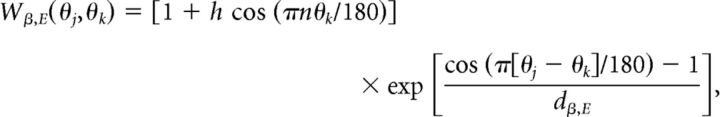

where dAMPA,E = 0.32, dNMDA,E = 0.32, dGABA,E = 5, dAMPA,I = 5, and dNMDA,I = 5. Equation 1 was used for excitatory (AMPA and NMDA) synapses between pyramidal (E) cells in the case of spatially homogeneous connectivity. In the case of spatially heterogeneous synaptic strength (Fig. 2), the strength of AMPA and NMDA connections between pyramidal cells (E) was given by

|

where h = 0.025 represents the strength of the heterogeneity and n is the frequency of the heterogeneity, which must be integer valued.

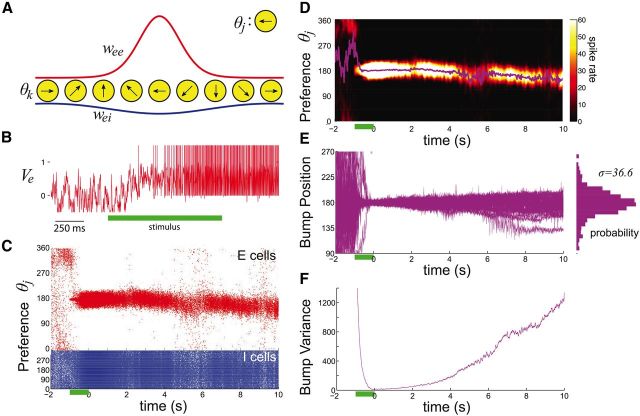

Figure 1.

Spiking network model with spatially homogeneous synaptic connectivity. A, Strength of connections from pyramidal neurons to pyramidal neurons (red) and synapses from interneurons to pyramidal neurons (blue). A neuron of preferred stimulus angle θj receives synaptic inputs from all neurons spanning preferred stimulus angles indexed by θk (see Materials and Methods). B, Voltage of the pyramidal cell with stimulus preference 170° before, during (green bar), and after cue presentation. C, Formation of a bump of spiking activity in the pyramidal neurons (red) following cue presentation (green bar). D, Spike rate, locally averaged across space and time (see Materials and Methods). Plot shows that the position of the bump's peak (Δ(t), magenta) diffuses in space due to voltage and synapse noise. E, Bump position (Δ(t)) plotted for 32 realizations. The resulting probability density of bump positions from 1000 realizations after 10 s is approximately Gaussian. F, Variance of the bump's position (⟨Δ(t)²⟩), across 1000 realizations, scales approximately linearly as a function of time.

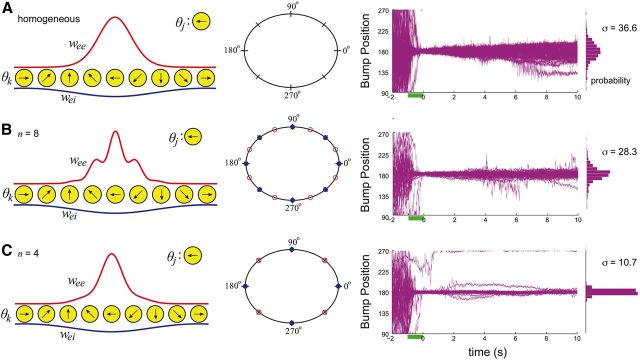

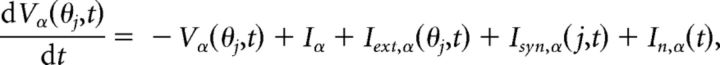

Figure 2.

A, Distance-dependent synaptic connections lead to a spatially homogeneous system. Bump dynamics lie on a line attractor, so bumps diffuse with ease. B, Periodically breaking the spatial homogeneity of synaptic connections with n = 8-fold heterogeneity leads to bump dynamics evolving on a chain of n = 8 attractors (red) each separated by repelling states (blue). Bumps do not wander away from their initial position as easily (position plot), which tightens the resulting probability density after 10 s. C, Effect of synaptic heterogeneity is more noticeable for an n = 4-fold break in homogeneity. Bump position rarely strays from 180°, as shown by the very tight probability density.

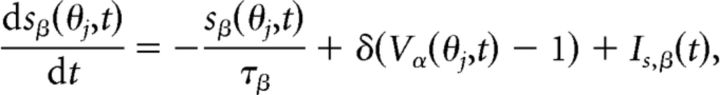

Synaptic gating variables of type β = AMPA, NMDA, or GABA associated with a neuron at location θj were instantaneously activating and exponentially decaying as described by

|

where α = E for β = AMPA and NMDA while α = I for β = GABA. Instantaneous activation is represented here using the delta function δ, so sβ(θj, t) increments by 1 when Vα(θj, t) attains the spike threshold Vth = 1. Decay time constants for each synapse type are τAMPA = 5 ms, τNMDA = 100 ms, and τGABA = 20 ms. Fluctuations in conductance were introduced into each synapse with the term Is,β(t), which is white noise with variance σs,β² (σs,AMPA = 0.1, σs,NMDA = 0.45, and σs,GABA = 0.05). We take the variance of noise to NMDA synapses to be high, σs,NMDA = 0.45, because it leads to high variances in the spike times, as commonly observed in prefrontal cortical neurons during the delay period of working memory tasks (Compte et al., 2003). In addition, this generates an error of a few degrees in the recall of cue position for delay periods of 2–10 s, as observed in psychophysical experiments (White et al., 1994).
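The deterministic part of the gating dynamics described above (a unit jump at each presynaptic spike followed by exponential decay) can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `gating_trace`, the forward Euler update, and the single test spike at 10 ms are assumptions, and the conductance noise term Is,β(t) is deliberately left out because its exact scaling is not recoverable from the text.

```python
import numpy as np

def gating_trace(spike_times_ms, tau_ms, t_end_ms, dt_ms=0.1):
    """Integrate ds/dt = -s/tau with a unit jump in s at every presynaptic spike.

    Noise is omitted here (assumption); only decay and instantaneous activation are modeled.
    """
    n_steps = int(round(t_end_ms / dt_ms)) + 1
    s = np.zeros(n_steps)
    # Map each spike time to the index of the step at which the jump is applied.
    spike_steps = set(int(round(t / dt_ms)) for t in spike_times_ms)
    for i in range(1, n_steps):
        s[i] = s[i - 1] * (1.0 - dt_ms / tau_ms)  # forward Euler decay
        if i in spike_steps:
            s[i] += 1.0  # instantaneous activation: increment by 1
    return s

# Decay time constants quoted in the text (ms).
TAU = {"AMPA": 5.0, "NMDA": 100.0, "GABA": 20.0}

# One presynaptic spike at t = 10 ms (hypothetical input), followed for 60 ms.
traces = {name: gating_trace([10.0], tau, t_end_ms=60.0) for name, tau in TAU.items()}
```

Comparing the three traces 50 ms after the spike shows why the slow NMDA component dominates the recurrent excitation that sustains the bump: it has barely decayed while the AMPA trace is essentially gone.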

Numerical simulations were done using an Euler–Maruyama method with timestep dt = 0.1 ms and normally distributed random initial conditions. Spike time rastergrams were smoothed to generate population firing rates as a function of degree and time, whose maximum at each time was used to calculate the centroid of the bump (Figs. 1D,E, 2). Variances (Figs. 1F, 3) and probability densities (Figs. 1E, 2) were computed using 1000 values for the bump centroid across 10 s. Linear fits of variance in the case of spatially homogeneous synapses and spatially heterogeneous synapses with n = 8 and n = 4 (Fig. 3) were performed using linear regression.
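As an illustration of the bump-tracking step, the sketch below smooths a spike-count raster around the ring and reads off the preferred angle of the peak in each time bin. The kernel width (`sigma_neurons`), the restriction to spatial smoothing only, and the synthetic Poisson raster are assumptions made for the example; the text does not specify the smoothing kernel.

```python
import numpy as np

def bump_peak_deg(counts, sigma_neurons=8.0):
    """Preferred angle (deg) of the peak of the spatially smoothed activity, per time bin.

    counts: array (n_neurons, n_bins); neuron j (1-based) prefers 360 * j / n_neurons degrees.
    """
    n = counts.shape[0]
    # Gaussian kernel on the ring, built from the circular index distance.
    idx = np.arange(n)
    dist = np.minimum(idx, n - idx)
    kernel = np.exp(-0.5 * (dist / sigma_neurons) ** 2)
    kernel /= kernel.sum()
    # Circular convolution along the neuron axis via FFT.
    spectrum = np.fft.fft(counts, axis=0) * np.fft.fft(kernel)[:, None]
    smooth = np.real(np.fft.ifft(spectrum, axis=0))
    peak_index = np.argmax(smooth, axis=0)      # zero-based neuron index
    return 360.0 * (peak_index + 1) / n         # theta_j = Delta_E * j

# Synthetic raster (hypothetical data): a bump centred on neuron j = 128, i.e. 180 deg.
rng = np.random.default_rng(0)
n_neurons, n_bins = 256, 20
j = np.arange(1, n_neurons + 1)
d = np.abs(j - 128)
d = np.minimum(d, n_neurons - d)
rate = 20.0 * np.exp(-0.5 * (d / 10.0) ** 2)
counts = rng.poisson(rate[:, None], size=(n_neurons, n_bins))
peaks = bump_peak_deg(counts)
```

Repeating this over many realizations and regressing the across-trial variance of the peak position against time would give the kind of diffusion estimate reported in Figure 3.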

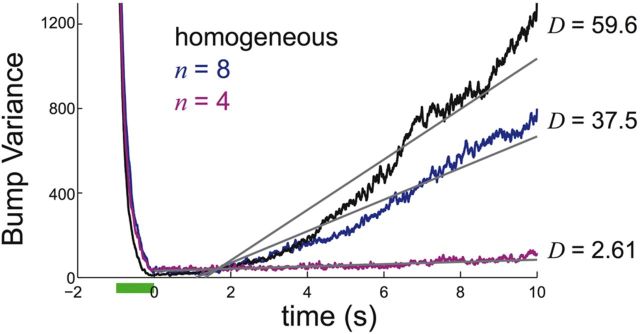

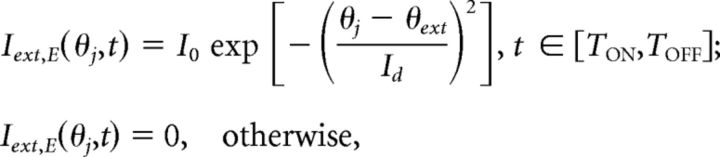

Figure 3.

Variance of bump position as a function of time, averaged across 1000 realizations for spatially heterogeneous structure of pyramidal-to-pyramidal synapses with frequency n = 4 and n = 8, as well as spatially homogeneous structure. We fit each curve to straight lines to generate an approximation of the effective diffusion coefficient D (see Materials and Methods).

Diffusion in the potential well model.

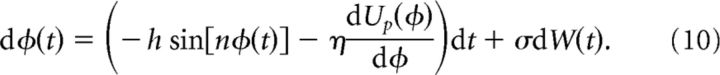

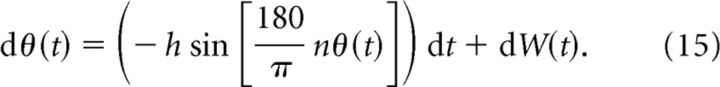

To analyze the diffusive dynamics of the bump, we studied an idealized model of bump motion. In this model, the bump position φ(t) obeys the stochastic differential equation (Lifson and Jackson, 1962; Lindner et al., 2001):

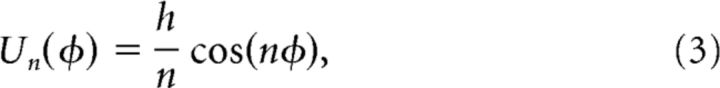

Here φ(t) was restricted to the periodic domain φ ∈(− π,π] and dW was a standard white noise process. The first term in Equation 2 models the periodic spatial heterogeneity that is responsible for attractor dynamics. Heterogeneity is parametrized by its strength h and spatial frequency n. In this framework, the dynamics of φ(t) is a diffusive process occurring on an energy landscape defined by the periodic potential

$$U(\phi) = -\frac{h}{n}\cos(n\phi) \tag{3}$$

producing n attractors (Fig. 4A). These attractors occur at the minima of Equation 3, given by φ = 2jπ/n where j = 1, …, n. They are separated from one another by repellers or saddles at the maxima of Equation 3.

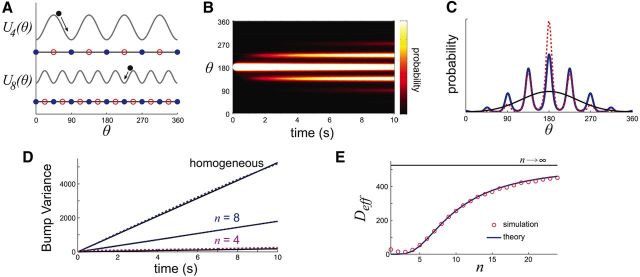

Figure 4.

A, Particle diffusing in periodic potential well Equation 3 is an idealized model of the bump diffusing over the network with spatially heterogeneous synapses, given by the stochastic process Equation 2. B, Probability density p(θ, t) of particle position θ spreads diffusively in time (n = 8; h = 1; noise variance σ2 = 0.16). C, Profile of p(θ, t) computed from 10,000 realizations (red); effective diffusion theory Equation 6 (black); and effective diffusion theory with periodic correction Equation 7 (blue). D, Effective diffusion theory using Deff in Equation 8 matches variance scaling from simulations of stochastic equation. Variance increases monotonically with the well frequency n. E, An effective diffusion coefficient Deff can be computed by treating well hopping as a jump Markov process (Lifson and Jackson, 1962; Lindner et al., 2001), yielding formula Equation 8.

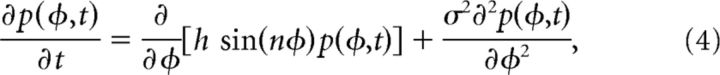

To analyze the model, we reformulated Equation 2 as an equivalent Fokker–Planck equation (Risken, 1996),

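$$\frac{\partial p(\phi,t)}{\partial t} = \frac{\partial}{\partial \phi}\left[h \sin(n\phi)\, p(\phi,t)\right] + \frac{\sigma^2}{2}\frac{\partial^2 p(\phi,t)}{\partial \phi^2} \tag{4}$$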

where p(φ, t) is the probability density of finding the bump at a given value φ at time t. For ease of analytic calculations, we let φ evolve on an infinite domain. Since we worked in parameter regimes where the resulting spread of probability densities was relatively narrow, this did not considerably alter the results. Also, experimentally measured errors in cue recall are typically not large enough to span more than a quarter of the possible stimulus space (Ploner et al., 1998).

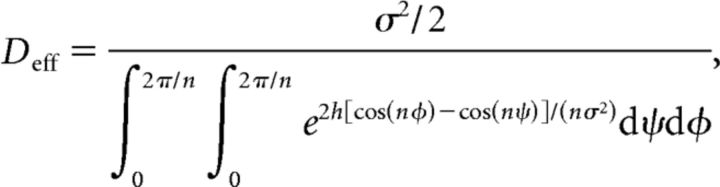

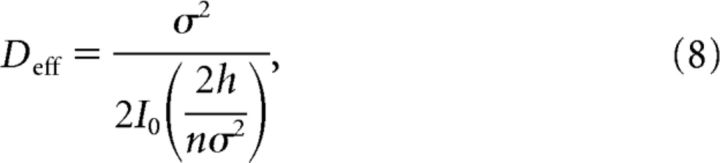

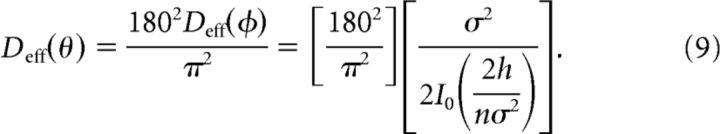

For large times and sufficiently high-frequency n, the variance of the stochastic process Equation 2 can be quantified using an effective diffusion coefficient (Lindner et al., 2001)

|

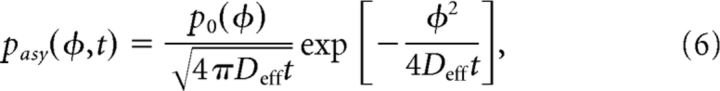

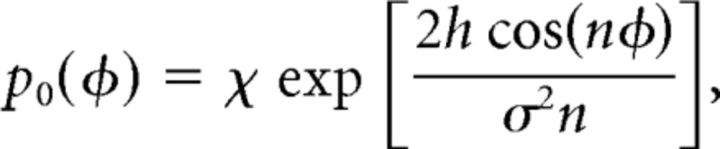

The density p(φ, t) tends asymptotically to:

|

where p0(φ) is the stationary, periodic solution of Equation 4, given by

|

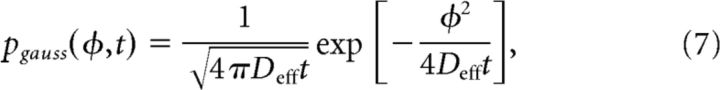

where χ is a normalization factor. The approximation, Equation 6, matches realizations of the full stochastic process Equation 2 very well (Fig. 4C). Clearly, the frequency of the probability distribution's microscale is commensurate with that of the periodic potential Equation 3. We were mainly concerned with computing the effective diffusion of the stochastic process defined by Equation 2. Remarkably, second-order statistics are still well approximated by ignoring the microperiodicity of the density in Equation 6, just using:

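$$p(\phi,t) \approx \frac{1}{\sqrt{2\pi D_{\mathrm{eff}}\, t}} \exp\left[-\frac{\phi^2}{2 D_{\mathrm{eff}}\, t}\right] \tag{7}$$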

(Fig. 4C). Previous authors have used asymptotic methods for computing the associated effective diffusion coefficient Deff inherent in the formula Equation 6 (Lifson and Jackson, 1962; Lindner et al., 2001). The long-standing result is (Lifson and Jackson, 1962)

|

and we can compute the integrals in the denominator to find:

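$$D_{\mathrm{eff}} = \frac{\sigma^2}{\left[I_0\!\left(\dfrac{2h}{n\sigma^2}\right)\right]^2} \tag{8}$$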

where I0(x) is the modified Bessel function of the first kind of order zero. Equation 8 demonstrates the monotone increasing dependence of the effective diffusion upon the number of potential wells n.
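The dependence of the effective diffusion on n can be checked numerically. The sketch below assumes a potential of the form U(φ) = −(h/n) cos(nφ), noise amplitude σ, and the convention that the variance grows as Deff·t; these forms are inferred from the surrounding description rather than copied from the original equations, so treat them as assumptions. It compares a Bessel-function expression, σ²/I0(2h/(nσ²))², with direct quadrature of the Lifson–Jackson averages over one period.

```python
import numpy as np

def d_eff_closed_form(n, h, sigma2):
    """sigma^2 / I0(2h / (n sigma^2))^2 for the assumed potential -(h/n) cos(n phi)."""
    return sigma2 / np.i0(2.0 * h / (n * sigma2)) ** 2

def d_eff_quadrature(n, h, sigma2, n_grid=20000):
    """Lifson-Jackson form: sigma^2 / (<exp(2U/sigma^2)> <exp(-2U/sigma^2)>).

    Angle brackets are averages over one period of the potential, evaluated on a uniform grid.
    """
    phi = np.linspace(0.0, 2.0 * np.pi / n, n_grid, endpoint=False)
    u = -(h / n) * np.cos(n * phi)
    return sigma2 / (np.mean(np.exp(2.0 * u / sigma2)) * np.mean(np.exp(-2.0 * u / sigma2)))

# Parameter values quoted in the Figure 4 caption.
h, sigma2 = 1.0, 0.16
ns = np.arange(1, 33)
closed = np.array([d_eff_closed_form(n, h, sigma2) for n in ns])
quad = np.array([d_eff_quadrature(n, h, sigma2) for n in ns])
```

Under these assumptions the two estimates agree, the coefficient rises monotonically with n, and it approaches the free-diffusion value σ² as n grows large, matching the qualitative statements in the text.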

To calculate the probability density p(φ, t), we simulated 10,000 realizations of Equation 2 using an Euler–Maruyama integration scheme with a timestep dt = 0.001 from t = 0 to t = 10 s (Fig. 4B,C). The effective diffusion coefficient was calculated as the gradient of the variance across the time window and converted to degrees with the change of variables θ = 180(φ + π)/π yielding:

|

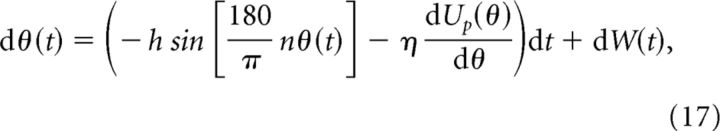
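A minimal sketch of this simulation procedure is given below. It assumes the stochastic differential equation has the form dφ = −h sin(nφ) dt + σ dW on the unwrapped line, uses 2000 trials rather than 10,000 to keep the run short, and estimates the diffusion coefficient as the slope of a straight-line fit to the second moment for t ≥ 1; the function names and the fitting window are illustrative choices, not the authors' settings.

```python
import numpy as np

def simulate_variance(n, h, sigma2, n_trials=2000, t_end=10.0, dt=0.001,
                      record_every=100, seed=0):
    """Euler-Maruyama for the assumed SDE dphi = -h sin(n phi) dt + sigma dW, phi(0) = 0.

    Returns the recording times and the across-trial second moment <phi(t)^2>.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_end / dt))
    sigma = np.sqrt(sigma2)
    phi = np.zeros(n_trials)
    times, variances = [], []
    for step in range(1, n_steps + 1):
        noise = rng.standard_normal(n_trials)
        phi += -h * np.sin(n * phi) * dt + sigma * np.sqrt(dt) * noise
        if step % record_every == 0:
            times.append(step * dt)
            variances.append(np.mean(phi ** 2))
    return np.array(times), np.array(variances)

def fitted_slope(times, variances, t_min=1.0):
    """Slope of a least-squares line through the variance curve for t >= t_min."""
    keep = times >= t_min
    slope, _intercept = np.polyfit(times[keep], variances[keep], 1)
    return slope

# Flat landscape (h = 0) versus n = 8 wells with h = 1, noise variance 0.16.
slope_flat = fitted_slope(*simulate_variance(n=8, h=0.0, sigma2=0.16))
slope_wells = fitted_slope(*simulate_variance(n=8, h=1.0, sigma2=0.16))
```

With a flat landscape the fitted slope should sit close to σ², while the periodic potential lowers it substantially, which is the stabilizing effect the potential well model is meant to capture.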

Adding unstructured heterogeneity.

Effects of unstructured heterogeneity were studied by perturbing the potential in Equation 2 with a combination of random periodic functions,

|

The unstructured heterogeneity was given by the random potential

|

where aj, bj (j = 1, …, Nh) are randomly drawn from normal distributions. Here η scales the amplitude of the random potential and the maximal frequency of the unstructured components is given by Nh. We take the rounded integer Nh = [0.05(1 + 0.1ξ)/(η + 0.0005) + 1], where ξ is a normally distributed random variable. Larger η values therefore reduce the number of modes added to the potential, decreasing the maximal number of attractors in the system, as in other studies of unstructured heterogeneity in bump attractor networks (Zhang, 1996; Renart et al., 2003; Itskov et al., 2011; Hansel and Mato, 2013). To calculate an effective diffusion coefficient Dh, we initialized 10,000 simulations of Equation 10 at φ(0) = 0 and computed Dh = ⟨φ(T)²⟩/T for T = 10 s.

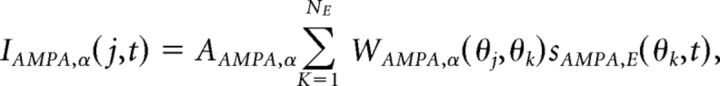
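One way to picture the unstructured perturbation is sketched below. The random potential is taken to be η Σj [aj cos(jφ) + bj sin(jφ)] with standard normal coefficients, and it is added to a structured term −(h/n) cos(nφ); both forms are assumptions consistent with the description above, not the original equations. The helper `count_attractors` simply counts strict local minima of the total potential on a periodic grid.

```python
import numpy as np

def random_potential(eta, rng):
    """Draw one realization of the assumed unstructured potential and its mode count N_h."""
    xi = rng.standard_normal()
    n_modes = int(round(0.05 * (1.0 + 0.1 * xi) / (eta + 0.0005) + 1.0))
    n_modes = max(n_modes, 1)  # guard against a non-positive mode count (assumption)
    a = rng.standard_normal(n_modes)
    b = rng.standard_normal(n_modes)
    j = np.arange(1, n_modes + 1)[:, None]

    def u_p(phi):
        phi = np.asarray(phi)
        modes = a[:, None] * np.cos(j * phi) + b[:, None] * np.sin(j * phi)
        return eta * np.sum(modes, axis=0)

    return u_p, n_modes

def count_attractors(potential, n_grid=4096):
    """Number of strict local minima of the potential on the ring (-pi, pi]."""
    phi = np.linspace(-np.pi, np.pi, n_grid, endpoint=False)
    u = potential(phi)
    return int(np.sum((u < np.roll(u, 1)) & (u < np.roll(u, -1))))

rng = np.random.default_rng(1)
h, n = 1.0, 8
structured = lambda phi: -(h / n) * np.cos(n * np.asarray(phi))
u_p, n_modes = random_potential(eta=0.01, rng=rng)
total = lambda phi: structured(phi) + u_p(phi)
n_attractors_structured = count_attractors(structured)
n_attractors_total = count_attractors(total)
```

Sweeping η and comparing `n_attractors_total` for h = 0 and h > 0 would reproduce the kind of comparison summarized in Figure 5C, under the stated assumptions.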

Information measures.

To measure the performance of the network on a working memory task, we used a Shannon measure of mutual information for a noisy channel (Cover and Thomas, 2006). We considered a channel receiving one of m possible stimuli (X), storing an input as one of n ≤ m possible states (Y(t)), and reading out the remembered stimulus as one of the original m possible values (Z). The stored variable Y(t) evolves during storage time t ∈ [0, T] due to degradation of the initially loaded signal Y(0) by dynamic noise. The stimuli were presented with equal probability pj = 1/m (j = 1, …, m), so that the stimulus entropy was as follows:

$$H(X) = -\sum_{j=1}^{m} p_j \log_2 p_j = \log_2 m$$

The network represented a stimulus as the bump position at one of the system's n attractors. If m was a multiple of n (m = qn with q an integer) then the mapping from stimulus to loaded representation was straightforward with Y(0) = ceil(X/q). When m was not a multiple of n, we allowed the potential well structure of the system to guide the loaded state to the nearest attractor. This led to slightly nonuniform distributions of loaded stimuli. However, the effects of diffusion made this slight nonuniformity insignificant, especially as the length of storage time T was increased. In our theoretical calculations, we assumed that the loading algorithm maximized the entropy of the neural representation Y(0); this sometimes involved random assignments from X to Y(0). In fact, we found our numerical results did not stray too far from this approximation (see Figs. 7, 8).

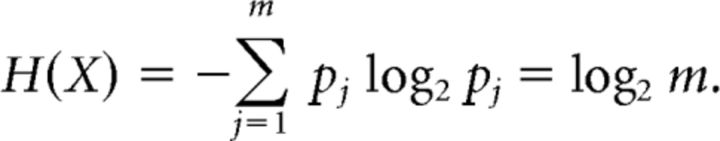

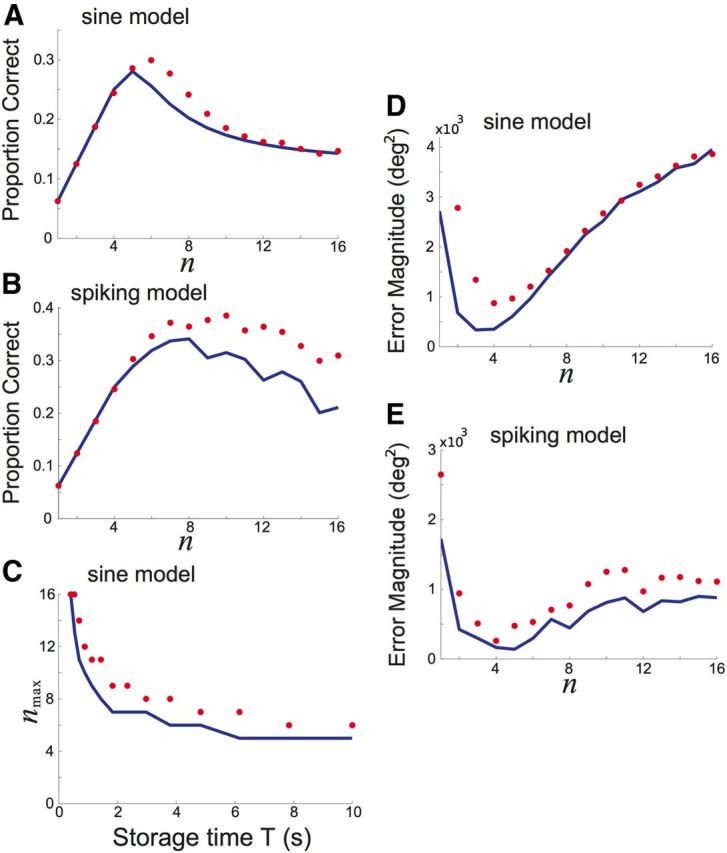

Figure 7.

An optimal number of attractors nmax < m emerges as the delay time T grows with m fixed. A, Mutual information I(X; Z) between input particle position X and output recalled position Z varies with n in the sine model for delay (storage) times T = 0.1, T = 1, and T = 10; input number m = 16; well height h = 1; and noise variance σ2 = 0.16. B, Mutual information I(X; Z) calculated between initial X and final Z position of the bump in the spiking network for delay times T = 0.1 and T = 10 with m = 16 inputs. C, Keeping the number of possible initial positions m = 16 fixed reveals that nmax decreases monotonically with delay time T.

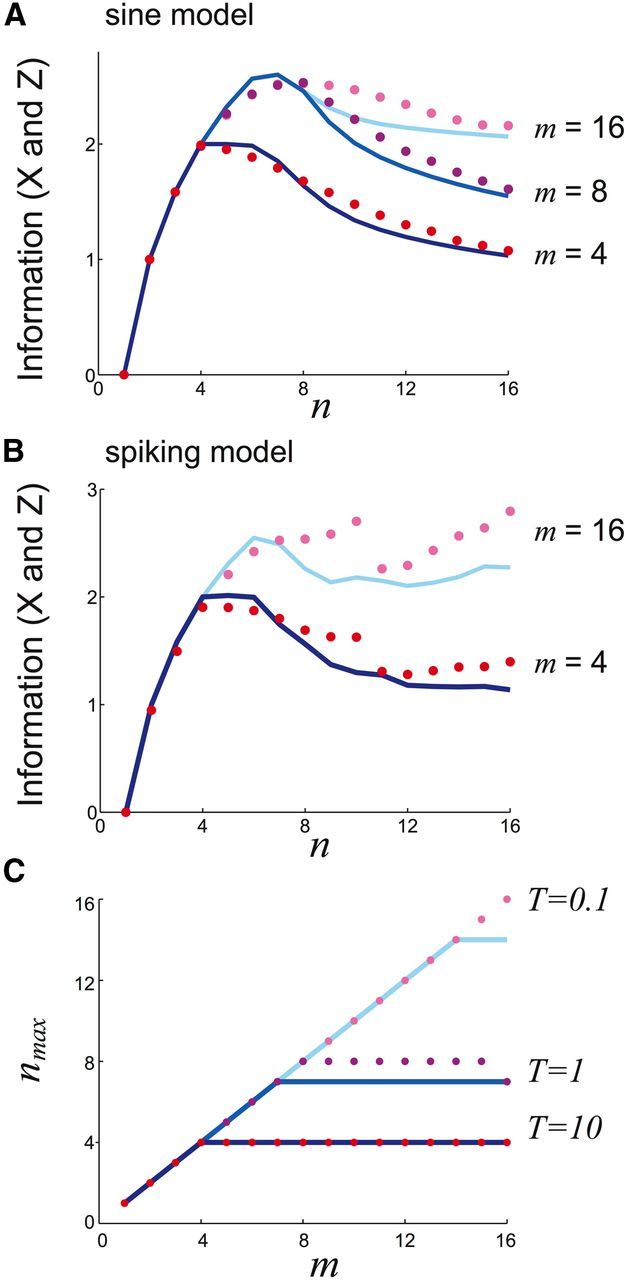

Figure 8.

An optimal number of attractors nmax < m emerges as m is increased with T fixed. A, I(X; Z) in sine model for input numbers m = 4, m = 8, and m = 16 with delay time T = 1 fixed. B, I(X; Z) in spiking model for input numbers m = 4 and m = 16 with delay time T = 5 s fixed. C, As the delay time T increases, nmax reaches an optimum at smaller cue numbers m. Noise variance σ2 = 0.16.

If n = m, then Y(0) = X and the loaded representation had the same entropy as the stimulus H(Y(0)) = H(X). If n < m, then the representation entropy was smaller than the stimulus entropy H(Y(0)) < H(X), since the space into which the stimulus is represented is smaller than the original stimulus space. Since the stimulus X has a uniform distribution, so does Y, with the probability that attractor j is loaded given by pj = 1/n (j = 1, …, n) and the entropy of the representation given by H(Y(t)) = log2 n (this holds for all t). The readout of the neural representation required an expansion from Y(T) to Z. If m was a multiple of n, then the expansion was straightforward with Z having probability 1/q for Z = q(Y(T) − 1) + 1, …, qY(T) and zero otherwise. In this case H(Z) = log2 m. If m was not a multiple of n, we subdivided the domain into m evenly spaced subdomains and assigned Z accordingly, and for theoretical calculations we again assumed H(Z) = log2 m.
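For the case where m is a multiple of n, the loading and readout maps and the associated entropies can be written out in a few lines. The function names below are hypothetical, and m = 16, n = 4 are chosen only as an example.

```python
import math

def load(x, q):
    """Map stimulus x in 1..m to attractor ceil(x / q) in 1..n, using integer arithmetic."""
    return (x + q - 1) // q

def readout_support(y, q):
    """Stimulus values that the readout assigns probability 1/q to, given stored state y."""
    return list(range(q * (y - 1) + 1, q * y + 1))

def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

m, n = 16, 4
q = m // n
loaded = [load(x, q) for x in range(1, m + 1)]
p_loaded = [loaded.count(j) / m for j in range(1, n + 1)]

h_x = entropy_bits([1 / m] * m)   # stimulus entropy, log2 m
h_y0 = entropy_bits(p_loaded)     # loaded-state entropy, log2 n
```

Here the four-to-one loading map discards log2 m − log2 n = 2 bits before any diffusion takes place, which is the coarse-graining cost that the later mutual information calculation weighs against reduced diffusion.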

While H(Y) ≤ H(Z), H(Y) nevertheless set an upper limit for the mutual information between the stimulus X and readout Z, expressed as:

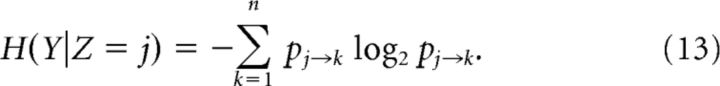

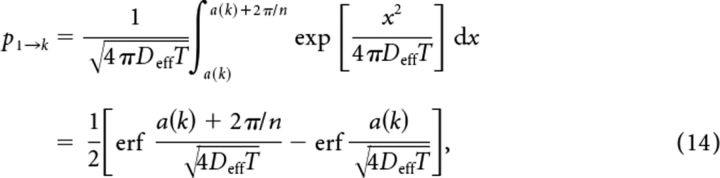

To compute the conditional entropy H(Y|Z) we first calculated the probability of transition between one state and another during the diffusion phase (0 ≤ t ≤ T). A direct estimate of the transition probabilities was obtained by numerically simulating many realizations of the model and estimating p(φ(0)|Z) where φ(0) is the center of mass of the bump at time t = T. We subdivided φ into n subdomains of equal width, and the area of each subdomain is pj→k, the transition probability from the loaded state X = j to another state (k ≠ j) or itself (k = j), where k = 1, … n. Due to discrete translation symmetry in both systems, we expected pj→k = pj+l→k+l. The conditional entropy can then be computed as follows (Cover and Thomas, 2006):

|

Our second method of computing the conditional entropy employed the effective diffusion coefficient Deff associated with the probability density for locations of the bump or particle. Deff depends upon n, ultimately introducing this further n dependence into the mutual information (Eq. 12). Using the associated pure Gaussian probability (Eq. 7), we computed transition probabilities analytically for the case j = 1, so, as Equation 14 states:

|

for a set delay time T, where a(k) = (2πk − 1)/n is the lower boundary of the kth subdomain and k = 1, …, ceiling(n/2). Due to reflection symmetry of the Gaussian, we expected p1→k = p1→n−k. For even n, the (n/2 + 1)th subdomain is (−π, π/n − π) ∪ (π − π/n, π), which would lead to two integrals in Equation 14. As mentioned, the transition probabilities pj→k for j = 2, …, n were easily computed as pj→k = p1→k−j+1 (see Fig. 6B,C, insets). We then plugged each pj→k value into our formula for conditional entropy, Equation 13. The analytic and numeric calculation of pj→k led to similar results for I(X; Z), the calculated value of mutual information (Eq. 12; see Fig. 7).
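The Gaussian route to the mutual information can be sketched as follows for m a multiple of n. The code assumes the bump position after delay T is Gaussian with variance Deff·T about the centre of the loaded well, wraps that Gaussian onto the ring by summing a few periodic images, takes well k to be the interval of width 2π/n centred on 2πk/n, and evaluates I(X; Z) directly from the joint distribution rather than through the conditional entropy formula. These choices, and the function names, are assumptions for illustration.

```python
import math
import numpy as np

def normal_cdf(x, s):
    """CDF of a zero-mean Gaussian with standard deviation s."""
    return 0.5 * (1.0 + math.erf(x / (s * math.sqrt(2.0))))

def transition_from_first_well(n, d_eff, t_delay, n_wraps=5):
    """p[k]: probability of ending in well k (0-based) after starting at the centre of well 0."""
    s = math.sqrt(d_eff * t_delay)
    half = math.pi / n
    p = np.zeros(n)
    for k in range(n):
        centre = 2.0 * math.pi * k / n
        for w in range(-n_wraps, n_wraps + 1):   # periodic images of the well
            shift = centre + 2.0 * math.pi * w
            p[k] += normal_cdf(shift + half, s) - normal_cdf(shift - half, s)
    return p

def mutual_information(m, n, d_eff, t_delay):
    """I(X; Z) in bits for m equiprobable stimuli, n wells (m = q n), uniform readout in a well."""
    q = m // n
    p1 = transition_from_first_well(n, d_eff, t_delay)
    # Discrete translation symmetry: trans[j, k] = p1[(k - j) mod n].
    trans = np.array([np.roll(p1, j) for j in range(n)])
    x = np.arange(m)
    p_z_given_x = trans[np.ix_(x // q, x // q)] / q   # rows: stimulus x, columns: readout z
    p_z = p_z_given_x.mean(axis=0)                    # stimuli are equiprobable
    ratio = np.where(p_z_given_x > 0, p_z_given_x / p_z, 1.0)
    return float(np.sum(p_z_given_x * np.log2(ratio)) / m)

info_short = mutual_information(m=16, n=16, d_eff=0.1, t_delay=0.1)
info_long = mutual_information(m=16, n=16, d_eff=0.1, t_delay=10.0)
```

In a full calculation `d_eff` would itself be a function of n, so scanning n at fixed m and T with the appropriate Deff(n) would trace out curves of the kind shown in Figures 7 and 8.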

Figure 6.

Noisy channel description of memory storage. A, Loading m possible initial conditions into n possible wells initially reduces information. After the storage period (T), information may have been lost due to hops between wells. B, Purely Gaussian probability density with the effective diffusion coefficient Deff calculated when n = 4 and σ2 = 0.16. The area of each filled portion represents the probability of recalling the cue angle associated with that color. Each area corresponds to the probability of transitioning from the original state to that state pj→k. C, For n = 8, the effective diffusion coefficient Deff is larger, leading to faster spreading.

Results

Diffusion of bumps in a spatially homogeneous network

The neural mechanics of parametric working memory has a long history of theoretical investigation (Amari, 1977; Camperi and Wang, 1998; Compte et al., 2000; Laing and Chow, 2001; Wang, 2001; Brody et al., 2003; Renart et al., 2003). Motivated by the working memory of visual cue orientations, we consider a network of spiking model neurons where each neuron has a preferred orientation in its feedforward input. Persistent neural spiking within the network is due to a combination of assumptions about synaptic connectivity (Goldman-Rakic, 1995; Rao et al., 1999; Lewis and Gonzalez-Burgos, 2000). First, the strength of pyramidal-to-pyramidal connectivity decreases as the distance between the tuning peak of each neuron increases (Fig. 1A, red line). Second, excitatory synaptic currents involve both fast-acting AMPA and slow-acting NMDA components (see Materials and Methods). Third, feedback connections from interneurons are broadly tuned (Fig. 1A, blue line). With these architectural features, neurons in the network respond to a transient stimulus (Fig. 1, green bar) with an elevated rate of spiking that persists long after the stimulation ceases (Fig. 1B). Short-range excitation leads to high-rate pyramidal spiking across a short range of orientations, while wide-range inhibition localizes this spiking (Fig. 1C); we refer to this pattern of activity as a “bump.” The position of the bump encodes the initial stimulus position in working memory (Compte et al., 2000; Wang, 2001; Brody et al., 2003).

We model the inherent trial-to-trial variability of neural response with an orientation-independent fluctuating input to each neuron, as well as a stochastic component of the recurrent synaptic feedback (see Materials and Methods). These fluctuations degrade the storage of the orientation cue by causing the bump to stochastically wander away from its initial position (Fig. 1C,D). Spatiotemporal averaging of the spike time raster plots identifies the maximal firing rate at each time point, and visualizes the bump wandering across the network (Fig. 1D, magenta line). We fix the stimulus orientation and perform many trials of the network simulation, with the only difference between trials being the realization of the stochastic forces in the network. The bump's position after a delay period of 10 s can be described by a probability density having an overall Gaussian profile (Fig. 1E), and the variance in bump position increases linearly as a function of time (Fig. 1F). These last two properties suggest the bump position behaves as a diffusion process (Risken, 1996).

Diffusive dynamics in working memory networks have been studied in several different frameworks (Compte et al., 2000; Miller, 2006; Wu et al., 2008; Burak and Fiete, 2012; Polk et al., 2012; Kilpatrick and Ermentrout, 2013). The intuition for the diffusive character of these networks is best gained from an analysis of the deterministic network. A bump can be formed with its center of mass located at any orientation, allowing for the storage of a continuum of stimuli (Amari, 1977; Camperi and Wang, 1998). However, perturbations that change the bump's position will be integrated and stored as if they were another input. Stochastic inputs lead to a continuous and random displacement of the bump, without the bump relaxing back to its original location. Over time, the position of the bump effectively obeys Brownian motion and recall error increases with the delay period. This diffusion-based error is consistent with psychophysical studies that show the spread of recalled continuous variables scales sublinearly with time (White et al., 1994; Ploner et al., 1998).

Reduced diffusion in a spatially heterogeneous network

Previous models of working memory have considered networks that use neuronal units with bistable properties (Rosen, 1972; Koulakov et al., 2002; Brody et al., 2003; Goldman et al., 2003; Miller, 2006). These networks lack the homogeneity required for a continuum of neutrally stable stimulus representations, and rather have a discrete number of stable states. One advantage of this network heterogeneity is a “robustness” of representation with respect to parameter perturbation, a feature that is absent in homogeneous networks (Brody et al., 2003; Goldman et al., 2003). We consider spatially periodic modulation of excitatory coupling (Fig. 2, left), where the period of the modulation is 360/n degrees, so that n cycles cover orientation space. We assume such an architecture would not be biased to favor one particular cue location because errors reported in recalling cues are approximately the same for each cue location (White et al., 1994). Such an architecture may develop from Hebbian plasticity rules during training in working memory tasks, since orientation cues are typically chosen at fixed and evenly spaced locations around the circle (Funahashi et al., 1989; White et al., 1994; Goldman-Rakic, 1995; Meyer et al., 2011). In this situation, some neuron pairs are activated more than other pairs, leading to relative strengthening of their recurrent connections (Clopath et al., 2010; Ko et al., 2011). Alternatively, reward-based plasticity mechanisms could also set up spatial heterogeneity in synapses if it improved a subject's performance during a task (Schultz, 1998; Wang, 2008; Klingberg, 2010).

Spatial biases introduced to network architecture shift the smooth continuum of stable states to a chain of discrete attractors, each separated by a repeller (Fig. 2, compare A, B, C, middle column). This discrete attractor structure occurs because some pyramidal neurons receive stronger excitatory projections than others (Zhang, 1996; Itskov et al., 2011; Hansel and Mato, 2013). Spatial heterogeneity in the strength of excitatory connections (decreasing n) stabilizes bump positions to perturbations by noise (Fig. 2, compare A, B, C, right column). For all n tested, the probability density of bump positions retains an approximately Gaussian shape with periodic modulation, so the variance of bump positions still grows nearly linearly with time (Fig. 3), and it is well approximated by Dt where D is the diffusion coefficient (see Materials and Methods). The coefficient D drops considerably for a network with spatially heterogeneous synapses, compared with the bump diffusion measured with the homogeneous network. In total, spatial heterogeneity of excitatory coupling helps stabilize bump position in models of working memory with fluctuating stochastic inputs.

Potential well model for bump diffusion

To analyze the relationship between network heterogeneity and bump diffusion more deeply, we now study an idealized model for parametric working memory. Briefly, a noise-driven particle on a periodic potential landscape retains the essential effects of noise and spatial heterogeneity in our spiking network model (see Materials and Methods). Our simplified model treats the bump position as a particle moving in a landscape of peaks, from which it is repelled (Fig. 4A, maxima), and wells, to which it is attracted (Fig. 4A, minima). In the potential well model, the memory of the stimulus location is tracked by the particle's position θ(t) obeying the following stochastic differential equation,

|

Here h is the amplitude of the periodic potential and W(t) is a Wiener process (Risken, 1996). The sine function determines the θ-dependent drift of the particle, which ultimately affects its diffusive motion. The positive integer n determines the number of stable attractors. Similar reduced neural models have been explored (Renart et al., 2003; Itskov et al., 2011), and the general problem of noise-induced behavior in periodic potentials has been well studied (Lifson and Jackson, 1962; Risken, 1996; Lindner et al., 2001).

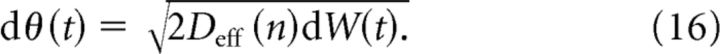

Diffusive behavior occurs in periodic potentials, yet the mechanics are different from diffusion on a free potential landscape (h = 0). In periodic potentials, a particle typically undergoes small variance dynamics confined within a well. However, rare but large noise kicks eventually push the particle to a neighboring well. These noise-induced well transitions continue indefinitely, and diffusion over the potential landscape occurs in a punctuated fashion. Across many trials, the probability p(θ, t) of finding the particle at position θ at time t evolves like a Gaussian kernel modulated by a periodic function (Fig. 4B; see Materials and Methods). The maxima of p(θ, t) are centered at the minima of the potential, indicating a higher likelihood of finding the particle at the bottom of a well than in transition between wells.

Treating the transitions between wells as a jump process, we can approximate the diffusion of the sine potential model, Equation 15, with the following Brownian walk:

$$d\theta(t) = \sqrt{D_{\mathrm{eff}}(n)}\; dW(t)$$

The effective diffusion coefficient Deff(n) is derived using standard approaches (see Materials and Methods, Eq. 9). Under this approximation, θ(t) obeys a Gaussian distribution with variance Deff(n)t, and p(θ, t) does not possess the periodic microstructure of the actual probability density (Fig. 4C, compare blue and black curves). Despite these differences, the approximation agrees closely with the variance in the particle's position in the periodic potential (Fig. 4D). In this framework we can directly relate the frequency of heterogeneity n to the overall diffusivity of the particle through Deff(n). As with bumps in the spiking network, increasing the frequency n of the spatial heterogeneity increases the effective diffusion Deff (Fig. 4D). This is true across the entire range of frequencies n, and as n → ∞ the particle's variance saturates to that of a system with a flat potential (Fig. 4E). Thus, despite the simplicity of the potential well framework, it can qualitatively explain the diffusivity observed in the spiking network. In both Figure 3 and Figure 4D, the variance in bump position is fit well by a linear function whose slope increases with the number of attractors n.

Impact of unstructured heterogeneity

As we have shown, a structured heterogeneity of cortical architecture that generates evenly spaced attractors (Figs. 2, 4A) curtails the rate of diffusion (Figs. 3, 4D). However, synaptic architecture may also have random components that are neither related to task specifics nor optimized to any specific computation (Wang et al., 2006). In principle, such perturbations in architecture could degrade the performance of working memory networks that require a fine-tuned architecture. Renart et al. (2003) demonstrated that the deleterious effects of such unstructured heterogeneity in bump attractor networks can be mitigated by homeostatic plasticity, which spatially homogenizes network excitability. In our model, including such a process would bar the system from establishing spatially structured heterogeneity, which we have shown improves storage accuracy. Thus, we next study how a combination of structured and unstructured spatial heterogeneity affects the diffusive dynamics in working memory networks. We show that the attractor positions set by structured heterogeneity, and the reduced diffusion they provide, persist when the potential is perturbed in this way.

Specifically, we modify the shape of the potential in Equation 15, so that,

|

where Up(θ) is an unstructured perturbation of the underlying cosine potential function (see Materials and Methods). Briefly, Up(θ) is a component of the potential that is randomized from trial to trial (two realizations of the full potential are shown in Fig. 5A,B). Adding a small component of unstructured heterogeneity (η > 0) to a network with an initially flat potential function (h = 0) substantially alters the attractor structure (Fig. 5A). The network shifts from having a continuum of preferred locations to having a small number of preferred locations at disordered positions. This is analogous to the drastic collapse in the number of possible stable bump locations observed in bump attractor models whose synaptic structure is randomly perturbed (Zhang, 1996; Renart et al., 2003; Itskov et al., 2011; Hansel and Mato, 2013). On the other hand, a network that possesses structured heterogeneity (h > 0) retains the original positions of its stable attractors after unstructured heterogeneity is added, even though the profile of the potential is distorted (Fig. 5B). When the severity of heterogeneity is increased (larger η), the number of attractors is considerably reduced in the network without structured heterogeneity, while remaining the same in the network with structured heterogeneity (Fig. 5C). Last, the effective diffusion Dh (see Materials and Methods) of the network containing structured heterogeneity increases only gradually as the degree of unstructured heterogeneity is increased (Fig. 5D), in contrast to the distinct rise in the effective diffusion Dh in the network without structured heterogeneity.

Figure 5.

Storage in potentials with structured heterogeneity resists degradation from unstructured heterogeneity. A, Adding unstructured heterogeneity to a flat potential function drastically alters the state space of attractors. B, When structured heterogeneity is already present, adding unstructured heterogeneity does not change the number of attractors or their positions. C, The number of attractors n is strongly influenced by the severity of unstructured heterogeneity η in the homogeneous potential. Starting with n = 8 attractors, adding unstructured heterogeneity does not alter n. D, Effective diffusion increases more for the homogeneous potential as a function of η than for the potential containing structured heterogeneity.
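A rough way to probe how a random perturbation changes the attractor count is to locate the local minima of the perturbed potential on a grid. The form of Up(θ) is given in Materials and Methods and is not reproduced here, so the random Fourier series below is a hypothetical stand-in, as are the assumed total potential −(h/n) cos(nθ) + ηUp(θ), the function name `count_attractors`, and the parameters `harmonics` and `grid`.

```python
import numpy as np

def count_attractors(n, h, eta, harmonics=20, grid=4096, seed=0):
    """Count local minima of an assumed potential on a periodic grid.

    Structured part: -(h/n) cos(n*theta).
    Unstructured part (hypothetical stand-in for Up): a random Fourier
    series with 1/k amplitude decay, scaled by eta.
    """
    rng = np.random.default_rng(seed)
    theta = 2.0 * np.pi * np.arange(grid) / grid
    structured = -(h / n) * np.cos(n * theta)
    k = np.arange(1, harmonics + 1)[:, None]
    a, b = rng.standard_normal((2, harmonics, 1))
    perturbation = ((a * np.cos(k * theta) + b * np.sin(k * theta)) / k).sum(axis=0)
    potential = structured + eta * perturbation
    # A grid point is a minimum if it lies strictly below both periodic neighbors.
    is_min = (potential < np.roll(potential, 1)) & (potential < np.roll(potential, -1))
    return int(is_min.sum())

for eta in (0.0, 0.01, 0.05):
    print(f"eta = {eta:4.2f}  structured (n = 8): {count_attractors(8, 1.0, eta)}"
          f"  flat: {count_attractors(8, 0.0, eta)}")
```

The counts depend on the chosen perturbation statistics, so this sketch is only meant to show the procedure, not to reproduce Figure 5C.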

Therefore, the spatial organization of attractors in the network with structured heterogeneity is robust to random perturbations of the underlying potential landscape. Recent studies have shown that parametrically perturbed spiking network models of bumps retain dynamics whose spatial profile is bump-shaped (Brody et al., 2003; Itskov et al., 2011; Hansel and Mato, 2013; Kilpatrick and Ermentrout, 2013). Brody et al. (2003) showed the effective dynamics of the resulting system can then be numerically approximated by a potential well model like Equation 17. Thus, the low-dimensional dynamics of the spiking network model can still be described by the potential well model, so we believe the spiking network will also be robust to unstructured perturbations in its spatial architecture. This robustness allows the reduction of diffusion due to structured heterogeneity to be relatively unaffected by sources of unstructured heterogeneity that undoubtedly exist in most cortical networks (Wang et al., 2006).

Memory storage as a noisy channel

Structured spatial heterogeneity in recurrent excitatory coupling has two distinct influences on response fidelity in working memory networks. First, it produces a finite set of attractors with which to store stimuli. Second, as we have shown, heterogeneity reduces the diffusion of persistent bump states across the network. These two influences have consequences for the overall storage performance of the network. We next characterize the working memory network as a noisy information channel (Cover and Thomas, 2006) and show how spatial heterogeneity of excitatory coupling mediates a tradeoff between the errors arising from these two influences.

Consider a stimulus that is chosen from m equally likely values and is to be stored by a working memory network. The network has n attractors and must store the stimulus value for T seconds before being read out. From a coding perspective, we have a chain where random input X ∈ [1, m] is loaded into attractor Y(0) ∈ [1, n] and remains in storage until Y(T) ∈ [1, n], after which it is finally read out as response Z ∈ [1, m] (Fig. 6A). If n < m then the transition X → Z involves a compression (X → Y(0)) and expansion (Y(T) → Z) of data, causing errors in transmission due to the quantization of the neural representation (Materials and Methods).

The transition Y(0) → Y(T) involves diffusion across the network, which also degrades storage. To compute the probability of transitioning from one attractor to another during the storage phase, we need only integrate the Gaussian approximation with variance Deff(n)T over the appropriate domain (Fig. 6B,C). In this way, we calculate the matrix of transition probabilities from one attractor to another during the retention interval. With this matrix, we can calculate the information lost due to diffusion (Materials and Methods). Naturally, as the product Deff(n)T increases, diffusion becomes more prominent (Fig. 6B,C), and information loss due to diffusion increases.

In total, an increase in n has the dual effect of reducing quantization error, yet increasing diffusion error. Thus, we predict that spatial heterogeneity (measured by n) causes a tradeoff between quantization and diffusion-based error, and an optimal heterogeneity will maximize the overall information flow across the channel. We explore this prediction in the next section.

Optimizing information flow with heterogeneous network coupling

Delay time T and the number of possible stimuli m can be easily controlled in working memory experiments (Funahashi et al., 1989). When the task protocol is held fixed, animals can improve their performance through extensive training and boost their average reward rate (Meyer et al., 2011). Performance improvements are likely caused by modifications to the structure of networks underlying working memory, so we presume the spatial heterogeneity of the network, parametrized by n, evolves internally through reward-based plasticity mechanisms (Schultz, 1998; Wang, 2008; Klingberg, 2010). To measure the overall success of storage, we consider the mutual information I between X and Z for both the potential well model and the full spiking network (Materials and Methods). Mutual information measures the reduction in uncertainty in stimulus X when response Z is known. Furthermore, mutual information allows for a clean dissection of the information loss due to quantization-based and diffusion-based errors.

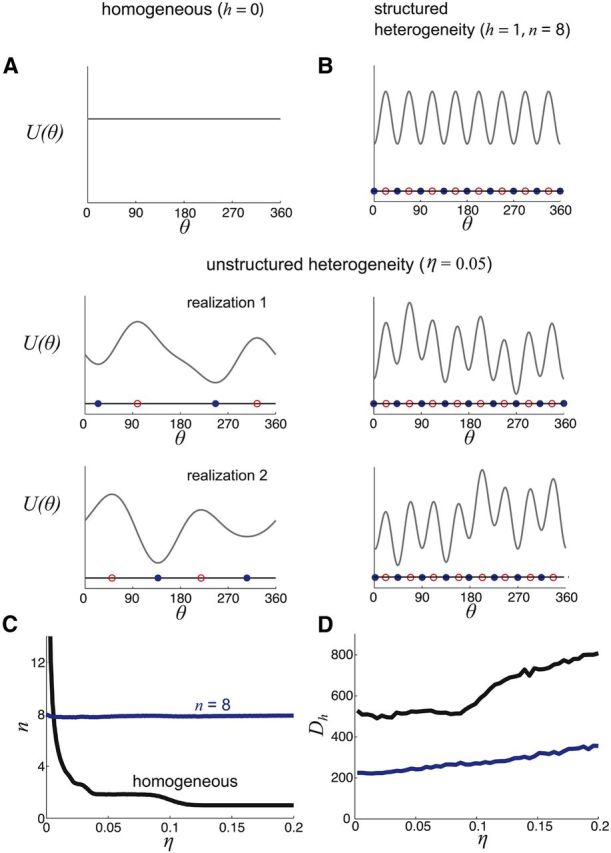

The stimulus space compression X → Y(0) involves a loss of log2(m/n) bits, a quantity that decreases with n. Calculating information loss due to diffusion (Y(0) → Y(T)) requires that we compute the effective diffusion coefficient Deff(n). To obtain Deff(n) for the potential well model, we use our analytical approach (see Materials and Methods, Eq. 9), while for the spiking model we use a numeric fit to Deff(n) (Fig. 3). Information loss due to diffusion increases with Deff(n), which in turn increases with n (Figs. 3, 4E). Given both sources of information loss, we compared the channel theory prediction for I(X, Z) to direct estimates of I(X, Z) (based on the joint density p(X, Z); Materials and Methods), for both the spiking and potential well models.
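As a worked special case, assume there is no diffusion and that n divides m, so that each attractor receives exactly m/n stimuli. The quantization loss then follows directly from the definition of mutual information:

```latex
\begin{align}
I(X,Z) &= \sum_{x=1}^{m}\sum_{z=1}^{m} p(x,z)\,\log_2\frac{p(x,z)}{p(x)\,p(z)}
        = H(X) - H(X \mid Z), \\
H(X) &= \log_2 m \quad \text{(uniformly distributed stimuli)}, \\
H(X \mid Z) &= \log_2\frac{m}{n} \quad \text{(no diffusion, } n \text{ divides } m\text{)}, \\
I(X,Z) &= \log_2 m - \log_2\frac{m}{n} = \log_2 n .
\end{align}
```

For example, m = 16 and n = 4 give 4 − 2 = 2 bits, so half of the stimulus entropy is lost to quantization alone.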

For a fixed m and a very short delay time T, the information I(X, Z) increases monotonically with n and is maximized when n = m (Fig. 7A,B; T = 0.1 s). This is expected, since recall is nearly immediate, so diffusion-based error is negligible and quantization error dominates the information loss. This error vanishes when n = m, and I(X, Z) approaches the stimulus entropy (log2(m) bits). As the delay time is increased, information loss due to quantization error does not change, but errors due to diffusion increase. For sufficiently long T, information peaks at a value of nmax < m in both the potential well model and the spiking model (Fig. 7A,B; T = 10 s). The value nmax marks a compromise between quantization and diffusion errors. For the potential well model, the optimal heterogeneity nmax decreases as the delay time T increases (Fig. 7C), since diffusion error grows as T increases. In total, we find that for sufficiently long delay times the number of attractors n should be less than the number of possible stimuli m to optimize information transfer.
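The tradeoff between quantization and diffusion can be explored numerically with a short sketch. This is not the authors' implementation. It assumes the Lifson-Jackson form of Deff(n) for a drift of −h sin(nθ), assumes that each stimulus is loaded into the nearest attractor and read out as the stimulus nearest the final attractor, and uses hypothetical helper names (`d_eff`, `ring_channel`, `nearest`, `information`, `best_n`). Transition probabilities come from integrating a Gaussian of variance Deff(n)T over each attractor's basin on the ring.

```python
import math
import numpy as np

def d_eff(n, h=1.0, sigma2=0.16):
    # Assumed Lifson-Jackson form; variance of position grows as d_eff * t.
    return sigma2 / float(np.i0(2.0 * h / (n * sigma2))) ** 2

def ring_channel(n, var, wraps=20):
    # P[i, j]: probability that a bump starting at attractor i ends in basin j.
    width = 2.0 * math.pi / n
    def cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0 * var)))
    shift = np.zeros(n)
    for k in range(-wraps * n, wraps * n + 1):  # unwrap the ring over several turns
        shift[k % n] += cdf((k + 0.5) * width) - cdf((k - 0.5) * width)
    shift /= shift.sum()
    return np.array([np.roll(shift, i) for i in range(n)])

def nearest(src_count, dst_count):
    # Map evenly spaced ring positions to the nearest of dst_count positions.
    src = 2.0 * np.pi * np.arange(src_count) / src_count
    dst = 2.0 * np.pi * np.arange(dst_count) / dst_count
    dist = np.abs(src[:, None] - dst[None, :])
    dist = np.minimum(dist, 2.0 * np.pi - dist)
    return dist.argmin(axis=1)

def mutual_information(joint):
    px = joint.sum(axis=1, keepdims=True)
    pz = joint.sum(axis=0, keepdims=True)
    mask = joint > 0
    return float((joint[mask] * np.log2(joint[mask] / (px @ pz)[mask])).sum())

def information(m, n, T):
    encode = nearest(m, n)            # X -> Y(0)
    decode = nearest(n, m)            # Y(T) -> Z
    channel = ring_channel(n, d_eff(n) * T)
    joint = np.zeros((m, m))
    for x in range(m):
        for y in range(n):
            joint[x, decode[y]] += channel[encode[x], y] / m
    return mutual_information(joint)

def best_n(m, T):
    return max(range(1, m + 1), key=lambda n: information(m, n, T))

for T in (0.001, 10.0):
    print(f"T = {T}: n maximizing I(X, Z) for m = 16 is {best_n(16, T)}")
```

Because the encoding, readout, and Deff(n) are all assumptions here, the specific location of the peak need not match Figure 7; the sketch only shows how a scan over n exposes the compromise between the two error sources.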

For a fixed delay time T, varying the number of possible inputs m also shifts nmax. Diffusion error is independent of the number of possible inputs m; however, the total possible information increases with the number of inputs m. For small m, we have that nmax = m since when n ≥ m, the quantization error is always zero and network diffusion increases with n (Fig. 8A,B; m = 4). However, for larger m, we find nmax < m, due to a compromise between quantization error and diffusion (Fig. 8A,B; m = 16). These results hold for the potential well model over a wide range of m (Fig. 8C). Overall, we highlight that a combination of varying T and m uncovers the effect of diffusion and quantization error on mutual information in a working memory network. In particular, for many combinations of T and m, an optimal spatial heterogeneity for information transfer can be found.

The information I(X, Z) measures the general relation between X and Z, one that is decoder independent. However, in psychophysical experiments, a reward is only given when the recall is correct, i.e., X = Z. Thus, it is important to consider how the probability of correct recall depends on the spatial heterogeneity of excitatory connections. In both the potential well and spiking network models, the probability of correct recall is maximized for a fixed n < m when T is sufficiently long (Fig. 9A,B), consistent with observations of I(X, Z) (Fig. 7A,B). The n that maximizes the probability of correct recall decreases as storage time increases (Fig. 9C), also in agreement with results using I(X, Z) (Fig. 7C). The probability of correct recall provides a quantification of error that weights all incorrect responses the same. To use knowledge of the spatial organization of the cue set in determining error, we also measure the impact of the number of attractors on the angular difference between the recalled and cued stimulus position. Specifically, we compute the variance of the difference between the recalled and input cue location X − Z. The magnitude of the recall error is minimized for a fixed n < m (Fig. 9D,E), corroborating our findings for I(X, Z) and proportion correct. Thus, our core finding that information transfer across the memory network is maximized for a specific degree of spatial heterogeneity also holds for a measure of task performance.

Figure 9.

The proportion of correct responses depends on the number of possible outputs, n. Blue curves are computed using theoretical probability densities and red dots employ numerically computed probability densities (see Materials and Methods). A, Proportion p(Z = X) of correct responses Z varies with n in the sine model for delay (storage) time T = 10 s. B, Proportion of correct responses p(Z = X) as a function of n in the spiking model for delay (storage) time T = 10 s and input number m = 16. C, The number of outputs nmax that maximizes the proportion of correct responses as the storage time T is varied in the sine model. D, Error magnitude (in degrees squared) varies with n in the sine model for delay (storage) time T = 10 s. E, Error magnitude (in degrees squared) varies with n in the spiking model for delay (storage) time T = 10 s. Input number m = 16. In the sine model, well height h = 1 and noise variance σ2 = 0.16.
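Both task-performance measures can be computed from a joint distribution p(X, Z) over m evenly spaced cue locations. The sketch below assumes the cues tile the full circle, so that one index step corresponds to 360/m degrees, and that the difference X − Z is wrapped onto the ring; the function name `recall_metrics` is hypothetical.

```python
import numpy as np

def recall_metrics(joint):
    """Return (proportion correct, variance of X - Z in degrees squared).

    joint[x, z] is the probability of cue x being recalled as z, for m
    evenly spaced cues on a ring (an assumption of this sketch).
    """
    m = joint.shape[0]
    p_correct = float(np.trace(joint))            # probability that Z = X
    idx = np.arange(m)
    diff = idx[:, None] - idx[None, :]            # X - Z in index units
    diff = (diff + m // 2) % m - m // 2           # wrap onto the ring
    deg = diff * 360.0 / m
    mean = float((joint * deg).sum())
    variance = float((joint * deg ** 2).sum()) - mean ** 2
    return p_correct, variance

# Example: half of the responses are correct, the rest miss by one cue either way.
m = 16
eye = np.eye(m)
joint = (0.5 * eye + 0.25 * np.roll(eye, 1, axis=1) + 0.25 * np.roll(eye, -1, axis=1)) / m
print(recall_metrics(joint))
```

Proportion correct treats all misses alike, whereas the variance weights each miss by its angular size, which is the distinction drawn in the text above.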

Discussion

We have outlined how both neural architecture and noisy fluctuations determine error in working memory codes. In working memory networks, the position of a bump in spiking activity encodes the memory of a stimulus, and input fluctuations cause diffusion of the bump position, which degrades the memory. Spatially heterogeneous recurrent excitation reduces the diffusion of bumps by stabilizing a discrete set of bump positions. However, this also introduces memory quantization, limiting the capacity of information transfer. By analyzing the information loss incurred by both error sources, we can maximize the transfer of information between the stimulus and the memory output by tuning the spatial heterogeneity of recurrent excitation. We found that the ideal heterogeneity gives a number of attractors in the network nmax, which can be less than the number of possible inputs to the network m.

Robust bump dynamics through quantization

Networks whose dynamics lie on a continuum attractor have steady-state activity that can be altered by arbitrarily weak noise and input (Bogacz et al., 2006). The advantage of this feature is that two stimuli with an arbitrarily fine distinction can be reliably stored and distinguished upon recall. However, this structure requires fine-tuning of network architecture, since any parametric jitter will destroy a continuum attractor. Previous work has shown how spatial heterogeneity in recurrent excitatory coupling quantizes the continuum attractor and stabilizes persistent network firing rates to perturbations in model parameters (Koulakov et al., 2002; Brody et al., 2003; Goldman et al., 2003; Cain and Shea-Brown, 2012) and fluctuations (Fransén et al., 2006). We believe our results apply to these models and have extended this previous work in two major ways.

First, we have shown that quantizing the state space of a spatially structured network into a finite number of attractors stabilizes bump position to dynamic noise. Second, we have shown that there is an optimal number of attractor positions for storing stimuli when dynamic noise is present. The optimal number can be lower than the actual quantization of possible stimuli, so that under-representing stimulus space can lead to more reliable coding. Studies of networks encoding the memory of eye position show individual neurons exhibit bistability in their firing rates (Aksay et al., 2003), which motivated modeling their firing rate to input relations as quantized, staircase-shaped functions (Goldman et al., 2003). This provides an example of a working memory network thought to provide a discrete delineation of a continuous variable. Our results also suggest parametric working memory networks should coarsen the stored signal to guard against diffusion error.

The advantage of spatial heterogeneity

Past work has suggested spatial heterogeneity in working memory networks is a barrier to reliable memory storage (Zhang, 1996; Renart et al., 2003; Itskov et al., 2011; Hansel and Mato, 2013). In these studies, parameters of single neurons (Renart et al., 2003) or synaptic architecture (Itskov et al., 2011; Hansel and Mato, 2013) change throughout the network in a spatially aperiodic way. Substantial quantization error results since the bump drifts toward one of a finite number of attractor positions that may not be evenly spread over representation space. Renart et al. (2003) show this effect can be overcome by considering homeostatic mechanisms that balance excitatory drive to each neuron in the network, halting drift of bumps altogether. On the other hand, both Itskov et al. (2011) and Hansel and Mato (2013) show drift can be slowed by including short-term facilitation in the network. Rather than exploring ways to remove the effective drift introduced by spatial heterogeneity, we have shown spatial heterogeneity can improve network coding. As long as quantization error is outweighed by a reduction in diffusion error, heterogeneous networks make less overall error in recall tasks than spatially homogeneous networks.

Our model represents the space of possible oriented cues as evenly distributed in space with uniform probability of presentation, a protocol often used in experiments (Funahashi et al., 1989; White et al., 1994; Goldman-Rakic, 1995; Meyer et al., 2011). Thus, we reason that the ideal covering of stimulus space by the network will have a uniform distribution. This translates into network spatial heterogeneity that is exactly periodic. The periodicity allows for a compact derivation of the effective diffusion coefficient Deff(n). Were the stimulus set to have an asymmetric probability distribution, we would expect the ideal spatial heterogeneity would not produce evenly spaced attractors. In this case, approximating bump position is possible, but motion between attractors will depend on θ and will be difficult to interpret as a simple diffusion process. Nevertheless, we expect that the specifics of spatially uneven heterogeneous coupling will significantly impact both the attractor quantization and stochastic drift across the network, and control the information transfer from stimulus to recall.

Mechanisms that produce structured heterogeneity

We conceive of two main biophysical processes that could produce structured spatial heterogeneity in a working memory network. First, Hebbian plasticity may operate at locations in the network that are driven by common external cues. Such cues will consistently activate neurons of similar orientation preference, so clusters of similarly tuned cells will tend to strengthen recurrent excitation between each other (Goldman-Rakic, 1995; Clopath et al., 2010; Ko et al., 2013). Recent experiments show training does increase the delay period firing rates of neurons with a preference for the encoded cue (Meyer et al., 2011), which may occur due to reinforcement of recurrent excitation. This mechanism would create attractors only at locations in the network that consistently receive feedforward input during training. Neurons that are never directly stimulated by cues would be deprived of continual reinforcement of their excitatory inputs, allowing broadly tuned inhibition to decrease their delay period firing (Wang, 2001). In the framework of our models, a network trained on n cue locations would form n attractors. Depending on the length of delay, this might not be the optimal number of attractors, but it would improve coding compared with the network without quantization.

Second, reward-based plasticity mechanisms signaled by dopamine may supervise the reinforcement of synaptic excitation to form a network with the optimal number of attractors. Many studies have verified that dopamine can carry reward signals back to the network responsible for a correct action (Schultz, 1998). Selectively acting on specific subsets of neurons, dopamine can prompt plasticity in network architecture to improve future chances of rewards (McNab et al., 2009; Klingberg, 2010). Such supervisory mechanisms could seek an optimal architecture in the network to maximize reward yields for a fixed retention time and number of possible cues. As we have demonstrated, this resulting structured heterogeneity will improve coding, even if there is unstructured heterogeneity present (Wang et al., 2006).

Relating diffusion of neural activity to behavior

Our results (and those of many other past studies) assume that neural activity has a diffusive component. However, how exactly neural variability drives behavioral variability is largely unknown (Britten et al., 1996; Churchland et al., 2011; Brunton et al., 2013; Haefner et al., 2013). Psychophysical studies of spatial working memory tasks reveal that subjects typically respond with nonzero error. In particular, Ploner et al. (1998) show that the midspread of memory-guided saccades in humans scales sublinearly with delay time over 0.5–20 s. This scaling is consistent with a diffusion of neural activity involved in the storage of memory, giving support to our modeling assumptions.

In contrast to these data, recent psychophysical work in rodents and humans performing decision-making tasks lasting 0.5–2 s suggests that models with sensory noise, rather than internal diffusion, best capture these behavioral data (Brunton et al., 2013). However, this study ultimately considers a two-alternative forced-choice task, and does not consider the storage and recall of inputs over a large stimulus space. When there are only two attractors in our network, the diffusion coefficient is near zero, consistent with Brunton et al. (2013). In addition, the timescale of tasks studied by Brunton et al. (2013) may not be long enough to substantially reveal the effects of internal diffusion. These differences between the diffusive nature of working memory and decision integrator networks suggest that more work needs to be done to link variability of neural and behavioral response.

Implications for multiple object working memory

We emphasize that we did not study network encoding of multiple object working memory. Added complications arise when several items must be remembered at once (Luck and Vogel, 1997). For instance, the error made in recalling the value of a set of multiple continuous variables increases with the set size (Wilken and Ma, 2004). Recently, it has been shown that a spiking network model can recapitulate many of these set size effects (Wei et al., 2012). Interestingly, there is an optimal spread of pyramidal synapses that minimizes errors due to set size. However, reduction of the effects of dynamic noise on the accuracy of memories has yet to be studied. Our ideas could be extended to analyze how networks that encode multiple object memory could be made more robust, applying network quantization to the storage of multiple bump attractors.

Footnotes

This work was supported by National Science Foundation Grants NSF-DMS-1121784 (B.D.), NSF-DMS-1311755 (Z.P.K.), and NSF-DMS-1219753 (B.E.). We thank Robert Rosenbaum, Ashok Litwin-Kumar, and Krešimir Josić for useful discussions.

The authors declare no competing financial interests.

References

- Aksay E, Major G, Goldman MS, Baker R, Seung HS, Tank DW. History dependence of rate covariation between neurons during persistent activity in an oculomotor integrator. Cereb Cortex. 2003;13:1173–1184. doi: 10.1093/cercor/bhg099. [DOI] [PubMed] [Google Scholar]

- Amari S. Dynamics of pattern formation in lateral-inhibition type neural fields. Biol Cybern. 1977;27:77–87. doi: 10.1007/BF00337259. [DOI] [PubMed] [Google Scholar]

- Ben-Yishai R, Bar-Or RL, Sompolinsky H. Theory of orientation tuning in visual cortex. Proc Natl Acad Sci U S A. 1995;92:3844–3848. doi: 10.1073/pnas.92.9.3844. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev. 2006;113:700–765. doi: 10.1037/0033-295X.113.4.700. [DOI] [PubMed] [Google Scholar]

- Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual responses of neurons in macaque MT. Vis Neurosci. 1996;13:87–100. doi: 10.1017/S095252380000715X. [DOI] [PubMed] [Google Scholar]

- Brody CD, Romo R, Kepecs A. Basic mechanisms for graded persistent activity: discrete attractors, continuous attractors, and dynamic representations. Curr Opin Neurobiol. 2003;13:204–211. doi: 10.1016/S0959-4388(03)00050-3. [DOI] [PubMed] [Google Scholar]

- Brunton BW, Botvinick MM, Brody CD. Rats and humans can optimally accumulate evidence for decision-making. Science. 2013;340:95–98. doi: 10.1126/science.1233912. [DOI] [PubMed] [Google Scholar]

- Burak Y, Fiete IR. Fundamental limits on persistent activity in networks of noisy neurons. Proc Natl Acad Sci U S A. 2012;109:17645–17650. doi: 10.1073/pnas.1117386109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cain N, Shea-Brown E. Computational models of decision making: integration, stability, and noise. Curr Opin Neurobiol. 2012;22:1047–1053. doi: 10.1016/j.conb.2012.04.013. [DOI] [PubMed] [Google Scholar]

- Camperi M, Wang XJ. A model of visuospatial working memory in prefrontal cortex: recurrent network and cellular bistability. J Comput Neurosci. 1998;5:383–405. doi: 10.1023/A:1008837311948. [DOI] [PubMed] [Google Scholar]

- Chelaru MI, Dragoi V. Efficient coding in heterogeneous neuronal populations. Proc Natl Acad Sci U S A. 2008;105:16344–16349. doi: 10.1073/pnas.0807744105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Churchland AK, Kiani R, Chaudhuri R, Wang XJ, Pouget A, Shadlen MN. Variance as a signature of neural computations during decision making. Neuron. 2011;69:818–831. doi: 10.1016/j.neuron.2010.12.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clopath C, Büsing L, Vasilaki E, Gerstner W. Connectivity reflects coding: a model of voltage-based stdp with homeostasis. Nat Neurosci. 2010;13:344–352. doi: 10.1038/nn.2479. [DOI] [PubMed] [Google Scholar]

- Compte A, Brunel N, Goldman-Rakic PS, Wang XJ. Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb Cortex. 2000;10:910–923. doi: 10.1093/cercor/10.9.910. [DOI] [PubMed] [Google Scholar]

- Compte A, Constantinidis C, Tegner J, Raghavachari S, Chafee MV, Goldman-Rakic PS, Wang XJ. Temporally irregular mnemonic persistent activity in prefrontal neurons of monkeys during a delayed response task. J Neurophysiol. 2003;90:3441–3454. doi: 10.1152/jn.00949.2002. [DOI] [PubMed] [Google Scholar]

- Cover T, Thomas J. Elements of information theory. New York: Wiley-Interscience; 2006. [Google Scholar]

- Durstewitz D, Seamans JK, Sejnowski TJ. Neurocomputational models of working memory. Nat Neurosci. 2000;3(Suppl):1184–1191. doi: 10.1038/81460. [DOI] [PubMed] [Google Scholar]

- Faisal AA, Selen LP, Wolpert DM. Noise in the nervous system. Nat Rev Neurosci. 2008;9:292–303. doi: 10.1038/nrn2258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fransén E, Tahvildari B, Egorov AV, Hasselmo ME, Alonso AA. Mechanism of graded persistent cellular activity of entorhinal cortex layer V neurons. Neuron. 2006;49:735–746. doi: 10.1016/j.neuron.2006.01.036. [DOI] [PubMed] [Google Scholar]

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J Neurophysiol. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331. [DOI] [PubMed] [Google Scholar]

- Fuster JM. Unit activity in prefrontal cortex during delayed-response performance: neuronal correlates of transient memory. J Neurophysiol. 1973;36:61–78. doi: 10.1152/jn.1973.36.1.61. [DOI] [PubMed] [Google Scholar]

- Goldman MS, Levine JH, Major G, Tank DW, Seung HS. Robust persistent neural activity in a model integrator with multiple hysteretic dendrites per neuron. Cereb Cortex. 2003;13:1185–1195. doi: 10.1093/cercor/bhg095. [DOI] [PubMed] [Google Scholar]

- Goldman-Rakic PS. Cellular basis of working memory. Neuron. 1995;14:477–485. doi: 10.1016/0896-6273(95)90304-6. [DOI] [PubMed] [Google Scholar]

- Haefner RM, Gerwinn S, Macke JH, Bethge M. Inferring decoding strategies from choice probabilities in the presence of correlated variability. Nat Neurosci. 2013;16:235–242. doi: 10.1038/nn.3309. [DOI] [PubMed] [Google Scholar]

- Hansel D, Mato G. Short-term plasticity explains irregular persistent activity in working memory tasks. J Neurosci. 2013;33:133–149. doi: 10.1523/JNEUROSCI.3455-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Itskov V, Hansel D, Tsodyks M. Short-term facilitation may stabilize parametric working memory trace. Front Comput Neurosci. 2011;5:40. doi: 10.3389/fncom.2011.00040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kilpatrick ZP, Ermentrout B. Wandering bumps in stochastic neural fields. SIAM J Appl Dyn Syst. 2013;12:61–94. doi: 10.1137/120877106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klingberg T. Training and plasticity of working memory. Trends Cogn Sci. 2010;14:317–324. doi: 10.1016/j.tics.2010.05.002. [DOI] [PubMed] [Google Scholar]

- Ko H, Hofer SB, Pichler B, Buchanan KA, Sjöström PJ, Mrsic-Flogel TD. Functional specificity of local synaptic connections in neocortical networks. Nature. 2011;473:87–91. doi: 10.1038/nature09880. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ko H, Cossell L, Baragli C, Antolik J, Clopath C, Hofer SB, Mrsic-Flogel TD. The emergence of functional microcircuits in visual cortex. Nature. 2013;496:96–100. doi: 10.1038/nature12015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koulakov AA, Raghavachari S, Kepecs A, Lisman JE. Model for a robust neural integrator. Nat Neurosci. 2002;5:775–782. doi: 10.1038/nn893. [DOI] [PubMed] [Google Scholar]

- Laing C, Lord GJ. Stochastic methods in neuroscience. New York: Oxford UP; 2009. [Google Scholar]

- Laing CR, Chow CC. Stationary bumps in networks of spiking neurons. Neural Comput. 2001;13:1473–1494. doi: 10.1162/089976601750264974. [DOI] [PubMed] [Google Scholar]

- Lewis DA, Gonzalez-Burgos G. Intrinsic excitatory connections in the prefrontal cortex and the pathophysiology of schizophrenia. Brain Res Bull. 2000;52:309–317. doi: 10.1016/S0361-9230(99)00243-9. [DOI] [PubMed] [Google Scholar]

- Lifson S, Jackson JL. On self-diffusion of ions in a polyelectrolyte solution. J Chem Phys. 1962;36:2410. [Google Scholar]

- Lindner B, Kostur M, Schimansky-Geier L. Optimal diffusive transport in a tilted periodic potential. Fluct Noise Lett. 2001;1:R25–R39. doi: 10.1142/S0219477501000056. [DOI] [Google Scholar]

- Luck SJ, Vogel EK. The capacity of visual working memory for features and conjunctions. Nature. 1997;390:279–281. doi: 10.1038/36846. [DOI] [PubMed] [Google Scholar]

- Marsat G, Maler L. Neural heterogeneity and efficient population codes for communication signals. J Neurophysiol. 2010;104:2543–2555. doi: 10.1152/jn.00256.2010. [DOI] [PubMed] [Google Scholar]

- McNab F, Varrone A, Farde L, Jucaite A, Bystritsky P, Forssberg H, Klingberg T. Changes in cortical dopamine d1 receptor binding associated with cognitive training. Science. 2009;323:800–802. doi: 10.1126/science.1166102. [DOI] [PubMed] [Google Scholar]

- Meyer T, Qi XL, Stanford TR, Constantinidis C. Stimulus selectivity in dorsal and ventral prefrontal cortex after training in working memory tasks. J Neurosci. 2011;31:6266–6276. doi: 10.1523/JNEUROSCI.6798-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller P. Analysis of spike statistics in neuronal systems with continuous attractors or multiple, discrete attractor states. Neural Comput. 2006;18:1268–1317. doi: 10.1162/neco.2006.18.6.1268. [DOI] [PubMed] [Google Scholar]

- Osborne LC, Palmer SE, Lisberger SG, Bialek W. The neural basis for combinatorial coding in a cortical population response. J Neurosci. 2008;28:13522–13531. doi: 10.1523/JNEUROSCI.4390-08.2008.

- Padmanabhan K, Urban NN. Intrinsic biophysical diversity decorrelates neuronal firing while increasing information content. Nat Neurosci. 2010;13:1276–1282. doi: 10.1038/nn.2630.

- Pesaran B, Pezaris JS, Sahani M, Mitra PP, Andersen RA. Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat Neurosci. 2002;5:805–811. doi: 10.1038/nn890.

- Ploner CJ, Gaymard B, Rivaud S, Agid Y, Pierrot-Deseilligny C. Temporal limits of spatial working memory in humans. Eur J Neurosci. 1998;10:794–797. doi: 10.1046/j.1460-9568.1998.00101.x.

- Polk A, Litwin-Kumar A, Doiron B. Correlated neural variability in persistent state networks. Proc Natl Acad Sci U S A. 2012;109:6295–6300. doi: 10.1073/pnas.1121274109.

- Rao SG, Williams GV, Goldman-Rakic PS. Isodirectional tuning of adjacent interneurons and pyramidal cells during working memory: evidence for microcolumnar organization in PFC. J Neurophysiol. 1999;81:1903–1916. doi: 10.1152/jn.1999.81.4.1903.

- Renart A, Song P, Wang XJ. Robust spatial working memory through homeostatic synaptic scaling in heterogeneous cortical networks. Neuron. 2003;38:473–485. doi: 10.1016/S0896-6273(03)00255-1.

- Ringach DL, Shapley RM, Hawken MJ. Orientation selectivity in macaque V1: diversity and laminar dependence. J Neurosci. 2002;22:5639–5651. doi: 10.1523/JNEUROSCI.22-13-05639.2002.

- Risken H. The Fokker-Planck equation: methods of solution and applications. Vol. 18. Berlin: Springer; 1996.

- Romo R, Brody CD, Hernández A, Lemus L. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature. 1999;399:470–473. doi: 10.1038/20939.

- Rosen MJ. A theoretical neural integrator. IEEE Trans Biomed Eng. 1972;19:362–367. doi: 10.1109/TBME.1972.324139.

- Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Shamir M, Sompolinsky H. Implications of neuronal diversity on population coding. Neural Comput. 2006;18:1951–1986. doi: 10.1162/neco.2006.18.8.1951.

- Stein RB, Gossen ER, Jones KE. Neuronal variability: noise or part of the signal? Nat Rev Neurosci. 2005;6:389–397. doi: 10.1038/nrn1668.

- Wang XJ. Synaptic basis of cortical persistent activity: the importance of NMDA receptors to working memory. J Neurosci. 1999;19:9587–9603. doi: 10.1523/JNEUROSCI.19-21-09587.1999.

- Wang XJ. Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 2001;24:455–463. doi: 10.1016/S0166-2236(00)01868-3.

- Wang XJ. Decision making in recurrent neuronal circuits. Neuron. 2008;60:215–234. doi: 10.1016/j.neuron.2008.09.034.

- Wang Y, Markram H, Goodman PH, Berger TK, Ma J, Goldman-Rakic PS. Heterogeneity in the pyramidal network of the medial prefrontal cortex. Nat Neurosci. 2006;9:534–542. doi: 10.1038/nn1670.

- Wei Z, Wang XJ, Wang DH. From distributed resources to limited slots in multiple-item working memory: a spiking network model with normalization. J Neurosci. 2012;32:11228–11240. doi: 10.1523/JNEUROSCI.0735-12.2012.

- White JM, Sparks DL, Stanford TR. Saccades to remembered target locations: an analysis of systematic and variable errors. Vision Res. 1994;34:79–92. doi: 10.1016/0042-6989(94)90259-3.

- Wilken P, Ma WJ. A detection theory account of change detection. J Vis. 2004;4(12):1120–1135. doi: 10.1167/4.12.11.

- Wu S, Hamaguchi K, Amari S. Dynamics and computation of continuous attractors. Neural Comput. 2008;20:994–1025. doi: 10.1162/neco.2008.10-06-378.

- Zhang K. Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. J Neurosci. 1996;16:2112–2126. doi: 10.1523/JNEUROSCI.16-06-02112.1996.