Abstract

The extent to which different cognitive processes are “embodied” is widely debated. Previous studies have implicated sensorimotor regions such as lateral intraparietal (LIP) area in perceptual decision making. This has led to the view that perceptual decisions are embodied in the same sensorimotor networks that guide body movements. We use event-related fMRI and effective connectivity analysis to investigate whether the human sensorimotor system implements perceptual decisions. We show that when eye and hand motor preparation is disentangled from perceptual decisions, sensorimotor areas are not involved in accumulating sensory evidence toward a perceptual decision. Instead, inferior frontal cortex increases its effective connectivity with sensory regions representing the evidence, is modulated by the amount of evidence, and shows greater task-positive BOLD responses during the perceptual decision stage. Once eye movement planning can begin, however, an intraparietal sulcus (IPS) area, putative LIP, participates in motor decisions. Moreover, sensory evidence levels modulate decision and motor preparation stages differently in different IPS regions, suggesting functional heterogeneity of the IPS. This suggests that different systems implement perceptual versus motor decisions, using different neural signatures.

Introduction

Embodied cognition theories hypothesize that the same sensorimotor networks that guide actions (e.g., hand or eye movements) also implement perceptual decisions (Gold and Shadlen, 2007; Cisek and Kalaska, 2010). This implies that a radiologist's decision of whether a mammogram contains a tumor would rely on different brain circuits depending on which effector indicates it. Whether or not perceptual decisions are implemented in sensorimotor circuits is contentious.

Moreover, whether perceptual decisions have a single neural signature is unknown. Diffusion models (Ratcliff, 1978) hypothesize that sensory evidence accumulates until a decision threshold is reached. In line with this framework, macaque neurophysiology studies have reported greater firing rates for high than for low sensory evidence (High>Low) perceptual decisions. When monkeys indicate perceptual decisions with saccades to already known spatial targets, sensorimotor areas such as the lateral intraparietal area (LIP) and frontal eye fields (FEF) show High>Low firing patterns (Kim and Shadlen, 1999; Shadlen and Newsome, 2001; Gold and Shadlen, 2007).

However, such increased firing could also reflect motor preparation. FEF and LIP show increasing presaccade neural activity in saccade tasks not involving perceptual decisions (Andersen and Buneo, 2002). Invasive microstimulation and recording studies suggest that in most FEF and LIP neurons the High>Low pattern disappears if saccade plans cannot be formed (Gold and Shadlen, 2003; Bennur and Gold, 2011). Thus, High>Low neuronal firing may in most cases indicate an evolving motor decision rather than sensory integration.

Human fMRI studies have revealed both sensorimotor and higher-level prefrontal activations when specific motor responses, effectors, or spatial targets were preassigned to particular perceptual decisions (Heekeren et al., 2004–2008; Grinband et al., 2006; Philiastides and Sajda, 2007; Ploran et al., 2007, 2011; Tosoni et al., 2008; Donner et al., 2009; Ho et al., 2009; Kayser et al., 2010a,b; Noppeney et al., 2010; Liu and Pleskac, 2011; Rahnev et al., 2011). However, effector-nonspecific sensorimotor areas can plan both eye and hand movements to fixed spatial targets (Filimon, 2010), or multiple hand movements, including left and right (Cisek and Kalaska, 2005; Medendorp et al., 2005), making their contribution to perceptual decisions unclear.

Moreover, contradictory BOLD fMRI response patterns have been predicted. Diffusion models could predict either a High>Low BOLD pattern, if activation remains elevated after the decision threshold is reached (i.e., sustained activity) (Heekeren et al., 2004; Tosoni et al., 2008; Ruff et al., 2010), or a Low>High pattern, if activation returns to baseline once the threshold is reached: because low evidence accumulates more slowly, it then produces greater integrated activity (Philiastides and Sajda, 2007; Kayser et al., 2010a,b; Noppeney et al., 2010). Perceptual decision-making studies to date have presumed a single, specific neural signature. However, a single neural signature may not hold across multiple brain regions (Heekeren et al., 2008).
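The two BOLD predictions above can be made concrete with a noise-free accumulator. In this minimal sketch (an illustration, not a model fitted to these data), activity rises linearly at a drift rate v until it reaches a threshold a, and the integrated activity over a fixed window T is compared for a hypothetical high and low drift rate. If activity stays at threshold, the integral is a(T − a/(2v)), which grows with v (High>Low); if activity returns to baseline once the threshold is reached, the integral is a²/(2v), which shrinks with v (Low>High). The drift rates, threshold, and window length are arbitrary assumptions.

```python
import numpy as np

def integrated_activity(drift, threshold=1.0, window=3.0, dt=0.001, sustained=True):
    """Time integral of a noise-free accumulator x(t) = drift * t over [0, window).

    sustained=True: activity stays at the threshold once it is reached.
    sustained=False: activity returns to baseline (0) once the threshold is reached.
    """
    t = np.arange(0.0, window, dt)
    x = np.minimum(drift * t, threshold)
    if not sustained:
        t_hit = threshold / drift
        x = np.where(t < t_hit, x, 0.0)
    return float(x.sum() * dt)  # Riemann sum approximating the integral

high, low = 2.0, 0.5  # hypothetical drift rates (threshold units per second)
area_sustained = {v: integrated_activity(v, sustained=True) for v in (high, low)}
area_transient = {v: integrated_activity(v, sustained=False) for v in (high, low)}
print("sustained (High>Low):", area_sustained)
print("return to baseline (Low>High):", area_transient)
```

Which pattern appears therefore depends on an assumption about post-threshold activity, not on the accumulation process itself.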

We disentangle motor preparation from perceptual decision making using event-related fMRI. Target locations and possible eye or hand responses are revealed only after the decision stage. Unlike perceptual decision-making studies to date, we do not presume either a High>Low or Low>High pattern. We investigate the modulation pattern within each brain area that shows increased effective connectivity with sensory evidence regions during perceptual decisions, before motor planning can begin.

We show that sensorimotor regions such as putative LIP are not involved in the accumulation of sensory evidence for perceptual decisions, whereas left inferior prefrontal cortex is. This area shows Low>High activations. However, once the motor instruction is known, LIP shows High>Low activations, consistent with motor decisions. Thus, different types of decisions have different neural signatures.

Materials and Methods

Participants.

Nineteen neurologically normal, right-handed subjects with normal or corrected-to-normal vision participated. Three were excluded due to excessive head motion (>1 voxel = 3 mm) or fatigue. The analyses were performed on the remaining 16 subjects (10 females; age range, 20–32 years; mean age, 24.9 years). Subjects gave written informed consent in accordance with the ethics committee of the Charité University Medicine Clinic, Berlin, Germany.

Stimuli.

Subjects performed a perceptual decision-making task involving faces and houses (Fig. 1). Stimuli were produced from grayscale images of 40 faces (face database, Max Planck Institute for Biological Cybernetics, http://faces.kyb.tuebingen.mpg.de/) and 40 front-facing houses (131 × 156 pixels, mean luminance = 100), cropped to match low-level features and overall organization of the face images (Fig. 1B).

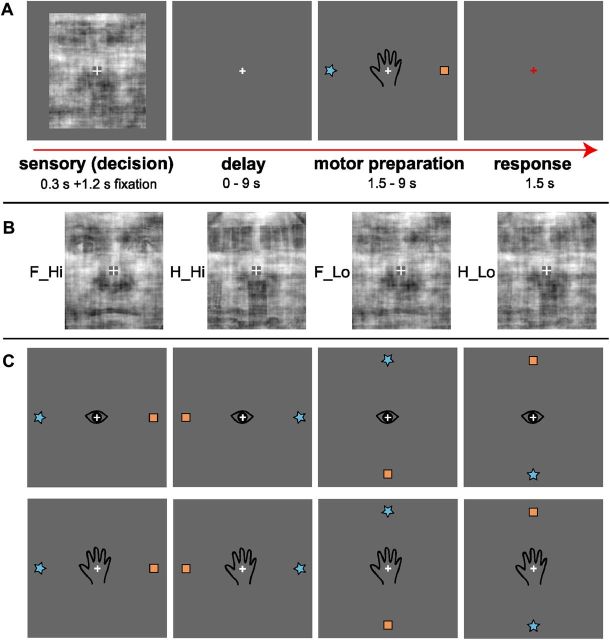

Figure 1.

Stimuli and task. A, Example event-related fMRI trial. Subjects decided whether a noisy stimulus represented a house or a face, without knowing how they would indicate their response. Following a delay, subjects received a specific motor preparation instruction for either an eye or hand movement. Star and square symbols indicated faces and houses (mapping counterbalanced across subjects). During the response stage, subjects indicated their face or house decision either by saccading to the remembered target location in eye trials (up, down, left, or right) or by pressing the corresponding button on a diamond-shaped button box in hand trials. A red fixation cross indicated the start of the response period. Subjects maintained fixation throughout the trial, except during eye movement responses. Fixation was used as the baseline between trials (jittered duration; see Materials and Methods). B, Example face and house stimuli at high or low levels of sensory evidence (low or high levels of noise, respectively). Note that these levels were adjusted for each subject individually, based on performance during training and after each run in the scanner. Face and house stimuli and levels of sensory evidence were pseudo-randomized across trials. C, Possible motor plans for the motor preparation stage. Eye and hand trials, as well as target locations, were pseudo-randomized across trials. F_Hi, H_Hi, high sensory evidence for faces and houses, respectively; F_Lo, H_Lo, low sensory evidence for faces and houses, respectively.

All face and house stimulus images were equated for spatial frequency, contrast, and luminance. The magnitude and phase spectrum of each image was obtained using a fast Fourier transform (FFT). The average magnitude spectrum of all images was calculated. Stimulus images were produced by calculating the inverse FFT of the average magnitude spectrum and a linear combination of their corresponding phase spectra and random noise, obtained using a weighted mean phase algorithm (Dakin et al., 2002). This generated images characterized by their percentage phase coherence. Higher coherence means a clearer image (more sensory evidence); lower coherence means a noisier image (less sensory evidence).
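A minimal sketch of the phase-coherence manipulation is shown below, using NumPy's FFT. It assumes that the weighted-mean-phase step can be approximated by treating each phase as a unit phasor and taking the angle of their weighted sum, with weights equal to the coherence and one minus the coherence; the exact weighting of Dakin et al. (2002) should be checked against that paper. The function names, the white-noise phase source, and keeping the image's own DC phase are illustrative choices, not the authors' code.

```python
import numpy as np

def mean_magnitude_spectrum(images):
    """Average FFT magnitude spectrum across a stack of equally sized grayscale images."""
    return np.mean([np.abs(np.fft.fft2(im)) for im in images], axis=0)

def phase_coherence_image(image, mean_magnitude, coherence, rng):
    """Combine the shared magnitude spectrum with a mix of image phase and noise phase."""
    image_phase = np.angle(np.fft.fft2(image))
    # The FFT phase of real white noise is conjugate-symmetric, so the output stays real.
    noise_phase = np.angle(np.fft.fft2(rng.standard_normal(image.shape)))
    # Weighted sum of unit phasors; its angle is the mixed phase.
    mixed = coherence * np.exp(1j * image_phase) + (1.0 - coherence) * np.exp(1j * noise_phase)
    phase = np.angle(mixed)
    phase[0, 0] = image_phase[0, 0]  # keep the DC (mean luminance) term from the image
    return np.real(np.fft.ifft2(mean_magnitude * np.exp(1j * phase)))

rng = np.random.default_rng(0)
images = rng.random((4, 64, 64))  # stand-ins for the 131 x 156 face/house images
magnitude = mean_magnitude_spectrum(images)
low_evidence = phase_coherence_image(images[0], magnitude, 0.30, rng)
high_evidence = phase_coherence_image(images[0], magnitude, 0.50, rng)
```

At a coherence of 1.0 the original image is recovered when its own magnitude spectrum is used; lowering the coherence replaces more of the phase structure with noise, while the shared magnitude spectrum keeps spatial frequency content matched across images.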

The coherence levels of face and house stimulus images in the high and low sensory evidence conditions were adjusted for each subject individually before each functional run and remained constant during that run. Subjects had two to three practice runs (128–192 trials) on the task before scanning began. Low coherence levels started at 30% for the first practice run and were adjusted in 2.5% steps before each subsequent practice run, depending on the subject's performance, to achieve at least 70% correct performance. High coherence levels were lowered from 50% in 2.5% decrements to avoid ceiling effects and to achieve ∼90% accuracy.

Stimuli were controlled by a Fujitsu Celsius H250 laptop running Presentation 12.1 software (Neurobehavioral Systems). Stimuli were viewed with goggles (VisuaStim, Resonance Technology), with an 800 × 600 pixel display subtending 32° × 24° visual angle. Stimulus images subtended a 5.24° × 6.24° visual angle, presented against a gray background. In addition to face and house stimuli, the start of response preparation was indicated with a schematic hand or an eye centered on the fixation cross, together with two motor targets located either above and below, or left and right, of the fixation cross (Fig. 1A). Each target (a square or a star) subtended 0.77° × 0.77° and was presented 5.4° above, below, left, or right from the fixation cross.
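The stated display geometry implies 25 pixels per degree in both directions (800/32 and 600/24), which reproduces the reported stimulus size. The sketch assumes a linear pixel-to-degree mapping, a reasonable approximation for the small angles involved.

```python
# Display geometry stated in the text: 800 x 600 pixels spanning 32 x 24 degrees.
PX_PER_DEG_X = 800 / 32
PX_PER_DEG_Y = 600 / 24

def px_to_deg(pixels, px_per_deg=PX_PER_DEG_X):
    """Linear (small-angle) conversion from pixels to degrees of visual angle."""
    return pixels / px_per_deg

def deg_to_px(degrees, px_per_deg=PX_PER_DEG_X):
    """Inverse conversion, from degrees of visual angle to pixels."""
    return degrees * px_per_deg

print(PX_PER_DEG_X, PX_PER_DEG_Y)       # 25.0 25.0
print(px_to_deg(131), px_to_deg(156))   # 5.24 6.24 (stimulus width, height)
print(deg_to_px(0.77), deg_to_px(5.4))  # roughly 19 and 135 pixels (target size, eccentricity)
```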

Task.

Subjects reported whether a centrally presented image showed a face or a house. A central fixation cross was always present. Each trial (Fig. 1A) included a 300 ms high coherence (Hi) or low coherence (Lo) Face (F) or House (H) stimulus (F_Hi, F_Lo, H_Hi, H_Lo), followed by: a 1200 ms fixation cross; variable delay; variable motor preparation (eye or hand); a 1500 ms response period; and a variable fixation baseline.

The subject did not know whether the response would be executed with an eye or hand movement until the motor preparation period, after the stimulus and delay periods. During each motor preparation event, two targets, a star and a square, appeared at opposite locations up and down, or left and right, of the fixation cross. Subjects were instructed to prepare a movement toward the appropriate response symbol (e.g., star for face or square for house). The stimulus-to-response symbol mapping was counterbalanced across subjects.

To signal the response period, the fixation cross turned red. Hand responses consisted of an up, down, left, or right button press on a four-button, diamond-shaped fiber-optic response pad (Current Designs, http://www.curdes.com), placed by each subject's right hand and not visible to them. Eye responses consisted of an eye movement to the remembered location of one of the targets on the visual display, recorded at 60 Hz with an MR-compatible eye tracker (ViewPoint, Arrington Research) built into the stimulus display goggles. Participants were instructed to maintain central fixation except when indicating their response on eye movement trials. No performance feedback was provided during the experiment. Subjects completed two to three training runs beforehand, to minimize learning effects during the experiment.

The order of stimulus images, eye- and hand-response trials, and target locations was pseudo-randomized. To maximize uncertainty about the duration and onset of each event, and to better separate the hemodynamic responses, the durations of the delay, motor preparation, and baseline periods were jittered according to a geometric distribution [mean, 2 repetition times (TRs); truncated to range from one to five TRs; one TR = 1500 ms]. The geometric distribution is the discrete analog of an exponential distribution (event durations are multiples of a TR). In contrast to a uniform (flat) distribution of event durations, the geometric distribution leads to maximum uncertainty about the beginning of the next event, because the probability that an event ends in the next TR, given that it has not yet ended, stays constant (apart from the truncation at five TRs) rather than rising over time. One thousand random designs (event orders and timing jitters) were produced in MATLAB. The power of each design was evaluated with 3dDeconvolve (nodata option; AFNI), and the stimulus timing sequences with the smallest total amount of unexplained variance (mean square error) were selected (http://afni.nimh.nih.gov/pub/dist/HOWTO//howto/ht03_stim/html/AFNI_howto.shtml). The four best sequences were used for the four functional runs, and their order was randomized across subjects. Each random event order lasted 672 s (448 TRs). There were 16 trials of each condition, totaling 64 trials per functional run.
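The uncertainty argument can be checked by computing each distribution's hazard, the probability that an event ends at a given TR given that it has not ended earlier. This sketch assumes that the stated mean of 2 TRs refers to a geometric distribution with p = 0.5 before truncation; the untruncated geometric has a constant hazard of p, whereas a uniform distribution over one to five TRs has a steeply rising hazard (1/5, 1/4, 1/3, 1/2, 1). Truncation at five TRs makes the geometric hazard climb at the end as well (about 0.52, 0.53, 0.57, 0.67, 1), but it remains much flatter than the uniform one over the early TRs. The final sampling step is illustrative only; the actual designs were generated in MATLAB and screened with 3dDeconvolve.

```python
import numpy as np

TR = 1.5  # seconds, from the acquisition protocol

def truncated_geometric_pmf(p=0.5, k_max=5):
    """P(duration = k TRs) for k = 1..k_max, geometric(p) renormalized after truncation."""
    k = np.arange(1, k_max + 1)
    pmf = p * (1 - p) ** (k - 1)
    return k, pmf / pmf.sum()

def hazard(pmf):
    """P(event ends at k | it has not ended before k)."""
    survival = np.cumsum(pmf[::-1])[::-1]  # P(duration >= k)
    return pmf / survival

k, geometric = truncated_geometric_pmf()
uniform = np.full(k.size, 1.0 / k.size)
print("truncated geometric hazard:", np.round(hazard(geometric), 3))
print("uniform hazard:            ", np.round(hazard(uniform), 3))

# Illustrative draw of 64 jittered delay durations, in seconds.
rng = np.random.default_rng(0)
delays_s = rng.choice(k, size=64, p=geometric) * TR
```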

MRI data acquisition.

A 3T Siemens Magnetom Trio scanner and 12-channel phased-array head coil were used. Functional images were acquired using an echoplanar T2* GRAPPA gradient echo pulse sequence [28 contiguous axial slices covering the entire brain; 3 mm in-plane voxel resolution; 4 mm slice thickness; 64 × 64 matrix; field of view (FOV) = 192 mm; TR = 1500 ms; echo time (TE) = 30 ms; flip angle = 70°; bandwidth = 2232 Hz/pixel; 448 volumes per run]. Images were collected in bottom-up interleaved order. Each subject completed four functional runs. At the beginning of each run, four volumes were discarded automatically to allow magnetization to reach a steady state. In the same scanning session, a high-resolution T1-weighted MPRAGE structural scan was collected for each participant for registration purposes (TR = 2300 ms; TE = 2.98 ms; 1 × 1 × 1 mm voxels; 256 × 256 matrix; FOV = 256 mm; flip angle = 9°).
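Slice timing correction needs each slice's acquisition time. The sketch below computes it for the 28-slice, 1500 ms protocol, assuming slices are numbered bottom-up, are spread evenly across the TR, and that the interleaved order acquires odd slices first. Some scanners (notably Siemens, for an even number of slices) start with the even slices instead, so the hypothetical `odd_first` flag should be set from the actual sequence parameters.

```python
import numpy as np

def interleaved_slice_times(n_slices=28, tr=1.5, odd_first=True):
    """Acquisition order and onset time (s) of each slice, slices numbered 1..n bottom-up."""
    slices = np.arange(1, n_slices + 1)
    odd, even = slices[0::2], slices[1::2]
    order = np.concatenate([odd, even] if odd_first else [even, odd])
    times = np.empty(n_slices)
    # Slice number order[i] is acquired at position i within the TR.
    times[order - 1] = np.arange(n_slices) * (tr / n_slices)
    return order, times

order, times = interleaved_slice_times()
print(order[:5])  # [1 3 5 7 9]
print(times[:3])  # slice 1 at 0 s, slice 2 at 0.75 s, slice 3 at about 0.054 s
```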

MRI data preprocessing and analysis.

Standard fMRI preprocessing, including brain extraction, motion correction, slice timing correction, whole-brain intensity normalization in each functional run, high-pass filtering (>100 s), and spatial smoothing (8 mm FWHM Gaussian kernel), was conducted using the FMRIB Software Library (FSL 4.1; http://www.fmrib.ox.ac.uk/fsl/index.html). For each subject, EPI images from functional runs 1, 2, and 4 were first registered to the EPI image from run 3, using the FMRIB Linear Image Registration Tool. All EPI images were then registered to each subject's high-resolution anatomical scan using a six-parameter rigid body transformation. Registration to standard space (Montréal Neurological Institute) was performed using the FMRIB Nonlinear Image Registration Tool.
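Smoothing functions in FSL and SciPy typically take the Gaussian standard deviation rather than the FWHM. The conversion is σ = FWHM / (2√(2 ln 2)) ≈ FWHM / 2.355, so an 8 mm kernel corresponds to σ ≈ 3.40 mm, or about 1.13 voxels in-plane and 0.85 voxels through-plane on the 3 × 3 × 4 mm acquisition grid. The sketch assumes smoothing is applied on that native grid and uses a random volume as a stand-in for an EPI image.

```python
import math

import numpy as np
from scipy.ndimage import gaussian_filter

FWHM_MM = 8.0
VOXEL_MM = (3.0, 3.0, 4.0)  # in-plane x, y and slice thickness from the acquisition

sigma_mm = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))
sigma_vox = tuple(sigma_mm / size for size in VOXEL_MM)  # per-axis sigma in voxels
print(round(sigma_mm, 2))  # 3.4

volume = np.random.default_rng(0).standard_normal((64, 64, 28))  # stand-in EPI volume
smoothed = gaussian_filter(volume, sigma=sigma_vox)
```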

Whole-brain statistical analyses were performed within the general linear model (GLM) framework implemented in the FMRIB Software Library. A first-level GLM was estimated for each run of each subject, modeling sensory (F_Hi, F_Lo, H_Hi, H_Lo); delay (Delay_F_Hi, Delay_F_Lo, Delay_H_Hi, Delay_H_Lo); motor preparation (Eye_Hi, Eye_Lo, Hand_Hi, Hand_Lo); and response (Eye Response, Hand Response) events. Delay and motor preparation events were labeled as high or low sensory evidence according to the stimulus shown in that trial, to investigate whether the amount of sensory evidence affects subsequent periods.

To avoid motor contamination of the decision-making period, false alarms during sensory, delay, and motor preparation periods were modeled as error regressors, as were incorrect responses. Eye movement false alarms were defined as an eye movement >2.5° from the fixation cross. Six motion correction parameters were modeled as confound regressors.
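The eye-movement false-alarm criterion can be sketched as a distance test on gaze samples. This assumes gaze has already been converted to degrees of visual angle relative to the fixation cross (about 25 pixels per degree on this display); the function names are hypothetical. Missing samples encoded as NaN are not flagged here, since how blinks and tracker dropouts were handled is not stated in the text.

```python
import numpy as np

def fixation_breaks(x_deg, y_deg, radius_deg=2.5):
    """Boolean mask of gaze samples farther than radius_deg from the fixation cross at (0, 0)."""
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    return np.hypot(x, y) > radius_deg

def is_eye_false_alarm(x_deg, y_deg, radius_deg=2.5):
    """True if any sample in the period leaves the fixation window."""
    return bool(np.any(fixation_breaks(x_deg, y_deg, radius_deg)))

# Example: a 1500 ms period sampled at 60 Hz (90 samples) with a brief excursion
# toward the upper target (5.4 degrees above fixation).
x = np.zeros(90)
y = np.zeros(90)
y[40:45] = 5.4
print(is_eye_false_alarm(x, y))  # True
```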

All regressors were convolved with the FMRIB preset double-gamma hemodynamic response function. Contrasts of interest were computed at the first level using linear combinations of the regressors above, including the following: Face (F_Hi, F_Lo); House (H_Hi, H_Lo); Face versus House; High Sensory (F_Hi, H_Hi); Low Sensory (F_Lo, H_Lo); High Delay (Delay_F_Hi, Delay_H_Hi); Low Delay (Delay_F_Lo, Delay_H_Lo); Eye Preparation (Eye_Hi, Eye_Lo); Hand Preparation (Hand_Hi, Hand_Lo); and Eye and Hand Preparation following High versus Low evidence (Eye_Hi vs Eye_Lo; Hand_Hi vs Hand_Lo).
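The sketch below builds one-TR event regressors for the four sensory conditions, convolves them with a double-gamma HRF, and evaluates several of the listed contrasts as weighted sums of parameter estimates. It assumes SPM-style canonical shape parameters (gamma shapes 6 and 16, undershoot ratio 1/6), which may differ in detail from FSL's preset, a hypothetical schedule with one stimulus every 7 TRs, and simulated data. The full model also contained delay, motor preparation, response, error, and motion regressors, which are omitted here.

```python
import numpy as np
from scipy.stats import gamma

TR = 1.5
N_SCANS = 448

def double_gamma_hrf(tr=TR, duration=32.0, shape1=6.0, shape2=16.0, ratio=1.0 / 6.0):
    """Canonical-style double-gamma HRF sampled every tr seconds, scaled to unit sum."""
    t = np.arange(0.0, duration, tr)
    h = gamma.pdf(t, shape1) - ratio * gamma.pdf(t, shape2)
    return h / h.sum()

def event_regressor(onsets, n_scans, hrf):
    """One-TR boxcar events at the given scan indices, convolved with the HRF."""
    box = np.zeros(n_scans)
    box[onsets] = 1.0
    return np.convolve(box, hrf)[:n_scans]

names = ["F_Hi", "F_Lo", "H_Hi", "H_Lo"]
stim_onsets = np.arange(0, N_SCANS, 7)  # hypothetical: 64 stimuli, one every 7 TRs
onsets = {name: stim_onsets[i::4] for i, name in enumerate(names)}  # 16 per condition
hrf = double_gamma_hrf()
X = np.column_stack([event_regressor(onsets[name], N_SCANS, hrf) for name in names])

# Contrast weights over the columns [F_Hi, F_Lo, H_Hi, H_Lo].
contrasts = {
    "Face": np.array([1, 1, 0, 0]),
    "House": np.array([0, 0, 1, 1]),
    "Face_vs_House": np.array([1, 1, -1, -1]),
    "High_Sensory": np.array([1, 0, 1, 0]),
    "Low_Sensory": np.array([0, 1, 0, 1]),
}

# Simulated voxel: faces respond twice as strongly as houses, plus a little noise.
rng = np.random.default_rng(0)
y = X @ np.array([2.0, 2.0, 1.0, 1.0]) + 0.01 * rng.standard_normal(N_SCANS)
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
estimates = {name: float(c @ beta_hat) for name, c in contrasts.items()}
print(estimates)
```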

Each subject's individual runs were combined in a fixed-effects second-level analysis. Data across subjects were combined in a mixed-effects, third-level (FLAME1 + 2) analysis, treating subjects as a random effect.

Face and house regions of interest.

Variation in brain size and shape and individual differences in ear canals and lateral ventricles produce different field distortions in ventral temporal cortex in each subject. As a result, registration of individual runs to standard space is imprecise and can slightly smear out activations in the fusiform and parahippocampal gyri. Note that group-level activations for faces and houses in the fusiform gyrus and parahippocampal gyrus were highly significant (z > 3.1, corresponding to p < 0.001, whole-brain corrected with AlphaSim cluster-thresholding; see Clustering section below), suggesting a good overlap between different subjects' activations. However, to ensure that selected voxels accurately track face and house sensory evidence in individual subjects on a run-by-run basis, face and house voxels were selected based on anatomical and functional criteria in individual runs. Face- and house-responsive voxels were selected based on Face>House and House>Face contrasts in the left and right fusiform gyrus and parahippocampal gyrus, respectively. In subjects lacking bilateral activations, only the activated side was selected. To ensure consistency in the selection of fusiform face area (FFA) and parahippocampal place area (PPA) clusters, FFA and PPA were also defined at each subject's run-average level in standard space, and back-transformed to single run space using the FSL invwarp and applywarp tools. Activations resulting from Face>House and House>Face contrasts in single runs were selected if they were consistent (close to or overlapping) with these back-transformed masks. A cluster of a minimum of 10 adjacent voxels surviving at least p < 0.05 (z > 1.65) was required. In runs in which large activations extended into neighboring occipital and temporal areas, the threshold was raised until a cluster was confined to the anatomical region of interest (ROI) (strongest p value used: p = 0.001; i.e., z = 3.1). 
Across all runs and subjects, in 14% of the selected face and house ROIs, the threshold had to be made less stringent than p < 0.05 to obtain a cluster of 10 adjacent voxels, due to occasionally noisier individual runs. In every case, these clusters were in the same anatomical location as the more significant face and house ROIs from other runs in the same subject.
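The threshold-raising rule for ROI selection can be sketched as follows: threshold a single-run z map, label connected clusters, and raise the threshold until the largest cluster of at least 10 voxels that touches the back-projected mask lies entirely inside it, stopping at z = 3.1. The function name, the 0.05 step size, and the use of SciPy's default face connectivity for "adjacent" voxels are assumptions; the occasional lowering of the threshold below z = 1.65 described above is not modeled.

```python
import numpy as np
from scipy import ndimage

def select_roi(zmap, mask, z_start=1.65, z_max=3.1, z_step=0.05, min_voxels=10):
    """Hypothetical sketch: return (roi, z) with roi confined to mask, or (None, z)."""
    for z in np.arange(z_start, z_max + 1e-9, z_step):
        labels, n = ndimage.label(zmap > z)  # face-connected clusters
        candidates = [labels == lab for lab in range(1, n + 1)]
        candidates = [c for c in candidates if c.sum() >= min_voxels and np.any(c & mask)]
        if not candidates:
            return None, z  # nothing large enough survives at this threshold
        best = max(candidates, key=lambda c: c.sum())
        if not np.any(best & ~mask):
            return best, z  # cluster is confined to the region of interest
    return None, z_max

# Synthetic run: a strong 12-voxel peak inside the mask plus weaker spill-over outside it.
zmap = np.zeros((10, 10, 10))
mask = np.zeros((10, 10, 10), dtype=bool)
mask[2:6, 2:6, 2:6] = True
zmap[3:5, 3:5, 3:6] = 4.0
zmap[5:8, 3:5, 3:6] = 2.0
roi, z_used = select_roi(zmap, mask)
```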

Single-unit recording studies investigating binary decisions suggest that perceptual decision areas compare the output of neuronal populations tuned to each alternative (Gold and Shadlen, 2007). Thus, the output of lower-level sensory neurons is integrated in a higher-level decision area. To investigate whether higher-level decision areas integrate sensory evidence for faces or houses, a difference fMRI signal was computed between face- and house-selective areas.

The average time series across all voxels in face and house ROIs, respectively, was extracted using fslmeants. The time series of each ROI was demeaned and normalized in MATLAB using the formulas demeaned_timeseries = timeseries − mean(timeseries) and norm_timeseries = demeaned_timeseries/norm(demeaned_timeseries), where the MATLAB norm function returns the Euclidean norm of a vector (the square root of the sum of its squared elements). If present bilaterally, the demeaned and normalized time series from left and right face regions and from left and right house regions were averaged separately, to create one time series for faces and one for houses. In subjects with a significant fusiform face or parahippocampal house activation in only one hemisphere, the time series from that hemisphere was used. Thus, each subject had one demeaned and normalized face-selective time series, Face(t), and one demeaned and normalized house-selective time series, House(t). The absolute difference between these two time series was then computed to obtain a difference time series, |Face(t) − House(t)|.
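In Python, the same demeaning, normalization, hemisphere averaging, and absolute difference could be written as below. This is a sketch rather than the original MATLAB code; passing `None` stands for a hemisphere without a significant cluster.

```python
import numpy as np

def demean_normalize(ts):
    """Subtract the mean, then divide by the Euclidean norm (as MATLAB norm(v))."""
    ts = np.asarray(ts, dtype=float)
    demeaned = ts - ts.mean()
    return demeaned / np.linalg.norm(demeaned)

def hemisphere_average(left=None, right=None):
    """Average the normalized time series of whichever hemispheres are present."""
    present = [demean_normalize(ts) for ts in (left, right) if ts is not None]
    return np.mean(present, axis=0)

def difference_signal(face_left, face_right, house_left, house_right):
    """|Face(t) - House(t)| from face- and house-selective ROI time series."""
    face = hemisphere_average(face_left, face_right)
    house = hemisphere_average(house_left, house_right)
    return np.abs(face - house)

d = difference_signal([1.0, 2.0, 3.0], None, [3.0, 2.0, 1.0], None)
```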

Psychophysiological interaction.

In addition to the main GLM, a psychophysiological interaction (PPI) analysis (Friston et al., 1997) was performed. The PPI identified which brain areas increase their effective connectivity with the difference signal |Face(t) − House(t)| from fusiform face and parahippocampal house regions, during the perceptual decision period when a stimulus was presented. Regions involved in face and house perceptual decisions should correlate with the absolute difference in activation (the absolute difference in BOLD signal over time) between fusiform face and parahippocampal house regions, |Face(t) − House(t)|. Moreover, the increase in correlation between perceptual decision areas and face and house sensory areas should be task specific; that is, it should be greater during the perceptual decision period (when the stimulus is presented) than during the rest of the trial.

Note that a PPI does not exclude regions that are also activated during the rest of the trial, or that show greater motor-related activations. Rather, it reveals regions that increase in effective connectivity with sensory regions specifically when sensory evidence is presented and a perceptual decision is to be made. A region revealed by this PPI method could still show high levels of activation during the motor stage. However, the effective connectivity with face and house regions should be greatest when the perceptual decision is to be made, and when that region needs to rely on sensory input from face and house regions. Percent signal change analysis on subjects' FFA and PPA showed that both FFA and PPA responded strongly during the sensory stage, but were deactivated or not significantly activated versus baseline during the delay and motor preparation phases (data not shown). Based on this response profile, an area integrating sensory information from FFA and PPA should show the greatest effective connectivity during the sensory stage, rather than the rest of the trial.

Note that this type of analysis is based on the drift diffusion model, which assumes a single directed drift process of accumulating relative evidence (i.e., the difference signal between alternatives) to one of two decision boundaries corresponding to each alternative (Ratcliff, 1978). This model has been applied to populations of neurons signaling leftward versus rightward motion direction (Smith and Ratcliff, 2004) (for review, see Gold and Shadlen, 2007), with the assumption that a single area integrates the relative difference (e.g., LIP or the superior colliculus, when the task involves preassigned eye movements). Unlike the drift diffusion model, race models assume two independent accumulators racing to a common boundary, with evidence for each decision accumulated separately (Usher and McClelland, 2001). However, the two models make very similar predictions in terms of changes in model parameters (Ratcliff, 2006).
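The difference between relative and independent accumulation can be illustrated with a small simulation. In the diffusion variant a single variable accumulates the difference between two noisy evidence streams and stops at ±bound; in the race variant each stream drives its own accumulator and the first to reach the bound determines the choice. Drift rates, bound, noise level, and time step are arbitrary assumptions, and the race shown has no leak or mutual inhibition, so it is simpler than the leaky competing accumulator of Usher and McClelland (2001).

```python
import numpy as np

def simulate_choices(mu_a, mu_b, model, bound=1.0, sigma=1.0, dt=0.01,
                     max_t=5.0, n_trials=2000, seed=0):
    """Simulate two-alternative choices; returns 1 (A), 0 (B) or -1 (no decision by max_t)."""
    rng = np.random.default_rng(seed)
    rel = np.zeros(n_trials)    # diffusion: evidence for A minus evidence for B
    acc_a = np.zeros(n_trials)  # race: accumulator for A
    acc_b = np.zeros(n_trials)  # race: accumulator for B
    choice = np.full(n_trials, -1)
    for _ in range(int(round(max_t / dt))):
        active = choice == -1
        if not active.any():
            break
        # Noisy momentary evidence for each alternative in this time step.
        e_a = mu_a * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_trials)
        e_b = mu_b * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_trials)
        if model == "diffusion":
            rel[active] += (e_a - e_b)[active]
            choice[active & (rel >= bound)] = 1
            choice[active & (rel <= -bound)] = 0
        elif model == "race":
            acc_a[active] += e_a[active]
            acc_b[active] += e_b[active]
            a_wins = active & (acc_a >= bound) & (acc_a >= acc_b)
            b_wins = active & (acc_b >= bound) & (acc_b > acc_a)
            choice[a_wins] = 1
            choice[b_wins] = 0
        else:
            raise ValueError(f"unknown model: {model!r}")
    return choice

def accuracy(choice):
    """Proportion of decided trials on which alternative A (the correct one) was chosen."""
    decided = choice >= 0
    return float(np.mean(choice[decided] == 1))

for model in ("diffusion", "race"):
    print(model, "high:", accuracy(simulate_choices(2.0, 0.0, model)),
          "low:", accuracy(simulate_choices(0.5, 0.0, model)))
```

Both variants produce more accurate choices for stronger evidence, which is one reason the two model classes are hard to tell apart from accuracy alone.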

Our goal here was to test whether areas previously assumed to fulfill the predictions of the diffusion model (e.g., LIP, when motor responses are preassigned) still behave as sensory integrators when motor preparation is disentangled from perceptual decisions.

The PPI analysis thus included the following regressors: (1) a physiological regressor, |Face(t) − House(t)|; (2) a psychological task regressor (PSY) in which face and house sensory TRs were weighted +1, TRs from the remainder of the trial were weighted −1, and baseline TRs were weighted 0; and (3) an interaction regressor, the element-wise product of the two (PSY .* |Face(t) − House(t)| in MATLAB notation).

The PSY (task) regressor weighting ensures that the correlation is task specific, rather than (for instance) a nonspecific and longer-duration attentional effect. By multiplying the seed region time series (|Face(t) − House(t)|) element by element with a task regressor that is weighted +1 during the perceptual decision period and −1 during nondecision periods, the resulting PPI regressor is only correlated with the time series during the perceptual decision period (for review, see O'Reilly et al., 2012). This ensures a task-specific correlation; that is, an increase in effective connectivity between two regions specifically during the task, rather than an overall correlation with the original seed time series that could be due to other factors, such as a third area driving both correlated regions (Friston, 2011). Additionally, incorrect/false alarm regressors and motion regressors were included as regressors of no interest, as above.
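A minimal sketch of the three PPI regressors, per TR, is given below. It assumes boolean masks marking sensory (decision) TRs and baseline TRs, with all other TRs treated as the remainder of the trial. It does not convolve the task regressor with an HRF, mean-center the regressors, or deconvolve the seed signal to the neural level, as some PPI implementations do.

```python
import numpy as np

def ppi_regressors(physio, sensory_tr, baseline_tr):
    """Columns: physiological, psychological (+1/-1/0), and their interaction."""
    physio = np.asarray(physio, dtype=float)
    psy = np.full(physio.shape, -1.0)   # remainder of the trial
    psy[np.asarray(baseline_tr)] = 0.0  # fixation baseline
    psy[np.asarray(sensory_tr)] = 1.0   # perceptual decision (stimulus) TRs
    interaction = psy * physio          # element-wise product (MATLAB: PSY .* physio)
    return np.column_stack([physio, psy, interaction])

X_ppi = ppi_regressors(
    [0.5, 0.2, 0.1, 0.3],
    sensory_tr=[True, False, False, False],
    baseline_tr=[False, False, False, True],
)
```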

To ensure that perceptual decision-related activity was not cut short in the first PPI, a second PPI (data not shown) was also implemented, in which the perceptual decision period was deemed to last for the full duration of the sensory and delay TRs. The pattern of results did not change, but the activations were weaker overall in this analysis, and a subset of the original PPI areas was revealed.

Clustering.

To correct for multiple comparisons, cluster-size thresholding (AlphaSim, AFNI) (Ward, 2000) was applied to the whole brain volume. Minimum cluster sizes for contiguous activated voxels occurring by chance with a probability of <0.05 were calculated with 10,000 Monte Carlo simulations for voxelwise probability thresholds p = 0.005 (z > 2.6) and p = 0.001 (z > 3.1), yielding a required minimum of 22 and 14 voxels, respectively.
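The logic of AlphaSim-style cluster-size thresholding can be sketched with a Monte Carlo simulation: generate smoothed Gaussian noise, threshold it voxelwise, record the largest cluster in each simulated volume, and take the smallest cluster size that occurs by chance in fewer than 5% of simulations. This sketch uses a box-shaped volume, an assumed 8 mm FWHM smoothness on 3 × 3 × 4 mm voxels, 200 iterations, and SciPy's default face connectivity, so it will not reproduce the 22- and 14-voxel minima reported above; it only illustrates the procedure.

```python
import numpy as np
from scipy import ndimage
from scipy.stats import norm

def max_cluster_sizes(shape, sigma_vox, z_thresholds, n_iter, seed=0):
    """Largest suprathreshold cluster per simulated noise volume and threshold."""
    rng = np.random.default_rng(seed)
    sizes = np.zeros((n_iter, len(z_thresholds)), dtype=int)
    for i in range(n_iter):
        noise = ndimage.gaussian_filter(rng.standard_normal(shape), sigma_vox)
        noise /= noise.std()  # restore unit variance after smoothing
        for j, z in enumerate(z_thresholds):
            labels, n = ndimage.label(noise > z)
            if n > 0:
                sizes[i, j] = np.bincount(labels.ravel())[1:].max()
    return sizes

def min_cluster_size(max_sizes, alpha=0.05):
    """Smallest k such that a chance cluster of size >= k occurs in < alpha of simulations."""
    for k in range(1, int(max_sizes.max()) + 2):
        if np.mean(max_sizes >= k) < alpha:
            return k

z_thresholds = norm.isf([0.005, 0.001])  # one-tailed, about 2.58 and 3.09
sigma_vox = (1.13, 1.13, 0.85)           # assumed smoothness, in voxels
sizes = max_cluster_sizes((32, 32, 24), sigma_vox, z_thresholds, n_iter=200)
k_005, k_001 = (min_cluster_size(sizes[:, j]) for j in range(2))
print(k_005, k_001)
```

Because every cluster above the stricter threshold lies inside a cluster above the laxer one, the required minimum cluster size is never larger at p = 0.001 than at p = 0.005, matching the direction of the reported values.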

Percent signal change analysis.

Areas revealed by the PPI to increase their effective connectivity with face and house signals during the perceptual decision period (i.e., areas that correlated with the interaction term in the PPI) were selected as ROIs. The activation profile (percent signal change for each of the regressors from the main GLM) was then calculated for these PPI ROIs using the Featquery tool in FSL and customized MATLAB scripts. This allowed us to examine how these PPI regions behave during the different stages of the trial (as modeled in the main GLM). ROIs were cluster thresholded, as described above. Note that the selection of PPI ROIs, which contain voxels that show an interaction between the task (perceptual decision) and |Face(t) − House(t)|, is independent of the percent signal change calculation for each regressor versus baseline within such voxels, thus avoiding a circular analysis.

Two-tailed paired t tests were performed to determine whether (1) the BOLD signal from these PPI regions differed between high and low sensory evidence events and (2) overall activations during the sensory period (high and low) differed significantly from those during the rest of the trial.
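The percent signal change and paired comparisons can be sketched as follows. The scaling shown (parameter estimate times the peak-to-peak height of the regressor, divided by the mean signal) is one common convention and is assumed here rather than taken from the text; the per-subject values are made up for illustration, and `scipy.stats.ttest_rel` performs a two-sided paired t test by default.

```python
import numpy as np
from scipy.stats import ttest_rel

def percent_signal_change(beta, regressor, mean_signal):
    """Scale a GLM parameter estimate to percent of the mean signal (peak-to-peak convention)."""
    return 100.0 * beta * np.ptp(regressor) / mean_signal

# Made-up per-subject values for 16 subjects, for illustration only.
psc_high = np.linspace(0.10, 0.40, 16)
psc_low = psc_high + np.linspace(0.05, 0.15, 16)
result = ttest_rel(psc_low, psc_high)  # paired, two-sided; df = n - 1 = 15
print(result.statistic, result.pvalue)
```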

Results

Subjects performed face versus house perceptual decisions on images containing high or low levels of sensory evidence. Importantly, subjects made their decision first and were only able to indicate their response after variable-length delay and motor preparation periods, using either eye or hand movements to four possible spatial targets (Fig. 1). This decoupled the perceptual decision from motor planning [eight possible motor plans: two effectors (hand, eye) × four response locations (top, bottom, right, left)].

Behavioral results

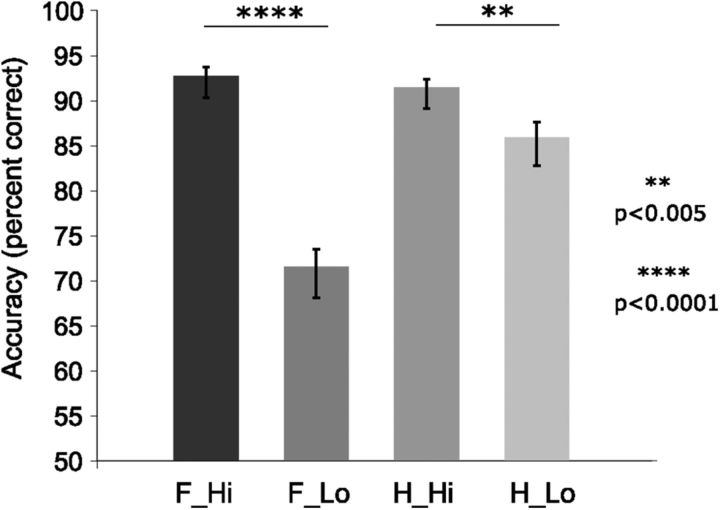

Subjects were highly sensitive to the amount of sensory evidence in face and house images (Fig. 2). Performance was significantly more accurate on high evidence trials (paired t test, F_Hi vs F_Lo, t(15) = 10.2, two-tailed p < 0.0001; H_Hi vs H_Lo, t(15) = 3.6, two-tailed p = 0.0012). Performance was well above chance (percentage correct responses: F_Hi: 92.8%; F_Lo: 71.6%; H_Hi: 91.5%; H_Lo: 85.9%; chance = 50%). Although accuracy was somewhat higher on H_Lo than F_Lo, the highly significant difference between H_Hi and H_Lo suggests the modulation was effective. Note that the task was not a reaction-time task. Rather, responses followed sensory, delay, and motor preparation events (Fig. 1A). Previous reaction-time studies of perceptual decisions have shown that subjects can detect faces and cars presented for as little as 50 ms, with reaction times <1 s (Philiastides and Sajda, 2007). In addition, we found no trend of increasing accuracy with longer delays (R2 = 0.07, p = 0.67, n.s.). We are thus confident that our subjects had sufficient time to view the stimuli and reach a decision within the 1500 ms decision period. The high accuracy rates for both high and low stimuli also support this.

Figure 2.

Accuracy (percentage correct classification) for high and low levels of sensory evidence for faces and houses. Subjects were sensitive to the amount of sensory evidence available for their perceptual decision, with significantly higher accuracy for higher levels of sensory evidence. Error bars represent the SEM. Abbreviations are as in Figure 1.

BOLD activations during sensory evidence

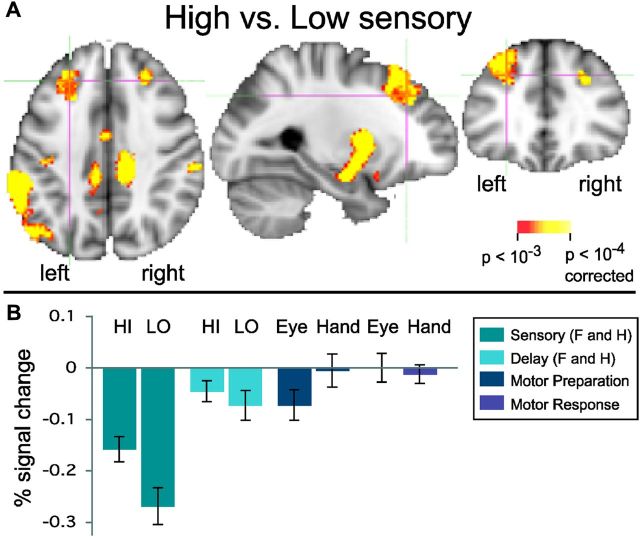

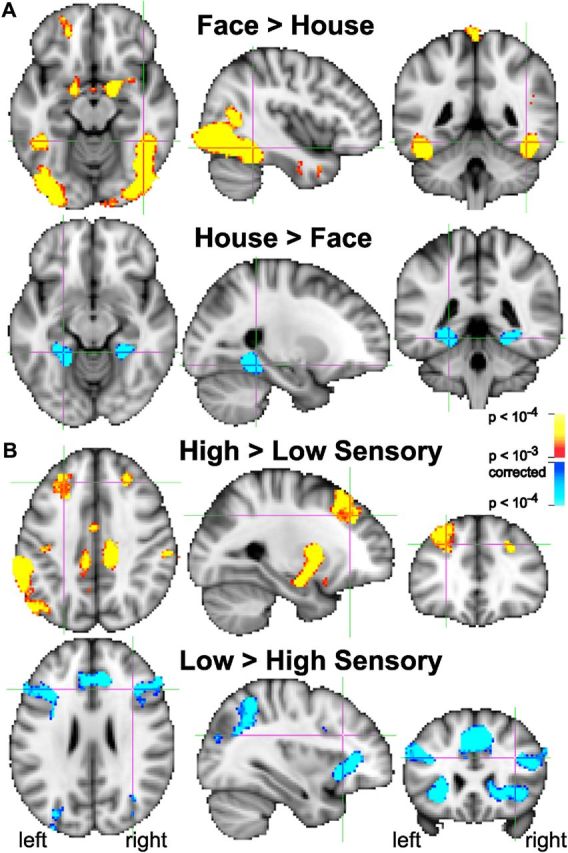

The activations for stimuli at different levels of evidence confirm the effectiveness of the sensory evidence manipulation (Fig. 3). Unless otherwise stated, all p values are voxelwise, corrected with AlphaSim cluster thresholding to p < 0.05 at the whole-brain level. Faces activated face-dominant regions in occipital and ventral temporal cortex significantly more than houses (p < 0.001, corrected; Fig. 3A). Houses activated the parahippocampal gyrus significantly more than faces (p < 0.001, corrected). Moreover, both low and high sensory evidence (across faces and houses) activated a wide network of areas (Fig. 3B). A High>Low sensory evidence contrast revealed BOLD activations in superior prefrontal cortex, inferior parietal cortex, precuneus, cingulate cortex, striatum, amygdala, superior temporal sulcus, right postcentral sulcus, medial prefrontal cortex (MPFC), and middle insula (p < 0.001, corrected). A Low>High sensory evidence contrast produced greater BOLD activations in lateral and inferior prefrontal cortex, FEF, intraparietal and superior parietal areas, pre-supplementary motor and anterior cingulate cortex, anterior insula, right orbitofrontal cortex, and the occipital pole (p < 0.001, corrected). The large number of areas showing such modulations illustrates that such contrasts do not eliminate attentional, working memory, difficulty, and other influences, and are not sufficient to identify decision-making regions. The PPI analysis narrowed down the list of likely decision-making areas by also requiring an increase in effective connectivity with face and house regions during the perceptual decision, rather than just a modulation by sensory evidence.

Figure 3.

BOLD activation contrasts between faces and houses, and between high and low sensory evidence. A, Red to yellow: activations greater for face stimuli than house stimuli (across both high and low sensory evidence). Face stimuli activated the fusiform gyrus, including the fusiform face area, significantly more than house stimuli. Dark blue to light blue: the parahippocampal place area shows greater activation for house stimuli than face stimuli. Fusiform face and parahippocampal house regions of interest were selected in each subject based on Face>House and House>Face contrasts. B, Multiple brain regions are modulated by the amount of sensory evidence available for a decision. Red to yellow: greater activations for high than low sensory evidence (across both faces and houses) can be found in multiple brain regions, including prefrontal and inferior parietal cortices. Dark blue to light blue: greater activations for low than high sensory evidence can be found in multiple cortical and subcortical areas, including prefrontal and posterior parietal cortex. All activations are shown at voxelwise p < 0.001, corrected to p < 0.05 with AlphaSim (see Materials and Methods).

PPI analysis

To determine which of these areas (Fig. 3) plays a role in perceptual decision making, we adopted an effective connectivity approach by using a PPI analysis, without requiring a particular BOLD activation pattern of either High>Low or Low>High a priori.

We hypothesized that perceptual decision-making regions that integrate sensory evidence should show greater effective connectivity with the absolute difference between time series from face- and house-dominant sensory regions, |Face(t) − House(t)|, during the perceptual decision period than during the rest of the trial, when motor preparation and other mental processes might take place. While a modulation in connectivity according to sensory evidence can be expected, effective connectivity between perceptual decision-making regions and sensory regions should be greatest during the decision period, for both high and low evidence decisions. Our approach avoids a priori assumptions about whether decision-making regions follow a High>Low or Low>High pattern. Instead, the direction of modulation by the amount of sensory evidence is determined empirically within the regions revealed by the PPI analysis.
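The seed and interaction regressors described above can be sketched as follows. This is a minimal illustration, not the study's pipeline: it assumes the face and house ROI time series are already extracted (one value per TR) and that the decision-period regressor is already HRF-convolved; the function name `ppi_regressors` and the midpoint centering of the psychological regressor are assumptions.

```python
from typing import List, Sequence, Tuple


def ppi_regressors(face: Sequence[float], house: Sequence[float],
                   decision: Sequence[float]) -> Tuple[List[float], List[float], List[float]]:
    """Build seed, psychological, and interaction regressors for a PPI GLM.

    face, house: ROI-averaged BOLD time series (one value per TR).
    decision:    HRF-convolved decision-period regressor (one value per TR).
    """
    if not (len(face) == len(house) == len(decision)):
        raise ValueError("all time series must have the same length")

    # Physiological (seed) regressor: |Face(t) - House(t)|, demeaned.
    raw_seed = [abs(f - h) for f, h in zip(face, house)]
    seed_mean = sum(raw_seed) / len(raw_seed)
    seed = [s - seed_mean for s in raw_seed]

    # Psychological regressor, centered between its minimum and maximum so that
    # TRs near the decision-period peak are positive and the rest are negative.
    midpoint = (min(decision) + max(decision)) / 2
    psych = [d - midpoint for d in decision]

    # Interaction (PPI) regressor: element-wise product of seed and psych.
    interaction = [s * p for s, p in zip(seed, psych)]
    return seed, psych, interaction
```

In a GLM that also contains the seed, psychological, and nuisance regressors, a voxel's beta for the interaction term indexes its decision-specific increase in coupling with |Face(t) − House(t)|. SPM's PPI implementation additionally deconvolves the seed to approximate neural activity before forming the product; this sketch omits that step.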

An increase in effective connectivity with sensory (face and house) regions could, however, also be due to non-decision-related attention, working memory, or inhibition effects. We therefore further hypothesized that, in addition to an increased effective connectivity, perceptual decision-making regions involved in sensory integration should (1) show some modulation by amount of sensory evidence (i.e., either High>Low or Low>High); (2) show greater activation during the decision period than during the rest of the trial, i.e., at the time that the decision is made; and (3) be activated above baseline (fixation).
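The three additional criteria can be written as a simple screen applied to each region that already passed the PPI test. This is a hedged sketch: the `RegionSummary` fields and the use of a separate test against fixation for criterion (3) are assumptions, and all example numbers except the left IFS p values (0.035 and 0.0015, reported in the text) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RegionSummary:
    """Group-level summary for one PPI region (hypothetical input format)."""
    name: str
    p_hi_vs_lo: float              # paired two-tailed p, HI vs LO evidence, sensory period
    p_decision_vs_rest: float      # paired two-tailed p, decision period vs rest of trial
    p_decision_vs_baseline: float  # p for decision-period activation vs fixation
    mean_decision: float           # mean % signal change, decision period
    mean_rest: float               # mean % signal change, rest of the trial


def is_integration_candidate(r: RegionSummary, alpha: float = 0.05) -> bool:
    """Apply criteria (1)-(3) to a region that already shows the PPI effect."""
    # (1) Modulated by sensory evidence, in either direction (High>Low or Low>High).
    modulated = r.p_hi_vs_lo < alpha
    # (2) Greater activation during the decision than during the rest of the trial.
    decision_specific = r.mean_decision > r.mean_rest and r.p_decision_vs_rest < alpha
    # (3) Activated above the fixation baseline during the decision.
    above_baseline = r.mean_decision > 0 and r.p_decision_vs_baseline < alpha
    return modulated and decision_specific and above_baseline
```

Requiring all three conditions jointly is what separates a candidate integration region from regions that couple with sensory areas for other reasons (e.g., deactivation or generic motor readiness).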

The perceptual decision period was defined as the TR (1500 ms) during which the sensory evidence was presented, convolved with a standard double-gamma hemodynamic response function. Including the delay in the perceptual decision period did not change the pattern of results other than producing overall weaker activations, presumably because of the long jittered delay (see Materials and Methods).
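A decision-period regressor of this kind (a 1500 ms boxcar at each stimulus onset, convolved with a double-gamma HRF and sampled once per TR) can be sketched as below. The SPM-like shape parameters (peak shape 6, undershoot shape 16, undershoot ratio 1/6, unit scale) and the 0.1 s fine grid are assumptions; the text specifies only a "standard double-gamma" function, and packages differ in their defaults.

```python
import math
from typing import List, Sequence


def gamma_pdf(x: float, shape: float) -> float:
    """Gamma density with unit scale (zero for x <= 0)."""
    if x <= 0:
        return 0.0
    return math.exp((shape - 1) * math.log(x) - x - math.lgamma(shape))


def double_gamma_hrf(t: float, peak_shape: float = 6.0,
                     undershoot_shape: float = 16.0,
                     undershoot_ratio: float = 1 / 6) -> float:
    """Double-gamma HRF: a positive response minus a scaled, later undershoot."""
    return gamma_pdf(t, peak_shape) - undershoot_ratio * gamma_pdf(t, undershoot_shape)


def decision_regressor(onsets_s: Sequence[float], n_scans: int,
                       duration_s: float = 1.5, tr: float = 1.5,
                       dt: float = 0.1, kernel_s: float = 32.0) -> List[float]:
    """Convolve decision-period boxcars with the HRF and sample once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration_s) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0

    # HRF kernel on the fine grid, scaled by dt to approximate the integral.
    kernel = [double_gamma_hrf(j * dt) * dt for j in range(int(round(kernel_s / dt)))]
    convolved = [0.0] * n_fine
    for i, value in enumerate(boxcar):
        if value == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j >= n_fine:
                break
            convolved[i + j] += value * k

    step = int(round(tr / dt))
    return [convolved[s * step] for s in range(n_scans)]
```

For a single onset at 0 s, the sampled regressor peaks roughly 6 s later and dips below zero around 15 s (the undershoot).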

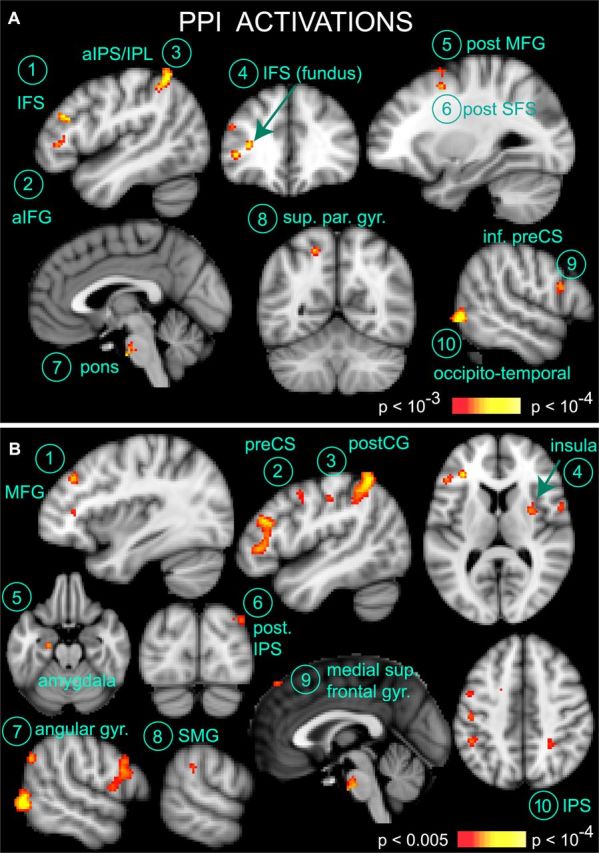

Figure 4A shows the outcome of the PPI analysis (also see Table 1). Regions that increased their effective connectivity with |Face(t) − House(t)| during the perceptual decision period included the left inferior frontal sulcus (IFS), anterior inferior frontal gyrus (IFG), an adjacent region in the fundus of the IFS, the posterior part of superior frontal sulcus (SFS) and middle frontal gyrus (MFG), the pons, the left superior parietal gyrus (SPG), and inferior parietal lobule [IPL/anterior intraparietal sulcus (aIPS)], as well as the right inferior pre-central sulcus (pre-CS) and occipitotemporal cortex (p < 0.001, corrected). The occipitotemporal area likely corresponds to the lateral occipital complex (LOC), a region known to respond to objects and faces (Grill-Spector et al., 1999).

Figure 4.

PPI results. A, Several brain regions increase their effective connectivity with face and house regions specifically during the face–house decision stage (Table 1). Activations are shown at voxelwise p < 0.001, cluster corrected to p < 0.05 (see Materials and Methods). The percent BOLD signal change of these areas is shown in Figure 5. The seed time series for the PPI was the absolute difference between the time series of face and house voxels selected in the fusiform gyrus and parahippocampal gyrus, respectively (see Fig. 3A; see Materials and Methods). B, PPI results surviving voxelwise p < 0.005, corrected to p < 0.05. This lower threshold reveals additional brain areas that increase their effective connectivity with face and house regions during the decision stage, including the left pre-central sulcus and right intraparietal sulcus (also see Table 2). None of these additional areas showed a response profile consistent with the accumulation of sensory evidence for perceptual decision making. The percent BOLD signal change of these regions is shown in Figure 6. a, anterior; post., posterior; inf. pre-CS, inferior pre-CS; sup. par. gyr., superior parietal gyrus; postCG, post-central gyrus. Also see Fig. 3 legend. Left is left in coronal and axial images.

Table 1.

PPI activations (p < 0.001, corrected)

| Brain region | BA | Number of voxels | Peak z-value | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| L anterior IPS/IPL | 7/40 | 139 | 4 | −44 | −52 | 54 |
| R occipitotemporal cortex | 37 | 115 | 4.05 | 58 | −66 | −2 |
| L inferior frontal sulcus | 46 | 67 | 3.84 | −48 | 28 | 22 |
| L anterior inferior frontal gyrus | 45 | 62 | 3.97 | −42 | 36 | 4 |
| L posterior superior frontal sulcus | 6 | 61 | 3.59 | −24 | 10 | 48 |
| R inferior precentral sulcus | 44 | 46 | 3.52 | 52 | 8 | 20 |
| L pons | — | 36 | 3.6 | −2 | −14 | −38 |
| L posterior middle frontal gyrus | 6 | 25 | 3.51 | −30 | 8 | 62 |
| L inferior frontal sulcus (fundus) | 46 | 24 | 3.91 | −32 | 34 | 10 |
| L superior parietal gyrus | 7 | 20 | 3.53 | −18 | −58 | 54 |

Brain areas involved in a psychophysiological interaction with fusiform face and parahippocampal house voxels during the decision period, thresholded at voxelwise p < 0.001, cluster corrected to p < 0.05 with AlphaSim. L, Left; R, right; BA, Brodmann area.

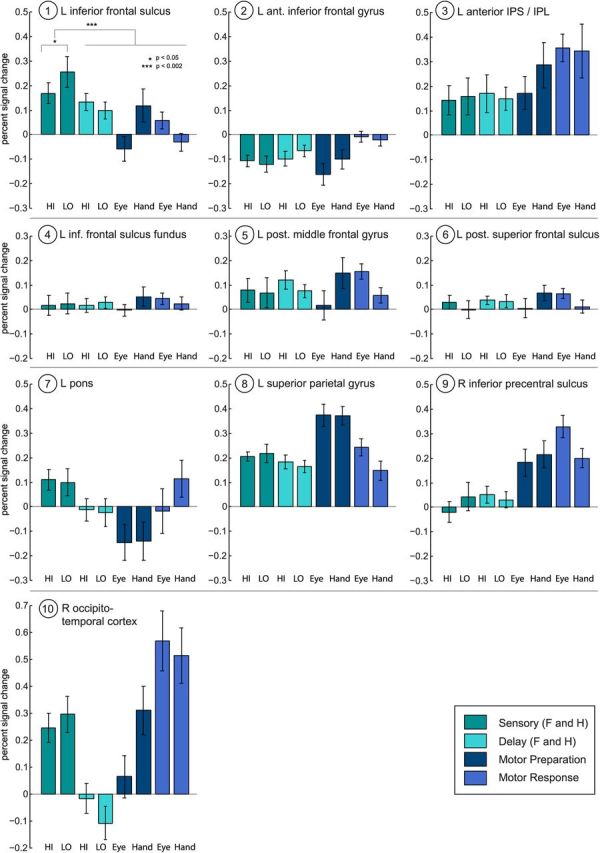

It is possible that this entire network is involved in perceptual decision making. However, Figure 5 shows that in some of these regions (e.g., the left anterior IFG, posterior part of SFS, fundus of IFS, and the right inferior pre-CS), activations during the decision (sensory) period were below, or not significantly different from, the fixation baseline. The left posterior MFG showed low BOLD activation during the perceptual decision period, slightly higher activation during the delay period, and the highest BOLD responses during hand motor preparation and saccades. The left aIPS showed similar above-baseline BOLD activation during the sensory, delay, and movement preparation periods, and its highest responses during both hand and eye responses. The right occipitotemporal cortex was most active during the sensory period, hand preparation, and hand and eye responses. The pontine nuclei showed relatively weak activation during the perceptual stage, and either deactivation or activation not significantly above baseline during the rest of the trial. The activation of the pontine nuclei is consistent with cerebro-pontine projections from frontal, occipital, and parietal cortex (Leergaard and Bjaalie, 2007). However, the pons showed no modulation by the amount of sensory evidence.

Figure 5.

Percent BOLD signal change for each area revealed by the PPI in Figure 4A. The left inferior frontal sulcus is the only region that (1) shows a modulation by the amount of sensory evidence (here: Low>High); (2) shows greater activation during the perceptual decision stage than during the rest of the trial; and (3) is positively activated during the perceptual decision stage. Other areas are deactivated (left anterior IFG), not significantly different from baseline during the decision (right inferior pre-CS, left posterior SFS, fundus of left IFS), or not modulated by evidence levels during the sensory stage. Error bars represent the SEM (vs baseline). Note that the HI and LO Sensory error bars are computed versus baseline, not for the difference between HI and LO. For the left IFS, a paired two-tailed t test between HI and LO Sensory activations was significant (p < 0.05). HI, High sensory evidence (both faces and houses); LO, low sensory evidence (both faces and houses).

The only PPI region that fulfilled the three criteria for a sensory integration region was the left IFS: it showed greatest above-baseline BOLD activation during the perceptual decision period, compared with the rest of the trial (paired t test, t(15) = 3.87, two-tailed p = 0.0015), a modulation by amount of sensory evidence (paired t test, t(15) = 2.32, two-tailed p = 0.035, the pattern being Low>High), and above-baseline activation (Fig. 5). Even without requiring greater perceptual decision-related activation compared with the rest of the trial, it was still the only area showing a modulation by sensory evidence in above-baseline activations during perceptual decisions.
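The reported statistics can be checked for internal consistency with a paired t test and a two-tailed p value from the Student t distribution. The sketch below is stdlib-only and integrates the t density numerically with Simpson's rule; the function names are hypothetical, and in practice a library routine such as `scipy.stats.ttest_rel` would normally be used instead.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def t_pdf(x: float, df: float) -> float:
    """Student t density, computed with log-gamma to avoid overflow."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: float, n_steps: int = 2000) -> float:
    """Two-tailed p = 1 - 2 * integral of the t density from 0 to |t| (Simpson's rule)."""
    a = abs(t)
    if a == 0:
        return 1.0
    h = a / n_steps  # n_steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3)


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int, float]:
    """Paired t test on per-subject values; returns (t, df, two-tailed p)."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1, two_tailed_p(t, n - 1)
```

With 16 subjects (df = 15), `two_tailed_p(3.87, 15)` is approximately 0.0015 and `two_tailed_p(2.32, 15)` approximately 0.035, in line with the values reported for the left IFS.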

It is possible that within this network of areas, the inhibition or deactivation of some regions (e.g., right inferior pre-CS, left IFG), motor readiness (for both hand and eye movements, in the absence of a known motor plan, e.g., aIPS and superior parietal gyrus), and actual integration of sensory evidence (left IFS) all need to be orchestrated to successfully reach a perceptual decision. Our results suggest, however, that within this network, only the IFS integrates sensory evidence relevant to the perceptual decision per se, being sensitive to the amount of sensory evidence available.

Given the nature of our task, subjects knew that either a hand or eye movement would eventually occur later in the trial. It is thus possible that both eye and hand preparatory networks were preactivated in anticipation of the upcoming specific motor instruction, to allow for a quick selection of a specific motor plan. While a left superior parietal gyrus region did increase its effective connectivity with face and house regions during the perceptual decision, it was not modulated by the level of sensory evidence, suggesting that it is not involved in accumulating sensory evidence. Moreover, this area responded equally strongly during eye and hand preparation (i.e., it did not show effector-specific motor planning during the motor preparation stage). The left aIPS was likewise not modulated by the amount of sensory evidence, showing comparatively low levels of activation during the decision period and greater motor-related activations. The left aIPS also responded equally strongly during eye and hand responses. The fact that the aIPS showed greater motor-related than perceptual decision-related responses also argues against a possible bias against motor-related regions in our analysis.

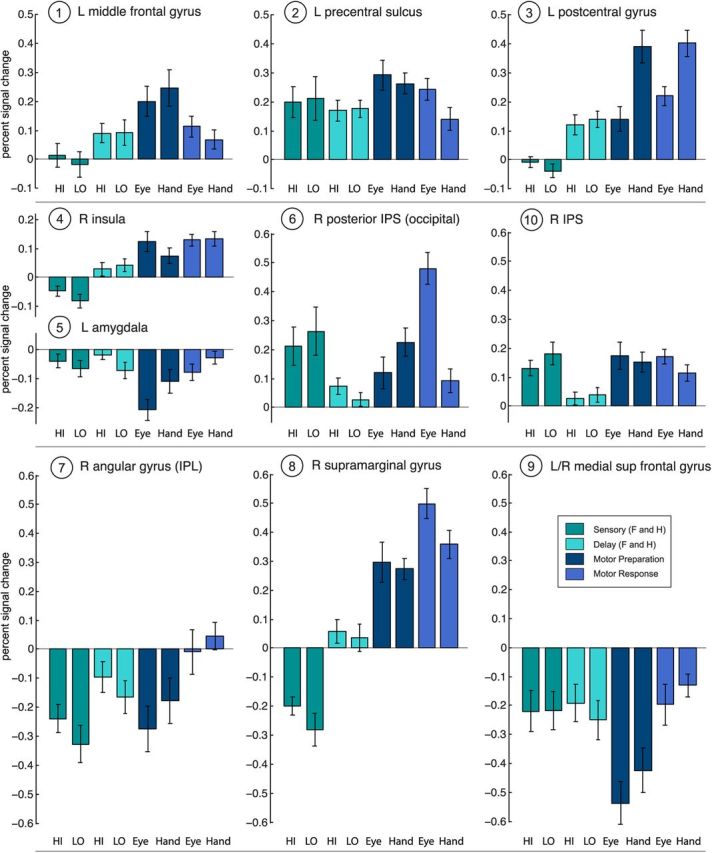

To ensure that additional activations were not missed because of too stringent a statistical threshold, we lowered the threshold for the PPI activations to p < 0.005 (minimum cluster size = 22 voxels) (Figs. 4B, 6; Table 2). The conclusions did not change: the lower threshold revealed no additional sensory evidence accumulation regions, that is, no additional regions modulated by the amount of sensory evidence. At this lower threshold, two smaller IPS clusters appeared in the right hemisphere, one at the posterior end of the IPS in the occipital lobe and one in an anterior segment of the IPS. None of these posterior parietal activations showed significant modulation by sensory evidence during the perceptual decision stage.
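Cluster-extent thresholding of this kind keeps only connected groups of suprathreshold voxels that reach a minimum size. AlphaSim derives that minimum by Monte Carlo simulation of smoothed noise; the sketch below only applies a given extent (22 voxels, as reported above) and assumes a one-tailed voxelwise threshold and face-adjacent (6-connected) neighbours, which may differ from the settings actually used. The function name `extent_threshold` is hypothetical.

```python
from collections import deque
from statistics import NormalDist
from typing import Dict, List, Set, Tuple

Voxel = Tuple[int, int, int]

# Face-adjacent (6-connected) neighbours; the connectivity rule is an assumption.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def extent_threshold(zmap: Dict[Voxel, float], voxel_p: float,
                     min_voxels: int) -> List[Set[Voxel]]:
    """Keep clusters of suprathreshold voxels containing at least min_voxels voxels."""
    z_crit = NormalDist().inv_cdf(1 - voxel_p)  # one-tailed voxelwise threshold
    supra = {v for v, z in zmap.items() if z > z_crit}
    seen: Set[Voxel] = set()
    clusters: List[Set[Voxel]] = []
    for start in supra:
        if start in seen:
            continue
        cluster: Set[Voxel] = set()
        queue = deque([start])
        seen.add(start)
        while queue:  # breadth-first flood fill over suprathreshold neighbours
            x, y, z = queue.popleft()
            cluster.add((x, y, z))
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if nb in supra and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        if len(cluster) >= min_voxels:
            clusters.append(cluster)
    return clusters
```

Lowering `voxel_p` from 0.001 to 0.005 admits more voxels, which is why neighbouring clusters can merge at the lower threshold.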

Figure 6.

Percent BOLD signal change for PPI areas shown in Figure 4B. The majority of these areas show negative BOLD responses during the perceptual decision stage or the entire trial (vs fixation baseline). The left pre-central sulcus is activated across all events, with no significant modulation by amount of sensory evidence. The right posterior IPS and a second IPS region also show no modulation by amount of sensory evidence for the decision. Abbreviations are as in Figures 4 and 5.

Table 2.

PPI activations (p < 0.005, corrected)

| Brain region | BA | Number of voxels | Peak z-value | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| **L IFS/IFG** | 45/46 | 440 | 3.97 | −42 | 36 | 4 |
| **L anterior IPS/IPL** | 7/40 | 384 | 4 | −44 | −52 | 54 |
| **R occipitotemporal cortex** | 37 | 317 | 4.05 | 58 | −66 | −2 |
| **L posterior SFS/MFG** | 6 | 298 | 3.59 | −24 | 10 | 48 |
| **R inferior precentral sulcus** | 44 | 262 | 3.52 | 52 | 8 | 20 |
| **L superior parietal gyrus** | 7 | 106 | 3.53 | −18 | −58 | 54 |
| **L pons** | — | 92 | 3.6 | −2 | −14 | −38 |
| L MFG | 9 | 62 | 3.6 | −34 | 34 | 34 |
| L precentral sulcus | 6 | 40 | 2.94 | −50 | 0 | 42 |
| R insula | 13 | 39 | 3.2 | 30 | 0 | 10 |
| L/R medial superior frontal gyrus | 8 | 38 | 3.01 | 0 | 46 | 52 |
| R posterior IPS (occipital) | 19 | 36 | 3.09 | 36 | −84 | 34 |
| R IPS | 7/39 | 32 | 2.9 | 26 | −46 | 46 |
| R angular gyrus (IPL) | 39 | 31 | 3.33 | 58 | −62 | 34 |
| R supramarginal gyrus | 40 | 31 | 3.13 | 66 | −36 | 30 |
| L calcarine sulcus/posterior lateral ventricle | 17 | 30 | 3.11 | −18 | −78 | 4 |
| L postcentral gyrus | 3 | 26 | 2.99 | −50 | −20 | 44 |
| L amygdala | — | 22 | 3.06 | −22 | −14 | −22 |

Brain areas involved in a psychophysiological interaction with fusiform face and parahippocampal house voxels during the decision period, thresholded at voxelwise p < 0.005, cluster corrected to p < 0.05 with AlphaSim. The top seven regions, highlighted in bold, correspond to the regions listed in Table 1, which survive a voxelwise p < 0.001, cluster corrected to p < 0.05. Several of the PPI clusters from Table 1 (left IFS and left anterior IFG; posterior SFS and MFG) merge at the lower threshold shown here. L, Left; R, right; BA, Brodmann area.

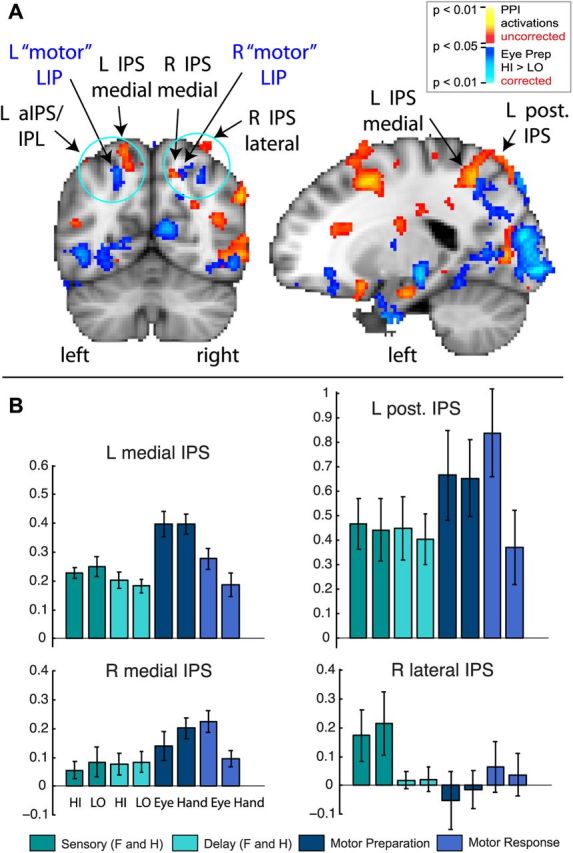

Because area LIP may be too small to detect with stringent cluster thresholding, we also lowered the PPI activation threshold to p < 0.05 (uncorrected) to examine any activations around the IPS (see Fig. 8). None of these PPI activations around the IPS showed a significant modulation by sensory evidence during the decision (sensory) stage. Even if these parietal PPI areas did show a modulation by sensory evidence during the perceptual decision that was too weak to be detected with fMRI, the question would remain why such a modulation was detected in prefrontal cortex but not in parietal cortex. This pattern of results suggests a greater involvement of prefrontal cortex in sensory evidence accumulation.

Figure 8.

Overlay between PPI activations (p < 0.05, uncorrected) and Eye Preparation HI>LO activations (p < 0.05, corrected with AlphaSim cluster thresholding) around the IPS. A, Stringent thresholds and clustering might accidentally miss an IPS activation that shows a modulation by sensory evidence during the decision (sensory) period. To ensure that we did not miss any IPS PPI activations that may be indicative of sensory integration, we lowered the threshold to p < 0.05 (uncorrected) for PPI activations. Two activations appeared lateral and medial to the IPS, bilaterally (in red to yellow). We overlaid the LIP activation from Figure 7 (in blue: Eye Preparation HI > LO, p < 0.05, corrected with AlphaSim cluster thresholding) on the same image. The motor LIP activation (blue) did not overlap with any of the uncorrected IPS PPI activations (red). B, None of the uncorrected IPS PPI activations showed a significant modulation by sensory evidence during the perceptual decision, as required for a sensory integration role during the decision (sensory) stage. All two-tailed paired t tests (HI vs LO during the Sensory period) were nonsignificant. The many regions and response profiles around the IPS suggest functional heterogeneity, even within saccade-responsive regions.

Note that our PPI analysis is analogous to the difference-based comparator mechanism hypothesized to integrate sensory evidence for one or the other perceptual category in macaque studies (Gold and Shadlen, 2007). A perceptual decision area should thus integrate evidence for both faces and houses, and respond during both face and house decisions. This analysis does not contrast face decisions with house decisions to identify face-specific and house-specific representations. Such finer representations may be intermingled at the neuronal level; this would not, however, affect the expected fMRI activations: whenever house decisions are made, the decision area is activated, and whenever face decisions are made, the area is also activated. Our analyses show that such sensory integration takes place in the left inferior prefrontal cortex, but not LIP, when motor plans cannot be formed.
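The difference-based comparator can be illustrated with a toy random-walk (drift-diffusion) simulation in the spirit of the diffusion models cited in the Introduction (Ratcliff, 1978): momentary face and house evidence are subtracted, and the difference is accumulated until it reaches +bound (face) or −bound (house). The same accumulator thus terminates on both face and house trials. All parameter values (drifts, bound, noise) are arbitrary assumptions for illustration, not a model fitted to these data.

```python
import random
from statistics import mean
from typing import Tuple


def comparator_trial(face_drift: float, house_drift: float, rng: random.Random,
                     bound: float = 10.0, noise_sd: float = 1.0,
                     max_steps: int = 10_000) -> Tuple[str, int]:
    """Accumulate momentary (face - house) evidence until it reaches +bound or -bound."""
    dv = 0.0  # decision variable: running sum of the difference signal
    for step in range(1, max_steps + 1):
        face = rng.gauss(face_drift, noise_sd)
        house = rng.gauss(house_drift, noise_sd)
        dv += face - house
        if dv >= bound:
            return "face", step
        if dv <= -bound:
            return "house", step
    return "undecided", max_steps


def simulate(face_drift: float, house_drift: float, n_trials: int = 500,
             seed: int = 0) -> Tuple[float, float]:
    """Return (proportion of 'face' choices, mean number of steps to bound)."""
    rng = random.Random(seed)
    trials = [comparator_trial(face_drift, house_drift, rng) for _ in range(n_trials)]
    p_face = sum(choice == "face" for choice, _ in trials) / n_trials
    return p_face, mean([steps for _, steps in trials])
```

With these settings, a weak face signal (e.g., `simulate(0.2, 0.0)`) produces less accurate choices and longer accumulation than a strong one (`simulate(1.0, 0.0)`), and a strong house signal (`simulate(0.0, 1.0)`) drives the same accumulator to the opposite bound.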

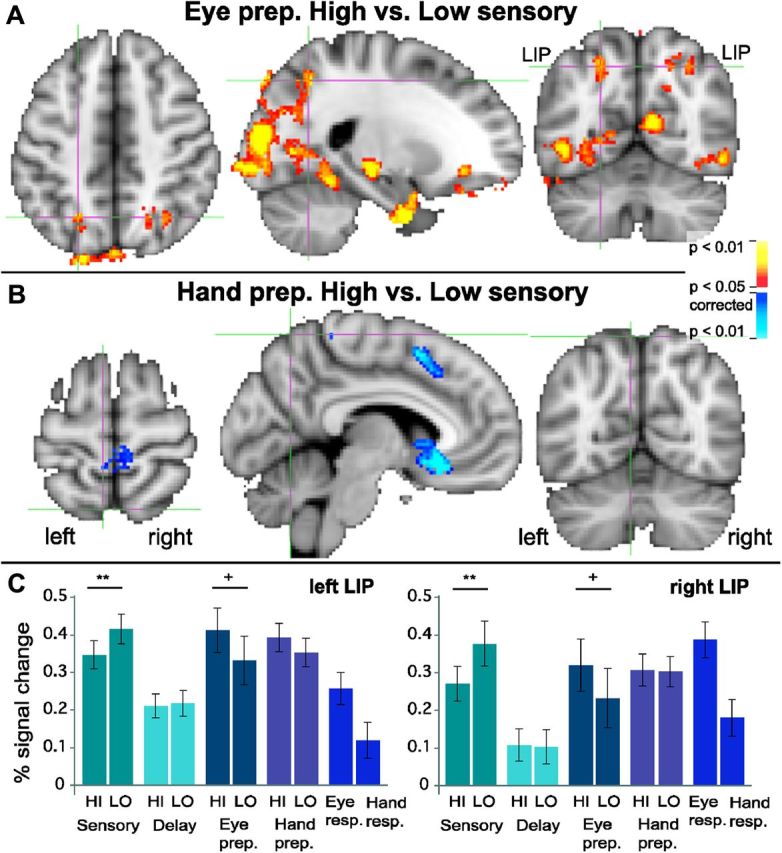

Previous studies in which perceptual decisions and motor planning were not disentangled did, however, find a High>Low sensory evidence modulation in area LIP (Tosoni et al., 2008). This predicts that once the motor plan is known, LIP should show a High>Low modulation, reflecting greater motor preparatory activity for easy oculomotor decisions. Figure 7A confirms this prediction. Once the eye movement preparation instruction was revealed, a bilateral IPS region showed greater activation for eye movement preparation following high than low sensory evidence (p < 0.05, corrected; left IPS: peak voxel z = 2.6, MNI (x, y, z) coordinates = −22, −60, 48; right IPS: peak voxel z = 2.2, MNI coordinates = 30, −64, 46; Fig. 7A). This confirms that putative human LIP is involved in decisions, but in motor decisions: specifically, eye movement decisions about which of the possible targets to saccade to. This motor IPS region did not overlap with any of the parietal PPI regions described above, even when a liberal threshold (p < 0.05, uncorrected) was used for the PPI activations (Fig. 8A). This IPS region showed a Low>High modulation during the sensory period and a High>Low modulation during the motor period (Fig. 7C). Thus, the same region can show different patterns of modulation during sensory and motor stages. The Low>High pattern during the sensory stage in putative LIP likely reflects attention. This suggests that different mechanisms underlie attention and motor decisions, despite the reported overlap between attentional and eye movement control networks (Corbetta et al., 1998).
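Checking whether the motor LIP cluster overlaps the parietal PPI clusters reduces to intersecting two binary masks. A minimal sketch, assuming both masks are given as voxel indices in the same space (e.g., both resampled to the same MNI grid); the function name and the Dice summary are assumptions added for illustration and are not reported in the text.

```python
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]


def mask_overlap(mask_a: Iterable[Voxel], mask_b: Iterable[Voxel]) -> Tuple[Set[Voxel], float]:
    """Return the shared voxels of two binary masks and their Dice coefficient."""
    a, b = set(mask_a), set(mask_b)
    shared = a & b
    if not a and not b:
        return shared, 0.0  # two empty masks: define Dice as 0 to avoid division by zero
    dice = 2 * len(shared) / (len(a) + len(b))
    return shared, dice
```

An empty intersection (Dice = 0) corresponds to the "no overlap" result; because the PPI masks were thresholded liberally, an empty intersection is a conservative finding.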

Figure 7.

Modulation by sensory evidence during motor preparation. A, Red to yellow: putative bilateral LIP (lateral intraparietal area) shows greater activation for high sensory evidence compared with low sensory evidence, but only during the eye movement preparation stage (i.e., after the motor plan is known). B, Dark blue to light blue: areas in posterior parietal cortex, including medial parietal cortex, do not show greater activation for high compared with low sensory evidence during hand movement preparation. In contrast, motor areas such as the left pre-SMA and right paracentral lobule do show greater activation for hand movement preparation in high sensory versus low sensory trials. C, LIP shows a reversal from a Low>High modulation in the sensory stage to a High>Low modulation in the eye movement preparation stage. During the decision (sensory) stage, both left and right LIP show a Low>High modulation (**paired two-tailed p = 0.008 and 0.005; t(15) = 3.03 and 3.28 for left and right LIP, respectively). In contrast, during the eye movement preparation stage, both left and right LIP show a High>Low modulation (+p < 0.05, corrected at the whole-brain level; within the ROI: paired two-tailed p = 0.007 and 0.009, t(15) = 3.14 and 3.02 for left and right LIP, respectively). Note that although the ROI was selected based on the latter contrast, High>Low during eye movement preparation, the contrast is significant at the whole-brain level, corrected, before the selection of any ROI. Error bars represent the SEM.

Both putative LIP and left IFS showed a Low>High pattern during the sensory stage. But unlike left IFS, putative LIP did not show a task-specific increase in effective connectivity with face and house regions during the perceptual decision stage. Thus, the Low>High pattern in left IFS cannot simply indicate attention. Additional analyses also revealed that left IFS lags FFA/PPA activation by 1.2 s. This suggests that the left IFS is not involved in top-down attentional modulation of FFA and PPA.
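One simple way to quantify such a lag is the difference in time-to-peak between two event-related responses. The sketch below assumes the responses have already been averaged across trials and resampled onto a common fine grid with step `dt` seconds; the resampling method and the lag estimator actually used in the study are not specified here, so this is an assumed approach with hypothetical function names.

```python
from typing import Sequence


def time_to_peak(response: Sequence[float], dt: float) -> float:
    """Latency (s) of the largest sample in an event-related response."""
    peak_index = max(range(len(response)), key=lambda i: response[i])
    return peak_index * dt


def peak_lag(target: Sequence[float], reference: Sequence[float], dt: float) -> float:
    """Positive values mean the target region peaks later than the reference region."""
    return time_to_peak(target, dt) - time_to_peak(reference, dt)
```

On coarsely sampled data (e.g., one sample per 1.5 s TR), the estimate is limited to multiples of the sampling interval unless the responses are interpolated first, which is why a fine grid is assumed.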

Figure 7B shows that putative human LIP participates only in eye movement decisions, not in hand movement decisions. Although the posterior IPS showed greater hand than eye preparatory activation (Fig. 6), no posterior parietal area showed a modulation by sensory evidence during the hand preparatory stage (Fig. 7B; note that the contrast Hand Prep Low versus Hand Prep High also showed no posterior parietal activation). In contrast, the left pre-supplementary motor area (pre-SMA), right paracentral lobule, and left anterior cingulate cortex were modulated by the amount of evidence during hand movement preparation (Fig. 7B). This suggests that frontal hand motor networks may be more sensitive than posterior hand motor networks to evidence for different motor decisions. The activation of the pre-SMA is consistent with its involvement in the speed–accuracy tradeoff for hand responses (Forstmann et al., 2010). Eye motor preparatory activations in the parietal eye fields, around the IPS, showed only High>Low modulations (Figs. 7A, 8A, motor LIP), not Low>High modulations, even at p = 0.05, uncorrected (data not shown), in contrast to the Low>High modulation during the sensory period in and around the IPS (Figs. 3B, 7C). This suggests that the mechanism driving Low>High sensory activations in parieto-frontal sensorimotor and attention areas differs from the mechanism driving High>Low eye movement preparation activations in putative human LIP.

To confirm that the connectivity between FFA/PPA and the left IFS reflects causal coupling, not mere correlations, we also performed a PPI analysis based on IFS activations (data not shown). IFS ROIs were identified in single runs based on PPI activations obtained with |FFA(t) − PPA(t)| as the seed time series. We performed the same PPI analysis as before, except using left IFS time series instead of |FFA(t) − PPA(t)|. Results showed that a different network of areas increased their effective connectivity with left IFS, including left OFC, right MPFC, and the right putamen/posterior insula. This suggests further processing in a different, possibly reward-related, network, with information transmitted from IFS to additional areas, rather than back to FFA/PPA. Thus, our PPI analysis did not merely reflect functional correlations between regions, but effective connectivity (Friston, 2011).

Finally, previous studies (Heekeren et al., 2004, 2006) identified a neighboring area in the left superior frontal sulcus [sometimes called posterior dorsolateral PFC (DLPFC)] using High>Low sensory evidence contrasts. Indeed, the peak activation in a High>Low sensory evidence contrast (Fig. 9) was located in the left SFS (peak voxel: z = 5.25; MNI = −28, 20, 42). This difference was due to less negative (below fixation baseline) BOLD responses during high than during low evidence, consistent with previous reports (Heekeren et al., 2004, 2006; Tosoni et al., 2008; Fig. 9). This SFS area did not increase its effective connectivity with |Face(t) − House(t)| during the decision period in our PPI analysis, which disentangled perceptual decisions from motor plans; this suggests that, once motor preparation is controlled for, it does not play a sensory integration role. However, recent simultaneous local field potential (LFP) and single-unit recordings in areas with task-negative BOLD responses have shown a correlation between decreases in LFP power and increased firing rates (Popa et al., 2009). A correlation between more negative BOLD responses and higher firing rates in SFS could suggest a role in modulating or controlling other, perhaps motor, networks. Such coordination or inhibition may be more necessary during the perceptual decision stage than during the rest of the trial (Fig. 9). This is consistent with evidence that repetitive transcranial magnetic stimulation (rTMS) over SFS impairs perceptual decision making when motor responses can be planned at the moment of the decision (Philiastides et al., 2011).

Figure 9.

An SFS area previously identified as posterior DLPFC does not integrate sensory evidence. A, Red to yellow: a region located in the left SFS (see crosshairs) shows significantly greater activation (voxelwise p < 0.0001) for high compared with low sensory evidence (faces and houses). B, A percent BOLD signal change analysis in this region shows that it is deactivated versus fixation baseline during both high and low sensory evidence stimuli. Further, it is not significantly above baseline during other parts of the trial. The positive difference in signal is due to the significantly less negative activation during high compared with low sensory evidence, consistent with previous literature (see Discussion). This region did not increase its effective connectivity with face and house regions during the decision period (Fig. 4). Abbreviations are as in Figure 5 legend.

The presence of multiple activations in and around the IPS that display different functional profiles (e.g., an increase in effective connectivity, but no modulation by sensory evidence during the sensory period; or opposite modulations during sensory and motor periods, as in motor LIP) indicates functional heterogeneity around the IPS. This is consistent with the presence of multiple retinotopic maps inside the IPS (Hagler et al., 2007). It is unclear which of these functionally heterogeneous areas are homologous to the macaque LIP area described in oculomotor decision-making tasks.

This suggests that fMRI activation observed in posterior parietal cortex during a perceptual decision-making task should be interpreted with caution: such activation does not by itself demonstrate LIP involvement in the accumulation of sensory evidence, especially if motor plans have not been disentangled from the perceptual decision. Our results show that when motor plans are disentangled from perceptual decisions, the left inferior frontal sulcus participates in perceptual decisions independent of effector-specific motor preparation, whereas a region in the IPS, putative LIP, participates in eye movement decisions once an oculomotor plan can be formed.

Discussion

Using an event-related fMRI design that disentangled motor plans from face–house perceptual decisions, we showed that the left IFS integrates sensory evidence during perceptual decisions, while putative LIP participates in motor, but not perceptual, decisions. The IFS showed greater activation for low- than high-evidence decisions. LIP showed a High>Low pattern during motor decisions, when subjects had to decide which target to saccade to. This suggests that different regions and types of decisions use different neural signatures.

A network of areas increased its effective connectivity with face and house regions during the perceptual decision period, before any specific motor responses could be planned. This network comprised lateral/inferior prefrontal areas (left IFS, IFG, right inferior pre-CS), posterior MFG/SFS, left superior parietal gyrus and anterior IPS, and right LOC. This parieto-frontal network is consistent with previous neuroimaging studies of perceptual decision making, including dot motion direction decisions (Kayser et al., 2010a,b; Liu and Pleskac, 2011; Rahnev et al., 2011); recognition of gradually revealed objects (Ploran et al., 2007, 2011); categorization of distorted dot-pattern configurations (Vogels et al., 2002); categorical decisions of animated point-light skeleton models (Li et al., 2007); and audiovisual object decisions (Noppeney et al., 2010).

By controlling for motor preparation, avoiding a priori assumptions about sensory evidence modulation patterns, and using an effective connectivity approach that permitted the study of sensory integration from separate areas representing alternative sensory evidence, we showed that sensorimotor areas LIP and FEF do not accumulate sensory evidence toward perceptual decisions.

Importantly, in our study neither the target location (Liu and Pleskac, 2011) nor the type of effector (Rahnev et al., 2011) was known during the perceptual decision. Several parietal areas code for spatial targets independent of hand or eye effectors, and plan movements with either the left or right hand (Medendorp et al., 2005). Two alternative hand movements can be planned simultaneously (Cisek and Kalaska, 2005). To disentangle motor preparation from perceptual decision making, it is thus paramount that neither the spatial target location nor the effector type be known until after the perceptual decision.

The left IFS (extending onto MFG), a subregion of DLPFC, was the only region showing increased effective connectivity with face and house regions that (1) was modulated by sensory evidence levels during the perceptual decision; (2) showed greatest activity during the decision stage; and (3) was activated above baseline. Even if the second requirement were omitted, e.g., allowing equal activation during decision and motor stages, this was still the only PPI region showing above-baseline BOLD responses modulated by sensory evidence levels during perceptual decisions.

During the motor preparation stage, evidence for faces versus houses became converted into evidence for a particular movement direction. Indeed, putative LIP was modulated by sensory evidence during the eye movement preparation stage. This suggests that the confidence/uncertainty of the perceptual decision is passed on to the motor system once a motor plan can be formed, and that it affects motor preparatory activation. This is consistent with single-cell evidence that area LIP is sensitive to confidence (Kiani and Shadlen, 2009).

The fact that we found the predicted High>Low modulation in LIP once specific saccade plans became possible argues against the possibility that fMRI lacked the sensitivity to detect such modulations in LIP. Similarly, the robust effective connectivity shown by other brain areas, including left IFS, suggests that fMRI has the sensitivity to detect sensory integration regions. Moreover, our PPI analysis also revealed regions with greater motor than perceptual decision-related activations (e.g., aIPS), arguing against a possible bias against regions with motor responses.

Prefrontal cortex

Left IFS showed a Low>High sensory evidence modulation, consistent with fMRI studies on varying dot motion coherence levels (Kayser et al., 2010a,b; Liu and Pleskac, 2011), degraded visual and auditory object evidence (Noppeney et al., 2010), and complex versus simple perceptual decisions (Li et al., 2007).

Our IFS activations were very anterior, consistent with findings that posterior, but not anterior, IFS is modulated by attention (Kayser et al., 2010b). Frontoparietal attention areas (Corbetta et al., 1998), which showed a Low>High pattern in the full GLM analysis, did not increase their effective connectivity with face and house regions during perceptual decisions. Also, peak activations for IFS lagged FFA/PPA by 1.2 s, arguing against a top-down attentional role for IFS. Given lower activations during the delay than the decision period in left IFS, a memory role also seems unlikely.

Our results suggest that DLPFC is not homogeneous but comprises multiple functionally distinct areas (IFS, MFG, and SFS), not all of which participate in perceptual decision making. This is consistent with the multiple cytoarchitectonic subdivisions of DLPFC (Petrides and Pandya, 1999). In addition, different kinds of decisions involve different prefrontal areas. Value-based decision making involves ventromedial PFC (Basten et al., 2010; Philiastides et al., 2010; Wunderlich et al., 2010). Predictive coding of categories also involves MPFC (Summerfield et al., 2006).

Area LIP and posterior parietal cortex

Once a saccade choice became possible, a bilateral posterior IPS region showed a High>Low activation pattern (Fig. 7). This is consistent with previous reports of LIP involvement, when saccade plans are already known (Gold and Shadlen, 2007; Tosoni et al., 2008). This region was not part of the PPI network that increased its effective connectivity with FFA/PPA during the perceptual decision. This suggests that putative LIP is involved in motor decisions, rather than perceptual decisions. We did not observe a High>Low evidence modulation for hand movement plans in medial parietal areas, in contrast to Tosoni et al. (2008). This may be because we used button presses rather than pointing, thus involving smaller movements.

A single-unit LIP study showed that the High>Low pattern disappears in many LIP neurons in the absence of a specific motor plan, although some neurons continue to show motion direction and target color selectivity (Bennur and Gold, 2011). In that study, targets appeared at two known locations, but the monkey could not know in advance which of the two it would have to saccade to. It is possible that LIP participates in motion direction decisions, given its connectivity with the middle temporal area (MT), but not in perceptual decision making more generally.

Both LIP and PFC neurons are involved in rule-based categorization, and some evidence suggests that LIP neurons show stronger and earlier category-selective responses (Swaminathan and Freedman, 2012). However, LIP neurons require weeks of training before they show such category selectivity (Freedman and Assad, 2011). Early in training, PFC may be more involved in categorization, with category effects becoming evident in LIP only after training (Swaminathan and Freedman, 2012). Indeed, a recent recording study found earlier and stronger rule-based signals in PFC than in parietal cortex (Goodwin et al., 2012). This fits our finding that sensory evidence level modulated the left IFS, but not parietal cortex, during perceptual decisions. Our subjects also received considerably less training than macaques. Moreover, rule-based decision making involves applying arbitrary rules to identical sensory input. Perceptual decision making, by contrast, involves integrating degraded sensory input. LIP may therefore participate in rule-based decisions rather than in sensory integration more generally. Finally, LIP cells showing category selectivity are usually selected based on their memory (delay-period) responses (Swaminathan and Freedman, 2012). It is unknown whether LIP cells that do not respond during delay periods also show such category-selective signals or play a role in perceptual decision making more generally.

Recent studies suggest functional heterogeneity within macaque LIP (Premereur et al., 2011). This agrees with our finding of functionally heterogeneous IPS regions and with previous reports of multiple retinotopic maps within human IPS (Hagler et al., 2007). Our results thus suggest that LIP cannot be assumed to be generally involved in perceptual decision making.

In our study, the left SPG and aIPS (putative AIP; Filimon, 2010) were part of the PPI network that showed increased effective connectivity with face and house voxels during perceptual decisions. However, neither SPG nor aIPS was effector specific: both were equally activated during hand and eye movement preparation. Multiple possible motor plans may be activated in parallel (Cisek and Kalaska, 2005, 2010). Subjects knew that the upcoming response would involve either an eye or a hand movement, so these activations may reflect generic hand and eye motor readiness rather than a specific movement plan. Neither SPG nor aIPS was modulated by sensory evidence level during the perceptual decision stage. Both were also less active during the perceptual decision than during the motor stage.
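The PPI network referred to in this section is defined by psychophysiological interaction analysis (Friston et al., 1997). A region belongs to the network if its coupling with a seed region (in this study, face and house voxels) changes with task context. The sketch below shows only the core regression and makes several simplifying assumptions. It forms the interaction directly from BOLD-level signals, without deconvolution. The condition coding and all data are hypothetical. It is not the analysis pipeline used in this study.

```python
import numpy as np

def ppi_design(seed_ts, psych):
    """Simplified PPI design matrix (after Friston et al., 1997).

    Columns: psychological regressor, physiological (seed) regressor,
    their product (the PPI term), and an intercept. Both inputs are
    mean-centred before multiplying. NOTE: the product is formed at the
    BOLD level. Standard SPM/FSL PPI pipelines treat the haemodynamics
    more carefully.
    """
    seed = np.asarray(seed_ts, dtype=float)
    psy = np.asarray(psych, dtype=float)
    seed_c = seed - seed.mean()
    psy_c = psy - psy.mean()
    return np.column_stack([psy_c, seed_c, seed_c * psy_c, np.ones_like(seed_c)])

def ppi_effect(target_ts, seed_ts, psych):
    """Least-squares weight on the PPI term, i.e. the difference in
    seed-target slope between the two psychological conditions."""
    X = ppi_design(seed_ts, psych)
    beta, *_ = np.linalg.lstsq(X, np.asarray(target_ts, dtype=float), rcond=None)
    return beta[2]

# Synthetic demo (not real data): coupling is present only when psych == 1.
rng = np.random.default_rng(0)
n = 400
psych = np.tile(np.r_[np.zeros(10), np.ones(10)], n // 20)   # hypothetical task blocks
seed = rng.standard_normal(n)                                  # hypothetical seed ROI signal
target = (0.5 * seed
          + 1.0 * (seed - seed.mean()) * (psych - psych.mean())
          + 0.1 * rng.standard_normal(n))
print(round(ppi_effect(target, seed, psych), 2))
```

A positive PPI weight means the target's coupling with the seed is stronger in the condition coded 1 (here, a stand-in for the perceptual decision period). The other regressors absorb the main effects of task and seed activity.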

Consistent with our results, a recent multivoxel pattern analysis study found that motion direction could not be decoded from parietal sensorimotor response regions once dot-motion directions were disentangled from left and right button presses (Hebart et al., 2012). Instead, perceptual choices of dot-motion direction could be predicted with 61% accuracy from deactivations in an angular gyrus region when motion coherence (sensory evidence) was zero. When sensory evidence was highest, classification accuracy was at chance. This further suggests that different mechanisms may underlie categorical choices and the accumulation of sensory evidence.
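The contrast between 61% and chance-level accuracy above depends on testing decoding accuracy against chance. The sketch below shows one simple option, an exact one-sided binomial test. The trial counts are hypothetical, and this is not necessarily the test used by Hebart et al. (2012). The test also assumes independent test trials, an assumption that cross-validated fMRI data often violate. Permutation tests are a common alternative.

```python
from math import comb

def binomial_p_above_chance(n_correct, n_trials, chance=0.5):
    """One-sided exact binomial p-value, P(X >= n_correct), where
    X ~ Binomial(n_trials, chance) under the null of chance-level decoding."""
    return sum(comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
               for k in range(n_correct, n_trials + 1))

# Hypothetical counts: 61% and 50% correct out of 100 test trials.
print(binomial_p_above_chance(61, 100))
print(binomial_p_above_chance(50, 100))
```

With 100 hypothetical trials, 61 correct reaches p < 0.05 but 50 correct does not. With fewer trials, the same 61% accuracy could fail to reach significance, so the number of test trials matters as much as the percentage.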

It has been argued that cognitive, perceptual, and motor processes are not necessarily separate components of the functional brain architecture, especially in natural behavior (Cisek and Kalaska, 2010). The motor system and evidence-accumulating areas appear to be tightly coupled, such that evolving decisions are reflected in motor activations (Klein-Flügge and Bestmann, 2012). Similarly, evolving lexical decisions are reflected in hand trajectories toward word or nonword stimuli (Barca and Pezzulo, 2012). Thus, not only LIP but probably even primary motor cortex (M1) can provide a readout of the accumulation of sensory (even linguistic) evidence when perceptual decisions are mapped onto motor decisions. This is consistent with immediate sharing of information between higher-level decision-making and motor systems whenever such sharing is possible. It is less consistent with a serial processing model, in which the decision is first completed and then passed on to the motor system. Where evidence accumulation happens may also depend on the nature of the stimuli. Highly “embodied” concepts (e.g., “hammer”) activate posterior parietal areas that process both sensory and hand-related motor information (Filimon et al., 2007, 2009; Pulvermüller and Fadiga, 2010). Our results suggest, however, that perceptual decisions involving abstract concepts, without well-trained specific motor mappings, rely primarily on prefrontal mechanisms. Future learning, TMS, and MEG experiments could further clarify how prefrontal and sensorimotor areas interact in different contexts.
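The accumulation-to-bound process discussed above, as formalized in diffusion models (Ratcliff, 1978), can be illustrated with a minimal simulation. This sketch assumes a symmetric two-bound drift-diffusion model. The drift values, threshold, noise level, and time step are arbitrary illustrative choices and are not fitted to any data. The simulation shows that stronger evidence (higher drift) reaches a bound sooner and more accurately. It says nothing about which brain region implements the process.

```python
import numpy as np

def simulate_ddm(drift, threshold=1.0, noise=1.0, dt=0.001,
                 max_t=5.0, n_trials=500, seed=0):
    """Euler simulation of a symmetric two-bound drift-diffusion process.

    Evidence x starts at 0 and evolves as dx = drift*dt + noise*sqrt(dt)*N(0, 1)
    until it reaches +threshold (choice +1) or -threshold (choice -1).
    Returns decision times (NaN if no bound was reached by max_t) and choices.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)
    rt = np.full(n_trials, np.nan)
    choice = np.zeros(n_trials, dtype=int)
    active = np.ones(n_trials, dtype=bool)
    for step in range(1, int(max_t / dt) + 1):
        # Only trials that have not yet reached a bound keep accumulating.
        x[active] += drift * dt + noise * np.sqrt(dt) * rng.standard_normal(active.sum())
        crossed = active & (np.abs(x) >= threshold)
        rt[crossed] = step * dt
        choice[crossed] = np.sign(x[crossed]).astype(int)
        active &= ~crossed
        if not active.any():
            break
    return rt, choice

# Illustrative drifts standing in for "low" and "high" sensory evidence.
for label, drift in [("low", 0.5), ("high", 2.0)]:
    rt, choice = simulate_ddm(drift)
    done = ~np.isnan(rt)
    print(label, "mean DT:", round(np.nanmean(rt), 3),
          "accuracy:", round(np.mean(choice[done] == 1), 3))
```

For this model, with bounds at ±a, drift v, and noise s, the mean decision time has the closed form (a/v)·tanh(av/s²). The simulated means should approximate this value, up to sampling error and a small bias from the discrete time step.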

Footnotes

This work was supported by Deutsche Forschungsgemeinschaft (Grant HE 3347/2-1 to H.R.H.), as well as by the Max Planck Society (F.F., M.G.P., J.D.N., N.A.K., and H.R.H.). Note that a majority of this work was performed while all authors were at the Max Planck Institute for Human Development, Berlin, Germany. We thank Hadi Roohani for help with eye tracker and behavioral analysis scripts; Jürgen Baudewig and Christian Kainz for MRI scanning support at Freie Universität Berlin; and H.S. Scholte for sharing his MATLAB FSL scripts.

References

- Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- Barca L, Pezzulo G. Unfolding visual lexical decision in time. PLoS One. 2012;7:e35932. doi: 10.1371/journal.pone.0035932.

- Basten U, Biele G, Heekeren HR, Fiebach CJ. How the brain integrates costs and benefits during decision making. Proc Natl Acad Sci U S A. 2010;107:21767–21772. doi: 10.1073/pnas.0908104107.

- Bennur S, Gold JI. Distinct representations of a perceptual decision and the associated oculomotor plan in the monkey lateral intraparietal area. J Neurosci. 2011;31:913–921. doi: 10.1523/JNEUROSCI.4417-10.2011.

- Cisek P, Kalaska JF. Neural correlates of reaching decisions in dorsal premotor cortex: specification of multiple direction choices and final selection of action. Neuron. 2005;45:801–814. doi: 10.1016/j.neuron.2005.01.027.

- Cisek P, Kalaska JF. Neural mechanisms for interacting with a world full of action choices. Annu Rev Neurosci. 2010;33:269–298. doi: 10.1146/annurev.neuro.051508.135409.

- Corbetta M, Akbudak E, Conturo TE, Snyder AZ, Ollinger JM, Drury HA, Linenweber MR, Petersen SE, Raichle ME, Van Essen DC, Shulman GL. A common network of functional areas for attention and eye movements. Neuron. 1998;21:761–773. doi: 10.1016/s0896-6273(00)80593-0.

- Dakin SC, Hess RF, Ledgeway T, Achtman RL. What causes non-monotonic tuning of fMRI response to noisy images? Curr Biol. 2002;12:R476–R477. doi: 10.1016/s0960-9822(02)00960-0.

- Donner TH, Siegel M, Fries P, Engel AK. Buildup of choice-predictive activity in human motor cortex during perceptual decision making. Curr Biol. 2009;19:1581–1585. doi: 10.1016/j.cub.2009.07.066.

- Filimon F. Human cortical control of hand movements: parietofrontal networks for reaching, grasping, and pointing. Neuroscientist. 2010;16:388–407. doi: 10.1177/1073858410375468.

- Filimon F, Nelson JD, Hagler DJ, Sereno MI. Human cortical representations for reaching: mirror neurons for execution, observation, and imagery. Neuroimage. 2007;37:1315–1328. doi: 10.1016/j.neuroimage.2007.06.008.

- Filimon F, Nelson JD, Huang RS, Sereno MI. Multiple parietal reach regions in humans: cortical representations for visual and proprioceptive feedback during on-line reaching. J Neurosci. 2009;29:2961–2971. doi: 10.1523/JNEUROSCI.3211-08.2009.

- Forstmann BU, Anwander A, Schäfer A, Neumann J, Brown S, Wagenmakers EJ, Bogacz R, Turner R. Cortico-striatal connections predict control over speed and accuracy in perceptual decision making. Proc Natl Acad Sci U S A. 2010;107:15916–15920. doi: 10.1073/pnas.1004932107.

- Freedman DJ, Assad JA. A proposed common neural mechanism for categorization and perceptual decisions. Nat Neurosci. 2011;14:143–146. doi: 10.1038/nn.2740.

- Friston KJ. Functional and effective connectivity: a review. Brain Connect. 2011;1:13–36. doi: 10.1089/brain.2011.0008.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage. 1997;6:218–229. doi: 10.1006/nimg.1997.0291.

- Gold JI, Shadlen MN. The influence of behavioral context on the representation of a perceptual decision in developing oculomotor commands. J Neurosci. 2003;23:632–651. doi: 10.1523/JNEUROSCI.23-02-00632.2003.

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Goodwin SJ, Blackman RK, Sakellaridi S, Chafee MV. Executive control over cognition: stronger and earlier rule-based modulation of spatial category signals in prefrontal cortex relative to parietal cortex. J Neurosci. 2012;32:3499–3515. doi: 10.1523/JNEUROSCI.3585-11.2012.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6.

- Grinband J, Hirsch J, Ferrera VP. A neural representation of categorization uncertainty in the human brain. Neuron. 2006;49:757–763. doi: 10.1016/j.neuron.2006.01.032.

- Hagler DJ, Jr, Riecke L, Sereno MI. Parietal and superior frontal visuospatial maps activated by pointing and saccades. Neuroimage. 2007;35:1562–1577. doi: 10.1016/j.neuroimage.2007.01.033.

- Hebart MN, Donner TH, Haynes JD. Human visual and parietal cortex encode visual choices independent of motor plans. Neuroimage. 2012;63:1393–1403. doi: 10.1016/j.neuroimage.2012.08.027.

- Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG. A general mechanism for perceptual decision-making in the human brain. Nature. 2004;431:859–862. doi: 10.1038/nature02966.

- Heekeren HR, Marrett S, Ruff DA, Bandettini PA, Ungerleider LG. Involvement of human left dorsolateral prefrontal cortex in perceptual decision making is independent of response modality. Proc Natl Acad Sci U S A. 2006;103:10023–10028. doi: 10.1073/pnas.0603949103.

- Heekeren HR, Marrett S, Ungerleider LG. The neural systems that mediate human perceptual decision making. Nat Rev Neurosci. 2008;9:467–479. doi: 10.1038/nrn2374.

- Ho TC, Brown S, Serences JT. Domain general mechanisms of perceptual decision making in human cortex. J Neurosci. 2009;29:8675–8687. doi: 10.1523/JNEUROSCI.5984-08.2009.

- Kayser AS, Buchsbaum BR, Erickson DT, D'Esposito M. The functional anatomy of a perceptual decision in the human brain. J Neurophysiol. 2010a;103:1179–1194. doi: 10.1152/jn.00364.2009.

- Kayser AS, Erickson DT, Buchsbaum BR, D'Esposito M. Neural representations of relevant and irrelevant features in perceptual decision making. J Neurosci. 2010b;30:15778–15789. doi: 10.1523/JNEUROSCI.3163-10.2010.

- Kiani R, Shadlen MN. Representation of confidence associated with a decision by neurons in the parietal cortex. Science. 2009;324:759–764. doi: 10.1126/science.1169405.

- Kim JN, Shadlen MN. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nat Neurosci. 1999;2:176–185. doi: 10.1038/5739.

- Klein-Flügge MC, Bestmann S. Time-dependent changes in human corticospinal excitability reveal value-based competition for action during decision processing. J Neurosci. 2012;32:8373–8382. doi: 10.1523/JNEUROSCI.0270-12.2012.

- Leergaard TB, Bjaalie JG. Topography of the complete corticopontine projection: from experiments to principal maps. Front Neurosci. 2007;1:211–223. doi: 10.3389/neuro.01.1.1.016.2007.

- Li S, Ostwald D, Giese M, Kourtzi Z. Flexible coding for categorical decisions in the human brain. J Neurosci. 2007;27:12321–12330. doi: 10.1523/JNEUROSCI.3795-07.2007.

- Liu T, Pleskac TJ. Neural correlates of evidence accumulation in a perceptual decision task. J Neurophysiol. 2011;106:2383–2398. doi: 10.1152/jn.00413.2011.

- Medendorp WP, Goltz HC, Crawford JD, Vilis T. Integration of target and effector information in human posterior parietal cortex for the planning of action. J Neurophysiol. 2005;93:954–962. doi: 10.1152/jn.00725.2004.

- Noppeney U, Ostwald D, Werner S. Perceptual decisions formed by accumulation of audiovisual evidence in prefrontal cortex. J Neurosci. 2010;30:7434–7446. doi: 10.1523/JNEUROSCI.0455-10.2010.