Abstract

The parietal cortex is highly multimodal and plays a key role in the processing of objects and actions in space, both in human and nonhuman primates. Despite the accumulated knowledge in both species, we lack the following: (1) a general description of the multisensory convergence in this cortical region to situate sparser lesion and electrophysiological recording studies; and (2) a way to compare and extrapolate monkey data to human results. Here, we use functional magnetic resonance imaging (fMRI) in the monkey to provide a bridge between human and monkey studies. We focus on the intraparietal sulcus (IPS) and specifically probe its involvement in the processing of visual, tactile, and auditory moving stimuli around and toward the face. We describe three major findings: (1) the visual and tactile modalities are strongly represented and activate mostly nonoverlapping sectors within the IPS. The visual domain occupies its posterior two-thirds and the tactile modality its anterior one-third. The auditory modality is much less represented, mostly on the medial IPS bank. (2) Processing of the movement component of sensory stimuli is specific to the fundus of the IPS and coincides with the anatomical definition of monkey ventral intraparietal area (VIP). (3) A cortical sector within VIP processes movement around and toward the face independently of the sensory modality. This amodal representation of movement may be a key component in the construction of peripersonal space. Overall, our observations highlight strong homologies between macaque and human VIP organization.

Introduction

The parietal cortex is considered to be an “association” cortex that receives convergent multimodal sensory inputs. This view, initially based on anatomical evidence, has been confirmed by single-cell electrophysiological recordings. Briefly, the parietal cortex receives visual afferents from the extrastriate visual cortex (Maunsell and van Essen, 1983; Ungerleider and Desimone, 1986). In agreement with this, visual neurons have been recorded in several parietal regions, coding the location and structure of visual items as well as their movement in 3D space with respect to the subject. These neurons can prefer large-field dynamic visual stimuli, such as expanding or contracting optic flow patterns or moving bars (Schaafsma and Duysens, 1996; Bremmer et al., 2002a; Zhang et al., 2004), or smaller moving visual stimuli (Vanduffel et al., 2001). The parietal cortex also receives monosynaptic afferents from the somatosensory cortex (Seltzer and Pandya, 1980; Disbrow et al., 2003). Accordingly, tactile neuronal responses have been documented in this region (Duhamel et al., 1998; Avillac et al., 2005). A more modest auditory input to the parietal cortex, originating in the caudomedial auditory belt, has also been described (Lewis and Van Essen, 2000a), consistent with reports of parietal neurons responsive to auditory stimuli (Mazzoni et al., 1996; Grunewald et al., 1999; Schlack et al., 2005). Finally, the parietal cortex receives vestibular afferents from several cortical regions with direct input from the vestibular nuclei (Faugier-Grimaud and Ventre, 1989; Akbarian et al., 1994) and accordingly contains neurons that are modulated by vestibular information (Bremmer et al., 2002b; Chen et al., 2011).

This heavily multimodal convergence within the parietal cortex appears to serve two key functions: on the one hand, the processing of space and objects in space and on the other hand the preparation of oriented actions in space (i.e., sensorimotor transformation). These functions are documented by lesion, fMRI, and single-cell recording studies (Colby and Goldberg, 1999; Bremmer et al., 2002b; Andersen and Cui, 2009; Orban, 2011).

Despite this accumulated knowledge on parietal functions, little effort has been directed toward functional neuroimaging of multimodal convergence within this cortex (Bremmer et al., 2001; Sereno and Huang, 2006). In particular, no such data are available in the nonhuman primate. This prevents a direct transfer of the knowledge accumulated from single-cell recording studies in the macaque to observations derived from human studies. Here, we propose to bridge this gap using nonhuman primate fMRI. More precisely, we focus on the intraparietal sulcus (IPS). This region has distinctive functions compared with the parietal convexity and is proposed to be involved in the construction of a multisensory representation of space (Bremmer, 2011). In particular, a subregion of the IPS is thought to code peripersonal space through the processing of stimuli moving toward the subject (Graziano and Cooke, 2006). We specifically probe the involvement of the IPS in the processing of visual, tactile, and auditory stimuli moving around and toward the face.

Materials and Methods

Subjects and materials

Two rhesus monkeys (female M1, male M2, 5–7 years old, 5–7 kg) participated in the study. The animals were implanted with a plastic MRI-compatible headset covered by dental acrylic. Anesthesia during surgery was induced with Zoletil (tiletamine-zolazepam, Virbac, 5 mg/kg) and followed by isoflurane (Belamont, 1–2%). Postsurgical analgesia was provided with Temgesic (buprenorphine, 0.3 mg/ml, 0.01 mg/kg). During recovery, appropriate analgesic and antibiotic coverage was provided. The surgical procedures conformed to European and National Institutes of Health guidelines for the care and use of laboratory animals.

During the scanning sessions, monkeys sat in a sphinx position in a plastic monkey chair positioned within a horizontal magnet (1.5-T MR scanner Sonata; Siemens), facing a translucent screen placed 90 cm from the eyes. Their head was restrained and equipped with MRI-compatible headphones customized for monkeys (MR Confon). A radial receive-only surface coil (10 cm diameter) was positioned above the head. Eye position was monitored at 120 Hz during scanning using a pupil-corneal reflection tracking system (Iscan). Monkeys were rewarded with liquid dispensed by a computer-controlled reward delivery system (Crist) through a plastic tube positioned at their mouth. The task, all behavioral parameters, and the sensory stimulations were controlled and monitored by two computers running Matlab and Presentation. Visual stimulations were projected onto the screen with a Canon XEED SX60 projector. Auditory stimulations were delivered through the MR Confon headphones. Tactile stimulations were delivered through Teflon tubing and 6 articulated plastic arms connected to remote air-pressure electro-valves. Monkeys were trained in a mock scanner environment that replicated the actual MRI scanner setup as closely as possible.

Task and stimuli

The animals were trained to maintain fixation on a red central spot (0.24° × 0.24°) while stimulations (visual, auditory, or tactile) were delivered. The monkeys were rewarded for staying within a 2° × 2° tolerance window centered on the fixation spot. The reward delivery was scheduled to encourage long fixation without breaks (i.e., the interval between successive deliveries was decreased and their amount was increased, up to a fixed limit, as long as the eyes did not leave the window). The different modalities were tested in independent interleaved runs (see below for the organization of the runs).

Visual stimulations.

Large-field (32° × 32°) visual stimulations consisted of white bars (3.2° × 24.3°; horizontal, vertical, or 45° oblique) or white random dots on a black background (see Fig. 1A for an example). Three conditions were tested in blocks of 10 volumes (TR = 2.08 s): (1) coherent movement, with bars moving in one of 8 directions or expanding or contracting random-dot patterns (with 5 possible optic flow origins: center, top left (−8°, 8°), top right, lower left, and lower right); each coherent movement sequence lasted 850 ms, and 24 such sequences were pseudo-randomly presented in a given coherent movement block; (2) scrambled movement, in which the frames of a given coherent movement sequence were randomly reordered so that no coherent movement component remained; each scrambled movement sequence lasted 850 ms, and the 24 scrambled sequences of a block matched the 24 coherent movement sequences of the coherent movement block from which they were derived, providing precise visual controls for the coherent movement blocks; (3) static, in which individual frames randomly picked from the coherent movement sequences were each presented for 250 ms; these frames likewise matched the coherent movement sequences of the block from which they were derived, again providing precise visual controls for the coherent movement blocks. As a result, within a given block, 850 ms portions of the different stimuli (bars/dots/directions/origins) of the same category (coherent/scrambled/static) were pseudo-randomly interleaved. All of these stimulations were optimized for the ventral intraparietal area (VIP): we used large-field visual stimuli moving at 100°/s in the coherent movement condition (Bremmer et al., 2002a).
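The logic of the two visual controls can be illustrated with a short Python sketch. It is not the stimulus code used in this study: a movie is represented by a hypothetical NumPy array of frames, the 60 Hz display frame rate is an assumption, and the helper names are illustrative. The scrambled control reuses the frames of one coherent sequence in a random order, and the static control samples single frames from it (each to be held for 250 ms); the 850 ms sequences, 24 sequences per block, and 10-volume blocks at TR = 2.08 s come from the text.

```python
import numpy as np

TR_S = 2.08              # repetition time (s), from the text
VOLUMES_PER_BLOCK = 10   # volumes per block, from the text
SEQ_MS = 850             # duration of one coherent or scrambled sequence (ms)
SEQS_PER_BLOCK = 24      # sequences per block, from the text
FRAME_RATE_HZ = 60       # assumption: display refresh rate, not stated in the text


def scramble_sequence(frames, rng):
    """Scrambled control: the frames of one coherent sequence, in random order."""
    return frames[rng.permutation(len(frames))]


def static_frames(frames, n_frames, rng):
    """Static control: single frames picked at random from a coherent sequence
    (each one would then be displayed for 250 ms)."""
    return frames[rng.integers(0, len(frames), size=n_frames)]


rng = np.random.default_rng(0)
n_frames = SEQ_MS * FRAME_RATE_HZ // 1000      # frames in one 850 ms sequence
# Hypothetical movie: each 4 x 4 "frame" is filled with its own frame index.
coherent = np.arange(n_frames, dtype=float)[:, None, None] * np.ones((1, 4, 4))
scrambled = scramble_sequence(coherent, rng)
static = static_frames(coherent, n_frames=3, rng=rng)

block_s = VOLUMES_PER_BLOCK * TR_S             # duration of one block (s)
stim_s = SEQS_PER_BLOCK * SEQ_MS / 1000        # stimulation time per block (s)
print(f"block {block_s:.1f} s, stimulation {stim_s:.1f} s")
```

Because the scrambled sequence contains exactly the same frames as its coherent source, the two conditions are matched in luminance and local content and differ only in temporal order; 24 × 850 ms = 20.4 s of stimulation fits within one 20.8 s block.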

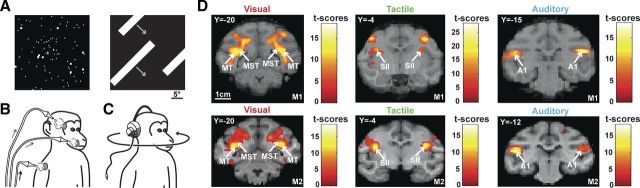

Figure 1.

Stimulations for the three sensory modalities and their corresponding primary activations. A, Two examples of visual stimuli: coherent optic flows and moving bars. The main condition of interest, coherent movement, was mixed with two control conditions (scrambled and static stimulations) and a baseline condition (fixation only). B, Schematics of the tactile stimulations: air puffs were delivered to the center of the face, the periphery of the face, or the shoulders, on both the left and right sides of the monkeys. C, Schematics of the auditory stimulations: moving sounds were delivered to the monkeys via headphones. We used the same conditions as for the visual modality. D, Statistical parametric maps (SPMs) showing the primary activations obtained for the three sensory modalities. The visual activations (left column) are specific to visual motion and correspond to the conjunction analysis of two contrasts (coherent movement vs scrambled and coherent movement vs static; p < 0.05, FWE-corrected level, masked to display only positive signal change relative to the fixation baseline). The tactile activations (middle column) correspond to stimulations to the center of the face relative to the fixation baseline (p < 0.05, FWE-corrected level). The auditory activations (right column) correspond to coherent movement relative to the fixation baseline (p < 0.05, FWE-corrected level). These results are presented individually for each monkey and displayed on coronal sections of each monkey's anatomy (M1, top row; M2, bottom row). MT, middle temporal area; MST, medial superior temporal area; SII, secondary somatosensory area; A1, primary auditory area.

Auditory stimulations.

In both monkeys, we used complex auditory stimuli moving coherently in near space around the head (binaural, 3D holographic sounds, http://gprime.net/flash.php/soundimmersion, http://onemansblog.com/2007/05/13/get-your-virtual-haircut-and-other-auditory-illusions/; durations ranging between 1.7 and 11.5 s). We selected the stimuli that evoked the best perception and localization of movement in a group of 12 human subjects (average age, 45.5 years). Scrambled stimulations were obtained by cutting the movement sounds into 100 ms or 300 ms segments and randomly mixing them. Static stimulations consisted of auditory stimuli evoking a sound source that is stable in space (selected from the same 3D holographic sound database and evaluated by the same group of subjects as the coherent movement sounds). In M2, we also used pure-tone auditory stimulations (generated in Goldwave, at 300, 500, and 800 Hz). For these pure tones, coherent movement stimulations consisted of two kinds of movement: (1) 2.0 s sounds simulating movement from far to near (linear increase in signal amplitude + Doppler effect) or the opposite (linear decrease in signal amplitude); and (2) sounds moving between the left and the right ear (3.5 s stimuli corresponding to two back-and-forth cycles, produced by opposite amplitude variations between the two binaural channels and a 1 Hz binaural frequency offset). Human subjects rated these stimuli less consistently than the complex sounds, usually describing them as moving within the head. Scrambled stimulations were obtained as for the complex scrambled stimuli. Static stimuli consisted of constant left-ear or right-ear stimulations. Within a given block, 2 s and 3.5 s stimulations of a given category (coherent/scrambled/static) were pseudo-randomly interleaved.
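The pure-tone movements used in M2 and the segment shuffling used for the scrambled controls can be sketched as follows. This is an illustration under stated assumptions, not the Goldwave procedure used here: the 44.1 kHz sample rate and the cosine-shaped interaural amplitude modulation are assumptions, the Doppler shift of the far-to-near sounds is omitted, and all function names are hypothetical. The 2.0 s and 3.5 s durations, the two back-and-forth cycles, the 1 Hz interaural frequency offset, the tone frequencies, and the 100 ms/300 ms scrambling segments come from the text.

```python
import numpy as np

FS = 44_100  # assumption: audio sample rate (Hz), not stated in the text


def looming_tone(freq_hz=500.0, dur_s=2.0, approaching=True):
    """Pure tone with a linear amplitude ramp (far-to-near if approaching).
    Sketch only: the Doppler shift mentioned in the text is omitted."""
    t = np.arange(int(dur_s * FS)) / FS
    env = t / dur_s if approaching else 1.0 - t / dur_s
    return env * np.sin(2 * np.pi * freq_hz * t)


def left_right_tone(freq_hz=500.0, dur_s=3.5, cycles=2, offset_hz=1.0):
    """Stereo tone moving between the ears: opposite amplitude modulation of the
    two channels plus a small interaural frequency offset."""
    t = np.arange(int(dur_s * FS)) / FS
    pan = 0.5 * (1 + np.cos(2 * np.pi * cycles * t / dur_s))  # 1 = fully left
    left = pan * np.sin(2 * np.pi * freq_hz * t)
    right = (1 - pan) * np.sin(2 * np.pi * (freq_hz + offset_hz) * t)
    return np.stack([left, right], axis=1)


def scramble_sound(signal, seg_ms, rng):
    """Scrambled control: cut into seg_ms segments and shuffle their order."""
    seg = int(FS * seg_ms / 1000)
    n_seg = len(signal) // seg
    pieces = [signal[i * seg:(i + 1) * seg] for i in range(n_seg)]
    tail = signal[n_seg * seg:]  # leftover samples are kept at the end
    return np.concatenate([pieces[i] for i in rng.permutation(n_seg)] + [tail])


rng = np.random.default_rng(1)
approach = looming_tone(500.0)                   # 2.0 s far-to-near tone
lateral = left_right_tone(800.0)                 # 3.5 s left/right movement
scrambled = scramble_sound(approach, 300, rng)   # 300 ms segments, shuffled
```

Keeping the leftover samples at the end is one arbitrary choice; discarding them or zero-padding the last segment would work equally well for a control stimulus.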

Tactile stimulations.

They consisted of air puffs delivered to three different locations on the left and right sides of the animal's body (see Fig. 1B for a schematic representation): (1) the center of the face, close to the nose and the mouth; (2) the periphery of the face, above the eyebrows; and (3) the shoulders. The stimulation intensity was 0.5 bar for the center and periphery of the face and 1 bar for the shoulders, to compensate for the larger distance between the tip of the stimulation tubes and the skin, as well as for the difference in hair density. Within a given block, left and right stimulations were pseudo-randomly interleaved; each stimulation lasted between 400 and 500 ms, and the interstimulation interval ranged between 500 and 1000 ms.
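One possible way to generate the air-puff schedule of a single tactile block is sketched below. The uniform distributions for puff duration and interstimulation interval, the shuffling used to balance left and right puffs, and the function name are assumptions; the 400–500 ms durations, the 500–1000 ms intervals, and the 10-volume block at TR = 2.08 s come from the text.

```python
import numpy as np

BLOCK_S = 10 * 2.08  # one 10-volume block at TR = 2.08 s


def tactile_block(location, rng, block_s=BLOCK_S):
    """Hypothetical air-puff schedule for one block at a single body location.

    Assumptions (not stated in the text): durations and intervals are drawn
    uniformly, and left/right sides are balanced by shuffling an alternating list.
    """
    events, t = [], 0.0
    while True:
        duration = rng.uniform(0.4, 0.5)          # puff duration: 400-500 ms
        if t + duration > block_s:                # stop before overrunning the block
            break
        events.append({"onset": t, "duration": duration, "location": location})
        t += duration + rng.uniform(0.5, 1.0)     # interstimulation interval: 500-1000 ms
    sides = np.array(["left", "right"] * (len(events) // 2 + 1))[: len(events)]
    rng.shuffle(sides)                            # pseudo-random left/right interleaving
    for event, side in zip(events, sides):
        event["side"] = str(side)
    return events


rng = np.random.default_rng(2)
center_block = tactile_block("center of the face", rng)
```

With these ranges each puff-plus-interval cycle lasts 0.9–1.5 s, so a 20.8 s block holds roughly 14 to 23 puffs, split as evenly as possible between the two sides.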

Functional time series (runs) were organized as follows: a 10-volume block of pure fixation (baseline) was followed by a 10-volume block of category 1, a 10-volume block of category 2, and a 10-volume block of category 3; this sequence was repeated four times, resulting in a 160-volume run. The blocks of the 3 categories were presented in 6 counterbalanced orders. A retinotopy localizer was run independently in the two monkeys using exactly the same stimuli as Fize et al. (2003). This localizer is not effective in driving VIP specifically or in revealing a reliable topographical organization in this region (except for a relative overrepresentation of the upper with respect to the lower visual field). Here, it was used to locate the central representation of the lateral intraparietal area (LIP) within each hemisphere, in both animals.
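The structure of one run can be written out explicitly. In this sketch, the six counterbalanced orders are assumed to be the 3! = 6 permutations of the three categories (the text does not list them), and the helper name is hypothetical; the 10-volume blocks, the four repetitions, and the TR come from the text.

```python
from itertools import permutations

VOLS_PER_BLOCK = 10
N_REPEATS = 4
TR_S = 2.08


def build_run(categories, order_index):
    """Volume-by-volume condition labels for one run:
    [fixation, c1, c2, c3] repeated four times, 10 volumes per block.
    The six orders are assumed to be the 3! permutations of the categories."""
    orders = list(permutations(categories))
    cycle = ["fixation", *orders[order_index]]
    labels = []
    for _ in range(N_REPEATS):
        for block in cycle:
            labels.extend([block] * VOLS_PER_BLOCK)
    return labels


visual = ("coherent", "scrambled", "static")
run = build_run(visual, order_index=0)
print(len(run))                   # 160
print(round(len(run) * TR_S, 1))  # 332.8 (s)
```

Each of the six orders yields a distinct 160-volume label sequence with 40 volumes per condition, which is the design that the block regressors are built from.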

Scanning

Before each scanning session, a contrast agent, monocrystalline iron oxide nanoparticle (MION; Sinerem, Guerbet, or Feraheme, AMAG), was injected into the animal's femoral/saphenous vein (4–10 mg/kg). For the sake of clarity, the polarity of the MR signal changes induced by the contrast agent, which are negative for increased blood volume, was inverted. We acquired gradient-echo echoplanar (EPI) images covering the whole brain (1.5 T; repetition time [TR] 2.08 s; echo time [TE] 27 ms; 32 sagittal slices; 2 × 2 × 2 mm voxels). During each scanning session, runs of the different modalities and the different block orders were pseudo-randomly intermixed. A total of 40 (34) runs were acquired for visual stimulations in M1 (M2), 36 (40) runs for tactile stimulations, and 37 (42) runs for complex auditory stimulations (plus 40 runs for pure-tone auditory stimulations in M2). Fifty-seven (45) runs for the retinotopy localizer were obtained in independent sessions for M1 (M2).
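The sign convention for the contrast agent can be illustrated with a simplified percent-signal-change calculation. This is a hypothetical block-average version (it ignores the hemodynamic delay and uses raw block means), not the GLM-based ROI computation used in the analyses; it only shows why the sign is flipped: with MION, an increase in blood volume lowers the MR signal, so the raw change is multiplied by −1.

```python
import numpy as np


def mion_psc(signal, labels, condition, baseline="fixation"):
    """Percent signal change of `condition` relative to `baseline`, with the sign
    inverted because the MION signal decreases when blood volume increases.
    Simplification: plain means over the volumes of each condition."""
    signal = np.asarray(signal, dtype=float)
    labels = np.asarray(labels)
    s_cond = signal[labels == condition].mean()
    s_base = signal[labels == baseline].mean()
    return -100.0 * (s_cond - s_base) / s_base


labels = ["fixation"] * 10 + ["coherent"] * 10
signal = [1000.0] * 10 + [990.0] * 10          # MION signal drops during stimulation
psc = mion_psc(signal, labels, "coherent")     # +1.0 (%)
```

A 1% drop in the raw MION signal during the coherent movement block is thus reported as a +1% activation, matching the polarity used in all figures.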

Analysis

The analyzed runs were selected based on the quality of the monkeys' fixation (>85% within the tolerance window): a total of 23 (25) runs were selected for visual stimulations in M1 (M2), 20 (32) for tactile stimulations, 26 (32) for complex auditory stimulations (and 34 for pure-tones auditory stimulations in M2) and 20 (24) for the retinotopy localizer. Time series were analyzed using SPM8 (Wellcome Department of Cognitive Neurology, London, United Kingdom). For spatial preprocessing, functional volumes were first realigned and rigidly coregistered with the anatomy of each individual monkey (T1-weighted MPRAGE 3D 0.6×0.6×0.6 mm or 0.5×0.5×0.5 mm voxel acquired at 1.5T) in stereotactic space. The JIP program (Mandeville et al., 2011) was used to perform a nonrigid coregistration (warping) of a mean functional image onto the individual anatomies. Fixed-effect individual analyses were performed for each sensory modality in each monkey, with a level of significance set at p < 0.05 corrected for multiple comparisons (familywise error [FWE], t > 4.89) unless stated otherwise. We also performed conjunction analyses (statistical levels set at p < 0.05 at corrected level unless stated otherwise). In all analyses, realignment parameters, as well as eye movement traces, were included as covariates of no interest to remove eye movement and brain motion artifacts. When coordinates are provided, they are expressed with respect to the anterior commissure. We also performed ROI analyses using MarsBar toolbox (Brett et al., 2002). The ROIs were defined based on the conjunction analyses results. The significance threshold for the t tests was set at p < 0.05 (one-tailed). Results are displayed on coronal sections from each individual anatomy or on individual flattened maps obtained with Caret (Van Essen et al., 2001; http://www.nitrc.org/projects/caret/). 
We also mapped each individual anatomy onto the F6 monkey atlas of Caret to visualize the obtained activations with respect to the anatomical subdivisions of the IPS described by Lewis et al. (2000, 2005).
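The fixed-effect analyses rely on a general linear model in which task regressors are estimated together with covariates of no interest (realignment parameters and eye traces). A minimal single-voxel version, fitted by ordinary least squares, is sketched below. It deliberately omits steps that SPM8 performs (response-function convolution, high-pass filtering, serial-correlation modeling) and runs on simulated data; the function name and the random stand-ins for the nuisance regressors are hypothetical.

```python
import numpy as np


def glm_t(y, X_task, X_nuisance, contrast):
    """OLS fit of one voxel time series and t statistic for a task contrast.

    y          : (n,) voxel time series
    X_task     : (n, k) task regressors (here plain block boxcars)
    X_nuisance : (n, m) covariates of no interest (realignment, eye traces)
    contrast   : (k,) weights on the task regressors
    """
    n = len(y)
    X = np.column_stack([X_task, X_nuisance, np.ones(n)])
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = n - rank
    sigma2 = resid @ resid / dof                   # residual variance
    c = np.concatenate([contrast, np.zeros(X.shape[1] - len(contrast))])
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    return float(c @ beta / np.sqrt(var_c)), int(dof)


rng = np.random.default_rng(3)
cycle = ["fixation", "coherent", "scrambled", "static"]
labels = np.repeat(np.tile(cycle, 4), 10)          # 160 volumes, as in one run
X_task = np.column_stack([(labels == c).astype(float) for c in cycle[1:]])
X_nuis = rng.normal(size=(160, 8))                 # stand-ins for realignment + eye traces
y = 100 + X_task @ np.array([3.0, 1.0, 1.0]) + rng.normal(scale=0.5, size=160)
t, dof = glm_t(y, X_task, X_nuis, np.array([1.0, -1.0, 0.0]))  # coherent vs scrambled
```

Fixation volumes have zeros on every task regressor, so they act as the implicit baseline captured by the constant term; each nuisance column costs one degree of freedom (here 160 − 12 = 148).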

Test-retest analyses were performed to evaluate the robustness of the reported activations (see Figs. 4 and 6). For each monkey, the runs of the sensory modality of interest were divided into two equal groups as follows. The runs were ordered from the first to the last recorded. A first group (the ODD test-retest group) comprised all odd runs in the acquisition sequence (first, third, fifth, seventh, etc.). A second group (the EVEN test-retest group) comprised all even runs in the acquisition sequence (second, fourth, sixth, eighth, etc.). The percentage of signal change (PSC) in the contrast of interest was then calculated independently for all runs, for the ODD runs only, and for the EVEN runs only.
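The ODD/EVEN split amounts to taking every other run in acquisition order. The sketch below assumes, for illustration only, that one PSC value per run is available and that the group values are simple means of those per-run values; the function names and the PSC values are hypothetical.

```python
from statistics import mean


def odd_even_split(runs):
    """Split runs listed in acquisition order into the ODD group (1st, 3rd,
    5th, ...) and the EVEN group (2nd, 4th, 6th, ...)."""
    runs = list(runs)
    return runs[0::2], runs[1::2]


def psc_all_odd_even(run_psc):
    """Mean PSC of one contrast over all runs, ODD runs only, and EVEN runs only."""
    odd, even = odd_even_split(run_psc)
    return {"all": mean(run_psc), "odd": mean(odd), "even": mean(even)}


run_psc = [1.0, 0.8, 1.2, 0.6, 0.9]   # hypothetical per-run PSC, acquisition order
summary = psc_all_odd_even(run_psc)
```

Interleaving runs in this way spreads slow drifts across sessions evenly between the two groups, so an activation that appears in both halves is unlikely to reflect a single session.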

Figure 4.

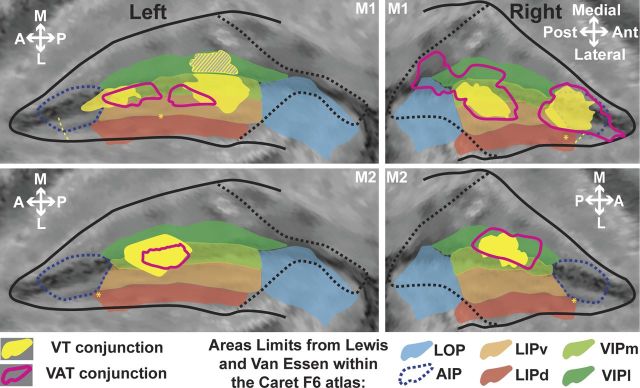

Motion processing regions within the intraparietal sulcus. SPMs of the visual conjunction analysis (coherent movement vs scrambled and coherent movement vs static contrasts; top panels, red scale) are displayed on the individual flat maps of the IPS (p < 0.05, FWE-corrected level, masked to display only positive signal change relative to the fixation baseline). Tactile activations (center of the face vs fixation contrast; green scale; p < 0.05, FWE-corrected level) are displayed in the middle panels. Auditory activations are displayed in the bottom panels (conjunction of the coherent movement vs static contrasts computed separately for the EVEN and ODD groups of runs; test-retest analysis; p < 0.05, uncorrected level). For other conventions, see Figure 2.

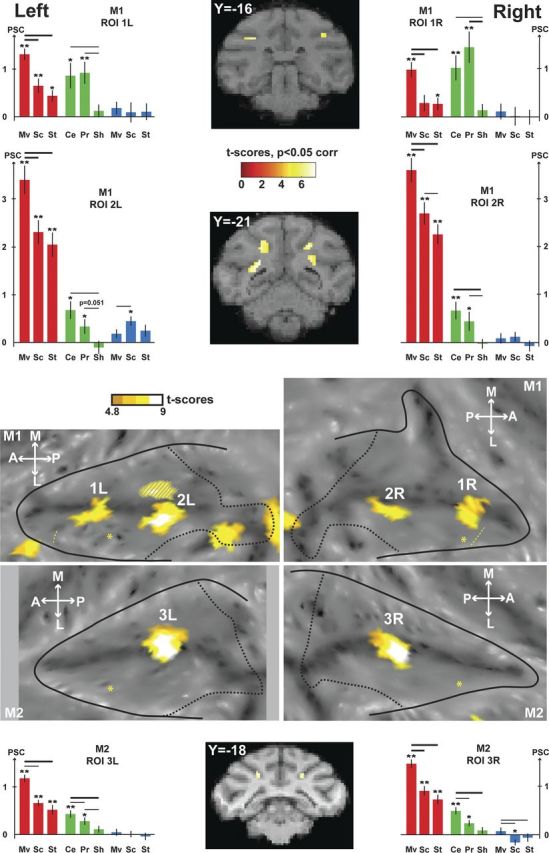

Figure 6.

VAT movement-specific conjunction analyses. A, The contrasts used for the conjunction are the visual contrasts (coherent movement vs scrambled and coherent movement vs static), the tactile contrast (center of the face vs fixation), and the auditory contrast (coherent movement vs static). The VAT conjunction is shown at the uncorrected level (p < 0.05). The activations are masked by the visual contrast (coherent movement vs fixation) so as to display only positive signal change relative to the fixation baseline. For other conventions, see Figure 5. B, Test-retest analyses performed on VAT ROIs (1′L/1′R and 2′L/2′R for M1, 3′L/3′R for M2). For each region of interest, histograms show the PSC (mean ± SE) for the contrasts of interest used to define the VAT conjunction (visual contrasts: Mv-Sc, coherent movement vs scrambled; Mv-St, coherent movement vs static; tactile contrast: Ce-Fi, center of the face vs fixation; auditory contrast: Mv-St, coherent movement vs static). For each sensory modality and each contrast, the PSC of the whole dataset (solid colored bars) and of the even/odd subsets (135°/45° hatched colored bars) are presented. t tests were performed on the PSC for each condition (**p < 0.001; *p < 0.05).

ANOVA.

A two-way ANOVA was performed where needed to test whether two ROIs had similar response profiles. PSCs were extracted for each ROI for the contrasts of interest. The two-way ANOVA took the contrast of interest as the first factor and the ROI identity as the second factor. A main effect of contrast indicates that the contrasts do not all activate the ROIs to the same extent, irrespective of ROI identity. A main effect of ROI indicates that the overall activation differs between the two ROIs, irrespective of the contrast considered. An interaction indicates that the two ROIs are activated differently by the contrasts of interest.
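For a balanced design, the three effects described above can be computed directly from sums of squares. The sketch assumes PSC values arranged as contrasts × ROIs × replicates (for example runs; the replicate unit is an assumption) and returns F statistics only; p-values would follow from the F distribution with the returned degrees of freedom (e.g., `scipy.stats.f.sf`). The function name and the simulated data are hypothetical.

```python
import numpy as np


def two_way_anova(y):
    """Balanced two-way ANOVA with interaction.

    y : array of shape (a, b, n) = contrasts x ROIs x replicates.
    Returns F statistics and degrees of freedom for the contrast main effect,
    the ROI main effect, and the contrast x ROI interaction.
    """
    y = np.asarray(y, dtype=float)
    a, b, n = y.shape
    grand = y.mean()
    m_a = y.mean(axis=(1, 2))                 # contrast means
    m_b = y.mean(axis=(0, 2))                 # ROI means
    cell = y.mean(axis=2)                     # contrast x ROI cell means
    ss_a = b * n * np.sum((m_a - grand) ** 2)
    ss_b = a * n * np.sum((m_b - grand) ** 2)
    ss_ab = n * np.sum((cell - m_a[:, None] - m_b[None, :] + grand) ** 2)
    ss_e = np.sum((y - cell[:, :, None]) ** 2)
    df = {"contrast": a - 1, "roi": b - 1, "interaction": (a - 1) * (b - 1)}
    df_e = a * b * (n - 1)
    ms_e = ss_e / df_e
    F = {
        "contrast": ss_a / df["contrast"] / ms_e,
        "roi": ss_b / df["roi"] / ms_e,
        "interaction": ss_ab / df["interaction"] / ms_e,
    }
    return F, df, df_e


rng = np.random.default_rng(4)
# Hypothetical PSCs: ROI 1 prefers contrast 0, ROI 2 prefers contrast 1.
means = np.array([[1.0, 0.2], [0.2, 1.0], [0.5, 0.5]])
y = means[:, :, None] + rng.normal(scale=0.1, size=(3, 2, 8))
F, df, df_e = two_way_anova(y)
```

In this simulated example both ROIs have the same overall mean, so the ROI main effect is small while the interaction term dominates, which is the signature of two ROIs with different response profiles.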

Potential covariates.

In all analyses, realignment parameters, as well as eye movement traces, were included as covariates of no interest to remove eye movement and brain motion artifacts. However, some of the stimulations might have induced a specific behavioral pattern that biased our analysis and was not fully accounted for by these regressors. For example, large-field, coherent, fast-moving stimuli might have induced nystagmus; air puffs to the face might have evoked facial movements (as well as some imprecision in the point of impact of the air puff); and spatially localized auditory stimuli might have induced overt orienting behavior (microsaccades and saccades, off-center fixation). Although we cannot completely rule out this possibility, our experimental setup minimizes its impact. First, monkeys worked head-restrained (to maintain the brain at the optimal position within the scanner, to minimize movement artifacts on the fMRI signal, and to allow precise monitoring of their eye movements). As a result, the points of impact of the tactile stimulations to the center and the periphery of the face were stable within a given session. Small lip movements occurred when the monkeys drank the liquid reward. These movements were therefore correlated with reward timing and were, on average, equally distributed over the different sensory runs and the different conditions within each run (we checked that the monkeys' performance was equal across conditions within a given run). The center-of-the-face air puffs were directed to the cheeks, on each side of the monkey's nose, at a location that was not affected by lip movements. The peripheral body stimulation air puffs were directed to the shoulders, at a location that was not affected by possible arm movements. This was possible because the monkey chair fit the monkey's width tightly. Second, monkeys were required to maintain their gaze on a small fixation point, within a tolerance window of 2° × 2°.
This was controlled online and was used to motivate the animal to maximize fixation rates (as fixation disruptions, such as saccades or drifts, affected the reward schedule). Eye traces were also analyzed offline to select the runs to include in the analysis (good fixation for >85% of the run duration, with no major fixation interruptions). A one-way ANOVA indicated that the monkeys' fixation performance did not differ significantly across visual, tactile, and auditory runs (M1, p = 0.75; M2, p = 0.65), suggesting that overall oculomotor behavior was constant across run types. Small systematic changes within the tolerance window, such as small saccades, microsaccades, or differences in eye position variance, could nonetheless have affected our data. When eye position is used as a regressor, the associated fMRI signal changes are specifically located in LIP and the FEF (this analysis was used to delineate the anterior limit of LIP in monkey M1). Microsaccades are expected to activate the same regions as saccades. The absence of LIP activation in the contrasts presented in Figures 5 and 6 therefore suggests that microsaccade and small-saccade patterns were similar across conditions.
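The offline run selection and the comparison of fixation performance across run types can be sketched as follows. The sketch assumes eye positions already converted to degrees relative to the fixation spot and implements only the >85% in-window criterion, not the additional check for major fixation interruptions; the function names and the simulated eye trace are hypothetical. `scipy.stats.f_oneway` returns the same F statistic together with its p-value.

```python
import numpy as np

WINDOW_HALF_DEG = 1.0   # 2 deg x 2 deg tolerance window centered on the fixation spot
MIN_FIXATION = 0.85     # run inclusion criterion (>85% of samples in the window)


def fixation_fraction(x_deg, y_deg):
    """Fraction of eye samples inside the tolerance window.
    Positions are assumed to be in degrees relative to the fixation spot."""
    x, y = np.asarray(x_deg), np.asarray(y_deg)
    inside = (np.abs(x) <= WINDOW_HALF_DEG) & (np.abs(y) <= WINDOW_HALF_DEG)
    return float(inside.mean())


def one_way_anova_f(*groups):
    """F statistic and degrees of freedom of a one-way ANOVA
    (e.g., fixation fractions of visual, tactile, and auditory runs)."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    pooled = np.concatenate(groups)
    grand = pooled.mean()
    k, n = len(groups), pooled.size
    ss_between = sum(g.size * (g.mean() - grand) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    return float(f_stat), k - 1, n - k


rng = np.random.default_rng(5)
# Hypothetical 120 Hz eye trace of about 333 s (one 160-volume run).
x, y = rng.normal(scale=0.4, size=(2, 40_000))
keep_run = fixation_fraction(x, y) > MIN_FIXATION
```

A nonsignificant F across the three run types, as reported above, is what supports treating oculomotor behavior as comparable between modalities.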

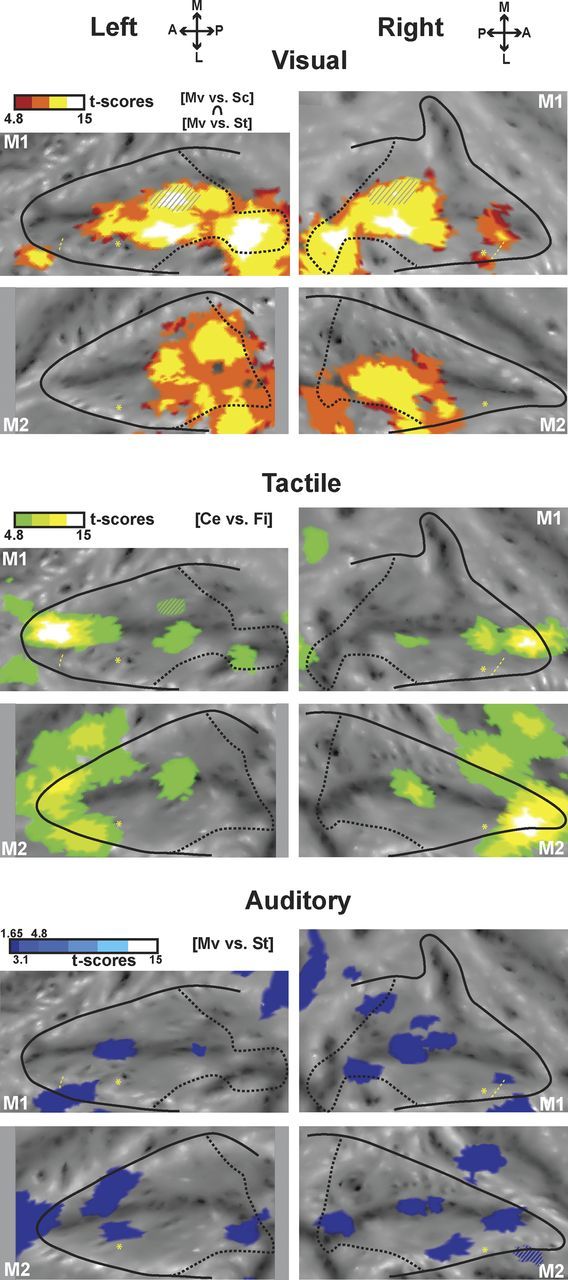

Figure 5.

VT movement-specific conjunction analyses. The contrasts used for the conjunction are the visual contrasts (coherent movement vs scrambled and coherent movement vs static) and the tactile contrast (center of the face vs fixation). The activations are masked by the visual contrast (coherent movement vs fixation) so as to display only positive signal change relative to the fixation baseline. The VT conjunction (p < 0.05, FWE-corrected level) is shown on the flat maps of the two individual monkeys, and SPMs are displayed on two coronal sections in M1 and one coronal section in M2. Histograms show the PSC (mean ± SE) within the ROIs defined by the VT conjunction (1L/1R and 2L/2R for M1, 3L/3R for M2) for each individual condition of the visual runs (red: Mv, coherent movement; Sc, scrambled; St, static), the tactile runs (green: Ce, center of the face; Pr, periphery of the face; Sh, shoulders), and the auditory runs (blue). t tests were performed on the PSC for each condition (**p < 0.001, *p < 0.05) and between conditions (thick line: p < 0.001; thin line: p < 0.05). For other conventions, see Figure 2.

Results

Monkeys were exposed to visual (Fig. 1A), tactile (Fig. 1B), or auditory (Fig. 1C) stimulations in independent time series while fixating a central point. Based on prior studies, we used large-field visual stimuli (expanding and contracting optic flow stimuli and moving bars) (Bremmer et al., 2002b), tactile stimuli to the face and upper body (Duhamel et al., 1998), and rich auditory stimuli moving in space (complex sounds have been shown to produce more robust cortical activations outside the primary auditory cortex than pure tones [Blauert, 1997], such as those used by Schlack et al., 2005). These stimulations effectively activated the primary areas involved in their processing: all visual stimuli activated the primary visual areas, and coherent movement specifically activated areas MT and MST (Fig. 1D, left); the three tactile stimulations activated area SII (Fig. 1D, middle, center of the face stimulation); and all auditory stimuli activated the primary auditory cortex and the auditory belt (Fig. 1D, right, coherent movement stimuli).

In this paper, we specifically focus on the IPS. However, other cortical regions in both hemispheres of both monkeys were also robustly activated (p < 0.05, FWE-corrected level) by our stimulations: the occipital cortex (visual stimulations: striate and extrastriate areas), temporal cortex (visual: superior temporal sulcus; auditory: different parts of the temporoparietal cortex), cingulate cortex (visual: anterior and posterior cingulate), frontal cortex (visual: anterior arcuate sulcus, principal sulcus and convexity; tactile: central sulcus, premotor cortex and ventrolateral prefrontal cortex), and parietal cortex outside of the IPS (visual: inferior parietal convexity; tactile: superior and inferior parietal convexities).

Visual, tactile, and auditory processing within the IPS

Because the parietal cortex is a site of multisensory convergence, we first studied how each modality activated the IPS (Fig. 2A). Figure 2B shows, for each monkey, the results for the main stimulation conditions contrasted with the fixation baseline. For the visual and auditory modalities, the main stimulation condition is coherent movement. These coherent movement versus fixation contrasts extract activations related both to stimulation onset (visual onset of the large-field visual stimuli or auditory onset of the rich moving sounds) and to the coherent movement component that builds up within a given coherent movement sequence. They therefore capture both movement-selective and nonselective IPS domains. For the tactile modality, the main condition is stimulation of the center of the face, as it elicits the strongest IPS activation in both monkeys (Fig. 3).

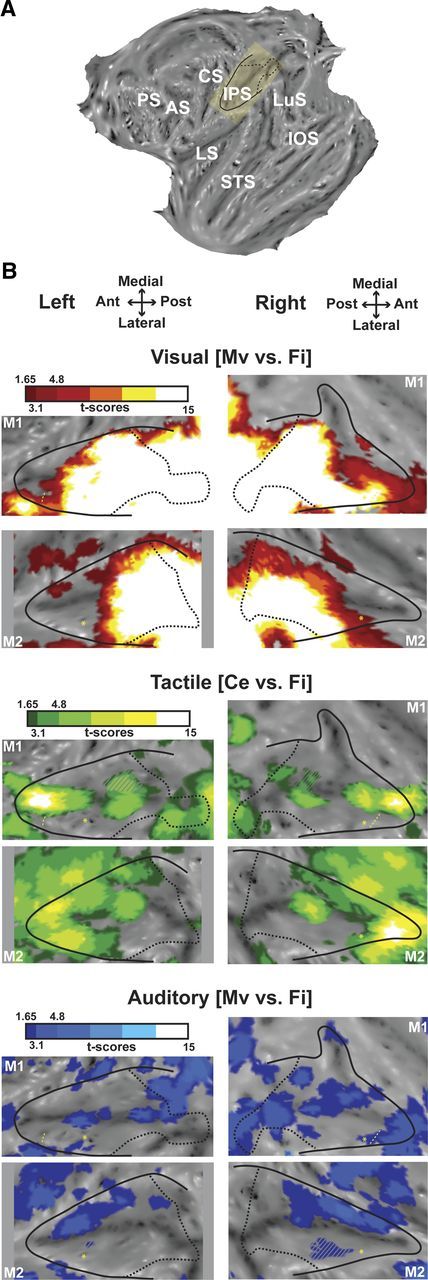

Figure 2.

Visual, tactile, and auditory modalities within the intraparietal sulcus. A, Localization of the IPS on the flattened representation of the cortex obtained with Caret (monkey M1, left hemisphere). The yellow inset corresponds to the IPS, which was slightly rotated to be depicted horizontally in B and in the following figures. The black solid line indicates the limit between the convexity and the banks of the IPS; the black dashed line indicates the projection on the flat map of the most posterior coronal section of the IPS, just before the annectant gyrus can be identified. AS, Arcuate sulcus; CS, central sulcus; IOS, inferior occipital sulcus; IPS, intraparietal sulcus; LS, lateral sulcus; LuS, lunate sulcus; PS, principal sulcus; STS, superior temporal sulcus. B, Activations presented on the flattened IPS for (1) the visual modality (top panels), showing the coherent movement versus fixation contrast (red t score scale; color transitions are set at t = 1.65, p < 0.05, uncorrected level; t = 3.1, p < 0.001, uncorrected level; and t = 4.8, p < 0.05, FWE-corrected level); (2) the tactile modality (middle panels), showing the center of the face versus fixation contrast (green t score scale, color transitions as in 1); and (3) the auditory modality (lower panels), showing the coherent movement versus fixation contrast (blue t score scale, color transitions as in 1). The dashed yellow line corresponds to the anterior boundary of the activations related to the eye movement regressors in M1, extracted from the visual analysis. This limit corresponds to the anterior boundary of LIP (Durand et al., 2007); nothing reliable was obtained in M2. The yellow asterisk indicates the local maximum of activation for the central compared with the peripheral visual field, assessed with the retinotopic localizer, and corresponds to the central representation located in anterior LIP (Ben Hamed et al., 2001; Fize et al., 2003; Arcaro et al., 2011).
Gray hatched areas represent activations spilling over onto the other bank of the IPS. Top (respectively, lower) panels correspond to flat maps of M1 (respectively, M2). A, Anterior; L, lateral; M, medial; P, posterior.

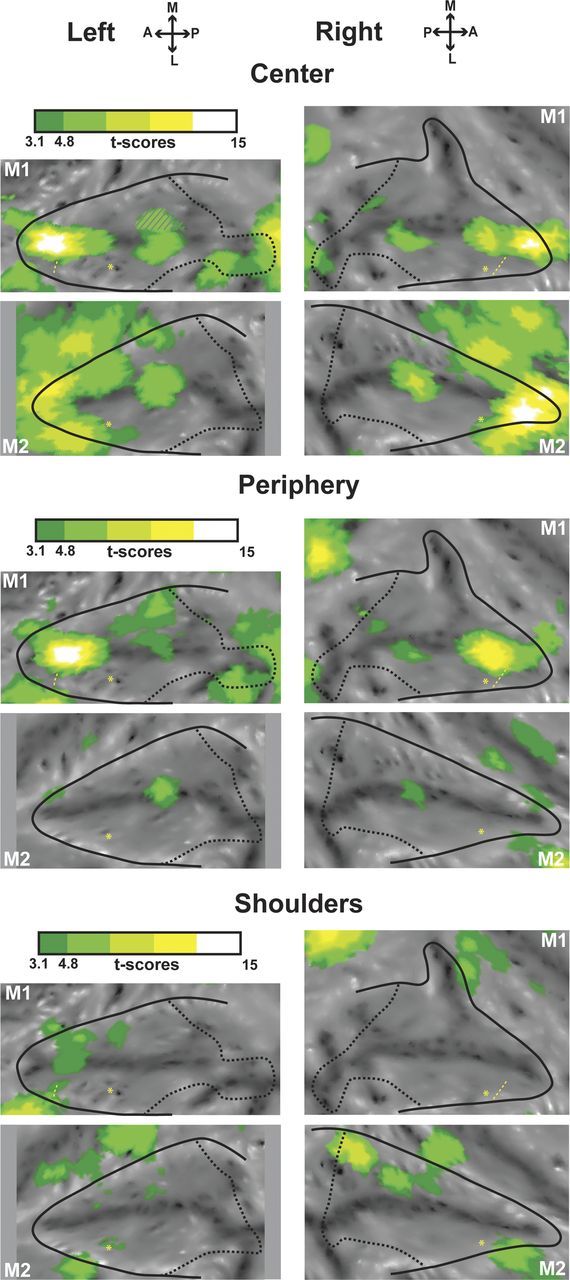

Figure 3.

Tactile activations for the center of the face, periphery of the face, and shoulders presented on flat maps of the intraparietal sulcus. The center of the face (top panels for left and right sides), periphery of the face (middle panels), and shoulder (lower panels) activations correspond, respectively, to the center of the face versus fixation, periphery of the face versus fixation, and shoulder versus fixation contrasts. For other conventions, see Figure 2.

Two major results can be highlighted. First, the three sensory modalities do not activate the IPS with the same robustness: the visual (t score for the principal local maximum: t = 41.4/42.6 and t = 29.4/29.9, left/right, in M1 and M2, respectively) and tactile (t = 14.4/13.1 and t = 9.7/13.4) modalities activate a large region at the corrected level, whereas no auditory activation can be observed at this criterion (t score for the principal local maximum: t = 3.8/4.1 and t = 3.1/5.2 in M1 and M2, respectively). To check that the weak auditory activations we observed were not specific to the 3D holographic binaural sound stimuli we chose, we presented monkey M2 with pure-tone auditory stimuli, as in Bremmer et al. (2001). These pure tones elicited hardly any activation within the IPS (t score for the principal local maximum: t = 2.46/1.35, left/right; data not shown). Thus, in the following analyses, we present the auditory results for the 3D holographic sound stimuli at a lower statistical threshold (p < 0.05, uncorrected).

Second, we observed a clear topography: the visual stimulations mainly activated the posterior two-thirds of the IPS, whereas the tactile stimulations activated its anterior third. This topography is very stable, as can be seen by comparing the activations at corrected and uncorrected levels. The auditory activations were patchier and more variable across animals and hemispheres but were mainly concentrated on the medial bank.

Movement processing toward and around the face within the IPS

The parietal cortex is also involved in the processing of stimuli moving with respect to the subject (Duhamel et al., 1997, 1998). Here, we studied how the movement component of our sensory stimulations specifically activated the IPS. For the visual modality, we thus contrasted the coherent movement condition with both the scrambled movement and the static conditions (coherent movement vs scrambled and coherent movement vs static; Fig. 4, top). Using both contrasts allows us to subtract visual onset and local motion cues, so as to reveal activations specific to large-field movement toward and around the face, or self-motion. In contrast to the widespread activations elicited relative to the fixation baseline (Fig. 2B), these specific visual coherent movement activations were found at the fundus of the IPS.
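
Requiring a voxel to pass both the coherent versus scrambled and the coherent versus static contrasts amounts to a conjunction. This section does not describe the exact implementation. The sketch below shows a minimum-statistic conjunction, in which a voxel survives only if its smallest t value across contrasts exceeds the threshold. The t values are hypothetical. The 4.8 threshold is the FWE-corrected value mentioned in the next paragraph.

```python
from typing import List, Sequence


def conjunction_mask(t_maps: Sequence[Sequence[float]], threshold: float) -> List[bool]:
    """Minimum-statistic conjunction over several contrast t maps.

    Each t map lists one t value per voxel, in the same voxel order.
    A voxel is kept only if its minimum t value across all maps exceeds
    the threshold, i.e. it is significant in every contrast.
    """
    if not t_maps:
        raise ValueError("at least one t map is required")
    n_voxels = len(t_maps[0])
    if any(len(t_map) != n_voxels for t_map in t_maps):
        raise ValueError("all t maps must cover the same voxels")
    return [min(t_map[v] for t_map in t_maps) > threshold for v in range(n_voxels)]


if __name__ == "__main__":
    # Hypothetical t values for four voxels in the two visual contrasts.
    coherent_vs_scrambled = [5.2, 1.1, 4.9, 0.3]
    coherent_vs_static = [6.0, 5.5, 2.0, 0.2]
    mask = conjunction_mask([coherent_vs_scrambled, coherent_vs_static], threshold=4.8)
    print(mask)  # [True, False, False, False]
```

The same function handles a conjunction of four contrasts, such as the visuo-auditory-tactile one, if four maps and a lower threshold are passed in.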

Ideally, auditory movement activations should also be defined by contrasting the coherent movement condition with both the scrambled movement and the static conditions, as done for the visual activations. However, the scrambled sounds activated the IPS nearly as much as the coherent sounds (see, for example, the PSC histograms in Fig. 5). A possible reason is that they retained some degree of sound motion, since they corresponded to a random rearrangement of small fragments of the complex coherent-motion sounds. We thus used only the coherent movement versus static contrast. We observed activations in the fundus of the IPS overlapping with the visual movement activations, but only at very low t scores. These scores ranged between 1.73 and 2.53, below both t = 4.8 (p < 0.05 at the FWE-corrected level) and t = 3.1 (p < 0.001 at the uncorrected level). To assess the reliability of these auditory activations, we performed a test-retest analysis by separating the runs into EVEN and ODD groups. Figure 4 (bottom) shows the conjunction analysis (p < 0.05, uncorrected level) of the coherent movement versus static contrasts in these EVEN and ODD groups, which reveals only the weak but reproducible auditory activations. Confirming our previous observations, auditory regions processing movement were mainly found at the fundus of the IPS in three of four hemispheres (two regions in both hemispheres of monkey M1 and one region in the right hemisphere of monkey M2).
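
The test-retest logic can be sketched as follows, assuming that runs are listed in acquisition order. Alternate runs go to the ODD and EVEN groups, each group is analyzed separately, and a voxel is retained only if it passes the threshold in both. The run labels and per-voxel significance flags are hypothetical placeholders for the outputs of two separate analyses.

```python
from typing import Dict, List, Sequence


def split_odd_even(runs: Sequence[str]) -> Dict[str, List[str]]:
    """Assign runs, in acquisition order, to ODD (1st, 3rd, ...) and EVEN (2nd, 4th, ...) groups."""
    return {"ODD": list(runs[0::2]), "EVEN": list(runs[1::2])}


def reproducible_voxels(odd_mask: Sequence[bool], even_mask: Sequence[bool]) -> List[bool]:
    """Keep a voxel only if it is significant in both independent halves of the data."""
    if len(odd_mask) != len(even_mask):
        raise ValueError("masks must cover the same voxels")
    return [in_odd and in_even for in_odd, in_even in zip(odd_mask, even_mask)]


if __name__ == "__main__":
    runs = [f"run{i:02d}" for i in range(1, 7)]  # hypothetical run labels
    groups = split_odd_even(runs)
    print(groups["ODD"])   # ['run01', 'run03', 'run05']
    print(groups["EVEN"])  # ['run02', 'run04', 'run06']

    # Hypothetical per-voxel significance (e.g. p < 0.05 uncorrected) in each half.
    odd_mask = [True, True, False, True]
    even_mask = [True, False, False, True]
    print(reproducible_voxels(odd_mask, even_mask))  # [True, False, False, True]
```

The two halves share no runs, so a voxel that survives in both is unlikely to reflect noise specific to a single subset of acquisitions.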

Together, this suggests that processing moving visual or auditory stimuli recruits overlapping regions in the fundus of the IPS. Interestingly, these regions also overlapped with tactile regions activated by stimulations to the center of the face (Fig. 4, middle), indicating a region of multisensory convergence. In this context, air puff stimulations can be viewed as the dynamic impact of an object approaching the face. We did not specifically test for dynamic versus stationary tactile stimulations, because stationary stimulations are quite challenging to produce. Instead, we focused on face specificity with respect to other body parts, since the fundus of the IPS is known to preferentially represent the face (Duhamel et al., 1998). We thus cannot conclude whether the tactile activations we observe at the fundus of the IPS are induced by the stationary or by the movement components of the tactile stimulations to the face, since air puffs to the face include both. Duhamel et al. (1998) showed that 30% of VIP tactile cells have no direction selectivity. If we extrapolate this recording result and assume that direction-selective and nonselective cells contribute equally to the BOLD signal, roughly 70% of the tactile fMRI signal we observe would be driven by the dynamic component of the air puffs. The remaining 30% would be driven by the purely stationary component of tactile face stimulation (see Discussion on this point).

Convergence of multimodal movement processing signals within the IPS as a putative definition of the VIP

In the next step, we used conjunction analyses to look for the multimodal regions at the fundus of the IPS that are specific to movement processing. Both the visual and the tactile modalities activated the IPS very robustly. As a result, the most reliable sensory convergence was the visuo-tactile one (Fig. 5, VT, coronal sections, orange to white areas on the flat maps). Two bilateral regions were observed at the fundus of the IPS in M1 [anterior, 1L (−14, −16, 10), t score = 6.12 and 1R (16, −16, 12), t score = 5.98; posterior, 2L (−9, −22, 9), t score = 6.61 and 2R (10, −22, 10), t score = 7.12], and one bilateral region was observed in M2 [3L (−9, −16, 9), t score = 6.86 and 3R (10, −16, 9), t score = 6.64]. An ROI analysis based on these VT conjunction results (Fig. 5, red and green histograms) showed that these bimodal regions were dominated by the visual input, specifically its movement component. They were also more activated by the face tactile stimulations than by the shoulder stimulations (Fig. 3). Auditory stimulations elicited almost no responses in these regions (Fig. 5, blue histograms).

The visual, auditory, and tactile conjunction analysis was performed at a lower statistical threshold (p < 0.05 at the uncorrected level, conjunction of four contrasts). In M1, we again observed two bilateral regions at the fundus of the IPS for the visuo-auditory-tactile (VAT) conjunction (Fig. 6A, purple to white color scale): M1 anterior, left (−12, −16, 9), t score = 2.64, right (22, −15, 11), t score = 2.11; M1 posterior, left (−9, −20, 7), t score = 1.70, right (7, −20, 9), t score = 2.33. In M2, one bilateral trimodal region was identified, again at the fundus of the IPS (Fig. 6A, purple to white color scale): M2, left (−10, −18, 8), t score = 2.21, right (8, −17, 7), t score = 2.63. These VAT regions were used to define another set of ROIs (Fig. 6A, histograms). In these ROIs, the PSC profiles were very similar to the VT profiles but lower for all conditions except the auditory ones. Notably, the PSC for auditory movement was significantly different from that for static auditory stimuli in all ROIs, in both monkey M1 and monkey M2. In monkey M2, one additional VAT conjunction region was observed on the medial bank of the IPS. This region will not be considered in the following sections, for two reasons. No VT region was identified on the medial bank at the corrected level in this monkey, and no VT or VAT convergence was observed on the medial bank of the IPS in monkey M1.

To evaluate the robustness of the VAT conjunction analysis, we performed test-retest analyses (Fig. 6B). ODD and EVEN groups of runs were selected independently for the visual, tactile, and auditory runs. PSCs were calculated for the relevant contrasts in each of the ROIs identified by the VAT conjunction analysis (two ROIs per hemisphere in monkey M1 and one ROI per hemisphere in monkey M2). The contrasts were coherent movement vs scrambled and coherent movement vs static for the visual modality, center of the face vs fixation for the tactile modality, and coherent movement vs static for the auditory modality. PSCs were computed on all runs, on the ODD test-retest dataset, and on the EVEN test-retest dataset. All PSC changes are significant at p < 0.05 or at p < 0.001, with one exception: the auditory contrast in the left ROI of monkey M2. There, the PSC reaches significance when all runs are pooled and is close to significance for the EVEN test-retest dataset (p = 0.07), but it is not significant for the ODD test-retest dataset.

In this section, we address the fact that two multimodal regions are observed in the fundus of the IPS in monkey M1, whereas only one multimodal region was found in monkey M2. First, we tested the robustness of the spatial disjunction between the two VAT regions in monkey M1. To this end, we defined two cubic ROIs of 8 contiguous voxels (2 × 2 × 2), lying in the fundus of the right and left IPS, respectively, just between the two multimodal regions of each hemisphere. Percentage signal changes remained far from significance in both ROIs for all tested contrasts: tactile (center of the face vs fixation), visual (coherent movement vs scrambled), visual (coherent movement vs static), and auditory (coherent movement vs static). This held on all data and in a test-retest analysis considering first the ODD runs and then the EVEN runs of each modality. Second, we compared the activation profiles within the anterior and posterior ROIs (VT ROIs 1 and 2 in Fig. 5 and VAT ROIs 1′ and 2′ in Fig. 6) to evaluate whether these two regions were functionally distinct. The PSCs for the center of the face tactile stimulation and for the visual coherent movement were similar in the anterior ROI. In the posterior ROI, however, the response to visual movement was significantly higher than the tactile one: pooled left and right data, two-way ANOVA, conditions × ROIs, interaction p < 2 × 10⁻¹⁴ for the VT ROI and p < 7 × 10⁻⁵ for the VAT ROI; Holm-Sidak post hoc test, p > 0.25 for the visual versus tactile comparison in the anterior VT ROI (respectively p > 0.1 for the anterior VAT ROI) and p < 10⁻¹⁵ for the visual versus tactile comparison in the posterior VT ROI (respectively p < 10⁻⁷ for the posterior VAT ROI). This was the only distinctive feature we could identify between these two regions.
In particular, the relative strength of the tactile responses to the center and to the periphery of the face did not differ between the anterior and posterior ROIs (interaction for the two-way ANOVA, condition × ROI, p = 0.11 and p = 0.58 for the VT and the VAT ROIs, respectively). Nor did the activations induced by the retinotopic localizer differ between these ROIs. Their activation profiles were similar for central visual stimulation, horizontal meridian, vertical meridian, superior peripheral stimulation, and inferior visual stimulation (stimulations as in Fize et al., 2003; data not shown; interaction for the two-way ANOVA, condition × ROI, p = 0.39 and p = 0.76 for the VT and the VAT ROIs, respectively). In conclusion, the anterior and posterior regions of the monkey M1 IPS fundus have similar activation profiles. The only difference is that the posterior regions are more activated by the visual stimuli than by the tactile stimuli, consistent with the observation that the visual modality dominates the posterior portion of the IPS (Fig. 2).
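
A cubic ROI of 8 contiguous voxels is a 2 × 2 × 2 block. The sketch below builds such a block from a hypothetical corner voxel. It then computes a simplified percent signal change: the ROI-averaged difference between a condition and a baseline, divided by the baseline. This definition is illustrative only. It is not necessarily how the PSCs reported here were estimated, which may have used GLM parameter estimates, for example. The signal values and function names are invented.

```python
from itertools import product
from statistics import mean
from typing import Dict, List, Tuple

Voxel = Tuple[int, int, int]


def cubic_roi(corner: Voxel, side: int = 2) -> List[Voxel]:
    """Return the side x side x side block of voxel indices starting at `corner`."""
    x0, y0, z0 = corner
    return [(x0 + dx, y0 + dy, z0 + dz) for dx, dy, dz in product(range(side), repeat=3)]


def percent_signal_change(
    signal: Dict[Voxel, Dict[str, float]],
    roi: List[Voxel],
    condition: str,
    baseline: str,
) -> float:
    """Simplified PSC: ROI-mean condition signal relative to ROI-mean baseline, in percent."""
    cond = mean(signal[v][condition] for v in roi)
    base = mean(signal[v][baseline] for v in roi)
    return 100.0 * (cond - base) / base


if __name__ == "__main__":
    roi = cubic_roi((10, 20, 5))  # hypothetical corner voxel
    print(len(roi))  # 8
    # Invented mean signal per voxel for a tactile condition and the fixation baseline.
    signal = {v: {"face_center": 1005.0, "fixation": 1000.0} for v in roi}
    print(percent_signal_change(signal, roi, "face_center", "fixation"))  # 0.5
```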

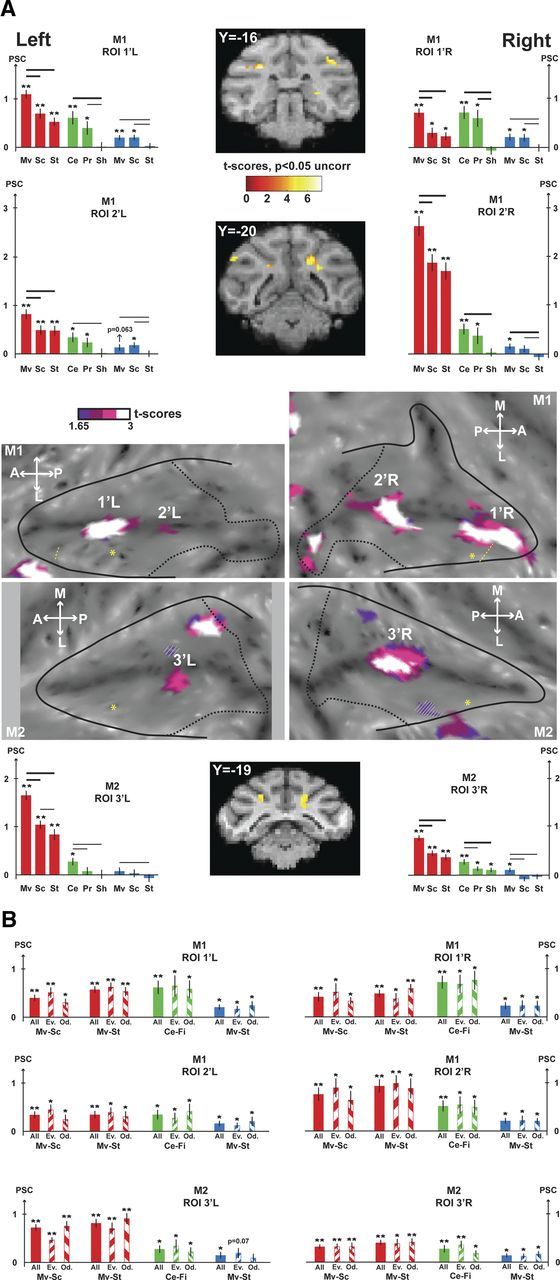

The regions of convergence for multimodal movement processing signals identified above (VT ROIs and VAT ROIs in all four hemispheres) consistently fall in the fundus of the IPS. Their location is compatible with the localization of area VIP described from anatomical and connectivity data (Lewis and Van Essen, 2000a). To confirm this, we projected the individual results of each monkey onto a common atlas (F6 atlas in Caret), together with the boundaries of the intraparietal areas as defined by Lewis and Van Essen (2000a, 2000b) (Fig. 7). Interestingly, the VT and VAT regions mainly fall within what has been described as VIPl, at the fundus of the IPS, without, however, encompassing its whole anteroposterior extent. In monkey M1, the anterior region extends anteriorly beyond the boundaries of area VIP, into what the F6 atlas identifies as the anterior intraparietal area (AIP). This anterior region is functionally indistinguishable from the posterior region, except for a slight difference in the ratio between the visual and tactile levels of activation. However, given the reported physiological properties of area AIP (Sakata and Taira, 1994; Murata et al., 2000), it appears that the coregistration of monkey M1 onto the F6 atlas does not perfectly capture its IPS cytoarchitectonic organization. As a result, we think that this anterior ROI actually belongs to VIP. Corroborating this interpretation, this ROI never extends beyond the anterior border of area LIP in either hemisphere (as defined by the anterior limit of the eye movement activation, p < 0.05, FWE-corrected level). This agrees with most electrophysiological and anatomical studies, which describe the anterior border of VIP as matching that of LIP (Sakata and Taira, 1994; Luppino et al., 1999; Murata et al., 2000; Borra et al., 2008). The proposed location of AIP in the F6 atlas is also questionable, as this area is rarely described as encompassing the fundus of the anterior IPS (Luppino et al., 1999; Lewis and Van Essen, 2000a, 2000b).

Figure 7.

Projection of the VT and VAT conjunction results onto the F6 Caret atlas. Only the activations at the fundus of the IPS are represented. The boundaries of relevant IPS subdivisions as defined in the F6 atlas from Lewis and Van Essen (2000a, 2000b) are color shaded. See main text for a discussion of the boundaries of the AIP (dotted blue lines). For other conventions, see Figure 2.

The dual VT and VAT regions observed in monkey M1 strongly suggest interindividual variability in IPS multisensory convergence patterns between monkeys M1 and M2. In the following, we consider, in turn, (1) within-individual interhemispheric size variability, (2) interindividual size variability, (3) within-individual interhemispheric spatial variability, and (4) interindividual spatial variability. The surface covered by the significant voxels when projected onto the flat map (Fig. 7) does not allow these questions to be addressed precisely. A voxel count is more appropriate, because it reflects the volume of cortex specifically recruited by the contrast of interest. The VT ROI counts are as follows: in M1, anterior ROI, right: 31 voxels, left: 49 voxels; posterior ROI, right: 65 voxels, left: 132 voxels, including mirror activations on the medial bank; in M2, right: 53 voxels, left: 86 voxels. Interhemispheric size variability is apparent, with size differences ranging between 30% and 60% (only an approximation of this measure can be derived for the posterior ROI of monkey M1). The interindividual size variability ranges between 50% (left hemisphere) and 80% (right hemisphere), thus slightly surpassing the intraindividual variability. This observation agrees with reports of higher interindividual than intraindividual variability in area size, specifically in higher-order areas such as VIP (for review, see Krubitzer and Seelke, 2012). From a spatial perspective, the convergence regions identified in the two hemispheres of a given animal are positioned symmetrically with respect to each other, as can be seen from the coronal sections of Figures 5 and 6. In contrast, their location in monkey M2 appears to fall just in between the two convergence regions identified in monkey M1, indicating a high degree of interindividual variability.
The same analysis applies to the VAT convergence regions, although both their intraindividual and interindividual variability appear to be greater than those of the VT convergence. This is most probably because the VT convergence analysis is affected by the variability of the visual and tactile projections within the IPS. The VAT convergence analysis, in contrast, is affected by the variability of the visual, tactile, and auditory projections together. The intraindividual and interindividual variability we observe in the VT and VAT convergence patterns reflects the variability of the visual and tactile projections within the IPS, as described in anatomical studies (Fig. 4) (e.g., Lewis and Van Essen, 2000a). Overall, this depicts a patchy topographic organization of VT VIP domains.
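
The size comparison above relies on simple voxel-count arithmetic. The text does not state which ROI serves as the reference when a size difference is expressed as a percentage. The sketch below therefore computes it both relative to the larger ROI and relative to the smaller one. It uses the VT voxel counts listed above for the anterior ROI of M1 and for M2. The posterior M1 counts include mirror activations and are left out. The resulting percentages only illustrate how the choice of reference changes the figure. They are not offered as a reproduction of the reported range, and the function name is hypothetical.

```python
def percent_size_difference(a: int, b: int, reference: str = "larger") -> float:
    """Absolute size difference between two ROIs, as a percentage of one of them.

    reference="larger" divides by the larger ROI; reference="smaller" by the smaller one.
    """
    if a <= 0 or b <= 0:
        raise ValueError("voxel counts must be positive")
    if reference == "larger":
        denominator = max(a, b)
    elif reference == "smaller":
        denominator = min(a, b)
    else:
        raise ValueError("reference must be 'larger' or 'smaller'")
    return 100.0 * abs(a - b) / denominator


# VT ROI voxel counts (right, left) taken from the text.
VT_COUNTS = {
    "M1 anterior": (31, 49),
    "M2": (53, 86),
}

if __name__ == "__main__":
    for roi, (right, left) in VT_COUNTS.items():
        vs_larger = percent_size_difference(right, left, "larger")
        vs_smaller = percent_size_difference(right, left, "smaller")
        print(f"{roi}: {vs_larger:.0f}% of larger ROI, {vs_smaller:.0f}% of smaller ROI")
```

With these counts, the interhemispheric difference is about 37% (M1 anterior) and 38% (M2) relative to the larger ROI. Relative to the smaller ROI, it is about 58% and 62%.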

Discussion

fMRI allows us to capture the spatial extent of the visual, tactile, and auditory domains within the IPS as well as their regions of overlap. In particular, we highlight a heterogeneous region involved in the processing of the movement component of these different sensory modalities. This region corresponds to area VIP as defined by its cytoarchitectonic limits. We further describe, within VIP, a region specifically dedicated to the amodal processing of movement (i.e., irrespective of the sensory domain by which it is determined).

Sensory domains within the IPS

The posterior IPS is strongly recruited by large-field moving visual patterns (coherent movement vs fixation contrast; Fig. 2). This activation extends from the parieto-occipital pole and the inferior parietal gyrus two-thirds of the way into the IPS and up its medial and lateral banks. It covers area LIP, in agreement with single-cell recording studies describing complex neuronal responses to moving visual patterns (Eskandar and Assad, 1999, 2002; Williams et al., 2003) and with fMRI studies (Vanduffel et al., 2001). It also covers the medial intraparietal area (MIP), although visual responses in this cortical area are strongest when associated with a coordinated hand movement (Eskandar and Assad, 2002; Grefkes and Fink, 2005). Finally, VIP is also robustly activated, in agreement with its functional involvement in the processing of large-field visual stimuli (Bremmer et al., 2002a, 2002b). Within this visual IPS, we further identify a visual movement-specific territory (coherent movement vs scrambled and coherent movement vs static contrasts; Fig. 5), roughly encompassing the posterior half of the IPS fundus. This region is consistently larger than the fundal IPS region activated by the random dot patterns that Vanduffel et al. (2001) used to identify VIP. The difference is most probably due to the high speed (100°/s vs 2–6°/s) and large aperture (60° vs 14°) of our stimuli.

Tactile stimulations to the face activate the anterior inferior parietal gyrus, extending down into the IPS fundus. Tactile stimulations to the center of the face produce higher IPS activations than tactile stimulations to the periphery of the face, although both are colocalized (Fig. 3). Shoulder tactile stimulations produce very low IPS activations, specific to the upper medial bank, in agreement with early descriptions of somatosensory responses in this region (for review, see Hyvärinen, 1982). This confirms the overrepresentation of the face relative to other body parts within the IPS fundus, as described by single-cell recording studies (Duhamel et al., 1998). Unlike for the two other modalities, we did not specifically test for tactile movement. However, given the reported characteristics of tactile neurons in the fundus of the IPS (Duhamel et al., 1998), we think that our tactile activations are part of the global processing of movement around and toward the face. In VIP, 70% of tactile cells are direction selective, a property that could be engaged by the dynamic component of the air puffs in our experiment. The other 30% of cells respond to stationary tactile stimulations touching the face or moving away from it, congruent with their visual responses to proceeding/receding optic flow.

The auditory IPS activations are weak, consistent with Joly et al. (2012) and with the known light connections from the caudomedial auditory belt (Lewis and Van Essen, 2000a). Schlack et al. (2005) described auditory responses in area VIP of naive animals using broadband auditory noise stimuli. Such stimuli are known to support better sound localization than stimuli with narrower bandwidths (Blauert, 1997). Auditory responses have also been recorded during spatially guided behavior, both in area LIP and in the parietal reach region (Mazzoni et al., 1996; Stricanne et al., 1996; Cohen et al., 2002; Gifford and Cohen, 2005). However, these responses have been shown to be absent in naive animals, arising only after behavioral training (Grunewald et al., 1999; Linden et al., 1999). We thus predict that our activations would be stronger in animals trained to perform an auditory-motor behavior (Mullette-Gillman et al., 2005, 2009).

Functional definition of the ventral intraparietal area VIP

In the present work, we were specifically interested in the IPS regions functionally specialized for processing moving stimuli, independently of the sensory modality in which they were presented. We identified a single domain dedicated to movement, situated at the fundus of the IPS and coinciding with area VIP as defined by Lewis and Van Essen (2000a, 2000b). This area is monosynaptically connected with the middle temporal area (MT) (Maunsell and van Essen, 1983; Ungerleider and Desimone, 1986) and with the somatosensory cortex (Seltzer and Pandya, 1980; Lewis and Van Essen, 2000a). Weak but direct connections with the caudomedial auditory belt have also been described (Lewis and Van Essen, 2000a). From a functional point of view, area VIP was initially defined by its neuronal responses to visual stimuli approaching the face (Colby et al., 1993) or representing relative movement between the subject and its environment (Bremmer et al., 2002a, 2002b). In addition to unimodal visual neurons, VIP also hosts neurons that are modulated by visuo-tactile (VT) stimulations (Duhamel et al., 1998; Avillac et al., 2005) and by visuo-vestibular stimulations (Bremmer et al., 2002b; Chen et al., 2011).

The extent of the motion-specific visual region we describe closely matches the expected cytoarchitectonically defined VIP (joint VIPl and VIPm of Lewis and Van Essen, 2000b). However, both the VT and VAT conjunctions cover only part of this region, sometimes extending into ventral LIP (Fig. 7). Although spatially contiguous, these multimodal regions only partially overlap. We thus provide functional evidence for a multimodal associative definition of area VIP that is spatially more restrictive than its motion-specific definition. Our data support a patchy organization of this IPS region, although higher-resolution fMRI acquisitions will be required to address this point directly. In addition, because the vestibular modality was not tested, we do not know where visuo-vestibular convergence regions would fall in the fundus of the IPS relative to the reported multimodal convergence regions. Nor do we know whether a purely visual region would be found to coexist next to the multimodal patches. The recordings of Chen et al. (2011) suggest that visuo-vestibular convergence should be found throughout the fundus of the IPS (their Fig. 1). They report that up to 97% of the cells recorded in this region respond to rotational vestibular stimulations, while 98% of them respond to translational or rotational visual stimulations (Table 1 in Chen et al., 2011).

In addition to multisensory convergence, VIP has also been described as a cortical site of multisensory integration (Bremmer et al., 2002b; Avillac et al., 2007; Chen et al., 2011). We expect the VIP patches we identify to perform multisensory integration across the dominant sensory modalities that activate them. It is not clear, however, whether they are also influenced by sensory signals from contiguous patches. Indeed, in Avillac et al. (2007), we describe unimodal visual neurons within VIP whose responses are modulated by tactile stimulation. Only electrophysiological recordings performed in fMRI-identified patches will make it possible to test whether multisensory integration is processed locally or at the level of the whole area.

It is important to note that the multimodal convergence we describe here depends on the stimulation characteristics. As such, it does not reflect the entirety of multisensory convergence patterns within the IPS. For example, multisensory convergence is also expected in areas MIP and AIP. However, whereas our visual stimuli robustly activate the medial bank of the IPS, the tactile stimulations we used do not. Hand or arm tactile stimulations would have been better suited to map multisensory convergence in MIP (for review, see Grefkes and Fink, 2005). Conversely, whereas our tactile stimulations robustly activate the anteriormost part of the IPS, the visual stimuli we used are clearly not optimal for this region. Objects with 3D structure or small 2D objects would have been better suited to describe multisensory convergence in area AIP (Durand et al., 2007).

Comparison with human studies

Our present observations in the macaque monkey reveal several similarities of interest with human fMRI studies identifying VIP on the basis of its multimodality. Bremmer et al. (2001) identify human VIP based on a conjunction analysis of visual, auditory, and face tactile stimulations. Their group results reveal a unitary human VIP. Their single-subject activations, however, suggest that the patchy organization we describe here might be a functional trait shared by humans and nonhuman primates. In another study, Sereno and Huang (2006) highlight substantial interindividual and intraindividual variability in the location and extent of human VIP within the parietal cortex. In addition, their subject 2 (Fig. 5 of Sereno and Huang, 2006) has duplicated representations of both the somatosensory and the visual maps. This resembles our monkey M1, in which we describe two disjoint sites of VAT conjunction. It suggests that such a duplication of cortical maps might depend more on ontogenetic than on phylogenetic factors.

Footnotes

This work was supported by the Agence Nationale de la Recherche (Grant ANR-05-JCJC-0230-01), and by the Fondation pour la Recherche Médicale to S.P. We thank J.R. Duhamel and S. Wirth for helpful comments on the manuscript; W. Vanduffel, J. Mandeville (JIP software), N. Richard, E. Metereau, and S. Maurin for technical support; E. Åstrand for her continuous support; and J.L. Charieau and F. Herant for animal care.

The authors declare no competing financial interests.

References

- Akbarian S, Grüsser OJ, Guldin WO. Corticofugal connections between the cerebral cortex and brainstem vestibular nuclei in the macaque monkey. J Comp Neurol. 1994;339:421–437. doi: 10.1002/cne.903390309.

- Andersen RA, Cui H. Intention, action planning, and decision making in parietal-frontal circuits. Neuron. 2009;63:568–583. doi: 10.1016/j.neuron.2009.08.028.

- Arcaro MJ, Pinsk MA, Li X, Kastner S. Visuotopic organization of macaque posterior parietal cortex: a functional magnetic resonance imaging study. J Neurosci. 2011;31:2064–2078. doi: 10.1523/JNEUROSCI.3334-10.2011.

- Avillac M, Denève S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Avillac M, Ben Hamed S, Duhamel JR. Multisensory integration in the ventral intraparietal area of the macaque monkey. J Neurosci. 2007;27:1922–1932. doi: 10.1523/JNEUROSCI.2646-06.2007.

- Ben Hamed S, Duhamel JR, Bremmer F, Graf W. Representation of the visual field in the lateral intraparietal area of macaque monkeys: a quantitative receptive field analysis. Exp Brain Res. 2001;140:127–144. doi: 10.1007/s002210100785.

- Blauert J. Spatial hearing. Cambridge, MA: MIT; 1997.

- Borra E, Belmalih A, Calzavara R, Gerbella M, Murata A, Rozzi S, Luppino G. Cortical connections of the macaque anterior intraparietal (AIP) area. Cereb Cortex. 2008;18:1094–1111. doi: 10.1093/cercor/bhm146.

- Bremmer F. Multisensory space: from eye-movements to self-motion. J Physiol. 2011;589:815–823. doi: 10.1113/jphysiol.2010.195537.

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron. 2001;29:287–296. doi: 10.1016/s0896-6273(01)00198-2.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002b;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Brett M, Anton JL, Valabregue R, Poline JB. Region of interest analysis using an SPM toolbox [abstract]. Presented at the 8th International Conference on Functional Mapping of the Human Brain; June 2–6, 2002; Sendai, Japan. 2002.

- Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 2011;31:12036–12052. doi: 10.1523/JNEUROSCI.0395-11.2011.

- Cohen YE, Batista AP, Andersen RA. Comparison of neural activity preceding reaches to auditory and visual stimuli in the parietal reach region. Neuroreport. 2002;13:891–894. doi: 10.1097/00001756-200205070-00031.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Colby CL, Duhamel JR, Goldberg ME. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol. 1993;69:902–914. doi: 10.1152/jn.1993.69.3.902.

- Disbrow E, Litinas E, Recanzone GH, Padberg J, Krubitzer L. Cortical connections of the second somatosensory area and the parietal ventral area in macaque monkeys. J Comp Neurol. 2003;462:382–399. doi: 10.1002/cne.10731.

- Duhamel JR, Bremmer F, Ben Hamed S, Graf W. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature. 1997;389:845–848. doi: 10.1038/39865.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- Durand JB, Nelissen K, Joly O, Wardak C, Todd JT, Norman JF, Janssen P, Vanduffel W, Orban GA. Anterior regions of monkey parietal cortex process visual 3D shape. Neuron. 2007;55:493–505. doi: 10.1016/j.neuron.2007.06.040.

- Eskandar EN, Assad JA. Dissociation of visual, motor and predictive signals in parietal cortex during visual guidance. Nat Neurosci. 1999;2:88–93. doi: 10.1038/4594.

- Eskandar EN, Assad JA. Distinct nature of directional signals among parietal cortical areas during visual guidance. J Neurophysiol. 2002;88:1777–1790. doi: 10.1152/jn.2002.88.4.1777.

- Faugier-Grimaud S, Ventre J. Anatomic connections of inferior parietal cortex (area 7) with subcortical structures related to vestibulo-ocular function in a monkey (Macaca fascicularis). J Comp Neurol. 1989;280:1–14. doi: 10.1002/cne.902800102.

- Fize D, Vanduffel W, Nelissen K, Denys K, Chef d'Hotel C, Faugeras O, Orban GA. The retinotopic organization of primate dorsal V4 and surrounding areas: a functional magnetic resonance imaging study in awake monkeys. J Neurosci. 2003;23:7395–7406. doi: 10.1523/JNEUROSCI.23-19-07395.2003.

- Gifford GW, 3rd, Cohen YE. Spatial and non-spatial auditory processing in the lateral intraparietal area. Exp Brain Res. 2005;162:509–512. doi: 10.1007/s00221-005-2220-2.

- Graziano MS, Cooke DF. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia. 2006;44:2621–2635. doi: 10.1016/j.neuropsychologia.2005.09.011.

- Grefkes C, Fink GR. The functional organization of the intraparietal sulcus in humans and monkeys. J Anat. 2005;207:3–17. doi: 10.1111/j.1469-7580.2005.00426.x.

- Grunewald A, Linden JF, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area: I. Effects of training. J Neurophysiol. 1999;82:330–342. doi: 10.1152/jn.1999.82.1.330.

- Hyvärinen J. Posterior parietal lobe of the primate brain. Physiol Rev. 1982;62:1060–1129. doi: 10.1152/physrev.1982.62.3.1060.

- Joly O, Ramus F, Pressnitzer D, Vanduffel W, Orban GA. Interhemispheric differences in auditory processing revealed by fMRI in awake rhesus monkeys. Cereb Cortex. 2012;22:838–853. doi: 10.1093/cercor/bhr150.

- Krubitzer LA, Seelke AM. Cortical evolution in mammals: the bane and beauty of phenotypic variability. Proc Natl Acad Sci U S A. 2012;109(Suppl 1):10647–10654. doi: 10.1073/pnas.1201891109.

- Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J Comp Neurol. 2000a;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::aid-cne8>3.0.co;2-9.

- Lewis JW, Van Essen DC. Mapping of architectonic subdivisions in the macaque monkey, with emphasis on parieto-occipital cortex. J Comp Neurol. 2000b;428:79–111. doi: 10.1002/1096-9861(20001204)428:1<79::aid-cne7>3.0.co;2-q.

- Lewis JW, Beauchamp MS, DeYoe EA. A comparison of visual and auditory motion processing in human cerebral cortex. Cereb Cortex. 2000;10:873–888. doi: 10.1093/cercor/10.9.873.

- Lewis JW, Brefczynski JA, Phinney RE, Janik JJ, DeYoe EA. Distinct cortical pathways for processing tool versus animal sounds. J Neurosci. 2005;25:5148–5158. doi: 10.1523/JNEUROSCI.0419-05.2005.

- Linden JF, Grunewald A, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area: II. Behavioral modulation. J Neurophysiol. 1999;82:343–358. doi: 10.1152/jn.1999.82.1.343.

- Luppino G, Murata A, Govoni P, Matelli M. Largely segregated parietofrontal connections linking rostral intraparietal cortex (areas AIP and VIP) and the ventral premotor cortex (areas F5 and F4). Exp Brain Res. 1999;128:181–187. doi: 10.1007/s002210050833.

- Mandeville JB, Choi JK, Jarraya B, Rosen BR, Jenkins BG, Vanduffel W. FMRI of cocaine self-administration in macaques reveals functional inhibition of basal ganglia. Neuropsychopharmacology. 2011;36:1187–1198. doi: 10.1038/npp.2011.1.

- Maunsell JH, van Essen DC. The connections of the middle temporal visual area (MT) and their relationship to a cortical hierarchy in the macaque monkey. J Neurosci. 1983;3:2563–2586. doi: 10.1523/JNEUROSCI.03-12-02563.1983.

- Mazzoni P, Bracewell RM, Barash S, Andersen RA. Spatially tuned auditory responses in area LIP of macaques performing delayed memory saccades to acoustic targets. J Neurophysiol. 1996;75:1233–1241. doi: 10.1152/jn.1996.75.3.1233.

- Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94:2331–2352. doi: 10.1152/jn.00021.2005.

- Mullette-Gillman OA, Cohen YE, Groh JM. Motor-related signals in the intraparietal cortex encode locations in a hybrid, rather than eye-centered reference frame. Cereb Cortex. 2009;19:1761–1775. doi: 10.1093/cercor/bhn207.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol. 2000;83:2580–2601. doi: 10.1152/jn.2000.83.5.2580.

- Orban GA. The extraction of 3D shape in the visual system of human and nonhuman primates. Annu Rev Neurosci. 2011;34:361–388. doi: 10.1146/annurev-neuro-061010-113819.

- Sakata H, Taira M. Parietal control of hand action. Curr Opin Neurobiol. 1994;4:847–856. doi: 10.1016/0959-4388(94)90133-3.

- Schaafsma SJ, Duysens J. Neurons in the ventral intraparietal area of awake macaque monkey closely resemble neurons in the dorsal part of the medial superior temporal area in their responses to optic flow patterns. J Neurophysiol. 1996;76:4056–4068. doi: 10.1152/jn.1996.76.6.4056.

- Schlack A, Sterbing-D'Angelo SJ, Hartung K, Hoffmann KP, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 2005;25:4616–4625. doi: 10.1523/JNEUROSCI.0455-05.2005.

- Seltzer B, Pandya DN. Converging visual and somatic sensory cortical input to the intraparietal sulcus of the rhesus monkey. Brain Res. 1980;192:339–351. doi: 10.1016/0006-8993(80)90888-4.

- Sereno MI, Huang RS. A human parietal face area contains aligned head-centered visual and tactile maps. Nat Neurosci. 2006;9:1337–1343. doi: 10.1038/nn1777.

- Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 1996;76:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

- Ungerleider LG, Desimone R. Cortical connections of visual area MT in the macaque. J Comp Neurol. 1986;248:190–222. doi: 10.1002/cne.902480204.

- Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH. An integrated software suite for surface-based analyses of cerebral cortex. J Am Med Inform Assoc. 2001;8:443–459. doi: 10.1136/jamia.2001.0080443.

- Vanduffel W, Fize D, Mandeville JB, Nelissen K, Van Hecke P, Rosen BR, Tootell RB, Orban GA. Visual motion processing investigated using contrast agent-enhanced fMRI in awake behaving monkeys. Neuron. 2001;32:565–577. doi: 10.1016/s0896-6273(01)00502-5.

- Williams ZM, Elfar JC, Eskandar EN, Toth LJ, Assad JA. Parietal activity and the perceived direction of ambiguous apparent motion. Nat Neurosci. 2003;6:616–623. doi: 10.1038/nn1055.

- Zhang T, Heuer HW, Britten KH. Parietal area VIP neuronal responses to heading stimuli are encoded in head-centered coordinates. Neuron. 2004;42:993–1001. doi: 10.1016/j.neuron.2004.06.008.