Abstract

Introduction Electronic health record (EHR) downtime is any period during which the EHR system is fully or partially unavailable. These periods are operationally disruptive and pose risks to patients. EHR downtime has not been sufficiently studied in the literature, and most hospitals are not adequately prepared for it.

Objective The objective of this study was to assess the operational implications of downtime with a focus on the clinical laboratory, and to derive recommendations for improved downtime contingency planning.

Methods A hybrid qualitative–quantitative study based on historical performance data and semistructured interviews was performed at two mid-Atlantic hospitals. In the quantitative analysis, paper records from downtime events were analyzed and compared with data from normal operations. To enrich this quantitative analysis, interviews were conducted with 17 hospital employees who had experienced several downtime events, including a hospital-wide EHR shutdown.

Results During downtime, laboratory turnaround times increased by an average of 62% compared with normal operation. However, the archival data were incomplete due to inconsistencies in the downtime paper records. The qualitative interview data confirmed that delays in laboratory result reporting are significant, and further revealed that the delays are often due to improper execution of procedures and to incomplete or incorrect documentation. Interviewees provided a variety of perspectives on the operational implications of downtime and how best to address them. Based on these insights, recommendations for improved downtime contingency planning were derived, which provide a foundation for enhancing the Safety Assurance Factors for EHR Resilience (SAFER) guides.

Conclusion This study documents the extent to which downtime events disrupt hospital operations. It further highlights the challenge of quantitatively assessing the implications of downtime events, given the absence of the data that the EHR would otherwise record. Organizations that seek to improve and evaluate their downtime contingency plans need more effective methods for collecting data during these periods.

Keywords: electronic health record, patient care, downtime, contingency planning, clinical laboratory

Background and Significance

Adoption of electronic health record (EHR) systems by U.S. hospitals reached 96% as of 2015, up from 72% in 2011. 1 The rapid adoption was motivated and incentivized by the 2009 Health Information Technology for Economic and Clinical Health (HITECH) Act. 1 2 3 EHR systems have brought operational efficiencies, improved patient safety, and more effective communication to health care. One challenge, however, is EHR system downtime. Downtime events disrupt the patient care process, deactivate safety measures such as clinical decision support systems, and can compromise patient safety. 4 5 6 7 8

Downtime events are considered to be any period in which any portion or all of the EHR system is disrupted. 9 10 11 Downtime events can have varying impacts depending on which systems are affected. For example, if the systems for patient registration go into downtime, the impacts to processing patient laboratory requests would be minimal once the patient data are manually entered. In contrast, a downtime event compromising the link between computerized physician order entry (CPOE) and the laboratory information system (LIS) could significantly impact performance.

Total downtime events, in which all systems and services become unavailable, are highly disruptive to hospital function because every computer system is offline. Total downtime scenarios are often not part of hospital downtime contingency plans, as it is typically assumed that at least a read-only snapshot of the EHR will remain accessible. One growing source of total downtime is deliberate cyberattacks on hospital systems. 12 13 14 15 16 During a cyberattack-instigated downtime, most or all of the systems are compromised and must be taken offline to be cleaned and restored. Regardless of the circumstances, a cyberattack downtime results in no access to any computer systems or backups. Because of the lack of planning and the inability to retrieve any patient history, total downtime events are more disruptive than planned or even unplanned partial downtimes.

Initiatives such as the Office of the National Coordinator's Safety Assurance Factors for EHR Resilience (SAFER) program recommend that downtime contingency plans be in place. However, SAFER is primarily focused on the comprehensive, safe adoption of EHRs in hospitals; while downtime is recognized as a concern, it is not the primary focus of the guides. 17 18 19 With regard to downtime, SAFER recommends creating downtime protocols and drilling them regularly, but it does not provide substantive guidance on how to create those protocols. The most recent revision added the creation of a formal communication plan to support the dissemination of information during downtime. It is worth noting that SAFER is still regarded as a guide and is not integrated into hospital regulation. Similar requirements and recommendations come from the Institute of Medicine, the Health Insurance Portability and Accountability Act (HIPAA), and the Centers for Medicare and Medicaid Services. However, as with SAFER, these sources do not provide a framework for how to construct or validate contingency plans; they simply require that one exists for the organization. 11 20 21

Laboratory Medicine in Downtime

Ordering laboratory tests and receiving their results is a critical part of the care process, and laboratory tests are a significant input to clinical decision-making. An estimated 7 billion laboratory tests are run in United States hospitals per year, and laboratory tests are consulted as part of the diagnostic process in at least 70% of medical cases. 22 23 The laboratory is a vital hospital department, and adverse patient safety events have resulted from errors in test selection or test interpretation. 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 Understanding the impact of EHR downtime on laboratory operations is essential to mitigating potential safety risks.

A challenge to understanding the impact of downtime in the laboratory is that comprehensive downtime data are not available. Most laboratory operational measures are captured electronically by the LIS or the EHR. During downtime, many of the electronic progress-tracking mechanisms are unavailable, and the paper records from downtime are the only available record of performance. Due to the chaotic events surrounding the total downtime examined in this study, not all documentation was completed, and the archived paper records are significantly fragmented.

Methods

Location

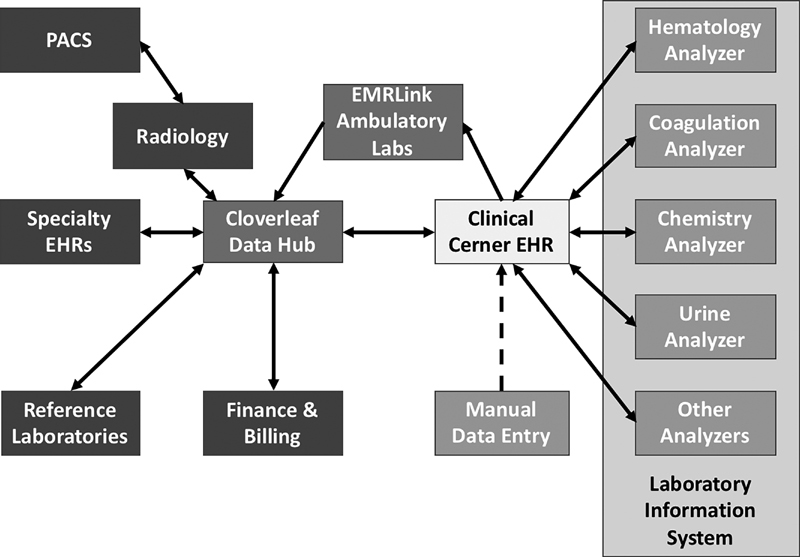

Data were obtained from a 300-bed suburban acute care facility with a 24-hour operating emergency department. The facility is located on the East Coast and is adjacent to a major metropolitan area. The study facility is part of a hospital system, which has implemented a network-wide integrated EHR system linking CPOE, LISs, and EHR as shown in Fig. 1 .

Fig. 1.

Health information technology structure of the study hospital.

The downtime incident of focus was a total downtime event instigated by a ransomware attack in 2016: all systems were completely shut down for approximately 48 hours, followed by 48 hours of partial downtime as individual systems were incrementally restored and reconnected.

The organizations of interest are periodically reviewed for compliance with relevant regulations (i.e., Clinical Laboratory Improvement Amendments, College of American Pathologists, and HIPAA); they were in compliance both at the time of the study and during the downtime incident of interest. Disaster plans for the information technology (IT) systems were on file and available, and the clinical laboratory had conducted some disaster drills involving unavailability of the IT systems. At the time of the study, the emergency department had not performed any comprehensive downtime drills.

Quantitative Analysis: Data Sources

Normal operational data were retrieved from the master EHR database and the clinical laboratory's quality assurance reports. Downtime data were obtained through manual review of the paper records generated in the laboratory during downtime operation. Normal operational data were obtained for the same period one year earlier to match seasonality. The data collected covered specific high-volume laboratory tests commonly ordered by emergency medicine. For both normal and downtime operation, the data were reviewed for specimen arrival in the laboratory, testing start time, testing completion, and time of result reporting. We focused on performance data for tests requested by the hospital emergency department, since these are typically STAT (urgent) orders for patients requiring rapid assessment. In addition, the emergency department must maintain full functionality during a downtime event. The specific test types and the number of observations available for downtime and normal operation are listed in Table 1.

Table 1. Volumes of laboratory tests examined.

| Laboratory test type | Normal operation (n) | Downtime (n) |
|---|---|---|
| Amylase level | 100 | 8 |
| Basic metabolic panel | 1,118 | 47 |
| Beta HCG qualitative urine | 635 | 8 |
| Complete blood count w/ differential | 2,285 | 45 |
| Comprehensive metabolic panel | 1,154 | 26 |
| Drug abuse screen urine | 202 | 5 |
| Lipase level | 581 | 19 |
| Magnesium level | 808 | 28 |
| Phosphorus level | 411 | 14 |
| PT and INR | 696 | 21 |
| PTT | 122 | 7 |
| Troponin-I | 1,183 | 35 |
| Urinalysis IRIS complete | 33 | 10 |
| Urinalysis IRIS microscopic | 1,042 | 9 |
| Urinalysis IRIS reflex microscopic | 6 | 11 |

Abbreviations: HCG, human chorionic gonadotropin; INR, international normalized ratio; PT, prothrombin time; PTT, partial thromboplastin time.

Downtime Laboratory Work

Testing requests were classified as downtime requests according to established laboratory protocols. During downtime, specimens are brought into the laboratory under downtime-specific identification numbers. Any specimen that passes through laboratory intake during downtime is processed under downtime protocols, even if the system comes back online during processing. The laboratory operated the analyzers in an off-network state and manually transcribed order information and results until the entire hospital network had been restored, a period of almost 4 days.
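The intake rule above can be expressed as a small classification function. This is a minimal sketch, assuming the laboratory's downtime window is bounded by two timestamps (the start of downtime and the restoration of networked operation); the function name `assign_workflow` and all timestamps are hypothetical and do not come from the hospital's systems.

```python
from datetime import datetime


def assign_workflow(intake, downtime_start, downtime_end):
    """Return "downtime" if the specimen was accepted during the downtime window.

    Classification depends only on intake time: a specimen accepted during
    downtime stays on the downtime workflow even if systems are restored
    before its testing is finished.
    """
    if downtime_start <= intake < downtime_end:
        return "downtime"
    return "normal"


# Hypothetical window and intake times (not the actual incident dates).
start = datetime(2020, 3, 1, 8, 0)
end = datetime(2020, 3, 3, 8, 0)

print(assign_workflow(datetime(2020, 3, 3, 7, 55), start, end))  # downtime
print(assign_workflow(datetime(2020, 3, 3, 8, 5), start, end))   # normal
```

Keying the decision to intake time alone avoids moving a specimen between paper and electronic workflows mid-process, which is the situation the protocol is designed to prevent.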

Outcome Measures and Statistical Analysis

To statistically compare downtime turnaround time (TAT) with normal operation, an analysis of variance (ANOVA) test would typically be performed. However, the data did not meet the assumptions of ANOVA; in particular, they were not normally distributed and the variances were not homogeneous. Instead, we performed the Kruskal–Wallis test, an accepted rank-based (nonparametric) alternative to one-way ANOVA for non-normal or skewed data.
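For readers unfamiliar with the test, the Kruskal–Wallis statistic can be computed from pooled ranks. The sketch below is a minimal, standard-library illustration for the two-group case used here (normal vs. downtime). It assumes average ranks for ties, the usual tie correction, and a chi-square approximation with one degree of freedom, and it enforces the five-observations-per-group minimum the authors apply. The function names and sample TAT values are hypothetical; in practice, a library routine such as `scipy.stats.kruskal` would typically be used.

```python
import math


def average_ranks(values):
    """Rank values 1..N, assigning tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(normal, downtime, min_group_size=5):
    """Return (H, p) for two independent samples, with tie correction."""
    if min(len(normal), len(downtime)) < min_group_size:
        raise ValueError(f"each group needs at least {min_group_size} observations")
    pooled = list(normal) + list(downtime)
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_sums = (sum(ranks[:len(normal)]), sum(ranks[len(normal):]))
    sizes = (len(normal), len(downtime))
    h = 12.0 / (n * (n + 1)) * sum(r * r / m for r, m in zip(rank_sums, sizes)) - 3 * (n + 1)
    # Tie correction: divide H by 1 - sum(t^3 - t) / (n^3 - n).
    tie_counts = {}
    for x in pooled:
        tie_counts[x] = tie_counts.get(x, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error
    # With two groups, H is compared with chi-square on 1 df:
    # P(X > h) = erfc(sqrt(h / 2)).
    p = math.erfc(math.sqrt(h / 2))
    return h, p


# Hypothetical in-laboratory TATs in minutes (not study data).
normal_tat = [20, 22, 25, 27, 30]
downtime_tat = [35, 40, 42, 50, 60]
h, p = kruskal_wallis_two_groups(normal_tat, downtime_tat)
print(f"H = {h:.3f}, p = {p:.4f}")
```

In these made-up samples every downtime value exceeds every normal value, which yields a small p-value (about 0.009). Because the test uses only ranks, a few extreme downtime TATs do not dominate the result the way they would in a comparison of means.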

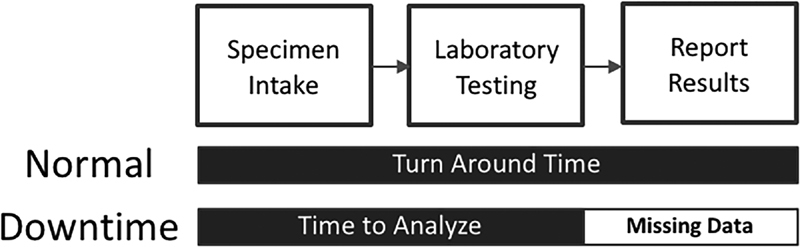

Laboratory performance is measured through TAT: the time from specimen acceptance in the laboratory, through completion of testing, to reporting of the result to the clinician, as depicted in Fig. 2. TAT is also collected and tracked as part of the laboratory's quality assurance program. Result reporting is normally managed by an automated process in which the analyzers populate results into the EHR. During downtime operation, all paper reports from the laboratory are reviewed by hand to identify critical results, which require immediate action. After critical results are reported directly to the physician, all remaining reports are batched for reporting to the physician by fax or hand delivery. Regardless of the status of the result, the standing downtime procedure requires that the time of reporting be documented on the paper records.

Fig. 2.

Depiction of turnaround time limitations due to incomplete downtime data.

Unfortunately, capturing the true TAT from specimen acceptance to result reporting during downtime was not possible due to inconsistencies in documentation. The reports were not time-stamped at result reporting, so acceptance-to-reporting TAT during downtime could not be calculated. The paper records contained only the times of laboratory acceptance and testing completion; the manual review and reporting steps were rarely documented, despite being required by the downtime procedures. As a result, the calculated downtime performance reflects the time at which analysis was completed and results were printed, not the time results were reported. The difference between the two performance measures is shown in Fig. 2. Due to these data issues, no consistent measure of the time to report results during downtime is available.
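The distinction between the two TAT measures can be made concrete with a small data structure. This is a sketch under stated assumptions: the record fields (`accepted`, `completed`, `reported`) and the timestamps are hypothetical, chosen to mirror what the downtime paper records did and did not capture. A missing reporting time yields `None` rather than a guessed value.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class DowntimeRecord:
    # Hypothetical fields mirroring the timestamps on a downtime paper record.
    accepted: datetime                   # specimen acceptance in the laboratory
    completed: datetime                  # testing completed / result printed
    reported: Optional[datetime] = None  # rarely documented during downtime


def minutes_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 60


def in_lab_tat(rec: DowntimeRecord) -> float:
    """Acceptance-to-completion TAT: the only interval the records support."""
    return minutes_between(rec.accepted, rec.completed)


def acceptance_to_report_tat(rec: DowntimeRecord) -> Optional[float]:
    """Full TAT through reporting, or None when the reporting time is missing."""
    if rec.reported is None:
        return None
    return minutes_between(rec.accepted, rec.reported)


rec = DowntimeRecord(accepted=datetime(2020, 3, 1, 9, 0),
                     completed=datetime(2020, 3, 1, 9, 45))
print(in_lab_tat(rec))                # 45.0
print(acceptance_to_report_tat(rec))  # None
```

Returning `None` keeps records with missing reporting times out of any acceptance-to-reporting average instead of silently substituting the completion time.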

Qualitative Analysis: Interviews

Interviews were conducted at the hospital where the downtime performance data were obtained and at an additional hospital within the same network. Seventeen participants took part in seven sessions at the two hospitals. Interview sessions were organized by job role: emergency medicine physicians and nurses, and clinical laboratory technicians and managers. All participants had experience working through several downtime events, including the unexpected large-scale event that resulted in a multiday total shutdown.

Code Book Development

Narrative statements were extracted from the session transcripts. Statement content was reviewed and classified iteratively to create a codebook. Following grounded theory practices, 37 38 an open coding approach was used to develop the codebook for analysis shown in Table 2. 37 39 This approach is established in the literature and has been used for similar work in the past. 10 40 Open coding allows the researcher to derive the structure of the codebook from the data itself when the outcomes are less well known. Because downtime events and the experiences workers may have had are not well characterized, questions were tailored to target specific themes; however, participants were free to share as much or as little of their downtime experiences as they wished.

Table 2. Downtime interview codebook and frequencies.

| Theme | Subcode | Definition | Frequency of occurrence |
|---|---|---|---|
| Downtime – downtime operations, such as the support for, discovery of, or recovery from downtime operations | Discovery | How the beginning of a downtime incident is discovered, generally or specifically | 20 |
| | Initiation | Once a downtime situation is identified, the factors surrounding implementation of the downtime procedure | 13 |
| | Recovery | Resolution/completion of the downtime incident; recovery/transition back to normal operations | 7 |
| | Communication | General relay of nondiagnostic information during downtime, such as person-to-person communication | 36 |
| | Handling | Comments on behaviors or actions during a downtime incident, such as execution of downtime procedures | 25 |
| | Infrastructure | Comments about equipment critical to maintaining operations | 16 |
| | General | Downtime-related statements that do not conform to the other subcodes | 10 |
| Specimen Handling – issues relating to the labeling, tracking, testing, and reporting of specimens moving between the ED and laboratory | Labeling | Statement refers directly to the labeling of a specimen | 9 |
| | Documentation | Other documents that accompany the specimen, such as the requisition form | 7 |
| | Positive patient identification | Specific mention of positive patient identification or demographic information being incorrect or missing | 4 |
| Workload and Workflow – work tasks, work stress, and concerns about the implications for patient safety resulting from stress and workload | Patient safety | Reference to patient care and safety concerns | 26 |
| | Job role | Reference to a specific job role or desire for a prescribed downtime job role | 38 |
| | Interruption | Work interruption during downtime | 12 |
| | Result reporting | Reporting of clinical or diagnostic patient information during downtime | 18 |
| | Volume | Volume of workload encountered during downtime | 27 |
| Communication – transfer of information, both clinical and general, such as understanding between the departments about needs and limitations | Transparency | Level of communication and work task understanding, and trust between hospital areas | 19 |
| | General | Communication-related statements that do not conform to the other subcodes | 10 |
| Preparation – activities related to the training, practicing, and creation of downtime procedures, and issues from their execution | Training | Discussion surrounding past/current/future downtime protocol training | 33 |
| | Document control | Issues specifically mentioned with version control of downtime protocol and training documents | 2 |
| | Procedure | Downtime procedure concerns related to the suitability or shortcomings of current procedures | 15 |
| | Improvement | Opportunities for improvement to downtime procedures, or improvements developed during downtime, e.g., “did X during last downtime and it worked well” | 21 |
| | General | Preparation-related statements that do not conform to the other subcodes | 4 |

Abbreviation: ED, emergency department.

Coding

One researcher independently coded the excerpts from the interview sessions while developing the codebook over multiple iterations. Themes are major subjects expressed by participants during the sessions; subcodes are based on the content of individual narrative statements. The research team reviewed the codebook and reached agreement on its content. In the final step, the codebook and excerpts were provided to a second researcher, who had not been involved in the interview sessions or codebook development, and who was asked to code the excerpts with the developed codebook. Disagreements between the two researchers were discussed, and consensus was reached in all cases, resulting in consistent coding.

Results

Quantitative Results: Laboratory Performance

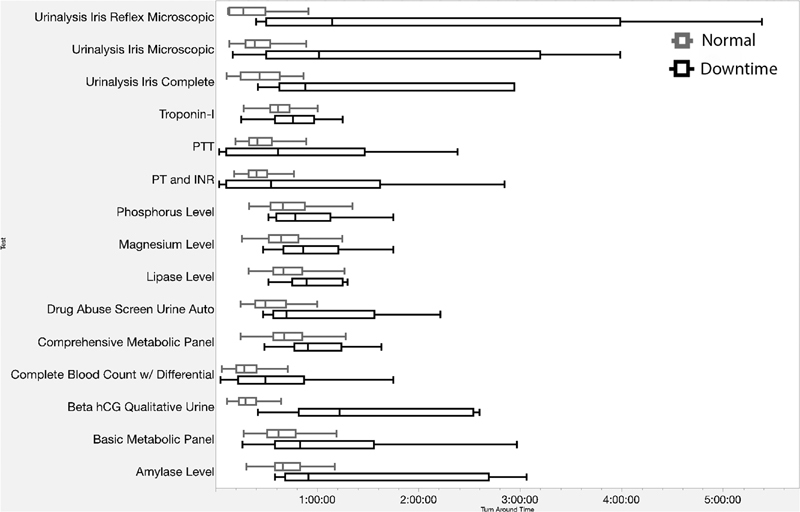

Of the 15 types of laboratory tests examined, 11 exhibited delays during downtime, and 9 of the 11 delayed tests were significantly delayed relative to normal operations according to the Kruskal–Wallis tests. The average delay across all test types was 20 minutes, a 62% increase in TAT over normal operating conditions. Depending on test type, delays ranged from 8 minutes (32.5% longer than normal) for a complete blood count with differential to 36 minutes (173% longer than normal) for a magnesium level request. A full summary of the delays is shown in Table 3 and Fig. 3.
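The percentages above are relative changes: (downtime TAT − normal TAT) / normal TAT × 100. A minimal sketch follows; the function names are hypothetical, and the implied baselines are back-calculated from the rounded delays quoted in the text, so they are approximate and are not values reported by the study.

```python
def percent_change(normal_minutes, downtime_minutes):
    """Relative change in TAT; positive values mean downtime was slower."""
    return (downtime_minutes - normal_minutes) / normal_minutes * 100


def implied_baseline(delay_minutes, percent):
    """Normal-operation TAT implied by an absolute delay and its percent change."""
    return delay_minutes / (percent / 100)


# Back-calculated from the rounded delays in the text (approximate).
for test, delay, pct in [("Complete blood count w/ differential", 8, 32.50),
                         ("Magnesium level", 36, 173.02)]:
    base = implied_baseline(delay, pct)
    print(f"{test}: ~{base:.1f} min normal, ~{base + delay:.1f} min downtime")
```

This shows why a similar absolute delay can produce very different percentages: the same number of minutes is a much larger relative change for a test with a short normal TAT.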

Table 3. Summary of testing data.

| Laboratory test type | Change in TAT, downtime relative to normal operation |
|---|---|
| Amylase level ᵃ | 44.74% |
| Basic metabolic panel ᵃ | 38.50% |
| Beta HCG qualitative urine ᵃ | 15.88% |
| Complete blood count w/ differential ᵃ | 32.50% |
| Comprehensive metabolic panel ᵃ | 19.21% |
| Drug abuse screen urine | 41.46% |
| Lipase level ᵃ | 57.65% |
| Magnesium level ᵃ | 173.02% |
| Phosphorus level | 146.54% |
| PT and INR | −37.10% |
| PTT | −8.25% |
| Troponin-I ᵃ | 100.65% |
| Urinalysis IRIS complete | −37.28% |
| Urinalysis IRIS microscopic | −20.83% |
| Urinalysis IRIS reflex microscopic ᵃ | 10.99% |

Abbreviations: HCG, human chorionic gonadotropin; INR, international normalized ratio; PT, prothrombin time; PTT, partial thromboplastin time; TAT, turnaround time.

ᵃ Significant delay (Kruskal–Wallis test).

Fig. 3.

Comparison of turnaround time for all available test types, normal (upper) versus downtime (lower). *Not all tests had sufficient numbers of observations for statistical analysis (n counts are given in Table 1).

Analysis of the available data showed that the data were not normally distributed and did not meet the homogeneity of variance required for an ANOVA test. 41 Kruskal–Wallis tests were therefore selected as the nonparametric ANOVA equivalent for comparing in-laboratory TATs for specific laboratory tests during normal operation and total downtime. Kruskal–Wallis tests require a minimum of five observations per group to be valid. The summarized results are shown in Table 3.

Unexpectedly, four tests showed improved performance during downtime. Because downtime data for these tests were limited (between 7 and 21 downtime observations per test, compared with up to 1,042 under normal operation; Table 1), no conclusion could be drawn from these observations. The spread of these tests in Fig. 3 suggests that more downtime samples could resolve the question, as the downtime data exhibit considerably more variance than the normal-operation data.

Qualitative Results: Interviews

Of the 372 excerpts collected, the two researchers initially agreed on the coding of 196. The remaining excerpts were discussed, and neither researcher was systematically favored in the final decisions: consensus sided with the initial researcher 87 times and with the independent researcher 85 times. In four instances, both researchers agreed that neither had initially identified the correct code, and the excerpt was recoded. Cohen's kappa for interrater reliability between the two researchers was moderate (κ = 0.48).
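Cohen's kappa corrects raw agreement for agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). Here, raw initial agreement was 196 of 372 excerpts (about 52.7%), which kappa then discounts for chance. The sketch below is a minimal illustration, assuming each excerpt receives exactly one code from each rater; the function name and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one nominal code per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if each rater coded independently at their own base rates.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes for four excerpts (not study data).
a = ["Training", "Volume", "Training", "Labeling"]
b = ["Training", "Volume", "Labeling", "Labeling"]
print(round(cohens_kappa(a, b), 3))
```

With many subcodes in the codebook, chance agreement tends to be low, so kappa sits closer to raw agreement than it would for a codebook with only a few categories.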

Comments about Downtime and about Workload and Workflow during downtime dominated the discussions, representing 34.1% and 32.5% of the discussion topics, respectively, followed by downtime Preparation at 20.2%. The complete breakdown is shown in Table 2.
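The theme percentages follow directly from the subcode frequencies in Table 2. The sketch below, with hypothetical function names, transcribes those frequencies and recomputes the shares. As a side effect, it checks that the subcodes sum to the 372 coded excerpts.

```python
# Subcode frequencies transcribed from Table 2 (coded excerpts per subcode).
CODEBOOK = {
    "Downtime": {"Discovery": 20, "Initiation": 13, "Recovery": 7,
                 "Communication": 36, "Handling": 25, "Infrastructure": 16,
                 "General": 10},
    "Specimen Handling": {"Labeling": 9, "Documentation": 7,
                          "Positive patient identification": 4},
    "Workload and Workflow": {"Patient safety": 26, "Job role": 38,
                              "Interruption": 12, "Result reporting": 18,
                              "Volume": 27},
    "Communication": {"Transparency": 19, "General": 10},
    "Preparation": {"Training": 33, "Document control": 2, "Procedure": 15,
                    "Improvement": 21, "General": 4},
}


def theme_shares(codebook):
    """Return (total excerpts, percentage of all excerpts under each theme)."""
    totals = {theme: sum(sub.values()) for theme, sub in codebook.items()}
    grand_total = sum(totals.values())
    return grand_total, {t: 100 * n / grand_total for t, n in totals.items()}


def subcode_shares(codebook, theme):
    """Percentage of a theme's excerpts that fall under each subcode."""
    sub = codebook[theme]
    total = sum(sub.values())
    return {s: 100 * n / total for s, n in sub.items()}


total, shares = theme_shares(CODEBOOK)
print(total)
for theme, pct in shares.items():
    print(f"{theme}: {pct:.1f}%")
```

The `subcode_shares` helper can likewise be used to recompute the within-theme percentages discussed in the subsections that follow.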

Downtime

Downtime was the most frequent discussion topic. Specifically, the discussion most frequently focused on communication among coworkers during downtime (28.3%), followed by how the event was handled (19.7%), how an event was discovered (15.7%), what infrastructure was needed (12.6%), how established downtime procedures were initiated (10.2%), and the recovery process after a situation was resolved (5.5%). The rest of the discussion was general downtime topics (7.8%).

Workload and Workflow

Concerns about workload and workflow during downtime were almost as prevalent as the Downtime theme. Discussion focused on job roles during downtime (31.4%), followed by the volume of work (22.3%) and concerns over patient safety (21.5%). The remainder of the discussion concerned result reporting (14.8%) and work task interruption from downtime-related sources (10%).

Downtime Preparation

Participants voiced concerns about how downtime preparation is handled. These comments addressed overall training and preparation for downtime (44%), opportunities for improvement (28%), and issues with the existing procedures (20%). The remaining comments were either general (5.3%) or mentioned problems with document control of the existing procedures, where multiple outdated versions were kept alongside the current documents (2.7%).

Communication

Communication during downtime was a recurring theme. However, the only specific subcode to emerge was concern about mutual understanding of limitations and needs between departments (65.5%). The remaining comments were categorized as general, with no distinct categories emerging within the theme (34.5%).

Specimen Handling

Specimen handling was the smallest theme in the discussions; however, the issues raised had the potential to affect the other areas. The most frequent concern was specimen labeling, which is necessary to perform laboratory testing and report results (45%), followed by general documentation such as the paper requisition forms (35%). Finally, participants commented on proper patient identification for care actions such as medication, testing, and imaging requests (20%).

Discussion

This study confirms that downtime is highly disruptive to patient care. The hybrid qualitative–quantitative analysis, which combined interview data with operational data, assessed the delays in laboratory result reporting. The analysis of the paper records suggested that the TAT of tests during downtime increased by 20 minutes, or 62%, compared with normal operation. While the archival paper records allowed this and other quantitative results, further verification and interpretation were needed due to sparsity and inconsistency in the data. Interview findings suggest that the actual delays were significantly larger, extending to multiple hours, far beyond the 20 minutes evidenced by the available data.

Interviews further provided insight into the experiences of employees who had encountered downtime, and into the sources of and reasons for these delays. Interviewees from the laboratory voiced concerns that the rest of the hospital was unaware of the limitations downtime places on laboratory operations and continued to order all tests as if there were no downtime. Communication issues, both between and within departments, were a recurring theme in the interviews.

There is a clear need to include effective communication plans in downtime contingency strategies. Some nursing participants voiced frustration that downtime impaired their ability to stay on top of information customarily conveyed by the EHR. During normal operation, the EHR helps keep the charge nurse informed about the status of all patients under the team's care. During downtime, the charge nurse must trust that all floor nurses are doing their jobs accurately, without having the means or the time to check on each floor nurse and patient individually.

One solution for the communication, expectation management, and workload management issues during downtime would be to improve the mutual understanding regarding operations and needs of different departments in the hospital. Emergency department participants indicated that they had little insight into the work and activities of the laboratory. Similarly, laboratory staff reported that they know little about emergency department operations and needs.

During normal operations, almost all of the work performed in the laboratory is automated, requiring minimal human intervention. Downtime workflows disable all of this automation, shifting the entire workload to manual processing that requires human intervention at every step. While interview participants from different areas of the hospital were collectively aware that the laboratory was affected by the downtime, many were not aware of the shift to manual work in the laboratory. During downtime, physicians and nurses continued to order a full spectrum of tests as if the hospital were not in downtime, causing the laboratory to become overloaded, with a significant increase in TAT. Not only did testing take longer, but the manual communication of test results back to clinicians further increased the workload of laboratory staff and the associated delays.

Interviewees frequently mentioned preparation for downtime. Nursing participants indicated that there was no formal training provided for downtime, and they had to rely on senior nurses who had worked in “pre-EHR” days. During downtime, senior nursing staff became overloaded by having to keep up with their own workload while supporting the younger staff.

A significant amount of the paper-recorded data was incomplete and could not be used in the analysis. In fact, none of the paper records from one of the two hospitals were sufficiently complete for a meaningful analysis. Downtime contingency plans, therefore, need to include approaches that facilitate manual paper documentation and ensure that documentation tasks do not impede patient care under these more stressful circumstances.

Based on their experiences of working through planned, unplanned, and total downtime events, interviewees provided the following recommendations for improving downtime management:

Have designated communication plans to alert all areas when one or more departments experience a downtime. The plan would also help to set and manage expectations regarding the time to complete requests from the laboratory, radiology, pharmacy, and other services.

Reduce the workload placed on services that are highly EHR dependent and automated, and that therefore require high levels of manual intervention during downtime. The clinical laboratory is capable of processing approximately 6,000 individual tests per hour, but only 15% of those tests require human intervention. It is physically and operationally impossible to staff the laboratory to handle this workload at 100% manual operation.

Train and drill all staff on downtime procedures. Ensure that all staff members are capable of carrying out the entire downtime procedure for their work area. Numerous participants spoke about how some staff members know only half of the tasks in a downtime procedure and “make up” a “close enough” solution for what they cannot remember. Nonstandard operations are inefficient, and problems compound further when work is handed off to the next shift.

All departments should be able to communicate to the rest of the hospital what they require to perform their work. For example, the laboratory has specific documentation and identification requirements that are necessary to ensure accurate result reporting; if the patient demographics on the forms are incorrect, the results may not be valid for the patient.

Have designated staff and support roles to handle nonclinical but necessary tasks such as communication and paperwork. This would reduce the workload of clinical staff so that they can continue to provide safe and timely patient care.
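The laboratory staffing problem raised in the recommendation on reducing the workload of automated services can be quantified with simple arithmetic. As a rough estimate, assume test volume stays near the stated capacity of approximately 6,000 tests per hour and that every test requires manual handling during downtime (both simplifying assumptions). Under those assumptions, the number of tests needing human intervention grows by a factor of roughly 6.7:

$$
6000 \times 0.15 = 900 \ \text{manually handled tests per hour (normal operation)}, \qquad \frac{6000}{900} \approx 6.7
$$

Even a partial reduction in ordering during downtime would therefore matter more than marginal staffing increases, since staffing alone cannot plausibly scale by this factor.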

Many of these suggestions fit with the general themes of SAFER guides. The SAFER guides provide a solid foundation, but further development of the guidelines with respect to downtime contingency planning is necessary.

Conclusion

The combined analysis of performance data and interviews shows that downtime introduces unique and significant demands on hospital staff and resources. To manage the allocation of the resources as well as maintain safe and effective patient care, better and more detailed downtime contingency plans with a focus on communications, resource allocation, and training are necessary.

Clinical Relevance Statement

This work highlights some of the issues encountered during EHR downtime, including a large-scale downtime event. It also identifies physical and organizational limitations, as well as opportunities, as areas of interest for future study. There is a clear need for enhanced planning to handle the continuing threat of downtime and ensure continuous, safe, and efficient patient care.

Multiple Choice Questions

- Downtime events can be triggered by:

a. Computer virus.

b. System upgrade.

c. Hardware failure.

d. All of the above.

Correct Answer: The correct answer is option d. Downtimes can originate from numerous sources, and even a planned downtime can last longer than anticipated, complicating patient care as much as an unplanned downtime event.

- Participants expressed concern for:

a. Not enough downtime events occur.

b. Desire to have better training for future events.

c. Sending staff home during downtime.

d. Not enough work during downtime.

Correct Answer: The correct answer is option b. All participants indicated a desire for better or new downtime training programs. Currently, downtime events trigger an all-hands scenario in which the hospital attempts to continue as close to normal operation as possible; in the laboratory and other automation-dependent areas of the hospital, however, there may be insufficient space to house the personnel needed to maintain normal workflows.

Acknowledgments

The authors would like to thank Tracy Kim and Josh Puthumana for their assistance in collecting the laboratory records for this study.

Funding Statement

Funding: This research was supported by a grant from the Agency for Healthcare Research and Quality (AHRQ): Evidence-Based Contingency Planning for Electronic Health Record Downtime (R21 HS024350–01A1).

Conflict of Interest: E.L. reports grants from the Agency for Healthcare Research and Quality during the conduct of the study. The authors declare no other conflicts of interest.

Authors' Contributions

Each author contributed to the conception or design of the work, data analysis and interpretation, critical revision of the article, and final approval of the version to be published.

Protection of Human and Animal Subjects

This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and was reviewed by the Institutional Review Boards of Virginia Polytechnic Institute, Virginia Commonwealth University, and MedStar Health.

References

- 1. Henry J, Pylypchuk Y, Searcy T, Patel V. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospitals: 2008–2015. 2016. Available at: https://www.healthit.gov/sites/default/files/briefs/2015_hospital_adoption_db_v17.pdf. Accessed December 16, 2016.

- 2. Blumenthal D. Launching HITECH. N Engl J Med. 2010;362(05):382–385. doi: 10.1056/NEJMp0912825.

- 3. Russo E, Sittig D F, Murphy D R, Singh H. Challenges in patient safety improvement research in the era of electronic health records. Healthc (Amst). 2016;4(04):285–290. doi: 10.1016/j.hjdsi.2016.06.005.

- 4. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010;363(06):501–504. doi: 10.1056/NEJMp1006114.

- 5. Buntin M B, Burke M F, Hoaglin M C, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood). 2011;30(03):464–471. doi: 10.1377/hlthaff.2011.0178.

- 6. Wolfstadt J I, Gurwitz J H, Field T S, et al. The effect of computerized physician order entry with clinical decision support on the rates of adverse drug events: a systematic review. J Gen Intern Med. 2008;23(04):451–458. doi: 10.1007/s11606-008-0504-5.

- 7. Bates D W, Kuperman G J, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(06):523–530. doi: 10.1197/jamia.M1370.

- 8. Campbell E M, Sittig D F, Guappone K P, Dykstra R H, Ash J S. Overdependence on technology: an unintended adverse consequence of computerized provider order entry. AMIA Annu Symp Proc. 2007:94–98.

- 9. Larsen E, Haubitz C, Wernz C, Ratwani R. Improving electronic health record downtime contingency plans with discrete-event simulation. In: Hawaii International Conference on System Sciences. Vol. 2016. Kauai; 2016.

- 10. Larsen E, Fong A, Wernz C, Ratwani R M. Implications of electronic health record downtime: an analysis of patient safety event reports. J Am Med Inform Assoc. 2018;25(02):187–191. doi: 10.1093/jamia/ocx057.

- 11. Sittig D F, Gonzalez D, Singh H. Contingency planning for electronic health record-based care continuity: a survey of recommended practices. Int J Med Inform. 2014;83(11):797–804. doi: 10.1016/j.ijmedinf.2014.07.007.

- 12. McDermott I E. Ransomware: tales from the CryptoLocker. Online Search. 2015;39(03):35–37.

- 13. Digital extortion: 26 things to know about ransomware. Available at: http://www.beckershospitalreview.com/healthcare-information-technology/digital-extortion-26-things-to-know-about-ransomware.html. Accessed April 15, 2016.

- 14. Hollywood Presbyterian declares emergency after hackers cut off data, demand $3.4 million ransom. Healthcare IT News. Available at: http://m.healthcareitnews.com/news/hollywood-presbyterian-declares-emergency-after-hackers-cut-data-demand-34-million-ransom?platform=hootsuite. Accessed April 14, 2016.

- 15. MedStar attack found to be ransomware, hackers demand Bitcoin. Healthcare IT News. Available at: https://www.healthcareitnews.com/news/medstar-attack-found-be-ransomware-hackers-demand-bitcoin. Published April 2016. Accessed October 9, 2018.

- 16. Larsen E, Rao A, Sasangohar F. Investigating electronic health record downtimes: a scoping review of literature and news media. J Med Internet Res. 2019. doi: 10.2196/preprints.13285.

- 17. Office of the National Coordinator for Health Information Technology. Contingency Planning - The Safety Assurance Factors for EHR Resilience (SAFER) Guides. Available at: http://www.healthit.gov/sites/safer/files/guides/safer_contingencyplanning_sg003_form_0.pdf. Accessed September 14, 2015.

- 18. Sittig D F, Ash J S, Singh H. The SAFER guides: empowering organizations to improve the safety and effectiveness of electronic health records. Am J Manag Care. 2014;20(05):418–423.

- 19. Sittig D F, Singh H. Electronic health records and national patient-safety goals. N Engl J Med. 2012;367(19):1854–1860. doi: 10.1056/NEJMsb1205420.

- 20. et al. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, DC: National Academies Press; 2012. Available at: http://cci.drexel.edu/faculty/ssilverstein/patientsafetyandhealthitprepub.pdf. Accessed May 22, 2017.

- 21. Office of the Federal Register. Public Welf. 2007. 45 CFR § 164.308(a)(7)(ii)(C). doi: 10.1037/018747.

- 22. Silverstein M D. An Approach to Medical Errors and Patient Safety in Laboratory Services: A White Paper Prepared for the Quality Institute Meeting Making the Laboratory a Partner in Patient Safety, Atlanta, April 2003. Atlanta; 2003.

- 23. Plebani M. Errors in laboratory medicine and patient safety: the road ahead. Clin Chem Lab Med. 2007;45(06):700–707. doi: 10.1515/CCLM.2007.170.

- 24. Wagar E A, Tamashiro L, Yasin B, Hilborne L, Bruckner D A. Patient safety in the clinical laboratory: a longitudinal analysis of specimen identification errors. Arch Pathol Lab Med. 2006;130(11):1662–1668. doi: 10.5858/2006-130-1662-PSITCL.

- 25. Sciacovelli L, Plebani M. The IFCC Working Group on laboratory errors and patient safety. Clin Chim Acta. 2009;404(01):79–85. doi: 10.1016/j.cca.2009.03.025.

- 26. Plebani M, Ceriotti F, Messeri G, Ottomano C, Pansini N, Bonini P. Laboratory network of excellence: enhancing patient safety and service effectiveness. Clin Chem Lab Med. 2006;44(02):150–160. doi: 10.1515/CCLM.2006.028.

- 27. Howanitz P J. Errors in laboratory medicine: practical lessons to improve patient safety. Arch Pathol Lab Med. 2005;129(10):1252–1261. doi: 10.5858/2005-129-1252-EILMPL.

- 28. Hallock M L, Alper S J, Karsh B. A macro-ergonomic work system analysis of the diagnostic testing process in an outpatient health care facility for process improvement and patient safety. Ergonomics. 2006;49(5-6):544–566.

- 29. Astion M L, Shojania K G, Hamill T R, Kim S, Ng V L. Classifying laboratory incident reports to identify problems that jeopardize patient safety. Am J Clin Pathol. 2003;120(01):18–26. doi: 10.1309/8EXC-CM6Y-R1TH-UBAF.

- 30. Straub W H, Gur D. The hidden costs of delayed access to diagnostic imaging information: impact on PACS implementation. AJR Am J Roentgenol. 1990;155(03):613–616. doi: 10.2214/ajr.155.3.2117364.

- 31. Wagar E A, Yuan S. The laboratory and patient safety. Clin Lab Med. 2007;27(04):909–930. doi: 10.1016/j.cll.2007.07.002.

- 32. Bonini P, Plebani M, Ceriotti F, Rubboli F. Errors in laboratory medicine. Clin Chem. 2002;48(05):691–698.

- 33. Lippi G, Plebani M. The importance of incident reporting in laboratory diagnostics. Scand J Clin Lab Invest. 2009;69(08):811–813. doi: 10.3109/00365510903307962.

- 34. Oral B, Cullen R M, Diaz D L, Hod E A, Kratz A. Downtime procedures for the 21st century: using a fully integrated health record for uninterrupted electronic reporting of laboratory results during laboratory information system downtimes. Am J Clin Pathol. 2015;143(01):100–104. doi: 10.1309/AJCPM0O7MNVGCEVT.

- 35. Blanchfield B, Fahlman C, Pittman M. Hospital Capital Financing In The Era of Quality and Safety: Strategies and Priorities for the Future – A Survey of CEOs. Robert Wood Johnson Found. 2005:1–27.

- 36. Hollensead S C, Lockwood W B, Elin R J. Errors in pathology and laboratory medicine: consequences and prevention. J Surg Oncol. 2004;88(03):161–181. doi: 10.1002/jso.20125.

- 37. Corbin J M, Strauss A. Grounded theory research: procedures, canons, and evaluative criteria. Qual Sociol. 1990;13(01):3–21.

- 38. Pope C, Ziebland S, Mays N. Qualitative research in health care. Analysing qualitative data. BMJ. 2000;320(7227):114–116.

- 39. Creswell J W, Knight V. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 4th ed. Los Angeles: SAGE Publications Inc; 2014.

- 40. Ratwani R, Fairbanks T, Savage E, et al. Mind the Gap. A systematic review to identify usability and safety challenges and practices during electronic health record implementation. Appl Clin Inform. 2016;7(04):1069–1087. doi: 10.4338/ACI-2016-06-R-0105.

- 41. Ott R L, Longnecker M. An Introduction to Statistical Methods and Data Analysis. Australia, United States: Brooks/Cole Cengage Learning; 2010.