Abstract

The mammalian cerebral cortex is characterized in vivo by irregular spontaneous activity, but how this ongoing dynamics affects signal processing and learning remains unknown. The associative plasticity rules demonstrated in vitro, mostly in silent networks, are based on the detection of correlations between presynaptic and postsynaptic activity and hence are sensitive to spontaneous activity and spurious correlations. Therefore, they cannot operate in realistic network states. Here, we present a new class of spike-timing-dependent plasticity learning rules with local floating plasticity thresholds, the slow dynamics of which account for metaplasticity. This novel algorithm is shown to both correctly predict homeostasis in synaptic weights and solve the problem of asymptotic stable learning in noisy states. It is shown to naturally encompass many other known types of learning rule, unifying them into a single coherent framework. The mixed presynaptic and postsynaptic dependency of the floating plasticity threshold is justified by a cascade of known molecular pathways, which leads to experimentally testable predictions.

Introduction

Various forms of synaptic plasticity have been discovered and characterized in the mammalian brain experimentally as well as theoretically. One type of associative plasticity is based on the relative spike timing between presynaptic and postsynaptic cells (Levy and Steward, 1983; Bell et al., 1997; Markram et al., 1997; Bi and Poo, 1998). This so-called spike-timing-dependent plasticity (STDP) has been shown to produce an asymmetric and reversible form of synaptic potentiation and depression depending on the temporal order of presynaptic and postsynaptic cell spikes. Models have been proposed to capture such mechanisms and have been very successful in accounting for the emergence of receptive fields, hippocampal place cells, synaptic competition, and stability (Gerstner et al., 1996; Song et al., 2000; van Rossum et al., 2000; Song and Abbott, 2001; Gütig et al., 2003; Yu et al., 2008). However, previous models suffer from an important limitation: their sensitivity to spontaneous activity. Because cerebral cortex is characterized by sustained and irregular spontaneous activity (Softky and Koch, 1993; Shadlen and Newsome, 1998), classical STDP models cannot be used to learn in such stochastic-like activity states, leading to either a complete loss of memory or a shift of the network dynamics toward a pathological synchronous state (Morrison et al., 2007). Because of this “catastrophic-forgetting” effect, memory retention in in vivo-like network states seems inconsistent with STDP.

We investigate a natural way to circumvent this problem through metaplasticity. Since early work on long-term potentiation (LTP) and depression (LTD), the need for higher-order regulation of plasticity rules has been evident. This process, termed metaplasticity (Deisseroth et al., 1995; Abraham and Bear, 1996), has been shown to take place through various molecular pathways and to be critical for the stabilization of learned statistics (Abraham, 2008). One major role of metaplasticity is to promote in a conditional way (depending on the previous history of synapses) persistent changes in synaptic weights during learning. A learning rule with activity-dependent induction threshold, known as the Bienenstock, Cooper, and Munro (BCM) theory, was introduced previously (Bienenstock et al., 1982). The BCM model is considered as a precursor form of metaplasticity and was shown to be consistent with STDP (Senn et al., 2001; Izhikevich and Desai, 2003; Pfister and Gerstner, 2006; Clopath et al., 2010). However, in these models, the activity-dependent threshold was introduced artificially or its postsynaptic dependency could not account for priming experiments reported in the hippocampus (Huang et al., 1992; Christie and Abraham, 1992; Mockett et al., 2002).

Thus, to our knowledge, no model has yet been proposed to account comprehensively for the multiple scales on which plasticity may occur and, in particular, the influence of previous presynaptic activity patterns. We introduce here an STDP model endowed with fast and slow induction processes that can, respectively, account for STDP, short-term competitive interactions (Sjöström et al., 2001; Wang et al., 2005) as well as long-term plasticity regulation as observed in hippocampal priming experiments. This metaplastic model of STDP, or mSTDP, provides a possible solution to the catastrophic-forgetting problem.

Materials and Methods

The mSTDP model

Biological basis.

The mSTDP model is based on a number of experimental observations in vitro. First, important nonlinear interactions in STDP were reported when spike pairings occur closely in time or if triplet protocols are considered (Markram et al., 1997; Sjöström et al., 2001; Wang et al., 2005). Second, priming experiments were conducted in classical LTP/LTD experiments by stimulating the synaptic pathway to be conditioned before the LTP/LTD induction protocol with a high-frequency tetanus or a low-frequency stimulation (Christie and Abraham, 1992; Huang et al., 1992; Wang et al., 1998; Mockett et al., 2002). These studies demonstrated that subthreshold preactivation of LTP or LTD mechanisms could impact their reactivation. Based on these results, it appears that plastic processes occurring at the same synapse through the same molecular pathways exhibit regulation on three distinct timescales that account, respectively, for the temporal and order specificity to induce synaptic weight changes (STDP rule), synaptic competition between paired and unpaired synapses (short-term interactions), and metaplasticity (long-term interactions). We propose here a unified phenomenological model in which the functional diversity of the synaptic effects naturally emerges across these timescales. This mSTDP model is based on putative biomolecular interactions between kinases and phosphatases acting on the synaptic weights and is inspired by experimental in vitro data on the effect of priming stimulations on LTP and LTD induction in the hippocampus.

The mSTDP algorithm.

To implement this as a learning rule, LTP and LTD are treated separately such that the final synaptic weight change results from a linear summation of the scaling contribution produced by these two processes. Both LTP and LTD will be described through the action of two variables: a fast variable responsible for the direct action of these plasticity rules and a slow variable that will act as an induction threshold for authorizing the expression of plasticity. Both variables are computed on eligibility traces resulting from a triplet rule (Pfister and Gerstner, 2006).

For LTP, every presynaptic spike followed by a postsynaptic spike will increase the corresponding eligibility trace by an amount governed by the STDP exponential curve. This eligibility trace then decays with a slower time constant accounting for the triplet interaction. Both the fast and slow actions of LTP are then computed directly with this eligibility trace. The fast action will give rise to an instantaneous synaptic weight increase at the time of a postsynaptic spike, whereas the slow action that is acting at the same instant is a nonlinear function of the eligibility trace averaged over a long timescale and across synapses. The latter will act as a threshold for plasticity induction so that we only consider the positive amount of synaptic change resulting from the difference between fast and slow variables. This will be computed by considering a rectified function of their difference. The mechanism is identical for LTD except that the slow variable will be driven by the LTP eligibility trace accounting for the concurrent changes in LTP and LTD induction thresholds (Mockett et al., 2002). In the special case in which the slow variables are taken as constants, this model formally reduces to a learning rule similar to a model introduced previously by Senn et al. (2001) in which LTP and LTD are both subject to fixed induction thresholds. In this case, the model exhibits plasticity induction thresholds reminiscent of the Artola, Bröcher, and Singer (ABS) learning rule (Artola et al., 1990; Artola and Singer, 1993). In that study, the authors reported that the depolarization level of the postsynaptic neuron defines a plasticity threshold for LTD and a second threshold for LTP at higher depolarization levels. When the postsynaptic membrane potential was clamped at values under these thresholds, LTD and LTP were both absent. In the study by Senn et al. 
(2001), these thresholds are defined at the suprathreshold level but obey the same induction crossing order for LTD and LTP for increasing postsynaptic spiking activity.

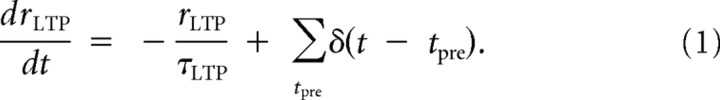

For the LTP mechanism, and for a given synaptic connection, our model posits that every presynaptic spike elicits instantaneous incrementation of a variable rLTP, followed by exponential decay with a time constant τLTP. This variable is used to detect pairing activity within the LTP side of the STDP curve:

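$$\frac{dr_{LTP}}{dt} = -\frac{r_{LTP}}{\tau_{LTP}} + S_{pre}(t) \tag{1}$$

where $S_{pre}(t)$ is the presynaptic spike train (a sum of delta functions at the presynaptic spike times).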

Once a postsynaptic spike is triggered, the current value of rLTP is used to determine the instantaneous increment of the LTP eligibility trace δwLTP, which decays with a time constant TLTP, slower than the STDP time constant (τLTP):

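$$\frac{d\,\delta w_{LTP}}{dt} = -\frac{\delta w_{LTP}}{T_{LTP}} + r_{LTP}(t)\,S_{post}(t) \tag{2}$$

where $S_{post}(t)$ is the postsynaptic spike train.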

Note that, once Equation 1 is solved and used in Equation 2, the dynamics of δwLTP depends on the correlation between presynaptic and postsynaptic spiking activity through the product of these point processes. The LTP eligibility trace δwLTP is used to define two different actions on the synaptic weight. The instantaneous action of LTP takes place at the postsynaptic spike time and results in an increase given by the value of δwLTP right after the spike. We chose a linear relationship for the sake of simplicity, but a monotonic nonlinear function involving more detailed biophysical processes could be used as well.
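The trace dynamics described above can be sketched in a few lines of Python. This is a minimal, event-driven illustration and not the authors' implementation: it assumes a unit increment of rLTP at each presynaptic spike, spike times given in seconds, and traces that start at zero. The function name `ltp_eligibility` is hypothetical.

```python
import math

def ltp_eligibility(pre_spikes, post_spikes, tau_ltp=0.020, T_ltp=1.0):
    """Return (t_post, dw_ltp) pairs, with dw_ltp read right after each postsynaptic spike.

    Assumptions (not from the source): unit increment of r_LTP per presynaptic
    spike, times in seconds, both traces start at zero.
    """
    # Merge both trains; at equal times the presynaptic spike (kind 0) is handled first.
    events = sorted([(t, 0) for t in pre_spikes] + [(t, 1) for t in post_spikes])
    r_ltp, dw_ltp = 0.0, 0.0
    t_last = events[0][0] if events else 0.0
    out = []
    for t, kind in events:
        dt = t - t_last
        r_ltp *= math.exp(-dt / tau_ltp)   # fast decay of the pairing detector
        dw_ltp *= math.exp(-dt / T_ltp)    # slower decay of the eligibility trace
        if kind == 0:
            r_ltp += 1.0                   # presynaptic spike increments r_LTP
        else:
            dw_ltp += r_ltp                # postsynaptic spike reads out r_LTP
            out.append((t, dw_ltp))
        t_last = t
    return out

# Two pre-before-post pairings, 100 ms apart: the second readout is larger
# because the eligibility trace has not yet decayed (triplet-like interaction).
for t_post, dw in ltp_eligibility([0.000, 0.100], [0.010, 0.110]):
    print(f"t_post = {t_post:.3f} s, dw_LTP = {dw:.4f}")
```

Because the eligibility trace decays with the slow constant TLTP, pairings that follow each other within about a second accumulate, which is how the sketch reproduces the dependence on pairing frequency.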

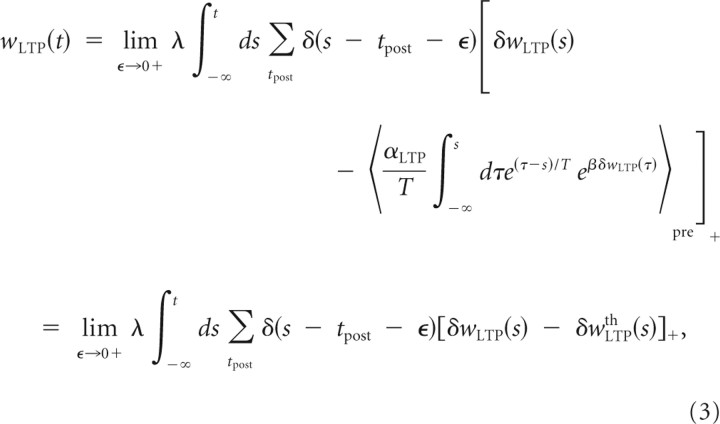

The second component introduced in our model describes the downregulation of the amount of LTP with a slow time constant and thus monitors the past history of a nonlinear function of δwLTP. We hypothesize that this LTP downregulation term can be modeled as an exponential operator on δwLTP averaged over a long time interval T and over a spatially distributed presynaptic ensemble, allowing some form of contextual regulation: $\delta w_{LTP}^{th}(t) = \alpha_{LTP}\exp\left(\beta\,\langle \delta w_{LTP}\rangle_{T,pre}\right)$, where $\langle\cdot\rangle_{T,pre}$ denotes the average over the interval T and over the presynaptic ensemble. The coefficient αLTP defines the impact of LTP downregulation relative to the potentiation term, and β determines the rate of modulation of the LTP downregulation. The average over a presynaptic ensemble determines the nature (including heterosynaptic effects) and the spatial extent of competition between presynaptic neurons. Unless stated otherwise, we will consider for simplicity an average over all presynaptic neurons such that the memory context is the same for all synapses, whether they are stimulated or not, and all synapses share the same plasticity thresholds (the slow variables δwLTPth). The slow variable acts on δwLTP right after each postsynaptic spike so that the complete synaptic increment at time t is given by the integration over times preceding t of the rectified difference between these two terms, such that

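$$\Delta w_{LTP}(t) = \lambda \int_{-\infty}^{t} \left[\delta w_{LTP}(s+\epsilon) - \delta w_{LTP}^{th}(s)\right]_{+} S_{post}(s)\, ds \tag{3}$$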

where λ is the learning magnitude relative to the synaptic weight. In this equation and in the following, the product of two identical point processes for the postsynaptic or presynaptic activity is performed with an infinitesimal difference ε such that the value used for the weight update is taken immediately after the spike.

As mentioned above, the slow variable δwLTPth is used as an induction threshold by considering the net effect of LTP as the rectified difference between the fast and slow variables at each postsynaptic spike. In the absence of synaptic activity, the slow variable is equal to αLTP, which is also the lower bound for this variable. As long as fluctuations of the fast variable can overcome the impact of the slow variable, LTP can occur. This induction threshold changes according to the past history of the synapses and can eventually prevent any additional potentiation.

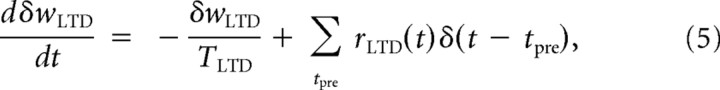

For LTD, each postsynaptic spike followed by a presynaptic spike elicits behavior governed by a set of equations similar to Equations 1 and 2:

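$$\frac{dr_{LTD}}{dt} = -\frac{r_{LTD}}{\tau_{LTD}} + S_{post}(t) \tag{4}$$

$$\frac{d\,\delta w_{LTD}}{dt} = -\frac{\delta w_{LTD}}{T_{LTD}} + \alpha\, r_{LTD}(t)\,S_{pre}(t) \tag{5}$$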

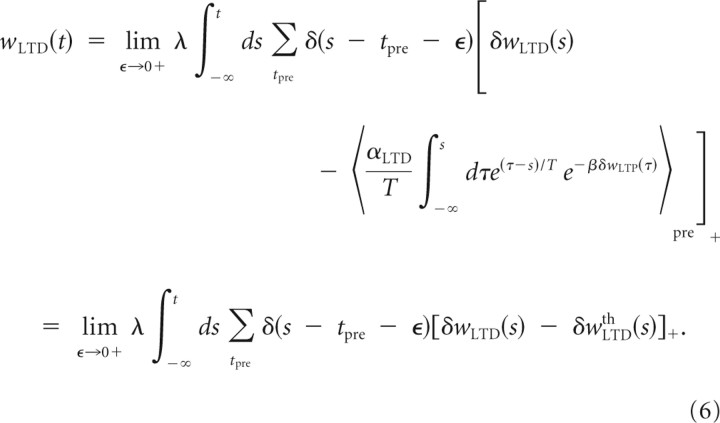

where α accounts for the asymmetric impact of the LTD and LTP sides of STDP. The LTD eligibility trace δwLTD is hypothesized to have an instantaneous effect on AMPARs that will act to reduce the net synaptic weight of the synapse by δwLTD right after the presynaptic neuron has fired. Moreover, the downregulation of the LTP variable is assumed to lower the threshold for LTD. This is accounted for by introducing an additional term that is inversely proportional to the LTP downregulation term averaged over the past history and the same presynaptic ensemble (as shown by the sign change in the exponential term and its dependency on LTP): $\delta w_{LTD}^{th}(t) = \alpha_{LTD}\exp\left(-\beta\,\langle \delta w_{LTP}\rangle_{T,pre}\right)$. The coefficient αLTD is the magnitude of facilitation of LTD that acts right after each presynaptic spike. Therefore, the net depressive effect on the synaptic weight at time t is given by the integration over times preceding t of the rectified difference between these two terms:

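$$\Delta w_{LTD}(t) = \lambda \int_{-\infty}^{t} \left[\delta w_{LTD}(s+\epsilon) - \delta w_{LTD}^{th}(s)\right]_{+} S_{pre}(s)\, ds \tag{6}$$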

The rectified difference creates plasticity induction thresholds for LTP and LTD that are essential for stabilization of individual synaptic weights. In the absence of synaptic activity, the resting value for the slow variable is αLTD, which is also the upper bound for this variable. Similar models could be obtained with other positive supralinear functions. Altogether, the synaptic weight fluctuations are dictated by the difference between LTP and LTD:

$$\frac{dw}{dt} = \lambda\left[\delta w_{LTP}(t+\epsilon) - \delta w_{LTP}^{th}(t)\right]_{+} S_{post}(t) - \lambda\left[\delta w_{LTD}(t+\epsilon) - \delta w_{LTD}^{th}(t)\right]_{+} S_{pre}(t) \tag{7}$$

We will assume lower bounds (wmin = 0 nS), and, in the case of Figure 9, we will introduce realistic upper hard bounds to avoid the local divergence of one or very few synaptic weights. This latter situation seems unrealistic because it would require all the impact of the distributed synaptic competition to be restricted to the reinforcement of a single synapse while forcing all other synapses to their lower bound value. Note that both the mSTDP algorithm and the BCM rule provide asymptotic stability of the mean of the synaptic weight distribution, as will be shown below. The further addition of hard bounds in the mSTDP model forbids the divergence of the weights of individual synapses because of the stochastic nature of competition in this latter model (which does not exist in rate-based models such as BCM).
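The learning rule described in this section can be sketched as a small fixed-step simulation of a single synapse. This is only an illustration under stated assumptions, not the authors' code: the thresholds are taken as αLTP·exp(β·avg) and αLTD·exp(−β·avg), where `avg` is a leaky average of δwLTP with time constant `T_slow` standing in for the average over the window T; no averaging over a presynaptic ensemble is performed; trace increments are 1 for LTP and α for LTD; and λ is applied as an absolute step. The class name and all attribute names are hypothetical.

```python
import math
import random

class MSTDPSynapse:
    """One synapse under a sketch of the mSTDP rule (see assumptions in the lead-in)."""

    def __init__(self, w=0.3, lam=2.5e-6, alpha=0.46, beta=0.15,
                 alpha_ltp=2.5, alpha_ltd=2.3, tau_ltp=0.020, tau_ltd=0.025,
                 T_ltp=1.0, T_ltd=1.0, T_slow=5.0, w_min=0.0, w_max=0.5):
        self.w, self.lam, self.alpha, self.beta = w, lam, alpha, beta
        self.alpha_ltp, self.alpha_ltd = alpha_ltp, alpha_ltd
        self.tau_ltp, self.tau_ltd = tau_ltp, tau_ltd
        self.T_ltp, self.T_ltd, self.T_slow = T_ltp, T_ltd, T_slow
        self.w_min, self.w_max = w_min, w_max
        self.r_ltp = self.r_ltd = 0.0      # fast pairing detectors
        self.dw_ltp = self.dw_ltd = 0.0    # eligibility traces (fast variables)
        self.avg = 0.0                     # leaky average of dw_ltp (assumed form)

    def thresholds(self):
        """Slow variables: LTP threshold rises and LTD threshold falls with avg."""
        th_ltp = self.alpha_ltp * math.exp(self.beta * self.avg)
        th_ltd = self.alpha_ltd * math.exp(-self.beta * self.avg)
        return th_ltp, th_ltd

    def step(self, dt, pre=False, post=False):
        """Advance by dt seconds; pre/post flag a spike in this step."""
        self.r_ltp *= math.exp(-dt / self.tau_ltp)
        self.r_ltd *= math.exp(-dt / self.tau_ltd)
        self.dw_ltp *= math.exp(-dt / self.T_ltp)
        self.dw_ltd *= math.exp(-dt / self.T_ltd)
        self.avg += dt * (self.dw_ltp - self.avg) / self.T_slow
        th_ltp, th_ltd = self.thresholds()
        if pre:   # handled first, so a coincident pair counts as pre-before-post
            self.r_ltp += 1.0
            self.dw_ltd += self.alpha * self.r_ltd
            self.w -= self.lam * max(0.0, self.dw_ltd - th_ltd)
        if post:
            self.r_ltd += 1.0
            self.dw_ltp += self.r_ltp
            self.w += self.lam * max(0.0, self.dw_ltp - th_ltp)
        self.w = min(self.w_max, max(self.w_min, self.w))  # hard bounds
        return self.w

# Usage: independent Poisson-like pre and post trains at 8 spikes/s for 20 s.
random.seed(0)
syn, dt = MSTDPSynapse(), 1e-3
for _ in range(20000):
    syn.step(dt, pre=random.random() < 8 * dt, post=random.random() < 8 * dt)
print("final weight (nS):", syn.w)
```

The rectification is applied to the value of each fast variable right after its own spike, which mirrors the ε convention used in the text. Replacing the leaky average by a true sliding window, or sharing `avg` across many synapses, would be needed to study the ensemble effects discussed above.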

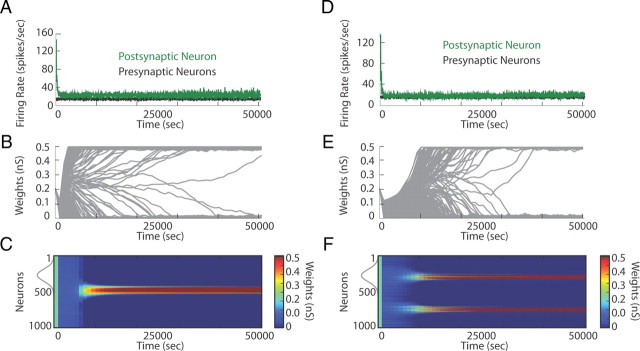

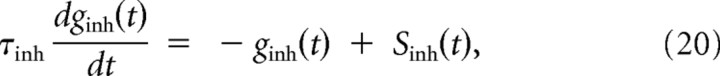

Figure 9.

Emergence of receptive fields with random and sparse activation of a spatially Gaussian distributed stimulation. A, Time evolution of the mean presynaptic (black) and postsynaptic (green) neuron firing rates. B, Time evolution of individual synaptic weights with hard upper bounds. These simulations were done by averaging slow threshold variables over all synapses. C, Emergence of a unimodal receptive field (one-dimensional mapping on the neuronal sheet). Synaptic weights are color coded and illustrated over the whole stimulation period. The Gaussian profile of the stimulation is shown in gray. D–F, Same as in A–C but with a finite diffusion coefficient for the slow variables (D = 10³ units²/s).

A weight-dependent version of mSTDP could be obtained by adding this dependency in Equation 7, as described by van Rossum et al. (2000), Gütig et al. (2003), and Morrison et al. (2007), so that each LTP and LTD synaptic weight update is modulated by the current weight value.

From BCM plasticity to stochastic mSTDP

mSTDP without slow variables.

Here, we formally describe the relationship between mSTDP and the BCM rule (Bienenstock et al., 1982) for a simple model in which two neurons are connected through a plastic synapse and follow independent Poisson processes. We will first consider the case in which the slow variables δwLTPth and δwLTDth have been set to a fixed value of 0 so that the rectification can be neglected. In this setting, the model reduces to a triplet rule defined by the fast variables δwLTP and δwLTD alone in Equations 3 and 6. The computation of the mean synaptic variation as a function of presynaptic and postsynaptic rate is straightforward and similar to that of Pfister and Gerstner (2006):

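$$\left\langle \frac{dw}{dt} \right\rangle = \rho_{pre}\,\Phi(\rho_{post}, \rho_{pre}), \qquad \Phi(\rho_{post}, \rho_{pre}) = \lambda\,\rho_{post}\left[\tau_{LTP}\left(1 + T_{LTP}\,\rho_{post}\right) - \alpha\,\tau_{LTD}\left(1 + T_{LTD}\,\rho_{pre}\right)\right] \tag{9}$$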

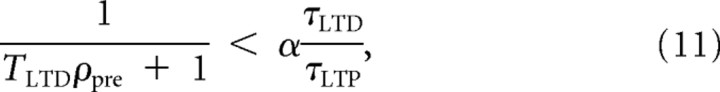

where ρpre and ρpost are, respectively, the presynaptic and postsynaptic firing rates, and Φ(ρpost, ρpre) is a quadratic function of ρpost that crosses the abscissa at 0 and another point given by

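$$\rho_{post}^{*} = \frac{\alpha\,\tau_{LTD}\left(1 + T_{LTD}\,\rho_{pre}\right) - \tau_{LTP}}{\tau_{LTP}\,T_{LTP}} \tag{10}$$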

which is positive if

$$\rho_{pre} > \frac{\tau_{LTP} - \alpha\,\tau_{LTD}}{\alpha\,\tau_{LTD}\,T_{LTD}} \tag{11}$$

a condition that is true for any ρpre if τLTP < ατLTD. Otherwise, the existence of a second positive crossing point will depend on the presynaptic firing rate. Note that the dependence on the presynaptic firing rate was not present in the description of the threshold in the original BCM rule (Bienenstock et al., 1982). However, in the case in which TLTD is very small or 0, the floating threshold dependence on presynaptic firing rate disappears in our model. This scenario is formally equivalent to the triplet rule found by Pfister and Gerstner (2006) after fitting their model to in vitro data (Sjöström et al., 2001; Wang et al., 2005).
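As a numerical illustration of this rate-based limit, the sketch below evaluates the quadratic plasticity curve and its nonzero crossing point. It assumes unit trace increments (scaled by α for LTD), which gives Φ(ρpost, ρpre) = λρpost[τLTP(1 + TLTPρpost) − ατLTD(1 + TLTDρpre)]. The default parameters are the fitted values quoted in this paper, λ is set to 1 for readability, and the function names are hypothetical.

```python
def phi(rho_post, rho_pre, lam=1.0, alpha=0.46, tau_ltp=0.020, tau_ltd=0.025,
        T_ltp=0.845, T_ltd=0.995):
    """Mean weight drift per unit presynaptic rate, slow variables set to 0.

    Rates in spikes/s, time constants in seconds.
    """
    ltp = tau_ltp * (1.0 + T_ltp * rho_post)
    ltd = alpha * tau_ltd * (1.0 + T_ltd * rho_pre)
    return lam * rho_post * (ltp - ltd)

def transition_threshold(rho_pre, alpha=0.46, tau_ltp=0.020, tau_ltd=0.025,
                         T_ltp=0.845, T_ltd=0.995):
    """Nonzero postsynaptic rate at which phi changes sign (LTD below, LTP above)."""
    return (alpha * tau_ltd * (1.0 + T_ltd * rho_pre) - tau_ltp) / (tau_ltp * T_ltp)

for rho_pre in (20.0, 50.0):
    theta = transition_threshold(rho_pre)
    print(f"rho_pre = {rho_pre:.0f} spikes/s -> LTD-LTP transition at {theta:.2f} spikes/s")

# With T_LTD = 0 the crossing point no longer depends on the presynaptic rate.
print(transition_threshold(20.0, T_ltd=0.0) == transition_threshold(50.0, T_ltd=0.0))
```

With these fitted values, τLTP exceeds ατLTD, so the crossing point is positive only above a minimal presynaptic rate, in line with the condition stated in the text.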

mSTDP with fast and slow variables.

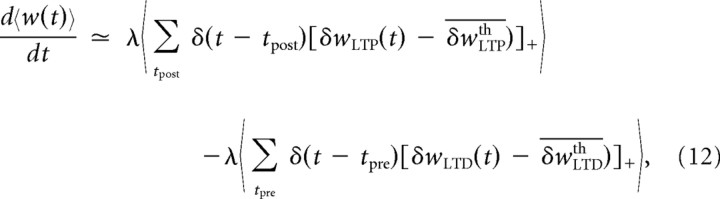

One of the main ingredients of the BCM theory in providing metaplasticity is that the threshold between LTP and LTD for various postsynaptic firing rates can shift as a supralinear function of the past activity of the postsynaptic neuron. We show here that the slow variables linked to the LTP downregulation similarly fulfill this role. However, they do so without the constraint of a supralinear power relationship between the LTD–LTP transition threshold and the mean postsynaptic activity, which is introduced in an ad hoc manner in the BCM model. The slow components in LTP and LTD can be considered constant during measurement of the BCM curve because the corresponding timescales are several orders of magnitude apart. Because we can compute, at least numerically, the expected asymptotic values of δwLTPth and δwLTDth for a given presynaptic and postsynaptic firing rate, we can derive the relationship between the BCM threshold and the postsynaptic firing averaged over the past history. We can thus write the mean synaptic weight derivative as

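$$\left\langle \frac{dw}{dt} \right\rangle = \lambda\left\langle \left[\delta w_{LTP}(t) - \delta w_{LTP}^{th}\right]_{+} S_{post}(t) \right\rangle - \lambda\left\langle \left[\delta w_{LTD}(t) - \delta w_{LTD}^{th}\right]_{+} S_{pre}(t) \right\rangle \tag{12}$$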

where the ε term is omitted in the notation for the sake of simplification. The first averaged term in Equation 12 can be computed through the joint probability distribution of the postsynaptic spike times {tpost} and the stochastic process δwLTP(t): P(δwLTP, tpost). The average can then be performed on the rectified function using this joint distribution. Moreover, by using the conditional probability definition and the Poisson stationarity, we can write P(δwLTP, tpost) = P(δwLTP|tpost)P(tpost) = P(δwLTP|tpost)ρpost. A similar expression can be obtained for LTD such that we can write Equation 12 as

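$$\left\langle \frac{dw}{dt} \right\rangle = \lambda\,\rho_{post}\int \left[\delta w_{LTP} - \delta w_{LTP}^{th}\right]_{+} P(\delta w_{LTP} \mid t_{post})\, d\delta w_{LTP} - \lambda\,\rho_{pre}\int \left[\delta w_{LTD} - \delta w_{LTD}^{th}\right]_{+} P(\delta w_{LTD} \mid t_{pre})\, d\delta w_{LTD} \tag{13}$$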

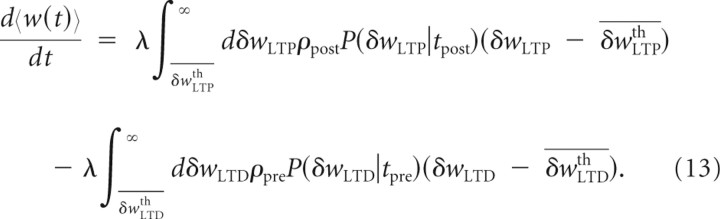

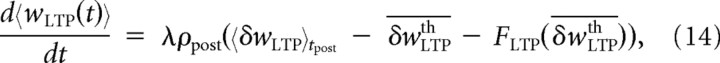

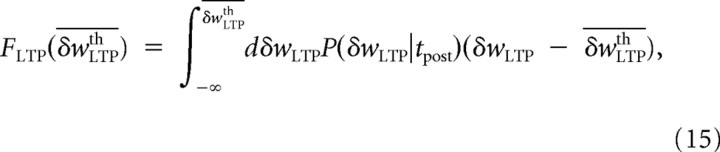

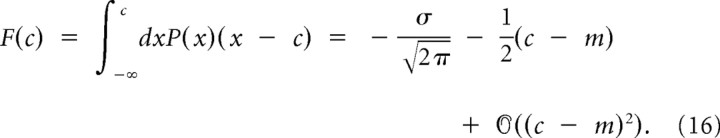

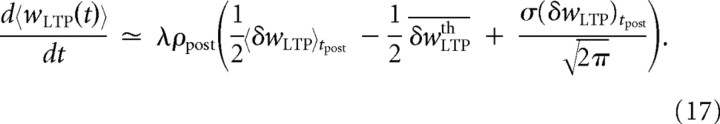

We will consider three asymptotic cases for LTP and LTD to study the effect of the slow variables. In the linear regime (〈δw〉 ≫ δwth), the threshold–linear function is approximated by a linear function so that a simple average can be performed in Equation 13. In the threshold regime (〈δw〉 ≪ δwth), the linear–threshold function is almost always equal to 0 so that we can neglect the corresponding integrals in Equation 13. Finally, we will also consider the case in which the distribution is close to the threshold (〈δw〉 ∼ δwth). In Equation 13, the term for LTP can be written as follows (an identical computation can be done for LTD):

|

where

|

is a function of the LTP threshold and 〈 … 〉tpost is a conditional average over the postsynaptic spike times. In this special case when the mean of the fast variable is assumed to be close to the threshold, FLTP(δwLTPth) can be expanded around the mean value of the distribution P(δwLTP). We will assume that the scale of synaptic weight changes λ is very small compared with the postsynaptic and presynaptic mean firing rates so that the distributions P(δwLTP|tpost) and P(δwLTD|tpre) in Equation 13 can be approximated by Gaussian probability distributions (diffusion approximation). The general form of such an expansion for a Gaussian distribution P(x) of mean value m and variance σ² is given by

|

The LTP contribution can therefore be written as

|

From the definition of the fast variable in Equation 3, it can be shown that the last term grows as $\rho_{post}^{2}$ for Poisson processes (the variance of the corresponding compound shot noise process grows as $\rho_{post}^{4}$). Therefore, the overall form of the BCM curve is unchanged. Note, however, that this equation is only valid in the limit of the diffusion approximation. If the thresholds are set to 0, the mean should also be close to 0 and the diffusion approximation is no longer valid. These different asymptotic cases are summarized in Table 1. In particular, $\rho_{post}\langle\delta w_{LTP}\rangle_{t_{post}} = \tau_{LTP}T_{LTP}\,\rho_{pre}\rho_{post}^{2} + \tau_{LTP}\,\rho_{pre}\rho_{post}$ and $\rho_{pre}\langle\delta w_{LTD}\rangle_{t_{pre}} = \alpha\tau_{LTD}T_{LTD}\,\rho_{post}\rho_{pre}^{2} + \alpha\tau_{LTD}\,\rho_{pre}\rho_{post}$, such that $\lambda\left(\rho_{post}\langle\delta w_{LTP}\rangle_{t_{post}} - \rho_{pre}\langle\delta w_{LTD}\rangle_{t_{pre}}\right) = \rho_{pre}\Phi(\rho_{post}, \rho_{pre})$. This last relation corresponds to the curve of Equation 9 found in the case in which the slow variables are neglected. In the situation in which slow variables are taken into account, more complex behavior can be expected from the synaptic weights depending on the definition of the presynaptic ensemble sharing the same slow variables, and this can be explored by introducing a new function Φ1(ρpost, ρpre). This function is derived from the reference BCM curve (case without slow variables in our model) with a shift in the floating threshold proportional to a term involving δwLTPth, where δwLTPth is an exponential function of the presynaptic activity averaged over time and over the presynaptic ensemble. This specific presynaptic dependence will be responsible for the various forms of synaptic competition observed in our simulations. In particular, when all synapses share the same slow variables (identical δwLTPth for all synapses), the ones that are targeted by presynaptic neurons with higher firing rates tend to express a lower LTD–LTP transition threshold. Conversely, when thresholds are assumed to be independent across synapses, a high presynaptic firing rate results in a higher floating threshold because of the exponential term. This will be illustrated in the following simulations.
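The three asymptotic regimes can be checked numerically with the standard closed form for the mean of a rectified Gaussian variable: for x with mean m and standard deviation σ, E[max(x − θ, 0)] = (m − θ)·C(z) + σ·p(z), with z = (m − θ)/σ and C, p the standard normal cumulative distribution and density. The sketch below is a generic illustration of the diffusion approximation invoked in the text; it is not claimed to be the exact expansion used by the authors, and the function name and numerical values are arbitrary.

```python
import math

def rectified_gaussian_mean(m, sigma, theta):
    """E[max(x - theta, 0)] for x ~ N(m, sigma^2)."""
    z = (m - theta) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))           # standard normal CDF at z
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)    # standard normal density at z
    return (m - theta) * cdf + sigma * pdf

m, sigma = 1.0, 0.1
# Linear regime (mean well above threshold): result is close to m - theta.
print("linear   :", rectified_gaussian_mean(m, sigma, theta=0.0))
# Threshold regime (mean well below threshold): result is close to 0.
print("threshold:", rectified_gaussian_mean(m, sigma, theta=2.0))
# Near-threshold regime (mean equal to threshold): result is sigma / sqrt(2*pi).
print("near     :", rectified_gaussian_mean(m, sigma, theta=1.0))
```

In the near-threshold case the result is set by the width of the distribution rather than by its mean, which is why fluctuations of the fast variable matter most in that regime.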

Table 1.

LTP and LTD asymptotic behaviors

| Regime | LTP | LTD |
|---|---|---|
| Threshold regime (〈δw〉 ≪ δwth) | 0 | 0 |

The middle and last columns contain the asymptotic expression of LTP and LTD terms for the three possible regimes corresponding to the cases in which the fast variables are well below, close to, or well above the slow variables. Depending on the past activity of the synapse and on the parameter set, any combination of these LTP/LTD terms can occur and describe the current plasticity pattern.

Because the LTP and LTD thresholds depend on the past history of the synapse and on the coefficients αLTP and αLTD, any combination of the terms in Table 1 can occur during the learning process. However, from the definition of these thresholds as exponential functions of the postsynaptic and presynaptic activities (Eqs. 3, 6), it can be shown that the BCM sliding threshold always increases with the postsynaptic and presynaptic firing rate past histories and that ρpost = 0 is always a crossing point. In particular, because of the induction thresholds, there is a postsynaptic domain including 0 (null activity level) where no plasticity can take place, which corresponds to rare coincidences between presynaptic and postsynaptic spiking activities: the “zero domain.” Note that this plateau domain differs from the origin singularity at 0 in the BCM model, because it is not limited to the 0 point but encompasses a postsynaptic activity domain that is similar to the dead zone assumed in the ABS model. Beyond this stable domain, three regimes can be outlined for increasing past synaptic activity. In the pure LTP regime, the BCM curve is entirely positive and only potentiation can occur. This scenario can happen when the past synaptic activity was very low and the corresponding slow variables could not shift the curve downward. For higher past synaptic activity, the slow variables δwLTPth and δwLTDth have reached values high enough to define a non-null BCM-like floating threshold. In this particular regime, LTD or LTP will be observed depending on the current level of postsynaptic firing rate. Finally, in the pure LTD regime, the LTP threshold has completely overtaken the fast variable so that the BCM sliding threshold has increased to infinity and only depression can occur. In this last situation, the zero domain will shrink to the single point at 0 because of the decreasing LTD threshold. 
Altogether, these terms produce, for increasing past postsynaptic activity, the desired supralinear shift of the BCM sliding threshold toward higher values. This behavior is further illustrated in Figure 4.

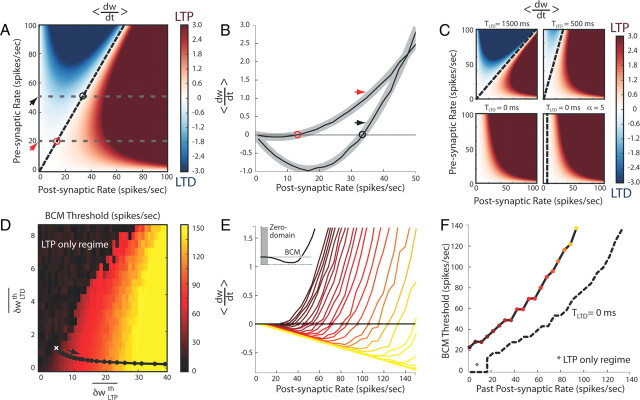

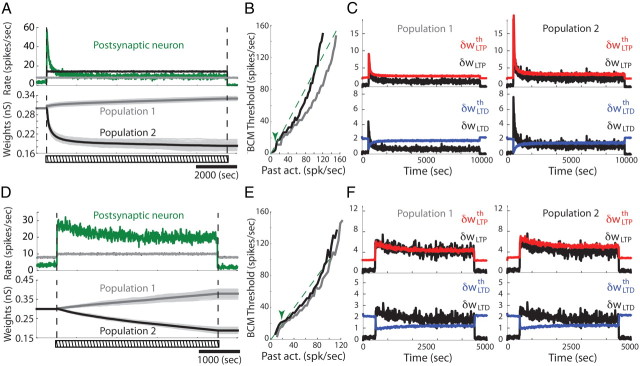

Figure 4.

Relationship between mSTDP and the BCM plasticity rule. A, Mean synaptic change 〈dw/dt〉 (LTP in red, LTD in blue) as a function of presynaptic and postsynaptic firing rates in a two-neuron model with Poisson-process firing statistics and in the absence of slow variables. The oblique dotted line represents the crossing point of the plasticity curve between LTD and LTP. B, Two BCM-like plasticity curves, linking synaptic change with mean postsynaptic activity, correspond to the gray dashed lines in A, respectively, for a presynaptic firing rate of 20 spikes/s (red arrow) and 50 spikes/s (black arrow), with the LTD–LTP transition threshold marked by an open circle. Theoretical predictions are shown in bold gray. C, Same as in A for different TLTD and α values. Note that α has been set to 0.46 in the first three panels and α = 5.00 in the last panel. D, Values of the LTD–LTP transition threshold (BCM) are plotted as a function of asymptotic (constant) slow variables δwLTPth and δwLTDth, corresponding to an infinite T. The black curve represents a trajectory obtained for a presynaptic firing rate of 10 spikes/s and different postsynaptic firing rates when T = 10 s and for (αLTP,αLTD) = (5, 1.15). E, BCM-like plasticity curves obtained along the black trajectory with the same color code as in D. In the inset, a representative curve depicting the singularity around the floating threshold (BCM) and the zero domain of stability at the origin. F, Supralinear dependence of the floating plasticity threshold on the past postsynaptic firing rate history corresponding to the curves shown in E. In dashed black, the same curve when TLTD = 0 ms. The input rate-dependent region in which only LTP can occur is indicated by an asterisk.

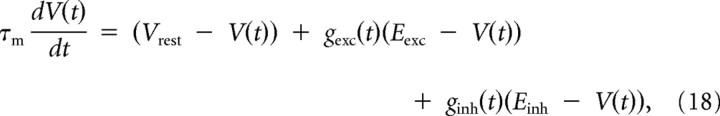

Neuron model

We consider leaky conductance-based integrate-and-fire neurons, with membrane time constant τm = 20 ms, and resting membrane potential Vrest = −70 mV. When the membrane potential V reaches the spiking threshold Vthresh = −54 mV, a spike is generated and the membrane potential is held at the resting potential for a refractory period of duration τref = 5 ms. Synaptic connections are modeled as conductance changes:

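$$\tau_m \frac{dV}{dt} = \left(V_{rest} - V\right) + g_{exc}(t)\left(E_{exc} - V\right) + g_{inh}(t)\left(E_{inh} - V\right)$$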

where the reversal potentials are Eexc = 0 mV and Einh = −70 mV. The synaptic activation is modeled as an instantaneous conductance increase followed by exponential decay:

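$$\frac{dg_{exc}}{dt} = -\frac{g_{exc}}{\tau_{exc}} + S_{exc}(t)$$

$$\frac{dg_{inh}}{dt} = -\frac{g_{inh}}{\tau_{inh}} + S_{inh}(t)$$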

with time constants τexc = 5 ms and τinh = 5 ms. Sexc/inh(t) are the synaptic spike trains (point processes) coming from the excitatory and inhibitory populations, respectively.
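A minimal forward-Euler sketch of such a neuron is given below. It uses the parameter values quoted in this section, but several choices are assumptions made for illustration only: conductances are expressed in units of the leak conductance (so the weights `w_exc` and `w_inh` are dimensionless here), each input spike adds its weight to the corresponding conductance, and the integration step is 0.1 ms. The function name `simulate_lif` is hypothetical.

```python
def simulate_lif(exc_spikes, inh_spikes, w_exc, w_inh, t_stop, dt=1e-4,
                 tau_m=0.020, v_rest=-70.0, v_thresh=-54.0, tau_ref=0.005,
                 e_exc=0.0, e_inh=-70.0, tau_exc=0.005, tau_inh=0.005):
    """Return output spike times (s) of a conductance-based leaky integrate-and-fire neuron.

    Voltages in mV, times in s; conductances in units of the leak conductance (assumption).
    """
    exc, inh = sorted(exc_spikes), sorted(inh_spikes)
    i_e = i_i = 0
    v, g_e, g_i = v_rest, 0.0, 0.0
    refractory_until = -1.0
    out = []
    for k in range(int(round(t_stop / dt))):
        t = k * dt
        # Deliver every input spike that has arrived by time t.
        while i_e < len(exc) and exc[i_e] <= t:
            g_e += w_exc
            i_e += 1
        while i_i < len(inh) and inh[i_i] <= t:
            g_i += w_inh
            i_i += 1
        if t < refractory_until:
            v = v_rest                      # held at rest during the refractory period
        else:
            dv = ((v_rest - v) + g_e * (e_exc - v) + g_i * (e_inh - v)) / tau_m
            v += dt * dv
            if v >= v_thresh:               # threshold crossing: emit a spike and reset
                out.append(t)
                v = v_rest
                refractory_until = t + tau_ref
        g_e -= dt * g_e / tau_exc           # exponential decay of the conductances
        g_i -= dt * g_i / tau_inh
    return out

# Usage: a regular 1 kHz excitatory barrage for 0.5 s, without inhibition.
drive = [k * 1e-3 for k in range(500)]
spikes = simulate_lif(drive, [], w_exc=0.2, w_inh=0.0, t_stop=0.5)
print("output spikes:", len(spikes))
```

Because Einh equals the resting potential, inhibition in this sketch acts purely by shunting: it lowers the effective membrane time constant and pulls the steady-state potential toward rest.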

Constraining the model from in vitro data

The data of Sjöström et al. (2001) and Wang et al. (2005) shown in Figure 2 were used to constrain the model with a least-squares fit, similar to the one described by Pfister and Gerstner (2006). The time constants for the STDP time windows were fixed to τLTP = 20 ms and τLTD = 25 ms, and parameters found by the model are TLTP = 845 ms, TLTD = 995 ms, and α = 0.46. In all the other simulations, the two parameters TLTP and TLTD were set to 1 s.

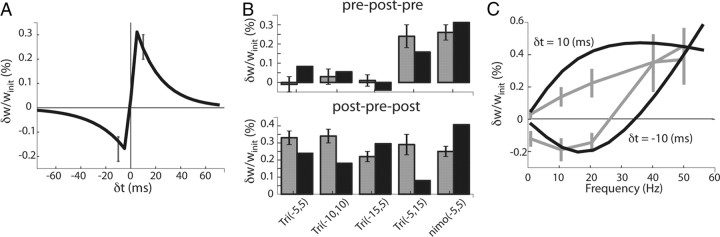

Figure 2.

Reproduction of STDP experiments in vitro. Data shown in gray have been used to fit the mSTDP model parameters for STDP and short-term interactions (with the slow variables set to 0). A, Typical biexponential STDP curve, linking the relative amplitude of the synaptic change (positive for LTP, negative for LTD) with the tpost − tpre interval and obtained with a simulated pairing protocol. Gray error bars in the graph are taken from Wang et al. (2005) recorded in hippocampal neurons for δt = ±10 ms. B, Relative synaptic changes induced by triplet protocols (denoted Tri) compared with experimental data obtained by Wang et al. (2005) (top, pre–post–pre; bottom, post–pre–post). Gray bars correspond to the original recordings for each protocol in the abscissa (with time intervals between each spike). Black bars correspond to the prediction of the model. The last set of columns represents the condition in which L-type calcium channels are blocked with nimo, a specific L-type calcium channel antagonist. C, STDP dependence on the pairing frequency for δt = ±10 ms. In gray are original data obtained for visual cortex neurons by Sjöström et al. (2001) for this protocol.

Priming simulations

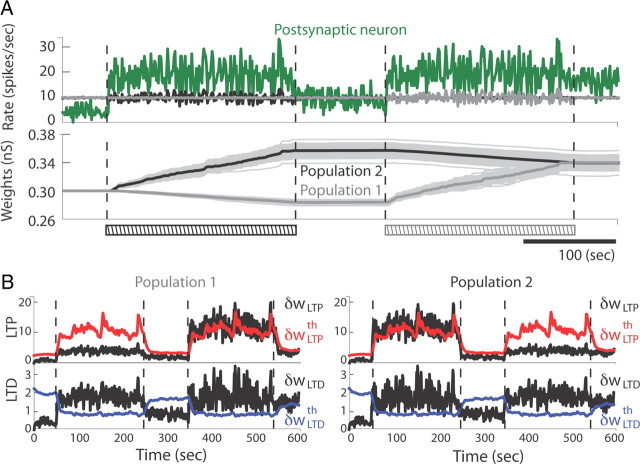

For the LTP/LTD priming protocols (see Fig. 3) corresponding to Huang et al. (1992) and Mockett et al. (2002), respectively, we used a two-neuron model. Synaptic weights were chosen such that weak presynaptic tetanic bursts are able to elicit some spikes in the postsynaptic neuron (winit = 50 nS and wmax = 100 nS). The strong values of those weights could be reduced if more than two neurons were considered. Parameters for the plasticity rule were β = 0.15, αLTP = 15, and αLTD = 2. For LTP priming, the presynaptic neuron received 10 weak tetanic bursts (20 spikes/s for 250 ms each), with an interburst interval of 1 s. Then, 10 s later, it received a strong burst at 100 spikes/s for 200 ms. The learning rate was fixed to λ = 5 × 10⁻³ and T = 50 s. For LTD priming, the presynaptic neuron received a low-frequency stimulation at 15 spikes/s for 40 s. Then, 40 s later, the exact same stimulation occurred. The learning rate was fixed to λ = 0.8 × 10⁻⁴, and T = 100 s.
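The two stimulation protocols can be written down as explicit presynaptic spike trains. The sketch below is one possible reading of the description, with assumptions flagged in the comments: spikes within a burst are regularly spaced (the text does not say whether they are regular or Poisson), the 1 s interburst interval is measured from the end of one burst to the start of the next, and the delays before the test stimulation are measured from the end of the priming stimulation. All function names are hypothetical.

```python
def regular_burst(t_start, rate, duration):
    """Regularly spaced spike times (s) at `rate` spikes/s for `duration` s (assumed regular)."""
    n = int(round(rate * duration))
    return [t_start + k / rate for k in range(n)]

def ltp_priming_train(n_bursts=10, weak_rate=20.0, weak_dur=0.25, gap=1.0,
                      delay=10.0, strong_rate=100.0, strong_dur=0.2):
    """Weak priming bursts followed by one strong burst; returns (spikes, t_strong)."""
    spikes, t = [], 0.0
    for _ in range(n_bursts):
        spikes += regular_burst(t, weak_rate, weak_dur)
        t += weak_dur + gap                 # gap counted from burst end (assumption)
    t_strong = t - gap + delay              # delay counted from the end of the last weak burst
    spikes += regular_burst(t_strong, strong_rate, strong_dur)
    return spikes, t_strong

def ltd_priming_train(rate=15.0, dur=40.0, delay=40.0):
    """Low-frequency stimulation repeated once after `delay` seconds of silence."""
    return regular_burst(0.0, rate, dur) + regular_burst(dur + delay, rate, dur)

ltp_spikes, t_strong = ltp_priming_train()
ltd_spikes = ltd_priming_train()
print(len(ltp_spikes), "spikes in the LTP priming protocol, strong burst at", t_strong, "s")
print(len(ltd_spikes), "spikes in the LTD priming protocol")
```

The control condition of each experiment corresponds to dropping the priming part, that is, keeping only the strong burst for LTP or only the second low-frequency block for LTD.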

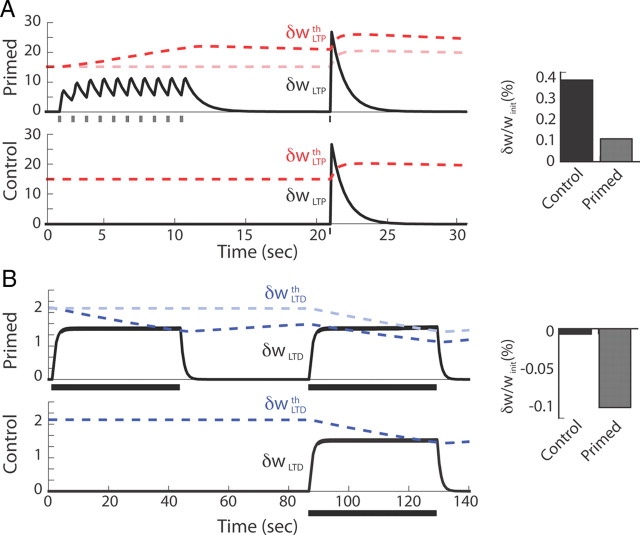

Figure 3.

Reproduction of priming experiments using the mSTDP rule. A, Simulation of an LTP priming experiment inspired by Huang et al. (1992), in which weak tetanus stimulation can elicit LTP suppression. The top shows the time evolution of fast and slow LTP variables with a priming stimulation, and the bottom shows the control condition without priming (with δwLTPth also illustrated in light red in the top). The postsynaptic spike times corresponding to the weight updates are depicted below each graph. The histograms on the right are the resulting synaptic weight changes relative to their initial value for the two conditions. B, Same as A but with a protocol inspired by Mockett et al. (2002) in which previous low-frequency stimulation can elicit LTD facilitation for subsequent low-frequency stimulations. Slow variables are drawn in blue. The presynaptic spike times corresponding to the weight updates are depicted below each graph.

Feedforward convergence simulations

In feedforward convergence simulations (see Figs. 4–9), the network was composed of 1000 excitatory and 250 inhibitory neurons connected to a postsynaptic neuron. Only excitatory connections were subject to the mSTDP learning rule, and parameters for the plasticity were β = 0.15, αLTP = 2.5, αLTD = 2.3, and T = 5 s. The initial weight for the presynaptic population was winit = 0.3 nS. The ratio between excitatory and inhibitory weights was such that winh = 33 × wexc. Every neuron in the presynaptic population had a constant firing rate of 8 spikes/s, and the learning rate was fixed to λ = 2.5 × 10⁻⁶. For Figure 9, presynaptic neurons were stimulated with wrapped Gaussian profiles of rates vi = 1 + 50 × exp(−(i − μ)²/(2σ²)) spikes/s, the center μ being shifted randomly every 50 ms over all possible positions i ∈ {1, …, 1000} and with σ = 80. Here an upper bound for the synaptic weights was set to wmax = 0.5 nS, and the learning rate was divided by 2 to avoid strong fluctuations near the boundaries.
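The stimulation used for the receptive-field simulations can be sketched as follows. The wrapping is implemented here with the circular distance on a ring of 1000 positions, which is an assumption: a strict wrapped Gaussian would sum over all periodic images, although the difference is small for σ = 80. The function name and the seed are hypothetical.

```python
import math
import random

def gaussian_rates(mu, n=1000, sigma=80.0, base=1.0, peak=50.0):
    """Firing rate (spikes/s) of each presynaptic neuron i = 1..n for a bump centered at mu."""
    rates = []
    for i in range(1, n + 1):
        d = abs(i - mu)
        d = min(d, n - d)                   # circular distance on the ring (assumption)
        rates.append(base + peak * math.exp(-d * d / (2.0 * sigma * sigma)))
    return rates

# The center is redrawn uniformly every 50 ms; here, the first five stimulation epochs.
random.seed(1)
for epoch in range(5):
    mu = random.randint(1, 1000)
    rates = gaussian_rates(mu)
    print(f"t = {50 * epoch} ms, center = {mu}, mean rate = {sum(rates) / len(rates):.2f} spikes/s")
```

Each list of rates would then drive independent Poisson spike generators for the 50 ms epoch before the center is redrawn.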

Figure 5.

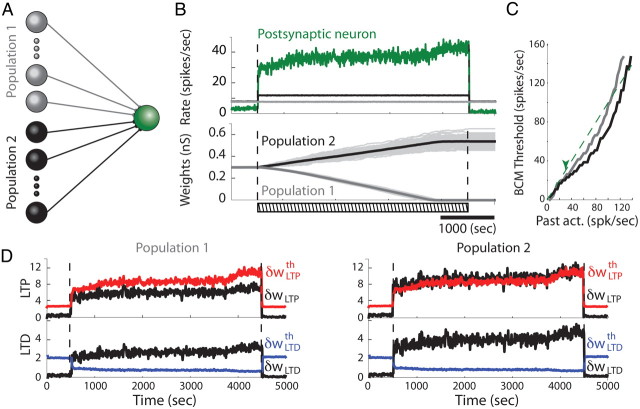

Firing rate-based competition produced by mSTDP. A, Illustration of the feedforward model (only excitatory cells are shown). B, Time evolution of firing rates (top) and synaptic weights (bottom) in a feedforward model with presynaptic Poisson processes. In this protocol, the postsynaptic neuron receives Poisson input from two presynaptic populations. After 500 s, presynaptic neurons in population 2 double their firing rates from 8 to 16 spikes/s (black curve), whereas the other population remains unchanged (gray curve). The firing rate of the postsynaptic neuron is shown in green. The bottom plot represents individual synaptic weights in light gray, as well as the mean weight of population 1 in dark gray and population 2 in black. After 4000 s, the firing rate of population 2 is relaxed to its initial value. The dashed bar indicates the onset, offset, and duration of the stimulation. C, Floating plasticity threshold dependence on the past postsynaptic firing rate for both populations. The green arrow indicates the stable postsynaptic firing rate during the competitive learning protocol, before population 1 weights reach their lower bound at 0. The dashed green line indicates identity. D, Time evolution of the fast variables δwLTP and δwLTD (black curves), as well as the corresponding slow variables δwLTPth (red) and δwLTDth (blue).

Figure 6.

Effect of the time constant TLTD on the presynaptic dependence of the floating plasticity threshold. A–C, Same as B–D in Figure 5 but with a mean firing rate of 20 spikes/s for population 1 and 50 spikes/s for population 2. Here, as in Figure 5, TLTD = 1000 ms. D–F, Same as in A–C but with TLTD = 50 ms.

Figure 7.

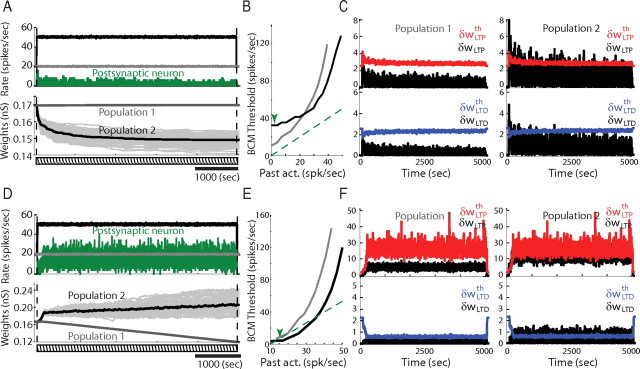

Synchrony-based competition produced by mSTDP. A, Time evolution of firing rates (top) and synaptic weights (bottom) in a feedforward model with presynaptic Poisson processes. In this protocol, population 2 neurons are synchronous Poisson processes (correlation coefficient of 0.25) between 50 and 250 s (black crosshatched bar), and population 1 neurons are synchronous Poisson processes between 350 and 550 s (gray crosshatched bar). Outside these synchrony periods, the synaptic inputs are uncorrelated. B, Time evolution of the fast variables δwLTP and δwLTD (black curves), as well as the corresponding slow variables δwLTPth and δwLTDth.

Figure 8.

Synaptic weight dynamics for mSTDP rules with independent slow variables. A, The time evolution of the firing rates of the presynaptic and postsynaptic neurons is shown in the top and the synaptic weight distributions of populations 1 and 2 in the bottom. Population 2 neurons increase their firing rate during the period delimited by the dashed black bar. B, Floating plasticity threshold dependence on the past postsynaptic firing rate for both populations. C, Time evolution of the fast and slow variables of both populations 1 and 2. The color code is identical to that of Figures 5–7. D–F, Same as in A–C but for a uniform increase in firing rate and for a bimodal distribution of coefficients αLTP with means of 2.3 and 2.7, corresponding to populations 1 and 2, respectively.

Network model

The network was composed of Nexc = 63 × 63 excitatory and Ninh = 31 × 31 inhibitory neurons, arranged on a two-dimensional grid of artificial size 1 mm². Because of the rather small neuron density, the metric unit used to describe the network can be considered arbitrary, but we will assume, for the sake of comparability between stimulation protocols and for establishing realistic propagation delays, that 1 mm corresponds to ∼1° of visual angle in cat primary visual cortex V1. The grid has periodic boundary conditions to avoid any border effects. Each neuron was sparsely connected with the rest of the network with a connection probability that depended on the distance Δij between neurons according to a Gaussian profile pij = exp(−Δij²/2σ²) with a spatial spread of σ = 0.1 mm. For each neuron, K = ε(Nexc + Ninh) incoming connections were drawn by randomly picking other neurons in the network that will or will not create a projection according to a rejection method based on the Gaussian profile. The connection density was ε = 0.1, and self-connections were discarded.
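The rejection method described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the acceptance probability is taken to be exp(−Δ²/2σ²), repeated connections between the same pair are allowed, and the small 21 × 21 demonstration grid and all function names are hypothetical.

```python
import math
import random

SIDE = 1.0     # grid side in mm (from the text)
SIGMA = 0.1    # spatial spread in mm (from the text)

def torus_distance(a, b, side=SIDE):
    """Euclidean distance between points a and b with periodic boundaries."""
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    dx = min(dx, side - dx)
    dy = min(dy, side - dy)
    return math.hypot(dx, dy)

def draw_inputs(target, positions, k, rng, sigma=SIGMA):
    """Pick k presynaptic indices for `target` by rejection sampling."""
    chosen = []
    while len(chosen) < k:
        j = rng.randrange(len(positions))
        if j == target:
            continue  # self-connections are discarded
        d = torus_distance(positions[target], positions[j])
        # Assumed acceptance probability: Gaussian profile with peak 1.
        if rng.random() < math.exp(-d * d / (2.0 * sigma * sigma)):
            chosen.append(j)  # duplicates are allowed in this sketch
    return chosen

# Number of incoming connections per neuron with the values quoted in the text.
k_paper = round(0.1 * (63 * 63 + 31 * 31))

# Small hypothetical demonstration grid (21 x 21 neurons on the unit torus).
positions = [(ix / 21.0, iy / 21.0) for ix in range(21) for iy in range(21)]
TARGET = 0
K_DEMO = 44
inputs = draw_inputs(TARGET, positions, K_DEMO, random.Random(1))
```

With the network sizes given in the text, `k_paper` evaluates to 493 incoming connections per neuron.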

The network was set to an asynchronous irregular (AI) state (Brunel, 2000) with a population rate of ∼7 spikes/s and with the mean coefficient of variation of the interspike interval (ISI CV) of 1. Initial synaptic weights were drawn from Gaussian distributions with mean values: δgexc = 5 nS and δginh = 50 nS. The SDs of the Gaussian distributions are one-third of their mean values. Every neuron received an additional Poisson input at 500 spikes/s in Figure 10 and 300 spikes/s in Figure 11. To reproduce lateral propagation along horizontal connections, as supported by in vivo intracellular evidence (Bringuier et al., 1999) and voltage-sensitive dye imaging (Benucci et al., 2007), synaptic delays are considered as being linearly dependent on distance, i.e., if Δij is the distance between two neurons, we have dij = dsyn + Δij/v, where dij is the delay between the two neurons, dsyn = 0.2 ms is the minimal delay attributable to synaptic transmission, and v is the velocity of dendritic conduction. In all simulations, v = 0.2 mm/ms, in agreement with biological measurements (Bringuier et al., 1999), i.e., delays in the network are in the range [dsyn, dsyn + √2/(2v)], where √2/2 mm is the longest possible connection in the network.
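As a worked example of the distance-dependent delays, the sketch below assumes that the delay is the minimal synaptic delay plus distance divided by conduction velocity, which is how the text describes it. The function and constant names are hypothetical.

```python
import math

D_SYN = 0.2      # minimal synaptic delay in ms (from the text)
VELOCITY = 0.2   # conduction velocity in mm/ms (from the text)
SIDE = 1.0       # grid side in mm (from the text)

def delay_ms(distance_mm, d_syn=D_SYN, v=VELOCITY):
    """Delay that grows linearly with distance: d_syn + distance / v."""
    return d_syn + distance_mm / v

# On a periodic square grid, the farthest point is half a side away along each axis.
longest = math.hypot(SIDE / 2.0, SIDE / 2.0)   # sqrt(2)/2 mm
shortest_delay = delay_ms(0.0)
longest_delay = delay_ms(longest)
```

Under these assumptions the delays span roughly 0.2 ms to 3.7 ms.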

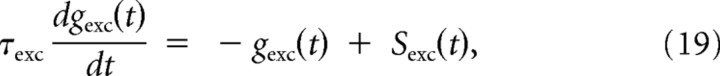

Figure 10.

Stable learning in a topographically organized recurrent two-dimensional neuronal network with in vivo-like spontaneous activity. A, Time evolution of the unstimulated population (gray curve) and stimulated population by an external drive within a circular area (black curve). The mean afferent synaptic weights for each neuron in the network are displayed as snapshots in the course of time above the graph. The mean afferent weights fed by neurons inside the stimulated region, outside the stimulated region, and from all neurons are plotted in the first, second, and third rows, respectively. B, From left to right, the columns labeled by circled numbers correspond, respectively, to the stable spontaneous regime (1), the end of the stimulation epoch (2), and the relaxation ongoing regime 1000 s after the stimulation (3). Network snapshots of the mean afferent and efferent synaptic weight per neuron are plotted in the first two rows for mSTDP. In the last two rows, the mean firing rates for each neuron in the network are plotted for the mSTDP and the weight-dependent STDP rules. Circled numbers at the top of each column refer to the same phases described in A and B. C, Network firing statistics before, during, and after stimulation: top, firing rate distribution; middle, ISI CV; bottom, mean Pearson's coefficient computed over 2000 distinct neuron pairs.

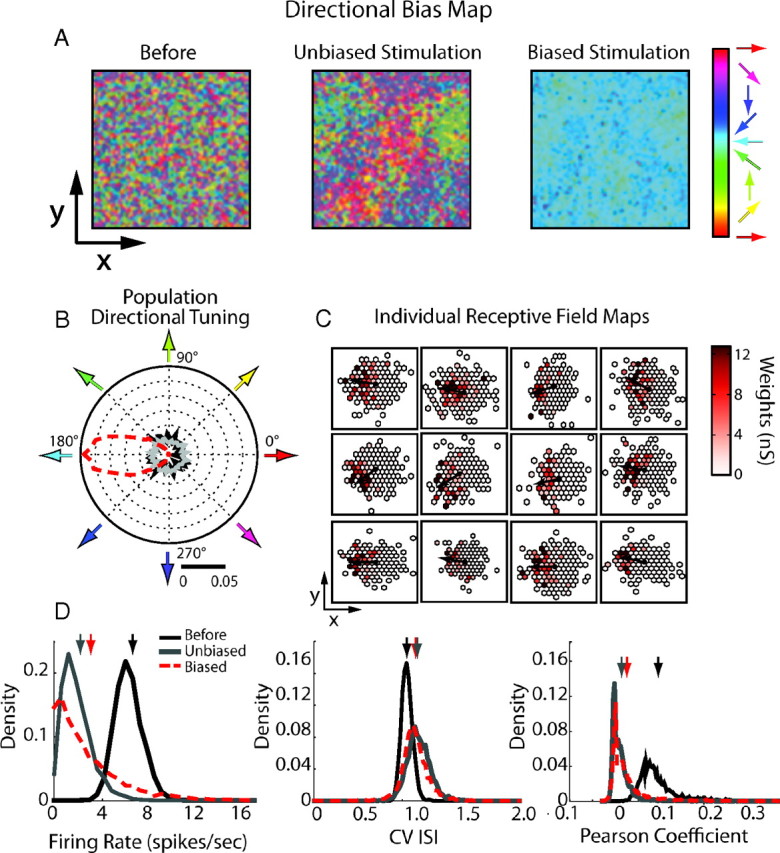

Figure 11.

Stable learning in recurrent retinotopically organized networks stimulated with moving bars. A, Maps of direction preferences, color coded and computed for each neuron in the network from their individual receptive fields. For one neuron, the receptive field corresponds to the spatial distribution of its synaptic sources (locations of the presynaptic neurons afferent to the same target cell). Individual direction preferences were computed by identifying the direction, in each receptive field, in which recurrent presynaptic weights were the strongest (see Materials and Methods). The left shows the map of the direction preferences in the initial, randomly connected network under spontaneous activity. The middle and right show the maps induced by plasticity after unbiased (middle) or directionally biased (right, red arrow) conditioning stimulations. B, Distribution of the direction preferences gathered across all neurons during spontaneous (black curve), directionally unbiased (gray curve), and biased conditioning (red dashed curve) stimulation. The direction tunings are measured from individual receptive fields as spatial biases in the recruitment of presynaptic sources. C, Examples of individual receptive fields for several neurons randomly selected in the network after the biased stimulation. The maps are centered on the reference postsynaptic neurons (black dots), and individual direction preferences are indicated by black arrows. Incoming synaptic weights are color coded. D, Network firing statistics before stimulation and after the different stimulation phases: left, firing rate distribution; middle, ISI CV; right, mean Pearson's coefficient computed over 2000 distinct neuron pairs. Arrows indicate mean values for each phase.

In all simulations, only recurrent excitatory connections are subject to plasticity, and the learning scale is set to λ = 1.5 × 10⁻⁶. For Figure 11, an external layer of stimulating neurons driven by Poisson processes is added on top of the recurrent network. All external neurons project locally to all neurons of the recurrent network within a radius of 0.3 mm, with synaptic weights gexc = 5 nS. In this external layer, moving bars were modeled as linear wave fronts with a profile described by a half-Gaussian function. The bars were 0.2 mm wide and 0.1 mm long, with a peak rate amplitude of 4 spikes/s. This pattern of activity originated every 100 ms at random places and propagated perpendicularly to the wave front in a random direction φ, with a constant speed s = 10 mm/s (which, according to the chosen metric, would correspond to 10°/s in the visual field, a speed to which cells in V1, and mostly in secondary visual cortex V2, respond). In the unbiased case, values of φ are drawn from a uniform distribution ranging from 0 to 2π, whereas in the biased case, they are drawn from a wrapped Gaussian distribution centered on 0 with variance σ² = π/4.
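The two ways of drawing the propagation direction φ can be sketched as follows. The function names are hypothetical; the biased case draws from a normal distribution and wraps the sample onto the circle, which is one standard way to sample a wrapped Gaussian.

```python
import math
import random

TWO_PI = 2.0 * math.pi

def draw_direction(rng, biased, variance=math.pi / 4.0):
    """Return a propagation direction phi in [0, 2*pi)."""
    if biased:
        # Wrapped Gaussian: draw from a normal centred on 0, then wrap onto the circle.
        return rng.gauss(0.0, math.sqrt(variance)) % TWO_PI
    # Unbiased case: uniform over the full circle.
    return rng.random() * TWO_PI

def circular_mean(angles):
    """Mean direction of a list of angles, returned by atan2 in [-pi, pi]."""
    s = sum(math.sin(a) for a in angles)
    c = sum(math.cos(a) for a in angles)
    return math.atan2(s, c)

rng = random.Random(0)
biased_sample = [draw_direction(rng, True) for _ in range(5000)]
unbiased_sample = [draw_direction(rng, False) for _ in range(5000)]
```

The circular mean of `biased_sample` should lie close to 0, whereas `unbiased_sample` has no preferred direction.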

For the quantification of the preferred direction, we considered, for each neuron, the centered map of its afferent presynaptic weights. In this neuron-centered reference frame, an incoming input originates from a position identified by its polar coordinates (r, θ), such that the map value at (r, θ) corresponds to the weight w of the projection. Angular positions of all the afferent connections were then gathered and weighted by their corresponding synaptic weights. The peak value of this weighted histogram is used to define the structural direction preference of the neuron, i.e., the angular direction along which the composite synaptic contribution of all synaptic weights recruited by the bar is the strongest.
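The quantification just described can be sketched as a weight-weighted angular histogram. This is an illustration only: the number of bins, the function name, and the assumption that source positions are already expressed without wrap-around relative to the target are all hypothetical choices not specified in the text.

```python
import math

def preferred_direction(target, sources, weights, n_bins=36):
    """Return the centre (radians, in [0, 2*pi)) of the heaviest angular bin.

    target:  (x, y) position of the postsynaptic neuron
    sources: list of (x, y) positions of its presynaptic neurons
    weights: synaptic weight of each afferent connection
    """
    bin_width = 2.0 * math.pi / n_bins
    histogram = [0.0] * n_bins
    for (x, y), w in zip(sources, weights):
        # Angular position of the source in the neuron-centred reference frame.
        theta = math.atan2(y - target[1], x - target[0]) % (2.0 * math.pi)
        histogram[int(theta / bin_width) % n_bins] += w
    # The peak of the weighted histogram defines the structural preference.
    best = max(range(n_bins), key=lambda b: histogram[b])
    return (best + 0.5) * bin_width

example = preferred_direction(
    (0.0, 0.0),
    [(1.0, 0.05), (1.0, 0.1), (0.1, 1.0), (-1.0, 0.1)],
    [1.0, 1.0, 1.5, 1.0],
)
```

In the example, the two sources lying just above the positive x axis share a bin and jointly outweigh the single stronger source near π/2, so the returned direction is the centre of the first bin.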

Simulator

All simulations were performed using a modified version of the NEST 2.0 simulator (Gewaltig and Diesmann, 2007), allowing updates of the synaptic weights at each time step of the simulation, controlled with the PyNN interface (Davison et al., 2008). The integration time step of our simulations was 0.1 ms.

Results

Biophysical correlates of the mSTDP model

The working assumption of our mSTDP model is based on electrophysiological in vitro results reported previously (Wang et al., 2005), showing evidence that LTP and LTD are elicited by calcium influx through distinct dedicated channels, NMDA and L-type, respectively. For LTP, and for every pre–post pairing, calcium enters the cell through NMDA channels and activates second-messenger-dependent molecular processes leading to potentiation (presumably through calmodulin binding and subsequent activation of kinases; see Lisman, 1989, 1994). For LTP, we consider that the STDP window is defined by an exponential curve that dictates the instantaneous amount of Ca2+/calmodulin that will be formed at the time of the postsynaptic spike. In a recent work by Faas et al. (2011), the authors have shown that the binding of calmodulin with calcium takes place over a very short timescale, in accordance with our phenomenological description of this process as an instantaneous rise of the corresponding variable at the time of postsynaptic spikes. This variable will be labeled δwLTP. The action of this variable is twofold. On a very short timescale, the corresponding molecule will activate a kinase K that will phosphorylate AMPA receptors, thus increasing the excitatory synaptic conductance, acting as a phenomenological substrate for LTP, as in previous biophysical models (Senn et al., 2001; Shouval et al., 2002; Badoual et al., 2006; Pfister and Gerstner, 2006; Graupner and Brunel, 2007; Clopath et al., 2010). On a slower timescale, it will also activate another pathway, resulting in a nonlinear downregulation of the kinase activation. This additional hypothetical slow molecular pathway is critical in this model and has not been taken into account in previous models of calcium-dependent kinase kinetics that usually rely on positive regulation of the kinase (Lisman, 1989, 1994; Okamoto and Ichikawa, 1994; Lisman et al., 2002; Graupner and Brunel, 2007).

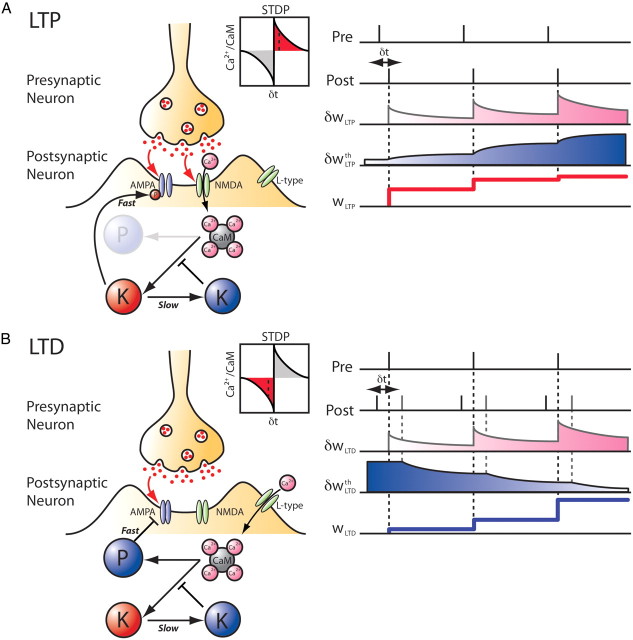

In our phenomenological model, these fast and slow actions were implemented through two different operators. The rapid Ca2+/calmodulin action is modeled as an instantaneous update of synaptic weight given by the value of δwLTP at the time of the postsynaptic spike. The long-lasting LTP downregulation acts as an induction threshold during these postsynaptic events and will be modeled as an average of exp(βδwLTP) over the past history and over a presynaptic ensemble. This function accounts for the strong nonlinear increase in the LTP threshold when weak tetanic priming stimulations are used before a strong tetanic induction in hippocampal slices (Huang et al., 1992). Unless stated otherwise, the presynaptic ensemble will be averaged over all previously active synapses projecting on the postsynaptic neuron such that they all share the same slow variables. Note also that the fast variable does not need to exhibit an instantaneous rise but can also have a finite rise time as long as the timescale is significantly faster than that of the slow variables. The net effect on the synaptic weight is proportional to the kinase concentration, thus resulting in the rectified difference between these two terms (see Materials and Methods). This LTP mechanism is depicted in Figure 1A and obeys Equation 3 for instantaneous weight changes induced by LTP: wLTP.
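The interplay between the fast variable and the slow threshold can be illustrated with a toy sketch. It is not Equation 3 of the model: it assumes an event-based update at each postsynaptic spike, a threshold that is a running average of exp(β × fast value) scaled by a coefficient `alpha`, and hypothetical parameter values (`beta`, `alpha`, `rate`) chosen only to make the priming effect visible.

```python
import math

def run_ltp(fast_values, beta=0.5, alpha=0.5, rate=0.05, theta0=1.0):
    """Apply a rectified fast-minus-threshold update at each postsynaptic spike.

    fast_values: value of the fast LTP variable sampled at each postsynaptic spike.
    Returns (total weight change, final value of the slow average).
    """
    theta = theta0  # slow average of exp(beta * fast); equals 1 after long silence
    total = 0.0
    for x in fast_values:
        total += max(0.0, x - alpha * theta)          # rectified difference
        theta += rate * (math.exp(beta * x) - theta)  # slow running average
    return total, theta

weak = [1.0] * 20     # hypothetical weak priming stimulation
strong = [3.0] * 10   # hypothetical strong induction

control_ltp, _ = run_ltp(strong)                           # no priming
_, theta_primed = run_ltp(weak)                            # priming raises the threshold
primed_ltp, _ = run_ltp(strong, theta0=theta_primed)       # induction after priming
```

Because the slow average is higher after priming, the same strong induction yields less potentiation (`primed_ltp` is smaller than `control_ltp`), which is the qualitative behavior the threshold is meant to capture.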

Figure 1.

Schematic description of the model of mSTDP. A, Mechanism of LTP with a fast potentiation action and slower downregulation of the instantaneous amount of potentiation at the postsynaptic spike times. The plasticity rule used for LTP is inspired by putative molecular pathways depicted on the left. A pairing protocol between presynaptic and postsynaptic spike trains is illustrated on the right with the time evolution of each variable of the model. The slowly increasing threshold (δwLTPth, blue curve) progressively reduces the effect of fast potentiation (δwLTP, pink curve) on the synaptic weight (wLTP, thick red lines) at subsequent postsynaptic spike times. The pairing time difference δt is relative to the presynaptic spike time (δt = tpost − tpre). B, Mechanism of LTD with a fast depression action and a facilitation produced by LTP downregulation at the presynaptic spike times. The plasticity rule used for LTD is inspired by putative molecular pathways depicted on the left. On the right, a triplet protocol illustrates how the progressive lowering of the LTD threshold (δwLTDth, blue curve) produced by repeated pre–post pairings can increase the functional impact of fast depression (δwLTD, pink curve) produced by previous post–pre pairings, resulting in larger depression of the synaptic weight (wLTD, thick blue lines) at the presynaptic spike times.

Note that other types of ensemble average can be considered for the slow variables. For instance, the sharing of the slow variables by synapses can be done on a local basis (restricted to a specific domain of the neurite) and not on a global basis as described above. This could correspond to local groups of synapses located in the same dendritic branch and sharing some of the proteins through local diffusion. This will result in different learning rules, as described in later sections.

Regarding LTD, it has been shown that priming stimulations can facilitate LTD induction with low-frequency stimulation (Christie and Abraham, 1992; Wang et al., 1998; Mockett et al., 2002). Although the direct action of LTD is assumed to be conveyed by calcium influx through dedicated L-type channels (Graef et al., 1999; Wang et al., 2005), the priming effect has been shown to occur through NMDA channels and to covary with the metaplasticity observed for LTP (Mockett et al., 2002). Indeed, LTD facilitation and LTP suppression are both elicited in priming experiments that activate NMDA receptors. We assumed that the direct action of “pre after post” pairing results in the binding of an independent Ca2+/calmodulin (variable δwLTD) to a phosphatase that will be responsible for rapid AMPA receptor dephosphorylation and endocytosis. Moreover, we hypothesized that this process can be facilitated by the Ca2+/calmodulin-dependent LTP downregulation generated through NMDA channels that will make more Ca2+/calmodulin molecules available for the phosphatase. This is modeled as a buffering term acting at the presynaptic spike times and that is inversely proportional to the slow LTP action exp(βδwLTP), averaged over the past history and over the same presynaptic ensemble. This LTD mechanism is depicted in Figure 1B and obeys Equation 6 for instantaneous weight changes induced by LTD: wLTD. The time evolution of the synaptic weight is given by the time integral over the synaptic changes (Eq. 7).

Thus, one of the main consequences of these mechanisms is that the LTP or LTD changes to be expressed will have to cross a plasticity induction threshold. This by itself is similar to a previously proposed plasticity model (Senn et al., 2001), except that the threshold here is moving as a function of the past activity attributable to the dual timescale interactions that we propose to underlie metaplasticity. In the model of Senn et al. (2001), changes in thresholds result from short-term upregulations and downregulations of purely presynaptic vesicle release probability at individual synapses through nonlinear messenger interactions. In contrast, the mSTDP model offers the possibility of studying several scenarios, ranging from learning rules with a single shared postsynaptic floating threshold (as in BCM) to multiple and purely presynaptic plasticity induction thresholds (as in the study by Senn et al., 2001).

The mSTDP model can be more readily compared with the BCM hypothesis, because the two learning rules produce a floating plasticity threshold driven, respectively, by presynaptic and postsynaptic activity (mSTDP) or shared by all the synapses (BCM) and evolving on a very slow timescale. Note that these two features are absent in the model of Senn et al. (2001), in which the sliding feature of the threshold arises from kinetic equations regulating the vesicle release probability. However, this adaptive threshold is driven by the same time constant causing the synaptic weight changes. Therefore, this model does not account for the multiple plasticity timescales necessary to reproduce in vitro priming experiments, nor does this rule incorporate plasticity induction thresholds shared among all synapses, which are critical to ensure synaptic competition in the BCM framework. More recently, models based on triplet interactions were introduced that reproduce some features of the BCM rule (Pfister and Gerstner, 2006; Clopath et al., 2010). However, the slow sliding threshold in these models was introduced as an ad hoc homeostatic process governing the amplitude of depression (Clopath et al., 2010) or potentiation (Pfister and Gerstner, 2006), without a structural relationship with the plasticity algorithm. The induction thresholds for LTP and LTD are therefore kept fixed in these models. We will show further in the text that our biochemical scenario can account for the classic “floating plasticity threshold” found in previous experimental and theoretical BCM-like studies of synaptic plasticity, but in this case, the “floating feature” is an emergent property of the model.

Single-cell STDP experiments

Before considering the mSTDP with the fast and slow variable actions, we first compared our model with plasticity protocols occurring at a fast timescale so that the slow variables can be considered constant. In particular, to determine whether this phenomenological model is consistent with STDP experiments, we show that its predictions reproduce the plasticity effects reported for first- and second-order interspike interactions. In Figure 2A, the biexponential STDP curve was obtained using a pairing protocol at 1 Hz. Triplet interactions were obtained in Figure 2, B and C, for a given set of parameters chosen to qualitatively match the data reported by Sjöström et al. (2001) and Wang et al. (2005) and with the slow variables set to 0 for the sake of simplicity. Note that these results are conserved even in the presence of slow variables (data not shown). Previously studied plasticity models have also succeeded in reproducing these data (Pfister and Gerstner, 2006; Clopath et al., 2010) or other similar datasets (Senn et al., 2001) with generalized short-term interactions. In the study by Senn et al. (2001), the short-term interaction is produced by regulation of presynaptic vesicle release probability. Here, the short-term interaction is inherited from the assumption that the Ca2+/calmodulin-bound concentration decays slowly compared with the STDP time constant. Figure 2B displays the synaptic changes for different patterns of presynaptic and postsynaptic spike triplets (Wang et al., 2005). In pre–post–pre firing patterns, for instance, the synapse displays virtually no plasticity, whereas significantly larger potentiation is obtained for post–pre–post patterns that would produce comparable synaptic changes if short-term interactions were neglected. 
Moreover, when simulating L-type channel blockade (“nimo” application condition) by forcing δwLTD to 0, we obtained a comparable potentiation between pre–post–pre and post–pre–post patterns, a prediction in accordance with experimental data. In Figure 2C, plasticity curves are plotted for fixed pairing intervals (δt = ±10 ms) occurring at various frequencies. In particular, for post–pre pairings, the synapse first experiences depression followed by potentiation at higher frequencies, as reported by Sjöström et al. (2001). The main features of the model responsible for this frequency-dependent effect are the extra time constants added by the binding of Ca2+ with independent calmodulin molecules (variables δwLTP/LTD). These time constants, similar to those of the triplet model by Pfister and Gerstner (2006), are capable of capturing not only pairwise interactions within pre–post pairings but also higher-order interactions. Note that these high-order plasticity rules were also shown to naturally emerge from the dynamics of kinase/phosphatase interaction with calcium and glutamate in a previous biophysical model (Badoual et al., 2006).

Priming experiments

We next considered experimental data involving timescales in which the action of the mSTDP slow variables is critical. To confirm that the mSTDP model faithfully reproduces the dynamics of metaplasticity as reported in vitro, we replicated priming experiments that were shown to either inhibit LTP or facilitate LTD. In Figure 3A, the protocol used by Huang et al. (1992) was reproduced in a two-neuron model. When weak tetanus priming stimulations were used before subsequent strong tetanus stimulation, the resulting synaptic weight change was considerably lower than the change elicited by the strong tetanus stimulation alone. Preactivation of LTP increases its induction threshold and then decreases the amount of LTP obtained during the strong tetanus stimulation. Conversely, LTD induction by low-frequency stimulation was significantly facilitated when a previous low-frequency priming stimulation was used (Fig. 3B). This result is in accordance with LTD facilitation reported by Christie and Abraham (1992), Wang et al. (1998), and Mockett et al. (2002). Low-frequency stimulation of the LTD pathway decreases the induction threshold for LTD and therefore increases the amount of depression compared with a control situation without priming. Moreover, in the study by Mockett et al. (2002), it was shown that this facilitation occurs through NMDA channels and is concurrent with LTP downregulation, which is in accordance with our definition of the slow variables both depending on δwLTP. Note that the results shown in Figure 3 were obtained with a set of parameters different from that used for the rest of the study to mimic the protocols used in vitro. To avoid excessively long simulations, time constants smaller than those observed experimentally were used in the rest of the study. Nevertheless, similar results on priming experiments can be obtained with our choice of parameters if the timescale and burst amplitude of each protocol are properly scaled.

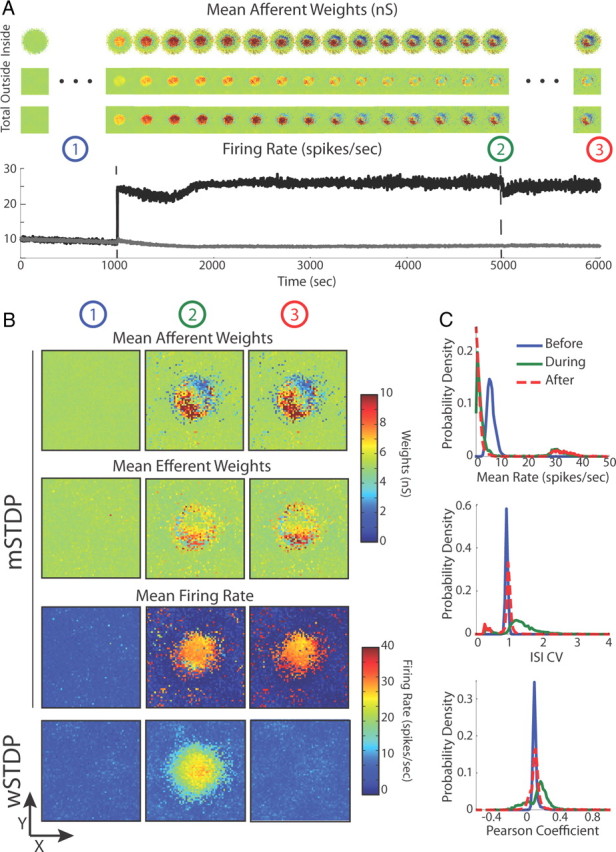

Relation with BCM

The dependence of LTP and LTD induction thresholds on the past synaptic activity in mSTDP introduces new interesting functional properties that can ensure stable learning as well as synaptic competition. We thus further studied the relationship between mSTDP and the original BCM plasticity rule first introduced to endow plasticity with stable convergence through synaptic competition (Bienenstock et al., 1982). Previous theoretical studies have succeeded in reproducing some aspects of the BCM rule by considering nonlinear interactions between presynaptic and postsynaptic spike patterns in STDP (Senn et al., 2001; Izhikevich and Desai, 2003; Burkitt et al., 2004; Pfister and Gerstner, 2006; Clopath et al., 2010). This correspondence was achieved either by assuming first-neighbor interactions between spikes (Izhikevich and Desai, 2003; Burkitt et al., 2004) or short-term interactions involving slower variables (Senn et al., 2001; Pfister and Gerstner, 2006; Clopath et al., 2010). However, these models were unable to account for one salient feature of the BCM rule: the floating plasticity threshold shared among all synapses integrating the impact of mean postsynaptic activity changes on slow timescales, which was either included as an ad hoc mechanism or was absent (Bush et al., 2010). The sliding plasticity threshold was originally assumed to be a supralinear function of the past postsynaptic firing rate history and was introduced to ensure nontrivial convergence and to avoid weight divergence in the plasticity algorithm (Bienenstock et al., 1982). In Figure 4A, we first plotted the amplitude and sign of plasticity for various presynaptic and postsynaptic firing rates in a model consisting of a pair of neurons linked by an ideal synapse and firing as Poisson processes. Figure 4B shows two BCM curves that correspond to selected lines in the diagram of Figure 4A. 
These curves were computed in the absence of slow variables and are compared with theoretical predictions (Eq. 9). The depression domain and thus the BCM threshold increases with presynaptic firing rate as expected from the model definition (see Materials and Methods). This dependence is absent from the original BCM rule and creates differential plasticity changes between synapses originating from neurons with different firing rates as reported previously (Burkitt et al., 2004). This form of synaptic competition usually requires fine tuning of the model parameters and produces unstable distributions of synaptic weights because no homeostatic regulation is present in the absence of slow variables to ensure a normalization of the overall synaptic weight impact on the postsynaptic neuron. Moreover, in the parameter regime of the triplet rule used in Figure 4A, regions of anti-Hebbian learning exist in which an increase in the presynaptic firing rate results in a decrease of the corresponding synaptic weight. When the value of TLTD is decreased, the presynaptic dependence progressively disappears until a fixed BCM threshold is observed independently of any change in the presynaptic rate when TLTD = 0. In Figure 4C, we illustrate several plasticity rules for different values of the triplet parameters TLTD and α. In particular, for TLTD = 0 and α = 0.46, only LTP can occur. The choice of parameters in the last panel is formally similar to that found by Pfister and Gerstner (2006) when fitting their triplet model to the data of Sjöström et al. (2001) and Wang et al. (2005); in their studies, as well as in this particular parametric case of our mSTDP model, the BCM threshold is constant and shared by all synapses.

We then studied the role of the slow variables δwLTPth and δwLTDth in modulating the LTD–LTP transition plasticity BCM threshold with the past postsynaptic firing rate history, as originally used by Bienenstock and colleagues to simulate the effect of rearing conditions (dark rearing vs normal visual experience) on visual cortical plasticity. Measuring this relationship directly from the model requires the decoupling of slow and fast variables such that the past history of the postsynaptic activity is not affected during the measurement of the “instantaneous” BCM threshold. Therefore, we assumed that the slow time constant T is slow enough compared with other time constants to be considered infinite during the BCM curve measurement. Moreover, we also assumed that the presynaptic average is carried over all synapses. In this asymptotic regime (T → ∞), all synapses share common LTP and LTD thresholds described by the coefficients δwLTPth and δwLTDth of Equations 3 and 6 that act as constant thresholds defined by the past activity regime. The new BCM plasticity curves and thresholds can thus be computed for any pair of fixed LTP and LTD thresholds. Figure 4D shows the plasticity thresholds obtained for different values of these coefficients. Note that the existence of an LTD induction threshold was absent from the original BCM rule (Bienenstock et al., 1982) but was introduced at the subthreshold level in the ABS rule (Artola and Singer, 1993) and at the suprathreshold level by Senn et al. (2001). However, in the ABS model, LTP and LTD thresholds were considered as fixed values. In the model by Senn et al., the effective floating threshold was not shared among synapses, contrary to the mSTDP rule. The slow variables are nonlinear functions of the postsynaptic activity history through the eligibility traces δwLTP and δwLTD in Equations 3 and 6 that are functions of the past postsynaptic activity for Poisson processes. 
For a given parameter set (αLTP, αLTD) and for a finite time constant T = 10 s and presynaptic rate of 10 spikes/s, successive stationary postsynaptic firing rates resulted in effective stationary variables δwLTPth and δwLTDth that are plotted as trajectories in Figure 4D. The white cross corresponds to a quiescent past postsynaptic activity, and the arrow indicates the floating plasticity thresholds for higher past activities. Figure 4E shows the plasticity curves as a function of the past postsynaptic firing rate (assumed constant during each protocol) for the trajectories drawn in Figure 4D. As described in Materials and Methods, two curve-axis crossing points can be highlighted: the first point at the origin of the postsynaptic activity axis, and the second point at the transition between pure LTD and LTP domains (BCM-like threshold). The corresponding two regimes are illustrated in Figure 4E (inset). The stable zero domain around the origin is usually large enough to operate as a “dead zone” and supports a static integrative mode with fixed synaptic weights. In this domain, no synaptic weight change will occur as long as the level of presynaptic and postsynaptic coincidence remains small. This domain can be shrunk after high past synaptic activity (LTD-only regime). However, the second point, the LTD–LTP transition BCM threshold, increases with increasing past synaptic activity as described in Table 1. Figure 4F shows the BCM thresholds for increasing postsynaptic past activity corresponding to the different curves in Figure 4E. This relation is supralinear as required by the formal demonstration of stability conditions during competitive BCM learning (Bienenstock et al., 1982). The rate at which this threshold increases with past synaptic activity is set by the parameter β, which describes the rate of LTP downregulation (Eq. 3). 
It is worth noting that the floating BCM threshold is a singular operating point that can only guarantee the convergence of the mean synaptic weight. The stochastic nature of neuronal firing creates a residual competition that slowly diffuses changes in the synaptic weights around the mean self-stabilized operating point. Thus, only the zero domain guarantees both individual and mean synaptic stability, allowing the cell to operate reliably in a passive (non-adaptive) integrative mode.
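The difference between stability of the mean and stability of individual weights can be illustrated with a deliberately simplified toy model, which is not the mSTDP rule itself. In the Python sketch below, each weight receives zero-mean random increments whose population average is removed at every step, so the mean weight is conserved while individual weights diffuse. The function name, noise amplitude, weight values, and step count are hypothetical choices made for illustration only.

```python
import random
import statistics

def diffuse_weights(weights, n_steps, noise_sd, seed=0):
    """Toy residual competition: zero-sum random increments at every step.

    Assumption (not taken from the model equations): competition is summarized
    as Gaussian noise whose population mean is subtracted, so the mean weight
    is conserved while each individual weight performs a random walk.
    No weight bounds are applied.
    """
    rng = random.Random(seed)
    w = list(weights)
    for _ in range(n_steps):
        noise = [rng.gauss(0.0, noise_sd) for _ in w]
        mean_noise = sum(noise) / len(noise)
        # Removing the mean increment keeps the summed weight constant.
        w = [wi + ni - mean_noise for wi, ni in zip(w, noise)]
    return w

initial = [0.25] * 200  # hypothetical homogeneous starting weights (nS)
final = diffuse_weights(initial, n_steps=500, noise_sd=0.001)
print("mean:", statistics.fmean(initial), "->", statistics.fmean(final))
print("variance:", statistics.pvariance(initial), "->", statistics.pvariance(final))
```

In this toy setting the mean stays at its initial value while the variance keeps growing with the number of steps, which mirrors why only the zero domain, and not the singular threshold, freezes individual weights.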

Stable competition of mSTDP during ongoing activity

To show that the mSTDP model produces stored weight patterns that are robust to ongoing activity, we investigated several network models, namely feedforward and recurrent network models. In the feedforward model, we considered a presynaptic population of 1000 excitatory and 250 inhibitory neurons projecting to a postsynaptic neuron, in which only the excitatory synapses were subject to plasticity. To mimic the stochastic ongoing activity observed in vivo, each presynaptic neuron followed a Poisson process with a mean rate of 8 spikes/s. The parameters αLTP and αLTD were chosen such that no synaptic changes were observed in this regime, considered as the normal operating point of the network. One important issue, common to all models of synaptic plasticity, concerns the operating point of the synapses. In the ABS and Senn et al. (2001) models, the implicit reference is the quiescent network state: as soon as the postsynaptic activity increases, the first plasticity threshold crossed by synapses is that of LTD induction. In contrast, the BCM and mSTDP models were proposed to account for the in vivo case. In this situation, the presence of ongoing activity differs from the absence of firing at rest in vitro. For BCM, the bending of the covariance rule so that it converges to the origin (the “zero-activity” point) was proposed only to ensure mathematical stability. Note that a pure covariance product rule (Sejnowski, 1977) would lead to instability and change in the sign of the synaptic weight (if allowed) near the origin. In our model, however, the “fixed” regime below LTP and LTD thresholds guarantees that individual synaptic weights remain fixed during ongoing activity.
This stable information transmission domain may be thought to correspond to the basal level of network spiking correlation during spontaneous activity, which has been reported to be low in vivo (Renart et al., 2010) or during sparse evoked activity during natural scene viewing (Vinje and Gallant, 2000). However, both zero-domain and “crossing” (floating threshold of transition between LTD and LTP) points can be used to stabilize the mean synaptic weight, although the singular nature of the floating threshold still allows for a residual competition. This singularity has a possible functional drawback because competition of infinite duration will induce a progressive increase in the variance of the weight distribution without affecting its mean (first-order stability remains valid), as will be shown in the following. This second-order instability can be seen as a correlate of synaptic competition but in the long term results in a synaptic weight distribution in which a single weight overtakes all the others. Alternatively, our model could have been parameterized in such a way as to create a stability domain around the LTD–LTP crossing point, similar to the zero domain at the origin. This second stability domain would stabilize the full synaptic weight distribution at the operating point between LTP and LTD. Such a second stability domain was proposed on the basis of the experimental validation of the BCM rule (Frégnac et al., 1988), as an operating domain matching the dynamical range of evoked sensory and ongoing activity (Frégnac, 2002). These differences in the working state of the synapse will be the focus of future developments of the mSTDP model. In the following, we considered different heterogeneous presynaptic firing patterns to study the evolution of synaptic weights produced by mSTDP.
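As a point of reference, the ongoing-activity input of the feedforward model (1000 excitatory and 250 inhibitory presynaptic neurons, each an independent Poisson process at 8 spikes/s) can be sketched in a few lines of Python. This is a minimal illustration of the input statistics only, not the simulation code used for the figures; the function names, the simulated duration, and the random seed are hypothetical.

```python
import random

def poisson_train(rate_hz, duration_s, rng):
    """Spike times (s) of a homogeneous Poisson process.

    Interspike intervals are drawn from an exponential distribution.
    """
    times = []
    t = rng.expovariate(rate_hz)
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(rate_hz)
    return times

def ongoing_input(n_exc=1000, n_inh=250, rate_hz=8.0, duration_s=10.0, seed=0):
    """Independent Poisson trains for the excitatory and inhibitory inputs.

    Population sizes and rate follow the text; duration_s and seed are
    arbitrary illustrative values.
    """
    rng = random.Random(seed)
    exc = [poisson_train(rate_hz, duration_s, rng) for _ in range(n_exc)]
    inh = [poisson_train(rate_hz, duration_s, rng) for _ in range(n_inh)]
    return exc, inh

def mean_rate(trains, duration_s):
    """Population-averaged firing rate in spikes/s."""
    return sum(len(tr) for tr in trains) / (len(trains) * duration_s)

if __name__ == "__main__":
    exc, inh = ongoing_input(duration_s=5.0)
    print("excitatory mean rate:", mean_rate(exc, 5.0))
    print("inhibitory mean rate:", mean_rate(inh, 5.0))
```

Raising `rate_hz` for a subset of the excitatory trains would give the kind of heterogeneous rate pattern considered in the following paragraphs.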

We first studied the case in which the presynaptic ensemble average of slow variables is made over all synapses, thus defining common LTP and LTD thresholds shared among all synapses as required by the BCM rule. We separately considered the effect of heterogeneous presynaptic firing rate (detailed in Figs. 5, 6) and synchrony (detailed in Fig. 7) within the presynaptic neuron population (see illustration in Fig. 5A). The nonlinear interspike interactions responsible for the BCM-like features of the plasticity curve can create competition between groups of neurons with different firing rates, which is further stabilized by the slow variables. We thus increased the mean firing rate of half the presynaptic excitatory neurons and, as expected, observed a rapid separation of their synaptic weights from those of neurons with unchanged rate (Fig. 5B). In particular, because of the strong impact of the LTP fast variables compared with LTD (α = 0.46) and our choice of presynaptic rates (8 and 16 spikes/s), the synaptic weights of population 2 increased when its mean firing rate was raised. This change was accompanied by a simultaneous decrease in the synaptic weights fed by population 1, whose firing rate was kept unchanged. This effect is expected from the BCM-like competition feature of the plasticity algorithm, which tends to keep the average synaptic weight and thus the postsynaptic firing rate constant. The floating plasticity threshold dependence on the past postsynaptic activity is illustrated for both input populations in Figure 5C, with lower floating thresholds for synapses fed by population 2 as expected from the BCM threshold presynaptic dependence discussed in Materials and Methods. In these conditions, population 1 experiences depression and population 2 potentiation.

Eventually, when all synaptic weights of population 1 reached their lower bound at 0, the average synaptic weight corresponding to population 2 became stabilized so that the postsynaptic firing rate reached a new stationary regime. If the stimulation pattern was maintained, the variance of the global synaptic weight distribution would slowly increase because of the residual competition. To avoid weight divergence of individual synapses (stability is guaranteed by the mSTDP model for the mean but not for the variance of the synaptic weight distribution), this plasticity phase was then followed by a phase in which presynaptic firing rates were relaxed to their original values, resulting in an inactivation of LTP and LTD. Variables δwLTP and δwLTD for both populations returned under their respective thresholds δwLTPth and δwLTDth corresponding to the zero domain of stability (Fig. 5D). The resulting postsynaptic firing rate was comparable with its original value, and the synaptic weights remained constant in this new ongoing regime (Fig. 5B). Therefore, spatially heterogeneous distribution of presynaptic firing rates elicits, at the level of the common postsynaptic target cell, synaptic weight scaling of a competitive nature. Ensuring that the fast variables return under their respective thresholds thus results in a segregation and stabilization of individual synaptic weights. This result does not depend on the initial weights as can be concluded from similar simulations in which the initial values were drawn from a Gaussian distribution (data not shown).

However, as discussed in Figure 4A–C in the case of a single presynaptic source, the presynaptic dependence of the LTD–LTP transition threshold can invert the outcome of competition between the input populations, when presynaptic firing rates are chosen so as to produce LTD instead of LTP in the absence of slow variables. If, for instance, the presynaptic firing rates are chosen to be 20 spikes/s for population 1 (instead of 8 spikes/s in Fig. 5) and 50 spikes/s for population 2 (instead of 16 spikes/s in Fig. 5), a depression is predicted by Figure 4A for both synaptic sets, and this depression is larger for the higher presynaptic rate. Consequently, the synaptic weights corresponding to population 2 will become smaller than for population 1 (Fig. 6A). Indeed, in the singular BCM regime (around the LTD–LTP threshold), the floating plasticity threshold of population 1 is smaller than that of population 2, thus inverting the pattern of competition (Fig. 6B). Note that this presynaptic dependence is lost when TLTD is very small or equal to 0. In Figure 6D–F, the same simulation was run with TLTD = 50 ms and resulted in synaptic weight distributions comparable with those in Figure 5, with stronger weights for population 2. This can be seen in particular in the dependence of the floating plasticity threshold on the past postsynaptic activity (Fig. 6E), which shows smaller values for population 2, corresponding to potentiation in the singular BCM regime.

We next considered the case of correlated Poisson processes of variable synchrony with a fixed mean firing rate. As reported by Song and Abbott (2001), if half of the presynaptic neurons are driven by synchronous inputs while the other half are randomly activated, heterosynaptic competition occurs between the synchronized and unsynchronized sets of synapses and produces two separate synaptic weight distributions corresponding to each input population. In Figure 7A, during a first phase, half the presynaptic neurons (population 2) were driven by correlated Poisson processes (correlation coefficient of 0.25 for 200 s). The resulting synaptic weight distribution after the rapid learning phase is separated into two distinct sharp unimodal distributions. If the synchronous firing of population 2 were to last longer, the synaptic weights of population 1 would eventually reach 0 and the average synaptic weight of population 2 would become stable as in Figure 5 (data not shown). Instead, this phase was then followed by a stationary phase of 100 s, during which the spiking activity of both populations was decorrelated. Fast variables were brought back below their induction thresholds (Fig. 7B), resulting in a stabilization of individual synaptic weights. To show that these changes are not irreversible and that homogeneous distributions can be recovered with appropriate synaptic inputs, we then inverted the two population spiking patterns for the same stimulation duration. The weight distribution self-organized into a unimodal distribution in which the synaptic weights of the two populations were indistinguishable. The combined effect of these alternated patterns of correlated stimulations induced a global potentiation of the mean synaptic weight, each set of synapses benefiting more from LTP than LTD, as can be seen in the time evolution of all variables (Fig. 7B). 
From this result, we concluded that presynaptic populations can be segregated based on both first-order (mean rate) and second-order (synchrony) input statistics, thus resulting in efficient synaptic competition mechanisms that rely on different aspects of the mSTDP rule.
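The text does not specify how the correlated Poisson processes were generated. One common construction, assumed here purely for illustration, draws a shared "mother" Poisson train at rate r/c and lets each neuron copy every mother spike independently with probability c; each neuron then fires at rate r, and the spike-count correlation between any two neurons is c. The Python sketch below uses hypothetical function names and produces exactly coincident (zero-lag) spikes, which may differ from the synchrony used in the simulations.

```python
import random

def correlated_poisson(n_neurons, rate_hz, corr, duration_s, seed=0):
    """Correlated Poisson trains obtained by thinning a shared mother train.

    Assumed construction (not stated in the text): the mother process has
    rate rate_hz / corr, and each neuron keeps each mother spike with
    probability corr, so every train has rate rate_hz.
    """
    rng = random.Random(seed)
    mother_rate = rate_hz / corr
    trains = [[] for _ in range(n_neurons)]
    t = rng.expovariate(mother_rate)
    while t < duration_s:
        for train in trains:
            if rng.random() < corr:
                train.append(t)
        t += rng.expovariate(mother_rate)
    return trains

def binned_counts(train, duration_s, bin_s):
    """Spike counts in consecutive bins of width bin_s."""
    n_bins = int(round(duration_s / bin_s))
    counts = [0] * n_bins
    for t in train:
        counts[min(int(t / bin_s), n_bins - 1)] += 1
    return counts

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5

if __name__ == "__main__":
    # Rate, correlation, and duration follow the protocol described above.
    trains = correlated_poisson(2, rate_hz=8.0, corr=0.25, duration_s=200.0)
    a = binned_counts(trains[0], 200.0, 0.05)
    b = binned_counts(trains[1], 200.0, 0.05)
    print("empirical count correlation:", pearson(a, b))
```

Setting the copy probability independently of the mean rate is what allows synchrony to be varied at a fixed firing rate, as required by this protocol.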

mSTDP with independent slow variables

We also considered the case in which synapses do not share a common plasticity threshold. Interestingly, new forms of synaptic competition can arise in this situation. We first replicated the stimulation protocol of Figure 5 where two presynaptic populations fire with different mean rates. In Figure 8A, the increase in postsynaptic firing rate elicits a fast and strong change in population 2 synaptic weights. However, instead of being potentiated as in Figure 5, these weights are strongly depressed. Indeed, the increase in slow variables (Fig. 8C) produced by the strong presynaptic and postsynaptic firing rate only affects the synapses of population 2, resulting in a shift toward depression for the corresponding weights until the fast variables return below their respective thresholds. Eventually, individual synaptic weights settle at constant values and the postsynaptic firing becomes stationary. Although this pattern of competition resembles that observed in Figure 6A–C, this effect is not the result of the presynaptic dependence of the LTD–LTP transition threshold. Indeed, the protocol is identical to that illustrated in Figure 5, and the singular LTD–LTP transition BCM point still keeps the same form of dependence on the past synaptic activity (Fig. 8B) for small TLTD. The homeostatic regulation acts in this latter case on each synapse independently and therefore produces a strong depression to compensate for the increase in presynaptic firing rate and keep the postsynaptic mean rate constant. Therefore, different forms of learning rules can arise based on the spatial extent of synaptic interactions within the same neurite.

This synaptic weight segregation can arise from functional competition but can also result from structural specificity. If the coefficients αLTP are drawn from a bimodal distribution, two distinct populations of synaptic weights emerge for a fixed and homogeneous presynaptic firing rate (Fig. 8D–F). These populations correspond respectively to each mode of the bimodal distribution. Population 1, having smaller LTP induction thresholds, experiences an increase in the synaptic weights, whereas population 2 synaptic weights are dominated by depression. If synapses interact only locally, synaptic weight distributions can be spontaneously segregated as a result of structural differences that may be related, for instance, to different dendritic branching morphologies.

Emergence of receptive fields with mSTDP

To illustrate the emergence of receptive fields in a simplified retinotopic feedforward model, we next considered an additional protocol in which retinotopically organized presynaptic neurons were driven by Poisson processes with mean rate following spatial Gaussian profiles (Song and Abbott, 2001; Pfister and Gerstner, 2006). Every 50 ms, the center of the Gaussian stimulation profile was randomly drawn among the presynaptic neurons with periodic boundary conditions. After an initial phase of ∼500 s, the postsynaptic firing rate reached a stationary regime (Fig. 9A) and a sharp and localized receptive field emerged in the presynaptic population (Fig. 9B,C) ∼500 s later. After ∼2000 s, the synaptic weights reached their upper (wmax = 0.5 nS) or lower (wmin = 0 nS) bound, and the receptive field stayed stable for the rest of the simulation. If the synaptic weights were unbounded, the excessive competition between synapses could shrink the receptive field to a single winner-take-all synapse. To avoid this anomalous trivial weight distribution, some additional constraint must be used, such as imposing weight dependency or hard bounds, as in Figure 9.
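The stimulation protocol can be sketched as follows in Python: a Gaussian rate profile over a ring of presynaptic neurons (periodic boundary conditions), with the profile center redrawn every 50 ms. Only the 50 ms epoch and the periodic wrapping come from the text; the function names, the profile width, and the peak and baseline rates are hypothetical values chosen for illustration.

```python
import math
import random

def gaussian_rates(n_neurons, center, sigma, peak_hz, base_hz=0.0):
    """Mean firing rate of each neuron for a Gaussian profile on a ring.

    Distances wrap around, so neuron n_neurons - 1 is adjacent to neuron 0.
    sigma, peak_hz, and base_hz are illustrative parameters.
    """
    rates = []
    for i in range(n_neurons):
        d = abs(i - center)
        d = min(d, n_neurons - d)  # circular (periodic) distance
        rates.append(base_hz + peak_hz * math.exp(-d * d / (2.0 * sigma * sigma)))
    return rates

def stimulus_centers(n_neurons, duration_s, epoch_s=0.05, seed=0):
    """Random profile center for each 50 ms epoch of the stimulation."""
    rng = random.Random(seed)
    n_epochs = int(round(duration_s / epoch_s))
    return [rng.randrange(n_neurons) for _ in range(n_epochs)]

if __name__ == "__main__":
    centers = stimulus_centers(n_neurons=100, duration_s=1.0)
    profile = gaussian_rates(100, centers[0], sigma=10.0, peak_hz=40.0, base_hz=5.0)
    print("first center:", centers[0], "peak rate:", max(profile))
```

Each epoch's rate vector would then drive independent Poisson spike generation for the corresponding 50 ms window.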